repo_name

stringlengths 9

75

| topic

stringclasses 30

values | issue_number

int64 1

203k

| title

stringlengths 1

976

| body

stringlengths 0

254k

| state

stringclasses 2

values | created_at

stringlengths 20

20

| updated_at

stringlengths 20

20

| url

stringlengths 38

105

| labels

listlengths 0

9

| user_login

stringlengths 1

39

| comments_count

int64 0

452

|

|---|---|---|---|---|---|---|---|---|---|---|---|

Evil0ctal/Douyin_TikTok_Download_API

|

web-scraping

| 513 |

[BUG] bilibili 获取指定用户的信息 一直返回风控校验失败

|

平台: bilibili

使用接口:api/bilibili/web/fetch_user_profile 获取指定用户的信息

接口返回: {

"code": -352,

"message": "风控校验失败",

"ttl": 1,

"data": {

"v_voucher": "voucher_f7a432cb-91fb-467e-a9a3-3e861aac9478"

}

}

错误描述: 已经更新过config.yaml内的cookie,使用 【获取用户发布的视频数据】接口就可以正常返回数据。但是使用【获取指定用户的信息】,就返回【风控校验失败】。

|

open

|

2024-11-28T10:52:56Z

|

2024-11-28T10:57:11Z

|

https://github.com/Evil0ctal/Douyin_TikTok_Download_API/issues/513

|

[

"BUG"

] |

sukris

| 0 |

jupyterhub/repo2docker

|

jupyter

| 1,131 |

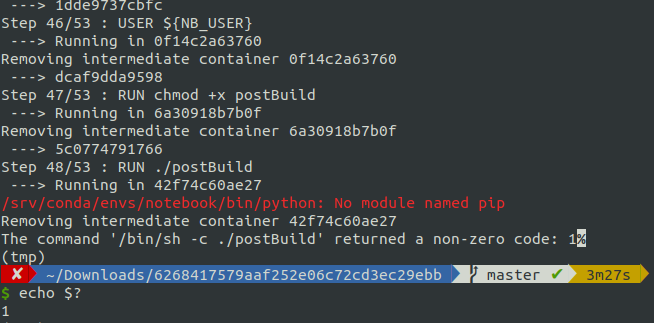

/srv/conda/envs/notebook/bin/python: No module named pip

|

<!-- Thank you for contributing. These HTML commments will not render in the issue, but you can delete them once you've read them if you prefer! -->

### Bug description

Opening a new issue as a follow-up to the comment posted in https://github.com/jupyterhub/repo2docker/pull/1062#issuecomment-1023073794.

Using the latest `repo2docker` (with `python -m pip install https://github.com/jupyterhub/repo2docker/archive/main.zip`), (existing) repos that have a custom `environment.yml` don't seem to be able to invoke `pip`, for example with `python -m pip`.

#### Expected behaviour

Running arbitrary `python -m pip install .` or similar should still be supported in a `postBuild` file.

#### Actual behaviour

Getting the following error:

```

/srv/conda/envs/notebook/bin/python: No module named pip

```

### How to reproduce

**With Binder**

Using the test gist: https://gist.github.com/jtpio/6268417579aaf252e06c72cd3ec29ebb

With `postBuild`:

```

python -m pip --help

```

And `environment.yml`:

```yaml

name: test

channels:

- conda-forge

dependencies:

- python >=3.10,<3.11

```

**Locally with repo2docker**

```

mamba create -n tmp -c conda-forge python=3.10 -y

conda activate tmp

python -m pip install https://github.com/jupyterhub/repo2docker/archive/main.zip

jupyter-repo2docker https://gist.github.com/jtpio/6268417579aaf252e06c72cd3ec29ebb

```

### Your personal set up

Using this gist on mybinder.org: https://gist.github.com/jtpio/6268417579aaf252e06c72cd3ec29ebb

|

closed

|

2022-01-28T16:35:33Z

|

2022-02-01T10:48:39Z

|

https://github.com/jupyterhub/repo2docker/issues/1131

|

[] |

jtpio

| 6 |

inducer/pudb

|

pytest

| 449 |

Internal shell height should be saved in the settings

|

I think the default height of internal shell is too small.

Thx~

|

closed

|

2021-05-09T09:41:15Z

|

2021-07-13T11:53:25Z

|

https://github.com/inducer/pudb/issues/449

|

[] |

sisrfeng

| 3 |

timkpaine/lantern

|

plotly

| 165 |

add "email notebook" to GUI

|

closed

|

2018-06-05T04:27:27Z

|

2018-08-07T14:13:28Z

|

https://github.com/timkpaine/lantern/issues/165

|

[

"feature"

] |

timkpaine

| 1 |

|

smarie/python-pytest-cases

|

pytest

| 238 |

Setting `ids` in `@parametrize` leads to "ValueError: Only one of ids and idgen should be provided"

|

Using `ids` without setting `idgen` to None explicitly leads to this error.

```python

from pytest_cases import parametrize, parametrize_with_cases

class Person:

def __init__(self, name):

self.name = name

def get_tasks():

return [Person("joe"), Person("ana")]

class CasesFoo:

@parametrize(task=get_tasks(), ids=lambda task: task.name)

def case_task(self, task):

return task

@parametrize_with_cases("task", cases=CasesFoo)

def test_foo(task):

print(task)

```

A workaround is to set `idgen=None` too: `@parametrize(task=get_tasks(), ids=lambda task: task.name, idgen=None)`

See also #237

|

closed

|

2021-11-24T09:19:47Z

|

2022-01-07T13:40:25Z

|

https://github.com/smarie/python-pytest-cases/issues/238

|

[] |

smarie

| 0 |

deepinsight/insightface

|

pytorch

| 2,374 |

Failed in downloading one of the facial analysis model

|

RuntimeError: Failed downloading url http://insightface.cn-sh2.ufileos.com/models/buffalo_l.zip

Reproduce:

model = FaceAnalysis(name='buffalo_l')

|

closed

|

2023-07-17T14:12:24Z

|

2023-07-17T14:58:26Z

|

https://github.com/deepinsight/insightface/issues/2374

|

[] |

amztc34283

| 1 |

gradio-app/gradio

|

data-visualization

| 9,956 |

[Gradio 5] - Gallery with two "X" close button

|

### Describe the bug

I have noticed that the gallery in the latest version of Gradio is showing 2 buttons to close the gallery image, and the button on top is interfering with the selection of the buttons below. This happens when I am in preview mode, either starting in preview mode or after clicking on the image to preview.

### Have you searched existing issues? 🔎

- [X] I have searched and found no existing issues

### Reproduction

```python

import gradio as gr

with gr.Blocks(analytics_enabled=False) as app:

gallery = gr.Gallery(label="Generated Images", interactive=True, show_label=True, preview=True, allow_preview=True)

app.launch(inbrowser=True)

```

### Screenshot

### Logs

```shell

N/A

```

### System Info

```shell

Gradio Environment Information:

------------------------------

Operating System: Windows

gradio version: 5.5.0

gradio_client version: 1.4.2

------------------------------------------------

gradio dependencies in your environment:

aiofiles: 23.2.1

anyio: 4.4.0

audioop-lts is not installed.

fastapi: 0.115.4

ffmpy: 0.4.0

gradio-client==1.4.2 is not installed.

httpx: 0.27.0

huggingface-hub: 0.25.2

jinja2: 3.1.3

markupsafe: 2.1.5

numpy: 1.26.3

orjson: 3.10.6

packaging: 24.1

pandas: 2.2.2

pillow: 10.2.0

pydantic: 2.8.2

pydub: 0.25.1

python-multipart==0.0.12 is not installed.

pyyaml: 6.0.1

ruff: 0.5.6

safehttpx: 0.1.1

semantic-version: 2.10.0

starlette: 0.41.2

tomlkit==0.12.0 is not installed.

typer: 0.12.3

typing-extensions: 4.12.2

urllib3: 2.2.2

uvicorn: 0.30.5

authlib; extra == 'oauth' is not installed.

itsdangerous; extra == 'oauth' is not installed.

gradio_client dependencies in your environment:

fsspec: 2024.2.0

httpx: 0.27.0

huggingface-hub: 0.25.2

packaging: 24.1

typing-extensions: 4.12.2

websockets: 12.0

```

### Severity

I can work around it

|

closed

|

2024-11-13T23:25:20Z

|

2024-11-25T17:13:39Z

|

https://github.com/gradio-app/gradio/issues/9956

|

[

"bug"

] |

elismasilva

| 9 |

gradio-app/gradio

|

machine-learning

| 10,738 |

gradio canvas won't accept images bigger then 600 x 600 on forgewebui

|

### Describe the bug

I think it's a gradio problem since the problem started today and forge hasn't updated anything

### Have you searched existing issues? 🔎

- [x] I have searched and found no existing issues

### Reproduction

```python

import gradio as gr

```

### Screenshot

_No response_

### Logs

```shell

```

### System Info

```shell

colab on forgewebui

```

### Severity

I can work around it

|

closed

|

2025-03-06T02:57:40Z

|

2025-03-06T15:21:24Z

|

https://github.com/gradio-app/gradio/issues/10738

|

[

"bug",

"pending clarification"

] |

Darknessssenkrad

| 13 |

serengil/deepface

|

machine-learning

| 709 |

How to avoid black padding pixels?

|

Thanks for the great work! I have a question about the face detector module.

The README.md mentions that

> To avoid deformation, deepface adds black padding pixels according to the target size argument after detection and alignment.

If I don't want any padding pixels, what pre-processing steps should I do? Or is there any requirement on the shape if I want to skip the padding?

|

closed

|

2023-04-02T11:59:30Z

|

2023-04-02T14:53:28Z

|

https://github.com/serengil/deepface/issues/709

|

[] |

xjtupanda

| 1 |

moshi4/pyCirclize

|

data-visualization

| 84 |

Auto annotation for sectors in chord diagram

|

> Annotation plotting is a feature added in v1.9.0 (python>=3.9). It is not available in v1.8.0.

_Originally posted by @moshi4 in [#83](https://github.com/moshi4/pyCirclize/issues/83#issuecomment-2658729865)_

upgraded to v1.9.0 still it is not changing.

```

from pycirclize import Circos, config

from pycirclize.parser import Matrix

config.ann_adjust.enable = True

circos = Circos.chord_diagram(

matrix,

cmap= sector_color_dict,

link_kws=dict(direction=0, ec="black", lw=0.5, fc="black", alpha=0.5),

link_kws_handler = link_kws_handler_overall,

order = country_order_list,

# label_kws = dict(orientation = 'vertical', r=115)

)

```

While in the documentation track.annotate is used. However I am using from to matrix and updates aren't happing still. Do you have any suggestions.

full pseudocode:

```

country_order_list = sorted(list(set(edge_list['source']).union(set(edge_list['target']))))

for country in country_order_list:

cnt = country.split('_')[0]

if country not in country_color_dict.keys():

sector_color_dict[cnt] = 'red'

else:

sector_color_dict[cnt] = country_color_dict[cnt]

from_to_table_df = edge_list.groupby(['source', 'target']).size().reset_index(name='count')[['source', 'target', 'count']]

matrix = Matrix.parse_fromto_table(from_to_table_df)

from_to_table_df['year'] = year

from_to_table_overall = pd.concat([from_to_table_overall, from_to_table_df])

circos = Circos.chord_diagram(

matrix,

cmap= sector_color_dict,

link_kws=dict(direction=0, ec="black", lw=0.5, fc="black", alpha=0.5),

link_kws_handler = link_kws_handler_overall,

order = country_order_list,

# label_kws = dict(orientation = 'vertical', r=115)

)

circos.plotfig()

plt.show()

plt.title(f'{year}_overall')

plt.close()

```

|

closed

|

2025-02-14T09:41:38Z

|

2025-02-21T09:36:39Z

|

https://github.com/moshi4/pyCirclize/issues/84

|

[

"question"

] |

jishnu-lab

| 7 |

autokey/autokey

|

automation

| 728 |

Key capture seems broken on Ubuntu 22.04

|

### Has this issue already been reported?

- [X] I have searched through the existing issues.

### Is this a question rather than an issue?

- [X] This is not a question.

### What type of issue is this?

Bug

### Which Linux distribution did you use?

I've been using AutoKey on Ubuntu 20.04 LTS for months now with this setup and it worked perfectly. Since updating to 22.04 LTS AutoKey no longer captures keys properly.

### Which AutoKey GUI did you use?

GTK

### Which AutoKey version did you use?

Autokey-gtk 0.95.10 from apt.

### How did you install AutoKey?

Distro's repository, didn't change anything during upgrade to 22.04LTS.

### Can you briefly describe the issue?

AutoKey no longer seems to capture keys reliably.

My old scripts are set up like: ALT+A = ä, ALT+SHIFT+A = Ä, ALT+S=ß etc. This worked perfectly on 20.04LTS across multiple machines.

Since the update to 22.04LTS, these scripts only work sporadically, and only in some apps.

Firefox (Snap):

ALT+A works in Firefox if pressed slowly.

ALT+SHIFT+A produces the same output as ALT+A in Firefox if pressed slowly.

If combination is pressed quickly while typing a word, such as "ändern", Firefox will capture the release of the ALT key and send the letters "ndern" to the menu, triggering EDIT=>SETTINGS.

Geany (text editor): ALT key is immediately captured by the menu

Gedit (text editor): ALT key is immediately captured by the menu

Setting hotkeys in AutoKey-GTK itself also doesn't seem to work any more. If I click "Press to Set" the program no longer recognizes any keypresses, hanging on "press a key..." indefinitely.

My scripts are set up as follows:

### Can the issue be reproduced?

Sometimes

### What are the steps to reproduce the issue?

I've reproduced this on two different machines, both of which were upgraded from 20.04LTS to 22.04LTS and run the same script files.

### What should have happened?

Same perfect performance as on 20.04LTS

### What actually happened?

See issue description. AutoKey seems to no longer be capturing the keys properly, or rather the foreground app is grabbing them before AutoKey has a chance to do so.

### Do you have screenshots?

_No response_

### Can you provide the output of the AutoKey command?

_No response_

### Anything else?

_No response_

|

closed

|

2022-08-31T16:58:57Z

|

2022-08-31T22:00:24Z

|

https://github.com/autokey/autokey/issues/728

|

[

"invalid",

"installation/configuration"

] |

sbroenner

| 4 |

AntonOsika/gpt-engineer

|

python

| 679 |

Sweep: add test coverage badge to github project

|

<details open>

<summary>Checklist</summary>

- [X] `.github/workflows/python-app.yml`

> • Add a new step to run tests with coverage using pytest-cov. This step should be added after the step where the tests are currently being run.

> • In the new step, use the command `pytest --cov=./` to run the tests with coverage.

> • Add another step to send the coverage report to Codecov. This can be done using the codecov/codecov-action GitHub Action. The step should look like this:

> - name: Upload coverage to Codecov

> uses: codecov/codecov-action@v1

- [X] `README.md`

> • Add the Codecov badge to the top of the README file. The markdown for the badge can be obtained from the settings page of the repository on Codecov. It should look something like this: `[](https://codecov.io/gh/AntonOsika/gpt-engineer)`

</details>

|

closed

|

2023-09-06T17:47:51Z

|

2023-09-15T07:56:56Z

|

https://github.com/AntonOsika/gpt-engineer/issues/679

|

[

"enhancement",

"sweep"

] |

ATheorell

| 1 |

jupyter-book/jupyter-book

|

jupyter

| 1,919 |

Add on page /lectures/big-o.html

|

closed

|

2023-02-02T16:38:30Z

|

2023-02-12T12:47:28Z

|

https://github.com/jupyter-book/jupyter-book/issues/1919

|

[] |

js-uri

| 1 |

|

apache/airflow

|

python

| 48,083 |

xmlsec==1.3.15 update on March 11/2025 breaks apache-airflow-providers-amazon builds in Ubuntu running Python 3.11+

|

### Apache Airflow Provider(s)

amazon

### Versions of Apache Airflow Providers

Looks like a return of https://github.com/apache/airflow/issues/39437

```

uname -a

Linux airflow-worker-qg8nn 6.1.123+ #1 SMP PREEMPT_DYNAMIC Sun Jan 12 17:02:52 UTC 2025 x86_64 x86_64 x86_64 GNU/Linux

airflow@airflow-worker-qg8nn:~$ cat /etc/issue

Ubuntu 24.04.2 LTS \n \l

```

When installing apache-airflow-providers-amazon

`

********************************************************************************

Please consider removing the following classifiers in favor of a SPDX license expression:

License :: OSI Approved :: MIT License

See https://packaging.python.org/en/latest/guides/writing-pyproject-toml/#license for details.

********************************************************************************

!!

self._finalize_license_expression()

running bdist_wheel

running build

running build_py

creating build/lib.linux-x86_64-cpython-311/xmlsec

copying src/xmlsec/__init__.pyi -> build/lib.linux-x86_64-cpython-311/xmlsec

copying src/xmlsec/template.pyi -> build/lib.linux-x86_64-cpython-311/xmlsec

copying src/xmlsec/tree.pyi -> build/lib.linux-x86_64-cpython-311/xmlsec

copying src/xmlsec/constants.pyi -> build/lib.linux-x86_64-cpython-311/xmlsec

copying src/xmlsec/py.typed -> build/lib.linux-x86_64-cpython-311/xmlsec

running build_ext

error: xmlsec1 is not installed or not in path.

[end of output]

```

note: This error originates from a subprocess, and is likely not a problem with pip.

ERROR: Failed building wheel for xmlsec

Building wheel for pyhive (setup.py): started

Building wheel for pyhive (setup.py): finished with status 'done'

Created wheel for pyhive: filename=PyHive-0.7.0-py3-none-any.whl size=53933 sha256=3db46c1d80f77ee8782f517987a0c1fc898576faf2efc3842475b53df6630d2f

Stored in directory: /tmp/pip-ephem-wheel-cache-nnezwghj/wheels/11/32/63/d1d379f01c15d6488b22ed89d257b613494e4595ed9b9c7f1c

Successfully built maxminddb-geolite2 thrift pure-sasl pyhive

Failed to build xmlsec

ERROR: Could not build wheels for xmlsec, which is required to install pyproject.toml-based projects

```

Pinning pip install xmlsec==1.3.14 resolve the issue

### Apache Airflow version

2.10.5

### Operating System

Ubuntu 24.04.2

### Deployment

Google Cloud Composer

### Deployment details

_No response_

### What happened

_No response_

### What you think should happen instead

_No response_

### How to reproduce

pip install apache-airflow-providers-amazon

### Anything else

_No response_

### Are you willing to submit PR?

- [ ] Yes I am willing to submit a PR!

### Code of Conduct

- [x] I agree to follow this project's [Code of Conduct](https://github.com/apache/airflow/blob/main/CODE_OF_CONDUCT.md)

|

closed

|

2025-03-21T21:24:51Z

|

2025-03-23T20:02:27Z

|

https://github.com/apache/airflow/issues/48083

|

[

"kind:bug",

"area:providers",

"area:dependencies",

"needs-triage"

] |

kmarutya

| 4 |

exaloop/codon

|

numpy

| 558 |

Error:Tuple from_py overload match problem

|

The following code snippet will result in a compilation error

```python

@python

def t3() -> tuple[pyobj, pyobj, pyobj]:

return (1, 2, 3)

@python

def t2() -> tuple[pyobj, pyobj]:

return (1, 2)

@python

def t33() -> tuple[pyobj, pyobj, pyobj]:

return (1, 3, 5)

def test1(a, b, c):

return a + b + c

def test2(a, b):

return a + b

print(test1(*t3()))

print(test2(*t2()))

print(test1(*t33()))

```

```

test_py_dec.py:10:1-41: error: 'Tuple[pyobj,pyobj,pyobj]' does not match expected type 'Tuple[T1,T2]'

╰─ test_py_dec.py:21:14-17: error: during the realization of t33()

```

|

closed

|

2024-05-10T12:26:40Z

|

2024-11-10T19:20:26Z

|

https://github.com/exaloop/codon/issues/558

|

[

"bug"

] |

victor3d

| 2 |

huggingface/datasets

|

deep-learning

| 6,441 |

Trouble Loading a Gated Dataset For User with Granted Permission

|

### Describe the bug

I have granted permissions to several users to access a gated huggingface dataset. The users accepted the invite and when trying to load the dataset using their access token they get

`FileNotFoundError: Couldn't find a dataset script at .....` . Also when they try to click the url link for the dataset they get a 404 error.

### Steps to reproduce the bug

1. Grant access to gated dataset for specific users

2. Users accept invitation

3. Users login to hugging face hub using cli login

4. Users run load_dataset

### Expected behavior

Dataset is loaded normally for users who were granted access to the gated dataset.

### Environment info

datasets==2.15.0

|

closed

|

2023-11-21T19:24:36Z

|

2023-12-13T08:27:16Z

|

https://github.com/huggingface/datasets/issues/6441

|

[] |

e-trop

| 3 |

miguelgrinberg/python-socketio

|

asyncio

| 443 |

python-socketio bridge with ws4py

|

what i need.

client-machine (python-socketio-client) -> server-1 (python-socketio-server also ws4py-client) -> server-2(ws4py-server)

currently 2 websocket connections exists

from client to server-1 (socketio)

from server-1 to server-2(ws4py)

what i hold is server-1.

server-2(ws4py) is from a third party service provider.

i want to get data from client -> receive it on my server-1 thru websocket running on socketio -> send this data to server-2 thru websocket running on ws4py.

What i have currently built.

socketio client and server-1 = working fine

ws4py server-1 to server-2 = working fine

what i want

get the event or class object of that connected client from socketio and send that directly to ws4py.

Can someone guide me on this.

|

closed

|

2020-03-20T10:20:56Z

|

2020-03-20T14:52:10Z

|

https://github.com/miguelgrinberg/python-socketio/issues/443

|

[

"question"

] |

Geo-Joy

| 6 |

jina-ai/clip-as-service

|

pytorch

| 310 |

Suggestions for building semantic search engine

|

Hello! I'm looking for suggestions of using BERT (and BERT-as-service) in my case. Sorry if such is off-topic here. I'm building kind of information retrieval system and trying to use BERT as semantic search engine. In my DB I have objects with descriptions like "pizza", "falafel", "Chinese restaurant", "I bake pies", "Chocolate Factory Roshen" and I want all these objects to be retrieved by a search query "food" or "I'm hungry" - with some score of semantic relatedness, of course.

First of all, does it look like semantic sentence similarity task or more like word similarity? I expect max_seq_len to be 10-15, on average up to 5. So that, should I look into fine-tuning and if yes, on what task? GLUE? Or maybe on my own data creating dataset like STS-B? Or maybe it's better to extract ELMo-like contextual word embedding and then average them?

Really appreciate any suggestion. Thanks in advance!

**Prerequisites**

> Please fill in by replacing `[ ]` with `[x]`.

* [x] Are you running the latest `bert-as-service`?

* [x] Did you follow [the installation](https://github.com/hanxiao/bert-as-service#install) and [the usage](https://github.com/hanxiao/bert-as-service#usage) instructions in `README.md`?

* [x] Did you check the [FAQ list in `README.md`](https://github.com/hanxiao/bert-as-service#speech_balloon-faq)?

* [x] Did you perform [a cursory search on existing issues](https://github.com/hanxiao/bert-as-service/issues)?

|

open

|

2019-04-04T11:57:14Z

|

2019-07-26T16:09:51Z

|

https://github.com/jina-ai/clip-as-service/issues/310

|

[] |

realsergii

| 2 |

kensho-technologies/graphql-compiler

|

graphql

| 904 |

Remove cached-property dependency

|

I think we should remove our dependency on `cached-property`, for a few reasons:

- We use a very minimal piece of functionality we can easily replicate and improve upon ourselves.

- It isn't type-hinted, and the open issue for it is over a year old with no activity: https://github.com/pydanny/cached-property/issues/172

- The lack of type hints means that we have to always suppress `mypy`'s `disallow_untyped_decorators` rule. It also means that `@cached_property` properties return type `Any`, which makes `mypy` even less useful.

- `@cached_property` doesn't inherit from `@property`, causing a number of other type issues. Here's the tracking issue for it, which has also been inactive in many years: https://github.com/pydanny/cached-property/issues/26

|

closed

|

2020-08-13T21:52:34Z

|

2020-08-14T18:22:20Z

|

https://github.com/kensho-technologies/graphql-compiler/issues/904

|

[] |

obi1kenobi

| 0 |

graphql-python/gql

|

graphql

| 206 |

gql-cli pagination

|

Looks like https://gql.readthedocs.io/en/latest/gql-cli/intro.html doesn't support pagination, which is necessary to get all results from API calls like GitLab https://docs.gitlab.com/ee/api/graphql/getting_started.html#pagination in one go.

Are there any plans to add it?

|

closed

|

2021-05-08T09:33:19Z

|

2021-08-24T15:00:39Z

|

https://github.com/graphql-python/gql/issues/206

|

[

"type: invalid",

"type: question or discussion"

] |

abitrolly

| 13 |

microsoft/unilm

|

nlp

| 900 |

Layoutlmv3 for RE

|

**Describe**

when i use layoutlmv3 to do RE task on XFUND_zh dataset, the result is 'eval_precision': 0.5283, 'eval_recall': 0.4392.

i do not konw the reason of the bad result. maybe there is something wrong with my RE task code? maybe i need more data for training? is there some suggestions for me to improve the result?

Dose anyone meet the same problem?

|

open

|

2022-10-25T10:00:19Z

|

2022-12-05T03:01:29Z

|

https://github.com/microsoft/unilm/issues/900

|

[] |

SuXuping

| 3 |

flairNLP/flair

|

nlp

| 3,487 |

[Bug]: GPU memory leak in TextPairRegressor when embed_separately is set to `False`

|

### Describe the bug

When training a `TextPairRegressor` model with `embed_separately=False` (the default), via e.g. `ModelTrainer.fine_tune`, the GPU memory slowly creeps up with each batch, eventually causing an OOM even when the model and a single batch fits easily in GPU memory.

The function `store_embeddings` is supposed to clear any embeddings of each DataPoint. For this model, the type of data point is `TextPair`. It actually does seem to handle clearing `text_pair.first` and `.second` when `embed_separately=True`, because it runs embed for each sentence (see `TextPairRegressor._get_embedding_for_data_point`), and that embedding is attached to each sentence so it can be referenced via the sentence.

However, the default setting is `False`; in that case, to embed the pair, it concatenates the text of both sentences (adding a separator), creates a new sentence, embeds that sentence, and then returns that embedding. Since it's never attached to the `DataPoint` object, `clear_embeddings` doesn't find it when you iterate over the data points. The function `identify_dynamic_embeddings` also always comes up empty

### To Reproduce

```python

import flair

from flair.data import DataPairCorpus

from flair.models import TextPairRegressor

search_rel_corpus = DataPairCorpus(Path('text_pair_dataset'), train_file='train.tsv', test_file='test.tsv', dev_file='dev.tsv', label_type='relevance', in_memory=False)

text_pair_regressor = TextPairRegressor(embeddings=embeddings, label_type='relevance')

embeddings = TransformerDocumentEmbeddings(

model='xlm-roberta-base',

layers="-1",

subtoken_pooling='first',

fine_tune=True,

use_context=True,

is_word_embedding=True,

)

trainer = ModelTrainer(text_pair_regressor, search_rel_corpus)

trainer.fine_tune(

"relevance_regressor",

learning_rate=1e-5,

epoch=0,

max_epochs=5,

mini_batch_size=4,

save_optimizer_state=True,

save_model_each_k_epochs=1,

use_amp=True, # aka Automatic Mixed Precision, e.g. float16

)

```

### Expected behavior

The memory should remain relatively flat with each epoch of training if memory is cleared correctly. In other training, such as for a `TextClassifier`, it stays roughly the same after each mini-batch,

### Logs and Stack traces

```stacktrace

OutOfMemoryError Traceback (most recent call last)

Cell In[15], line 1

----> 1 final_score = trainer.fine_tune(

2 "relevance_regressor",

3 learning_rate=1e-5,

4 epoch=0,

5 max_epochs=5,

6 mini_batch_size=4,

7 save_optimizer_state=True,

8 save_model_each_k_epochs=1,

9 use_amp=True, # aka Automatic Mixed Precision, e.g. float16

10 )

11 final_score

File /pyzr/active_venv/lib/python3.10/site-packages/flair/trainers/trainer.py:253, in ModelTrainer.fine_tune(self, base_path, warmup_fraction, learning_rate, decoder_learning_rate, mini_batch_size, eval_batch_size, mini_batch_chunk_size, max_epochs, optimizer, train_with_dev, train_with_test, reduce_transformer_vocab, main_evaluation_metric, monitor_test, monitor_train_sample, use_final_model_for_eval, gold_label_dictionary_for_eval, exclude_labels, sampler, shuffle, shuffle_first_epoch, embeddings_storage_mode, epoch, save_final_model, save_optimizer_state, save_model_each_k_epochs, create_file_logs, create_loss_file, write_weights, use_amp, plugins, attach_default_scheduler, **kwargs)

250 if attach_default_scheduler:

251 plugins.append(LinearSchedulerPlugin(warmup_fraction=warmup_fraction))

--> 253 return self.train_custom(

254 base_path=base_path,

255 # training parameters

256 learning_rate=learning_rate,

257 decoder_learning_rate=decoder_learning_rate,

258 mini_batch_size=mini_batch_size,

259 eval_batch_size=eval_batch_size,

260 mini_batch_chunk_size=mini_batch_chunk_size,

261 max_epochs=max_epochs,

262 optimizer=optimizer,

263 train_with_dev=train_with_dev,

264 train_with_test=train_with_test,

265 reduce_transformer_vocab=reduce_transformer_vocab,

266 # evaluation and monitoring

267 main_evaluation_metric=main_evaluation_metric,

268 monitor_test=monitor_test,

269 monitor_train_sample=monitor_train_sample,

270 use_final_model_for_eval=use_final_model_for_eval,

271 gold_label_dictionary_for_eval=gold_label_dictionary_for_eval,

272 exclude_labels=exclude_labels,

273 # sampling and shuffling

274 sampler=sampler,

275 shuffle=shuffle,

276 shuffle_first_epoch=shuffle_first_epoch,

277 # evaluation and monitoring

278 embeddings_storage_mode=embeddings_storage_mode,

279 epoch=epoch,

280 # when and what to save

281 save_final_model=save_final_model,

282 save_optimizer_state=save_optimizer_state,

283 save_model_each_k_epochs=save_model_each_k_epochs,

284 # logging parameters

285 create_file_logs=create_file_logs,

286 create_loss_file=create_loss_file,

287 write_weights=write_weights,

288 # amp

289 use_amp=use_amp,

290 # plugins

291 plugins=plugins,

292 **kwargs,

293 )

File /pyzr/active_venv/lib/python3.10/site-packages/flair/trainers/trainer.py:624, in ModelTrainer.train_custom(self, base_path, learning_rate, decoder_learning_rate, mini_batch_size, eval_batch_size, mini_batch_chunk_size, max_epochs, optimizer, train_with_dev, train_with_test, max_grad_norm, reduce_transformer_vocab, main_evaluation_metric, monitor_test, monitor_train_sample, use_final_model_for_eval, gold_label_dictionary_for_eval, exclude_labels, sampler, shuffle, shuffle_first_epoch, embeddings_storage_mode, epoch, save_final_model, save_optimizer_state, save_model_each_k_epochs, create_file_logs, create_loss_file, write_weights, use_amp, plugins, **kwargs)

622 gradient_norm = None

623 scale_before = scaler.get_scale()

--> 624 scaler.step(self.optimizer)

625 scaler.update()

626 scale_after = scaler.get_scale()

File /pyzr/active_venv/lib/python3.10/site-packages/torch/cuda/amp/grad_scaler.py:370, in GradScaler.step(self, optimizer, *args, **kwargs)

366 self.unscale_(optimizer)

368 assert len(optimizer_state["found_inf_per_device"]) > 0, "No inf checks were recorded for this optimizer."

--> 370 retval = self._maybe_opt_step(optimizer, optimizer_state, *args, **kwargs)

372 optimizer_state["stage"] = OptState.STEPPED

374 return retval

File /pyzr/active_venv/lib/python3.10/site-packages/torch/cuda/amp/grad_scaler.py:290, in GradScaler._maybe_opt_step(self, optimizer, optimizer_state, *args, **kwargs)

288 retval = None

289 if not sum(v.item() for v in optimizer_state["found_inf_per_device"].values()):

--> 290 retval = optimizer.step(*args, **kwargs)

291 return retval

File /pyzr/active_venv/lib/python3.10/site-packages/torch/optim/lr_scheduler.py:69, in LRScheduler.__init__.<locals>.with_counter.<locals>.wrapper(*args, **kwargs)

67 instance._step_count += 1

68 wrapped = func.__get__(instance, cls)

---> 69 return wrapped(*args, **kwargs)

File /pyzr/active_venv/lib/python3.10/site-packages/torch/optim/optimizer.py:280, in Optimizer.profile_hook_step.<locals>.wrapper(*args, **kwargs)

276 else:

277 raise RuntimeError(f"{func} must return None or a tuple of (new_args, new_kwargs),"

278 f"but got {result}.")

--> 280 out = func(*args, **kwargs)

281 self._optimizer_step_code()

283 # call optimizer step post hooks

File /pyzr/active_venv/lib/python3.10/site-packages/torch/optim/optimizer.py:33, in _use_grad_for_differentiable.<locals>._use_grad(self, *args, **kwargs)

31 try:

32 torch.set_grad_enabled(self.defaults['differentiable'])

---> 33 ret = func(self, *args, **kwargs)

34 finally:

35 torch.set_grad_enabled(prev_grad)

File /pyzr/active_venv/lib/python3.10/site-packages/torch/optim/adamw.py:171, in AdamW.step(self, closure)

158 beta1, beta2 = group["betas"]

160 self._init_group(

161 group,

162 params_with_grad,

(...)

168 state_steps,

169 )

--> 171 adamw(

172 params_with_grad,

173 grads,

174 exp_avgs,

175 exp_avg_sqs,

176 max_exp_avg_sqs,

177 state_steps,

178 amsgrad=amsgrad,

179 beta1=beta1,

180 beta2=beta2,

181 lr=group["lr"],

182 weight_decay=group["weight_decay"],

183 eps=group["eps"],

184 maximize=group["maximize"],

185 foreach=group["foreach"],

186 capturable=group["capturable"],

187 differentiable=group["differentiable"],

188 fused=group["fused"],

189 grad_scale=getattr(self, "grad_scale", None),

190 found_inf=getattr(self, "found_inf", None),

191 )

193 return loss

File /pyzr/active_venv/lib/python3.10/site-packages/torch/optim/adamw.py:321, in adamw(params, grads, exp_avgs, exp_avg_sqs, max_exp_avg_sqs, state_steps, foreach, capturable, differentiable, fused, grad_scale, found_inf, amsgrad, beta1, beta2, lr, weight_decay, eps, maximize)

318 else:

319 func = _single_tensor_adamw

--> 321 func(

322 params,

323 grads,

324 exp_avgs,

325 exp_avg_sqs,

326 max_exp_avg_sqs,

327 state_steps,

328 amsgrad=amsgrad,

329 beta1=beta1,

330 beta2=beta2,

331 lr=lr,

332 weight_decay=weight_decay,

333 eps=eps,

334 maximize=maximize,

335 capturable=capturable,

336 differentiable=differentiable,

337 grad_scale=grad_scale,

338 found_inf=found_inf,

339 )

File /pyzr/active_venv/lib/python3.10/site-packages/torch/optim/adamw.py:566, in _multi_tensor_adamw(params, grads, exp_avgs, exp_avg_sqs, max_exp_avg_sqs, state_steps, grad_scale, found_inf, amsgrad, beta1, beta2, lr, weight_decay, eps, maximize, capturable, differentiable)

564 exp_avg_sq_sqrt = torch._foreach_sqrt(device_exp_avg_sqs)

565 torch._foreach_div_(exp_avg_sq_sqrt, bias_correction2_sqrt)

--> 566 denom = torch._foreach_add(exp_avg_sq_sqrt, eps)

568 torch._foreach_addcdiv_(device_params, device_exp_avgs, denom, step_size)

OutOfMemoryError: CUDA out of memory. Tried to allocate 20.00 MiB (GPU 0; 15.78 GiB total capacity; 14.06 GiB already allocated; 12.00 MiB free; 14.90 GiB reserved in total by PyTorch) If reserved memory is >> allocated memory try setting max_split_size_mb to avoid fragmentation. See documentation for Memory Management and PYTORCH_CUDA_ALLOC_CONF

```

### Screenshots

_No response_

### Additional Context

I printed out the GPU usage in an altered `train_custom`:

```

def print_gpu_usage(entry=None):

allocated_memory = torch.cuda.memory_allocated(0)

reserved_memory = torch.cuda.memory_reserved(0)

print(f"{entry}\t{allocated_memory:<15,} / {reserved_memory:<15,}")

```

I saw that when training a `TextClassifier`, the memory usage goes back down to the value at the beginning of a batch after `store_embeddings` is called. In `TextPairRegressor`, the memory does not go down at all after `store_embeddings` is called.

### Environment

#### Versions:

##### Flair

0.13.1

##### Pytorch

2.3.1+cu121

##### Transformers

4.31.0

#### GPU

True

|

closed

|

2024-07-03T18:26:59Z

|

2024-07-24T06:24:40Z

|

https://github.com/flairNLP/flair/issues/3487

|

[

"bug"

] |

MattGPT-ai

| 0 |

python-restx/flask-restx

|

api

| 141 |

How do I programmatically access the sample requests from the generated swagger UI

|

**Ask a question**

For a given restx application, I can see a rich set of details contained in the generated Swagger UI, for example for each endpoint, I can see sample requests populated with default values from the restx `fields` I created to serve as the components when defining the endpoints. These show up as example `curl` commands that I can copy/paste into a shell (as well as being executed from the 'Try it out' button).

However, I want to access this data programmatically from the app client itself. Suppose I load and run the app in a standalone Python program and have a handle to the Flask `app` object. I can see attributes such as `api.application.blueprints['restx_doc']` to get a handle to the `Apidoc` object.

But I cannot find out where this object stores all the information I need to programmatically reconstruct valid requests to the service's endpoint.

|

open

|

2020-05-23T19:46:12Z

|

2020-05-23T19:46:12Z

|

https://github.com/python-restx/flask-restx/issues/141

|

[

"question"

] |

espears1

| 0 |

darrenburns/posting

|

automation

| 48 |

Request body is saved in a non human-readable format when it contains special characters

|

Hello and thank you for creating this tool, it looks very promising! The overall experience has been good so far, but I did notice an issue that's a bit inconvenient.

I've created a `POST` request which contains letters with diacritics in the body, such as this one:

```json

{

"Hello": "There",

"Hi": "Čau"

}

```

If I save the request into a yaml file, the body will be saved in a hard to read format:

```yaml

name: Test

method: POST

url: https://example.org/test

body:

content: "{\n \"Hello\": \"There\",\n \"Hi\": \"\u010Cau\"\n}"

```

If I replace the `Č` with a regular `C`, the resulting yaml file will have the format that I expect:

```yaml

name: Test

method: POST

url: https://example.org/test

body:

content: |-

{

"Hello": "There",

"Hi": "Cau"

}

```

Is it possible to fix this? The current behavior complicates manual editing and version control diffs, so I think it might be worth looking into.

I'm using `posting` `1.7.0`

Thanks!

|

closed

|

2024-07-19T08:54:53Z

|

2024-07-19T20:02:11Z

|

https://github.com/darrenburns/posting/issues/48

|

[] |

MilanVasko

| 0 |

voila-dashboards/voila

|

jupyter

| 1,447 |

Voila not displaying Canvas from IpyCanvas

|

<!--

Welcome! Before creating a new issue please search for relevant issues and recreate the issue in a fresh environment.

-->

## Description

When executing the Jupyter Notebook, the canvas appears and works as intended, but when executing with Voila, its a blank canvas

<!--Describe the bug clearly and concisely. Include screenshots/gifs if possible-->

Empty...

|

closed

|

2024-02-25T16:34:54Z

|

2024-02-27T19:21:16Z

|

https://github.com/voila-dashboards/voila/issues/1447

|

[

"bug"

] |

Voranto

| 5 |

tflearn/tflearn

|

tensorflow

| 275 |

Error when loading multiple models - Tensor name not found

|

In my code, I'm loading two DNN models. Model A is a normal DNN with fully-connected layers, and Model B is a Convolutional Neural Network similar to the one used in the MNIST example.

Individually, they both work just fine - they train properly, they save properly, they load properly, and predict properly. However, when loading both neural networks, tflearn crashes with an error that seems to indicate `"Tensor name 'example_name' not found in checkpoint files..."`

This error will be thrown for whatever model is loaded second (i.e. Model A will load and run correctly but Model B will not, and if the order is switched, then vice-versa). This happens even when the models are saved in and loaded from completely different directories. I'm guessing it's some sort of internal caching problem with the checkpoint files. Any solutions?

Here's some more of the stack trace, if it helps

```

File "/usr/local/lib/python2.7/site-packages/tflearn/models/dnn.py", line 227, in load

self.trainer.restore(model_file)

File "/usr/local/lib/python2.7/site-packages/tflearn/helpers/trainer.py", line 379, in restore

self.restorer.restore(self.session, model_file)

File "/usr/local/lib/python2.7/site-packages/tensorflow/python/training/saver.py", line 1105, in restore

{self.saver_def.filename_tensor_name: save_path})

File "/usr/local/lib/python2.7/site-packages/tensorflow/python/client/session.py", line 372, in run

run_metadata_ptr)

File "/usr/local/lib/python2.7/site-packages/tensorflow/python/client/session.py", line 636, in _run

feed_dict_string, options, run_metadata)

File "/usr/local/lib/python2.7/site-packages/tensorflow/python/client/session.py", line 708, in _do_run

target_list, options, run_metadata)

File "/usr/local/lib/python2.7/site-packages/tensorflow/python/client/session.py", line 728, in _do_call

raise type(e)(node_def, op, message)

tensorflow.python.framework.errors.NotFoundError: Tensor name "Accuracy/Mean/moving_avg_1" not found in checkpoint files classification_classifier.tfl

[[Node: save_5/restore_slice_1 = RestoreSlice[dt=DT_FLOAT, preferred_shard=-1, _device="/job:localhost/replica:0/task:0/cpu:0"](_recv_save_5/Const_0, save_5/restore_slice_1/tensor_name, save_5/restore_slice_1/shape_and_slice)]]

Caused by op u'save_5/restore_slice_1', defined at:

```

|

open

|

2016-08-12T07:31:03Z

|

2020-11-19T10:46:14Z

|

https://github.com/tflearn/tflearn/issues/275

|

[] |

samvaran

| 8 |

ploomber/ploomber

|

jupyter

| 726 |

Programmatically create tasks based on the product of the task executed in the previous pipeline step

|

I would like to understand how to programmatically create tasks based on the product of the task executed in the previous pipeline step.

For example, `get_data` creates csv-file and I want to create tasks for each row of csv: `process_row_1`, `process_row_2`, .... Accordingly, I have code using the python api that reads a csv-file -- how do I indicate that this csv-file is the product of another task?

So how do I use the upstream idiom (`upstream['get_data']`)?

I formulate the question a brief, assuming that my case is quite typical. If this assumption of mine is incorrect, then I am ready to supplement the issue with code that more clearly illustrates my request.

|

closed

|

2022-04-25T12:32:48Z

|

2024-04-01T05:09:19Z

|

https://github.com/ploomber/ploomber/issues/726

|

[] |

theotheo

| 5 |

litestar-org/litestar

|

api

| 3,995 |

Bug: `Unsupported type: <class 'msgspec._core.StructMeta'>`

|

### Description

Visiting /schema when a route contains a request struct that utilizes a `msgspec` Struct via default factory is raising the error `Unsupported type: <class 'msgspec._core.StructMeta'>`.

Essentially, if I have a struct like this:

```

class Stuff(msgspec.Struct):

foo: list = msgspec.field(default=list)

```

And I use that struct as my request and then I visit `/schema`, I will get the error `Unsupported type: <class 'msgspec._core.StructMeta'>`.

### URL to code causing the issue

_No response_

### MCVE

```python

# Your MCVE code here

```

### Steps to reproduce

```bash

1. Go to '...'

2. Click on '....'

3. Scroll down to '....'

4. See error

```

### Screenshots

```bash

""

```

### Logs

```bash

```

### Litestar Version

2.13.0 final

### Platform

- [x] Linux

- [ ] Mac

- [ ] Windows

- [ ] Other (Please specify in the description above)

|

closed

|

2025-02-13T09:32:13Z

|

2025-02-13T11:35:31Z

|

https://github.com/litestar-org/litestar/issues/3995

|

[

"Bug :bug:"

] |

umarbutler

| 3 |

holoviz/panel

|

plotly

| 7,264 |

Bokeh: BokehJS was loaded multiple times but one version failed to initialize.

|

Hi team, thanks for your hard work. If possible, can we put a high priority on this fix? It's quite damaging to user experience.

#### ALL software version info

(this library, plus any other relevant software, e.g. bokeh, python, notebook, OS, browser, etc should be added within the dropdown below.)

<details>

<summary>Software Version Info</summary>

```plaintext

acryl-datahub==0.10.5.5

aiohappyeyeballs==2.4.0

aiohttp==3.10.5

aiosignal==1.3.1

alembic==1.13.2

ansi2html==1.9.2

anyio==4.4.0

argon2-cffi==23.1.0

argon2-cffi-bindings==21.2.0

arrow==1.3.0

asttokens==2.4.1

async-generator==1.10

async-lru==2.0.4

attrs==24.2.0

autograd==1.7.0

autograd-gamma==0.5.0

avro==1.10.2

avro-gen3==0.7.10

awscli==1.33.27

babel==2.16.0

backports.tarfile==1.2.0

beautifulsoup4==4.12.3

black==24.8.0

bleach==6.1.0

blinker==1.8.2

bokeh==3.4.2

bokehtools==0.46.2

boto3==1.34.76

botocore==1.34.145

bouncer-client==0.4.1

cached-property==1.5.2

certifi==2024.7.4

certipy==0.1.3

cffi==1.17.0

charset-normalizer==3.3.2

click==8.1.7

click-default-group==1.2.4

click-spinner==0.1.10

cloudpickle==3.0.0

colorama==0.4.6

colorcet==3.0.1

comm==0.2.2

contourpy==1.3.0

cryptography==43.0.0

cycler==0.12.1

dash==2.17.1

dash-core-components==2.0.0

dash-html-components==2.0.0

dash-table==5.0.0

dask==2024.8.1

datashader==0.16.3

datatank-client==2.1.10.post12049

dataworks-common==2.1.10.post12049

debugpy==1.8.5

decorator==5.1.1

defusedxml==0.7.1

Deprecated==1.2.14

directives-client==0.4.4

docker==7.1.0

docutils==0.16

entrypoints==0.4

executing==2.0.1

expandvars==0.12.0

fastjsonschema==2.20.0

Flask==3.0.3

fonttools==4.53.1

formulaic==1.0.2

fqdn==1.5.1

frozenlist==1.4.1

fsspec==2024.6.1

future==1.0.0

gitdb==4.0.11

GitPython==3.1.43

greenlet==3.0.3

h11==0.14.0

holoviews==1.19.0

httpcore==1.0.5

httpx==0.27.2

humanfriendly==10.0

hvplot==0.10.0

idna==3.8

ijson==3.3.0

importlib-metadata==4.13.0

interface-meta==1.3.0

ipykernel==6.29.5

ipython==8.18.0

ipython-genutils==0.2.0

ipywidgets==8.1.5

isoduration==20.11.0

isort==5.13.2

itsdangerous==2.2.0

jaraco.classes==3.4.0

jaraco.context==6.0.1

jaraco.functools==4.0.2

jedi==0.19.1

jeepney==0.8.0

Jinja2==3.1.4

jira==3.2.0

jmespath==1.0.1

json5==0.9.25

jsonpointer==3.0.0

jsonref==1.1.0

jsonschema==4.17.3

jsonschema-specifications==2023.12.1

jupyter==1.0.0

jupyter-console==6.6.3

jupyter-dash==0.4.2

jupyter-events==0.10.0

jupyter-lsp==2.2.5

jupyter-resource-usage==1.1.0

jupyter-server-mathjax==0.2.6

jupyter-telemetry==0.1.0

jupyter_bokeh==4.0.5

jupyter_client==8.6.2

jupyter_core==5.7.2

jupyter_server==2.14.2

jupyter_server_proxy==4.3.0

jupyter_server_terminals==0.5.3

jupyterhub==4.1.4

jupyterlab==4.2.5

jupyterlab-vim==4.1.3

jupyterlab_code_formatter==3.0.2

jupyterlab_git==0.50.1

jupyterlab_pygments==0.3.0

jupyterlab_server==2.27.3

jupyterlab_templates==0.5.2

jupyterlab_widgets==3.0.13

keyring==25.3.0

kiwisolver==1.4.5

lckr_jupyterlab_variableinspector==3.2.1

lifelines==0.29.0

linkify-it-py==2.0.3

llvmlite==0.43.0

locket==1.0.0

Mako==1.3.5

Markdown==3.3.7

markdown-it-py==3.0.0

MarkupSafe==2.1.5

matplotlib==3.9.2

matplotlib-inline==0.1.7

mdit-py-plugins==0.4.1

mdurl==0.1.2

mistune==3.0.2

mixpanel==4.10.1

more-itertools==10.4.0

multidict==6.0.5

multipledispatch==1.0.0

mypy-extensions==1.0.0

nbclassic==1.1.0

nbclient==0.10.0

nbconvert==7.16.4

nbdime==4.0.1

nbformat==5.10.4

nbgitpuller==1.2.1

nest-asyncio==1.6.0

notebook==7.2.2

notebook_shim==0.2.4

numba==0.60.0

numpy==1.26.4

oauthlib==3.2.2

overrides==7.7.0

packaging==24.1

pamela==1.2.0

pandas==2.1.4

pandocfilters==1.5.1

panel==1.4.4

param==2.1.1

parso==0.8.4

partd==1.4.2

pathspec==0.12.1

pexpect==4.9.0

pillow==10.4.0

platformdirs==4.2.2

plotly==5.23.0

progressbar2==4.5.0

prometheus_client==0.20.0

prompt-toolkit==3.0.38

psutil==5.9.8

psycopg2-binary==2.9.9

ptyprocess==0.7.0

pure_eval==0.2.3

pyarrow==15.0.2

pyasn1==0.6.0

pycparser==2.22

pyct==0.5.0

pydantic==1.10.18

Pygments==2.18.0

PyHive==0.7.0

PyJWT==2.9.0

pymssql==2.3.0

PyMySQL==1.1.1

pyodbc==5.1.0

pyOpenSSL==24.2.1

pyparsing==3.1.4

pyrsistent==0.20.0

pyspork==2.24.0

python-dateutil==2.9.0.post0

python-json-logger==2.0.7

python-utils==3.8.2

pytz==2024.1

pyviz_comms==3.0.3

PyYAML==6.0.1

pyzmq==26.2.0

qtconsole==5.5.2

QtPy==2.4.1

ratelimiter==1.2.0.post0

redis==3.5.3

referencing==0.35.1

requests==2.32.3

requests-file==2.1.0

requests-oauthlib==2.0.0

requests-toolbelt==1.0.0

retrying==1.3.4

rfc3339-validator==0.1.4

rfc3986-validator==0.1.1

rpds-py==0.20.0

rsa==4.7.2

ruamel.yaml==0.17.40

ruamel.yaml.clib==0.2.8

ruff==0.6.2

s3transfer==0.10.2

scipy==1.13.0

SecretStorage==3.3.3

Send2Trash==1.8.3

sentry-sdk==2.13.0

simpervisor==1.0.0

six==1.16.0

smmap==5.0.1

sniffio==1.3.1

soupsieve==2.6

SQLAlchemy==1.4.52

sqlparse==0.4.4

stack-data==0.6.3

structlog==22.1.0

tabulate==0.9.0

tenacity==9.0.0

termcolor==2.4.0

terminado==0.18.1

tesladex-client==0.9.0

tinycss2==1.3.0

toml==0.10.2

toolz==0.12.1

tornado==6.4.1

tqdm==4.66.4

traitlets==5.14.3

types-python-dateutil==2.9.0.20240821

typing-inspect==0.9.0

typing_extensions==4.5.0

tzdata==2024.1

tzlocal==5.2

uc-micro-py==1.0.3

uri-template==1.3.0

urllib3==1.26.19

wcwidth==0.2.13

webcolors==24.8.0

webencodings==0.5.1

websocket-client==1.8.0

Werkzeug==3.0.4

widgetsnbextension==4.0.13

wrapt==1.16.0

xarray==2024.7.0

xyzservices==2024.6.0

yapf==0.32.0

yarl==1.9.4

zipp==3.20.1

```

</details>

#### Description of expected behavior and the observed behavior

I should be able to use panel in 2 notebooks simultaneously, but if I save my changes and reload the page, the error will show.

#### Complete, minimal, self-contained example code that reproduces the issue

Steps to reproduce:

1. create 2 notebooks with the following content

```python

# notebook 1

import panel as pn

pn.extension()

pn.Column('hi')

```

```python

# notebook 2 (open in another jupyterlab tab)

import panel as pn

pn.extension()

pn.Column('hi')

```

2. Run both notebooks

3. Save both notebooks

4. Reload your page

5. Try to run either of the notebooks and you'll see the error.

#### Stack traceback and/or browser JavaScript console output

(Ignore 'set_log_level` error. I think it's unrelated.)

|

closed

|

2024-09-12T16:03:33Z

|

2024-09-13T17:34:46Z

|

https://github.com/holoviz/panel/issues/7264

|

[] |

tomascsantos

| 4 |

jupyterhub/repo2docker

|

jupyter

| 1,295 |

--base-image not recognise as valid argument

|

Related with https://github.com/jupyterhub/repo2docker/issues/487

https://github.com/jupyterhub/repo2docker/blob/247e9535b167112cabf69eed59a6947e4af1ee34/repo2docker/app.py#L450 should make `--base-image` a valid argument for `repo2docker` but I'm getting

```

repo2docker: error: unrecognized arguments: --base-image

```

with

```

$ repo2docker --version

2023.06.0

```

|

closed

|

2023-07-12T16:05:50Z

|

2023-07-13T07:47:53Z

|

https://github.com/jupyterhub/repo2docker/issues/1295

|

[] |

rgaiacs

| 2 |

coqui-ai/TTS

|

pytorch

| 3,017 |

[Bug] pip install TTS failure: pip._vendor.resolvelib.resolvers.ResolutionTooDeep: 200000

|

### Describe the bug

Can't make pip installation

### To Reproduce

**1. Run the following command:** `pip install TTS`

```

C:>C:\Python38\scripts\pip install TTS

```

**2. Wait:**

```

Collecting TTS

Downloading TTS-0.14.3.tar.gz (1.5 MB)

---------------------------------------- 1.5/1.5 MB 1.7 MB/s eta 0:00:00

Installing build dependencies ... done

Getting requirements to build wheel ... done

Preparing metadata (pyproject.toml) ... done

Collecting cython==0.29.28 (from TTS)

Using cached Cython-0.29.28-py2.py3-none-any.whl (983 kB)

Requirement already satisfied: scipy>=1.4.0 in C:\python38\lib\site-packages (from TTS) (1.7.1)

Collecting torch>=1.7 (from TTS)

Downloading torch-2.0.1-cp38-cp38-win_amd64.whl (172.4 MB)

---------------------------------------- 172.4/172.4 MB ? eta 0:00:00

Collecting torchaudio (from TTS)

Downloading torchaudio-2.0.2-cp38-cp38-win_amd64.whl (2.1 MB)

---------------------------------------- 2.1/2.1 MB 3.6 MB/s eta 0:00:00

Collecting soundfile (from TTS)

Downloading soundfile-0.12.1-py2.py3-none-win_amd64.whl (1.0 MB)

---------------------------------------- 1.0/1.0 MB 5.8 MB/s eta 0:00:00

Collecting librosa==0.10.0.* (from TTS)

Downloading librosa-0.10.0.post2-py3-none-any.whl (253 kB)

---------------------------------------- 253.0/253.0 kB 15.2 MB/s eta 0:00:00

Collecting inflect==5.6.0 (from TTS)

Downloading inflect-5.6.0-py3-none-any.whl (33 kB)

Requirement already satisfied: tqdm in C:\python38\lib\site-packages (from TTS) (4.60.0)

Collecting anyascii (from TTS)

Downloading anyascii-0.3.2-py3-none-any.whl (289 kB)

---------------------------------------- 289.9/289.9 kB 9.0 MB/s eta 0:00:00

Requirement already satisfied: pyyaml in C:\python38\lib\site-packages (from TTS) (5.4.1)

Requirement already satisfied: fsspec>=2021.04.0 in C:\python38\lib\site-packages (from TTS) (2022.3.0)

Requirement already satisfied: aiohttp in C:\python38\lib\site-packages (from TTS) (3.7.3)

Requirement already satisfied: packaging in C:\python38\lib\site-packages (from TTS) (23.0)

Collecting flask (from TTS)

Downloading flask-2.3.3-py3-none-any.whl (96 kB)

---------------------------------------- 96.1/96.1 kB 5.4 MB/s eta 0:00:00

Collecting pysbd (from TTS)

Downloading pysbd-0.3.4-py3-none-any.whl (71 kB)

---------------------------------------- 71.1/71.1 kB 2.0 MB/s eta 0:00:00

Collecting umap-learn==0.5.1 (from TTS)

Downloading umap-learn-0.5.1.tar.gz (80 kB)

---------------------------------------- 80.9/80.9 kB 4.7 MB/s eta 0:00:00

Preparing metadata (setup.py) ... done

Requirement already satisfied: pandas in C:\python38\lib\site-packages (from TTS) (1.5.1)

Requirement already satisfied: matplotlib in C:\python38\lib\site-packages (from TTS) (3.6.3)

Collecting trainer==0.0.20 (from TTS)

Downloading trainer-0.0.20-py3-none-any.whl (45 kB)

---------------------------------------- 45.2/45.2 kB 1.1 MB/s eta 0:00:00

Collecting coqpit>=0.0.16 (from TTS)

Downloading coqpit-0.0.17-py3-none-any.whl (13 kB)

Collecting jieba (from TTS)

Downloading jieba-0.42.1.tar.gz (19.2 MB)

---------------------------------------- 19.2/19.2 MB 2.0 MB/s eta 0:00:00

Preparing metadata (setup.py) ... done

Collecting pypinyin (from TTS)

Downloading pypinyin-0.49.0-py2.py3-none-any.whl (1.4 MB)

---------------------------------------- 1.4/1.4 MB 3.2 MB/s eta 0:00:00

Collecting mecab-python3==1.0.5 (from TTS)

Downloading mecab_python3-1.0.5-cp38-cp38-win_amd64.whl (500 kB)

---------------------------------------- 500.8/500.8 kB 6.3 MB/s eta 0:00:00

Collecting unidic-lite==1.0.8 (from TTS)

Downloading unidic-lite-1.0.8.tar.gz (47.4 MB)

---------------------------------------- 47.4/47.4 MB 1.8 MB/s eta 0:00:00

Preparing metadata (setup.py) ... done

Collecting gruut[de,es,fr]==2.2.3 (from TTS)

Downloading gruut-2.2.3.tar.gz (73 kB)

---------------------------------------- 73.5/73.5 kB 213.1 kB/s eta 0:00:00

Preparing metadata (setup.py) ... done

Collecting jamo (from TTS)

Downloading jamo-0.4.1-py3-none-any.whl (9.5 kB)

Collecting nltk (from TTS)

Downloading nltk-3.8.1-py3-none-any.whl (1.5 MB)

---------------------------------------- 1.5/1.5 MB 3.4 MB/s eta 0:00:00

Collecting g2pkk>=0.1.1 (from TTS)

Downloading g2pkk-0.1.2-py3-none-any.whl (25 kB)

Collecting bangla==0.0.2 (from TTS)

Downloading bangla-0.0.2-py2.py3-none-any.whl (6.2 kB)

Collecting bnnumerizer (from TTS)

Downloading bnnumerizer-0.0.2.tar.gz (4.7 kB)

Preparing metadata (setup.py) ... done

Collecting bnunicodenormalizer==0.1.1 (from TTS)

Downloading bnunicodenormalizer-0.1.1.tar.gz (38 kB)

Preparing metadata (setup.py) ... done

Collecting k-diffusion (from TTS)

Downloading k_diffusion-0.1.0-py3-none-any.whl (33 kB)

Collecting einops (from TTS)

Downloading einops-0.6.1-py3-none-any.whl (42 kB)

---------------------------------------- 42.2/42.2 kB 1.0 MB/s eta 0:00:00

Collecting transformers (from TTS)

Downloading transformers-4.33.3-py3-none-any.whl (7.6 MB)

---------------------------------------- 7.6/7.6 MB 3.1 MB/s eta 0:00:00

Collecting numpy==1.21.6 (from TTS)

Using cached numpy-1.21.6-cp38-cp38-win_amd64.whl (14.0 MB)

Collecting numba==0.55.1 (from TTS)

Downloading numba-0.55.1-cp38-cp38-win_amd64.whl (2.4 MB)

---------------------------------------- 2.4/2.4 MB 4.1 MB/s eta 0:00:00

Requirement already satisfied: Babel<3.0.0,>=2.8.0 in C:\python38\lib\site-packages (from gruut[de,es,fr]==2.2.3->TT

Collecting dateparser~=1.1.0 (from gruut[de,es,fr]==2.2.3->TTS)

Downloading dateparser-1.1.8-py2.py3-none-any.whl (293 kB)

---------------------------------------- 293.8/293.8 kB 4.6 MB/s eta 0:00:00

Collecting gruut-ipa<1.0,>=0.12.0 (from gruut[de,es,fr]==2.2.3->TTS)

Downloading gruut-ipa-0.13.0.tar.gz (101 kB)

---------------------------------------- 101.6/101.6 kB ? eta 0:00:00

Preparing metadata (setup.py) ... done

Collecting gruut_lang_en~=2.0.0 (from gruut[de,es,fr]==2.2.3->TTS)

Downloading gruut_lang_en-2.0.0.tar.gz (15.2 MB)

---------------------------------------- 15.2/15.2 MB 3.5 MB/s eta 0:00:00

Preparing metadata (setup.py) ... done

Collecting jsonlines~=1.2.0 (from gruut[de,es,fr]==2.2.3->TTS)

Downloading jsonlines-1.2.0-py2.py3-none-any.whl (7.6 kB)

Requirement already satisfied: networkx<3.0.0,>=2.5.0 in C:\python38\lib\site-packages (from gruut[de,es,fr]==2.2.3-

Collecting num2words<1.0.0,>=0.5.10 (from gruut[de,es,fr]==2.2.3->TTS)

Downloading num2words-0.5.12-py3-none-any.whl (125 kB)

---------------------------------------- 125.2/125.2 kB 7.2 MB/s eta 0:00:00

Collecting python-crfsuite~=0.9.7 (from gruut[de,es,fr]==2.2.3->TTS)

Downloading python_crfsuite-0.9.9-cp38-cp38-win_amd64.whl (138 kB)

---------------------------------------- 138.9/138.9 kB 4.2 MB/s eta 0:00:00

Requirement already satisfied: importlib_resources in C:\python38\lib\site-packages (from gruut[de,es,fr]==2.2.3->TT

Collecting gruut_lang_es~=2.0.0 (from gruut[de,es,fr]==2.2.3->TTS)

Downloading gruut_lang_es-2.0.0.tar.gz (31.4 MB)

---------------------------------------- 31.4/31.4 MB 2.8 MB/s eta 0:00:00

Preparing metadata (setup.py) ... done

Collecting gruut_lang_fr~=2.0.0 (from gruut[de,es,fr]==2.2.3->TTS)

Downloading gruut_lang_fr-2.0.2.tar.gz (10.9 MB)

---------------------------------------- 10.9/10.9 MB 3.8 MB/s eta 0:00:00

Preparing metadata (setup.py) ... done

Collecting gruut_lang_de~=2.0.0 (from gruut[de,es,fr]==2.2.3->TTS)

Downloading gruut_lang_de-2.0.0.tar.gz (18.1 MB)

---------------------------------------- 18.1/18.1 MB 3.9 MB/s eta 0:00:00

Preparing metadata (setup.py) ... done

Collecting audioread>=2.1.9 (from librosa==0.10.0.*->TTS)

Downloading audioread-3.0.1-py3-none-any.whl (23 kB)

Requirement already satisfied: scikit-learn>=0.20.0 in C:\python38\lib\site-packages (from librosa==0.10.0.*->TTS) (

Requirement already satisfied: joblib>=0.14 in C:\python38\lib\site-packages (from librosa==0.10.0.*->TTS) (1.0.1)

Requirement already satisfied: decorator>=4.3.0 in C:\python38\lib\site-packages (from librosa==0.10.0.*->TTS) (4.4.

Collecting pooch<1.7,>=1.0 (from librosa==0.10.0.*->TTS)

Downloading pooch-1.6.0-py3-none-any.whl (56 kB)

---------------------------------------- 56.3/56.3 kB 51.7 kB/s eta 0:00:00

Collecting soxr>=0.3.2 (from librosa==0.10.0.*->TTS)

Downloading soxr-0.3.6-cp38-cp38-win_amd64.whl (185 kB)

---------------------------------------- 185.1/185.1 kB 431.8 kB/s eta 0:00:00

Requirement already satisfied: typing-extensions>=4.1.1 in C:\python38\lib\site-packages (from librosa==0.10.0.*->TT

Collecting lazy-loader>=0.1 (from librosa==0.10.0.*->TTS)

Downloading lazy_loader-0.3-py3-none-any.whl (9.1 kB)

Collecting msgpack>=1.0 (from librosa==0.10.0.*->TTS)

Downloading msgpack-1.0.7-cp38-cp38-win_amd64.whl (222 kB)

---------------------------------------- 222.8/222.8 kB 1.4 MB/s eta 0:00:00

Collecting llvmlite<0.39,>=0.38.0rc1 (from numba==0.55.1->TTS)

Downloading llvmlite-0.38.1-cp38-cp38-win_amd64.whl (23.2 MB)

---------------------------------------- 23.2/23.2 MB 917.7 kB/s eta 0:00:00

Requirement already satisfied: setuptools in C:\python38\lib\site-packages (from numba==0.55.1->TTS) (67.6.1)

Requirement already satisfied: psutil in C:\python38\lib\site-packages (from trainer==0.0.20->TTS) (5.8.0)

Collecting tensorboardX (from trainer==0.0.20->TTS)

Downloading tensorboardX-2.6.2.2-py2.py3-none-any.whl (101 kB)

---------------------------------------- 101.7/101.7 kB 1.9 MB/s eta 0:00:00

Requirement already satisfied: protobuf<3.20,>=3.9.2 in C:\python38\lib\site-packages (from trainer==0.0.20->TTS) (3

Collecting pynndescent>=0.5 (from umap-learn==0.5.1->TTS)

Downloading pynndescent-0.5.10.tar.gz (1.1 MB)

---------------------------------------- 1.1/1.1 MB 3.3 MB/s eta 0:00:00

Preparing metadata (setup.py) ... done

Requirement already satisfied: cffi>=1.0 in C:\python38\lib\site-packages (from soundfile->TTS) (1.14.5)

Requirement already satisfied: filelock in C:\python38\lib\site-packages (from torch>=1.7->TTS) (3.0.12)

Requirement already satisfied: sympy in C:\python38\lib\site-packages (from torch>=1.7->TTS) (1.11.1)

Requirement already satisfied: jinja2 in C:\python38\lib\site-packages (from torch>=1.7->TTS) (3.0.1)

Requirement already satisfied: attrs>=17.3.0 in C:\python38\lib\site-packages (from aiohttp->TTS) (21.2.0)

Requirement already satisfied: chardet<4.0,>=2.0 in C:\python38\lib\site-packages (from aiohttp->TTS) (3.0.4)

Requirement already satisfied: multidict<7.0,>=4.5 in C:\python38\lib\site-packages (from aiohttp->TTS) (5.1.0)

Requirement already satisfied: async-timeout<4.0,>=3.0 in C:\python38\lib\site-packages (from aiohttp->TTS) (3.0.1)

Requirement already satisfied: yarl<2.0,>=1.0 in C:\python38\lib\site-packages (from aiohttp->TTS) (1.6.3)

Collecting Werkzeug>=2.3.7 (from flask->TTS)

Downloading werkzeug-2.3.7-py3-none-any.whl (242 kB)

---------------------------------------- 242.2/242.2 kB 1.5 MB/s eta 0:00:00

Collecting jinja2 (from torch>=1.7->TTS)

Downloading Jinja2-3.1.2-py3-none-any.whl (133 kB)

---------------------------------------- 133.1/133.1 kB 7.7 MB/s eta 0:00:00

Requirement already satisfied: itsdangerous>=2.1.2 in C:\python38\lib\site-packages (from flask->TTS) (2.1.2)

Requirement already satisfied: click>=8.1.3 in C:\python38\lib\site-packages (from flask->TTS) (8.1.7)

Collecting blinker>=1.6.2 (from flask->TTS)

Downloading blinker-1.6.2-py3-none-any.whl (13 kB)

Requirement already satisfied: importlib-metadata>=3.6.0 in C:\python38\lib\site-packages (from flask->TTS) (6.0.0)

Collecting accelerate (from k-diffusion->TTS)

Downloading accelerate-0.23.0-py3-none-any.whl (258 kB)

---------------------------------------- 258.1/258.1 kB 4.0 MB/s eta 0:00:00

Collecting clean-fid (from k-diffusion->TTS)

Downloading clean_fid-0.1.35-py3-none-any.whl (26 kB)

Collecting clip-anytorch (from k-diffusion->TTS)

Downloading clip_anytorch-2.5.2-py3-none-any.whl (1.4 MB)

---------------------------------------- 1.4/1.4 MB 3.1 MB/s eta 0:00:00

Collecting dctorch (from k-diffusion->TTS)

Downloading dctorch-0.1.2-py3-none-any.whl (2.3 kB)

Collecting jsonmerge (from k-diffusion->TTS)

Downloading jsonmerge-1.9.2-py3-none-any.whl (19 kB)

Collecting kornia (from k-diffusion->TTS)

Downloading kornia-0.7.0-py2.py3-none-any.whl (705 kB)

---------------------------------------- 705.7/705.7 kB 3.0 MB/s eta 0:00:00

Requirement already satisfied: Pillow in C:\python38\lib\site-packages (from k-diffusion->TTS) (9.5.0)

Collecting rotary-embedding-torch (from k-diffusion->TTS)

Downloading rotary_embedding_torch-0.3.0-py3-none-any.whl (4.9 kB)

Collecting safetensors (from k-diffusion->TTS)

Downloading safetensors-0.3.3-cp38-cp38-win_amd64.whl (266 kB)

---------------------------------------- 266.3/266.3 kB 1.6 MB/s eta 0:00:00

Collecting scikit-image (from k-diffusion->TTS)

Downloading scikit_image-0.21.0-cp38-cp38-win_amd64.whl (22.7 MB)

---------------------------------------- 22.7/22.7 MB 944.0 kB/s eta 0:00:00

Collecting torchdiffeq (from k-diffusion->TTS)

Downloading torchdiffeq-0.2.3-py3-none-any.whl (31 kB)

Collecting torchsde (from k-diffusion->TTS)

Downloading torchsde-0.2.6-py3-none-any.whl (61 kB)

---------------------------------------- 61.2/61.2 kB ? eta 0:00:00

Collecting torchvision (from k-diffusion->TTS)

Downloading torchvision-0.15.2-cp38-cp38-win_amd64.whl (1.2 MB)

---------------------------------------- 1.2/1.2 MB 6.3 MB/s eta 0:00:00

Collecting wandb (from k-diffusion->TTS)

Downloading wandb-0.15.11-py3-none-any.whl (2.1 MB)

---------------------------------------- 2.1/2.1 MB 2.8 MB/s eta 0:00:00

Requirement already satisfied: contourpy>=1.0.1 in C:\python38\lib\site-packages (from matplotlib->TTS) (1.0.7)

Requirement already satisfied: cycler>=0.10 in C:\python38\lib\site-packages (from matplotlib->TTS) (0.10.0)

Requirement already satisfied: fonttools>=4.22.0 in C:\python38\lib\site-packages (from matplotlib->TTS) (4.38.0)

Requirement already satisfied: kiwisolver>=1.0.1 in C:\python38\lib\site-packages (from matplotlib->TTS) (1.3.1)

Requirement already satisfied: pyparsing>=2.2.1 in C:\python38\lib\site-packages (from matplotlib->TTS) (2.4.7)

Requirement already satisfied: python-dateutil>=2.7 in C:\python38\lib\site-packages (from matplotlib->TTS) (2.8.2)

Collecting regex>=2021.8.3 (from nltk->TTS)

Downloading regex-2023.8.8-cp38-cp38-win_amd64.whl (268 kB)

---------------------------------------- 268.3/268.3 kB 4.2 MB/s eta 0:00:00

Requirement already satisfied: pytz>=2020.1 in C:\python38\lib\site-packages (from pandas->TTS) (2021.1)

Collecting huggingface-hub<1.0,>=0.15.1 (from transformers->TTS)

Downloading huggingface_hub-0.17.3-py3-none-any.whl (295 kB)

---------------------------------------- 295.0/295.0 kB 1.1 MB/s eta 0:00:00

Requirement already satisfied: requests in C:\python38\lib\site-packages (from transformers->TTS) (2.31.0)

Collecting tokenizers!=0.11.3,<0.14,>=0.11.1 (from transformers->TTS)

Downloading tokenizers-0.13.3-cp38-cp38-win_amd64.whl (3.5 MB)

---------------------------------------- 3.5/3.5 MB 4.7 MB/s eta 0:00:00

Requirement already satisfied: pycparser in C:\python38\lib\site-packages (from cffi>=1.0->soundfile->TTS) (2.20)

Requirement already satisfied: colorama in C:\python38\lib\site-packages (from click>=8.1.3->flask->TTS) (0.4.6)

Requirement already satisfied: six in C:\python38\lib\site-packages (from cycler>=0.10->matplotlib->TTS) (1.15.0)

Requirement already satisfied: tzlocal in C:\python38\lib\site-packages (from dateparser~=1.1.0->gruut[de,es,fr]==2.

Requirement already satisfied: zipp>=0.5 in C:\python38\lib\site-packages (from importlib-metadata>=3.6.0->flask->TT

Requirement already satisfied: MarkupSafe>=2.0 in C:\python38\lib\site-packages (from jinja2->torch>=1.7->TTS) (2.0.

Collecting docopt>=0.6.2 (from num2words<1.0.0,>=0.5.10->gruut[de,es,fr]==2.2.3->TTS)

Downloading docopt-0.6.2.tar.gz (25 kB)

Preparing metadata (setup.py) ... done

Requirement already satisfied: appdirs>=1.3.0 in C:\python38\lib\site-packages (from pooch<1.7,>=1.0->librosa==0.10.

Requirement already satisfied: charset-normalizer<4,>=2 in C:\python38\lib\site-packages (from requests->transformer

Requirement already satisfied: idna<4,>=2.5 in C:\python38\lib\site-packages (from requests->transformers->TTS) (2.1

Requirement already satisfied: urllib3<3,>=1.21.1 in C:\python38\lib\site-packages (from requests->transformers->TTS

Requirement already satisfied: certifi>=2017.4.17 in C:\python38\lib\site-packages (from requests->transformers->TTS

Requirement already satisfied: threadpoolctl>=2.0.0 in C:\python38\lib\site-packages (from scikit-learn>=0.20.0->lib

Collecting MarkupSafe>=2.0 (from jinja2->torch>=1.7->TTS)

Downloading MarkupSafe-2.1.3-cp38-cp38-win_amd64.whl (17 kB)

Collecting ftfy (from clip-anytorch->k-diffusion->TTS)

Downloading ftfy-6.1.1-py3-none-any.whl (53 kB)

---------------------------------------- 53.1/53.1 kB 2.7 MB/s eta 0:00:00

INFO: pip is looking at multiple versions of dctorch to determine which version is compatible with other requirements. This could take a while.

Collecting dctorch (from k-diffusion->TTS)

Downloading dctorch-0.1.1-py3-none-any.whl (2.3 kB)

Downloading dctorch-0.1.0-py3-none-any.whl (2.3 kB)

Collecting clean-fid (from k-diffusion->TTS)

Downloading clean_fid-0.1.34-py3-none-any.whl (26 kB)

Collecting requests (from transformers->TTS)

Using cached requests-2.25.1-py2.py3-none-any.whl (61 kB)

Collecting clean-fid (from k-diffusion->TTS)

Downloading clean_fid-0.1.33-py3-none-any.whl (25 kB)

INFO: pip is looking at multiple versions of dctorch to determine which version is compatible with other requirements. This could take a while.

Downloading clean_fid-0.1.32-py3-none-any.whl (26 kB)

Downloading clean_fid-0.1.31-py3-none-any.whl (24 kB)

INFO: This is taking longer than usual. You might need to provide the dependency resolver with stricter constraints to reduce runtime. See https://pip.pypa.io

u want to abort this run, press Ctrl + C.

Downloading clean_fid-0.1.30-py3-none-any.whl (24 kB)

Downloading clean_fid-0.1.29-py3-none-any.whl (24 kB)

Downloading clean_fid-0.1.28-py3-none-any.whl (23 kB)

Downloading clean_fid-0.1.26-py3-none-any.whl (23 kB)

Downloading clean_fid-0.1.25-py3-none-any.whl (23 kB)

Downloading clean_fid-0.1.24-py3-none-any.whl (23 kB)

Downloading clean_fid-0.1.23-py3-none-any.whl (23 kB)

Downloading clean_fid-0.1.22-py3-none-any.whl (23 kB)

Downloading clean_fid-0.1.21-py3-none-any.whl (23 kB)

Downloading clean_fid-0.1.19-py3-none-any.whl (23 kB)

Downloading clean_fid-0.1.18-py3-none-any.whl (23 kB)

Downloading clean_fid-0.1.17-py3-none-any.whl (23 kB)

Downloading clean_fid-0.1.16-py3-none-any.whl (22 kB)

Downloading clean_fid-0.1.15-py3-none-any.whl (22 kB)

Downloading clean_fid-0.1.14-py3-none-any.whl (22 kB)

Downloading clean_fid-0.1.13-py3-none-any.whl (19 kB)

Downloading clean_fid-0.1.12-py3-none-any.whl (19 kB)

Downloading clean_fid-0.1.11-py3-none-any.whl (19 kB)

Downloading clean_fid-0.1.10-py3-none-any.whl (16 kB)

Downloading clean_fid-0.1.9-py3-none-any.whl (15 kB)

Downloading clean_fid-0.1.8-py3-none-any.whl (16 kB)

Downloading clean_fid-0.1.6-py3-none-any.whl (15 kB)

Collecting accelerate (from k-diffusion->TTS)

Downloading accelerate-0.22.0-py3-none-any.whl (251 kB)

---------------------------------------- 251.2/251.2 kB 15.1 MB/s eta 0:00:00

Downloading accelerate-0.21.0-py3-none-any.whl (244 kB)

---------------------------------------- 244.2/244.2 kB 7.3 MB/s eta 0:00:00

Downloading accelerate-0.20.3-py3-none-any.whl (227 kB)

---------------------------------------- 227.6/227.6 kB 6.8 MB/s eta 0:00:00

Downloading accelerate-0.20.2-py3-none-any.whl (227 kB)

---------------------------------------- 227.5/227.5 kB 2.8 MB/s eta 0:00:00

Downloading accelerate-0.20.1-py3-none-any.whl (227 kB)

---------------------------------------- 227.5/227.5 kB 2.8 MB/s eta 0:00:00