| anchor | positive | negative | anchor_status |
|---|---|---|---|

## Inspiration

As engineering students at public universities, we find class sizes of 300 are no rarity. Sadly, that also means our most important classes can take weeks or months to give us feedback on our assignments. This hamstrings the learning process and widens the disconnect between professor and student. A quarter system is 10 weeks long, so 3 weeks of ungraded assignments means learning 30% of the class without any feedback.

We're also keenly aware that now more than ever, teachers are overworked. The average teacher logs upwards of 180 hours per year on grading alone, making it the most time-consuming task outside of actual teaching in the classroom. The solutions they have right now are few. Hiring extra teaching assistants to help with grading is an extensive process that demands thorough interviewing to ensure the applicants actually know the material. At the same time, most universities can't spare money for extra graders, so those who are qualified face heavily inflated workloads. At the moment, there seems to be no feasible way out. Yet with less time spent grading, teachers would have more time to spend with students.

Enter gradeAI, our AI-based tool that aims to streamline grading by automatically processing assignments and evaluating them against a given rubric. Our goal isn't to replace the teacher or the TA, but rather to appraise assignments quickly, let educators review and alter the judgments it makes, and expedite grading large volumes of similar assignments.

## What it does

It uses AI agents from Fetch.ai to break grading into multiple steps. On the frontend, the teacher creates an assignment in a course, names it, and provides a rubric. As students submit their homework, the assignment starts getting graded after the due date.

Our first agent runs locally and is exposed publicly through Fetch.ai's mailbox. It pulls submitted homework files and the associated rubric from our Google Cloud Platform storage, processes the text, sends the homework into our grading pipeline, and eventually writes the processed grades to our database.

Next comes our pipeline of 4 agents on the Agentverse! The first sends all of the processed text, in a clean format, to the next agent, which parses the homework into readable chunks. This second agent (let's call it Parser) takes each problem number and its associated work and puts them into an array. Parser does this for both the solution and the submitted homework, then sends the data to our third agent, which we'll call Solver. This is where the grading happens: Solver cross-examines each problem's solution and attempt one at a time, which improves grading accuracy. We ask Solver to return JSON containing the grade, confidence level, summary, and details for each problem. Solver then sends all of the problems it graded to our last agent on the Agentverse, which aggregates the data and sends it back to the locally run agent. The local agent writes everything to our database, and the results are shown on our frontend!
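Here is a minimal sketch of the Parser and aggregation steps. It assumes each problem in the extracted text begins on a line like `1.`, `2)`, or `Problem 3:`, and that Solver replies with one JSON object per problem containing the four fields described above. The regex, the function names, the field names (`grade`, `confidence`, `summary`, `details`), and the `needs_review` hint are illustrative assumptions, not gradeAI's actual code. The Fetch.ai messaging between agents is omitted.

```python
import json
import re
from dataclasses import asdict, dataclass

# Hypothetical header format: a line starting with "1." / "2)" / "Problem 3:".
PROBLEM_HEADER = re.compile(r"^\s*(?:Problem\s+)?(\d+)[.):]", re.IGNORECASE | re.MULTILINE)


def split_into_problems(text: str) -> list[tuple[int, str]]:
    """Parser step: return (problem_number, work) pairs in document order."""
    matches = list(PROBLEM_HEADER.finditer(text))
    problems = []
    for i, match in enumerate(matches):
        # Each problem's work runs until the next header (or the end of the text).
        end = matches[i + 1].start() if i + 1 < len(matches) else len(text)
        problems.append((int(match.group(1)), text[match.end():end].strip()))
    return problems


@dataclass
class GradedProblem:
    number: int
    grade: float
    confidence: float
    summary: str
    details: str


def parse_solver_reply(number: int, reply: str) -> GradedProblem:
    """Validate one Solver reply, assumed to be a JSON object for a single problem."""
    data = json.loads(reply)
    return GradedProblem(number, float(data["grade"]), float(data["confidence"]),
                         str(data["summary"]), str(data["details"]))


def aggregate(results: list[GradedProblem]) -> dict:
    """Aggregator step: total score, lowest-confidence problem, per-problem records."""
    return {
        "total": sum(r.grade for r in results),
        "needs_review": min(results, key=lambda r: r.confidence).number if results else None,
        "problems": [asdict(r) for r in results],
    }
```

Keeping each problem as its own (number, work) pair is what lets a grader handle problems one at a time. The lowest-confidence problem is a natural place for an educator to start reviewing.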

## How we built it

We used the Reflex framework and Fetch.ai to accomplish all of our goals. This was both difficult and convenient: we had never used these technologies before, but everything was written in Python! Thanks to Reflex, we built our entire website in Python, and Fetch.ai is Python-based as well, which was great. On the Fetch.ai side, we used many of their products, such as the Agentverse, the mailbox, template agents, and a little bit of DeltaV! We used the Agentverse because it provided a convenient way to deploy our agents and keep them running 24/7, which allowed us to maintain a constant pipeline. Its integrated development environment was also helpful for debugging and creating agents from scratch. Fetch.ai's mailbox allowed our locally run agent to communicate with all of the deployed agents. By combining these tools, we pieced together multiple agents that use OpenAI's API and OCR. Reflex was also a great tool: we relied on its documentation and its ability to wrap React code to create complex, functional components.

## Challenges we ran into

We took on a big challenge by using technology we'd never touched before! Reflex and Fetch.ai were the two major components of our project, and there was a steep learning curve. One issue we ran into was a lack of documentation. As a relatively new product that had also recently changed its name, Reflex didn't have as many resources as the tools we were more accustomed to. As a result, it took us a while to get the hang of it, and complex issues such as managing routes and backend integration with GCP became even more difficult while we searched for solutions. Even simpler tasks, like embedding PDF views of files, proved demanding, and our design timeline stretched because we underestimated things we didn't expect to be issues.

## Accomplishments that we're proud of

## What we learned

For our web framework, we chose Reflex to build a web app that incorporated Fetch.ai, FastAPI, and the OpenAI API. We learned how to design our application's architecture by working through the Reflex documentation and, of course, by reaching out to the Reflex developers for assistance. We also learned to link the various pages together and to incorporate components into them, so that users are fully immersed and students and teachers have a platform to view graded papers and their results.

## What's next for gradeAI

Given a time frame of 36 hours, our team had many more features planned than we were able to execute. Primarily, we'd love to add a plagiarism checker, LLM integration for student follow-ups, and textbook breakdowns for relevant homework questions. If gradeAI were a product in wide circulation, plagiarism would undoubtedly be the number one threat to its efficacy; as such, this feature demands our most immediate attention. Textbook breakdowns would also be a great quality-of-life feature we'd love to tackle, but in truth, the feature we look forward to most is LLM integration. This project was meant to challenge us from the very beginning, and LLMs are something we're all excited about and ready to learn and apply.

Outside of features, we're looking to simply run and test a wider variety of materials so gradeAI can be the product it was meant to be. We've been nothing but thoroughly impressed with what we've been able to make, and we hope educators will be too.

There's a long road ahead for gradeAI, but whatever comes our way, we're confident we have the tools to ace it. | ## **Inspiration:**

Our inspiration stemmed from the realization that the pinnacle of innovation occurs at the intersection of deep curiosity and an expansive space to explore one's imagination. Recognizing the barriers faced by learners—particularly their inability to gain real-time, personalized, and contextualized support—we envisioned a solution that would empower anyone, anywhere to seamlessly pursue their inherent curiosity and desire to learn.

## **What it does:**

Our platform is a revolutionary step forward in the realm of AI-assisted learning. It integrates advanced AI technologies with intuitive human-computer interactions to enhance the context a generative AI model can work within. By analyzing screen content—be it text, graphics, or diagrams—and amalgamating it with the user's audio explanation, our platform grasps a nuanced understanding of the user's specific pain points. Imagine a learner pointing at a perplexing diagram while voicing out their doubts; our system swiftly responds by offering immediate clarifications, both verbally and with on-screen annotations.

## **How we built it**:

We architected a Flask-based backend, creating RESTful APIs to seamlessly interface with user input and machine learning models. Integration of Google's Speech-to-Text enabled the transcription of users' learning preferences, and the incorporation of the Mathpix API facilitated image content extraction. Harnessing the prowess of the GPT-4 model, we've been able to produce contextually rich textual and audio feedback based on captured screen content and stored user data. For frontend fluidity, audio responses were encoded into base64 format, ensuring efficient playback without unnecessary re-renders.
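The base64 step can be sketched as follows. The sketch assumes the synthesized speech is available as MP3 bytes before the response is sent. The function names and JSON field names are hypothetical, and the Flask route and the Speech-to-Text, Mathpix, and GPT-4 calls are omitted. Packing the audio into a data URI is one way to let the frontend play it directly from the JSON response without a second request.

```python
import base64
import json


def audio_payload(audio_bytes: bytes, text: str, mime: str = "audio/mpeg") -> str:
    """Bundle feedback text and audio into one JSON string (hypothetical schema)."""
    encoded = base64.b64encode(audio_bytes).decode("ascii")
    return json.dumps({
        "text": text,
        # A data URI can be assigned directly to an <audio> element's src.
        "audio": f"data:{mime};base64,{encoded}",
    })


def decode_audio(payload: str) -> bytes:
    """Inverse operation (useful for tests): recover the raw audio bytes."""
    uri = json.loads(payload)["audio"]
    return base64.b64decode(uri.split(",", 1)[1])
```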

## **Challenges we ran into**:

Scaling the model to accommodate diverse learning scenarios, especially in the broad fields of maths and chemistry, was a notable challenge. Ensuring the accuracy of content extraction and effectively translating that into meaningful AI feedback required meticulous fine-tuning.

## **Accomplishments that we're proud of**:

Successfully building a digital platform that not only deciphers image and audio content but also produces high-utility, real-time feedback stands out as a paramount achievement. This platform has the potential to revolutionize how learners interact with digital content, breaking down barriers of confusion in real time. One aspect of our implementation that separates us from other approaches is that we let the user perform in-context learning (ICL), something few large language model tools let users do seamlessly.

## **What we learned**:

We learned the immense value of integrating multiple AI technologies for a holistic user experience. The project also reinforced the importance of continuous feedback loops in learning and the transformative potential of merging generative AI models with real-time user input. | ## Inspiration

Loneliness affects countless people, and over time it can have significant consequences for a person's mental health. One quarter of Canada's 65+ population lives completely alone, which has been scientifically linked to very serious health risks. With the growing population of seniors, this problem only seems to be getting worse, so we wanted to find a way to help elderly citizens take care of themselves and help their loved ones take care of them.

## What it does

Claire is an AI chatbot with a UX designed specifically for the less tech-savvy elderly population. It helps seniors to journal and self-reflect, both proven to have mental health benefits, through a simulated social experience. At the same time, it allows caregivers to stay up-to-date on the emotional wellbeing of the elderly. This is all done with natural language processing, used to identify the emotions associated with each conversation session.

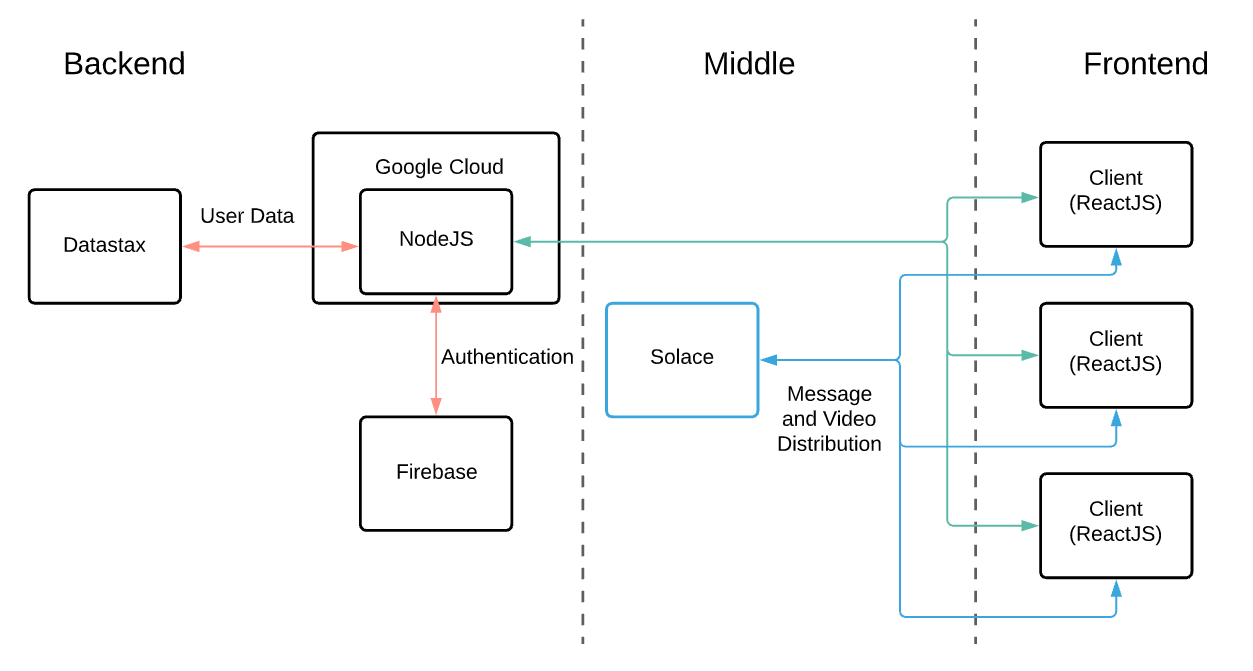

## How we built it

We used a React front end served by a Node.js back end. Messages were sent to Google Cloud's Natural Language API, where we could identify emotions for recording and entities for enhancing the simulated conversation experience. Information on user activity and profiles is maintained in a Firebase database.
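Google Cloud's Natural Language API reports sentiment as a score in [-1, 1] plus a non-negative magnitude, not as named emotions. One way to turn those readings into something a caregiver can scan is a small labeling step. Below is a minimal sketch that assumes per-message (score, magnitude) pairs have already been fetched. The labels, thresholds, and caregiver flag are hypothetical, not Claire's actual rules. It is written in Python for consistency with the other examples here, even though Claire's back end is Node.js.

```python
from statistics import mean


def label_message(score: float, magnitude: float) -> str:
    """Map one sentiment reading to a coarse label (hypothetical thresholds)."""
    if magnitude < 0.5:
        return "neutral"          # little emotional content either way
    if score >= 0.25:
        return "positive"
    if score <= -0.25:
        return "negative"
    return "mixed"                # strong feelings that roughly cancel out


def summarize_session(readings: list[tuple[float, float]]) -> dict:
    """Summarize one conversation session for the caregiver view."""
    labels = [label_message(s, m) for s, m in readings]
    return {
        "average_score": round(mean(s for s, _ in readings), 3),
        "labels": labels,
        # Hypothetical rule: flag sessions where most messages read as negative.
        "flag_for_caregiver": labels.count("negative") > len(labels) / 2,
    }
```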

## Challenges we ran into

We wanted to use speech-to-text so as to reach an even broader seniors' market, but we ran into technical difficulties with streaming audio from the browser in a consistent way. As a result, we chose simply to have a text-based conversation.

## Accomplishments that we're proud of

Designing a convincing AI chatbot was the biggest challenge. We found that the bot would often miss contextual cues and interpret responses incorrectly. Over the course of the project, we had to tweak how our bot responded and prompted conversation so that these lapses were minimized. Also, as developers, we found it very difficult to design for the needs of a less tech-savvy target audience. We had to make sure our application was intuitive enough for all users.

## What we learned

We learned how to work with natural language processing to follow a conversation and respond appropriately to human input. As well, we got to further practise our technical skills by applying React, node.js, and Firebase to build a full-stack application.

## What's next for Claire

We want to implement accurate speech-to-text and text-to-speech functionality. We think this is the natural next step toward making our product more widely accessible. | partial |

## Inspiration

Two of our teammates have personal experiences with wildfires: one who has lived all her life in California, and one who was exposed to a fire in his uncle's backyard in the same state. We found the recent wildfires especially troubling and thus decided to focus our efforts on doing what we could with technology.

## What it does

CacheTheHeat uses different computer vision algorithms to classify fires in camera footage and videos, in particular from cameras mounted on homes for surveillance purposes. It calculates the relative size and rate of growth of the fire in order to alert nearby residents if the wildfire may pose a threat. It hosts a database with multiple video sources so that warnings are far-reaching and effective.

## How we built it

This software uses computer vision (OpenCV) to detect the sizes of possible wildfires and the rate at which those fires are growing. The web application gives a pre-emptive warning (phone alerts) to nearby individuals using Twilio. It has a MongoDB Stitch database of both surveillance-type videos (from campgrounds, drones, etc.) and neighborhood cameras that can be continually added to, depending on which neighbors and individuals sign the agreement form using DocuSign. We hope this will help people deal with wildfires creatively in the future.
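The size and rate-of-growth calculation can be sketched once a detector (for example, an OpenCV color threshold or a CNN classifier) has produced a binary fire mask for each sampled frame. The function names and the two alert thresholds below are hypothetical, not CacheTheHeat's values. The Twilio alert itself is left out.

```python
def fire_fraction(mask: list[list[int]]) -> float:
    """Relative fire size: fraction of pixels flagged as fire (1) in a frame."""
    total = sum(len(row) for row in mask)
    return sum(sum(row) for row in mask) / total if total else 0.0


def growth_rate(fractions: list[float], fps: float) -> float:
    """Average change in relative size per second across the sampled frames."""
    if len(fractions) < 2:
        return 0.0
    elapsed = (len(fractions) - 1) / fps
    return (fractions[-1] - fractions[0]) / elapsed


def should_alert(masks: list[list[list[int]]], fps: float,
                 min_size: float = 0.05, min_growth: float = 0.01) -> bool:
    """Alert if the fire is already large or is growing quickly (assumed thresholds)."""
    fractions = [fire_fraction(m) for m in masks]
    return fractions[-1] >= min_size or growth_rate(fractions, fps) >= min_growth
```

Using the fraction of the frame rather than a raw pixel count keeps the measure comparable across cameras with different resolutions.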

## Challenges we ran into

Among the difficulties we faced, we had the most trouble with understanding the applications of multiple relevant DocuSign solutions for use within our project as per our individual specifications. For example, our team wasn't sure how we could use something like the text tab to enhance our features within our client's agreement.

One other thing we were not fond of was that DocuSign logged us out of the sandbox every few minutes, which was sometimes a pain. Moreover, the development environment sometimes seemed a bit cluttered at a glance, which discouraged us from using their API.

There was a bug in Google Chrome where Authorize.Net (DocuSign's affiliate) could not process payments due to browser-specific misbehavior. This was brought to the attention of DocuSign staff.

One more unfortunate thing was that DocuSign's GitHub examples included certain fields required for initialization, but the descriptions of these fields differed between the code examples and the documentation. For example, "ACCOUNT\_ID" might be a synonym for "USERNAME" (not exactly, but same idea).

## Why we love DocuSign

Apart from the fact that the mentorship team was amazing and super-helpful, our team noted a few things about their API. Helpful documentation existed on GitHub with up-to-date code examples clearly outlining the dependencies required as well as offering helpful comments. Most importantly, DocuSign contains everything from A-Z for all enterprise signature/contractual document processing needs. We hope to continue hacking with DocuSign in the future.

## Accomplishments that we're proud of

We are very happy to have experimented with the power of enterprise solutions in making a difference while hacking for resilience. Wildfires, among the most devastating of natural disasters in the US, have had a huge impact on residents of states such as California. Our team has been working hard to leverage existing residential video footage systems for high-risk wildfire neighborhoods.

## What we learned

Our team members learned a range of technical and fundamental concepts, including MongoDB, Flask, Django, OpenCV, DocuSign, and fire safety.

## What's next for CacheTheHeat.com

Cache the Heat is excited to commercialize this solution with the support of Wharton Risk Center if possible. | ## How we built it

The sensors consist of the Maxim Pegasus board and any Android phone with our app installed. The two are synchronized at the beginning, and then by moving the "tape" away from the "measure," we can get an accurate measure of distance, even for non-linear surfaces.

## Challenges we ran into

The sensors we used, such as Android gyroscopes, sometimes produce high-variance outputs. Maintaining an inertial reference frame from our board to the ground as it was rotated proved very difficult and required quaternion rotational transforms. Using the Maxim Pegasus board was difficult because it is a relatively new piece of hardware, so no APIs or libraries have been written for basic functions yet. We had to query accelerometer and gyro data manually from internal IMU registers over I2C.
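The quaternion step can be sketched on its own. This minimal version assumes the board's orientation is already available as a unit quaternion (w, x, y, z), for example from integrating gyro data. It rotates an accelerometer reading from the board frame into the ground frame via v' = q v q*. The function names are illustrative, not the team's code, and the I2C register reads are omitted.

```python
import math

Quat = tuple[float, float, float, float]  # (w, x, y, z)


def quat_mul(a: Quat, b: Quat) -> Quat:
    """Hamilton product a * b."""
    aw, ax, ay, az = a
    bw, bx, by, bz = b
    return (
        aw * bw - ax * bx - ay * by - az * bz,
        aw * bx + ax * bw + ay * bz - az * by,
        aw * by - ax * bz + ay * bw + az * bx,
        aw * bz + ax * by - ay * bx + az * bw,
    )


def rotate(q: Quat, v: tuple[float, float, float]) -> tuple[float, float, float]:
    """Rotate vector v by unit quaternion q: v' = q * (0, v) * conj(q)."""
    conj = (q[0], -q[1], -q[2], -q[3])
    _, x, y, z = quat_mul(quat_mul(q, (0.0, *v)), conj)
    return (x, y, z)


def axis_angle(axis: tuple[float, float, float], angle: float) -> Quat:
    """Unit quaternion for a rotation of `angle` radians about a unit `axis`."""
    s = math.sin(angle / 2)
    return (math.cos(angle / 2), axis[0] * s, axis[1] * s, axis[2] * s)
```

For example, rotating (1, 0, 0) by `axis_angle((0, 0, 1), math.pi / 2)` gives approximately (0, 1, 0).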

## Accomplishments that we're proud of

Full integration with the Maxim board and the flexibility to adapt the software to many different handyman-style use cases, e.g. as a table level, compass, etc. We experimented with and implemented various noise filtering techniques such as Kalman filters and low pass filters to increase the accuracy of our data. In general, working with the Pegasus board involved a lot of low-level read-write operations within internal device registers, so basic tasks like getting accelerometer data became much more complex than we were used to.
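The two filter types named above can be sketched for a single noisy axis: an exponential low-pass filter, and a scalar Kalman filter that tracks a slowly varying value. The smoothing factor and noise variances are hypothetical tuning values, not the ones the team used.

```python
def low_pass(samples: list[float], alpha: float = 0.2) -> list[float]:
    """Exponential moving average: y[n] = y[n-1] + alpha * (x[n] - y[n-1])."""
    out: list[float] = []
    for x in samples:
        out.append(x if not out else out[-1] + alpha * (x - out[-1]))
    return out


class ScalarKalman:
    """1-D Kalman filter for a slowly varying value (e.g. one accelerometer axis)."""

    def __init__(self, process_var: float = 1e-4, measurement_var: float = 0.25):
        self.q = process_var       # how much the true value may drift per step
        self.r = measurement_var   # sensor noise variance
        self.x = 0.0               # state estimate
        self.p = 1.0               # estimate variance (large = uncertain start)

    def update(self, z: float) -> float:
        self.p += self.q                    # predict: uncertainty grows
        k = self.p / (self.p + self.r)      # Kalman gain
        self.x += k * (z - self.x)          # correct toward the measurement
        self.p *= 1 - k                     # uncertainty shrinks after the update
        return self.x
```

The low-pass filter uses a fixed blend factor. The Kalman gain instead adapts: it starts large while the estimate is uncertain and settles to a small value as confidence grows.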

## What's next

Other possibilities were listed above, along with the potential to make even better estimates of absolute positioning in space through different statistical algorithms. | ## Inspiration

I love to play board games, but I often can't get a big enough group together to play. This led to my original idea: an AI-powered opponent in Tabletop Simulator that could play virtual board games against you after reading their rule books. This proved to be too ambitious, so I settled on a simplified case for my project: a bot for a modified version of the card game Cheat, built in Python.

## Features

The project features a graphical implementation of Cheat in Pygame and a bot integrated with the OpenAI API that plays against the user.

## Challenges

Towards the end of the hackathon, I had some struggles integrating the OpenAI API and the features it brought into the Pygame piece of my project, but I ultimately found a solution and got the bot working.

## Future Plans

I feel this project is still incomplete: the bot could be improved to have a longer memory, or perhaps a reinforcement learning approach would work better. Nevertheless, I enjoyed learning to work with APIs and hope to continue learning with this project, however that may be! | winning |

## Never plan for fun again. Just have it. Now, with CityCrawler.

While squabbling over bars to visit in the city, we pined for a solution to our problem.

And here we find CityCrawler, an app that takes your interests and a couple of other details to immediately plan your ideal trip. So whether it's a pub crawl or a night full of entertainment, CityCrawler will be at your service to help you decide and focus on the conversations that actually matter.

With CityCrawler you can also share your plan with your friends, so no one is left behind.

## Tech Used

On the iOS side, we used RxSwift and RxAlamofire to handle asynchronous tasks and network requests.

On the Android side, we used Kotlin, RxKotlin, Retrofit, and OkHttp.

Our backend system consists of a set of stdlib endpoint functions, which are built using the Google Maps Places API, Distance Matrix API, and the Firebase API. We also wrote a custom algorithm to solve the Travelling Salesman Problem based on Kruskal's Minimum Spanning Tree algorithm and the Depth First Tree Tour algorithm.
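The MST-based approach described above corresponds to the classic 2-approximation for metric TSP. First build a minimum spanning tree with Kruskal's algorithm, then visit stops in depth-first (preorder) order, skipping repeats. The factor-of-2 guarantee only holds when distances satisfy the triangle inequality. Below is a minimal sketch that assumes a symmetric distance matrix (for example, travel times from the Distance Matrix API) with the starting location at index 0. It illustrates the technique and is not CityCrawler's stdlib code.

```python
def kruskal_mst(dist: list[list[float]]) -> dict[int, list[int]]:
    """Return the MST of a complete graph as an adjacency list."""
    n = len(dist)
    parent = list(range(n))

    def find(i: int) -> int:
        while parent[i] != i:
            parent[i] = parent[parent[i]]  # path halving
            i = parent[i]
        return i

    edges = sorted((dist[i][j], i, j) for i in range(n) for j in range(i + 1, n))
    tree: dict[int, list[int]] = {i: [] for i in range(n)}
    for _, i, j in edges:
        ri, rj = find(i), find(j)
        if ri != rj:               # edge joins two components: keep it
            parent[ri] = rj
            tree[i].append(j)
            tree[j].append(i)
    return tree


def mst_tour(dist: list[list[float]], start: int = 0) -> list[int]:
    """Preorder DFS over the MST gives the visiting order of the stops."""
    tree = kruskal_mst(dist)
    order, seen, stack = [], set(), [start]
    while stack:
        node = stack.pop()
        if node in seen:
            continue
        seen.add(node)
        order.append(node)
        # Push neighbours in reverse so the lowest-indexed child is visited first.
        stack.extend(sorted(tree[node], reverse=True))
    return order


def tour_length(dist: list[list[float]], order: list[int]) -> float:
    """Total length of the route that visits the stops in `order` (open path)."""
    return sum(dist[a][b] for a, b in zip(order, order[1:]))
```

A crawl does not need to return to the first stop, so the length is computed as an open path. Adding `dist[order[-1]][order[0]]` would close the loop.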

We have also exposed our Firebase and Google Maps stdlib functions to the public to contribute to that ecosystem. Oh and #OneMoreThing.

### Android YouTube Video - <https://youtu.be/WudxqMyaszQ> | ## **Problem**

* Less than a third of Canada’s fish populations, 29.4 per cent, can confidently be considered healthy and 17 per cent are in the critical zone, where conservation actions are crucial.

* A fishery audit conducted by Oceana Canada, reported that just 30.4 per cent of fisheries in Canada are considered “healthy” and nearly 20 per cent of stocks are “critically depleted.”

### **Lack of monitoring**

"However, short term economics versus long term population monitoring and rebuilding has always been a problem in fisheries decision making. This makes it difficult to manage dealing with major issues, such as species decline, right away." - Marine conservation coordinator, Susanna Fuller

"sharing observations of fish catches via phone apps, or following guidelines to prevent transfer of invasive species by boats, all contribute to helping freshwater fish populations" - The Globe and Mail

## **Our solution: Aquatrack**

Aquatrack aggregates several datasets from Canada's Open Government portal into a public dashboard!

Slides with more info: <https://www.canva.com/design/DAFCEO85hI0/c02cZwk92ByDkxMW98Iljw/view?utm_content=DAFCEO85hI0&utm_campaign=designshare&utm_medium=link2&utm_source=sharebutton>

GitHub repo: <https://github.com/HikaruSadashi/Aquatrack>

The datasets used:

1) <https://open.canada.ca/data/en/dataset/c9d45753-5820-4fa2-a1d1-55e3bf8e68f3/resource/7340c4ad-b909-4658-bbf3-165a612472de>

2) <https://open.canada.ca/data/en/dataset/aca81811-4b08-4382-9af7-204e0b9d2448> | ## Inspiration

40 million people in the world are blind, including 20% of all people aged 85 or older. Half a million people suffer paralyzing spinal cord injuries every year. 8.5 million people are affected by Parkinson’s disease, the vast majority of them senior citizens. The pervasive difficulty these individuals face in interacting with objects in their environment, including identifying or physically taking the medications vital to their health, is unacceptable given the capabilities of today’s technology.

First, we asked ourselves the question, what if there was a vision-powered robotic appliance that could serve as a helping hand to the physically impaired? Then we began brainstorming: Could a language AI model make the interface between these individual’s desired actions and their robot helper’s operations even more seamless? We ended up creating Baymax—a robot arm that understands everyday speech to generate its own instructions for meeting exactly what its loved one wants. Much more than its brilliant design, Baymax is intelligent, accurate, and eternally diligent.

We know that if Baymax was implemented first in high-priority nursing homes, then later in household bedsides and on wheelchairs, it would create a lasting improvement in the quality of life for millions. Baymax currently helps its patients take their medicine, but it is easily extensible to do much more—assisting these same groups of people with tasks like eating, dressing, or doing their household chores.

## What it does

Baymax listens to a user’s requests on which medicine to pick up, then picks up the appropriate pill and feeds it to the user. Note that this could be generalized to any object, ranging from food, to clothes, to common household trinkets, to more. Baymax responds accurately to conversational, even meandering, natural language requests for which medicine to take—making it perfect for older members of society who may not want to memorize specific commands. It interprets these requests to generate its own pseudocode, later translated to robot arm instructions, for following the tasks outlined by its loved one. Subsequently, Baymax delivers the medicine to the user by employing a powerful computer vision model to identify and locate a user’s mouth and make real-time adjustments.

## How we built it

The robot arm by Reazon Labs, a 3D-printed arm with 8 servos as pivot points, is the heart of our project. We wrote custom inverse kinematics software from scratch to control these 8 degrees of freedom and navigate the end-effector to a point in three-dimensional space, and we built our own animation methods for the arm to follow a given path. Our animation methods interpolate the arm’s movements through keyframes, or defined positions, similar to how film editors dictate animations. This allowed us to achieve smooth yet precise motion that is safe for the end user.
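The keyframe approach can be sketched as linear interpolation between joint-angle poses. This minimal version assumes each keyframe is a time in seconds plus a list of servo angles in degrees (8 for this arm). It is a generic illustration, not the team's animation library. A real arm would likely also want easing near each keyframe and per-joint speed limits.

```python
from bisect import bisect_right

Keyframe = tuple[float, list[float]]  # (time in seconds, joint angles in degrees)


def pose_at(keyframes: list[Keyframe], t: float) -> list[float]:
    """Joint angles at time t, linearly interpolated between surrounding keyframes."""
    times = [time for time, _ in keyframes]
    if t <= times[0]:
        return list(keyframes[0][1])
    if t >= times[-1]:
        return list(keyframes[-1][1])
    i = bisect_right(times, t)            # times[i-1] <= t < times[i]
    (t0, a0), (t1, a1) = keyframes[i - 1], keyframes[i]
    u = (t - t0) / (t1 - t0)              # 0..1 progress through this segment
    return [x0 + u * (x1 - x0) for x0, x1 in zip(a0, a1)]


def sample_path(keyframes: list[Keyframe], rate_hz: float) -> list[list[float]]:
    """Poses to send to the servos at a fixed command rate."""
    steps = int(round((keyframes[-1][0] - keyframes[0][0]) * rate_hz))
    return [pose_at(keyframes, keyframes[0][0] + k / rate_hz) for k in range(steps + 1)]
```

Sampling at a fixed command rate keeps each servo update small. This is where the smoothness of keyframed motion comes from.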

We built a pipeline to take in speech input from the user and process their request. We wanted users to speak with the robot in natural language, so we used OpenAI’s Whisper system to convert the user commands to text, then used OpenAI’s GPT-4 API to figure out which medicine(s) they were requesting assistance with.

We focused on computer vision to recognize the user’s face and mouth. We used OpenCV to get the webcam live stream and used 3 different Convolutional Neural Networks for facial detection, masking, and feature recognition. We extracted coordinates from the model output to extrapolate facial landmarks and identify the location of the center of the mouth, simultaneously detecting if the user’s mouth is open or closed.
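Once facial landmarks are available, finding the mouth center and testing whether the mouth is open reduce to simple geometry. This minimal sketch assumes four mouth landmarks (left and right corners, upper and lower inner lip) in pixel coordinates. The landmark choice and the 0.35 threshold are hypothetical, not values from the team's models.

```python
import math

Point = tuple[float, float]


def mouth_center(left: Point, right: Point, top: Point, bottom: Point) -> Point:
    """Target point for the end-effector: the mean of the four mouth landmarks."""
    xs = (left[0], right[0], top[0], bottom[0])
    ys = (left[1], right[1], top[1], bottom[1])
    return (sum(xs) / 4, sum(ys) / 4)


def mouth_open(left: Point, right: Point, top: Point, bottom: Point,
               threshold: float = 0.35) -> bool:
    """Open if lip gap / mouth width exceeds a threshold (scale-invariant ratio)."""
    width = math.dist(left, right)
    gap = math.dist(top, bottom)
    return width > 0 and gap / width > threshold
```

Dividing the lip gap by the mouth width makes the test independent of how far the user sits from the camera.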

When we put everything together, our result was a functional system where a user can request medicines or pills, and the arm will pick up the appropriate medicines one by one, feeding them to the user while making real time adjustments as it approaches the user’s mouth.

## Challenges we ran into

We quickly learned that working with hardware introduced a lot of room for complications. The robot arm we used was a prototype, entirely 3D-printed yet equipped with high-torque motors, and parts were subject to wear and tear very quickly, which sacrificed the accuracy of its movements. To solve this, we implemented torque and current limiting software and wrote Python code to smoothen movements and preserve the integrity of instruction.

Controlling the arm was another challenge because it has 8 motors that need to be manipulated finely enough in tandem to reach a specific point in 3D space. We had to not only learn how to work with the robot arm SDK and libraries but also understand the math and intuition behind its movement. We did this by using forward kinematics and restricting the servo motors’ degrees of freedom to simplify the math. Realizing it would be tricky to write all the movement code from scratch, we created an animation library for the arm in which we captured certain arm positions as keyframes and then interpolated between them to create fluid motion.

Another critical issue was the high latency between the video stream and robot arm’s movement, and we spent much time optimizing our computer vision pipeline to create a near instantaneous experience for our users.

## Accomplishments that we're proud of

As first-time hackathon participants, we are incredibly proud of the progress we were able to make in a very short amount of time, proving to ourselves that with hard work, passion, and a clear vision, anything is possible. Our team did a fantastic job embracing the challenge of using technology unfamiliar to us, and we stepped out of our comfort zones to bring our idea to life. Whether it was building the computer vision model or learning how to interface the robot arm’s movements with voice controls, we ended up building a robust prototype that far surpassed our initial expectations. One of our greatest successes was coordinating our work so that each function could be pieced together and emerge as a functional robot. Let’s not overlook the success of not eating the Hi-Chews we were using for testing!

## What we learned

We developed our skills in areas we were initially unfamiliar with, such as applying machine learning algorithms in a real-time context. We also learned how to successfully interface software with hardware, crafting complex functions that we could see work in three-dimensional space. Through developing this project, we also realized just how much social impact a robot arm can have for disabled or elderly populations.

## What's next for Baymax

Envision a world where Baymax, a vigilant companion, eases medication management for those with mobility challenges. First, Baymax can be implemented in nursing homes, then can become a part of households and mobility aids. Baymax is a helping hand, restoring independence to a large disadvantaged group.

This innovation marks an improvement in increasing quality of life for millions of older people, and is truly a human-centric solution in robotic form. | losing |

## What it does

XEN SPACE is an interactive web-based game that incorporates emotion recognition technology and the Leap motion controller to create an immersive emotional experience that will pave the way for the future gaming industry.

## How we built it

We built it using three.js, Leap Motion Controller for controls, and Indico Facial Emotion API. We also used Blender, Cinema4D, Adobe Photoshop, and Sketch for all graphical assets. | ## Inspiration

Video games evolved when the Xbox Kinect was released in 2010, but for some reason we reverted to controller-based games. We are here to bring back the amazingness of movement-controlled games with a new twist: reinventing how mobile games are played!

## What it does

AR.cade uses a body-part detection model to track movements that correspond to controls for classic games running in a web browser. The user can choose from a variety of classic games, such as Temple Run and Super Mario, and play them with their body movements.

## How we built it

* The first step was setting up OpenCV and importing a body-tracking model from Google MediaPipe.

* Next, based on the positions of and angles between the landmarks, we created classification functions that detected specific movements, such as when an arm or leg was raised or when the user jumped.

* Then we mapped these movement identifications to keybinds on the computer. For example, when the user raises their right arm, it corresponds to the right arrow key.

* We then embedded some online games of our choice into our front end, so that when the user makes a movement corresponding to a certain key, the respective action happens.

* Finally, we created a visually appealing and interactive frontend/loading page where the user can select which game they want to play.
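The angle-based classification and key mapping from the steps above can be sketched independently of MediaPipe and of whatever library sends the keypresses. This minimal version assumes normalized (x, y) landmark coordinates with y growing downward, as in image coordinates. The landmark names, the 140-degree threshold, and the key bindings are hypothetical, and actually pressing the key is left out.

```python
import math

Point = tuple[float, float]


def joint_angle(a: Point, b: Point, c: Point) -> float:
    """Angle ABC in degrees, e.g. shoulder-elbow-wrist for elbow bend."""
    ang = math.degrees(
        math.atan2(c[1] - b[1], c[0] - b[0]) - math.atan2(a[1] - b[1], a[0] - b[0])
    )
    ang = abs(ang) % 360
    return 360 - ang if ang > 180 else ang


def arm_raised(shoulder: Point, elbow: Point, wrist: Point) -> bool:
    """Raised if the wrist is above the shoulder and the arm is mostly straight."""
    return wrist[1] < shoulder[1] and joint_angle(shoulder, elbow, wrist) > 140


# Hypothetical gesture-to-key bindings.
KEYMAP = {"right_arm_up": "right", "left_arm_up": "left"}


def keys_for_frame(lm: dict[str, Point]) -> list[str]:
    """Translate one frame of landmarks into the keys that should be pressed."""
    keys = []
    if arm_raised(lm["right_shoulder"], lm["right_elbow"], lm["right_wrist"]):
        keys.append(KEYMAP["right_arm_up"])
    if arm_raised(lm["left_shoulder"], lm["left_elbow"], lm["left_wrist"]):
        keys.append(KEYMAP["left_arm_up"])
    return keys
```

Combining a position check with an angle check makes the classifier less likely to fire on a bent arm that merely passes near shoulder height.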

## Challenges we ran into

A large challenge we ran into was embedding the video output window into the front end. We tried passing it through an API, and that worked with a plain video; however, difficulties arose when we tried to pass the video with the body-tracking model overlay on it.

## Accomplishments that we're proud of

We are proud of the fact that we are able to have a functioning product in the sense that multiple games can be controlled with body part commands of our specification. Thanks to threading optimization there is little latency between user input and video output which was a fear when starting the project.

## What we learned

We learned that it is possible to embed other websites (such as simple games) into our own local HTML sites.

We learned how to map landmark node positions into meaningful movement classifications considering positions, and angles.

We learned how to resize, move, and give priority to external windows such as the video output window.

We learned how to run Python files from JavaScript to make automated calls to further processes.

## What's next for AR.cade

The next steps for AR.cade are to implement a more accurate body tracking model in order to track more precise parameters. This would allow us to scale our product to more modern games that require more user inputs such as Fortnite or Minecraft. | ## Inspiration

A single full charge of the average EV's battery uses as much electricity as a house uses in 2.5 days. This puts a huge strain on the electrical grid: people usually plug in their car as soon as they get home, during what are already peak demand hours. At this time, electricity is not only the most expensive but also the most carbon-intensive, with as much as 20% generated by fossil fuels, even in Ontario, which is not a primarily fossil-fuel-dependent region. We can change this: by charging at our calculated optimal time, our users will not only save money but also help the environment.

## What it does

Given an interval in which the user can charge their car (e.g., from when they get home to when they have to leave in the morning), ChargeVerte analyses live and historical electricity-generation data to calculate the interval in which generation is cleanest. The user can then instruct their car to begin charging at our recommended time and charge with peace of mind, knowing they are using sustainable energy.
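A minimal sketch of the window search, assuming hourly carbon-intensity values (for example, gCO2eq/kWh pulled from the Electricity Maps API) are already in a Python list indexed by hour. The function name and the hourly granularity are assumptions, and ChargeVerte's actual predictive algorithm may weigh forecasts differently; this only shows the core "lowest-average contiguous block" idea, using a sliding window so each step costs O(1).

```python
from typing import List, Tuple

def cleanest_window(intensity: List[float], start: int, end: int,
                    hours_needed: int) -> Tuple[int, float]:
    """Find the start hour of the contiguous block of `hours_needed` hours
    inside [start, end) with the lowest average carbon intensity.

    Returns (best_start_hour, average_intensity_of_that_block).
    """
    if hours_needed <= 0 or end - start < hours_needed:
        raise ValueError("charging window is shorter than the charge needs")
    # Sum of the first candidate block.
    window = sum(intensity[start:start + hours_needed])
    best_sum, best_start = window, start
    # Slide one hour at a time: add the hour entering, drop the hour leaving.
    for s in range(start + 1, end - hours_needed + 1):
        window += intensity[s + hours_needed - 1] - intensity[s - 1]
        if window < best_sum:
            best_sum, best_start = window, s
    return best_start, best_sum / hours_needed
```

For example, with intensities `[50, 40, 30, 10, 20, 60, 70]` and a two-hour charge anywhere in hours 0 to 6, the cleanest block starts at hour 3 with an average of 15.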

## How we built it

ChargeVerte was made using a purely Python-based tech stack. We leveraged various libraries, including requests to make API requests, pandas for data processing, and Taipy for front-end design. Our project pulls data about the electrical grid from the Electricity Maps API in real-time.

## Challenges we ran into

Our biggest challenges were primarily learning how to handle all the different libraries we used in this project, many of which we had never used before but were eager to try. One notable challenge was trying to use a Flask API with React to create a Python/JS full-stack app; we found it difficult to make API GET requests because of the different data types supported by the two languages. We decided to pivot to Taipy to overcome this hurdle.

## Accomplishments that we're proud of

We built a functioning predictive algorithm, which, given a range of time, finds the timespan of electricity with the lowest carbon intensity.

## What we learned

We learned how to design critical processes related to full-stack development, including how to make API requests, design a front-end, and connect a front-end and backend together. We also learned how to program in a team setting, and the many strategies and habits we had to change in order to make it happen.

## What's next for ChargeVerte

A potential partner for ChargeVerte is power-generating companies themselves. Generating companies could package ChargeVerte and a charging timer, such that when a driver plugs in for the night, ChargeVerte will automatically begin charging at off-peak times, without any needed driver oversight. This would reduce costs significantly for the power-generating companies, as they can maintain a flatter demand line and thus reduce the amount of expensive, polluting fossil fuels needed. | winning |

## Inspiration

We wanted to do something fun and exciting, nothing too serious. Slang is a vital part of thriving in today's society. Ever heard Travis Scott go, "My dawg would prolly do it for a Louis belt"? Even most millennials are not familiar with this slang. Therefore, we are leveraging the power of a modern platform, Urban Dictionary, to educate people about today's ways and to show how today's music is changing with the slang thrown in.

## What it does

You choose your desired song, and SlangSlack prints out the lyrics and even sings them for you in a robotic voice. It then looks up the Urban Dictionary meaning of the slang, replaces the original words with those meanings, and attempts to sing the result.
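The replace-the-slang step could look like the following minimal sketch. The `glossary` dict stands in for Urban Dictionary lookups (a hypothetical simplification); in a real version, caching definitions locally is one way to cut down the excessive API calls mentioned under challenges.

```python
import re
from typing import Dict

def translate_slang(lyrics: str, glossary: Dict[str, str]) -> str:
    """Replace each slang term found in `glossary` with its definition.

    Matching is whole-word and case-insensitive. `glossary` is a hypothetical
    stand-in for Urban Dictionary results fetched (and cached) beforehand.
    """
    if not glossary:
        return lyrics
    # Longest terms first so multi-word slang wins over its own prefixes.
    terms = sorted(glossary, key=len, reverse=True)
    pattern = re.compile(r"\b(" + "|".join(map(re.escape, terms)) + r")\b",
                         re.IGNORECASE)
    lookup = {term.lower(): meaning for term, meaning in glossary.items()}
    return pattern.sub(lambda m: lookup[m.group(0).lower()], lyrics)
```

Compiling one alternation pattern means the lyrics are scanned once, no matter how many slang terms are known.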

## How I built it

We used Python's Flask framework as well as numerous Python natural language processing libraries. We created the front end with the Bootstrap framework, and we also used Kaggle datasets and Zdict APIs.

## Challenges I ran into

Redirecting with Flask was a frequent challenge, and the excessive API calls made the program very slow.

## Accomplishments that I'm proud of

We are proud of the excellent UI design, along with the amazing outcomes that translating slang can produce.

## What I learned

We learned a lot of things.

## What's next for SlangSlack

We are going to transform the way today's millennials keep up with growing trends in slang.

As a student at the University of Waterloo, I have to attend interviews for co-op positions every other semester. Although talking to people gets easier the more often you do it, I still feel slightly nervous during such face-to-face interactions. Because of this nervousness, my conversation isn't always as fluent as it could be: I tend to use unnecessary filler words ("um", "umm", etc.) and repeat the same adjectives over and over again. I created this application so that I could improve my speech by practicing against a program.

## What it does

InterPrep uses the IBM Watson "Speech-To-Text" API to convert spoken word into text. After doing this, it analyzes the words that are used by the user and highlights certain words that can be avoided, and maybe even improved to create a stronger presentation of ideas. By practicing speaking with InterPrep, one can keep track of their mistakes and improve themselves in time for "speaking events" such as interviews, speeches and/or presentations.
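Once Watson returns a transcript, the highlighting step could be sketched as below. This is an illustration, not InterPrep's code: the filler list and the `watch` list of words to track are hypothetical, and spotting repeated adjectives automatically would need a part-of-speech tagger rather than a fixed list.

```python
import re
from collections import Counter
from typing import Dict, Iterable

# Hypothetical filler-word list.
FILLERS = ("um", "umm", "uh", "er")

def speech_report(transcript: str, watch: Iterable[str] = (),
                  min_repeats: int = 3) -> Dict[str, Dict[str, int]]:
    """Count filler words, plus any watched word used at least `min_repeats` times."""
    words = re.findall(r"[a-z']+", transcript.lower())
    counts = Counter(words)
    # Counter returns 0 for missing words without inserting them.
    fillers = {w: counts[w] for w in FILLERS if counts[w]}
    repeated = {w: counts[w] for w in watch if counts[w] >= min_repeats}
    return {"fillers": fillers, "repeated": repeated}
```

For a transcript like "Um, I am a really great, um, really great team player. Really!" with `watch=["great", "really"]`, this flags two "um"s and three uses of "really".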

## How I built it

To build InterPrep, I used the Stdlib platform to host the site and create the backend service. The IBM Watson API was used to convert spoken words into text. The MediaRecorder API was used to record the user's speech into an audio file, which is then transcribed by the Watson API.

The languages and tools used to build InterPrep are HTML5, CSS3, JavaScript, and Node.js.

## Challenges I ran into

"Speech-To-Text" APIs, like the one offered by IBM, tend to remove profanity and words that don't exist in the English language. Therefore, the word "um" wasn't detected by the API at first. However, my application needed to detect frequently used filler words such as "um", so that the user can be notified and improve their overall speech delivery. To detect this word, I had to create a custom language library within the Watson API platform and then connect it via Node.js on top of the Stdlib platform. This proved to be a very challenging task, as I faced many errors and had to seek help from mentors before I could figure it out. However, once that was fixed, the project went smoothly.

## Accomplishments that I'm proud of

I am very proud of the entire application itself. Before coming to QHacks, I only knew how to do front-end web development. I didn't have any knowledge of back-end development or of using APIs. Therefore, having created an application that contains all of the things stated above, I am really proud of the project as a whole. In terms of smaller individual accomplishments, I am very proud of creating my own custom language library and of successfully using multiple APIs in one application.

## What I learned

I learned a lot of things during this hackathon: back-end programming, how to use APIs, and how to develop a coherent web application from scratch.

## What's next for InterPrep

I would like to add more features to InterPrep and improve the UI/UX in the coming weeks after returning home. There is a lot that can be done with additional technologies such as machine learning and artificial intelligence, which I wish to incorporate into my project! | ## WebMS

**Inspiration**

The inspiration came from when one of our team members was in India and experienced large floods. The floods disabled cell towers and caused much of the population to lose data capabilities. However, people retained SMS and MMS capabilities, a viable asset that was not being used effectively. During these floods, some of his relatives would have been helped a lot by even indirect access to the internet, for example to receive alerts and similar information they could not otherwise access.

**What it does**

WebMS is a tool that allows the user to access information on the internet using SMS/MMS when Wi-Fi and cellular data are inaccessible. The user texts commands to the WebMS number to launch many different applets, such as searching the internet for websites, browsing web pages (via screenshots), checking the weather, and more. This works by processing the commands remotely (in the cloud) and returning the requested information via SMS. Screenshots of websites are sent via MMS, using the Twilio API.

**How it works**

WebMS uses the Twilio API to provide this service. The user can run commands via SMS to receive information from our servers in the cloud. Our servers access the information over their own internet connection and relay it to the user’s mobile phone via SMS. Many applets are available, such as web search, web browsing (via screenshots), important quick information such as weather and alerts, and even some fun games and jokes to lighten the mood.
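A minimal sketch of how incoming texts could be routed to applets, assuming the first word of the message names the applet. The command names and stub applets are hypothetical (WebMS itself was written in Node.js); in a Twilio webhook, the returned string would become the body of the reply message. Keeping applets in a registry dict is one simple way to get the expandability the team describes.

```python
from typing import Callable, Dict

# Hypothetical stub applets; real ones would call Bing, AccuWeather, etc.
def weather(arg: str) -> str:
    return f"Weather lookup for {arg or 'your area'} (stub)"

def search(arg: str) -> str:
    return f"Top results for '{arg}' (stub)" if arg else "Usage: search <terms>"

# Registry: adding an applet is one new entry.
APPLETS: Dict[str, Callable[[str], str]] = {"weather": weather, "search": search}

def handle_sms(body: str) -> str:
    """Route an incoming SMS like 'weather Toronto' to the matching applet."""
    command, _, arg = body.strip().partition(" ")
    applet = APPLETS.get(command.lower())
    if applet is None:
        return "Unknown command. Try: " + ", ".join(sorted(APPLETS))
    return applet(arg.strip())
```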

**How we built it**

WebMS was made possible by many modern technologies. The backbone of WebMS, the ability to send and receive SMS and MMS messages, is provided by Twilio. Bing search, through the Microsoft Azure API, is used for web indexing. Continuity between this applet and the Navigator (browser) was one of the main priorities behind WebMS. Many different APIs (such as AccuWeather) power the quick-info applets, which provide information faster than the Navigator. Another significant feature of WebMS is quick language translation powered by the Google Translate API. Some applets even serve a comedic purpose. As WebMS was designed to be expandable, a variety of applets can easily be added over time.

**Challenges We Ran into**

WebMS required a lot of work to reach its current state. Before we could focus on our quick applets, we had to develop the backbone of the app. We hit some road bumps because many sites contained characters that are illegal in XML, and these characters caused errors in our code. Our team had no prior experience dealing with issues like these, so we stayed up overnight learning regular expressions and how to use them before we could support all the applets we have now. Sending images via MMS required a lot of work as well: we had to try many different hosts to find one consistent and reliable enough for our needs. We wanted WebMS to be stable and not crash, since crashes could be a problem for the user. We also had to find a place to host our code and the headless browser used for scraping. Unfortunately, we ran into some issues setting up some of the APIs, as we did not have immediate access to a credit card. However, we were able to overcome most of these challenges before the end of the hackathon.
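The XML problem described above has a standard fix: strip characters outside the XML 1.0 `Char` production and escape markup characters before building the reply. A minimal Python sketch (the team's own fix was in Node.js and may differ):

```python
import re
from xml.sax.saxutils import escape

# Complement of the XML 1.0 Char production: #x9 | #xA | #xD | [#x20-#xD7FF]
# | [#xE000-#xFFFD] | [#x10000-#x10FFFF]. Anything matched here is illegal.
_ILLEGAL_XML = re.compile(
    r"[^\x09\x0A\x0D\x20-\uD7FF\uE000-\uFFFD\U00010000-\U0010FFFF]"
)

def xml_safe(text: str) -> str:
    """Drop characters XML 1.0 forbids, then escape &, < and >."""
    return escape(_ILLEGAL_XML.sub("", text))
```

`escape` does not touch quotes by default, which is fine for element text; attribute values would also need `"` escaped.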

**Accomplishments that we’re proud of**

We are proud of the technology we developed to solve a major issue. While some of us did not have much experience with Node.js, we were able to deliver a well-thought-out service. We even went further and delivered quick, fun applets.

**What we learned**

We learned about new and unfamiliar languages and their quirks, like how XML disallows certain characters, and the interesting bits of syntax that Node.js developers have to deal with. We also learned how to send SMS messages programmatically and transfer information effectively between two different devices in very different situations.

**Built With**

Node.js, Twilio, Puppeteer, Express, Google Translate API, Microsoft Azure Search, AccuWeather API, and (for the gaming section) a few random fun APIs we found on the internet

**Try it out**

WebMS will be available to early testers at the first expo, and then to the public soon after it becomes more stable and secure. | winning |

## Inspiration

Blockchain has created new opportunities for financial empowerment and decentralized finance (DeFi), but it also introduces several new considerations. Despite its potential for equitability, malicious actors can currently exploit it to launder money and fund criminal activities. There has been a recent wave of efforts to introduce regulations for crypto, but the ease of money laundering is a serious challenge for regulatory bodies like the Canada Revenue Agency. Recognizing these dangers, we aimed to tackle this issue through BlockXism!

## What it does

BlockXism is an attempt at placing more transparency in the blockchain ecosystem, through a simple verification system. It consists of (1) a self-authenticating service, (2) a ledger of verified users, and (3) rules for how verified and unverified users interact. Users can "verify" themselves by giving proof of identity to our self-authenticating service, which stores their encrypted identity on-chain. A ledger of verified users keeps track of which addresses have been verified, without giving away personal information. Finally, users will lose verification status if they make transactions with an unverified address, preventing suspicious funds from ever entering the verified economy. Importantly, verified users will remain anonymous as long as they are in good standing. Otherwise, such as if they transact with an unverified user, a regulatory body (like the CRA) will gain permission to view their identity (as determined by a smart contract).
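The verification rules can be modelled as a small in-memory ledger. This is a toy Python sketch of the rules as described, not the BlockXism Solidity contract; the class and method names are hypothetical, and identity storage and encryption are left out.

```python
from dataclasses import dataclass, field
from typing import Set

@dataclass
class VerificationLedger:
    """Toy in-memory model of the BlockXism verification rules (hypothetical names)."""
    verified: Set[str] = field(default_factory=set)
    # Addresses whose identity the regulator may now view.
    disclosed: Set[str] = field(default_factory=set)

    def verify(self, address: str) -> None:
        """Called once the self-authenticating service has accepted proof of ID."""
        self.verified.add(address)

    def record_transfer(self, sender: str, receiver: str) -> None:
        """A verified party transacting with an unverified one loses its status."""
        sender_ok = sender in self.verified
        receiver_ok = receiver in self.verified
        if sender_ok != receiver_ok:
            demoted = sender if sender_ok else receiver
            self.verified.discard(demoted)
            self.disclosed.add(demoted)
```

Transfers between two verified addresses (or two unverified ones) leave the ledger unchanged, so verified users stay anonymous while in good standing.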

Through this system, we create a verified market, where suspicious funds cannot enter the verified economy while flagging suspicious activity. With the addition of a legislation piece (e.g. requiring banks and stores to be verified and only transact with verified users), BlockXism creates a safer and more regulated crypto ecosystem, while maintaining benefits like blockchain’s decentralization, absence of a middleman, and anonymity.

## How we built it

BlockXism is built on a smart contract written in Solidity, which manages the ledger. For our self-authenticating service, we incorporated Circle wallets, which we plan to integrate into a self-sovereign identification system. We simulated the chain locally using Ganache and MetaMask. On the application side, we used a combination of React, Tailwind, and ethers.js for the frontend and Express and MongoDB for our backend.

## Challenges we ran into

One challenge we faced was working within the constraints of connecting the different tools to one another, which meant we often ran into issues with our fetch requests. For instance, we realized MetaMask can only be called from the frontend, so we had to find an alternative for the backend. Additionally, there were multiple versioning issues in our local test chain, leading to inconsistent behaviour and some very strange bugs.

## Accomplishments that we're proud of

Since most of our team had limited exposure to blockchain prior to this hackathon, we are proud to have quickly learned about the technologies used in a crypto ecosystem. We are also proud to have built a fully working full-stack web3 MVP with many of the features we originally planned to incorporate.

## What we learned

Firstly, from researching cryptocurrency transactions and fraud prevention on the blockchain, we learned about the advantages and challenges at the intersection of blockchain and finance. We also learned how to simulate how users interact with one another on the blockchain, such as through peer-to-peer verification and making secure transactions using Circle wallets. Furthermore, we learned how to write smart contracts and integrate them with a web application.

## What's next for BlockXism

We plan to use IPFS instead of using MongoDB to better maintain decentralization. For our self-sovereign identity service, we want to incorporate an API to recognize valid proof of ID, and potentially move the logic into another smart contract. Finally, we plan on having a chain scraper to automatically recognize unverified transactions and edit the ledger accordingly. | ## Inspiration

For companies that want to introduce automation into their pipeline, finding the right robot, paying for a specialized robotics system, and programming that robot are all very expensive. We looked for solutions in general-purpose robotics, imagining how such systems could be "trained" for certain tasks and "learn" to become specialized robots.

## What it does

The Simon System consists of Simon, our robot that learns to perform the human's input actions. There are two "play" fields, one for the human to perform actions and the other for Simon to reproduce actions.

Everything starts with a human action. The Simon System detects human motion and records what happens. Then those actions are interpreted into actions that Simon can take. Then Simon performs those actions in the second play field, making sure to plan efficient paths taking into consideration that it is a robot in the field.

## How we built it

### Hardware

The hardware was really built from the ground up. We CADded the entire model of the two play fields, as well as the arches that hold the smartphone cameras, here at PennApps. The assembly of the two play fields consists of 100 individual CAD models and took over three hours to fully assemble, making full use of lap joints and mechanical advantage to create a structurally sound system. The LEDs in the enclosure communicate with the offboard field controllers using Unix Domain Sockets that simulate a serial port, allowing color changes that tell the user the state of the fields.

Simon, the robot, was also constructed completely from scratch. At its core, Simon is an Arduino Nano. It uses a dual H-bridge motor driver to control its two powered wheels and an IMU for its feedback control system. It uses a MOSFET to control the onboard electromagnet for "grabbing" and "releasing" the cubes it manipulates. On top of that, the entire motion planning library for Simon was written from scratch. Simon uses a Bluetooth module to communicate offboard with the path planning server.

### Software

There are four major software systems in this project. The path planning system uses a modified BFS algorithm that accounts for path smoothing, with real-time updates from the low-level controls to calibrate the path plan throughout execution. The computer vision systems detect when updates are made to the human control field and acquire a normalized grid of the play field, using QR boundaries to create a virtual enclosure. The CV system also determines the orientation of Simon on the field as it travels around. Servers and clients are also instantiated on every part of the stack for low-latency communication.
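Grid BFS, the starting point for Simon's path planner, can be sketched as follows. The grid encoding ('#' for blocked cells) and the `waypoints` helper are assumptions: the helper keeps only turning points, which is one simple form of path smoothing for a two-wheeled robot (fewer rotations), not necessarily the team's modification.

```python
from collections import deque
from typing import Dict, List, Optional, Tuple

Cell = Tuple[int, int]

def bfs_path(grid: List[str], start: Cell, goal: Cell) -> Optional[List[Cell]]:
    """Shortest 4-connected path on a grid where '#' marks blocked cells."""
    rows, cols = len(grid), len(grid[0])
    prev: Dict[Cell, Optional[Cell]] = {start: None}  # also serves as the visited set
    queue = deque([start])
    while queue:
        cur = queue.popleft()
        if cur == goal:
            # Walk predecessors back to the start, then reverse.
            path: List[Cell] = []
            node: Optional[Cell] = cur
            while node is not None:
                path.append(node)
                node = prev[node]
            return path[::-1]
        r, c = cur
        for nxt in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            nr, nc = nxt
            if 0 <= nr < rows and 0 <= nc < cols and grid[nr][nc] != "#" and nxt not in prev:
                prev[nxt] = cur
                queue.append(nxt)
    return None  # goal unreachable

def waypoints(path: List[Cell]) -> List[Cell]:
    """Keep the endpoints and every cell where the direction of travel changes."""
    if len(path) < 3:
        return list(path)
    out = [path[0]]
    for a, b, c in zip(path, path[1:], path[2:]):
        if (b[0] - a[0], b[1] - a[1]) != (c[0] - b[0], c[1] - b[1]):
            out.append(b)
    out.append(path[-1])
    return out
```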

## Challenges we ran into

We lacked the acrylic needed to complete the system, so we had to refactor many of our hardware designs to accommodate. Robot rotation calibration and path planning were difficult due to very small inconsistencies in the low-level controllers. We also built many things from scratch instead of using public libraries, because those libraries weren't specialized enough.

Dealing with smartphone cameras for CV, and figuring out how to coordinate across phones with similar aspect ratios but different resolutions.

Some of the tools we used, such as Unix Domain Sockets, don't run on Windows, so we had to switch to using a Mac as our main system.

## Accomplishments that we're proud of

This thing works, somehow. We wrote modular code this hackathon and kept a solid, actively used GitHub repo.

## What we learned

We got better at CV; this was our first real CV hackathon.

## What's next for The Simon System

More robustness. | ## Inspiration -

I was inspired to make this app when I saw that my friends and family sometimes don't have enough internet bandwidth to spare for an application, and signal drops make calling someone a cumbersome task. Messaging was not included in this app, since I wanted it to be lightweight. This also achieves another goal: encouraging people to have one-on-one conversations, which have become rarer as people text more and more.

## What it does -

This app helps people call their friends, co-workers, and acquaintances without using much internet bandwidth, when signal drops are frequent and STD calls are not possible. The absence of a messaging feature saves more data and pushes people to talk instead of texting, which helps them be more socially active among their friends.

## How I built it -

This app combines multiple technologies and frameworks: it is built with Flutter, Android, and Firebase, and developed in Dart and Java. It was a fun task to make all the UI elements and then integrate them into the main frontend of the application. The backend uses Google Firebase, a Google service for hosting apps with many features that runs on Google Cloud Platform, for its database and authentication. Connecting the frontend and backend was not an easy task, especially for a single person, hence **the app is still in the development phase and not yet fully functional.**

## Challenges we ran into -

This whole idea was a pretty big challenge for me. This is my first project in Flutter, and I had never done something on this large a scale, so I was very unsure about completing the project and its elements. The majority of the time was dedicated to the frontend of the application, but the backend was a big problem, especially for a beginner like me, hence the incomplete status.

## Accomplishments that we're proud of -

Despite many of the challenges I ran into, I'm extremely proud of what I've been able to produce over the course of these 36 hours.

## What I learned -

I learned a lot about Flutter and Firebase, and frontend-backend services in general. I learned how to make many new UI widgets and features, use a lot of new plugins, and connect Android SDKs to the app for smooth functioning. I learned how Firebase authenticates users and their emails/passwords with its built-in authentication features, and how it stores data in containerized formats for use in projects, which will be very helpful in the future. One more important thing I learned was how to keep my code organized and better formatted for easier changes whenever required. And lastly, I learned a lot about Git and how useful it is for such projects.

## What's next for Berufung -

I hope this app will be fully-functioning, and we will add new features such as 2 Factor Authentication, Video calling, and group calling. | winning |

## Inspiration

While munching on 3 Snickers bars, 10 packs of Welch's Fruit Snacks, a few Red Bulls, and an apple, we were trying to think of a hack idea. It then hit us that we were eating so unhealthily! We realized that as college students, we are often less aware of our eating habits because we are focused on other priorities. Then came GrubSub, a way for college students to easily discover new foods that suit their eating habits.

## What it does

Imagine that you have recently been tracking your nutrient intake, but have run into the problem of eating the same foods over and over again. GrubSub allows a user to discover different foods that fulfill their nutritional requirements, substitute missing ingredients in recipes, or simply explore a wider range of eating options.

## How I built it

GrubSub utilizes a large data set of foods with information about their nutritional content such as proteins, carbohydrates, fats, vitamins, and minerals. GrubSub takes in a user-inputted query and finds the best matching entry in the data set. It searches through the list for the entry with the highest number of common words and the shortest length. It then compares this entry with the rest of the data set and outputs a list of foods that are the most similar in nutritional content. Specifically, we rank their similarities by calculating the sum of squared differences of each nutrient variable for each food and our query.
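The two steps described above (best name match, then ranking by sum of squared nutrient differences) can be sketched in plain Python. This is a hedged illustration rather than GrubSub's code: the nutrient dicts and function names are hypothetical, and missing nutrients are treated as zero.

```python
from typing import Dict, List, Tuple

Nutrients = Dict[str, float]  # nutrient name -> amount (units as in the dataset)

def best_match(query: str, names: List[str]) -> str:
    """Name sharing the most words with the query; ties go to the shorter name."""
    q = set(query.lower().split())

    def score(name: str) -> Tuple[int, int]:
        words = set(name.lower().replace(",", " ").split())
        return (len(q & words), -len(name))

    return max(names, key=score)

def most_similar(target: str, foods: Dict[str, Nutrients],
                 k: int = 5) -> List[Tuple[str, float]]:
    """Rank other foods by sum of squared nutrient differences (lower = closer)."""
    t = foods[target]
    ranked = []
    for name, n in foods.items():
        if name == target:
            continue
        keys = t.keys() | n.keys()  # compare over every nutrient either food lists
        ssd = sum((n.get(key, 0.0) - t.get(key, 0.0)) ** 2 for key in keys)
        ranked.append((name, ssd))
    ranked.sort(key=lambda pair: pair[1])
    return ranked[:k]
```

Because raw squared differences let large-valued nutrients (e.g., milligrams of sodium) dominate, scaling each nutrient first, for example by its standard deviation, is a common refinement; the writeup does not say whether GrubSub did this.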

## Challenges I ran into

We used the Django framework to build our web application, even though most of our team had no prior experience with it. We spent a lot of time figuring out basic functionality, such as sending and receiving information between the front and back ends. We also spent a good amount of time finding a good dataset and preprocessing it so that it would be easier to work with and understand.

## Accomplishments that I'm proud of

Finding, preprocessing, and reading in the data set into the Django framework was one of our first big accomplishments since it was the backbone of our project.

## What I learned

We became more familiar with the Django framework and python libraries for data processing.

## What's next for GrubSub

A better underlying data set will naturally make the app better, as there would be more selections and more information with which to make comparisons. We would also want to allow the user to select exactly which nutrients they want to find close substitutes for. We implemented this both in the front and back ends, but were unable to send the correct signals to finish this particular function. We would also like to incorporate recipes and ingredient swapping more explicitly into our app, perhaps by taking a food item and an ingredient, and being able to suggest an appropriate alternative. | ## Inspiration

As we brainstormed areas we could work in for our project, we began to look for inconveniences in each of our lives that we could tackle. One of our teammates unfortunately has a lot of dietary restrictions due to allergies, and as we watched him finding organizers to check ingredients and straining to read the microscopic text on processed foods' packaging, we realized that this was an everyday issue that we could help to resolve, and that the issue is not limited to just our teammate. Thus, we sought to find a way to make his and others' lives easier and simplify the way they check for allergens.

## What it does

Our project scans food items' ingredients lists and identifies allergens within the ingredients list to ensure that a given food item is safe for consumption, as well as putting the tool in a user-friendly web app.
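After the camera input is turned into text, the allergen check itself can be as simple as matching ingredient words against per-allergen trigger words. A minimal sketch; the trigger lists are hypothetical and far from complete, so a real tool would need a vetted allergen database.

```python
import re
from typing import Dict, List, Set

# Hypothetical allergen -> trigger-word map (illustrative, not exhaustive).
ALLERGENS: Dict[str, Set[str]] = {
    "milk": {"milk", "whey", "casein", "butter", "cream"},
    "peanut": {"peanut", "peanuts", "groundnut", "groundnuts"},
    "wheat": {"wheat", "semolina", "spelt"},
}

def find_allergens(ingredients: str, user_allergies: List[str]) -> Dict[str, List[str]]:
    """Map each of the user's allergens to the ingredient words that triggered it."""
    words = set(re.findall(r"[a-z]+", ingredients.lower()))
    hits: Dict[str, List[str]] = {}
    for allergen in user_allergies:
        # Allergens missing from the map fall back to matching their own name.
        triggers = ALLERGENS.get(allergen, {allergen})
        matched = sorted(words & triggers)
        if matched:
            hits[allergen] = matched
    return hits
```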

## How we built it

We divided responsibilities and made sure each of us was on the same page when completing our individual parts. Some of us worked on the backend, with initializing databases and creating the script to process camera inputs, and some of us worked on frontend development, striving to create an easy-to-navigate platform for people to use.

## Challenges we ran into

One major challenge we ran into was time management. As relatively new hackathon participants, we were a bit shocked by the pace of project development. Additionally, we ran into various incompatibilities between software packages, causing setbacks that ultimately led to most of the issues with the final product.

## Accomplishments that we're proud of

We are very proud of the fact that the tool is functional. Even though the product is certainly far from what we wanted to end up with, we are happy that we were able to at least approach a state of completion.

## What we learned

In the end, our project was a part of the grander learning experience each of us went through. The stress of completing all intended functionality and the difficulties of working under difficult, tiring conditions was a combination that challenged us all, and from those challenges we were able to learn strategies to mitigate such obstacles in the future.

## What's next for foodsense

We hope to finally complete the web app the way we originally intended. A big regret was that we were not able to execute our plan as originally meant, so we definitely plan to continue developing the website. | ## Inspiration

We were inspired by the Instagram app, which set out to connect people using photo media.

We believe that the next evolution of connectivity is augmented reality, which allows people to share and bring creations into the world around them. This revolutionary technology has immense potential to help restore the financial security of small businesses, which can no longer offer the same in-person shopping experiences they once did before the pandemic.

## What It Does

Metagram is a social network that aims to restore the connection between people and small businesses. Metagram allows users to scan creative works (food, models, furniture), which are then converted to models that can be experienced by others using AR technology.

## How we built it

We built our front-end UI using React.js and our backend with Express/Node.js, and used MongoDB to store user data. We used Echo3D to host our models and provide AR capabilities on mobile phones. To create personalized AR models, we hosted COLMAP and OpenCV scripts on Google Cloud to process images and turn them into 3D models ready for AR.
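The image-to-model step can be driven from a script that calls COLMAP's command-line interface. A minimal sketch, assuming the `colmap` binary (a CUDA-enabled build for dense reconstruction) is on the PATH of the Google Cloud instance; the folder layout and function names are hypothetical, and converting the output into a format Echo3D accepts is not shown.

```python
import shutil
import subprocess
from pathlib import Path
from typing import List

def colmap_command(image_dir: Path, workspace: Path) -> List[str]:
    """Build the COLMAP call that runs the full automatic reconstruction pipeline."""
    return [
        "colmap", "automatic_reconstructor",
        "--workspace_path", str(workspace),
        "--image_path", str(image_dir),
    ]

def reconstruct(image_dir: Path, workspace: Path) -> None:
    """Run COLMAP on one scan's photos; raises if COLMAP is missing or fails."""
    if shutil.which("colmap") is None:
        raise RuntimeError("colmap executable not found on PATH")
    workspace.mkdir(parents=True, exist_ok=True)
    # check=True turns a non-zero exit status into CalledProcessError.
    subprocess.run(colmap_command(image_dir, workspace), check=True)
```

Passing the command as a list (rather than a shell string) avoids quoting problems with user-uploaded folder names.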

## Challenges we ran into

One of the challenges we ran into was hosting software on Google Cloud, as it needed CUDA to run COLMAP. Since this was our first time using AR technology, we faced some hurdles getting to know Echo3D. However, the documentation was very well written, and the API integrated very nicely with our custom models and web app!

## Accomplishments that we're proud of

We are proud of being able to find a method in which we can host COLMAP on Google Cloud and also connect it to the rest of our application. The application is fully functional, and can be accessed by [clicking here](https://meta-match.herokuapp.com/).

## What We Learned

We learned a great deal about hosting COLMAP on Google Cloud. We also learned how to create AR experiences and how to use Echo3D, which we had never used before, and how to integrate it all into a functional social networking web app!

## Next Steps for Metagram

* [ ] Improving the web interface and overall user experience

* [ ] Scan and upload 3D models in a more efficient manner

## Research

Small businesses are the backbone of our economy. They create jobs, improve our communities, fuel innovation, and ultimately help grow our economy! For context, small businesses made up 98% of all Canadian businesses in 2020 and provided nearly 70% of all jobs in Canada [[1]](https://www150.statcan.gc.ca/n1/pub/45-28-0001/2021001/article/00034-eng.htm).

However, the COVID-19 pandemic has devastated small businesses across the country. The Canadian Federation of Independent Business estimates that one in six businesses in Canada will close their doors permanently before the pandemic is over. This would be an economic catastrophe for employers, workers, and Canadians everywhere.

Why is the pandemic affecting these businesses so severely? We live in the age of the internet after all, right? Many retailers believe customers shop similarly online as they do in-store, but the research says otherwise.

The data is clear. According to a 2019 survey of over 1000 respondents, consumers spend significantly more per visit in-store than online [[2]](https://www.forbes.com/sites/gregpetro/2019/03/29/consumers-are-spending-more-per-visit-in-store-than-online-what-does-this-man-for-retailers/?sh=624bafe27543). Furthermore, a 2020 survey of over 16,000 shoppers found that 82% of consumers are more inclined to purchase after seeing, holding, or demoing products in-store [[3]](https://www.businesswire.com/news/home/20200102005030/en/2020-Shopping-Outlook-82-Percent-of-Consumers-More-Inclined-to-Purchase-After-Seeing-Holding-or-Demoing-Products-In-Store).

It seems that our senses and emotions play an integral role in the shopping experience. This fact is what inspired us to create Metagram, an AR app to help restore small businesses.

## References

* [1] <https://www150.statcan.gc.ca/n1/pub/45-28-0001/2021001/article/00034-eng.htm>

* [2] <https://www.forbes.com/sites/gregpetro/2019/03/29/consumers-are-spending-more-per-visit-in-store-than-online-what-does-this-man-for-retailers/?sh=624bafe27543>

* [3] <https://www.businesswire.com/news/home/20200102005030/en/2020-Shopping-Outlook-82-Percent-of-Consumers-More-Inclined-to-Purchase-After-Seeing-Holding-or-Demoing-Products-In-Store>

## Contributors

Andrea Tongsak, Vivian Zhang, Alyssa Tan, and Mira Tellegen

## Categories

* **Route: Hack for Resilience**

* **Route: Best Education Hack**

## Inspiration

We were inspired to focus our hack on the rise of Instagram accounts exposing sexual assault stories from college campuses across the US, including the Case Western Reserve University account **@cwru.survivors**, and on the history of sexual assault on campuses nationwide. We wanted to create an iOS app that would help sexual assault survivors and students navigate the dangerous reality of college campuses. With our app, it will be easier for survivors to report instances of harassment, while we maintain the integrity of user data and ensure that it stays anonymous and randomized. Our app maps safe and dangerous areas on campus based on user data to help women, minorities, and sexual assault survivors feel protected.

### **"When I looked in the mirror the next day, I could hardly recognize myself. Physically, emotionally, and mentally."** -A submission on @cwru.survivors IG page

Even with the **#MeToo movement**, there's only so much that technology can do. However, we hope that by creating this app, we will help college students take accountability and create a campus culture that fosters learning and contributes toward social good.

### **"The friendly guy who helps you move and assists senior citizens in the pool is the same guy who assaulted me. One person can be capable of both. Society often fails to wrap its head around the fact that these truths often coexist, they are not mutually exclusive."** - Chanel Miller

## Brainstorming/Refining

* We started with the idea of mapping sexual assaults that happen on college campuses. Throughout the weekend, however, we brainstormed many directions to take the app in.

* We considered making the app a platform focused on telling the stories of sexual assault survivors through maps containing quotes, but decided to pivot because of security concerns about protecting survivors' identities, and because we wanted an app with everyday functionality.

* We were interested in building an emergency messaging app that would alert friends to dangerous situations on campus, but found that similar apps already existed, so we kept brainstorming toward something more original.

* We were inspired by the heat map functionality of Snap Map and decided to pursue the idea of a map showing where users had reported danger or sexual assault on campus. With this idea, the app could be interactive for the user, give sexual assault survivors a platform to share where they had been assaulted, and serve as a hub for women and minorities to check the safety of their surroundings. The app would adapt to each campus based on app users in the area protecting each other.

## What it does

### App Purpose

* Our app allows users to create a profile, then sign in to view a map of their college campus or area. The map displays a heat map of dangerous areas on campus, ranging from areas with many reports of assault or danger to areas where app users have felt safe.

* Users can anonymously submit a date, address, and story related to sexual assault or feeling unsafe, and the map is generated from these submissions.

* Therefore, users of the app can assess their safety based on other students' experiences, and understand how to protect themselves on campus.
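One way the heat map described above could be built is to snap each anonymous submission to a coarse grid cell, count reports per cell, and normalize the counts to weights between 0 and 1 that a heat-map layer can consume. The sketch below is in Python rather than the app's Swift for brevity; the `HeatGrid` class, the 0.002-degree cell size, and the normalization are illustrative assumptions, not Viva's actual implementation.

```python
import math
from collections import Counter


class HeatGrid:
    """Hypothetical aggregator: counts anonymous reports per coarse grid cell."""

    def __init__(self, cell_deg: float = 0.002) -> None:
        # Cell size in degrees (assumption). 0.002 deg of latitude is roughly 220 m;
        # the east-west width of a cell shrinks with cos(latitude).
        self.cell_deg = cell_deg
        self.counts: Counter = Counter()

    def cell_of(self, lat: float, lon: float) -> tuple:
        # Snap a coordinate to the index of its grid cell so exact addresses are never kept.
        return (math.floor(lat / self.cell_deg), math.floor(lon / self.cell_deg))

    def add(self, lat: float, lon: float) -> None:
        # Called once per new submission; the map can then be redrawn from weights().
        self.counts[self.cell_of(lat, lon)] += 1

    def weights(self) -> dict:
        # Normalize counts to [0, 1] so the cell with the most reports gets weight 1.0.
        if not self.counts:
            return {}
        peak = max(self.counts.values())
        return {cell: n / peak for cell, n in self.counts.items()}

    def center(self, cell: tuple) -> tuple:
        # Center of a cell: this is what gets plotted instead of the submitted point.
        i, j = cell
        return ((i + 0.5) * self.cell_deg, (j + 0.5) * self.cell_deg)


if __name__ == "__main__":
    # Made-up coordinates: two reports land in the same cell, one lands in another.
    grid = HeatGrid()
    grid.add(41.5045, -81.6087)
    grid.add(41.5046, -81.6088)
    grid.add(41.5110, -81.6010)
    for cell, weight in grid.weights().items():
        print(grid.center(cell), weight)
```

Calling `add()` for each new report and redrawing from `weights()` is one way to get a map that adjusts with every submission, and plotting each cell's `center()` rather than the raw point keeps individual addresses off the map.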

### Functions

* Account creation and sign-in using **Firebase**, allowing users to have accounts and profiles

* Home screen with heat map of dangerous locations in the area, using the **Mapbox SDK**

* Profile screen, listing contact information and displaying the user's past submissions of dangerous locations

* Submission screen, where users can enter an address, time, and story related to a dangerous area on campus

## How we built it

### Technologies Utilized

* **Mapbox SDK**

* **GitHub**

* **Xcode & Swift**

* **Firebase**

* **Adobe Illustrator**

* **Google Cloud**

* **Canva**

* **CocoaPods**

* **SurveyMonkey**

## Mentors & Help

* Ryan Matsumoto

* Rachel Lovell

## Challenges we ran into

**Mapbox SDK**

* Integrating an outside mapping service came with a variety of difficulties. We ran into problems learning the platform and troubleshooting errors with the Mapbox view. Mapbox is also built largely around navigation, while our goal was a data map with strong visual impact and easy readability, so we had to adapt the Mapbox SDK to handle many data inputs. This meant writing code so that the map would auto-adjust with each new submission of a dangerous location on campus.

**UI Privacy Concerns**

* The Mapbox SDK is designed to pin very specific locations. However, our app deals with data points marking locations of sexual assault or places where users felt unsafe. This raises the concern of protecting the privacy of the people who submit addresses and ensuring that users can't see the exact location submitted. So, we adjusted the code to limit how far a user can zoom in and to display the data as a heat map of general locations rather than as pins.
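To choose a zoom cap, it can help to work out how much ground the screen still covers at a given zoom level. The sketch below assumes Web Mercator tiles that are 512 px wide at zoom 0 (the convention used by Mapbox's vector maps) and an equatorial circumference C of 40,075,016.686 m. The visible width in metres at zoom z and latitude φ on a screen W pixels wide is then span = W · C · cos(φ) / (512 · 2^z), and solving span ≥ S for z gives the largest allowed zoom. The function names, the 390 px screen, and the 500 m threshold are hypothetical; the resulting number would still have to be passed to the SDK's own maximum-zoom setting.

```python
import math

# WGS84 equatorial circumference in metres.
EARTH_CIRCUMFERENCE_M = 40_075_016.686


def visible_span_m(zoom: float, lat_deg: float, screen_px: int, tile_px: int = 512) -> float:
    """Ground distance covered by screen_px pixels at this zoom and latitude (Web Mercator)."""
    metres_per_px = EARTH_CIRCUMFERENCE_M * math.cos(math.radians(lat_deg)) / (tile_px * 2 ** zoom)
    return screen_px * metres_per_px


def max_zoom_for_min_span(min_span_m: float, lat_deg: float, screen_px: int, tile_px: int = 512) -> float:
    """Largest zoom at which the screen still shows at least min_span_m of ground.

    Solves screen_px * C * cos(lat) / (tile_px * 2**z) >= min_span_m for z.
    """
    ratio = screen_px * EARTH_CIRCUMFERENCE_M * math.cos(math.radians(lat_deg)) / (tile_px * min_span_m)
    return math.log2(ratio)


if __name__ == "__main__":
    # Hypothetical numbers: a 390-px-wide phone screen at latitude 41.5 N that
    # should never show less than 500 m of ground from edge to edge.
    z = max_zoom_for_min_span(500, 41.5, 390)
    print(f"cap zoom at about {z:.1f}")
```

Because the span halves with each zoom level, doubling the minimum span lowers the cap by exactly one level, which makes it easy to tune the privacy threshold.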

**Coding for non-tech users**

* Our app, **Viva**, was designed to be used by college students on their nights out or at parties. The idea is for them to check the safety of their area while walking home or while out with friends. So, we had to appeal to an audience of young people using the app in their free time or on special occasions. The app would not appeal to them if it seemed techy or hard to use, so we worked to combine a lot of functionality with a user interface that was easy to use and appealing to young people. This included letting them make accounts, providing an easily readable map, creating a submission page, and incorporating design elements.

## Accomplishments that we're proud of

## What we learned

We learned so much about many different aspects of coding while hacking this app. First, most of the people in our group had never used **GitHub** before, so even just setting up GitHub Desktop, coordinating pushes, and managing permissions was a struggle. We feel we have mastered GitHub after this project, whereas before it was brand new to us. Working remotely, we also faced Xcode compatibility issues, to the point that one person in our group couldn't demo the app because of her Xcode version. So, we learned a lot about troubleshooting systems we weren't familiar with and finding support forums and creative solutions.

In terms of code, we had rarely worked in **Swift** and had never worked with the **Mapbox SDK**, so learning how to adapt to a new SDK and integrate it without fully understanding the errors that appeared was a huge learning experience. This involved working with `.netrc` files and permissions, and gave us insight into the coding aspect as well as the computer networking aspect of the project.

We also learned how to adapt to an audience, going through many drafts of the UI to hit on one that we thought would appeal to college students.

Last, we learned that what we heard at the opening ceremony about the importance of passion for the code is true. We have all personally experienced feeling unsafe on campus. We understand how difficult it can be for women and minorities to feel at ease on campus, given the culture of sexual predation against women and the administration's blind eye. We put those emotions into the app, and we found that our shared experience as a group made us feel really connected to the project. Because we invested so much, the other things we learned sank in deeply.

## What's next for Viva: an iOS app to map dangerous areas on college campuses

* A stretch goal or next step would be to use the **Adafruit Bluefruit** device to create wearable hardware that, when tapped, records danger in the app. This would allow users to report danger easily without opening the app, and could open up other safety features in the future.

* We conducted a survey of college students, and 95.65% of respondents thought our app would be an effective way to keep themselves safe on campus. Many also requested a way to connect with other survivors or other people who have felt unsafe on campus. One respondent suggested we add **"ways to stay calm and remind you that nothing's your fault"**. So, another next step would be to add forums and messaging for users, to advance our goal of connecting survivors through the platform.

## Inspiration

In the “new normal” that COVID-19 has caused us to adapt to, our group found that a common challenge we faced was deciding where it was safe to go to complete trivial daily tasks, such as grocery shopping or occasionally eating out. We were inspired to create a mobile app built on a database created entirely by its users: a program where anyone could rate how well these “hubs” were following COVID-19 safety protocols.

## What it does

Our app allows users to search for a “hub” using the Google Maps API and write a review by rating prompted questions on a scale of 1 to 5 regarding how well the location enforces public health guidelines. Future visitors can then see these reviews and add their own, contributing to a collective safety rating.
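A collective rating like this could be computed by averaging each prompted question across all reviews of a hub and then averaging those per-question means. The sketch below is in Python for brevity (SafeHubs itself was written in Java); the function name, the question keys, the equal weighting of questions, and skipping questions a reviewer left unanswered are all assumptions for illustration.

```python
from statistics import mean
from typing import Dict, List, Optional, Tuple


def hub_safety_rating(reviews: List[Dict[str, int]]) -> Tuple[Dict[str, float], Optional[float]]:
    """Aggregate 1-5 answers from many reviews of one hub (hypothetical helper).

    Returns the mean score per question and an overall score (the mean of the
    per-question means), or None overall if there are no answers yet.
    """
    per_question: Dict[str, List[int]] = {}
    for review in reviews:
        # A review only contains the questions the reviewer actually answered.
        for question, score in review.items():
            # Reject anything outside the 1-5 scale instead of silently skewing the average.
            if not 1 <= score <= 5:
                raise ValueError(f"score for {question!r} must be between 1 and 5, got {score}")
            per_question.setdefault(question, []).append(score)

    question_means = {q: float(mean(scores)) for q, scores in per_question.items()}
    overall = float(mean(list(question_means.values()))) if question_means else None
    return question_means, overall


if __name__ == "__main__":
    # Made-up reviews with hypothetical question keys.
    reviews = [
        {"masks": 5, "distancing": 4},
        {"masks": 3, "distancing": 2, "sanitizer": 4},
    ]
    means, overall = hub_safety_rating(reviews)
    print(means, overall)
```

Averaging the per-question means gives every question equal weight regardless of how many reviewers answered it; weighting by answer count would be a reasonable alternative if some questions are rarely answered.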

## How I built it

We collaborated using GitHub and Android Studio, and integrated both the Google Maps API and Firebase.

## Challenges I ran into

Our group unfortunately faced a number of unprecedented challenges, including losing a team member mid-hack due to an emergency, working across 3 different time zones, and additional technical difficulties. However, we pushed through and came up with a final product we are all very passionate about and proud of!

## Accomplishments that I'm proud of

We are proud of how well we collaborated through adversity, despite never having met in person. We were able to tackle a prevalent social issue and come up with a plausible solution that could help bring our communities together worldwide, similar to how our diverse team was brought together through this opportunity.

## What I learned

Our team brought a wide range of skill sets to the table, and we were able to learn a lot from each other because of our various experiences. From a technical perspective, we improved our Java and Android development fluency. From a team perspective, we improved our ability to compromise and adapt to unforeseen situations. For 3 of us, this was our first ever hackathon, and we feel very lucky to have found such kind and patient teammates that we could learn a lot from. The amount of knowledge they shared in the past 24 hours is insane.

## What's next for SafeHubs

Our next steps for SafeHubs include personalizing the user experience by creating profiles, and integrating the app into our community. We want SafeHubs to be a new way of staying connected (virtually) to look out for each other and keep our neighbours safe during the pandemic.

## Inspiration

We wanted to create an immersive virtual reality experience that connects people and innovates on classic party games and gatherings, bringing a new twist to socializing by leveraging cutting-edge technologies like deep learning and VR.

## What it does

StoryBoxVR is an intelligent party game driven by deep learning, in which players act out a spontaneous drama based on an AI-generated storyline in a VR environment, with constant disruptions from random GIFs and sentiment changes. Secondary users view the VR stream of one main player via an iPhone app and can interact with that player's environment, spawning monsters or changing the mood.

## How I built it

We generated dialogue using fairseq, detected keywords using the Microsoft Cognitive Toolkit, and used Unity for VR.

## Challenges I ran into

Live streaming from Unity

## Accomplishments that I'm proud of

The idea itself, its ability to be 'smart', and the AI-generated script

## What I learned

Connecting different modules is key to success and needs more time in the future.

## What's next for StoryBox

## Inspiration

Let's face it: Museums, parks, and exhibits need some work in this digital era. Why lean over to read a small plaque when you can get a summary and details by tagging exhibits with a portable device?

There is a solution for this of course: NFC tags are a fun modern technology, and they could be used to help people appreciate both modern and historic masterpieces. Also there's one on your chest right now!

## The Plan