anchor | positive | negative | anchor_status |
|---|---|---|---|

## Inspiration

Vision is perhaps our most important sense; we use our sight every waking moment to navigate the world safely, to make decisions, and to connect with others. As such, keeping our eyes healthy is extremely important to our quality of life. In spite of this, we often neglect to get our vision tested regularly, even as we subject our eyes to many varieties of strain in our computer-saturated lives. Because visiting the optometrist can be both time-consuming and difficult to schedule, we sought to create MySight – a simple and inexpensive way to test our vision anywhere, using only a smartphone and a Google Cardboard virtual reality (VR) headset. This app also has large potential impact in developing nations, where administering eye tests cheaply using portable, readily available equipment can change many lives for the better.

## What it does

MySight is a general vision testing application that runs on any modern smartphone in concert with a Google Cardboard VR headset. It allows you to perform a variety of clinical vision tests quickly and easily, including tests for color blindness, stereo vision, visual acuity, and irregular blindspots in the visual field. Beyond informing the user about the current state of their visual health, the results of these tests can be used to recommend that the patient follow up with an optometrist for further treatment. One salient example would be if the app detects one or more especially large blindspots in the patient’s visual field, which is indicative of conditions requiring medical attention, such as glaucoma or an ischemic stroke.

## How we built it

We built MySight using the Unity game engine and the Google Cardboard SDK. All scripts were written in C#. Our website (whatswrongwithmyeyes.org) was generated using Angular 2.

## Challenges we ran into

None of us on the team had ever used Unity before, and only two of us had even minimal exposure to the C# language in the past. As such, we needed to learn both Unity and C#.

## Accomplishments that we're proud of

We are very pleased to have produced a working version of MySight, which will run on any modern smartphone.

## What we learned

Beyond learning the basics of Unity and C#, we also learned a great deal more about how we see, and how our eyes can be tested.

## What's next for MySight

We envision MySight as a general platform for diagnosing our eyes’ health, and potentially for *improving* eye health in the future, as we plan to implement eye and vision training exercises (cf. Ultimeyes). | ## Inspiration

Retinal degeneration affects 1 in 3000 people, slowly robbing them of vision over the course of their mid-life. The need to adjust to life without vision, often after decades of relying on it for daily life, presents a unique challenge to individuals facing genetic disease or ocular injury. One of our teammates saw this firsthand in his family, which inspired our group to work on a modular, affordable solution. Current technologies that provide similar proximity awareness often cost many thousands of dollars and require replacing a specific item in the user's environment (shoes with active proximity sensing similar to our system often cost $3-4k for a single pair). Instead, our group has worked to create a versatile module which can be attached to any shoe, walker, or wheelchair, to provide situational awareness to the thousands of people adjusting to their loss of vision.

## What it does (higher-quality demo on Google Drive: <https://drive.google.com/file/d/1o2mxJXDgxnnhsT8eL4pCnbk_yFVVWiNM/view?usp=share_link>)

The module constantly pings its surroundings through a combination of IR and ultrasonic sensors. These are readily visible on the prototype, with the ultrasonic sensor facing forward and the IR sensor facing the outward flank. These readings are combined with measurements from an Inertial Measurement Unit (IMU) to tell when the user is nearing an obstacle. The combination of sensors allows detection of a wide gamut of materials, including those of room walls, furniture, and people. The device is powered by a 7.4 V LiPo battery, with a charging port exposed on the front of the module. The device has a three-hour battery life, but with more compact PCB-based electronics, it could easily be doubled. While the primary use case is envisioned to be clipped onto the top surface of a shoe, the device, roughly the size of a wallet, can be attached to a wide range of mobility devices.

The internal logic uses IMU data to determine when the shoe is on the bottom of a step 'cycle', and touching the ground. The Arduino Nano MCU polls the IMU's gyroscope to check that the shoe's angular speed is close to zero, and that the module is not accelerating significantly. After the MCU has established that the shoe is on the ground, it will then compare ultrasonic and IR proximity sensor readings to see if an obstacle is within a configurable range (in our case, 75cm front, 10cm side).

If the shoe detects an obstacle, it will activate a pager motor which vibrates the wearer's shoe (or other device). The pager motor will continue vibrating until the wearer takes a step which encounters no obstacles, thus acting as a toggle flip-flop.

An RGB LED is added for our debugging of the prototype:

RED - Shoe is moving - In the middle of a step

GREEN - Shoe is at bottom of step and sees an obstacle

BLUE - Shoe is at bottom of step and sees no obstacles
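The decision logic described above can be sketched in a few lines. This is a minimal Python illustration rather than the Arduino firmware itself: the 75 cm and 10 cm ranges come from the description, while the rest thresholds (`GYRO_REST_DPS`, `ACCEL_REST_G`) and the `Reading` and `ShoeModule` names are assumptions made for the example.

```python
from dataclasses import dataclass

# The 75 cm / 10 cm ranges come from the write-up; the motion limits
# below are placeholder assumptions, not the team's calibrated values.
FRONT_RANGE_CM = 75.0
SIDE_RANGE_CM = 10.0
GYRO_REST_DPS = 5.0   # assumed: max angular speed still counted as "at rest"
ACCEL_REST_G = 0.1    # assumed: max deviation from 1 g still counted as "at rest"


@dataclass
class Reading:
    gyro_dps: float   # magnitude of angular velocity, degrees per second
    accel_g: float    # magnitude of acceleration, in g
    front_cm: float   # ultrasonic distance, forward
    side_cm: float    # IR distance, outward flank


class ShoeModule:
    def __init__(self) -> None:
        self.motor_on = False

    def update(self, r: Reading) -> str:
        """Process one sensor sample and return the debug LED colour."""
        grounded = (r.gyro_dps < GYRO_REST_DPS
                    and abs(r.accel_g - 1.0) < ACCEL_REST_G)
        if not grounded:
            # Mid-step: the motor keeps whatever state the last step set.
            return "RED"
        obstacle = r.front_cm <= FRONT_RANGE_CM or r.side_cm <= SIDE_RANGE_CM
        self.motor_on = obstacle
        return "GREEN" if obstacle else "BLUE"
```

Because the motor state only changes when the shoe is on the ground, the vibration persists through the swing phase of a step and stops on the first grounded sample that sees no obstacle.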

While our group's concept is to package these electronics into a sleek, clip-on plastic case, for now the electronics have simply been folded into a wearable form factor for demonstration.

## How we built it

Our group used an Arduino Nano, batteries, voltage regulators, and proximity sensors from the venue, and supplied our own IMU, kapton tape, and zip ties. (yay zip ties!)

I2C code for basic communication and calibration was taken from a user's guide of the IMU sensor. Code used for logic, sensor polling, and all other functions of the shoe was custom.

All electronics were custom.

Testing was done on the circuits by first assembling the Arduino Microcontroller Unit (MCU) and sensors on a breadboard, powered by laptop. We used this setup to test our code and fine tune our sensors, so that the module would behave how we wanted. We tested and wrote the code for the ultrasonic sensor, the IR sensor, and the gyro separately, before integrating as a system.

Next, we assembled a second breadboard with LiPo cells and a 5 V regulator. The two 3.7 V cells are wired in series to produce a single 7.4 V 2S battery, which is then regulated back down to 5 V by an LM7805 regulator chip. One by one, we switched all the MCU/sensor components off of laptop power, and onto our power supply unit. Unfortunately, this took a few tries, and resulted in a lot of debugging.

After a circuit was finalized, we moved all of the breadboard circuitry to harnessing only, then folded the harnessing and PCB components into a wearable shape for the user.

## Challenges we ran into

The largest challenge we ran into was designing the power supply circuitry, as the combined load of the sensor DAQ package exceeds amp limits on the MCU. This took a few tries (and smoked components) to get right. The rest of the build went fairly smoothly, with the other main pain points being the calibration and stabilization of the IMU readings (this simply necessitated more trials) and the complex folding of the harnessing, which took many hours to arrange into its final shape.

## Accomplishments that we're proud of

We're proud to have found a good way to balance the sensitivity of the sensors. We're also proud of integrating all the parts together, supplying them with appropriate power, and assembling the final product as compactly as possible, all in one day.

## What we learned

Power was the largest challenge, both in terms of the electrical engineering and the product design: ensuring that enough power can be supplied for long enough, while not compromising on the wearability of the product, as it is designed to be a versatile solution for many different shoes. Currently the design has a 3 hour battery life, and is easily rechargeable through a pair of front ports. The challenges with the power system really taught us firsthand how picking the right power source for a product can determine its usability. We were also forced to consider hard questions about our product, such as whether there was really a need for such a solution, and what kind of form factor would be needed for a real impact to be made. Likely the biggest thing we learned from our hackathon project was the importance of the end user, and of the impact that engineering decisions have on the daily life of people who use your solution. For example, one of our primary goals was making our solution modular and affordable. Solutions in this space already exist, but their high price and single-purpose design mean that they are unable to have the impact they could. Our modular design hopes to allow for greater flexibility, acting as a more general tool for situational awareness.

## What's next for Smart Shoe Module

Our original idea was to use a combination of miniaturized LiDAR and ultrasound, so our next steps would likely involve the integration of these higher quality sensors, as well as a switch to custom PCBs, allowing for a much more compact sensing package, which could better fit into the sleek, usable clip on design our group envisions.

Additional features might include the use of different vibration modes to signal directional obstacles and paths, and indeed expanding our group's concept of modular assistive devices to other solution types.

We would also look forward to making a more professional demo video.

Current example clip of the prototype module taking measurements:(<https://youtube.com/shorts/ECUF5daD5pU?feature=share>) | ## Inspiration

Toronto, one of the busiest cities in the world, faces tremendous amounts of traffic from pedestrians, commuters, cyclists, and drivers. With this comes an increased risk of crashes and accidents, making safe travel an ever-pressing issue, not only for those in Toronto but for everyone living in metropolitan areas. This led us to explore possible solutions, as we believe that accidents should be tackled proactively, emphasizing prevention rather than better handling of the aftermath. Hence, we devised a solution that uses machine learning to predict which routes and streets are likely to be dangerous and advises you on which route to take wherever you want to go.

## What it does

Leveraging AI technology, RouteSafer provides safer alternatives to Google Map routes and aims to reduce automotive collisions in cities. Using machine learning algorithms such as k-nearest neighbours, RouteSafer analyzes over 20 years of collision data and uses over 11 parameters to make an intelligent estimate about the safety of a route, and ensure the user arrives safe.

## How I built it

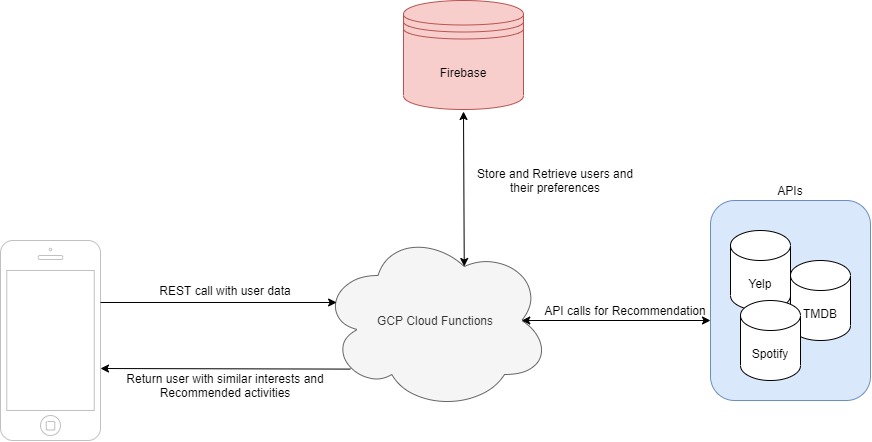

The path to implement RouteSafer starts with developing rough architecture that shows different modules of the project being independently built and at the same time being able to interact with each other in an efficient way. We divided the project into 3 different segments of UI, Backend and AI handled by Sherley, Shane & Hanz and Tanvir respectively.

The product leverages extensive API usage for different and diverse purposes including Google map API, AWS API and Kaggle API. Technologies involve React.js for front end, Flask for web services and Python for Machine Learning along with AWS to deploy it on the cloud.

The dataset ‘KSI’ was downloaded from Kaggle and has records from 2014 to 2018 on major accidents that took place in the city of Toronto. The dataset required a good amount of preprocessing because of its inconsistency; the techniques involved one-hot encoding (OneHotEncoder), dimensionality reduction, filling null or None values, and feature engineering. This made sure that the data was consistent for all later steps.

Machine learning gives the project a smart way to solve our problem: K-Means clustering let us extract from the dataset a risk level for driving on a particular street. The Google Maps API retrieves the candidate routes, and the model assigns each one a risk score, helping you pick a safer route.

## Challenges I ran into

One of the first challenges that we ran into as a team was learning how to properly integrate the Google Maps API polyline, and accurately converting the compressed string into numerical values expressing longitudes and latitudes. We finally solved this first challenge through lots of research, and even more Stack Overflow searches :)
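For readers facing the same problem, the conversion from a compressed polyline string to coordinates can be sketched as follows. This is a plain-Python illustration of Google's published encoded polyline format (5-bit chunks offset by 63, zigzag-encoded deltas, precision 1e-5), not the team's actual code; the function name `decode_polyline` is our own.

```python
from typing import List, Tuple


def decode_polyline(encoded: str) -> List[Tuple[float, float]]:
    """Decode an encoded polyline string into (latitude, longitude) pairs."""
    coords = []
    index = lat = lng = 0
    while index < len(encoded):
        deltas = []
        for _ in range(2):  # first the latitude delta, then the longitude delta
            result = shift = 0
            while True:
                chunk = ord(encoded[index]) - 63
                index += 1
                result |= (chunk & 0x1F) << shift  # low 5 bits carry data
                shift += 5
                if chunk < 0x20:  # continuation bit clear: last chunk
                    break
            # Undo zigzag encoding: the lowest bit marks a negative value.
            deltas.append(~(result >> 1) if result & 1 else result >> 1)
        lat += deltas[0]
        lng += deltas[1]
        coords.append((lat / 1e5, lng / 1e5))
    return coords
```

Each point is stored as an offset from the previous one, which is why the running `lat` and `lng` totals are kept across iterations.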

Furthermore, another challenge we ran into was the implementation/construction of our machine learning based REST API, as there were many different parts/models that we had to "glue" together, whether it was through http POST and GET requests, or some other form of communication protocol.

We faced many challenges throughout these two days, but we were able to push through thanks to the help of the mentors and lots of caffeine!

## Accomplishments that I'm proud of

The thing that we were most proud of was the fact that we reached all of our initial expectations, and beyond with regards to the product build. At the end of the two days we were left with a deployable product, that had gone through end to end testing and was ready for production. Given the limited time for development, we were very pleased with our performance and the resulting project we built. We were especially proud when we tested the service, and found that the results matched our intuition.

## What I learned

Working on RouteSafer has helped each one of us gain soft skills and technical skills. Some of us had no prior experience with the technologies on our stack, and working together helped us share knowledge, such as the use of React.js and Machine Learning. The guidance provided through HackThe6ix gave us all insights into the big and great world of cloud computing, with two of the world's largest cloud computing services onsite at the hackathon. Apart from technical skills, building our teamwork and communication skills was something we all benefitted from, and something we will definitely need in the future.

## What's next for RouteSafer

Moving forward we see RouteSafer expanding to other large cities like New York, Boston, and Vancouver. Car accidents are a pressing issue in all metropolitan areas, and we want RouteSafer there to prevent them. If one day RouteSafer could be fully integrated into Google Maps, and could be provided on any global route, our goal would be achieved.

In addition, we aim to expand our coverage by using Google Places data alongside collision data collected by various police forces. Google Places data will further enhance our model and allow us to better serve our customers.

Finally, we see RouteSafer partnering with a number of large insurance companies that would like to use the service to better protect their customers, provide lower premiums, and cut costs on claims. Partnering with a large insurance company would also give RouteSafer the ability to train and vastly improve its model.

To summarize, we want RouteSafer to grow and keep drivers safe across the Globe! | partial |

## Inspiration

While there are several applications that use OCR to read receipts, few take the leap towards informing consumers on their purchase decisions. We decided to capitalize on this gap: we currently provide information to customers about the healthiness of the food they purchase at grocery stores by analyzing receipts. In order to encourage healthy eating, we are also donating a portion of the total value of healthy food to a food-related non-profit charity in the United States or abroad.

## What it does

Our application uses Optical Character Recognition (OCR) to capture items and their respective prices on scanned receipts. We then parse through these words and numbers using an advanced Natural Language Processing (NLP) algorithm to match grocery items with their nutritional values from a database. By analyzing the amount of calories, fats, saturates, sugars, and sodium in each of these grocery items, we determine if the food is relatively healthy or unhealthy. Then, we calculate the amount of money spent on healthy and unhealthy foods, and donate a portion of the total healthy values to a food-related charity. In the future, we plan to run analytics on receipts from other industries, including retail, clothing, wellness, and education to provide additional information on personal spending habits.

## How We Built It

We use AWS Textract and Instabase API for OCR to analyze the words and prices in receipts. After parsing out the purchases and prices in Python, we used Levenshtein distance optimization for text classification to associate grocery purchases with nutritional information from an online database. Our algorithm utilizes Pandas to sort nutritional facts of food and determine if grocery items are healthy or unhealthy by calculating a “healthiness” factor based on calories, fats, saturates, sugars, and sodium. Ultimately, we output the amount of money spent in a given month on healthy and unhealthy food.
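To illustrate the matching step, here is a minimal sketch of Levenshtein-distance lookup in plain Python. It shows the general technique only; the catalog entries, the `best_match` helper, and the lowercase normalization are assumptions for the example, and the project's actual optimization may differ.

```python
from typing import Iterable


def levenshtein(a: str, b: str) -> int:
    """Edit distance (insertions, deletions, substitutions) between a and b."""
    if len(a) < len(b):
        a, b = b, a  # keep the shorter string in the inner loop
    previous = list(range(len(b) + 1))
    for i, ca in enumerate(a, start=1):
        current = [i]
        for j, cb in enumerate(b, start=1):
            cost = 0 if ca == cb else 1
            current.append(min(previous[j] + 1,          # deletion
                               current[j - 1] + 1,       # insertion
                               previous[j - 1] + cost))  # substitution
        previous = current
    return previous[-1]


def best_match(receipt_text: str, catalog: Iterable[str]) -> str:
    """Return the catalog name closest to an OCR'd receipt line."""
    needle = receipt_text.strip().lower()
    return min(catalog, key=lambda name: levenshtein(needle, name.lower()))


# Hypothetical usage with a made-up catalog:
# best_match("ORG BANANA", ["organic bananas", "orange juice", "whole milk"])
```

A nearest-string lookup like this tolerates the abbreviations and truncation common on receipts, at the cost of occasionally matching a similar but different product.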

## Challenges We Ran Into

Our product relies heavily on utilizing the capabilities of OCR APIs such as Instabase and AWS Textract to parse the receipts that we use as our dataset. While both of these APIs have been developed on finely-tuned algorithms, the accuracy of parsing from OCR was lower than desired due to abbreviations for items on receipts, brand names, and low resolution images. As a result, we were forced to dedicate a significant amount of time to augment abbreviations of words, and then match them to a large nutritional dataset.

## Accomplishments That We're Proud Of

Project Horus can use powerful APIs from either Instabase or AWS to solve the complex OCR problem of receipt parsing. By supporting both services, we were able to glean useful information and higher accuracy from each to further strengthen the project itself, which leaves us with a unique dual capability.

We are exceptionally satisfied with our solution’s food health classification. While our algorithm does not always identify the exact same food item on the receipt due to truncation and OCR inaccuracy, it still matches items to substitutes with similar nutritional information.

## What We Learned

Through this project, the team gained experience with developing on APIs from Amazon Web Services. We found Amazon Textract extremely powerful and integral to our work of reading receipts. We were also exposed to the power of natural language processing, and its applications in bringing ML solutions to everyday life. Finally, we learned about combining multiple algorithms in a sequential order to solve complex problems. This placed an emphasis on modularity, communication, and documentation.

## The Future Of Project Horus

We plan on using our application and algorithm to provide analytics on receipts from outside of the grocery industry, including the clothing, technology, wellness, and education industries, to improve spending decisions among average consumers. Additionally, this technology can be applied to manage the finances of startups and analyze the spending of small businesses in their early stages. Finally, we can improve the individual components of our model to increase accuracy, particularly text classification. | ## Inspiration

As college students more accustomed to having meals prepared by someone else than doing so ourselves, we are not the best at keeping track of ingredients’ expiration dates. As a consequence, money is wasted and food is thrown away, which undercuts the financial advantage of cooking for ourselves. With this problem in mind, we built an iOS app that easily allows anyone to record and track expiration dates for groceries.

## What it does

The app, iPerish, allows users to either take a photo of a receipt or load a pre-saved picture of the receipt from their photo library. The app uses Tesseract OCR to identify and parse through the text scanned from the receipt, generating an estimated expiration date for each food item listed. It then sorts the items by their expiration dates and displays the items with their corresponding expiration dates in a tabular view, such that the user can easily keep track of food that needs to be consumed soon. Once the user has consumed or disposed of the food, they could then remove the corresponding item from the list. Furthermore, as the expiration date for an item approaches, the text is highlighted in red.

## How we built it

We used Swift, Xcode, and the Tesseract OCR API. To generate expiration dates for grocery items, we made a local database with standard expiration dates for common grocery goods.
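The lookup-and-sort behaviour can be sketched as below. The app itself is written in Swift; this Python version only illustrates the idea, and the shelf-life values, the two-day warning window, and the function names are all made up for the example.

```python
from datetime import date, timedelta

# Hypothetical shelf lives in days; the app's real database is not shown
# in the write-up, so these numbers are illustrative only.
SHELF_LIFE_DAYS = {"milk": 7, "eggs": 21, "bread": 5, "spinach": 4}
WARNING_WINDOW = timedelta(days=2)  # assumed threshold for red highlighting


def estimate_expirations(items, purchased):
    """Return (item, estimated expiry date) pairs, soonest first."""
    rows = []
    for item in items:
        days = SHELF_LIFE_DAYS.get(item.lower())
        if days is None:
            continue  # not a known grocery item (e.g. a non-food line)
        rows.append((item, purchased + timedelta(days=days)))
    return sorted(rows, key=lambda row: row[1])


def is_expiring_soon(expires, today):
    """True when an item should be highlighted as close to expiry."""
    return expires - today <= WARNING_WINDOW
```

Sorting by the estimated date puts the most urgent items at the top of the table, and `is_expiring_soon` is the kind of check that would drive the red highlighting.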

## Challenges we ran into

We found out that one of our initial ideas had already been implemented by one of CalHacks' sponsors. After discovering this, we had to scrap the idea and restart our ideation stage.

Choosing the right API for OCR on an iOS app also required time. We tried many available APIs, including the Microsoft Cognitive Services and Google Computer Vision APIs, but they do not have iOS support (the former has a third-party SDK that unfortunately does not work, at least for OCR). We eventually decided to use Tesseract for our app.

Our team met at Cubstart; this hackathon *is* our first hackathon ever! So, while we had some challenges setting things up initially, this made the process all the more rewarding!

## Accomplishments that we're proud of

We successfully managed to learn the Tesseract OCR API and made a final, beautiful product - iPerish. Our app has a very intuitive, user-friendly UI and an elegant app icon and launch screen. We have a functional MVP, and we are proud that our idea has been successfully implemented. On top of that, we have a promising market in no small part due to the ubiquitous functionality of our app.

## What we learned

During the hackathon, we learned both hard and soft skills. We learned how to incorporate the Tesseract API and make an iOS mobile app. We also learned team building skills such as cooperating, communicating, and dividing labor to efficiently use each and every team member's assets and skill sets.

## What's next for iPerish

Machine learning can optimize iPerish greatly. For instance, it can be used to expand our current database of common expiration dates by extrapolating expiration dates for similar products (e.g. milk-based items). Machine learning can also serve to increase the accuracy of the estimates by learning the nuances in shelf life of similarly-worded products. Additionally, ML can help users identify their most frequently bought products using data from scanned receipts. The app could recommend future grocery items to users, streamlining their grocery list planning experience.

Aside from machine learning, another useful update would be a notification feature that alerts users about items that will expire soon, so that they can consume the items in question before the expiration date. | ## Inspiration

* None of my friends wanted to do an iOS application with me, so I built an application to find friends to hack with

* I would like to reuse the swiping functionality in another personal project

## What it does

* Create an account

* Make a profile of skills, interests and languages

* Find matches to hack with based on profiles

* Check out my Video!

## How we built it

* Built using Swift in Xcode

* Used Parse and Heroku for backend

## Challenges we ran into

* I got stuck on so many things, but luckily not for long because of..

* Stack Overflow and YouTube and all my hacking friends that lent me a hand!

## Accomplishments that we're proud of

* Able to finish a project in 36 hours

* Trying some of the

* No dying of caffeine overdose

## What we learned

* I had never made an iOS app before

* I had never used Heroku before

## What's next for HackMates

* Add chat capabilities

* Add UI

* Better matching algorithm | winning |

## Inspiration

The California wildfires have proven how deadly fires can be; the mere smoke from fireworks can set ablaze hundreds of acres. What starts as a few sparks can easily become the ignition for a fire capable of destroying homes and habitats. California is just one example; fires can be just as dangerous in other parts of the world, even if not as often.

Approximately 300,000 people were affected by fires and 14 million people were affected by floods last year in the US alone. These numbers will continue to rise, due to issues such as climate change.

Preventative equipment and forecasting are only half of the solution; the other half is education. People should be able to navigate any situation they may encounter. However, there are inherent shortcomings in the traditional teaching approach, and our game, S.O.S., looks to bridge that gap by mixing fun and education.

## What it does

S.O.S. is a first-person story mode game that allows the player to choose between two scenarios: a home fire, or a flooded car. Players will be presented with multiple options designed to either help get the player out of the situation unscathed or impede their escape. For example, players may choose between breaking open car windows in a flood or waiting inside for help, based on their experience and knowledge.

Through trial and error and "bulletin boards" of info gathered from national institutions, players will be able to learn about fire and flood safety. We hope to make learning safety rules fun and engaging, straying from conventional teaching methods to create an overall pleasant experience and ultimately, save lives.

## How we built it

The game was built using C#, Unity, and Blender. Some open-source models were downloaded and, if needed, textured in Blender. These models were then imported into Unity, where the scenes were laid out using ProBuilder and ProGrids. Afterward, C# code was written using the Visual Studio IDE that ships with Unity.

## Challenges we ran into

Some challenges we ran into include learning how to use Unity and code in C# as well as texture models in Blender and Unity itself. We ran into problems such as models not having the right textures or the wrong UV maps, so one of our biggest challenges was troubleshooting all of these problems. Furthermore, the C# code proved to be a challenge, especially with buttons and the physics component of Unity. Time was the biggest challenge of all, forcing us to cut down on our initial idea.

## Accomplishments that we're proud of

There are many accomplishments we as a team are proud of in this hackathon. Overall, our group has become much more adept with 3D software and coding.

## What we learned

We expanded our knowledge of making games in Unity, coding in C#, and modeling in Blender.

## What's next for SOS; Saving Our Souls

Next, we plan to improve the appearance of our game. The maps, lighting, and animation could use some work. Furthermore, more scenarios can be added, such as a Covid-19 scenario which we had initially planned. | As a response to the ongoing wildfires devastating vast areas of Australia, our team developed a web tool which provides wildfire data visualization, prediction, and logistics handling. We had two target audiences in mind: the general public and firefighters. The homepage of Phoenix is publicly accessible and anyone can learn information about the wildfires occurring globally, along with statistics regarding weather conditions, smoke levels, and safety warnings. We have a paid membership tier for firefighting organizations, where they have access to more in-depth information, such as wildfire spread prediction.

We deployed our web app using Microsoft Azure, and used Standard Library to incorporate Airtable, which enabled us to centralize the data we pulled from various sources. We also used it to create a notification system, where we send users a text whenever the air quality warrants action such as staying indoors or wearing a P2 mask.

We have taken many approaches to improving our platform’s scalability, as we anticipate spikes of traffic during wildfire events. Our code's scalability features include reusing connections to external resources whenever possible, using asynchronous programming, and processing API calls in batches. We used Azure Functions in order to achieve this.
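The three scalability techniques mentioned above can be illustrated with a small `asyncio` sketch. This is not the Azure Functions code; `fetch_reading` is a stand-in for a real API call, and the shared `client` object is a hypothetical placeholder for a reusable connection.

```python
import asyncio


async def fetch_reading(client, station_id):
    """Stand-in for one API call; a real version would send it over `client`."""
    await asyncio.sleep(0)  # yield control, as a network request would
    return {"station": station_id, "client": id(client)}


async def fetch_in_batches(station_ids, batch_size=3):
    """Fetch all stations, running each batch of calls concurrently."""
    client = object()  # hypothetical shared connection, created once and reused
    results = []
    for start in range(0, len(station_ids), batch_size):
        batch = station_ids[start:start + batch_size]
        # gather() runs the batch concurrently and preserves input order.
        results.extend(await asyncio.gather(
            *(fetch_reading(client, s) for s in batch)))
    return results
```

Batching caps the number of requests in flight at `batch_size`, which helps avoid overwhelming an upstream API during a traffic spike while still overlapping the waiting time within each batch.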

Azure Notebook and Cognitive Services were used to build various machine learning models using the information we collected from the NASA, EarthData, and VIIRS APIs. The neural network had a reasonable accuracy of 0.74, but did not generalize well to niche climates such as Siberia.

Our web-app was designed using React, Python, and d3js. We kept accessibility in mind by using a high-contrast navy-blue and white colour scheme paired with clearly legible, sans-serif fonts. Future work includes incorporating a text-to-speech feature to increase accessibility, and a color-blind mode. As this was a 24-hour hackathon, we ran into a time challenge and were unable to include this feature, however, we would hope to implement this in further stages of Phoenix. | ## Inspiration

Our inspiration comes from telemarketing surveys. We wanted to create a sort of "prank call" for people, especially our friends, in which a super-realistic voice presents a survey to them. In the end, we decided to program a chatbot that conducts a survey via phone and asks people how they feel about AI.

## What it does

Our project is a chatbot that conducts a survey of the population of London, Ontario about their thoughts and beliefs regarding Artificial Intelligence. The chatbot presents a series of multiple-choice and open-ended questions about the perception and knowledge of AI. The answers are recorded and analyzed before being sent to our website, where the data is presented. The purpose is to score how well our target population knows AI and how likely they would be to survive an AI apocalypse.

## How we built it

We built it using Dasha AI.

## Challenges we ran into

We ran into two challenges. First, the application (AI) hangs up when there is a longer delay between the question and the user's response. Second, during the first test of our application, the AI skipped the last questions, exited automatically, and hung up.

## Accomplishments that we're proud of

This is the first hackathon that most of our members have participated in. Therefore, being able to challenge ourselves and build a complex project in a span of 36 hours is the greatest achievement that we have accomplished.

## What we learned

* The basics of Dasha AI and how to use it to develop software.

* Fostered our skills in web design.

## What's next for Boom or Doom : The Future of AI

**Target a larger population**

## If you want to try it out for yourself:

Clone the GitHub repo and download Node.js and Dasha!

<https://dasha.ai/en-us>

More instructions on setting up Dasha available here. | winning |

## Inspiration

Imagine this: you are at an event with your best friends and want some great jams to play. However, you have no playlist on hand that represents this energy-filled moment. After several years of struggling to find the perfect playlist for various occasions, we finally developed a solution to meet all needs.

## What it does

Mixr solves this problem by generating custom playlists based on a short questionnaire which inquires about your current mood and environment. Using this information in combination with your Spotify listening habits, Mixr synthesizes a playlist that is perfect for the moment.

## How we built it

We utilized HTML, CSS, and JavaScript, in combination with an Express server and the Spotify API. | ## Inspiration

Starting from our initial interest in web-app development and music, we wanted to make something useful and applicable to real life, such as playing music with your friends. So we came up with the idea of a web app that serves as a virtual DJ: it learns the party's favourite songs and generates an endless stream of similar songs that the group can vote on to decide what plays next.

## How we built it

We used HTML and CSS for web design, Firebase for the database, and JavaScript with Knockout.js for data binding, as well as the Spotify API.

## Challenges we ran into

Learning how to handle real-time responses, and finding out how to use a function that changes data in Firebase so that the web view updates automatically as well. | ## Inspiration

Fall lab and design bay cleanout leads to some pretty interesting things being put out at the free tables. In this case, we were drawn in by a motorized Audi Spyder car. And then, we saw the Neurosity Crown headsets, and an idea was born. A single late night call among team members, excited about the possibility of using a kiddy car for something bigger was all it took. Why can't we learn about cool tech and have fun while we're at it?

Spyder is a way we can control cars with our minds. Use cases include remote rescue, accessibility for non-able-bodied individuals, warehouse work, and being extremely cool.

## What it does

Spyder uses the Neurosity Crown to capture an individual's brainwaves, train an AI model to detect and identify certain brainwave patterns, and turn them into output that humans can recognize. It's a dry brain-computer interface (BCI), which means electrodes are placed against the scalp to read the brain's electrical activity. Taking advantage of this non-invasive method of reading electrical impulses allows for greater accessibility to neural technology.

Collecting these impulses, we are then able to forward these commands to our Viam interface. Viam is a software platform that allows you to easily put together smart machines and robotic projects. It completely changed the way we coded this hackathon. We used it to integrate every single piece of hardware on the car. More about this below! :)

## How we built it

### Mechanical

The manual steering had to be converted to automatic. We did this in SolidWorks by creating a custom 3D printed rack and pinion steering mechanism with a motor mount that was mounted to the existing steering bracket. Custom gear sizing was used for the rack and pinion due to load-bearing constraints. This allows us to command it with a DC motor via Viam and turn the wheel of the car, while maintaining the aesthetics of the steering wheel.

### Hardware

A 12V battery is connected to a custom soldered power distribution board. This powers the car, the boards, and the steering motor. The DC motors are connected to a Cytron motor controller that supplies 10A to both the drive and steering motors via pulse-width modulation (PWM).

A custom LED controller and buck converter PCB stepped down the voltage from 12V to 5V for the LED under glow lights and the Raspberry Pi 4. The Raspberry Pi 4 uses the Viam SDK (which controls all peripherals) and connects to the Neurosity Crown for vision software controlling for the motors. All the wiring is custom soldered, and many parts are custom to fit our needs.

### Software

Viam was an integral part of our software development and hardware bringup. It significantly reduced the amount of code, testing, and general pain we'd normally go through creating smart machine or robotics projects. Viam was instrumental in debugging and testing to see if our system was even viable and to quickly check for bugs. The ability to test features without writing drivers or custom code saved us a lot of time. An exciting feature was how we could take code from Viam and merge it with a Go backend, which is normally very difficult to do. Being able to integrate with Go was very cool, since we would usually have to use Python (Flask + SDK). With Go, we get extra backend benefits without the headache of integration!

Additional software that we used included Python for the keyboard control client, testing, and validation of mechanical and electrical hardware. We also used JavaScript and Node to access the Neurosity Crown, Neurosity SDK, and Kinesis API to grab trained AI signals from the console. We then used websockets to port them over to the Raspberry Pi to be used in driving the car.

## Challenges we ran into

Using the Neurosity Crown was the most challenging. Training the AI model to recognize a user's brainwaves and associate them with actions didn't always work. In addition, grabbing this data for more than one action per session was not possible which made controlling the car difficult as we couldn't fully realise our dream.

Additionally, it only caught fire once - which we consider to be a personal best. If anything, we created the world's fastest smoke machine.

## Accomplishments that we're proud of

We are proud of being able to complete a full mechatronics system within our 32 hours. We iterated through the engineering design process several times, pivoting multiple times to best suit our hardware availabilities and quickly making decisions to make sure we'd finish everything on time. It's a technically challenging project - diving into learning about neurotechnology and combining it with a new platform - Viam, to create something fun and useful.

## What we learned

Cars are really cool! Turns out we can do more than we thought with a simple kid car.

Viam is really cool! We learned through their workshop that we can easily attach peripherals to boards, use and train computer vision models, and even use SLAM! We spend so much time in class writing drivers, interfaces, and code for peripherals in robotics projects, but Viam has it covered. We were really excited to have had the chance to try it out!

Neurotech is really cool! Being able to try out technology that normally isn’t available or difficult to acquire and learn something completely new was a great experience.

## What's next for Spyder

* Backflipping car + wheelies

* Fully integrating the Viam CV for human safety concerning reaction time

* Integrating Adhawk glasses and other sensors to help determine user focus and control | losing |

## Inspiration

More creators are coming online to create entertaining content for fans across the globe. On platforms like Twitch and YouTube, creators have amassed billions of dollars in revenue thanks to loyal fans who return to be part of the experiences they create.

Most of these experiences feel transactional, however: Twitch creators mostly generate revenue from donations, subscriptions, and currency like "bits," where Twitch often takes a hefty 50% of the revenue from the transaction.

Creators need something new in their toolkit. Fans want to feel like they're part of something.

## Purpose

Moments enables creators to instantly turn on livestreams that can be captured as NFTs for live fans at any moment, powered by livepeer's decentralized video infrastructure network.

>

> "That's a moment."

>

>

>

During a stream, there often comes a time when fans want to save a "clip" and share it on social media for others to see. When such a moment happens, the creator can press a button and all fans will receive a non-fungible token in their wallet as proof that they were there for it, stamped with their viewer number during the stream.

Fans can rewatch video clips of their saved moments in their Inventory page.

## Description

Moments is a decentralized streaming service that allows streamers to save and share their greatest moments with their fans as NFTs. Using Livepeer's decentralized streaming platform, anyone can become a creator. After fans connect their wallet to watch streams, creators can mass send their viewers tokens of appreciation in the form of NFTs (a short highlight clip from the stream, a unique badge etc.) Viewers can then build their collection of NFTs through their inventory. Many streamers and content creators have short viral moments that get shared amongst their fanbase. With Moments, a bond is formed with the issuance of exclusive NFTs to the viewers that supported creators at their milestones. An integrated chat offers many emotes for viewers to interact with as well. | ## Inspiration

Paying for knowledge has become more widely accepted, and people are increasingly willing to pay for truly insightful, cutting-edge, and well-structured knowledge or curricula. However, current centralized video content platforms (like YouTube, Udemy, etc.) take too much of the profit from content producers (research has shown that content creators usually receive only 15% of the value their content creates), and the value generated by the videos is not distributed in a timely manner. To tackle this unfair value distribution, we built EDU.IO, a decentralized platform where video content is backed as a digital asset by an NFT (copyright protection!) and fractionalized into tokens. It creates direct connections between content creators and viewers/fans (no more middlemen!), maximizing the value of the content creators make.

## What it does

EDU.IO is a decentralized educational video streaming media platform & fractionalized NFT exchange that empowers creator economy and redefines knowledge value distribution via smart contracts.

* As an educational hub, EDU.IO is a decentralized platform of high-quality educational videos on disruptive innovations and hot topics like metaverse, 5G, IoT, etc.

* As a booster of the creator economy, once a creator uploads a video (or course series), it is minted as an NFT (with copyright protection) and fractionalized into multiple tokens. Our platform conducts a mini-IPO for each piece of content produced, in which users bid for fractionalized NFTs. The value of each video token is determined by the number of views over a certain time interval. Token owners (who can be creators, viewers, fans, or investors) can advertise the content they own to increase its value, and trade these tokens to earn money or make other investments (more liquidity!!).

* By the end of the week, the value generated by each video NFT will be distributed via smart contracts to the copyright / fractionalized NFT owners of each video.
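
The weekly value distribution described above can be illustrated with a small off-chain sketch. This is not the team's smart contract (the write-up lists Solidity contract development as future work); it is a plain Python illustration of a pro-rata split, and the function name `distribute`, the cents-based amounts, and the rule for assigning leftover cents are all assumptions made for the example.

```python
from typing import Dict


def distribute(revenue_cents: int, holdings: Dict[str, int]) -> Dict[str, int]:
    """Split revenue among token owners in proportion to tokens held.

    Hypothetical helper: integer cents are used so no value is lost to
    floating-point rounding, which mirrors how on-chain amounts are handled.
    """
    if revenue_cents < 0 or any(tokens < 0 for tokens in holdings.values()):
        raise ValueError("revenue and holdings must be non-negative")
    total_tokens = sum(holdings.values())
    if total_tokens == 0:
        raise ValueError("no tokens outstanding")

    # Floor division gives each owner their guaranteed share.
    payouts = {
        owner: revenue_cents * tokens // total_tokens
        for owner, tokens in holdings.items()
    }

    # Flooring can leave a few cents undistributed; hand them out one by one
    # to the largest holders (ties broken by name so the result is stable).
    remainder = revenue_cents - sum(payouts.values())
    ranked = sorted(holdings, key=lambda owner: (-holdings[owner], owner))
    for owner in ranked[:remainder]:
        payouts[owner] += 1
    return payouts


if __name__ == "__main__":
    print(distribute(1000, {"creator": 60, "fan": 30, "investor": 10}))
```

The payouts always sum to the full revenue, which is the property a real value-distribution contract would also need to guarantee.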

Overall we’re hoping to build an ecosystem with more engagement between viewers and content creators, and our three main target users are:

* 1. Instructors or Content creators: where the video contents can get copyright protection via NFT, and they can get fairer value distribution and more liquidity compared to using large centralized platforms

* 2. Fans or Content viewers: where they can directly interact with and support content creators, and the fee will be sent directly to the copyright owners via smart contract.

* 3. Investors: a lower barrier to investment, where everyone can own a fragment of a piece of content. People can also bid or trade in a secondary market.

## How we built it

* Frontend in HTML, CSS, SCSS, Less, React.JS

* Backend in Express.JS, Node.JS

* ELUV.IO for minting video NFTs (eth-based) and for playing quick streaming videos with high quality & low latency

* CockroachDB (a distributed SQL DB) for storing structured user information (name, email, account, password, transactions, balance, etc.)

* IPFS & Filecoin (distributed protocol & data storage) for storing video/course previews (decentralization & anti-censorship)

## Challenges we ran into

* Transition from design to code

* CockroachDB has an extensive & complicated setup, which requires other extensions and stacks (like Docker) during the set up phase which caused a lot of problems locally on different computers.

* IPFS initially had set up errors as we had no access to the given ports → we modified the original access files to access different ports to get access.

* Error in Eluv.io’s documentation, but the Eluv.io mentor was very supportive :)

* Merging process was difficult when we attempted to put all the features (Frontend, IPFS+Filecoin, CockroachDB, Eluv.io) into one ultimate full-stack project as we worked separately and locally

* Sometimes we found the documentation hard to read and understand. For many of the problems we encountered, the docs or forum said DO this rather than RUN this, so the guidance was not specific enough and we had to spend a lot of extra time researching and debugging. Also, since few people are familiar with the API, it was hard to find the exact issues we faced. Of course, the staff were very helpful and solved a lot of problems for us :)

## Accomplishments that we're proud of

* Our Idea! Creative, unique, revolutionary. DeFi + Education + Creator Economy

* Learned new technologies like IPFS, Filecoin, Eluv.io, CockroachDB in one day

* Successful integration of each member's work into one big full-stack project

## What we learned

* More in depth knowledge of Cryptocurrency, IPFS, NFT

* Different APIs and their functionalities (strengths and weaknesses)

* How to combine different subparts with different functionalities into a single application in a project

* Learned how to communicate efficiently with team members whenever there is a misunderstanding or difference in opinion

* Make sure we know what is going on within the project through active communication, so that when we detect a potential problem we solve it right away instead of waiting until it produces more problems

* Different hashing methods that are currently popular in the “crypto world”, such as multihash with CID, IPFS’s own hashing system, etc., all of which go beyond our prior knowledge, which was limited to SHA-256

* The awesomeness of NFT fragmentation, we believe it has great potential in the future

* Learned the concept of a decentralized database which is directly opposite the current data bank structure that most of the world is using

## What's next for EDU.IO

* Implement NFT Fragmentation (fractionalized tokens)

* Improve the trading and secondary market by adding more features, such as more graphs

* Smart contract development in solidity for value distribution based on the fractionalized tokens people owned

* Formulation of more complete rules and regulations - The current trading prices of fractionalized tokens are based on auction transactions, and eventually we hope it can become a free secondary market (just as the stock market) | ## Inspiration

We wanted to build an app that could replicate the background blur in professional pictures

## What it does

Imports a photo from the library or camera and lets you select the zone you want to keep in focus

## How we built it

We used Xcode and did most of the code in Swift

## Challenges we ran into

Everything

## Accomplishments that we're proud of

Finishing

## What we learned

How to use pictures inside an iOS app

## What's next for BlurryApp

Reaching a billion users. | winning |

## Inspiration

Rewind back to the beginning of this hackathon. Our group was stuck brainstorming a wide variety of ideas, and like any young group, we were spending so much time talking and weren't able to keep track of ideas. But what if all we had to do was speak and have our ideas written down? That's when we thought of Jabber AI. Taking inspiration from sticky note applications and AI that can have conversations, we built a project that listens to your ideas, summarizes them, and takes note of them to keep you organized. All you need to do is hit start and talk!

## What it does

Meet your personal assistant Mindy, who is integrated into Jabber AI to help you brainstorm ideas about your next revolutionary project. Mindy helps you talk through your ideas, generate new possibilities, and encourage you when you are stuck. As you speak, GPT-4o processes your spoken ideas into digestible note cards, and displays them in a bento-box layout in your workspace. The workspace is interactive: you can delete note cards and start or stop conversations with Mindy while keeping your workspace untouched. Using Hume's Speech Prosody model, Jabber AI analyzes expressions in the user's voice and emphasizes notes on the screen that the user is excited about.

## How we built it

### Frontend:

The frontend receives the summarized notes and displays each in its own note card, with different background colors to emphasize unique qualities. We also implemented features such as deleting sticky notes, so users keep only the notes that are important to them. On the right sidebar, the message history lets the user look back on responses they might have missed or keep track of where their thought process went. We also made the note cards fit together with minimal gaps between them, giving the user a larger view of the note cards.

### Backend:

We designed our voice assistant Mindy using Hume AI's EVI configuration and prompt engineering. We gave Mindy personality traits like patience and kindness, as well as specific goals like helping the user with project inspiration. We passed each user message from the voice conversation to the GPT-4o model through the OpenAI API, with specific prompt instructions to process the voice transcript and organize it into detailed, hierarchical notes. These textual notes were then fed to the front end to be placed in note cards. We also used Hume's Speech Prosody model to analyze expressions for the emotions interest, excitement, and surprise; when a level was high (>0.7), we enabled a special yellow note card for those ideas and added a pulsing effect to that card.
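
As a rough illustration of the highlighting rule described above, here is a minimal Python sketch. The three emotion names and the 0.7 threshold come from the write-up; the plain `scores` dictionary, the function names, and the returned style fields are hypothetical and do not reflect the actual shape of Hume's API responses or the team's frontend code.

```python
from typing import Dict, Mapping

# Emotions and threshold as stated in the write-up.
HIGHLIGHT_EMOTIONS = ("interest", "excitement", "surprise")
HIGHLIGHT_THRESHOLD = 0.7


def should_highlight(scores: Mapping[str, float],
                     threshold: float = HIGHLIGHT_THRESHOLD) -> bool:
    """Return True if any tracked emotion score is strictly above the threshold.

    `scores` is an assumed mapping of emotion name -> score in [0, 1];
    emotions missing from the mapping are treated as 0.
    """
    return any(scores.get(name, 0.0) > threshold for name in HIGHLIGHT_EMOTIONS)


def card_style(scores: Mapping[str, float]) -> Dict[str, object]:
    """Pick a (hypothetical) note-card style from the prosody scores."""
    if should_highlight(scores):
        return {"color": "yellow", "pulse": True}
    return {"color": "default", "pulse": False}


if __name__ == "__main__":
    print(card_style({"excitement": 0.82, "interest": 0.4}))
    print(card_style({"interest": 0.55}))
```

Keeping the threshold as a parameter makes it easy to tune how often cards are emphasized without touching the rendering logic.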

## What's next for Jabber AI

We'd like to add the ability to link different notecards, turning the workspace into a sort of mind map so that the user's train of thought can be seen. Since the AI can recommend different ideas to look into regarding a topic, the user could select a specific notecard, speak about topics related to it, and have the resulting notecards automatically linked to the selected one.

## Who we are

Kevin Zhu: rising sophomore at MIT studying CS and Math

Garman Xu: rising sophomore at NYU interested in intersections between technology and music

Chris Franco: rising sophomore at MIT studying CS | ## Inspiration

Oftentimes we find ourselves not understanding the content taught in class and rarely remembering exactly what was conveyed. Some of us also have the habit of misplacing notes and forgetting where we put them. To help struggling students, we came up with the idea of an app that gives students automatically curated content based on the notes they upload online.

## What it does

A student uploads his notes to the application. The application creates a summary of the notes, additional information on the subject of the notes, flashcards for easy remembering, and quizzes to test his knowledge. There is also the option to view other students' notes (those uploaded to the same platform) and do all of the above with them as well. We made an interactive website that can help students digitize and share notes!

## How we built it

Google Cloud Vision was used to convert images into text files. We used the Google Cloud Natural Language API to form questions from the plain text by identifying the entities and syntax of the notes. We also identified the most salient features of the text and assumed them to be the topic of interest. This lets us scrape more detailed information on the topic using the Google Custom Search API. We also scrape information from Wikipedia. Then we make flashcards based on the questions and answers, and quizzes to test the student's knowledge. We used Django as the backend to create a web app. We also made a chatbot in Google Dialogflow to enable the use of Google Assistant skills.

## Challenges we ran into

Extending the platform to a collaborative domain was tough. Connecting the chatbot framework to the backend and sending back dynamic responses using webhook was more complicated than we expected.

Also, we had to go through multiple iterations to get our question formation framework right. We used the assumption that the main topic would be the noun at the beginning of the sentence. Also, we had to replace pronouns in order to keep track of the conversation.

## Accomplishments that we're proud of

Our team has only three members, one of whom has a background in electronics engineering and no experience in computer science. We started with only an idea of what we planned to make and no idea of how to make it, so we are very proud to have achieved a fully functional application at the end of this 36-hour hackathon. We learned a lot of concepts regarding UI/UX design, backend logic formation, connecting backend and frontend in Django, and general software engineering techniques.

## What we learned

We learned a lot about the problems of integrations and deploying an application. We also had a lot of fun making this application because we had the motive to contribute to a large number of people in day to day life. Also, we learned about NLP, UI/UX and the importance of having a well-set plan.

## What's next for Noted

In the best-case scenario, we would want to convert this into an open-source startup and help millions of students with their studies, so that they can score good marks in their upcoming examinations. | ## Inspiration

Inspired by the challenges posed by complex and expensive tools like Cvent, we developed Eventdash: a comprehensive event platform that handles everything from start to finish. Our intuitive AI simplifies the planning process, ensuring it's both effortless and user-friendly. With Eventdash, you can easily book venues and services, track your budget from beginning to end, and rely on our agents to negotiate pricing with venues and services via email or phone.

## What it does

EventEase is an AI-powered, end-to-end event management platform. It simplifies planning by booking venues, managing budgets, and coordinating services like catering and AV. A dashboard shows costs and progress in real time. With EventEase, event planning becomes seamless and efficient, transforming complex tasks into a user-friendly experience.

## How we built it

We designed a modular AI platform using Langchain to orchestrate services. AWS Bedrock powered our AI/ML capabilities, while You.com enhanced our search and data retrieval. We integrated Claude, Streamlit, and Vocode for NLP, UI, and voice features, creating a comprehensive event planning solution.

## Challenges we ran into

We faced several challenges during the integration process. We encountered difficulties integrating multiple tools, particularly with some open-source solutions not aligning with our specific use cases. We are actively working to address these issues and improve the integration.

## Accomplishments that we're proud of

We're thrilled about the strides we've made with Eventdash. It's more than just an event platform; it's a game-changer. Our AI-driven system redefines event planning, making it a breeze from start to finish. From booking venues to managing services, tracking budgets, and negotiating pricing, Eventdash handles it all seamlessly. It's the culmination of our dedication to simplifying event management, and we're proud to offer it to you. **Eventdash could potentially achieve a market cap in the range of $2 billion to $5 billion in the B2B sector alone**; the market cap could potentially be higher due to the broader reach and larger number of potential users.

## What we learned

Our project deepened our understanding of AWS Bedrock's AI/ML capabilities and Vocode's voice interaction features. We mastered the art of seamlessly integrating 6-7 diverse tools, including Langchain, You.com, Claude, and Streamlit. This experience enhanced our skills in creating cohesive AI-driven platforms for complex business processes.

## What's next for EventDash

We aim to become the DoorDash of event planning, revolutionizing the B2B world. Unlike Cvent, which offers a more traditional approach, our AI-driven platform provides personalized, efficient, and cost-effective event solutions. We'll expand our capabilities, enhancing AI-powered venue matching, automated negotiations, and real-time budget optimization. Our goal is to streamline the entire event lifecycle, making complex planning as simple as ordering food delivery. | losing |

## Inspiration

With the rise of climate change and the lack of change in public policy, the greatest danger is not only natural disasters but also the public not understanding what is actually causing devastating weather events throughout the world. Since changing public policy and people's behavior would be the best way to combat climate change, we needed to build a solution targeted at an audience moved not by data but by what affects them.

## What it does

We created a new way to present data to help change the minds of climate deniers and bring events to those who are less informed. Weather Or Not focuses more on showing strong media (videos, pictures) of **local** events rather than global events climate deniers tend to ignore. When users receive the website, the videos render based on climate events and anomalies in their area, and the videos go into a landing site which shows the cause of such events.

## How we built it

To get the local events and media for the landing page, we used both the Twitter API, connected to an Azure SQL database hosted within a Python Flask server, and Chrome's Puppeteer web scraper in Node.js to pull from third-party sources such as news outlets and to scrape embedded YouTube videos from those sites. We created an HTML5 template with jQuery as the frontend to render the videos. The landing page also includes a newsletter, with the data stored in Firebase's Realtime Database, to provide resources to visitors who have been moved by the images and want to learn more about how they can help combat the effects of climate change.

## Challenges we ran into

The hardest part was connecting the various API's from all the applications that were created.

## Accomplishments that we're proud of

We used tools and services most of us were new to, and within a 24-hour period we managed to build a complex API-calling system of the kind common in enterprise IT architectures!

## What we learned

We learned a lot of new tools, such as Python flask, SQL, and machine learning models with Azure.

## What's next for Weather Or Not | ## Inspiration

Diseases in monoculture farms can spread easily and significantly impact food security and farmers' lives. We aim to create a solution that uses computer vision for the early detection and mitigation of these diseases.

## What it does

Our project is a proof-of-concept for detecting plant diseases using leaf images. We have a raspberry pi with a camera that takes an image of the plant, processes it, and sends an image to our API, which uses a neural network to detect signs of disease in that image. Our end goal is to incorporate this technology onto a drone-based system that can automatically detect crop diseases and alert farmers of potential outbreaks.

## How we built it

The first layer of our implementation is a raspberry pi that connects to a camera to capture leaf images. The second layer is our neural network, which the raspberry pi accesses through an API deployed on Digital Ocean.

## Challenges we ran into

The first hurdle in our journey was training the neural network for disease detection. We overcame this with FastAI and using transfer learning to build our network on top of ResNet, a complicated and performant CNN. The second part of our challenge was interfacing our software with our hardware, which ranged from creating and deploying APIs to figuring out specific Arduino wirings.

## Accomplishments that we're proud of

We're proud of creating a working POC of a complicated idea that has the potential to make an actual impact on people's lives.

## What we learned

We learned about a lot of aspects of building and deploying technology, ranging from MLOps to electronics. Specifically, we explored Computer Vision, Backend Development, Deployment, and Microcontrollers (and all the things that come between).

## What's next for Plant Disease Analysis

The next stage is to incorporate our technology with drones to automate the process of image capture and processing. We aim to create a technology that can help farmers prevent disease outbreaks and push the world into a more sustainable direction. | ## Inspiration

Since the outbreak of the pandemic, we have seen a surge in people’s need for an affordable, convenient, and environmentally friendly means of transportation. The main pain points in this area include the risk of taking public transportation during the pandemic, the strain of riding a bike for long-distance commuting, and increasing traffic congestion.

In the post-COVID era, private and renewable-energy transportation will be a huge market. Compared with the cutthroat competition in the EV industry, the eBike market has been ignored to some extent, so the competition is not as overwhelming and the market opportunity and potential are extremely promising.

At the moment, 95% of the bikes are exported from China, and they can not provide prompt aftersales service. The next step of our idea is to integrate resources to build an efficient service system for the current Chinese exporters.

We also see great progress and a promising future for carbon credit projects and decarbonization. We are integrating this into our app, which tracks people’s carbon footprint and translates it into carbon credits to encourage people to contribute to decarbonization.

## What it does

We are building an aftersales service system to integrate the existing resources such as manufacturers in China and more than 7000 brick and mortar shops in the US.

Unique value proposition: We have strong supply chain management because most of our suppliers are in China and we have a close relationship with them. In the meantime, we are about to build an assembly line in the US to provide better service to customers. Moreover, we are working on a system that links cycling to carbon emissions; this unique model can make rides more meaningful and intriguing.

## How we built it

The ecosystem will be built for various platforms and devices. The platform will include both Android and iOS apps because both operating systems have nearly equal percentages of users in the United States.

Google Cloud Maps API:

We'll use the Google Cloud Maps API to receive map location requests continuously and plot a path map accordingly. Every API request will carry metadata: direction, compass degrees, acceleration, speed, and height above sea level. These data features will be used to calculate reward points.

Detecting Mock Locations:

The above features can also be mapped for checking irregularities in the data received.

For instance, if a customer tries to trick the system to gain undue favors, these data features can be used to see if the location request data received is sent by a mock location app or a real one.

For example, a mock location app won't be able to give out varying directions. Moreover, the acceleration calculated by map request can be verified against the accelerometer sensor's values.
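
The two checks described above (headings that never vary, and location-derived acceleration that disagrees with the accelerometer) could be sketched roughly as follows. This is a hedged illustration only: the `Sample` fields, the function name `looks_mocked`, and both tolerance values are hypothetical and would need tuning against real ride data.

```python
from dataclasses import dataclass
from typing import Sequence


@dataclass
class Sample:
    t: float        # timestamp in seconds
    speed: float    # speed from the location fix, in m/s
    heading: float  # compass direction in degrees
    accel: float    # forward acceleration from the accelerometer, in m/s^2


def looks_mocked(samples: Sequence[Sample],
                 accel_tolerance: float = 1.5,
                 min_heading_spread: float = 1.0) -> bool:
    """Heuristically flag a ride whose location data looks simulated.

    Both thresholds are illustrative assumptions, not measured values.
    """
    if len(samples) < 2:
        return False  # not enough data to judge

    # Check 1: a mock app tends to report a heading that never changes.
    headings = [s.heading for s in samples]
    heading_is_static = max(headings) - min(headings) < min_heading_spread

    # Check 2: acceleration implied by successive speed readings should
    # roughly match what the accelerometer measured over the same interval.
    mismatches = 0
    pairs = 0
    for prev, cur in zip(samples, samples[1:]):
        dt = cur.t - prev.t
        if dt <= 0:
            continue  # skip out-of-order or duplicate timestamps
        pairs += 1
        location_accel = (cur.speed - prev.speed) / dt
        sensor_accel = (prev.accel + cur.accel) / 2
        if abs(location_accel - sensor_accel) > accel_tolerance:
            mismatches += 1
    accel_disagrees = pairs > 0 and mismatches / pairs > 0.5

    return heading_is_static or accel_disagrees
```

A flag from a heuristic like this is better treated as one input to the fraud-prevention models than as a final verdict, since a rider on a perfectly straight road could also produce a nearly constant heading.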

Fraud Prevention using Machine Learning:

Our app will be able to prevent various levels of fraud by cross-referencing different users and by using Machine Learning models of usage patterns. Patterns that deviate from normal usage behavior will stand out and be flagged.
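As a rough illustration of cross-referencing users, the sketch below flags riders whose daily distance is far from the group's typical value. It uses a simple robust statistic (median and median absolute deviation) as a stand-in for a trained Machine Learning model; the `flag_outliers` name, the input shape, and the threshold are assumptions made for the example.

```python
from statistics import median


def flag_outliers(daily_km_by_user, threshold=3.5):
    """Return the set of users whose daily distance is a strong outlier.

    Uses the modified z-score: 0.6745 * |x - median| / MAD.
    A threshold of 3.5 is a common rule of thumb, used here as an assumption.
    """
    values = list(daily_km_by_user.values())
    med = median(values)
    mad = median([abs(v - med) for v in values])
    if mad == 0:
        return set()  # all users look alike; nothing to flag

    flagged = set()
    for user, value in daily_km_by_user.items():
        score = 0.6745 * abs(value - med) / mad
        if score > threshold:
            flagged.add(user)
    return flagged
```

A flagged user is only a candidate for review; a long but genuine ride would be flagged as well, which is why a real system would combine several signals.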

Trusted Platform Execution:

The app will be inherently secure, as we will leverage the SDK APIs of phone platforms to check the integrity level of devices. It will match the security level of banking apps, using advanced program isolation techniques and cryptography to protect our app from other escalated processes. Our app won't work on rooted Android phones or jailbroken iPhones.

## Challenges we ran into

One challenge is how to precisely calculate the conversion from mileage to carbon credits. Currently we use our own method to convert these numbers, but in the future, when we have a large enough customer base and want to work on individual carbon credit trading, this conversion will need to be meticulous.
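To make the shape of such a conversion concrete, here is a minimal sketch. It is not the team's method, which the write-up does not describe. It assumes each e-bike kilometre replaces a car kilometre, uses placeholder emission factors, and relies on the common convention that one carbon credit represents one tonne of CO2 equivalent.

```python
# Placeholder emission factors (assumptions for illustration only).
CAR_KG_CO2_PER_KM = 0.20    # hypothetical average car
EBIKE_KG_CO2_PER_KM = 0.02  # hypothetical e-bike, including charging

KG_PER_CREDIT = 1000.0      # one credit = one tonne of CO2 equivalent


def credits_for_distance(km):
    """Convert ridden kilometres into (fractional) carbon credits."""
    if km < 0:
        raise ValueError("distance must be non-negative")
    saved_kg = km * (CAR_KG_CO2_PER_KM - EBIKE_KG_CO2_PER_KM)
    return saved_kg / KG_PER_CREDIT
```

With these placeholder factors, 1000 km of riding corresponds to roughly 0.18 credits, which shows why individual trading would need carefully validated factors.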

During this week, one challenge was the time difference among teammates. Our IT brain is in China, so it was quite challenging to communicate fully and keep information flowing well within the team in such a short time.

## Accomplishments that we're proud of

We are the only company that combines micromobility with action on climate change and uses this approach to protect forests.

## What we learned

We have talked to many existing and potential customers and learned a lot about their behavior patterns, preferences, social media exposure and comments on the eBike products.

We have learned a lot about app design, product design, business development, and business model innovation through a lot of trial and error.

We have also learned how important partnerships and relationships are, and we have learned to invest a lot of time and resources into cultivating them.

Above all, we learned how fun hackathons can be!

## What's next for Meego Inc

Right now we have already built up the supply chain for eBikes and the next step of our idea is to integrate resources to build an efficient service system for the current Chinese exporters. | losing |

## Hack The Valley 4

Hack the Valley 2020 project

## On The Radar

**Inspiration**

Have you ever been walking through your campus and wondered what’s happening around you, but been too unmotivated to search through Facebook, the school’s website, and wherever else people post about social gatherings, when you just want to see what’s around?

Ever see an event online and think this looks like a lot of fun, just to realize that the event has already ended, or is on a different day?

Do you usually find yourself looking for nearby events in your neighborhood while you’re bored?

Looking for a better app that could give you notifications and have all the events in one accessible place?

These are some of the questions that inspired us to build “On the Radar”, a user-friendly map navigation system that allows users to discover cool, real-time events in the nearby area that suit their interests and passions.

*Now you’ll be flying over the Radar!*

**Purpose**

On the Radar is a mobile application that matches users with nearby events that suit their preferences.

The user’s location is detected using the “standard autocomplete search”, which tracks their current location. The app then displays a set of events currently in progress in the user’s area, customized to each user’s preferences.
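The matching step can be sketched as a distance filter combined with an interest filter. This is an illustrative sketch only: the `Event` fields, the `nearby_events` helper, and the 2 km default radius are assumptions, and the actual app relies on Radar.io and Google Maps rather than hand-written geometry.

```python
import math
from dataclasses import dataclass


@dataclass
class Event:
    """Hypothetical event record for the example."""
    name: str
    lat: float
    lon: float
    tags: frozenset
    in_progress: bool


def haversine_km(lat1, lon1, lat2, lon2):
    """Great-circle distance in kilometres between two lat/lon points."""
    earth_radius_km = 6371.0
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = phi2 - phi1
    dlmb = math.radians(lon2 - lon1)
    a = math.sin(dphi / 2) ** 2 + math.cos(phi1) * math.cos(phi2) * math.sin(dlmb / 2) ** 2
    return 2 * earth_radius_km * math.asin(math.sqrt(a))


def nearby_events(events, user_lat, user_lon, interests, radius_km=2.0):
    """Return in-progress events within radius_km that share a tag with the user, nearest first."""
    matches = []
    for event in events:
        if not event.in_progress:
            continue  # skip events that have ended or not started
        if not (event.tags & interests):
            continue  # no overlap with the user's interests
        distance = haversine_km(user_lat, user_lon, event.lat, event.lon)
        if distance <= radius_km:
            matches.append((distance, event))
    matches.sort(key=lambda pair: pair[0])
    return [event for _, event in matches]
```

Sorting by distance keeps the closest events at the top of the list, which suits a map view centred on the user.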

**Challenges**

* Lack of RAM in some computers, see Android Studio (This made some of our tests and emulations slow as it is a very resource-intensive program. We resolved this by having one of our team members run a massive virtual machine)

* Google Cloud (Implementing Google Maps integration and using Google App Engine to host the REST API both proved more complicated than originally imagined.)

* Android Studio (As it was the first time for the majority of us using Android Studio and app development in general, it was quite the learning curve for all of us to help contribute to the app.)

* Domain.com (Linking our Domain.com name, flyingovertheradar.space, to our GitHub Pages site was a little trickier than anticipated, needing a particular CNAME DNS setup.)

* Radar.io (As it was our first time using Radar.io and implementing its SDK, it took a lot of troubleshooting to get it to work as desired.)

* MongoDB (We decided to use MongoDB Atlas to host our backend database, which took a while to configure properly.)

* JSON objects/files (These proved to be the bane of our existence and took many hours to convert into a usable format.)

* REST API (Getting the REST API to respond correctly to our HTTP requests was quite frustrating; we had to try many different Java HTTP libraries before we found one that worked with our project.)

* Java/XML (As some of our members had no prior experience with either Java or XML, development proved even more difficult than originally anticipated.)

* Merge Conflicts (Ah, good old merge conflicts, a lot of fun trying to figure out what code you want to keep, delete or merge at 3am)

* Sleep deprivation (Overall, our team of four got a collective 24 hours of sleep over this 36-hour hackathon.)

**Process of Building**

* For the front-end, we used Android Studio to develop the user interface of the app and its interactivity. This included a login page, a registration page, and our home page, which has a map and events near you.

* MongoDB Atlas was used for the back-end; it stores the users’ login and personal information along with events and their details.

* The GitHub repository for “On the Radar” is available here: <https://github.com/maxerenberg/hackthevalley4/tree/master/app/src/main/java/com/hackthevalley4/hackthevalleyiv/controller>

* We also designed a prototype using Figma to plan out what the app could look like.

The prototype’s link → <https://www.figma.com/proto/iKQ5ypH54mBKbhpLZDSzPX/On-The-Radar?node-id=13%3A0&scaling=scale-down>

* We also used the Bootstrap framework to make our website. Our team uploaded the website files through GitHub.

The website’s code → <https://github.com/arianneghislainerull/arianneghislainerull.github.io>

The website’s link → <https://flyingovertheradar.space/#>

*Look us up at*

# <http://flyingovertheradar.space> | ## Inspiration

As first-year students, we have experienced the difficulties of navigating our way around our new home. We wanted to facilitate the transition to university by helping students learn more about their university campus.

## What it does

A social media app for students to share daily images of their campus and earn points by accurately guessing the locations of their friends' images. After guessing, students can explore the location in full with detailed maps, including within university buildings.
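One simple way to reward accurate guesses is to let points decay with the distance between the guess and the true location. The write-up does not say how LooGuessr scores guesses, so the sketch below is only an assumption: the `guess_points` name, the 1000-point maximum, and the 150 m scale are all hypothetical.

```python
import math


def guess_points(distance_m, max_points=1000, scale_m=150.0):
    """Score a guess: full points at 0 m, decaying exponentially with distance.

    scale_m is the distance at which the score drops to about 37% of max_points.
    """
    if distance_m < 0:
        raise ValueError("distance must be non-negative")
    return round(max_points * math.exp(-distance_m / scale_m))
```

An exponential curve keeps near-misses rewarding on a compact campus while guesses in the wrong building still earn little.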

## How we built it

The Mappedin SDK was used to display user locations in relation to surrounding buildings and to help identify different campus areas. React was used to build a mobile website because the SDK was unavailable for mobile. Express and Node power the backend, and MongoDB Atlas serves as the database for its flexible data types.

## Challenges we ran into

* Developing in an unfamiliar framework (React) after learning that Android Studio was not feasible

* Bypassing CORS permissions when accessing the user's camera

## Accomplishments that we're proud of

* Purposefully using a new SDK to address an issue that was relevant to our team

* Going through the development process, and gaining a range of experiences over a short period of time

## What we learned

* Planning time effectively and redirecting our goals accordingly

* How to learn by collaborating with everyone from team members to SDK experts, as well as by reading documentation

* Our tech stack

## What's next for LooGuessr

* creating more social elements, such as a global leaderboard/tournaments to increase engagement beyond first years

* considering freemium components, such as extra guesses, 360-view, and interpersonal wagers

* showcasing 360-picture view by stitching together a video recording from the user

* addressing privacy concerns with image face blur and an option for delaying showing the image | ## Inspiration

Have you ever wished to give a memorable dining experience to your loved ones, regardless of their location? We were inspired by the desire to provide our friends and family with a taste of our favorite dining experiences, no matter where they might be.

## What it does

It lets you book and pay for a meal for someone you care about.

## How we built it

Languages:-

JavaScript, HTML, MongoDB, Aello API

Methodologies:-

- Simple and accessible UI

- database management

- blockchain contract validation

- AI chatbot

## Challenges we ran into

1. We had to design a friendly front-end user interface for both customers and restaurant partners, each of whom has their own functionality. Furthermore, we needed to integrate numerous concepts into our backend system, aggregating information from various APIs and utilizing Google Cloud for the storage of user data.

2. Given the abundance of information requiring straightforward organization, we had to carefully consider how to ensure an efficient user experience.

## Accomplishments that we're proud of

We designed a product development flow that clearly shows the idea's potential to scale in the future.

## What we learned

1. System Design: Through this project, we have delved deep into the intricacies of system design. We've learned how to architect and structure systems efficiently, considering scalability, performance, and user experience. This understanding is invaluable as it forms the foundation for creating robust and user-friendly solutions.

2. Collaboration: Working as a team has taught us the significance of effective collaboration. We've realized that diverse skill sets and perspectives can lead to innovative solutions. Communication, coordination, and the ability to leverage each team member's strengths have been essential in achieving our project goals.

3. Problem-Solving: Challenges inevitably arise during any project. Our experiences have honed our problem-solving skills, enabling us to approach obstacles with creativity and resilience. We've learned to break down complex issues into manageable tasks and find solutions collaboratively.

4. Adaptability: In the ever-evolving field of technology, adaptability is crucial. We've learned to embrace new tools, technologies, and methodologies as needed to keep our project on track and ensure it remains relevant in a dynamic landscape.

## What's next for Meal Treat

We want to integrate more tools for personalization, including a chatbot that helps customers reserve their spot in the restaurant. This chatbot, built on Google Cloud's Dialogflow, will be trained to handle scheduling tasks. We also plan to use Twilio's services to communicate with our customers by SMS. We expect to incorporate blockchain technology to encrypt customer information, making it easier for restaurants to manage and better protected, especially given our international services. Lastly, we aim to design an ecosystem that enhances the dining experience for everyone and fosters stronger relationships through meal care. | partial |

## Away From Keyboard

## Inspiration

We wanted to create something that anyone can use AFK for Chrome. Whether for accessibility reasons, such as for those with disabilities who can't use a keyboard, or for daily use when you're cooking, our aim was to make scrolling and Chrome browsing easier.

## What it does

Our app AFK (away from keyboard) helps users scroll and read hands-free. You can control the page by saying "go down/up", "open/close tab", "go back/forward", or "reload/refresh", or by reading the text on the page (it will autoscroll once you reach the bottom).
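The command handling can be pictured as a lookup from recognized phrases to browser actions. The sketch below is written in Python purely to show the logic; the extension itself runs in the browser, and the action names and the `parse_command` helper are hypothetical.

```python
# Spoken phrase -> action name (action names are illustrative assumptions).
COMMANDS = {
    "go down": "scroll_down",
    "go up": "scroll_up",
    "open tab": "open_tab",
    "close tab": "close_tab",
    "go back": "history_back",
    "go forward": "history_forward",
    "reload": "reload",
    "refresh": "reload",
}


def parse_command(transcript):
    """Return the action for the first known phrase found in the transcript, or None."""
    # Normalize case and collapse repeated whitespace from the recognizer output.
    text = " ".join(transcript.lower().split())
    for phrase, action in COMMANDS.items():
        if phrase in text:
            return action
    return None
```

Matching on substrings lets a user say "please go down" without the recognizer needing an exact phrase, at the cost of occasional false matches in longer sentences.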

## How we built it

Stack Overflow and lots of panicked googling. We also used Mozilla's Web Speech API.

## Challenges we ran into

We had some difficulty scraping the text from sites for the reading function, as well as integrating the APIs into our extension. We started off with a completely different idea and had to pivot mid-hack. This cut into our time, and we had trouble reorganizing and gauging the situation.

However, as a team, we all contributed parts to the project, and in the end we were able to create a working product despite the small road bumps we ran into.

## Accomplishments that we are proud of

As a team, we were able to learn how to make Chrome extensions in 24 hours :D

## What we learned

We learned how to build Chrome extensions and use APIs within them, and we also had some side adventures with Vue.js and Vuetify for web apps.

## What's next for AFK

We want to add other functionality, such as taking screenshots and taking notes by voice. | ## Inspiration

```

We had multiple inspirations for creating Discotheque. Multiple members of our team have fond memories of virtual music festivals, silent discos, and other ways of enjoying music or other audio over the internet in a more immersive experience. In addition, Sumedh is a DJ with experience performing for thousands back at Georgia Tech, so this space seemed like a fun project to do.

```

## What it does

```