| url (string, 58-61 chars) | repository_url (string, 1 value) | labels_url (string, 72-75 chars) | comments_url (string, 67-70 chars) | events_url (string, 65-68 chars) | html_url (string, 48-51 chars) | id (int64, 600M-1.08B) | node_id (string, 18-24 chars) | number (int64, 2-3.45k) | title (string, 1-276 chars) | user (dict) | labels (list) | state (string, 2 values) | locked (bool, 1 class) | assignee (dict) | assignees (list) | milestone (dict) | comments (sequence) | created_at (int64, 1,587B-1,640B) | updated_at (int64, 1,588B-1,640B) | closed_at (int64, 1,588B-1,640B, nullable) | author_association (string, 3 values) | active_lock_reason (null) | body (string, 0-228k chars, nullable) | reactions (dict) | timeline_url (string, 67-70 chars) | performed_via_github_app (null) | draft (null) | pull_request (null) | is_pull_request (bool, 1 class) |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

https://api.github.com/repos/huggingface/datasets/issues/1643 | https://api.github.com/repos/huggingface/datasets | https://api.github.com/repos/huggingface/datasets/issues/1643/labels{/name} | https://api.github.com/repos/huggingface/datasets/issues/1643/comments | https://api.github.com/repos/huggingface/datasets/issues/1643/events | https://github.com/huggingface/datasets/issues/1643 | 775,280,046 | MDU6SXNzdWU3NzUyODAwNDY= | 1,643 | Dataset social_bias_frames 404 | {

"login": "atemate",

"id": 7501517,

"node_id": "MDQ6VXNlcjc1MDE1MTc=",

"avatar_url": "https://avatars.githubusercontent.com/u/7501517?v=4",

"gravatar_id": "",

"url": "https://api.github.com/users/atemate",

"html_url": "https://github.com/atemate",

"followers_url": "https://api.github.com/users/atemate/followers",

"following_url": "https://api.github.com/users/atemate/following{/other_user}",

"gists_url": "https://api.github.com/users/atemate/gists{/gist_id}",

"starred_url": "https://api.github.com/users/atemate/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/atemate/subscriptions",

"organizations_url": "https://api.github.com/users/atemate/orgs",

"repos_url": "https://api.github.com/users/atemate/repos",

"events_url": "https://api.github.com/users/atemate/events{/privacy}",

"received_events_url": "https://api.github.com/users/atemate/received_events",

"type": "User",

"site_admin": false

} | [] | closed | false | null | [] | null | [

"I see, master is already fixed in https://github.com/huggingface/datasets/commit/9e058f098a0919efd03a136b9b9c3dec5076f626"

] | 1,609,144,534,000 | 1,609,144,687,000 | 1,609,144,687,000 | NONE | null | ```

>>> from datasets import load_dataset
>>> dataset = load_dataset("social_bias_frames")
...
Downloading and preparing dataset social_bias_frames/default
...
~/.pyenv/versions/3.7.6/lib/python3.7/site-packages/datasets/utils/file_utils.py in get_from_cache(url, cache_dir, force_download, proxies, etag_timeout, resume_download, user_agent, local_files_only, use_etag)
484 )
485 elif response is not None and response.status_code == 404:
--> 486 raise FileNotFoundError("Couldn't find file at {}".format(url))
487 raise ConnectionError("Couldn't reach {}".format(url))
488
FileNotFoundError: Couldn't find file at https://homes.cs.washington.edu/~msap/social-bias-frames/SocialBiasFrames_v2.tgz

```

The [Social Bias Frames page](https://homes.cs.washington.edu/~msap/social-bias-frames/) has a `Download data` button that points to the correct URL for the data: https://homes.cs.washington.edu/~msap/social-bias-frames/SBIC.v2.tgz | {

"url": "https://api.github.com/repos/huggingface/datasets/issues/1643/reactions",

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} | https://api.github.com/repos/huggingface/datasets/issues/1643/timeline | null | null | null | false |

https://api.github.com/repos/huggingface/datasets/issues/1641 | https://api.github.com/repos/huggingface/datasets | https://api.github.com/repos/huggingface/datasets/issues/1641/labels{/name} | https://api.github.com/repos/huggingface/datasets/issues/1641/comments | https://api.github.com/repos/huggingface/datasets/issues/1641/events | https://github.com/huggingface/datasets/issues/1641 | 775,110,872 | MDU6SXNzdWU3NzUxMTA4NzI= | 1,641 | muchocine dataset cannot be downloaded | {

"login": "mrm8488",

"id": 3653789,

"node_id": "MDQ6VXNlcjM2NTM3ODk=",

"avatar_url": "https://avatars.githubusercontent.com/u/3653789?v=4",

"gravatar_id": "",

"url": "https://api.github.com/users/mrm8488",

"html_url": "https://github.com/mrm8488",

"followers_url": "https://api.github.com/users/mrm8488/followers",

"following_url": "https://api.github.com/users/mrm8488/following{/other_user}",

"gists_url": "https://api.github.com/users/mrm8488/gists{/gist_id}",

"starred_url": "https://api.github.com/users/mrm8488/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/mrm8488/subscriptions",

"organizations_url": "https://api.github.com/users/mrm8488/orgs",

"repos_url": "https://api.github.com/users/mrm8488/repos",

"events_url": "https://api.github.com/users/mrm8488/events{/privacy}",

"received_events_url": "https://api.github.com/users/mrm8488/received_events",

"type": "User",

"site_admin": false

} | [

{

"id": 1935892913,

"node_id": "MDU6TGFiZWwxOTM1ODkyOTEz",

"url": "https://api.github.com/repos/huggingface/datasets/labels/wontfix",

"name": "wontfix",

"color": "ffffff",

"default": true,

"description": "This will not be worked on"

},

{

"id": 2067388877,

"node_id": "MDU6TGFiZWwyMDY3Mzg4ODc3",

"url": "https://api.github.com/repos/huggingface/datasets/labels/dataset%20bug",

"name": "dataset bug",

"color": "2edb81",

"default": false,

"description": "A bug in a dataset script provided in the library"

}

] | closed | false | null | [] | null | [

"I have encountered the same error with `v1.0.1` and `v1.0.2` on both Windows and Linux environments. However, cloning the repo and using the path to the dataset's root directory worked for me. Even after having the dataset cached - passing the path is the only way (for now) to load the dataset.\r\n\r\n```python\r\nfrom datasets import load_dataset\r\n\r\ndataset = load_dataset(\"squad\") # Works\r\ndataset = load_dataset(\"code_search_net\", \"python\") # Error\r\ndataset = load_dataset(\"covid_qa_deepset\") # Error\r\n\r\npath = \"/huggingface/datasets/datasets/{}/\"\r\ndataset = load_dataset(path.format(\"code_search_net\"), \"python\") # Works\r\ndataset = load_dataset(path.format(\"covid_qa_deepset\")) # Works\r\n```\r\n\r\n",

"Hi @mrm8488 and @amoux!\r\n The datasets you are trying to load have been added to the library during the community sprint for v2 last month. They will be available with the v2 release!\r\nFor now, there are still a couple of solutions to load the datasets:\r\n1. As suggested by @amoux, you can clone the git repo and pass the local path to the script\r\n2. You can also install the latest (master) version of `datasets` using pip: `pip install git+https://github.com/huggingface/datasets.git@master`",

"If you don't want to clone the entire `datasets` repo, just download the `muchocine` directory and pass the local path to it. Cheers!",

"Muchocine was added recently, that's why it wasn't available yet.\r\n\r\nTo load it you can just update `datasets`\r\n```\r\npip install --upgrade datasets\r\n```\r\n\r\nand then you can load `muchocine` with\r\n\r\n```python\r\nfrom datasets import load_dataset\r\n\r\ndataset = load_dataset(\"muchocine\", split=\"train\")\r\n```",

"Thanks @lhoestq "

] | 1,609,104,388,000 | 1,627,967,249,000 | 1,627,967,249,000 | NONE | null | ```python

---------------------------------------------------------------------------
FileNotFoundError Traceback (most recent call last)
/usr/local/lib/python3.6/dist-packages/datasets/load.py in prepare_module(path, script_version, download_config, download_mode, dataset, force_local_path, **download_kwargs)
267 try:
--> 268 local_path = cached_path(file_path, download_config=download_config)
269 except FileNotFoundError:
7 frames
FileNotFoundError: Couldn't find file at https://raw.githubusercontent.com/huggingface/datasets/1.0.2/datasets/muchocine/muchocine.py

During handling of the above exception, another exception occurred:

FileNotFoundError Traceback (most recent call last)
FileNotFoundError: Couldn't find file at https://s3.amazonaws.com/datasets.huggingface.co/datasets/datasets/muchocine/muchocine.py

During handling of the above exception, another exception occurred:

FileNotFoundError Traceback (most recent call last)
/usr/local/lib/python3.6/dist-packages/datasets/load.py in prepare_module(path, script_version, download_config, download_mode, dataset, force_local_path, **download_kwargs)
281 raise FileNotFoundError(
282 "Couldn't find file locally at {}, or remotely at {} or {}".format(
--> 283 combined_path, github_file_path, file_path
284 )
285 )
FileNotFoundError: Couldn't find file locally at muchocine/muchocine.py, or remotely at https://raw.githubusercontent.com/huggingface/datasets/1.0.2/datasets/muchocine/muchocine.py or https://s3.amazonaws.com/datasets.huggingface.co/datasets/datasets/muchocine/muchocine.py

``` | {

"url": "https://api.github.com/repos/huggingface/datasets/issues/1641/reactions",

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} | https://api.github.com/repos/huggingface/datasets/issues/1641/timeline | null | null | null | false |

https://api.github.com/repos/huggingface/datasets/issues/1639 | https://api.github.com/repos/huggingface/datasets | https://api.github.com/repos/huggingface/datasets/issues/1639/labels{/name} | https://api.github.com/repos/huggingface/datasets/issues/1639/comments | https://api.github.com/repos/huggingface/datasets/issues/1639/events | https://github.com/huggingface/datasets/issues/1639 | 774,903,472 | MDU6SXNzdWU3NzQ5MDM0NzI= | 1,639 | bug with sst2 in glue | {

"login": "ghost",

"id": 10137,

"node_id": "MDQ6VXNlcjEwMTM3",

"avatar_url": "https://avatars.githubusercontent.com/u/10137?v=4",

"gravatar_id": "",

"url": "https://api.github.com/users/ghost",

"html_url": "https://github.com/ghost",

"followers_url": "https://api.github.com/users/ghost/followers",

"following_url": "https://api.github.com/users/ghost/following{/other_user}",

"gists_url": "https://api.github.com/users/ghost/gists{/gist_id}",

"starred_url": "https://api.github.com/users/ghost/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/ghost/subscriptions",

"organizations_url": "https://api.github.com/users/ghost/orgs",

"repos_url": "https://api.github.com/users/ghost/repos",

"events_url": "https://api.github.com/users/ghost/events{/privacy}",

"received_events_url": "https://api.github.com/users/ghost/received_events",

"type": "User",

"site_admin": false

} | [] | open | false | null | [] | null | [

"Maybe you can use nltk's treebank detokenizer ?\r\n```python\r\nfrom nltk.tokenize.treebank import TreebankWordDetokenizer\r\n\r\nTreebankWordDetokenizer().detokenize(\"it 's a charming and often affecting journey . \".split())\r\n# \"it's a charming and often affecting journey.\"\r\n```",

"I am looking for an alternative file URL here instead of adding extra processing code: https://github.com/huggingface/datasets/blob/171f2bba9dd8b92006b13cf076a5bf31d67d3e69/datasets/glue/glue.py#L174",

"I don't know if a detokenized version exists somewhere. Even the version on Kaggle is tokenized."

] | 1,609,001,843,000 | 1,630,076,603,000 | null | NONE | null | Hi

I am getting very low accuracy on SST2. Investigating, I noticed that the sentences in this dataset are tokenized, whereas the sentences in the other GLUE datasets are not; please see below.

Is there an alternative source from which I could get untokenized sentences? Unfortunately, I am under time pressure to report some results on this dataset. Thank you for your help, @lhoestq.

```

>>> a = datasets.load_dataset('glue', 'sst2', split="validation", script_version="master")
Reusing dataset glue (/julia/datasets/glue/sst2/1.0.0/7c99657241149a24692c402a5c3f34d4c9f1df5ac2e4c3759fadea38f6cb29c4)
>>> a[:10]
{'idx': [0, 1, 2, 3, 4, 5, 6, 7, 8, 9], 'label': [1, 0, 1, 1, 0, 1, 0, 0, 1, 0], 'sentence': ["it 's a charming and often affecting journey . ", 'unflinchingly bleak and desperate ', 'allows us to hope that nolan is poised to embark a major career as a commercial yet inventive filmmaker . ', "the acting , costumes , music , cinematography and sound are all astounding given the production 's austere locales . ", "it 's slow -- very , very slow . ", 'although laced with humor and a few fanciful touches , the film is a refreshingly serious look at young women . ', 'a sometimes tedious film . ', "or doing last year 's taxes with your ex-wife . ", "you do n't have to know about music to appreciate the film 's easygoing blend of comedy and romance . ", "in exactly 89 minutes , most of which passed as slowly as if i 'd been sitting naked on an igloo , formula 51 sank from quirky to jerky to utter turkey . "]}

``` | {

"url": "https://api.github.com/repos/huggingface/datasets/issues/1639/reactions",

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} | https://api.github.com/repos/huggingface/datasets/issues/1639/timeline | null | null | null | false |

https://api.github.com/repos/huggingface/datasets/issues/1636 | https://api.github.com/repos/huggingface/datasets | https://api.github.com/repos/huggingface/datasets/issues/1636/labels{/name} | https://api.github.com/repos/huggingface/datasets/issues/1636/comments | https://api.github.com/repos/huggingface/datasets/issues/1636/events | https://github.com/huggingface/datasets/issues/1636 | 774,574,378 | MDU6SXNzdWU3NzQ1NzQzNzg= | 1,636 | winogrande cannot be downloaded | {

"login": "ghost",

"id": 10137,

"node_id": "MDQ6VXNlcjEwMTM3",

"avatar_url": "https://avatars.githubusercontent.com/u/10137?v=4",

"gravatar_id": "",

"url": "https://api.github.com/users/ghost",

"html_url": "https://github.com/ghost",

"followers_url": "https://api.github.com/users/ghost/followers",

"following_url": "https://api.github.com/users/ghost/following{/other_user}",

"gists_url": "https://api.github.com/users/ghost/gists{/gist_id}",

"starred_url": "https://api.github.com/users/ghost/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/ghost/subscriptions",

"organizations_url": "https://api.github.com/users/ghost/orgs",

"repos_url": "https://api.github.com/users/ghost/repos",

"events_url": "https://api.github.com/users/ghost/events{/privacy}",

"received_events_url": "https://api.github.com/users/ghost/received_events",

"type": "User",

"site_admin": false

} | [] | open | false | null | [] | null | [

"I have the same issue with other datasets (`myanmar_news` in my case).\r\n\r\nA version of `datasets` running on my local machine (**without GPU**) works correctly; it looks for the dataset at \r\n```\r\nhttps://raw.githubusercontent.com/huggingface/datasets/master/datasets/myanmar_news/myanmar_news.py\r\n```\r\n\r\nMeanwhile, another version running on Colab (**with GPU**) fails to download the dataset. It tries to find the dataset at `1.1.3` instead of `master`. If I disable the GPU on Colab, the code loads the dataset without any problem.\r\n\r\nMaybe there is a version mismatch between the GPU and CPU versions of the code for these datasets?",

"It looks like these are two different issues.\r\n\r\n----------\r\n\r\nFirst, for `myanmar_news`: \r\n\r\nIt must come from the way you installed `datasets`.\r\nIf you install `datasets` from source, then the `myanmar_news` script will be loaded from `master`.\r\nHowever, if you install from `pip`, it will fetch the script using the version of the lib (here `1.1.3`), and `myanmar_news` is not available in `1.1.3`.\r\n\r\nThe difference between your GPU and CPU executions must come from the environment: one seems to have `datasets` installed from source and the other does not.\r\n\r\n----------\r\n\r\nThen, for `winogrande`:\r\n\r\nThe error says that the URL https://raw.githubusercontent.com/huggingface/datasets/1.1.3/datasets/winogrande/winogrande.py is not reachable.\r\nHowever, it works fine on my side.\r\n\r\nDoes your machine have an internet connection? Are connections to GitHub blocked by some sort of proxy?\r\nCan you also try again, in case GitHub had issues when you tried the first time?\r\n"

] | 1,608,848,902,000 | 1,609,163,629,000 | null | NONE | null | Hi,

I am getting this error when trying to run the code in the cloud. Thank you for any suggestions and help on this, @lhoestq.

```

  File "./finetune_trainer.py", line 318, in <module>
    main()
  File "./finetune_trainer.py", line 148, in main
    for task in data_args.tasks]
  File "./finetune_trainer.py", line 148, in <listcomp>
    for task in data_args.tasks]
  File "/workdir/seq2seq/data/tasks.py", line 65, in get_dataset
    dataset = self.load_dataset(split=split)
  File "/workdir/seq2seq/data/tasks.py", line 466, in load_dataset
    return datasets.load_dataset('winogrande', 'winogrande_l', split=split)
  File "/usr/local/lib/python3.6/dist-packages/datasets/load.py", line 589, in load_dataset
    path, script_version=script_version, download_config=download_config, download_mode=download_mode, dataset=True
  File "/usr/local/lib/python3.6/dist-packages/datasets/load.py", line 267, in prepare_module
    local_path = cached_path(file_path, download_config=download_config)
  File "/usr/local/lib/python3.6/dist-packages/datasets/utils/file_utils.py", line 308, in cached_path
    use_etag=download_config.use_etag,
  File "/usr/local/lib/python3.6/dist-packages/datasets/utils/file_utils.py", line 487, in get_from_cache
    raise ConnectionError("Couldn't reach {}".format(url))
ConnectionError: Couldn't reach https://raw.githubusercontent.com/huggingface/datasets/1.1.3/datasets/winogrande/winogrande.py
yo/0 I1224 14:17:46.419031 31226 main shadow.py:122 > Traceback (most recent call last):
  File "/usr/lib/python3.6/runpy.py", line 193, in _run_module_as_main
    "__main__", mod_spec)
  File "/usr/lib/python3.6/runpy.py", line 85, in _run_code
    exec(code, run_globals)
  File "/usr/local/lib/python3.6/dist-packages/torch/distributed/launch.py", line 260, in <module>
    main()
  File "/usr/local/lib/python3.6/dist-packages/torch/distributed/launch.py", line 256, in main
    cmd=cmd)

``` | {

"url": "https://api.github.com/repos/huggingface/datasets/issues/1636/reactions",

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} | https://api.github.com/repos/huggingface/datasets/issues/1636/timeline | null | null | null | false |

https://api.github.com/repos/huggingface/datasets/issues/1635 | https://api.github.com/repos/huggingface/datasets | https://api.github.com/repos/huggingface/datasets/issues/1635/labels{/name} | https://api.github.com/repos/huggingface/datasets/issues/1635/comments | https://api.github.com/repos/huggingface/datasets/issues/1635/events | https://github.com/huggingface/datasets/issues/1635 | 774,524,492 | MDU6SXNzdWU3NzQ1MjQ0OTI= | 1,635 | Persian Abstractive/Extractive Text Summarization | {

"login": "m3hrdadfi",

"id": 2601833,

"node_id": "MDQ6VXNlcjI2MDE4MzM=",

"avatar_url": "https://avatars.githubusercontent.com/u/2601833?v=4",

"gravatar_id": "",

"url": "https://api.github.com/users/m3hrdadfi",

"html_url": "https://github.com/m3hrdadfi",

"followers_url": "https://api.github.com/users/m3hrdadfi/followers",

"following_url": "https://api.github.com/users/m3hrdadfi/following{/other_user}",

"gists_url": "https://api.github.com/users/m3hrdadfi/gists{/gist_id}",

"starred_url": "https://api.github.com/users/m3hrdadfi/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/m3hrdadfi/subscriptions",

"organizations_url": "https://api.github.com/users/m3hrdadfi/orgs",

"repos_url": "https://api.github.com/users/m3hrdadfi/repos",

"events_url": "https://api.github.com/users/m3hrdadfi/events{/privacy}",

"received_events_url": "https://api.github.com/users/m3hrdadfi/received_events",

"type": "User",

"site_admin": false

} | [

{

"id": 2067376369,

"node_id": "MDU6TGFiZWwyMDY3Mzc2MzY5",

"url": "https://api.github.com/repos/huggingface/datasets/labels/dataset%20request",

"name": "dataset request",

"color": "e99695",

"default": false,

"description": "Requesting to add a new dataset"

}

] | closed | false | null | [] | null | [] | 1,608,832,032,000 | 1,609,773,064,000 | 1,609,773,064,000 | CONTRIBUTOR | null | Assembling datasets tailored to different tasks and languages is a precious target. This would be great to have this dataset included.

## Adding a Dataset

- **Name:** *pn-summary*

- **Description:** *A well-structured summarization dataset for the Persian language, consisting of 93,207 records. It is prepared for abstractive/extractive tasks (like cnn_dailymail for English). It can also be used for other tasks such as text generation, title generation, and news category classification.*

- **Paper:** *https://arxiv.org/abs/2012.11204*

- **Data:** *https://github.com/hooshvare/pn-summary/#download*

- **Motivation:** *It is the first Persian abstractive/extractive text summarization dataset (like cnn_dailymail for English)!*

Instructions to add a new dataset can be found [here](https://github.com/huggingface/datasets/blob/master/ADD_NEW_DATASET.md).

| {

"url": "https://api.github.com/repos/huggingface/datasets/issues/1635/reactions",

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} | https://api.github.com/repos/huggingface/datasets/issues/1635/timeline | null | null | null | false |

https://api.github.com/repos/huggingface/datasets/issues/1634 | https://api.github.com/repos/huggingface/datasets | https://api.github.com/repos/huggingface/datasets/issues/1634/labels{/name} | https://api.github.com/repos/huggingface/datasets/issues/1634/comments | https://api.github.com/repos/huggingface/datasets/issues/1634/events | https://github.com/huggingface/datasets/issues/1634 | 774,487,934 | MDU6SXNzdWU3NzQ0ODc5MzQ= | 1,634 | Inspecting datasets per category | {

"login": "ghost",

"id": 10137,

"node_id": "MDQ6VXNlcjEwMTM3",

"avatar_url": "https://avatars.githubusercontent.com/u/10137?v=4",

"gravatar_id": "",

"url": "https://api.github.com/users/ghost",

"html_url": "https://github.com/ghost",

"followers_url": "https://api.github.com/users/ghost/followers",

"following_url": "https://api.github.com/users/ghost/following{/other_user}",

"gists_url": "https://api.github.com/users/ghost/gists{/gist_id}",

"starred_url": "https://api.github.com/users/ghost/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/ghost/subscriptions",

"organizations_url": "https://api.github.com/users/ghost/orgs",

"repos_url": "https://api.github.com/users/ghost/repos",

"events_url": "https://api.github.com/users/ghost/events{/privacy}",

"received_events_url": "https://api.github.com/users/ghost/received_events",

"type": "User",

"site_admin": false

} | [] | open | false | null | [] | null | [

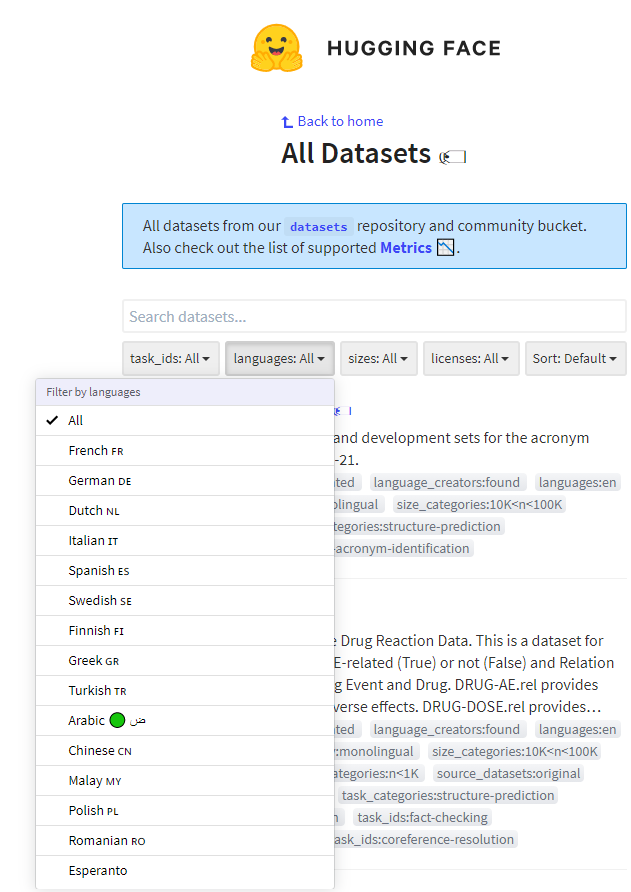

"That's interesting. Can you tell me what you think would be useful to access when inspecting a dataset?\r\n\r\nBy the way, have you seen that you can filter them with the search on the Hub? https://huggingface.co/datasets",

"Hi @thomwolf,\r\nthank you, I was not aware of this; I was looking at the data viewer linked from the README page.\r\n\r\nThis is exactly what I was looking for, but it does not currently work; please see the attached image.\r\nI am selecting all NLI datasets in English and it retrieves none. Thanks\r\n\r\n\r\n\r\n\r\n\r\n",

"I see 4 results for NLI in English, but indeed some datasets are not tagged yet and are missing (GLUE); we will focus on that in January (cc @yjernite): https://huggingface.co/datasets?filter=task_ids:natural-language-inference,languages:en"

] | 1,608,823,594,000 | 1,610,098,084,000 | null | NONE | null | Hi

Is there a way I could list all NLI datasets or all QA datasets, to get an overview of the available datasets per category? It is hard for me to inspect the datasets one by one on the webpage. Thanks for any suggestions, @lhoestq | {

"url": "https://api.github.com/repos/huggingface/datasets/issues/1634/reactions",

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} | https://api.github.com/repos/huggingface/datasets/issues/1634/timeline | null | null | null | false |

https://api.github.com/repos/huggingface/datasets/issues/1633 | https://api.github.com/repos/huggingface/datasets | https://api.github.com/repos/huggingface/datasets/issues/1633/labels{/name} | https://api.github.com/repos/huggingface/datasets/issues/1633/comments | https://api.github.com/repos/huggingface/datasets/issues/1633/events | https://github.com/huggingface/datasets/issues/1633 | 774,422,603 | MDU6SXNzdWU3NzQ0MjI2MDM= | 1,633 | social_i_qa wrong format of labels | {

"login": "ghost",

"id": 10137,

"node_id": "MDQ6VXNlcjEwMTM3",

"avatar_url": "https://avatars.githubusercontent.com/u/10137?v=4",

"gravatar_id": "",

"url": "https://api.github.com/users/ghost",

"html_url": "https://github.com/ghost",

"followers_url": "https://api.github.com/users/ghost/followers",

"following_url": "https://api.github.com/users/ghost/following{/other_user}",

"gists_url": "https://api.github.com/users/ghost/gists{/gist_id}",

"starred_url": "https://api.github.com/users/ghost/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/ghost/subscriptions",

"organizations_url": "https://api.github.com/users/ghost/orgs",

"repos_url": "https://api.github.com/users/ghost/repos",

"events_url": "https://api.github.com/users/ghost/events{/privacy}",

"received_events_url": "https://api.github.com/users/ghost/received_events",

"type": "User",

"site_admin": false

} | [] | closed | false | null | [] | null | [

"@lhoestq, should I open a PR for this? It is just a minor change when reading the labels text file.",

"Sure, feel free to open a PR, thanks!"

] | 1,608,815,514,000 | 1,609,348,729,000 | 1,609,348,729,000 | NONE | null | Hi,

There is an extra "\n" in the labels of the social_i_qa dataset. It is no big deal, but I was wondering if you could remove it to make the labels consistent.

That is, the label is '1\n' instead of '1'.

Thanks

```

>>> import datasets
>>> from datasets import load_dataset
>>> dataset = load_dataset(
... 'social_i_qa')
cahce dir /julia/cache/datasets
Downloading: 4.72kB [00:00, 3.52MB/s]
cahce dir /julia/cache/datasets
Downloading: 2.19kB [00:00, 1.81MB/s]
Using custom data configuration default
Reusing dataset social_i_qa (/julia/datasets/social_i_qa/default/0.1.0/4a4190cc2d2482d43416c2167c0c5dccdd769d4482e84893614bd069e5c3ba06)
>>> dataset['train'][0]
{'answerA': 'like attending', 'answerB': 'like staying home', 'answerC': 'a good friend to have', 'context': 'Cameron decided to have a barbecue and gathered her friends together.', 'label': '1\n', 'question': 'How would Others feel as a result?'}

```
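
Until the loading script is fixed, one possible client-side workaround is to strip the whitespace from each label after loading. This is a minimal sketch, assuming the labels are strings like the `'1\n'` shown in the output above; the helper name `strip_label` is hypothetical and not part of the `datasets` library.

```python
def strip_label(example):
    """Return the example's label with surrounding whitespace (such as a trailing newline) removed."""
    # Hypothetical helper: it returns only the updated column, which is the
    # shape of dict that `map` merges back into each row of a dataset.
    return {"label": example["label"].strip()}


# A record in the same shape as the social_i_qa output above.
record = {
    "context": "Cameron decided to have a barbecue and gathered her friends together.",
    "label": "1\n",
}
print(repr(strip_label(record)["label"]))  # '1'

# With the `datasets` library installed, the same function could be applied to every split:
# dataset = dataset.map(strip_label)
```

Returning only the `label` key leaves the other columns untouched, because `map` updates each row with the dictionary the function returns.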

| {

"url": "https://api.github.com/repos/huggingface/datasets/issues/1633/reactions",

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} | https://api.github.com/repos/huggingface/datasets/issues/1633/timeline | null | null | null | false |

https://api.github.com/repos/huggingface/datasets/issues/1632 | https://api.github.com/repos/huggingface/datasets | https://api.github.com/repos/huggingface/datasets/issues/1632/labels{/name} | https://api.github.com/repos/huggingface/datasets/issues/1632/comments | https://api.github.com/repos/huggingface/datasets/issues/1632/events | https://github.com/huggingface/datasets/issues/1632 | 774,388,625 | MDU6SXNzdWU3NzQzODg2MjU= | 1,632 | SICK dataset | {

"login": "rabeehk",

"id": 6278280,

"node_id": "MDQ6VXNlcjYyNzgyODA=",

"avatar_url": "https://avatars.githubusercontent.com/u/6278280?v=4",

"gravatar_id": "",

"url": "https://api.github.com/users/rabeehk",

"html_url": "https://github.com/rabeehk",

"followers_url": "https://api.github.com/users/rabeehk/followers",

"following_url": "https://api.github.com/users/rabeehk/following{/other_user}",

"gists_url": "https://api.github.com/users/rabeehk/gists{/gist_id}",

"starred_url": "https://api.github.com/users/rabeehk/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/rabeehk/subscriptions",

"organizations_url": "https://api.github.com/users/rabeehk/orgs",

"repos_url": "https://api.github.com/users/rabeehk/repos",

"events_url": "https://api.github.com/users/rabeehk/events{/privacy}",

"received_events_url": "https://api.github.com/users/rabeehk/received_events",

"type": "User",

"site_admin": false

} | [

{

"id": 2067376369,

"node_id": "MDU6TGFiZWwyMDY3Mzc2MzY5",

"url": "https://api.github.com/repos/huggingface/datasets/labels/dataset%20request",

"name": "dataset request",

"color": "e99695",

"default": false,

"description": "Requesting to add a new dataset"

}

] | closed | false | null | [] | null | [] | 1,608,813,614,000 | 1,612,540,165,000 | 1,612,540,165,000 | CONTRIBUTOR | null | Hi, this would be great to have this dataset included. I might be missing something, but I could not find it in the list of already included datasets. Thank you.

## Adding a Dataset

- **Name:** SICK

- **Description:** SICK consists of about 10,000 English sentence pairs that include many examples of lexical, syntactic, and semantic phenomena.

- **Paper:** https://www.aclweb.org/anthology/L14-1314/

- **Data:** http://marcobaroni.org/composes/sick.html

- **Motivation:** This dataset is well known in the NLP community and is used for recognizing entailment between sentences.

Instructions to add a new dataset can be found [here](https://github.com/huggingface/datasets/blob/master/ADD_NEW_DATASET.md).

| {

"url": "https://api.github.com/repos/huggingface/datasets/issues/1632/reactions",

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} | https://api.github.com/repos/huggingface/datasets/issues/1632/timeline | null | null | null | false |

https://api.github.com/repos/huggingface/datasets/issues/1630 | https://api.github.com/repos/huggingface/datasets | https://api.github.com/repos/huggingface/datasets/issues/1630/labels{/name} | https://api.github.com/repos/huggingface/datasets/issues/1630/comments | https://api.github.com/repos/huggingface/datasets/issues/1630/events | https://github.com/huggingface/datasets/issues/1630 | 774,332,129 | MDU6SXNzdWU3NzQzMzIxMjk= | 1,630 | Adding UKP Argument Aspect Similarity Corpus | {

"login": "rabeehk",

"id": 6278280,

"node_id": "MDQ6VXNlcjYyNzgyODA=",

"avatar_url": "https://avatars.githubusercontent.com/u/6278280?v=4",

"gravatar_id": "",

"url": "https://api.github.com/users/rabeehk",

"html_url": "https://github.com/rabeehk",

"followers_url": "https://api.github.com/users/rabeehk/followers",

"following_url": "https://api.github.com/users/rabeehk/following{/other_user}",

"gists_url": "https://api.github.com/users/rabeehk/gists{/gist_id}",

"starred_url": "https://api.github.com/users/rabeehk/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/rabeehk/subscriptions",

"organizations_url": "https://api.github.com/users/rabeehk/orgs",

"repos_url": "https://api.github.com/users/rabeehk/repos",

"events_url": "https://api.github.com/users/rabeehk/events{/privacy}",

"received_events_url": "https://api.github.com/users/rabeehk/received_events",

"type": "User",

"site_admin": false

} | [

{

"id": 2067376369,

"node_id": "MDU6TGFiZWwyMDY3Mzc2MzY5",

"url": "https://api.github.com/repos/huggingface/datasets/labels/dataset%20request",

"name": "dataset request",

"color": "e99695",

"default": false,

"description": "Requesting to add a new dataset"

}

] | open | false | null | [] | null | [

"Adding a link to the guide on adding a dataset if someone wants to give it a try: https://github.com/huggingface/datasets#add-a-new-dataset-to-the-hub\r\n\r\nWe should add this guide to the issue template @lhoestq ",

"Thanks @thomwolf, this is added now. The template is correct; sorry, my mistake for not including it. "

] | 1,608,807,691,000 | 1,608,809,418,000 | null | CONTRIBUTOR | null | Hi, it would be great to have this dataset included.

## Adding a Dataset

- **Name:** UKP Argument Aspect Similarity Corpus

- **Description:** The UKP Argument Aspect Similarity Corpus (UKP ASPECT) includes 3,595 sentence pairs over 28 controversial topics. Each sentence pair was annotated via crowdsourcing as either “high similarity”, “some similarity”, “no similarity” or “not related” with respect to the topic.

- **Paper:** https://www.aclweb.org/anthology/P19-1054/

- **Data:** https://tudatalib.ulb.tu-darmstadt.de/handle/tudatalib/1998

- **Motivation:** this dataset is frequently used in recent adapter papers such as https://arxiv.org/pdf/2005.00247.pdf

Instructions to add a new dataset can be found [here](https://github.com/huggingface/datasets/blob/master/ADD_NEW_DATASET.md).

Thank you | {

"url": "https://api.github.com/repos/huggingface/datasets/issues/1630/reactions",

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} | https://api.github.com/repos/huggingface/datasets/issues/1630/timeline | null | null | null | false |

https://api.github.com/repos/huggingface/datasets/issues/1627 | https://api.github.com/repos/huggingface/datasets | https://api.github.com/repos/huggingface/datasets/issues/1627/labels{/name} | https://api.github.com/repos/huggingface/datasets/issues/1627/comments | https://api.github.com/repos/huggingface/datasets/issues/1627/events | https://github.com/huggingface/datasets/issues/1627 | 773,960,255 | MDU6SXNzdWU3NzM5NjAyNTU= | 1,627 | `Dataset.map` disable progress bar | {

"login": "Nickil21",

"id": 8767964,

"node_id": "MDQ6VXNlcjg3Njc5NjQ=",

"avatar_url": "https://avatars.githubusercontent.com/u/8767964?v=4",

"gravatar_id": "",

"url": "https://api.github.com/users/Nickil21",

"html_url": "https://github.com/Nickil21",

"followers_url": "https://api.github.com/users/Nickil21/followers",

"following_url": "https://api.github.com/users/Nickil21/following{/other_user}",

"gists_url": "https://api.github.com/users/Nickil21/gists{/gist_id}",

"starred_url": "https://api.github.com/users/Nickil21/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/Nickil21/subscriptions",

"organizations_url": "https://api.github.com/users/Nickil21/orgs",

"repos_url": "https://api.github.com/users/Nickil21/repos",

"events_url": "https://api.github.com/users/Nickil21/events{/privacy}",

"received_events_url": "https://api.github.com/users/Nickil21/received_events",

"type": "User",

"site_admin": false

} | [] | closed | false | null | [] | null | [

"Progress bar can be disabled like this:\r\n```python\r\nfrom datasets.utils.logging import set_verbosity_error\r\nset_verbosity_error()\r\n```\r\n\r\nThere is this line in `Dataset.map`:\r\n```python\r\nnot_verbose = bool(logger.getEffectiveLevel() > WARNING)\r\n```\r\n\r\nSo any logging level higher than `WARNING` turns off the progress bar."

] | 1,608,746,022,000 | 1,609,012,656,000 | 1,609,012,637,000 | NONE | null | I can't find anything to turn off the `tqdm` progress bars while running a preprocessing function using `Dataset.map`. I want to do something akin to `disable_tqdm=True` in `transformers`. Is there something like that? | {

"url": "https://api.github.com/repos/huggingface/datasets/issues/1627/reactions",

"total_count": 1,

"+1": 1,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} | https://api.github.com/repos/huggingface/datasets/issues/1627/timeline | null | null | null | false |
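The workaround in the issue 1627 comment relies on `Dataset.map` comparing the logger's effective level against `WARNING`. The sketch below reproduces only that comparison with the standard `logging` module, so it runs without `datasets` installed. The logger name `"datasets_sketch"` and the helper `progress_bar_disabled` are hypothetical and not part of the library; in practice you would call `datasets.utils.logging.set_verbosity_error()` as the comment shows.

```python
import logging
from logging import WARNING

# Hypothetical logger standing in for the library's own logger.
logger = logging.getLogger("datasets_sketch")


def progress_bar_disabled(log: logging.Logger) -> bool:
    """Mirror of the check quoted from Dataset.map: any effective level
    strictly above WARNING means the progress bar is not shown."""
    return bool(log.getEffectiveLevel() > WARNING)


logger.setLevel(logging.WARNING)
print(progress_bar_disabled(logger))  # False: WARNING is not above WARNING

logger.setLevel(logging.ERROR)  # roughly what set_verbosity_error() does to the library logger
print(progress_bar_disabled(logger))  # True
```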

https://api.github.com/repos/huggingface/datasets/issues/1624 | https://api.github.com/repos/huggingface/datasets | https://api.github.com/repos/huggingface/datasets/issues/1624/labels{/name} | https://api.github.com/repos/huggingface/datasets/issues/1624/comments | https://api.github.com/repos/huggingface/datasets/issues/1624/events | https://github.com/huggingface/datasets/issues/1624 | 773,669,700 | MDU6SXNzdWU3NzM2Njk3MDA= | 1,624 | Cannot download ade_corpus_v2 | {

"login": "him1411",

"id": 20259310,

"node_id": "MDQ6VXNlcjIwMjU5MzEw",

"avatar_url": "https://avatars.githubusercontent.com/u/20259310?v=4",

"gravatar_id": "",

"url": "https://api.github.com/users/him1411",

"html_url": "https://github.com/him1411",

"followers_url": "https://api.github.com/users/him1411/followers",

"following_url": "https://api.github.com/users/him1411/following{/other_user}",

"gists_url": "https://api.github.com/users/him1411/gists{/gist_id}",

"starred_url": "https://api.github.com/users/him1411/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/him1411/subscriptions",

"organizations_url": "https://api.github.com/users/him1411/orgs",

"repos_url": "https://api.github.com/users/him1411/repos",

"events_url": "https://api.github.com/users/him1411/events{/privacy}",

"received_events_url": "https://api.github.com/users/him1411/received_events",

"type": "User",

"site_admin": false

} | [] | closed | false | null | [] | null | [

"Hi @him1411, the dataset you are trying to load has been added during the community sprint and has not been released yet. It will be available with the v2 of `datasets`.\r\nFor now, you should be able to load the datasets after installing the latest (master) version of `datasets` using pip:\r\n`pip install git+https://github.com/huggingface/datasets.git@master`",

"`ade_corpus_v2` was added recently, that's why it wasn't available yet.\r\n\r\nTo load it you can just update `datasets`\r\n```\r\npip install --upgrade datasets\r\n```\r\n\r\nand then you can load `ade_corpus_v2` with\r\n\r\n```python\r\nfrom datasets import load_dataset\r\n\r\ndataset = load_dataset(\"ade_corpus_v2\", \"Ade_corpos_v2_drug_ade_relation\")\r\n```\r\n\r\n(looks like there is a typo in the configuration name, we'll fix it for the v2.0 release of `datasets` soon)"

] | 1,608,721,094,000 | 1,627,967,334,000 | 1,627,967,334,000 | NONE | null | I tried to load the dataset following this URL: https://huggingface.co/datasets/ade_corpus_v2

but received this error:

```
Traceback (most recent call last):
  File "/opt/anaconda3/lib/python3.7/site-packages/datasets/load.py", line 267, in prepare_module
    local_path = cached_path(file_path, download_config=download_config)
  File "/opt/anaconda3/lib/python3.7/site-packages/datasets/utils/file_utils.py", line 308, in cached_path
    use_etag=download_config.use_etag,
  File "/opt/anaconda3/lib/python3.7/site-packages/datasets/utils/file_utils.py", line 486, in get_from_cache
    raise FileNotFoundError("Couldn't find file at {}".format(url))
FileNotFoundError: Couldn't find file at https://raw.githubusercontent.com/huggingface/datasets/1.1.3/datasets/ade_corpus_v2/ade_corpus_v2.py

During handling of the above exception, another exception occurred:

Traceback (most recent call last):
  File "/opt/anaconda3/lib/python3.7/site-packages/datasets/load.py", line 278, in prepare_module
    local_path = cached_path(file_path, download_config=download_config)
  File "/opt/anaconda3/lib/python3.7/site-packages/datasets/utils/file_utils.py", line 308, in cached_path
    use_etag=download_config.use_etag,
  File "/opt/anaconda3/lib/python3.7/site-packages/datasets/utils/file_utils.py", line 486, in get_from_cache
    raise FileNotFoundError("Couldn't find file at {}".format(url))
FileNotFoundError: Couldn't find file at https://s3.amazonaws.com/datasets.huggingface.co/datasets/datasets/ade_corpus_v2/ade_corpus_v2.py

During handling of the above exception, another exception occurred:

Traceback (most recent call last):
  File "<stdin>", line 1, in <module>
  File "/opt/anaconda3/lib/python3.7/site-packages/datasets/load.py", line 589, in load_dataset
    path, script_version=script_version, download_config=download_config, download_mode=download_mode, dataset=True
  File "/opt/anaconda3/lib/python3.7/site-packages/datasets/load.py", line 282, in prepare_module
    combined_path, github_file_path, file_path
FileNotFoundError: Couldn't find file locally at ade_corpus_v2/ade_corpus_v2.py, or remotely at https://raw.githubusercontent.com/huggingface/datasets/1.1.3/datasets/ade_corpus_v2/ade_corpus_v2.py or https://s3.amazonaws.com/datasets.huggingface.co/datasets/datasets/ade_corpus_v2/ade_corpus_v2.py
```

| {

"url": "https://api.github.com/repos/huggingface/datasets/issues/1624/reactions",

"total_count": 2,

"+1": 2,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} | https://api.github.com/repos/huggingface/datasets/issues/1624/timeline | null | null | null | false |

https://api.github.com/repos/huggingface/datasets/issues/1622 | https://api.github.com/repos/huggingface/datasets | https://api.github.com/repos/huggingface/datasets/issues/1622/labels{/name} | https://api.github.com/repos/huggingface/datasets/issues/1622/comments | https://api.github.com/repos/huggingface/datasets/issues/1622/events | https://github.com/huggingface/datasets/issues/1622 | 772,940,768 | MDU6SXNzdWU3NzI5NDA3Njg= | 1,622 | Can't call shape on the output of select() | {

"login": "noaonoszko",

"id": 47183162,

"node_id": "MDQ6VXNlcjQ3MTgzMTYy",

"avatar_url": "https://avatars.githubusercontent.com/u/47183162?v=4",

"gravatar_id": "",

"url": "https://api.github.com/users/noaonoszko",

"html_url": "https://github.com/noaonoszko",

"followers_url": "https://api.github.com/users/noaonoszko/followers",

"following_url": "https://api.github.com/users/noaonoszko/following{/other_user}",

"gists_url": "https://api.github.com/users/noaonoszko/gists{/gist_id}",

"starred_url": "https://api.github.com/users/noaonoszko/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/noaonoszko/subscriptions",

"organizations_url": "https://api.github.com/users/noaonoszko/orgs",

"repos_url": "https://api.github.com/users/noaonoszko/repos",

"events_url": "https://api.github.com/users/noaonoszko/events{/privacy}",

"received_events_url": "https://api.github.com/users/noaonoszko/received_events",

"type": "User",

"site_admin": false

} | [] | closed | false | null | [] | null | [

"Indeed that's a typo, do you want to open a PR to fix it?",

"Yes, created a PR"

] | 1,608,643,120,000 | 1,608,730,633,000 | 1,608,730,632,000 | CONTRIBUTOR | null | I get the error `TypeError: tuple expected at most 1 argument, got 2` when calling `shape` on the output of `select()`.

The problem is caused by line 531 of the `shape` property in `arrow_dataset.py`:

``return tuple(self._indices.num_rows, self._data.num_columns)``

This makes sense, since `tuple(num1, num2)` is not a valid call.

Full code to reproduce:

```python
from datasets import load_dataset

dataset = load_dataset("cnn_dailymail", "3.0.0")
train_set = dataset["train"]
t = train_set.select(range(10))
print(t.shape)
```
| {

"url": "https://api.github.com/repos/huggingface/datasets/issues/1622/reactions",

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} | https://api.github.com/repos/huggingface/datasets/issues/1622/timeline | null | null | null | false |
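The bug reported in issue 1622 comes from passing two positional arguments to the `tuple` constructor, which accepts at most one iterable. The standalone sketch below illustrates the failure and one plausible correction (returning a tuple literal). The function names are hypothetical, and this is not necessarily the patch that was merged into `arrow_dataset.py`.

```python
def shape_buggy(num_rows: int, num_columns: int) -> tuple:
    # tuple() takes at most one iterable argument, so this raises TypeError.
    return tuple(num_rows, num_columns)


def shape_fixed(num_rows: int, num_columns: int) -> tuple:
    # A tuple literal builds the (rows, columns) pair directly.
    return (num_rows, num_columns)


try:
    shape_buggy(10, 3)
except TypeError as err:
    # The exact message wording varies between Python versions.
    print("buggy:", err)

print("fixed:", shape_fixed(10, 3))  # fixed: (10, 3)
```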

https://api.github.com/repos/huggingface/datasets/issues/1618 | https://api.github.com/repos/huggingface/datasets | https://api.github.com/repos/huggingface/datasets/issues/1618/labels{/name} | https://api.github.com/repos/huggingface/datasets/issues/1618/comments | https://api.github.com/repos/huggingface/datasets/issues/1618/events | https://github.com/huggingface/datasets/issues/1618 | 772,248,730 | MDU6SXNzdWU3NzIyNDg3MzA= | 1,618 | Can't filter language:EN on https://huggingface.co/datasets | {

"login": "davidefiocco",

"id": 4547987,

"node_id": "MDQ6VXNlcjQ1NDc5ODc=",

"avatar_url": "https://avatars.githubusercontent.com/u/4547987?v=4",

"gravatar_id": "",

"url": "https://api.github.com/users/davidefiocco",

"html_url": "https://github.com/davidefiocco",

"followers_url": "https://api.github.com/users/davidefiocco/followers",

"following_url": "https://api.github.com/users/davidefiocco/following{/other_user}",

"gists_url": "https://api.github.com/users/davidefiocco/gists{/gist_id}",

"starred_url": "https://api.github.com/users/davidefiocco/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/davidefiocco/subscriptions",

"organizations_url": "https://api.github.com/users/davidefiocco/orgs",

"repos_url": "https://api.github.com/users/davidefiocco/repos",

"events_url": "https://api.github.com/users/davidefiocco/events{/privacy}",

"received_events_url": "https://api.github.com/users/davidefiocco/received_events",

"type": "User",

"site_admin": false

} | [] | closed | false | null | [] | null | [

"cc'ing @mapmeld ",

"Full language list is now deployed to https://huggingface.co/datasets ! Recommend close",

"Cool @mapmeld ! My 2 cents (for a next iteration), it would be cool to have a small search widget in the filter dropdown as you have a ton of languages now here! Closing this in the meantime."

] | 1,608,564,203,000 | 1,608,657,420,000 | 1,608,657,369,000 | NONE | null | When visiting https://huggingface.co/datasets, I don't see an obvious way to filter for English datasets only. This is unexpected; am I missing something? I'd expect English to be selectable in the language widget. The problem reproduces on both Mozilla Firefox and MS Edge.

| {

"url": "https://api.github.com/repos/huggingface/datasets/issues/1618/reactions",

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} | https://api.github.com/repos/huggingface/datasets/issues/1618/timeline | null | null | null | false |

https://api.github.com/repos/huggingface/datasets/issues/1615 | https://api.github.com/repos/huggingface/datasets | https://api.github.com/repos/huggingface/datasets/issues/1615/labels{/name} | https://api.github.com/repos/huggingface/datasets/issues/1615/comments | https://api.github.com/repos/huggingface/datasets/issues/1615/events | https://github.com/huggingface/datasets/issues/1615 | 771,641,088 | MDU6SXNzdWU3NzE2NDEwODg= | 1,615 | Bug: Can't download TriviaQA with `load_dataset` - custom `cache_dir` | {

"login": "SapirWeissbuch",

"id": 44585792,

"node_id": "MDQ6VXNlcjQ0NTg1Nzky",

"avatar_url": "https://avatars.githubusercontent.com/u/44585792?v=4",

"gravatar_id": "",

"url": "https://api.github.com/users/SapirWeissbuch",

"html_url": "https://github.com/SapirWeissbuch",

"followers_url": "https://api.github.com/users/SapirWeissbuch/followers",

"following_url": "https://api.github.com/users/SapirWeissbuch/following{/other_user}",

"gists_url": "https://api.github.com/users/SapirWeissbuch/gists{/gist_id}",

"starred_url": "https://api.github.com/users/SapirWeissbuch/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/SapirWeissbuch/subscriptions",

"organizations_url": "https://api.github.com/users/SapirWeissbuch/orgs",

"repos_url": "https://api.github.com/users/SapirWeissbuch/repos",

"events_url": "https://api.github.com/users/SapirWeissbuch/events{/privacy}",

"received_events_url": "https://api.github.com/users/SapirWeissbuch/received_events",

"type": "User",

"site_admin": false

} | [] | open | false | null | [] | null | [

"Hi @SapirWeissbuch,\r\nWhen you say it freezes, it is actually extracting the zip file it downloaded. Since it's a very large file, this takes some time. For me, it had used ~11GB after extraction when it started reading examples. Hope that helps!\r\n\r\n",

"Hi @bhavitvyamalik \r\nThanks for the reply!\r\nActually I let it run for 30 minutes before I killed the process. In this time, 30GB were extracted (much more than 11GB), I checked the size of the destination directory.\r\n\r\nWhat version of Datasets are you using?\r\n",

"I'm using datasets version: 1.1.3. I think you should drop `cache_dir` and use only\r\n`dataset = datasets.load_dataset(\"trivia_qa\", \"rc\")`\r\n\r\nTried that on colab and it's working there too\r\n\r\n",

"Train, Validation, and Test splits contain 138384, 18669, and 17210 samples respectively. It takes some time to read the samples. Even in your colab notebook it was reading the samples before you killed the process. Let me know if it works now!",

"Hi, it works on colab but it still doesn't work on my computer, same problem as before - overly large and long extraction process.\r\nI have to use a custom 'cache_dir' because I don't have any space left in my home directory where it is defaulted, maybe this could be the issue?",

"I tried running this again - More details of the problem:\r\nCode:\r\n```\r\ndatasets.load_dataset(\"trivia_qa\", \"rc\", cache_dir=\"/path/to/cache\")\r\n```\r\n\r\nThe output:\r\n```\r\nDownloading and preparing dataset trivia_qa/rc (download: 2.48 GiB, generated: 14.92 GiB, post-processed: Unknown size, total: 17.40 GiB) to path/to/cache/trivia_qa/rc/1.1.0/e734e28133f4d9a353af322aa52b9f266f6f27cbf2f072690a1694e577546b0d... \r\nDownloading: 100%|██████████████████████████████████████████████████████████████████████████████████████████████████████████████████████| 2.67G/2.67G [03:38<00:00, 12.2MB/s]\r\n\r\n```\r\nThe process continues (no progress bar is visible).\r\nI tried `du -sh .` in `path/to/cache`, and the size keeps increasing, reached 35G before I killed the process.\r\n\r\nGoogle Colab with custom `cache_dir` has same issue.\r\nhttps://colab.research.google.com/drive/1nn1Lw02GhfGFylzbS2j6yksGjPo7kkN-?usp=sharing#scrollTo=2G2O0AeNIXan",

"1) You can clear the huggingface folder in your `.cache` directory to use the default directory for datasets. The speed of extraction and loading of samples also depends a lot on your machine's configuration.\r\n\r\n2) I tried on colab `dataset = datasets.load_dataset(\"trivia_qa\", \"rc\", cache_dir = \"./datasets\")`. After memory usage reached around 42GB (starting from 32GB already used), the dataset was loaded into memory. Even your colab notebook shows \r\n\r\nwhich means it's loaded now.",

"Facing the same issue.\r\nI am able to download datasets without `cache_dir`, however, when I specify the `cache_dir`, the process hangs indefinitely after partial download. \r\nTried for `data = load_dataset(\"cnn_dailymail\", \"3.0.0\")`",

"Hi @ashutoshml,\r\nI tried this and it worked for me:\r\n`data = load_dataset(\"cnn_dailymail\", \"3.0.0\", cache_dir=\"./dummy\")`\r\n\r\nI'm using datasets==1.8.0. It took around 3-4 mins for dataset to unpack and start loading examples.",

"Ok. I waited for 20-30 mins, and it is still stuck.\r\nI am using datasets==1.8.0.\r\n\r\nIs there any way to check what is happening, like a `--verbose` flag?\r\n\r\n\r\n"

] | 1,608,485,258,000 | 1,624,626,693,000 | null | NONE | null | Hello,

I'm having an issue downloading the TriviaQA dataset with `load_dataset`.

## Environment info

- `datasets` version: 1.1.3

- Platform: Linux-4.19.129-aufs-1-x86_64-with-debian-10.1

- Python version: 3.7.3

## The code I'm running:

```python

import datasets

dataset = datasets.load_dataset("trivia_qa", "rc", cache_dir = "./datasets")

```

## The output:

1. Download begins:

```

Downloading and preparing dataset trivia_qa/rc (download: 2.48 GiB, generated: 14.92 GiB, post-processed: Unknown size, total: 17.40 GiB) to /cs/labs/gabis/sapirweissbuch/trivia_qa/rc/1.1.0/e734e28133f4d9a353af322aa52b9f266f6f27cbf2f072690a1694e577546b0d...

Downloading: 17%|███████████████████▉ | 446M/2.67G [00:37<04:45, 7.77MB/s]

```

2. 100% is reached

3. It got stuck here for about an hour and added an additional 30G of data to the "./datasets" directory. I eventually killed the process.

A similar issue can be observed in Google Colab:

https://colab.research.google.com/drive/1nn1Lw02GhfGFylzbS2j6yksGjPo7kkN-?usp=sharing

## Expected behaviour:

The dataset "TriviaQA" should be successfully downloaded.

| {

"url": "https://api.github.com/repos/huggingface/datasets/issues/1615/reactions",

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} | https://api.github.com/repos/huggingface/datasets/issues/1615/timeline | null | null | null | false |

https://api.github.com/repos/huggingface/datasets/issues/1611 | https://api.github.com/repos/huggingface/datasets | https://api.github.com/repos/huggingface/datasets/issues/1611/labels{/name} | https://api.github.com/repos/huggingface/datasets/issues/1611/comments | https://api.github.com/repos/huggingface/datasets/issues/1611/events | https://github.com/huggingface/datasets/issues/1611 | 771,486,456 | MDU6SXNzdWU3NzE0ODY0NTY= | 1,611 | shuffle with torch generator | {

"login": "rabeehkarimimahabadi",

"id": 73364383,

"node_id": "MDQ6VXNlcjczMzY0Mzgz",

"avatar_url": "https://avatars.githubusercontent.com/u/73364383?v=4",

"gravatar_id": "",

"url": "https://api.github.com/users/rabeehkarimimahabadi",

"html_url": "https://github.com/rabeehkarimimahabadi",

"followers_url": "https://api.github.com/users/rabeehkarimimahabadi/followers",

"following_url": "https://api.github.com/users/rabeehkarimimahabadi/following{/other_user}",

"gists_url": "https://api.github.com/users/rabeehkarimimahabadi/gists{/gist_id}",

"starred_url": "https://api.github.com/users/rabeehkarimimahabadi/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/rabeehkarimimahabadi/subscriptions",

"organizations_url": "https://api.github.com/users/rabeehkarimimahabadi/orgs",

"repos_url": "https://api.github.com/users/rabeehkarimimahabadi/repos",

"events_url": "https://api.github.com/users/rabeehkarimimahabadi/events{/privacy}",

"received_events_url": "https://api.github.com/users/rabeehkarimimahabadi/received_events",

"type": "User",

"site_admin": false

} | [

{

"id": 1935892871,

"node_id": "MDU6TGFiZWwxOTM1ODkyODcx",

"url": "https://api.github.com/repos/huggingface/datasets/labels/enhancement",

"name": "enhancement",

"color": "a2eeef",

"default": true,

"description": "New feature or request"

}

] | open | false | null | [] | null | [

"Is there a way to convert between the two generators? I'm not sure what alternatives I have for shuffling the datasets with a torch generator. Thanks ",

"@lhoestq let me explain in more detail; maybe you could suggest an alternative to solve the issue for now. I have multiple large datasets loaded with the huggingface library, and I need to define a distributed sampler on top of them. For this I need to shard the datasets and give each shard to a core, but before sharding I need to shuffle the datasets. If you are familiar with the distributed sampler in pytorch, this needs to be done with a seed+epoch generator to keep it consistent across the cores; they do it by defining a torch generator. Could you tell me how I can shuffle the data for now? I am unfortunately blocked by this and have limited time left, and I greatly appreciate your help on this. Thanks ",

"@lhoestq Is there a way I could shuffle the datasets from this library with a custom-defined shuffle function? Thanks for your help on this. ",

"Right now the shuffle method only accepts the `seed` (optional int) or `generator` (optional `np.random.Generator`) parameters.\r\n\r\nHere is a suggestion to shuffle the data with your own shuffle method using `select`.\r\n`select` can be used to re-order the dataset samples or simply pick a few if you want.\r\nIt's what is used under the hood when you call `dataset.shuffle`.\r\n\r\nTo use `select` you must have the list of re-ordered indices of your samples.\r\n\r\nLet's say you have a `shuffle` method that you want to use. Then you can first build your shuffled list of indices:\r\n```python\r\nshuffled_indices = shuffle(range(len(dataset)))\r\n```\r\n\r\nThen you can shuffle your dataset using the shuffled indices with \r\n```python\r\nshuffled_dataset = dataset.select(shuffled_indices)\r\n```\r\n\r\nHope that helps",

"Thank you @lhoestq, thank you very much for responding to my question. This greatly helped me and removed the blocker to continuing my work, thanks. ",

"@lhoestq could you confirm the method proposed does not bring the whole data into memory? thanks ",

"Yes the dataset is not loaded into memory",

"great. thanks a lot."

] | 1,608,425,834,000 | 1,608,574,339,000 | null | NONE | null | Hi

I need to shuffle multiple large datasets with `generator = torch.Generator()` for a distributed sampler, which needs to make sure the datasets are consistent across different cores. For this it is really necessary for me to use a torch generator, but according to the documentation this generator is not supported by `datasets`. I really need to make shuffle work with this generator, and I was wondering what I can do about this issue. Thanks for your help

@lhoestq | {

"url": "https://api.github.com/repos/huggingface/datasets/issues/1611/reactions",

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} | https://api.github.com/repos/huggingface/datasets/issues/1611/timeline | null | null | null | false |
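The `select`-based workaround suggested in issue 1611 only needs a reproducible list of shuffled indices. The sketch below derives that list from `seed + epoch`, the same seeding scheme PyTorch's `DistributedSampler` uses, but substitutes the standard-library `random.Random` for `torch.Generator` so it runs without PyTorch. The helper names are hypothetical. With PyTorch installed, `g.manual_seed(seed + epoch)` followed by `torch.randperm(n, generator=g).tolist()` would play the same role as `shuffled_indices`.

```python
import random
from typing import List


def shuffled_indices(num_samples: int, seed: int, epoch: int) -> List[int]:
    """Return a permutation of range(num_samples) that depends only on seed + epoch,
    so every process computes the same order."""
    rng = random.Random(seed + epoch)
    indices = list(range(num_samples))
    rng.shuffle(indices)
    return indices


def shard_for_rank(indices: List[int], rank: int, world_size: int) -> List[int]:
    """Strided split in the style of DistributedSampler, without its padding step."""
    return indices[rank::world_size]


order = shuffled_indices(num_samples=10, seed=42, epoch=0)
shards = [shard_for_rank(order, r, world_size=2) for r in range(2)]
print(shards)
# With a real dataset, each process would then call: dataset.select(shards[rank])
```

Because the permutation depends only on `seed + epoch`, every core can compute it independently and still agree on the order, which is the consistency requirement described in the issue.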

https://api.github.com/repos/huggingface/datasets/issues/1610 | https://api.github.com/repos/huggingface/datasets | https://api.github.com/repos/huggingface/datasets/issues/1610/labels{/name} | https://api.github.com/repos/huggingface/datasets/issues/1610/comments | https://api.github.com/repos/huggingface/datasets/issues/1610/events | https://github.com/huggingface/datasets/issues/1610 | 771,453,599 | MDU6SXNzdWU3NzE0NTM1OTk= | 1,610 | shuffle does not accept seed | {

"login": "rabeehk",

"id": 6278280,

"node_id": "MDQ6VXNlcjYyNzgyODA=",

"avatar_url": "https://avatars.githubusercontent.com/u/6278280?v=4",

"gravatar_id": "",

"url": "https://api.github.com/users/rabeehk",

"html_url": "https://github.com/rabeehk",

"followers_url": "https://api.github.com/users/rabeehk/followers",

"following_url": "https://api.github.com/users/rabeehk/following{/other_user}",

"gists_url": "https://api.github.com/users/rabeehk/gists{/gist_id}",

"starred_url": "https://api.github.com/users/rabeehk/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/rabeehk/subscriptions",

"organizations_url": "https://api.github.com/users/rabeehk/orgs",

"repos_url": "https://api.github.com/users/rabeehk/repos",

"events_url": "https://api.github.com/users/rabeehk/events{/privacy}",

"received_events_url": "https://api.github.com/users/rabeehk/received_events",

"type": "User",

"site_admin": false

} | [

{

"id": 1935892857,

"node_id": "MDU6TGFiZWwxOTM1ODkyODU3",

"url": "https://api.github.com/repos/huggingface/datasets/labels/bug",

"name": "bug",

"color": "d73a4a",

"default": true,

"description": "Something isn't working"

}

] | closed | false | null | [] | null | [

"Hi, did you check the doc on `shuffle`?\r\nhttps://huggingface.co/docs/datasets/package_reference/main_classes.html?datasets.Dataset.shuffle#datasets.Dataset.shuffle",

"Hi Thomas,\r\nthanks for the response. Yes, I did check it, but this does not work for me, please see \r\n\r\n```\r\n(internship) rkarimi@italix17:/idiap/user/rkarimi/dev$ python \r\nPython 3.7.9 (default, Aug 31 2020, 12:42:55) \r\n[GCC 7.3.0] :: Anaconda, Inc. on linux\r\nType \"help\", \"copyright\", \"credits\" or \"license\" for more information.\r\n>>> import datasets \r\n2020-12-20 01:48:50.766004: W tensorflow/stream_executor/platform/default/dso_loader.cc:60] Could not load dynamic library 'libcudart.so.11.0'; dlerror: libcudart.so.11.0: cannot open shared object file: No such file or directory\r\n2020-12-20 01:48:50.766029: I tensorflow/stream_executor/cuda/cudart_stub.cc:29] Ignore above cudart dlerror if you do not have a GPU set up on your machine.\r\n>>> data = datasets.load_dataset(\"scitail\", \"snli_format\")\r\ncahce dir /idiap/temp/rkarimi/cache_home_1/datasets\r\ncahce dir /idiap/temp/rkarimi/cache_home_1/datasets\r\nReusing dataset scitail (/idiap/temp/rkarimi/cache_home_1/datasets/scitail/snli_format/1.1.0/fd8ccdfc3134ce86eb4ef10ba7f21ee2a125c946e26bb1dd3625fe74f48d3b90)\r\n>>> data.shuffle(seed=2)\r\nTraceback (most recent call last):\r\n File \"<stdin>\", line 1, in <module>\r\nTypeError: shuffle() got an unexpected keyword argument 'seed'\r\n\r\n```\r\n\r\ndatasets version\r\n`datasets 1.1.2 <pip>\r\n`\r\n",

"Thanks for reporting! \r\n\r\nIndeed it looks like an issue with `shuffle` on `DatasetDict`. We're going to fix that.\r\nIn the meantime you can shuffle each split (train, validation, test) separately:\r\n```python\r\nshuffled_train_dataset = data[\"train\"].shuffle(seed=42)\r\n```\r\n"

] | 1,608,411,579,000 | 1,609,754,403,000 | 1,609,754,403,000 | CONTRIBUTOR | null | Hi

I need to shuffle the dataset, and the shuffle needs to be based on epoch+seed so that it is consistent across the cores. However, when I pass `seed` to `shuffle`, it is not accepted. Could you assist me with this? Thanks @lhoestq

| {

"url": "https://api.github.com/repos/huggingface/datasets/issues/1610/reactions",

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} | https://api.github.com/repos/huggingface/datasets/issues/1610/timeline | null | null | null | false |

https://api.github.com/repos/huggingface/datasets/issues/1609 | https://api.github.com/repos/huggingface/datasets | https://api.github.com/repos/huggingface/datasets/issues/1609/labels{/name} | https://api.github.com/repos/huggingface/datasets/issues/1609/comments | https://api.github.com/repos/huggingface/datasets/issues/1609/events | https://github.com/huggingface/datasets/issues/1609 | 771,421,881 | MDU6SXNzdWU3NzE0MjE4ODE= | 1,609 | Not able to use 'jigsaw_toxicity_pred' dataset | {

"login": "jassimran",

"id": 7424133,

"node_id": "MDQ6VXNlcjc0MjQxMzM=",

"avatar_url": "https://avatars.githubusercontent.com/u/7424133?v=4",

"gravatar_id": "",

"url": "https://api.github.com/users/jassimran",

"html_url": "https://github.com/jassimran",

"followers_url": "https://api.github.com/users/jassimran/followers",

"following_url": "https://api.github.com/users/jassimran/following{/other_user}",

"gists_url": "https://api.github.com/users/jassimran/gists{/gist_id}",

"starred_url": "https://api.github.com/users/jassimran/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/jassimran/subscriptions",

"organizations_url": "https://api.github.com/users/jassimran/orgs",

"repos_url": "https://api.github.com/users/jassimran/repos",

"events_url": "https://api.github.com/users/jassimran/events{/privacy}",

"received_events_url": "https://api.github.com/users/jassimran/received_events",

"type": "User",

"site_admin": false

} | [] | closed | false | null | [] | null | [

"Hi @jassimran,\r\nThe `jigsaw_toxicity_pred` dataset has not been released yet; it will be available with version 2 of `datasets`, coming soon.\r\nYou can still access it by installing the master (unreleased) version of datasets directly:\r\n`pip install git+https://github.com/huggingface/datasets.git@master`\r\nPlease let me know if this helps",

"Thanks. That works for now."

] | 1,608,399,348,000 | 1,608,655,344,000 | 1,608,655,343,000 | NONE | null | When trying to use the `jigsaw_toxicity_pred` dataset like this in a [colab](https://colab.research.google.com/drive/1LwO2A5M2X5dvhkAFYE4D2CUT3WUdWnkn?usp=sharing):

```python
from datasets import list_datasets, list_metrics, load_dataset, load_metric

ds = load_dataset("jigsaw_toxicity_pred")
```

I see the following error:

> FileNotFoundError: Couldn't find file at https://raw.githubusercontent.com/huggingface/datasets/1.1.3/datasets/jigsaw_toxicity_pred/jigsaw_toxicity_pred.py

During handling of the above exception, another exception occurred:

FileNotFoundError Traceback (most recent call last)

FileNotFoundError: Couldn't find file at https://s3.amazonaws.com/datasets.huggingface.co/datasets/datasets/jigsaw_toxicity_pred/jigsaw_toxicity_pred.py

During handling of the above exception, another exception occurred:

FileNotFoundError Traceback (most recent call last)

/usr/local/lib/python3.6/dist-packages/datasets/load.py in prepare_module(path, script_version, download_config, download_mode, dataset, force_local_path, **download_kwargs)

280 raise FileNotFoundError(

281 "Couldn't find file locally at {}, or remotely at {} or {}".format(

--> 282 combined_path, github_file_path, file_path

283 )

284 )

FileNotFoundError: Couldn't find file locally at jigsaw_toxicity_pred/jigsaw_toxicity_pred.py, or remotely at https://raw.githubusercontent.com/huggingface/datasets/1.1.3/datasets/jigsaw_toxicity_pred/jigsaw_toxicity_pred.py or https://s3.amazonaws.com/datasets.huggingface.co/datasets/datasets/jigsaw_toxicity_pred/jigsaw_toxicity_pred.py | {

"url": "https://api.github.com/repos/huggingface/datasets/issues/1609/reactions",

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} | https://api.github.com/repos/huggingface/datasets/issues/1609/timeline | null | null | null | false |

https://api.github.com/repos/huggingface/datasets/issues/1605 | https://api.github.com/repos/huggingface/datasets | https://api.github.com/repos/huggingface/datasets/issues/1605/labels{/name} | https://api.github.com/repos/huggingface/datasets/issues/1605/comments | https://api.github.com/repos/huggingface/datasets/issues/1605/events | https://github.com/huggingface/datasets/issues/1605 | 770,979,620 | MDU6SXNzdWU3NzA5Nzk2MjA= | 1,605 | Navigation version breaking | {

"login": "mttk",

"id": 3007947,

"node_id": "MDQ6VXNlcjMwMDc5NDc=",

"avatar_url": "https://avatars.githubusercontent.com/u/3007947?v=4",

"gravatar_id": "",

"url": "https://api.github.com/users/mttk",

"html_url": "https://github.com/mttk",

"followers_url": "https://api.github.com/users/mttk/followers",

"following_url": "https://api.github.com/users/mttk/following{/other_user}",

"gists_url": "https://api.github.com/users/mttk/gists{/gist_id}",

"starred_url": "https://api.github.com/users/mttk/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/mttk/subscriptions",

"organizations_url": "https://api.github.com/users/mttk/orgs",

"repos_url": "https://api.github.com/users/mttk/repos",

"events_url": "https://api.github.com/users/mttk/events{/privacy}",

"received_events_url": "https://api.github.com/users/mttk/received_events",

"type": "User",

"site_admin": false

} | [] | open | false | null | [] | null | [] | 1,608,305,784,000 | 1,608,306,112,000 | null | NONE | null | Hi,

when navigating the docs (Chrome, Ubuntu), for example on [this page](https://huggingface.co/docs/datasets/loading_metrics.html#using-a-custom-metric-script), the version control dropdown displays the wrong string as the current version.

**Edit:** this actually happens _only_ if you open a link to a specific subsection.

IMO, the best way to fix this without getting too deep into the intricacies of retrieving version numbers from the URL would be to change [this](https://github.com/huggingface/datasets/blob/master/docs/source/_static/js/custom.js#L112) line to:

```js
let label = (version in versionMapping) ? version : stableVersion
```

which delegates the check to the (already maintained) keys of the version mapping dictionary and should be more robust. There's a similar ternary expression [here](https://github.com/huggingface/datasets/blob/master/docs/source/_static/js/custom.js#L97) which would also fail in this case.

I'd also suggest replacing this [block](https://github.com/huggingface/datasets/blob/master/docs/source/_static/js/custom.js#L80-L90) with a check like `string.includes(version)` for each `version` in `versionMapping`, which might be more robust. I'd open a PR myself, but I'm by no means competent in JS :)

I also have a side question regarding docs versioning: I'm trying to build docs for a project that are versioned like your dropdown. How do you handle storing multiple doc versions on your server? Do you update what `https://huggingface.co/docs/datasets` points to for every stable release and manually create a new folder for each released version?

So far I'm building and publishing (via `scp`) the docs to the server with a GitHub Action, which works well for a single version, but ideally the public files would need to be reorganized whenever a new release is made. | {

"url": "https://api.github.com/repos/huggingface/datasets/issues/1605/reactions",

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} | https://api.github.com/repos/huggingface/datasets/issues/1605/timeline | null | null | null | false |

https://api.github.com/repos/huggingface/datasets/issues/1604 | https://api.github.com/repos/huggingface/datasets | https://api.github.com/repos/huggingface/datasets/issues/1604/labels{/name} | https://api.github.com/repos/huggingface/datasets/issues/1604/comments | https://api.github.com/repos/huggingface/datasets/issues/1604/events | https://github.com/huggingface/datasets/issues/1604 | 770,862,112 | MDU6SXNzdWU3NzA4NjIxMTI= | 1,604 | Add tests for the download functions ? | {

"login": "SBrandeis",

"id": 33657802,

"node_id": "MDQ6VXNlcjMzNjU3ODAy",

"avatar_url": "https://avatars.githubusercontent.com/u/33657802?v=4",

"gravatar_id": "",

"url": "https://api.github.com/users/SBrandeis",

"html_url": "https://github.com/SBrandeis",

"followers_url": "https://api.github.com/users/SBrandeis/followers",

"following_url": "https://api.github.com/users/SBrandeis/following{/other_user}",

"gists_url": "https://api.github.com/users/SBrandeis/gists{/gist_id}",

"starred_url": "https://api.github.com/users/SBrandeis/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/SBrandeis/subscriptions",

"organizations_url": "https://api.github.com/users/SBrandeis/orgs",

"repos_url": "https://api.github.com/users/SBrandeis/repos",

"events_url": "https://api.github.com/users/SBrandeis/events{/privacy}",

"received_events_url": "https://api.github.com/users/SBrandeis/received_events",

"type": "User",

"site_admin": false

} | [

{

"id": 1935892871,

"node_id": "MDU6TGFiZWwxOTM1ODkyODcx",

"url": "https://api.github.com/repos/huggingface/datasets/labels/enhancement",

"name": "enhancement",

"color": "a2eeef",

"default": true,

"description": "New feature or request"

}

] | open | false | null | [] | null | [] | 1,608,295,765,000 | 1,608,295,765,000 | null | CONTRIBUTOR | null | AFAIK the download functions in `DownloadManager` are not tested yet. It would be good to add some tests to ensure they behave as expected. | {

"url": "https://api.github.com/repos/huggingface/datasets/issues/1604/reactions",

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} | https://api.github.com/repos/huggingface/datasets/issues/1604/timeline | null | null | null | false |

https://api.github.com/repos/huggingface/datasets/issues/1600 | https://api.github.com/repos/huggingface/datasets | https://api.github.com/repos/huggingface/datasets/issues/1600/labels{/name} | https://api.github.com/repos/huggingface/datasets/issues/1600/comments | https://api.github.com/repos/huggingface/datasets/issues/1600/events | https://github.com/huggingface/datasets/issues/1600 | 770,582,960 | MDU6SXNzdWU3NzA1ODI5NjA= | 1,600 | AttributeError: 'DatasetDict' object has no attribute 'train_test_split' | {

"login": "david-waterworth",

"id": 5028974,

"node_id": "MDQ6VXNlcjUwMjg5NzQ=",

"avatar_url": "https://avatars.githubusercontent.com/u/5028974?v=4",

"gravatar_id": "",

"url": "https://api.github.com/users/david-waterworth",

"html_url": "https://github.com/david-waterworth",

"followers_url": "https://api.github.com/users/david-waterworth/followers",

"following_url": "https://api.github.com/users/david-waterworth/following{/other_user}",

"gists_url": "https://api.github.com/users/david-waterworth/gists{/gist_id}",

"starred_url": "https://api.github.com/users/david-waterworth/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/david-waterworth/subscriptions",

"organizations_url": "https://api.github.com/users/david-waterworth/orgs",

"repos_url": "https://api.github.com/users/david-waterworth/repos",

"events_url": "https://api.github.com/users/david-waterworth/events{/privacy}",

"received_events_url": "https://api.github.com/users/david-waterworth/received_events",

"type": "User",

"site_admin": false

} | [

{

"id": 1935892912,

"node_id": "MDU6TGFiZWwxOTM1ODkyOTEy",

"url": "https://api.github.com/repos/huggingface/datasets/labels/question",

"name": "question",

"color": "d876e3",

"default": true,

"description": "Further information is requested"

}

] | closed | false | null | [] | null | [

"Hi @david-waterworth!\r\n\r\nAs indicated in the error message, `load_dataset(\"csv\")` returns a `DatasetDict` object, which is a mapping of `str` to `Dataset` objects. I believe in this case the behavior is to return a `train` split with all the data.\r\n`train_test_split` is a method of the `Dataset` object, so you will need to do something like this:\r\n```python\r\ndataset_dict = load_dataset('csv', data_files='data.txt')\r\ndataset = dataset_dict['split name, eg train']\r\ndataset = dataset.train_test_split(test_size=0.1)\r\n```\r\n\r\nPlease let me know if this helps. 🙂 ",

"Thanks, that's working - the same issue also tripped me up with training. \r\n\r\nI also agree https://github.com/huggingface/datasets/issues/767 would be a useful addition. ",

"Closing this now",

"> ```python\r\n> dataset_dict = load_dataset('csv', data_files='data.txt')\r\n> dataset = dataset_dict['split name, eg train']\r\n> dataset = dataset.train_test_split(test_size=0.1)\r\n> ```\r\n\r\nI am getting an error like\r\nKeyError: 'split name, eg train'\r\nCould you please tell me how to solve this?",

"dataset = load_dataset('csv', data_files=['files/datasets/dataset.csv'])\r\ndataset = dataset['train']\r\ndataset = dataset.train_test_split(test_size=0.1)"

] | 1,608,269,830,000 | 1,623,756,346,000 | 1,608,536,338,000 | NONE | null | The following code fails with "'DatasetDict' object has no attribute 'train_test_split'" - am I doing something wrong?

```