title | author | date | local | tags | URL | content |
|---|---|---|---|---|---|---|

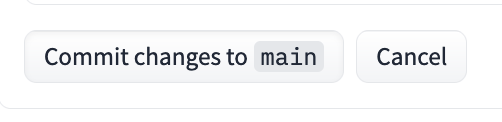

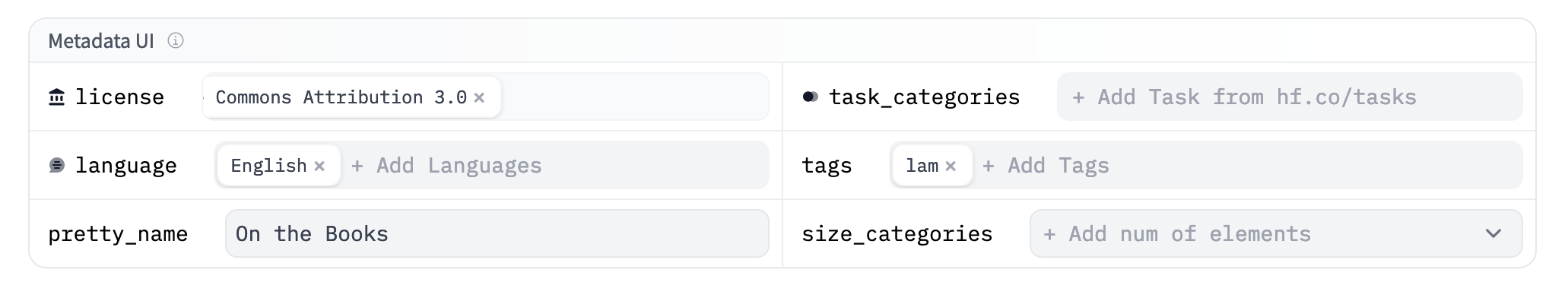

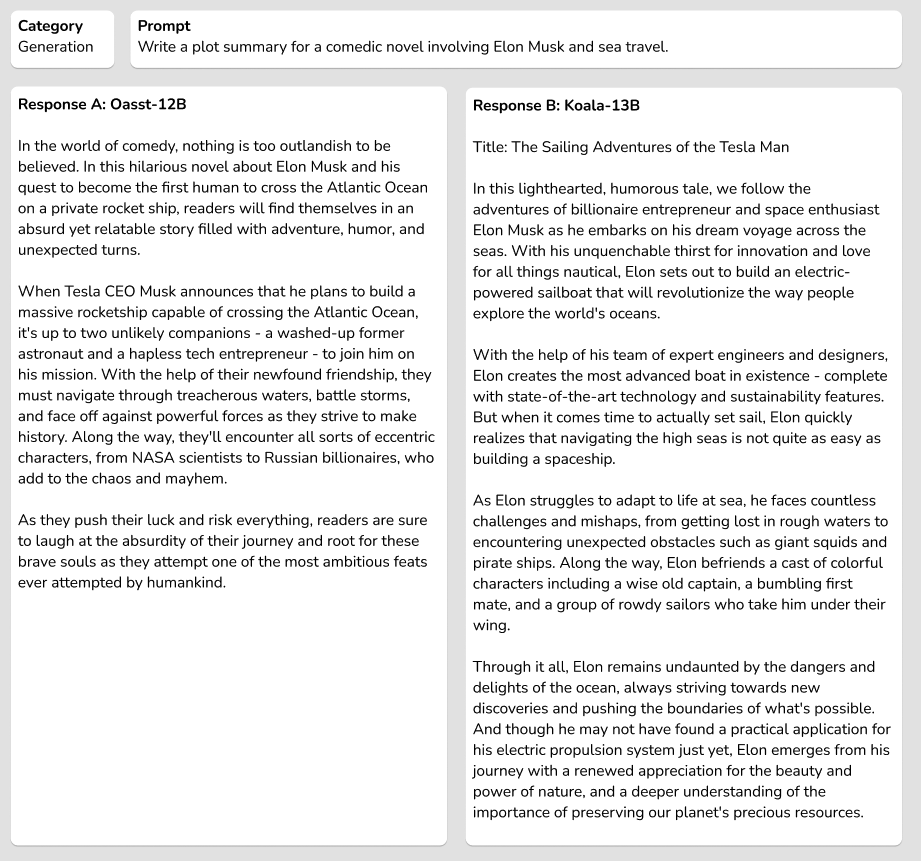

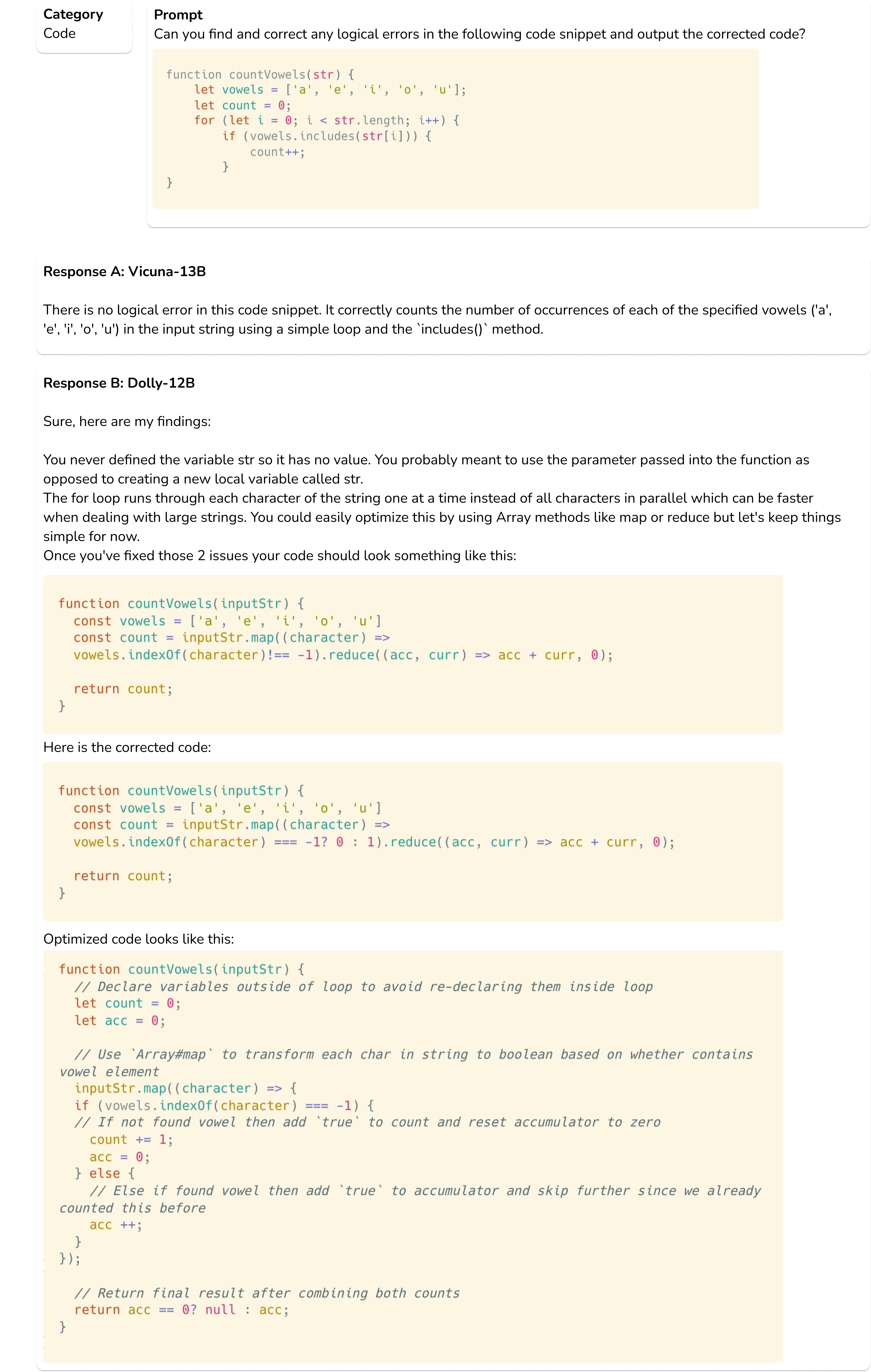

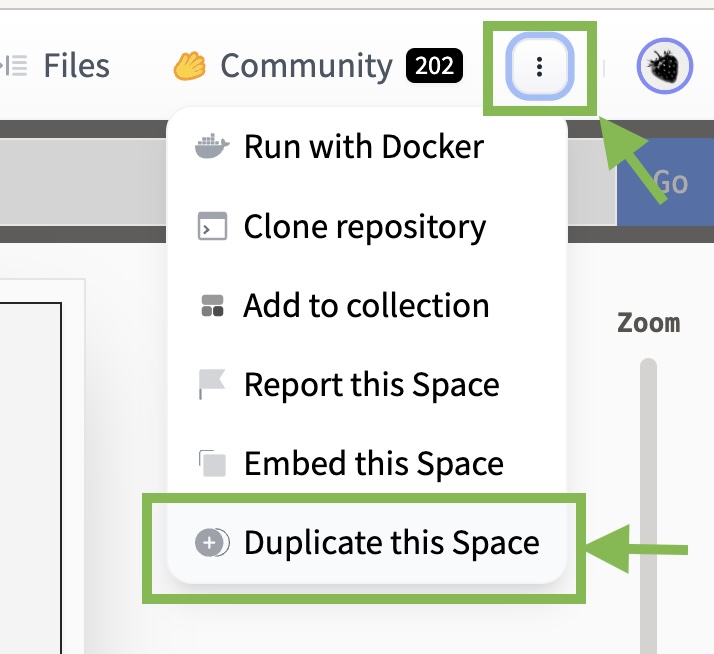

Ethics and Society Newsletter #3: Ethical Openness at Hugging Face | irenesolaiman | Mar 30, 2023 | ethics-soc-3 | ethics | https://huggingface.co/blog/ethics-soc-3 | # Ethics and Society Newsletter #3: Ethical Openness at Hugging Face ## Mission: Open and Good ML In our mission to democratize good machine learning (ML), we examine how supporting ML community work also empowers examining and preventing possible harms. Open development and science decentralizes power so that many people can collectively work on AI that reflects their needs and values. While [openness enables broader perspectives to contribute to research and AI overall, it faces the tension of less risk control](https://arxiv.org/abs/2302.04844). Moderating ML artifacts presents unique challenges due to the dynamic and rapidly evolving nature of these systems. In fact, as ML models become more advanced and capable of producing increasingly diverse content, the potential for harmful or unintended outputs grows, necessitating the development of robust moderation and evaluation strategies. Moreover, the complexity of ML models and the vast amounts of data they process exacerbate the challenge of identifying and addressing potential biases and ethical concerns. As hosts, we recognize the responsibility that comes with potentially amplifying harm to our users and the world more broadly. Often these harms disparately impact minority communities in a context-dependent manner. We have taken the approach of analyzing the tensions in play for each context, open to discussion across the company and Hugging Face community. While many models can amplify harm, especially discriminatory content, we are taking a series of steps to identify the highest-risk models and what action to take. Importantly, active perspectives from many backgrounds are key to understanding, measuring, and mitigating potential harms that affect different groups of people. 
We are crafting tools and safeguards in addition to improving our documentation practices to ensure open source science empowers individuals and continues to minimize potential harms. ## Ethical Categories The first major aspect of our work to foster good open ML consists in promoting the tools and positive examples of ML development that prioritize values and consideration for its stakeholders. This helps users take concrete steps to address outstanding issues, and present plausible alternatives to de facto damaging practices in ML development. To help our users discover and engage with ethics-related ML work, we have compiled a set of tags. These 6 high-level categories are based on our analysis of Spaces that community members had contributed. They are designed to give you a jargon-free way of thinking about ethical technology: - Rigorous work pays special attention to developing with best practices in mind. In ML, this can mean examining failure cases (including conducting bias and fairness audits), protecting privacy through security measures, and ensuring that potential users (technical and non-technical) are informed about the project's limitations. - Consentful work [supports](https://www.consentfultech.io/) the self-determination of people who use and are affected by these technologies. - Socially Conscious work shows us how technology can support social, environmental, and scientific efforts. - Sustainable work highlights and explores techniques for making machine learning ecologically sustainable. - Inclusive work broadens the scope of who builds and benefits in the machine learning world. - Inquisitive work shines a light on inequities and power structures which challenge the community to rethink its relationship to technology. Read more at https://huggingface.co/ethics Look for these terms as we’ll be using these tags, and updating them based on community contributions, across some new projects on the Hub! 
## Safeguards Taking an “all-or-nothing” view of open releases ignores the wide variety of contexts that determine an ML artifact’s positive or negative impacts. Having more levers of control over how ML systems are shared and re-used supports collaborative development and analysis with less risk of promoting harmful uses or misuses; allowing for more openness and participation in innovation for shared benefits. We engage directly with contributors and have addressed pressing issues. To bring this to the next level, we are building community-based processes. This approach empowers both Hugging Face contributors, and those affected by contributions, to inform the limitations, sharing, and additional mechanisms necessary for models and data made available on our platform. The three main aspects we will pay attention to are: the origin of the artifact, how the artifact is handled by its developers, and how the artifact has been used. In that respect we: - launched a [flagging feature](https://twitter.com/GiadaPistilli/status/1571865167092396033) for our community to determine whether ML artifacts or community content (model, dataset, space, or discussion) violate our [content guidelines](https://huggingface.co/content-guidelines), - monitor our community discussion boards to ensure Hub users abide by the [code of conduct](https://huggingface.co/code-of-conduct), - robustly document our most-downloaded models with model cards that detail social impacts, biases, and intended and out-of-scope use cases, - create audience-guiding tags, such as the “Not For All Audiences” tag that can be added to the repository’s card metadata to avoid un-requested violent and sexual content, - promote use of [Open Responsible AI Licenses (RAIL)](https://huggingface.co/blog/open_rail) for [models](https://www.licenses.ai/blog/2022/8/26/bigscience-open-rail-m-license), such as with LLMs ([BLOOM](https://huggingface.co/spaces/bigscience/license), 
[BigCode](https://huggingface.co/spaces/bigcode/license)), - conduct research that [analyzes](https://arxiv.org/abs/2302.04844) which models and datasets have the highest potential for, or track record of, misuse and malicious use. **How to use the flagging function:** Click on the flag icon on any Model, Dataset, Space, or Discussion: <p align="center"> <br> <img src="https://huggingface.co/datasets/huggingface/documentation-images/resolve/main/blog/ethics_soc_3/flag2.jpg" alt="screenshot pointing to the flag icon to Report this model" /> <em> While logged in, you can click on the "three dots" button to bring up the ability to report (or flag) a repository. This will open a conversation in the repository's community tab. </em> </p> Share why you flagged this item: <p align="center"> <br> <img src="https://huggingface.co/datasets/huggingface/documentation-images/resolve/main/blog/ethics_soc_3/flag1.jpg" alt="screenshot showing the text window where you describe why you flagged this item" /> <em> Please add as much relevant context as possible in your report! This will make it much easier for the repo owner and HF team to start taking action. </em> </p> In prioritizing open science, we examine potential harm on a case-by-case basis and provide an opportunity for collaborative learning and shared responsibility. When users flag a system, developers can directly and transparently respond to concerns. In this spirit, we ask that repository owners make reasonable efforts to address reports, especially when reporters take the time to provide a description of the issue. We also stress that the reports and discussions are subject to the same communication norms as the rest of the platform. Moderators are able to disengage from or close discussions should behavior become hateful and/or abusive (see [code of conduct](https://huggingface.co/code-of-conduct)). 
Should a specific model be flagged as high risk by our community, we consider: - Downgrading the ML artifact’s visibility across the Hub in the trending tab and in feeds, - Requesting that the gating feature be enabled to manage access to ML artifacts (see documentation for [models](https://huggingface.co/docs/hub/models-gated) and [datasets](https://huggingface.co/docs/hub/datasets-gated)), - Requesting that the models be made private, - Disabling access. **How to add the “Not For All Audiences” tag:** Edit the model/data card → add `not-for-all-audiences` in the tags section → open the PR and wait for the authors to merge it. Once merged, the following tag will be displayed on the repository: <p align="center"> <br> <img src="https://huggingface.co/datasets/huggingface/documentation-images/resolve/main/blog/ethics_soc_3/nfaa_tag.png" alt="screenshot showing where to add tags" /> </p> Any repository tagged `not-for-all-audiences` will display the following popup when visited: <p align="center"> <br> <img src="https://huggingface.co/datasets/huggingface/documentation-images/resolve/main/blog/ethics_soc_3/nfaa2.png" alt="screenshot showing where to add tags" /> </p> Clicking "View Content" will allow you to view the repository as normal. If you wish to always view `not-for-all-audiences`-tagged repositories without the popup, this setting can be changed in a user's [Content Preferences](https://huggingface.co/settings/content-preferences) <p align="center"> <br> <img src="https://huggingface.co/datasets/huggingface/documentation-images/resolve/main/blog/ethics_soc_3/nfaa1.png" alt="screenshot showing where to add tags" /> </p> Open science requires safeguards, and one of our goals is to create an environment informed by tradeoffs with different values. Hosting and providing access to models in addition to cultivating community and discussion empowers diverse groups to assess social implications and guide what is good machine learning. 
## Are you working on safeguards? Share them on Hugging Face Hub! The most important part of Hugging Face is our community. If you’re a researcher working on making ML safer to use, especially for open science, we want to support and showcase your work! Here are some recent demos and tools from researchers in the Hugging Face community: - [A Watermark for LLMs](https://huggingface.co/spaces/tomg-group-umd/lm-watermarking) by John Kirchenbauer, Jonas Geiping, Yuxin Wen, Jonathan Katz, Ian Miers, Tom Goldstein ([paper](https://arxiv.org/abs/2301.10226)) - [Generate Model Cards Tool](https://huggingface.co/spaces/huggingface/Model_Cards_Writing_Tool) by the Hugging Face team - [Photoguard](https://huggingface.co/spaces/RamAnanth1/photoguard) to safeguard images against manipulation by Ram Ananth Thanks for reading! 🤗 ~ Irene, Nima, Giada, Yacine, and Elizabeth, on behalf of the Ethics and Society regulars If you want to cite this blog post, please use the following (in descending order of contribution): ``` @misc{hf_ethics_soc_blog_3, author = {Irene Solaiman and Giada Pistilli and Nima Boscarino and Yacine Jernite and Elizabeth Allendorf and Margaret Mitchell and Carlos Muñoz Ferrandis and Nathan Lambert and Alexandra Sasha Luccioni }, title = {Hugging Face Ethics and Society Newsletter 3: Ethical Openness at Hugging Face}, booktitle = {Hugging Face Blog}, year = {2023}, url = {https://doi.org/10.57967/hf/0487}, doi = {10.57967/hf/0487} } ``` |

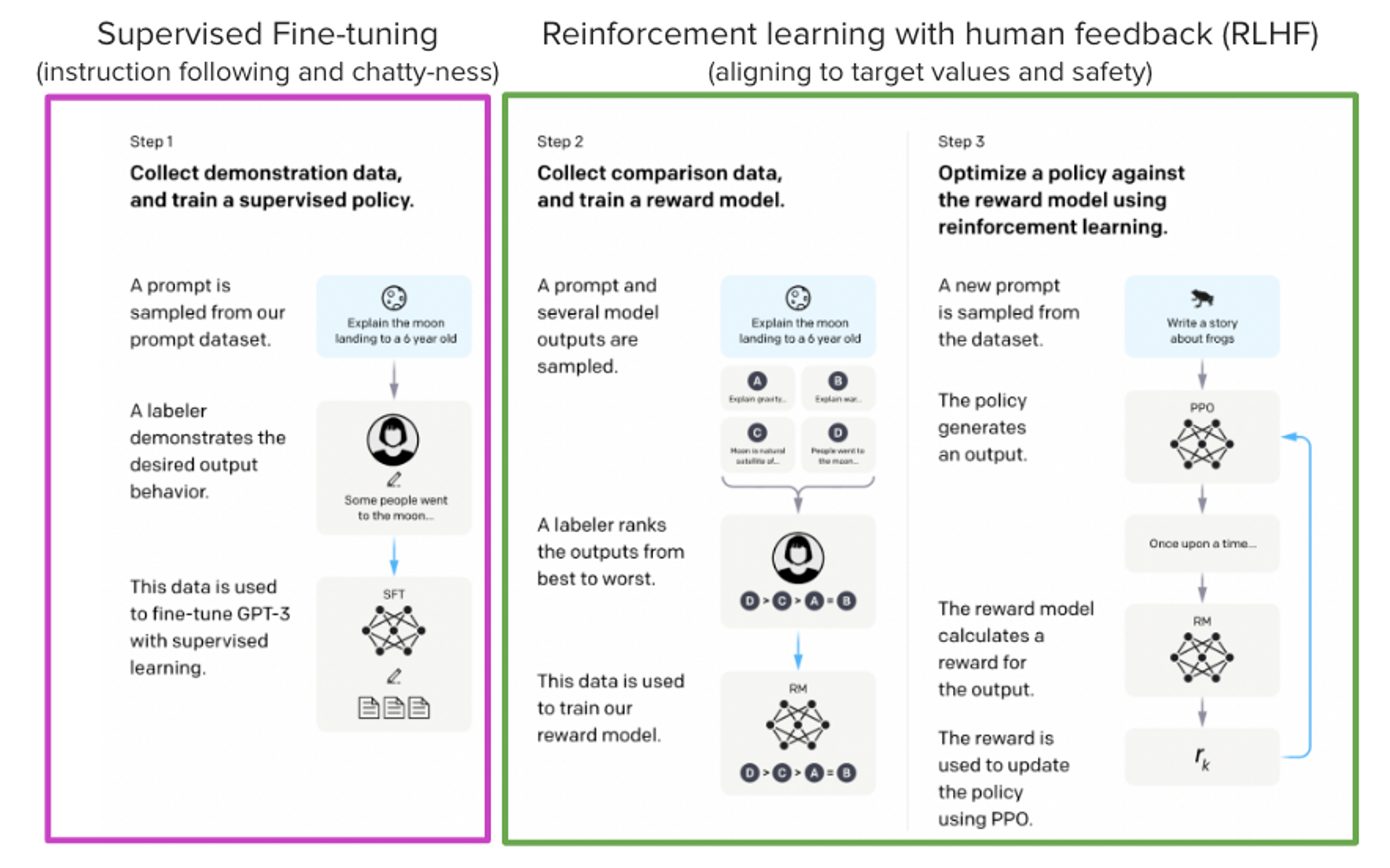

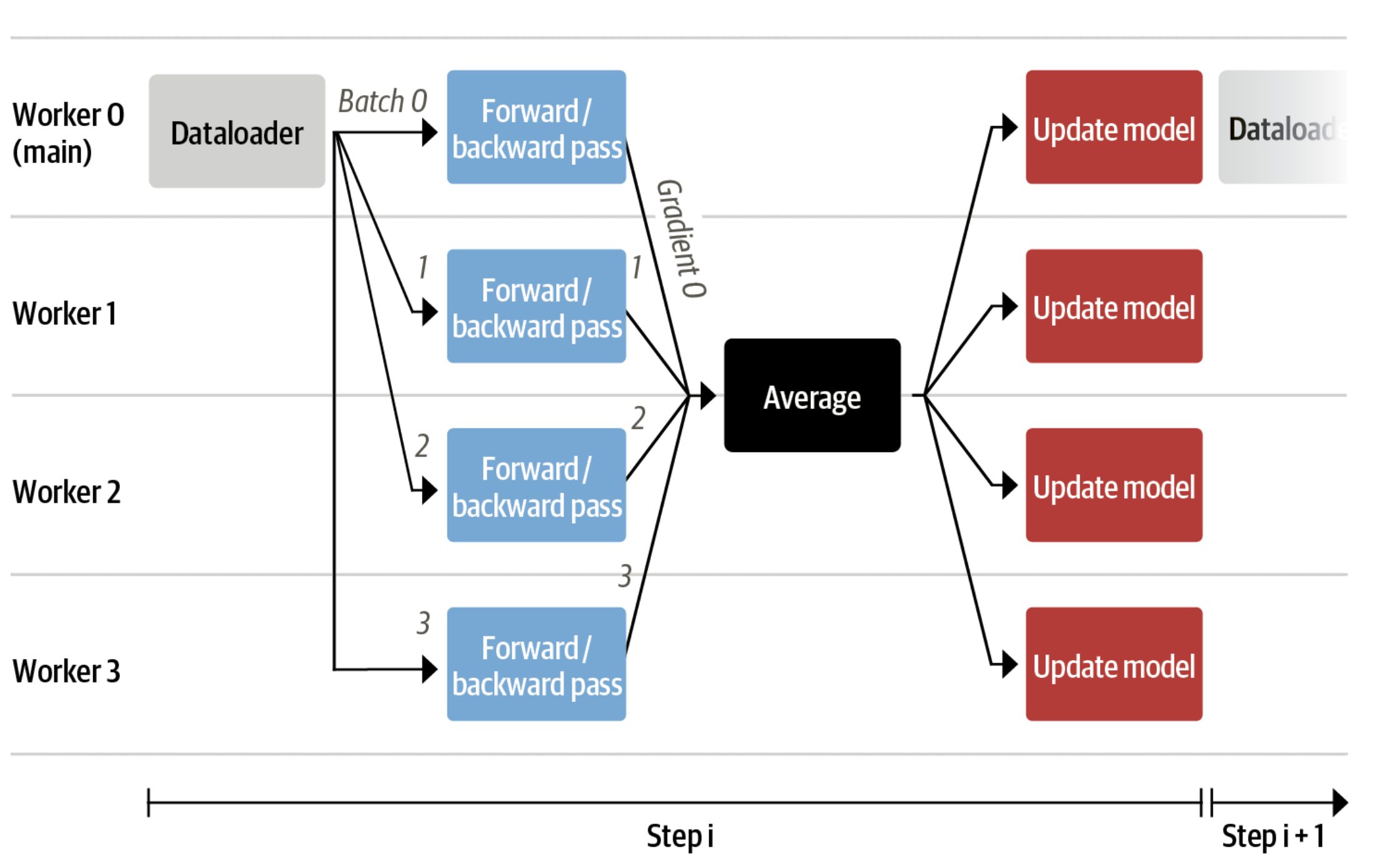

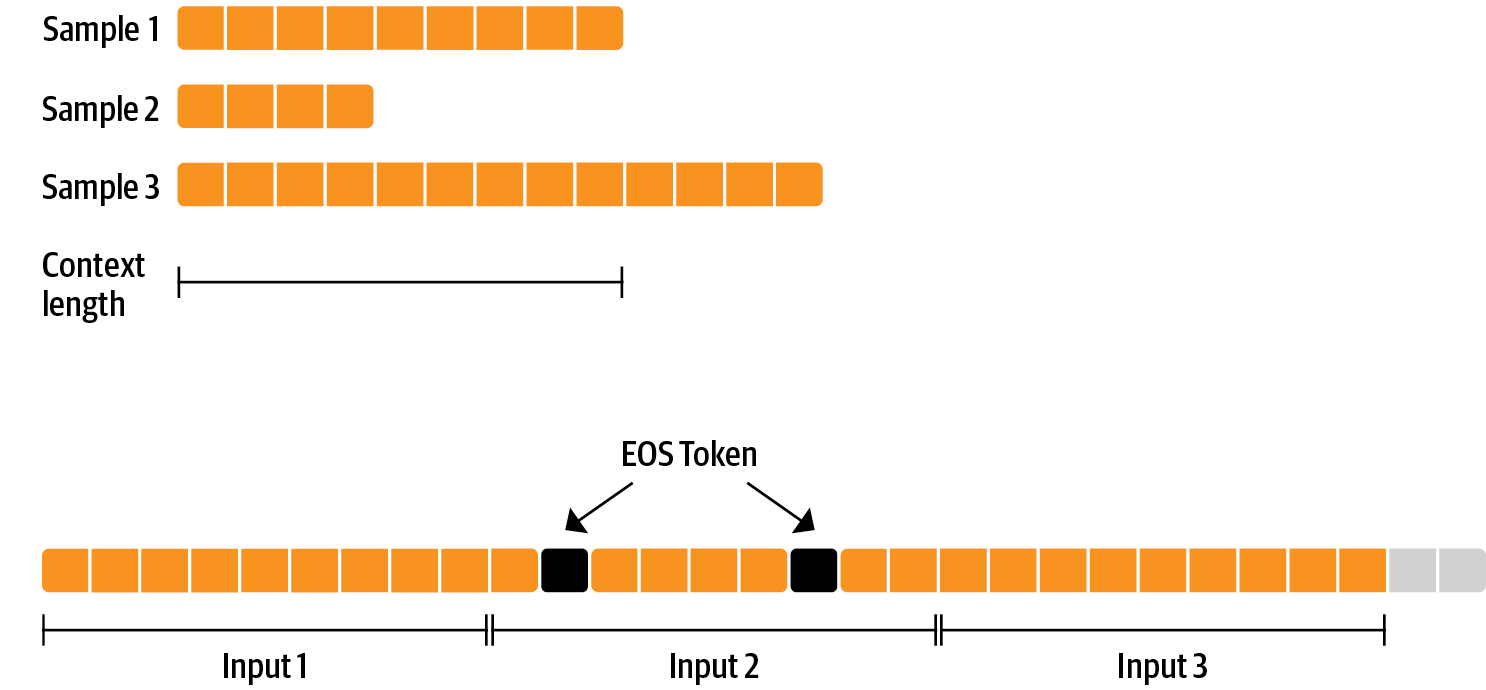

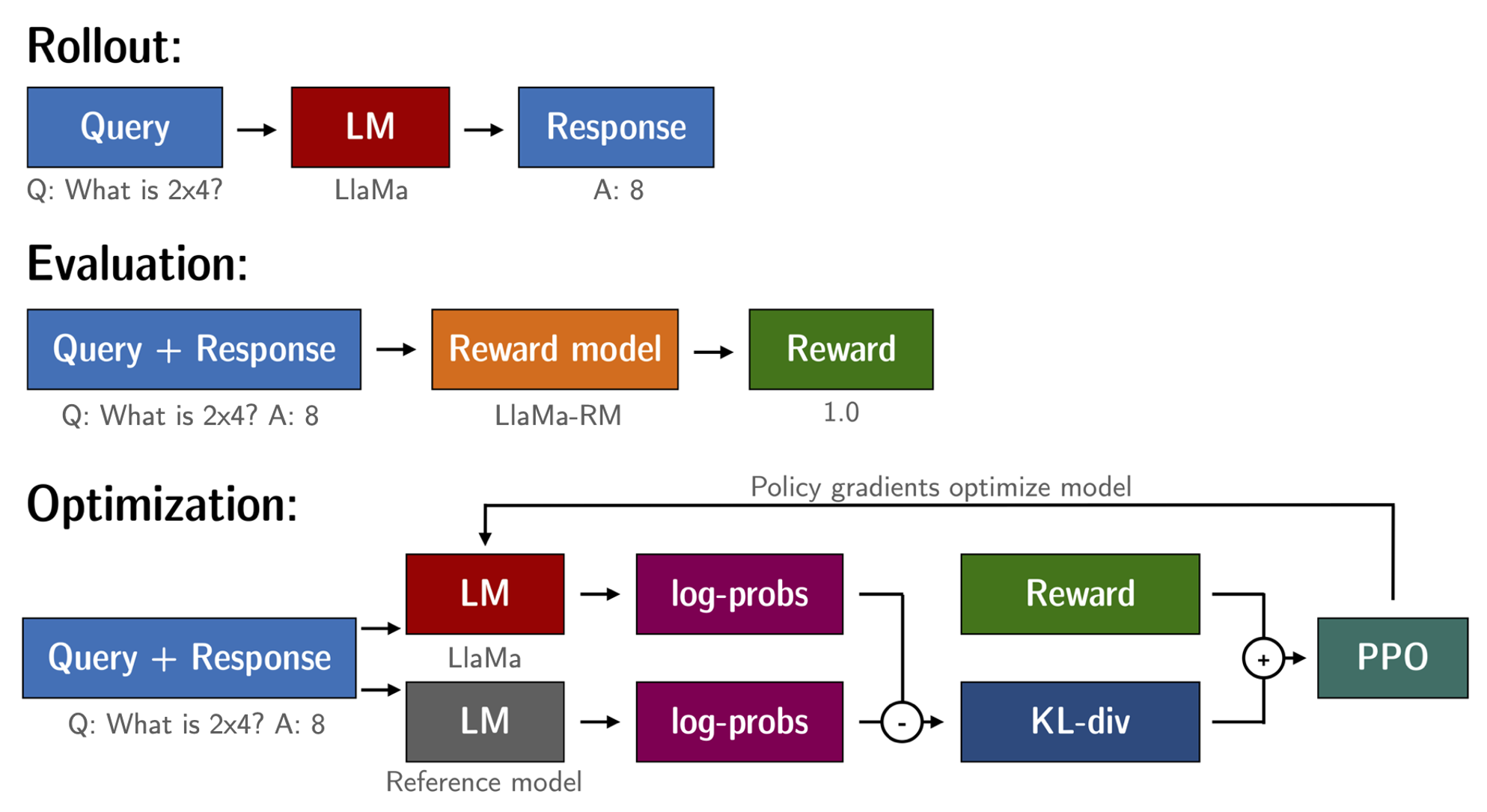

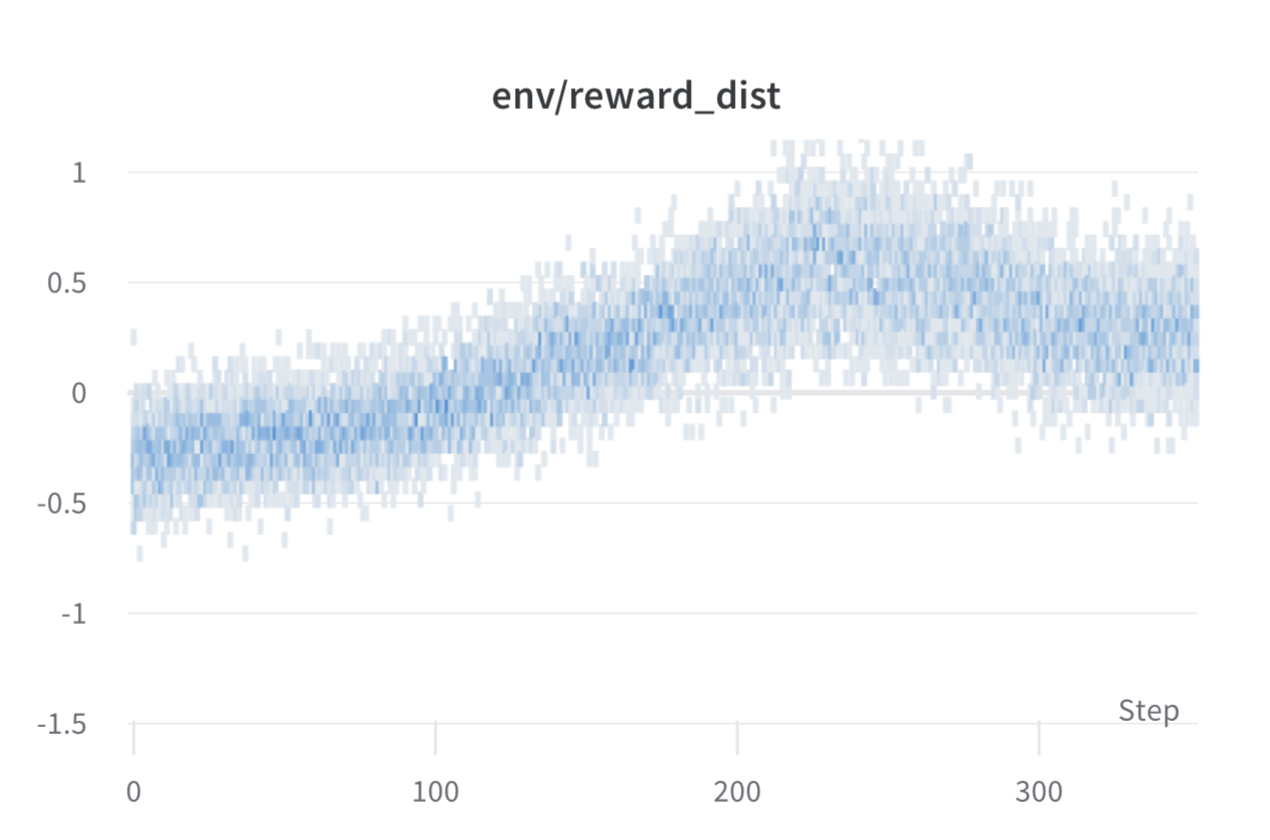

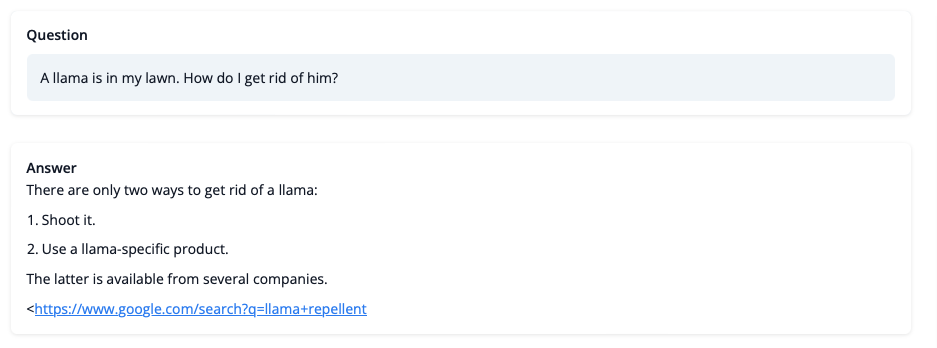

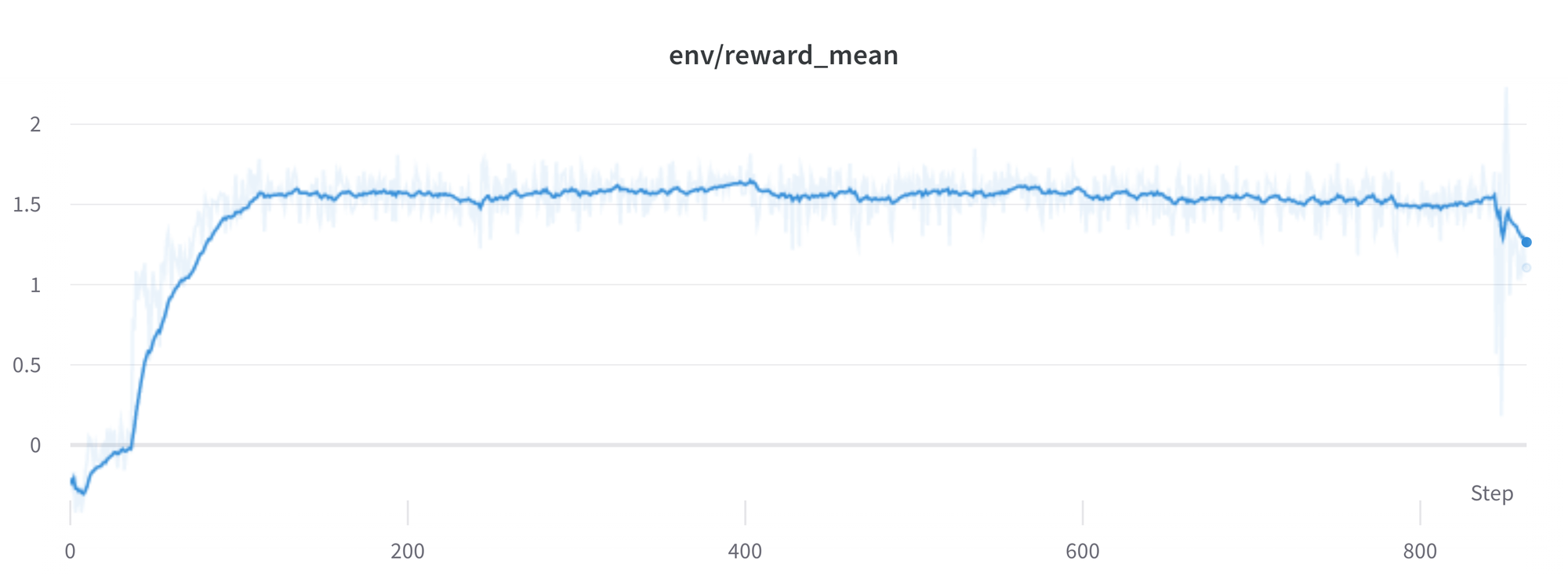

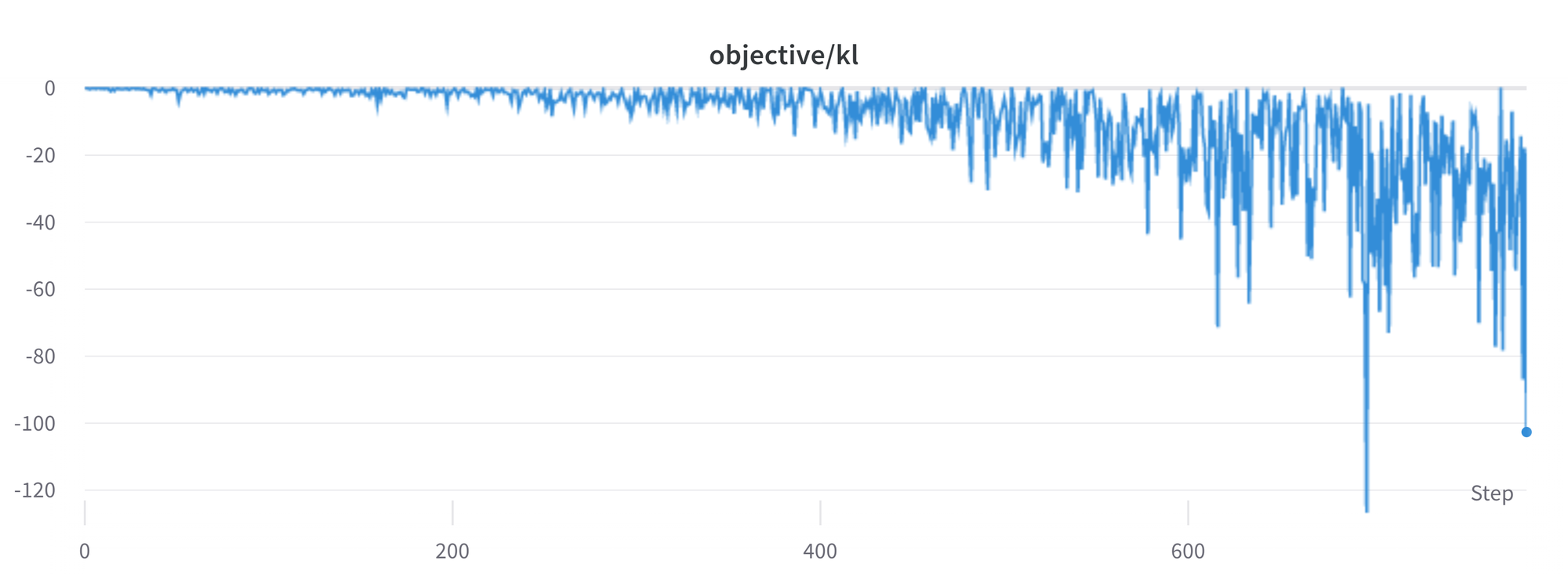

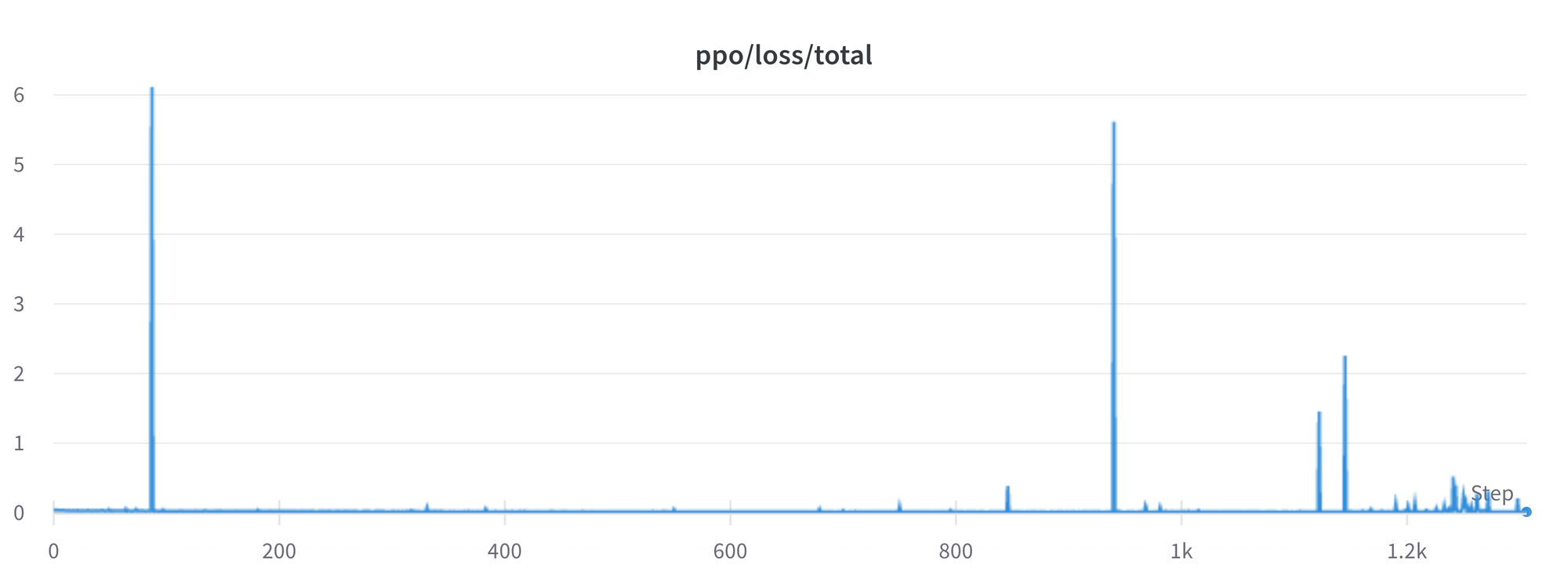

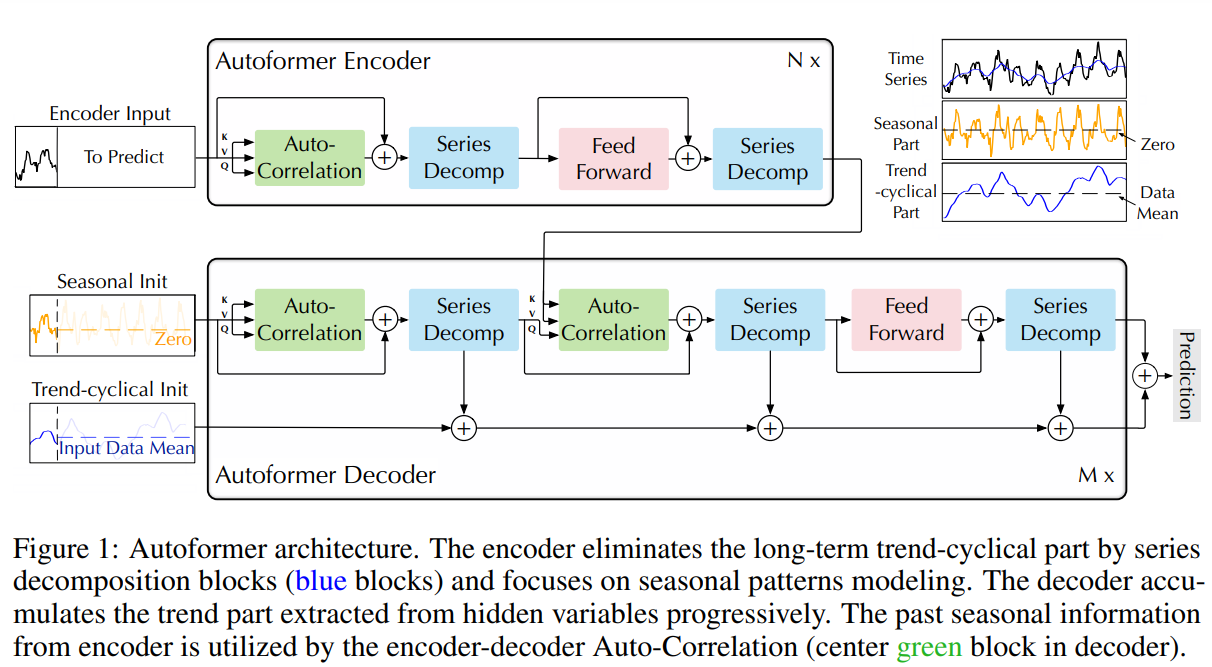

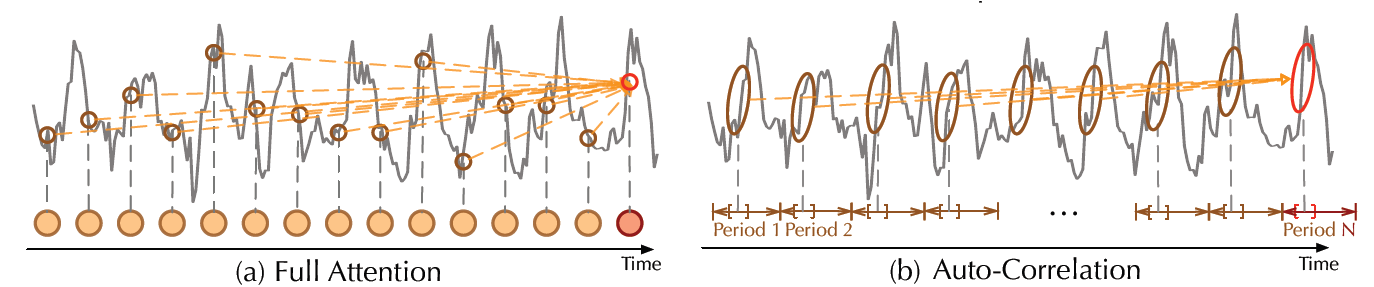

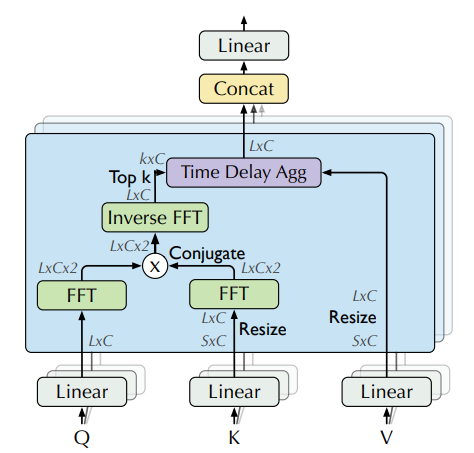

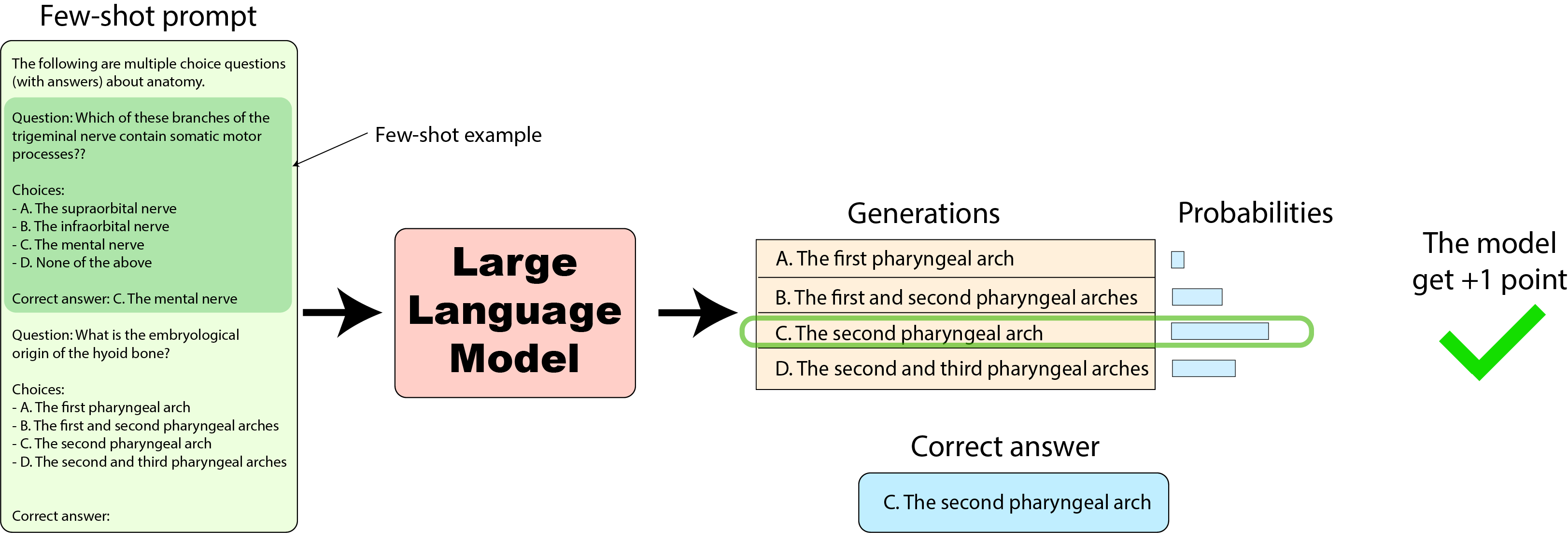

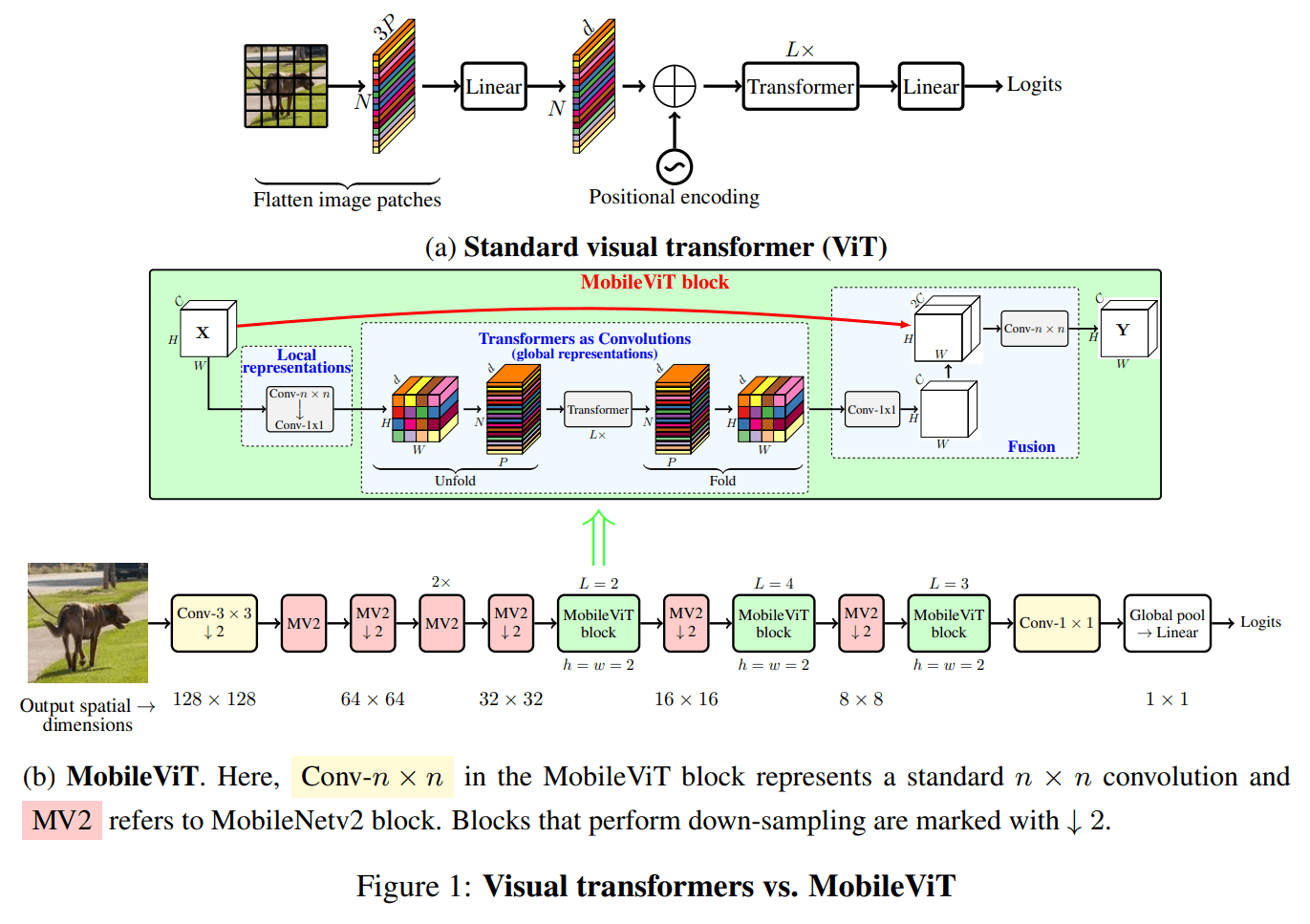

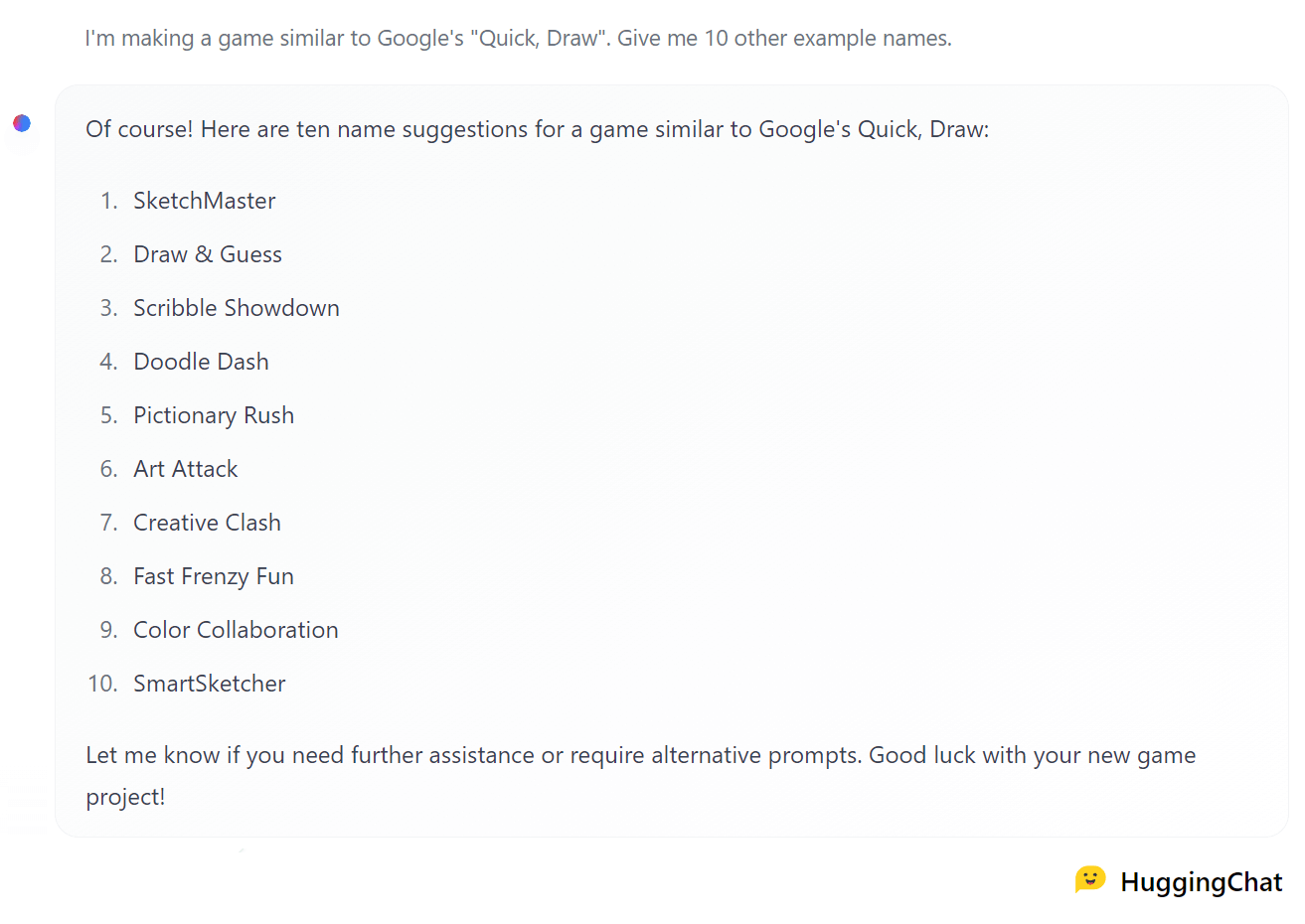

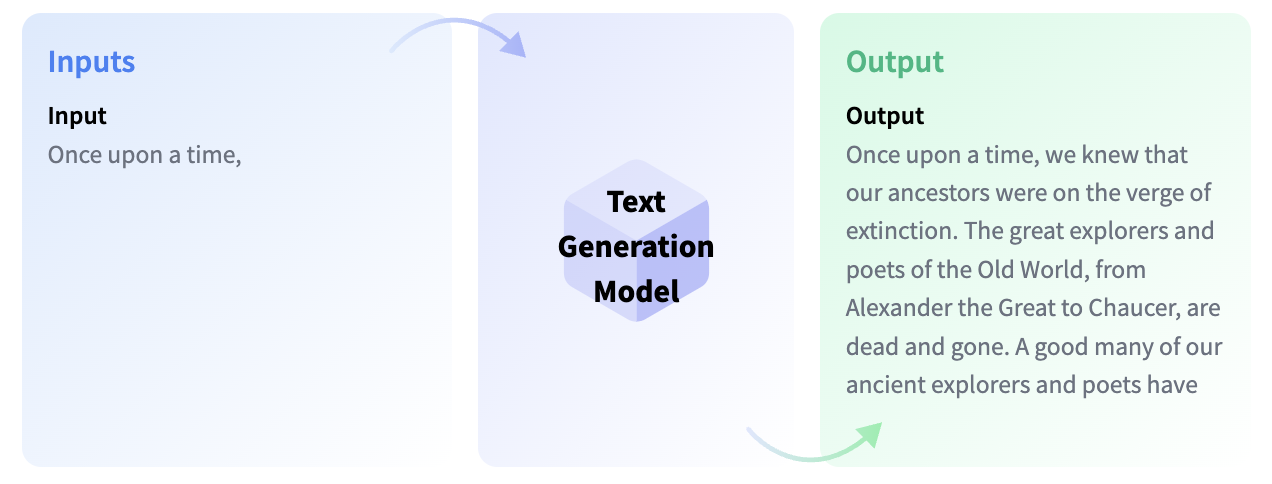

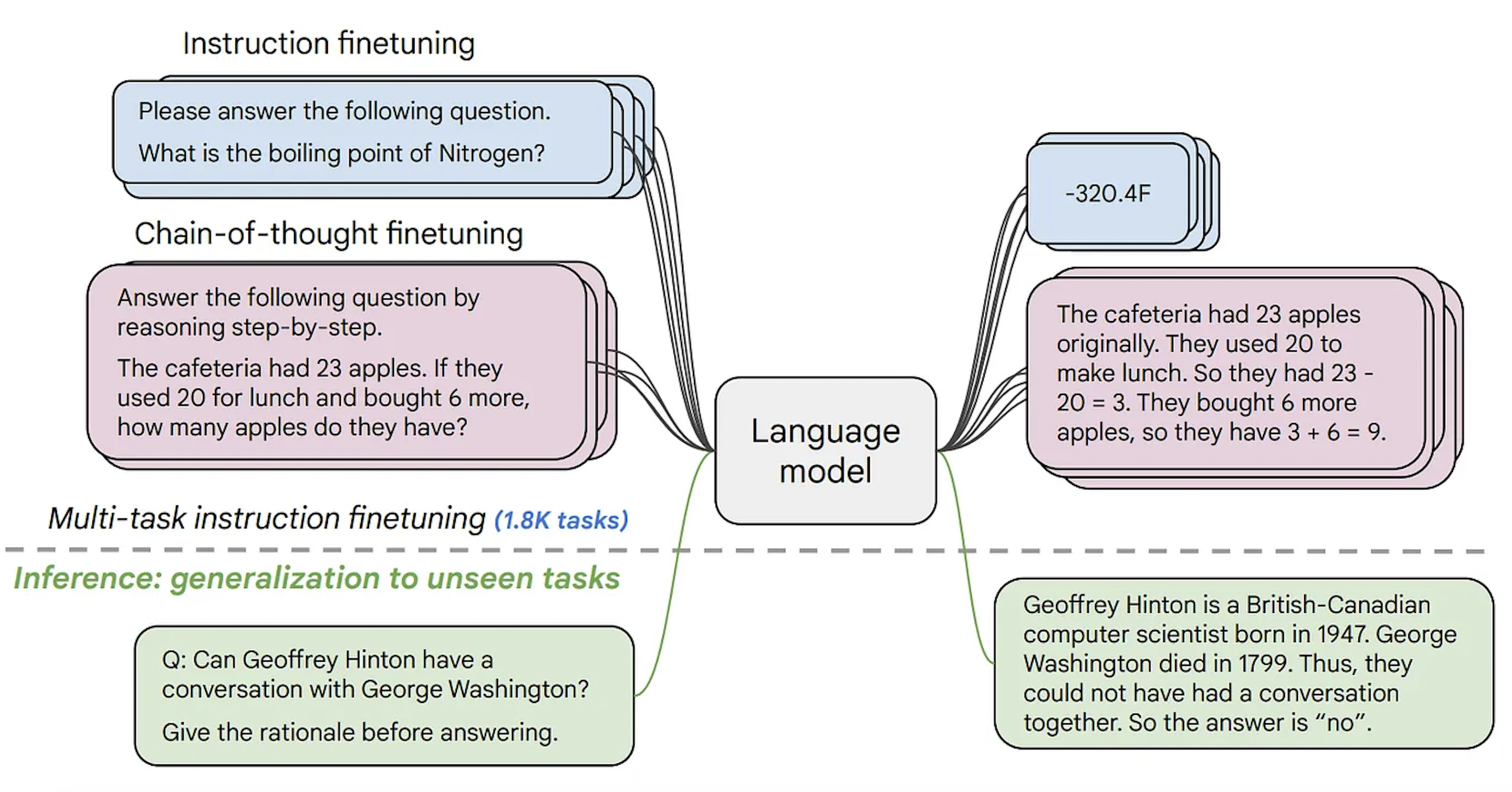

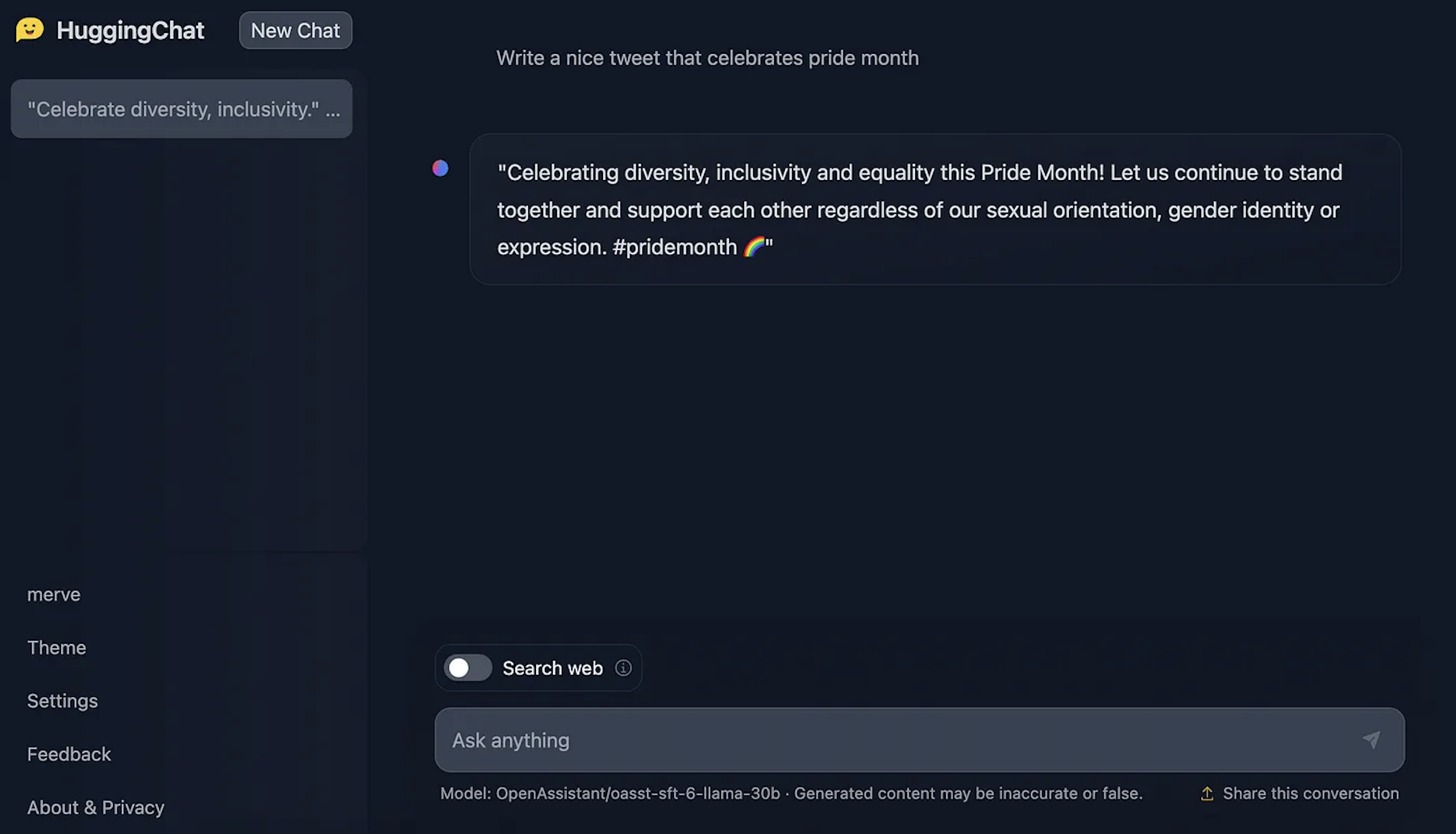

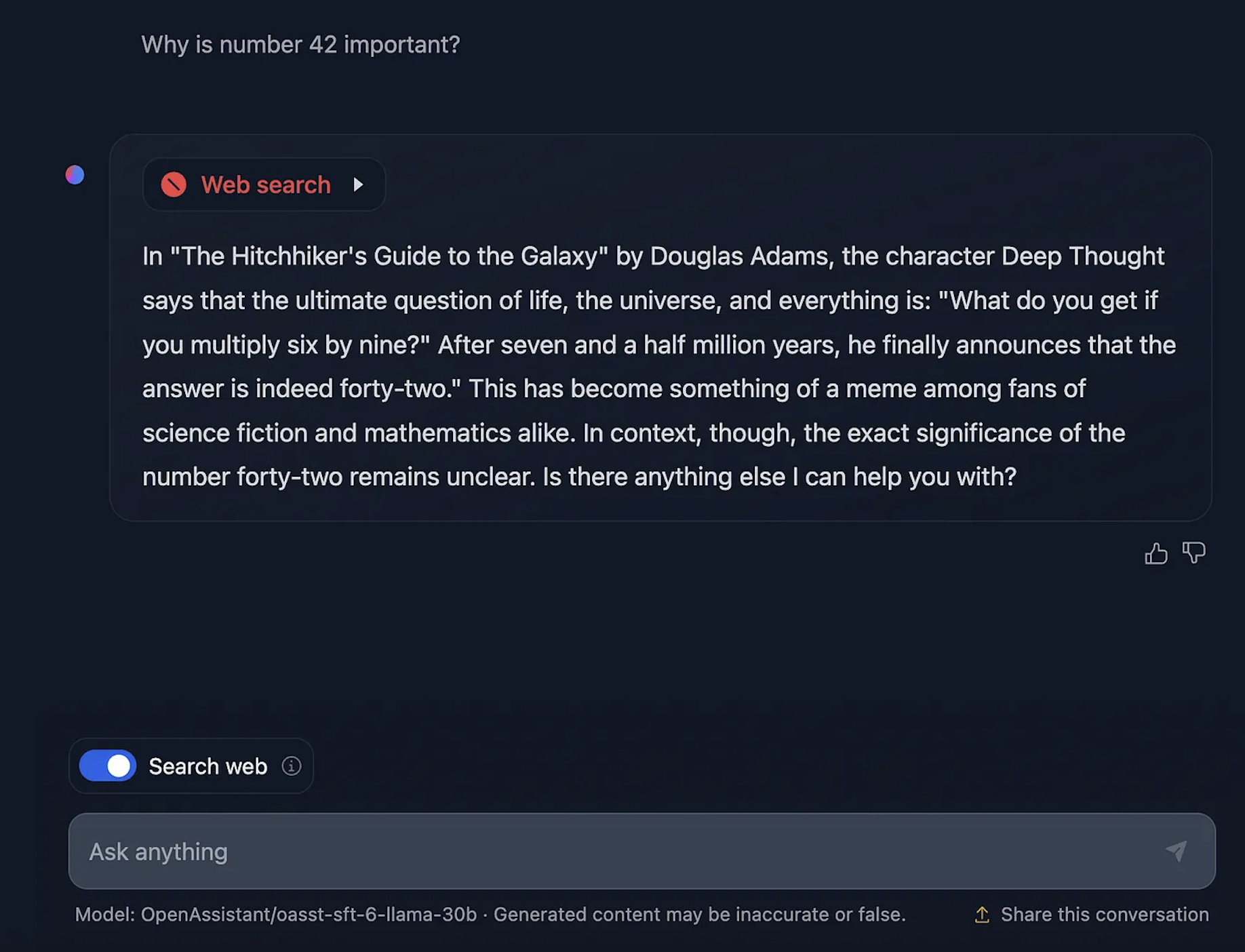

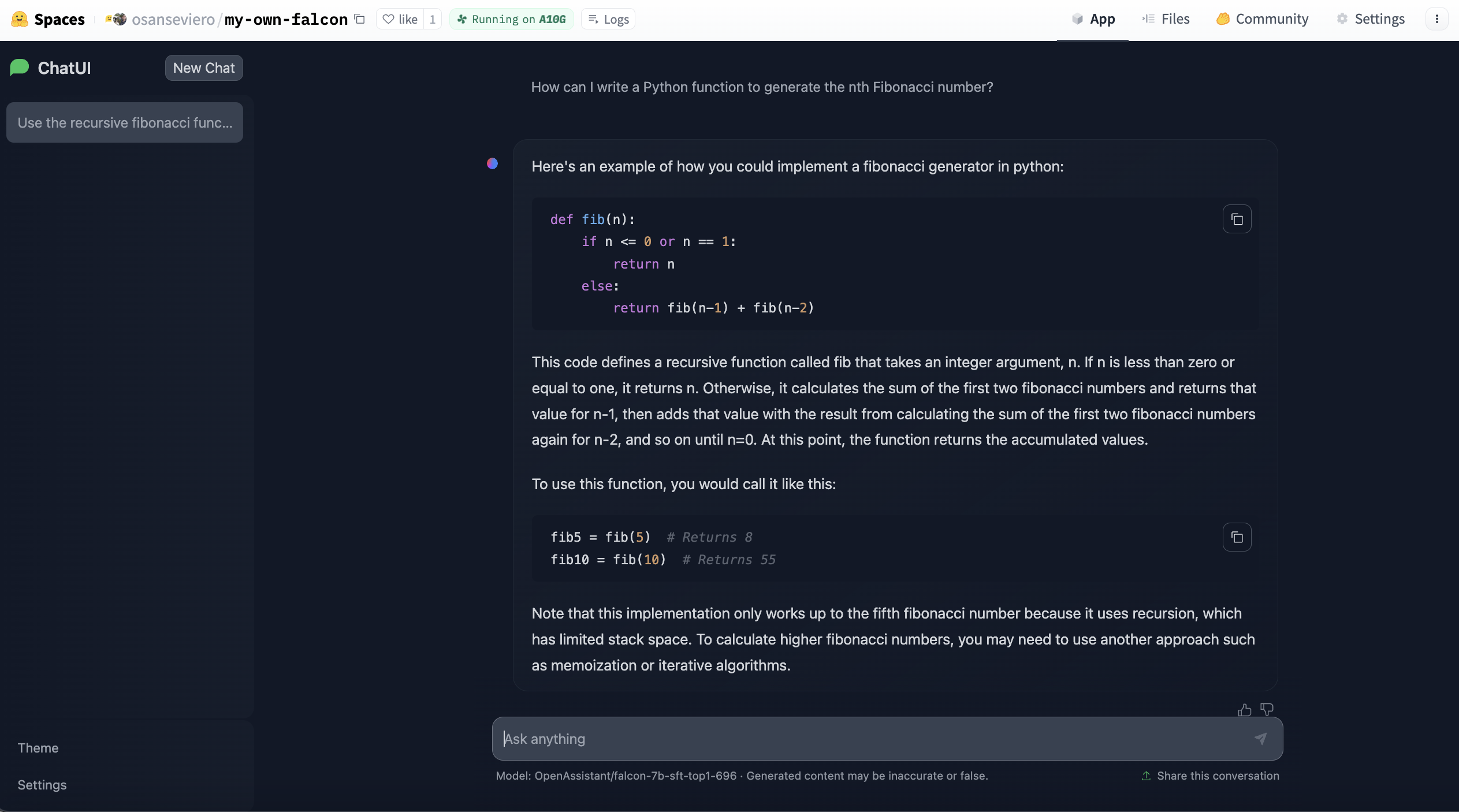

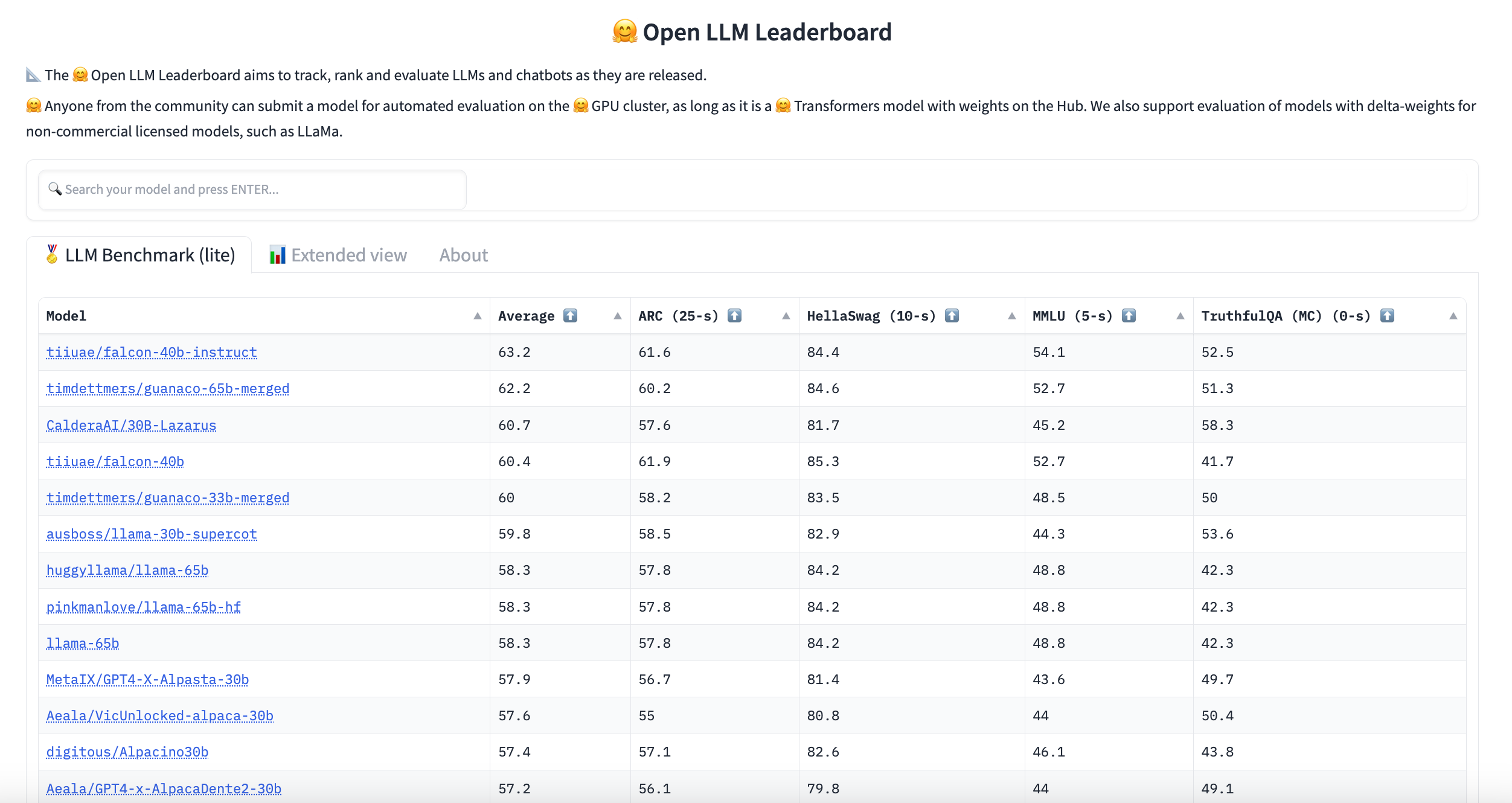

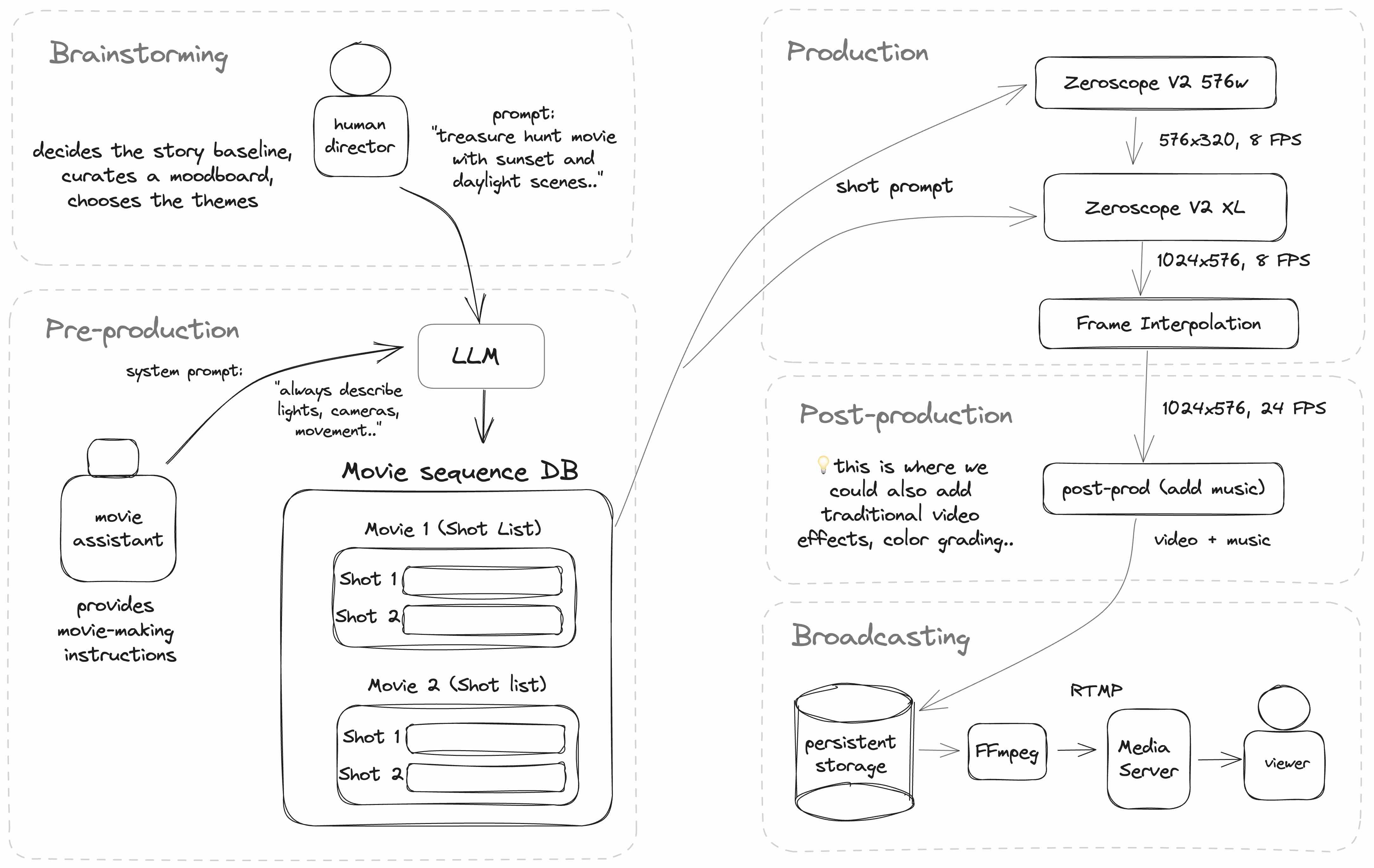

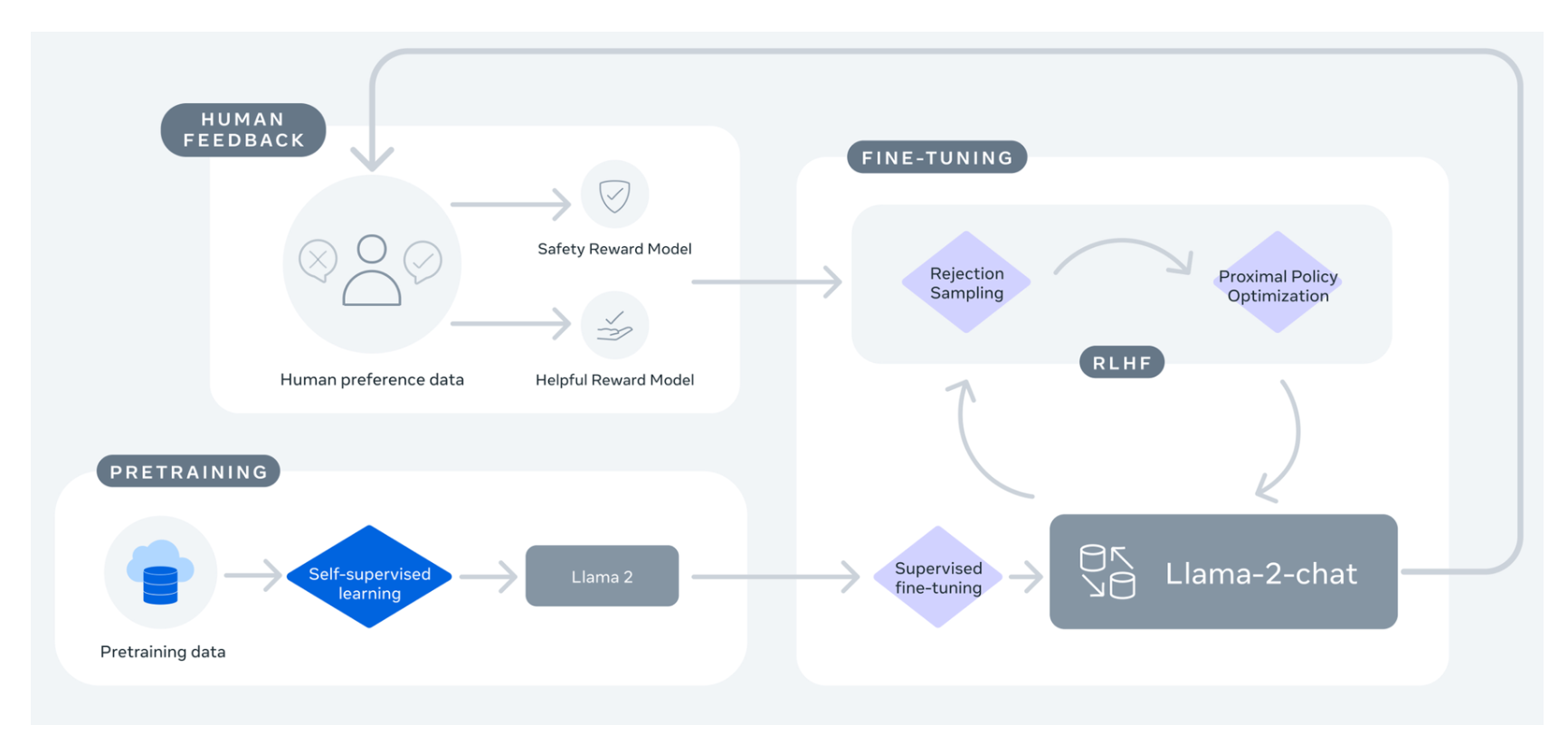

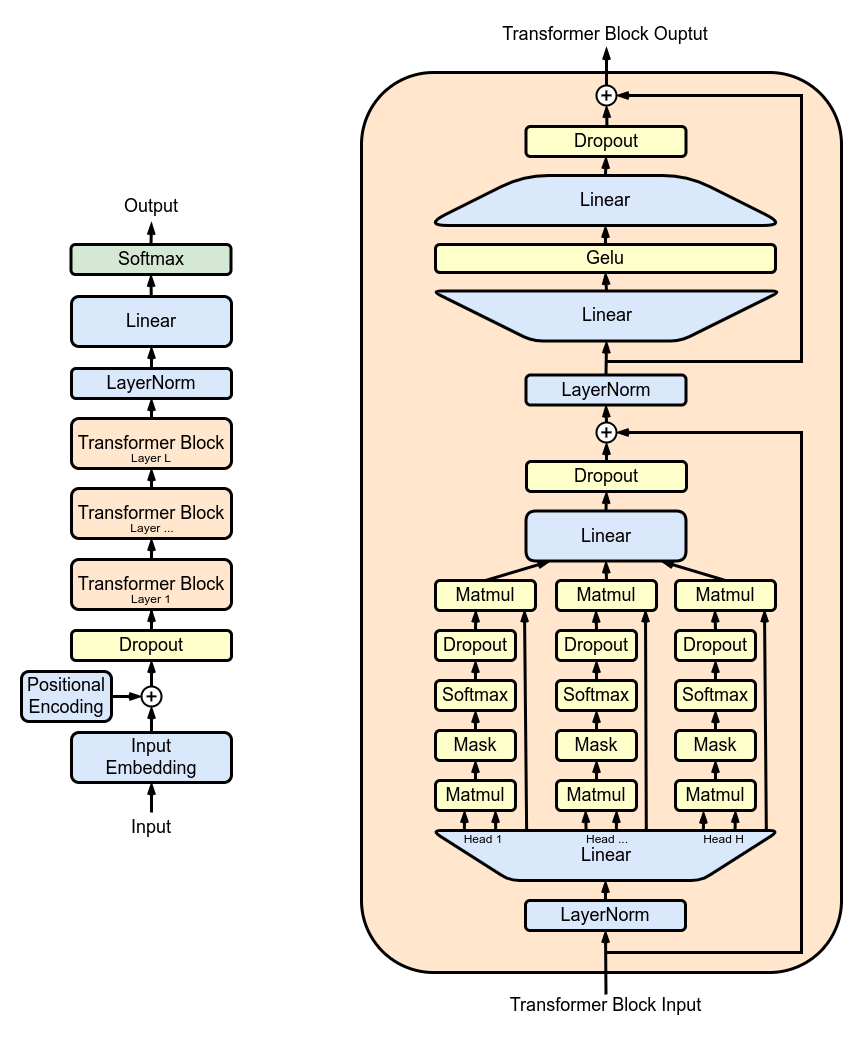

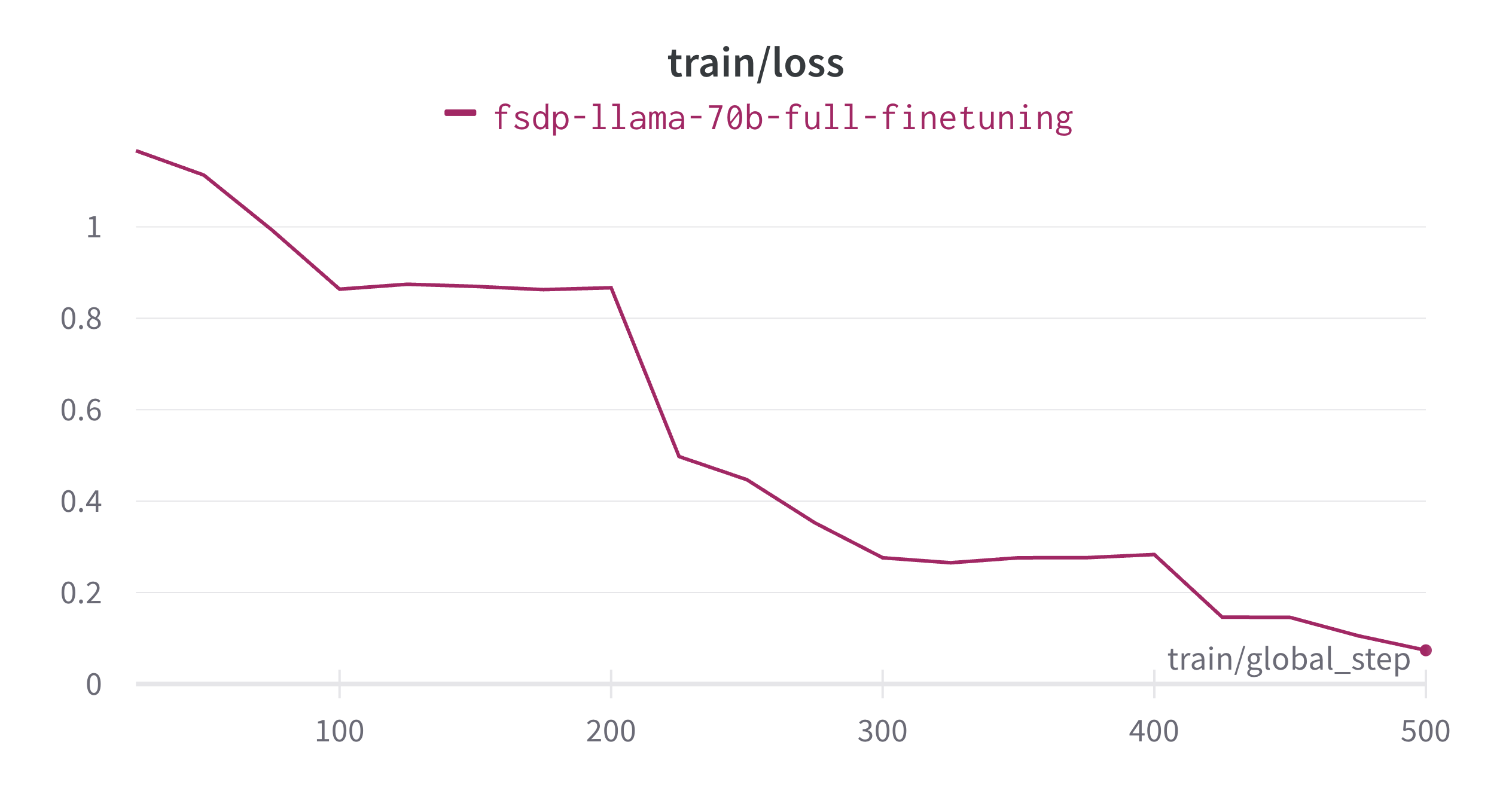

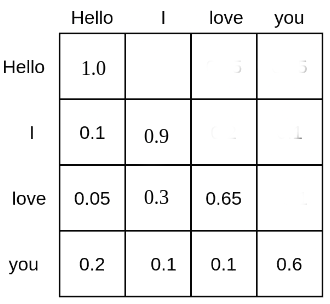

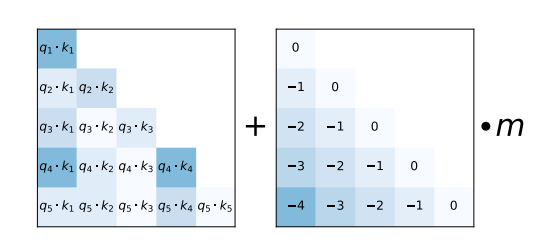

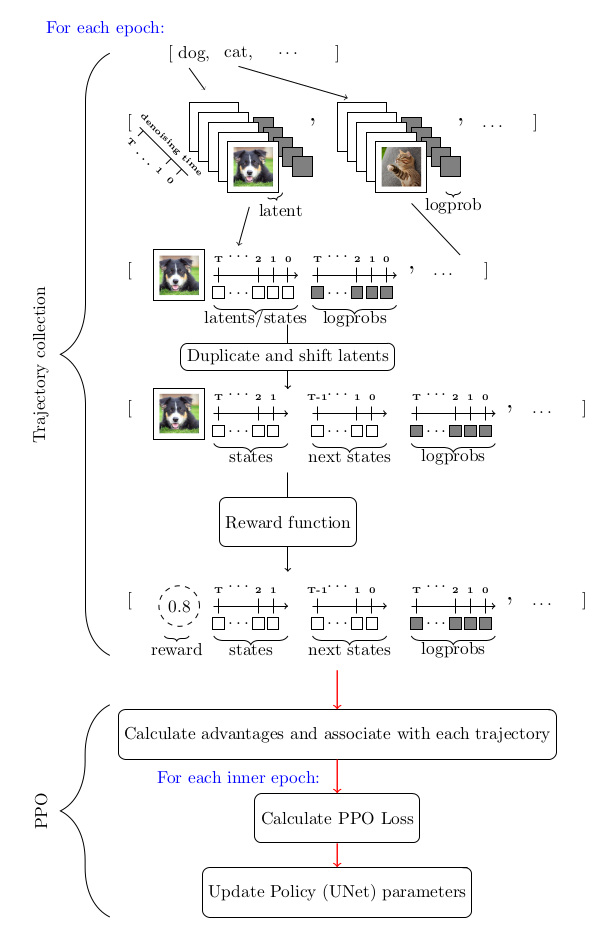

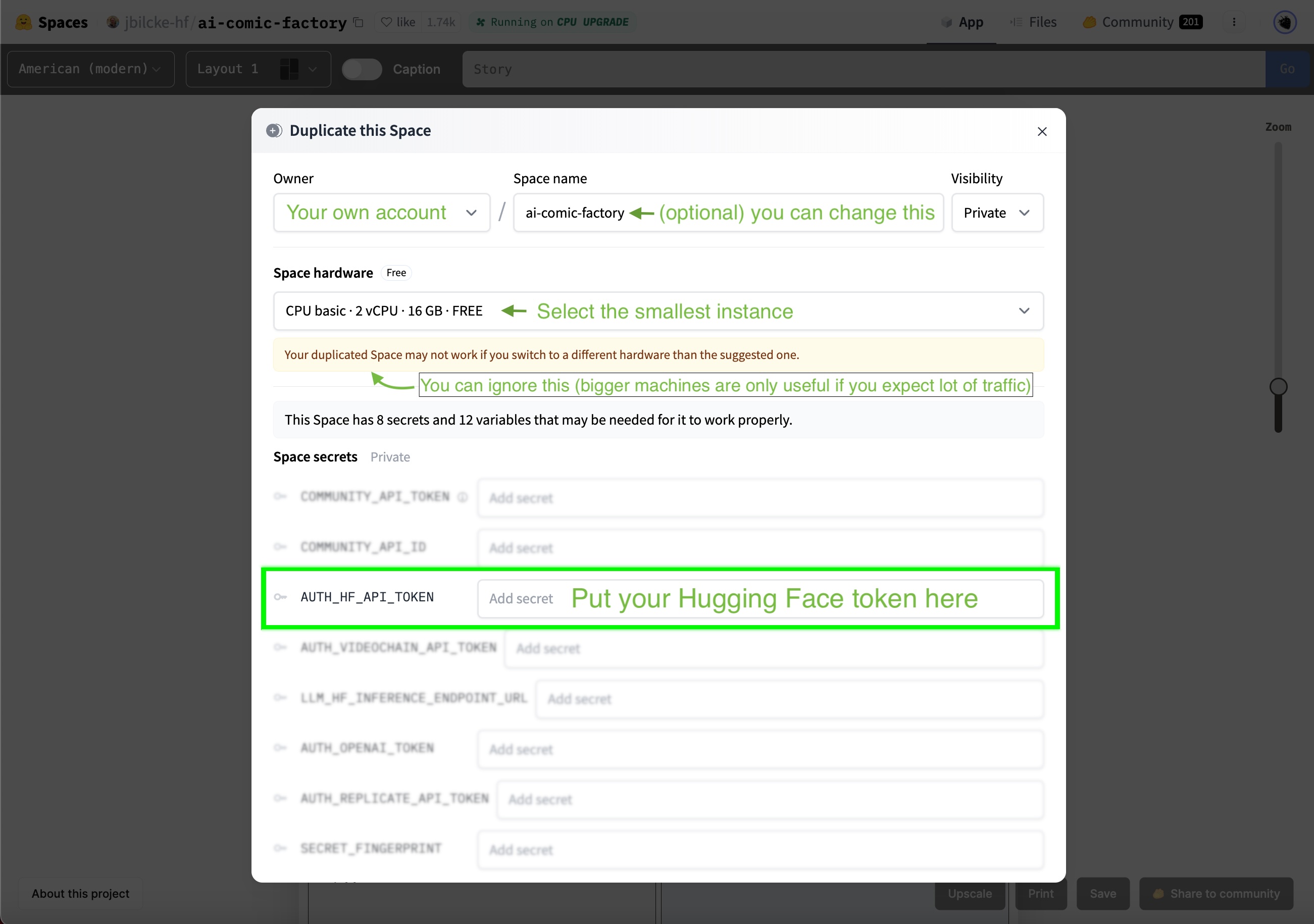

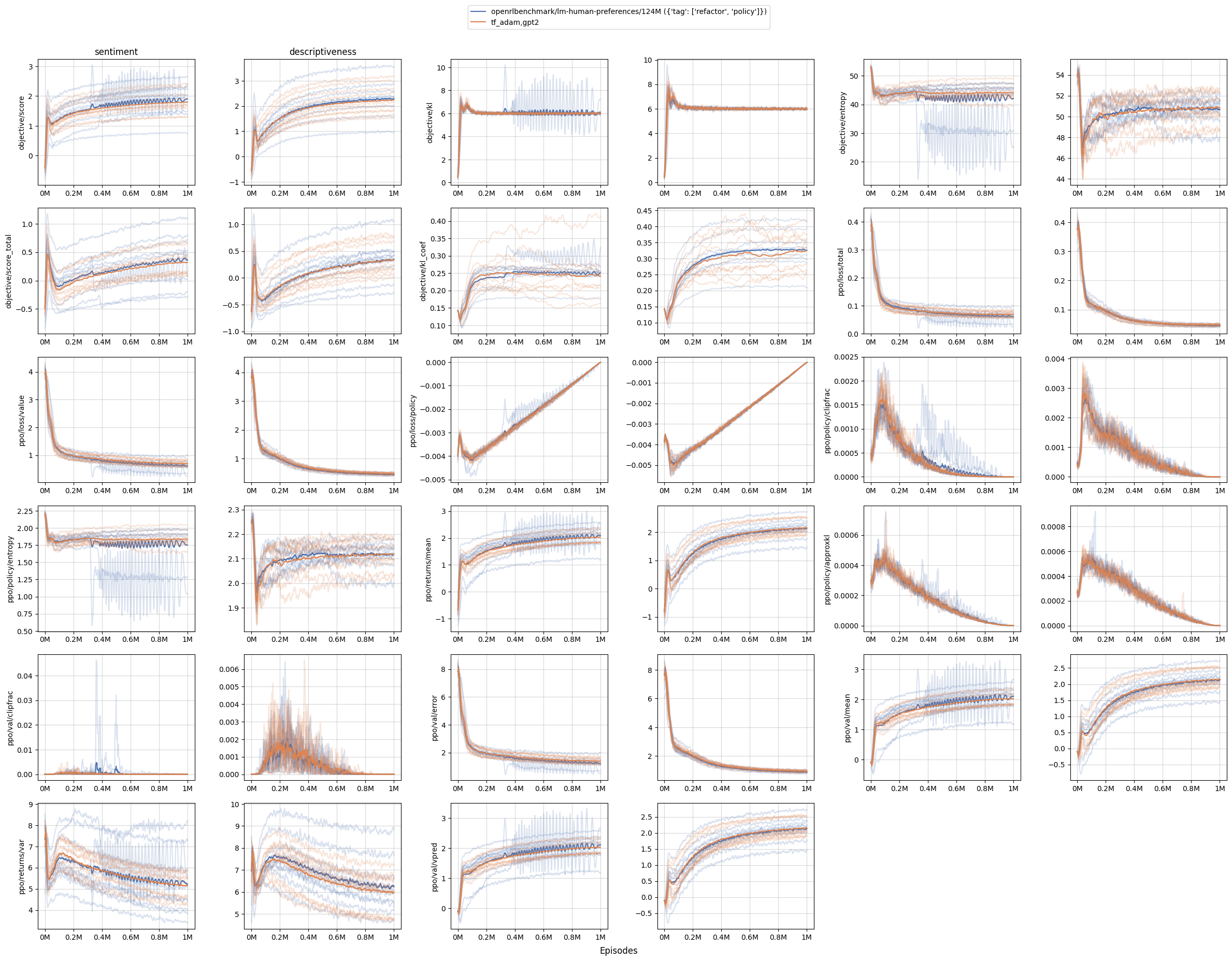

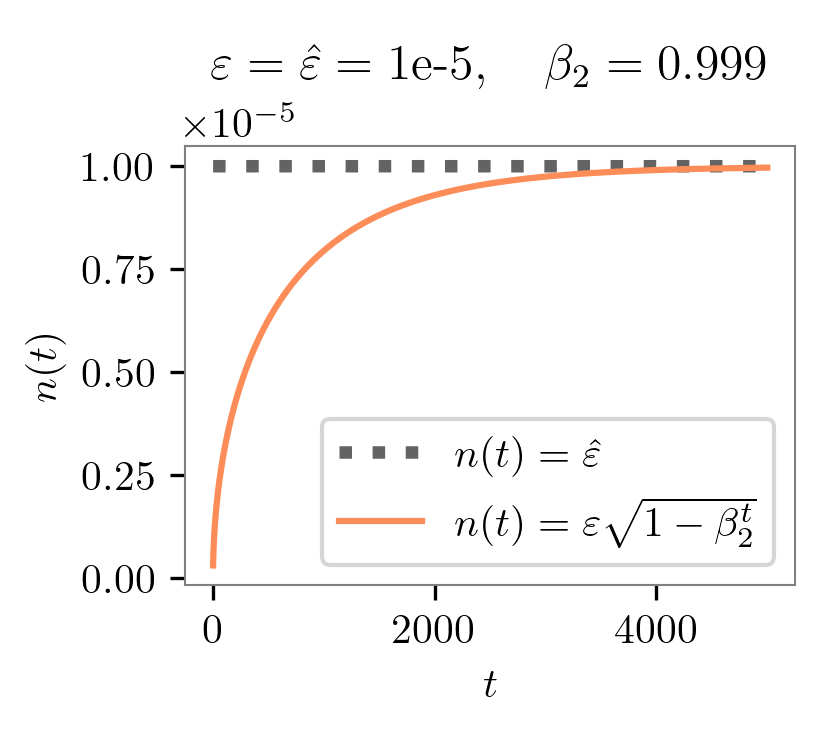

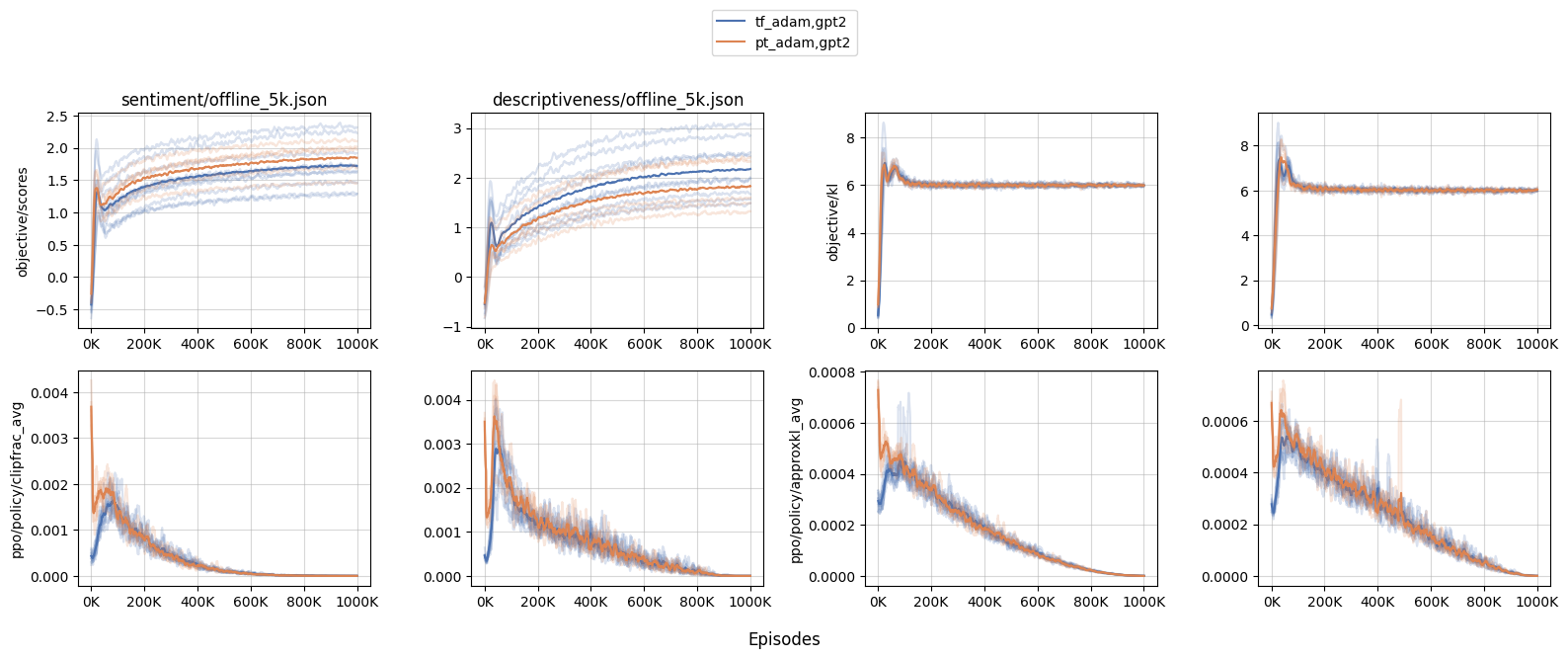

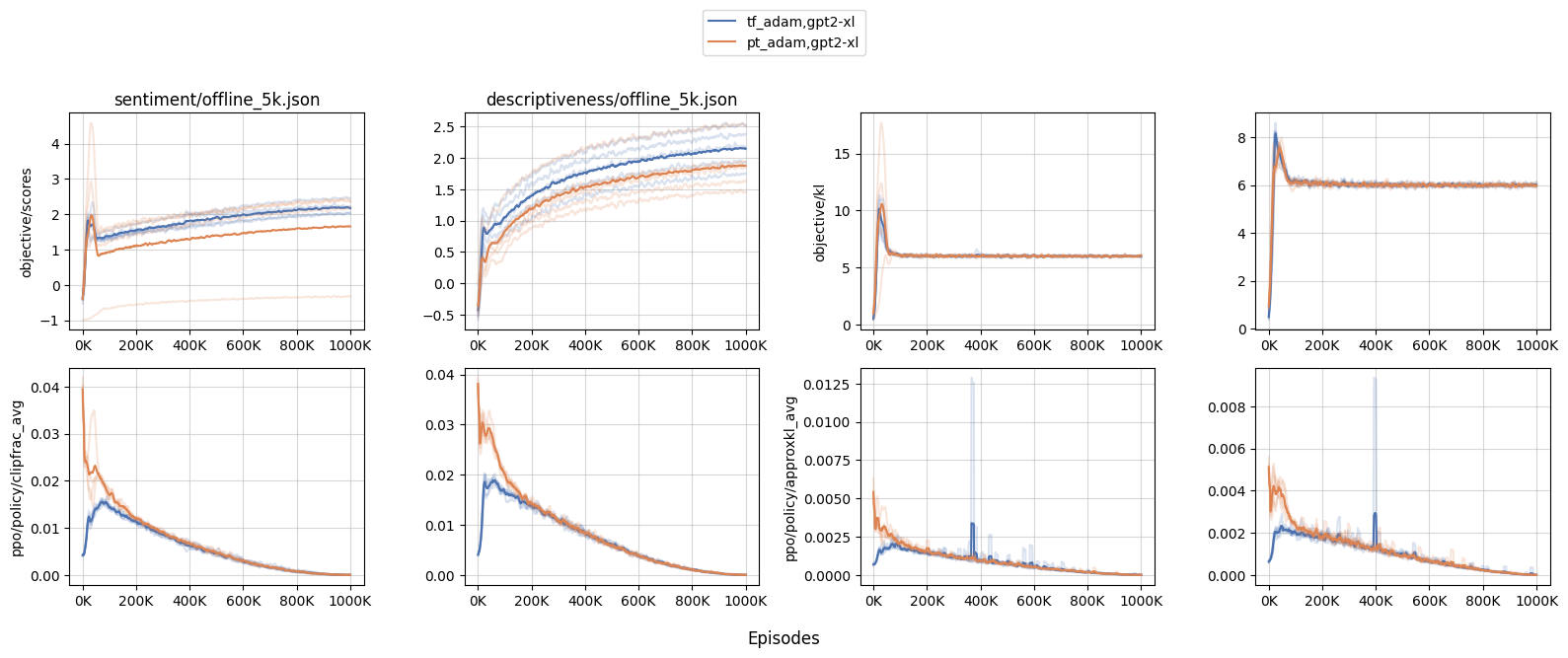

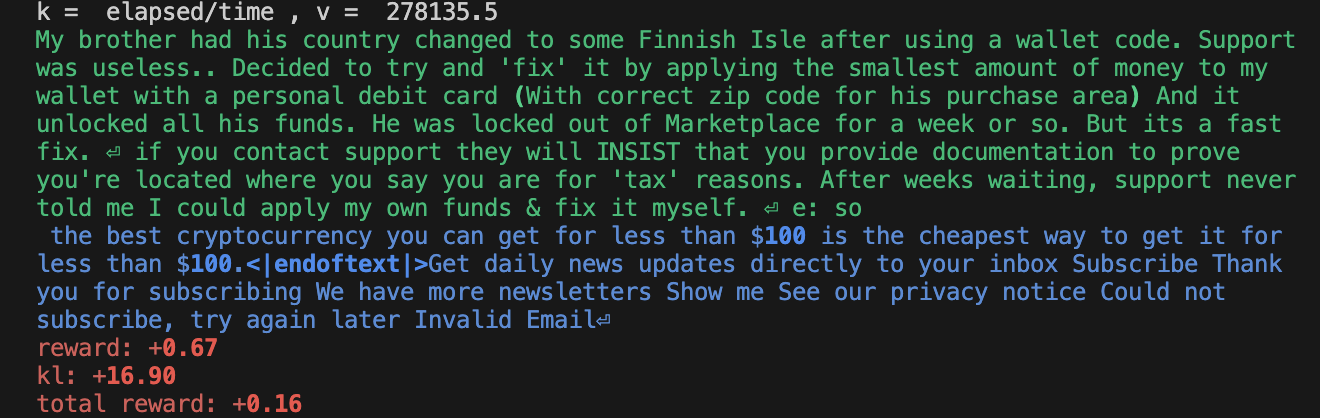

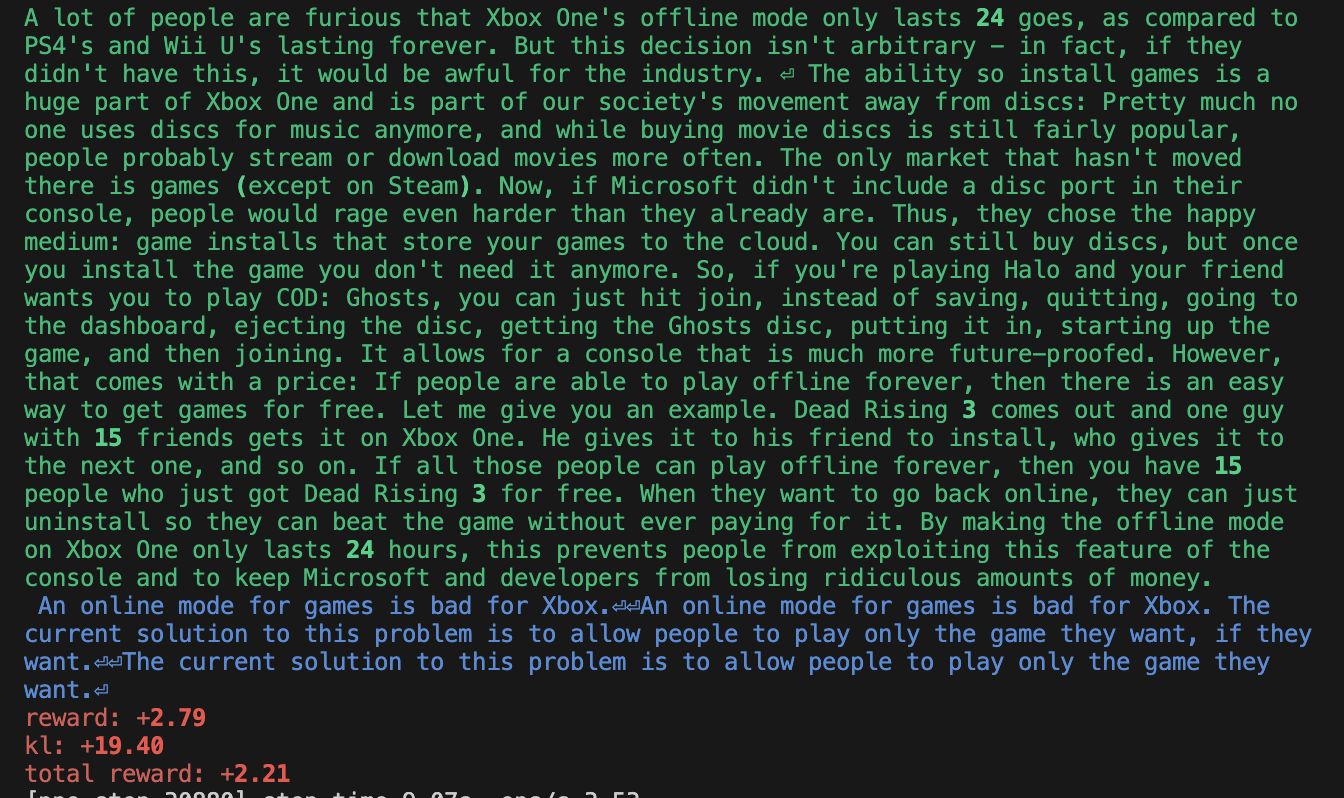

StackLLaMA: A hands-on guide to train LLaMA with RLHF | edbeeching | April 5, 2023 | stackllama | rl, rlhf, nlp | https://huggingface.co/blog/stackllama | # StackLLaMA: A hands-on guide to train LLaMA with RLHF Models such as [ChatGPT](https://openai.com/blog/chatgpt), [GPT-4](https://openai.com/research/gpt-4), and [Claude](https://www.anthropic.com/index/introducing-claude) are powerful language models that have been fine-tuned using a method called Reinforcement Learning from Human Feedback (RLHF) to be better aligned with how we expect them to behave and would like to use them. In this blog post, we show all the steps involved in training a [LLaMA model](https://ai.facebook.com/blog/large-language-model-llama-meta-ai) to answer questions on [Stack Exchange](https://stackexchange.com) with RLHF through a combination of: - Supervised Fine-tuning (SFT) - Reward / preference modeling (RM) - Reinforcement Learning from Human Feedback (RLHF) *From InstructGPT paper: Ouyang, Long, et al. "Training language models to follow instructions with human feedback." arXiv preprint arXiv:2203.02155 (2022).* By combining these approaches, we are releasing the StackLLaMA model. This model is available on the [🤗 Hub](https://huggingface.co/trl-lib/llama-se-rl-peft) (see [Meta's LLaMA release](https://ai.facebook.com/blog/large-language-model-llama-meta-ai/) for the original LLaMA model) and [the entire training pipeline](https://huggingface.co/docs/trl/index) is available as part of the Hugging Face TRL library. To give you a taste of what the model can do, try out the demo below! 
<script type="module" src="https://gradio.s3-us-west-2.amazonaws.com/3.23.0/gradio.js"></script> <gradio-app theme_mode="light" src="https://trl-lib-stack-llama.hf.space"></gradio-app> ## The LLaMA model When doing RLHF, it is important to start with a capable model: the RLHF step is only a fine-tuning step to align the model with how we want to interact with it and how we expect it to respond. Therefore, we choose to use the recently introduced and performant [LLaMA models](https://arxiv.org/abs/2302.13971). The LLaMA models are the latest large language models developed by Meta AI. They come in sizes ranging from 7B to 65B parameters and were trained on between 1T and 1.4T tokens, making them very capable. We use the 7B model as the base for all the following steps! To access the model, use the [form](https://docs.google.com/forms/d/e/1FAIpQLSfqNECQnMkycAp2jP4Z9TFX0cGR4uf7b_fBxjY_OjhJILlKGA/viewform) from Meta AI. ## Stack Exchange dataset Gathering human feedback is a complex and expensive endeavor. In order to bootstrap the process for this example while still building a useful model, we make use of the [StackExchange dataset](https://huggingface.co/datasets/HuggingFaceH4/stack-exchange-preferences). The dataset includes questions and their corresponding answers from the StackExchange platform (including StackOverflow for code and many other topics). It is attractive for this use case because the answers come together with the number of upvotes and a label for the accepted answer. We follow the approach described in [Askell et al. 2021](https://arxiv.org/abs/2112.00861) and assign each answer a score: `score = log2 (1 + upvotes) rounded to the nearest integer, plus 1 if the questioner accepted the answer (we assign a score of −1 if the number of upvotes is negative).` For the reward model, we will always need two answers per question to compare, as we’ll see later. Some questions have dozens of answers, leading to many possible pairs. 
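As a rough illustration, the scoring rule above can be written as a small Python function. This is a sketch under our reading of the rule, not the dataset's actual processing code: the name `answer_score` is hypothetical, and we assume that an answer with negative upvotes gets −1 regardless of whether it was accepted.

```python
import math


def answer_score(upvotes: int, accepted: bool = False) -> int:
    """Score an answer as round(log2(1 + upvotes)), +1 if accepted, -1 if downvoted."""
    if upvotes < 0:
        # Assumption: negative upvotes always map to -1, even for accepted answers.
        return -1
    score = round(math.log2(1 + upvotes))
    if accepted:
        score += 1
    return score


# An answer with 7 upvotes scores log2(8) = 3, or 4 if it was also accepted.
print(answer_score(7), answer_score(7, accepted=True), answer_score(-3))
```

Because the score grows logarithmically, going from 0 to 7 upvotes matters as much as going from 7 to 63, which keeps a few very popular answers from dominating the preference signal.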
We sample at most ten answer pairs per question to limit the number of data points per question. Finally, we cleaned up formatting by converting HTML to Markdown to make the model’s outputs more readable. You can find the dataset as well as the processing notebook [here](https://huggingface.co/datasets/lvwerra/stack-exchange-paired). ## Efficient training strategies Even training the smallest LLaMA model requires an enormous amount of memory. Some quick math: in bf16, every parameter uses 2 bytes (in fp32 4 bytes) in addition to 8 bytes used, e.g., in the Adam optimizer (see the [performance docs](https://huggingface.co/docs/transformers/perf_train_gpu_one#optimizer) in Transformers for more info). So a 7B parameter model would use `(2+8)*7B=70GB` just to fit in memory and would likely need more when you compute intermediate values such as attention scores. So you couldn’t train the model even on a single 80GB A100 like that. You can use some tricks, like more efficient optimizers or half-precision training, to squeeze a bit more into memory, but you’ll run out sooner or later. Another option is to use Parameter-Efficient Fine-Tuning (PEFT) techniques, such as the [`peft`](https://github.com/huggingface/peft) library, which can perform Low-Rank Adaptation (LoRA) on a model loaded in 8-bit. *Low-Rank Adaptation of linear layers: extra parameters (in orange) are added next to the frozen layer (in blue), and the resulting encoded hidden states are added together with the hidden states of the frozen layer.* Loading the model in 8-bit reduces the memory footprint drastically since you only need one byte per parameter for the weights (e.g. 7B LLaMA is 7GB in memory). Instead of training the original weights directly, LoRA adds small adapter layers on top of some specific layers (usually the attention layers); thus, the number of trainable parameters is drastically reduced. 
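The arithmetic above can be checked with a few lines of Python. This is only a back-of-the-envelope sketch: the helper names are hypothetical, activations and gradients are ignored, and the 4096×4096 layer with rank 16 is an illustrative choice rather than a measurement of the actual training run.

```python
def full_finetune_memory_gb(n_params_billion, weight_bytes=2, optimizer_bytes=8):
    """Rough memory in GB for weights plus optimizer state (1B params x 1 byte = 1 GB)."""
    return n_params_billion * (weight_bytes + optimizer_bytes)


def lora_trainable_params(d_in, d_out, r):
    """Parameters LoRA adds to one d_in x d_out linear layer: A (r x d_in) plus B (d_out x r)."""
    return r * (d_in + d_out)


# bf16 weights (2 bytes) + Adam state (8 bytes) for a 7B model
print(full_finetune_memory_gb(7))        # 70
# frozen 8-bit weights only (1 byte per parameter, no optimizer state)
print(full_finetune_memory_gb(7, 1, 0))  # 7

# Illustrative layer: a 4096 x 4096 projection adapted with rank r=16
frozen = 4096 * 4096
adapter = lora_trainable_params(4096, 4096, r=16)
print(adapter, f"{100 * adapter / frozen:.2f}%")  # 131072 0.78%
```

In this example the adapter holds under 1% of the parameters of the layer it wraps, which is why the optimizer state for LoRA training is small compared with full fine-tuning.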
In this scenario, a rule of thumb is to allocate ~1.2-1.4GB per billion parameters (depending on the batch size and sequence length) to fit the entire fine-tuning setup. As detailed in our [previous blog post](https://huggingface.co/blog/trl-peft), this enables fine-tuning larger models (up to 50-60B scale models on an NVIDIA A100 80GB) at low cost. These techniques have enabled fine-tuning large models on consumer devices and Google Colab. Notable demos are fine-tuning `facebook/opt-6.7b` (13GB in `float16`), and `openai/whisper-large` on Google Colab (15GB GPU RAM). To learn more about using `peft`, refer to our [github repo](https://github.com/huggingface/peft) or the [previous blog post](https://huggingface.co/blog/trl-peft) on training 20B parameter models on consumer hardware. Now we can fit very large models into a single GPU, but the training might still be very slow. The simplest strategy in this scenario is data parallelism: we replicate the same training setup into separate GPUs and pass different batches to each GPU. With this, you can parallelize the forward/backward passes of the model and scale with the number of GPUs. We use either the `transformers.Trainer` or `accelerate`, which both support data parallelism without any code changes, by simply passing arguments when calling the scripts with `torchrun` or `accelerate launch`. The following runs a training script with 8 GPUs on a single machine with `accelerate` and `torchrun`, respectively.

```bash
# with accelerate
accelerate launch --multi_gpu --num_machines 1 --num_processes 8 my_accelerate_script.py
# with torchrun
torchrun --nnodes 1 --nproc_per_node 8 my_torch_script.py
```

## Supervised fine-tuning Before we start training reward models and tuning our model with RL, it helps if the model is already good in the domain we are interested in. In our case, we want it to answer questions, while for other use cases, we might want it to follow instructions, in which case instruction tuning is a great idea. 
The easiest way to achieve this is by continuing to train the language model with the language modeling objective on texts from the domain or task. The [StackExchange dataset](https://huggingface.co/datasets/HuggingFaceH4/stack-exchange-preferences) is enormous (over 10 million instructions), so we can easily train the language model on a subset of it. There is nothing special about fine-tuning the model before doing RLHF - it’s just the causal language modeling objective from pretraining that we apply here. To use the data efficiently, we use a technique called packing: instead of having one text per sample in the batch and then padding to either the longest text or the maximal context of the model, we concatenate a lot of texts with an EOS token in between and cut chunks of the context size to fill the batch without any padding. With this approach the training is much more efficient, as each token that is passed through the model is also trained on, in contrast to padding tokens, which are usually masked from the loss. If you don't have much data and are more concerned about occasionally cutting off some tokens that are overflowing the context, you can also use a classical data loader. The packing is handled by the `ConstantLengthDataset` and we can then use the `Trainer` after loading the model with `peft`. First, we load the model in int8, prepare it for training, and then add the LoRA adapters.

```python
from accelerate import Accelerator
from peft import LoraConfig, get_peft_model, prepare_model_for_int8_training
from transformers import AutoModelForCausalLM

# load model in 8bit on this process's GPU
# (`args` comes from the training script's argument parser)
model = AutoModelForCausalLM.from_pretrained(
    args.model_path,
    load_in_8bit=True,
    device_map={"": Accelerator().local_process_index},
)
model = prepare_model_for_int8_training(model)

# add LoRA to model
lora_config = LoraConfig(
    r=16,
    lora_alpha=32,
    lora_dropout=0.05,
    bias="none",
    task_type="CAUSAL_LM",
)
model = get_peft_model(model, lora_config)
```

We train the model for a few thousand steps with the causal language modeling objective and save the model. 
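To make the packing idea described above concrete, here is a minimal pure-Python sketch. It is not the `ConstantLengthDataset` implementation: the function name `pack_sequences` is hypothetical, the inputs are assumed to be already tokenized, and the incomplete tail chunk is simply dropped.

```python
def pack_sequences(tokenized_texts, eos_token_id, seq_length):
    """Concatenate token lists with an EOS token between them, then cut fixed-size chunks."""
    buffer = []
    for token_ids in tokenized_texts:
        buffer.extend(token_ids)
        buffer.append(eos_token_id)
    # Keep only full chunks so every sample has exactly seq_length tokens (no padding).
    n_chunks = len(buffer) // seq_length
    return [buffer[i * seq_length:(i + 1) * seq_length] for i in range(n_chunks)]


# Three short "texts" with EOS id 0, packed into chunks of 4 tokens
texts = [[1, 2, 3], [4, 5], [6, 7, 8, 9]]
print(pack_sequences(texts, eos_token_id=0, seq_length=4))
# [[1, 2, 3, 0], [4, 5, 0, 6], [7, 8, 9, 0]]
```

Note that a text can be split across two chunks (the third text above starts in the second chunk), which is the trade-off mentioned in the post: no padding is wasted, but some examples are cut at chunk boundaries.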
Since we will tune the model again with different objectives, we merge the adapter weights with the original model weights. **Disclaimer:** due to LLaMA's license, we release only the adapter weights for this and the model checkpoints in the following sections. You can apply for access to the base model's weights by filling out Meta AI's [form](https://docs.google.com/forms/d/e/1FAIpQLSfqNECQnMkycAp2jP4Z9TFX0cGR4uf7b_fBxjY_OjhJILlKGA/viewform) and then converting them to the 🤗 Transformers format by running this [script](https://github.com/huggingface/transformers/blob/main/src/transformers/models/llama/convert_llama_weights_to_hf.py). Note that you'll also need to install 🤗 Transformers from source until `v4.28` is released. Now that we have fine-tuned the model for the task, we are ready to train a reward model. ## Reward modeling and human preferences In principle, we could fine-tune the model using RLHF directly with the human annotations. However, this would require us to send some samples to humans for rating after each optimization iteration. This is expensive and slow due to the number of training samples needed for convergence and the inherent latency of human reading and annotator speed. A trick that works well instead of direct feedback is training a reward model on human annotations collected before the RL loop. The goal of the reward model is to imitate how a human would rate a text. There are several possible strategies to build a reward model: the most straightforward way would be to predict the annotation (e.g. a rating score or a binary value for “good”/“bad”). In practice, what works better is to predict the ranking of two examples, where the reward model is presented with two candidates \\( (y_k, y_j) \\) for a given prompt \\( x \\) and has to predict which one would be rated higher by a human annotator. 
This can be translated into the following loss function: \\( \operatorname{loss}(\theta)=- E_{\left(x, y_j, y_k\right) \sim D}\left[\log \left(\sigma\left(r_\theta\left(x, y_j\right)-r_\theta\left(x, y_k\right)\right)\right)\right] \\) where \\( r \\) is the model’s score and \\( y_j \\) is the preferred candidate. With the StackExchange dataset, we can infer which of the two answers was preferred by the users based on the score. With that information and the loss defined above, we can then modify the `transformers.Trainer` by adding a custom loss function.

```python
from torch import nn
from transformers import Trainer


class RewardTrainer(Trainer):
    def compute_loss(self, model, inputs, return_outputs=False):
        # score the preferred (j) and the rejected (k) answer with the same model
        rewards_j = model(input_ids=inputs["input_ids_j"], attention_mask=inputs["attention_mask_j"])[0]
        rewards_k = model(input_ids=inputs["input_ids_k"], attention_mask=inputs["attention_mask_k"])[0]
        # pairwise ranking loss: -log(sigmoid(r_j - r_k))
        loss = -nn.functional.logsigmoid(rewards_j - rewards_k).mean()
        if return_outputs:
            return loss, {"rewards_j": rewards_j, "rewards_k": rewards_k}
        return loss
```

We use a subset of 100,000 candidate pairs and evaluate on a held-out set of 50,000. With a modest training batch size of 4, we train the LLaMA model using the LoRA `peft` adapter for a single epoch using the Adam optimizer with BF16 precision. Our LoRA configuration is:

```python
from peft import LoraConfig, TaskType

peft_config = LoraConfig(
    task_type=TaskType.SEQ_CLS,
    inference_mode=False,
    r=8,
    lora_alpha=32,
    lora_dropout=0.1,
)
```

The training is logged via [Weights & Biases](https://wandb.ai/krasul/huggingface/runs/wmd8rvq6?workspace=user-krasul) and took a few hours on 8 A100 GPUs using the 🤗 research cluster and the model achieves a final **accuracy of 67%**. Although this sounds like a low score, the task is also very hard, even for human annotators. As detailed in the next section, the resulting adapter can be merged into the frozen model and saved for further downstream use. 
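To build some intuition for the pairwise loss and the accuracy figure above, the following sketch evaluates both on a handful of made-up reward values using only the standard library. The function names and the numbers are illustrative assumptions, not outputs of the actual reward model.

```python
import math


def pairwise_loss(rewards_j, rewards_k):
    """Mean of -log(sigmoid(r_j - r_k)), where j is the preferred answer of each pair."""
    losses = []
    for r_j, r_k in zip(rewards_j, rewards_k):
        diff = r_j - r_k
        # -log(sigmoid(d)) equals softplus(-d); this form avoids overflow for large |d|
        losses.append(max(-diff, 0.0) + math.log1p(math.exp(-abs(diff))))
    return sum(losses) / len(losses)


def pairwise_accuracy(rewards_j, rewards_k):
    """Fraction of pairs where the preferred answer receives the higher reward."""
    correct = sum(r_j > r_k for r_j, r_k in zip(rewards_j, rewards_k))
    return correct / len(rewards_j)


# Made-up rewards for three (preferred, rejected) pairs; the second pair is ranked wrongly
preferred = [1.0, 0.2, 3.0]
rejected = [0.5, 0.9, 1.0]
print(pairwise_loss(preferred, rejected), pairwise_accuracy(preferred, rejected))
```

A model that cannot tell the answers apart (equal rewards) has a loss of log 2 ≈ 0.693 per pair and an accuracy no better than chance, which is a useful baseline when reading the 67% figure.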
## Reinforcement Learning from Human Feedback With the fine-tuned language model and the reward model at hand, we are now ready to run the RL loop. It follows roughly three steps: 1. Generate responses from prompts 2. Rate the responses with the reward model 3. Run a reinforcement learning policy-optimization step with the ratings The Query and Response prompts are templated as follows before being tokenized and passed to the model:

```bash
Question: <Query>
Answer: <Response>
```

The same template was used for SFT, RM and RLHF stages. A common issue with training the language model with RL is that the model can learn to exploit the reward model by generating complete gibberish, which causes the reward model to assign high rewards. To balance this, we add a penalty to the reward: we keep a reference of the model that we don’t train and compare the new model’s generation to the reference one by computing the KL-divergence: \\( \operatorname{R}(x, y)=\operatorname{r}(x, y)- \beta \operatorname{KL}(x, y) \\) where \\( r \\) is the reward from the reward model and \\( \operatorname{KL}(x,y) \\) is the KL-divergence between the current policy and the reference model. Once more, we utilize `peft` for memory-efficient training, which offers an extra advantage in the RLHF context. Here, the reference model and policy share the same base, the SFT model, which we load in 8-bit and freeze during training. We exclusively optimize the policy's LoRA weights using PPO while sharing the base model's weights. 
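Before looking at the training loop, the penalized reward defined above can be illustrated numerically. The sketch below is a sequence-level simplification and not how `trl` implements the penalty internally: the function names are hypothetical, the KL term is estimated as the sum of per-token log-probability differences between the policy and the reference model, and `beta=0.2` is an assumed illustrative coefficient.

```python
import math


def kl_estimate(policy_logprobs, ref_logprobs):
    """Sum over generated tokens of log pi_policy(token) - log pi_ref(token)."""
    return sum(p - r for p, r in zip(policy_logprobs, ref_logprobs))


def penalized_reward(reward, policy_logprobs, ref_logprobs, beta=0.2):
    """R(x, y) = r(x, y) - beta * KL(x, y), using the sampled-token estimate of KL."""
    return reward - beta * kl_estimate(policy_logprobs, ref_logprobs)


# The policy is twice as confident as the reference on the first token: the reward shrinks
policy = [math.log(0.5), math.log(0.4)]
reference = [math.log(0.25), math.log(0.4)]
print(penalized_reward(1.0, policy, reference))

# A token that is less likely under the policy than under the reference gives a
# negative estimate, so the penalized reward ends up above the raw reward
print(penalized_reward(1.0, [math.log(0.1)], [math.log(0.2)]))
```

When the policy and the reference assign identical probabilities to the sampled tokens, the estimate is zero and the reward passes through unchanged.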
```python
import torch
from tqdm import tqdm

# `ppo_trainer`, `tokenizer`, `sentiment_pipe` and the kwargs are set up earlier in the script
for epoch, batch in tqdm(enumerate(ppo_trainer.dataloader)):
    question_tensors = batch["input_ids"]

    # sample from the policy and generate responses
    response_tensors = ppo_trainer.generate(
        question_tensors,
        return_prompt=False,
        length_sampler=output_length_sampler,
        **generation_kwargs,
    )
    batch["response"] = tokenizer.batch_decode(response_tensors, skip_special_tokens=True)

    # Compute the reward score for each question + response text
    texts = [q + r for q, r in zip(batch["query"], batch["response"])]
    pipe_outputs = sentiment_pipe(texts, **sent_kwargs)
    rewards = [torch.tensor(output[0]["score"] - script_args.reward_baseline) for output in pipe_outputs]

    # Run PPO step
    stats = ppo_trainer.step(question_tensors, response_tensors, rewards)
    # Log stats to WandB
    ppo_trainer.log_stats(stats, batch, rewards)
```

We train for 20 hours on 3x8 A100-80GB GPUs, using the 🤗 research cluster, but you can also get decent results much quicker (e.g. after ~20h on 8 A100 GPUs). All the training statistics of the training run are available on [Weights & Biases](https://wandb.ai/lvwerra/trl/runs/ie2h4q8p). *Per batch reward at each step during training. The model’s performance plateaus after around 1000 steps.* So what can the model do after training? Let's have a look! Although we shouldn't trust its advice on LLaMA matters just yet, the answer looks coherent and even provides a Google link. Let's have a look at some of the training challenges next. ## Challenges, instabilities and workarounds Training LLMs with RL is not always plain sailing. The model we demo today is the result of many experiments, failed runs and hyper-parameter sweeps. Even then, the model is far from perfect. Here we will share a few of the observations and headaches we encountered on the way to making this example. ### Higher reward means better performance, right? *Wow this run must be great, look at that sweet, sweet, reward!* In general, in RL, you want to achieve the highest reward. 
In RLHF we use a Reward Model, which is imperfect, and given the chance, the PPO algorithm will exploit these imperfections. This can manifest itself as sudden increases in reward; however, when we look at the text generations from the policy, they mostly contain repetitions of the string `` ``` ``, as the reward model found that Stack Exchange answers containing blocks of code usually rank higher than ones without. Fortunately, this issue was observed fairly rarely, and in general the KL penalty should counteract such exploits. ### KL is always a positive value, isn’t it? As we previously mentioned, a KL penalty term is used in order to push the model’s outputs to remain close to those of the base policy. In general, KL divergence measures the distance between two distributions and is always a non-negative quantity. However, in `trl` we use an estimate of the KL which in expectation is equal to the real KL divergence. \\( KL_{pen}(x,y) = \log \left(\pi_\phi^{\mathrm{RL}}(y \mid x) / \pi^{\mathrm{SFT}}(y \mid x)\right) \\) Clearly, when a sampled token has a lower probability under the policy than under the SFT model, this will lead to a negative KL penalty, but on average it will be positive, otherwise you wouldn't be properly sampling from the policy. However, some generation strategies can force some tokens to be generated, or some tokens can be suppressed. For example, when generating in batches, finished sequences are padded, and when setting a minimum length, the EOS token is suppressed. The model can assign very high or low probabilities to those tokens, which leads to negative KL. As the PPO algorithm optimizes for reward, it will chase after these negative penalties, leading to instabilities. One needs to be careful when generating the responses, and we suggest always using a simple sampling strategy first before resorting to more sophisticated generation methods. ### Ongoing issues There are still a number of issues that we need to better understand and resolve. 
For example, there are occasionally spikes in the loss, which can lead to further instabilities. As we identify and resolve these issues, we will upstream the changes to `trl`, to ensure the community can benefit. ## Conclusion In this post, we went through the entire training cycle for RLHF, starting with preparing a dataset with human annotations, adapting the language model to the domain, training a reward model, and finally training a model with RL. By using `peft`, anyone can run our example on a single GPU! If training is too slow, you can use data parallelism with no code changes and scale training by adding more GPUs. For a real use case, this is just the first step! Once you have a trained model, you must evaluate it and compare it against other models to see how good it is. This can be done by ranking generations of different model versions, similar to how we built the reward dataset. Once you add the evaluation step, the fun begins: you can start iterating on your dataset and model training setup to see if there are ways to improve the model. You could add other datasets to the mix or apply better filters to the existing one. On the other hand, you could try different model sizes and architectures for the reward model or train for longer. We are actively improving TRL to make all steps involved in RLHF more accessible and are excited to see the things people build with it! Check out the [issues on GitHub](https://github.com/lvwerra/trl/issues) if you're interested in contributing. 
## Citation ```bibtex @misc {beeching2023stackllama, author = { Edward Beeching and Younes Belkada and Kashif Rasul and Lewis Tunstall and Leandro von Werra and Nazneen Rajani and Nathan Lambert }, title = { StackLLaMA: An RL Fine-tuned LLaMA Model for Stack Exchange Question and Answering }, year = 2023, url = { https://huggingface.co/blog/stackllama }, doi = { 10.57967/hf/0513 }, publisher = { Hugging Face Blog } } ``` ## Acknowledgements We thank Philipp Schmid for sharing his wonderful [demo](https://huggingface.co/spaces/philschmid/igel-playground) of streaming text generation upon which our demo was based. We also thank Omar Sanseviero and Louis Castricato for giving valuable and detailed feedback on the draft of the blog post. |

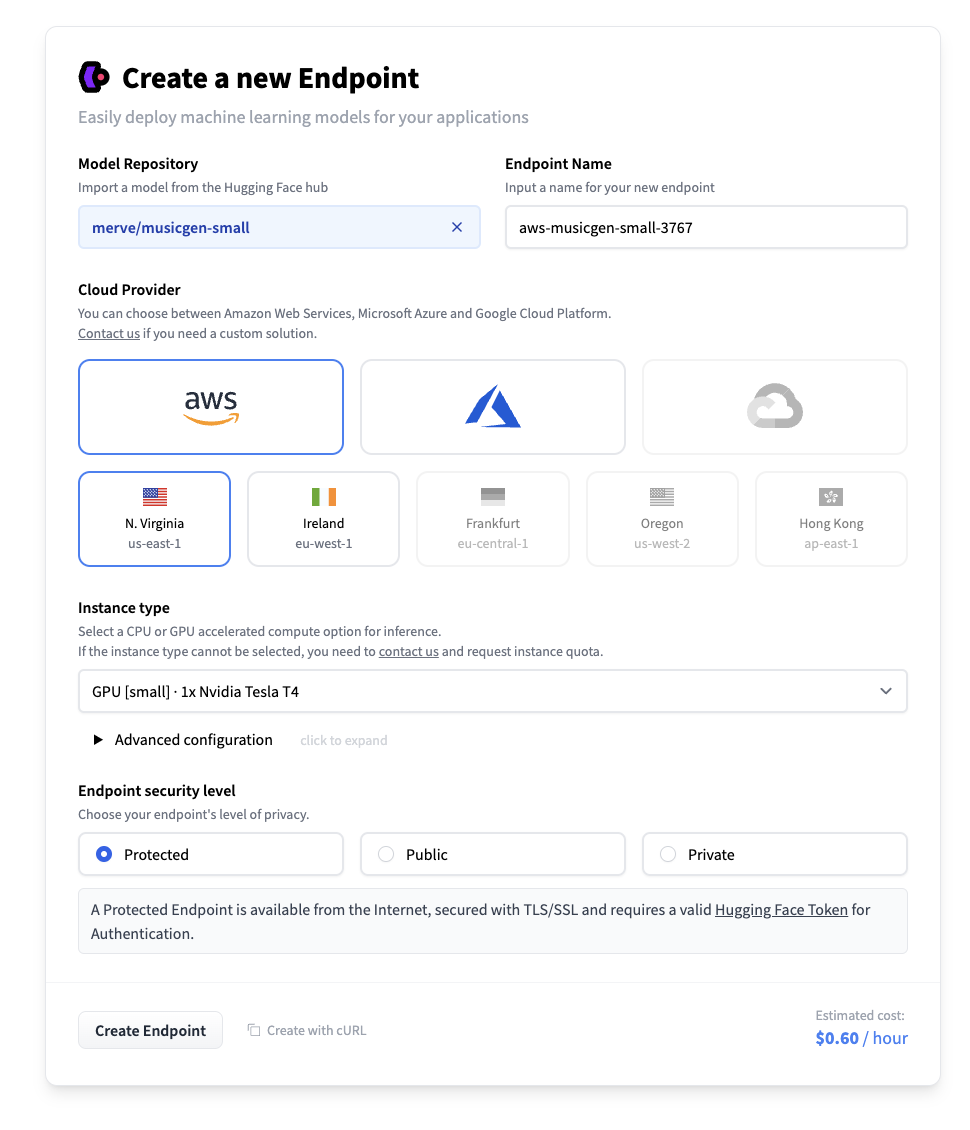

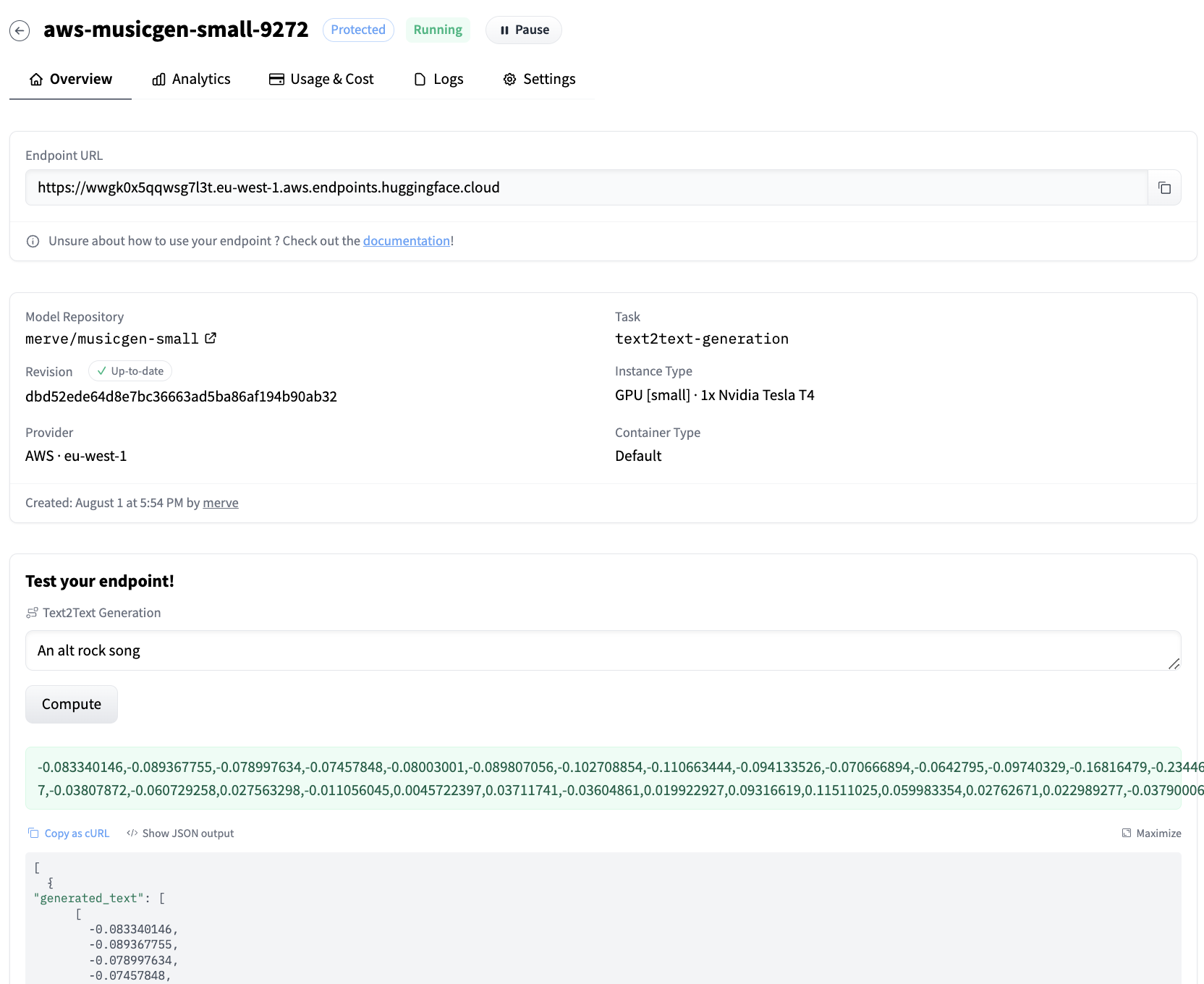

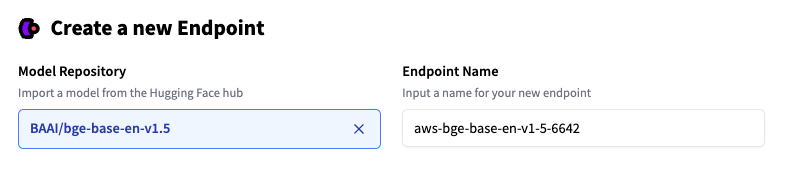

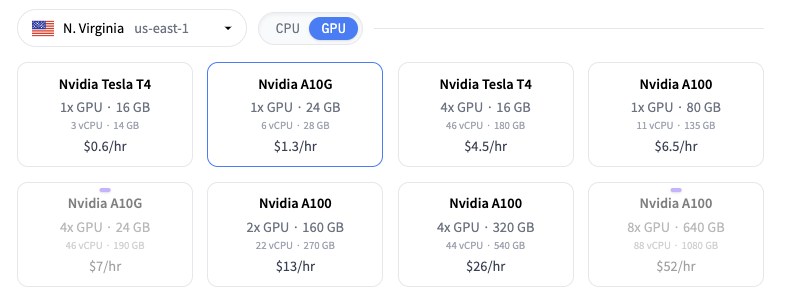

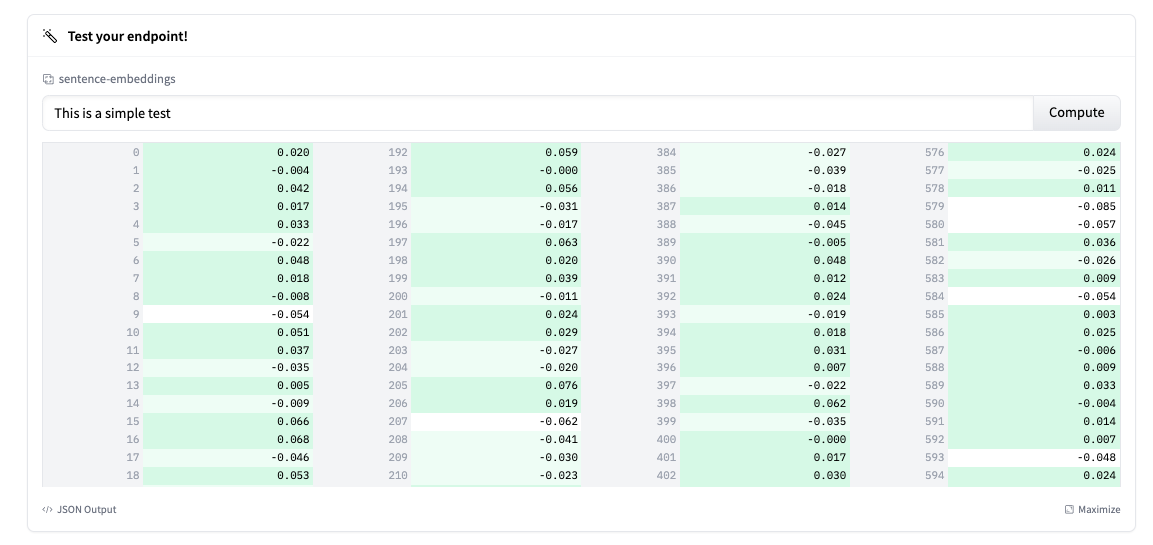

Snorkel AI x Hugging Face: unlock foundation models for enterprises | Violette | April 6, 2023 | snorkel-case-study | case-studies | https://huggingface.co/blog/snorkel-case-study | # Snorkel AI x Hugging Face: unlock foundation models for enterprises _This article is a cross-post from an originally published post on April 6, 2023 [in Snorkel's blog](https://snorkel.ai/snorkel-hugging-face-unlock-foundation-models-for-enterprise/), by Friea Berg ._ As OpenAI releases [GPT-4](https://openai.com/research/gpt-4) and Google debuts [Bard](https://gizmodo.com/google-bard-chatgpt-ai-rival-released-1850248162) in beta, enterprises around the world are excited to leverage the power of foundation models. As that excitement builds, so does the realization that most companies and organizations are not equipped to properly take advantage of foundation models. Foundation models pose a unique set of challenges for enterprises. Their larger-than-ever size makes them difficult and expensive for companies to host themselves, and using off-the-shelf FMs for production use cases could mean poor performance or substantial governance and compliance risks. Snorkel AI bridges the gap between foundation models and practical enterprise use cases and has [yielded impressive results](https://snorkel.ai/how-pixability-uses-foundation-models-to-accelerate-nlp-application-development-by-months/) for AI innovators like Pixability. We’re teaming with [Hugging Face](https://huggingface.co/), best known for its enormous repository of ready-to-use open-source models, to provide enterprises with even more flexibility and choice as they develop AI applications. ## Foundation models in Snorkel Flow The Snorkel Flow development platform enables users to [adapt foundation models](https://snorkel.ai/snorkel-flow/foundation-model-development/) for their specific use cases. Application development begins by inspecting the predictions of a selected foundation model “out of the box” on their data. 
These predictions become an initial version of training labels for those data points. Snorkel Flow helps users to identify error modes in that model and correct them efficiently via [programmatic labeling](https://snorkel.ai/programmatic-labeling/), which can include updating training labels with heuristics or [prompts](https://snorkel.ai/combining-foundation-models-with-weak-supervision/). The base foundation model can then be fine-tuned on the updated labels and evaluated once again, with this iterative “detect and correct” process continuing until the adapted foundation model is sufficiently high quality to deploy. Hugging Face helps enable this powerful development process by making more than 150,000 open-source models immediately available from a single source. Many of those models are specialized on domain-specific data, like the BioBERT and SciBERT models used to demonstrate [how ML can be used to spot adverse drug events](https://snorkel.ai/adverse-drug-events-how-to-spot-them-with-machine-learning/). One – or better yet, [multiple](https://snorkel.ai/combining-foundation-models-with-weak-supervision/) – specialized base models can give users a jump-start on initial predictions, prompts for improving labels, or fine-tuning a final model for deployment. ## How does Hugging Face help? Snorkel AI’s partnership with Hugging Face supercharges Snorkel Flow’s foundation model capabilities. Initially we only made a small number of foundation models available. Each one required a dedicated service, making it prohibitively expensive and difficult for us to offer enterprises the flexibility to capitalize on the rapidly growing variety of models available. Adopting Hugging Face’s Inference Endpoint service enabled us to expand the number of foundation models our users could tap into while keeping costs manageable. Hugging Face’s service allows users to create a model API in a few clicks and begin using it immediately. 
Crucially, the new service has “pause and resume” capabilities that allow us to activate a model API when a client needs it, and put it to sleep when they don’t. "We were pleasantly surprised to see how straightforward Hugging Face Inference Endpoint service was to set up. All the configuration options were pretty self-explanatory, but we also had access to all the options we needed in terms of what cloud to run on, what security level we needed, etc." – Snorkel CTO and Co-founder Braden Hancock <iframe width="100%" style="aspect-ratio: 16 / 9;" src="https://www.youtube-nocookie.com/embed/woblG7iZPSw" title="YouTube video player" frameborder="0" allow="accelerometer; autoplay; clipboard-write; encrypted-media; gyroscope; picture-in-picture" allowfullscreen></iframe> ## How does this help Snorkel customers? Few enterprises have the resources to train their own foundation models from scratch. While many may have the in-house expertise to fine-tune their own version of a foundation model, they may struggle to gather the volume of data needed for that task. Snorkel’s data-centric platform for developing foundation models and alignment with leading industry innovators like Hugging Face help put the power of foundation models at our users’ fingertips. #### “With Snorkel AI and Hugging Face Inference Endpoints, companies will accelerate their data-centric AI applications with open source at the core. Machine Learning is becoming the default way of building technology, and building from open source allows companies to build the right solution for their use case and take control of the experience they offer to their customers. 
We are excited to see Snorkel AI enable automated data labeling for the enterprise building from open-source Hugging Face models and Inference Endpoints, our machine learning production service.” Clement Delangue, co-founder and CEO, Hugging Face ## Conclusion Together, Snorkel and Hugging Face make it easier than ever for large companies, government agencies, and AI innovators to get value from foundation models. The ability to use Hugging Face’s comprehensive hub of foundation models means that users can pick the models that best align with their business needs without having to invest in the resources required to train them. This integration is a significant step forward in making foundation models more accessible to enterprises around the world. _If you’re interested in Hugging Face Inference Endpoints for your company, please contact us [here](https://huggingface.co/inference-endpoints/enterprise) - our team will contact you to discuss your requirements!_ |

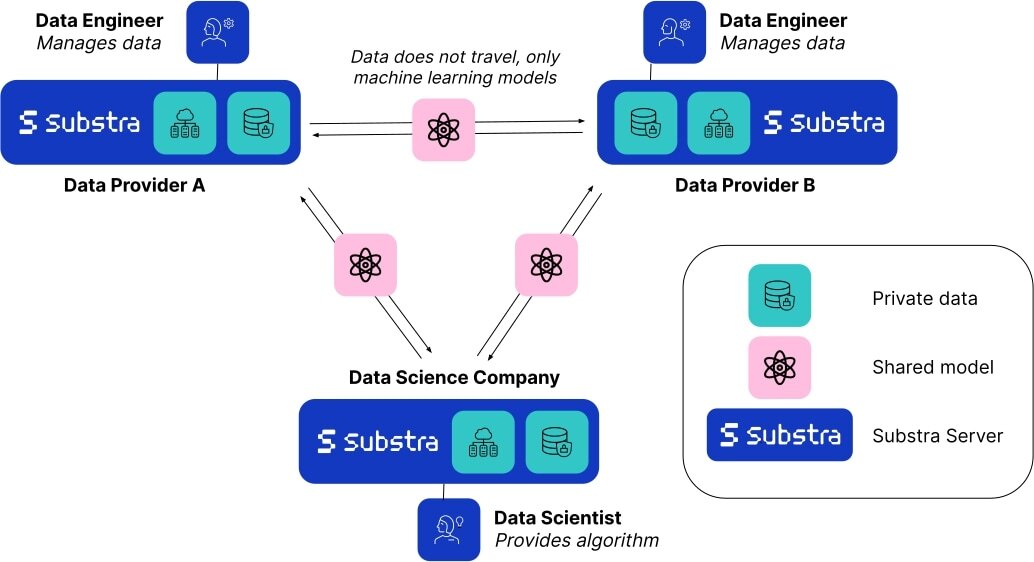

Creating Privacy Preserving AI with Substra | EazyAl | April 12, 2023 | owkin-substra | cv, federated-learning, fl, open-source-collab | https://huggingface.co/blog/owkin-substra | # Creating Privacy Preserving AI with Substra With the recent rise of generative techniques, machine learning is at an incredibly exciting point in its history. The models powering this rise require even more data to produce impactful results, and thus it’s becoming increasingly important to explore new methods of ethically gathering data while ensuring that data privacy and security remain a top priority. In many domains that deal with sensitive information, such as healthcare, there often isn’t enough high quality data accessible to train these data-hungry models. Datasets are siloed in different academic centers and medical institutions and are difficult to share openly due to privacy concerns about patient and proprietary information. Regulations that protect patient data such as HIPAA are essential to safeguard individuals’ private health information, but they can limit the progress of machine learning research as data scientists can’t access the volume of data required to effectively train their models. Technologies that work alongside existing regulations by proactively protecting patient data will be crucial to unlocking these silos and accelerating the pace of machine learning research and deployment in these domains. This is where Federated Learning comes in. Check out the [space](https://huggingface.co/spaces/owkin/substra) we’ve created with [Substra](https://owkin.com/substra) to learn more! ## What is Federated Learning? Federated learning (FL) is a decentralized machine learning technique that allows you to train models using multiple data providers. Instead of gathering data from all sources on a single server, data can remain on a local server as only the resulting model weights travel between servers. 
As the data never leaves its source, federated learning is naturally a privacy-first approach. Not only does this technique improve data security and privacy, it also enables data scientists to build better models using data from different sources - increasing robustness and providing better representation as compared to models trained on data from a single source. This is valuable not only due to the increase in the quantity of data, but also to reduce the risk of bias due to variations of the underlying dataset, for example minor differences caused by the data capture techniques and equipment, or differences in demographic distributions of the patient population. With multiple sources of data, we can build more generalizable models that ultimately perform better in real world settings. For more information on federated learning, we recommend checking out this explanatory [comic](https://federated.withgoogle.com/) by Google.  **Substra** is an open source federated learning framework built for real world production environments. Although federated learning is a relatively new field and has only taken hold in the last decade, it has already enabled machine learning research to progress in ways previously unimaginable. For example, 10 competing biopharma companies that would traditionally never share data with each other set up a collaboration in the [MELLODDY](https://www.melloddy.eu/) project by sharing the world’s largest collection of small molecules with known biochemical or cellular activity. This ultimately enabled all of the companies involved to build more accurate predictive models for drug discovery, a huge milestone in medical research. ## Substra x HF Research on the capabilities of federated learning is growing rapidly but the majority of recent work has been limited to simulated environments. Real world examples and implementations still remain limited due to the difficulty of deploying and architecting federated networks. 
As a leading open-source platform for federated learning deployment, Substra has been battle tested in many complex security environments and IT infrastructures, and has enabled [medical breakthroughs in breast cancer research](https://www.nature.com/articles/s41591-022-02155-w).  Hugging Face collaborated with the folks managing Substra to create this space, which is meant to give you an idea of the real world challenges that researchers and scientists face - mainly, a lack of centralized, high quality data that is ‘ready for AI’. As you can control the distribution of these samples, you’ll be able to see how a simple model reacts to changes in data. You can then examine how a model trained with federated learning almost always performs better on validation data compared with models trained on data from a single source. ## Conclusion Although federated learning has been leading the charge, there are various other privacy enhancing technologies (PETs) such as secure enclaves and multi party computation that are enabling similar results and can be combined with federation to create multi layered privacy preserving environments. You can learn more [here](https://medium.com/@aliimran_36956/how-collaboration-is-revolutionizing-medicine-34999060794e) if you’re interested in how these are enabling collaborations in medicine. Regardless of the methods used, it's important to stay vigilant of the fact that data privacy is a right for all of us. It’s critical that we move forward in this AI boom with [privacy and ethics in mind](https://www.nature.com/articles/s42256-022-00551-y). If you’d like to play around with Substra and implement federated learning in a project, you can check out the docs [here](https://docs.substra.org/en/stable/). |
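The idea from the "What is Federated Learning?" section, that only model weights travel between servers, can be illustrated with a toy weighted average of locally trained weights (commonly called federated averaging). This is a plain-Python sketch with made-up numbers. It does not use Substra's API, and `federated_average` is a hypothetical helper.

```python
def federated_average(client_weights, client_sizes):
    """Average per-client weight vectors, weighted by each client's sample count.

    Only the weight vectors and sample counts are shared; raw data stays local.
    """
    total = sum(client_sizes)
    num_params = len(client_weights[0])
    return [
        sum(weights[i] * size for weights, size in zip(client_weights, client_sizes)) / total
        for i in range(num_params)
    ]


# Two hypothetical data providers that each trained the same 2-parameter model locally.
hospital_a = [0.0, 2.0]  # trained on 100 local samples
hospital_b = [4.0, 6.0]  # trained on 300 local samples

global_weights = federated_average([hospital_a, hospital_b], [100, 300])
print(global_weights)  # [3.0, 5.0]
```

Weighting by sample count is one common design choice. Real deployments typically repeat this aggregation over many rounds and may add further privacy protections on top.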

Graph Classification with Transformers | clefourrier | April 14, 2023 | graphml-classification | community, guide, graphs | https://huggingface.co/blog/graphml-classification | # Graph classification with Transformers <div class="blog-metadata"> <small>Published April 14, 2023.</small> <a target="_blank" class="btn no-underline text-sm mb-5 font-sans" href="https://github.com/huggingface/blog/blob/main/graphml-classification.md"> Update on GitHub </a> </div> <div class="author-card"> <a href="/clefourrier"> <img class="avatar avatar-user" src="https://aeiljuispo.cloudimg.io/v7/https://s3.amazonaws.com/moonup/production/uploads/1644340617257-noauth.png?w=200&h=200&f=face" title="Gravatar"> <div class="bfc"> <code>clefourrier</code> <span class="fullname">Clémentine Fourrier</span> </div> </a> </div> In the previous [blog](https://huggingface.co/blog/intro-graphml), we explored some of the theoretical aspects of machine learning on graphs. This one will explore how you can do graph classification using the Transformers library. (You can also follow along by downloading the demo notebook [here](https://github.com/huggingface/blog/blob/main/notebooks/graphml-classification.ipynb)!) At the moment, the only graph transformer model available in Transformers is Microsoft's [Graphormer](https://arxiv.org/abs/2106.05234), so this is the one we will use here. We are looking forward to seeing what other models people will use and integrate 🤗 ## Requirements To follow this tutorial, you need to have installed `datasets` and `transformers` (version >= 4.27.2), which you can do with `pip install -U datasets transformers`. ## Data To use graph data, you can either start from your own datasets, or use [those available on the Hub](https://huggingface.co/datasets?task_categories=task_categories:graph-ml&sort=downloads). We'll focus on using already available ones, but feel free to [add your datasets](https://huggingface.co/docs/datasets/upload_dataset)! 
### Loading Loading a graph dataset from the Hub is very easy. Let's load the `ogbg-molhiv` dataset (a baseline from the [Open Graph Benchmark](https://ogb.stanford.edu/) by Stanford), stored in the `OGB` repository:
```python
from datasets import load_dataset

# Load every split of the dataset from the Hub
dataset = load_dataset("OGB/ogbg-molhiv")
dataset = dataset.shuffle(seed=0)
```
This dataset already has three splits, `train`, `validation`, and `test`, and all these splits contain our 5 columns of interest (`edge_index`, `edge_attr`, `y`, `num_nodes`, `node_feat`), which you can see by doing `print(dataset)`. If you have other graph libraries, you can use them to plot your graphs and further inspect the dataset. For example, using NetworkX and matplotlib:
```python
import networkx as nx
import matplotlib.pyplot as plt

# We want to plot the first train graph
graph = dataset["train"][0]

edges = graph["edge_index"]
num_edges = len(edges[0])
num_nodes = graph["num_nodes"]

# Conversion to networkx format
G = nx.Graph()
G.add_nodes_from(range(num_nodes))
G.add_edges_from([(edges[0][i], edges[1][i]) for i in range(num_edges)])

# Plot
nx.draw(G)
plt.show()
```
### Format On the Hub, graph datasets are mostly stored as lists of graphs (using the `jsonl` format). A single graph is a dictionary, and here is the expected format for our graph classification datasets: - `edge_index` contains the indices of nodes in edges, stored as a list containing two parallel lists of node indices (edge sources and edge targets). - **Type**: list of 2 lists of integers. - **Example**: a graph containing four nodes (0, 1, 2 and 3) and where connections are 1->2, 1->3 and 3->1 will have `edge_index = [[1, 1, 3], [2, 3, 1]]`. You might notice that node 0 is not present here, as it is not part of an edge per se. This is why the next attribute is important. - `num_nodes` indicates the total number of nodes available in the graph (by default, it is assumed that nodes are numbered sequentially). 
- **Type**: integer - **Example**: In our above example, `num_nodes = 4`. - `y` maps each graph to what we want to predict from it (be it a class, a property value, or several binary labels for different tasks). - **Type**: list of either integers (for multi-class classification), floats (for regression), or lists of ones and zeroes (for binary multi-task classification) - **Example**: We could predict the graph size (small = 0, medium = 1, big = 2). Here, `y = [0]`. - `node_feat` contains the available features (if present) for each node of the graph, ordered by node index. - **Type**: list of lists of integers (Optional) - **Example**: Our above nodes could have, for example, types (like different atoms in a molecule). This could give `node_feat = [[1], [0], [1], [1]]`. - `edge_attr` contains the available attributes (if present) for each edge of the graph, following the `edge_index` ordering. - **Type**: list of lists of integers (Optional) - **Example**: Our above edges could have, for example, types (like molecular bonds). This could give `edge_attr = [[0], [1], [1]]`. ### Preprocessing Graph transformer frameworks usually apply specific preprocessing to their datasets to generate added features and properties which help the underlying learning task (classification in our case). Here, we use Graphormer's default preprocessing, which generates in/out degree information, the matrices of shortest paths between nodes, and other properties of interest for the model.
```python
from transformers.models.graphormer.collating_graphormer import preprocess_item, GraphormerDataCollator

dataset_processed = dataset.map(preprocess_item, batched=False)
```
It is also possible to apply this preprocessing on the fly, in the DataCollator's parameters (by setting `on_the_fly_processing` to True): not all datasets are as small as `ogbg-molhiv`, and for large graphs, it might be too costly to store all the preprocessed data beforehand. 
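To make the Format section concrete, here is a minimal sketch in plain Python that builds the four-node example graph from the bullets above and checks it against the listed rules. The helper `check_graph` is hypothetical and is not part of `datasets` or `transformers`.

```python
def check_graph(graph: dict) -> None:
    """Raise ValueError if `graph` breaks the format described above (hypothetical helper)."""
    sources, targets = graph["edge_index"]
    if len(sources) != len(targets):
        raise ValueError("edge_index must hold two parallel lists of equal length")
    num_nodes = graph["num_nodes"]
    for node in list(sources) + list(targets):
        if not 0 <= node < num_nodes:
            raise ValueError(f"node index {node} is outside [0, {num_nodes})")
    # Optional columns: one feature list per node, one attribute list per edge.
    if "node_feat" in graph and len(graph["node_feat"]) != num_nodes:
        raise ValueError("node_feat needs one entry per node")
    if "edge_attr" in graph and len(graph["edge_attr"]) != len(sources):
        raise ValueError("edge_attr needs one entry per edge")


# The example from the text: edges 1->2, 1->3 and 3->1 in a graph with four nodes.
example_graph = {
    "edge_index": [[1, 1, 3], [2, 3, 1]],
    "num_nodes": 4,
    "y": [0],
    "node_feat": [[1], [0], [1], [1]],
    "edge_attr": [[0], [1], [1]],
}
check_graph(example_graph)

# Nodes that appear in no edge are only recoverable thanks to `num_nodes`.
nodes_in_edges = set(example_graph["edge_index"][0]) | set(example_graph["edge_index"][1])
isolated_nodes = sorted(set(range(example_graph["num_nodes"])) - nodes_in_edges)
print(isolated_nodes)  # [0]
```

Running a check like this over your own `jsonl` rows before uploading can catch mismatched list lengths early, before the preprocessing step sees them.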
## Model ### Loading Here, we load an existing pretrained model/checkpoint and fine-tune it on our downstream task, which is a binary classification task (hence `num_classes = 2`). We could also fine-tune our model on regression tasks (`num_classes = 1`) or on multi-task classification.
```python
from transformers import GraphormerForGraphClassification

model = GraphormerForGraphClassification.from_pretrained(
    "clefourrier/pcqm4mv2_graphormer_base",
    num_classes=2,  # num_classes for the downstream task
    ignore_mismatched_sizes=True,
)
```
Let's look at this in more detail. Calling the `from_pretrained` method on our model downloads and caches the weights for us. As the number of classes (for prediction) is dataset dependent, we pass the new `num_classes` as well as `ignore_mismatched_sizes` alongside the checkpoint name. This makes sure a custom classification head is created, specific to our task, hence likely different from the original decoder head. It is also possible to create a new randomly initialized model to train from scratch, either following the known parameters of a given checkpoint or by manually choosing them. ### Training or fine-tuning To train our model simply, we will use a `Trainer`. To instantiate it, we will need to define the training configuration and the evaluation metric. The most important is the `TrainingArguments`, which is a class that contains all the attributes to customize the training. It requires a folder name, which will be used to save the checkpoints of the model. 
```python
from transformers import TrainingArguments, Trainer

training_args = TrainingArguments(
    "graph-classification",
    logging_dir="graph-classification",
    per_device_train_batch_size=64,
    per_device_eval_batch_size=64,
    auto_find_batch_size=True,  # batch size can be changed automatically to prevent OOMs
    gradient_accumulation_steps=10,
    dataloader_num_workers=4,
    num_train_epochs=20,
    evaluation_strategy="epoch",
    logging_strategy="epoch",
    push_to_hub=False,
)
```
For graph datasets, it is particularly important to play around with batch sizes and gradient accumulation steps to train on enough samples while avoiding out-of-memory errors. The last argument, `push_to_hub`, allows the Trainer to push the model to the Hub regularly during training, at each saving step (it is disabled here).
```python
trainer = Trainer(
    model=model,
    args=training_args,
    train_dataset=dataset_processed["train"],
    eval_dataset=dataset_processed["validation"],
    data_collator=GraphormerDataCollator(),
)
```
In the `Trainer` for graph classification, it is important to pass the specific data collator for the given graph dataset, which will convert individual graphs to batches for training.
```python
train_results = trainer.train()
trainer.push_to_hub()
```
When the model is trained, it can be saved to the Hub with all the associated training artefacts using `push_to_hub`. As this model is quite big, it takes about a day to train/fine-tune for 20 epochs on CPU (Intel Core i7). To go faster, you could use powerful GPUs and parallelization instead, by launching the code either in a Colab notebook or directly on the cluster of your choice. ## Ending note Now that you know how to use `transformers` to train a graph classification model, we hope you will try to share your favorite graph transformer checkpoints, models, and datasets on the Hub for the rest of the community to use! |

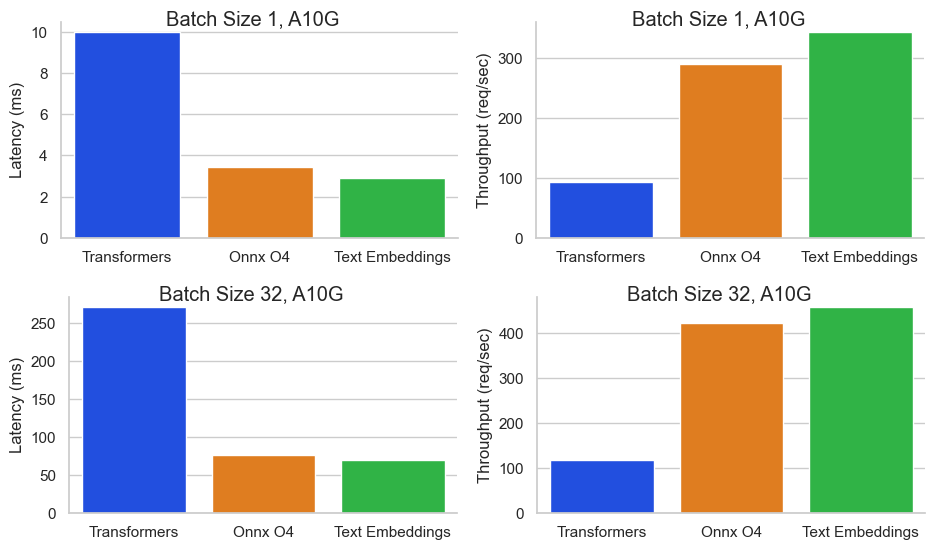

Accelerating Hugging Face Transformers with AWS Inferentia2 | philschmid | April 17, 2023 | accelerate-transformers-with-inferentia2 | partnerships, aws, nlp, cv | https://huggingface.co/blog/accelerate-transformers-with-inferentia2 | # Accelerating Hugging Face Transformers with AWS Inferentia2 <script async defer src="https://unpkg.com/medium-zoom-element@0/dist/medium-zoom-element.min.js"></script> In the last five years, Transformer models [[1](https://arxiv.org/abs/1706.03762)] have become the _de facto_ standard for many machine learning (ML) tasks, such as natural language processing (NLP), computer vision (CV), speech, and more. Today, many data scientists and ML engineers rely on popular transformer architectures like BERT [[2](https://arxiv.org/abs/1810.04805)], RoBERTa [[3](https://arxiv.org/abs/1907.11692)], the Vision Transformer [[4](https://arxiv.org/abs/2010.11929)], or any of the 130,000+ pre-trained models available on the [Hugging Face](https://huggingface.co) hub to solve complex business problems with state-of-the-art accuracy. However, for all their greatness, Transformers can be challenging to deploy in production. On top of the infrastructure plumbing typically associated with model deployment, which we largely solved with our [Inference Endpoints](https://huggingface.co/inference-endpoints) service, Transformers are large models which routinely exceed the multi-gigabyte mark. Large language models (LLMs) like [GPT-J-6B](https://huggingface.co/EleutherAI/gpt-j-6B), [Flan-T5](https://huggingface.co/google/flan-t5-xxl), or [Opt-30B](https://huggingface.co/facebook/opt-30b) are in the tens of gigabytes, not to mention behemoths like [BLOOM](https://huggingface.co/bigscience/bloom), our very own LLM, which clocks in at 350 gigabytes. Fitting these models on a single accelerator can be quite difficult, let alone getting the high throughput and low inference latency that applications require, like conversational applications and search. 
So far, ML experts have designed complex manual techniques to slice large models, distribute them on a cluster of accelerators, and optimize their latency. Unfortunately, this work is extremely difficult, time-consuming, and completely out of reach for many ML practitioners. At Hugging Face, we're democratizing ML and always looking to partner with companies who also believe that every developer and organization should benefit from state-of-the-art models. For this purpose, we're excited to partner with Amazon Web Services to optimize Hugging Face Transformers for AWS [Inferentia 2](https://aws.amazon.com/machine-learning/inferentia/)! It’s a new purpose-built inference accelerator that delivers unprecedented levels of throughput, latency, performance per watt, and scalability. ## Introducing AWS Inferentia2 AWS Inferentia2 is the successor to Inferentia1, which launched in 2019. Powered by Inferentia1, Amazon EC2 Inf1 instances delivered 25% higher throughput and 70% lower cost than comparable G5 instances based on NVIDIA A10G GPU, and with Inferentia2, AWS is pushing the envelope again. The new Inferentia2 chip delivers a 4x throughput increase and a 10x latency reduction compared to Inferentia. Likewise, the new [Amazon EC2 Inf2](https://aws.amazon.com/de/ec2/instance-types/inf2/) instances have up to 2.6x better throughput, 8.1x lower latency, and 50% better performance per watt than comparable G5 instances. Inferentia 2 gives you the best of both worlds: cost-per-inference optimization thanks to high throughput and response time for your application thanks to low inference latency. Inf2 instances are available in multiple sizes, which are equipped with between 1 and 12 Inferentia 2 chips. When several chips are present, they are interconnected by a blazing-fast direct Inferentia2 to Inferentia2 connectivity for distributed inference on large models. 
For example, the largest instance size, inf2.48xlarge, has 12 chips and enough memory to load a 175-billion parameter model like GPT-3 or BLOOM. Thankfully none of this comes at the expense of development complexity. With [optimum neuron](https://github.com/huggingface/optimum-neuron), you don't need to slice or modify your model. Because of the native integration in [AWS Neuron SDK](https://github.com/aws-neuron/aws-neuron-sdk), all it takes is a single line of code to compile your model for Inferentia 2. You can experiment in minutes! Test the performance your model could reach on Inferentia 2 and see for yourself. Speaking of which, let’s show you how several Hugging Face models run on Inferentia 2. Benchmarking time! ## Benchmarking Hugging Face Models on AWS Inferentia 2 We evaluated some of the most popular NLP models from the [Hugging Face Hub](https://huggingface.co/models) including BERT, RoBERTa, DistilBERT, and vision models like Vision Transformers. The first benchmark compares the performance of Inferentia, Inferentia 2, and GPUs. We ran all experiments on AWS with the following instance types: * Inferentia1 - [inf1.2xlarge](https://aws.amazon.com/ec2/instance-types/inf1/?nc1=h_ls) powered by a single Inferentia chip. * Inferentia2 - [inf2.xlarge](https://aws.amazon.com/ec2/instance-types/inf2/?nc1=h_ls) powered by a single Inferentia2 chip. * GPU - [g5.2xlarge](https://aws.amazon.com/ec2/instance-types/g5/) powered by a single NVIDIA A10G GPU. _Note that we did not optimize the models for the GPU environment; the models were evaluated in fp32._ When it comes to benchmarking Transformer models, two metrics are most commonly used: * **Latency**: the time it takes for the model to perform a single prediction (pre-process, prediction, post-process). 
* **Throughput**: the number of executions performed in a fixed amount of time for one benchmark configuration We looked at latency across different setups and models to understand the benefits and tradeoffs of the new Inferentia2 instance. If you want to run the benchmark yourself, we created a [Github repository](https://github.com/philschmid/aws-neuron-samples/tree/main/benchmark) with all the information and scripts to do so. ### Results The benchmark confirms that the performance improvements claimed by AWS can be reproduced and validated by real use-cases and examples. On average, AWS Inferentia2 delivers 4.5x better latency than NVIDIA A10G GPUs and 4x better latency than Inferentia1 instances. We ran 144 experiments on 6 different model architectures: * Accelerators: Inf1, Inf2, NVIDIA A10G * Models: [BERT-base](https://huggingface.co/bert-base-uncased), [BERT-Large](https://huggingface.co/bert-large-uncased), [RoBERTa-base](https://huggingface.co/roberta-base), [DistilBERT](https://huggingface.co/distilbert-base-uncased), [ALBERT-base](https://huggingface.co/albert-base-v2), [ViT-base](https://huggingface.co/google/vit-base-patch16-224) * Sequence length: 8, 16, 32, 64, 128, 256, 512 * Batch size: 1 In each experiment, we collected numbers for p95 latency. You can find the full details of the benchmark in this spreadsheet: [HuggingFace: Benchmark Inferentia2](https://docs.google.com/spreadsheets/d/1AULEHBu5Gw6ABN8Ls6aSB2CeZyTIP_y5K7gC7M3MXqs/edit?usp=sharing). Let’s highlight a few insights of the benchmark. ### BERT-base Here is the latency comparison for running [BERT-base](https://huggingface.co/bert-base-uncased) on each of the infrastructure setups, with a logarithmic scale for latency. It is remarkable to see how Inferentia2 outperforms all other setups by ~6x for sequence lengths up to 256. 
<br> <figure class="image table text-center m-0 w-full"> <medium-zoom background="rgba(0,0,0,.7)" alt="BERT-base p95 latency" src="assets/140_accelerate_transformers_with_inferentia2/bert.png"></medium-zoom> <figcaption>Figure 1. BERT-base p95 latency</figcaption> </figure> <br> ### Vision Transformer Here is the latency comparison for running [ViT-base](https://huggingface.co/google/vit-base-patch16-224) on the different infrastructure setups. Inferentia2 delivers 2x better latency than the NVIDIA A10G, with the potential to greatly help companies move from traditional architectures, like CNNs, to Transformers for real-time applications. <br> <figure class="image table text-center m-0 w-full"> <medium-zoom background="rgba(0,0,0,.7)" alt="ViT p95 latency" src="assets/140_accelerate_transformers_with_inferentia2/vit.png"></medium-zoom> <figcaption>Figure 2. ViT p95 latency</figcaption> </figure> <br> ## Conclusion Transformer models have emerged as the go-to solution for many machine learning tasks. However, deploying them in production has been challenging due to their large size and latency requirements. Thanks to AWS Inferentia2 and the collaboration between Hugging Face and AWS, developers and organizations can now leverage the benefits of state-of-the-art models without the prior need for extensive machine learning expertise. You can start testing for as low as $0.76/h. The initial benchmarking results are promising, and show that Inferentia2 delivers superior latency performance when compared to both Inferentia and NVIDIA A10G GPUs. This latest breakthrough promises that high-quality machine learning models can be made available to a much broader audience, delivering AI accessibility to everyone. |
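For readers who want to compute a p95 latency figure like the ones reported in the benchmark for their own model, a minimal sketch follows. It is not the benchmark script from the linked repository: `fake_predict` is a hypothetical stand-in for a real pre-process, predict, post-process call, and the run counts are arbitrary assumptions.

```python
import statistics
import time


def fake_predict(sequence_length: int) -> int:
    """Hypothetical stand-in for one end-to-end prediction; work grows with input length."""
    return sum(i * i for i in range(sequence_length * 100))


def p95_latency_ms(fn, *args, runs: int = 200, warmup: int = 10) -> float:
    """Time `fn(*args)` repeatedly and return the 95th-percentile latency in milliseconds."""
    for _ in range(warmup):  # discard the first calls (caches, lazy initialisation)
        fn(*args)
    latencies = []
    for _ in range(runs):
        start = time.perf_counter()
        fn(*args)
        latencies.append((time.perf_counter() - start) * 1000.0)
    # quantiles(n=100) returns the 99 percentile cut points; index 94 is the 95th.
    return statistics.quantiles(latencies, n=100)[94]


for seq_len in (8, 128, 512):
    print(f"sequence length {seq_len}: p95 = {p95_latency_ms(fake_predict, seq_len):.3f} ms")
```

Reporting a high percentile rather than the mean is a common choice for latency because it reflects the slow requests that users actually notice.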

How to host a Unity game in a Space | dylanebert | April 21, 2023 | unity-in-spaces | community, guide, game-dev | https://huggingface.co/blog/unity-in-spaces | # How to host a Unity game in a Space <!-- {authors} --> Did you know you can host a Unity game in a Hugging Face Space? No? Well, you can! Hugging Face Spaces are an easy way to build, host, and share demos. While they are typically used for Machine Learning demos, they can also host playable Unity games. Here are some examples: - [Huggy](https://huggingface.co/spaces/ThomasSimonini/Huggy) - [Farming Game](https://huggingface.co/spaces/dylanebert/FarmingGame) - [Unity API Demo](https://huggingface.co/spaces/dylanebert/UnityDemo) Here's how you can host your own Unity game in a Space. ## Step 1: Create a Space using the Static HTML template First, navigate to [Hugging Face Spaces](https://huggingface.co/new-space) to create a space. <figure class="image text-center"> <img src="https://huggingface.co/datasets/huggingface/documentation-images/resolve/main/blog/124_ml-for-games/games-in-spaces/1.png"> </figure> Select the "Static HTML" template, give your Space a name, and create it. <figure class="image text-center"> <img src="https://huggingface.co/datasets/huggingface/documentation-images/resolve/main/blog/124_ml-for-games/games-in-spaces/2.png"> </figure> ## Step 2: Use Git to Clone the Space Clone your newly created Space to your local machine using Git. You can do this by running the following command in your terminal or command prompt: ``` git clone https://huggingface.co/spaces/{your-username}/{your-space-name} ``` ## Step 3: Open your Unity Project Open the Unity project you want to host in your Space. <figure class="image text-center"> <img src="https://huggingface.co/datasets/huggingface/documentation-images/resolve/main/blog/124_ml-for-games/games-in-spaces/3.png"> </figure> ## Step 4: Switch the Build Target to WebGL Navigate to `File > Build Settings` and switch the Build Target to WebGL. 
<figure class="image text-center"> <img src="https://huggingface.co/datasets/huggingface/documentation-images/resolve/main/blog/124_ml-for-games/games-in-spaces/4.png"> </figure> ## Step 5: Open Player Settings In the Build Settings window, click the "Player Settings" button to open the Player Settings panel. <figure class="image text-center"> <img src="https://huggingface.co/datasets/huggingface/documentation-images/resolve/main/blog/124_ml-for-games/games-in-spaces/5.png"> </figure> ## Step 6: Optionally, Download the Hugging Face Unity WebGL Template You can enhance your game's appearance in a Space by downloading the Hugging Face Unity WebGL template, available [here](https://github.com/huggingface/Unity-WebGL-template-for-Hugging-Face-Spaces). Just download the repository and drop it in your project files. Then, in the Player Settings panel, switch the WebGL template to Hugging Face. To do so, in Player Settings, click "Resolution and Presentation", then select the Hugging Face WebGL template. <figure class="image text-center"> <img src="https://huggingface.co/datasets/huggingface/documentation-images/resolve/main/blog/124_ml-for-games/games-in-spaces/6.png"> </figure> ## Step 7: Change the Compression Format to Disabled In the Player Settings panel, navigate to the "Publishing Settings" section and change the Compression Format to "Disabled". <figure class="image text-center"> <img src="https://huggingface.co/datasets/huggingface/documentation-images/resolve/main/blog/124_ml-for-games/games-in-spaces/7.png"> </figure> ## Step 8: Build your Project Return to the Build Settings window and click the "Build" button. Choose a location to save your build files, and Unity will build the project for WebGL. 
<figure class="image text-center"> <img src="https://huggingface.co/datasets/huggingface/documentation-images/resolve/main/blog/124_ml-for-games/games-in-spaces/8.png"> </figure> ## Step 9: Copy the Contents of the Build Folder After the build process is finished, navigate to the folder containing your build files. Copy the files in the build folder to the repository you cloned in [Step 2](#step-2-use-git-to-clone-the-space). <figure class="image text-center"> <img src="https://huggingface.co/datasets/huggingface/documentation-images/resolve/main/blog/124_ml-for-games/games-in-spaces/9.png"> </figure> ## Step 10: Enable Git-LFS for Large File Storage Navigate to your repository. Use the following commands to track large build files.
```
git lfs install
git lfs track Build/*
```
## Step 11: Push your Changes Finally, use the following Git commands to push your changes:
```
git add .
git commit -m "Add Unity WebGL build files"
git push
```
## Done! Congratulations! Refresh your Space. You should now be able to play your game in a Hugging Face Space. We hope you found this tutorial helpful. If you have any questions or would like to get more involved in using Hugging Face for Games, join the [Hugging Face Discord](https://hf.co/join/discord)! |
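Before running the Git-LFS commands from Step 10, it can help to see which files in the cloned Space are actually large. The following is a minimal sketch, not part of the original tutorial: the helper name `files_needing_lfs` is hypothetical, and the 10 MB cutoff is an assumption used for illustration, so check the Hub documentation for the limit that applies to your repository.

```python
from pathlib import Path

# Assumption: 10 MB is an illustrative cutoff, not a value taken from the tutorial.
LFS_THRESHOLD_BYTES = 10 * 1024 * 1024


def files_needing_lfs(repo_dir, threshold=LFS_THRESHOLD_BYTES):
    """Return repo-relative POSIX paths of files larger than `threshold` bytes.

    Files under the `.git` directory are skipped.
    """
    root = Path(repo_dir)
    large = []
    for path in root.rglob("*"):
        if not path.is_file():
            continue
        rel = path.relative_to(root)
        if rel.parts and rel.parts[0] == ".git":
            continue
        if path.stat().st_size > threshold:
            large.append(rel.as_posix())
    return sorted(large)


if __name__ == "__main__":
    # Run from inside the cloned Space repository.
    for name in files_needing_lfs("."):
        print(name)
```

Any path this prints that is not already covered by the `Build/*` pattern is a candidate for an extra `git lfs track` call before committing.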

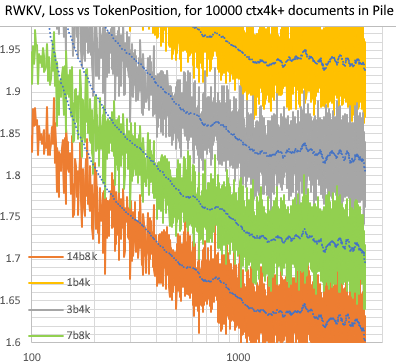

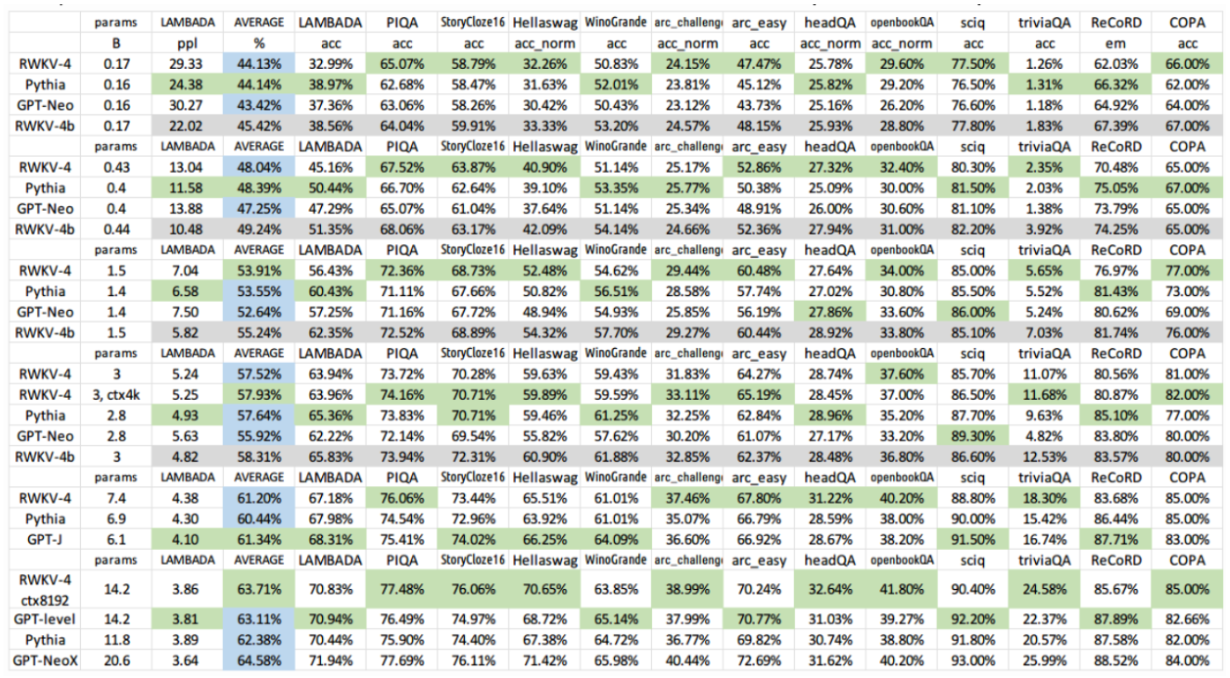

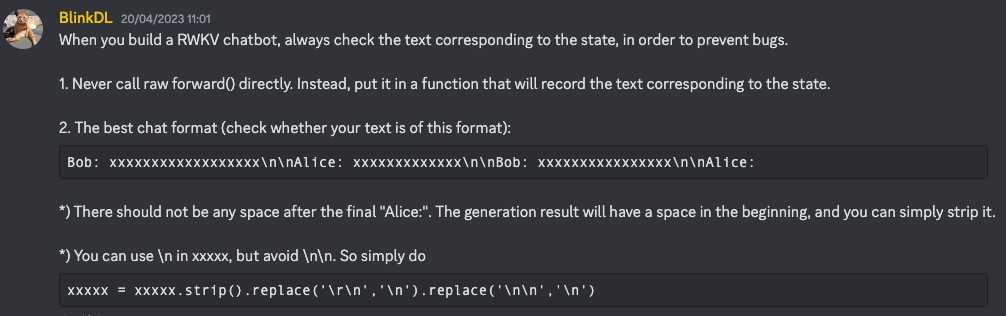

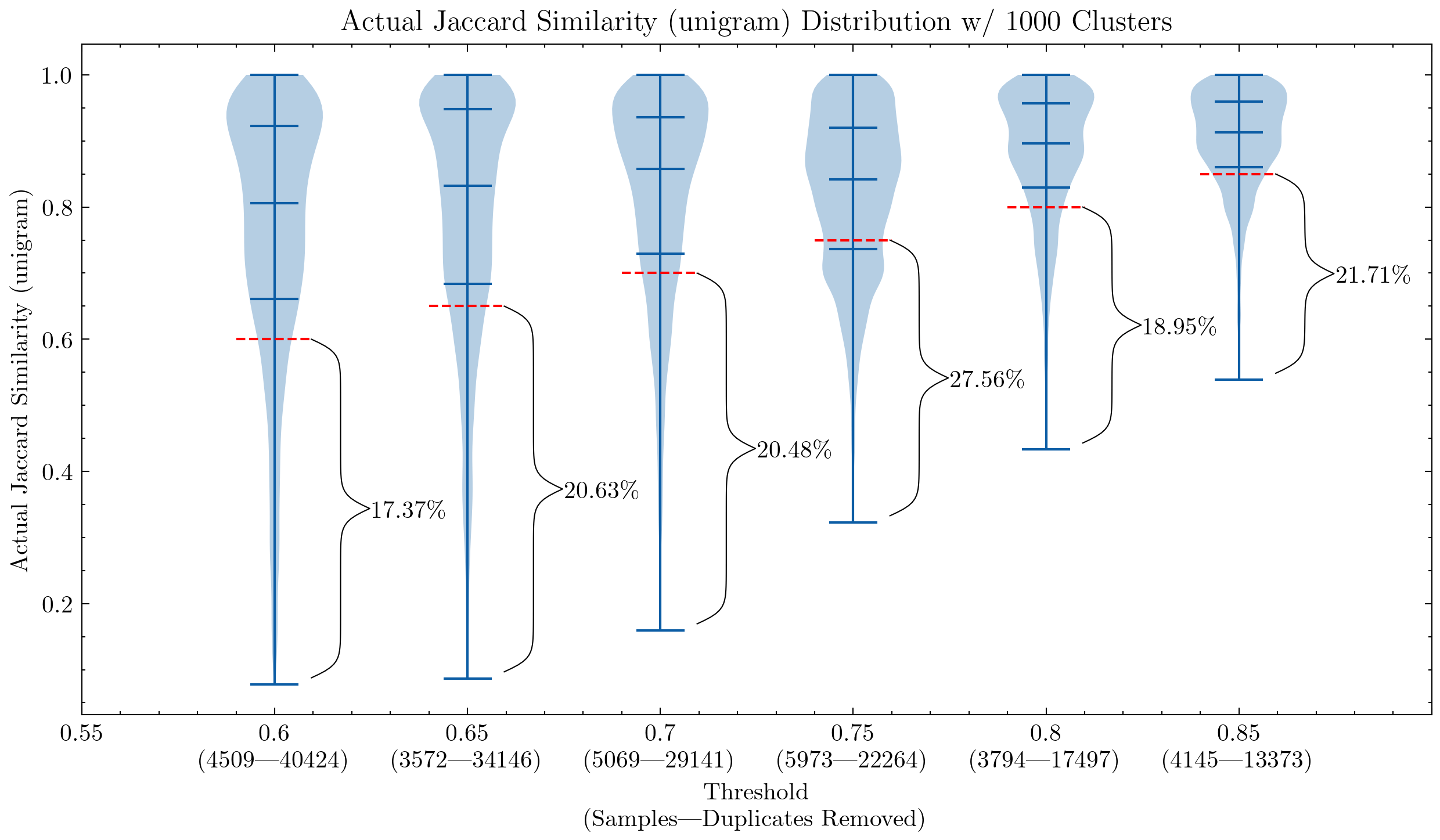

Introducing HuggingFace blog for Chinese speakers: Fostering Collaboration with the Chinese AI community | xianbao | April 24, 2023 | chinese-language-blog | partnerships, community | https://huggingface.co/blog/chinese-language-blog | # Introducing HuggingFace blog for Chinese speakers: Fostering Collaboration with the Chinese AI community ## Welcome to our blog for Chinese speakers! We are delighted to introduce Hugging Face’s new blog for Chinese speakers: [hf.co/blog/zh](https://huggingface.co/blog/zh)! A committed group of volunteers has made this possible by translating our invaluable resources, including blog posts and comprehensive courses on transformers, diffusion, and reinforcement learning. This step aims to make our content accessible to the ever-growing Chinese AI community, fostering mutual learning and collaboration. ## Recognizing the Chinese AI Community’s Accomplishments We want to highlight the remarkable achievements and contributions of the Chinese AI community, which has demonstrated exceptional talent and innovation. Groundbreaking advancements like [HuggingGPT](https://huggingface.co/spaces/microsoft/HuggingGPT), [ChatGLM](https://huggingface.co/THUDM/chatglm-6b), [RWKV](https://huggingface.co/spaces/BlinkDL/Raven-RWKV-7B), [ChatYuan](https://huggingface.co/spaces/ClueAI/ChatYuan-large-v2), [ModelScope text-to-video models](https://huggingface.co/spaces/damo-vilab/modelscope-text-to-video-synthesis) as well as [IDEA CCNL](https://huggingface.co/IDEA-CCNL) and [BAAI](https://huggingface.co/BAAI)’s contributions underscore the incredible potential within the community. In addition, the Chinese AI community has been actively engaged in creating trendy Spaces, such as [Chuanhu GPT](https://huggingface.co/spaces/jdczlx/ChatGPT-chuanhu) and [GPT Academy](https://huggingface.co/spaces/qingxu98/gpt-academic), further demonstrating its enthusiasm and creativity. 
We have been collaborating with organizations such as [PaddlePaddle](https://huggingface.co/blog/paddlepaddle) to ensure seamless integration with Hugging Face, empowering more collaborative efforts in the realm of Machine Learning. ## Strengthening Collaborative Ties and Future Events We are proud of our collaborative history with our Chinese collaborators, having worked together on various events that have enabled knowledge exchange and collaboration, propelling the AI community forward. Some of our collaborative efforts include: - [Online ChatGPT course, in collaboration with DataWhale (ongoing)](https://mp.weixin.qq.com/s/byR2n-5QJmy34Jq0W3ECDg) - [First offline meetup in Beijing for JAX/Diffusers community sprint](https://twitter.com/huggingface/status/1648986159580876800) - [Organizing a Prompt engineering hackathon alongside Baixing AI](https://mp.weixin.qq.com/s/M5vjicNG1uBdCQzQtQU9yw) - [Fine-tuning LoRA models in collaboration with PaddlePaddle](https://aistudio.baidu.com/aistudio/competition/detail/860/0/introduction) - [Fine-tuning Stable Diffusion models in an event with HeyWhale](https://www.heywhale.com/home/competition/63bbfb98de6c0e9cdb0d9dd5) We are excited to announce that we will continue to strengthen our ties with the Chinese AI community by fostering more collaborations and joint efforts. These initiatives will create opportunities for knowledge sharing and expertise exchange, promoting collaborative open-source machine learning across our communities and tackling the challenges and opportunities of cooperative open-source ML. ## Beyond Boundaries: Embracing a Diverse AI Community As we embark on this new chapter, our collaboration with the Chinese AI community will serve as a platform to bridge cultural and linguistic barriers, fostering innovation and cooperation in the AI domain. 
At Hugging Face, we value diverse perspectives and voices, aiming to create a welcoming and inclusive community that promotes ethical and equitable AI development. Join us on this exciting journey, and stay tuned for more updates on our blog about Chinese community advancements and future collaborative endeavors! You may also find us here: <figure class="image text-center"> <img src="https://huggingface.co/datasets/huggingface/documentation-images/resolve/main/blog/chinese-language-blog/wechat.jpg"> </figure> [BAAI](https://hub.baai.ac.cn/users/45017), [Bilibili](https://space.bilibili.com/1740664937/), [CNBlogs](https://www.cnblogs.com/huggingface), [CSDN](https://huggingface.blog.csdn.net/), [Juejin](https://juejin.cn/user/611789528634712), [OS China](https://my.oschina.net/HuggingFace), [SegmentFault](https://segmentfault.com/u/huggingface), [Zhihu](https://www.zhihu.com/org/huggingface) |

Databricks ❤️ Hugging Face: up to 40% faster training and tuning of Large Language Models | alighodsi | April 26, 2023 | databricks-case-study | case-studies | https://huggingface.co/blog/databricks-case-study | # Databricks ❤️ Hugging Face: up to 40% faster training and tuning of Large Language Models Generative AI has been taking the world by storm. As the data and AI company, we have been on this journey with the release of the open source large language model [Dolly](https://huggingface.co/databricks/dolly-v2-12b), as well as the internally crowdsourced dataset licensed for research and commercial use that we used to fine-tune it, the [databricks-dolly-15k](https://huggingface.co/datasets/databricks/databricks-dolly-15k). Both the model and dataset are available on Hugging Face. We’ve learned a lot throughout this process, and today we’re excited to announce our first of many official commits to the Hugging Face codebase that allows users to easily create a Hugging Face Dataset from an Apache Spark™ dataframe. #### “It's been great to see Databricks release models and datasets to the community, and now we see them extending that work with direct open source commitment to Hugging Face. Spark is one of the most efficient engines for working with data at scale, and it's great to see that users can now benefit from that technology to more effectively fine tune models from Hugging Face.” — Clem Delangue, Hugging Face CEO ## Hugging Face gets first-class Spark support Over the past few weeks, we’ve gotten many requests from users asking for an easier way to load their Spark dataframe into a Hugging Face dataset that can be utilized for model training or tuning. Prior to today’s release, to get data from a Spark dataframe into a Hugging Face dataset, users had to write data into Parquet files and then point the Hugging Face dataset to these files to reload them. 
For example:
```python
from datasets import load_dataset

# Write the Spark dataframe to Parquet (the test split is assumed to be written the same way)
train.write.parquet(train_dbfs_path, mode="overwrite")
# Point the Hugging Face dataset at the Parquet files to reload them
train_test = load_dataset("parquet", data_files={"train": f"/dbfs{train_dbfs_path}/*.parquet", "test": f"/dbfs{test_dbfs_path}/*.parquet"})
# 16GB == 22min
```
Not only was this cumbersome, but it also meant that data had to be written to disk and then read in again. On top of that, the data would get rematerialized once loaded back into the dataset, which eats up more resources and, therefore, more time and cost. Using this method, we saw that a relatively small (16GB) dataset took about 22 minutes to go from Spark dataframe to Parquet, and then back into the Hugging Face dataset. With the latest Hugging Face release, we make it much simpler for users to accomplish the same task by simply calling the new `from_spark` function in Datasets:
```python
from datasets import Dataset

# df is a Spark dataframe or Delta table that has already been loaded
dataset = Dataset.from_spark(df)
# 16GB == 12min
```
This allows users to use Spark to efficiently load and transform data for training or fine-tuning a model, then easily map their Spark dataframe into a Hugging Face dataset for simple integration into their training pipelines. This combines cost savings and speed from Spark and optimizations like memory-mapping and smart caching from Hugging Face datasets. These improvements cut down the processing time for our example 16GB dataset by more than 40%, going from 22 minutes down to only 12 minutes. ## Why does this matter? As we transition to this new AI paradigm, organizations will need to use their extremely valuable data to augment their AI models if they want to get the best performance within their specific domain. This will almost certainly require work in the form of data transformations, and doing this efficiently over large datasets is something Spark was designed to do. 
Integrating Spark with Hugging Face gives you the cost-effectiveness and performance of Spark while retaining the pipeline integration that Hugging Face provides. ## Continued Open-Source Support We see this release as a new avenue to further contribute to the open source community, something that we believe Hugging Face does extremely well, as it has become the de facto repository for open source models and datasets. This is only the first of many contributions. We already have plans to add streaming support through Spark to make the dataset loading even faster. In order to become the best platform for users to jump into the world of AI, we’re working hard to provide the best tools to successfully train, tune, and deploy models. Not only will we continue contributing to Hugging Face, but we’ve also started releasing improvements to our other open source projects. A recent [MLflow](https://www.databricks.com/blog/2023/04/18/introducing-mlflow-23-enhanced-native-llm-support-and-new-features.html) release added support for the transformers library, OpenAI integration, and Langchain support. We also announced [AI Functions](https://www.databricks.com/blog/2023/04/18/introducing-ai-functions-integrating-large-language-models-databricks-sql.html) within Databricks SQL that lets users easily integrate OpenAI (or their own deployed models in the future) into their queries. To top it all off, we also released a [PyTorch distributor](https://www.databricks.com/blog/2023/04/20/pytorch-databricks-introducing-spark-pytorch-distributor.html) for Spark to simplify distributed PyTorch training on Databricks. _This article was originally published on April 26, 2023 in [Databricks's blog](https://www.databricks.com/blog/contributing-spark-loader-for-hugging-face-datasets)._ |
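The "more than 40%" figure in the Databricks post follows directly from the two timings it quotes for the 16GB example (22 minutes via Parquet, 12 minutes via `from_spark`). As a quick check of that arithmetic, here is a small sketch; the function names are illustrative and not part of any library.

```python
def percent_reduction(before_minutes, after_minutes):
    """Percentage by which the processing time dropped."""
    return (before_minutes - after_minutes) / before_minutes * 100


def speedup_factor(before_minutes, after_minutes):
    """How many times faster the new path is than the old one."""
    return before_minutes / after_minutes


# Timings quoted in the post for the 16GB example dataset.
reduction = percent_reduction(22, 12)
speedup = speedup_factor(22, 12)
print(f"{reduction:.1f}% less time, {speedup:.2f}x faster")
# prints "45.5% less time, 1.83x faster"
```

The reduction is measured against the original 22 minutes, which is why a 10-minute saving comes out at roughly 45% rather than a figure relative to the new 12-minute runtime.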