title | author | date | local | tags | URL | content |
|---|---|---|---|---|---|---|

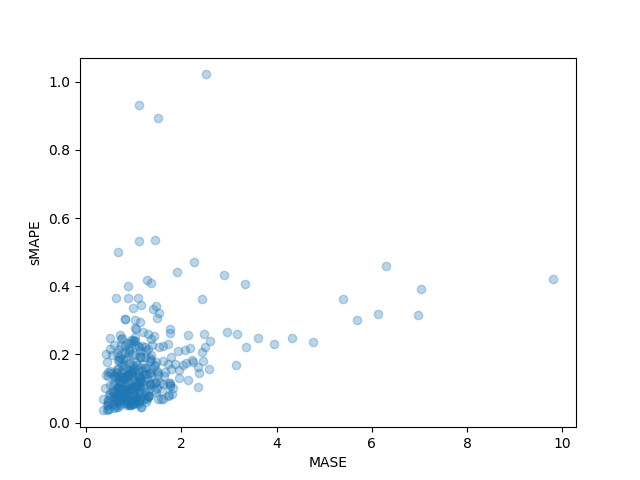

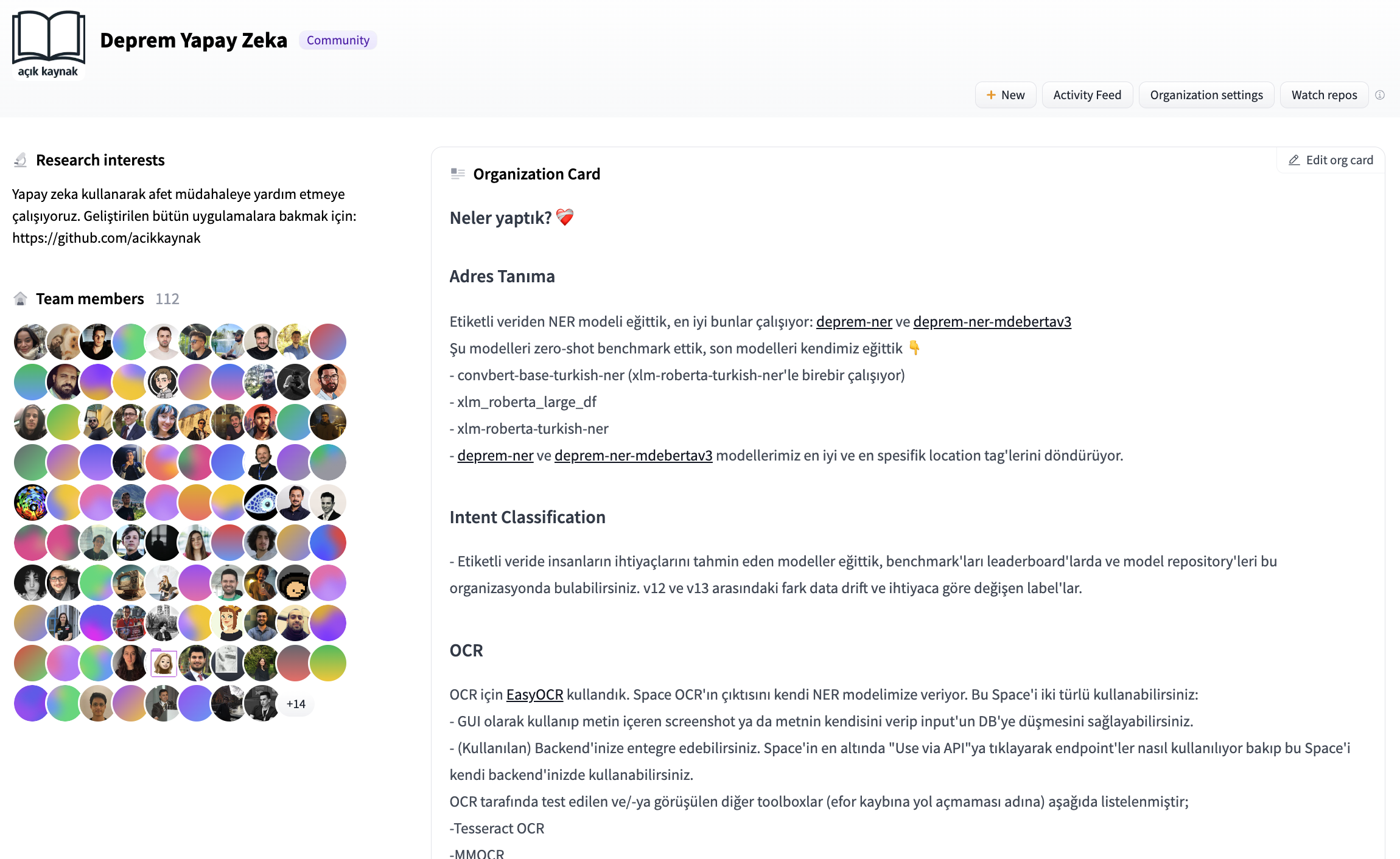

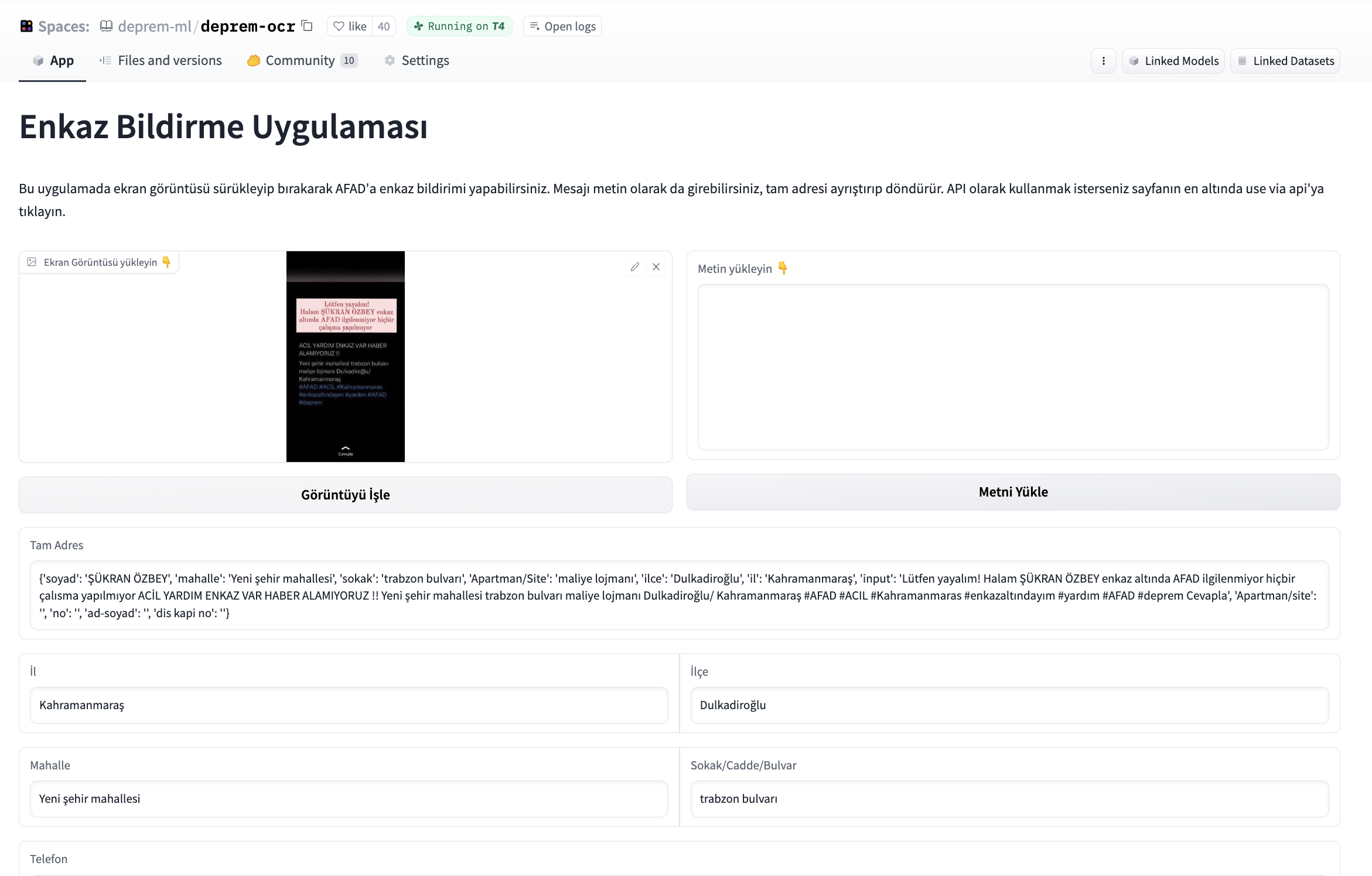

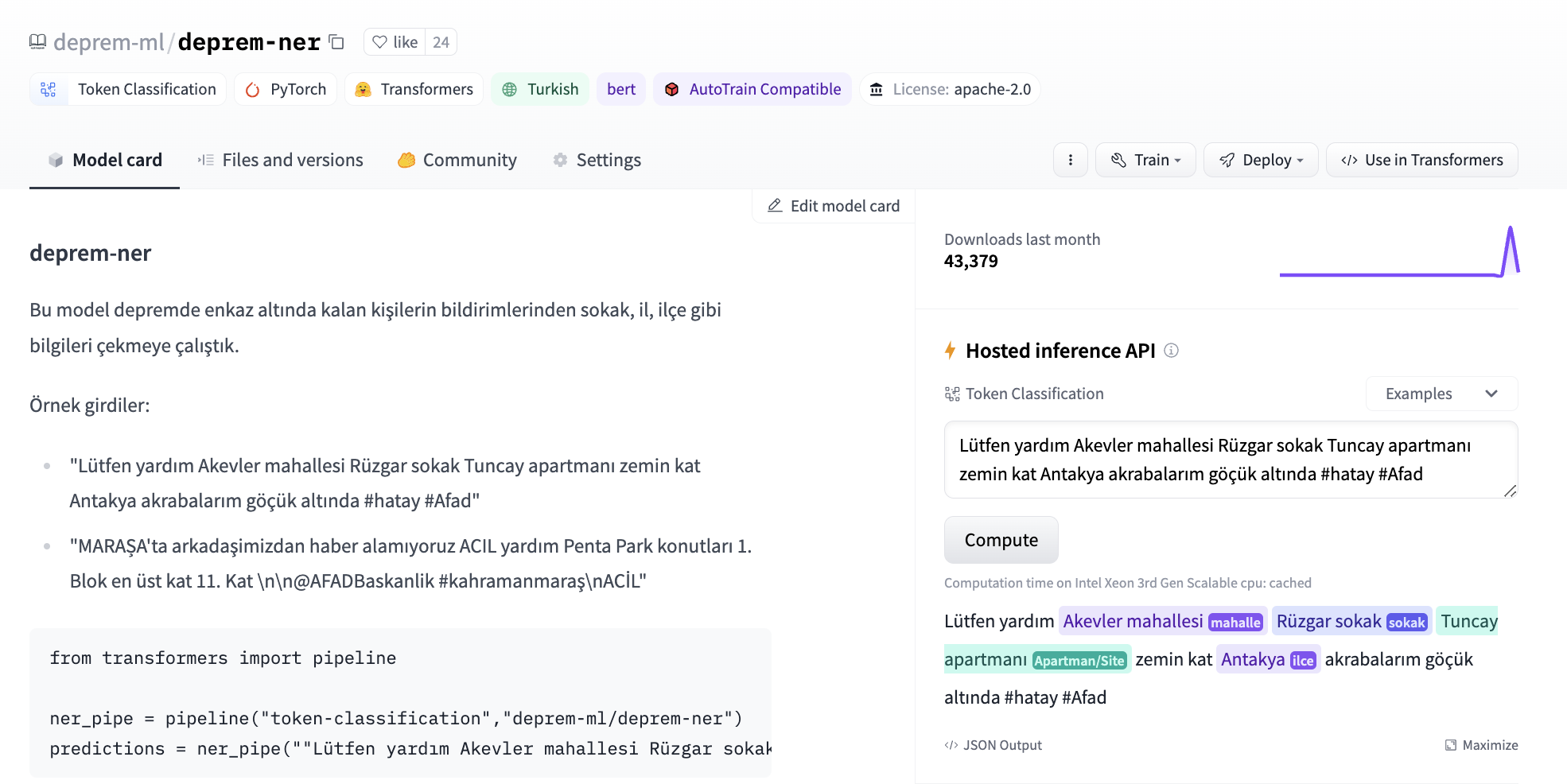

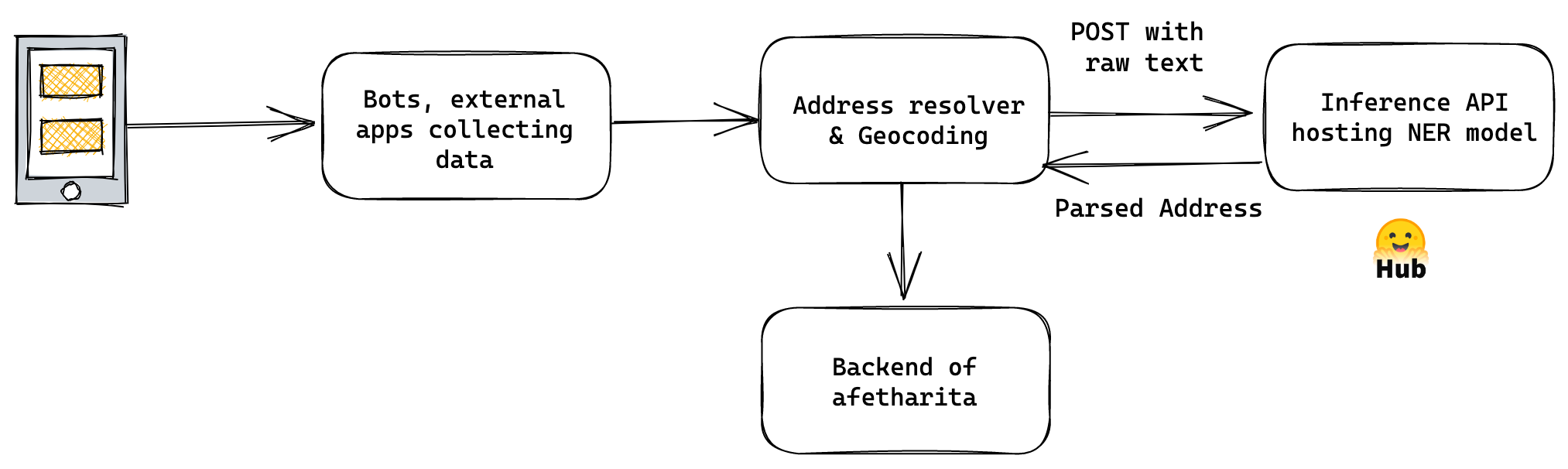

Getting Started with Sentiment Analysis on Twitter | FedericoPascual | July 7, 2022 | sentiment-analysis-twitter | sentiment-analysis, nlp, guide | https://huggingface.co/blog/sentiment-analysis-twitter | # Getting Started with Sentiment Analysis on Twitter <script async defer src="https://unpkg.com/medium-zoom-element@0/dist/medium-zoom-element.min.js"></script> Sentiment analysis is the automatic process of classifying text data according to its polarity, such as positive, negative and neutral. Companies leverage sentiment analysis of tweets to get a sense of how customers are talking about their products and services, get insights to drive business decisions, and identify product issues and potential PR crises early on. In this guide, we will cover everything you need to learn to get started with sentiment analysis on Twitter. We'll share a step-by-step process to do sentiment analysis for both coders and non-coders. If you are a coder, you'll learn how to use the [Inference API](https://huggingface.co/inference-api), a plug & play machine learning API for doing sentiment analysis of tweets at scale in just a few lines of code. If you don't know how to code, don't worry! We'll also cover how to do sentiment analysis with Zapier, a no-code tool that will enable you to gather tweets, analyze them with the Inference API, and finally send the results to Google Sheets ⚡️ Read along or jump to the section that sparks 🌟 your interest: 1. [What is sentiment analysis?](#what-is-sentiment-analysis) 2. [How to do Twitter sentiment analysis with code?](#how-to-do-twitter-sentiment-analysis-with-code) 3. [How to do Twitter sentiment analysis without coding?](#how-to-do-twitter-sentiment-analysis-without-coding) Buckle up and enjoy the ride! 🤗 ## What is Sentiment Analysis? Sentiment analysis uses [machine learning](https://en.wikipedia.org/wiki/Machine_learning) to automatically identify how people are talking about a given topic.
The most common use of sentiment analysis is detecting the polarity of text data, that is, automatically identifying if a tweet, product review or support ticket is talking positively, negatively, or neutrally about something. As an example, let's check out some tweets mentioning [@Salesforce](https://twitter.com/Salesforce) and see how they would be tagged by a sentiment analysis model: - *"The more I use @salesforce the more I dislike it. It's slow and full of bugs. There are elements of the UI that look like they haven't been updated since 2006. Current frustration: app exchange pages won't stop refreshing every 10 seconds"* --> This first tweet would be tagged as "Negative". - *"That’s what I love about @salesforce. That it’s about relationships and about caring about people and it’s not only about business and money. Thanks for caring about #TrailblazerCommunity"* --> In contrast, this tweet would be classified as "Positive". - *"Coming Home: #Dreamforce Returns to San Francisco for 20th Anniversary. Learn more: http[]()://bit.ly/3AgwO0H via @Salesforce"* --> Lastly, this tweet would be tagged as "Neutral" as it doesn't contain an opinion or polarity. Up until recently, analyzing tweets mentioning a brand, product or service was a very manual, hard and tedious process; it required someone to manually go over relevant tweets, and read and label them according to their sentiment. As you can imagine, this approach doesn't scale: it is expensive, very time-consuming, and prone to human error. Luckily, recent advancements in AI have allowed companies to use machine learning models for sentiment analysis of tweets that are as good as humans. By using machine learning, companies can analyze tweets in real-time 24/7, do it at scale and analyze thousands of tweets in seconds, and more importantly, get the insights they are looking for when they need them. Why do sentiment analysis on Twitter?
Companies use sentiment analysis of tweets for a wide variety of purposes, but two of the most common use cases are analyzing user feedback and monitoring mentions to detect potential issues early on. **Analyze Feedback on Twitter** Listening to customers is key for detecting insights on how you can improve your product or service. Although there are multiple sources of feedback, such as surveys or public reviews, Twitter offers raw, unfiltered feedback on what your audience thinks about your offering. By analyzing how people talk about your brand on Twitter, you can understand whether they like a new feature you just launched. You can also get a sense of whether your pricing is clear for your target audience. You can also see what aspects of your offering are the most liked and disliked to make business decisions (e.g. customers love the simplicity of the user interface but hate how slow customer support is). **Monitor Twitter Mentions to Detect Issues** Twitter has become the default way to share a bad customer experience and express frustrations whenever something goes wrong while using a product or service. This is why companies monitor how users mention their brand on Twitter to detect any issues early on. By implementing a sentiment analysis model that analyzes incoming mentions in real-time, you can automatically be alerted about sudden spikes of negative mentions. Most of the time, such a spike is caused by an ongoing situation that needs to be addressed asap (e.g. an app not working because of server outages or a really bad experience with a customer support representative). Now that we've covered what sentiment analysis is and why it's useful, let's get our hands dirty and actually do sentiment analysis of tweets!💥 ## How to do Twitter sentiment analysis with code? Nowadays, getting started with sentiment analysis on Twitter is quite easy and straightforward 🙌 With a few lines of code, you can automatically get tweets, run sentiment analysis and visualize the results.
And you can learn how to do all these things in just a few minutes! In this section, we'll show you how to do it with a cool little project: we'll do sentiment analysis of tweets mentioning [Notion](https://twitter.com/notionhq)! First, you'll use [Tweepy](https://www.tweepy.org/), an open source Python library to get tweets mentioning @NotionHQ using the [Twitter API](https://developer.twitter.com/en/docs/twitter-api). Then you'll use the [Inference API](https://huggingface.co/inference-api) for doing sentiment analysis. Once you get the sentiment analysis results, you will create some charts to visualize the results and detect some interesting insights. You can use this [Google Colab notebook](https://colab.research.google.com/drive/1R92sbqKMI0QivJhHOp1T03UDaPUhhr6x?usp=sharing) to follow this tutorial. Let's get started with it! 💪 1. Install Dependencies As a first step, you'll need to install the required dependencies. You'll use [Tweepy](https://www.tweepy.org/) for gathering tweets, [Matplotlib](https://matplotlib.org/) for building some charts and [WordCloud](https://amueller.github.io/word_cloud/) for building a visualization with the most common keywords: ```python !pip install -q transformers tweepy matplotlib wordcloud ``` 2. Setting up Twitter credentials Then, you need to set up the [Twitter API credentials](https://developer.twitter.com/en/docs/twitter-api) so you can authenticate with Twitter and then gather tweets automatically using their API: ```python import tweepy # Add Twitter API key and secret consumer_key = "XXXXXX" consumer_secret = "XXXXXX" # Handling authentication with Twitter auth = tweepy.AppAuthHandler(consumer_key, consumer_secret) # Create a wrapper for the Twitter API api = tweepy.API(auth, wait_on_rate_limit=True, wait_on_rate_limit_notify=True) ``` 3. Search for tweets using Tweepy Now you are ready to start collecting data from Twitter! 
🎉 You will use [Tweepy Cursor](https://docs.tweepy.org/en/v3.5.0/cursor_tutorial.html) to automatically collect 1,000 tweets mentioning Notion: ```python import time # Helper function for handling pagination in our search and handling rate limits def limit_handled(cursor): while True: try: yield cursor.next() except tweepy.RateLimitError: print('Reached rate limit. Sleeping for >15 minutes') time.sleep(15 * 61) except StopIteration: break # Define the term you will be using for searching tweets query = '@NotionHQ' query = query + ' -filter:retweets' # Define how many tweets to get from the Twitter API count = 1000 # Search for tweets using Tweepy search = limit_handled(tweepy.Cursor(api.search, q=query, tweet_mode='extended', lang='en', result_type="recent").items(count)) # Process the results from the search using Tweepy tweets = [] for result in search: tweet_content = result.full_text tweets.append(tweet_content) # Only saving the tweet content. ``` 4. Analyzing tweets with sentiment analysis Now that you have data, you are ready to analyze the tweets with sentiment analysis! 💥 You will be using [Inference API](https://huggingface.co/inference-api), an easy-to-use API for integrating machine learning models via simple API calls. With the Inference API, you can use state-of-the-art models for sentiment analysis without the hassle of building infrastructure for machine learning or dealing with model scalability. You can serve the latest (and greatest!) open source models for sentiment analysis while staying out of MLOps. 🤩 For using the Inference API, first you will need to define your `model id` and your `Hugging Face API Token`: - The `model ID` specifies which model you want to use for making predictions. Hugging Face has more than [400 models for sentiment analysis in multiple languages](https://huggingface.co/models?pipeline_tag=text-classification&sort=downloads&search=sentiment), including various models specifically fine-tuned for sentiment analysis of tweets.
For this particular tutorial, you will use [twitter-roberta-base-sentiment-latest](https://huggingface.co/cardiffnlp/twitter-roberta-base-sentiment-latest), a sentiment analysis model trained on ≈124 million tweets and fine-tuned for sentiment analysis. - You'll also need to specify your `Hugging Face token`; you can get one for free by signing up [here](https://huggingface.co/join) and then copying your token on this [page](https://huggingface.co/settings/tokens). ```python model = "cardiffnlp/twitter-roberta-base-sentiment-latest" hf_token = "XXXXXX" ``` Next, you will create the API call using the `model id` and `hf_token`: ```python import requests API_URL = "https://api-inference.huggingface.co/models/" + model headers = {"Authorization": "Bearer %s" % (hf_token)} def analysis(data): payload = dict(inputs=data, options=dict(wait_for_model=True)) response = requests.post(API_URL, headers=headers, json=payload) return response.json() ``` Now, you are ready to do sentiment analysis on each tweet. 🔥🔥🔥 ```python tweets_analysis = [] for tweet in tweets: try: sentiment_result = analysis(tweet)[0] top_sentiment = max(sentiment_result, key=lambda x: x['score']) # Get the sentiment with the highest score tweets_analysis.append({'tweet': tweet, 'sentiment': top_sentiment['label']}) except Exception as e: print(e) ``` 5. Explore the results of sentiment analysis Wondering if people on Twitter are talking positively or negatively about Notion? Or what do users discuss when talking positively or negatively about Notion? We'll use some data visualization to explore the results of the sentiment analysis and find out!
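The loop in step 4 depends on the shape of the Inference API response. As a rough sketch, assuming a text-classification model returns one list of `{label, score}` dictionaries per input, picking the winning label could look like the following. The sample payload is made up for illustration and `top_label` is a hypothetical helper, not part of any library:

```python
# Hypothetical sample mimicking the assumed response shape for one tweet:
# an outer list (one entry per input) holding a list of label/score dicts.
sample_response = [[
    {"label": "Negative", "score": 0.02},
    {"label": "Neutral", "score": 0.13},
    {"label": "Positive", "score": 0.85},
]]


def top_label(response):
    """Return the label with the highest score for the first input."""
    scores = response[0]
    best = max(scores, key=lambda item: item["score"])
    return best["label"]


print(top_label(sample_response))
```

If the real response has a different shape (for example an error payload), indexing with `[0]` would fail, which is why the loop above wraps each call in `try`/`except`.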
First, let's see examples of tweets that were labeled for each sentiment to get a sense of the different polarities of these tweets: ```python import pandas as pd # Load the data in a dataframe pd.set_option('max_colwidth', None) pd.set_option('display.width', 3000) df = pd.DataFrame(tweets_analysis) # Show a tweet for each sentiment display(df[df["sentiment"] == 'Positive'].head(1)) display(df[df["sentiment"] == 'Neutral'].head(1)) display(df[df["sentiment"] == 'Negative'].head(1)) ``` Results: ``` @thenotionbar @hypefury @NotionHQ That’s genuinely smart. So basically you’ve setup your posting queue to by a recurrent recycling of top content that runs 100% automatic? Sentiment: Positive @itskeeplearning @NotionHQ How you've linked gallery cards? Sentiment: Neutral @NotionHQ Running into an issue here recently were content is not showing on on web but still in the app. This happens for all of our pages. https://t.co/3J3AnGzDau. Sentiment: Negative ``` Next, you'll count the number of tweets that were tagged as positive, negative and neutral: ```python sentiment_counts = df.groupby(['sentiment']).size() print(sentiment_counts) ``` Remarkably, most of the tweets about Notion are positive: ``` sentiment Negative 82 Neutral 420 Positive 498 ``` Then, let's create a pie chart to visualize each sentiment in relative terms: ```python import matplotlib.pyplot as plt fig = plt.figure(figsize=(6,6), dpi=100) ax = plt.subplot(111) sentiment_counts.plot.pie(ax=ax, autopct='%1.1f%%', startangle=270, fontsize=12, label="") ``` It's cool to see that 50% of all tweets are positive and only 8.2% are negative: <figure class="image table text-center m-0 w-full"> <medium-zoom background="rgba(0,0,0,.7)" alt="Sentiment analysis results of tweets mentioning Notion" src="assets/85_sentiment_analysis_twitter/sentiment-pie.png"></medium-zoom> <figcaption>Sentiment analysis results of tweets mentioning Notion</figcaption> </figure> As a last step, let's create some wordclouds to see which 
words are the most used for each sentiment: ```python from wordcloud import WordCloud from wordcloud import STOPWORDS # Wordcloud with positive tweets positive_tweets = df['tweet'][df["sentiment"] == 'Positive'] stop_words = ["https", "co", "RT"] + list(STOPWORDS) # Join the tweets into one string; str() on a Series would only give its truncated printed form positive_wordcloud = WordCloud(max_font_size=50, max_words=50, background_color="white", stopwords = stop_words).generate(" ".join(positive_tweets)) plt.figure() plt.title("Positive Tweets - Wordcloud") plt.imshow(positive_wordcloud, interpolation="bilinear") plt.axis("off") plt.show() # Wordcloud with negative tweets negative_tweets = df['tweet'][df["sentiment"] == 'Negative'] stop_words = ["https", "co", "RT"] + list(STOPWORDS) negative_wordcloud = WordCloud(max_font_size=50, max_words=50, background_color="white", stopwords = stop_words).generate(" ".join(negative_tweets)) plt.figure() plt.title("Negative Tweets - Wordcloud") plt.imshow(negative_wordcloud, interpolation="bilinear") plt.axis("off") plt.show() ``` Curiously, some of the words that stand out from the positive tweets include "notes", "cron", and "paid": <figure class="image table text-center m-0 w-full"> <medium-zoom background="rgba(0,0,0,.7)" alt="Sentiment analysis results of tweets mentioning Notion" src="assets/85_sentiment_analysis_twitter/positive-tweets.png"></medium-zoom> <figcaption>Word cloud for positive tweets</figcaption> </figure> In contrast, "figma", "enterprise" and "account" are some of the most used words from the negative tweets: <figure class="image table text-center m-0 w-full"> <medium-zoom background="rgba(0,0,0,.7)" alt="Sentiment analysis results of tweets mentioning Notion" src="assets/85_sentiment_analysis_twitter/negative-tweets.png"></medium-zoom> <figcaption>Word cloud for negative tweets</figcaption> </figure> That was fun, right?
With just a few lines of code, you were able to automatically gather tweets mentioning Notion using Tweepy, analyze them with a sentiment analysis model using the [Inference API](https://huggingface.co/inference-api), and finally create some visualizations to analyze the results. 💥 Are you interested in doing more? As a next step, you could use a second [text classifier](https://huggingface.co/tasks/text-classification) to classify each tweet by its theme or topic. This way, each tweet will be labeled with both sentiment and topic, and you can get more granular insights (e.g. are users praising how easy Notion is to use but complaining about its pricing or customer support?). ## How to do Twitter sentiment analysis without coding? To get started with sentiment analysis, you don't need to be a developer or know how to code. 🤯 There are some amazing no-code solutions that will enable you to easily do sentiment analysis in just a few minutes. In this section, you will use [Zapier](https://zapier.com/), a no-code tool that enables users to connect 5,000+ apps with an easy-to-use user interface. You will create a [Zap](https://zapier.com/help/create/basics/create-zaps) that is triggered whenever someone mentions Notion on Twitter. Then the Zap will use the [Inference API](https://huggingface.co/inference-api) to analyze the tweet with a sentiment analysis model and finally it will save the results on Google Sheets: 1. Step 1 (trigger): Getting the tweets. 2. Step 2: Analyze tweets with sentiment analysis. 3. Step 3: Save the results on Google Sheets. No worries, it won't take much time; in under 10 minutes, you'll create and activate the zap, and will start seeing the sentiment analysis results pop up in Google Sheets. Let's get started! 🚀 ### Step 1: Getting the Tweets To get started, you'll need to [create a Zap](https://zapier.com/webintent/create-zap), and configure the first step of your Zap, also called the *"Trigger"* step.
In your case, you will need to set it up so that it triggers the Zap whenever someone mentions Notion on Twitter. To set it up, follow these steps: - First select "Twitter" and select "Search mention" as event on "Choose app & event". - Then connect your Twitter account to Zapier. - Set up the trigger by specifying "NotionHQ" as the search term for this trigger. - Finally test the trigger to make sure it gathers tweets and runs correctly. <figure class="image table text-center m-0 w-full"> <medium-zoom background="rgba(0,0,0,.7)" alt="Sentiment analysis results of tweets mentioning Notion" src="assets/85_sentiment_analysis_twitter/zapier-getting-tweets-cropped-cut-optimized.gif"></medium-zoom> <figcaption>Step 1 on the Zap</figcaption> </figure> ### Step 2: Analyze Tweets with Sentiment Analysis Now that your Zap can gather tweets mentioning Notion, let's add a second step to do the sentiment analysis. 🤗 You will be using [Inference API](https://huggingface.co/inference-api), an easy-to-use API for integrating machine learning models. For using the Inference API, you will need to define your "model id" and your "Hugging Face API Token": - The `model ID` tells the Inference API which model you want to use for making predictions. For this guide, you will use [twitter-roberta-base-sentiment-latest](https://huggingface.co/cardiffnlp/twitter-roberta-base-sentiment-latest), a sentiment analysis model trained on ≈124 million tweets and fine-tuned for sentiment analysis. You can explore the more than [400 models for sentiment analysis available on the Hugging Face Hub](https://huggingface.co/models?pipeline_tag=text-classification&sort=downloads&search=sentiment) in case you want to use a different model (e.g. doing sentiment analysis on a different language).
- You'll also need to specify your `Hugging Face token`; you can get one for free by signing up [here](https://huggingface.co/join) and then copying your token on this [page](https://huggingface.co/settings/tokens). Once you have your model ID and your Hugging Face token, go back to your Zap and follow these instructions to set up the second step of the zap: 1. First select "Code by Zapier" and "Run python" in "Choose app and event". 2. On "Set up action", you will need to first add the tweet "full text" as "input_data". Then you will need to add these [28 lines of python code](https://gist.github.com/feconroses/0e064f463b9a0227ba73195f6376c8ed) in the "Code" section. This code will allow the Zap to call the Inference API and make the predictions with sentiment analysis. Before adding this code to your zap, please make sure that you do the following: - Change line 5 and add your Hugging Face token, that is, instead of `hf_token = "ADD_YOUR_HUGGING_FACE_TOKEN_HERE"`, you will need to change it to something like `hf_token = "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"` - If you want to use a different sentiment analysis model, you will need to change line 4 and specify the id of the new model here. For example, instead of using the default model, you could use [this model](https://huggingface.co/finiteautomata/beto-sentiment-analysis?text=Te+quiero.+Te+amo.) to do sentiment analysis on tweets in Spanish by changing this line `model = "cardiffnlp/twitter-roberta-base-sentiment-latest"` to `model = "finiteautomata/beto-sentiment-analysis"`. 3. Finally, test this step to make sure it makes predictions and runs correctly.
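The linked gist is not reproduced here. As a minimal sketch of the kind of logic such a Code step could contain, assume that Zapier hands the mapped fields to the script in a dictionary called `input_data` and reads the result from a variable called `output` (both worth checking against Zapier's own documentation). The key `tweet`, the helper `build_row`, the output field names, and the stand-in scores are all hypothetical; in a real Zap the scores would come from the Inference API call:

```python
# Inside Zapier, `input_data` would be provided by the Code step.
# It is defined here only so the sketch runs on its own (hypothetical key).
input_data = {"tweet": "I love using @NotionHQ for my notes!"}


def build_row(tweet_text, scores):
    """Keep only the highest-scoring label for one tweet."""
    best = max(scores, key=lambda item: item["score"])
    return {
        "tweet": tweet_text,
        "sentiment_label": best["label"],
        "sentiment_score": best["score"],
    }


# Stand-in for the label/score pairs a sentiment model might return.
fake_scores = [
    {"label": "Negative", "score": 0.03},
    {"label": "Neutral", "score": 0.07},
    {"label": "Positive", "score": 0.9},
]

# The Code step is assumed to expose `output` to later steps of the Zap.
output = build_row(input_data["tweet"], fake_scores)
print(output)
```

Returning a flat dictionary keeps each value easy to map to a spreadsheet column in a later step.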
<figure class="image table text-center m-0 w-full"> <medium-zoom background="rgba(0,0,0,.7)" alt="Sentiment analysis results of tweets mentioning Notion" src="assets/85_sentiment_analysis_twitter/zapier-analyze-tweets-cropped-cut-optimized.gif"></medium-zoom> <figcaption>Step 2 on the Zap</figcaption> </figure> ### Step 3: Save the results on Google Sheets As the last step to your Zap, you will save the results of the sentiment analysis on a spreadsheet on Google Sheets and visualize the results. 📊 First, [create a new spreadsheet on Google Sheets](https://docs.google.com/spreadsheets/u/0/create), and define the following columns: - **Tweet**: this column will contain the text of the tweet. - **Sentiment**: will have the label of the sentiment analysis results (e.g. positive, negative or neutral). - **Score**: will store the value that reflects how confident the model is with its prediction. - **Date**: will contain the date of the tweet (which can be handy for creating graphs and charts over time). Then, follow these instructions to configure this last step: 1. Select Google Sheets as an app, and "Create Spreadsheet Row" as the event in "Choose app & Event". 2. Then connect your Google Sheets account to Zapier. 3. Next, you'll need to set up the action. First, you'll need to specify the Google Drive value (e.g. My Drive), then select the spreadsheet, and finally the worksheet where you want Zapier to automatically write new rows. Once you are done with this, you will need to map each column on the spreadsheet with the values you want to use when your zap automatically writes a new row on your file. If you have created the columns we suggested before, this will look like the following (column → value): - Tweet → Full Text (value from step 1 of the zap) - Sentiment → Sentiment Label (value from step 2) - Score → Sentiment Score (value from step 2) - Date → Created At (value from step 1) 4.
Finally, test this last step to make sure it can add a new row to your spreadsheet. After confirming it's working, you can delete this row on your spreadsheet. <figure class="image table text-center m-0 w-full"> <medium-zoom background="rgba(0,0,0,.7)" alt="Sentiment analysis results of tweets mentioning Notion" src="assets/85_sentiment_analysis_twitter/zapier-add-to-google-sheets-cropped-cut.gif"></medium-zoom> <figcaption>Step 3 on the Zap</figcaption> </figure> ### Step 4: Turn on your Zap At this point, you have completed all the steps of your zap! 🔥 Now, you just need to turn it on so it can start gathering tweets, analyzing them with sentiment analysis, and storing the results on Google Sheets. ⚡️ To turn it on, just click on the "Publish" button at the bottom of your screen: <figure class="image table text-center m-0 w-full"> <medium-zoom background="rgba(0,0,0,.7)" alt="Sentiment analysis results of tweets mentioning Notion" src="assets/85_sentiment_analysis_twitter/zap-turn-on-cut-optimized.gif"></medium-zoom> <figcaption>Turning on the Zap</figcaption> </figure> After a few minutes, you will see how your spreadsheet starts populating with tweets and the results of sentiment analysis. You can also create a graph that can be updated in real-time as tweets come in: <figure class="image table text-center m-0 w-full"> <medium-zoom background="rgba(0,0,0,.7)" alt="Sentiment analysis results of tweets mentioning Notion" src="assets/85_sentiment_analysis_twitter/google-sheets-results-cropped-cut.gif"></medium-zoom> <figcaption>Tweets popping up on Google Sheets</figcaption> </figure> Super cool, right? 🚀 ## Wrap up Twitter is the public town hall where people share their thoughts about all kinds of topics. From people talking about politics, sports or tech, users sharing their feedback about a new shiny app, or passengers complaining to an airline about a canceled flight, the amount of data on Twitter is massive.
Sentiment analysis allows making sense of all that data in real-time to uncover insights that can drive business decisions. Luckily, tools like the [Inference API](https://huggingface.co/inference-api) make it super easy to get started with sentiment analysis on Twitter. Whether or not you know how to code or have experience with machine learning, in a few minutes, you can set up a process that can gather tweets in real-time, analyze them with a state-of-the-art model for sentiment analysis, and explore the results with some cool visualizations. 🔥🔥🔥 If you have questions, you can ask them in the [Hugging Face forum](https://discuss.huggingface.co/) so the Hugging Face community can help you out and others can benefit from seeing the discussion. You can also join our [Discord](https://discord.gg/YRAq8fMnUG) server to talk with us and the entire Hugging Face community. |

Introducing The World's Largest Open Multilingual Language Model: BLOOM | BigScience | July 12, 2022 | bloom | open-source-collab, community, research | https://huggingface.co/blog/bloom | # 🌸 Introducing The World's Largest Open Multilingual Language Model: BLOOM 🌸 <a href="https://huggingface.co/bigscience/bloom"><img style="middle" width="950" src="/blog/assets/86_bloom/thumbnail-2.png"></a> Large language models (LLMs) have made a significant impact on AI research. These powerful, general models can take on a wide variety of new language tasks from a user’s instructions. However, academia, nonprofits and smaller companies' research labs find it difficult to create, study, or even use LLMs as only a few industrial labs with the necessary resources and exclusive rights can fully access them. Today, we release [BLOOM](https://huggingface.co/bigscience/bloom), the first multilingual LLM trained in complete transparency, to change this status quo — the result of the largest collaboration of AI researchers ever involved in a single research project. With its 176 billion parameters, BLOOM is able to generate text in 46 natural languages and 13 programming languages. For almost all of them, such as Spanish, French and Arabic, BLOOM will be the first language model with over 100B parameters ever created. This is the culmination of a year of work involving over 1000 researchers from 70+ countries and 250+ institutions, leading to a final run of 117 days (March 11 - July 6) training the BLOOM model on the [Jean Zay supercomputer](http://www.idris.fr/eng/info/missions-eng.html) in the south of Paris, France thanks to a compute grant worth an estimated €3M from French research agencies CNRS and GENCI. Researchers can [now download, run and study BLOOM](https://huggingface.co/bigscience/bloom) to investigate the performance and behavior of recently developed large language models down to their deepest internal operations. 
More generally, any individual or institution who agrees to the terms of the model’s [Responsible AI License](https://bigscience.huggingface.co/blog/the-bigscience-rail-license) (developed during the BigScience project itself) can use and build upon the model on a local machine or on a cloud provider. In this spirit of collaboration and continuous improvement, we’re also releasing, for the first time, the intermediary checkpoints and optimizer states of the training. Don’t have 8 A100s to play with? An inference API, currently backed by Google’s TPU cloud and a FLAX version of the model, also allows quick tests, prototyping, and lower-scale use. You can already play with it on the Hugging Face Hub. <img class="mx-auto" style="center" width="950" src="/blog/assets/86_bloom/bloom-examples.jpg"> This is only the beginning. BLOOM’s capabilities will continue to improve as the workshop continues to experiment and tinker with the model. We’ve started work to make it instructable as our earlier effort T0++ was and are slated to add more languages, compress the model into a more usable version with the same level of performance, and use it as a starting point for more complex architectures… All of the experiments researchers and practitioners have always wanted to run, starting with the power of a 100+ billion parameter model, are now possible. BLOOM is the seed of a living family of models that we intend to grow, not just a one-and-done model, and we’re ready to support community efforts to expand it. |

Building a Playlist Generator with Sentence Transformers | NimaBoscarino | July 13, 2022 | playlist-generator | nlp, guide | https://huggingface.co/blog/playlist-generator | # Building a Playlist Generator with Sentence Transformers <script async defer src="https://unpkg.com/medium-zoom-element@0/dist/medium-zoom-element.min.js"></script> A short while ago I published a [playlist generator](https://huggingface.co/spaces/NimaBoscarino/playlist-generator) that I’d built using Sentence Transformers and Gradio, and I followed that up with a [reflection on how I try to use my projects as effective learning experiences](https://huggingface.co/blog/your-first-ml-project). But how did I actually *build* the playlist generator? In this post we’ll break down that project and look at **two** technical details: how the embeddings were generated, and how the *multi-step* Gradio demo was built. <div class="hidden xl:block"> <div style="display: flex; flex-direction: column; align-items: center;"> <iframe src="https://nimaboscarino-playlist-generator.hf.space" frameBorder="0" width="1400" height="690" title="Gradio app" class="p-0 flex-grow space-iframe" allow="accelerometer; ambient-light-sensor; autoplay; battery; camera; document-domain; encrypted-media; fullscreen; geolocation; gyroscope; layout-animations; legacy-image-formats; magnetometer; microphone; midi; oversized-images; payment; picture-in-picture; publickey-credentials-get; sync-xhr; usb; vr ; wake-lock; xr-spatial-tracking" sandbox="allow-forms allow-modals allow-popups allow-popups-to-escape-sandbox allow-same-origin allow-scripts allow-downloads"></iframe> </div> </div> As we’ve explored in [previous posts on the Hugging Face blog](https://huggingface.co/blog/getting-started-with-embeddings), Sentence Transformers (ST) is a library that gives us tools to generate sentence embeddings, which have a variety of uses. 
Since I had access to a dataset of song lyrics, I decided to leverage ST’s semantic search functionality to generate playlists from a given text prompt. Specifically, the goal was to create an embedding from the prompt, then use that embedding for a semantic search across a set of *pre-generated lyrics embeddings* to generate a relevant set of songs. This would all be wrapped up in a Gradio app using the new Blocks API, hosted on Hugging Face Spaces. We’ll be looking at a slightly advanced use of Gradio, so if you’re a beginner to the library I recommend reading the [Introduction to Blocks](https://gradio.app/introduction_to_blocks/) before tackling the Gradio-specific parts of this post. Also, note that while I won’t be releasing the lyrics dataset, the **[lyrics embeddings are available on the Hugging Face Hub](https://huggingface.co/datasets/NimaBoscarino/playlist-generator)** for you to play around with. Let’s jump in! 🪂 ## Sentence Transformers: Embeddings and Semantic Search Embeddings are **key** in Sentence Transformers! We’ve learned about **[what embeddings are and how we generate them in a previous article](https://huggingface.co/blog/getting-started-with-embeddings)**, and I recommend checking that out before continuing with this post. Sentence Transformers offers a large collection of pre-trained embedding models! It even includes tutorials for fine-tuning those models with our own training data, but for many use-cases (such as semantic search over a corpus of song lyrics) the pre-trained models will perform excellently right out of the box. With so many embedding models available, though, how do we know which one to use? [The ST documentation highlights many of the choices](https://www.sbert.net/docs/pretrained_models.html), along with their evaluation metrics and some descriptions of their intended use-cases.
The **[MS MARCO models](https://www.sbert.net/docs/pretrained-models/msmarco-v5.html)** are trained on Bing search engine queries, but since they also perform well on other domains I decided any one of these could be a good choice for this project. All we need for the playlist generator is to find songs that have some semantic similarity, and since I don’t really care about hitting a particular performance metric I arbitrarily chose [sentence-transformers/msmarco-MiniLM-L-6-v3](https://huggingface.co/sentence-transformers/msmarco-MiniLM-L-6-v3). Each model in ST has a configurable input sequence length (up to a maximum), after which your inputs will be truncated. The model I chose had a max sequence length of 512 word pieces, which, as I found out, is often not enough to embed entire songs. Luckily, there’s an easy way for us to split lyrics into smaller chunks that the model can digest – verses! Once we’ve chunked our songs into verses and embedded each verse, we’ll find that the search works much better. <figure class="image table text-center m-0 w-full"> <medium-zoom background="rgba(0,0,0,.7)" alt="The songs are split into verses, and then each verse is embedded." src="assets/87_playlist_generator/embedding-diagram.svg"></medium-zoom> <figcaption>The songs are split into verses, and then each verse is embedded.</figcaption> </figure> To actually generate the embeddings, you can call the `.encode()` method of the Sentence Transformers model and pass it a list of strings. Then you can save the embeddings however you like – in this case I opted to pickle them. ```python from sentence_transformers import SentenceTransformer import pickle embedder = SentenceTransformer('msmarco-MiniLM-L-6-v3') verses = [...] 
# Load up your strings in a list corpus_embeddings = embedder.encode(verses, show_progress_bar=True) with open('verse-embeddings.pkl', "wb") as fOut: pickle.dump(corpus_embeddings, fOut) ``` To be able to share your embeddings with others, you can even upload the Pickle file to a Hugging Face dataset. [Read this tutorial to learn more](https://huggingface.co/blog/getting-started-with-embeddings#2-host-embeddings-for-free-on-the-hugging-face-hub), or [visit the Datasets documentation](https://huggingface.co/docs/datasets/upload_dataset#upload-with-the-hub-ui) to try it out yourself! In short, once you've created a new Dataset on the Hub, you can manually upload your Pickle file by clicking the "Add file" button, shown below. <figure class="image table text-center m-0 w-full"> <medium-zoom background="rgba(0,0,0,.7)" alt="You can upload dataset files manually on the Hub." src="assets/87_playlist_generator/add-dataset.png"></medium-zoom> <figcaption>You can upload dataset files manually on the Hub.</figcaption> </figure> The last thing we need to do now is actually use the embeddings for semantic search! The following code generates a new embedding for a given string and runs a semantic search over the previously computed lyrics embeddings to find the closest hits. To make it easier to work with the results, I also like to put them into a Pandas DataFrame. ```python from sentence_transformers import util import pandas as pd prompt_embedding = embedder.encode(prompt, convert_to_tensor=True) hits = util.semantic_search(prompt_embedding, corpus_embeddings, top_k=20) hits = pd.DataFrame(hits[0], columns=['corpus_id', 'score']) # Note that "corpus_id" is the index of the verse for that embedding # You can use the "corpus_id" to look up the original song ``` Since we’re searching for any verse that matches the text prompt, there’s a good chance that the semantic search will find multiple verses from the same song. 
When we drop the duplicates, we might only end up with a few distinct songs. If we increase the number of verse embeddings that `util.semantic_search` fetches with the `top_k` parameter, we can increase the number of songs that we'll find. Experimentally, I found that when I set `top_k=20`, I almost always get at least 9 distinct songs. ## Making a Multi-Step Gradio App For the demo, I wanted users to enter a text prompt (or choose from some examples), and conduct a semantic search to find the top 9 most relevant songs. Then, users should be able to select from the resulting songs to be able to see the lyrics, which might give them some insight into why the particular songs were chosen. Here’s how we can do that! [At the top of the Gradio demo](https://huggingface.co/spaces/NimaBoscarino/playlist-generator/blob/main/app.py) we load the embeddings, mappings, and lyrics from Hugging Face datasets when the app starts up. ```python from sentence_transformers import SentenceTransformer, util from huggingface_hub import hf_hub_download import os import pickle import pandas as pd corpus_embeddings = pickle.load(open(hf_hub_download("NimaBoscarino/playlist-generator", repo_type="dataset", filename="verse-embeddings.pkl"), "rb")) songs = pd.read_csv(hf_hub_download("NimaBoscarino/playlist-generator", repo_type="dataset", filename="songs_new.csv")) verses = pd.read_csv(hf_hub_download("NimaBoscarino/playlist-generator", repo_type="dataset", filename="verses.csv")) # I'm loading the lyrics from my private dataset, with my own API token auth_token = os.environ.get("TOKEN_FROM_SECRET") lyrics = pd.read_csv(hf_hub_download("NimaBoscarino/playlist-generator-private", repo_type="dataset", filename="lyrics_new.csv", use_auth_token=auth_token)) ``` The Gradio Blocks API lets you build *multi-step* interfaces, which means that you’re free to create quite complex sequences for your demos. 
We’ll take a look at some example code snippets here, but [check out the project code to see it all in action](https://huggingface.co/spaces/NimaBoscarino/playlist-generator/blob/main/app.py). For this project, we want users to choose a text prompt and then, after the semantic search is complete, users should have the ability to choose a song from the results to inspect the lyrics. With Gradio, this can be built iteratively by starting off with defining the initial input components and then registering a `click` event on the button. There’s also a `Radio` component, which will get updated to show the names of the songs for the playlist. ```python import gradio as gr song_prompt = gr.TextArea( value="Running wild and free", placeholder="Enter a song prompt, or choose an example" ) fetch_songs = gr.Button(value="Generate Your Playlist!") song_option = gr.Radio() fetch_songs.click( fn=generate_playlist, inputs=[song_prompt], outputs=[song_option], ) ``` This way, when the button gets clicked, Gradio grabs the current value of the `TextArea` and passes it to a function, shown below: ```python def generate_playlist(prompt): prompt_embedding = embedder.encode(prompt, convert_to_tensor=True) hits = util.semantic_search(prompt_embedding, corpus_embeddings, top_k=20) hits = pd.DataFrame(hits[0], columns=['corpus_id', 'score']) # ... code to map from the verse IDs to the song names song_names = ... # e.g. ["Thank U, Next", "Freebird", "La Cucaracha"] return ( gr.Radio.update(label="Songs", interactive=True, choices=song_names) ) ``` In that function, we use the text prompt to conduct the semantic search. As seen above, to push updates to the Gradio components in the app, the function just needs to return components created with the `.update()` method. Since we connected the `song_option` `Radio` component to `fetch_songs.click` with its `outputs` parameter, `generate_playlist` can control the choices for the `Radio` component! 
You can even do something similar to the `Radio` component in order to let users choose which song lyrics to view. [Visit the code on Hugging Face Spaces to see it in detail!](https://huggingface.co/spaces/NimaBoscarino/playlist-generator/blob/main/app.py) ## Some Thoughts Sentence Transformers and Gradio are great choices for this kind of project! ST has the utility functions that we need for quickly generating embeddings, as well as for running semantic search with minimal code. Having access to a large collection of pre-trained models is also extremely helpful, since we don’t need to create and train our own models for this kind of stuff. Building our demo in Gradio means we only have to focus on coding in Python, and [deploying Gradio projects to Hugging Face Spaces is also super simple](https://huggingface.co/docs/hub/spaces-sdks-gradio)! There’s a ton of other stuff I wish I’d had the time to build into this project, such as these ideas that I might explore in the future: - Integrating with Spotify to automatically generate a playlist, and maybe even using Spotify’s embedded player to let users immediately listen to the songs. - Using the [**HighlightedText** Gradio component](https://gradio.app/docs/#highlightedtext) to identify the specific verse that was found by the semantic search. - Creating some visualizations of the embedding space, like in [this Space by Radamés Ajna](https://huggingface.co/spaces/radames/sentence-embeddings-visualization). While the song *lyrics* aren’t being released, I’ve **[published the verse embeddings along with the mappings to each song](https://huggingface.co/datasets/NimaBoscarino/playlist-generator)**, so you’re free to play around and get creative! Remember to [drop by the Discord](https://huggingface.co/join/discord) to ask questions and share your work! 
I’m excited to see what you end up doing with Sentence Transformers embeddings 🤗 ## Extra Resources - [Getting Started With Embeddings](https://huggingface.co/blog/getting-started-with-embeddings) by Omar Espejel - [Or as a Twitter thread](https://twitter.com/osanseviero/status/1540993407883042816?s=20&t=4gskgxZx6yYKknNB7iD7Aw) by Omar Sanseviero - [Hugging Face + Sentence Transformers docs](https://www.sbert.net/docs/hugging_face.html) - [Gradio Blocks party](https://huggingface.co/Gradio-Blocks) - View some amazing community projects showcasing Gradio Blocks! |

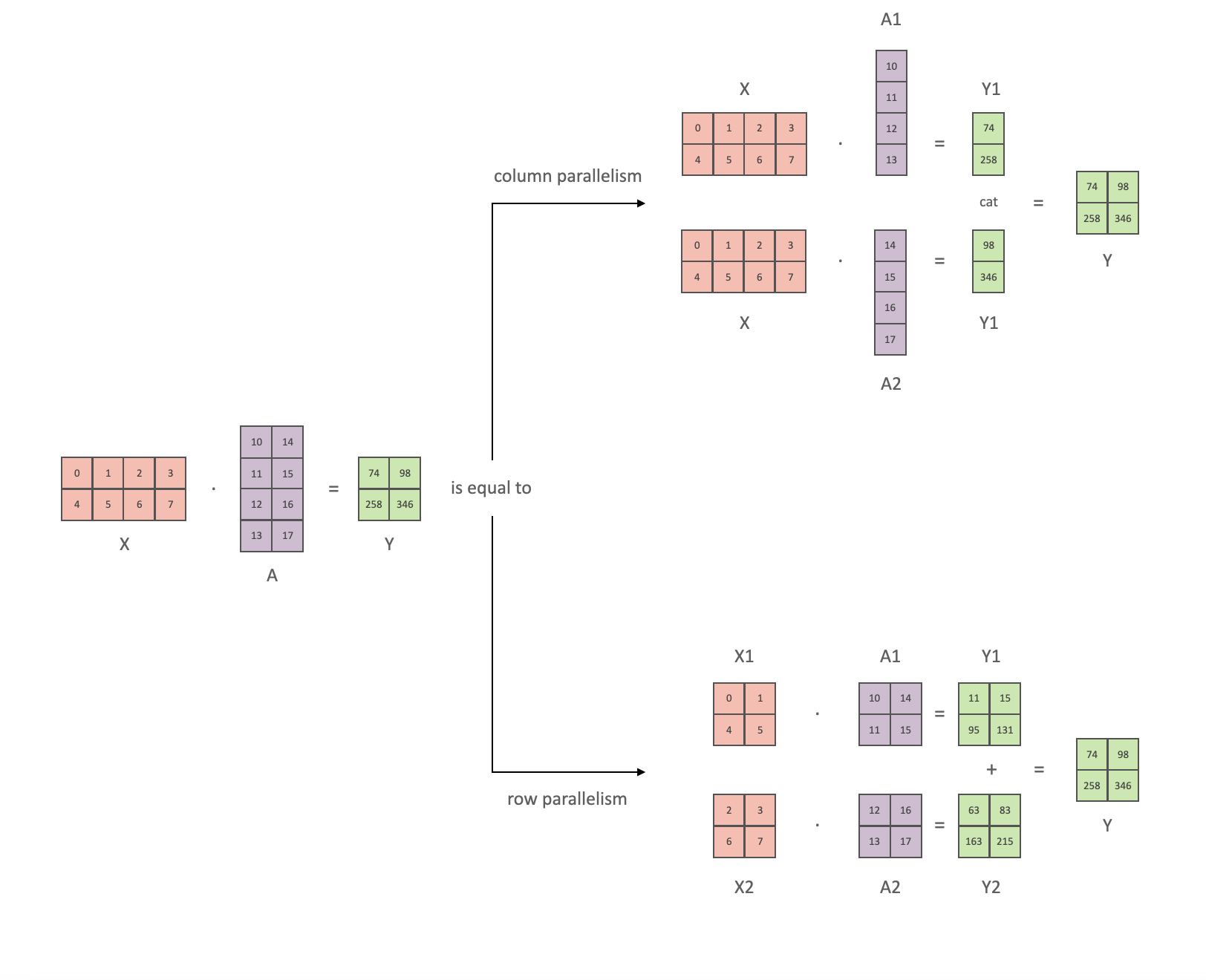

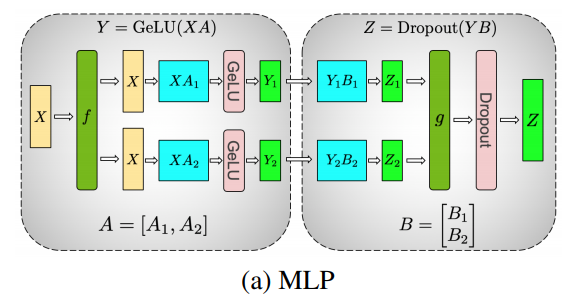

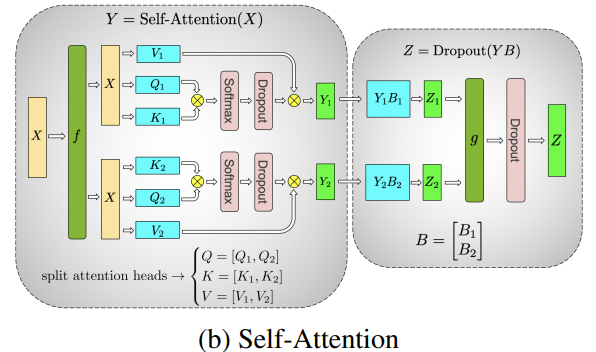

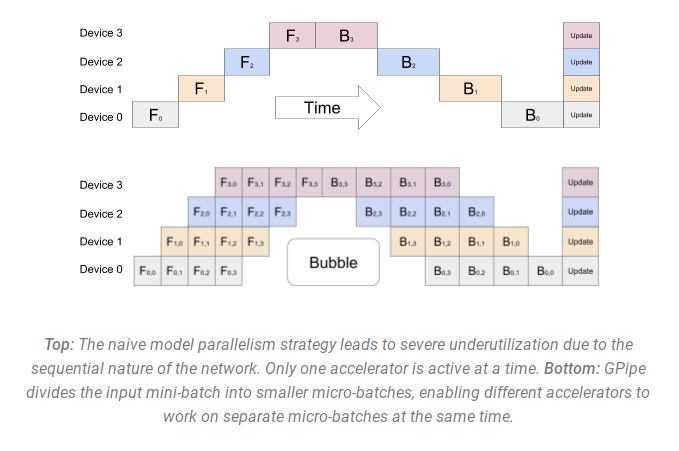

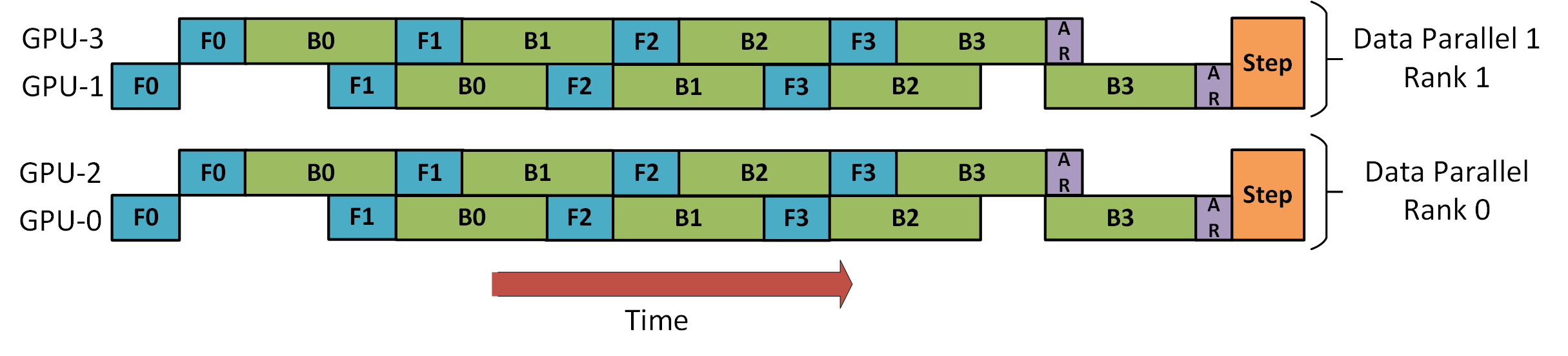

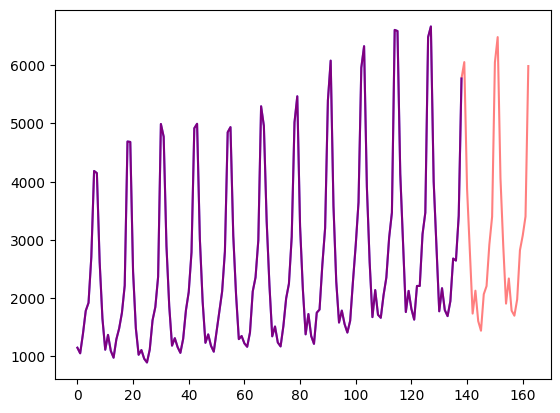

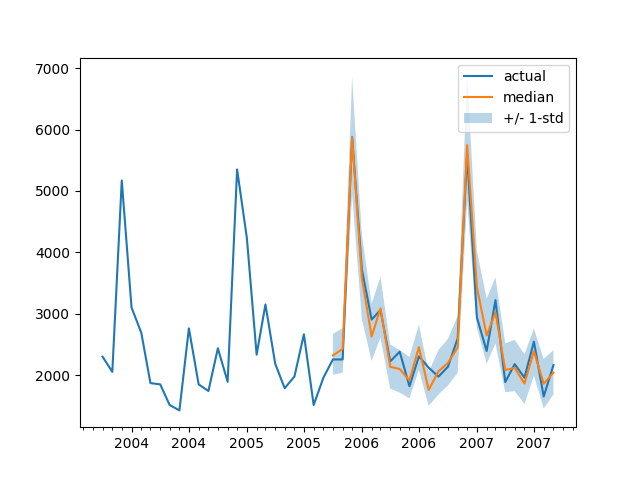

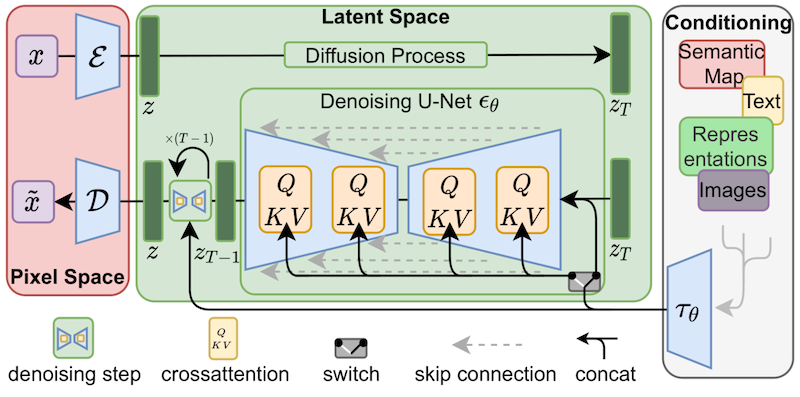

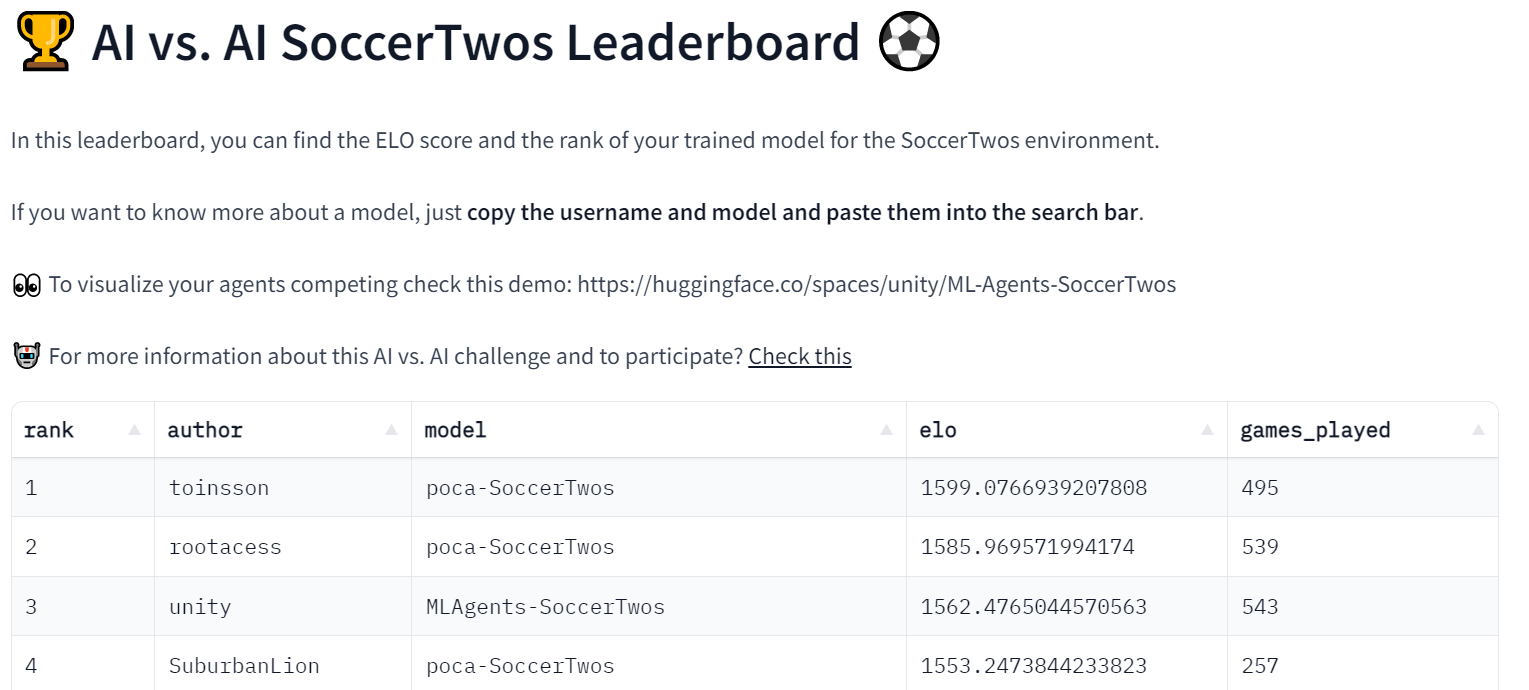

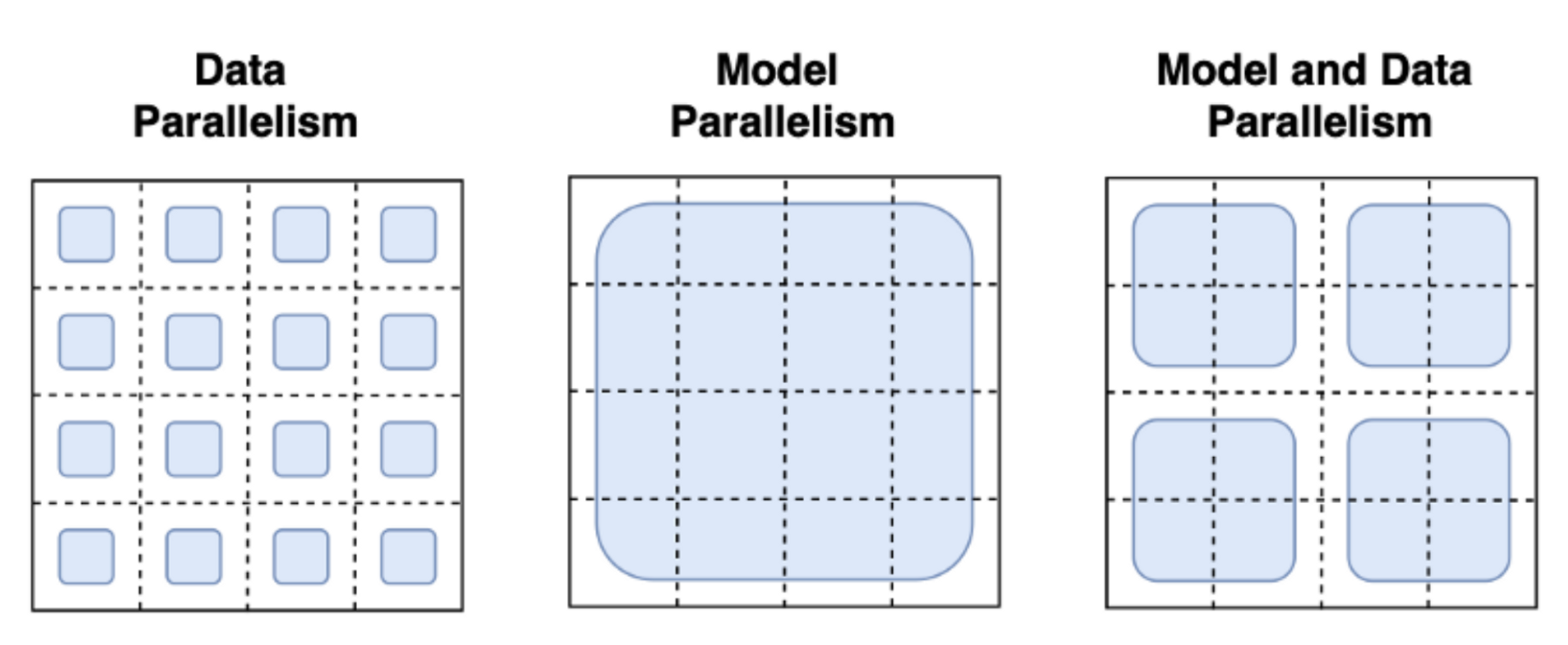

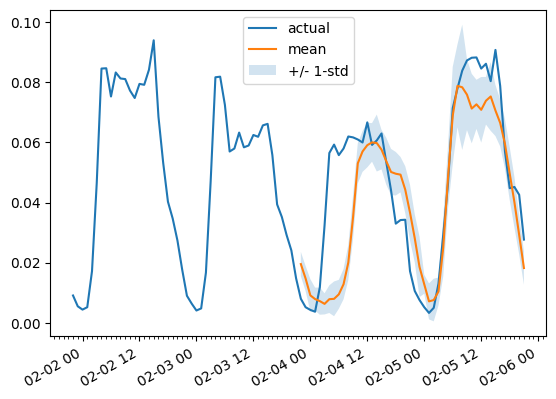

The Technology Behind BLOOM Training | stas | July 14, 2022 | bloom-megatron-deepspeed | nlp, llm | https://huggingface.co/blog/bloom-megatron-deepspeed | # The Technology Behind BLOOM Training In recent years, training ever larger language models has become the norm. While the issue of those models not being released for further study is frequently discussed, the hidden knowledge about how to train such models rarely gets any attention. This article aims to change this by shedding some light on the technology and engineering behind training such models, both in terms of hardware and software, using the example of the 176B parameter language model [BLOOM](https://huggingface.co/bigscience/bloom). But first we would like to thank the companies and key people and groups that made the amazing feat of training a 176 Billion parameter model by a small group of dedicated people possible. Then the hardware setup and main technological components will be discussed.  Here's a quick summary of the project: | | | | :----- | :------------- | | Hardware | 384 80GB A100 GPUs | | Software | Megatron-DeepSpeed | | Architecture | GPT3 w/ extras | | Dataset | 350B tokens of 59 Languages | | Training time | 3.5 months | ## People The project was conceived by Thomas Wolf (co-founder and CSO - Hugging Face), who dared to compete with the huge corporations not only to train one of the largest multilingual models, but also to make the final result accessible to all people, thus making what was but a dream to most people a reality. This article focuses specifically on the engineering side of the training of the model. The most important part of the technology behind BLOOM was the people and companies who shared their expertise and helped us with coding and training. There are 6 main groups of people to thank: 1. 
The Hugging Face BigScience team, who dedicated more than half a dozen full-time employees to figure out and run the training from inception to the finishing line, and who provided and paid for all the infrastructure beyond Jean Zay's compute. 2. The Microsoft DeepSpeed team, who developed DeepSpeed and later integrated it with Megatron-LM, and whose developers spent many weeks working on the needs of the project and provided lots of awesome practical experiential advice before and during the training. 3. The NVIDIA Megatron-LM team, who developed Megatron-LM and who were super helpful answering our numerous questions and providing first class experiential advice. 4. The IDRIS / GENCI team managing the Jean Zay supercomputer, who donated to the project an insane amount of compute and great system administration support. 5. The PyTorch team, who created a super powerful framework, on which the rest of the software was based, and who were very supportive to us during the preparation for the training, fixing multiple bugs and improving the usability of the PyTorch components we relied on during the training. 6. The volunteers in the BigScience Engineering workgroup. It'd be very difficult to name all the amazing people who contributed to the engineering side of the project, so I will just name a few key people outside of Hugging Face who were the engineering foundation of this project for the last 14 months: Olatunji Ruwase, Deepak Narayanan, Jeff Rasley, Jared Casper, Samyam Rajbhandari and Rémi Lacroix. Also we are grateful to all the companies who allowed their employees to contribute to this project. ## Overview BLOOM's architecture is very similar to [GPT3](https://en.wikipedia.org/wiki/GPT-3) with a few added improvements as will be discussed later in this article. 
The model was trained on [Jean Zay](http://www.idris.fr/eng/jean-zay/jean-zay-presentation-eng.html), the French government-funded super computer that is managed by GENCI and installed at [IDRIS](http://www.idris.fr/), the national computing center for the French National Center for Scientific Research (CNRS). The compute was generously donated to the project by GENCI (grant 2021-A0101012475). The following hardware was used during the training: - GPUs: 384 NVIDIA A100 80GB GPUs (48 nodes) + 32 spare gpus - 8 GPUs per node Using NVLink 4 inter-gpu connects, 4 OmniPath links - CPU: AMD EPYC 7543 32-Core Processor - CPU memory: 512GB per node - GPU memory: 640GB per node - Inter-node connect: Omni-Path Architecture (OPA) w/ non-blocking fat tree - NCCL-communications network: a fully dedicated subnet - Disc IO network: GPFS shared with other nodes and users Checkpoints: - [main checkpoints](https://huggingface.co/bigscience/bloom) - each checkpoint with fp32 optim states and bf16+fp32 weights is 2.3TB - just the bf16 weights are 329GB. Datasets: - 46 Languages in 1.5TB of deduplicated massively cleaned up text, converted into 350B unique tokens - Vocabulary size of the model is 250,680 tokens - For full details please see [The BigScience Corpus A 1.6TB Composite Multilingual Dataset](https://openreview.net/forum?id=UoEw6KigkUn) The training of the 176B BLOOM model occurred over Mar-Jul 2022 and took about 3.5 months to complete (approximately 1M compute hours). ## Megatron-DeepSpeed The 176B BLOOM model has been trained using [Megatron-DeepSpeed](https://github.com/bigscience-workshop/Megatron-DeepSpeed), which is a combination of 2 main technologies: * [DeepSpeed](https://github.com/microsoft/DeepSpeed) is a deep learning optimization library that makes distributed training easy, efficient, and effective. 
* [Megatron-LM](https://github.com/NVIDIA/Megatron-LM) is a large, powerful transformer model framework developed by the Applied Deep Learning Research team at NVIDIA. The DeepSpeed team developed a 3D parallelism based implementation by combining ZeRO sharding and pipeline parallelism from the DeepSpeed library with Tensor Parallelism from Megatron-LM. More details about each component can be seen in the table below. Please note that BigScience's [Megatron-DeepSpeed](https://github.com/bigscience-workshop/Megatron-DeepSpeed) is a fork of the original [Megatron-DeepSpeed](https://github.com/microsoft/Megatron-DeepSpeed) repository, to which we made multiple additions. Here is a table of which components were provided by which framework to train BLOOM: | Component | DeepSpeed | Megatron-LM | | :---- | :---- | :---- | | [ZeRO Data Parallelism](#zero-data-parallelism) | V | | | [Tensor Parallelism](#tensor-parallelism) | | V | | [Pipeline Parallelism](#pipeline-parallelism) | V | | | [BF16Optimizer](#bf16optimizer) | V | | | [Fused CUDA Kernels](#fused-cuda-kernels) | | V | | [DataLoader](#datasets) | | V | Please note that both Megatron-LM and DeepSpeed have Pipeline Parallelism and BF16 Optimizer implementations, but we used the ones from DeepSpeed as they are integrated with ZeRO. Megatron-DeepSpeed implements 3D Parallelism to allow huge models to train in a very efficient way. Let’s briefly discuss the 3D components. 1. **DataParallel (DP)** - the same setup is replicated multiple times, and each replica is fed a slice of the data. The processing is done in parallel and all setups are synchronized at the end of each training step. 2. **TensorParallel (TP)** - each tensor is split up into multiple chunks, so instead of having the whole tensor reside on a single GPU, each shard of the tensor resides on its designated GPU. During processing each shard gets processed separately and in parallel on different GPUs and the results are synced at the end of the step. 
This is what one may call horizontal parallelism, as the splitting happens on a horizontal level. 3. **PipelineParallel (PP)** - the model is split up vertically (layer-level) across multiple GPUs, so that only one or several layers of the model are placed on a single GPU. Each GPU processes in parallel different stages of the pipeline and works on a small chunk of the batch. 4. **Zero Redundancy Optimizer (ZeRO)** - also performs sharding of the tensors somewhat similar to TP, except the whole tensor gets reconstructed in time for a forward or backward computation, therefore the model doesn't need to be modified. It also supports various offloading techniques to compensate for limited GPU memory. ## Data Parallelism Most users with just a few GPUs are likely to be familiar with `DistributedDataParallel` (DDP) [PyTorch documentation](https://pytorch.org/docs/master/generated/torch.nn.parallel.DistributedDataParallel.html#torch.nn.parallel.DistributedDataParallel). In this method the model is fully replicated to each GPU and then after each iteration all the models synchronize their states with each other. This approach allows training to be sped up by throwing more resources at the problem, but it only works if the model can fit onto a single GPU. ### ZeRO Data Parallelism ZeRO-powered data parallelism (ZeRO-DP) is described in the following diagram from this [blog post](https://www.microsoft.com/en-us/research/blog/zero-deepspeed-new-system-optimizations-enable-training-models-with-over-100-billion-parameters/)  It can be difficult to wrap one's head around it, but in reality, the concept is quite simple. This is just the usual DDP, except, instead of replicating the full model params, gradients and optimizer states, each GPU stores only a slice of it. And then at run-time when the full layer params are needed just for the given layer, all GPUs synchronize to give each other parts that they miss - this is it. This component is implemented by DeepSpeed. 
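The ZeRO-DP idea can be sketched in plain Python, with lists standing in for GPUs. This is only a toy illustration under simplifying assumptions: `shard` and `all_gather` are hypothetical helpers written for this example and are not DeepSpeed APIs, and real ZeRO also shards gradients and optimizer states and overlaps the communication with compute.

```python
# Toy illustration of ZeRO-style parameter sharding (not DeepSpeed code).
# Each "GPU" permanently stores only its slice of a layer's parameters,
# and the full layer is rebuilt just in time for the computation.

def shard(params, world_size):
    """Split a flat parameter list into `world_size` near-equal contiguous slices."""
    base, extra = divmod(len(params), world_size)
    shards, start = [], 0
    for rank in range(world_size):
        size = base + (1 if rank < extra else 0)
        shards.append(params[start:start + size])
        start += size
    return shards

def all_gather(shards):
    """Every rank receives the concatenation of all slices (the full layer)."""
    full = [p for s in shards for p in s]
    return [list(full) for _ in shards]

layer_params = [0.1 * i for i in range(10)]  # one layer with 10 parameters
world_size = 4

shards = shard(layer_params, world_size)     # what each rank keeps resident
gathered = all_gather(shards)                # temporary full copies for one layer

resident = [len(s) for s in shards]
print("parameters resident per rank:", resident)
print("full layer rebuilt on every rank:",
      all(g == layer_params for g in gathered))
```

Between layers the gathered copies can be discarded, so the steady-state memory per rank is roughly the layer size divided by the number of ranks, plus one temporarily materialized layer.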
## Tensor Parallelism In Tensor Parallelism (TP) each GPU processes only a slice of a tensor and only aggregates the full tensor for operations that require the whole thing. In this section we use concepts and diagrams from the [Megatron-LM](https://github.com/NVIDIA/Megatron-LM) paper: [Efficient Large-Scale Language Model Training on GPU Clusters](https://arxiv.org/abs/2104.04473). The main building block of any transformer is a fully connected `nn.Linear` followed by a nonlinear activation `GeLU`. Following the Megatron paper's notation, we can write the dot-product part of it as `Y = GeLU(XA)`, where `X` and `Y` are the input and output vectors, and `A` is the weight matrix. If we look at the computation in matrix form, it's easy to see how the matrix multiplication can be split between multiple GPUs:  If we split the weight matrix `A` column-wise across `N` GPUs and perform matrix multiplications `XA_1` through `XA_n` in parallel, then we will end up with `N` output vectors `Y_1, Y_2, ..., Y_n` which can be fed into `GeLU` independently: . Notice with the Y matrix split along the columns, we can split the second GEMM along its rows so that it takes the output of the GeLU directly without any extra communication. Using this principle, we can update an MLP of arbitrary depth, while synchronizing the GPUs after each row-column sequence. The Megatron-LM paper authors provide a helpful illustration for that:  Here `f` is an identity operator in the forward pass and all reduce in the backward pass while `g` is an all reduce in the forward pass and identity in the backward pass. Parallelizing the multi-headed attention layers is even simpler, since they are already inherently parallel, due to having multiple independent heads!  Special considerations: Due to the two all reduces per layer in both the forward and backward passes, TP requires a very fast interconnect between devices. 
Therefore it's not advisable to do TP across more than one node, unless you have a very fast network. In our case the inter-node was much slower than PCIe. Practically, if a node has 4 GPUs, the highest TP degree is therefore 4. If you need a TP degree of 8, you need to use nodes that have at least 8 GPUs. This component is implemented by Megatron-LM. Megatron-LM has recently expanded tensor parallelism to include sequence parallelism that splits the operations that cannot be split as above, such as LayerNorm, along the sequence dimension. The paper [Reducing Activation Recomputation in Large Transformer Models](https://arxiv.org/abs/2205.05198) provides details for this technique. Sequence parallelism was developed after BLOOM was trained so not used in the BLOOM training. ## Pipeline Parallelism Naive Pipeline Parallelism (naive PP) is where one spreads groups of model layers across multiple GPUs and simply moves data along from GPU to GPU as if it were one large composite GPU. The mechanism is relatively simple - switch the desired layers `.to()` the desired devices and now whenever the data goes in and out those layers switch the data to the same device as the layer and leave the rest unmodified. This performs a vertical model parallelism, because if you remember how most models are drawn, we slice the layers vertically. For example, if the following diagram shows an 8-layer model: ``` =================== =================== | 0 | 1 | 2 | 3 | | 4 | 5 | 6 | 7 | =================== =================== GPU0 GPU1 ``` we just sliced it in 2 vertically, placing layers 0-3 onto GPU0 and 4-7 to GPU1. Now while data travels from layer 0 to 1, 1 to 2 and 2 to 3 this is just like the forward pass of a normal model on a single GPU. But when data needs to pass from layer 3 to layer 4 it needs to travel from GPU0 to GPU1 which introduces a communication overhead. If the participating GPUs are on the same compute node (e.g. 
same physical machine) this copying is pretty fast, but if the GPUs are located on different compute nodes (e.g. multiple machines) the communication overhead could be significantly larger. Then layers 4 to 5 to 6 to 7 are as a normal model would have and when the 7th layer completes we often need to send the data back to layer 0 where the labels are (or alternatively send the labels to the last layer). Now the loss can be computed and the optimizer can do its work. Problems: - the main deficiency and why this one is called "naive" PP, is that all but one GPU is idle at any given moment. So if 4 GPUs are used, it's almost identical to quadrupling the amount of memory of a single GPU, and ignoring the rest of the hardware. Plus there is the overhead of copying the data between devices. So 4x 6GB cards will be able to accommodate the same size as 1x 24GB card using naive PP, except the latter will complete the training faster, since it doesn't have the data copying overhead. But, say, if you have 40GB cards and need to fit a 45GB model you can with 4x 40GB cards (but barely because of the gradient and optimizer states). - shared embeddings may need to get copied back and forth between GPUs. Pipeline Parallelism (PP) is almost identical to a naive PP described above, but it solves the GPU idling problem, by chunking the incoming batch into micro-batches and artificially creating a pipeline, which allows different GPUs to concurrently participate in the computation process. The following illustration from the [GPipe paper](https://ai.googleblog.com/2019/03/introducing-gpipe-open-source-library.html) shows the naive PP on the top, and PP on the bottom:  It's easy to see from the bottom diagram how PP has fewer dead zones, where GPUs are idle. The idle parts are referred to as the "bubble". Both parts of the diagram show parallelism that is of degree 4. That is 4 GPUs are participating in the pipeline. 
So there is the forward path of 4 pipe stages F0, F1, F2 and F3 and then the return reverse order backward path of B3, B2, B1 and B0. PP introduces a new hyper-parameter to tune that is called `chunks`. It defines how many chunks of data are sent in a sequence through the same pipe stage. For example, in the bottom diagram, you can see that `chunks=4`. GPU0 performs the same forward path on chunk 0, 1, 2 and 3 (F0,0, F0,1, F0,2, F0,3) and then it waits for other GPUs to do their work and only when their work is starting to be complete, does GPU0 start to work again doing the backward path for chunks 3, 2, 1 and 0 (B0,3, B0,2, B0,1, B0,0). Note that this is conceptually the same as gradient accumulation steps (GAS). PyTorch uses `chunks`, whereas DeepSpeed refers to the same hyper-parameter as GAS. Because of the chunks, PP introduces the concept of micro-batches (MBS). DP splits the global data batch size into mini-batches, so if you have a DP degree of 4, a global batch size of 1024 gets split up into 4 mini-batches of 256 each (1024/4). And if the number of `chunks` (or GAS) is 32 we end up with a micro-batch size of 8 (256/32). Each Pipeline stage works with a single micro-batch at a time. To calculate the global batch size of the DP + PP setup we then do: `mbs*chunks*dp_degree` (`8*32*4=1024`). Let's go back to the diagram. With `chunks=1` you end up with the naive PP, which is very inefficient. With a very large `chunks` value you end up with tiny micro-batch sizes, which may not be very efficient either. So one has to experiment to find the value that leads to the most efficient utilization of the GPUs. 
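The batch-size bookkeeping above can be written down as a small helper. This is just a sketch of the arithmetic in the text; the function name `split_batch` is a hypothetical one chosen for this example and does not come from PyTorch or DeepSpeed.

```python
def split_batch(global_batch_size, dp_degree, chunks):
    """Return (mini_batch, micro_batch) sizes for a DP + PP setup.

    mini_batch  = samples handled by one DP replica per step
    micro_batch = samples a pipeline stage processes at a time
    """
    if global_batch_size % dp_degree:
        raise ValueError("global batch size must be divisible by the DP degree")
    mini_batch = global_batch_size // dp_degree
    if mini_batch % chunks:
        raise ValueError("mini-batch size must be divisible by chunks (GAS)")
    return mini_batch, mini_batch // chunks

# The numbers used in the text: global batch 1024, DP degree 4, chunks 32
mini_batch, mbs = split_batch(1024, dp_degree=4, chunks=32)
print(mini_batch, mbs)

# Going the other way recovers the global batch size: mbs * chunks * dp_degree
global_batch_size = mbs * 32 * 4
print(global_batch_size)
```

With `chunks=1` the helper returns a micro-batch equal to the whole mini-batch, which corresponds to the naive PP case.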
While the diagram shows that there is a bubble of "dead" time that can't be parallelized because the last `forward` stage has to wait for `backward` to complete the pipeline, the purpose of finding the best value for `chunks` is to enable a high concurrent GPU utilization across all participating GPUs which translates to minimizing the size of the bubble. This scheduling mechanism is known as `all forward all backward`. Some other alternatives are [one forward one backward](https://www.microsoft.com/en-us/research/publication/pipedream-generalized-pipeline-parallelism-for-dnn-training/) and [interleaved one forward one backward](https://arxiv.org/abs/2104.04473). While both Megatron-LM and DeepSpeed have their own implementation of the PP protocol, Megatron-DeepSpeed uses the DeepSpeed implementation as it's integrated with other aspects of DeepSpeed. One other important issue here is the size of the word embedding matrix. While normally a word embedding matrix consumes less memory than the transformer block, in our case with a huge 250k vocabulary, the embedding layer needed 7.2GB in bf16 weights and the transformer block is just 4.9GB. Therefore, we had to instruct Megatron-DeepSpeed to consider the embedding layer as a transformer block. So we had a pipeline of 72 layers, 2 of which were dedicated to the embedding (first and last). This allowed us to balance out the GPU memory consumption. If we didn't do it, we would have had the first and the last stages consume most of the GPU memory, and 95% of GPUs would be using much less memory and thus the training would be far from being efficient. ## DP+PP The following diagram from the DeepSpeed [pipeline tutorial](https://www.deepspeed.ai/tutorials/pipeline/) demonstrates how one combines DP with PP.  Here it's important to see how DP rank 0 doesn't see GPU2 and DP rank 1 doesn't see GPU3. To DP there are just GPUs 0 and 1 where it feeds data as if there were just 2 GPUs. 
GPU0 "secretly" offloads some of its load to GPU2 using PP. And GPU1 does the same by enlisting GPU3 to its aid. Since each dimension requires at least 2 GPUs, here you'd need at least 4 GPUs. ## DP+PP+TP To get an even more efficient training PP is combined with TP and DP which is called 3D parallelism. This can be seen in the following diagram.  This diagram is from a blog post [3D parallelism: Scaling to trillion-parameter models](https://www.microsoft.com/en-us/research/blog/deepspeed-extreme-scale-model-training-for-everyone/), which is a good read as well. Since each dimension requires at least 2 GPUs, here you'd need at least 8 GPUs for full 3D parallelism. ## ZeRO DP+PP+TP One of the main features of DeepSpeed is ZeRO, which is a super-scalable extension of DP. It has already been discussed in [ZeRO Data Parallelism](#zero-data-parallelism). Normally it's a standalone feature that doesn't require PP or TP. But it can be combined with PP and TP. When ZeRO-DP is combined with PP (and optionally TP) it typically enables only ZeRO stage 1, which shards only optimizer states. ZeRO stage 2 additionally shards gradients, and stage 3 also shards the model weights. While it's theoretically possible to use ZeRO stage 2 with Pipeline Parallelism, it will have bad performance impacts. There would need to be an additional reduce-scatter collective for every micro-batch to aggregate the gradients before sharding, which adds a potentially significant communication overhead. By nature of Pipeline Parallelism, small micro-batches are used and instead the focus is on trying to balance arithmetic intensity (micro-batch size) with minimizing the Pipeline bubble (number of micro-batches). Therefore those communication costs are going to hurt. In addition, there are already fewer layers than normal due to PP and so the memory savings won't be huge. PP already reduces gradient size by ``1/PP``, and so gradient sharding savings on top of that are less significant than pure DP. 
ZeRO stage 3 can also be used to train models at this scale, however, it requires more communication than the DeepSpeed 3D parallel implementation. After careful evaluation in our environment which happened a year ago we found Megatron-DeepSpeed 3D parallelism performed best. Since then ZeRO stage 3 performance has dramatically improved and if we were to evaluate it today perhaps we would have chosen stage 3 instead. ## BF16Optimizer Training huge LLMs in FP16 is a no-no. We have proved it to ourselves by spending several months [training a 104B model](https://github.com/bigscience-workshop/bigscience/tree/master/train/tr8-104B-wide) which as you can tell from the [tensorboard](https://huggingface.co/bigscience/tr8-104B-logs/tensorboard) was but a complete failure. We learned a lot of things while fighting the ever diverging lm-loss:  and we also got the same advice from the Megatron-LM and DeepSpeed teams after they trained the [530B model](https://arxiv.org/abs/2201.11990). The recent release of [OPT-175B](https://arxiv.org/abs/2205.01068) too reported that they had a very difficult time training in FP16. So back in January, as we knew we would be training on A100s, which support the BF16 format, Olatunji Ruwase developed a `BF16Optimizer` which we used to train BLOOM. If you are not familiar with this data format, please have a look [at the bits layout](https://en.wikipedia.org/wiki/Bfloat16_floating-point_format#bfloat16_floating-point_format). The key to BF16 format is that it has the same number of exponent bits as FP32 and thus doesn't suffer from the overflow that FP16 suffers from a lot! With FP16, which has a max numerical range of 64k (the largest finite value is 65504), you can only multiply small numbers. e.g. you can do `250*250=62500`, but if you were to try `256*256=65536` you got yourself an overflow, which is what causes the main problems during training. This means your weights have to remain tiny. 
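The range difference can be checked without a GPU using Python's standard `struct` module, which supports IEEE half precision through the `e` format. The sketch below is only an illustration: `to_fp16` and `to_bf16` are hypothetical helpers written for this example, and BF16 is emulated by truncating an FP32 value to its top 16 bits, whereas real hardware typically rounds instead of truncating.

```python
import struct

def to_fp16(x):
    """Round-trip a float through IEEE half precision (struct format 'e')."""
    try:
        return struct.unpack("<e", struct.pack("<e", x))[0]
    except OverflowError:
        # struct refuses to pack values beyond the FP16 range;
        # an FP16 computation would produce inf here
        return float("inf")

def to_bf16(x):
    """Emulate BF16 by keeping only the top 16 bits of the FP32 encoding."""
    bits = struct.unpack("<I", struct.pack("<f", x))[0]
    return struct.unpack("<f", struct.pack("<I", bits & 0xFFFF0000))[0]

# Range: FP16 runs out just above 65504, BF16 keeps the FP32 exponent range
print("fp16 250*250       =", to_fp16(250.0 * 250.0))
print("fp16 256*256       =", to_fp16(256.0 * 256.0))
print("bf16 10_000*10_000 =", to_bf16(10_000.0 * 10_000.0))

# Precision: BF16 keeps far fewer mantissa bits than FP16
print("fp16 1.001 =", to_fp16(1.001))
print("bf16 1.001 =", to_bf16(1.001))
```

The first group of prints shows the overflow behaviour, the second shows what BF16 gives up in exchange: small differences near 1.0 that FP16 still resolves are lost in BF16.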
A technique called loss scaling can help with this problem, but the limited range of FP16 is still an issue when models become very large. BF16 has no such problem: you can easily do `10_000*10_000=100_000_000` without overflowing. Of course, since BF16 and FP16 have the same size of 2 bytes, one doesn't get a free lunch and one pays with really bad precision when using BF16. However, recall that training with stochastic gradient descent and its variations is a sort of stumbling walk, so if you don't get the perfect direction immediately it's no problem; you will correct yourself in the next steps. Regardless of whether one uses BF16 or FP16 there is also a copy of weights which is always in FP32 - this is what gets updated by the optimizer. So the 16-bit formats are only used for the computation; the optimizer updates the FP32 weights with full precision and then casts them into the 16-bit format for the next iteration. All PyTorch components have been updated to ensure that they perform any accumulation in FP32, so no precision is lost there. One crucial issue is gradient accumulation, and it's one of the main features of pipeline parallelism as the gradients from each microbatch processing get accumulated. It's crucial to implement gradient accumulation in FP32 to keep the training precise, and this is what `BF16Optimizer` does. Besides other improvements, we believe that using BF16 mixed precision training turned a potential nightmare into a relatively smooth process, as can be observed from the lm loss graph of the training. ## Fused CUDA Kernels The GPU does two things: it copies data to/from memory and it performs computations on that data. While the GPU is busy copying, its computation units sit idle. If we want to utilize the GPU efficiently, we want to minimize the idle time. A kernel is a set of instructions that implements a specific PyTorch operation. 
For example, when you call `torch.add`, it goes through a [PyTorch dispatcher](http://blog.ezyang.com/2020/09/lets-talk-about-the-pytorch-dispatcher/) which looks at the input tensor(s) and various other things and decides which code it should run, and then runs it. A CUDA kernel is a specific implementation that uses the CUDA API library and can only run on NVIDIA GPUs. Now, when instructing the GPU to compute `c = torch.add(a, b); e = torch.max(c, d)`, a naive approach, and what PyTorch will do unless instructed otherwise, is to launch two separate kernels, one to perform the addition of `a` and `b` and another to find the maximum value between `c` and `d`. In this case, the GPU fetches `a` and `b` from its memory, performs the addition, and then copies the result back into the memory. It then fetches `c` and `d`, performs the `max` operation, and again copies the result back into the memory. If we were to fuse these two operations, i.e. put them into a single "fused kernel", and just launch that one kernel, we wouldn't copy the intermediary result `c` to the memory but would leave it in the GPU registers, and would only need to fetch `d` to complete the last computation. This saves a lot of overhead, prevents GPU idling and makes the whole operation much more efficient. Fused kernels are just that. Primarily they replace multiple discrete computations and data movements to/from memory with fused computations that have very few memory movements. Additionally, some fused kernels rewrite the math so that certain groups of computations can be performed faster. To train BLOOM fast and efficiently it was necessary to use several custom fused CUDA kernels provided by Megatron-LM. In particular there is an optimized kernel to perform LayerNorm as well as kernels to fuse various combinations of the scaling, masking, and softmax operations. The addition of a bias term is also fused with the GeLU operation using PyTorch's JIT functionality. 
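The memory-traffic argument for fusion can be made concrete with a toy model in plain Python. This is only a counting sketch under simplifying assumptions (one "memory access" per element read or written, a local variable standing in for a GPU register); the class and function names are hypothetical and nothing here reflects how Megatron-LM's CUDA kernels are actually written.

```python
class CountingMemory:
    """Toy 'GPU memory' that counts element-level reads and writes."""

    def __init__(self, n, **arrays):
        self.data = dict(arrays)
        # Pre-allocate output buffers for the intermediate c and the result e.
        self.data.setdefault("c", [0.0] * n)
        self.data.setdefault("e", [0.0] * n)
        self.reads = 0
        self.writes = 0

    def load(self, name, i):
        self.reads += 1
        return self.data[name][i]

    def store(self, name, i, value):
        self.writes += 1
        self.data[name][i] = value


def unfused(mem, n):
    # Kernel 1: c = a + b, with c written back to memory.
    for i in range(n):
        mem.store("c", i, mem.load("a", i) + mem.load("b", i))
    # Kernel 2: e = max(c, d), with c fetched from memory again.
    for i in range(n):
        mem.store("e", i, max(mem.load("c", i), mem.load("d", i)))


def fused(mem, n):
    # Single kernel: the intermediate c never leaves the "register".
    for i in range(n):
        c = mem.load("a", i) + mem.load("b", i)
        mem.store("e", i, max(c, mem.load("d", i)))


n = 4
a, b, d = [1.0, 2.0, 3.0, 4.0], [4.0, 3.0, 2.0, 1.0], [0.0, 6.0, 0.0, 6.0]
m1 = CountingMemory(n, a=a, b=b, d=d)
m2 = CountingMemory(n, a=a, b=b, d=d)
unfused(m1, n)
fused(m2, n)
print("unfused:", m1.reads, "reads,", m1.writes, "writes")
print("fused:  ", m2.reads, "reads,", m2.writes, "writes")
```

Both variants produce the same `e`, but in this model the fused version performs `3n` reads and `n` writes instead of `4n` reads and `2n` writes.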
These operations are all memory bound, so it is important to fuse them to maximize the amount of computation done once a value has been retrieved from memory. So, for example, adding the bias term while already doing the memory bound GeLU operation adds no additional time. These kernels are all available in the [Megatron-LM repository](https://github.com/NVIDIA/Megatron-LM). ## Datasets Another important feature from Megatron-LM is the efficient data loader. During start up of the initial training each dataset is split into samples of the requested sequence length (2048 for BLOOM) and an index is created to number each sample. Based on the training parameters the number of epochs for a dataset is calculated and an ordering for that many epochs is created and then shuffled. For example, if a dataset has 10 samples and should be gone through twice, the system first lays out the sample indices in order `[0, ..., 9, 0, ..., 9]` and then shuffles that order to create the final global order for the dataset. Notice that this means that training will not simply go through the entire dataset and then repeat; it is possible to see the same sample twice before seeing another sample at all, but at the end of training the model will have seen each sample twice. This helps ensure a smooth training curve through the entire training process. These indices, including the offsets into the base dataset of each sample, are saved to a file to avoid recomputing them each time a training process is started. Several of these datasets can then be blended with varying weights into the final data seen by the training process. ## Embedding LayerNorm While we were trying to stop the 104B model from diverging we discovered that adding an additional LayerNorm right after the first word embedding made the training much more stable. 
This insight came from experimenting with [bitsandbytes](https://github.com/facebookresearch/bitsandbytes), which contains a `StableEmbedding`: a normal Embedding with LayerNorm that uses a uniform Xavier initialization. ## Positional Encoding We also replaced the usual positional embedding with ALiBi, based on the paper [Train Short, Test Long: Attention with Linear Biases Enables Input Length Extrapolation](https://arxiv.org/abs/2108.12409), which allows the model to extrapolate to input sequences longer than the ones it was trained on. So even though we train on sequences with length 2048 the model can also deal with much longer sequences during inference. ## Training Difficulties With the architecture, hardware and software in place we were able to start training in early March 2022. However, it was not just smooth sailing from there. In this section we discuss some of the main hurdles we encountered. There were a lot of issues to figure out before the training started. In particular we found several issues that manifested themselves only once we started training on 48 nodes, and didn't appear at small scale. E.g., `CUDA_LAUNCH_BLOCKING=1` was needed to prevent the framework from hanging, and we needed to split the optimizer groups into smaller groups, otherwise the framework would again hang. You can read about those in detail in the [training prequel chronicles](https://github.com/bigscience-workshop/bigscience/blob/master/train/tr11-176B-ml/chronicles-prequel.md). The main type of issue encountered during training was hardware failures. As this was a new cluster with about 400 GPUs, on average we were getting 1-2 GPU failures a week. We were saving a checkpoint every 3h (100 iterations), so on average we would lose 1.5h of training on each hardware crash. The Jean Zay sysadmins would then replace the faulty GPUs and bring the node back up. Meanwhile we had backup nodes to use instead. 
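The 1.5h figure follows from assuming that a crash is equally likely at any point between two checkpoints, so on average half an interval is lost. A minimal sketch of that arithmetic, with a quick simulation as a sanity check, is below; the function names are hypothetical and the model ignores restart time and the time needed to swap in backup nodes.

```python
import random


def expected_lost_hours(checkpoint_interval_h: float) -> float:
    """Mean work lost per crash if crashes fall uniformly within an interval."""
    return checkpoint_interval_h / 2


def simulate_lost_hours(checkpoint_interval_h: float, n_failures: int = 100_000, seed: int = 0) -> float:
    """Monte Carlo estimate of the same quantity (uniform crash times assumed)."""
    rng = random.Random(seed)
    total = sum(rng.uniform(0, checkpoint_interval_h) for _ in range(n_failures))
    return total / n_failures


per_crash = expected_lost_hours(3.0)
print(f"lost per crash: {per_crash} h")
# With 1-2 failures a week, the expected weekly loss is between these two values.
print(f"lost per week: {1 * per_crash} to {2 * per_crash} h")
print(f"simulated mean: {simulate_lost_hours(3.0):.2f} h")
```

Under these assumptions the weekly cost of hardware failures stays at a few hours, which is small compared to a week of 24/7 training.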
We also ran into a variety of other problems that led to 5-10h of downtime several times, some related to a deadlock bug in PyTorch, others due to running out of disk space. If you are curious about specific details please see [training chronicles](https://github.com/bigscience-workshop/bigscience/blob/master/train/tr11-176B-ml/chronicles.md). We were planning for all these downtimes when deciding on the feasibility of training this model - we chose the size of the model to match that feasibility and the amount of data we wanted the model to consume. With all the downtimes we managed to finish the training in our estimated time. As mentioned earlier it took about 1M compute hours to complete. One other issue was that SLURM wasn't designed to be used by a team of people. A SLURM job is owned by a single user and if they aren't around, the other members of the group can't do anything to the running job. We developed a kill-switch workaround that allowed other users in the group to kill the current process without requiring the user who started the process to be present. This worked well in 90% of the cases. If SLURM designers read this - please add a concept of Unix groups, so that a SLURM job can be owned by a group. As the training was happening 24/7 we needed someone to be on call - but since we had people both in Europe and on the West Coast of Canada, there was no need for someone to carry a pager; we would just overlap nicely. Of course, someone had to watch the training on the weekends as well. We automated most things, including recovery from hardware crashes, but sometimes human intervention was needed as well. ## Conclusion The most difficult and intense part of the training was the 2 months leading to the start of the training. We were under a lot of pressure to start training ASAP, since the resource allocation was limited in time and we didn't have access to A100s until the very last moment. 
So it was a very difficult time, considering that the `BF16Optimizer` was written at the last moment and we needed to debug it and fix various bugs. And as explained in the previous section we discovered new problems that manifested themselves only once we started training on 48 nodes, and didn't appear at small scale. But once we sorted those out, the training itself was surprisingly smooth and without major problems. Most of the time we had one person monitoring the training and only a few times several people were involved to troubleshoot. We enjoyed great support from Jean Zay's administration who quickly addressed most needs that emerged during the training. Overall it was a super-intense but very rewarding experience. Training large language models is still a challenging task, but we hope that by building and sharing this technology in the open others can build on top of our experience. ## Resources ### Important links - [main training document](https://github.com/bigscience-workshop/bigscience/blob/master/train/tr11-176B-ml/README.md) - [tensorboard](https://huggingface.co/bigscience/tr11-176B-ml-logs/tensorboard) - [training slurm script](https://github.com/bigscience-workshop/bigscience/blob/master/train/tr11-176B-ml/tr11-176B-ml.slurm) - [training chronicles](https://github.com/bigscience-workshop/bigscience/blob/master/train/tr11-176B-ml/chronicles.md) ### Papers and Articles We couldn't have possibly explained everything in detail in this article, so if the technology presented here piqued your curiosity and you'd like to know more, here are the papers to read: Megatron-LM: - [Efficient Large-Scale Language Model Training on GPU Clusters](https://arxiv.org/abs/2104.04473). 
- [Reducing Activation Recomputation in Large Transformer Models](https://arxiv.org/abs/2205.05198) DeepSpeed: - [ZeRO: Memory Optimizations Toward Training Trillion Parameter Models](https://arxiv.org/abs/1910.02054) - [ZeRO-Offload: Democratizing Billion-Scale Model Training](https://arxiv.org/abs/2101.06840) - [ZeRO-Infinity: Breaking the GPU Memory Wall for Extreme Scale Deep Learning](https://arxiv.org/abs/2104.07857) - [DeepSpeed: Extreme-scale model training for everyone](https://www.microsoft.com/en-us/research/blog/deepspeed-extreme-scale-model-training-for-everyone/) Joint Megatron-LM and DeepSpeed: - [Using DeepSpeed and Megatron to Train Megatron-Turing NLG 530B, A Large-Scale Generative Language Model](https://arxiv.org/abs/2201.11990). ALiBi: - [Train Short, Test Long: Attention with Linear Biases Enables Input Length Extrapolation](https://arxiv.org/abs/2108.12409) - [What Language Model to Train if You Have One Million GPU Hours?](https://openreview.net/forum?id=rI7BL3fHIZq) - there you will find the experiments that led us to choose ALiBi. BitsNBytes: - [8-bit Optimizers via Block-wise Quantization](https://arxiv.org/abs/2110.02861) (in the context of Embedding LayerNorm, but the rest of the paper and the technology is amazing - the only reason we weren't using the 8-bit optimizer is that we were already saving the optimizer memory with DeepSpeed-ZeRO). ## Blog credits Huge thanks to the following kind folks who asked good questions and helped improve the readability of the article (listed in alphabetical order): Britney Muller, Douwe Kiela, Jared Casper, Jeff Rasley, Julien Launay, Leandro von Werra, Omar Sanseviero, Stefan Schweter and Thomas Wang. The main graphic was created by Chunte Lee. |