repo

stringclasses 147

values | number

int64 1

172k

| title

stringlengths 2

476

| body

stringlengths 0

5k

| url

stringlengths 39

70

| state

stringclasses 2

values | labels

listlengths 0

9

| created_at

timestamp[ns, tz=UTC]date 2017-01-18 18:50:08

2026-01-06 07:33:18

| updated_at

timestamp[ns, tz=UTC]date 2017-01-18 19:20:07

2026-01-06 08:03:39

| comments

int64 0

58

⌀ | user

stringlengths 2

28

|

|---|---|---|---|---|---|---|---|---|---|---|

pytorch/pytorch

| 20,271

|

Official instructions for how to build libtorch don't have same structure as prebuilt binaries

|

On Slack, Geoffrey Yu asked:

> Are there instructions for building libtorch from source? I feel like I'm missing something since I've tried building with `tools/build_libtorch.py`. However the build output doesn't seem to have the same structure as the prebuilt libtorch that you can download on pytorch.org

@pjh5 responded: "If you're curious, here's exactly what builds the libtorches https://github.com/pytorch/builder/blob/master/manywheel/build_common.sh#L120 . It's mostly tools/build_libtorch.py but also copies some header files from a wheel file"

This is not mentioned at all in the "how to build libtorch" documentation: https://github.com/pytorch/pytorch/blob/master/docs/libtorch.rst Normally we give build instructions in README but there are no libtorch build instructions in the README. Additionally, the C++ API docs https://pytorch.org/cppdocs/ don't explain how to build from source.

Some more users being confused about the matter:

* https://discuss.pytorch.org/t/building-libtorch-c-distribution-from-source/27519/2

* https://github.com/pytorch/pytorch/issues/20156

|

https://github.com/pytorch/pytorch/issues/20271

|

closed

|

[

"high priority",

"module: binaries",

"module: build",

"module: docs",

"module: cpp",

"triaged"

] | 2019-05-08T13:02:51Z

| 2019-05-30T19:52:28Z

| null |

ezyang

|

huggingface/transformers

| 591

|

What is the use of [SEP]?

|

Hello. I know that [CLS] means the start of a sentence and [SEP] makes BERT know the second sentence has begun. [SEP] can’t stop one sentence from extracting information from another sentence. However, I have a question.

If I have 2 sentences, which are s1 and s2., and our fine-tuning task is the same. In one way, I add special tokens and the input looks like [CLS]+s1+[SEP] + s2 + [SEP]. In another, I make the input look like [CLS] + s1 + s2 + [SEP]. When I input them to BERT respectively, what is the difference between them? Will the s1 in second one integrate more information from s2 than the s1 in first one does? Will the token embeddings change a lot between the 2 methods?

Thanks for any help!

|

https://github.com/huggingface/transformers/issues/591

|

closed

|

[] | 2019-05-07T04:12:16Z

| 2019-05-21T10:51:31Z

| null |

RomanShen

|

pytorch/pytorch

| 20,090

|

How to add dynamically allocated strings to Pickler?

|

The following code prints `111` and `111`, instead of `222` and `111`, because `222` is skipped [here](https://github.com/pytorch/pytorch/blob/master/torch/csrc/jit/pickler.cpp#L68). Is this by design as Pickler only works for statically allocated strings? Or is there a way to correctly add dynamically allocated strings? (and all other types listed [here](https://github.com/pytorch/pytorch/blob/master/torch/csrc/jit/pickler.cpp#L104-L114))?

```c++

std::string str1 = "111";

std::string str2 = "222";

std::vector<at::Tensor> tensor_table;

torch::jit::Pickler pickler(&tensor_table);

pickler.start();

pickler.addIValue(str1);

pickler.addIValue(str2);

pickler.finish();

auto buffer = new char[pickler.stack().size()];

memcpy(buffer, pickler.stack().data(), pickler.stack().size());

torch::jit::Unpickler unpickler(buffer, pickler.stack().size(), &tensor_table);

auto values = unpickler.parse_ivalue_list();

std::cout << values.back().toStringRef() << std::endl;

values.pop_back();

std::cout << values.back().toStringRef() << std::endl;

values.pop_back();

```

cc @zdevito

|

https://github.com/pytorch/pytorch/issues/20090

|

closed

|

[

"oncall: jit",

"triaged"

] | 2019-05-03T04:43:08Z

| 2019-05-17T21:45:41Z

| null |

mrshenli

|

huggingface/neuralcoref

| 157

|

Performance?

|

Hi there,

Thanks for the nice package!

Are there any performance comparisons with other systems? (say, Lee et el'18: https://arxiv.org/pdf/1804.05392.pdf).

|

https://github.com/huggingface/neuralcoref/issues/157

|

closed

|

[

"question",

"perf / accuracy"

] | 2019-04-30T21:38:56Z

| 2019-10-16T08:48:09Z

| null |

danyaljj

|

pytorch/examples

| 554

|

Where is the hook?

|

On the tutorial, I see it says this is an example of hook. So where is the hook?

|

https://github.com/pytorch/examples/issues/554

|

closed

|

[] | 2019-04-30T11:38:40Z

| 2019-05-27T21:00:53Z

| null |

yanbixing

|

pytorch/pytorch

| 19,908

|

c++/pytorch How to convert tensor to image array?

|

## ❓ Questions and Help

### Please note that this issue tracker is not a help form and this issue will be closed.

I would like to convert a tensor to image array and use tensor.data<short>() method. But it doesn't work.

My function is showed below:

```

#include <torch/script.h> // One-stop header.

#include <iostream>

#include <memory>

#include <sstream>

#include <string>

#include <vector>

#include "itkImage.h"

#include "itkImageFileReader.h"

#include "itkImageFileWriter.h"

#include "itkImageRegionIterator.h"

//////////////////////////////////////////////////////

//Goal: load jit script model and segment myocardium

//Step: 1. load jit script model

// 2. load input image

// 3. predict by model

// 4. save the result to file

//////////////////////////////////////////////////////

typedef short PixelType;

const unsigned int Dimension = 3;

typedef itk::Image<PixelType, Dimension> ImageType;

typedef itk::ImageFileReader<ImageType> ReaderType;

typedef itk::ImageRegionIterator<ImageType> IteratorType;

bool itk2tensor(ImageType::Pointer itk_img, torch::Tensor &tensor_img) {

typename ImageType::RegionType region = itk_img->GetLargestPossibleRegion();

const typename ImageType::SizeType size = region.GetSize();

std::cout << "Input size: " << size[0] << ", " << size[1]<< ", " << size[2] << std::endl;

int len = size[0] * size[1] * size[2];

short rowdata[len];

int count = 0;

IteratorType iter(itk_img, itk_img->GetRequestedRegion());

// convert itk to array

for (iter.GoToBegin(); !iter.IsAtEnd(); ++iter) {

rowdata[count] = iter.Get();

count++;

}

std::cout << "Convert itk to array DONE!" << std::endl;

// convert array to tensor

tensor_img = torch::from_blob(rowdata, {1, 1, (int)size[0], (int)size[1], (int)size[2]}, torch::kShort).clone();

tensor_img = tensor_img.toType(torch::kFloat);

tensor_img = tensor_img.to(torch::kCUDA);

tensor_img.set_requires_grad(0);

return true;

}

bool tensor2itk(torch::Tensor &t, ImageType::Pointer itk_img) {

std::cout << "tensor dtype = " << t.dtype() << std::endl;

std::cout << "tensor size = " << t.sizes() << std::endl;

t = t.toType(torch::kShort);

short * array = t.data<short>();

ImageType::IndexType start;

start[0] = 0; // first index on X

start[1] = 0; // first index on Y

start[2] = 0; // first index on Z

ImageType::SizeType size;

size[0] = t.size(2);

size[1] = t.size(3);

size[2] = t.size(4);

ImageType::RegionType region;

region.SetSize( size );

region.SetIndex( start );

itk_img->SetRegions( region );

itk_img->Allocate();

int len = size[0] * size[1] * size[2];

IteratorType iter(itk_img, itk_img->GetRequestedRegion());

int count = 0;

// convert array to itk

std::cout << "start!" << std::endl;

for (iter.GoToBegin(); !iter.IsAtEnd(); ++iter) {

short temp = *array++; // ERROR!

std::cout << temp << " ";

iter.Set(temp);

count++;

}

std::cout << "end!" << std::endl;

return true;

}

int main(int argc, const char* argv[]) {

int a, b, c;

if (argc != 4) {

std::cerr << "usage: automyo input jitmodel output\n";

return -1;

}

std::cout << "========= jit start =========\n";

// 1. load jit script model

std::cout << "Load script module: " << argv[2] << std::endl;

std::shared_ptr<torch::jit::script::Module> module = torch::jit::load(argv[2]);

module->to(at::kCUDA);

// assert(module != nullptr);

std::cout << "Load script module DONE" << std::endl;

// 2. load input image

const char* img_path = argv[1];

std::cout << "Load image: " << img_path << std::endl;

ReaderType::Pointer reader = ReaderType::New();

if (!img_path) {

std::cout << "Load input file error!" << std::endl;

return false;

}

reader->SetFileName(img_path);

reader->Update();

std::cout << "Load image DONE!" << std::endl;

ImageType::Pointer itk_img = reader->GetOutput();

torch::Tensor tensor_img;

if (!itk2tensor(itk_img, tensor_img)) {

std::cerr << "itk2tensor ERROR!" << std::endl;

}

else {

std::cout << "Convert array to tensor DONE!" << std::endl;

}

std::vector<torch::jit::IValue> inputs;

inputs.push_back(tensor_img);

// 3. predict by model

torch::Tensor y = module->forward(inputs).toTensor();

std::cout << "Inference DONE!" << std::endl;

// 4. save the result to file

torch::Tensor seg = y.gt(0.5);

// std::cout << seg << std::endl;

ImageType::Pointer out_itk_img = ImageType::New();

if (!tensor2itk(seg, out_itk_img)) {

std::cerr << "tensor2itk ERROR!" << std::endl;

}

else {

std::cout << "Convert tensor to itk DONE!" << std::endl;

}

std::cout << out_itk_img << std::endl;

return true;

}

```

The runtime log is showed below:

> Load script module: model_myo_jit.pt

> Load script module DONE

> Load image: patch_6.nii.gz

> Load image DONE!

> Input size: 128,

|

https://github.com/pytorch/pytorch/issues/19908

|

closed

|

[] | 2019-04-29T07:49:29Z

| 2019-04-29T09:58:24Z

| null |

JingLiRaysightmed

|

pytorch/pytorch

| 19,822

|

How to use torch.tensor(n) in Python3 to adapt to ’ at::TensorImpl‘

|

## ❓ Questions and Help

Hi ,when nms_cpp compiled by cpp_extension ,it didnt work but work in pytorch0.4.0.:

## TypeError: gpu_nms(): incompatible function arguments. The following argument types are supported:

1. (arg0: at::TensorImpl, arg1: at::TensorImpl, arg2: at::TensorImpl, arg3:

float) -> int

## Invoked with:

tensor([ 2.5353e+09, 2.5238e+09, -4.5295e+18, ..., 4.7854e+18,

4.7424e+18, 4.7895e+18]), tensor([ 566]),

tensor([[ 146.1686, 111.1691, 242.2774, 288.5695, 0.8267],

[ 144.7030, 108.2768, 244.0824, 282.2564, 0.8234],

[ 144.5566, 110.4112, 243.3897, 283.4086, 0.8225],

...,

[ 100.9274, 81.2732, 155.0707, 130.5494, 0.0500],

[ 0.0000, 185.7541, 47.3124, 276.2884, 0.0500],

[ 4.5178, 57.4754, 37.1159, 115.0753, 0.0500]], device='cuda:0

'), //

0.5

## cpp file:

## Code

// ------------------------------------------------------------------

// Faster R-CNN

// Copyright (c) 2015 Microsoft

// Licensed under The MIT License [see fast-rcnn/LICENSE for details]

// Written by Shaoqing Ren

// ------------------------------------------------------------------

#include <torch/script.h>

#include<torch/serialize/tensor.h>

#include <THC/THC.h>

#include <ATen/ATen.h>//state

#include <TH/TH.h>

#include <THC/THCTensorCopy.h>

//#include <TH/generic/THTensorCopy.h>

#include <THC/generic/THCTensorCopy.h>//generic/THCTensorCopy.h

#include <THC/THCTensorCopy.hpp>

#include <math.h>

#include <stdio.h>

#include <cstddef>

#include <torch/torch.h>

#include <torch/script.h>

#include "cuda/nms_kernel2.h"

#include "nms.h"

//src/nms_cuda.cpp(27): error C2440: “初始化”: 无法从“

//std::unique_ptr<THCState,void (__cdecl *)(THCState *)>

//THCState *state = at::globalContext().thc_state;

//std::unique_ptr<THCState,void (__cdecl *)(THCState *)> state= at::globalContext().thc_state;

THCState *state;

int gpu_nms(THLongTensor * keep, THLongTensor* num_out, THCudaTensor * boxes, float nms_overlap_thresh) {

// boxes has to be sorted

THArgCheck(THLongTensor_isContiguous(keep), 0, "boxes must be contiguous");

THArgCheck(THCudaTensor_isContiguous(state, boxes), 2, "boxes must be contiguous");

// Number of ROIs

int64_t boxes_num = THCudaTensor_size(state, boxes, 0);

int64_t boxes_dim = THCudaTensor_size(state, boxes, 1);

float* boxes_flat = THCudaTensor_data(state, boxes);

const int64_t col_blocks = DIVUP(boxes_num, threadsPerBlock);

printf("100,%d,%d ,%d ,%d " , *state,boxes_num, boxes_dim, col_blocks);

//, *state

THCudaLongTensor * mask = THCudaLongTensor_newWithSize2d(state, boxes_num, col_blocks);

//#unsigned

unsigned long long* mask_flat = (unsigned long long* )THCudaLongTensor_data(state, mask);

//_mns from

_nms(boxes_num, boxes_flat, mask_flat, nms_overlap_thresh);

THLongTensor * mask_cpu = THLongTensor_newWithSize2d(boxes_num, col_blocks);

//THCudaTensor_copyFloat

//THLongTensor_copyCuda(state, mask_cpu, mask); #no found

//THCTensor_(copyAsyncCPU)

//THTensor_copyCuda(state, mask_cpu, mask);

//THLongTensor_copyCudaLong(state, mask_cpu, mask);

//not found cu file

//THCStorage_copyCudaLong(state, mask_cpu, mask);#

//THCTensor_copy(state, mask_cpu, mask);

//THCudaTensor_copyLong(state, mask_cpu, mask);

//THLongTensor_copyCudaLong(state, mask_cpu, mask);

//copy_from_cpu(state, mask_cpu, mask);

//ok mask 2 mask_cpu

//

THCudaLongTensor_freeCopyTo(state, mask_cpu, mask);

//Copy_Long(state, mask_cpu, mask);

//copyAsyncCuda

//THTensor_copyLong(state, mask_cpu, mask);

THCudaLongTensor_free(state, mask);

//unsigned

long long * mask_cpu_flat = THLongTensor_data(mask_cpu);

THLongTensor * remv_cpu = THLongTensor_newWithSize1d(col_blocks);

//unsigned

long long* remv_cpu_flat = THLongTensor_data(remv_cpu);

THLongTensor_fill(remv_cpu, 0);

int64_t * keep_flat = THLongTensor_data(keep);

long num_to_keep = 0;

int i, j;

for (i = 0; i < boxes_num; i++) {

int nblock = i / threadsPerBlock;

int inblock = i % threadsPerBlock;

if (!(remv_cpu_flat[nblock] & (1ULL << inblock))) {

keep_flat[num_to_keep++] = i;

long long *p = &mask_cpu_flat[0] + i * col_blocks;

for (j = nblock; j < col_blocks; j++) {

remv_cpu_flat[j] |= p[j];

}

}

}

int64_t * num_out_flat = THLongTensor_data(num_out);

* num_out_flat = num_to_keep;

THLongTensor_free(mask_cpu);

THLongTensor_free(remv_cpu);

//return 1;

return num_to_keep;

}

PYBIND11_MODULE(TORCH_EXTENSION_NAME, m) {

m.def("cpu_nms", &cpu_nms, "nms cpu_nms ");

m.def("gpu_nms", &gpu_nms, "nms gpu_nms (CUDA)");

}

|

https://github.com/pytorch/pytorch/issues/19822

|

closed

|

[] | 2019-04-27T08:40:06Z

| 2019-04-28T07:42:41Z

| null |

liuchanfeng165

|

pytorch/pytorch

| 19,744

|

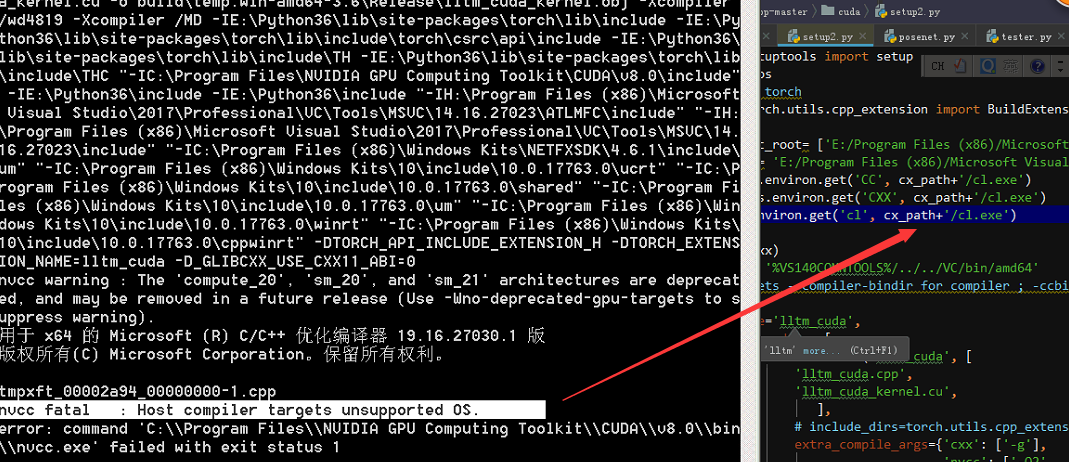

How to select cl.exe for a config of cpp_extension?

|

## ❓ Questions and Help

Hi, I got this error and dont wanna change vs15 again because the compiler keeps in OS,if I use cl.exe of VS2015 not VS2017 to compile my cpp_extension by setup.py and how to modify setup.py

## setup.py

from setuptools import setup

import os

#import torch

from torch.utils.cpp_extension import BuildExtension, CUDAExtension

_ext_src_root= ['E:/Program Files (x86)/Microsoft Visual Studio 14.0/VC'] #

cx_path= 'E:/Program Files (x86)/Microsoft Visual Studio 14.0/VC/bin/amd64'

cc = os.environ.get('CC', cx_path+'/cl.exe')

cxx = os.environ.get('CXX', cx_path+'/cl.exe')

cl=os.environ.get('cl', cx_path+'/cl.exe')

print(cxx)

vs_bin= '%VS140COMNTOOLS%/../../VC/bin/amd64'

#nvcc sets --compiler-bindir for compiler ; -ccbin

setup(

name='lltm_cuda',

ext_modules=[

CUDAExtension('lltm_cuda', [

'lltm_cuda.cpp',

'lltm_cuda_kernel.cu',

],

# include_dirs=torch.utils.cpp_extension.include_paths(),

extra_compile_args={'cxx': ['-g'],

'nvcc': ['-O2' , '--compiler-bindir' " {}".format(cx_path+'/cl.exe')]}

#extra_cflags

# extra_compile_args ={

# "cxx": ["-O2", "-I{}".format("{}/include".format(_ext_src_root))],

# # "nvcc": ["-O2", "-I{}".format("{}/include".format(_ext_src_root))],

# "cl": ["-O2", "-I{}".format("{}/include".format(_ext_src_root))],

# },

),

],

cmdclass={

'build_ext': BuildExtension

})

## add list

just to compile ' .cu' not '.cpp ', mabe modify there or not?

line 240 in cpp_extension.py :+1:

# Register .cu and .cuh as valid source extensions.

self.compiler.src_extensions += ['.cu', '.cuh']

# Save the original _compile method for later.

if self.compiler.compiler_type == 'msvc':

self.compiler._cpp_extensions += ['.cu', '.cuh']

original_compile = self.compiler.compile

original_spawn = self.compiler.spawn

else:

original_compile = self.compiler._compile

## Thanks a lot

|

https://github.com/pytorch/pytorch/issues/19744

|

closed

|

[] | 2019-04-25T16:29:54Z

| 2019-04-26T08:28:24Z

| null |

liuchanfeng165

|

pytorch/pytorch

| 19,611

|

How to understand the results of model. Eval () and how to obtain the predictive probability value?

|

Hello everyone

I've been using tensorflow before, but I met torch when I added functionality to a tool. My ultimate goal was to get the classification probability. On a binary classification problem, I used model. Eval () (inputs). numpy () to get the prediction results.

like this

2.19903 -2.06323

2.22841 -2.09061

2.20833 -2.07209

2.22888 -2.09125

2.22644 -2.08869

I don't know how to convert it to probability, or should I use other commands to get classification probability?

I hope I can get help. Thank you.

|

https://github.com/pytorch/pytorch/issues/19611

|

closed

|

[

"triaged"

] | 2019-04-23T09:29:27Z

| 2019-04-23T19:26:02Z

| null |

xujiameng

|

pytorch/pytorch

| 19,561

|

How to do prediction/inference for a batch of images at a time with libtorch?

|

Anybody knows how to do prediction/inference for a batch of images at a time with libtorch/pytorch C++?

any reply would be appreciated, thank you!

|

https://github.com/pytorch/pytorch/issues/19561

|

closed

|

[] | 2019-04-22T08:40:04Z

| 2019-04-22T20:59:27Z

| null |

asa008

|

pytorch/examples

| 547

|

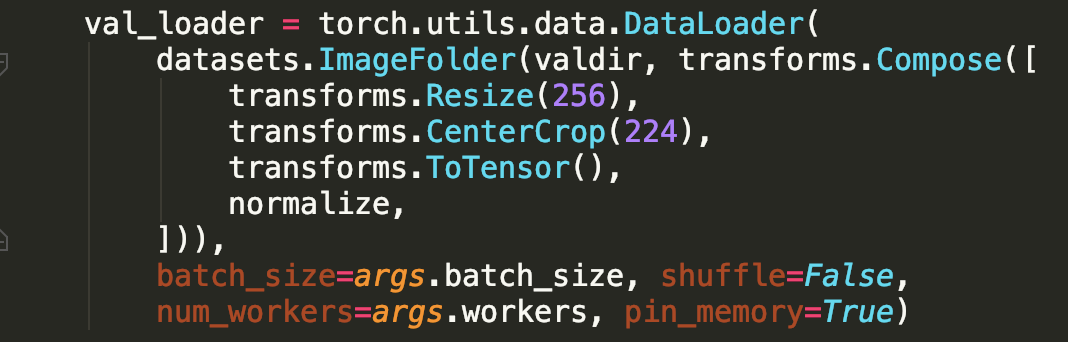

where can I get the inference code for classification?

|

I have trained the resnet-18 model for classification on my own dataset with examples/imagenet/main.py. And now I want to infernece the images, but there is no inference code.

|

https://github.com/pytorch/examples/issues/547

|

closed

|

[] | 2019-04-22T03:20:25Z

| 2019-04-24T10:50:50Z

| 2

|

ShaneYS

|

pytorch/pytorch

| 19,453

|

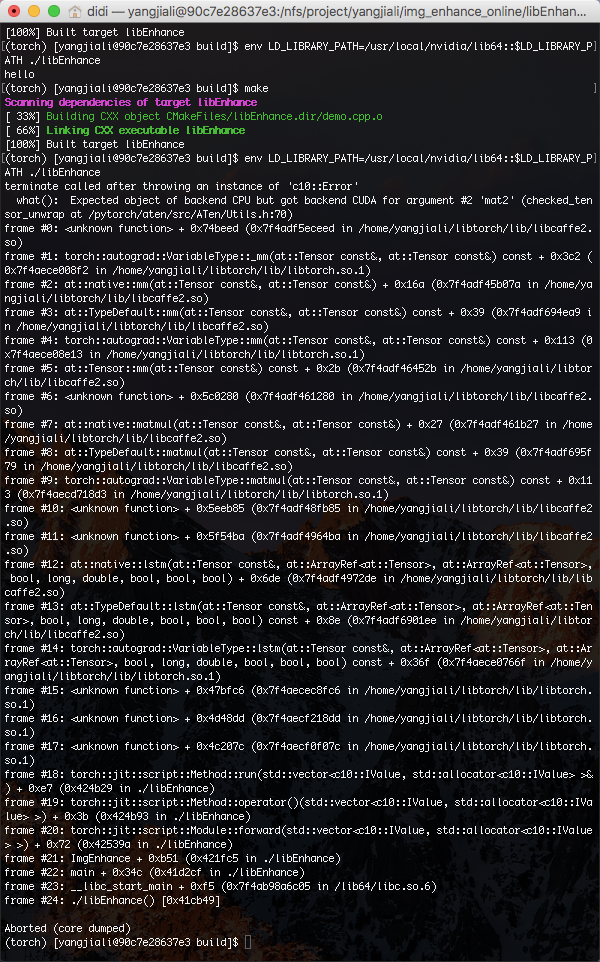

How to load PyTorch model with LSTM using C++ api

|

## 🐛 Bug

<!-- A clear and concise description of what the bug is. -->

## To Reproduce

Steps to reproduce the behavior:

1. Establish a PyTorch model with LSTM module using python, and store the script module after using torch.jit.trace. Python code like this:

```python

class MyModule(nn.Module):

def __init__(self, N, M):

super(MyModule, self).__init__()

self.lstm = nn.LSTM(M, M, batch_first=True)

self.linear = nn.Linear(M, 1)

def forward(self, inputs, h0, c0):

output, (_, _) = self.lstm(inputs, h0, c0)

output, _ = torch.max(output, dim=1)

# output, _ = torch.max(inputs, dim=1)

output = self.linear(output)

return output

batch_size = 8

h = 33

w = 45

model = MyModule(h, w)

data = np.random.normal(1, 1, size=(batch_size, h, w))

data = torch.Tensor(data)

h0, c0 = torch.zeros(1, batch_size, w), torch.zeros(1, batch_size, w)

traced_script_module = torch.jit.trace(model, (data, h0,c0))

traced_script_module.save('model.pt')

```

2. Load the model and move the model to GPU, then when the script exit, there is a core dump. However, If we don't move the model to gpu, the cpp script exits normally.My cpp script like this:

```c++

int main(int argc, const char* argv[]) {

if (argc != 2) {

std::cerr << "usage: example-app <path-to-exported-script-module>\n";

return -1;

}

// Deserialize the ScriptModule from a file using torch::jit::load().

std::shared_ptr<torch::jit::script::Module> module = torch::jit::load(argv[1]);

assert(module != nullptr);

std::cout << "ok\n";

this->module->to(at::Device("cuda:0"))

vector<torch::jit::IValue> inputs;

int b = 2, h = 33, w = 45;

vector<float> data(b*h*w, 1.0);

torch::Tensor data_tensor = torch::from_blob(data.data(), {b, h, w}.to(at::Device("cuda:0"));

torch::Tensor h0 = torch::from_blob(vector<float>(1*b*w, 0.0), {b, h, w}).to(at::Device("cuda:0"));

torch::Tensor c0 = torch::from_blob(vector<float>(1*b*w, 0.0), {b, h, w}).to(at::Device("cuda:0"));

inputs.push_back(data_tensor);

inputs.push_back(h0);

inputs.push(c0);

torch::Tensor output = module->forward(inputs).toTensor().cpu();

auto accessor = output.accessor<float, 2>();

vector<float> answer(b);

for (int i=0; i<accessor.size(0); ++i){

answer[i] = accessor[i][0];

}

cout << "predict ok" << endl;

}

```

> Note: There is a bug to move init hidden state tensor of lstm to gpu [link](https://github.com/pytorch/pytorch/issues/15272) I use two methods to solve this problem, one is to specify the device in python model using hard code, another is to pass init hidden state as input parameter of forward in cpp script, which may cause a warning [link](https://discuss.pytorch.org/t/rnn-module-weights-are-not-part-of-single-contiguous-chunk-of-memory/6011/14)

the gdb trace info like this:

```shell

(gdb) where

#0 0x00007ffff61ca9fe in ?? () from /usr/local/cuda/lib64/libcudart.so.10.0

#1 0x00007ffff61cf96b in ?? () from /usr/local/cuda/lib64/libcudart.so.10.0

#2 0x00007ffff61e4be2 in cudaDeviceSynchronize () from /usr/local/cuda/lib64/libcudart.so.10.0

#3 0x00007fffb945dcf4 in cudnnDestroy () from repo/pytorch_cpp/libtorch/lib/libcaffe2_gpu.so

#4 0x00007fffb4fca17d in std::unordered_map<int, std::vector<at::native::(anonymous namespace)::Handle, std::allocator<at::native::(anonymous namespace)::Handle> >, std::hash<int>, std::equal_to<int>, std::allocator<std::pair<int const, std::vector<at::native::(anonymous namespace)::Handle, std::allocator<at::native::(anonymous namespace)::Handle> > > > >::~unordered_map() () from repo/pytorch_cpp/libtorch/lib/libcaffe2_gpu.so

#5 0x00007fffb31fe615 in __cxa_finalize (d=0x7fffe8519680) at cxa_finalize.c:83

#6 0x00007fffb4dd3ac3 in __do_global_dtors_aux () from repo/pytorch_cpp/libtorch/lib/libcaffe2_gpu.so

#7 0x00007fffffffe010 in ?? ()

#8 0x00007ffff7de5b73 in _dl_fini () at dl-fini.c:138

Backtrace stopped: frame did not save the PC

```

3. When I remove the LSTM in python model, then the cpp script exits normally.

4. I guess the hidden state of LSTM cause the core dump, maybe relate to the release the init hidden state memory?

<!-- If you have a code sample, error messages, stack traces, please provide it here as well -->

## Expected behavior

So, When I want to load a model with LSTM using c++, how to deal with the hidden state, and how to avoid core dump?

## Environment

PyTorch version: 1.0.1.post2

Is debug build: No

CUDA used to build PyTorch: 10.0.130

OS: Ubuntu 18.04.2 LTS

GCC version: (Ubuntu 7.3.0-27ubuntu1~18.04) 7.3.0

CMake version: version 3.10.2

Python version: 3.6

Is CUDA available: Yes

CUDA runtime version: 10.0.130

GPU models and configuration:

GPU 0: GeForce RTX 2080 Ti

GPU 1: GeForce RTX 2080 Ti

Nvidia driver version: 410.48

cuDNN version: Could not collect

Versions of relevant libraries:

[pip3] numpy==1

|

https://github.com/pytorch/pytorch/issues/19453

|

open

|

[

"module: cpp",

"triaged"

] | 2019-04-19T02:11:06Z

| 2024-07-24T20:52:00Z

| null |

SixerWang

|

pytorch/tutorials

| 484

|

different results

|

HI, i copied to code exactly and and ran with all the appropriate downloads and im not achieving the resutls stated with 40 epochs. the loss stays at around 2.2 with test accuracy of 100/837 .. around 11%..

is there something i need to change to get over 50% accuracy ?

|

https://github.com/pytorch/tutorials/issues/484

|

closed

|

[] | 2019-04-18T16:04:34Z

| 2021-06-16T17:49:27Z

| 1

|

taylerpauls

|

pytorch/examples

| 544

|

the error when I run the example for the imagenet

|

When I tried to run the model for the example/imagenet, I encounter such error.So could you tell me how to solve the problem?

python /home/zrz/code/imagenet_dist/examples-master/imagenet/main.py -a resnet18 -/home/zrz/dataset/imagenet/imagenet2012/ILSVRC2012/raw-data/imagenet-data

=> creating model 'resnet18'

Epoch: [0][ 0/320292] Time 3.459 ( 3.459) Data 0.295 ( 0.295) Loss 7.2399e+00 (7.2399e+00) Acc@1 0.00 ( 0.00) Acc@5 0.00 ( 0.00)

Epoch: [0][ 10/320292] Time 0.043 ( 0.357) Data 0.000 ( 0.027) Loss 9.4861e+00 (1.3169e+01) Acc@1 0.00 ( 0.00) Acc@5 0.00 ( 0.00)

Epoch: [0][ 20/320292] Time 0.046 ( 0.209) Data 0.000 ( 0.014) Loss 7.3722e+00 (1.0817e+01) Acc@1 0.00 ( 0.00) Acc@5 0.00 ( 0.00)

Epoch: [0][ 30/320292] Time 0.032 ( 0.154) Data 0.000 ( 0.010) Loss 6.9166e+00 (9.5394e+00) Acc@1 0.00 ( 0.00) Acc@5 0.00 ( 0.00)

/opt/conda/conda-bld/pytorch_1549630534704/work/aten/src/THCUNN/ClassNLLCriterion.cu:105: void cunn_ClassNLLCriterion_updateOutput_kernel(Dtype *, Dtype *, Dtype *, long *, Dtype *, int, int, int, int, long) [with Dtype = float, Acctype = float]: block: [0,0,0], thread: [3,0,0] Assertion `t >= 0 && t < n_classes` failed.

Traceback (most recent call last):

File "/home/zrz/code/imagenet_dist/examples-master/imagenet/main.py", line 417, in <module>

main()

File "/home/zrz/code/imagenet_dist/examples-master/imagenet/main.py", line 113, in main

main_worker(args.gpu, ngpus_per_node, args)

File "/home/zrz/code/imagenet_dist/examples-master/imagenet/main.py", line 239, in main_worker

train(train_loader, model, criterion, optimizer, epoch, args)

File "/home/zrz/code/imagenet_dist/examples-master/imagenet/main.py", line 286, in train

losses.update(loss.item(), input.size(0))

RuntimeError: CUDA error: device-side assert triggered

terminate called after throwing an instance of 'c10::Error'

what(): CUDA error: device-side assert triggered (insert_events at /opt/conda/conda-bld/pytorch_1549630534704/work/aten/src/THC/THCCachingAllocator.cpp:470)

frame #0: c10::Error::Error(c10::SourceLocation, std::string const&) + 0x45 (0x7f099a50acf5 in /home/zrz/miniconda3/envs/runze_env_name/lib/python3.6/site-packages/torch/lib/libc10.so)

frame #1: <unknown function> + 0x123b8c0 (0x7f099e7ee8c0 in /home/zrz/miniconda3/envs/runze_env_name/lib/python3.6/site-packages/torch/lib/libcaffe2_gpu.so)

frame #2: at::TensorImpl::release_resources() + 0x50 (0x7f099ac76c30 in /home/zrz/miniconda3/envs/runze_env_name/lib/python3.6/site-packages/torch/lib/libcaffe2.so)

frame #3: <unknown function> + 0x2a836b (0x7f099818b36b in /home/zrz/miniconda3/envs/runze_env_name/lib/python3.6/site-packages/torch/lib/libtorch.so.1)

frame #4: <unknown function> + 0x30eff0 (0x7f09981f1ff0 in /home/zrz/miniconda3/envs/runze_env_name/lib/python3.6/site-packages/torch/lib/libtorch.so.1)

frame #5: torch::autograd::deleteFunction(torch::autograd::Function*) + 0x2f0 (0x7f099818dd70 in /home/zrz/miniconda3/envs/runze_env_name/lib/python3.6/site-packages/torch/lib/libtorch.so.1)

frame #6: std::_Sp_counted_base<(__gnu_cxx::_Lock_policy)2>::_M_release() + 0x45 (0x7f09c17f87f5 in /home/zrz/miniconda3/envs/runze_env_name/lib/python3.6/site-packages/torch/lib/libtorch_python.so)

frame #7: torch::autograd::Variable::Impl::release_resources() + 0x4a (0x7f09984001ba in /home/zrz/miniconda3/envs/runze_env_name/lib/python3.6/site-packages/torch/lib/libtorch.so.1)

frame #8: <unknown function> + 0x12148b (0x7f09c181048b in /home/zrz/miniconda3/envs/runze_env_name/lib/python3.6/site-packages/torch/lib/libtorch_python.so)

frame #9: <unknown function> + 0x31a49f (0x7f09c1a0949f in /home/zrz/miniconda3/envs/runze_env_name/lib/python3.6/site-packages/torch/lib/libtorch_python.so)

frame #10: <unknown function> + 0x31a4e1 (0x7f09c1a094e1 in /home/zrz/miniconda3/envs/runze_env_name/lib/python3.6/site-packages/torch/lib/libtorch_python.so)

frame #11: <unknown function> + 0x1993cf (0x5574e4c9a3cf in /home/zrz/miniconda3/envs/runze_env_name/bin/python3.6)

frame #12: <unknown function> + 0xf12b7 (0x5574e4bf22b7 in /home/zrz/miniconda3/envs/runze_env_name/bin/python3.6)

frame #13: <unknown function> + 0xf1147 (0x5574e4bf2147 in /home/zrz/miniconda3/envs/runze_env_name/bin/python3.6)

frame #14: <unknown function> + 0xf115d (0x5574e4bf215d in /home/zrz/miniconda3/envs/runze_env_name/bin/python3.6)

frame #15: <unknown function> + 0xf115d (0x5574e4bf215d in /home/zrz/miniconda3/envs/runze_env_name/bin/python3.6)

frame #16: <unknown function> + 0xf115d (0x5574e4bf215d in /home/zrz/miniconda3/envs/runze_env_name/bin/python3.6)

frame #17: PyDict_SetItem + 0x3da (0x5574e4c37e7a in /home/zrz/miniconda3/envs/runze_env_name/bin/python3.6)

frame #18: PyDict_SetItemString + 0x4f (0x5574e4c4078f in /home/zrz/miniconda3/envs/runze_env_name/bin/python3.6)

frame #19: PyImport_Cleanup + 0x99 (0x5574e4ca4709 in /ho

|

https://github.com/pytorch/examples/issues/544

|

closed

|

[] | 2019-04-14T06:22:38Z

| 2022-03-10T05:56:43Z

| 4

|

runzeer

|

pytorch/examples

| 543

|

[Important BUG] non-consistent behavior between "final evaluation" and "eval on each epoch" for mnist example

|

It is a common sense that, during evaluation, the model is not trained by the dev dataset.

However, I noticed a strange different behavior between the two results:

(1) train 10 epoch, having final evaluate on test data

(2) train 10 epoch, having an evaluation after each training epoch on test data

## Prior knowledge:

Even though you set seed for everything

```

# set seed

random.seed(args.seed)

np.random.seed(args.seed)

torch.manual_seed(args.seed)

if use_cuda:

torch.cuda.manual_seed_all(args.seed) # if got GPU also set this seed

```

When you run `examples/mnist/main.py`, it still give different result on GPU.

```

run 1

-------------

Test set: Average loss: 0.1018, Accuracy: 9660/10000 (97%)

Test set: Average loss: 0.0611, Accuracy: 9825/10000 (98%)

Test set: Average loss: 0.0555, Accuracy: 9813/10000 (98%)

Test set: Average loss: 0.0409, Accuracy: 9862/10000 (99%)

Test set: Average loss: 0.0381, Accuracy: 9870/10000 (99%)

Test set: Average loss: 0.0339, Accuracy: 9891/10000 (99%)

Test set: Average loss: 0.0340, Accuracy: 9877/10000 (99%)

Test set: Average loss: 0.0399, Accuracy: 9872/10000 (99%)

Test set: Average loss: 0.0291, Accuracy: 9908/10000 (99%)

Test set: Average loss: 0.0315, Accuracy: 9896/10000 (99%)

run 2

--------------

Test set: Average loss: 0.1016, Accuracy: 9666/10000 (97%)

Test set: Average loss: 0.0608, Accuracy: 9828/10000 (98%)

Test set: Average loss: 0.0567, Accuracy: 9810/10000 (98%)

Test set: Average loss: 0.0408, Accuracy: 9864/10000 (99%)

Test set: Average loss: 0.0382, Accuracy: 9868/10000 (99%)

Test set: Average loss: 0.0339, Accuracy: 9894/10000 (99%)

Test set: Average loss: 0.0349, Accuracy: 9871/10000 (99%)

Test set: Average loss: 0.0396, Accuracy: 9876/10000 (99%)

Test set: Average loss: 0.0294, Accuracy: 9911/10000 (99%)

Test set: Average loss: 0.0304, Accuracy: 9895/10000 (99%)

```

As long as you set `torch.backends.cudnn.deterministic = True`

You could get consistent results:

```

====== parameters ========

batch_size: 64

do_eval: True

do_eval_each_epoch: True

epochs: 10

log_interval: 10

lr: 0.01

momentum: 0.5

no_cuda: False

save_model: False

seed: 42

test_batch_size: 1000

==========================

Test set: Average loss: 0.1034, Accuracy: 9679/10000 (97%)

Test set: Average loss: 0.0615, Accuracy: 9804/10000 (98%)

Test set: Average loss: 0.0484, Accuracy: 9847/10000 (98%)

Test set: Average loss: 0.0361, Accuracy: 9888/10000 (99%)

Test set: Average loss: 0.0341, Accuracy: 9887/10000 (99%)

Test set: Average loss: 0.0380, Accuracy: 9877/10000 (99%)

Test set: Average loss: 0.0302, Accuracy: 9899/10000 (99%)

Test set: Average loss: 0.0315, Accuracy: 9884/10000 (99%)

Test set: Average loss: 0.0283, Accuracy: 9909/10000 (99%)

Test set: Average loss: 0.0266, Accuracy: 9907/10000 (99%) -> epoch 10

====== parameters ========

batch_size: 64

do_eval: True

do_eval_each_epoch: True

epochs: 20

log_interval: 10

lr: 0.01

momentum: 0.5

no_cuda: False

save_model: False

seed: 42

test_batch_size: 1000

==========================

Test set: Average loss: 0.1034, Accuracy: 9679/10000 (97%)

Test set: Average loss: 0.0615, Accuracy: 9804/10000 (98%)

Test set: Average loss: 0.0484, Accuracy: 9847/10000 (98%)

Test set: Average loss: 0.0361, Accuracy: 9888/10000 (99%)

Test set: Average loss: 0.0341, Accuracy: 9887/10000 (99%)

Test set: Average loss: 0.0380, Accuracy: 9877/10000 (99%)

Test set: Average loss: 0.0302, Accuracy: 9899/10000 (99%)

Test set: Average loss: 0.0315, Accuracy: 9884/10000 (99%)

Test set: Average loss: 0.0283, Accuracy: 9909/10000 (99%)

Test set: Average loss: 0.0266, Accuracy: 9907/10000 (99%) -> epoch 10

Test set: Average loss: 0.0373, Accuracy: 9870/10000 (99%)

Test set: Average loss: 0.0286, Accuracy: 9909/10000 (99%)

Test set: Average loss: 0.0309, Accuracy: 9908/10000 (99%)

Test set: Average loss: 0.0302, Accuracy: 9899/10000 (99%)

Test set: Average loss: 0.0261, Accuracy: 9907/10000 (99%)

Test set: Average loss: 0.0258, Accuracy: 9913/10000 (99%)

Test set: Average loss: 0.0288, Accuracy: 9917/10000 (99%)

Test set: Average loss: 0.0280, Accuracy: 9904/10000 (99%)

Test set: Average loss: 0.0294, Accuracy: 9902/10000 (99%)

Test set: Average loss: 0.0257, Accuracy: 9914/10000 (99%) -> epoch 20

```

However, when you change the model to have `final evaluation` after epoch 10, the result becomes:

```

====== parameters ========

batch_size: 64

do_eval: True

do_eval_each_epoch: False

epochs: 10

log_interval: 10

lr: 0.01

momentum: 0.5

no_cuda: False

save_model: False

seed: 42

test_batch_size: 1000

==========================

Test set: Average loss: 0.0361, Accuracy: 9885/10000 (99%) -> epoch 10

```

I also tried to add `torch.backends.cudnn.benchmark = False`, it gives the same result.

Repeatability and consistent result is crucial in machine learning, do you guys know what is the r

|

https://github.com/pytorch/examples/issues/543

|

open

|

[

"help wanted",

"nlp"

] | 2019-04-12T06:09:13Z

| 2022-03-10T06:03:31Z

| 1

|

Jacob-Ma

|

pytorch/examples

| 542

|

non-deterministic behavior on PyTorch mnist example

|

I tried PyTorch `examples/mnist/main.py` example to check if it is deterministic.

Although I modified the code to set the seed on everything, it still gives quite different results on GPU.

Do you know how to make the code be deterministic? Thank you very much.

Below is the code I have run and the output.

```

from __future__ import print_function

import argparse

import random

import numpy as np

import torch

import torch.nn as nn

import torch.nn.functional as F

import torch.optim as optim

from torchvision import datasets, transforms

class Net(nn.Module):

def __init__(self):

super(Net, self).__init__()

self.conv1 = nn.Conv2d(1, 20, 5, 1)

self.conv2 = nn.Conv2d(20, 50, 5, 1)

self.fc1 = nn.Linear(4 * 4 * 50, 500)

self.fc2 = nn.Linear(500, 10)

def forward(self, x):

x = F.relu(self.conv1(x))

x = F.max_pool2d(x, 2, 2)

x = F.relu(self.conv2(x))

x = F.max_pool2d(x, 2, 2)

x = x.view(-1, 4 * 4 * 50)

x = F.relu(self.fc1(x))

x = self.fc2(x)

return F.log_softmax(x, dim=1)

def train(args, model, device, train_loader, optimizer, epoch):

model.train()

for batch_idx, (data, target) in enumerate(train_loader):

data, target = data.to(device), target.to(device)

optimizer.zero_grad()

output = model(data)

loss = F.nll_loss(output, target)

loss.backward()

optimizer.step()

#if batch_idx % args.log_interval == 0:

#print('Train Epoch: {} [{}/{} ({:.0f}%)]\tLoss: {:.6f}'.format(

#epoch, batch_idx * len(data), len(train_loader.dataset),

#100. * batch_idx / len(train_loader), loss.item()))

def test(args, model, device, test_loader):

model.eval()

test_loss = 0

correct = 0

with torch.no_grad():

for data, target in test_loader:

data, target = data.to(device), target.to(device)

output = model(data)

test_loss += F.nll_loss(output, target, reduction='sum').item() # sum up batch loss

pred = output.argmax(dim=1, keepdim=True) # get the index of the max log-probability

correct += pred.eq(target.view_as(pred)).sum().item()

test_loss /= len(test_loader.dataset)

print('Test set: Average loss: {:.4f}, Accuracy: {}/{} ({:.0f}%)'.format(

test_loss, correct, len(test_loader.dataset),

100. * correct / len(test_loader.dataset)))

def main():

# Training settings

parser = argparse.ArgumentParser(description='PyTorch MNIST Example')

parser.add_argument('--batch-size', type=int, default=64, metavar='N',

help='input batch size for training (default: 64)')

parser.add_argument('--test-batch-size', type=int, default=1000, metavar='N',

help='input batch size for testing (default: 1000)')

parser.add_argument('--epochs', type=int, default=10, metavar='N',

help='number of epochs to train (default: 10)')

parser.add_argument('--lr', type=float, default=0.01, metavar='LR',

help='learning rate (default: 0.01)')

parser.add_argument('--momentum', type=float, default=0.5, metavar='M',

help='SGD momentum (default: 0.5)')

parser.add_argument('--no-cuda', action='store_true', default=False,

help='disables CUDA training')

parser.add_argument('--seed', type=int, default=1, metavar='S',

help='random seed (default: 1)')

parser.add_argument('--log-interval', type=int, default=10, metavar='N',

help='how many batches to wait before logging training status')

parser.add_argument('--save-model', action='store_true', default=False,

help='For Saving the current Model')

args = parser.parse_args()

use_cuda = not args.no_cuda and torch.cuda.is_available()

# set seed

random.seed(args.seed)

np.random.seed(args.seed)

torch.manual_seed(args.seed)

if use_cuda:

torch.cuda.manual_seed_all(args.seed) # if got GPU also set this seed

# torch.manual_seed(args.seed)

device = torch.device("cuda" if use_cuda else "cpu")

kwargs = {'num_workers': 1, 'pin_memory': True} if use_cuda else {}

train_loader = torch.utils.data.DataLoader(

datasets.MNIST('../data', train=True, download=True,

transform=transforms.Compose([

transforms.ToTensor(),

transforms.Normalize((0.1307,), (0.3081,))

])),

batch_size=args.batch_size, shuffle=True, **kwargs)

test_loader = torch.utils.data.DataLoader(

datasets.MNIST('../data', train=False, transform=transforms.Compose([

transforms.ToTensor(),

transforms.Norm

|

https://github.com/pytorch/examples/issues/542

|

closed

|

[] | 2019-04-12T04:31:25Z

| 2019-04-12T05:53:14Z

| 1

|

Jacob-Ma

|

pytorch/tutorials

| 476

|

Example with torch.empty in What is PyTorch? is misleading

|

In this [example](https://github.com/pytorch/tutorials/blob/master/beginner_source/blitz/tensor_tutorial.py) the output of a `torch.empty` call looks the same result I would obtain with `torch.zeros`, while it should be filled with garbage values.

This might be misleading to beginners.

|

https://github.com/pytorch/tutorials/issues/476

|

closed

|

[] | 2019-04-11T09:19:06Z

| 2019-08-23T21:18:53Z

| null |

alexchapeaux

|

pytorch/pytorch

| 19,098

|

[C++ front end] how to use clamp to clip gradients?

|

## ❓ Questions and Help

hi, I wonder if this could clip the gradients:

for(int i=0; i<net.parameters().size(); i++)

{

net.parameters().at(i).grad() = torch::clamp(net.parameters().at(i).grad(), -GRADIENT_CLIP, GRADIENT_CLIP);

}

optimizer.step();

I found it doesn't seem to work, and I still got large output.

How can I use the "clamp“ correctly?

### Please note that this issue tracker is not a help form and this issue will be closed.

We have a set of [listed resources available on the website](https://pytorch.org/resources). Our primary means of support is our discussion forum:

- [Discussion Forum](https://discuss.pytorch.org/)

|

https://github.com/pytorch/pytorch/issues/19098

|

closed

|

[] | 2019-04-10T05:37:24Z

| 2019-04-10T05:38:01Z

| null |

ZhuXingJune

|

pytorch/pytorch

| 19,012

|

I have a piece of code that is written in LUA and I want to know what is the pytorch equivalent of the code.?How do I implement these lines in pytorch .. Can somebody help me with it? The code is mentioned in the comment

|

## ❓ Questions and Help

### Please note that this issue tracker is not a help form and this issue will be closed.

We have a set of [listed resources available on the website](https://pytorch.org/resources). Our primary means of support is our discussion forum:

- [Discussion Forum](https://discuss.pytorch.org/)

|

https://github.com/pytorch/pytorch/issues/19012

|

closed

|

[] | 2019-04-08T10:15:59Z

| 2019-04-08T10:30:32Z

| null |

AshishRMenon

|

pytorch/pytorch

| 18,951

|

Completed code with bug report for hdf5 dataset. How to fix?

|

Hello all, I want to report the issue of pytorch with hdf5 loader. The full source code and bug are provided

The problem is that I want to call the `test_dataloader.py` in two terminals. The file is used to load the custom hdf5 dataset (`custom_h5_loader`). To generate h5 files, you may need first run the file `convert_to_h5` to generate 100 random h5 files.

To reproduce the error. Please run follows steps

**Step 1:** Generate the hdf5

```

from __future__ import print_function

import h5py

import numpy as np

import random

import os

if not os.path.exists('./data_h5'):

os.makedirs('./data_h5')

for index in range(100):

data = np.random.uniform(0,1, size=(3,128,128))

data = data[None, ...]

print (data.shape)

with h5py.File('./data_h5/' +'%s.h5' % (str(index)), 'w') as f:

f['data'] = data

```

Step2: Create a python file custom_h5_loader.py and paste the code

```

import h5py

import torch.utils.data as data

import glob

import torch

import numpy as np

import os

class custom_h5_loader(data.Dataset):

def __init__(self, root_path):

self.hdf5_list = [x for x in glob.glob(os.path.join(root_path, '*.h5'))]

self.data_list = []

for ind in range (len(self.hdf5_list)):

self.h5_file = h5py.File(self.hdf5_list[ind])

data_i = self.h5_file.get('data')

self.data_list.append(data_i)

def __getitem__(self, index):

self.data = np.asarray(self.data_list[index])

return (torch.from_numpy(self.data).float())

def __len__(self):

return len(self.hdf5_list)

```

**Step 3:** Create a python file with name test_dataloader.py

```

from dataloader import custom_h5_loader

import torch

import torchvision.datasets as dsets

train_h5_dataset = custom_h5_loader('./data_h5')

h5_loader = torch.utils.data.DataLoader(dataset=train_h5_dataset, batch_size=2, shuffle=True, num_workers=4)

for epoch in range(100000):

for i, data in enumerate(h5_loader):

print (data.shape)

```

Step 4: Open first terminal and run (it worked)

> python test_dataloader.py

Step 5: Open the second terminal and run (Error report in below)

> python test_dataloader.py

The error is

```

Traceback (most recent call last):

File "/home/john/anaconda3/lib/python3.6/site-packages/h5py/_hl/files.py", line 162, in make_fid

fid = h5f.open(name, h5f.ACC_RDWR, fapl=fapl)

File "h5py/_objects.pyx", line 54, in h5py._objects.with_phil.wrapper

File "h5py/_objects.pyx", line 55, in h5py._objects.with_phil.wrapper

File "h5py/h5f.pyx", line 78, in h5py.h5f.open

OSError: Unable to open file (unable to lock file, errno = 11, error message = 'Resource temporarily unavailable')

During handling of the above exception, another exception occurred:

Traceback (most recent call last):

File "/home/john/anaconda3/lib/python3.6/site-packages/h5py/_hl/files.py", line 165, in make_fid

fid = h5f.open(name, h5f.ACC_RDONLY, fapl=fapl)

File "h5py/_objects.pyx", line 54, in h5py._objects.with_phil.wrapper

File "h5py/_objects.pyx", line 55, in h5py._objects.with_phil.wrapper

File "h5py/h5f.pyx", line 78, in h5py.h5f.open

OSError: Unable to open file (unable to lock file, errno = 11, error message = 'Resource temporarily unavailable')

During handling of the above exception, another exception occurred:

Traceback (most recent call last):

File "test_dataloader.py", line 5, in <module>

train_h5_dataset = custom_h5_loader('./data_h5')

File "/home/john/test_hdf5/dataloader.py", line 13, in __init__

self.h5_file = h5py.File(self.hdf5_list[ind])

File "/home/john/anaconda3/lib/python3.6/site-packages/h5py/_hl/files.py", line 312, in __init__

fid = make_fid(name, mode, userblock_size, fapl, swmr=swmr)

File "/home/john/anaconda3/lib/python3.6/site-packages/h5py/_hl/files.py", line 167, in make_fid

fid = h5f.create(name, h5f.ACC_EXCL, fapl=fapl, fcpl=fcpl)

File "h5py/_objects.pyx", line 54, in h5py._objects.with_phil.wrapper

File "h5py/_objects.pyx", line 55, in h5py._objects.with_phil.wrapper

File "h5py/h5f.pyx", line 98, in h5py.h5f.create

OSError: Unable to create file (unable to open file: name = './data_h5/47.h5', errno = 17, error message = 'File exists', flags = 15, o_flags = c2)

```

This is my configuration

```

HDF5 Version: 1.10.2

Configured on: Wed May 9 23:24:59 UTC 2018

Features:

---------

Parallel HDF5: no

High-level library: yes

Threadsafety: yes

print (torch.__version__)

1.0.0.dev20181227

```

|

https://github.com/pytorch/pytorch/issues/18951

|

closed

|

[] | 2019-04-05T14:50:55Z

| 2019-04-06T12:36:34Z

| null |

John1231983

|

pytorch/pytorch

| 18,872

|

How to convert a cudnn.BLSTM model to nn.LSTM bidirectional model

|

## ❓ Questions and Help

### Please note that this issue tracker is not a help form and this issue will be closed.

We have a set of [listed resources available on the website](https://pytorch.org/resources). Our primary means of support is our discussion forum:

- [Discussion Forum](https://discuss.pytorch.org/)

I have a *.t7 model that consists in few convolution layers and 1 block of cudnn.BLSTM(). To convert the model to pytorch, I create the same architecture with pytorch and try to get the weights from the t7 file. I think the convolution layers were correct but I have a doubt about the cudnn.BLSTM. When I extract the BLSTM weighs, I got one dimentional list of millions of parameters which corresponds to the same numbers of parameters in pytorch LSTM. However, in pytorch the weights and biases are with well know structure and weight_ih_l0, weight_hh_l0,... bias_ih_l_0, bias_hh_l0, ... weight_ih_l0_reverse, ... but in the cuddnn.BLSTM(), all parameters are set in one flattened list, so how to know the order and the shape of weights and biases ??

I debug the cudnn.BLSTM structure on th terminal and I get some idea about the concatenation orders and the shape:

Exemple

```

# torch

rnn = cudnn.BLSTM(1,1, 2, false, 0.5)

# get the weights

weights = rnn:weights()

th> rnn:weights()

{

1 :

{

1 : CudaTensor - size: 1

2 : CudaTensor - size: 1

3 : CudaTensor - size: 1

4 : CudaTensor - size: 1

5 : CudaTensor - size: 1

6 : CudaTensor - size: 1

7 : CudaTensor - size: 1

8 : CudaTensor - size: 1

}

2 :

{

1 : CudaTensor - size: 1

2 : CudaTensor - size: 1

3 : CudaTensor - size: 1

4 : CudaTensor - size: 1

5 : CudaTensor - size: 1

6 : CudaTensor - size: 1

7 : CudaTensor - size: 1

8 : CudaTensor - size: 1

}

3 :

{

1 : CudaTensor - size: 2

2 : CudaTensor - size: 2

3 : CudaTensor - size: 2

4 : CudaTensor - size: 2

5 : CudaTensor - size: 1

6 : CudaTensor - size: 1

7 : CudaTensor - size: 1

8 : CudaTensor - size: 1

}

4 :

{

1 : CudaTensor - size: 2

2 : CudaTensor - size: 2

3 : CudaTensor - size: 2

4 : CudaTensor - size: 2

5 : CudaTensor - size: 1

6 : CudaTensor - size: 1

7 : CudaTensor - size: 1

8 : CudaTensor - size: 1

}

}

biases = rnn:biaises()

th> rnn:biases()

{

1 :

{

1 : CudaTensor - size: 1

2 : CudaTensor - size: 1

3 : CudaTensor - size: 1

4 : CudaTensor - size: 1

5 : CudaTensor - size: 1

6 : CudaTensor - size: 1

7 : CudaTensor - size: 1

8 : CudaTensor - size: 1

}

2 :

{

1 : CudaTensor - size: 1

2 : CudaTensor - size: 1

3 : CudaTensor - size: 1

4 : CudaTensor - size: 1

5 : CudaTensor - size: 1

6 : CudaTensor - size: 1

7 : CudaTensor - size: 1

8 : CudaTensor - size: 1

}

3 :

{

1 : CudaTensor - size: 1

2 : CudaTensor - size: 1

3 : CudaTensor - size: 1

4 : CudaTensor - size: 1

5 : CudaTensor - size: 1

6 : CudaTensor - size: 1

7 : CudaTensor - size: 1

8 : CudaTensor - size: 1

}

4 :

{

1 : CudaTensor - size: 1

2 : CudaTensor - size: 1

3 : CudaTensor - size: 1

4 : CudaTensor - size: 1

5 : CudaTensor - size: 1

6 : CudaTensor - size: 1

7 : CudaTensor - size: 1

8 : CudaTensor - size: 1

}

}

```

all_flattened_params = rnn:parameters()

with this small example: I see that the rnn:parameters() function put the weighs and after that the biases in the above order. So:

weights =all_flattened_params[:-32]

biases = all_flattened_params[-32:]

Now, How to know the order of weights and biases regarding the pytorch nn.LSTM() ?

I supposed that this order:

weight_ih_l0, weight_hh_l0, weight_ih_l0_reverse, weight_hh_l0_reverse, weight_ih_l1, ....

bias_ih_l0, bias_hh_l0, bias_ih_l0_reverse, bias_hh_l0_reverse, .... but my model does not give the right output!!

|

https://github.com/pytorch/pytorch/issues/18872

|

closed

|

[] | 2019-04-04T18:30:20Z

| 2019-04-04T19:00:04Z

| null |

rafikg

|

pytorch/pytorch

| 18,837

|

How to use libtorch api torch::nn::parallel::data_parallel train on multi-gpu

|

## 📚 Documentation

<!-- A clear and concise description of what content in https://pytorch.org/docs is an issue. If this has to do with the general https://pytorch.org website, please file an issue at https://github.com/pytorch/pytorch.github.io/issues/new/choose instead. If this has to do with https://pytorch.org/tutorials, please file an issue at https://github.com/pytorch/tutorials/issues/new -->

|

https://github.com/pytorch/pytorch/issues/18837

|

closed

|

[

"module: performance",

"oncall: distributed",

"module: multi-gpu",

"module: docs",

"module: cpp",

"module: nn",

"triaged"

] | 2019-04-04T02:48:41Z

| 2020-06-25T16:48:51Z

| null |

DDFlyInCode

|

pytorch/tutorials

| 468

|

auxilary net confusion Inception_v3 Vs. GoogLeNet in finetune script?

|

Hi, I followed the finetune tutorial (but using this script to train from scratch): for `inception` as there is only one `aux_logit` below snippet working fine.

```

elif model_name == "inception":

""" Inception v3

Be careful, expects (299,299) sized images and has auxiliary output

"""

model_ft = models.inception_v3(pretrained=use_pretrained)

set_parameter_requires_grad(model_ft, feature_extract)

# Handle the auxilary net

num_ftrs = model_ft.AuxLogits.fc.in_features

model_ft.AuxLogits.fc = nn.Linear(num_ftrs, num_classes)

# Handle the primary net

num_ftrs = model_ft.fc.in_features

model_ft.fc = nn.Linear(num_ftrs,num_classes)

input_size = 299

```

correspoing `inception_v3` net file snippet:

```

if self.training and self.aux_logits:

aux = self.AuxLogits(x)

```

and the `fc` snippet:

```

self.fc = nn.Linear(768, num_classes)

```

Whereas for `GoogLeNet` has two auxilary outputs, the net file snippet has:

```

if self.training and self.aux_logits:

aux1 = self.aux1(x)

.....

if self.training and self.aux_logits:

aux2 = self.aux2(x)

```

and the `fc` snippets:

```

self.fc1 = nn.Linear(2048, 1024)

self.fc2 = nn.Linear(1024, num_classes)

```

Now, my confusion is about using the `fc` in finetuning script, how to embed?

```

num_ftrs = model_ft.(aux1/aux2).(fc1/fc2).in_features

model_ft.(aux1/aux2).(fc1/fc2) = nn.Linear(num_ftrs, num_classes)

```

any thoughts?

|

https://github.com/pytorch/tutorials/issues/468

|

closed

|

[] | 2019-04-03T15:43:05Z

| 2019-04-07T10:52:28Z

| 0

|

rajasekharponakala

|

pytorch/pytorch

| 18,781

|

TORCH_CUDA_ARCH_LIST=All should know what is possible

|

## 🐛 Bug

When setting TORCH_CUDA_ARCH_LIST=All, I expect Torch to compile with all CUDA architectures available to my current version of CUDA. Instead, it attempted to build for cuda 2.0.

See error:

nvcc fatal : Unsupported gpu architecture 'compute_20'

## To Reproduce

Steps to reproduce the behavior:

1. Install CUDA >= 9.0

2. TORCH_CUDA_ARCH_LIST=All cmake -DUSE_CUDA=ON ..

<!-- If you have a code sample, error messages, stack traces, please provide it here as well -->

## Expected behavior

Filters out 2.x architectures if CUDA >= 9.0

## Environment

CUDA 10.1

|

https://github.com/pytorch/pytorch/issues/18781

|

closed

|

[

"module: build",

"module: docs",

"module: cuda",

"module: molly-guard",

"triaged"

] | 2019-04-03T00:31:35Z

| 2024-08-04T05:06:56Z

| null |

xsacha

|

pytorch/tutorials

| 463

|

The input of a GRU is of shape (seq_len, batch, input_size). I wonder does seq_len means anything?

|

I noticed that in the documentation of pytorch GRU, the input shape should be (seq_len, batch, input_size), thus the input ought to be a sequence and the model will deal with the sequence inside itself. But in this notebook, the author passes a tensor only of length one in each iteration in function `train`. I mean if the model can deal with sequential inputs, why not just feed a sequence of sentence to it?

This is my first time to start an issue on github, please forgive me if there is anything wrong.

|

https://github.com/pytorch/tutorials/issues/463

|

closed

|

[] | 2019-04-02T09:32:10Z

| 2019-08-23T21:49:49Z

| 1

|

CSUN1997

|

pytorch/text

| 522

|

how to set random seed for BucketIterator to guarantee that it produce the same itrator every time you run the code ?

|

https://github.com/pytorch/text/issues/522

|

closed

|

[

"obsolete"

] | 2019-04-02T01:47:03Z

| 2022-01-25T04:04:42Z

| null |

zide05

|

|

pytorch/pytorch

| 18,677

|

How to compile/install caffe2 with cuda 9.0?

|

I'm building caffe2 on ubuntu 18.04 with CUDA 9.0? But when I run "python setup.py install" command, I have met issue about version of CUDA. It needs to CUDA 9.2 instead of 9.0 but i only want to build with 9.0.

How to pass it?

Thank you!

|

https://github.com/pytorch/pytorch/issues/18677

|

open

|

[

"caffe2"

] | 2019-04-01T06:54:50Z

| 2019-05-27T12:43:18Z

| null |

TuanHAnhVN

|

pytorch/tutorials

| 459

|

Inappropriate example code of vector-Jacobian product in (AUTOGRAD: AUTOMATIC DIFFERENTIATION)

|

I want to check the vector-Jacobian product. But in the example code the x is randomly generated and the the function relation between x and y is not clear. So how can I check if I correctly understand the vector-Jacobian product ? Can you improve it ?

|

https://github.com/pytorch/tutorials/issues/459

|

closed

|

[] | 2019-03-30T13:57:54Z

| 2021-06-16T20:24:11Z

| 2

|

guixianjin

|

pytorch/tutorials

| 458

|

how can i remove the layers in model.

|

I want to use the googlenet model to do finetuing, remove the fc layer, and then add other layers.

|

https://github.com/pytorch/tutorials/issues/458

|

closed

|

[] | 2019-03-30T08:28:08Z

| 2021-06-16T20:25:08Z

| 1

|

wangjue-wzq

|

pytorch/examples

| 534

|

when i run the main.py in imagenet,i encounter a problem

|

|

https://github.com/pytorch/examples/issues/534

|

open

|

[

"help wanted"

] | 2019-03-27T02:09:15Z

| 2022-03-10T06:03:41Z

| 0

|

Xavier-cvpr

|

pytorch/examples

| 531

|

Running examples/world_language_model

|

Hello, I am trying to run 'examples/world_language_model'.

However, when I do 'python main.py --cuda' in the above directory.

It prints an error like this.

Does anyone know how to solve this problem?

|

https://github.com/pytorch/examples/issues/531

|

closed

|

[] | 2019-03-21T11:51:02Z

| 2020-03-25T00:54:09Z

| 1

|

ohcurrent

|

huggingface/transformers

| 370

|

What is Synthetic Self-Training?

|

The current best performing model on[ SQuAD 2.0](https://rajpurkar.github.io/SQuAD-explorer/) is BERT + N-Gram Masking + Synthetic Self-Training (ensemble):

What is Synthetic Self-Training?

|

https://github.com/huggingface/transformers/issues/370

|

closed

|

[

"Discussion",

"wontfix"

] | 2019-03-12T20:40:50Z

| 2019-07-13T20:58:32Z

| null |

hsm207

|

pytorch/examples

| 524

|

[super_resolution]How can I get 'model_epoch_500.pth' file?

|

When I run:

`python super_resolve.py --input_image dataset/BSDS300/images/test/16077.jpg --model model_epoch_500.pth --output_filename out.png` .

It outpus :

` [Errno 2] No such file or directory: 'model_epoch_500.pth'`

How can I get 'model_epoch_500.pth' file?

|

https://github.com/pytorch/examples/issues/524

|

closed

|

[] | 2019-03-08T11:08:17Z

| 2019-05-31T08:57:22Z

| 0

|

dyfloveslife

|

pytorch/pytorch

| 17,654

|

I want to know how to use the select(int64_t dim, int64_t index) in at::Tensor?What is the definition of a parameter ?

|

## ❓ Questions and Help

### Please note that this issue tracker is not a help form and this issue will be closed.

We have a set of [listed resources available on the website](https://pytorch.org/resources). Our primary means of support is our discussion forum:

- [Discussion Forum](https://discuss.pytorch.org/)

|

https://github.com/pytorch/pytorch/issues/17654

|

closed

|

[] | 2019-03-04T12:57:04Z

| 2019-03-04T17:05:24Z

| null |

SongyiGao

|

pytorch/tutorials

| 441

|

Add 'Open in Colab' Button to Tutorial Code

|

Is it possible to edit the downloadable Jupyter notebooks at the bottom of each 60-minute blitz section? In a utopian scenario there would be a button at the top of each file, allowing the user to 'Open in Colab'. If this button was present the user would fix the code so that each cell was ran with the output visible.

Here is an example with Keras, to explicate what I mean. There are some uploaded PyTorch Jupyter Notebook files in the same repository (along with the Keras Jupyter Notebooks) to gain a more comprehensive perspective.

https://github.com/PhillySchoolofAI/DL-Libraries/blob/master/KerasFunctionalAPI.ipynb

|

https://github.com/pytorch/tutorials/issues/441

|

closed

|

[] | 2019-03-04T12:26:51Z

| 2019-03-31T10:34:34Z

| 2

|

pynchmeister

|

pytorch/examples

| 518

|

How to train from scratch on custom model

|

Hi,

Thank you very much for the code.

I am new to pytorch (have worked a lot with tensorflow), and have a question which is probably basic, but I can't find the answer.

If I want to train ImageNet on a model which doesn't appear in the code list (under "model names"), but I do have the model as pth.tar file, how am I able to do the training?

Thanks!

|

https://github.com/pytorch/examples/issues/518

|

closed

|

[] | 2019-02-26T10:19:24Z

| 2022-03-10T05:28:08Z

| 1

|

jennyzu

|

huggingface/transformers

| 320

|

what is the batch size we can use for SQUAD task?

|

I am running the squad example.

I have a Tesla M60 GPU which has about 8GB of memory. For bert-large-uncased model, I can only take batch size as 2, even after I used --fp16. Is it normal?

|

https://github.com/huggingface/transformers/issues/320

|

closed

|

[] | 2019-02-26T08:56:20Z

| 2019-03-03T00:21:25Z

| null |

leonwyang

|

pytorch/examples

| 517

|

How to save model in mnist.cpp?

|

How to save the model in cpp api mnist.cpp?

model.save and torch::save(model,"mnisttrain.pkl")

All error

|

https://github.com/pytorch/examples/issues/517

|

open

|

[

"c++"

] | 2019-02-26T06:58:05Z

| 2022-03-09T20:49:34Z

| 5

|

engineer1109

|

pytorch/tutorials

| 437

|

A short tutorial showing the input arguments for NLL loss/ cross entropy loss would be incredibly helpful

|

The arguments NLL loss (and by proxy cross entropy loss) take are in a relatively weird format. The documentation for the function does all it can within reason of the original documentation, but there's an incredible number of questions posted about weird problems giving them the kind of arguments. Much more than for other comparable things, and most don't really have good reusable answers.

The obvious solution to this is for someone to create a simple example based tutorial of using NLL loss, and clearly showing exactly what format the arguments need to be in (perhaps starting with input and targets that are one hot encoded to make it as idiot proof as possible).

I've spent 4 hours trying to solve a problem exactly like this without success, and am about to refer to source over it. Someone please take mercy on future programmers.

|

https://github.com/pytorch/tutorials/issues/437

|

open

|

[] | 2019-02-26T05:35:50Z

| 2019-02-26T05:35:50Z

| 0

|

jkterry1

|

pytorch/pytorch

| 17,368

|

What is a version/git-hash of nightly build?

|

## 🚀 Feature

Nightly build does not include git-hash of pytorch.

So we can not know what the build is.

https://download.pytorch.org/libtorch/nightly/cpu/libtorch-shared-with-deps-latest.zip

I know the zip includes build_version which is like "1.0.0dev20190221".

Could you add git-hash of pytorch in build_version and include native_functions.yaml and the README of the yaml?

## Motivation

We are writing ffi-bindings by using nightly build and native_functions.yaml of pytorch-github.

Both the build and the yaml-spec's format are changed frequently and do not match version.

|

https://github.com/pytorch/pytorch/issues/17368

|

closed

|

[

"awaiting response (this tag is deprecated)"

] | 2019-02-21T19:54:41Z

| 2019-02-28T22:34:49Z

| null |

junjihashimoto

|

pytorch/ELF

| 142

|

what is meaning of outputs at verbose mode?

|

after I input quit at the df_console , it was still calulateing

```

D:\elfv2\play_opengo_v2\elf_gpu_full\elf>df_console --load d:/pretrained-go-19x19-v1.bin --num_block 20 --dim 224 --ver

bose

[2019-02-21 10:35:22.103] [elfgames::go::common::GoGameBase-12] [info] [0] Seed: 62127748, thread_id: 156604934899288204

? Invalid input

? Invalid input

genmove b

[2019-02-21 10:48:10.811] [elfgames::go::GoGameSelfPlay-0-15] [info] Current board:

A B C D E F G H J K L M N O P Q R S T

19 . . . . . . . . . . . . . . . . . . . 19

18 . . . . . . . . . . . . . . . . . . . 18

17 . . . . . . . . . . . . . . . . . . . 17

16 . . . + . . . . . + . . . . . + . . . 16

15 . . . . . . . . . . . . . . . . . . . 15

14 . . . . . . . . . . . . . . . . . . . 14

13 . . . . . . . . . . . . . . . . . . . 13

12 . . . . . . . . . . . . . . . . . . . 12

11 . . . . . . . . . . . . . . . . . . . 11 WHITE (O) has captured 0 stones

10 . . . + . . . . . + . . . . . + . . . 10 BLACK (X) has captured 0 stones

9 . . . . . . . . . . . . . . . . . . . 9

8 . . . . . . . . . . . . . . . . . . . 8

7 . . . . . . . . . . . . . . . . . . . 7

6 . . . . . . . . . . . . . . . . . . . 6

5 . . . . . . . . . . . . . . . . . . . 5

4 . . . + . . . . . + . . . . . + . . . 4

3 . . . . . . . . . . . . . . . . . . . 3

2 . . . . . . . . . . . . . . . . . . . 2

1 . . . . . . . . . . . . . . . . . . . 1

A B C D E F G H J K L M N O P Q R S T

Last move: C0, nextPlayer: Black

[1] Propose move [Q16][pp][352]

= Q16

? Invalid input

? Invalid input

? Invalid input

? Invalid input

quit

[2019-02-21 10:51:47.147] [elf::base::Context-3] [info] Prepare to stop ...

[2019-02-21 10:51:47.521] [elfgames::go::GoGameSelfPlay-0-15] [info] Current board:

A B C D E F G H J K L M N O P Q R S T

19 . . . . . . . . . . . . . . . . . . . 19

18 . . . . . . . . . . . . . . . . . . . 18

17 . . . . . . . . . . . . . . . . . . . 17

16 . . . + . . . . . + . . . . . X). . . 16

15 . . . . . . . . . . . . . . . . . . . 15

14 . . . . . . . . . . . . . . . . . . . 14

13 . . . . . . . . . . . . . . . . . . . 13

12 . . . . . . . . . . . . . . . . . . . 12

11 . . . . . . . . . . . . . . . . . . . 11 WHITE (O) has captured 0 stones

10 . . . + . . . . . + . . . . . + . . . 10 BLACK (X) has captured 0 stones

9 . . . . . . . . . . . . . . . . . . . 9

8 . . . . . . . . . . . . . . . . . . . 8

7 . . . . . . . . . . . . . . . . . . . 7

6 . . . . . . . . . . . . . . . . . . . 6

5 . . . . . . . . . . . . . . . . . . . 5

4 . . . + . . . . . + . . . . . + . . . 4

3 . . . . . . . . . . . . . . . . . . . 3

2 . . . . . . . . . . . . . . . . . . . 2

1 . . . . . . . . . . . . . . . . . . . 1

A B C D E F G H J K L M N O P Q R S T

Last move: Q16, nextPlayer: White

[2] Propose move [D4][dd][88]

[2019-02-21 10:51:48.657] [elfgames::go::GoGameSelfPlay-0-15] [info] Current board:

A B C D E F G H J K L M N O P Q R S T

19 . . . . . . . . . . . . . . . . . . . 19

18 . . . . . . . . . . . . . . . . . . . 18

17 . . . . . . . . . . . . . . . . . . . 17

16 . . . + . . . . . + . . . . . X . . . 16

15 . . . . . . . . . . . . . . . . . . . 15

14 . . . . . . . . . . . . . . . . . . . 14

13 . . . . . . . . . . . . . . . . . . . 13

12 . . . . . . . . . . . . . . . . . . . 12

11 . . . . . . . . . . . . . . . . . . . 11 WHITE (O) has captured 0 stones

10 . . . + . . . . . + . . . . . + . . . 10 BLACK (X) has captured 0 stones

9 . . . . . . . . . . . . . . . . . . . 9

8 . . . . . . . . . . . . . . . . . . . 8

7 . . . . . . . . . . . . . . . . . . . 7

6 . . . . . . . . . . . . . . . . . . . 6

5 . . . . . . . . . . . . . . . . . . . 5

4 . . . O . . . . . + . . . . . + . . . 4

3 . . . . . . . . . . . . . . . . . . . 3

2 X). . . . . . . . . . . . . . . . . . 2

1 . . . . . . . . . . . . . . . . . . . 1

A B C D E F G H J K L M N O P Q R S T

Last move: A2, nextPlayer: White

[4] Propose move [F17][fq][363]

[2019-02-21 10:51:50.631] [elfgames::go::GoGameSelfPlay-0-15] [info] Current board:

A B C D E F G H J K L M N O P Q R S T

19 . . . . . . . . . . . . . . . . . . . 19

18 . . . . . . . . . . . . . . . . . . . 18

17 . . . . . O). . . . . . . . . . . . . 17

16 . . . + . . . . . + . . . . . X . . . 16

15 . . . . . . . . . . . . . . . . . . . 15

14 . . . . . . . . . . . . . . . . . . . 14

13 . . . . . . . . . . . . . . . . . . . 13

12 . . . . . . . . . . . . . . . . . . . 12

11 . . . . . . . . . . . . . . . . . . . 11 WHITE (O) has captured 0 stones

10 . . . + . . . . . + . . . . . + . . . 10 BLACK (X) has captured 0 stones

9 . . . . . . . . . . . . . . . . . . . 9

8 . . . . . . . . . . . . . . . . . . . 8

7 . . . . . . . . . . . . . . . . . . . 7

6 . . . . . . . . . . . . . . . . . . . 6

5 . . . . . . . . . . . . . . . . . . . 5

4 . . . O . . . . . + . . . . . + . . . 4

3 . . . . . . . . . . . . . . . . . . . 3

2 X . . . . . . .

|

https://github.com/pytorch/ELF/issues/142

|

open

|

[] | 2019-02-21T02:51:58Z

| 2019-02-21T02:51:58Z

| null |

l1t1

|

pytorch/examples

| 514

|

Why does discriminator's output change between batchsize:64 and batchsize:1 on inference.

|

I'm trying to get the discriminator output using train finished discriminator.

Procedure is below.

1. training dcgan.

2. preparing my image data and resize it 64 * 64.

3. load my image data using dataloader(same as training's one.)

4. I change only batch size at inference.

5. I got small Discriminator outputs(after sigmoid result).

for example)

I prepared 64 images under the dataroot directory. And I tried 2 experience.

I got a below's discriminator output at inference using batch size 64.

```

tensor([0.9955, 0.8801, 0.9727, 0.7377, 0.2667, 0.9432, 0.9941, 0.6896, 0.8638,

0.5006, 0.9766, 0.4148, 0.9577, 0.9065, 0.9849, 0.9027, 0.1619, 0.5418,

0.9256, 0.7502, 0.1467, 0.8197, 0.9100, 0.3416, 0.0066, 0.9521, 0.9973,

1.0000, 0.4952, 0.3026, 0.5347, 0.8695, 0.8033, 0.6709, 0.3602, 0.2145,

0.6901, 0.0129, 0.6780, 0.5321, 0.8195, 0.8662, 0.1759, 0.5599, 0.7313,

0.5138, 0.9396, 0.9256, 0.3011, 0.8163, 0.8046, 0.4802, 0.6256, 0.1656,

0.9368, 0.1080, 0.5960, 0.9493, 0.9533, 0.9609, 0.0137, 0.1603, 0.7717,

0.5684], device='cuda:0', grad_fn=<SqueezeBackward1>)

```

I got a below's discriminator output at inference using batch size 1.

```

tensor([0.6289], device='cuda:0', grad_fn=<SqueezeBackward1>)

tensor([0.2455], device='cuda:0', grad_fn=<SqueezeBackward1>)

tensor([0.8702], device='cuda:0', grad_fn=<SqueezeBackward1>)

tensor([0.0000], device='cuda:0', grad_fn=<SqueezeBackward1>)

tensor([0.0002], device='cuda:0', grad_fn=<SqueezeBackward1>)

............

tensor([0.0002], device='cuda:0', grad_fn=<SqueezeBackward1>)

tensor([0.0022], device='cuda:0', grad_fn=<SqueezeBackward1>)

tensor([0.9955], device='cuda:0', grad_fn=<SqueezeBackward1>)

tensor([0.1370], device='cuda:0', grad_fn=<SqueezeBackward1>)

```

I wonder why I got a small output(different from batch size64) on batch size1.

For instance, I got the 0.0000 on batch size 1, but at batch size 64 0.0000 is nothing.

Also I tried to match tensor size using torch.cat at batch size 1.

I changed from [1, 3, 64, 64] -> [64, 3, 64, 64], using same one image's tensor and torch.cat.

But I got different output value.

If you have any suggestions or point out then please let me know.

|

https://github.com/pytorch/examples/issues/514

|

closed

|

[] | 2019-02-20T09:07:47Z

| 2019-02-20T10:55:48Z

| 2

|

y-shirai-r

|

pytorch/examples

| 507

|

Is there a plan to make the imagenet example in this repository support `fp16`?

|

Thanks! :)

|

https://github.com/pytorch/examples/issues/507

|

closed

|

[] | 2019-02-14T19:38:58Z

| 2022-03-10T03:12:22Z

| 1

|

deepakn94

|

pytorch/pytorch

| 17,111

|

where is the code for the implement of loss?

|

i want to find the implement of nn.BCEWithLogitsLoss, but it returns F functions , and i cannot find where F functions is , i want to modify the loss

|

https://github.com/pytorch/pytorch/issues/17111

|

closed

|

[] | 2019-02-14T12:29:06Z

| 2019-02-14T15:29:47Z

| null |

Jasperty

|

pytorch/examples

| 503

|

I have some basic questions about training

|