repo

stringclasses 147

values | number

int64 1

172k

| title

stringlengths 2

476

| body

stringlengths 0

5k

| url

stringlengths 39

70

| state

stringclasses 2

values | labels

listlengths 0

9

| created_at

timestamp[ns, tz=UTC]date 2017-01-18 18:50:08

2026-01-06 07:33:18

| updated_at

timestamp[ns, tz=UTC]date 2017-01-18 19:20:07

2026-01-06 08:03:39

| comments

int64 0

58

⌀ | user

stringlengths 2

28

|

|---|---|---|---|---|---|---|---|---|---|---|

pytorch/pytorch

| 26,392

|

How to print out the name and value of parameters in module?

|

torch::jit::script::Module module = torch::jit::load(model_path);

How to print out the name and value of parameters in module?

|

https://github.com/pytorch/pytorch/issues/26392

|

closed

|

[] | 2019-09-18T03:13:03Z

| 2019-09-18T16:15:05Z

| null |

boyob

|

pytorch/pytorch

| 26,344

|

How to get rid of zombie processes using torch.multiprocessing.Pool?

|

I am using torch.multiprocessing.Pool to speed up my NN in inference, like this:

import torch.multiprocessing as mp

mp = mp.get_context('forkserver')

def parallel_predict(predict_func, sequences, args):

predicted_cluster_ids = []

pool = mp.Pool(args.num_workers, maxtasksperchild=1)

out = pool.imap(

func=functools.partial(predict_func, args=args),

iterable=sequences,

chunksize=1)

for item in tqdm(out, total=len(sequences), ncols=85):

predicted_cluster_ids.append(item)

pool.close()

pool.terminate()

pool.join()

return predicted_cluster_ids

Note 1) I am using `imap` because I want to be able to show a progress bar with tqdm.

Note 2) I tried with both `forkserver` and spawn but no luck. I cannot use other methods because of how they interact (poorly) with CUDA.

Note 3) I am using `maxtasksperchild=1` and `chunksize=1` so for each sequence in sequences it spawns a new process.

Note 4) Adding or removing `pool.terminate()` and `pool.join()` makes no difference.

Note 5) `predict_func` is a method of a class I created. I could also pass the whole model to `parallel_predict` but it does not change anything.

Everything works fine except the fact that after a while I run out of memory on the CPU (while on the GPU everything works as expected). Using `htop` to monitor memory usage I notice that, for every process I spawn with pool I get a zombie that uses 0.4% of the memory. They don't get cleared, so they keep using space. Still, `parallel_predict` does return the correct result and the computation goes on. My script is structured in a way that id does validation multiple times so next time `parallel_predict` is called the zombies add up.

This is what I get in `htop`:

Usually, these zombies get cleared after ctrl-c but in some rare cases I need to killall.

Is there some way I can force the`Pool` to close them?

UPDATE:

I tried to kill the zombies using this:

def kill(pool):

import multiprocessing

import signal

# stop repopulating new child

pool._state = multiprocessing.pool.TERMINATE

pool._worker_handler._state = multiprocessing.pool.TERMINATE

for p in pool._pool:

os.kill(p.pid, signal.SIGKILL)

# .is_alive() will reap dead process

while any(p.is_alive() for p in pool._pool):

pass

pool.terminate()

But it does not work. It hangs in `pool.terminate()`

cc @pietern @mrshenli @pritamdamania87 @zhaojuanmao @satgera @rohan-varma @gqchen

|

https://github.com/pytorch/pytorch/issues/26344

|

open

|

[

"module: dependency bug",

"oncall: distributed",

"module: multiprocessing",

"triaged"

] | 2019-09-17T11:55:41Z

| 2019-11-14T00:08:11Z

| null |

DonkeyShot21

|

pytorch/pytorch

| 25,990

|

How to reproduce a Cross Entropy Loss without losing numerical accuracy?

|

## 🐛 Bug

<!-- A clear and concise description of what the bug is. -->

I get a discrepancy between the values of the losses obtained by the torch. CrossEntropyLoss and CustomeCrossEntropyLoss.

## To Reproduce

import torch

import torch.nn.modules.loss as L

class CustomCrossEntropyLoss(L._Loss):

def __init__(self, reduction=True):

super(CustomCrossEntropyLoss, self).__init__()

self.reduction = reduction

def forward(self, inp, target):

input_target = inp.gather(1, target.view(-1, 1))

input_max, _ = inp.max(dim=1, keepdim=True)

output_exp = torch.exp(inp - input_max)

output_softmax_sum = output_exp.sum(dim=1)

output = -input_target + torch.log(output_softmax_sum).view(-1, 1) + input_max

if self.reduction:

output = output.mean()

return output

torch_ce = torch.nn.CrossEntropyLoss(reduction='none')

custom_ce = CustomCrossEntropyLoss(reduction=False)

batch_size = 128

N_class = 90000

logits = torch.randn((batch_size,N_class))

targets = torch.randint(N_class, (batch_size,))

print((torch_ce(logits, targets).view(-1) - custom_ce(logits,targets).view(-1)).mean())

<!-- If you have a code sample, error messages, stack traces, please provide it here as well -->

## Expected behavior

I get a minimal non-zero discrepancy of the order of 10e-7, which then occurs in gradients and can affect the learning outcome.

How to fix this problem?

## Environment

Collecting environment information...

PyTorch version: 1.2.0

Is debug build: No

CUDA used to build PyTorch: 10.0.130

OS: CentOS Linux 7 (Core)

GCC version: (GCC) 4.8.5 20150623 (Red Hat 4.8.5-36)

CMake version: version 2.8.12.2

Python version: 3.7

Is CUDA available: Yes

CUDA runtime version: Could not collect

Nvidia driver version: 430.14

cuDNN version: Could not collect

Versions of relevant libraries:

[pip3] numpy==1.15.4

[pip3] tensorboard-pytorch==0.7.1

[pip3] torch==1.0.0

[pip3] torchvision==0.2.1

[conda] blas 1.0 mkl.conda

[conda] mkl 2019.4 243.conda

[conda] mkl-service 2.0.2 py37h7b6447c_0.conda

[conda] mkl_fft 1.0.12 py37ha843d7b_0.conda

[conda] mkl_random 1.0.2 py37hd81dba3_0.conda

[conda] pytorch 1.2.0 py3.7_cuda10.0.130_cudnn7.6.2_0 pytorch

[conda] torchvision 0.4.0 py37_cu100 pytorch

## Additional context

<!-- Add any other context about the problem here. -->

|

https://github.com/pytorch/pytorch/issues/25990

|

closed

|

[] | 2019-09-11T10:45:29Z

| 2019-09-11T19:51:51Z

| null |

tamerlansb

|

pytorch/pytorch

| 25,910

|

How to use batch norm when there has padding?

|

I am doing a NLP task and input various-length sentences into model, so I add some padding to keep every sentence to the longest length in one batch. However, padding length can affect batch norm obviously. And I cannot find a module with a parameter of mask? Can you help me ?

|

https://github.com/pytorch/pytorch/issues/25910

|

closed

|

[] | 2019-09-10T12:22:13Z

| 2019-09-10T16:19:48Z

| null |

RichardHWD

|

pytorch/pytorch

| 25,766

|

how to get cube-root of a negative?

|

hello, I want to know how to get cube-root of a negative?

for example (-8)^(1/3) = -2

however, torch.pow(-8, 1/3) get Nan.

|

https://github.com/pytorch/pytorch/issues/25766

|

closed

|

[] | 2019-09-06T13:33:15Z

| 2021-06-18T15:26:46Z

| null |

qianlinjun

|

pytorch/examples

| 628

|

No data on lo interface for a single node training

|

Hi, I ran the single node training and it ran normally. I want to monitor the bandwidth of the process in one single machine. I used `iptraf-ng` on CentOS 7. I selected the `lo` interface. But it showed nothing. The IP I used is `127.0.0.1`. Is there something wrong?

Thanks

|

https://github.com/pytorch/examples/issues/628

|

open

|

[

"triaged"

] | 2019-09-06T12:28:10Z

| 2022-03-09T23:48:11Z

| 0

|

ElegantLin

|

pytorch/pytorch

| 25,699

|

PyTorch C++ API as a static lib: how to compile ?

|

## ❓ Questions and Help

this questions is linked to the bug described in the issue https://github.com/pytorch/pytorch/issues/25698

I'd like to have instructions on how to compile PyTorch C++ API (libtorch project) as a statical library to link with my C++ projects :

- for Linux, Windows and MacOS

- with the last Intel compilers if possible

- mode with and witout GPU support (CPU vs GPU), with the last drivers and controling GPU cart 'compute capabilities' support, to be able to execute the code on old Kepler cards

- debug and release (advanced optimization and vectorization) modes

thanks for your help and assistance !

|

https://github.com/pytorch/pytorch/issues/25699

|

closed

|

[] | 2019-09-05T10:30:33Z

| 2025-07-02T12:59:59Z

| null |

VitaMusic

|

pytorch/pytorch

| 25,572

|

How to change dynamic model to onnx?

|

## 📚 Documentation

I want to change [Extremenet](https://github.com/xingyizhou/ExtremeNet) to onnx, which programmed a dynamic model, I searched

1 https://pytorch.org/docs/stable/onnx.html#tracing-vs-scripting

2 https://github.com/onnx/tutorials

It seems not told how to change a dynamic pytorch model to onnx, where is the example of change dynamic model to onnx?

below is core dynamic code patch:

class exkp(nn.Module):

def __init__(

self, n, nstack, dims, modules, out_dim, pre=None, cnv_dim=256,

make_tl_layer=None, make_br_layer=None,

make_cnv_layer=make_cnv_layer, make_heat_layer=make_kp_layer,

make_tag_layer=make_kp_layer, make_regr_layer=make_kp_layer,

make_up_layer=make_layer, make_low_layer=make_layer,

make_hg_layer=make_layer, make_hg_layer_revr=make_layer_revr,

make_pool_layer=make_pool_layer, make_unpool_layer=make_unpool_layer,

make_merge_layer=make_merge_layer, make_inter_layer=make_inter_layer,

kp_layer=residual

):

super(exkp, self).__init__()

self.nstack = nstack

self._decode = _exct_decode

curr_dim = dims[0]

self.pre = nn.Sequential(

convolution(7, 3, 128, stride=2),

residual(3, 128, 256, stride=2)

) if pre is None else pre

self.kps = nn.ModuleList([

kp_module(

n, dims, modules, layer=kp_layer,

make_up_layer=make_up_layer,

make_low_layer=make_low_layer,

make_hg_layer=make_hg_layer,

make_hg_layer_revr=make_hg_layer_revr,

make_pool_layer=make_pool_layer,

make_unpool_layer=make_unpool_layer,

make_merge_layer=make_merge_layer

) for _ in range(nstack)

])

self.cnvs = nn.ModuleList([

make_cnv_layer(curr_dim, cnv_dim) for _ in range(nstack)

])

## keypoint heatmaps

self.t_heats = nn.ModuleList([

make_heat_layer(cnv_dim, curr_dim, out_dim) for _ in range(nstack)

])

self.l_heats = nn.ModuleList([

make_heat_layer(cnv_dim, curr_dim, out_dim) for _ in range(nstack)

])

self.b_heats = nn.ModuleList([

make_heat_layer(cnv_dim, curr_dim, out_dim) for _ in range(nstack)

])

self.r_heats = nn.ModuleList([

make_heat_layer(cnv_dim, curr_dim, out_dim) for _ in range(nstack)

])

self.ct_heats = nn.ModuleList([

make_heat_layer(cnv_dim, curr_dim, out_dim) for _ in range(nstack)

])

for t_heat, l_heat, b_heat, r_heat, ct_heat in \

zip(self.t_heats, self.l_heats, self.b_heats, \

self.r_heats, self.ct_heats):

t_heat[-1].bias.data.fill_(-2.19)

l_heat[-1].bias.data.fill_(-2.19)

b_heat[-1].bias.data.fill_(-2.19)

r_heat[-1].bias.data.fill_(-2.19)

ct_heat[-1].bias.data.fill_(-2.19)

self.inters = nn.ModuleList([

make_inter_layer(curr_dim) for _ in range(nstack - 1)

])

self.inters_ = nn.ModuleList([

nn.Sequential(

nn.Conv2d(curr_dim, curr_dim, (1, 1), bias=False),

nn.BatchNorm2d(curr_dim)

) for _ in range(nstack - 1)

])

self.cnvs_ = nn.ModuleList([

nn.Sequential(

nn.Conv2d(cnv_dim, curr_dim, (1, 1), bias=False),

nn.BatchNorm2d(curr_dim)

) for _ in range(nstack - 1)

])

self.t_regrs = nn.ModuleList([

make_regr_layer(cnv_dim, curr_dim, 2) for _ in range(nstack)

])

self.l_regrs = nn.ModuleList([

make_regr_layer(cnv_dim, curr_dim, 2) for _ in range(nstack)

])

self.b_regrs = nn.ModuleList([

make_regr_layer(cnv_dim, curr_dim, 2) for _ in range(nstack)

])

self.r_regrs = nn.ModuleList([

make_regr_layer(cnv_dim, curr_dim, 2) for _ in range(nstack)

])

self.relu = nn.ReLU(inplace=True)

def _train(self, *xs):

image = xs[0]

t_inds = xs[1]

l_inds = xs[2]

b_inds = xs[3]

r_inds = xs[4]

inter = self.pre(image)

outs = []

layers = zip(

self.kps, self.cnvs,

self.t_heats, self.l_heats, self.b_heats, self.r_heats,

self.ct_heats,

self.t_regrs, self.l_regrs, self.b_regrs, self.r_regrs,

)

for ind, layer in enumerate(layers):

kp_, cnv_ = layer[0:2]

t_heat_, l_heat_, b_heat_, r_heat_ = layer[2:6]

ct_heat_ = layer[6]

t_regr_, l_regr_, b_regr_, r_regr_ = layer[7:11]

kp = kp_(inter)

cnv = cnv_(kp)

|

https://github.com/pytorch/pytorch/issues/25572

|

closed

|

[

"module: onnx"

] | 2019-09-03T05:27:41Z

| 2019-09-03T14:17:53Z

| null |

qingzhouzhen

|

huggingface/transformers

| 1,150

|

What is the relationship between `run_lm_finetuning.py` and the scripts in `lm_finetuning`?

|

## ❓ Questions & Help

It looks like there are now two scripts for running LM fine-tuning. While `run_lm_finetuning` seems to be newer, the documentation in `lm_finetuning` seems to indicate that there is more subtlety to generating the right data for performing LM fine-tuning in the BERT format. Does the new script take this into account?

Sorry if I'm missing something obvious!

|

https://github.com/huggingface/transformers/issues/1150

|

closed

|

[

"wontfix"

] | 2019-08-29T18:15:45Z

| 2019-12-30T15:04:14Z

| null |

zphang

|

pytorch/pytorch

| 25,383

|

In torch::jit::script::Module module = torch::jit::load("xxx.pt"), How to release module?

|

## ❓ Questions and Help

### Please note that this issue tracker is not a help form and this issue will be closed.

We have a set of [listed resources available on the website](https://pytorch.org/resources). Our primary means of support is our discussion forum:

- [Discussion Forum](https://discuss.pytorch.org/)

cc @suo

|

https://github.com/pytorch/pytorch/issues/25383

|

closed

|

[

"oncall: jit",

"triaged"

] | 2019-08-29T09:16:14Z

| 2019-12-12T19:40:20Z

| null |

pxEkin

|

pytorch/pytorch

| 25,294

|

What is the purpose of fp16 training? faster training? or better accuracy?

|

I think for fp16 inference, just train a model on FP32 and then just model.half() will work.

But this forceful type casting would lead worse accuracy.

I'm not sure which is better between

1. FP32 training then model.half() -> fp16 inference VS

2. FP16 training using apex then inference FP16 with the same setting.

if 1 and 2 has not that big accuracy gap, case 1 would be used just for faster/memory efficent training?

Please give me any hint.

Thank you.

|

https://github.com/pytorch/pytorch/issues/25294

|

closed

|

[] | 2019-08-28T06:12:01Z

| 2019-09-18T02:06:39Z

| null |

dedoogong

|

pytorch/pytorch

| 25,284

|

How to preserve backward grad_fn after distributed 'all_gather' operations

|

I am trying to implement model parallelism in a distributed data parallel setting.

Let’s say I have a tensor output in each process and a number of operations have been performed on it (in each process independently). The tensor has a .grad_fn attached to it. Now I want to perform an all_gather to create a list [tensor_1, tensor_2...tensor_n]. But all the tensors in the list will lose the grad_fn property.

My expectation is to be able to backward() using concated tensor list in each process i through tensor_i.

cc @pietern @mrshenli @pritamdamania87 @zhaojuanmao @satgera

|

https://github.com/pytorch/pytorch/issues/25284

|

closed

|

[

"oncall: distributed"

] | 2019-08-28T02:00:49Z

| 2019-08-28T10:13:50Z

| null |

JoyHuYY1412

|

pytorch/examples

| 624

|

How to get Class Activation Map (CAM) in c++ front end ?

|

Hi everyone ,

I saw many example of visualizing CAM with python using "register_backward_hook" but can't find any way to do the same in C++ frontend.

Is there away to visualize Conv layers in c++ frontend ?

Thank you in advance.

|

https://github.com/pytorch/examples/issues/624

|

open

|

[

"c++"

] | 2019-08-28T00:52:19Z

| 2022-03-09T23:48:32Z

| null |

zirid

|

pytorch/xla

| 961

|

[Question] how to track TPU memory usage

|

How can I access TPU (internal) memory utilization?

Thanks!

|

https://github.com/pytorch/xla/issues/961

|

closed

|

[

"question"

] | 2019-08-27T20:10:59Z

| 2019-10-30T16:50:52Z

| null |

nosound2

|

pytorch/examples

| 622

|

how to train MnasNet? I use the main.py to train MnasNet, but get the worse result. It is just 60.7%, while it should be 73% as you say in Mnasnet.py

|

https://github.com/pytorch/examples/issues/622

|

closed

|

[] | 2019-08-27T14:25:29Z

| 2019-09-06T18:12:16Z

| null |

xufana7

|

|

pytorch/pytorch

| 25,247

|

where is the link to this NOTE?

|

## 📚 Documentation

<!-- A clear and concise description of what content in https://pytorch.org/docs is an issue. If this has to do with the general https://pytorch.org website, please file an issue at https://github.com/pytorch/pytorch.github.io/issues/new/choose instead. If this has to do with https://pytorch.org/tutorials, please file an issue at https://github.com/pytorch/tutorials/issues/new -->

```

# No `def __len__(self)` default?

# See NOTE [ Lack of Default `__len__` in Python Abstract Base Classes ]

```

|

https://github.com/pytorch/pytorch/issues/25247

|

closed

|

[] | 2019-08-27T13:14:09Z

| 2024-02-27T05:02:26Z

| null |

vainaixr

|

pytorch/examples

| 621

|

RuntimeError: CUDA out of memory. Tried to allocate 26.00 MiB .................

|

RuntimeError: CUDA out of memory. Tried to allocate 26.00 MiB (GPU 0; 1024.00 MiB total capacity; 435.61 MiB already allocated; 24.17 MiB free; 26.39 MiB cached)

I have tried to set the batch-size as 16 or 32, but it didn't work. Would you please tell me how to solve this problem?

|

https://github.com/pytorch/examples/issues/621

|

closed

|

[] | 2019-08-27T12:38:17Z

| 2022-03-09T23:46:25Z

| 4

|

qiahui

|

pytorch/examples

| 620

|

Can I use async in ImageNet

|

Does the example of ImageNet support async? If not, how can I add this? Are there some suggestions?

Thanks!

|

https://github.com/pytorch/examples/issues/620

|

closed

|

[] | 2019-08-27T02:38:13Z

| 2019-09-23T00:13:17Z

| 2

|

ElegantLin

|

pytorch/pytorch

| 25,180

|

How to filter with a transfer function in Pytorch??

|

Hi,

I am trying to implement a 1D convolution operation with `F.conv1d`. The current usage of this function is to provide the weights of the filter directly in the time domain. However, for some DSP purposes, it is more effective to apply the filtering process in terms of the transfer function of the filter. In other words, to provide the coefficients of numerator and denominator of the transfer function and the function applies the filtering process accordingly.

This is already provided in [scipy](https://docs.scipy.org/doc/scipy/reference/generated/scipy.signal.lfilter.html) as well as [Matlab](https://www.mathworks.com/help/matlab/ref/filter.html).

I think it is possible to do the same with Pytorch, but I am still struggling to grasp all the details to achieve this .. could you please give any tips?

Many thanks in advance

Best

|

https://github.com/pytorch/pytorch/issues/25180

|

closed

|

[] | 2019-08-26T15:22:31Z

| 2019-08-26T15:42:02Z

| null |

ahmed-fau

|

pytorch/pytorch

| 25,003

|

How to use manually installed third_party libraries, instead of recursive third_party?

|

Some of the packages **have been manually installed** on the system. How to build **everything** based on existing packages, instead of the **recursively checked out** third_party libraries?

For instance: **pybind11** ???

|

https://github.com/pytorch/pytorch/issues/25003

|

closed

|

[] | 2019-08-22T00:39:11Z

| 2019-08-22T15:28:57Z

| null |

jiapei100

|

pytorch/xla

| 943

|

[Looking for suggestions] How to start looking at xla code?

|

not really an issue, it's just I met so many random errors from stack-trace-back or other source which I barely understand, and the model training is quite slow and I cannot spot which tensors got various shape at different training and are re-allocated to cpu, so I'm thinking if looking into the `csrc` would help. Yet not quite sure if I wanna find out the reason of the slow training where shall I get started with? thanks so much!

|

https://github.com/pytorch/xla/issues/943

|

closed

|

[] | 2019-08-19T14:58:08Z

| 2019-08-25T14:24:47Z

| null |

crystina-z

|

pytorch/examples

| 611

|

Problem on multiple nodes

|

Hi, when I am running ImageNet example on multiple nodes, I met the problem showing

`RuntimeError: NCCL error in: /pytorch/torch/lib/c10d/ProcessGroupNCCL.cpp:272, unhandled system error` on my node 0.

The command I used is

`python main.py -a resnet50 --dist-url 'tcp://IP_OF_NODE0:FREEPORT' --dist-backend 'nccl' --multiprocessing-distributed --world-size 2 --rank 0 [imagenet-folder with train and val folders]`

The platform I used is `Python 3.6` using Anaconda on CentOS and the PyTorch Version is `1.1.0`.

Actually, I met this problem on single node and multi GPUs but I solved it by setting

`export NCCL_P2P_DISABLE=1`.

Also before I ran the code, I would set `OMP_NUM_THREADS=1` to make the distributed training faster.

I made sure that my IP and port were correct.

Do you know how to solve it?

Thanks

|

https://github.com/pytorch/examples/issues/611

|

open

|

[

"distributed"

] | 2019-08-15T09:21:21Z

| 2022-03-09T20:52:45Z

| 2

|

ElegantLin

|

pytorch/pytorch

| 24,399

|

How to get NLLLoss grad?

|

import torch

import torch.nn as nn

m = nn.LogSoftmax(dim=1)

loss = nn.NLLLoss()

a=[[2., 0.],

[1., 1.]]

input = torch.tensor(a, requires_grad=True)

target = torch.tensor([1, 1])

output = loss(m(input), target)

output.backward()

print(input.grad)

-------------------------------------------------------

tensor([[ 0.4404, -0.4404],

[ 0.2500, -0.2500]])

----------------------------------

How to get input.grad?What's the formula?

|

https://github.com/pytorch/pytorch/issues/24399

|

closed

|

[] | 2019-08-15T09:07:53Z

| 2019-08-15T16:26:06Z

| null |

williamlzw

|

pytorch/audio

| 235

|

How to make the data precision loaded by torchaudio.load be consistent with the data loaded by the librosa.load

|

I found that data precision loaded by torchaudio.load is much lower than librosa. Is there a way to improve data precision?

|

https://github.com/pytorch/audio/issues/235

|

closed

|

[] | 2019-08-14T15:13:41Z

| 2019-08-27T20:12:50Z

| null |

YapengTian

|

pytorch/pytorch

| 24,310

|

[Question] Who can tell me where is the windows version torch in PYPI? It just like missing.

|

## ❓ Questions and Help

### Please note that this issue tracker is not a help form and this issue will be closed.

We have a set of [listed resources available on the website](https://pytorch.org/resources). Our primary means of support is our discussion forum:

- [Discussion Forum](https://discuss.pytorch.org/)

|

https://github.com/pytorch/pytorch/issues/24310

|

closed

|

[

"module: windows",

"triaged"

] | 2019-08-14T05:48:57Z

| 2020-02-04T03:10:59Z

| null |

xiaohuihuichao

|

pytorch/examples

| 607

|

Does this variable 'tokens' make sense?

|

https://github.com/pytorch/examples/blob/4581968193699de14b56527296262dd76ab43557/word_language_model/data.py#L32

Thanks!

|

https://github.com/pytorch/examples/issues/607

|

closed

|

[] | 2019-08-13T03:33:11Z

| 2019-08-16T12:01:08Z

| 4

|

standbyme

|

pytorch/pytorch

| 24,220

|

Where is nn.Transformer for pytorch 1.2.0 on win10?

|

## ❓ Questions and Help

I ran the installing code "pip3 install torch==1.2.0 torchvision==0.4.0 -f https://download.pytorch.org/whl/torch_stable.html" and installed torch 1.2.0.

Yet, I can't find the Transformers in nn module, where are they? platform: WIN10

### Please note that this issue tracker is not a help form and this issue will be closed.

We have a set of [listed resources available on the website](https://pytorch.org/resources). Our primary means of support is our discussion forum:

- [Discussion Forum](https://discuss.pytorch.org/)

|

https://github.com/pytorch/pytorch/issues/24220

|

closed

|

[] | 2019-08-13T01:08:31Z

| 2019-10-07T02:07:59Z

| null |

shuaishuaij

|

pytorch/examples

| 605

|

DistributedDataParralle training speed

|

Hi, I am using image net. But there is no big difference between the time consuming when I used 2 GPUs, 4 GPUs or 8 GPUs. I just changed the `gpu id` and batch size to guarantee the memory of GPU was fully used. The speed did not increase although I used more GPUs. Is there something wrong about what I did?

Thanks a lot.

|

https://github.com/pytorch/examples/issues/605

|

open

|

[

"distributed"

] | 2019-08-10T16:37:09Z

| 2022-10-11T11:29:08Z

| 5

|

ElegantLin

|

pytorch/tutorials

| 606

|

Is there any reason for using tensor.data?

|

https://github.com/pytorch/tutorials/blob/60d6ef365e36f3ba82c2b61bf32cc40ac4e86c7b/beginner_source/blitz/autograd_tutorial.py#L160

This is an official tutorial codes.

Is there any reason for using tensor.data?

~~~

x = torch.randn(3, requires_grad=True)

y = x * 2

while y.data.norm() < 1000:

y = y * 2

~~~

If not, I think this code should be replaced by

~~~

y = x * 2

while y.detach().norm() < 1000:

y = y * 2

~~~

|

https://github.com/pytorch/tutorials/issues/606

|

closed

|

[] | 2019-08-09T06:27:43Z

| 2019-09-04T13:35:22Z

| 0

|

minlee077

|

pytorch/pytorch

| 24,009

|

How to convert pytorch model(faster-rcnn) to onnx?

|

## ❓ Questions and Help

I trained a faster-rcnn model use the project: jwyang/faster-rcnn.pytorch

[https://github.com/jwyang/faster-rcnn.pytorch](url)

I want convert the model to onnx, this is my code:

` torch_out=torch.onnx.export(fasterRCNN,\

(im_data,im_info,gt_boxes,num_boxes),\

"onnx_model_name.onnx",\

export_params=True,\

opset_version=10,\

do_constant_folding=True,\

input_names=['input'],\

output_names=['output'])`

I get this error:

File "/home/user/anaconda3/envs/mypytorch-env/lib/python3.7/site-packages/torch/jit/__init__.py", line 297, in forward

out_vars, _ = _flatten(out)

RuntimeError: Only tuples, lists and Variables supported as JIT inputs, but got int

Terminated

Who ever encountered this problem?

Please help me. Thank you very much.

|

https://github.com/pytorch/pytorch/issues/24009

|

closed

|

[

"module: onnx",

"triaged"

] | 2019-08-08T08:57:05Z

| 2021-12-22T21:51:39Z

| null |

waynebianxx

|

pytorch/examples

| 604

|

Do you have any instructions for the use of functions related to C + + ports?

|

Do you have any instructions for the use of functions related to C + + ports?

for example:

auto aa = torch::tensor({ 1, 2 , 3, 4, 5, 6, 7, 8, 9, 10, 11, 12, 13, 14, 15, 16 });

auto bb = torch::reshape(aa, { 2, 2, 2, 2 });

const void *src = bb.data<int>();

std::vector<int>value;

void *dst = &value;

torch::CopyBytes(2, src, torch::DeviceType::CPU, dst, torch::DeviceType::CPU, 0);

But I found that I could not get the desired result.

I hope to get your help. Thank you.

|

https://github.com/pytorch/examples/issues/604

|

closed

|

[] | 2019-08-07T06:56:32Z

| 2019-08-07T17:26:02Z

| 1

|

yeyuxmf

|

pytorch/tutorials

| 593

|

does the attention need do mask on the encoder_outputs?

|

attn_weights = self.attn(rnn_output, encoder_outputs)

this is the attn_weights, the number of output vector in a batch is different because the input length is different, and the number of output vector is padded align max_seq_len in the batch.

but when compute attn_weights, there is no mask operation on the padding output vec,is this right ?

|

https://github.com/pytorch/tutorials/issues/593

|

closed

|

[] | 2019-08-06T12:45:14Z

| 2019-08-07T19:11:51Z

| 1

|

littttttlebird

|

huggingface/sentence-transformers

| 6

|

What is the classical loss for doc ranking problem? Thank you.

|

Based on my understanding, Multiple Negatives Ranking Loss is a better loss for doc ranking problem.

What is the former classical loss for doc ranking problem?

Thank you very much.

|

https://github.com/huggingface/sentence-transformers/issues/6

|

closed

|

[] | 2019-08-05T03:49:39Z

| 2019-08-05T08:21:27Z

| null |

guotong1988

|

pytorch/examples

| 600

|

What is the different between train_loss and test_loss?

|

Hello, I am a student who is just beginning to learn pytorch, I have runnd the examples of MNIST code, I am curious why the train_loss and test_loss are calculated differently.Here is the calculation code.Why are they not using the same code?

`loss = F.nll_loss(output, target) # train_loss`

` test_loss = F.nll_loss(output, target, reduction='sum')`

`test_loss = test_loss/len(test_loader.dataset)`

|

https://github.com/pytorch/examples/issues/600

|

closed

|

[] | 2019-08-05T02:35:51Z

| 2019-08-18T11:15:44Z

| null |

wulongjian

|

pytorch/pytorch

| 23,773

|

how to modify activation in GRU

|

We know in keras, `Bidirectional(GRU(128, activation='linear', return_sequences=True))(a1) # (240,256)`,that is to say, we can choose activation.But in torch,there’s no para to choose.`nn.GRU(n_in, n_hidden, bidirectional=True, dropout=droupout, batch_first=True, num_layers=num_layers)

`I want to know how to modify activation in GRU in torch

|

https://github.com/pytorch/pytorch/issues/23773

|

closed

|

[] | 2019-08-05T01:45:08Z

| 2019-08-05T02:05:10Z

| null |

MichelleYang2017

|

pytorch/examples

| 598

|

Problem finding the model en

|

Hi,

How can I resolve this error?

```

$ python train.py

Traceback (most recent call last):

File "train.py", line 20, in <module>

inputs = data.Field(lower=args.lower, tokenize='spacy')

File "/home/mahmood/.local/lib/python2.7/site-packages/torchtext/data/field.py", line 152, in __init__

self.tokenize = get_tokenizer(tokenize)

File "/home/mahmood/.local/lib/python2.7/site-packages/torchtext/data/utils.py", line 12, in get_tokenizer

spacy_en = spacy.load('en')

File "/home/mahmood/.local/lib/python2.7/site-packages/spacy/__init__.py", line 27, in load

return util.load_model(name, **overrides)

File "/home/mahmood/.local/lib/python2.7/site-packages/spacy/util.py", line 139, in load_model

raise IOError(Errors.E050.format(name=name))

IOError: [E050] Can't find model 'en'. It doesn't seem to be a shortcut link, a Python package or a valid path to a data directory.

```

|

https://github.com/pytorch/examples/issues/598

|

closed

|

[] | 2019-08-01T11:09:46Z

| 2022-03-10T00:25:00Z

| 1

|

mahmoodn

|

pytorch/text

| 572

|

How to set the max length for batches?

|

Is there a way to use something like `max_len` argument: all text entries in a dataset longer than a certain length can be thrown out.

|

https://github.com/pytorch/text/issues/572

|

closed

|

[] | 2019-07-29T18:25:25Z

| 2019-09-16T15:04:40Z

| null |

XinDongol

|

pytorch/pytorch

| 23,490

|

upcoming PEP 554: how much effort we need to support sub-interpreter

|

## 🚀 Feature

support python sub-interpreters and maintains all status of the torch library.

## Motivation

as #10950 demonstrates, the current ``torch`` library cannot lives on multiple sub-interpreter simultaneously within the same process. But we do need to run python codes on multiple "threads" at the same time for the very reasons why ``torch`` introduces ``torch.multiprocessing`` and ``DistributedDataParallel`` (the single node scenario). As [PEP 554](https://www.python.org/dev/peps/pep-0554/) is proposed back in 2017 and maybe available by 2019 or 2020, I think it is necessary to make use of it because:

- It is easier to sharing data between interpreters than between processes

- It will reduce gpu memory overhead (Every subprocess consume at least 400~500MB gpu memory)

- It can help avoid relatively complex process management problems

And between multi-interpreter and multi-process, there is almost no difference on user coding experience and front-end design, and the changes will be made behind the scene.

## Pitch

<!-- A clear and concise description of what you want to happen. -->

I think works need to be done on following aspects:

- Changes any global status that should bind to a interpreter to a per-interpreter status set. (the ``detach`` method mentioned in #10950, for example)

``Tensor`` lifecycle management maybe not a good example, because it is also a choice that ``Tensor`` can be shared across interpreters.

- Prevent re-initializing and double-finalize for those status that are indeed global. (CUDA initialization, for example)

- Create interface and infrastructure for controlling communication and sharing ``Tensor`` between interpreters.

- Deprecate ``torch.multiprocessing`` module

|

https://github.com/pytorch/pytorch/issues/23490

|

open

|

[

"feature",

"triaged"

] | 2019-07-28T16:19:53Z

| 2019-08-02T16:16:00Z

| null |

winggan

|

pytorch/pytorch

| 23,423

|

how to process c++ forward multiple return values

|

my model return value like this , it is detection model

output = (

tensor1, # loc preds

tensor2 # conf preds

)

how to get return values in c++

|

https://github.com/pytorch/pytorch/issues/23423

|

closed

|

[] | 2019-07-26T07:37:15Z

| 2019-07-26T11:14:56Z

| null |

kakaluote

|

pytorch/vision

| 1,166

|

How to train resnet18 to the best accuracy?

|

I recently did a simple experiment, training cifar10 with resnet18( torchvision.models), but I can't achieve the desired accuracy(93%).

I found a GitHub repository where the example can be trained to 93% accuracy, [pytorch-cifar](https://github.com/kuangliu/pytorch-cifar). But his implementation is different from torchvision.models.resnet18, The difference may be [here](https://github.com/kuangliu/pytorch-cifar/issues/91#issuecomment-514933998).

Is there any example to teach us how to train cifar10 with resnet18 to the optimal precision?

|

https://github.com/pytorch/vision/issues/1166

|

closed

|

[

"question",

"module: models",

"topic: classification"

] | 2019-07-25T09:57:20Z

| 2019-07-26T09:05:35Z

| null |

zerolxf

|

huggingface/neuralcoref

| 187

|

Where is the conll parser?

|

In the [instructions](https://github.com/huggingface/neuralcoref/blob/master/neuralcoref/train/training.md) there is a reference to a conll parser and conll processing scripts, but those links are dead. They have been removed but it's not clear to me why.

|

https://github.com/huggingface/neuralcoref/issues/187

|

closed

|

[

"training",

"docs"

] | 2019-07-23T20:59:40Z

| 2019-12-17T06:30:02Z

| null |

BramVanroy

|

pytorch/pytorch

| 23,218

|

What is the torchvision version for pytorch-nightly? Use 0.3.0 to report errors

|

The official website does not provide the torchvison version installation method.

Here is the error message when torchvison 0.3.0 is used.

torchvision/_C.cpython-36m-x86_64-linux-gnu.so: undefined symbol: _ZN2at7getTypeERKNS_6TensorE

That should be caused by the mismatch version between pytorch-nightly and torchvision 0.3.0.

|

https://github.com/pytorch/pytorch/issues/23218

|

closed

|

[

"module: docs",

"triaged",

"module: vision"

] | 2019-07-23T06:37:44Z

| 2021-03-12T13:00:16Z

| null |

zymale

|

pytorch/pytorch

| 23,215

|

How the ops are registerd to ATenDispatch's op tables_

|

I want to know how ops are registered to ATenDispatch's op_tables_;

I see ATenDispatch has registerOp/registerVariableOp interface, but not found code that these interfaces are called to register ops;

Is ATenDispatch use the global static varable to trigger the registration like C10_DECLARE_REGISTRY mechanism?

I see the code below, but cannot find where "aten::linear(Tensor input, Tensor weight, Tensor? bias=None) -> Tensor" is registered to ATenDispatch.

`static inline Tensor linear(const Tensor & input, const Tensor & weight, const Tensor & bias) {

static auto table = globalATenDispatch().getOpTable("aten::linear(Tensor input, Tensor weight, Tensor? bias=None) -> Tensor");

return table->getOp<Tensor (const Tensor &, const Tensor &, const Tensor &)>(at::detail::infer_backend(input), at::detail::infer_is_variable(input))(input, weight, bias);

}`

|

https://github.com/pytorch/pytorch/issues/23215

|

closed

|

[] | 2019-07-23T06:17:08Z

| 2019-07-23T10:44:28Z

| null |

dongfangduoshou123

|

pytorch/tutorials

| 566

|

Is this RNN implementation different from the vanilla RNN?

|

https://github.com/pytorch/tutorials/blob/master/intermediate_source/char_rnn_classification_tutorial.py

Standard Interpretation

-------------------------

In the original RNN, the hidden state and output are calculated as

[![enter image description here][1]][1]

in other words, we obtain the the output from the hidden state.

According to [Wiki][2], the RNN architecture can be unfolded like this

[![vani][3]][3]

And the code I have been using is like:

class Model(nn.Module):

def __init__(self, input_size, output_size, hidden_dim, n_layers):

super(Model, self).__init__()

self.hidden_dim = hidden_dim

self.rnn = nn.RNN(input_size, hidden_dim, 1)

self.fc = nn.Linear(hidden_dim, output_size)

def forward(self, x):

batch_size = x.size(0)

out, hidden = self.rnn(x)

# getting output from the hidden state

out = out..view(-1, self.hidden_dim)

out = self.fc(out)

return out, hidden

RNN as "pure" feed-forward layers

-------------------------

But in this tutorial the hidden layer calculation is same as the standard interpretation, but the output is is calculated independently from the current hidden state `h`.

To me, the math behind this implementation is:

[![enter image description here][6]][6]

So, this implementation is different from the original RNN implementation?

[1]: https://i.stack.imgur.com/1IdH7.png

[2]: https://en.wikipedia.org/wiki/Recurrent_neural_network

[3]: https://i.stack.imgur.com/aJL7l.png

[4]: https://pytorch.org/tutorials/intermediate/char_rnn_classification_tutorial.html#creating-the-network

[5]: https://i.stack.imgur.com/mfjcp.png

[6]: https://i.stack.imgur.com/M3jgf.gif

@chsasank @zou3519

|

https://github.com/pytorch/tutorials/issues/566

|

closed

|

[] | 2019-07-20T07:49:30Z

| 2021-06-16T00:15:41Z

| 1

|

KinWaiCheuk

|

pytorch/xla

| 837

|

How to save/load a model trained with `torch_xla_py.data_parallel`

|

A `torch_xla_py.data_parallel` model doesn't have an implementation for the function `state_dict()` which is required to save/load the model. Is there a way around this?

Thanks

|

https://github.com/pytorch/xla/issues/837

|

closed

|

[] | 2019-07-18T17:23:42Z

| 2019-07-29T23:23:51Z

| null |

ibeltagy

|

pytorch/xla

| 836

|

how to read the output of `_xla_metrics_report`

|

This is not an issue, but I am curious how to read the metrics report printed by:

`print(torch_xla._XLAC._xla_metrics_report())`

I want to see if all the operations I am using are supported or not, and if there's something to do to speed it up.

Here's a sample output:

```

2019-07-18 17:15:45,048: ** ** * Saving fine-tuned model ** ** *

Metric: CompileTime

TotalSamples: 24

Counter: 07s501ms263.438us

ValueRate: 415ms845.224us / second

Rate: 1.53144 / second

Percentiles: 1%=092ms776.335us; 5%=116ms990.572us; 10%=184ms266.354us; 20%=185ms543.885us; 50%=270ms754.761us; 80%=415ms236.583us; 90%=416ms362.582us; 95%=417ms693.385us; 99%=417ms171.393us

Metric: ExecuteTime

TotalSamples: 1752

Counter: 07m04s113ms694.219us

ValueRate: 03s312ms257.946us / second

Rate: 25.5639 / second

Percentiles: 1%=068ms224.061us; 5%=069ms564.310us; 10%=069ms785.400us; 20%=069ms102.875us; 50%=181ms379.773us; 80%=189ms745.170us; 90%=191ms81.607us; 95%=194ms931.718us; 99%=225ms80.436us

Metric: InboundData

TotalSamples: 880

Counter: 3.44KB

ValueRate: 37.22B / second

Rate: 9.30398 / second

Percentiles: 1%=4.00B; 5%=4.00B; 10%=4.00B; 20%=4.00B; 50%=4.00B; 80%=4.00B; 90%=4.00B; 95%=4.00B; 99%=4.00B

Metric: OutboundData

TotalSamples: 1976

Counter: 4.09GB

ValueRate: 20.98MB / second

Rate: 10.5791 / second

Percentiles: 1%=4.00B; 5%=3.00KB; 10%=3.00KB; 20%=3.00KB; 50%=12.00KB; 80%=2.25MB; 90%=2.25MB; 95%=9.00MB; 99%=9.00MB

Metric: ReleaseCompileHandlesTime

TotalSamples: 14

Counter: 41s345ms872.979us

ValueRate: 02s970ms383.483us / second

Rate: 0.667202 / second

Percentiles: 1%=001ms416.541us; 5%=001ms416.541us; 10%=049ms618.232us; 20%=059ms4.227us; 50%=130ms959.829us; 80%=10s703ms744.276us; 90%=11s570ms353.038us; 95%=11s585ms908.001us; 99%=11s585ms908.001us

Metric: ReleaseDataHandlesTime

TotalSamples: 3409

Counter: 18s036ms534.459us

ValueRate: 101ms925.451us / second

Rate: 49.556 / second

Percentiles: 1%=643.545us; 5%=776.956us; 10%=879.853us; 20%=001ms27.498us; 50%=001ms436.214us; 80%=003ms823.050us; 90%=004ms105.973us; 95%=005ms355.654us; 99%=008ms39.847us

Metric: TransferFromServerTime

TotalSamples: 880

Counter: 09s893ms77.809us

ValueRate: 094ms23.901us / second

Rate: 9.30398 / second

Percentiles: 1%=001ms136.428us; 5%=001ms262.894us; 10%=001ms369.658us; 20%=002ms514.730us; 50%=002ms768.530us; 80%=002ms232.447us; 90%=055ms522.896us; 95%=063ms333.605us; 99%=071ms470.850us

Metric: TransferToServerTime

TotalSamples: 1976

Counter: 02m42s730ms811.808us

ValueRate: 670ms609.184us / second

Rate: 10.5653 / second

Percentiles: 1%=001ms358.042us; 5%=002ms672.558us; 10%=003ms745.003us; 20%=005ms642.737us; 50%=016ms554.496us; 80%=077ms944.343us; 90%=187ms107.324us; 95%=231ms913.229us; 99%=263ms297.246us

Counter: CachedSyncTensors

Value: 1736

Counter: CreateCompileHandles

Value: 17

Counter: CreateDataHandles

Value: 190064

Counter: CreateXlaTensor

Value: 2813256

Counter: DestroyCompileHandles

Value: 14

Counter: DestroyDataHandles

Value: 186616

Counter: DestroyXlaTensor

Value: 2809944

Counter: ReleaseCompileHandles

Value: 14

Counter: ReleaseDataHandles

Value: 186616

Counter: UncachedSyncTensors

Value: 24

Counter: XRTAllocateFromTensor_Empty

Value: 1629

Counter: XrtCompile_Empty

Value: 2176

Counter: XrtExecuteChained_Empty

Value: 2176

Counter: XrtExecute_Empty

Value: 2176

Counter: XrtRead_Empty

Value: 2176

Counter: XrtReleaseAllocationHandle_Empty

Value: 2176

Counter: XrtReleaseCompileHandle_Empty

Value: 2176

Counter: XrtSessionCount

Value: 25

Counter: XrtSubTuple_Empty

Value: 2176

Counter: aten::_local_scalar_dense

Value: 880

```

|

https://github.com/pytorch/xla/issues/836

|

closed

|

[] | 2019-07-18T17:21:05Z

| 2019-07-25T23:21:40Z

| null |

ibeltagy

|

pytorch/pytorch

| 23,015

|

I used libtorch to write resnet18 for training in C++, so how to load resnet18.pth in pytorch to help pre-training

|

## ❓ Questions and Help

### Please note that this issue tracker is not a help form and this issue will be closed.

We have a set of [listed resources available on the website](https://pytorch.org/resources). Our primary means of support is our discussion forum:

- [Discussion Forum](https://discuss.pytorch.org/)

I used libtorch to write resnet18 for training in C++, so how to load resnet18.pth in pytorch to help pre-training;

Cannot import using torch::load(resnet18,"./resnet18.pth")

Resnet18 written in c++ is correct

|

https://github.com/pytorch/pytorch/issues/23015

|

closed

|

[] | 2019-07-18T08:53:31Z

| 2019-07-18T08:57:16Z

| null |

CF-chen-feng-CF

|

huggingface/transformers

| 805

|

Where is "run_bert_classifier.py"?

|

Thanks for this great repo.

Is there any equivalent to [the previous run_bert_classifier.py](https://github.com/huggingface/pytorch-pretrained-BERT/tree/master/examples/run_bert_classifier.py)?

|

https://github.com/huggingface/transformers/issues/805

|

closed

|

[] | 2019-07-17T14:57:53Z

| 2020-12-02T15:59:46Z

| null |

amirj

|

pytorch/pytorch

| 22,858

|

What is the abbreviation of CI?

|

## ❓ Questions and Help

I ma sorry, I don't understand the sentence: ` On CI, we test with BUILD_SHARED_LIBS=OFF.`

What is the CI ?

What is the BUILD_SHARED_LIBS?

Could someone explain it for me?

Thank you very much!

|

https://github.com/pytorch/pytorch/issues/22858

|

closed

|

[] | 2019-07-15T07:11:37Z

| 2019-07-15T07:17:31Z

| null |

137996047

|

pytorch/pytorch

| 22,791

|

How to use mpi backend without CUDA_aware

|

We noticed that the MPI backend doesn't support the GPU from the official website,(https://pytorch.org/docs/master/distributed.html), then we complied the Pytorch with USE_MPI=1. The command line is `python3 main.py -a resnet50 --dist-url 'tcp://12.0.50.1:12348' --dist-backend 'mpi' --multiprocessing-distributed --world-size 2 --rank 0 /data/tiny-imagenet-200/`. However, we got the error message "CUDA tensor detected and the MPI used doesn't have CUDA-aware MPI support". I think the GPU-Direct is not enabled if used MPI backend, I don't know why it uses the CUDA tensor and CUDA-aware , seems GPU-Direct route. And how to set the args for avoiding the CUDA-aware. Thank you :)

|

https://github.com/pytorch/pytorch/issues/22791

|

open

|

[

"triaged",

"module: mpi"

] | 2019-07-12T08:09:15Z

| 2020-11-25T06:05:04Z

| null |

401qingkong

|

pytorch/pytorch

| 22,731

|

How to convert at::Tensor (one element) type into a float type in c++ libtorch?

|

When I take an element A (eg: x[1][2][3][4], also type at::Tensor) from a four-dimensional variable x(at::Tensor), I want to compare (or multiply) A and B (float type) , how do I convert A (at::Tensor) to float? Thanks a lot!

|

https://github.com/pytorch/pytorch/issues/22731

|

closed

|

[] | 2019-07-11T05:33:49Z

| 2023-06-16T06:44:22Z

| null |

FightStone

|

pytorch/pytorch

| 22,709

|

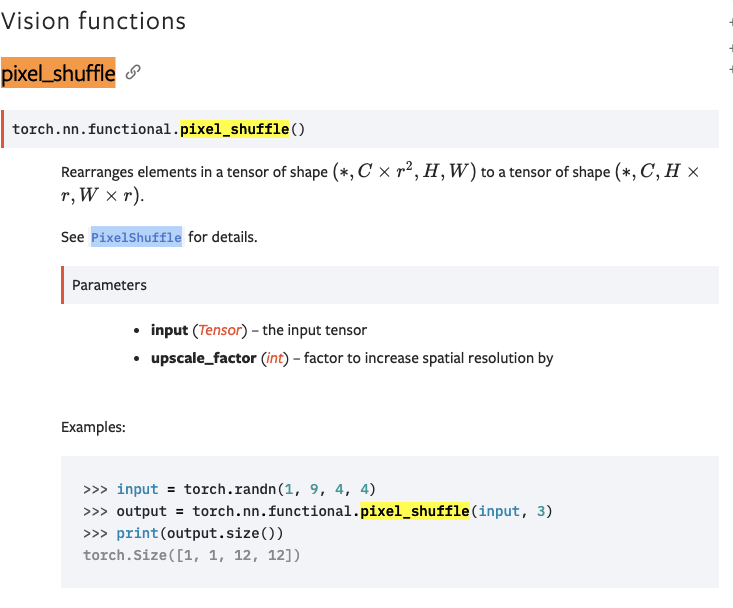

[docs] Unclear how to use pixel_shuffle

|

## 📚 Documentation

The function is documented as taking no inputs, but uses inputs in the example.

|

https://github.com/pytorch/pytorch/issues/22709

|

closed

|

[

"module: docs",

"triaged"

] | 2019-07-10T22:28:19Z

| 2020-10-06T10:53:53Z

| null |

zou3519

|

pytorch/examples

| 590

|

Why normalize rewards?

|

In [line 75](https://github.com/pytorch/examples/blob/master/reinforcement_learning/actor_critic.py#L75) of actor-critic.py, there is a code that normalizes the rewards. However, we don't normalize the values returned from the critic. Why do we do this?

|

https://github.com/pytorch/examples/issues/590

|

closed

|

[] | 2019-07-10T12:06:56Z

| 2022-03-09T23:58:34Z

| 1

|

ThisIsIsaac

|

pytorch/tutorials

| 553

|

improve pytorch tutorial for Data Parallelism

|

in this tutorial for data parallel ([link](https://pytorch.org/tutorials/beginner/blitz/data_parallel_tutorial.html))

it can be useful if you can add how to handle loss function for the case that we are using multiple gpus.

usually naive way will cause unbalance gpu memory usage

|

https://github.com/pytorch/tutorials/issues/553

|

open

|

[] | 2019-07-08T21:04:57Z

| 2021-10-28T13:15:08Z

| 3

|

isalirezag

|

pytorch/examples

| 586

|

syntax for evaluation mode on custom data?

|

I fine-tuned a model on a custom dataset that was pretrained with Imagenet. Now that I have the model and resulting best epoch in a pth file. What is the correct syntax for evaluation on the validation dataset using my new model. I know I need to set the '-e' flag, butI don't see how to point the script to my newly-trained model. Apologies if this is a novice issue.

|

https://github.com/pytorch/examples/issues/586

|

closed

|

[] | 2019-07-06T16:41:18Z

| 2022-03-10T05:54:16Z

| 1

|

gbrow004

|

pytorch/pytorch

| 22,549

|

How to programmatically check PyTorch version

|

## 📚 Documentation

[Minor minor detail]

In the reproducibility section of the docs or in the FAQ, I would add a simple subsection/snippet of code to show how to programmatically check the running version of PyTorch.

This can also encourage users to take into account heterogeneity of PyTorch versions in their code.

By the way, a simple regex on `torch.__version__` is enough (this assuming version numbering will not change).

```python

import torch

import re

if int(re.search(r'([\d.]+)', torch.__version__).group(1).replace('.', '')) < 100:

raise ImportError('Your PyTorch version is not supported. '

'Please download and install PyTorch 1.x')

```

|

https://github.com/pytorch/pytorch/issues/22549

|

closed

|

[

"module: docs",

"triaged",

"enhancement"

] | 2019-07-05T15:02:31Z

| 2019-11-16T11:55:47Z

| null |

srossi93

|

pytorch/xla

| 803

|

How to monitor the TPU utilization and memory usage when training?

|

https://github.com/pytorch/xla/issues/803

|

closed

|

[

"question"

] | 2019-07-05T11:19:51Z

| 2021-11-15T18:12:41Z

| null |

anhle-uet

|

|

pytorch/xla

| 802

|

Is there any detailed document on how to build and train Pytorch network on TPU?

|

Hi, I couldn't find any detailed guide or tutorial on how to use this project. Could you point me out some? Thanks a lot!

|

https://github.com/pytorch/xla/issues/802

|

closed

|

[] | 2019-07-05T05:27:11Z

| 2019-07-05T05:46:57Z

| null |

anhle-uet

|

pytorch/examples

| 584

|

in DCGAN example, Why do we need to make netD inference twice?

|

To my understanding, the two lines nearly have the same functionality (same input, same output) except in the first inference `fake` is detached.

https://github.com/pytorch/examples/blob/1de2ff9338bacaaffa123d03ce53d7522d5dcc2e/dcgan/main.py#L229

https://github.com/pytorch/examples/blob/1de2ff9338bacaaffa123d03ce53d7522d5dcc2e/dcgan/main.py#L241

Is it necessary to make netD inference twice with the same input?

What if we re-use the output of the first inference (with fake input) to calculate `errG`?

instead of the original codes:

```

############################

# (1) Update D network: maximize log(D(x)) + log(1 - D(G(z)))

###########################

# train with real

netD.zero_grad()

real_cpu = data[0].to(device)

batch_size = real_cpu.size(0)

label = torch.full((batch_size,), real_label, device=device)

output = netD(real_cpu)

errD_real = criterion(output, label)

errD_real.backward()

D_x = output.mean().item()

# train with fake

noise = torch.randn(batch_size, nz, 1, 1, device=device)

fake = netG(noise)

label.fill_(fake_label)

output = netD(fake.detach())

errD_fake = criterion(output, label)

errD_fake.backward()

D_G_z1 = output.mean().item()

errD = errD_real + errD_fake

optimizerD.step()

############################

# (2) Update G network: maximize log(D(G(z)))

###########################

netG.zero_grad()

label.fill_(real_label) # fake labels are real for generator cost

output = netD(fake)

errG = criterion(output, label)

errG.backward()

D_G_z2 = output.mean().item()

optimizerG.step()

```

can we re-write them as following?

```

############################

# (1) Update D network: maximize log(D(x)) + log(1 - D(G(z)))

###########################

# train with real

netD.zero_grad()

real_cpu = data[0].to(device)

batch_size = real_cpu.size(0)

label = torch.full((batch_size,), real_label, device=device)

real_output = netD(real_cpu)

errD_real = criterion(real_output, label)

errD_real.backward()

D_x = real_output.mean().item()

# train with fake

noise = torch.randn(batch_size, nz, 1, 1, device=device)

fake = netG(noise)

label.fill_(fake_label)

# output = netD(fake.detach())

fake_output = netD(fake)

errD_fake = criterion(fake_output, label)

#errD_fake.backward()

errD_fake.backward(retain_graph=True)

D_G_z1 = fake_output.mean().item()

errD = errD_real + errD_fake

optimizerD.step()

############################

# (2) Update G network: maximize log(D(G(z)))

###########################

netG.zero_grad()

netD.zero_grad()

label.fill_(real_label) # fake labels are real for generator cost

# output = netD(fake)

errG = criterion(fake_output, label)

errG.backward()

D_G_z2 = fake_output.mean().item()

optimizerG.step()

```

|

https://github.com/pytorch/examples/issues/584

|

closed

|

[] | 2019-07-04T08:37:17Z

| 2022-03-10T00:37:26Z

| 7

|

DreamChaserMXF

|

pytorch/xla

| 797

|

How to use LR schedulers?

|

Can you provide an example of how to use torch.optim LR schedulers with Pytorch/XLA? I've been basing my code on the [test examples](https://github.com/pytorch/xla/tree/master/test), but not sure where to define the LR scheduler and where to step the scheduler since it looks like the optimizer is re-initialized each training loop. Thanks!

|

https://github.com/pytorch/xla/issues/797

|

closed

|

[] | 2019-07-04T04:41:29Z

| 2019-07-10T05:32:16Z

| null |

brianhhu

|

pytorch/examples

| 582

|

[How to write nn.ModuleList() in Pytorch C++ API]

|

Hi,

How can i write nn.Modulelist() using Pytorch C++?

@goldsborough

@soumith

Any help would be great.

Thank you

|

https://github.com/pytorch/examples/issues/582

|

open

|

[

"c++"

] | 2019-07-03T05:55:19Z

| 2022-03-09T20:49:35Z

| null |

vinayak618

|

pytorch/xla

| 793

|

How to use a cluster of tpus?

|

Hi,

The documentation described the use case with only one cloud tpu. However, in tensorflow it is possible to setup multiple TPU's via TPUClusterResolver. So, how to setup pytorch-xla for training on a multiple tpu devices?. In my case it is 25 preemptible v2-8 TPUs.

|

https://github.com/pytorch/xla/issues/793

|

closed

|

[] | 2019-07-02T14:43:29Z

| 2019-07-10T05:37:19Z

| null |

Rexhaif

|

pytorch/tutorials

| 549

|

How to combine Rescale with other transforms.RandomHorizontalFlip?

|

I am following the tutorial, but meet the problem:` File "/home/swg/anaconda3/envs/pytorch/lib/python3.6/site-packages/torchvision/transforms/transforms.py", line 49, in __call__

img = t(img)

File "/home/swg/anaconda3/envs/pytorch/lib/python3.6/site-packages/torchvision/transforms/transforms.py", line 448, in __call__

return F.hflip(img)

File "/home/swg/anaconda3/envs/pytorch/lib/python3.6/site-packages/torchvision/transforms/functional.py", line 345, in hflip

raise TypeError('img should be PIL Image. Got {}'.format(type(img)))

TypeError: img should be PIL Image. Got <class 'dict'>

` How to solve? Thx

|

https://github.com/pytorch/tutorials/issues/549

|

closed

|

[] | 2019-07-01T09:47:31Z

| 2021-06-16T16:20:20Z

| null |

swg209

|

pytorch/pytorch

| 22,381

|

How to apply transfer learning for custom object detection ?

|

## ❓ Questions and Help

Is there an example to apply transfer learning for **custom** object detection.

[https://pytorch.org/tutorials/beginner/transfer_learning_tutorial.html](https://pytorch.org/tutorials/beginner/transfer_learning_tutorial.html)

Reference:-

https://www.learnopencv.com/faster-r-cnn-object-detection-with-pytorch/

|

https://github.com/pytorch/pytorch/issues/22381

|

closed

|

[

"triaged",

"module: vision"

] | 2019-06-30T16:44:47Z

| 2019-07-01T21:38:00Z

| null |

spatiallysaying

|

pytorch/tutorials

| 548

|

how to set two inputs for caffe2

|

when I try to run the model on mobile devices by ONNX, I read the code in official turorials as follow:

`import onnx`

`import caffe2.python.onnx.backend as onnx_caffe2_backend`

`model = onnx.load("super_resolution.onnx")`

`prepared_backend = onnx_caffe2_backend.prepare(model)`

`W = {model.graph.input[0].name: x.data.numpy()}`

`c2_out = prepared_backend.run(W)[0]`

the input in this demo is x, but what should i do if i want to set two inputs?

thanks for you attention!

|

https://github.com/pytorch/tutorials/issues/548

|

closed

|

[] | 2019-06-30T11:55:22Z

| 2019-07-31T05:11:54Z

| null |

mmmmayi

|

huggingface/transformers

| 739

|

where is "pytorch_model.bin"?

|

https://github.com/huggingface/transformers/issues/739

|

closed

|

[

"wontfix"

] | 2019-06-28T15:09:50Z

| 2019-09-03T17:19:30Z

| null |

jufengada

|

|

pytorch/examples

| 579

|

Implementing some C++ examples

|

Hi @soumith I want to implement some examples for C++ side. I want to start with an example of loading dataset with OpenCV and another example for RNN.

There is this [PR](https://github.com/pytorch/examples/pull/506) but it is not active and the example doesn't contain training. Should I write one myself?

|

https://github.com/pytorch/examples/issues/579

|

open

|

[

"c++"

] | 2019-06-27T07:14:40Z

| 2022-03-09T20:49:35Z

| 4

|

ShahriarRezghi

|

pytorch/pytorch

| 22,290

|

How to define a new backward function in libtorch ?

|

## ❓Is it possible to define a backward function libtorch ?

### In pytorch, a new backward function can be defined

`

class new_function(torch.autograd.Function):

def ....

def forward(self,...)

def backward(self, ...)

`

However, in libtorch, how to define a new backward function?

|

https://github.com/pytorch/pytorch/issues/22290

|

closed

|

[

"module: cpp",

"triaged"

] | 2019-06-27T03:05:20Z

| 2019-06-29T05:13:07Z

| null |

buduo15

|

pytorch/pytorch

| 22,249

|

How to convert pytorch0.41 model to CAFFE

|

https://github.com/pytorch/pytorch/issues/22249

|

closed

|

[] | 2019-06-26T03:25:03Z

| 2019-06-26T03:28:12Z

| null |

BokyLiu

|

|

pytorch/tutorials

| 543

|

Can I translate this tutorial and make a book?

|

Hello sir,

As the title says, can I translate this whole tutorial into S.Korean and make a book?

I've noticed that this project is BSD licensed but the first thing to do will be asking you for permission.

It would be a great & fun job for me and I'm in a plan to donate the book royalty to charity.

|

https://github.com/pytorch/tutorials/issues/543

|

closed

|

[] | 2019-06-24T12:49:48Z

| 2019-08-20T11:03:06Z

| 0

|

amsukdu

|

pytorch/examples

| 578

|

DCGAN BatchNorm initialization weight looks different

|

Hi there,

I used the `torch.utils.tensorboard` to watch the weight/grad when training the DCGAN example on MNIST dataset.

In the DCGAN example, we use the normal distribution to initialize both the weight of Conv and BatchNorm. However, I find it is strange when I visualize the weight of them. In the following figure, it seems that the `G/main/1/weight` (BatchNorm) is not initialized with the normal distribution because it looks so different from `G/main/0/weight` (ConvTranspose2d). It has been trained for 10 iters with batch size 64.

Could someone explain this?

The related tensorboard code is copied from [here](https://github.com/yunjey/pytorch-tutorial/blob/master/tutorials/04-utils/tensorboard/main.py):

```python

# logging weight and grads

for tag, value in netD.named_parameters():

tag = 'D/' + tag.replace('.', '/')

writer.add_histogram(tag, value.data.cpu().numpy(), global_step)

writer.add_histogram(tag+'/grad', value.grad.data.cpu().numpy(), global_step)

for tag, value in netG.named_parameters():

tag = 'G/' + tag.replace('.', '/')

writer.add_histogram(tag, value.data.cpu().numpy(), global_step)

writer.add_histogram(tag+'/grad', value.grad.data.cpu().numpy(), global_step)

```

|

https://github.com/pytorch/examples/issues/578

|

open

|

[

"question"

] | 2019-06-22T09:51:44Z

| 2022-03-10T05:48:52Z

| 0

|

daa233

|

pytorch/tutorials

| 541

|

Sphinx error (builder name data not registered)

|

Notebooks for beginner and intermediate tutorials have been generated fine. When building advanced tutorial I have problems: 1) need to install torchaudio in a hard way (no easy way, need to compile from sources and hack some command to be completed, but checked - python can import torchaudio); 2) when running "make data" I am getting Sphinx error:

make data

Running Sphinx v2.1.2

Traceback (most recent call last):

File "/usr/lib/python3.7/site-packages/sphinx/registry.py", line 145, in preload_builder

entry_point = next(entry_points)

StopIteration

During handling of the above exception, another exception occurred:

Traceback (most recent call last):

File "/usr/lib/python3.7/site-packages/sphinx/cmd/build.py", line 283, in build_main

args.tags, args.verbosity, args.jobs, args.keep_going)

File "/usr/lib/python3.7/site-packages/sphinx/application.py", line 238, in __init__

self.preload_builder(buildername)

File "/usr/lib/python3.7/site-packages/sphinx/application.py", line 315, in preload_builder

self.registry.preload_builder(self, name)

File "/usr/lib/python3.7/site-packages/sphinx/registry.py", line 148, in preload_builder

' through entry point') % name)

sphinx.errors.SphinxError: Builder name data not registered or available through entry point

|

https://github.com/pytorch/tutorials/issues/541

|

closed

|

[] | 2019-06-22T01:29:50Z

| 2021-06-16T16:27:17Z

| 2

|

olegmikul

|

pytorch/tutorials

| 539

|

how to create custom dataloader for with labels of varying length?

|

My image labels are in .csv format and it has labels in varying order.

i have created custom dataset. My labels are in separate csv files and they have varying length.

I have tried making customdataset and then dataloader.

I want to do transfer learning with Resnet. Can anyonehelp, I am a beginner to both pytorch and deep learning.

Upto this step, Everything is fine, However, when i create dataloader,

it shows problem. Can anyone help? i am a beginner.

|

https://github.com/pytorch/tutorials/issues/539

|

closed

|

[] | 2019-06-21T11:33:27Z

| 2021-07-30T22:48:21Z

| null |

nisnab

|

huggingface/neuralcoref

| 175

|

what version of python is required to run the script ?

|

I did try to run the script as described in CoNLL 2012 to produce the *._conll files.

However without any success.

the command:

skeleton2conll.sh -D [path_to_ontonotes_train_folder] [path_to_skeleton_train_folder]

always returns that "please make sure that you are pointing to the directory 'conll-2012'"

I did all as pointed on the web site and according other posts about that, which i did check.

I work under windows 10 , so to run .sh file I use cygwin.

However, i'm not sure what version of python I need , is it 2.7 or 3.xx and is this related to the issue i have?

Any help is welcome.

|

https://github.com/huggingface/neuralcoref/issues/175

|

closed

|

[

"training",

"usage"

] | 2019-06-20T16:01:14Z

| 2019-09-26T12:31:16Z

| null |

dtsonov

|

pytorch/tutorials

| 535

|

Under which license are the images?

|

Hi,

Under which license are the style and content images? I want to use your tutorial in an open source repository and wonder if the images won't change the license of my repo.

Thanks!

|

https://github.com/pytorch/tutorials/issues/535

|

open

|

[] | 2019-06-19T12:21:57Z

| 2019-06-19T12:21:57Z

| 0

|

HyamsG

|

pytorch/pytorch

| 21,962

|

How to deploy pytorch???

|

How to deploy pytorch???

|

https://github.com/pytorch/pytorch/issues/21962

|

closed

|

[] | 2019-06-19T08:47:10Z

| 2019-06-19T14:22:20Z

| null |

yuanjie-ai

|

pytorch/pytorch

| 21,945

|

how to use mkl-dnn after installing by conda?

|

## ❓ Questions and Help

### Please note that this issue tracker is not a help form and this issue will be closed.

We have a set of [listed resources available on the website](https://pytorch.org/resources). Our primary means of support is our discussion forum:

- [Discussion Forum](https://discuss.pytorch.org/)

how to use mkl-dnn after installing by conda "conda install mkl-dnn"? And I don`t know what to do in the next step for using mkl-dnn on the pytorch?

|

https://github.com/pytorch/pytorch/issues/21945

|

closed

|

[] | 2019-06-19T02:53:45Z

| 2019-06-20T21:57:03Z

| null |

HITerStudy

|

huggingface/neuralcoref

| 172

|

Accuracy Report of model

|

Hi,

I am doing a research on co-reference resolution and comparing different models currently available. I have been looking for an accuracy measure of your model but couldn't find it in your GitHub Repo. description nor in your medium blog.

Can you report how much accuracy (F1/Precision/Recall) have you achieved using this model and on which test dataset?

Thank you!

|

https://github.com/huggingface/neuralcoref/issues/172

|

closed

|

[

"question",

"wontfix",

"perf / accuracy"

] | 2019-06-18T07:26:35Z

| 2019-10-16T08:48:23Z

| null |

uahmad235

|

pytorch/pytorch

| 21,630

|

How to accerlate dataloader?

|

how to accelerate dataloader?

When we load data like :

for i, data in enumerate(dataset):

data.to(gpu)

if we transfer data to gpu, the operation takes a lot of time.

how can we get data stored in gpu directly? Or any other ways to accelerate the dataloader

|

https://github.com/pytorch/pytorch/issues/21630

|

closed

|

[] | 2019-06-11T14:19:14Z

| 2019-06-11T18:57:33Z

| null |

luhc15

|

pytorch/pytorch

| 21,583

|

where is boradcast.h after installation caffe2

|

I'm trying to run cpp program with caffe2 in ubuntu 16.04.

I installed caffe2 according to the official guide and checked that it is working properly.

In my case, I linked caffe2 & c10 libs and set include dir as `/usr/local/lib/python*.*/dist-packages/torch/include/`

Then I confronted with below error.

```bash

In file included from /usr/include/caffe2/utils/filler.h:8:0,

from /usr/include/caffe2/core/operator_schema.h:16,

from /usr/include/caffe2/core/net.h:18

/usr/include/caffe2/utils/math.h:18:41: fatal error: caffe2/utils/math/broadcast.h: No such file or directory

```

In build progress, the whole directory `/caffe2/core/utils` is omitted. Is there any reason?

If I can get, any solution to this problem?

|

https://github.com/pytorch/pytorch/issues/21583

|

closed

|

[

"caffe2"

] | 2019-06-10T08:11:43Z

| 2020-04-17T07:52:10Z

| null |

helloahn

|

pytorch/pytorch

| 21,571

|

How to retrieve hidden states for all time steps in LSTM or BiLSTM?

|

How to retrieve hidden states for all time steps in LSTM or BiLSTM?

|

https://github.com/pytorch/pytorch/issues/21571

|

closed

|

[] | 2019-06-09T08:49:29Z

| 2019-06-09T22:12:28Z

| null |

gongel

|

pytorch/pytorch

| 21,551

|

How to disable MKL-DNN 64-bit compilation?

|

My build from current source on RPi 3B fails because the compilation is selecting the 64-bit option for the Intel MKL-DNN library. Is there an _option/flag_ to disable this selection during the **make** process?

Thanks.

```

-- MIOpen not found. Compiling without MIOpen support

% Total % Received % Xferd Average Speed Time Time Time Current

Dload Upload Total Spent Left Speed

100 621 0 621 0 0 1408 0 --:--:-- --:--:-- --:--:-- 1411

100 66.4M 100 66.4M 0 0 8019k 0 0:00:08 0:00:08 --:--:-- 9169k

Downloaded and unpacked Intel(R) MKL small libraries to /home/pi/projects/pytorch/third_party/ideep/mkl-dnn/external

CMake Error at third_party/ideep/mkl-dnn/CMakeLists.txt:59 (message):

Intel(R) MKL-DNN supports 64 bit platforms only

```

|

https://github.com/pytorch/pytorch/issues/21551

|

closed

|

[

"module: build",

"triaged",

"module: mkldnn"

] | 2019-06-08T00:25:42Z

| 2019-09-10T08:46:33Z

| null |

baqwas

|

pytorch/pytorch

| 21,477

|

Not obvious how to install torchvision with PyTorch source build

|

Previously, it used to be possible to build PyTorch from source, and then `pip install torchvision` and get torchvision available. Now that torchvision is binary distributions, this no longer works; to make matters worse, it explodes in non-obvious ways.

When I had an existing install of torchvision 0.3.0, I got this error:

```

ImportError: /scratch/ezyang/pytorch-tmp-env/lib/python3.7/site-packages/torchvision/_C.cpython-37m-x86_64-linux-gnu.so: u

ndefined symbol: _ZN3c106Device8validateEv

```

I reinstalled torchvision with `pip install torchvision`. Then I got this error:

```

File "/scratch/ezyang/pytorch-tmp-env/lib/python3.7/site-packages/torchvision/ops/boxes.py", line 2, in <module>

from torchvision import _C

ImportError: libcudart.so.9.0: cannot open shared object file: No such file or directory

```

(I'm on a CUDA 10 system).

In the end, I cloned torchvision and built/installed it from source.

|

https://github.com/pytorch/pytorch/issues/21477

|

open

|

[

"triaged",

"module: vision"

] | 2019-06-06T18:04:12Z

| 2019-06-11T22:10:40Z

| null |

ezyang

|

pytorch/pytorch

| 21,456

|

Where is the algorithm for conv being selected?

|

## ❓ Questions and Help

### Please note that this issue tracker is not a help form and this issue will be closed.