| repo_name | issue_id | text |
|---|---|---|

jhipster/jhipster-registry | 558169619 | Title: Links for logs and loggers are incorrect

Question:

username_0:

##### **Overview of the issue**

The links for Logs and Loggers seem to be swapped. Clicking Logs displays the Loggers screen, and vice versa.

##### **Motivation for or Use Case**

Each link in the navigation bar should open the page that matches its label.

##### **Reproduce the error**

I checked out the v6.0.2 tag from GitHub and ran JHipster Registry in development mode (`./mvnw -Pdev,webpack`).

##### **Related issues**

None that I could find.

##### **Suggest a Fix**

Index: src/main/webapp/app/layouts/navbar/navbar.component.html

IDEA additional info:

Subsystem: com.intellij.openapi.diff.impl.patch.CharsetEP

<+>UTF-8

===================================================================

--- src/main/webapp/app/layouts/navbar/navbar.component.html (revision c6e8a17a35495d7487af6781d0e35bc9b7844205)

+++ src/main/webapp/app/layouts/navbar/navbar.component.html (date 1580433952757)

@@ -103,7 +103,7 @@

</a>

</li>

<li>

- <a class="dropdown-item" routerLink="admin/logs" routerLinkActive="active" (click)="collapseNavbar()">

+ <a class="dropdown-item" routerLink="admin/logfile" routerLinkActive="active" (click)="collapseNavbar()">

<fa-icon icon="file-alt" [fixedWidth]="true"></fa-icon>

<span>Logs</span>

</a>

@@ -115,7 +115,7 @@

</a>

</li>

<li>

- <a class="dropdown-item" routerLink="admin/logfile" routerLinkActive="active" (click)="collapseNavbar()">

+ <a class="dropdown-item" routerLink="admin/logs" routerLinkActive="active" (click)="collapseNavbar()">

<fa-icon icon="tasks" [fixedWidth]="true"></fa-icon>

<span>Loggers</span>

</a>

##### **JHipster Registry Version(s)**

v6.0.2

##### **Browsers and Operating System**

Chrome, MacOS

- [x] Checking this box is mandatory (this is just to show you read everything)

Answers:

username_1: I confirm it!

This bug was introduced by #391.

When I synchronized the registry with JHipster 6.5.0, the links for Logs and Loggers were overwritten.

Status: Issue closed

|

godotengine/webrtc-native | 728825911 | Title: RPC calls failing when integrating webrtc-native into bomber-rtc project

Question:

username_0: While prototyping multiplayer with Godot, I originally had a working example based on the bomberman example codebase, doing NAT punchthrough with a rendezvous server on top of Godot's ENet implementation. However, after some research I realized how naive my implementation was, and since I didn't want to dive into implementing ICE on top of ENet (just yet), I figured it was worth seeing whether WebRTC would serve my purposes.

Using a combination of the `godot-example-project`'s `webrtc-signaling` example, @Faless's `bomber-rtc` example, `webrtc-native`, and my existing codebase, I went to work transitioning everything over to WebRTC. For what it's worth, I'm currently testing with 3.2.3-stable and have four different machines interacting:

- Windows 10 (local)

- Ubuntu 20.04 LTS (local)

- OSX 10.14.5 (local)

- Ubuntu 20.04 LTS (DigitalOcean droplet running headless)

The issues I have seem to stem from having more than two peers connecting. I went through a pretty lengthy process of trying different combinations of systems being the "host" (for what that means in the webrtc sense with `server_compatibility = true`), with and without the non local headless instance to determine if it was an issue with ICE/NAT punchthrough.

The issues always seemed to come up once the ICE candidate process had completed and `connected_ok` was called from `gamestate.gd`. I've greatly simplified `gamestate`, essentially stopping it before the `register_player` calls, since those were what occasionally failed. Instead, after receiving `connected_ok`, each of the "clients" starts a loop of RPC calls, each sending the string `ping+n`, where `n` is an incrementing number. The other connected clients, as well as the server, should then receive:

```
ping+1
ping+2
ping+3
ping+4
ping+5
ping+6
...
```

Instead, occasionally one of these RPC calls seems to get dropped, and instead of `ping+n` I just get a blank line written to the debug log, like this:

```
ping+1
ping+2
ping+3
ping+5
ping+6
...
```

The drops seem random: a call dropped on one receiver (be it another client or the server) is not necessarily missed by another receiver. This leads me to believe there is _something_ happening on the webrtc-native RPC reception side that's causing the miss.

I've narrowed this down to webrtc-native because last night I generated HTML5 exports on the three local machines, which use the same high-level WebRTC multiplayer code base but not webrtc-native, and I have not been able to recreate the issue once. I ran roughly 20 iterations, switching back and forth between the HTML5 and native exports, and the native export always failed a few times when sending 25 ping RPC calls following `connected_ok`.

I understand this is a pretty large wall of text. I can certainly put together a reproducible test if someone else has at least three separate machines that can run HTML5 and native tests, but I figured it was worthwhile to post my findings and get a conversation going first.
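To tell a genuinely dropped call apart from a logging glitch, each receiver's log can be checked against the expected `ping+n` sequence. The Python sketch below is a hypothetical helper, not part of Godot or webrtc-native; it assumes one received payload per line in the `ping+n` format from the report and simply lists the sequence numbers that never arrived.

```python
import re

# Matches payloads of the form "ping+<n>" as described in the report.
PING_RE = re.compile(r"^ping\+(\d+)$")


def find_missing_pings(lines, total=None):
    """Return the sequence numbers 1..total that never appear in `lines`.

    If `total` is None, the highest number seen is used instead, so drops
    after the last received ping cannot be detected in that case.
    """
    seen = set()
    for line in lines:
        match = PING_RE.match(line.strip())
        if match:
            seen.add(int(match.group(1)))
    last = total if total is not None else max(seen, default=0)
    return [n for n in range(1, last + 1) if n not in seen]


# One receiver's log from the report: ping+4 was replaced by a blank line.
received = ["ping+1", "ping+2", "ping+3", "", "ping+5", "ping+6"]
print(find_missing_pings(received))  # [4]
```

Running it once per receiver with `total=25` (the number of pings sent in the test above) would show directly whether different receivers miss different calls.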

Answers:

username_1: I think I was able to reproduce something identical to this in https://github.com/godotengine/godot-demo-projects/pull/667 |

bbonnin/zeppelin-mongodb-interpreter | 298259685 | Title: error while integrating mongodb interpreter into zeppelin

Question:

username_0: org.apache.thrift.TApplicationException: Internal error processing getFormType

at org.apache.thrift.TApplicationException.read(TApplicationException.java:111)

at org.apache.thrift.TServiceClient.receiveBase(TServiceClient.java:71)

at org.apache.zeppelin.interpreter.thrift.RemoteInterpreterService$Client.recv_getFormType(RemoteInterpreterService.java:337)

at org.apache.zeppelin.interpreter.thrift.RemoteInterpreterService$Client.getFormType(RemoteInterpreterService.java:323)

at org.apache.zeppelin.interpreter.remote.RemoteInterpreter.getFormType(RemoteInterpreter.java:446)

at org.apache.zeppelin.interpreter.LazyOpenInterpreter.getFormType(LazyOpenInterpreter.java:111)

at org.apache.zeppelin.notebook.Paragraph.jobRun(Paragraph.java:387)

at org.apache.zeppelin.scheduler.Job.run(Job.java:175)

at org.apache.zeppelin.scheduler.RemoteScheduler$JobRunner.run(RemoteScheduler.java:329)

at java.util.concurrent.Executors$RunnableAdapter.call(Executors.java:511)

at java.util.concurrent.FutureTask.run(FutureTask.java:266)

at java.util.concurrent.ScheduledThreadPoolExecutor$ScheduledFutureTask.access$201(ScheduledThreadPoolExecutor.java:180)

at java.util.concurrent.ScheduledThreadPoolExecutor$ScheduledFutureTask.run(ScheduledThreadPoolExecutor.java:293)

at java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1149)

at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:624)

at java.lang.Thread.run(Thread.java:748)

Answers:

username_1: @username_0 could you provide the version of Zeppelin you are using? Thanks!

username_0: zeppelin version 0.7.3

Thanks

username_0: Hi, I'm using Zeppelin 0.7.3 and I'm facing a problem while executing a query with the MongoDB interpreter.

Thanks


username_2: Hi, I faced the same problem while developing my own interpreter with Zeppelin 0.8.0. Did you solve this problem, and which Zeppelin version are you using? Thanks!

username_2: I solved this problem by not using the Spring Framework to develop the interpreter. |

kemitix/thorp | 512997534 | Title: Create and use a cache of hashes for local files

Question:

username_0: The cache would allow a quick check of a file's last-modified time, so the cached hash can be reused instead of recalculating it. On remote storage, or even on a non-SSD drive, recalculating hashes can be slow for a lot of large files. |
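For the hash-cache proposal in the thorp issue, a minimal Python sketch, assuming the cache key is the file path and an entry is reused only while both the last-modified time and the size are unchanged. MD5 is used purely as an example digest; `HashCache`, `CacheEntry`, and the JSON cache-file layout are hypothetical, not thorp's actual design.

```python
import hashlib
import json
import os
from dataclasses import dataclass


@dataclass
class CacheEntry:
    mtime_ns: int  # last-modified time when the hash was computed
    size: int      # file size when the hash was computed
    digest: str    # hex digest of the file contents


class HashCache:
    """Path -> hash cache that only rehashes files whose mtime or size changed."""

    def __init__(self):
        self._entries = {}
        self.computed = 0  # how many times a file was actually read and hashed

    def _compute(self, path):
        h = hashlib.md5()
        with open(path, "rb") as f:
            for chunk in iter(lambda: f.read(1 << 20), b""):  # read in 1 MiB chunks
                h.update(chunk)
        self.computed += 1
        return h.hexdigest()

    def get_hash(self, path):
        st = os.stat(path)
        entry = self._entries.get(path)
        if entry and entry.mtime_ns == st.st_mtime_ns and entry.size == st.st_size:
            return entry.digest  # cheap stat check matched: skip rehashing
        digest = self._compute(path)
        self._entries[path] = CacheEntry(st.st_mtime_ns, st.st_size, digest)
        return digest

    def save(self, cache_file):
        data = {p: [e.mtime_ns, e.size, e.digest] for p, e in self._entries.items()}
        with open(cache_file, "w") as f:
            json.dump(data, f)

    @classmethod
    def load(cls, cache_file):
        cache = cls()
        if os.path.exists(cache_file):
            with open(cache_file) as f:
                for path, (mtime_ns, size, digest) in json.load(f).items():
                    cache._entries[path] = CacheEntry(mtime_ns, size, digest)
        return cache
```

Comparing the size as well as the mtime is a cheap extra guard on filesystems with coarse timestamps; a file rewritten with the same size within the same timestamp tick would still be missed, so a sync tool may want a way to force a full rehash.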

BEEmod/BEE2-items | 495407379 | Title: lightbridges that have the properties of the custom fizzlers

Question:

username_0: Basically what the title says: orange ones would act like normal fizzlers, green ones would be solid to physics objects, white ones would let nothing through, and so on.

Answers:

username_1: Can't do that, and why would you want it?

Status: Issue closed

|

Azure/azure-powershell | 419327387 | Title: I need to create Python Azure Function using Az PowerShell

Question:

username_0: Hi,

I need to create a Python Azure Function using an Az PowerShell command. Could you please let me know which command does this?

The Azure CLI command I am using is below, but I need an Az PowerShell command for this:

`az functionapp createpreviewapp -n buhler-uat-we-snapit-diehole-algorithm -g buhler-uat-snapit-dieholealgorithm -l "westeurope" -s buhleruatsnapitalgo --runtime python --is-linux`

Answers:

username_1: @sphibss @sisrap Can you please respond about Functions support in Azure PowerShell?

username_2: @ahmedelnably can help you here.

username_3: @username_0 a couple of weeks ago we published a first preview of the Az.Functions module for PowerShell. Please give it a try and let us know what you think.

`Install-Module -Name Az.Functions -AllowPrerelease`

username_4: Closing this because of the new Az.Functions module.

Status: Issue closed

username_3: Hi @username_0

The Azure PowerShell product team is looking for customers who are willing to try some of the newest Azure PowerShell features for Azure Functions and provide feedback.

If you are interested in talking with Azure PowerShell product team on this topic, fill out this short survey (https://microsoft.qualtrics.com/jfe/form/SV_0PATZ9XFWS8S82V?Q_CHL=github) and include your email at the end. We will contact you to set up a Teams call if you are a good fit for this study.

Thank you!

Damien |

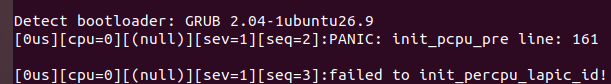

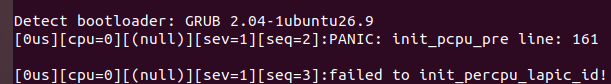

projectacrn/acrn-hypervisor | 866548290 | Title: ACRN panic when boot acrn-hypervisor in MAXTANG WHL WL10 board

Question:

username_0: **Describe the bug**

When I follow the ACRN document [Getting Started Guide for ACRN Industry Scenario with Ubuntu Service VM](https://projectacrn.github.io/2.0/getting-started/rt_industry_ubuntu.html) with ACRN v2.0 on a MAXTANG WHL WL10 board, ACRN fails to boot.

**Platform**

cpu: i5: Intel(R) Core(TM) i5-8265U

service vm: ubuntu20

grub: grub2.04

**Codebase**

I've tried ACRN v2.0, v2.2, and v2.4.

**Scenario**

Industry

**To Reproduce**

Steps to reproduce the behavior:

1. install service vm ubuntu20

2. compile the ACRN hypervisor using `make all BOARD_FILE=misc/acrn-config/xmls/board-xmls/whl-ipc-i5.xml SCENARIO_FILE=misc/acrn-config/xmls/config-xmls/whl-ipc-i5/industry.xml RELEASE=0`; this step produces no errors or warnings.

3. compile kernel without error.

4. update grub

5. reboot

6. see following error

**Expected behavior**

service vm should boot successfully.

**Is there something wrong with my way to boot ACRN?**

Answers:

username_1: Can you check/confirm the following:

* Number of CPUs on your platform?

* Check bios options (hyperthreading turned off, all VT turned on, e.g. VT-x, VT-d)

* Version of Ubuntu used, is that 18.04 or 20.04?

I don't know whether this is what causes the issue, but you need to patch Grub on 18.04.

username_0: Thanks @username_1

After disabling hyperthreading in the BIOS, ACRN now boots successfully. Thanks a lot!

username_1: Thanks for the confirmation @username_0 !

@NanlinXie @dbkinder , this is an example of an error message that could be improved. And perhaps ACRN shouldn't panic if there are more processors than what it was configured to use.

username_0: In [Getting Started Guide for ACRN Industry Scenario with Ubuntu Service VM](https://projectacrn.github.io/2.0/getting-started/rt_industry_ubuntu.html) with ACRN v2.0, Service VM should be installed in nvm while RTVM should be installed in SATA.

Is it necessary?

I installed both Service VM and RTVM in SATA, while Service VM on `/dev/sda5 `and RTVM in `/dev/sda6`. However I came across such error when launch RTVM. I don't know if this has anything to do with installing Service VM and RTVM in the same device.

username_1: Hi @username_0 , you will need to adjust the launch script and also make sure that you have a bootloader properly configured if you do that. Where did you put the ESP (Grub bootloader)?

Having said that, you will find that sharing the same physical disk (just different partition) will adversely affect the realtime performance of your RTVM. The reason we install the Service VM and RTVM rootfs on different partitions is so that we can pass-through the storage controller to the RTVM and we avoid sharing that resource.

username_0: Hi @username_1

My ESP is on `/dev/sda3`.

I modified the RTVM Grub file like this,

where the UUID and PARTUUID have been updated to match the RTVM's root partition `/dev/sda6`.

**I'm confused: how does ACRN find the RTVM's image?**

Here is my RTVM launch script, in which I commented out the disk pass-through.

username_2: @username_0

This is expected; it is a setup-related issue:

The SATA controller was passed through to the RTVM, but the SOS is also using that SATA disk.

We recommend installing the RTVM on a whole SSD of its own (if there is no extra SSD on your board, please use a USB drive) and then passing the whole SSD through to the RTVM; OVMF will launch it based on the BDF info in the launch script.

username_1: Hi @username_0, I'm not sure what you call the RTVM Grub config, but in your case I suspect you have only one Grub instance for the entire system, and that's causing problems here. Here are the boot sequences the Getting Started Guide sets up when you use two separate disks:

1. Service VM: platform UEFI firmware -> Grub (ESP partition on the **NVMe** disk) -> rootfs on NVMe (`/dev/nvme0n1p2`)

2. User VM (RTVM): virtual UEFI firmware (`OVMF.fd`) -> Grub (on the **SATA** disk) -> rootfs on SATA (`/dev/sda2`)

The key is that **each** VM (Service VM and RTVM) has its own Grub, and each is configured to pick up the right kernel and set the rootfs to the right disk partition.

There are a couple of options (well, maybe three actually) for you if you want to use a single disk:

1. Create a virtual disk image based on Ubuntu in which we replace the stock kernel with a real-time kernel (that virtual disk would contain an ESP partition with its own Grub, configured for the RTVM using the UUID and PARTUUID of the virtual disk)

2. Use a dedicated partition (like you are doing today):

   1. Use the `-k` and `-B` options of `acrn-dm` to boot the RTVM kernel (located somewhere in your Service VM filesystem) and set the `bootargs` value to point at the partition where the RTVM rootfs is

   2. Treat the partition as an entire disk and install a copy of Grub in it. This probably involves splitting that partition into at least two partitions, one acting as the ESP and the other hosting the RTVM rootfs.

Note that the realtime performance in **any** of those scenarios is likely to be sub-optimal because you are sharing the same physical resource and the Service VM will therefore interfere with the RTVM (for storage access).

We do not have a good set of instructions for you for any of these set-ups as described above but if you pick one, we can guide you through it. My recommendation is that we try first with option 2.1 (using the `-k` and `-B` options of `acrn-dm` and point at the SATA partition for the RTVM rootfs).

We will also need to restore your Grub installation to point at the correct Service VM partition.

username_0: Thanks! @username_1 @username_2

I'd like to try option 2.1 first. I have two questions.

1. Where should `bootargs` be set, and what should its value be: a UUID or a BDF?

2. _We will also need to restore your Grub installation to point at the correct Service VM partition._ Do you mean I should update file `/etc/grub.d/40_custom` of SOS?


username_1: The `-B, --bootargs` parameter should be set to something like: `root=PARTUUID=<UUID of rootfs partition> rw rootwait nohpet console=hvc0 console=ttyS0 no_timer_check ignore_loglevel log_buf_len=16M consoleblank=0 clocksource=tsc tsc=reliable x2apic_phys processor.max_cstate=0 intel_idle.max_cstate=0 intel_pstate=disable mce=ignore_ce audit=0 isolcpus=nohz,domain,1 nohz_full=1 rcu_nocbs=1 nosoftlockup idle=poll irqaffinity=0`

Re: Grub: yes, you need to make sure that the `/etc/grub.d/40_custom` file that's on the **Service VM partition (i.e. /dev/sda5?)** is updated to use the Service VM kernel parameters and correct PARTUUID (from `/dev/sda5`).

username_0: Thanks! Following the tips above, I updated the `acrn-dm` command parameters, but my usage is still not correct. Here is my script.

Status: Issue closed

username_0: Can I install the RTVM on a USB flash drive and pass the USB controller through to the RTVM?


username_0: Hi @username_1, I changed the launch script like this:

However, I ran into the same error as in https://github.com/projectacrn/acrn-hypervisor/issues/5970#issuecomment-826776579

username_2: @username_0 Yes, you can pass the USB controller through to the RTVM.

username_3: About ‘Fix address space of post launched rfc v2 #5914’

My issue is that OVMF can't drive the NVMe ptdev in the pre-merge test environment, but I can't reproduce it on my whl-ipc-i7.

If you use /usr/share/acrn/bios/OVMFD_debug.fd, you may see more serial output and find out where the problem is.

By the way, have you tried virtio-blk? In my case, the VM can launch with a virtio-blk device, because the problem is specific to NVMe.

Status: Issue closed

|

blakeohare/crayon | 724063870 | Title: Migrate these libraries to core functions

Question:

username_0: In an effort to redo how CNI works, the following libraries should be rewritten as native-free libraries that depend on common core functions:

- DateTime

- Environment

- FileIOCommon

- Image Encoder

- Json

- Matrices

- ProcessUtil

- Random

- Resources

- SRandom

- TextEncoding

- UserData

- Web

- Xml

Answers:

username_0: They're all done now!

Status: Issue closed

|

scrapy/scrapy | 73950960 | Title: Allow FEED_EXPORT_FIELDS to be specified per Item rather than for the whole project

Question:

username_0: This setting was added in this commit: https://github.com/scrapy/scrapy/commit/1534e8540bf083c8d7beb0264cccea5488ee0250

But it would be more useful to define it per Item. Maybe change it to a dict with the Item name as the key? Or a new attribute on the Item class?

Answers:

username_1: `FEED_EXPORT_FIELDS` option was added for 2 main reasons:

1. It is not possible to know all possible fields in advance (because items are processed one by one), but we need to know them to write a CSV header. With a single Item class, its set of defined fields can be used, but with several Item classes this doesn't work, and `FEED_EXPORT_FIELDS` allows specifying which fields should be in the result.

2. In Scrapy master it is possible to yield raw dicts instead of Item instances; `FEED_EXPORT_FIELDS` allows defining the fields to export for cases where dicts have different keys (e.g. when keys for empty values are omitted).

(1) won't work if you define `FEED_EXPORT_FIELDS` per Item class.

A set of fields defined in Item is essentially a per-item `FEED_EXPORT_FIELDS`.

Do you want to skip some fields on export time even if they are defined?

username_0: I see what you mean. I have a requirement at the moment that feed exports are csv format and that the fields remain in the same order (currently using a custom exporter to do this). Spiders only use one item each, so defining via the Item is not an issue. Maybe define these fields in the Spider instead?

username_1: In scrapy master it is possible to override settings per-spider - see http://doc.scrapy.org/en/master/topics/settings.html#settings-per-spider.
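For reference, Scrapy's per-spider override is the `custom_settings` class attribute on a spider, e.g. `custom_settings = {"FEED_EXPORT_FIELDS": ["name", "price", "url"]}` (the field names are hypothetical). The standard-library sketch below does not use Scrapy at all; it only illustrates reasons (1) and (2) above: a CSV header must be written before any item is seen, so a fixed, ordered field list is needed, and dicts that omit a key still line up under the same columns.

```python
import csv
import io

# Hypothetical per-spider field order, analogous to a FEED_EXPORT_FIELDS value.
EXPORT_FIELDS = ["name", "price", "url"]


def export_csv(items, fields):
    """Write dict items as CSV with a fixed header; missing keys become empty cells."""
    buf = io.StringIO()
    writer = csv.DictWriter(buf, fieldnames=fields, extrasaction="ignore", lineterminator="\n")
    writer.writeheader()  # the header depends only on `fields`, not on the items
    for item in items:
        writer.writerow(item)  # keys not in `fields` are ignored
    return buf.getvalue()


items = [
    {"name": "A", "price": 1, "url": "http://example.com/a"},
    {"name": "B", "url": "http://example.com/b"},  # "price" omitted because it was empty
]
print(export_csv(items, EXPORT_FIELDS))
```

The second row comes out as `B,,http://example.com/b`, so column order stays stable even though that dict has no `price` key.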

username_0: I keep forgetting this! Thank you!

Status: Issue closed

|

metal3-io/baremetal-operator | 811463280 | Title: Resetting status.errorCount manually?

Question:

username_0: Is there any way to reset `errorCount` manually in a BMH object?

Maybe using some kind of special annotation?

```
{"level":"info","ts":1613681703.0899441,"logger":"controllers.BareMetalHost","msg":"done","baremetalhost":"default/master-2","provisioningState":"deprovisioning","requeue":false,"after":18790.040866384}
```

Waiting for ~5h is a bit too much :)

Would you be willing to review a PR that adds a special annotation for clearing the error count and message?

Answers:

username_1: /kind feature

/cc @username_2

username_2: The error count was meant to introduce an exponential backoff in case of error, so clearing out the message doesn't seem the correct way to manage an erroneous situation: there should be an intervention (manual or automatic) to fix the problem, with a subsequent re-evaluation (reconcile) that will take care of clearing the error if the right conditions are met. Can you please provide more details about your specific scenario? What kind of error? What prevented the reconcile from being retriggered?

username_0: @username_2 The Image field on the BMH object was incorrectly set in this particular case; this triggered multiple reconciles and eventually a backoff.

I think we need some kind of backup plan to remove these errors without re-provisioning the BMH.

username_0: @username_2 Any ideas how to approach this?

username_2: And fixing the Image field on the BMH object didn't retrigger the reconcile loop and clear out the errorCount? If not, that's the issue to be fixed.

username_0: @username_2 no, to re-trigger I had to pause/resume reconcile via annotation, after that, successful de-provisioning cleared errorCount as expected.

username_0: @username_2 So this happened again in different setup, stuck at backoff with no apparent way to trigger reconcile.

```
{"level":"info","ts":1615829787.7539394,"logger":"controllers.BareMetalHost","msg":"publishing event","baremetalhost":"worker-1","reason":"InspectionError","message":"No lookup attributes were found, inspector won't be able to find it after introspection, consider creating ironic ports or providing an IPMI address"}
{"level":"info","ts":1615829788.0157337,"logger":"controllers.BareMetalHost","msg":"done","baremetalhost":"worker-1","provisioningState":"inspecting","requeue":false,"after":11686.964158174}
```

This error happened because Ironic did not provide a free port to the `worker-1` node, but that is a different story, not related to this feature.

cc: @username_3

Can we please consider implementing this in some way?

As an additional note, the Ironic error happened because the Ironic pod was unexpectedly restarted.

```
"error":"action \"registering\" failed: failed to validate BMC access: failed to create port in ironic: The service is currently unable to handle the request due to a temporary overloading or maintenance. This is a temporary condition. Try again later."
```

username_2: I guess it could make sense to have an explicit mechanism to force re-triggering a reconciliation loop for a specific BMH, considering that in some cases the remediation could be an activity external to the resource, carried out by a user. But I'm still wondering why amending the Image field didn't work, since it is a normal spec field.

username_3: Any edit of the host resource should trigger reconciliation. If we want a less crude way to do it, we could add an annotation API to reset the value, but maybe it would be better to reduce the longest wait time that we use?

username_0: This approach would also greatly help with debuggability.

I'm currently struggling with the long back-off blocking progress and the inability to disable it while debugging.

username_2: I'm not very keen on such an approach (if the intention is to zero the value), as it would let the user modify a field that is managed by the operator; also, the ErrorCount usually goes in pair with the ErrorMessage, so clearing it alone wouldn't be enough.

I think what is required in such cases is something like a "wake up" annotation that sets it to 1, thus forcing a re-evaluation of the current status without losing the current error condition.
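To make the trade-off concrete, here is a hypothetical Python sketch of an error-count-driven exponential backoff together with the "wake up" idea above (resetting the count to 1 while keeping the error message). The base delay, the cap, and all names are assumptions for illustration; they are not the baremetal-operator's actual formula or API.

```python
from dataclasses import dataclass, replace

BASE_SECONDS = 10.0       # hypothetical first retry delay
CAP_SECONDS = 6 * 3600.0  # hypothetical upper bound (6 hours)


@dataclass(frozen=True)
class HostStatus:
    error_count: int
    error_message: str


def backoff_delay(error_count: int) -> float:
    """Delay before the next retry: doubles with every consecutive error, capped."""
    if error_count <= 0:
        return 0.0
    return min(BASE_SECONDS * 2 ** (error_count - 1), CAP_SECONDS)


def wake_up(status: HostStatus) -> HostStatus:
    """Hypothetical "wake up" action: keep the error, restart the backoff at step 1."""
    if status.error_count == 0:
        return status
    return replace(status, error_count=1)


stuck = HostStatus(error_count=12, error_message="InspectionError")
print(backoff_delay(stuck.error_count))           # 20480.0 (about 5.7 hours)
print(backoff_delay(wake_up(stuck).error_count))  # 10.0
```

The error message survives the wake-up, which addresses the concern that ErrorCount and ErrorMessage go together; the next reconcile still decides whether the error is actually gone.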

username_3: That makes sense. I was mostly pointing out that *any* edit to the host should trigger reconciliation, so we don't need a special API just to trigger a retry. If we wanted an API to let the user say "I think I have cleared the error on this host, try again" then an annotation would make sense instead of a spec field, because the controller could delete the annotation.

username_0: @username_2 So how do we set ErrorCount to 1?

Via an annotation?

username_2: The idea sounds fine to me, but I think we should first prepare a design proposal on https://github.com/metal3-io/metal3-docs to be discussed and approved by the community (I could prepare a draft tomorrow if needed).

username_0: @username_2 Would be great, thanks!

username_4: @username_0 Please open a new bug and upload logs so we can figure out what the root cause is here.

As Andrea and Doug pointed out, modifying the BMH to fix the erroneous configuration will cause an immediate reconcile, and the fact that another reconcile is scheduled in 5 hours time is not relevant.

There is definitely work still to be done on retrying provisioning. When a bad image is set I believe we currently retry in a loop without incrementing error count past 1 (it goes from provisioning->deprovisioning->ready->provisioning with the successful deprovisioning clearing the error count). Ideally the error count should continue to increment as long as the image hasn't changed.
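A hypothetical Python sketch of that ideal rule: consecutive failures with the same image keep raising the count (so the backoff keeps growing), a different image starts over at 1, and only a successful provisioning resets it. The successful deprovisioning in between, which currently clears the count, is deliberately not a reset event here. All names are illustrative, not the operator's code.

```python
from typing import Optional, Tuple


def next_error_count(
    error_count: int,
    failed_image: Optional[str],
    image: str,
    provisioned_ok: bool,
) -> Tuple[int, Optional[str]]:
    """Return the updated (error_count, failed_image) after one provisioning attempt."""
    if provisioned_ok:
        return 0, None  # success clears the error state
    if image == failed_image:
        return error_count + 1, image  # same bad image: keep counting
    return 1, image  # a different image: start counting again


# Three failed attempts with the same (bad) image, then the image is fixed.
count, failed = 0, None
for _ in range(3):
    count, failed = next_error_count(count, failed, "bad.qcow2", provisioned_ok=False)
print(count)  # 3
count, failed = next_error_count(count, failed, "good.qcow2", provisioned_ok=True)
print(count)  # 0
```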

Clearly you are seeing something else, where the deprovisioning is failing repeatedly. That shouldn't happen if ironic is working and the BMC is reachable, so we need to look into it.

username_0: @username_4 For the most part https://github.com/metal3-io/metal3-docs/pull/171 seems like a solution, let's keep this one open until that design doc is implemented.

If I encounter a similar issue, I will open another, more specific issue with logs.

username_4: No, that is absolutely not a solution. In fact, the way such workarounds tend to stop people from reporting obvious bugs is one reason not to implement them.

username_0: @username_4 the existence of such workarounds helps people fix issues in production clusters without recompiling code or getting new gray hairs.

Let's not forget that code contributions work both ways: sometimes the community just needs a workaround for currently active problems, without waiting a long time for a proper fix.

username_4: After a lot of back-and-forth on metal3-io/metal3-docs#171 we have concluded that a retry can be triggered at any time by making any change to the BMH resource (e.g. add an annotation of your choice, not one recognised by the bmo), and we have no information to suggest that this mechanism would not work as intended when the underlying problem is fixed. Please open another bug if information comes to light suggesting otherwise.

/close |

EthicalNYC/website | 245258609 | Title: Design: What page width for the site?

Question:

username_0: 960px? Fluid? Other?

Answers:

username_0: From Renee: To be determined – we ultimately want the site to be mobile responsive, which (I imagine) will drive the proper answer to this question. In the meantime, I suggest you create a width that is appropriate for the three-column layout.

Status: Issue closed

|

Esri/military-tools-geoprocessing-toolbox | 192081539 | Title: Highest Points and Find Local Peaks in Pro 1.2 are symbolizing properly but not labeling

Question:

username_0: ## Expected Behavior

Running Highest Points or Find Local Peaks should produce an output with an orange triangle as the marker and a label giving the value of that point from the elevation surface.

## Current Behavior

Both tools run and symbolize properly, but the points have no labels.

Answers:

username_1: This is a known issue in core geoprocessing, present as recently as Pro 1.3. There is not much we can do for our tools until it is fixed in core.

Status: Issue closed

|

flutter/flutter | 769188340 | Title: Can't run on Ubuntu 20.10

Question:

username_0:

## Steps to Reproduce


1. `flutter create bug`

2. `cd bug`

3. `flutter run`

**Expected results:**

The app runs! It worked before on Ubuntu 20.04. Also, the snap version of flutter-gallery no longer works!

**Actual results:**

```

Launching lib/main.dart on Linux in debug mode...

Building Linux application...

Error waiting for a debug connection: The log reader stopped unexpectedly.

Error launching application on Linux.

```

<details>

<summary>Logs</summary>


```

$ flutter run --verbose

[ +102 ms] executing: [/home/username_0/snap/flutter/common/flutter/] git -c log.showSignature=false log -n 1 --pretty=format:%H

[ +57 ms] Exit code 0 from: git -c log.showSignature=false log -n 1 --pretty=format:%H

[ ] e24e763872c1bcd34e9a5b1e6baaa98defff7fc5

[ ] executing: [/home/username_0/snap/flutter/common/flutter/] git tag --points-at e24e763872c1bcd34e9a5b1e6baaa98defff7fc5

[ +22 ms] Exit code 0 from: git tag --points-at e24e763872c1bcd34e9a5b1e6baaa98defff7fc5

[ +7 ms] executing: [/home/username_0/snap/flutter/common/flutter/] git describe --match *.*.* --long --tags e24e763872c1bcd34e9a5b1e6baaa98defff7fc5

[ +45 ms] Exit code 0 from: git describe --match *.*.* --long --tags e24e763872c1bcd34e9a5b1e6baaa98defff7fc5

[ ] 1.25.0-8.0.pre-88-ge24e763872

[ +59 ms] executing: [/home/username_0/snap/flutter/common/flutter/] git rev-parse --abbrev-ref --symbolic @{u}

[ +9 ms] Exit code 0 from: git rev-parse --abbrev-ref --symbolic @{u}

[ ] origin/master

[ ] executing: [/home/username_0/snap/flutter/common/flutter/] git ls-remote --get-url origin

[ +9 ms] Exit code 0 from: git ls-remote --get-url origin

[ ] https://github.com/flutter/flutter.git

[ +61 ms] executing: [/home/username_0/snap/flutter/common/flutter/] git rev-parse --abbrev-ref HEAD

[Truncated]

See https://flutter.dev/docs/get-started/install/linux#android-setup for more details.

[✓] Linux toolchain - develop for Linux desktop

• clang version 6.0.0-1ubuntu2 (tags/RELEASE_600/final)

• cmake version 3.10.2

• ninja version 1.8.2

• pkg-config version 0.29.1

[!] Android Studio (not installed)

• Android Studio not found; download from https://developer.android.com/studio/index.html

(or visit https://flutter.dev/docs/get-started/install/linux#android-setup for detailed instructions).

[✓] Connected device (1 available)

• Linux (desktop) • linux • linux-x64 • Linux

! Doctor found issues in 2 categories.

```

</details>

Answers:

username_1: The same happens on Ubuntu 18.04.

username_2: I tried to run the counter app on Ubuntu 20.04 with the latest master:

<details>

<summary>logs</summary>

```bash

[ +4 ms] Launching lib/main.dart on Linux in debug mode...

[ +5 ms] /home/francesco/snap/flutter/common/flutter/bin/cache/dart-sdk/bin/dart --disable-dart-dev

/home/francesco/snap/flutter/common/flutter/bin/cache/artifacts/engine/linux-x64/frontend_server.dart.snapshot --sdk-root

/home/francesco/snap/flutter/common/flutter/bin/cache/artifacts/engine/common/flutter_patched_sdk/ --incremental --target=flutter --debugger-module-names --experimental-emit-debug-metadata --output-dill

/tmp/flutter_tools.DNDTRL/flutter_tool.QXNDPQ/app.dill --packages /home/francesco/projects/issue/.dart_tool/package_config.json -Ddart.vm.profile=false -Ddart.vm.product=false --enable-asserts

--track-widget-creation --filesystem-scheme org-dartlang-root --initialize-from-dill build/152a2ce78bff4a6f74a44ed554717a64.cache.dill.track.dill --flutter-widget-cache

--enable-experiment=alternative-invalidation-strategy

[ +15 ms] Building Linux application...

[ +12 ms] <- compile package:issue/main.dart

[ +4 ms] executing: [build/linux/debug/] cmake -G Ninja -DCMAKE_BUILD_TYPE=Debug /home/francesco/projects/issue/linux

[ +964 ms] -- The CXX compiler identification is Clang 6.0.0

[ +15 ms] -- Check for working CXX compiler: /snap/flutter/38/usr/bin/clang++

[ +162 ms] -- Check for working CXX compiler: /snap/flutter/38/usr/bin/clang++ -- works

[ +2 ms] -- Detecting CXX compiler ABI info

[ +123 ms] -- Detecting CXX compiler ABI info - done

[ +5 ms] -- Detecting CXX compile features

[ +480 ms] -- Detecting CXX compile features - done

[ +9 ms] -- Found PkgConfig: /snap/flutter/38/usr/bin/pkg-config (found version "0.29.1")

[ ] -- Checking for module 'gtk+-3.0'

[ +32 ms] -- Found gtk+-3.0, version 3.22.30

[ +98 ms] -- Checking for module 'glib-2.0'

[ +22 ms] -- Found glib-2.0, version 2.56.4

[ +59 ms] -- Checking for module 'gio-2.0'

[ +33 ms] -- Found gio-2.0, version 2.56.4

[ +60 ms] -- Checking for module 'blkid'

[ +28 ms] -- Found blkid, version 2.31.1

[ +64 ms] -- Checking for module 'liblzma'

[ +25 ms] -- Found liblzma, version 5.2.2

[ +66 ms] -- Configuring done

[ +50 ms] -- Generating done

[ +1 ms] -- Build files have been written to: /home/francesco/projects/issue/build/linux/debug

[ +3 ms] executing: ninja -C build/linux/debug install

[ +18 ms] ninja: Entering directory `build/linux/debug'

[+20536 ms] [1/6] Generating /home/francesco/projects/issue/linux/flutter/ephemeral/libflutter_linux_gtk.so, /home/francesco/projects/issue/linux/flutter/ephemeral/flutter_linux/fl_basic_message_channel.h,

/home/francesco/projects/issue/linux/flutter/ephemeral/flutter_linux/fl_binary_codec.h, /home/francesco/projects/issue/linux/flutter/ephemeral/flutter_linux/fl_binary_messenger.h,

/home/francesco/projects/issue/linux/flutter/ephemeral/flutter_linux/fl_dart_project.h, /home/francesco/projects/issue/linux/flutter/ephemeral/flutter_linux/fl_engine.h,

/home/francesco/projects/issue/linux/flutter/ephemeral/flutter_linux/fl_json_message_codec.h, /home/francesco/projects/issue/linux/flutter/ephemeral/flutter_linux/fl_json_method_codec.h,

/home/francesco/projects/issue/linux/flutter/ephemeral/flutter_linux/fl_message_codec.h, /home/francesco/projects/issue/linux/flutter/ephemeral/flutter_linux/fl_method_call.h,

/home/francesco/projects/issue/linux/flutter/ephemeral/flutter_linux/fl_method_channel.h, /home/francesco/projects/issue/linux/flutter/ephemeral/flutter_linux/fl_method_codec.h,

/home/francesco/projects/issue/linux/flutter/ephemeral/flutter_linux/fl_method_response.h, /home/francesco/projects/issue/linux/flutter/ephemeral/flutter_linux/fl_plugin_registrar.h,

/home/francesco/projects/issue/linux/flutter/ephemeral/flutter_linux/fl_plugin_registry.h, /home/francesco/projects/issue/linux/flutter/ephemeral/flutter_linux/fl_standard_message_codec.h,

/home/francesco/projects/issue/linux/flutter/ephemeral/flutter_linux/fl_standard_method_codec.h, /home/francesco/projects/issue/linux/flutter/ephemeral/flutter_linux/fl_string_codec.h,

/home/francesco/projects/issue/linux/flutter/ephemeral/flutter_linux/fl_value.h, /home/francesco/projects/issue/linux/flutter/ephemeral/flutter_linux/fl_view.h,

/home/francesco/projects/issue/linux/flutter/ephemeral/flutter_linux/flutter_linux.h, _phony_

[ +20 ms] [ +83 ms] executing: [/home/francesco/snap/flutter/common/flutter/] git -c log.showSignature=false log -n 1 --pretty=format:%H

[ ] [ +44 ms] Exit code 0 from: git -c log.showSignature=false log -n 1 --pretty=format:%H

[ ] [ ] 9ff4326e1fb5375e9adae37367338a2de5daa438

[ ] [ +1 ms] executing: [/home/francesco/snap/flutter/common/flutter/] git tag --points-at 9ff4326e1fb5375e9adae37367338a2de5daa438

[ ] [ +16 ms] Exit code 0 from: git tag --points-at 9ff4326e1fb5375e9adae37367338a2de5daa438

[ ] [ +2 ms] executing: [/home/francesco/snap/flutter/common/flutter/] git describe --match *.*.* --long --tags 9ff4326e1fb5375e9adae37367338a2de5daa438

[ ] [ +32 ms] Exit code 0 from: git describe --match *.*.* --long --tags 9ff4326e1fb5375e9adae37367338a2de5daa438

[ ] [ ] 1.26.0-1.0.pre-64-g9ff4326e1f

[ ] [ +64 ms] executing: [/home/francesco/snap/flutter/common/flutter/] git rev-parse --abbrev-ref --symbolic @{u}

[ ] [ +6 ms] Exit code 0 from: git rev-parse --abbrev-ref --symbolic @{u}

[Truncated]

Terminal: gnome-terminal

CPU: Intel i5-7200U (4) @ 2.500GHz

GPU: Intel HD Graphics 620

Memory: 6149MiB / 7685MiB

```

</details>

Everything works fine, although this shows up in the logs:

```bash

[+1213 ms] libEGL warning: DRI2: failed to create dri screen

[ +18 ms] libEGL warning: DRI2: failed to create dri screen

```

username_3: Hi @username_0

I just tried to reproduce this on the latest `master` channel, with no issues:

<details>

<summary>logs</summary>

```bash

taha@pop-os:~/AndroidStudioProjects/mybug$ flutterm run -d linux -v

[ +51 ms] executing: [/home/taha/Code/flutter_master/] git -c

log.showSignature=false log -n 1 --pretty=format:%H

[ +27 ms] Exit code 0 from: git -c log.showSignature=false log -n 1

--pretty=format:%H

[ ] 9ff4326e1fb5375e9adae37367338a2de5daa438

[ ] executing: [/home/taha/Code/flutter_master/] git tag --points-at

9ff4326e1fb5375e9adae37367338a2de5daa438

[ +9 ms] Exit code 0 from: git tag --points-at

9ff4326e1fb5375e9adae37367338a2de5daa438

[ +1 ms] executing: [/home/taha/Code/flutter_master/] git describe --match *.*.*

--long --tags 9ff4326e1fb5375e9adae37367338a2de5daa438

[ +19 ms] Exit code 0 from: git describe --match *.*.* --long --tags

9ff4326e1fb5375e9adae37367338a2de5daa438

[ ] 1.26.0-1.0.pre-64-g9ff4326e1f

[ +33 ms] executing: [/home/taha/Code/flutter_master/] git rev-parse --abbrev-ref

--symbolic @{u}

[ +4 ms] Exit code 0 from: git rev-parse --abbrev-ref --symbolic @{u}

[ ] origin/master

[ ] executing: [/home/taha/Code/flutter_master/] git ls-remote --get-url

origin

[ +3 ms] Exit code 0 from: git ls-remote --get-url origin

[ ] https://github.com/flutter/flutter.git

[ +27 ms] executing: [/home/taha/Code/flutter_master/] git rev-parse --abbrev-ref

HEAD

[ +3 ms] Exit code 0 from: git rev-parse --abbrev-ref HEAD

[ ] master

[ +28 ms] Artifact Instance of 'AndroidGenSnapshotArtifacts' is not required,

skipping update.

[ ] Artifact Instance of 'AndroidInternalBuildArtifacts' is not required,

skipping update.

[ ] Artifact Instance of 'IOSEngineArtifacts' is not required, skipping

update.

[ ] Artifact Instance of 'FlutterWebSdk' is not required, skipping update.

[ +1 ms] Artifact Instance of 'WindowsEngineArtifacts' is not required, skipping

update.

[ ] Artifact Instance of 'MacOSEngineArtifacts' is not required, skipping

update.

[ ] Artifact Instance of 'LinuxEngineArtifacts' is not required, skipping

update.

[ ] Artifact Instance of 'LinuxFuchsiaSDKArtifacts' is not required,

skipping update.

[ ] Artifact Instance of 'MacOSFuchsiaSDKArtifacts' is not required,

skipping update.

[ ] Artifact Instance of 'FlutterRunnerSDKArtifacts' is not required,

skipping update.

[ ] Artifact Instance of 'FlutterRunnerDebugSymbols' is not required,

skipping update.

[ +38 ms] executing: /home/taha/Code/sdk/platform-tools/adb devices -l

[ +10 ms] Artifact Instance of 'AndroidGenSnapshotArtifacts' is not required,

skipping update.

[ ] Artifact Instance of 'AndroidInternalBuildArtifacts' is not required,

skipping update.

[Truncated]

• VS Code at /usr/share/code

• Flutter extension version 3.17.0

[✓] Connected device (2 available)

• Linux (desktop) • linux • linux-x64 • Linux

• Chrome (web) • chrome • web-javascript • Google Chrome 87.0.4280.88

! Doctor found issues in 1 category.

```

</details>

@username_0 @username_1

It seems you are both using the snap version of Flutter.

For issues related to Flutter on snap, please file an issue at https://github.com/canonical/flutter-snap/issues

If it reproduces with a git-cloned version of Flutter, please provide details:

https://flutter.dev/docs/get-started/install/linux

Thank you

username_0: Hi @username_2, just to make sure: did you create a new Flutter project or use an old copy?

username_0: @username_3 I think it's an issue with the boilerplate code because @username_2 uses the snap version and it works for him!

username_2: @username_0 As a matter of fact, I do.

I know it's a long shot, but this sometimes happens with master... would you mind trying `flutter run upgrade -f`?

username_0: You mean `flutter upgrade -f`?

username_0: ```

$ flutter upgrade -f

Flutter is already up to date on channel master

Flutter 1.26.0-2.0.pre.64 • channel master • https://github.com/flutter/flutter.git

Framework • revision 9ff4326e1f (9 hours ago) • 2020-12-17 06:19:05 +0000

Engine • revision 6edb402ee4

Tools • Dart 2.12.0 (build 2.12.0-157.0.dev)

```

username_3: Hi @username_0

Does the issue persist after the upgrade?

Can you please provide the output of `flutter run --verbose` and a complete, minimal, reproducible code sample?

Thank you

username_4: I removed the Flutter snap package and installed Flutter manually; no issue now.

username_0: Hi @username_3, yes, it does!

<details>

<summary>flutter run -v</summary>

```

[ +101 ms] executing: [/home/username_0/snap/flutter/common/flutter/] git -c log.showSignature=false log -n 1 --pretty=format:%H

[ +53 ms] Exit code 0 from: git -c log.showSignature=false log -n 1 --pretty=format:%H

[ ] cda1fae6b6f17e7178c7668cfe1a8b714840f4b3

[ +1 ms] executing: [/home/username_0/snap/flutter/common/flutter/] git tag --points-at cda1fae6b6f17e7178c7668cfe1a8b714840f4b3

[ +21 ms] Exit code 0 from: git tag --points-at cda1fae6b6f17e7178c7668cfe1a8b714840f4b3

[ +5 ms] executing: [/home/username_0/snap/flutter/common/flutter/] git describe --match *.*.* --long --tags cda1fae6b6f17e7178c7668cfe1a8b714840f4b3

[ +39 ms] Exit code 0 from: git describe --match *.*.* --long --tags cda1fae6b6f17e7178c7668cfe1a8b714840f4b3

[ ] 1.26.0-1.0.pre-84-gcda1fae6b6

[ +61 ms] executing: [/home/username_0/snap/flutter/common/flutter/] git rev-parse --abbrev-ref --symbolic @{u}

[ +11 ms] Exit code 0 from: git rev-parse --abbrev-ref --symbolic @{u}

[ ] origin/master

[ ] executing: [/home/username_0/snap/flutter/common/flutter/] git ls-remote --get-url origin

[ +5 ms] Exit code 0 from: git ls-remote --get-url origin

[ ] https://github.com/flutter/flutter.git

[ +65 ms] executing: [/home/username_0/snap/flutter/common/flutter/] git rev-parse --abbrev-ref HEAD

[ +6 ms] Exit code 0 from: git rev-parse --abbrev-ref HEAD

[ ] master

[ +65 ms] Artifact Instance of 'AndroidGenSnapshotArtifacts' is not required, skipping update.

[ ] Artifact Instance of 'AndroidInternalBuildArtifacts' is not required, skipping update.

[ ] Artifact Instance of 'IOSEngineArtifacts' is not required, skipping update.

[ ] Artifact Instance of 'FlutterWebSdk' is not required, skipping update.

[ +2 ms] Artifact Instance of 'WindowsEngineArtifacts' is not required, skipping update.

[ ] Artifact Instance of 'MacOSEngineArtifacts' is not required, skipping update.

[ ] Artifact Instance of 'LinuxEngineArtifacts' is not required, skipping update.

[ ] Artifact Instance of 'LinuxFuchsiaSDKArtifacts' is not required, skipping update.

[ ] Artifact Instance of 'MacOSFuchsiaSDKArtifacts' is not required, skipping update.

[ ] Artifact Instance of 'FlutterRunnerSDKArtifacts' is not required, skipping update.

[ ] Artifact Instance of 'FlutterRunnerDebugSymbols' is not required, skipping update.

[ +88 ms] executing: /home/username_0/Android/Sdk/platform-tools/adb devices -l

[ +56 ms] List of devices attached

[ +5 ms] Artifact Instance of 'AndroidGenSnapshotArtifacts' is not required, skipping update.

[ ] Artifact Instance of 'AndroidInternalBuildArtifacts' is not required, skipping update.

[ ] Artifact Instance of 'IOSEngineArtifacts' is not required, skipping update.

[ ] Artifact Instance of 'FlutterWebSdk' is not required, skipping update.

[ +1 ms] Artifact Instance of 'WindowsEngineArtifacts' is not required, skipping update.

[ ] Artifact Instance of 'MacOSEngineArtifacts' is not required, skipping update.

[ +19 ms] Downloading linux-x64/linux-x64-flutter-gtk tools...

[+1283 ms] Content https://storage.googleapis.com/flutter_infra/flutter/1be6f414e7db52304b5e94a6725260c7f32f8dec/linux-x64/linux-x64-flutter-gtk.zip

md5 hash: dqSECrYxWPG+xTqbrKnerg==

[+293698 ms] Downloading linux-x64/linux-x64-flutter-gtk tools... (completed in 295.0s)

[ ] executing: unzip -o -q /home/username_0/snap/flutter/common/flutter/bin/cache/downloads/storage.googleapis.com/flutter_infra/flutter/1be6f414e7db52304b5e94a6725260c7f32f8dec/linux-x64/linux-x64-flutter-gtk.zip -d /home/username_0/snap/flutter/common/flutter/bin/cache/artifacts/engine/linux-x64

[+1245 ms] Exit code 0 from: unzip -o -q /home/username_0/snap/flutter/common/flutter/bin/cache/downloads/storage.googleapis.com/flutter_infra/flutter/1be6f414e7db52304b5e94a6725260c7f32f8dec/linux-x64/linux-x64-flutter-gtk.zip -d /home/username_0/snap/flutter/common/flutter/bin/cache/artifacts/engine/linux-x64

[ +65 ms] Downloading linux-x64-profile/linux-x64-flutter-gtk tools...

[ +826 ms] Content https://storage.googleapis.com/flutter_infra/flutter/1be6f414e7db52304b5e94a6725260c7f32f8dec/linux-x64-profile/linux-x64-flutter-gtk.zip md5 hash: gVpm7iiDoJrBfKmEDkf7Ng==

[+142955 ms] Downloading linux-x64-profile/linux-x64-flutter-gtk tools... (completed in 143.8s)

[ ] executing: unzip -o -q

[Truncated]

<asynchronous suspension>

#14 AppContext.run (package:flutter_tools/src/base/context.dart:149:12)

<asynchronous suspension>

#15 runInContext (package:flutter_tools/src/context_runner.dart:72:10)

<asynchronous suspension>

#16 main (package:flutter_tools/executable.dart:89:3)

<asynchronous suspension>

[ +254 ms] ensureAnalyticsSent: 252ms

[ +1 ms] Running shutdown hooks

[ ] Shutdown hook priority 4

[ +11 ms] Shutdown hooks complete

[ ] exiting with code 1

```

</details>

Both `flutter build linux --debug && ./build/linux/debug/bundle/bugs` and `flutter build linux --release && ./build/linux/debug/bundle/bugs` work! It's only the run command that fails. I'll try installing Flutter manually...

username_0: I cloned the repo and ran `flutter doctor` and other commands like `flutter channel`, and I'm still waiting for Flutter to download the Dart SDK! I'm not sure whether this is by design, a bug, or some issue with my laptop!

username_0: It works now!

username_0: Maybe the issue was that I chose "Ubuntu on Wayland" at login! I think I wasn't able to run the snap version of flutter-gallery until I changed that setting!

Or maybe it was because I have a minimal installation of Ubuntu and needed to run `sudo apt install clang cmake ninja-build liblzma-dev`, as reported by `flutter doctor`?

Anyway, I can't reinstall the snap version of Flutter to know for sure, because it would download another 200MB and I'm really tired of downloading! Hopefully someone else will be able to solve the mystery! :)

Thank you.

Status: Issue closed

username_3: Hi @username_0

Glad it works for you now. Generally, snap packages should download everything necessary, even on a minimal installation of Ubuntu. Since @username_4 also had the same issue, I think it might be a snap Flutter issue. If the problem persists with the snap version of Flutter, please feel free to file an issue in its dedicated GitHub [repository](https://github.com/canonical/flutter-snap/issues).

Given your last message, I feel safe closing this issue. If you disagree, please write in the comments and I will reopen it.

Thank you

username_0: Does it work if you choose "Ubuntu on Wayland" when you log in? :thinking:

username_5: Hi, could I please ask you to try a full reinstall of the snap and see if it fixes things:

```

snap remove --purge flutter

rm -rf ~/snap/flutter

snap install flutter --classic

flutter channel dev

flutter upgrade

flutter config --enable-linux-desktop

```

username_0: Hi @username_5

I believe I did that before (with the --edge flag) and it didn't work at all! I really don't want to do it again because my internet speed isn't fast, but I may try in a few days.

I think it's a Wayland issue or an Ubuntu 20.10 issue!

The snap version of flutter-gallery used to work on Ubuntu 20.04 on Wayland (as did Flutter itself), but now I get:

```

$ flutter-gallery

/bin/bash: warning: setlocale: LC_ALL: cannot change locale (en_US.UTF-8)

(flutter_gallery:7808): Gtk-WARNING **: 17:00:39.491: cannot open display: :0

```

when I run flutter-gallery on Wayland, but not when I use the default display server (X11?!).

username_0: @username_5 It seems I did purge Flutter and installed the git version afterwards! I will try these steps later on.
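Since the behaviour differs between the "Ubuntu on Wayland" session and the default one, it may help to record which display server a failing run actually used. Below is a minimal sketch in Python; `session_type` is a hypothetical helper (not part of Flutter or GTK), and it assumes the session exports the conventional `XDG_SESSION_TYPE`, `WAYLAND_DISPLAY` and `DISPLAY` variables. The `:0` in the GTK warning looks like an X11 `DISPLAY` value.

```python
import os
from typing import Mapping, Optional


def session_type(env: Optional[Mapping[str, str]] = None) -> str:
    """Best-effort guess of the display server used by the current session."""
    env = os.environ if env is None else env
    # Sessions started by systemd-logind/GDM usually declare their type here.
    declared = env.get("XDG_SESSION_TYPE", "").lower()
    if declared in ("wayland", "x11"):
        return declared
    # Otherwise fall back to the display a client would connect to.
    if env.get("WAYLAND_DISPLAY"):
        return "wayland"
    if env.get("DISPLAY"):
        return "x11"
    return "unknown"


if __name__ == "__main__":
    print("session type:", session_type())
    print("WAYLAND_DISPLAY =", os.environ.get("WAYLAND_DISPLAY"))
    print("DISPLAY =", os.environ.get("DISPLAY"))
```

Running it in the same terminal as `flutter run` makes it easier to attach the session details to a bug report.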

username_0: Hi @username_5,

I tried to run the snap version of Flutter on X11 and Wayland, but it still doesn't run!

These are the commands I used:

```

sudo snap remove --purge flutter

rm -rf ~/snap/flutter

sudo snap install flutter --classic

flutter channel dev

flutter upgrade

flutter config --enable-linux-desktop

flutter create isbuggy

```

<details>

<summary>flutter run -v</summary>

```

$ flutter run -v

[ +101 ms] executing: [/home/username_0/snap/flutter/common/flutter/] git -c log.showSignature=false log -n 1 --pretty=format:%H

[ +52 ms] Exit code 0 from: git -c log.showSignature=false log -n 1 --pretty=format:%H

[ ] 63062a64432cce03315d6b5196fda7912866eb37

[ +1 ms] executing: [/home/username_0/snap/flutter/common/flutter/] git tag --points-at 63062a64432cce03315d6b5196fda7912866eb37

[ +17 ms] Exit code 0 from: git tag --points-at 63062a64432cce03315d6b5196fda7912866eb37

[ ] 1.26.0-1.0.pre

[ +58 ms] executing: [/home/username_0/snap/flutter/common/flutter/] git rev-parse --abbrev-ref --symbolic @{u}

[ +8 ms] Exit code 0 from: git rev-parse --abbrev-ref --symbolic @{u}

[ ] origin/dev

[ ] executing: [/home/username_0/snap/flutter/common/flutter/] git ls-remote --get-url origin

[ +6 ms] Exit code 0 from: git ls-remote --get-url origin

[ ] https://github.com/flutter/flutter.git

[ +72 ms] executing: [/home/username_0/snap/flutter/common/flutter/] git rev-parse --abbrev-ref HEAD

[ +5 ms] Exit code 0 from: git rev-parse --abbrev-ref HEAD

[ ] dev

[ +88 ms] Artifact Instance of 'AndroidGenSnapshotArtifacts' is not required, skipping update.

[ ] Artifact Instance of 'AndroidInternalBuildArtifacts' is not required, skipping update.

[ ] Artifact Instance of 'IOSEngineArtifacts' is not required, skipping update.

[ ] Artifact Instance of 'FlutterWebSdk' is not required, skipping update.

[ +3 ms] Artifact Instance of 'WindowsEngineArtifacts' is not required, skipping update.

[ ] Artifact Instance of 'MacOSEngineArtifacts' is not required, skipping update.

[ ] Artifact Instance of 'LinuxEngineArtifacts' is not required, skipping update.

[ ] Artifact Instance of 'LinuxFuchsiaSDKArtifacts' is not required, skipping update.

[ ] Artifact Instance of 'MacOSFuchsiaSDKArtifacts' is not required, skipping update.

[ ] Artifact Instance of 'FlutterRunnerSDKArtifacts' is not required, skipping update.

[ ] Artifact Instance of 'FlutterRunnerDebugSymbols' is not required, skipping update.

[ +104 ms] Artifact Instance of 'AndroidGenSnapshotArtifacts' is not required, skipping update.

[ ] Artifact Instance of 'AndroidInternalBuildArtifacts' is not required, skipping update.

[ ] Artifact Instance of 'IOSEngineArtifacts' is not required, skipping update.

[ ] Artifact Instance of 'FlutterWebSdk' is not required, skipping update.

[ +1 ms] Artifact Instance of 'WindowsEngineArtifacts' is not required, skipping update.

[ ] Artifact Instance of 'MacOSEngineArtifacts' is not required, skipping update.

[ +1 ms] Artifact Instance of 'LinuxFuchsiaSDKArtifacts' is not required, skipping update.

[ ] Artifact Instance of 'MacOSFuchsiaSDKArtifacts' is not required, skipping update.

[ ] Artifact Instance of 'FlutterRunnerSDKArtifacts' is not required, skipping update.

[ ] Artifact Instance of 'FlutterRunnerDebugSymbols' is not required, skipping update.

[ +105 ms] Skipping pub get: version match.

[ +119 ms] Found plugin integration_test at /home/username_0/snap/flutter/common/flutter/packages/integration_test/

[ +295 ms] Found plugin integration_test at /home/username_0/snap/flutter/common/flutter/packages/integration_test/

[Truncated]

[ +256 ms] ensureAnalyticsSent: 254ms

[ +3 ms] Running shutdown hooks

[ ] Shutdown hook priority 4

[ +11 ms] Shutdown hooks complete

[ ] exiting with code 1

```

</details>

Then:

```

snap refresh flutter --edge

flutter upgrade

flutter run -v

```

It still doesn't work on Wayland or X11.

Note: It seems I hadn't actually used Flutter on Wayland on Ubuntu 20.04.

username_5: Thanks @username_0, it appears this may not be snap-specific. We’re seeing the same from another user using the Flutter git repo directly: https://github.com/flutter/flutter/issues/72703#issuecomment-749529246

username_0: You're welcome, but that issue seems a bit different to me (logger vs. linker)!

username_5: Oh, I see. Sorry, I only noticed that the end of the trace looked the same and missed the message just above it.

username_5: I've just pushed a new version of the snap to edge. Please check whether it fixes the issue for you:

```

snap refresh flutter --edge

```

username_5: I've just pushed a new version of the snap. Please check whether it fixes the issue for you:

```

snap refresh flutter --stable

```

username_0: Hi @username_5, yes, it does fix it, but closing the release version of the app doesn't end `flutter run`; I have to press `q` in the terminal to stop it!

Also, the app renders a black screen for about 2 seconds in debug mode, and in release mode it keeps showing a black screen until I press something or move the mouse!

```

$ flutter run --release -v

[ +100 ms] executing: [/home/username_0/snap/flutter/common/flutter/] git -c log.showSignature=false log -n 1 --pretty=format:%H

[ +55 ms] Exit code 0 from: git -c log.showSignature=false log -n 1 --pretty=format:%H

[ ] 63062a64432cce03315d6b5196fda7912866eb37

[ ] executing: [/home/username_0/snap/flutter/common/flutter/] git tag --points-at 63062a64432cce03315d6b5196fda7912866eb37

[ +23 ms] Exit code 0 from: git tag --points-at 63062a64432cce03315d6b5196fda7912866eb37

[ ] 1.26.0-1.0.pre

[ +65 ms] executing: [/home/username_0/snap/flutter/common/flutter/] git rev-parse --abbrev-ref --symbolic @{u}

[ +10 ms] Exit code 0 from: git rev-parse --abbrev-ref --symbolic @{u}

[ ] origin/dev

[ ] executing: [/home/username_0/snap/flutter/common/flutter/] git ls-remote --get-url origin

[ +14 ms] Exit code 0 from: git ls-remote --get-url origin

[ ] https://github.com/flutter/flutter.git

[ +62 ms] executing: [/home/username_0/snap/flutter/common/flutter/] git rev-parse --abbrev-ref HEAD

[ +6 ms] Exit code 0 from: git rev-parse --abbrev-ref HEAD

[ ] dev

[ +65 ms] Artifact Instance of 'AndroidGenSnapshotArtifacts' is not required, skipping update.

[ ] Artifact Instance of 'AndroidInternalBuildArtifacts' is not required, skipping update.

[ ] Artifact Instance of 'IOSEngineArtifacts' is not required, skipping update.

[ ] Artifact Instance of 'FlutterWebSdk' is not required, skipping update.

[ +2 ms] Artifact Instance of 'WindowsEngineArtifacts' is not required, skipping update.

[ ] Artifact Instance of 'MacOSEngineArtifacts' is not required, skipping update.

[ ] Artifact Instance of 'LinuxEngineArtifacts' is not required, skipping update.

[ ] Artifact Instance of 'LinuxFuchsiaSDKArtifacts' is not required, skipping update.

[ ] Artifact Instance of 'MacOSFuchsiaSDKArtifacts' is not required, skipping update.

[ ] Artifact Instance of 'FlutterRunnerSDKArtifacts' is not required, skipping update.

[ ] Artifact Instance of 'FlutterRunnerDebugSymbols' is not required, skipping update.

[ +94 ms] Artifact Instance of 'AndroidGenSnapshotArtifacts' is not required, skipping update.

[ ] Artifact Instance of 'AndroidInternalBuildArtifacts' is not required, skipping update.

[ ] Artifact Instance of 'IOSEngineArtifacts' is not required, skipping update.

[ ] Artifact Instance of 'FlutterWebSdk' is not required, skipping update.

[ +1 ms] Artifact Instance of 'WindowsEngineArtifacts' is not required, skipping update.

[ ] Artifact Instance of 'MacOSEngineArtifacts' is not required, skipping update.

[ +1 ms] Artifact Instance of 'LinuxFuchsiaSDKArtifacts' is not required, skipping update.

[ ] Artifact Instance of 'MacOSFuchsiaSDKArtifacts' is not required, skipping update.

[ ] Artifact Instance of 'FlutterRunnerSDKArtifacts' is not required, skipping update.

[ ] Artifact Instance of 'FlutterRunnerDebugSymbols' is not required, skipping update.

[ +110 ms] Skipping pub get: version match.

[ +124 ms] Found plugin integration_test at /home/username_0/snap/flutter/common/flutter/packages/integration_test/

[ +275 ms] Found plugin integration_test at /home/username_0/snap/flutter/common/flutter/packages/integration_test/

[ +5 ms] Generating /home/username_0/Coding/projects/waw/android/app/src/main/java/io/flutter/plugins/GeneratedPluginRegistrant.java

[ +127 ms] Launching lib/main.dart on Linux in release mode...

[ +13 ms] Building Linux application...

[ +24 ms] executing: [build/linux/release/] cmake -G Ninja -DCMAKE_BUILD_TYPE=Release /home/username_0/Coding/projects/waw/linux

[ +60 ms] -- Configuring done

[ +2 ms] -- Generating done

[ ] -- Build files have been written to: /home/username_0/Coding/projects/waw/build/linux/release

[ +14 ms] executing: ninja -C build/linux/release install

[ +17 ms] ninja: Entering directory `build/linux/release'

[+3581 ms] [1/5] Generating /home/username_0/Coding/projects/waw/linux/flutter/ephemeral/libflutter_linux_gtk.so,

/home/username_0/Coding/projects/waw/linux/flutter/ephemeral/flutter_linux/fl_basic_message_channel.h,

/home/username_0/Coding/projects/waw/linux/flutter/ephemeral/flutter_linux/fl_binary_codec.h,

/home/username_0/Coding/projects/waw/linux/flutter/ephemeral/flutter_linux/fl_binary_messenger.h,

/home/username_0/Coding/projects/waw/linux/flutter/ephemeral/flutter_linux/fl_dart_project.h,

/home/username_0/Coding/projects/waw/linux/flutter/ephemeral/flutter_linux/fl_engine.h,

[Truncated]

• Framework revision 63062a6443 (3 weeks ago), 2020-12-13 23:19:13 +0800

• Engine revision 4797b06652

• Dart version 2.12.0 (build 2.12.0-141.0.dev)

[✓] Linux toolchain - develop for Linux desktop

• clang version 6.0.0-1ubuntu2 (tags/RELEASE_600/final)

• cmake version 3.10.2

• ninja version 1.8.2

• pkg-config version 0.29.1

[✗] Flutter IDE Support (No supported IDEs installed)

• IntelliJ - https://www.jetbrains.com/idea/

• Android Studio - https://developer.android.com/studio/

• VS Code - https://code.visualstudio.com/

[✓] Connected device (1 available)

• Linux (desktop) • linux • linux-x64 • Linux

! Doctor found issues in 1 category.

```

username_5: @username_0, do you see the same behaviour when running the built application directly from `build/linux/debug/bundle/` or `build/linux/release/bundle/`?

username_0: @username_5 Yes for the debug version, and no for the release version!

Using the commands:

```

flutter build linux --debug && ./build/linux/debug/bundle/waw

flutter build linux --release && ./build/linux/release/bundle/waw

```

Note: A similar issue started when I upgraded to Ubuntu 20.10: the clock doesn't update whenever I watch a video in full-screen mode. So maybe it's an Ubuntu issue? Or a snap issue (I'm not sure whether the clock is related to the gnome-calendar snap)!

username_5: OK, if you see any of these issues when running directly from `build/linux/xxx/bundle/`, they won't be snap-specific. I think it's fair to say this bug can be closed now. Could you please [log a separate bug](https://github.com/flutter/flutter/issues/new?assignees=&labels=&template=2_bug.md&title=) for the new issue you're facing? Thanks for all your help! |

ssu-see/see.ssu.ac.kr | 64591764 | Title: Requests for everyone working on this [Please be sure to read]

Question:

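username_0: Never work directly on the server. It conflicts with the local environment.

(You shouldn't, but if you did, be sure to push the changes to GitHub.)

@준형 @Luavis Changing the server environment is one thing, I'll let that go. However,

there are things you absolutely must tell me about in advance.

(That said, I'm still learning too, and I apologize again for the many mistakes I made last time.)

Above all, collaboration matters far more than skill.

When making changes, please fork the repository, do your work there, and then open a pull request to get them applied.

Please check the Travis results.<issue_closed>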

Status: Issue closed |

cmdcolin/travigraphjs | 456654226 | Title: Data display sometimes includes bad time (unix epoch)

Question:

username_0: Could be related to network failure as it's not super reproducible

Answers:

username_1: It seems it's because sometimes when you're querying new jobs that haven't finished yet, their `finished_at` field is `null`, which is probably interpreted as `0` by the graphing library

username_0: Thanks, I'll see if I can at least filter these out!

Status: Issue closed

username_0: Should be added now |

wuhan2020/map-viz | 561465868 | Title: Merge the two line charts

Question:

username_0: ## Describe the feature request

### Attach images, if any

[Figma](https://www.figma.com/file/Dq4SyxvTwO7lDQVnwox36G/wuhan-v1-rapid-prototype?node-id=0%3A28)

## Additional information

Answers:

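username_0: With the two charts merged together, deaths and recoveries can't be seen clearly; the difference in order of magnitude is too large. So I'm closing this issue.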

Status: Issue closed

|

prometheus/snmp_exporter | 734715655 | Title: Can't add APC Switched Rack PDU

Question:

username_0: <!--

Please note: GitHub issues should only be used for feature requests and

bug reports. For general discussions and support, please refer to one of:

- #prometheus on freenode

- the Prometheus Users list: https://groups.google.com/forum/#!forum/prometheus-users

For bug reports, please fill out the below fields and provide as much detail

as possible about your issue. For feature requests, you may omit the

following template.

If you include CLI output, please run those programs with additional parameters:

snmp_exporter: `-log.level=debug`

snmpbulkget etc: `-On`

-->

### Host operating system: output of `uname -a`

### snmp_exporter version: output of `snmp_exporter -version`

<!-- If building from source, run `make` first. -->

### What device/snmpwalk OID are you using?

```
modules:
  apcpdu:
    version: 1
    walk:
      - .1.3.6.1.4.1.318.1.1.26.2.1.3 # rPDU2IdentName
      - .1.3.6.1.4.1.318.1.1.26.2.1.4 # rPDU2IdentLocation
      - .1.3.6.1.4.1.318.1.1.26.2.1.8 # rPDU2IdentModelNumber
      - .1.3.6.1.4.1.318.1.1.26.2.1.9 # rPDU2IdentSerialNumber
      - .1.3.6.1.4.1.318.1.1.26.4.3.1.4 # rPDU2DeviceStatusLoadState
      - 1.3.6.1.4.1.318.1.1.26.4.3.1.5 # rPDU2DeviceStatusPower
      - 1.3.6.1.4.1.318.1.1.26.4.3.1.6 # rPDU2DeviceStatusPeakPower
      - 1.3.6.1.4.1.318.1.1.26.4.3.1.9 # rPDU2DeviceStatusEnergy
      - 1.3.6.1.4.1.318.1.1.26.4.3.1.12 # rPDU2DeviceStatusPowerSupplyAlarm
      - 1.3.6.1.4.1.318.1.1.26.4.3.1.13 # rPDU2DeviceStatusPowerSupply1Status
      - 1.3.6.1.4.1.318.1.1.26.4.3.1.14 # rPDU2DeviceStatusPowerSupply2Status
      - 1.3.6.1.4.1.318.1.1.26.4.3.1.16 # rPDU2DeviceStatusApparentPower
```

### If this is a new device, please link to the MIB(s).

### What did you do that produced an error?

`generate` doesn't build the snmp.yml file. I am using this MIB: https://www.se.com/us/en/download/document/APC_POWERNETMIB_432/

### What did you expect to see?

A successful snmp.yml file.

### What did you see instead?

```
./generator generate
level=info ts=2020-11-02T18:06:50.747Z caller=net_snmp.go:142 msg="Loading MIBs" from=../mibs/apc/powernet432.mib
level=info ts=2020-11-02T18:06:50.752Z caller=main.go:52 msg="Generating config for module" module=apcpdu
level=error ts=2020-11-02T18:06:50.752Z caller=main.go:130 msg="Error generating config netsnmp" err="cannot find oid '.1.3.6.1.4.1.318.1.1.26.2.1.3' to walk"
```

Answers:

username_1: It makes more sense to ask questions like this on the [prometheus-users mailing list](https://groups.google.com/forum/#!forum/prometheus-users) rather than in a GitHub issue. On the mailing list, more people are available to potentially respond to your question, and the whole community can benefit from the answers provided.

Status: Issue closed

|

quasilyte/go-ruleguard | 677921898 | Title: Breaking API change in ruleguard.go -> Context -> Report?

Question:

username_0: See https://github.com/username_1/go-ruleguard/pull/64/files#r469483412 for the exact location of the problematic change

Here is how the error materializes itself in a project that uses go-ruleguard (in this case, go-critic):

```

[16:55:23] : [Step 5/6] #21 51.74 /go/pkg/mod/github.com/go-critic/go-critic@v0.5.0/checkers/ruleguard_checker.go:81:3: cannot use func literal (type func(ast.Node, string, *ruleguard.Suggestion)) as type func(ruleguard.GoRuleInfo, ast.Node, string, *ruleguard.Suggestion) in field value

[16:55:23] : [Step 5/6] #21 57.86 make: *** [GNUmakefile:183: lint-deps] Error 2

```

Typical dependency chain is as follows:

<project> -> golangci-lint -> go-critic -> go-ruleguard

If <project> uses `go get -u` to install packages, the dependency tree gets updated, go-critic no longer builds, and the build breaks.

I think this would be considered a breaking change by semver rules, but given that it's all pre-release, I guess all bets are off. Up to you to decide how best to address this :)

Answers:

username_1: Am I right that we basically need to bump at least the minor version to make things work?

username_0: Looking at:

- https://golang.org/cmd/go/#hdr-Add_dependencies_to_current_module_and_install_them

I think bumping the minor version would protect folks using the `-u=patch` option but not folks using `-u`, so unfortunately not everything is in your hands: it would reduce the number of users running into issues but not eliminate it. I'm not sure it's possible to eliminate the problem entirely, though.

- https://semver.org/#spec-item-4

specifies that major version zero (0.y.z) is for initial development: anything may change at any time, so with 0.x.x all bets are off.
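To make the `-u` versus `-u=patch` distinction concrete, here is a simplified Go model of the selection rule as the `go` command documentation describes it: `-u` takes newer minor or patch releases, `-u=patch` takes patch releases only. It is not the real module resolver (it ignores pre-releases, `+incompatible` versions and major-version edge cases), and the functions and version numbers are hypothetical.

```go
package main

import (
	"fmt"
	"strconv"
	"strings"
)

// version is a minimal vMAJOR.MINOR.PATCH triple (no pre-release or build metadata).
type version struct{ major, minor, patch int }

// parse accepts strings such as "v0.1.9"; a simplified parser for illustration.
func parse(s string) (version, error) {
	parts := strings.Split(strings.TrimPrefix(s, "v"), ".")
	if len(parts) != 3 {
		return version{}, fmt.Errorf("bad version %q", s)
	}
	var n [3]int
	for i, p := range parts {
		v, err := strconv.Atoi(p)
		if err != nil {
			return version{}, fmt.Errorf("bad version %q: %w", s, err)
		}
		n[i] = v
	}
	return version{n[0], n[1], n[2]}, nil
}

func mustParse(s string) version {
	v, err := parse(s)
	if err != nil {
		panic(err)
	}
	return v
}

// eligible reports whether candidate would be picked up as an upgrade of
// current in this model: patchOnly mimics -u=patch, otherwise -u.
func eligible(current, candidate version, patchOnly bool) bool {
	if candidate.major != current.major {
		return false // the model never crosses a major version
	}
	if patchOnly {
		return candidate.minor == current.minor && candidate.patch > current.patch
	}
	return candidate.minor > current.minor ||
		(candidate.minor == current.minor && candidate.patch > current.patch)
}

func main() {
	// Hypothetical: a consumer on v0.1.9 and a new v0.2.0 with the API change.
	cur, next := mustParse("v0.1.9"), mustParse("v0.2.0")
	fmt.Println("-u=patch:", eligible(cur, next, true)) // -u=patch: false
	fmt.Println("-u:", eligible(cur, next, false))      // -u: true
}
```

This is why a minor bump only shields the `-u=patch` users: under `-u`, v0.2.0 is still a candidate.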

In my experience (mostly with npm), pre-release packages are easier to manage using the following versioning scheme:

`<X.Y.Z>-preview.<a>` or, at the extreme, `<X.Y.Z>-preview.<a.b.c>` (this is what NuGet does), where `X.Y.Z` is the version you expect the package to be released as when stable and `<a>` or `<a.b.c>` is the pre-release version. I'm still fairly new to the Go world, so I don't know all the intricacies of how `go get` works. And I believe that, as the maintainer, you have the right to pick what works best for you :)
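The `<X.Y.Z>-preview.<a>` scheme relies on SemVer 2.0.0 precedence rules (spec item 11): a pre-release sorts below the release it leads up to, and numeric identifiers compare numerically, so `1.2.3-preview.2` < `1.2.3-preview.10` < `1.2.3`. A minimal Go sketch of just the pre-release comparison, assuming the `X.Y.Z` parts are already equal and the input is valid SemVer; the function names are hypothetical and build metadata is ignored.

```go
package main

import (
	"fmt"
	"strings"
)

// isNumeric reports whether a pre-release identifier is all ASCII digits.
func isNumeric(s string) bool {
	if s == "" {
		return false
	}
	for i := 0; i < len(s); i++ {
		if s[i] < '0' || s[i] > '9' {
			return false
		}
	}
	return true
}

// compareIdent orders two dot-separated pre-release identifiers.
func compareIdent(x, y string) int {
	xn, yn := isNumeric(x), isNumeric(y)
	switch {
	case xn && yn:
		// SemVer forbids leading zeros, so a longer number is a larger one.
		if len(x) != len(y) {
			if len(x) < len(y) {
				return -1
			}
			return 1
		}
		return strings.Compare(x, y)
	case xn:
		return -1 // numeric identifiers rank below alphanumeric ones
	case yn:
		return 1
	default:
		return strings.Compare(x, y) // ASCII order
	}
}

// comparePre compares pre-release strings ("" means a normal release)
// and returns -1, 0 or 1 like strings.Compare.
func comparePre(a, b string) int {
	if a == b {
		return 0
	}
	if a == "" {
		return 1 // a release outranks any pre-release of the same X.Y.Z
	}
	if b == "" {
		return -1
	}
	as, bs := strings.Split(a, "."), strings.Split(b, ".")
	for i := 0; i < len(as) && i < len(bs); i++ {
		if c := compareIdent(as[i], bs[i]); c != 0 {
			return c
		}
	}
	// All shared identifiers are equal: the longer list ranks higher.
	switch {
	case len(as) < len(bs):
		return -1
	case len(as) > len(bs):
		return 1
	}
	return 0
}

func main() {
	fmt.Println(comparePre("preview.2", "preview.10")) // -1
	fmt.Println(comparePre("preview.10", ""))          // -1
}
```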

username_1: I'll start by updating the ruleguard version in go-critic. This should fix the golangci build.

username_1: Release `v0.2.0` is out and we're also using Go modules now.

username_0: That's awesome, thanks for the quick turn around :)

Status: Issue closed

|

openshift/origin | 82076180 | Title: Container status errors look ugly in details sidebar

Question:

username_0: Better to have the message than not have it, but we could present it better.

/cc @liggitt

Answers:

username_0: We might just close this. We have individual pod pages now and don't show pod details in the sidebar.

username_0: Container state reason is supposed to be a brief message.

```
// ContainerStateWaiting is a waiting state of a container.
type ContainerStateWaiting struct {
	// (brief) reason the container is not yet running.
	Reason string `json:"reason,omitempty"`
	// Message regarding why the container is not yet running.
	Message string `json:"message,omitempty"`
}
```

I haven't seen a message like this for a while. Closing. We can reopen if we see it again.

Status: Issue closed

|

postmanlabs/postman-app-support | 345663751 | Title: Postman App Performance Lag

Question:

username_0: I'm using the native desktop app on a Windows 10 machine. I've closed all tabs within the collection and rebooted my machine. I'm authoring form-data fields in the Body section of a POST request in the collection and am noticing a very long lag (~10-20 seconds) between a keystroke and the character appearing in the text box. That one collection has 176 requests, so I understand I may be pushing the tool to its limits. Still, please advise on best practices for improving the tool's performance on our end. Is there a way to, say, author requests outside the app and import them? All of my content is already written; I'm just pulling it in from another source using a combination of bulk edit and copy/paste.

Answers:

username_1: I have the "new" Windows desktop app. I have installed and uninstalled it, and it runs poorly. My laptop has a 4-core CPU and 16 GB of RAM, running Windows 10 Enterprise.

It takes several minutes to respond to a mouse click. When it does finally respond, searching takes ages. The Interceptor feature was removed. This is a horrible experience that I would not wish on my worst enemy. When does a company decide to remove critical functionality? Is it so you can make $$$ from support cases? I really wish you had added to the Chrome version rather than deprecating it. You seem to be moving backward in time: apps are moving away from the desktop, not to it.

username_2: I'm a paying client (Postman Pro) and the one who reported the original lag issue. I'm running Windows 10 Enterprise on a laptop with a Core i7 processor and 16 GB of RAM, 64-bit OS.

username_3: We are making some fundamental improvements to Postman's response rendering including using the Monaco editor for better memory optimization and rendering speeds. You can try out these updates in the Canary channel here: https://www.getpostman.com/downloads/canary

<img width="1790" alt="Screenshot 2019-06-12 12 19 53" src="https://user-images.githubusercontent.com/653409/59379813-72ffc700-8d0c-11e9-9da4-5c9ce0492dc4.png">

You can test it out with an API that we have created on the Postman Echo service. Examples here: https://documenter.getpostman.com/view/55577/S1ZudWWm?version=latest

username_4: It's 2020 and I think it's still the same. I use Postman extensively. Insomnia still gives a better experience when switching tabs. Sorry, but you can feel it too, IMHO.

username_5: Still lagging on Windows: up to a 1.5-second delay between typing and the text appearing in the URL bar.

username_6: We have made some performance improvements in the past few months.

Can anyone having issues please try the most recent version of the application and let us know if they are still seeing an issue?

username_7: Windows 10. Core i7 and 16GB of RAM.

Postman lags so much.

username_8: I had a similar issue with a not-so-big Postman file (1 collection with 30 requests) on Windows 10: the UI was intermittently laggy when moving around the app.

Changing the Windows "Performance Options" settings to "best performance" solved the problem for me. I did not spend time identifying which option caused the lag (maybe text smoothing, I don't know).

That could be an idea worth trying.