url

stringlengths 58

61

| repository_url

stringclasses 1

value | labels_url

stringlengths 72

75

| comments_url

stringlengths 67

70

| events_url

stringlengths 65

68

| html_url

stringlengths 46

51

| id

int64 599M

1.83B

| node_id

stringlengths 18

32

| number

int64 1

6.09k

| title

stringlengths 1

290

| labels

list | state

stringclasses 2

values | locked

bool 1

class | milestone

dict | comments

int64 0

54

| created_at

stringlengths 20

20

| updated_at

stringlengths 20

20

| closed_at

stringlengths 20

20

⌀ | active_lock_reason

null | body

stringlengths 0

228k

⌀ | reactions

dict | timeline_url

stringlengths 67

70

| performed_via_github_app

null | state_reason

stringclasses 3

values | draft

bool 2

classes | pull_request

dict | is_pull_request

bool 2

classes | comments_text

sequence |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

https://api.github.com/repos/huggingface/datasets/issues/1709 | https://api.github.com/repos/huggingface/datasets | https://api.github.com/repos/huggingface/datasets/issues/1709/labels{/name} | https://api.github.com/repos/huggingface/datasets/issues/1709/comments | https://api.github.com/repos/huggingface/datasets/issues/1709/events | https://github.com/huggingface/datasets/issues/1709 | 781,875,640 | MDU6SXNzdWU3ODE4NzU2NDA= | 1,709 | Databases | [] | closed | false | null | 0 | 2021-01-08T06:14:03Z | 2021-01-08T09:00:08Z | 2021-01-08T09:00:08Z | null | ## Adding a Dataset

- **Name:** *name of the dataset*

- **Description:** *short description of the dataset (or link to social media or blog post)*

- **Paper:** *link to the dataset paper if available*

- **Data:** *link to the Github repository or current dataset location*

- **Motivation:** *what are some good reasons to have this dataset*

Instructions to add a new dataset can be found [here](https://github.com/huggingface/datasets/blob/master/ADD_NEW_DATASET.md). | {

"+1": 0,

"-1": 0,

"confused": 0,

"eyes": 0,

"heart": 0,

"hooray": 0,

"laugh": 0,

"rocket": 0,

"total_count": 0,

"url": "https://api.github.com/repos/huggingface/datasets/issues/1709/reactions"

} | https://api.github.com/repos/huggingface/datasets/issues/1709/timeline | null | completed | null | null | false | [] |

https://api.github.com/repos/huggingface/datasets/issues/2864 | https://api.github.com/repos/huggingface/datasets | https://api.github.com/repos/huggingface/datasets/issues/2864/labels{/name} | https://api.github.com/repos/huggingface/datasets/issues/2864/comments | https://api.github.com/repos/huggingface/datasets/issues/2864/events | https://github.com/huggingface/datasets/pull/2864 | 986,159,438 | MDExOlB1bGxSZXF1ZXN0NzI1MzkyNjcw | 2,864 | Fix data URL in ToTTo dataset | [] | closed | false | {

"closed_at": null,

"closed_issues": 2,

"created_at": "2021-07-21T15:34:56Z",

"creator": {

"avatar_url": "https://avatars.githubusercontent.com/u/8515462?v=4",

"events_url": "https://api.github.com/users/albertvillanova/events{/privacy}",

"followers_url": "https://api.github.com/users/albertvillanova/followers",

"following_url": "https://api.github.com/users/albertvillanova/following{/other_user}",

"gists_url": "https://api.github.com/users/albertvillanova/gists{/gist_id}",

"gravatar_id": "",

"html_url": "https://github.com/albertvillanova",

"id": 8515462,

"login": "albertvillanova",

"node_id": "MDQ6VXNlcjg1MTU0NjI=",

"organizations_url": "https://api.github.com/users/albertvillanova/orgs",

"received_events_url": "https://api.github.com/users/albertvillanova/received_events",

"repos_url": "https://api.github.com/users/albertvillanova/repos",

"site_admin": false,

"starred_url": "https://api.github.com/users/albertvillanova/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/albertvillanova/subscriptions",

"type": "User",

"url": "https://api.github.com/users/albertvillanova"

},

"description": "Next minor release",

"due_on": "2021-08-30T07:00:00Z",

"html_url": "https://github.com/huggingface/datasets/milestone/8",

"id": 6968069,

"labels_url": "https://api.github.com/repos/huggingface/datasets/milestones/8/labels",

"node_id": "MI_kwDODunzps4AalMF",

"number": 8,

"open_issues": 4,

"state": "open",

"title": "1.12",

"updated_at": "2021-10-13T10:26:33Z",

"url": "https://api.github.com/repos/huggingface/datasets/milestones/8"

} | 0 | 2021-09-02T05:25:08Z | 2021-09-02T06:47:40Z | 2021-09-02T06:47:40Z | null | Data source host changed their data URL: google-research-datasets/ToTTo@cebeb43.

Fix #2860. | {

"+1": 0,

"-1": 0,

"confused": 0,

"eyes": 0,

"heart": 0,

"hooray": 0,

"laugh": 0,

"rocket": 0,

"total_count": 0,

"url": "https://api.github.com/repos/huggingface/datasets/issues/2864/reactions"

} | https://api.github.com/repos/huggingface/datasets/issues/2864/timeline | null | null | false | {

"diff_url": "https://github.com/huggingface/datasets/pull/2864.diff",

"html_url": "https://github.com/huggingface/datasets/pull/2864",

"merged_at": "2021-09-02T06:47:40Z",

"patch_url": "https://github.com/huggingface/datasets/pull/2864.patch",

"url": "https://api.github.com/repos/huggingface/datasets/pulls/2864"

} | true | [] |

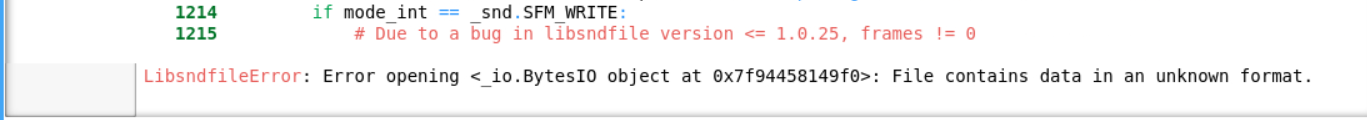

https://api.github.com/repos/huggingface/datasets/issues/5276 | https://api.github.com/repos/huggingface/datasets | https://api.github.com/repos/huggingface/datasets/issues/5276/labels{/name} | https://api.github.com/repos/huggingface/datasets/issues/5276/comments | https://api.github.com/repos/huggingface/datasets/issues/5276/events | https://github.com/huggingface/datasets/issues/5276 | 1,459,363,442 | I_kwDODunzps5W_B5y | 5,276 | Bug in downloading common_voice data and snall chunk of it to one's own hub | [] | closed | false | null | 17 | 2022-11-22T08:17:53Z | 2023-07-21T14:33:10Z | 2023-07-21T14:33:10Z | null | ### Describe the bug

I'm trying to load the common voice dataset. Currently there is no implementation to download just par tof the data, and I need just one part of it, without downloading the entire dataset

Help please?

### Steps to reproduce the bug

So here is what I have done:

1. Download common_voice data

2. Trim part of it and publish it to my own repo.

3. Download data from my own repo, but am getting this error.

### Expected behavior

There shouldn't be an error in downloading part of the data and publishing it to one's own repo

### Environment info

common_voice 11 | {

"+1": 0,

"-1": 0,

"confused": 0,

"eyes": 0,

"heart": 0,

"hooray": 0,

"laugh": 0,

"rocket": 0,

"total_count": 0,

"url": "https://api.github.com/repos/huggingface/datasets/issues/5276/reactions"

} | https://api.github.com/repos/huggingface/datasets/issues/5276/timeline | null | completed | null | null | false | [

"Sounds like one of the file is not a valid one, can you make sure you uploaded valid mp3 files ?",

"Well I just sharded the original commonVoice dataset and pushed a small chunk of it in a private rep\n\nWhat did go wrong?\n\nHolen Sie sich Outlook für iOS<https://aka.ms/o0ukef>\n________________________________\nVon: Quentin Lhoest ***@***.***>\nGesendet: Tuesday, November 22, 2022 3:03:40 PM\nAn: huggingface/datasets ***@***.***>\nCc: capsabogdan ***@***.***>; Author ***@***.***>\nBetreff: Re: [huggingface/datasets] Bug in downloading common_voice data and snall chunk of it to one's own hub (Issue #5276)\n\n\nSounds like one of the file is not a valid one, can you make sure you uploaded valid mp3 files ?\n\n—\nReply to this email directly, view it on GitHub<https://github.com/huggingface/datasets/issues/5276#issuecomment-1323727434>, or unsubscribe<https://github.com/notifications/unsubscribe-auth/ALSIFOAPAL2V4TBJTSPMAULWJTHDZANCNFSM6AAAAAASHQJ63U>.\nYou are receiving this because you authored the thread.Message ID: ***@***.***>\n",

"It should be all good then !\r\nCould you share a link to your repository for me to investigate what went wrong ?",

"https://huggingface.co/datasets/DTU54DL/common-voice-test16k\n\nAm Di., 22. Nov. 2022 um 16:43 Uhr schrieb Quentin Lhoest <\n***@***.***>:\n\n> It should be all good then !\n> Could you share a link to your repository for me to investigate what went\n> wrong ?\n>\n> —\n> Reply to this email directly, view it on GitHub\n> <https://github.com/huggingface/datasets/issues/5276#issuecomment-1323876682>,\n> or unsubscribe\n> <https://github.com/notifications/unsubscribe-auth/ALSIFOEUJRZWXAM7DYA5VJDWJTS3NANCNFSM6AAAAAASHQJ63U>\n> .\n> You are receiving this because you authored the thread.Message ID:\n> ***@***.***>\n>\n",

"I see ! This is a bug with MP3 files.\r\n\r\nWhen we store audio data in parquet, we store the bytes and the file name. From the file name extension we know if it's a WAV, an MP3 or else. But here it looks like the paths are all None.\r\n\r\nIt looks like it comes from here:\r\n\r\nhttps://github.com/huggingface/datasets/blob/7feeb5648a63b6135a8259dedc3b1e19185ee4c7/src/datasets/features/audio.py#L212\r\n\r\nCc @polinaeterna maybe we should simply put the file name instead of None values ?",

"@lhoestq I remember we wanted to avoid storing redundant data but maybe it's not that crucial indeed to store one more string value. \r\nOr we can store paths only for mp3s, considering that for other formats we don't have such a problem with reading from bytes without format specified. ",

"It doesn't cost much to always store the file name IMO",

"thanks for the help!\n\ncan I do anything on my side? we are doing a DL project and we need the\ndata really quick.\n\nthanks\nbogdan\n\n> Message ID: ***@***.***>\n>\n",

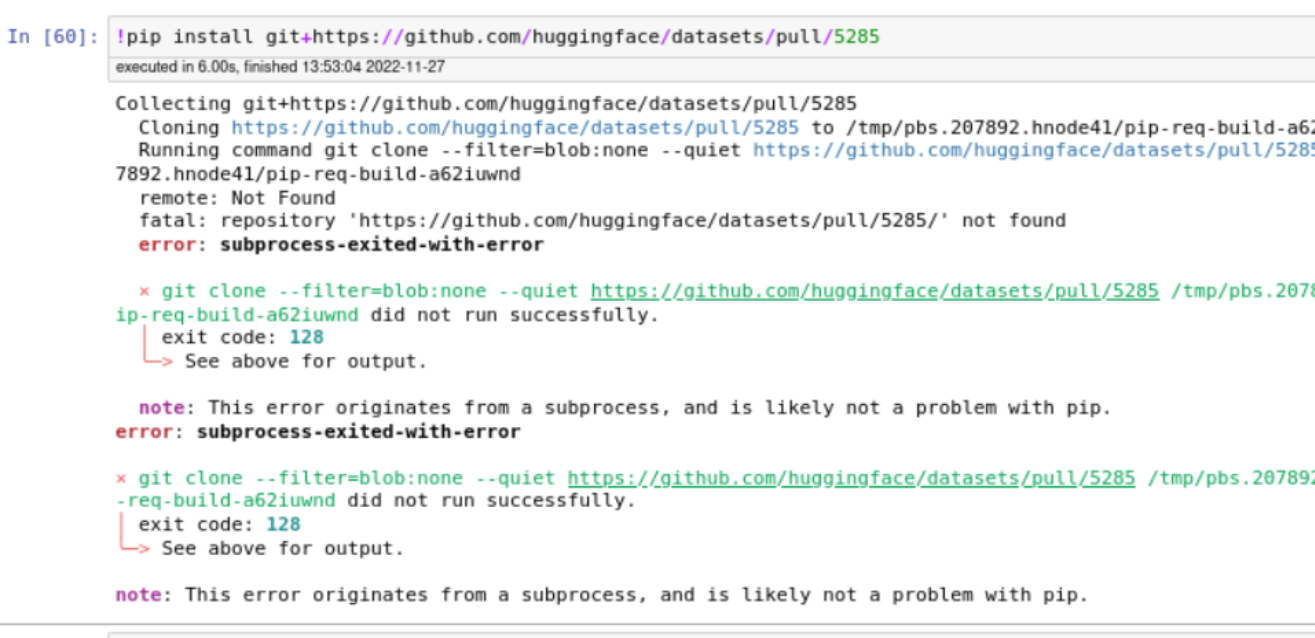

"I opened a pull requests here: https://github.com/huggingface/datasets/pull/5285, we'll do a new release soon with this fix.\r\n\r\nOtherwise if you're really in a hurry you can install `datasets` from this PR",

"[image: image.png]\n\n> Message ID: ***@***.***>\n>\n",

"any idea on what's going wrong here?\n\nthanks\n\nAm So., 27. Nov. 2022 um 13:53 Uhr schrieb Bogdan Capsa <\n***@***.***>:\n\n> [image: image.png]\n>\n>> Message ID: ***@***.***>\n>>\n>\n",

"hi @capsabogdan! \r\ncould you please share more specifically what problem do you have now?",

"I have attached this screenshot above . can u pls help? So can not pip from pull request\r\n\r\n\r\n",

"The pull request has been merged on `main`.\r\nYou can install `datasets` from `main` using\r\n```\r\npip install git+https://github.com/huggingface/datasets.git\r\n```",

"I've tried to load this dataset DTU54DL/common-voice-test16k, but am\ngetting the same error.\n\nSo the bug fix will fix only if I upload a new dataset, or also loading\npreviously uploaded datasets?\n\nthanks\n\nAm Mo., 28. Nov. 2022 um 19:51 Uhr schrieb Quentin Lhoest <\n***@***.***>:\n\n> The pull request has been merged on main.\n> You can install datasets from main using\n>\n> pip install git+https://github.com/huggingface/datasets.git\n>\n> —\n> Reply to this email directly, view it on GitHub\n> <https://github.com/huggingface/datasets/issues/5276#issuecomment-1329587334>,\n> or unsubscribe\n> <https://github.com/notifications/unsubscribe-auth/ALSIFOCNYYIGHM2EX3ZIO6DWKT5MXANCNFSM6AAAAAASHQJ63U>\n> .\n> You are receiving this because you were mentioned.Message ID:\n> ***@***.***>\n>\n",

"> So the bug fix will fix only if I upload a new dataset, or also loading\r\npreviously uploaded datasets?\r\n\r\nYou have to reupload the dataset, sorry for the inconvenience",

"thank you so much for the help! works like a charm!\n\nAm Di., 29. Nov. 2022 um 12:15 Uhr schrieb Quentin Lhoest <\n***@***.***>:\n\n> So the bug fix will fix only if I upload a new dataset, or also loading\n> previously uploaded datasets?\n>\n> You have to reupload the dataset, sorry for the inconvenience\n>\n> —\n> Reply to this email directly, view it on GitHub\n> <https://github.com/huggingface/datasets/issues/5276#issuecomment-1330468393>,\n> or unsubscribe\n> <https://github.com/notifications/unsubscribe-auth/ALSIFOBKEFZO57BAKY4IGW3WKXQUZANCNFSM6AAAAAASHQJ63U>\n> .\n> You are receiving this because you were mentioned.Message ID:\n> ***@***.***>\n>\n"

] |

https://api.github.com/repos/huggingface/datasets/issues/111 | https://api.github.com/repos/huggingface/datasets | https://api.github.com/repos/huggingface/datasets/issues/111/labels{/name} | https://api.github.com/repos/huggingface/datasets/issues/111/comments | https://api.github.com/repos/huggingface/datasets/issues/111/events | https://github.com/huggingface/datasets/pull/111 | 618,528,060 | MDExOlB1bGxSZXF1ZXN0NDE4MjQwMjMy | 111 | [Clean-up] remove under construction datastes | [] | closed | false | null | 0 | 2020-05-14T20:52:13Z | 2020-05-14T20:52:23Z | 2020-05-14T20:52:22Z | null | {

"+1": 0,

"-1": 0,

"confused": 0,

"eyes": 0,

"heart": 0,

"hooray": 0,

"laugh": 0,

"rocket": 0,

"total_count": 0,

"url": "https://api.github.com/repos/huggingface/datasets/issues/111/reactions"

} | https://api.github.com/repos/huggingface/datasets/issues/111/timeline | null | null | false | {

"diff_url": "https://github.com/huggingface/datasets/pull/111.diff",

"html_url": "https://github.com/huggingface/datasets/pull/111",

"merged_at": "2020-05-14T20:52:22Z",

"patch_url": "https://github.com/huggingface/datasets/pull/111.patch",

"url": "https://api.github.com/repos/huggingface/datasets/pulls/111"

} | true | [] |

|

https://api.github.com/repos/huggingface/datasets/issues/2723 | https://api.github.com/repos/huggingface/datasets | https://api.github.com/repos/huggingface/datasets/issues/2723/labels{/name} | https://api.github.com/repos/huggingface/datasets/issues/2723/comments | https://api.github.com/repos/huggingface/datasets/issues/2723/events | https://github.com/huggingface/datasets/pull/2723 | 954,864,104 | MDExOlB1bGxSZXF1ZXN0Njk4Njk0NDMw | 2,723 | Fix en subset by modifying dataset_info with correct validation infos | [] | closed | false | null | 0 | 2021-07-28T13:36:19Z | 2021-07-28T15:22:23Z | 2021-07-28T15:22:23Z | null | - Related to: #2682

We correct the values of `en` subset concerning the expected validation values (both `num_bytes` and `num_examples`.

Instead of having:

`{"name": "validation", "num_bytes": 828589180707, "num_examples": 364868892, "dataset_name": "c4"}`

We replace with correct values:

`{"name": "validation", "num_bytes": 825767266, "num_examples": 364608, "dataset_name": "c4"}`

There are still issues with validation with other subsets, but I can't download all the files, unzip to check for the correct number of bytes. (If you have a fast way to obtain those values for other subsets, I can do this in this PR ... otherwise I can't spend those resources)

| {

"+1": 0,

"-1": 0,

"confused": 0,

"eyes": 0,

"heart": 0,

"hooray": 0,

"laugh": 0,

"rocket": 0,

"total_count": 0,

"url": "https://api.github.com/repos/huggingface/datasets/issues/2723/reactions"

} | https://api.github.com/repos/huggingface/datasets/issues/2723/timeline | null | null | false | {

"diff_url": "https://github.com/huggingface/datasets/pull/2723.diff",

"html_url": "https://github.com/huggingface/datasets/pull/2723",

"merged_at": "2021-07-28T15:22:23Z",

"patch_url": "https://github.com/huggingface/datasets/pull/2723.patch",

"url": "https://api.github.com/repos/huggingface/datasets/pulls/2723"

} | true | [] |

https://api.github.com/repos/huggingface/datasets/issues/1237 | https://api.github.com/repos/huggingface/datasets | https://api.github.com/repos/huggingface/datasets/issues/1237/labels{/name} | https://api.github.com/repos/huggingface/datasets/issues/1237/comments | https://api.github.com/repos/huggingface/datasets/issues/1237/events | https://github.com/huggingface/datasets/pull/1237 | 758,318,353 | MDExOlB1bGxSZXF1ZXN0NTMzNTExMDky | 1,237 | Add AmbigQA dataset | [] | closed | false | null | 0 | 2020-12-07T09:07:19Z | 2020-12-08T13:38:52Z | 2020-12-08T13:38:52Z | null | # AmbigQA: Answering Ambiguous Open-domain Questions Dataset

Adding the [AmbigQA](https://nlp.cs.washington.edu/ambigqa/) dataset as part of the sprint 🎉 (from Open dataset list for Dataset sprint)

Added both the light and full versions (as seen on the dataset homepage)

The json format changes based on the value of one 'type' field, so I set the unavailable field to an empty list. This is explained in the README -> Data Fields

```py

train_light_dataset = load_dataset('./datasets/ambig_qa',"light",split="train")

val_light_dataset = load_dataset('./datasets/ambig_qa',"light",split="validation")

train_full_dataset = load_dataset('./datasets/ambig_qa',"full",split="train")

val_full_dataset = load_dataset('./datasets/ambig_qa',"full",split="validation")

for example in train_light_dataset:

for i,t in enumerate(example['annotations']['type']):

if t =='singleAnswer':

# use the example['annotations']['answer'][i]

# example['annotations']['qaPairs'][i] - > is []

print(example['annotations']['answer'][i])

else:

# use the example['annotations']['qaPairs'][i]

# example['annotations']['answer'][i] - > is []

print(example['annotations']['qaPairs'][i])

```

- [x] All tests passed

- [x] Added dummy data

- [x] Added data card (as much as I could)

| {

"+1": 0,

"-1": 0,

"confused": 0,

"eyes": 0,

"heart": 0,

"hooray": 0,

"laugh": 0,

"rocket": 0,

"total_count": 0,

"url": "https://api.github.com/repos/huggingface/datasets/issues/1237/reactions"

} | https://api.github.com/repos/huggingface/datasets/issues/1237/timeline | null | null | false | {

"diff_url": "https://github.com/huggingface/datasets/pull/1237.diff",

"html_url": "https://github.com/huggingface/datasets/pull/1237",

"merged_at": "2020-12-08T13:38:52Z",

"patch_url": "https://github.com/huggingface/datasets/pull/1237.patch",

"url": "https://api.github.com/repos/huggingface/datasets/pulls/1237"

} | true | [] |

https://api.github.com/repos/huggingface/datasets/issues/3579 | https://api.github.com/repos/huggingface/datasets | https://api.github.com/repos/huggingface/datasets/issues/3579/labels{/name} | https://api.github.com/repos/huggingface/datasets/issues/3579/comments | https://api.github.com/repos/huggingface/datasets/issues/3579/events | https://github.com/huggingface/datasets/pull/3579 | 1,103,451,118 | PR_kwDODunzps4xBmY4 | 3,579 | Add Text2log Dataset | [] | closed | false | null | 1 | 2022-01-14T10:45:01Z | 2022-01-20T17:09:44Z | 2022-01-20T17:09:44Z | null | Adding the text2log dataset used for training FOL sentence translating models | {

"+1": 0,

"-1": 0,

"confused": 0,

"eyes": 0,

"heart": 0,

"hooray": 0,

"laugh": 0,

"rocket": 0,

"total_count": 0,

"url": "https://api.github.com/repos/huggingface/datasets/issues/3579/reactions"

} | https://api.github.com/repos/huggingface/datasets/issues/3579/timeline | null | null | false | {

"diff_url": "https://github.com/huggingface/datasets/pull/3579.diff",

"html_url": "https://github.com/huggingface/datasets/pull/3579",

"merged_at": "2022-01-20T17:09:44Z",

"patch_url": "https://github.com/huggingface/datasets/pull/3579.patch",

"url": "https://api.github.com/repos/huggingface/datasets/pulls/3579"

} | true | [

"The CI fails are unrelated to your PR and fixed on master, I think we can merge now !"

] |

https://api.github.com/repos/huggingface/datasets/issues/3157 | https://api.github.com/repos/huggingface/datasets | https://api.github.com/repos/huggingface/datasets/issues/3157/labels{/name} | https://api.github.com/repos/huggingface/datasets/issues/3157/comments | https://api.github.com/repos/huggingface/datasets/issues/3157/events | https://github.com/huggingface/datasets/pull/3157 | 1,034,775,165 | PR_kwDODunzps4tm3_I | 3,157 | Fixed: duplicate parameter and missing parameter in docstring | [] | closed | false | null | 0 | 2021-10-25T07:26:00Z | 2021-10-25T14:02:19Z | 2021-10-25T14:02:19Z | null | changing duplicate parameter `data_files` in `DatasetBuilder.__init__` to the missing parameter `data_dir` | {

"+1": 0,

"-1": 0,

"confused": 0,

"eyes": 0,

"heart": 0,

"hooray": 0,

"laugh": 0,

"rocket": 0,

"total_count": 0,

"url": "https://api.github.com/repos/huggingface/datasets/issues/3157/reactions"

} | https://api.github.com/repos/huggingface/datasets/issues/3157/timeline | null | null | false | {

"diff_url": "https://github.com/huggingface/datasets/pull/3157.diff",

"html_url": "https://github.com/huggingface/datasets/pull/3157",

"merged_at": "2021-10-25T14:02:18Z",

"patch_url": "https://github.com/huggingface/datasets/pull/3157.patch",

"url": "https://api.github.com/repos/huggingface/datasets/pulls/3157"

} | true | [] |

https://api.github.com/repos/huggingface/datasets/issues/1568 | https://api.github.com/repos/huggingface/datasets | https://api.github.com/repos/huggingface/datasets/issues/1568/labels{/name} | https://api.github.com/repos/huggingface/datasets/issues/1568/comments | https://api.github.com/repos/huggingface/datasets/issues/1568/events | https://github.com/huggingface/datasets/pull/1568 | 766,722,994 | MDExOlB1bGxSZXF1ZXN0NTM5NjY2ODg1 | 1,568 | Added the dataset clickbait_news_bg | [] | closed | false | null | 2 | 2020-12-14T17:03:00Z | 2020-12-15T18:28:56Z | 2020-12-15T18:28:56Z | null | There was a problem with my [previous PR 1445](https://github.com/huggingface/datasets/pull/1445) after rebasing, so I'm copying the dataset code into a new branch and submitting a new PR. | {

"+1": 0,

"-1": 0,

"confused": 0,

"eyes": 0,

"heart": 0,

"hooray": 0,

"laugh": 0,

"rocket": 0,

"total_count": 0,

"url": "https://api.github.com/repos/huggingface/datasets/issues/1568/reactions"

} | https://api.github.com/repos/huggingface/datasets/issues/1568/timeline | null | null | false | {

"diff_url": "https://github.com/huggingface/datasets/pull/1568.diff",

"html_url": "https://github.com/huggingface/datasets/pull/1568",

"merged_at": "2020-12-15T18:28:56Z",

"patch_url": "https://github.com/huggingface/datasets/pull/1568.patch",

"url": "https://api.github.com/repos/huggingface/datasets/pulls/1568"

} | true | [

"Hi @tsvm Great work! \r\nSince you have raised a clean PR could you close the earlier one - #1445 ? \r\n",

"> Hi @tsvm Great work!\r\n> Since you have raised a clean PR could you close the earlier one - #1445 ?\r\n\r\nDone."

] |

https://api.github.com/repos/huggingface/datasets/issues/3643 | https://api.github.com/repos/huggingface/datasets | https://api.github.com/repos/huggingface/datasets/issues/3643/labels{/name} | https://api.github.com/repos/huggingface/datasets/issues/3643/comments | https://api.github.com/repos/huggingface/datasets/issues/3643/events | https://github.com/huggingface/datasets/pull/3643 | 1,116,417,428 | PR_kwDODunzps4xr8mX | 3,643 | Fix sem_eval_2018_task_1 download location | [] | closed | false | null | 1 | 2022-01-27T15:45:00Z | 2022-02-04T15:15:26Z | 2022-02-04T15:15:26Z | null | As discussed with @lhoestq in https://github.com/huggingface/datasets/issues/3549#issuecomment-1020176931_ this is the new pull request to fix the download location. | {

"+1": 0,

"-1": 0,

"confused": 0,

"eyes": 0,

"heart": 0,

"hooray": 0,

"laugh": 0,

"rocket": 0,

"total_count": 0,

"url": "https://api.github.com/repos/huggingface/datasets/issues/3643/reactions"

} | https://api.github.com/repos/huggingface/datasets/issues/3643/timeline | null | null | false | {

"diff_url": "https://github.com/huggingface/datasets/pull/3643.diff",

"html_url": "https://github.com/huggingface/datasets/pull/3643",

"merged_at": "2022-02-04T15:15:26Z",

"patch_url": "https://github.com/huggingface/datasets/pull/3643.patch",

"url": "https://api.github.com/repos/huggingface/datasets/pulls/3643"

} | true | [

"I fixed those two things, the two remaining failing checks seem to be due to some dependency missing in the tests."

] |

https://api.github.com/repos/huggingface/datasets/issues/2903 | https://api.github.com/repos/huggingface/datasets | https://api.github.com/repos/huggingface/datasets/issues/2903/labels{/name} | https://api.github.com/repos/huggingface/datasets/issues/2903/comments | https://api.github.com/repos/huggingface/datasets/issues/2903/events | https://github.com/huggingface/datasets/pull/2903 | 995,715,191 | PR_kwDODunzps4rtxxV | 2,903 | Fix xpathopen to accept positional arguments | [] | closed | false | null | 1 | 2021-09-14T08:02:50Z | 2021-09-14T08:51:21Z | 2021-09-14T08:40:47Z | null | Fix `xpathopen()` so that it also accepts positional arguments.

Fix #2901. | {

"+1": 0,

"-1": 0,

"confused": 0,

"eyes": 0,

"heart": 0,

"hooray": 0,

"laugh": 0,

"rocket": 0,

"total_count": 0,

"url": "https://api.github.com/repos/huggingface/datasets/issues/2903/reactions"

} | https://api.github.com/repos/huggingface/datasets/issues/2903/timeline | null | null | false | {

"diff_url": "https://github.com/huggingface/datasets/pull/2903.diff",

"html_url": "https://github.com/huggingface/datasets/pull/2903",

"merged_at": "2021-09-14T08:40:47Z",

"patch_url": "https://github.com/huggingface/datasets/pull/2903.patch",

"url": "https://api.github.com/repos/huggingface/datasets/pulls/2903"

} | true | [

"thanks!"

] |

https://api.github.com/repos/huggingface/datasets/issues/2395 | https://api.github.com/repos/huggingface/datasets | https://api.github.com/repos/huggingface/datasets/issues/2395/labels{/name} | https://api.github.com/repos/huggingface/datasets/issues/2395/comments | https://api.github.com/repos/huggingface/datasets/issues/2395/events | https://github.com/huggingface/datasets/pull/2395 | 898,762,730 | MDExOlB1bGxSZXF1ZXN0NjUwNTk3NjI0 | 2,395 | `pretty_name` for dataset in YAML tags | [

{

"color": "0e8a16",

"default": false,

"description": "Contribution to a dataset script",

"id": 4564477500,

"name": "dataset contribution",

"node_id": "LA_kwDODunzps8AAAABEBBmPA",

"url": "https://api.github.com/repos/huggingface/datasets/labels/dataset%20contribution"

}

] | closed | false | null | 19 | 2021-05-22T09:24:45Z | 2022-09-23T13:29:14Z | 2022-09-23T13:29:13Z | null | I'm updating `pretty_name` for datasets in YAML tags as discussed with @lhoestq. Here are the first 10, please let me know if they're looking good.

If dataset has 1 config, I've added `pretty_name` as `config_name: full_name_of_dataset` as config names were `plain_text`, `default`, `squad` etc (not so important in this case) whereas when dataset has >1 configs, I've added `config_name: full_name_of_dataset+config_name` so as to let user know about the `config` here. | {

"+1": 1,

"-1": 0,

"confused": 0,

"eyes": 0,

"heart": 0,

"hooray": 0,

"laugh": 0,

"rocket": 0,

"total_count": 1,

"url": "https://api.github.com/repos/huggingface/datasets/issues/2395/reactions"

} | https://api.github.com/repos/huggingface/datasets/issues/2395/timeline | null | null | false | {

"diff_url": "https://github.com/huggingface/datasets/pull/2395.diff",

"html_url": "https://github.com/huggingface/datasets/pull/2395",

"merged_at": null,

"patch_url": "https://github.com/huggingface/datasets/pull/2395.patch",

"url": "https://api.github.com/repos/huggingface/datasets/pulls/2395"

} | true | [

"Initially I removed the ` - ` since there was only one `pretty_name` per config but turns out it was breaking here in `from_yaml_string`https://github.com/huggingface/datasets/blob/74751e3f98c74d22c48c6beb1fab0c13b5dfd075/src/datasets/utils/metadata.py#L197 in `/utils/metadata.py`",

"@lhoestq I guess this will also need some validation?",

"Looks like the parser doesn't allow things like\r\n```\r\npretty_name:\r\n config_name1: My awesome config number 1\r\n config_name2: My amazing config number 2\r\n```\r\ntherefore you had to use `-` and consider them as a list.\r\n\r\nI would be nice to add support for this case in the validator.\r\n\r\nThere's one thing though: the DatasetMetadata object currently corresponds to the yaml tags that are flattened: the config names are just ignored, and the lists are concatenated.\r\n\r\nTherefore I think we would potentially need to instantiate several `DatasetMetadata` objects: one per config. Otherwise we would end up with a list of several pretty_name while we actually need at most 1 per config.\r\n\r\nWhat do you think @gchhablani ?",

"I was thinking of returning `metada_dict` (on line 193) whenever `load_dataset_card` is called (we can pass an extra parameter to `from_readme` or `from_yaml_string` for that to happen).\r\n\r\nOne just needs config_name as key for the dictionary inside `pretty_name` dict and for single config, there would be only one value to print. We can do this for other fields as well like `size_categories`, `languages` etc. This will obviate the need to flatten the YAML tags so that don't have to instantiate several DatasetMetadata objects. What do you guys think @lhoestq @gchhablani? \r\n\r\nUpdate: I was thinking of returning the whole dictionary before flattening so that user can access whatever they want with specific configs. Let's say [this](https://pastebin.com/eJ84314f) is my `metadata_dict` before flattening (the loaded YAML string), so instead of validating it and then returning the items individually we can return it just after loading the YAML string.",

"Hi @lhoestq @bhavitvyamalik \r\n\r\n@bhavitvyamalik, I'm not sure I understand your approach, can you please elaborate? The `metadata_dict` is flattened before instantiating the object, do you want to remove that? Still confused.\r\n\r\nFew things come to my mind after going through this PR. They might not be entirely relevant to the current task, but I'm just trying to think about possible cases and discuss them here.\r\n\r\n1. Instead of instantiating a new `DatasetMetadata` for each config with flattened tags, why can't we make it more flexible and validate only non-dict items? However, in that case, the types wouldn't be as strict for the class attributes. It would also not work for cases that are like `Dict[str,List[Dict[str,str]]`, but I guess that won't be needed anyway in the foreseeable future?\r\n\r\n Ideally, it would be something like - Check the metadata tag type (root), do a DFS, and find the non-dict objects (leaves), and validate them. Is this an overkill to handle the problem?\r\n2. For single-config datasets, there can be slightly different validation for `pretty_names`, than for multi-config. The user shouldn't need to provide a config name for single-config datasets, wdyt @bhavitvyamalik @lhoestq? Either way, for multi-config, the validation can use the dictionary keys in the path to that leaf node to verify `pretty_names: ... (config)` as well. This will check whether the config name is same as the key (might be unnecessary but prevents typos, so less work for the reviewer(s)). For future, however, it might be beneficial to have something like this.\r\n3. Should we have a default config name for single-config datasets? People use any string they feel like. I've seen `plain_text`, `default` and the dataset name. I've used `image` for a few datasets myself AFAIR. For smarter validation (again, a future case ;-;), it'd be easier for us to have a simple rule for naming configs in single-config datasets. Wdyt @lhoestq?",

"Btw, `pretty_names` can also be used to handle this during validation :P \r\n\r\n```\r\n-# Dataset Card for [Dataset Name]\r\n+# Dataset Card for Allegro Reviews\r\n```\r\n\r\nThis is where `DatasetMetadata` and `ReadMe` should be combined. But there are very few overlaps, I guess.\r\n\r\n\n@bhavitvyamalik @lhoestq What about adding a pretty name across all configs, and then config-specific names?\n\nLike\n\n```yaml\npretty_names:\n all_configs: X (dataset_name)\n config_1: X1 (config_1_name)\n config_2: X2 (config_2_name)\n```\nThen, using the `metadata_dict`, the ReadMe header can be validated against `X`.\n\nSorry if I'm throwing too many ideas at once.",

"@bhavitvyamalik\r\n\r\nNow, I think I better understand what you're saying. So you want to skip validation for the unflattened metadata and just return it? And let the validation run for the flattened version?",

"Exactly! Validation is important but once the YAML tags are validated I feel we shouldn't do that again while calling `load_dataset_card`. +1 for default config name for single-config datasets.",

"@bhavitvyamalik\r\nActually, I made the `ReadMe` validation similar to `DatasetMetadata` validation and the class was validating the metadata during the creation. \r\n\r\nMaybe we need to have a separate validation method instead of having it in `__post_init__`? Wdyt @lhoestq? \r\n\r\nI'm sensing too many things to look into. It'd be great to discuss these sometime. \r\n\r\nBut if this PR is urgent then @bhavitvyamalik's logic seems good to me. It doesn't need major modifications in validation.",

"> Maybe we need to have a separate validation method instead of having it in __post_init__? Wdyt @lhoestq?\r\n\r\nWe can definitely have a `is_valid()` method instead of doing it in the post init.\r\n\r\n> What about adding a pretty name across all configs, and then config-specific names?\r\n\r\nLet's keep things simple to starts with. If we can allow both single-config and multi-config cases it would already be great :)\r\n\r\nfor single-config:\r\n```yaml\r\npretty_name: Allegro Reviews\r\n```\r\n\r\nfor multi-config:\r\n```yaml\r\npretty_name:\r\n mrpc: Microsoft Research Paraphrase Corpus (MRPC)\r\n sst2: Stanford Sentiment Treebank\r\n ...\r\n```\r\n\r\nTo support the multi-config case I see two options:\r\n1. Don't allow DatasetMetadata to have dictionaries but instead have separate DatasetMetadata objects per config\r\n2. allow DatasetMetadata to have dictionaries. It implies to remove the flattening step. Then we could get metadata for a specific config this way for example:\r\n```python\r\nfrom datasets import load_dataset_card\r\n\r\nglue_dataset_card = load_dataset_card(\"glue\")\r\nprint(glue_dataset_card.metadata)\r\n# DatasetMetatada object with dictionaries since there are many configs\r\nprint(glue_dataset_card.metadata.get_metadata_for_config(\"mrpc\"))\r\n# DatasetMetatada object with no dictionaries since there are only the mrpc tags\r\n```\r\n\r\nLet me know what you think or if you have other ideas.",

"I think Option 2 is better.\n\nJust to clarify, will `get_metadata_for_config` also return common details, like language, say?",

"> Just to clarify, will get_metadata_for_config also return common details, like language, say?\r\n\r\nYes that would be more convenient IMO. For example a dataset card like this\r\n```yaml\r\nlanguages:\r\n- en\r\npretty_name:\r\n config1: Pretty Name for Config 1\r\n config3: Pretty Name for Config 2\r\n```\r\n\r\nthen `metadat.get_metadata_for_config(\"config1\")` would return something like\r\n```python\r\nDatasetMetadata(languages=[\"en\"], pretty_name=\"Pretty Name for Config 1\")\r\n```",

"@lhoestq, should we do this post-processing in `load_dataset_card` by returning unflattened dictionary from `DatasetMetadata` or send this from `DatasetMetadata`? Since there isn't much to do I feel once we have the unflattened dictionary",

"Not sure I understand the difference @bhavitvyamalik , could you elaborate please ?",

"I was talking about this unflattened dictionary:\r\n\r\n> I was thinking of returning the whole dictionary before flattening so that user can access whatever they want with specific configs. Let's say [this](https://pastebin.com/eJ84314f) is my metadata_dict before flattening (the loaded YAML string), so instead of validating it and then returning the items individually we can return it just after loading the YAML string.\r\n\r\nPost-processing meant extracting config-specific fields from this dictionary and then return this `languages=[\"en\"], pretty_name=\"Pretty Name for Config 1\"`",

"I still don't understand what you mean by \"returning unflattened dictionary from DatasetMetadata or send this from DatasetMetadata\", sorry. Can you give an example or rephrase this ?\r\n\r\nIMO load_dataset_card can return a dataset card object with a metadata field. If the metadata isn't flat (i.e. it has several configs), you can get the flat metadata of 1 specific config with `get_metadata_for_config`. But of course if you have better ideas or suggestions, we can discuss this",

"@lhoestq, I think he is saying whatever `get_metadata_for_config` is doing can be done in `load_dataset_card` by taking the unflattened `metadata_dict` as input.\r\n\r\n@bhavitvyamalik, I think it'd be better to have this \"post-processing\" in `DatasetMetadata` instead of `load_dataset_card`, as @lhoestq has shown. I'll quickly get on that.\r\n\r\n---\r\nThree things that are to be changed in `DatasetMetadata`:\r\n1. Allow Non-flat elements and their validation.\r\n2. Create a method to get metadata by config name.\r\n3. Create a `validate()` method.\r\n\r\nOnce that is done, this PR can be updated and reviewed, wdys?",

"Thanks @gchhablani for the help ! Now that https://github.com/huggingface/datasets/pull/2436 is merged you can remove the `-` in the pretty_name @bhavitvyamalik :)",

"Thanks @bhavitvyamalik.\r\n\r\nI think this PR was superseded by these others also made by you:\r\n- #3498\r\n- #3536\r\n\r\nI'm closing this."

] |

https://api.github.com/repos/huggingface/datasets/issues/973 | https://api.github.com/repos/huggingface/datasets | https://api.github.com/repos/huggingface/datasets/issues/973/labels{/name} | https://api.github.com/repos/huggingface/datasets/issues/973/comments | https://api.github.com/repos/huggingface/datasets/issues/973/events | https://github.com/huggingface/datasets/pull/973 | 754,807,963 | MDExOlB1bGxSZXF1ZXN0NTMwNjQxMTky | 973 | Adding The Microsoft Terminology Collection dataset. | [] | closed | false | null | 9 | 2020-12-01T23:36:23Z | 2020-12-04T15:25:44Z | 2020-12-04T15:12:46Z | null | {

"+1": 0,

"-1": 0,

"confused": 0,

"eyes": 0,

"heart": 0,

"hooray": 0,

"laugh": 0,

"rocket": 0,

"total_count": 0,

"url": "https://api.github.com/repos/huggingface/datasets/issues/973/reactions"

} | https://api.github.com/repos/huggingface/datasets/issues/973/timeline | null | null | false | {

"diff_url": "https://github.com/huggingface/datasets/pull/973.diff",

"html_url": "https://github.com/huggingface/datasets/pull/973",

"merged_at": "2020-12-04T15:12:46Z",

"patch_url": "https://github.com/huggingface/datasets/pull/973.patch",

"url": "https://api.github.com/repos/huggingface/datasets/pulls/973"

} | true | [

"I have to manually copy a dataset_infos.json file from other dataset and modify it since the `datasets-cli` isn't able to handle manually downloaded datasets yet (as far as I know).",

"you can generate the dataset_infos.json file even for dataset with manual data\r\nTo do so just specify `--data_dir <path/to/the/folder/containing/the/manual/data>`",

"Also, dummy_data seems having difficulty to handle manually downloaded datasets. `python datasets-cli dummy_data datasets/ms_terms --data_dir ...` reported `error: unrecognized arguments: --data_dir` error. Without `--data_dir`, it reported this error:\r\n```\r\nDataset ms_terms with config BuilderConfig(name='ms_terms-full', version=1.0.0, data_dir=None, data_files=None, description='...\\n') seems to already open files in the method `_split_generators(...)`. You might consider to instead only open files in the method `_generate_examples(...)` instead. If this is not possible the dummy data has to be created with less guidance. Make sure you create the file None.\r\nTraceback (most recent call last):\r\n File \"datasets-cli\", line 36, in <module>\r\n service.run()\r\n File \"/Users/lzhao/Downloads/huggingface/datasets/src/datasets/commands/dummy_data.py\", line 326, in run\r\n dataset_builder=dataset_builder, mock_dl_manager=mock_dl_manager\r\n File \"/Users/lzhao/Downloads/huggingface/datasets/src/datasets/commands/dummy_data.py\", line 406, in _print_dummy_data_instructions\r\n for split in generator_splits:\r\nUnboundLocalError: local variable 'generator_splits' referenced before assignment\r\n```",

"Oh yes `--data_dir` seems to only be supported for the `datasets_cli test` command. Sorry about that.\r\n\r\nCan you try to build the dummy_data.zip file manually ?\r\n\r\nIt has to be inside `./datasets/ms_terms/dummy/ms_terms-full/1.0.0`.\r\nInside this folder, please create a folder `dummy_data` that contains a dummy file `MicrosoftTermCollection.tbx` (with just a few examples in it). Then you can zip the `dummy_data` folder to `dummy_data.zip`\r\n\r\nThen you can check if it worked using the command\r\n```\r\npytest tests/test_dataset_common.py::LocalDatasetTest::test_load_dataset_ms_terms\r\n```\r\n\r\nFeel free to use some debugging print statements in your script if it doesn't work first try to see what `dl_manager.manual_dir` ends up being and also `path_to_manual_file`.\r\n\r\nFeel free to ping me if you have other questions",

"`pytest tests/test_dataset_common.py::LocalDatasetTest::test_load_dataset_ms_terms` gave `1 passed, 4 warnings in 8.13s`. Existing datasets, like `wikihow`, and `newsroom`, also report 4 warnings. So, I guess that is not related to this dataset.",

"Could you run `make style` before we merge @leoxzhao ?",

"the other errors are fixed on master so it's fine",

"> Could you run `make style` before we merge @leoxzhao ?\r\n\r\nSure thing. Done. Thanks Quentin. I have other datasets in mind. All of which requires manual download. This process is very helpful",

"Thank you :) "

] |

|

https://api.github.com/repos/huggingface/datasets/issues/4411 | https://api.github.com/repos/huggingface/datasets | https://api.github.com/repos/huggingface/datasets/issues/4411/labels{/name} | https://api.github.com/repos/huggingface/datasets/issues/4411/comments | https://api.github.com/repos/huggingface/datasets/issues/4411/events | https://github.com/huggingface/datasets/pull/4411 | 1,249,462,390 | PR_kwDODunzps44g_yL | 4,411 | Update `_format_columns` in `remove_columns` | [] | closed | false | null | 20 | 2022-05-26T11:40:06Z | 2022-06-14T19:05:37Z | 2022-06-14T16:01:56Z | null | As explained at #4398, when calling `dataset.add_faiss_index` under certain conditions when calling a sequence of operations `cast_column`, `map`, and `remove_columns`, it fails as it's trying to look for already removed columns.

So on, after testing some possible fixes, it seems that setting the dataset format right after removing the columns seems to be working fine, so I had to add a call to `.set_format` in the `remove_columns` function.

Hope this helps! | {

"+1": 0,

"-1": 0,

"confused": 0,

"eyes": 0,

"heart": 0,

"hooray": 0,

"laugh": 0,

"rocket": 0,

"total_count": 0,

"url": "https://api.github.com/repos/huggingface/datasets/issues/4411/reactions"

} | https://api.github.com/repos/huggingface/datasets/issues/4411/timeline | null | null | false | {

"diff_url": "https://github.com/huggingface/datasets/pull/4411.diff",

"html_url": "https://github.com/huggingface/datasets/pull/4411",

"merged_at": "2022-06-14T16:01:55Z",

"patch_url": "https://github.com/huggingface/datasets/pull/4411.patch",

"url": "https://api.github.com/repos/huggingface/datasets/pulls/4411"

} | true | [

"🤗 This PR closes https://github.com/huggingface/datasets/issues/4398",

"_The documentation is not available anymore as the PR was closed or merged._",

"Hi! Thanks for reporting and providing a fix. I made a small change to make the fix easier to understand.",

"Hi, @mariosasko thanks! It makes sense, sorry I'm not that familiar with `datasets` code 😩 ",

"Sure @albertvillanova I'll do that later today and ping you once done, thanks! :hugs:",

"Hi again @albertvillanova! Let me know if those tests are fine 🤗 ",

"Hi @alvarobartt,\r\n\r\nI think your tests are failing. I don't know why previously, after your last commit, the CI tests were not triggered. \r\n\r\nIn order to force the re-running of the CI tests, I had to edit your file using the GitHub UI.\r\n\r\nFirst I tried to do it using my terminal, but I don't have push right to your PR branch: I would ask you next time you open a PR, please mark the checkbox \"Allow edits from maintainers\": https://docs.github.com/en/pull-requests/collaborating-with-pull-requests/working-with-forks/allowing-changes-to-a-pull-request-branch-created-from-a-fork#enabling-repository-maintainer-permissions-on-existing-pull-requests",

"Hi @albertvillanova, let me check those again! And regarding that checkbox I thought it was already checked so my bad there 😩 ",

"@albertvillanova again it seems that the tests were not automatically triggered, but I tested those locally and now they work, as previously those were failing as I created an assertion as `self.assertEqual` over an empty list that was compared as `None` while the value was `[]` so I updated it to be `self.assertListEqual` and changed the comparison value to `[]`.",

"@lhoestq any idea why the CI is not triggered?",

"@alvarobartt I have tested locally and the tests continue failing.\r\n\r\nI think there is a basis error: `new_dset._format_columns` is always `None` in those cases.\r\n",

"You're right @albertvillanova I was indeed running the tests with `datasets==2.2.0` rather than with the branch version, I'll check it again! Sorry for the inconvenience...",

"> @alvarobartt I have tested locally and the tests continue failing.\r\n> \r\n> I think there is a basis error: `new_dset._format_columns` is always `None` in those cases.\r\n\r\nIn order to have some regressions tests for the fixed scenario, I've manually updated the value of `_format_columns` in the `ArrowDataset` so as to check whether it's updated or not right after calling `remove_columns`, and it does behave as expected, so with the latest version of this branch the reported issue doesn't occur anymore.",

"Hi again @albertvillanova sorry I was on leave! I'll do that ASAP :hugs:",

"@albertvillanova, does it make sense to add regression tests for `DatasetDict`? As `DatasetDict` doesn't have the attribute `_format_columns`, when we call `remove_columns` over a `DatasetDict` it removes the columns and updates the attributes of each split which is an `ArrowDataset`.\r\n\r\nSo on, we can either:\r\n- Update first the `_format_columns` attribute of each split and then remove the columns over the `DatasetDict`\r\n- Loop over the splits of `DatasetDict` and remove the columns right after updating `_format_columns` of each `ArrowDataset`.\r\n\r\nI assume that the best regression test is the one implemented (mentioned first above), let me know if there's a better way to do that 👍🏻 ",

"I think there's already a decorator to support transmitting the right `_format_columns`: `@transmit_format`, have you tried adding this decorator to `remove_columns` ?",

"> I think there's already a decorator to support transmitting the right `_format_columns`: `@transmit_format`, have you tried adding this decorator to `remove_columns` ?\r\n\r\nHi @lhoestq I can check now!",

"It worked indeed @lhoestq, thanks for the proposal and the review! 🤗 ",

"Oops, I forgot about `@transmit_format`'s existence. From what I see, we should also use this decorator in `flatten`, `rename_column` and `rename_columns`. \r\n\r\n@alvarobartt Let me know if you'd like to work on this (in a subsequent PR).",

"Sure @mariosasko I can prepare another PR to add those too, thanks! "

] |

https://api.github.com/repos/huggingface/datasets/issues/4999 | https://api.github.com/repos/huggingface/datasets | https://api.github.com/repos/huggingface/datasets/issues/4999/labels{/name} | https://api.github.com/repos/huggingface/datasets/issues/4999/comments | https://api.github.com/repos/huggingface/datasets/issues/4999/events | https://github.com/huggingface/datasets/pull/4999 | 1,379,610,030 | PR_kwDODunzps4_SQxL | 4,999 | Add EmptyDatasetError | [] | closed | false | null | 1 | 2022-09-20T15:28:05Z | 2022-09-21T12:23:43Z | 2022-09-21T12:21:24Z | null | examples:

from the hub:

```python

Traceback (most recent call last):

File "playground/ttest.py", line 3, in <module>

print(load_dataset("lhoestq/empty"))

File "/Users/quentinlhoest/Desktop/hf/nlp/src/datasets/load.py", line 1686, in load_dataset

**config_kwargs,

File "/Users/quentinlhoest/Desktop/hf/nlp/src/datasets/load.py", line 1458, in load_dataset_builder

data_files=data_files,

File "/Users/quentinlhoest/Desktop/hf/nlp/src/datasets/load.py", line 1171, in dataset_module_factory

raise e1 from None

File "/Users/quentinlhoest/Desktop/hf/nlp/src/datasets/load.py", line 1162, in dataset_module_factory

download_mode=download_mode,

File "/Users/quentinlhoest/Desktop/hf/nlp/src/datasets/load.py", line 760, in get_module

else get_data_patterns_in_dataset_repository(hfh_dataset_info, self.data_dir)

File "/Users/quentinlhoest/Desktop/hf/nlp/src/datasets/data_files.py", line 678, in get_data_patterns_in_dataset_repository

) from None

datasets.data_files.EmptyDatasetError: The dataset repository at 'lhoestq/empty' doesn't contain any data file.

```

from local directory:

```python

Traceback (most recent call last):

File "playground/ttest.py", line 3, in <module>

print(load_dataset("playground/empty"))

File "/Users/quentinlhoest/Desktop/hf/nlp/src/datasets/load.py", line 1686, in load_dataset

**config_kwargs,

File "/Users/quentinlhoest/Desktop/hf/nlp/src/datasets/load.py", line 1458, in load_dataset_builder

data_files=data_files,

File "/Users/quentinlhoest/Desktop/hf/nlp/src/datasets/load.py", line 1107, in dataset_module_factory

path, data_dir=data_dir, data_files=data_files, download_mode=download_mode

File "/Users/quentinlhoest/Desktop/hf/nlp/src/datasets/load.py", line 625, in get_module

else get_data_patterns_locally(base_path)

File "/Users/quentinlhoest/Desktop/hf/nlp/src/datasets/data_files.py", line 460, in get_data_patterns_locally

raise EmptyDatasetError(f"The directory at {base_path} doesn't contain any data file") from None

datasets.data_files.EmptyDatasetError: The directory at playground/empty doesn't contain any data file

```

Close https://github.com/huggingface/datasets/issues/4995 | {

"+1": 0,

"-1": 0,

"confused": 0,

"eyes": 0,

"heart": 0,

"hooray": 0,

"laugh": 0,

"rocket": 0,

"total_count": 0,

"url": "https://api.github.com/repos/huggingface/datasets/issues/4999/reactions"

} | https://api.github.com/repos/huggingface/datasets/issues/4999/timeline | null | null | false | {

"diff_url": "https://github.com/huggingface/datasets/pull/4999.diff",

"html_url": "https://github.com/huggingface/datasets/pull/4999",

"merged_at": "2022-09-21T12:21:24Z",

"patch_url": "https://github.com/huggingface/datasets/pull/4999.patch",

"url": "https://api.github.com/repos/huggingface/datasets/pulls/4999"

} | true | [

"_The documentation is not available anymore as the PR was closed or merged._"

] |

https://api.github.com/repos/huggingface/datasets/issues/1455 | https://api.github.com/repos/huggingface/datasets | https://api.github.com/repos/huggingface/datasets/issues/1455/labels{/name} | https://api.github.com/repos/huggingface/datasets/issues/1455/comments | https://api.github.com/repos/huggingface/datasets/issues/1455/events | https://github.com/huggingface/datasets/pull/1455 | 761,205,073 | MDExOlB1bGxSZXF1ZXN0NTM1OTA1OTQy | 1,455 | Add HEAD-QA: A Healthcare Dataset for Complex Reasoning | [] | closed | false | null | 1 | 2020-12-10T12:36:56Z | 2020-12-17T17:03:32Z | 2020-12-17T16:58:11Z | null | HEAD-QA is a multi-choice HEAlthcare Dataset, the questions come from exams to access a specialized position in the

Spanish healthcare system. | {

"+1": 0,

"-1": 0,

"confused": 0,

"eyes": 0,

"heart": 0,

"hooray": 0,

"laugh": 0,

"rocket": 0,

"total_count": 0,

"url": "https://api.github.com/repos/huggingface/datasets/issues/1455/reactions"

} | https://api.github.com/repos/huggingface/datasets/issues/1455/timeline | null | null | false | {

"diff_url": "https://github.com/huggingface/datasets/pull/1455.diff",

"html_url": "https://github.com/huggingface/datasets/pull/1455",

"merged_at": "2020-12-17T16:58:11Z",

"patch_url": "https://github.com/huggingface/datasets/pull/1455.patch",

"url": "https://api.github.com/repos/huggingface/datasets/pulls/1455"

} | true | [

"Thank you for your review @lhoestq, I've changed the types of `qid` and `ra` and now they are integers as `aid`.\r\n\r\nReady for another review!"

] |

https://api.github.com/repos/huggingface/datasets/issues/3172 | https://api.github.com/repos/huggingface/datasets | https://api.github.com/repos/huggingface/datasets/issues/3172/labels{/name} | https://api.github.com/repos/huggingface/datasets/issues/3172/comments | https://api.github.com/repos/huggingface/datasets/issues/3172/events | https://github.com/huggingface/datasets/issues/3172 | 1,038,351,587 | I_kwDODunzps494_zj | 3,172 | `SystemError 15` thrown in `Dataset.__del__` when using `Dataset.map()` with `num_proc>1` | [

{

"color": "d73a4a",

"default": true,

"description": "Something isn't working",

"id": 1935892857,

"name": "bug",

"node_id": "MDU6TGFiZWwxOTM1ODkyODU3",

"url": "https://api.github.com/repos/huggingface/datasets/labels/bug"

}

] | closed | false | null | 9 | 2021-10-28T10:29:00Z | 2023-01-26T07:07:54Z | 2021-11-03T11:26:10Z | null | ## Describe the bug

I use `datasets.map` to preprocess some data in my application. The error `SystemError 15` is thrown at the end of the execution of `Dataset.map()` (only with `num_proc>1`. Traceback included bellow.

The exception is raised only when the code runs within a specific context. Despite ~10h spent investigating this issue, I have failed to isolate the bug, so let me describe my setup.

In my project, `Dataset` is wrapped into a `LightningDataModule` and the data is preprocessed when calling `LightningDataModule.setup()`. Calling `.setup()` in an isolated script works fine (even when wrapped with `hydra.main()`). However, when calling `.setup()` within the experiment script (depends on `pytorch_lightning`), the script crashes and `SystemError 15`.

I could avoid throwing this error by modifying ` Dataset.__del__()` (see bellow), but I believe this only moves the problem somewhere else. I am completely stuck with this issue, any hint would be welcome.

```python

class Dataset()

...

def __del__(self):

if hasattr(self, "_data"):

_ = self._data # <- ugly trick that allows avoiding the issue.

del self._data

if hasattr(self, "_indices"):

del self._indices

```

## Steps to reproduce the bug

```python

# Unfortunately I couldn't isolate the bug.

```

## Expected results

Calling `Dataset.map()` without throwing an exception. Or at least raising a more detailed exception/traceback.

## Actual results

```

Exception ignored in: <function Dataset.__del__ at 0x7f7cec179160>███████████████████████████████████████████████████| 5/5 [00:05<00:00, 1.17ba/s]

Traceback (most recent call last):

File ".../python3.8/site-packages/datasets/arrow_dataset.py", line 906, in __del__

del self._data

File ".../python3.8/site-packages/ray/worker.py", line 1033, in sigterm_handler

sys.exit(signum)

SystemExit: 15

```

## Environment info

Tested on 2 environments:

**Environment 1.**

- `datasets` version: 1.14.0

- Platform: macOS-10.16-x86_64-i386-64bit

- Python version: 3.8.8

- PyArrow version: 6.0.0

**Environment 2.**

- `datasets` version: 1.14.0

- Platform: Linux-4.18.0-305.19.1.el8_4.x86_64-x86_64-with-glibc2.28

- Python version: 3.9.7

- PyArrow version: 6.0.0

| {

"+1": 0,

"-1": 0,

"confused": 0,

"eyes": 0,

"heart": 0,

"hooray": 0,

"laugh": 0,

"rocket": 0,

"total_count": 0,

"url": "https://api.github.com/repos/huggingface/datasets/issues/3172/reactions"

} | https://api.github.com/repos/huggingface/datasets/issues/3172/timeline | null | completed | null | null | false | [

"NB: even if the error is raised, the dataset is successfully cached. So restarting the script after every `map()` allows to ultimately run the whole preprocessing. But this prevents to realistically run the code over multiple nodes.",

"Hi,\r\n\r\nIt's not easy to debug the problem without the script. I may be wrong since I'm not very familiar with PyTorch Lightning, but shouldn't you preprocess the data in the `prepare_data` function of `LightningDataModule` and not in the `setup` function.\r\nAs you can't modify the module state in `prepare_data` (according to the docs), use the `cache_file_name` argument in `Dataset.map` there, and reload the processed data in `setup` with `Dataset.from_file(cache_file_name)`. If `num_proc>1`, check the docs on the `suffix_template` argument of `Dataset.map` to get an idea what the final `cache_file_names` are going to be.\r\n\r\nLet me know if this helps.",

"Hi @mariosasko, thank you for the hint, that helped me to move forward with that issue. \r\n\r\nI did a major refactoring of my project to disentangle my `LightningDataModule` and `Dataset`. Just FYI, it looks like:\r\n\r\n```python\r\nclass Builder():\r\n def __call__() -> DatasetDict:\r\n # load and preprocess the data\r\n return dataset\r\n\r\nclass DataModule(LightningDataModule):\r\n def prepare_data():\r\n self.builder()\r\n def setup():\r\n self.dataset = self.builder()\r\n```\r\n\r\nUnfortunately, the entanglement between `LightningDataModule` and `Dataset` was not the issue.\r\n\r\nThe culprit was `hydra` and a slight adjustment of the structure of my project solved this issue. The problematic project structure was:\r\n\r\n```\r\nsrc/\r\n | - cli.py\r\n | - training/\r\n | -experiment.py\r\n\r\n# code in experiment.py\r\ndef run_experiment(config):\r\n # preprocess data and run\r\n \r\n# code in cli.py\r\n@hydra.main(...)\r\ndef run(config):\r\n return run_experiment(config)\r\n```\r\n\r\nMoving `run()` from `clip.py` to `training.experiment.py` solved the issue with `SystemError 15`. No idea why. \r\n\r\nEven if the traceback was referring to `Dataset.__del__`, the problem does not seem to be primarily related to `datasets`, so I will close this issue. Thank you for your help!",

"Please allow me to revive this discussion, as I have an extremely similar issue. Instead of an error, my datasets functions simply aren't caching properly. My setup is almost the same as yours, with hydra to configure my experiment parameters.\r\n\r\n@vlievin Could you confirm if your code correctly loads the cache? If so, do you have any public code that I can reference for comparison?\r\n\r\nI will post a full example with hydra that illustrates this problem in a little bit, probably on another thread.",

"Hello @mariomeissner, very sorry for the late reply, I hope you have found a solution to your problem!\r\n\r\nI don't have public code at the moment. I have not experienced any other issue with hydra, even if I don't understand why changing the location of the definition of `run()` fixed the problem. \r\n\r\nOverall, I don't have issue with caching anymore, even when \r\n1. using custom fingerprints using the argument `new_fingerprint \r\n2. when using `num_proc>1`",

"I solved my issue by turning the map callable into a class static method, like they do in `lightning-transformers`. Very strange...",

"I have this issue with datasets v2.5.2 with Python 3.8.10 on Ubuntu 20.04.4 LTS. It does not occur when num_proc=1. When num_proc>1, it intermittently occurs and will cause process to hang. As previously mentioned, it occurs even when datasets have been previously cached. I have tried wrapping logic in a static class as suggested with @mariomeissner with no improvement.",

"@philipchung hello ,i have the same issue like yours,did you solve it?",

"No. I was not able to get num_proc>1 to work."

] |

https://api.github.com/repos/huggingface/datasets/issues/306 | https://api.github.com/repos/huggingface/datasets | https://api.github.com/repos/huggingface/datasets/issues/306/labels{/name} | https://api.github.com/repos/huggingface/datasets/issues/306/comments | https://api.github.com/repos/huggingface/datasets/issues/306/events | https://github.com/huggingface/datasets/pull/306 | 644,176,078 | MDExOlB1bGxSZXF1ZXN0NDM4ODQ2MTI3 | 306 | add pg19 dataset | [] | closed | false | null | 12 | 2020-06-23T22:03:52Z | 2020-07-06T07:55:59Z | 2020-07-06T07:55:59Z | null | https://github.com/huggingface/nlp/issues/274

Add functioning PG19 dataset with dummy data

`cos_e.py` was just auto-linted by `make style` | {

"+1": 0,

"-1": 0,

"confused": 0,

"eyes": 0,

"heart": 0,

"hooray": 0,

"laugh": 0,

"rocket": 0,

"total_count": 0,

"url": "https://api.github.com/repos/huggingface/datasets/issues/306/reactions"

} | https://api.github.com/repos/huggingface/datasets/issues/306/timeline | null | null | false | {

"diff_url": "https://github.com/huggingface/datasets/pull/306.diff",

"html_url": "https://github.com/huggingface/datasets/pull/306",

"merged_at": "2020-07-06T07:55:59Z",

"patch_url": "https://github.com/huggingface/datasets/pull/306.patch",

"url": "https://api.github.com/repos/huggingface/datasets/pulls/306"

} | true | [

"@lucidrains - Thanks a lot for making the PR - PG19 is a super important dataset! Thanks for making it. Many people are asking for PG-19, so it would be great to have that in the library as soon as possible @thomwolf .",

"@mariamabarham yup! around 11GB!",

"I'm looking forward to our first deep learning written novel already lol. It's definitely happening",

"Good to merge IMO.",

"Oh I just noticed but as we changed the urls to download the files, we have to update `dataset_infos.json`.\r\nCould you re-rurn `nlp-cli test ./datasets/pg19 --save_infos` ?",

"@lhoestq on it!",

"should be good!",

"@lhoestq - I think it's good to merge no?",

"`dataset_infos.json` is still not up to date with the new urls (we can see that there are urls like `gs://deepmind-gutenberg/train/*` instead of `https://storage.googleapis.com/deepmind-gutenberg/train/*` in the json file)\r\n\r\nCan you check that you re-ran the command to update the json file, and that you pushed the changes @lucidrains ?",

"@lhoestq ohhh, I made the change in this commit https://github.com/lucidrains/nlp/commit/f3e23d823ad9942031be80b7c4e4212c592cd90c , that's interesting that the pull request didn't pick it up. maybe it's because I did it on another machine, let me check and get back to you!",

"@lhoestq wrong branch 😅 thanks for catching! ",

"Awesome thanks 🎉"

] |

https://api.github.com/repos/huggingface/datasets/issues/789 | https://api.github.com/repos/huggingface/datasets | https://api.github.com/repos/huggingface/datasets/issues/789/labels{/name} | https://api.github.com/repos/huggingface/datasets/issues/789/comments | https://api.github.com/repos/huggingface/datasets/issues/789/events | https://github.com/huggingface/datasets/pull/789 | 734,237,839 | MDExOlB1bGxSZXF1ZXN0NTEzODM1MzE0 | 789 | dataset(ncslgr): add initial loading script | [] | closed | false | null | 4 | 2020-11-02T06:50:10Z | 2020-12-01T13:41:37Z | 2020-12-01T13:41:36Z | null | Its a small dataset, but its heavily annotated

https://www.bu.edu/asllrp/ncslgr.html

| {

"+1": 0,

"-1": 0,

"confused": 0,

"eyes": 0,

"heart": 0,

"hooray": 0,

"laugh": 0,

"rocket": 0,

"total_count": 0,

"url": "https://api.github.com/repos/huggingface/datasets/issues/789/reactions"

} | https://api.github.com/repos/huggingface/datasets/issues/789/timeline | null | null | false | {

"diff_url": "https://github.com/huggingface/datasets/pull/789.diff",

"html_url": "https://github.com/huggingface/datasets/pull/789",

"merged_at": null,

"patch_url": "https://github.com/huggingface/datasets/pull/789.patch",

"url": "https://api.github.com/repos/huggingface/datasets/pulls/789"

} | true | [

"Hi @AmitMY, sorry for leaving you hanging for a minute :) \r\n\r\nWe've developed a new pipeline for adding datasets with a few extra steps, including adding a dataset card. You can find the full process [here](https://github.com/huggingface/datasets/blob/master/ADD_NEW_DATASET.md)\r\n\r\nWould you be up for adding the tags and description in the README.md so we can merge this cool dataset?",

"@lhoestq should be ready for another review :) ",

"Awesome thank you !\r\n\r\nIt looks like the PR now includes changes from other PR that were previously merged. \r\nFeel free to create another branch and another PR so that we can have a clean diff.\r\n",

"Closing for #958 "

] |

https://api.github.com/repos/huggingface/datasets/issues/6018 | https://api.github.com/repos/huggingface/datasets | https://api.github.com/repos/huggingface/datasets/issues/6018/labels{/name} | https://api.github.com/repos/huggingface/datasets/issues/6018/comments | https://api.github.com/repos/huggingface/datasets/issues/6018/events | https://github.com/huggingface/datasets/pull/6018 | 1,799,411,999 | PR_kwDODunzps5VOmKY | 6,018 | test1 | [] | closed | false | null | 1 | 2023-07-11T17:25:49Z | 2023-07-20T10:11:41Z | 2023-07-20T10:11:41Z | null | null | {

"+1": 0,

"-1": 0,

"confused": 0,

"eyes": 0,

"heart": 0,

"hooray": 0,

"laugh": 0,

"rocket": 0,

"total_count": 0,

"url": "https://api.github.com/repos/huggingface/datasets/issues/6018/reactions"

} | https://api.github.com/repos/huggingface/datasets/issues/6018/timeline | null | null | false | {

"diff_url": "https://github.com/huggingface/datasets/pull/6018.diff",

"html_url": "https://github.com/huggingface/datasets/pull/6018",

"merged_at": null,

"patch_url": "https://github.com/huggingface/datasets/pull/6018.patch",

"url": "https://api.github.com/repos/huggingface/datasets/pulls/6018"

} | true | [

"We no longer host datasets in this repo. You should use the HF Hub instead."

] |

https://api.github.com/repos/huggingface/datasets/issues/3570 | https://api.github.com/repos/huggingface/datasets | https://api.github.com/repos/huggingface/datasets/issues/3570/labels{/name} | https://api.github.com/repos/huggingface/datasets/issues/3570/comments | https://api.github.com/repos/huggingface/datasets/issues/3570/events | https://github.com/huggingface/datasets/pull/3570 | 1,100,480,791 | PR_kwDODunzps4w3Xez | 3,570 | Add the KMWP dataset (extension of #3564) | [

{

"color": "0e8a16",

"default": false,

"description": "Contribution to a dataset script",

"id": 4564477500,

"name": "dataset contribution",

"node_id": "LA_kwDODunzps8AAAABEBBmPA",

"url": "https://api.github.com/repos/huggingface/datasets/labels/dataset%20contribution"

}

] | closed | false | null | 3 | 2022-01-12T15:33:08Z | 2022-10-01T06:43:16Z | 2022-10-01T06:43:16Z | null | New pull request of #3564 (Add the KMWP dataset) | {

"+1": 0,

"-1": 0,

"confused": 0,

"eyes": 0,

"heart": 0,

"hooray": 0,

"laugh": 0,

"rocket": 0,

"total_count": 0,

"url": "https://api.github.com/repos/huggingface/datasets/issues/3570/reactions"

} | https://api.github.com/repos/huggingface/datasets/issues/3570/timeline | null | null | false | {

"diff_url": "https://github.com/huggingface/datasets/pull/3570.diff",

"html_url": "https://github.com/huggingface/datasets/pull/3570",

"merged_at": null,

"patch_url": "https://github.com/huggingface/datasets/pull/3570.patch",

"url": "https://api.github.com/repos/huggingface/datasets/pulls/3570"

} | true | [

"Sorry, I'm late to check! I'll send it to you soon!",

"Thanks for your contribution, @sooftware. Are you still interested in adding this dataset?\r\n\r\nWe are removing the dataset scripts from this GitHub repo and moving them to the Hugging Face Hub: https://huggingface.co/datasets\r\n\r\nWe would suggest you create this dataset there, under this organization namespace: https://huggingface.co/tunib\r\n\r\nPlease, feel free to tell us if you need some help.",

"Close this PR. Thanks!"

] |

https://api.github.com/repos/huggingface/datasets/issues/5081 | https://api.github.com/repos/huggingface/datasets | https://api.github.com/repos/huggingface/datasets/issues/5081/labels{/name} | https://api.github.com/repos/huggingface/datasets/issues/5081/comments | https://api.github.com/repos/huggingface/datasets/issues/5081/events | https://github.com/huggingface/datasets/issues/5081 | 1,399,340,050 | I_kwDODunzps5TaDwS | 5,081 | Bug loading `sentence-transformers/parallel-sentences` | [

{

"color": "d73a4a",

"default": true,

"description": "Something isn't working",

"id": 1935892857,

"name": "bug",

"node_id": "MDU6TGFiZWwxOTM1ODkyODU3",

"url": "https://api.github.com/repos/huggingface/datasets/labels/bug"

}

] | open | false | null | 8 | 2022-10-06T10:47:51Z | 2022-10-11T10:00:48Z | null | null | ## Steps to reproduce the bug

```python

from datasets import load_dataset

dataset = load_dataset("sentence-transformers/parallel-sentences")

```

raises this:

```

/home/phmay/miniconda3/envs/paraphrase-mining/lib/python3.9/site-packages/datasets/download/streaming_download_manager.py:697: FutureWarning: the 'mangle_dupe_cols' keyword is deprecated and will be removed in a future version. Please take steps to stop the use of 'mangle_dupe_cols'

return pd.read_csv(xopen(filepath_or_buffer, "rb", use_auth_token=use_auth_token), **kwargs)

/home/phmay/miniconda3/envs/paraphrase-mining/lib/python3.9/site-packages/datasets/download/streaming_download_manager.py:697: FutureWarning: the 'mangle_dupe_cols' keyword is deprecated and will be removed in a future version. Please take steps to stop the use of 'mangle_dupe_cols'

return pd.read_csv(xopen(filepath_or_buffer, "rb", use_auth_token=use_auth_token), **kwargs)

---------------------------------------------------------------------------

ValueError Traceback (most recent call last)

Cell In [4], line 1

----> 1 dataset = load_dataset("sentence-transformers/parallel-sentences", split="train")

File ~/miniconda3/envs/paraphrase-mining/lib/python3.9/site-packages/datasets/load.py:1693, in load_dataset(path, name, data_dir, data_files, split, cache_dir, features, download_config, download_mode, ignore_verifications, keep_in_memory, save_infos, revision, use_auth_token, task, streaming, **config_kwargs)

1690 try_from_hf_gcs = path not in _PACKAGED_DATASETS_MODULES

1692 # Download and prepare data

-> 1693 builder_instance.download_and_prepare(

1694 download_config=download_config,

1695 download_mode=download_mode,

1696 ignore_verifications=ignore_verifications,

1697 try_from_hf_gcs=try_from_hf_gcs,

1698 use_auth_token=use_auth_token,

1699 )

1701 # Build dataset for splits

1702 keep_in_memory = (

1703 keep_in_memory if keep_in_memory is not None else is_small_dataset(builder_instance.info.dataset_size)

1704 )

File ~/miniconda3/envs/paraphrase-mining/lib/python3.9/site-packages/datasets/builder.py:807, in DatasetBuilder.download_and_prepare(self, output_dir, download_config, download_mode, ignore_verifications, try_from_hf_gcs, dl_manager, base_path, use_auth_token, file_format, max_shard_size, storage_options, **download_and_prepare_kwargs)

801 if not downloaded_from_gcs:

802 prepare_split_kwargs = {

803 "file_format": file_format,

804 "max_shard_size": max_shard_size,

805 **download_and_prepare_kwargs,

806 }

--> 807 self._download_and_prepare(

808 dl_manager=dl_manager,

809 verify_infos=verify_infos,

810 **prepare_split_kwargs,

811 **download_and_prepare_kwargs,

812 )

813 # Sync info

814 self.info.dataset_size = sum(split.num_bytes for split in self.info.splits.values())

File ~/miniconda3/envs/paraphrase-mining/lib/python3.9/site-packages/datasets/builder.py:898, in DatasetBuilder._download_and_prepare(self, dl_manager, verify_infos, **prepare_split_kwargs)

894 split_dict.add(split_generator.split_info)

896 try:

897 # Prepare split will record examples associated to the split

--> 898 self._prepare_split(split_generator, **prepare_split_kwargs)

899 except OSError as e:

900 raise OSError(

901 "Cannot find data file. "

902 + (self.manual_download_instructions or "")

903 + "\nOriginal error:\n"

904 + str(e)

905 ) from None

File ~/miniconda3/envs/paraphrase-mining/lib/python3.9/site-packages/datasets/builder.py:1513, in ArrowBasedBuilder._prepare_split(self, split_generator, file_format, max_shard_size)

1506 shard_id += 1

1507 writer = writer_class(

1508 features=writer._features,

1509 path=fpath.replace("SSSSS", f"{shard_id:05d}"),

1510 storage_options=self._fs.storage_options,

1511 embed_local_files=embed_local_files,

1512 )

-> 1513 writer.write_table(table)

1514 finally:

1515 num_shards = shard_id + 1

File ~/miniconda3/envs/paraphrase-mining/lib/python3.9/site-packages/datasets/arrow_writer.py:540, in ArrowWriter.write_table(self, pa_table, writer_batch_size)

538 if self.pa_writer is None:

539 self._build_writer(inferred_schema=pa_table.schema)

--> 540 pa_table = table_cast(pa_table, self._schema)

541 if self.embed_local_files:

542 pa_table = embed_table_storage(pa_table)

File ~/miniconda3/envs/paraphrase-mining/lib/python3.9/site-packages/datasets/table.py:2044, in table_cast(table, schema)

2032 """Improved version of pa.Table.cast.

2033

2034 It supports casting to feature types stored in the schema metadata.

(...)

2041 table (:obj:`pyarrow.Table`): the casted table

2042 """

2043 if table.schema != schema:

-> 2044 return cast_table_to_schema(table, schema)

2045 elif table.schema.metadata != schema.metadata:

2046 return table.replace_schema_metadata(schema.metadata)

File ~/miniconda3/envs/paraphrase-mining/lib/python3.9/site-packages/datasets/table.py:2005, in cast_table_to_schema(table, schema)

2003 features = Features.from_arrow_schema(schema)

2004 if sorted(table.column_names) != sorted(features):

-> 2005 raise ValueError(f"Couldn't cast\n{table.schema}\nto\n{features}\nbecause column names don't match")

2006 arrays = [cast_array_to_feature(table[name], feature) for name, feature in features.items()]

2007 return pa.Table.from_arrays(arrays, schema=schema)

ValueError: Couldn't cast

Action taken on Parliament's resolutions: see Minutes: string

Následný postup na základě usnesení Parlamentu: viz zápis: string

-- schema metadata --

pandas: '{"index_columns": [{"kind": "range", "name": null, "start": 0, "' + 742

to

{'Membership of Parliament: see Minutes': Value(dtype='string', id=None), 'Състав на Парламента: вж. протоколи': Value(dtype='string', id=None)}

because column names don't match

```

## Expected results

no error

## Actual results

error

## Environment info

<!-- You can run the command `datasets-cli env` and copy-and-paste its output below. -->

- `datasets` version:

- Platform: Linux

- Python version: Python 3.9.13

- PyArrow version: pyarrow 9.0.0

- transformers 4.22.2

- datasets 2.5.2 | {

"+1": 0,

"-1": 0,

"confused": 0,

"eyes": 0,

"heart": 0,

"hooray": 0,

"laugh": 0,

"rocket": 0,

"total_count": 0,

"url": "https://api.github.com/repos/huggingface/datasets/issues/5081/reactions"

} | https://api.github.com/repos/huggingface/datasets/issues/5081/timeline | null | null | null | null | false | [

"tagging @nreimers ",

"The dataset is sadly not really compatible to be loaded with `load_dataset`. So far it is better to git clone it and to use the files directly.\r\n\r\nA data loading script would be needed to be added to this dataset. But this was too much overhead / not really intuitive how to create it.",

"Since the dataset is a bunch of TSVs we should not need a dataset script I think.\r\n\r\nBy default it tries to load all the TSVs at once, which fails here because they don't all have the same columns (pd.read_csv uses the first line as header by default). But those files have no header ! So, to properly load any TSV file in this repo, one has to pass `names=[...]` for pd.read_csv to know which column names to use.\r\n\r\nTo fix this situation, we can either do\r\n1. replace the TSVs by TSV with column names\r\n2. OR specify the pd.read_csv kwargs as YAML in the dataset card - and `datasets` would use that by default\r\n\r\nWDTY ?",