questions | answers | site | answers_cleaned |

|---|---|---|---|

vault Client count calculation Technical overview of client count calculations in Vault Vault provides usage telemetry for the number of clients based on the number of layout docs page title Client count calculation | ---

layout: docs

page_title: Client count calculation

description: |-

Technical overview of client count calculations in Vault

---

# Client count calculation

Vault provides usage telemetry for the number of clients based on the number of

unique entity assignments within a Vault cluster over a given billing period:

- Standard entity assignments based on authentication method for active entities.

- Constructed entity assignments for active non-entity tokens, including batch

tokens created by performance standby nodes.

- Certificate entity assignments for ACME connections.

- Secrets being synced to at least one sync destination.

```markdown

CLIENT_COUNT_PER_CLUSTER = UNIQUE_STANDARD_ENTITIES +

UNIQUE_CONSTRUCTED_ENTITIES +

UNIQUE_CERTIFICATE_ENTITIES +

UNIQUE_SYNCED_SECRETS

```

Vault does not aggregate or de-duplicate clients across clusters, but all logs

and precomputed reports are included in DR replication.
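
As a rough illustration of the formula above, the following Python sketch sums the four categories independently for each cluster. The `ClusterActivity` container, the cluster names, and the sample IDs are hypothetical and are not part of Vault.

```python
from dataclasses import dataclass, field


@dataclass
class ClusterActivity:
    """Hypothetical container for the unique IDs seen in one cluster."""
    standard_entities: set = field(default_factory=set)
    constructed_entities: set = field(default_factory=set)
    certificate_entities: set = field(default_factory=set)
    synced_secrets: set = field(default_factory=set)


def client_count(activity: ClusterActivity) -> int:
    # Each category is a set, so duplicates within a category collapse
    # before the four sizes are summed.
    return (
        len(activity.standard_entities)
        + len(activity.constructed_entities)
        + len(activity.certificate_entities)
        + len(activity.synced_secrets)
    )


clusters = {
    "cluster-a": ClusterActivity(
        standard_entities={"entity-1", "entity-2"},
        constructed_entities={"token-group-1"},
        synced_secrets={("ns1", "kv/secret")},
    ),
    "cluster-b": ClusterActivity(
        standard_entities={"entity-1"},  # same ID as in cluster-a
        certificate_entities={"a.test.com"},
    ),
}

# Counts are reported per cluster; the shared "entity-1" is not
# de-duplicated across clusters.
per_cluster = {name: client_count(a) for name, a in clusters.items()}
print(per_cluster)
```

In this toy data, `entity-1` contributes to both clusters because each cluster is counted on its own.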

## How Vault tracks clients

Each time a client authenticates, Vault checks whether the corresponding entity

ID has already been recorded in the client log as active for the current month:

- **If no record exists**, Vault adds an entry for the entity ID.

- If a record exists but the entity was last active **prior to the current month**,

Vault adds a new entry to the client record for the entity ID.

- If a record exists and the entity was last active **within the current month**,

Vault does not add a new entry to the client record for the entity ID.

For example:

- Two non-entity tokens under the same namespace, with the same alias name and

policy assignment receive the same entity assignment and are only counted

**once**.

- Two authentication requests from a single ACME client for the same certificate

identifiers from different mounts receive the same entity assignments and

are counted **once**.

- An application authenticating with AppRole receives the same entity assignment

every time and is only counted **once**.
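
The three tracking rules above can be sketched as a small in-memory model. This is a simplified illustration, not Vault's implementation: the `ClientLog` class, its method name, and the `"YYYY-MM"` month strings are assumptions, and the sketch assumes activity arrives in chronological order.

```python
class ClientLog:
    """Toy model of the monthly client log (not Vault's implementation)."""

    def __init__(self):
        # Maps entity ID -> list of months ("YYYY-MM") with a recorded entry.
        self.entries = {}

    def record_activity(self, entity_id: str, month: str) -> bool:
        """Return True if a new entry was added for this month."""
        months = self.entries.setdefault(entity_id, [])
        if months and months[-1] == month:
            # Already active within the current month: nothing to add.
            return False
        # No record yet, or last active in an earlier month: add an entry.
        months.append(month)
        return True


log = ClientLog()
results = [
    log.record_activity("approle-role-id", "2024-01"),  # first sighting
    log.record_activity("approle-role-id", "2024-01"),  # same month again
    log.record_activity("approle-role-id", "2024-02"),  # a new month
]
print(results)
```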

At the **end of each month**, Vault pre-computes reports for each cluster on the

number of active entities, per namespace, for each time period within the

configured retention period. By de-duplicating records from the current month

against records for the previous month, Vault ensures entities that remain

active within every calendar month are only counted once for the year.

The deduplication process has two additional consequences:

1. Detailed reporting lags by 1 month at the start of the billing period.

1. Billing period reports that include the current month must use an

approximation for the number of new clients in the current month.

## How Vault approximates current-month client count

Vault approximates client count for the current month using a

[hyperloglog algorithm](https://en.wikipedia.org/wiki/HyperLogLog) that looks

at the difference between the cardinalities of:

- the number of clients across the **entire** billing period, and

- the number of clients across the billing period **excluding** clients from the current month.

The approximation algorithm uses the

[axiomhq](https://github.com/axiomhq/hyperloglog) library with 14-bit

precision (2<sup>14</sup> registers) and sparse representations (when applicable). The multiset for the

calculation is the total number of clients within a billing period, and the

accuracy estimate for the approximation decreases as the difference between the

number of clients in the current month and the number of clients in the billing

period increases.
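
The difference of cardinalities described above can be sketched as follows. To keep the example self-contained, exact Python sets stand in for HyperLogLog sketches, so the result here is exact rather than approximate. The function names and sample data are hypothetical.

```python
def estimate_cardinality(client_sets):
    """Stand-in for a HyperLogLog estimate; this sketch counts exactly."""
    union = set()
    for clients in client_sets:
        union |= clients
    return len(union)


def new_clients_in_current_month(monthly_clients, current_month):
    # Cardinality across the entire billing period.
    all_months = list(monthly_clients.values())
    # Cardinality across the billing period excluding the current month.
    prior_months = [
        clients
        for month, clients in monthly_clients.items()
        if month != current_month
    ]
    return estimate_cardinality(all_months) - estimate_cardinality(prior_months)


billing_period = {
    "2024-01": {"a", "b", "c"},
    "2024-02": {"b", "c", "d"},
    "2024-03": {"a", "d", "e", "f"},  # current month
}
new_clients = new_clients_in_current_month(billing_period, "2024-03")
print(new_clients)
```

With real HyperLogLog sketches, each of the two estimates carries its own error, so the difference may be noisy when the current month adds few clients relative to the billing period.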

### Testing verification for client count approximations

Given `CM` as the number of clients for the current month and `BP` as the number

of clients in the billing period, we found that the approximation becomes

increasingly imprecise as:

- the difference between `BP` and `CM` increases,

- the value of `CM` approaches zero, and

- the number of months in the billing period increases.

The maximum observed error rate

(`ER = (FOUND_NEW_CLIENTS / EXPECTED_NEW_CLIENTS)`) was 30% for 10,000 clients

or fewer, with an error rate of 5 – 10% in the average case.

For the purposes of predictive analysis, the following tables list a random

sample of the values we found during testing for `CM`, `BP`, and `ER`.

<Tabs>

<Tab heading="Single-month tests">

| Current month (`CM`) | Billing period (`BP`) | Error rate (`ER`) |

| :-----------------: | :------------------: | :---------------: |

| 7 | 10 | 0% |

| 20 | 600 | 0% |

| 20 | 1000 | 0% |

| 20 | 6000 | 10% |

| 20 | 10000 | 10% |

| 200 | 600 | 0% |

| 200 | 10000 | 7% |

| 400 | 6000 | 5% |

| 2000 | 10000 | 4% |

</Tab>

<Tab heading="Multi-month / multi-segment tests">

| Current month (`CM`) | Billing period (`BP`) | Error rate (`ER`) |

| :-----------------: | :------------------: | :---------------: |

| 20 | 15 | 0% |

| 20 | 100 | 0% |

| 20 | 1000 | 0% |

| 20 | 10000 | 30% |

| 200 | 10000 | 6% |

| 2000 | 10000 | 2% |

</Tab>

</Tabs>

## Resource costs for client computation

In addition to the storage used for storing the pre-computed reports, each

active entity in the client log consumes a few bytes of storage. As a safety

measure against runaway storage growth, Vault limits the number of entity

records to 656,000 per month, but typical storage costs are much less.

On average, 1000 monthly active entities require 3.0 MiB of storage capacity

over the default 48-month retention period.
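
For capacity planning, the average figure above can be turned into a back-of-the-envelope estimate. The sketch below assumes storage scales linearly with the number of monthly active entities, which is an assumption for illustration rather than a documented guarantee. The function names are hypothetical.

```python
MIB_PER_1000_ENTITIES = 3.0  # average figure quoted above
RETENTION_MONTHS = 48        # default retention period quoted above


def estimated_storage_mib(monthly_active_entities: int) -> float:
    # Assumes storage scales linearly with the number of active entities.
    return MIB_PER_1000_ENTITIES * monthly_active_entities / 1000


def bytes_per_entity_month() -> float:
    # Spread the quoted total across 1000 entities and the retention period.
    total_bytes = MIB_PER_1000_ENTITIES * 1024 * 1024
    return total_bytes / (1000 * RETENTION_MONTHS)


print(estimated_storage_mib(25_000))
print(round(bytes_per_entity_month(), 1))
```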

@include "content-footer-title.mdx"

<Tabs>

<Tab heading="Related concepts">

<ul>

<li>

<a href="/vault/docs/concepts/client-count/">Clients and entities</a>

</li>

<li>

<a href="/vault/docs/concepts/client-count/faq">Client count FAQ</a>

</li>

</ul>

</Tab>

<Tab heading="Related API docs">

<ul>

<li>

<a href="/vault/api-docs/system/internal-counters#client-count">Client Count API</a>

</li>

<li>

<a href="/vault/api-docs/system/internal-counters">Internal counters API</a>

</li>

</ul>

</Tab>

<Tab heading="Related tutorials">

<ul>

<li>

<a href="/vault/tutorials/monitoring/usage-metrics">

Vault Usage Metrics in Vault UI

</a>

</li>

<li>

<a href="/vault/tutorials/monitoring/usage-metrics">KMIP Client metrics</a>

</li>

</ul>

</Tab>

<Tab heading="Other resources">

<ul>

<li>

<a href="https://github.com/axiomhq/hyperloglog#readme">Accuracy estimates for the axiomhq hyperloglog library</a>

</li>

<li>

Blog post: <a href="https://www.hashicorp.com/blog/onboarding-applications-to-vault-using-terraform-a-practical-guide">

Onboarding Applications to Vault Using Terraform: A Practical Guide

</a>

</li>

</ul>

</Tab>

</Tabs> | vault | layout docs page title Client count calculation description Technical overview of client count calculations in Vault Client count calculation Vault provides usage telemetry for the number of clients based on the number of unique entity assignments within a Vault cluster over a given billing period Standard entity assignments based on authentication method for active entities Constructed entity assignments for active non entity tokens including batch tokens created by performance standby nodes Certificate entity assignments for ACME connections Secrets being synced to at least one sync destination markdown CLIENT COUNT PER CLUSTER UNIQUE STANDARD ENTITIES UNIQUE CONSTRUCTED ENTITIES UNIQUE CERTIFICATE ENTITIES UNIQUE SYNCED SECRETS Vault does not aggregate or de duplicate clients across clusters but all logs and precomputed reports are included in DR replication How Vault tracks clients Each time a client authenticates Vault checks whether the corresponding entity ID has already been recorded in the client log as active for the current month If no record exists Vault adds an entry for the entity ID If a record exists but the entity was last active prior to the current month Vault adds a new entry to the client record for the entity ID If a record exists and the entity was last active within the current month Vault does not add a new entry to the client record for the entity ID For example Two non entity tokens under the same namespace with the same alias name and policy assignment receive the same entity assignment and are only counted once Two authentication requests from a single ACME client for the same certificate identifiers from different mounts receive the same entity assignments and are counted once An application authenticating with AppRole receive the same entity assignment every time and only counted once At the end of each month Vault pre computes reports for each cluster on the number of active entities per namespace for each time 
period within the configured retention period By de duplicating records from the current month against records for the previous month Vault ensures entities that remain active within every calendar month are only counted once for the year The deduplication process has two additional consequences 1 Detailed reporting lags by 1 month at the start of the billing period 1 Billing period reports that include the current month must use an approximation for the number of new clients in the current month How Vault approximates current month client count Vault approximates client count for the current month using a hyperloglog algorithm https en wikipedia org wiki HyperLogLog that looks at the difference between the cardinalities of the number of clients across the entire billing period and the number of clients across the billing period excluding clients from the current month The approximation algorithm uses the axiomhq https github com axiomhq hyperloglog library with fourteen registers and sparse representations when applicable The multiset for the calculation is the total number of clients within a billing period and the accuracy estimate for the approximation decreases as the difference between the number of clients in the current month and the number of clients in the billing period increases Testing verification for client count approximations Given CM as the number of clients for the current month and BP as the number of clients in the billing period we found that the approximation becomes increasingly imprecise as the difference between BC and CM increases the value of CM approaches zero the number of months in the billing period increase The maximum observed error rate ER FOUND NEW CLIENTS EXPECTED NEW CLIENTS was 30 for 10 000 clients or less with an error rate of 5 ndash 10 in the average case For the purposes of predictive analysis the following tables list a random sample the values we found during testing for CM BP and ER Tabs Tab heading Single month tests 
Current month CM Billing period BP Error rate ER 7 10 0 20 600 0 20 1000 0 20 6000 10 20 10000 10 200 600 0 200 10000 7 400 6000 5 2000 10000 4 Tab Tab heading Multi month multi segment tests Current month CM Billing period BP Error rate ER 20 15 0 20 100 0 20 1000 0 20 10000 30 200 10000 6 2000 10000 2 Tab Tabs Resource costs for client computation In addition to the storage used for storing the pre computed reports each active entity in the client log consumes a few bytes of storage As a safety measure against runaway storage growth Vault limits the number of entity records to 656 000 per month but typical storage costs are much less On average 1000 monthly active entities requires 3 0 MiB of storage capacity over the default 48 month retention period include content footer title mdx Tabs Tab heading Related concepts ul li a href vault docs concepts client count Clients and entities a li li a href vault docs concepts client count faq Client count FAQ a li ul Tab Tab heading Related API docs ul li a href vault api docs system internal counters client count Client Count API a li li a href vault api docs system internal counters Internal counters API a li ul Tab Tab heading Related tutorials ul li a href vault tutorials monitoring usage metrics Vault Usage Metrics in Vault UI a li li a href vault tutorials monitoring usage metrics KMIP Client metrics a li ul Tab Tab heading Other resources ul li a href https github com axiomhq hyperloglog readme Accuracy estimates for the axiomhq hyperloglog library a li li Blog post a href https www hashicorp com blog onboarding applications to vault using terraform a practical guide Onboarding Applications to Vault Using Terraform A Practical Guide a li ul Tab Tabs |

vault in Vault page title Clients and entities Clients and entities layout docs Technical overview covering the concept of clients entities and entity IDs | ---

layout: docs

page_title: Clients and entities

description: |-

Technical overview covering the concept of clients, entities, and entity IDs

in Vault

---

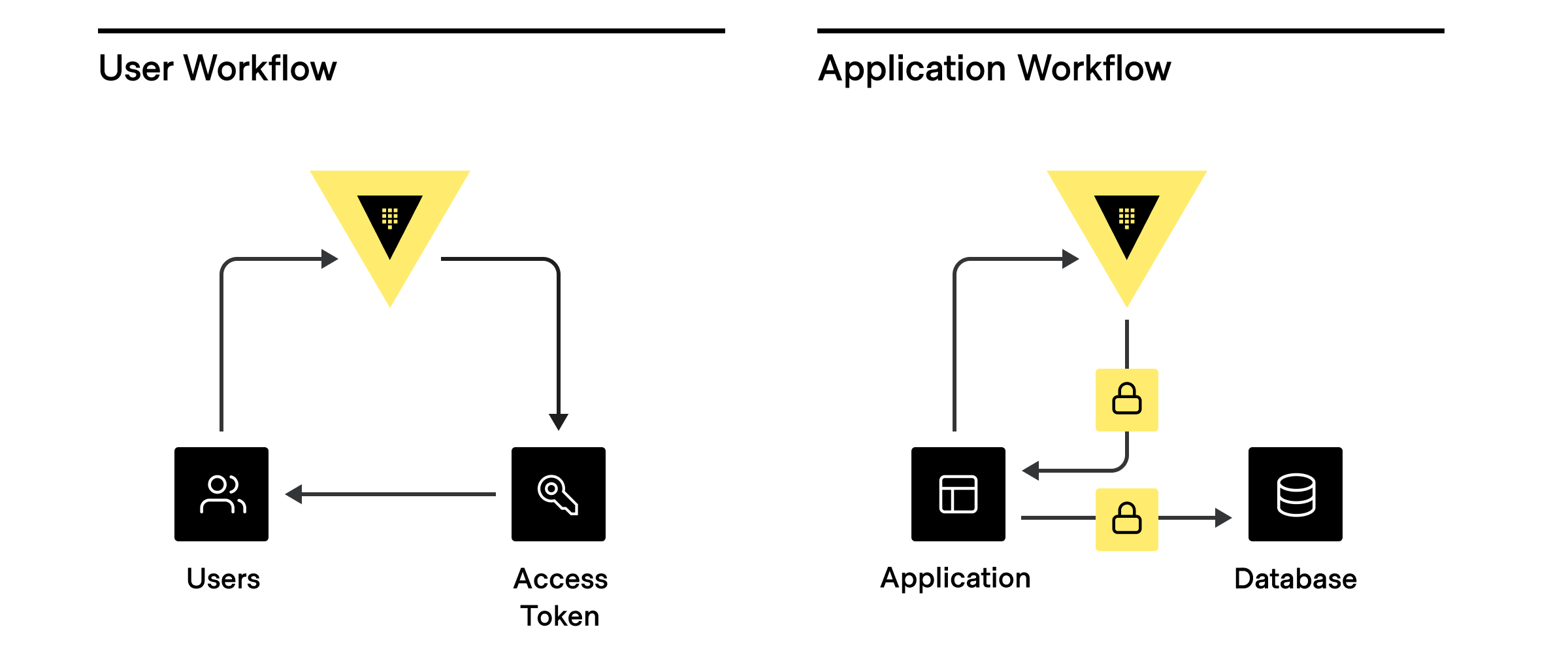

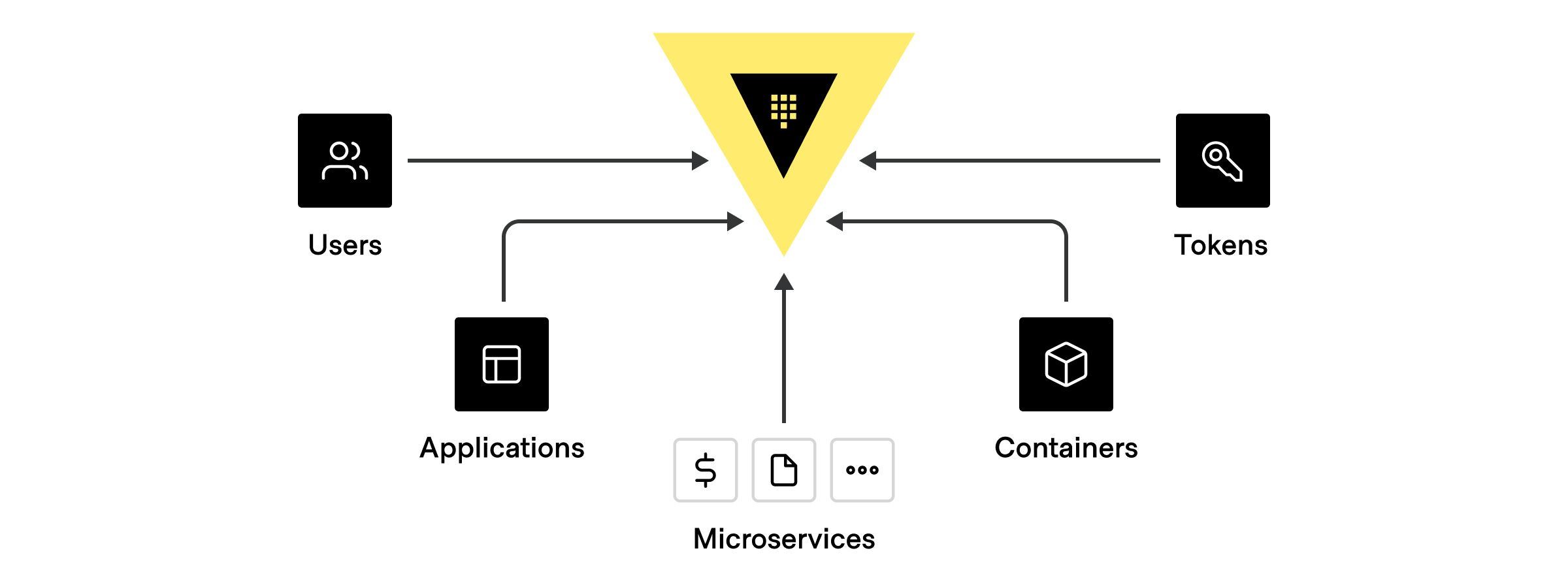

# Clients and entities

Anything that connects and authenticates to Vault to accomplish a task is a

**client**. For example, a user logging into a cluster to manage policies or a

machine-based system (application or cloud service) requesting a database token

are both considered clients.

While there are many different potential clients, the most common are:

1. **Human users** interacting directly with Vault.

1. **Applications and microservices**.

1. **Servers and platforms** like VMs, Docker containers, or Kubernetes pods.

1. **Orchestrators** like Nomad, Terraform, Ansible, ACME, and other continuous

integration / continuous delivery (CI/CD) pipelines.

1. **Vault agents and proxies** that act on behalf of an application or

microservice.

## Identity and entity assignment

Authorized clients can connect to Vault with a variety of authentication methods.

Authorization source | AuthN method

-------------------------- | ---------------------------------

Externally managed or SSO | Active Directory, LDAP, OIDC, JWT, GitHub, username+password

Platform- or server-based | Kubernetes, AWS, GCP, Azure, Cert, Cloud Foundry

Self | AppRole, tokens with no associated authN path or role

When a client authenticates, Vault assigns a unique identifier

(**client entity**) in the [Vault identity system](/vault/docs/secrets/identity)

based on the authentication method used or a previously assigned alias.

**Entity aliases** let clients authenticate with multiple methods but still be

associated with a single policy, share resources, and count as the same entity,

regardless of the authentication method used for a particular session.

## Standard entity assignments

@include "authn-names.mdx"

Each authentication method has a unique ID string that corresponds to a client

entity used for telemetry. For example, a microservice authenticating with

AppRole takes the associated role ID as the entity. If you are running at scale

and have multiple copies of the microservices using the same role ID, the full

set of instances will share the same identifier.

As a result, it is critical that you configure different clients

(microservices, humans, applications, services, platforms, servers, or pipelines)

in a way that results in distinct clients having unique identifiers. For example,

the role IDs should be different **between** two microservices, MicroserviceA and

MicroserviceB, even if the **specific instances** of each microservice share a

common role ID among themselves.

## Entity assignment with ACME

Vault treats all ACME connections that authenticate under the same certificate

identifier (domain) as the same **certificate entity** for client count

calculations.

For example:

- ACME client requests (from the same server or separate servers) for the same

certificate identifier (a unique combination of CN, DNS SANs, and IP SANs)

are treated as the same entity.

- If an ACME client makes a request for `a.test.com`, and subsequently makes a new

request for `b.test.com` and `*.test.com` then two distinct entities will be created,

one for `a.test.com` and another for the combination of `b.test.com` and `*.test.com`.

- Overlapping certificate identifiers from different ACME clients are treated

as the same entity. For example, if client 1 requests `a.test.com` and client 2 also

requests `a.test.com`, Vault creates a single entity for both requests.
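
The ACME examples above can be sketched by keying each request on its set of requested names. This is only an illustrative model: the `certificate_entity_key` function and the client labels are hypothetical, and the way Vault actually derives the identifier is not shown in this document.

```python
def certificate_entity_key(identifiers):
    # The order of names in a request should not matter, so the key
    # is an unordered, hashable set of the requested names.
    return frozenset(identifiers)


requests = [
    ("client-1", ["a.test.com"]),
    ("client-1", ["b.test.com", "*.test.com"]),
    ("client-2", ["a.test.com"]),                # overlaps with client-1
    ("client-2", ["*.test.com", "b.test.com"]),  # same names, other order
]

# The requesting client is ignored; only the name combination matters.
entities = {certificate_entity_key(names) for _client, names in requests}
print(len(entities))
```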

## Secret sync clients

Vault can automatically update secrets in external destinations with [secret sync](/vault/docs/sync).

A secret that gets synced to one or more destinations is considered a **secret

sync client** for client count calculations.

Note that:

- Each synced secret is counted distinctly based on the path and namespace of

the secret. If you have secrets at path `kv1/secret` and `kv2/secret`

which are both synced, then two distinct secret syncs will be counted.

- A secret can be synced to multiple different destinations, and it will still

only be counted as one secret sync. If `kv/secret` is synced to both Azure Key

Vault and AWS Secrets Manager, this will be counted as only one secret sync

client.

- Secret sync clients are only created after you create an association between a

secret and a store. If you create `kv/secret` and do not associate this secret

with any destinations, it will not be counted as a secret sync client.

- Secret sync clients are registered in Vault's client counting system so long

as the sync is active. If you create `kv/secret` and associate it with a

destination in January, update the secret in May, and then delete the secret

in September, Vault will consider this client as having been seen throughout

the entire period of January through September.
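
The counting rules in the list above can be sketched as a set of `(namespace, path)` pairs built from secret-to-destination associations. The tuple layout, destination labels, and function name are hypothetical and chosen only for illustration.

```python
# Each association is (namespace, secret path, destination name).
associations = [
    ("ns1", "kv1/secret", "aws-sm"),
    ("ns1", "kv2/secret", "aws-sm"),
    ("ns1", "kv/secret", "azure-kv"),
    ("ns1", "kv/secret", "aws-sm"),  # same secret, second destination
]


def secret_sync_clients(associations):
    # A secret counts once per (namespace, path), however many
    # destinations it is synced to. Secrets with no association
    # never appear in the list, so they are never counted.
    return {(namespace, path) for namespace, path, _dest in associations}


clients = secret_sync_clients(associations)
print(len(clients))
```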

## Entity assignment with namespaces

A namespace represents an isolated, logical space within a single Vault

cluster and is typically used for administrative purposes.

When a client authenticates **within a given namespace**, Vault assigns the same

client entity to activities within any child namespaces because the namespaces

exist within the same larger scope.

When a client authenticates **across namespace boundaries**, Vault treats the

single client as two distinct entities because the client is operating

across different scopes with different policy assignments and resources.

For example:

- Different requests under parent and child namespaces from a single client

authenticated under the **parent** namespace are assigned **the same entity

ID**. All the client activities occur **within** the boundaries of the

namespace referenced in the original authentication request.

- Different requests under parent and child namespaces from a single client

authenticated under the **child** namespace are assigned **different entity

IDs**. Some of the client activities occur **outside** the boundaries of the

namespace referenced in the original authentication request.

- Requests by the same client to two different namespaces, NAMESPACE<sub>A</sub>

and NAMESPACE<sub>B</sub> are assigned **different entity IDs**.
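
The three examples above can be captured in a toy model where an entity is scoped to the namespace used for authentication and reused for requests inside that namespace or its children. The path-style namespace strings and both function names are assumptions made for this sketch, not Vault internals.

```python
def is_within(namespace: str, scope: str) -> bool:
    """True if `namespace` is `scope` itself or one of its descendants."""
    return namespace == scope or namespace.startswith(scope.rstrip("/") + "/")


def entity_key(client: str, auth_namespace: str, request_namespace: str):
    if is_within(request_namespace, auth_namespace):
        # Inside the authenticating namespace: same entity.
        return (client, auth_namespace)
    # Outside the authenticating namespace: counted as a separate entity.
    return (client, request_namespace)


# Authenticated under the parent: parent and child requests share an entity.
parent_auth = {
    entity_key("app", "parent", "parent"),
    entity_key("app", "parent", "parent/child"),
}

# Authenticated under the child: a request in the parent is a new entity.
child_auth = {
    entity_key("app", "parent/child", "parent/child"),
    entity_key("app", "parent/child", "parent"),
}

print(len(parent_auth), len(child_auth))
```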

## Entity assignment with non-entity tokens

Vault uses tokens as the core method for authentication. You can use tokens to

authenticate directly, or use token [auth methods](/vault/docs/concepts/auth)

to dynamically generate tokens based on external identities.

When clients authenticate with the [token auth method](/vault/docs/auth/token)

**without** a client identity, the result is a **non-entity token**. For example,

a service might use the token authentication method to create a token for a user

whose explicit identity is unknown.

Ultimately, non-entity tokens trace back to a particular client or purpose so

Vault assigns unique entity IDs to non-entity tokens based on a combination of

the:

- assigned entity alias name (if present),

- associated policies, and

- namespace under which the token was created.
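
To make the combination above concrete, the sketch below derives a stable identifier from the three inputs. The hashing scheme, field separator, and function name are hypothetical choices for illustration. The exact derivation Vault uses is not described in this document.

```python
import hashlib


def constructed_entity_id(namespace, policies, alias_name=""):
    # Sort and de-duplicate policies so their order does not change the ID.
    normalized_policies = ",".join(sorted(set(policies)))
    material = "|".join([namespace, normalized_policies, alias_name])
    return hashlib.sha256(material.encode("utf-8")).hexdigest()


token_a = constructed_entity_id("ns1", ["default", "app-read"], "svc-alias")
token_b = constructed_entity_id("ns1", ["app-read", "default"], "svc-alias")
token_c = constructed_entity_id("ns2", ["default", "app-read"], "svc-alias")

# Same namespace, policies, and alias: one client. Different namespace: two.
print(token_a == token_b, token_a == token_c)
```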

In **rare** cases, tokens may be created outside of the Vault identity system

**without** an associated entity or identity. Vault treats every unaffiliated

token as a unique client for production usage. We strongly discourage the use of

unaffiliated tokens and recommend that you always associate a token with an

entity alias and token role.

<Note title="Behavior change in Vault 1.9+">

As of Vault 1.9, all non-entity tokens with the same namespace and policy

assignments are treated as the same client entity. Prior to Vault 1.9, every

non-entity token was treated as a unique client entity, which drastically

inflated telemetry around client count.

If you are using Vault 1.8 or earlier, and need to address client count

inflation without upgrading, we recommend creating a

[token role](/vault/api-docs/auth/token#create-update-token-role) with

allowable entity aliases and assigning all tokens to an appropriate

[role and entity alias name](/vault/api-docs/auth/token#create-token) before

using them.

</Note>

@include "content-footer-title.mdx"

<Tabs>

<Tab heading="Related concepts">

<ul>

<li>

<a href="/vault/docs/concepts/client-count/counting">Client count calculation</a>

</li>

<li>

<a href="/vault/docs/concepts/client-count/faq">Client count FAQ</a>

</li>

</ul>

</Tab>

<Tab heading="Related tutorials">

<ul>

<li>

<a href="/vault/tutorials/auth-methods/identity">Identity: Entities and Groups</a>

</li>

<li>

<a href="/vault/tutorials/enterprise/namespaces">Secure Multi-Tenancy with Namespaces</a>

</li>

</ul>

</Tab>

<Tab heading="Other resources">

<ul>

<li>

Article: <a href="https://www.hashicorp.com/identity-based-security-and-low-trust-networks">

Identity-based Security and Low-trust Networks

</a>

</li>

</ul>

</Tab>

</Tabs> | vault | layout docs page title Clients and entities description Technical overview covering the concept of clients entities and entity IDs in Vault Clients and entities Anything that connects and authenticates to Vault to accomplish a task is a client For example a user logging into a cluster to manage policies or a machine based system application or cloud service requesting a database token are both considered clients Vault Client Workflows https www datocms assets com 2885 1617325020 valult client workflows png While there are many different potential clients the most common are 1 Human users interacting directly with Vault 1 Applications and microservices 1 Servers and platforms like VMs Docker containers or Kubernetes pods 1 Orchestrators like Nomad Terraform Ansible ACME and other continuous integration continuous delivery CI CD pipelines 1 Vault agents and proxies that act on behalf of an application or microservice Identity and entity assignment Authorized clients can connect to Vault with a variety of authentication methods Authorization source AuthN method Externally managed or SSO Active Directory LDAP OIDC JWT GitHub username password Platform or server based Kubernetes AWS GCP Azure Cert Cloud Foundry Self AppRole tokens with no associated authN path or role Vault client types https www datocms assets com 2885 1617325030 vault clients png When a client authenticates Vault assigns a unique identifier client entity in the Vault identity system vault docs secrets identity based on the authentication method used or a previously assigned alias Entity aliases let clients authenticate with multiple methods but still be associated with a single policy share resources and count as the same entity regardless of the authentication method used for a particular session Standard entity assignments include authn names mdx Each authentication method has a unique ID string that corresponds to a client entity used for telemetry For example a microservice 
authenticating with AppRole takes the associated role ID as the entity If you are running at scale and have multiple copies of the microservices using the same role id the full set of instances will share the same identifier As a result it is critical that you configure different clients microservices humans applications services platforms servers or pipelines in a way that results in distinct clients having unique identifiers For example the role IDs should be different between two microservices MicroserviceA and MicroServiceB even if the specific instances of MicroServiceA and MicroServiceB share a common role ID Entity assignment with ACME Vault treats all ACME connections that authenticate under the same certificate identifier domain as the same certificate entity for client count calculations For example ACME client requests from the same server or separate servers for the same certificate identifier a unique combination of CN DNS SANS and IP SANS are treated as the same entity If an ACME client makes a request for a test com and subsequently makes a new request for b test com and test com then two distinct entities will be created one for a test com and another for the combination of b test com and test com Overlap of certificate identifiers from different ACME clients will be treated as the same entity e g if client 1 requests a test com and client 2 requests a test com a single entity is created for both requests Secret sync clients Vault can automatically update secrets in external destinations with secret sync vault docs sync A secret that gets synced to one or more destinations is considered a secret sync client for client count calculations Note that Each synced secret is counted distinctly based on the path and namespace of the secret If you have secrets at path kv1 secret and kv2 secret which are both synced then two distinct secret syncs will be counted A secret can be synced to multiple different destinations and it will still only be counted as one 
secret sync If kv secret is synced to both Azure Key Vault and AWS Secret Manager this will be counted as only one secret sync client Secret sync clients are only created after you create an association between a secret and a store If you create kv secret and do not associate this secret with any destinations it will not be counted as a secret sync client Secret syncs clients are registered in Vault s client counting system so long as the sync is active If you create kv secret and associate it with a destination in January update the secret in May and then delete the secret in September Vault will consider this client as having been seen throughout the entire period of January through September Entity assignment with namespaces A namespace represents a isolated logical space within a single Vault cluster and is typically used for administrative purposes When a client authenticates within a given namespace Vault assigns the same client entity to activities within any child namespaces because the namespaces exist within the same larger scope When a client authenticates across namespace boundaries Vault treats the single client as two distinct entities because the client is operating across different scopes with different policy assignments and resources For example Different requests under parent and child namespaces from a single client authenticated under the parent namespace are assigned the same entity ID All the client activities occur within the boundaries of the namespace referenced in the original authentication request Different requests under parent and child namespaces from a single client authenticated under the child namespace are assigned different entity IDs Some of the client activities occur outside the boundaries of the namespace referenced in the original authentication request Requests by the same client to two different namespaces NAMESPACE sub A sub and NAMESPACE sub B sub are assigned different entity IDs Entity assignment with non entity 
tokens Vault uses tokens as the core method for authentication You can use tokens to authenticate directly or use token auth methods vault docs concepts auth to dynamically generate tokens based on external identities When clients authenticate with the token auth method vault docs auth token without a client identity the result is a non entity token For example a service might use the token authentication method to create a token for a user whose explicit identity is unknown Ultimately non entity tokens trace back to a particular client or purpose so Vault assigns unique entity IDs to non entity tokens based on a combination of the assigned entity alias name if present associated policies and namespace under which the token was created In rare cases tokens may be created outside of the Vault identity system without an associated entity or identity Vault treats every unaffiliated token as a unique client for production usage We strongly discourage the use of unaffiliated tokens and recommend that you always associate a token with an entity alias and token role Note title Behavior change in Vault 1 9 As of Vault 1 9 all non entity tokens with the same namespace and policy assignments are treated as the same client entity Prior to Vault 1 9 every non entity token was treated as a unique client entity which drastically inflated telemetry around client count If you are using Vault 1 8 or earlier and need to address client count inflation without upgrading we recommend creating a token role vault api docs auth token create update token role with allowable entity aliases and assigning all tokens to an appropriate role and entity alias name vault api docs auth token create token before using them Note include content footer title mdx Tabs Tab heading Related concepts ul li a href vault docs concepts client count counting Client count calculation a li li a href vault docs concepts client count faq Client count FAQ a li ul Tab Tab heading Related tutorials ul li a href vault 
tutorials auth methods identity Identity Entities and Groups a li li a href vault tutorials enterprise namespaces Secure Multi Tenancy with Namespaces a li ul Tab Tab heading Other resources ul li Article a href https www hashicorp com identity based security and low trust networks Identity based Security and Low trust Networks a li ul Tab Tabs |

vault Client calculation and sizing can be complex to compute when you have multiple Vault usage metrics page title Vault usage metrics Learn how to discover the number of Vault clients for each namespace in Vault layout docs | ---

layout: docs

page_title: Vault usage metrics

description: |-

Learn how to discover the number of Vault clients for each namespace in Vault.

---

# Vault usage metrics

Client calculation and sizing can be complex to compute when you have multiple

namespaces and auth mounts. The **Vault Usage Metrics** dashboard in the Vault UI

lets you filter client count data by namespace, auth mount, or both.

You can also use the Vault CLI or API to query the usage metrics.

## Enable usage metrics

Usage metrics are enabled by default for Vault Enterprise and

HCP Vault Dedicated. If you are running Vault Community Edition, you

must enable usage metrics yourself because they are disabled by default.

<Tabs>

<Tab heading="Web UI" group="ui">

1. Open a web browser to access the Vault UI, and sign in.

1. Select **Client Count** from the left navigation menu.

1. Select **Configuration**.

1. Select **Edit configuration**.

1. Select the toggle for **Usage data collection** so that the text reads **Data

collection is on**.

<Tip title="Retention period">

The retention period sets the number of months for which Vault will maintain

activity logs to track active clients. (Default: 48 months)

</Tip>

1. Click **Save** to apply the changes.

1. Click **Continue** in the confirmation dialog to enable usage metrics tracking.

</Tab>

<Tab heading="CLI command" group="cli">

```shell-session

$ vault write sys/internal/counters/config enabled=enable

```

Valid values for `enabled` parameter are: `default`, `enable`, and `disable`.

<Tip title="Retention period">

By default, Vault maintains activity logs to track

active clients for 48 months. If you wish to change the retention period, use

the `retention_months` parameter.

</Tip>

**Example:**

```shell-session

$ vault write sys/internal/counters/config \

enabled=enable \

retention_months=12

```

</Tab>

<Tab heading="API call using cURL" group="api">

```shell-session

$ curl --header "X-Vault-Token: <TOKEN>" \

--request POST \

--data '{"enabled": "enable"}' \

$VAULT_ADDR/v1/sys/internal/counters/config

```

Valid values for the `enabled` parameter are: `default`, `enable`, and `disable`.

<Tip title="Retention period">

By default, Vault maintains activity logs to track

active clients for 24 months. If you wish to change the retention period, use

the `retention_months` parameter.

</Tip>

**Example:**

```shell-session

$ curl --header "X-Vault-Token: <TOKEN>" \

--request POST \

--data '{"enabled": "enable", "retention_months": 12}' \

$VAULT_ADDR/v1/sys/internal/counters/config

```

</Tab>

</Tabs>
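
As a minimal sketch of the CLI path above, the helper below rejects anything other than the three documented values for `enabled` before calling `vault write`. The function name `set_usage_collection` is hypothetical and not part of the Vault CLI; it assumes `VAULT_ADDR` and a valid token are already set in the environment.

```shell
# Hypothetical helper (not part of Vault): validate the `enabled` value
# against the documented choices, then apply it.
set_usage_collection() {
  case "$1" in
    default|enable|disable) ;;
    *)
      echo "invalid value: $1 (expected default, enable, or disable)" >&2
      return 2
      ;;
  esac
  vault write sys/internal/counters/config enabled="$1"
}

# Example call (requires a reachable Vault server):
#   set_usage_collection enable
```

Validating locally keeps a typo from reaching the server, although Vault itself also rejects unknown values.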

## Usage metrics dashboard

1. Sign in to the Vault UI. The **Client count** section displays the total number of

clients for the current billing period.

1. Select **Details**.

1. Examine the **Vault Usage Metrics** dashboard to learn your Vault usage.

#### Usage metrics data categories

- **Running client total** is the primary metric on which pricing is based.

It is the sum of entity clients (or distinct entities) and non-entity clients.

- **Entity clients** (distinct entities) are representations of a particular

user, client, or application that belongs to a defined Vault entity. If you

are unfamiliar with Vault entities, refer to the [Identity: Entities and

Groups](/vault/tutorials/auth-methods/identity) tutorial.

- **Non-entity clients** are clients without an entity attached.

They occur when customers or workflows avoid entity-creating

authentication methods and instead depend on token creation through the Vault

API. Refer to [understanding non-entity

tokens](/vault/docs/concepts/client-count#understanding-non-entity-tokens)

to learn more.

<Note>

The non-entity client count excludes `root` tokens.

</Note>

- **Secrets sync clients** are the secrets that Vault syncs to at least one

external destination. Refer to the

[documentation](/vault/docs/concepts/client-count#secret-sync-clients) for

more details.

- **ACME clients** are ACME connections grouped by certificate identifier

(domain). Connections that authenticate under the same identifier count as

the same certificate entity in client count calculations. Refer to the

[documentation](/vault/docs/concepts/client-count#entity-assignment-with-acme)

for more details.

## Select a data range

Under the **Client counting period**, select **Edit** to query the data for

a different billing period.

Keep in mind that Vault begins collecting data when the feature is enabled. For

example, if you enabled usage metrics in March of 2023, you cannot query

data for January of 2023.

Vault will return metrics from March of 2023 through the most recent full month.

## Filter by namespaces

If you have [namespaces](/vault/docs/enterprise/namespaces), the dashboard

displays the top ten namespaces by total clients.

Use the **Filters** to view the metrics data of a specific namespace.

## Mount attribution

The clients are also shown as graphs per auth mount. The **Mount attribution**

section displays the auth mounts with the highest client usage, which lets you

identify the auth mount that had the largest number of total clients in the

given billing period. You can filter for auth mounts within a namespace, or view

auth mounts across namespaces. Mount attribution is available even if you are

not using namespaces.

## Query usage metrics via CLI

Retrieve the usage metrics for the current billing period.

```shell-session

$ vault operator usage

```

**Example output:**

<CodeBlockConfig hideClipboard>

```plaintext

Period start: 2024-03-01T00:00:00Z

Period end: 2024-10-31T23:59:59Z

Namespace path Entity Clients Non-Entity clients Secret syncs ACME clients Active clients

-------------- -------------- ------------------ ------------ ------------ --------------

[root] 86 114 0 0 200

education/ 31 31 0 0 62

education/certification/ 18 25 0 0 43

education/training/ 192 197 0 0 389

finance/ 18 26 0 0 44

marketing/ 28 17 0 0 45

test-ns-1-with-namespace-length-over-18-characters/ 84 75 0 0 159

test-ns-1/ 59 66 0 0 125

test-ns-2-with-namespace-length-over-18-characters/ 58 46 0 0 104

test-ns-2/ 56 47 0 0 103

Total 630 644 0 0 1274

```

</CodeBlockConfig>

The output shows client usage metrics for each namespace.
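
The sketch below is one way to sanity-check a saved copy of that output. It assumes, as the sample rows suggest, that the **Active clients** column is the sum of the four client columns to its left, and that each data row has exactly six whitespace-separated fields. The function name `check_usage_rows` is hypothetical, and the rows are copied from the example output above.

```shell
# Hypothetical helper: confirm that each row's active-client total equals
# the sum of its entity, non-entity, secret sync, and ACME columns.
# Rows whose second field is not numeric (such as the header) are skipped.
check_usage_rows() {
  awk 'NF == 6 && $2 ~ /^[0-9]+$/ {
         if ($2 + $3 + $4 + $5 != $6) { printf "mismatch: %s\n", $1; bad = 1 }
       }
       END { if (!bad) print "all rows consistent"; exit (bad ? 1 : 0) }'
}

# Rows copied from the example output above.
check_usage_rows <<'EOF'
[root] 86 114 0 0 200
education/ 31 31 0 0 62
education/certification/ 18 25 0 0 43
finance/ 18 26 0 0 44
Total 630 644 0 0 1274
EOF
```

The function exits with a non-zero status when any row disagrees, so it can be used in a script that processes exported usage data.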

### Filter by namespace

You can narrow the scope to the `education` namespace and its child namespaces.

```shell-session

$ vault operator usage -namespace education

Period start: 2024-03-01T00:00:00Z

Period end: 2024-10-31T23:59:59Z

Namespace path Entity Clients Non-Entity clients Secret syncs ACME clients Active clients

-------------- -------------- ------------------ ------------ ------------ --------------

education/ 31 31 0 0 62

education/certification/ 18 25 0 0 43

education/training/ 192 197 0 0 389

Total 241 253 0 0 494

```

### Query with a time frame

To query the client usage metrics for the month of June 2024, set the start

time to June 1, 2024 (`2024-06-01T00:00:00Z`) and the end time to June

30, 2024 (`2024-06-30T23:59:59Z`).

The `-start-time` and `-end-time` values must be RFC3339 timestamps or Unix epoch

times.

```shell-session

$ vault operator usage \

-start-time=2024-06-01T00:00:00Z \

-end-time=2024-06-30T23:59:59Z

```

**Example output:**

<CodeBlockConfig hideClipboard>

```plaintext

Period start: 2024-06-01T00:00:00Z

Period end: 2024-06-30T23:59:59Z

Namespace path Entity Clients Non-Entity clients Secret syncs ACME clients Active clients

-------------- -------------- ------------------ ------------ ------------ --------------

[root] 10 16 0 0 26

education/ 7 1 0 0 8

education/certification/ 2 4 0 0 6

education/training/ 37 30 0 0 67

finance/ 3 6 0 0 9

marketing/ 2 2 0 0 4

test-ns-1-with-namespace-length-over-18-characters/ 6 9 0 0 15

test-ns-1/ 9 12 0 0 21

test-ns-2-with-namespace-length-over-18-characters/ 5 5 0 0 10

test-ns-2/ 9 7 0 0 16

Total 90 92 0 0 182

```

</CodeBlockConfig>
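
Computing the last day of a month by hand is easy to get wrong, so the sketch below derives both RFC3339 timestamps from a year and month. The function name `month_bounds` is hypothetical; it uses only shell arithmetic and assumes UTC boundaries like the example above.

```shell
# Hypothetical helper: print "<start> <end>" RFC3339 timestamps (UTC)
# covering the whole of the given month.
month_bounds() {
  year=$1
  month=${2#0}   # strip one leading zero so "06" is not parsed as octal
  case "$month" in
    4|6|9|11) last=30 ;;
    2)
      # Gregorian leap-year rule
      if [ $((year % 4)) -eq 0 ] && { [ $((year % 100)) -ne 0 ] || [ $((year % 400)) -eq 0 ]; }; then
        last=29
      else
        last=28
      fi
      ;;
    *) last=31 ;;
  esac
  printf '%04d-%02d-01T00:00:00Z %04d-%02d-%02dT23:59:59Z\n' \
    "$year" "$month" "$year" "$month" "$last"
}

month_bounds 2024 6   # prints 2024-06-01T00:00:00Z 2024-06-30T23:59:59Z

# Example use with the CLI (requires a reachable Vault server):
#   set -- $(month_bounds 2024 6)
#   vault operator usage -start-time="$1" -end-time="$2"
```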

## Export the metrics data

You can export the metrics data by clicking on the **Export attribution data**

button.

This downloads the usage metrics data to your local drive in comma-separated

values (`.csv`) or JSON format.

## API

- Refer to the

[`sys/internal/counters`](/vault/api-docs/system/internal-counters#client-count)

page to retrieve the client count using the API.

- [Activity export API](/vault/api-docs/system/internal-counters#activity-export) to

export activity log. | vault | layout docs page title Vault usage metrics description Learn how to discover the number of Vault clients for each namespace in Vault Vault usage metrics Client calculation and sizing can be complex to compute when you have multiple namespaces and auth mounts The Vault Usage Metrics dashboard on Vault UI provides the information where you can filter the data by namespace and or auth mounts You can also use Vault CLI or API to query the usage metrics Enable usage metrics Usage metrics are a feature that is enabled by default for Vault Enterprise and HCP Vault Dedicated However if you are running Vault Community Edition you need to enable usage metrics since it is disabled by default Tabs Tab heading Web UI group ui 1 Open a web browser to access the Vault UI and sign in 1 Select Client Count from the left navigation menu 1 Select Configuration 1 Select Edit configuration Edit configuration img ui usage metrics config png 1 Select the toggle for Usage data collection so that the text reads Data collection is on Tip title Retention period The retention period sets the number of months for which Vault will maintain activity logs to track active clients Default 48 months Tip 1 Click Save to apply the changes 1 Click Continue in the confirmation dialog to enable usage metrics tracking Tab Tab heading CLI command group cli shell session vault write sys internal counters config enabled enable Valid values for enabled parameter are default enable and disable Tip title Retention period By default Vault maintains activity logs to track active clients for 24 months If you wish to change the retention period use the retention months parameter Tip Example shell session vault write sys internal counters config enabled enable retention months 12 Tab Tab heading API call using cURL group api shell session curl header X Vault Token TOKEN request POST data enabled enable VAULT ADDR v1 sys internal counters config Valid values for enabled parameter are 
default enable and disable Tip title Retention period By default Vault maintains activity logs to track active clients for 24 months If you wish to change the retention period use the retention months parameter Tip Example shell session curl header X Vault Token TOKEN request POST data enabled enable retention months 12 VAULT ADDR v1 sys internal counters config Tab Tabs Usage metrics dashboard 1 Sign into Vault UI The Client count section displays the total number of clients for the current billing period 1 Select Details Vault UI default dashboard example img ui client count png 1 Examine the Vault Usage Metrics dashboard to learn your Vault usage Example Vault Usage Metrics dashboard view img ui usage metrics 1 png Usage metrics data categories Running client total are the primary metric on which pricing is based It is the sum of entity clients or distinct entities and non entity clients Entity clients distinct entities are representations of a particular user client or application that belongs to a defined Vault entity If you are unfamiliar with Vault entities refer to the Identity Entities and Groups vault tutorials auth methods identity tutorial Non entity clients are clients without an entity attached This is because some customers or workflows might avoid using entity creating authentication methods and instead depend on token creation through the Vault API Refer to understanding non entity tokens vault docs concepts client count understanding non entity tokens to learn more Note The non entity client count excludes root tokens Note Secrets sync clients are the number of external destinations Vault connects to sync the secrets Refer to the documentation vault docs concepts client count secret sync clients for more details ACME clients are the ACME connections that authenticate under the same certificate identifier domain as the same certificate entity for client count calculations Refer to the documentation vault docs concepts client count entity assignment 
with acme for more details ACME clients example img ui usage metrics acme png Select a data range Under the Client counting period select Edit to query the data for a different billing period Query img ui usage metrics period png Keep in mind that Vault begins collecting data when the feature is enabled For example if you enabled the usage metrics in March of 2023 you cannot query data in January of 2023 Vault will return metrics from March of 2023 through most recent full month Filter by namespaces If you have namespaces vault docs enterprise namespaces the dashboard displays the top ten namespaces by total clients Namespace attribution example img ui usage metrics namespace png Use the Filters to view the metrics data of a specific namespace Filter by namespace img ui usage metrics filter png Mount attribution The clients are also shown as graphs per auth mount The Mount attribution section displays the top auth mounts where you can expect to find your most used auth method mounts with respect to client usage This allows you to detect which auth mount had the most number of total clients in the given billing period You can filter for auth mounts within a namespace or view auth mounts across namespaces The mount attribution is available even if you are not using namespaces Usage metrics by mount attribution img ui usage metrics mounts png Query usage metrics via CLI Retrieve the usage metrics for the current billing period shell session vault operator usage Example output CodeBlockConfig hideClipboard plaintxt Period start 2024 03 01T00 00 00Z Period end 2024 10 31T23 59 59Z Namespace path Entity Clients Non Entity clients Secret syncs ACME clients Active clients root 86 114 0 0 200 education 31 31 0 0 62 education certification 18 25 0 0 43 education training 192 197 0 0 389 finance 18 26 0 0 44 marketing 28 17 0 0 45 test ns 1 with namespace length over 18 characters 84 75 0 0 159 test ns 1 59 66 0 0 125 test ns 2 with namespace length over 18 characters 58 46 0 
0 104 test ns 2 56 47 0 0 103 Total 630 644 0 0 1274 CodeBlockConfig The output shows client usage metrics for each namespace Filter by namespace You can narrow the scope for education namespace and its child namespaces shell session vault operator usage namespace education Period start 2024 03 01T00 00 00Z Period end 2024 10 31T23 59 59Z Namespace path Entity Clients Non Entity clients Secret syncs ACME clients Active clients education 31 31 0 0 62 education certification 18 25 0 0 43 education training 192 197 0 0 389 Total 241 253 0 0 494 Query with a time frame To query the client usage metrics for the month of June 2024 The start time is June 1 2024 2024 06 01T00 00 00Z and the end time is June 30 2024 2024 06 30T23 59 59Z The start time and end time should be an RFC3339 timestamp or Unix epoch time shell session vault operator usage start time 2024 06 01T00 00 00Z end time 2024 06 30T23 59 59Z Example output CodeBlockConfig hideClipboard plaintext Period start 2024 06 01T00 00 00Z Period end 2024 06 30T23 59 59Z Namespace path Entity Clients Non Entity clients Secret syncs ACME clients Active clients root 10 16 0 0 26 education 7 1 0 0 8 education certification 2 4 0 0 6 education training 37 30 0 0 67 finance 3 6 0 0 9 marketing 2 2 0 0 4 test ns 1 with namespace length over 18 characters 6 9 0 0 15 test ns 1 9 12 0 0 21 test ns 2 with namespace length over 18 characters 5 5 0 0 10 test ns 2 9 7 0 0 16 Total 90 92 0 0 182 CodeBlockConfig Export the metrics data You can export the metrics data by clicking on the Export attribution data button Metrics UI img ui usage metrics export png This downloads the usage metrics data on your local drive in comma separated values format csv or JSON API Refer to the sys internal counters vault api docs system internal counters client count page to retrieve client count using API Activity export API vault api docs system internal counters activity export to export activity log |

vault Deprecation announcements updates and migration plans for Vault page title Deprecation notices Vault implements a multi phased approach to deprecations to provide users with layout docs Deprecation notices | ---

layout: docs

page_title: Deprecation notices

description: >-

Deprecation announcements, updates, and migration plans for Vault.

---

# Deprecation notices

Vault implements a multi-phased approach to deprecations to provide users with

advance warning, minimize business disruptions, and allow for the safe handling

of data affected by a feature removal.

<Highlight title="Have questions?">

If you have questions or concerns about a deprecated feature, please create a

topic on the [Vault community forum](https://discuss.hashicorp.com/c/vault/30)

or raise a ticket with your support team.

</Highlight>

<a id="announcements" />

## Recent announcements

<Tabs>

<Tab heading="DEPRECATED">

<EnterpriseAlert product="vault">

The Vault Support Team can provide <b>limited</b> help with a deprecated feature.

Limited support includes troubleshooting solutions and workarounds but does not

include software patches or bug fixes. Refer to

the <a href="https://support.hashicorp.com/hc/en-us/articles/360021185113-Support-Period-and-End-of-Life-EOL-Policy">HashiCorp Support Policy</a> for

more information on the product support timeline.

</EnterpriseAlert>

@include 'deprecation/ruby-client-library.mdx'

@include 'deprecation/active-directory-secrets-engine.mdx'

</Tab>

<Tab heading="PENDING REMOVAL">

@include 'deprecation/vault-agent-api-proxy.mdx'

@include 'deprecation/aws-field-change.mdx'

@include 'deprecation/centrify-auth-method.mdx'

</Tab>

<Tab heading="REMOVED">

@include 'deprecation/duplicative-docker-images.mdx'

@include 'deprecation/azure-password-policy.mdx'

</Tab>

</Tabs>

<a id="phases" />

## Deprecation phases

The lifecycle of a Vault feature or plugin includes 4 phases:

- **supported** - generally available (GA), functioning as expected, and under

active maintenance

- **deprecated** - marked for removal in a future release

- **pending removal** - support ended or replaced by another feature

- **removed** - end of lifecycle

### Deprecated ((#deprecated))

"Deprecated" is the first phase of the deprecation process and indicates that

the feature is marked for removal in a future release. When you upgrade Vault,

newly deprecated features begin alerting you to their deprecated status:

- Built-in authentication and secrets plugins log `Warn`-level messages on

unseal.

- All deprecated features log `Warn`-level messages.

- All `POST`, `GET`, and `LIST` endpoints associated with the feature return

warnings in response data.

Built-in Vault authentication and secrets plugins also expose their deprecation

status through the Vault CLI and Vault API.

CLI command | API endpoint

---------------------------------------------------------------------------- | --------------

N/A | [`/sys/plugins/catalog`](/vault/api-docs/system/plugins-catalog)

[`vault plugin info auth <PLUGIN_NAME>`](/vault/docs/commands/plugin/info) | [`/sys/plugins/catalog/auth/:name`](/vault/api-docs/system/plugins-catalog#list-plugins-1)

[`vault plugin info secret <PLUGIN_NAME>`](/vault/docs/commands/plugin/info) | [`/sys/plugins/catalog/secret/:name`](/vault/api-docs/system/plugins-catalog#list-plugins-1)
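
As a rough sketch of the CLI column in the table above, the loop below runs `vault plugin info auth` for each plugin name you pass in. The function name `check_auth_plugins` is hypothetical, and the plugin name in the usage comment is only an illustration. The sketch prints the command output as-is and makes no assumption about which field carries the deprecation status.

```shell
# Hypothetical helper: print plugin details for each named built-in auth plugin.
check_auth_plugins() {
  for name in "$@"; do
    echo "== $name =="
    vault plugin info auth "$name" || echo "lookup failed for $name" >&2
  done
}

# Example call (requires a reachable Vault server):
#   check_auth_plugins centrify
```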

### Pending removal

"Pending removal" is the second phase of the deprecation process and indicates

that the feature behavior is fundamentally altered in the following ways:

- Built-in authentication and secrets plugins log `Error`-level messages and

cause an immediate shutdown of the Vault core.

- All features pending removal fail and log `Error`-level messages.

- All CLI commands and API endpoints associated with the feature fail and return

errors.

<Warning title="Use with caution">

In critical situations, you may be able to override the pending removal behavior with the

[`VAULT_ALLOW_PENDING_REMOVAL_MOUNTS`](/vault/docs/commands/server#vault_allow_pending_removal_mounts)

environment variable, which forces Vault to treat some features that are pending

removal as if they were still only deprecated.

</Warning>

### Removed

"Removed" is the last phase of the deprecation process and indicates that the

feature is no longer supported and no longer exists within Vault.

## Migrate from deprecated features

Features in the "pending removal" and "removed" phases will fail, log errors,

and, for built-in authentication or secret plugins, cause an immediate shutdown

of the Vault core.

To migrate away from a deprecated feature and successfully upgrade to newer Vault

versions, you must eliminate the deprecated features:

1. Downgrade Vault to a previous version if necessary.

1. Replace any "Removed" or "Pending removal" feature with the recommended

alternative.

1. Upgrade to latest desired version. | vault | layout docs page title Deprecation notices description Deprecation announcements updates and migration plans for Vault Deprecation notices Vault implements a multi phased approach to deprecations to provide users with advanced warning minimize business disruptions and allow for the safe handling of data affected by a feature removal Highlight title Have questions If you have questions or concerns about a deprecated feature please create a topic on the Vault community forum https discuss hashicorp com c vault 30 or raise a ticket with your support team Highlight a id announcements Recent announcements Tabs Tab heading DEPRECATED EnterpriseAlert product vault The Vault Support Team can provide b limited b help with a deprecated feature Limited support includes troubleshooting solutions and workarounds but does not include software patches or bug fixes Refer to the a href https support hashicorp com hc en us articles 360021185113 Support Period and End of Life EOL Policy HashiCorp Support Policy a for more information on the product support timeline EnterpriseAlert include deprecation ruby client library mdx include deprecation active directory secrets engine mdx Tab Tab heading PENDING REMOVAL include deprecation vault agent api proxy mdx include deprecation aws field change mdx include deprecation centrify auth method mdx Tab Tab heading REMOVED include deprecation duplicative docker images mdx include deprecation azure password policy mdx Tab Tabs a id phases Deprecation phases The lifecycle of a Vault feature or plugin includes 4 phases supported generally available GA functioning as expected and under active maintenance deprecated marked for removal in a future release pending removal support ended or replaced by another feature removed end of lifecycle Deprecated deprecated Deprecated is the first phase of the deprecation process and indicates that the feature is marked for removal in a future release When you 
upgrade Vault newly deprecated features will begin alerting that the feature is deprecated Built in authentication and secrets plugins log Warn level messages on unseal All deprecated features log Warn level messages All POST GET and LIST endpoints associated with the feature return warnings in response data Built in Vault authentication and secrets plugins also expose their deprecation status through the Vault CLI and Vault API CLI command API endpoint N A sys plugins catalog vault api docs system plugins catalog vault plugin info auth PLUGIN NAME vault docs commands plugin info sys plugins catalog auth name vault api docs system plugins catalog list plugins 1 vault plugin info secret PLUGIN NAME vault docs commands plugin info sys plugins catalog secret name vault api docs system plugins catalog list plugins 1 Pending removal Pending removal is the second phase of the deprecation process and indicates that the feature behavior is fundamentally altered in the following ways Built in authentication and secrets plugins log Error level messages and cause an immediate shutdown of the Vault core All features pending removal fail and log Error level messages All CLI commands and API endpoints associated with the feature fail and return errors Warning title Use with caution In critical situations you may be able to override the pending removal behavior with the VAULT ALLOW PENDING REMOVAL MOUNTS vault docs commands server vault allow pending removal mounts environment variable which forces Vault to treat some features that are pending removal as if they were still only deprecated Warning Removed Removed is the last phase of the deprecation process and indicates that the feature is no longer supported and no longer exists within Vault Migrate from deprecated features Features in the pending removal and removed phases will fail log errors and for built in authentication or secret plugins cause an immediate shutdown of the Vault core Migrate away from a deprecated feature 
and successfully upgrade to newer Vault versions you must eliminate the deprecated features 1 Downgrade Vault to a previous version if necessary 1 Replace any Removed or Pending removal feature with the recommended alternative 1 Upgrade to latest desired version |

vault it for a certain duration page title debug Command The debug command monitors a Vault server probing information about layout docs debug | ---

layout: docs

page_title: debug - Command

description: |-

The "debug" command monitors a Vault server, probing information about

it for a certain duration.

---

# debug

The `debug` command starts a process that monitors a Vault server, probing

information about it for a certain duration.

Gathering information about the state of the Vault cluster often requires the

operator to access all necessary information via various API calls and terminal

commands. The `debug` command aims to provide a simple workflow that produces a

consistent output to help operators retrieve and share information about the

server in question.

The `debug` command honors the same variables that the base command

accepts, such as the token stored via a previous login or the environment

variables `VAULT_TOKEN` and `VAULT_ADDR`. The token used determines the

permissions and, in turn, the information that `debug` may be able to collect.

The address specified determines the target server that `debug` probes.

If the command is interrupted, the information collected up until that

point gets persisted to an output directory.

## Permissions

Regardless of whether a particular target is provided, the ability for `debug`

to fetch data for the target depends on the token provided. Some targets, such

as `server-status`, query unauthenticated endpoints, which means that they can

always be queried. Other targets require the token to have ACL permissions to

query the matching endpoint in order to get a proper response. Any errors

encountered during capture, due to permissions or otherwise, are logged in the

index file.

The following policy can be used for generating debug packages with all targets:

```hcl

path "auth/token/lookup-self" {

capabilities = ["read"]

}

path "sys/pprof/*" {

capabilities = ["read"]

}

path "sys/config/state/sanitized" {

capabilities = ["read"]

}

path "sys/monitor" {

capabilities = ["read"]

}

path "sys/host-info" {

capabilities = ["read"]

}

path "sys/in-flight-req" {

capabilities = ["read"]

}

```
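
One way to put the policy to use is sketched below: register it, create a short-lived token bound to it, and run `debug` with that token. The function name `debug_with_scoped_token`, the policy name `debug`, the file name `debug.hcl`, and the 15-minute TTL are all assumptions chosen for illustration.

```shell
# Hypothetical wrapper: register the policy above and run debug with a
# token scoped to it. Assumes the policy is saved as debug.hcl.
debug_with_scoped_token() {
  policy_file=${1:-debug.hcl}
  vault policy write debug "$policy_file" || return 1
  # -field=token prints only the token value
  token=$(vault token create -policy=debug -ttl=15m -field=token) || return 1
  VAULT_TOKEN="$token" vault debug
}

# Example call (requires a reachable Vault server and a token that is
# allowed to write policies and create tokens):
#   debug_with_scoped_token debug.hcl
```

Using a dedicated token keeps the debug run from depending on a more privileged operator token.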

## Capture targets

The `-target` flag can be specified multiple times to capture specific

information when debug is running. By default, it captures all information.

| Target | Description |

| :------------------- | :-------------------------------------------------------------------------------- |

| `config` | Sanitized version of the configuration state. |

| `host` | Information about the instance running the server, such as CPU, memory, and disk. |

| `metrics` | Telemetry information. |

| `pprof` | Runtime profiling data, including heap, CPU, goroutine, and trace profiling. |

| `replication-status` | Replication status. |

| `server-status` | Health and seal status. |

Note that the `config`, `host`, `metrics`, and `pprof` targets are only queried

on active and performance standby nodes because the information

pertains to the local node and the request should not be forwarded.

Additionally, host information is not available on the OpenBSD platform due to

library limitations in fetching the data without enabling `cgo`.

[Enterprise] Telemetry can be gathered from a DR Secondary active node via the

`metrics` target if [unauthenticated_metrics_access](/vault/docs/configuration/listener/tcp#unauthenticated_metrics_access) is enabled.

## Output layout

The output of the bundled information, once decompressed, is contained within a

single directory. Each target, with the exception of profiling data, is captured

in a single file. For each of those targets, the collection is represented as a JSON

array, with each entry captured at each interval as a JSON object.

```shell-session

$ tree vault-debug-2019-10-15T21-44-49Z/

vault-debug-2019-10-15T21-44-49Z/

├── 2019-10-15T21-44-49Z

│ ├── goroutine.prof

│ ├── heap.prof

│ ├── profile.prof

│ └── trace.out

├── 2019-10-15T21-45-19Z

│ ├── goroutine.prof

│ ├── heap.prof

│ ├── profile.prof

│ └── trace.out

├── 2019-10-15T21-45-49Z

│ ├── goroutine.prof

│ ├── heap.prof

│ ├── profile.prof

│ └── trace.out

├── 2019-10-15T21-46-19Z

│ ├── goroutine.prof

│ ├── heap.prof

│ ├── profile.prof

│ └── trace.out

├── 2019-10-15T21-46-49Z

│ ├── goroutine.prof

│ └── heap.prof

├── config.json

├── host_info.json

├── index.json

├── metrics.json

├── replication_status.json

└── server_status.json

```
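
The sample tree shows five timestamped directories, 30 seconds apart, for what appears to be a run with the default 2 minute duration and 30 second interval. A minimal sketch of that arithmetic follows. It assumes one capture at the start plus one per elapsed interval, which matches the sample but is not a documented guarantee; the function name `expected_captures` is hypothetical.

```shell
# Hypothetical estimate of how many timestamped capture directories a run
# produces, assuming one capture at t=0 and one per elapsed interval.
expected_captures() {
  duration_s=$1
  interval_s=$2
  echo $(( duration_s / interval_s + 1 ))
}

expected_captures 120 30   # defaults: 2m duration, 30s interval; prints 5
```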

## Examples

Start debug using reasonable defaults:

```shell-session

$ vault debug

```

Start debug with different duration, intervals, and metrics interval values, and

skip compression:

```shell-session

$ vault debug -duration=1m -interval=10s -metrics-interval=5s -compress=false

```

Start debug with specific targets:

```shell-session

$ vault debug -target=host -target=metrics

```

## Usage

The following flags are available in addition to the [standard set of

flags](/vault/docs/commands) included on all commands.

### Command options

- `-compress` `(bool: true)` - Toggles whether to compress the output package. The

default is true.

- `-duration` `(int or time string: "2m")` - Duration to run the command. The

default is 2m0s.

- `-interval` `(int or time string: "30s")` - The polling interval at which to

collect profiling data and server state. The default is 30s.

- `-log-format` `(string: "standard")` - Log format to capture if the "log"

target is specified. Supported values are "standard" and "json". The default is

"standard".

- `-metrics-interval` `(int or time string: "10s")` - The polling interval at

which to collect metrics data. The default is 10s.

- `-output` `(string)` - Specifies the output path for the debug package. Defaults

to a time-based generated file name.

- `-target` `(string: all targets)` - Target to capture, defaulting to all if

none specified. This can be specified multiple times to capture multiple

targets. Available targets are: config, host, metrics, pprof,

replication-status, server-status. | vault | layout docs page title debug Command description The debug command monitors a Vault server probing information about it for a certain duration debug The debug command starts a process that monitors a Vault server probing information about it for a certain duration Gathering information about the state of the Vault cluster often requires the operator to access all necessary information via various API calls and terminal commands The debug command aims to provide a simple workflow that produces a consistent output to help operators retrieve and share information about the server in question The debug command honors the same variables that the base command accepts such as the token stored via a previous login or the environment variables VAULT TOKEN and VAULT ADDR The token used determines the permissions and in turn the information that debug may be able to collect The address specified determines the target server that will be probed against If the command is interrupted the information collected up until that point gets persisted to an output directory Permissions Regardless of whether a particular target is provided the ability for debug to fetch data for the target depends on the token provided Some targets such as server status queries unauthenticated endpoints which means that it can be queried all the time Other targets require the token to have ACL permissions to query the matching endpoint in order to get a proper response Any errors encountered during capture due to permissions or otherwise will be logged in the index file The following policy can be used for generating debug packages with all targets hcl path auth token lookup self capabilities read path sys pprof capabilities read path sys config state sanitized capabilities read path sys monitor capabilities read path sys host info capabilities read path sys in flight req capabilities read Capture targets The target flag can be specified multiple times to 

---

layout: docs

page_title: events - Command

description: |-

The "events" command interacts with the Vault events notifications subsystem.

---

# events

<EnterpriseAlert product="vault" />

Use the `events` command to get a real-time display of

[event notifications](/vault/docs/concepts/events) generated by Vault and to subscribe to Vault

event notifications. Note that `events subscribe` runs indefinitely and will not exit on

its own unless it encounters an unexpected error. Similar to `tail -f` in the

Unix world, you must terminate the process from the command line to end the

`events` command.

Specify the desired event types (also called "topics") as a glob pattern. To

match against multiple event types, use `*` as a wildcard. The command returns

serialized JSON objects in the default protobuf JSON serialization format with

one line per event received.
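As a rough illustration of how a glob-style topic selects event types, the sketch below uses the shell's own `case` pattern matching. The helper name `topic_matches` and the event type `other/topic` are hypothetical, and shell globbing is only a stand-in here; Vault's matcher may differ in its details.

```shell
#!/usr/bin/env bash
# Hypothetical helper: succeed when an event type matches a topic pattern.
# Shell glob matching is an assumption standing in for Vault's own matcher.
topic_matches() {
  local pattern="$1" event_type="$2"
  # $pattern is left unquoted so that `*` acts as a wildcard.
  case "$event_type" in
    $pattern) return 0 ;;
    *) return 1 ;;
  esac
}

# `kv*` covers any event type that starts with "kv".
topic_matches 'kv*' 'kv-v2/data-write' && echo "kv* matches kv-v2/data-write"

# A pattern without a wildcard matches only that exact event type.
topic_matches 'kv-v2/data-write' 'other/topic' || echo "kv-v2/data-write does not match other/topic"
```

Quote the pattern on the command line (as in `'kv*'`) so that your shell passes the `*` to Vault instead of expanding it against local file names.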

## Examples

Subscribe to all event notifications:

```shell-session

$ vault events subscribe '*'

```

Subscribe to all KV event notifications:

```shell-session

$ vault events subscribe 'kv*'

```

Subscribe to all `kv-v2/data-write` event notifications:

```shell-session

$ vault events subscribe kv-v2/data-write

```

Subscribe to all KV event notifications in the current and `ns1` namespaces for the secret `secret/data/foo` that do not involve writing data:

```shell-session

$ vault events subscribe -namespaces=ns1 -filter='data_path == secret/data/foo and operation != "data-write"' 'kv*'

```
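The `-namespaces` values in the last example are interpreted relative to the namespace that issues the request, and the issuing namespace is always included. The sketch below mimics that expansion locally. The helper `effective_namespaces` is hypothetical, and the label `root` for an empty issuing namespace is an assumption made for readability; Vault performs the real expansion on the server.

```shell
#!/usr/bin/env bash
# Hypothetical helper: list the namespaces a subscription would cover.
# $1 is the issuing namespace ("" for the root namespace);
# the remaining arguments are the values passed to -namespaces.
effective_namespaces() {
  local issuing="${1%/}"
  shift
  # The requesting namespace itself is always part of the subscription.
  # "root" is only an illustrative label for the empty namespace.
  printf '%s\n' "${issuing:-root}"
  local child
  for child in "$@"; do
    child="${child#/}"
    if [ -n "$issuing" ]; then
      # Child patterns are prefixed with the issuing namespace.
      printf '%s\n' "$issuing/${child%/}"
    else
      printf '%s\n' "${child%/}"
    fi
  done
}

# A request made in ns1 with -namespaces=ns2 covers ns1 and ns1/ns2.
effective_namespaces ns1 ns2
```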

## Usage

`events subscribe` supports the following flags in addition to the [standard set of

flags](/vault/docs/commands) included on all commands.

### Options

- `-timeout` `(duration: "")` - Close the WebSocket automatically after the

specified duration.

- `-namespaces` `(string)` - Additional **child** namespaces for the

subscription. Repeat the flag to add additional namespace patterns to the

subscription request. Vault automatically prepends the issuing namespace for

the request to the provided namespace. For example, if you include

`-namespaces=ns2` on a request made in the `ns1` namespace, Vault will attempt

to subscribe you to event notifications under the `ns1/ns2` and `ns1` namespaces. You can

use the `*` character to include wildcards in the namespace pattern. By

default, Vault will only subscribe to event notifications in the requesting namespace.

<Note>

To subscribe to event notifications across multiple namespaces, you must provide a root

token or a token associated with appropriate policies across all the targeted

namespaces. Refer to

the <a href="/vault/tutorials/enterprise/namespaces">Secure multi-tenancy with

namespaces</a> tutorial for configuring your Vault instance appropriately.

</Note>

- `-filter` `(string: "")` - Filter expression used to select event notifications to be sent

through the WebSocket.

Refer to the [Filter expressions](/vault/docs/concepts/filtering) guide for a complete

list of filtering options and an explanation of how Vault evaluates filter expressions.

The following values are available in the filter expression:

- `event_type`: the event type, e.g., `kv-v2/data-write`.

- `operation`: the operation name that caused the event notification, e.g., `write`.

- `source_plugin_mount`: the mount of the plugin that produced the event notification,

e.g., `secret/`

- `data_path`: the API path that can be used to access the data of the secret related to the event notification, e.g., `secret/data/foo`

- `namespace`: the path of the namespace that created the event notification, e.g., `ns1/`

The filter string is empty by default. Unfiltered subscription requests match

all event notifications that the requestor has access to for the target event type. When the

filter string is not empty, Vault applies the filter conditions after the policy

checks to narrow the event notifications provided in the response.

Filters can be straightforward path matches like
`data_path == secret/data/foo`, which specifies that Vault should pass only
event notifications that refer to the `secret/data/foo` secret to the WebSocket,
or more complex statements that exclude specific operations. For example:

```

data_path == secret/data/foo and operation != write

```
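To make the logic of the filter `data_path == secret/data/foo and operation != write` concrete, the following sketch applies the same two conditions to a few made-up `(data_path, operation)` pairs. The helper `passes_filter` and the `delete` operation name are assumptions for illustration only; Vault evaluates the real expression on the server, after the policy checks.

```shell
#!/usr/bin/env bash
# Hypothetical stand-in for the filter
#   data_path == secret/data/foo and operation != write
# It mirrors the two conditions locally; it is not Vault's evaluator.
passes_filter() {
  local data_path="$1" operation="$2"
  [ "$data_path" = "secret/data/foo" ] && [ "$operation" != "write" ]
}

# Sample pairs, invented for illustration.
while read -r data_path operation; do
  if passes_filter "$data_path" "$operation"; then
    echo "deliver: $data_path $operation"
  else
    echo "drop:    $data_path $operation"
  fi
done <<'EOF'
secret/data/foo write
secret/data/foo delete
secret/data/bar delete
EOF
```

Only the second pair satisfies both conditions: the first is excluded by the operation check and the third by the path check.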

---

layout: docs

page_title: Use a custom token helper

description: >-

The Vault CLI supports external token helpers to help simplify retrieving,

setting and erasing authentication tokens.

---

# Use a custom token helper

A **token helper** is a program or script that saves, retrieves, or erases a

saved authentication token.