instance_id

stringlengths 13

37

| text

stringlengths 2.59k

1.94M

| repo

stringclasses 35

values | base_commit

stringlengths 40

40

| problem_statement

stringlengths 10

256k

| hints_text

stringlengths 0

908k

| created_at

stringlengths 20

20

| patch

stringlengths 18

101M

| test_patch

stringclasses 1

value | version

stringclasses 1

value | FAIL_TO_PASS

stringclasses 1

value | PASS_TO_PASS

stringclasses 1

value | environment_setup_commit

stringclasses 1

value |

|---|---|---|---|---|---|---|---|---|---|---|---|---|

ipython__ipython-7819

|

You will be provided with a partial code base and an issue statement explaining a problem to resolve.

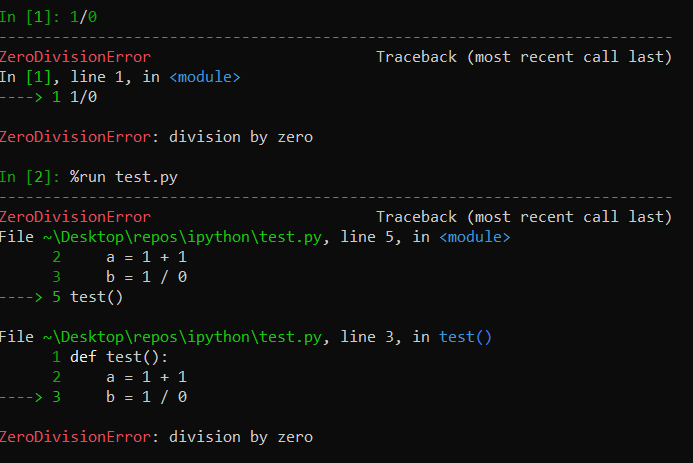

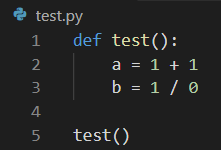

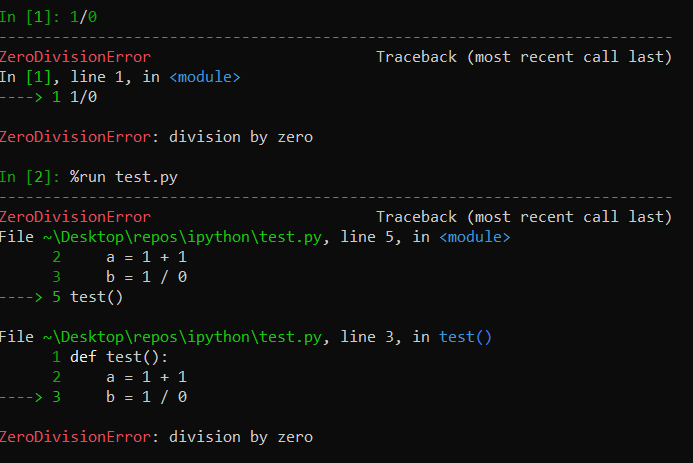

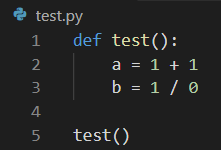

<issue>

Inspect requests inside a function call should be smarter about what they inspect.

Previously, `func(a, b, <shift-tab>` would give information on `func`, now it gives information on `b`, which is not especially helpful.

This is because we removed logic from the frontend to make it more language agnostic, and we have not yet reimplemented that on the frontend. For 3.1, we should make it at least as smart as 2.x was. The quicky and dirty approach would be a regex; the proper way is tokenising the code.

Ping @mwaskom who brought this up on the mailing list.

</issue>

<code>

[start of README.rst]

1 .. image:: https://img.shields.io/coveralls/ipython/ipython.svg

2 :target: https://coveralls.io/r/ipython/ipython?branch=master

3

4 .. image:: https://img.shields.io/pypi/dm/IPython.svg

5 :target: https://pypi.python.org/pypi/ipython

6

7 .. image:: https://img.shields.io/pypi/v/IPython.svg

8 :target: https://pypi.python.org/pypi/ipython

9

10 .. image:: https://img.shields.io/travis/ipython/ipython.svg

11 :target: https://travis-ci.org/ipython/ipython

12

13

14 ===========================================

15 IPython: Productive Interactive Computing

16 ===========================================

17

18 Overview

19 ========

20

21 Welcome to IPython. Our full documentation is available on `our website

22 <http://ipython.org/documentation.html>`_; if you downloaded a built source

23 distribution the ``docs/source`` directory contains the plaintext version of

24 these manuals. If you have Sphinx installed, you can build them by typing

25 ``cd docs; make html`` for local browsing.

26

27

28 Dependencies and supported Python versions

29 ==========================================

30

31 For full details, see the installation section of the manual. The basic parts

32 of IPython only need the Python standard library, but much of its more advanced

33 functionality requires extra packages.

34

35 Officially, IPython requires Python version 2.7, or 3.3 and above.

36 IPython 1.x is the last IPython version to support Python 2.6 and 3.2.

37

38

39 Instant running

40 ===============

41

42 You can run IPython from this directory without even installing it system-wide

43 by typing at the terminal::

44

45 $ python -m IPython

46

47

48 Development installation

49 ========================

50

51 If you want to hack on certain parts, e.g. the IPython notebook, in a clean

52 environment (such as a virtualenv) you can use ``pip`` to grab the necessary

53 dependencies quickly::

54

55 $ git clone --recursive https://github.com/ipython/ipython.git

56 $ cd ipython

57 $ pip install -e ".[notebook]" --user

58

59 This installs the necessary packages and symlinks IPython into your current

60 environment so that you can work on your local repo copy and run it from anywhere::

61

62 $ ipython notebook

63

64 The same process applies for other parts, such as the qtconsole (the

65 ``extras_require`` attribute in the setup.py file lists all the possibilities).

66

67 Git Hooks and Submodules

68 ************************

69

70 IPython now uses git submodules to ship its javascript dependencies.

71 If you run IPython from git master, you may need to update submodules once in a while with::

72

73 $ git submodule update

74

75 or::

76

77 $ python setup.py submodule

78

79 We have some git hooks for helping keep your submodules always in sync,

80 see our ``git-hooks`` directory for more info.

81

[end of README.rst]

[start of IPython/utils/tokenutil.py]

1 """Token-related utilities"""

2

3 # Copyright (c) IPython Development Team.

4 # Distributed under the terms of the Modified BSD License.

5

6 from __future__ import absolute_import, print_function

7

8 from collections import namedtuple

9 from io import StringIO

10 from keyword import iskeyword

11

12 from . import tokenize2

13 from .py3compat import cast_unicode_py2

14

15 Token = namedtuple('Token', ['token', 'text', 'start', 'end', 'line'])

16

17 def generate_tokens(readline):

18 """wrap generate_tokens to catch EOF errors"""

19 try:

20 for token in tokenize2.generate_tokens(readline):

21 yield token

22 except tokenize2.TokenError:

23 # catch EOF error

24 return

25

26 def line_at_cursor(cell, cursor_pos=0):

27 """Return the line in a cell at a given cursor position

28

29 Used for calling line-based APIs that don't support multi-line input, yet.

30

31 Parameters

32 ----------

33

34 cell: text

35 multiline block of text

36 cursor_pos: integer

37 the cursor position

38

39 Returns

40 -------

41

42 (line, offset): (text, integer)

43 The line with the current cursor, and the character offset of the start of the line.

44 """

45 offset = 0

46 lines = cell.splitlines(True)

47 for line in lines:

48 next_offset = offset + len(line)

49 if next_offset >= cursor_pos:

50 break

51 offset = next_offset

52 else:

53 line = ""

54 return (line, offset)

55

56 def token_at_cursor(cell, cursor_pos=0):

57 """Get the token at a given cursor

58

59 Used for introspection.

60

61 Parameters

62 ----------

63

64 cell : unicode

65 A block of Python code

66 cursor_pos : int

67 The location of the cursor in the block where the token should be found

68 """

69 cell = cast_unicode_py2(cell)

70 names = []

71 tokens = []

72 offset = 0

73 for tup in generate_tokens(StringIO(cell).readline):

74

75 tok = Token(*tup)

76

77 # token, text, start, end, line = tup

78 start_col = tok.start[1]

79 end_col = tok.end[1]

80 # allow '|foo' to find 'foo' at the beginning of a line

81 boundary = cursor_pos + 1 if start_col == 0 else cursor_pos

82 if offset + start_col >= boundary:

83 # current token starts after the cursor,

84 # don't consume it

85 break

86

87 if tok.token == tokenize2.NAME and not iskeyword(tok.text):

88 if names and tokens and tokens[-1].token == tokenize2.OP and tokens[-1].text == '.':

89 names[-1] = "%s.%s" % (names[-1], tok.text)

90 else:

91 names.append(tok.text)

92 elif tok.token == tokenize2.OP:

93 if tok.text == '=' and names:

94 # don't inspect the lhs of an assignment

95 names.pop(-1)

96

97 if offset + end_col > cursor_pos:

98 # we found the cursor, stop reading

99 break

100

101 tokens.append(tok)

102 if tok.token == tokenize2.NEWLINE:

103 offset += len(tok.line)

104

105 if names:

106 return names[-1]

107 else:

108 return ''

109

110

111

[end of IPython/utils/tokenutil.py]

</code>

I need you to solve this issue by generating a single patch file that I can apply directly to this repository using git apply. Please respond with a single patch file in the following format.

<patch>

--- a/file.py

+++ b/file.py

@@ -1,27 +1,35 @@

def euclidean(a, b):

- while b:

- a, b = b, a % b

- return a

+ if b == 0:

+ return a

+ return euclidean(b, a % b)

def bresenham(x0, y0, x1, y1):

points = []

dx = abs(x1 - x0)

dy = abs(y1 - y0)

- sx = 1 if x0 < x1 else -1

- sy = 1 if y0 < y1 else -1

- err = dx - dy

+ x, y = x0, y0

+ sx = -1 if x0 > x1 else 1

+ sy = -1 if y0 > y1 else 1

- while True:

- points.append((x0, y0))

- if x0 == x1 and y0 == y1:

- break

- e2 = 2 * err

- if e2 > -dy:

+ if dx > dy:

+ err = dx / 2.0

+ while x != x1:

+ points.append((x, y))

err -= dy

- x0 += sx

- if e2 < dx:

- err += dx

- y0 += sy

+ if err < 0:

+ y += sy

+ err += dx

+ x += sx

+ else:

+ err = dy / 2.0

+ while y != y1:

+ points.append((x, y))

+ err -= dx

+ if err < 0:

+ x += sx

+ err += dy

+ y += sy

+ points.append((x, y))

return points

</patch>

|

ipython/ipython

|

92333e1084ea0d6ff91b55434555e741d2274dc7

|

Inspect requests inside a function call should be smarter about what they inspect.

Previously, `func(a, b, <shift-tab>` would give information on `func`, now it gives information on `b`, which is not especially helpful.

This is because we removed logic from the frontend to make it more language agnostic, and we have not yet reimplemented that on the frontend. For 3.1, we should make it at least as smart as 2.x was. The quicky and dirty approach would be a regex; the proper way is tokenising the code.

Ping @mwaskom who brought this up on the mailing list.

|

Thanks! I don't actually know how to _use_ any of these packages, so I rely on what IPython tells me they'll do :)

Should note here too that the help also seems to be displaying the `__repr__` for, at least, pandas DataFrames slightly differently in 3.0.rc1, which yields a help popup that is garbled and hides the important bits.

The dataframe reprs sounds like a separate thing - can you file an issue for it? Preferably with screenshots? Thanks.

Done: #7817

More related to this issue:

While implementing a smarter inspector, it would be _great_ if it would work across line breaks. I'm constantly getting bitten by trying to do

``` python

complex_function(some_arg, another_arg, data_frame.some_transformation(),

a_kwarg=a_value, <shift-TAB>

```

And having it not work.

This did not work on the 2.x series either, AFAICT, but if the inspector is going to be reimplemented it would be awesome if it could be added.

If there's smart, tokenising logic to determine what you're inspecting, there's no reason it shouldn't handle multiple lines. Making it smart enough for that might not be a 3.1 thing, though.

|

2015-02-19T20:14:23Z

|

<patch>

diff --git a/IPython/utils/tokenutil.py b/IPython/utils/tokenutil.py

--- a/IPython/utils/tokenutil.py

+++ b/IPython/utils/tokenutil.py

@@ -58,6 +58,9 @@ def token_at_cursor(cell, cursor_pos=0):

Used for introspection.

+ Function calls are prioritized, so the token for the callable will be returned

+ if the cursor is anywhere inside the call.

+

Parameters

----------

@@ -70,6 +73,7 @@ def token_at_cursor(cell, cursor_pos=0):

names = []

tokens = []

offset = 0

+ call_names = []

for tup in generate_tokens(StringIO(cell).readline):

tok = Token(*tup)

@@ -93,6 +97,11 @@ def token_at_cursor(cell, cursor_pos=0):

if tok.text == '=' and names:

# don't inspect the lhs of an assignment

names.pop(-1)

+ if tok.text == '(' and names:

+ # if we are inside a function call, inspect the function

+ call_names.append(names[-1])

+ elif tok.text == ')' and call_names:

+ call_names.pop(-1)

if offset + end_col > cursor_pos:

# we found the cursor, stop reading

@@ -102,7 +111,9 @@ def token_at_cursor(cell, cursor_pos=0):

if tok.token == tokenize2.NEWLINE:

offset += len(tok.line)

- if names:

+ if call_names:

+ return call_names[-1]

+ elif names:

return names[-1]

else:

return ''

</patch>

|

[]

|

[]

| |||

docker__compose-2878

|

You will be provided with a partial code base and an issue statement explaining a problem to resolve.

<issue>

Merge build args when using multiple compose files (or when extending services)

Based on the behavior of `environment` and `labels`, as well as `build.image`, `build.context` etc, I would also expect `build.args` to be merged, instead of being replaced.

To give an example:

## Input

**docker-compose.yml:**

``` yaml

version: "2"

services:

my_service:

build:

context: my-app

args:

SOME_VARIABLE: "42"

```

**docker-compose.override.yml:**

``` yaml

version: "2"

services:

my_service:

build:

args:

HTTP_PROXY: http://proxy.somewhere:80

HTTPS_PROXY: http://proxy.somewhere:80

NO_PROXY: somewhere,localhost

```

**my-app/Dockerfile**

``` Dockerfile

# Just needed to be able to use `build:`

FROM busybox:latest

ARG SOME_VARIABLE=xyz

RUN echo "$SOME_VARIABLE" > /etc/example

```

## Current Output

``` bash

$ docker-compose config

networks: {}

services:

my_service:

build:

args:

HTTPS_PROXY: http://proxy.somewhere:80

HTTP_PROXY: http://proxy.somewhere:80

NO_PROXY: somewhere,localhost

context: <project-dir>\my-app

version: '2.0'

volumes: {}

```

## Expected Output

``` bash

$ docker-compose config

networks: {}

services:

my_service:

build:

args:

SOME_VARIABLE: 42 # Note the merged variable here

HTTPS_PROXY: http://proxy.somewhere:80

HTTP_PROXY: http://proxy.somewhere:80

NO_PROXY: somewhere,localhost

context: <project-dir>\my-app

version: '2.0'

volumes: {}

```

## Version Information

``` bash

$ docker-compose version

docker-compose version 1.6.0, build cdb920a

docker-py version: 1.7.0

CPython version: 2.7.11

OpenSSL version: OpenSSL 1.0.2d 9 Jul 2015

```

# Implementation proposal

I mainly want to get clarification on what the desired behavior is, so that I can possibly help implementing it, maybe even for `1.6.1`.

Personally, I'd like the behavior to be to merge the `build.args` key (as outlined above), for a couple of reasons:

- Principle of least surprise/consistency with `environment`, `labels`, `ports` and so on.

- It enables scenarios like the one outlined above, where the images require some transient configuration to build, in addition to other build variables which actually have an influence on the final image.

The scenario that one wants to replace all build args at once is not very likely IMO; why would you define base build variables in the first place if you're going to replace them anyway?

# Alternative behavior: Output a warning

If the behavior should stay the same as it is now, i.e. to fully replaced the `build.args` keys, then `docker-compose` should at least output a warning IMO. It took me some time to figure out that `docker-compose` was ignoring the build args in the base `docker-compose.yml` file.

</issue>

<code>

[start of README.md]

1 Docker Compose

2 ==============

3

4

5 Compose is a tool for defining and running multi-container Docker applications.

6 With Compose, you use a Compose file to configure your application's services.

7 Then, using a single command, you create and start all the services

8 from your configuration. To learn more about all the features of Compose

9 see [the list of features](https://github.com/docker/compose/blob/release/docs/overview.md#features).

10

11 Compose is great for development, testing, and staging environments, as well as

12 CI workflows. You can learn more about each case in

13 [Common Use Cases](https://github.com/docker/compose/blob/release/docs/overview.md#common-use-cases).

14

15 Using Compose is basically a three-step process.

16

17 1. Define your app's environment with a `Dockerfile` so it can be

18 reproduced anywhere.

19 2. Define the services that make up your app in `docker-compose.yml` so

20 they can be run together in an isolated environment:

21 3. Lastly, run `docker-compose up` and Compose will start and run your entire app.

22

23 A `docker-compose.yml` looks like this:

24

25 web:

26 build: .

27 ports:

28 - "5000:5000"

29 volumes:

30 - .:/code

31 links:

32 - redis

33 redis:

34 image: redis

35

36 For more information about the Compose file, see the

37 [Compose file reference](https://github.com/docker/compose/blob/release/docs/compose-file.md)

38

39 Compose has commands for managing the whole lifecycle of your application:

40

41 * Start, stop and rebuild services

42 * View the status of running services

43 * Stream the log output of running services

44 * Run a one-off command on a service

45

46 Installation and documentation

47 ------------------------------

48

49 - Full documentation is available on [Docker's website](https://docs.docker.com/compose/).

50 - If you have any questions, you can talk in real-time with other developers in the #docker-compose IRC channel on Freenode. [Click here to join using IRCCloud.](https://www.irccloud.com/invite?hostname=irc.freenode.net&channel=%23docker-compose)

51 - Code repository for Compose is on [Github](https://github.com/docker/compose)

52 - If you find any problems please fill out an [issue](https://github.com/docker/compose/issues/new)

53

54 Contributing

55 ------------

56

57 [](http://jenkins.dockerproject.org/job/Compose%20Master/)

58

59 Want to help build Compose? Check out our [contributing documentation](https://github.com/docker/compose/blob/master/CONTRIBUTING.md).

60

61 Releasing

62 ---------

63

64 Releases are built by maintainers, following an outline of the [release process](https://github.com/docker/compose/blob/master/project/RELEASE-PROCESS.md).

65

[end of README.md]

[start of compose/config/config.py]

1 from __future__ import absolute_import

2 from __future__ import unicode_literals

3

4 import codecs

5 import functools

6 import logging

7 import operator

8 import os

9 import string

10 import sys

11 from collections import namedtuple

12

13 import six

14 import yaml

15 from cached_property import cached_property

16

17 from ..const import COMPOSEFILE_V1 as V1

18 from ..const import COMPOSEFILE_V2_0 as V2_0

19 from .errors import CircularReference

20 from .errors import ComposeFileNotFound

21 from .errors import ConfigurationError

22 from .errors import VERSION_EXPLANATION

23 from .interpolation import interpolate_environment_variables

24 from .sort_services import get_container_name_from_network_mode

25 from .sort_services import get_service_name_from_network_mode

26 from .sort_services import sort_service_dicts

27 from .types import parse_extra_hosts

28 from .types import parse_restart_spec

29 from .types import ServiceLink

30 from .types import VolumeFromSpec

31 from .types import VolumeSpec

32 from .validation import match_named_volumes

33 from .validation import validate_against_fields_schema

34 from .validation import validate_against_service_schema

35 from .validation import validate_depends_on

36 from .validation import validate_extends_file_path

37 from .validation import validate_network_mode

38 from .validation import validate_top_level_object

39 from .validation import validate_top_level_service_objects

40 from .validation import validate_ulimits

41

42

43 DOCKER_CONFIG_KEYS = [

44 'cap_add',

45 'cap_drop',

46 'cgroup_parent',

47 'command',

48 'cpu_quota',

49 'cpu_shares',

50 'cpuset',

51 'detach',

52 'devices',

53 'dns',

54 'dns_search',

55 'domainname',

56 'entrypoint',

57 'env_file',

58 'environment',

59 'extra_hosts',

60 'hostname',

61 'image',

62 'ipc',

63 'labels',

64 'links',

65 'mac_address',

66 'mem_limit',

67 'memswap_limit',

68 'net',

69 'pid',

70 'ports',

71 'privileged',

72 'read_only',

73 'restart',

74 'security_opt',

75 'stdin_open',

76 'stop_signal',

77 'tty',

78 'user',

79 'volume_driver',

80 'volumes',

81 'volumes_from',

82 'working_dir',

83 ]

84

85 ALLOWED_KEYS = DOCKER_CONFIG_KEYS + [

86 'build',

87 'container_name',

88 'dockerfile',

89 'logging',

90 'network_mode',

91 ]

92

93 DOCKER_VALID_URL_PREFIXES = (

94 'http://',

95 'https://',

96 'git://',

97 'github.com/',

98 'git@',

99 )

100

101 SUPPORTED_FILENAMES = [

102 'docker-compose.yml',

103 'docker-compose.yaml',

104 ]

105

106 DEFAULT_OVERRIDE_FILENAME = 'docker-compose.override.yml'

107

108

109 log = logging.getLogger(__name__)

110

111

112 class ConfigDetails(namedtuple('_ConfigDetails', 'working_dir config_files')):

113 """

114 :param working_dir: the directory to use for relative paths in the config

115 :type working_dir: string

116 :param config_files: list of configuration files to load

117 :type config_files: list of :class:`ConfigFile`

118 """

119

120

121 class ConfigFile(namedtuple('_ConfigFile', 'filename config')):

122 """

123 :param filename: filename of the config file

124 :type filename: string

125 :param config: contents of the config file

126 :type config: :class:`dict`

127 """

128

129 @classmethod

130 def from_filename(cls, filename):

131 return cls(filename, load_yaml(filename))

132

133 @cached_property

134 def version(self):

135 if 'version' not in self.config:

136 return V1

137

138 version = self.config['version']

139

140 if isinstance(version, dict):

141 log.warn('Unexpected type for "version" key in "{}". Assuming '

142 '"version" is the name of a service, and defaulting to '

143 'Compose file version 1.'.format(self.filename))

144 return V1

145

146 if not isinstance(version, six.string_types):

147 raise ConfigurationError(

148 'Version in "{}" is invalid - it should be a string.'

149 .format(self.filename))

150

151 if version == '1':

152 raise ConfigurationError(

153 'Version in "{}" is invalid. {}'

154 .format(self.filename, VERSION_EXPLANATION))

155

156 if version == '2':

157 version = V2_0

158

159 if version != V2_0:

160 raise ConfigurationError(

161 'Version in "{}" is unsupported. {}'

162 .format(self.filename, VERSION_EXPLANATION))

163

164 return version

165

166 def get_service(self, name):

167 return self.get_service_dicts()[name]

168

169 def get_service_dicts(self):

170 return self.config if self.version == V1 else self.config.get('services', {})

171

172 def get_volumes(self):

173 return {} if self.version == V1 else self.config.get('volumes', {})

174

175 def get_networks(self):

176 return {} if self.version == V1 else self.config.get('networks', {})

177

178

179 class Config(namedtuple('_Config', 'version services volumes networks')):

180 """

181 :param version: configuration version

182 :type version: int

183 :param services: List of service description dictionaries

184 :type services: :class:`list`

185 :param volumes: Dictionary mapping volume names to description dictionaries

186 :type volumes: :class:`dict`

187 :param networks: Dictionary mapping network names to description dictionaries

188 :type networks: :class:`dict`

189 """

190

191

192 class ServiceConfig(namedtuple('_ServiceConfig', 'working_dir filename name config')):

193

194 @classmethod

195 def with_abs_paths(cls, working_dir, filename, name, config):

196 if not working_dir:

197 raise ValueError("No working_dir for ServiceConfig.")

198

199 return cls(

200 os.path.abspath(working_dir),

201 os.path.abspath(filename) if filename else filename,

202 name,

203 config)

204

205

206 def find(base_dir, filenames):

207 if filenames == ['-']:

208 return ConfigDetails(

209 os.getcwd(),

210 [ConfigFile(None, yaml.safe_load(sys.stdin))])

211

212 if filenames:

213 filenames = [os.path.join(base_dir, f) for f in filenames]

214 else:

215 filenames = get_default_config_files(base_dir)

216

217 log.debug("Using configuration files: {}".format(",".join(filenames)))

218 return ConfigDetails(

219 os.path.dirname(filenames[0]),

220 [ConfigFile.from_filename(f) for f in filenames])

221

222

223 def validate_config_version(config_files):

224 main_file = config_files[0]

225 validate_top_level_object(main_file)

226 for next_file in config_files[1:]:

227 validate_top_level_object(next_file)

228

229 if main_file.version != next_file.version:

230 raise ConfigurationError(

231 "Version mismatch: file {0} specifies version {1} but "

232 "extension file {2} uses version {3}".format(

233 main_file.filename,

234 main_file.version,

235 next_file.filename,

236 next_file.version))

237

238

239 def get_default_config_files(base_dir):

240 (candidates, path) = find_candidates_in_parent_dirs(SUPPORTED_FILENAMES, base_dir)

241

242 if not candidates:

243 raise ComposeFileNotFound(SUPPORTED_FILENAMES)

244

245 winner = candidates[0]

246

247 if len(candidates) > 1:

248 log.warn("Found multiple config files with supported names: %s", ", ".join(candidates))

249 log.warn("Using %s\n", winner)

250

251 return [os.path.join(path, winner)] + get_default_override_file(path)

252

253

254 def get_default_override_file(path):

255 override_filename = os.path.join(path, DEFAULT_OVERRIDE_FILENAME)

256 return [override_filename] if os.path.exists(override_filename) else []

257

258

259 def find_candidates_in_parent_dirs(filenames, path):

260 """

261 Given a directory path to start, looks for filenames in the

262 directory, and then each parent directory successively,

263 until found.

264

265 Returns tuple (candidates, path).

266 """

267 candidates = [filename for filename in filenames

268 if os.path.exists(os.path.join(path, filename))]

269

270 if not candidates:

271 parent_dir = os.path.join(path, '..')

272 if os.path.abspath(parent_dir) != os.path.abspath(path):

273 return find_candidates_in_parent_dirs(filenames, parent_dir)

274

275 return (candidates, path)

276

277

278 def load(config_details):

279 """Load the configuration from a working directory and a list of

280 configuration files. Files are loaded in order, and merged on top

281 of each other to create the final configuration.

282

283 Return a fully interpolated, extended and validated configuration.

284 """

285 validate_config_version(config_details.config_files)

286

287 processed_files = [

288 process_config_file(config_file)

289 for config_file in config_details.config_files

290 ]

291 config_details = config_details._replace(config_files=processed_files)

292

293 main_file = config_details.config_files[0]

294 volumes = load_mapping(config_details.config_files, 'get_volumes', 'Volume')

295 networks = load_mapping(config_details.config_files, 'get_networks', 'Network')

296 service_dicts = load_services(

297 config_details.working_dir,

298 main_file,

299 [file.get_service_dicts() for file in config_details.config_files])

300

301 if main_file.version != V1:

302 for service_dict in service_dicts:

303 match_named_volumes(service_dict, volumes)

304

305 return Config(main_file.version, service_dicts, volumes, networks)

306

307

308 def load_mapping(config_files, get_func, entity_type):

309 mapping = {}

310

311 for config_file in config_files:

312 for name, config in getattr(config_file, get_func)().items():

313 mapping[name] = config or {}

314 if not config:

315 continue

316

317 external = config.get('external')

318 if external:

319 if len(config.keys()) > 1:

320 raise ConfigurationError(

321 '{} {} declared as external but specifies'

322 ' additional attributes ({}). '.format(

323 entity_type,

324 name,

325 ', '.join([k for k in config.keys() if k != 'external'])

326 )

327 )

328 if isinstance(external, dict):

329 config['external_name'] = external.get('name')

330 else:

331 config['external_name'] = name

332

333 mapping[name] = config

334

335 return mapping

336

337

338 def load_services(working_dir, config_file, service_configs):

339 def build_service(service_name, service_dict, service_names):

340 service_config = ServiceConfig.with_abs_paths(

341 working_dir,

342 config_file.filename,

343 service_name,

344 service_dict)

345 resolver = ServiceExtendsResolver(service_config, config_file)

346 service_dict = process_service(resolver.run())

347

348 service_config = service_config._replace(config=service_dict)

349 validate_service(service_config, service_names, config_file.version)

350 service_dict = finalize_service(

351 service_config,

352 service_names,

353 config_file.version)

354 return service_dict

355

356 def build_services(service_config):

357 service_names = service_config.keys()

358 return sort_service_dicts([

359 build_service(name, service_dict, service_names)

360 for name, service_dict in service_config.items()

361 ])

362

363 def merge_services(base, override):

364 all_service_names = set(base) | set(override)

365 return {

366 name: merge_service_dicts_from_files(

367 base.get(name, {}),

368 override.get(name, {}),

369 config_file.version)

370 for name in all_service_names

371 }

372

373 service_config = service_configs[0]

374 for next_config in service_configs[1:]:

375 service_config = merge_services(service_config, next_config)

376

377 return build_services(service_config)

378

379

380 def process_config_file(config_file, service_name=None):

381 service_dicts = config_file.get_service_dicts()

382 validate_top_level_service_objects(config_file.filename, service_dicts)

383

384 interpolated_config = interpolate_environment_variables(service_dicts, 'service')

385

386 if config_file.version == V2_0:

387 processed_config = dict(config_file.config)

388 processed_config['services'] = services = interpolated_config

389 processed_config['volumes'] = interpolate_environment_variables(

390 config_file.get_volumes(), 'volume')

391 processed_config['networks'] = interpolate_environment_variables(

392 config_file.get_networks(), 'network')

393

394 if config_file.version == V1:

395 processed_config = services = interpolated_config

396

397 config_file = config_file._replace(config=processed_config)

398 validate_against_fields_schema(config_file)

399

400 if service_name and service_name not in services:

401 raise ConfigurationError(

402 "Cannot extend service '{}' in {}: Service not found".format(

403 service_name, config_file.filename))

404

405 return config_file

406

407

408 class ServiceExtendsResolver(object):

409 def __init__(self, service_config, config_file, already_seen=None):

410 self.service_config = service_config

411 self.working_dir = service_config.working_dir

412 self.already_seen = already_seen or []

413 self.config_file = config_file

414

415 @property

416 def signature(self):

417 return self.service_config.filename, self.service_config.name

418

419 def detect_cycle(self):

420 if self.signature in self.already_seen:

421 raise CircularReference(self.already_seen + [self.signature])

422

423 def run(self):

424 self.detect_cycle()

425

426 if 'extends' in self.service_config.config:

427 service_dict = self.resolve_extends(*self.validate_and_construct_extends())

428 return self.service_config._replace(config=service_dict)

429

430 return self.service_config

431

432 def validate_and_construct_extends(self):

433 extends = self.service_config.config['extends']

434 if not isinstance(extends, dict):

435 extends = {'service': extends}

436

437 config_path = self.get_extended_config_path(extends)

438 service_name = extends['service']

439

440 extends_file = ConfigFile.from_filename(config_path)

441 validate_config_version([self.config_file, extends_file])

442 extended_file = process_config_file(

443 extends_file,

444 service_name=service_name)

445 service_config = extended_file.get_service(service_name)

446

447 return config_path, service_config, service_name

448

449 def resolve_extends(self, extended_config_path, service_dict, service_name):

450 resolver = ServiceExtendsResolver(

451 ServiceConfig.with_abs_paths(

452 os.path.dirname(extended_config_path),

453 extended_config_path,

454 service_name,

455 service_dict),

456 self.config_file,

457 already_seen=self.already_seen + [self.signature])

458

459 service_config = resolver.run()

460 other_service_dict = process_service(service_config)

461 validate_extended_service_dict(

462 other_service_dict,

463 extended_config_path,

464 service_name)

465

466 return merge_service_dicts(

467 other_service_dict,

468 self.service_config.config,

469 self.config_file.version)

470

471 def get_extended_config_path(self, extends_options):

472 """Service we are extending either has a value for 'file' set, which we

473 need to obtain a full path too or we are extending from a service

474 defined in our own file.

475 """

476 filename = self.service_config.filename

477 validate_extends_file_path(

478 self.service_config.name,

479 extends_options,

480 filename)

481 if 'file' in extends_options:

482 return expand_path(self.working_dir, extends_options['file'])

483 return filename

484

485

486 def resolve_environment(service_dict):

487 """Unpack any environment variables from an env_file, if set.

488 Interpolate environment values if set.

489 """

490 env = {}

491 for env_file in service_dict.get('env_file', []):

492 env.update(env_vars_from_file(env_file))

493

494 env.update(parse_environment(service_dict.get('environment')))

495 return dict(filter(None, (resolve_env_var(k, v) for k, v in six.iteritems(env))))

496

497

498 def resolve_build_args(build):

499 args = parse_build_arguments(build.get('args'))

500 return dict(filter(None, (resolve_env_var(k, v) for k, v in six.iteritems(args))))

501

502

503 def validate_extended_service_dict(service_dict, filename, service):

504 error_prefix = "Cannot extend service '%s' in %s:" % (service, filename)

505

506 if 'links' in service_dict:

507 raise ConfigurationError(

508 "%s services with 'links' cannot be extended" % error_prefix)

509

510 if 'volumes_from' in service_dict:

511 raise ConfigurationError(

512 "%s services with 'volumes_from' cannot be extended" % error_prefix)

513

514 if 'net' in service_dict:

515 if get_container_name_from_network_mode(service_dict['net']):

516 raise ConfigurationError(

517 "%s services with 'net: container' cannot be extended" % error_prefix)

518

519 if 'network_mode' in service_dict:

520 if get_service_name_from_network_mode(service_dict['network_mode']):

521 raise ConfigurationError(

522 "%s services with 'network_mode: service' cannot be extended" % error_prefix)

523

524 if 'depends_on' in service_dict:

525 raise ConfigurationError(

526 "%s services with 'depends_on' cannot be extended" % error_prefix)

527

528

529 def validate_service(service_config, service_names, version):

530 service_dict, service_name = service_config.config, service_config.name

531 validate_against_service_schema(service_dict, service_name, version)

532 validate_paths(service_dict)

533

534 validate_ulimits(service_config)

535 validate_network_mode(service_config, service_names)

536 validate_depends_on(service_config, service_names)

537

538 if not service_dict.get('image') and has_uppercase(service_name):

539 raise ConfigurationError(

540 "Service '{name}' contains uppercase characters which are not valid "

541 "as part of an image name. Either use a lowercase service name or "

542 "use the `image` field to set a custom name for the service image."

543 .format(name=service_name))

544

545

546 def process_service(service_config):

547 working_dir = service_config.working_dir

548 service_dict = dict(service_config.config)

549

550 if 'env_file' in service_dict:

551 service_dict['env_file'] = [

552 expand_path(working_dir, path)

553 for path in to_list(service_dict['env_file'])

554 ]

555

556 if 'build' in service_dict:

557 if isinstance(service_dict['build'], six.string_types):

558 service_dict['build'] = resolve_build_path(working_dir, service_dict['build'])

559 elif isinstance(service_dict['build'], dict) and 'context' in service_dict['build']:

560 path = service_dict['build']['context']

561 service_dict['build']['context'] = resolve_build_path(working_dir, path)

562

563 if 'volumes' in service_dict and service_dict.get('volume_driver') is None:

564 service_dict['volumes'] = resolve_volume_paths(working_dir, service_dict)

565

566 if 'labels' in service_dict:

567 service_dict['labels'] = parse_labels(service_dict['labels'])

568

569 if 'extra_hosts' in service_dict:

570 service_dict['extra_hosts'] = parse_extra_hosts(service_dict['extra_hosts'])

571

572 for field in ['dns', 'dns_search']:

573 if field in service_dict:

574 service_dict[field] = to_list(service_dict[field])

575

576 return service_dict

577

578

579 def finalize_service(service_config, service_names, version):

580 service_dict = dict(service_config.config)

581

582 if 'environment' in service_dict or 'env_file' in service_dict:

583 service_dict['environment'] = resolve_environment(service_dict)

584 service_dict.pop('env_file', None)

585

586 if 'volumes_from' in service_dict:

587 service_dict['volumes_from'] = [

588 VolumeFromSpec.parse(vf, service_names, version)

589 for vf in service_dict['volumes_from']

590 ]

591

592 if 'volumes' in service_dict:

593 service_dict['volumes'] = [

594 VolumeSpec.parse(v) for v in service_dict['volumes']]

595

596 if 'net' in service_dict:

597 network_mode = service_dict.pop('net')

598 container_name = get_container_name_from_network_mode(network_mode)

599 if container_name and container_name in service_names:

600 service_dict['network_mode'] = 'service:{}'.format(container_name)

601 else:

602 service_dict['network_mode'] = network_mode

603

604 if 'restart' in service_dict:

605 service_dict['restart'] = parse_restart_spec(service_dict['restart'])

606

607 normalize_build(service_dict, service_config.working_dir)

608

609 service_dict['name'] = service_config.name

610 return normalize_v1_service_format(service_dict)

611

612

613 def normalize_v1_service_format(service_dict):

614 if 'log_driver' in service_dict or 'log_opt' in service_dict:

615 if 'logging' not in service_dict:

616 service_dict['logging'] = {}

617 if 'log_driver' in service_dict:

618 service_dict['logging']['driver'] = service_dict['log_driver']

619 del service_dict['log_driver']

620 if 'log_opt' in service_dict:

621 service_dict['logging']['options'] = service_dict['log_opt']

622 del service_dict['log_opt']

623

624 if 'dockerfile' in service_dict:

625 service_dict['build'] = service_dict.get('build', {})

626 service_dict['build'].update({

627 'dockerfile': service_dict.pop('dockerfile')

628 })

629

630 return service_dict

631

632

633 def merge_service_dicts_from_files(base, override, version):

634 """When merging services from multiple files we need to merge the `extends`

635 field. This is not handled by `merge_service_dicts()` which is used to

636 perform the `extends`.

637 """

638 new_service = merge_service_dicts(base, override, version)

639 if 'extends' in override:

640 new_service['extends'] = override['extends']

641 elif 'extends' in base:

642 new_service['extends'] = base['extends']

643 return new_service

644

645

646 class MergeDict(dict):

647 """A dict-like object responsible for merging two dicts into one."""

648

649 def __init__(self, base, override):

650 self.base = base

651 self.override = override

652

653 def needs_merge(self, field):

654 return field in self.base or field in self.override

655

656 def merge_field(self, field, merge_func, default=None):

657 if not self.needs_merge(field):

658 return

659

660 self[field] = merge_func(

661 self.base.get(field, default),

662 self.override.get(field, default))

663

664 def merge_mapping(self, field, parse_func):

665 if not self.needs_merge(field):

666 return

667

668 self[field] = parse_func(self.base.get(field))

669 self[field].update(parse_func(self.override.get(field)))

670

671 def merge_sequence(self, field, parse_func):

672 def parse_sequence_func(seq):

673 return to_mapping((parse_func(item) for item in seq), 'merge_field')

674

675 if not self.needs_merge(field):

676 return

677

678 merged = parse_sequence_func(self.base.get(field, []))

679 merged.update(parse_sequence_func(self.override.get(field, [])))

680 self[field] = [item.repr() for item in merged.values()]

681

682 def merge_scalar(self, field):

683 if self.needs_merge(field):

684 self[field] = self.override.get(field, self.base.get(field))

685

686

687 def merge_service_dicts(base, override, version):

688 md = MergeDict(base, override)

689

690 md.merge_mapping('environment', parse_environment)

691 md.merge_mapping('labels', parse_labels)

692 md.merge_mapping('ulimits', parse_ulimits)

693 md.merge_sequence('links', ServiceLink.parse)

694

695 for field in ['volumes', 'devices']:

696 md.merge_field(field, merge_path_mappings)

697

698 for field in [

699 'depends_on',

700 'expose',

701 'external_links',

702 'networks',

703 'ports',

704 'volumes_from',

705 ]:

706 md.merge_field(field, operator.add, default=[])

707

708 for field in ['dns', 'dns_search', 'env_file']:

709 md.merge_field(field, merge_list_or_string)

710

711 for field in set(ALLOWED_KEYS) - set(md):

712 md.merge_scalar(field)

713

714 if version == V1:

715 legacy_v1_merge_image_or_build(md, base, override)

716 else:

717 merge_build(md, base, override)

718

719 return dict(md)

720

721

722 def merge_build(output, base, override):

723 build = {}

724

725 if 'build' in base:

726 if isinstance(base['build'], six.string_types):

727 build['context'] = base['build']

728 else:

729 build.update(base['build'])

730

731 if 'build' in override:

732 if isinstance(override['build'], six.string_types):

733 build['context'] = override['build']

734 else:

735 build.update(override['build'])

736

737 if build:

738 output['build'] = build

739

740

741 def legacy_v1_merge_image_or_build(output, base, override):

742 output.pop('image', None)

743 output.pop('build', None)

744 if 'image' in override:

745 output['image'] = override['image']

746 elif 'build' in override:

747 output['build'] = override['build']

748 elif 'image' in base:

749 output['image'] = base['image']

750 elif 'build' in base:

751 output['build'] = base['build']

752

753

754 def merge_environment(base, override):

755 env = parse_environment(base)

756 env.update(parse_environment(override))

757 return env

758

759

760 def split_env(env):

761 if isinstance(env, six.binary_type):

762 env = env.decode('utf-8', 'replace')

763 if '=' in env:

764 return env.split('=', 1)

765 else:

766 return env, None

767

768

769 def split_label(label):

770 if '=' in label:

771 return label.split('=', 1)

772 else:

773 return label, ''

774

775

776 def parse_dict_or_list(split_func, type_name, arguments):

777 if not arguments:

778 return {}

779

780 if isinstance(arguments, list):

781 return dict(split_func(e) for e in arguments)

782

783 if isinstance(arguments, dict):

784 return dict(arguments)

785

786 raise ConfigurationError(

787 "%s \"%s\" must be a list or mapping," %

788 (type_name, arguments)

789 )

790

791

792 parse_build_arguments = functools.partial(parse_dict_or_list, split_env, 'build arguments')

793 parse_environment = functools.partial(parse_dict_or_list, split_env, 'environment')

794 parse_labels = functools.partial(parse_dict_or_list, split_label, 'labels')

795

796

797 def parse_ulimits(ulimits):

798 if not ulimits:

799 return {}

800

801 if isinstance(ulimits, dict):

802 return dict(ulimits)

803

804

805 def resolve_env_var(key, val):

806 if val is not None:

807 return key, val

808 elif key in os.environ:

809 return key, os.environ[key]

810 else:

811 return ()

812

813

814 def env_vars_from_file(filename):

815 """

816 Read in a line delimited file of environment variables.

817 """

818 if not os.path.exists(filename):

819 raise ConfigurationError("Couldn't find env file: %s" % filename)

820 env = {}

821 for line in codecs.open(filename, 'r', 'utf-8'):

822 line = line.strip()

823 if line and not line.startswith('#'):

824 k, v = split_env(line)

825 env[k] = v

826 return env

827

828

829 def resolve_volume_paths(working_dir, service_dict):

830 return [

831 resolve_volume_path(working_dir, volume)

832 for volume in service_dict['volumes']

833 ]

834

835

836 def resolve_volume_path(working_dir, volume):

837 container_path, host_path = split_path_mapping(volume)

838

839 if host_path is not None:

840 if host_path.startswith('.'):

841 host_path = expand_path(working_dir, host_path)

842 host_path = os.path.expanduser(host_path)

843 return u"{}:{}".format(host_path, container_path)

844 else:

845 return container_path

846

847

848 def normalize_build(service_dict, working_dir):

849

850 if 'build' in service_dict:

851 build = {}

852 # Shortcut where specifying a string is treated as the build context

853 if isinstance(service_dict['build'], six.string_types):

854 build['context'] = service_dict.pop('build')

855 else:

856 build.update(service_dict['build'])

857 if 'args' in build:

858 build['args'] = resolve_build_args(build)

859

860 service_dict['build'] = build

861

862

863 def resolve_build_path(working_dir, build_path):

864 if is_url(build_path):

865 return build_path

866 return expand_path(working_dir, build_path)

867

868

869 def is_url(build_path):

870 return build_path.startswith(DOCKER_VALID_URL_PREFIXES)

871

872

873 def validate_paths(service_dict):

874 if 'build' in service_dict:

875 build = service_dict.get('build', {})

876

877 if isinstance(build, six.string_types):

878 build_path = build

879 elif isinstance(build, dict) and 'context' in build:

880 build_path = build['context']

881

882 if (

883 not is_url(build_path) and

884 (not os.path.exists(build_path) or not os.access(build_path, os.R_OK))

885 ):

886 raise ConfigurationError(

887 "build path %s either does not exist, is not accessible, "

888 "or is not a valid URL." % build_path)

889

890

891 def merge_path_mappings(base, override):

892 d = dict_from_path_mappings(base)

893 d.update(dict_from_path_mappings(override))

894 return path_mappings_from_dict(d)

895

896

897 def dict_from_path_mappings(path_mappings):

898 if path_mappings:

899 return dict(split_path_mapping(v) for v in path_mappings)

900 else:

901 return {}

902

903

904 def path_mappings_from_dict(d):

905 return [join_path_mapping(v) for v in d.items()]

906

907

908 def split_path_mapping(volume_path):

909 """

910 Ascertain if the volume_path contains a host path as well as a container

911 path. Using splitdrive so windows absolute paths won't cause issues with

912 splitting on ':'.

913 """

914 # splitdrive has limitations when it comes to relative paths, so when it's

915 # relative, handle special case to set the drive to ''

916 if volume_path.startswith('.') or volume_path.startswith('~'):

917 drive, volume_config = '', volume_path

918 else:

919 drive, volume_config = os.path.splitdrive(volume_path)

920

921 if ':' in volume_config:

922 (host, container) = volume_config.split(':', 1)

923 return (container, drive + host)

924 else:

925 return (volume_path, None)

926

927

928 def join_path_mapping(pair):

929 (container, host) = pair

930 if host is None:

931 return container

932 else:

933 return ":".join((host, container))

934

935

936 def expand_path(working_dir, path):

937 return os.path.abspath(os.path.join(working_dir, os.path.expanduser(path)))

938

939

940 def merge_list_or_string(base, override):

941 return to_list(base) + to_list(override)

942

943

944 def to_list(value):

945 if value is None:

946 return []

947 elif isinstance(value, six.string_types):

948 return [value]

949 else:

950 return value

951

952

953 def to_mapping(sequence, key_field):

954 return {getattr(item, key_field): item for item in sequence}

955

956

957 def has_uppercase(name):

958 return any(char in string.ascii_uppercase for char in name)

959

960

961 def load_yaml(filename):

962 try:

963 with open(filename, 'r') as fh:

964 return yaml.safe_load(fh)

965 except (IOError, yaml.YAMLError) as e:

966 error_name = getattr(e, '__module__', '') + '.' + e.__class__.__name__

967 raise ConfigurationError(u"{}: {}".format(error_name, e))

968

[end of compose/config/config.py]

</code>

I need you to solve this issue by generating a single patch file that I can apply directly to this repository using git apply. Please respond with a single patch file in the following format.

<patch>

--- a/file.py

+++ b/file.py

@@ -1,27 +1,35 @@

def euclidean(a, b):

- while b:

- a, b = b, a % b

- return a

+ if b == 0:

+ return a

+ return euclidean(b, a % b)

def bresenham(x0, y0, x1, y1):

points = []

dx = abs(x1 - x0)

dy = abs(y1 - y0)

- sx = 1 if x0 < x1 else -1

- sy = 1 if y0 < y1 else -1

- err = dx - dy

+ x, y = x0, y0

+ sx = -1 if x0 > x1 else 1

+ sy = -1 if y0 > y1 else 1

- while True:

- points.append((x0, y0))

- if x0 == x1 and y0 == y1:

- break

- e2 = 2 * err

- if e2 > -dy:

+ if dx > dy:

+ err = dx / 2.0

+ while x != x1:

+ points.append((x, y))

err -= dy

- x0 += sx

- if e2 < dx:

- err += dx

- y0 += sy

+ if err < 0:

+ y += sy

+ err += dx

+ x += sx

+ else:

+ err = dy / 2.0

+ while y != y1:

+ points.append((x, y))

+ err -= dx

+ if err < 0:

+ x += sx

+ err += dy

+ y += sy

+ points.append((x, y))

return points

</patch>

|

docker/compose

|

7b5bad6050e337ca41d8f1a0e80b44787534e92f

|

Merge build args when using multiple compose files (or when extending services)

Based on the behavior of `environment` and `labels`, as well as `build.image`, `build.context` etc, I would also expect `build.args` to be merged, instead of being replaced.

To give an example:

## Input

**docker-compose.yml:**

``` yaml

version: "2"

services:

my_service:

build:

context: my-app

args:

SOME_VARIABLE: "42"

```

**docker-compose.override.yml:**

``` yaml

version: "2"

services:

my_service:

build:

args:

HTTP_PROXY: http://proxy.somewhere:80

HTTPS_PROXY: http://proxy.somewhere:80

NO_PROXY: somewhere,localhost

```

**my-app/Dockerfile**

``` Dockerfile

# Just needed to be able to use `build:`

FROM busybox:latest

ARG SOME_VARIABLE=xyz

RUN echo "$SOME_VARIABLE" > /etc/example

```

## Current Output

``` bash

$ docker-compose config

networks: {}

services:

my_service:

build:

args:

HTTPS_PROXY: http://proxy.somewhere:80

HTTP_PROXY: http://proxy.somewhere:80

NO_PROXY: somewhere,localhost

context: <project-dir>\my-app

version: '2.0'

volumes: {}

```

## Expected Output

``` bash

$ docker-compose config

networks: {}

services:

my_service:

build:

args:

SOME_VARIABLE: 42 # Note the merged variable here

HTTPS_PROXY: http://proxy.somewhere:80

HTTP_PROXY: http://proxy.somewhere:80

NO_PROXY: somewhere,localhost

context: <project-dir>\my-app

version: '2.0'

volumes: {}

```

## Version Information

``` bash

$ docker-compose version

docker-compose version 1.6.0, build cdb920a

docker-py version: 1.7.0

CPython version: 2.7.11

OpenSSL version: OpenSSL 1.0.2d 9 Jul 2015

```

# Implementation proposal

I mainly want to get clarification on what the desired behavior is, so that I can possibly help implementing it, maybe even for `1.6.1`.

Personally, I'd like the behavior to be to merge the `build.args` key (as outlined above), for a couple of reasons:

- Principle of least surprise/consistency with `environment`, `labels`, `ports` and so on.

- It enables scenarios like the one outlined above, where the images require some transient configuration to build, in addition to other build variables which actually have an influence on the final image.

The scenario that one wants to replace all build args at once is not very likely IMO; why would you define base build variables in the first place if you're going to replace them anyway?

# Alternative behavior: Output a warning

If the behavior should stay the same as it is now, i.e. to fully replaced the `build.args` keys, then `docker-compose` should at least output a warning IMO. It took me some time to figure out that `docker-compose` was ignoring the build args in the base `docker-compose.yml` file.

|

I think we should merge build args. It was probably just overlooked since this is the first time we have nested configuration that we actually want to merge (other nested config like `logging` is not merged by design, because changing one option likely invalidates the rest).

I think the implementation would be to use the new `MergeDict()` object in `merge_build()`. Currently we just use `update()`.

A PR for this would be great!

I'm going to pick this up since it can be fixed at the same time as #2874

|

2016-02-10T18:55:23Z

|

<patch>

diff --git a/compose/config/config.py b/compose/config/config.py

--- a/compose/config/config.py

+++ b/compose/config/config.py

@@ -713,29 +713,24 @@ def merge_service_dicts(base, override, version):

if version == V1:

legacy_v1_merge_image_or_build(md, base, override)

- else:

- merge_build(md, base, override)

+ elif md.needs_merge('build'):

+ md['build'] = merge_build(md, base, override)

return dict(md)

def merge_build(output, base, override):

- build = {}

-

- if 'build' in base:

- if isinstance(base['build'], six.string_types):

- build['context'] = base['build']

- else:

- build.update(base['build'])

-

- if 'build' in override:

- if isinstance(override['build'], six.string_types):

- build['context'] = override['build']

- else:

- build.update(override['build'])

-

- if build:

- output['build'] = build

+ def to_dict(service):

+ build_config = service.get('build', {})

+ if isinstance(build_config, six.string_types):

+ return {'context': build_config}

+ return build_config

+

+ md = MergeDict(to_dict(base), to_dict(override))

+ md.merge_scalar('context')

+ md.merge_scalar('dockerfile')

+ md.merge_mapping('args', parse_build_arguments)

+ return dict(md)

def legacy_v1_merge_image_or_build(output, base, override):

</patch>

|

[]

|

[]

| |||

ipython__ipython-13417

|

You will be provided with a partial code base and an issue statement explaining a problem to resolve.

<issue>

Add line number to error messages

As suggested in #13169, it adds line number to error messages, in order to make them more friendly.

That was the file used in the test

</issue>

<code>

[start of README.rst]

1 .. image:: https://codecov.io/github/ipython/ipython/coverage.svg?branch=master

2 :target: https://codecov.io/github/ipython/ipython?branch=master

3

4 .. image:: https://img.shields.io/pypi/v/IPython.svg

5 :target: https://pypi.python.org/pypi/ipython

6

7 .. image:: https://github.com/ipython/ipython/actions/workflows/test.yml/badge.svg

8 :target: https://github.com/ipython/ipython/actions/workflows/test.yml)

9

10 .. image:: https://www.codetriage.com/ipython/ipython/badges/users.svg

11 :target: https://www.codetriage.com/ipython/ipython/

12

13 .. image:: https://raster.shields.io/badge/Follows-NEP29-brightgreen.png

14 :target: https://numpy.org/neps/nep-0029-deprecation_policy.html

15

16

17 ===========================================

18 IPython: Productive Interactive Computing

19 ===========================================

20

21 Overview

22 ========

23

24 Welcome to IPython. Our full documentation is available on `ipython.readthedocs.io

25 <https://ipython.readthedocs.io/en/stable/>`_ and contains information on how to install, use, and

26 contribute to the project.

27 IPython (Interactive Python) is a command shell for interactive computing in multiple programming languages, originally developed for the Python programming language, that offers introspection, rich media, shell syntax, tab completion, and history.

28

29 **IPython versions and Python Support**

30

31 Starting with IPython 7.10, IPython follows `NEP 29 <https://numpy.org/neps/nep-0029-deprecation_policy.html>`_

32

33 **IPython 7.17+** requires Python version 3.7 and above.

34

35 **IPython 7.10+** requires Python version 3.6 and above.

36

37 **IPython 7.0** requires Python version 3.5 and above.

38

39 **IPython 6.x** requires Python version 3.3 and above.

40

41 **IPython 5.x LTS** is the compatible release for Python 2.7.

42 If you require Python 2 support, you **must** use IPython 5.x LTS. Please

43 update your project configurations and requirements as necessary.

44

45

46 The Notebook, Qt console and a number of other pieces are now parts of *Jupyter*.

47 See the `Jupyter installation docs <https://jupyter.readthedocs.io/en/latest/install.html>`__

48 if you want to use these.

49

50 Main features of IPython

51 ========================

52 Comprehensive object introspection.

53

54 Input history, persistent across sessions.

55

56 Caching of output results during a session with automatically generated references.

57

58 Extensible tab completion, with support by default for completion of python variables and keywords, filenames and function keywords.

59

60 Extensible system of ‘magic’ commands for controlling the environment and performing many tasks related to IPython or the operating system.

61

62 A rich configuration system with easy switching between different setups (simpler than changing $PYTHONSTARTUP environment variables every time).

63

64 Session logging and reloading.

65

66 Extensible syntax processing for special purpose situations.

67

68 Access to the system shell with user-extensible alias system.

69

70 Easily embeddable in other Python programs and GUIs.

71

72 Integrated access to the pdb debugger and the Python profiler.

73

74

75 Development and Instant running

76 ===============================

77

78 You can find the latest version of the development documentation on `readthedocs

79 <https://ipython.readthedocs.io/en/latest/>`_.

80

81 You can run IPython from this directory without even installing it system-wide

82 by typing at the terminal::

83

84 $ python -m IPython

85

86 Or see the `development installation docs

87 <https://ipython.readthedocs.io/en/latest/install/install.html#installing-the-development-version>`_

88 for the latest revision on read the docs.

89

90 Documentation and installation instructions for older version of IPython can be

91 found on the `IPython website <https://ipython.org/documentation.html>`_

92

93

94

95 IPython requires Python version 3 or above

96 ==========================================

97

98 Starting with version 6.0, IPython does not support Python 2.7, 3.0, 3.1, or

99 3.2.

100

101 For a version compatible with Python 2.7, please install the 5.x LTS Long Term

102 Support version.

103

104 If you are encountering this error message you are likely trying to install or

105 use IPython from source. You need to checkout the remote 5.x branch. If you are

106 using git the following should work::

107

108 $ git fetch origin

109 $ git checkout 5.x

110

111 If you encounter this error message with a regular install of IPython, then you

112 likely need to update your package manager, for example if you are using `pip`

113 check the version of pip with::

114

115 $ pip --version

116

117 You will need to update pip to the version 9.0.1 or greater. If you are not using

118 pip, please inquiry with the maintainers of the package for your package

119 manager.

120

121 For more information see one of our blog posts:

122

123 https://blog.jupyter.org/release-of-ipython-5-0-8ce60b8d2e8e

124

125 As well as the following Pull-Request for discussion:

126

127 https://github.com/ipython/ipython/pull/9900

128

129 This error does also occur if you are invoking ``setup.py`` directly – which you

130 should not – or are using ``easy_install`` If this is the case, use ``pip

131 install .`` instead of ``setup.py install`` , and ``pip install -e .`` instead

132 of ``setup.py develop`` If you are depending on IPython as a dependency you may

133 also want to have a conditional dependency on IPython depending on the Python

134 version::

135

136 install_req = ['ipython']

137 if sys.version_info[0] < 3 and 'bdist_wheel' not in sys.argv:

138 install_req.remove('ipython')

139 install_req.append('ipython<6')

140

141 setup(

142 ...

143 install_requires=install_req

144 )

145

146 Alternatives to IPython

147 =======================

148

149 IPython may not be to your taste; if that's the case there might be similar

150 project that you might want to use:

151

152 - The classic Python REPL.

153 - `bpython <https://bpython-interpreter.org/>`_

154 - `mypython <https://www.asmeurer.com/mypython/>`_

155 - `ptpython and ptipython <https://pypi.org/project/ptpython/>`_

156 - `Xonsh <https://xon.sh/>`_

157

158 Ignoring commits with git blame.ignoreRevsFile

159 ==============================================

160

161 As of git 2.23, it is possible to make formatting changes without breaking

162 ``git blame``. See the `git documentation

163 <https://git-scm.com/docs/git-config#Documentation/git-config.txt-blameignoreRevsFile>`_

164 for more details.

165

166 To use this feature you must:

167

168 - Install git >= 2.23

169 - Configure your local git repo by running:

170 - POSIX: ``tools\configure-git-blame-ignore-revs.sh``

171 - Windows: ``tools\configure-git-blame-ignore-revs.bat``

172

[end of README.rst]

[start of IPython/core/ultratb.py]

1 # -*- coding: utf-8 -*-

2 """

3 Verbose and colourful traceback formatting.

4

5 **ColorTB**

6

7 I've always found it a bit hard to visually parse tracebacks in Python. The

8 ColorTB class is a solution to that problem. It colors the different parts of a

9 traceback in a manner similar to what you would expect from a syntax-highlighting

10 text editor.

11

12 Installation instructions for ColorTB::

13

14 import sys,ultratb

15 sys.excepthook = ultratb.ColorTB()

16

17 **VerboseTB**

18

19 I've also included a port of Ka-Ping Yee's "cgitb.py" that produces all kinds

20 of useful info when a traceback occurs. Ping originally had it spit out HTML

21 and intended it for CGI programmers, but why should they have all the fun? I

22 altered it to spit out colored text to the terminal. It's a bit overwhelming,

23 but kind of neat, and maybe useful for long-running programs that you believe

24 are bug-free. If a crash *does* occur in that type of program you want details.

25 Give it a shot--you'll love it or you'll hate it.

26

27 .. note::

28

29 The Verbose mode prints the variables currently visible where the exception

30 happened (shortening their strings if too long). This can potentially be

31 very slow, if you happen to have a huge data structure whose string

32 representation is complex to compute. Your computer may appear to freeze for

33 a while with cpu usage at 100%. If this occurs, you can cancel the traceback

34 with Ctrl-C (maybe hitting it more than once).

35

36 If you encounter this kind of situation often, you may want to use the

37 Verbose_novars mode instead of the regular Verbose, which avoids formatting

38 variables (but otherwise includes the information and context given by

39 Verbose).

40

41 .. note::

42

43 The verbose mode print all variables in the stack, which means it can

44 potentially leak sensitive information like access keys, or unencrypted

45 password.

46

47 Installation instructions for VerboseTB::

48

49 import sys,ultratb

50 sys.excepthook = ultratb.VerboseTB()

51

52 Note: Much of the code in this module was lifted verbatim from the standard

53 library module 'traceback.py' and Ka-Ping Yee's 'cgitb.py'.

54

55 Color schemes

56 -------------

57

58 The colors are defined in the class TBTools through the use of the

59 ColorSchemeTable class. Currently the following exist:

60

61 - NoColor: allows all of this module to be used in any terminal (the color

62 escapes are just dummy blank strings).

63

64 - Linux: is meant to look good in a terminal like the Linux console (black

65 or very dark background).

66

67 - LightBG: similar to Linux but swaps dark/light colors to be more readable

68 in light background terminals.

69

70 - Neutral: a neutral color scheme that should be readable on both light and

71 dark background

72

73 You can implement other color schemes easily, the syntax is fairly

74 self-explanatory. Please send back new schemes you develop to the author for

75 possible inclusion in future releases.

76

77 Inheritance diagram:

78

79 .. inheritance-diagram:: IPython.core.ultratb

80 :parts: 3

81 """

82

83 #*****************************************************************************

84 # Copyright (C) 2001 Nathaniel Gray <n8gray@caltech.edu>

85 # Copyright (C) 2001-2004 Fernando Perez <fperez@colorado.edu>

86 #

87 # Distributed under the terms of the BSD License. The full license is in

88 # the file COPYING, distributed as part of this software.

89 #*****************************************************************************

90

91

92 import inspect

93 import linecache

94 import pydoc

95 import sys

96 import time

97 import traceback

98

99 import stack_data

100 from pygments.formatters.terminal256 import Terminal256Formatter

101 from pygments.styles import get_style_by_name

102

103 # IPython's own modules

104 from IPython import get_ipython

105 from IPython.core import debugger

106 from IPython.core.display_trap import DisplayTrap

107 from IPython.core.excolors import exception_colors

108 from IPython.utils import path as util_path

109 from IPython.utils import py3compat

110 from IPython.utils.terminal import get_terminal_size

111

112 import IPython.utils.colorable as colorable

113

114 # Globals

115 # amount of space to put line numbers before verbose tracebacks

116 INDENT_SIZE = 8

117

118 # Default color scheme. This is used, for example, by the traceback

119 # formatter. When running in an actual IPython instance, the user's rc.colors

120 # value is used, but having a module global makes this functionality available

121 # to users of ultratb who are NOT running inside ipython.

122 DEFAULT_SCHEME = 'NoColor'

123

124 # ---------------------------------------------------------------------------

125 # Code begins

126

127 # Helper function -- largely belongs to VerboseTB, but we need the same

128 # functionality to produce a pseudo verbose TB for SyntaxErrors, so that they

129 # can be recognized properly by ipython.el's py-traceback-line-re

130 # (SyntaxErrors have to be treated specially because they have no traceback)

131

132

133 def _format_traceback_lines(lines, Colors, has_colors, lvals):

134 """

135 Format tracebacks lines with pointing arrow, leading numbers...

136

137 Parameters

138 ----------

139 lines : list[Line]

140 Colors

141 ColorScheme used.

142 lvals : str

143 Values of local variables, already colored, to inject just after the error line.

144 """

145 numbers_width = INDENT_SIZE - 1

146 res = []

147

148 for stack_line in lines:

149 if stack_line is stack_data.LINE_GAP:

150 res.append('%s (...)%s\n' % (Colors.linenoEm, Colors.Normal))

151 continue

152

153 line = stack_line.render(pygmented=has_colors).rstrip('\n') + '\n'

154 lineno = stack_line.lineno

155 if stack_line.is_current:

156 # This is the line with the error

157 pad = numbers_width - len(str(lineno))

158 num = '%s%s' % (debugger.make_arrow(pad), str(lineno))

159 start_color = Colors.linenoEm

160 else:

161 num = '%*s' % (numbers_width, lineno)

162 start_color = Colors.lineno

163

164 line = '%s%s%s %s' % (start_color, num, Colors.Normal, line)

165

166 res.append(line)

167 if lvals and stack_line.is_current:

168 res.append(lvals + '\n')

169 return res

170

171

172 def _format_filename(file, ColorFilename, ColorNormal):

173 """

174 Format filename lines with `In [n]` if it's the nth code cell or `File *.py` if it's a module.

175

176 Parameters

177 ----------

178 file : str

179 ColorFilename

180 ColorScheme's filename coloring to be used.

181 ColorNormal

182 ColorScheme's normal coloring to be used.

183 """

184 ipinst = get_ipython()

185

186 if ipinst is not None and file in ipinst.compile._filename_map:

187 file = "[%s]" % ipinst.compile._filename_map[file]

188 tpl_link = "Input %sIn %%s%s" % (ColorFilename, ColorNormal)

189 else:

190 file = util_path.compress_user(

191 py3compat.cast_unicode(file, util_path.fs_encoding)

192 )

193 tpl_link = "File %s%%s%s" % (ColorFilename, ColorNormal)

194

195 return tpl_link % file

196

197 #---------------------------------------------------------------------------

198 # Module classes

199 class TBTools(colorable.Colorable):

200 """Basic tools used by all traceback printer classes."""

201

202 # Number of frames to skip when reporting tracebacks

203 tb_offset = 0

204

205 def __init__(self, color_scheme='NoColor', call_pdb=False, ostream=None, parent=None, config=None):

206 # Whether to call the interactive pdb debugger after printing

207 # tracebacks or not

208 super(TBTools, self).__init__(parent=parent, config=config)

209 self.call_pdb = call_pdb

210

211 # Output stream to write to. Note that we store the original value in

212 # a private attribute and then make the public ostream a property, so

213 # that we can delay accessing sys.stdout until runtime. The way

214 # things are written now, the sys.stdout object is dynamically managed