repo_name

stringlengths 9

75

| topic

stringclasses 30

values | issue_number

int64 1

203k

| title

stringlengths 1

976

| body

stringlengths 0

254k

| state

stringclasses 2

values | created_at

stringlengths 20

20

| updated_at

stringlengths 20

20

| url

stringlengths 38

105

| labels

listlengths 0

9

| user_login

stringlengths 1

39

| comments_count

int64 0

452

|

|---|---|---|---|---|---|---|---|---|---|---|---|

katanaml/sparrow

|

computer-vision

| 54 |

Getting Errors while running sparrow.sh under llm

|

I already installed all dependencies, Looks like there are some version conflicts.

FYI: I'm running it on Linux(Ubuntu 22.04.1)

Issues: ModuleNotFoundError: No module named 'tenacity.asyncio'

|

closed

|

2024-06-17T11:01:41Z

|

2024-06-18T10:53:23Z

|

https://github.com/katanaml/sparrow/issues/54

|

[] |

sanjay-nit

| 3 |

custom-components/pyscript

|

jupyter

| 484 |

@state_trigger does not trigger on climate domain, attribute changes

|

In general, there appears to be a hit or miss state trigger situation with the climate domain.

For instance, the following

`@state_trigger('climate.my_thermostat.hvac_action')`

does not trigger on `hvac_action` but only on `hvac_mode` changes.

to workaround that, I tried adding a watch:

`@state_trigger('climate.my_thermostat.hvac_action', watch=['climate.my_thermostat.hvac_action'])`

which made it trigger on the `hvac_action` attribute but the value/old_value I get, are both from the `hvac_mode`.

meaning, inside the trigger I have to explicitly get the value of the climate attribute `hvac_action`.

Another problem that I could not work around easily this time, was trigger on the climate `current_temperature` attribute.

Even with a watch, I can't get it to trigger. It only triggers for `hvac_action` changes.

Example:

`@state_trigger('climate.my_thermostat.current_temperature', watch=['climate.my_thermostat.current_temperature'])`

This one, just does not want to trigger on current temperature changes. It triggers on `hvac_mode` changes, instead.

Same thing, even with the `*`, like so:

`@state_trigger('climate.my_thermostat.*', watch=['climate.my_thermostat.*'])`

It will only trigger again, on `hvac_mode` changes and NOT on `current_temperature` changes.

My more involved workaround, was making a service with pyscript, that gets called by a HA "native" trigger on the `current_temperature` attribute changes, so that I can capture changes to that attribute. But that now has created a tightly coupled service/function to a HA trigger, which is not ideal. My pyscripts should be standalone.

Thank you.

|

open

|

2023-07-08T19:43:06Z

|

2024-10-22T22:13:28Z

|

https://github.com/custom-components/pyscript/issues/484

|

[] |

antokara

| 1 |

Lightning-AI/pytorch-lightning

|

deep-learning

| 19,989 |

TransformerEnginePrecision _convert_layers(module) fails for FSDP zero2/zero3

|

### Bug description

`TransformerEnginePrecision.convert_module` function seems to not work for the the FSDP-wrapped model.

### What version are you seeing the problem on?

master

### How to reproduce the bug

```python

model = FSDP(

model,

sharding_strategy=sharding_strategy,

auto_wrap_policy=custom_wrap_policy,

device_id=local_rank,

use_orig_params=True,

device_mesh=mesh,

)

te_precision = TransformerEnginePrecision(weights_dtype=torch.bfloat16, replace_layers=True)

self.model = te_precision.convert_module(self.model)

```

### Error messages and logs

```

[rank1]: self.model = te_precision.convert_module(self.model)

[rank1]: _convert_layers(module)

[rank1]: File "/usr/local/lib/python3.10/dist-packages/lightning/fabric/plugins/precision/transformer_engine.py", line 165, in _convert_layers

[rank1]: replacement.weight.data = child.weight.data.clone()

[rank1]: RuntimeError: Attempted to call `variable.set_data(tensor)`, but `variable` and `tensor` have incompatible tensor type.

```

### More info

I actually see it for pytorch-lightning==2.3.0

|

open

|

2024-06-18T14:08:00Z

|

2024-06-18T14:08:56Z

|

https://github.com/Lightning-AI/pytorch-lightning/issues/19989

|

[

"bug",

"needs triage",

"ver: 2.2.x"

] |

wprazuch

| 0 |

twopirllc/pandas-ta

|

pandas

| 890 |

wrong NaN import

|

in momentum squeeze_pro

there should be

from numpy import nan as npNaN

not from numpy import Nan as npNaN

|

closed

|

2025-02-22T09:52:54Z

|

2025-02-22T18:59:15Z

|

https://github.com/twopirllc/pandas-ta/issues/890

|

[

"bug",

"duplicate"

] |

kkr007007

| 1 |

jupyterlab/jupyter-ai

|

jupyter

| 833 |

We have Github Copilot license and we want to use this model provider on Jupyter AI

|

### Proposed Solution

We don´t see in the list of model providers : https://jupyter-ai.readthedocs.io/en/latest/users/index.html#model-providers , the GithHUb Copilot solution, Are you going to integrate this model provider?

Thanks.

|

open

|

2024-06-14T10:24:16Z

|

2024-07-30T22:56:55Z

|

https://github.com/jupyterlab/jupyter-ai/issues/833

|

[

"enhancement",

"status:Blocked"

] |

japineda3

| 2 |

jacobgil/pytorch-grad-cam

|

computer-vision

| 193 |

Gradients are: 'NoneType' object has no attribute 'shape'

|

Hi, thank you for providing an open-source implementation for your work. I am trying to build on top of it to visualize my Resnet-18 encoder for the Image captioning task. It is an encoder-decoder architecture with a resnet-18 encoder and an RNN decoder. Below is my code for reproducibility.

```python

from pytorch_grad_cam import GradCAM, ScoreCAM, GradCAMPlusPlus, AblationCAM, XGradCAM, EigenCAM, FullGrad

from pytorch_grad_cam.utils.image import show_cam_on_image

from model import EncoderCNN, DecoderRNN

class CaptioningModelOutputWrapper(torch.nn.Module):

def __init__(self, embedding, gloves):

super(CaptioningModelOutputWrapper, self).__init__()

self.embedding_size = 300

self.embed_size = 512

self.embedding = embedding

self.gloves = gloves

self.hidden_size = 512

self.vocab_size = len(data_loader.dataset.vocab)

self.encoder = EncoderCNN(self.embed_size,self.embedding_size+18)

self.decoder = DecoderRNN(self.embedding_size, self.embed_size, self.hidden_size, self.vocab_size)

def forward(self, x):

features = self.encoder(x, self.embedding)

outputs = self.decoder(features, self.gloves)

return outputs

class CaptionModelOutputTarget:

def __init__(self, index, category):

self.index = index

self.category = category

def __call__(self, model_output):

print(model_output.shape)

return model_output[self.index, self.category]

device = torch.device("cuda" if torch.cuda.is_available() else "cpu")

it=iter(data_loader)

input_tensor, embedding, gloves, caption, string_caption, entity_name, path = next(it)

input_tensor, embedding, gloves = input_tensor.to(device), embedding.to(device), gloves.to(device)

model = CaptioningModelOutputWrapper(embedding, gloves)

model.to(device)

outputs = model.forward(input_tensor)

print(outputs.shape)

target_layers = [model.encoder.resnet[:-2]]

# Note: input_tensor can be a batch tensor with several images!

# Construct the CAM object once, and then re-use it on many images:

cam = GradCAM(model=model, target_layers=target_layers, use_cuda=True)

targets = [CaptionModelOutputTarget(10,caption[0,10])]

cam.model = model.train()

# You can also pass aug_smooth=True and eigen_smooth=True, to apply smoothing.

grayscale_cam = cam(input_tensor=input_tensor, targets=targets)

# In this example grayscale_cam has only one image in the batch:

grayscale_cam = grayscale_cam[0, :]

visualization = show_cam_on_image(rgb_img, grayscale_cam, use_rgb=True)

```

Error Stack:

```python

torch.Size([1, 100, 31480])

torch.Size([100, 31480])

---------------------------------------------------------------------------

AttributeError Traceback (most recent call last)

/tmp/ipykernel_86959/3158658298.py in <module>

13 cam.model = model.train()

14 # You can also pass aug_smooth=True and eigen_smooth=True, to apply smoothing.

---> 15 grayscale_cam = cam(input_tensor=input_tensor, targets=targets)

16

17 # In this example grayscale_cam has only one image in the batch:

~/anaconda3/envs/fake/lib/python3.9/site-packages/pytorch_grad_cam/base_cam.py in __call__(self, input_tensor, targets, aug_smooth, eigen_smooth)

182 input_tensor, targets, eigen_smooth)

183

--> 184 return self.forward(input_tensor,

185 targets, eigen_smooth)

186

~/anaconda3/envs/fake/lib/python3.9/site-packages/pytorch_grad_cam/base_cam.py in forward(self, input_tensor, targets, eigen_smooth)

91 # use all conv layers for example, all Batchnorm layers,

92 # or something else.

---> 93 cam_per_layer = self.compute_cam_per_layer(input_tensor,

94 targets,

95 eigen_smooth)

~/anaconda3/envs/fake/lib/python3.9/site-packages/pytorch_grad_cam/base_cam.py in compute_cam_per_layer(self, input_tensor, targets, eigen_smooth)

123 layer_grads = grads_list[i]

124

--> 125 cam = self.get_cam_image(input_tensor,

126 target_layer,

127 targets,

~/anaconda3/envs/fake/lib/python3.9/site-packages/pytorch_grad_cam/base_cam.py in get_cam_image(self, input_tensor, target_layer, targets, activations, grads, eigen_smooth)

48 eigen_smooth: bool = False) -> np.ndarray:

49

---> 50 weights = self.get_cam_weights(input_tensor,

51 target_layer,

52 targets,

~/anaconda3/envs/fake/lib/python3.9/site-packages/pytorch_grad_cam/grad_cam.py in get_cam_weights(self, input_tensor, target_layer, target_category, activations, grads)

20 activations,

21 grads):

---> 22 return np.mean(grads, axis=(2, 3))

AttributeError: 'NoneType' object has no attribute 'shape'

```

I add the line ```python cam.model=model.train()``` to the training script in order to allow backpropagation in the RNN otherwise it throws cuDNN error that RNN backward cannot be called outside train mode. Solving that error leads to this. It would really help if you can help debug why this issue occurs in first place.

|

closed

|

2022-01-11T11:17:02Z

|

2022-01-11T14:47:10Z

|

https://github.com/jacobgil/pytorch-grad-cam/issues/193

|

[] |

Anurag14

| 1 |

pytest-dev/pytest-django

|

pytest

| 1,144 |

do not require DJANGO_SETTINGS_MODULE when running pytest help

|

Is it really necessary to require seting `DJANGO_SETTINGS_MODULE` only to display help?

I run my tests using `tox`, and `DJANGO_SETTINGS_MODULE` is being set there.

Sometimes I just need to quickly run `pytest help` just to see some syntax.

Does the output of `pytest help` depend on my currently configured settings?

My current output:

```

$ pytest --help

Traceback (most recent call last):

File "<frozen runpy>", line 198, in _run_module_as_main

File "<frozen runpy>", line 88, in _run_code

File "H:\python\django-pytest\.venv\Scripts\pytest.exe\__main__.py", line 7, in <module>

File "H:\python\django-pytest\.venv\Lib\site-packages\_pytest\config\__init__.py", line 201, in console_main

code = main()

^^^^^^

File "H:\python\django-pytest\.venv\Lib\site-packages\_pytest\config\__init__.py", line 156, in main

config = _prepareconfig(args, plugins)

^^^^^^^^^^^^^^^^^^^^^^^^^^^^^

File "H:\python\django-pytest\.venv\Lib\site-packages\_pytest\config\__init__.py", line 341, in _prepareconfig

config = pluginmanager.hook.pytest_cmdline_parse(

^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^

File "H:\python\django-pytest\.venv\Lib\site-packages\pluggy\_hooks.py", line 513, in __call__

return self._hookexec(self.name, self._hookimpls.copy(), kwargs, firstresult)

^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^

File "H:\python\django-pytest\.venv\Lib\site-packages\pluggy\_manager.py", line 120, in _hookexec

return self._inner_hookexec(hook_name, methods, kwargs, firstresult)

^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^

File "H:\python\django-pytest\.venv\Lib\site-packages\pluggy\_callers.py", line 139, in _multicall

raise exception.with_traceback(exception.__traceback__)

File "H:\python\django-pytest\.venv\Lib\site-packages\pluggy\_callers.py", line 122, in _multicall

teardown.throw(exception) # type: ignore[union-attr]

^^^^^^^^^^^^^^^^^^^^^^^^^

File "H:\python\django-pytest\.venv\Lib\site-packages\_pytest\helpconfig.py", line 105, in pytest_cmdline_parse

config = yield

^^^^^

File "H:\python\django-pytest\.venv\Lib\site-packages\pluggy\_callers.py", line 103, in _multicall

res = hook_impl.function(*args)

^^^^^^^^^^^^^^^^^^^^^^^^^

File "H:\python\django-pytest\.venv\Lib\site-packages\_pytest\config\__init__.py", line 1140, in pytest_cmdline_parse

self.parse(args)

File "H:\python\django-pytest\.venv\Lib\site-packages\_pytest\config\__init__.py", line 1490, in parse

self._preparse(args, addopts=addopts)

File "H:\python\django-pytest\.venv\Lib\site-packages\_pytest\config\__init__.py", line 1394, in _preparse

self.hook.pytest_load_initial_conftests(

File "H:\python\django-pytest\.venv\Lib\site-packages\pluggy\_hooks.py", line 513, in __call__

return self._hookexec(self.name, self._hookimpls.copy(), kwargs, firstresult)

^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^

File "H:\python\django-pytest\.venv\Lib\site-packages\pluggy\_manager.py", line 120, in _hookexec

return self._inner_hookexec(hook_name, methods, kwargs, firstresult)

^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^

File "H:\python\django-pytest\.venv\Lib\site-packages\pluggy\_callers.py", line 139, in _multicall

raise exception.with_traceback(exception.__traceback__)

File "H:\python\django-pytest\.venv\Lib\site-packages\pluggy\_callers.py", line 122, in _multicall

teardown.throw(exception) # type: ignore[union-attr]

^^^^^^^^^^^^^^^^^^^^^^^^^

File "H:\python\django-pytest\.venv\Lib\site-packages\_pytest\warnings.py", line 151, in pytest_load_initial_conftests

return (yield)

^^^^^

File "H:\python\django-pytest\.venv\Lib\site-packages\pluggy\_callers.py", line 122, in _multicall

teardown.throw(exception) # type: ignore[union-attr]

^^^^^^^^^^^^^^^^^^^^^^^^^

File "H:\python\django-pytest\.venv\Lib\site-packages\_pytest\capture.py", line 154, in pytest_load_initial_conftests

yield

File "H:\python\django-pytest\.venv\Lib\site-packages\pluggy\_callers.py", line 103, in _multicall

res = hook_impl.function(*args)

^^^^^^^^^^^^^^^^^^^^^^^^^

File "H:\python\django-pytest\.venv\Lib\site-packages\_pytest\config\__init__.py", line 1218, in pytest_load_initial_conftests

self.pluginmanager._set_initial_conftests(

File "H:\python\django-pytest\.venv\Lib\site-packages\_pytest\config\__init__.py", line 581, in _set_initial_conftests

self._try_load_conftest(

File "H:\python\django-pytest\.venv\Lib\site-packages\_pytest\config\__init__.py", line 619, in _try_load_conftest

self._loadconftestmodules(

File "H:\python\django-pytest\.venv\Lib\site-packages\_pytest\config\__init__.py", line 659, in _loadconftestmodules

mod = self._importconftest(

^^^^^^^^^^^^^^^^^^^^^

File "H:\python\django-pytest\.venv\Lib\site-packages\_pytest\config\__init__.py", line 735, in _importconftest

self.consider_conftest(mod, registration_name=conftestpath_plugin_name)

File "H:\python\django-pytest\.venv\Lib\site-packages\_pytest\config\__init__.py", line 816, in consider_conftest

self.register(conftestmodule, name=registration_name)

File "H:\python\django-pytest\.venv\Lib\site-packages\_pytest\config\__init__.py", line 512, in register

self.consider_module(plugin)

File "H:\python\django-pytest\.venv\Lib\site-packages\_pytest\config\__init__.py", line 824, in consider_module

self._import_plugin_specs(getattr(mod, "pytest_plugins", []))

File "H:\python\django-pytest\.venv\Lib\site-packages\_pytest\config\__init__.py", line 831, in _import_plugin_specs

self.import_plugin(import_spec)

File "H:\python\django-pytest\.venv\Lib\site-packages\_pytest\config\__init__.py", line 858, in import_plugin

__import__(importspec)

File "<frozen importlib._bootstrap>", line 1176, in _find_and_load

File "<frozen importlib._bootstrap>", line 1147, in _find_and_load_unlocked

File "<frozen importlib._bootstrap>", line 690, in _load_unlocked

File "H:\python\django-pytest\.venv\Lib\site-packages\_pytest\assertion\rewrite.py", line 174, in exec_module

exec(co, module.__dict__)

File "h:\python\django-pytest\test_fixtures.py", line 11, in <module>

from django_pytest_app.models import (

File "H:\python\django-pytest\django_pytest_app\models.py", line 5, in <module>

class Foo(models.Model):

File "H:\python\django-pytest\.venv\Lib\site-packages\django\db\models\base.py", line 129, in __new__

app_config = apps.get_containing_app_config(module)

^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^

File "H:\python\django-pytest\.venv\Lib\site-packages\django\apps\registry.py", line 260, in get_containing_app_config

self.check_apps_ready()

File "H:\python\django-pytest\.venv\Lib\site-packages\django\apps\registry.py", line 137, in check_apps_ready

settings.INSTALLED_APPS

File "H:\python\django-pytest\.venv\Lib\site-packages\django\conf\__init__.py", line 81, in __getattr__

self._setup(name)

File "H:\python\django-pytest\.venv\Lib\site-packages\django\conf\__init__.py", line 61, in _setup

raise ImproperlyConfigured(

django.core.exceptions.ImproperlyConfigured: Requested setting INSTALLED_APPS, but settings are not configured. You must either define the environment variable DJANGO_SETTINGS_MODULE or call settings.configure() before accessing settings.

```

|

closed

|

2024-09-04T08:48:47Z

|

2024-09-04T12:20:33Z

|

https://github.com/pytest-dev/pytest-django/issues/1144

|

[] |

piotr-kubiak

| 4 |

deepfakes/faceswap

|

machine-learning

| 439 |

Secondary masks/artifacts/blur in converted images, even thou secondary aligned images have been deleted

|

running latest github version.

|

closed

|

2018-06-21T15:01:37Z

|

2018-07-18T05:37:14Z

|

https://github.com/deepfakes/faceswap/issues/439

|

[] |

minersday

| 4 |

tqdm/tqdm

|

pandas

| 1,185 |

Throw a warning for `tqdm(enumerate(...))`

|

It would be helpful to warn people know they use `tqdm(enumerate(...))` instead of `enumerate(tqdm(...))`.

It can be detected like this:

```

>>> a = [1,2,3]

>>> b = enumerate(a)

>>> isinstance(b, enumerate)

True

```

|

open

|

2021-06-15T14:00:42Z

|

2021-07-29T11:04:38Z

|

https://github.com/tqdm/tqdm/issues/1185

|

[

"help wanted 🙏",

"p4-enhancement-future 🧨"

] |

cccntu

| 0 |

neuml/txtai

|

nlp

| 541 |

Encoding using multiple-GPUs

|

I am doing this task where I am recommending products based on their reviews. To do that I am using your library and when I am creating the index, what I would like to know is whether there is a way to utilize several GPUs. Because the time it takes to encode the review text is huge and I have a multiGPU environment.

Also the task also expects explanations and I would also like to know can the same be done when I execute the explain method.

Thank you.

|

closed

|

2023-09-01T07:21:20Z

|

2024-12-22T01:32:13Z

|

https://github.com/neuml/txtai/issues/541

|

[] |

DenuwanClouda

| 7 |

nolar/kopf

|

asyncio

| 143 |

[PR] Die properly: fast and with no remnants

|

> <a href="https://github.com/nolar"><img align="left" height="50" src="https://avatars0.githubusercontent.com/u/544296?v=4"></a> A pull request by [nolar](https://github.com/nolar) at _2019-07-10 15:42:31+00:00_

> Original URL: https://github.com/zalando-incubator/kopf/pull/143

>

When an operator gets SIGINT (Ctrl+C) or SIGTERM (in pods), it exits immediately, with no extra waits for anything.

This also improves its behaviour in PyCharm, where the operator can now be stopped and restarted in one click (previously required double-stopping).

> Issue : #142

> Requires: https://github.com/hjacobs/pykube/pull/31

## Description

### Watch-stream termination

`pykube-ng`, `kubernetes`, `requests`, and any other synchronous client libraries use the streaming responses of the built-in `urllib3` and `http` for watching over the k8s-events.

These streaming requests/responses can be closed when a chunk/line is yielded to the consumer, the control flow is returned to the caller, and the streaming socket itself is idling. E.g., for `requests`: https://2.python-requests.org/en/master/user/advanced/#streaming-requests

However, if nothing happens on the k8s-event stream (i.e. no k8s resources are added/modified/deleted), the streaming response spends most of its time in the blocking `read()` operation on a socket. It can remain there for long time — minutes, hours — until some data arrives on the socket.

If the streaming response runs in a thread, while the main thread is used for an asyncio event-loop, such stream cannot be closed/cancelled/terminated (because of the blocking `read()`). This, in turn, makes the application to hang on exit, holding its pod from restarting, since the thread is not finished until the `read()` call is finished.

There is no easy way to terminate the blocking `read()` operation on a socket. The only way is a dirty hack with the OS-level process signals, which interrupt the I/O operations on low (libc&co) level.

This hack can be done only from the main thread (because signal handlers can be set only from the main thread — a Python's limitation), and therefore is only suitable for the runnable application rather than for a library.

In `kopf run`, we can be sure that the event-loop and its asyncio tasks run in the main thread. In case of explicit task orchestration, we can assume that the operator developers do the same, but warn if they don't.

This PR:

* Moves the streaming watch-requests into their dedicated threads (instead of the asyncio thread-pool executors for every `next()` call).

* These dedicated threads are tracked in each `watch_objs()` call, and are interrupted when the watch-stream exits for any reason (an error, generator-exit, task cancellation, etc).

### Tasks cancellation

Beside of the stream termination, this PR improves the way how the tasks are orchestrated in an event-loop, so that they are cancelled properly, and have some minimal time for graceful exit (e.g. cleanups). This is used, for example, by the peering `disappear()` call to remove self from the peering object (previously, this call was cancelled on start, and therefore never actually executed).

Also, the sub-tasks of the root tasks are tracked and also cancelled. For example, the scheduled workers, handlers, all shielded tasks, and generally anything that is not produced by `create_tasks()`, but runs in the loop. This guarantees, that when `run()` exits, it leaves nothing behind.

## Types of Changes

- Bug fix (non-breaking change which fixes an issue)

- Refactor/improvements

## Review

_List of tasks the reviewer must do to review the PR_

- [ ] Tests

- [ ] Documentation

---

> <a href="https://github.com/nolar"><img align="left" height="30" src="https://avatars0.githubusercontent.com/u/544296?v=4"></a> Commented by [nolar](https://github.com/nolar) at _2019-07-16 10:33:52+00:00_

>

Closed in favour of separate #147 #148 #149 #152 — one per fix.

|

closed

|

2020-08-18T19:57:19Z

|

2020-08-23T20:47:32Z

|

https://github.com/nolar/kopf/issues/143

|

[

"bug",

"archive"

] |

kopf-archiver[bot]

| 0 |

kynan/nbstripout

|

jupyter

| 86 |

Allowlist metadata fields to keep

|

By far the most requested feature is adding extra metadata fields to strip (#58 #72 #78 #85). A more scalable approach would be using a (configurable) list of metadata fields to keep.

|

open

|

2018-08-14T10:53:46Z

|

2025-01-20T10:40:30Z

|

https://github.com/kynan/nbstripout/issues/86

|

[

"type:enhancement",

"help wanted"

] |

kynan

| 5 |

vitalik/django-ninja

|

django

| 569 |

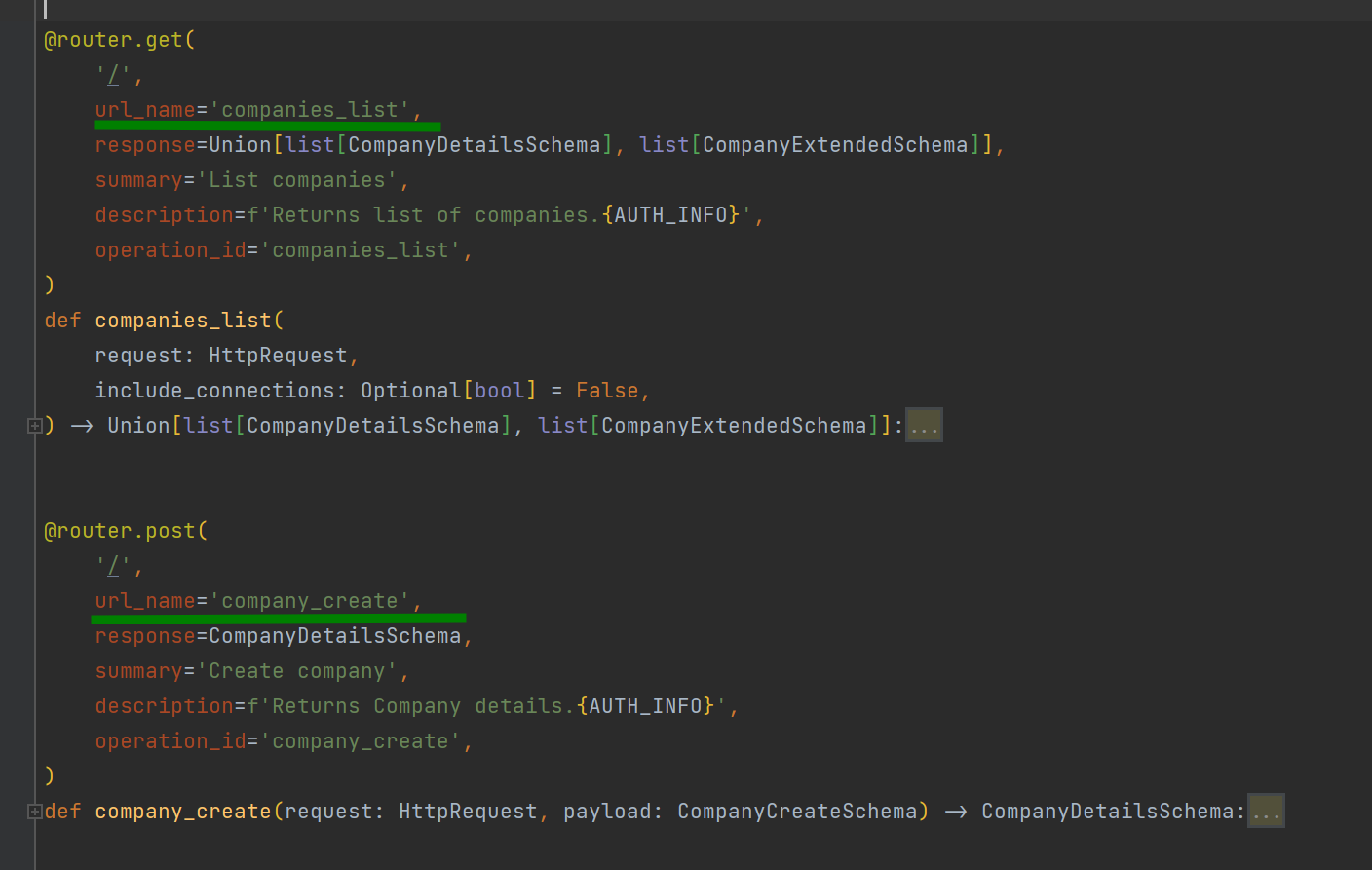

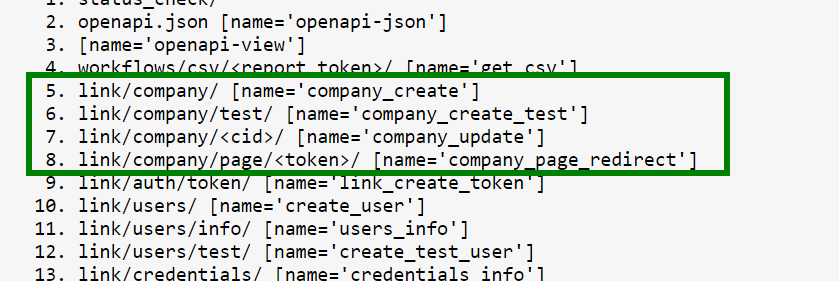

[BUG] Incorrect storing url_name to URLconf

|

In case that you have two endpoints with same url, but different `url_name` param, django will store only last name in URLconf for reverse matching.

Example:

We have companies_list endpoint and company_create endpoint with the same url '/' but different methods (GET and POST).

And only second name appears in URLconf list.

So if we try make reverse match `reverse('api:companies_list ')` - NoReverseMatch exception will be raised.

**Versions (please complete the following information):**

- Python version: 3.9

- Django version: 3.2.15

- Django-Ninja version: 0.18.0

|

closed

|

2022-09-16T12:27:34Z

|

2022-09-16T12:33:54Z

|

https://github.com/vitalik/django-ninja/issues/569

|

[] |

denis-manakov-cndt

| 1 |

dask/dask

|

scikit-learn

| 11,809 |

module 'dask.dataframe.multi' has no attribute 'concat'

|

<!-- Please include a self-contained copy-pastable example that generates the issue if possible.

Please be concise with code posted. See guidelines below on how to provide a good bug report:

- Craft Minimal Bug Reports http://matthewrocklin.com/blog/work/2018/02/28/minimal-bug-reports

- Minimal Complete Verifiable Examples https://stackoverflow.com/help/mcve

Bug reports that follow these guidelines are easier to diagnose, and so are often handled much more quickly.

-->

**Describe the issue**:

I am trying to run the first examle code from https://xgboost.readthedocs.io/en/stable/tutorials/dask.html

and get following error

```shell

Traceback (most recent call last):

File "/Users/liao/miniconda3/envs/boost3/lib/python3.11/site-packages/xgboost/dask/__init__.py", line 244, in dconcat

return concat(value)

^^^^^^^^^^^^^^^^^

File "/Users/liao/miniconda3/envs/boost3/lib/python3.11/site-packages/xgboost/compat.py", line 164, in concat

raise TypeError("Unknown type.")

^^^^^^^^^^^^^^^^^

TypeError: Unknown type.

During handling of the above exception, another exception occurred:

Traceback (most recent call last):

File "/Users/liao/LeoGit/NEU_courses/a.py", line 20, in <module>

output = dxgb.train(

^^^^^^^^^^^

File "/Users/liao/miniconda3/envs/boost3/lib/python3.11/site-packages/xgboost/core.py", line 726, in inner_f

return func(**kwargs)

^^^^^^^^^^^^^^

File "/Users/liao/miniconda3/envs/boost3/lib/python3.11/site-packages/xgboost/dask/__init__.py", line 1088, in train

return client.sync(

^^^^^^^^^^^^

File "/Users/liao/miniconda3/envs/boost3/lib/python3.11/site-packages/distributed/utils.py", line 363, in sync

return sync(

^^^^^

File "/Users/liao/miniconda3/envs/boost3/lib/python3.11/site-packages/distributed/utils.py", line 439, in sync

raise error

File "/Users/liao/miniconda3/envs/boost3/lib/python3.11/site-packages/distributed/utils.py", line 413, in f

result = yield future

^^^^^^^^^^^^

File "/Users/liao/miniconda3/envs/boost3/lib/python3.11/site-packages/tornado/gen.py", line 766, in run

value = future.result()

^^^^^^^^^^^^^^^

File "/Users/liao/miniconda3/envs/boost3/lib/python3.11/site-packages/xgboost/dask/__init__.py", line 1024, in _train_async

result = await map_worker_partitions(

^^^^^^^^^^^^^^^^^^^^^^^^^^^^

File "/Users/liao/miniconda3/envs/boost3/lib/python3.11/site-packages/xgboost/dask/__init__.py", line 549, in map_worker_partitions

result = await client.compute(fut).result()

^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^

File "/Users/liao/miniconda3/envs/boost3/lib/python3.11/site-packages/distributed/client.py", line 409, in _result

raise exc.with_traceback(tb)

File "/Users/liao/miniconda3/envs/boost3/lib/python3.11/site-packages/xgboost/dask/__init__.py", line 533, in <lambda>

lambda *args, **kwargs: [func(*args, **kwargs)],

^^^^^^^^^^^^^^^

File "/Users/liao/miniconda3/envs/boost3/lib/python3.11/site-packages/xgboost/dask/__init__.py", line 979, in dispatched_train

Xy, evals = _get_dmatrices(

^^^^^^^^^^^^^^^

File "/Users/liao/miniconda3/envs/boost3/lib/python3.11/site-packages/xgboost/dask/__init__.py", line 921, in _get_dmatrices

Xy = _dmatrix_from_list_of_parts(**train_ref, nthread=n_threads)

^^^^^^^^^^^^^^^^^

File "/Users/liao/miniconda3/envs/boost3/lib/python3.11/site-packages/xgboost/dask/__init__.py", line 828, in _dmatrix_from_list_of_parts

return _create_dmatrix(**kwargs)

^^^^^^^^^^^^^^^^^

File "/Users/liao/miniconda3/envs/boost3/lib/python3.11/site-packages/xgboost/dask/__init__.py", line 810, in _create_dmatrix

v = concat_or_none(value)

^^^^^^^^^^^^^^^^^

File "/Users/liao/miniconda3/envs/boost3/lib/python3.11/site-packages/xgboost/dask/__init__.py", line 805, in concat_or_none

return dconcat(data)

^^^^^^^^^^^^^^^^^

File "/Users/liao/miniconda3/envs/boost3/lib/python3.11/site-packages/xgboost/dask/__init__.py", line 246, in dconcat

return dd.multi.concat(list(value), axis=0)

^^^^^^^^^^^^^^^^^

AttributeError: module 'dask.dataframe.multi' has no attribute 'concat'

```

**Minimal Complete Verifiable Example**:

```python

from xgboost import dask as dxgb

import dask.array as da

import dask.distributed

if __name__ == "__main__":

cluster = dask.distributed.LocalCluster()

client = dask.distributed.Client(cluster)

# X and y must be Dask dataframes or arrays

num_obs = 1e5

num_features = 20

X = da.random.random(size=(num_obs, num_features), chunks=(1000, num_features))

y = da.random.random(size=(num_obs, 1), chunks=(1000, 1))

dtrain = dxgb.DaskDMatrix(client, X, y)

# or

# dtrain = dxgb.DaskQuantileDMatrix(client, X, y)

output = dxgb.train(

client,

{"verbosity": 2, "tree_method": "hist", "objective": "reg:squarederror"},

dtrain,

num_boost_round=4,

evals=[(dtrain, "train")],

)

```

**Anything else we need to know?**:

I create a new conda env, and only install neccessary packages

**Environment**:

- Dask version: 2025.2.0

- Python version: 3.11

- Operating System: mac M3

- Install method (conda, pip, source): conda

- xgboost version: 2.1.4

|

closed

|

2025-03-05T01:50:56Z

|

2025-03-05T10:58:26Z

|

https://github.com/dask/dask/issues/11809

|

[

"needs triage"

] |

Leo-AO-99

| 1 |

biosustain/potion

|

sqlalchemy

| 57 |

Enhancement: Optionally make use of SQLAlchemy joinedloads in `instances` queries

|

Depending on how ones' relationships are configured in SQLAlchemy, adding `.options(joinedload("relationship_name"))` to the query can substantially reduce the number of queries executed.

I've prototyped this a bit for our code, relationship set ups, and on Postgres, and would be happy to share that snippet or start a preliminary PR. Unfortunately, I'm not 100% sure how well this generates to different model specifications, DB backends, etc.

|

open

|

2015-12-30T20:17:01Z

|

2016-01-01T21:06:34Z

|

https://github.com/biosustain/potion/issues/57

|

[] |

boydgreenfield

| 2 |

2noise/ChatTTS

|

python

| 238 |

【分享】几个还不错的音色分享给大家

|

https://ixo96gpj4vq.feishu.cn/wiki/SjiZwNSuUin9fukZh23cIMBOnge?from=from_copylink

|

closed

|

2024-06-03T16:40:32Z

|

2024-07-01T10:12:39Z

|

https://github.com/2noise/ChatTTS/issues/238

|

[

"ad"

] |

QuantumDriver

| 7 |

pyjanitor-devs/pyjanitor

|

pandas

| 1,102 |

improvement suggestions for conditional_join

|

- [x] allow selection/renaming of final columns within the function

- [x] implement a numba alternative, for hopefully faster processing

- [x] improve performance for left and right joins

|

closed

|

2022-05-11T23:06:42Z

|

2022-11-03T20:03:11Z

|

https://github.com/pyjanitor-devs/pyjanitor/issues/1102

|

[] |

samukweku

| 1 |

replicate/cog

|

tensorflow

| 1,303 |

the server sometimes just hang, can't receive request or process the request

|

i 'm using the cog python interface, and i deploy a model on virtual machine with ubuntu os

the server is running successfully at the beginning. but after a long time could be days or a cuple of hours, when the server process plenty of http requests, suddenly the server can't receive any request.

if i use postman to send a post request, there will be no logs. and if i use curl to send a health-check request, there will be nothing output. but the port is still occupied and the server process still exists.

and sometimes the server suddenly can't process any request. if i send a request, the cog log will be like this:

```python

{"prediction_id": "dcb630a2-f1d1-4d60-b05d-28918f087478", "logger": "cog.server.runner", "timestamp": "2023-09-14T02:58:10.318314Z", "severity": "INFO", "message": "starting prediction"}

```

and after the starting prediction log, there will be no more logs, and server hang. even i print something in predict function, there will be no logs.

could anyone give me some advices?

|

open

|

2023-09-14T03:14:14Z

|

2023-09-14T03:14:14Z

|

https://github.com/replicate/cog/issues/1303

|

[] |

zhangvia

| 0 |

thtrieu/darkflow

|

tensorflow

| 495 |

Please verify correct format of detection in Json

|

I am getting this as result in json file.

[{"topleft": {"y": 376, "x": 1073}, "confidence": 0.6, "bottomright": {"y": 419, "x": 1135}, "label": "0"}, {"topleft": {"y": 562, "x": 755}, "confidence": 0.62, "bottomright": {"y": 605, "x": 826}, "label": "0"},

{"topleft": {"y": 562, "x": 857}, "confidence": 0.61, "bottomright": {"y": 605, "x": 932}, "label": "0"}]

Is above is the correct format of result.

In Readme file of this repo the format shown for detection is below which i different from what I am getting:

[{"label":"person", "confidence": 0.56, "topleft": {"x": 184, "y": 101}, "bottomright": {"x": 274, "y": 382}},

{"label": "dog", "confidence": 0.32, "topleft": {"x": 71, "y": 263}, "bottomright": {"x": 193, "y": 353}},

{"label": "horse", "confidence": 0.76, "topleft": {"x": 412, "y": 109}, "bottomright": {"x": 592,"y": 337}}]

Any help will be appreciated.

|

closed

|

2017-12-31T09:30:47Z

|

2017-12-31T11:20:27Z

|

https://github.com/thtrieu/darkflow/issues/495

|

[] |

gangooteli

| 1 |

benbusby/whoogle-search

|

flask

| 1,089 |

[QUESTION] Persistency of custom theme when using Docker(-compose)

|

Hi,

since a few days now im using this awesome application.

I had a look at the documentation and was wondering why there is no volume-mount in the docker-compose file to keep all settings persistent

I understand that it is possible to set 90% of all settings as env-var, but i could not figure out how to make e.g. a custom theme persistent

After each server-reboot, the default settings/theme is present again

Is there a single file holding all of these settings to be able to mount this file/folder to a docker-volume?

Thanks in advance

|

closed

|

2023-10-23T16:49:47Z

|

2023-10-25T14:18:25Z

|

https://github.com/benbusby/whoogle-search/issues/1089

|

[

"question"

] |

SHU-red

| 2 |

plotly/dash-table

|

plotly

| 717 |

Objects are not valid as a React child (found: object with keys {id, full_name}). If you meant to render a collection of children, use an array instead.

|

Code

```python

app.layout = html.Div(children=[

html.H1('Dashboard', className='text-center'),

dash_table.DataTable(

id='preventions',

columns=[{"name": col, "id": col} for col in df.columns],

data=df.to_dict('records')

)

])

```

```

Objects are not valid as a React child (found: object with keys {id, full_name}). If you meant to render a collection of children, use an array instead.

in div (created by t)

in t (created by t)

in td (created by t)

in t (created by t)

in tr (created by t)

in tbody (created by t)

in table (created by t)

in div (created by t)

in div (created by t)

in div (created by t)

in div (created by t)

in div (created by t)

in t (created by t)

in t (created by t)

in t (created by t)

in Suspense (created by t)

in t (created by CheckedComponent)

in CheckedComponent (created by TreeContainer)

in UnconnectedComponentErrorBoundary (created by Connect(UnconnectedComponentErrorBoundary))

in Connect(UnconnectedComponentErrorBoundary) (created by TreeContainer)

in TreeContainer (created by Connect(TreeContainer))

in Connect(TreeContainer) (created by TreeContainer)

in div (created by u)

in u (created by CheckedComponent)

in CheckedComponent (created by TreeContainer)

in UnconnectedComponentErrorBoundary (created by Connect(UnconnectedComponentErrorBoundary))

in Connect(UnconnectedComponentErrorBoundary) (created by TreeContainer)

in TreeContainer (created by Connect(TreeContainer))

in Connect(TreeContainer) (created by UnconnectedContainer)

in div (created by UnconnectedGlobalErrorContainer)

in div (created by GlobalErrorOverlay)

in div (created by GlobalErrorOverlay)

in GlobalErrorOverlay (created by DebugMenu)

in div (created by DebugMenu)

in DebugMenu (created by UnconnectedGlobalErrorContainer)

in div (created by UnconnectedGlobalErrorContainer)

in UnconnectedGlobalErrorContainer (created by Connect(UnconnectedGlobalErrorContainer))

in Connect(UnconnectedGlobalErrorContainer) (created by UnconnectedContainer)

in UnconnectedContainer (created by Connect(UnconnectedContainer))

in Connect(UnconnectedContainer) (created by UnconnectedAppContainer)

in UnconnectedAppContainer (created by Connect(UnconnectedAppContainer))

in Connect(UnconnectedAppContainer) (created by AppProvider)

in Provider (created by AppProvider)

in AppProvider

```

```

(This error originated from the built-in JavaScript code that runs Dash apps. Click to see the full stack trace or open your browser's console.)

invariant@http://localhost:8050/_dash-component-suites/dash_renderer/react-dom@16.v1_2_2m1583518104.8.6.js:49:15

throwOnInvalidObjectType@http://localhost:8050/_dash-component-suites/dash_renderer/react-dom@16.v1_2_2m1583518104.8.6.js:11968:14

reconcileChildFibers@http://localhost:8050/_dash-component-suites/dash_renderer/react-dom@16.v1_2_2m1583518104.8.6.js:12742:31

reconcileChildren@http://localhost:8050/_dash-component-suites/dash_renderer/react-dom@16.v1_2_2m1583518104.8.6.js:14537:28

updateHostComponent@http://localhost:8050/_dash-component-suites/dash_renderer/react-dom@16.v1_2_2m1583518104.8.6.js:14998:20

beginWork@http://localhost:8050/_dash-component-suites/dash_renderer/react-dom@16.v1_2_2m1583518104.8.6.js:15784:14

performUnitOfWork@http://localhost:8050/_dash-component-suites/dash_renderer/react-dom@16.v1_2_2m1583518104.8.6.js:19447:12

workLoop@http://localhost:8050/_dash-component-suites/dash_renderer/react-dom@16.v1_2_2m1583518104.8.6.js:19487:24

renderRoot@http://localhost:8050/_dash-component-suites/dash_renderer/react-dom@16.v1_2_2m1583518104.8.6.js:19570:15

performWorkOnRoot@http://localhost:8050/_dash-component-suites/dash_renderer/react-dom@16.v1_2_2m1583518104.8.6.js:20477:17

performWork@http://localhost:8050/_dash-component-suites/dash_renderer/react-dom@16.v1_2_2m1583518104.8.6.js:20389:24

performSyncWork@http://localhost:8050/_dash-component-suites/dash_renderer/react-dom@16.v1_2_2m1583518104.8.6.js:20363:14

requestWork@http://localhost:8050/_dash-component-suites/dash_renderer/react-dom@16.v1_2_2m1583518104.8.6.js:20232:5

retryTimedOutBoundary@http://localhost:8050/_dash-component-suites/dash_renderer/react-dom@16.v1_2_2m1583518104.8.6.js:19924:18

wrapped@http://localhost:8050/_dash-component-suites/dash_renderer/react@16.v1_2_2m1583518104.8.6.js:1353:34

```

|

closed

|

2020-03-06T19:35:01Z

|

2020-03-06T20:06:10Z

|

https://github.com/plotly/dash-table/issues/717

|

[] |

e0ff

| 1 |

pydantic/pydantic-ai

|

pydantic

| 1,082 |

Cannot upload images to gemini

|

### Initial Checks

- [x] I confirm that I'm using the latest version of Pydantic AI

### Description

Hi, i have been trying to upload an image to gemini mode gemini-2.0-flash-thinking-exp-01-21 which accepts multimodal image.

However when I try to upload the image which has a file extension it seems to break. It works fine if there is some url on the internet which has an image but the URL does not have file extension.

below is the attached response

```

pydantic_ai.exceptions.ModelHTTPError: status_code: 400, model_name: gemini-2.0-flash-thinking-exp-01-21, body: {

"error": {

"code": 400,

"message": "Request contains an invalid argument.",

"status": "INVALID_ARGUMENT"

}

}

```

### Example Code

```Python

import os

import io

import base64

from dotenv import load_dotenv

from pydantic_ai.models.gemini import GeminiModel

from pydantic_ai.agent import Agent

from pydantic_ai.messages import ImageUrl

from PIL import Image

load_dotenv(override=True)

llm = GeminiModel(os.getenv('MODEL'))

pydantic_agent = Agent(

model=llm,

system_prompt="You are an helpful agent for reading and extracting data from images and pdfs."

)

def image_to_byte_string(image: Image.Image) -> str:

img_byte_arr = io.BytesIO()

image.save(img_byte_arr, format='PNG') # or 'JPEG', etc.

img_byte_arr = img_byte_arr.getvalue()

return base64.b64encode(img_byte_arr).decode('utf-8')

def main():

image = Image.open("./fuzzy-bunnies.png")

formatted_image=image_to_byte_string(image)

input = [

"What is this image of?",

# ImageUrl(f'data:{image.get_format_mimetype()};base64,{formatted_image}'),

ImageUrl(url='https://hatrabbits.com/wp-content/uploads/2017/01/random.png')

]

response = pydantic_agent.run_sync(input)

print(response.data)

if __name__ == "__main__":

main()

```

### Python, Pydantic AI & LLM client version

```Text

python: 3.9.6

pydantic_core: 2.27.2

pydantic_ai: 0.0.36

```

|

open

|

2025-03-08T21:08:41Z

|

2025-03-10T10:29:56Z

|

https://github.com/pydantic/pydantic-ai/issues/1082

|

[

"need confirmation"

] |

Atoo35

| 6 |

fastapi/sqlmodel

|

pydantic

| 356 |

Support discriminated union

|

### First Check

- [X] I added a very descriptive title to this issue.

- [X] I used the GitHub search to find a similar issue and didn't find it.

- [X] I searched the SQLModel documentation, with the integrated search.

- [X] I already searched in Google "How to X in SQLModel" and didn't find any information.

- [X] I already read and followed all the tutorial in the docs and didn't find an answer.

- [X] I already checked if it is not related to SQLModel but to [Pydantic](https://github.com/samuelcolvin/pydantic).

- [X] I already checked if it is not related to SQLModel but to [SQLAlchemy](https://github.com/sqlalchemy/sqlalchemy).

### Commit to Help

- [ ] I commit to help with one of those options 👆

### Example Code

```python

from typing import Literal, Union, Optional

from sqlmodel import SQLModel, Field

class Bar(SQLModel):

class_type: Literal["bar"] = "bar"

name: str

class Foo(SQLModel):

class_type: Literal["foo"] = "foo"

name: str

id: Optional[int]

class FooBar(SQLModel, table=True):

id: int = Field(default=None, primary_key=True)

data: Union[Foo, Bar] = Field(discriminator="class_type")

```

### Description

* No support for Pydantic 1.9's implementation of discriminated union

### Wanted Solution

Would like to have support for discriminated union in SQLModel-classes

### Wanted Code

```python

from typing import Literal, Union, Optional

from sqlmodel import SQLModel, Field

class Bar(SQLModel):

class_type: Literal["bar"] = "bar"

name: str

class Foo(SQLModel):

class_type: Literal["foo"] = "foo"

name: str

id: Optional[int]

class FooBar(SQLModel, table=True):

id: int = Field(default=None, primary_key=True)

data: Union[Foo, Bar] = Field(discriminator="class_type")

```

### Alternatives

_No response_

### Operating System

Linux

### Operating System Details

_No response_

### SQLModel Version

0.0.6

### Python Version

3.10.4

### Additional Context

Pydantic has added support for discrimated unions, something I use in my project with Pydantic + SQLAlchemy.

I want to switch to SQLModel, but cannot switch before discriminated unions

|

closed

|

2022-06-07T12:50:05Z

|

2023-11-06T00:10:07Z

|

https://github.com/fastapi/sqlmodel/issues/356

|

[

"feature",

"answered"

] |

wholmen

| 3 |

SALib/SALib

|

numpy

| 614 |

`extract_group_names()` and associated methods can be simplified

|

These methods can now be replaced with variations of `pd.unique()` and `np.unique()`

|

closed

|

2024-04-19T13:13:25Z

|

2024-04-21T01:56:45Z

|

https://github.com/SALib/SALib/issues/614

|

[

"clean up/maintenance"

] |

ConnectedSystems

| 0 |

deepspeedai/DeepSpeed

|

pytorch

| 6,994 |

[BUG] loading model error

|

**Describe the bug**

I use 8 * A100-80GB GPUs to fine-tune a 72B model, but when it loads 16/37 safetensor, it always been shutdown for no reasons without any hints!

The error is as follows:

```

Loading checkpoint Shards"43%16/37 [19:49<25:57,74.18s/it]

Sending process4029695 closing signal SIGTERK

failad (edtcote: -5) lcalrank:5

```

I use zero-3 and acclerate to launch my script. The command I use is `accelerate launch --config_file xxx --gpu_ids 0,1,2,3,4,5,6,7 xxx.py`.

The deepspeed file is as follows:

```

{

"fp16": {

"enabled": true,

"loss_scale": 0,

"loss_scale_window": 1000,

"initial_scale_power": 16,

"hysteresis": 2,

"min_loss_scale": 1

},

"optimizer": {

"type": "AdamW",

"params": {

"lr": "auto",

"weight_decay": "auto"

}

},

"scheduler": {

"type": "WarmupDecayLR",

"params": {

"warmup_min_lr": "auto",

"warmup_max_lr": "auto",

"warmup_num_steps": "auto",

"total_num_steps": "auto"

}

},

"zero_optimization": {

"stage": 3,

"offload_optimizer": {

"device": "cpu",

"pin_memory": true

},

"offload_param": {

"device": "cpu",

"pin_memory": true

},

"overlap_comm": true,

"contiguous_gradients": true,

"reduce_bucket_size": "auto",

"stage3_prefetch_bucket_size": "auto",

"stage3_param_persistence_threshold": "auto",

"sub_group_size": 1e9,

"stage3_max_live_parameters": 1e9,

"stage3_max_reuse_distance": 1e9,

"stage3_gather_16bit_weights_on_model_save": "auto"

},

"gradient_accumulation_steps": 1,

"gradient_clipping": "auto",

"steps_per_print": 2000,

"train_batch_size": "auto",

"train_micro_batch_size_per_gpu": "auto",

"wall_clock_breakdown": false

}

```

**Expected behavior**

I think it should load all of safetensors, what's more, during the loading process, I notice that gpu memory is still 0.

**Screenshots**

<img width="1392" alt="Image" src="https://github.com/user-attachments/assets/9da6fc2c-d842-4a77-8f9c-6d14fa6f74c3" />

**System info (please complete the following information):**

- OS: Ubuntu 18.04

- GPU count and types: 8*A100-80GB

- Python version 3.12

|

open

|

2025-02-03T08:03:14Z

|

2025-02-03T08:03:14Z

|

https://github.com/deepspeedai/DeepSpeed/issues/6994

|

[

"bug",

"training"

] |

tengwang0318

| 0 |

mirumee/ariadne-codegen

|

graphql

| 350 |

10x memory increase from v0.13 -> v0.14

|

We measured the Ariadne generated module after upgrading to v0.14, and we realize memory of the module increased from 400MB -> 4GB after upgrading from v0.13 to v0.14.

We would like to use ClientForwardRefs in v0.14, but the memory increase between v0.13 and v0.14 is untenable.

Any ideas what might be causing this and how to avoid this?

Verified on python 3.9 and 3.10 and 3.11 and 3.12

|

closed

|

2025-02-03T21:43:11Z

|

2025-02-11T16:59:56Z

|

https://github.com/mirumee/ariadne-codegen/issues/350

|

[] |

eltontian

| 3 |

jupyter-book/jupyter-book

|

jupyter

| 2,181 |

README - outdated section?

|

is this section outdated and can be deleted from README.md?

https://github.com/jupyter-book/jupyter-book/blob/47d2481c8ddc427ee8c9d1bbb24a4d57cc0a8fbd/README.md?plain=1#L21-L25

|

closed

|

2024-07-29T18:34:22Z

|

2024-08-29T22:27:54Z

|

https://github.com/jupyter-book/jupyter-book/issues/2181

|

[] |

VladimirFokow

| 2 |

jacobgil/pytorch-grad-cam

|

computer-vision

| 364 |

Possibly inverted heatmaps for Score-CAM for YOLOv5

|

Hi @jacobgil , I'm working on applying Score-CAM on YOLOv5 by implementing the `YOLOBoxScoreTarget` class (so this issue is closely related with #242). There are several issues I want to iron out before making a PR (e.g. YOLOv5 returns parseable `Detection` objects only when the input is _not_ a Torch tensor - see https://github.com/ultralytics/yolov5/issues/6726 - and my workaround is ad-hoc atm), but in my current implementation I find that the resulting heatmaps are inverted, e.g.:

Outputs for dog & cat example, unnormalized (L)/normalized (R):

Also observed similar trends with other images like the 5-dogs example. My `YOLOBoxScoreTarget` is as follows, it is essentially identical to [FasterRCNNBoxScoreTarget](https://github.com/jacobgil/pytorch-grad-cam/blob/2183a9cbc1bd5fc1d8e134b4f3318c3b6db5671f/pytorch_grad_cam/utils/model_targets.py#L69), except it leverages `parse_detections` from the YOLOv5 notebook:

```

class YOLOBoxScoreTarget:

""" For every original detected bounding box specified in "bounding boxes",

assign a score on how the current bounding boxes match it,

1. In IOU

2. In the classification score.

If there is not a large enough overlap, or the category changed,

assign a score of 0.

The total score is the sum of all the box scores.

"""

def __init__(self, labels, bounding_boxes, iou_threshold=0.5):

self.labels = labels

self.bounding_boxes = bounding_boxes

self.iou_threshold = iou_threshold

def __call__(self, model_outputs):

boxes, colors, categories, names, confidences = parse_detections(model_outputs)

boxes = torch.Tensor(boxes)

output = torch.Tensor([0])

if torch.cuda.is_available():

output = output.cuda()

boxes = boxes.cuda()

if len(boxes) == 0:

return output

for box, label in zip(self.bounding_boxes, self.labels):

box = torch.Tensor(box[None, :])

if torch.cuda.is_available():

box = box.cuda()

ious = torchvision.ops.box_iou(box, boxes)

index = ious.argmax()

if ious[0, index] > self.iou_threshold and categories[index] == label:

score = ious[0, index] + confidences[index]

output = output + score

return output

```

I also slightly altered `get_cam_weights` in [score_cam.py](https://github.com/jacobgil/pytorch-grad-cam/blob/master/pytorch_grad_cam/score_cam.py) to make it play nice with numpy inputs instead of torch tensors.

```

import torch

import tqdm

from .base_cam import BaseCAM

import numpy as np

class ScoreCAM(BaseCAM):

def __init__(

self,

model,

target_layers,

use_cuda=False,

reshape_transform=None):

super(ScoreCAM, self).__init__(model,

target_layers,

use_cuda,

reshape_transform=reshape_transform,

uses_gradients=False)

if len(target_layers) > 0:

print("Warning: You are using ScoreCAM with target layers, "

"however ScoreCAM will ignore them.")

def get_cam_weights(self,

input_tensor,

target_layer,

targets,

activations,

grads):

with torch.no_grad():

upsample = torch.nn.UpsamplingBilinear2d(

size=input_tensor.shape[-2:])

activation_tensor = torch.from_numpy(activations)

if self.cuda:

activation_tensor = activation_tensor.cuda()

upsampled = upsample(activation_tensor)

maxs = upsampled.view(upsampled.size(0),

upsampled.size(1), -1).max(dim=-1)[0]

mins = upsampled.view(upsampled.size(0),

upsampled.size(1), -1).min(dim=-1)[0]

maxs, mins = maxs[:, :, None, None], mins[:, :, None, None]

upsampled = (upsampled - mins) / (maxs - mins)

input_tensors = input_tensor[:, None,

:, :] * upsampled[:, :, None, :, :]

if hasattr(self, "batch_size"):

BATCH_SIZE = self.batch_size

else:

BATCH_SIZE = 16

scores = []

for target, tensor in zip(targets, input_tensors):

for i in tqdm.tqdm(range(0, tensor.size(0), BATCH_SIZE)):

batch = tensor[i: i + BATCH_SIZE, :]

# TODO: current solution to handle the issues with torch inputs, improve

batch = list(batch.numpy())

batch = [np.swapaxes((elt * 255).astype(np.uint8), 0, -1) for elt in batch]

outs = [self.model(b) for b in batch]

outputs = [target(o).cpu().item() for o in outs]

scores.extend(outputs)

scores = torch.Tensor(scores)

scores = scores.view(activations.shape[0], activations.shape[1])

weights = torch.nn.Softmax(dim=-1)(scores).numpy()

return weights

```

To me the implementations seem correct, and therefore I am not able to address why the resulting heatmaps seem inverted. Any ideas? I'd be happy to make a branch with a reproducible example to debug and potentially extend it to a PR. Please let me know.

|

open

|

2022-11-17T12:13:34Z

|

2023-11-08T15:38:48Z

|

https://github.com/jacobgil/pytorch-grad-cam/issues/364

|

[] |

semihcanturk

| 3 |

ageitgey/face_recognition

|

python

| 624 |

How to increase the size of a detected rectangle in python?

|

* face_recognition version: latest version

* Python version: 3.7

* Operating System: Kali Linux

### Description

How to increase the size of a detected rectangle, for example, I want to crop 3x4 photo of detected faces

### What I Did

First I thought it needs to increase the top&bottom position but then it breaks the accuracy of face detection and images are not face-centered. after that, I searched about that and found OpenCV version which can add padding to the detected rectangle. Are you have the same function that can add padding and space to the detected rectangle?

```

from PIL import Image

import face_recognition

image = face_recognition.load_image_file(importLocation)

face_locations = face_recognition.face_locations(image)

i = 0

for face_location in face_locations:

top, right, bottom, left = face_location

face_image = image[top:bottom, left:right]

pil_img = Image.fromarray(face_image)

pil_img.save("%s/face-{}.jpg".format(i) % (exportLocation))

i += 1

```

|

closed

|

2018-09-19T13:53:13Z

|

2018-09-23T14:59:24Z

|

https://github.com/ageitgey/face_recognition/issues/624

|

[] |

hasanparasteh

| 1 |

onnx/onnx

|

deep-learning

| 6,425 |

Inconsistency in LSTM's operator description and backend testcases?

|

# Ask a Question

### Question

In the [LSTM's operator description](https://onnx.ai/onnx/operators/onnx__LSTM.html#inputs), there are 3 optional outputs(`Y, Y_h, Y_c`). But in the [testcases](https://github.com/onnx/onnx/blob/main/onnx/backend/test/case/node/lstm.py), there are only 2 outputs(looks like `Y, Y_h`). Is this something deliberate?

### Further information

- Relevant Area: operators

- Is this issue related to a specific model?

**Model name**: LSTM

**Model opset**: all

|

open

|

2024-10-03T20:48:13Z

|

2024-10-21T09:13:40Z

|

https://github.com/onnx/onnx/issues/6425

|

[

"question"

] |

knwng

| 1 |

marcomusy/vedo

|

numpy

| 430 |

Color aberations

|

Hey,

I am using [BrainRender](https://github.com/brainglobe/brainrender) for visualisation of neuro-related data.

I have been using a function to slice some parts of the 3D objects however I get some aberations.

After discussing with Fede Claudi, he mentionned that the problem could be probably using vedo.

I am attached here an example. Any idea how to solve that?

Thanks a lot !!

|

closed

|

2021-07-20T13:30:10Z

|

2021-08-28T10:53:24Z

|

https://github.com/marcomusy/vedo/issues/430

|

[] |

MathieuBo

| 6 |

ivy-llc/ivy

|

pytorch

| 28,628 |

Fix Frontend Failing Test: torch - activations.tensorflow.keras.activations.relu

|

To-do List: https://github.com/unifyai/ivy/issues/27498

|

closed

|

2024-03-17T23:57:34Z

|

2024-03-25T12:47:11Z

|

https://github.com/ivy-llc/ivy/issues/28628

|

[

"Sub Task"

] |

ZJay07

| 0 |

nerfstudio-project/nerfstudio

|

computer-vision

| 3,541 |

Error running command: colmap vocab_tree_matcher --database_path during ns-process-data

|

Given an input of 20 images in a folder located in /vulcanscratch/aidris12/nerfstudio/data/nerfstudio/unprocessed_monkey/, I am trying to run ns-process-data. I am running the following:

`ns-process-data images --data /vulcanscratch/aidris12/nerfstudio/data/nerfstudio/unprocessed_monkey/ --output-dir /vulcanscratch/aidris12/nerfstudio/data/nerfstudio/processed_monkey/

`

However, I am currently running into this problem:

<img width="1440" alt="Screen Shot 2024-12-04 at 10 48 06 PM" src="https://github.com/user-attachments/assets/7aba065a-0397-4ab7-98e4-8b5fb6f0e68a">

I don't know why this is occurring. I have tried running COLMAP before in another directory, where I ran

`ns-process-data images --data /vulcanscratch/aidris12/nerfstudio/data/nerfstudio/unprocessed_teapot/ --output-dir /vulcanscratch/aidris12/nerfstudio/data/nerfstudio/processed_teapot/`

The result was the following. Not good, but at least it ran:

<img width="1440" alt="Screen Shot 2024-12-04 at 10 51 31 PM" src="https://github.com/user-attachments/assets/5ba64237-a505-4fcc-b099-4e2eaa7d6065">

They are both folders of png images that COLMAP processes that are even in the same superfolder (/vulcanscratch/aidris12/nerfstudio/data/nerfstudio), but it is freaking out in one folder versus the other.

Is there perhaps a problem with pathing? You may have noticed that I had a folder named nerfstudio/data/nerfstudio, which seems like poor practice. However, I am confused why the pathing to one folder was fine when the other was not.

|

open

|

2024-12-05T03:55:25Z

|

2024-12-05T03:55:25Z

|

https://github.com/nerfstudio-project/nerfstudio/issues/3541

|

[] |

aidris823

| 0 |

huggingface/datasets

|

deep-learning

| 6,990 |

Problematic rank after calling `split_dataset_by_node` twice

|

### Describe the bug

I'm trying to split `IterableDataset` by `split_dataset_by_node`.

But when doing split on a already split dataset, the resulting `rank` is greater than `world_size`.

### Steps to reproduce the bug

Here is the minimal code for reproduction:

```py

>>> from datasets import load_dataset

>>> from datasets.distributed import split_dataset_by_node

>>> dataset = load_dataset('fla-hub/slimpajama-test', split='train', streaming=True)

>>> dataset = split_dataset_by_node(dataset, 1, 32)

>>> dataset._distributed

DistributedConfig(rank=1, world_size=32)

>>> dataset = split_dataset_by_node(dataset, 1, 15)

>>> dataset._distributed

DistributedConfig(rank=481, world_size=480)

```

As you can see, the second rank 481 > 480, which is problematic.

### Expected behavior

I think this error comes from this line @lhoestq

https://github.com/huggingface/datasets/blob/a6ccf944e42c1a84de81bf326accab9999b86c90/src/datasets/iterable_dataset.py#L2943-L2944

We may need to obtain the rank first. Then the above code gives

```py

>>> dataset._distributed

DistributedConfig(rank=16, world_size=480)

```

### Environment info

datasets==2.20.0

|

closed

|

2024-06-21T14:25:26Z

|

2024-06-25T16:19:19Z

|

https://github.com/huggingface/datasets/issues/6990

|

[] |

yzhangcs

| 1 |

schemathesis/schemathesis

|

pytest

| 2,355 |

[FEATURE] Negative tests for GraphQL

|

### Checklist

- [x] I checked the [FAQ section](https://schemathesis.readthedocs.io/en/stable/faq.html#frequently-asked-questions) of the documentation

- [x] I looked for similar issues in the [issue tracker](https://github.com/schemathesis/schemathesis/issues)

- [x] I am using the latest version of Schemathesis

### Describe the bug

I try to generate negative tests, however it doesn't work.

### To Reproduce

```python

import schemathesis

from hypothesis import settings

from schemathesis import DataGenerationMethod

f = open("schema.graphql")

schema = schemathesis.graphql.from_file(f, data_generation_methods=[DataGenerationMethod.negative])

@schema.parametrize()

@settings(max_examples=10)

def test_api(case):

print(case.body)

```

Minimal API schema causing this issue:

```

schema {

query: Query

mutation: Mutation

}

type Query {

hello: String

user(id: Int!): String

}

type Mutation {

createUser(name: String!, age: Int!): String

}

```

### Expected behavior

I was expecting negative tests, but clearly these are just ordinary.

```

{

user(id: 0)

}

```

```

mutation {

createUser(name: "", age: 0) {

age

}

}

```

### Environment

```

- OS: MacOS Ventura

- Python version: 3.12

- Schemathesis version: 3.32.2

```

|

open

|

2024-07-18T11:01:47Z

|

2024-07-18T11:10:13Z

|

https://github.com/schemathesis/schemathesis/issues/2355

|

[

"Priority: Medium",

"Type: Feature",

"Specification: GraphQL",

"Difficulty: Hard"

] |

antonchuvashow

| 1 |

ansible/ansible

|

python

| 84,074 |

Expose more timeout-related context in `ansible-test`

|

### Summary

So sometimes our CI gets stuck on a single task for so long that it gets killed after a 50-minute timeout. And there wouldn't be any context regarding what was the state of processes at that point.

Here's an example: https://dev.azure.com/ansible/ansible/_build/results?buildId=125233&view=logs&j=decc2f6c-caaf-5327-9dc2-edc5c3d85ed8&t=c5b61780-1e0c-5015-76da-ae0f2062a595&l=11894. Following the source code is just a guessing game. My guess is that the file system access is slow somewhere since `assert` does templating, but I'm pretty sure I won't be able to reproduce it w/o knowing where to look more precisely.

### Issue Type

Bug Report

### Component Name

ansible-test

### Ansible Version

```

stable-2.15

```

### Configuration

```console

N/A

```

### OS / Environment

alpine container @ azure pipelines

### Steps to Reproduce

N/A

### Expected Results

There should be more info printed out.

I think the signal handler @ https://github.com/ansible/ansible/blob/f1f0d9bd5355de5b45b894a9adf649abb2f97df5/test/lib/ansible_test/_internal/timeout.py#L115-L119 should attempt retrieving more context to show us.

What occurred to me was that maybe it could call `py-spy` and perhaps integrate [the stdlib `faulthandler`](https://docs.python.org/3/library/faulthandler.html#dumping-the-traceback-on-a-user-signal).

cc @mattclay any other ideas?

### Actual Results

```console

No useful context that would provide any clues regarding what's happening beyond the timestamps that vaguely point to the action plugin that's hanging:

15:14 TASK [assert] ******************************************************************

15:14 ok: [localhost] => {

15:14 "changed": false,

15:14 "msg": "All assertions passed"

15:14 }

15:14

15:14 TASK [Less than 1] *************************************************************

15:15 Pausing for 1 seconds

15:15 ok: [localhost] => {"changed": false, "delta": 1, "echo": true, "rc": 0, "start": "2024-10-08 07:20:03.109197", "stderr": "", "stdout": "Paused for 1.0 seconds", "stop": "2024-10-08 07:20:04.112144", "user_input": ""}

15:15

15:15 TASK [assert] ******************************************************************

50:10

50:10 NOTICE: Killed command to avoid an orphaned child process during handling of an unexpected exception.

50:10 Run command with stdout: docker exec -i ansible-test-controller-fNcVkXOj sh -c 'tar cf - -C /root/ansible/test --exclude .tmp results | gzip'

50:10 Run command with stdin: tar oxzf - -C /__w/1/ansible/test

50:10 FATAL: Tests aborted after exceeding the 50 minute time limit.

50:10 Run command: docker stop --time 0 ansible-test-controller-fNcVkXOj

50:10 Run command: docker rm ansible-test-controller-fNcVkXOj

50:11 Run command: docker stop --time 0 48cbf6f96fc859d835848038445a1164ddfe08ea7695b68f39636c8cb6693599

50:11 Run command: docker rm 48cbf6f96fc859d835848038445a1164ddfe08ea7695b68f39636c8cb6693599

##[error]Bash exited with code '1'.

Finishing: Run Tests

```

### Code of Conduct

- [X] I agree to follow the Ansible Code of Conduct

|

open

|

2024-10-08T13:24:55Z

|

2025-01-21T15:43:38Z

|

https://github.com/ansible/ansible/issues/84074

|

[

"bug"

] |

webknjaz

| 4 |

junyanz/pytorch-CycleGAN-and-pix2pix

|

pytorch

| 1,608 |

wandb & visdom question

|

I used visdom and wandb to record the training process.But steps is not the same,For visdom ,it's xaxis have 200 epochs,but in wandb have 1200 steps.Why is there such a problem.

|

open

|

2023-11-01T02:49:01Z

|

2023-12-22T11:12:37Z

|

https://github.com/junyanz/pytorch-CycleGAN-and-pix2pix/issues/1608

|

[] |

xia-QQ

| 1 |

kizniche/Mycodo

|

automation

| 678 |

7.6.3 Test Email Not Sending

|

Mycodo Issue Report: 7.6.3

Problem Description:

While using the test email alert system I noticed that none of the emails showed up in my inbox despite saying that the email was sent successfully. I have filled in all of the SMTP account information and have enabled the POP and IMAP stuff on the gmail account.

|

closed

|

2019-08-06T23:38:11Z

|

2023-11-08T20:21:03Z

|

https://github.com/kizniche/Mycodo/issues/678

|

[] |

wllyng

| 4 |

autogluon/autogluon

|

scikit-learn

| 4,561 |

[MultiModal] Need to add supported loss functions in AutoMM

|

### Describe the issue linked to the documentation

Users need to understand the list of supported loss functions for their problem type in our doc, specifically at [here](https://auto.gluon.ai/stable/tutorials/multimodal/advanced_topics/customization.html#optimization)

### Suggest a potential alternative/fix

_No response_

|

open

|

2024-10-18T19:56:44Z

|

2024-11-26T21:42:57Z

|

https://github.com/autogluon/autogluon/issues/4561

|

[

"API & Doc",

"Needs Triage",

"module: multimodal"

] |

tonyhoo

| 2 |

FactoryBoy/factory_boy

|

django

| 776 |

Add Dynamodb ORM Factory

|

#### The problem

I haven't found support for creating a factory with the Dynamodb ORM [pynamodb](https://github.com/pynamodb/PynamoDB). Sometimes I use a django-supported ORM for which the `DjangoModelFactory` works great, and sometimes I need a NoSQL DB.

#### Proposed solution

I assume this would include implementing the `base.Factory` interface, though I'm pretty unfamiliar with what's under the hood of factory_boy.

Edit: ORM (Object Relational Mapping) for a NoSQL DB is a misnomer :-P ONSQLM (Object NoSQL Mapping) would be more appropriate

|

open

|

2020-08-28T15:46:34Z

|

2022-01-25T12:57:30Z

|

https://github.com/FactoryBoy/factory_boy/issues/776

|

[

"Feature",

"DesignDecision"

] |

ezbc

| 6 |

yt-dlp/yt-dlp

|

python

| 11,871 |

[telegram:embed] Extractor telegram:embed returned nothing

|

### DO NOT REMOVE OR SKIP THE ISSUE TEMPLATE

- [X] I understand that I will be **blocked** if I *intentionally* remove or skip any mandatory\* field

### Checklist

- [X] I'm reporting that yt-dlp is broken on a **supported** site

- [X] I've verified that I have **updated yt-dlp to nightly or master** ([update instructions](https://github.com/yt-dlp/yt-dlp#update-channels))

- [X] I've checked that all provided URLs are playable in a browser with the same IP and same login details

- [X] I've checked that all URLs and arguments with special characters are [properly quoted or escaped](https://github.com/yt-dlp/yt-dlp/wiki/FAQ#video-url-contains-an-ampersand--and-im-getting-some-strange-output-1-2839-or-v-is-not-recognized-as-an-internal-or-external-command)

- [X] I've searched [known issues](https://github.com/yt-dlp/yt-dlp/issues/3766) and the [bugtracker](https://github.com/yt-dlp/yt-dlp/issues?q=) for similar issues **including closed ones**. DO NOT post duplicates

- [X] I've read the [guidelines for opening an issue](https://github.com/yt-dlp/yt-dlp/blob/master/CONTRIBUTING.md#opening-an-issue)

- [X] I've read about [sharing account credentials](https://github.com/yt-dlp/yt-dlp/blob/master/CONTRIBUTING.md#are-you-willing-to-share-account-details-if-needed) and I'm willing to share it if required

### Region

Russia

### Provide a description that is worded well enough to be understood

Unable to download audio or videos messages from telegram

### Provide verbose output that clearly demonstrates the problem

- [X] Run **your** yt-dlp command with **-vU** flag added (`yt-dlp -vU <your command line>`)

- [ ] If using API, add `'verbose': True` to `YoutubeDL` params instead

- [X] Copy the WHOLE output (starting with `[debug] Command-line config`) and insert it below

### Complete Verbose Output

```shell

[debug] Command-line config: ['-vU', '--verbose', 'https://t.me/trsch/4068']

[debug] Encodings: locale cp1251, fs utf-8, pref cp1251, out utf-8, error utf-8, screen utf-8

[debug] yt-dlp version stable@2024.12.13 from yt-dlp/yt-dlp [542166962] (win_exe)

[debug] Python 3.10.11 (CPython AMD64 64bit) - Windows-10-10.0.17763-SP0 (OpenSSL 1.1.1t 7 Feb 2023)

[debug] exe versions: ffmpeg 6.0-full_build-www.gyan.dev (setts), ffprobe 6.0-full_build-www.gyan.dev

[debug] Optional libraries: Cryptodome-3.21.0, brotli-1.1.0, certifi-2024.08.30, curl_cffi-0.5.10, mutagen-1.47.0, requests-2.32.3, sqlite3-3.40.1, urllib3-2.2.3, websockets-14.1

[debug] Proxy map: {}

[debug] Request Handlers: urllib, requests, websockets, curl_cffi

[debug] Loaded 1837 extractors

[debug] Fetching release info: https://api.github.com/repos/yt-dlp/yt-dlp/releases/latest

Latest version: stable@2024.12.13 from yt-dlp/yt-dlp

yt-dlp is up to date (stable@2024.12.13 from yt-dlp/yt-dlp)

[telegram:embed] Extracting URL: https://t.me/trsch/4068

[telegram:embed] 4068: Downloading embed frame

WARNING: Extractor telegram:embed returned nothing; please report this issue on https://github.com/yt-dlp/yt-dlp/issues?q= , filling out the appropriate issue template. Confirm you are on the latest version using yt-dlp -U

```

|

open

|

2024-12-22T06:01:58Z

|

2024-12-24T11:53:28Z

|

https://github.com/yt-dlp/yt-dlp/issues/11871

|

[

"site-bug",

"patch-available"

] |

k0zyrev

| 5 |

microsoft/nni

|

machine-learning

| 5,766 |

TypeError: 'Tensor' object is not a mapping

|

**Describe the issue**:

Upon running the following code to compress and speed up the YOLOv5 model using NNI's pruning and speedup techniques.:

```

import torch

from nni.common.concrete_trace_utils import concrete_trace

from nni.compression.pruning import L1NormPruner

from nni.compression.utils import auto_set_denpendency_group_ids

from nni.compression.speedup import ModelSpeedup

model = torch.hub.load('ultralytics/yolov5', 'yolov5s', device='cpu')

model(torch.rand([1, 3, 640, 640]))

config_list = [{

'sparsity': 0.5,

'op_types': ['Conv2d'],

'exclude_op_names_re': ['model.model.model.24.*'], # this layer is detector head

}]

config_list = auto_set_denpendency_group_ids(model, config_list, torch.rand([1, 3, 640, 640]))

pruner = L1NormPruner(model, config_list)

masked_model, masks = pruner.compress()

pruner.unwrap_model()