repo_name

stringlengths 9

75

| topic

stringclasses 30

values | issue_number

int64 1

203k

| title

stringlengths 1

976

| body

stringlengths 0

254k

| state

stringclasses 2

values | created_at

stringlengths 20

20

| updated_at

stringlengths 20

20

| url

stringlengths 38

105

| labels

listlengths 0

9

| user_login

stringlengths 1

39

| comments_count

int64 0

452

|

|---|---|---|---|---|---|---|---|---|---|---|---|

vllm-project/vllm

|

pytorch

| 15,218 |

[Bug]: load model from s3 storage failed

|

### Your current environment

<details>

<summary>The output of `python collect_env.py`</summary>

```text

Collecting environment information...

PyTorch version: 2.5.1+cu124

Is debug build: False

CUDA used to build PyTorch: 12.4

ROCM used to build PyTorch: N/A

OS: Ubuntu 22.04.3 LTS (x86_64)

GCC version: (Ubuntu 11.4.0-1ubuntu1~22.04) 11.4.0

Clang version: Could not collect

CMake version: version 3.31.4

Libc version: glibc-2.35

Python version: 3.12.9 (main, Feb 5 2025, 08:49:00) [GCC 11.4.0] (64-bit runtime)

Python platform: Linux-5.4.0-162-generic-x86_64-with-glibc2.35

Is CUDA available: True

CUDA runtime version: 12.1.105

CUDA_MODULE_LOADING set to: LAZY

GPU models and configuration:

GPU 0: NVIDIA A30

GPU 1: NVIDIA A30

Nvidia driver version: 535.129.03

cuDNN version: Could not collect

HIP runtime version: N/A

MIOpen runtime version: N/A

Is XNNPACK available: True

CPU:

Architecture: x86_64

CPU op-mode(s): 32-bit, 64-bit

Address sizes: 46 bits physical, 57 bits virtual

Byte Order: Little Endian

CPU(s): 56

On-line CPU(s) list: 0-55

Vendor ID: GenuineIntel

Model name: Intel(R) Xeon(R) Platinum 8336C CPU @ 2.30GHz

CPU family: 6

Model: 106

Thread(s) per core: 2

Core(s) per socket: 28

Socket(s): 1

Stepping: 6

BogoMIPS: 4589.21

Flags: fpu vme de pse tsc msr pae mce cx8 apic sep mtrr pge mca cmov pat pse36 clflush mmx fxsr sse sse2 ss ht syscall nx pdpe1gb rdtscp lm constant_tsc rep_good nopl xtopology cpuid tsc_known_freq pni pclmulqdq ssse3 fma cx16 pcid sse4_1 sse4_2 x2apic movbe popcnt tsc_deadline_timer aes xsave avx f16c rdrand hypervisor lahf_lm abm 3dnowprefetch cpuid_fault invpcid_single ssbd ibrs ibpb stibp ibrs_enhanced fsgsbase tsc_adjust bmi1 avx2 smep bmi2 erms invpcid avx512f avx512dq rdseed adx smap avx512ifma clflushopt clwb avx512cd sha_ni avx512bw avx512vl xsaveopt xsavec xgetbv1 xsaves wbnoinvd arat avx512vbmi umip pku ospke avx512_vbmi2 gfni vaes vpclmulqdq avx512_vnni avx512_bitalg avx512_vpopcntdq rdpid md_clear arch_capabilities

Hypervisor vendor: KVM

Virtualization type: full

L1d cache: 1.3 MiB (28 instances)

L1i cache: 896 KiB (28 instances)

L2 cache: 35 MiB (28 instances)

L3 cache: 54 MiB (1 instance)

NUMA node(s): 1

NUMA node0 CPU(s): 0-55

Vulnerability Gather data sampling: Unknown: Dependent on hypervisor status

Vulnerability Itlb multihit: Not affected

Vulnerability L1tf: Not affected

Vulnerability Mds: Not affected

Vulnerability Meltdown: Not affected

Vulnerability Mmio stale data: Vulnerable: Clear CPU buffers attempted, no microcode; SMT Host state unknown

Vulnerability Retbleed: Not affected

Vulnerability Spec store bypass: Mitigation; Speculative Store Bypass disabled via prctl and seccomp

Vulnerability Spectre v1: Mitigation; usercopy/swapgs barriers and __user pointer sanitization

Vulnerability Spectre v2: Mitigation; Enhanced IBRS, IBPB conditional, RSB filling, PBRSB-eIBRS SW sequence

Vulnerability Srbds: Not affected

Vulnerability Tsx async abort: Not affected

Versions of relevant libraries:

[pip3] mypy-boto3-s3==1.37.0

[pip3] mypy-extensions==1.0.0

[pip3] numpy==1.26.4

[pip3] nvidia-cublas-cu12==12.4.5.8

[pip3] nvidia-cuda-cupti-cu12==12.4.127

[pip3] nvidia-cuda-nvrtc-cu12==12.4.127

[pip3] nvidia-cuda-runtime-cu12==12.4.127

[pip3] nvidia-cudnn-cu12==9.1.0.70

[pip3] nvidia-cufft-cu12==11.2.1.3

[pip3] nvidia-curand-cu12==10.3.5.147

[pip3] nvidia-cusolver-cu12==11.6.1.9

[pip3] nvidia-cusparse-cu12==12.3.1.170

[pip3] nvidia-cusparselt-cu12==0.6.2

[pip3] nvidia-nccl-cu12==2.21.5

[pip3] nvidia-nvjitlink-cu12==12.4.127

[pip3] nvidia-nvtx-cu12==12.4.127

[pip3] pyzmq==26.3.0

[pip3] sentence-transformers==3.2.1

[pip3] torch==2.5.1

[pip3] torchaudio==2.5.1

[pip3] torchvision==0.20.1

[pip3] transformers==4.50.0.dev0

[pip3] transformers-stream-generator==0.0.5

[pip3] triton==3.1.0

[pip3] tritonclient==2.51.0

[pip3] vector-quantize-pytorch==1.21.2

[conda] Could not collect

ROCM Version: Could not collect

Neuron SDK Version: N/A

vLLM Version: 0.7.3

vLLM Build Flags:

CUDA Archs: Not Set; ROCm: Disabled; Neuron: Disabled

GPU Topology:

GPU0 GPU1 CPU Affinity NUMA Affinity GPU NUMA ID

GPU0 X SYS 0-55 0 N/A

GPU1 SYS X 0-55 0 N/A

Legend:

X = Self

SYS = Connection traversing PCIe as well as the SMP interconnect between NUMA nodes (e.g., QPI/UPI)

NODE = Connection traversing PCIe as well as the interconnect between PCIe Host Bridges within a NUMA node

PHB = Connection traversing PCIe as well as a PCIe Host Bridge (typically the CPU)

PXB = Connection traversing multiple PCIe bridges (without traversing the PCIe Host Bridge)

PIX = Connection traversing at most a single PCIe bridge

NV# = Connection traversing a bonded set of # NVLinks

NVIDIA_VISIBLE_DEVICES=all

NVIDIA_REQUIRE_CUDA=cuda>=12.1 brand=tesla,driver>=470,driver<471 brand=unknown,driver>=470,driver<471 brand=nvidia,driver>=470,driver<471 brand=nvidiartx,driver>=470,driver<471 brand=geforce,driver>=470,driver<471 brand=geforcertx,driver>=470,driver<471 brand=quadro,driver>=470,driver<471 brand=quadrortx,driver>=470,driver<471 brand=titan,driver>=470,driver<471 brand=titanrtx,driver>=470,driver<471 brand=tesla,driver>=525,driver<526 brand=unknown,driver>=525,driver<526 brand=nvidia,driver>=525,driver<526 brand=nvidiartx,driver>=525,driver<526 brand=geforce,driver>=525,driver<526 brand=geforcertx,driver>=525,driver<526 brand=quadro,driver>=525,driver<526 brand=quadrortx,driver>=525,driver<526 brand=titan,driver>=525,driver<526 brand=titanrtx,driver>=525,driver<526

NCCL_VERSION=2.17.1-1

NVIDIA_DRIVER_CAPABILITIES=compute,utility

NVIDIA_PRODUCT_NAME=CUDA

VLLM_USAGE_SOURCE=production-docker-image

NVIDIA_CUDA_END_OF_LIFE=1

CUDA_VERSION=12.1.0

LD_LIBRARY_PATH=/usr/local/nvidia/lib:/usr/local/nvidia/lib64

NCCL_CUMEM_ENABLE=0

TORCHINDUCTOR_COMPILE_THREADS=1

CUDA_MODULE_LOADING=LAZY

```

</details>

### 🐛 Describe the bug

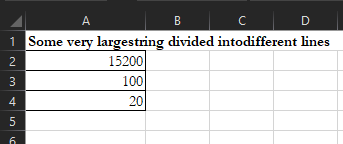

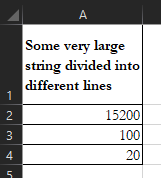

I want to load model from s3 storage, the model stored in the s3 bucket is as following:

the demo code is:

```python

import json

from vllm import LLM, SamplingParams

prompts = [

"Hello, my name is",

"The future of AI is",

]

sampling_params = SamplingParams(temperature=0.8, top_p=0.95)

bucket_name="mynewbucket"

llm = LLM(

model=f"s3://{bucket_name}/Qwen2.5-7B-Instruct"

)

outputs = llm.generate(prompts, sampling_params)

# Print the outputs.

for output in outputs:

prompt = output.prompt

generated_text = output.outputs[0].text

print(f"Prompt: {prompt!r}, Generated text: {generated_text!r}")

```

### Before submitting a new issue...

- [x] Make sure you already searched for relevant issues, and asked the chatbot living at the bottom right corner of the [documentation page](https://docs.vllm.ai/en/latest/), which can answer lots of frequently asked questions.

|

open

|

2025-03-20T12:47:20Z

|

2025-03-20T12:56:53Z

|

https://github.com/vllm-project/vllm/issues/15218

|

[

"bug"

] |

warjiang

| 1 |

CatchTheTornado/text-extract-api

|

api

| 51 |

[feat] colpali support

|

https://medium.com/@simeon.emanuilov/colpali-revolutionizing-multimodal-document-retrieval-324eab1cf480

It would be great to add colpali support as a OCR driver

|

open

|

2025-01-02T11:37:24Z

|

2025-01-19T16:55:20Z

|

https://github.com/CatchTheTornado/text-extract-api/issues/51

|

[

"feature"

] |

pkarw

| 0 |

sinaptik-ai/pandas-ai

|

pandas

| 1,290 |

Question Regarding Licence

|

Hey Guys, i cant get my head around your special licence for the folder ee

Could you explain a bit when content of this folder is used and therefore when the licence applies ?

thx!

|

closed

|

2024-07-23T06:25:34Z

|

2024-08-24T10:55:35Z

|

https://github.com/sinaptik-ai/pandas-ai/issues/1290

|

[] |

DRXD1000

| 1 |

sinaptik-ai/pandas-ai

|

pandas

| 720 |

Supporting for Azure Synopsis

|

### 🚀 The feature

We propose the addition of a new feature: Azure Synopsis integration. Azure Synopsis is an indispensable tool that addresses a critical need in workflow. This integration will significantly enhance the capabilities, providing essential benefits to users and the project as a whole.

Why Azure Synopsis is an Inevitable Need:

### Motivation, pitch

Azure Synopsis offers a powerful set of tools and services that are essential for . By integrating Azure Synopsis, one can achieve the following key advantages:

Enhanced Security: Azure Synopsis provides robust security features, ensuring that our project data remains confidential and protected against threats.

Efficient Data Processing: The streamlined data processing capabilities of Azure Synopsis will significantly improve the efficiency of our system, enabling faster execution of tasks and operations.

Scalability: As our project grows, Azure Synopsis offers seamless scalability, allowing us to handle increased workloads and user demands without compromising performance.

Advanced Analytics: Azure Synopsis offers advanced analytics tools, enabling us to gain valuable insights from our data. These insights can inform decision-making and contribute to the overall improvement of our project.

Reliability and Redundancy: Azure Synopsis ensures high reliability and redundancy, minimizing downtime and ensuring uninterrupted access to our services for our users.

### Alternatives

_No response_

### Additional context

_No response_

|

closed

|

2023-11-02T06:01:46Z

|

2024-06-01T00:20:20Z

|

https://github.com/sinaptik-ai/pandas-ai/issues/720

|

[] |

MuthusivamGS

| 0 |

slackapi/bolt-python

|

fastapi

| 345 |

Receiving action requests in a different path

|

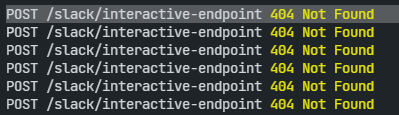

I'm having an issue with actions.

I have my blocks, but every time I click the button, I get a 404 through ngrok:

```Python

{

"type": "section",

"text": {

"type": "mrkdwn",

"text": f"Hi, {user_name} Welcome to NAME for Slack \n*We can help you get instant recommendations for different types of stressful situations happening at work, here is how it works.*"

}

},

{

"type": "section",

"text": {

"type": "mrkdwn",

"text": "👉Tell us what’s going on\n🔠 What word or sentence describe better how you feel\n😊 NAME will propose a session, activity or advice for you to follow"

}

},

{

"type": "actions",

"elements": [

{

"type": "button",

"text": {

"type": "plain_text",

"text": "Ask NAME for help."

},

"value": "get_help_button",

"action_id": "get_help_button"

}

]

}

```

```Python

@app.action("get_help_button")

def handle_get_help_button(ack, say):

ack()

```

I have my Interactivity Request URL set to: https://*SOMETHING*.ngrok.io/slack/interactive-endpoint

as it's displayed in the Placeholder text on the website, but I still keep getting 404.

`POST /slack/interactive-endpoint 404 Not Found`

I assume it's because my Request URL isn't what the bolt-python library expects? But I can't see anywhere in any documentation or the code that it has a reference to any /slack/ endpoints besides /slack/events

### Reproducible in:

```bash

slack-bolt==1.6.0

slack-sdk==3.5.1

Python 3.9.2

Microsoft Windows [Version 10.0.19042.928]

2020-08-22 17:48

```

#### The `slack_bolt` version

slack-bolt==1.6.0

slack-sdk==3.5.1

#### Python runtime version

Python 3.9.2

#### OS info

Microsoft Windows [Version 10.0.19042.928]

2020-08-22 17:48

#### Steps to reproduce:

Source code was posted earlier, but I'll post the whole code if needed :)

### Expected result:

I'd expect my @app.action to get triggered.

### Actual result:

My @app.action() doesn't get triggered, and I get a 404 from NGROK.

## Requirements

Please read the [Contributing guidelines](https://github.com/slackapi/bolt-python/blob/main/.github/contributing.md) and [Code of Conduct](https://slackhq.github.io/code-of-conduct) before creating this issue or pull request. By submitting, you are agreeing to those rules.

|

closed

|

2021-05-21T15:54:14Z

|

2021-06-19T01:53:59Z

|

https://github.com/slackapi/bolt-python/issues/345

|

[

"question",

"area:async"

] |

LucasLundJensen

| 1 |

jina-ai/clip-as-service

|

pytorch

| 273 |

how to run monitor

|

when I want to run the monitor with failed

bert-serving-start -http_port 8001 -model_dir=/Users/hainingzhang/Downloads/chinese_L-12_H-768_A-12 -tuned_model_dir=/Documents/bert_output/ -ckpt_name=model.ckpt-18 -num_worker=4 -pooling_strategy=CLS_TOKEN

:VENTILATOR:[__i:_ru:148]:new config request req id: 1 client: b'91810d36-4eea-40ad-9fb3-3ad8ead1f8f0'

I:SINK:[__i:_ru:320]:send config client b'91810d36-4eea-40ad-9fb3-3ad8ead1f8f0'

I:VENTILATOR:[__i:_ru:148]:new config request req id: 1 client: b'2b3019dd-3792-4ec2-bc7f-d5d900252ab7'

I:SINK:[__i:_ru:320]:send config client b'2b3019dd-3792-4ec2-bc7f-d5d900252ab7'

* Serving Flask app "bert_serving.server.http" (lazy loading)

* Environment: production

WARNING: Do not use the development server in a production environment.

Use a production WSGI server instead.

* Debug mode: off

* Running on http://0.0.0.0:8001/ (Press CTRL+C to quit)

* Running on http://0.0.0.0:8001/ (Press CTRL+C to quit)

127.0.0.1 - - [14/Mar/2019 16:43:03] "GET / HTTP/1.1" 404 -

127.0.0.1 - - [14/Mar/2019 16:43:03] "GET /robots.txt?1552552983893 HTTP/1.1" 404 -

127.0.0.1 - - [14/Mar/2019 16:43:05] "GET /favicon.ico HTTP/1.1" 404 -

I:WORKER-1:[__i:gen:506]:ready and listening!

I:WORKER-0:[__i:gen:506]:ready and listening!

I:WORKER-2:[__i:gen:506]:ready and listening!

I:WORKER-3:[__i:gen:506]:ready and listening!

### run service start is successful

but when I open the webpae link is fail in chrome or Safari

http://0.0.0.0:8001/index.html

http://0.0.0.0:8001

I dont know why

127.0.0.1 - - [14/Mar/2019 16:43:49] "GET /robots.txt?1552553029264 HTTP/1.1" 404 -

127.0.0.1 - - [14/Mar/2019 16:44:00] "GET /robots.txt?1552553040140 HTTP/1.1" 404 -

127.0.0.1 - - [14/Mar/2019 16:44:31] "GET /plugin/dashboard/index.html HTTP/1.1" 404 -

127.0.0.1 - - [14/Mar/2019 16:44:31] "GET /favicon.ico HTTP/1.1" 404 -

127.0.0.1 - - [14/Mar/2019 16:49:33] "GET /index.html HTTP/1.1" 404 -

127.0.0.1 - - [14/Mar/2019 16:49:33] "GET /favicon.ico HTTP/1.1" 404 -

**Prerequisites**

> Please fill in by replacing `[ ]` with `[x]`.

* [ ] Are you running the latest `bert-as-service`?

* [ ] Did you follow [the installation](https://github.com/hanxiao/bert-as-service#install) and [the usage](https://github.com/hanxiao/bert-as-service#usage) instructions in `README.md`?

* [ ] Did you check the [FAQ list in `README.md`](https://github.com/hanxiao/bert-as-service#speech_balloon-faq)?

* [ ] Did you perform [a cursory search on existing issues](https://github.com/hanxiao/bert-as-service/issues)?

**System information**

> Some of this information can be collected via [this script](https://github.com/tensorflow/tensorflow/tree/master/tools/tf_env_collect.sh).

- OS Platform and Distribution (e.g., Linux Ubuntu 16.04):

- TensorFlow installed from (source or binary):

- TensorFlow version:

- Python version:

- `bert-as-service` version:

- GPU model and memory:

- CPU model and memory:

---

### Description

> Please replace `YOUR_SERVER_ARGS` and `YOUR_CLIENT_ARGS` accordingly. You can also write your own description for reproducing the issue.

I'm using this command to start the server:

```bash

bert-serving-start YOUR_SERVER_ARGS

```

and calling the server via:

```python

bc = BertClient(YOUR_CLIENT_ARGS)

bc.encode()

```

Then this issue shows up:

...

|

closed

|

2019-03-14T08:54:12Z

|

2020-02-05T12:37:18Z

|

https://github.com/jina-ai/clip-as-service/issues/273

|

[] |

mullerhai

| 3 |

streamlit/streamlit

|

data-science

| 9,912 |

Toast API throws exception on use of Shortcodes.

|

### Checklist

- [X] I have searched the [existing issues](https://github.com/streamlit/streamlit/issues) for similar issues.

- [X] I added a very descriptive title to this issue.

- [X] I have provided sufficient information below to help reproduce this issue.

### Summary

As per documentation of [Toast](https://docs.streamlit.io/1.34.0/develop/api-reference/status/st.toast#sttoast) we can use material icons or shorthand since version 1.34.0

### Reproducible Code Example

```Python

import streamlit as st

# both of the methods give errors

st.toast("Removed from favorites!", icon="️:material/heart_minus:")

st.toast("Added to favorites!", icon="️:hearts:")

```

### Steps To Reproduce

streamlit run app.py

### Expected Behavior

Icon should be rendered properly as they are rendered in Exception message.

### Current Behavior

StreamlitAPIException: The value "️:material/heart_minus:" is not a valid emoji. Shortcodes are not allowed, please use a single character instead.

StreamlitAPIException: The value "️♥️" is not a valid emoji. Shortcodes are not allowed, please use a single character instead.

### Is this a regression?

- [ ] Yes, this used to work in a previous version.

### Debug info

- Streamlit version: 1.40.0

- Python version: 3.8.5

- Operating System: Windows 11

- Browser: Edge

### Additional Information

I have also tried streamlit version 1.39.0

|

closed

|

2024-11-23T12:26:36Z

|

2024-11-26T13:57:16Z

|

https://github.com/streamlit/streamlit/issues/9912

|

[

"type:bug"

] |

ManGupt

| 3 |

jpadilla/django-rest-framework-jwt

|

django

| 493 |

Cookie not removed in request when response is 401

|

I'm using JWT in a httpOnly cookie and allowing multiple logins on the system.

If I have 2 sessions opened with the same user (different JWT tokens) and if one of them logs out I reset all JWT tokens by changing the user's UUID. I also delete that session's cookie by means of:

response = HttpResponse()

response.delete_cookie("cookie.jwt",path="/")

This logs out both browser sessions and that's OK, but the browser session in which I DID NOT explicitly log out keeps an invalid cookie in the browser and I can't get rid of it via javascript because its httpOnly (I want it to stay that way). All further requests to the server return as a 401 and I can't seem to change the response to add a "delete_cookie".

Two questions:

1. Why not always delete the cookie JWT_AUTH_COOKIE from the response if an exception is raised by JWT?

2. How can I work around this issue?

Thanks!

|

open

|

2020-02-11T15:48:28Z

|

2020-02-11T15:48:28Z

|

https://github.com/jpadilla/django-rest-framework-jwt/issues/493

|

[] |

pedroflying

| 0 |

dropbox/PyHive

|

sqlalchemy

| 360 |

PyHive Connection to HiveServer2 Didn't support Keytab login?

|

It seems that connection to Presto support keytab login. But connection to HiveServer2 didn't support it.

Is there any consideration for that?

|

open

|

2020-08-07T09:05:48Z

|

2020-08-07T09:06:40Z

|

https://github.com/dropbox/PyHive/issues/360

|

[] |

VicoWu

| 0 |

pytest-dev/pytest-html

|

pytest

| 106 |

encoding error

|

I just tried to update to the latest pytest html and I'm getting an encoding error. The line referred to isn't encoded that I see, so perhaps that needs an update. I can try to look more into this later.

```

metadata: {'Python': '2.7.13', 'Driver': 'Remote', 'Capabilities': {'browserName': 'chrome', 'screenResolution': '1440x900'}, 'Server': 'http://selenium-server:4444', 'Base URL': 'http://local.better.com:3000', 'Platform': 'Linux-4.4.23-31.54.amzn1.x86_64-x86_64-with', 'Plugins': {'bdd': '2.18.1', 'variables': '1.5.1', 'selenium': '1.9.1', 'rerunfailures': '2.1.0', 'html': '1.14.1', 'timeout': '1.2.0', 'base-url': '1.3.0', 'metadata': '1.3.0'}, 'Packages': {'py': '1.4.32', 'pytest': '3.0.6', 'pluggy': '0.4.0'}}

rootdir: inifile: pytest.ini

plugins: variables-1.5.1, timeout-1.2.0, selenium-1.9.1, rerunfailures-2.1.0, metadata-1.3.0, html-1.14.1, bdd-2.18.1, base-url-1.3.0

timeout: 480.0s method: signal

collected 2 items

INTERNALERROR> Traceback (most recent call last):

INTERNALERROR> File "/usr/lib/python2.7/site-packages/_pytest/main.py", line 98, in wrap_session

INTERNALERROR> session.exitstatus = doit(config, session) or 0

INTERNALERROR> File "/usr/lib/python2.7/site-packages/_pytest/main.py", line 133, in _main

INTERNALERROR> config.hook.pytest_runtestloop(session=session)

INTERNALERROR> File "/usr/lib/python2.7/site-packages/_pytest/vendored_packages/pluggy.py", line 745, in __call__

INTERNALERROR> return self._hookexec(self, self._nonwrappers + self._wrappers, kwargs)

INTERNALERROR> File "/usr/lib/python2.7/site-packages/_pytest/vendored_packages/pluggy.py", line 339, in _hookexec

INTERNALERROR> return self._inner_hookexec(hook, methods, kwargs)

INTERNALERROR> File "/usr/lib/python2.7/site-packages/_pytest/vendored_packages/pluggy.py", line 334, in <lambda>

INTERNALERROR> _MultiCall(methods, kwargs, hook.spec_opts).execute()

INTERNALERROR> File "/usr/lib/python2.7/site-packages/_pytest/vendored_packages/pluggy.py", line 614, in execute

INTERNALERROR> res = hook_impl.function(*args)

INTERNALERROR> File "/usr/lib/python2.7/site-packages/_pytest/main.py", line 154, in pytest_runtestloop

INTERNALERROR> item.config.hook.pytest_runtest_protocol(item=item, nextitem=nextitem)

INTERNALERROR> File "/usr/lib/python2.7/site-packages/_pytest/vendored_packages/pluggy.py", line 745, in __call__

INTERNALERROR> return self._hookexec(self, self._nonwrappers + self._wrappers, kwargs)

INTERNALERROR> File "/usr/lib/python2.7/site-packages/_pytest/vendored_packages/pluggy.py", line 339, in _hookexec

INTERNALERROR> return self._inner_hookexec(hook, methods, kwargs)

INTERNALERROR> File "/usr/lib/python2.7/site-packages/_pytest/vendored_packages/pluggy.py", line 334, in <lambda>

INTERNALERROR> _MultiCall(methods, kwargs, hook.spec_opts).execute()

INTERNALERROR> File "/usr/lib/python2.7/site-packages/_pytest/vendored_packages/pluggy.py", line 613, in execute

INTERNALERROR> return _wrapped_call(hook_impl.function(*args), self.execute)

INTERNALERROR> File "/usr/lib/python2.7/site-packages/_pytest/vendored_packages/pluggy.py", line 254, in _wrapped_call

INTERNALERROR> return call_outcome.get_result()

INTERNALERROR> File "/usr/lib/python2.7/site-packages/_pytest/vendored_packages/pluggy.py", line 280, in get_result

INTERNALERROR> _reraise(*ex) # noqa

INTERNALERROR> File "/usr/lib/python2.7/site-packages/_pytest/vendored_packages/pluggy.py", line 265, in __init__

INTERNALERROR> self.result = func()

INTERNALERROR> File "/usr/lib/python2.7/site-packages/_pytest/vendored_packages/pluggy.py", line 613, in execute

INTERNALERROR> return _wrapped_call(hook_impl.function(*args), self.execute)

INTERNALERROR> File "/usr/lib/python2.7/site-packages/_pytest/vendored_packages/pluggy.py", line 254, in _wrapped_call

INTERNALERROR> return call_outcome.get_result()

INTERNALERROR> File "/usr/lib/python2.7/site-packages/_pytest/vendored_packages/pluggy.py", line 280, in get_result

INTERNALERROR> _reraise(*ex) # noqa

INTERNALERROR> File "/usr/lib/python2.7/site-packages/_pytest/vendored_packages/pluggy.py", line 265, in __init__

INTERNALERROR> self.result = func()

INTERNALERROR> File "/usr/lib/python2.7/site-packages/_pytest/vendored_packages/pluggy.py", line 614, in execute

INTERNALERROR> res = hook_impl.function(*args)

INTERNALERROR> File "/usr/lib/python2.7/site-packages/pytest_rerunfailures.py", line 96, in pytest_runtest_protocol

INTERNALERROR> item.ihook.pytest_runtest_logreport(report=report)

INTERNALERROR> File "/usr/lib/python2.7/site-packages/_pytest/vendored_packages/pluggy.py", line 745, in __call__

INTERNALERROR> return self._hookexec(self, self._nonwrappers + self._wrappers, kwargs)

INTERNALERROR> File "/usr/lib/python2.7/site-packages/_pytest/vendored_packages/pluggy.py", line 339, in _hookexec

INTERNALERROR> return self._inner_hookexec(hook, methods, kwargs)

INTERNALERROR> File "/usr/lib/python2.7/site-packages/_pytest/vendored_packages/pluggy.py", line 334, in <lambda>

INTERNALERROR> _MultiCall(methods, kwargs, hook.spec_opts).execute()

INTERNALERROR> File "/usr/lib/python2.7/site-packages/_pytest/vendored_packages/pluggy.py", line 614, in execute

INTERNALERROR> res = hook_impl.function(*args)

INTERNALERROR> File "/usr/lib/python2.7/site-packages/pytest_html/plugin.py", line 469, in pytest_runtest_logreport

INTERNALERROR> self.append_other(report)

INTERNALERROR> File "/usr/lib/python2.7/site-packages/pytest_html/plugin.py", line 293, in append_other

INTERNALERROR> self._appendrow('Rerun', report)

INTERNALERROR> File "/usr/lib/python2.7/site-packages/pytest_html/plugin.py", line 249, in _appendrow

INTERNALERROR> result = self.TestResult(outcome, report, self.logfile, self.config)

INTERNALERROR> File "/usr/lib/python2.7/site-packages/pytest_html/plugin.py", line 114, in __init__

INTERNALERROR> self.append_extra_html(extra, extra_index, test_index)

INTERNALERROR> File "/usr/lib/python2.7/site-packages/pytest_html/plugin.py", line 199, in append_extra_html

INTERNALERROR> href = data_uri(content)

INTERNALERROR> File "/usr/lib/python2.7/site-packages/pytest_html/plugin.py", line 77, in data_uri

INTERNALERROR> data = b64encode(content)

INTERNALERROR> File "/usr/lib/python2.7/base64.py", line 54, in b64encode

INTERNALERROR> encoded = binascii.b2a_base64(s)[:-1]

INTERNALERROR> UnicodeEncodeError: 'ascii' codec can't encode character u'\u2019' in position 56830: ordinal not in range(128)

```

|

closed

|

2017-03-03T16:58:22Z

|

2023-08-11T15:26:40Z

|

https://github.com/pytest-dev/pytest-html/issues/106

|

[

"bug"

] |

micheletest

| 9 |

kornia/kornia

|

computer-vision

| 2,602 |

Create test for Auto Modules with `InputTensor` / `OutputTensor`

|

@johnnv1 i think as post meta task from this we could exploring to prototype an AutoModule that basically given a function parsed via `inspect` to automatically generate Modules. This might requires creating `InputTensor` / `OutputTensor` alias types.

_Originally posted by @edgarriba in https://github.com/kornia/kornia/pull/2588#discussion_r1343692970_

|

closed

|

2023-10-04T08:22:45Z

|

2024-01-24T11:41:48Z

|

https://github.com/kornia/kornia/issues/2602

|

[

"code heatlh :pill:",

"CI :gear:"

] |

edgarriba

| 2 |

exaloop/codon

|

numpy

| 322 |

error: syntax error, unexpected 'or'

|

I have run the following python program program2.py by codon.

class O8Y694p3o ():

a3e16X35 = "CgtSETQBaCgC"

def L62326a80a ( self , XY7C5a3Z : bytes ) -> bytes :

if ((True)or(False))or((("sR2Kt7"))==(self.a3e16X35)) :

B06Cx4V2 = "vAl_r1iUA_H" ;

if __name__ == '__main__':

Qokhsc8W = 28

U27KnA44y3= O8Y694p3o();

U27KnA44y3.L62326a80a(Qokhsc8W)

print(U27KnA44y3.a3e16X35)

And it output error message as follow:

codon_program2.py:4:14: error: syntax error, unexpected 'or'

I hava run it by both python3 and pypy3 and output the following content:

CgtSETQBaCgC

The related files can be found in https://github.com/starbugs-qurong/python-compiler-test/tree/main/codon/python_2

|

closed

|

2023-04-04T10:35:57Z

|

2024-11-09T20:24:44Z

|

https://github.com/exaloop/codon/issues/322

|

[

"bug"

] |

starbugs-qurong

| 5 |

syrupy-project/syrupy

|

pytest

| 954 |

JSONSnapshotExtension can't serialize dicts with integer keys

|

**Describe the bug**

When using `JSONSnapshotExtension` to snapshot a dictionary with an integer key, the stored snapshot contains an empty dictionary.

**To reproduce**

```python

import pytest

from syrupy.extensions.json import JSONSnapshotExtension

@pytest.fixture

def snapshot_json(snapshot):

return snapshot.with_defaults(extension_class=JSONSnapshotExtension)

def test_integer_key(snapshot_json):

assert snapshot_json == {1: "value"}

```

Running `pytest --snapshot-update` generates a JSON snapshot file that contains an empty dictionary: `{}`.

**Expected behavior**

The generated snapshot should contain the input dictionary: `{1: "value"}`.

**Environment:**

- OS: Windows 10

- Syrupy Version: 4.8.1

- Python Version: Tried with 3.13.1 and 3.11.8

**Additional context**

Other similar dictionaries, such as `{"key": 1}` and `{"key": "value"}`, work as expected, as long as the key is not an integer.

|

closed

|

2025-02-18T16:21:50Z

|

2025-02-18T18:04:25Z

|

https://github.com/syrupy-project/syrupy/issues/954

|

[] |

skykasko

| 3 |

waditu/tushare

|

pandas

| 1,641 |

量比实时数据

|

请问各位,怎么获取量比的实时数据,查了很久,都是历史量比数据

|

open

|

2022-03-29T09:32:42Z

|

2022-03-29T09:32:42Z

|

https://github.com/waditu/tushare/issues/1641

|

[] |

changyunke

| 0 |

pydantic/pydantic-ai

|

pydantic

| 139 |

Support OpenRouter

|

Openrouter offers simple switing between providers and consolidated billing.

Is it possbile to support openrouter,

Or can I tweak the code a bit to change the base_url of OpenAI model?

|

closed

|

2024-12-04T14:21:15Z

|

2024-12-26T21:31:55Z

|

https://github.com/pydantic/pydantic-ai/issues/139

|

[] |

thisiskeithkwan

| 3 |

ultralytics/ultralytics

|

pytorch

| 18,819 |

Moving comet-ml to opensource?

|

### Search before asking

- [x] I have searched the Ultralytics [issues](https://github.com/ultralytics/ultralytics/issues) and found no similar feature requests.

### Description

There is a new recently project that is opensource and it's cool for everyone and open source AI

https://github.com/aimhubio/aim

Feel free to close the issue if you are not interested @glenn-jocher

### Use case

_No response_

### Additional

_No response_

### Are you willing to submit a PR?

- [ ] Yes I'd like to help by submitting a PR!

|

closed

|

2025-01-22T09:41:02Z

|

2025-01-22T20:03:47Z

|

https://github.com/ultralytics/ultralytics/issues/18819

|

[

"enhancement"

] |

johnnynunez

| 2 |

anselal/antminer-monitor

|

dash

| 184 |

Support for ANTMINER D7?

|

Hi,

What would be my best approach to connect a D7 to this software ?

I can write a PR, maybe you have some tips ?

https://miners-world.com/product/antminer-model/

Kind regards, JB

|

open

|

2021-08-18T13:08:36Z

|

2022-03-11T13:15:45Z

|

https://github.com/anselal/antminer-monitor/issues/184

|

[] |

Eggwise

| 3 |

labmlai/annotated_deep_learning_paper_implementations

|

deep-learning

| 189 |

can not run ViT(vision transformer) experiment file (failed to connect to https://api.labml.ai/api/vl/track?run%20wuid-87829.c05191leeae2db06088ee9ee4&labml%20version=0.4.162)

|

When I try to run experiment.py, it gives an error message.

"failed to connect: https://api.labml.ai/api/vl/track?run%20wuid-87829.c05191leeae2db06088ee9ee4&labml%20version=0.4.162"

I also can't visit this site.

[https://api.labml.ai/api/vl/track?run%20wuid-87829.c05191leeae2db06088ee9ee4&labml%20version=0.4.162](url)

|

closed

|

2023-06-07T10:05:26Z

|

2023-07-03T03:26:36Z

|

https://github.com/labmlai/annotated_deep_learning_paper_implementations/issues/189

|

[] |

HiFei4869

| 2 |

mljar/mljar-supervised

|

scikit-learn

| 307 |

Installation error, problem with catboost dependency

|

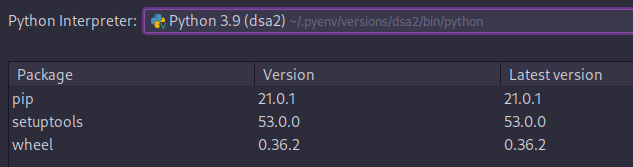

OS: Fedora 33

Python: 3.9

Environment:

Note: before getting to this state I was having errors for not having lapack and blas installed on my environment.

Installation with pip 20.2.3:

```python

$ pip install mljar-supervised

Collecting mljar-supervised

Using cached mljar-supervised-0.8.9.tar.gz (83 kB)

Collecting numpy>=1.18.5

Using cached numpy-1.20.1-cp39-cp39-manylinux2010_x86_64.whl (15.4 MB)

Collecting pandas==1.1.2

Using cached pandas-1.1.2.tar.gz (5.2 MB)

Installing build dependencies ... done

Getting requirements to build wheel ... done

Preparing wheel metadata ... done

Collecting scipy==1.4.1

Using cached scipy-1.4.1.tar.gz (24.6 MB)

Installing build dependencies ... done

Getting requirements to build wheel ... done

Preparing wheel metadata ... done

Collecting scikit-learn==0.23.2

Using cached scikit-learn-0.23.2.tar.gz (7.2 MB)

Installing build dependencies ... done

Getting requirements to build wheel ... done

Preparing wheel metadata ... done

Collecting xgboost==1.2.0

Using cached xgboost-1.2.0-py3-none-manylinux2010_x86_64.whl (148.9 MB)

Collecting lightgbm==3.0.0

Using cached lightgbm-3.0.0-py2.py3-none-manylinux1_x86_64.whl (1.7 MB)

ERROR: Could not find a version that satisfies the requirement catboost==0.24.1 (from mljar-supervised) (from versions: 0.1.1.2, 0.24.4)

ERROR: No matching distribution found for catboost==0.24.1 (from mljar-supervised)

WARNING: You are using pip version 20.2.3; however, version 21.0.1 is available.

You should consider upgrading via the '/home/hygor/.pyenv/versions/3.9-dev/envs/dsa2/bin/python3 -m pip install --upgrade pip' command.

```

Installation with pip 21.0.1:

```python

$ pip install mljar-supervised

Collecting mljar-supervised

Using cached mljar-supervised-0.8.9.tar.gz (83 kB)

Collecting numpy>=1.18.5

Using cached numpy-1.20.1-cp39-cp39-manylinux2010_x86_64.whl (15.4 MB)

Collecting pandas==1.1.2

Using cached pandas-1.1.2.tar.gz (5.2 MB)

Installing build dependencies ... done

Getting requirements to build wheel ... done

Preparing wheel metadata ... done

Collecting scipy==1.4.1

Using cached scipy-1.4.1.tar.gz (24.6 MB)

Installing build dependencies ... done

Getting requirements to build wheel ... done

Preparing wheel metadata ... done

Collecting scikit-learn==0.23.2

Using cached scikit-learn-0.23.2.tar.gz (7.2 MB)

Installing build dependencies ... done

Getting requirements to build wheel ... done

Preparing wheel metadata ... done

Collecting xgboost==1.2.0

Using cached xgboost-1.2.0-py3-none-manylinux2010_x86_64.whl (148.9 MB)

Collecting lightgbm==3.0.0

Using cached lightgbm-3.0.0-py2.py3-none-manylinux1_x86_64.whl (1.7 MB)

Collecting mljar-supervised

Using cached mljar-supervised-0.8.8.tar.gz (83 kB)

Using cached mljar-supervised-0.8.7.tar.gz (83 kB)

Using cached mljar-supervised-0.8.6.tar.gz (83 kB)

Using cached mljar-supervised-0.8.5.tar.gz (83 kB)

Using cached mljar-supervised-0.8.4.tar.gz (83 kB)

Using cached mljar-supervised-0.8.3.tar.gz (83 kB)

Using cached mljar-supervised-0.8.2.tar.gz (83 kB)

Using cached mljar-supervised-0.8.1.tar.gz (83 kB)

Using cached mljar-supervised-0.8.0.tar.gz (83 kB)

Using cached mljar-supervised-0.7.20.tar.gz (79 kB)

Using cached mljar-supervised-0.7.19.tar.gz (78 kB)

Using cached mljar-supervised-0.7.18.tar.gz (78 kB)

Using cached mljar-supervised-0.7.17.tar.gz (78 kB)

Using cached mljar-supervised-0.7.16.tar.gz (77 kB)

Using cached mljar-supervised-0.7.15.tar.gz (77 kB)

Using cached mljar-supervised-0.7.14.tar.gz (77 kB)

Using cached mljar-supervised-0.7.13.tar.gz (77 kB)

Using cached mljar-supervised-0.7.12.tar.gz (76 kB)

Using cached mljar-supervised-0.7.11.tar.gz (75 kB)

Using cached mljar-supervised-0.7.10.tar.gz (74 kB)

Using cached mljar-supervised-0.7.9.tar.gz (73 kB)

Using cached mljar-supervised-0.7.8.tar.gz (72 kB)

Using cached mljar-supervised-0.7.7.tar.gz (72 kB)

Using cached mljar-supervised-0.7.6.tar.gz (72 kB)

Using cached mljar-supervised-0.7.5.tar.gz (72 kB)

Using cached mljar-supervised-0.7.4.tar.gz (71 kB)

Using cached mljar-supervised-0.7.3.tar.gz (72 kB)

Using cached mljar-supervised-0.7.2.tar.gz (70 kB)

Using cached mljar-supervised-0.7.1.tar.gz (69 kB)

Using cached mljar-supervised-0.7.0.tar.gz (69 kB)

Using cached mljar-supervised-0.6.1.tar.gz (65 kB)

Using cached mljar-supervised-0.6.0.tar.gz (61 kB)

Using cached mljar-supervised-0.5.5.tar.gz (58 kB)

Using cached mljar-supervised-0.5.4.tar.gz (58 kB)

Using cached mljar-supervised-0.5.3.tar.gz (57 kB)

Using cached mljar-supervised-0.5.2.tar.gz (55 kB)

Using cached mljar-supervised-0.5.1.tar.gz (55 kB)

Using cached mljar-supervised-0.5.0.tar.gz (55 kB)

Using cached mljar-supervised-0.4.1.tar.gz (52 kB)

Using cached mljar-supervised-0.4.0.tar.gz (52 kB)

Using cached mljar-supervised-0.3.5.tar.gz (43 kB)

Collecting numpy==1.16.4

Using cached numpy-1.16.4.zip (5.1 MB)

Collecting pandas==1.0.3

Using cached pandas-1.0.3.tar.gz (5.0 MB)

Installing build dependencies ... error

ERROR: Command errored out with exit status 1:

command: /home/hygor/.pyenv/versions/3.9-dev/envs/dsa2/bin/python3 /home/hygor/.pyenv/versions/3.9-dev/envs/dsa2/lib/python3.9/site-packages/pip install --ignore-installed --no-user --prefix /tmp/pip-build-env-21iiv1pg/overlay --no-warn-script-location --no-binary :none: --only-binary :none: -i https://pypi.org/simple -- setuptools wheel 'Cython>=0.29.13' 'numpy==1.13.3; python_version=='"'"'3.6'"'"' and platform_system!='"'"'AIX'"'"'' 'numpy==1.14.5; python_version>='"'"'3.7'"'"' and platform_system!='"'"'AIX'"'"'' 'numpy==1.16.0; python_version=='"'"'3.6'"'"' and platform_system=='"'"'AIX'"'"'' 'numpy==1.16.0; python_version>='"'"'3.7'"'"' and platform_system=='"'"'AIX'"'"''

cwd: None

Complete output (5158 lines):

Ignoring numpy: markers 'python_version == "3.6" and platform_system != "AIX"' don't match your environment

Ignoring numpy: markers 'python_version == "3.6" and platform_system == "AIX"' don't match your environment

Ignoring numpy: markers 'python_version >= "3.7" and platform_system == "AIX"' don't match your environment

...

> OMITTING SOME OUTPUT DUE TO GITHUB'S MESSAGE SIZE LIMIT

...

Collecting mljar-supervised

Using cached mljar-supervised-0.1.6.tar.gz (25 kB)

Using cached mljar-supervised-0.1.5.tar.gz (25 kB)

Using cached mljar-supervised-0.1.4.tar.gz (25 kB)

Using cached mljar-supervised-0.1.3.tar.gz (25 kB)

Using cached mljar-supervised-0.1.2.tar.gz (24 kB)

Using cached mljar-supervised-0.1.1.tar.gz (23 kB)

Using cached mljar-supervised-0.1.0.tar.gz (21 kB)

ERROR: Cannot install mljar-supervised==0.1.0, mljar-supervised==0.1.1, mljar-supervised==0.1.2, mljar-supervised==0.1.3, mljar-supervised==0.1.4, mljar-supervised==0.1.5, mljar-supervised==0.1.6, mljar-supervised==0.1.7, mljar-supervised==0.2.2, mljar-supervised==0.2.3, mljar-supervised==0.2.4, mljar-supervised==0.2.5, mljar-supervised==0.2.6, mljar-supervised==0.2.7, mljar-supervised==0.2.8, mljar-supervised==0.3.0, mljar-supervised==0.3.1, mljar-supervised==0.3.2, mljar-supervised==0.3.3, mljar-supervised==0.3.4, mljar-supervised==0.3.5, mljar-supervised==0.4.0, mljar-supervised==0.4.1, mljar-supervised==0.5.0, mljar-supervised==0.5.1, mljar-supervised==0.5.2, mljar-supervised==0.5.3, mljar-supervised==0.5.4, mljar-supervised==0.5.5, mljar-supervised==0.6.0, mljar-supervised==0.6.1, mljar-supervised==0.7.0, mljar-supervised==0.7.1, mljar-supervised==0.7.10, mljar-supervised==0.7.11, mljar-supervised==0.7.12, mljar-supervised==0.7.13, mljar-supervised==0.7.14, mljar-supervised==0.7.15, mljar-supervised==0.7.16, mljar-supervised==0.7.17, mljar-supervised==0.7.18, mljar-supervised==0.7.19, mljar-supervised==0.7.2, mljar-supervised==0.7.20, mljar-supervised==0.7.3, mljar-supervised==0.7.4, mljar-supervised==0.7.5, mljar-supervised==0.7.6, mljar-supervised==0.7.7, mljar-supervised==0.7.8, mljar-supervised==0.7.9, mljar-supervised==0.8.0, mljar-supervised==0.8.1, mljar-supervised==0.8.2, mljar-supervised==0.8.3, mljar-supervised==0.8.4, mljar-supervised==0.8.5, mljar-supervised==0.8.6, mljar-supervised==0.8.7, mljar-supervised==0.8.8 and mljar-supervised==0.8.9 because these package versions have conflicting dependencies.

The conflict is caused by:

mljar-supervised 0.8.9 depends on catboost==0.24.1

mljar-supervised 0.8.8 depends on catboost==0.24.1

mljar-supervised 0.8.7 depends on catboost==0.24.1

mljar-supervised 0.8.6 depends on catboost==0.24.1

mljar-supervised 0.8.5 depends on catboost==0.24.1

mljar-supervised 0.8.4 depends on catboost==0.24.1

mljar-supervised 0.8.3 depends on catboost==0.24.1

mljar-supervised 0.8.2 depends on catboost==0.24.1

mljar-supervised 0.8.1 depends on catboost==0.24.1

mljar-supervised 0.8.0 depends on catboost==0.24.1

mljar-supervised 0.7.20 depends on catboost==0.24.1

mljar-supervised 0.7.19 depends on catboost==0.24.1

mljar-supervised 0.7.18 depends on catboost==0.24.1

mljar-supervised 0.7.17 depends on catboost==0.24.1

mljar-supervised 0.7.16 depends on catboost==0.24.1

mljar-supervised 0.7.15 depends on catboost==0.24.1

mljar-supervised 0.7.14 depends on catboost==0.24.1

mljar-supervised 0.7.13 depends on catboost==0.24.1

mljar-supervised 0.7.12 depends on catboost==0.24.1

mljar-supervised 0.7.11 depends on catboost==0.24.1

mljar-supervised 0.7.10 depends on catboost==0.24.1

mljar-supervised 0.7.9 depends on catboost==0.24.1

mljar-supervised 0.7.8 depends on catboost==0.24.1

mljar-supervised 0.7.7 depends on catboost==0.24.1

mljar-supervised 0.7.6 depends on catboost==0.24.1

mljar-supervised 0.7.5 depends on catboost==0.24.1

mljar-supervised 0.7.4 depends on catboost==0.24.1

mljar-supervised 0.7.3 depends on tensorflow==2.2.0

mljar-supervised 0.7.2 depends on tensorflow==2.2.0

mljar-supervised 0.7.1 depends on tensorflow==2.2.0

mljar-supervised 0.7.0 depends on tensorflow==2.2.0

mljar-supervised 0.6.1 depends on tensorflow==2.2.0

mljar-supervised 0.6.0 depends on tensorflow==2.2.0

mljar-supervised 0.5.5 depends on tensorflow==2.2.0

mljar-supervised 0.5.4 depends on tensorflow==2.2.0

mljar-supervised 0.5.3 depends on tensorflow==2.2.0

mljar-supervised 0.5.2 depends on tensorflow==2.2.0

mljar-supervised 0.5.1 depends on tensorflow==2.2.0

mljar-supervised 0.5.0 depends on tensorflow==2.2.0

mljar-supervised 0.4.1 depends on tensorflow==2.2.0

mljar-supervised 0.4.0 depends on tensorflow==2.2.0

mljar-supervised 0.3.5 depends on pandas==1.0.3

mljar-supervised 0.3.4 depends on pandas==1.0.3

mljar-supervised 0.3.3 depends on pandas==1.0.3

mljar-supervised 0.3.2 depends on pandas==1.0.3

mljar-supervised 0.3.1 depends on pandas==1.0.3

mljar-supervised 0.3.0 depends on pandas==1.0.3

mljar-supervised 0.2.8 depends on pandas==1.0.3

mljar-supervised 0.2.7 depends on pandas==1.0.3

mljar-supervised 0.2.6 depends on pandas==1.0.3

mljar-supervised 0.2.5 depends on pandas==1.0.3

mljar-supervised 0.2.4 depends on pandas==1.0.3

mljar-supervised 0.2.3 depends on pandas==1.0.3

mljar-supervised 0.2.2 depends on pandas==1.0.3

mljar-supervised 0.1.7 depends on catboost==0.13.1

mljar-supervised 0.1.6 depends on catboost==0.13.1

mljar-supervised 0.1.5 depends on catboost==0.13.1

mljar-supervised 0.1.4 depends on catboost==0.13.1

mljar-supervised 0.1.3 depends on catboost==0.13.1

mljar-supervised 0.1.2 depends on catboost==0.13.1

mljar-supervised 0.1.1 depends on catboost==0.13.1

mljar-supervised 0.1.0 depends on catboost==0.13.1

To fix this you could try to:

1. loosen the range of package versions you've specified

2. remove package versions to allow pip attempt to solve the dependency conflict

ERROR: ResolutionImpossible: for help visit https://pip.pypa.io/en/latest/user_guide/#fixing-conflicting-dependencies

```

|

closed

|

2021-02-09T10:28:02Z

|

2021-02-09T23:37:18Z

|

https://github.com/mljar/mljar-supervised/issues/307

|

[] |

hygorxaraujo

| 2 |

psf/black

|

python

| 3,633 |

Line not wrapped

|

I found an issue in the current version of black where line wrapping is not correctly applied. This does not involve string reformatting.

**Black v23.3.0**

[Playground link](https://black.vercel.app/?version=stable&state=_Td6WFoAAATm1rRGAgAhARYAAAB0L-Wj4AHfAOhdAD2IimZxl1N_WlXnON2nzOXxnCQIxzIqQZbS3zQTwTW6xptQlTj-3pETmXPnpbnXnLb3eYNAvH6LCRbVMTSCqZQr7FCRQ4-fkpwBhQ_Kz5CtmFXpjv0xc0_v8nuB0CGjzA9o2ytyiyMRU7df_BmY9pQ2UapC3cfAYp1osTezMWNzrcufcWOYSQlLLO62l9n9j0LJUiBO-IQyOXnjiVNVMD9JCUIwGB3jbUyYFJKo-yvRpw9WGHrhrOkRZtwvsPzNCn17jwUPU9VEs55zPlEadM_bJk9QUD-ihc-tsr7jdIQ27qff53k9ygAAcjvKZbMf-RQAAYQC4AMAABIujeixxGf7AgAAAAAEWVo=)

## Options

`--line-length=88`

`--safe`

## Input

```python

class Test:

def example(self):

if True:

try:

result = very_important_function(

testdict=json.dumps(

{

"something_quite_long": self.this_is_a_long_string_as_well,

})

)

except:

pass

```

## Output

```python

class Test:

def example(self):

if True:

try:

result = very_important_function(

testdict=json.dumps(

{

"something_quite_long": self.this_is_a_long_string_as_well,

}

)

)

except:

pass

```

## Expected

```python

class Test:

def example(self):

if True:

try:

result = very_important_function(

testdict=json.dumps(

{

"something_quite_long":

self.this_is_a_long_string_as_well,

}

)

)

except:

pass

```

|

closed

|

2023-03-31T16:30:40Z

|

2024-02-02T21:05:49Z

|

https://github.com/psf/black/issues/3633

|

[] |

classner

| 4 |

tensorflow/tensor2tensor

|

machine-learning

| 1,441 |

Problems in multihead_attention

|

In T2T v1.12.0

Lines 3600-3643 in common_attention.py

` if cache is None or memory_antecedent is None:

q, k, v = compute_qkv(query_antecedent, memory_antecedent,

total_key_depth, total_value_depth, q_filter_width,

kv_filter_width, q_padding, kv_padding,

vars_3d_num_heads=vars_3d_num_heads)

if cache is not None:

if attention_type not in ["dot_product", "dot_product_relative"]:

# TODO(petershaw): Support caching when using relative position

# representations, i.e. "dot_product_relative" attention.

raise NotImplementedError(

"Caching is not guaranteed to work with attention types other than"

" dot_product.")

if bias is None:

raise ValueError("Bias required for caching. See function docstring "

"for details.")

if memory_antecedent is not None:

# Encoder-Decoder Attention Cache

q = compute_attention_component(query_antecedent, total_key_depth,

q_filter_width, q_padding, "q",

vars_3d_num_heads=vars_3d_num_heads)

k = cache["k_encdec"]

v = cache["v_encdec"]

else:

k = split_heads(k, num_heads)

v = split_heads(v, num_heads)

decode_loop_step = kwargs.get("decode_loop_step")

if decode_loop_step is None:

k = cache["k"] = tf.concat([cache["k"], k], axis=2)

v = cache["v"] = tf.concat([cache["v"], v], axis=2)

else:

# Inplace update is required for inference on TPU.

# Inplace_ops only supports inplace_update on the first dimension.

# The performance of current implementation is better than updating

# the tensor by adding the result of matmul(one_hot,

# update_in_current_step)

tmp_k = tf.transpose(cache["k"], perm=[2, 0, 1, 3])

tmp_k = inplace_ops.alias_inplace_update(

tmp_k, decode_loop_step, tf.squeeze(k, axis=2))

k = cache["k"] = tf.transpose(tmp_k, perm=[1, 2, 0, 3])

tmp_v = tf.transpose(cache["v"], perm=[2, 0, 1, 3])

tmp_v = inplace_ops.alias_inplace_update(

tmp_v, decode_loop_step, tf.squeeze(v, axis=2))

v = cache["v"] = tf.transpose(tmp_v, perm=[1, 2, 0, 3])`

The block:

` if memory_antecedent is not None:

# Encoder-Decoder Attention Cache

q = compute_attention_component(query_antecedent, total_key_depth,

q_filter_width, q_padding, "q",

vars_3d_num_heads=vars_3d_num_heads)

k = cache["k_encdec"]

v = cache["v_encdec"]`

should be unreachable, due to the sequence of preceding conditionals.

Also - currently trying to debug why I'm having shape issues in dot product attention:

` with tf.variable_scope(

name, default_name="dot_product_attention", values=[q, k, v]) as scope:

q = tf.Print(q,[q],'ATTENTION QUERY: ')

k = tf.Print(k,[k],'ATTENTION KEY: ')

logits = tf.matmul(q, k, transpose_b=True) # [..., length_q, length_kv]

if bias is not None:

bias = common_layers.cast_like(bias, logits)

bias = tf.Print(bias,[bias],'BIAS: ')

logits = tf.Print(logits,[logits],'LOGITS ')

logits += bias

logits = tf.Print(logits,[logits],'LOGITS POST ADD BIAS')

# If logits are fp16, upcast before softmax

logits = maybe_upcast(logits, activation_dtype, weight_dtype)

weights = tf.nn.softmax(logits, name="attention_weights")

weights = common_layers.cast_like(weights, q)

weights = tf.Print(weights,[weights],'WEIGHTS POST CAST LIKE')

if save_weights_to is not None:

save_weights_to[scope.name] = weights

save_weights_to[scope.name + "/logits"] = logits

# Drop out attention links for each head.

weights = common_layers.dropout_with_broadcast_dims(weights, 1.0 - dropout_rate, broadcast_dims=dropout_broadcast_dims)

#Debugging weights last dimension not matching value first dimension

weights = tf.Print(weights,[weights],'ATTENTION WEIGHTS AFTER DROPOUT: ')

v = tf.Print(v,[v],'VALUE VECTOR')

if common_layers.should_generate_summaries() and make_image_summary:

attention_image_summary(weights, image_shapes)

return tf.matmul(weights, v)

`

OUTPUT:

BIAS: [[[[-0]]]]BIAS: [[[[-0]]]]

BIAS: [[[[-0]]]]

BIAS: [[[[-0]]]]

BIAS: [[[[-0]]]]

BIAS: [[[[-0]]]]

ATTENTION KEY: [[[[0.151335612 -0.404835284 1.54091191...]]]...]

VALUE VECTOR[[[[0.166511878 -1.3204602 -0.372632712...]]]...]

ATTENTION QUERY: [[[[0.0620518401 -0.0874474421 0.000517165521...]]]...]

LOGITS [[[[-0.137875706]][[1.05136979]][[-0.866865873]]]...]

LOGITS POST ADD BIAS[[[[-0.137875706]][[1.05136979]][[-0.866865873]]]...]

WEIGHTS POST CAST LIKE[[[[1]][[1]][[1]]]...]

ATTENTION WEIGHTS AFTER DROPOUT: [[[[1]][[1]][[1]]]...]

ATTENTION KEY: [[[[-0.493546933 0.678736925 1.12754095...]]]...]

VALUE VECTOR[[[[0.427185386 -0.0682374686 -0.551386356...]]]...]

ATTENTION QUERY: [[[[-0.290087074 0.0257696807 -0.136151105...]]]...]

LOGITS [[[[-0.889816523]][[0.497348607]][[-1.31549573]]]...]

LOGITS POST ADD BIAS[[[[-0.889816523]][[0.497348607]][[-1.31549573]]]...]

WEIGHTS POST CAST LIKE[[[[1]][[1]][[1]]]...]

ATTENTION WEIGHTS AFTER DROPOUT: [[[[1]][[1]][[1]]]...]

VALUE VECTOR[[[[0.394824564 -0.571578 -1.01061356...]]]...]

ATTENTION KEY: [[[[3.20773053 -1.05240285 0.20996505...]]]...]

ATTENTION QUERY: [[[[0.187075511 -0.0486161038 0.089396365...]]]...]

LOGITS [[[[1.59205556]][[-1.71665192]][[-1.37695456]]]...]

LOGITS POST ADD BIAS[[[[1.59205556]][[-1.71665192]][[-1.37695456]]]...]

WEIGHTS POST CAST LIKE[[[[1]][[1]][[1]]]...]

ATTENTION WEIGHTS AFTER DROPOUT: [[[[1]][[1]][[1]]]...]

ATTENTION QUERY: [[[[-0.136278272 -0.159313381 -0.204157695...]]]...]

VALUE VECTOR[[[[-1.74210846 1.38060951 -1.33350658...]]]...]

ATTENTION KEY: [[[[-0.646322787 -0.519498885 -0.108884774...]]]...]

LOGITS [[[[-1.05637074]][[-1.38687372]][[1.01201]]]...]

LOGITS POST ADD BIAS[[[[-1.05637074]][[-1.38687372]][[1.01201]]]...]

WEIGHTS POST CAST LIKE[[[[1]][[1]][[1]]]...]

ATTENTION WEIGHTS AFTER DROPOUT: [[[[1]][[1]][[1]]]...]

ATTENTION KEY: [[[[-0.766771 1.29248929 0.353579193...]]]...]

VALUE VECTOR[[[[-0.473533779 0.339176893 -0.285344601...]]]...]

ATTENTION QUERY: [[[[-0.00684091263 -0.0722586587 -0.0857348889...]]]...]

LOGITS [[[[-1.03410649]][[0.495673776]][[-1.93785417]]]...]

LOGITS POST ADD BIAS[[[[-1.03410649]][[0.495673776]][[-1.93785417]]]...]

WEIGHTS POST CAST LIKE[[[[1]][[1]][[1]]]...]

ATTENTION WEIGHTS AFTER DROPOUT: [[[[1]][[1]][[1]]]...]

ATTENTION KEY: [[[[-0.752419114 0.899734139 0.263004512...]]]...]

VALUE VECTOR[[[[-1.55072808 0.319361448 0.537948072...]]]...]

ATTENTION QUERY: [[[[-0.130305797 0.192893207 -0.0268632658...]]]...]

LOGITS [[[[-0.535128653]][[0.04286623]][[0.769883]]]...]

LOGITS POST ADD BIAS[[[[-0.535128653]][[0.04286623]][[0.769883]]]...]

WEIGHTS POST CAST LIKE[[[[1]][[1]][[1]]]...]

ATTENTION WEIGHTS AFTER DROPOUT: [[[[1]][[1]][[1]]]...]

BIAS: [[[[-0 -0]]]]

BIAS: [[[[-0 -0]]]]

BIAS: [[[[-0 -0]]]]

BIAS: [[[[-0 -0]]]]

BIAS: [[[[-0 -0]]]]

BIAS: [[[[-0 -0]]]]

ATTENTION KEY: [[[[-1.19346094 0.665090382 -0.502224...]]]...]

ATTENTION QUERY: [[[[0.193519413 -0.132038742 -0.0287191756...]]]...]

LOGITS [[[[-0.292171031]][[1.25045216]][[1.87848222]]]...]

VALUE VECTOR[[[[-0.436115 -0.63339144 0.0544230118...]]]...]

LOGITS POST ADD BIAS[[[[-0.292171031 -0.292171031]][[1.25045216...]]]...]

WEIGHTS POST CAST LIKE[[[[0.5 0.5]][[0.5...]]]...]

ATTENTION WEIGHTS AFTER DROPOUT: [[[[0.5 0.5]][[0.5...]]]...]

File "/usr/local/lib/python2.7/dist-packages/tensor2tensor/layers/common_attention.py", line 3682, in multihead_attention

activation_dtype=kwargs.get("activation_dtype"))

File "/usr/local/lib/python2.7/dist-packages/tensor2tensor/layers/common_attention.py", line 1540, in dot_product_attention

return tf.matmul(weights, v)

File "/usr/local/lib/python2.7/dist-packages/tensorflow/python/ops/math_ops.py", line 2019, in matmul

a, b, adj_x=adjoint_a, adj_y=adjoint_b, name=name)

File "/usr/local/lib/python2.7/dist-packages/tensorflow/python/ops/gen_math_ops.py", line 1245, in batch_mat_mul

"BatchMatMul", x=x, y=y, adj_x=adj_x, adj_y=adj_y, name=name)

File "/usr/local/lib/python2.7/dist-packages/tensorflow/python/framework/op_def_library.py", line 787, in _apply_op_helper

op_def=op_def)

File "/usr/local/lib/python2.7/dist-packages/tensorflow/python/util/deprecation.py", line 488, in new_func

return func(*args, **kwargs)

File "/usr/local/lib/python2.7/dist-packages/tensorflow/python/framework/ops.py", line 3274, in create_op

op_def=op_def)

File "/usr/local/lib/python2.7/dist-packages/tensorflow/python/framework/ops.py", line 1770, in __init__

self._traceback = tf_stack.extract_stack()

InvalidArgumentError (see above for traceback): In[0] mismatch In[1] shape: 2 vs. 1: [1,16,1,2] [1,16,1,64] 0 0

[[node transformer/while/body/parallel_0/body/decoder/layer_0/self_attention/multihead_attention/dot_product_attention/MatMul_1 (defined at /usr/local/lib/python2.7/dist-packages/tensor2tensor/layers/common_attention.py:1540) = BatchMatMul[T=DT_FLOAT, adj_x=false, adj_y=false, _device="/job:localhost/replica:0/task:0/device:CPU:0"](transformer/while/body/parallel_0/body/decoder/layer_0/self_attention/multihead_attention/dot_product_attention/Print_6, transformer/while/body/parallel_0/body/decoder/layer_0/self_attention/multihead_attention/dot_product_attention/Print_7)]]

Basically, adding logits to bias is causing logits to have an invalid shape. This sort of seems to be a caching issue, since the previous queries,keys, and values are not found on the second autoregressive iteration.

|

open

|

2019-02-07T19:01:01Z

|

2019-02-07T19:44:53Z

|

https://github.com/tensorflow/tensor2tensor/issues/1441

|

[] |

mikeymezher

| 1 |

amidaware/tacticalrmm

|

django

| 1,459 |

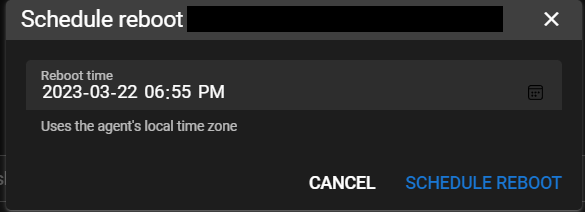

[FEATURE REQUEST]: Schedule bulk tasks

|

**Is your feature request related to a problem? Please describe.**

One of our clients has frequent power outages that don't follow any sort of schedule.

Each time, we need to "time" this using `shutdown /s /t` a day or so in advance, and we have to calculate the number of minutes until the desired shutdown time.

**Describe the solution you'd like**

Besides shutdowns, there are several cases where we have desired to quickly fire off bulk command / script at a scheduled time as opposed to creating a one-time task in Automation Manager.

In addition, we often use the same Automation Policies across clients (using sub containers as a workaround for fine grained policies). This works perfect for our needs, but since you can only apply one policy per container it would be much more flexible and powerful (and easier) if we could schedule bulk tasks to run at a specified time.

**Describe alternatives you've considered**

Some commands like `shutdown` allow us to work around this. Another potential workaround is to do a bulk command that adds the task using the build in Windows Task Scheduler, but this is a lot more work.

**Additional context**

IMO **Tools -> Bulk Command** and **Tools -> Bulk Script** would benefit form having a scheduling option similar to the **Reboot Later** option:

For consistency, I also think the right-click on agent -> **Send Command** and **Run Script** should have this option as well.

Thank you,

|

open

|

2023-03-23T00:59:26Z

|

2023-03-23T01:01:39Z

|

https://github.com/amidaware/tacticalrmm/issues/1459

|

[] |

joeldeteves

| 0 |

polarsource/polar

|

fastapi

| 4,526 |

Deprecate `Ads`

|

Our Ad benefit was designed for two use cases:

1. README sponsorship

2. Sponsorship shoutout/showcase in our newsletter feature

We've sunsetted our newsletter feature a few months ago (https://github.com/orgs/polarsource/discussions/3998) so `#2` is no longer applicable – leaving `#1`. Simultaneously, we've been supported in the amazing `Sponsorkit` (https://github.com/antfu-collective/sponsorkit) library for a while which can merge sponsors/subscribers from GitHub, Patreon, Polar and more into one. Being a better offering and solution for `#1`.

As a result, we should sunset our ad benefit and update our documentation to promote `Sponsorkit` alone for this use case as it's a better solution and offering. Since you're getting setup now, I'd highly recommend going with `Sponsorkit` out of the gates.

|

closed

|

2024-11-24T21:03:04Z

|

2025-02-28T10:03:48Z

|

https://github.com/polarsource/polar/issues/4526

|

[

"refactor",

"docs/content"

] |

birkjernstrom

| 0 |

kizniche/Mycodo

|

automation

| 471 |

6.1.0: Send Test Mail broken?

|

So my installation has been acting up lately, so I tried to set up some alerts.

After configuring all the smtp parameters I tried to send a test mail:

Entered my eMail Address, clicked "Send Test eMail" and got the following error:

Error: Modify Alert Settings: name 'message' is not defined

Either my installation has some serious issues, or mycodo is quite buggy right now...

|

closed

|

2018-05-15T10:01:54Z

|

2018-06-20T03:18:30Z

|

https://github.com/kizniche/Mycodo/issues/471

|

[] |

fry42

| 7 |

microsoft/nni

|

tensorflow

| 5,059 |

borken when ModelSpeedup

|

**borken when ModelSpeedup**:

**Ubuntu20**:

- NNI version:2.7

- Training service (local|remote|pai|aml|etc):local

- Client OS:

- Server OS (for remote mode only):

- Python version:3.7

- PyTorch/TensorFlow version:PyTorch 1.11.0

- Is conda/virtualenv/venv used?:conda

- Is running in Docker?:No

**Configuration**:

- Experiment config (remember to remove secrets!):

- Search space:

**Log message**:

-

nnimanager.log:

> Traceback (most recent call last):

File "lib/prune_gssl.py", line 137, in <module>

ModelSpeedup(model, torch.rand(3, 3, cfg.input_size, cfg.input_size).to(device), "./prune_model/mask.pth").speedup_model()

File "/home/inspur/Projects/nni/nni/compression/pytorch/speedup/compressor.py", line 512, in speedup_model

self.infer_modules_masks()

File "/home/inspur/Projects/nni/nni/compression/pytorch/speedup/compressor.py", line 355, in infer_modules_masks

self.update_direct_sparsity(curnode)

File "/home/inspur/Projects/nni/nni/compression/pytorch/speedup/compressor.py", line 237, in update_direct_sparsity

node.outputs) == 1, 'The number of the output should be one after the Tuple unpacked manually'

AssertionError: The number of the output should be one after the Tuple unpacked manually

- dispatcher.log:

- nnictl stdout and stderr:

<!--

Where can you find the log files:

LOG: https://github.com/microsoft/nni/blob/master/docs/en_US/Tutorial/HowToDebug.md#experiment-root-director

STDOUT/STDERR: https://nni.readthedocs.io/en/stable/reference/nnictl.html#nnictl-log-stdout

-->

**How to reproduce it?**: below is my forward code

> def forward(self, x):

x = self.conv1(x)

x = self.bn1(x)

x = F.relu(x)

x = self.maxpool(x)

x = self.layer1(x)

x = self.layer2(x)

x = self.layer3(x)

x = self.layer4(x)

cls1 = self.cls_layer(x)

offset_x = self.x_layer(x)

offset_y = self.y_layer(x)

nb_x = self.nb_x_layer(x)

nb_y = self.nb_y_layer(x)

x = self.my_maxpool(x)

cls2 = self.cls_layer(x)

x = self.my_maxpool(x)

cls3 = self.cls_layer(x)

return cls1, cls2, cls3, offset_x, offset_y, nb_x, nb_y

"cls1, cls2, cls3" are produced by the same layer(cls_layer), when return cls1, cls2, cls3, error comes up, when return one of them, error is gone

|

open

|

2022-08-11T08:33:38Z

|

2023-05-11T10:30:20Z

|

https://github.com/microsoft/nni/issues/5059

|

[

"bug",

"support",

"ModelSpeedup",

"v3.0"

] |

ly0303521

| 3 |

explosion/spaCy

|

machine-learning

| 13,528 |

Numpy v2.0.0 breaks the ability to download models using spaCy

|

## How to reproduce the behaviour

In my dockerfile, I run these commands:

```Dockerfile

FROM --platform=linux/amd64 python:3.12.4

RUN pip install --upgrade pip

RUN pip install torch --index-url https://download.pytorch.org/whl/cpu

RUN pip install spacy

RUN python -m spacy download en_core_web_lg

```

It returns the following error (and stacktrace):

```

2.519 Traceback (most recent call last):

2.519 File "<frozen runpy>", line 189, in _run_module_as_main

2.519 File "<frozen runpy>", line 148, in _get_module_details

2.519 File "<frozen runpy>", line 112, in _get_module_details

2.519 File "/usr/local/lib/python3.12/site-packages/spacy/__init__.py", line 6, in <module>

2.521 from .errors import setup_default_warnings

2.522 File "/usr/local/lib/python3.12/site-packages/spacy/errors.py", line 3, in <module>

2.522 from .compat import Literal

2.522 File "/usr/local/lib/python3.12/site-packages/spacy/compat.py", line 39, in <module>

2.522 from thinc.api import Optimizer # noqa: F401

2.522 ^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^

2.522 File "/usr/local/lib/python3.12/site-packages/thinc/api.py", line 1, in <module>

2.522 from .backends import (

2.522 File "/usr/local/lib/python3.12/site-packages/thinc/backends/__init__.py", line 17, in <module>

2.522 from .cupy_ops import CupyOps

2.522 File "/usr/local/lib/python3.12/site-packages/thinc/backends/cupy_ops.py", line 16, in <module>

2.522 from .numpy_ops import NumpyOps

2.522 File "thinc/backends/numpy_ops.pyx", line 1, in init thinc.backends.numpy_ops

2.524 ValueError: numpy.dtype size changed, may indicate binary incompatibility. Expected 96 from C header, got 88 from PyObject

```

Locking to the previous version of numpy will resolve this issue:

```Dockerfile

FROM --platform=linux/amd64 python:3.12.4

RUN pip install --upgrade pip

RUN pip install torch --index-url https://download.pytorch.org/whl/cpu

RUN pip install numpy==1.26.4 spacy

RUN python -m spacy download en_core_web_lg

```

|

open

|

2024-06-16T15:42:21Z

|

2024-12-29T11:20:23Z

|

https://github.com/explosion/spaCy/issues/13528

|

[

"bug"

] |

afogel

| 16 |

modoboa/modoboa

|

django

| 2,336 |

Webmail does not list mails when FTS-Solr is enabled

|

# Impacted versions

* OS Type: Debian

* OS Version: 9 (stretch)

* Database Type: MySQL

* Database version: 10.1.48-MariaDB-0+deb9u2

* Modoboa: 1.17.0

* installer used: Yes

* Webserver: Nginx / Uwsgi

# Steps to reproduce

1. Configure Solr as FTS engine for Dovecot like:

plugin {

fts = solr

fts_autoindex = yes

fts_solr = break-imap-search url=http://xxxx:8080/solr/

}

2. Launch webmail client in Modoboa

# Current behavior

1. Mails are not listed in overview but are searchable

2. Also quarantine lists mails

Due to how default IMAP search is implemented no mails are found

# Expected behavior

List mails in overview

# Video/Screenshot link (optional)

<img width="1236" alt="grafik" src="https://user-images.githubusercontent.com/15322546/127743140-5afd3717-d139-4209-ab33-f91b65cc1993.png">

|

open

|

2021-07-31T14:33:07Z

|

2021-10-14T07:21:02Z

|

https://github.com/modoboa/modoboa/issues/2336

|

[

"bug"

] |

zsoltbarat

| 5 |

marshmallow-code/apispec

|

rest-api

| 440 |

Add minimum/maximum on date/datetime fields

|

Python's date/datetime objects have min/max bounds.

https://docs.python.org/3.5/library/datetime.html

https://stackoverflow.com/a/31972447/4653485

We should use those to automatically specify min/max values in the spec.

|

open

|

2019-04-29T13:09:32Z

|

2025-01-21T18:22:35Z

|

https://github.com/marshmallow-code/apispec/issues/440

|

[

"help wanted"

] |

lafrech

| 0 |

wkentaro/labelme

|

computer-vision

| 780 |

new script to convert a whole folder of Json files to a ready to training dataset

|

Hi, i have created a variation script of json_to_dataset.py script, i would like make this contribution to the project if you consider it appropriate.

its named "folder_to_dataset.py", it converts not a single json file into dataset, but a whole folder of json files into a ready to training dataset.

**OUTPUT**: as output it drops the folders "training/images" and "training/labels" with the png files obtained from his correspondent json file, the png files are named as a sequence of numbers started by default from "1"

**PARAMETERS**: folder_to_dataset.py receives as input the folder which contains the json files you want to convert into dataset, also has an optional parameter named "-startsWith" which sets the first number to start the sequence of png output files.

**Example**:

the command: "folder_to_dataset.py myJsonsFolderPath" will drop: 1.png, 2.png, 3.png, … in training/images and training/labels folders

the command: "folder_to_dataset.py myJsonsFolderPath -startsWith 5“ will drop: 5.png, 6.png, 7.png, … in training/images and training/labels folders

**script features:**

*shows dataset building progress by percents

*skip no Json files without interrupt the process

*allows dataset updating by “startsWith” parameter

-the picture “example” shows how the script works

|

closed

|

2020-09-27T16:34:47Z

|

2022-06-25T04:56:21Z

|

https://github.com/wkentaro/labelme/issues/780

|

[] |

JasgTrilla

| 0 |

Miserlou/Zappa

|

flask

| 1,896 |

FastAPI incompatibility

|

## Context

I get a 500 response code when I try to deploy a simple application.

```python

from fastapi import FastAPI

app = FastAPI()

@app.get("/")

def read_root():

return {"Hello": "World"}

```

Zappa tail says `[1562665728357] __call__() missing 1 required positional argument: 'send'`.

When I go to the api gateway url, I see this message:

> "{'message': 'An uncaught exception happened while servicing this request. You can investigate this with the `zappa tail` command.', 'traceback': ['Traceback (most recent call last):\\n', ' File \"/var/task/handler.py\", line 531, in handler\\n with Response.from_app(self.wsgi_app, environ) as response:\\n', ' File \"/var/task/werkzeug/wrappers/base_response.py\", line 287, in from_app\\n return cls(*_run_wsgi_app(app, environ, buffered))\\n', ' File \"/var/task/werkzeug/test.py\", line 1119, in run_wsgi_app\\n app_rv = app(environ, start_response)\\n', ' File \"/var/task/zappa/middleware.py\", line 70, in __call__\\n response = self.application(environ, encode_response)\\n', \"TypeError: __call__() missing 1 required positional argument: 'send'\\n\"]}"

## Steps to Reproduce

```bash

zappa init

zappa deploy dev

# Error: Warning! Status check on the deployed lambda failed. A GET request to '/' yielded a 500 response code.

```

## Your Environment

<!--- Include as many relevant details about the environment you experienced the bug in -->

* Zappa version used: 0.48.2

* Operating System and Python version: macOS Mojave | Python 3.6.5

* The output of `pip freeze`:

```

Package Version

------------------- ---------

aiofiles 0.4.0

aniso8601 6.0.0

argcomplete 1.9.3

boto3 1.9.184

botocore 1.12.184

certifi 2019.6.16

cfn-flip 1.2.1

chardet 3.0.4

Click 7.0

dataclasses 0.6

dnspython 1.16.0

docutils 0.14

durationpy 0.5

email-validator 1.0.4

fastapi 0.31.0

future 0.16.0

graphene 2.1.6

graphql-core 2.2

graphql-relay 0.4.5

h11 0.8.1

hjson 3.0.1

httptools 0.0.13

idna 2.8

itsdangerous 1.1.0

Jinja2 2.10.1

jmespath 0.9.3

kappa 0.6.0

lambda-packages 0.20.0

MarkupSafe 1.1.1

pip 19.1.1

placebo 0.9.0

promise 2.2.1

pydantic 0.29

python-dateutil 2.6.1

python-multipart 0.0.5

python-slugify 1.2.4

PyYAML 5.1.1

requests 2.22.0

Rx 1.6.1

s3transfer 0.2.1

setuptools 39.0.1

six 1.12.0

starlette 0.12.0

toml 0.10.0

tqdm 4.19.1

troposphere 2.4.9

ujson 1.35

Unidecode 1.1.1

urllib3 1.25.3

uvicorn 0.8.3

uvloop 0.12.2

websockets 7.0

Werkzeug 0.15.4

wheel 0.33.4

wsgi-request-logger 0.4.6

zappa 0.48.2

```

* Your `zappa_settings.py`:

```

{

"dev": {

"app_function": "app.main.app",

"aws_region": "ap-northeast-2",

"profile_name": "default",

"project_name": "myprojectname",

"runtime": "python3.6",

"s3_bucket": "mybucketname"

}

}

```

|

open

|

2019-07-09T10:06:07Z

|

2020-02-09T21:40:56Z

|

https://github.com/Miserlou/Zappa/issues/1896

|

[] |

sunnysid3up

| 11 |

kizniche/Mycodo

|

automation

| 426 |

LCD does not activate when attempt to display relay state

|

## Mycodo Issue Report:

- Specific Mycodo Version: 5.6.1

#### Problem Description

- Attempt to display relay state on LCD. e.g. Output 4 (Lights) State (on/off)

- Activate LCD

- nothing happens, LCD continues to show Mycodo version (see Daemon errors below)

### Errors

2018-03-15 00:34:38,819 - mycodo.lcd_1 - ERROR - Count not initialize LCD. Error: 'LCDController' object has no attribute 'LCD_CMD'

2018-03-15 00:39:45,509 - mycodo.lcd_1 - ERROR - Error: 'relay_state'

Traceback (most recent call last):

File "/var/mycodo-root/mycodo/controller_lcd.py", line 171, in __init__

each_lcd_display.line_2_measurement)

File "/var/mycodo-root/mycodo/controller_lcd.py", line 440, in setup_lcd_line

self.lcd_line[display_id][line]['unit'] = self.list_inputs[measurement]['unit']

KeyError: 'relay_state'

2018-03-15 00:39:45,540 - mycodo.lcd_1 - INFO - Activated in 24436.7 ms

2018-03-15 00:39:45,547 - mycodo.lcd_1 - ERROR - Exception: 'LCDController' object has no attribute 'LCD_CMD'

Traceback (most recent call last):

File "/var/mycodo-root/mycodo/controller_lcd.py", line 268, in run

self.lcd_byte(0x01, self.LCD_CMD, self.LCD_BACKLIGHT_OFF)

AttributeError: 'LCDController' object has no attribute 'LCD_CMD'

2018-03-15 00:39:45,599 - mycodo.lcd_1 - ERROR - Count not initialize LCD. Error: 'LCDController' object has no attribute 'LCD_CMD'

2018-03-15 00:51:16,468 - mycodo.lcd_1 - INFO - Activated in 9383.0 ms

|

closed

|

2018-03-15T01:03:42Z

|

2018-03-15T02:09:27Z

|

https://github.com/kizniche/Mycodo/issues/426

|

[] |

drgrumpy

| 4 |

FactoryBoy/factory_boy

|

django

| 912 |

Consider renaming to FactoryPy or the like

|

#### The problem

There is massive gender imbalance in the tech industry and the Ruby library which this project was inspired from was renamed some time ago. A fixtures factory has nothing to do with a "boy".

[FactoryGirl was renamed FactoryBot](https://dev.to/ben/factorygirl-has-been-renamed-factorybot-cma)