| repo_name (string, 9-75 chars) | topic (string, 30 classes) | issue_number (int64, 1-203k) | title (string, 1-976 chars) | body (string, 0-254k chars) | state (string, 2 classes) | created_at (string, 20 chars) | updated_at (string, 20 chars) | url (string, 38-105 chars) | labels (sequence, 0-9 items) | user_login (string, 1-39 chars) | comments_count (int64, 0-452) |

|---|---|---|---|---|---|---|---|---|---|---|---|

ydataai/ydata-profiling | jupyter | 741 | pandas profiling too slow: add multiprocessing | I have a table with 10,000 rows and 4,000 columns,

and pandas profiling is too slow.

Could you add multiprocessing support?

(i.e., column statistics could easily be computed in parallel with multiprocessing.)

Thanks! | closed | 2021-03-30T06:11:08Z | 2021-09-27T22:45:44Z | https://github.com/ydataai/ydata-profiling/issues/741 | [

"performance 🚀"

] | arita37 | 1 |
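Here is a minimal sketch of the per-column parallelism the request describes, using only the standard library. `column_stats` and `profile_columns` are hypothetical names and are not part of ydata-profiling's API. The statistics are a small illustrative subset. The sketch assumes each column fits in memory as a Python list, with `None` marking missing values.

```python
import statistics
from concurrent.futures import ProcessPoolExecutor, ThreadPoolExecutor
from typing import Dict, List, Optional

Stats = Dict[str, Optional[float]]


def column_stats(values: List[Optional[float]]) -> Stats:
    """Compute a few summary statistics for one numeric column.

    Missing values are represented as None and counted separately.
    """
    present = [v for v in values if v is not None]
    return {
        "count": len(present),
        "missing": len(values) - len(present),
        "mean": statistics.fmean(present) if present else None,
        "min": min(present) if present else None,
        "max": max(present) if present else None,
    }


def profile_columns(
    columns: Dict[str, List[Optional[float]]],
    max_workers: Optional[int] = None,
    use_processes: bool = True,
) -> Dict[str, Stats]:
    """Compute column_stats for every column in parallel.

    Columns are independent, so each one can be handed to a pool worker.
    chunksize batches many small columns per task to reduce inter-process
    overhead with a process pool (thread pools ignore it).
    """
    executor_cls = ProcessPoolExecutor if use_processes else ThreadPoolExecutor
    names = list(columns)
    with executor_cls(max_workers=max_workers) as pool:
        results = pool.map(column_stats, (columns[n] for n in names), chunksize=64)
        return dict(zip(names, results))
```

With `use_processes=True`, call `profile_columns` from under an `if __name__ == "__main__":` guard so it also works on platforms that spawn worker processes. Processes sidestep the GIL for pure-Python statistics. Threads only help if the per-column work releases the GIL, as many NumPy routines do.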

CorentinJ/Real-Time-Voice-Cloning | tensorflow | 695 | No module named pathlib | > matteo@MBP-di-matteo Real-Time-Voice-Cloning-master % python demo_cli.py

> Traceback (most recent call last):

> File "demo_cli.py", line 2, in <module>

> from utils.argutils import print_args

> File "/Users/matteo/Real-Time-Voice-Cloning-master/utils/argutils.py", line 22

> def print_args(args: argparse.Namespace, parser=None):

> ^

> SyntaxError: invalid syntax

> matteo@MBP-di-matteo Real-Time-Voice-Cloning-master % python demo_toolbox.py

> Traceback (most recent call last):

> File "demo_toolbox.py", line 1, in <module>

> from pathlib import Path

> ImportError: No module named pathlib

> matteo@MBP-di-matteo Real-Time-Voice-Cloning-master % sudo python demo_toolbox.py

> Password:

> Traceback (most recent call last):

> File "demo_toolbox.py", line 1, in <module>

> from pathlib import Path

> ImportError: No module named pathlib

What should I do? | closed | 2021-03-07T10:40:27Z | 2021-03-08T21:24:20Z | https://github.com/CorentinJ/Real-Time-Voice-Cloning/issues/695 | [] | matteopuppis | 3 |
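Both tracebacks are consistent with `python` on this Mac resolving to Python 2. Function annotations such as `args: argparse.Namespace` are a syntax error there, and `pathlib` only joined the standard library in Python 3.4. Running the scripts with `python3`, or from a Python 3 virtual environment, should get past both errors.

Below is a minimal sketch of an early version guard. `MIN_PYTHON` is an assumed value, not the project's documented requirement. The code deliberately avoids Python-3-only syntax (no f-strings, no annotations), so Python 2 can parse it far enough to print the message.

```python
import sys

# Assumed minimum for illustration; check the project's README for its real requirement.
MIN_PYTHON = (3, 6)


def python_is_supported(version_info=None, minimum=MIN_PYTHON):
    """Return True if the given (or running) interpreter meets the minimum."""
    if version_info is None:
        version_info = sys.version_info
    return tuple(version_info[:2]) >= tuple(minimum)


def require_python(minimum=MIN_PYTHON):
    """Exit with a readable message instead of a SyntaxError or ImportError."""
    if not python_is_supported(minimum=minimum):
        sys.exit(
            "This script needs Python %d.%d or newer, but it is running under %s. "
            "Try: python3 demo_toolbox.py" % (minimum[0], minimum[1], sys.version.split()[0])
        )
```

The guard only helps if it runs before any Python-3-only code is parsed or imported. The file that contains it must therefore itself be parseable by Python 2, and it should call `require_python()` before importing modules such as `pathlib`.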

axnsan12/drf-yasg | django | 129 | Is it possible to set example value for fields | Hello,

I am looking to set the *example* attribute of a model property but cannot find an example of this in the documentation or source code.

Basically, I would like the generated swagger.yaml to include the attribute [here](https://github.com/axnsan12/drf-yasg/blob/aca0c4713e0163fb0deea8ea397368084a7c83e5/tests/reference.yaml#L1485-L1489), like this:

```yaml
properties:
  title:
    description: title model help_text
    type: string
    maxLength: 255
    minLength: 1
    example: My title
```

The resulting documentation would then show "My title" instead of "string", as in the screenshot:

Is that possible? I would appreciate a pointer on where to look. | closed | 2018-05-21T20:21:19Z | 2024-06-20T00:35:36Z | https://github.com/axnsan12/drf-yasg/issues/129 | [] | bmihelac | 11 |

huggingface/transformers | deep-learning | 36,145 | Problems with Training ModernBERT | ### System Info

- `transformers` version: 4.48.3

- Platform: Linux-6.8.0-52-generic-x86_64-with-glibc2.35

- Python version: 3.12.9

- Huggingface_hub version: 0.28.1

- Safetensors version: 0.5.2

- Accelerate version: 1.3.0

- Accelerate config: not found

- PyTorch version (GPU?): 2.6.0+cu126 (True)

- Tensorflow version (GPU?): not installed (NA)

- Flax version (CPU?/GPU?/TPU?): not installed (NA)

- Jax version: not installed

- JaxLib version: not installed

- Using distributed or parallel set-up in script?: Parallel (I'm not sure; I'm using a single GPU on a single machine)

- Using GPU in script?: Yes

- GPU type: NVIDIA GeForce RTX 2060

I have also tried installing `python3.12-dev` in response to the following error message (it also appears in the full output after the code below),

```python

/usr/include/python3.12/pyconfig.h:3:12: fatal error: x86_64-linux-gnu/python3.12/pyconfig.h: No such file or directory

# include <x86_64-linux-gnu/python3.12/pyconfig.h>

^~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~

compilation terminated.

```

but the error persists.

### Who can help?

@ArthurZucker

### Information

- [ ] The official example scripts

- [x] My own modified scripts

### Tasks

- [ ] An officially supported task in the `examples` folder (such as GLUE/SQuAD, ...)

- [x] My own task or dataset (give details below)

### Reproduction

I am essentially replicating the code from the following:

- https://github.com/di37/ner-electrical-engineering-finetuning/blob/main/notebooks/01_data_tokenization.ipynb

- https://github.com/di37/ner-electrical-engineering-finetuning/blob/main/notebooks/02_finetuning.ipynb

- See also: https://blog.cubed.run/automating-electrical-engineering-text-analysis-with-named-entity-recognition-ner-part-1-babd2df422d8

The following is the code for setting up the training process:

```python

import os

from transformers import BertTokenizerFast
from datasets import load_dataset

# from utilities import MODEL_ID, DATASET_ID, OUTPUT_DATASET_PATH

DATASET_ID = "disham993/ElectricalNER"
MODEL_ID = "answerdotai/ModernBERT-large"
LOGS = "logs"
OUTPUT_DATASET_PATH = os.path.join(
    "data", "tokenized_electrical_ner_modernbert"
)  # "data"
OUTPUT_DIR = "models"
MODEL_PATH = os.path.join(OUTPUT_DIR, MODEL_ID)
OUTPUT_MODEL = os.path.join(OUTPUT_DIR, f"electrical-ner-{MODEL_ID.split('/')[-1]}")
EVAL_STRATEGY = "epoch"
LEARNING_RATE = 1e-5
PER_DEVICE_TRAIN_BATCH_SIZE = 64
PER_DEVICE_EVAL_BATCH_SIZE = 64
NUM_TRAIN_EPOCHS = 5
WEIGHT_DECAY = 0.01

LOCAL_MODELS = {
    "google-bert/bert-base-uncased": "electrical-ner-bert-base-uncased",
    "distilbert/distilbert-base-uncased": "electrical-ner-distilbert-base-uncased",
    "google-bert/bert-large-uncased": "electrical-ner-bert-large-uncased",
    "answerdotai/ModernBERT-base": "electrical-ner-ModernBERT-base",
    "answerdotai/ModernBERT-large": "electrical-ner-ModernBERT-large",
}

ONLINE_MODELS = {
    "google-bert/bert-base-uncased": "disham993/electrical-ner-bert-base",
    "distilbert/distilbert-base-uncased": "disham993/electrical-ner-distilbert-base",
    "google-bert/bert-large-uncased": "disham993/electrical-ner-bert-large",
    "answerdotai/ModernBERT-base": "disham993/electrical-ner-ModernBERT-base",
    "answerdotai/ModernBERT-large": "disham993/electrical-ner-ModernBERT-large",
}

electrical_ner_dataset = load_dataset(DATASET_ID, trust_remote_code=True)
print(electrical_ner_dataset)

from datasets import DatasetDict

shrunk_train = electrical_ner_dataset['train'].select(range(10))
shrunk_valid = electrical_ner_dataset['validation'].select(range(5))
shrunk_test = electrical_ner_dataset['test'].select(range(5))
electrical_ner_dataset = DatasetDict({
    'train': shrunk_train,
    'validation': shrunk_valid,
    'test': shrunk_test
})
electrical_ner_dataset.shape

tokenizer = BertTokenizerFast.from_pretrained(MODEL_ID)


def tokenize_and_align_labels(examples, label_all_tokens=True):
    """
    Function to tokenize and align labels with respect to the tokens. This function is specifically designed for
    Named Entity Recognition (NER) tasks where alignment of the labels is necessary after tokenization.

    Parameters:
    examples (dict): A dictionary containing the tokens and the corresponding NER tags.
        - "tokens": list of words in a sentence.
        - "ner_tags": list of corresponding entity tags for each word.
    label_all_tokens (bool): A flag to indicate whether all tokens should have labels.
        If False, only the first token of a word will have a label,
        the other tokens (subwords) corresponding to the same word will be assigned -100.

    Returns:
    tokenized_inputs (dict): A dictionary containing the tokenized inputs and the corresponding labels aligned with the tokens.
    """
    tokenized_inputs = tokenizer(examples["tokens"], truncation=True, is_split_into_words=True)
    labels = []
    for i, label in enumerate(examples["ner_tags"]):
        word_ids = tokenized_inputs.word_ids(batch_index=i)
        # word_ids() => Return a list mapping the tokens
        # to their actual word in the initial sentence.
        # It returns a list indicating the word corresponding to each token.
        previous_word_idx = None
        label_ids = []
        # Special tokens like `<s>` and `</s>` are originally mapped to None
        # We need to set the label to -100 so they are automatically ignored in the loss function.
        for word_idx in word_ids:
            if word_idx is None:
                # set -100 as the label for these special tokens
                label_ids.append(-100)
            # For the other tokens in a word, we set the label to either the current label or -100, depending on
            # the label_all_tokens flag.
            elif word_idx != previous_word_idx:
                # if current word_idx is != prev then it's the most regular case
                # and add the corresponding token
                label_ids.append(label[word_idx])
            else:
                # to take care of sub-words which have the same word_idx
                # set -100 as well for them, but only if label_all_tokens == False
                label_ids.append(label[word_idx] if label_all_tokens else -100)
            # mask the subword representations after the first subword
            previous_word_idx = word_idx
        labels.append(label_ids)
    tokenized_inputs["labels"] = labels
    return tokenized_inputs


tokenized_datasets = electrical_ner_dataset.map(tokenize_and_align_labels, batched=True)
tokenized_electrical_ner_dataset = tokenized_datasets

import os
import numpy as np
from transformers import AutoTokenizer
from transformers import DataCollatorForTokenClassification
from transformers import AutoModelForTokenClassification
from datasets import load_from_disk
from transformers import TrainingArguments, Trainer
import evaluate
import json
import pandas as pd

label_list = tokenized_electrical_ner_dataset["train"].features["ner_tags"].feature.names
num_labels = len(label_list)
print(f"Labels: {label_list}")
print(f"Number of labels: {num_labels}")

model = AutoModelForTokenClassification.from_pretrained(MODEL_ID, num_labels=num_labels)
tokenizer = AutoTokenizer.from_pretrained(MODEL_ID)

args = TrainingArguments(
    output_dir=MODEL_PATH,
    eval_strategy=EVAL_STRATEGY,
    learning_rate=LEARNING_RATE,
    per_device_train_batch_size=1,
    per_device_eval_batch_size=1,
    num_train_epochs=NUM_TRAIN_EPOCHS,
    weight_decay=WEIGHT_DECAY,
    push_to_hub=False
)

data_collator = DataCollatorForTokenClassification(tokenizer)


def compute_metrics(eval_preds):
    """
    Function to compute the evaluation metrics for Named Entity Recognition (NER) tasks.
    The function computes precision, recall, F1 score and accuracy.

    Parameters:
    eval_preds (tuple): A tuple containing the predicted logits and the true labels.

    Returns:
    A dictionary containing the precision, recall, F1 score and accuracy.
    """
    pred_logits, labels = eval_preds
    pred_logits = np.argmax(pred_logits, axis=2)
    # the logits and the probabilities are in the same order,
    # so we don't need to apply the softmax
    # We remove all the values where the label is -100
    predictions = [
        [label_list[p] for (p, l) in zip(prediction, label) if l != -100]
        for prediction, label in zip(pred_logits, labels)
    ]
    true_labels = [
        [label_list[l] for (p, l) in zip(prediction, label) if l != -100]
        for prediction, label in zip(pred_logits, labels)
    ]
    metric = evaluate.load("seqeval")
    results = metric.compute(predictions=predictions, references=true_labels)
    return {
        "precision": results["overall_precision"],
        "recall": results["overall_recall"],
        "f1": results["overall_f1"],
        "accuracy": results["overall_accuracy"],
    }


trainer = Trainer(
    model,
    args,
    train_dataset=tokenized_electrical_ner_dataset["train"],
    eval_dataset=tokenized_electrical_ner_dataset["validation"],
    data_collator=data_collator,
    tokenizer=tokenizer,
    compute_metrics=compute_metrics,
)

trainer.train()

```
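The label-alignment rule inside `tokenize_and_align_labels` can be isolated into a small pure-Python function. That makes it easy to check without downloading a tokenizer. `align_labels` is a hypothetical helper that mirrors the loop in the script. Its `word_ids` argument stands in for what a fast tokenizer's `word_ids()` returns: `None` for special tokens, otherwise the index of the source word.

```python
from typing import List, Optional

IGNORE_INDEX = -100  # label value the loss function skips


def align_labels(
    word_ids: List[Optional[int]],
    word_labels: List[int],
    label_all_tokens: bool = True,
) -> List[int]:
    """Map word-level NER tags onto sub-word tokens."""
    aligned: List[int] = []
    previous: Optional[int] = None
    for word_idx in word_ids:
        if word_idx is None:
            # Special tokens such as [CLS]/[SEP] never get a label.
            aligned.append(IGNORE_INDEX)
        elif word_idx != previous:
            # First sub-word of a new word gets that word's tag.
            aligned.append(word_labels[word_idx])
        else:
            # Continuation sub-word: copy the tag or mask it.
            aligned.append(word_labels[word_idx] if label_all_tokens else IGNORE_INDEX)
        previous = word_idx
    return aligned
```

With `label_all_tokens=True`, continuation sub-words inherit the word's tag, including a `B-` tag, so `compute_metrics` scores them too. With `False`, they are masked with -100, so each word is scored once.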

```python

The tokenizer class you load from this checkpoint is not the same type as the class this function is called from. It may result in unexpected tokenization.

The tokenizer class you load from this checkpoint is 'PreTrainedTokenizerFast'.

The class this function is called from is 'BertTokenizerFast'.

Map: 100%|█████████████████████████████| 10/10 [00:00<00:00, 1220.41 examples/s]

Map: 100%|████████████████████████████████| 5/5 [00:00<00:00, 818.40 examples/s]

Map: 100%|███████████████████████████████| 5/5 [00:00<00:00, 1246.82 examples/s]

Some weights of ModernBertForTokenClassification were not initialized from the model checkpoint at answerdotai/ModernBERT-large and are newly initialized: ['classifier.bias', 'classifier.weight']

You should probably TRAIN this model on a down-stream task to be able to use it for predictions and inference.

/home/hyunjong/Documents/Development/Python/trouver_personal_playground/playgrounds/ml_model_training_playground/modernbert_training_error.py:183: FutureWarning: `tokenizer` is deprecated and will be removed in version 5.0.0 for `Trainer.__init__`. Use `processing_class` instead.

trainer = Trainer(

0%| | 0/50 [00:00<?, ?it/s]In file included from /usr/include/python3.12/Python.h:12:0,

from /tmp/tmpjbmobkir/main.c:5:

/usr/include/python3.12/pyconfig.h:3:12: fatal error: x86_64-linux-gnu/python3.12/pyconfig.h: No such file or directory

# include <x86_64-linux-gnu/python3.12/pyconfig.h>

^~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~

compilation terminated.

Traceback (most recent call last):

File "/home/hyunjong/Documents/Development/Python/trouver_personal_playground/playgrounds/ml_model_training_playground/modernbert_training_error.py", line 193, in <module>

trainer.train()

File "/home/hyunjong/Documents/Development/Python/trouver_py312_venv/lib/python3.12/site-packages/transformers/trainer.py", line 2171, in train

return inner_training_loop(

^^^^^^^^^^^^^^^^^^^^

File "/home/hyunjong/Documents/Development/Python/trouver_py312_venv/lib/python3.12/site-packages/transformers/trainer.py", line 2531, in _inner_training_loop

tr_loss_step = self.training_step(model, inputs, num_items_in_batch)

^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^

File "/home/hyunjong/Documents/Development/Python/trouver_py312_venv/lib/python3.12/site-packages/transformers/trainer.py", line 3675, in training_step

loss = self.compute_loss(model, inputs, num_items_in_batch=num_items_in_batch)

^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^

File "/home/hyunjong/Documents/Development/Python/trouver_py312_venv/lib/python3.12/site-packages/transformers/trainer.py", line 3731, in compute_loss

outputs = model(**inputs)

^^^^^^^^^^^^^^^

File "/home/hyunjong/Documents/Development/Python/trouver_py312_venv/lib/python3.12/site-packages/torch/nn/modules/module.py", line 1739, in _wrapped_call_impl

return self._call_impl(*args, **kwargs)

^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^

File "/home/hyunjong/Documents/Development/Python/trouver_py312_venv/lib/python3.12/site-packages/torch/nn/modules/module.py", line 1750, in _call_impl

return forward_call(*args, **kwargs)

^^^^^^^^^^^^^^^^^^^^^^^^^^^^^

File "/home/hyunjong/Documents/Development/Python/trouver_py312_venv/lib/python3.12/site-packages/transformers/models/modernbert/modeling_modernbert.py", line 1349, in forward

outputs = self.model(

^^^^^^^^^^^

File "/home/hyunjong/Documents/Development/Python/trouver_py312_venv/lib/python3.12/site-packages/torch/nn/modules/module.py", line 1739, in _wrapped_call_impl

return self._call_impl(*args, **kwargs)

^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^

File "/home/hyunjong/Documents/Development/Python/trouver_py312_venv/lib/python3.12/site-packages/torch/nn/modules/module.py", line 1750, in _call_impl

return forward_call(*args, **kwargs)

^^^^^^^^^^^^^^^^^^^^^^^^^^^^^

File "/home/hyunjong/Documents/Development/Python/trouver_py312_venv/lib/python3.12/site-packages/transformers/models/modernbert/modeling_modernbert.py", line 958, in forward

hidden_states = self.embeddings(input_ids=input_ids, inputs_embeds=inputs_embeds)

^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^

File "/home/hyunjong/Documents/Development/Python/trouver_py312_venv/lib/python3.12/site-packages/torch/nn/modules/module.py", line 1739, in _wrapped_call_impl

return self._call_impl(*args, **kwargs)

^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^

File "/home/hyunjong/Documents/Development/Python/trouver_py312_venv/lib/python3.12/site-packages/torch/nn/modules/module.py", line 1750, in _call_impl

return forward_call(*args, **kwargs)

^^^^^^^^^^^^^^^^^^^^^^^^^^^^^

File "/home/hyunjong/Documents/Development/Python/trouver_py312_venv/lib/python3.12/site-packages/transformers/models/modernbert/modeling_modernbert.py", line 217, in forward

self.compiled_embeddings(input_ids)

File "/home/hyunjong/Documents/Development/Python/trouver_py312_venv/lib/python3.12/site-packages/torch/_dynamo/eval_frame.py", line 574, in _fn

return fn(*args, **kwargs)

^^^^^^^^^^^^^^^^^^^

File "/home/hyunjong/Documents/Development/Python/trouver_py312_venv/lib/python3.12/site-packages/torch/_dynamo/convert_frame.py", line 1380, in __call__

return self._torchdynamo_orig_callable(

^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^

File "/home/hyunjong/Documents/Development/Python/trouver_py312_venv/lib/python3.12/site-packages/torch/_dynamo/convert_frame.py", line 1164, in __call__

result = self._inner_convert(

^^^^^^^^^^^^^^^^^^^^

File "/home/hyunjong/Documents/Development/Python/trouver_py312_venv/lib/python3.12/site-packages/torch/_dynamo/convert_frame.py", line 547, in __call__

return _compile(

^^^^^^^^^

File "/home/hyunjong/Documents/Development/Python/trouver_py312_venv/lib/python3.12/site-packages/torch/_dynamo/convert_frame.py", line 986, in _compile

guarded_code = compile_inner(code, one_graph, hooks, transform)

^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^

File "/home/hyunjong/Documents/Development/Python/trouver_py312_venv/lib/python3.12/site-packages/torch/_dynamo/convert_frame.py", line 715, in compile_inner

return _compile_inner(code, one_graph, hooks, transform)

^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^

File "/home/hyunjong/Documents/Development/Python/trouver_py312_venv/lib/python3.12/site-packages/torch/_utils_internal.py", line 95, in wrapper_function

return function(*args, **kwargs)

^^^^^^^^^^^^^^^^^^^^^^^^^

File "/home/hyunjong/Documents/Development/Python/trouver_py312_venv/lib/python3.12/site-packages/torch/_dynamo/convert_frame.py", line 750, in _compile_inner

out_code = transform_code_object(code, transform)

^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^

File "/home/hyunjong/Documents/Development/Python/trouver_py312_venv/lib/python3.12/site-packages/torch/_dynamo/bytecode_transformation.py", line 1361, in transform_code_object

transformations(instructions, code_options)

File "/home/hyunjong/Documents/Development/Python/trouver_py312_venv/lib/python3.12/site-packages/torch/_dynamo/convert_frame.py", line 231, in _fn

return fn(*args, **kwargs)

^^^^^^^^^^^^^^^^^^^

File "/home/hyunjong/Documents/Development/Python/trouver_py312_venv/lib/python3.12/site-packages/torch/_dynamo/convert_frame.py", line 662, in transform

tracer.run()

File "/home/hyunjong/Documents/Development/Python/trouver_py312_venv/lib/python3.12/site-packages/torch/_dynamo/symbolic_convert.py", line 2868, in run

super().run()

File "/home/hyunjong/Documents/Development/Python/trouver_py312_venv/lib/python3.12/site-packages/torch/_dynamo/symbolic_convert.py", line 1052, in run

while self.step():

^^^^^^^^^^^

File "/home/hyunjong/Documents/Development/Python/trouver_py312_venv/lib/python3.12/site-packages/torch/_dynamo/symbolic_convert.py", line 962, in step

self.dispatch_table[inst.opcode](self, inst)

File "/home/hyunjong/Documents/Development/Python/trouver_py312_venv/lib/python3.12/site-packages/torch/_dynamo/symbolic_convert.py", line 3048, in RETURN_VALUE

self._return(inst)

File "/home/hyunjong/Documents/Development/Python/trouver_py312_venv/lib/python3.12/site-packages/torch/_dynamo/symbolic_convert.py", line 3033, in _return

self.output.compile_subgraph(

File "/home/hyunjong/Documents/Development/Python/trouver_py312_venv/lib/python3.12/site-packages/torch/_dynamo/output_graph.py", line 1101, in compile_subgraph

self.compile_and_call_fx_graph(

File "/home/hyunjong/Documents/Development/Python/trouver_py312_venv/lib/python3.12/site-packages/torch/_dynamo/output_graph.py", line 1382, in compile_and_call_fx_graph

compiled_fn = self.call_user_compiler(gm)

^^^^^^^^^^^^^^^^^^^^^^^^^^^

File "/home/hyunjong/Documents/Development/Python/trouver_py312_venv/lib/python3.12/site-packages/torch/_dynamo/output_graph.py", line 1432, in call_user_compiler

return self._call_user_compiler(gm)

^^^^^^^^^^^^^^^^^^^^^^^^^^^^

File "/home/hyunjong/Documents/Development/Python/trouver_py312_venv/lib/python3.12/site-packages/torch/_dynamo/output_graph.py", line 1483, in _call_user_compiler

raise BackendCompilerFailed(self.compiler_fn, e).with_traceback(

File "/home/hyunjong/Documents/Development/Python/trouver_py312_venv/lib/python3.12/site-packages/torch/_dynamo/output_graph.py", line 1462, in _call_user_compiler

compiled_fn = compiler_fn(gm, self.example_inputs())

^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^

File "/home/hyunjong/Documents/Development/Python/trouver_py312_venv/lib/python3.12/site-packages/torch/_dynamo/repro/after_dynamo.py", line 130, in __call__

compiled_gm = compiler_fn(gm, example_inputs)

^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^

File "/home/hyunjong/Documents/Development/Python/trouver_py312_venv/lib/python3.12/site-packages/torch/__init__.py", line 2340, in __call__

return compile_fx(model_, inputs_, config_patches=self.config)

^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^

File "/home/hyunjong/Documents/Development/Python/trouver_py312_venv/lib/python3.12/site-packages/torch/_inductor/compile_fx.py", line 1863, in compile_fx

return aot_autograd(

^^^^^^^^^^^^^

File "/home/hyunjong/Documents/Development/Python/trouver_py312_venv/lib/python3.12/site-packages/torch/_dynamo/backends/common.py", line 83, in __call__

cg = aot_module_simplified(gm, example_inputs, **self.kwargs)

^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^

File "/home/hyunjong/Documents/Development/Python/trouver_py312_venv/lib/python3.12/site-packages/torch/_functorch/aot_autograd.py", line 1155, in aot_module_simplified

compiled_fn = dispatch_and_compile()

^^^^^^^^^^^^^^^^^^^^^^

File "/home/hyunjong/Documents/Development/Python/trouver_py312_venv/lib/python3.12/site-packages/torch/_functorch/aot_autograd.py", line 1131, in dispatch_and_compile

compiled_fn, _ = create_aot_dispatcher_function(

^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^

File "/home/hyunjong/Documents/Development/Python/trouver_py312_venv/lib/python3.12/site-packages/torch/_functorch/aot_autograd.py", line 580, in create_aot_dispatcher_function

return _create_aot_dispatcher_function(

^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^

File "/home/hyunjong/Documents/Development/Python/trouver_py312_venv/lib/python3.12/site-packages/torch/_functorch/aot_autograd.py", line 830, in _create_aot_dispatcher_function

compiled_fn, fw_metadata = compiler_fn(

^^^^^^^^^^^^

File "/home/hyunjong/Documents/Development/Python/trouver_py312_venv/lib/python3.12/site-packages/torch/_functorch/_aot_autograd/jit_compile_runtime_wrappers.py", line 678, in aot_dispatch_autograd

compiled_fw_func = aot_config.fw_compiler(fw_module, adjusted_flat_args)

^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^

File "/home/hyunjong/Documents/Development/Python/trouver_py312_venv/lib/python3.12/site-packages/torch/_functorch/aot_autograd.py", line 489, in __call__

return self.compiler_fn(gm, example_inputs)

^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^

File "/home/hyunjong/Documents/Development/Python/trouver_py312_venv/lib/python3.12/site-packages/torch/_inductor/compile_fx.py", line 1741, in fw_compiler_base

return inner_compile(

^^^^^^^^^^^^^^

File "/home/hyunjong/Documents/Development/Python/trouver_py312_venv/lib/python3.12/site-packages/torch/_inductor/compile_fx.py", line 569, in compile_fx_inner

return wrap_compiler_debug(_compile_fx_inner, compiler_name="inductor")(

^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^

File "/home/hyunjong/Documents/Development/Python/trouver_py312_venv/lib/python3.12/site-packages/torch/_dynamo/repro/after_aot.py", line 102, in debug_wrapper

inner_compiled_fn = compiler_fn(gm, example_inputs)

^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^

File "/home/hyunjong/Documents/Development/Python/trouver_py312_venv/lib/python3.12/site-packages/torch/_inductor/compile_fx.py", line 685, in _compile_fx_inner

mb_compiled_graph = fx_codegen_and_compile(

^^^^^^^^^^^^^^^^^^^^^^^

File "/home/hyunjong/Documents/Development/Python/trouver_py312_venv/lib/python3.12/site-packages/torch/_inductor/compile_fx.py", line 1129, in fx_codegen_and_compile

return scheme.codegen_and_compile(gm, example_inputs, inputs_to_check, graph_kwargs)

^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^

File "/home/hyunjong/Documents/Development/Python/trouver_py312_venv/lib/python3.12/site-packages/torch/_inductor/compile_fx.py", line 1044, in codegen_and_compile

compiled_fn = graph.compile_to_module().call

^^^^^^^^^^^^^^^^^^^^^^^^^

File "/home/hyunjong/Documents/Development/Python/trouver_py312_venv/lib/python3.12/site-packages/torch/_inductor/graph.py", line 2027, in compile_to_module

return self._compile_to_module()

^^^^^^^^^^^^^^^^^^^^^^^^^

File "/home/hyunjong/Documents/Development/Python/trouver_py312_venv/lib/python3.12/site-packages/torch/_inductor/graph.py", line 2033, in _compile_to_module

self.codegen_with_cpp_wrapper() if self.cpp_wrapper else self.codegen()

^^^^^^^^^^^^^^

File "/home/hyunjong/Documents/Development/Python/trouver_py312_venv/lib/python3.12/site-packages/torch/_inductor/graph.py", line 1968, in codegen

self.scheduler.codegen()

File "/home/hyunjong/Documents/Development/Python/trouver_py312_venv/lib/python3.12/site-packages/torch/_inductor/scheduler.py", line 3477, in codegen

return self._codegen()

^^^^^^^^^^^^^^^

File "/home/hyunjong/Documents/Development/Python/trouver_py312_venv/lib/python3.12/site-packages/torch/_inductor/scheduler.py", line 3554, in _codegen

self.get_backend(device).codegen_node(node)

File "/home/hyunjong/Documents/Development/Python/trouver_py312_venv/lib/python3.12/site-packages/torch/_inductor/codegen/cuda_combined_scheduling.py", line 80, in codegen_node

return self._triton_scheduling.codegen_node(node)

^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^

File "/home/hyunjong/Documents/Development/Python/trouver_py312_venv/lib/python3.12/site-packages/torch/_inductor/codegen/simd.py", line 1219, in codegen_node

return self.codegen_node_schedule(

^^^^^^^^^^^^^^^^^^^^^^^^^^^

File "/home/hyunjong/Documents/Development/Python/trouver_py312_venv/lib/python3.12/site-packages/torch/_inductor/codegen/simd.py", line 1263, in codegen_node_schedule

src_code = kernel.codegen_kernel()

^^^^^^^^^^^^^^^^^^^^^^^

File "/home/hyunjong/Documents/Development/Python/trouver_py312_venv/lib/python3.12/site-packages/torch/_inductor/codegen/triton.py", line 3154, in codegen_kernel

**self.inductor_meta_common(),

^^^^^^^^^^^^^^^^^^^^^^^^^^^

File "/home/hyunjong/Documents/Development/Python/trouver_py312_venv/lib/python3.12/site-packages/torch/_inductor/codegen/triton.py", line 3013, in inductor_meta_common

"backend_hash": torch.utils._triton.triton_hash_with_backend(),

^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^

File "/home/hyunjong/Documents/Development/Python/trouver_py312_venv/lib/python3.12/site-packages/torch/utils/_triton.py", line 111, in triton_hash_with_backend

backend = triton_backend()

^^^^^^^^^^^^^^^^

File "/home/hyunjong/Documents/Development/Python/trouver_py312_venv/lib/python3.12/site-packages/torch/utils/_triton.py", line 103, in triton_backend

target = driver.active.get_current_target()

^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^

File "/home/hyunjong/Documents/Development/Python/trouver_py312_venv/lib/python3.12/site-packages/triton/runtime/driver.py", line 23, in __getattr__

self._initialize_obj()

File "/home/hyunjong/Documents/Development/Python/trouver_py312_venv/lib/python3.12/site-packages/triton/runtime/driver.py", line 20, in _initialize_obj

self._obj = self._init_fn()

^^^^^^^^^^^^^^^

File "/home/hyunjong/Documents/Development/Python/trouver_py312_venv/lib/python3.12/site-packages/triton/runtime/driver.py", line 9, in _create_driver

return actives[0]()

^^^^^^^^^^^^

File "/home/hyunjong/Documents/Development/Python/trouver_py312_venv/lib/python3.12/site-packages/triton/backends/nvidia/driver.py", line 450, in __init__

self.utils = CudaUtils() # TODO: make static

^^^^^^^^^^^

File "/home/hyunjong/Documents/Development/Python/trouver_py312_venv/lib/python3.12/site-packages/triton/backends/nvidia/driver.py", line 80, in __init__

mod = compile_module_from_src(Path(os.path.join(dirname, "driver.c")).read_text(), "cuda_utils")

^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^

File "/home/hyunjong/Documents/Development/Python/trouver_py312_venv/lib/python3.12/site-packages/triton/backends/nvidia/driver.py", line 57, in compile_module_from_src

so = _build(name, src_path, tmpdir, library_dirs(), include_dir, libraries)

^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^

File "/home/hyunjong/Documents/Development/Python/trouver_py312_venv/lib/python3.12/site-packages/triton/runtime/build.py", line 50, in _build

ret = subprocess.check_call(cc_cmd)

^^^^^^^^^^^^^^^^^^^^^^^^^^^^^

File "/usr/lib/python3.12/subprocess.py", line 415, in check_call

raise CalledProcessError(retcode, cmd)

torch._dynamo.exc.BackendCompilerFailed: backend='inductor' raised:

CalledProcessError: Command '['/home/hyunjong/anaconda3/bin/x86_64-conda-linux-gnu-cc', '/tmp/tmpjbmobkir/main.c', '-O3', '-shared', '-fPIC', '-Wno-psabi', '-o', '/tmp/tmpjbmobkir/cuda_utils.cpython-312-x86_64-linux-gnu.so', '-lcuda', '-L/home/hyunjong/Documents/Development/Python/trouver_py312_venv/lib/python3.12/site-packages/triton/backends/nvidia/lib', '-L/lib/x86_64-linux-gnu', '-L/lib/i386-linux-gnu', '-I/home/hyunjong/Documents/Development/Python/trouver_py312_venv/lib/python3.12/site-packages/triton/backends/nvidia/include', '-I/tmp/tmpjbmobkir', '-I/usr/include/python3.12']' returned non-zero exit status 1.

Set TORCH_LOGS="+dynamo" and TORCHDYNAMO_VERBOSE=1 for more information

You can suppress this exception and fall back to eager by setting:

import torch._dynamo

torch._dynamo.config.suppress_errors = True

0%| | 0/50 [00:01<?, ?it/s]

```

### Expected behavior

Training should run to completion. | closed | 2025-02-12T04:04:50Z | 2025-02-14T04:21:22Z | https://github.com/huggingface/transformers/issues/36145 | [

"bug"

] | hyunjongkimmath | 4 |
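Reading the traceback, the failure happens before any model computation. ModernBERT's embedding layer goes through `torch.compile`, Inductor asks Triton to build a small C helper (`cuda_utils`), and the compiler Triton invoked is conda's `x86_64-conda-linux-gnu-cc`.

A plausible explanation, inferred from the log rather than confirmed in the report: this conda compiler does not search `/usr/include`, where Ubuntu's `python3.12-dev` places the multiarch `x86_64-linux-gnu/python3.12/pyconfig.h`. That would also explain why installing `python3.12-dev` did not help.

Possible workarounds:

- Triton's build step reads the `CC` environment variable, so point `CC` at the system compiler, or deactivate the conda environment that sets it.
- Skip compilation with the `torch._dynamo.config.suppress_errors` fallback that the traceback itself suggests.
- In recent transformers versions, pass `reference_compile=False` when loading ModernBERT.

The sketch below only decides which compiler to export. `pick_triton_cc` is a hypothetical helper, and the `/usr/bin` paths are assumptions about a typical Ubuntu install.

```python
import os
import shutil
from typing import Callable, Mapping, Optional

# Assumed locations of the distribution's own compilers on Ubuntu.
SYSTEM_COMPILERS = ("/usr/bin/gcc", "/usr/bin/clang")


def pick_triton_cc(
    env: Mapping[str, str],
    which: Callable[[str], Optional[str]] = shutil.which,
) -> Optional[str]:
    """Return a compiler path to export as CC, or None to leave CC alone.

    Only overrides CC when it points at a conda toolchain compiler
    (heuristic: 'conda' appears in the executable's file name).
    """
    current = env.get("CC")
    if not current or "conda" not in os.path.basename(current):
        # Unset CC: Triton looks for gcc/clang on PATH by itself.
        # Non-conda CC: respect the user's explicit choice.
        return None
    for candidate in SYSTEM_COMPILERS:
        found = which(candidate)
        if found:
            return found
    return None
```

Call it once near the top of the training script, e.g. `cc = pick_triton_cc(os.environ)`, and set `os.environ["CC"] = cc` when it returns a path. This must happen before `trainer.train()` triggers compilation. The shell equivalent is `CC=/usr/bin/gcc python modernbert_training_error.py`.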

psf/black | python | 4,507 | Re-condensation / Simplification Of Code After Line Length Reduction | Generally, I've come to really love the black formatter, so first off, thanks for that!

There is really only one thing I don't like about it: if a line is shortened so that it could simply fit on one line, the nested format remains and sometimes leaves goofy-looking artifacts.

I believe black would be better off if it first (perhaps via an option) eliminated any line nesting or extra whitespace and then performed the format.

Example: a list comprehension whose conditional logic was removed

```
existing = list(
    [bw.uuid for bw in session.query(Benchmark_Whitelist_DB).all()]
)
```

Could easily be replaced with:

```

existing = [bw.uuid for bw in session.query(Benchmark_Whitelist_DB).all()]

```

No doubt that is more readable, and I think this would reduce some of the opposition to regular use of black.

I think this case could be isolated for strings of a certain line length, between the tab and a colon?

I feel like someone has to have suggested this before?

| closed | 2024-11-08T03:06:46Z | 2024-11-12T04:38:37Z | https://github.com/psf/black/issues/4507 | [

"T: style"

] | SoundsSerious | 8 |
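Black only changes layout. It checks that the reformatted file parses to an equivalent AST, so dropping the redundant `list(...)` wrapper, which changes the AST, is outside what it will do on its own.

Linters cover this case instead: flake8-comprehensions and Ruff's reimplementation of it report it, to my knowledge under rule C411.

As a minimal sketch of how such a check works, the hypothetical `find_redundant_list_calls` below walks Python's `ast` and reports every `list(...)` call whose sole argument is already a list comprehension.

```python
import ast
from typing import List


def find_redundant_list_calls(source: str) -> List[int]:
    """Return line numbers of `list(<list comprehension>)` calls in source."""
    tree = ast.parse(source)
    hits: List[int] = []
    for node in ast.walk(tree):
        if (
            isinstance(node, ast.Call)
            and isinstance(node.func, ast.Name)
            and node.func.id == "list"
            and len(node.args) == 1
            and not node.keywords
            and isinstance(node.args[0], ast.ListComp)
        ):
            # The comprehension already builds a list; the call only copies it.
            hits.append(node.lineno)
    return sorted(hits)
```

The check is deliberately narrow. It does not flag `list(x for x in rows)`, whose argument is a generator expression rather than a list comprehension.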

keras-team/autokeras | tensorflow | 1,209 | Set minimum epochs before early stopping | ### Feature Description

The ability to run a minimum number of epochs before early stopping is activated. For example, with `min_epochs=100`, the model would train for at least 100 epochs before early stopping could end training.

### Code Example


```python
classifier = ak.AutoModel(
    inputs=input_node, outputs=output_node, max_trials=100, min_epochs=100, overwrite=True
)
```

### Reason

<!---

It allows for more model control and the ability to run a minimum amount of epochs before stopping the model.

-->

### Solution

<!---

Add it as a parameter for the automodel or fit function.

-->

| closed | 2020-06-25T14:17:53Z | 2020-12-10T06:23:15Z | https://github.com/keras-team/autokeras/issues/1209 | [

"feature request",

"wontfix"

] | sword134 | 3 |

ansible/ansible | python | 84,381 | exctract list of ips from inventory without providing vault password | ### Summary

I want to extract the list of IPs from the Ansible inventory.

I can do that as follows:

```

ansible-inventory --list --ask-vault-pass | jq -r '._meta.hostvars[].ansible_host'

```
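The same extraction as the `jq` filter can be written in Python for scripts that already consume the `ansible-inventory --list` JSON. This is a minimal sketch and `extract_hosts` is a hypothetical name. It only post-processes the JSON, so it does not by itself avoid the vault prompt. Unlike `jq -r`, which prints `null` for hosts that lack `ansible_host`, this version skips those hosts.

```python
import json


def extract_hosts(inventory_json):
    """Return every ansible_host value from `ansible-inventory --list` output."""
    data = json.loads(inventory_json)
    hostvars = data.get("_meta", {}).get("hostvars", {})
    # Mirrors jq's '._meta.hostvars[].ansible_host', skipping hosts without one.
    return [v["ansible_host"] for v in hostvars.values() if "ansible_host" in v]
```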

but this requires entering the vault password, which in principle should not be necessary.

Please provide a way to extract the IP list from the inventory without querying the encrypted vault.

A generalization of the requested feature is as follows:

Provide a way to parse and extract information from the inventory without querying the encrypted vault.

### Issue Type

Feature Idea

### Component Name

cli

### Additional Information

This is useful in bash scripts that need the IP list. It would let users avoid keeping a duplicate IP list for tasks that cannot be done directly with Ansible playbooks and require scripting.

### Code of Conduct

- [X] I agree to follow the Ansible Code of Conduct | open | 2024-11-24T04:48:24Z | 2024-12-09T15:48:18Z | https://github.com/ansible/ansible/issues/84381 | [

"feature"

] | zxsimba | 5 |

Miserlou/Zappa | flask | 1,735 | "package" create zip with files modified date set to Jan 1, 1980 (this prevents django collectstatic from working properly) | ## Context

Running `zappa package <stage> --output package.zip` creates package.zip; however, all of the files in the package have a modified date of January 1, 1980. This breaks Django's `collectstatic`, which seems to rely on a file's modified date changing.

## Expected Behavior

Modified dates in the package zip should be the files' actual modification dates.

## Actual Behavior

Modified dates in the package zip are fixed to January 1, 1980, preventing `collectstatic` from updating existing files that have changed.
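To check what ended up in a package, the stored timestamps can be read back with Python's `zipfile`. The sketch below is not Zappa's packaging code, and its file names are hypothetical. It shows one way a fixed 1980-01-01 stamp can appear. `ZipFile.write()` copies each file's own modification time, whereas `writestr()` with an explicit `ZipInfo` stores whatever `date_time` it is given. 1980-01-01 is the earliest date the zip format can represent.

```python
import os
import tempfile
import time
import zipfile


def zip_dates(zip_path):
    """Map each archive member to its stored (year, month, day, h, m, s)."""
    with zipfile.ZipFile(zip_path) as zf:
        return {info.filename: info.date_time for info in zf.infolist()}


def demo():
    """Build a small zip two ways and return the stored timestamps."""
    with tempfile.TemporaryDirectory() as tmp:
        src = os.path.join(tmp, "style.css")
        with open(src, "w") as f:
            f.write("body { color: black; }\n")
        # Give the source file a known modification time (local time).
        mtime = time.mktime((2018, 12, 21, 12, 0, 0, 0, 0, -1))
        os.utime(src, (mtime, mtime))

        zip_path = os.path.join(tmp, "package.zip")
        with zipfile.ZipFile(zip_path, "w") as zf:
            # write() stores the file's own modification time.
            zf.write(src, arcname="static/style.css")
            # writestr() with an explicit ZipInfo stores the date it is given.
            fixed = zipfile.ZipInfo("static/fixed.css", date_time=(1980, 1, 1, 0, 0, 0))
            zf.writestr(fixed, "body { color: red; }\n")
        return zip_dates(zip_path)
```

Running `zip_dates("package.zip")` on a real Zappa package shows whether every entry carries the 1980 stamp.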

## Your Environment

* Zappa version used: 0.47

* Operating System and Python version: ubuntu 16.04, python 3.6.5

## temporary work-around

Delete the files from the S3 bucket before running `collectstatic`. | open | 2018-12-21T15:53:46Z | 2018-12-21T16:14:56Z | https://github.com/Miserlou/Zappa/issues/1735 | [] | kylegibson | 1 |

ScottfreeLLC/AlphaPy | scikit-learn | 38 | Error in importing BalanceCascade from imblearn.ensemble | **Describe the bug**

ImportError: cannot import name 'BalanceCascade' from 'imblearn.ensemble' (/opt/conda/lib/python3.7/site-packages/imblearn/ensemble/__init__.py)

**To Reproduce**

Steps to reproduce the behavior:

1. Following instructions from here: https://alphapy.readthedocs.io/en/latest/tutorials/kaggle.html

2. Running step 2 (`alphapy`) throws the error above. It seems there is no `BalanceCascade` in imblearn.

**Expected behavior**

No error thrown.

**Desktop (please complete the following information):**

- OS: Google Cloud Platform Virtual Machine running Linux

**Additional context**

I'm not sure what I'm doing wrong. I looked up the imblearn package, and it doesn't seem to have a `BalanceCascade` class in `ensemble`: https://github.com/scikit-learn-contrib/imbalanced-learn/blob/master/imblearn/ensemble/__init__.py
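If the installed imbalanced-learn no longer ships `BalanceCascade`, one stopgap is to make the import optional so the remaining code can still load. The other option is to install an older imbalanced-learn release that still includes it. This is a minimal sketch with a hypothetical helper name, not AlphaPy's code. Any feature that actually needs `BalanceCascade` would still be unavailable.

```python
import importlib


def optional_attr(module_name, attr):
    """Return module_name.attr, or None if the module or attribute is missing."""
    try:
        module = importlib.import_module(module_name)
    except ImportError:
        return None
    return getattr(module, attr, None)


# BalanceCascade is only present in older imbalanced-learn releases.
BalanceCascade = optional_attr("imblearn.ensemble", "BalanceCascade")
if BalanceCascade is None:
    print("imblearn.ensemble.BalanceCascade is not available in this environment")
```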

Any help is appreciated!

| closed | 2020-07-06T08:47:21Z | 2020-08-25T23:48:49Z | https://github.com/ScottfreeLLC/AlphaPy/issues/38 | [

"bug"

] | toko-stephen-leo | 4 |

indico/indico | flask | 6,538 | Fix faulty checking for empty string and extend to more formats | **Describe the bug**

The `check-format-strings` function should warn us when we remove `{}` from a translation string. Currently with empty braces this is not the case (see below). We also want to extend the feature so that we support `%(...)` notation.
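A likely pitfall for the empty-brace case is that Python's `string.Formatter().parse` reports `{}` with an empty field name `""`. A truthiness check such as `if field:` therefore skips it. The sketch below is not Indico's implementation, and the function names are hypothetical. It counts brace placeholders by testing `is not None` and adds a regex for `%(name)s`-style placeholders.

```python
import re
from collections import Counter
from string import Formatter

# %-style named placeholders such as "%(name)s" or "%(count)d".
PERCENT_RE = re.compile(r"%\(([^)]+)\)[#0\- +]*\d*(?:\.\d+)?[diouxXeEfFgGcrsa]")


def placeholders(s):
    """Count brace-style and %-style placeholders in a string."""
    found = Counter()
    for _literal, field, _spec, _conv in Formatter().parse(s):
        # field is None for plain trailing text, but "" for an empty "{}".
        if field is not None:
            found["{" + field + "}"] += 1
    for name in PERCENT_RE.findall(s):
        found["%(" + name + ")"] += 1
    return found


def missing(msgid, msgstr):
    """Placeholders present in msgid that are absent (or fewer) in msgstr."""
    return placeholders(msgid) - placeholders(msgstr)
```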

**To Reproduce**

Steps to reproduce the behavior:

1. Go to a translation file

2. Change a `msgstr` that contains `{}` to not contain that `{}` anymore.

3. Run `indico i18n check-format-strings`

4. It reports `No issues found!`, even though a placeholder was removed.

**Screenshots**

| closed | 2024-09-13T14:37:18Z | 2024-09-18T09:07:04Z | https://github.com/indico/indico/issues/6538 | [

"bug"

] | AjobK | 7 |

jmcnamara/XlsxWriter | pandas | 653 | XlsxWriter Roadmap | I write and maintain 4 libraries for writing Xlsx files in 4 different programming languages with more or less the same APIs:

* [Excel::Writer::XLSX][1] in Perl,

* [XlsxWriter][2] in Python,

* [Libxlsxwriter][3] in C and

* [rust_xlsxwriter][4] in Rust.

See also Note 1.

New features get added to the Perl version first, then the Python version and then the C version. As such a feature request has to be implemented 4 times, with tests and documentation. The Perl and Python versions are almost completely feature compatible. The C version is somewhat behind the others and the Rust version is a work in progress.

This document gives a broad overview of features that are planned to be implemented, in order.

1. Bugs. These have the highest priority. (Note 2)

2. ~~Add user defined types to the write() method in XlsxWriter.~~ #631 **Done**.

3. ~~Add hyperlinks to images in Excel::Writer::XLSX [Issue 161][5], fix it in the Python version, and add it to the C version.~~ **Done**

4. ~~Learn the phrygian dominant mode in all keys.~~ Close enough.

5. ~~Fix the issue where duplicate images aren't removed/merged.~~ #615 In Python, Perl and C versions. **Done**

6. ~~Add support for comments to the C version~~ https://github.com/jmcnamara/libxlsxwriter/issues/38. **Done**

7. ~~Add support for object positioning to the C version.~~ **Done**

8. ~~Add support for user defined chart data labels.~~ #343 This is the most frequently requested feature across all the libraries. **Done**

9. ~~Add header/footer image support to the C version.~~ **Done**

10. Learn the altered scale in all keys.

11. ~~Add conditional formatting to the C library.~~ **Done**

12. ~~Add support for new Excel dynamic functions.~~ **Done**

13. ~~Add autofilter filter conditions to the C library.~~ **Done**

14. ~~Drop Python 2 support.~~ #720

15. ~~Add table support to libxlsxwriter.~~ **Done**

16. Implement missing features in the C library.

17. Other frequently requested, and feasible, features, in all 3 versions.

**Update for 2023**: I will implement a simulated column autofit method in the Python library. The majority of any other effort will go into getting the Rust version of the library to feature compatibility with the Python version.

Notes:

1. I also wrote a version in Lua, and two other Perl versions (for older Excel file formats) that I no longer actively maintain. I wrote, and open sourced, the first version in January 2000.

2. Some avoidable bugs have lower priority.

[1]: https://github.com/jmcnamara/excel-writer-xlsx

[2]: https://github.com/jmcnamara/XlsxWriter

[3]: https://github.com/jmcnamara/libxlsxwriter

[4]: https://github.com/jmcnamara/rust_xlsxwriter

[5]: https://github.com/jmcnamara/excel-writer-xlsx/issues/161

| open | 2019-08-31T17:13:10Z | 2024-11-19T11:15:01Z | https://github.com/jmcnamara/XlsxWriter/issues/653 | [] | jmcnamara | 21 |

nschloe/tikzplotlib | matplotlib | 452 | Figure alignment in subfloats | Hi,

what is your workflow when generating plots to be used as subfloats?

(I'm not happy with groupplots because of the lack of configurability, the visual appeal and it being complicated to add/change subcaptions of the plots)

I've tried it with the code below, but I have several size issues. Is it possible to set the 'drawing area' to a fixed size or something similar? And how would I do this from tikzplotlib?

Thanks!

Python:

```
import matplotlib.pyplot as plt
import tikzplotlib
import numpy as np

x1=np.arange(0,10)*10e9
x2=np.arange(0,1000)
y1=np.random.randn(1,len(x1))[0]
y2=0.01*x2*np.random.randn(1,len(x2))[0]
KIT_green=(0/255,150/255,130/255)
KIT_blue=(70/255,100/255,170/255)

plt.figure()
plt.plot(x2,y2,label="second trace",color=KIT_green)
plt.xlabel(r"Time $t$ (in \si{\milli\second})")
plt.ylabel(r"Amplitude $S_{11}$ \\ (some measurement) \\ (and another meaningless line) (in \si{\volt})");
tikzplotlib.save("subfigs_left.tikz",extra_axis_parameters=["ylabel style={align=center}"],axis_width="5cm",axis_height="5cm")

plt.figure()
plt.plot(x1,y1,label="first trace",color=KIT_blue)
plt.xlabel(r"Time $t$ (in \si{\milli\second})")
plt.ylabel(r"Amplitude $S_{11}$, $S_{35}$ (in \si{\volt})");
tikzplotlib.save("subfigs_right.tikz",extra_axis_parameters=["ylabel style={align=center}"],axis_width="5cm",axis_height="5cm")
```

LaTeX:

```

\documentclass[11pt]{article}

\usepackage[utf8]{inputenc}

\usepackage{graphicx}

\usepackage{subfig}

\usepackage{siunitx}

\usepackage{tikz}

\usepackage{pgfplots}

\usepackage{tikzscale}

\begin{document}

\begin{figure}

\centering

\subfloat[Plot 1: this shows this]{\includegraphics[width=0.4\textwidth]{subfigs_left.tikz}}

\qquad

\subfloat[Plot 2: and this shows that. But this explanation is quite long. blablabla]{\includegraphics[width=0.4\textwidth]{subfigs_right.tikz}}

\caption{Two plots}

\label{fig:subfig}

\end{figure}

\end{document}

```

| open | 2020-12-04T22:52:00Z | 2020-12-04T22:52:00Z | https://github.com/nschloe/tikzplotlib/issues/452 | [] | youcann | 0 |

desec-io/desec-stack | rest-api | 655 | GUI: Domains not selectable using Firefox | From a user's report:

> One note, using firefox on OSX ( ffv 109.0 (64-bit) ) I was unable to select a domain to edit,

>

> I tested with chrome and safari and was able to select a domain to edit. | closed | 2023-01-26T12:53:01Z | 2024-10-07T16:59:39Z | https://github.com/desec-io/desec-stack/issues/655 | [

"bug",

"gui"

] | peterthomassen | 0 |

vitalik/django-ninja | pydantic | 828 | [BUG] ForeignKey field in modelSchema do not use the related alias_generator | **Describe the bug**

When using an `alias_generator` in the config of a `ModelSchema`, the keys returned for ForeignKey fields are not passed through that generator.

**Versions (please complete the following information):**

- Python version: [ 3.11.4]

- Django version: [4.1.5]

- Django-Ninja version: [0.20.0]

- Pydantic version: [1.10.4]

I have this `Dealer` model

```
class Dealer(AbstractDisableModel, AddressBase, ContactInfoBase):
    ...
    distributor = models.ForeignKey(
        Distributor, on_delete=models.DO_NOTHING, related_name="dealers"
    )
    ...
```

It is serialized with this `ModelSchema`:

```
class DealerSchema(ModelSchema):
    ...
    class Config(CamelModelSchema.Config):
        ...
```

whose `Config` inherits from this schema, which converts property names to camelCase:

```
class CamelModelSchema(Schema):
    class Config:
        alias_generator = to_camel
        allow_population_by_field_name = True
```

All of this works for fields stored directly on the instance, but ForeignKey fields (whose names end in `_id`) don't seem to be converted. Would it be possible to have the generator apply to ALL fields?

E.g. this is the schema of the response of a dealer instance

<img width="242" alt="image" src="https://github.com/vitalik/django-ninja/assets/8971598/584a1d91-ef33-4312-b41e-e4c51493d7d3">

`distributor_id` is still not camelcased although the rest of the keys are. The `distributor_id` field is not sent through the alias_generator. Applying the same alias generator to the modelschema of the distributor also doesn't fix the issue.
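The issue does not show how `to_camel` is defined. The sketch below assumes a snake_case to lowerCamelCase converter, a hypothetical stand-in written for illustration. It shows the key the reporter expected in place of `distributor_id`. `camelize_keys` is likewise a hypothetical helper for post-processing a plain dict, not django-ninja API.

```python
def to_camel(name):
    """snake_case -> lowerCamelCase, e.g. 'distributor_id' -> 'distributorId'."""
    first, *rest = name.split("_")
    return first + "".join(word.capitalize() for word in rest)


def camelize_keys(data):
    """Apply to_camel to every top-level key of a dict."""
    return {to_camel(k): v for k, v in data.items()}
```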

I would guess this is a bug with the framework? Or potentially it is expected to behave like this... | open | 2023-08-18T09:27:14Z | 2024-03-21T19:29:39Z | https://github.com/vitalik/django-ninja/issues/828 | [] | stvdrsch | 3 |

roboflow/supervision | deep-learning | 1,411 | Increasing Video FPS running on CPU Using Threading | ### Search before asking

- [X] I have searched the Supervision [issues](https://github.com/roboflow/supervision/issues) and found no similar feature requests.

### Description

I want to increase the FPS of video processing on my CPU-only system. I tested with a few annotated and object-tracking videos. Even when I read the frames without passing them through a model, the FPS is low, so it drops further when the frames are passed through YOLO or any other model.

The code snippet I am using is

-----

<img width="410" alt="VideoSpeed1" src="https://github.com/user-attachments/assets/f36f708c-b9aa-477f-af1d-031a90d6fa01">

With the following method, reading plain frames, I get something like this:

<img width="469" alt="VideoSpeed2" src="https://github.com/user-attachments/assets/438503da-2746-4bc4-8bd4-be12099cef15">

With supervision's standard frame generator, the FPS is around 1-10 at most.

With threading, it increases substantially.

### Use case

There is a significant improvement with threading, so I was wondering whether we could add a `MainThread` class to the supervision utils (in `sv.VideoInfo` or as an entirely new class) so that frame reading on CPU can reach similar FPS. Let me know if we can handle this case. I can share the Python file on Drive if necessary.
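The idea can be sketched as a read-ahead wrapper. A background thread pulls frames from any iterator into a bounded queue while the main thread consumes them, for example by running a model. `threaded_frames` is a hypothetical name, not supervision API. The speed-up mainly depends on frame decoding releasing the GIL, which OpenCV's video reading generally does.

```python
import queue
import threading

_SENTINEL = object()


def threaded_frames(frame_iter, maxsize=64):
    """Yield items from frame_iter while a background thread reads ahead.

    At most `maxsize` frames are buffered, which bounds memory use.
    """
    buf = queue.Queue(maxsize=maxsize)

    def producer():
        try:
            for frame in frame_iter:
                buf.put(frame)
        finally:
            # Always signal end of stream, even if reading raised an error.
            buf.put(_SENTINEL)

    threading.Thread(target=producer, daemon=True).start()
    while True:
        item = buf.get()
        if item is _SENTINEL:
            return
        yield item
```

Usage might look like `for frame in threaded_frames(sv.get_video_frames_generator("video.mp4")): ...`, with a hypothetical path. Note that a reading error ends the stream early rather than propagating, and that a consumer which stops early leaves the daemon thread blocked until the process exits.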

Thanks

### Additional

_No response_

### Are you willing to submit a PR?

- [X] Yes I'd like to help by submitting a PR! | open | 2024-07-27T21:45:43Z | 2024-10-19T01:27:38Z | https://github.com/roboflow/supervision/issues/1411 | [

"enhancement"

] | dsaha21 | 13 |

zappa/Zappa | django | 616 | [Migrated] KeyError: 'events' on running cli command `zappa unschedule STAGE` | Originally from: https://github.com/Miserlou/Zappa/issues/1577 by [monkut](https://github.com/monkut)

<!--- Provide a general summary of the issue in the Title above -->

## Context

In an attempt to reset scheduled events via zappa cli:

```

zappa unschedule prod

```

It seems that on unschedule an `events` key is expected in the defined `event_source` settings, even though it is not required?

## Expected Behavior

<!--- Tell us what should happen -->

If unschedule is successful, the output should reflect that.

## Actual Behavior

<!--- Tell us what happens instead -->

```
zappa unschedule prod
Calling unschedule for stage prod..
Unscheduling..
Oh no! An error occurred! :(
==============
Traceback (most recent call last):
  File "/project/.venv/lib/python3.6/site-packages/zappa/cli.py", line 2693, in handle
    sys.exit(cli.handle())
  File "/project/.venv/lib/python3.6/site-packages/zappa/cli.py", line 504, in handle
    self.dispatch_command(self.command, stage)
  File "/project/.venv/lib/python3.6/site-packages/zappa/cli.py", line 605, in dispatch_command
    self.unschedule()
  File "/project/.venv/lib/python3.6/site-packages/zappa/cli.py", line 1228, in unschedule
    events=events,
  File "/project/.venv/lib/python3.6/site-packages/zappa/core.py", line 2615, in unschedule_events
    print("Removed event " + name + " (" + str(event_source['events']) + ").")
KeyError: 'events'
```

## Possible Fix

<!--- Not obligatory, but suggest a fix or reason for the bug -->

A possible fix is to remove the attempt to output the missing key

(since it's not being output now, I'm not sure what it is for, but things seem to work when I comment out this line).
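Rather than deleting the print, the message could fall back to a field the event source does have. In the reporter's Kinesis configuration that would be `arn`, since those sources carry no `events` key. `removal_message` is a hypothetical helper sketched for illustration; it is not Zappa's code or its eventual fix.

```python
def removal_message(name, event_source):
    """Build the 'Removed event' log line without assuming an 'events' key."""
    # Fall back to the ARN (or a placeholder) when 'events' is absent.
    detail = event_source.get("events") or event_source.get("arn", "unknown source")
    return "Removed event " + name + " (" + str(detail) + ")."
```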

## Steps to Reproduce

<!--- Provide a link to a live example, or an unambiguous set of steps to -->

<!--- reproduce this bug include code to reproduce, if relevant -->

Not sure if this is a problem with my setup or not.. but

1. Create a project with Kinesis stream scheduled events.

2. Deploy.

3. Turn off scheduling with `zappa unschedule STAGE`.

## Your Environment

<!--- Include as many relevant details about the environment you experienced the bug in -->

* Zappa version used: 0.46.2 (was also a problem in 0.46.1)

* Operating System and Python version: macOS (3.6.2)

* The output of `pip freeze`:

```

argcomplete==1.9.3

aws-requests-auth==0.4.1

awscli==1.15.55

base58==1.0.0

boto3==1.7.68

botocore==1.10.68

Cerberus==1.2

certifi==2018.4.16

cfn-flip==1.0.3

chardet==3.0.4

click==6.7

colorama==0.3.9

Django==2.0.7

django-extensions==2.0.7

django-logging-json==1.15

django-reversion==2.0.13

django-storages==1.6.6

djangorestframework==3.8.2

djangorestframework-csv==2.1.0

docutils==0.14

durationpy==0.5

elasticsearch==6.3.0

future==0.16.0

hjson==3.0.1

idna==2.7

jmespath==0.9.3

jsonlines==1.2.0

kappa==0.6.0

lambda-packages==0.20.0

placebo==0.8.1

psycopg2-binary==2.7.5

pyasn1==0.4.4

PyJWT==1.6.4

python-dateutil==2.6.1

python-slugify==1.2.4

pytz==2018.5

PyYAML==3.12

redis==2.10.6

requests==2.19.1

rsa==3.4.2

s3transfer==0.1.13

six==1.11.0

toml==0.9.4

tqdm==4.19.1

troposphere==2.3.1

unicodecsv==0.14.1

Unidecode==1.0.22

urllib3==1.23

Werkzeug==0.14.1

wsgi-request-logger==0.4.6

zappa==0.46.2

```

* Link to your project (optional):

* Your `zappa_settings.py`:

```
{
    "prod": {
        "aws_region": "region",
        "django_settings": "project.settings",
        "profile_name": "myprofile",
        "project_name": "project",
        "runtime": "python3.6",
        "memory_size": 2048,
        "timeout_seconds": 300,
        "s3_bucket": "zappa-project-prod",
        "vpc_config": {
            "SubnetIds": [
                "subnet-yyyy88",
                "subnet-zzz99"
            ],
            "SecurityGroupIds": [
                "sg-119191"
            ]
        },
        "events": [
            {
                "function": "project.event_handler.x_data_handler",
                "event_source": {
                    "arn": "arn:aws:kinesis...",
                    "starting_position": "LATEST",
                    "batch_size": 500,
                    "enabled": true
                },
                "name": "lumbergh_prod_track_data_handler"
            },
            {
                "function": "project.track_event_handler.y_data_handler",
                "event_source": {
                    "arn": "kinesis-stream..",
                    "starting_position": "LATEST",
                    "batch_size": 500,
                    "enabled": true
                },
                "name": "y_event_data_handler"
            }
        ],
        "aws_environment_variables": {
            "GDAL_DATA": "/var/task/geolib/usr/local/share/gdal/",
            "GDAL_LIBRARY_PATH": "/var/task/libgdal.so.1.18.2",
            "GEOS_LIBRARY_PATH": "/var/task/libgeos_c.so.1"
        },
        "manage_roles": false,
        "role_name": "xxx"
    }
}
``` | closed | 2021-02-20T12:26:41Z | 2022-07-16T06:52:03Z | https://github.com/zappa/Zappa/issues/616 | [] | jneves | 1 |

hyperspy/hyperspy | data-visualization | 2,774 | BLO output writer not working for lazy signals | It seems that the BLO writer does not work for lazy signals. The error seems to be in the line containing `tofile`. This is problematic for converting larger 4DSTEM/NBED datasets to BLO. I'll try to fix this.
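A lazy signal's data is a dask array rather than a NumPy array, so a writer that ends in `tofile` presumably needs the data fully materialized. One way around that is to compute and write one block at a time. This is an assumption, not HyperSpy's actual fix, and the helper names are hypothetical. Concatenated block bytes equal the full C-order bytes only when the array is split along its first axis alone.

```python
import io

import numpy as np


def write_chunks(chunks, fh, dtype):
    """Write array chunks to a binary file handle, one after another.

    The result equals the full array's C-order bytes only if the chunks
    split the array along its first axis alone.
    """
    for chunk in chunks:
        fh.write(np.ascontiguousarray(chunk, dtype=dtype).tobytes())


def to_bytes(chunks, dtype):
    """Convenience wrapper that collects the chunked output in memory."""
    buf = io.BytesIO()
    write_chunks(chunks, buf, dtype)
    return buf.getvalue()
```

For a dask array `lazy` whose trailing axes are single chunks (e.g. after `lazy.rechunk({i: -1 for i in range(1, lazy.ndim)})`), the generator `(lazy.blocks[i].compute() for i in range(lazy.numblocks[0]))` could be passed as `chunks`. This keeps only one block in memory at a time.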

In addition, the writer is very particular about units and does not seem to accept angstroms. | closed | 2021-06-22T14:44:49Z | 2021-09-05T17:08:07Z | https://github.com/hyperspy/hyperspy/issues/2774 | [

"type: bug"

] | din14970 | 3 |

graphql-python/gql | graphql | 471 | Shopify error: Prefix "query" when sending a mutation | When I send my mutation, I get an error saying there is an unexpected string ("query") at the start of my code. In the logged request I can see a "query" prefix at the start of the request body. I don't have that hard-coded in my mutation, so I'm unsure what to do, and any advice would be greatly appreciated. There might be something I am doing wrong, but I'm just unsure. Thanks. I have a transport and a client already defined in my code; I'm not sure whether there is an error there, but it doesn't seem relevant, since my traceback error is the same as the logged errors.

2024-02-28 10:05:26 INFO: >>> {"query": "mutation customerRequestDataErasure($customerId: ID!) {\n customerRequestDataErasure(customerId: $customerId) {\n customerId\n userErrors {\n field\n message\n code\n }\n }\n}", "variables": {"customerId": "global_id (removed for security purposes)"}}

2024-02-28 10:05:26 INFO: <<< {"errors":[{"message":"syntax error, unexpected STRING (\"query\") at [1, 2]","locations":[{"line":1,"column":2}]}]}

My mutation:

query = gql("""

mutation customerRequestDataErasure($customerId: ID!) {

customerRequestDataErasure(customerId: $customerId) {

customerId

userErrors {

field

message

code

}

}

}

""")

params = {"customerId": ""}

result = client.execute(query, variable_values=params)
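The `{"query": ..., "variables": ...}` wrapper in the log is the usual GraphQL-over-HTTP JSON envelope. The log shows gql sending it for this mutation, so it is not a stray prefix. Shopify's `unexpected STRING ("query") at [1, 2]` reads as if the server parsed the whole JSON body as raw GraphQL text. One possible cause is a `Content-Type` header other than JSON, such as `application/graphql`, set on the transport, but this is an assumption not confirmed in the issue. The sketch below only shows the expected request shape; `graphql_request` is a hypothetical helper.

```python
import json

MUTATION = """
mutation customerRequestDataErasure($customerId: ID!) {
  customerRequestDataErasure(customerId: $customerId) {
    customerId
    userErrors { field message code }
  }
}
"""


def graphql_request(document, variables):
    """Build headers and a JSON body in the usual GraphQL-over-HTTP shape.

    The "query" key is used for mutations as well as queries; the server
    is expected to parse the body as JSON, not as raw GraphQL text.
    """
    headers = {"Content-Type": "application/json"}
    body = json.dumps({"query": document, "variables": variables})
    return headers, body
```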

| closed | 2024-02-28T00:17:31Z | 2024-03-06T01:14:02Z | https://github.com/graphql-python/gql/issues/471 | [

"type: question or discussion",

"status: needs more information"

] | mattroberts96 | 3 |

python-visualization/folium | data-visualization | 1,521 | How to add custom `script` content to Folium output? | #### Please add a code sample or a nbviewer link, copy-pastable if possible

```html

<!DOCTYPE html>

<html lang="en">

<head>

<title></title>

<meta charset="utf-8" />

<meta http-equiv="x-ua-compatible" content="IE=Edge" />

<meta name="viewport" content="width=device-width, initial-scale=1, shrink-to-fit=no" />

<!-- Add references to the Leaflet JS map control resources. -->

<link rel="stylesheet" href="https://unpkg.com/leaflet@1.3.1/dist/leaflet.css"

integrity="sha512-Rksm5RenBEKSKFjgI3a41vrjkw4EVPlJ3+OiI65vTjIdo9brlAacEuKOiQ5OFh7cOI1bkDwLqdLw3Zg0cRJAAQ=="

crossorigin="" />

<script src="https://unpkg.com/leaflet@1.3.1/dist/leaflet.js"

integrity="sha512-/Nsx9X4HebavoBvEBuyp3I7od5tA0UzAxs+j83KgC8PU0kgB4XiK4Lfe4y4cgBtaRJQEIFCW+oC506aPT2L1zw=="

crossorigin=""></script>

<script type='text/javascript'>

var map;

function GetMap() {

map = L.map('myMap').setView([25,0], 3);

//Create a tile layer that points to the Azure Maps tiles.

L.tileLayer('https://atlas.microsoft.com/map/tile?subscription-key={subscriptionKey}&api-version=2.0&tilesetId={tilesetId}&zoom={z}&x={x}&y={y}&tileSize=256&language={language}&view={view}', {

attribution: `© ${new Date().getFullYear()} TomTom, Microsoft`,

//Add your Azure Maps key to the map SDK. Get an Azure Maps key at https://azure.com/maps. NOTE: The primary key should be used as the key.

subscriptionKey: 'key_goes_here',

tilesetId: 'microsoft.base.road',

language: 'en-US',

view: 'Auto'

}).addTo(map);

}

</script>

</head>

<body onload="GetMap()">

<div id="myMap" style="position:relative;width:100%;height:1000px;"></div>

</body>

</html>

```

#### Problem description

How do I add the `<script>` shown above (the one with `var map` and `subscriptionKey`) to Folium output? I'd like to use an Azure Maps basemap with my own subscription key.
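One way to use the Azure Maps tiles without custom `<script>` content is to pass a tile URL template straight to folium, which accepts a custom `tiles` URL together with an `attr` attribution string. The hypothetical helper below fills in the fixed query parameters from the JavaScript above and leaves only `{z}`, `{x}` and `{y}` for Leaflet. The subscription key ends up in the generated HTML, just as it does in the original script.

```python
from urllib.parse import urlencode


def azure_tile_url(subscription_key, tileset_id="microsoft.base.road",
                   language="en-US", view="Auto"):
    """Tile URL template for Azure Maps with every parameter except z/x/y filled in."""
    fixed = urlencode({
        "subscription-key": subscription_key,
        "api-version": "2.0",
        "tilesetId": tileset_id,
        "tileSize": 256,
        "language": language,
        "view": view,
    })
    # Leaflet substitutes {z}, {x} and {y} for each tile request.
    return "https://atlas.microsoft.com/map/tile?" + fixed + "&zoom={z}&x={x}&y={y}"
```

Usage might look like `folium.Map(location=[25, 0], zoom_start=3, tiles=azure_tile_url("key_goes_here"), attr="© TomTom, Microsoft")`.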

| closed | 2021-10-21T20:13:43Z | 2022-11-18T11:01:56Z | https://github.com/python-visualization/folium/issues/1521 | [] | SeaDude | 2 |

microsoft/qlib | deep-learning | 901 | loss_fn() takes 3 positional arguments but 4 were given | Hi author,

When I run examples/benchmarks/LSTM/workflow_config_lstm_Alpha158.yaml, I get the errors below. Could you help me?

I do not use a GPU, and the qlib version is 0.8.3.

(venv) aurora@aurora-MS-7D22:/data/vcodes/qlib/workshop$ python ../qlib/workflow/cli.py workflow_config_lstm_Alpha158.yaml

[64471:MainThread](2022-01-27 09:12:13,773) INFO - qlib.Initialization - [config.py:405] - default_conf: client.

[64471:MainThread](2022-01-27 09:12:13,774) INFO - qlib.Initialization - [__init__.py:73] - qlib successfully initialized based on client settings.

[64471:MainThread](2022-01-27 09:12:13,774) INFO - qlib.Initialization - [__init__.py:75] - data_path={'__DEFAULT_FREQ': PosixPath('/home/aurora/.qlib/qlib_data/cn_data')}

[64471:MainThread](2022-01-27 09:12:13,774) INFO - qlib.workflow - [expm.py:320] - <mlflow.tracking.client.MlflowClient object at 0x7fea7a241c10>

[64471:MainThread](2022-01-27 09:12:13,783) INFO - qlib.workflow - [exp.py:257] - Experiment 1 starts running ...

[64471:MainThread](2022-01-27 09:12:13,848) INFO - qlib.workflow - [recorder.py:290] - Recorder 22a03d2d5a164b45a1beebd9cea24de3 starts running under Experiment 1 ...

Please install necessary libs for CatBoostModel.

Please install necessary libs for XGBModel, such as xgboost.

[64471:MainThread](2022-01-27 09:12:14,194) INFO - qlib.LSTM - [pytorch_lstm_ts.py:62] - LSTM pytorch version...

[64471:MainThread](2022-01-27 09:12:14,194) INFO - qlib.LSTM - [pytorch_lstm_ts.py:80] - LSTM parameters setting:

d_feat : 20

hidden_size : 64

num_layers : 2

dropout : 0.0

n_epochs : 200

lr : 0.001

metric : loss

batch_size : 800

early_stop : 10

optimizer : adam

loss_type : mse

device : cpu

n_jobs : 20

use_GPU : False

seed : None

/data/vcodes/qlib/venv/lib/python3.8/site-packages/qlib/utils/__init__.py:689: FutureWarning: MultiIndex.is_lexsorted is deprecated as a public function, users should use MultiIndex.is_monotonic_increasing instead.

if idx.is_monotonic_increasing and not (isinstance(idx, pd.MultiIndex) and not idx.is_lexsorted()):

[64471:MainThread](2022-01-27 09:12:47,336) INFO - qlib.timer - [log.py:113] - Time cost: 33.140s | Loading data Done

[64471:MainThread](2022-01-27 09:12:47,696) INFO - qlib.timer - [log.py:113] - Time cost: 0.016s | FilterCol Done

/data/vcodes/qlib/venv/lib/python3.8/site-packages/qlib/data/dataset/processor.py:288: SettingWithCopyWarning:

A value is trying to be set on a copy of a slice from a DataFrame.

Try using .loc[row_indexer,col_indexer] = value instead

See the caveats in the documentation: https://pandas.pydata.org/pandas-docs/stable/user_guide/indexing.html#returning-a-view-versus-a-copy

df[self.cols] = X

/data/vcodes/qlib/venv/lib/python3.8/site-packages/qlib/data/dataset/processor.py:290: SettingWithCopyWarning:

A value is trying to be set on a copy of a slice from a DataFrame

See the caveats in the documentation: https://pandas.pydata.org/pandas-docs/stable/user_guide/indexing.html#returning-a-view-versus-a-copy

df.clip(-3, 3, inplace=True)

[64471:MainThread](2022-01-27 09:12:48,510) INFO - qlib.timer - [log.py:113] - Time cost: 0.813s | RobustZScoreNorm Done

/data/vcodes/qlib/venv/lib/python3.8/site-packages/qlib/data/dataset/processor.py:192: SettingWithCopyWarning:

A value is trying to be set on a copy of a slice from a DataFrame.

Try using .loc[row_indexer,col_indexer] = value instead

See the caveats in the documentation: https://pandas.pydata.org/pandas-docs/stable/user_guide/indexing.html#returning-a-view-versus-a-copy

df.fillna({col: self.fill_value for col in cols}, inplace=True)

[64471:MainThread](2022-01-27 09:12:48,718) INFO - qlib.timer - [log.py:113] - Time cost: 0.208s | Fillna Done

[64471:MainThread](2022-01-27 09:12:48,788) INFO - qlib.timer - [log.py:113] - Time cost: 0.027s | DropnaLabel Done

/data/vcodes/qlib/venv/lib/python3.8/site-packages/qlib/data/dataset/processor.py:334: SettingWithCopyWarning:

A value is trying to be set on a copy of a slice from a DataFrame.

Try using .loc[row_indexer,col_indexer] = value instead

See the caveats in the documentation: https://pandas.pydata.org/pandas-docs/stable/user_guide/indexing.html#returning-a-view-versus-a-copy

df[cols] = t

[64471:MainThread](2022-01-27 09:12:48,970) INFO - qlib.timer - [log.py:113] - Time cost: 0.182s | CSRankNorm Done

[64471:MainThread](2022-01-27 09:12:48,970) INFO - qlib.timer - [log.py:113] - Time cost: 1.634s | fit & process data Done

[64471:MainThread](2022-01-27 09:12:48,970) INFO - qlib.timer - [log.py:113] - Time cost: 34.774s | Init data Done

/data/vcodes/qlib/venv/lib/python3.8/site-packages/qlib/utils/__init__.py:689: FutureWarning: MultiIndex.is_lexsorted is deprecated as a public function, users should use MultiIndex.is_monotonic_increasing instead.

if idx.is_monotonic_increasing and not (isinstance(idx, pd.MultiIndex) and not idx.is_lexsorted()):

/data/vcodes/qlib/venv/lib/python3.8/site-packages/qlib/utils/__init__.py:689: FutureWarning: MultiIndex.is_lexsorted is deprecated as a public function, users should use MultiIndex.is_monotonic_increasing instead.

if idx.is_monotonic_increasing and not (isinstance(idx, pd.MultiIndex) and not idx.is_lexsorted()):

/data/vcodes/qlib/venv/lib/python3.8/site-packages/torch/utils/data/dataloader.py:478: UserWarning: This DataLoader will create 20 worker processes in total. Our suggested max number of worker in current system is 12, which is smaller than what this DataLoader is going to create. Please be aware that excessive worker creation might get DataLoader running slow or even freeze, lower the worker number to avoid potential slowness/freeze if necessary.

warnings.warn(_create_warning_msg(

[64471:MainThread](2022-01-27 09:12:50,313) INFO - qlib.LSTM - [pytorch_lstm_ts.py:250] - training...

[64471:MainThread](2022-01-27 09:12:50,313) INFO - qlib.LSTM - [pytorch_lstm_ts.py:254] - Epoch0:

[64471:MainThread](2022-01-27 09:12:50,313) INFO - qlib.LSTM - [pytorch_lstm_ts.py:255] - training...

[64471:MainThread](2022-01-27 09:12:51,220) INFO - qlib.timer - [log.py:113] - Time cost: 0.000s | waiting `async_log` Done

[64471:MainThread](2022-01-27 09:12:51,220) ERROR - qlib.workflow - [utils.py:38] - An exception has been raised[TypeError: loss_fn() takes 3 positional arguments but 4 were given].

File "../qlib/workflow/cli.py", line 67, in <module>

run()

File "../qlib/workflow/cli.py", line 63, in run

fire.Fire(workflow)

File "/data/vcodes/qlib/venv/lib/python3.8/site-packages/fire/core.py", line 141, in Fire

component_trace = _Fire(component, args, parsed_flag_args, context, name)

File "/data/vcodes/qlib/venv/lib/python3.8/site-packages/fire/core.py", line 466, in _Fire

component, remaining_args = _CallAndUpdateTrace(

File "/data/vcodes/qlib/venv/lib/python3.8/site-packages/fire/core.py", line 681, in _CallAndUpdateTrace

component = fn(*varargs, **kwargs)

File "../qlib/workflow/cli.py", line 57, in workflow

recorder = task_train(config.get("task"), experiment_name=experiment_name)

File "/data/vcodes/qlib/venv/lib/python3.8/site-packages/qlib/model/trainer.py", line 122, in task_train

_exe_task(task_config)

File "/data/vcodes/qlib/venv/lib/python3.8/site-packages/qlib/model/trainer.py", line 44, in _exe_task

auto_filter_kwargs(model.fit)(dataset, reweighter=reweighter)

File "/data/vcodes/qlib/venv/lib/python3.8/site-packages/qlib/utils/__init__.py", line 852, in _func

return func(*args, **new_kwargs)

File "/data/vcodes/qlib/venv/lib/python3.8/site-packages/qlib/contrib/model/pytorch_lstm_ts.py", line 256, in fit

self.train_epoch(train_loader)

File "/data/vcodes/qlib/venv/lib/python3.8/site-packages/qlib/contrib/model/pytorch_lstm_ts.py", line 172, in train_epoch

loss = self.loss_fn(pred, label, weight.to(self.device))

TypeError: loss_fn() takes 3 positional arguments but 4 were given
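The traceback shows `train_epoch` calling `self.loss_fn(pred, label, weight)`, while the `loss_fn` it reaches accepts only `(pred, label)`. The two pieces of code disagree about the signature. The run also mixes `../qlib/workflow/cli.py` from the checkout with modules from `site-packages`, so it may be worth checking that both come from the same qlib version. A loss with an optional `weight` argument accepts both call styles. Below is a NumPy sketch of such a signature, a hypothetical stand-in rather than qlib's PyTorch implementation.

```python
import numpy as np


def mse(pred, label, weight=None):
    """Weighted mean squared error over entries whose label is not NaN.

    `weight` is optional, so both mse(pred, label) and
    mse(pred, label, weight) call styles work.
    """
    pred = np.asarray(pred, dtype=float)
    label = np.asarray(label, dtype=float)
    w = np.ones_like(label) if weight is None else np.asarray(weight, dtype=float)
    mask = ~np.isnan(label)  # ignore missing labels
    return float(np.mean(w[mask] * (pred[mask] - label[mask]) ** 2))
```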

| closed | 2022-01-27T01:23:50Z | 2022-02-06T14:40:15Z | https://github.com/microsoft/qlib/issues/901 | [

"question"

] | aurora5161 | 4 |

onnx/onnx | machine-learning | 5,916 | Should numpy_helper.to_array() support segments? | # Ask a Question

### Question

Should numpy_helper.to_array() be expected to support segments?

### Further information

Currently in [numpy_helper](https://github.com/onnx/onnx/blob/48501e0ff59843a33f89e028cbcbf080edd0c1df/onnx/numpy_helper.py#L231-L232) there is:

```

def to_array(tensor: TensorProto, base_dir: str = "") -> np.ndarray: # noqa: PLR0911

"""Converts a tensor def object to a numpy array.

...

"""

if tensor.HasField("segment"):

raise ValueError("Currently not supporting loading segments.")

```

Issue https://github.com/onnx/onnx/issues/2984 hit this in the past and [comment](https://github.com/onnx/onnx/issues/2984#issuecomment-748358644) that says it was closed by https://github.com/onnx/onnx/pull/3136.

However it looks like to_array() still doesn't support segments. If I'm reading it correctly the "fix" for #2984 was to use [_load_proto](https://github.com/jcwchen/onnx/blob/main/onnx/backend/test/runner/__init__.py#L461) instead of to_array().

I'm currently hitting this error from to_array() trying to load resnet-preproc-v1-18/test_data_set_0/input_0.pb from [resnet-preproc-v1-18.tar.gz in the onnx model zoo](https://github.com/onnx/models/blob/main/validated/vision/classification/resnet/preproc/resnet-preproc-v1-18.tar.gz) using:

```
import onnx.numpy_helper  # also binds the top-level `onnx` name

proto = onnx.TensorProto()
with open('resnet-preproc-v1-18/test_data_set_0/input_0.pb', 'rb') as pb:
    proto.ParseFromString(pb.read())

arr = onnx.numpy_helper.to_array(proto)
```

So should numpy_helper.to_array() support segments?

* If yes, can the logic for load_segments be copied/moved/made accessible to to_array()?

* If no, should the to_array() error be updated to use _load_proto()?

### Relevant Area: <!--e.g., model usage, backend, best practices, converters, shape_inference, version_converter, training, test, operators, IR, ONNX Hub, data preprocessing, CI pipelines. -->

loading model zoo test data

### Is this issue related to a specific model?

It's not model-specific, but I hit it while attempting to load test data from resnet-preproc-v1-18.tar.gz in the onnx model zoo.

### Notes

<!-- Any additional information, code snippets. -->

| closed | 2024-02-07T23:19:47Z | 2024-03-07T20:27:37Z | https://github.com/onnx/onnx/issues/5916 | [

"question"

] | cjvolzka | 1 |

voila-dashboards/voila | jupyter | 947 | Widgets not rendering with xeus-python kernel. | ## Description

<!--Describe the bug clearly and concisely. Include screenshots/gifs if possible-->

Widgets do not render in Voila with `xeus-python` kernel.

## Reproduce

<!--Describe step-by-step instructions to reproduce the behavior-->

1. Create a new environment:

```bash

mamba create -n testenv python=3.9 jupyterlab voila ipywidgets xeus-python -y

```

2. Create a new notebook with content:

```python

from ipywidgets import widgets

widgets.Button(description="Click Me!")

```

3. Select `XPython` as the kernel, then save the notebook.

4. Start `Voila` with the newly created notebook.

5. See error:

```python

Traceback (most recent call last):

File "/home/********/miniconda3/lib/python3.9/site-packages/voila/handler.py", line 209, in _jinja_cell_generator

output_cell = await task

File "/home/********/miniconda3/lib/python3.9/site-packages/voila/execute.py", line 69, in execute_cell

result = await self.async_execute_cell(cell, cell_index, store_history)

File "/home/********/miniconda3/lib/python3.9/site-packages/nbclient/client.py", line 846, in async_execute_cell

exec_reply = await self.task_poll_for_reply

File "/home/********/miniconda3/lib/python3.9/site-packages/nbclient/client.py", line 632, in _async_poll_for_reply

await asyncio.wait_for(task_poll_output_msg, self.iopub_timeout)

File "/home/********/miniconda3/lib/python3.9/asyncio/tasks.py", line 481, in wait_for

return fut.result()

File "/home/********/miniconda3/lib/python3.9/site-packages/nbclient/client.py", line 665, in _async_poll_output_msg

self.process_message(msg, cell, cell_index)

File "/home/********/miniconda3/lib/python3.9/site-packages/nbclient/client.py", line 904, in process_message

display_id = content.get('transient', {}).get('display_id', None)

AttributeError: 'NoneType' object has no attribute 'get'

```
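The failing line in nbclient is `content.get('transient', {}).get('display_id', None)`. A dict's `.get(key, default)` only falls back to the default when the key is missing, so if a kernel sends `transient` with an explicit `null` value, the first `.get` returns `None` and the second call raises exactly this `AttributeError` (the same message would also appear if `content` itself were `None`). The sketch below assumes the first case, which the issue does not confirm; the helper names are hypothetical. It contrasts the pattern from the traceback with a guard that also tolerates `null`:

```python
def display_id_unsafe(content):
    # Pattern from the traceback: the {} default is used only when the
    # 'transient' key is absent, not when it is present with value None.
    return content.get('transient', {}).get('display_id', None)


def display_id_safe(content):
    # `or {}` also replaces an explicit None (JSON null) with an empty dict.
    return (content.get('transient') or {}).get('display_id', None)


# A message whose 'transient' field was sent as null
msg = {'transient': None}
print(display_id_safe(msg))  # None
```

With the safe variant, a `null` `transient` field is treated the same as a missing one.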

<!--Describe how you diagnosed the issue -->

## Expected behavior

A button with text "Click Me!" should be shown.

## Context

<!--Complete the following for context, and add any other relevant context-->

- Version:

```
ipykernel 6.3.1 py39hef51801_0 conda-forge
ipython 7.27.0 py39hef51801_0 conda-forge
ipywidgets 7.6.4 pyhd8ed1ab_0 conda-forge
jupyter_client 7.0.2 pyhd8ed1ab_0 conda-forge
jupyter_core 4.7.1 py39hf3d152e_0 conda-forge
jupyter_server 1.10.2 pyhd8ed1ab_0 conda-forge
jupyterlab 3.1.10 pyhd8ed1ab_0 conda-forge
jupyterlab_pygments 0.1.2 pyh9f0ad1d_0 conda-forge
jupyterlab_server 2.7.2 pyhd8ed1ab_0 conda-forge
jupyterlab_widgets 1.0.1 pyhd8ed1ab_0 conda-forge
nbclient 0.5.4 pyhd8ed1ab_0 conda-forge
nbconvert 6.1.0 py39hf3d152e_0 conda-forge
nbformat 5.1.3 pyhd8ed1ab_0 conda-forge
notebook 6.4.3 pyha770c72_0 conda-forge
python 3.9.7 h49503c6_0_cpython conda-forge
tornado 6.1 py39h3811e60_1 conda-forge
traitlets 5.1.0 pyhd8ed1ab_0 conda-forge
voila 0.2.11 pyhd8ed1ab_0 conda-forge
widgetsnbextension 3.5.1 py39hf3d152e_4 conda-forge
xeus 1.0.4 h7d0c39e_0 conda-forge
xeus-python 0.12.5 py39h1aaad98_2 conda-forge
```

- Operating System and version: Windows 10

- Browser and version: Chrome 92.0.4515.159

| closed | 2021-09-03T14:17:04Z | 2021-09-09T12:37:23Z | https://github.com/voila-dashboards/voila/issues/947 | [

"bug"

] | trungleduc | 4 |

Lightning-AI/pytorch-lightning | deep-learning | 19,624 | IterableDataset with CORRECT length causes validation loop to be skipped | ### Bug description

This is related to this issue:

https://github.com/Lightning-AI/pytorch-lightning/issues/10290

An IterableDataset with a defined length won't trigger a validation epoch, even when that length is correct, as long as all of the following conditions are met:

1. The IterableDataset defines an accurate length.

2. The dataset is split between multiple workers with no overlap.

3. `drop_last=True` is set on the DataLoader.

4. The dataset size does not divide evenly into batches.

In this case, several workers can each be left with an incomplete batch at the end of the training epoch, so more than one batch is dropped. The dataloader then raises StopIteration before the reported length is reached, and the validation epoch is skipped.

This is standard PyTorch behavior, because with an IterableDataset the collation function is called per worker.

https://github.com/pytorch/pytorch/issues/33413

I am hitting this issue right now. My current fix is to artificially subtract from the length of my IterableDataset to account for this. Unfortunately, I really want the length to be defined, so I can't set it to inf, which was the hotfix in the previous thread.

The progress bar helps me judge which partition to run certain jobs on, and I use the dataset length to sync my cyclic learning rate with the number of steps in an epoch.
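The batch arithmetic can be checked in plain Python. This sketch assumes the contiguous `np.array_split` sharding used in the reproduction in this issue, and PyTorch's rule that `len(DataLoader)` for an IterableDataset with `drop_last=True` is `len(dataset) // batch_size`. The helper names are hypothetical. It also shows one way to compute the reduced length mentioned above: a sample count whose floor division by the batch size equals the number of batches the workers actually yield.

```python
from typing import List


def shard_sizes(n_samples: int, n_workers: int) -> List[int]:
    # Mirrors np.array_split: the first n_samples % n_workers shards get one extra item.
    base, extra = divmod(n_samples, n_workers)
    return [base + 1 if w < extra else base for w in range(n_workers)]


def reported_batches(n_samples: int, batch_size: int) -> int:
    # What len(DataLoader) reports for a sized IterableDataset with drop_last=True.
    return n_samples // batch_size


def actual_batches(n_samples: int, batch_size: int, n_workers: int) -> int:
    # Each worker batches its own shard and drops its own incomplete tail batch.
    if n_workers == 0:
        return n_samples // batch_size
    return sum(size // batch_size for size in shard_sizes(n_samples, n_workers))


def adjusted_len(n_samples: int, batch_size: int, n_workers: int) -> int:
    # A __len__ value whose // batch_size matches the batches actually produced.
    return actual_batches(n_samples, batch_size, n_workers) * batch_size


print(reported_batches(100, 32), actual_batches(100, 32, 4))  # 3 0
```

For the reproduction (100 samples, `batch_size=32`, 4 workers), each worker gets 25 samples and yields no full batch, so the loader reports 3 batches but produces 0. With `num_workers=0` it produces the expected 3.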

### What version are you seeing the problem on?

master

### How to reproduce the bug

```python
import torch as T
import numpy as np
from torch.utils.data import DataLoader, Dataset, IterableDataset, get_worker_info
from lightning import LightningModule, Trainer, LightningDataModule

nwrkrs = 4
drop = True


class Data(IterableDataset):
    def __init__(self) -> None:
        super().__init__()
        self.data = np.random.rand(100, 10).astype(np.float32)

    def __len__(self) -> int:
        return len(self.data)

    def __iter__(self):
        worker_info = get_worker_info()
        worker_id = 0 if worker_info is None else worker_info.id
        num_workers = 1 if worker_info is None else worker_info.num_workers
        # Each worker iterates over its own contiguous, non-overlapping shard
        worker_samples = np.array_split(self.data, num_workers)[worker_id]
        for i in worker_samples:
            yield i


class Model(LightningModule):
    def __init__(self) -> None:
        super().__init__()
        self.layer = T.nn.Linear(10, 1)
        self.did_validation = False

    def forward(self, x: T.Tensor) -> T.Tensor:
        return self.layer(x)

    def training_step(self, batch):
        return self(batch).mean()

    def validation_step(self, batch):
        # The flag flips only if at least one validation batch is processed
        self.did_validation = True
        return self(batch).mean()

    def configure_optimizers(self):
        return T.optim.Adam(self.parameters())


model = Model()
trainer = Trainer(logger=False, max_epochs=2, num_sanity_val_steps=0)
train_loader = DataLoader(Data(), num_workers=nwrkrs, batch_size=32, drop_last=drop)
valid_loader = DataLoader(Data(), num_workers=nwrkrs, batch_size=32, drop_last=drop)
trainer.fit(model, train_loader, valid_loader)
print("Performed validation:", model.did_validation)
```

Running the code above with the following settings gives these results:

- `nwrkrs = 0`, `drop = True`: `Performed validation: True`

- `nwrkrs = 4`, `drop = False`: `Performed validation: True`

- `nwrkrs = 4`, `drop = True`: `Performed validation: False`

cc @justusschock @awaelchli | open | 2024-03-13T09:14:56Z | 2025-03-03T10:33:07Z | https://github.com/Lightning-AI/pytorch-lightning/issues/19624 | [

"question",

"data handling",

"ver: 2.2.x"

] | mattcleigh | 10 |

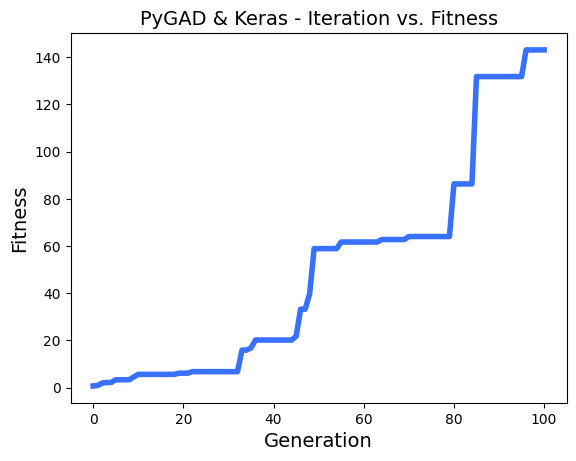

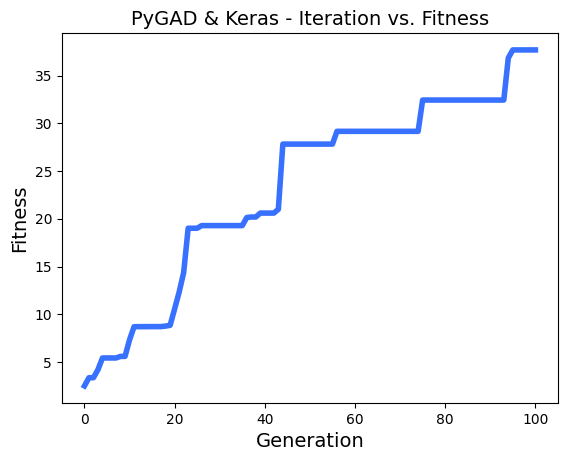

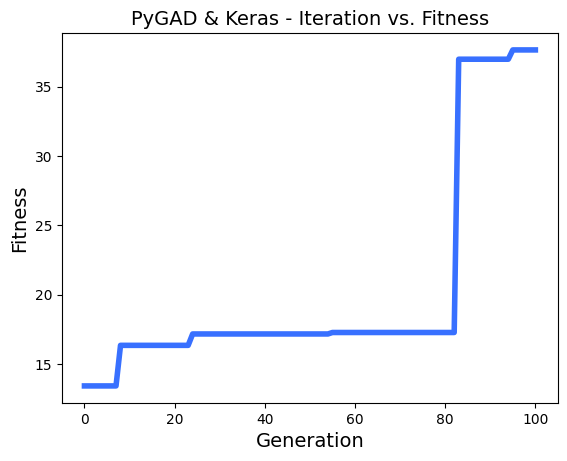

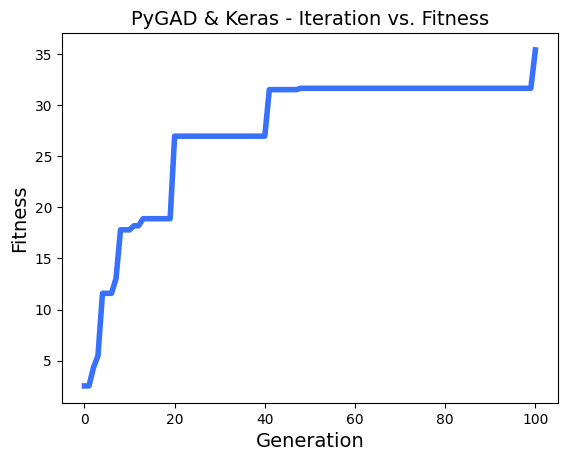

ahmedfgad/GeneticAlgorithmPython | numpy | 150 | Problems with multithreading and generation step | Hi,

I really appreciate your work on PyGAD!

I'm using it for chaotic learning with thousands of models and a resource-hungry fitness function. The parallelization is really efficient in my case.

I have found some problems with multithreading when using Keras models.

To reproduce the problem, I use this regression example: `https://pygad.readthedocs.io/en/latest/README_pygad_kerasga_ReadTheDocs.html#example-1-regression-example`

I only reduced **num_generations** to 100.

Steps to reproduce:

- I ran the example a few times.

- Then I enabled parallel processing with 8 threads.

- Then I ran it again a few times.

- Sometimes, the logs show a fitness lower than that of generation n-1, for example:

- I printed all solutions used in each epoch and saw that the solutions are the same most of the time, so parallel_processing seems to break the generation of the next population in most cases.
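Both symptoms (best fitness dropping below the previous generation's, and populations filled with identical solutions) can be quantified with a small diagnostic run over values recorded each generation, for example inside PyGAD's `on_generation` callback. This sketch is hypothetical and independent of PyGAD; it assumes the GA carries its best parents into the next population and that the fitness function is deterministic, in which case the best fitness should never decrease.

```python
from typing import List, Sequence, Tuple


def fitness_regressions(best_per_generation: Sequence[float]) -> List[Tuple[int, float, float]]:
    # (generation, previous_best, current_best) wherever the best fitness dropped.
    regressions = []
    for gen in range(1, len(best_per_generation)):
        prev = best_per_generation[gen - 1]
        curr = best_per_generation[gen]
        if curr < prev:
            regressions.append((gen, prev, curr))
    return regressions


def duplicate_ratio(population: Sequence[Sequence[float]]) -> float:
    # Fraction of solutions that exactly repeat an earlier solution in the same population.
    seen = set()
    dupes = 0
    for solution in population:
        key = tuple(solution)
        if key in seen:
            dupes += 1
        seen.add(key)
    return dupes / len(population) if population else 0.0


print(fitness_regressions([1.0, 2.0, 1.5, 3.0]))  # [(2, 2.0, 1.5)]
```

Comparing these numbers with and without parallel processing would show whether the regressions and duplicated populations only appear in the threaded runs.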

Thanks!

EDIT:

In addition, I tried to reproduce the same problem with this [classification example](https://pygad.readthedocs.io/en/latest/README_pygad_gann_ReadTheDocs.html#image-classification).

Adding multiprocessing support causes the same problem.

| open | 2022-12-13T13:09:47Z | 2024-01-28T05:47:51Z | https://github.com/ahmedfgad/GeneticAlgorithmPython/issues/150 | [

"bug"