| repo_name | topic | issue_number | title | body | state | created_at | updated_at | url | labels | user_login | comments_count |

|---|---|---|---|---|---|---|---|---|---|---|---|

FactoryBoy/factory_boy | sqlalchemy | 215 | Generic Foreign Keys and Rails-like polymorphic associations | I can't figure out how to implement a GenericForeignKey with FactoryBoy. I have a model `Comment` and a model `Post`, but `Comment` can be attached to almost anything. In Python (Django), `Comment` looks like this:

```python
from django.contrib.contenttypes.fields import GenericForeignKey
from django.contrib.contenttypes.models import ContentType
from django.db import models


class Comment(models.Model):
    """Comment for any other object."""

    #: Message for the Comment
    message = models.TextField()

    #: Date of creation, automatically set upon creation
    created_at = models.DateTimeField(auto_now=True)

    #: Type of class this is associated to
    owner_type = models.ForeignKey(ContentType, on_delete=models.CASCADE)

    #: ID of the associated model
    owner_id = models.PositiveIntegerField()

    #: Object to associate with
    content_object = GenericForeignKey('owner_type', 'owner_id')

    class Meta:
        ordering = ['-created_at']
```

I was about to implement this using the `@factory.post_generation` decorator, but I wasn't sure how to associate the keys properly there either. Is there any more information on this?

| closed | 2015-07-09T14:52:31Z | 2015-07-25T12:10:34Z | https://github.com/FactoryBoy/factory_boy/issues/215 | [

"Q&A"

] | Amnesthesia | 4 |

lukas-blecher/LaTeX-OCR | pytorch | 177 | [feature] Support readline hotkey? | That is, keys such as `<C-B>/<C-F>/...` should move the cursor at the `Predict LaTeX code for image ("?"/"h" for help).` prompt. Thanks. | closed | 2022-09-12T11:03:52Z | 2022-09-20T09:05:07Z | https://github.com/lukas-blecher/LaTeX-OCR/issues/177 | [] | Freed-Wu | 1 |

vitalik/django-ninja | rest-api | 1,063 | query parameter variable | Some applications request data through URL query parameters, and one of the variables is called `pass`.

For example:

http://my_url/api?pass=testvalue

When I try to get the parameter like this:

```python
@api.get("/user")
def list_weapons(request, pass: str):
    return pass
```

it's a problem, because `pass` is a reserved keyword and can't be used as a variable name in Python. How should I handle it?
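Because `pass` is a reserved keyword, it can never be a Python parameter name, so the query key has to be mapped onto a different Python name. In django-ninja this is most likely done with an alias, e.g. `pass_: str = Query(..., alias="pass")`; treat that exact spelling as an assumption and check the django-ninja docs for your version. The framework-free sketch below only illustrates the aliasing idea: `keyword.iskeyword` detects reserved names, and the hypothetical helper `query_to_kwargs` renames them with a trailing underscore (the PEP 8 convention) before they are passed as keyword arguments.

```python
import keyword
from urllib.parse import parse_qs, urlsplit


def query_to_kwargs(url):
    """Turn a URL's query string into keyword arguments.

    Reserved words such as ``pass`` get a trailing underscore (``pass_``),
    following the PEP 8 convention for names that clash with keywords.
    """
    query = parse_qs(urlsplit(url).query)
    kwargs = {}
    for name, values in query.items():
        safe_name = name + "_" if keyword.iskeyword(name) else name
        kwargs[safe_name] = values[0]  # keep only the first value of each key
    return kwargs


def list_weapons(pass_: str):
    # Hypothetical handler; the `request` argument is omitted in this
    # framework-free sketch. The alias maps the "pass" query key onto `pass_`.
    return pass_


kwargs = query_to_kwargs("http://my_url/api?pass=testvalue")
print(kwargs)                  # {'pass_': 'testvalue'}
print(list_weapons(**kwargs))  # testvalue
```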

| open | 2024-01-25T17:00:16Z | 2024-01-26T12:31:20Z | https://github.com/vitalik/django-ninja/issues/1063 | [] | lutfyneutron | 1 |

plotly/dash-table | plotly | 231 | Feature request: Ability to remove table borders completely | I'm trying to convert some tables that I rendered in my app through convoluted HTML into the new `DataTable`s, but in order to reproduce the format I need to get rid of some of the table borders and that doesn't seem possible right now. I have used `style_as_list_view` but that doesn't help me with the horizontal borders. Basically, I'm trying to style my table like this:

Just as there is the `style_as_list_view` flag, could there be one called something like `style_without_borders` so that we can then add borders as needed through normal cell styling?

If this is acceptable and with some guidance, I'm happy to submit a PR for this. | closed | 2018-11-07T17:23:36Z | 2021-10-05T09:06:54Z | https://github.com/plotly/dash-table/issues/231 | [] | oriolmirosa | 7 |

xonsh/xonsh | data-science | 5,610 | conda and mamba: `DeprecationWarning: Use xonsh.lib.lazyasd instead of xonsh.lazyasd.` | Just pinning the related PRs in downstream tools here.

The fix for this in downstream tools:

```xsh
try:
    # xonsh >= 0.18.0
    from xonsh.lib.lazyasd import lazyobject
except ImportError:
    # xonsh < 0.18.0
    from xonsh.lazyasd import lazyobject
```
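When more than two locations have to be tried, the same try/except fallback can be generalized. A minimal sketch using the standard-library `importlib`; `import_first` is a hypothetical helper, and the xonsh call is shown only as a comment because it needs xonsh installed. Listing the newest path first avoids triggering the deprecation warning on xonsh >= 0.18.0.

```python
import importlib


def import_first(*module_names):
    """Return the first module in module_names that imports successfully.

    Generalizes the try/except ImportError fallback to any number of
    candidate locations, listed newest first.
    """
    errors = []
    for name in module_names:
        try:
            return importlib.import_module(name)
        except ImportError as exc:  # ModuleNotFoundError is a subclass
            errors.append(f"{name}: {exc}")
    raise ImportError("none of the candidate modules could be imported: " + "; ".join(errors))


# With xonsh installed:
# lazyobject = import_first("xonsh.lib.lazyasd", "xonsh.lazyasd").lazyobject

# Standard-library demonstration: the first name does not exist, the second does.
json_module = import_first("no_such_module_xyz", "json")
print(json_module.__name__)  # json
```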

## For community

⬇️ **Please click the 👍 reaction instead of leaving a `+1` or 👍 comment**

| closed | 2024-07-18T15:36:31Z | 2024-07-18T15:38:54Z | https://github.com/xonsh/xonsh/issues/5610 | [

"refactoring"

] | anki-code | 1 |

flairNLP/flair | nlp | 3,307 | [Question]: Fail to install allennlp=0.9.0 | ### Question

When I install allennlp without specifying a version, the ELMo embedding does not work.

But when I try to install allennlp 0.9.0 in Colab, the installation fails:

```
Collecting spacy<2.2,>=2.1.0 (from allennlp==0.9.0)
Using cached spacy-2.1.9.tar.gz (30.7 MB)
Installing build dependencies ... error
error: subprocess-exited-with-error
``` | closed | 2023-08-30T18:25:22Z | 2023-09-04T09:57:06Z | https://github.com/flairNLP/flair/issues/3307 | [

"question"

] | nonameisagoodname | 1 |

AUTOMATIC1111/stable-diffusion-webui | deep-learning | 16,676 | [Bug]: Networks with errors lora | ### Checklist

- [ ] The issue exists after disabling all extensions

- [ ] The issue exists on a clean installation of webui

- [ ] The issue is caused by an extension, but I believe it is caused by a bug in the webui

- [X] The issue exists in the current version of the webui

- [ ] The issue has not been reported before recently

- [ ] The issue has been reported before but has not been fixed yet

### What happened?

When I try to create an image using a LoRA, I get a "network with error" message containing the LoRA name and (32), and the LoRA does not come out correctly in the image.

For example, when using the LoRA diana-prince-dcau-ponyxl-lora-nochekaiser, the error message looks like this:

Network with error: diana-prince-dcau-ponyxl-lora-nochekaiser (32)

The same happens with other LoRAs.

### Steps to reproduce the problem

1. Select Lora.

2. Create a prompt for that Lora.

3. Start the task.

### What should have happened?

The LoRA (character) should work properly and the images should generate successfully.

Additionally, the "Network with error: <LoRA name> (32)" message should no longer appear.

### What browsers do you use to access the UI ?

Google Chrome

### Sysinfo

[sysinfo-2024-11-24-02-54.json](https://github.com/user-attachments/files/17891574/sysinfo-2024-11-24-02-54.json)

### Console logs

```Shell
Loading network C:\stable-diffusion-webui\models\Lora\loradiana-prince-dcau-ponyxl-lora-nochekaiser.safetensors: FileNotFoundError
Traceback (most recent call last):
  File "C:\stable-diffusion-webui\extensions-builtin\Lora\networks.py", line 321, in load_networks
    net = load_network(name, network_on_disk)
  File "C:\stable-diffusion-webui\extensions-builtin\Lora\networks.py", line 160, in load_network
    net.mtime = os.path.getmtime(network_on_disk.filename)
  File "C:\Python310\lib\genericpath.py", line 55, in getmtime
    return os.stat(filename).st_mtime
FileNotFoundError: [WinError 2] The system cannot find the file specified: 'C:\\stable-diffusion-webui\\models\\Lora\\loradiana-prince-dcau-ponyxl-lora-nochekaiser.safetensors'
```
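The log shows webui trying to open `C:\stable-diffusion-webui\models\Lora\loradiana-prince-dcau-ponyxl-lora-nochekaiser.safetensors`, whose file name has an extra `lora` prefix compared with the LoRA name in the error message. A quick standalone check can confirm whether that exact file exists and list similarly named files in the folder. The sketch below uses a hypothetical helper, `diagnose_lora`, built only on the standard-library `pathlib` and `difflib`; it is not part of webui.

```python
import difflib
from pathlib import Path


def diagnose_lora(lora_dir, name, ext=".safetensors"):
    """Hypothetical helper: look for <lora_dir>/<name><ext> and suggest near matches.

    Returns (path, []) when the exact file exists, otherwise
    (None, up to three similarly named files found anywhere under lora_dir).
    """
    lora_dir = Path(lora_dir)
    expected = lora_dir / (name + ext)
    if expected.is_file():
        return expected, []
    # Collect the stems of all files with the same extension, including subfolders.
    candidates = [p.stem for p in lora_dir.rglob("*" + ext)]
    return None, difflib.get_close_matches(name, candidates, n=3, cutoff=0.6)


# Example call for the path in the log (run on the affected machine):
# print(diagnose_lora(r"C:\stable-diffusion-webui\models\Lora",
#                     "loradiana-prince-dcau-ponyxl-lora-nochekaiser"))
```

If the exact path is missing but a file without the `lora` prefix is suggested, the name webui resolves and the file on disk do not match.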

### Additional information

This is the version I'm using.

version:v1.10.1 • python: 3.10.11 • torch: 2.1.2+cu121 • xformers: 0.0.23.post1 • gradio: 3.41.2 • checkpoint: ac006fdd7e

The path to the LoRA folder:

C:\stable-diffusion-webui\models\Lora

The checkpoint I used:

autismmixSDXL_autismmixConfetti.safetensors

Steps I have tried to resolve the issue:

1. Reinstalled Stable Diffusion.

2. Deleted and reinstalled the Lora file.

3. Renamed the Lora file.

4. Reinstalled the checkpoint (model).

I still have no idea why this error occurs. I would greatly appreciate your help. | closed | 2024-11-24T03:02:37Z | 2025-01-17T12:57:32Z | https://github.com/AUTOMATIC1111/stable-diffusion-webui/issues/16676 | [

"bug-report"

] | Musess | 0 |

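PaddlePaddle/ERNIE | nlp | 713 | What are the ##-prefixed tokens in ERNIE's vocabulary used for? | ERNIE's vocabulary file contains many characters prefixed with ##, but ERNIE itself is a character-based model, so why does it need these ##-prefixed characters? | closed | 2021-07-08T12:48:03Z | 2021-10-02T06:09:43Z | https://github.com/PaddlePaddle/ERNIE/issues/713 | [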

"wontfix"

] | KinvenW | 2 |

HumanSignal/labelImg | deep-learning | 724 | CreateML annotation format does not work. | The CreateML annotation format is currently not supported in the tool. It can be selected, but saving and loading does not work. I assume that this is a temporary fix for some incompatibility, but I would suggest either making it work or getting rid of the format altogether to not have unexpected behavior in the tool

_Originally posted by @Cerno-b in https://github.com/tzutalin/labelImg/pull/723#r598457996_ | closed | 2021-03-22T06:46:54Z | 2022-09-26T03:41:50Z | https://github.com/HumanSignal/labelImg/issues/724 | [] | Cerno-b | 1 |

deezer/spleeter | deep-learning | 792 | [Feature] speaker/singer/vocalist separation | ## Description

Speaker separation: when different speakers, singers, or vocalists can be heard, each distinct voice gets its own separate audio track.

## Additional information

| closed | 2022-09-27T02:45:38Z | 2022-10-07T10:33:54Z | https://github.com/deezer/spleeter/issues/792 | [

"enhancement",

"feature"

] | bartman081523 | 2 |

CPJKU/madmom | numpy | 264 | Spectral flux vs median deviation | Hello,

I noticed that you have changed the way you compute positive differences in the Mel filterbank compared with your 2011 paper. It seems you no longer look at deviations from the median, but use spectral flux instead.

Would this affect the results from 2011, or should I go ahead with the median deviations?
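To make the distinction concrete, the sketch below contrasts the two ideas on a tiny hand-made magnitude spectrogram (rows are frames, columns are frequency bins). Positive spectral flux compares each frame with the previous frame; the median variant compares it with the median of a window of preceding frames. This is a generic illustration, not madmom's implementation, and the window length `w` is an arbitrary assumption.

```python
from statistics import median


def positive_flux(spec):
    """Sum over bins of max(0, S[t][k] - S[t-1][k]); frame 0 gets 0."""
    out = [0]
    for t in range(1, len(spec)):
        out.append(sum(max(0, cur - prev) for cur, prev in zip(spec[t], spec[t - 1])))
    return out


def positive_median_deviation(spec, w=3):
    """Sum over bins of max(0, S[t][k] - median of S[t-w..t-1][k]); frame 0 gets 0."""
    out = [0]
    for t in range(1, len(spec)):
        history = spec[max(0, t - w):t]  # up to w preceding frames
        total = 0
        for k, cur in enumerate(spec[t]):
            ref = median(frame[k] for frame in history)
            total += max(0, cur - ref)
        out.append(total)
    return out


spec = [[5], [0], [5], [6]]  # one frequency bin, four frames
print(positive_flux(spec))              # [0, 0, 5, 1]
print(positive_median_deviation(spec))  # [0, 0, 2.5, 1]
```

The median reference damps the response to a frame that merely returns to an earlier level (frame 2), which is one reason the two detection functions can rank onsets differently.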

Also,

Could you tell me what the difference is between the BEATS_BLSTM models?

Cheers | closed | 2017-03-07T19:59:34Z | 2017-04-12T06:45:14Z | https://github.com/CPJKU/madmom/issues/264 | [

"question"

] | ghost | 2 |

matterport/Mask_RCNN | tensorflow | 2,517 | Why apply the biggest anchor sizes to the smallest feature maps? | Hi everyone,

In `model.py`, anchor generation is done with

```
a = utils.generate_pyramid_anchors(
    self.config.RPN_ANCHOR_SCALES,
    self.config.RPN_ANCHOR_RATIOS,
    backbone_shapes,
    self.config.BACKBONE_STRIDES,
    self.config.RPN_ANCHOR_STRIDE)
```

In `utils.generate_pyramid_anchors`, we find this line:

```
anchors.append(generate_anchors(scales[i], ratios, feature_shapes[i],
                                feature_strides[i], anchor_stride))
```

meaning that anchors of size `scales[i]` are generated on the feature map of shape `feature_shapes[i]`. On one hand, we have

`config.RPN_ANCHOR_SCALES = (32, 64, 128, 256, 512)`

and on the other hand

`backbone_shapes = compute_backbone_shapes(self.config, image_shape)`

which is the array of feature-map shapes, biggest first. For example, for an input image of 1024x1024x1:

`backbone_shapes = np.array([[256, 256], [128, 128], [64, 64], [32, 32], [16, 16]])`

This means that the smallest anchor size is applied to the biggest feature map (which may be fine) and the biggest anchor sizes to the smallest feature maps. My question is: why? I would have expected the smallest anchor sizes to go with the smallest feature maps. Am I missing something here?
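One way to see the pairing is to compute, per pyramid level, the feature-map size and how many feature-map cells one anchor spans. The sketch below assumes the Mask_RCNN defaults `BACKBONE_STRIDES = [4, 8, 16, 32, 64]` and the `RPN_ANCHOR_SCALES` quoted in the question, and re-implements the shape rule `ceil(image_size / stride)` in plain Python instead of calling the repository's `compute_backbone_shapes`. Anchor scales are side lengths in input-image pixels, so on every level an anchor covers scale / stride = 8 cells; the coarse 16x16 map has one cell per 64 pixels, which is why it is paired with the largest (512 px) anchors. This matches the usual Feature Pyramid Network design, where coarser maps handle larger objects.

```python
import math

# Assumed Mask_RCNN defaults (check mrcnn/config.py in your checkout).
BACKBONE_STRIDES = [4, 8, 16, 32, 64]        # P2..P6
RPN_ANCHOR_SCALES = (32, 64, 128, 256, 512)  # anchor side length in image pixels


def pyramid_levels(image_size=1024):
    """Return (feature_map_size, stride, anchor_scale, anchor_size_in_cells) per level."""
    levels = []
    for stride, scale in zip(BACKBONE_STRIDES, RPN_ANCHOR_SCALES):
        fmap = math.ceil(image_size / stride)  # same rule as compute_backbone_shapes
        levels.append((fmap, stride, scale, scale / stride))
    return levels


for fmap, stride, scale, cells in pyramid_levels():
    print(f"{fmap:>3}x{fmap:<3} stride={stride:<2} anchor={scale:<3} px, spans {cells:.0f} cells")
```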

Thank you in advance. | closed | 2021-03-24T15:30:09Z | 2025-01-06T12:33:33Z | https://github.com/matterport/Mask_RCNN/issues/2517 | [] | gdavid57 | 1 |

jupyterhub/jupyterhub-deploy-docker | jupyter | 19 | How to spawn user container with DOCKER_NOTEBOOK_DIR | Suppose a user named bob signs in with GitHub. How can I spawn bob's container with DOCKER_NOTEBOOK_DIR set to /home/bob/work?

| closed | 2016-08-18T04:21:52Z | 2022-12-05T00:55:52Z | https://github.com/jupyterhub/jupyterhub-deploy-docker/issues/19 | [

"enhancement",

"help wanted"

] | z333d | 7 |

Ehco1996/django-sspanel | django | 22 | Um... a suggestion | Single-port multi-user support feels quite important...

Given the current situation. | closed | 2017-10-26T05:29:03Z | 2017-10-28T18:53:49Z | https://github.com/Ehco1996/django-sspanel/issues/22 | [] | cheapssr | 4 |

ludwig-ai/ludwig | computer-vision | 3,881 | Cannot run/install finetuning colab notebook | **Describe the bug**

The [demo colab notebook](https://colab.research.google.com/drive/1r4oSEwRJpYKBPM0M0RSh0pBEYK_gBKbe) for finetuning Llama-2-7b is crashing at the third runnable cell when trying to import torch.

```

---------------------------------------------------------------------------

TypeError Traceback (most recent call last)

[<ipython-input-3-dac5961b998e>](https://localhost:8080/#) in <cell line: 5>()

3 import logging

4 import os

----> 5 import torch

6 import yaml

7

12 frames

[/usr/lib/python3.10/_pyio.py](https://localhost:8080/#) in __init__(self, buffer, encoding, errors, newline, line_buffering, write_through)

2043 encoding = "utf-8"

2044 else:

-> 2045 encoding = locale.getpreferredencoding(False)

2046

2047 if not isinstance(encoding, str):

TypeError: <lambda>() takes 0 positional arguments but 1 was given

```
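The last frame shows `_pyio` calling `locale.getpreferredencoding(False)` and receiving a `<lambda>` that accepts no arguments. That suggests something earlier in the notebook replaced `locale.getpreferredencoding` with a zero-argument lambda (an assumption, since the earlier cells are not shown here). The sketch below reproduces the error in isolation and shows a replacement stub that accepts any arguments; `patch_preferred_encoding` is a hypothetical helper.

```python
import locale


def patch_preferred_encoding(encoding="UTF-8"):
    """Replace locale.getpreferredencoding with a stub that accepts any arguments.

    Returns the original function so the caller can restore it.
    """
    original = locale.getpreferredencoding
    # *args/**kwargs matter: _pyio calls getpreferredencoding(False).
    locale.getpreferredencoding = lambda *args, **kwargs: encoding
    return original


# A zero-argument stub reproduces the TypeError from the traceback.
zero_arg_stub = lambda: "UTF-8"
try:
    zero_arg_stub(False)
except TypeError as exc:
    print("zero-argument stub fails:", exc)

original = patch_preferred_encoding()
print(locale.getpreferredencoding(False))  # UTF-8
locale.getpreferredencoding = original      # restore the real function
```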

**To Reproduce**

1. Go to https://colab.research.google.com/drive/1r4oSEwRJpYKBPM0M0RSh0pBEYK_gBKbe

2. Connect T4 GPU

3. Run the first three cells

4. The last of these cells fails with the error above

**Expected behavior**

It should work!

**Environment (please complete the following information):**

(not sure if relevant)

| closed | 2024-01-15T17:15:32Z | 2024-01-15T21:29:12Z | https://github.com/ludwig-ai/ludwig/issues/3881 | [] | dotXem | 3 |

xlwings/xlwings | automation | 2,267 | test_markdown.py on macOS | #### OS (e.g. Windows 10 or macOS Sierra)

macOS

#### Versions of xlwings, Excel and Python (e.g. 0.11.8, Office 365, Python 3.7)

xlwings - 0.30.7

Python 3.11

#### Describe your issue (incl. Traceback!)

```shell
pytest tests/test_markdown.py
```

All tests fail on macOS, as is also noted in a comment within the module:

> `test_markdown.py`

> Characters are currently not properly supported

> on macOS due to an Excel/AppleScript bug

Knowing this is the case on macOS, would it make sense to wrap these tests in a pytest class and skip them if the platform is macOS, as is already done for some other individual tests?

I prefer skipping the tests, as this gives me as a developer the most peace of mind. Seeing failing tests makes me a bit nervous 🙂.
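Instead of a class, pytest also supports a module-level `pytestmark` variable, which applies a marker to every test in the file. A minimal sketch for the top of `tests/test_markdown.py`, assuming pytest is the runner as in the command above; `IS_MACOS` and `test_example` are illustrative names, and the reason string paraphrases the module comment.

```python
import sys

import pytest

IS_MACOS = sys.platform == "darwin"

# Module-level marker: pytest applies it to every test collected from this file.
pytestmark = pytest.mark.skipif(
    IS_MACOS,
    reason="Characters are not properly supported on macOS (Excel/AppleScript bug)",
)


def test_example():
    # Placeholder; the existing markdown tests would stay unchanged below the marker.
    assert True
```

A module-level mark keeps the individual tests untouched, while a class would require indenting every test.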

```
/Users/hayer/xlwings/xlwings/pro/reports/markdown.py:214: UserWarning: Markdown formatting is currently ignored on macOS.
  warnings.warn("Markdown formatting is currently ignored on macOS.")
----
FAILED tests/test_markdown.py::test_markdown_cell_defaults_formatting - assert False is True
FAILED tests/test_markdown.py::test_markdown_cell_defaults_value - AssertionError: assert 'Title\nText ...eak\nnew line' == 'Title\n\nTex...eak\nnew line'
FAILED tests/test_markdown.py::test_markdown_cell_h1 - assert None == (255, 0, 0)
FAILED tests/test_markdown.py::test_markdown_cell_strong - assert None == (255, 0, 0)
FAILED tests/test_markdown.py::test_markdown_cell_emphasis - assert None == (255, 0, 0)
FAILED tests/test_markdown.py::test_markdown_cell_unordered_list - AssertionError: assert ' a first bul... a second bul' == '-'
FAILED tests/test_markdown.py::test_markdown_shape_defaults_formatting - AttributeError: Characters isn't supported on macOS with shapes.
FAILED tests/test_markdown.py::test_markdown_shape_defaults_value - AssertionError: assert 'Title\nText ...eak\nnew line' == 'Title\n\nTex...eak\nnew line'
FAILED tests/test_markdown.py::test_markdown_shape_h1 - AttributeError: Characters isn't supported on macOS with shapes.
FAILED tests/test_markdown.py::test_markdown_shape_strong - AttributeError: Characters isn't supported on macOS with shapes.
FAILED tests/test_markdown.py::test_markdown_shape_emphasis - AttributeError: Characters isn't supported on macOS with shapes.
FAILED tests/test_markdown.py::test_markdown_shape_unordered_list - AttributeError: Characters isn't supported on macOS with shapes.
``` | closed | 2023-05-24T11:44:10Z | 2023-05-25T09:34:00Z | https://github.com/xlwings/xlwings/issues/2267 | [] | Jeroendevr | 0 |

huggingface/datasets | nlp | 6,941 | Supporting FFCV: Fast Forward Computer Vision | ### Feature request

Supporting FFCV, https://github.com/libffcv/ffcv

### Motivation

According to its benchmarks, FFCV appears to be the fastest image-loading method.

### Your contribution

no | open | 2024-06-01T05:34:52Z | 2024-06-01T05:34:52Z | https://github.com/huggingface/datasets/issues/6941 | [

"enhancement"

] | Luciennnnnnn | 0 |

nalepae/pandarallel | pandas | 77 | Hangs on Completion When nb_workers is Too High | Debian 9.9

pandarallel 1.4.5

python 3.7.5

I'm applying some string comparisons in parallel:

```python
track_matches = isrcs_to_match_by_title["title_cleaned"].parallel_apply(
    lambda title_cleaned: tracks.index[tracks["name_cleaned"].values == title_cleaned])
```

It's not always reproducible: some runs complete and others don't.

Setting `progress_bar=True` or `False` doesn't seem to affect it.

The higher the number of processes, the less likely it is to complete. With `nb_workers=8` it always completes, with 24 it sometimes completes, and with 96 it never completes.

When the work is done, the processes all die down (none of them are being used), but the program never continues. | closed | 2020-02-14T00:04:04Z | 2022-09-07T11:17:17Z | https://github.com/nalepae/pandarallel/issues/77 | [] | xanderdunn | 2 |

explosion/spaCy | machine-learning | 12,738 | Custom spaCy NER model not making expected predictions | ## Issue

A custom NER model (trained to identify certain code numbers) does not produce predictions for certain documents.

The tables below show text snippets from pairs of documents in row#1 and row#2 respectively, where row#1 is a text on which the model returns the correct prediction and row#2 is another text on which it does not, even though text1 and text2 are very similar.

I have tried multiple variations of these texts from row#3 onwards, to pinpoint which part of the text causes the difference in predictions. The issue is: if the model recognizes a given code correctly in one document, why can it not identify a similar-looking code in another, similar document?

### Model Inputs and Corresponding Outputs

Example1:

<img width="1162" alt="PC spaCy model input and output" src="https://github.com/explosion/spaCy/assets/15351802/8755fdd3-2c92-45cf-b4f2-203aca4e0c6d">

Example2:

<img width="1193" alt="Screenshot 2023-06-18 at 6 59 41 PM" src="https://github.com/explosion/spaCy/assets/15351802/8b5220dd-7a7a-45f2-b156-57595519d346">

## Environment

* spaCy version: 3.0.6

* Platform: Linux-5.10.178-162.673.amzn2.x86_64-x86_64-with-glibc2.26

* Python version: 3.10.10

| closed | 2023-06-18T23:14:18Z | 2023-07-20T00:02:26Z | https://github.com/explosion/spaCy/issues/12738 | [] | MisbahKhan789 | 3 |

yzhao062/pyod | data-science | 150 | callbacks in autoencoder | How can I pass a `callbacks` parameter to `fit` for the AutoEncoder model?

There is no such parameter:

```python
from keras.callbacks.callbacks import EarlyStopping

cb_earlystop = EarlyStopping(monitor='val_loss', min_delta=0, patience=0, verbose=0,
                             mode='auto', baseline=None, restore_best_weights=False)

pyod_model.fit(scaler, callbacks=[cb_earlystop])
```

```
TypeError: fit() got an unexpected keyword argument 'callbacks'
```

Could you implement this parameter? It's very useful for monitoring, early stopping, and other cases.

| open | 2019-12-16T16:58:31Z | 2024-05-07T13:05:38Z | https://github.com/yzhao062/pyod/issues/150 | [

"enhancement",

"help wanted",

"good first issue"

] | satrum | 6 |

pydantic/pydantic-settings | pydantic | 446 | Validation is only performed during debugging | I have an environment file that contains several key variables my application needs to function properly. However, I accidentally added an inline comment after one of these variables, which caused some confusion during debugging. My code runs without any apparent issues, but when I run it under the debugger I get a validation error for that specific variable.

Here is some sample code:

```python
# config.py
from typing import Literal

from pydantic_settings import BaseSettings, SettingsConfigDict


class Config(BaseSettings):
    ENVIRONMENT: Literal['debug', 'testing', 'production'] = 'debug'

    model_config = SettingsConfigDict(
        env_file='.env',
        env_ignore_empty=True,
        extra='ignore',
    )


config = Config()
```

```python
# main.py
from config import config
```

```
# .env
ENVIRONMENT=debug # some comments here
```

Enclosing the `debug` value in double quotes doesn't seem to resolve the issue, but removing the comment does.

Am I missing something? | closed | 2024-10-18T09:58:35Z | 2024-10-18T16:14:17Z | https://github.com/pydantic/pydantic-settings/issues/446 | [

"unconfirmed"

] | BabakAmini | 11 |

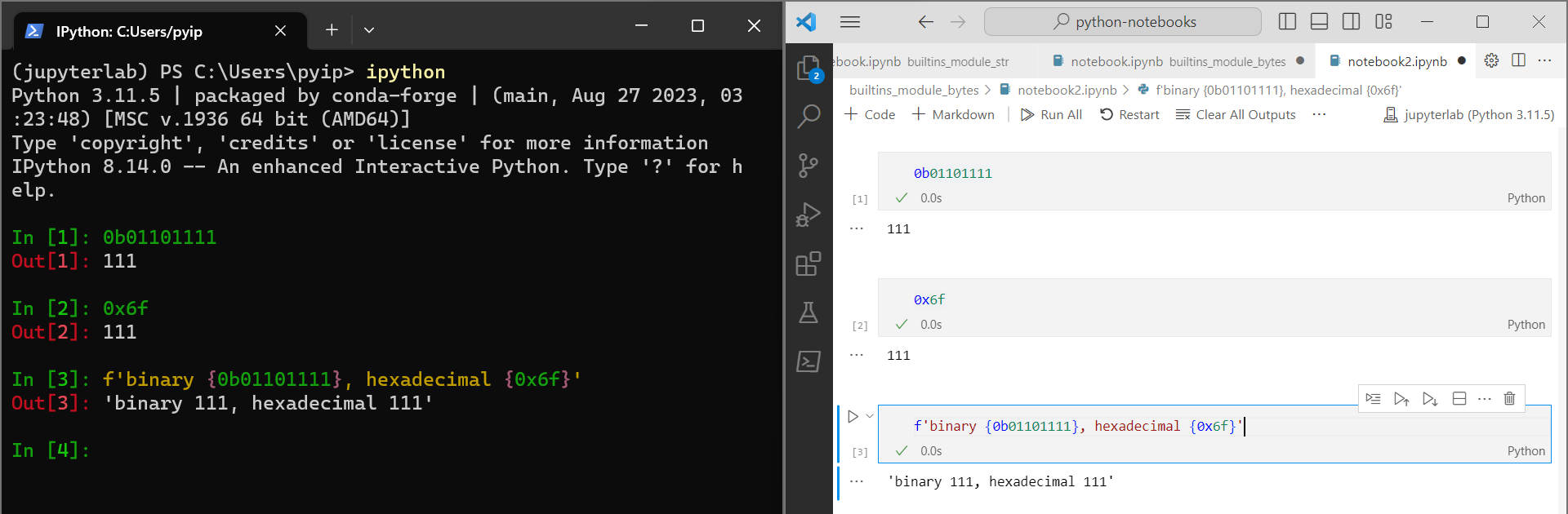

ipython/ipython | data-science | 14,202 | Syntax Highlighting 0b and 0x Prefix | The prefixes for binary `0b` and hex `0x` are not highlighted in a different colour in IPython, as they are in VS Code. Having the prefix in a different colour makes the code a bit more readable, emphasising that it is not a decimal number:

```python
0b01101111
0x6f
f'binary {0b01101111}, hexadecimal {0x6f}'
```
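To illustrate how a highlighter could treat the prefix separately, the sketch below uses the standard-library `tokenize` module to find number literals and split off a `0b`/`0o`/`0x` prefix so it could be given its own colour. It is only an illustration: IPython's actual highlighting goes through Pygments, and wiring this into a Pygments lexer or style is not shown. Before Python 3.12, numbers inside an f-string are part of a single STRING token, so the f-string line in the example above would not be split this way on older versions.

```python
import io
import re
import tokenize

# Splits an integer literal into (prefix, digits); decimals have no prefix.
PREFIX_RE = re.compile(r"(0[bBoOxX])(.*)")


def number_parts(source):
    """Yield (prefix, digits) for every NUMBER token in the Python source."""
    for tok in tokenize.generate_tokens(io.StringIO(source).readline):
        if tok.type == tokenize.NUMBER:
            match = PREFIX_RE.fullmatch(tok.string)
            yield (match.group(1), match.group(2)) if match else ("", tok.string)


print(list(number_parts("x = 0b01101111 + 0x6f + 111\n")))
# [('0b', '01101111'), ('0x', '6f'), ('', '111')]
```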

| open | 2023-10-02T10:30:05Z | 2023-10-02T10:30:05Z | https://github.com/ipython/ipython/issues/14202 | [] | PhilipYip1988 | 0 |

microsoft/nni | data-science | 5,488 | It is recommended to combine NNI quantization with onnxruntime | NNI is a great project, and its quantization feature is very easy to use.

However, I find that the quantized results can only be converted to TensorRT for GPU acceleration within the NNI framework. This will be the biggest obstacle for developers targeting mobile or cross-language platforms when deciding whether to choose NNI.

So if NNI's quantization could be combined with onnxruntime's inference, project deployment would be much more convenient. I believe it would make NNI the most popular project. | open | 2023-03-29T07:54:13Z | 2023-03-31T02:35:49Z | https://github.com/microsoft/nni/issues/5488 | [

"feature request"

] | Z-yq | 0 |

drivendataorg/erdantic | pydantic | 139 | Add support for msgspec.Struct | [msgspec](https://jcristharif.com/msgspec/) is a serialization and validation library, and it has a `Struct` class for declaring typed dataclass-like classes.

https://jcristharif.com/msgspec/structs.html | open | 2025-03-24T01:10:21Z | 2025-03-24T01:10:21Z | https://github.com/drivendataorg/erdantic/issues/139 | [

"enhancement"

] | jayqi | 0 |

ading2210/poe-api | graphql | 144 | Run temp_message error | - I know this question may be stupid, but I have struggled with it for a long time.
- Is this library still working?
- I used version `0.4.8`.
- This is my first time running it, and I got this error:

File "C:\Users\111\AppData\Local\Programs\Python\Python38\lib\threading.py", line 932, in _bootstrap_inner

self.run()

File "C:\Users\111\AppData\Local\Programs\Python\Python38\lib\threading.py", line 870, in run

self._target(*self._args, **self._kwargs)

File "C:\Users\111\AppData\Local\Programs\Python\Python38\lib\site-packages\poe.py", line 233, in get_bot_thread

chat_data = self.get_bot(bot["node"]["displayName"])

File "C:\Users\111\AppData\Local\Programs\Python\Python38\lib\site-packages\poe.py", line 214, in get_bot

chat_data = data["pageProps"]["data"]["chatOfBotDisplayName"]

KeyError: 'chatOfBotDisplayName'

Exception in thread Thread-3:

Traceback (most recent call last):

File "C:\Users\111\AppData\Local\Programs\Python\Python38\lib\threading.py", line 932, in _bootstrap_inner

self.run()

File "C:\Users\111\AppData\Local\Programs\Python\Python38\lib\threading.py", line 870, in run

self._target(*self._args, **self._kwargs)

File "C:\Users\111\AppData\Local\Programs\Python\Python38\lib\site-packages\poe.py", line 233, in get_bot_thread

chat_data = self.get_bot(bot["node"]["displayName"])

File "C:\Users\111\AppData\Local\Programs\Python\Python38\lib\site-packages\poe.py", line 214, in get_bot

chat_data = data["pageProps"]["data"]["chatOfBotDisplayName"]

KeyError: 'chatOfBotDisplayName'

Exception in thread Thread-5:

Traceback (most recent call last):

File "C:\Users\111\AppData\Local\Programs\Python\Python38\lib\threading.py", line 932, in _bootstrap_inner

self.run()

File "C:\Users\111\AppData\Local\Programs\Python\Python38\lib\threading.py", line 870, in run

self._target(*self._args, **self._kwargs)

File "C:\Users\111\AppData\Local\Programs\Python\Python38\lib\site-packages\poe.py", line 233, in get_bot_thread

chat_data = self.get_bot(bot["node"]["displayName"])

File "C:\Users\111\AppData\Local\Programs\Python\Python38\lib\site-packages\poe.py", line 214, in get_bot

chat_data = data["pageProps"]["data"]["chatOfBotDisplayName"]

KeyError: 'chatOfBotDisplayName'

Exception in thread Thread-1:

Traceback (most recent call last):

File "C:\Users\111\AppData\Local\Programs\Python\Python38\lib\threading.py", line 932, in _bootstrap_inner

self.run()

File "C:\Users\111\AppData\Local\Programs\Python\Python38\lib\threading.py", line 870, in run

self._target(*self._args, **self._kwargs)

File "C:\Users\111\AppData\Local\Programs\Python\Python38\lib\site-packages\poe.py", line 233, in get_bot_thread

chat_data = self.get_bot(bot["node"]["displayName"])

File "C:\Users\111\AppData\Local\Programs\Python\Python38\lib\site-packages\poe.py", line 214, in get_bot

chat_data = data["pageProps"]["data"]["chatOfBotDisplayName"]

KeyError: 'chatOfBotDisplayName'

Exception in thread Thread-7:

Traceback (most recent call last):

File "C:\Users\111\AppData\Local\Programs\Python\Python38\lib\threading.py", line 932, in _bootstrap_inner

self.run()

File "C:\Users\111\AppData\Local\Programs\Python\Python38\lib\threading.py", line 870, in run

self._target(*self._args, **self._kwargs)

File "C:\Users\111\AppData\Local\Programs\Python\Python38\lib\site-packages\poe.py", line 233, in get_bot_thread

chat_data = self.get_bot(bot["node"]["displayName"])

File "C:\Users\111\AppData\Local\Programs\Python\Python38\lib\site-packages\poe.py", line 214, in get_bot

chat_data = data["pageProps"]["data"]["chatOfBotDisplayName"]

KeyError: 'chatOfBotDisplayName'

Exception in thread Thread-4:

Traceback (most recent call last):

File "C:\Users\111\AppData\Local\Programs\Python\Python38\lib\threading.py", line 932, in _bootstrap_inner

self.run()

File "C:\Users\111\AppData\Local\Programs\Python\Python38\lib\threading.py", line 870, in run

self._target(*self._args, **self._kwargs)

File "C:\Users\111\AppData\Local\Programs\Python\Python38\lib\site-packages\poe.py", line 233, in get_bot_thread

chat_data = self.get_bot(bot["node"]["displayName"])

File "C:\Users\111\AppData\Local\Programs\Python\Python38\lib\site-packages\poe.py", line 214, in get_bot

chat_data = data["pageProps"]["data"]["chatOfBotDisplayName"]

KeyError: 'chatOfBotDisplayName'

Exception in thread Thread-2:

Traceback (most recent call last):

File "C:\Users\111\AppData\Local\Programs\Python\Python38\lib\threading.py", line 932, in _bootstrap_inner

self.run()

File "C:\Users\111\AppData\Local\Programs\Python\Python38\lib\threading.py", line 870, in run

self._target(*self._args, **self._kwargs)

File "C:\Users\111\AppData\Local\Programs\Python\Python38\lib\site-packages\poe.py", line 233, in get_bot_thread

chat_data = self.get_bot(bot["node"]["displayName"])

File "C:\Users\111\AppData\Local\Programs\Python\Python38\lib\site-packages\poe.py", line 214, in get_bot

chat_data = data["pageProps"]["data"]["chatOfBotDisplayName"]

KeyError: 'chatOfBotDisplayName'

INFO:root:Subscribing to mutations

WARNING:websocket:websocket connected

INFO:root:Sending message to capybara: Who are you?

WARNING:root:Server returned a status code of 404 while downloading https://poe.com/_next/data/2WUKJiaLlyAttUI7qPryw/capybara.json. Retrying (1/10)...

WARNING:root:Server returned a status code of 404 while downloading https://poe.com/_next/data/2WUKJiaLlyAttUI7qPryw/capybara.json. Retrying (2/10)...

WARNING:root:Server returned a status code of 404 while downloading https://poe.com/_next/data/2WUKJiaLlyAttUI7qPryw/capybara.json. Retrying (3/10)...

WARNING:root:Server returned a status code of 404 while downloading https://poe.com/_next/data/2WUKJiaLlyAttUI7qPryw/capybara.json. Retrying (4/10)...

WARNING:root:Server returned a status code of 404 while downloading https://poe.com/_next/data/2WUKJiaLlyAttUI7qPryw/capybara.json. Retrying (5/10)...

WARNING:root:Server returned a status code of 404 while downloading https://poe.com/_next/data/2WUKJiaLlyAttUI7qPryw/capybara.json. Retrying (6/10)...

WARNING:root:Server returned a status code of 404 while downloading https://poe.com/_next/data/2WUKJiaLlyAttUI7qPryw/capybara.json. Retrying (7/10)...

WARNING:root:Server returned a status code of 404 while downloading https://poe.com/_next/data/2WUKJiaLlyAttUI7qPryw/capybara.json. Retrying (8/10)...

WARNING:root:Server returned a status code of 404 while downloading https://poe.com/_next/data/2WUKJiaLlyAttUI7qPryw/capybara.json. Retrying (9/10)...

WARNING:root:Server returned a status code of 404 while downloading https://poe.com/_next/data/2WUKJiaLlyAttUI7qPryw/capybara.json. Retrying (10/10)...

Traceback (most recent call last):

File "D:\Git_project\Duolingo_auto_answer\temp.py", line 20, in <module>

for chunk in client.send_message("capybara", message, with_chat_break=True):

File "C:\Users\111\AppData\Local\Programs\Python\Python38\lib\site-packages\poe.py", line 484, in send_message

chat_id = self.get_bot_by_codename(chatbot)["chatId"]

File "C:\Users\111\AppData\Local\Programs\Python\Python38\lib\site-packages\poe.py", line 254, in get_bot_by_codename

return self.get_bot(bot_codename)

File "C:\Users\111\AppData\Local\Programs\Python\Python38\lib\site-packages\poe.py", line 210, in get_bot

data = request_with_retries(self.session.get, url).json()

File "C:\Users\111\AppData\Local\Programs\Python\Python38\lib\site-packages\poe.py", line 53, in request_with_retries

raise RuntimeError(f"Failed to download {url} too many times.")

RuntimeError: Failed to download https://poe.com/_next/data/2WUKJiaLlyAttUI7qPryw/capybara.json too many times. | closed | 2023-07-04T02:20:00Z | 2023-07-04T09:08:26Z | https://github.com/ading2210/poe-api/issues/144 | [

"bug"

] | LiMingchen159 | 3 |

flaskbb/flaskbb | flask | 218 | Activation email error | Hello, I have an issue with sending the email activation token.

```
[2016-09-23 23:56:21,050: INFO/MainProcess] Received task: flaskbb.email.send_activation_token[811ca7d8-7f1b-4440-8e89-3a5f5e9c266d]
[2016-09-23 23:56:21,061: ERROR/MainProcess] Task flaskbb.email.send_activation_token[811ca7d8-7f1b-4440-8e89-3a5f5e9c266d] raised unexpected: DetachedInstanceError('Instance <User at 0x7fc6a76e1590> is not bound to a Session; attribute refresh operation cannot proceed',)
Traceback (most recent call last):
  File "/var/www/virtenv/flaskbb/local/lib/python2.7/site-packages/celery/app/trace.py", line 240, in trace_task
    R = retval = fun(*args, **kwargs)
  File "/var/www/flaskbb/app.py", line 92, in __call__
    return TaskBase.__call__(self, *args, **kwargs)
  File "/var/www/virtenv/flaskbb/local/lib/python2.7/site-packages/celery/app/trace.py", line 438, in __protected_call__
    return self.run(*args, **kwargs)
  File "/var/www/flaskbb/email.py", line 48, in send_activation_token
    token = make_token(user=user, operation="activate_account")
  File "/var/www/flaskbb/utils/tokens.py", line 37, in make_token
    data = {"id": user.id, "op": operation}
  File "/var/www/virtenv/flaskbb/local/lib/python2.7/site-packages/sqlalchemy/orm/attributes.py", line 237, in __get__
    return self.impl.get(instance_state(instance), dict_)
  File "/var/www/virtenv/flaskbb/local/lib/python2.7/site-packages/sqlalchemy/orm/attributes.py", line 578, in get
    value = state._load_expired(state, passive)
  File "/var/www/virtenv/flaskbb/local/lib/python2.7/site-packages/sqlalchemy/orm/state.py", line 474, in _load_expired
    self.manager.deferred_scalar_loader(self, toload)
  File "/var/www/virtenv/flaskbb/local/lib/python2.7/site-packages/sqlalchemy/orm/loading.py", line 610, in load_scalar_attributes
    (state_str(state)))
DetachedInstanceError: Instance <User at 0x7fc6a76e1590> is not bound to a Session; attribute refresh operation cannot proceed
```

Have any ideas?

| closed | 2016-09-23T21:03:36Z | 2018-04-15T07:47:40Z | https://github.com/flaskbb/flaskbb/issues/218 | [] | JustOnce | 3 |

modelscope/modelscope | nlp | 828 | Failed to download the dataset | Thanks for your error report and we appreciate it a lot.

**Checklist**

* I have searched the tutorial on modelscope [doc-site](https://modelscope.cn/docs)

* I have searched related issues but cannot get the expected help.

* The bug has not been fixed in the latest version.

**Describe the bug**

```
Traceback (most recent call last):
  File "/Users/wangxiaoxin/Downloads/test/download.py", line 6, in <module>
    ds = MsDataset.load('modelscope/Youku-AliceMind', namespace='tany0699', split='train')
  File "/opt/homebrew/lib/python3.9/site-packages/modelscope/msdatasets/ms_dataset.py", line 315, in load
    dataset_inst = remote_dataloader_manager.load_dataset(
  File "/opt/homebrew/lib/python3.9/site-packages/modelscope/msdatasets/data_loader/data_loader_manager.py", line 132, in load_dataset
    oss_downloader.process()
  File "/opt/homebrew/lib/python3.9/site-packages/modelscope/msdatasets/data_loader/data_loader.py", line 82, in process
    self._build()
  File "/opt/homebrew/lib/python3.9/site-packages/modelscope/msdatasets/data_loader/data_loader.py", line 109, in _build
    meta_manager.parse_dataset_structure()
  File "/opt/homebrew/lib/python3.9/site-packages/modelscope/msdatasets/meta/data_meta_manager.py", line 119, in parse_dataset_structure
    raise 'Cannot find dataset meta-files, please fetch meta from modelscope hub.'
TypeError: exceptions must derive from BaseException
```
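The final `TypeError` hides the real message: line 119 of `data_meta_manager.py` raises a plain string, and Python 3 only allows raising `BaseException` instances or subclasses, so the interpreter reports the `raise` itself instead of "Cannot find dataset meta-files". A minimal sketch of the difference; the exception class used in the "fixed" version is an assumption, not ModelScope's actual code:

```python
def raise_plain_string():
    # Same pattern as the traceback: the string itself is raised.
    raise "Cannot find dataset meta-files, please fetch meta from modelscope hub."


def raise_exception_instance():
    # Hypothetical fix: wrap the message in an exception class.
    raise FileNotFoundError(
        "Cannot find dataset meta-files, please fetch meta from modelscope hub."
    )


def caught(fn):
    """Call fn and return (exception type name, message) of whatever it raises."""
    try:
        fn()
    except Exception as exc:
        return type(exc).__name__, str(exc)
    return None


print(caught(raise_plain_string))
print(caught(raise_exception_instance))
```

With the string version the original message is lost; with an exception instance it reaches the user unchanged.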

**To Reproduce**

```python
from modelscope.msdatasets.ms_dataset import MsDataset
from modelscope import HubApi

api = HubApi()
api.login('')

ds = MsDataset.load('modelscope/Youku-AliceMind', namespace='tany0699', split='train')
print(next(iter(ds)))
```

**Your Environments (__required__)**

* OS: `uname -a`

* CPU: `lscpu`

* Commit id (e.g. `a3ffc7d8`)

* You may add additional information that may be helpful for locating the problem, such as:

  * How you installed PyTorch [e.g., pip, conda, source]

  * Other environment variables that may be related (such as $PATH, $LD_LIBRARY_PATH, $PYTHONPATH, etc.)

Please @ corresponding people according to your problem:

Model related: @wenmengzhou @tastelikefeet

Model hub related: @liuyhwangyh

Dataset related: @wangxingjun778

Finetune related: @tastelikefeet @Jintao-Huang

Pipeline related: @Firmament-cyou @wenmengzhou

Contribute your model: @zzclynn

| closed | 2024-04-12T12:41:49Z | 2024-04-17T08:13:47Z | https://github.com/modelscope/modelscope/issues/828 | [] | 459737087 | 2 |

pydata/pandas-datareader | pandas | 111 | Additional data for yahoo finance data reader | Can additional data, other than OHLC and volume data, be added to the `Datareader`?

Data that could be added includes:

- Dividend information (dates, yield, ex-dividend date...etc)

- EPS

- Market cap

- Price to book ratio

- P/E ratio

- Financial information such as quarter earnings.

The yahoo API supports streaming some of these through special tags as seen here: https://greenido.wordpress.com/2009/12/22/yahoo-finance-hidden-api/

This can be done with a manual request, but it would be nice if the Datareader had an option to scrape this information.

Thoughts?

| closed | 2015-10-19T08:11:41Z | 2018-01-18T16:32:37Z | https://github.com/pydata/pandas-datareader/issues/111 | [] | ccsv | 5 |

deezer/spleeter | tensorflow | 77 | [Discussion] WARNING:spleeter:[WinError 2] The system cannot find the file specified | Hello guys,

I'm trying spleeter on a Win10 laptop. When running ``spleeter separate -i spleeter\audio_example.mp3 -p spleeter:2stems -o output`` I got the following error:

``WARNING:spleeter:[WinError 2] The system cannot find the file specified``

I'm sure the file path is correct, so I'm confused about this error. Does anyone have an idea?
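One common cause of this warning on Windows, assumed here rather than confirmed by the report, is that spleeter cannot find the `ffmpeg`/`ffprobe` executables it uses to decode audio: `[WinError 2]` is also what Windows reports when a program to be launched is missing, not only when an input file is. A quick, hypothetical check of whether both executables are on `PATH`:

```python
import shutil


def missing_executables(names=("ffmpeg", "ffprobe")):
    """Return the names from `names` that cannot be found on PATH."""
    return [name for name in names if shutil.which(name) is None]


print(missing_executables())
```

An empty list means both tools were found, which would point away from this explanation.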

For your information, I didn't install spleeter using "conda" but using "pip", because with conda I always got the error "simplejson finished with status error". | closed | 2019-11-12T10:32:50Z | 2019-11-16T23:21:21Z | https://github.com/deezer/spleeter/issues/77 | [

"question"

] | gladys0313 | 9 |

google-research/bert | tensorflow | 888 | How can I use the pretrained checkpoint to continue training on my own corpus? | I want to load the pretrained checkpoint and continue training on my own corpus. I use the `run_pretrain.py` code and set `init_checkpoint` to the pretrained checkpoint directory, but when I run the code it raises an error:

```

ERROR:tensorflow:Error recorded from training_loop: Restoring from checkpoint failed. This is most likely due to a Variable name or other graph key that is missing from the checkpoint. Please ensure that you have not altered the graph expected based on the checkpoint. Original error:

From /job:worker/replica:0/task:0:

Key bert/embeddings/LayerNorm/beta/adam_m not found in checkpoint

[[node save/RestoreV2 (defined at /usr/local/lib/python3.6/dist-packages/tensorflow_core/python/framework/ops.py:1748) ]]

```

I know that once training is finished, it is better to remove the `adam_m` and `adam_v` parameters to reduce the size of the checkpoint file. But since I want to continue training from the pretrained checkpoint, how can I solve this problem? Maybe I can recover the Adam variable names in the checkpoint file? Thank you | open | 2019-10-25T13:27:04Z | 2021-11-10T03:27:30Z | https://github.com/google-research/bert/issues/888 | [] | RyanHuangNLP | 7 |

databricks/koalas | pandas | 2,213 | read_excel's parameter - mangle_dupe_cols is used to handle duplicate columns but fails if the duplicate columns are case sensitive. | mangle_dupe_cols - default is True

So ideally it should handle the duplicate columns, but if the duplicate names differ only in case, it fails as below:

AnalysisException: Reference '`Sheet.col`' is ambiguous, could be: Sheet.col, Sheet.col.

where the two columns are `Col` and `cOL`.

The best practices guide mentions not using column names that differ only in case: https://koalas.readthedocs.io/en/latest/user_guide/best_practices.html#do-not-use-duplicated-column-names
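Spark resolves column names case-insensitively by default (`spark.sql.caseSensitive` is `false`), so `Col` and `cOL` clash even though pandas' `mangle_dupe_cols` treats them as distinct. Until this is handled, one possible workaround, sketched here as a hypothetical helper rather than a Koalas API, is to rename such columns on the pandas side before conversion, mimicking the `X`, `X.1` naming that `mangle_dupe_cols` uses for exact duplicates:

```python
from collections import defaultdict


def mangle_case_insensitive(columns):
    """Rename string column names that collide ignoring case: Col, cOL -> Col, cOL.1.

    Hypothetical helper; it does not guard against a renamed column
    colliding with an existing name such as a literal "cOL.1".
    """
    seen = defaultdict(int)  # lower-cased name -> occurrences so far
    renamed = []
    for name in columns:
        key = name.lower()
        renamed.append(name if seen[key] == 0 else f"{name}.{seen[key]}")
        seen[key] += 1
    return renamed


print(mangle_case_insensitive(["Col", "cOL", "other"]))
```

It would be applied to the column list of a pandas DataFrame before that frame is converted to Koalas.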

Either the docs for `read_excel`/`mangle_dupe_cols` should be updated to mention this, or the case should be handled. | open | 2022-01-17T18:39:26Z | 2022-01-25T15:10:34Z | https://github.com/databricks/koalas/issues/2213 | [

"docs"

] | saikrishnapujari102087 | 2 |

robusta-dev/robusta | automation | 1,601 | Enable a CPU limit for the "robusta-forwarder" service, as the current config causes CPU hogging | **Describe the bug**

The current Helm config for the CPU limit of `robusta-forwarder` caused the pod to consume all of the node's available CPU.

**To Reproduce**

NA

**Expected behavior**

The `robusta-forwarder` pod should run within the specified CPU limits.

**Screenshots**

```

pratikraj@Pratiks-MacBook-Pro ~ %

pratikraj@Pratiks-MacBook-Pro ~ % oc adm top nodes

NAME CPU(cores) CPU% MEMORY(bytes) MEMORY%

worker7.copper.cp.xxxxx.xxxx.com 42648m 98% 34248Mi 14%

pratikraj@Pratiks-MacBook-Pro ~ %

pratikraj@Pratiks-MacBook-Pro ~ % oc adm top po -n robusta

NAME CPU(cores) MEMORY(bytes)

robusta-forwarder-6ddb7758f7-xm42p 53400m 502Mi

robusta-runner-6cb648c696-44sqp 9m 838Mi

pratikraj@Pratiks-MacBook-Pro ~ %

pratikraj@Pratiks-MacBook-Pro ~ % oc delete -n robusta po robusta-forwarder-6ddb7758f7-xm42p

pod "robusta-forwarder-6ddb7758f7-xm42p" deleted

pratikraj@Pratiks-MacBook-Pro ~ %

pratikraj@Pratiks-MacBook-Pro ~ % oc get po -n robusta

NAME READY STATUS RESTARTS AGE

robusta-forwarder-6ddb7758f7-b2l69 1/1 Running 0 36s

robusta-runner-6cb648c696-44sqp 2/2 Running 2 (31h ago) 3d22h

pratikraj@Pratiks-MacBook-Pro ~ %

pratikraj@Pratiks-MacBook-Pro ~ % oc version

Client Version: 4.15.15

Kustomize Version: v5.0.4-0.20230601165947-6ce0bf390ce3

Server Version: 4.14.36

Kubernetes Version: v1.27.16+03a907c

pratikraj@Pratiks-MacBook-Pro ~ %

pratikraj@Pratiks-MacBook-Pro ~ % oc get po -n robusta

NAME READY STATUS RESTARTS AGE

robusta-forwarder-6ddb7758f7-b2l69 1/1 Running 0 17h

robusta-runner-6cb648c696-44sqp 2/2 Running 2 (2d1h ago) 4d16h

pratikraj@Pratiks-MacBook-Pro ~ %

pratikraj@Pratiks-MacBook-Pro ~ % oc adm top po -n robusta

NAME CPU(cores) MEMORY(bytes)

robusta-forwarder-6ddb7758f7-b2l69 29m 286Mi

robusta-runner-6cb648c696-44sqp 880m 991Mi

pratikraj@Pratiks-MacBook-Pro ~ %

pratikraj@Pratiks-MacBook-Pro ~ % oc adm top nodes

NAME CPU(cores) CPU% MEMORY(bytes) MEMORY%

worker7.copper.cp.xxxxx.xxxx.com 1284m 2% 34086Mi 14%

pratikraj@Pratiks-MacBook-Pro ~ %

```

**Environment Info (please complete the following information):**

```

Client Version: 4.15.15

Kustomize Version: v5.0.4-0.20230601165947-6ce0bf390ce3

Server Version: 4.14.36

Kubernetes Version: v1.27.16+03a907c

```

**Additional context**

Add any other context about the problem here.

| open | 2024-10-22T06:03:59Z | 2024-10-29T06:57:15Z | https://github.com/robusta-dev/robusta/issues/1601 | [] | Rajpratik71 | 3 |

QuivrHQ/quivr | api | 3,378 | fix: Linking brain syncs return as root | File list should filter on local only | closed | 2024-10-16T07:33:39Z | 2024-10-16T08:19:30Z | https://github.com/QuivrHQ/quivr/issues/3378 | [

"bug"

] | linear[bot] | 1 |

neuml/txtai | nlp | 816 | Fix error with inferring function parameters in agents | Currently, when passing a function as a tool, it's not correctly inferring the name and input parameters. This should be fixed.
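A minimal sketch of inferring a tool's name and inputs from a plain Python function with the standard-library `inspect` module. `describe_tool` and the returned dictionary layout are hypothetical, not txtai's actual tool format:

```python
import inspect


def describe_tool(fn):
    """Infer a tool description (name, docstring, inputs) from a plain function."""
    inputs = {}
    for name, param in inspect.signature(fn).parameters.items():
        annotation = param.annotation
        inputs[name] = {
            # Unannotated parameters get no type; annotated ones report the type's name.
            "type": None
            if annotation is inspect.Parameter.empty
            else getattr(annotation, "__name__", str(annotation)),
            # A parameter without a default value must be supplied by the caller.
            "required": param.default is inspect.Parameter.empty,
        }
    return {"name": fn.__name__, "description": inspect.getdoc(fn) or "", "inputs": inputs}


def search(query: str, limit: int = 5):
    """Search the index for query."""
    return []


print(describe_tool(search))
```

Callable objects would need a fallback such as `type(obj).__name__`, since instances usually have no `__name__`.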

In the meantime, callable objects are working as expected. | closed | 2024-11-21T19:01:47Z | 2024-11-21T19:16:22Z | https://github.com/neuml/txtai/issues/816 | [

"bug"

] | davidmezzetti | 0 |

holoviz/panel | matplotlib | 7,428 | Bubble map at each well location represented by pie charts. | In the oil industry we typically plot a bubble plot at each well location on a map where the size of the bubble might be scaled by daily oil production. In our applications the bubbles are actually pie charts at each well location where the pie could be divided up by total oil, water or gas production at each well.
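A minimal sketch of the geometry described above, assuming bubble area (not radius) should be proportional to total production, a common convention for proportional symbols. `pie_bubble` and `max_radius` are hypothetical names, not Panel or HoloViews API, and the three volumes are assumed to already be in comparable units:

```python
import math


def pie_bubble(oil, water, gas, max_total, max_radius=20.0):
    """Return (radius, wedges) for one well's pie-chart bubble.

    radius: scaled so that bubble area is proportional to total production,
            reaching max_radius for the largest well (max_total).
    wedges: {phase: (start_deg, end_deg)} splitting the pie by phase.
    """
    total = oil + water + gas
    radius = max_radius * math.sqrt(total / max_total) if max_total > 0 else 0.0
    wedges = {}
    start = 0.0
    for phase, volume in (("oil", oil), ("water", water), ("gas", gas)):
        sweep = 360.0 * volume / total if total > 0 else 0.0
        wedges[phase] = (start, start + sweep)
        start += sweep
    return radius, wedges


print(pie_bubble(50, 25, 25, max_total=100))
```

Scaling the radius by the square root keeps a well with four times the production at twice the radius, so areas stay comparable across the map.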

I would think that this application might be useful for other mapping applications too. | closed | 2024-10-21T18:51:30Z | 2025-01-21T13:43:48Z | https://github.com/holoviz/panel/issues/7428 | [] | Philliec459 | 4 |

python-restx/flask-restx | api | 358 | Apply method_decorators globally | Is there a way to apply `method_decorators` globally without having to define them on every Resource? | closed | 2021-07-22T16:34:26Z | 2021-07-22T19:07:27Z | https://github.com/python-restx/flask-restx/issues/358 | [

"question"

] | santalvarez | 1 |

scikit-hep/awkward | numpy | 3,036 | awkward._nplikes.typetracer.TypeTracerArray._new needs further optimization, it is hot code | ### Version of Awkward Array

2.6.1

### Description and code to reproduce

The present code is below:

```python3
@classmethod
def _new(
    cls,
    dtype: DType,
    shape: tuple[ShapeItem, ...],
    form_key: str | None = None,
    report: TypeTracerReport | None = None,
):
    self = super().__new__(cls)
    self.form_key = form_key
    self.report = report

    if not isinstance(shape, tuple):
        raise TypeError("typetracer shape must be a tuple")
    if not all(isinstance(x, int) or x is unknown_length for x in shape):
        raise TypeError("typetracer shape must be integers or unknown-length")
    if not isinstance(dtype, np.dtype):
        raise TypeError("typetracer dtype must be an instance of np.dtype")

    self._shape = shape
    self._dtype = dtype

    return self
```

in particular:

```python3
if not isinstance(shape, tuple):
    raise TypeError("typetracer shape must be a tuple")
if not all(isinstance(x, int) or x is unknown_length for x in shape):
    raise TypeError("typetracer shape must be integers or unknown-length")
if not isinstance(dtype, np.dtype):
    raise TypeError("typetracer dtype must be an instance of np.dtype")
```

is quite expensive at the scale of thousands of awkward array kernel calls, and in normal, non-development operation none of these `if` conditions ever evaluates to True. They are relatively expensive to evaluate when there is no data processing (see, for example, typetracing in dask-awkward).

We know already that commenting out these lines brings significant performance improvements.

A reasonable alternative would be to wrap this code in an `if` block:

```python3
@classmethod
def _new(
    cls,
    dtype: DType,
    shape: tuple[ShapeItem, ...],
    form_key: str | None = None,
    report: TypeTracerReport | None = None,
):
    self = super().__new__(cls)
    self.form_key = form_key
    self.report = report

    if __development_build__:
        if not isinstance(shape, tuple):
            raise TypeError("typetracer shape must be a tuple")
        if not all(isinstance(x, int) or x is unknown_length for x in shape):
            raise TypeError("typetracer shape must be integers or unknown-length")
        if not isinstance(dtype, np.dtype):
            raise TypeError("typetracer dtype must be an instance of np.dtype")

    self._shape = shape
    self._dtype = dtype

    return self
```

Here `__development_build__` is just a stand-in name. I think the only requirement would be that the condition of the `if` statement is a simple bool (no lookups, etc.), as this is known to be pretty hot code.

Savings are O(1s) for a complex HEP analysis when building a single few-thousand layer task graph. This corresponds to many minutes saved in processing for full-scale analysis.

@jpivarski @agoose77 @martindurant | closed | 2024-03-01T16:03:08Z | 2024-03-21T23:09:00Z | https://github.com/scikit-hep/awkward/issues/3036 | [

"performance"

] | lgray | 10 |

Ehco1996/django-sspanel | django | 207 | Is this still being updated? | Is this still being updated? | closed | 2019-02-01T10:53:50Z | 2019-02-25T13:47:57Z | https://github.com/Ehco1996/django-sspanel/issues/207 | [] | perfect-network | 2 |

unit8co/darts | data-science | 2,111 | How to use darts libraries with PySpark? | Hello all,

I'm trying to integrate the darts library with a PySpark session so that my application can run time-series predictions in parallel.

I followed this blog post, [Time-Series Forecasting with Spark and Prophet](https://medium.com/@y.s.yoon/scalable-time-series-forecasting-in-spark-prophet-cnn-lstm-and-sarima-a5306153711e), but I am trying to use darts in place of Prophet.

Is this a good approach, or are there other suggestions for running predictions in parallel? | closed | 2023-12-07T14:26:12Z | 2024-01-10T07:44:56Z | https://github.com/unit8co/darts/issues/2111 | [

"question"

] | AyushBhardwaj321 | 1 |

sinaptik-ai/pandas-ai | data-visualization | 712 | Using pandasai with llama2 70B. Error: "Unfortunately, I was not able to answer your question, because of the following error:\n\nCSVFormatter.__init__() got an unexpected keyword argument 'line_terminator'\n" | ### System Info

pandasai version 1.4.1

python version 3.10.6

llm - llama2 70B

environment - sagemaker

### 🐛 Describe the bug

code:

```python
from pandasai.llm import HuggingFaceTextGen
from pandasai import SmartDataframe

llm = HuggingFaceTextGen(
    inference_server_url="ENDPOINT_URL"
)
df = SmartDataframe("data.csv", config={"llm": llm})
df.chat("plot a chart of col_1 by col_2")
```

Error:

"Unfortunately, I was not able to answer your question, because of the following error:\n\nCSVFormatter.__init__() got an unexpected keyword argument 'line_terminator'\n" | closed | 2023-10-31T02:24:14Z | 2024-06-01T00:19:41Z | https://github.com/sinaptik-ai/pandas-ai/issues/712 | [] | aparnakesarkar | 2 |

ultralytics/yolov5 | pytorch | 13,127 | YOLOv5 receptive range size | Hello

I am currently working on small-defect detection. The length or width of a defect covers at most 0.02-0.08 of the original image. I am using YOLOv5s. From previous questions I found that YOLO uses P3, P4 and P5 as detection heads by default, and from other Q&As I know that P5 is the large-object detector, so I thought it could be deleted in my case. In a previous answer, I noticed that you mentioned the receptive field.

I want to ask about this. I calculated the receptive field sizes in YOLOv5s, ignoring the skip connections.

(The only modules that affect the receptive field calculation are the convolutions and the bottlenecks in the CSP blocks.)

P1 is 6

P2+CSP is 18

P3+CSP is 66

P4+CSP is 194

P5+CSP is 322
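Values like these follow the standard recurrence for the theoretical receptive field along one path through the network: `r_l = r_{l-1} + (k_l - 1) * j_{l-1}` and `j_l = j_{l-1} * s_l`, starting from `r_0 = j_0 = 1`, where `k` is the kernel size, `s` the stride and `j` the cumulative stride. A small sketch; the layer list is an assumed simplification of the first YOLOv5s stages, not read from the model config:

```python
def receptive_fields(layers):
    """Theoretical receptive field after each layer.

    layers: sequence of (kernel_size, stride) pairs in forward order along
    one path (skip connections ignored, as in the question).
    Returns (receptive_field, jump) pairs, where jump is the cumulative stride:
    the spacing, in input pixels, between neighbouring output positions.
    """
    rf, jump = 1, 1
    out = []
    for kernel, stride in layers:
        rf += (kernel - 1) * jump  # each extra kernel tap widens the field by one jump
        jump *= stride
        out.append((rf, jump))
    return out


# Assumed path: 6x6/2 stem conv, 3x3/2 downsampling conv,
# then one bottleneck (1x1 conv followed by a 3x3 conv).
stem_to_p2 = [(6, 2), (3, 2), (1, 1), (3, 1)]
print(receptive_fields(stem_to_p2))
```

This reproduces the P1 (6) and P2+CSP (18) values above. The effective receptive field is usually noticeably smaller than this theoretical bound.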

My question is about how the receptive field should correspond to the object size.

In my case the input size is 640, and multiplying it by the maximum defect extent of 0.08 gives a defect size of about 52 pixels. So I think I only need one layer whose receptive field covers this small defect, and another layer whose receptive field is about twice as large to cover the surrounding area. Taking the 52 pixels above as an example, I would select P3 (receptive field 66), reduce the bottlenecks in P4 so that its receptive field is about twice 66, and delete P5.

(P5 is aimed at large objects, and its larger receptive field may cause small objects to be blurred deep in the convolutional layers.) I chose to modify the backbone because the backbone is responsible for feature extraction.

My question is: is the idea of deleting bottlenecks correct?

In addition, does this idea correspond to the receptive field you mentioned? (I am doing research on alignment.) | closed | 2024-06-25T13:06:54Z | 2024-10-20T19:48:52Z | https://github.com/ultralytics/yolov5/issues/13127 | [

"question"

] | Heaven0612 | 8 |

viewflow/viewflow | django | 81 | django 1.7+ support? | whats the roadmap/plans for supporting atleast django 1.7?

| closed | 2015-03-19T16:47:34Z | 2015-07-31T08:24:57Z | https://github.com/viewflow/viewflow/issues/81 | [

"request/question"

] | Bashar | 1 |

davidteather/TikTok-Api | api | 378 | [BUG] - Your Error Here | # Read Below!!! If this doesn't fix your issue delete these two lines

**You may need to install chromedriver for your machine globally. Download it [here](https://sites.google.com/a/chromium.org/chromedriver/) and add it to your path.**

**Describe the bug**

A clear and concise description of what the bug is.

**The buggy code**

Please insert the code that is throwing errors or is giving you weird unexpected results.

```

# Code Goes Here

```

**Expected behavior**

A clear and concise description of what you expected to happen.

**Error Trace (if any)**

Put the error trace below if there's any error thrown.

```

# Error Trace Here

```

**Desktop (please complete the following information):**

- OS: [e.g. Windows 10]

- TikTokApi Version [e.g. 3.3.1] - if out of date upgrade before posting an issue

**Additional context**

Add any other context about the problem here.

| closed | 2020-11-20T10:31:11Z | 2020-11-20T10:40:46Z | https://github.com/davidteather/TikTok-Api/issues/378 | [

"bug"

] | handole | 1 |

sunscrapers/djoser | rest-api | 353 | It's unclear what is meant by SERIALIZERS.current_user | I'm getting a deprecation warning "Current user endpoints now use their own serializer setting. For more information, see: https://djoser.readthedocs.io/en/latest/settings.html#serializers".

The referred documentation mentions that "Current user endpoints now use the serializer specified by SERIALIZERS.current_user". However, it's unclear what `SERIALIZERS.current_user` exactly refers to. Does it mean `settings.DJOSER['SERIALIZERS']['current_user']`? Or does it mean that `settings.SERIALIZERS` should point to an object that has a `current_user` member? In the latter case, I suppose `settings.SERIALIZERS.current_user` is a string that points to a serializer class?
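For reference, Djoser is configured through a `DJOSER` dictionary in Django settings, and its `SERIALIZERS` entry maps serializer names to dotted import paths. Under that convention, `SERIALIZERS.current_user` corresponds to the first reading above, `settings.DJOSER['SERIALIZERS']['current_user']`. A settings fragment under that assumption (the serializer path is hypothetical):

```python
# settings.py (fragment)
DJOSER = {
    "SERIALIZERS": {
        # Hypothetical project serializer: any dotted import path to a serializer class.
        "current_user": "accounts.serializers.CurrentUserSerializer",
    },
}
```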

In any case, I'm confused. Could this be clarified? | closed | 2019-02-12T10:37:59Z | 2019-05-15T18:08:39Z | https://github.com/sunscrapers/djoser/issues/353 | [] | mnieber | 1 |

Anjok07/ultimatevocalremovergui | pytorch | 1,402 | Model weights changed? Won't run past 1% | I'm getting this error every time I try to use the MDX23C_D1581 model. I didn't change any settings (that I'm aware of), and I even uninstalled and reinstalled the GUI, but the same thing is happening. I'm completely lost.

Last Error Received:

Process: MDX-Net

If this error persists, please contact the developers with the error details.

Raw Error Details:

RuntimeError: "Error(s) in loading state_dict for TFC_TDF_net:

size mismatch for final_conv.2.weight: copying a param with shape torch.Size([32, 128, 1, 1]) from checkpoint, the shape in current model is torch.Size([16, 128, 1, 1])."

Traceback Error: "

File "UVR.py", line 6638, in process_start

File "separate.py", line 652, in seperate

File "separate.py", line 741, in demix

File "torch\nn\modules\module.py", line 1667, in load_state_dict

"

Error Time Stamp [2024-06-11 17:21:37]

Full Application Settings:

vr_model: Choose Model

aggression_setting: 5

window_size: 512

mdx_segment_size: 256

batch_size: Default

crop_size: 256

is_tta: True

is_output_image: False

is_post_process: False

is_high_end_process: False

post_process_threshold: 0.2

vr_voc_inst_secondary_model: MDX-Net: UVR-MDX-NET-Voc_FT

vr_other_secondary_model: Demucs: v3 | mdx_extra_q

vr_bass_secondary_model: Demucs: v4 | htdemucs_ft

vr_drums_secondary_model: Demucs: v4 | htdemucs_6s

vr_is_secondary_model_activate: True

vr_voc_inst_secondary_model_scale: 0.80

vr_other_secondary_model_scale: 0.7

vr_bass_secondary_model_scale: 0.5

vr_drums_secondary_model_scale: 0.5

demucs_model: Choose Model

segment: Default

overlap: 0.25

overlap_mdx: Default

overlap_mdx23: 8

shifts: 2

chunks_demucs: Auto

margin_demucs: 44100

is_chunk_demucs: False

is_chunk_mdxnet: False

is_primary_stem_only_Demucs: False

is_secondary_stem_only_Demucs: False

is_split_mode: True

is_demucs_combine_stems: True

is_mdx23_combine_stems: True

demucs_voc_inst_secondary_model: MDX-Net: UVR-MDX-NET-Voc_FT

demucs_other_secondary_model: Demucs: v3 | repro_mdx_a_hybrid

demucs_bass_secondary_model: Demucs: v4 | htdemucs_ft

demucs_drums_secondary_model: Demucs: v4 | htdemucs_6s

demucs_is_secondary_model_activate: True

demucs_voc_inst_secondary_model_scale: 0.80

demucs_other_secondary_model_scale: 0.7

demucs_bass_secondary_model_scale: 0.5

demucs_drums_secondary_model_scale: 0.5

demucs_pre_proc_model: MDX-Net: MDX23C-InstVoc HQ 2

is_demucs_pre_proc_model_activate: True

is_demucs_pre_proc_model_inst_mix: False

mdx_net_model: MDX23C_D1581.ckpt

chunks: Auto

margin: 44100

compensate: Auto

denoise_option: None

is_match_frequency_pitch: True

phase_option: Automatic

phase_shifts: None

is_save_align: False

is_match_silence: False

is_spec_match: True

is_mdx_c_seg_def: False

is_invert_spec: False

is_deverb_vocals: True

deverb_vocal_opt: All Vocal Types

voc_split_save_opt: Lead Only

is_mixer_mode: False

mdx_batch_size: Default

mdx_voc_inst_secondary_model: MDX-Net: UVR-MDX-NET Karaoke 2

mdx_other_secondary_model: Demucs: v3 | repro_mdx_a_hybrid

mdx_bass_secondary_model: Demucs: v4 | htdemucs_ft

mdx_drums_secondary_model: Demucs: v4 | htdemucs_6s

mdx_is_secondary_model_activate: True

mdx_voc_inst_secondary_model_scale: 0.80

mdx_other_secondary_model_scale: 0.7

mdx_bass_secondary_model_scale: 0.5

mdx_drums_secondary_model_scale: 0.5

is_save_all_outputs_ensemble: True

is_append_ensemble_name: False

chosen_audio_tool: Manual Ensemble

choose_algorithm: Min Spec

time_stretch_rate: 2.0

pitch_rate: 2.0

is_time_correction: True

is_gpu_conversion: True

is_primary_stem_only: False

is_secondary_stem_only: False

is_testing_audio: False

is_auto_update_model_params: True

is_add_model_name: False

is_accept_any_input: False

is_task_complete: False

is_normalization: False

is_use_opencl: False

is_wav_ensemble: False

is_create_model_folder: False

mp3_bit_set: 320k

semitone_shift: 0

save_format: WAV

wav_type_set: PCM_16

device_set: Quadro M4000:0

help_hints_var: True

set_vocal_splitter: VR Arc: 6_HP-Karaoke-UVR

is_set_vocal_splitter: True

is_save_inst_set_vocal_splitter: False

model_sample_mode: False

model_sample_mode_duration: 30

demucs_stems: All Stems

mdx_stems: Vocals | open | 2024-06-11T21:35:22Z | 2024-06-11T21:49:21Z | https://github.com/Anjok07/ultimatevocalremovergui/issues/1402 | [] | dirtdoggy | 0 |

deezer/spleeter | deep-learning | 116 | [Discussion] FileNotFoundError When Trying to Train | ### **Steps:**

1. Installed using anaconda

2. Run as: spleeter train -p sirens_config.json -d sirens_train.csv

3. FileNotFoundError: [Errno 2] File b'configs/sirens_train.csv' does not exist: b'configs/sirens_train.csv'
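Note that the path in the error is `configs/sirens_train.csv`, not the `sirens_train.csv` passed with `-d`. Assuming spleeter follows its sample `musdb_config.json`, the training CSV comes from a `train_csv` entry in the JSON config (with `-d` pointing at the audio dataset root), and a relative path there is resolved against the current working directory. A small, hypothetical check for which configured CSV paths cannot be found; the key names are assumptions:

```python
import json
import os


def load_config(path):
    """Read a spleeter-style JSON training config."""
    with open(path, encoding="utf-8") as f:
        return json.load(f)


def missing_csv_paths(config, keys=("train_csv", "validation_csv")):
    """Return (key, absolute path) for configured CSV files that do not exist.

    Relative paths are resolved against the current working directory,
    just as opening them directly would do.
    """
    missing = []
    for key in keys:
        path = config.get(key)
        if path and not os.path.exists(path):
            missing.append((key, os.path.abspath(path)))
    return missing
```

Usage would be `print(missing_csv_paths(load_config("sirens_config.json")))`, run from the same directory as the `spleeter train` command.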

`sirens_train.csv` definitely exists, so I'm not sure what the issue is. Any help would be great, thanks! | closed | 2019-11-18T22:43:11Z | 2020-01-23T09:38:13Z | https://github.com/deezer/spleeter/issues/116 | [

"question"

] | clockworkred458 | 1 |

kizniche/Mycodo | automation | 670 | TH10 w/ AM2301. Mycodo reporting C as F | ## Mycodo Issue Report:

- Specific Mycodo Version:

Mycodo Version: 7.5.10

Python Version: 3.5.3 (default, Sep 27 2018, 17:25:39) [GCC 6.3.0 20170516]

Database Version: 6333b0832b3d

Daemon Status: Running

Daemon Process ID: 759

Daemon RAM Usage: 68.756 MB

Daemon Virtualenv: Yes

Frontend RAM Usage: 53.084 MB

Frontend Virtualenv: Yes

#### Problem Description

TH10 + AM2301 with Tasmota 6.6.0. Mycodo reports humidity correctly but displays incorrect temperature data. When the input is set to read C with "Do Not Convert", it shows a Celsius temperature much lower than the actual one. When the input is set to convert to Fahrenheit, it shows the correct temperature in Celsius but labels it as Fahrenheit.
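Both symptoms would be consistent with one hypothesis, not confirmed in the report: Mycodo treating the Celsius value reported by Tasmota as if it were Fahrenheit. A worked sketch with a hypothetical reading of 25 C:

```python
def c_to_f(celsius):
    return celsius * 9 / 5 + 32


def f_to_c(fahrenheit):
    return (fahrenheit - 32) * 5 / 9


raw = 25.0  # hypothetical Tasmota reading, actually in degrees Celsius

# If raw is mistakenly treated as Fahrenheit, the "Celsius" display becomes
# (25 - 32) * 5 / 9, about -3.9: much lower than the real temperature.
shown_as_celsius = f_to_c(raw)

# Asking for Fahrenheit then needs no conversion at all, so the real
# Celsius value appears with a Fahrenheit label.
shown_as_fahrenheit = raw
```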

### Errors

- List any errors you encountered.

- Copy and pasting crash logs, or link to any specific

code lines you've isolated (please use GitHub permalinks for this)

### Steps to Reproduce the issue:

How can this issue be reproduced?

1. Add TH10 w/ AM2301 w/ Tasmota as input

2. Live data lists incorrect C temperature

3. Set input to Convert to Fahrenheit

4. Live data displays correct C but displayed as F

### Additional Notes

Images attached. Thanks for the great product!

| closed | 2019-07-09T21:16:18Z | 2019-07-17T11:09:17Z | https://github.com/kizniche/Mycodo/issues/670 | [

"bug"

] | toposwope | 49 |

collerek/ormar | pydantic | 885 | save_related doesn't work if id is uuid | **Describe the bug**

If you use Model.save_related and the model has a uuid pk instead of an int it doent save anything

(The save itself returns the numer of rows saved correctly)

**To Reproduce**

Copy the code example from https://collerek.github.io/ormar/models/methods/#save_related

The code works as expected.

Replace `id` with `id: UUID4 = ormar.UUID(primary_key=True, default=uuid4)`

Now the get() raises `ormar.exceptions.NoMatch`

**Expected behavior**

Expect the same behavior as with int pk.

**Versions (please complete the following information):**

- Database backend used: PostgreSQL

- Python version python:3.10.8-slim-bullseye (docker image)

- `ormar` version 0.11.3

- `pydantic` version 1.10.2

- if applicable `fastapi` version 0.85.0

| closed | 2022-10-18T18:09:02Z | 2022-10-31T16:45:39Z | https://github.com/collerek/ormar/issues/885 | [

"bug"

] | eloi-martinez-qida | 2 |

vvbbnn00/WARP-Clash-API | flask | 152 | What IP is "your IP"? | http://YOUR_IP:21001

What IP is "your IP" supposed to be?

I'm a beginner and don't quite understand.

http://127.0.0.1:21001/api/clash?best=false&randomName=true&proxyFormat=full&ipv6=true

I got this link, but I can't download the nodes from it. Opening the link above returns {"code":403,"message":"Unauthorized"}.

What does this mean? | closed | 2024-03-16T06:19:47Z | 2024-11-24T06:07:01Z | https://github.com/vvbbnn00/WARP-Clash-API/issues/152 | [

"enhancement"

] | hicaicai | 2 |

junyanz/pytorch-CycleGAN-and-pix2pix | pytorch | 1,127 | When training the GAN model, how many epochs do we need to train | Hello,

In your paper, CycleGAN is trained for a total of 200 epochs.

But in a recent GAN paper, "Reusing Discriminators for Encoding: Towards Unsupervised Image-to-Image Translation", the authors compare several GAN models, including CycleGAN, and all models are trained for 100K iterations.
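Epochs and iterations are related through the number of batches per epoch: `iterations = epochs * ceil(dataset_size / batch_size)`. A small sketch of the conversion, assuming a batch size of 1 as in the CycleGAN paper; `dataset_size` is whatever number of samples the data loader yields per epoch, and the 1,000-image figure is purely illustrative:

```python
import math


def iterations_per_epoch(dataset_size, batch_size=1):
    # A final partial batch still counts as one iteration (i.e. drop_last=False).
    return math.ceil(dataset_size / batch_size)


def epochs_for_iterations(total_iterations, dataset_size, batch_size=1):
    return total_iterations / iterations_per_epoch(dataset_size, batch_size)


def iterations_for_epochs(epochs, dataset_size, batch_size=1):
    return epochs * iterations_per_epoch(dataset_size, batch_size)


# Illustrative numbers only: a hypothetical 1,000-image training set.
print(epochs_for_iterations(100_000, dataset_size=1_000))  # 100K iterations in epochs
print(iterations_for_epochs(200, dataset_size=1_000))      # 200 epochs in iterations
```

So "100K iterations" and "200 epochs" describe the same amount of training only for one particular dataset size and batch size.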

So I want to know: when training a GAN model, how many epochs do we need to train? | closed | 2020-08-19T08:13:03Z | 2021-04-15T14:24:56Z | https://github.com/junyanz/pytorch-CycleGAN-and-pix2pix/issues/1127 | [] | hankhaohao | 6 |

psf/requests | python | 6,145 | timeout parameter not applied to initial connection | When the timeout parameter is passed to requests.get(), an exception is raised after the specified timeout. If the code is executed without an internet connection, however, an exception is raised immediately. Python is at 3.10.

## Expected Result

It feels like the timeout should apply to this scenario as well.

## Actual Result


## Reproduction Steps

```python

import requests

```

## System Information

$ python -m requests.help

```json

{

"paste": "here"

}

```


| closed | 2022-05-29T18:56:10Z | 2023-05-30T00:02:52Z | https://github.com/psf/requests/issues/6145 | [] | chatumao | 2 |

huggingface/pytorch-image-models | pytorch | 1,878 | [BUG] EfficientFormer TypeError: expected TensorOptions | **Describe the Bug**

A TypeError occurs when creating the EfficientFormer model. The other models I tested work well.

**To Reproduce**

Run the below code in the training pipeline

```python
import timm
import torch


class EfficientFormer(torch.nn.Module):
    def __init__(self):
        super(EfficientFormer, self).__init__()
        self.model = timm.create_model('efficientformer_l1.snap_dist_in1k', num_classes=2)

    def forward(self, x):
        # Freeze everything except the classification head
        for name, param in self.model.named_parameters():
            if 'head' not in name:
                param.requires_grad = False
            print(name, param.requires_grad)
        return self.model(x)
```

**Expected Behavior**

```

C:\Users\e0575844\Anaconda3\envs\ET\lib\site-packages\torch\functional.py:504: UserWarning: torch.meshgrid: in an upcoming release, it will be required to pass the indexing argument. (Triggered internally at C:\actions-runner\_work\pytorch\pytorch\builder\windows\pytorch\aten\src\ATen\native\TensorShape.cpp:3191.)

return _VF.meshgrid(tensors, **kwargs) # type: ignore[attr-defined]

Traceback (most recent call last):

File "C:\Users\A\Desktop\CCCC\train.py", line 116, in <module>

main()

File "C:\Users\A\Desktop\CCCC\train.py", line 112, in main

train(**vars(args))

File "C:\Users\A\Desktop\CCCC\train.py", line 14, in train

model = model_sel(model, device)

File "C:\Users\A\Desktop\CCCC\utils\selection.py", line 35, in model_sel

model = model_dict[model_name]()

File "C:\Users\A\Desktop\CCCC\utils\models.py", line 352, in __init__

self.model = timm.create_model('efficientformer_l1.snap_dist_in1k', num_classes=2)

File "C:\Users\A\Anaconda3\envs\ET\lib\site-packages\timm\models\_factory.py", line 114, in create_model

model = create_fn(

File "C:\Users\A\Anaconda3\envs\ET\lib\site-packages\timm\models\efficientformer.py", line 551, in efficientformer_l1

return _create_efficientformer('efficientformer_l1', pretrained=pretrained, **dict(model_args, **kwargs))

File "C:\Users\A\Anaconda3\envs\ET\lib\site-packages\timm\models\efficientformer.py", line 537, in _create_efficientformer

model = build_model_with_cfg(

File "C:\Users\A\Anaconda3\envs\ET\lib\site-packages\timm\models\_builder.py", line 381, in build_model_with_cfg

model = model_cls(**kwargs)

File "C:\Users\A\Anaconda3\envs\ET\lib\site-packages\timm\models\efficientformer.py", line 389, in __init__

stage = EfficientFormerStage(

File "C:\Users\A\Anaconda3\envs\ET\lib\site-packages\timm\models\efficientformer.py", line 320, in __init__

MetaBlock1d(

File "C:\Users\A\Anaconda3\envs\ET\lib\site-packages\timm\models\efficientformer.py", line 220, in __init__

self.token_mixer = Attention(dim)

File "C:\Users\A\Anaconda3\envs\ET\lib\site-packages\timm\models\efficientformer.py", line 70, in __init__

self.register_buffer('attention_bias_idxs', torch.LongTensor(rel_pos))

TypeError: expected TensorOptions(dtype=__int64, device=cpu, layout=Strided, requires_grad=false (default), pinned_memory=false (default), memory_format=(nullopt)) (got TensorOptions(dtype=__int64, device=cuda:0, layout=Strided, requires_grad=false (default), pinned_memory=false (default), memory_format=(nullopt)))

```

**Desktop**

- Windows 10

- timm==0.9.2

- PyTorch==1.13.1+cu116, cuDNN==8302 | closed | 2023-07-22T07:07:24Z | 2023-08-03T23:39:47Z | https://github.com/huggingface/pytorch-image-models/issues/1878 | [

"bug"

] | NUS-Tim | 1 |

jupyter-book/jupyter-book | jupyter | 2,150 | Can't build book on mac when folder name is not project name. | ### Describe the bug

I have a repository whose folder name is different from the package name. Building the book fails because the virtual environment path uses the wrong folder name (see below).

### Reproduce the bug

Use a repository whose folder name differs from the package name, and the build fails. Rename the folder to match the package, and the build succeeds. The bug only manifests on macOS; on Linux it works as expected.
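One plausible cause, not confirmed in this report, is a stale Jupyter kernelspec whose `argv[0]` still points at the interpreter in the old `flybody/.venv` folder. The sketch below is a hypothetical stdlib-only helper (`find_broken_kernelspecs` is an illustrative name) that scans kernelspec directories for `kernel.json` files whose absolute interpreter path no longer exists. The directory list is an assumption based on Jupyter's usual defaults (`~/Library/Jupyter/kernels` on macOS, `~/.local/share/jupyter/kernels` on Linux, plus `share/jupyter/kernels` under `sys.prefix`); `jupyter kernelspec list` shows the authoritative locations on a given machine.

```python
import json
import os
import sys
from pathlib import Path


def default_kernel_dirs():
    """Assumed default kernelspec locations; `jupyter kernelspec list` is authoritative."""
    home = Path.home()
    return [
        home / "Library" / "Jupyter" / "kernels",            # macOS per-user dir
        home / ".local" / "share" / "jupyter" / "kernels",   # Linux per-user dir
        Path(sys.prefix) / "share" / "jupyter" / "kernels",  # current environment
        Path("/usr/local/share/jupyter/kernels"),            # system-wide
        Path("/usr/share/jupyter/kernels"),
    ]


def find_broken_kernelspecs(dirs):
    """Return (kernel_name, interpreter) pairs whose absolute interpreter path is missing."""
    broken = []
    for base in map(Path, dirs):
        if not base.is_dir():
            continue  # directory not present on this machine
        for spec_file in sorted(base.glob("*/kernel.json")):
            try:
                spec = json.loads(spec_file.read_text(encoding="utf-8"))
            except (OSError, ValueError):
                continue  # unreadable or malformed spec: skip it
            argv = spec.get("argv") or []
            if not argv:
                continue
            interpreter = argv[0]
            # Bare names like "python" are resolved via PATH at launch time,
            # so only absolute paths are checked here.
            if os.path.isabs(interpreter) and not os.path.exists(interpreter):
                broken.append((spec_file.parent.name, interpreter))
    return broken


if __name__ == "__main__":
    for name, interpreter in find_broken_kernelspecs(default_kernel_dirs()):
        print(f"{name}: interpreter not found: {interpreter}")
```

If a stale spec shows up, `jupyter kernelspec remove <name>` deletes it, and `python -m ipykernel install --user --name <name>` run from the current venv re-creates it with the correct interpreter path.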

### List your environment

```

# Platform: darwin; (macOS-14.1-arm64-arm-64bit)

# Sphinx version: 7.3.7

# Python version: 3.11.9 (CPython)

# Docutils version: 0.20.1

# Jinja2 version: 3.1.3

# Pygments version: 2.18.0

# Last messages:

#

#

# reading sources... [ 50%]

# markdown

#

#

# reading sources... [ 75%]

# markdown-notebooks

#

# /Users/bothg/Documents/flybody/flybody_internal/docs/markdown-notebooks.md: Executing notebook using local CWD [mystnb]

# Loaded extensions:

# sphinx.ext.mathjax (7.3.7)

# alabaster (0.7.16)

# sphinxcontrib.applehelp (1.0.8)

# sphinxcontrib.devhelp (1.0.6)

# sphinxcontrib.htmlhelp (2.0.5)

# sphinxcontrib.serializinghtml (1.1.10)

# sphinxcontrib.qthelp (1.0.7)

# sphinx_togglebutton (0.3.2)

# sphinx_copybutton (0.5.2)

# myst_nb (1.1.0)

# jupyter_book (1.0.0)

# sphinx_thebe (0.3.1)

# sphinx_comments (0.0.3)

# sphinx_external_toc (1.0.1)

# sphinx.ext.intersphinx (7.3.7)

# sphinx_design (0.5.0)

# sphinx_book_theme (unknown version)

# sphinxcontrib.bibtex (2.6.2)

# sphinx_jupyterbook_latex (unknown version)

# sphinx_multitoc_numbering (unknown version)

# pydata_sphinx_theme (unknown version)

# Traceback:

Traceback (most recent call last):

File "/Users/bothg/.pyenv/versions/3.11.9/lib/python3.11/site-packages/jupyter_book/sphinx.py", line 167, in build_sphinx

app.build(force_all, filenames)

File "/Users/bothg/.pyenv/versions/3.11.9/lib/python3.11/site-packages/sphinx/application.py", line 351, in build

self.builder.build_update()

File "/Users/bothg/.pyenv/versions/3.11.9/lib/python3.11/site-packages/sphinx/builders/__init__.py", line 293, in build_update

self.build(to_build,

File "/Users/bothg/.pyenv/versions/3.11.9/lib/python3.11/site-packages/sphinx/builders/__init__.py", line 313, in build

updated_docnames = set(self.read())

^^^^^^^^^^^

File "/Users/bothg/.pyenv/versions/3.11.9/lib/python3.11/site-packages/sphinx/builders/__init__.py", line 419, in read

self._read_serial(docnames)

File "/Users/bothg/.pyenv/versions/3.11.9/lib/python3.11/site-packages/sphinx/builders/__init__.py", line 440, in _read_serial

self.read_doc(docname)

File "/Users/bothg/.pyenv/versions/3.11.9/lib/python3.11/site-packages/sphinx/builders/__init__.py", line 497, in read_doc

publisher.publish()

File "/Users/bothg/.pyenv/versions/3.11.9/lib/python3.11/site-packages/docutils/core.py", line 234, in publish

self.document = self.reader.read(self.source, self.parser,

^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^

File "/Users/bothg/.pyenv/versions/3.11.9/lib/python3.11/site-packages/sphinx/io.py", line 107, in read

self.parse()

File "/Users/bothg/.pyenv/versions/3.11.9/lib/python3.11/site-packages/docutils/readers/__init__.py", line 76, in parse

self.parser.parse(self.input, document)

File "/Users/bothg/.pyenv/versions/3.11.9/lib/python3.11/site-packages/myst_nb/sphinx_.py", line 152, in parse

with create_client(

File "/Users/bothg/.pyenv/versions/3.11.9/lib/python3.11/site-packages/myst_nb/core/execute/base.py", line 79, in __enter__

self.start_client()

File "/Users/bothg/.pyenv/versions/3.11.9/lib/python3.11/site-packages/myst_nb/core/execute/direct.py", line 40, in start_client

result = single_nb_execution(

^^^^^^^^^^^^^^^^^^^^

File "/Users/bothg/.pyenv/versions/3.11.9/lib/python3.11/site-packages/jupyter_cache/executors/utils.py", line 58, in single_nb_execution

executenb(

File "/Users/bothg/.pyenv/versions/3.11.9/lib/python3.11/site-packages/nbclient/client.py", line 1314, in execute

return NotebookClient(nb=nb, resources=resources, km=km, **kwargs).execute()

^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^

File "/Users/bothg/.pyenv/versions/3.11.9/lib/python3.11/site-packages/jupyter_core/utils/__init__.py", line 165, in wrapped

return loop.run_until_complete(inner)

^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^

File "/Users/bothg/.pyenv/versions/3.11.9/lib/python3.11/asyncio/base_events.py", line 654, in run_until_complete

return future.result()

^^^^^^^^^^^^^^^

File "/Users/bothg/.pyenv/versions/3.11.9/lib/python3.11/site-packages/nbclient/client.py", line 693, in async_execute

async with self.async_setup_kernel(**kwargs):

File "/Users/bothg/.pyenv/versions/3.11.9/lib/python3.11/contextlib.py", line 210, in __aenter__

return await anext(self.gen)

^^^^^^^^^^^^^^^^^^^^^

File "/Users/bothg/.pyenv/versions/3.11.9/lib/python3.11/site-packages/nbclient/client.py", line 648, in async_setup_kernel

await self.async_start_new_kernel(**kwargs)

File "/Users/bothg/.pyenv/versions/3.11.9/lib/python3.11/site-packages/nbclient/client.py", line 550, in async_start_new_kernel

await ensure_async(self.km.start_kernel(extra_arguments=self.extra_arguments, **kwargs))

File "/Users/bothg/.pyenv/versions/3.11.9/lib/python3.11/site-packages/jupyter_core/utils/__init__.py", line 198, in ensure_async

result = await obj

^^^^^^^^^

File "/Users/bothg/.pyenv/versions/3.11.9/lib/python3.11/site-packages/jupyter_client/manager.py", line 96, in wrapper

raise e

File "/Users/bothg/.pyenv/versions/3.11.9/lib/python3.11/site-packages/jupyter_client/manager.py", line 87, in wrapper

out = await method(self, *args, **kwargs)

^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^

File "/Users/bothg/.pyenv/versions/3.11.9/lib/python3.11/site-packages/jupyter_client/manager.py", line 439, in _async_start_kernel

await self._async_launch_kernel(kernel_cmd, **kw)

File "/Users/bothg/.pyenv/versions/3.11.9/lib/python3.11/site-packages/jupyter_client/manager.py", line 354, in _async_launch_kernel

connection_info = await self.provisioner.launch_kernel(kernel_cmd, **kw)

^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^

File "/Users/bothg/.pyenv/versions/3.11.9/lib/python3.11/site-packages/jupyter_client/provisioning/local_provisioner.py", line 210, in launch_kernel

self.process = launch_kernel(cmd, **scrubbed_kwargs)

^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^

File "/Users/bothg/.pyenv/versions/3.11.9/lib/python3.11/site-packages/jupyter_client/launcher.py", line 170, in launch_kernel

raise ex

File "/Users/bothg/.pyenv/versions/3.11.9/lib/python3.11/site-packages/jupyter_client/launcher.py", line 155, in launch_kernel

proc = Popen(cmd, **kwargs) # noqa

^^^^^^^^^^^^^^^^^^^^

File "/Users/bothg/.pyenv/versions/3.11.9/lib/python3.11/subprocess.py", line 1026, in __init__

self._execute_child(args, executable, preexec_fn, close_fds,

File "/Users/bothg/.pyenv/versions/3.11.9/lib/python3.11/subprocess.py", line 1955, in _execute_child

raise child_exception_type(errno_num, err_msg, err_filename)

FileNotFoundError: [Errno 2] No such file or directory: '/Users/bothg/Documents/flybody/flybody/.venv/bin/python'

``` | open | 2024-05-13T20:58:20Z | 2024-05-13T20:58:50Z | https://github.com/jupyter-book/jupyter-book/issues/2150 | [

"bug"

] | GJBoth | 1 |

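ymcui/Chinese-LLaMA-Alpaca | nlp | 735 | Does the run_clm_sft_with_peft.py script not support multi-turn training data in ShareGPT format? | ### Items that must be checked before submitting

- [X] Make sure you are using the latest code from the repository (git pull); some issues have already been resolved and fixed.

- [X] Since the related dependencies are updated frequently, make sure you follow the relevant steps in the [Wiki](https://github.com/ymcui/Chinese-LLaMA-Alpaca/wiki)

- [X] I have read the [FAQ section](https://github.com/ymcui/Chinese-LLaMA-Alpaca/wiki/常见问题) and searched the Issues, without finding a similar problem or solution

- [X] Third-party plugin issues: e.g. [llama.cpp](https://github.com/ggerganov/llama.cpp), [text-generation-webui](https://github.com/oobabooga/text-generation-webui), [LlamaChat](https://github.com/alexrozanski/LlamaChat), etc.; it is also recommended to look for solutions in the corresponding projects

- [X] Model correctness check: be sure to check the model against [SHA256.md](https://github.com/ymcui/Chinese-LLaMA-Alpaca/blob/main/SHA256.md); if the model is incorrect, neither its quality nor its normal operation can be guaranteed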

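### Issue type

Model training and fine-tuning

### Base model

LLaMA-Plus-13B

### Operating system

Linux

### Detailed description of the problem

Does the run_clm_sft_with_peft.py script not support multi-turn training data in ShareGPT format?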
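The question above concerns ShareGPT-style multi-turn data. If the script only reads single-turn instruction data (an assumption here: the Alpaca-style `instruction`/`input`/`output` JSON records), one common workaround is to flatten each ShareGPT conversation into single-turn records, folding earlier turns into the prompt as context. The sketch below assumes the usual ShareGPT layout (a `conversations` list of `{"from": "human" | "gpt", "value": ...}` items); the function names and the `Human:`/`Assistant:` history template are hypothetical choices, not part of the repository.

```python
import json


def sharegpt_to_alpaca(conversation, max_history_turns=None):
    """Flatten one ShareGPT conversation into single-turn Alpaca-style records.

    Each human -> gpt exchange becomes one record. Earlier exchanges are
    prepended to the instruction using a hypothetical "Human:/Assistant:"
    template; max_history_turns optionally limits how many are kept.
    """
    records = []
    history = []          # (human_text, gpt_text) pairs already emitted
    pending_human = None  # latest human message still waiting for a reply
    for turn in conversation.get("conversations", []):
        role, text = turn.get("from"), turn.get("value", "")
        if role == "human":
            pending_human = text  # consecutive human turns: keep the latest
        elif role == "gpt" and pending_human is not None:
            if max_history_turns is None:
                context = history
            else:
                context = history[max(0, len(history) - max_history_turns):]
            prefix = "".join(f"Human: {h}\nAssistant: {g}\n" for h, g in context)
            records.append({"instruction": prefix + pending_human, "input": "", "output": text})
            history.append((pending_human, text))
            pending_human = None
        # Other roles (e.g. "system") are ignored in this sketch.
    return records


def convert_file(src_path, dst_path, max_history_turns=None):
    """Read a ShareGPT JSON list and write the flattened records; return their count."""
    with open(src_path, encoding="utf-8") as f:
        conversations = json.load(f)
    records = [rec for conv in conversations
               for rec in sharegpt_to_alpaca(conv, max_history_turns)]
    with open(dst_path, "w", encoding="utf-8") as f:
        json.dump(records, f, ensure_ascii=False, indent=2)
    return len(records)
```

Folding the history into the instruction keeps the data compatible with a single-turn loader, but each record only trains on the final reply; a native multi-turn loader would instead mask the human turns and compute the loss on every assistant turn in one sequence.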

### Dependencies (required for code-related issues)

```

# Please paste your dependencies here

```

### Run logs or screenshots

```

# Please paste your run logs here

``` | closed | 2023-07-11T05:51:49Z | 2023-07-21T22:02:09Z | https://github.com/ymcui/Chinese-LLaMA-Alpaca/issues/735 | [

"stale"

] | xyfZzz | 4 |

netbox-community/netbox | django | 18,373 | Cannot Assign devices to Cluster | ### Deployment Type

Self-hosted

### Triage priority

N/A

### NetBox Version

v4.2.1

### Python Version

3.11

### Steps to Reproduce

1. Create a new Cluster, filling out only the required fields

2. Click "Assign Device to Cluster" and fill out only the required fields

3. Click "Add Devices"

### Expected Behavior

The device(s) are assigned to the cluster.

### Observed Behavior

NetBox returns a server error:

```

<class 'AttributeError'>

'Cluster' object has no attribute 'site'

Python version: 3.11.2

NetBox version: 4.2.1

Plugins:

netbox_branching: 0.5.2

netbox_device_view: 0.1.7

netbox_reorder_rack: 1.1.3

``` | closed | 2025-01-09T21:45:21Z | 2025-01-17T13:35:18Z | https://github.com/netbox-community/netbox/issues/18373 | [

"type: bug",

"status: accepted",

"severity: medium"

] | joeladria | 0 |

pydantic/pydantic-ai | pydantic | 667 | Ollama: Stream Always Fails | Hi,