modelId

stringlengths 5

122

| author

stringlengths 2

42

| last_modified

unknown | downloads

int64 0

738M

| likes

int64 0

11k

| library_name

stringclasses 245

values | tags

sequencelengths 1

4.05k

| pipeline_tag

stringclasses 48

values | createdAt

unknown | card

stringlengths 1

901k

|

|---|---|---|---|---|---|---|---|---|---|

myshell-ai/MeloTTS-French | myshell-ai | "2024-03-01T17:32:59Z" | 28,334 | 3 | transformers | [

"transformers",

"text-to-speech",

"ko",

"license:mit",

"endpoints_compatible",

"region:us"

] | text-to-speech | "2024-02-29T14:54:16Z" | ---

license: mit

language:

- ko

pipeline_tag: text-to-speech

---

# MeloTTS

MeloTTS is a **high-quality multi-lingual** text-to-speech library by [MyShell.ai](https://myshell.ai). Supported languages include:

| Model card | Example |

| --- | --- |

| [English](https://huggingface.co/myshell-ai/MeloTTS-English-v2) (American) | [Link](https://myshell-public-repo-hosting.s3.amazonaws.com/myshellttsbase/examples/en/EN-US/speed_1.0/sent_000.wav) |

| [English](https://huggingface.co/myshell-ai/MeloTTS-English-v2) (British) | [Link](https://myshell-public-repo-hosting.s3.amazonaws.com/myshellttsbase/examples/en/EN-BR/speed_1.0/sent_000.wav) |

| [English](https://huggingface.co/myshell-ai/MeloTTS-English-v2) (Indian) | [Link](https://myshell-public-repo-hosting.s3.amazonaws.com/myshellttsbase/examples/en/EN_INDIA/speed_1.0/sent_000.wav) |

| [English](https://huggingface.co/myshell-ai/MeloTTS-English-v2) (Australian) | [Link](https://myshell-public-repo-hosting.s3.amazonaws.com/myshellttsbase/examples/en/EN-AU/speed_1.0/sent_000.wav) |

| [English](https://huggingface.co/myshell-ai/MeloTTS-English-v2) (Default) | [Link](https://myshell-public-repo-hosting.s3.amazonaws.com/myshellttsbase/examples/en/EN-Default/speed_1.0/sent_000.wav) |

| [Spanish](https://huggingface.co/myshell-ai/MeloTTS-Spanish) | [Link](https://myshell-public-repo-hosting.s3.amazonaws.com/myshellttsbase/examples/es/ES/speed_1.0/sent_000.wav) |

| [French](https://huggingface.co/myshell-ai/MeloTTS-French) | [Link](https://myshell-public-repo-hosting.s3.amazonaws.com/myshellttsbase/examples/fr/FR/speed_1.0/sent_000.wav) |

| [Chinese](https://huggingface.co/myshell-ai/MeloTTS-Chinese) (mix EN) | [Link](https://myshell-public-repo-hosting.s3.amazonaws.com/myshellttsbase/examples/zh/ZH/speed_1.0/sent_008.wav) |

| [Japanese](https://huggingface.co/myshell-ai/MeloTTS-Japanese) | [Link](https://myshell-public-repo-hosting.s3.amazonaws.com/myshellttsbase/examples/jp/JP/speed_1.0/sent_000.wav) |

| [Korean](https://huggingface.co/myshell-ai/MeloTTS-Korean/) | [Link](https://myshell-public-repo-hosting.s3.amazonaws.com/myshellttsbase/examples/kr/KR/speed_1.0/sent_000.wav) |

Some other features include:

- The Chinese speaker supports `mixed Chinese and English`.

- Fast enough for `CPU real-time inference`.

## Usage

### Without Installation

An unofficial [live demo](https://huggingface.co/spaces/mrfakename/MeloTTS) is hosted on Hugging Face Spaces.

#### Use it on MyShell

There are hundreds of TTS models on MyShell, many more than just MeloTTS. See examples [here](https://github.com/myshell-ai/MeloTTS/blob/main/docs/quick_use.md#use-melotts-without-installation).

More can be found at the widget center of [MyShell.ai](https://app.myshell.ai/robot-workshop).

### Install and Use Locally

Follow the installation steps [here](https://github.com/myshell-ai/MeloTTS/blob/main/docs/install.md#linux-and-macos-install) before using the following snippet:

```python

from melo.api import TTS

# Speed is adjustable

speed = 1.0

device = 'cpu' # or cuda:0

text = "La lueur dorée du soleil caresse les vagues, peignant le ciel d'une palette éblouissante."

model = TTS(language='FR', device=device)

speaker_ids = model.hps.data.spk2id

output_path = 'fr.wav'

model.tts_to_file(text, speaker_ids['FR'], output_path, speed=speed)

```

## Join the Community

**Open Source AI Grant**

We are actively sponsoring open-source AI projects. The sponsorship includes GPU resources, funding and intellectual support (collaboration with top research labs). We welcome both research and engineering projects, as long as the open-source community needs them. Please contact [Zengyi Qin](https://www.qinzy.tech/) if you are interested.

**Contributing**

If you find this work useful, please consider contributing to the GitHub [repo](https://github.com/myshell-ai/MeloTTS).

- Many thanks to [@fakerybakery](https://github.com/fakerybakery) for adding the Web UI and CLI part.

## License

This library is released under the MIT License, which means it is free for both commercial and non-commercial use.

## Acknowledgements

This implementation is based on [TTS](https://github.com/coqui-ai/TTS), [VITS](https://github.com/jaywalnut310/vits), [VITS2](https://github.com/daniilrobnikov/vits2) and [Bert-VITS2](https://github.com/fishaudio/Bert-VITS2). We appreciate their awesome work.

|

backyardai/Hathor_Fractionate-L3-8B-v.05-GGUF | backyardai | "2024-06-26T15:40:40Z" | 28,323 | 0 | null | [

"gguf",

"en",

"base_model:Nitral-AI/Hathor_Fractionate-L3-8B-v.05",

"license:other",

"region:us"

] | null | "2024-06-25T20:39:19Z" | ---

language:

- en

license: other

base_model: Nitral-AI/Hathor_Fractionate-L3-8B-v.05

model_name: Hathor_Fractionate-L3-8B-v.05-GGUF

quantized_by: brooketh

parameter_count: 8030261248

---

<img src="BackyardAI_Banner.png" alt="Backyard.ai" style="height: 90px; min-width: 32px; display: block; margin: auto;">

**<p style="text-align: center;">The official library of GGUF format models for use in the local AI chat app, Backyard AI.</p>**

<p style="text-align: center;"><a href="https://backyard.ai/">Download Backyard AI here to get started.</a></p>

<p style="text-align: center;"><a href="https://www.reddit.com/r/LLM_Quants/">Request Additional models at r/LLM_Quants.</a></p>

***

# Hathor_Fractionate L3 V.05 8B

- **Creator:** [Nitral-AI](https://huggingface.co/Nitral-AI/)

- **Original:** [Hathor_Fractionate L3 V.05 8B](https://huggingface.co/Nitral-AI/Hathor_Fractionate-L3-8B-v.05)

- **Date Created:** 2024-06-22

- **Trained Context:** 8192 tokens

- **Description:** Uncensored model based on the LLaMA 3 architecture, designed to seamlessly integrate the qualities of creativity, intelligence, and robust performance. Trained on 3 epochs of private data, synthetic opus instructions, a mix of light/classical novel data, and roleplaying chat pairs over llama 3 8B instruct, with domain knowledge of cybersecurity, programming, biology and anatomy.

***

## What is a GGUF?

GGUF is a large language model (LLM) format that can be split between CPU and GPU. GGUFs are compatible with applications based on llama.cpp, such as Backyard AI. Where other model formats require higher end GPUs with ample VRAM, GGUFs can be efficiently run on a wider variety of hardware.

GGUF models are quantized to reduce resource usage, with a tradeoff of reduced coherence at lower quantizations. Quantization reduces the precision of the model weights by changing the number of bits used for each weight.
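The precision tradeoff described above can be illustrated with a minimal sketch. It uses plain symmetric uniform quantization on a handful of made-up weights; this is an assumption chosen for clarity and is not the block-wise scheme that GGUF files actually use. The function names `quantize` and `dequantize` are hypothetical.

```python
def quantize(weights, bits):
    """Symmetric uniform quantization of floats to signed integer codes.

    Illustrative only: requires bits >= 2 and is not the GGUF format itself.
    """
    qmax = 2 ** (bits - 1) - 1  # 127 for 8-bit, 7 for 4-bit, 1 for 2-bit
    scale = max(abs(w) for w in weights) / qmax or 1.0  # avoid a zero scale
    codes = [round(w / scale) for w in weights]
    return codes, scale


def dequantize(codes, scale):
    """Map integer codes back to approximate float weights."""
    return [c * scale for c in codes]


# Made-up example weights
weights = [0.12, -0.53, 0.98, -0.07, 0.31]

for bits in (8, 4, 2):
    codes, scale = quantize(weights, bits)
    restored = dequantize(codes, scale)
    max_err = max(abs(w - r) for w, r in zip(weights, restored))
    print(f"{bits}-bit codes={codes} max_error={max_err:.4f}")
```

Fewer bits leave fewer representable values, so the reconstruction error grows as the bit width shrinks, which is the source of the reduced coherence at lower quantizations.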

***

<img src="BackyardAI_Logo.png" alt="Backyard.ai" style="height: 75px; min-width: 32px; display: block; horizontal align: left;">

## Backyard AI

- Free, local AI chat application.

- One-click installation on Mac and PC.

- Automatically use GPU for maximum speed.

- Built-in model manager.

- High-quality character hub.

- Zero-config desktop-to-mobile tethering.

Backyard AI makes it easy to start chatting with AI using your own characters or one of the many found in the built-in character hub. The model manager helps you find the latest and greatest models without worrying about whether it's the correct format. Backyard AI supports advanced features such as lorebooks, author's note, text formatting, custom context size, sampler settings, grammars, local TTS, cloud inference, and tethering, all implemented in a way that is straightforward and reliable.

**Join us on [Discord](https://discord.gg/SyNN2vC9tQ)**

*** |

Lajavaness/sentence-camembert-large | Lajavaness | "2024-06-11T13:02:57Z" | 28,305 | 6 | transformers | [

"transformers",

"pytorch",

"safetensors",

"camembert",

"feature-extraction",

"Text",

"Sentence Similarity",

"Sentence-Embedding",

"camembert-large",

"sentence-similarity",

"fr",

"dataset:stsb_multi_mt",

"arxiv:1908.10084",

"license:apache-2.0",

"model-index",

"endpoints_compatible",

"text-embeddings-inference",

"region:us"

] | sentence-similarity | "2023-10-25T19:46:36Z" | ---

pipeline_tag: sentence-similarity

language: fr

datasets:

- stsb_multi_mt

tags:

- Text

- Sentence Similarity

- Sentence-Embedding

- camembert-large

license: apache-2.0

model-index:

- name: sentence-camembert-large by Van Tuan DANG

results:

- task:

name: Sentence-Embedding

type: Text Similarity

dataset:

name: Text Similarity fr

type: stsb_multi_mt

args: fr

metrics:

- name: Test Pearson correlation coefficient

type: Pearson_correlation_coefficient

value: 88.63

---

## Description:

This [**Sentence-CamemBERT-Large**](https://huggingface.co/Lajavaness/sentence-camembert-large) Model is an Embedding Model for French developed by [La Javaness](https://www.lajavaness.com/). The purpose of this embedding model is to represent the content and semantics of a French sentence as a mathematical vector, allowing it to understand the meaning of the text beyond individual words in queries and documents. It offers powerful semantic search capabilities.

## Pre-trained sentence embedding models are the state of the art for French sentence embeddings.

The [Lajavaness/sentence-camembert-large](https://huggingface.co/Lajavaness/sentence-camembert-large) model is an improvement over [dangvantuan/sentence-camembert-base](https://huggingface.co/dangvantuan/sentence-camembert-large), offering greater robustness and better performance on all STS benchmark datasets. It was fine-tuned from the pre-trained [facebook/camembert-large](https://huggingface.co/camembert/camembert-large) using [Siamese BERT-Networks with 'sentence-transformers'](https://www.sbert.net/) on the [stsb](https://huggingface.co/datasets/stsb_multi_mt/viewer/fr/train) dataset. It was additionally trained with [Augmented SBERT](https://aclanthology.org/2021.naacl-main.28.pdf) on the same [stsb](https://huggingface.co/datasets/stsb_multi_mt/viewer/fr/train) dataset, with pair sampling strategies that draw on two models: [CrossEncoder-camembert-large](https://huggingface.co/dangvantuan/CrossEncoder-camembert-large) and [dangvantuan/sentence-camembert-large](https://huggingface.co/dangvantuan/sentence-camembert-large).

## Usage

The model can be used directly (without a language model) as follows:

```python

from sentence_transformers import SentenceTransformer

model = SentenceTransformer("Lajavaness/sentence-camembert-large")

sentences = ["Un avion est en train de décoller.",

"Un homme joue d'une grande flûte.",

"Un homme étale du fromage râpé sur une pizza.",

"Une personne jette un chat au plafond.",

"Une personne est en train de plier un morceau de papier.",

]

embeddings = model.encode(sentences)

```
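Embeddings such as those returned by `model.encode` are typically compared with cosine similarity. The sketch below shows the computation on toy 3-dimensional vectors that stand in for real embeddings; the vectors and the helper name `cosine_similarity` are illustrative assumptions, not output of the model.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


# Toy vectors standing in for sentence embeddings (made-up values)
toy_embeddings = {
    "avion": [0.9, 0.1, 0.0],
    "pizza": [0.1, 0.8, 0.3],
    "fromage": [0.2, 0.7, 0.4],
}

query = toy_embeddings["pizza"]
for name, vector in toy_embeddings.items():
    print(name, round(cosine_similarity(query, vector), 3))
```

The same function should accept rows of the array returned by `model.encode(sentences)`, since it only iterates over the values.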

## Evaluation

The model can be evaluated as follows on the French test data of stsb.

```python
from sentence_transformers import SentenceTransformer
from sentence_transformers.evaluation import EmbeddingSimilarityEvaluator
from sentence_transformers.readers import InputExample
from datasets import load_dataset

# Load the model to evaluate
model = SentenceTransformer("Lajavaness/sentence-camembert-large")

def convert_dataset(dataset):
    """Turn STS-B rows into InputExample pairs with labels scaled to [0, 1]."""
    dataset_samples = []
    for df in dataset:
        score = float(df['similarity_score']) / 5.0  # Normalize score to range 0 ... 1
        inp_example = InputExample(texts=[df['sentence1'], df['sentence2']], label=score)
        dataset_samples.append(inp_example)
    return dataset_samples

# Loading the dataset for evaluation
df_dev = load_dataset("stsb_multi_mt", name="fr", split="dev")
df_test = load_dataset("stsb_multi_mt", name="fr", split="test")

# Convert the dataset for evaluation
# For Dev set:
dev_samples = convert_dataset(df_dev)
val_evaluator = EmbeddingSimilarityEvaluator.from_input_examples(dev_samples, name='sts-dev')
val_evaluator(model, output_path="./")

# For Test set:
test_samples = convert_dataset(df_test)
test_evaluator = EmbeddingSimilarityEvaluator.from_input_examples(test_samples, name='sts-test')
test_evaluator(model, output_path="./")
```

**Test Result**:

The performance is measured using Pearson and Spearman correlation:

- On dev

| Model | Pearson correlation | Spearman correlation | #params |

| ------------- | ------------- | ------------- |------------- |

| [Lajavaness/sentence-camembert-large](https://huggingface.co/Lajavaness/sentence-camembert-large)| **88.63** |**88.46** | 336M|

| [dangvantuan/sentence-camembert-large](https://huggingface.co/dangvantuan/sentence-camembert-large)| 88.2 |88.02 | 336M|

| [Sahajtomar/french_semantic](https://huggingface.co/Sahajtomar/french_semantic)| 87.44 |87.30 | 336M|

| [Lajavaness/sentence-flaubert-base](https://huggingface.co/Lajavaness/sentence-flaubert-base)| 87.14 |87.10 | 137M |

| [GPT-3 (text-davinci-003)](https://platform.openai.com/docs/models) | 85 | NaN|175B |

| [GPT-3 (text-embedding-ada-002)](https://platform.openai.com/docs/models) | 79.75 | 80.44|NaN |

- On test, Pearson and Spearman correlation are evaluated on many different benchmark datasets:

**Pearson score**

| Model | [STS-B](https://huggingface.co/datasets/stsb_multi_mt/viewer/fr/train) | [STS12-fr ](https://huggingface.co/datasets/Lajavaness/STS12-fr)| [STS13-fr](https://huggingface.co/datasets/Lajavaness/STS13-fr) | [STS14-fr](https://huggingface.co/datasets/Lajavaness/STS14-fr) | [STS15-fr](https://huggingface.co/datasets/Lajavaness/STS15-fr) | [STS16-fr](https://huggingface.co/datasets/Lajavaness/STS16-fr) | [SICK-fr](https://huggingface.co/datasets/Lajavaness/SICK-fr) | params |

|------------------------------------------|-------|----------|----------|----------|----------|----------|---------|--------|

| [Lajavaness/sentence-camembert-large](https://huggingface.co/Lajavaness/sentence-camembert-large) | **86.26** | **87.42** | **89.34** | **88.05** | **88.91** | 77.15 | 83.13 | 336M |

| [dangvantuan/sentence-camembert-large](https://huggingface.co/dangvantuan/sentence-camembert-large) | 85.88 | 87.28 | 89.25 | 87.91 | 88.54 | 76.90 | 83.26 | 336M |

| [Sahajtomar/french_semantic](https://huggingface.co/Sahajtomar/french_semantic) | 85.80 | 86.05 | 88.50 | 86.57 | 87.49 | 77.85 | 83.27 | 336M |

| [Lajavaness/sentence-flaubert-base](https://huggingface.co/Lajavaness/sentence-flaubert-base) | 85.39 | 86.64 | 87.24 | 85.68 | 87.99 | 75.78 | 82.84 | 137M |

| [GPT3 (text-embedding-ada-002)](https://platform.openai.com/docs/models) | 79.03 | 66.16 | 75.48 | 70.69 | 77.88 | 65.18 | - | - |

**Spearman score**

| Model | [STS-B](https://huggingface.co/datasets/stsb_multi_mt/viewer/fr/train) | [STS12-fr ](https://huggingface.co/datasets/Lajavaness/STS12-fr)| [STS13-fr](https://huggingface.co/datasets/Lajavaness/STS13-fr) | [STS14-fr](https://huggingface.co/datasets/Lajavaness/STS14-fr) | [STS15-fr](https://huggingface.co/datasets/Lajavaness/STS15-fr) | [STS16-fr](https://huggingface.co/datasets/Lajavaness/STS16-fr) | [SICK-fr](https://huggingface.co/datasets/Lajavaness/SICK-fr) | params |

|:-------------------------------------|-------:|---------:|---------:|---------:|---------:|---------:|--------:|:-------|

| [Lajavaness/sentence-camembert-large](https://huggingface.co/Lajavaness/sentence-camembert-large) | **86.14** | **81.22** | 88.61 | **86.28** | **89.01** | 78.65 | **77.71** | 336M |

| [dangvantuan/sentence-camembert-large](https://huggingface.co/dangvantuan/sentence-camembert-large) | 85.78 | 81.09 | 88.68 | 85.81 | 88.56 | 78.49 | 77.70 | 336M |

| [Sahajtomar/french_semantic](https://huggingface.co/Sahajtomar/french_semantic) | 85.55 | 77.92 | 87.85 | 83.96 | 87.63 | 79.07 | 77.14 | 336M |

| [Lajavaness/sentence-flaubert-base](https://huggingface.co/Lajavaness/sentence-flaubert-base) | 85.67 | 79.97 | 86.91 | 84.57 | 88.10 | 77.84 | 77.55 | 137M |

| [GPT3 (text-embedding-ada-002)](https://platform.openai.com/docs/models) | 77.53 | 64.27 | 76.41 | 69.63 | 78.65 | 75.30 | - | - |
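For readers unfamiliar with the two metrics reported above, here is a minimal pure-Python sketch of how Pearson and Spearman correlation are computed between gold similarity scores and predicted similarities. The sample scores are made up, and the helper names are hypothetical; the tables appear to report these coefficients multiplied by 100.

```python
import math


def pearson(x, y):
    """Pearson correlation: linear agreement between two score lists."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    std_x = math.sqrt(sum((a - mean_x) ** 2 for a in x))
    std_y = math.sqrt(sum((b - mean_y) ** 2 for b in y))
    return cov / (std_x * std_y)


def average_ranks(values):
    """1-based ranks, with tied values sharing their average rank."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        shared = (i + j) / 2 + 1  # average rank of the tie group
        for k in range(i, j + 1):
            ranks[order[k]] = shared
        i = j + 1
    return ranks


def spearman(x, y):
    """Spearman correlation: Pearson correlation of the ranks."""
    return pearson(average_ranks(x), average_ranks(y))


# Made-up gold scores (0 to 5) and predicted cosine similarities
gold = [0.0, 1.0, 2.5, 4.0, 5.0]
predicted = [0.10, 0.20, 0.50, 0.70, 0.99]

print("Pearson: ", round(pearson(gold, predicted) * 100, 2))
print("Spearman:", round(spearman(gold, predicted) * 100, 2))
```

Pearson rewards predictions that are linearly related to the gold scores, while Spearman only checks that the ordering of the pairs is preserved.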

## Citation

@article{reimers2019sentence,

title={Sentence-BERT: Sentence Embeddings using Siamese BERT-Networks},

author={Reimers, Nils and Gurevych, Iryna},

journal={https://arxiv.org/abs/1908.10084},

year={2019}

}

@article{martin2020camembert,

title={CamemBERT: a Tasty French Language Model},

author={Martin, Louis and Muller, Benjamin and Su{\'a}rez, Pedro Javier Ortiz and Dupont, Yoann and Romary, Laurent and de la Clergerie, {\'E}ric Villemonte and Seddah, Djam{\'e} and Sagot, Beno{\^\i}t},

journal={Proceedings of the 58th Annual Meeting of the Association for Computational Linguistics},

year={2020}

} |

SenseTime/deformable-detr-with-box-refine-two-stage | SenseTime | "2024-05-08T07:47:46Z" | 28,297 | 0 | transformers | [

"transformers",

"pytorch",

"safetensors",

"deformable_detr",

"object-detection",

"vision",

"dataset:coco",

"arxiv:2010.04159",

"license:apache-2.0",

"endpoints_compatible",

"region:us"

] | object-detection | "2022-03-02T23:29:05Z" | ---

license: apache-2.0

tags:

- object-detection

- vision

datasets:

- coco

widget:

- src: https://huggingface.co/datasets/mishig/sample_images/resolve/main/savanna.jpg

example_title: Savanna

- src: https://huggingface.co/datasets/mishig/sample_images/resolve/main/football-match.jpg

example_title: Football Match

- src: https://huggingface.co/datasets/mishig/sample_images/resolve/main/airport.jpg

example_title: Airport

---

# Deformable DETR model with ResNet-50 backbone, with box refinement and two stage

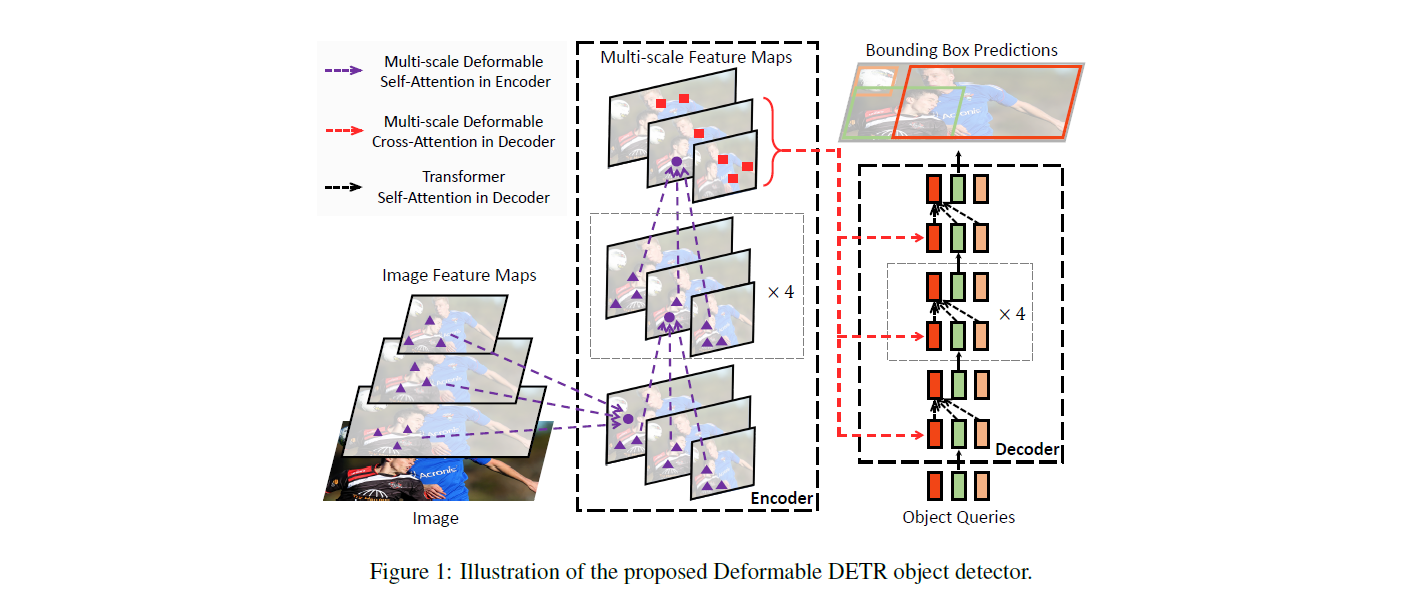

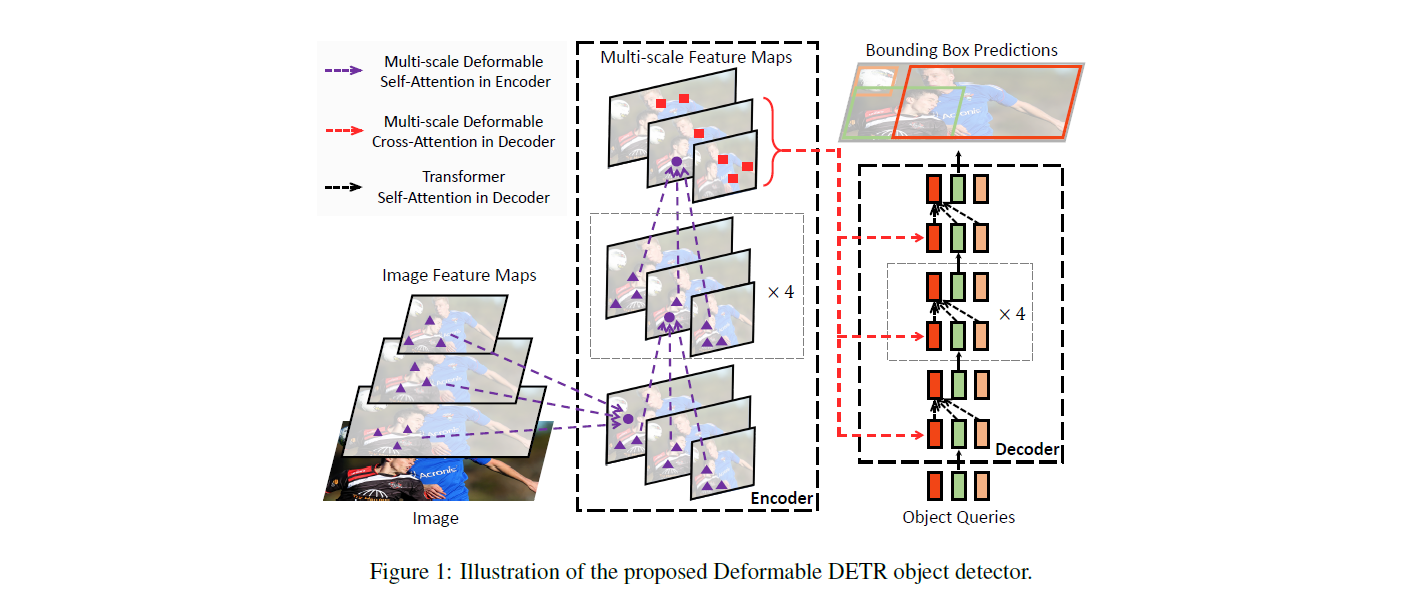

Deformable DEtection TRansformer (DETR), with box refinement and two stage model trained end-to-end on COCO 2017 object detection (118k annotated images). It was introduced in the paper [Deformable DETR: Deformable Transformers for End-to-End Object Detection](https://arxiv.org/abs/2010.04159) by Zhu et al. and first released in [this repository](https://github.com/fundamentalvision/Deformable-DETR).

Disclaimer: The team releasing Deformable DETR did not write a model card for this model so this model card has been written by the Hugging Face team.

## Model description

The DETR model is an encoder-decoder transformer with a convolutional backbone. Two heads are added on top of the decoder outputs in order to perform object detection: a linear layer for the class labels and an MLP (multi-layer perceptron) for the bounding boxes. The model uses so-called object queries to detect objects in an image. Each object query looks for a particular object in the image. For COCO, the number of object queries is set to 100.

The model is trained using a "bipartite matching loss": one compares the predicted classes + bounding boxes of each of the N = 100 object queries to the ground truth annotations, padded up to the same length N (so if an image only contains 4 objects, 96 annotations will just have a "no object" as class and "no bounding box" as bounding box). The Hungarian matching algorithm is used to create an optimal one-to-one mapping between each of the N queries and each of the N annotations. Next, standard cross-entropy (for the classes) and a linear combination of the L1 and generalized IoU loss (for the bounding boxes) are used to optimize the parameters of the model.
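The one-to-one matching step can be sketched on a tiny example. The code below does not implement the Hungarian algorithm; it brute-forces every permutation, which yields the same optimal assignment for very small N but does not scale. The cost values are made up, and in practice implementations commonly rely on a polynomial-time solver such as `scipy.optimize.linear_sum_assignment`.

```python
from itertools import permutations


def best_assignment(cost):
    """Return (total_cost, assignment) minimizing the summed matching cost.

    cost[q][t] is the cost of matching query q to target t, and
    assignment[q] is the target index chosen for query q.
    Brute force over all permutations: only suitable for tiny N.
    """
    n = len(cost)
    best = None
    for perm in permutations(range(n)):
        total = sum(cost[q][perm[q]] for q in range(n))
        if best is None or total < best[0]:
            best = (total, perm)
    return best


# Made-up costs for N = 3 queries (rows) and 3 targets (columns).
# The last column plays the role of a padded "no object" target.
cost = [
    [0.9, 0.1, 0.5],
    [0.2, 0.8, 0.5],
    [0.7, 0.6, 0.5],
]

total, assignment = best_assignment(cost)
for query, target in enumerate(assignment):
    print(f"query {query} -> target {target}")
print("total matching cost:", round(total, 2))
```

Once each query has its matched target, the classification and box losses are computed pairwise over those matches.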

## Intended uses & limitations

You can use the raw model for object detection. See the [model hub](https://huggingface.co/models?search=sensetime/deformable-detr) to look for all available Deformable DETR models.

### How to use

Here is how to use this model:

```python

from transformers import AutoImageProcessor, DeformableDetrForObjectDetection

import torch

from PIL import Image

import requests

url = "http://images.cocodataset.org/val2017/000000039769.jpg"

image = Image.open(requests.get(url, stream=True).raw)

processor = AutoImageProcessor.from_pretrained("SenseTime/deformable-detr-with-box-refine-two-stage")

model = DeformableDetrForObjectDetection.from_pretrained("SenseTime/deformable-detr-with-box-refine-two-stage")

inputs = processor(images=image, return_tensors="pt")

outputs = model(**inputs)

# convert outputs (bounding boxes and class logits) to COCO API

# let's only keep detections with score > 0.7

target_sizes = torch.tensor([image.size[::-1]])

results = processor.post_process_object_detection(outputs, target_sizes=target_sizes, threshold=0.7)[0]

for score, label, box in zip(results["scores"], results["labels"], results["boxes"]):

box = [round(i, 2) for i in box.tolist()]

print(

f"Detected {model.config.id2label[label.item()]} with confidence "

f"{round(score.item(), 3)} at location {box}"

)

```

Currently, both the image processor and model support PyTorch.

## Training data

The Deformable DETR model was trained on [COCO 2017 object detection](https://cocodataset.org/#download), a dataset consisting of 118k/5k annotated images for training/validation respectively.

### BibTeX entry and citation info

```bibtex

@misc{https://doi.org/10.48550/arxiv.2010.04159,

doi = {10.48550/ARXIV.2010.04159},

url = {https://arxiv.org/abs/2010.04159},

author = {Zhu, Xizhou and Su, Weijie and Lu, Lewei and Li, Bin and Wang, Xiaogang and Dai, Jifeng},

keywords = {Computer Vision and Pattern Recognition (cs.CV), FOS: Computer and information sciences, FOS: Computer and information sciences},

title = {Deformable DETR: Deformable Transformers for End-to-End Object Detection},

publisher = {arXiv},

year = {2020},

copyright = {arXiv.org perpetual, non-exclusive license}

}

``` |

digiplay/AM-mix1 | digiplay | "2024-05-10T15:54:42Z" | 28,296 | 3 | diffusers | [

"diffusers",

"safetensors",

"stable-diffusion",

"stable-diffusion-diffusers",

"text-to-image",

"license:other",

"autotrain_compatible",

"endpoints_compatible",

"diffusers:StableDiffusionPipeline",

"region:us"

] | text-to-image | "2023-11-02T18:51:41Z" | ---

license: other

tags:

- stable-diffusion

- stable-diffusion-diffusers

- text-to-image

- diffusers

inference: true

---

in test...

Sample image I made generated by huggingface's API :

|

NbAiLab/nb-whisper-large-beta | NbAiLab | "2023-07-24T18:05:01Z" | 28,286 | 8 | transformers | [

"transformers",

"pytorch",

"tf",

"jax",

"safetensors",

"whisper",

"automatic-speech-recognition",

"audio",

"asr",

"hf-asr-leaderboard",

"no",

"nb",

"nn",

"en",

"dataset:NbAiLab/ncc_speech",

"dataset:NbAiLab/NST",

"dataset:NbAiLab/NPSC",

"arxiv:2212.04356",

"arxiv:1910.09700",

"license:cc-by-4.0",

"endpoints_compatible",

"region:us"

] | automatic-speech-recognition | "2023-07-23T19:31:02Z" | ---

license: cc-by-4.0

language:

- 'no'

- nb

- nn

- en

datasets:

- NbAiLab/ncc_speech

- NbAiLab/NST

- NbAiLab/NPSC

tags:

- audio

- asr

- automatic-speech-recognition

- hf-asr-leaderboard

metrics:

- wer

- cer

library_name: transformers

pipeline_tag: automatic-speech-recognition

widget:

- src: https://datasets-server.huggingface.co/assets/google/fleurs/--/nb_no/train/1/audio/audio.mp3

example_title: FLEURS sample 1

- src: https://datasets-server.huggingface.co/assets/google/fleurs/--/nb_no/train/4/audio/audio.mp3

example_title: FLEURS sample 2

---

# NB-Whisper Large (beta)

This is a **_public beta_** of the Norwegian NB-Whisper Large model released by the National Library of Norway. NB-Whisper is a series of models for automatic speech recognition (ASR) and speech translation, building upon the foundation laid by [OpenAI's Whisper](https://arxiv.org/abs/2212.04356). All models are trained on 20,000 hours of labeled data.

<center>

<figure>

<video controls>

<source src="https://huggingface.co/NbAiLab/nb-whisper-small-beta/resolve/main/king.mp4" type="video/mp4">

Your browser does not support the video tag.

</video>

<figcaption><a href="https://www.royalcourt.no/tale.html?tid=137662&sek=28409&scope=27248" target="_blank">Speech given by His Majesty The King of Norway at the garden party hosted by Their Majesties The King and Queen at the Palace Park on 1 September 2016.</a>Transcribed using the Small model.</figcaption>

</figure>

</center>

## Model Details

NB-Whisper models will be available in five different sizes:

| Model Size | Parameters | Availability |

|------------|------------|--------------|

| tiny | 39M | [NB-Whisper Tiny (beta)](https://huggingface.co/NbAiLab/nb-whisper-tiny-beta) |

| base | 74M | [NB-Whisper Base (beta)](https://huggingface.co/NbAiLab/nb-whisper-base-beta) |

| small | 244M | [NB-Whisper Small (beta)](https://huggingface.co/NbAiLab/nb-whisper-small-beta) |

| medium | 769M | [NB-Whisper Medium (beta)](https://huggingface.co/NbAiLab/nb-whisper-medium-beta) |

| large | 1550M | [NB-Whisper Large (beta)](https://huggingface.co/NbAiLab/nb-whisper-large-beta) |

An official release of NB-Whisper models is planned for Fall 2023.

Please refer to the OpenAI Whisper model card for more details about the backbone model.

### Model Description

- **Developed by:** [NB AI-Lab](https://ai.nb.no/)

- **Shared by:** [NB AI-Lab](https://ai.nb.no/)

- **Model type:** `whisper`

- **Language(s) (NLP):** Norwegian, Norwegian Bokmål, Norwegian Nynorsk, English

- **License:** [Creative Commons Attribution 4.0 International (CC BY 4.0)](https://creativecommons.org/licenses/by/4.0/)

- **Finetuned from model:** [openai/whisper-small](https://huggingface.co/openai/whisper-small)

### Model Sources

<!-- Provide the basic links for the model. -->

- **Repository:** https://github.com/NbAiLab/nb-whisper/

- **Paper:** _Coming soon_

- **Demo:** http://ai.nb.no/demo/nb-whisper

## Uses

### Direct Use

This is a **_public beta_** release. The models published in this repository are intended for a generalist purpose and are available to third parties.

### Downstream Use

For Norwegian transcriptions we are confident that this public beta will give you State-of-the-Art results compared to currently available Norwegian ASR models of the same size. However, it is still known to show some hallucinations, as well as a tendency to drop part of the transcript from time to time. Please also note that the transcripts are typically not word by word. Spoken language and written language are often very different, and the model aims to "translate" spoken utterances into grammatically correct written sentences. We strongly believe that the best way to understand these models is to try them yourself.

A significant part of the training material comes from TV subtitles. Subtitles often shorten the content to make it easier to read. Typically, non-essential parts of the utterance can also be dropped. In some cases this is a desired ability; in others it is not. The final release of these models will provide a mechanism to control this behaviour.

## Bias, Risks, and Limitations

This is a public beta that is not intended for production. Production use without adequate assessment of risks and mitigation may be considered irresponsible or harmful. These models may have bias and/or any other undesirable distortions. When third parties deploy or provide systems and/or services to other parties using any of these models (or using systems based on these models) or become users of the models, they should note that it is their responsibility to mitigate the risks arising from their use and, in any event, to comply with applicable regulations, including regulations regarding the use of artificial intelligence. In no event shall the owner of the models (The National Library of Norway) be liable for any results arising from the use made by third parties of these models.

## How to Get Started with the Model

Use the code below to get started with the model.

```python

from transformers import pipeline

asr = pipeline(

"automatic-speech-recognition",

"NbAiLab/nb-whisper-large-beta"

)

asr(

"audio.mp3",

generate_kwargs={'task': 'transcribe', 'language': 'no'}

)

# {'text': ' Så mange anga kører seg i så viktig sak, så vi får du kører det tilbake med. Om kabaret gudam i at vi skal hjælge. Kør seg vi gjør en uda? Nei noe skal å abelistera sonvorne skrifer. Det er sak, så kjent det bare handling i samtatsen til bargører. Trudet første lask. På den å først så å køre og en gange samme, og så får vi gjør å vorte vorte vorte når vi kjent dit.'}

```

Timestamps can also be retrieved by passing in the right parameter.

```python

asr(

"audio.mp3",

generate_kwargs={'task': 'transcribe', 'language': 'no'},

return_timestamps=True,

)

# {'text': ' at så mange angar til seg så viktig sak, så vi får jo kjølget klare tilbakemeldingen om hva valget dem gjør at vi skal gjøre. Hva skjer vi gjøre nå da? Nei, nå skal jo administrationen vår skrivferdige sak, så kjem til behandling i samfærdshetshøyvalget, tror det første år. Først så kan vi ta og henge dem kjemme, og så får vi gjøre vårt valget når vi kommer dit.',

# 'chunks': [{'timestamp': (0.0, 5.34),

# 'text': ' at så mange angar til seg så viktig sak, så vi får jo kjølget klare tilbakemeldingen om'},

# {'timestamp': (5.34, 8.64),

# 'text': ' hva valget dem gjør at vi skal gjøre.'},

# {'timestamp': (8.64, 10.64), 'text': ' Hva skjer vi gjøre nå da?'},

# {'timestamp': (10.64, 17.44),

# 'text': ' Nei, nå skal jo administrationen vår skrivferdige sak, så kjem til behandling i samfærdshetshøyvalget,'},

# {'timestamp': (17.44, 19.44), 'text': ' tror det første år.'},

# {'timestamp': (19.44, 23.94),

# 'text': ' Først så kan vi ta og henge dem kjemme, og så får vi gjøre vårt valget når vi kommer dit.'}]}

```
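The `chunks` list shown above maps naturally onto subtitle formats. As a minimal sketch, assuming the result dictionary has the shape shown in the example output, the hypothetical helpers below turn the chunks into SRT-style text. Falling back to the start time when a chunk has no end timestamp is an assumption made for robustness.

```python
def format_timestamp(seconds):
    """Convert seconds to an SRT-style HH:MM:SS,mmm string."""
    total_ms = round(seconds * 1000)
    hours, rem = divmod(total_ms, 3_600_000)
    minutes, rem = divmod(rem, 60_000)
    secs, ms = divmod(rem, 1000)
    return f"{hours:02d}:{minutes:02d}:{secs:02d},{ms:03d}"


def chunks_to_srt(chunks):
    """Build SRT text from a list of {'timestamp': (start, end), 'text': ...}."""
    blocks = []
    for index, chunk in enumerate(chunks, start=1):
        start, end = chunk["timestamp"]
        if end is None:  # assumption: reuse the start time if the end is missing
            end = start
        time_line = f"{format_timestamp(start)} --> {format_timestamp(end)}"
        blocks.append(f"{index}\n{time_line}\n{chunk['text'].strip()}")
    return "\n\n".join(blocks)


# Two chunks copied from the example output above
sample_chunks = [
    {"timestamp": (8.64, 10.64), "text": " Hva skjer vi gjøre nå da?"},
    {"timestamp": (17.44, 19.44), "text": " tror det første år."},
]

print(chunks_to_srt(sample_chunks))
```

With a real transcription, the same function would be called on the `chunks` entry of the dictionary returned by `asr(..., return_timestamps=True)`.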

## Training Data

The training data comes from Språkbanken and the digital collection at the National Library of Norway. It includes:

- NST Norwegian ASR Database (16 kHz), and its corresponding dataset

- Transcribed speeches from the Norwegian Parliament produced by Språkbanken

- TV broadcast (NRK) subtitles (NLN digital collection)

- Audiobooks (NLN digital collection)

## Environmental Impact

<!-- Total emissions (in grams of CO2eq) and additional considerations, such as electricity usage, go here. Edit the suggested text below accordingly -->

Carbon emissions estimated using the [Machine Learning Impact calculator](https://mlco2.github.io/impact#compute) presented in [Lacoste et al. (2019)](https://arxiv.org/abs/1910.09700).

- **Hardware Type:** TPUv4

- **Hours used:** 1,536

- **Cloud Provider:** Google Cloud

- **Compute Region:** `us-central1`

- **Carbon Emitted:** Total emissions are estimated to be 247.77 kgCO₂, of which 100 percent was directly offset by the cloud provider.

#### Software

The model is trained using Jax/Flax. The final model is converted to PyTorch, TensorFlow, whisper.cpp and ONNX. Please tell us if you would like future models to be converted to other formats.

## Citation & Contributors

The development of this model was part of the contributors' professional roles at the National Library of Norway, under the _NoSTram_ project led by _Per Egil Kummervold (PEK)_. The Jax code, dataset loaders, and training scripts were collectively designed by _Javier de la Rosa (JdlR)_, _Freddy Wetjen (FW)_, _Rolv-Arild Braaten (RAB)_, and _PEK_. Primary dataset curation was handled by _FW_, _RAB_, and _PEK_, while _JdlR_ and _PEK_ crafted the documentation. The project was completed under the umbrella of AiLab, directed by _Svein Arne Brygfjeld_.

All contributors played a part in shaping the optimal training strategy for the Norwegian ASR model based on the Whisper architecture.

_A paper detailing our process and findings is underway!_

## Acknowledgements

Thanks to [Google TPU Research Cloud](https://sites.research.google/trc/about/) for supporting this project with extensive training resources. Thanks to Google Cloud for supporting us with credits for translating large parts of the corpus. A special thanks to [Sanchit Gandhi](https://huggingface.co/sanchit-gandhi) for providing thorough technical advice in debugging and with the work of getting this to train on Google TPUs. A special thanks to Per Erik Solberg at Språkbanken for the collaboration with regard to the Stortinget corpus.

## Contact

We are releasing this ASR Whisper model as a public beta to gather constructive feedback on its performance. Please do not hesitate to contact us with any experiences, insights, or suggestions that you may have. Your input is invaluable in helping us to improve the model and ensure that it effectively serves the needs of users. Whether you have technical concerns, usability suggestions, or ideas for future enhancements, we welcome your input. Thank you for participating in this critical stage of our model's development.

If you intend to incorporate this model into your research, we kindly request that you reach out to us. We can provide you with the most current status of our upcoming paper, which you can cite to acknowledge and provide context for the work done on this model.

Please use this email as the main contact point; it is read by the entire team: <a rel="noopener nofollow" href="mailto:ailab@nb.no">ailab@nb.no</a>

|

malteos/scincl | malteos | "2024-06-04T17:45:02Z" | 28,199 | 32 | sentence-transformers | [

"sentence-transformers",

"pytorch",

"safetensors",

"bert",

"feature-extraction",

"transformers",

"en",

"dataset:SciDocs",

"dataset:s2orc",

"arxiv:2202.06671",

"license:mit",

"endpoints_compatible",

"region:us"

] | feature-extraction | "2022-03-02T23:29:05Z" | ---

tags:

- feature-extraction

- sentence-transformers

- transformers

library_name: sentence-transformers

language: en

datasets:

- SciDocs

- s2orc

metrics:

- F1

- accuracy

- map

- ndcg

license: mit

---

## SciNCL

SciNCL is a pre-trained BERT language model that generates document-level embeddings of research papers.

It uses the citation graph neighborhood to generate samples for contrastive learning.

Prior to the contrastive training, the model is initialized with weights from [scibert-scivocab-uncased](https://huggingface.co/allenai/scibert_scivocab_uncased).

The underlying citation embeddings are trained on the [S2ORC citation graph](https://github.com/allenai/s2orc).

Paper: [Neighborhood Contrastive Learning for Scientific Document Representations with Citation Embeddings (EMNLP 2022 paper)](https://arxiv.org/abs/2202.06671).

Code: https://github.com/malteos/scincl

PubMedNCL: Working with biomedical papers? Try [PubMedNCL](https://huggingface.co/malteos/PubMedNCL).

## How to use the pretrained model

### Sentence Transformers

```python

from sentence_transformers import SentenceTransformer

# Load the model

model = SentenceTransformer("malteos/scincl")

# Concatenate the title and abstract with the [SEP] token

papers = [

"BERT [SEP] We introduce a new language representation model called BERT",

"Attention is all you need [SEP] The dominant sequence transduction models are based on complex recurrent or convolutional neural networks",

]

# Inference

embeddings = model.encode(papers)

# Compute the (cosine) similarity between embeddings

similarity = model.similarity(embeddings[0], embeddings[1])

print(similarity.item())

# => 0.8440517783164978

```

### Transformers

```python

import torch

from transformers import AutoTokenizer, AutoModel

# load model and tokenizer

tokenizer = AutoTokenizer.from_pretrained('malteos/scincl')

model = AutoModel.from_pretrained('malteos/scincl')

papers = [{'title': 'BERT', 'abstract': 'We introduce a new language representation model called BERT'},

{'title': 'Attention is all you need', 'abstract': ' The dominant sequence transduction models are based on complex recurrent or convolutional neural networks'}]

# concatenate title and abstract with [SEP] token

title_abs = [d['title'] + tokenizer.sep_token + (d.get('abstract') or '') for d in papers]

# preprocess the input

inputs = tokenizer(title_abs, padding=True, truncation=True, return_tensors="pt", max_length=512)

# inference

result = model(**inputs)

# take the first token ([CLS] token) in the batch as the embedding

embeddings = result.last_hidden_state[:, 0, :]

# calculate the similarity

embeddings = torch.nn.functional.normalize(embeddings, p=2, dim=1)

similarity = (embeddings[0] @ embeddings[1].T)

print(similarity.item())

# => 0.8440518379211426

```
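
The last two steps of the snippet above (L2 normalisation followed by a dot product) amount to cosine similarity. A minimal, dependency-free sketch of that computation follows; the three-dimensional vectors are hypothetical toy values, not SciNCL output, and the helper names are introduced here for illustration only.

```python
import math


def l2_normalize(vector):
    """Scale a vector to unit length (returned unchanged if it is all zeros)."""
    norm = math.sqrt(sum(x * x for x in vector))
    return [x / norm for x in vector] if norm > 0 else list(vector)


def cosine_similarity(a, b):
    """Dot product of the two L2-normalised vectors."""
    a_unit, b_unit = l2_normalize(a), l2_normalize(b)
    return sum(x * y for x, y in zip(a_unit, b_unit))


# Toy "embeddings" (hypothetical values, not model output)
paper_a = [1.0, 2.0, 3.0]
paper_b = [2.0, 4.0, 6.0]    # same direction as paper_a
paper_c = [-3.0, 0.0, 1.0]   # orthogonal to paper_a

print(cosine_similarity(paper_a, paper_b))  # close to 1: same direction
print(cosine_similarity(paper_a, paper_c))  # close to 0: orthogonal
```

Because the vectors are normalised first, the score depends only on direction, not on embedding magnitude.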

## Triplet Mining Parameters

| **Setting** | **Value** |

|-------------------------|--------------------|

| seed | 4 |

| triples_per_query | 5 |

| easy_positives_count | 5 |

| easy_positives_strategy | 5 |

| easy_positives_k | 20-25 |

| easy_negatives_count | 3 |

| easy_negatives_strategy | random_without_knn |

| hard_negatives_count | 2 |

| hard_negatives_strategy | knn |

| hard_negatives_k | 3998-4000 |
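
One way to read the settings above is sketched below. This is an illustration under stated assumptions, not the mining code from the SciNCL repository: the function `mine_triplets`, its arguments, and the interpretation of a range such as `20-25` as "neighbour ranks 21 to 25" are all assumptions made for this example.

```python
import random


def mine_triplets(query, ranked_neighbours, corpus, *, seed=4,
                  easy_positives_k=(20, 25), hard_negatives_k=(3998, 4000),
                  easy_negatives_count=3):
    """Build (query, positive, negative) triples for one query paper.

    ranked_neighbours: paper ids ordered from nearest to farthest in the
    citation embedding space. A range (lo, hi) is read as ranks lo+1..hi,
    which is an assumption of this sketch.
    """
    rng = random.Random(seed)
    positives = ranked_neighbours[easy_positives_k[0]:easy_positives_k[1]]
    hard_negatives = ranked_neighbours[hard_negatives_k[0]:hard_negatives_k[1]]
    # "random_without_knn": sample from papers outside the retrieved neighbours
    excluded = set(ranked_neighbours) | {query}
    candidates = [p for p in corpus if p not in excluded]
    easy_negatives = rng.sample(candidates, easy_negatives_count)
    negatives = easy_negatives + hard_negatives
    return [(query, pos, neg) for pos, neg in zip(positives, negatives)]


# Synthetic ids: paper 0 is the query, papers 1..4000 are its ranked neighbours
triples = mine_triplets(0, list(range(1, 4001)), range(5000))
print(len(triples))
```

With these values, five positives are paired with three easy and two hard negatives, which matches `triples_per_query = 5`.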

## SciDocs Results

These model weights are the ones that yielded the best results on SciDocs (`seed=4`).

In the paper we report the SciDocs results as mean over ten seeds.

| **model** | **mag-f1** | **mesh-f1** | **co-view-map** | **co-view-ndcg** | **co-read-map** | **co-read-ndcg** | **cite-map** | **cite-ndcg** | **cocite-map** | **cocite-ndcg** | **recomm-ndcg** | **recomm-P@1** | **Avg** |

|-------------------|-----------:|------------:|----------------:|-----------------:|----------------:|-----------------:|-------------:|--------------:|---------------:|----------------:|----------------:|---------------:|--------:|

| Doc2Vec | 66.2 | 69.2 | 67.8 | 82.9 | 64.9 | 81.6 | 65.3 | 82.2 | 67.1 | 83.4 | 51.7 | 16.9 | 66.6 |

| fasttext-sum | 78.1 | 84.1 | 76.5 | 87.9 | 75.3 | 87.4 | 74.6 | 88.1 | 77.8 | 89.6 | 52.5 | 18 | 74.1 |

| SGC | 76.8 | 82.7 | 77.2 | 88 | 75.7 | 87.5 | 91.6 | 96.2 | 84.1 | 92.5 | 52.7 | 18.2 | 76.9 |

| SciBERT | 79.7 | 80.7 | 50.7 | 73.1 | 47.7 | 71.1 | 48.3 | 71.7 | 49.7 | 72.6 | 52.1 | 17.9 | 59.6 |

| SPECTER | 82 | 86.4 | 83.6 | 91.5 | 84.5 | 92.4 | 88.3 | 94.9 | 88.1 | 94.8 | 53.9 | 20 | 80 |

| SciNCL (10 seeds) | 81.4 | 88.7 | 85.3 | 92.3 | 87.5 | 93.9 | 93.6 | 97.3 | 91.6 | 96.4 | 53.9 | 19.3 | 81.8 |

| **SciNCL (seed=4)** | 81.2 | 89.0 | 85.3 | 92.2 | 87.7 | 94.0 | 93.6 | 97.4 | 91.7 | 96.5 | 54.3 | 19.6 | 81.9 |

Additional evaluations are available in the paper.
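
The **Avg** column appears to be the unweighted mean of the twelve metric columns. The short check below tests that assumption against the `SciNCL (seed=4)` row, using only values copied from the table.

```python
from statistics import mean

# The twelve metric columns of the "SciNCL (seed=4)" row, in table order
scincl_seed4 = [81.2, 89.0, 85.3, 92.2, 87.7, 94.0,
                93.6, 97.4, 91.7, 96.5, 54.3, 19.6]
reported_avg = 81.9  # value in the Avg column

computed_avg = mean(scincl_seed4)
print(round(computed_avg, 1), reported_avg)
```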

## License

MIT

|

suno/bark | suno | "2023-10-04T14:17:55Z" | 28,173 | 954 | transformers | [

"transformers",

"pytorch",

"bark",

"text-to-audio",

"audio",

"text-to-speech",

"en",

"de",

"es",

"fr",

"hi",

"it",

"ja",

"ko",

"pl",

"pt",

"ru",

"tr",

"zh",

"license:mit",

"endpoints_compatible",

"region:us"

] | text-to-speech | "2023-04-25T14:44:46Z" | ---

language:

- en

- de

- es

- fr

- hi

- it

- ja

- ko

- pl

- pt

- ru

- tr

- zh

thumbnail: >-

https://user-images.githubusercontent.com/5068315/230698495-cbb1ced9-c911-4c9a-941d-a1a4a1286ac6.png

library: bark

license: mit

tags:

- bark

- audio

- text-to-speech

pipeline_tag: text-to-speech

inference: true

---

# Bark

Bark is a transformer-based text-to-audio model created by [Suno](https://www.suno.ai).

Bark can generate highly realistic, multilingual speech as well as other audio - including music,

background noise and simple sound effects. The model can also produce nonverbal

communications like laughing, sighing and crying. To support the research community,

we are providing access to pretrained model checkpoints ready for inference.

The original github repo and model card can be found [here](https://github.com/suno-ai/bark).

This model is meant for research purposes only.

The model output is not censored and the authors do not endorse the opinions in the generated content.

Use at your own risk.

Two checkpoints are released:

- [small](https://huggingface.co/suno/bark-small)

- [**large** (this checkpoint)](https://huggingface.co/suno/bark)

## Example

Try out Bark yourself!

* Bark Colab:

<a target="_blank" href="https://colab.research.google.com/drive/1eJfA2XUa-mXwdMy7DoYKVYHI1iTd9Vkt?usp=sharing">

<img src="https://colab.research.google.com/assets/colab-badge.svg" alt="Open In Colab"/>

</a>

* Hugging Face Colab:

<a target="_blank" href="https://colab.research.google.com/drive/1dWWkZzvu7L9Bunq9zvD-W02RFUXoW-Pd?usp=sharing">

<img src="https://colab.research.google.com/assets/colab-badge.svg" alt="Open In Colab"/>

</a>

* Hugging Face Demo:

<a target="_blank" href="https://huggingface.co/spaces/suno/bark">

<img src="https://huggingface.co/datasets/huggingface/badges/raw/main/open-in-hf-spaces-sm.svg" alt="Open in HuggingFace"/>

</a>

## 🤗 Transformers Usage

You can run Bark locally with the 🤗 Transformers library from version 4.31.0 onwards.

1. First install the 🤗 [Transformers library](https://github.com/huggingface/transformers) and scipy:

```

pip install --upgrade pip

pip install --upgrade transformers scipy

```

2. Run inference via the `Text-to-Speech` (TTS) pipeline. You can run the Bark model via the TTS pipeline in just a few lines of code!

```python

from transformers import pipeline

import scipy

synthesiser = pipeline("text-to-speech", "suno/bark")

speech = synthesiser("Hello, my dog is cooler than you!", forward_params={"do_sample": True})

scipy.io.wavfile.write("bark_out.wav", rate=speech["sampling_rate"], data=speech["audio"])

```

3. Run inference via the Transformers modelling code. You can use the processor + generate code to convert text into a mono 24 kHz speech waveform for more fine-grained control.

```python

from transformers import AutoProcessor, AutoModel

processor = AutoProcessor.from_pretrained("suno/bark")

model = AutoModel.from_pretrained("suno/bark")

inputs = processor(

text=["Hello, my name is Suno. And, uh — and I like pizza. [laughs] But I also have other interests such as playing tic tac toe."],

return_tensors="pt",

)

speech_values = model.generate(**inputs, do_sample=True)

```

4. Listen to the speech samples either in an ipynb notebook:

```python

from IPython.display import Audio

sampling_rate = model.generation_config.sample_rate

Audio(speech_values.cpu().numpy().squeeze(), rate=sampling_rate)

```

Or save them as a `.wav` file using a third-party library, e.g. `scipy`:

```python

import scipy

sampling_rate = model.generation_config.sample_rate

scipy.io.wavfile.write("bark_out.wav", rate=sampling_rate, data=speech_values.cpu().numpy().squeeze())

```
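
If 16-bit PCM output is preferred, or `scipy` is not available, the waveform can also be written with Python's standard `wave` module. The sketch below assumes the samples are floats in the range [-1.0, 1.0]; `write_wav_int16` is a helper name introduced here, and the sine tone is a stand-in signal rather than Bark output.

```python
import math
import os
import struct
import tempfile
import wave


def write_wav_int16(path, samples, sample_rate):
    """Write mono float samples in [-1.0, 1.0] as 16-bit PCM."""
    clipped = (max(-1.0, min(1.0, s)) for s in samples)
    pcm = [int(round(s * 32767)) for s in clipped]
    with wave.open(path, "wb") as wav_file:
        wav_file.setnchannels(1)            # mono
        wav_file.setsampwidth(2)            # 2 bytes per sample = 16 bits
        wav_file.setframerate(sample_rate)
        wav_file.writeframes(struct.pack(f"<{len(pcm)}h", *pcm))


# Stand-in signal: one second of a 440 Hz tone at 24 kHz (not Bark output)
sample_rate = 24_000
tone = [0.5 * math.sin(2 * math.pi * 440 * n / sample_rate)
        for n in range(sample_rate)]
wav_path = os.path.join(tempfile.gettempdir(), "tone.wav")
write_wav_int16(wav_path, tone, sample_rate)
```

To use it with the model output, a call such as `write_wav_int16("bark_out.wav", speech_values.cpu().numpy().squeeze().tolist(), sampling_rate)` should work under the same range assumption.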

For more details on using the Bark model for inference using the 🤗 Transformers library, refer to the [Bark docs](https://huggingface.co/docs/transformers/model_doc/bark).

## Suno Usage

You can also run Bark locally through the original [Bark library](https://github.com/suno-ai/bark):

1. First install the [`bark` library](https://github.com/suno-ai/bark)

2. Run the following Python code:

```python

from bark import SAMPLE_RATE, generate_audio, preload_models

from IPython.display import Audio

# download and load all models

preload_models()

# generate audio from text

text_prompt = """

Hello, my name is Suno. And, uh — and I like pizza. [laughs]

But I also have other interests such as playing tic tac toe.

"""

speech_array = generate_audio(text_prompt)

# play text in notebook

Audio(speech_array, rate=SAMPLE_RATE)

```

[pizza.webm](https://user-images.githubusercontent.com/5068315/230490503-417e688d-5115-4eee-9550-b46a2b465ee3.webm)

To save `speech_array` as a WAV file:

```python

from scipy.io.wavfile import write as write_wav

write_wav("/path/to/audio.wav", SAMPLE_RATE, speech_array)

```

## Model Details

The following is additional information about the models released here.

Bark is a series of three transformer models that turn text into audio.

### Text to semantic tokens

- Input: text, tokenized with [BERT tokenizer from Hugging Face](https://huggingface.co/docs/transformers/model_doc/bert#transformers.BertTokenizer)

- Output: semantic tokens that encode the audio to be generated

### Semantic to coarse tokens

- Input: semantic tokens

- Output: tokens from the first two codebooks of the [EnCodec codec](https://github.com/facebookresearch/encodec) from Facebook

### Coarse to fine tokens

- Input: the first two codebooks from EnCodec

- Output: 8 codebooks from EnCodec

### Architecture

| Model | Parameters | Attention | Output Vocab size |

|:-------------------------:|:----------:|------------|:-----------------:|

| Text to semantic tokens | 80/300 M | Causal | 10,000 |

| Semantic to coarse tokens | 80/300 M | Causal | 2x 1,024 |

| Coarse to fine tokens | 80/300 M | Non-causal | 6x 1,024 |

### Release date

April 2023

## Broader Implications

We anticipate that this model's text to audio capabilities can be used to improve accessibility tools in a variety of languages.

While we hope that this release will enable users to express their creativity and build applications that are a force

for good, we acknowledge that any text to audio model has the potential for dual use. While it is not straightforward

to voice clone known people with Bark, it can still be used for nefarious purposes. To further reduce the chances of unintended use of Bark,

we also release a simple classifier to detect Bark-generated audio with high accuracy (see notebooks section of the main repository). |

vikp/column_detector | vikp | "2023-12-22T05:55:14Z" | 28,079 | 10 | transformers | [

"transformers",

"pytorch",

"layoutlmv3",

"text-classification",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

] | text-classification | "2023-11-22T05:53:47Z" | Detects the number of columns in PDF page images. Based on LayoutLMv3.

Used in [marker](https://github.com/VikParuchuri/marker). |

facebook/vit-mae-huge | facebook | "2023-06-13T19:43:24Z" | 28,061 | 6 | transformers | [

"transformers",

"pytorch",

"tf",

"vit_mae",

"pretraining",

"vision",

"dataset:imagenet-1k",

"arxiv:2111.06377",

"license:apache-2.0",

"endpoints_compatible",

"region:us"

] | null | "2022-03-02T23:29:05Z" | ---

license: apache-2.0

tags:

- vision

datasets:

- imagenet-1k

---

# Vision Transformer (huge-sized model) pre-trained with MAE

Vision Transformer (ViT) model pre-trained using the MAE method. It was introduced in the paper [Masked Autoencoders Are Scalable Vision Learners](https://arxiv.org/abs/2111.06377) by Kaiming He, Xinlei Chen, Saining Xie, Yanghao Li, Piotr Dollár, Ross Girshick and first released in [this repository](https://github.com/facebookresearch/mae).

Disclaimer: The team releasing MAE did not write a model card for this model so this model card has been written by the Hugging Face team.

## Model description

The Vision Transformer (ViT) is a transformer encoder model (BERT-like). Images are presented to the model as a sequence of fixed-size patches.

During pre-training, one randomly masks out a high portion (75%) of the image patches. First, the encoder is used to encode the visual patches. Next, a learnable (shared) mask token is added at the positions of the masked patches. The decoder takes the encoded visual patches and mask tokens as input and reconstructs raw pixel values for the masked positions.

By pre-training the model, it learns an inner representation of images that can then be used to extract features useful for downstream tasks: if you have a dataset of labeled images for instance, you can train a standard classifier by placing a linear layer on top of the pre-trained encoder.
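
The random masking step described above can be sketched in plain Python. This is an illustration of the idea rather than the model's actual implementation: the function name, the choice of 196 patches, and the seed are assumptions; the convention that `1` marks a masked patch and the name `ids_restore` follow the outputs used in the usage example of this card.

```python
import random


def random_masking(num_patches, mask_ratio=0.75, seed=0):
    """Pick a random subset of patches to keep and mask out the rest."""
    rng = random.Random(seed)
    num_keep = int(num_patches * (1 - mask_ratio))

    # Shuffle patch indices; the first num_keep of them stay visible
    ids_shuffle = list(range(num_patches))
    rng.shuffle(ids_shuffle)
    ids_keep = ids_shuffle[:num_keep]

    # ids_restore[i] is the position of patch i in the shuffled order,
    # which lets a decoder put tokens back into the original order
    ids_restore = [0] * num_patches
    for shuffled_pos, patch_idx in enumerate(ids_shuffle):
        ids_restore[patch_idx] = shuffled_pos

    # Binary mask in original patch order: 0 = visible, 1 = masked
    mask = [0 if ids_restore[i] < num_keep else 1 for i in range(num_patches)]
    return ids_keep, mask, ids_restore


ids_keep, mask, ids_restore = random_masking(num_patches=196)
print(len(ids_keep), sum(mask))
```

Only the visible patches would be passed to the encoder, which is what makes pre-training with a high masking ratio comparatively cheap.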

## Intended uses & limitations

You can use the raw model for image classification. See the [model hub](https://huggingface.co/models?search=facebook/vit-mae) to look for

fine-tuned versions on a task that interests you.

### How to use

Here is how to use this model:

```python

from transformers import AutoImageProcessor, ViTMAEForPreTraining

from PIL import Image

import requests

url = 'http://images.cocodataset.org/val2017/000000039769.jpg'

image = Image.open(requests.get(url, stream=True).raw)

processor = AutoImageProcessor.from_pretrained('facebook/vit-mae-huge')

model = ViTMAEForPreTraining.from_pretrained('facebook/vit-mae-huge')

inputs = processor(images=image, return_tensors="pt")

outputs = model(**inputs)

loss = outputs.loss

mask = outputs.mask

ids_restore = outputs.ids_restore

```

### BibTeX entry and citation info

```bibtex

@article{DBLP:journals/corr/abs-2111-06377,

author = {Kaiming He and

Xinlei Chen and

Saining Xie and

Yanghao Li and

Piotr Doll{\'{a}}r and

Ross B. Girshick},

title = {Masked Autoencoders Are Scalable Vision Learners},

journal = {CoRR},

volume = {abs/2111.06377},

year = {2021},

url = {https://arxiv.org/abs/2111.06377},

eprinttype = {arXiv},

eprint = {2111.06377},

timestamp = {Tue, 16 Nov 2021 12:12:31 +0100},

biburl = {https://dblp.org/rec/journals/corr/abs-2111-06377.bib},

bibsource = {dblp computer science bibliography, https://dblp.org}

}

``` |

sentence-transformers/stsb-mpnet-base-v2 | sentence-transformers | "2024-03-27T12:57:11Z" | 28,058 | 12 | sentence-transformers | [

"sentence-transformers",

"pytorch",

"safetensors",

"mpnet",

"feature-extraction",

"sentence-similarity",

"transformers",

"arxiv:1908.10084",

"license:apache-2.0",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

] | sentence-similarity | "2022-03-02T23:29:05Z" | ---

license: apache-2.0

library_name: sentence-transformers

tags:

- sentence-transformers

- feature-extraction

- sentence-similarity

- transformers

pipeline_tag: sentence-similarity

---

# sentence-transformers/stsb-mpnet-base-v2

This is a [sentence-transformers](https://www.SBERT.net) model: It maps sentences & paragraphs to a 768 dimensional dense vector space and can be used for tasks like clustering or semantic search.

## Usage (Sentence-Transformers)

Using this model becomes easy when you have [sentence-transformers](https://www.SBERT.net) installed:

```

pip install -U sentence-transformers

```

Then you can use the model like this:

```python

from sentence_transformers import SentenceTransformer

sentences = ["This is an example sentence", "Each sentence is converted"]

model = SentenceTransformer('sentence-transformers/stsb-mpnet-base-v2')

embeddings = model.encode(sentences)

print(embeddings)

```

## Usage (HuggingFace Transformers)

Without [sentence-transformers](https://www.SBERT.net), you can use the model like this: First, you pass your input through the transformer model, then you have to apply the right pooling-operation on-top of the contextualized word embeddings.

```python

from transformers import AutoTokenizer, AutoModel

import torch

#Mean Pooling - Take attention mask into account for correct averaging

def mean_pooling(model_output, attention_mask):

    token_embeddings = model_output[0]  # First element of model_output contains all token embeddings

    input_mask_expanded = attention_mask.unsqueeze(-1).expand(token_embeddings.size()).float()

    return torch.sum(token_embeddings * input_mask_expanded, 1) / torch.clamp(input_mask_expanded.sum(1), min=1e-9)

# Sentences we want sentence embeddings for

sentences = ['This is an example sentence', 'Each sentence is converted']

# Load model from HuggingFace Hub

tokenizer = AutoTokenizer.from_pretrained('sentence-transformers/stsb-mpnet-base-v2')

model = AutoModel.from_pretrained('sentence-transformers/stsb-mpnet-base-v2')

# Tokenize sentences

encoded_input = tokenizer(sentences, padding=True, truncation=True, return_tensors='pt')

# Compute token embeddings

with torch.no_grad():

    model_output = model(**encoded_input)

# Perform pooling. In this case, mean pooling.

sentence_embeddings = mean_pooling(model_output, encoded_input['attention_mask'])

print("Sentence embeddings:")

print(sentence_embeddings)

```
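
To make the role of the attention mask concrete, here is a small pure-Python sketch of masked mean pooling for a single sentence. The function name `masked_mean` and the toy vectors are introduced for illustration only; the third token stands in for a padding position.

```python
def masked_mean(token_embeddings, attention_mask):
    """Average the token vectors of one sentence, ignoring padding positions."""
    dim = len(token_embeddings[0])
    summed = [0.0] * dim
    for vector, keep in zip(token_embeddings, attention_mask):
        if keep:
            for i, value in enumerate(vector):
                summed[i] += value
    # Guard against an all-zero mask, similar to the clamp in the PyTorch code
    count = max(sum(attention_mask), 1e-9)
    return [s / count for s in summed]


# Two real tokens followed by one padding token (toy values)
tokens = [[1.0, 2.0], [3.0, 4.0], [100.0, 100.0]]
mask = [1, 1, 0]
print(masked_mean(tokens, mask))  # [2.0, 3.0]
```

Without the mask, the padding vector would be averaged in and would distort the sentence embedding.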

## Evaluation Results

For an automated evaluation of this model, see the *Sentence Embeddings Benchmark*: [https://seb.sbert.net](https://seb.sbert.net?model_name=sentence-transformers/stsb-mpnet-base-v2)

## Full Model Architecture

```

SentenceTransformer(

(0): Transformer({'max_seq_length': 75, 'do_lower_case': False}) with Transformer model: MPNetModel

(1): Pooling({'word_embedding_dimension': 768, 'pooling_mode_cls_token': False, 'pooling_mode_mean_tokens': True, 'pooling_mode_max_tokens': False, 'pooling_mode_mean_sqrt_len_tokens': False})

)

```

## Citing & Authors

This model was trained by [sentence-transformers](https://www.sbert.net/).

If you find this model helpful, feel free to cite our publication [Sentence-BERT: Sentence Embeddings using Siamese BERT-Networks](https://arxiv.org/abs/1908.10084):

```bibtex

@inproceedings{reimers-2019-sentence-bert,

title = "Sentence-BERT: Sentence Embeddings using Siamese BERT-Networks",

author = "Reimers, Nils and Gurevych, Iryna",

booktitle = "Proceedings of the 2019 Conference on Empirical Methods in Natural Language Processing",

month = "11",

year = "2019",

publisher = "Association for Computational Linguistics",

url = "http://arxiv.org/abs/1908.10084",

}

``` |

diffusers/controlnet-depth-sdxl-1.0 | diffusers | "2024-04-24T01:31:15Z" | 28,029 | 152 | diffusers | [

"diffusers",

"safetensors",

"stable-diffusion-xl",

"stable-diffusion-xl-diffusers",

"text-to-image",

"controlnet",

"base_model:stabilityai/stable-diffusion-xl-base-1.0",

"license:openrail++",

"region:us"

] | text-to-image | "2023-08-12T17:23:20Z" |

---

license: openrail++

base_model: stabilityai/stable-diffusion-xl-base-1.0

tags:

- stable-diffusion-xl

- stable-diffusion-xl-diffusers

- text-to-image

- diffusers

- controlnet

inference: false

---

# SDXL-controlnet: Depth

These are controlnet weights trained on stabilityai/stable-diffusion-xl-base-1.0 with depth conditioning. You can find some example images in the following.

prompt: spiderman lecture, photorealistic

## Usage

Make sure to first install the libraries:

```bash

pip install accelerate transformers safetensors diffusers

```

And then we're ready to go:

```python

import torch

import numpy as np

from PIL import Image

from transformers import DPTFeatureExtractor, DPTForDepthEstimation

from diffusers import ControlNetModel, StableDiffusionXLControlNetPipeline, AutoencoderKL

from diffusers.utils import load_image

depth_estimator = DPTForDepthEstimation.from_pretrained("Intel/dpt-hybrid-midas").to("cuda")

feature_extractor = DPTFeatureExtractor.from_pretrained("Intel/dpt-hybrid-midas")

controlnet = ControlNetModel.from_pretrained(

"diffusers/controlnet-depth-sdxl-1.0",

variant="fp16",

use_safetensors=True,

torch_dtype=torch.float16,

)

vae = AutoencoderKL.from_pretrained("madebyollin/sdxl-vae-fp16-fix", torch_dtype=torch.float16)

pipe = StableDiffusionXLControlNetPipeline.from_pretrained(

"stabilityai/stable-diffusion-xl-base-1.0",

controlnet=controlnet,

vae=vae,

variant="fp16",

use_safetensors=True,

torch_dtype=torch.float16,

)

pipe.enable_model_cpu_offload()

def get_depth_map(image):

    image = feature_extractor(images=image, return_tensors="pt").pixel_values.to("cuda")

    with torch.no_grad(), torch.autocast("cuda"):

        depth_map = depth_estimator(image).predicted_depth

    depth_map = torch.nn.functional.interpolate(

        depth_map.unsqueeze(1),

        size=(1024, 1024),

        mode="bicubic",

        align_corners=False,

    )

    depth_min = torch.amin(depth_map, dim=[1, 2, 3], keepdim=True)

    depth_max = torch.amax(depth_map, dim=[1, 2, 3], keepdim=True)

    depth_map = (depth_map - depth_min) / (depth_max - depth_min)

    image = torch.cat([depth_map] * 3, dim=1)

    image = image.permute(0, 2, 3, 1).cpu().numpy()[0]

    image = Image.fromarray((image * 255.0).clip(0, 255).astype(np.uint8))

    return image

prompt = "stormtrooper lecture, photorealistic"

image = load_image("https://huggingface.co/lllyasviel/sd-controlnet-depth/resolve/main/images/stormtrooper.png")

controlnet_conditioning_scale = 0.5 # recommended for good generalization

depth_image = get_depth_map(image)

images = pipe(

prompt, image=depth_image, num_inference_steps=30, controlnet_conditioning_scale=controlnet_conditioning_scale,

).images

images[0]

images[0].save("stormtrooper.png")

```

For more details, check out the official documentation of [`StableDiffusionXLControlNetPipeline`](https://huggingface.co/docs/diffusers/main/en/api/pipelines/controlnet_sdxl).
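
The min-max rescaling inside `get_depth_map` can be illustrated on a tiny grid without any GPU or model. The sketch below uses plain Python lists; `normalize_depth` is a name introduced for this example, and the guard for a constant depth map is an addition of this sketch that the snippet above does not include.

```python
def normalize_depth(depth_rows):
    """Rescale a 2-D grid of depth values to integers in the 0..255 range."""
    flat = [v for row in depth_rows for v in row]
    d_min, d_max = min(flat), max(flat)
    span = d_max - d_min
    if span == 0:
        # Constant depth map: avoid dividing by zero (a choice made for this sketch)
        return [[0 for _ in row] for row in depth_rows]
    # int() truncates, like casting to an unsigned 8-bit integer
    return [[int((v - d_min) / span * 255.0) for v in row] for row in depth_rows]


depth = [[2.0, 4.0], [6.0, 10.0]]
print(normalize_depth(depth))  # [[0, 63], [127, 255]]
```

The nearest and farthest values always map to the two ends of the range, so the conditioning image uses the full contrast regardless of the absolute depth scale.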

### Training

Our training script was built on top of the official training script that we provide [here](https://github.com/huggingface/diffusers/blob/main/examples/controlnet/README_sdxl.md).

#### Training data and Compute

The model is trained on 3M image-text pairs from LAION-Aesthetics V2. The model is trained for 700 GPU hours on 80GB A100 GPUs.

#### Batch size

Data-parallel training with a per-GPU batch size of 8, for a total batch size of 256.

#### Hyper Parameters

A constant learning rate of 1e-5.

#### Mixed precision

fp16 |

monologg/bert-base-cased-goemotions-original | monologg | "2021-05-19T23:48:33Z" | 27,952 | 7 | transformers | [

"transformers",

"pytorch",

"bert",

"endpoints_compatible",

"region:us"

] | null | "2022-03-02T23:29:05Z" | Entry not found |

ptx0/terminus-xl-velocity-v2 | ptx0 | "2024-06-15T16:09:04Z" | 27,851 | 6 | diffusers | [

"diffusers",

"safetensors",

"stable-diffusion",

"stable-diffusion-diffusers",

"text-to-image",

"full",

"base_model:ptx0/terminus-xl-velocity-v1",

"license:creativeml-openrail-m",

"region:us"

] | text-to-image | "2024-04-14T23:35:06Z" | ---

license: creativeml-openrail-m

base_model: "ptx0/terminus-xl-velocity-v1"

tags:

- stable-diffusion

- stable-diffusion-diffusers

- text-to-image

- diffusers

- full

inference: true

---

# terminus-xl-velocity-v2

This is a full rank finetuned model derived from [ptx0/terminus-xl-velocity-v1](https://huggingface.co/ptx0/terminus-xl-velocity-v1).

The main validation prompt used during training was:

```

a cute anime character named toast

```

## Validation settings

- CFG: `7.5`

- CFG Rescale: `0.7`

- Steps: `30`

- Sampler: `euler`

- Seed: `420420420`

- Resolutions: `1024x1024,1152x960,896x1152`

Note: The validation settings are not necessarily the same as the [training settings](#training-settings).

<Gallery />

The text encoder **was not** trained.

You may reuse the base model text encoder for inference.

## Training settings

- Training epochs: 0

- Training steps: 5400

- Learning rate: 1e-06

- Effective batch size: 32

- Micro-batch size: 8

- Gradient accumulation steps: 4

- Prediction type: v_prediction

- Rescaled betas zero SNR: True

- Optimizer: AdamW, stochastic bf16

- Precision: Pure BF16

- Xformers: Enabled
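
The batch-size figures above are related by simple arithmetic. The sketch below assumes a single training device and that "training steps" counts optimizer steps; neither is stated in this card, and the function name is introduced for illustration.

```python
def effective_batch_size(micro_batch_size, gradient_accumulation_steps, num_devices=1):
    """Number of samples that contribute to one optimizer step."""
    return micro_batch_size * gradient_accumulation_steps * num_devices


batch = effective_batch_size(micro_batch_size=8, gradient_accumulation_steps=4)
samples_seen = 5400 * batch  # training steps x effective batch size

print(batch)         # 32
print(samples_seen)  # 172800
```

Under those assumptions, the run processed roughly 173 thousand samples in total.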

## Datasets

### celebrities

- Repeats: 4

- Total number of images: 1184

- Total number of aspect buckets: 3

- Resolution: 1.0 megapixels

- Cropped: True

- Crop style: random

- Crop aspect: random

### movieposters

- Repeats: 5

- Total number of images: 1728

- Total number of aspect buckets: 3

- Resolution: 1.0 megapixels

- Cropped: True

- Crop style: random

- Crop aspect: random

### normalnudes

- Repeats: 5

- Total number of images: 1056

- Total number of aspect buckets: 3

- Resolution: 1.0 megapixels

- Cropped: True

- Crop style: random

- Crop aspect: random

### propagandaposters

- Repeats: 0

- Total number of images: 608

- Total number of aspect buckets: 3

- Resolution: 1.0 megapixels

- Cropped: True

- Crop style: random

- Crop aspect: random

### guys

- Repeats: 5

- Total number of images: 352

- Total number of aspect buckets: 3

- Resolution: 1.0 megapixels

- Cropped: True

- Crop style: random

- Crop aspect: random

### pixel-art

- Repeats: 0

- Total number of images: 1024

- Total number of aspect buckets: 3

- Resolution: 1.0 megapixels

- Cropped: True

- Crop style: random

- Crop aspect: random

### signs

- Repeats: 5

- Total number of images: 352

- Total number of aspect buckets: 3

- Resolution: 1.0 megapixels

- Cropped: True

- Crop style: random

- Crop aspect: random

### moviecollection

- Repeats: 0

- Total number of images: 1888

- Total number of aspect buckets: 3

- Resolution: 1.0 megapixels

- Cropped: True

- Crop style: random

- Crop aspect: random

### bookcovers

- Repeats: 0

- Total number of images: 736

- Total number of aspect buckets: 3

- Resolution: 1.0 megapixels

- Cropped: True

- Crop style: random

- Crop aspect: random

### nijijourney

- Repeats: 0

- Total number of images: 608

- Total number of aspect buckets: 3

- Resolution: 1.0 megapixels

- Cropped: True

- Crop style: random

- Crop aspect: random

### experimental

- Repeats: 0

- Total number of images: 3040

- Total number of aspect buckets: 3

- Resolution: 1.0 megapixels

- Cropped: True

- Crop style: random

- Crop aspect: random

### ethnic

- Repeats: 0

- Total number of images: 3072

- Total number of aspect buckets: 3

- Resolution: 1.0 megapixels

- Cropped: True

- Crop style: random

- Crop aspect: random

### sports

- Repeats: 0

- Total number of images: 736

- Total number of aspect buckets: 3

- Resolution: 1.0 megapixels

- Cropped: True

- Crop style: random

- Crop aspect: random

### gay

- Repeats: 0

- Total number of images: 1056

- Total number of aspect buckets: 3

- Resolution: 1.0 megapixels

- Cropped: True

- Crop style: random

- Crop aspect: random

### architecture

- Repeats: 0

- Total number of images: 4320

- Total number of aspect buckets: 3

- Resolution: 1.0 megapixels

- Cropped: True

- Crop style: random

- Crop aspect: random

### shutterstock

- Repeats: 0

- Total number of images: 21059

- Total number of aspect buckets: 3

- Resolution: 1.0 megapixels

- Cropped: True

- Crop style: random

- Crop aspect: random

### cinemamix-1mp

- Repeats: 0

- Total number of images: 8992

- Total number of aspect buckets: 3

- Resolution: 1.0 megapixels

- Cropped: True

- Crop style: random

- Crop aspect: random

### nsfw-1024

- Repeats: 0

- Total number of images: 10761

- Total number of aspect buckets: 3

- Resolution: 1.0 megapixels

- Cropped: True

- Crop style: random

- Crop aspect: random

### anatomy

- Repeats: 5

- Total number of images: 16385

- Total number of aspect buckets: 3

- Resolution: 1.0 megapixels

- Cropped: True

- Crop style: random

- Crop aspect: random

### bg20k-1024

- Repeats: 0

- Total number of images: 89250

- Total number of aspect buckets: 3

- Resolution: 1.0 megapixels

- Cropped: True

- Crop style: random

- Crop aspect: random

### yoga

- Repeats: 0

- Total number of images: 3584

- Total number of aspect buckets: 3

- Resolution: 1.0 megapixels

- Cropped: True

- Crop style: random

- Crop aspect: random

### photo-aesthetics

- Repeats: 0

- Total number of images: 33121

- Total number of aspect buckets: 3

- Resolution: 1.0 megapixels

- Cropped: True

- Crop style: random

- Crop aspect: random

### text-1mp

- Repeats: 5

- Total number of images: 13123

- Total number of aspect buckets: 3

- Resolution: 1.0 megapixels

- Cropped: True

- Crop style: random

- Crop aspect: random

### photo-concept-bucket

- Repeats: 0

- Total number of images: 567521

- Total number of aspect buckets: 3

- Resolution: 1.0 megapixels

- Cropped: True

- Crop style: random

- Crop aspect: random

|

facebook/nllb-200-3.3B | facebook | "2023-02-11T20:19:13Z" | 27,849 | 212 | transformers | [

"transformers",

"pytorch",

"m2m_100",

"text2text-generation",

"nllb",

"translation",

"ace",

"acm",

"acq",

"aeb",

"af",

"ajp",

"ak",

"als",

"am",

"apc",

"ar",

"ars",

"ary",

"arz",

"as",

"ast",

"awa",

"ayr",

"azb",

"azj",

"ba",

"bm",

"ban",

"be",

"bem",

"bn",

"bho",

"bjn",

"bo",

"bs",

"bug",

"bg",

"ca",

"ceb",

"cs",

"cjk",

"ckb",

"crh",

"cy",

"da",

"de",

"dik",

"dyu",

"dz",

"el",

"en",

"eo",

"et",

"eu",

"ee",

"fo",

"fj",

"fi",

"fon",

"fr",

"fur",

"fuv",

"gaz",

"gd",

"ga",

"gl",

"gn",

"gu",

"ht",

"ha",

"he",

"hi",

"hne",

"hr",

"hu",

"hy",

"ig",

"ilo",

"id",

"is",

"it",

"jv",

"ja",

"kab",

"kac",

"kam",

"kn",

"ks",

"ka",

"kk",

"kbp",

"kea",

"khk",

"km",

"ki",

"rw",

"ky",

"kmb",

"kmr",

"knc",

"kg",

"ko",

"lo",

"lij",

"li",

"ln",

"lt",

"lmo",

"ltg",

"lb",

"lua",

"lg",

"luo",

"lus",

"lvs",

"mag",

"mai",

"ml",

"mar",

"min",

"mk",

"mt",

"mni",

"mos",

"mi",

"my",

"nl",

"nn",

"nb",

"npi",

"nso",

"nus",

"ny",

"oc",

"ory",

"pag",

"pa",

"pap",

"pbt",

"pes",

"plt",

"pl",

"pt",

"prs",

"quy",

"ro",

"rn",

"ru",

"sg",

"sa",

"sat",

"scn",

"shn",

"si",

"sk",

"sl",

"sm",

"sn",

"sd",

"so",

"st",

"es",

"sc",

"sr",

"ss",

"su",

"sv",

"swh",

"szl",

"ta",

"taq",

"tt",

"te",

"tg",

"tl",

"th",

"ti",

"tpi",

"tn",

"ts",

"tk",

"tum",

"tr",

"tw",

"tzm",

"ug",

"uk",

"umb",

"ur",

"uzn",

"vec",

"vi",

"war",

"wo",

"xh",

"ydd",

"yo",

"yue",

"zh",

"zsm",

"zu",

"dataset:flores-200",

"license:cc-by-nc-4.0",

"autotrain_compatible",

"region:us"

] | translation | "2022-07-08T10:06:00Z" | ---

language:

- ace

- acm

- acq

- aeb

- af

- ajp

- ak

- als

- am

- apc

- ar

- ars

- ary

- arz

- as

- ast

- awa

- ayr

- azb

- azj

- ba

- bm

- ban

- be

- bem

- bn

- bho

- bjn

- bo

- bs

- bug

- bg

- ca

- ceb

- cs

- cjk

- ckb

- crh

- cy

- da

- de

- dik

- dyu

- dz

- el

- en

- eo

- et

- eu

- ee

- fo

- fj

- fi

- fon

- fr

- fur

- fuv

- gaz

- gd

- ga

- gl

- gn

- gu

- ht

- ha

- he

- hi

- hne

- hr

- hu

- hy

- ig

- ilo

- id

- is

- it

- jv

- ja

- kab

- kac

- kam

- kn

- ks

- ka

- kk

- kbp

- kea

- khk

- km

- ki

- rw

- ky

- kmb

- kmr

- knc

- kg

- ko

- lo

- lij

- li

- ln

- lt

- lmo

- ltg

- lb

- lua

- lg

- luo

- lus

- lvs

- mag

- mai

- ml

- mar

- min

- mk

- mt

- mni

- mos

- mi

- my

- nl

- nn

- nb

- npi

- nso

- nus

- ny

- oc

- ory

- pag

- pa

- pap

- pbt

- pes

- plt

- pl

- pt

- prs

- quy

- ro

- rn

- ru

- sg

- sa

- sat

- scn

- shn

- si

- sk

- sl

- sm

- sn

- sd

- so

- st

- es

- sc

- sr

- ss

- su

- sv

- swh

- szl

- ta

- taq

- tt

- te

- tg

- tl

- th

- ti

- tpi

- tn

- ts

- tk

- tum

- tr

- tw

- tzm

- ug

- uk

- umb

- ur

- uzn

- vec

- vi

- war

- wo

- xh

- ydd

- yo

- yue

- zh

- zsm

- zu

language_details: "ace_Arab, ace_Latn, acm_Arab, acq_Arab, aeb_Arab, afr_Latn, ajp_Arab, aka_Latn, amh_Ethi, apc_Arab, arb_Arab, ars_Arab, ary_Arab, arz_Arab, asm_Beng, ast_Latn, awa_Deva, ayr_Latn, azb_Arab, azj_Latn, bak_Cyrl, bam_Latn, ban_Latn,bel_Cyrl, bem_Latn, ben_Beng, bho_Deva, bjn_Arab, bjn_Latn, bod_Tibt, bos_Latn, bug_Latn, bul_Cyrl, cat_Latn, ceb_Latn, ces_Latn, cjk_Latn, ckb_Arab, crh_Latn, cym_Latn, dan_Latn, deu_Latn, dik_Latn, dyu_Latn, dzo_Tibt, ell_Grek, eng_Latn, epo_Latn, est_Latn, eus_Latn, ewe_Latn, fao_Latn, pes_Arab, fij_Latn, fin_Latn, fon_Latn, fra_Latn, fur_Latn, fuv_Latn, gla_Latn, gle_Latn, glg_Latn, grn_Latn, guj_Gujr, hat_Latn, hau_Latn, heb_Hebr, hin_Deva, hne_Deva, hrv_Latn, hun_Latn, hye_Armn, ibo_Latn, ilo_Latn, ind_Latn, isl_Latn, ita_Latn, jav_Latn, jpn_Jpan, kab_Latn, kac_Latn, kam_Latn, kan_Knda, kas_Arab, kas_Deva, kat_Geor, knc_Arab, knc_Latn, kaz_Cyrl, kbp_Latn, kea_Latn, khm_Khmr, kik_Latn, kin_Latn, kir_Cyrl, kmb_Latn, kon_Latn, kor_Hang, kmr_Latn, lao_Laoo, lvs_Latn, lij_Latn, lim_Latn, lin_Latn, lit_Latn, lmo_Latn, ltg_Latn, ltz_Latn, lua_Latn, lug_Latn, luo_Latn, lus_Latn, mag_Deva, mai_Deva, mal_Mlym, mar_Deva, min_Latn, mkd_Cyrl, plt_Latn, mlt_Latn, mni_Beng, khk_Cyrl, mos_Latn, mri_Latn, zsm_Latn, mya_Mymr, nld_Latn, nno_Latn, nob_Latn, npi_Deva, nso_Latn, nus_Latn, nya_Latn, oci_Latn, gaz_Latn, ory_Orya, pag_Latn, pan_Guru, pap_Latn, pol_Latn, por_Latn, prs_Arab, pbt_Arab, quy_Latn, ron_Latn, run_Latn, rus_Cyrl, sag_Latn, san_Deva, sat_Beng, scn_Latn, shn_Mymr, sin_Sinh, slk_Latn, slv_Latn, smo_Latn, sna_Latn, snd_Arab, som_Latn, sot_Latn, spa_Latn, als_Latn, srd_Latn, srp_Cyrl, ssw_Latn, sun_Latn, swe_Latn, swh_Latn, szl_Latn, tam_Taml, tat_Cyrl, tel_Telu, tgk_Cyrl, tgl_Latn, tha_Thai, tir_Ethi, taq_Latn, taq_Tfng, tpi_Latn, tsn_Latn, tso_Latn, tuk_Latn, tum_Latn, tur_Latn, twi_Latn, tzm_Tfng, uig_Arab, ukr_Cyrl, umb_Latn, urd_Arab, uzn_Latn, vec_Latn, vie_Latn, war_Latn, wol_Latn, xho_Latn, ydd_Hebr, yor_Latn, 
yue_Hant, zho_Hans, zho_Hant, zul_Latn"

tags:

- nllb

- translation

license: "cc-by-nc-4.0"

datasets:

- flores-200

metrics:

- bleu

- spbleu

- chrf++

inference: false

---

# NLLB-200

This is the model card of NLLB-200's 3.3B variant.

Here are the [metrics](https://tinyurl.com/nllb200dense3bmetrics) for that particular checkpoint.

- Information about training algorithms, parameters, fairness constraints or other applied approaches, and features: The exact training algorithm, the data, and the strategies for handling data imbalances between high- and low-resource languages that were used to train NLLB-200 are described in the paper.

- Paper or other resource for more information: NLLB Team et al., No Language Left Behind: Scaling Human-Centered Machine Translation, arXiv, 2022

- License: CC-BY-NC

- Where to send questions or comments about the model: https://github.com/facebookresearch/fairseq/issues

## Intended Use

- Primary intended uses: NLLB-200 is a machine translation model primarily intended for research in machine translation, especially for low-resource languages. It allows for single-sentence translation among 200 languages. Information on how to use the model can be found in the Fairseq code repository, along with the training code and references to evaluation and training data.

- Primary intended users: Primary users are researchers and the machine translation research community.

- Out-of-scope use cases: NLLB-200 is a research model and is not released for production deployment. NLLB-200 is trained on general-domain text data and is not intended to be used with domain-specific texts, such as medical or legal texts. The model is not intended to be used for document translation. The model was trained with input lengths not exceeding 512 tokens, so translating longer sequences might result in quality degradation. NLLB-200 translations cannot be used as certified translations.
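
The constraints above (single-sentence input, at most 512 tokens) can be sketched in code. The following is a minimal illustration, not the authors' pipeline: the sentence splitter and the whitespace token counter are simplifying assumptions, and `translate_sentence` assumes the checkpoint is loaded through the Hugging Face `transformers` library rather than Fairseq. The helper names and the default language pair are hypothetical.

```python
import re
from typing import Callable, List

MAX_INPUT_TOKENS = 512  # input length limit stated in the model card


def split_sentences(text: str) -> List[str]:
    """Naive splitter (assumption): break after '.', '!' or '?' plus whitespace."""
    parts = re.split(r"(?<=[.!?])\s+", text.strip())
    return [p for p in parts if p]


def whitespace_token_count(sentence: str) -> int:
    """Stand-in counter (assumption); a real SentencePiece count is usually higher."""
    return len(sentence.split())


def within_limit(sentence: str,
                 count_tokens: Callable[[str], int] = whitespace_token_count,
                 limit: int = MAX_INPUT_TOKENS) -> bool:
    """Return True if the sentence fits the length the model was trained on."""
    return count_tokens(sentence) <= limit


def translate_sentence(sentence: str,
                       src_lang: str = "eng_Latn",
                       tgt_lang: str = "fra_Latn") -> str:
    """Translate one sentence; downloads the checkpoint on first use."""
    # Imported lazily because transformers and the weights are heavy dependencies.
    from transformers import AutoModelForSeq2SeqLM, AutoTokenizer

    name = "facebook/nllb-200-3.3B"
    tokenizer = AutoTokenizer.from_pretrained(name, src_lang=src_lang)
    model = AutoModelForSeq2SeqLM.from_pretrained(name)
    inputs = tokenizer(sentence, return_tensors="pt",
                       truncation=True, max_length=MAX_INPUT_TOKENS)
    output_ids = model.generate(
        **inputs,
        # The target language is selected by forcing its code as the first token.
        forced_bos_token_id=tokenizer.convert_tokens_to_ids(tgt_lang),
        max_new_tokens=MAX_INPUT_TOKENS,
    )
    return tokenizer.batch_decode(output_ids, skip_special_tokens=True)[0]
```

In practice the tokenizer and model would be loaded once and reused, and `count_tokens` would be replaced by a count taken from the model's own tokenizer. Splitting a document into sentences this way does not make document-level translation an intended use; it only keeps each input within the trained length.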

## Metrics

- Model performance measures: The NLLB-200 model was evaluated using the BLEU, spBLEU, and chrF++ metrics, which are widely adopted by the machine translation community. Additionally, we performed human evaluation with the XSTS protocol and measured the toxicity of the generated translations.
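
To give a feel for what a chrF++-style score measures, here is a simplified sketch in plain Python. It is an illustration only and is not the implementation used to produce the reported metrics: it averages clipped n-gram precision and recall over character orders 1 to 6 and word orders 1 to 2, then combines them with an F-beta score (beta = 2). The function name `simple_chrf_pp` and the handling of very short inputs are assumptions of this sketch, and established evaluation tools differ in details such as tokenization, smoothing, and the 0 to 100 reporting scale.

```python
from collections import Counter
from typing import List


def _ngrams(items: List[str], n: int) -> Counter:
    """Count all contiguous n-grams in a sequence."""
    return Counter(tuple(items[i:i + n]) for i in range(len(items) - n + 1))


def simple_chrf_pp(hypothesis: str, reference: str,
                   char_order: int = 6, word_order: int = 2,
                   beta: float = 2.0) -> float:
    """Simplified chrF++-style score in [0, 1]; not an official implementation."""
    # Character n-grams ignore whitespace; word n-grams use whitespace tokens.
    hyp_chars = list("".join(hypothesis.split()))
    ref_chars = list("".join(reference.split()))
    hyp_words, ref_words = hypothesis.split(), reference.split()

    precisions, recalls = [], []
    streams = [(hyp_chars, ref_chars, char_order),
               (hyp_words, ref_words, word_order)]
    for hyp, ref, max_n in streams:
        for n in range(1, max_n + 1):
            hyp_counts, ref_counts = _ngrams(hyp, n), _ngrams(ref, n)
            hyp_total = sum(hyp_counts.values())
            ref_total = sum(ref_counts.values())
            if hyp_total == 0 and ref_total == 0:
                continue  # neither side has n-grams of this order
            # Counter intersection keeps the minimum count: the clipped overlap.
            matches = sum((hyp_counts & ref_counts).values())
            precisions.append(matches / hyp_total if hyp_total else 0.0)
            recalls.append(matches / ref_total if ref_total else 0.0)

    if not precisions:
        return 0.0
    p = sum(precisions) / len(precisions)
    r = sum(recalls) / len(recalls)
    if p + r == 0:
        return 0.0
    # beta = 2 weights recall more heavily than precision.
    return (1 + beta ** 2) * p * r / (beta ** 2 * p + r)
```

Identical strings score 1.0 and strings with no shared characters score 0.0; a hypothesis that drops part of the reference keeps full precision but loses recall, which the beta = 2 weighting penalizes.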

## Evaluation Data

- Datasets: The Flores-200 dataset is described in Section 4 of the paper.

- Motivation: We used Flores-200 as it provides full evaluation coverage of the languages in NLLB-200

- Preprocessing: Sentence-split raw text data was preprocessed using SentencePiece. The SentencePiece model is released along with NLLB-200.

## Training Data