| modelId | author | last_modified | downloads | likes | library_name | tags | pipeline_tag | createdAt | card |

|---|---|---|---|---|---|---|---|---|---|

Qwen/Qwen2-7B | Qwen | "2024-06-06T14:41:44Z" | 57,379 | 81 | transformers | [

"transformers",

"safetensors",

"qwen2",

"text-generation",

"pretrained",

"conversational",

"en",

"license:apache-2.0",

"autotrain_compatible",

"endpoints_compatible",

"text-generation-inference",

"region:us"

] | text-generation | "2024-06-04T13:35:27Z" | ---

language:

- en

pipeline_tag: text-generation

tags:

- pretrained

license: apache-2.0

---

# Qwen2-7B

## Introduction

Qwen2 is the new series of Qwen large language models. For Qwen2, we release a number of base language models and instruction-tuned language models ranging from 0.5 to 72 billion parameters, including a Mixture-of-Experts model. This repo contains the 7B Qwen2 base language model.

Compared with state-of-the-art open-source language models, including the previously released Qwen1.5, Qwen2 has generally surpassed most open-source models and demonstrated competitiveness against proprietary models across a series of benchmarks targeting language understanding, language generation, multilingual capability, coding, mathematics, reasoning, etc.

For more details, please refer to our [blog](https://qwenlm.github.io/blog/qwen2/), [GitHub](https://github.com/QwenLM/Qwen2), and [Documentation](https://qwen.readthedocs.io/en/latest/).

<br>

## Model Details

Qwen2 is a language model series including decoder language models of different sizes. For each size, we release the base language model and the aligned chat model. It is based on the Transformer architecture with SwiGLU activation, attention QKV bias, grouped-query attention, etc. Additionally, we have an improved tokenizer adaptive to multiple natural languages and code.
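Grouped-query attention lets several query heads share one key/value head, which mainly shrinks the key/value cache kept during generation. The plain-Python sketch below illustrates the head mapping and the cache arithmetic. The layer and head counts are assumptions taken from Qwen2-7B's `config.json` (28 layers, 28 query heads, 4 key/value heads, head dimension 128), not from this card, so check them against the actual config before relying on the numbers; the function names are hypothetical.

```python
def kv_head_for_query_head(q_head: int, n_heads: int, n_kv_heads: int) -> int:
    """In grouped-query attention, consecutive query heads share one key/value head."""
    if n_heads % n_kv_heads != 0:
        raise ValueError("n_heads must be a multiple of n_kv_heads")
    group_size = n_heads // n_kv_heads
    return q_head // group_size


def kv_cache_bytes_per_token(n_layers: int, n_kv_heads: int, head_dim: int, bytes_per_value: int = 2) -> int:
    """Keys and values (factor 2) for every layer and KV head; 2 bytes per value for fp16/bf16."""
    return 2 * n_layers * n_kv_heads * head_dim * bytes_per_value


# ASSUMED Qwen2-7B shapes (from its config.json, not stated in this card)
N_LAYERS, N_HEADS, N_KV_HEADS, HEAD_DIM = 28, 28, 4, 128

gqa = kv_cache_bytes_per_token(N_LAYERS, N_KV_HEADS, HEAD_DIM)
mha = kv_cache_bytes_per_token(N_LAYERS, N_HEADS, HEAD_DIM)  # if every query head had its own K/V
print(gqa, mha, mha // gqa)  # 57344 401408 7
```

Under these assumed shapes, each cached token costs 56 KiB in fp16 instead of 392 KiB, a saving equal to `n_heads / n_kv_heads`.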

## Requirements

The code for Qwen2 is included in recent versions of Hugging Face Transformers. We advise you to install `transformers>=4.37.0`; otherwise, you might encounter the following error:

```

KeyError: 'qwen2'

```
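To check ahead of time whether an installed Transformers release meets the `4.37.0` minimum stated above, a small version comparison is enough. The sketch below is a hypothetical helper, not part of Transformers; it ignores pre-release suffixes such as `.dev0`, a simplification (`packaging.version` handles those properly).

```python
import re

# Minimum version named in this card; older releases raise KeyError: 'qwen2'
MIN_TRANSFORMERS = (4, 37, 0)


def parse_version(version: str) -> tuple:
    """Return the leading major.minor.patch numbers, e.g. '4.40.0.dev0' -> (4, 40, 0)."""
    match = re.match(r"(\d+)\.(\d+)(?:\.(\d+))?", version)
    if match is None:
        raise ValueError(f"unrecognized version string: {version!r}")
    major, minor, patch = match.groups(default="0")
    return int(major), int(minor), int(patch)


def supports_qwen2(version: str) -> bool:
    """True if the version is at least the minimum required for the qwen2 architecture."""
    return parse_version(version) >= MIN_TRANSFORMERS


if __name__ == "__main__":
    try:
        import transformers
    except ImportError:
        print("transformers is not installed")
    else:
        print(transformers.__version__, supports_qwen2(transformers.__version__))
```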

## Usage

We do not advise using the base language model directly for text generation. Instead, you can apply post-training (e.g., SFT, RLHF, or continued pretraining) to this model.

### Performance

The evaluation of base models mainly focuses on natural language understanding, general question answering, coding, mathematics, scientific knowledge, reasoning, multilingual capability, etc.

The datasets for evaluation include:

**English Tasks**: MMLU (5-shot), MMLU-Pro (5-shot), GPQA (5-shot), Theorem QA (5-shot), BBH (3-shot), HellaSwag (10-shot), Winogrande (5-shot), TruthfulQA (0-shot), ARC-C (25-shot)

**Coding Tasks**: EvalPlus (0-shot) (HumanEval, MBPP, HumanEval+, MBPP+), MultiPL-E (0-shot) (Python, C++, Java, PHP, TypeScript, C#, Bash, JavaScript)

**Math Tasks**: GSM8K (4-shot), MATH (4-shot)

**Chinese Tasks**: C-Eval (5-shot), CMMLU (5-shot)

**Multilingual Tasks**: Multi-Exam (M3Exam 5-shot, IndoMMLU 3-shot, ruMMLU 5-shot, mMMLU 5-shot), Multi-Understanding (BELEBELE 5-shot, XCOPA 5-shot, XWinograd 5-shot, XStoryCloze 0-shot, PAWS-X 5-shot), Multi-Mathematics (MGSM 8-shot), Multi-Translation (Flores-101 5-shot)

#### Qwen2-7B performance

| Datasets | Mistral-7B | Gemma-7B | Llama-3-8B | Qwen1.5-7B | Qwen2-7B |
| :--------| :---------: | :------------: | :------------: | :------------: | :------------: |
| # Params | 7.2B | 8.5B | 8.0B | 7.7B | 7.6B |
| # Non-emb Params | 7.0B | 7.8B | 7.0B | 6.5B | 6.5B |
| ***English*** | | | | | |
| MMLU | 64.2 | 64.6 | 66.6 | 61.0 | **70.3** |
| MMLU-Pro | 30.9 | 33.7 | 35.4 | 29.9 | **40.0** |
| GPQA | 24.7 | 25.7 | 25.8 | 26.7 | **31.8** |
| Theorem QA | 19.2 | 21.5 | 22.1 | 14.2 | **31.1** |
| BBH | 56.1 | 55.1 | 57.7 | 40.2 | **62.6** |
| HellaSwag | **83.2** | 82.2 | 82.1 | 78.5 | 80.7 |
| Winogrande | 78.4 | **79.0** | 77.4 | 71.3 | 77.0 |
| ARC-C | 60.0 | **61.1** | 59.3 | 54.2 | 60.6 |
| TruthfulQA | 42.2 | 44.8 | 44.0 | 51.1 | **54.2** |
| ***Coding*** | | | | | |
| HumanEval | 29.3 | 37.2 | 33.5 | 36.0 | **51.2** |
| MBPP | 51.1 | 50.6 | 53.9 | 51.6 | **65.9** |
| EvalPlus | 36.4 | 39.6 | 40.3 | 40.0 | **54.2** |
| MultiPL-E | 29.4 | 29.7 | 22.6 | 28.1 | **46.3** |
| ***Mathematics*** | | | | | |
| GSM8K | 52.2 | 46.4 | 56.0 | 62.5 | **79.9** |
| MATH | 13.1 | 24.3 | 20.5 | 20.3 | **44.2** |
| ***Chinese*** | | | | | |
| C-Eval | 47.4 | 43.6 | 49.5 | 74.1 | **83.2** |
| CMMLU | - | - | 50.8 | 73.1 | **83.9** |
| ***Multilingual*** | | | | | |
| Multi-Exam | 47.1 | 42.7 | 52.3 | 47.7 | **59.2** |
| Multi-Understanding | 63.3 | 58.3 | 68.6 | 67.6 | **72.0** |
| Multi-Mathematics | 26.3 | 39.1 | 36.3 | 37.3 | **57.5** |
| Multi-Translation | 23.3 | 31.2 | **31.9** | 28.4 | 31.5 |
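The gap between the "# Params" and "# Non-emb Params" rows is roughly the size of each model's embedding-related matrices. The short sketch below recomputes that gap from the table, then cross-checks the Qwen2-7B figure under ASSUMED config values (vocabulary size 152,064, hidden size 3,584, untied input embedding and output head) taken from the model's `config.json`, not from this card.

```python
# Total and non-embedding parameter counts in billions, copied from the table above
PARAMS = {
    "Mistral-7B": (7.2, 7.0),
    "Gemma-7B": (8.5, 7.8),
    "Llama-3-8B": (8.0, 7.0),
    "Qwen1.5-7B": (7.7, 6.5),
    "Qwen2-7B": (7.6, 6.5),
}

# Embedding-related parameters = total minus non-embedding, rounded to the table's precision
embedding_b = {name: round(total - non_emb, 1) for name, (total, non_emb) in PARAMS.items()}
print(embedding_b)

# Cross-check for Qwen2-7B under ASSUMED config values (not stated in this card)
VOCAB_SIZE, HIDDEN_SIZE = 152_064, 3_584
TIED_EMBEDDINGS = False  # assumed: separate input embedding and output (LM head) matrices
qwen2_embedding = VOCAB_SIZE * HIDDEN_SIZE * (1 if TIED_EMBEDDINGS else 2)
print(qwen2_embedding)  # 1089994752, i.e. about 1.09B
```

Under those assumptions the estimate (about 1.09B) is consistent with the 1.1B gap in the table, which is why models with large vocabularies report a noticeably higher total than non-embedding count.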

## Citation

If you find our work helpful, feel free to cite us.

```

@article{qwen2,

title={Qwen2 Technical Report},

year={2024}

}

``` |

duxprajapati/symptom-disease-model | duxprajapati | "2023-08-28T10:03:44Z" | 57,269 | 0 | transformers | [

"transformers",

"pytorch",

"safetensors",

"distilbert",

"text-classification",

"en",

"dataset:duxprajapati/symptom-disease-dataset",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

] | text-classification | "2023-08-22T12:40:18Z" | ---

datasets:

- duxprajapati/symptom-disease-dataset

language:

- en

pipeline_tag: text-classification

--- |

mradermacher/Smaug-Qwen2-72B-Instruct-GGUF | mradermacher | "2024-06-27T16:41:33Z" | 57,233 | 0 | transformers | [

"transformers",

"gguf",

"chat",

"en",

"base_model:abacusai/Smaug-Qwen2-72B-Instruct",

"license:other",

"endpoints_compatible",

"region:us"

] | null | "2024-06-27T05:59:42Z" | ---

base_model: abacusai/Smaug-Qwen2-72B-Instruct

language:

- en

library_name: transformers

license: other

license_link: https://huggingface.co/Qwen/Qwen2-72B-Instruct/blob/main/LICENSE

license_name: tongyi-qianwen

quantized_by: mradermacher

tags:

- chat

---

## About

<!-- ### quantize_version: 2 -->

<!-- ### output_tensor_quantised: 1 -->

<!-- ### convert_type: hf -->

<!-- ### vocab_type: -->

<!-- ### tags: -->

Static quants of https://huggingface.co/abacusai/Smaug-Qwen2-72B-Instruct

<!-- provided-files -->

Weighted/imatrix quants are available at https://huggingface.co/mradermacher/Smaug-Qwen2-72B-Instruct-i1-GGUF

## Usage

If you are unsure how to use GGUF files, refer to one of [TheBloke's READMEs](https://huggingface.co/TheBloke/KafkaLM-70B-German-V0.1-GGUF) for more details, including how to concatenate multi-part files.
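The multi-part files in the table below (`.part1of2`, `.part2of2`) are pieces of a single GGUF file that must be joined, in order, before use. The sketch below does the byte-for-byte concatenation (the same thing `cat` does on Unix); the `join_parts` helper is hypothetical and assumes all parts sit in the same directory.

```python
import re
import shutil
from pathlib import Path

# Matches names such as "Smaug-Qwen2-72B-Instruct.Q6_K.gguf.part1of2"
PART_RE = re.compile(r"^(?P<base>.+)\.part\d+of(?P<total>\d+)$")


def join_parts(first_part: Path) -> Path:
    """Concatenate <base>.part1ofN ... <base>.partNofN into <base> and return its path."""
    match = PART_RE.match(first_part.name)
    if match is None:
        raise ValueError(f"not a multi-part file name: {first_part.name}")
    base, total = match["base"], int(match["total"])
    parts = [first_part.with_name(f"{base}.part{i}of{total}") for i in range(1, total + 1)]
    missing = [p.name for p in parts if not p.exists()]
    if missing:
        raise FileNotFoundError(f"missing parts: {missing}")
    output = first_part.with_name(base)
    with output.open("wb") as dst:
        for part in parts:
            with part.open("rb") as src:
                shutil.copyfileobj(src, dst)  # streams in chunks; parts can be tens of GB
    return output
```

For example, `join_parts(Path("Smaug-Qwen2-72B-Instruct.Q6_K.gguf.part1of2"))` would write `Smaug-Qwen2-72B-Instruct.Q6_K.gguf` next to the parts. Streaming with `shutil.copyfileobj` keeps memory use flat regardless of file size.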

## Provided Quants

(Sorted by size, not necessarily quality. IQ-quants are often preferable to similar-sized non-IQ quants.)

| Link | Type | Size/GB | Notes |
|:-----|:-----|--------:|:------|
| [GGUF](https://huggingface.co/mradermacher/Smaug-Qwen2-72B-Instruct-GGUF/resolve/main/Smaug-Qwen2-72B-Instruct.Q2_K.gguf) | Q2_K | 29.9 | |
| [GGUF](https://huggingface.co/mradermacher/Smaug-Qwen2-72B-Instruct-GGUF/resolve/main/Smaug-Qwen2-72B-Instruct.IQ3_XS.gguf) | IQ3_XS | 32.9 | |
| [GGUF](https://huggingface.co/mradermacher/Smaug-Qwen2-72B-Instruct-GGUF/resolve/main/Smaug-Qwen2-72B-Instruct.IQ3_S.gguf) | IQ3_S | 34.6 | beats Q3_K* |
| [GGUF](https://huggingface.co/mradermacher/Smaug-Qwen2-72B-Instruct-GGUF/resolve/main/Smaug-Qwen2-72B-Instruct.Q3_K_S.gguf) | Q3_K_S | 34.6 | |
| [GGUF](https://huggingface.co/mradermacher/Smaug-Qwen2-72B-Instruct-GGUF/resolve/main/Smaug-Qwen2-72B-Instruct.IQ3_M.gguf) | IQ3_M | 35.6 | |
| [GGUF](https://huggingface.co/mradermacher/Smaug-Qwen2-72B-Instruct-GGUF/resolve/main/Smaug-Qwen2-72B-Instruct.Q3_K_M.gguf) | Q3_K_M | 37.8 | lower quality |
| [GGUF](https://huggingface.co/mradermacher/Smaug-Qwen2-72B-Instruct-GGUF/resolve/main/Smaug-Qwen2-72B-Instruct.Q3_K_L.gguf) | Q3_K_L | 39.6 | |
| [GGUF](https://huggingface.co/mradermacher/Smaug-Qwen2-72B-Instruct-GGUF/resolve/main/Smaug-Qwen2-72B-Instruct.IQ4_XS.gguf) | IQ4_XS | 40.3 | |
| [GGUF](https://huggingface.co/mradermacher/Smaug-Qwen2-72B-Instruct-GGUF/resolve/main/Smaug-Qwen2-72B-Instruct.Q4_K_S.gguf) | Q4_K_S | 44.0 | fast, recommended |
| [GGUF](https://huggingface.co/mradermacher/Smaug-Qwen2-72B-Instruct-GGUF/resolve/main/Smaug-Qwen2-72B-Instruct.Q4_K_M.gguf) | Q4_K_M | 47.5 | fast, recommended |
| [PART 1](https://huggingface.co/mradermacher/Smaug-Qwen2-72B-Instruct-GGUF/resolve/main/Smaug-Qwen2-72B-Instruct.Q5_K_S.gguf.part1of2) [PART 2](https://huggingface.co/mradermacher/Smaug-Qwen2-72B-Instruct-GGUF/resolve/main/Smaug-Qwen2-72B-Instruct.Q5_K_S.gguf.part2of2) | Q5_K_S | 51.5 | |
| [PART 1](https://huggingface.co/mradermacher/Smaug-Qwen2-72B-Instruct-GGUF/resolve/main/Smaug-Qwen2-72B-Instruct.Q5_K_M.gguf.part1of2) [PART 2](https://huggingface.co/mradermacher/Smaug-Qwen2-72B-Instruct-GGUF/resolve/main/Smaug-Qwen2-72B-Instruct.Q5_K_M.gguf.part2of2) | Q5_K_M | 54.5 | |
| [PART 1](https://huggingface.co/mradermacher/Smaug-Qwen2-72B-Instruct-GGUF/resolve/main/Smaug-Qwen2-72B-Instruct.Q6_K.gguf.part1of2) [PART 2](https://huggingface.co/mradermacher/Smaug-Qwen2-72B-Instruct-GGUF/resolve/main/Smaug-Qwen2-72B-Instruct.Q6_K.gguf.part2of2) | Q6_K | 64.4 | very good quality |
| [PART 1](https://huggingface.co/mradermacher/Smaug-Qwen2-72B-Instruct-GGUF/resolve/main/Smaug-Qwen2-72B-Instruct.Q8_0.gguf.part1of2) [PART 2](https://huggingface.co/mradermacher/Smaug-Qwen2-72B-Instruct-GGUF/resolve/main/Smaug-Qwen2-72B-Instruct.Q8_0.gguf.part2of2) | Q8_0 | 77.4 | fast, best quality |
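As a rough aid for choosing among these files, the sketch below picks the largest quant whose file fits a given memory budget, breaking size ties in favor of IQ quants as the note above suggests. The `pick_quant` helper is hypothetical, and its 2 GB `headroom_gb` default for the KV cache and runtime buffers is an assumed figure, not something this card specifies; actual memory use depends on the runtime and context length.

```python
# (type, size in GB) copied from the "Provided Quants" table above
QUANTS = [
    ("Q2_K", 29.9), ("IQ3_XS", 32.9), ("IQ3_S", 34.6), ("Q3_K_S", 34.6),
    ("IQ3_M", 35.6), ("Q3_K_M", 37.8), ("Q3_K_L", 39.6), ("IQ4_XS", 40.3),
    ("Q4_K_S", 44.0), ("Q4_K_M", 47.5), ("Q5_K_S", 51.5), ("Q5_K_M", 54.5),
    ("Q6_K", 64.4), ("Q8_0", 77.4),
]


def pick_quant(memory_gb: float, headroom_gb: float = 2.0):
    """Return the largest quant type whose file fits in memory_gb - headroom_gb, or None.

    Equal sizes are resolved in favor of IQ quants (True sorts above False).
    """
    budget = memory_gb - headroom_gb
    fitting = [(size, name.startswith("IQ"), name) for name, size in QUANTS if size <= budget]
    if not fitting:
        return None
    return max(fitting)[2]


print(pick_quant(48))  # Q4_K_S
```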

Here is a handy graph by ikawrakow comparing some lower-quality quant types (lower is better):

And here are Artefact2's thoughts on the matter:

https://gist.github.com/Artefact2/b5f810600771265fc1e39442288e8ec9

## FAQ / Model Request

See https://huggingface.co/mradermacher/model_requests for some answers to questions you might have and/or if you want some other model quantized.

## Thanks

I thank my company, [nethype GmbH](https://www.nethype.de/), for letting me use its servers and providing upgrades to my workstation to enable this work in my free time.

<!-- end -->

|

Qwen/Qwen1.5-1.8B | Qwen | "2024-04-05T10:39:41Z" | 57,187 | 42 | transformers | [

"transformers",

"safetensors",

"qwen2",

"text-generation",

"pretrained",

"conversational",

"en",

"license:other",

"autotrain_compatible",

"endpoints_compatible",

"text-generation-inference",

"region:us"

] | text-generation | "2024-01-22T16:53:32Z" | ---

license: other

license_name: tongyi-qianwen-research

license_link: >-

https://huggingface.co/Qwen/Qwen1.5-1.8B/blob/main/LICENSE

language:

- en

pipeline_tag: text-generation

tags:

- pretrained

---

# Qwen1.5-1.8B

## Introduction

Qwen1.5 is the beta version of Qwen2, a transformer-based decoder-only language model pretrained on a large amount of data. In comparison with the previously released Qwen, the improvements include:

* 8 model sizes, including 0.5B, 1.8B, 4B, 7B, 14B, 32B and 72B dense models, and an MoE model of 14B with 2.7B activated;

* Significant performance improvement in Chat models;

* Multilingual support of both base and chat models;

* Stable support of 32K context length for models of all sizes;

* No need for `trust_remote_code`.

For more details, please refer to our [blog post](https://qwenlm.github.io/blog/qwen1.5/) and [GitHub repo](https://github.com/QwenLM/Qwen1.5).

## Model Details

Qwen1.5 is a language model series including decoder language models of different sizes. For each size, we release the base language model and the aligned chat model. It is based on the Transformer architecture with SwiGLU activation, attention QKV bias, grouped-query attention, a mixture of sliding window attention and full attention, etc. Additionally, we have an improved tokenizer adaptive to multiple natural languages and code. For the beta version, we have temporarily not included GQA (except for 32B) or the mixture of SWA and full attention.
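SwiGLU, named in the paragraph above, replaces the usual two-matrix feed-forward block with a gated variant: FFN(x) = W_down (SiLU(W_gate x) ⊙ (W_up x)). The pure-Python sketch below shows that computation on plain lists with toy sizes; the matrix names are illustrative, and real implementations use batched tensor operations rather than Python loops.

```python
import math


def silu(x: float) -> float:
    """SiLU (Swish-1): x * sigmoid(x), written to avoid overflow for large |x|."""
    if x >= 0:
        return x / (1.0 + math.exp(-x))
    e = math.exp(x)
    return x * e / (1.0 + e)


def matvec(w, x):
    """Multiply a matrix given as a list of rows by a vector."""
    return [sum(wij * xj for wij, xj in zip(row, x)) for row in w]


def swiglu_ffn(x, w_gate, w_up, w_down):
    """Gated feed-forward block: W_down @ (silu(W_gate @ x) * (W_up @ x))."""
    gate = [silu(g) for g in matvec(w_gate, x)]
    up = matvec(w_up, x)
    hidden = [g * u for g, u in zip(gate, up)]  # element-wise gating
    return matvec(w_down, hidden)


# Toy sizes: model dimension 2, hidden dimension 3 (real models use thousands)
x = [1.0, -0.5]
w_gate = [[0.2, 0.1], [0.0, 1.0], [-0.3, 0.4]]
w_up = [[1.0, 0.0], [0.5, 0.5], [0.0, 2.0]]
w_down = [[1.0, 0.0, -1.0], [0.0, 1.0, 1.0]]
print(swiglu_ffn(x, w_gate, w_up, w_down))
```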

## Requirements

The code for Qwen1.5 is included in recent versions of Hugging Face Transformers. We advise you to install `transformers>=4.37.0`; otherwise, you might encounter the following error:

```

KeyError: 'qwen2'

```

## Usage

We do not advise using the base language model directly for text generation. Instead, you can apply post-training (e.g., SFT, RLHF, or continued pretraining) to this model.

## Citation

If you find our work helpful, feel free to cite us.

```

@article{qwen,

title={Qwen Technical Report},

author={Jinze Bai and Shuai Bai and Yunfei Chu and Zeyu Cui and Kai Dang and Xiaodong Deng and Yang Fan and Wenbin Ge and Yu Han and Fei Huang and Binyuan Hui and Luo Ji and Mei Li and Junyang Lin and Runji Lin and Dayiheng Liu and Gao Liu and Chengqiang Lu and Keming Lu and Jianxin Ma and Rui Men and Xingzhang Ren and Xuancheng Ren and Chuanqi Tan and Sinan Tan and Jianhong Tu and Peng Wang and Shijie Wang and Wei Wang and Shengguang Wu and Benfeng Xu and Jin Xu and An Yang and Hao Yang and Jian Yang and Shusheng Yang and Yang Yao and Bowen Yu and Hongyi Yuan and Zheng Yuan and Jianwei Zhang and Xingxuan Zhang and Yichang Zhang and Zhenru Zhang and Chang Zhou and Jingren Zhou and Xiaohuan Zhou and Tianhang Zhu},

journal={arXiv preprint arXiv:2309.16609},

year={2023}

}

``` |

upstage/SOLAR-10.7B-Instruct-v1.0 | upstage | "2024-04-16T09:46:14Z" | 57,175 | 594 | transformers | [

"transformers",

"safetensors",

"llama",

"text-generation",

"conversational",

"en",

"dataset:c-s-ale/alpaca-gpt4-data",

"dataset:Open-Orca/OpenOrca",

"dataset:Intel/orca_dpo_pairs",

"dataset:allenai/ultrafeedback_binarized_cleaned",

"arxiv:2312.15166",

"arxiv:2403.19270",

"base_model:upstage/SOLAR-10.7B-v1.0",

"license:cc-by-nc-4.0",

"autotrain_compatible",

"endpoints_compatible",

"text-generation-inference",

"region:us"

] | text-generation | "2023-12-12T12:39:22Z" | ---

datasets:

- c-s-ale/alpaca-gpt4-data

- Open-Orca/OpenOrca

- Intel/orca_dpo_pairs

- allenai/ultrafeedback_binarized_cleaned

language:

- en

license: cc-by-nc-4.0

base_model:

- upstage/SOLAR-10.7B-v1.0

---

<p align="left">

<a href="https://go.upstage.ai/solar-obt-hf-modelcardv1-instruct">

<img src="https://huggingface.co/upstage/SOLAR-10.7B-Instruct-v1.0/resolve/main/solar-api-banner.png" width="100%"/>

</a>

</p>

# **Meet 10.7B Solar: Elevating Performance with Upstage Depth UP Scaling!**

**(This model is a fine-tuned version of [upstage/SOLAR-10.7B-v1.0](https://huggingface.co/upstage/SOLAR-10.7B-v1.0) for single-turn conversation.)**

# **Introduction**

We introduce SOLAR-10.7B, an advanced large language model (LLM) with 10.7 billion parameters that demonstrates superior performance on various natural language processing (NLP) tasks. It is compact yet remarkably powerful, and it achieves state-of-the-art performance among models with fewer than 30B parameters.

We present a methodology for scaling LLMs called depth up-scaling (DUS), which encompasses architectural modifications and continued pretraining. Concretely, we integrated Mistral 7B weights into the upscaled layers and then continued pretraining the entire model.
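The layer arithmetic of depth up-scaling can be sketched on layer indices. Following the description in the SOLAR paper (a 32-layer base model, two copies, with m = 8 layers removed from the end of one copy and the start of the other), the sketch below builds the resulting 48-layer order. The `depth_up_scale` function is hypothetical; in a real implementation each entry would be a deep copy of a Transformer layer rather than an index, and continued pretraining of the whole stack would follow.

```python
def depth_up_scale(base_layers: list, m: int) -> list:
    """Return the layer order of a depth up-scaled model.

    Copy 1 keeps base layers [0, n - m), copy 2 keeps [m, n); stacking them
    gives 2 * (n - m) layers.
    """
    n = len(base_layers)
    if not 0 <= m < n:
        raise ValueError("m must satisfy 0 <= m < n")
    first_copy = base_layers[: n - m]  # drop the final m layers
    second_copy = base_layers[m:]      # drop the initial m layers
    return first_copy + second_copy


layers = depth_up_scale(list(range(32)), m=8)
print(len(layers))    # 48
print(layers[22:26])  # [22, 23, 8, 9]
```

The seam in the middle (base layer 23 followed by base layer 8) is where the two copies meet; removing m layers from each side is what keeps the stack at 48 layers instead of 64.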

SOLAR-10.7B has remarkable performance: it outperforms models with up to 30B parameters, even surpassing the recent Mixtral 8x7B model. For detailed results, please refer to the experimental table.

SOLAR-10.7B is an ideal choice for fine-tuning, offering robustness and adaptability for your fine-tuning needs. Our simple instruction fine-tuning of the pretrained SOLAR-10.7B model yields significant performance improvements.

For full details of this model, please read our [paper](https://arxiv.org/abs/2312.15166).

# **Instruction Fine-Tuning Strategy**

We utilize state-of-the-art instruction fine-tuning methods including supervised fine-tuning (SFT) and direct preference optimization (DPO) [1].

We used a mixture of the following datasets:

- c-s-ale/alpaca-gpt4-data (SFT)

- Open-Orca/OpenOrca (SFT)

- in-house generated data utilizing Metamath [2] (SFT, DPO)

- Intel/orca_dpo_pairs (DPO)

- allenai/ultrafeedback_binarized_cleaned (DPO)

We guarded against data contamination by not using GSM8K samples when generating data and by filtering out the following tasks where applicable:

```python

filtering_task_list = [

'task228_arc_answer_generation_easy',

'ai2_arc/ARC-Challenge:1.0.0',

'ai2_arc/ARC-Easy:1.0.0',

'task229_arc_answer_generation_hard',

'hellaswag:1.1.0',

'task1389_hellaswag_completion',

'cot_gsm8k',

'cot_gsm8k_ii',

'drop:2.0.0',

'winogrande:1.1.0'

]

```
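A minimal sketch of how such a list might be applied, assuming each training record carries its source task name in a field. The `task` field name and the `drop_filtered_tasks` helper are hypothetical, since the card does not describe the record format, and exact string matching is only the simplest reading of "filtering tasks via the following list".

```python
from typing import Iterable, Iterator

# Same task identifiers as filtering_task_list above
FILTERED_TASKS = frozenset([
    'task228_arc_answer_generation_easy',
    'ai2_arc/ARC-Challenge:1.0.0',
    'ai2_arc/ARC-Easy:1.0.0',
    'task229_arc_answer_generation_hard',
    'hellaswag:1.1.0',
    'task1389_hellaswag_completion',
    'cot_gsm8k',
    'cot_gsm8k_ii',
    'drop:2.0.0',
    'winogrande:1.1.0',
])


def drop_filtered_tasks(records: Iterable[dict], task_key: str = "task") -> Iterator[dict]:
    """Yield only the records whose task identifier is not on the filtering list."""
    for record in records:
        if record.get(task_key) not in FILTERED_TASKS:
            yield record


sample = [
    {"task": "cot_gsm8k", "text": "hypothetical example"},
    {"task": "some_other_task", "text": "hypothetical example"},
]
print([r["task"] for r in drop_filtered_tasks(sample)])  # ['some_other_task']
```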

Using the datasets mentioned above, we applied SFT and iterative DPO training, a proprietary alignment strategy, to maximize the performance of our resulting model.

[1] Rafailov, R., Sharma, A., Mitchell, E., Ermon, S., Manning, C.D. and Finn, C., 2023. Direct preference optimization: Your language model is secretly a reward model. NeurIPS.

[2] Yu, L., Jiang, W., Shi, H., Yu, J., Liu, Z., Zhang, Y., Kwok, J.T., Li, Z., Weller, A. and Liu, W., 2023. Metamath: Bootstrap your own mathematical questions for large language models. arXiv preprint arXiv:2309.12284.

# **Data Contamination Test Results**

Recently, there have been contamination issues in some models on the LLM leaderboard.

We note that we made every effort to exclude any benchmark-related datasets from training.

We also ensured the integrity of our model by conducting a data contamination test [3] that is also used by the Hugging Face team [4, 5].

Our results, in which every `result < 0.1, %:` value is well below 0.9, indicate that our model is free from contamination.

*The data contamination test results of HellaSwag and Winogrande will be added once [3] supports them.*

| Model | ARC | MMLU | TruthfulQA | GSM8K |
|------------------------------|-------|-------|-------|-------|
| **SOLAR-10.7B-Instruct-v1.0**| result < 0.1, %: 0.06 |result < 0.1, %: 0.15 | result < 0.1, %: 0.28 | result < 0.1, %: 0.70 |

[3] https://github.com/swj0419/detect-pretrain-code-contamination

[4] https://huggingface.co/spaces/HuggingFaceH4/open_llm_leaderboard/discussions/474#657f2245365456e362412a06

[5] https://huggingface.co/spaces/HuggingFaceH4/open_llm_leaderboard/discussions/265#657b6debf81f6b44b8966230

# **Evaluation Results**

| Model | H6 | Model Size |
|----------------------------------------|-------|------------|
| **SOLAR-10.7B-Instruct-v1.0** | **74.20** | **~ 11B** |
| mistralai/Mixtral-8x7B-Instruct-v0.1 | 72.62 | ~ 46.7B |
| 01-ai/Yi-34B-200K | 70.81 | ~ 34B |
| 01-ai/Yi-34B | 69.42 | ~ 34B |
| mistralai/Mixtral-8x7B-v0.1 | 68.42 | ~ 46.7B |
| meta-llama/Llama-2-70b-hf | 67.87 | ~ 70B |
| tiiuae/falcon-180B | 67.85 | ~ 180B |
| **SOLAR-10.7B-v1.0** | **66.04** | **~11B** |
| mistralai/Mistral-7B-Instruct-v0.2 | 65.71 | ~ 7B |
| Qwen/Qwen-14B | 65.86 | ~ 14B |
| 01-ai/Yi-34B-Chat | 65.32 | ~34B |
| meta-llama/Llama-2-70b-chat-hf | 62.4 | ~ 70B |
| mistralai/Mistral-7B-v0.1 | 60.97 | ~ 7B |
| mistralai/Mistral-7B-Instruct-v0.1 | 54.96 | ~ 7B |

H6 is the average score over the six Open LLM Leaderboard benchmarks: ARC, HellaSwag, MMLU, TruthfulQA, Winogrande, and GSM8K.

# **Usage Instructions**

This model has been fine-tuned primarily for single-turn conversation, making it less suitable for multi-turn conversations such as chat.

### **Version**

Make sure you have the correct version of the transformers library installed:

```sh

pip install transformers==4.35.2

```

### **Loading the Model**

Use the following Python code to load the model:

```python

import torch

from transformers import AutoModelForCausalLM, AutoTokenizer

tokenizer = AutoTokenizer.from_pretrained("upstage/SOLAR-10.7B-Instruct-v1.0")

model = AutoModelForCausalLM.from_pretrained(

"upstage/SOLAR-10.7B-Instruct-v1.0",

device_map="auto",

torch_dtype=torch.float16,

)

```

### **Conducting Single-Turn Conversation**

```python

conversation = [ {'role': 'user', 'content': 'Hello?'} ]

prompt = tokenizer.apply_chat_template(conversation, tokenize=False, add_generation_prompt=True)

inputs = tokenizer(prompt, return_tensors="pt").to(model.device)

outputs = model.generate(**inputs, use_cache=True, max_length=4096)

output_text = tokenizer.decode(outputs[0])

print(output_text)

```

Below is an example of the output.

```

<s> ### User:

Hello?

### Assistant:

Hello, how can I assist you today? Please feel free to ask any questions or request help with a specific task.</s>

```

### **License**

- [upstage/SOLAR-10.7B-v1.0](https://huggingface.co/upstage/SOLAR-10.7B-v1.0): apache-2.0

- [upstage/SOLAR-10.7B-Instruct-v1.0](https://huggingface.co/upstage/SOLAR-10.7B-Instruct-v1.0): cc-by-nc-4.0

- Since some non-commercial datasets such as Alpaca are used for fine-tuning, we release this model as cc-by-nc-4.0.

### **How to Cite**

If you use this model, please cite the following papers:

```bibtex

@misc{kim2023solar,

title={SOLAR 10.7B: Scaling Large Language Models with Simple yet Effective Depth Up-Scaling},

author={Dahyun Kim and Chanjun Park and Sanghoon Kim and Wonsung Lee and Wonho Song and Yunsu Kim and Hyeonwoo Kim and Yungi Kim and Hyeonju Lee and Jihoo Kim and Changbae Ahn and Seonghoon Yang and Sukyung Lee and Hyunbyung Park and Gyoungjin Gim and Mikyoung Cha and Hwalsuk Lee and Sunghun Kim},

year={2023},

eprint={2312.15166},

archivePrefix={arXiv},

primaryClass={cs.CL}

}

```

```bibtex

@misc{kim2024sdpo,

title={sDPO: Don't Use Your Data All at Once},

author={Dahyun Kim and Yungi Kim and Wonho Song and Hyeonwoo Kim and Yunsu Kim and Sanghoon Kim and Chanjun Park},

year={2024},

eprint={2403.19270},

archivePrefix={arXiv},

primaryClass={cs.CL}

}

```

### **The Upstage AI Team** ###

Upstage is creating the best LLM and DocAI. Please find more information at https://upstage.ai

### **Contact Us** ###

For questions and suggestions, please use the discussion tab. If you want to contact us directly, email [contact@upstage.ai](mailto:contact@upstage.ai) |

luminar9/bert-finetuned-368items | luminar9 | "2024-05-11T07:06:05Z" | 57,173 | 0 | transformers | [

"transformers",

"safetensors",

"bert",

"text-classification",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

] | text-classification | "2024-05-11T07:02:44Z" | Entry not found |

lpiccinelli/unidepth-v2-vitl14 | lpiccinelli | "2024-06-12T12:46:03Z" | 57,067 | 0 | UniDepth | [

"UniDepth",

"pytorch",

"safetensors",

"monocular-metric-depth-estimation",

"pytorch_model_hub_mixin",

"model_hub_mixin",

"region:us"

] | null | "2024-06-12T12:39:28Z" | ---

library_name: UniDepth

tags:

- monocular-metric-depth-estimation

- pytorch_model_hub_mixin

- model_hub_mixin

---

This model has been pushed to the Hub using the [PytorchModelHubMixin](https://huggingface.co/docs/huggingface_hub/package_reference/mixins#huggingface_hub.PyTorchModelHubMixin) integration:

- Library: https://github.com/lpiccinelli-eth/UniDepth

- Docs: [More Information Needed] |

Dremmar/nsfw-xl | Dremmar | "2024-01-07T11:19:41Z" | 57,053 | 48 | diffusers | [

"diffusers",

"text-to-image",

"stable-diffusion",

"lora",

"template:sd-lora",

"base_model:stabilityai/stable-diffusion-xl-base-1.0",

"region:us"

] | text-to-image | "2024-01-07T11:18:33Z" | ---

tags:

- text-to-image

- stable-diffusion

- lora

- diffusers

- template:sd-lora

widget:

- text: "analog film photo woman, breasts, heatshot, facing viewer <lora:nsfw-xl-2.0:1> . faded film, desaturated, 35mm photo, grainy, vignette, vintage, Kodachrome, Lomography, stained, highly detailed, found footage"

output:

url: images/00097-3192725504.jpeg

base_model: stabilityai/stable-diffusion-xl-base-1.0

instance_prompt: null

---

# nsfw-xl

<Gallery />

## Model description

This is a copy of https://civitai.com/models/141300/nsfw-xl.

## Download model

Weights for this model are available in Safetensors format.

[Download](/Dremmar/nsfw-xl/tree/main) them in the Files & versions tab.

|

internlm/internlm2-7b | internlm | "2024-07-02T12:26:11Z" | 57,041 | 36 | transformers | [

"transformers",

"pytorch",

"internlm2",

"text-generation",

"custom_code",

"arxiv:2403.17297",

"license:other",

"autotrain_compatible",

"region:us"

] | text-generation | "2024-01-12T06:18:18Z" | ---

pipeline_tag: text-generation

license: other

---

# InternLM

<div align="center">

<img src="https://github.com/InternLM/InternLM/assets/22529082/b9788105-8892-4398-8b47-b513a292378e" width="200"/>

<div> </div>

<div align="center">

<b><font size="5">InternLM</font></b>

<sup>

<a href="https://internlm.intern-ai.org.cn/">

<i><font size="4">HOT</font></i>

</a>

</sup>

<div> </div>

</div>


[💻Github Repo](https://github.com/InternLM/InternLM) • [🤔Reporting Issues](https://github.com/InternLM/InternLM/issues/new) • [📜Technical Report](https://arxiv.org/abs/2403.17297)

</div>

## Introduction

InternLM2, the second generation of the InternLM model, includes models at two scales: 7B and 20B. For the convenience of users and researchers, we have open-sourced four versions at each scale:

- internlm2-base: A high-quality and highly adaptable model base, serving as an excellent starting point for deep domain adaptation.

- internlm2 (**recommended**): Built upon internlm2-base, this version has been further pretrained on a domain-specific corpus. It shows outstanding performance in evaluations while maintaining robust general language abilities, making it our recommended choice for most applications.

- internlm2-chat-sft: Based on the base model, this version undergoes supervised human alignment training.

- internlm2-chat (**recommended**): Optimized for conversational interaction on top of the internlm2-chat-sft through RLHF, it excels in instruction adherence, empathetic chatting, and tool invocation.

The base model of InternLM2 has the following technical features:

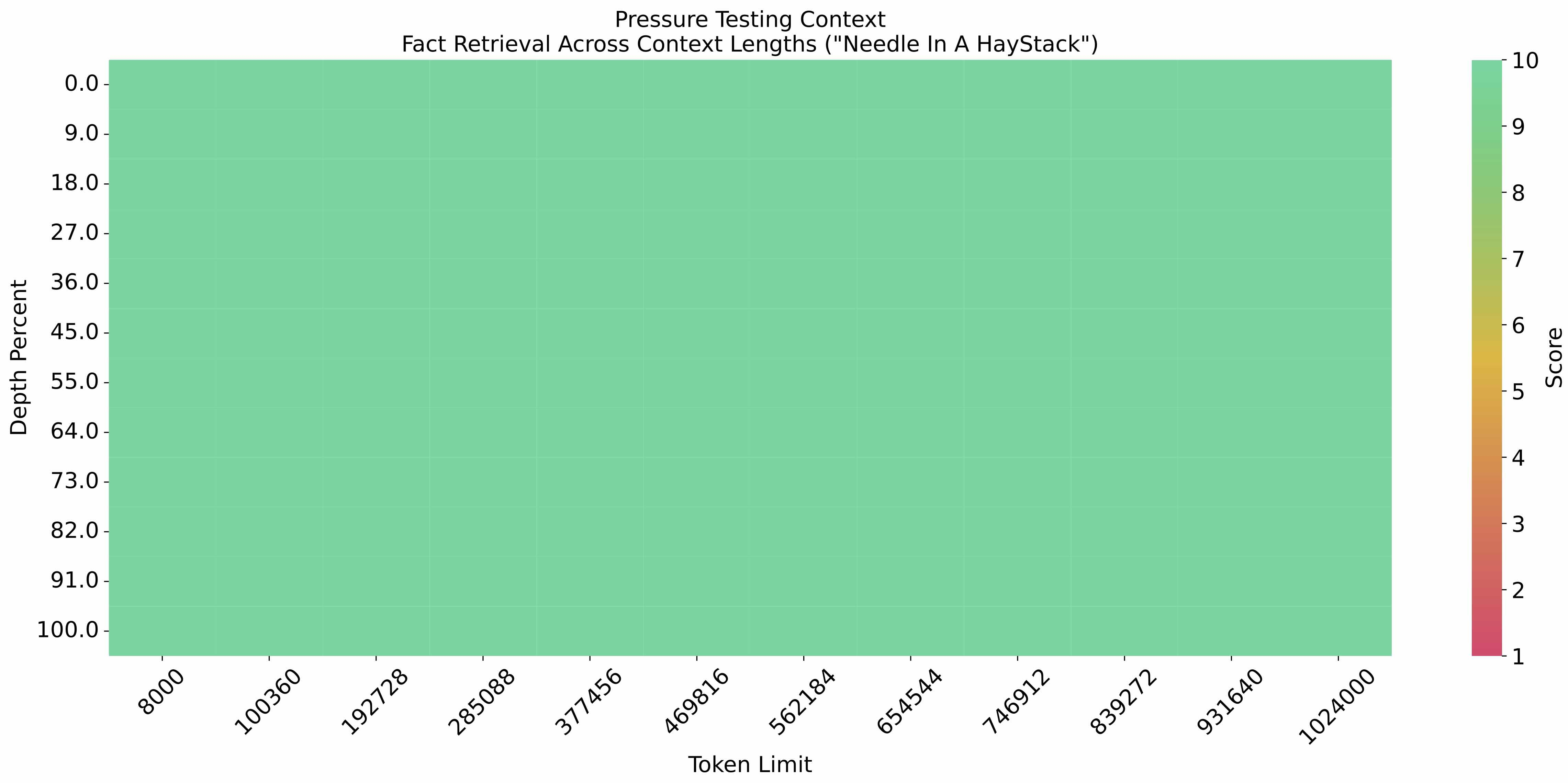

- Effective support for ultra-long contexts of up to 200,000 characters: The model nearly perfectly achieves "finding a needle in a haystack" in long inputs of 200,000 characters. It also leads among open-source models in performance on long-text tasks such as LongBench and L-Eval.

- Comprehensive performance enhancement: Compared to the previous generation model, it shows significant improvements in various capabilities, including reasoning, mathematics, and coding.

## InternLM2-7B

### Performance Evaluation

We have evaluated InternLM2 on several important benchmarks using the open-source evaluation tool [OpenCompass](https://github.com/open-compass/opencompass). Some of the evaluation results are shown in the table below. You are welcome to visit the [OpenCompass Leaderboard](https://rank.opencompass.org.cn/leaderboard-llm) for more evaluation results.

| Dataset\Models | InternLM2-7B | InternLM2-Chat-7B | InternLM2-20B | InternLM2-Chat-20B | ChatGPT | GPT-4 |
| --- | --- | --- | --- | --- | --- | --- |
| MMLU | 65.8 | 63.7 | 67.7 | 66.5 | 69.1 | 83.0 |
| AGIEval | 49.9 | 47.2 | 53.0 | 50.3 | 39.9 | 55.1 |
| BBH | 65.0 | 61.2 | 72.1 | 68.3 | 70.1 | 86.7 |
| GSM8K | 70.8 | 70.7 | 76.1 | 79.6 | 78.2 | 91.4 |
| MATH | 20.2 | 23.0 | 25.5 | 31.9 | 28.0 | 45.8 |
| HumanEval | 43.3 | 59.8 | 48.8 | 67.1 | 73.2 | 74.4 |
| MBPP(Sanitized) | 51.8 | 51.4 | 63.0 | 65.8 | 78.9 | 79.0 |

- The evaluation results were obtained with [OpenCompass](https://github.com/open-compass/opencompass); the evaluation configurations can be found in the configuration files provided by [OpenCompass](https://github.com/open-compass/opencompass).

- Evaluation numbers may differ across versions of [OpenCompass](https://github.com/open-compass/opencompass) as the tool evolves, so please refer to the latest evaluation results from [OpenCompass](https://github.com/open-compass/opencompass).

**Limitations:** Although we have made efforts to ensure the safety of the model during the training process and to encourage the model to generate text that complies with ethical and legal requirements, the model may still produce unexpected outputs due to its size and probabilistic generation paradigm. For example, the generated responses may contain biases, discrimination, or other harmful content. Please do not propagate such content. We are not responsible for any consequences resulting from the dissemination of harmful information.

### Import from Transformers

To load the InternLM2-7B model using Transformers, use the following code:

```python
import torch
from transformers import AutoTokenizer, AutoModelForCausalLM

tokenizer = AutoTokenizer.from_pretrained("internlm/internlm2-7b", trust_remote_code=True)
# Set `torch_dtype=torch.float16` to load the model in float16; otherwise it is loaded in float32, which may cause an out-of-memory (OOM) error.
model = AutoModelForCausalLM.from_pretrained("internlm/internlm2-7b", torch_dtype=torch.float16, trust_remote_code=True).cuda()
model = model.eval()

inputs = tokenizer(["A beautiful flower"], return_tensors="pt")
# Move every input tensor to the GPU that holds the model
for k, v in inputs.items():
    inputs[k] = v.cuda()

gen_kwargs = {"max_length": 128, "top_p": 0.8, "temperature": 0.8, "do_sample": True, "repetition_penalty": 1.0}
output = model.generate(**inputs, **gen_kwargs)
output = tokenizer.decode(output[0].tolist(), skip_special_tokens=True)
print(output)
# A beautiful flowering shrub with clusters of pinkish white flowers in the summer. The foliage is glossy green with a hint of bronze. A great plant for small gardens or as a pot plant. Can be grown as a hedge or as a single specimen plant.
```
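The `temperature` and `top_p` entries in `gen_kwargs` control sampling when `do_sample=True`. The pure-Python sketch below shows the idea: a temperature-scaled softmax, then nucleus filtering to the smallest set of tokens whose probability reaches `top_p`, then sampling from that set. It is a simplified illustration with hypothetical function names, not the Transformers implementation, which operates on logit tensors and supports further options.

```python
import math
import random


def softmax(logits, temperature=1.0):
    """Temperature-scaled softmax; temperatures below 1 sharpen the distribution."""
    scaled = [x / temperature for x in logits]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(x - peak) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def top_p_indices(probs, top_p):
    """Smallest set of token ids, most probable first, whose total probability reaches top_p."""
    order = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)
    kept, cumulative = [], 0.0
    for i in order:
        kept.append(i)
        cumulative += probs[i]
        if cumulative >= top_p:
            break
    return kept


def sample_next_token(logits, temperature=0.8, top_p=0.8, rng=random):
    """Sample one token id using temperature scaling followed by nucleus (top-p) filtering."""
    probs = softmax(logits, temperature)
    kept = top_p_indices(probs, top_p)
    # random.choices treats weights as relative, so the kept probabilities need no rescaling
    return rng.choices(kept, weights=[probs[i] for i in kept], k=1)[0]


print(sample_next_token([2.0, 1.0, -1.0, -3.0], rng=random.Random(0)))
```

With `top_p=0.8`, low-probability tail tokens are never sampled. The `repetition_penalty` of 1.0 in `gen_kwargs` means no repetition penalty is applied.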

## Open Source License

The code is licensed under Apache-2.0, while model weights are fully open for academic research and also allow **free** commercial usage. To apply for a commercial license, please fill in the [application form (English)](https://wj.qq.com/s2/12727483/5dba/)/[申请表(中文)](https://wj.qq.com/s2/12725412/f7c1/). For other questions or collaborations, please contact <internlm@pjlab.org.cn>.

## Citation

```

@misc{cai2024internlm2,

title={InternLM2 Technical Report},

author={Zheng Cai and Maosong Cao and Haojiong Chen and Kai Chen and Keyu Chen and Xin Chen and Xun Chen and Zehui Chen and Zhi Chen and Pei Chu and Xiaoyi Dong and Haodong Duan and Qi Fan and Zhaoye Fei and Yang Gao and Jiaye Ge and Chenya Gu and Yuzhe Gu and Tao Gui and Aijia Guo and Qipeng Guo and Conghui He and Yingfan Hu and Ting Huang and Tao Jiang and Penglong Jiao and Zhenjiang Jin and Zhikai Lei and Jiaxing Li and Jingwen Li and Linyang Li and Shuaibin Li and Wei Li and Yining Li and Hongwei Liu and Jiangning Liu and Jiawei Hong and Kaiwen Liu and Kuikun Liu and Xiaoran Liu and Chengqi Lv and Haijun Lv and Kai Lv and Li Ma and Runyuan Ma and Zerun Ma and Wenchang Ning and Linke Ouyang and Jiantao Qiu and Yuan Qu and Fukai Shang and Yunfan Shao and Demin Song and Zifan Song and Zhihao Sui and Peng Sun and Yu Sun and Huanze Tang and Bin Wang and Guoteng Wang and Jiaqi Wang and Jiayu Wang and Rui Wang and Yudong Wang and Ziyi Wang and Xingjian Wei and Qizhen Weng and Fan Wu and Yingtong Xiong and Chao Xu and Ruiliang Xu and Hang Yan and Yirong Yan and Xiaogui Yang and Haochen Ye and Huaiyuan Ying and Jia Yu and Jing Yu and Yuhang Zang and Chuyu Zhang and Li Zhang and Pan Zhang and Peng Zhang and Ruijie Zhang and Shuo Zhang and Songyang Zhang and Wenjian Zhang and Wenwei Zhang and Xingcheng Zhang and Xinyue Zhang and Hui Zhao and Qian Zhao and Xiaomeng Zhao and Fengzhe Zhou and Zaida Zhou and Jingming Zhuo and Yicheng Zou and Xipeng Qiu and Yu Qiao and Dahua Lin},

year={2024},

eprint={2403.17297},

archivePrefix={arXiv},

primaryClass={cs.CL}

}

```

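## Introduction

InternLM2, the second-generation InternLM model, comes in two sizes: 7B and 20B. To make the models easy to use and study, we have open-sourced four versions at each size:

- internlm2-base: a high-quality, highly adaptable base model and a strong starting point for in-depth domain adaptation;

- internlm2 (**recommended**): built on internlm2-base with further pretraining on domain-specific corpora. It scores well in evaluations while retaining strong general language ability, and is the base model we recommend for most applications;

- internlm2-chat-sft: supervised fine-tuning for human alignment on top of the base model;

- internlm2-chat (**recommended**): built on internlm2-chat-sft with RLHF and optimized for conversational interaction, with strong instruction following, empathetic chat, and tool-calling abilities.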

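The InternLM2 base models have the following technical features:

- Effective support for ultra-long contexts of up to 200,000 characters: the model achieves near-perfect "needle in a haystack" retrieval on 200K-character inputs, and its performance on long-context tasks such as LongBench and L-Eval is among the best of open-source models.

- Comprehensive performance improvements: every capability dimension improves over the previous generation, with notable gains in reasoning, mathematics, and code.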

## InternLM2-7B

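### Performance Evaluation

We evaluated InternLM2 on several important benchmarks with the open-source evaluation tool [OpenCompass](https://github.com/internLM/OpenCompass/); selected results are shown in the table below. Visit the [OpenCompass leaderboard](https://rank.opencompass.org.cn/leaderboard-llm) for more results.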

| Benchmark | InternLM2-7B | InternLM2-Chat-7B | InternLM2-20B | InternLM2-Chat-20B | ChatGPT | GPT-4 |
| --- | --- | --- | --- | --- | --- | --- |
| MMLU | 65.8 | 63.7 | 67.7 | 66.5 | 69.1 | 83.0 |
| AGIEval | 49.9 | 47.2 | 53.0 | 50.3 | 39.9 | 55.1 |
| BBH | 65.0 | 61.2 | 72.1 | 68.3 | 70.1 | 86.7 |
| GSM8K | 70.8 | 70.7 | 76.1 | 79.6 | 78.2 | 91.4 |
| MATH | 20.2 | 23.0 | 25.5 | 31.9 | 28.0 | 45.8 |
| HumanEval | 43.3 | 59.8 | 48.8 | 67.1 | 73.2 | 74.4 |
| MBPP(Sanitized) | 51.8 | 51.4 | 63.0 | 65.8 | 78.9 | 79.0 |

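- The evaluation results above were obtained with [OpenCompass](https://github.com/open-compass/opencompass) (entries marked with `*` are taken from the original papers); see the configuration files provided in [OpenCompass](https://github.com/open-compass/opencompass) for test details.

- Evaluation numbers may change as [OpenCompass](https://github.com/open-compass/opencompass) is updated; please treat the results from the latest version of [OpenCompass](https://github.com/open-compass/opencompass) as authoritative.

**Limitations:** Although we paid close attention to model safety during training and tried to make the model produce text that complies with ethical and legal requirements, the model may still generate unexpected outputs because of its size and probabilistic generation paradigm, for example responses containing bias, discrimination, or other harmful content. Please do not propagate such content. This project is not responsible for any consequences resulting from the dissemination of harmful information.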

### Loading with Transformers

Use the following code to load the InternLM2-7B model for text completion:

```python

import torch

from transformers import AutoTokenizer, AutoModelForCausalLM

tokenizer = AutoTokenizer.from_pretrained("internlm/internlm2-7b", trust_remote_code=True)

# `torch_dtype=torch.float16` loads the model in float16; otherwise transformers loads it in float32, which may exhaust GPU memory

model = AutoModelForCausalLM.from_pretrained("internlm/internlm2-7b", torch_dtype=torch.float16, trust_remote_code=True).cuda()

model = model.eval()

inputs = tokenizer(["来到美丽的大自然"], return_tensors="pt")  # prompt: "Arriving in beautiful nature"

# move every input tensor to the GPU
for k, v in inputs.items():
    inputs[k] = v.cuda()

gen_kwargs = {"max_length": 128, "top_p": 0.8, "temperature": 0.8, "do_sample": True, "repetition_penalty": 1.0}

output = model.generate(**inputs, **gen_kwargs)

output = tokenizer.decode(output[0].tolist(), skip_special_tokens=True)

print(output)

# 来到美丽的大自然

# 走进那迷人的花园

# 鸟儿在枝头歌唱

# 花儿在微风中翩翩起舞

# 我们坐在草地上

# 仰望蔚蓝的天空

# 白云像棉花糖一样柔软

# 阳光温暖着我们的脸庞

# 大自然的美景

# 让我们感到无比的幸福

# 让我们心旷神怡

# 让我们感到无比的快乐

# 让我们陶醉其中

# 让我们流连忘返

# 让我们忘记所有的烦恼

# 让我们尽情享受这美好的时光

# 让我们珍惜这美好的瞬间

# 让我们感恩大自然

# 让我们与大自然和谐共处

# 让我们共同保护这美丽的家园

# 让我们永远保持一颗纯真的心灵

```
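The `gen_kwargs` above enable sampling with `temperature=0.8` and `top_p=0.8`. As a rough illustration of what those two settings do, here is a generic pure-Python sketch of temperature scaling followed by nucleus (top-p) filtering over a toy list of logits. It is not the `transformers` implementation, which applies these steps as logits warpers inside `generate` with its own edge-case handling; the logits are made up.

```python
import math
import random


def top_p_filter(logits, temperature=0.8, top_p=0.8):
    """Return {token_id: prob} kept after temperature scaling + nucleus filtering."""
    # Temperature < 1 sharpens the distribution, > 1 flattens it.
    scaled = [x / temperature for x in logits]
    # Numerically stable softmax.
    m = max(scaled)
    exps = [math.exp(x - m) for x in scaled]
    total = sum(exps)
    probs = [e / total for e in exps]
    # Keep the smallest set of most likely tokens whose cumulative mass reaches top_p.
    order = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)
    kept, cumulative = [], 0.0
    for i in order:
        kept.append(i)
        cumulative += probs[i]
        if cumulative >= top_p:
            break
    # Renormalize over the surviving tokens.
    mass = sum(probs[i] for i in kept)
    return {i: probs[i] / mass for i in kept}


def sample(logits, temperature=0.8, top_p=0.8, rng=random):
    """Draw one token id from the filtered distribution."""
    dist = top_p_filter(logits, temperature, top_p)
    ids = list(dist)
    return rng.choices(ids, weights=[dist[i] for i in ids], k=1)[0]


dist = top_p_filter([2.0, 1.0, 0.5, -1.0])
print(sorted(dist))  # [0, 1]
```

With these toy logits the two most likely ids already hold about 88% of the probability mass after temperature scaling, so ids 2 and 3 can never be sampled at `top_p=0.8`.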

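## Open Source License

The code in this repository is open-sourced under the Apache-2.0 license. Model weights are fully open for academic research, and a free commercial-use license can also be requested ([application form](https://wj.qq.com/s2/12725412/f7c1/)). For other questions or collaborations, please contact <internlm@pjlab.org.cn>.

## Citation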

```

@misc{cai2024internlm2,

title={InternLM2 Technical Report},

author={Zheng Cai and Maosong Cao and Haojiong Chen and Kai Chen and Keyu Chen and Xin Chen and Xun Chen and Zehui Chen and Zhi Chen and Pei Chu and Xiaoyi Dong and Haodong Duan and Qi Fan and Zhaoye Fei and Yang Gao and Jiaye Ge and Chenya Gu and Yuzhe Gu and Tao Gui and Aijia Guo and Qipeng Guo and Conghui He and Yingfan Hu and Ting Huang and Tao Jiang and Penglong Jiao and Zhenjiang Jin and Zhikai Lei and Jiaxing Li and Jingwen Li and Linyang Li and Shuaibin Li and Wei Li and Yining Li and Hongwei Liu and Jiangning Liu and Jiawei Hong and Kaiwen Liu and Kuikun Liu and Xiaoran Liu and Chengqi Lv and Haijun Lv and Kai Lv and Li Ma and Runyuan Ma and Zerun Ma and Wenchang Ning and Linke Ouyang and Jiantao Qiu and Yuan Qu and Fukai Shang and Yunfan Shao and Demin Song and Zifan Song and Zhihao Sui and Peng Sun and Yu Sun and Huanze Tang and Bin Wang and Guoteng Wang and Jiaqi Wang and Jiayu Wang and Rui Wang and Yudong Wang and Ziyi Wang and Xingjian Wei and Qizhen Weng and Fan Wu and Yingtong Xiong and Chao Xu and Ruiliang Xu and Hang Yan and Yirong Yan and Xiaogui Yang and Haochen Ye and Huaiyuan Ying and Jia Yu and Jing Yu and Yuhang Zang and Chuyu Zhang and Li Zhang and Pan Zhang and Peng Zhang and Ruijie Zhang and Shuo Zhang and Songyang Zhang and Wenjian Zhang and Wenwei Zhang and Xingcheng Zhang and Xinyue Zhang and Hui Zhao and Qian Zhao and Xiaomeng Zhao and Fengzhe Zhou and Zaida Zhou and Jingming Zhuo and Yicheng Zou and Xipeng Qiu and Yu Qiao and Dahua Lin},

year={2024},

eprint={2403.17297},

archivePrefix={arXiv},

primaryClass={cs.CL}

}

``` |

timm/convnext_xxlarge.clip_laion2b_soup_ft_in1k | timm | "2024-02-10T23:38:09Z" | 56,947 | 2 | timm | [

"timm",

"pytorch",

"safetensors",

"image-classification",

"dataset:imagenet-1k",

"dataset:laion-2b",

"arxiv:2210.08402",

"arxiv:2201.03545",

"arxiv:2103.00020",

"license:apache-2.0",

"region:us"

] | image-classification | "2023-03-31T22:51:41Z" | ---

license: apache-2.0

library_name: timm

tags:

- image-classification

- timm

datasets:

- imagenet-1k

- laion-2b

---

# Model card for convnext_xxlarge.clip_laion2b_soup_ft_in1k

A ConvNeXt image classification model. CLIP image tower weights pretrained in [OpenCLIP](https://github.com/mlfoundations/open_clip) on LAION and fine-tuned on ImageNet-1k in `timm` by Ross Wightman.

Please see the related OpenCLIP model cards for more details on pretraining:

* https://huggingface.co/laion/CLIP-convnext_xxlarge-laion2B-s34B-b82K-augreg-soup

* https://huggingface.co/laion/CLIP-convnext_large_d.laion2B-s26B-b102K-augreg

* https://huggingface.co/laion/CLIP-convnext_base_w-laion2B-s13B-b82K-augreg

* https://huggingface.co/laion/CLIP-convnext_base_w_320-laion_aesthetic-s13B-b82K

## Model Details

- **Model Type:** Image classification / feature backbone
- **Model Stats:**
  - Params (M): 846.5
  - GMACs: 198.1
  - Activations (M): 124.5
  - Image size: 256 x 256
- **Papers:**
  - LAION-5B: An open large-scale dataset for training next generation image-text models: https://arxiv.org/abs/2210.08402
  - A ConvNet for the 2020s: https://arxiv.org/abs/2201.03545
  - Learning Transferable Visual Models From Natural Language Supervision: https://arxiv.org/abs/2103.00020
- **Original:** https://github.com/mlfoundations/open_clip
- **Pretrain Dataset:** LAION-2B
- **Dataset:** ImageNet-1k

## Model Usage

### Image Classification

```python

from urllib.request import urlopen

from PIL import Image

import timm

import torch  # needed for torch.topk below

img = Image.open(urlopen(

'https://huggingface.co/datasets/huggingface/documentation-images/resolve/main/beignets-task-guide.png'

))

model = timm.create_model('convnext_xxlarge.clip_laion2b_soup_ft_in1k', pretrained=True)

model = model.eval()

# get model specific transforms (normalization, resize)

data_config = timm.data.resolve_model_data_config(model)

transforms = timm.data.create_transform(**data_config, is_training=False)

output = model(transforms(img).unsqueeze(0)) # unsqueeze single image into batch of 1

top5_probabilities, top5_class_indices = torch.topk(output.softmax(dim=1) * 100, k=5)

```
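The last line of the example converts the classifier logits to percentages with a softmax and keeps the five largest. The same computation on a plain list of logits, as a dependency-free sketch (the toy logits are made up; the real model outputs 1000 ImageNet-1k logits):

```python
import math


def topk_percent(logits, k=5):
    """Softmax the logits, scale to percent, and return (percentages, indices) of the top k."""
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]  # subtract max for numerical stability
    total = sum(exps)
    percents = [100.0 * e / total for e in exps]
    top = sorted(range(len(percents)), key=lambda i: percents[i], reverse=True)[:k]
    return [percents[i] for i in top], top


probs, indices = topk_percent([0.1, 3.0, -2.0, 1.5, 0.0, 2.2, -0.5], k=5)
print(indices)  # [1, 5, 3, 0, 4]
```

Because softmax is monotonic, the top-5 indices are simply the five largest logits; the softmax only turns them into comparable percentages.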

### Feature Map Extraction

```python

from urllib.request import urlopen

from PIL import Image

import timm

img = Image.open(urlopen(

'https://huggingface.co/datasets/huggingface/documentation-images/resolve/main/beignets-task-guide.png'

))

model = timm.create_model(

'convnext_xxlarge.clip_laion2b_soup_ft_in1k',

pretrained=True,

features_only=True,

)

model = model.eval()

# get model specific transforms (normalization, resize)

data_config = timm.data.resolve_model_data_config(model)

transforms = timm.data.create_transform(**data_config, is_training=False)

output = model(transforms(img).unsqueeze(0)) # unsqueeze single image into batch of 1

for o in output:
    # print shape of each feature map in output
    # e.g.:
    #  torch.Size([1, 384, 64, 64])
    #  torch.Size([1, 768, 32, 32])
    #  torch.Size([1, 1536, 16, 16])
    #  torch.Size([1, 3072, 8, 8])
    print(o.shape)

```
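The shapes in the comments follow from the ConvNeXt stage layout: four stages at strides 4, 8, 16 and 32 relative to the input. A small pure-Python sketch reproduces them for a 256 x 256 input. It assumes the stage widths 384/768/1536/3072 read off the example output; on the real model, prefer `model.feature_info.channels()` and `model.feature_info.reduction()` over hard-coding these.

```python
def feature_map_shapes(img_size=256, batch=1,
                       widths=(384, 768, 1536, 3072),
                       strides=(4, 8, 16, 32)):
    """Expected (B, C, H, W) of each ConvNeXt stage output for a square input."""
    return [(batch, c, img_size // s, img_size // s) for c, s in zip(widths, strides)]


for shape in feature_map_shapes():
    print(shape)
# (1, 384, 64, 64)
# (1, 768, 32, 32)
# (1, 1536, 16, 16)
# (1, 3072, 8, 8)
```

Since the network is fully convolutional, the spatial sizes scale with the input; a 320 x 320 image, for example, would give 80, 40, 20 and 10.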

### Image Embeddings

```python

from urllib.request import urlopen

from PIL import Image

import timm

img = Image.open(urlopen(

'https://huggingface.co/datasets/huggingface/documentation-images/resolve/main/beignets-task-guide.png'

))

model = timm.create_model(

'convnext_xxlarge.clip_laion2b_soup_ft_in1k',

pretrained=True,

num_classes=0, # remove classifier nn.Linear

)

model = model.eval()

# get model specific transforms (normalization, resize)

data_config = timm.data.resolve_model_data_config(model)

transforms = timm.data.create_transform(**data_config, is_training=False)

output = model(transforms(img).unsqueeze(0)) # output is (batch_size, num_features) shaped tensor

# or equivalently (without needing to set num_classes=0)

output = model.forward_features(transforms(img).unsqueeze(0))

# output is unpooled, a (1, 3072, 8, 8) shaped tensor

output = model.forward_head(output, pre_logits=True)

# output is a (1, num_features) shaped tensor

```
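Going from the unpooled `(1, 3072, 8, 8)` features to a `(1, num_features)` vector is, at its core, a global average over the spatial positions. timm's ConvNeXt head may also apply a normalization layer after pooling; this pure-Python sketch shows only the averaging step, on a made-up toy feature map:

```python
def global_avg_pool(feature_map):
    """Average a [C][H][W] nested list over H and W, giving a length-C vector."""
    pooled = []
    for channel in feature_map:
        values = [v for row in channel for v in row]
        pooled.append(sum(values) / len(values))
    return pooled


# Toy 2-channel, 2x2 feature map (made-up values).
fmap = [
    [[1.0, 2.0], [3.0, 4.0]],
    [[0.0, 0.0], [0.0, 8.0]],
]
print(global_avg_pool(fmap))  # [2.5, 2.0]
```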

## Model Comparison

Explore the dataset and runtime metrics of this model in timm [model results](https://github.com/huggingface/pytorch-image-models/tree/main/results).

All timing numbers are from eager-mode PyTorch 1.13 on an RTX 3090 with AMP.

| model |top1 |top5 |img_size|param_count|gmacs |macts |samples_per_sec|batch_size|
|------------------------------------------------------------------------------------------------------------------------------|------|------|--------|-----------|------|------|---------------|----------|
| [convnextv2_huge.fcmae_ft_in22k_in1k_512](https://huggingface.co/timm/convnextv2_huge.fcmae_ft_in22k_in1k_512) |88.848|98.742|512 |660.29 |600.81|413.07|28.58 |48 |
| [convnextv2_huge.fcmae_ft_in22k_in1k_384](https://huggingface.co/timm/convnextv2_huge.fcmae_ft_in22k_in1k_384) |88.668|98.738|384 |660.29 |337.96|232.35|50.56 |64 |
| [convnext_xxlarge.clip_laion2b_soup_ft_in1k](https://huggingface.co/timm/convnext_xxlarge.clip_laion2b_soup_ft_in1k) |88.612|98.704|256 |846.47 |198.09|124.45|122.45 |256 |
| [convnext_large_mlp.clip_laion2b_soup_ft_in12k_in1k_384](https://huggingface.co/timm/convnext_large_mlp.clip_laion2b_soup_ft_in12k_in1k_384) |88.312|98.578|384 |200.13 |101.11|126.74|196.84 |256 |
| [convnextv2_large.fcmae_ft_in22k_in1k_384](https://huggingface.co/timm/convnextv2_large.fcmae_ft_in22k_in1k_384) |88.196|98.532|384 |197.96 |101.1 |126.74|128.94 |128 |
| [convnext_large_mlp.clip_laion2b_soup_ft_in12k_in1k_320](https://huggingface.co/timm/convnext_large_mlp.clip_laion2b_soup_ft_in12k_in1k_320) |87.968|98.47 |320 |200.13 |70.21 |88.02 |283.42 |256 |
| [convnext_xlarge.fb_in22k_ft_in1k_384](https://huggingface.co/timm/convnext_xlarge.fb_in22k_ft_in1k_384) |87.75 |98.556|384 |350.2 |179.2 |168.99|124.85 |192 |
| [convnextv2_base.fcmae_ft_in22k_in1k_384](https://huggingface.co/timm/convnextv2_base.fcmae_ft_in22k_in1k_384) |87.646|98.422|384 |88.72 |45.21 |84.49 |209.51 |256 |
| [convnext_large.fb_in22k_ft_in1k_384](https://huggingface.co/timm/convnext_large.fb_in22k_ft_in1k_384) |87.476|98.382|384 |197.77 |101.1 |126.74|194.66 |256 |
| [convnext_large_mlp.clip_laion2b_augreg_ft_in1k](https://huggingface.co/timm/convnext_large_mlp.clip_laion2b_augreg_ft_in1k) |87.344|98.218|256 |200.13 |44.94 |56.33 |438.08 |256 |
| [convnextv2_large.fcmae_ft_in22k_in1k](https://huggingface.co/timm/convnextv2_large.fcmae_ft_in22k_in1k) |87.26 |98.248|224 |197.96 |34.4 |43.13 |376.84 |256 |
| [convnext_base.clip_laion2b_augreg_ft_in12k_in1k_384](https://huggingface.co/timm/convnext_base.clip_laion2b_augreg_ft_in12k_in1k_384) |87.138|98.212|384 |88.59 |45.21 |84.49 |365.47 |256 |
| [convnext_xlarge.fb_in22k_ft_in1k](https://huggingface.co/timm/convnext_xlarge.fb_in22k_ft_in1k) |87.002|98.208|224 |350.2 |60.98 |57.5 |368.01 |256 |
| [convnext_base.fb_in22k_ft_in1k_384](https://huggingface.co/timm/convnext_base.fb_in22k_ft_in1k_384) |86.796|98.264|384 |88.59 |45.21 |84.49 |366.54 |256 |
| [convnextv2_base.fcmae_ft_in22k_in1k](https://huggingface.co/timm/convnextv2_base.fcmae_ft_in22k_in1k) |86.74 |98.022|224 |88.72 |15.38 |28.75 |624.23 |256 |
| [convnext_large.fb_in22k_ft_in1k](https://huggingface.co/timm/convnext_large.fb_in22k_ft_in1k) |86.636|98.028|224 |197.77 |34.4 |43.13 |581.43 |256 |
| [convnext_base.clip_laiona_augreg_ft_in1k_384](https://huggingface.co/timm/convnext_base.clip_laiona_augreg_ft_in1k_384) |86.504|97.97 |384 |88.59 |45.21 |84.49 |368.14 |256 |
| [convnext_base.clip_laion2b_augreg_ft_in12k_in1k](https://huggingface.co/timm/convnext_base.clip_laion2b_augreg_ft_in12k_in1k) |86.344|97.97 |256 |88.59 |20.09 |37.55 |816.14 |256 |
| [convnextv2_huge.fcmae_ft_in1k](https://huggingface.co/timm/convnextv2_huge.fcmae_ft_in1k) |86.256|97.75 |224 |660.29 |115.0 |79.07 |154.72 |256 |
| [convnext_small.in12k_ft_in1k_384](https://huggingface.co/timm/convnext_small.in12k_ft_in1k_384) |86.182|97.92 |384 |50.22 |25.58 |63.37 |516.19 |256 |
| [convnext_base.clip_laion2b_augreg_ft_in1k](https://huggingface.co/timm/convnext_base.clip_laion2b_augreg_ft_in1k) |86.154|97.68 |256 |88.59 |20.09 |37.55 |819.86 |256 |
| [convnext_base.fb_in22k_ft_in1k](https://huggingface.co/timm/convnext_base.fb_in22k_ft_in1k) |85.822|97.866|224 |88.59 |15.38 |28.75 |1037.66 |256 |
| [convnext_small.fb_in22k_ft_in1k_384](https://huggingface.co/timm/convnext_small.fb_in22k_ft_in1k_384) |85.778|97.886|384 |50.22 |25.58 |63.37 |518.95 |256 |
| [convnextv2_large.fcmae_ft_in1k](https://huggingface.co/timm/convnextv2_large.fcmae_ft_in1k) |85.742|97.584|224 |197.96 |34.4 |43.13 |375.23 |256 |
| [convnext_small.in12k_ft_in1k](https://huggingface.co/timm/convnext_small.in12k_ft_in1k) |85.174|97.506|224 |50.22 |8.71 |21.56 |1474.31 |256 |
| [convnext_tiny.in12k_ft_in1k_384](https://huggingface.co/timm/convnext_tiny.in12k_ft_in1k_384) |85.118|97.608|384 |28.59 |13.14 |39.48 |856.76 |256 |
| [convnextv2_tiny.fcmae_ft_in22k_in1k_384](https://huggingface.co/timm/convnextv2_tiny.fcmae_ft_in22k_in1k_384) |85.112|97.63 |384 |28.64 |13.14 |39.48 |491.32 |256 |
| [convnextv2_base.fcmae_ft_in1k](https://huggingface.co/timm/convnextv2_base.fcmae_ft_in1k) |84.874|97.09 |224 |88.72 |15.38 |28.75 |625.33 |256 |
| [convnext_small.fb_in22k_ft_in1k](https://huggingface.co/timm/convnext_small.fb_in22k_ft_in1k) |84.562|97.394|224 |50.22 |8.71 |21.56 |1478.29 |256 |
| [convnext_large.fb_in1k](https://huggingface.co/timm/convnext_large.fb_in1k) |84.282|96.892|224 |197.77 |34.4 |43.13 |584.28 |256 |
| [convnext_tiny.in12k_ft_in1k](https://huggingface.co/timm/convnext_tiny.in12k_ft_in1k) |84.186|97.124|224 |28.59 |4.47 |13.44 |2433.7 |256 |
| [convnext_tiny.fb_in22k_ft_in1k_384](https://huggingface.co/timm/convnext_tiny.fb_in22k_ft_in1k_384) |84.084|97.14 |384 |28.59 |13.14 |39.48 |862.95 |256 |
| [convnextv2_tiny.fcmae_ft_in22k_in1k](https://huggingface.co/timm/convnextv2_tiny.fcmae_ft_in22k_in1k) |83.894|96.964|224 |28.64 |4.47 |13.44 |1452.72 |256 |
| [convnext_base.fb_in1k](https://huggingface.co/timm/convnext_base.fb_in1k) |83.82 |96.746|224 |88.59 |15.38 |28.75 |1054.0 |256 |
| [convnextv2_nano.fcmae_ft_in22k_in1k_384](https://huggingface.co/timm/convnextv2_nano.fcmae_ft_in22k_in1k_384) |83.37 |96.742|384 |15.62 |7.22 |24.61 |801.72 |256 |
| [convnext_small.fb_in1k](https://huggingface.co/timm/convnext_small.fb_in1k) |83.142|96.434|224 |50.22 |8.71 |21.56 |1464.0 |256 |
| [convnextv2_tiny.fcmae_ft_in1k](https://huggingface.co/timm/convnextv2_tiny.fcmae_ft_in1k) |82.92 |96.284|224 |28.64 |4.47 |13.44 |1425.62 |256 |
| [convnext_tiny.fb_in22k_ft_in1k](https://huggingface.co/timm/convnext_tiny.fb_in22k_ft_in1k) |82.898|96.616|224 |28.59 |4.47 |13.44 |2480.88 |256 |
| [convnext_nano.in12k_ft_in1k](https://huggingface.co/timm/convnext_nano.in12k_ft_in1k) |82.282|96.344|224 |15.59 |2.46 |8.37 |3926.52 |256 |
| [convnext_tiny_hnf.a2h_in1k](https://huggingface.co/timm/convnext_tiny_hnf.a2h_in1k) |82.216|95.852|224 |28.59 |4.47 |13.44 |2529.75 |256 |
| [convnext_tiny.fb_in1k](https://huggingface.co/timm/convnext_tiny.fb_in1k) |82.066|95.854|224 |28.59 |4.47 |13.44 |2346.26 |256 |
| [convnextv2_nano.fcmae_ft_in22k_in1k](https://huggingface.co/timm/convnextv2_nano.fcmae_ft_in22k_in1k) |82.03 |96.166|224 |15.62 |2.46 |8.37 |2300.18 |256 |
| [convnextv2_nano.fcmae_ft_in1k](https://huggingface.co/timm/convnextv2_nano.fcmae_ft_in1k) |81.83 |95.738|224 |15.62 |2.46 |8.37 |2321.48 |256 |
| [convnext_nano_ols.d1h_in1k](https://huggingface.co/timm/convnext_nano_ols.d1h_in1k) |80.866|95.246|224 |15.65 |2.65 |9.38 |3523.85 |256 |
| [convnext_nano.d1h_in1k](https://huggingface.co/timm/convnext_nano.d1h_in1k) |80.768|95.334|224 |15.59 |2.46 |8.37 |3915.58 |256 |
| [convnextv2_pico.fcmae_ft_in1k](https://huggingface.co/timm/convnextv2_pico.fcmae_ft_in1k) |80.304|95.072|224 |9.07 |1.37 |6.1 |3274.57 |256 |
| [convnext_pico.d1_in1k](https://huggingface.co/timm/convnext_pico.d1_in1k) |79.526|94.558|224 |9.05 |1.37 |6.1 |5686.88 |256 |
| [convnext_pico_ols.d1_in1k](https://huggingface.co/timm/convnext_pico_ols.d1_in1k) |79.522|94.692|224 |9.06 |1.43 |6.5 |5422.46 |256 |
| [convnextv2_femto.fcmae_ft_in1k](https://huggingface.co/timm/convnextv2_femto.fcmae_ft_in1k) |78.488|93.98 |224 |5.23 |0.79 |4.57 |4264.2 |256 |
| [convnext_femto_ols.d1_in1k](https://huggingface.co/timm/convnext_femto_ols.d1_in1k) |77.86 |93.83 |224 |5.23 |0.82 |4.87 |6910.6 |256 |
| [convnext_femto.d1_in1k](https://huggingface.co/timm/convnext_femto.d1_in1k) |77.454|93.68 |224 |5.22 |0.79 |4.57 |7189.92 |256 |
| [convnextv2_atto.fcmae_ft_in1k](https://huggingface.co/timm/convnextv2_atto.fcmae_ft_in1k) |76.664|93.044|224 |3.71 |0.55 |3.81 |4728.91 |256 |
| [convnext_atto_ols.a2_in1k](https://huggingface.co/timm/convnext_atto_ols.a2_in1k) |75.88 |92.846|224 |3.7 |0.58 |4.11 |7963.16 |256 |
| [convnext_atto.d2_in1k](https://huggingface.co/timm/convnext_atto.d2_in1k) |75.664|92.9 |224 |3.7 |0.55 |3.81 |8439.22 |256 |

## Citation

```bibtex

@software{ilharco_gabriel_2021_5143773,

author = {Ilharco, Gabriel and

Wortsman, Mitchell and

Wightman, Ross and

Gordon, Cade and

Carlini, Nicholas and

Taori, Rohan and

Dave, Achal and

Shankar, Vaishaal and

Namkoong, Hongseok and

Miller, John and

Hajishirzi, Hannaneh and

Farhadi, Ali and

Schmidt, Ludwig},

title = {OpenCLIP},

month = jul,

year = 2021,

note = {If you use this software, please cite it as below.},

publisher = {Zenodo},

version = {0.1},

doi = {10.5281/zenodo.5143773},

url = {https://doi.org/10.5281/zenodo.5143773}

}

```

```bibtex

@inproceedings{schuhmann2022laionb,

title={{LAION}-5B: An open large-scale dataset for training next generation image-text models},

author={Christoph Schuhmann and

Romain Beaumont and

Richard Vencu and

Cade W Gordon and

Ross Wightman and

Mehdi Cherti and

Theo Coombes and

Aarush Katta and

Clayton Mullis and

Mitchell Wortsman and

Patrick Schramowski and

Srivatsa R Kundurthy and

Katherine Crowson and

Ludwig Schmidt and

Robert Kaczmarczyk and

Jenia Jitsev},

booktitle={Thirty-sixth Conference on Neural Information Processing Systems Datasets and Benchmarks Track},

year={2022},

url={https://openreview.net/forum?id=M3Y74vmsMcY}

}

```

```bibtex

@misc{rw2019timm,

author = {Ross Wightman},

title = {PyTorch Image Models},

year = {2019},

publisher = {GitHub},

journal = {GitHub repository},

doi = {10.5281/zenodo.4414861},

howpublished = {\url{https://github.com/huggingface/pytorch-image-models}}

}

```

```bibtex

@inproceedings{Radford2021LearningTV,

title={Learning Transferable Visual Models From Natural Language Supervision},

author={Alec Radford and Jong Wook Kim and Chris Hallacy and A. Ramesh and Gabriel Goh and Sandhini Agarwal and Girish Sastry and Amanda Askell and Pamela Mishkin and Jack Clark and Gretchen Krueger and Ilya Sutskever},

booktitle={ICML},

year={2021}

}

```

```bibtex

@article{liu2022convnet,

author = {Zhuang Liu and Hanzi Mao and Chao-Yuan Wu and Christoph Feichtenhofer and Trevor Darrell and Saining Xie},

title = {A ConvNet for the 2020s},

journal = {Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR)},

year = {2022},

}

```

|

ntc-ai/SDXL-LoRA-slider.cinematic-lighting | ntc-ai | "2024-01-27T01:28:52Z" | 56,812 | 5 | diffusers | [

"diffusers",

"text-to-image",

"stable-diffusion-xl",

"lora",

"template:sd-lora",

"template:sdxl-lora",

"sdxl-sliders",

"ntcai.xyz-sliders",

"concept",

"en",

"base_model:stabilityai/stable-diffusion-xl-base-1.0",

"license:mit",

"region:us"

] | text-to-image | "2024-01-27T01:28:49Z" |

---

language:

- en

thumbnail: "images/evaluate/cinematic lighting.../cinematic lighting_17_3.0.png"

widget:

- text: cinematic lighting

output:

url: images/cinematic lighting_17_3.0.png

- text: cinematic lighting

output:

url: images/cinematic lighting_19_3.0.png

- text: cinematic lighting

output:

url: images/cinematic lighting_20_3.0.png

- text: cinematic lighting

output:

url: images/cinematic lighting_21_3.0.png

- text: cinematic lighting

output:

url: images/cinematic lighting_22_3.0.png

tags:

- text-to-image

- stable-diffusion-xl

- lora

- template:sd-lora

- template:sdxl-lora

- sdxl-sliders

- ntcai.xyz-sliders

- concept

- diffusers

license: "mit"

inference: false

instance_prompt: "cinematic lighting"

base_model: "stabilityai/stable-diffusion-xl-base-1.0"

---

# ntcai.xyz slider - cinematic lighting (SDXL LoRA)

| Strength: -3 | Strength: 0 | Strength: 3 |
| --- | --- | --- |
| <img src="images/cinematic lighting_17_-3.0.png" width=256 height=256 /> | <img src="images/cinematic lighting_17_0.0.png" width=256 height=256 /> | <img src="images/cinematic lighting_17_3.0.png" width=256 height=256 /> |
| <img src="images/cinematic lighting_19_-3.0.png" width=256 height=256 /> | <img src="images/cinematic lighting_19_0.0.png" width=256 height=256 /> | <img src="images/cinematic lighting_19_3.0.png" width=256 height=256 /> |
| <img src="images/cinematic lighting_20_-3.0.png" width=256 height=256 /> | <img src="images/cinematic lighting_20_0.0.png" width=256 height=256 /> | <img src="images/cinematic lighting_20_3.0.png" width=256 height=256 /> |

## Download

Weights for this model are available in Safetensors format.
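For reference, a `.safetensors` file begins with an 8-byte little-endian unsigned integer giving the length of a JSON header, followed by that header (tensor names, dtypes, shapes and byte offsets, plus an optional `__metadata__` entry) and then the raw tensor bytes. The minimal pure-Python sketch below lists the tensors in a file without loading them. In practice the `safetensors` library's `safe_open` does this for you; the file name in the usage comment is only a placeholder.

```python
import json
import struct


def read_safetensors_header(path):
    """Return the JSON header of a .safetensors file as a dict (tensor data is not read)."""
    with open(path, "rb") as f:
        (header_len,) = struct.unpack("<Q", f.read(8))  # u64, little-endian
        header = json.loads(f.read(header_len))
    header.pop("__metadata__", None)  # optional free-form string metadata
    return header


def list_tensors(path):
    """Map tensor name -> (dtype, shape) for every tensor in the file."""
    return {name: (info["dtype"], tuple(info["shape"]))
            for name, info in read_safetensors_header(path).items()}


# e.g. list_tensors("cinematic lighting.safetensors")
```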

## Trigger words

You can apply this LoRA with trigger words for additional effect:

```

cinematic lighting

```

## Use in diffusers

```python

from diffusers import StableDiffusionXLPipeline

from diffusers import EulerAncestralDiscreteScheduler

import torch

pipe = StableDiffusionXLPipeline.from_single_file("https://huggingface.co/martyn/sdxl-turbo-mario-merge-top-rated/blob/main/topRatedTurboxlLCM_v10.safetensors")

pipe.to("cuda")

pipe.scheduler = EulerAncestralDiscreteScheduler.from_config(pipe.scheduler.config)

# Load the LoRA

pipe.load_lora_weights('ntc-ai/SDXL-LoRA-slider.cinematic-lighting', weight_name='cinematic lighting.safetensors', adapter_name="cinematic lighting")

# Activate the LoRA

pipe.set_adapters(["cinematic lighting"], adapter_weights=[2.0])

prompt = "medieval rich kingpin sitting in a tavern, cinematic lighting"

negative_prompt = "nsfw"

width = 512

height = 512

num_inference_steps = 10

guidance_scale = 2

image = pipe(prompt, negative_prompt=negative_prompt, width=width, height=height, guidance_scale=guidance_scale, num_inference_steps=num_inference_steps).images[0]

image.save('result.png')

```
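`adapter_weights=[2.0]` sets how strongly the slider is applied, and the strength table above shows values from -3 to 3. Conceptually, a LoRA adds a low-rank update to each adapted weight matrix, W' = W + s · (B @ A), so s = 0 leaves the base model unchanged and a negative s pushes in the opposite direction. A pure-Python sketch of that merge on toy 2x2 matrices follows. The matrices are made up, and PEFT additionally multiplies the update by `lora_alpha / r`, which is omitted here.

```python
def matmul(a, b):
    """Multiply two matrices given as nested lists."""
    return [[sum(a[i][k] * b[k][j] for k in range(len(b)))
             for j in range(len(b[0]))] for i in range(len(a))]


def apply_lora(w, lora_b, lora_a, scale):
    """Return W + scale * (B @ A), the merged weight for a given slider strength."""
    delta = matmul(lora_b, lora_a)
    return [[w[i][j] + scale * delta[i][j] for j in range(len(w[0]))]
            for i in range(len(w))]


W = [[1.0, 0.0], [0.0, 1.0]]  # toy base weight (d_out x d_in)
B = [[1.0], [0.5]]            # rank-1 LoRA factor, d_out x r
A = [[0.2, -0.4]]             # rank-1 LoRA factor, r x d_in

for s in (-3.0, 0.0, 3.0):
    print(s, apply_lora(W, B, A, s))
```

The update is linear in s, which is why negative strengths are meaningful for a slider; how these particular sliders were trained is not described in this card.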

## Support the Patreon

If you like this model please consider [joining our Patreon](https://www.patreon.com/NTCAI).

By joining our Patreon, you'll gain access to an ever-growing library of over 1,140 unique and diverse LoRAs, covering a wide range of styles and genres. You'll also receive early access to new models and updates, exclusive behind-the-scenes content, and the powerful LoRA slider creator, allowing you to craft your own custom LoRAs and experiment with endless possibilities.

Your support on Patreon will allow us to continue developing and refining new models.

## Other resources

- [CivitAI](https://civitai.com/user/ntc) - Follow ntc on Civit for even more LoRAs

- [ntcai.xyz](https://ntcai.xyz) - See ntcai.xyz to find more articles and LoRAs

|

ntc-ai/SDXL-LoRA-slider.anime | ntc-ai | "2024-02-06T00:29:53Z" | 56,778 | 1 | diffusers | [

"diffusers",

"text-to-image",

"stable-diffusion-xl",

"lora",

"template:sd-lora",

"template:sdxl-lora",

"sdxl-sliders",

"ntcai.xyz-sliders",

"concept",

"en",

"base_model:stabilityai/stable-diffusion-xl-base-1.0",

"license:mit",

"region:us"

] | text-to-image | "2023-12-11T09:45:31Z" |

---

language:

- en

thumbnail: "images/anime_17_3.0.png"

widget:

- text: anime

output:

url: images/anime_17_3.0.png

- text: anime

output:

url: images/anime_19_3.0.png

- text: anime

output:

url: images/anime_20_3.0.png

- text: anime

output:

url: images/anime_21_3.0.png

- text: anime

output:

url: images/anime_22_3.0.png

tags:

- text-to-image

- stable-diffusion-xl

- lora

- template:sd-lora

- template:sdxl-lora

- sdxl-sliders

- ntcai.xyz-sliders

- concept

- diffusers

license: "mit"

inference: false

instance_prompt: "anime"

base_model: "stabilityai/stable-diffusion-xl-base-1.0"

---

# ntcai.xyz slider - anime (SDXL LoRA)

| Strength: -3 | Strength: 0 | Strength: 3 |
| --- | --- | --- |
| <img src="images/anime_17_-3.0.png" width=256 height=256 /> | <img src="images/anime_17_0.0.png" width=256 height=256 /> | <img src="images/anime_17_3.0.png" width=256 height=256 /> |
| <img src="images/anime_19_-3.0.png" width=256 height=256 /> | <img src="images/anime_19_0.0.png" width=256 height=256 /> | <img src="images/anime_19_3.0.png" width=256 height=256 /> |
| <img src="images/anime_20_-3.0.png" width=256 height=256 /> | <img src="images/anime_20_0.0.png" width=256 height=256 /> | <img src="images/anime_20_3.0.png" width=256 height=256 /> |

See more at [https://sliders.ntcai.xyz/sliders/app/loras/36f7a252-7e7a-4e7d-9ae0-fc31cdc48fef](https://sliders.ntcai.xyz/sliders/app/loras/36f7a252-7e7a-4e7d-9ae0-fc31cdc48fef)

## Download

Weights for this model are available in Safetensors format.

## Trigger words

You can apply this LoRA with trigger words for additional effect:

```

anime

```

## Use in diffusers

```python

from diffusers import StableDiffusionXLPipeline

from diffusers import EulerAncestralDiscreteScheduler

import torch

pipe = StableDiffusionXLPipeline.from_single_file("https://huggingface.co/martyn/sdxl-turbo-mario-merge-top-rated/blob/main/topRatedTurboxlLCM_v10.safetensors")

pipe.to("cuda")

pipe.scheduler = EulerAncestralDiscreteScheduler.from_config(pipe.scheduler.config)

# Load the LoRA

pipe.load_lora_weights('ntc-ai/SDXL-LoRA-slider.anime', weight_name='anime.safetensors', adapter_name="anime")

# Activate the LoRA

pipe.set_adapters(["anime"], adapter_weights=[2.0])

prompt = "medieval rich kingpin sitting in a tavern, anime"

negative_prompt = "nsfw"

width = 512

height = 512

num_inference_steps = 10

guidance_scale = 2

image = pipe(prompt, negative_prompt=negative_prompt, width=width, height=height, guidance_scale=guidance_scale, num_inference_steps=num_inference_steps).images[0]

image.save('result.png')

```

## Support the Patreon

If you like this model please consider [joining our Patreon](https://www.patreon.com/NTCAI).

By joining our Patreon, you'll gain access to an ever-growing library of over 1,496 unique and diverse LoRAs along with more than 14,600 slider merges, covering a wide range of styles and genres. You'll also receive early access to new models and updates, exclusive behind-the-scenes content, and the powerful <strong>NTC Slider Factory</strong> LoRA creator, allowing you to craft your own custom LoRAs and merges, opening up endless possibilities.

Your support on Patreon will allow us to continue developing new models and tools.

## Other resources

- [CivitAI](https://civitai.com/user/ntc) - Follow ntc on Civit for even more LoRAs

- [ntcai.xyz](https://ntcai.xyz) - See ntcai.xyz to find more articles and LoRAs

|

prajjwal1/bert-mini | prajjwal1 | "2021-10-27T18:27:38Z" | 56,656 | 17 | transformers | [

"transformers",

"pytorch",

"BERT",

"MNLI",

"NLI",

"transformer",

"pre-training",

"en",

"arxiv:1908.08962",

"arxiv:2110.01518",

"license:mit",

"endpoints_compatible",

"region:us"

] | null | "2022-03-02T23:29:05Z" | ---

language:

- en

license:

- mit

tags:

- BERT

- MNLI

- NLI

- transformer

- pre-training

---

This model is a PyTorch pre-trained model converted from a TensorFlow checkpoint in the [official Google BERT repository](https://github.com/google-research/bert).

This is one of the smaller pre-trained BERT variants, together with [bert-small](https://huggingface.co/prajjwal1/bert-small) and [bert-medium](https://huggingface.co/prajjwal1/bert-medium). They were introduced in the study `Well-Read Students Learn Better: On the Importance of Pre-training Compact Models` ([arXiv](https://arxiv.org/abs/1908.08962)) and ported to HF for the study `Generalization in NLI: Ways (Not) To Go Beyond Simple Heuristics` ([arXiv](https://arxiv.org/abs/2110.01518)). These models are meant to be fine-tuned on a downstream task.

If you use the model, please consider citing both papers:

```

@misc{bhargava2021generalization,

title={Generalization in NLI: Ways (Not) To Go Beyond Simple Heuristics},

author={Prajjwal Bhargava and Aleksandr Drozd and Anna Rogers},

year={2021},

eprint={2110.01518},

archivePrefix={arXiv},

primaryClass={cs.CL}

}

@article{DBLP:journals/corr/abs-1908-08962,

author = {Iulia Turc and

Ming{-}Wei Chang and

Kenton Lee and

Kristina Toutanova},

title = {Well-Read Students Learn Better: The Impact of Student Initialization

on Knowledge Distillation},

journal = {CoRR},

volume = {abs/1908.08962},

year = {2019},

url = {http://arxiv.org/abs/1908.08962},

eprinttype = {arXiv},

eprint = {1908.08962},

timestamp = {Thu, 29 Aug 2019 16:32:34 +0200},

biburl = {https://dblp.org/rec/journals/corr/abs-1908-08962.bib},

bibsource = {dblp computer science bibliography, https://dblp.org}

}

```

Config of this model:

`prajjwal1/bert-mini` (L=4, H=256) [Model Link](https://huggingface.co/prajjwal1/bert-mini)

Other models to check out:

- `prajjwal1/bert-tiny` (L=2, H=128) [Model Link](https://huggingface.co/prajjwal1/bert-tiny)

- `prajjwal1/bert-small` (L=4, H=512) [Model Link](https://huggingface.co/prajjwal1/bert-small)

- `prajjwal1/bert-medium` (L=8, H=512) [Model Link](https://huggingface.co/prajjwal1/bert-medium)
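To put the L (layers) and H (hidden size) figures in perspective, here is a back-of-the-envelope parameter count for a BERT encoder. It assumes the usual BERT conventions (a 30,522-token uncased WordPiece vocabulary, 512 positions, 2 token types, a feed-forward size of 4H, and a pooler layer); these are assumptions, so check each checkpoint's `config.json` for the real values. The number of attention heads does not change the count.

```python
def bert_param_count(num_layers, hidden, vocab=30522, max_pos=512, type_vocab=2):
    """Approximate parameter count of a BERT encoder (embeddings + layers + pooler)."""
    ffn = 4 * hidden           # intermediate size, assumed to be 4H
    layer_norm = 2 * hidden    # LayerNorm gain and bias
    embeddings = (vocab + max_pos + type_vocab) * hidden + layer_norm
    attention = 4 * (hidden * hidden + hidden)  # Q, K, V and output projections
    feed_forward = (hidden * ffn + ffn) + (ffn * hidden + hidden)
    per_layer = attention + feed_forward + 2 * layer_norm
    pooler = hidden * hidden + hidden
    return embeddings + num_layers * per_layer + pooler


for name, L, H in [("bert-tiny", 2, 128), ("bert-mini", 4, 256),
                   ("bert-small", 4, 512), ("bert-medium", 8, 512)]:
    print(f"{name}: ~{bert_param_count(L, H) / 1e6:.1f}M parameters")
# bert-tiny: ~4.4M parameters
# bert-mini: ~11.2M parameters
# bert-small: ~28.8M parameters
# bert-medium: ~41.4M parameters
```

Most of bert-mini's roughly 11M parameters sit in the token embedding table (about 7.8M), which is why shrinking H reduces size much faster than removing layers.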

The original implementation and more information can be found in [this GitHub repository](https://github.com/prajjwal1/generalize_lm_nli).

Twitter: [@prajjwal_1](https://twitter.com/prajjwal_1)

|

ntc-ai/SDXL-LoRA-slider.pixar-style | ntc-ai | "2024-02-06T00:30:20Z" | 56,644 | 5 | diffusers | [

"diffusers",

"text-to-image",

"stable-diffusion-xl",

"lora",

"template:sd-lora",

"template:sdxl-lora",

"sdxl-sliders",

"ntcai.xyz-sliders",

"concept",

"en",

"base_model:stabilityai/stable-diffusion-xl-base-1.0",

"license:mit",

"region:us"

] | text-to-image | "2023-12-11T16:46:56Z" |

---

language:

- en

thumbnail: "images/pixar-style_17_3.0.png"

widget:

- text: pixar-style

output:

url: images/pixar-style_17_3.0.png

- text: pixar-style

output:

url: images/pixar-style_19_3.0.png

- text: pixar-style

output:

url: images/pixar-style_20_3.0.png

- text: pixar-style

output:

url: images/pixar-style_21_3.0.png

- text: pixar-style

output:

url: images/pixar-style_22_3.0.png

tags:

- text-to-image

- stable-diffusion-xl

- lora

- template:sd-lora

- template:sdxl-lora

- sdxl-sliders

- ntcai.xyz-sliders

- concept

- diffusers

license: "mit"

inference: false

instance_prompt: "pixar-style"

base_model: "stabilityai/stable-diffusion-xl-base-1.0"

---

# ntcai.xyz slider - pixar-style (SDXL LoRA)

| Strength: -3 | Strength: 0 | Strength: 3 |
| --- | --- | --- |
| <img src="images/pixar-style_17_-3.0.png" width=256 height=256 /> | <img src="images/pixar-style_17_0.0.png" width=256 height=256 /> | <img src="images/pixar-style_17_3.0.png" width=256 height=256 /> |
| <img src="images/pixar-style_19_-3.0.png" width=256 height=256 /> | <img src="images/pixar-style_19_0.0.png" width=256 height=256 /> | <img src="images/pixar-style_19_3.0.png" width=256 height=256 /> |
| <img src="images/pixar-style_20_-3.0.png" width=256 height=256 /> | <img src="images/pixar-style_20_0.0.png" width=256 height=256 /> | <img src="images/pixar-style_20_3.0.png" width=256 height=256 /> |
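The strength columns presumably correspond to the adapter weight passed to `pipe.set_adapters` in the diffusers example below. Conceptually, a LoRA adds a low-rank update to each adapted weight matrix, scaled by that weight. The following is a simplified, pure-Python sketch of the idea, not the diffusers or PEFT implementation: it assumes the common form W' = W + s · (alpha / r) · (B A) and ignores dropout and layer selection. It shows why strength 0 reproduces the base model and why a negative strength pushes the weights the opposite way. `matmul` and `apply_lora` are hypothetical helpers.

```python
from typing import List

Matrix = List[List[float]]


def matmul(a: Matrix, b: Matrix) -> Matrix:
    """Plain nested-list matrix product: (m x k) @ (k x n) -> (m x n)."""
    return [[sum(a[i][k] * b[k][j] for k in range(len(b))) for j in range(len(b[0]))]
            for i in range(len(a))]


def apply_lora(w: Matrix, down: Matrix, up: Matrix,
               strength: float, alpha: float, rank: int) -> Matrix:
    """Return W + strength * (alpha / rank) * (up @ down).

    w: base weight (out x in); down: LoRA "A" (rank x in); up: LoRA "B" (out x rank).
    """
    delta = matmul(up, down)          # low-rank update, shape (out x in)
    scale = strength * alpha / rank   # the slider strength scales the whole update
    return [[w[i][j] + scale * delta[i][j] for j in range(len(w[0]))]
            for i in range(len(w))]


if __name__ == "__main__":
    w = [[1.0, 0.0], [0.0, 1.0]]
    down = [[1.0, 2.0]]    # rank-1 "A"
    up = [[1.0], [0.0]]    # rank-1 "B"
    for s in (-3.0, 0.0, 3.0):
        print(s, apply_lora(w, down, up, strength=s, alpha=1.0, rank=1))
```

Because the update is linear in the strength, the -3 and +3 results are mirror images around the base weights.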

See more at [https://sliders.ntcai.xyz/sliders/app/loras/c48d077a-a9e0-4bc0-a9a8-e607835a7f1d](https://sliders.ntcai.xyz/sliders/app/loras/c48d077a-a9e0-4bc0-a9a8-e607835a7f1d)

## Download

Weights for this model are available in Safetensors format.

## Trigger words

You can apply this LoRA with trigger words for additional effect:

```
pixar-style
```

## Use in diffusers

```python
from diffusers import StableDiffusionXLPipeline
from diffusers import EulerAncestralDiscreteScheduler
import torch

# Base checkpoint for this example; it appears to be an SDXL Turbo/LCM merge,
# which fits the low step count and guidance scale used below.
pipe = StableDiffusionXLPipeline.from_single_file("https://huggingface.co/martyn/sdxl-turbo-mario-merge-top-rated/blob/main/topRatedTurboxlLCM_v10.safetensors")
pipe.to("cuda")
pipe.scheduler = EulerAncestralDiscreteScheduler.from_config(pipe.scheduler.config)

# Load the LoRA (named adapters require the `peft` package to be installed)
pipe.load_lora_weights('ntc-ai/SDXL-LoRA-slider.pixar-style', weight_name='pixar-style.safetensors', adapter_name="pixar-style")

# Activate the LoRA; the adapter weight sets the slider strength
pipe.set_adapters(["pixar-style"], adapter_weights=[2.0])

prompt = "medieval rich kingpin sitting in a tavern, pixar-style"
negative_prompt = "nsfw"
width = 512
height = 512
num_inference_steps = 10
guidance_scale = 2

image = pipe(prompt, negative_prompt=negative_prompt, width=width, height=height, guidance_scale=guidance_scale, num_inference_steps=num_inference_steps).images[0]
image.save('result.png')
```

## Support the Patreon

If you like this model, please consider [joining our Patreon](https://www.patreon.com/NTCAI).

By joining our Patreon, you'll gain access to an ever-growing library of 1496+ unique and diverse LoRAs along with 14602+ slider merges, covering a wide range of styles and genres. You'll also receive early access to new models and updates, exclusive behind-the-scenes content, and the powerful <strong>NTC Slider Factory</strong> LoRA creator, which lets you craft your own custom LoRAs and merges, opening up endless possibilities.

Your support on Patreon will allow us to continue developing new models and tools.

## Other resources

- [CivitAI](https://civitai.com/user/ntc) - Follow ntc on CivitAI for even more LoRAs

- [ntcai.xyz](https://ntcai.xyz) - See ntcai.xyz to find more articles and LoRAs

|

ntc-ai/SDXL-LoRA-slider.raw | ntc-ai | "2024-02-06T00:34:31Z" | 56,548 | 2 | diffusers | [

"diffusers",

"text-to-image",

"stable-diffusion-xl",

"lora",

"template:sd-lora",

"template:sdxl-lora",

"sdxl-sliders",

"ntcai.xyz-sliders",

"concept",

"en",

"base_model:stabilityai/stable-diffusion-xl-base-1.0",

"license:mit",

"region:us"

] | text-to-image | "2023-12-17T10:32:34Z" |

---
language:
- en
thumbnail: "images/raw_17_3.0.png"
widget:
- text: raw
  output:
    url: images/raw_17_3.0.png
- text: raw
  output:
    url: images/raw_19_3.0.png
- text: raw
  output:
    url: images/raw_20_3.0.png
- text: raw
  output:
    url: images/raw_21_3.0.png
- text: raw
  output:
    url: images/raw_22_3.0.png
tags:
- text-to-image
- stable-diffusion-xl
- lora
- template:sd-lora
- template:sdxl-lora
- sdxl-sliders
- ntcai.xyz-sliders
- concept
- diffusers
license: "mit"
inference: false
instance_prompt: "raw"
base_model: "stabilityai/stable-diffusion-xl-base-1.0"
---

# ntcai.xyz slider - raw (SDXL LoRA)

| Strength: -3 | Strength: 0 | Strength: 3 |
| --- | --- | --- |
| <img src="images/raw_17_-3.0.png" width=256 height=256 /> | <img src="images/raw_17_0.0.png" width=256 height=256 /> | <img src="images/raw_17_3.0.png" width=256 height=256 /> |
| <img src="images/raw_19_-3.0.png" width=256 height=256 /> | <img src="images/raw_19_0.0.png" width=256 height=256 /> | <img src="images/raw_19_3.0.png" width=256 height=256 /> |
| <img src="images/raw_20_-3.0.png" width=256 height=256 /> | <img src="images/raw_20_0.0.png" width=256 height=256 /> | <img src="images/raw_20_3.0.png" width=256 height=256 /> |

See more at [https://sliders.ntcai.xyz/sliders/app/loras/c2a33da7-6834-473d-8e52-0b4a5637fbde](https://sliders.ntcai.xyz/sliders/app/loras/c2a33da7-6834-473d-8e52-0b4a5637fbde)

## Download

Weights for this model are available in Safetensors format.

## Trigger words

You can apply this LoRA with trigger words for additional effect:

```
raw
```

## Use in diffusers

```python
from diffusers import StableDiffusionXLPipeline
from diffusers import EulerAncestralDiscreteScheduler
import torch

# Base checkpoint for this example; it appears to be an SDXL Turbo/LCM merge,
# which fits the low step count and guidance scale used below.
pipe = StableDiffusionXLPipeline.from_single_file("https://huggingface.co/martyn/sdxl-turbo-mario-merge-top-rated/blob/main/topRatedTurboxlLCM_v10.safetensors")
pipe.to("cuda")
pipe.scheduler = EulerAncestralDiscreteScheduler.from_config(pipe.scheduler.config)

# Load the LoRA (named adapters require the `peft` package to be installed)
pipe.load_lora_weights('ntc-ai/SDXL-LoRA-slider.raw', weight_name='raw.safetensors', adapter_name="raw")

# Activate the LoRA; the adapter weight sets the slider strength
pipe.set_adapters(["raw"], adapter_weights=[2.0])

prompt = "medieval rich kingpin sitting in a tavern, raw"
negative_prompt = "nsfw"
width = 512
height = 512
num_inference_steps = 10
guidance_scale = 2

image = pipe(prompt, negative_prompt=negative_prompt, width=width, height=height, guidance_scale=guidance_scale, num_inference_steps=num_inference_steps).images[0]
image.save('result.png')
```
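The comparison table at the top of this card varies only the slider strength across each row. A minimal sketch of producing such a grid, assuming each row is rendered with one fixed seed (the card does not say how its preview images were generated). `strength_sweep` and its callback parameters are hypothetical helpers, not part of diffusers; passing callbacks keeps the loop testable without a GPU, and the commented wiring reuses `pipe` and the settings from the example above.

```python
from typing import Callable, Dict, Sequence, Tuple


def strength_sweep(
    set_strength: Callable[[float], None],
    render: Callable[[int], object],
    seeds: Sequence[int],
    strengths: Sequence[float] = (-3.0, 0.0, 3.0),
) -> Dict[Tuple[int, float], object]:
    """Render one image per (seed, strength) pair.

    Each seed plays the role of one table row and each strength one column.
    The seed stays fixed across a row so only the LoRA strength changes.
    """
    grid: Dict[Tuple[int, float], object] = {}
    for seed in seeds:
        for strength in strengths:
            set_strength(strength)          # e.g. update the adapter weight
            grid[(seed, strength)] = render(seed)
    return grid


# Hypothetical wiring to the diffusers pipeline above (not executed here):
#
#   set_strength = lambda s: pipe.set_adapters(["raw"], adapter_weights=[s])
#   render = lambda seed: pipe(
#       prompt,
#       negative_prompt=negative_prompt,
#       width=width, height=height,
#       guidance_scale=guidance_scale,
#       num_inference_steps=num_inference_steps,
#       generator=torch.Generator("cuda").manual_seed(seed),
#   ).images[0]
#   grid = strength_sweep(set_strength, render, seeds=[0, 1, 2])  # arbitrary seeds
```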

## Support the Patreon

If you like this model, please consider [joining our Patreon](https://www.patreon.com/NTCAI).

By joining our Patreon, you'll gain access to an ever-growing library of 1496+ unique and diverse LoRAs along with 14602+ slider merges, covering a wide range of styles and genres. You'll also receive early access to new models and updates, exclusive behind-the-scenes content, and the powerful <strong>NTC Slider Factory</strong> LoRA creator, which lets you craft your own custom LoRAs and merges, opening up endless possibilities.

Your support on Patreon will allow us to continue developing new models and tools.

## Other resources

- [CivitAI](https://civitai.com/user/ntc) - Follow ntc on CivitAI for even more LoRAs

- [ntcai.xyz](https://ntcai.xyz) - See ntcai.xyz to find more articles and LoRAs

|

naver-clova-ocr/bros-base-uncased | naver-clova-ocr | "2022-04-05T13:56:46Z" | 56,503 | 13 | transformers | [

"transformers",

"pytorch",

"bros",

"feature-extraction",

"arxiv:2108.04539",

"endpoints_compatible",

"region:us"

] | feature-extraction | "2022-03-02T23:29:05Z" | # BROS

GitHub: https://github.com/clovaai/bros

## Introduction

BROS (BERT Relying On Spatiality) is a pre-trained language model that focuses on text and layout for better key information extraction from documents.

Given the OCR results of a document image (pairs of text and bounding boxes), it can perform various key information extraction tasks, such as extracting an ordered item list from a receipt.

For more details, please refer to our paper:

BROS: A Pre-trained Language Model Focusing on Text and Layout for Better Key Information Extraction from Documents<br>
Teakgyu Hong, Donghyun Kim, Mingi Ji, Wonseok Hwang, Daehyun Nam, Sungrae Park<br>
AAAI 2022 - Main Technical Track

[[arXiv]](https://arxiv.org/abs/2108.04539)
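BROS takes OCR text together with bounding boxes. A minimal sketch of preparing those boxes, under two assumptions not stated in this card: that pixel coordinates are normalized to [0, 1] by page width and height, and that a box may be expanded into a four-corner quad (top-left, top-right, bottom-right, bottom-left), the corner order the Hugging Face `transformers` BROS implementation is understood to use when it receives 4-value boxes. Verify both against the version you use. `normalize_box`, `box_to_quad`, and `prepare_boxes` are hypothetical helpers.

```python
from typing import List, Sequence, Tuple

Box = Tuple[float, float, float, float]  # (x0, y0, x1, y1)


def _clamp(v: float) -> float:
    # OCR boxes can spill slightly outside the page; keep values in [0, 1].
    return min(max(v, 0.0), 1.0)


def normalize_box(box: Box, width: float, height: float) -> Box:
    """Scale a pixel box to [0, 1] by the page width and height."""
    x0, y0, x1, y1 = box
    return (_clamp(x0 / width), _clamp(y0 / height),
            _clamp(x1 / width), _clamp(y1 / height))


def box_to_quad(box: Box) -> Tuple[float, ...]:
    """Expand (x0, y0, x1, y1) into 8 values: TL, TR, BR, BL corners."""
    x0, y0, x1, y1 = box
    return (x0, y0, x1, y0, x1, y1, x0, y1)


def prepare_boxes(words: Sequence[Tuple[str, Box]], width: float,
                  height: float) -> List[Tuple[str, Tuple[float, ...]]]:
    """Pair each OCR word with its normalized quad."""
    return [(text, box_to_quad(normalize_box(box, width, height)))
            for text, box in words]


if __name__ == "__main__":
    ocr = [("TOTAL", (20, 10, 100, 50)), ("12.00", (120, 10, 190, 50))]
    for text, quad in prepare_boxes(ocr, width=200, height=100):
        print(text, quad)
```

When a word is split into several subword tokens, each token would typically reuse the word's box.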

## Pre-trained models

| name | # params | Hugging Face - Models |
|---------------------|---------:|-------------------------------------------------------------------------------------------------|
| bros-base-uncased (**this**) | < 110M | [naver-clova-ocr/bros-base-uncased](https://huggingface.co/naver-clova-ocr/bros-base-uncased) |
| bros-large-uncased | < 340M | [naver-clova-ocr/bros-large-uncased](https://huggingface.co/naver-clova-ocr/bros-large-uncased) |

|

microsoft/Phi-3-mini-4k-instruct-gguf | microsoft | "2024-07-02T19:36:04Z" | 56,415 | 396 | null | [

"gguf",

"nlp",

"code",

"text-generation",

"en",

"license:mit",

"region:us"

] | text-generation | "2024-04-22T17:02:08Z" | ---
license: mit
license_link: >-
  https://huggingface.co/microsoft/Phi-3-mini-4k-instruct-gguf/resolve/main/LICENSE
language:
- en
pipeline_tag: text-generation
tags:
- nlp
- code
---

## Model Summary

This repo provides the GGUF format for the Phi-3-Mini-4K-Instruct.
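GGUF is the single-file model format used by llama.cpp. As a quick sanity check on a downloaded file, its fixed-size header can be read with the standard library alone. This sketch assumes GGUF version 2 or later (where the tensor and metadata counts are 64-bit) and a little-endian file; it does not parse the metadata key/value pairs that follow the header. `read_gguf_header` is a hypothetical helper, and the file name in the comment is illustrative only.

```python
import io
import struct
from typing import BinaryIO, NamedTuple


class GGUFHeader(NamedTuple):
    version: int
    tensor_count: int
    metadata_kv_count: int


def read_gguf_header(f: BinaryIO) -> GGUFHeader:
    """Read magic, version, tensor count and metadata count from a GGUF stream."""
    magic = f.read(4)
    if magic != b"GGUF":
        raise ValueError(f"not a GGUF file (magic={magic!r})")
    (version,) = struct.unpack("<I", f.read(4))      # uint32, little-endian
    if version < 2:
        raise ValueError(f"GGUF v{version} uses 32-bit counts; not handled here")
    tensor_count, kv_count = struct.unpack("<QQ", f.read(16))  # two uint64
    return GGUFHeader(version, tensor_count, kv_count)


if __name__ == "__main__":
    # Real use (illustrative file name):
    #   with open("Phi-3-mini-4k-instruct-q4.gguf", "rb") as f:
    #       print(read_gguf_header(f))
    # Synthetic header with made-up counts, so the demo runs without a download:
    demo = io.BytesIO(b"GGUF" + struct.pack("<IQQ", 3, 2, 5))
    print(read_gguf_header(demo))
```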