| anchor (string, 159 to 16.8k chars) | positive (string, 184 to 16.2k chars) | negative (string, 167 to 16.2k chars) | anchor_status (3 classes) |

|---|---|---|---|

## Inspiration

As women ourselves, we have always been aware that there are unfortunately additional measures we have to take in order to stay safe in public. Recently, we have seen videos emerge online for individuals to play in these situations, prompting users to engage in conversation with a “friend” on the other side. We saw that the idea was extremely helpful to so many people around the world, and wanted to use the features of voice assistants to add more convenience and versatility to the concept.

## What it does

Safety Buddy is an Alexa Skill that simulates a conversation with the user, creating the illusion that someone on the other end of the line is aware of the user's situation. It deliberately states that the user's location is being shared, and it keeps conversing with the user until they reach a safe location and can stop the skill.

## How I built it

We built Safety Buddy on the Alexa Developer Console, hosted the audio files on AWS S3, and used the Twilio messaging API to send the user a text message. On the front end, we created intents to capture what the user says and connected them to a JavaScript back end that handles each intent.

## Challenges I ran into

When we added a feature that has Alexa send the user a text message, sending the message interrupted the audio that was playing. With the help of a mentor, we learned to handle these asynchronous events correctly.

## Accomplishments that I'm proud of

We are proud of building an application that can help prevent dangerous situations. Our Alexa skill will keep people out of uncomfortable situations when they are alone and cannot contact anyone on their phone. We hope to see our creation being used for the greater good!

## What I learned

We were exploring different ways we could improve our skill in the future, and learned about the differences between deploying on AWS Lambda versus Microsoft Azure Functions. We used AWS Lambda for our development, but tested out Azure Functions briefly. In the future, we would further consider which platform to continue with.

## What's next for Safety Buddy

We wish to expand the skill by developing more intents so that users can engage in a variety of conversation flows. We can monetize these additional conversation options through in-skill purchases to fund further improvements to Safety Buddy and raise awareness among more people. We can also adapt the skill to other languages. | ## Inspiration

During the lockdown, most people are staying home and cannot interact with others, so we wanted to build a platform that connects like-minded people.

## What it does

You can register on our portal, browse events happening near you (e.g., sports or hiking), and join the host. The best thing about our platform is that once you register, you can use the voice assistant to search for events, ask a host to let you join, and publish your own events. Everything is hands-free and easy to use.

## How we built it

We built the front end with ReactJS and used Alexa for the voice assistant. Both connect to a back end running on an AWS instance, which handles every request to search for or publish an event. It is hosted live right now, so anyone can try it. We use MongoDB to store the currently active events, user details, and so on. When a user makes a request, we query the database based on the user's location and return the events happening nearby. The server exposes several REST APIs that serve these requests.
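The location-based lookup can be sketched as below. It assumes each event stores a latitude and longitude; the `Event` class, its field names, and the 10 km default radius are hypothetical. This version filters in application code with the haversine formula. In MongoDB itself, a `2dsphere` index with a `$near` query can push the same filtering into the database.

```python
import math
from dataclasses import dataclass

EARTH_RADIUS_KM = 6371.0  # mean Earth radius


@dataclass
class Event:
    title: str
    lat: float  # degrees
    lon: float  # degrees


def haversine_km(lat1: float, lon1: float, lat2: float, lon2: float) -> float:
    """Great-circle distance in km between two points given in degrees."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = math.radians(lat2 - lat1)
    dlmb = math.radians(lon2 - lon1)
    a = math.sin(dphi / 2) ** 2 + math.cos(phi1) * math.cos(phi2) * math.sin(dlmb / 2) ** 2
    return 2 * EARTH_RADIUS_KM * math.asin(math.sqrt(a))


def events_near(events, user_lat, user_lon, radius_km=10.0):
    """Return the events within radius_km of the user, nearest first."""
    scored = sorted(
        ((haversine_km(user_lat, user_lon, e.lat, e.lon), e) for e in events),
        key=lambda pair: pair[0],
    )
    return [e for dist, e in scored if dist <= radius_km]
```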

## Challenges we ran into

We faced a lot of technical challenges, such as setting up the server and building an Alexa voice assistant that serves the user without asking too many questions. We also treated safety and privacy as our top priorities.

## Accomplishments that we're proud of

An easy to use assistant and web portal to connect people.

## What we learned

How to use Alexa for a custom real-life use case. How to deploy to production on AWS instances. Configuring the server to

## What's next for Get Together

Adding more privacy for users who post events, offering official accounts for better credibility, and adding a rating mechanism for better matchmaking. | ## Inspiration

Partially inspired by the Smart Cities track, we wanted our app to have the direct utility of ordering food, while still being fun to interact with. We aimed to combine convenience with entertainment, making the experience more enjoyable than your typical drive-through order.

## What it does

You interact using only your voice. The app automatically detects when you start and stop talking, uses AI to transcribe what you say, identifies the food items (with modifications) you want, and adds them to your current order. It even handles details like size and flavor preferences. The AI then generates text-to-speech audio, which is played back to confirm your order in a humorous, engaging way. No setup or management is needed: the program is designed to ignore background noise and conversation, and it still takes your order with high precision.
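The write-up does not say how the start and end of speech are detected. One common and simple approach is energy-based voice activity detection with a short "hangover" of silent frames, sketched below. The sketch assumes audio that is already split into frames of float samples in [-1, 1]. The threshold and frame counts are illustrative values, not the project's tuned settings. Rejecting background conversation would take more than an energy threshold.

```python
import math


def frame_rms(frame):
    """Root-mean-square energy of one frame of samples."""
    return math.sqrt(sum(s * s for s in frame) / len(frame)) if frame else 0.0


def detect_utterances(frames, threshold=0.02, min_speech=3, max_silence=5):
    """Return (first_frame, last_frame) index pairs for each detected utterance.

    Speech starts after `min_speech` consecutive frames above `threshold`
    and ends once `max_silence` quiet frames follow the last loud frame.
    """
    segments = []
    start, last_loud, loud_run = None, -1, 0
    for i, frame in enumerate(frames):
        loud = frame_rms(frame) > threshold
        if start is None:
            loud_run = loud_run + 1 if loud else 0
            if loud_run >= min_speech:
                start, last_loud = i - min_speech + 1, i  # back-date to first loud frame
        elif loud:
            last_loud = i
        elif i - last_loud >= max_silence:
            segments.append((start, last_loud))
            start, loud_run = None, 0
    if start is not None:  # speaker was still talking when the audio ended
        segments.append((start, last_loud))
    return segments
```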

## How we built it

The frontend of the app is built with React and TypeScript, and the backend uses Flask and Python. We containerized the app with Docker and deployed it using Defang. We designed the menu in Canva, with a dash of Harvard colors.

## Challenges we ran into

One major challenge was getting the different parts of the app—frontend, backend, and AI—to communicate effectively. From media file conversions to AI prompt engineering, we worked through each of the problems together. We struggled particularly with maintaining smooth communication once the app was deployed. Additionally, fine-tuning the AI to accurately extract order information from voice inputs while keeping the interaction natural was a big hurdle.

## Accomplishments that we're proud of

We're proud of building a fully functioning product that integrates all the features we envisioned. We also managed to deploy the app, which was a huge achievement given the complexity of the project, and we completed our initial feature set within the hackathon timeframe. Working with Python data types was difficult, and we were proud to navigate around that. We are also extremely proud to have met a bunch of new people and to have tackled challenges we were not previously comfortable with.

## What we learned

We honed our skills in React, TypeScript, Flask, and Python, especially in how to make these technologies work together. We also learned how to containerize and deploy applications using Docker and Docker Compose, as well as how to use Defang for cloud deployment.

## What's next for Harvard Burger

Moving forward, we want to add a business-facing interface, where restaurant staff would be able to view and fulfill customer orders. There will also be individual kiosk devices to handle order inputs. These features would allow *Harvard Burger* to move from a demo to a fully functional app that restaurants could actually use. Lastly, we can sell the product by designing marketing strategies for fast food chains. | losing |

## Inspiration

There is a need for an electronic health record (EHR) system that is secure, accessible, and user-friendly. Hundreds of EHRs currently exist, and different clinical practices may use different systems. If a patient needs an emergency visit to a particular physician, that physician may be unable to access important records and patient information efficiently. This costs extra time and resources and strains the healthcare system. The problem is especially acute for patients traveling abroad, because doctors in one country may be unable to access a centralized healthcare database in another.

In addition, there is strong potential to use the available data for improved analytics. In a clinical consultation, a patient's description of symptoms may be ambiguous, and doctors often want to monitor symptoms over an extended period. With limited resources, this is impossible outside of an acute care unit in a hospital. As internet access becomes increasingly widespread, patients could self-report certain symptoms through a web portal, if such an EHR existed. With a large amount of patient data, artificial intelligence techniques can analyze the similarity between patients to predict certain outcomes before adverse events happen, so that intervention can occur in time.

## What it does

myHealthTech is a blockchain EHR system with a user-friendly interface that lets patients and healthcare providers record patient information such as clinical visit history, lab test results, and the patient's self-reported records. The system is a web application that any end user approved by the patient can access, so doctors in different clinics can reach essential information efficiently. Compared with a traditional database, the blockchain architecture stores patient data securely and anonymously in a decentralized manner, so third parties cannot access the encrypted information.
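The tamper-evidence idea behind a blockchain record store can be shown with a plain hash chain. This is a minimal illustration, not the project's Solidity contracts: it has no encryption, access control, or decentralization, and the `Block` and `RecordChain` names are hypothetical. Each block stores the SHA-256 digest of the previous block, so editing any stored record breaks validation.

```python
import hashlib
import json
from dataclasses import dataclass, field


@dataclass
class Block:
    index: int
    record: dict
    prev_digest: str
    digest: str = field(init=False)

    def __post_init__(self):
        self.digest = self.compute_digest()

    def compute_digest(self) -> str:
        # Canonical JSON so identical content always hashes identically.
        payload = json.dumps(
            {"index": self.index, "record": self.record, "prev": self.prev_digest},
            sort_keys=True,
        )
        return hashlib.sha256(payload.encode("utf-8")).hexdigest()


class RecordChain:
    def __init__(self):
        self.blocks = [Block(0, {"genesis": True}, "0" * 64)]

    def append(self, record: dict) -> None:
        prev = self.blocks[-1]
        self.blocks.append(Block(prev.index + 1, record, prev.digest))

    def is_valid(self) -> bool:
        for i, block in enumerate(self.blocks):
            if block.digest != block.compute_digest():
                return False  # contents changed after the block was sealed
            if i > 0 and block.prev_digest != self.blocks[i - 1].digest:
                return False  # link to the previous block is broken
        return True
```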

Artificial intelligence methods are used to analyze patient data for prognostication of adverse events. For instance, a patient's reported mood scores are compared to a database of similar patients that have resulted in self-harm, and myHealthTech will compute a probability that the patient will trend towards a self-harm event. This allows healthcare providers to monitor and intervene if an adverse event is predicted.
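The write-up does not name the similarity measure. A k-nearest-neighbour estimate is one straightforward reading of "compared to a database of similar patients", and it is sketched below. The feature vectors (for example, average mood and energy scores), the Euclidean distance, and k=3 are all assumptions. The estimated probability is the share of the k closest past patients whose records include the adverse event.

```python
import math


def knn_risk(query, history, k=3):
    """Estimate P(adverse event) as the fraction of the k most similar past
    patients (by Euclidean distance) whose records include that event.

    history: list of (feature_vector, had_event) pairs.
    """
    if not history:
        raise ValueError("history is empty")
    neighbours = sorted(history, key=lambda item: math.dist(query, item[0]))[:k]
    return sum(1 for _, had_event in neighbours if had_event) / len(neighbours)
```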

## How we built it

The blockchain EHR architecture was written in Solidity, using Truffle, TestRPC, and Remix. The web interface was written in HTML5, CSS3, and JavaScript. The artificial intelligence predictive behavior engine was written in Python.

## Challenges we ran into

The greatest challenge was integrating the back-end and front-end components. We had trouble linking smart contracts to the web UI and running the artificial intelligence engine from the web interface. Several of these problems required compatibility troubleshooting and a centralized Python server, which we will implement in a consistent environment as the project develops further.

## Accomplishments that we're proud of

We are proud of working with novel architecture and technology, providing a solution to solve common EHR problems in design, functionality, and implementation of data.

## What we learned

We learned the value of leveraging the strengths of different team members from design to programming and math in order to advance the technology of EHRs.

## What's next for myHealthTech?

Next is adding more self-reporting fields to increase the robustness of the artificial intelligence engine. For depression, the Diagnostic and Statistical Manual of Mental Disorders (DSM) provides clinical standards that identify markers such as mood level, confidence, energy, and feelings of guilt. By monitoring these values for individuals who have recovered, are depressed, or self-harm, the AI engine could predict the behavior of new individuals much more reliably by applying logistic regression and a deep learning approach to the data.
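The logistic-regression step mentioned above can be illustrated with a small, dependency-free sketch. The features are hypothetical DSM-style self-reports (such as mood and energy) rescaled to [0, 1], and label 1 marks a hypothetical adverse outcome. This is plain batch gradient descent with no regularization. In practice, a library model such as scikit-learn's `LogisticRegression` would be the usual choice.

```python
import math


def sigmoid(z: float) -> float:
    # Branch on the sign of z so math.exp never overflows.
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    ez = math.exp(z)
    return ez / (1.0 + ez)


def predict_proba(w, b, x) -> float:
    return sigmoid(sum(wj * xj for wj, xj in zip(w, x)) + b)


def fit_logistic(X, y, lr=0.5, epochs=5000):
    """Fit logistic regression by batch gradient descent on the mean log-loss."""
    n_features, m = len(X[0]), len(X)
    w, b = [0.0] * n_features, 0.0
    for _ in range(epochs):
        grad_w, grad_b = [0.0] * n_features, 0.0
        for xi, yi in zip(X, y):
            err = predict_proba(w, b, xi) - yi  # d(log-loss)/dz for this sample
            for j in range(n_features):
                grad_w[j] += err * xi[j]
            grad_b += err
        w = [wj - lr * gj / m for wj, gj in zip(w, grad_w)]
        b -= lr * grad_b / m
    return w, b
```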

Reporting symptoms is inconvenient, so a logical next step would be to integrate smart home technology, such as an Amazon Echo, that the patient can use for self-reporting. For instance, when the patient is at home, the Amazon Echo will prompt the patient with "What would you rate your mood today? What would you rate your energy today?" and record the answers in the patient's self-reporting records on myHealthTech.

These improvements would make myHealthTech a highly dynamic EHR with strong analytical capabilities to understand and predict patient outcomes and improve treatment options. | ## Inspiration

As a patient in the United States, you do not know what costs you face when you receive treatment at a hospital, or whether your insurance plan covers the expenses. Patients receive unexpected bills and are left with expensive copayments. In some instances, patients would pay less by covering the expenses out of pocket instead of using their insurance plan.

## What it does

Healthiator gives patients a comprehensive overview of the medical procedures they will need for their health condition. It sums up the total cost of that treatment depending on which hospital they go to and whether they pay out of pocket or through their insurance.

This allows patients to choose the most cost-effective treatment and understand the medical expenses they face. A second Healthiator feature lets patients dispute inaccuracies once they receive their actual hospital bill. Healthiator helps with these billing disputes by using AI to handle the process of negotiating fair pricing.
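The insurance-versus-cash comparison can be sketched under a deliberately simple benefit model (remaining deductible, then coinsurance, capped by the remaining out-of-pocket maximum). Real plans also involve copays, network status, and coverage rules, and the write-up does not describe Healthiator's actual calculation. The `Plan` fields, function names, and all numbers in the usage are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Plan:
    deductible_remaining: float  # what the patient still owes before coverage starts
    coinsurance: float           # patient's share after the deductible, e.g. 0.2
    oop_max_remaining: float     # cap on what the patient pays this year


def insured_cost(negotiated_price: float, plan: Plan) -> float:
    """Patient's share of one bill under a simple deductible + coinsurance model."""
    deductible_part = min(negotiated_price, plan.deductible_remaining)
    coinsurance_part = (negotiated_price - deductible_part) * plan.coinsurance
    return min(deductible_part + coinsurance_part, plan.oop_max_remaining)


def cheapest_option(quotes: dict, plan: Plan):
    """quotes maps hospital -> (insurer_negotiated_price, cash_price).

    Returns (patient_cost, hospital, "insurance" or "cash") for the cheapest choice.
    """
    options = []
    for hospital, (negotiated, cash) in quotes.items():
        options.append((insured_cost(negotiated, plan), hospital, "insurance"))
        options.append((cash, hospital, "cash"))
    return min(options)
```

For example, with 1,000 of deductible left and 20% coinsurance, a 3,000 negotiated price costs the patient 1,400 through insurance. A 1,200 cash price at the same hospital would therefore be cheaper.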

## How we built it

We used a combination of Together.AI and Fetch.AI. Several smart agents run on Fetch.AI, each responsible for one feature. For instance, one agent fetches up-to-date price and cash-discount data from hospitals (publicly available under the Good Faith act/law), and we then use Together.ai's API to integrate that information into the negotiation feature.

## Ethics

Although our end goal is to help people get medical treatment by reducing the fear of surprise bills and making healthcare more affordable, we know that wrong suggestions or violations of the user's privacy would have significant consequences. The most challenging part of our work was giving the user as much information as possible while avoiding clinical suggestions and false or hallucinated information.

## Challenges we ran into

Finding actionable data from hospitals was one of the most challenging parts. Each hospital has its own format and assumptions, and integrating them all into a single database was not at all straightforward. Another challenge was getting various APIs and third-party services to work together in time.

## Accomplishments that we're proud of

Solving a relevant social issue. Everyone we talked to has experienced not knowing what different hospital procedures will cost, or whether their insurance covers them. Beyond making the process stressful for everyone, this uncertainty may cause some people to delay or avoid getting care they urgently need. That delay can lead to worse outcomes for conditions that would have responded better to earlier treatment.

## What we learned

How to work with Convex, Fetch.ai, and the Together.ai API.

## What's next for Healthiator

As a next step, we want to set up a database and pull medical costs directly from the files published by hospitals. | ## Inspiration

We hate making resumes and customizing them for each employer, so we created a tool to speed that up.

## What it does

A user creates "blocks" of resume content, which are saved. They can then pick and choose which blocks to use.

## How we built it

[Node.js](https://nodejs.org/en/)

[Express](https://expressjs.com/)

[Nuxt.js](https://nuxtjs.org/)

[Editor.js](https://editorjs.io/)

[html2pdf.js](https://ekoopmans.github.io/html2pdf.js/)

[mongoose](https://mongoosejs.com/docs/)

[MongoDB](https://www.mongodb.com/) | partial |

## Inspiration

As cybersecurity enthusiasts, we are taking one for the team by breaking the curse of CLIs. `Appealing UI for tools like nmap` + `Implementation of Metasploitable scripts` = `happy hacker`

## What it does

nmap is a cybersecurity tool that scans the ports of an IP address on a network and retrieves the service running on each of them, along with its version. Metasploit is a framework that can run attacks against a specified IP address and port to gain access to a machine.

Our app provides a graphical user interface for both tools. It first scans an IP address with nmap, then retrieves the Metasploit attack script that matches each service's version so that it can be run.

At a glance, see which ports of an IP address are open and whether they are vulnerable. If they are, click the `🕹️` button to run the attack.
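The scan-then-match step can be sketched as follows. The sketch assumes nmap is run with service detection and XML output (`nmap -sV -oX - <target>`, for example against a Metasploitable VM). It also assumes the app keeps its own lookup table from service versions to exploit modules. `KNOWN_EXPLOITS` and the function names are hypothetical; only the vsftpd entry is a real Metasploit module path.

```python
import xml.etree.ElementTree as ET

# Hypothetical lookup table: (product, version) -> Metasploit module path.
# vsftpd 2.3.4 is the classic backdoored FTP service on Metasploitable 2.
KNOWN_EXPLOITS = {
    ("vsftpd", "2.3.4"): "exploit/unix/ftp/vsftpd_234_backdoor",
}


def open_services(nmap_xml: str):
    """Yield (port, product, version) for every open port in `nmap -sV -oX` output."""
    root = ET.fromstring(nmap_xml)
    for port in root.iter("port"):
        state = port.find("state")
        if state is None or state.get("state") != "open":
            continue
        service = port.find("service")
        attrs = service.attrib if service is not None else {}
        yield int(port.get("portid")), attrs.get("product", ""), attrs.get("version", "")


def match_exploits(nmap_xml: str):
    """Pair each open service with a known exploit module, or None if there is no match."""
    return [
        (port, product, version, KNOWN_EXPLOITS.get((product, version)))
        for port, product, version in open_services(nmap_xml)
    ]
```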

## How we built it

* ⚛️ React for the front-end

* 🐍 Python with FastAPI for the backend

* 🌐 nmap and 🪳 Metasploit

* 📚 SQLite for the database

## Challenges we ran into

Understanding that terminal sessions running under python take time to complete 💀

## Accomplishments that we're proud of

We are proud of the project in general. As cybersecurity peeps, we're making one small step for humans but a giant leap for hackers.

## What we learned

How Metasploit actually works lol.

No, for real: discovering new libraries is always one of the main takeaways of a hackathon, and McHacks delivered on that one.

## What's next for Phoenix

Have a fuller database, and possibly a way to update it redundantly and less manually. Then, it's just a matter of showing it to the world. | ## Inspiration

We wanted to be able to connect with mentors. There are very few opportunities to do that outside of LinkedIn, where many mentors work in fields unrelated to our interests.

## What it does

A networking website that connects mentors with mentees. It uses a weighted matching algorithm based on mentors' specializations and mentees' interests to prioritize matches.
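The write-up does not give Pyre's scoring formula, so the version below is one plausible weighted scheme under stated assumptions. Each mentee weights their interests, and a mentor's score is the total weight of the interests they specialize in. Pairs are then assigned greedily, highest score first. The names and data shapes are hypothetical. Greedy assignment is not guaranteed to be optimal; an optimal one-to-one assignment could use the Hungarian algorithm (for example, SciPy's `linear_sum_assignment`).

```python
def match_score(specializations: set, interests: dict) -> float:
    """Sum of the mentee's interest weights covered by the mentor's specializations."""
    return sum(weight for topic, weight in interests.items() if topic in specializations)


def greedy_match(mentors: dict, mentees: dict, capacity: int = 1) -> dict:
    """mentors: {name: set of topics}; mentees: {name: {topic: weight}}.

    Assigns the highest-scoring pairs first; each mentee gets at most one
    mentor and each mentor takes at most `capacity` mentees.
    """
    pairs = sorted(
        (
            (match_score(specs, interests), mentor, mentee)
            for mentor, specs in mentors.items()
            for mentee, interests in mentees.items()
        ),
        key=lambda pair: pair[0],
        reverse=True,
    )
    load = {mentor: 0 for mentor in mentors}
    assigned = {}
    for score, mentor, mentee in pairs:
        if score > 0 and mentee not in assigned and load[mentor] < capacity:
            assigned[mentee] = mentor
            load[mentor] += 1
    return assigned
```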

## How we built it

Google Firebase is used for our NoSQL database which holds all user data. The other website elements were programmed using JavaScript and HTML.

## Challenges we ran into

There was no suitable matching algorithm module on Node.js that did not have version mismatches so we abandoned Node.js and programmed our own weighted matching algorithm. Also, our functions did not work since our code completed execution before Google Firebase returned the data from its API call, so we had to make all of our functions asynchronous.

## Accomplishments that we're proud of

We programmed our own weighted matching algorithm based on interest and specialization. Also, we refactored our entire code to make it suitable for asynchronous execution.

## What we learned

We learned how to use Google Firebase, Node.js and JavaScript from scratch. Additionally, we learned advanced programming concepts such as asynchronous programming.

## What's next for Pyre

We would like to add interactive elements such as integrated text chat between matched members. Additionally, we would like to incorporate distance between mentor and mentee into our matching algorithm. | As a response to the ongoing wildfires devastating vast areas of Australia, our team developed a web tool which provides wildfire data visualization, prediction, and logistics handling. We had two target audiences in mind: the general public and firefighters. The homepage of Phoenix is publicly accessible and anyone can learn information about the wildfires occurring globally, along with statistics regarding weather conditions, smoke levels, and safety warnings. We have a paid membership tier for firefighting organizations, where they have access to more in-depth information, such as wildfire spread prediction.

We deployed our web app using Microsoft Azure, and used Standard Library to incorporate Airtable, which enabled us to centralize the data we pulled from various sources. We also used it to create a notification system, where we send users a text whenever the air quality warrants action such as staying indoors or wearing a P2 mask.

We took several approaches to improving our platform's scalability, since we anticipate traffic spikes during wildfire events. Our code's scalability features include reusing connections to external resources whenever possible, using asynchronous programming, and processing API calls in batches. We used Azure Functions to achieve this.
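The "asynchronous, batched API calls" idea can be sketched with Python's `asyncio` rather than the project's Azure Functions code. Here `fetch` is a hypothetical coroutine that would wrap one external API call. In real use, it would share a single HTTP client session so that connections are reused. Batching caps how many calls are in flight at once during a traffic spike.

```python
import asyncio


def chunks(items, size):
    """Split a list into consecutive slices of at most `size` items."""
    for i in range(0, len(items), size):
        yield items[i:i + size]


async def fetch_all(items, fetch, batch_size=10):
    """Await fetch(item) for every item, running at most batch_size calls at once.

    Results come back in the same order as `items`.
    """
    results = []
    for batch in chunks(items, batch_size):
        results.extend(await asyncio.gather(*(fetch(item) for item in batch)))
    return results
```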

Azure Notebook and Cognitive Services were used to build various machine learning models using the information we collected from the NASA, EarthData, and VIIRS APIs. The neural network had a reasonable accuracy of 0.74, but did not generalize well to niche climates such as Siberia.

Our web app was built with React, Python, and d3.js. We kept accessibility in mind by using a high-contrast navy-blue and white colour scheme paired with clearly legible sans-serif fonts. Future work includes a text-to-speech feature and a color-blind mode to further increase accessibility. Because this was a 24-hour hackathon, we ran out of time to include these features, but we hope to implement them in later stages of Phoenix. | partial |

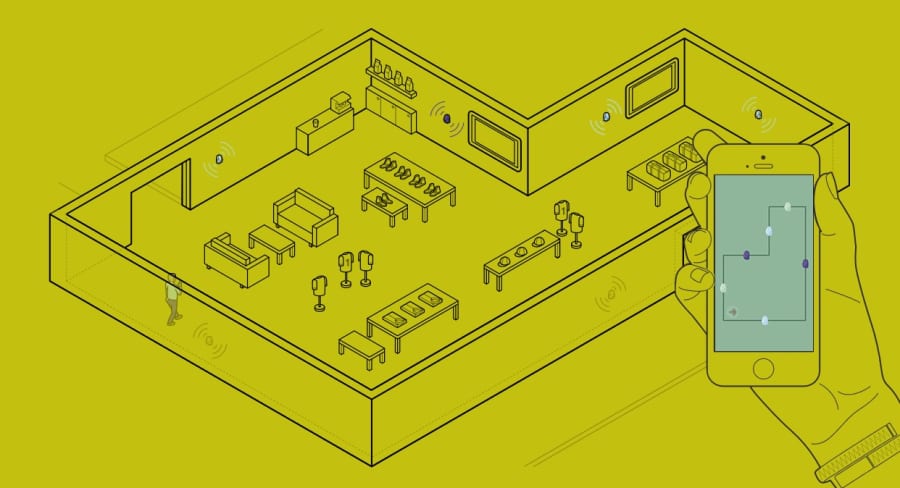

## Inspiration

We've all been in the situation where we run back and forth in a store looking for a single small item on our grocery list. We've all been in a time crunch, running from dairy to snacks to veggies and frustrated that we can't find what we need efficiently. The problem isn't limited to college students like us. People of all ages face it, including parents and elderly grandparents, and it can make shopping very unpleasant. InstaShop is a platform that solves this problem once and for all.

## What it does

Input any grocery list of items to search for at the Target retail store in Boston. If an item is available, our application finds where it is located in the store and adds a bullet point at that location on the store map. You can add as many items as you wish. Then, based on the Target store map, we provide the exact route you should take from the entrance to the exit to pick up all of the items.

## How we built it

Based on the grocery list, we call the Target retail developer API to search for each item and retrieve its aisle number within the given store. We also wrote classes and functions to build a graph of nodes that mocks the exact layout of the store, and we plot each item's location on this map. Once the user has entered all of the items, we run our custom dynamic programming algorithm, which combines a variant of the Traveling Salesman problem with a breadth-first search. The algorithm returns the shortest path from the entrance, past all of your items, to the exit. We then display that path on the frontend.
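The BFS-plus-TSP combination can be sketched with the standard Held-Karp formulation, which is not necessarily the team's custom algorithm. The sketch assumes the store is a grid (`#` marks shelving) and that each item has already been mapped to a grid cell; the grid, coordinates, and aisle-to-cell mapping are hypothetical. BFS gives walking distances between every pair of points. Held-Karp then solves the fixed-start, fixed-end tour exactly in O(2^n · n²) time for n items, instead of trying all n! orders.

```python
from collections import deque
from itertools import combinations

INF = float("inf")


def bfs_distances(grid, start):
    """Steps from `start` to every reachable cell; '#' cells are shelving."""
    rows, cols = len(grid), len(grid[0])
    dist = {start: 0}
    queue = deque([start])
    while queue:
        r, c = queue.popleft()
        for dr, dc in ((1, 0), (-1, 0), (0, 1), (0, -1)):
            nr, nc = r + dr, c + dc
            if 0 <= nr < rows and 0 <= nc < cols and grid[nr][nc] != "#" and (nr, nc) not in dist:
                dist[(nr, nc)] = dist[(r, c)] + 1
                queue.append((nr, nc))
    return dist


def shortest_route(grid, entrance, exit_, items):
    """Held-Karp DP: fewest steps entrance -> all items (any order) -> exit.

    Returns (total_steps, items in visiting order).
    """
    points = [entrance, *items, exit_]
    dist = []
    for p in points:
        reach = bfs_distances(grid, p)
        dist.append([reach.get(q, INF) for q in points])
    n, end = len(items), len(items) + 1
    if n == 0:
        return dist[0][end], []
    # best[(mask, j)] = (cost, previous point) for paths that start at the
    # entrance, visit exactly the items in `mask`, and stop at item j.
    best = {(1 << (j - 1), j): (dist[0][j], 0) for j in range(1, n + 1)}
    for size in range(2, n + 1):
        for subset in combinations(range(1, n + 1), size):
            mask = sum(1 << (j - 1) for j in subset)
            for j in subset:
                prev_mask = mask ^ (1 << (j - 1))
                best[(mask, j)] = min(
                    (best[(prev_mask, k)][0] + dist[k][j], k) for k in subset if k != j
                )
    full = (1 << n) - 1
    cost, last = min((best[(full, j)][0] + dist[j][end], j) for j in range(1, n + 1))
    order, mask = [], full
    while last != 0:  # follow predecessor links back to the entrance
        order.append(points[last])
        mask, last = mask ^ (1 << (last - 1)), best[(mask, last)][1]
    return cost, order[::-1]
```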

## Challenges we ran into

One of the major problems we ran into was working out the intricacies of the algorithm, which is quite convoluted (as described above). Setting up the data structures for nodes and edges, and combining them into a graph, also required a lot of thought. We planned our data structures carefully to make sure we were approaching the problem correctly.

## Accomplishments that we're proud of

Based on our estimates for retrieving all of the items in the retail store, we are extremely proud that we brought our runtime down from 1932! \* 7 / 100! minutes to a few seconds. Initially, we performed a recursive depth-first search from each node to calculate the shortest path. It worked flawlessly on a small scale, but on a larger grid (10\*10) it took around 7 minutes to find the path for a single operation. Scaling this to the size of the store, we estimated that one operation would take 7 divided by 100! minutes and the entire store would take 1932! \* 7 / 100! minutes. To improve this, we run a breadth-first search combined with an application of the Traveling Salesman problem in our custom dynamic programming algorithm, which brought the runtime down to just a few seconds. Yay!

## What we learned

We learned about optimizing algorithms and overall graph usage and building an application from the ground up regarding the structure of the data.

## What's next for InstaShop

Our next step is to go to Target and pitch our idea. We would like to establish partnerships with many Target stores and build a profitable business model together with Target. We strongly believe this will be a huge help to the public. | ## Inspiration

Shopping can be a very frustrating experience at times. Nowadays almost everything is digitally connected, yet some stores fall behind when it comes to the shopping experience. We've encountered situations where we couldn't find products stocked at our local grocery store, and times when we had no idea how much stock was left or whether we needed to hurry! Our app solves this issue by showing the user various data about each ingredient.

## What it does

Our application aims to guide users to the nearest store that stocks the ingredient they're looking for. This is done on the maps section of the app, and the user can redirect to other stores in the area as well to find the most suitable option. Displaying the price also enables the user to find the most suitable product for them if there are alternatives, ultimately leading to a much smoother shopping experience.

## How we built it

The application was built using React Native and MongoDB. While there were some hurdles to overcome, we were finally able to get a functional application that we could view and interact with using Expo.

## Challenges we ran into

Despite our best efforts, we weren't able to fit the integration of the database within the allocated timeframe. Given that this was a fairly new experience to us with using MongoDB, we struggled to correctly implement it within our React Native code which resulted in having to rely on hard-coding ingredients.

## Accomplishments that we're proud of

We're very proud of the progress we made on our mobile app. Both of us had little experience making this kind of program, so we're very happy to have a fully functioning app in so little time.

Although we weren't able to get the database loaded into the search functionality, we're still quite proud of the fact that we were able to create and connect all users on the team to the database, as well as correctly upload documents to it and we were even able to get the database printing through our code. Just being able to connect to the database and correctly output it, as well as being able to implement a query functionality, was quite a positive experience since this was unfamiliar territory to us.

## What we learned

We learnt how to create and use databases with MongoDB and were able to enhance our React Native skills through importing Google Cloud APIs and being able to work with them (particularly through react-native-maps).

## What's next for IngredFind

In the future, we hope to improve both the front end and back end of our application. Aside from visual tweaks, feature enhancements, and bug fixes, we hope to get the database fully working. We would perhaps also build an application that lets grocery stores add and update products on their end. | **Come check out our fun Demo near the Google Cloud Booth in the West Atrium!! Could you use a physiotherapy exercise?**

## The problem

One application of physiotherapy is restoring joint movement that has become limited through muscle atrophy, surgery, an accident, a stroke, or other causes. Reportedly, up to 70% of patients give up physiotherapy too early, often because they cannot see their progress. Automated tracking of range of motion (ROM) through a mobile app could help patients reach their physiotherapy goals.

Insurance studies show that 70% of people quit physiotherapy sessions once the pain disappears and they regain their mobility. There are multiple reasons, including the cost of treatment, the feeling that they have recovered, no longer having time to dedicate to recovery, and loss of motivation. Worst of all, half of them see the injury reappear within 2-3 years.

Current pose tracking technology is NOT real-time and automatic: it requires physiotherapists on hand and **expensive** tracking devices. Although these work well, there is HUGE room to develop a cheap and scalable solution.

Additionally, many seniors are unable to comprehend current solutions and are unable to adapt to current in-home technology, let alone the kinds of tech that require hours of professional setup and guidance, as well as expensive equipment.

[Video](http://www.youtube.com/watch?feature=player_embedded&v=PrbmBMehYx0)

## Our Solution!

* Our solution **only requires a device with a working internet connection!!** We aim to revolutionize the physiotherapy industry by allowing for extensive scaling and efficiency of physiotherapy clinics and businesses. We understand that in many areas, the therapist to patient ratio may be too high to be profitable, reducing quality and range of service for everyone, so an app to do this remotely is revolutionary.

Using a machine learning model that runs directly in the browser, we collect real-time 3D position data of the patient's body during exercises. The data is first analyzed within the app and then provided to a physiotherapist, who can analyze it further and adjust the exercises. The app also asks the patient for subjective feedback on a pain scale.

This makes physiotherapy exercise feedback from their therapist more accessible to remote individuals **WORLDWIDE**.
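The write-up lists "getting accurate angle data" as a challenge, so the core geometry is sketched below. A joint angle is the angle at the middle keypoint (for example, shoulder, elbow, and wrist), computed from the dot product of the two limb vectors. Range of motion is then the spread of that angle over an exercise. The keypoint format (plain coordinate tuples, 2D or 3D) and the function names are assumptions, not the app's actual code.

```python
import math


def joint_angle(a, b, c) -> float:
    """Angle ABC in degrees at joint b (e.g. shoulder-elbow-wrist), 2D or 3D."""
    ba = [ai - bi for ai, bi in zip(a, b)]
    bc = [ci - bi for ci, bi in zip(c, b)]
    norm = math.hypot(*ba) * math.hypot(*bc)
    if norm == 0:
        raise ValueError("keypoints must be distinct")
    cos_theta = sum(x * y for x, y in zip(ba, bc)) / norm
    # Clamp to [-1, 1] so rounding error cannot push acos out of its domain.
    return math.degrees(math.acos(max(-1.0, min(1.0, cos_theta))))


def range_of_motion(frames) -> float:
    """Spread between the largest and smallest joint angle over an exercise.

    frames: iterable of (a, b, c) keypoint triples, one per video frame.
    """
    angles = [joint_angle(a, b, c) for a, b, c in frames]
    return max(angles) - min(angles)
```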

## Inspiration

* The growing need for accessible physiotherapy among seniors, stroke patients, and individuals in third-world countries without access to therapists but with a stable internet connection

* The room for AI and ML innovation within the physiotherapy market for scaling and growth

## How I built it

* Firebase hosting

* Google cloud services

* React front-end

* TensorFlow PoseNet ML model for computer vision

* Several algorithms to analyze 3D pose data.

## Challenges I ran into

* Testing in React Native

* Getting accurate angle data

* Setting up an accurate timer

* Setting up the ML model to work with the camera using React

## Accomplishments that I'm proud of

* Getting real-time 3D position data

* Supporting multiple exercises

* Collection of objective quantitative as well as qualitative subjective data from the patient for the therapist

* Increasing the usability for senior patients by moving data analysis onto the therapist's side

* **finishing this within 48 hours!!!!** We did NOT think we could do it, but we came up with a working MVP!!!

## What I learned

* How to implement TensorFlow models in React

* Creating reusable components and styling in React

* Creating algorithms to analyze 3D space

## What's next for Physio-Space

* Implementing the sharing of the collected 3D position data with the therapist

* Adding a dashboard onto the therapist's side | losing |

## Inspiration

We wanted to introduce an impartial, trustworthy form of vote submission in response to the controversy over electoral polling in the 2018 US midterm elections, when citizen voters raised doubts about the authenticity of the results. This motivated us to bring much-needed decentralized security to the polling process.

## What it does

Block Vote allows voters to vote through a web portal backed by a blockchain. The web portal is written in HTML and JavaScript using the Bootstrap UI framework. It uses jQuery to send Ajax HTTP requests to a Flask server written in Python, and that server communicates with a blockchain running on the ARK platform. The polling station uses the web portal to generate a unique passphrase for each voter. The voter then uses that passphrase to cast their ballot anonymously and securely. The vote and passphrase are then sent to the Flask web server, where they are parsed and submitted to the ARK blockchain as a transaction. Each transaction carries one ARK coin, which counts as a single vote. Finally, a paper trail is generated after the vote is submitted on the web portal, in case public verification is needed.
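To make the vote flow above concrete, here is a minimal, self-contained sketch built on several assumptions:

* The `Ledger` class is an in-memory stand-in for the ARK blockchain. It is not the ARK SDK.
* The wordlist is a toy placeholder.
* The rule that each passphrase can vote only once is our assumption about how duplicate ballots would be rejected.

The sketch covers passphrase issuance, submission as a one-coin transaction, and tallying.

```python
import hashlib
import secrets
from collections import Counter
from dataclasses import dataclass

# Hypothetical stand-in wordlist; a real system would use a much larger list.
WORDS = ["apple", "river", "stone", "cloud", "maple", "orbit", "tiger", "lemon",
         "harbor", "violet", "canyon", "ember", "falcon", "glacier", "meadow", "quartz"]


def issue_passphrase(n_words: int = 12) -> str:
    """Polling-station step: draw a random passphrase with a secure RNG."""
    return " ".join(secrets.choice(WORDS) for _ in range(n_words))


@dataclass(frozen=True)
class VoteTx:
    sender: str      # identifier derived from the voter's passphrase
    recipient: str   # address standing in for a candidate
    amount: int = 1  # one coin == one vote


class Ledger:
    """In-memory stand-in for the ARK chain, used only to illustrate the flow."""

    def __init__(self) -> None:
        self.txs: list = []
        self.used: set = set()

    def submit(self, passphrase: str, candidate: str) -> VoteTx:
        # Only a hash of the passphrase is recorded, never the passphrase itself
        sender = hashlib.sha256(passphrase.encode("utf-8")).hexdigest()
        if sender in self.used:
            raise ValueError("this passphrase has already voted")
        tx = VoteTx(sender=sender, recipient=candidate)
        self.used.add(sender)
        self.txs.append(tx)
        return tx

    def tally(self) -> Counter:
        totals: Counter = Counter()
        for tx in self.txs:
            totals[tx.recipient] += tx.amount
        return totals


ledger = Ledger()
for choice in ["candidate_A", "candidate_B", "candidate_A"]:
    ledger.submit(issue_passphrase(), choice)
print(ledger.tally())  # Counter({'candidate_A': 2, 'candidate_B': 1})
```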

## How we built it

Our initial approach was to use Node.js. However, we opted for Python with Flask because it proved easier to implement. We used Visual Studio Code to build the HTML and CSS front end that presents the voting interface. Separately, the ARK blockchain was set up in a Docker container. These pieces were combined to deliver the web-based application.

## Challenges I ran into

* Integrating the front end and back end seamlessly into a single app

* Using Flask as an intermediary layer between the front end and the back end

* Understanding how to incorporate blockchain, how to use it, and what it can offer for security in this application

## Accomplishments that I'm proud of

* Successfully implementing blockchain technology through an intuitive web-based medium to address a highly relevant and critical societal concern

## What I learned

* Application of ARK.io blockchain and security protocols

* The multiple stages by which passphrases are converted into private and public keys

* Using jQuery to build a complete program
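The passphrase-to-key step mentioned above can be sketched in pure Python. The sketch assumes ARK's scheme as we understand it: the private key is the SHA-256 hash of the UTF-8 passphrase, and the public key is the compressed secp256k1 point `priv * G`. Verify this against ARK's own crypto SDK before relying on it. The code is deliberately simple and not constant-time, so it is meant for learning, not production signing.

```python
import hashlib
from typing import Optional, Tuple

Point = Optional[Tuple[int, int]]  # None represents the point at infinity

# secp256k1 domain parameters
P = 2**256 - 2**32 - 977
N = 0xFFFFFFFFFFFFFFFFFFFFFFFFFFFFFFFEBAAEDCE6AF48A03BBFD25E8CD0364141
G = (0x79BE667EF9DCBBAC55A06295CE870B07029BFCDB2DCE28D959F2815B16F81798,
     0x483ADA7726A3C4655DA4FBFC0E1108A8FD17B448A68554199C47D08FFB10D4B8)


def point_add(p1: Point, p2: Point) -> Point:
    """Add two points on y^2 = x^3 + 7 over the prime field GF(P)."""
    if p1 is None:
        return p2
    if p2 is None:
        return p1
    (x1, y1), (x2, y2) = p1, p2
    if x1 == x2 and (y1 + y2) % P == 0:
        return None  # a point plus its negation is the point at infinity
    if p1 == p2:
        lam = 3 * x1 * x1 * pow(2 * y1, -1, P) % P  # tangent slope (doubling)
    else:
        lam = (y2 - y1) * pow(x2 - x1, -1, P) % P  # chord slope
    x3 = (lam * lam - x1 - x2) % P
    return (x3, (lam * (x1 - x3) - y1) % P)


def scalar_mult(k: int, point: Point = G) -> Point:
    """Double-and-add; not constant-time, so for illustration only."""
    result: Point = None
    while k:
        if k & 1:
            result = point_add(result, point)
        point = point_add(point, point)
        k >>= 1
    return result


def keys_from_passphrase(passphrase: str) -> Tuple[str, str]:
    """Assumed ARK-style derivation: private key = SHA-256(passphrase)."""
    priv = int.from_bytes(hashlib.sha256(passphrase.encode("utf-8")).digest(), "big")
    if not 0 < priv < N:
        raise ValueError("hash is not a valid secp256k1 private key")
    x, y = scalar_mult(priv)
    # Compressed public key: 0x02 if y is even, 0x03 if odd, followed by x
    pub = (b"\x03" if y & 1 else b"\x02") + x.to_bytes(32, "big")
    return priv.to_bytes(32, "big").hex(), pub.hex()


priv_hex, pub_hex = keys_from_passphrase("this is a top secret passphrase")
print(len(priv_hex), len(pub_hex), pub_hex[:2] in ("02", "03"))  # 64 66 True
```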

## What's next for Block Vote

Expand Block Vote to other areas that require decentralized, trusted security, making it a universal initiative.

## Inspiration

After following the news about police use of force for so long, we asked ourselves how to address it. We realized that, in some ways, the problem was made worse by a lack of trust in law enforcement. We then realized that we could use blockchain to create a better system of accountability for the use of force. We believe it can help people trust law enforcement officers more and reduce the use of force where possible, saving lives.

## What it does

Chain Gun is a modification for a gun (a Nerf gun for the purposes of the hackathon) that sits behind the trigger mechanism. When the gun is fired, the GPS location and ID of the gun are put onto the Ethereum blockchain.
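The sketch below is not Ethereum or Web3 code. It is a local illustration of the property Chain Gun relies on: once a shot's gun ID, GPS position and time are appended to a hash-linked log, no earlier entry can be altered without detection. The field names, the `GUN-0001` ID format and the coordinates are made up for the example.

```python
import hashlib
import json
from dataclasses import asdict, dataclass, field


@dataclass(frozen=True)
class FireEvent:
    gun_id: str
    lat: float
    lon: float
    timestamp: float  # seconds since the Unix epoch


def _digest(body: dict) -> str:
    # Canonical JSON (sorted keys) so the same record always hashes the same way
    return hashlib.sha256(json.dumps(body, sort_keys=True).encode("utf-8")).hexdigest()


@dataclass
class EventChain:
    """Append-only, hash-linked log of firing events.

    A local stand-in for the Ethereum contract: every record commits to the
    previous record's hash, so editing any past event breaks verification.
    """
    records: list = field(default_factory=list)

    def append(self, event: FireEvent) -> str:
        prev = self.records[-1]["hash"] if self.records else "0" * 64
        body = {"prev": prev, **asdict(event)}
        record = {**body, "hash": _digest(body)}
        self.records.append(record)
        return record["hash"]

    def verify(self) -> bool:
        prev = "0" * 64
        for rec in self.records:
            body = {k: v for k, v in rec.items() if k != "hash"}
            if rec["prev"] != prev or rec["hash"] != _digest(body):
                return False
            prev = rec["hash"]
        return True


chain = EventChain()
chain.append(FireEvent("GUN-0001", 40.7128, -74.0060, 1_700_000_000.0))
chain.append(FireEvent("GUN-0001", 40.7130, -74.0055, 1_700_000_042.0))
print(chain.verify())  # True
chain.records[0]["lat"] = 0.0  # tamper with the first shot's location
print(chain.verify())  # False
```

On a real chain, the network's consensus provides this tamper-evidence instead of a local `verify` call.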

## Challenges we ran into

Some things did not work well with the new Web3 updates, causing a continuous stream of bugs. On top of that, the major updates broke most older code samples. Android lacks a good implementation of any Ethereum client, which makes it a poor platform for connecting the gun to the blockchain. Sending raw transactions is not well documented, especially when signing the transactions manually with a public/private key pair.

## Accomplishments that we're proud of

* Combining many parts to form a solution including an Android app, a smart contract, two different back ends, and a front end

* Working together to create something we believe has the ability to change the world for the better.

## What we learned

* Hardware prototyping

* Integrating a bunch of different platforms into one system (Arduino, Android, Ethereum Blockchain, Node.JS API, React.JS frontend)

* Web3 1.0.0

## What's next for Chain Gun

* Refine the prototype

## Inspiration

While working on a political campaign this summer, we noticed a lack of distributed systems that enable civic engagement. The campaigns we saw had large numbers of volunteers willing to cold-call voters to help their favorite candidate win the election, but they lacked the infrastructure to do so. Even the few who managed it were used ineffectively. With no communication with the campaign, they often wasted calls on people who would vote for their candidate anyway, or in districts where their candidate already had an overwhelming majority.

## What it does

Our app allows political campaigns and volunteers to strategize and work together to get their candidate elected. On the logistical end, campaign managers can use our web dashboard to target the people most open to their candidate and see what people are saying. They can also input the numbers of people in the districts most vital to the campaign, and have their volunteers target those people.
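Here is one way the district targeting could work, sketched under assumptions:

* Each district carries a projected vote share and an estimate of undecided voters. Both fields are hypothetical.
* Races outside a `safe_margin` are skipped, because calls there are likely wasted.
* The remaining districts are ranked by how close the race is.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class District:
    name: str
    projected_share: float  # projected vote share for our candidate, 0..1
    undecided: int          # estimated number of undecided voters


def prioritize(districts: List[District], safe_margin: float = 0.10,
               top_n: int = 3) -> List[str]:
    """Rank districts where extra calls are most likely to matter.

    Districts projected to be won or lost by more than `safe_margin` are
    skipped; the rest are ordered by closeness of the race, then by the
    number of undecided voters (more first).
    """
    contested = [d for d in districts if abs(d.projected_share - 0.5) <= safe_margin]
    contested.sort(key=lambda d: (abs(d.projected_share - 0.5), -d.undecided))
    return [d.name for d in contested[:top_n]]


districts = [
    District("North", 0.72, 500),   # safe: calls here are likely wasted
    District("East", 0.51, 1200),
    District("South", 0.47, 3000),
    District("West", 0.30, 800),    # likely lost
]
print(prioritize(districts))  # ['East', 'South']
```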

## How we built it

We took a two-pronged approach to building our applications, since they had to serve two different audiences. Our web app is more analytically focused and closely resembles an enterprise app in its sophistication and functionality. It allows campaign staff to see clearly how their volunteers are being used and whom they are calling. Staff can also perform advanced analytics to improve volunteer effectiveness.

This is very different from our approach to the consumer app, which we wanted to make as easy to use and intuitive as possible. Our consumer-facing app lets users quickly log in with their Google accounts. With the touch of a button, they can start calling voters carefully curated by the campaign staff on their dashboard. We also added a gamification element with a leaderboard and simple analytics on each user's performance.

## Challenges we ran into

One challenge we ran into was getting statistically relevant data into our platform. At first, we struggled to create an easy-to-use interface for users to report information about the people they called back to the campaign staff without making the process tedious. We solved this problem by spending a lot of time refining our app's user interface to be as simple as possible.

## Accomplishments that we're proud of

We're very proud that we were able to build what is essentially two closely integrated platforms in one hackathon. Our iOS app is built natively in Swift, while our website is built in PHP. As a result, very little of the code besides the API was reusable, even though the two apps constantly interface with each other.

## What we learned

We learned that creating effective, actionable data is hard, and that it's not being done enough. Through brainstorming the concept for the app, we also learned that for civic movements to be effective in the future, they have to be more strategic about whom they target and how they use their volunteers.

## What's next for PolitiCall

Analytics are at the core of any modern political campaign, and we believe volunteers calling thousands of people are one of the best ways to gather analytics. We plan to combine user gathered analytics with proprietary campaign information to offer campaign managers the best possible picture of their campaign, and what they need to focus on.

# BananaExpress

A self-writing journal of your life, with superpowers!

We make journaling easier and more engaging than ever before by leveraging **home-grown, cutting-edge CNN + LSTM** models for **novel text generation** that prompts our users to practice active reflection by journaling!

Features:

* User photo --> unique question about that photo based on 3 creative techniques

+ Real-time question generation based on the user's live journaling (and the rest of their writing)!

+ Ad-lib style questions - we extract location and analyze the user's activity to generate a fun question!

+ Question-corpus matching - we search for good questions about the user's current topics

* NLP on previous journal entries for sentiment analysis
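The ad-lib question path can be illustrated without any model at all: once a location and an activity have been extracted, they are slotted into a question template. This minimal sketch assumes the extraction has already happened. The templates and `adlib_question` are hypothetical, and the sketch does not reproduce the team's CNN + LSTM generator.

```python
import random
from typing import Optional

# Hypothetical ad-lib templates; the real app's wording is not specified.
TEMPLATES = [
    "What was the most surprising part of {activity} in {location} today?",
    "If you could relive one moment of {activity} at {location}, which would it be?",
    "How did {activity} in {location} change your mood?",
]


def adlib_question(location: str, activity: str,
                   rng: Optional[random.Random] = None) -> str:
    """Fill a randomly chosen template with the extracted location and activity."""
    rng = rng or random.Random()
    return rng.choice(TEMPLATES).format(location=location, activity=activity)


print(adlib_question("Golden Gate Park", "a morning run", random.Random(0)))
```

Passing a seeded `random.Random` makes the choice reproducible, which helps when testing prompts.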

I love our front end - we've re-imagined how easy and futuristic journaling can be :)

And, honestly, SO much more! Please come see!

♥️ from the Lotus team,

Theint, Henry, Jason, Kastan

## Inspiration

Have you ever had to wait in long lines just to buy a few items from a store? Or not wanted to interact with employees to get what you want? Now you can buy items quickly and hassle-free through your phone, without interacting with anyone at all.

## What it does

CheckMeOut is an iOS application that allows users to buy an item that has been 'locked' in a store, for example clothing with sensors attached or items physically locked behind glass. Users can scan a QR code or use Apple Pay to quickly access information about an item (price, description, etc.) and 'unlock' the item by paying for it. The user does not have to interact with any store clerks or wait in line to buy the item.
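The unlock flow can be sketched as a tiny state machine: look up an item from its QR payload, then release the lock only once payment covers the price. This is a hypothetical backend, not the team's Azure code. The assumption that the QR code encodes the SKU directly, and every name below, are illustrative. In the demo, an Intel Edison simulated the lock, so a real version would signal the hardware at the marked line.

```python
from dataclasses import dataclass
from enum import Enum


class LockState(Enum):
    LOCKED = "locked"
    UNLOCKED = "unlocked"


@dataclass
class Item:
    sku: str
    description: str
    price_cents: int
    state: LockState = LockState.LOCKED


class Store:
    """Hypothetical backend: QR payload -> item details, confirmed payment -> unlock."""

    def __init__(self, items: list) -> None:
        self.items = {item.sku: item for item in items}

    def lookup(self, qr_payload: str) -> Item:
        # Assumption: the QR code encodes the item's SKU directly
        return self.items[qr_payload]

    def unlock(self, sku: str, paid_cents: int) -> bool:
        item = self.items[sku]
        if item.state is LockState.UNLOCKED:
            return False  # already sold; never unlock twice
        if paid_cents < item.price_cents:
            return False  # payment does not cover the price
        item.state = LockState.UNLOCKED
        # A real deployment would signal the lock hardware here
        return True


store = Store([Item("JKT-42", "Denim jacket", 5999)])
item = store.lookup("JKT-42")
print(item.description, item.price_cents / 100)  # Denim jacket 59.99
print(store.unlock("JKT-42", paid_cents=5999))     # True
```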

## How we built it

We used Xcode to build the iOS application and Microsoft Azure to host our backend. We used an Intel Edison board to simulate the 'locking' of an item.

## Challenges I ran into

We used many technologies that our team was unfamiliar with, namely Swift and Azure.

## What I learned

I've learned not to underestimate things I don't know, to ask for help when I need it, and to just have a good time.

## What's next for CheckMeOut

We hope to see it more polished in the future.

## Inspiration 💡

Our inspiration for this project was to leverage new AI technologies such as text-to-image generation, text generation, and natural language processing to enhance the education space. We wanted to harness the power of machine learning to inspire creativity and improve the way students learn and interact with educational content. We believe that these cutting-edge technologies have the potential to revolutionize education and make learning more engaging, interactive, and personalized.

## What it does 🎮

Our project is a text and image generation tool that uses machine learning to create stories from prompts given by the user. The user can input a prompt, and the tool will generate a story with corresponding text and images. The user can also specify certain attributes such as characters, settings, and emotions to influence the story's outcome. Additionally, the tool allows users to export the generated story as a downloadable book in the PDF format. The goal of this project is to make story-telling interactive and fun for users.

## How we built it 🔨

We built our project using a combination of front-end and back-end technologies. For the front end, we used React, which allows us to create interactive user interfaces. On the back end, we chose Go as our main programming language and used the Gin framework to handle concurrency and scalability. To handle communication between the resource-intensive back-end tasks, we used RabbitMQ as the message broker and Celery as the work queue. These technologies allowed us to efficiently handle the flow of data and messages between the different components of our project.

To generate the text and images for the stories, we leveraged OpenAI's DALL-E 2 and GPT-3 models. These models are state-of-the-art in their respective fields and allow us to generate high-quality text and images for our stories. To improve the performance of our system, we used MongoDB to cache images and prompts, so we can quickly retrieve data without re-processing it every time it is requested. To minimize the load on the server, we used socket.io for real-time communication. It keeps a connection open, and once the work queue finishes processing the data, it sends a notification to the React client.
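The broker, worker, cache and notify flow described above can be sketched in a single Python process. This is a stand-in, not the team's Go/Gin, RabbitMQ, Celery, MongoDB and socket.io stack:

* `queue.Queue` plays the message broker.
* A dict plays the MongoDB cache.
* A `notify` callback plays the socket.io push.
* `generate` is a hypothetical placeholder for the calls to the text and image models.

```python
import hashlib
import queue
import threading
from typing import Callable, Dict, Optional


class StoryPipeline:
    """In-process stand-in for the broker -> worker -> cache -> notify flow."""

    def __init__(self, generate: Callable[[str], str],
                 notify: Callable[[str, str], None]) -> None:
        self.generate = generate  # hypothetical: would call the text/image models
        self.notify = notify      # stands in for the socket.io push to the client
        self.cache: Dict[str, str] = {}  # stands in for the MongoDB cache
        self.jobs: "queue.Queue[Optional[str]]" = queue.Queue()
        self.worker = threading.Thread(target=self._work, daemon=True)
        self.worker.start()

    @staticmethod
    def cache_key(prompt: str) -> str:
        # Light normalization so trivially different prompts share a cache entry
        return hashlib.sha256(prompt.strip().lower().encode("utf-8")).hexdigest()

    def submit(self, prompt: str) -> None:
        self.jobs.put(prompt)  # returns immediately; the client waits for a push

    def _work(self) -> None:
        while (prompt := self.jobs.get()) is not None:
            key = self.cache_key(prompt)
            if key not in self.cache:  # cache miss: do the expensive work once
                self.cache[key] = self.generate(prompt)
            self.notify(prompt, self.cache[key])

    def close(self) -> None:
        self.jobs.put(None)  # sentinel tells the worker to stop
        self.worker.join()


calls = []


def fake_generate(prompt: str) -> str:
    calls.append(prompt)
    return f"<story about {prompt.strip().lower()}>"


pipeline = StoryPipeline(fake_generate, lambda p, story: print(story))
pipeline.submit("A dragon")
pipeline.submit("a dragon ")  # served from the cache
pipeline.close()
print(len(calls))  # 1
```

Because `submit` returns immediately, the request handler never blocks on model latency. That non-blocking behavior is the point of putting a queue between the API and the workers.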

## Challenges we ran into 🚩

One of the challenges we ran into during the development of this project was converting the generated text and images into a PDF format within the React front-end. There were several libraries available for this task, but many of them did not work well with the specific version of React we were using. Additionally, some of the libraries required additional configuration and setup, which added complexity to the project. We had to spend a significant amount of time researching and testing different solutions before we were able to find a library that worked well with our project and was easy to integrate into our codebase. This challenge highlighted the importance of thorough testing and research when working with new technologies and libraries.

## Accomplishments that we're proud of ⭐

One of the accomplishments we are most proud of in this project is our ability to leverage the latest technologies, particularly machine learning, to enhance the user experience. By incorporating natural language processing and image generation, we were able to create a tool that can generate high-quality stories with corresponding text and images. This not only makes the process of story-telling more interactive and fun, but also allows users to create unique and personalized stories.

## What we learned 📚

Throughout the development of this project, we learned a lot about building highly scalable data pipelines and infrastructure. We discovered the importance of choosing the right technology stack and tools to handle large amounts of data and ensure efficient communication between different components of the system. We also learned the importance of thorough testing and research when working with new technologies and libraries.

We also learned about the importance of using message brokers and work queues to handle data flow and communication between different components of the system, which allowed us to create a more robust and scalable infrastructure. We also learned about the use of NoSQL databases, such as MongoDB to cache data and improve performance. Additionally, we learned about the importance of using socket.io for real-time communication, which can minimize the load on the server.

Overall, we learned about the importance of using the right tools and technologies to build a highly scalable and efficient data pipeline and infrastructure, which is a critical component of any large-scale project.

## What's next for Dream.ai 🚀

There are several exciting features and improvements that we plan to implement for Dream.ai. One of the main focuses will be allowing users to export their generated stories to YouTube, so they can easily share their stories and potentially reach a much larger audience.

Another feature we plan to implement is user history. This will allow users to save and revisit old prompts and stories they have created, making it easier for them to pick up where they left off. We also plan to allow users to share their prompts on the site with other users, which will allow them to collaborate and create stories together.

Finally, we are planning to improve the overall user experience by incorporating more customization options, such as the ability to select different themes, characters and settings. We believe these features will further enhance the interactive and fun nature of the tool, making it even more engaging for users.

# 🪼 **SeaScript** 🪸

## Inspiration

Learning MATLAB can be as appealing as a jellyfish sting. Traditional resources often leave students lost at sea, making the process more exhausting than a shark's endless swim. SeaScript transforms this challenge into an underwater adventure, turning the tedious journey of mastering MATLAB into an exciting expedition.

## What it does

SeaScript plunges you into an oceanic MATLAB adventure with three distinct zones:

1. 🪼 Jellyfish Junction: Help bioluminescent jellies navigate nighttime waters.

2. 🦈 Shark Bay: Count endangered sharks to aid conservation efforts.

3. 🪸 Coral Code Reef: Assist Nemo in finding the tallest coral home.

Solve MATLAB challenges in each zone to collect puzzle pieces, unlocking a final mystery message. It's not just coding – it's saving the ocean, one function at a time!

## How we built it

* Python game engine for our underwater world

* MATLAB integration for real-time, LeetCode-style challenges

* MongoDB for data storage (player progress, challenges, marine trivia)
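The challenge-and-puzzle-piece loop can be sketched on the Python side. The sketch makes these assumptions:

* In the real game, the player's MATLAB code might be run through something like the MATLAB Engine API for Python (`matlab.engine`). Here, plain Python callables stand in for submissions.
* The coral challenge uses 0-based indexing, whereas MATLAB is 1-based.
* `Challenge`, `Player` and the test cases are hypothetical.

```python
from dataclasses import dataclass, field
from typing import Any, Callable, List, Set, Tuple

ZONES = ("Jellyfish Junction", "Shark Bay", "Coral Code Reef")


@dataclass
class Challenge:
    zone: str
    prompt: str
    cases: List[Tuple[tuple, Any]]  # (arguments, expected result) pairs

    def grade(self, solution: Callable[..., Any]) -> bool:
        """LeetCode-style check: every case must pass and nothing may raise."""
        for args, expected in self.cases:
            try:
                if solution(*args) != expected:
                    return False
            except Exception:
                return False
        return True


@dataclass
class Player:
    name: str
    pieces: Set[str] = field(default_factory=set)

    def attempt(self, challenge: Challenge, solution: Callable[..., Any]) -> bool:
        passed = challenge.grade(solution)
        if passed:
            self.pieces.add(challenge.zone)  # one puzzle piece per zone
        return passed

    def mystery_unlocked(self) -> bool:
        return set(ZONES) <= self.pieces


coral = Challenge(
    zone="Coral Code Reef",
    prompt="Return the (0-based) index of the tallest coral",
    cases=[(([3, 9, 4],), 1), (([5],), 0)],
)
nemo = Player("Nemo")
print(nemo.attempt(coral, lambda heights: heights.index(max(heights))))  # True
print(nemo.mystery_unlocked())  # False: two zones still to go
```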

## Challenges we ran into

* Seamlessly integrating MATLAB with our Python game engine

* Crafting progressively difficult challenges without overwhelming players

* Balancing education and entertainment (fish puns have limits!)

## Accomplishments that we're proud of

* Created a unique three-part underwater journey for MATLAB learning

* Successfully merged MATLAB, Python, and MongoDB into a cohesive game

* Developed a rewarding puzzle system that tracks player progress

## What we learned

* MATLAB's capabilities are as vast as the ocean

* Gamification can transform challenging subjects into adventures

* The power of combining coding, marine biology, and puzzle-solving in education

## What's next for SeaScript

* Expand with more advanced MATLAB concepts

* Implement multiplayer modes for collaborative problem-solving

* Develop mobile and VR versions for on-the-go and immersive learning

Ready to dive in? Don't let MATLAB be the one that got away – catch the wave with SeaScript and code like a fish boss! 🐠👑

## Inspiration

We were inspired by hard-working teachers and students. Although everyone was working hard, there was still a disconnect: many students were not able to retain what they learned. So we decided to create both a web application and a companion phone application to target this problem.

## What it does

The app connects students with teachers in a whole new fashion. Students can provide live feedback to their professors on various aspects of the lecture, such as the volume and pace. Professors, on the other hand, get an opportunity to receive live feedback on their teaching style and also give students a few warm-up exercises with a built-in clicker functionality.
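Live feedback like this is often summarized over a sliding time window, so the professor sees what students think right now rather than over the whole lecture. This is a minimal sketch under that assumption. `FeedbackWindow`, the 60-second default and the feedback labels are hypothetical, and timestamps are assumed to arrive in order.

```python
from collections import Counter, deque
from typing import Deque, Tuple


class FeedbackWindow:
    """Counts live student feedback (e.g. "too quiet", "too fast") in a sliding window."""

    def __init__(self, window_seconds: float = 60.0) -> None:
        self.window = window_seconds
        self.events: Deque[Tuple[float, str]] = deque()  # (timestamp, kind), oldest first

    def add(self, timestamp: float, kind: str) -> None:
        self.events.append((timestamp, kind))

    def counts(self, now: float) -> Counter:
        # Evict feedback older than the window before counting
        while self.events and self.events[0][0] < now - self.window:
            self.events.popleft()
        return Counter(kind for _, kind in self.events)


w = FeedbackWindow(window_seconds=60)
w.add(0, "too quiet")
w.add(30, "too fast")
w.add(70, "too quiet")
print(w.counts(now=80))
```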

The web portion of the project ties the classroom experience to the home. Students receive live transcripts of what the professor is currently saying, along with a summary at the end of the lecture which includes key points. The backend will also generate further reading material based on keywords from the lecture, which will further solidify the students’ understanding of the material.
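The keyword step behind the further-reading suggestions could be as simple as term frequency over the transcript. This sketch is an assumption for illustration. The original relies on Google Speech-to-Text and a summary API, and the stopword list and `top_keywords` name here are hypothetical.

```python
import re
from collections import Counter
from typing import List

# Hypothetical, deliberately tiny stopword list
STOPWORDS = {"the", "a", "an", "and", "or", "of", "to", "in", "is", "are", "we",
             "that", "this", "it", "for", "on", "with", "as", "be", "so", "now"}


def top_keywords(transcript: str, k: int = 3) -> List[str]:
    """Return the k most frequent non-stopword terms in a lecture transcript."""
    words = re.findall(r"[a-z']+", transcript.lower())
    counts = Counter(w for w in words if w not in STOPWORDS and len(w) > 2)
    return [word for word, _ in counts.most_common(k)]


lecture = ("Entropy measures disorder. Entropy increases in isolated systems, "
           "and isolated systems tend toward equilibrium.")
print(top_keywords(lecture))  # ['entropy', 'isolated', 'systems']
```

The resulting keywords could then seed searches for reading material. A production system would likely weight terms against a background corpus (e.g. TF-IDF) rather than use raw counts.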

## How we built it

We built the mobile portion using React Native for the front end and Firebase for the backend. The web app is built with React for the front end and Firebase for the backend. We also implemented a few custom Python modules to facilitate client-server interaction and ensure a smooth experience for both the instructor and the student.

## Challenges we ran into

One major challenge we ran into was capturing and processing live audio and providing a real-time transcription of it to all students enrolled in the class. We solved this with a Python script that bridged the gap between opening an audio stream and operating on it, while still serving students a live version of the rest of the site.

## Accomplishments that we’re proud of

Being able to process text data to the point that we were able to get a summary and information on tone/emotions from it. We are also extremely proud of the

## What we learned

We learned more about React and its usefulness when coding in JavaScript, especially when there were many repeating elements in our Material Design. We also learned that creating a mockup of what we want first makes coding easier, because everyone is on the same page about what is going on and everything that needs to be done is evident. We used APIs such as the Google Speech-to-Text API and a summary API, and we were able to work around their constraints to create a working product. We also learned more about other technologies we used, such as Firebase, Adobe XD, React Native, and Python.

## What's next for Gradian

The next goal for Gradian is to implement a grading system for teachers that automatically integrates with their existing grading platform, so that clicker data and other quiz material can be graded and imported instantly without any issues. Beyond that, we see potential for Gradian in office settings as well, so that people never miss a beat thanks to live transcription.

## Inspiration

As college students, we can all relate to having a teacher who was not engaging during lectures or who mumbled to the point where we could not hear them at all. Instead of finding solutions to help students outside the classroom, we realized that teachers need better feedback to see how they can improve. That feedback would let them deliver better lecture sessions and earn better RateMyProfessors ratings.

## What it does

Morpheus is a machine learning system that analyzes a professor's lesson audio to differentiate between the various emotions conveyed through their speech. We then use an original algorithm to grade the lecture. Similarly, we record and score the professor's body language throughout the lesson using motion detection and analysis software. We store everything in a database and show the data on a dashboard, which the professor can access and use to improve their body and voice engagement with students. The goal is to help professors be more engaging and effective during their lectures through their speech and body language.

## How we built it

### Visual Studio Code/Front End Development: Sovannratana Khek

I used a premade React foundation with Material UI to create a basic dashboard, deleting and adding pages as needed for our specific purpose. Since the foundation came with prebuilt components, I studied how they worked and adapted them for our purpose instead of working from scratch, which saved time on styling to a theme. I needed to add a couple of new original features and connect to our database endpoints, which required learning a fetching library in React. In the end, we have a dashboard with two views: a development history, displayed as a line graph of the score per lecture (refer to section 2), and a summary display for a single selected lecture. This is based on our backend database setup. There is also room for scaling and added functionality.

### PHP-MySQL-Docker/Backend Development & DevOps: Giuseppe Steduto

I developed the backend for the application and connected the different pieces of the software together. I designed a relational database using MySQL and created API endpoints for the frontend using PHP. These endpoints filter and process the data generated by our machine learning algorithm before presenting it to the frontend side of the dashboard. I chose PHP because it lets the developer get an application running quickly without the hassle of converters and compilers, and it gives easy access to the SQL database. Since we're dealing with personal data about the professor, every endpoint is accessible only after authentication (handled with session tokens). Data is stored following security best practices (e.g. salting and hashing passwords). I deployed a phpMyAdmin instance to manage the database in a user-friendly way.
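The backend is PHP, where `password_hash` and `password_verify` provide salted password hashing out of the box. To keep new examples in a single language, here is the same idea sketched in Python with the standard library's PBKDF2. The `iterations$salt$hash` storage format and the iteration count are our assumptions, not the project's.

```python
import hashlib
import hmac
import os

ITERATIONS = 600_000  # work factor: higher is slower for attackers (and for you)


def hash_password(password: str) -> str:
    salt = os.urandom(16)  # a fresh random salt per password
    digest = hashlib.pbkdf2_hmac("sha256", password.encode("utf-8"), salt, ITERATIONS)
    # Store the parameters alongside the hash so they can change later
    return f"{ITERATIONS}${salt.hex()}${digest.hex()}"


def verify_password(password: str, stored: str) -> bool:
    iterations, salt_hex, digest_hex = stored.split("$")
    digest = hashlib.pbkdf2_hmac("sha256", password.encode("utf-8"),
                                 bytes.fromhex(salt_hex), int(iterations))
    # Constant-time comparison avoids leaking how many characters matched
    return hmac.compare_digest(digest.hex(), digest_hex)


stored = hash_password("correct horse")
print(verify_password("correct horse", stored))  # True
print(verify_password("wrong guess", stored))    # False
```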

To make the software easily portable across different platforms, I containerized the whole tech stack using Docker, with docker-compose handling the interaction among several containers at once.

### MATLAB/Machine Learning Model for Speech and Emotion Recognition: Braulio Aguilar Islas

I developed a machine learning model to recognize speech emotion patterns using MATLAB's Audio Toolbox, Simulink and Deep Learning Toolbox. I used the Berlin Database of Emotional Speech to train my model. I augmented the dataset to increase the accuracy of my results. I also normalized the data so it can be visualized as a pie chart, which makes it easy to integrate with the database that connects to our website.
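The model itself was built in MATLAB. This Python sketch only illustrates the normalization step behind the pie chart. It assumes, hypothetically, that the model emits one predicted emotion label per audio segment. The shares are rounded to one decimal, so they sum to approximately 100.

```python
from collections import Counter
from typing import Dict, Iterable


def emotion_shares(segment_labels: Iterable[str]) -> Dict[str, float]:
    """Turn per-segment emotion predictions into percentages for a pie chart."""
    counts = Counter(segment_labels)
    total = sum(counts.values())
    if total == 0:
        raise ValueError("no predictions to summarize")
    return {label: round(100 * n / total, 1) for label, n in counts.items()}


print(emotion_shares(["neutral", "happy", "neutral", "bored", "neutral"]))
# {'neutral': 60.0, 'happy': 20.0, 'bored': 20.0}
```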

### Solidworks/Product Design Engineering: Riki Osako

Using Solidworks, I created the 3D model of Morpheus, including fixtures, sensors, and materials. Our team had to consider how the device would track the teacher's movements and hear the volume without disturbing the flow of class. The main sensors currently in this product are:

* a microphone (to detect volume for recording and data)
* an NFC sensor (for card tapping)
* a front camera
* a tilt sensor (for vertical tilting and tracking the professor)

The device also has a magnetic connector on the bottom that lets it switch from a stationary position to a mobile one. It can connect modularly to a holonomic drivetrain to move freely around the classroom if the professor moves around a lot. Overall, this allowed us to create a visual model of how our product would look and how the professor could interact with it. The device and drivetrain are kept running with USB-C charging.

### Figma/UI Design of the Product: Riki Osako

Using Figma, I created the UI design of Morpheus to show how the professor would interact with it. In the demo, we made the interface simple: all the professor needs to do is scan in using their school ID, then either check their lecture data or start the lecture. Overall, the professor can see whether the device is tracking their movements and volume throughout the lecture, and see the results of the lecture at the end.

## Challenges we ran into

Riki Osako: Two issues I faced were learning Solidworks and Figma (using both for the first time) and modeling the product in a way that would feel simple for the user to understand. I did a lot of research through Amazon videos to see how they created their Amazon Echo model. I also went back over my UI/UX notes from the Google Coursera certification course that I'm taking.

Sovannratana Khek: The main issues I ran into stemmed from my inexperience with the React framework. I was often confused about how to implement a feature I wanted to add. I overcame these issues by researching existing documentation on errors and using existing libraries. Some problems couldn't be solved this way because the logic was specific to our software. Fortunately, these problems just needed time and a lot of debugging, with some help from peers and existing resources. Since React is JavaScript-based, I could also draw on past experience with JS and Django despite the unfamiliar framework.

Giuseppe Steduto: The main issue I faced was making everything run in a smooth way and interact in the correct manner. Often I ended up in a dependency hell, and had to rethink the architecture of the whole project to not over engineer it without losing speed or consistency.

Braulio Aguilar Islas: The main issue I faced was working with audio data to train my model and finding a way to quantify the fluctuations associated with different emotions when speaking. Also, the dataset was in German.

## Accomplishments that we're proud of

Achieved about 60% accuracy in detecting speech emotion patterns, wrote data to our database, and created an attractive dashboard to present the results of the data analysis while learning new technologies (such as React and Docker), even though our time was short.

## What we learned

As a team coming from different backgrounds, we learned how to use our strengths in different aspects of the project to operate smoothly. For example, Riki is a mechanical engineering major with little coding experience. We were able to let his strengths in that area shine: he created a visual model of our product and designed the UI in Figma. Sovannratana is a freshman who was at his first hackathon and was able to use his experience to create a website for the first time. Braulio and Giuseppe were the most experienced on the team, but we were all able to help each other, not just with code but with ideas as well.

## What's next for Untitled

We have a couple of ideas on how we would like to proceed with this project after HackHarvard and after hibernating for a couple of days.

From a coding standpoint, we would like to improve the user experience on the website by adding more features and better styling for the professor to interact with. We would also like to add motion-tracking feedback so the professor gets a general idea of how they should change their gestures.

We would also like to integrate a student portal to gather data on student performance and help the teacher better understand where students need the most help.

From a business standpoint, we would like to possibly see if we could team up with our university, Illinois Institute of Technology, and test the functionality of it in actual classrooms.

## What it does

ColoVR is a virtual experience allowing users to modify their environment with colors and models. It's a relaxing, low-poly experience that is the first of its kind to bring an accessible 3D experience to the Google Cardboard platform. It's a great way to become a creative in 3D without spending a ton of money on VR hardware.

## How we built it

We used Google Daydream and extended its demo scene to our liking. We used Unity and C# to create the scene and handle all the user interactions. We implemented painting and dragging objects around the scene using ray casting, a common graphics technique. We colored the scene by changing vertex colors. We also created a palette that lets you change tools and colors and insert meshes. We hand-modeled the low-poly meshes in Maya.

## Challenges we ran into

The main challenge was finding a proper mechanism for coloring the geometry in the scene. We first tried to paint the mesh face by face, but the way the Unity mesh was set up gave us no way to access an entire face. We then looked into an asset that would let us paint directly on top of the texture, but it was too slow, because texture updates tend to render too slowly. We ended up using a vertex shader that interpolates between vertex colors, allowing real-time painting of meshes. When a face is painted, we change the colors of all the vertices that belong to it. The vertex shader was the fastest way we found to render real-time painting and emulate painting a triangulated face.

Our second biggest challenge was using ray casting to find the object we were interacting with. We had to navigate the Unity API and get acquainted with its Physics raycasting and mesh colliders to properly implement all interaction with the cursor.

Our third challenge was making use of the controller that Google Daydream supplied us with. It was great and very sandboxed, but it limited us in terms of functionality. We had to find a way to change all colors, insert different meshes, and interact with the objects using only two available buttons.
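The picking-and-painting logic described above can be sketched outside Unity. In Unity itself, this would typically go through `Physics.Raycast` against a `MeshCollider`, with `RaycastHit.triangleIndex` identifying the hit triangle and `Mesh.colors` holding per-vertex colors. The pure-Python version below swaps in an explicit Moller-Trumbore ray/triangle test so the math is visible. Function names and the string color values are hypothetical.

```python
from typing import List, Optional, Sequence, Tuple

Vec = Tuple[float, float, float]


def sub(a: Vec, b: Vec) -> Vec:
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])


def dot(a: Vec, b: Vec) -> float:
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def cross(a: Vec, b: Vec) -> Vec:
    return (a[1] * b[2] - a[2] * b[1], a[2] * b[0] - a[0] * b[2], a[0] * b[1] - a[1] * b[0])


def ray_triangle(origin: Vec, direction: Vec, v0: Vec, v1: Vec, v2: Vec,
                 eps: float = 1e-9) -> Optional[float]:
    """Moller-Trumbore test: distance t along the ray to the hit, or None on a miss."""
    e1, e2 = sub(v1, v0), sub(v2, v0)
    p = cross(direction, e2)
    det = dot(e1, p)
    if abs(det) < eps:
        return None  # ray is parallel to the triangle's plane
    inv = 1.0 / det
    s = sub(origin, v0)
    u = dot(s, p) * inv  # first barycentric coordinate
    if u < 0.0 or u > 1.0:
        return None
    q = cross(s, e1)
    v = dot(direction, q) * inv  # second barycentric coordinate
    if v < 0.0 or u + v > 1.0:
        return None
    t = dot(e2, q) * inv
    return t if t > eps else None  # ignore hits behind the ray origin


def paint(vertices: Sequence[Vec], triangles: Sequence[Tuple[int, int, int]],
          colors: List[str], origin: Vec, direction: Vec,
          color: str) -> Optional[Tuple[int, int, int]]:
    """Recolor the three vertices of the nearest triangle hit by the ray."""
    best_t, best_tri = None, None
    for tri in triangles:
        t = ray_triangle(origin, direction, *(vertices[i] for i in tri))
        if t is not None and (best_t is None or t < best_t):
            best_t, best_tri = t, tri
    if best_tri is not None:
        for i in best_tri:
            colors[i] = color  # the shader then interpolates across the face
    return best_tri


verts = [(0, 0, 0), (1, 0, 0), (0, 1, 0), (0, 0, 2), (1, 0, 2), (0, 1, 2)]
tris = [(3, 4, 5), (0, 1, 2)]  # the triangle at z=2 is listed first but is farther away
cols = ["white"] * 6
print(paint(verts, tris, cols, origin=(0.2, 0.2, -1), direction=(0, 0, 1), color="red"))  # (0, 1, 2)
print(cols)  # ['red', 'red', 'red', 'white', 'white', 'white']
```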

## Accomplishments that we're proud of

We're proud of the fact that we were able to get a clean, working project that was exactly what we pictured. People seemed to really enjoy the concept and interaction.

## What we learned

How to optimize for a certain platform - in terms of UI, geometry, textures and interaction.

## What's next for ColoVR

Hats, and an interactive collaborative space for multiple users. We want to host the scene, make it accessible to multiple users at once, and store the state of the scene (maybe even turning it into an infinite world). Users could then explore what past users have done and see changes other users make in real time.

## Background

Collaboration is the heart of humanity. From contributing to the rises and falls of great civilizations to helping five sleep-deprived hackers communicate over 36 hours, it has become a required dependency in `git@ssh.github.com:hacker/life`.

Plowing through the weekend, we found ourselves shortchanged by the currently available tools for collaborating within a cluster of devices. Every service requires:

1. *Authentication*: Users must sign up and register to use a service.

2. *Contact*: People trying to share information must first establish a point of contact (sharing e-mails, phone numbers, etc.).

3. *Unlinked Output*: Shared content is not deep-linked with mobile or web applications.

This is where Grasshopper jumps in. Built as a **streamlined cross-platform contextual collaboration service**, it uses our on-prem installation of Magnet on Microsoft Azure and Google Cloud Messaging to integrate deep-linked commands and execute real-time applications across all mobile and web platforms in a cluster of nearby devices. It completely removes the overhead of authenticating users and sharing contacts: all sharing is done locally through GPS-enabled devices.

## Use Cases

Grasshopper lets you collaborate locally with friends and colleagues. We account for sharing application information at the deepest contextual level, launching instances with accurately prepopulated information where necessary. As this data is compatible with all third-party applications, the use cases can shoot through the sky. Here are some applications that we built to demonstrate the power of our platform:

1. Share a video within a team through their mobile's native YouTube app **in seconds**.

2. Instantly play said video on a bigger screen by hopping the video over to Chrome on your computer.

3. Share locations on Google maps between nearby mobile devices and computers with a single swipe.

4. Remotely hop important links while surfing your smartphone over to your computer's web browser.

5. Rick Roll your team.
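As an illustration of what a "hop" might carry, here is a minimal sketch that turns a small message payload into a URL the receiving device can open. The payload shape and `hopToUrl` are hypothetical, since the write-up does not say how Grasshopper encodes its commands; only the URL formats are real: YouTube `watch?v=` links with a `t` start-time parameter, and Google Maps URLs (`/maps/search/?api=1&query=`).

```javascript
// Hypothetical hop payloads: { type: "youtube" | "maps" | "link", ... }
function hopToUrl(hop) {
  switch (hop.type) {
    case "youtube": {
      const url = new URL("https://www.youtube.com/watch");
      url.searchParams.set("v", hop.videoId);
      // Resume the video where the sender left off, if known.
      if (hop.startSeconds) url.searchParams.set("t", `${Math.floor(hop.startSeconds)}s`);
      return url.toString();
    }
    case "maps": {
      const url = new URL("https://www.google.com/maps/search/");
      url.searchParams.set("api", "1");
      url.searchParams.set("query", `${hop.lat},${hop.lng}`);
      return url.toString();
    }
    case "link": {
      // Only forward web links; reject other schemes coming from peers.
      const url = new URL(hop.href);
      if (url.protocol !== "http:" && url.protocol !== "https:") {
        throw new Error(`refusing to open ${url.protocol} link`);
      }
      return url.toString();
    }
    default:
      throw new Error(`unknown hop type: ${hop.type}`);
  }
}
```

For example, `hopToUrl({ type: "youtube", videoId: "dQw4w9WgXcQ", startSeconds: 42 })` yields `https://www.youtube.com/watch?v=dQw4w9WgXcQ&t=42s`, which covers use case 5. Building URLs with `URL` and `searchParams` rather than string concatenation keeps query values correctly escaped.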

## What's Next?

Sleep. | ## Inspiration

Metaverse, VR, and games today lack true immersion. Even in the Metaverse, you exist as a phantom from the waist down. The movement of your elbows is predicted by an algorithm and can look unstable and jittery. Worst of all, you have to use Joy-Cons to do something like waterbend or spawn a fireball in your open palm.

## What it does

We built an iPhone-powered full-body 3D tracking system that captures every aspect of the way you move, and it costs practically nothing. By combining MediaPipe Pose's precise body-part tracking with Unity's dynamic digital environment, the system lets users embody a virtual avatar that mirrors their real-life movements. Python-based sockets provide real-time communication, translating physical actions into the virtual world immediately and elevating immersion for users in virtual experiences.

## How we built it

To create our real-life full-body tracking avatar, we integrated MediaPipe Pose with Unity using Python-based sockets. First, we used MediaPipe Pose's computer vision capabilities to capture precise body-part coordinates, which form the basis of the avatar. In parallel, we built a dynamic digital environment in Unity to house the avatar. Python-based sockets formed the critical link between these technologies, enabling real-time communication. This integration translates users' physical movements into their virtual avatars, enhancing immersion in virtual spaces.
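Because TCP delivers a byte stream rather than discrete messages, a receiver has to reassemble each pose frame from whatever chunks arrive. A minimal sketch of one common approach, newline-delimited JSON, is below. The framing choice and the `LineFramer` class are assumptions, since the write-up does not describe its wire format (and its actual receiver was a C# Unity script).

```javascript
// Accumulates raw socket chunks and yields one parsed JSON object per "\n"-terminated line.
class LineFramer {
  constructor() {
    this.buffer = "";
  }

  // Feed a string chunk (e.g. from a socket's "data" event); returns all complete messages.
  push(chunk) {
    this.buffer += chunk;
    const messages = [];
    let newline;
    while ((newline = this.buffer.indexOf("\n")) !== -1) {
      const line = this.buffer.slice(0, newline).trim();
      this.buffer = this.buffer.slice(newline + 1);
      if (line.length > 0) messages.push(JSON.parse(line));
    }
    // Anything after the last newline is a partial frame; keep it for the next chunk.
    return messages;
  }
}

// A frame split across two chunks is only emitted once it is complete.
const framer = new LineFramer();
const first = framer.push('{"joint":0,"x":0.5,');
const second = framer.push('"y":0.25}\n{"joint":1,"x":0.1,"y":0.2}\n');
```

In Node, calling `socket.setEncoding("utf8")` makes the `data` event deliver strings, so each chunk can be passed straight to `push`.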

## Challenges we ran into

There were a number of issues. We first used MediaPipe Holistic, but then realized it was a legacy solution and we couldn't get 3D coordinates for the hands. We then switched to MediaPipe Pose for the person's body, cropped small sections of the image where we detected hands, and ran MediaPipe Hands on those sub-images, capturing both the position of the body and the position of the hands. The math required to map the local coordinate system of the hand tracking to the global coordinate system of the full-body pose was difficult. There were latency issues sending data from Python to Unity, which we resolved by reducing the number of data points. We also used techniques like an exponential moving average to make the movements smoother. And naturally, there were hundreds of bugs to resolve in parsing, moving, storing, and working with the data from these deep learning CV models.
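The two fixes described above can be sketched as follows. `cropToFrame` maps a hand landmark detected in a cropped sub-image back into the full frame, assuming both use normalized [0, 1] image coordinates (as MediaPipe landmark outputs do) and that the crop is an axis-aligned box with no rotation. `makeSmoother` applies an exponential moving average to each landmark. The names, the crop box format, and the smoothing factor `alpha` are hypothetical illustrations, not the team's code.

```javascript
// Map a landmark from a crop's normalized coordinates into the full frame's normalized coordinates.
// crop = { x, y, width, height } in full-image pixels; image = { width, height } in pixels.
function cropToFrame(landmark, crop, image) {
  const scaleX = crop.width / image.width;
  return {
    x: (crop.x + landmark.x * crop.width) / image.width,
    y: (crop.y + landmark.y * crop.height) / image.height,
    // Assumption: z uses roughly the same scale as x, so it shrinks by the same factor.
    z: landmark.z * scaleX,
  };
}

// Exponential moving average over a stream of {x, y, z} points.
// alpha in (0, 1]: higher follows motion faster, lower smooths more.
function makeSmoother(alpha) {
  let state = null;
  return function smooth(point) {
    if (state === null) {
      state = { ...point }; // first sample seeds the average
    } else {
      for (const axis of ["x", "y", "z"]) {
        state[axis] = alpha * point[axis] + (1 - alpha) * state[axis];
      }
    }
    return { ...state };
  };
}

// Example: a 200x200 hand crop at (400, 100) inside a 1280x720 frame.
const mapped = cropToFrame(
  { x: 0.5, y: 0.5, z: 0.1 },
  { x: 400, y: 100, width: 200, height: 200 },
  { width: 1280, height: 720 },
);
```

In practice one smoother would be kept per landmark index; the trade-off is that lower `alpha` values remove more jitter but add visible lag.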

## Accomplishments that we're proud of

We're proud of achieving full-body tracking, which enhances user immersion. Equally satisfying is how seamlessly body movement carries over into the virtual world with little latency, ensuring a fluid user experience.

## What we learned

For one, we learned how to integrate MediaPipe Pose with Unity while learning socket programming in Python to transfer data through servers. We learned how to bridge C# and Python, since Unity only works with C# scripts and our MediaPipe Pose pipeline ran in Python. We also got to know OpenCV and computer vision pretty intimately, since we had to work around a number of library limitations and old legacy code lurking around Google. There was also an element of asynchronous code handling via queues. Cool to see the data structure in action!
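The write-up mentions handling asynchronous data with queues without detailing the design. One plausible pattern for real-time tracking, sketched here as an assumption, is a small bounded queue that drops the oldest frame when full, so latency stays low even if the consumer falls behind. `DropOldestQueue` is hypothetical.

```javascript
// A bounded FIFO that discards the oldest item when full, so a slow consumer
// always works on recent pose frames instead of a growing backlog.
class DropOldestQueue {
  constructor(capacity) {
    if (!Number.isInteger(capacity) || capacity < 1) {
      throw new RangeError("capacity must be a positive integer");
    }
    this.capacity = capacity;
    this.items = [];
    this.dropped = 0;
  }

  push(item) {
    if (this.items.length === this.capacity) {
      this.items.shift(); // evict the stalest frame
      this.dropped++;
    }
    this.items.push(item);
  }

  // Returns the oldest remaining item, or undefined when empty.
  pop() {
    return this.items.shift();
  }

  get size() {
    return this.items.length;
  }
}

// The capture loop produces frames faster than the sender drains them.
const q = new DropOldestQueue(2);
for (const frame of [1, 2, 3, 4]) q.push(frame);
```

`Array.prototype.shift` is linear in the array length, which is fine for the handful of frames such a queue would hold; a ring buffer would be the usual choice for larger capacities.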

## What's next for Full Body Fusion!

Optimizing the hand mappings, implementing gesture recognition, and adding a real avatar in Unity instead of white dots. | partial |

# Inspiration and Product

There's a certain feeling we all have when we're lost. It's a combination of apprehension and curiosity, and it usually drives us to explore and learn more about what we see. It happens to be the case that there's a [huge disconnect](http://www.purdue.edu/discoverypark/vaccine/assets/pdfs/publications/pdf/Storylines%20-%20Visual%20Exploration%20and.pdf) between what we see around us and what we know: the building in front of us might look like a historic and famous structure, but we might not understand its significance until we read about it in a book, by which point we're no longer standing in front of it to experience it visually.

Insight gives you actionable information about your surroundings in a visual format that lets you immerse yourself in them, whether you're exploring or finding your way through. The app shows the true directions of the places around you where you can see them, and displays their descriptions as you turn your phone around. Need directions to one of them? Get them without leaving the app. Insight also supports deeper exploration of what's around you: everything from restaurant ratings to the history of the buildings you're near.

## Features

* View places around you heads-up on your phone - as you rotate, your field of vision changes in real time.

* Facebook Integration: trying to find a meeting or party? Pull your Facebook events into Insight to get your bearings.

* Directions, wherever, whenever: surveyed the area and found where you want to be? Touch it and get directions instantly.

* Filter events based on your location. Want a tour of Yale? Touch to filter only Yale buildings, and learn about the history and culture. Want to get a bite to eat? Change to a restaurants view. Want both? You get the idea.

* Slow day? Shrink your search radius to filter out distant locations. Feeling adventurous? Widen it instead.

* Want to get the word out about where you are? Automatically check in on Facebook at any of the locations you see around you, without leaving the app.

# Engineering

## High-Level Tech Stack

* Node.js powers a RESTful API hosted on Microsoft Azure.

* The API server takes advantage of a wealth of Azure's computational resources:

+ A Windows Server 2012 R2 instance and an Ubuntu 14.04 Trusty instance, each of which handles different batches of geospatial calculations

+ Azure internal load balancers

+ Azure CDN for asset pipelining

+ Azure automation accounts for version control

* The Bing Maps API suite, which offers powerful geospatial analysis tools:

+ RESTful services such as the Bing Spatial Data Service

+ Bing Maps' Spatial Query API

+ Bing Maps' AJAX control, externally through direction and waypoint services

* iOS Objective-C clients interact with the server RESTfully and display the parsed results

## Application Flow

iOS handles the entire user interaction layer and the authentication layer for user input. Users open the app and, if logging in with Facebook or Office 365, proceed through the standard OAuth flow, all on the phone. Users can also skip authentication with either provider (in which case they forfeit the option to integrate Facebook events or Office 365 calendar events into their views).

After sign-in (assuming the user grants permission to use these resources) and once the camera starts, requests containing the user's current location are sent to a central server on an Ubuntu box on Azure. The server parses that location data and initiates a multithreaded Node process via the Windows Server 2012 R2 instances. These processes do the following, and more:

* Geospatial radial search schemes with data from Bing

* Location detail API calls from Bing Spatial Query APIs

* Review data about relevant places from a slew of APIs

After the data is all present on the server, it's combined and analyzed, also on R2 instances, via the following:

* Haversine calculations for distance measurements, in accordance with radial searches

* Heading data (to make client side parsing feasible)

* Condensation and dynamic merging: asynchronously cross-checking the collected data to determine which events are closest
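The combine step can be sketched as below: annotate each candidate place with its haversine distance and initial bearing (heading) from the user, drop anything outside the search radius, and order the rest nearest first. This is a JavaScript sketch under stated assumptions (a mean Earth radius of 6,371 km, places as plain `{ lat, lng }` objects in degrees, and the hypothetical helper names shown); the original calculations ran in the C# engine on the Windows instances.

```javascript
const EARTH_RADIUS_M = 6371000; // mean Earth radius in meters (assumed)
const toRad = (deg) => (deg * Math.PI) / 180;

// Great-circle distance via the haversine formula (inputs in degrees).
function haversineMeters(lat1, lon1, lat2, lon2) {
  const dLat = toRad(lat2 - lat1);
  const dLon = toRad(lon2 - lon1);
  const a = Math.sin(dLat / 2) ** 2 +
    Math.cos(toRad(lat1)) * Math.cos(toRad(lat2)) * Math.sin(dLon / 2) ** 2;
  return 2 * EARTH_RADIUS_M * Math.atan2(Math.sqrt(a), Math.sqrt(1 - a));
}

// Initial bearing from point 1 to point 2, in degrees clockwise from true north, in [0, 360).
function bearingDegrees(lat1, lon1, lat2, lon2) {
  const phi1 = toRad(lat1);
  const phi2 = toRad(lat2);
  const dLon = toRad(lon2 - lon1);
  const y = Math.sin(dLon) * Math.cos(phi2);
  const x = Math.cos(phi1) * Math.sin(phi2) - Math.sin(phi1) * Math.cos(phi2) * Math.cos(dLon);
  return ((Math.atan2(y, x) * 180) / Math.PI + 360) % 360;
}

// Annotate places with distance and heading, keep those inside the radius, nearest first.
function placesWithinRadius(user, places, radiusM) {
  return places
    .map((p) => ({
      ...p,
      distanceM: haversineMeters(user.lat, user.lng, p.lat, p.lng),
      headingDeg: bearingDegrees(user.lat, user.lng, p.lat, p.lng),
    }))
    .filter((p) => p.distanceM <= radiusM)
    .sort((a, b) => a.distanceM - b.distanceM);
}
```

Precomputing the heading on the server, as described above, means the client only has to compare it with the phone's compass reading.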

Ubuntu brokers and manages the data, sends it back to the client, and prepares for and handles future requests.

## Other Notes

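* The most intense calculations involved the application of the [Haversine formulae](https://en.wikipedia.org/wiki/Haversine_formula). For two points on a sphere with latitudes $\varphi_1, \varphi_2$ and longitudes $\lambda_1, \lambda_2$, the central angle $\theta$ between them can be described as:

$$\operatorname{hav}(\theta) = \operatorname{hav}(\varphi_2 - \varphi_1) + \cos\varphi_1 \cos\varphi_2 \, \operatorname{hav}(\lambda_2 - \lambda_1), \qquad \operatorname{hav}(x) = \sin^2\!\left(\frac{x}{2}\right)$$

and the distance, for a sphere of radius $R$, as:

$$d = R\,\theta = 2R \arcsin\!\left(\sqrt{\operatorname{hav}(\theta)}\right)$$

(a great-circle distance rather than a straight-line Euclidean one, due to the Earth's curvature). The results of these formulae translate into the placement of locations on the viewing device.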

These calculations are handled by the Windows R2 instance, essentially running as a computation engine. All communications are RESTful between all internal server instances.
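To turn those results into on-screen placement, one option is to compare each place's heading (bearing) from the user with the device's compass heading, and map the angular offset linearly across the camera's horizontal field of view. This sketch is an assumption about how such placement could work (a linear, distortion-free mapping and the hypothetical `screenX` and `wrapDegrees` helpers), not a description of the app's actual rendering code.

```javascript
// Normalize an angle in degrees to the range [-180, 180).
const wrapDegrees = (deg) => ((((deg + 180) % 360) + 360) % 360) - 180;

// Horizontal pixel position for a place, or null if it is outside the camera's view.
// Assumes angle maps linearly to pixels across the horizontal field of view.
function screenX(placeBearingDeg, deviceHeadingDeg, fovDeg, screenWidthPx) {
  const offset = wrapDegrees(placeBearingDeg - deviceHeadingDeg); // negative = left of center
  if (Math.abs(offset) > fovDeg / 2) return null;
  return screenWidthPx / 2 + (offset / fovDeg) * screenWidthPx;
}
```

Wrapping the offset matters near north: a place at 350° seen from a heading of 10° is 20° to the left, not 340° to the right.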

## Challenges We Ran Into

* *iOS and rotation*: a number of limitations in iOS prevent interaction with the camera in landscape mode, which we needed so that users could see a wide field of view. For one thing, the requisite data registers aren't even accessible via daemons when the phone is in landscape mode. This was the root of the vast majority of our problems in the iOS client: since we couldn't rotate any inherited or pre-made views, we had to build all of our views from scratch.

* *Azure deployment specifics with Windows R2*: running a pure calculation engine (written primarily in C# with ASP.NET network interfacing components) was at times tricky to set up and to get logging data from.

* *Simultaneous and asynchronous analysis*: Simultaneously parsing asynchronously-arriving data with uniform Node threads presented challenges. Our solution was ultimately a recursive one that involved checking the status of other resources upon reaching the base case, then using that knowledge to better sort data as the bottoming-out step bubbled up.
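For comparison, here is a different technique from the recursive one described above: wait for every source with `Promise.allSettled`, then merge once all results are in, deduplicating places reported by more than one API. This is a swapped-in pattern rather than the team's code; the source functions, the name-based matching key, and `mergeSources` itself are hypothetical.

```javascript
// Query several sources concurrently and merge their results once all have arrived.
// Each source is a function returning a Promise of [{ name, distanceM, ... }].
async function mergeSources(sources) {
  const settled = await Promise.allSettled(sources.map((fetchPlaces) => fetchPlaces()));
  const byName = new Map();
  for (const result of settled) {
    if (result.status !== "fulfilled") continue; // one failing API should not sink the response
    for (const place of result.value) {
      const key = place.name.trim().toLowerCase();
      const existing = byName.get(key);
      // When two sources report the same place, keep the closer record.
      if (!existing || place.distanceM < existing.distanceM) byName.set(key, place);
    }
  }
  return [...byName.values()].sort((a, b) => a.distanceM - b.distanceM);
}
```

Matching on a normalized name is deliberately crude; real data would likely need coordinates or provider IDs to decide that two records describe the same place.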

* *Deprecations in Facebook's Graph APIs*: we needed to use the Facebook Graph APIs to query specific Facebook events for their locations, a feature only available in a slightly older version of the API. We thus had to use that version concurrently with the newer version (which had its own location-related features we relied on), which created some confusion and required care.

## A few of Our Favorite Code Snippets

A few gems from our codebase:

```
var deprecatedFQLQuery = '...
```

*The story*: in order to extract geolocation data from events vis-a-vis the Facebook Graph API, we were forced to use a deprecated API version for that specific query, which proved challenging in how we versioned our interactions with the Facebook API.

```
addYaleBuildings(placeDetails, function(bulldogArray) {
  addGoogleRadarSearch(bulldogArray, function(luxEtVeritas) {
    ...
```

*The story*: dealing with quite a lot of Yale API data meant we needed to be creative with our naming...

```
var R = 6371000; // the earth's radius in meters; latitudes and longitudes are in degrees
var a = R * 2 * Math.atan2(
  Math.sqrt(Math.sin((Math.PI / 180) * (latitude2 - latitude1) / 2) * Math.sin((Math.PI / 180) * (latitude2 - latitude1) / 2) + Math.cos((Math.PI / 180) * latitude1) * Math.cos((Math.PI / 180) * latitude2) * Math.sin((Math.PI / 180) * (longitude2 - longitude1) / 2) * Math.sin((Math.PI / 180) * (longitude2 - longitude1) / 2)),
  Math.sqrt(1 - (Math.sin((Math.PI / 180) * (latitude2 - latitude1) / 2) * Math.sin((Math.PI / 180) * (latitude2 - latitude1) / 2) + Math.cos((Math.PI / 180) * latitude1) * Math.cos((Math.PI / 180) * latitude2) * Math.sin((Math.PI / 180) * (longitude2 - longitude1) / 2) * Math.sin((Math.PI / 180) * (longitude2 - longitude1) / 2)))
);
```

*The story*: although it was changed and condensed shortly after, once we noticed its proliferation, our implementation of the Haversine formula became cumbersome very quickly. Degree/radian mismatches between APIs didn't make things any easier. | ## Inspiration