text

stringlengths 74

478k

| repo

stringlengths 7

106

|

|---|---|

microsoft/promptbase;promptbase promptbase is an evolving collection of resources, best practices, and example scripts for eliciting the best performance from foundation models like GPT-4 . We currently host scripts demonstrating the Medprompt methodology , including examples of how we further extended this collection of prompting techniques (" Medprompt+ ") into non-medical domains: | Benchmark | GPT-4 Prompt | GPT-4 Results | Gemini Ultra Results |

| ---- | ------- | ------- | ---- |

| MMLU | Medprompt+ | 90.10% | 90.04% |

| GSM8K | Zero-shot | 95.3% | 94.4% |

| MATH | Zero-shot | 68.4% | 53.2% |

| HumanEval | Zero-shot | 87.8% | 74.4% |

| BIG-Bench-Hard | Few-shot + CoT | 89.0% | 83.6% |

| DROP | Zero-shot + CoT | 83.7% | 82.4% |

| HellaSwag | 10-shot | 95.3% | 87.8% | In the near future, promptbase will also offer further case studies and structured interviews around the scientific process we take behind prompt engineering. We'll also offer specialized deep dives into specialized tooling that accentuates the prompt engineering process. Stay tuned! Medprompt and The Power of Prompting "Can Generalist Foundation Models Outcompete Special-Purpose Tuning? Case Study in Medicine" (H. Nori, Y. T. Lee, S. Zhang, D. Carignan, R. Edgar, N. Fusi, N. King, J. Larson, Y. Li, W. Liu, R. Luo, S. M. McKinney, R. O. Ness, H. Poon, T. Qin, N. Usuyama, C. White, E. Horvitz 2023) @article{nori2023can,

title={Can Generalist Foundation Models Outcompete Special-Purpose Tuning? Case Study in Medicine},

author={Nori, Harsha and Lee, Yin Tat and Zhang, Sheng and Carignan, Dean and Edgar, Richard and Fusi, Nicolo and King, Nicholas and Larson, Jonathan and Li, Yuanzhi and Liu, Weishung and others},

journal={arXiv preprint arXiv:2311.16452},

year={2023}

} Paper link In a recent study , we showed how the composition of several prompting strategies into a method that we refer to as Medprompt can efficiently steer generalist models like GPT-4 to achieve top performance, even when compared to models specifically finetuned for medicine. Medprompt composes three distinct strategies together -- including dynamic few-shot selection, self-generated chain of thought, and choice-shuffle ensembling -- to elicit specialist level performance from GPT-4. We briefly describe these strategies here: Dynamic Few Shots : Few-shot learning -- providing several examples of the task and response to a foundation model -- enables models quickly adapt to a specific domain and

learn to follow the task format. For simplicity and efficiency, the few-shot examples applied in prompting for a particular task are typically fixed; they are unchanged across test examples. This necessitates that the few-shot examples selected are broadly representative and relevant to a wide distribution of text examples. One approach to meeting these requirements is to have domain experts carefully hand-craft exemplars. Even so, this approach cannot guarantee that the curated, fixed few-shot examples will be appropriately representative of every test example. However, with enough available data, we can select different few-shot examples for different task inputs. We refer to this approach as employing dynamic few-shot examples. The method makes use of a mechanism to identify examples based on their similarity to the case at hand. For Medprompt, we did the following to identify representative few shot examples: Given a test example, we choose k training examples that are semantically similar using a k-NN clustering in the embedding space. Specifically, we first use OpenAI's text-embedding-ada-002 model to embed candidate exemplars for few-shot learning. Then, for each test question x, we retrieve its nearest k neighbors x1, x2, ..., xk from the training set (according to distance in the embedding space of text-embedding-ada-002). These examples -- the ones most similar in embedding space to the test question -- are ultimately registered in the prompt. Self-Generated Chain of Thought (CoT) : Chain-of-thought (CoT) uses natural language statements, such as “Let’s think step by step,” to explicitly encourage the model to generate a series of intermediate reasoning steps. The approach has been found to significantly improve the ability of foundation models to perform complex reasoning. Most approaches to chain-of-thought center on the use of experts to manually compose few-shot examples with chains of thought for prompting. Rather than rely on human experts, we pursued

a mechanism to automate the creation of chain-of-thought examples. We found that we could simply ask GPT-4 to generate chain-of-thought for the training examples, with appropriate guardrails for reducing risk of hallucination via incorrect reasoning chains. Majority Vote Ensembling : Ensembling refers to combining the output of several algorithms together to yield better predictive performance than any individual algorithm. Frontier models like GPT-4 benefit from ensembling of their own outputs. A simple technique is to have a variety of prompts, or a single prompt with varied temperature , and report the most frequent answer amongst the ensemble constituents. For multiple choice questions, we employ a further trick that increases the diversity of the ensemble called choice-shuffling , where we shuffle the relative order of the answer choices before generating each reasoning

path. We then select the most consistent answer, i.e., the one that is least sensitive to choice shuffling, which increases the robustness of the answer. The combination of these three techniques led to breakthrough performance in Medprompt for medical challenge questions. Implementation details of these techniques can be found here: https://github.com/microsoft/promptbase/tree/main/src/promptbase/mmlu Medprompt+ | Extending the power of prompting Here we provide some intuitive details on how we extended the medprompt prompting framework to elicit even stronger out-of-domain performance on the MMLU (Measuring Massive Multitask Language Understanding) benchmark. MMLU was established as a test of general knowledge and reasoning powers of large language models. The complete MMLU benchmark contains tens of thousands of challenge problems of different forms across 57 areas from basic mathematics to United States history, law, computer science, engineering, medicine, and more. We found that applying Medprompt without modification to the whole MMLU achieved a score of 89.1%. Not bad for a single policy working across a great diversity of problems! But could we push Medprompt to do better? Simply scaling-up MedPrompt can yield further benefits. As a first step, we increased the number of ensembled calls from five to 20. This boosted performance to 89.56%. On working to push further with refinement of Medprompt, we noticed that performance was relatively poor for specific topics of the MMLU. MMLU contains a great diversity of types of questions, depending on the discipline and specific benchmark at hand. How might we push GPT-4 to perform even better on MMLU given the diversity of problems? We focused on extension to a portfolio approach based on the observation that some topical areas tend to ask questions that would require multiple steps of reasoning and perhaps a scratch pad to keep track of multiple parts of a solution. Other areas seek factual answers that follow more directly from questions. Medprompt employs “chain-of-thought” (CoT) reasoning, resonating with multi-step solving. We wondered if the sophisticated Medprompt-classic approach might do less well on very simple questions and if the system might do better if a simpler method were used for the factual queries. Following this argument, we found that we could boost the performance on MMLU by extending MedPrompt with a simple two-method prompt portfolio. We add to the classic Medprompt a set of 10 simple, direct few-shot prompts soliciting an answer directly without Chain of Thought. We then ask GPT-4 for help with deciding on the best strategy for each topic area and question. As a screening call, for each question we first ask GPT-4:

``` Question {{ question }} Task Does answering the question above require a scratch-pad?

A. Yes

B. No

``` If GPT-4 thinks the question does require a scratch-pad, then the contribution of the Chain-of-Thought component of the ensemble is doubled. If it doesn't, we halve that contribution (and let the ensemble instead depend more on the direct few-shot prompts). Dynamically leveraging the appropriate prompting technique in the ensemble led to a further +0.5% performance improvement across the MMLU. We note that Medprompt+ relies on accessing confidence scores (logprobs) from GPT-4. These are not publicly available via the current API but will be enabled for all in the near future. Running Scripts Note: Some scripts hosted here are published for reference on methodology, but may not be immediately executable against public APIs. We're working hard on making the pipelines easier to run "out of the box" over the next few days, and appreciate your patience in the interim! First, clone the repo and install the promptbase package: bash

cd src

pip install -e . Next, decide which tests you'd like to run. You can choose from: bigbench drop gsm8k humaneval math mmlu Before running the tests, you will need to download the datasets from the original sources (see below) and place them in the src/promptbase/datasets directory. After downloading datasets and installing the promptbase package, you can run a test with: python -m promptbase dataset_name For example: python -m promptbase gsm8k Dataset Links To run evaluations, download these datasets and add them to /src/promptbase/datasets/ MMLU: https://github.com/hendrycks/test Download the data.tar file from the above page Extract the contents Run mkdir src/promptbase/datasets/mmlu Run python ./src/promptbase/format/format_mmlu.py --mmlu_csv_dir /path/to/extracted/csv/files --output_path ./src/promptbase/datasets/mmlu You will also need to set the following environment variables: AZURE_OPENAI_API_KEY AZURE_OPENAI_CHAT_API_KEY AZURE_OPENAI_CHAT_ENDPOINT_URL AZURE_OPENAI_EMBEDDINGS_URL Run with python -m promptbase mmlu --subject <SUBJECT> where <SUBJECT> is one of the MMLU datasets (such as 'abstract_algebra') In addition to the individual subjects, the format_mmlu.py script prepares files which enables all to be passed as a subject, which will run on the entire dataset HumanEval: https://huggingface.co/datasets/openai_humaneval DROP: https://allenai.org/data/drop GSM8K: https://github.com/openai/grade-school-math MATH: https://huggingface.co/datasets/hendrycks/competition_math Big-Bench-Hard: https://github.com/suzgunmirac/BIG-Bench-Hard

The contents of this repo need to be put into a directory called BigBench in the datasets directory Other Resources: Medprompt Blog: https://www.microsoft.com/en-us/research/blog/the-power-of-prompting/ Medprompt Research Paper: https://arxiv.org/abs/2311.16452 Medprompt+: https://www.microsoft.com/en-us/research/blog/steering-at-the-frontier-extending-the-power-of-prompting/ Microsoft Introduction to Prompt Engineering: https://learn.microsoft.com/en-us/azure/ai-services/openai/concepts/prompt-engineering Microsoft Advanced Prompt Engineering Guide: https://learn.microsoft.com/en-us/azure/ai-services/openai/concepts/advanced-prompt-engineering?pivots=programming-language-chat-completions;All things prompt engineering;[] | microsoft/promptbase |

google/gemma_pytorch;Gemma in PyTorch Gemma is a family of lightweight, state-of-the art open models built from research and technology used to create Google Gemini models. They are text-to-text, decoder-only large language models, available in English, with open weights, pre-trained variants, and instruction-tuned variants. For more details, please check out the following links: Gemma on Google AI Gemma on Kaggle Gemma on Vertex AI Model Garden This is the official PyTorch implementation of Gemma models. We provide model and inference implementations using both PyTorch and PyTorch/XLA, and support running inference on CPU, GPU and TPU. Updates [April 9th] Support CodeGemma. You can find the checkpoints on Kaggle and Hugging Face [April 5] Support Gemma v1.1. You can find the v1.1 checkpoints on Kaggle and Hugging Face . Download Gemma model checkpoint You can find the model checkpoints on Kaggle here . Alternatively, you can find the model checkpoints on the Hugging Face Hub here . To download the models, go the the model repository of the model of interest and click the Files and versions tab, and download the model and tokenizer files. For programmatic downloading, if you have huggingface_hub installed, you can also run: huggingface-cli download google/gemma-7b-it-pytorch Note that you can choose between the 2B, 7B, 7B int8 quantized variants. VARIANT=<2b or 7b>

CKPT_PATH=<Insert ckpt path here> Try it free on Colab Follow the steps at https://ai.google.dev/gemma/docs/pytorch_gemma . Try it out with PyTorch Prerequisite: make sure you have setup docker permission properly as a non-root user. bash

sudo usermod -aG docker $USER

newgrp docker Build the docker image. ```bash

DOCKER_URI=gemma:${USER} docker build -f docker/Dockerfile ./ -t ${DOCKER_URI}

``` Run Gemma inference on CPU. ```bash

PROMPT="The meaning of life is" docker run -t --rm \

-v ${CKPT_PATH}:/tmp/ckpt \

${DOCKER_URI} \

python scripts/run.py \

--ckpt=/tmp/ckpt \

--variant="${VARIANT}" \

--prompt="${PROMPT}"

# add --quant for the int8 quantized model.

``` Run Gemma inference on GPU. ```bash

PROMPT="The meaning of life is" docker run -t --rm \

--gpus all \

-v ${CKPT_PATH}:/tmp/ckpt \

${DOCKER_URI} \

python scripts/run.py \

--device=cuda \

--ckpt=/tmp/ckpt \

--variant="${VARIANT}" \

--prompt="${PROMPT}"

# add --quant for the int8 quantized model.

``` Try It out with PyTorch/XLA Build the docker image (CPU, TPU). ```bash

DOCKER_URI=gemma_xla:${USER} docker build -f docker/xla.Dockerfile ./ -t ${DOCKER_URI}

``` Build the docker image (GPU). ```bash

DOCKER_URI=gemma_xla_gpu:${USER} docker build -f docker/xla_gpu.Dockerfile ./ -t ${DOCKER_URI}

``` Run Gemma inference on CPU. bash

docker run -t --rm \

--shm-size 4gb \

-e PJRT_DEVICE=CPU \

-v ${CKPT_PATH}:/tmp/ckpt \

${DOCKER_URI} \

python scripts/run_xla.py \

--ckpt=/tmp/ckpt \

--variant="${VARIANT}" \

# add `--quant` for the int8 quantized model. Run Gemma inference on TPU. Note: be sure to use the docker container built from xla.Dockerfile . bash

docker run -t --rm \

--shm-size 4gb \

-e PJRT_DEVICE=TPU \

-v ${CKPT_PATH}:/tmp/ckpt \

${DOCKER_URI} \

python scripts/run_xla.py \

--ckpt=/tmp/ckpt \

--variant="${VARIANT}" \

# add `--quant` for the int8 quantized model. Run Gemma inference on GPU. Note: be sure to use the docker container built from xla_gpu.Dockerfile . bash

docker run -t --rm --privileged \

--shm-size=16g --net=host --gpus all \

-e USE_CUDA=1 \

-e PJRT_DEVICE=CUDA \

-v ${CKPT_PATH}:/tmp/ckpt \

${DOCKER_URI} \

python scripts/run_xla.py \

--ckpt=/tmp/ckpt \

--variant="${VARIANT}" \

# add `--quant` for the int8 quantized model. Tokenizer Notes 99 unused tokens are reserved in the pretrained tokenizer model to assist with more efficient training/fine-tuning. Unused tokens are in the string format of <unused[0-98]> with token id range of [7-105] . "<unused0>": 7,

"<unused1>": 8,

"<unused2>": 9,

...

"<unused98>": 105, Disclaimer This is not an officially supported Google product.;The official PyTorch implementation of Google's Gemma models;gemma,google,pytorch | google/gemma_pytorch |

levihsu/OOTDiffusion;OOTDiffusion This repository is the official implementation of OOTDiffusion 🤗 Try out OOTDiffusion (Thanks to ZeroGPU for providing A100 GPUs) OOTDiffusion: Outfitting Fusion based Latent Diffusion for Controllable Virtual Try-on [ arXiv paper ] Yuhao Xu , Tao Gu , Weifeng Chen , Chengcai Chen Xiao-i Research Our model checkpoints trained on VITON-HD (half-body) and Dress Code (full-body) have been released 🤗 Hugging Face link for checkpoints (ootd, humanparsing, and openpose) 📢📢 We support ONNX for humanparsing now. Most environmental issues should have been addressed : ) Please also download clip-vit-large-patch14 into checkpoints folder We've only tested our code and models on Linux (Ubuntu 22.04) Installation Clone the repository sh

git clone https://github.com/levihsu/OOTDiffusion Create a conda environment and install the required packages sh

conda create -n ootd python==3.10

conda activate ootd

pip install torch==2.0.1 torchvision==0.15.2 torchaudio==2.0.2

pip install -r requirements.txt Inference Half-body model sh

cd OOTDiffusion/run

python run_ootd.py --model_path <model-image-path> --cloth_path <cloth-image-path> --scale 2.0 --sample 4 Full-body model Garment category must be paired: 0 = upperbody; 1 = lowerbody; 2 = dress sh

cd OOTDiffusion/run

python run_ootd.py --model_path <model-image-path> --cloth_path <cloth-image-path> --model_type dc --category 2 --scale 2.0 --sample 4 Citation @article{xu2024ootdiffusion,

title={OOTDiffusion: Outfitting Fusion based Latent Diffusion for Controllable Virtual Try-on},

author={Xu, Yuhao and Gu, Tao and Chen, Weifeng and Chen, Chengcai},

journal={arXiv preprint arXiv:2403.01779},

year={2024}

} Star History TODO List [x] Paper [x] Gradio demo [x] Inference code [x] Model weights [ ] Training code;Official implementation of OOTDiffusion: Outfitting Fusion based Latent Diffusion for Controllable Virtual Try-on;[] | levihsu/OOTDiffusion |

lavague-ai/LaVague;Welcome to LaVague A Large Action Model framework for developing AI Web Agents 🏄♀️ What is LaVague? LaVague is an open-source Large Action Model framework to develop AI Web Agents. Our web agents take an objective, such as "Print installation steps for Hugging Face's Diffusers library" and performs the required actions to achieve this goal by leveraging our two core components: A World Model that takes an objective and the current state (aka the current web page) and turns that into instructions An Action Engine which “compiles” these instructions into action code, e.g. Selenium or Playwright & execute them 🚀 Getting Started Demo Here is an example of how LaVague can take multiple steps to achieve the objective of "Go on the quicktour of PEFT": Hands-on You can do this with the following steps: Download LaVague with: bash

pip install lavague 2. Use our framework to build a Web Agent and implement the objective: ```python

from lavague.core import WorldModel, ActionEngine

from lavague.core.agents import WebAgent

from lavague.drivers.selenium import SeleniumDriver selenium_driver = SeleniumDriver(headless=False)

world_model = WorldModel()

action_engine = ActionEngine(selenium_driver)

agent = WebAgent(world_model, action_engine)

agent.get("https://huggingface.co/docs")

agent.run("Go on the quicktour of PEFT") Launch Gradio Agent Demo agent.demo("Go on the quicktour of PEFT")

``` For more information on this example and how to use LaVague, see our quick-tour . Note, these examples use our default OpenAI API configuration and you will need to set the OPENAI_API_KEY variable in your local environment with a valid API key for these to work. For an end-to-end example of LaVague in a Google Colab, see our quick-tour notebook 🙋 Contributing We would love your help and support on our quest to build a robust and reliable Large Action Model for web automation. To avoid having multiple people working on the same things & being unable to merge your work, we have outlined the following contribution process: 1) 📢 We outline tasks on our backlog : we recommend you check out issues with the help-wanted labels & good first issue labels

2) 🙋♀️ If you are interested in working on one of these tasks, comment on the issue!

3) 🤝 We will discuss with you and assign you the task with a community assigned label

4) 💬 We will then be available to discuss this task with you

5) ⬆️ You should submit your work as a PR

6) ✅ We will review & merge your code or request changes/give feedback Please check out our contributing guide for a more detailed guide. If you want to ask questions, contribute, or have proposals, please come on our Discord to chat! 🗺️ Roadmap TO keep up to date with our project backlog here . 🚨 Security warning Note, this project executes LLM-generated code using exec . This is not considered a safe practice. We therefore recommend taking extra care when using LaVague and running LaVague in a sandboxed environment! 📈 Data collection We want to build a dataset that can be used by the AI community to build better Large Action Models for better Web Agents. You can see our work so far on building community datasets on our BigAction HuggingFace page . This is why LaVague collects the following user data telemetry by default: Version of LaVague installed Code generated for each web action step LLM used (i.e GPT4) Multi modal LLM used (i.e GPT4) Randomly generated anonymous user ID Whether you are using a CLI command or our library directly The instruction used/generated The objective used (if you are using the agent) The chain of thoughts (if you are using the agent) The interaction zone on the page (bounding box) The viewport size of your browser The URL you performed an action on Whether the action failed or succeeded Error message, where relevant The source nodes (chunks of HTML code retrieved from the web page to perform this action) 🚫 Turn off all telemetry If you want to turn off all telemetry, you can set the TELEMETRY_VAR environment variable to "NONE". If you are running LaVague locally in a Linux environment, you can persistently set this variable for your environment with the following steps: 1) Add TELEMETRY_VAR="NONE" to your ~/.bashrc, ~/.bash_profile, or ~/.profile file (which file you have depends on your shell and its configuration)

2) Use `source ~/.bashrc (or .bash_profile or .profile) to apply your modifications without having to log out and back in In a notebook cell, you can use: bash

import os

os.environ['TELEMETRY_VAR'] = "NONE";Large Action Model framework to develop AI Web Agents;ai,browser,large-action-model,llm,oss,rag | lavague-ai/LaVague |

miurla/morphic;Morphic An AI-powered search engine with a generative UI. [!NOTE]

Please note that there are differences between this repository and the official website morphic.sh . The official website is a fork of this repository with additional features such as authentication, which are necessary for providing the service online. The core source code of Morphic resides in this repository, and it's designed to be easily built and deployed. 🗂️ Overview 🛠 Features 🧱 Stack 🚀 Quickstart 🌐 Deploy 🔎 Search Engine ✅ Verified models 🛠 Features Search and answer using GenerativeUI Understand user's questions Search history functionality Share search results ( Optional ) Video search support ( Optional ) Get answers from specified URLs Use as a search engine ※ Support for providers other than OpenAI Google Generative AI Provider ※ Anthropic Provider ※ Ollama Provider ( Unstable ) Specify the model to generate answers Groq API support ※ 🧱 Stack App framework: Next.js Text streaming / Generative UI: Vercel AI SDK Generative Model: OpenAI Search API: Tavily AI / Serper Reader API: Jina AI Serverless Database: Upstash Component library: shadcn/ui Headless component primitives: Radix UI Styling: Tailwind CSS 🚀 Quickstart 1. Fork and Clone repo Fork the repo to your Github account, then run the following command to clone the repo: git clone git@github.com:[YOUR_GITHUB_ACCOUNT]/morphic.git 2. Install dependencies cd morphic

bun install 3. Setting up Upstash Redis Follow the guide below to set up Upstash Redis. Create a database and obtain UPSTASH_REDIS_REST_URL and UPSTASH_REDIS_REST_TOKEN . Refer to the Upstash guide for instructions on how to proceed. 4. Fill out secrets cp .env.local.example .env.local Your .env.local file should look like this: ``` OpenAI API key retrieved here: https://platform.openai.com/api-keys OPENAI_API_KEY= Tavily API Key retrieved here: https://app.tavily.com/home TAVILY_API_KEY= Upstash Redis URL and Token retrieved here: https://console.upstash.com/redis UPSTASH_REDIS_REST_URL=

UPSTASH_REDIS_REST_TOKEN=

``` Note: This project focuses on Generative UI and requires complex output from LLMs. Currently, it's assumed that the official OpenAI models will be used. Although it's possible to set up other models, if you use an OpenAI-compatible model, but we don't guarantee that it'll work. 5. Run app locally bun dev You can now visit http://localhost:3000. 🌐 Deploy Host your own live version of Morphic with Vercel or Cloudflare Pages. Vercel Cloudflare Pages Fork the repo to your GitHub. Create a Cloudflare Pages project. Select Morphic repo and Next.js preset. Set OPENAI_API_KEY and TAVILY_API_KEY env vars. Save and deploy. Cancel deployment, go to Settings -> Functions -> Compatibility flags , add nodejs_compat to preview and production. Redeploy. The build error needs to be fixed: issue 🔎 Search Engine Setting up the Search Engine in Your Browser If you want to use Morphic as a search engine in your browser, follow these steps: Open your browser settings. Navigate to the search engine settings section. Select "Manage search engines and site search". Under "Site search", click on "Add". Fill in the fields as follows: Search engine : Morphic Shortcut : morphic URL with %s in place of query : https://morphic.sh/search?q=%s Click "Add" to save the new search engine. Find "Morphic" in the list of site search, click on the three dots next to it, and select "Make default". This will allow you to use Morphic as your default search engine in the browser. ✅ Verified models List of models applicable to all: OpenAI gpt-4o gpt-4-turbo gpt-3.5-turbo Google Gemini 1.5 pro ※ Ollama (Unstable) mistral/openhermes & Phi3/llama3 ※ List of verified models that can be specified to writers: Groq LLaMA3 8b LLaMA3 70b;An AI-powered search engine with a generative UI;generative-ai,generative-ui,nextjs,react,tailwindcss,typescript,shadcn-ui,vercel-ai-sdk | miurla/morphic |

stanford-oval/storm;STORM: Synthesis of Topic Outlines through Retrieval and Multi-perspective Question Asking | Research preview | Paper | Documentation (WIP) |

**Latest News** 🔥

- [2024/06] We will present STORM at NAACL 2024! Find us at Poster Session 2 on June 17 or check our [presentation material](assets/storm_naacl2024_slides.pdf).

- [2024/05] We add Bing Search support in [rm.py](src/rm.py). Test STORM with `GPT-4o` - we now configure the article generation part in our demo using `GPT-4o` model.

- [2024/04] We release refactored version of STORM codebase! We define [interface](src/interface.py) for STORM pipeline and reimplement STORM-wiki (check out [`src/storm_wiki`](src/storm_wiki)) to demonstrate how to instantiate the pipeline. We provide API to support customization of different language models and retrieval/search integration.

## Overview [(Try STORM now!)](https://storm.genie.stanford.edu/) STORM is a LLM system that writes Wikipedia-like articles from scratch based on Internet search.

While the system cannot produce publication-ready articles that often require a significant number of edits, experienced Wikipedia editors have found it helpful in their pre-writing stage.

**Try out our [live research preview](https://storm.genie.stanford.edu/) to see how STORM can help your knowledge exploration journey and please provide feedback to help us improve the system 🙏!**

## How STORM works

STORM breaks down generating long articles with citations into two steps:

1. **Pre-writing stage**: The system conducts Internet-based research to collect references and generates an outline.

2. **Writing stage**: The system uses the outline and references to generate the full-length article with citations. STORM identifies the core of automating the research process as automatically coming up with good questions to ask. Directly prompting the language model to ask questions does not work well. To improve the depth and breadth of the questions, STORM adopts two strategies:

1. **Perspective-Guided Question Asking**: Given the input topic, STORM discovers different perspectives by surveying existing articles from similar topics and uses them to control the question-asking process.

2. **Simulated Conversation**: STORM simulates a conversation between a Wikipedia writer and a topic expert grounded in Internet sources to enable the language model to update its understanding of the topic and ask follow-up questions.

Based on the separation of the two stages, STORM is implemented in a highly modular way using [dspy](https://github.com/stanfordnlp/dspy).

## Getting started

### 1. Setup

Below, we provide a quick start guide to run STORM locally.

1. Clone the git repository.

```shell

git clone https://github.com/stanford-oval/storm.git

cd storm

```

2. Install the required packages.

```shell

conda create -n storm python=3.11

conda activate storm

pip install -r requirements.txt

```

3. Set up OpenAI API key (if you want to use OpenAI models to power STORM) and [You.com search API](https://api.you.com/) key. Create a file `secrets.toml` under the root directory and add the following content:

```shell

# Set up OpenAI API key.

OPENAI_API_KEY="your_openai_api_key"

# If you are using the API service provided by OpenAI, include the following line:

OPENAI_API_TYPE="openai"

# If you are using the API service provided by Microsoft Azure, include the following lines:

OPENAI_API_TYPE="azure"

AZURE_API_BASE="your_azure_api_base_url"

AZURE_API_VERSION="your_azure_api_version"

# Set up You.com search API key.

YDC_API_KEY="your_youcom_api_key"

```

### 2. Running STORM-wiki locally

Currently, we provide example scripts under [`examples`](examples) to demonstrate how you can run STORM using different models.

**To run STORM with `gpt` family models**: Make sure you have set up the OpenAI API key and run the following command.

```

python examples/run_storm_wiki_gpt.py \

--output_dir $OUTPUT_DIR \

--retriever you \

--do-research \

--do-generate-outline \

--do-generate-article \

--do-polish-article

```

- `--do-research`: if True, simulate conversation to research the topic; otherwise, load the results.

- `--do-generate-outline`: If True, generate an outline for the topic; otherwise, load the results.

- `--do-generate-article`: If True, generate an article for the topic; otherwise, load the results.

- `--do-polish-article`: If True, polish the article by adding a summarization section and (optionally) removing duplicate content.

**To run STORM with `mistral` family models on local VLLM server**: have a VLLM server running with the `Mistral-7B-Instruct-v0.2` model and run the following command.

```

python examples/run_storm_wiki_mistral.py \

--url $URL \

--port $PORT \

--output_dir $OUTPUT_DIR \

--retriever you \

--do-research \

--do-generate-outline \

--do-generate-article \

--do-polish-article

```

- `--url` URL of the VLLM server.

- `--port` Port of the VLLM server.

## Customize STORM

### Customization of the Pipeline

STORM is a knowledge curation engine consisting of 4 modules:

1. Knowledge Curation Module: Collects a broad coverage of information about the given topic.

2. Outline Generation Module: Organizes the collected information by generating a hierarchical outline for the curated knowledge.

3. Article Generation Module: Populates the generated outline with the collected information.

4. Article Polishing Module: Refines and enhances the written article for better presentation.

The interface for each module is defined in `src/interface.py`, while their implementations are instantiated in `src/storm_wiki/modules/*`. These modules can be customized according to your specific requirements (e.g., generating sections in bullet point format instead of full paragraphs).

:star2: **You can share your customization of `Engine` by making PRs to this repo!**

### Customization of Retriever Module

As a knowledge curation engine, STORM grabs information from the Retriever module. The interface for the Retriever module is defined in [`src/interface.py`](src/interface.py). Please consult the interface documentation if you plan to create a new instance or replace the default search engine API. By default, STORM utilizes the You.com search engine API (see `YouRM` in [`src/rm.py`](src/rm.py)).

:new: [2024/05] We test STORM with [Bing Search](https://learn.microsoft.com/en-us/bing/search-apis/bing-web-search/reference/endpoints). See `BingSearch` in [`src/rm.py`](src/rm.py) for the configuration and you can specify `--retriever bing` to use Bing Search in our [example scripts](examples).

:star2: **PRs for integrating more search engines/retrievers are highly appreciated!**

### Customization of Language Models

STORM provides the following language model implementations in [`src/lm.py`](src/lm.py):

- `OpenAIModel`

- `ClaudeModel`

- `VLLMClient`

- `TGIClient`

- `TogetherClient`

:star2: **PRs for integrating more language model clients are highly appreciated!**

:bulb: **For a good practice,**

- choose a cheaper/faster model for `conv_simulator_lm` which is used to split queries, synthesize answers in the conversation.

- if you need to conduct the actual writing step, choose a more powerful model for `article_gen_lm`. Based on our experiments, weak models are bad at generating text with citations.

- for open models, adding one-shot example can help it better follow instructions.

Please refer to the scripts in the [`examples`](examples) directory for concrete guidance on customizing the language model used in the pipeline.

## Replicate NAACL2024 result

Please switch to the branch `NAACL-2024-code-backup` Show me instructions ### Paper Experiments

The FreshWiki dataset used in our experiments can be found in [./FreshWiki](FreshWiki).

Run the following commands under [./src](src).

#### Pre-writing Stage

For batch experiment on FreshWiki dataset:

```shell

python -m scripts.run_prewriting --input-source file --input-path ../FreshWiki/topic_list.csv --engine gpt-4 --do-research --max-conv-turn 5 --max-perspective 5

```

- `--engine` (choices=[`gpt-4`, `gpt-35-turbo`]): the LLM engine used for generating the outline

- `--do-research`: if True, simulate conversation to research the topic; otherwise, load the results.

- `--max-conv-turn`: the maximum number of questions for each information-seeking conversation

- `--max-perspective`: the maximum number of perspectives to be considered, each perspective corresponds to an information-seeking conversation.

- STORM also uses a general conversation to collect basic information about the topic. So, the maximum number of QA pairs is `max_turn * (max_perspective + 1)`. :bulb: Reducing `max_turn` or `max_perspective` can speed up the process and reduce the cost but may result in less comprehensive outline.

- The parameter will not have any effect if `--disable-perspective` is set (the perspective-driven question asking is disabled).

To run the experiment on a single topic:

```shell

python -m scripts.run_prewriting --input-source console --engine gpt-4 --max-conv-turn 5 --max-perspective 5 --do-research

```

- The script will ask you to enter the `Topic` and the `Ground truth url` that will be excluded. If you do not have any url to exclude, leave that field empty.

The generated outline will be saved in `{output_dir}/{topic}/storm_gen_outline.txt` and the collected references will be saved in `{output_dir}/{topic}/raw_search_results.json`.

#### Writing Stage

For batch experiment on FreshWiki dataset:

```shell

python -m scripts.run_writing --input-source file --input-path ../FreshWiki/topic_list.csv --engine gpt-4 --do-polish-article --remove-duplicate

```

- `--do-polish-article`: if True, polish the article by adding a summarization section and removing duplicate content if `--remove-duplicate` is set True.

To run the experiment on a single topic:

```shell

python -m scripts.run_writing --input-source console --engine gpt-4 --do-polish-article --remove-duplicate

```

- The script will ask you to enter the `Topic`. Please enter the same topic as the one used in the pre-writing stage.

The generated article will be saved in `{output_dir}/{topic}/storm_gen_article.txt` and the references corresponding to citation index will be saved in `{output_dir}/{topic}/url_to_info.json`. If `--do-polish-article` is set, the polished article will be saved in `{output_dir}/{topic}/storm_gen_article_polished.txt`.

### Customize the STORM Configurations

We set up the default LLM configuration in `LLMConfigs` in [src/modules/utils.py](src/modules/utils.py). You can use `set_conv_simulator_lm()`,`set_question_asker_lm()`, `set_outline_gen_lm()`, `set_article_gen_lm()`, `set_article_polish_lm()` to override the default configuration. These functions take in an instance from `dspy.dsp.LM` or `dspy.dsp.HFModel`.

### Automatic Evaluation

In our paper, we break down the evaluation into two parts: outline quality and full-length article quality.

#### Outline Quality

We introduce *heading soft recall* and *heading entity recall* to evaluate the outline quality. This makes it easier to prototype methods for pre-writing.

Run the following command under [./eval](eval) to compute the metrics on FreshWiki dataset:

```shell

python eval_outline_quality.py --input-path ../FreshWiki/topic_list.csv --gt-dir ../FreshWiki --pred-dir ../results --pred-file-name storm_gen_outline.txt --result-output-path ../results/storm_outline_quality.csv

```

#### Full-length Article Quality

[eval/eval_article_quality.py](eval/eval_article_quality.py) provides the entry point of evaluating full-length article quality using ROUGE, entity recall, and rubric grading. Run the following command under `eval` to compute the metrics:

```shell

python eval_article_quality.py --input-path ../FreshWiki/topic_list.csv --gt-dir ../FreshWiki --pred-dir ../results --gt-dir ../FreshWiki --output-dir ../results/storm_article_eval_results --pred-file-name storm_gen_article_polished.txt

```

#### Use the Metric Yourself

The similarity-based metrics (i.e., ROUGE, entity recall, and heading entity recall) are implemented in [eval/metrics.py](eval/metrics.py).

For rubric grading, we use the [prometheus-13b-v1.0](https://huggingface.co/prometheus-eval/prometheus-13b-v1.0) introduced in [this paper](https://arxiv.org/abs/2310.08491). [eval/evaluation_prometheus.py](eval/evaluation_prometheus.py) provides the entry point of using the metric. ## Contributions

If you have any questions or suggestions, please feel free to open an issue or pull request. We welcome contributions to improve the system and the codebase!

Contact person: [Yijia Shao](mailto:shaoyj@stanford.edu) and [Yucheng Jiang](mailto:yuchengj@stanford.edu)

## Acknowledgement

We would like to thank Wikipedia for their excellent open-source content. The FreshWiki dataset is sourced from Wikipedia, licensed under the Creative Commons Attribution-ShareAlike (CC BY-SA) license.

We are very grateful to [Michelle Lam](https://michelle123lam.github.io/) for designing the logo for this project.

## Citation

Please cite our paper if you use this code or part of it in your work:

```bibtex

@inproceedings{shao2024assisting,

title={{Assisting in Writing Wikipedia-like Articles From Scratch with Large Language Models}},

author={Yijia Shao and Yucheng Jiang and Theodore A. Kanell and Peter Xu and Omar Khattab and Monica S. Lam},

year={2024},

booktitle={Proceedings of the 2024 Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies, Volume 1 (Long and Short Papers)}

}

```;An LLM-powered knowledge curation system that researches a topic and generates a full-length report with citations.;large-language-models,nlp,knowledge-curation,naacl,report-generation,retrieval-augmented-generation | stanford-oval/storm |

microsoft/sudo;Sudo for Windows Welcome to the repository for Sudo for Windows 🥪. Sudo

for Windows allows users to run elevated commands directly from unelevated

terminal windows. The "Inbox" version of sudo is available for Windows 11 builds 26045 and later. If you're on an Insiders

build with sudo, you can enable it in the Windows Settings app, on the

"Developer Features" page. Here you can report issues and file feature requests. Relationship to sudo on Unix/Linux Everything about permissions and the command line experience is

different between Windows and Linux. This project is not a fork of the Unix/Linux sudo project, nor is it a port of that sudo project. Instead, Sudo for

Windows is a Windows-specific implementation of the sudo concept. As the two are entirely different applications, you'll find that certain

elements of the traditional sudo experience are not present in Sudo for Windows, and

vice versa. Scripts and documentation that are written for sudo may not

be able to be used directly with Sudo for Windows without some modification. Documentation All project documentation is located at aka.ms/sudo-docs . If you would like to contribute to

the documentation, please submit a pull request on the Sudo for Windows

Documentation repo . Contributing Check out CONTRIBUTING.md for details on how to contribute to this project. sudo.ps1 In the meantime, you can contribute to the [ sudo.ps1 ] script. This script is

meant to be a helper wrapper around sudo.exe that provides a more

user-friendly experience for using sudo from PowerShell. This script is located

in the scripts/ directory. Communicating with the Team The easiest way to communicate with the team is via GitHub issues. Please file new issues, feature requests and suggestions, but DO search for

similar open/closed preexisting issues before creating a new issue. If you would like to ask a question that you feel doesn't warrant an issue

(yet), try a discussion thread . Those are especially helpful for question &

answer threads. Otherwise, you can reach out to us via your social media

platform of choice: Mike Griese, Senior Developer: @zadjii@mastodon.social Jordi Adoumie, Product Manager: @joadoumie Dustin Howett, Engineering Lead: @dhowett@mas.to Clint Rutkas, Lead Product Manager: @crutkas Code of Conduct This project has adopted the Microsoft Open Source Code of

Conduct . For more information see the Code of Conduct

FAQ or contact opencode@microsoft.com with any

additional questions or comments.;It's sudo, for Windows;sudo,windows,windows-11 | microsoft/sudo |

jianchang512/ChatTTS-ui;English README | 获取音色 | Discord交流群 | 打赏项目 ChatTTS webUI & API 一个简单的本地网页界面,在网页使用 ChatTTS 将文字合成为语音,支持中英文、数字混杂,并提供API接口。 原始 ChatTTS 项目 界面预览 试听合成语音效果 https://github.com/jianchang512/ChatTTS-ui/assets/3378335/bd6aaef9-a49a-4a81-803a-91e3320bf808 文字数字符号 控制符混杂效果 https://github.com/jianchang512/ChatTTS-ui/assets/3378335/e2a08ea0-32af-4a30-8880-3a91f6cbea55 Windows预打包版 从 Releases 中下载压缩包,解压后双击 app.exe 即可使用 某些安全软件可能报毒,请退出或使用源码部署 英伟达显卡大于4G显存,并安装了CUDA11.8+后,将启用GPU加速 Linux 下容器部署 安装 拉取项目仓库 在任意路径下克隆项目,例如: bash

git clone https://github.com/jianchang512/ChatTTS-ui.git chat-tts-ui 启动 Runner 进入到项目目录: bash

cd chat-tts-ui 启动容器并查看初始化日志: ```bash

gpu版本

docker compose -f docker-compose.gpu.yaml up -d cpu版本 docker compose -f docker-compose.cpu.yaml up -d docker compose logs -f --no-log-prefix 访问 ChatTTS WebUI 启动:['0.0.0.0', '9966'] ,也即,访问部署设备的 IP:9966 即可,例如: 本机: http://127.0.0.1:9966 服务器: http://192.168.1.100:9966 更新 Get the latest code from the main branch: bash

git checkout main

git pull origin main Go to the next step and update to the latest image: ```bash

docker compose down gpu版本

docker compose -f docker-compose.gpu.yaml up -d --build cpu版本

docker compose -f docker-compose.cpu.yaml up -d --build docker compose logs -f --no-log-prefix

``` Linux 下源码部署 配置好 python3.9-3.11环境 创建空目录 /data/chattts 执行命令 cd /data/chattts && git clone https://github.com/jianchang512/chatTTS-ui . 创建虚拟环境 python3 -m venv venv 激活虚拟环境 source ./venv/bin/activate 安装依赖 pip3 install -r requirements.txt 如果不需要CUDA加速,执行 pip3 install torch==2.2.0 torchaudio==2.2.0 如果需要CUDA加速,执行 ```

pip install torch==2.2.0 torchaudio==2.2.0 --index-url https://download.pytorch.org/whl/cu118 pip install nvidia-cublas-cu11 nvidia-cudnn-cu11 ``` 另需安装 CUDA11.8+ ToolKit,请自行搜索安装方法 或参考 https://juejin.cn/post/7318704408727519270 除CUDA外,也可以使用AMD GPU进行加速,这需要安装ROCm和PyTorch_ROCm版本。AMG GPU借助ROCm,在PyTorch开箱即用,无需额外修改代码。

1. 请参考https://rocm.docs.amd.com/projects/install-on-linux/en/latest/tutorial/quick-start.html 来安装AMD GPU Driver及ROCm.

1. 再通过https://pytorch.org/ 安装PyTorch_ROCm版本。 pip3 install torch==2.2.0 torchaudio==2.2.0 --index-url https://download.pytorch.org/whl/rocm6.0 安装完成后,可以通过rocm-smi命令来查看系统中的AMD GPU。也可以用以下Torch代码(query_gpu.py)来查询当前AMD GPU Device. ```

import torch print(torch. version ) if torch.cuda.is_available():

device = torch.device("cuda") # a CUDA device object

print('Using GPU:', torch.cuda.get_device_name(0))

else:

device = torch.device("cpu")

print('Using CPU') torch.cuda.get_device_properties(0) ``` 使用以上代码,以AMD Radeon Pro W7900为例,查询设备如下。 ``` $ python ~/query_gpu.py 2.4.0.dev20240401+rocm6.0 Using GPU: AMD Radeon PRO W7900 ``` 执行 python3 app.py 启动,将自动打开浏览器窗口,默认地址 http://127.0.0.1:9966 (注意:默认从 modelscope 魔塔下载模型,不可使用代理下载,请关闭代理) MacOS 下源码部署 配置好 python3.9-3.11 环境,安装git ,执行命令 brew install libsndfile git python@3.10 继续执行 ```

export PATH="/usr/local/opt/python@3.10/bin:$PATH" source ~/.bash_profile source ~/.zshrc ``` 创建空目录 /data/chattts 执行命令 cd /data/chattts && git clone https://github.com/jianchang512/chatTTS-ui . 创建虚拟环境 python3 -m venv venv 激活虚拟环境 source ./venv/bin/activate 安装依赖 pip3 install -r requirements.txt 安装torch pip3 install torch==2.2.0 torchaudio==2.2.0 执行 python3 app.py 启动,将自动打开浏览器窗口,默认地址 http://127.0.0.1:9966 (注意:默认从 modelscope 魔塔下载模型,不可使用代理下载,请关闭代理) Windows源码部署 下载python3.9-3.11,安装时注意选中 Add Python to environment variables 下载并安装git,https://github.com/git-for-windows/git/releases/download/v2.45.1.windows.1/Git-2.45.1-64-bit.exe 创建空文件夹 D:/chattts 并进入,地址栏输入 cmd 回车,在弹出的cmd窗口中执行命令 git clone https://github.com/jianchang512/chatTTS-ui . 创建虚拟环境,执行命令 python -m venv venv 激活虚拟环境,执行 .\venv\scripts\activate 安装依赖,执行 pip install -r requirements.txt 如果不需要CUDA加速, 执行 pip install torch==2.2.0 torchaudio==2.2.0 如果需要CUDA加速,执行 pip install torch==2.2.0 torchaudio==2.2.0 --index-url https://download.pytorch.org/whl/cu118 另需安装 CUDA11.8+ ToolKit,请自行搜索安装方法或参考 https://juejin.cn/post/7318704408727519270 执行 python app.py 启动,将自动打开浏览器窗口,默认地址 http://127.0.0.1:9966 (注意:默认从 modelscope 魔塔下载模型,不可使用代理下载,请关闭代理) 部署注意 如果GPU显存低于4G,将强制使用CPU。 Windows或Linux下如果显存大于4G并且是英伟达显卡,但源码部署后仍使用CPU,可尝试先卸载torch再重装,卸载 pip uninstall -y torch torchaudio , 重新安装cuda版torch。 pip install torch==2.2.0 torchaudio==2.2.0 --index-url https://download.pytorch.org/whl/cu118 。必须已安装CUDA11.8+ 默认检测 modelscope 是否可连接,如果可以,则从modelscope下载模型,否则从 huggingface.co下载模型 音色获取 从 0.92 版本起,支持csv或pt格式的固定音色,下载后保存到软件目录下的 speaker 文件夹中即可 pt文件可从 https://github.com/6drf21e/ChatTTS_Speaker 项目提供的体验链接页面 (https://modelscope.cn/studios/ttwwwaa/ChatTTS_Speaker) 下载。 也可以从此页面 http://ttslist.aiqbh.com/10000cn/ 查看试听后将对应音色值填写到 “自定义音色值”文本框中 不同设备同一音色值seed,最终合成的声音会有差异的,以及同一设备相同音色值,音色也可能会有变化,尤其音调 常见问题与报错解决方法 修改http地址 默认地址是 http://127.0.0.1:9966 ,如果想修改,可打开目录下的 .env 文件,将 WEB_ADDRESS=127.0.0.1:9966 改为合适的ip和端口,比如修改为 WEB_ADDRESS=192.168.0.10:9966 以便局域网可访问 使用API请求 v0.5+ 请求方法: POST 请求地址: http://127.0.0.1:9966/tts 请求参数: text: str| 必须, 要合成语音的文字 voice: 可选,默认 2222, 决定音色的数字, 2222 | 7869 | 6653 | 4099 | 5099,可选其一,或者任意传入将随机使用音色 prompt: str| 可选,默认 空, 设定 笑声、停顿,例如 [oral_2][laugh_0][break_6] temperature: float| 可选, 默认 0.3 top_p: float| 可选, 默认 0.7 top_k: int| 可选, 默认 20 skip_refine: int| 可选, 默认0, 1=跳过 refine text,0=不跳过 custom_voice: int| 可选, 默认0,自定义获取音色值时的种子值,需要大于0的整数,如果设置了则以此为准,将忽略 voice 返回:json数据 成功返回:

{code:0,msg:ok,audio_files:[dict1,dict2]} 其中 audio_files 是字典数组,每个元素dict为 {filename:wav文件绝对路径,url:可下载的wav网址} 失败返回: {code:1,msg:错误原因} ``` API调用代码 import requests res = requests.post('http://127.0.0.1:9966/tts', data={

"text": "若不懂无需填写",

"prompt": "",

"voice": "3333",

"temperature": 0.3,

"top_p": 0.7,

"top_k": 20,

"skip_refine": 0,

"custom_voice": 0

})

print(res.json()) ok {code:0, msg:'ok', audio_files:[{filename: E:/python/chattts/static/wavs/20240601-22_12_12-c7456293f7b5e4dfd3ff83bbd884a23e.wav, url: http://127.0.0.1:9966/static/wavs/20240601-22_12_12-c7456293f7b5e4dfd3ff83bbd884a23e.wav}]} error {code:1, msg:"error"} ``` 在pyVideoTrans软件中使用 升级 pyVideoTrans 到 1.82+ https://github.com/jianchang512/pyvideotrans 点击菜单-设置-ChatTTS,填写请求地址,默认应该填写 http://127.0.0.1:9966 测试无问题后,在主界面中选择 ChatTTS;一个简单的本地网页界面,使用ChatTTS将文字合成为语音,同时支持对外提供API接口。A simple native web interface that uses ChatTTS to synthesize text into speech, along with support for external API interfaces.;tts,chattts | jianchang512/ChatTTS-ui |

birobirobiro/awesome-shadcn-ui;awesome-shadcn/ui A curated list of awesome things related to shadcn/ui Created by: birobirobiro.dev ## Libs and Components

- [aceternity-ui](https://ui.aceternity.com/) - Copy paste the most trending react components without having to worry about styling and animations.

- [assistant-ui](https://github.com/Yonom/assistant-ui) - React Components for AI Chat.

- [autocomplete-select-shadcn-ui](https://www.armand-salle.fr/post/autocomplete-select-shadcn-ui) - Autocomplete component built with shadcn/ui and Fancy Multi Select by Maximilian Kaske.

- [auto-form](https://github.com/vantezzen/auto-form) - A React component that automatically creates a shadcn/ui form based on a zod schema.

- [capture-photo](https://github.com/UretzkyZvi/capture-photo) - Capture-Photo is a versatile, browser-based React component designed to streamline the integration of camera functionalities directly into your web applications.

- [clerk-elements](https://clerk.com/docs/elements/examples/shadcn-ui) - Composable components that can be used to build custom UIs on top of Clerk's APIs.

- [clerk-shadcn-theme](https://github.com/stormynight9/clerk-shadcn-theme) - Easily synchronize your Clerk ` ` and ` ` components with your shadcn/ui styles.

- [country-state-dropdown](https://github.com/Jayprecode/country-state-dropdown) - This Component is built with Nextjs, Tailwindcss, shadcn/ui & Zustand for state management.

- [cult-ui](https://www.cult-ui.com/) - A well curated set of animated shadcn-style React components for more specific use-cases.

- [credenza](https://github.com/redpangilinan/credenza) - Ready-made responsive modal component for shadcn/ui.

- [date-range-picker-for-shadcn](https://github.com/johnpolacek/date-range-picker-for-shadcn) - Includes multi-month views, text entry, preset ranges, responsive design, and date range comparisons.

- [downshift-shadcn-combobox](https://github.com/TheOmer77/downshift-shadcn-combobox) - Combobox/autocomplete component built with shadcn/ui and Downshift.

- [echo-editor](https://github.com/Seedsa/echo-editor) - A modern WYSIWYG rich-text editor base on tiptap and shadcn/ui

- [emblor](https://github.com/JaleelB/emblor) - A highly customizable, accessible, and fully-featured tag input component built with shadcn/ui.

- [enhanced-button](https://github.com/jakobhoeg/enhanced-button) - An enhanced version of the default shadcn-button component.

- [fancy-area](https://craft.mxkaske.dev/post/fancy-area) - The Textarea is inspired by GitHub's PR comment section. The impressive part is the @mention support including hover cards in the preview. The goal is to reproduce it without text editor library.

- [fancy-box](https://craft.mxkaske.dev/post/fancy-box) - The Combobox is inspired by GitHub's PR label selector and is powered by shadcn/ui. Almost all elements are radix-ui components, styled with tailwindcss.

- [fancy-multi-select](https://craft.mxkaske.dev/post/fancy-multi-select) - The Multi Select Component is inspired by campsite.design's and cal.com's settings forms.

- [farmui](https://farmui.com) - A shadcn and tailwindcss based beautifully styled and animated component library solution with its own [npm package](https://www.npmjs.com/package/@kinfe123/farm-ui) to install any component with in a component registery.

- [file-uploader](https://github.com/sadmann7/file-uploader) - A file uploader built with shadcn/ui and react-dropzone.

- [file-vault](https://github.com/ManishBisht777/file-vault) - File upload component for React.

- [ibelick/background-snippet](https://bg.ibelick.com/) - Ready to use collection of modern background snippets.

- [indie-ui](https://github.com/Ali-Hussein-dev/indie-ui) - UI components with variants - [Docs](https://ui.indie-starter.dev)

- [magicui](https://magicui.design) - React components to build beautiful landing pages using tailwindcss + framer motion + shadcn/ui

- [maily.to](https://github.com/arikchakma/maily.to) - Craft beautiful emails effortlessly with notion like powerful editor.

- [minimal-tiptap](https://github.com/Aslam97/shadcn-minimal-tiptap) - A minimal WYSIWYG editor built with shadcn/ui and tiptap.

- [mynaui](https://mynaui.com/) - TailwindCSS and shadcn/ui UI Kit for Figma and React.

- [neobrutalism-components](https://github.com/ekmas/neobrutalism-components) - Collection of neobrutalism-styled Tailwind React and Shadcn UI components.

- [nextjs-components](https://components.bridger.to/) - A collection of Next.js components build with TypeScript, React, shadcn/ui, Craft UI, and Tailwind CSS.

- [nextjs-dnd](https://github.com/sujjeee/nextjs-dnd) - Sortable Drag and Drop with Next.js, shadcn/ui, and dnd-kit.

- [novel](https://github.com/steven-tey/novel) - Novel is a Notion-style WYSIWYG editor with AI-powered autocompletion. Built with [Tiptap](https://tiptap.dev/) + [Vercel AI SDK](https://sdk.vercel.ai/docs).

- [password-input](https://gist.github.com/mjbalcueva/b21f39a8787e558d4c536bf68e267398) - shadcn/ui custom password input.

- [phone-input-shadcn-ui](https://www.armand-salle.fr/post/phone-input-shadcn-ui) - Custom phone number component built with shadcn/ui.

- [planner](https://github.com/UretzkyZvi/planner) - Planner is a highly adaptable scheduling component tailored for React applications.

- [plate](https://github.com/udecode/plate) - The rich-text editor for React.

- [pricing-page-shadcn](https://github.com/m4nute/pricing-page-shadcn) - Pricing Page made with shadcn/ui & Next.js 14. Completely customizable.

- [progress-button](https://github.com/tomredman/ProgressButton) - An extension of shadcn/ui button component that uses a state machine to drive a progress UX.

- [react-dnd-kit-tailwind-shadcn-ui](https://github.com/Georgegriff/react-dnd-kit-tailwind-shadcn-ui) - Drag and drop Accessible kanban board implementing using React, dnd-kit, tailwind, and shadcn/ui.

- [search-address](https://github.com/UretzkyZvi/search-address) - The SearchAddress component provides a flexible and interactive search interface for addresses, utilizing the powerful Nominatim service from OpenStreetMap.

- [shadcn-blocks](https://ui.shadcn.com/blocks) - Blocks is the official shadcn/ui pre-made but customizable components that can be copied and pasted into your projects.

- [shadcn-cal](https://shadcn-cal-com.vercel.app/?date=2024-04-29) - A copy of the monthly calendar used by Cal.com with shadcn/ui, Radix Colors and React Aria.

- [shadcn-calendar-component](https://github.com/sersavan/shadcn-calendar-component) - A calendar date picker component designed with shadcn/ui.

- [shadcn-chat](https://github.com/jakobhoeg/shadcn-chat) - Customizable and reusable chat component for you to use in your projects.

- [shadcn-data-table-advanced-col-opions](https://github.com/danielagg/shadcn-data-table-advanced-col-opions) - Column-resizing option to shadcn/ui DataTable.

- [shadcn-drag-table](https://github.com/zenoncao/shadcn-drag-table) - A drag-and-drop table component using shadcn/ui and Next.js.

- [shadcn-extends](https://github.com/lucioew28/extends) - Intended to be a collection of components built using shadcn/ui.

- [shadcn-extension](https://github.com/BelkacemYerfa/shadcn-extension) - An open-source component collection that extends your UI library, built using shadcn/ui components.

- [shadcn-linear-combobox](https://github.com/damianricobelli/shadcn-linear-combobox) - A copy of the combobox that Linear uses to set the priority of a task.

- [shadcn-multi-select-component](https://github.com/sersavan/shadcn-multi-select-component) - A multi-select component designed with shadcn/ui.

- [shadcn-phone-input-2](https://github.com/damianricobelli/shadcn-phone-input) - Simple and formatted phone input component built with shadcn/ui y libphonenumber-js.

- [shadcn-phone-input](https://github.com/omeralpi/shadcn-phone-input) - Customizable phone input component with proper validation for any country.

- [shadcn-stepper](https://github.com/damianricobelli/shadcn-stepper) - A complete stepper component built with shadcn/ui.

- [shadcn-table-v2](https://github.com/sadmann7/shadcn-table) - shadcn/ui table component with server-side sorting, filtering, and pagination.

- [shadcn-timeline](https://github.com/timDeHof/shadcn-timeline) - Customizable and re-usable timeline component for you to use in your projects. Built on top of shadcn.

- [shadcn-ui-blocks](https://shadcn-ui-blocks.vercel.app/) - A collection of Over 10+ fully responsive, UI blocks you can drop into your Shadcn UI projects and customize to your heart's content.

- [shadcn-ui-expansions](https://github.com/hsuanyi-chou/shadcn-ui-expansions) - A lots of useful components which shadcn/ui does not have out of the box.

- [shadcn-ui-sidebar](https://github.com/salimi-my/shadcn-ui-sidebar) - A stunning, functional and responsive retractable sidebar built on top of shadcn/ui.

- [sortable](https://github.com/sadmann7/sortable) - A sortable component built with shadcn/ui, radix ui, and dnd-kit.

- [time-picker](https://github.com/openstatusHQ/time-picker) - A simple TimePicker for your shadcn/ui project.

- [tremor-raw](https://github.com/tremorlabs/tremor-raw) - Copy & paste React components to build modern web applications. Good for building charts.

- [uixmat/onborda](https://github.com/uixmat/onborda) - Give your application the onboarding it deserves with Onborda product tour for Next.js

## Apps

### Plugins and Extensions

- [chat-with-youtube](https://chat-with-youtube.vercel.app/) - A chrome extension is designed to give you the ability to efficiently summarize videos, easily search for specific parts, and enjoy additional useful features.

- [raycast-shadcn](https://www.raycast.com/luisFilipePT/shadcn-ui) - Raycast extension to Browse shadcn/ui documentation, components and examples

- [shadcn-ui](https://marketplace.visualstudio.com/items?itemName=SuhelMakkad.shadcn-ui) - Add components from shadcn/ui directly from VS Code.

- [shadcn/ui Components Manager](https://plugins.jetbrains.com/plugin/23479-shadcn-ui-components-manager) - A plugin for Jetbrain products. It allows you to manage your shadcn/ui components across Svelte, React, Vue, and Solid frameworks with this plugin. Simplify tasks like adding, removing, and updating components.

- [vscode-shadcn-svelte](https://marketplace.visualstudio.com/items?itemName=Selemondev.vscode-shadcn-svelte&ssr=false#overview) - VS Code extension for shadcn/ui components in Svelte projects.

- [vscode-shadcn-ui-snippets](https://marketplace.visualstudio.com/items?itemName=VeroXyle.shadcn-ui-snippets) - Easily import and use shadcn-ui components with ease using snippets within VSCode. Just type cn or shadcn in you jsx/tsx file and you will get a list of all the components to choose from.

- [vscode-shadcn-vue](https://marketplace.visualstudio.com/items?itemName=Selemondev.vscode-shadcn-vue) - Extension for integrating shadcn/ui components into Vue.js projects.

### Colors and Customizations

- [10000+Themes for shadcn/ui](https://ui.jln.dev/) - 10000+ Themes for shadcn/ui.

- [dizzy](https://dizzy.systems/) - Bootstrap a new Next or Vite project with shadcn/ui. Customize font, icons, colors, spacing, radii, and shadows.

- [gradient-picker](https://github.com/Illyism/gradient-picker) - Fancy Gradient Picker built with Shadcn UI, Radix UI and Tailwind CSS.

- [navnote/rangeen](https://github.com/navnote/rangeen) - Tool that helps you to create a colour palette for your website

- [shadcn-ui-customizer](https://github.com/Railly/shadcn-ui-customizer) - POC - shadcn/ui themes with color pickers

- [ui-colorgen](https://ui-colorgen.vercel.app/) - An application designed to assist you with color configuration of shadcn/ui.

- [zippy starter's shadcn/ui theme generator.](https://zippystarter.com/tools/shadcn-ui-theme-generator) - Easily create custom themes from a single colour that you can copy and paste into your apps.

### Animations

- [magicui.design](https://magicui.design) - Largest collection of open-source react components to build beautiful landing pages.

- [motionvariants](https://github.com/chrisabdo/motionvariants) - Beautiful Framer Motion Animations.

### Tools

- [5devs](https://www.5devs.com.br/) - A website to get fake brazilian data for testing purposes.

- [cut-it](https://github.com/mehrabmp/cut-it) - Link shortener built using Next.js App Router, Server Actions, Drizzle ORM, Turso and styled with shadcn/ui

- [CV Forge](https://cvforge.app) - Resume builder, build with @shadcn/ui, react-hook-form and react-pdf

- [form-builder](https://github.com/AlandSleman/FormBuilder) - UI based codegen tool to easily create Beautiful and Type safe @shadcn/ui forms.

- [imgsrc](https://imgsrc.io/) - Generate beautiful Open Graph images with zero effort.

- [invoify](https://github.com/aliabb01/invoify) - An invoice generator app built using Next.js, Typescript, and shadcn/ui

- [pastecode](https://github.com/Quorin/PasteCode.app) - Pastebin alternative built with Typescript, Next.js, Drizzle, Shadcn, RSC

- [QuackDB](https://github.com/mattf96s/QuackDB) - Open-source in-browser DuckDB SQL editor

- [shadcn-pricing-page-generator](https://shipixen.com/shadcn-pricing-page) - The easiest way to get a React pricing page with shadcn/ui, Radix UI and/or Tailwind CSS.

- [translate-app](https://github.com/developaul/translate-app) - Translate App using TypeScript, Tailwind CSS, NextJS, Bun, shadcn/ui, AI-SDK/OpenAI, Zod

- [typelabs](https://github.com/imsandeshpandey/typelabs) - MonkeyType inspired typing test app built with React, shadcn, and Zustand at it's core.

- [v0](https://v0.dev/) - Vercel's generative UI system, built on shadcn/ui and TailwindCSS, allows effortless UI generation from text prompts and/or images. It produces React and HTML code, integration is also possible via v0 CLI command.

## Platforms

- [bolhadev](https://bolhadev.chat/) - The quickest path to learn English is speaking it regularly. Just find someone to chat with.

- [enjoytown](https://github.com/avalynndev/enjoytown) - A free anime, manga, movie, tv-shows streaming platform. Built with Nextjs, shadcn/ui

- [infinitunes](https://github.com/rajput-hemant/infinitunes) - A Simple Music Player Web App built using Next.js, shadcn/ui, Tailwind CSS, DrizzleORM and more...

- [kd](https://github.com/gneiru/kd) - Ad-free Kdrama streaming app. Built with Nextjs, Drizzle ORM, NeonDB and shadcn/ui

- [plotwist](https://plotwist.app/en-US) - Easy management and reviews of your movies, series and animes using Next.js, Tailwind CSS, Supabase and shadcn/ui.

## Ports

- [Angular](https://github.com/goetzrobin/spartan) - Angular port of shadcn/ui

- [Flutter](https://github.com/nank1ro/shadcn-ui) - Flutter port of shadcn/ui

- [Franken UI](https://www.franken-ui.dev/) - HTML-first, framework-agnostic, beautifully designed components that you can truly copy and paste into your site. Accessible. Customizable. Open Source.

- [JollyUI](https://github.com/jolbol1/jolly-ui) - shadcn/ui compatible react aria components

- [Kotlin](https://github.com/dead8309/shadcn-kotlin) - Kotlin port of shadcn/ui

- [Phoenix Liveview](https://github.com/bluzky/salad_ui) - Phoenix Liveview port of shadcn/ui

- [React Native](https://github.com/Mobilecn-UI/nativecn-ui) - React Native port of shadcn/ui

- [React Native](https://github.com/mrzachnugent/react-native-reusables) - React Native port of shadcn/ui (recommended)

- [Ruby](https://github.com/aviflombaum/shadcn-rails) - Ruby port of shadcn/ui

- [Solid](https://github.com/hngngn/shadcn-solid) - Solid port of shadcn/ui

- [Svelte](https://github.com/huntabyte/shadcn-svelte) - Svelte port of shadcn/ui

- [Swift](https://github.com/Mobilecn-UI/swiftcn-ui) - Swift port of shadcn/ui

- [Vue](https://github.com/radix-vue/shadcn-vue) - Vue port of shadcn/ui

## Design System

- [shadcn-ui-components](https://www.figma.com/community/file/1342715840824755935/shadcn-ui-components) - Every component recreated in Figma.

- [shadcn-ui-storybook](https://65711ecf32bae758b457ae34-uryqbzvojc.chromatic.com/) - All shadcn/ui components registered in the storybook by [JheanAntunes](https://github.com/JheanAntunes/storybook-shadcn)

- [shadcn-ui-storybook](https://fellipeutaka-ui.vercel.app/?path=/docs/components-accordion--docs) - All shadcn/ui components registered in the storybook by [fellipeutaka](https://github.com/fellipeutaka/ui)

## Boilerplates / Templates

- [chadnext](https://github.com/moinulmoin/chadnext) - Quick Starter Template includes Next.js 14 App router, shadcn/ui, LuciaAuth, Prisma, Server Actions, Stripe, Internationalization and more.

- [design-system-template](https://github.com/arevalolance/design-system-template) - Turborepo + TailwindCSS + Storybook + shadcn/ui

- [electron-shadcn](https://github.com/LuanRoger/electron-shadcn) - Electron app template with shadcn/ui and a bunch of other libs and tools ready to use.

- [horizon-ai-nextjs-shadcn-boilerplate](https://horizon-ui.com/boilerplate-shadcn) - Premium AI NextJS & Shadcn UI Boilerplate + Stripe + Supabase + OAuth

- [kirimase](https://kirimase.dev/) - A template and boilerplate for quickly starting your next project with shadcn/ui, Tailwind CSS, and Next.js.

- [magicui-startup-templates](https://magicui.design/docs/templates/startup) - Magic UI Startup template built using shadcn/ui + tailwindcss + framer-motion

- [next-shadcn-dashboard-starter](https://github.com/Kiranism/next-shadcn-dashboard-starter) - Admin Dashboard Starter with Next.js 14 and shadcn/ui

- [nextjs-mdx-blog](https://github.com/ChangoMan/nextjs-mdx-blog) - Starter template built with Contentlayer, MDX, shadcn/ui, and Tailwind CSS.

- [shadcn-landing-page](https://github.com/leoMirandaa/shadcn-landing-page) - Landing page template using shadcn/ui, React, Typescript and Tailwind CSS

- [shadcn-landing-page](https://github.com/nobruf/shadcn-landing-page) - Project conversion [shadcn-vue-landing-page](https://github.com/leoMirandaa/shadcn-vue-landing-page) to nextjs - Landing page template using Nestjs, shadcn/ui, TypeScript, Tailwind CSS

- [shadcn-nextjs-free-boilerplate](https://github.com/horizon-ui/shadcn-nextjs-boilerplate) - Free & Open-source NextJS Boilerplate + ChatGPT API Dashboard Template

- [shadcn-vue-landing-page](https://github.com/leoMirandaa/shadcn-vue-landing-page) - Landing page template using Vue, shadcn-vue, TypeScript, Tailwind CSS

- [t3-app-template](https://github.com/gaofubin/t3-app-template) - This is the admin template for T3 Stack and shadcn/ui

- [taxonomy](https://github.com/shadcn/taxonomy) - An open source application built using the new router, server components and everything new in Next.js

- [turborepo-shadcn-ui-tailwindcss](https://github.com/henriqpohl/turborepo-shadcn-ui-tailwindcss) - Turborepo starter with shadcn/ui & Tailwind CSS pre-configured for shared ui components.

- [turborepo-launchpad](https://github.com/JadRizk/turborepo-launchpad) - A comprehensive monorepo boilerplate for shadcn projects using Turbo. It features a highly scalable setup ideal for developing complex applications with shared components and utilities.

## Star History ## Contributors

Thanks goes to all these wonderful people:;A curated list of awesome things related to shadcn/ui.;awesome,awesome-list,resources,shadcn,shadcn-ui,list,open-source,shad | birobirobiro/awesome-shadcn-ui |

OpenInterpreter/01;○ The open-source language model computer. Preorder the Light | Get Updates | Documentation | [日本語](docs/README_JP.md) | [English](README.md) | We want to help you build. Apply for 1-on-1 support. [!IMPORTANT]

This experimental project is under rapid development and lacks basic safeguards. Until a stable 1.0 release, only run this repository on devices without sensitive information or access to paid services. A substantial rewrite to address these concerns and more, including the addition of RealtimeTTS and RealtimeSTT , is occurring here . The 01 Project is building an open-source ecosystem for AI devices. Our flagship operating system can power conversational devices like the Rabbit R1, Humane Pin, or Star Trek computer . We intend to become the GNU/Linux of this space by staying open, modular, and free. Software shell

git clone https://github.com/OpenInterpreter/01 # Clone the repository

cd 01/software # CD into the source directory shell

brew install portaudio ffmpeg cmake # Install Mac OSX dependencies

poetry install # Install Python dependencies

export OPENAI_API_KEY=sk... # OR run `poetry run 01 --local` to run everything locally

poetry run 01 # Runs the 01 Light simulator (hold your spacebar, speak, release) The RealtimeTTS and RealtimeSTT libraries in the incoming 01-rewrite are thanks to the state-of-the-art voice interface work of Kolja Beigel . Please star those repos and consider contributing to / utilizing those projects! Hardware The 01 Light is an ESP32-based voice interface. Build instructions are here . A list of what to buy here . It works in tandem with the 01 Server ( setup guide below ) running on your home computer. Mac OSX and Ubuntu are supported by running poetry run 01 ( Windows is supported experimentally). This uses your spacebar to simulate the 01 Light. (coming soon) The 01 Heavy is a standalone device that runs everything locally. We need your help supporting & building more hardware. The 01 should be able to run on any device with input (microphone, keyboard, etc.), output (speakers, screens, motors, etc.), and an internet connection (or sufficient compute to run everything locally). Contribution Guide → What does it do? The 01 exposes a speech-to-speech websocket at localhost:10001 . If you stream raw audio bytes to / in Streaming LMC format , you will receive its response in the same format. Inspired in part by Andrej Karpathy's LLM OS , we run a code-interpreting language model , and call it when certain events occur at your computer's kernel . The 01 wraps this in a voice interface: Protocols LMC Messages To communicate with different components of this system, we introduce LMC Messages format, which extends OpenAI’s messages format to include a "computer" role: https://github.com/OpenInterpreter/01/assets/63927363/8621b075-e052-46ba-8d2e-d64b9f2a5da9 Dynamic System Messages Dynamic System Messages enable you to execute code inside the LLM's system message, moments before it appears to the AI. ```python Edit the following settings in i.py interpreter.system_message = r" The time is {{time.time()}}. " # Anything in double brackets will be executed as Python

interpreter.chat("What time is it?") # It will know, without making a tool/API call

``` Guides 01 Server To run the server on your Desktop and connect it to your 01 Light, run the following commands: shell

brew install ngrok/ngrok/ngrok

ngrok authtoken ... # Use your ngrok authtoken

poetry run 01 --server --expose The final command will print a server URL. You can enter this into your 01 Light's captive WiFi portal to connect to your 01 Server. Local Mode poetry run 01 --local If you want to run local speech-to-text using Whisper, you must install Rust. Follow the instructions given here . Customizations To customize the behavior of the system, edit the system message, model, skills library path, etc. in the profiles directory under the server directory. This file sets up an interpreter, and is powered by Open Interpreter. To specify the text-to-speech service for the 01 base_device.py , set interpreter.tts to either "openai" for OpenAI, "elevenlabs" for ElevenLabs, or "coqui" for Coqui (local) in a profile. For the 01 Light, set SPEAKER_SAMPLE_RATE to 24000 for Coqui (local) or 22050 for OpenAI TTS. We currently don't support ElevenLabs TTS on the 01 Light. Ubuntu Dependencies bash

sudo apt-get install portaudio19-dev ffmpeg cmake Contributors Please see our contributing guidelines for more details on how to get involved. Roadmap Visit our roadmap to see the future of the 01. Background Context ↗ The story of devices that came before the 01. Inspiration ↗ Things we want to steal great ideas from. ○;The open-source language model computer;[] | OpenInterpreter/01 |

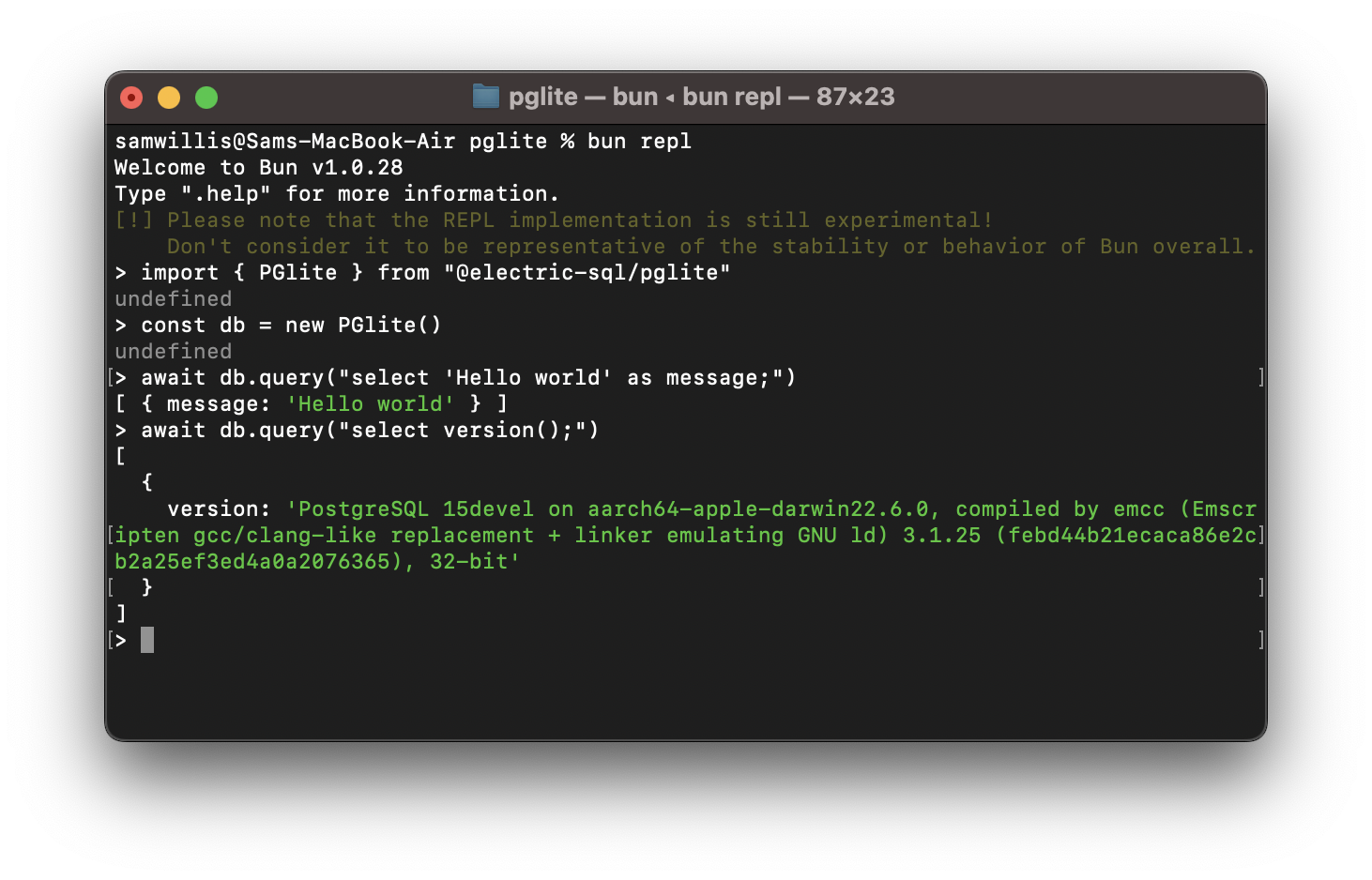

electric-sql/pglite;PGlite - the WASM build of Postgres from ElectricSQL . Build reactive, realtime, local-first apps directly on Postgres. # PGlite - Postgres in WASM

PGlite is a WASM Postgres build packaged into a TypeScript client library that enables you to run Postgres in the browser, Node.js and Bun, with no need to install any other dependencies. It is only 2.6mb gzipped.

```javascript

import { PGlite } from "@electric-sql/pglite";

const db = new PGlite();

await db.query("select 'Hello world' as message;");

// -> { rows: [ { message: "Hello world" } ] }

```

It can be used as an ephemeral in-memory database, or with persistence either to the file system (Node/Bun) or indexedDB (Browser).

Unlike previous "Postgres in the browser" projects, PGlite does not use a Linux virtual machine - it is simply Postgres in WASM.

It is being developed at [ElectricSQL](http://electric-sql.com) in collaboration with [Neon](http://neon.tech). We will continue to build on this experiment with the aim of creating a fully capable lightweight WASM Postgres with support for extensions such as pgvector.

## Whats new in V0.1

Version 0.1 (up from 0.0.2) includes significant changes to the Postgres build - it's about 1/3 smaller at 2.6mb gzipped, and up to 2-3 times faster. We have also found a way to statically compile Postgres extensions into the build - the first of these is pl/pgsql with more coming soon.

Key changes in this release are:

- Support for [parameterised queries](#querytquery-string-params-any-options-queryoptions-promiseresultst) #39

- An interactive [transaction API](#transactiontcallback-tx-transaction--promiset) #39

- pl/pgsql support #48

- Additional [query options](#queryoptions) #51

- Run PGlite in a [Web Workers](#web-workers) #49

- Fix for running on Windows #54

- Fix for missing `pg_catalog` and `information_schema` tables and view #41

We have also [published some benchmarks](https://github.com/electric-sql/pglite/blob/main/packages/benchmark/README.md) in comparison to a WASM SQLite build, and both native Postgres and SQLite. While PGlite is currently a little slower than WASM SQLite we have plans for further optimisations, including OPFS support and removing some the the Emscripten options that can add overhead.

## Browser

It can be installed and imported using your usual package manager:

```js

import { PGlite } from "@electric-sql/pglite";

```

or using a CDN such as JSDeliver:

```js

import { PGlite } from "https://cdn.jsdelivr.net/npm/@electric-sql/pglite/dist/index.js";

```

Then for an in-memory Postgres:

```js

const db = new PGlite()

await db.query("select 'Hello world' as message;")

// -> { rows: [ { message: "Hello world" } ] }

```

or to persist the database to indexedDB:

```js

const db = new PGlite("idb://my-pgdata");

```

## Node/Bun

Install into your project:

```bash

npm install @electric-sql/pglite

```

To use the in-memory Postgres:

```javascript

import { PGlite } from "@electric-sql/pglite";

const db = new PGlite();

await db.query("select 'Hello world' as message;");

// -> { rows: [ { message: "Hello world" } ] }

```

or to persist to the filesystem:

```javascript

const db = new PGlite("./path/to/pgdata");

```

## Deno

To use the in-memory Postgres, create a file `server.ts`:

```typescript

import { PGlite } from "npm:@electric-sql/pglite";

Deno.serve(async (_request: Request) => {

const db = new PGlite();

const query = await db.query("select 'Hello world' as message;");

return new Response(JSON.stringify(query));

});

```

Then run the file with `deno run --allow-net --allow-read server.ts`.

## API Reference

### Main Constructor:

#### `new PGlite(dataDir: string, options: PGliteOptions)`

A new pglite instance is created using the `new PGlite()` constructor.

##### `dataDir`

Path to the directory to store the Postgres database. You can provide a url scheme for various storage backends: