full_name

stringlengths 7

70

| description

stringlengths 4

590

⌀ | created_at

stringlengths 20

20

| last_commit

float64 | readme

stringlengths 14

559k

⌀ | label

int64 0

1

|

|---|---|---|---|---|---|

totond/TextPathView | A View with text path animation! | 2018-01-10T10:36:47Z | null | # TextPathView

<figure class="half">

<img src="https://github.com/totond/MyTUKU/blob/master/textdemo1.gif?raw=true">

<img src="https://github.com/totond/MyTUKU/blob/master/text1.gif?raw=true">

</figure>

> [Go to the English README](https://github.com/totond/TextPathView/blob/master/README-en.md)

## 介绍

TextPathView是一个把文字转化为路径动画然后展现出来的自定义控件。效果如上图。

> 这里有[原理解析!](https://juejin.im/post/5a9677b16fb9a063375765ad)

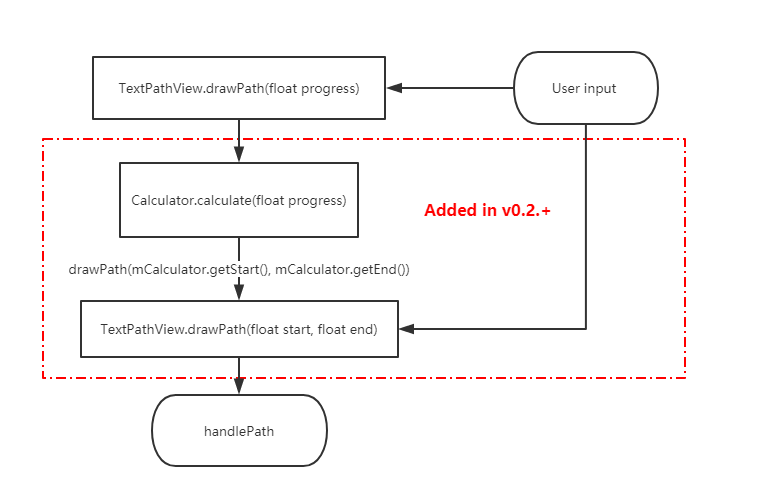

### v0.2.+重要更新

- 现在不但可以控制文字路径结束位置end,还可以控制开始位置start,如上图二

- 可以通过PathCalculator的子类来控制实现一些字路径变化,如下面的MidCalculator、AroundCalculator、BlinkCalculator

- 可以通知直接设置FillColor属性来控制结束时是否填充颜色

## 使用

主要的使用流程就是输入文字,然后设置一些动画的属性,还有画笔特效,最后启动就行了。想要自己控制绘画的进度也可以,详情见下面。

### Gradle

```

compile 'com.yanzhikai:TextPathView:0.2.1'

```

> minSdkVersion 16

> 如果遇到播放完后消失的问题,请关闭硬件加速,可能是硬件加速对`drawPath()`方法不支持

### 使用方法

#### TextPathView

TextPathView分为两种,一种是每个笔画按顺序刻画的SyncTextPathView,一种是每个笔画同时刻画的AsyncTextPathView,使用方法都是一样,在xml里面配置属性,然后直接在java里面调用startAnimation()方法就行了,具体的可以看例子和demo。下面是一个简单的例子:

xml里面:

```

<yanzhikai.textpath.SyncTextPathView

android:id="@+id/stpv_2017"

android:layout_width="match_parent"

android:layout_height="wrap_content"

app:duration="12000"

app:showPainter="true"

app:text="2017"

app:textInCenter="true"

app:textSize="60sp"

android:layout_weight="1"

/>

<yanzhikai.textpath.AsyncTextPathView

android:id="@+id/atpv_1"

android:layout_width="wrap_content"

android:layout_height="wrap_content"

app:duration="12000"

app:showPainter="true"

app:text="炎之铠"

app:textStrokeColor="@android:color/holo_orange_light"

app:textInCenter="true"

app:textSize="62sp"

android:layout_gravity="center_horizontal"

/>

```

java里面使用:

```

atpv1 = findViewById(R.id.atpv_1);

stpv_2017 = findViewById(R.id.stpv_2017);

//从无到显示

atpv1.startAnimation(0,1);

//从显示到消失

stpv_2017.startAnimation(1,0);

```

还可以通过控制进度,来控制TextPathView显示,这里用SeekBar:

```

sb_progress.setOnSeekBarChangeListener(new SeekBar.OnSeekBarChangeListener() {

@Override

public void onProgressChanged(SeekBar seekBar, int progress, boolean fromUser) {

atpv1.drawPath(progress / 1000f);

stpv_2017.drawPath(progress / 1000f);

}

}

```

#### PathView

PathView是0.1.1版本之后新增的,拥有三个子类TextPathView、SyncPathView和AsyncPathView,前者上面有介绍是文字的路径,后面这两个就是图形的路径,必须要输入一个Path类,才能正常运行:

```

public class TestPath extends Path {

public TestPath(){

init();

}

private void init() {

addCircle(350,300,150,Direction.CCW);

addCircle(350,300,100,Direction.CW);

addCircle(350,300,50,Direction.CCW);

moveTo(350,300);

lineTo(550,500);

}

}

```

```

//必须先调用setPath设置路径

aspv.setPath(new TestPath());

aspv.startAnimation(0,1);

```

(录屏可能有些问题,实际上是没有背景色的)上面就是SyncPathView和AsyncPathView效果,区别和文字路径是一样的。

### 属性

|**属性名称**|**意义**|**类型**|**默认值**|

|--|--|:--:|:--:|

|textSize | 文字的大小size | integer| 108 |

|text | 文字的具体内容 | String| Test|

|autoStart| 是否加载完后自动启动动画 | boolean| false|

|showInStart| 是否一开始就把文字全部显示 | boolean| false|

|textInCenter| 是否让文字内容处于控件中心 | boolean| false|

|duration | 动画的持续时间,单位ms | integer| 10000|

|showPainter | 在动画执行的时候是否执行画笔特效 | boolean| false|

|showPainterActually| 在所有时候是否展示画笔特效| boolean| false|

|~~textStrokeWidth~~ strokeWidth | 路径刻画的线条粗细 | dimension| 5px|

|~~textStrokeColor~~ pathStrokeColor| 路径刻画的线条颜色 | color| Color.black|

|paintStrokeWidth | 画笔特效刻画的线条粗细 | dimension| 3px|

|paintStrokeColor | 画笔特效刻画的线条颜色 | color| Color.black|

|repeat| 是否重复播放动画,重复类型| enum | NONE|

|fillColor| 文字动画结束时是否填充颜色 | boolean | false |

|**repeat属性值**|**意义**|

|--|--|

|NONE|不重复播放|

|RESTART|动画从头重复播放|

|REVERSE|动画从尾重复播放|

> PS:showPainterActually属性,由于动画绘画完毕应该将画笔特效消失,所以每次执行完动画都会自动设置为false。因此最好用于使用非自带动画的时候。

### 方法

#### 画笔特效

```

//设置画笔特效

public void setPainter(SyncPathPainter painter);

//设置画笔特效

public void setPainter(SyncPathPainter painter);

```

因为绘画的原理不一样,画笔特效也分两种:

```

public interface SyncPathPainter extends PathPainter {

//开始动画的时候执行

void onStartAnimation();

/**

* 绘画画笔特效时候执行

* @param x 当前绘画点x坐标

* @param y 当前绘画点y坐标

* @param paintPath 画笔Path对象,在这里画出想要的画笔特效

*/

@Override

void onDrawPaintPath(float x, float y, Path paintPath);

}

public interface AsyncPathPainter extends PathPainter {

/**

* 绘画画笔特效时候执行

* @param x 当前绘画点x坐标

* @param y 当前绘画点y坐标

* @param paintPath 画笔Path对象,在这里画出想要的画笔特效

*/

@Override

void onDrawPaintPath(float x, float y, Path paintPath);

}

```

看名字就知道是对应哪一个了,想要自定义画笔特效的话就可以实现上面之中的一个或者两个接口来自己画啦。

另外,还有里面已经自带了3种画笔特效,可供参考和使用(关于这些画笔特效的实现,可以参考[原理解析](http://blog.csdn.net/totond/article/details/79375200)):

```

//箭头画笔特效,根据传入的当前点与上一个点之间的速度方向,来调整箭头方向

public class ArrowPainter implements SyncPathPainter {

//一支笔的画笔特效,就是在绘画点旁边画多一支笔

public class PenPainter implements SyncPathPainter,AsyncPathPainter {

//火花特效,根据箭头引申变化而来,根据当前点与上一个点算出的速度方向来控制火花的方向

public class FireworksPainter implements SyncPathPainter {

```

由上面可见,因为烟花和箭头画笔特效都需要记录上一个点的位置,所以只适合按顺序绘画的SyncTextPathView,而PenPainter就适合两种TextPathView。仔细看它的代码的话,会发现画起来都是很简单的哦。

#### 自定义画笔特效

自定义画笔特效也是非常简单的,原理就是在当前绘画点上加上一个附加的Path,实现SyncPathPainter和AsyncPathPainter之中的一个或者两个接口,重写里面的`onDrawPaintPath(float x, float y, Path paintPath)`方法就行了,如下面这个:

```

atpv2.setPathPainter(new AsyncPathPainter() {

@Override

public void onDrawPaintPath(float x, float y, Path paintPath) {

paintPath.addCircle(x,y,6, Path.Direction.CCW);

}

});

```

#### 动画监听

```

//设置自定义动画监听

public void setAnimatorListener(PathAnimatorListener animatorListener);

```

PathAnimatorListener是实现了AnimatorListener接口的类,继承它的时候注意不要删掉super父类方法,因为里面可能有一些操作。

#### 画笔获取

```

//获取绘画文字的画笔

public Paint getDrawPaint() {

return mDrawPaint;

}

//获取绘画画笔特效的画笔

public Paint getPaint() {

return mPaint;

}

```

#### 控制绘画

```

/**

* 绘画文字路径的方法

*

* @param start 路径开始点百分比

* @param end 路径结束点百分比

*/

public abstract void drawPath(float start, float end);

/**

* 开始绘制路径动画

* @param start 路径比例,范围0-1

* @param end 路径比例,范围0-1

*/

public void startAnimation(float start, float end);

/**

* 绘画路径的方法

* @param progress 绘画进度,0-1

*/

public void drawPath(float progress);

/**

* Stop animation

*/

public void stopAnimation();

/**

* Pause animation

*/

@RequiresApi(api = Build.VERSION_CODES.KITKAT)

public void pauseAnimation();

/**

* Resume animation

*/

@RequiresApi(api = Build.VERSION_CODES.KITKAT)

public void resumeAnimation();

```

#### 填充颜色

```

//直接显示填充好颜色了的全部文字

public void showFillColorText();

//设置动画播放完后是否填充颜色

public void setFillColor(boolean fillColor)

```

由于正在绘画的时候文字路径不是封闭的,填充颜色会变得很混乱,所以这里给出`showFillColorText()`来设置直接显示填充好颜色了的全部文字,一般可以在动画结束后文字完全显示后过渡填充

#### 取值计算器

0.2.+版本开始,加入了取值计算器PathCalculator,可以通过`setCalculator(PathCalculator calculator)`方法设置。PathCalculator可以控制路径的起点start和终点end属性在不同progress对应的取值。TextPathView自带一些PathCalculator子类:

- **MidCalculator**

start和end从0.5开始往两边扩展:

- **AroundCalculator**

start会跟着end增长,end增长到0.75后start会反向增长

- **BlinkCalculator**

start一直为0,end自然增长,但是每增加几次会有一次end=1,造成闪烁

- **自定义PathCalculator:**用户可以通过继承抽象类PathCalculator,通过里面的`setStart(float start)`和`setEnd(float end)`,具体可以参考上面几个自带的PathCalculator实现代码。

#### 其他

```

//设置文字内容

public void setText(String text);

//设置路径,必须先设置好路径在startAnimation(),不然会报错!

public void setPath(Path path) ;

//设置字体样式

public void setTypeface(Typeface typeface);

//清除画面

public void clear();

//设置动画时能否显示画笔效果

public void setShowPainter(boolean showPainter);

//设置所有时候是否显示画笔效果,由于动画绘画完毕应该将画笔特效消失,所以每次执行完动画都会自动设置为false

public void setCanShowPainter(boolean canShowPainter);

//设置动画持续时间

public void setDuration(int duration);

//设置重复方式

public void setRepeatStyle(int repeatStyle);

//设置Path开始结束取值的计算器

public void setCalculator(PathCalculator calculator)

```

## 更新

- 2018/03/08 **version 0.0.5**:

- 增加了`showFillColorText()`方法来设置直接显示填充好颜色了的全部文字。

- 把PathAnimatorListener从TextPathView的内部类里面解放出来,之前使用太麻烦了。

- 增加`showPainterActually`属性,设置所有时候是否显示画笔效果,由于动画绘画完毕应该将画笔特效消失,所以每次执行完动画都会自动将它设置为false。因此它用处就是在不使用自带Animator的时候显示画笔特效。

- 2018/03/08 **version 0.0.6**:

- 增加了`stop(), pause(), resume()`方法来控制动画。之前是觉得让使用者自己用Animator实现就好了,现在一位外国友人[toanvc](https://github.com/toanvc)提交的PR封装好了,我稍作修改,不过后两者使用时API要大于等于19。

- 增加了`repeat`属性,让动画支持重复播放,也是[toanvc](https://github.com/toanvc)同学的PR。

- 2018/03/18 **version 0.1.0**:

- 重构代码,加入路径动画SyncPathView和AsyncPathView,把总父类抽象为PathView

- 增加`setDuration()`、`setRepeatStyle()`

- 修改一系列名字如下:

|Old Name|New Name|

|---|---|

|TextPathPainter|PathPainter|

|SyncTextPainter|SyncPathPainter|

|AsyncTextPainter|AsyncPathPainter|

|TextAnimatorListener|PathAnimatorListener|

- 2018/03/21 **version 0.1.2**:

- 修复高度warp_content时候内容有可能显示不全

- 原来PathMeasure获取文字Path时候,最后会有大概一个像素的缺失,现在只能在onDraw判断progress是否为1来显示完全路径(但是这样可能会导致硬件加速上显示不出来,需要手动关闭这个View的硬件加速)

- 增加字体设置

- 支持自动换行

- 2018/09/09 **version 0.1.3**:

- 默认关闭此控件的硬件加速

- 加入内存泄漏控制

- 准备后续优化

- 2019/04/04 **version 0.2.1**:

- 现在不但可以控制文字路径结束位置end,还可以控制开始位置start

- 可以通过PathCalculator的子类来控制实现一些字路径变化,如上面的MidCalculator、AroundCalculator、BlinkCalculator

- 可以通知直接设置FillColor属性来控制结束时是否填充颜色

- 硬件加速问题解决,默认打开

- 去除无用log和报错

#### 后续将会往下面的方向努力:

- 更多的特效,更多的动画,如果有什么想法和建议的欢迎issue提出来一起探讨,还可以提交PR出一份力。

- 更好的性能,目前单个TextPathView在模拟器上运行动画时是不卡的,多个就有一点点卡顿了,在性能较好的真机多个也是没问题的,这个性能方面目前还没头绪。

- 文字换行符支持。

- Path的宽高测量(包含空白,从坐标(0,0)开始)

## 贡献代码

如果想为TextPathView的完善出一份力的同学,欢迎提交PR:

- 首先请创建一个分支branch。

- 如果加入新的功能或者效果,请不要覆盖demo里面原来用于演示Activity代码,如FristActivity里面的实例,可以选择新增一个Activity做演示测试,或者不添加演示代码。

- 如果修改某些功能或者代码,请附上合理的依据和想法。

- 翻译成English版README(暂时没空更新英文版)

## 开源协议

TextPathView遵循MIT协议。

## 关于作者

> id:炎之铠

> 炎之铠的邮箱:yanzhikai_yjk@qq.com

> CSDN:http://blog.csdn.net/totond

| 0 |

square/mortar | A simple library that makes it easy to pair thin views with dedicated controllers, isolated from most of the vagaries of the Activity life cycle. | 2013-11-09T00:01:50Z | null | # Mortar

## Deprecated

Mortar had a good run and served us well, but new use is strongly discouraged. The app suite at Square that drove its creation is in the process of replacing Mortar with [Square Workflow](https://square.github.io/workflow/).

## What's a Mortar?

Mortar provides a simplified, composable overlay for the Android lifecycle,

to aid in the use of [Views as the modular unit of Android applications][rant].

It leverages [Context#getSystemService][services] to act as an a la carte supplier

of services like dependency injection, bundle persistence, and whatever else

your app needs to provide itself.

One of the most useful services Mortar can provide is its [BundleService][bundle-service],

which gives any View (or any object with access to the Activity context) safe access to

the Activity lifecycle's persistence bundle. For fans of the [Model View Presenter][mvp]

pattern, we provide a persisted [Presenter][presenter] class that builds on BundleService.

Presenters are completely isolated from View concerns. They're particularly good at

surviving configuration changes, weathering the storm as Android destroys your portrait

Activity and Views and replaces them with landscape doppelgangers.

Mortar can similarly make [Dagger][dagger] ObjectGraphs (or [Dagger2][dagger2]

Components) visible as system services. Or not — these services are

completely decoupled.

Everything is managed by [MortarScope][scope] singletons, typically

backing the top level Application and Activity contexts. You can also spawn

your own shorter lived scopes to manage transient sessions, like the state of

an object being built by a set of wizard screens.

<!--

This example is a little bit confusing. Maybe explain why you would want to have an extended graph for a wizard, then explain how Mortar shadows the parent graph with that extended graph.

-->

These nested scopes can shadow the services provided by higher level scopes.

For example, a [Dagger extension graph][ogplus] specific to your wizard session

can cover the one normally available, transparently to the wizard Views.

Calls like `ObjectGraphService.inject(getContext(), this)` are now possible

without considering which graph will do the injection.

## The Big Picture

An application will typically have a singleton MortarScope instance.

Its job is to serve as a delegate to the app's `getSystemService` method, something like:

```java

public class MyApplication extends Application {

private MortarScope rootScope;

@Override public Object getSystemService(String name) {

if (rootScope == null) rootScope = MortarScope.buildRootScope().build(getScopeName());

return rootScope.hasService(name) ? rootScope.getService(name) : super.getSystemService(name);

}

}

```

This exposes a single, core service, the scope itself. From the scope you can

spawn child scopes, and you can register objects that implement the

[Scoped](https://github.com/square/mortar/blob/master/mortar/src/main/java/mortar/Scoped.java#L18)

interface with it for setup and tear-down calls.

* `Scoped#onEnterScope(MortarScope)`

* `Scoped#onExitScope(MortarScope)`

To make a scope provide other services, like a [Dagger ObjectGraph][og],

you register them while building the scope. That would make our Application's

`getSystemService` method look like this:

```java

@Override public Object getSystemService(String name) {

if (rootScope == null) {

rootScope = MortarScope.buildRootScope()

.with(ObjectGraphService.SERVICE_NAME, ObjectGraph.create(new RootModule()))

.build(getScopeName());

}

return rootScope.hasService(name) ? rootScope.getService(name) : super.getSystemService(name);

}

```

Now any part of our app that has access to a `Context` can inject itself:

```java

public class MyView extends LinearLayout {

@Inject SomeService service;

public MyView(Context context, AttributeSet attrs) {

super(context, attrs);

ObjectGraphService.inject(context, this);

}

}

```

To take advantage of the BundleService describe above, you'll put similar code

into your Activity. If it doesn't exist already, you'll

build a sub-scope to back the Activity's `getSystemService` method, and

while building it set up the `BundleServiceRunner`. You'll also notify

the BundleServiceRunner each time `onCreate` and `onSaveInstanceState` are

called, to make the persistence bundle available to the rest of the app.

```java

public class MyActivity extends Activity {

private MortarScope activityScope;

@Override public Object getSystemService(String name) {

MortarScope activityScope = MortarScope.findChild(getApplicationContext(), getScopeName());

if (activityScope == null) {

activityScope = MortarScope.buildChild(getApplicationContext()) //

.withService(BundleServiceRunner.SERVICE_NAME, new BundleServiceRunner())

.withService(HelloPresenter.class.getName(), new HelloPresenter())

.build(getScopeName());

}

return activityScope.hasService(name) ? activityScope.getService(name)

: super.getSystemService(name);

}

@Override protected void onCreate(Bundle savedInstanceState) {

super.onCreate(savedInstanceState);

BundleServiceRunner.getBundleServiceRunner(this).onCreate(savedInstanceState);

setContentView(R.layout.main_view);

}

@Override protected void onSaveInstanceState(Bundle outState) {

super.onSaveInstanceState(outState);

BundleServiceRunner.getBundleServiceRunner(this).onSaveInstanceState(outState);

}

}

```

With that in place, any object in your app can sign up with the `BundleService`

to save and restore its state. This is nice for views, since Bundles are less

of a hassle than the `Parcelable` objects required by `View#onSaveInstanceState`,

and a boon to any business objects in the rest of your app.

Download

--------

Download [the latest JAR][jar] or grab via Maven:

```xml

<dependency>

<groupId>com.squareup.mortar</groupId>

<artifactId>mortar</artifactId>

<version>(insert latest version)</version>

</dependency>

```

Gradle:

```groovy

compile 'com.squareup.mortar:mortar:(latest version)'

```

## Full Disclosure

This stuff has been in "rapid" development over a pretty long gestation period,

but is finally stabilizing. We don't expect drastic changes before cutting a

1.0 release, but we still cannot promise a stable API from release to release.

Mortar is a key component of multiple Square apps, including our flagship

[Square Register][register] app.

License

--------

Copyright 2013 Square, Inc.

Licensed under the Apache License, Version 2.0 (the "License");

you may not use this file except in compliance with the License.

You may obtain a copy of the License at

http://www.apache.org/licenses/LICENSE-2.0

Unless required by applicable law or agreed to in writing, software

distributed under the License is distributed on an "AS IS" BASIS,

WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

See the License for the specific language governing permissions and

limitations under the License.

[bundle-service]: https://github.com/square/mortar/blob/master/mortar/src/main/java/mortar/bundler/BundleService.java

[mvp]: http://en.wikipedia.org/wiki/Model%E2%80%93view%E2%80%93presenter

[dagger]: http://square.github.io/dagger/

[dagger2]: http://google.github.io/dagger/

[jar]: http://repository.sonatype.org/service/local/artifact/maven/redirect?r=central-proxy&g=com.squareup.mortar&a=mortar&v=LATEST

[og]: https://square.github.io/dagger/1.x/dagger/dagger/ObjectGraph.html

[ogplus]: https://github.com/square/dagger/blob/dagger-parent-1.1.0/core/src/main/java/dagger/ObjectGraph.java#L96

[presenter]: https://github.com/square/mortar/blob/master/mortar/src/main/java/mortar/Presenter.java

[rant]: http://corner.squareup.com/2014/10/advocating-against-android-fragments.html

[register]: https://play.google.com/store/apps/details?id=com.squareup

[scope]: https://github.com/square/mortar/blob/master/mortar/src/main/java/mortar/MortarScope.java

[services]: http://developer.android.com/reference/android/content/Context.html#getSystemService(java.lang.String)

| 0 |

joyoyao/superCleanMaster | [DEPRECATED] | 2015-02-12T03:37:41Z | null | # superCleanMaster

superCleanMaster is deprecated Thanks for all your support!

| 0 |

frogermcs/GithubClient | Example of Github API client implemented on top of Dagger 2 DI framework. | 2015-05-27T16:43:03Z | null | # GithubClient

Example of Github API client implemented on top of Dagger 2 DI framework.

This code was created as an example for Dependency Injection with Dagger 2 series on my dev-blog:

- [Introdution to Dependency Injection](http://frogermcs.github.io/dependency-injection-with-dagger-2-introdution-to-di/)

- [Dagger 2 API](http://frogermcs.github.io/dependency-injection-with-dagger-2-the-api/)

- [Dagger 2 - custom scopes](http://frogermcs.github.io/dependency-injection-with-dagger-2-custom-scopes/)

- [Dagger 2 - graph creation performance](http://frogermcs.github.io/dagger-graph-creation-performance/)

- [Dependency injection with Dagger 2 - Producers](http://frogermcs.github.io/dependency-injection-with-dagger-2-producers/)

- [Inject everything - ViewHolder and Dagger 2 (with Multibinding and AutoFactory example)](http://frogermcs.github.io/inject-everything-viewholder-and-dagger-2-example/)

This code was originally prepared for my presentation at Google I/O Extended 2015 in Tech Space Cracow. http://www.meetup.com/GDG-Krakow/events/221822600/

| 1 |

patric-r/jvmtop | Java monitoring for the command-line, profiler included | 2015-07-14T12:58:49Z | null | <b>jvmtop</b> is a lightweight console application to monitor all accessible, running jvms on a machine.<br>

In a top-like manner, it displays <a href='https://github.com/patric-r/jvmtop/blob/master/doc/ExampleOutput.md'>JVM internal metrics</a> (e.g. memory information) of running java processes.<br>

<br>

Jvmtop does also include a <a href='https://github.com/patric-r/jvmtop/blob/master/doc/ConsoleProfiler.md'>CPU console profiler</a>.<br>

<br>

It's tested with different releases of Oracle JDK, IBM JDK and OpenJDK on Linux, Solaris, FreeBSD and Windows hosts.<br>

Jvmtop requires a JDK - a JRE will not suffice.<br>

<br>

Please note that it's currently in an alpha state -<br>

if you experience an issue or need further help, please <a href='https://github.com/patric-r/jvmtop/issues'>let us know</a>.<br>

<br>

Jvmtop is open-source. Checkout the <a href='https://github.com/patric-r/jvmtop'>source code</a>. Patches are very welcome!<br>

<br>

Also have a look at the <a href='https://github.com/patric-r/jvmtop/blob/master/doc/Documentation.md'>documentation</a> or at a <a href='https://github.com/patric-r/jvmtop/blob/master/doc/ExampleOutput.md'>captured live-example</a>.<br>

```

JvmTop 0.8.0 alpha amd64 8 cpus, Linux 2.6.32-27, load avg 0.12

https://github.com/patric-r/jvmtop

PID MAIN-CLASS HPCUR HPMAX NHCUR NHMAX CPU GC VM USERNAME #T DL

3370 rapperSimpleApp 165m 455m 109m 176m 0.12% 0.00% S6U37 web 21

11272 ver.resin.Resin [ERROR: Could not attach to VM]

27338 WatchdogManager 11m 28m 23m 130m 0.00% 0.00% S6U37 web 31

19187 m.jvmtop.JvmTop 20m 3544m 13m 130m 0.93% 0.47% S6U37 web 20

16733 artup.Bootstrap 159m 455m 166m 304m 0.12% 0.00% S6U37 web 46

```

<hr />

<h3>Installation</h3>

Click on the <a href="https://github.com/patric-r/jvmtop/releases"> releases tab</a>, download the

most recent tar.gz archive. Extract it, ensure that the `JAVA_HOME` environment variable points to a valid JDK and run `./jvmtop.sh`.<br><br>

Further information can be found in the [INSTALL file](https://github.com/patric-r/jvmtop/blob/master/INSTALL)

<h3>08/14/2013 jvmtop 0.8.0 released</h3>

<b>Changes:</b>

<ul><li>improved attach compatibility for all IBM jvms<br>

</li><li>fixed wrong CPU/GC values for IBM J9 jvms<br>

</li><li>in case of unsupported heap size metric retrieval, n/a will be displayed instead of 0m<br>

</li><li>improved argument parsing, support for short-options, added help (pass <code>--help</code>), see <a href='https://github.com/patric-r/jvmtop/issues/28'>issue #28</a> (now using the great <a href='http://pholser.github.io/jopt-simple'>jopt-simple</a> library)<br>

</li><li>when passing the <code>--once</code> option, terminal will not be cleared anymore (see <a href='https://github.com/patric-r/jvmtop/issues/27'>issue #27</a>)<br>

</li><li>improved shell script for guessing the path if a <code>JAVA_HOME</code> environment variable is not present (thanks to <a href='https://groups.google.com/forum/#!topic/jvmtop-discuss/KGg_WpL_yAU'>Markus Kolb</a>)</li></ul>

<a href='https://github.com/patric-r/jvmtop/blob/master/doc/Changelog.md'>Full changelog</a>

<hr />

In <a href='https://github.com/patric-r/jvmtop/blob/master/doc/ExampleOutput.md'>VM detail mode</a> it shows you the top CPU-consuming threads, beside detailed metrics:<br>

<br>

<br>

```

JvmTop 0.8.0 alpha amd64, 4 cpus, Linux 2.6.18-34

https://github.com/patric-r/jvmtop

PID 3539: org.apache.catalina.startup.Bootstrap

ARGS: start

VMARGS: -Djava.util.logging.config.file=/home/webserver/apache-tomcat-5.5[...]

VM: Sun Microsystems Inc. Java HotSpot(TM) 64-Bit Server VM 1.6.0_25

UP: 869:33m #THR: 106 #THRPEAK: 143 #THRCREATED: 128020 USER: webserver

CPU: 4.55% GC: 3.25% HEAP: 137m / 227m NONHEAP: 75m / 304m

TID NAME STATE CPU TOTALCPU BLOCKEDBY

25 http-8080-Processor13 RUNNABLE 4.55% 1.60%

128022 RMI TCP Connection(18)-10.101. RUNNABLE 1.82% 0.02%

36578 http-8080-Processor164 RUNNABLE 0.91% 2.35%

36453 http-8080-Processor94 RUNNABLE 0.91% 1.52%

27 http-8080-Processor15 RUNNABLE 0.91% 1.81%

14 http-8080-Processor2 RUNNABLE 0.91% 3.17%

128026 JMX server connection timeout TIMED_WAITING 0.00% 0.00%

```

<a href='https://github.com/patric-r/jvmtop/issues'>Pull requests / bug reports</a> are always welcome.<br>

<br>

| 0 |

Gavin-ZYX/StickyDecoration | null | 2017-05-31T07:38:49Z | null | # StickyDecoration

利用`RecyclerView.ItemDecoration`实现顶部悬浮效果

## 支持

- **LinearLayoutManager**

- **GridLayoutManager**

- **点击事件**

- **分割线**

## 添加依赖

项目要求: `minSdkVersion` >= 14.

在你的`build.gradle`中 :

```gradle

repositories {

maven { url 'https://jitpack.io' }

}

dependencies {

compile 'com.github.Gavin-ZYX:StickyDecoration:1.6.1'

}

```

**最新版本**

[](https://jitpack.io/#Gavin-ZYX/StickyDecoration)

## 使用

#### 文字悬浮——StickyDecoration

> **注意**

使用recyclerView.addItemDecoration()之前,必须先调用recyclerView.setLayoutManager();

代码:

```java

GroupListener groupListener = new GroupListener() {

@Override

public String getGroupName(int position) {

//获取分组名

return mList.get(position).getProvince();

}

};

StickyDecoration decoration = StickyDecoration.Builder

.init(groupListener)

//重置span(使用GridLayoutManager时必须调用)

//.resetSpan(mRecyclerView, (GridLayoutManager) manager)

.build();

...

mRecyclerView.setLayoutManager(manager);

//需要在setLayoutManager()之后调用addItemDecoration()

mRecyclerView.addItemDecoration(decoration);

```

效果:

**支持的方法:**

| 方法 | 功能 | 默认 |

|-|-|-|

| setGroupBackground | 背景色 | #48BDFF |

| setGroupHeight | 高度 | 120px |

| setGroupTextColor | 字体颜色 | Color.WHITE |

| setGroupTextSize | 字体大小 | 50px |

| setDivideColor | 分割线颜色 | #CCCCCC |

| setDivideHeight | 分割线高宽度 | 0 |

| setTextSideMargin | 边距(靠左时为左边距 靠右时为右边距) | 10 |

| setHeaderCount | 头部Item数量(仅LinearLayoutManager) | 0 |

| setSticky | 是否需要吸顶效果 | true |

|方法|功能|描述|

|-|-|-|

| setOnClickListener | 点击事件 | 设置点击事件,返回当前分组下第一个item的position |

| resetSpan | 重置 | 使用GridLayoutManager时必须调用 |

### 自定义View悬浮——PowerfulStickyDecoration

先创建布局`item_group`

```xml

<?xml version="1.0" encoding="utf-8"?>

<LinearLayout

xmlns:android="http://schemas.android.com/apk/res/android"

android:id="@+id/ll"

android:orientation="horizontal"

...>

<ImageView

android:id="@+id/iv"

.../>

<TextView

android:id="@+id/tv"

.../>

</LinearLayout>

```

创建`PowerfulStickyDecoration`,实现自定`View`悬浮

```java

PowerGroupListener listener = new PowerGroupListener() {

@Override

public String getGroupName(int position) {

return mList.get(position).getProvince();

}

@Override

public View getGroupView(int position) {

//获取自定定义的组View

View view = getLayoutInflater().inflate(R.layout.item_group, null, false);

((TextView) view.findViewById(R.id.tv)).setText(mList.get(position).getProvince());

return view;

}

};

PowerfulStickyDecoration decoration = PowerfulStickyDecoration.Builder

.init(listener)

//重置span(注意:使用GridLayoutManager时必须调用)

//.resetSpan(mRecyclerView, (GridLayoutManager) manager)

.build();

...

mRecyclerView.addItemDecoration(decoration);

```

效果:

**支持的方法:**

| 方法 | 功能 | 默认 |

| -- | -- | -- |

| setGroupHeight | 高度 | 120px |

| setGroupBackground | 背景色 | #48BDFF |

| setDivideColor | 分割线颜色 | #CCCCCC |

| setDivideHeight | 分割线高宽度 | 0 |

| setCacheEnable | 是否使用缓存| 使用缓存 |

| setHeaderCount | 头部Item数量(仅LinearLayoutManager) | 0 |

| setSticky | 是否需要吸顶效果 | true |

|方法|功能|描述|

|-|-|-|

| setOnClickListener | 点击事件 | 设置点击事件,返回当前分组下第一个item的position以及对应的viewId |

| resetSpan | 重置span |使用GridLayoutManager时必须调用 |

| notifyRedraw | 通知重新绘制 | 使用场景:网络图片加载后调用方法使用) |

| clearCache | 清空缓存 | 在使用缓存的情况下,数据改变时需要清理缓存 |

**Tips**

1、若使用网络图片时,在图片加载完成后需要调用

```java

decoration.notifyRedraw(mRv, view, position);

```

2、使用缓存时,若数据源改变,需要调用clearCache清除数据

3、点击事件穿透问题,参考demo中MyRecyclerView。[issue47](https://github.com/Gavin-ZYX/StickyDecoration/issues/37)

# 更新日志

----------------------------- 1.6.0 (2022-8-21)----------------------------

- fix:取消缓存无效问题

- 迁移仓库

- 迁移到Androidx

----------------------------- 1.5.3 (2020-12-15)----------------------------

- 支持是否需要吸顶效果

----------------------------- 1.5.2 (2019-9-3)----------------------------

- fix:特殊情况下,吸顶效果不佳问题

----------------------------- 1.5.1 (2019-8-8)----------------------------

- fix:setHeaderCount导致显示错乱问题

----------------------------- 1.5.0 (2019-6-17)----------------------------

- fix:GridLayoutManager刷新后数据混乱问题

----------------------------- 1.4.12 (2019-5-8)----------------------------

- fix:setDivideColor不生效问题

----------------------------- 1.4.9 (2018-10-9)----------------------------

- fix:由于添加header导致的一些问题

----------------------------- 1.4.8 (2018-08-26)----------------------------

- 顶部悬浮栏点击事件穿透问题:提供处理方案

----------------------------- 1.4.7 (2018-08-16)----------------------------

- fix:数据变化后,布局未刷新问题

----------------------------- 1.4.6 (2018-07-29)----------------------------

- 修改缓存方式

- 加入性能检测

----------------------------- 1.4.5 (2018-06-17)----------------------------

- 在GridLayoutManager中使用setHeaderCount方法导致布局错乱问题

----------------------------- 1.4.4 (2018-06-2)----------------------------

- 添加setHeaderCount方法

- 修改README

- 修复bug

----------------------------- 1.4.3 (2018-05-27)----------------------------

- 修复一些bug,更改命名

----------------------------- 1.4.2 (2018-04-2)----------------------------

- 增强点击事件,现在可以得到悬浮条内View点击事件(没有设置id时,返回View.NO_ID)

- 修复加载更多返回null崩溃或出现多余的悬浮Item问题(把加载更多放在Item中的加载方式)

----------------------------- 1.4.1 (2018-03-21)----------------------------

- 默认取消缓存,避免数据改变时显示出问题

- 添加clearCache方法用于清理缓存

----------------------------- 1.4.0 (2018-03-04)----------------------------

- 支持异步加载后的重新绘制(如网络图片加载)

- 优化缓存

- 优化GridLayoutManager的分割线

----------------------------- 1.3.1 (2018-01-30)----------------------------

- 修改测量方式

----------------------------- 1.3.0 (2018-01-28)----------------------------

- 删除isAlignLeft()方法,需要靠右时,直接在布局中处理就可以了。

- 优化缓存机制。

| 0 |

in28minutes/spring-master-class | An updated introduction to the Spring Framework 5. Become an Expert understanding the core features of Spring In Depth. You would write Unit Tests, AOP, JDBC and JPA code during the course. Includes introductions to Spring Boot, JPA, Eclipse, Maven, JUnit and Mockito. | 2017-08-07T06:56:45Z | null | # Spring Master Class - Journey from Beginner to Expert

[](https://www.udemy.com/course/spring-tutorial-for-beginners/)

Learn the magic of Spring Framework. From IOC (Inversion of Control), DI (Dependency Injection), Application Context to the world of Spring Boot, AOP, JDBC and JPA. Get set for an incredible journey.

### Introduction

Spring Framework remains as popular today as it was when I first used it 12 years back. How is this possible in the incredibly dynamic world where architectures have completely changed?

### What You will learn

- You will learn the basics of Spring Framework - Dependency Injection, IOC Container, Application Context and Bean Factory.

- You will understand how to use Spring Annotations - @Autowired, @Component, @Service, @Repository, @Configuration, @Primary....

- You will understand Spring MVC in depth - DispatcherServlet , Model, Controllers and ViewResolver

- You will use a variety of Spring Boot Starters - Spring Boot Starter Web, Starter Data Jpa, Starter Test

- You will learn the basics of Spring Boot, Spring AOP, Spring JDBC and JPA

- You will learn the basics of Eclipse, Maven, JUnit and Mockito

- You will develop a basic Web application step by step using JSP Servlets and Spring MVC

- You will learn to write unit tests with XML, Java Application Contexts and Mockito

### Requirements

- You should have working knowledge of Java and Annotations.

- We will help you install Eclipse and get up and running with Maven and Tomcat.

### Step Wise Details

Refer each section

## Installing Tools

- Installation Video : https://www.youtube.com/playlist?list=PLBBog2r6uMCSmMVTW_QmDLyASBvovyAO3

- GIT Repository For Installation : https://github.com/in28minutes/getting-started-in-5-steps

- PDF : https://github.com/in28minutes/SpringIn28Minutes/blob/master/InstallationGuide-JavaEclipseAndMaven_v2.pdf

## Running Examples

- Download the zip or clone the Git repository.

- Unzip the zip file (if you downloaded one)

- Open Command Prompt and Change directory (cd) to folder containing pom.xml

- Open Eclipse

- File -> Import -> Existing Maven Project -> Navigate to the folder where you unzipped the zip

- Select the right project

- Choose the Spring Boot Application file (search for @SpringBootApplication)

- Right Click on the file and Run as Java Application

- You are all Set

- For help : use our installation guide - https://www.youtube.com/playlist?list=PLBBog2r6uMCSmMVTW_QmDLyASBvovyAO3

### Troubleshooting

- Refer our TroubleShooting Guide - https://github.com/in28minutes/in28minutes-initiatives/tree/master/The-in28Minutes-TroubleshootingGuide-And-FAQ

## Youtube Playlists - 500+ Videos

[Click here - 30+ Playlists with 500+ Videos on Spring, Spring Boot, REST, Microservices and the Cloud](https://www.youtube.com/user/rithustutorials/playlists?view=1&sort=lad&flow=list)

## Keep Learning in28Minutes

in28Minutes is creating amazing solutions for you to learn Spring Boot, Full Stack and the Cloud - Docker, Kubernetes, AWS, React, Angular etc. - [Check out all our courses here](https://github.com/in28minutes/learn)

| 1 |

JeasonWong/Particle | It's a cool animation which can use in splash or somewhere else. | 2016-08-29T09:21:15Z | null | ## What's Particle ?

It's a cool animation which can use in splash or anywhere else.

## Demo

## Article

[手摸手教你用Canvas实现简单粒子动画](http://www.wangyuwei.me/2016/08/29/%E6%89%8B%E6%91%B8%E6%89%8B%E6%95%99%E4%BD%A0%E5%AE%9E%E7%8E%B0%E7%AE%80%E5%8D%95%E7%B2%92%E5%AD%90%E5%8A%A8%E7%94%BB/)

## Attributes

|name|format|description|中文解释

|:---:|:---:|:---:|:---:|

| pv_host_text | string |set left host text|设置左边主文案

| pv_host_text_size | dimension |set host text size|设置主文案的大小

| pv_particle_text | string |set right particle text|设置右边粒子上的文案

| pv_particle_text_size | dimension |set particle text size|设置粒子上文案的大小

| pv_text_color | color |set host text color|设置左边主文案颜色

|pv_background_color|color|set background color|设置背景颜色

| pv_text_anim_time | integer |set particle text duration|设置粒子上文案的运动时间

| pv_spread_anim_time | integer |set particle text spread duration|设置粒子上文案的伸展时间

|pv_host_text_anim_time|integer|set host text displacement duration|设置左边主文案的位移时间

## Usage

#### Define your banner under your xml :

```xml

<me.wangyuwei.particleview.ParticleView

android:layout_width="match_parent"

android:layout_height="match_parent"

pv:pv_background_color="#2E2E2E"

pv:pv_host_text="github"

pv:pv_host_text_size="14sp"

pv:pv_particle_text=".com"

pv:pv_particle_text_size="14sp"

pv:pv_text_color="#FFF"

pv:pv_text_anim_time="3000"

pv:pv_spread_anim_time="2000"

pv:pv_host_text_anim_time="3000" />

```

#### Start animation :

```java

mParticleView.startAnim();

```

#### Add animation listener to listen the end callback :

```java

mParticleView.setOnParticleAnimListener(new ParticleView.ParticleAnimListener() {

@Override

public void onAnimationEnd() {

Toast.makeText(MainActivity.this, "Animation is End", Toast.LENGTH_SHORT).show();

}

});

```

## Import

Step 1. Add it in your project's build.gradle at the end of repositories:

```gradle

repositories {

maven {

url 'https://dl.bintray.com/wangyuwei/maven'

}

}

```

Step 2. Add the dependency:

```gradle

dependencies {

compile 'me.wangyuwei:ParticleView:1.0.4'

}

```

### About Me

[Weibo](http://weibo.com/WongYuwei)

[Blog](http://www.wangyuwei.me)

### QQ Group 欢迎讨论

**479729938**

##**License**

```license

Copyright [2016] [JeasonWong of copyright owner]

Licensed under the Apache License, Version 2.0 (the "License");

you may not use this file except in compliance with the License.

You may obtain a copy of the License at

http://www.apache.org/licenses/LICENSE-2.0

Unless required by applicable law or agreed to in writing, software

distributed under the License is distributed on an "AS IS" BASIS,

WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

See the License for the specific language governing permissions and

limitations under the License.

``` | 0 |

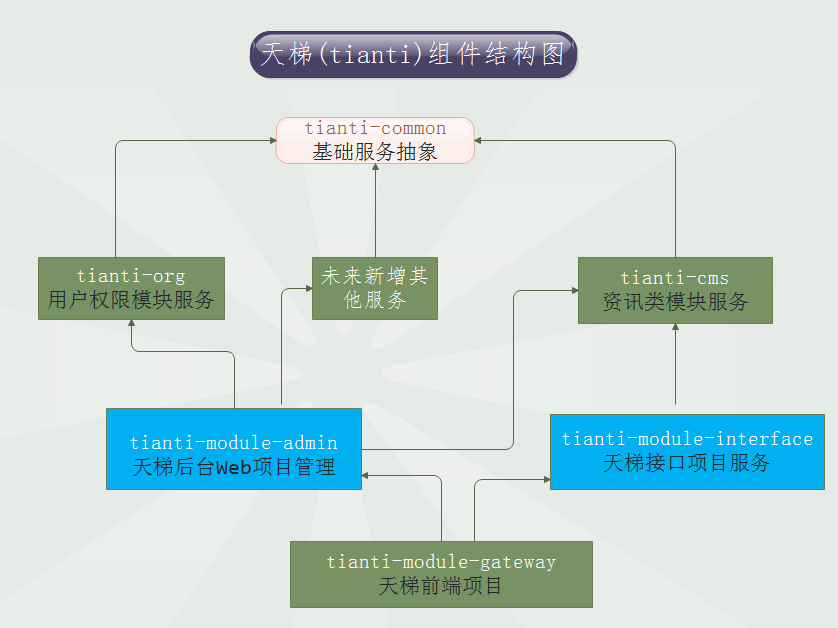

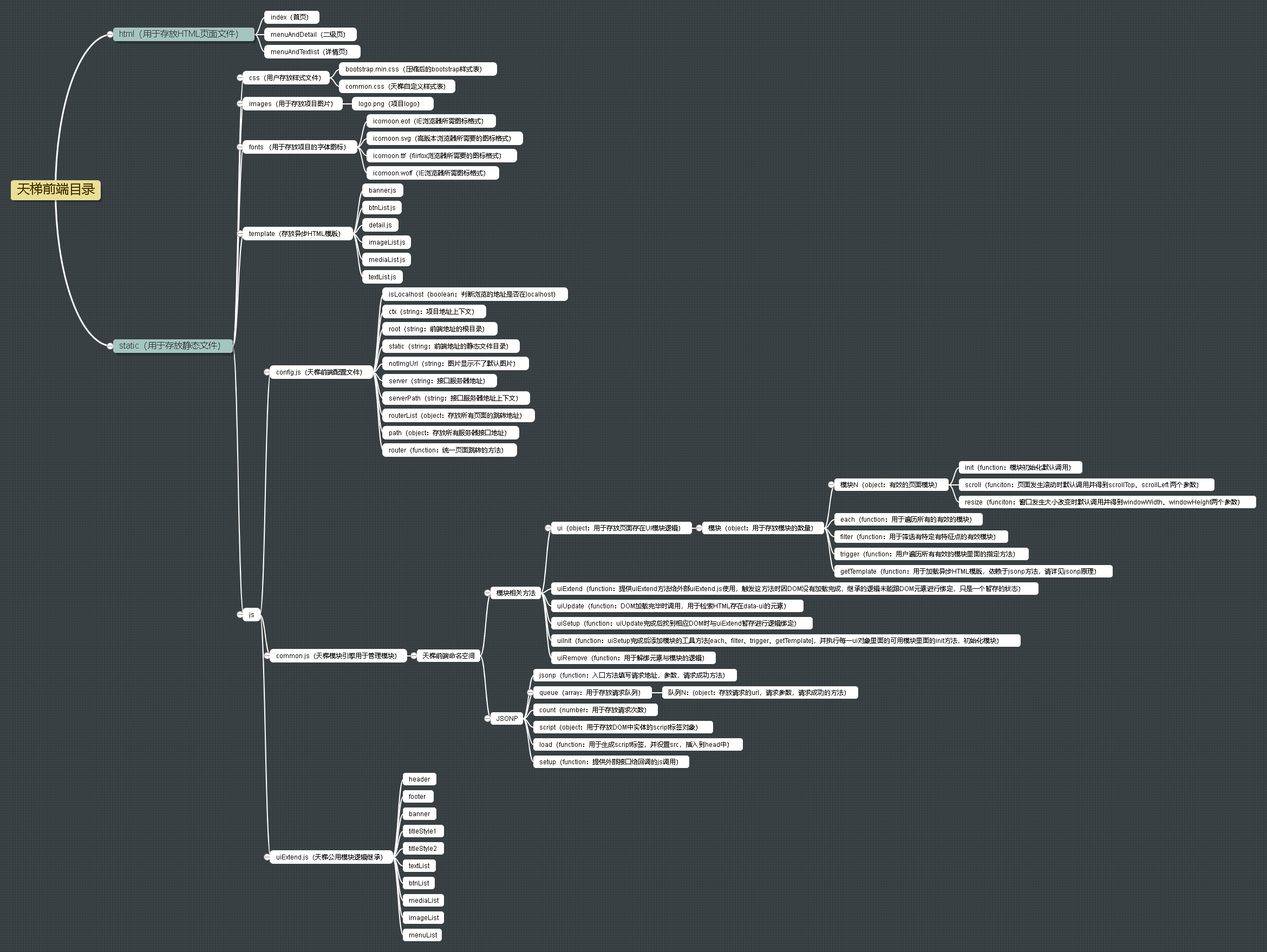

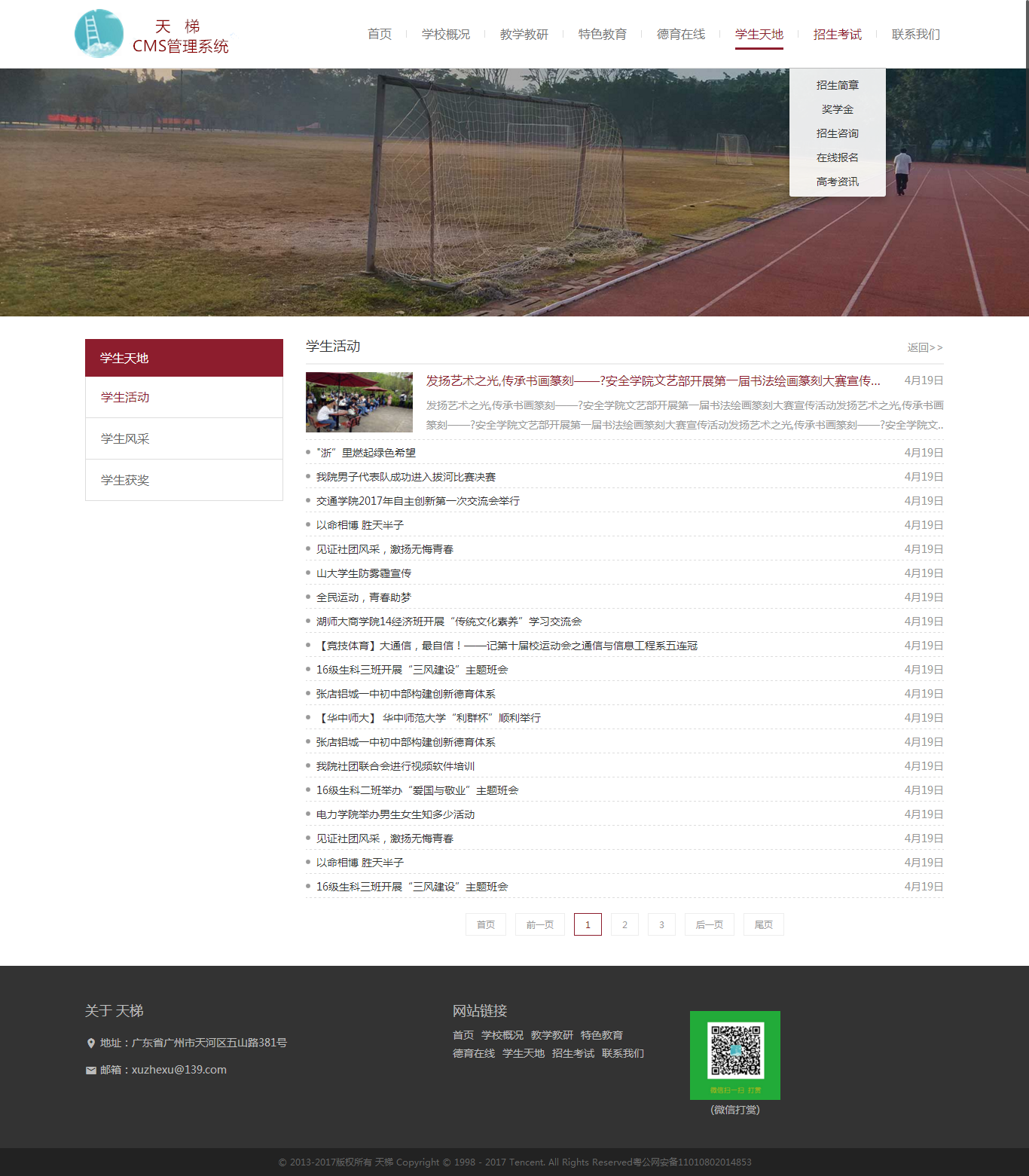

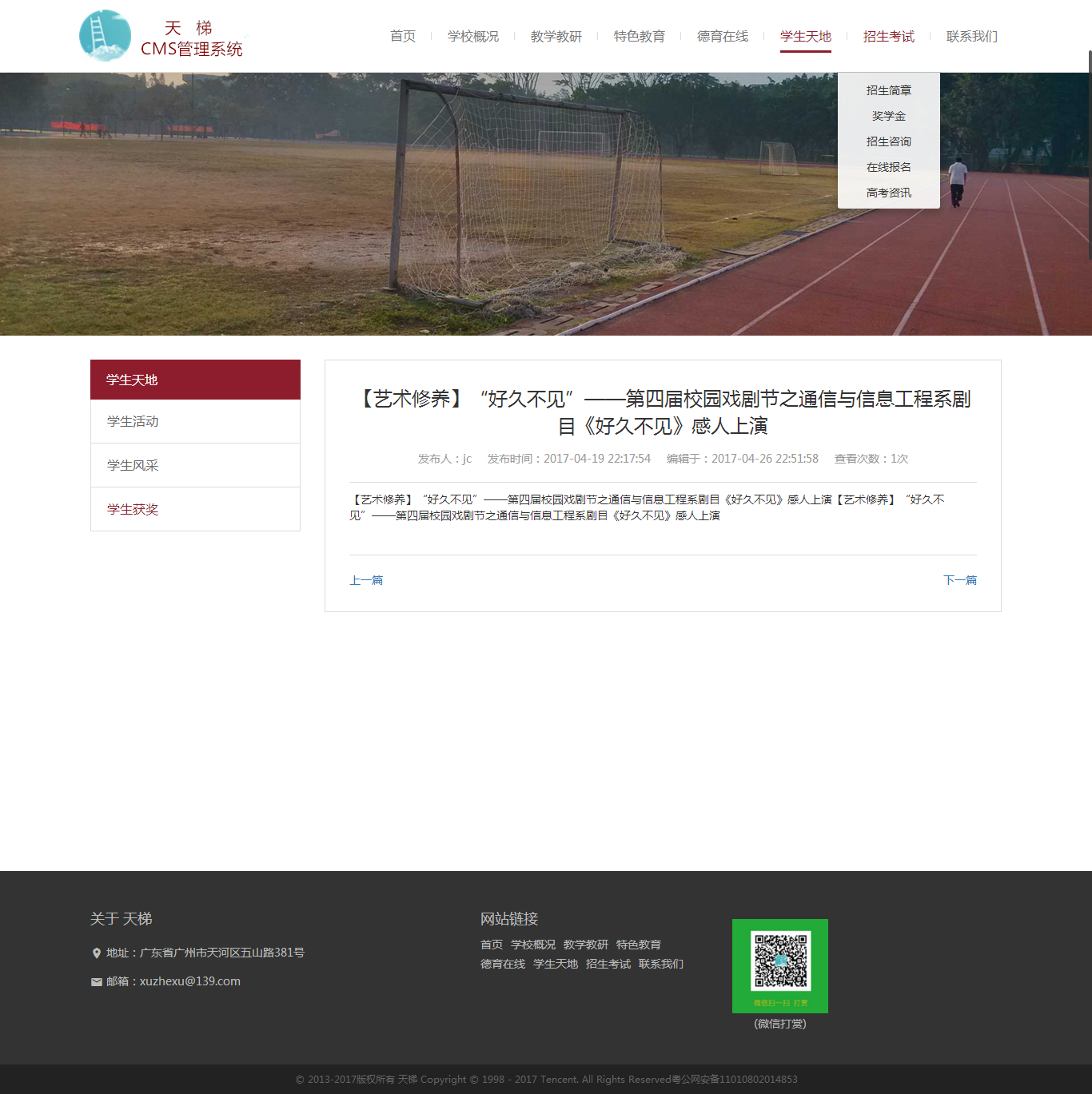

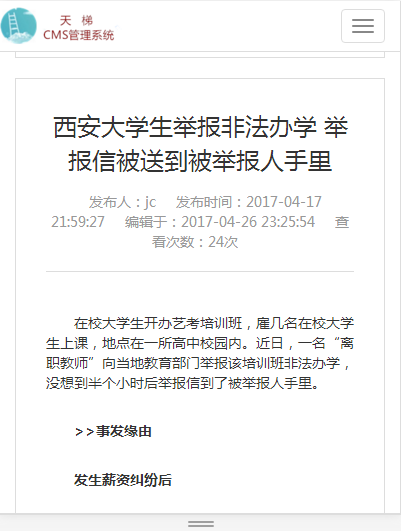

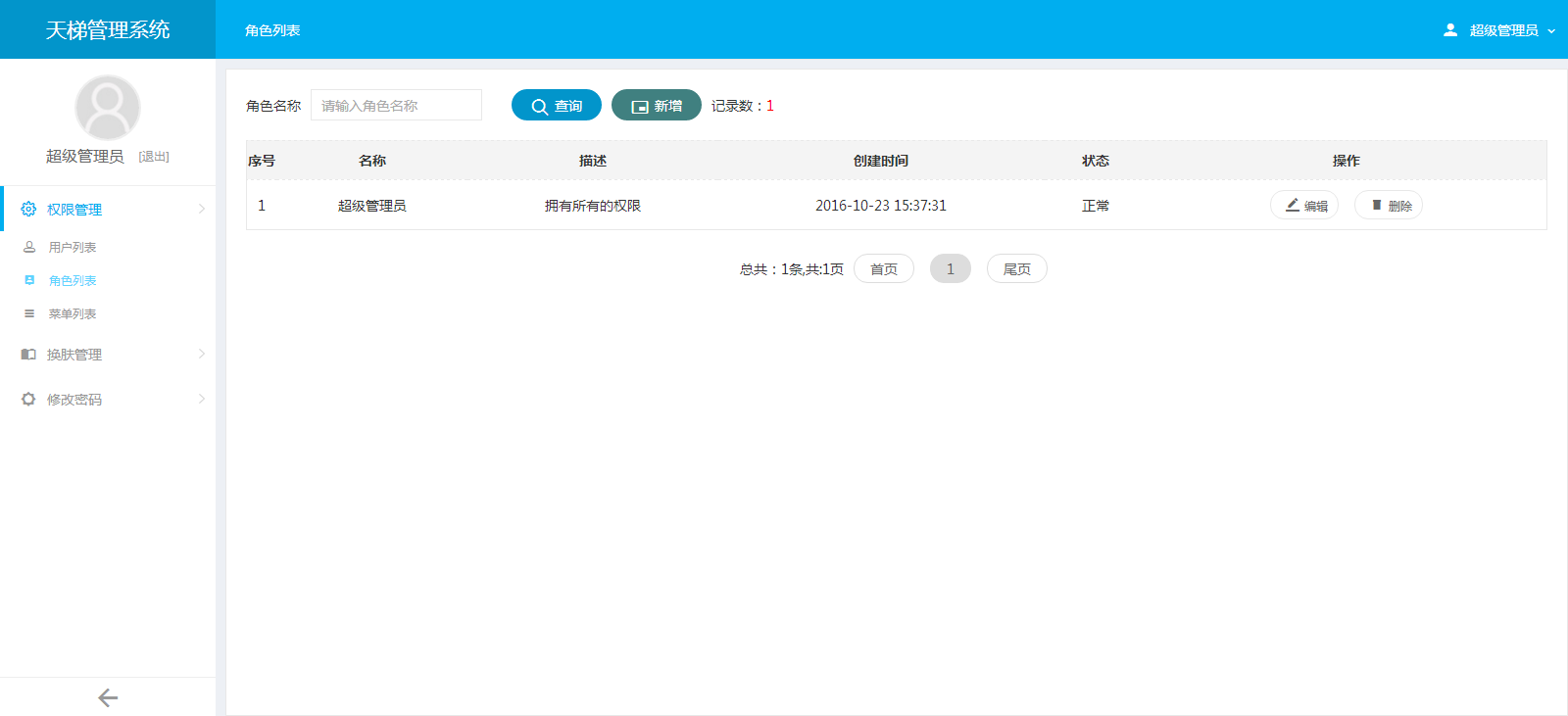

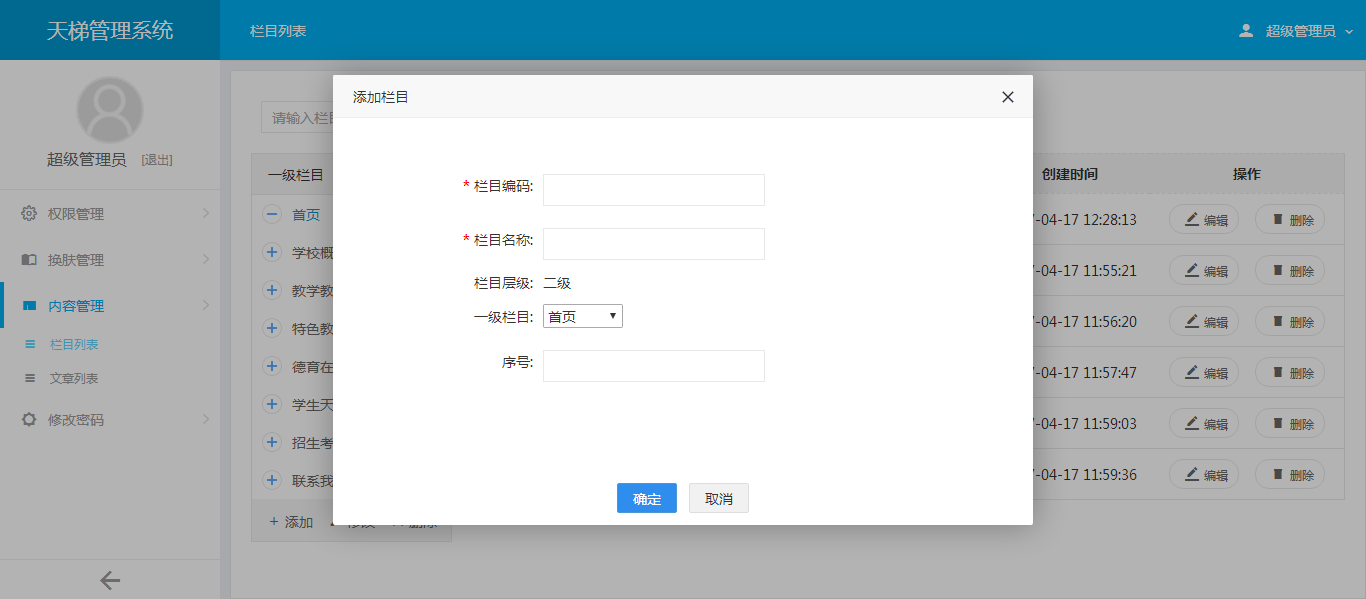

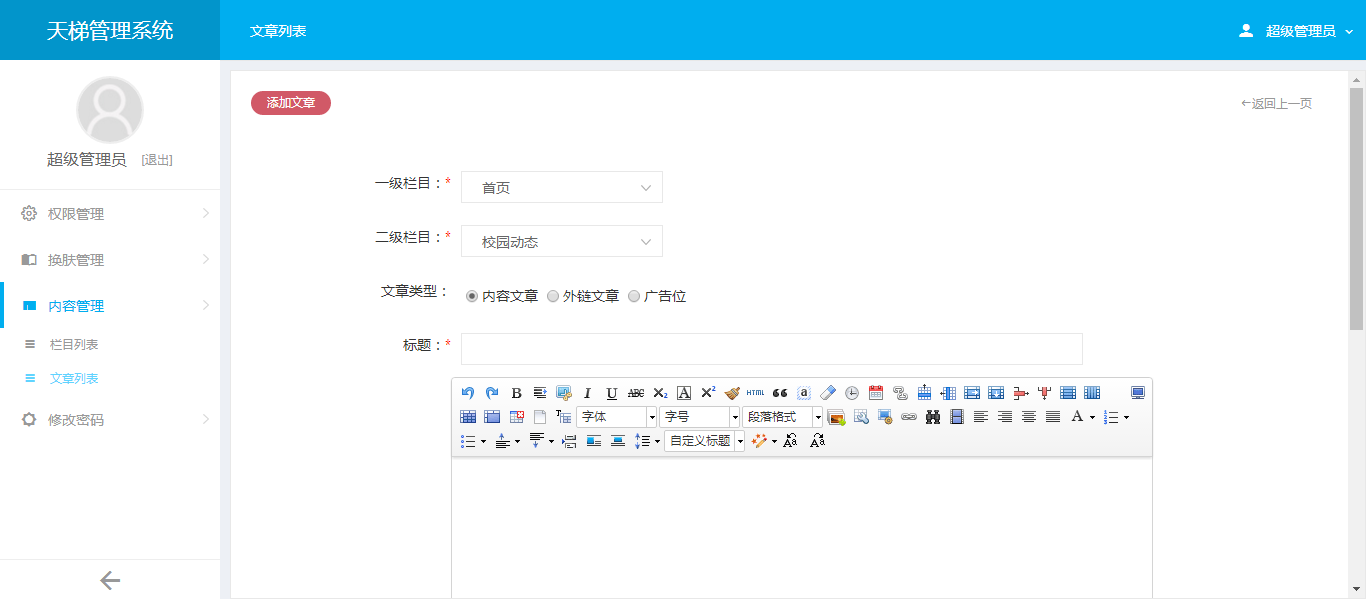

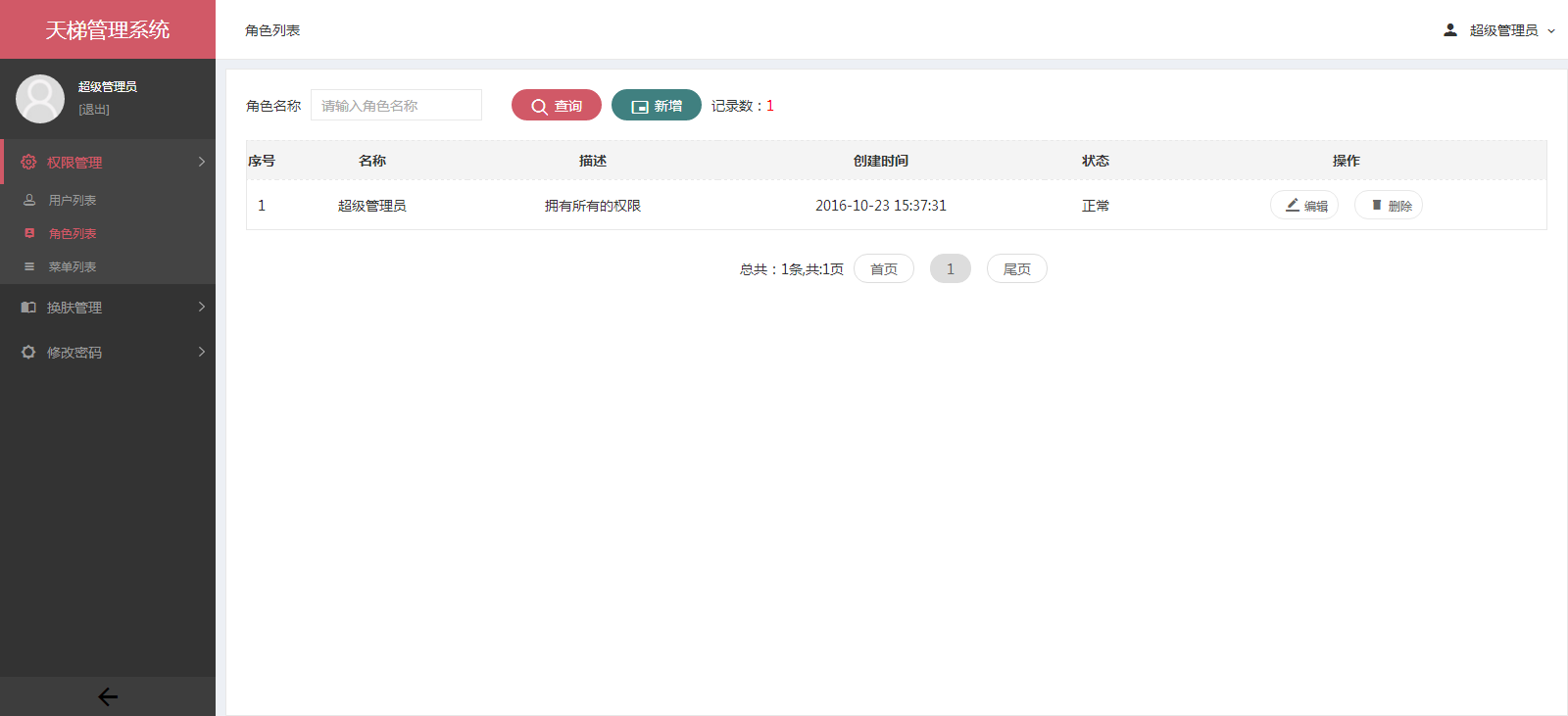

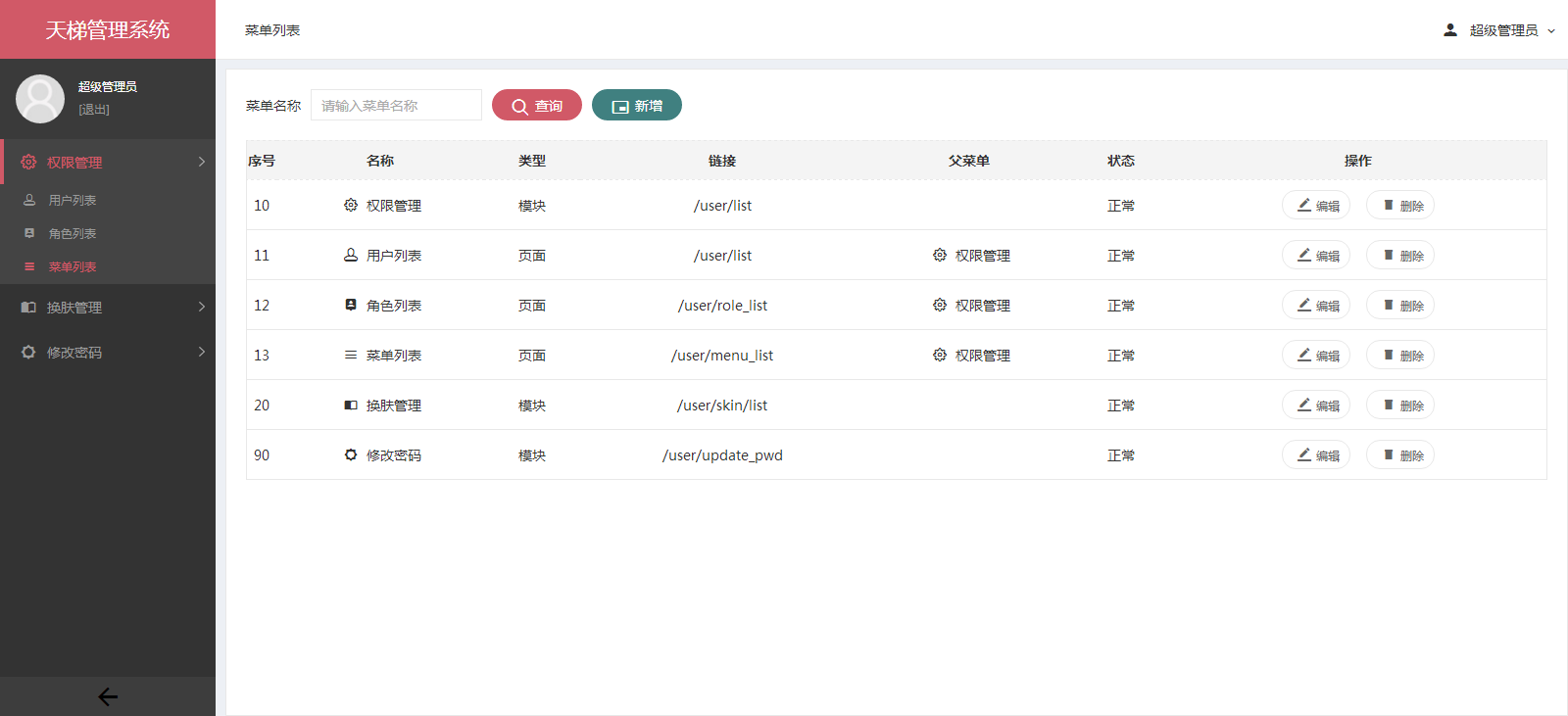

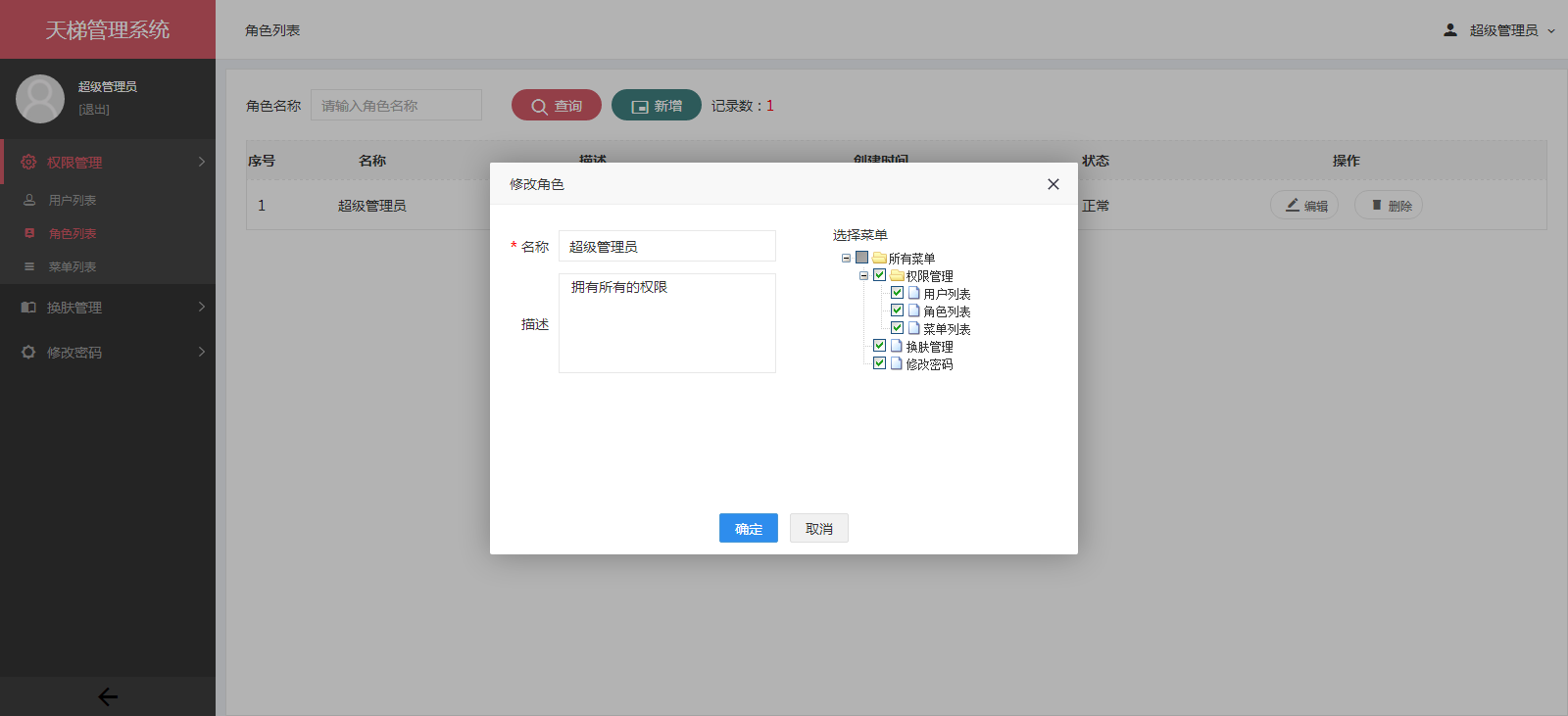

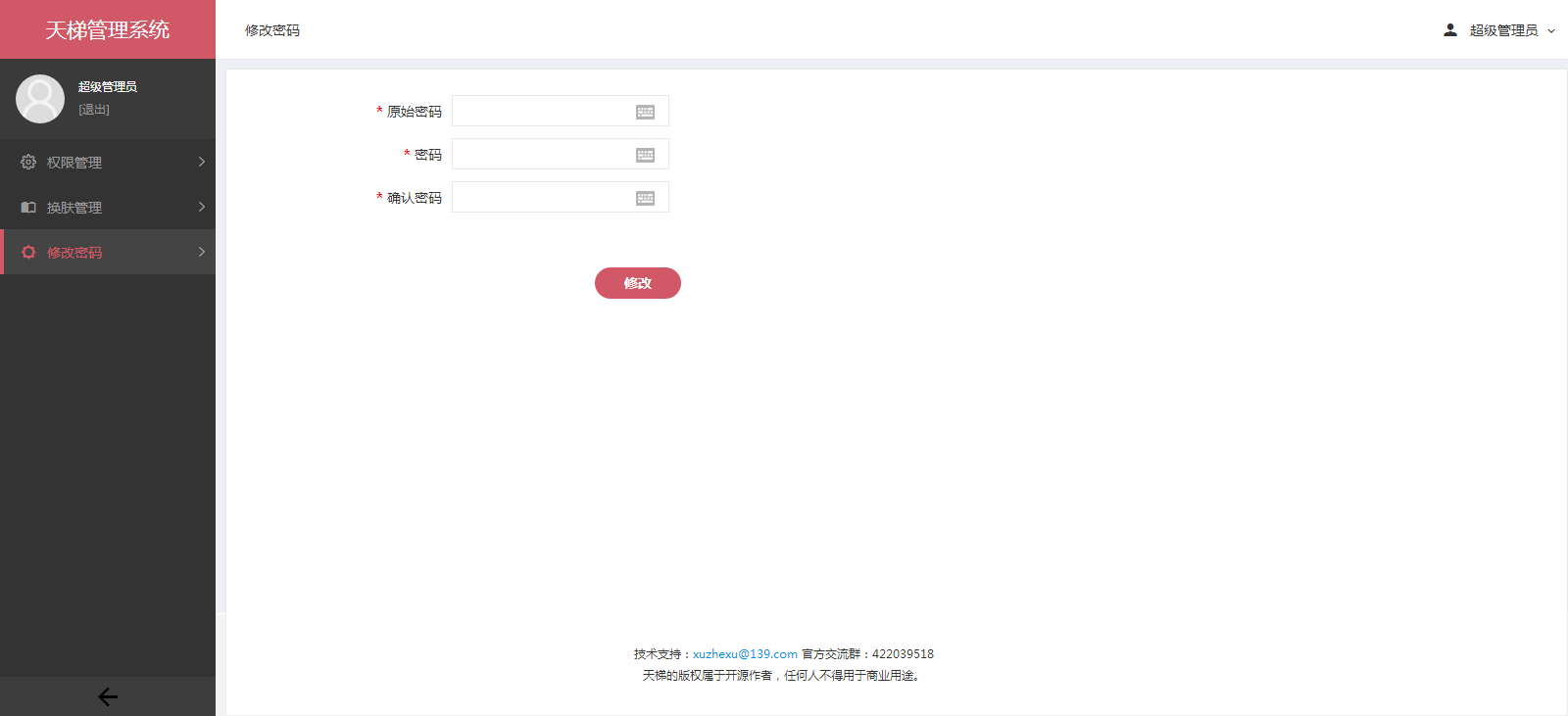

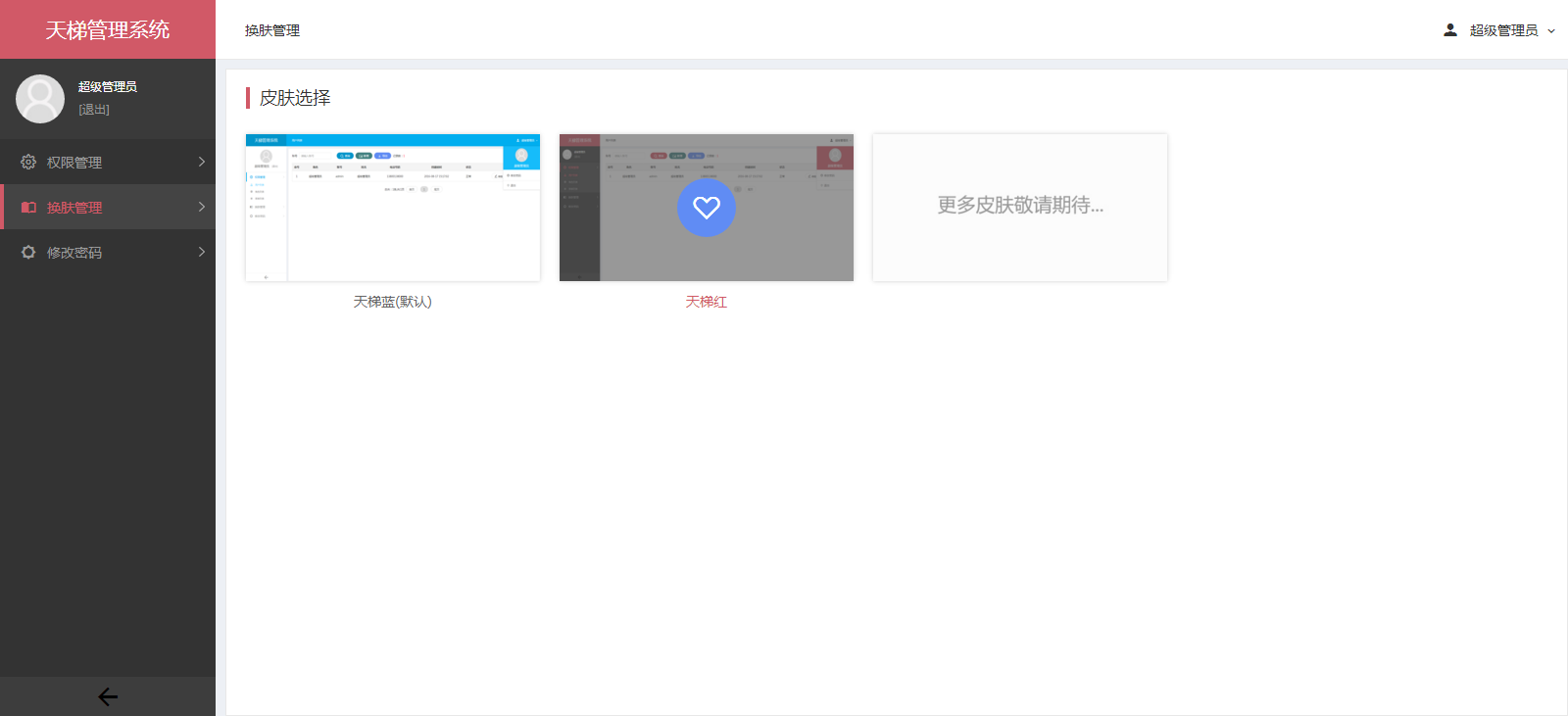

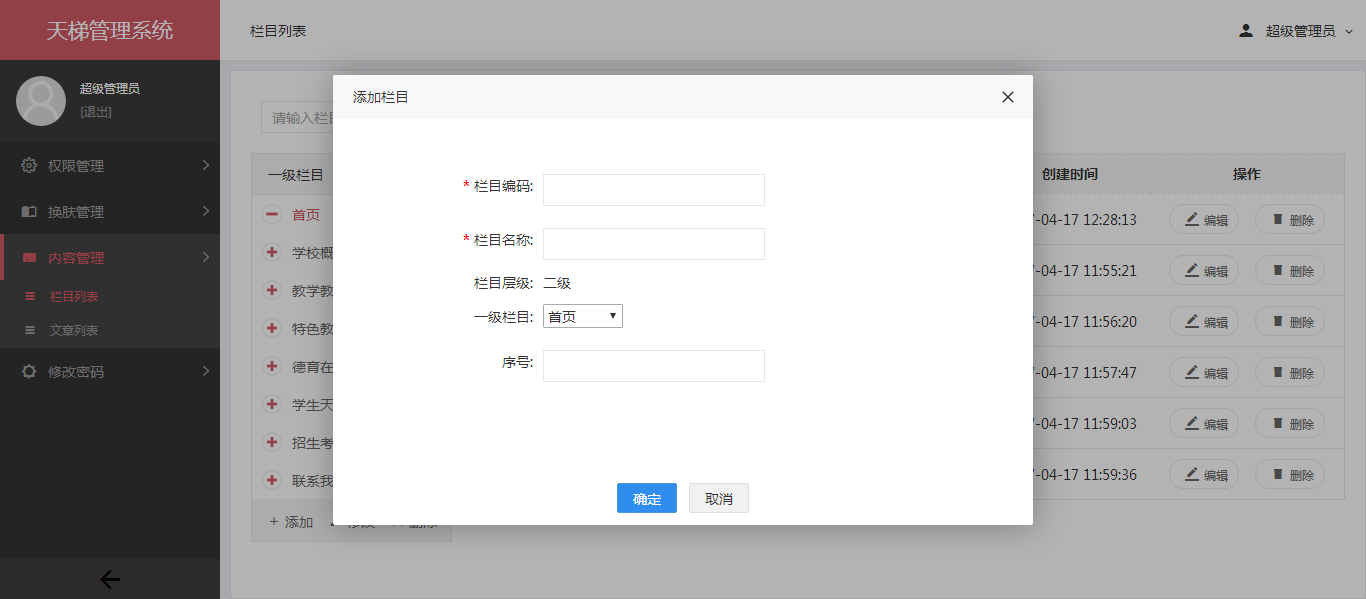

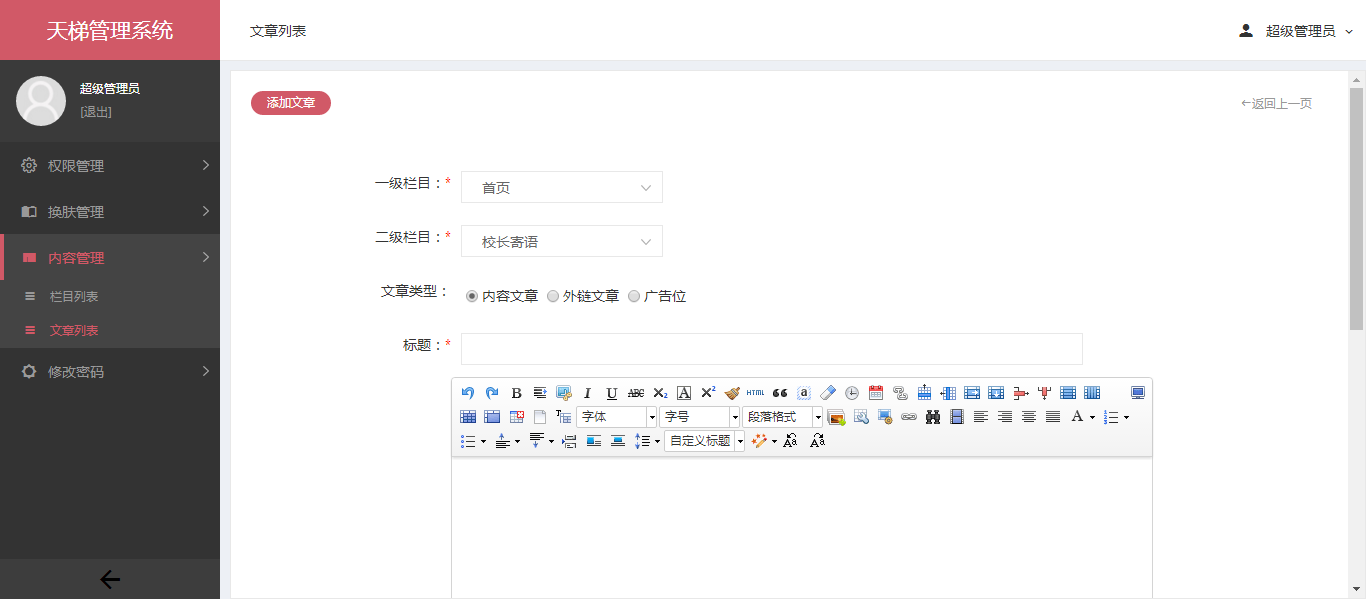

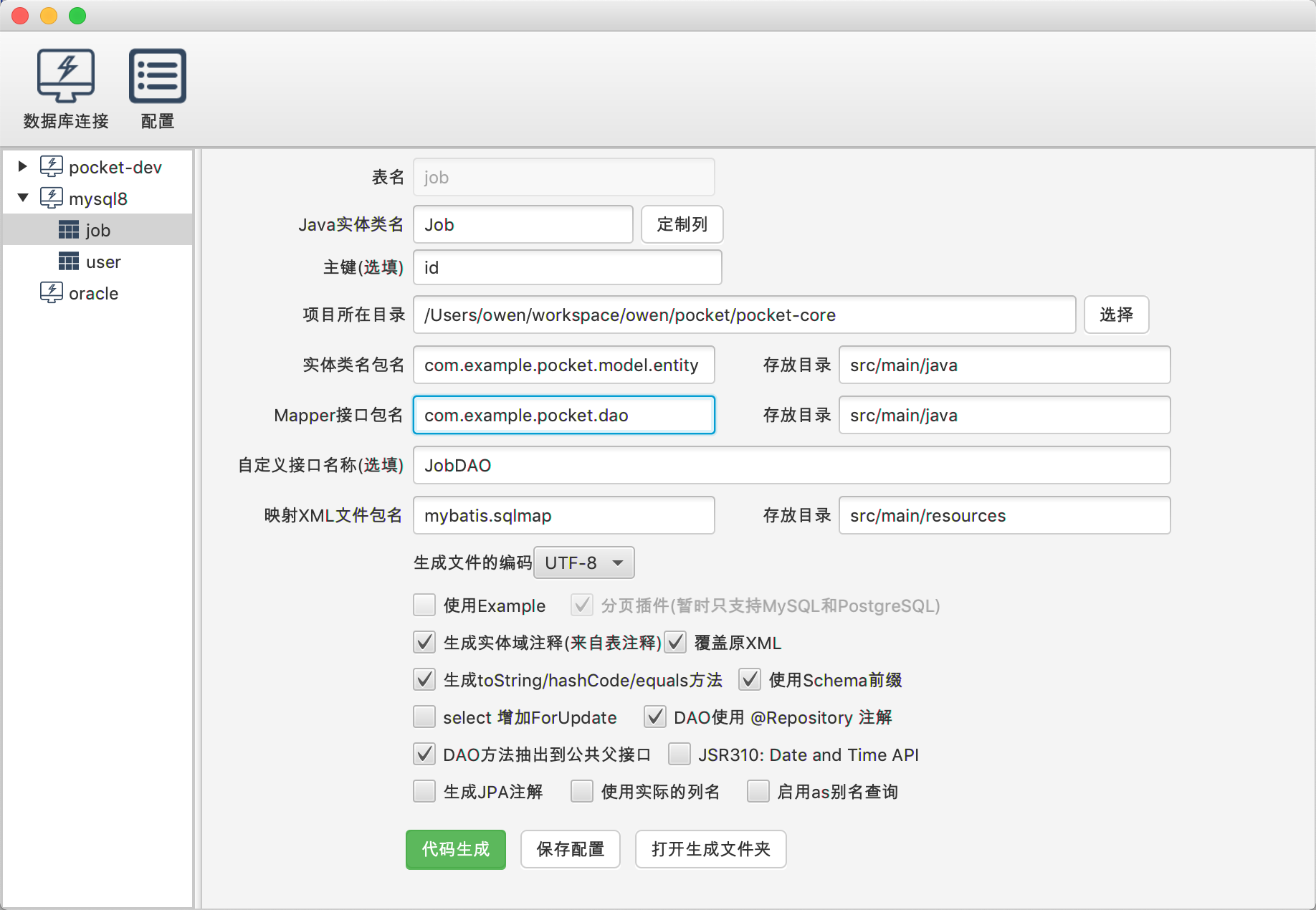

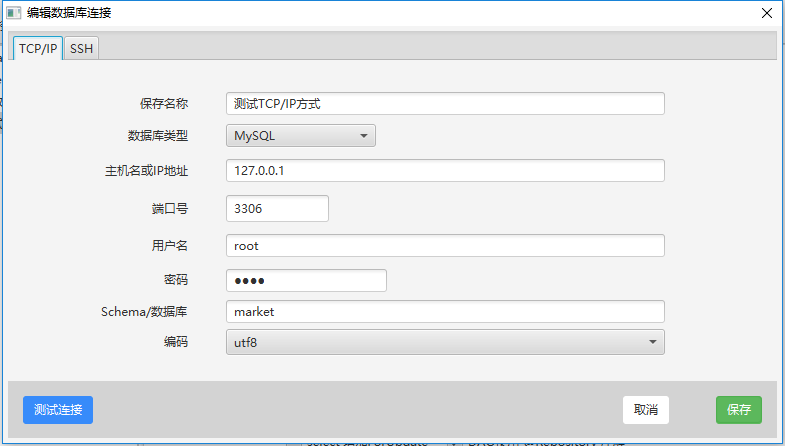

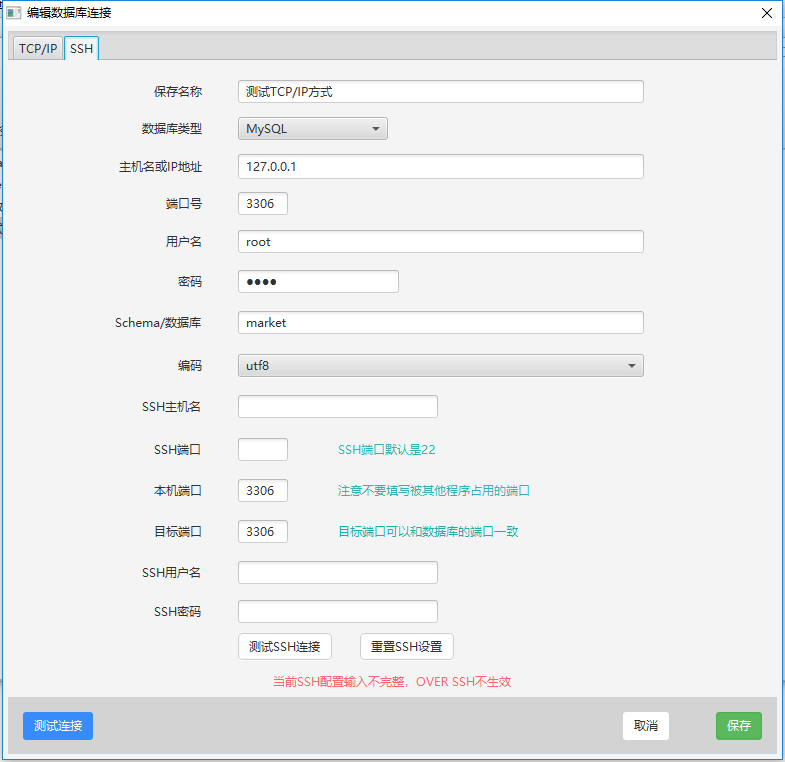

xujeff/tianti | java轻量级的CMS解决方案-天梯。天梯是一个用java相关技术搭建的后台CMS解决方案,用户可以结合自身业务进行相应扩展,同时提供了针对dao、service等的代码生成工具。技术选型:Spring Data JPA、Hibernate、Shiro、 Spring MVC、Layer、Mysql等。 | 2017-02-08T08:21:02Z | null | # 天梯(tianti)

[天梯](https://yuedu.baidu.com/ebook/7a5efa31fbd6195f312b3169a45177232f60e487)[tianti-tool](https://github.com/xujeff/tianti-tool)简介:<br>

1、天梯是一款使用Java编写的免费的轻量级CMS系统,目前提供了从后台管理到前端展现的整体解决方案。

2、用户可以不编写一句代码,就制作出一个默认风格的CMS站点。

3、前端页面自适应,支持PC和H5端,采用前后端分离的机制实现。后端支持天梯蓝和天梯红换肤功能。

4、项目技术分层明显,用户可以根据自己的业务模块进行相应地扩展,很方便二次开发。

<br>

<br>

技术架构:<br>

1、技术选型:

后端

·核心框架:Spring Framework 4.2.5.RELEASE

·安全框架:Apache Shiro 1.3.2

·视图框架:Spring MVC 4.2.5.RELEASE

·数据库连接池:Tomcat JDBC

·缓存框架:Ehcache

·ORM框架:Spring Data JPA、hibernate 4.3.5.Final

·日志管理:SLF4J 1.7.21、Log4j

·编辑器:ueditor

·工具类:Apache Commons、Jackson 2.8.5、POI 3.15

·view层:JSP

·数据库:mysql、oracle等关系型数据库

前端

·dom : Jquery

·分页 : jquery.pagination

·UI管理 : common

·UI集成 : uiExtend

·滚动条 : jquery.nicescroll.min.js

·图表 : highcharts

·3D图表 :highcharts-more

·轮播图 : jquery-swipe

·表单提交 :jquery.form

·文件上传 :jquery.uploadify

·表单验证 :jquery.validator

·展现树 :jquery.ztree

·html模版引擎 :template

2、项目结构:

2.1、tianti-common:系统基础服务抽象,包括entity、dao和service的基础抽象;

2.2、tianti-org:用户权限模块服务实现;

2.3、tianti-cms:资讯类模块服务实现;

2.4、tianti-module-admin:天梯后台web项目实现;

2.5、tianti-module-interface:天梯接口项目实现;

2.6、tianti-module-gateway:天梯前端自适应项目实现(是一个静态项目,调用tianti-module-interface获取数据);

前端项目概览:<br>

PC:<br>

H5:<br>

<br>

后台项目概览:<br>

天梯登陆页面:

天梯蓝风格(默认):

天梯红风格:

| 0 |

heysupratim/material-daterange-picker | A material Date Range Picker based on wdullaers MaterialDateTimePicker | 2015-09-14T12:00:47Z | null | [](http://android-arsenal.com/details/1/2501)

[  ](https://bintray.com/borax12/maven/material-datetime-rangepicker/_latestVersion)

[](https://maven-badges.herokuapp.com/maven-central/com.borax12.materialdaterangepicker/library)

Material Date and Time Picker with Range Selection

======================================================

Credits to the original amazing material date picker library by wdullaer - https://github.com/wdullaer/MaterialDateTimePicker

## Adding to your project

Add the jcenter repository information in your build.gradle file like this

```java

repositories {

jcenter()

}

dependencies {

implementation 'com.borax12.materialdaterangepicker:library:2.0'

}

```

Beginning Version 2.0 now also available on Maven Central

## Date Selection

## Time Selection

Support for Android 4.0 and up.

From the original library documentation -

You may also add the library as an Android Library to your project. All the library files live in ```library```.

Using the Pickers

--------------------------------

1. Implement an `OnDateSetListener` or `OnTimeSetListener`

2. Create a ``DatePickerDialog` using the supplied factory

### Implement an `OnDateSetListener`

In order to receive the date set in the picker, you will need to implement the `OnDateSetListener` interfaces. Typically this will be the `Activity` or `Fragment` that creates the Pickers.

or

### Implement an `OnTimeSetListener`

In order to receive the time set in the picker, you will need to implement the `OnTimeSetListener` interfaces. Typically this will be the `Activity` or `Fragment` that creates the Pickers.

```java

//new onDateSet

@Override

public void onDateSet(DatePickerDialog view, int year, int monthOfYear, int dayOfMonth,int yearEnd, int monthOfYearEnd, int dayOfMonthEnd) {

}

@Override

public void onTimeSet(DatePickerDialog view, int year, int monthOfYear, int dayOfMonth,int yearEnd, int monthOfYearEnd, int dayOfMonthEnd) {

String hourString = hourOfDay < 10 ? "0"+hourOfDay : ""+hourOfDay;

String minuteString = minute < 10 ? "0"+minute : ""+minute;

String hourStringEnd = hourOfDayEnd < 10 ? "0"+hourOfDayEnd : ""+hourOfDayEnd;

String minuteStringEnd = minuteEnd < 10 ? "0"+minuteEnd : ""+minuteEnd;

String time = "You picked the following time: From - "+hourString+"h"+minuteString+" To - "+hourStringEnd+"h"+minuteStringEnd;

timeTextView.setText(time);

}

```

### Create a DatePickerDialog` using the supplied factory

You will need to create a new instance of `DatePickerDialog` using the static `newInstance()` method, supplying proper default values and a callback. Once the dialogs are configured, you can call `show()`.

```java

Calendar now = Calendar.getInstance();

DatePickerDialog dpd = DatePickerDialog.newInstance(

MainActivity.this,

now.get(Calendar.YEAR),

now.get(Calendar.MONTH),

now.get(Calendar.DAY_OF_MONTH)

);

dpd.show(getFragmentManager(), "Datepickerdialog");

```

### Create a TimePickerDialog` using the supplied factory

You will need to create a new instance of `TimePickerDialog` using the static `newInstance()` method, supplying proper default values and a callback. Once the dialogs are configured, you can call `show()`.

```java

Calendar now = Calendar.getInstance();

TimePickerDialog tpd = TimePickerDialog.newInstance(

MainActivity.this,

now.get(Calendar.HOUR_OF_DAY),

now.get(Calendar.MINUTE),

false

);

tpd.show(getFragmentManager(), "Timepickerdialog");

```

For other documentation regarding theming , handling orientation changes , and callbacks - check out the original documentation - https://github.com/wdullaer/MaterialDateTimePicker | 0 |

strapdata/elassandra | Elassandra = Elasticsearch + Apache Cassandra | 2015-08-22T13:52:08Z | null | # Elassandra [](https://travis-ci.org/strapdata/elassandra) [](https://elassandra.readthedocs.io/en/latest/?badge=latest) [](https://github.com/strapdata/elassandra/releases/latest)

[](https://twitter.com/strapdataio)

## [http://www.elassandra.io/](http://www.elassandra.io/)

Elassandra is an [Apache Cassandra](http://cassandra.apache.org) distribution including an [Elasticsearch](https://github.com/elastic/elasticsearch) search engine.

Elassandra is a multi-master multi-cloud database and search engine with support for replicating across multiple datacenters in active/active mode.

Elasticsearch code is embedded in Cassanda nodes providing advanced search features on Cassandra tables and Cassandra serves as an Elasticsearch data and configuration store.

Elassandra supports Cassandra vnodes and scales horizontally by adding more nodes without the need to reshard indices.

Project documentation is available at [doc.elassandra.io](http://doc.elassandra.io).

## Benefits of Elassandra

For Cassandra users, elassandra provides Elasticsearch features :

* Cassandra updates are indexed in Elasticsearch.

* Full-text and spatial search on your Cassandra data.

* Real-time aggregation (does not require Spark or Hadoop to GROUP BY)

* Provide search on multiple keyspaces and tables in one query.

* Provide automatic schema creation and support nested documents using [User Defined Types](https://docs.datastax.com/en/cql/3.1/cql/cql_using/cqlUseUDT.html).

* Provide read/write JSON REST access to Cassandra data.

* Numerous Elasticsearch plugins and products like [Kibana](https://www.elastic.co/guide/en/kibana/current/introduction.html).

* Manage concurrent elasticsearch mappings changes and applies batched atomic CQL schema changes.

* Support [Elasticsearch ingest processors](https://www.elastic.co/guide/en/elasticsearch/reference/master/ingest.html) allowing to transform input data.

For Elasticsearch users, elassandra provides useful features :

* Elassandra is masterless. Cluster state is managed through [cassandra lightweight transactions](http://www.datastax.com/dev/blog/lightweight-transactions-in-cassandra-2-0).

* Elassandra is a sharded multi-master database, where Elasticsearch is sharded master-slave. Thus, Elassandra has no Single Point Of Write, helping to achieve high availability.

* Elassandra inherits Cassandra data repair mechanisms (hinted handoff, read repair and nodetool repair) providing support for **cross datacenter replication**.

* When adding a node to an Elassandra cluster, only data pulled from existing nodes are re-indexed in Elasticsearch.

* Cassandra could be your unique datastore for indexed and non-indexed data. It's easier to manage and secure. Source documents are now stored in Cassandra, reducing disk space if you need a NoSQL database and Elasticsearch.

* Write operations are not restricted to one primary shard, but distributed across all Cassandra nodes in a virtual datacenter. The number of shards does not limit your write throughput. Adding elassandra nodes increases both read and write throughput.

* Elasticsearch indices can be replicated among many Cassandra datacenters, allowing write to the closest datacenter and search globally.

* The [cassandra driver](http://www.planetcassandra.org/client-drivers-tools/) is Datacenter and Token aware, providing automatic load-balancing and failover.

* Elassandra efficiently stores Elasticsearch documents in binary SSTables without any JSON overhead.

## Quick start

* [Quick Start](http://doc.elassandra.io/en/latest/quickstart.html) guide to run a single node Elassandra cluster in docker.

* [Deploy Elassandra by launching a Google Kubernetes Engine](./docs/google-kubernetes-tutorial.md):

[](https://console.cloud.google.com/cloudshell/open?git_repo=https://github.com/strapdata/elassandra-google-k8s-marketplace&tutorial=docs/google-kubernetes-tutorial.md)

## Upgrade Instructions

#### Elassandra 6.8.4.2+

<<<<<<< HEAD

Since version 6.8.4.2, the gossip X1 application state can be compressed using a system property. Enabling this settings allows the creation of a lot of virtual indices.

Before enabling this setting, upgrade all the 6.8.4.x nodes to the 6.8.4.2 (or higher). Once all the nodes are in 6.8.4.2, they are able to decompress the application state even if the settings isn't yet configured locally.

#### Elassandra 6.2.3.25+

Elassandra use the Cassandra GOSSIP protocol to manage the Elasticsearch routing table and Elassandra 6.8.4.2+ add support for compression of

the X1 application state to increase the maxmimum number of Elasticsearch indices. For backward compatibility, the compression is disabled by default,

but once all your nodes are upgraded into version 6.8.4.2+, you should enable the X1 compression by adding **-Des.compress_x1=true** in your **conf/jvm.options** and rolling restart all nodes.

Nodes running version 6.8.4.2+ are able to read compressed and not compressed X1.

#### Elassandra 6.2.3.21+

Before version 6.2.3.21, the Cassandra replication factor for the **elasic_admin** keyspace (and elastic_admin_[datacenter.group]) was automatically adjusted to the

number of nodes of the datacenter. Since version 6.2.3.21 and because it has a performance impact on large clusters, it's now up to your Elassandra administrator to

properly adjust the replication factor for this keyspace. Keep in mind that Elasticsearch mapping updates rely on a PAXOS transaction that requires QUORUM nodes to succeed,

so replication factor should be at least 3 on each datacenter.

#### Elassandra 6.2.3.19+

Elassandra 6.2.3.19 metadata version now relies on the Cassandra table **elastic_admin.metadata_log** (that was **elastic_admin.metadata** from 6.2.3.8 to 6.2.3.18)

to keep the elasticsearch mapping update history and automatically recover from a possible PAXOS write timeout issue.

When upgrading the first node of a cluster, Elassandra automatically copy the current **metadata.version** into the new **elastic_admin.metadata_log** table.

To avoid Elasticsearch mapping inconsistency, you must avoid mapping update while the rolling upgrade is in progress. Once all nodes are upgraded,

the **elastic_admin.metadata** is not more used and can be removed. Then, you can get the mapping update history from the new **elastic_admin.metadata_log** and know

which node has updated the mapping, when and for which reason.

#### Elassandra 6.2.3.8+

Elassandra 6.2.3.8+ now fully manages the elasticsearch mapping in the CQL schema through the use of CQL schema extensions (see *system_schema.tables*, column *extensions*). These table extensions and the CQL schema updates resulting of elasticsearch index creation/modification are updated in batched atomic schema updates to ensure consistency when concurrent updates occurs. Moreover, these extensions are stored in binary and support partial updates to be more efficient. As the result, the elasticsearch mapping is not more stored in the *elastic_admin.metadata* table.

WARNING: During the rolling upgrade, elasticserach mapping changes are not propagated between nodes running the new and the old versions, so don't change your mapping while you're upgrading. Once all your nodes have been upgraded to 6.2.3.8+ and validated, apply the following CQL statements to remove useless elasticsearch metadata:

```bash

ALTER TABLE elastic_admin.metadata DROP metadata;

ALTER TABLE elastic_admin.metadata WITH comment = '';

```

WARNING: Due to CQL table extensions used by Elassandra, some old versions of **cqlsh** may lead to the following error message **"'module' object has no attribute 'viewkeys'."**. This comes from the old python cassandra driver embedded in Cassandra and has been reported in [CASSANDRA-14942](https://issues.apache.org/jira/browse/CASSANDRA-14942). Possible workarounds:

* Use the **cqlsh** embedded with Elassandra

* Install a recent version of the **cqlsh** utility (*pip install cqlsh*) or run it from a docker image:

```bash

docker run -it --rm strapdata/cqlsh:0.1 node.example.com

```

#### Elassandra 6.x changes

* Elasticsearch now supports only one document type per index backed by one Cassandra table. Unless you specify an elasticsearch type name in your mapping, data is stored in a cassandra table named **"_doc"**. If you want to search many cassandra tables, you now need to create and search many indices.

* Elasticsearch 6.x manages shard consistency through several metadata fields (_primary_term, _seq_no, _version) that are not used in elassandra because replication is fully managed by cassandra.

## Installation

Ensure Java 8 is installed and `JAVA_HOME` points to the correct location.

* [Download](https://github.com/strapdata/elassandra/releases) and extract the distribution tarball

* Define the CASSANDRA_HOME environment variable : `export CASSANDRA_HOME=<extracted_directory>`

* Run `bin/cassandra -e`

* Run `bin/nodetool status`

* Run `curl -XGET localhost:9200/_cluster/state`

#### Example

Try indexing a document on a non-existing index:

```bash

curl -XPUT 'http://localhost:9200/twitter/_doc/1?pretty' -H 'Content-Type: application/json' -d '{

"user": "Poulpy",

"post_date": "2017-10-04T13:12:00Z",

"message": "Elassandra adds dynamic mapping to Cassandra"

}'

```

Then look-up in Cassandra:

```bash

bin/cqlsh -e "SELECT * from twitter.\"_doc\""

```

Behind the scenes, Elassandra has created a new Keyspace `twitter` and table `_doc`.

```CQL

admin@cqlsh>DESC KEYSPACE twitter;

CREATE KEYSPACE twitter WITH replication = {'class': 'NetworkTopologyStrategy', 'DC1': '1'} AND durable_writes = true;

CREATE TABLE twitter."_doc" (

"_id" text PRIMARY KEY,

message list<text>,

post_date list<timestamp>,

user list<text>

) WITH bloom_filter_fp_chance = 0.01

AND caching = {'keys': 'ALL', 'rows_per_partition': 'NONE'}

AND comment = ''

AND compaction = {'class': 'org.apache.cassandra.db.compaction.SizeTieredCompactionStrategy', 'max_threshold': '32', 'min_threshold': '4'}

AND compression = {'chunk_length_in_kb': '64', 'class': 'org.apache.cassandra.io.compress.LZ4Compressor'}

AND crc_check_chance = 1.0

AND dclocal_read_repair_chance = 0.1

AND default_time_to_live = 0

AND gc_grace_seconds = 864000

AND max_index_interval = 2048

AND memtable_flush_period_in_ms = 0

AND min_index_interval = 128

AND read_repair_chance = 0.0

AND speculative_retry = '99PERCENTILE';

CREATE CUSTOM INDEX elastic__doc_idx ON twitter."_doc" () USING 'org.elassandra.index.ExtendedElasticSecondaryIndex';

```

By default, multi valued Elasticsearch fields are mapped to Cassandra list.

Now, insert a row with CQL :

```CQL

INSERT INTO twitter."_doc" ("_id", user, post_date, message)

VALUES ( '2', ['Jimmy'], [dateof(now())], ['New data is indexed automatically']);

SELECT * FROM twitter."_doc";

_id | message | post_date | user

-----+--------------------------------------------------+-------------------------------------+------------

2 | ['New data is indexed automatically'] | ['2019-07-04 06:00:21.893000+0000'] | ['Jimmy']

1 | ['Elassandra adds dynamic mapping to Cassandra'] | ['2017-10-04 13:12:00.000000+0000'] | ['Poulpy']

(2 rows)

```

Then search for it with the Elasticsearch API:

```bash

curl "localhost:9200/twitter/_search?q=user:Jimmy&pretty"

```

And here is a sample response :

```JSON

{

"took" : 3,

"timed_out" : false,

"_shards" : {

"total" : 1,

"successful" : 1,

"skipped" : 0,

"failed" : 0

},

"hits" : {

"total" : 1,

"max_score" : 0.6931472,

"hits" : [

{

"_index" : "twitter",

"_type" : "_doc",

"_id" : "2",

"_score" : 0.6931472,

"_source" : {

"post_date" : "2019-07-04T06:00:21.893Z",

"message" : "New data is indexed automatically",

"user" : "Jimmy"

}

}

]

}

}

```

## Support

* Commercial support is available through [Strapdata](http://www.strapdata.com/).

* Community support available via [elassandra google groups](https://groups.google.com/forum/#!forum/elassandra).

* Post feature requests and bugs on https://github.com/strapdata/elassandra/issues

## License

```

This software is licensed under the Apache License, version 2 ("ALv2"), quoted below.

Copyright 2015-2018, Strapdata (contact@strapdata.com).

Licensed under the Apache License, Version 2.0 (the "License"); you may not

use this file except in compliance with the License. You may obtain a copy of

the License at

http://www.apache.org/licenses/LICENSE-2.0

Unless required by applicable law or agreed to in writing, software

distributed under the License is distributed on an "AS IS" BASIS, WITHOUT

WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied. See the

License for the specific language governing permissions and limitations under

the License.

```

## Acknowledgments

* Elasticsearch, Logstash, Beats and Kibana are trademarks of Elasticsearch BV, registered in the U.S. and in other countries.

* Apache Cassandra, Apache Lucene, Apache, Lucene and Cassandra are trademarks of the Apache Software Foundation.

* Elassandra is a trademark of Strapdata SAS.

| 0 |

dongjunkun/DropDownMenu | 一个实用的多条件筛选菜单 | 2015-06-23T07:43:56Z | null | [](https://jitpack.io/#dongjunkun/DropDownMenu)

## 简介

一个实用的多条件筛选菜单,在很多App上都能看到这个效果,如美团,爱奇艺电影票等

我的博客 [自己造轮子--android常用多条件帅选菜单实现思路(类似美团,爱奇艺电影票下拉菜单)](http://www.jianshu.com/p/d9407f799d2d)

## 特色

- 支持多级菜单

- 你可以完全自定义你的菜单样式,我这里只是封装了一些实用的方法,Tab的切换效果,菜单显示隐藏效果等

- 并非用popupWindow实现,无卡顿

## ScreenShot

<img src="https://raw.githubusercontent.com/dongjunkun/DropDownMenu/master/art/simple.gif"/>

<a href="https://raw.githubusercontent.com/dongjunkun/DropDownMenu/master/app/build/outputs/apk/app-debug.apk">Download APK</a>

或者扫描二维码

<img src="https://raw.githubusercontent.com/dongjunkun/DropDownMenu/master/art/download.png"/>

## Gradle Dependency

```

allprojects {

repositories {

...

maven { url "https://jitpack.io" }

}

}

dependencies {

compile 'com.github.dongjunkun:DropDownMenu:1.0.4'

}

```

## 使用

添加DropDownMenu 到你的布局文件,如下

```

<com.yyydjk.library.DropDownMenu

android:id="@+id/dropDownMenu"

android:layout_width="match_parent"

android:layout_height="match_parent"

app:ddmenuTextSize="13sp" //tab字体大小

app:ddtextUnselectedColor="@color/drop_down_unselected" //tab未选中颜色

app:ddtextSelectedColor="@color/drop_down_selected" //tab选中颜色

app:dddividerColor="@color/gray" //分割线颜色

app:ddunderlineColor="@color/gray" //下划线颜色

app:ddmenuSelectedIcon="@mipmap/drop_down_selected_icon" //tab选中状态图标

app:ddmenuUnselectedIcon="@mipmap/drop_down_unselected_icon"//tab未选中状态图标

app:ddmaskColor="@color/mask_color" //遮罩颜色,一般是半透明

app:ddmenuBackgroundColor="@color/white" //tab 背景颜色

app:ddmenuMenuHeightPercent="0.5" 菜单的最大高度,根据屏幕高度的百分比设置

...

/>

```

我们只需要在java代码中调用下面的代码

```

//tabs 所有标题,popupViews 所有菜单,contentView 内容

mDropDownMenu.setDropDownMenu(tabs, popupViews, contentView);

```

如果你要了解更多,可以直接看源码 <a href="https://github.com/dongjunkun/DropDownMenu/blob/master/app/src/main/java/com/yyy/djk/dropdownmenu/MainActivity.java">Example</a>

> 建议拷贝代码到项目中使用,拷贝DropDownMenu.java 以及res下的所有文件即可

## 关于我

简书[dongjunkun](http://www.jianshu.com/users/f07458c1a8f3/latest_articles)

| 0 |

DingMouRen/PaletteImageView | 懂得智能配色的ImageView,还能给自己设置多彩的阴影哦。(Understand the intelligent color matching ImageView, but also to set their own colorful shadow Oh!) | 2017-04-25T12:05:08Z | null |

### English Readme

[English Version](https://github.com/hasanmohdkhan/PaletteImageView/blob/master/README%20English.md)

(Thank you, [hasanmohdkhan](https://github.com/hasanmohdkhan))

### 简介

* 可以解析图片中的主色调,**默认将主色调作为控件阴影的颜色**

* 可以**自定义设置控件的阴影颜色**

* 可以**控制控件四个角的圆角大小**(如果控件设置成正方向,随着圆角半径增大,可以将控件变成圆形)

* 可以**控制控件的阴影半径大小**

* 可以分别**控制阴影在x方向和y方向上的偏移量**

* 可以将图片中的颜色解析出**六种主题颜色**,每一种主题颜色都有相应的**匹配背景、标题、正文的推荐颜色**

### build.gradle中引用

```

compile 'com.dingmouren.paletteimageview:paletteimageview:1.0.7'

```

##### 1.参数的控制

圆角半径|阴影模糊范围|阴影偏移量

---|---|---

|  |

##### 2.阴影颜色默认是图片的主色调

##### 3.图片颜色主题解析

### 使用

```

<com.dingmouren.paletteimageview.PaletteImageView

android:id="@+id/palette"

android:layout_width="match_parent"

android:layout_height="wrap_content"

app:palettePadding="20dp"

app:paletteOffsetX="15dp"

app:paletteOffsetY="15dp"

/>

mPaletteImageView.setOnParseColorListener(new PaletteImageView.OnParseColorListener() {

@Override//解析图片的颜色完毕

public void onComplete(PaletteImageView paletteImageView) {

int[] vibrant = paletteImageView.getVibrantColor();

int[] vibrantDark = paletteImageView.getDarkVibrantColor();

int[] vibrantLight = paletteImageView.getLightVibrantColor();

int[] muted = paletteImageView.getMutedColor();

int[] mutedDark = paletteImageView.getDarkMutedColor();

int[] mutedLight = paletteImageView.getLightMutedColor();

}

@Override//解析图片的颜色失败

public void onFail() {

}

});

```

### xml属性

xml属性 | 描述

---|---

app:palettePadding | **表示阴影显示最大空间距离。值为0,没有阴影,大于0,才有阴影。**

app:paletteOffsetX | 表示阴影在x方向上的偏移量

app:paletteOffsetY | 表示阴影在y方向上的偏移量

app:paletteSrc | 表示图片资源

app:paletteRadius | 表示圆角半径

app:paletteShadowRadius | 表示阴影模糊范围

### 公共的方法

方法 | 描述

---|---

public void setShadowColor(int color) | 表示自定义设置控件阴影的颜色

public void setBitmap(Bitmap bitmap) | 表示设置控件位图

public void setPaletteRadius(int raius) | 表示设置控件圆角半径

public void setPaletteShadowOffset(int offsetX, int offsetY) | 表示设置阴影在控件阴影在x方向 或 y方向上的偏移量

public void setPaletteShadowRadius(int radius) | 表示设置控件阴影模糊范围

public void setOnParseColorListener(OnParseColorListener listener) | 设置控件解析图片颜色的监听器

public int[] getVibrantColor() | 表示获取Vibrant主题的颜色数组;假设颜色数组为arry,arry[0]是推荐标题使用的颜色,arry[1]是推荐正文使用的颜色,arry[2]是推荐背景使用的颜色。颜色只是用于推荐,可以自行选择

public int[] getDarkVibrantColor()| 表示获取DarkVibrant主题的颜色数组,数组元素含义同上

public int[] getLightVibrantColor()| 表示获取LightVibrant主题的颜色数组,数组元素含义同上

public int[] getMutedColor()| 表示获取Muted主题的颜色数组,数组元素含义同上

public int[] getDarkMutedColor()| 表示获取DarkMuted主题的颜色数组,数组元素含义同上

public int[] getLightMutedColor()| 表示获取LightMuted主题的颜色数组,数组元素含义同上

<br>此项目已暂停维护<br>

| 0 |

Sunzxyong/Recovery | a crash recovery framework.(一个App异常恢复框架) | 2016-09-04T08:13:19Z | null | # **Recovery**

A crash recovery framework!

----

[  ](https://bintray.com/sunzxyong/maven/Recovery/_latestVersion)  [](https://github.com/Sunzxyong/Recovery/blob/master/LICENSE)

[中文文档](https://github.com/Sunzxyong/Recovery/blob/master/README-Chinese.md)

# **Introduction**

[Blog entry with introduction](http://zhengxiaoyong.com/2016/09/05/Android%E8%BF%90%E8%A1%8C%E6%97%B6Crash%E8%87%AA%E5%8A%A8%E6%81%A2%E5%A4%8D%E6%A1%86%E6%9E%B6-Recovery)

“Recovery” can help you to automatically handle application crash in runtime. It provides you with following functionality:

* Automatic recovery activity with stack and data;

* Ability to recover to the top activity;

* A way to view and save crash info;

* Ability to restart and clear the cache;

* Allows you to do a restart instead of recovering if failed twice in one minute.

# **Art**

# **Usage**

## **Installation**

**Using Gradle**

```gradle

implementation 'com.zxy.android:recovery:1.0.0'

```

or

```gradle

debugImplementation 'com.zxy.android:recovery:1.0.0'

releaseImplementation 'com.zxy.android:recovery-no-op:1.0.0'

```

**Using Maven**

```xml

<dependency>

<groupId>com.zxy.android</groupId>

<artifactId>recovery</artifactId>

<version>1.0.0</version>

<type>pom</type>

</dependency>

```

## **Initialization**

You can use this code sample to initialize Recovery in your application:

```java

Recovery.getInstance()

.debug(true)

.recoverInBackground(false)

.recoverStack(true)

.mainPage(MainActivity.class)

.recoverEnabled(true)

.callback(new MyCrashCallback())

.silent(false, Recovery.SilentMode.RECOVER_ACTIVITY_STACK)

.skip(TestActivity.class)

.init(this);

```

If you don't want to show the RecoveryActivity when the application crash in runtime,you can use silence recover to restore your application.

You can use this code sample to initialize Recovery in your application:

```java

Recovery.getInstance()

.debug(true)

.recoverInBackground(false)

.recoverStack(true)

.mainPage(MainActivity.class)

.recoverEnabled(true)

.callback(new MyCrashCallback())

.silent(true, Recovery.SilentMode.RECOVER_ACTIVITY_STACK)

.skip(TestActivity.class)

.init(this);

```

If you only need to display 'RecoveryActivity' page in development to obtain the debug data, and in the online version does not display, you can set up `recoverEnabled(false);`

## **Arguments**

| Argument | Type | Function |

| :-: | :-: | :-: |

| debug | boolean | Whether to open the debug mode |

| recoverInBackgroud | boolean | When the App in the background, whether to restore the stack |

| recoverStack | boolean | Whether to restore the activity stack, or to restore the top activity |

| mainPage | Class<? extends Activity> | Initial page activity |

| callback | RecoveryCallback | Crash info callback |

| silent | boolean,SilentMode | Whether to use silence recover,if true it will not display RecoveryActivity and restore the activity stack automatically |

**SilentMode**

> 1. RESTART - Restart App

> 2. RECOVER_ACTIVITY_STACK - Restore the activity stack

> 3. RECOVER_TOP_ACTIVITY - Restore the top activity

> 4. RESTART_AND_CLEAR - Restart App and clear data

## **Callback**

```java

public interface RecoveryCallback {

void stackTrace(String stackTrace);

void cause(String cause);

void exception(

String throwExceptionType,

String throwClassName,

String throwMethodName,

int throwLineNumber

);

void throwable(Throwable throwable);

}

```

## **Custom Theme**

You can customize UI by setting these properties in your styles file:

```xml

<color name="recovery_colorPrimary">#2E2E36</color>

<color name="recovery_colorPrimaryDark">#2E2E36</color>

<color name="recovery_colorAccent">#BDBDBD</color>

<color name="recovery_background">#3C4350</color>

<color name="recovery_textColor">#FFFFFF</color>

<color name="recovery_textColor_sub">#C6C6C6</color>

```

## **Crash File Path**

> {SDCard Dir}/Android/data/{packageName}/files/recovery_crash/

----

## **Update history**

* `VERSION-0.0.5`——**Support silent recovery**

* `VERSION-0.0.6`——**Strengthen the protection of silent restore mode**

* `VERSION-0.0.7`——**Add confusion configuration**

* `VERSION-0.0.8`——**Add the skip Activity features,method:skip()**

* `VERSION-0.0.9`——**Update the UI and solve some problems**

* `VERSION-0.1.0`——**Optimization of crash exception delivery, initial Recovery framework can be in any position, release the official version-0.1.0**

* `VERSION-0.1.3`——**Add 'no-op' support**

* `VERSION-0.1.4`——**update default theme**

* `VERSION-0.1.5`——**fix 8.0+ hook bug**

* `VERSION-0.1.6`——**update**

* `VERSION-1.0.0`——**Fix 8.0 compatibility issue**

## **About**

* **Blog**:[https://zhengxiaoyong.com](https://zhengxiaoyong.com)

* **Wechat**:

# **LICENSE**

```

Copyright 2016 zhengxiaoyong

Licensed under the Apache License, Version 2.0 (the "License");

you may not use this file except in compliance with the License.

You may obtain a copy of the License at

http://www.apache.org/licenses/LICENSE-2.0

Unless required by applicable law or agreed to in writing, software

distributed under the License is distributed on an "AS IS" BASIS,

WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

See the License for the specific language governing permissions and

limitations under the License.

```

| 0 |

Jude95/EasyRecyclerView | ArrayAdapter,pull to refresh,auto load more,Header/Footer,EmptyView,ProgressView,ErrorView | 2015-07-18T13:11:48Z | null | # EasyRecyclerView

[中文](https://github.com/Jude95/EasyRecyclerView/blob/master/README_ch.md) | [English](https://github.com/Jude95/EasyRecyclerView/blob/master/README.md)

Encapsulate many API about RecyclerView into the library,such as arrayAdapter,pull to refresh,auto load more,no more and error in the end,header&footer.

The library uses a new usage of ViewHolder,decoupling the ViewHolder and Adapter.

Adapter will do less work,adapter only direct the ViewHolder,if you use MVP,you can put adapter into presenter.ViewHolder only show the item,then you can use one ViewHolder for many Adapter.

Part of the code modified from [Malinskiy/SuperRecyclerView](https://github.com/Malinskiy/SuperRecyclerView),make more functions handed by Adapter.

# Dependency

```groovy

compile 'com.jude:easyrecyclerview:4.4.2'

```

# ScreenShot

# Usage

## EasyRecyclerView

```xml

<com.jude.easyrecyclerview.EasyRecyclerView

android:id="@+id/recyclerView"

android:layout_width="match_parent"

android:layout_height="match_parent"

app:layout_empty="@layout/view_empty"

app:layout_progress="@layout/view_progress"

app:layout_error="@layout/view_error"

app:recyclerClipToPadding="true"

app:recyclerPadding="8dp"

app:recyclerPaddingTop="8dp"

app:recyclerPaddingBottom="8dp"

app:recyclerPaddingLeft="8dp"

app:recyclerPaddingRight="8dp"

app:scrollbarStyle="insideOverlay"//insideOverlay or insideInset or outsideOverlay or outsideInset

app:scrollbars="none"//none or vertical or horizontal

/>

```

**Attention** EasyRecyclerView is not a RecyclerView just contain a RecyclerView.use 'getRecyclerView()' to get the RecyclerView;

**EmptyView&LoadingView&ErrorView**

xml:

```xml

app:layout_empty="@layout/view_empty"

app:layout_progress="@layout/view_progress"

app:layout_error="@layout/view_error"

```

code:

```java

void setEmptyView(View emptyView)

void setProgressView(View progressView)

void setErrorView(View errorView)

```

then you can show it by this whenever:

```java

void showEmpty()

void showProgress()

void showError()

void showRecycler()

```

**scrollToPosition**

```java

void scrollToPosition(int position); // such as scroll to top

```

**control the pullToRefresh**

```java

void setRefreshing(boolean isRefreshing);

void setRefreshing(final boolean isRefreshing, final boolean isCallback); //second params is callback immediately

```

##RecyclerArrayAdapter<T>

there is no relation between RecyclerArrayAdapter and EasyRecyclerView.you can user any Adapter for the EasyRecyclerView,and use the RecyclerArrayAdapter for any RecyclerView.

**Data Manage**

```java

void add(T object);

void addAll(Collection<? extends T> collection);

void addAll(T ... items);

void insert(T object, int index);

void update(T object, int index);

void remove(T object);

void clear();

void sort(Comparator<? super T> comparator);

```

**Header&Footer**

```java

void addHeader(ItemView view)

void addFooter(ItemView view)

```

ItemView is not a view but a view creator;

```java

public interface ItemView {

View onCreateView(ViewGroup parent);

void onBindView(View itemView);

}

```

The onCreateView and onBindView correspond the callback in RecyclerView's Adapter,so adapter will call `onCreateView` once and `onBindView` more than once;

It recommend that add the ItemView to Adapter after the data is loaded,initialization View in onCreateView and nothing in onBindView.

Header and Footer support `LinearLayoutManager`,`GridLayoutManager`,`StaggeredGridLayoutManager`.

In `GridLayoutManager` you must add this:

```java

//make adapter obtain a LookUp for LayoutManager,param is maxSpan。

gridLayoutManager.setSpanSizeLookup(adapter.obtainGridSpanSizeLookUp(2));

```

**OnItemClickListener&OnItemLongClickListener**

```java

adapter.setOnItemClickListener(new RecyclerArrayAdapter.OnItemClickListener() {

@Override

public void onItemClick(int position) {

//position not contain Header

}

});

adapter.setOnItemLongClickListener(new RecyclerArrayAdapter.OnItemLongClickListener() {

@Override

public boolean onItemLongClick(int position) {

return true;

}

});

```

equal 'itemview.setOnClickListener()' in ViewHolder.

if you set listener after RecyclerView has layout.you should use 'notifyDataSetChange()';

###the API below realized by add a Footer。

**LoadMore**

```java

void setMore(final int res,OnMoreListener listener);

void setMore(final View view,OnMoreListener listener);

```

Attention when you add null or the length of data you add is 0 ,it will finish LoadMore and show NoMore;

also you can show NoMore manually `adapter.stopMore();`

**LoadError**

```java

void setError(final int res,OnErrorListener listener)

void setError(final View view,OnErrorListener listener)

```

use `adapter.pauseMore()` to show Error,when your loading throw an error;

if you add data when showing Error.it will resume to load more;

when the ErrorView display to screen again,it will resume to load more too,and callback the OnLoadMoreListener(retry).

`adapter.resumeMore()`you can resume to load more manually,it will callback the OnLoadMoreListener immediately.

you can put resumeMore() into the OnClickListener of ErrorView to realize click to retry.

**NoMore**

```java

void setNoMore(final int res,OnNoMoreListener listener)

void setNoMore(final View view,OnNoMoreListener listener)

```

when loading is finished(add null or empty or stop manually),it while show in the end.

## BaseViewHolder\<M\>

decoupling the ViewHolder and Adapter,new ViewHolder in Adapter and inflate view in ViewHolder.

Example:

```java

public class PersonViewHolder extends BaseViewHolder<Person> {

private TextView mTv_name;

private SimpleDraweeView mImg_face;

private TextView mTv_sign;

public PersonViewHolder(ViewGroup parent) {

super(parent,R.layout.item_person);

mTv_name = $(R.id.person_name);

mTv_sign = $(R.id.person_sign);

mImg_face = $(R.id.person_face);

}

@Override

public void setData(final Person person){

mTv_name.setText(person.getName());

mTv_sign.setText(person.getSign());

mImg_face.setImageURI(Uri.parse(person.getFace()));

}

}

-----------------------------------------------------------------------

public class PersonAdapter extends RecyclerArrayAdapter<Person> {

public PersonAdapter(Context context) {

super(context);

}

@Override

public BaseViewHolder OnCreateViewHolder(ViewGroup parent, int viewType) {

return new PersonViewHolder(parent);

}

}

```

## Decoration

Now there are three commonly used decoration provide for you.

**DividerDecoration**

Usually used in LinearLayoutManager.add divider between items.

```java

DividerDecoration itemDecoration = new DividerDecoration(Color.GRAY, Util.dip2px(this,0.5f), Util.dip2px(this,72),0);//color & height & paddingLeft & paddingRight

itemDecoration.setDrawLastItem(true);//sometimes you don't want draw the divider for the last item,default is true.

itemDecoration.setDrawHeaderFooter(false);//whether draw divider for header and footer,default is false.