sha

stringlengths 40

40

| text

stringlengths 0

13.4M

| id

stringlengths 2

117

| tags

sequence | created_at

stringlengths 25

25

| metadata

stringlengths 2

31.7M

| last_modified

stringlengths 25

25

|

|---|---|---|---|---|---|---|

a5f168f935ebaebd708794c03241f07efbfdbeb1 | Rizwan125/AIByRizwan | [

"license:apache-2.0",

"region:us"

] | 2022-05-06T16:02:34+00:00 | {"license": "apache-2.0"} | 2022-05-06T16:06:15+00:00 |

|

e2fd67fea2d92b54b613fa1eb2af9023f172e91a |

# Dataset Card for "twitter-pos"

## Table of Contents

- [Dataset Description](#dataset-description)

- [Dataset Summary](#dataset-summary)

- [Supported Tasks and Leaderboards](#supported-tasks-and-leaderboards)

- [Languages](#languages)

- [Dataset Structure](#dataset-structure)

- [Data Instances](#data-instances)

- [Data Fields](#data-fields)

- [Data Splits](#data-splits)

- [Dataset Creation](#dataset-creation)

- [Curation Rationale](#curation-rationale)

- [Source Data](#source-data)

- [Annotations](#annotations)

- [Personal and Sensitive Information](#personal-and-sensitive-information)

- [Considerations for Using the Data](#considerations-for-using-the-data)

- [Social Impact of Dataset](#social-impact-of-dataset)

- [Discussion of Biases](#discussion-of-biases)

- [Other Known Limitations](#other-known-limitations)

- [Additional Information](#additional-information)

- [Dataset Curators](#dataset-curators)

- [Licensing Information](#licensing-information)

- [Citation Information](#citation-information)

- [Contributions](#contributions)

## Dataset Description

- **Homepage:** [https://gate.ac.uk/wiki/twitter-postagger.html](https://gate.ac.uk/wiki/twitter-postagger.html)

- **Repository:** [https://github.com/GateNLP/gateplugin-Twitter](https://github.com/GateNLP/gateplugin-Twitter)

- **Paper:** [https://aclanthology.org/R13-1026/](https://aclanthology.org/R13-1026/)

- **Point of Contact:** [Leon Derczynski](https://github.com/leondz)

- **Size of downloaded dataset files:** 51.96 MiB

- **Size of the generated dataset:** 251.22 KiB

- **Total amount of disk used:** 52.05 MB

### Dataset Summary

Part-of-speech information is basic NLP task. However, Twitter text

is difficult to part-of-speech tag: it is noisy, with linguistic errors and idiosyncratic style.

This dataset contains two datasets for English PoS tagging for tweets:

* Ritter, with train/dev/test

* Foster, with dev/test

Splits defined in the Derczynski paper, but the data is from Ritter and Foster.

* Ritter: [https://aclanthology.org/D11-1141.pdf](https://aclanthology.org/D11-1141.pdf),

* Foster: [https://www.aaai.org/ocs/index.php/ws/aaaiw11/paper/download/3912/4191](https://www.aaai.org/ocs/index.php/ws/aaaiw11/paper/download/3912/4191)

### Supported Tasks and Leaderboards

* [Part of speech tagging on Ritter](https://paperswithcode.com/sota/part-of-speech-tagging-on-ritter)

### Languages

English, non-region-specific. `bcp47:en`

## Dataset Structure

### Data Instances

An example of 'train' looks as follows.

```

{'id': '0', 'tokens': ['Antick', 'Musings', 'post', ':', 'Book-A-Day', '2010', '#', '243', '(', '10/4', ')', '--', 'Gray', 'Horses', 'by', 'Hope', 'Larson', 'http://bit.ly/as8fvc'], 'pos_tags': [23, 23, 22, 9, 23, 12, 22, 12, 5, 12, 6, 9, 23, 23, 16, 23, 23, 51]}

```

### Data Fields

The data fields are the same among all splits.

#### twitter-pos

- `id`: a `string` feature.

- `tokens`: a `list` of `string` features.

- `pos_tags`: a `list` of classification labels (`int`). Full tagset with indices:

```python

```

### Data Splits

| name |tokens|sentences|

|---------|----:|---------:|

|ritter train|10652|551|

|ritter dev |2242|118|

|ritter test |2291|118|

|foster dev |2998|270|

|foster test |2841|250|

## Dataset Creation

### Curation Rationale

[More Information Needed](https://github.com/huggingface/datasets/blob/master/CONTRIBUTING.md#how-to-contribute-to-the-dataset-cards)

### Source Data

#### Initial Data Collection and Normalization

[More Information Needed](https://github.com/huggingface/datasets/blob/master/CONTRIBUTING.md#how-to-contribute-to-the-dataset-cards)

#### Who are the source language producers?

[More Information Needed](https://github.com/huggingface/datasets/blob/master/CONTRIBUTING.md#how-to-contribute-to-the-dataset-cards)

### Annotations

#### Annotation process

[More Information Needed](https://github.com/huggingface/datasets/blob/master/CONTRIBUTING.md#how-to-contribute-to-the-dataset-cards)

#### Who are the annotators?

[More Information Needed](https://github.com/huggingface/datasets/blob/master/CONTRIBUTING.md#how-to-contribute-to-the-dataset-cards)

### Personal and Sensitive Information

[More Information Needed](https://github.com/huggingface/datasets/blob/master/CONTRIBUTING.md#how-to-contribute-to-the-dataset-cards)

## Considerations for Using the Data

### Social Impact of Dataset

[More Information Needed](https://github.com/huggingface/datasets/blob/master/CONTRIBUTING.md#how-to-contribute-to-the-dataset-cards)

### Discussion of Biases

[More Information Needed](https://github.com/huggingface/datasets/blob/master/CONTRIBUTING.md#how-to-contribute-to-the-dataset-cards)

### Other Known Limitations

[More Information Needed](https://github.com/huggingface/datasets/blob/master/CONTRIBUTING.md#how-to-contribute-to-the-dataset-cards)

## Additional Information

### Dataset Curators

[More Information Needed](https://github.com/huggingface/datasets/blob/master/CONTRIBUTING.md#how-to-contribute-to-the-dataset-cards)

### Licensing Information

### Citation Information

```

@inproceedings{ritter2011named,

title={Named entity recognition in tweets: an experimental study},

author={Ritter, Alan and Clark, Sam and Etzioni, Oren and others},

booktitle={Proceedings of the 2011 conference on empirical methods in natural language processing},

pages={1524--1534},

year={2011}

}

@inproceedings{foster2011hardtoparse,

title={\# hardtoparse: POS Tagging and Parsing the Twitterverse},

author={Foster, Jennifer and Cetinoglu, Ozlem and Wagner, Joachim and Le Roux, Joseph and Hogan, Stephen and Nivre, Joakim and Hogan, Deirdre and Van Genabith, Josef},

booktitle={Workshops at the Twenty-Fifth AAAI Conference on Artificial Intelligence},

year={2011}

}

@inproceedings{derczynski2013twitter,

title={Twitter part-of-speech tagging for all: Overcoming sparse and noisy data},

author={Derczynski, Leon and Ritter, Alan and Clark, Sam and Bontcheva, Kalina},

booktitle={Proceedings of the international conference recent advances in natural language processing ranlp 2013},

pages={198--206},

year={2013}

}

```

### Contributions

Author uploaded ([@leondz](https://github.com/leondz)) | strombergnlp/twitter_pos | [

"task_categories:token-classification",

"task_ids:part-of-speech",

"annotations_creators:expert-generated",

"language_creators:found",

"multilinguality:monolingual",

"size_categories:10K<n<100K",

"source_datasets:original",

"language:en",

"license:cc-by-4.0",

"region:us"

] | 2022-05-06T18:09:49+00:00 | {"annotations_creators": ["expert-generated"], "language_creators": ["found"], "language": ["en"], "license": ["cc-by-4.0"], "multilinguality": ["monolingual"], "size_categories": ["10K<n<100K"], "source_datasets": ["original"], "task_categories": ["token-classification"], "task_ids": ["part-of-speech"], "paperswithcode_id": "ritter-pos", "pretty_name": "Twitter Part-of-speech"} | 2022-10-25T20:43:15+00:00 |

c66f16a81c93184bdc7f22cfbed284e5b7c12cc7 |

# Dataset Card for [KOR-RE-natures-and-environments]

You can find relation map, guidelines(written in Korean), short technical papers in this [github repo](https://github.com/boostcampaitech3/level2-data-annotation_nlp-level2-nlp-03). This work is done by as part of project for Boostcamp AI Tech supported by Naver Connect Foundation.

### Dataset Description

* Language: Korean

* Task: Relation Extraction

* Topics: Natures and Environments

* Sources: Korean wiki

### Main Data Fields

* Sentences: sentences

* Subject_entity: infos for subject entity in the sentence including words, start index, end index, type of entity

* object_entity: infos for object entity in the sentence including words, start index, end index, type of entity

* label : class ground truth label

* file : name of the file

| kimcando/KOR-RE-natures-and-environments | [

"license:apache-2.0",

"region:us"

] | 2022-05-06T20:59:28+00:00 | {"license": "apache-2.0"} | 2022-05-06T21:11:26+00:00 |

c1b3a1715af331b7834a66a4e878f5fad0a5761e | nateraw/background-remover-files | [

"license:apache-2.0",

"region:us"

] | 2022-05-07T01:49:48+00:00 | {"license": "apache-2.0"} | 2022-05-07T01:53:12+00:00 |

|

7dad1ae753d14498544c4dc1e48e41e7bd633d56 | d0r1h/customer_churn | [

"license:apache-2.0",

"region:us"

] | 2022-05-07T02:04:13+00:00 | {"license": "apache-2.0"} | 2022-05-07T02:27:33+00:00 |

|

6ed818c8ce6d452e5de3133f822c2b80cf02f8d5 | # README

## Annotated Student Feedback

---

annotations_creators: []

language:

- en

license:

- mit

---

This resource contains 3000 student feedback data that have been annotated for aspect terms, opinion terms, polarities of the opinion terms towards targeted aspects, document-level opinion polarities, and sentence separations.

### Folder Structure of the resource,

```bash

└───Annotated Student Feedback Data

├───Annotator_1

│ ├───Annotated_part_1

│ ├───Annotated_part_2

│ └───towe-eacl_recreation_data_set

│ ├───defomative comment removed

│ └───less than 100 lengthy comment

├───Annotator_2

│ ├───Annotated_part_3

│ ├───Annotated_part_4

│ └───Annotated_part_5

└───Annotator_3

└───Annotated_part_6

```

Each Annotated_part_# folders contain three files. Those are in XMI, XML, and ZIP formats.

XMI files contain the annotated student feedback data and XML files contain tagsets used for annotation.

Find the code for reading data from XML and XMI files in `code_for_read_annotated_data.py`

| NLPC-UOM/Student_feedback_analysis_dataset | [

"region:us"

] | 2022-05-07T02:17:15+00:00 | {} | 2022-10-25T09:13:19+00:00 |

e96165af1c82b5dd47b286d196f6ad6ab03ed3ff |

# Dataset Card for Bingsu/arcalive_220506

## Table of Contents

- [Dataset Description](#dataset-description)

- [Dataset Summary](#dataset-summary)

- [Supported Tasks](#supported-tasks-and-leaderboards)

- [Languages](#languages)

- [Dataset Structure](#dataset-structure)

- [Data Instances](#data-instances)

- [Data Fields](#data-instances)

- [Data Splits](#data-instances)

## Dataset Description

- **Homepage:** https://huggingface.co/datasets/Bingsu/arcalive_220506

- **Repository:** [Needs More Information]

- **Paper:** [Needs More Information]

- **Leaderboard:** [Needs More Information]

- **Point of Contact:** [Needs More Information]

### Dataset Summary

[아카라이브 베스트 라이브 채널](https://arca.live/b/live)의 2021년 8월 16일부터 2022년 5월 6일까지의 데이터를 수집하여, 댓글만 골라낸 데이터입니다.

커뮤니티 특성상, 민감한 데이터들도 많으므로 사용에 주의가 필요합니다.

### Supported Tasks and Leaderboards

[Needs More Information]

### Languages

ko

## Dataset Structure

### Data Instances

- Size of downloaded dataset files: 21.3 MB

### Data Fields

- text: `string`

### Data Splits

| | train |

| ---------- | ------ |

| # of texts | 195323 |

```pycon

>>> from datasets import load_dataset

>>>

>>> data = load_dataset("Bingsu/arcalive_220506")

>>> data["train"].features

{'text': Value(dtype='string', id=None)}

```

```pycon

>>> data["train"][0]

{'text': '오오오오...'}

```

| Bingsu/arcalive_220506 | [

"task_categories:fill-mask",

"task_categories:text-generation",

"task_ids:masked-language-modeling",

"task_ids:language-modeling",

"annotations_creators:no-annotation",

"language_creators:crowdsourced",

"multilinguality:monolingual",

"size_categories:100K<n<1M",

"source_datasets:original",

"language:ko",

"license:cc0-1.0",

"region:us"

] | 2022-05-07T02:40:31+00:00 | {"annotations_creators": ["no-annotation"], "language_creators": ["crowdsourced"], "language": ["ko"], "license": ["cc0-1.0"], "multilinguality": ["monolingual"], "size_categories": ["100K<n<1M"], "source_datasets": ["original"], "task_categories": ["fill-mask", "text-generation"], "task_ids": ["masked-language-modeling", "language-modeling"], "pretty_name": "arcalive_210816_220506"} | 2022-07-01T23:09:48+00:00 |

c31fd74df02439e5a085005238addab9c70dfcf6 | readme! | zhiguoxu/test_data | [

"region:us"

] | 2022-05-07T05:53:04+00:00 | {} | 2022-05-07T05:55:39+00:00 |

9c250843ee2a24eb03085907ade3d4261916fa9c | deydebasmita91/Tweet | [

"license:afl-3.0",

"region:us"

] | 2022-05-07T06:09:33+00:00 | {"license": "afl-3.0"} | 2022-05-07T06:09:33+00:00 |

|

daab7272f119b6d223bb119da987cf10fe210ed7 |

Token classification dataset developed from dataset by Katarina Nimas Kusumawati's undergraduate thesis:

**"Identifikasi Entitas Bernama dalam Domain Medis pada Layanan Konsultasi Kesehatan Berbahasa Menggunkan Alrogritme Bidirectional-LSTM-CRF"**

Institut Teknologi Sepuluh Nopember, Surabaya, Indonesia - 2022

I just performed stratified train-validation-test split work from the original dataset.

Compatible with HuggingFace token-classification script (Tested in 4.17)

https://github.com/huggingface/transformers/tree/v4.17.0/examples/pytorch/token-classification | nadhifikbarw/id_ner_nimas | [

"task_categories:token-classification",

"language:id",

"region:us"

] | 2022-05-07T10:23:27+00:00 | {"language": ["id"], "task_categories": ["token-classification"]} | 2022-10-25T09:13:25+00:00 |

45afd873a3a06ec89473aee2cc4bcd0037474384 | ## fanfiction.net

Cleaning up https://archive.org/download/fanfictiondotnet_repack

Starting with "Z" stories to get the hang of it. | jeremyf/fanfiction_z | [

"language:en",

"fanfiction",

"region:us"

] | 2022-05-07T15:19:15+00:00 | {"language": ["en"], "tags": ["fanfiction"], "datasets": ["fanfiction_z"]} | 2022-05-07T19:53:30+00:00 |

26b54f488012d7f8fd935a4d5d85c46f05fb665d | Can be used for qualifying data sources | hidude562/textsources | [

"region:us"

] | 2022-05-07T16:10:18+00:00 | {} | 2022-05-07T16:12:39+00:00 |

9cdb9cd60e61788d28f341c0cd0bd6ffd2eb3eef | This dataset is a copy from a wikipedia dataset on kaggle | hidude562/BadWikipedia | [

"region:us"

] | 2022-05-07T16:47:50+00:00 | {} | 2022-05-07T16:48:25+00:00 |

764d16c169120835d703ec866dc9c41a6c2a7d88 | This is the English part of the ConceptNet and we have removed the useless information. | peandrew/conceptnet_en_nomalized | [

"region:us"

] | 2022-05-08T00:47:33+00:00 | {} | 2022-05-08T02:11:02+00:00 |

1925dfe6101a528f3dba572ae6aee25f49225c26 | This dataset is the CSV version of the original MCMD (Multi-programming-language Commit Message Dataset) provided by Tao et al. in their paper "On the Evaluation of Commit Message Generation Models: An Experimental Study".

The original version of the dataset can be found in [Zenodo](https://doi.org/10.5281/zenodo.5025758).

| parvezmrobin/MCMD | [

"region:us"

] | 2022-05-08T02:34:28+00:00 | {} | 2022-05-09T06:25:40+00:00 |

ab6223087bf5d6f2e81fef71cb174750266305d1 | nateraw/imagenet-sketch | [

"license:mit",

"region:us"

] | 2022-05-08T04:32:17+00:00 | {"license": "mit"} | 2022-05-08T04:41:33+00:00 |

|

6a2a328e05f100eff4a63f6aec652dbb2ccb214d | data I hand picked from https://blcklst.com/lists/ and http://cs.cmu.edu/~ark/personas/ | bananabot/engMollywoodSummaries | [

"license:wtfpl",

"region:us"

] | 2022-05-08T14:43:03+00:00 | {"license": "wtfpl"} | 2022-05-08T14:54:28+00:00 |

810d972d39c9710587f353f07efe5d3e5432815f | ufukhaman/uspto_balanced_200k_ipc_classification | [

"task_categories:text-classification",

"task_ids:topic-classification",

"annotations_creators:USPTO",

"size_categories:100K<n<1M",

"source_datasets:USPTO",

"language:en",

"license:mit",

"patent",

"refined_patents",

"patent classification",

"uspto",

"ipc",

"region:us"

] | 2022-05-08T15:50:41+00:00 | {"annotations_creators": ["USPTO"], "language": ["en"], "license": ["mit"], "size_categories": ["100K<n<1M"], "source_datasets": ["USPTO"], "task_categories": ["text-classification"], "task_ids": ["topic-classification"], "pretty_name": "uspto_balanced_filtered_200k_ipc_patents", "tags": ["patent", "refined_patents", "patent classification", "uspto", "ipc"]} | 2023-11-20T03:16:38+00:00 |

|

b8f1d27905d8f70f9ab5440a925e00f7bbddcb5f | nguyenvulebinh/fsd50k | [

"license:cc-by-4.0",

"region:us"

] | 2022-05-08T21:16:36+00:00 | {"license": "cc-by-4.0"} | 2022-05-08T21:18:48+00:00 |

|

212b8789f3958e28a961b7147be3c52b83992918 | # Dataset Card for eoir_privacy

## Table of Contents

- [Dataset Description](#dataset-description)

- [Dataset Summary](#dataset-summary)

- [Supported Tasks](#supported-tasks-and-leaderboards)

- [Languages](#languages)

- [Dataset Structure](#dataset-structure)

- [Data Instances](#data-instances)

- [Data Fields](#data-instances)

- [Data Splits](#data-instances)

- [Dataset Creation](#dataset-creation)

- [Curation Rationale](#curation-rationale)

- [Source Data](#source-data)

- [Annotations](#annotations)

- [Personal and Sensitive Information](#personal-and-sensitive-information)

- [Considerations for Using the Data](#considerations-for-using-the-data)

- [Social Impact of Dataset](#social-impact-of-dataset)

- [Discussion of Biases](#discussion-of-biases)

- [Other Known Limitations](#other-known-limitations)

- [Additional Information](#additional-information)

- [Dataset Curators](#dataset-curators)

- [Licensing Information](#licensing-information)

- [Citation Information](#citation-information)

## Dataset Description

- **Homepage:** [Needs More Information]

- **Repository:** [Needs More Information]

- **Paper:** [Needs More Information]

- **Leaderboard:** [Needs More Information]

- **Point of Contact:** [Needs More Information]

### Dataset Summary

This dataset mimics privacy standards for EOIR decisions. It is meant to help learn contextual data sanitization rules to anonymize potentially sensitive contexts in crawled language data.

### Languages

English

## Dataset Structure

### Data Instances

{

"text" : masked paragraph,

"label" : whether to use a pseudonym in filling masks

}

### Data Splits

train 75%, validation 25%

## Dataset Creation

### Curation Rationale

This dataset mimics privacy standards for EOIR decisions. It is meant to help learn contextual data sanitization rules to anonymize potentially sensitive contexts in crawled language data.

### Source Data

#### Initial Data Collection and Normalization

We scrape EOIR. We then filter at the paragraph level and replace any references to respondent, applicant, or names with [MASK] tokens. We then determine if the case used a pseudonym or not.

#### Who are the source language producers?

U.S. Executive Office for Immigration Review

### Annotations

#### Annotation process

Annotations (i.e., pseudonymity decisions) were made by the EOIR court. We use regex to identify if a pseudonym was used to refer to the applicant/respondent.

#### Who are the annotators?

EOIR judges.

### Personal and Sensitive Information

There may be sensitive contexts involved, the courts already make a determination as to data filtering of sensitive data, but nonetheless there may be sensitive topics discussed.

## Considerations for Using the Data

### Social Impact of Dataset

This dataset is meant to learn contextual privacy rules to help filter private/sensitive data, but itself encodes biases of the courts from which the data came. We suggest that people look beyond this data for learning more contextual privacy rules.

### Discussion of Biases

Data may be biased due to its origin in U.S. immigration courts.

### Licensing Information

CC-BY-NC

### Citation Information

```

@misc{hendersonkrass2022pileoflaw,

url = {https://arxiv.org/abs/2207.00220},

author = {Henderson, Peter and Krass, Mark S. and Zheng, Lucia and Guha, Neel and Manning, Christopher D. and Jurafsky, Dan and Ho, Daniel E.},

title = {Pile of Law: Learning Responsible Data Filtering from the Law and a 256GB Open-Source Legal Dataset},

publisher = {arXiv},

year = {2022}

}

``` | pile-of-law/eoir_privacy | [

"task_categories:text-classification",

"language_creators:found",

"multilinguality:monolingual",

"language:en",

"license:cc-by-nc-sa-4.0",

"arxiv:2207.00220",

"region:us"

] | 2022-05-08T21:30:20+00:00 | {"language_creators": ["found"], "language": ["en"], "license": ["cc-by-nc-sa-4.0"], "multilinguality": ["monolingual"], "source_datasets": [], "task_categories": ["text-classification"], "pretty_name": "eoir_privacy", "viewer": false} | 2022-07-07T07:44:32+00:00 |

369d3fa365afd16e699f5dfa2ff283675f637aaa | lilitket/voxlingua107 | [

"license:apache-2.0",

"region:us"

] | 2022-05-08T22:27:04+00:00 | {"license": "apache-2.0"} | 2022-05-08T22:27:04+00:00 |

|

a2a4aa7bb2f872f0164a04f198b1c875df065a8a |

# Dataset Card for "rustance"

## Table of Contents

- [Dataset Description](#dataset-description)

- [Dataset Summary](#dataset-summary)

- [Supported Tasks and Leaderboards](#supported-tasks-and-leaderboards)

- [Languages](#languages)

- [Dataset Structure](#dataset-structure)

- [Data Instances](#data-instances)

- [Data Fields](#data-fields)

- [Data Splits](#data-splits)

- [Dataset Creation](#dataset-creation)

- [Curation Rationale](#curation-rationale)

- [Source Data](#source-data)

- [Annotations](#annotations)

- [Personal and Sensitive Information](#personal-and-sensitive-information)

- [Considerations for Using the Data](#considerations-for-using-the-data)

- [Social Impact of Dataset](#social-impact-of-dataset)

- [Discussion of Biases](#discussion-of-biases)

- [Other Known Limitations](#other-known-limitations)

- [Additional Information](#additional-information)

- [Dataset Curators](#dataset-curators)

- [Licensing Information](#licensing-information)

- [Citation Information](#citation-information)

- [Contributions](#contributions)

## Dataset Description

- **Homepage:** [https://figshare.com/articles/dataset/dataset_csv/7151906](https://figshare.com/articles/dataset/dataset_csv/7151906)

- **Repository:** [https://github.com/StrombergNLP/rustance](https://github.com/StrombergNLP/rustance)

- **Paper:** [https://link.springer.com/chapter/10.1007/978-3-030-14687-0_16](https://link.springer.com/chapter/10.1007/978-3-030-14687-0_16), [https://arxiv.org/abs/1809.01574](https://arxiv.org/abs/1809.01574)

- **Point of Contact:** [Leon Derczynski](https://github.com/leondz)

- **Size of downloaded dataset files:** 212.54 KiB

- **Size of the generated dataset:** 186.76 KiB

- **Total amount of disk used:** 399.30KiB

### Dataset Summary

This is a stance prediction dataset in Russian. The dataset contains comments on news articles,

and rows are a comment, the title of the news article it responds to, and the stance of the comment

towards the article.

Stance detection is a critical component of rumour and fake news identification. It involves the extraction of the stance a particular author takes related to a given claim, both expressed in text. This paper investigates stance classification for Russian. It introduces a new dataset, RuStance, of Russian tweets and news comments from multiple sources, covering multiple stories, as well as text classification approaches to stance detection as benchmarks over this data in this language. As well as presenting this openly-available dataset, the first of its kind for Russian, the paper presents a baseline for stance prediction in the language.

### Supported Tasks and Leaderboards

* Stance Detection: [Stance Detection on RuStance](https://paperswithcode.com/sota/stance-detection-on-rustance)

### Languages

Russian, as spoken on the Meduza website (i.e. from multiple countries) (`bcp47:ru`)

## Dataset Structure

### Data Instances

#### rustance

- **Size of downloaded dataset files:** 349.79 KiB

- **Size of the generated dataset:** 366.11 KiB

- **Total amount of disk used:** 715.90 KiB

An example of 'train' looks as follows.

```

{

'id': '0',

'text': 'Волки, волки!!',

'title': 'Минобороны обвинило «гражданского сотрудника» в публикации скриншота из игры вместо фото террористов. И показало новое «неоспоримое подтверждение»',

'stance': 3

}

```

### Data Fields

- `id`: a `string` feature.

- `text`: a `string` expressing a stance.

- `title`: a `string` of the target/topic annotated here.

- `stance`: a class label representing the stance the text expresses towards the target. Full tagset with indices:

```

0: "support",

1: "deny",

2: "query",

3: "comment",

```

### Data Splits

| name |train|

|---------|----:|

|rustance|958 sentences|

## Dataset Creation

### Curation Rationale

Toy data for training and especially evaluating stance prediction in Russian

### Source Data

#### Initial Data Collection and Normalization

The data is comments scraped from a Russian news site not situated in Russia, [Meduza](https://meduza.io/), in 2018.

#### Who are the source language producers?

Russian speakers including from the Russian diaspora, especially Latvia

### Annotations

#### Annotation process

Annotators labelled comments for supporting, denying, querying or just commenting on a news article.

#### Who are the annotators?

Russian native speakers, IT education, male, 20s.

### Personal and Sensitive Information

The data was public at the time of collection. No PII removal has been performed.

## Considerations for Using the Data

### Social Impact of Dataset

There's a risk of misinformative content being in this data. The data has NOT been vetted for any content.

### Discussion of Biases

### Other Known Limitations

The above limitations apply.

## Additional Information

### Dataset Curators

The dataset is curated by the paper's authors.

### Licensing Information

The authors distribute this data under Creative Commons attribution license, CC-BY 4.0.

### Citation Information

```

@inproceedings{lozhnikov2018stance,

title={Stance prediction for russian: data and analysis},

author={Lozhnikov, Nikita and Derczynski, Leon and Mazzara, Manuel},

booktitle={International Conference in Software Engineering for Defence Applications},

pages={176--186},

year={2018},

organization={Springer}

}

```

### Contributions

Author-added dataset [@leondz](https://github.com/leondz)

| strombergnlp/rustance | [

"task_categories:text-classification",

"task_ids:fact-checking",

"task_ids:sentiment-classification",

"annotations_creators:expert-generated",

"language_creators:found",

"multilinguality:monolingual",

"size_categories:n<1K",

"source_datasets:original",

"language:ru",

"license:cc-by-4.0",

"stance-detection",

"arxiv:1809.01574",

"region:us"

] | 2022-05-09T07:53:27+00:00 | {"annotations_creators": ["expert-generated"], "language_creators": ["found"], "language": ["ru"], "license": ["cc-by-4.0"], "multilinguality": ["monolingual"], "size_categories": ["n<1K"], "source_datasets": ["original"], "task_categories": ["text-classification"], "task_ids": ["fact-checking", "sentiment-classification"], "paperswithcode_id": "rustance", "pretty_name": "RuStance", "tags": ["stance-detection"]} | 2022-10-25T20:46:32+00:00 |

a2026a5ccc555b7a1658105c515df80b683f26db |

# Dataset Card for audioset2022

## Table of Contents

- [Dataset Description](#dataset-description)

- [Dataset Summary](#dataset-summary)

- [Supported Tasks and Leaderboards](#supported-tasks-and-leaderboards)

- [Languages](#languages)

- [Dataset Structure](#dataset-structure)

- [Data Instances](#data-instances)

- [Data Fields](#data-fields)

- [Data Splits](#data-splits)

- [Dataset Creation](#dataset-creation)

- [Curation Rationale](#curation-rationale)

- [Source Data](#source-data)

- [Annotations](#annotations)

- [Personal and Sensitive Information](#personal-and-sensitive-information)

- [Considerations for Using the Data](#considerations-for-using-the-data)

- [Social Impact of Dataset](#social-impact-of-dataset)

- [Discussion of Biases](#discussion-of-biases)

- [Other Known Limitations](#other-known-limitations)

- [Additional Information](#additional-information)

- [Dataset Curators](#dataset-curators)

- [Licensing Information](#licensing-information)

- [Citation Information](#citation-information)

- [Contributions](#contributions)

## Dataset Description

- **Homepage:** [AudioSet Ontology](https://research.google.com/audioset/ontology/index.html)

- **Repository:** [Needs More Information]

- **Paper:** [Audio Set: An ontology and human-labeled dataset for audio events](https://research.google.com/pubs/pub45857.html)

- **Leaderboard:** [Paperswithcode Leaderboard](https://paperswithcode.com/dataset/audioset)

### Dataset Summary

The AudioSet ontology is a collection of sound events organized in a hierarchy. The ontology covers a wide range of everyday sounds, from human and animal sounds, to natural and environmental sounds, to musical and miscellaneous sounds.

**This repository only includes audio files for DCASE 2022 - Task 3**

The included labels are limited to:

- Female speech, woman speaking

- Male speech, man speaking

- Clapping

- Telephone

- Telephone bell ringing

- Ringtone

- Laughter

- Domestic sounds, home sounds

- Vacuum cleaner

- Kettle whistle

- Mechanical fan

- Walk, footsteps

- Door

- Cupboard open or close

- Music

- Background music

- Pop music

- Musical instrument

- Acoustic guitar

- Marimba, xylophone

- Cowbell

- Piano

- Electric piano

- Rattle (instrument)

- Water tap, faucet

- Bell

- Bicycle bell

- Chime

- Knock

### Supported Tasks and Leaderboards

- `audio-classification`: The dataset can be used to train a model for Sound Event Detection/Localization.

**The recordings only includes the single channel audio. For Localization tasks, it will required to apply RIR information**

### Languages

None

## Dataset Structure

### Data Instances

**WIP**

```

{

'file':

}

```

### Data Fields

- file: A path to the downloaded audio file in .mp3 format.

### Data Splits

This dataset only includes audio file from the unbalance train list.

The data comprises two splits: weak labels and strong labels.

## Dataset Creation

### Curation Rationale

[Needs More Information]

### Source Data

#### Initial Data Collection and Normalization

[Needs More Information]

#### Who are the source language producers?

[Needs More Information]

### Annotations

#### Annotation process

[Needs More Information]

#### Who are the annotators?

[Needs More Information]

### Personal and Sensitive Information

[Needs More Information]

## Considerations for Using the Data

### Social Impact of Dataset

[More Information Needed]

### Discussion of Biases

[More Information Needed]

### Other Known Limitations

[Needs More Information]

## Additional Information

### Dataset Curators

The dataset was initially downloaded by Nelson Yalta (nelson.yalta@ieee.org).

### Licensing Information

[CC BY-SA 4.0](https://creativecommons.org/licenses/by-sa/4.0)

### Citation Information

```

@inproceedings{45857,

title = {Audio Set: An ontology and human-labeled dataset for audio events},

author = {Jort F. Gemmeke and Daniel P. W. Ellis and Dylan Freedman and Aren Jansen and Wade Lawrence and R. Channing Moore and Manoj Plakal and Marvin Ritter},

year = {2017},

booktitle = {Proc. IEEE ICASSP 2017},

address = {New Orleans, LA}

}

```

| Fhrozen/AudioSet2K22 | [

"task_categories:audio-classification",

"annotations_creators:unknown",

"language_creators:unknown",

"size_categories:100K<n<100M",

"source_datasets:unknown",

"license:cc-by-sa-4.0",

"audio-slot-filling",

"region:us"

] | 2022-05-09T11:42:09+00:00 | {"annotations_creators": ["unknown"], "language_creators": ["unknown"], "license": "cc-by-sa-4.0", "size_categories": ["100K<n<100M"], "source_datasets": ["unknown"], "task_categories": ["audio-classification"], "task_ids": [], "tags": ["audio-slot-filling"]} | 2023-05-07T22:50:56+00:00 |

14ee3d2371f129249d64b6e9171b0fa57a8270c8 | Maddy132/bottles | [

"license:afl-3.0",

"region:us"

] | 2022-05-09T12:13:11+00:00 | {"license": "afl-3.0"} | 2022-05-09T12:13:11+00:00 |

|

f223cad3fce49e4490733772610a0cbdb7fbcb9d |

# WCEP10 dataset for summarization

Summarization dataset copied from [PRIMERA](https://github.com/allenai/PRIMER)

This dataset is compatible with the [`run_summarization.py`](https://github.com/huggingface/transformers/tree/master/examples/pytorch/summarization) script from Transformers if you add this line to the `summarization_name_mapping` variable:

```python

"ccdv/WCEP-10": ("document", "summary")

```

# Configs

4 possibles configs:

- `roberta` will concatenate documents with "\</s\>" (default)

- `newline` will concatenate documents with "\n"

- `bert` will concatenate documents with "[SEP]"

- `list` will return the list of documents instead of a string

### Data Fields

- `id`: paper id

- `document`: a string/list containing the body of a set of documents

- `summary`: a string containing the abstract of the set

### Data Splits

This dataset has 3 splits: _train_, _validation_, and _test_. \

| Dataset Split | Number of Instances |

| ------------- | --------------------|

| Train | 8158 |

| Validation | 1020 |

| Test | 1022 |

# Cite original article

```

@article{DBLP:journals/corr/abs-2005-10070,

author = {Demian Gholipour Ghalandari and

Chris Hokamp and

Nghia The Pham and

John Glover and

Georgiana Ifrim},

title = {A Large-Scale Multi-Document Summarization Dataset from the Wikipedia

Current Events Portal},

journal = {CoRR},

volume = {abs/2005.10070},

year = {2020},

url = {https://arxiv.org/abs/2005.10070},

eprinttype = {arXiv},

eprint = {2005.10070},

timestamp = {Fri, 22 May 2020 16:21:28 +0200},

biburl = {https://dblp.org/rec/journals/corr/abs-2005-10070.bib},

bibsource = {dblp computer science bibliography, https://dblp.org}

}

@article{DBLP:journals/corr/abs-2110-08499,

author = {Wen Xiao and

Iz Beltagy and

Giuseppe Carenini and

Arman Cohan},

title = {{PRIMER:} Pyramid-based Masked Sentence Pre-training for Multi-document

Summarization},

journal = {CoRR},

volume = {abs/2110.08499},

year = {2021},

url = {https://arxiv.org/abs/2110.08499},

eprinttype = {arXiv},

eprint = {2110.08499},

timestamp = {Fri, 22 Oct 2021 13:33:09 +0200},

biburl = {https://dblp.org/rec/journals/corr/abs-2110-08499.bib},

bibsource = {dblp computer science bibliography, https://dblp.org}

}

``` | ccdv/WCEP-10 | [

"task_categories:summarization",

"task_categories:text2text-generation",

"multilinguality:monolingual",

"size_categories:1K<n<10K",

"language:en",

"conditional-text-generation",

"arxiv:2005.10070",

"arxiv:2110.08499",

"region:us"

] | 2022-05-09T13:13:26+00:00 | {"language": ["en"], "multilinguality": ["monolingual"], "size_categories": ["1K<n<10K"], "task_categories": ["summarization", "text2text-generation"], "task_ids": [], "tags": ["conditional-text-generation"]} | 2022-10-25T09:55:52+00:00 |

bc70f671fe1762dc8b9822701c05fcca2ac6169d |

This dataset is created by Ilja Samoilov. In dataset is tv show subtitles from ERR and transcriptions of those shows created with TalTech ASR.

```

from datasets import load_dataset, load_metric

dataset = load_dataset('csv', data_files={'train': "train.tsv", \

"validation":"val.tsv", \

"test": "test.tsv"}, delimiter='\t')

``` | IljaSamoilov/ERR-transcription-to-subtitles | [

"license:afl-3.0",

"region:us"

] | 2022-05-09T14:30:37+00:00 | {"license": "afl-3.0"} | 2022-05-09T17:29:16+00:00 |

feb713097480947041997b09537353df3632e1bd | emotion datset | mmillet/copy | [

"license:other",

"region:us"

] | 2022-05-09T15:55:02+00:00 | {"license": "other"} | 2022-05-10T08:53:27+00:00 |

d9c5be9a7315c640a3562b12fa5406d15221e6e2 | benyang123/code | [

"region:us"

] | 2022-05-09T16:10:40+00:00 | {} | 2022-05-09T16:13:17+00:00 |

|

aa54aa83ba43c62484e0bba3bc3f50edd3c6d238 | Pengfei/test22 | [

"region:us"

] | 2022-05-09T19:21:11+00:00 | {} | 2022-05-09T19:21:40+00:00 |

|

d3e892e10158b2a84a8a9f7ad689c5db4fde444b | Eigen/twttone | [

"region:us"

] | 2022-05-09T20:18:19+00:00 | {} | 2022-05-09T20:45:39+00:00 |

|

ebe8f93c58bbd2a506df86b82d5f4375abf28bae |

This Dataset is from Kaggle. It originally comes from the US Consumer Finance Complaints. This is great dataset for NLP multi-class classification.

| milesbutler/consumer_complaints | [

"license:mit",

"region:us"

] | 2022-05-09T20:21:32+00:00 | {"license": "mit"} | 2022-05-09T20:27:44+00:00 |

d38d3f42978e72c8c3ccc5dca0d3a2ac745f1fcf |

# Dataset Card for QA2D

## Table of Contents

- [Dataset Description](#dataset-description)

- [Dataset Summary](#dataset-summary)

- [Supported Tasks](#supported-tasks-and-leaderboards)

- [Languages](#languages)

- [Dataset Structure](#dataset-structure)

- [Data Instances](#data-instances)

- [Data Fields](#data-instances)

- [Data Splits](#data-instances)

- [Dataset Creation](#dataset-creation)

- [Curation Rationale](#curation-rationale)

- [Source Data](#source-data)

- [Annotations](#annotations)

- [Personal and Sensitive Information](#personal-and-sensitive-information)

- [Considerations for Using the Data](#considerations-for-using-the-data)

- [Social Impact of Dataset](#social-impact-of-dataset)

- [Discussion of Biases](#discussion-of-biases)

- [Other Known Limitations](#other-known-limitations)

- [Additional Information](#additional-information)

- [Dataset Curators](#dataset-curators)

- [Licensing Information](#licensing-information)

- [Citation Information](#citation-information)

## Dataset Description

- **Homepage:** https://worksheets.codalab.org/worksheets/0xd4ebc52cebb84130a07cbfe81597aaf0/

- **Repository:** https://github.com/kelvinguu/qanli

- **Paper:** https://arxiv.org/abs/1809.02922

- **Leaderboard:** [Needs More Information]

- **Point of Contact:** [Needs More Information]

### Dataset Summary

Existing datasets for natural language inference (NLI) have propelled research on language understanding. We propose a new method for automatically deriving NLI datasets from the growing abundance of large-scale question answering datasets. Our approach hinges on learning a sentence transformation model which converts question-answer pairs into their declarative forms. Despite being primarily trained on a single QA dataset, we show that it can be successfully applied to a variety of other QA resources. Using this system, we automatically derive a new freely available dataset of over 500k NLI examples (QA-NLI), and show that it exhibits a wide range of inference phenomena rarely seen in previous NLI datasets.

This Question to Declarative Sentence (QA2D) Dataset contains 86k question-answer pairs and their manual transformation into declarative sentences. 95% of question answer pairs come from SQuAD (Rajkupar et al., 2016) and the remaining 5% come from four other question answering datasets.

### Supported Tasks and Leaderboards

[Needs More Information]

### Languages

en

## Dataset Structure

### Data Instances

See below.

### Data Fields

- `dataset`: lowercased name of dataset (movieqa, newsqa, qamr, race, squad)

- `example_uid`: unique id of example within dataset (there are examples with the same uids from different datasets, so the combination of dataset + example_uid should be used for unique indexing)

- `question`: tokenized (space-separated) question from the source QA dataset

- `answer`: tokenized (space-separated) answer span from the source QA dataset

- `turker_answer`: tokenized (space-separated) answer sentence collected from MTurk

- `rule-based`: tokenized (space-separated) answer sentence, generated by the rule-based model

### Data Splits

| Dataset Split | Number of Instances in Split |

| ------------- |----------------------------- |

| Train | 60,710 |

| Dev | 10,344 |

## Dataset Creation

### Curation Rationale

This Question to Declarative Sentence (QA2D) Dataset contains 86k question-answer pairs and their manual transformation into declarative sentences. 95% of question answer pairs come from SQuAD (Rajkupar et al., 2016) and the remaining 5% come from four other question answering datasets.

### Source Data

#### Initial Data Collection and Normalization

[Needs More Information]

#### Who are the source language producers?

[Needs More Information]

### Annotations

#### Annotation process

[Needs More Information]

#### Who are the annotators?

[Needs More Information]

### Personal and Sensitive Information

[Needs More Information]

## Considerations for Using the Data

### Social Impact of Dataset

[Needs More Information]

### Discussion of Biases

[Needs More Information]

### Other Known Limitations

[Needs More Information]

## Additional Information

### Dataset Curators

[Needs More Information]

### Licensing Information

[Needs More Information]

### Citation Information

@article{DBLP:journals/corr/abs-1809-02922,

author = {Dorottya Demszky and

Kelvin Guu and

Percy Liang},

title = {Transforming Question Answering Datasets Into Natural Language Inference

Datasets},

journal = {CoRR},

volume = {abs/1809.02922},

year = {2018},

url = {http://arxiv.org/abs/1809.02922},

eprinttype = {arXiv},

eprint = {1809.02922},

timestamp = {Fri, 05 Oct 2018 11:34:52 +0200},

biburl = {https://dblp.org/rec/journals/corr/abs-1809-02922.bib},

bibsource = {dblp computer science bibliography, https://dblp.org}

} | domenicrosati/QA2D | [

"task_categories:text2text-generation",

"task_ids:text-simplification",

"annotations_creators:machine-generated",

"annotations_creators:crowdsourced",

"annotations_creators:found",

"language_creators:machine-generated",

"language_creators:crowdsourced",

"multilinguality:monolingual",

"size_categories:10K<n<100K",

"source_datasets:original",

"source_datasets:extended|squad",

"source_datasets:extended|race",

"source_datasets:extended|newsqa",

"source_datasets:extended|qamr",

"source_datasets:extended|movieQA",

"license:mit",

"arxiv:1809.02922",

"region:us"

] | 2022-05-09T22:35:19+00:00 | {"annotations_creators": ["machine-generated", "crowdsourced", "found"], "language_creators": ["machine-generated", "crowdsourced"], "language": [], "license": ["mit"], "multilinguality": ["monolingual"], "size_categories": ["10K<n<100K"], "source_datasets": ["original", "extended|squad", "extended|race", "extended|newsqa", "extended|qamr", "extended|movieQA"], "task_categories": ["text2text-generation"], "task_ids": ["text-simplification"], "pretty_name": "QA2D"} | 2022-10-25T09:13:31+00:00 |

21b1791c498766ed3d204ba380db7f6242fe3aab | annotations_creators:

- crowdsourced

language_creators:

- crowdsourced

languages:

- en-US

- ''

licenses:

- osl-2.0

multilinguality:

- monolingual

pretty_name: github_issues_300

size_categories:

- n<1K

source_datasets: []

task_categories:

- text-classification

task_ids:

- acceptability-classification

- topic-classification

# Dataset Card for github_issues_300

## Table of Contents

- [Dataset Description](#dataset-description)

- [Dataset Summary](#dataset-summary)

- [Supported Tasks](#supported-tasks-and-leaderboards)

- [Languages](#languages)

- [Dataset Structure](#dataset-structure)

- [Data Instances](#data-instances)

- [Data Fields](#data-instances)

- [Data Splits](#data-instances)

- [Dataset Creation](#dataset-creation)

- [Curation Rationale](#curation-rationale)

- [Source Data](#source-data)

- [Annotations](#annotations)

- [Personal and Sensitive Information](#personal-and-sensitive-information)

- [Considerations for Using the Data](#considerations-for-using-the-data)

- [Social Impact of Dataset](#social-impact-of-dataset)

- [Discussion of Biases](#discussion-of-biases)

- [Other Known Limitations](#other-known-limitations)

- [Additional Information](#additional-information)

- [Dataset Curators](#dataset-curators)

- [Licensing Information](#licensing-information)

- [Citation Information](#citation-information)

## Dataset Description

- **Homepage:** [Needs More Information]

- **Repository:** https://huggingface.co/datasets/mdroth/github_issues_300

- **Paper:** [Needs More Information]

- **Leaderboard:** [Needs More Information]

- **Point of Contact:** [Needs More Information]

### Dataset Summary

GitHub issues dataset as in the Hugging Face course (https://huggingface.co/course/chapter5/5?fw=pt) but restricted to 300 issues

### Supported Tasks and Leaderboards

[Needs More Information]

### Languages

[Needs More Information]

## Dataset Structure

### Data Instances

[Needs More Information]

### Data Fields

[Needs More Information]

### Data Splits

[Needs More Information]

## Dataset Creation

### Curation Rationale

[Needs More Information]

### Source Data

#### Initial Data Collection and Normalization

[Needs More Information]

#### Who are the source language producers?

[Needs More Information]

### Annotations

#### Annotation process

[Needs More Information]

#### Who are the annotators?

[Needs More Information]

### Personal and Sensitive Information

[Needs More Information]

## Considerations for Using the Data

### Social Impact of Dataset

[Needs More Information]

### Discussion of Biases

[Needs More Information]

### Other Known Limitations

[Needs More Information]

## Additional Information

### Dataset Curators

[Needs More Information]

### Licensing Information

[Needs More Information]

### Citation Information

[Needs More Information] | mdroth/github_issues_300 | [

"region:us"

] | 2022-05-09T23:17:18+00:00 | {"dataset_info": {"features": [{"name": "url", "dtype": "string"}, {"name": "repository_url", "dtype": "string"}, {"name": "labels_url", "dtype": "string"}, {"name": "comments_url", "dtype": "string"}, {"name": "events_url", "dtype": "string"}, {"name": "html_url", "dtype": "string"}, {"name": "id", "dtype": "int64"}, {"name": "node_id", "dtype": "string"}, {"name": "number", "dtype": "int64"}, {"name": "title", "dtype": "string"}, {"name": "user", "struct": [{"name": "login", "dtype": "string"}, {"name": "id", "dtype": "int64"}, {"name": "node_id", "dtype": "string"}, {"name": "avatar_url", "dtype": "string"}, {"name": "gravatar_id", "dtype": "string"}, {"name": "url", "dtype": "string"}, {"name": "html_url", "dtype": "string"}, {"name": "followers_url", "dtype": "string"}, {"name": "following_url", "dtype": "string"}, {"name": "gists_url", "dtype": "string"}, {"name": "starred_url", "dtype": "string"}, {"name": "subscriptions_url", "dtype": "string"}, {"name": "organizations_url", "dtype": "string"}, {"name": "repos_url", "dtype": "string"}, {"name": "events_url", "dtype": "string"}, {"name": "received_events_url", "dtype": "string"}, {"name": "type", "dtype": "string"}, {"name": "site_admin", "dtype": "bool"}]}, {"name": "labels", "list": [{"name": "id", "dtype": "int64"}, {"name": "node_id", "dtype": "string"}, {"name": "url", "dtype": "string"}, {"name": "name", "dtype": "string"}, {"name": "color", "dtype": "string"}, {"name": "default", "dtype": "bool"}, {"name": "description", "dtype": "string"}]}, {"name": "state", "dtype": "string"}, {"name": "locked", "dtype": "bool"}, {"name": "assignee", "struct": [{"name": "login", "dtype": "string"}, {"name": "id", "dtype": "int64"}, {"name": "node_id", "dtype": "string"}, {"name": "avatar_url", "dtype": "string"}, {"name": "gravatar_id", "dtype": "string"}, {"name": "url", "dtype": "string"}, {"name": "html_url", "dtype": "string"}, {"name": "followers_url", "dtype": "string"}, {"name": "following_url", "dtype": "string"}, {"name": "gists_url", "dtype": "string"}, {"name": "starred_url", "dtype": "string"}, {"name": "subscriptions_url", "dtype": "string"}, {"name": "organizations_url", "dtype": "string"}, {"name": "repos_url", "dtype": "string"}, {"name": "events_url", "dtype": "string"}, {"name": "received_events_url", "dtype": "string"}, {"name": "type", "dtype": "string"}, {"name": "site_admin", "dtype": "bool"}]}, {"name": "assignees", "list": [{"name": "login", "dtype": "string"}, {"name": "id", "dtype": "int64"}, {"name": "node_id", "dtype": "string"}, {"name": "avatar_url", "dtype": "string"}, {"name": "gravatar_id", "dtype": "string"}, {"name": "url", "dtype": "string"}, {"name": "html_url", "dtype": "string"}, {"name": "followers_url", "dtype": "string"}, {"name": "following_url", "dtype": "string"}, {"name": "gists_url", "dtype": "string"}, {"name": "starred_url", "dtype": "string"}, {"name": "subscriptions_url", "dtype": "string"}, {"name": "organizations_url", "dtype": "string"}, {"name": "repos_url", "dtype": "string"}, {"name": "events_url", "dtype": "string"}, {"name": "received_events_url", "dtype": "string"}, {"name": "type", "dtype": "string"}, {"name": "site_admin", "dtype": "bool"}]}, {"name": "milestone", "struct": [{"name": "url", "dtype": "string"}, {"name": "html_url", "dtype": "string"}, {"name": "labels_url", "dtype": "string"}, {"name": "id", "dtype": "int64"}, {"name": "node_id", "dtype": "string"}, {"name": "number", "dtype": "int64"}, {"name": "title", "dtype": "string"}, {"name": "description", "dtype": "string"}, {"name": "creator", "struct": [{"name": "login", "dtype": "string"}, {"name": "id", "dtype": "int64"}, {"name": "node_id", "dtype": "string"}, {"name": "avatar_url", "dtype": "string"}, {"name": "gravatar_id", "dtype": "string"}, {"name": "url", "dtype": "string"}, {"name": "html_url", "dtype": "string"}, {"name": "followers_url", "dtype": "string"}, {"name": "following_url", "dtype": "string"}, {"name": "gists_url", "dtype": "string"}, {"name": "starred_url", "dtype": "string"}, {"name": "subscriptions_url", "dtype": "string"}, {"name": "organizations_url", "dtype": "string"}, {"name": "repos_url", "dtype": "string"}, {"name": "events_url", "dtype": "string"}, {"name": "received_events_url", "dtype": "string"}, {"name": "type", "dtype": "string"}, {"name": "site_admin", "dtype": "bool"}]}, {"name": "open_issues", "dtype": "int64"}, {"name": "closed_issues", "dtype": "int64"}, {"name": "state", "dtype": "string"}, {"name": "created_at", "dtype": "timestamp[s]"}, {"name": "updated_at", "dtype": "timestamp[s]"}, {"name": "due_on", "dtype": "null"}, {"name": "closed_at", "dtype": "null"}]}, {"name": "comments", "sequence": "string"}, {"name": "created_at", "dtype": "timestamp[s]"}, {"name": "updated_at", "dtype": "timestamp[s]"}, {"name": "closed_at", "dtype": "timestamp[s]"}, {"name": "author_association", "dtype": "string"}, {"name": "active_lock_reason", "dtype": "null"}, {"name": "draft", "dtype": "bool"}, {"name": "pull_request", "struct": [{"name": "url", "dtype": "string"}, {"name": "html_url", "dtype": "string"}, {"name": "diff_url", "dtype": "string"}, {"name": "patch_url", "dtype": "string"}, {"name": "merged_at", "dtype": "timestamp[s]"}]}, {"name": "body", "dtype": "string"}, {"name": "reactions", "struct": [{"name": "url", "dtype": "string"}, {"name": "total_count", "dtype": "int64"}, {"name": "+1", "dtype": "int64"}, {"name": "-1", "dtype": "int64"}, {"name": "laugh", "dtype": "int64"}, {"name": "hooray", "dtype": "int64"}, {"name": "confused", "dtype": "int64"}, {"name": "heart", "dtype": "int64"}, {"name": "rocket", "dtype": "int64"}, {"name": "eyes", "dtype": "int64"}]}, {"name": "timeline_url", "dtype": "string"}, {"name": "performed_via_github_app", "dtype": "null"}, {"name": "state_reason", "dtype": "string"}, {"name": "is_pull_request", "dtype": "bool"}], "splits": [{"name": "train", "num_bytes": 2626101.12, "num_examples": 192}, {"name": "valid", "num_bytes": 656525.28, "num_examples": 48}, {"name": "test", "num_bytes": 820656.6, "num_examples": 60}], "download_size": 1373746, "dataset_size": 4103283.0000000005}, "configs": [{"config_name": "default", "data_files": [{"split": "train", "path": "data/train-*"}, {"split": "valid", "path": "data/valid-*"}, {"split": "test", "path": "data/test-*"}]}]} | 2023-07-26T14:36:44+00:00 |

bb8c37d84ddf2da1e691d226c55fef48fd8149b5 |

# Information Card for Brat

## Table of Contents

- [Description](#description)

- [Summary](#summary)

- [Dataset Structure](#dataset-structure)

- [Data Instances](#data-instances)

- [Data Fields](#data-instances)

- [Usage](#usage)

- [Additional Information](#additional-information)

- [Licensing Information](#licensing-information)

- [Citation Information](#citation-information)

## Description

- **Homepage:** https://brat.nlplab.org

- **Paper:** https://aclanthology.org/E12-2021/

- **Leaderboard:** \[Needs More Information\]

- **Point of Contact:** \[Needs More Information\]

### Summary

Brat is an intuitive web-based tool for text annotation supported by Natural Language Processing (NLP) technology. BRAT has been developed for rich structured annota- tion for a variety of NLP tasks and aims to support manual curation efforts and increase annotator productivity using NLP techniques. brat is designed in particular for structured annotation, where the notes are not free form text but have a fixed form that can be automatically processed and interpreted by a computer.

## Dataset Structure

Dataset annotated with brat format is processed using this script. Annotations created in brat are stored on disk in a standoff format: annotations are stored separately from the annotated document text, which is never modified by the tool. For each text document in the system, there is a corresponding annotation file. The two are associated by the file naming convention that their base name (file name without suffix) is the same: for example, the file DOC-1000.ann contains annotations for the file DOC-1000.txt. More information can be found [here](https://brat.nlplab.org/standoff.html).

### Data Instances

```

{

"context": ''<?xml version="1.0" encoding="UTF-8" standalone="no"?>\n<Document xmlns:gate="http://www.gat...'

"file_name": "A01"

"spans": {

'id': ['T1', 'T2', 'T4', 'T5', 'T6', 'T3', 'T7', 'T8', 'T9', 'T10', 'T11', 'T12',...]

'type': ['background_claim', 'background_claim', 'background_claim', 'own_claim',...]

'locations': [{'start': [2417], 'end': [2522]}, {'start': [2524], 'end': [2640]},...]

'text': ['complicated 3D character models...', 'The range of breathtaking realistic...', ...]

}

"relations": {

'id': ['R1', 'R2', 'R3', 'R4', 'R5', 'R6', 'R7', 'R8', 'R9', 'R10', 'R11', 'R12',...]

'type': ['supports', 'supports', 'supports', 'supports', 'contradicts', 'contradicts',...]

'arguments': [{'type': ['Arg1', 'Arg2'], 'target': ['T4', 'T5']},...]

}

"equivalence_relations": {'type': [], 'targets': []},

"events": {'id': [], 'type': [], 'trigger': [], 'arguments': []},

"attributions": {'id': [], 'type': [], 'target': [], 'value': []},

"normalizations": {'id': [], 'type': [], 'target': [], 'resource_id': [], 'entity_id': []},

"notes": {'id': [], 'type': [], 'target': [], 'note': []},

}

```

### Data Fields

- `context` (`str`): the textual content of the data file

- `file_name` (`str`): the name of the data / annotation file without extension

- `spans` (`dict`): span annotations of the `context` string

- `id` (`str`): the id of the span, starts with `T`

- `type` (`str`): the label of the span

- `locations` (`list`): the indices indicating the span's locations (multiple because of fragments), consisting of `dict`s with

- `start` (`list` of `int`): the indices indicating the inclusive character start positions of the span fragments

- `end` (`list` of `int`): the indices indicating the exclusive character end positions of the span fragments

- `text` (`list` of `str`): the texts of the span fragments

- `relations`: a sequence of relations between elements of `spans`

- `id` (`str`): the id of the relation, starts with `R`

- `type` (`str`): the label of the relation

- `arguments` (`list` of `dict`): the spans related to the relation, consisting of `dict`s with

- `type` (`list` of `str`): the argument roles of the spans in the relation, either `Arg1` or `Arg2`

- `target` (`list` of `str`): the spans which are the arguments of the relation

- `equivalence_relations`: contains `type` and `target` (more information needed)

- `events`: contains `id`, `type`, `trigger`, and `arguments` (more information needed)

- `attributions` (`dict`): attribute annotations of any other annotation

- `id` (`str`): the instance id of the attribution

- `type` (`str`): the type of the attribution

- `target` (`str`): the id of the annotation to which the attribution is for

- `value` (`str`): the attribution's value or mark

- `normalizations` (`dict`): the unique identification of the real-world entities referred to by specific text expressions

- `id` (`str`): the instance id of the normalized entity

- `type`(`str`): the type of the normalized entity

- `target` (`str`): the id of the annotation to which the normalized entity is for

- `resource_id` (`str`): the associated resource to the normalized entity

- `entity_id` (`str`): the instance id of normalized entity

- `notes` (`dict`): a freeform text, added to the annotation

- `id` (`str`): the instance id of the note

- `type` (`str`): the type of note

- `target` (`str`): the id of the related annotation

- `note` (`str`): the text body of the note

### Usage

The `brat` dataset script can be used by calling `load_dataset()` method and passing any arguments that are accepted by the `BratConfig` (which is a special [BuilderConfig](https://huggingface.co/docs/datasets/v2.2.1/en/package_reference/builder_classes#datasets.BuilderConfig)). It requires at least the `url` argument. The full list of arguments is as follows:

- `url` (`str`): the url of the dataset which should point to either a zip file or a directory containing the Brat data (`*.txt`) and annotation (`*.ann`) files

- `description` (`str`, optional): the description of the dataset

- `citation` (`str`, optional): the citation of the dataset

- `homepage` (`str`, optional): the homepage of the dataset

- `split_paths` (`dict`, optional): a mapping of (arbitrary) split names to subdirectories or lists of files (without extension), e.g. `{"train": "path/to/train_directory", "test": "path/to/test_director"}` or `{"train": ["path/to/train_file1", "path/to/train_file2"]}`. In both cases (subdirectory paths or file paths), the paths are relative to the url. If `split_paths` is not provided, the dataset will be loaded from the root directory and all direct subfolders will be considered as splits.

- `file_name_blacklist` (`list`, optional): a list of file names (without extension) that should be ignored, e.g. `["A28"]`. This is useful if the dataset contains files that are not valid brat files.

Important: Using the `data_dir` parameter of the `load_dataset()` method overrides the `url` parameter of the `BratConfig`.

We provide an example of [SciArg](https://aclanthology.org/W18-5206.pdf) dataset below:

```python

from datasets import load_dataset

kwargs = {

"description" :

"""This dataset is an extension of the Dr. Inventor corpus (Fisas et al., 2015, 2016) with an annotation layer containing

fine-grained argumentative components and relations. It is the first argument-annotated corpus of scientific

publications (in English), which allows for joint analyses of argumentation and other rhetorical dimensions of

scientific writing.""",

"citation" :

"""@inproceedings{lauscher2018b,

title = {An argument-annotated corpus of scientific publications},

booktitle = {Proceedings of the 5th Workshop on Mining Argumentation},

publisher = {Association for Computational Linguistics},

author = {Lauscher, Anne and Glava\v{s}, Goran and Ponzetto, Simone Paolo},

address = {Brussels, Belgium},

year = {2018},

pages = {40–46}

}""",

"homepage": "https://github.com/anlausch/ArguminSci",

"url": "http://data.dws.informatik.uni-mannheim.de/sci-arg/compiled_corpus.zip",

"split_paths": {

"train": "compiled_corpus",

},

"file_name_blacklist": ['A28'],

}

dataset = load_dataset('dfki-nlp/brat', **kwargs)

```

## Additional Information

### Licensing Information

\[Needs More Information\]

### Citation Information

```

@inproceedings{stenetorp-etal-2012-brat,

title = "brat: a Web-based Tool for {NLP}-Assisted Text Annotation",

author = "Stenetorp, Pontus and

Pyysalo, Sampo and

Topi{\'c}, Goran and

Ohta, Tomoko and

Ananiadou, Sophia and

Tsujii, Jun{'}ichi",

booktitle = "Proceedings of the Demonstrations at the 13th Conference of the {E}uropean Chapter of the Association for Computational Linguistics",

month = apr,

year = "2012",

address = "Avignon, France",

publisher = "Association for Computational Linguistics",

url = "https://aclanthology.org/E12-2021",

pages = "102--107",

}

```

| DFKI-SLT/brat | [

"task_categories:token-classification",

"task_ids:parsing",

"annotations_creators:expert-generated",

"language_creators:found",

"region:us"

] | 2022-05-10T05:13:33+00:00 | {"annotations_creators": ["expert-generated"], "language_creators": ["found"], "license": [], "task_categories": ["token-classification"], "task_ids": ["parsing"]} | 2023-12-11T09:54:08+00:00 |

5bb1a071177dc778c2e9818d75a84bc70f4c1338 |

# Dataset Card for [kejian/pile-severetoxic-balanced2]

## Generation Procedures

The dataset was constructed using documents from the Pile scored using Perspective API SEVERE-TOXICITY scores.

The procedure was the following:

- The first half of this dataset is kejian/pile-severetoxic-chunk-0, 100k most toxic documents from Pile chunk-0

- The second half of this dataset is kejian/pile-severetoxic-random100k, 100k randomly sampled documents from Pile chunk-3

- Then, the dataset was shuffled and a 9:1 train-test split was done

## Basic Statistics

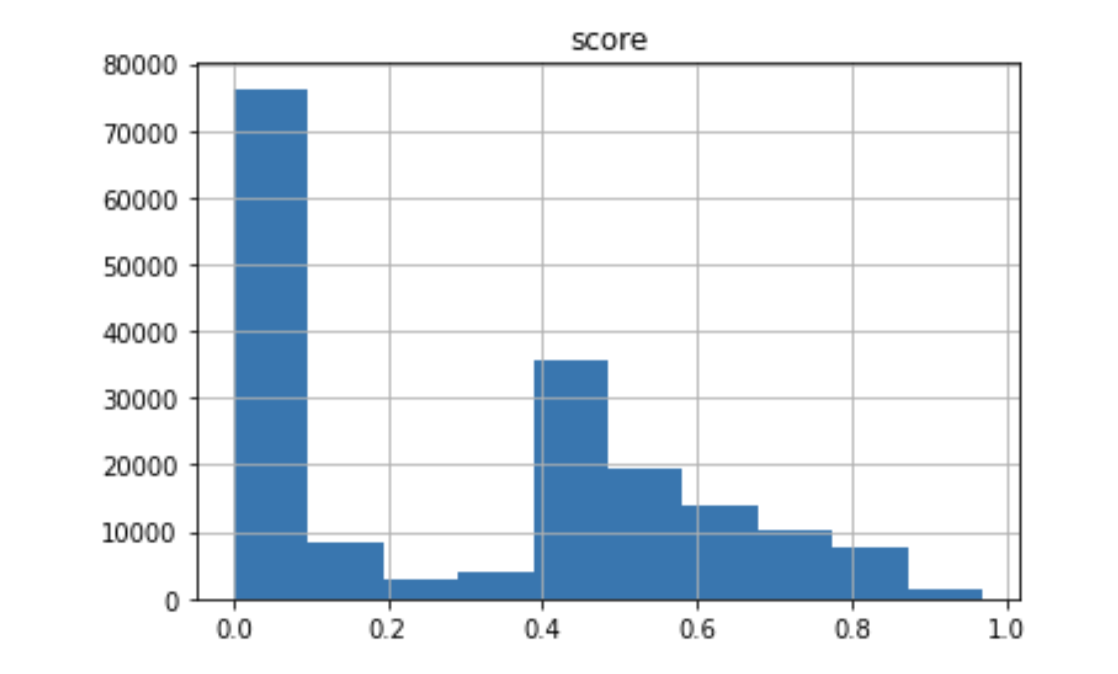

The average scores of the most toxic and random half are 0.555 and 0.061, respectively. The average score of the whole dataset is 0.308; the median is 0.385.

The weighted average score (weighted by document length) is 0.337. The correlation between score and document length is 0.099

| kejian/pile-severetoxic-balanced2 | [

"region:us"

] | 2022-05-10T05:25:33+00:00 | {} | 2022-05-10T13:34:07+00:00 |

cd95c2b7bda1e61b32ffde9ed59df0aec56f42d3 | # Golos dataset

Golos is a Russian corpus suitable for speech research. The dataset mainly consists of recorded audio files manually annotated on the crowd-sourcing platform. The total duration of the audio is about 1240 hours.

We have made the corpus freely available for downloading, along with the acoustic model prepared on this corpus.

Also we create 3-gram KenLM language model using an open Common Crawl corpus.

## **Dataset structure**

| Domain | Train files | Train hours | Test files | Test hours |

|:--------------:|:----------:|:------:|:-----:|:----:|

| Crowd | 979 796 | 1 095 | 9 994 | 11.2 |

| Farfield | 124 003 | 132.4| 1 916 | 1.4 |

| Total | 1 103 799 | 1 227.4|11 910 | 12.6 |

## **Downloads**

### **Audio files in opus format**

| Archive | Size | Link |

|:-----------------|:-----------|:--------------------|

| golos_opus.tar | 20.5 GB | https://sc.link/JpD |

### **Audio files in wav format**

Manifest files with all the training transcription texts are in the train_crowd9.tar archive listed in the table:

| Archives | Size | Links |

|-------------------|------------|---------------------|

| train_farfield.tar| 15.4 GB | https://sc.link/1Z3 |

| train_crowd0.tar | 11 GB | https://sc.link/Lrg |

| train_crowd1.tar | 14 GB | https://sc.link/MvQ |

| train_crowd2.tar | 13.2 GB | https://sc.link/NwL |

| train_crowd3.tar | 11.6 GB | https://sc.link/Oxg |

| train_crowd4.tar | 15.8 GB | https://sc.link/Pyz |

| train_crowd5.tar | 13.1 GB | https://sc.link/Qz7 |

| train_crowd6.tar | 15.7 GB | https://sc.link/RAL |

| train_crowd7.tar | 12.7 GB | https://sc.link/VG5 |

| train_crowd8.tar | 12.2 GB | https://sc.link/WJW |

| train_crowd9.tar | 8.08 GB | https://sc.link/XKk |

| test.tar | 1.3 GB | https://sc.link/Kqr |

### **Acoustic and language models**

Acoustic model built using [QuartzNet15x5](https://arxiv.org/pdf/1910.10261.pdf) architecture and trained using [NeMo toolkit](https://github.com/NVIDIA/NeMo/tree/r1.0.0b4)

Three n-gram language models created using [KenLM Language Model Toolkit](https://kheafield.com/code/kenlm)

* LM built on [Common Crawl](https://commoncrawl.org) Russian dataset

* LM built on Golos train set

* LM built on [Common Crawl](https://commoncrawl.org) and Golos datasets together (50/50)

| Archives | Size | Links |

|--------------------------|------------|-----------------|

| QuartzNet15x5_golos.nemo | 68 MB | https://sc.link/ZMv |

| KenLMs.tar | 4.8 GB | https://sc.link/YL0 |

Golos data and models are also available in the hub of pre-trained models, datasets, and containers - DataHub ML Space. You can train the model and deploy it on the high-performance SberCloud infrastructure in [ML Space](https://sbercloud.ru/ru/aicloud/mlspace) - full-cycle machine learning development platform for DS-teams collaboration based on the Christofari Supercomputer.

## **Evaluation**

Percents of Word Error Rate for different test sets

| Decoder \ Test set | Crowd test | Farfield test | MCV<sup>1</sup> dev | MCV<sup>1</sup> test |

|-------------------------------------|-----------|----------|-----------|----------|

| Greedy decoder | 4.389 % | 14.949 % | 9.314 % | 11.278 % |

| Beam Search with Common Crawl LM | 4.709 % | 12.503 % | 6.341 % | 7.976 % |

| Beam Search with Golos train set LM | 3.548 % | 12.384 % | - | - |

| Beam Search with Common Crawl and Golos LM | 3.318 % | 11.488 % | 6.4 % | 8.06 % |

<sup>1</sup> [Common Voice](https://commonvoice.mozilla.org) - Mozilla's initiative to help teach machines how real people speak.

## **Resources**

[[arxiv.org] Golos: Russian Dataset for Speech Research](https://arxiv.org/abs/2106.10161)

[[habr.com] Golos — самый большой русскоязычный речевой датасет, размеченный вручную, теперь в открытом доступе](https://habr.com/ru/company/sberdevices/blog/559496/)

[[habr.com] Как улучшить распознавание русской речи до 3% WER с помощью открытых данных](https://habr.com/ru/company/sberdevices/blog/569082/)

| SberDevices/Golos | [

"arxiv:1910.10261",

"arxiv:2106.10161",

"region:us"

] | 2022-05-10T07:20:45+00:00 | {} | 2022-05-10T07:37:58+00:00 |

ed0114d3241e3a55fdc92902f25b4e4a24ab77eb |

# Polish-Political-Advertising

## Info

Political campaigns are full of political ads posted by candidates on social media. Political advertisement constitute a basic form of campaigning, subjected to various social requirements. We present the first publicly open dataset for detecting specific text chunks and categories of political advertising in the Polish language. It contains 1,705 human-annotated tweets tagged with nine categories, which constitute campaigning under Polish electoral law.

> We achieved a 0.65 inter-annotator agreement (Cohen's kappa score). An additional annotator resolved the mismatches between the first two annotators improving the consistency and complexity of the annotation process.

## Tasks (input, output and metrics)

Political Advertising Detection

**Input** ('*tokens'* column): sequence of tokens

**Output** ('tags*'* column): sequence of tags

**Domain**: politics

**Measurements**: F1-Score (seqeval)

**Example:**

Input: `['@k_mizera', '@rdrozd', 'Problemem', 'jest', 'mała', 'produkcja', 'dlatego', 'takie', 'ceny', '.', '10', '000', 'mikrofirm', 'zamknęło', 'się', 'w', 'poprzednim', 'tygodniu', 'w', 'obawie', 'przed', 'ZUS', 'a', 'wystarczyło', 'zlecić', 'tym', 'co', 'chcą', 'np', '.', 'szycie', 'masek', 'czy', 'drukowanie', 'przyłbic', 'to', 'nie', 'wymaga', 'super', 'sprzętu', ',', 'umiejętności', '.', 'nie', 'będzie', 'pit', ',', 'vat', 'i', 'zus', 'będą', 'bezrobotni']`

Input (translated by DeepL): `@k_mizera @rdrozd The problem is small production that's why such prices . 10,000 micro businesses closed down last week for fear of ZUS and all they had to do was outsource to those who want e.g . sewing masks or printing visors it doesn't require super equipment , skills . there will be no pit , vat and zus will be unemployed`

Output: `['O', 'O', 'O', 'O', 'O', 'O', 'O', 'O', 'O', 'O', 'O', 'O', 'O', 'O', 'O', 'O', 'O', 'O', 'O', 'O', 'O', 'B-WELFARE', 'O', 'O', 'O', 'O', 'O', 'O', 'O', 'O', 'O', 'O', 'O', 'O', 'O', 'O', 'O', 'O', 'O', 'O', 'O', 'O', 'O', 'O', 'O', 'B-WELFARE', 'O', 'B-WELFARE', 'O', 'B-WELFARE', 'O', 'B-WELFARE']`

## Data splits

| Subset | Cardinality |

|:-----------|--------------:|

| train | 1020 |

| test | 341 |

| validation | 340 |

## Class distribution

| Class | train | validation | test |

|:--------------------------------|--------:|-------------:|-------:|

| B-HEALHCARE | 0.237 | 0.226 | 0.233 |

| B-WELFARE | 0.210 | 0.232 | 0.183 |

| B-SOCIETY | 0.156 | 0.153 | 0.149 |

| B-POLITICAL_AND_LEGAL_SYSTEM | 0.137 | 0.143 | 0.149 |

| B-INFRASTRUCTURE_AND_ENVIROMENT | 0.110 | 0.104 | 0.133 |

| B-EDUCATION | 0.062 | 0.060 | 0.080 |

| B-FOREIGN_POLICY | 0.040 | 0.039 | 0.028 |

| B-IMMIGRATION | 0.028 | 0.017 | 0.018 |

| B-DEFENSE_AND_SECURITY | 0.020 | 0.025 | 0.028 |

## License

[Attribution-NonCommercial-ShareAlike 4.0 International (CC BY-NC-SA 4.0)](https://creativecommons.org/licenses/by-nc-sa/4.0/)

## Links

[HuggingFace](https://huggingface.co/datasets/laugustyniak/political-advertising-pl)

[Paper](https://aclanthology.org/2020.winlp-1.28/)

## Citing

> ACL WiNLP 2020 Paper

```bibtex

@inproceedings{augustyniak-etal-2020-political,

title = "Political Advertising Dataset: the use case of the Polish 2020 Presidential Elections",

author = "Augustyniak, Lukasz and Rajda, Krzysztof and Kajdanowicz, Tomasz and Bernaczyk, Micha{\l}",

booktitle = "Proceedings of the The Fourth Widening Natural Language Processing Workshop",

month = jul,

year = "2020",

address = "Seattle, USA",

publisher = "Association for Computational Linguistics",

url = "https://www.aclweb.org/anthology/2020.winlp-1.28",

pages = "110--114"

}

```

> Advances in Neural Information Processing Systems 35 (NeurIPS 2022) Datasets and Benchmarks Track

```bibtex

@inproceedings{NEURIPS2022_890b206e,

author = {Augustyniak, Lukasz and Tagowski, Kamil and Sawczyn, Albert and Janiak, Denis and Bartusiak, Roman and Szymczak, Adrian and Janz, Arkadiusz and Szyma\'{n}ski, Piotr and W\k{a}troba, Marcin and Morzy, Miko\l aj and Kajdanowicz, Tomasz and Piasecki, Maciej},

booktitle = {Advances in Neural Information Processing Systems},

editor = {S. Koyejo and S. Mohamed and A. Agarwal and D. Belgrave and K. Cho and A. Oh},

pages = {21805--21818},

publisher = {Curran Associates, Inc.},

title = {This is the way: designing and compiling LEPISZCZE, a comprehensive NLP benchmark for Polish},

url = {https://proceedings.neurips.cc/paper_files/paper/2022/file/890b206ebb79e550f3988cb8db936f42-Paper-Datasets_and_Benchmarks.pdf},

volume = {35},

year = {2022}

}

``` | laugustyniak/political-advertising-pl | [

"task_categories:token-classification",

"task_ids:named-entity-recognition",

"task_ids:part-of-speech",

"annotations_creators:hired_annotators",

"language_creators:found",

"multilinguality:monolingual",

"size_categories:10<n<10K",

"language:pl",

"license:other",

"region:us"

] | 2022-05-10T08:06:08+00:00 | {"annotations_creators": ["hired_annotators"], "language_creators": ["found"], "language": ["pl"], "license": ["other"], "multilinguality": ["monolingual"], "size_categories": ["10<n<10K"], "task_categories": ["token-classification"], "task_ids": ["named-entity-recognition", "part-of-speech"], "pretty_name": "Polish-Political-Advertising"} | 2023-03-29T09:49:42+00:00 |

b3d2e2bb154eae638f61999224f9ec1f7aff6c53 | mteb/raw_arxiv | [

"language:en",

"region:us"

] | 2022-05-10T08:43:45+00:00 | {"language": ["en"]} | 2022-09-27T18:12:40+00:00 |

|

0594adab4ce7680af4dd0f8df7471d4acd6594c6 |

# Dataset Card for "offenseval_2020"

## Table of Contents

- [Dataset Description](#dataset-description)

- [Dataset Summary](#dataset-summary)

- [Supported Tasks and Leaderboards](#supported-tasks-and-leaderboards)

- [Languages](#languages)

- [Dataset Structure](#dataset-structure)

- [Data Instances](#data-instances)

- [Data Fields](#data-fields)

- [Data Splits](#data-splits)

- [Dataset Creation](#dataset-creation)

- [Curation Rationale](#curation-rationale)

- [Source Data](#source-data)

- [Annotations](#annotations)

- [Personal and Sensitive Information](#personal-and-sensitive-information)

- [Considerations for Using the Data](#considerations-for-using-the-data)

- [Social Impact of Dataset](#social-impact-of-dataset)

- [Discussion of Biases](#discussion-of-biases)

- [Other Known Limitations](#other-known-limitations)

- [Additional Information](#additional-information)

- [Dataset Curators](#dataset-curators)

- [Licensing Information](#licensing-information)

- [Citation Information](#citation-information)

- [Contributions](#contributions)

## Dataset Description

- **Homepage:** [https://sites.google.com/site/offensevalsharedtask/results-and-paper-submission](https://sites.google.com/site/offensevalsharedtask/results-and-paper-submission)

- **Repository:**