| issue | pr | pr_details |

|---|---|---|

{

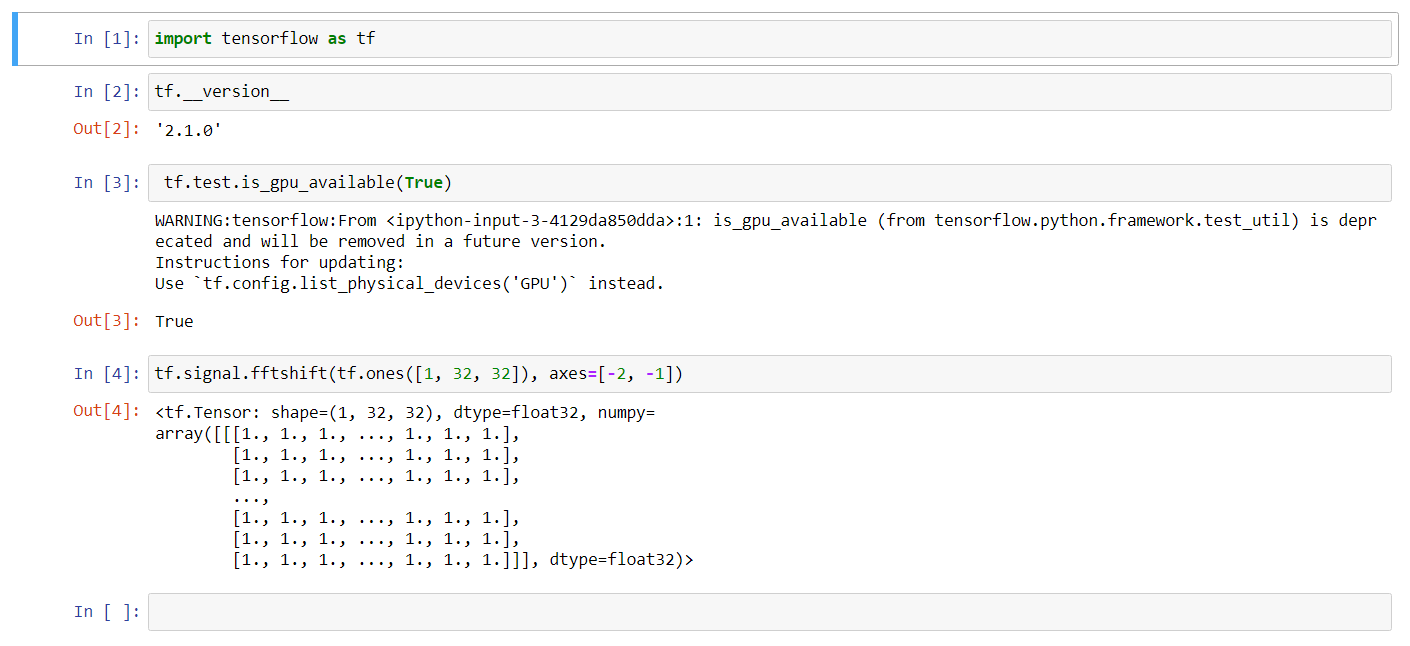

"body": "<em>Please make sure that this is a bug. As per our\r\n[GitHub Policy](https://github.com/tensorflow/tensorflow/blob/master/ISSUES.md),\r\nwe only address code/doc bugs, performance issues, feature requests and\r\nbuild/installation issues on GitHub. tag:bug_template</em>\r\n\r\n**System information**\r\n- Have I written custom code (as opposed to using a stock example script provided in TensorFlow):\r\n- OS Platform and Distribution (e.g., Linux Ubuntu 16.04): Windows 10\r\n- Mobile device (e.g. iPhone 8, Pixel 2, Samsung Galaxy) if the issue happens on mobile device:\r\n- TensorFlow installed from (source or binary): pip\r\n- TensorFlow version (use command below): 2.1.0\r\n- Python version: 3.7\r\n- Bazel version (if compiling from source):\r\n- GCC/Compiler version (if compiling from source):\r\n- CUDA/cuDNN version: 10.2 & 10.1\r\n- GPU model and memory:\r\n\r\nYou can collect some of this information using our environment capture\r\n[script](https://github.com/tensorflow/tensorflow/tree/master/tools/tf_env_collect.sh)\r\nYou can also obtain the TensorFlow version with:\r\n1. TF 1.0: `python -c \"import tensorflow as tf; print(tf.GIT_VERSION, tf.VERSION)\"`\r\n2. TF 2.0: `python -c \"import tensorflow as tf; print(tf.version.GIT_VERSION, tf.version.VERSION)\"`\r\n\r\n\r\n**Describe the current behavior**\r\nWhen loading the model with keras.models.load_model, the activation functions are not recognized. This happens only when the activations are not specified as built-in strings (e.g. a `tf.keras.layers.PReLU` layer instance).\r\n\r\n**Describe the expected behavior**\r\nThe model should load successfully.\r\n\r\n**Standalone code to reproduce the issue**\r\nProvide a reproducible test case that is the bare minimum necessary to generate\r\nthe problem. If possible, please share a link to Colab/Jupyter/any notebook.\r\n\r\n```python\r\nimport tensorflow as tf\r\n\r\n# Dense layer whose activation is a PReLU layer object rather than a string\r\nlayer = tf.keras.layers.Dense(1, activation=tf.keras.layers.PReLU(alpha_initializer='random_uniform', alpha_regularizer=None, alpha_constraint=None, shared_axes=None),\r\n    name='layerX', kernel_initializer=tf.keras.initializers.he_normal())\r\nmodel = tf.keras.Sequential([layer])\r\nmodel.compile(\"adam\", \"binary_crossentropy\", [\"accuracy\"])\r\nmodel.fit([[1]], [1])\r\nmodel.save(\"keras_model.h5\", save_format='h5')\r\n# Fails with ValueError: Unknown activation: PReLU\r\nmodel = tf.keras.models.load_model(\"keras_model.h5\")\r\n```\r\n**EDIT**: After reading the docs, I saved the model with save_format='h5'; the first time, I tried without specifying a format (the default is 'tf' in TensorFlow 2.0+).\r\nAs a workaround, I found that the model loads if I save it with the default save_format='tf'.\r\n\r\n**Other info / logs** Include any logs or source code that would be helpful to\r\ndiagnose the problem. If including tracebacks, please include the full\r\ntraceback. 
Large logs and files should be attached.\r\n```Traceback (most recent call last):\r\n File \"C:\\Users\\Teo\\OneDrive\\Licenta\\main.py\", line 138, in <module>\r\n main()\r\n File \"C:\\Users\\Teo\\OneDrive\\Licenta\\main.py\", line 135, in main\r\n model = keras.models.load_model(os.path.join(dataset_path, \"keras_model.h5\"))\r\n File \"C:\\ProgramData\\Anaconda3\\lib\\site-packages\\tensorflow_core\\python\\keras\\saving\\save.py\", line 146, in load_model\r\n return hdf5_format.load_model_from_hdf5(filepath, custom_objects, compile)\r\n File \"C:\\ProgramData\\Anaconda3\\lib\\site-packages\\tensorflow_core\\python\\keras\\saving\\hdf5_format.py\", line 168, in load_model_from_hdf5\r\n custom_objects=custom_objects)\r\n File \"C:\\ProgramData\\Anaconda3\\lib\\site-packages\\tensorflow_core\\python\\keras\\saving\\model_config.py\", line 55, in model_from_config\r\n return deserialize(config, custom_objects=custom_objects)\r\n File \"C:\\ProgramData\\Anaconda3\\lib\\site-packages\\tensorflow_core\\python\\keras\\layers\\serialization.py\", line 106, in deserialize\r\n printable_module_name='layer')\r\n File \"C:\\ProgramData\\Anaconda3\\lib\\site-packages\\tensorflow_core\\python\\keras\\utils\\generic_utils.py\", line 303, in deserialize_keras_object\r\n list(custom_objects.items())))\r\n File \"C:\\ProgramData\\Anaconda3\\lib\\site-packages\\tensorflow_core\\python\\keras\\engine\\sequential.py\", line 377, in from_config\r\n custom_objects=custom_objects)\r\n File \"C:\\ProgramData\\Anaconda3\\lib\\site-packages\\tensorflow_core\\python\\keras\\layers\\serialization.py\", line 106, in deserialize\r\n printable_module_name='layer')\r\n File \"C:\\ProgramData\\Anaconda3\\lib\\site-packages\\tensorflow_core\\python\\keras\\utils\\generic_utils.py\", line 305, in deserialize_keras_object\r\n return cls.from_config(cls_config)\r\n File \"C:\\ProgramData\\Anaconda3\\lib\\site-packages\\tensorflow_core\\python\\keras\\engine\\base_layer.py\", line 519, in 
from_config\r\n return cls(**config)\r\n File \"C:\\ProgramData\\Anaconda3\\lib\\site-packages\\tensorflow_core\\python\\keras\\layers\\core.py\", line 1082, in __init__\r\n self.activation = activations.get(activation)\r\n File \"C:\\ProgramData\\Anaconda3\\lib\\site-packages\\tensorflow_core\\python\\keras\\activations.py\", line 450, in get\r\n identifier, printable_module_name='activation')\r\n File \"C:\\ProgramData\\Anaconda3\\lib\\site-packages\\tensorflow_core\\python\\keras\\utils\\generic_utils.py\", line 292, in deserialize_keras_object\r\n config, module_objects, custom_objects, printable_module_name)\r\n File \"C:\\ProgramData\\Anaconda3\\lib\\site-packages\\tensorflow_core\\python\\keras\\utils\\generic_utils.py\", line 250, in class_and_config_for_serialized_keras_object\r\n raise ValueError('Unknown ' + printable_module_name + ': ' + class_name)\r\nValueError: Unknown activation: PReLU\r\n```",

"comments": [

{

"body": "@teodor440 \r\n\r\nPlease share a Colab link or simple standalone code to reproduce the issue in our environment. It helps us localize the issue faster. Thanks!",

"created_at": "2020-04-29T06:56:00Z"

},

{

"body": "> \r\n> \r\n> @teodor440\r\n> \r\n> Request you to share colab link or simple standalone code to reproduce the issue in our environment.It helps us in localizing the issue faster.Thanks!\r\n\r\nhttps://colab.research.google.com/drive/1qZ8RuI-oNoTsD2uokHZztqeCb20RX3wR\r\nI also updated the code in the issue",

"created_at": "2020-04-29T14:09:43Z"

},

{

"body": "I have tried in Colab with TF 2.1.0 and 2.2-rc3 and was able to reproduce the issue. Please find the gist [here](https://colab.sandbox.google.com/gist/ravikyram/d18890e6d91c6fe62dcff2934500927a/untitled840.ipynb). Thanks!",

"created_at": "2020-04-30T03:55:40Z"

},

{

"body": "@teodor440 I was able to reproduce the issue with `save_format='h5'`. However, when I used `save_format='tf'`, everything worked as expected: the outputs of `model.predict` before saving and after loading are exactly the same. Please take a look at the [gist here](https://colab.research.google.com/gist/jvishnuvardhan/e753ca365a61c21d77bdd70844156f68/untitled840.ipynb). Thanks!",

"created_at": "2020-04-30T05:19:17Z"

},

{

"body": "I am not familiar with the TensorFlow implementation, but it seems like there is a problem with how TF handles Keras models.\r\nI recall getting the same ValueError when trying to convert the model to an estimator with keras.estimator.model_to_estimator.",

"created_at": "2020-05-01T21:59:00Z"

},

{

"body": "OK, so the problem was that I specified the layers' activations as something other than a string. For some reason, this confuses the operations TF performs on Keras models.",

"created_at": "2020-05-03T14:44:09Z"

},

{

"body": "Are you satisfied with the resolution of your issue?\n<a href=\"https://docs.google.com/forms/d/e/1FAIpQLSfaP12TRhd9xSxjXZjcZFNXPGk4kc1-qMdv3gc6bEP90vY1ew/viewform?entry.85265664=Yes&entry.2137816233=https://github.com/tensorflow/tensorflow/issues/38994\">Yes</a>\n<a href=\"https://docs.google.com/forms/d/e/1FAIpQLSfaP12TRhd9xSxjXZjcZFNXPGk4kc1-qMdv3gc6bEP90vY1ew/viewform?entry.85265664=No&entry.2137816233=https://github.com/tensorflow/tensorflow/issues/38994\">No</a>\n",

"created_at": "2020-05-03T14:44:11Z"

},

{

"body": "@teodor440 You can use the \"tf\" format since it works well. However, this is still a bug with the \"h5\" format. I will reopen the issue. Thanks!",

"created_at": "2020-05-03T15:55:14Z"

},

{

"body": "You can now see at the Colab link that the issue with saving the h5 model no longer occurs when using separate layers for the Dense layer and the activation. So this is basically a workaround for the problem.",

"created_at": "2020-05-03T16:23:49Z"

},

{

"body": "Closing this issue since the associated PR has been merged and the issue is [fixed](https://colab.research.google.com/gist/ymodak/6217fe6a0850d369c7c8da9107821808/38994.ipynb). Thanks",

"created_at": "2021-04-23T18:10:31Z"

},

{

"body": "Are you satisfied with the resolution of your issue?\n<a href=\"https://docs.google.com/forms/d/e/1FAIpQLSfaP12TRhd9xSxjXZjcZFNXPGk4kc1-qMdv3gc6bEP90vY1ew/viewform?entry.85265664=Yes&entry.2137816233=https://github.com/tensorflow/tensorflow/issues/38994\">Yes</a>\n<a href=\"https://docs.google.com/forms/d/e/1FAIpQLSfaP12TRhd9xSxjXZjcZFNXPGk4kc1-qMdv3gc6bEP90vY1ew/viewform?entry.85265664=No&entry.2137816233=https://github.com/tensorflow/tensorflow/issues/38994\">No</a>\n",

"created_at": "2021-04-23T18:10:33Z"

}

],

"number": 38994,

"title": "Can't load saved keras model.h5"

}
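The failure can be modeled without TensorFlow. The H5 config stores a layer-object activation such as `PReLU` by its class name. According to the review comment on PR #39252, Keras checks the `custom_objects` dict before the module's own objects when it resolves that name. The sketch below is a hypothetical pure-Python model of that lookup order, not the Keras implementation; `resolve`, `ACTIVATION_MODULE_OBJECTS` and the stand-in `PReLU` class are illustrative names. In real code, the corresponding user-side workaround should be passing `custom_objects={'PReLU': tf.keras.layers.PReLU}` to `tf.keras.models.load_model`.

```python
class PReLU:
    """Hypothetical stand-in for tf.keras.layers.PReLU (not the real class)."""

    def __init__(self, **config):
        self.config = config


def relu(x):
    """Stand-in for a built-in activation that is addressable by its name."""
    return max(x, 0.0)


# Hypothetical model of the names visible to activations.deserialize():
# the activations module holds functions such as `relu`, but no layer classes.
ACTIVATION_MODULE_OBJECTS = {"relu": relu}


def resolve(class_name, module_objects, custom_objects=None):
    """Look up a serialized name: custom_objects first, then module_objects."""
    custom_objects = custom_objects or {}
    if class_name in custom_objects:
        return custom_objects[class_name]
    if class_name in module_objects:
        return module_objects[class_name]
    raise ValueError("Unknown activation: " + class_name)


# Illustrative config: a layer-object activation recorded by class name and config.
serialized = {"class_name": "PReLU", "config": {"alpha_initializer": "random_uniform"}}

# Without custom_objects the lookup fails, mirroring the reported error.
try:
    resolve(serialized["class_name"], ACTIVATION_MODULE_OBJECTS)
except ValueError as err:
    lookup_error = str(err)  # "Unknown activation: PReLU"

# Passing the class through custom_objects lets the lookup succeed.
cls = resolve(serialized["class_name"], ACTIVATION_MODULE_OBJECTS,
              custom_objects={"PReLU": PReLU})
activation = cls(**serialized["config"])
```

Because `custom_objects` wins, the same mechanism also lets a user override a built-in name when needed.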

|

{

"body": "@k-w-w and @jvishnuvardhan, this references #38994.\r\n\r\nPlease review the changes. Tests: activations_test.py and advanced_activations.py passed.\r\n\r\nWe need to bring in the classes from advanced_activations when no custom objects are specified. In that case, the module_objects (globals()) used in activations.deserialize() don't contain any of the advanced activations.",

"number": 39252,

"review_comments": [

{

"body": "This if statement can be removed -- [the custom object dict is always checked before the module objects](https://github.com/tensorflow/tensorflow/blob/master/tensorflow/python/keras/utils/generic_utils.py#L200), so it's safe to add the missing activations here.",

"created_at": "2020-06-03T04:42:18Z"

},

{

"body": "ahh right, removed if statement in 4e74ac602c55a77bd9d238a801df1894297bc464",

"created_at": "2020-06-03T18:16:02Z"

},

{

"body": "Ah, there's a small issue here. Can you swap lines 121 and 122? (deps should appear in alphabetical order)",

"created_at": "2020-10-15T00:44:38Z"

},

{

"body": "ah good to know, sorry about that. Fixed! :)",

"created_at": "2020-10-15T01:55:36Z"

}

],

"title": "Fixed loading saved keras model (h5 files) when using advanced activation functions"

}

|

{

"commits": [

{

"message": "We need to bring in the classes from advanced_activations if there are no custom objects specified. When no custom objects are specified, our module_objects/globals() in activations.deserialize() won't contain any advanced_activations."

},

{

"message": "no need for if statement since custom object dict is checked before module objects"

},

{

"message": "fixing pylint issues"

},

{

"message": "New feature. Use new param log_all in CSVLogger to log all elements in training even if some epochs don't contain the same elements."

},

{

"message": "Revert \"New feature. Use new param log_all in CSVLogger to log all elements in training even if some epochs don't contain the same elements.\"\n\nThis reverts commit 204913109700abfa7fd620bf05c4603dc7795f34."

},

{

"message": "Merge branch 'master' of https://github.com/tensorflow/tensorflow into datapi_tflow"

},

{

"message": "We need to bring in the classes from advanced_activations if there are no custom objects specified. When no custom objects are specified, our module_objects/globals() in activations.deserialize() won't contain any advanced_activations."

},

{

"message": "no need for if statement since custom object dict is checked before module objects"

},

{

"message": "fixing pylint issues"

},

{

"message": "New feature. Use new param log_all in CSVLogger to log all elements in training even if some epochs don't contain the same elements."

},

{

"message": "Revert \"New feature. Use new param log_all in CSVLogger to log all elements in training even if some epochs don't contain the same elements.\"\n\nThis reverts commit 204913109700abfa7fd620bf05c4603dc7795f34."

},

{

"message": "Merge branch 'datapi_tflow' of https://github.com/PiyushDatta/tensorflow into datapi_tflow"

},

{

"message": "added advanced_activations into activation lib dependancies"

},

{

"message": "swap these lines to keep in alphabetical order"

}

],

"files": [

{

"diff": "@@ -118,6 +118,7 @@ py_library(\n srcs_version = \"PY2AND3\",\n deps = [\n \":backend\",\n+ \"//tensorflow/python/keras/layers:advanced_activations\",\n \"//tensorflow/python/keras/utils:engine_utils\",\n ],\n )",

"filename": "tensorflow/python/keras/BUILD",

"status": "modified"

},

{

"diff": "@@ -26,6 +26,7 @@\n from tensorflow.python.ops import nn\n from tensorflow.python.util import dispatch\n from tensorflow.python.util.tf_export import keras_export\n+from tensorflow.python.keras.layers import advanced_activations\n \n # b/123041942\n # In TF 2.x, if the `tf.nn.softmax` is used as an activation function in Keras\n@@ -525,9 +526,17 @@ def deserialize(name, custom_objects=None):\n ValueError: `Unknown activation function` if the input string does not\n denote any defined Tensorflow activation function.\n \"\"\"\n+ globs = globals()\n+\n+ # only replace missing activations\n+ advanced_activations_globs = advanced_activations.get_globals()\n+ for key, val in advanced_activations_globs.items():\n+ if key not in globs:\n+ globs[key] = val\n+\n return deserialize_keras_object(\n name,\n- module_objects=globals(),\n+ module_objects=globs,\n custom_objects=custom_objects,\n printable_module_name='activation function')\n ",

"filename": "tensorflow/python/keras/activations.py",

"status": "modified"

},

{

"diff": "@@ -65,12 +65,19 @@ def test_serialization_with_layers(self):\n activation = advanced_activations.LeakyReLU(alpha=0.1)\n layer = core.Dense(3, activation=activation)\n config = serialization.serialize(layer)\n+ # with custom objects\n deserialized_layer = serialization.deserialize(\n config, custom_objects={'LeakyReLU': activation})\n self.assertEqual(deserialized_layer.__class__.__name__,\n layer.__class__.__name__)\n self.assertEqual(deserialized_layer.activation.__class__.__name__,\n activation.__class__.__name__)\n+ # without custom objects\n+ deserialized_layer = serialization.deserialize(config)\n+ self.assertEqual(deserialized_layer.__class__.__name__,\n+ layer.__class__.__name__)\n+ self.assertEqual(deserialized_layer.activation.__class__.__name__,\n+ activation.__class__.__name__)\n \n def test_softmax(self):\n x = backend.placeholder(ndim=2)",

"filename": "tensorflow/python/keras/activations_test.py",

"status": "modified"

},

{

"diff": "@@ -30,6 +30,10 @@\n from tensorflow.python.util.tf_export import keras_export\n \n \n+def get_globals():\n+ return globals()\n+\n+\n @keras_export('keras.layers.LeakyReLU')\n class LeakyReLU(Layer):\n \"\"\"Leaky version of a Rectified Linear Unit.",

"filename": "tensorflow/python/keras/layers/advanced_activations.py",

"status": "modified"

}

]

}
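The core of the #39252 change is a merge step in `activations.deserialize()`. Names from `advanced_activations` are added to the lookup namespace only where no entry already exists. Below is a minimal pure-Python sketch of that step. The namespaces and stand-in classes are hypothetical, and the `relu` clash is invented to show that existing names win.

One deliberate difference from the diff: the diff writes into the dict returned by `globals()`. That dict is the `activations` module's own namespace, so the merged names persist in that module. The sketch merges into a copy instead.

```python
class LeakyReLU:
    """Hypothetical stand-in for an advanced activation layer class."""


class PReLU:
    """Hypothetical stand-in for an advanced activation layer class."""


def relu(x):
    """Stand-in for the built-in `relu` activation function."""
    return max(x, 0.0)


def other_relu(x):
    """Hypothetical object that happens to share the name `relu`."""
    return x


# Hypothetical namespaces of the two modules involved.
activation_objects = {"relu": relu}
advanced_activation_objects = {
    "LeakyReLU": LeakyReLU,
    "PReLU": PReLU,
    "relu": other_relu,  # name clash: must not replace the built-in
}


def merge_missing(base, extra):
    """Return a copy of `base` extended with the names from `extra` it lacks."""
    merged = dict(base)  # copy, so `base` itself is left unchanged
    for key, val in extra.items():
        if key not in merged:  # only add missing activations
            merged[key] = val
    return merged


module_objects = merge_missing(activation_objects, advanced_activation_objects)
print(sorted(module_objects))  # ['LeakyReLU', 'PReLU', 'relu']
```

With the merged dict passed as `module_objects`, a name like `PReLU` resolves even without `custom_objects`. The new test case in `activations_test.py` checks exactly this for `LeakyReLU`.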

|

{

"body": "**System information**\r\n- Have I written custom code (as opposed to using a stock example script provided in TensorFlow): Yes\r\n- TensorFlow installed from (source or binary): pip\r\n- TensorFlow version (use command below): v2.2.0-rc4-0-g70087ab4f4\r\n- Python version: 3.6.9\r\n\r\n**Describe the current behavior**\r\n`map_fn` doesn't support empty lists.\r\n\r\n**Describe the expected behavior**\r\nIt should return an empty list.\r\n\r\n**Standalone code to reproduce the issue**\r\n```python\r\nimport numpy as np\r\nimport tensorflow as tf\r\nfn = lambda x: x\r\ntf.map_fn(fn, [])\r\n\r\n# Additionally, this works:\r\ntf.map_fn(fn, np.array([1.]))\r\n# but this doesn't, even though [1.] is not a scalar:\r\ntf.map_fn(fn, [1.])\r\n```",

"comments": [

{

"body": "The behavior in the second and third examples is expected. In the second example,\r\n```\r\ntf.map_fn(fn, np.array([1.]))\r\n```\r\n`np.array([1.])` is a single array (which `map_fn` converts to a tensor), so it is a **single element of shape `[1]`.**\r\n\r\nThe third example:\r\n```\r\ntf.map_fn(fn, [1.])\r\n```\r\nis treated by `map_fn` as **a list of one (scalar) element `1.`**, which has no first dimension to unstack, so you see an error.\r\n\r\nThe first example, `tf.map_fn(fn, [])`, is not handled by the current implementation of `tf.map_fn`. I think it makes sense to raise an explicit error here.\r\n\r\nCreated PR #39241 to fix `tf.map_fn(fn, [])`.",

"created_at": "2020-05-06T22:40:33Z"

},

{

"body": "Hi,\r\n\r\nI really don't think you should raise when the list is empty. Every framework out there just returns an empty sequence when using map on an empty sequence.",

"created_at": "2020-05-07T08:50:21Z"

},

{

"body": "Was able to reproduce the issue with TF v2.1, [TF v2.2.0-rc4](https://colab.research.google.com/gist/amahendrakar/c164706bf7bd7f67bf13fe6719e0a489/39229.ipynb) and [TF-nightly](https://colab.research.google.com/gist/amahendrakar/81517ce8a62dd8cc9f121c1956630636/39229-tf-nightly.ipynb). Please find the attached gist. Thanks!",

"created_at": "2020-05-07T11:02:31Z"

},

{

"body": "@AdrienCorenflos If you want to map over a sequence, then you must pass that sequence to `map_fn` as a Tensor. \r\n\r\nIf you pass a non-Tensor sequence to `map_fn`, then it does *not* map over that sequence. Instead, it unstacks each tensor in that sequence, and calls the function with a list constructed from those unstacked slices.\r\n\r\nI.e., `tf.map_fn(func, [a, b, c])` is equivalent to Python's `map(func, a, b, c)`, and *not* to `map(func, [a, b, c])`. So calling `tf.map_fn(func, [])` is equivalent to calling `map(func)` in Python, which does indeed raise a `TypeError`.\r\n\r\nA more complex example might help illustrate what's going on here -- we can pass any nested structure into `elems`, including e.g. nested dictionaries. So if I call:\r\n\r\n```\r\ntf.map_fn(func, {'a': t1, 'b': [t2, t3]})\r\n```\r\n\r\nThen `func` will be called with:\r\n```\r\nfunc({'a': t1[0], 'b': [t2[0], t3[0]]})\r\nfunc({'a': t1[1], 'b': [t2[1], t3[1]]})\r\nfunc({'a': t1[2], 'b': [t2[2], t3[2]]})\r\n...\r\nfunc({'a': t1[N-1], 'b': [t2[N-1], t3[N-1]]})\r\n```\r\n\r\nwhere `N == t1.shape[0] == t2.shape[0] == t3.shape[0]`. By default, `func` must return a value with the same structure as its input. E.g., in the example above, `func` must return a dictionary with keys `a` and `b`, where `a` is a tensor and `b` is a list of two tensors. If you want `func` to return a different structure, then you need to specify that structure with the `fn_output_signature` argument.\r\n",

"created_at": "2020-05-07T16:30:44Z"

},

{

"body": "Are you satisfied with the resolution of your issue?\n<a href=\"https://docs.google.com/forms/d/e/1FAIpQLSfaP12TRhd9xSxjXZjcZFNXPGk4kc1-qMdv3gc6bEP90vY1ew/viewform?entry.85265664=Yes&entry.2137816233=https://github.com/tensorflow/tensorflow/issues/39229\">Yes</a>\n<a href=\"https://docs.google.com/forms/d/e/1FAIpQLSfaP12TRhd9xSxjXZjcZFNXPGk4kc1-qMdv3gc6bEP90vY1ew/viewform?entry.85265664=No&entry.2137816233=https://github.com/tensorflow/tensorflow/issues/39229\">No</a>\n",

"created_at": "2020-06-30T04:08:04Z"

}

],

"number": 39229,

"title": "map_fn doesn't work with empty lists"

}
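The semantics in the maintainer's comment on this issue can be modeled in plain Python. Each tensor in `elems` is unstacked along its first dimension, and `func` receives the i-th slices repacked into the same structure. This is a hypothetical, eager-only sketch. `FakeTensor`, `flatten`, `pack_like` and `map_fn_sketch` are illustrative names, not TensorFlow APIs. Real `tf.map_fn` also converts inputs to tensors and handles dtypes and `fn_output_signature`. The empty-input check reuses the error message adopted in PR #39241.

```python
class FakeTensor:
    """Hypothetical stand-in for a tensor: a list of rows along axis 0."""

    def __init__(self, rows):
        self.rows = list(rows)

    def __len__(self):
        return len(self.rows)

    def __getitem__(self, i):
        return self.rows[i]


def flatten(structure):
    """Return the leaves of a nested dict/list/tuple structure in a fixed order."""
    if isinstance(structure, dict):
        return [leaf for key in sorted(structure) for leaf in flatten(structure[key])]
    if isinstance(structure, (list, tuple)):
        return [leaf for item in structure for leaf in flatten(item)]
    return [structure]


def pack_like(structure, leaves):
    """Rebuild `structure`, substituting `leaves` in the order flatten() uses."""
    remaining = iter(leaves)

    def build(s):
        if isinstance(s, dict):
            return {key: build(s[key]) for key in sorted(s)}
        if isinstance(s, (list, tuple)):
            return type(s)(build(item) for item in s)
        return next(remaining)

    return build(structure)


def map_fn_sketch(fn, elems):
    """Call fn once per row index, on the i-th slice of every leaf of elems."""
    leaves = flatten(elems)
    if not leaves:
        # Message mirrors the one adopted in the PR that resolved this issue.
        raise ValueError(
            "elems must be a Tensor or (possibly nested) sequence of Tensors. "
            "Got {}, which does not contain any Tensors.".format(elems))
    n = len(leaves[0])
    if any(len(leaf) != n for leaf in leaves):
        raise ValueError("all leaves must have the same size along axis 0")
    return [fn(pack_like(elems, [leaf[i] for leaf in leaves])) for i in range(n)]


t1, t2, t3 = FakeTensor([1, 2, 3]), FakeTensor([10, 20, 30]), FakeTensor([100, 200, 300])

# Like tf.map_fn(func, {'a': t1, 'b': [t2, t3]}): func sees {'a': t1[i], 'b': [t2[i], t3[i]]}.
nested = map_fn_sketch(lambda d: d["a"] + d["b"][0] + d["b"][1], {"a": t1, "b": [t2, t3]})

# A plain list of tensors is unstacked leaf by leaf; the per-row values match map(f, a, b, c).
as_list = map_fn_sketch(sum, [t1, t2, t3])
builtin = list(map(lambda *xs: sum(xs), t1.rows, t2.rows, t3.rows))

# An empty list has no leaves, so there is nothing to unstack.
try:
    map_fn_sketch(sum, [])
except ValueError as err:
    empty_error = str(err)
```

One difference from Python's `map` is visible here: `fn` receives a single repacked structure (a list or dict) per row, not separate positional arguments.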

|

{

"body": "This PR tries to address the issue raised in #39229 where\r\nempty list input was not checked and a non-obvious error was thrown:\r\n```python\r\n>>> import numpy as np\r\n>>> import tensorflow as tf\r\n>>> fn = lambda x: x\r\n>>> tf.map_fn(fn, [])\r\nTraceback (most recent call last):\r\n File \"<stdin>\", line 1, in <module>\r\n File \"/Library/Python/3.7/site-packages/tensorflow/python/util/deprecation.py\", line 574, in new_func\r\n return func(*args, **kwargs)\r\n File \"/Library/Python/3.7/site-packages/tensorflow/python/ops/map_fn.py\", line 425, in map_fn_v2\r\n name=name)\r\n File \"/Library/Python/3.7/site-packages/tensorflow/python/ops/map_fn.py\", line 213, in map_fn\r\n static_shape = elems_flat[0].shape\r\nIndexError: list index out of range\r\n>>>\r\n```\r\n\r\n\r\nThis PR adds a check that raises\r\n`ValueError(\"elems must not be empty\")` to make the error clearer.\r\n\r\nThis PR fixes #39229.\r\n\r\nSigned-off-by: Yong Tang <yong.tang.github@outlook.com>",

"number": 39241,

"review_comments": [

{

"body": "It looks like the common confusion here is that people expect that when `map_fn` is applied to a list, it will map over that list. To help steer them in the right direction, let's make this message a little more verbose. How about something along the lines of:\r\n\r\n```\r\nraise ValueError(\r\n \"elems must be a Tensor or (possibly nested) sequence of Tensors. \"\r\n \"Got {}, which does not contain any Tensors.\".format(elems))\r\n```",

"created_at": "2020-05-07T13:05:44Z"

},

{

"body": "container -> contain",

"created_at": "2020-05-08T00:54:03Z"

},

{

"body": "@terrytangyuan Updated.",

"created_at": "2020-05-08T01:41:46Z"

},

{

"body": "Consists -> consist",

"created_at": "2020-06-08T17:58:51Z"

}

],

"title": "Return ValueError in case of empty list input for tf.map_fn"

}

|

{

"commits": [

{

"message": "Return ValueError in case of empty list input for tf.map_fn\n\nThis PR tries to address the issue raised in 39229 where\nempty lists input was not checked and throw out a non-obvious error:\n```python\n>>> import numpy as np\n>>> import tensorflow as tf\n>>> fn = lambda x: x\n>>> tf.map_fn(fn, [])\nTraceback (most recent call last):\n File \"<stdin>\", line 1, in <module>\n File \"/Library/Python/3.7/site-packages/tensorflow/python/util/deprecation.py\", line 574, in new_func\n return func(*args, **kwargs)\n File \"/Library/Python/3.7/site-packages/tensorflow/python/ops/map_fn.py\", line 425, in map_fn_v2\n name=name)\n File \"/Library/Python/3.7/site-packages/tensorflow/python/ops/map_fn.py\", line 213, in map_fn\n static_shape = elems_flat[0].shape\nIndexError: list index out of range\n>>>\n```\n\nIn case of empty list the behavior is undefined as we even don't know the output dtype.\n\nThis PR update to perform a check and thrown out\n`ValueError(\"elems must not be empty\")` to help clarify.\n\nThis PR fixes 39229.\n\nSigned-off-by: Yong Tang <yong.tang.github@outlook.com>"

},

{

"message": "Update tf.map_fn to specify that at least one tensor must be present\n\nSigned-off-by: Yong Tang <yong.tang.github@outlook.com>\n\nTypo fix\n\nSigned-off-by: Yong Tang <yong.tang.github@outlook.com>"

}

],

"files": [

{

"diff": "@@ -217,6 +217,12 @@ def testMapEmptyTensor(self):\n self.assertAllEqual([0, 3, 2], map_return.get_shape().dims)\n self.assertAllEqual([0, 3, 2], self.evaluate(map_return).shape)\n \n+ @test_util.run_in_graph_and_eager_modes\n+ def testMapEmptyList(self):\n+ x = []\n+ with self.assertRaisesRegexp(\n+ ValueError, r\"elems must be a Tensor or\"):\n+ _ = map_fn.map_fn(lambda e: e, x)\n \n if __name__ == \"__main__\":\n test.main()",

"filename": "tensorflow/python/kernel_tests/map_fn_test.py",

"status": "modified"

},

{

"diff": "@@ -267,7 +267,7 @@ def map_fn(fn,\n elems: A tensor or (possibly nested) sequence of tensors, each of which will\n be unstacked along their first dimension. `fn` will be applied to the\n nested sequence of the resulting slices. `elems` may include ragged and\n- sparse tensors.\n+ sparse tensors. `elems` must consist of at least one tensor.\n dtype: Deprecated: Equivalent to `fn_output_signature`.\n parallel_iterations: (optional) The number of iterations allowed to run in\n parallel. When graph building, the default value is 10. While executing\n@@ -296,7 +296,7 @@ def map_fn(fn,\n TypeError: if `fn` is not callable or the structure of the output of\n `fn` and `fn_output_signature` do not match.\n ValueError: if the lengths of the output of `fn` and `fn_output_signature`\n- do not match.\n+ do not match, or if the `elems` does not contain any tensor.\n \n Examples:\n \n@@ -375,6 +375,13 @@ def map_fn(fn,\n \n # Flatten the input tensors, and get the TypeSpec for each one.\n elems_flat = nest.flatten(elems)\n+\n+ # Check in case this is an empty list\n+ if len(elems_flat) == 0:\n+ raise ValueError(\n+ \"elems must be a Tensor or (possibly nested) sequence of Tensors. \"\n+ \"Got {}, which does not contain any Tensors.\".format(elems))\n+\n elems_flat_signature = [type_spec.type_spec_from_value(e) for e in elems_flat]\n elems_unflatten = lambda x: nest.pack_sequence_as(elems, x)\n ",

"filename": "tensorflow/python/ops/map_fn.py",

"status": "modified"

}

]

}

|

{

"body": "**System information**\r\n- Have I written custom code (as opposed to using a stock example script provided in TensorFlow): yes\r\n- OS Platform and Distribution (e.g., Linux Ubuntu 16.04): Windows 10 x64\r\n- Mobile device (e.g. iPhone 8, Pixel 2, Samsung Galaxy) if the issue happens on mobile device: NA\r\n- TensorFlow installed from (source or binary): binary\r\n- TensorFlow version (use command below): unknown 1.14.0\r\n- Python version: 3.6.8 (Anaconda)\r\n- Bazel version (if compiling from source): NA\r\n- GCC/Compiler version (if compiling from source): NA\r\n- CUDA/cuDNN version: NA\r\n- GPU model and memory: NA\r\n\r\n**Describe the current behavior**\r\n\r\n[`tf.boolean_mask`](https://www.tensorflow.org/api_docs/python/tf/boolean_mask) does not accept a scalar [`tf.Tensor`](https://www.tensorflow.org/api_docs/python/tf/Tensor) object as axis parameter.\r\n\r\n**Describe the expected behavior**\r\n\r\nAs per the docs, [`tf.boolean_mask`](https://www.tensorflow.org/api_docs/python/tf/boolean_mask) should accept a scalar [`tf.Tensor`](https://www.tensorflow.org/api_docs/python/tf/Tensor) object as axis parameter.\r\n\r\n> * `axis`: A 0-D int Tensor representing the axis in `tensor` to mask from. By default, axis is 0 which will mask from the first dimension. 
Otherwise K + axis <= N.\r\n\r\n**Code to reproduce the issue**\r\n\r\nThe following snippet:\r\n\r\n```py\r\nimport tensorflow as tf\r\ntf.boolean_mask([1, 2, 3], [True, False, True], axis=tf.constant(0, dtype=tf.int32))\r\n```\r\n\r\nCauses the exception:\r\n\r\n```none\r\nTypeError: slice indices must be integers or None or have an __index__ method\r\n```\r\n\r\nFor comparison, the equivalent operation with [`tf.gather`](https://www.tensorflow.org/api_docs/python/tf/gather) works correctly:\r\n\r\n```py\r\nimport tensorflow as tf\r\nwith tf.Session() as sess:\r\n print(sess.run(tf.gather([1, 2, 3], [0, 1], axis=tf.constant(0, dtype=tf.int32))))\r\n # [1 2]\r\n```\r\n\r\n\r\n**Other info / logs**\r\n\r\nFull traceback:\r\n\r\n```none\r\nTypeError Traceback (most recent call last)\r\n<ipython-input-3-4beb5ed72842> in <module>\r\n 1 import tensorflow as tf\r\n----> 2 tf.boolean_mask([1, 2, 3], [True, False, True], axis=tf.constant(0, dtype=tf.int32))\r\n\r\n~\\AppData\\Local\\Continuum\\anaconda3\\envs\\tf_test\\lib\\site-packages\\tensorflow\\python\\ops\\array_ops.py in boolean_mask(tensor, mask, name, axis)\r\n 1369 \" are None. E.g. shape=[None] is ok, but shape=None is not.\")\r\n 1370 axis = 0 if axis is None else axis\r\n-> 1371 shape_tensor[axis:axis + ndims_mask].assert_is_compatible_with(shape_mask)\r\n 1372\r\n 1373 leading_size = gen_math_ops.prod(shape(tensor)[axis:axis + ndims_mask], [0])\r\n\r\n~\\AppData\\Local\\Continuum\\anaconda3\\envs\\tf_test\\lib\\site-packages\\tensorflow\\python\\framework\\tensor_shape.py in __getitem__(self, key)\r\n 861 if self._dims is not None:\r\n 862 if isinstance(key, slice):\r\n--> 863 return TensorShape(self._dims[key])\r\n 864 else:\r\n 865 if self._v2_behavior:\r\n\r\nTypeError: slice indices must be integers or None or have an __index__ method\r\n```",

"comments": [

{

"body": "The issue replicates with TF 1.14; please find the Colab [gist](https://colab.sandbox.google.com/gist/oanush/8f7790e5d292c7d84482bd3c9629d2e0/32236.ipynb). Thanks!",

"created_at": "2019-09-06T09:03:42Z"

},

{

"body": "@javidcf I tried with the latest TF and the issue appears to be fixed. I will close this issue, but feel free to reopen it if the issue persists.",

"created_at": "2020-05-03T18:02:58Z"

},

{

"body": "Are you satisfied with the resolution of your issue?\n<a href=\"https://docs.google.com/forms/d/e/1FAIpQLSfaP12TRhd9xSxjXZjcZFNXPGk4kc1-qMdv3gc6bEP90vY1ew/viewform?entry.85265664=Yes&entry.2137816233=https://github.com/tensorflow/tensorflow/issues/32236\">Yes</a>\n<a href=\"https://docs.google.com/forms/d/e/1FAIpQLSfaP12TRhd9xSxjXZjcZFNXPGk4kc1-qMdv3gc6bEP90vY1ew/viewform?entry.85265664=No&entry.2137816233=https://github.com/tensorflow/tensorflow/issues/32236\">No</a>\n",

"created_at": "2020-05-03T18:03:00Z"

},

{

"body": "@yongtang Thanks for having a look. Unfortunately, this still happens in graph mode, e.g. with `tf.function`:\r\n\r\n```python\r\n@tf.function\r\ndef f():\r\n return tf.boolean_mask([1, 2, 3], [True, False, True],\r\n axis=tf.constant(0, dtype=tf.int32))\r\nf()\r\n# TypeError: slice indices must be integers or None or have an __index__ method\r\n```\r\n\r\nTested in TensorFlow 2.2.0-rc4.",

"created_at": "2020-05-04T10:06:28Z"

},

{

"body": "@javidcf Added a PR for the fix.",

"created_at": "2020-05-04T16:14:57Z"

},

{

"body": "@javidcf Update: Added a PR #39159 for the fix.",

"created_at": "2020-05-04T16:15:42Z"

},

{

"body": "Are you satisfied with the resolution of your issue?\n<a href=\"https://docs.google.com/forms/d/e/1FAIpQLSfaP12TRhd9xSxjXZjcZFNXPGk4kc1-qMdv3gc6bEP90vY1ew/viewform?entry.85265664=Yes&entry.2137816233=https://github.com/tensorflow/tensorflow/issues/32236\">Yes</a>\n<a href=\"https://docs.google.com/forms/d/e/1FAIpQLSfaP12TRhd9xSxjXZjcZFNXPGk4kc1-qMdv3gc6bEP90vY1ew/viewform?entry.85265664=No&entry.2137816233=https://github.com/tensorflow/tensorflow/issues/32236\">No</a>\n",

"created_at": "2020-05-05T19:17:17Z"

}

],

"number": 32236,

"title": "boolean_mask does not accept a Tensor as axis"

}

|

{

"body": "\r\nThis PR tries to address the issue raised in #32236 where\r\na TypeError was raised when axis is passed as a tensor,\r\neven though the docstring specifies that axis accepts a 0-D (scalar) int tensor.\r\n\r\nThis PR fixes #32236.\r\n\r\nSigned-off-by: Yong Tang <yong.tang.github@outlook.com>",

"number": 39159,

"review_comments": [],

"title": "Fix issue in boolean_mask when axis is passed as a tensor"

}

|

{

"commits": [

{

"message": "Fix issue in boolean_mask when axis is passed as a tensor\n\nThis PR tries to address the issue raised in 32236 where\na TypeError was thrown out when axis is passed as a tensor.\nIn the docstring axis has been specified as accepting a 1-D tensor.\n\nThis PR fixes the issue.\n\nThis PR fixes 32236.\n\nSigned-off-by: Yong Tang <yong.tang.github@outlook.com>"

},

{

"message": "Add test case for boolean_mask with axis passed as a tensor\n\nSigned-off-by: Yong Tang <yong.tang.github@outlook.com>"

},

{

"message": "Extend support of non-const axis tensor in boolean_mask\n\nSigned-off-by: Yong Tang <yong.tang.github@outlook.com>"

},

{

"message": "Add test case for non-const axis tensor in boolean_mask\n\nSigned-off-by: Yong Tang <yong.tang.github@outlook.com>"

}

],

"files": [

{

"diff": "@@ -281,6 +281,28 @@ def testStringMask(self):\n result = sess.run(masked_tensor, feed_dict={tile_placeholder: [2, 2]})\n self.assertAllEqual([b\"hello\", b\"hello\", b\"hello\", b\"hello\"], result)\n \n+ def testMaskWithAxisTensor(self):\n+ @def_function.function(autograph=False)\n+ def f():\n+ return array_ops.boolean_mask(\n+ [1, 2, 3], [True, False, True],\n+ axis=constant_op.constant(0, dtype=dtypes.int32))\n+\n+ self.assertAllEqual(self.evaluate(f()), [1, 3])\n+\n+ def testMaskWithAxisNonConstTensor(self):\n+ @def_function.function(\n+ autograph=False,\n+ input_signature=[\n+ tensor_spec.TensorSpec(shape=None, dtype=dtypes.int32)])\n+ def f(axis):\n+ return array_ops.boolean_mask(\n+ [1, 2, 3], [True, False, True],\n+ axis=axis)\n+\n+ self.assertAllEqual(\n+ self.evaluate(f(constant_op.constant(0, dtype=dtypes.int32))), [1, 3])\n+\n \n @test_util.run_all_in_graph_and_eager_modes\n class OperatorShapeTest(test_util.TensorFlowTestCase):",

"filename": "tensorflow/python/kernel_tests/array_ops_test.py",

"status": "modified"

},

{

"diff": "@@ -1699,7 +1699,10 @@ def _apply_mask_1d(reshaped_tensor, mask, axis=None):\n \"Number of mask dimensions must be specified, even if some dimensions\"\n \" are None. E.g. shape=[None] is ok, but shape=None is not.\")\n axis = 0 if axis is None else axis\n- shape_tensor[axis:axis + ndims_mask].assert_is_compatible_with(shape_mask)\n+ axis_value = tensor_util.constant_value(axis)\n+ if axis_value is not None:\n+ axis = axis_value\n+ shape_tensor[axis:axis + ndims_mask].assert_is_compatible_with(shape_mask)\n \n leading_size = gen_math_ops.prod(shape(tensor)[axis:axis + ndims_mask], [0])\n tensor = reshape(\n@@ -1708,10 +1711,15 @@ def _apply_mask_1d(reshaped_tensor, mask, axis=None):\n shape(tensor)[:axis], [leading_size],\n shape(tensor)[axis + ndims_mask:]\n ], 0))\n- first_dim = shape_tensor[axis:axis + ndims_mask].num_elements()\n- tensor.set_shape(\n- tensor_shape.as_shape(shape_tensor[:axis]).concatenate(\n- [first_dim]).concatenate(shape_tensor[axis + ndims_mask:]))\n+ # TODO(yongtang): tf.reshape in C++ kernel might have set the shape\n+ # correctly, so the following may not be needed? It still might ben\n+ # possible that there are some edge case where tensor_util.constant_value\n+ # resolves more case than ShapeInference of tf.reshape in C++ kernel.\n+ if axis_value is not None:\n+ first_dim = shape_tensor[axis:axis + ndims_mask].num_elements()\n+ tensor.set_shape(\n+ tensor_shape.as_shape(shape_tensor[:axis]).concatenate(\n+ [first_dim]).concatenate(shape_tensor[axis + ndims_mask:]))\n \n mask = reshape(mask, [-1])\n return _apply_mask_1d(tensor, mask, axis)",

"filename": "tensorflow/python/ops/array_ops.py",

"status": "modified"

}

]

}
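The shape bookkeeping changed in `array_ops.py` above can be sketched in plain Python. This is not TensorFlow code: `flattened_static_shape` is a hypothetical helper, `axis_value` stands in for what `tensor_util.constant_value(axis)` returns (an int for a constant axis, `None` for a non-constant tensor), and `None` inside a shape marks an unknown dimension. It shows why the fix only computes the collapsed static shape, and calls `set_shape`, when the axis value is known at graph-construction time.

```python
from math import prod
from typing import List, Optional

Shape = List[Optional[int]]  # None marks an unknown dimension


def flattened_static_shape(shape: Shape, ndims_mask: int,
                           axis_value: Optional[int]) -> Optional[Shape]:
    """Static shape after collapsing the masked dims into one, or None.

    Hypothetical helper: axis_value plays the role of
    tensor_util.constant_value(axis), which is None for a non-constant axis.
    """
    if axis_value is None:
        # A non-constant axis cannot slice the static shape, so the fix skips
        # set_shape and leaves the shape to runtime shape inference.
        return None
    masked = shape[axis_value:axis_value + ndims_mask]
    # Like TensorShape.num_elements(): unknown if any masked dim is unknown.
    first_dim = None if any(d is None for d in masked) else prod(masked)
    return shape[:axis_value] + [first_dim] + shape[axis_value + ndims_mask:]


print(flattened_static_shape([2, 3, 4], 2, 0))     # [6, 4]
print(flattened_static_shape([2, None, 4], 2, 0))  # [None, 4]
print(flattened_static_shape([2, 3, 4], 1, None))  # None
```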

|

{

"body": "**System information**\r\n- Have I written custom code (as opposed to using a stock example script provided in TensorFlow): yes\r\n- OS Platform and Distribution (e.g., Linux Ubuntu 16.04): Windows 10 x64 (1909)\r\n- TensorFlow installed from (source or binary): pip\r\n- TensorFlow version (use command below): v2.1.0-rc2-17-ge5bf8de410 2.1.0\r\n- Python version: 3.7.7\r\n- CUDA/cuDNN version: Using CPU\r\n- GPU model and memory: Using CPU\r\n\r\n**Describe the current behavior**\r\nCalling `Dataset.unbatch()` on a dataset with a known batch size resets its cardinality to -2 (unknown).\r\n\r\n**Describe the expected behavior**\r\nWhen the batch size of the dataset is known, unbatch should set the cardinality to `batch_size * cardinality`.\r\n\r\n**Standalone code to reproduce the issue**\r\n```\r\nimport tensorflow as tf\r\nds = tf.data.Dataset.range(10) # shape=()\r\nds = ds.batch(2, drop_remainder=True) # shape=(2,)\r\nprint(tf.data.experimental.cardinality(ds)) # 5\r\nds = ds.unbatch() # shape=()\r\nprint(tf.data.experimental.cardinality(ds)) # Should be 10, but is -2 (unknown)\r\n```\r\n\r\n**Other info / logs** Include any logs or source code that would be helpful to\r\ndiagnose the problem. If including tracebacks, please include the full\r\ntraceback. Large logs and files should be attached.\r\nAlthough cardinality is currently experimental, it is used when training Keras models.",

"comments": [

{

"body": "Added a PR #39137 for the fix.",

"created_at": "2020-05-04T03:09:08Z"

},

{

"body": "Was able to reproduce the issue with [TF v2.1](https://colab.research.google.com/gist/amahendrakar/caaefad7a4ba4579d410e203fa20057c/39136-tf-nightly.ipynb), [TF v2.2.0-rc4](https://colab.research.google.com/gist/amahendrakar/b2ef86f09489bdad388f089ab3ba315b/39136-2-2.ipynb#scrollTo=52pV0lOQX93A) and [TF-nightly](https://colab.research.google.com/gist/amahendrakar/b01f15c8b4e12c9e879f62eca2fb8395/39136-tf-nightly.ipynb). Please find the attached gist. Thanks!",

"created_at": "2020-05-04T17:11:34Z"

},

{

"body": "@aaudiber please review the PR from Yong.",

"created_at": "2020-05-04T20:02:43Z"

},

{

"body": "Are you satisfied with the resolution of your issue?\n<a href=\"https://docs.google.com/forms/d/e/1FAIpQLSfaP12TRhd9xSxjXZjcZFNXPGk4kc1-qMdv3gc6bEP90vY1ew/viewform?entry.85265664=Yes&entry.2137816233=https://github.com/tensorflow/tensorflow/issues/39136\">Yes</a>\n<a href=\"https://docs.google.com/forms/d/e/1FAIpQLSfaP12TRhd9xSxjXZjcZFNXPGk4kc1-qMdv3gc6bEP90vY1ew/viewform?entry.85265664=No&entry.2137816233=https://github.com/tensorflow/tensorflow/issues/39136\">No</a>\n",

"created_at": "2020-05-07T18:26:42Z"

}

],

"number": 39136,

"title": "Dataset.unbatch() sets cardinality to -2 even when batch size is known"

}

|

{

"body": "This PR tries to address the issue raised in #39136 where the cardinality\r\nof Dataset.unbatch() was always UNKNOWN, even when it could be computed\r\nin certain situations.\r\n\r\nThis PR adds the cardinality calculation for the case where the input cardinality\r\nis known and the leading dimension (batch size) of the input dataset's element shape is known.\r\n\r\nThis PR fixes #39136.\r\n\r\nSigned-off-by: Yong Tang <yong.tang.github@outlook.com>",

"number": 39137,

"review_comments": [

{

"body": "combine the two `if` clauses:\r\n```\r\nif (known_batch_size_ < 0 && shape.dim_size(0) >= 0) {\r\n ...\r\n}\r\n```",

"created_at": "2020-05-04T23:08:19Z"

},

{

"body": "can we call this just `batch_size_`? Then add a comment that it may or may not be known, with -1 representing unknown.",

"created_at": "2020-05-04T23:09:33Z"

},

{

"body": "Add test with 2 components, where only the second component's batch size is known:\r\n```\r\nlambda: dataset_ops.Dataset.zip(\r\n dataset_ops.Dataset.range(4).batch(2, drop_remainder=False),\r\n dataset_ops.Dataset.range(5).batch(2, drop_remainder=True))\r\n```",

"created_at": "2020-05-04T23:15:05Z"

},

{

"body": "Thanks @aaudiber, done.",

"created_at": "2020-05-05T16:10:00Z"

},

{

"body": "Thanks @aaudiber, updated.",

"created_at": "2020-05-05T16:10:11Z"

},

{

"body": "Done.",

"created_at": "2020-05-05T16:10:16Z"

}

],

"title": "Add cardinality calculation for Dataset.unbatch() when possible"

}

|

{

"commits": [

{

"message": "Add cardinality calculation for Dataset.unbatch() when possible\n\nThis PR tries to address the issue raised in 39136 where the cardinality\nof Dataset.unbatch() was always UNKNOWN, even when it could be computed\nin certain situations.\n\nThis PR adds the cardinality calculation for the case where the input cardinality\nis known and the leading dimension (batch size) of the input dataset's element shape is known.\n\nThis PR fixes 39136.\n\nSigned-off-by: Yong Tang <yong.tang.github@outlook.com>"

},

{

"message": "Add test case for cardinality with Dataset.unbatch()\n\nSigned-off-by: Yong Tang <yong.tang.github@outlook.com>"

},

{

"message": "Update based on review feedback.\n\nSigned-off-by: Yong Tang <yong.tang.github@outlook.com>"

},

{

"message": "Add additional test case where only the second batch size is known (from the review comment)\n\nSigned-off-by: Yong Tang <yong.tang.github@outlook.com>"

}

],

"files": [

{

"diff": "@@ -38,8 +38,12 @@ class UnbatchDatasetOp : public UnaryDatasetOpKernel {\n explicit Dataset(OpKernelContext* ctx, DatasetBase* input)\n : DatasetBase(DatasetContext(ctx)), input_(input) {\n input_->Ref();\n+ batch_size_ = -1;\n for (const PartialTensorShape& shape : input->output_shapes()) {\n if (!shape.unknown_rank()) {\n+ if (batch_size_ < 0 && shape.dim_size(0) >= 0) {\n+ batch_size_ = shape.dim_size(0);\n+ }\n gtl::InlinedVector<int64, 4> partial_dim_sizes;\n for (int i = 1; i < shape.dims(); ++i) {\n partial_dim_sizes.push_back(shape.dim_size(i));\n@@ -69,6 +73,17 @@ class UnbatchDatasetOp : public UnaryDatasetOpKernel {\n \n string DebugString() const override { return \"UnbatchDatasetOp::Dataset\"; }\n \n+ int64 Cardinality() const override {\n+ int64 n = input_->Cardinality();\n+ if (n == kInfiniteCardinality || n == kUnknownCardinality) {\n+ return n;\n+ }\n+ if (batch_size_ > 0) {\n+ return n * batch_size_;\n+ }\n+ return kUnknownCardinality;\n+ }\n+\n Status CheckExternalState() const override {\n return input_->CheckExternalState();\n }\n@@ -222,6 +237,8 @@ class UnbatchDatasetOp : public UnaryDatasetOpKernel {\n \n const DatasetBase* const input_;\n std::vector<PartialTensorShape> shapes_;\n+ // batch_size_ may or may not be known, with -1 as unknown\n+ int64 batch_size_;\n };\n };\n ",

"filename": "tensorflow/core/kernels/data/experimental/unbatch_dataset_op.cc",

"status": "modified"

},

{

"diff": "@@ -134,6 +134,21 @@ def _test_combinations():\n lambda: dataset_ops.Dataset.range(5).filter(lambda _: True).take(2),\n cardinality.UNKNOWN),\n (\"Take4\", lambda: dataset_ops.Dataset.range(5).repeat().take(2), 2),\n+ (\"Unbatch1\",\n+ lambda: dataset_ops.Dataset.range(5).batch(2, drop_remainder=True).unbatch(), 4),\n+ (\"Unbatch2\",\n+ lambda: dataset_ops.Dataset.range(5).batch(2, drop_remainder=False).unbatch(), cardinality.UNKNOWN),\n+ (\"Unbatch3\",\n+ lambda: dataset_ops.Dataset.range(5).batch(2, drop_remainder=True).filter(lambda _: True).unbatch(),\n+ cardinality.UNKNOWN),\n+ (\"Unbatch4\", lambda: dataset_ops.Dataset.range(5).batch(2, drop_remainder=True).repeat().unbatch(),\n+ cardinality.INFINITE),\n+ (\"Unbatch5\",\n+ lambda: dataset_ops.Dataset.zip((\n+ dataset_ops.Dataset.range(4).batch(2, drop_remainder=False),\n+ dataset_ops.Dataset.range(5).batch(2, drop_remainder=True),\n+ )).unbatch(),\n+ 4),\n (\"Window1\", lambda: dataset_ops.Dataset.range(5).window(\n size=2, shift=2, drop_remainder=True), 2),\n (\"Window2\", lambda: dataset_ops.Dataset.range(5).window(",

"filename": "tensorflow/python/data/experimental/kernel_tests/cardinality_test.py",

"status": "modified"

}

]

}
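The `Cardinality()` override added in `unbatch_dataset_op.cc` can be mirrored in plain Python to check the expected values in the new `Unbatch1` to `Unbatch5` test cases. This is a sketch, not the kernel: `unbatch_cardinality` is a hypothetical helper, each component shape is a list with `None` for an unknown dimension (or `None` for an unknown rank), and -1 / -2 are the values of `tf.data.experimental.INFINITE_CARDINALITY` and `tf.data.experimental.UNKNOWN_CARDINALITY`.

```python
from typing import List, Optional, Sequence

INFINITE = -1  # tf.data.experimental.INFINITE_CARDINALITY
UNKNOWN = -2   # tf.data.experimental.UNKNOWN_CARDINALITY

ComponentShape = Optional[List[Optional[int]]]  # None = unknown rank


def unbatch_cardinality(input_cardinality: int,
                        component_shapes: Sequence[ComponentShape]) -> int:
    """Hypothetical mirror of UnbatchDatasetOp::Dataset::Cardinality()."""
    # batch_size_ in the kernel: first known leading dim across components,
    # with -1 meaning unknown. Unknown-rank (None) shapes are skipped.
    batch_size = -1
    for shape in component_shapes:
        if shape and batch_size < 0 and shape[0] is not None:
            batch_size = shape[0]
    if input_cardinality in (INFINITE, UNKNOWN):
        return input_cardinality  # propagate infinite/unknown unchanged
    if batch_size > 0:
        return input_cardinality * batch_size
    return UNKNOWN


# Issue 39136: range(10).batch(2, drop_remainder=True) has 5 elements of shape [2].
print(unbatch_cardinality(5, [[2]]))  # 10

# Unbatch5: zip of batch(2, drop_remainder=False) (leading dim None) and
# batch(2, drop_remainder=True) (leading dim 2); the zipped cardinality is 2.
print(unbatch_cardinality(2, [[None], [2]]))  # 4
```

As in the kernel, the batch size is taken from the first component whose leading dimension is known, so a zip where only the second component has `drop_remainder=True` still yields a known cardinality.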

|

{

"body": "**System information** - Have I written custom code on **Google Colab**: - \r\n**Code:**\r\n```\r\ntf.keras.backend.set_floatx('float64')\r\n\r\nmodel.compile(optimizer= Adam(learning_rate= 0.001, clipnorm=1.0, clipvalue=0.5),\r\n loss={\r\n 'class_output': BinaryCrossentropy(),\r\n 'decoder_output': BinaryCrossentropy()\r\n },\r\n loss_weights=[0.5, 1.0],\r\n metrics = {\r\n 'class_output':[tf.metrics.Recall(), tf.metrics.Precision()],\r\n 'decoder_output':[tf.metrics.Recall(), tf.metrics.Precision()],\r\n }\r\n ) \r\n```\r\n**Error:**\r\n```\r\n/usr/local/lib/python3.6/dist-packages/tensorflow_core/python/framework/ops.py in convert_to_tensor(value, dtype, name, as_ref, preferred_dtype, dtype_hint, ctx, accepted_result_types)\r\n 1288 raise ValueError(\r\n 1289 \"Tensor conversion requested dtype %s for Tensor with dtype %s: %r\" %\r\n-> 1290 (dtype.name, value.dtype.name, value))\r\n 1291 return value\r\n 1292 \r\n\r\nValueError: Tensor conversion requested dtype float64 for Tensor with dtype float32: <tf.Tensor 'metrics_6/class_output_recall_8/Sum:0' shape=(1,) dtype=float32>\r\n```\r\n\r\nOS Platform and Distribution : - \r\n```\r\nos.uname()\r\n>>> posix.uname_result(sysname='Linux', nodename='ed841897617b', release='4.14.137+', version='#1 SMP Thu Aug 8 02:47:02 PDT 2019', machine='x86_64')\r\n```\r\nTensorFlow installed from : - \r\n```!pip install tensorflow==2.10```\r\nTensorFlow version : - \r\n```\r\ntf.__version__\r\n>>> '2.1.0'\r\n```\r\nPython version: - \r\n```\r\n!python -V\r\n>>> Python 3.6.9\r\n```\r\n~~Bazel version :- NA~~\r\n~~GCC/Compiler version : - NA~~ \r\n~~CUDA/cuDNN version: - NA~~\r\n\r\nGPU model and memory:\r\n```\r\nfrom psutil import virtual_memory\r\nmem = virtual_memory()\r\nprint(mem.total / 1024**3, 'GB') # total physical memory available\r\n>>>12.717426300048828 GB\r\n````\r\n~~You can collect some of this information using our environment 
capture\r\n[script](https://github.com/tensorflow/tensorflow/tree/master/tools/tf_env_collect.sh)\r\nYou can also obtain the TensorFlow version with: 1. TF 1.0: `python -c \"import\r\ntensorflow as tf; print(tf.GIT_VERSION, tf.VERSION)\"` 2. TF 2.0: `python -c\r\n\"import tensorflow as tf; print(tf.version.GIT_VERSION, tf.version.VERSION)\"`~~\r\n\r\n**Describe the current behavior**\r\nWhen using `tf.keras.backend.set_floatx('float64')` , whole tf should be set to float64, right ?\r\nBut the tf.metrics are not getting set as shown in the code above\r\n\r\n**Describe the expected behavior**\r\nAll of tf including tf.metrics should be calculated on the basis of tf.keras.backend.set_floatx('float64')\r\n\r\n**Code to reproduce the issue** \r\n```\r\nimport tensorflow as tf \r\n\r\ntf.keras.backend.set_floatx('float64')\r\n\r\nm = tf.keras.metrics.Recall()\r\nm.update_state([0, 1, 1, 1], [1, 0, 1, 1])\r\nprint('Final result: ', m.result().numpy())\r\n```\r\n\r\n**Other info / logs** ~~Include any logs or source code that would be helpful to\r\ndiagnose the problem. If including tracebacks, please include the full\r\ntraceback. 
Large logs and files should be attached.~~\r\nStackTrace:\r\n```\r\n---------------------------------------------------------------------------\r\nValueError Traceback (most recent call last)\r\n<ipython-input-30-86f79f751766> in <module>()\r\n 6 loss_weights=[0.5, 1.0],\r\n 7 metrics = {\r\n----> 8 'class_output':[tf.metrics.Recall(), tf.metrics.Precision()],\r\n 9 # 'decoder_output':[tf.metrics.Recall(), tf.metrics.Precision()],\r\n 10 }\r\n\r\n13 frames\r\n/usr/local/lib/python3.6/dist-packages/tensorflow_core/python/training/tracking/base.py in _method_wrapper(self, *args, **kwargs)\r\n 455 self._self_setattr_tracking = False # pylint: disable=protected-access\r\n 456 try:\r\n--> 457 result = method(self, *args, **kwargs)\r\n 458 finally:\r\n 459 self._self_setattr_tracking = previous_value # pylint: disable=protected-access\r\n\r\n/usr/local/lib/python3.6/dist-packages/tensorflow_core/python/keras/engine/training.py in compile(self, optimizer, loss, metrics, loss_weights, sample_weight_mode, weighted_metrics, target_tensors, distribute, **kwargs)\r\n 437 targets=self._targets,\r\n 438 skip_target_masks=self._prepare_skip_target_masks(),\r\n--> 439 masks=self._prepare_output_masks())\r\n 440 \r\n 441 # Prepare sample weight modes. 
List with the same length as model outputs.\r\n\r\n/usr/local/lib/python3.6/dist-packages/tensorflow_core/python/keras/engine/training.py in _handle_metrics(self, outputs, targets, skip_target_masks, sample_weights, masks, return_weighted_metrics, return_weighted_and_unweighted_metrics)\r\n 2002 metric_results.extend(\r\n 2003 self._handle_per_output_metrics(self._per_output_metrics[i],\r\n-> 2004 target, output, output_mask))\r\n 2005 if return_weighted_and_unweighted_metrics or return_weighted_metrics:\r\n 2006 metric_results.extend(\r\n\r\n/usr/local/lib/python3.6/dist-packages/tensorflow_core/python/keras/engine/training.py in _handle_per_output_metrics(self, metrics_dict, y_true, y_pred, mask, weights)\r\n 1953 with K.name_scope(metric_name):\r\n 1954 metric_result = training_utils.call_metric_function(\r\n-> 1955 metric_fn, y_true, y_pred, weights=weights, mask=mask)\r\n 1956 metric_results.append(metric_result)\r\n 1957 return metric_results\r\n\r\n/usr/local/lib/python3.6/dist-packages/tensorflow_core/python/keras/engine/training_utils.py in call_metric_function(metric_fn, y_true, y_pred, weights, mask)\r\n 1153 \r\n 1154 if y_pred is not None:\r\n-> 1155 return metric_fn(y_true, y_pred, sample_weight=weights)\r\n 1156 # `Mean` metric only takes a single value.\r\n 1157 return metric_fn(y_true, sample_weight=weights)\r\n\r\n/usr/local/lib/python3.6/dist-packages/tensorflow_core/python/keras/metrics.py in __call__(self, *args, **kwargs)\r\n 194 from tensorflow.python.keras.distribute import distributed_training_utils # pylint:disable=g-import-not-at-top\r\n 195 return distributed_training_utils.call_replica_local_fn(\r\n--> 196 replica_local_fn, *args, **kwargs)\r\n 197 \r\n 198 @property\r\n\r\n/usr/local/lib/python3.6/dist-packages/tensorflow_core/python/keras/distribute/distributed_training_utils.py in call_replica_local_fn(fn, *args, **kwargs)\r\n 1133 with strategy.scope():\r\n 1134 return strategy.extended.call_for_each_replica(fn, args, kwargs)\r\n-> 
1135 return fn(*args, **kwargs)\r\n 1136 \r\n 1137 \r\n\r\n/usr/local/lib/python3.6/dist-packages/tensorflow_core/python/keras/metrics.py in replica_local_fn(*args, **kwargs)\r\n 177 def replica_local_fn(*args, **kwargs):\r\n 178 \"\"\"Updates the state of the metric in a replica-local context.\"\"\"\r\n--> 179 update_op = self.update_state(*args, **kwargs) # pylint: disable=not-callable\r\n 180 with ops.control_dependencies([update_op]):\r\n 181 result_t = self.result() # pylint: disable=not-callable\r\n\r\n/usr/local/lib/python3.6/dist-packages/tensorflow_core/python/keras/utils/metrics_utils.py in decorated(metric_obj, *args, **kwargs)\r\n 74 \r\n 75 with tf_utils.graph_context_for_symbolic_tensors(*args, **kwargs):\r\n---> 76 update_op = update_state_fn(*args, **kwargs)\r\n 77 if update_op is not None: # update_op will be None in eager execution.\r\n 78 metric_obj.add_update(update_op)\r\n\r\n/usr/local/lib/python3.6/dist-packages/tensorflow_core/python/keras/metrics.py in update_state(self, y_true, y_pred, sample_weight)\r\n 1340 top_k=self.top_k,\r\n 1341 class_id=self.class_id,\r\n-> 1342 sample_weight=sample_weight)\r\n 1343 \r\n 1344 def result(self):\r\n\r\n/usr/local/lib/python3.6/dist-packages/tensorflow_core/python/keras/utils/metrics_utils.py in update_confusion_matrix_variables(variables_to_update, y_true, y_pred, thresholds, top_k, class_id, sample_weight, multi_label, label_weights)\r\n 438 update_ops.append(\r\n 439 weighted_assign_add(label, pred, weights_tiled,\r\n--> 440 variables_to_update[matrix_cond]))\r\n 441 \r\n 442 return control_flow_ops.group(update_ops)\r\n\r\n/usr/local/lib/python3.6/dist-packages/tensorflow_core/python/keras/utils/metrics_utils.py in weighted_assign_add(label, pred, weights, var)\r\n 414 if weights is not None:\r\n 415 label_and_pred *= weights\r\n--> 416 return var.assign_add(math_ops.reduce_sum(label_and_pred, 1))\r\n 417 \r\n 418 loop_vars = 
{\r\n\r\n/usr/local/lib/python3.6/dist-packages/tensorflow_core/python/ops/resource_variable_ops.py in assign_add(self, delta, use_locking, name, read_value)\r\n 783 with _handle_graph(self.handle), self._assign_dependencies():\r\n 784 assign_add_op = gen_resource_variable_ops.assign_add_variable_op(\r\n--> 785 self.handle, ops.convert_to_tensor(delta, dtype=self.dtype),\r\n 786 name=name)\r\n 787 if read_value:\r\n\r\n/usr/local/lib/python3.6/dist-packages/tensorflow_core/python/framework/ops.py in convert_to_tensor(value, dtype, name, as_ref, preferred_dtype, dtype_hint, ctx, accepted_result_types)\r\n 1288 raise ValueError(\r\n 1289 \"Tensor conversion requested dtype %s for Tensor with dtype %s: %r\" %\r\n-> 1290 (dtype.name, value.dtype.name, value))\r\n 1291 return value\r\n 1292 \r\n\r\nValueError: Tensor conversion requested dtype float64 for Tensor with dtype float32: <tf.Tensor 'metrics_6/class_output_recall_8/Sum:0' shape=(1,) dtype=float32>\r\n```\r\n",

"comments": [

{

"body": "@MarkDaoust \r\nTagging you for escalation. Kindly excuse me if this is unprofessional.",

"created_at": "2020-02-16T06:55:40Z"

},

{

"body": "@Hemal-Mamtora Could you please confirm if the issue faced by you is similar to existing [issue](https://github.com/tensorflow/tensorflow/issues/33365)",

"created_at": "2020-02-17T07:07:28Z"

},

{

"body": "Yes, it seems like TF 2.0 has issues with float64.\r\n\r\nWhen will this issue be resolved?",

"created_at": "2020-02-17T12:35:51Z"

},

{

"body": "Hi @Hemal-Mamtora , \r\n\r\nI think you're right.\r\n\r\nIt looks like this is being caused by the mismatch of [metric.py recognizing `floatx`](https://github.com/tensorflow/tensorflow/blob/master/tensorflow/python/keras/metrics.py#L146) but [metric_utils.py casting directly to float32](https://github.com/tensorflow/tensorflow/blob/master/tensorflow/python/keras/utils/metrics_utils.py#L427) .\r\n\r\n@pavithrasv, what's the right way to fix this?\r\n",

"created_at": "2020-02-18T15:02:52Z"

},

{

"body": "Thank you @MarkDaoust. It should be cast to the predictions' dtype. If anyone would like to work on the fix please feel free to send me a PR.",

"created_at": "2020-02-18T17:52:45Z"

},

{

"body": "Added a fix and test case in PR #39134 that will address this issue.",

"created_at": "2020-05-03T22:43:05Z"

},

{

"body": "Are you satisfied with the resolution of your issue?\n<a href=\"https://docs.google.com/forms/d/e/1FAIpQLSfaP12TRhd9xSxjXZjcZFNXPGk4kc1-qMdv3gc6bEP90vY1ew/viewform?entry.85265664=Yes&entry.2137816233=https://github.com/tensorflow/tensorflow/issues/36790\">Yes</a>\n<a href=\"https://docs.google.com/forms/d/e/1FAIpQLSfaP12TRhd9xSxjXZjcZFNXPGk4kc1-qMdv3gc6bEP90vY1ew/viewform?entry.85265664=No&entry.2137816233=https://github.com/tensorflow/tensorflow/issues/36790\">No</a>\n",

"created_at": "2020-05-28T17:07:19Z"

}

],

"number": 36790,

"title": "tf.keras.backend.set_floatx() causing ValueError (dtype conversion error) while computing tf.keras.metrics.*"

}

|

{

"body": "\r\nThis PR fixes the issue raised in #36790 where tf.keras.metrics.Recall\r\nraises a ValueError when the Keras backend floatx is float64.\r\n\r\nThis PR casts the value to the dtype of var before var.assign_add\r\nis called.\r\n\r\nThis PR fixes #36790.\r\n\r\nSigned-off-by: Yong Tang <yong.tang.github@outlook.com>\r\n",

"number": 39134,

"review_comments": [

{

"body": "Could we change other places float32 is used in this function as well?",

"created_at": "2020-05-26T19:31:10Z"

}

],

"title": "Fix ValueError with tf.keras.metrics.Recall and float64 keras backend"

}

|

{

"commits": [

{

"message": "Fix ValueError with tf.keras.metrics.Recall and float64 keras backend\n\nThis PR fixes the issue raised in 36790 where tf.keras.metrics.Recall\nraises a ValueError when the Keras backend floatx is float64.\n\nThis PR casts the value to the dtype of var before var.assign_add\nis called.\n\nThis PR fixes 36790.\n\nSigned-off-by: Yong Tang <yong.tang.github@outlook.com>"

},

{

"message": "Add test case for tf.keras.metrics.Recall() and float64 keras backend.\n\nSigned-off-by: Yong Tang <yong.tang.github@outlook.com>"

},

{

"message": "Update update_confusion_matrix_variables to always cast to variables_to_update dtype (vs. explicit float32)\n\nThis commit updates the function update_confusion_matrix_variables\nto always cast to the dtype of variables_to_update (previously\nthe values were cast to float32 explicitly, which caused issues\nwhen the Keras backend uses a dtype other than float32).\n\nSigned-off-by: Yong Tang <yong.tang.github@outlook.com>"

}

],

"files": [

{

"diff": "@@ -33,6 +33,7 @@\n from tensorflow.python.framework import errors_impl\n from tensorflow.python.framework import ops\n from tensorflow.python.framework import test_util\n+from tensorflow.python.keras import backend\n from tensorflow.python.keras import combinations\n from tensorflow.python.keras import keras_parameterized\n from tensorflow.python.keras import layers\n@@ -2174,6 +2175,23 @@ def test_reset_states_mean_iou(self):\n self.assertArrayNear(self.evaluate(m_obj.total_cm)[0], [1, 0], 1e-1)\n self.assertArrayNear(self.evaluate(m_obj.total_cm)[1], [3, 0], 1e-1)\n \n+ def test_reset_states_recall_float64(self):\n+ # Test case for GitHub issue 36790.\n+ try:\n+ backend.set_floatx('float64')\n+ r_obj = metrics.Recall()\n+ model = _get_model([r_obj])\n+ x = np.concatenate((np.ones((50, 4)), np.zeros((50, 4))))\n+ y = np.concatenate((np.ones((50, 1)), np.ones((50, 1))))\n+ model.evaluate(x, y)\n+ self.assertEqual(self.evaluate(r_obj.true_positives), 50.)\n+ self.assertEqual(self.evaluate(r_obj.false_negatives), 50.)\n+ model.evaluate(x, y)\n+ self.assertEqual(self.evaluate(r_obj.true_positives), 50.)\n+ self.assertEqual(self.evaluate(r_obj.false_negatives), 50.)\n+ finally:\n+ backend.set_floatx('float32')\n+\n \n if __name__ == '__main__':\n test.main()",

"filename": "tensorflow/python/keras/metrics_test.py",

"status": "modified"

},

{

"diff": "@@ -299,9 +299,19 @@ def update_confusion_matrix_variables(variables_to_update,\n '`multi_label` is True.')\n if variables_to_update is None:\n return\n- y_true = math_ops.cast(y_true, dtype=dtypes.float32)\n- y_pred = math_ops.cast(y_pred, dtype=dtypes.float32)\n- thresholds = ops.convert_to_tensor_v2(thresholds, dtype=dtypes.float32)\n+ if not any(\n+ key for key in variables_to_update if key in list(ConfusionMatrix)):\n+ raise ValueError(\n+ 'Please provide at least one valid confusion matrix '\n+ 'variable to update. Valid variable key options are: \"{}\". '\n+ 'Received: \"{}\"'.format(\n+ list(ConfusionMatrix), variables_to_update.keys()))\n+\n+ variable_dtype = list(variables_to_update.values())[0].dtype\n+\n+ y_true = math_ops.cast(y_true, dtype=variable_dtype)\n+ y_pred = math_ops.cast(y_pred, dtype=variable_dtype)\n+ thresholds = ops.convert_to_tensor_v2(thresholds, dtype=variable_dtype)\n num_thresholds = thresholds.shape[0]\n if multi_label:\n one_thresh = math_ops.equal(\n@@ -314,14 +324,6 @@ def update_confusion_matrix_variables(variables_to_update,\n sample_weight)\n one_thresh = math_ops.cast(True, dtype=dtypes.bool)\n \n- if not any(\n- key for key in variables_to_update if key in list(ConfusionMatrix)):\n- raise ValueError(\n- 'Please provide at least one valid confusion matrix '\n- 'variable to update. Valid variable key options are: \"{}\". 
'\n- 'Received: \"{}\"'.format(\n- list(ConfusionMatrix), variables_to_update.keys()))\n-\n invalid_keys = [\n key for key in variables_to_update if key not in list(ConfusionMatrix)\n ]\n@@ -401,7 +403,7 @@ def update_confusion_matrix_variables(variables_to_update,\n \n if sample_weight is not None:\n sample_weight = weights_broadcast_ops.broadcast_weights(\n- math_ops.cast(sample_weight, dtype=dtypes.float32), y_pred)\n+ math_ops.cast(sample_weight, dtype=variable_dtype), y_pred)\n weights_tiled = array_ops.tile(\n array_ops.reshape(sample_weight, thresh_tiles), data_tiles)\n else:\n@@ -422,9 +424,9 @@ def update_confusion_matrix_variables(variables_to_update,\n \n def weighted_assign_add(label, pred, weights, var):\n label_and_pred = math_ops.cast(\n- math_ops.logical_and(label, pred), dtype=dtypes.float32)\n+ math_ops.logical_and(label, pred), dtype=var.dtype)\n if weights is not None:\n- label_and_pred *= weights\n+ label_and_pred *= math_ops.cast(weights, dtype=var.dtype)\n return var.assign_add(math_ops.reduce_sum(label_and_pred, 1))\n \n loop_vars = {",

"filename": "tensorflow/python/keras/utils/metrics_utils.py",

"status": "modified"

}

]

}
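The core of the fix in `metrics_utils.py` is to take the accumulation dtype from the metric's own variables instead of hard-coding `float32`. The plain-Python sketch below models only that idea: `StrictVariable` and `update_recall_counts` are hypothetical stand-ins (dtypes are plain strings), and `assign_add` rejects a dtype mismatch the way `ops.convert_to_tensor(delta, dtype=self.dtype)` does in the traceback from issue #36790. The 0.5 threshold and the `pred > threshold` comparison are assumptions chosen to match Recall's default behavior.

```python
from dataclasses import dataclass
from typing import Dict, Sequence


@dataclass
class StrictVariable:
    """Hypothetical stand-in for a resource variable with a fixed dtype."""
    dtype: str
    value: float = 0.0

    def assign_add(self, delta: float, delta_dtype: str) -> None:
        # Mimics convert_to_tensor(delta, dtype=self.dtype): no implicit casting.
        if delta_dtype != self.dtype:
            raise ValueError(
                f"Tensor conversion requested dtype {self.dtype} "
                f"for Tensor with dtype {delta_dtype}")
        self.value += delta


def update_recall_counts(variables: Dict[str, StrictVariable],
                         y_true: Sequence[int], y_pred: Sequence[float],
                         threshold: float = 0.5) -> None:
    # The fix: derive the dtype from the variables being updated
    # (previously every value was cast to "float32").
    variable_dtype = next(iter(variables.values())).dtype
    tp = sum(1.0 for t, p in zip(y_true, y_pred) if t and p > threshold)
    fn = sum(1.0 for t, p in zip(y_true, y_pred) if t and p <= threshold)
    variables["true_positives"].assign_add(tp, variable_dtype)
    variables["false_negatives"].assign_add(fn, variable_dtype)


# Same inputs as the reproduction in issue 36790, with float64 variables.
v = {"true_positives": StrictVariable("float64"),
     "false_negatives": StrictVariable("float64")}
update_recall_counts(v, [0, 1, 1, 1], [1, 0, 1, 1])
tp, fn = v["true_positives"].value, v["false_negatives"].value
print(tp / (tp + fn))  # 0.6666666666666666
```

Passing `"float32"` as `delta_dtype` to a float64 `StrictVariable` raises the same kind of `ValueError` shown in the issue, which is the behavior the old hard-coded cast triggered.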

|

{

"body": "<em>Please make sure that this is a bug. As per our [GitHub Policy](https://github.com/tensorflow/tensorflow/blob/master/ISSUES.md), we only address code/doc bugs, performance issues, feature requests and build/installation issues on GitHub. tag:bug_template</em>\r\n\r\n**System information**\r\n- Have I written custom code (as opposed to using a stock example script provided in TensorFlow): yes\r\n- OS Platform and Distribution (e.g., Linux Ubuntu 16.04): Linux Ubuntu 18.04\r\n- Mobile device (e.g. iPhone 8, Pixel 2, Samsung Galaxy) if the issue happens on mobile device: N/A\r\n- TensorFlow installed from (source or binary): binary\r\n- TensorFlow version (use command below): \r\nv2.0.0-beta1-5101-gc75bb66 2.0.0-rc0\r\n- Python version: 3.6.8\r\n- Bazel version (if compiling from source): N/A\r\n- GCC/Compiler version (if compiling from source): N/A\r\n- CUDA/cuDNN version: CUDA 10.0, cuDNN 7.6.2.24-1\r\n- GPU model and memory: Nvidia RTX 2070 8 GB\r\n\r\n**Describe the current behavior**\r\nA constructor of a tf.keras Model that uses `tf.keras.layers.BatchNormalization` with `virtual_batch_size` set and unspecified input shape dimensions throws an exception.\r\n\r\n**Describe the expected behavior**\r\nSuch a model should be usable.\r\n\r\n**Code to reproduce the issue**\r\n```python\r\nimport tensorflow as tf\r\n\r\ninp = tf.keras.layers.Input(shape=(None, None, 3))\r\nnet = tf.keras.layers.BatchNormalization(virtual_batch_size=8)(inp)\r\n\r\nmodel = tf.keras.Model(inputs=inp, outputs=net)\r\n```\r\n\r\n**Other info / logs**\r\nTraceback of the exception:\r\n```\r\nTraceback (most recent call last):\r\n File \"/home/ikrets/tf2/lib/python3.6/site-packages/tensorflow_core/python/framework/tensor_util.py\", line 541, in make_tensor_proto\r\n str_values = [compat.as_bytes(x) for x in proto_values]\r\n File \"/home/ikrets/tf2/lib/python3.6/site-packages/tensorflow_core/python/framework/tensor_util.py\", line 541, in <listcomp>\r\n str_values = 
[compat.as_bytes(x) for x in proto_values]\r\n File \"/home/ikrets/tf2/lib/python3.6/site-packages/tensorflow_core/python/util/compat.py\", line 71, in as_bytes\r\n (bytes_or_text,))\r\nTypeError: Expected binary or unicode string, got 8\r\n\r\nDuring handling of the above exception, another exception occurred:\r\n\r\nTraceback (most recent call last):\r\n File \"/home/ikrets/tf2/lib/python3.6/site-packages/tensorflow_core/python/framework/test_virtual_batch.py\", line 6, in <module>\r\n net = tf.keras.layers.BatchNormalization(virtual_batch_size=8)(inp)\r\n File \"/home/ikrets/tf2/lib/python3.6/site-packages/tensorflow_core/python/keras/engine/base_layer.py\", line 802, in __call__\r\n outputs = call_fn(cast_inputs, *args, **kwargs)\r\n File \"/home/ikrets/tf2/lib/python3.6/site-packages/tensorflow_core/python/keras/layers/normalization.py\", line 652, in call\r\n inputs = array_ops.reshape(inputs, expanded_shape)\r\n File \"/home/ikrets/tf2/lib/python3.6/site-packages/tensorflow_core/python/ops/array_ops.py\", line 131, in reshape\r\n result = gen_array_ops.reshape(tensor, shape, name)\r\n File \"/home/ikrets/tf2/lib/python3.6/site-packages/tensorflow_core/python/ops/gen_array_ops.py\", line 8117, in reshape\r\n \"Reshape\", tensor=tensor, shape=shape, name=name)\r\n File \"/home/ikrets/tf2/lib/python3.6/site-packages/tensorflow_core/python/framework/op_def_library.py\", line 530, in _apply_op_helper\r\n raise err\r\n File \"/home/ikrets/tf2/lib/python3.6/site-packages/tensorflow_core/python/framework/op_def_library.py\", line 527, in _apply_op_helper\r\n preferred_dtype=default_dtype)\r\n File \"/home/ikrets/tf2/lib/python3.6/site-packages/tensorflow_core/python/framework/ops.py\", line 1296, in internal_convert_to_tensor\r\n ret = conversion_func(value, dtype=dtype, name=name, as_ref=as_ref)\r\n File \"/home/ikrets/tf2/lib/python3.6/site-packages/tensorflow_core/python/framework/constant_op.py\", line 286, in _constant_tensor_conversion_function\r\n return 
constant(v, dtype=dtype, name=name)\r\n File \"/home/ikrets/tf2/lib/python3.6/site-packages/tensorflow_core/python/framework/constant_op.py\", line 227, in constant\r\n allow_broadcast=True)\r\n File \"/home/ikrets/tf2/lib/python3.6/site-packages/tensorflow_core/python/framework/constant_op.py\", line 265, in _constant_impl\r\n allow_broadcast=allow_broadcast))\r\n File \"/home/ikrets/tf2/lib/python3.6/site-packages/tensorflow_core/python/framework/tensor_util.py\", line 545, in make_tensor_proto\r\n \"supported type.\" % (type(values), values))\r\nTypeError: Failed to convert object of type <class 'list'> to Tensor. Contents: [8, -1, None, None, 3]. Consider casting elements to a supported type.\r\n```",

"comments": [

{

"body": "I replicate the issue with TF 2.0.0.rc0. Please take a look at [gist here](https://colab.sandbox.google.com/gist/gadagashwini/514760082d2c9017a6a4cd51977e3a51/untitled138.ipynb). Thanks!",

"created_at": "2019-09-11T06:22:55Z"

},

{

"body": "Added a PR #39131 for the fix.",

"created_at": "2020-05-03T20:29:33Z"

},

{

"body": "Are you satisfied with the resolution of your issue?\n<a href=\"https://docs.google.com/forms/d/e/1FAIpQLSfaP12TRhd9xSxjXZjcZFNXPGk4kc1-qMdv3gc6bEP90vY1ew/viewform?entry.85265664=Yes&entry.2137816233=https://github.com/tensorflow/tensorflow/issues/32380\">Yes</a>\n<a href=\"https://docs.google.com/forms/d/e/1FAIpQLSfaP12TRhd9xSxjXZjcZFNXPGk4kc1-qMdv3gc6bEP90vY1ew/viewform?entry.85265664=No&entry.2137816233=https://github.com/tensorflow/tensorflow/issues/32380\">No</a>\n",

"created_at": "2020-05-05T19:27:58Z"

}

],

"number": 32380,

"title": "BatchNormalization virtual_batch_size does not work with None in input shape"

}

|

{

"body": "This PR tries to address the issue raised in #32380, where BatchNormalization with virtual_batch_size throws an error if the input shape contains None:\r\n```\r\nTypeError: Failed to convert object of type <class 'list'> to Tensor. Contents: [8, -1, None, None, 3]. Consider casting elements to a supported type.\r\n```\r\n\r\nThis PR converts None to -1 so that the shape can be passed as a tensor to `reshape`.\r\n\r\nThis PR fixes #32380.\r\n\r\nSigned-off-by: Yong Tang <yong.tang.github@outlook.com>",

"number": 39131,

"review_comments": [

{

"body": "This is not super safe as it can generate more than one -1.\r\n\r\nIsn't it better to use tf.shape instead?",

"created_at": "2020-05-04T14:52:04Z"

}

],

"title": "Fix BatchNormalization issue with virtual_batch_size when shape has None"

}
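The review comment's concern can be checked with plain Python lists. This is a sketch, not TensorFlow code: `expanded_static_shape` mirrors the two lines the diff in this PR removes from `normalization.py` (which read the static shape via `inputs.shape.as_list()`), while `none_to_minus_one` and `is_valid_reshape_target` are hypothetical helpers illustrating the "convert None to -1" idea and the `reshape` rule that at most one dimension may be `-1`.

```python
def expanded_static_shape(static_shape, virtual_batch_size):
    """Mirror of the removed lines in normalization.py, which build the
    reshape target from the *static* shape (inputs.shape.as_list())."""
    original_shape = [-1] + list(static_shape[1:])
    return [virtual_batch_size, -1] + original_shape[1:]


def none_to_minus_one(shape):
    """Hypothetical helper for the first-commit idea: map unknown dims to -1."""
    return [-1 if dim is None else dim for dim in shape]


def is_valid_reshape_target(shape):
    """reshape can infer at most one dimension, i.e. allows at most one -1."""
    return shape.count(-1) <= 1


# keras.layers.Input(shape=(None, None, 3)) has static shape (None, None, None, 3).
static = (None, None, None, 3)
target = expanded_static_shape(static, virtual_batch_size=8)
print(target)                          # [8, -1, None, None, 3], the list in the TypeError
fixed = none_to_minus_one(target)
print(fixed)                           # [8, -1, -1, -1, 3]
print(is_valid_reshape_target(fixed))  # False: three dimensions would need inferring
```

For an input whose only unknown dimension is the batch, e.g. static shape `(None, 4, 4, 3)`, the same conversion yields `[8, -1, 4, 4, 3]`, which is a valid target; the problem appears only when non-batch dimensions are also unknown.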

|

{

"commits": [

{

"message": "Fix BatchNormalization issue with virtual_batch_size when shape has None\n\nThis PR tries to address the issue raised in 32380, where\nBatchNormalization with virtual_batch_size throws an error if the\ninput shape contains None:\n```\nTypeError: Failed to convert object of type <class 'list'> to Tensor. Contents: [8, -1, None, None, 3]. Consider casting elements to a supported type.\n```\n\nThis PR converts None to -1 so that the shape can be passed as a tensor to `reshape`.\n\nThis PR fixes 32380.\n\nSigned-off-by: Yong Tang <yong.tang.github@outlook.com>"

},

{

"message": "Add test case for BatchNormalization with virtual_batch_size when the shape contains None.\n\nSigned-off-by: Yong Tang <yong.tang.github@outlook.com>"

},

{

"message": "Update to use tf.shape to get the shape of the tensor, from review comment\n\nSigned-off-by: Yong Tang <yong.tang.github@outlook.com>"

}

],

"files": [

{

"diff": "@@ -736,8 +736,13 @@ def call(self, inputs, training=None):\n if self.virtual_batch_size is not None:\n # Virtual batches (aka ghost batches) can be simulated by reshaping the\n # Tensor and reusing the existing batch norm implementation\n- original_shape = [-1] + inputs.shape.as_list()[1:]\n- expanded_shape = [self.virtual_batch_size, -1] + original_shape[1:]\n+ original_shape = array_ops.shape(inputs)\n+ original_shape = array_ops.concat([\n+ constant_op.constant([-1]),\n+ original_shape[1:]], axis=0)\n+ expanded_shape = array_ops.concat([\n+ constant_op.constant([self.virtual_batch_size, -1]),\n+ original_shape[1:]], axis=0)\n \n # Will cause errors if virtual_batch_size does not divide the batch size\n inputs = array_ops.reshape(inputs, expanded_shape)",

"filename": "tensorflow/python/keras/layers/normalization.py",

"status": "modified"

},

{

"diff": "@@ -354,6 +354,13 @@ def my_func():\n # Updates should be tracked in a `wrap_function`.\n self.assertLen(layer.updates, 2)\n \n+ @keras_parameterized.run_all_keras_modes\n+ def test_basic_batchnorm_v2_none_shape_and_virtual_batch_size(self):\n+ # Test case for GitHub issue for 32380\n+ norm = normalization_v2.BatchNormalization(virtual_batch_size=8)\n+ inp = keras.layers.Input(shape=(None, None, 3))\n+ _ = norm(inp)\n+\n \n def _run_batchnorm_correctness_test(layer, dtype='float32', fused=False):\n model = keras.models.Sequential()",

"filename": "tensorflow/python/keras/layers/normalization_test.py",

"status": "modified"

}

]

}
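The reshape target that the updated `call` builds can be traced with plain Python. In this sketch, `runtime_shape` stands in for the concrete value that `array_ops.shape(inputs)` (i.e. `tf.shape`) takes when the graph runs; `expanded_runtime_shape` mirrors the two `array_ops.concat` calls in the diff, and `resolve_reshape` is a hypothetical, simplified stand-in for how `reshape` infers its single `-1` entry. It uses `math.prod`, which assumes Python 3.8+.

```python
from math import prod


def expanded_runtime_shape(runtime_shape, virtual_batch_size):
    """Plain-Python mirror of the array_ops.concat calls in the diff.

    runtime_shape stands in for the value tf.shape(inputs) takes at run
    time, so every entry is a concrete int (no None).
    """
    original_shape = [-1] + list(runtime_shape[1:])
    return [virtual_batch_size, -1] + original_shape[1:]


def resolve_reshape(target, num_elements):
    """Infer the single -1 entry the way reshape does (simplified)."""
    if target.count(-1) != 1:
        raise ValueError("expected exactly one -1 in %r" % (target,))
    known = prod(dim for dim in target if dim != -1)
    if num_elements % known:
        raise ValueError("cannot reshape %d elements into %r" % (num_elements, target))
    return [num_elements // known if dim == -1 else dim for dim in target]


# A batch of 16 images of size 5x7 with 3 channels.
shape = (16, 5, 7, 3)
target = expanded_runtime_shape(shape, virtual_batch_size=8)
print(target)                                # [8, -1, 5, 7, 3]
print(resolve_reshape(target, prod(shape)))  # [8, 2, 5, 7, 3]
```

Because only the batch entry is replaced by `-1` and every other entry comes from the runtime shape, the target always contains exactly one `-1`, which is the property the review comment asked for. A batch of 12 with `virtual_batch_size=8` still makes `resolve_reshape` raise `ValueError`, consistent with the existing comment that the reshape fails when virtual_batch_size does not divide the batch size.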

|

{

"body": "**System information**\r\n- Have I written custom code (as opposed to using a stock example script provided in TensorFlow): somewhat custom\r\n- OS Platform and Distribution (e.g., Linux Ubuntu 16.04): Ubuntu 18.04.04\r\n- Mobile device (e.g. iPhone 8, Pixel 2, Samsung Galaxy) if the issue happens on mobile device: laptop\r\n- TensorFlow installed from (source or binary):\r\n- TensorFlow version (use command below): \r\n- Python version: 3.6.10\r\n- Bazel version (if compiling from source):\r\n- GCC/Compiler version (if compiling from source): 7.3.0\r\n- CUDA/cuDNN version: None\r\n- GPU model and memory: None\r\n\r\n**Describe the current behavior**\r\nHuber Loss crashes the script with the following error message:\r\n\r\n> TypeError: Input 'y' of 'Mul' Op has type float64 that does not match type float32 of argument 'x'.\r\n\r\nThat happens even though I cast everything to either `tf.float32` or `tf.float64` manually. It **does** work if I add this line:\r\n```\r\ntf.keras.backend.set_floatx('float32')\r\n```\r\nor if I remove the original line that sets `float64`. It seems to me that setting the global data type fails somewhere. I also get the following warning that tensors are being recast automatically:\r\n>WARNING:tensorflow:Layer dense_8 is casting an input tensor from dtype float64 to the layer's dtype of float32, which is new behavior in TensorFlow 2. The layer has dtype float32 because it's dtype defaults to floatx.\r\n\r\n**Standalone code to reproduce the issue**\r\n```python\r\nimport os\r\nos.environ['TF_CPP_MIN_LOG_LEVEL'] = '3'\r\nfrom sklearn.datasets import load_linnerud\r\nimport tensorflow as tf\r\ntf.keras.backend.set_floatx('float64')\r\nfrom tensorflow.keras.models import Model\r\nfrom tensorflow.keras.layers import Dense, Dropout, LSTM, Concatenate\r\n\r\nX, y = load_linnerud(return_X_y=True)\r\n\r\ndata = tf.data.Dataset.from_tensor_slices((X, y)).\\\r\n map(lambda a, b: (tf.divide(a, tf.reduce_max(X, axis=0, keepdims=True)), b))\r\n\r\ntrain_data = 
data.take(16).shuffle(16).batch(4)\r\ntest_data = data.skip(16).shuffle(4).batch(4)\r\n\r\n\r\nclass FullyConnectedNetwork(Model):\r\n def __init__(self):\r\n super(FullyConnectedNetwork, self).__init__()\r\n self.layer1 = Dense(9, input_shape=(3,))\r\n self.layer2 = LSTM(8, return_sequences=True)\r\n self.layer3 = Dense(27)\r\n self.layer4 = Dropout(5e-1)\r\n self.layer5 = Dense(27)\r\n self.layer6 = Concatenate()\r\n self.layer7 = Dense(3)\r\n\r\n def __call__(self, x, *args, **kwargs):\r\n x = tf.nn.tanh(self.layer1(x))\r\n y = self.layer2(x)\r\n x = tf.nn.selu(self.layer3(x))\r\n x = self.layer4(x)\r\n x = tf.nn.relu(self.layer5(x))\r\n x = self.layer6([x, y])\r\n x = self.layer7(x)\r\n return x\r\n\r\n\r\nmodel = FullyConnectedNetwork()\r\n\r\nloss_object = tf.keras.losses.Huber()\r\n\r\ntrain_loss = tf.keras.metrics.Mean()\r\ntest_loss = tf.keras.metrics.Mean()\r\n\r\noptimizer = tf.keras.optimizers.Adamax()\r\n\r\n\r\n@tf.function\r\ndef train_step(inputs, targets):\r\n with tf.GradientTape() as tape:\r\n outputs = model(inputs)\r\n loss = loss_object(outputs, targets)\r\n train_loss(loss)\r\n\r\n gradients = tape.gradient(loss, model.trainable_variables)\r\n optimizer.apply_gradients(zip(gradients, model.trainable_variables))\r\n\r\n\r\n@tf.function\r\ndef test_step(inputs, targets):\r\n outputs = model(inputs)\r\n print(outputs.dtype, 
targets.dtype)\r\n loss = loss_object(outputs, targets)\r\n test_loss(loss)\r\n\r\n\r\ndef main():\r\n train_loss.reset_states()\r\n test_loss.reset_states()\r\n\r\n for epoch in range(1, 10_000 + 1):\r\n for x, y in train_data:\r\n train_step(x, y)\r\n\r\n for x, y in test_data:\r\n test_step(x, y)\r\n\r\n if epoch % 25 == 0:\r\n print(f'Epoch: {epoch:>4} Train Loss: {train_loss.result().numpy():.2f} '\r\n f'Test Loss: {test_loss.result().numpy():.2f}')\r\n\r\n\r\nif __name__ == '__main__':\r\n main()\r\n```\r\n```\r\nTraceback (most recent call last):\r\n File \"/home/nicolas/anaconda3/envs/condaenv/lib/python3.6/site-packages/IPython/core/interactiveshell.py\", line 3331, in run_code\r\n exec(code_obj, self.user_global_ns, self.user_ns)\r\n File \"<ipython-input-30-b08781047662>\", line 86, in <module>\r\n main()\r\n File \"<ipython-input-30-b08781047662>\", line 75, in main\r\n train_step(x, y)\r\n File \"/home/nicolas/anaconda3/envs/condaenv/lib/python3.6/site-packages/tensorflow_core/python/eager/def_function.py\", line 568, in __call__\r\n result = self._call(*args, **kwds)\r\n File \"/home/nicolas/anaconda3/envs/condaenv/lib/python3.6/site-packages/tensorflow_core/python/eager/def_function.py\", line 615, in _call\r\n self._initialize(args, kwds, add_initializers_to=initializers)\r\n File \"/home/nicolas/anaconda3/envs/condaenv/lib/python3.6/site-packages/tensorflow_core/python/eager/def_function.py\", line 497, in _initialize\r\n *args, **kwds))\r\n File \"/home/nicolas/anaconda3/envs/condaenv/lib/python3.6/site-packages/tensorflow_core/python/eager/function.py\", line 2389, in _get_concrete_function_internal_garbage_collected\r\n graph_function, _, _ = self._maybe_define_function(args, kwargs)\r\n File \"/home/nicolas/anaconda3/envs/condaenv/lib/python3.6/site-packages/tensorflow_core/python/eager/function.py\", line 2703, in _maybe_define_function\r\n graph_function = self._create_graph_function(args, kwargs)\r\n File 
\"/home/nicolas/anaconda3/envs/condaenv/lib/python3.6/site-packages/tensorflow_core/python/eager/function.py\", line 2593, in _create_graph_function\r\n capture_by_value=self._capture_by_value),\r\n File \"/home/nicolas/anaconda3/envs/condaenv/lib/python3.6/site-packages/tensorflow_core/python/framework/func_graph.py\", line 978, in func_graph_from_py_func\r\n func_outputs = python_func(*func_args, **func_kwargs)\r\n File \"/home/nicolas/anaconda3/envs/condaenv/lib/python3.6/site-packages/tensorflow_core/python/eager/def_function.py\", line 439, in wrapped_fn\r\n return weak_wrapped_fn().__wrapped__(*args, **kwds)\r\n File \"/home/nicolas/anaconda3/envs/condaenv/lib/python3.6/site-packages/tensorflow_core/python/framework/func_graph.py\", line 968, in wrapper\r\n raise e.ag_error_metadata.to_exception(e)\r\nTypeError: in converted code:\r\n <ipython-input-20-f2c31267a363>:54 train_step *\r\n loss = loss_object(outputs, targets)\r\n /home/nicolas/anaconda3/envs/condaenv/lib/python3.6/site-packages/tensorflow_core/python/keras/losses.py:126 __call__\r\n losses = self.call(y_true, y_pred)\r\n /home/nicolas/anaconda3/envs/condaenv/lib/python3.6/site-packages/tensorflow_core/python/keras/losses.py:221 call\r\n return self.fn(y_true, y_pred, **self._fn_kwargs)\r\n /home/nicolas/anaconda3/envs/condaenv/lib/python3.6/site-packages/tensorflow_core/python/keras/losses.py:915 huber_loss\r\n math_ops.multiply(delta, linear))\r\n /home/nicolas/anaconda3/envs/condaenv/lib/python3.6/site-packages/tensorflow_core/python/util/dispatch.py:180 wrapper\r\n return target(*args, **kwargs)\r\n /home/nicolas/anaconda3/envs/condaenv/lib/python3.6/site-packages/tensorflow_core/python/ops/math_ops.py:334 multiply\r\n return gen_math_ops.mul(x, y, name)\r\n /home/nicolas/anaconda3/envs/condaenv/lib/python3.6/site-packages/tensorflow_core/python/ops/gen_math_ops.py:6125 mul\r\n \"Mul\", x=x, y=y, name=name)\r\n 
/home/nicolas/anaconda3/envs/condaenv/lib/python3.6/site-packages/tensorflow_core/python/framework/op_def_library.py:504 _apply_op_helper\r\n inferred_from[input_arg.type_attr]))\r\n TypeError: Input 'y' of 'Mul' Op has type float64 that does not match type float32 of argument 'x'.\r\n```",

"comments": [

{

"body": "Was able to reproduce the issue with TF v2.1, [TF v2.2.0-rc3](https://colab.research.google.com/gist/amahendrakar/6baa93476d84d2fef692b159e39eaaaa/39004.ipynb) and [TF-nightly](https://colab.research.google.com/gist/amahendrakar/869d37d6c2c3909353ce483f01fa5df0/39004-tf-nightly.ipynb). Please find the attached gist. Thanks!",

"created_at": "2020-04-29T13:56:43Z"

},

{

"body": "Potentially related to #36790",

"created_at": "2020-05-02T22:19:07Z"

},

{

"body": "Added PR #39123 to fix this issue.",

"created_at": "2020-05-03T16:36:19Z"

},

{

"body": "Are you satisfied with the resolution of your issue?\n<a href=\"https://docs.google.com/forms/d/e/1FAIpQLSfaP12TRhd9xSxjXZjcZFNXPGk4kc1-qMdv3gc6bEP90vY1ew/viewform?entry.85265664=Yes&entry.2137816233=https://github.com/tensorflow/tensorflow/issues/39004\">Yes</a>\n<a href=\"https://docs.google.com/forms/d/e/1FAIpQLSfaP12TRhd9xSxjXZjcZFNXPGk4kc1-qMdv3gc6bEP90vY1ew/viewform?entry.85265664=No&entry.2137816233=https://github.com/tensorflow/tensorflow/issues/39004\">No</a>\n",

"created_at": "2020-05-05T18:16:57Z"

}

],

"number": 39004,

"title": "Huber Loss crashes training loop due to data type mismatch"

}
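The traceback above ends in `huber_loss` at `math_ops.multiply(delta, linear)`. The plain-Python sketch below assumes the standard quadratic/linear split of the Huber loss (the names `quadratic` and `linear` are illustrative, apart from `linear`, which appears in the traceback) and marks where that product occurs. Python floats have no float32/float64 distinction, so it only shows where `delta` enters the computation, not the dtype mismatch itself.

```python
def huber_loss(y_true, y_pred, delta=1.0):
    """Mean Huber loss over two equal-length lists of floats."""
    total = 0.0
    for t, p in zip(y_true, y_pred):
        abs_error = abs(p - t)
        quadratic = min(abs_error, delta)  # part of the error up to delta
        linear = abs_error - quadratic     # part of the error beyond delta
        # The failing frame in the traceback is math_ops.multiply(delta, linear);
        # in TensorFlow both operands of this product must share one dtype.
        total += 0.5 * quadratic ** 2 + delta * linear
    return total / len(y_true)


print(huber_loss([0.0, 0.0], [0.5, 3.0]))  # 1.3125
```

With `delta=1.0`, the error of 0.5 stays in the quadratic regime (0.125) and the error of 3.0 contributes 0.5 from the quadratic part plus 2.0 from the linear part, so the mean is 1.3125.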

|

{