issue (dict) | pr (dict) | pr_details (dict) |

|---|---|---|

{

"body": "<details><summary>Click to expand!</summary> \n \n ### Issue Type\n\nBug\n\n### Have you reproduced the bug with TF nightly?\n\nYes\n\n### Source\n\nsource\n\n### Tensorflow Version\n\ntf 2.12.0\n\n### Custom Code\n\nYes\n\n### OS Platform and Distribution\n\nwin11\n\n### Mobile device\n\n_No response_\n\n### Python version\n\n_No response_\n\n### Bazel version\n\n_No response_\n\n### GCC/Compiler version\n\n_No response_\n\n### CUDA/cuDNN version\n\n_No response_\n\n### GPU model and memory\n\n_No response_\n\n### Current Behaviour?\n\nAccording to [doc](https://tensorflow.google.cn/api_docs/python/tf/eye), the param `num_rows` should be `Non-negative int32 scalar Tensor`. But below snippet code 1 indicates that the param `num_rows` cannot be zero which is inconsistent with doc. On the other hand, the param `num_rows` shouldnt be Bool Tensor, but when given bool tensor, `tf.eye` works, as below snippet code 2 shows.\n\n### Standalone code to reproduce the issue\n\n```shell\nsnippet code 1:\r\n\r\nimport tensorflow as tf\r\nresults={}\r\ntry:\r\n num_rows = \"1\"\r\n results[\"res\"] = tf.eye(num_rows=num_rows)\r\nexcept Exception as e:\r\n results[\"err\"] = \"Error:\"+str(e)\r\nprint(results)\r\n# results = Error:Arguments `num_rows` and `num_columns` must be positive integer values. Received: num_rows=1, num_columns=1\r\n```\r\n\r\nsnippet code 2:\r\n```\r\nimport tensorflow as tf\r\nresults={}\r\ntry:\r\n num_rows = True\r\n results[\"res\"] = tf.eye(num_rows=num_rows,)\r\nexcept Exception as e:\r\n results[\"err\"] = \"Error:\"+str(e)\r\nprint(results)\r\n# results = {'res': <tf.Tensor: shape=(1, 1), dtype=float32, numpy=array([[1.]], dtype=float32)>}\r\n```\n```\n\n\n### Relevant log output\n\n_No response_</details>",

"comments": [

{

"body": "Hi @cheyennee ,\r\n\r\nThanks for reporting. I need to cross check the implementation and let you update and do necessary. Thanks!",

"created_at": "2023-05-02T04:31:11Z"

},

{

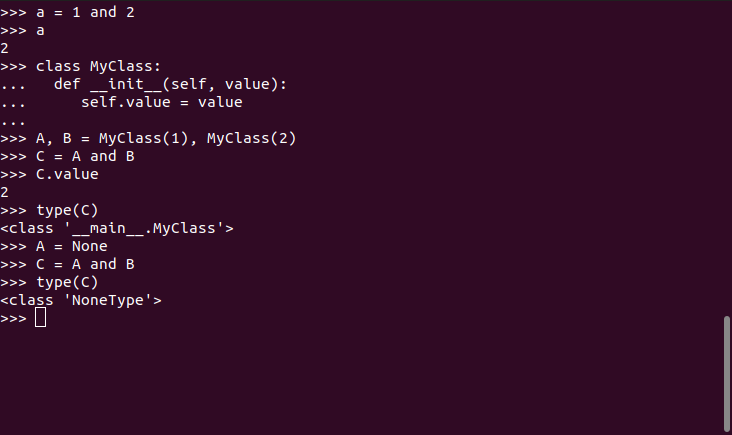

"body": "Hi @cheyennee ,\r\n\r\n**For the code snippet 1:**\r\n\r\nSince you are passing `num_rows = \"1\"` as string and it is raising the error as intended and in the description it is printing num_rows=1, and here 1 is string not a number. By default if you won't provide any value to `num_columns` then `num_columns=num_rows` as per API, hence you are getting same value for both `num_rows` and `num_columns`.\r\n\r\nFor example if I pass `num_rows = \"anything\"` then the error description will be like below:\r\n\r\n\r\n> \r\n\r\n> TypeError: Arguments `num_rows` and `num_columns` must be positive integer values. Received: num_rows=anything, num_columns=anything\r\n\r\n\r\n\r\nI hope this will clarify your query and there is no need to change any description here.\r\n\r\n\r\n\r\n**For the code snippet 2:**\r\n\r\nIf you pass `num_rows = True`, here True will be converted as 1 and hence `num_rows=1` and `num_columns=1` and the output will be (1,1) shaped tensor.\r\n\r\nHope this clarify your queries. Thanks!",

"created_at": "2023-05-02T11:30:01Z"

},

{

"body": "@SuryanarayanaY I see many other APIs that don't convert type internally. So I am a little confused, which APIs will automatically convert type internally, and which ones will not?",

"created_at": "2023-05-03T11:09:37Z"

},

{

"body": "Hi @cheyennee ,\r\n\r\nI hope for `code snippet-1`, I have answered your query right?\r\n\r\nI am assuming your question is for `code snippet-2` where True is converted as '1'.Correct me if I am wrong. \r\n\r\nThe `tf.eye` API calls `tf.ones` internally and this is where booleans are converted into integer.Please refer the source code and the [gist](https://colab.research.google.com/gist/SuryanarayanaY/c434827ac7569eceb8b37a7f9ab28d50/60457.ipynb) to explain this.\r\n\r\n I think in the both APIs we need to change the description of argument `shape` that it also accepts boolean and converts them into integers 1 or 0.\r\n\r\nI think this documentation change will suffice the purpose of this issue right? Please confirm",

"created_at": "2023-05-03T17:05:04Z"

},

{

"body": "@SuryanarayanaY Yeah, you're right. I think the documentation should be changed since the argument `shape` can accepts both boolean and integer.",

"created_at": "2023-05-04T14:42:12Z"

},

{

"body": "You didn't pass a boolean tensor, you passed a Python boolean, which automatically gets converted to and integer when you do math on it. The function fails if you do actually try to pass a tensor of type `tf.bool`. It's not useful to document that `tf.ones(True)` happens to work - it has no valid semantic meaning.",

"created_at": "2023-06-01T15:27:17Z"

},

{

"body": "Are you satisfied with the resolution of your issue?\n<a href=\"https://docs.google.com/forms/d/e/1FAIpQLSfaP12TRhd9xSxjXZjcZFNXPGk4kc1-qMdv3gc6bEP90vY1ew/viewform?entry.85265664=Yes&entry.2137816233=https://github.com/tensorflow/tensorflow/issues/60457\">Yes</a>\n<a href=\"https://docs.google.com/forms/d/e/1FAIpQLSfaP12TRhd9xSxjXZjcZFNXPGk4kc1-qMdv3gc6bEP90vY1ew/viewform?entry.85265664=No&entry.2137816233=https://github.com/tensorflow/tensorflow/issues/60457\">No</a>\n",

"created_at": "2023-06-01T15:27:20Z"

}

],

"number": 60457,

"title": "error message of tf.eye is inconsistent with doc"

}

|

{

"body": "At present as per documentation of `tf.zeros` and `tf.ones`, both APIs have argument `shape` which accepts \"A list of integers, a tuple of integers, or a 1-D Tensor of type int32 \". \r\n\r\nBut its find out that when Boolean data types `True, False` passed to the `shape` argument it also accepts it and convert them into 1, 0 respectively and outputs correct results. Hence i am proposing to add a note under the '`shape`' argument description that it also accepts boolean data types.\r\n\r\nAttaching the [gist](https://colab.research.google.com/gist/SuryanarayanaY/837bf1888c618e3585f4f7247c329885/tf-ones_tf-zeros_tf-eye.ipynb) also for referring the results. \r\n\r\nAlso fixes #60457 ",

"number": 60584,

"review_comments": [],

"title": "Update Args data types of tf.ones and tf.zeros"

}

|

{

"commits": [

{

"message": "Update Args data types of tf.ones and tf.zeros\n\nAt present as per documentation of tf.zeros and tf.ones, both APIs have argument shape which accepts \"A list of integers, a tuple of integers, or a 1-D Tensor of type int32 \". But its find out that when Boolean data types True, False passed to the same argument it also accepts it and convert them into 1,0 respectively and outputs correct results. Hence i am proposing to add a note under the 'shape' argument description that it also accepts boolean data types.\r\n\r\nAttaching the gist also for referring the results.\r\nhttps://colab.research.google.com/gist/SuryanarayanaY/837bf1888c618e3585f4f7247c329885/tf-ones_tf-zeros_tf-eye.ipynb"

},

{

"message": "Update supported dtypes of tf.eye num_rows argument \n\nAt present as per documentation the tf.eye API, the argument num_rows accepts \"Non-negative int32 scalar Tensor giving the number of rows in each batch matrix\".\r\n\r\nBut when tested with booleans True or False it also accepting these arguments and converting them to 1 or 0 respectively and generating desired output.This means bool data types also supported argument and hence I am proposing to add a note in the argument description that bool data types also supported.\r\n\r\nGist is attached here for reference of results.\r\n\r\nhttps://colab.research.google.com/gist/SuryanarayanaY/837bf1888c618e3585f4f7247c329885/tf-ones_tf-zeros_tf-eye.ipynb"

}

],

"files": [

{

"diff": "@@ -2832,6 +2832,8 @@ def zeros(shape, dtype=dtypes.float32, name=None):\n Args:\n shape: A `list` of integers, a `tuple` of integers, or\n a 1-D `Tensor` of type `int32`.\n+ Note: Boolean datatypes True,False also acceptable and converted\n+ into numerics 1,0 respectively.\n dtype: The DType of an element in the resulting `Tensor`.\n name: Optional string. A name for the operation.\n \n@@ -3090,6 +3092,8 @@ def ones(shape, dtype=dtypes.float32, name=None):\n Args:\n shape: A `list` of integers, a `tuple` of integers, or\n a 1-D `Tensor` of type `int32`.\n+ Note: Boolean datatypes True,False also acceptable and converted\n+ into numerics 1,0 respectively.\n dtype: Optional DType of an element in the resulting `Tensor`. Default is\n `tf.float32`.\n name: Optional string. A name for the operation.",

"filename": "tensorflow/python/ops/array_ops.py",

"status": "modified"

},

{

"diff": "@@ -219,6 +219,8 @@ def eye(num_rows,\n Args:\n num_rows: Non-negative `int32` scalar `Tensor` giving the number of rows\n in each batch matrix.\n+ Note: Boolean data types True,False also acceptable and converts into\n+ 1, 0 respectively.\n num_columns: Optional non-negative `int32` scalar `Tensor` giving the number\n of columns in each batch matrix. Defaults to `num_rows`.\n batch_shape: A list or tuple of Python integers or a 1-D `int32` `Tensor`.",

"filename": "tensorflow/python/ops/linalg_ops.py",

"status": "modified"

}

]

}

|

{

"body": "<details><summary>Click to expand!</summary> \n \n ### Issue Type\n\nBug\n\n### Have you reproduced the bug with TF nightly?\n\nYes\n\n### Source\n\nsource\n\n### Tensorflow Version\n\ntf 2.12.0\n\n### Custom Code\n\nYes\n\n### OS Platform and Distribution\n\nwin11\n\n### Mobile device\n\n_No response_\n\n### Python version\n\n_No response_\n\n### Bazel version\n\n_No response_\n\n### GCC/Compiler version\n\n_No response_\n\n### CUDA/cuDNN version\n\n_No response_\n\n### GPU model and memory\n\n_No response_\n\n### Current Behaviour?\n\nAccording to [doc](https://tensorflow.google.cn/api_docs/python/tf/nn/leaky_relu), the argument `features` can be `float16, float32, float64, int32, int64`. But the error message in following snippet code indicates that the type of `features` can only be `float16, float32, float64` without `int`.\n\n### Standalone code to reproduce the issue\n\n```shell\nimport tensorflow as tf\r\nresults={}\r\ntry:\r\n features = [True]\r\n results[\"res\"] = tf.nn.leaky_relu(features=features,)\r\nexcept Exception as e:\r\n results[\"err\"] = \"Error:\"+str(e)\r\nprint(results)\r\n# results={'err': \"Error:Value for attr 'T' of bool is not in the list of allowed values: half, bfloat16, float, double\\n\\t; NodeDef: {{node LeakyRelu}}; Op<name=LeakyRelu; signature=features:T -> activations:T; attr=alpha:float,default=0.2; attr=T:type,default=DT_FLOAT,allowed=[DT_HALF, DT_BFLOAT16, DT_FLOAT, DT_DOUBLE]> [Op:LeakyRelu]\"}\n```\n\n\n### Relevant log output\n\n_No response_</details>",

"comments": [

{

"body": "Hi. Is anyone working on the issue to update the docs/error message? Would love to take it up and start working on the issue",

"created_at": "2023-05-29T06:06:07Z"

}

],

"number": 60521,

"title": "error message is inconsistent with documentation in tf.nn.leaky_relu"

}

|

{

"body": "https://github.com/tensorflow/tensorflow/issues/60521 issue no. #60521\r\n\"According to doc(https://tensorflow.google.cn/api_docs/python/tf/nn/leaky_relu), the argument features can be float16, float32, float64, int32, int64. But the error message in following snippet code indicates that the type of features can only be float16, float32, float64 without int.\" This is my first contribution to open source so please excuse any mistake and please tell if anything is wrong.",

"number": 60522,

"review_comments": [],

"title": "Update LeakyRelu.pbtxt"

}

|

{

"commits": [

{

"message": "Update LeakyRelu.pbtxt\n\nhttps://github.com/tensorflow/tensorflow/issues/60521\r\nissue no. #60521\r\n\"According to doc(https://tensorflow.google.cn/api_docs/python/tf/nn/leaky_relu), the argument features can be float16, float32, float64, int32, int64. But the error message in following snippet code indicates that the type of features can only be float16, float32, float64 without int.\"\r\nThis is my contribution to open source so please excuse any mistake and please tell if anything is wrong."

},

{

"message": "add int value in allowed_values list \n\nif applied, this commit will add DT_INT as a type of value in allowed_values list in LeakyRelu.pbtxt file."

}

],

"files": [

{

"diff": "@@ -59,6 +59,7 @@ op {\n type: DT_BFLOAT16\n type: DT_FLOAT\n type: DT_DOUBLE\n+ type: DT_INT\n }\n }\n }",

"filename": "tensorflow/core/ops/compat/ops_history_v1/LeakyRelu.pbtxt",

"status": "modified"

},

{

"diff": "@@ -59,6 +59,7 @@ op {\n type: DT_BFLOAT16\n type: DT_FLOAT\n type: DT_DOUBLE\n+ type: DT_INT\n }\n }\n }",

"filename": "tensorflow/core/ops/compat/ops_history_v2/LeakyRelu.pbtxt",

"status": "modified"

}

]

}

|

{

"body": "<details><summary>Click to expand!</summary> \n \n ### Issue Type\n\nBug\n\n### Have you reproduced the bug with TF nightly?\n\nYes\n\n### Source\n\nsource\n\n### Tensorflow Version\n\n2.13.0-dev20230208\n\n### Custom Code\n\nYes\n\n### OS Platform and Distribution\n\n_No response_\n\n### Mobile device\n\n_No response_\n\n### Python version\n\n_No response_\n\n### Bazel version\n\n_No response_\n\n### GCC/Compiler version\n\n_No response_\n\n### CUDA/cuDNN version\n\n_No response_\n\n### GPU model and memory\n\n_No response_\n\n### Current Behaviour?\n\n```shell\ntf.raw_ops.ResourceScatterUpdate crash with abortion\n```\n\n\n### Standalone code to reproduce the issue\n\n```shell\nimport tensorflow as tf\r\nimport numpy as np\r\n\r\ninit = np.random.rand(20)\r\nupdate = np.random.rand(20)\r\n\r\nresource = tf.Variable(init, dtype=tf.float32)\r\nresource_var = resource.handle\r\nindices = np.array([1, 3, 5], dtype=np.int32)\r\ntf.raw_ops.ResourceScatterUpdate(resource=resource_var, indices=indices, updates=update)\n```\n\n\n### Relevant log output\n\n```shell\n2023-03-28 11:39:22.735062: F tensorflow/core/framework/tensor.cc:770] Check failed: dtype() == expected_dtype (1 vs. 2) double expected, got float\r\nAborted (core dumped)\n```\n</details>",

"comments": [

{

"body": "Try this below\r\n\r\nimport tensorflow as tf\r\nimport numpy as np\r\n\r\ninit = np.random.rand(20).astype(np.float32) # Convert to float32\r\n\r\nresource = tf.Variable(init, dtype=tf.float32)\r\nresource_var = resource.handle\r\nindices = np.array([1, 3, 5], dtype=np.int32)\r\n\r\n# Make sure the updates tensor has the correct shape: (len(indices),)\r\nupdate = np.random.rand(len(indices)).astype(np.float32) # Convert to float32\r\n\r\ntf.raw_ops.ResourceScatterUpdate(resource=resource_var, indices=indices, updates=update)\r\nNow, the 'updates' tensor has a shape that matches the size of the 'indices' tensor, and the error should be resolved.",

"created_at": "2023-03-31T20:21:23Z"

},

{

"body": "@trickiwoo Could you please let us know if the above workaround worked for you?\r\nThank you!",

"created_at": "2023-04-04T11:16:20Z"

},

{

"body": "This issue is stale because it has been open for 7 days with no activity. It will be closed if no further activity occurs. Thank you.",

"created_at": "2023-04-12T01:53:24Z"

},

{

"body": "Thanks for providing the workaround. However, in my understanding, crashes like this seem to be a vulnerability according to https://github.com/tensorflow/tensorflow/issues/60121#issuecomment-1485230826",

"created_at": "2023-04-15T20:04:57Z"

},

{

"body": "I was able to reproduce the issue in Tensorflow 2.12, please find the attached Gist [here](https://gist.github.com/sachinprasadhs/028763a39c0f83e59f6a5ba2807fef8a). Thanks!",

"created_at": "2023-04-18T20:57:02Z"

},

{

"body": "@trickiwoo,\r\nI tried to execute the mentioned code on tf-nightly and the code was executed with the error and also observed that the crash did not happen. And the same has been in the respective files. Kindly find the [gist](https://colab.research.google.com/gist/tilakrayal/58a438e3530de19720ef3cac0201383f/untitled1681.ipynb) for the [reference](https://colab.research.google.com/gist/tilakrayal/64c329a1db61dd97eaaffed5e04e2f54/untitled1682.ipynb).\r\n\r\nhttps://github.com/tensorflow/tensorflow/blob/master/tensorflow/core/kernels/quantize_and_dequantize_op.cc#L22 \r\n\r\nhttps://github.com/tensorflow/tensorflow/blob/master/tensorflow/core/kernels/resource_variable_ops.cc#L1120\r\n\r\n> // Check data type of update and resource to scatter.\r\n> const DataType update_dtype = c->input(2).dtype();\r\n> OP_REQUIRES(c, v->tensor()->dtype() == update_dtype,\r\n> errors::InvalidArgument(\r\n> \"DType of scatter resource and updates does not match.\"));\r\n\r\nThank you!",

"created_at": "2024-01-25T12:55:14Z"

},

{

"body": "This issue is stale because it has been open for 7 days with no activity. It will be closed if no further activity occurs. Thank you.",

"created_at": "2024-02-02T01:47:25Z"

},

{

"body": "This issue was closed because it has been inactive for 7 days since being marked as stale. Please reopen if you'd like to work on this further.",

"created_at": "2024-02-10T01:46:04Z"

},

{

"body": "Are you satisfied with the resolution of your issue?\n<a href=\"https://docs.google.com/forms/d/e/1FAIpQLSfaP12TRhd9xSxjXZjcZFNXPGk4kc1-qMdv3gc6bEP90vY1ew/viewform?entry.85265664=Yes&entry.2137816233=https://github.com/tensorflow/tensorflow/issues/60147\">Yes</a>\n<a href=\"https://docs.google.com/forms/d/e/1FAIpQLSfaP12TRhd9xSxjXZjcZFNXPGk4kc1-qMdv3gc6bEP90vY1ew/viewform?entry.85265664=No&entry.2137816233=https://github.com/tensorflow/tensorflow/issues/60147\">No</a>\n",

"created_at": "2024-02-10T01:46:10Z"

}

],

"number": 60147,

"title": "tf.raw_ops.ResourceScatterUpdate crash with abortion"

}

|

{

"body": "Add dtype checks to Scatter ops(Assign*VariableOp, ResourceScatter*) to report error instead of core dump.\r\nfix issues:\r\n#60147 \r\n#60121 \r\n",

"number": 60452,

"review_comments": [],

"title": "Add dtype checks to Scatter ops to report error instead of core dump"

}

|

{

"commits": [

{

"message": "Add dtype checks to Scatter ops to report error instead of core dump"

}

],

"files": [

{

"diff": "@@ -592,6 +592,14 @@ class AssignUpdateVariableOp : public OpKernel {\n // PrepareToUpdateVariable() for commutative operations like Op ==\n // ADD if value's refcount was 1.\n mutex_lock ml(*variable->mu());\n+ OP_REQUIRES(context,\n+ (variable->tensor()->dtype() == DT_INVALID &&\n+ !variable->is_initialized) ||\n+ variable->tensor()->dtype() == value.dtype(),\n+ errors::InvalidArgument(\n+ \"Trying to assign update var with wrong dtype. Expected \",\n+ DataTypeString(variable->tensor()->dtype()), \" got \",\n+ DataTypeString(value.dtype())));\n Tensor* var_tensor = variable->tensor();\n OP_REQUIRES_OK(context, ValidateAssignUpdateVariableOpShapes(\n var_tensor->shape(), value.shape()));\n@@ -1106,6 +1114,11 @@ class ResourceScatterUpdateOp : public OpKernel {\n \"updates.shape \", updates.shape().DebugString(),\n \", indices.shape \", indices.shape().DebugString(),\n \", params.shape \", params->shape().DebugString()));\n+ OP_REQUIRES(c, params->dtype() == updates.dtype(),\n+ errors::InvalidArgument(\n+ \"Trying to scatter update var with wrong dtype. Expected \",\n+ DataTypeString(params->dtype()), \" got \",\n+ DataTypeString(updates.dtype())));\n \n // Check that we have enough index space\n const int64_t N_big = indices.NumElements();",

"filename": "tensorflow/core/kernels/resource_variable_ops.cc",

"status": "modified"

}

]

}

|

{

"body": "<details><summary>Click to expand!</summary> \n \n ### Issue Type\n\nBug\n\n### Have you reproduced the bug with TF nightly?\n\nYes\n\n### Source\n\nsource\n\n### Tensorflow Version\n\n2.13.0-dev20230208\n\n### Custom Code\n\nYes\n\n### OS Platform and Distribution\n\n_No response_\n\n### Mobile device\n\n_No response_\n\n### Python version\n\n3.9\n\n### Bazel version\n\n_No response_\n\n### GCC/Compiler version\n\n_No response_\n\n### CUDA/cuDNN version\n\n_No response_\n\n### GPU model and memory\n\n_No response_\n\n### Current Behaviour?\n\n```shell\ntf.raw_ops.AssignAddVariableOp crash with abortion\n```\n\n\n### Standalone code to reproduce the issue\n\n```shell\nimport tensorflow as tf\r\nfrom tensorflow.python.eager import context\r\n\r\ninput1 = tf.raw_ops.VarHandleOp(dtype=tf.int32, shape=[2, 3], shared_name=context.anonymous_name())\r\ninput2 = tf.constant([],dtype=tf.float32)\r\n\r\ntf.raw_ops.AssignAddVariableOp(resource=input1, value=input2)\n```\n\n\n### Relevant log output\n\n```shell\n2023-03-26 18:39:30.729731: F tensorflow/core/framework/tensor.cc:770] Check failed: dtype() == expected_dtype (3 vs. 1) float expected, got int32\r\nAborted (core dumped\n```\n</details>",

"comments": [

{

"body": "https://github.com/tensorflow/tensorflow/blob/master/SECURITY.md#reporting-vulnerabilities\r\n\r\n(if you want to be credited in advisories and Google VRP board)\r\n\r\nAlso, responsible disclosure.",

"created_at": "2023-03-27T14:35:58Z"

},

{

"body": "@sachinprasadhs,\r\nI was able to reproduce the issue on tensorflow v2.11, v2.12 and tf-nightly. Kindly find the gist of it [here](https://colab.research.google.com/gist/tilakrayal/363047ca6136212ebfc5820ba7525242/untitled1055.ipynb).",

"created_at": "2023-03-27T16:39:53Z"

},

{

"body": "@mihaimaruseac \r\nThank you for the great suggestion! I have reported this and other crash issues to the Google's Bug Hunting project.\r\n",

"created_at": "2023-03-27T17:09:59Z"

},

{

"body": "@trickiwoo what fuzzer you used to find this issue and another crashes?",

"created_at": "2023-04-03T00:24:05Z"

}

],

"number": 60121,

"title": "tf.raw_ops.AssignAddVariableOp crash with abortion"

}

|

{

"body": "Add dtype checks to Scatter ops(Assign*VariableOp, ResourceScatter*) to report error instead of core dump.\r\nfix issues:\r\n#60147 \r\n#60121 \r\n",

"number": 60452,

"review_comments": [],

"title": "Add dtype checks to Scatter ops to report error instead of core dump"

}

|

{

"commits": [

{

"message": "Add dtype checks to Scatter ops to report error instead of core dump"

}

],

"files": [

{

"diff": "@@ -592,6 +592,14 @@ class AssignUpdateVariableOp : public OpKernel {\n // PrepareToUpdateVariable() for commutative operations like Op ==\n // ADD if value's refcount was 1.\n mutex_lock ml(*variable->mu());\n+ OP_REQUIRES(context,\n+ (variable->tensor()->dtype() == DT_INVALID &&\n+ !variable->is_initialized) ||\n+ variable->tensor()->dtype() == value.dtype(),\n+ errors::InvalidArgument(\n+ \"Trying to assign update var with wrong dtype. Expected \",\n+ DataTypeString(variable->tensor()->dtype()), \" got \",\n+ DataTypeString(value.dtype())));\n Tensor* var_tensor = variable->tensor();\n OP_REQUIRES_OK(context, ValidateAssignUpdateVariableOpShapes(\n var_tensor->shape(), value.shape()));\n@@ -1106,6 +1114,11 @@ class ResourceScatterUpdateOp : public OpKernel {\n \"updates.shape \", updates.shape().DebugString(),\n \", indices.shape \", indices.shape().DebugString(),\n \", params.shape \", params->shape().DebugString()));\n+ OP_REQUIRES(c, params->dtype() == updates.dtype(),\n+ errors::InvalidArgument(\n+ \"Trying to scatter update var with wrong dtype. Expected \",\n+ DataTypeString(params->dtype()), \" got \",\n+ DataTypeString(updates.dtype())));\n \n // Check that we have enough index space\n const int64_t N_big = indices.NumElements();",

"filename": "tensorflow/core/kernels/resource_variable_ops.cc",

"status": "modified"

}

]

}

|

{

"body": "<details><summary>Click to expand!</summary> \n \n ### Issue Type\n\nBug\n\n### Have you reproduced the bug with TF nightly?\n\nNo\n\n### Source\n\nsource\n\n### Tensorflow Version\n\n2.12.0\n\n### Custom Code\n\nNo\n\n### OS Platform and Distribution\n\nmacOS 13.3\n\n### Mobile device\n\n_No response_\n\n### Python version\n\n3.10\n\n### Bazel version\n\n5.3.0\n\n### GCC/Compiler version\n\nXCode 14.3\n\n### CUDA/cuDNN version\n\n_No response_\n\n### GPU model and memory\n\n_No response_\n\n### Current Behaviour?\n\n```shell\nUsing standard compiling procedure (no special flags), compilation of the external library: boringssl/src/crypto/x509 fails. Log attached below.\n```\n\n\n### Standalone code to reproduce the issue\n\n```shell\nCompile TF 2.12.0 using MacOS 13.x and XCode 14.3 (not earlier).\n```\n\n\n### Relevant log output\n\n```shell\n: /private/var/tmp/_bazel_alex/dc1a9368c8e4ba5b96348c2850b37ab0/external/boringssl/BUILD:161:11: Compiling src/crypto/x509/t_x509.c [for host] failed: (Exit 1): cc_wrapper.sh failed: error executing command external/local_config_cc/cc_wrapper.sh -U_FORTIFY_SOURCE -fstack-protector -Wall -Wthread-safety -Wself-assign -Wunused-but-set-parameter -Wno-free-nonheap-object -fcolor-diagnostics ... (remaining 44 arguments skipped)\r\nexternal/boringssl/src/crypto/x509/t_x509.c:321:18: error: variable 'l' set but not used [-Werror,-Wunused-but-set-variable]\r\n int ret = 0, l, i;\r\n ^\r\n1 error generated.\r\nTarget //tensorflow/tools/pip_package:build_pip_package failed to build\n```\n</details>",

"comments": [

{

"body": "The issue is related to an unused variable in `external/boringssl/src/crypto/x509/t_x509.c:321:18`. Removing that variable fixes the issue. See attached.\r\n\r\nPatch: [issue_60191_patch.txt](https://github.com/tensorflow/tensorflow/files/11149628/issue_60191_patch.txt)\r\n\r\n",

"created_at": "2023-04-01T04:52:54Z"

},

{

"body": "@feranick \r\nCould you please elaborate more and provide detailed steps to replicate the issue reported here ?\r\n\r\nThank you!",

"created_at": "2023-04-03T10:02:43Z"

},

{

"body": "> Could you please elaborate more and provide detailed steps to replicate the issue reported here ?\r\n\r\n1. Make sure you have `XCode 14.3` installed (earlier versions won't compile TF as per issue: https://github.com/tensorflow/tensorflow/issues/58368 )\r\n2. `git clone` TF and checkout version 2.12.0 (or 2.11.1)\r\n3. `cd` in the folder `tensorflow` run `./configure` with all default options. \r\n4. run compilation: `bazel build --config=opt //tensorflow/tools/pip_package:build_pip_package --verbose_failures`\r\n\r\nAt some point compilation will stop with the error in this issue. \r\n\r\nTo fix it:\r\n1. run your text editor (I use nano) into the external folder with the problematic library boringssl: `nano /private/var/tmp/_bazel_YOU-AS-USER/SOME_ALPHANUMERIC/external/boringssl/src/crypto/x509/t_x509.c`\r\n2. modify the code according to the patch attached (essentially remove all references to the unused variable `l`)\r\n3. restart compilation: `bazel build --config=opt //tensorflow/tools/pip_package:build_pip_package --verbose_failures`\r\n\r\nPatch: \r\n[issue_60191_patch.txt](https://github.com/tensorflow/tensorflow/files/11150058/issue_60191_patch.txt)\r\n\r\n\r\n\r\n",

"created_at": "2023-04-04T11:05:10Z"

},

{

"body": "There might be ways to disable the `-Wunused-but-set-variable` flag, but I prefer to actually fix the code by removing the useless variable in first place. \r\n\r\nRemoving the variable should be applied in the ustream version as well (or make it do something useful, if that was the intent)",

"created_at": "2023-04-04T11:07:10Z"

},

{

"body": "Note: the issue is not present in the master git for boringssl: \r\nhttps://boringssl.googlesource.com/boringssl/\r\n\r\nTh unused variable is simply removed as per my patch above. Therefore TF either needs to resync boringssl for a newer release or apply my patch (attached). \r\nPatch: \r\n[issue_60191_patch.txt](https://github.com/tensorflow/tensorflow/files/11149626/issue_60191_patch.txt)\r\n",

"created_at": "2023-04-04T14:28:04Z"

},

{

"body": "@feranick ,\r\n\r\nThanks for bringing this with the solution. If you are willing to contribute please feel free to raise a PR.\r\n\r\nThanks!",

"created_at": "2023-04-06T10:41:24Z"

},

{

"body": "I would... Unfortunately the library is not included in the main TF tree, as it is pulled from private google servers. It needs to be fixed internally. BTW, TF pulls a specific version (can't tell you which one), but the bug is no longer present in the current master for boringssl (basically it has my patch applied). So bazel or whatever software pulls boringssl from the server needs to be updated to pull a more recent version, something only people with access to Google boringssl private repo can do.\r\nhttps://boringssl.googlesource.com/boringssl/\r\nIt is also fixed in the github repo:\r\nhttps://github.com/google/boringssl\r\nSo all this really needs is to pull a more recent version of boringssl. ",

"created_at": "2023-04-06T10:48:08Z"

},

{

"body": "Also, correct me if I am wrong.The file you mentioned for correction seems to be a temp file generated during bazel build.Not sure we can fix this from TF source tree. May be its related to Bazel.\r\n\r\nI have gone through the bazel build docs in TF repo and found this one have some context for boring SSL. \r\n\r\nhttps://github.com/tensorflow/tensorflow/blob/bc54be865c99c9c8b8174c98bf8665af4ab10949/tensorflow/workspace2.bzl#L557-L563\r\n\r\nWhether we can do something here by changing URL or any thing to rectify this problem ?",

"created_at": "2023-04-06T10:52:24Z"

},

{

"body": "> Whether we can do something here by changing URL or any thing to rectify this problem ?\r\n\r\nYes, you are correct. Bazel builds it within a temporary folder.\r\n\r\nAnd yes, I would think changing the URL might do it. However, I am not sure what URL/file to use from git as it probably uses an internal branch that is tar zipped. So the question is whether that package is there exclusively for TF... Maybe one can create a new package branched from main and placed in the same folder and then correct the reference URL in bazel.... ",

"created_at": "2023-04-06T11:01:56Z"

},

{

"body": "OK, on a deeper inspection, it seems that the link has been already fixed in TF master. When looking at `tensorflow/tensorflow/workspace2.bzl`\r\n\r\nTF Master:\r\n```\r\n tf_http_archive(\r\n name = \"boringssl\",\r\n sha256 = \"9dc53f851107eaf87b391136d13b815df97ec8f76dadb487b58b2fc45e624d2c\",\r\n strip_prefix = \"boringssl-c00d7ca810e93780bd0c8ee4eea28f4f2ea4bcdc\",\r\n system_build_file = \"//third_party/systemlibs:boringssl.BUILD\",\r\n urls = tf_mirror_urls(\"https://github.com/google/boringssl/archive/c00d7ca810e93780bd0c8ee4eea28f4f2ea4bcdc.tar.gz\"),\r\n )\r\n```\r\n\r\nwhile for TF 2.12.0:\r\n```\r\ntf_http_archive(\r\n name = \"boringssl\",\r\n sha256 = \"534fa658bd845fd974b50b10f444d392dfd0d93768c4a51b61263fd37d851c40\",\r\n strip_prefix = \"boringssl-b9232f9e27e5668bc0414879dcdedb2a59ea75f2\",\r\n system_build_file = \"//third_party/systemlibs:boringssl.BUILD\",\r\n urls = tf_mirror_urls(\"https://github.com/google/boringssl/archive/b9232f9e27e5668bc0414879dcdedb2a59ea75f2.tar.gz\"),\r\n )\r\n```\r\nSo, in principle, one would only need to replace the reference links in `workspace2.bzl`to the newer version now in master...",

"created_at": "2023-04-06T11:10:32Z"

},

{

"body": "I am doing a test build where I replaced the strings above from main. Will report shortly.",

"created_at": "2023-04-06T11:14:50Z"

},

{

"body": "So far compilation is proceeding normally, beyond the point where it would crash because of this issue. It seems like the proposed solution (swapping the `tf_http_archive` from master) will fix the issue, possibly also for the 2.12.0 branch.",

"created_at": "2023-04-06T14:08:33Z"

},

{

"body": "I can confirm that compilation proceeds correctly on any platform I tried (MacOSX, linux).",

"created_at": "2023-04-06T15:34:19Z"

},

{

"body": "> Thanks for bringing this with the solution. If you are willing to contribute please feel free to raise a PR.\r\n\r\nPull request is in https://github.com/tensorflow/tensorflow/pull/60259\r\n",

"created_at": "2023-04-06T23:00:05Z"

},

{

"body": "@feranick ,\r\n\r\nThanks for all your effort and time in resolving and raising the PR. Our Team will review and update.\r\n\r\nThanks!",

"created_at": "2023-04-10T06:32:45Z"

},

{

"body": "Hi @feranick ,\r\n\r\nI can see nightly build was updated as required.\r\n\r\nhttps://github.com/tensorflow/tensorflow/blob/0bc361b51ee4bad40392e77641ab74bd1ec4331a/tensorflow/workspace2.bzl#L569-L575\r\n\r\nCan we mark this as resolved. Please spare some time to verify and close the issue.\r\n\r\nThanks!",

"created_at": "2023-06-22T05:51:01Z"

},

{

"body": "It works now. Thanks for pushing it. Closing.",

"created_at": "2023-06-22T12:18:56Z"

},

{

"body": "Are you satisfied with the resolution of your issue?\n<a href=\"https://docs.google.com/forms/d/e/1FAIpQLSfaP12TRhd9xSxjXZjcZFNXPGk4kc1-qMdv3gc6bEP90vY1ew/viewform?entry.85265664=Yes&entry.2137816233=https://github.com/tensorflow/tensorflow/issues/60191\">Yes</a>\n<a href=\"https://docs.google.com/forms/d/e/1FAIpQLSfaP12TRhd9xSxjXZjcZFNXPGk4kc1-qMdv3gc6bEP90vY1ew/viewform?entry.85265664=No&entry.2137816233=https://github.com/tensorflow/tensorflow/issues/60191\">No</a>\n",

"created_at": "2023-06-22T12:18:58Z"

}

],

"number": 60191,

"title": "Fail to compile TF 2.12.0 with XCode 14.3 due to Compiler flag in boringssl/src/crypto/x509"

}

|

{

"body": "The current version of boringssl pulled for TF 2.12.x does not compile with TF using XCode 14.3 due to an unused variable and an aggressive compiler flag (-Werror,-Wunused-but-set-variable). This patch adds links to an updated version of boringssl (used for TF Master) that fixes the issue. This fixes TF issue #60191",

"number": 60259,

"review_comments": [],

"title": "Update boringssl to allow compilation in MacOS XCode 14.3"

}

|

{

"commits": [

{

"message": "Update boringssl to allow compilation in MacOS XCode 14.3\n\nCurrent version of boringssl pulled for TF 2.12.x does not compile with TF using XCode 14.3\ndue to an unused variable and an aggressive compiler flag (-Werror,-Wunused-but-set-variable)\nThis patch adds links to an updated version of boringssl (used for TF Master), that\nfixes the issue. This fixes TF issue #60191"

}

],

"files": [

{

"diff": "@@ -567,10 +567,10 @@ def _tf_repositories():\n \n tf_http_archive(\n name = \"boringssl\",\n- sha256 = \"534fa658bd845fd974b50b10f444d392dfd0d93768c4a51b61263fd37d851c40\",\n- strip_prefix = \"boringssl-b9232f9e27e5668bc0414879dcdedb2a59ea75f2\",\n+ sha256 = \"9dc53f851107eaf87b391136d13b815df97ec8f76dadb487b58b2fc45e624d2c\",\n+ strip_prefix = \"boringssl-c00d7ca810e93780bd0c8ee4eea28f4f2ea4bcdc\",\n system_build_file = \"//third_party/systemlibs:boringssl.BUILD\",\n- urls = tf_mirror_urls(\"https://github.com/google/boringssl/archive/b9232f9e27e5668bc0414879dcdedb2a59ea75f2.tar.gz\"),\n+ urls = tf_mirror_urls(\"https://github.com/google/boringssl/archive/c00d7ca810e93780bd0c8ee4eea28f4f2ea4bcdc.tar.gz\"),\n )\n \n # Note: if you update this, you have to update libpng too. See cl/437813808",

"filename": "tensorflow/workspace2.bzl",

"status": "modified"

}

]

}

|

{

"body": "<details><summary>Click to expand!</summary> \r\n \r\n ### Issue Type\r\n\r\nBug\r\n\r\n### Have you reproduced the bug with TF nightly?\r\n\r\nNo\r\n\r\n### Source\r\n\r\nsource\r\n\r\n### Tensorflow Version\r\n\r\n2.11.0\r\n\r\n### Custom Code\r\n\r\nYes\r\n\r\n### OS Platform and Distribution\r\n\r\nMacOS 13.1\r\n\r\n### Mobile device\r\n\r\n_No response_\r\n\r\n### Python version\r\n\r\n3.10.6\r\n\r\n### Bazel version\r\n\r\n_No response_\r\n\r\n### GCC/Compiler version\r\n\r\n_No response_\r\n\r\n### CUDA/cuDNN version\r\n\r\n_No response_\r\n\r\n### GPU model and memory\r\n\r\n_No response_\r\n\r\n### Current Behaviour?\r\n\r\n```shell\r\nThe documentation for `tf.image.ssim` claims to output\r\n\r\n> ... a tensor containing an SSIM value for each pixel for each image in batch if return_index_map is True\r\n\r\nHowever, the output image is smaller than the source image (see example below).\r\n\r\nUpon comparing with a more well-known library implemented in PyTorch, I believe the reason for such discrepency is due to the Conv2D padding used.\r\n\r\nIn PyTorch's implementation, a \"SAME\" padding is used\r\n\r\nhttps://github.com/Po-Hsun-Su/pytorch-ssim/blob/3add4532d3f633316cba235da1c69e90f0dfb952/pytorch_ssim/__init__.py#L25\r\n\r\nHowever, in the current Tensorflow implementation, \"VALID\" padding is used\r\n\r\nhttps://github.com/tensorflow/tensorflow/blob/d5b57ca93e506df258271ea00fc29cf98383a374/tensorflow/python/ops/image_ops_impl.py#L4340\r\n\r\nPlease verify if this is the case.\r\n```\r\n\r\n\r\n### Standalone code to reproduce the issue\r\n\r\n```shell\r\nimport numpy as np\r\nimport tensorflow as tf # tf.__version__ == \"2.11.0\"\r\n# B x T x n_mel\r\nshape = (16, 2106, 80, 1)\r\nimage1 = np.arange(np.prod(shape))\r\nimage1 = (image1 / np.max(image1)) * 10 + 100\r\nimage1 = np.reshape(image1, shape)\r\nimage2 = np.linspace(0, 1, np.prod(shape))\r\nimage2 = np.exp(image2)\r\nimage2 = (image2 / np.max(image2)) * 10 + 100\r\nimage2 = 
np.reshape(image2, shape)\r\n\r\nout_tf = tf.image.ssim(image1, image2, max_val=255, return_index_map=True)\r\n\r\n```\r\nwhich outputs a tensor of shape [16, 2096, 70].\r\n\r\n\r\n### Relevant log output\r\n\r\n_No response_</details>",

"comments": [

{

"body": "@sapphire008,\r\nI was facing a different issue/error while executing the mentioned code. Kindly find the gist of it [here](https://colab.research.google.com/gist/tilakrayal/a18c78fc2f901e1c2c54facba4f6548c/untitled829.ipynb) and provide the dependencies. Thank you!",

"created_at": "2023-01-02T12:32:14Z"

},

{

"body": "This issue has been automatically marked as stale because it has no recent activity. It will be closed if no further activity occurs. Thank you.\n",

"created_at": "2023-01-09T12:39:12Z"

},

{

"body": "Closing as stale. Please reopen if you'd like to work on this further.\n",

"created_at": "2023-01-16T12:55:12Z"

},

{

"body": "Are you satisfied with the resolution of your issue?\n<a href=\"https://docs.google.com/forms/d/e/1FAIpQLSfaP12TRhd9xSxjXZjcZFNXPGk4kc1-qMdv3gc6bEP90vY1ew/viewform?entry.85265664=Yes&entry.2137816233=https://github.com/tensorflow/tensorflow/issues/59067\">Yes</a>\n<a href=\"https://docs.google.com/forms/d/e/1FAIpQLSfaP12TRhd9xSxjXZjcZFNXPGk4kc1-qMdv3gc6bEP90vY1ew/viewform?entry.85265664=No&entry.2137816233=https://github.com/tensorflow/tensorflow/issues/59067\">No</a>\n",

"created_at": "2023-01-16T12:55:16Z"

},

{

"body": "@tilakrayal This is an issue with the latest release of tensorflow 2.11.0 as indicated in the original post under \"Tensorflow Version\". The default colab version is still using 2.9.2. `pip install tensorflow==2.11.0` and restart the runtime will get to the right version.\r\n\r\nPlease keep this issue open. Thank you.",

"created_at": "2023-01-21T04:37:32Z"

},

{

"body": "@sapphire008,\r\nThank you for the issue. In tf.image.ssim when we are using **return_index_map = True**, returns the **index map**; where as in the case of **return_index_map = False**, it should return the reduced **global value** which was working as expected. \r\n\r\n**O/p for return_index_map = True** :\r\n\r\n```\r\n2.12.0-dev20230201\r\n(2695680,)\r\n(2695680,)\r\n(16, 2106, 80, 1)\r\n(2695680,)\r\n(2695680,)\r\n(2695680,)\r\n(16, 2106, 80, 1)\r\n(16,)\r\n```\r\n\r\n**O/p for return_index_map = False** :\r\n\r\n```\r\n2.12.0-dev20230201\r\n(2695680,)\r\n(2695680,)\r\n(16, 2106, 80, 1)\r\n(2695680,)\r\n(2695680,)\r\n(2695680,)\r\n(16, 2106, 80, 1)\r\n(16, 2096, 70)\r\n```\r\nA tensor containing an SSIM value for each image in batch or a tensor containing an SSIM value for each pixel for each image in batch if **return_index_map** is True. Returned SSIM values are in range (-1, 1], when pixel values are non-negative. Returns a tensor with shape:\r\n```\r\n broadcast(img1.shape[:-3], img2.shape[:-3]) or broadcast(img1.shape[:-1],\r\n img2.shape[:-1]).\r\n```\r\n\r\nKindly find the gist of it [here](https://colab.research.google.com/gist/tilakrayal/9af60d2910d739bfc1230eb296c8599c/untitled917.ipynb) and also please have a look at the reference where it is explicitly described that the global ssim value is the mean of the local ssim value: https://medium.com/srm-mic/all-about-structural-similarity-index-ssim-theory-code-in-pytorch-6551b455541e .",

"created_at": "2023-02-03T09:00:14Z"

},

{

"body": "This issue has been automatically marked as stale because it has no recent activity. It will be closed if no further activity occurs. Thank you.\n",

"created_at": "2023-02-10T09:07:00Z"

},

{

"body": "@tilakrayal Thank you for your response. I believe in your response, the `return_index_map =True` and `return_index_map =False` cases are switched. However, the [gist](https://colab.research.google.com/gist/tilakrayal/9af60d2910d739bfc1230eb296c8599c/untitled917.ipynb) version correctly reproduced the problem I have described in the original post. Regardless, the output shape is (16, 2096, 70) when setting `return_index_map=True`, which is not consistent with the input shape of (16, 2106, 80). This is unexpected, because an index map of SSIM calculation is the scores corresponding to the original input, thus their shape should have been consistent. You can also find in my original post for a link to another implementation made in PyTorch, which does produce index map with shapes consistent with the inputs, and has been cited / reproduced by various repositories such as https://github.com/MoonInTheRiver/DiffSinger.",

"created_at": "2023-02-10T16:15:26Z"

},

{

"body": "Hi, @sapphire008 \r\n\r\nApologies for the delayed response. I was able to replicate the same issue. I executed the same code multiple times, and it seems to be working as expected with `TF2.11`; I also tried our latest pre-releases, `tensorflow==2.12.0rc1` and `tensorflow==2.12.0rc0`. For your reference I have added a [gist-file](https://colab.research.google.com/gist/gaikwadrahul8/a332efd18ba5adb3e34a95ffa4abea92/-59067-test.ipynb). I understand your point that the PyTorch implementation uses \"`SAME`\" padding while in TensorFlow we are using \"`VALID`\" padding. If I have missed something here, please let me know. Thank you!",

"created_at": "2023-03-09T14:04:55Z"

},

{

"body": "@gaikwadrahul8 Is there any reason why `VALID` padding is chosen instead of `SAME` padding in this specific implementation? To my understanding, the returned `index_map` is usually expected to be the same size as the inputs. That is also how I see it being used as a loss function.",

"created_at": "2023-03-20T13:59:55Z"

},

{

"body": "This issue is stale because it has been open for 7 days with no activity. It will be closed if no further activity occurs. Thank you.",

"created_at": "2023-03-28T01:58:14Z"

},

{

"body": "Hi, @SuryanarayanaY \r\n\r\nCould you please look into this issue ? Thank you!",

"created_at": "2023-03-29T06:13:10Z"

},

{

"body": "This issue is stale because it has been open for 7 days with no activity. It will be closed if no further activity occurs. Thank you.",

"created_at": "2023-04-06T01:53:50Z"

},

{

"body": "@sapphire008 ,\r\n\r\nThe shape mismatch might be due to the padding, as you noticed. As per the API documentation, the output is:\r\n`Returns a tensor with shape: broadcast(img1.shape[:-3], img2.shape[:-3]) or broadcast(img1.shape[:-1], img2.shape[:-1])`.\r\n \r\nBut for our example, where the input is of shape (16, 2106, 80, 1), the API returns an output of shape (16, 2096, 70), which seems incorrect; the root cause is padding='VALID'.\r\n\r\nI have tested the code by changing to padding='SAME', and in this case the output is (16, 2106, 80).\r\n\r\nAll these details are captured in the attached [gist](https://colab.research.google.com/gist/SuryanarayanaY/d75ef4fcbd69b5211975fd4d29490084/59067_r1.ipynb#scrollTo=G24eYEw6Qi9Q).\r\n\r\nIt seems like a bug to me and I am going to raise a PR for this. Thanks for reporting.",

"created_at": "2023-04-06T06:42:06Z"

}

],

"number": 59067,

"title": "TF 2.11.0: tf.image.ssim return_index_map=True outputs wrong shape"

}

|

{

"body": "Currently the API `tf.image.ssim` uses `padding='VALID'` internally. With this padding, the shape of the output does not match what the documentation states. With `return_index_map=True` the output shape should be `broadcast(img1.shape[:-1], img2.shape[:-1])`. \r\n\r\nBut with `padding='VALID'`, for an input of shape `(16, 2106, 80, 1)` the current output shape is `(16, 2096, 70)`, which does not match the expected output shape of `(16, 2106, 80)`. With `padding='SAME'` the output matches the desired output. \r\n\r\nPlease refer to the attached [gist](https://colab.research.google.com/gist/SuryanarayanaY/d75ef4fcbd69b5211975fd4d29490084/59067_r1.ipynb) showcasing all the mentioned details. \r\n\r\nFixes #59067 ",

"number": 60251,

"review_comments": [],

"title": "Update padding='SAME' in tf.image.ssim API"

}

|

{

"commits": [

{

"message": "update padding='SAME' in tf.image.ssim API\n\nCurrently the API tf.image.ssim is using padding='VALID' internally. But with this padding the shape of the output is not matching with what documentation states. With return_index_map=True the output shape should be broadcast(img1.shape[:-1], img2.shape[:-1]). But with padding='VALID', for input of shape \r\n(16, 2106, 80, 1) the current output shape is (16, 2096, 70) which is not matching with the expected output which should be (16, 2106, 80). With padding='SAME' the output is matching to the desired output. \r\n\r\nPlease refer to the attached gist showcasing all the mentioned details.\r\nhttps://colab.research.google.com/gist/SuryanarayanaY/d75ef4fcbd69b5211975fd4d29490084/59067_r1.ipynb"

}

],

"files": [

{

"diff": "@@ -4348,7 +4348,7 @@ def _ssim_per_channel(img1,\n def reducer(x):\n shape = array_ops.shape(x)\n x = array_ops.reshape(x, shape=array_ops.concat([[-1], shape[-3:]], 0))\n- y = nn.depthwise_conv2d(x, kernel, strides=[1, 1, 1, 1], padding='VALID')\n+ y = nn.depthwise_conv2d(x, kernel, strides=[1, 1, 1, 1], padding='SAME')\n return array_ops.reshape(\n y, array_ops.concat([shape[:-3], array_ops.shape(y)[1:]], 0))\n ",

"filename": "tensorflow/python/ops/image_ops_impl.py",

"status": "modified"

}

]

}

|

{

"body": "<details><summary>Click to expand!</summary> \r\n \r\n ### Issue Type\r\n\r\nBug\r\n\r\n### Have you reproduced the bug with TF nightly?\r\n\r\nYes\r\n\r\n### Source\r\n\r\nbinary\r\n\r\n### Tensorflow Version\r\n\r\nTF 2.12.0, TF nightly 2.13.0-dev20230404\r\n\r\n### Custom Code\r\n\r\nYes\r\n\r\n### OS Platform and Distribution\r\n\r\n_No response_\r\n\r\n### Mobile device\r\n\r\n_No response_\r\n\r\n### Python version\r\n\r\n_No response_\r\n\r\n### Bazel version\r\n\r\n_No response_\r\n\r\n### GCC/Compiler version\r\n\r\n_No response_\r\n\r\n### CUDA/cuDNN version\r\n\r\n_No response_\r\n\r\n### GPU model and memory\r\n\r\n_No response_\r\n\r\n### Current Behaviour?\r\n\r\nConsider the following code creating ragged batches using `tf.data.Dataset.ragged_batch`:\r\n\r\n```python\r\ndata = tf.data.Dataset.from_tensor_slices(tf.ragged.constant([[1, 2], [3]]))\r\nlist(data.ragged_batch(2))\r\n```\r\n\r\nThe above code works fine in normal mode. However, if you enable debug mode using `tf.data.experimental.enable_debug_mode()`, the same code crashes with an error.\r\n\r\n### Standalone code to reproduce the issue\r\n\r\nI reproduced the error in https://colab.research.google.com/drive/1nf1BHjssx2YhF0ZbbgPg1QSALSS4Z89r?usp=sharing , both for TF 2.12.0 and TF nightly 2.13.0-dev20230404.\r\n\r\nThe code for triggering the bug is the following:\r\n\r\n```python\r\ntf.data.experimental.enable_debug_mode()\r\ndata = tf.data.Dataset.from_tensor_slices(tf.ragged.constant([[1, 2], [3]]))\r\nlist(data.ragged_batch(2))\r\n```\r\n\r\n### Relevant log output\r\n\r\nHere is the error printed by TF 2.12.0\r\n\r\n```\r\n---------------------------------------------------------------------------\r\n\r\nInvalidArgumentError Traceback (most recent call last)\r\n\r\n<ipython-input-3-34b7e4bb8c4b> in <cell line: 4>()\r\n 2 tf.data.experimental.enable_debug_mode()\r\n 3 data = tf.data.Dataset.from_tensor_slices(tf.ragged.constant([[1, 2], [3]]))\r\n----> 4 
list(data.ragged_batch(2))\r\n\r\n3 frames\r\n\r\n/usr/local/lib/python3.9/dist-packages/tensorflow/python/framework/ops.py in raise_from_not_ok_status(e, name)\r\n 6651 def raise_from_not_ok_status(e, name):\r\n 6652 e.message += (\" name: \" + str(name if name is not None else \"\"))\r\n-> 6653 raise core._status_to_exception(e) from None # pylint: disable=protected-access\r\n 6654 \r\n 6655 \r\n\r\nInvalidArgumentError: {{function_node __wrapped__IteratorGetNext_output_types_1_device_/job:localhost/replica:0/task:0/device:CPU:0}} ValueError: Value [1 2] is not convertible to a tensor with dtype <dtype: 'variant'> and shape ().\r\nTraceback (most recent call last):\r\n\r\n File \"/usr/local/lib/python3.9/dist-packages/tensorflow/python/data/util/structure.py\", line 347, in reduce_fn\r\n component = ops.convert_to_tensor(component, spec.dtype)\r\n\r\n File \"/usr/local/lib/python3.9/dist-packages/tensorflow/python/profiler/trace.py\", line 183, in wrapped\r\n return func(*args, **kwargs)\r\n\r\n File \"/usr/local/lib/python3.9/dist-packages/tensorflow/python/framework/ops.py\", line 1440, in convert_to_tensor\r\n return tensor_conversion_registry.convert(\r\n\r\n File \"/usr/local/lib/python3.9/dist-packages/tensorflow/python/framework/tensor_conversion_registry.py\", line 209, in convert\r\n return overload(dtype, name) # pylint: disable=not-callable\r\n\r\n File \"/usr/local/lib/python3.9/dist-packages/tensorflow/python/framework/ops.py\", line 1335, in __tf_tensor__\r\n return super().__tf_tensor__(dtype, name)\r\n\r\n File \"/usr/local/lib/python3.9/dist-packages/tensorflow/python/framework/ops.py\", line 967, in __tf_tensor__\r\n raise ValueError(\r\n\r\nValueError: Tensor conversion requested dtype variant for Tensor with dtype int32: <tf.Tensor: shape=(2,), dtype=int32, numpy=array([1, 2], dtype=int32)>\r\n\r\n\r\nDuring handling of the above exception, another exception occurred:\r\n\r\n\r\nTraceback (most recent call last):\r\n\r\n File 
\"/usr/local/lib/python3.9/dist-packages/tensorflow/python/ops/script_ops.py\", line 266, in __call__\r\n return func(device, token, args)\r\n\r\n File \"/usr/local/lib/python3.9/dist-packages/tensorflow/python/ops/script_ops.py\", line 144, in __call__\r\n outputs = self._call(device, args)\r\n\r\n File \"/usr/local/lib/python3.9/dist-packages/tensorflow/python/ops/script_ops.py\", line 151, in _call\r\n ret = self._func(*args)\r\n\r\n File \"/usr/local/lib/python3.9/dist-packages/tensorflow/python/autograph/impl/api.py\", line 643, in wrapper\r\n return func(*args, **kwargs)\r\n\r\n File \"/usr/local/lib/python3.9/dist-packages/tensorflow/python/data/ops/structured_function.py\", line 213, in py_function_wrapper\r\n ret = structure.to_tensor_list(self._output_structure, ret)\r\n\r\n File \"/usr/local/lib/python3.9/dist-packages/tensorflow/python/data/util/structure.py\", line 410, in to_tensor_list\r\n return _to_tensor_list_helper(\r\n\r\n File \"/usr/local/lib/python3.9/dist-packages/tensorflow/python/data/util/structure.py\", line 360, in _to_tensor_list_helper\r\n return functools.reduce(\r\n\r\n File \"/usr/local/lib/python3.9/dist-packages/tensorflow/python/data/util/structure.py\", line 349, in reduce_fn\r\n raise ValueError(\r\n\r\nValueError: Value [1 2] is not convertible to a tensor with dtype <dtype: 'variant'> and shape ().\r\n\r\n\r\n\t [[{{node EagerPyFunc}}]] [Op:IteratorGetNext] name:\r\n```\r\n</details>",

"comments": [],

"number": 60239,

"title": "In tf.data.experimental.enable_debug_mode, tf.data.Dataset.ragged_batch fails with an error"

}

|

{

"body": "Fixed a DEBUG_MODE bug when performing from_tensor_slices on a ragged tensor, related to issue #60239\r\n\r\nThank you. If there are any suggestions, feel free to comment.",

"number": 60250,

"review_comments": [],

"title": "Fixed bug with DEBUG_MODE bug when performing from_tensor_slices on a ragged tensor"

}

|

{

"commits": [

{

"message": "Update structured_function.py"

},

{

"message": "Merge branch 'tensorflow:master' into master"

},

{

"message": "Update structured_function.py"

}

],

"files": [

{

"diff": "@@ -247,7 +247,8 @@ def wrapped_fn(*args): # pylint: disable=missing-docstring\n else:\n defun_kwargs.update({\"func_name\": func_name})\n defun_kwargs.update({\"_tf_data_function\": True})\n- if debug_mode.DEBUG_MODE:\n+ element_spec_name = str(dataset.element_spec)[0:16]\n+ if debug_mode.DEBUG_MODE and element_spec_name != 'RaggedTensorSpec':\n fn_factory = trace_py_function(defun_kwargs)\n else:\n if def_function.functions_run_eagerly():",

"filename": "tensorflow/python/data/ops/structured_function.py",

"status": "modified"

}

]

}

|

{

"body": "<details><summary>Click to expand!</summary> \r\n \r\n ### Issue Type\r\n\r\nBug\r\n\r\n### Have you reproduced the bug with TF nightly?\r\n\r\nYes\r\n\r\n### Source\r\n\r\nsource\r\n\r\n### Tensorflow Version\r\n\r\ntf 2.12\r\n\r\n### Custom Code\r\n\r\nNo\r\n\r\n### OS Platform and Distribution\r\n\r\n_No response_\r\n\r\n### Mobile device\r\n\r\n_No response_\r\n\r\n### Python version\r\n\r\n_No response_\r\n\r\n### Bazel version\r\n\r\n_No response_\r\n\r\n### GCC/Compiler version\r\n\r\n_No response_\r\n\r\n### CUDA/cuDNN version\r\n\r\n_No response_\r\n\r\n### GPU model and memory\r\n\r\n_No response_\r\n\r\n### Current Behaviour?\r\n\r\n```shell\r\nVariable `dict` may be `nullptr` and is dereferenced on line 149 in `tensorflow/compiler/xla/mlir/backends/cpu/transforms/lmhlo_to_cpu_runtime.cc`. \r\n\r\n`dict` is initialized on line 146 and may equal `nullptr`. Then it is dereferenced on line 149. \r\n```\r\n\r\n\r\n### Standalone code to reproduce the issue\r\n\r\n```shell\r\nBug was found by Svace static analysis tool.\r\n```\r\n\r\n\r\n### Relevant log output\r\n\r\n_No response_</details>",

"comments": [

{

"body": "@mihaimaruseac \r\n\r\nhttps://github.com/tensorflow/tensorflow/blob/c1169a1ba98e1c5d0874cd44ffeb605bfd1cefba/tensorflow/compiler/xla/mlir/backends/cpu/transforms/lmhlo_to_cpu_runtime.cc#L145-L149",

"created_at": "2023-04-04T13:18:29Z"

},

{

"body": "@SweetVishnya Thanks for the PR.\r\n\r\n@PaDarochek The issue will be closed once the PR is merged.\r\n\r\nThanks.",

"created_at": "2023-04-06T12:45:18Z"

},

{

"body": "Are you satisfied with the resolution of your issue?\n<a href=\"https://docs.google.com/forms/d/e/1FAIpQLSfaP12TRhd9xSxjXZjcZFNXPGk4kc1-qMdv3gc6bEP90vY1ew/viewform?entry.85265664=Yes&entry.2137816233=https://github.com/tensorflow/tensorflow/issues/60223\">Yes</a>\n<a href=\"https://docs.google.com/forms/d/e/1FAIpQLSfaP12TRhd9xSxjXZjcZFNXPGk4kc1-qMdv3gc6bEP90vY1ew/viewform?entry.85265664=No&entry.2137816233=https://github.com/tensorflow/tensorflow/issues/60223\">No</a>\n",

"created_at": "2023-04-27T17:54:24Z"

}

],

"number": 60223,

"title": "Null pointer dereference in lmhlo_to_cpu_runtime.cc"

}

|

{

"body": "The bug was found by Svace static analyzer:\r\n\r\n1. op.getBackendConfig() can be null\r\n2. dict will be nullptr\r\n3. dict.begin() dereferences a null pointer\r\n\r\nCloses #60223\r\n\r\ncc @mihaimaruseac",

"number": 60242,

"review_comments": [],

"title": "Fix null pointer dereference in xla::cpu::CustomCallOpLowering::rewriteTypedCustomCall()"

}

|

{

"commits": [

{

"message": "Fix null pointer dereference in xla::cpu::CustomCallOpLowering::rewriteTypedCustomCall()\n\nThe bug was found by Svace static analyzer:\n\n1. op.getBackendConfig() can be null\n2. dict will be nullptr\n3. dict.begin() dereferences a null pointer\n\nCloses #60223"

}

],

"files": [

{

"diff": "@@ -143,9 +143,10 @@ class CustomCallOpLowering : public OpRewritePattern<CustomCallOp> {\n callee->setAttr(\"rt.dynamic\", UnitAttr::get(b.getContext()));\n \n // Forward backend config to the custom call implementation.\n- auto dict = op.getBackendConfig()\n- ? op.getBackendConfig()->cast<mlir::DictionaryAttr>()\n- : nullptr;\n+ auto config = op.getBackendConfig();\n+ if (!config)\n+ return op.emitOpError(\"Failed to get backend config\");\n+ auto dict = config->cast<mlir::DictionaryAttr>();\n llvm::SmallVector<NamedAttribute> backend_config(dict.begin(), dict.end());\n \n // Call the custom call function forwarding user-defined attributes.",

"filename": "tensorflow/compiler/xla/mlir/backends/cpu/transforms/lmhlo_to_cpu_runtime.cc",

"status": "modified"

}

]

}

|

{

"body": "<details><summary>Click to expand!</summary> \n \n ### Issue Type\n\nDocumentation Bug\n\n### Have you reproduced the bug with TF nightly?\n\nNo\n\n### Source\n\nsource\n\n### Tensorflow Version\n\n2.11\n\n### Custom Code\n\nNo\n\n### OS Platform and Distribution\n\n_No response_\n\n### Mobile device\n\n_No response_\n\n### Python version\n\n_No response_\n\n### Bazel version\n\n_No response_\n\n### GCC/Compiler version\n\n_No response_\n\n### CUDA/cuDNN version\n\n_No response_\n\n### GPU model and memory\n\n_No response_\n\n### Current Behaviour?\n\n```shell\nDocumentation links to .cc files are broken.\r\nhttps://www.tensorflow.org/lite/microcontrollers/get_started_low_level\r\n\r\nIn section 'Run Inference', link to 'hello_world_test.cc' and the other remaining .cc files linked cannot be opened.\n```\n\n\n### Standalone code to reproduce the issue\n\n```shell\nN/A\n```\n\n\n### Relevant log output\n\n_No response_</details>",

"comments": [

{

"body": "@acridland Thanks for reporting this.\r\n\r\nPR #59969 has been created. Issue will be closed once PR is merged.\r\n\r\nThanks.",

"created_at": "2023-03-13T17:18:45Z"

},

{

"body": "Hi @acridland \r\n\r\nThe Hello World example has been refactored with the commit https://github.com/tensorflow/tensorflow/commit/f263e19e227da0d325f15477f57a359291fc387c and broken links are fixed.\r\n\r\nThanks.",

"created_at": "2023-10-27T06:00:01Z"

},

{

"body": "This issue is stale because it has been open for 7 days with no activity. It will be closed if no further activity occurs. Thank you.",

"created_at": "2023-11-04T01:47:33Z"

},

{

"body": "This issue was closed because it has been inactive for 7 days since being marked as stale. Please reopen if you'd like to work on this further.",

"created_at": "2023-11-11T01:48:01Z"

},

{

"body": "Are you satisfied with the resolution of your issue?\n<a href=\"https://docs.google.com/forms/d/e/1FAIpQLSfaP12TRhd9xSxjXZjcZFNXPGk4kc1-qMdv3gc6bEP90vY1ew/viewform?entry.85265664=Yes&entry.2137816233=https://github.com/tensorflow/tensorflow/issues/59959\">Yes</a>\n<a href=\"https://docs.google.com/forms/d/e/1FAIpQLSfaP12TRhd9xSxjXZjcZFNXPGk4kc1-qMdv3gc6bEP90vY1ew/viewform?entry.85265664=No&entry.2137816233=https://github.com/tensorflow/tensorflow/issues/59959\">No</a>\n",

"created_at": "2023-11-11T01:48:04Z"

}

],

"number": 59959,

"title": "Tensorflow lite for microcontrollers documentation has broken links to .cc files"

}

|

{

"body": "This commit updates the \"Hello World\" example documentation in the TensorFlow Lite for Microcontrollers repository to use the latest version of the `evaluate_test.cc`. \r\n\r\nAdditionally, the broken links in the example documentation have been fixed to ensure that users can access all the necessary resources. \r\n\r\nThese changes make it easier for users to get started with tflite on microcontrollers and ensure that the example document is up-to-date.\r\n\r\n Merging this closes the issue #59959",

"number": 59969,

"review_comments": [

{

"body": "```suggestion\r\n```\r\n\r\nPlease don't add empty lines that are not needed",

"created_at": "2023-09-01T11:31:59Z"

},

{

"body": "This seems to be doing something totally different than the original",

"created_at": "2023-09-01T11:32:38Z"

}

],

"title": "Update \"Hello World\" Example Doc and Fix Broken Links"

}

|

{

"commits": [

{

"message": "Update \"Hello World\" Example Doc and Fix Broken Links\n\nThis commit updates the \"Hello World\" example documentation in the TensorFlow Lite for Microcontrollers repository to use the latest version of the `evaluate_test.cc`. Additionally, the broken links in the example documentation have been fixed to ensure that users can access all the necessary resources. These changes make it easier for users to get started with machine learning on microcontrollers and ensure that the example document is up-to-date. Merging this closes the issue #59959"

},

{

"message": "Merge branch 'master' into pjpratik-patch-2"

},

{

"message": "Merge branch 'master' into pjpratik-patch-2"

},

{

"message": "Update tensorflow/lite/g3doc/microcontrollers/get_started_low_level.md\n\nCo-authored-by: Mihai Maruseac <mihaimaruseac@google.com>"

}

],

"files": [

{

"diff": "@@ -86,7 +86,7 @@ following header files:\n \n - [`micro_mutable_op_resolver.h`](https://github.com/tensorflow/tflite-micro/tree/main/tensorflow/lite/micro/micro_mutable_op_resolver.h)\n provides the operations used by the interpreter to run the model.\n-- [`micro_error_reporter.h`](https://github.com/tensorflow/tflite-micro/blob/main/tensorflow/lite/micro/tflite_bridge/micro_error_reporter.h)\n+- [`micro_log.h`](https://github.com/tensorflow/tflite-micro/blob/main/tensorflow/lite/micro/micro_log.h)\n outputs debug information.\n - [`micro_interpreter.h`](https://github.com/tensorflow/tflite-micro/tree/main/tensorflow/lite/micro/micro_interpreter.h)\n contains code to load and run models.\n@@ -99,11 +99,11 @@ following header files:\n ### 2. Include the model header\n \n The TensorFlow Lite for Microcontrollers interpreter expects the model to be\n-provided as a C++ array. The model is defined in `model.h` and `model.cc` files.\n+provided as a C++ array. The model is defined in `hello_world_float_model_data.h` and `hello_world_float_model_data.cc` files.\n The header is included with the following line:\n \n ```C++\n-#include \"tensorflow/lite/micro/examples/hello_world/model.h\"\n+#include \"tensorflow/lite/micro/examples/hello_world/models/hello_world_float_model_data.h\"\n ```\n \n ### 3. Include the unit test framework header\n@@ -120,7 +120,7 @@ The test is defined using the following macros:\n ```C++\n TF_LITE_MICRO_TESTS_BEGIN\n \n-TF_LITE_MICRO_TEST(LoadModelAndPerformInference) {\n+TF_LITE_MICRO_TEST(LoadFloatModelAndPerformInference) {\n . // add code here\n .\n }\n@@ -132,36 +132,38 @@ We now discuss the code included in the macro above.\n \n ### 4. 
Set up logging\n \n-To set up logging, a `tflite::ErrorReporter` pointer is created using a pointer\n-to a `tflite::MicroErrorReporter` instance:\n+To set up logging, `micro_log.h` is used.\n+\n+`MicroPrintf()` function can be used independent of the MicroErrorReporter to get\n+printf-like functionalitys and are common to all target platforms.\n+\n+### 5. Define the input and the expected output\n+\n+In the following lines, the input and the expected output are defined:\n \n ```C++\n-tflite::MicroErrorReporter micro_error_reporter;\n-tflite::ErrorReporter* error_reporter = &micro_error_reporter;\n+ float x = 0.0f;\n+ float y_true = sin(x);\n ```\n \n-This variable will be passed into the interpreter, which allows it to write\n-logs. Since microcontrollers often have a variety of mechanisms for logging, the\n-implementation of `tflite::MicroErrorReporter` is designed to be customized for\n-your particular device.\n-\n-### 5. Load a model\n+### 6. Load a model\n \n In the following code, the model is instantiated using data from a `char` array,\n-`g_model`, which is declared in `model.h`. We then check the model to ensure its\n+`g_hello_world_float_model_data`, which is declared in `g_hello_world_float_model_data.h`.\n+We then check the model to ensure its\n schema version is compatible with the version we are using:\n \n ```C++\n-const tflite::Model* model = ::tflite::GetModel(g_model);\n+const tflite::Model* model = ::tflite::GetModel(g_hello_world_float_model_data);\n if (model->version() != TFLITE_SCHEMA_VERSION) {\n- TF_LITE_REPORT_ERROR(error_reporter,\n+ MIcroPrintf(\n \"Model provided is schema version %d not equal \"\n \"to supported version %d.\\n\",\n model->version(), TFLITE_SCHEMA_VERSION);\n }\n ```\n \n-### 6. Instantiate operations resolver\n+### 7. 
Instantiate operations resolver\n \n A\n [`MicroMutableOpResolver`](https://github.com/tensorflow/tflite-micro/tree/main/tensorflow/lite/micro/micro_mutable_op_resolver.h)\n@@ -187,40 +189,43 @@ TF_LITE_ENSURE_STATUS(RegisterOps(op_resolver));\n \n ```\n \n-### 7. Allocate memory\n+### 8. Allocate memory\n \n We need to preallocate a certain amount of memory for input, output, and\n intermediate arrays. This is provided as a `uint8_t` array of size\n `tensor_arena_size`:\n \n ```C++\n-const int tensor_arena_size = 2 * 1024;\n+const int tensor_arena_size = 2056;\n uint8_t tensor_arena[tensor_arena_size];\n ```\n \n The size required will depend on the model you are using, and may need to be\n determined by experimentation.\n \n-### 8. Instantiate interpreter\n+### 9. Instantiate interpreter\n \n We create a `tflite::MicroInterpreter` instance, passing in the variables\n created earlier:\n \n ```C++\n tflite::MicroInterpreter interpreter(model, resolver, tensor_arena,\n- tensor_arena_size, error_reporter);\n+ tensor_arena_size);\n ```\n \n-### 9. Allocate tensors\n+### 10. Allocate tensors\n \n We tell the interpreter to allocate memory from the `tensor_arena` for the\n-model's tensors:\n+model's tensors and throw error if failed:\n \n ```C++\n-interpreter.AllocateTensors();\n+if (interpreter.AllocateTensors() != kTfLiteOk) {\n+ MicroPrintf(\"Allocate tensor failed.\");\n+ return kTfLiteError;\n+ }\n ```\n \n-### 10. Validate input shape\n+### 11. 
Validate input shape\n \n The `MicroInterpreter` instance can provide us with a pointer to the model's\n input tensor by calling `.input(0)`, where `0` represents the first (and only)\n@@ -231,65 +236,51 @@ input tensor:\n TfLiteTensor* input = interpreter.input(0);\n ```\n \n-We then inspect this tensor to confirm that its shape and type are what we are\n-expecting:\n+We then inspect this tensor to confirm that it has properties what we\n+expect:\n \n ```C++\n-// Make sure the input has the properties we expect\n-TF_LITE_MICRO_EXPECT_NE(nullptr, input);\n-// The property \"dims\" tells us the tensor's shape. It has one element for\n-// each dimension. Our input is a 2D tensor containing 1 element, so \"dims\"\n-// should have size 2.\n-TF_LITE_MICRO_EXPECT_EQ(2, input->dims->size);\n-// The value of each element gives the length of the corresponding tensor.\n-// We should expect two single element tensors (one is contained within the\n-// other).\n-TF_LITE_MICRO_EXPECT_EQ(1, input->dims->data[0]);\n-TF_LITE_MICRO_EXPECT_EQ(1, input->dims->data[1]);\n-// The input is a 32 bit floating point value\n-TF_LITE_MICRO_EXPECT_EQ(kTfLiteFloat32, input->type);\n+if (input == nullptr) {\n+ MicroPrintf(\"Input tensor in null.\");\n+ return kTfLiteError;\n+ }\n ```\n \n The enum value `kTfLiteFloat32` is a reference to one of the TensorFlow Lite\n data types, and is defined in\n [`common.h`](https://github.com/tensorflow/tflite-micro/tree/main/tensorflow/lite/c/common.h).\n \n-### 11. Provide an input value\n+### 12. Provide an input value\n \n-To provide an input to the model, we set the contents of the input tensor, as\n-follows:\n+To provide an input to the model, we set the contents of the input tensor,\n+as follows:\n \n ```C++\n-input->data.f[0] = 0.;\n+input->data.f[0] = x;\n ```\n \n-In this case, we input a floating point value representing `0`.\n+In this case, we input a quantized input `x`.\n \n-### 12. Run the model\n+### 13. 
Run the model\n \n To run the model, we can call `Invoke()` on our `tflite::MicroInterpreter`\n instance:\n \n ```C++\n TfLiteStatus invoke_status = interpreter.Invoke();\n if (invoke_status != kTfLiteOk) {\n- TF_LITE_REPORT_ERROR(error_reporter, \"Invoke failed\\n\");\n-}\n+ MicroPrintf(\"Interpreter invocation failed.\");\n+ return kTfLiteError;\n+ }\n ```\n \n We can check the return value, a `TfLiteStatus`, to determine if the run was\n successful. The possible values of `TfLiteStatus`, defined in\n [`common.h`](https://github.com/tensorflow/tflite-micro/tree/main/tensorflow/lite/c/common.h),\n are `kTfLiteOk` and `kTfLiteError`.\n \n-The following code asserts that the value is `kTfLiteOk`, meaning inference was\n-successfully run.\n \n-```C++\n-TF_LITE_MICRO_EXPECT_EQ(kTfLiteOk, invoke_status);\n-```\n-\n-### 13. Obtain the output\n+### 14. Obtain the output\n \n The model's output tensor can be obtained by calling `output(0)` on the\n `tflite::MicroInterpreter`, where `0` represents the first (and only) output\n@@ -300,41 +291,65 @@ within a 2D tensor:\n \n ```C++\n TfLiteTensor* output = interpreter.output(0);\n-TF_LITE_MICRO_EXPECT_EQ(2, output->dims->size);\n-TF_LITE_MICRO_EXPECT_EQ(1, input->dims->data[0]);\n-TF_LITE_MICRO_EXPECT_EQ(1, input->dims->data[1]);\n-TF_LITE_MICRO_EXPECT_EQ(kTfLiteFloat32, output->type);\n ```\n \n We can read the value directly from the output tensor and assert that it is what\n we expect:\n \n ```C++\n // Obtain the output value from the tensor\n-float value = output->data.f[0];\n+float y_pred = output->data.f[0];\n // Check that the output value is within 0.05 of the expected value\n-TF_LITE_MICRO_EXPECT_NEAR(0., value, 0.05);\n+float epsilon = 0.05f;\n+ if (abs(y_true - y_pred) > epsilon) {\n+ MicroPrintf(\n+ \"Difference between predicted and actual y value \"\n+ \"is significant.\");\n+ return kTfLiteError;\n+ }\n ```\n \n-### 14. Run inference again\n+### 15. 
Run inference again\n \n The remainder of the code runs inference several more times. In each instance,\n we assign a value to the input tensor, invoke the interpreter, and read the\n result from the output tensor:\n \n ```C++\n-input->data.f[0] = 1.;\n-interpreter.Invoke();\n-value = output->data.f[0];\n-TF_LITE_MICRO_EXPECT_NEAR(0.841, value, 0.05);\n-\n-input->data.f[0] = 3.;\n-interpreter.Invoke();\n-value = output->data.f[0];\n-TF_LITE_MICRO_EXPECT_NEAR(0.141, value, 0.05);\n-\n-input->data.f[0] = 5.;\n-interpreter.Invoke();\n-value = output->data.f[0];\n-TF_LITE_MICRO_EXPECT_NEAR(-0.959, value, 0.05);\n+ x = 1.f;\n+ y_true = sin(x);\n+ input->data.f[0] = x;\n+ interpreter.Invoke();\n+ y_pred = output->data.f[0];\n+ if (abs(y_true - y_pred) > epsilon) {\n+ MicroPrintf(\n+ \"Difference between predicted and actual y value \"\n+ \"is significant.\");\n+ return kTfLiteError;\n+ }\n+\n+ x = 3.f;\n+ y_true = sin(x);\n+ input->data.f[0] = x;\n+ interpreter.Invoke();\n+ y_pred = output->data.f[0];\n+ if (abs(y_true - y_pred) > epsilon) {\n+ MicroPrintf(\n+ \"Difference between predicted and actual y value \"\n+ \"is significant.\");\n+ return kTfLiteError;\n+ }\n+\n+ x = 5.f;\n+ y_true = sin(x);\n+ input->data.f[0] = x;\n+ interpreter.Invoke();\n+ y_pred = output->data.f[0];\n+ if (abs(y_true - y_pred) > epsilon) {\n+ MicroPrintf(\n+ \"Difference between predicted and actual y value \"\n+ \"is significant.\");\n+ return kTfLiteError;\n+ }\n ```\n+",

"filename": "tensorflow/lite/g3doc/microcontrollers/get_started_low_level.md",

"status": "modified"

}

]

}

|

{

"body": "<details><summary>Click to expand!</summary> \n \n ### Issue Type\n\nBug\n\n### Source\n\nsource\n\n### Tensorflow Version\n\nTF 2.11\n\n### Custom Code\n\nYes\n\n### OS Platform and Distribution\n\n_No response_\n\n### Mobile device\n\n_No response_\n\n### Python version\n\n_No response_\n\n### Bazel version\n\n_No response_\n\n### GCC/Compiler version\n\n_No response_\n\n### CUDA/cuDNN version\n\nCUDA: 11.2 cuDNN 8.1\n\n### GPU model and memory\n\n_No response_\n\n### Current Behaviour?\n\n```shell\nThe results of tf.image.convert_image_dtype running on CPU and GPU are very different.\n```\n\n\n### Standalone code to reproduce the issue\n\n```shell\nCPU code:\r\n\r\n import tensorflow as tf\r\n with tf.device('/CPU'):\r\n arg_0 = [[[1.0, 2.0, 3.0], [4.0, 5.0, 6.0]], [[7.0, 8.0, 9.0], [10.0, 11.0, 12.0]]]\r\n out = tf.image.convert_image_dtype(arg_0, dtype=tf.uint32, saturate=-1)\r\n print(out)\r\n\r\nGPU code:\r\n\r\n import tensorflow as tf\r\n with tf.device('/GPU:0'):\r\n arg_0 = [[[1.0, 2.0, 3.0], [4.0, 5.0, 6.0]], [[7.0, 8.0, 9.0], [10.0, 11.0, 12.0]]]\r\n out = tf.image.convert_image_dtype(arg_0, dtype=tf.uint32, saturate=-1)\r\n print(out)\n```\n\n\n### Relevant log output\n\n```shell\nCPU result: tf.Tensor(\r\n[[[0 0 0]\r\n [0 0 0]]\r\n\r\n [[0 0 0]\r\n [0 0 0]]], shape=(2, 2, 3), dtype=uint32)\r\n\r\n\r\nGPU result: tf.Tensor(\r\n[[[2147483647 2147483647 2147483647]\r\n [2147483647 2147483647 2147483647]]\r\n\r\n [[2147483647 2147483647 2147483647]\r\n [2147483647 2147483647 2147483647]]], shape=(2, 2, 3), dtype=uint32)\n```\n</details>",

"comments": [

{

"body": "Hi @triumph-wangyuyang ,\r\n\r\nThere is this condition mentioned in [API](https://www.tensorflow.org/api_docs/python/tf/image/convert_image_dtype).\r\n\r\n`Images that are represented using floating point values are expected to have values in the range [0,1).`\r\n\r\nHence there is inconsistency in the result. I have tried values within [0,1) and result same on both CPU & GPU.Please refer to attached [gist](https://colab.research.google.com/gist/SuryanarayanaY/7d212236f3088b3edbd7de35532eedc3/17282.ipynb#scrollTo=q6utqo90YELo).\r\n\r\nPlease check and close the issue if your query got resolved.\r\n\r\nThankyou!",

"created_at": "2022-12-02T06:01:16Z"

},

{

"body": "> Hi @triumph-wangyuyang ,\r\n> \r\n> There is this condition mentioned in [API](https://www.tensorflow.org/api_docs/python/tf/image/convert_image_dtype).\r\n> \r\n> `Images that are represented using floating point values are expected to have values in the range [0,1).`\r\n> \r\n> Hence there is inconsistency in the result. I have tried values within [0,1) and result same on both CPU & GPU.Please refer to attached [gist](https://colab.research.google.com/gist/SuryanarayanaY/7d212236f3088b3edbd7de35532eedc3/17282.ipynb#scrollTo=q6utqo90YELo).\r\n> \r\n> Please check and close the issue if your query got resolved.\r\n> \r\n> Thankyou!\r\n\r\nI am doing the tensorflow operator test, and then deliberately use illegal parameters to test the operator. In this test, I did not use [0,1), but use >=1 value, and then in this way on the CPU and GPU The above results are different. Can we make a preliminary judgment on the value of the Images parameter at the operator entry, and if it is not in [0,1), an exception will be thrown to prevent the program from continuing.",

"created_at": "2022-12-02T06:14:58Z"

},

{

"body": "@triumph-wangyuyang ,\r\n\r\nI agree to that.It is better to raise exception/warning regarding invalid inputs rather than continuing and generating inconsistent results.Lets see if i can do something on this.\r\n\r\nThankyou!\r\n\r\n",

"created_at": "2022-12-02T07:30:23Z"

},

{

"body": "Hi @triumph-wangyuyang ,\r\n\r\nThe above mention PR should address the issue.",

"created_at": "2023-02-21T10:44:32Z"

},

{

"body": "We make no guarantees that CPU and GPU results are identical, especially for garbage data. The input doesn't crash, so it's not a security issue. Error checking is expensive.\r\n\r\nThe GPU result is flushing all results to the max value (essentially saturating the input). We could potentially do the same on CPU. I wouldn't say it's a requirement though.",

"created_at": "2023-02-21T17:54:40Z"

},

{

"body": "Just noticed that `saturate` was set to `True` (indirectly via the -1 value), so this should actually have defined behavior and there is an issue with saturation. Will dig into it.",

"created_at": "2023-02-24T17:12:38Z"

},

{

"body": "The issue here is that `uint32.max` is not actually representable in `float32` - and rounds up when converting, from 4294967295 to 4294967300.0. This eventually leads to a cast overflow and undefined behavior - which is why we see different values between CPU and GPU.\r\n",

"created_at": "2023-03-13T22:55:55Z"

},

{

"body": "Hi @triumph-wangyuyang ,\r\n\r\nPlease refer to attached explanation in above [comment](https://github.com/tensorflow/tensorflow/issues/58749#issuecomment-1467086661).The cast overflow causing undefined behaviour and hence getting different results. This has been fixed with tf-nightly(2.14.0-dev20230503). Please refer to attached [gist](https://colab.research.google.com/gist/SuryanarayanaY/fdbe3aa4f57128d0aef6dc890294fd52/58749_final.ipynb#scrollTo=1jbL0o8JOfRD) which showing both CPU and GPU are now producing same results.\r\n\r\nThanks!",

"created_at": "2023-05-04T05:21:21Z"

},

{

"body": "Are you satisfied with the resolution of your issue?\n<a href=\"https://docs.google.com/forms/d/e/1FAIpQLSfaP12TRhd9xSxjXZjcZFNXPGk4kc1-qMdv3gc6bEP90vY1ew/viewform?entry.85265664=Yes&entry.2137816233=https://github.com/tensorflow/tensorflow/issues/58749\">Yes</a>\n<a href=\"https://docs.google.com/forms/d/e/1FAIpQLSfaP12TRhd9xSxjXZjcZFNXPGk4kc1-qMdv3gc6bEP90vY1ew/viewform?entry.85265664=No&entry.2137816233=https://github.com/tensorflow/tensorflow/issues/58749\">No</a>\n",

"created_at": "2023-05-04T15:22:15Z"

},

{