url | repository_url | labels_url | comments_url | events_url | html_url | id | node_id | number | title | user | labels | state | locked | assignee | assignees | milestone | comments | created_at | updated_at | closed_at | author_association | active_lock_reason | body | reactions | timeline_url | performed_via_github_app | state_reason | draft | pull_request | is_pull_request |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

https://api.github.com/repos/huggingface/datasets/issues/3454 | https://api.github.com/repos/huggingface/datasets | https://api.github.com/repos/huggingface/datasets/issues/3454/labels{/name} | https://api.github.com/repos/huggingface/datasets/issues/3454/comments | https://api.github.com/repos/huggingface/datasets/issues/3454/events | https://github.com/huggingface/datasets/pull/3454 | 1,084,519,107 | PR_kwDODunzps4wENam | 3,454 | Fix iter_archive generator | {

"login": "albertvillanova",

"id": 8515462,

"node_id": "MDQ6VXNlcjg1MTU0NjI=",

"avatar_url": "https://avatars.githubusercontent.com/u/8515462?v=4",

"gravatar_id": "",

"url": "https://api.github.com/users/albertvillanova",

"html_url": "https://github.com/albertvillanova",

"followers_url": "https://api.github.com/users/albertvillanova/followers",

"following_url": "https://api.github.com/users/albertvillanova/following{/other_user}",

"gists_url": "https://api.github.com/users/albertvillanova/gists{/gist_id}",

"starred_url": "https://api.github.com/users/albertvillanova/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/albertvillanova/subscriptions",

"organizations_url": "https://api.github.com/users/albertvillanova/orgs",

"repos_url": "https://api.github.com/users/albertvillanova/repos",

"events_url": "https://api.github.com/users/albertvillanova/events{/privacy}",

"received_events_url": "https://api.github.com/users/albertvillanova/received_events",

"type": "User",

"site_admin": false

} | [] | closed | false | null | [] | null | [] | "2021-12-20T08:50:15" | "2021-12-20T10:05:00" | "2021-12-20T10:04:59" | MEMBER | null | This PR:

- Adds tests to DownloadManager and StreamingDownloadManager `iter_archive` for both path and file inputs

- Fixes bugs in `iter_archive` introduced in:

  - #3443

Fix #3453. | {

"url": "https://api.github.com/repos/huggingface/datasets/issues/3454/reactions",

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} | https://api.github.com/repos/huggingface/datasets/issues/3454/timeline | null | null | false | {

"url": "https://api.github.com/repos/huggingface/datasets/pulls/3454",

"html_url": "https://github.com/huggingface/datasets/pull/3454",

"diff_url": "https://github.com/huggingface/datasets/pull/3454.diff",

"patch_url": "https://github.com/huggingface/datasets/pull/3454.patch",

"merged_at": "2021-12-20T10:04:59"

} | true |

https://api.github.com/repos/huggingface/datasets/issues/3453 | https://api.github.com/repos/huggingface/datasets | https://api.github.com/repos/huggingface/datasets/issues/3453/labels{/name} | https://api.github.com/repos/huggingface/datasets/issues/3453/comments | https://api.github.com/repos/huggingface/datasets/issues/3453/events | https://github.com/huggingface/datasets/issues/3453 | 1,084,515,911 | I_kwDODunzps5ApGZH | 3,453 | ValueError while iter_archive | {

"login": "albertvillanova",

"id": 8515462,

"node_id": "MDQ6VXNlcjg1MTU0NjI=",

"avatar_url": "https://avatars.githubusercontent.com/u/8515462?v=4",

"gravatar_id": "",

"url": "https://api.github.com/users/albertvillanova",

"html_url": "https://github.com/albertvillanova",

"followers_url": "https://api.github.com/users/albertvillanova/followers",

"following_url": "https://api.github.com/users/albertvillanova/following{/other_user}",

"gists_url": "https://api.github.com/users/albertvillanova/gists{/gist_id}",

"starred_url": "https://api.github.com/users/albertvillanova/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/albertvillanova/subscriptions",

"organizations_url": "https://api.github.com/users/albertvillanova/orgs",

"repos_url": "https://api.github.com/users/albertvillanova/repos",

"events_url": "https://api.github.com/users/albertvillanova/events{/privacy}",

"received_events_url": "https://api.github.com/users/albertvillanova/received_events",

"type": "User",

"site_admin": false

} | [

{

"id": 1935892857,

"node_id": "MDU6TGFiZWwxOTM1ODkyODU3",

"url": "https://api.github.com/repos/huggingface/datasets/labels/bug",

"name": "bug",

"color": "d73a4a",

"default": true,

"description": "Something isn't working"

}

] | closed | false | {

"login": "albertvillanova",

"id": 8515462,

"node_id": "MDQ6VXNlcjg1MTU0NjI=",

"avatar_url": "https://avatars.githubusercontent.com/u/8515462?v=4",

"gravatar_id": "",

"url": "https://api.github.com/users/albertvillanova",

"html_url": "https://github.com/albertvillanova",

"followers_url": "https://api.github.com/users/albertvillanova/followers",

"following_url": "https://api.github.com/users/albertvillanova/following{/other_user}",

"gists_url": "https://api.github.com/users/albertvillanova/gists{/gist_id}",

"starred_url": "https://api.github.com/users/albertvillanova/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/albertvillanova/subscriptions",

"organizations_url": "https://api.github.com/users/albertvillanova/orgs",

"repos_url": "https://api.github.com/users/albertvillanova/repos",

"events_url": "https://api.github.com/users/albertvillanova/events{/privacy}",

"received_events_url": "https://api.github.com/users/albertvillanova/received_events",

"type": "User",

"site_admin": false

} | [

{

"login": "albertvillanova",

"id": 8515462,

"node_id": "MDQ6VXNlcjg1MTU0NjI=",

"avatar_url": "https://avatars.githubusercontent.com/u/8515462?v=4",

"gravatar_id": "",

"url": "https://api.github.com/users/albertvillanova",

"html_url": "https://github.com/albertvillanova",

"followers_url": "https://api.github.com/users/albertvillanova/followers",

"following_url": "https://api.github.com/users/albertvillanova/following{/other_user}",

"gists_url": "https://api.github.com/users/albertvillanova/gists{/gist_id}",

"starred_url": "https://api.github.com/users/albertvillanova/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/albertvillanova/subscriptions",

"organizations_url": "https://api.github.com/users/albertvillanova/orgs",

"repos_url": "https://api.github.com/users/albertvillanova/repos",

"events_url": "https://api.github.com/users/albertvillanova/events{/privacy}",

"received_events_url": "https://api.github.com/users/albertvillanova/received_events",

"type": "User",

"site_admin": false

}

] | null | [] | "2021-12-20T08:46:18" | "2021-12-20T10:04:59" | "2021-12-20T10:04:59" | MEMBER | null | ## Describe the bug

After the merge of:

- #3443

the method `iter_archive` throws a ValueError:

```

ValueError: read of closed file

```

## Steps to reproduce the bug

```python

# `dl_manager`: a DownloadManager instance, e.g. the one passed to `_split_generators`
for path, file in dl_manager.iter_archive(archive_path):
    pass

```

| {

"url": "https://api.github.com/repos/huggingface/datasets/issues/3453/reactions",

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} | https://api.github.com/repos/huggingface/datasets/issues/3453/timeline | null | completed | null | null | false |
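The `ValueError: read of closed file` reported in #3453 is the kind of error a generator produces when it hands out file objects whose underlying archive has already been closed. The sketch below illustrates that general pitfall with the standard-library `tarfile` module. `iter_archive_broken`, `iter_archive_fixed` and `write_demo_tar` are hypothetical names, and this is not claimed to be the code actually changed in #3454.

```python
import io
import os
import tarfile
import tempfile
from typing import BinaryIO, Dict, Iterator, Tuple


def iter_archive_broken(path: str) -> Iterator[Tuple[str, BinaryIO]]:
    """Anti-pattern: the archive is closed before the caller reads any member."""
    with tarfile.open(path) as tar:
        members = [(m.name, tar.extractfile(m)) for m in tar if m.isfile()]
    # Leaving the `with` block closed the underlying file, so every member
    # file object handed out below fails with ValueError when read.
    yield from members


def iter_archive_fixed(path: str) -> Iterator[Tuple[str, BinaryIO]]:
    """Keep the archive open for as long as the generator is being consumed."""
    with tarfile.open(path) as tar:
        for member in tar:
            if member.isfile():
                yield member.name, tar.extractfile(member)


def write_demo_tar(path: str, files: Dict[str, bytes]) -> None:
    """Write a small uncompressed tar archive from a name -> bytes mapping."""
    with tarfile.open(path, "w") as tar:
        for name, data in files.items():
            info = tarfile.TarInfo(name)
            info.size = len(data)
            tar.addfile(info, io.BytesIO(data))


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        archive = os.path.join(tmp, "demo.tar")
        write_demo_tar(archive, {"a.txt": b"hello", "b.txt": b"world"})

        for name, f in iter_archive_fixed(archive):
            print(name, f.read())

        try:
            for name, f in iter_archive_broken(archive):
                f.read()
        except ValueError as err:
            print("ValueError:", err)
```

The difference is only where the `yield` sits: inside the `with` block, the archive stays open until the caller has finished with each member.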

https://api.github.com/repos/huggingface/datasets/issues/3452 | https://api.github.com/repos/huggingface/datasets | https://api.github.com/repos/huggingface/datasets/issues/3452/labels{/name} | https://api.github.com/repos/huggingface/datasets/issues/3452/comments | https://api.github.com/repos/huggingface/datasets/issues/3452/events | https://github.com/huggingface/datasets/issues/3452 | 1,083,803,178 | I_kwDODunzps5AmYYq | 3,452 | why the stratify option is omitted from test_train_split function? | {

"login": "j-sieger",

"id": 9985334,

"node_id": "MDQ6VXNlcjk5ODUzMzQ=",

"avatar_url": "https://avatars.githubusercontent.com/u/9985334?v=4",

"gravatar_id": "",

"url": "https://api.github.com/users/j-sieger",

"html_url": "https://github.com/j-sieger",

"followers_url": "https://api.github.com/users/j-sieger/followers",

"following_url": "https://api.github.com/users/j-sieger/following{/other_user}",

"gists_url": "https://api.github.com/users/j-sieger/gists{/gist_id}",

"starred_url": "https://api.github.com/users/j-sieger/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/j-sieger/subscriptions",

"organizations_url": "https://api.github.com/users/j-sieger/orgs",

"repos_url": "https://api.github.com/users/j-sieger/repos",

"events_url": "https://api.github.com/users/j-sieger/events{/privacy}",

"received_events_url": "https://api.github.com/users/j-sieger/received_events",

"type": "User",

"site_admin": false

} | [

{

"id": 1935892871,

"node_id": "MDU6TGFiZWwxOTM1ODkyODcx",

"url": "https://api.github.com/repos/huggingface/datasets/labels/enhancement",

"name": "enhancement",

"color": "a2eeef",

"default": true,

"description": "New feature or request"

},

{

"id": 3761482852,

"node_id": "LA_kwDODunzps7gM6xk",

"url": "https://api.github.com/repos/huggingface/datasets/labels/good%20second%20issue",

"name": "good second issue",

"color": "BDE59C",

"default": false,

"description": "Issues a bit more difficult than \"Good First\" issues"

}

] | closed | false | null | [] | null | [

"Hi ! It's simply not added yet :)\r\n\r\nIf someone wants to contribute to add the `stratify` parameter I'd be happy to give some pointers.\r\n\r\nIn the meantime, I guess you can use `sklearn` or other tools to do a stratified train/test split over the **indices** of your dataset and then do\r\n```\r\ntrain_dataset = dataset.select(train_indices)\r\ntest_dataset = dataset.select(test_indices)\r\n```",

"Hi @lhoestq I would like to add `stratify` parameter, can you give me some pointers for adding the same ?",

"Hi ! Sure :)\r\n\r\nThe `train_test_split` method is defined here: \r\n\r\nhttps://github.com/huggingface/datasets/blob/dc62232fa1b3bcfe2fbddcb721f2d141f8908943/src/datasets/arrow_dataset.py#L3253-L3253\r\n\r\nand inside `train_test_split ` we need to create the right `train_indices` and `test_indices` that are passed here to `.select()`:\r\n\r\nhttps://github.com/huggingface/datasets/blob/dc62232fa1b3bcfe2fbddcb721f2d141f8908943/src/datasets/arrow_dataset.py#L3450-L3464\r\n\r\nFor example if your dataset is like\r\n| | label |\r\n|---:|--------:|\r\n| 0 | 1 |\r\n| 1 | 1 |\r\n| 2 | 0 |\r\n| 3 | 0 |\r\n\r\nand the user passes `stratify=dataset[\"label\"]`, then you should get indices that look like this\r\n```\r\ntrain_indices = [0, 2]\r\ntest_indices = [1, 3]\r\n```\r\n\r\nthese indices will be passed to `.select` to return the stratified train and test splits :)\r\n\r\nFeel free to îng me if you have any question !",

"@lhoestq \r\nI just added the implementation for `stratify` option here #4322 "

] | "2021-12-18T10:37:47" | "2022-05-25T20:43:51" | "2022-05-25T20:43:51" | NONE | null | why the stratify option is omitted from test_train_split function?

is there any other way implement the stratify option while splitting the dataset? as it is important point to be considered while splitting the dataset. | {

"url": "https://api.github.com/repos/huggingface/datasets/issues/3452/reactions",

"total_count": 3,

"+1": 3,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} | https://api.github.com/repos/huggingface/datasets/issues/3452/timeline | null | completed | null | null | false |
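The workaround suggested in the first comment (build a stratified split over the **indices**, then call `dataset.select`) can be sketched without `sklearn`. `stratified_split_indices` is a hypothetical helper written for illustration, not part of the `datasets` API. It shuffles the indices of each label separately and sends a `test_size` fraction of every label group to the test split.

```python
import random
from collections import defaultdict
from typing import Dict, Hashable, List, Sequence, Tuple


def stratified_split_indices(
    labels: Sequence[Hashable], test_size: float = 0.25, seed: int = 0
) -> Tuple[List[int], List[int]]:
    """Split row indices so each label keeps roughly the same share in both splits."""
    if not 0.0 < test_size < 1.0:
        raise ValueError("test_size must be strictly between 0 and 1")
    rng = random.Random(seed)

    # Group row indices by their label value.
    by_label: Dict[Hashable, List[int]] = defaultdict(list)
    for idx, label in enumerate(labels):
        by_label[label].append(idx)

    train: List[int] = []
    test: List[int] = []
    for label in sorted(by_label, key=repr):  # deterministic label order
        idxs = by_label[label]
        rng.shuffle(idxs)
        n_test = round(len(idxs) * test_size)  # per-label test count
        test.extend(idxs[:n_test])
        train.extend(idxs[n_test:])
    return sorted(train), sorted(test)


if __name__ == "__main__":
    labels = [1, 1, 0, 0]  # the toy "label" column from the comments above
    train_indices, test_indices = stratified_split_indices(labels, test_size=0.5)
    print(train_indices, test_indices)
```

The two lists can then be passed to `dataset.select(train_indices)` and `dataset.select(test_indices)`, as in the first comment. Because each label group is rounded separately, the overall test fraction can drift slightly from `test_size` on small datasets.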

https://api.github.com/repos/huggingface/datasets/issues/3451 | https://api.github.com/repos/huggingface/datasets | https://api.github.com/repos/huggingface/datasets/issues/3451/labels{/name} | https://api.github.com/repos/huggingface/datasets/issues/3451/comments | https://api.github.com/repos/huggingface/datasets/issues/3451/events | https://github.com/huggingface/datasets/pull/3451 | 1,083,459,137 | PR_kwDODunzps4wA5LP | 3,451 | [Staging] Update dataset repos automatically on the Hub | {

"login": "lhoestq",

"id": 42851186,

"node_id": "MDQ6VXNlcjQyODUxMTg2",

"avatar_url": "https://avatars.githubusercontent.com/u/42851186?v=4",

"gravatar_id": "",

"url": "https://api.github.com/users/lhoestq",

"html_url": "https://github.com/lhoestq",

"followers_url": "https://api.github.com/users/lhoestq/followers",

"following_url": "https://api.github.com/users/lhoestq/following{/other_user}",

"gists_url": "https://api.github.com/users/lhoestq/gists{/gist_id}",

"starred_url": "https://api.github.com/users/lhoestq/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/lhoestq/subscriptions",

"organizations_url": "https://api.github.com/users/lhoestq/orgs",

"repos_url": "https://api.github.com/users/lhoestq/repos",

"events_url": "https://api.github.com/users/lhoestq/events{/privacy}",

"received_events_url": "https://api.github.com/users/lhoestq/received_events",

"type": "User",

"site_admin": false

} | [] | closed | false | null | [] | null | [] | "2021-12-17T17:12:11" | "2021-12-21T10:25:46" | "2021-12-20T14:09:51" | MEMBER | null | Let's have a script that updates the dataset repositories on staging for now. This way we can make sure it works fine before going to production.

Related to https://github.com/huggingface/datasets/issues/3341

The script runs on each commit on `master`. It checks the datasets that were changed, and it pushes the changes to the corresponding repositories on the Hub.

If there's a new dataset, then a new repository is created.

If the commit is a new release of `datasets`, it also pushes the tag to all the repositories. | {

"url": "https://api.github.com/repos/huggingface/datasets/issues/3451/reactions",

"total_count": 1,

"+1": 1,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} | https://api.github.com/repos/huggingface/datasets/issues/3451/timeline | null | null | false | {

"url": "https://api.github.com/repos/huggingface/datasets/pulls/3451",

"html_url": "https://github.com/huggingface/datasets/pull/3451",

"diff_url": "https://github.com/huggingface/datasets/pull/3451.diff",

"patch_url": "https://github.com/huggingface/datasets/pull/3451.patch",

"merged_at": "2021-12-20T14:09:51"

} | true |
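According to the PR description, the script checks which datasets a commit changed before pushing them to the Hub. One way to do that step is sketched below. It assumes that each dataset lives in its own `datasets/<name>/` folder. `changed_dataset_names` is a hypothetical helper, not the script added in this PR. The list of changed paths could come from something like `git diff --name-only HEAD~1 HEAD`.

```python
from pathlib import PurePosixPath
from typing import Iterable, Set


def changed_dataset_names(changed_paths: Iterable[str], root: str = "datasets") -> Set[str]:
    """Map changed file paths to the names of the dataset folders they belong to."""
    names: Set[str] = set()
    for path in changed_paths:
        parts = PurePosixPath(path).parts
        # Only files inside <root>/<name>/... count; top-level files such as
        # datasets/README.md and library code under src/ are ignored.
        if len(parts) >= 3 and parts[0] == root:
            names.add(parts[1])
    return names


if __name__ == "__main__":
    paths = [
        "datasets/squad/squad.py",
        "datasets/imdb/README.md",
        "src/datasets/load.py",
        "datasets/README.md",
    ]
    print(sorted(changed_dataset_names(paths)))  # ['imdb', 'squad']
```

Each resulting name would then map to one Hub repository. A name with no existing repository corresponds to the "new dataset" case, in which the PR creates one.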

https://api.github.com/repos/huggingface/datasets/issues/3448 | https://api.github.com/repos/huggingface/datasets | https://api.github.com/repos/huggingface/datasets/issues/3448/labels{/name} | https://api.github.com/repos/huggingface/datasets/issues/3448/comments | https://api.github.com/repos/huggingface/datasets/issues/3448/events | https://github.com/huggingface/datasets/issues/3448 | 1,083,231,080 | I_kwDODunzps5AkMto | 3,448 | JSONDecodeError with HuggingFace dataset viewer | {

"login": "kathrynchapman",

"id": 57716109,

"node_id": "MDQ6VXNlcjU3NzE2MTA5",

"avatar_url": "https://avatars.githubusercontent.com/u/57716109?v=4",

"gravatar_id": "",

"url": "https://api.github.com/users/kathrynchapman",

"html_url": "https://github.com/kathrynchapman",

"followers_url": "https://api.github.com/users/kathrynchapman/followers",

"following_url": "https://api.github.com/users/kathrynchapman/following{/other_user}",

"gists_url": "https://api.github.com/users/kathrynchapman/gists{/gist_id}",

"starred_url": "https://api.github.com/users/kathrynchapman/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/kathrynchapman/subscriptions",

"organizations_url": "https://api.github.com/users/kathrynchapman/orgs",

"repos_url": "https://api.github.com/users/kathrynchapman/repos",

"events_url": "https://api.github.com/users/kathrynchapman/events{/privacy}",

"received_events_url": "https://api.github.com/users/kathrynchapman/received_events",

"type": "User",

"site_admin": false

} | [

{

"id": 3470211881,

"node_id": "LA_kwDODunzps7O1zsp",

"url": "https://api.github.com/repos/huggingface/datasets/labels/dataset-viewer",

"name": "dataset-viewer",

"color": "E5583E",

"default": false,

"description": "Related to the dataset viewer on huggingface.co"

}

] | closed | false | null | [] | null | [

"Hi ! I think the issue comes from the dataset_infos.json file: it has the \"flat\" field twice.\r\n\r\nCan you try deleting this file and regenerating it please ?",

"Thanks! That fixed that, but now I am getting:\r\nServer Error\r\nStatus code: 400\r\nException: KeyError\r\nMessage: 'feature'\r\n\r\nI checked the dataset_infos.json and pubmed_neg.py script, I don't use 'feature' anywhere as a key. Is the dataset viewer expecting that I do?",

"It seems that the `feature` key is missing from some feature type definition in your dataset_infos.json:\r\n```json\r\n\t\t\t\"tokens\": {\r\n\t\t\t\t\"dtype\": \"list\",\r\n\t\t\t\t\"id\": null,\r\n\t\t\t\t\"_type\": \"Sequence\"\r\n\t\t\t},\r\n\t\t\t\"tags\": {\r\n\t\t\t\t\"dtype\": \"list\",\r\n\t\t\t\t\"id\": null,\r\n\t\t\t\t\"_type\": \"Sequence\"\r\n\t\t\t}\r\n```\r\nThey should be\r\n```json\r\n\t\t\t\"tokens\": {\r\n\t\t\t\t\"dtype\": \"list\",\r\n\t\t\t\t\"id\": null,\r\n\t\t\t\t\"_type\": \"Sequence\"\r\n \"feature\": {\"dtype\": \"string\", \"id\": null, \"_type\": \"Value\"}\r\n\t\t\t},\r\n\t\t\t\"tags\": {\r\n\t\t\t\t\"dtype\": \"list\",\r\n\t\t\t\t\"id\": null,\r\n\t\t\t\t\"_type\": \"Sequence\",\r\n \"feature\": {\"num_classes\": 5, \"names\": [\"-\", \"S\", \"H\", \"N\", \"C\"], \"names_file\": null, \"id\": null, \"_type\": \"ClassLabel\"}\r\n\t\t\t}\r\n```\r\n\r\nNote that you can generate the dataset_infos.json automatically to avoid mistakes:\r\n```bash\r\ndatasets-cli test ./path/to/dataset --save_infos\r\n```"

] | "2021-12-17T12:52:41" | "2022-02-24T09:10:26" | "2022-02-24T09:10:26" | NONE | null | ## Dataset viewer issue for 'pubmed_neg'

**Link:** https://huggingface.co/datasets/IGESML/pubmed_neg

I am getting the error:

Status code: 400

Exception: JSONDecodeError

Message: Expecting property name enclosed in double quotes: line 61 column 2 (char 1202)

I have checked all files - I am not using single quotes anywhere. Not sure what is causing this issue.

Am I the one who added this dataset ? Yes

| {

"url": "https://api.github.com/repos/huggingface/datasets/issues/3448/reactions",

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} | https://api.github.com/repos/huggingface/datasets/issues/3448/timeline | null | completed | null | null | false |
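Two problems were diagnosed in this thread: a duplicated key (`"flat"`) and `Sequence` entries without a `feature` key. Both can be caught locally before uploading. The sketch below uses only the standard `json` module. `reject_duplicate_keys` and `find_sequences_missing_feature` are hypothetical helpers, and the sample JSON is trimmed from the `tokens`/`tags` example in the comments. Python's `json` silently keeps the last value for a repeated key, so the `object_pairs_hook` is what surfaces duplicates. Regenerating the file with `datasets-cli test ./path/to/dataset --save_infos`, as the last comment suggests, remains the more reliable fix.

```python
import json
from typing import Any, Dict, List, Tuple


def reject_duplicate_keys(pairs: List[Tuple[str, Any]]) -> Dict[str, Any]:
    """object_pairs_hook that fails on repeated keys instead of keeping the last one."""
    result: Dict[str, Any] = {}
    for key, value in pairs:
        if key in result:
            raise ValueError(f"duplicate key in JSON object: {key!r}")
        result[key] = value
    return result


def find_sequences_missing_feature(node: Any, path: str = "") -> List[str]:
    """Return the path of every {"_type": "Sequence"} dict that lacks a "feature" key."""
    problems: List[str] = []
    if isinstance(node, dict):
        if node.get("_type") == "Sequence" and "feature" not in node:
            problems.append(path or "<root>")
        for key, value in node.items():
            child = f"{path}.{key}" if path else str(key)
            problems.extend(find_sequences_missing_feature(value, child))
    elif isinstance(node, list):
        for i, value in enumerate(node):
            problems.extend(find_sequences_missing_feature(value, f"{path}[{i}]"))
    return problems


if __name__ == "__main__":
    text = """
    {"features": {
        "tokens": {"dtype": "list", "id": null, "_type": "Sequence"},
        "tags": {"dtype": "list", "id": null, "_type": "Sequence",
                 "feature": {"dtype": "string", "id": null, "_type": "Value"}}
    }}
    """
    infos = json.loads(text, object_pairs_hook=reject_duplicate_keys)
    print(find_sequences_missing_feature(infos))  # ['features.tokens']
```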

https://api.github.com/repos/huggingface/datasets/issues/3447 | https://api.github.com/repos/huggingface/datasets | https://api.github.com/repos/huggingface/datasets/issues/3447/labels{/name} | https://api.github.com/repos/huggingface/datasets/issues/3447/comments | https://api.github.com/repos/huggingface/datasets/issues/3447/events | https://github.com/huggingface/datasets/issues/3447 | 1,082,539,790 | I_kwDODunzps5Ahj8O | 3,447 | HF_DATASETS_OFFLINE=1 didn't stop datasets.builder from downloading | {

"login": "dunalduck0",

"id": 51274745,

"node_id": "MDQ6VXNlcjUxMjc0NzQ1",

"avatar_url": "https://avatars.githubusercontent.com/u/51274745?v=4",

"gravatar_id": "",

"url": "https://api.github.com/users/dunalduck0",

"html_url": "https://github.com/dunalduck0",

"followers_url": "https://api.github.com/users/dunalduck0/followers",

"following_url": "https://api.github.com/users/dunalduck0/following{/other_user}",

"gists_url": "https://api.github.com/users/dunalduck0/gists{/gist_id}",

"starred_url": "https://api.github.com/users/dunalduck0/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/dunalduck0/subscriptions",

"organizations_url": "https://api.github.com/users/dunalduck0/orgs",

"repos_url": "https://api.github.com/users/dunalduck0/repos",

"events_url": "https://api.github.com/users/dunalduck0/events{/privacy}",

"received_events_url": "https://api.github.com/users/dunalduck0/received_events",

"type": "User",

"site_admin": false

} | [

{

"id": 1935892857,

"node_id": "MDU6TGFiZWwxOTM1ODkyODU3",

"url": "https://api.github.com/repos/huggingface/datasets/labels/bug",

"name": "bug",

"color": "d73a4a",

"default": true,

"description": "Something isn't working"

}

] | closed | false | null | [] | null | [

"Hi ! Indeed it says \"downloading and preparing\" but in your case it didn't need to download anything since you used local files (it would have thrown an error otherwise). I think we can improve the logging to make it clearer in this case",

"@lhoestq Thank you for explaining. I am sorry but I was not clear about my intention. I didn't want to kill internet traffic; I wanted to kill all write activity. In other words, you can imagine that my storage has only read access but crashes on write.\r\n\r\nWhen run_clm.py is invoked with the same parameters, the hash in the cache directory \"datacache/trainpy.v2/json/default-471372bed4b51b53/0.0.0/...\" doesn't change, and my job can load cached data properly. This is great.\r\n\r\nUnfortunately, when params change (which happens sometimes), the hash changes and the old cache is invalid. datasets builder would create a new cache directory with the new hash and create JSON builder there, even though every JSON builder is the same. I didn't find a way to avoid such behavior.\r\n\r\nThis problem can be resolved when using datasets.map() for tokenizing and grouping text. This function allows me to specify output filenames with --cache_file_names, so that the cached files are always valid.\r\n\r\nThis is the code that I used to freeze cache filenames for tokenization. I wish I could do the same to datasets.load_dataset()\r\n```\r\n tokenized_datasets = raw_datasets.map(\r\n tokenize_function,\r\n batched=True,\r\n num_proc=data_args.preprocessing_num_workers,\r\n remove_columns=column_names,\r\n load_from_cache_file=not data_args.overwrite_cache,\r\n desc=\"Running tokenizer on dataset\",\r\n cache_file_names={k: os.path.join(model_args.cache_dir, f'{k}-tokenized') for k in raw_datasets},\r\n )\r\n```",

"Hi ! `load_dataset` may re-generate your dataset if some parameters changed indeed. If you want to freeze a dataset loaded with `load_dataset`, I think the best solution is just to save it somewhere on your disk with `.save_to_disk(my_dataset_dir)` and reload it with `load_from_disk(my_dataset_dir)`. This way you will be able to reload the dataset without having to run `load_dataset`"

] | "2021-12-16T18:51:13" | "2022-02-17T14:16:27" | "2022-02-17T14:16:27" | NONE | null | ## Describe the bug

According to https://huggingface.co/docs/datasets/loading_datasets.html#loading-a-dataset-builder, setting HF_DATASETS_OFFLINE to 1 should make datasets "run in full offline mode". It didn't work for me: at the very beginning, datasets still tried to download the "custom data configuration" for JSON, even though I had already run the program once and cached all data into the same --cache_dir.

"Downloading" is not an issue when running with local disk, but crashes often with cloud storage because (1) multiply GPU processes try to access the same file, AND (2) FileLocker fails to synchronize all processes, due to storage throttling. 99% of times, when the main process releases FileLocker, the file is not actually ready for access in cloud storage and thus triggers "FileNotFound" errors for all other processes. Well, another way to resolve the problem is to investigate super reliable cloud storage, but that's out of scope here.

## Steps to reproduce the bug

```

export HF_DATASETS_OFFLINE=1

python run_clm.py --model_name_or_path=models/gpt-j-6B --train_file=trainpy.v2.train.json --validation_file=trainpy.v2.eval.json --cache_dir=datacache/trainpy.v2

```

## Expected results

datasets should stop all "downloading" behavior and reuse the cached JSON configuration. I think the problem is that part of the cache directory path, "default-471372bed4b51b53", is generated automatically and can change if some parameters change, and I didn't find a way to use a fixed path that makes datasets reuse the cached data every time.

## Actual results

The logging shows datasets are still downloading into "datacache/trainpy.v2/json/default-471372bed4b51b53/0.0.0/c2d554c3377ea79c7664b93dc65d0803b45e3279000f993c7bfd18937fd7f426".

```

12/16/2021 10:25:59 - WARNING - datasets.builder - Using custom data configuration default-471372bed4b51b53

12/16/2021 10:25:59 - INFO - datasets.builder - Generating dataset json (datacache/trainpy.v2/json/default-471372bed4b51b53/0.0.0/c2d554c3377ea79c7664b93dc65d0803b45e3279000f993c7bfd18937fd7f426)

Downloading and preparing dataset json/default to datacache/trainpy.v2/json/default-471372bed4b51b53/0.0.0/c2d554c3377ea79c7664b93dc65d0803b45e3279000f993c7bfd18937fd7f426...

100%|██████████████████████████████████████████████████████████████████████████████████| 2/2 [00:00<00:00, 17623.13it/s]

12/16/2021 10:25:59 - INFO - datasets.utils.download_manager - Downloading took 0.0 min

12/16/2021 10:26:00 - INFO - datasets.utils.download_manager - Checksum Computation took 0.0 min

100%|███████████████████████████████████████████████████████████████████████████████████| 2/2 [00:00<00:00, 1206.99it/s]

12/16/2021 10:26:00 - INFO - datasets.utils.info_utils - Unable to verify checksums.

12/16/2021 10:26:00 - INFO - datasets.builder - Generating split train

12/16/2021 10:26:01 - INFO - datasets.builder - Generating split validation

12/16/2021 10:26:02 - INFO - datasets.utils.info_utils - Unable to verify splits sizes.

Dataset json downloaded and prepared to datacache/trainpy.v2/json/default-471372bed4b51b53/0.0.0/c2d554c3377ea79c7664b93dc65d0803b45e3279000f993c7bfd18937fd7f426. Subsequent calls will reuse this data.

100%|█████████████████████████████████████████████████████████████████████████████████████| 2/2 [00:00<00:00, 53.54it/s]

```

## Environment info

<!-- You can run the command `datasets-cli env` and copy-and-paste its output below. -->

- `datasets` version: 1.16.1

- Platform: Linux

- Python version: 3.8.10

- PyArrow version: 6.0.1

| {

"url": "https://api.github.com/repos/huggingface/datasets/issues/3447/reactions",

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} | https://api.github.com/repos/huggingface/datasets/issues/3447/timeline | null | completed | null | null | false |

https://api.github.com/repos/huggingface/datasets/issues/3445 | https://api.github.com/repos/huggingface/datasets | https://api.github.com/repos/huggingface/datasets/issues/3445/labels{/name} | https://api.github.com/repos/huggingface/datasets/issues/3445/comments | https://api.github.com/repos/huggingface/datasets/issues/3445/events | https://github.com/huggingface/datasets/issues/3445 | 1,082,370,968 | I_kwDODunzps5Ag6uY | 3,445 | question | {

"login": "BAKAYOKO0232",

"id": 38075175,

"node_id": "MDQ6VXNlcjM4MDc1MTc1",

"avatar_url": "https://avatars.githubusercontent.com/u/38075175?v=4",

"gravatar_id": "",

"url": "https://api.github.com/users/BAKAYOKO0232",

"html_url": "https://github.com/BAKAYOKO0232",

"followers_url": "https://api.github.com/users/BAKAYOKO0232/followers",

"following_url": "https://api.github.com/users/BAKAYOKO0232/following{/other_user}",

"gists_url": "https://api.github.com/users/BAKAYOKO0232/gists{/gist_id}",

"starred_url": "https://api.github.com/users/BAKAYOKO0232/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/BAKAYOKO0232/subscriptions",

"organizations_url": "https://api.github.com/users/BAKAYOKO0232/orgs",

"repos_url": "https://api.github.com/users/BAKAYOKO0232/repos",

"events_url": "https://api.github.com/users/BAKAYOKO0232/events{/privacy}",

"received_events_url": "https://api.github.com/users/BAKAYOKO0232/received_events",

"type": "User",

"site_admin": false

} | [

{

"id": 3470211881,

"node_id": "LA_kwDODunzps7O1zsp",

"url": "https://api.github.com/repos/huggingface/datasets/labels/dataset-viewer",

"name": "dataset-viewer",

"color": "E5583E",

"default": false,

"description": "Related to the dataset viewer on huggingface.co"

}

] | closed | false | null | [] | null | [

"Hi ! What's your question ?"

] | "2021-12-16T15:57:00" | "2022-01-03T10:09:00" | "2022-01-03T10:09:00" | NONE | null | ## Dataset viewer issue for '*name of the dataset*'

**Link:** *link to the dataset viewer page*

*short description of the issue*

Am I the one who added this dataset ? Yes-No

| {

"url": "https://api.github.com/repos/huggingface/datasets/issues/3445/reactions",

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} | https://api.github.com/repos/huggingface/datasets/issues/3445/timeline | null | completed | null | null | false |

https://api.github.com/repos/huggingface/datasets/issues/3443 | https://api.github.com/repos/huggingface/datasets | https://api.github.com/repos/huggingface/datasets/issues/3443/labels{/name} | https://api.github.com/repos/huggingface/datasets/issues/3443/comments | https://api.github.com/repos/huggingface/datasets/issues/3443/events | https://github.com/huggingface/datasets/pull/3443 | 1,082,052,833 | PR_kwDODunzps4v8QDX | 3,443 | Extend iter_archive to support file object input | {

"login": "albertvillanova",

"id": 8515462,

"node_id": "MDQ6VXNlcjg1MTU0NjI=",

"avatar_url": "https://avatars.githubusercontent.com/u/8515462?v=4",

"gravatar_id": "",

"url": "https://api.github.com/users/albertvillanova",

"html_url": "https://github.com/albertvillanova",

"followers_url": "https://api.github.com/users/albertvillanova/followers",

"following_url": "https://api.github.com/users/albertvillanova/following{/other_user}",

"gists_url": "https://api.github.com/users/albertvillanova/gists{/gist_id}",

"starred_url": "https://api.github.com/users/albertvillanova/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/albertvillanova/subscriptions",

"organizations_url": "https://api.github.com/users/albertvillanova/orgs",

"repos_url": "https://api.github.com/users/albertvillanova/repos",

"events_url": "https://api.github.com/users/albertvillanova/events{/privacy}",

"received_events_url": "https://api.github.com/users/albertvillanova/received_events",

"type": "User",

"site_admin": false

} | [] | closed | false | null | [] | null | [] | "2021-12-16T10:59:14" | "2021-12-17T17:53:03" | "2021-12-17T17:53:02" | MEMBER | null | This PR adds support to passing a file object to `[Streaming]DownloadManager.iter_archive`.

With this feature, we can iterate over a tar file inside another tar file. | {

"url": "https://api.github.com/repos/huggingface/datasets/issues/3443/reactions",

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} | https://api.github.com/repos/huggingface/datasets/issues/3443/timeline | null | null | false | {

"url": "https://api.github.com/repos/huggingface/datasets/pulls/3443",

"html_url": "https://github.com/huggingface/datasets/pull/3443",

"diff_url": "https://github.com/huggingface/datasets/pull/3443.diff",

"patch_url": "https://github.com/huggingface/datasets/pull/3443.patch",

"merged_at": "2021-12-17T17:53:02"

} | true |
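Accepting a file object as input is what makes nested archives possible, because a member extracted from the outer tar is itself a file object that can be handed to a second iteration. The sketch below shows that idea with the standard library only. `iter_tar`, `build_tar` and `read_nested` are hypothetical names, not the `[Streaming]DownloadManager.iter_archive` implementation. Each archive is opened in stream mode (`"r|*"`), which reads members strictly front to back, so each yielded member has to be read before the iteration moves on.

```python
import io
import tarfile
from typing import BinaryIO, Dict, Iterator, Tuple


def iter_tar(fileobj: BinaryIO) -> Iterator[Tuple[str, BinaryIO]]:
    """Yield (member name, member file object) for each regular file in a tar stream."""
    # Stream mode reads sequentially, so the input does not need to support
    # random access; read each member before asking for the next one.
    with tarfile.open(fileobj=fileobj, mode="r|*") as tar:
        for member in tar:
            if member.isfile():
                yield member.name, tar.extractfile(member)


def build_tar(files: Dict[str, bytes]) -> bytes:
    """Build an uncompressed tar archive in memory from a name -> content mapping."""
    buf = io.BytesIO()
    with tarfile.open(fileobj=buf, mode="w") as tar:
        for name, data in files.items():
            info = tarfile.TarInfo(name)
            info.size = len(data)
            tar.addfile(info, io.BytesIO(data))
    return buf.getvalue()


def read_nested(archive_bytes: bytes) -> Dict[str, bytes]:
    """Read every file, descending one level into members whose name ends in .tar."""
    contents: Dict[str, bytes] = {}
    for name, f in iter_tar(io.BytesIO(archive_bytes)):
        if name.endswith(".tar"):
            # The extracted member is itself a file object, so it can be iterated directly.
            for inner_name, inner_f in iter_tar(f):
                contents[f"{name}/{inner_name}"] = inner_f.read()
        else:
            contents[name] = f.read()
    return contents


if __name__ == "__main__":
    inner = build_tar({"x.txt": b"inner data"})
    outer = build_tar({"readme.txt": b"top level", "inner.tar": inner})
    print(read_nested(outer))
```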

https://api.github.com/repos/huggingface/datasets/issues/3442 | https://api.github.com/repos/huggingface/datasets | https://api.github.com/repos/huggingface/datasets/issues/3442/labels{/name} | https://api.github.com/repos/huggingface/datasets/issues/3442/comments | https://api.github.com/repos/huggingface/datasets/issues/3442/events | https://github.com/huggingface/datasets/pull/3442 | 1,081,862,747 | PR_kwDODunzps4v7oBZ | 3,442 | Extend text to support yielding lines, paragraphs or documents | {

"login": "albertvillanova",

"id": 8515462,

"node_id": "MDQ6VXNlcjg1MTU0NjI=",

"avatar_url": "https://avatars.githubusercontent.com/u/8515462?v=4",

"gravatar_id": "",

"url": "https://api.github.com/users/albertvillanova",

"html_url": "https://github.com/albertvillanova",

"followers_url": "https://api.github.com/users/albertvillanova/followers",

"following_url": "https://api.github.com/users/albertvillanova/following{/other_user}",

"gists_url": "https://api.github.com/users/albertvillanova/gists{/gist_id}",

"starred_url": "https://api.github.com/users/albertvillanova/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/albertvillanova/subscriptions",

"organizations_url": "https://api.github.com/users/albertvillanova/orgs",

"repos_url": "https://api.github.com/users/albertvillanova/repos",

"events_url": "https://api.github.com/users/albertvillanova/events{/privacy}",

"received_events_url": "https://api.github.com/users/albertvillanova/received_events",

"type": "User",

"site_admin": false

} | [] | closed | false | null | [] | null | [] | "2021-12-16T07:33:17" | "2021-12-20T16:59:10" | "2021-12-20T16:39:18" | MEMBER | null | Add `config.row` option to `text` module to allow yielding lines (default, current case), paragraphs or documents.

Feel free to comment on the name of the config parameter `row`:

- Currently, the docs state datasets are made of rows and columns

- Other names I considered: `example`, `item` | {

"url": "https://api.github.com/repos/huggingface/datasets/issues/3442/reactions",

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} | https://api.github.com/repos/huggingface/datasets/issues/3442/timeline | null | null | false | {

"url": "https://api.github.com/repos/huggingface/datasets/pulls/3442",

"html_url": "https://github.com/huggingface/datasets/pull/3442",

"diff_url": "https://github.com/huggingface/datasets/pull/3442.diff",

"patch_url": "https://github.com/huggingface/datasets/pull/3442.patch",

"merged_at": "2021-12-20T16:39:18"

} | true |

https://api.github.com/repos/huggingface/datasets/issues/3440 | https://api.github.com/repos/huggingface/datasets | https://api.github.com/repos/huggingface/datasets/issues/3440/labels{/name} | https://api.github.com/repos/huggingface/datasets/issues/3440/comments | https://api.github.com/repos/huggingface/datasets/issues/3440/events | https://github.com/huggingface/datasets/issues/3440 | 1,081,528,426 | I_kwDODunzps5AdtBq | 3,440 | datasets keeps reading from cached files, although I disabled it | {

"login": "dorost1234",

"id": 79165106,

"node_id": "MDQ6VXNlcjc5MTY1MTA2",

"avatar_url": "https://avatars.githubusercontent.com/u/79165106?v=4",

"gravatar_id": "",

"url": "https://api.github.com/users/dorost1234",

"html_url": "https://github.com/dorost1234",

"followers_url": "https://api.github.com/users/dorost1234/followers",

"following_url": "https://api.github.com/users/dorost1234/following{/other_user}",

"gists_url": "https://api.github.com/users/dorost1234/gists{/gist_id}",

"starred_url": "https://api.github.com/users/dorost1234/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/dorost1234/subscriptions",

"organizations_url": "https://api.github.com/users/dorost1234/orgs",

"repos_url": "https://api.github.com/users/dorost1234/repos",

"events_url": "https://api.github.com/users/dorost1234/events{/privacy}",

"received_events_url": "https://api.github.com/users/dorost1234/received_events",

"type": "User",

"site_admin": false

} | [

{

"id": 1935892857,

"node_id": "MDU6TGFiZWwxOTM1ODkyODU3",

"url": "https://api.github.com/repos/huggingface/datasets/labels/bug",

"name": "bug",

"color": "d73a4a",

"default": true,

"description": "Something isn't working"

}

] | closed | false | null | [] | null | [

"Hi! What version of `datasets` are you using? Can you also provide the logs you get before it raises the error?"

] | "2021-12-15T21:26:22" | "2022-02-24T09:12:22" | "2022-02-24T09:12:22" | NONE | null | ## Describe the bug

Hi,

I am trying to prevent the `datasets` library from using cached files. I get the following error when it tries to read the cached files. I tried the following:

```

from datasets import set_caching_enabled

set_caching_enabled(False)

```

I also tried forcing a redownload:

```

download_mode='force_redownload'

```

But neither has worked so far. This is on a cluster, and on some of the machines it still reads from the cached files. I would really appreciate any ideas on how to fully disable caching @lhoestq

Many thanks

```

File "run_clm.py", line 496, in <module>

main()

File "run_clm.py", line 419, in main

train_result = trainer.train(resume_from_checkpoint=checkpoint)

File "/users/dara/codes/fewshot/debug/fewshot/third_party/trainers/trainer.py", line 943, in train

self._maybe_log_save_evaluate(tr_loss, model, trial, epoch, ignore_keys_for_eval)

File "/users/dara/conda/envs/multisuccess/lib/python3.8/site-packages/transformers/trainer.py", line 1445, in _maybe_log_save_evaluate

metrics = self.evaluate(ignore_keys=ignore_keys_for_eval)

File "/users/dara/codes/fewshot/debug/fewshot/third_party/trainers/trainer.py", line 172, in evaluate

output = self.eval_loop(

File "/users/dara/codes/fewshot/debug/fewshot/third_party/trainers/trainer.py", line 241, in eval_loop

metrics = self.compute_pet_metrics(eval_datasets, model, self.extra_info[metric_key_prefix], task=task)

File "/users/dara/codes/fewshot/debug/fewshot/third_party/trainers/trainer.py", line 268, in compute_pet_metrics

centroids = self._compute_per_token_train_centroids(model, task=task)

File "/users/dara/codes/fewshot/debug/fewshot/third_party/trainers/trainer.py", line 353, in _compute_per_token_train_centroids

data = get_label_samples(self.get_per_task_train_dataset(task), label)

File "/users/dara/codes/fewshot/debug/fewshot/third_party/trainers/trainer.py", line 350, in get_label_samples

return dataset.filter(lambda example: int(example['labels']) == label)

File "/users/dara/conda/envs/multisuccess/lib/python3.8/site-packages/datasets/arrow_dataset.py", line 470, in wrapper

out: Union["Dataset", "DatasetDict"] = func(self, *args, **kwargs)

File "/users/dara/conda/envs/multisuccess/lib/python3.8/site-packages/datasets/fingerprint.py", line 406, in wrapper

out = func(self, *args, **kwargs)

File "/users/dara/conda/envs/multisuccess/lib/python3.8/site-packages/datasets/arrow_dataset.py", line 2519, in filter

indices = self.map(

File "/users/dara/conda/envs/multisuccess/lib/python3.8/site-packages/datasets/arrow_dataset.py", line 2036, in map

return self._map_single(

File "/users/dara/conda/envs/multisuccess/lib/python3.8/site-packages/datasets/arrow_dataset.py", line 503, in wrapper

out: Union["Dataset", "DatasetDict"] = func(self, *args, **kwargs)

File "/users/dara/conda/envs/multisuccess/lib/python3.8/site-packages/datasets/arrow_dataset.py", line 470, in wrapper

out: Union["Dataset", "DatasetDict"] = func(self, *args, **kwargs)

File "/users/dara/conda/envs/multisuccess/lib/python3.8/site-packages/datasets/fingerprint.py", line 406, in wrapper

out = func(self, *args, **kwargs)

File "/users/dara/conda/envs/multisuccess/lib/python3.8/site-packages/datasets/arrow_dataset.py", line 2248, in _map_single

return Dataset.from_file(cache_file_name, info=info, split=self.split)

File "/users/dara/conda/envs/multisuccess/lib/python3.8/site-packages/datasets/arrow_dataset.py", line 654, in from_file

return cls(

File "/users/dara/conda/envs/multisuccess/lib/python3.8/site-packages/datasets/arrow_dataset.py", line 593, in __init__

self.info.features = self.info.features.reorder_fields_as(inferred_features)

File "/users/dara/conda/envs/multisuccess/lib/python3.8/site-packages/datasets/features/features.py", line 1092, in reorder_fields_as

return Features(recursive_reorder(self, other))

File "/users/dara/conda/envs/multisuccess/lib/python3.8/site-packages/datasets/features/features.py", line 1081, in recursive_reorder

raise ValueError(f"Keys mismatch: between {source} and {target}" + stack_position)

ValueError: Keys mismatch: between {'indices': Value(dtype='uint64', id=None)} and {'candidates_ids': Sequence(feature=Value(dtype='null', id=None), length=-1, id=None), 'labels': Value(dtype='int64', id=None), 'attention_mask': Sequence(feature=Value(dtype='int8', id=None), length=-1, id=None), 'input_ids': Sequence(feature=Value(dtype='int32', id=None), length=-1, id=None), 'extra_fields': {}, 'task': Value(dtype='string', id=None)}

```

## Environment info

<!-- You can run the command `datasets-cli env` and copy-and-paste its output below. -->

- `datasets` version:

- Platform: linux

- Python version: 3.8.12

- PyArrow version: 6.0.1

| {

"url": "https://api.github.com/repos/huggingface/datasets/issues/3440/reactions",

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} | https://api.github.com/repos/huggingface/datasets/issues/3440/timeline | null | completed | null | null | false |

https://api.github.com/repos/huggingface/datasets/issues/3439 | https://api.github.com/repos/huggingface/datasets | https://api.github.com/repos/huggingface/datasets/issues/3439/labels{/name} | https://api.github.com/repos/huggingface/datasets/issues/3439/comments | https://api.github.com/repos/huggingface/datasets/issues/3439/events | https://github.com/huggingface/datasets/pull/3439 | 1,081,389,723 | PR_kwDODunzps4v6Hxs | 3,439 | Add `cast_column` to `IterableDataset` | {

"login": "mariosasko",

"id": 47462742,

"node_id": "MDQ6VXNlcjQ3NDYyNzQy",

"avatar_url": "https://avatars.githubusercontent.com/u/47462742?v=4",

"gravatar_id": "",

"url": "https://api.github.com/users/mariosasko",

"html_url": "https://github.com/mariosasko",

"followers_url": "https://api.github.com/users/mariosasko/followers",

"following_url": "https://api.github.com/users/mariosasko/following{/other_user}",

"gists_url": "https://api.github.com/users/mariosasko/gists{/gist_id}",

"starred_url": "https://api.github.com/users/mariosasko/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/mariosasko/subscriptions",

"organizations_url": "https://api.github.com/users/mariosasko/orgs",

"repos_url": "https://api.github.com/users/mariosasko/repos",

"events_url": "https://api.github.com/users/mariosasko/events{/privacy}",

"received_events_url": "https://api.github.com/users/mariosasko/received_events",

"type": "User",

"site_admin": false

} | [] | closed | false | null | [] | null | [] | "2021-12-15T19:00:45" | "2021-12-16T15:55:20" | "2021-12-16T15:55:19" | CONTRIBUTOR | null | Closes #3369.

cc: @patrickvonplaten | {

"url": "https://api.github.com/repos/huggingface/datasets/issues/3439/reactions",

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} | https://api.github.com/repos/huggingface/datasets/issues/3439/timeline | null | null | false | {

"url": "https://api.github.com/repos/huggingface/datasets/pulls/3439",

"html_url": "https://github.com/huggingface/datasets/pull/3439",

"diff_url": "https://github.com/huggingface/datasets/pull/3439.diff",

"patch_url": "https://github.com/huggingface/datasets/pull/3439.patch",

"merged_at": "2021-12-16T15:55:19"

} | true |

https://api.github.com/repos/huggingface/datasets/issues/3438 | https://api.github.com/repos/huggingface/datasets | https://api.github.com/repos/huggingface/datasets/issues/3438/labels{/name} | https://api.github.com/repos/huggingface/datasets/issues/3438/comments | https://api.github.com/repos/huggingface/datasets/issues/3438/events | https://github.com/huggingface/datasets/pull/3438 | 1,081,302,203 | PR_kwDODunzps4v52Va | 3,438 | Update supported versions of Python in setup.py | {

"login": "mariosasko",

"id": 47462742,

"node_id": "MDQ6VXNlcjQ3NDYyNzQy",

"avatar_url": "https://avatars.githubusercontent.com/u/47462742?v=4",

"gravatar_id": "",

"url": "https://api.github.com/users/mariosasko",

"html_url": "https://github.com/mariosasko",

"followers_url": "https://api.github.com/users/mariosasko/followers",

"following_url": "https://api.github.com/users/mariosasko/following{/other_user}",

"gists_url": "https://api.github.com/users/mariosasko/gists{/gist_id}",

"starred_url": "https://api.github.com/users/mariosasko/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/mariosasko/subscriptions",

"organizations_url": "https://api.github.com/users/mariosasko/orgs",

"repos_url": "https://api.github.com/users/mariosasko/repos",

"events_url": "https://api.github.com/users/mariosasko/events{/privacy}",

"received_events_url": "https://api.github.com/users/mariosasko/received_events",

"type": "User",

"site_admin": false

} | [] | closed | false | null | [] | null | [] | "2021-12-15T17:30:12" | "2021-12-20T14:22:13" | "2021-12-20T14:22:12" | CONTRIBUTOR | null | Update the list of supported versions of Python in `setup.py` to keep the PyPI project description updated. | {

"url": "https://api.github.com/repos/huggingface/datasets/issues/3438/reactions",

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} | https://api.github.com/repos/huggingface/datasets/issues/3438/timeline | null | null | false | {

"url": "https://api.github.com/repos/huggingface/datasets/pulls/3438",

"html_url": "https://github.com/huggingface/datasets/pull/3438",

"diff_url": "https://github.com/huggingface/datasets/pull/3438.diff",

"patch_url": "https://github.com/huggingface/datasets/pull/3438.patch",

"merged_at": "2021-12-20T14:22:12"

} | true |

https://api.github.com/repos/huggingface/datasets/issues/3437 | https://api.github.com/repos/huggingface/datasets | https://api.github.com/repos/huggingface/datasets/issues/3437/labels{/name} | https://api.github.com/repos/huggingface/datasets/issues/3437/comments | https://api.github.com/repos/huggingface/datasets/issues/3437/events | https://github.com/huggingface/datasets/pull/3437 | 1,081,247,889 | PR_kwDODunzps4v5qzI | 3,437 | Update BLEURT hyperlink | {

"login": "lewtun",

"id": 26859204,

"node_id": "MDQ6VXNlcjI2ODU5MjA0",

"avatar_url": "https://avatars.githubusercontent.com/u/26859204?v=4",

"gravatar_id": "",

"url": "https://api.github.com/users/lewtun",

"html_url": "https://github.com/lewtun",

"followers_url": "https://api.github.com/users/lewtun/followers",

"following_url": "https://api.github.com/users/lewtun/following{/other_user}",

"gists_url": "https://api.github.com/users/lewtun/gists{/gist_id}",

"starred_url": "https://api.github.com/users/lewtun/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/lewtun/subscriptions",

"organizations_url": "https://api.github.com/users/lewtun/orgs",

"repos_url": "https://api.github.com/users/lewtun/repos",

"events_url": "https://api.github.com/users/lewtun/events{/privacy}",

"received_events_url": "https://api.github.com/users/lewtun/received_events",

"type": "User",

"site_admin": false

} | [] | closed | false | null | [] | null | [] | "2021-12-15T16:34:47" | "2021-12-17T13:28:26" | "2021-12-17T13:28:25" | MEMBER | null | The description of BLEURT on the hf.co website has a strange use of URL hyperlinking. This PR attempts to fix this, although I am not 100% sure whether Markdown syntax is allowed on the frontend.

| {

"url": "https://api.github.com/repos/huggingface/datasets/issues/3437/reactions",

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} | https://api.github.com/repos/huggingface/datasets/issues/3437/timeline | null | null | false | {

"url": "https://api.github.com/repos/huggingface/datasets/pulls/3437",

"html_url": "https://github.com/huggingface/datasets/pull/3437",

"diff_url": "https://github.com/huggingface/datasets/pull/3437.diff",

"patch_url": "https://github.com/huggingface/datasets/pull/3437.patch",

"merged_at": "2021-12-17T13:28:25"

} | true |

https://api.github.com/repos/huggingface/datasets/issues/3436 | https://api.github.com/repos/huggingface/datasets | https://api.github.com/repos/huggingface/datasets/issues/3436/labels{/name} | https://api.github.com/repos/huggingface/datasets/issues/3436/comments | https://api.github.com/repos/huggingface/datasets/issues/3436/events | https://github.com/huggingface/datasets/pull/3436 | 1,081,068,139 | PR_kwDODunzps4v5FE3 | 3,436 | Add the OneStopQa dataset | {

"login": "scaperex",

"id": 28459495,

"node_id": "MDQ6VXNlcjI4NDU5NDk1",

"avatar_url": "https://avatars.githubusercontent.com/u/28459495?v=4",

"gravatar_id": "",

"url": "https://api.github.com/users/scaperex",

"html_url": "https://github.com/scaperex",

"followers_url": "https://api.github.com/users/scaperex/followers",

"following_url": "https://api.github.com/users/scaperex/following{/other_user}",

"gists_url": "https://api.github.com/users/scaperex/gists{/gist_id}",

"starred_url": "https://api.github.com/users/scaperex/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/scaperex/subscriptions",

"organizations_url": "https://api.github.com/users/scaperex/orgs",

"repos_url": "https://api.github.com/users/scaperex/repos",

"events_url": "https://api.github.com/users/scaperex/events{/privacy}",

"received_events_url": "https://api.github.com/users/scaperex/received_events",

"type": "User",

"site_admin": false

} | [] | closed | false | null | [] | null | [] | "2021-12-15T13:53:31" | "2021-12-17T14:32:00" | "2021-12-17T13:25:29" | CONTRIBUTOR | null | Adding OneStopQA, a multiple choice reading comprehension dataset annotated according to the STARC (Structured Annotations for Reading Comprehension) scheme. | {

"url": "https://api.github.com/repos/huggingface/datasets/issues/3436/reactions",

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} | https://api.github.com/repos/huggingface/datasets/issues/3436/timeline | null | null | false | {

"url": "https://api.github.com/repos/huggingface/datasets/pulls/3436",

"html_url": "https://github.com/huggingface/datasets/pull/3436",

"diff_url": "https://github.com/huggingface/datasets/pull/3436.diff",

"patch_url": "https://github.com/huggingface/datasets/pull/3436.patch",

"merged_at": "2021-12-17T13:25:29"

} | true |

https://api.github.com/repos/huggingface/datasets/issues/3435 | https://api.github.com/repos/huggingface/datasets | https://api.github.com/repos/huggingface/datasets/issues/3435/labels{/name} | https://api.github.com/repos/huggingface/datasets/issues/3435/comments | https://api.github.com/repos/huggingface/datasets/issues/3435/events | https://github.com/huggingface/datasets/pull/3435 | 1,081,043,756 | PR_kwDODunzps4v4_-0 | 3,435 | Improve Wikipedia Loading Script | {

"login": "geohci",

"id": 45494522,

"node_id": "MDQ6VXNlcjQ1NDk0NTIy",

"avatar_url": "https://avatars.githubusercontent.com/u/45494522?v=4",

"gravatar_id": "",

"url": "https://api.github.com/users/geohci",

"html_url": "https://github.com/geohci",

"followers_url": "https://api.github.com/users/geohci/followers",

"following_url": "https://api.github.com/users/geohci/following{/other_user}",

"gists_url": "https://api.github.com/users/geohci/gists{/gist_id}",

"starred_url": "https://api.github.com/users/geohci/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/geohci/subscriptions",

"organizations_url": "https://api.github.com/users/geohci/orgs",

"repos_url": "https://api.github.com/users/geohci/repos",

"events_url": "https://api.github.com/users/geohci/events{/privacy}",

"received_events_url": "https://api.github.com/users/geohci/received_events",

"type": "User",

"site_admin": false

} | [] | closed | false | null | [] | null | [] | "2021-12-15T13:30:06" | "2022-03-04T08:16:00" | "2022-03-04T08:16:00" | CONTRIBUTOR | null | * More structured approach to detecting redirects

* Remove redundant template filter code (covered by strip_code)

* Add language-specific lists of additional media namespace aliases for filtering

* Add language-specific lists of category namespace aliases for new link text cleaning step

* Remove magic words (parser directions like __TOC__ that occasionally occur in text)

Fix #3400

With support from @albertvillanova

CC @yjernite | {

"url": "https://api.github.com/repos/huggingface/datasets/issues/3435/reactions",

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} | https://api.github.com/repos/huggingface/datasets/issues/3435/timeline | null | null | false | {

"url": "https://api.github.com/repos/huggingface/datasets/pulls/3435",

"html_url": "https://github.com/huggingface/datasets/pull/3435",

"diff_url": "https://github.com/huggingface/datasets/pull/3435.diff",

"patch_url": "https://github.com/huggingface/datasets/pull/3435.patch",

"merged_at": "2022-03-04T08:16:00"

} | true |

https://api.github.com/repos/huggingface/datasets/issues/3434 | https://api.github.com/repos/huggingface/datasets | https://api.github.com/repos/huggingface/datasets/issues/3434/labels{/name} | https://api.github.com/repos/huggingface/datasets/issues/3434/comments | https://api.github.com/repos/huggingface/datasets/issues/3434/events | https://github.com/huggingface/datasets/issues/3434 | 1,080,917,446 | I_kwDODunzps5AbX3G | 3,434 | Add The People's Speech | {

"login": "mariosasko",

"id": 47462742,

"node_id": "MDQ6VXNlcjQ3NDYyNzQy",

"avatar_url": "https://avatars.githubusercontent.com/u/47462742?v=4",

"gravatar_id": "",

"url": "https://api.github.com/users/mariosasko",

"html_url": "https://github.com/mariosasko",

"followers_url": "https://api.github.com/users/mariosasko/followers",

"following_url": "https://api.github.com/users/mariosasko/following{/other_user}",

"gists_url": "https://api.github.com/users/mariosasko/gists{/gist_id}",

"starred_url": "https://api.github.com/users/mariosasko/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/mariosasko/subscriptions",

"organizations_url": "https://api.github.com/users/mariosasko/orgs",

"repos_url": "https://api.github.com/users/mariosasko/repos",

"events_url": "https://api.github.com/users/mariosasko/events{/privacy}",

"received_events_url": "https://api.github.com/users/mariosasko/received_events",

"type": "User",

"site_admin": false

} | [

{

"id": 2067376369,

"node_id": "MDU6TGFiZWwyMDY3Mzc2MzY5",

"url": "https://api.github.com/repos/huggingface/datasets/labels/dataset%20request",

"name": "dataset request",

"color": "e99695",

"default": false,

"description": "Requesting to add a new dataset"

},

{

"id": 2725241052,

"node_id": "MDU6TGFiZWwyNzI1MjQxMDUy",

"url": "https://api.github.com/repos/huggingface/datasets/labels/speech",

"name": "speech",

"color": "d93f0b",

"default": false,

"description": ""

}

] | closed | false | null | [] | null | [

"This dataset is now available on the Hub here: https://huggingface.co/datasets/MLCommons/peoples_speech"

] | "2021-12-15T11:21:21" | "2023-02-28T16:22:29" | "2023-02-28T16:22:28" | CONTRIBUTOR | null | ## Adding a Dataset

- **Name:** The People's Speech

- **Description:** a massive English-language dataset of audio transcriptions of full sentences.

- **Paper:** https://openreview.net/pdf?id=R8CwidgJ0yT

- **Data:** https://mlcommons.org/en/peoples-speech/

- **Motivation:** With over 30,000 hours of speech, this dataset is the largest and most diverse freely available English speech recognition corpus today.

[This article](https://thegradient.pub/new-datasets-to-democratize-speech-recognition-technology-2/) may be useful when working on the dataset.

cc: @anton-l

Instructions to add a new dataset can be found [here](https://github.com/huggingface/datasets/blob/master/ADD_NEW_DATASET.md).

| {

"url": "https://api.github.com/repos/huggingface/datasets/issues/3434/reactions",

"total_count": 3,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 3,

"rocket": 0,

"eyes": 0

} | https://api.github.com/repos/huggingface/datasets/issues/3434/timeline | null | completed | null | null | false |

https://api.github.com/repos/huggingface/datasets/issues/3433 | https://api.github.com/repos/huggingface/datasets | https://api.github.com/repos/huggingface/datasets/issues/3433/labels{/name} | https://api.github.com/repos/huggingface/datasets/issues/3433/comments | https://api.github.com/repos/huggingface/datasets/issues/3433/events | https://github.com/huggingface/datasets/issues/3433 | 1,080,910,724 | I_kwDODunzps5AbWOE | 3,433 | Add Multilingual Spoken Words dataset | {

"login": "albertvillanova",

"id": 8515462,

"node_id": "MDQ6VXNlcjg1MTU0NjI=",

"avatar_url": "https://avatars.githubusercontent.com/u/8515462?v=4",

"gravatar_id": "",

"url": "https://api.github.com/users/albertvillanova",

"html_url": "https://github.com/albertvillanova",

"followers_url": "https://api.github.com/users/albertvillanova/followers",

"following_url": "https://api.github.com/users/albertvillanova/following{/other_user}",

"gists_url": "https://api.github.com/users/albertvillanova/gists{/gist_id}",

"starred_url": "https://api.github.com/users/albertvillanova/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/albertvillanova/subscriptions",

"organizations_url": "https://api.github.com/users/albertvillanova/orgs",

"repos_url": "https://api.github.com/users/albertvillanova/repos",

"events_url": "https://api.github.com/users/albertvillanova/events{/privacy}",

"received_events_url": "https://api.github.com/users/albertvillanova/received_events",

"type": "User",

"site_admin": false

} | [

{

"id": 2067376369,

"node_id": "MDU6TGFiZWwyMDY3Mzc2MzY5",

"url": "https://api.github.com/repos/huggingface/datasets/labels/dataset%20request",

"name": "dataset request",

"color": "e99695",

"default": false,

"description": "Requesting to add a new dataset"

},

{

"id": 2725241052,

"node_id": "MDU6TGFiZWwyNzI1MjQxMDUy",

"url": "https://api.github.com/repos/huggingface/datasets/labels/speech",

"name": "speech",

"color": "d93f0b",

"default": false,

"description": ""

}

] | closed | false | null | [] | null | [] | "2021-12-15T11:14:44" | "2022-02-22T10:03:53" | "2022-02-22T10:03:53" | MEMBER | null | ## Adding a Dataset

- **Name:** Multilingual Spoken Words

- **Description:** Multilingual Spoken Words Corpus is a large and growing audio dataset of spoken words in 50 languages for academic research and commercial applications in keyword spotting and spoken term search, licensed under CC-BY 4.0. The dataset contains more than 340,000 keywords, totaling 23.4 million 1-second spoken examples (over 6,000 hours).

Read more: https://mlcommons.org/en/news/spoken-words-blog/

- **Paper:** https://datasets-benchmarks-proceedings.neurips.cc/paper/2021/file/fe131d7f5a6b38b23cc967316c13dae2-Paper-round2.pdf

- **Data:** https://mlcommons.org/en/multilingual-spoken-words/

- **Motivation:**

Instructions to add a new dataset can be found [here](https://github.com/huggingface/datasets/blob/master/ADD_NEW_DATASET.md).

| {

"url": "https://api.github.com/repos/huggingface/datasets/issues/3433/reactions",

"total_count": 1,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 1,

"rocket": 0,

"eyes": 0

} | https://api.github.com/repos/huggingface/datasets/issues/3433/timeline | null | completed | null | null | false |

https://api.github.com/repos/huggingface/datasets/issues/3432 | https://api.github.com/repos/huggingface/datasets | https://api.github.com/repos/huggingface/datasets/issues/3432/labels{/name} | https://api.github.com/repos/huggingface/datasets/issues/3432/comments | https://api.github.com/repos/huggingface/datasets/issues/3432/events | https://github.com/huggingface/datasets/pull/3432 | 1,079,910,769 | PR_kwDODunzps4v1NGS | 3,432 | Correctly indent builder config in dataset script docs | {

"login": "mariosasko",

"id": 47462742,

"node_id": "MDQ6VXNlcjQ3NDYyNzQy",

"avatar_url": "https://avatars.githubusercontent.com/u/47462742?v=4",

"gravatar_id": "",

"url": "https://api.github.com/users/mariosasko",

"html_url": "https://github.com/mariosasko",

"followers_url": "https://api.github.com/users/mariosasko/followers",

"following_url": "https://api.github.com/users/mariosasko/following{/other_user}",

"gists_url": "https://api.github.com/users/mariosasko/gists{/gist_id}",

"starred_url": "https://api.github.com/users/mariosasko/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/mariosasko/subscriptions",

"organizations_url": "https://api.github.com/users/mariosasko/orgs",

"repos_url": "https://api.github.com/users/mariosasko/repos",

"events_url": "https://api.github.com/users/mariosasko/events{/privacy}",

"received_events_url": "https://api.github.com/users/mariosasko/received_events",

"type": "User",

"site_admin": false

} | [] | closed | false | null | [] | null | [] | "2021-12-14T15:39:47" | "2021-12-14T17:35:17" | "2021-12-14T17:35:17" | CONTRIBUTOR | null | null | {

"url": "https://api.github.com/repos/huggingface/datasets/issues/3432/reactions",

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} | https://api.github.com/repos/huggingface/datasets/issues/3432/timeline | null | null | false | {

"url": "https://api.github.com/repos/huggingface/datasets/pulls/3432",

"html_url": "https://github.com/huggingface/datasets/pull/3432",

"diff_url": "https://github.com/huggingface/datasets/pull/3432.diff",

"patch_url": "https://github.com/huggingface/datasets/pull/3432.patch",

"merged_at": "2021-12-14T17:35:17"

} | true |

https://api.github.com/repos/huggingface/datasets/issues/3431 | https://api.github.com/repos/huggingface/datasets | https://api.github.com/repos/huggingface/datasets/issues/3431/labels{/name} | https://api.github.com/repos/huggingface/datasets/issues/3431/comments | https://api.github.com/repos/huggingface/datasets/issues/3431/events | https://github.com/huggingface/datasets/issues/3431 | 1,079,866,083 | I_kwDODunzps5AXXLj | 3,431 | Unable to resolve any data file after loading once | {

"login": "LzyFischer",

"id": 84694183,

"node_id": "MDQ6VXNlcjg0Njk0MTgz",

"avatar_url": "https://avatars.githubusercontent.com/u/84694183?v=4",

"gravatar_id": "",

"url": "https://api.github.com/users/LzyFischer",

"html_url": "https://github.com/LzyFischer",

"followers_url": "https://api.github.com/users/LzyFischer/followers",

"following_url": "https://api.github.com/users/LzyFischer/following{/other_user}",

"gists_url": "https://api.github.com/users/LzyFischer/gists{/gist_id}",

"starred_url": "https://api.github.com/users/LzyFischer/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/LzyFischer/subscriptions",

"organizations_url": "https://api.github.com/users/LzyFischer/orgs",

"repos_url": "https://api.github.com/users/LzyFischer/repos",

"events_url": "https://api.github.com/users/LzyFischer/events{/privacy}",

"received_events_url": "https://api.github.com/users/LzyFischer/received_events",

"type": "User",

"site_admin": false

} | [] | closed | false | null | [] | null | [

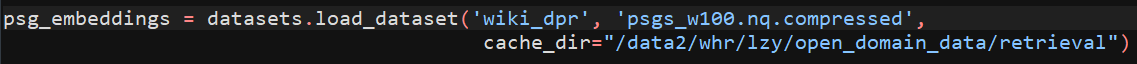

"Hi ! `load_dataset` accepts as input either a local dataset directory or a dataset name from the Hugging Face Hub.\r\n\r\nSo here you are getting this error the second time because it tries to load the local `wiki_dpr` directory, instead of `wiki_dpr` from the Hub. It doesn't work since it's a **cache** directory, not a **dataset** directory in itself.\r\n\r\nTo fix that you can use another cache directory like `cache_dir=\"/data2/whr/lzy/open_domain_data/retrieval/cache\"`",

"thx a lot"

] | "2021-12-14T15:02:15" | "2022-12-11T10:53:04" | "2022-02-24T09:13:52" | NONE | null | When I rerun my program, the following error occurs:

"Unable to resolve any data file that matches '['**train*']' at /data2/whr/lzy/open_domain_data/retrieval/wiki_dpr with any supported extension ['csv', 'tsv', 'json', 'jsonl', 'parquet', 'txt', 'zip']". How can I deal with this problem?

thx.

And below is my code.

| {

"url": "https://api.github.com/repos/huggingface/datasets/issues/3431/reactions",

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} | https://api.github.com/repos/huggingface/datasets/issues/3431/timeline | null | completed | null | null | false |

https://api.github.com/repos/huggingface/datasets/issues/3430 | https://api.github.com/repos/huggingface/datasets | https://api.github.com/repos/huggingface/datasets/issues/3430/labels{/name} | https://api.github.com/repos/huggingface/datasets/issues/3430/comments | https://api.github.com/repos/huggingface/datasets/issues/3430/events | https://github.com/huggingface/datasets/pull/3430 | 1,079,811,124 | PR_kwDODunzps4v033w | 3,430 | Make decoding of Audio and Image feature optional | {

"login": "mariosasko",

"id": 47462742,

"node_id": "MDQ6VXNlcjQ3NDYyNzQy",

"avatar_url": "https://avatars.githubusercontent.com/u/47462742?v=4",

"gravatar_id": "",

"url": "https://api.github.com/users/mariosasko",

"html_url": "https://github.com/mariosasko",

"followers_url": "https://api.github.com/users/mariosasko/followers",

"following_url": "https://api.github.com/users/mariosasko/following{/other_user}",

"gists_url": "https://api.github.com/users/mariosasko/gists{/gist_id}",

"starred_url": "https://api.github.com/users/mariosasko/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/mariosasko/subscriptions",

"organizations_url": "https://api.github.com/users/mariosasko/orgs",

"repos_url": "https://api.github.com/users/mariosasko/repos",

"events_url": "https://api.github.com/users/mariosasko/events{/privacy}",

"received_events_url": "https://api.github.com/users/mariosasko/received_events",

"type": "User",

"site_admin": false

} | [] | closed | false | null | [] | null | [] | "2021-12-14T14:15:08" | "2022-01-25T18:57:52" | "2022-01-25T18:57:52" | CONTRIBUTOR | null | Add the `decode` argument (`True` by default) to the `Audio` and the `Image` feature to make it possible to toggle on/off decoding of these features.

Even though we've discussed that on Slack, I'm not removing the `_storage_dtype` argument of the Audio feature in this PR to avoid breaking the Audio feature tests. | {

"url": "https://api.github.com/repos/huggingface/datasets/issues/3430/reactions",

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} | https://api.github.com/repos/huggingface/datasets/issues/3430/timeline | null | null | false | {

"url": "https://api.github.com/repos/huggingface/datasets/pulls/3430",

"html_url": "https://github.com/huggingface/datasets/pull/3430",

"diff_url": "https://github.com/huggingface/datasets/pull/3430.diff",

"patch_url": "https://github.com/huggingface/datasets/pull/3430.patch",

"merged_at": "2022-01-25T18:57:52"

} | true |

https://api.github.com/repos/huggingface/datasets/issues/3429 | https://api.github.com/repos/huggingface/datasets | https://api.github.com/repos/huggingface/datasets/issues/3429/labels{/name} | https://api.github.com/repos/huggingface/datasets/issues/3429/comments | https://api.github.com/repos/huggingface/datasets/issues/3429/events | https://github.com/huggingface/datasets/pull/3429 | 1,078,902,390 | PR_kwDODunzps4vx1gp | 3,429 | Make cast cacheable (again) on Windows | {

"login": "mariosasko",

"id": 47462742,

"node_id": "MDQ6VXNlcjQ3NDYyNzQy",

"avatar_url": "https://avatars.githubusercontent.com/u/47462742?v=4",

"gravatar_id": "",

"url": "https://api.github.com/users/mariosasko",

"html_url": "https://github.com/mariosasko",

"followers_url": "https://api.github.com/users/mariosasko/followers",

"following_url": "https://api.github.com/users/mariosasko/following{/other_user}",

"gists_url": "https://api.github.com/users/mariosasko/gists{/gist_id}",

"starred_url": "https://api.github.com/users/mariosasko/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/mariosasko/subscriptions",

"organizations_url": "https://api.github.com/users/mariosasko/orgs",

"repos_url": "https://api.github.com/users/mariosasko/repos",

"events_url": "https://api.github.com/users/mariosasko/events{/privacy}",

"received_events_url": "https://api.github.com/users/mariosasko/received_events",

"type": "User",

"site_admin": false

} | [] | closed | false | null | [] | null | [] | "2021-12-13T19:32:02" | "2021-12-14T14:39:51" | "2021-12-14T14:39:50" | CONTRIBUTOR | null | `cast` currently emits the following warning when called on Windows:

```
Parameter 'function'=<function Dataset.cast.<locals>.<lambda> at 0x000001C930571EA0> of the transform datasets.arrow_dataset.Dataset._map_single couldn't be hashed properly, a random hash was used instead. Make sure your transforms and parameters are serializable with pickle or dill for the dataset fingerprinting and caching to work. If you reuse this transform, the caching mechanism will consider it to be different from the previous calls and recompute everything. This warning is only showed once. Subsequent hashing failures won't be showed.
```

It seems like the issue stems from the `config.PYARROW_VERSION` object not being serializable on Windows (tested with `dumps(lambda: config.PYARROW_VERSION)`), so I'm fixing this by capturing `config.PYARROW_VERSION.major` before the lambda definition. | {

"url": "https://api.github.com/repos/huggingface/datasets/issues/3429/reactions",

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} | https://api.github.com/repos/huggingface/datasets/issues/3429/timeline | null | null | false | {

"url": "https://api.github.com/repos/huggingface/datasets/pulls/3429",

"html_url": "https://github.com/huggingface/datasets/pull/3429",

"diff_url": "https://github.com/huggingface/datasets/pull/3429.diff",

"patch_url": "https://github.com/huggingface/datasets/pull/3429.patch",

"merged_at": "2021-12-14T14:39:50"

} | true |

https://api.github.com/repos/huggingface/datasets/issues/3428 | https://api.github.com/repos/huggingface/datasets | https://api.github.com/repos/huggingface/datasets/issues/3428/labels{/name} | https://api.github.com/repos/huggingface/datasets/issues/3428/comments | https://api.github.com/repos/huggingface/datasets/issues/3428/events | https://github.com/huggingface/datasets/pull/3428 | 1,078,863,468 | PR_kwDODunzps4vxtNT | 3,428 | Clean squad dummy data | {

"login": "lhoestq",

"id": 42851186,

"node_id": "MDQ6VXNlcjQyODUxMTg2",

"avatar_url": "https://avatars.githubusercontent.com/u/42851186?v=4",

"gravatar_id": "",

"url": "https://api.github.com/users/lhoestq",

"html_url": "https://github.com/lhoestq",

"followers_url": "https://api.github.com/users/lhoestq/followers",

"following_url": "https://api.github.com/users/lhoestq/following{/other_user}",

"gists_url": "https://api.github.com/users/lhoestq/gists{/gist_id}",

"starred_url": "https://api.github.com/users/lhoestq/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/lhoestq/subscriptions",

"organizations_url": "https://api.github.com/users/lhoestq/orgs",

"repos_url": "https://api.github.com/users/lhoestq/repos",

"events_url": "https://api.github.com/users/lhoestq/events{/privacy}",

"received_events_url": "https://api.github.com/users/lhoestq/received_events",

"type": "User",

"site_admin": false

} | [] | closed | false | null | [] | null | [] | "2021-12-13T18:46:29" | "2021-12-13T18:57:50" | "2021-12-13T18:57:50" | MEMBER | null | Some unused files were left over; this PR removes them. We only need to keep the dummy_data.zip file. | {

"url": "https://api.github.com/repos/huggingface/datasets/issues/3428/reactions",

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} | https://api.github.com/repos/huggingface/datasets/issues/3428/timeline | null | null | false | {

"url": "https://api.github.com/repos/huggingface/datasets/pulls/3428",

"html_url": "https://github.com/huggingface/datasets/pull/3428",

"diff_url": "https://github.com/huggingface/datasets/pull/3428.diff",

"patch_url": "https://github.com/huggingface/datasets/pull/3428.patch",

"merged_at": "2021-12-13T18:57:50"

} | true |

https://api.github.com/repos/huggingface/datasets/issues/3427 | https://api.github.com/repos/huggingface/datasets | https://api.github.com/repos/huggingface/datasets/issues/3427/labels{/name} | https://api.github.com/repos/huggingface/datasets/issues/3427/comments | https://api.github.com/repos/huggingface/datasets/issues/3427/events | https://github.com/huggingface/datasets/pull/3427 | 1,078,782,159 | PR_kwDODunzps4vxb_y | 3,427 | Add The Pile Enron Emails subset | {

"login": "albertvillanova",

"id": 8515462,

"node_id": "MDQ6VXNlcjg1MTU0NjI=",

"avatar_url": "https://avatars.githubusercontent.com/u/8515462?v=4",

"gravatar_id": "",

"url": "https://api.github.com/users/albertvillanova",

"html_url": "https://github.com/albertvillanova",

"followers_url": "https://api.github.com/users/albertvillanova/followers",

"following_url": "https://api.github.com/users/albertvillanova/following{/other_user}",