prompt | ground_truth |

|---|---|

Ingress Nginx - how to serve assets to application

I have an issue, I am deploying an application on [hostname]/product/console, but the .css .js files are being requested from [hostname]/product/static, hence they are not being loaded and I get 404.

I have tried `nginx.ingress.kubernetes.io/rewrite-target:` to no avail.

I also tried using: `nginx.ingress.kubernetes.io/location-snippet: |

location = /product/console/ {

proxy_pass http://[hostname]/product/static/;

}`

But the latter does not seem to be picked up by the nginx controller at all. This is my ingress.yaml

```
---
apiVersion: extensions/v1beta1
kind: Ingress
metadata:
  name: ingress-resource
  annotations:
    kubernetes.io/ingress.class: "nginx"
    nginx.ingress.kubernetes.io/enable-rewrite-log: "true"
    # nginx.ingress.kubernetes.io/rewrite-target: /$1
    nginx.ingress.kubernetes.io/location-snippet: |
      location = /product/console/ {
        proxy_pass http://[hostname]/product/static/;
      }
spec:
  rules:
    - host: {{.Values.HOSTNAME}}
      http:
        paths:
          - path: /product/console
            backend:
              serviceName: product-svc
              servicePort: prod ##25022
          - path: /product/
            backend:
              serviceName: product-svc
              servicePort: prod #25022
```

--

Can I ask for some pointers? I have been trying to google this out and tried some different variations, but I seem to be doing something wrong. Thanks!

| **TL;DR**

To find out why you get a 404 error, check the logs of the `nginx-ingress` controller pod with the command below:

`kubectl logs -n ingress-nginx INGRESS_NGINX_CONTROLLER_POD_NAME`

You should get output similar to this (depending on your use case):

```

CLIENT_IP - - [12/May/2020:11:06:56 +0000] "GET / HTTP/1.1" 200 238 "-" "REDACTED" 430 0.003 [default-ubuntu-service-ubuntu-port] [] 10.48.0.13:8080 276 0.003 200

CLIENT_IP - - [12/May/2020:11:06:56 +0000] "GET /assets/styles/style.css HTTP/1.1" 200 22 "http://SERVER_IP/" "REDACTED" 348 0.002 [default-ubuntu-service-ubuntu-port] [] 10.48.0.13:8080 22 0.002 200

```

These logs show whether the `nginx-ingress` controller handles the requests properly and where it sends them.

Also you can check the [Kubernetes.github.io: ingress-nginx: Ingress-path-matching](https://kubernetes.github.io/ingress-nginx/user-guide/ingress-path-matching/). It's a document describing how `Ingress` matches paths with regular expressions.

---

You can experiment with `Ingress` by following the example below:

- Deploy `nginx-ingress` controller

- Create a `pod` and a `service`

- Run example application

- Create an `Ingress` resource

- Test

- Rewrite example

### Deploy `nginx-ingress` controller

You can deploy your `nginx-ingress` controller by following official documentation:

[Kubernetes.github.io: Ingress-nginx](https://kubernetes.github.io/ingress-nginx/deploy/)

### Create a `pod` and a `service`

Below is an example definition of a Deployment (which creates the pod) and a Service attached to it, both used for testing purposes:

```
apiVersion: apps/v1
kind: Deployment
metadata:
  name: ubuntu-deployment
spec:
  selector:
    matchLabels:
      app: ubuntu
  replicas: 1
  template:
    metadata:
      labels:
        app: ubuntu
    spec:
      containers:
        - name: ubuntu
          image: ubuntu
          command:
            - sleep
            - "infinity"
---
apiVersion: v1
kind: Service
metadata:
  name: ubuntu-service
spec:
  selector:
    app: ubuntu
  ports:
    - name: ubuntu-port
      port: 8080
      targetPort: 8080
      nodePort: 30080
  type: NodePort
```

### Example page

I created a basic `index.html` with one `css` file to simulate the request process. You need to create these files inside the pod (manually, or by copying them to the pod).

The file tree looks like this:

- **index.html**

- assets/styles/**style.css**

**index.html**:

```
<!DOCTYPE html>
<html lang="en">
<head>
  <meta charset="UTF-8">
  <meta name="viewport" content="width=device-width, initial-scale=1.0">
  <link rel="stylesheet" href="assets/styles/style.css">
  <title>Document</title>
</head>
<body>
  <h1>Hi</h1>
</body>
</html>
```

Pay particular attention to this line:

```

<link rel="stylesheet" href="assets/styles/style.css">

```

**style.css**:

```

h1 {

color: red;

}

```

You can run above page with `python`:

- `$ apt update && apt install -y python3`

- `$ python3 -m http.server 8080`, run from the directory where `index.html` and the `assets` folder are stored.

### Create an `Ingress` resource

Below is an example `Ingress` resource configured to use `nginx-ingress` controller:

```
apiVersion: extensions/v1beta1
kind: Ingress
metadata:
  name: nginx-ingress-example
  annotations:
    kubernetes.io/ingress.class: nginx
spec:
  rules:
    - host:
      http:
        paths:
          - path: /
            backend:
              serviceName: ubuntu-service
              servicePort: ubuntu-port
```

After applying above resource you can start to test.

### Test

You can go to your browser and enter the external IP address associated with your `Ingress` resource.

**As I said above you can check the logs of `nginx-ingress` controller pod to check how your controller is handling request.**

If you run command mentioned earlier `python3 -m http.server 8080` you will get logs too:

```

$ python3 -m http.server 8080

Serving HTTP on 0.0.0.0 port 8080 (http://0.0.0.0:8080/) ...

10.48.0.16 - - [12/May/2020 11:06:56] "GET / HTTP/1.1" 200 -

10.48.0.16 - - [12/May/2020 11:06:56] "GET /assets/styles/style.css HTTP/1.1" 200 -

```

### Rewrite example

I've edited the `Ingress` resource to show you an example of a path rewrite:

```
apiVersion: extensions/v1beta1
kind: Ingress
metadata:
  name: nginx-ingress-example
  annotations:
    kubernetes.io/ingress.class: nginx
    nginx.ingress.kubernetes.io/rewrite-target: /$1
spec:
  rules:
    - host:
      http:
        paths:
          - path: /product/(.*)
            backend:
              serviceName: ubuntu-service
              servicePort: ubuntu-port
```

Changes were made to lines:

```

nginx.ingress.kubernetes.io/rewrite-target: /$1

```

and:

```

- path: /product/(.*)

```

Steps:

- The browser sent: `/product/`

- Controller got `/product/` and had it rewritten to `/`

- Pod got `/` from a controller.

Logs from the `nginx-ingress` controller:

```

CLIENT_IP - - [12/May/2020:11:33:23 +0000] "GET /product/ HTTP/1.1" 200 228 "-" "REDACTED" 438 0.002 [default-ubuntu-service-ubuntu-port] [] 10.48.0.13:8080 276 0.001 200 fb0d95e7253335fc82cc84f70348683a

CLIENT_IP - - [12/May/2020:11:33:23 +0000] "GET /product/assets/styles/style.css HTTP/1.1" 200 22 "http://SERVER_IP/product/" "REDACTED" 364 0.002 [default-ubuntu-service-ubuntu-port] [] 10.48.0.13:8080 22 0.002 200

```

Logs from the pod:

```

10.48.0.16 - - [12/May/2020 11:33:23] "GET / HTTP/1.1" 200 -

10.48.0.16 - - [12/May/2020 11:33:23] "GET /assets/styles/style.css HTTP/1.1" 200 -

```

Please let me know if you have any questions about that.

|

why ({}+{})="[object Object][object Object]"?

I have tested the code:

```

{}+{} = NaN;

({}+{}) = "[object Object][object Object]";

```

Why does adding the `()` change the result?

| `{}+{}` is a *block* followed by an expression. The first `{}` is the block (like the kind you attach to an `if` statement), the `+{}` is the expression. The first `{}` is a block because when the parser is looking for a statement and sees `{`, it interprets it as the opening of a block. That block, being empty, does nothing. Having processed the block, the parser sees the `+` and reads it as a unary `+`. That shifts the parser into handling an expression. In an expression, a `{` starts an object initializer instead of a block, so the `{}` is an object initializer. The object initializer creates an object, which `+` then tries to coerce to a number, getting `NaN`.

In `({}+{})`, the opening `(` shifts the parser into the mode where it's expecting an expression, not a statement. So the `()` contains *two* object initializers with a *binary* `+` (i.e., the "addition" operator, which can be arithmetic or string concatenation) between them. The binary `+` operator will attempt to add or concatenate depending on its operands. It coerces its operands to primitives, and in the case of `{}`, they each become the string `"[object Object]"`. So you end up with `"[object Object][object Object]"`, the result of concatenating them.

|

ZFS storage on Docker

I would like to try out ZFS in an Ubuntu (16.04) Docker container. I followed this guide: <https://docs.docker.com/engine/userguide/storagedriver/zfs-driver/>

```

> lsmod | grep zfs

zfs 2813952 5

zunicode 331776 1 zfs

zcommon 57344 1 zfs

znvpair 90112 2 zfs,zcommon

spl 102400 3 zfs,zcommon,znvpair

zavl 16384 1 zfs

```

Listing the ZFS mounts

```

>sudo zfs list

NAME USED AVAIL REFER MOUNTPOINT

zpool-docker 261K 976M 53.5K /zpool-docker

zpool-docker/docker 120K 976M 120K /var/lib/docker

```

After starting docker

```

> sudo docker info

Containers: 0

Running: 0

Paused: 0

Stopped: 0

Images: 0

Server Version: 1.12.0

Storage Driver: aufs

Root Dir: /var/lib/docker/aufs

Backing Filesystem: zfs

Dirs: 0

...

```

I wonder why I still get "Storage Driver: aufs" and "Root Dir: /var/lib/docker/aufs" in place of zfs?

Also how can I map "/zpool-docker" into the Ubuntu container image?

| Assuming you have:

- a ZFS pool (let's call it `data`)

- a ZFS dataset mounted on `/var/lib/docker` (created with a command along the lines of `zfs create -o mountpoint=/var/lib/docker data/docker`)

Then:

Stop your Docker daemon (e.g. `systemctl stop docker.service`).

Create a file `/etc/docker/daemon.json` or amend it to contain a line with `"storage-driver"` set to `zfs`:

```

{

...

"storage-driver": "zfs"

...

}

```

Restart your docker daemon.

`docker info` should now reveal:

```

Storage Driver: zfs

Zpool: data

Zpool Health: ONLINE

Parent Dataset: data/docker

```

|

How to return the result from Task?

I have the following methods:

```

public int getData() { return 2; } // suppose it is slow and takes 20 sec

// pseudocode

public int GetPreviousData()

{

Task<int> t = new Task<int>(() => getData());

return _cachedData; // some previous value

_cachedData = t.Result; // _cachedData == 2

}

```

I don't want to wait for the result of an already running operation.

I want to return `_cachedData` and update it after the `Task` will finish.

How to do this? I'm using `.net framework 4.5.2`

| You might want to use an `out` parameter here:

```
public Task<int> GetPreviousDataAsync(out int cachedData)
{
    // Start the slow operation on the thread pool without waiting for it
    Task<int> t = Task.Run(() => getData());

    cachedData = _cachedData; // some previous value

    return t; // completes later with the fresh value (2)
}

// Caller (inside an async method):
int cachedData;
int freshData = await GetPreviousDataAsync(out cachedData);
```

Note the use of `Task.Run`: it starts a task on the thread pool and returns a `Task<int>`, which lets the caller decide whether to await it, attach a continuation, or *fire and forget* it.

See the following sample. I've re-arranged everything into a class:

```

class A

{

private int _cachedData;

private readonly static AutoResetEvent _getDataResetEvent = new AutoResetEvent(true);

private int GetData()

{

return 1;

}

public Task<int> GetPreviousDataAsync(out int cachedData)

{

// This forces calls to this method to be executed one by one, so that
// N concurrent calls cannot update the _cachedData field in an unpredictable way.
// It waits up to 6 seconds for the lock. If that times out, it means that
// a previous task is taking too much time. The timeout prevents a deadlock.

if (!_getDataResetEvent.WaitOne(TimeSpan.FromSeconds(6)))

{

throw new InvalidOperationException("Some previous operation is taking too much time");

}

// It has acquired an exclusive lock since WaitOne returned true

Task<int> getDataTask = Task.Run(() => GetData());

cachedData = _cachedData; // some previous value

// Once the getDataTask has finished, this will set the

// _cachedData class field. Since it's an asynchronous

// continuation, the return statement will be hit before the

// task ends, letting the caller await for the asynchronous

// operation, while the method was able to output

// previous _cachedData using the "out" parameter.

getDataTask.ContinueWith

(

t =>

{

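// The continuation also runs when the task faulted or was canceled,
// so only read Result after a successful run
if (t.Status == TaskStatus.RanToCompletion)
    _cachedData = t.Result;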

// Open the door again to let other calls proceed

_getDataResetEvent.Set();

}

);

return getDataTask;

}

public void DoStuff()

{

int previousCachedData;

// Don't await it, when the underlying task ends, sets

// _cachedData already. This is like saying "fire and forget it"

GetPreviousDataAsync(out previousCachedData);

}

}

```

|

Most efficient way to find an entry in a C++ vector

I'm trying to construct an output table containing 80 rows of table status, that could be `EMPTY` or `USED` as below

```

+-------+-------+

| TABLE | STATE |

+-------+-------+

| 00 | USED |

| 01 | EMPTY |

| 02 | EMPTY |

..

..

| 79 | EMPTY |

+-------+-------+

```

I have a vector `m_availTableNums` which contains a list of available table numbers. In the below example, I'm putting 20 random table numbers into the above vector such that all the remaining 60 would be empty. My logic below works fine.

Is there a scope for improvement here on the find logic?

```

#include <iostream>

#include <iomanip>

#include <cmath>

#include <string.h>

#include <cstdlib>

#include <vector>

#include <algorithm>

#include <ctime>

using namespace std;

int main(int argc, const char * argv[]) {

std::vector<uint8_t> m_availTableNums;

char tmpStr[50];

uint8_t tableNum;

uint8_t randomCount;

srand(time(NULL));

for( tableNum=0; tableNum < 20; tableNum++ )

{

randomCount = ( rand() % 80 );

m_availTableNums.push_back( randomCount );

}

sprintf(tmpStr, "+-------+-------+");

printf("%s\n", tmpStr);

tmpStr[0]='\0';

sprintf(tmpStr, "| TABLE | STATE |");

printf("%s\n", tmpStr);

tmpStr[0]='\0';

sprintf(tmpStr, "+-------+-------+");

printf("%s\n", tmpStr);

tableNum = 0;

for( tableNum=0; tableNum < 80 ; tableNum++ )

{

tmpStr[0]='\0';

if ( std::find(m_availTableNums.begin(), m_availTableNums.end(), tableNum) != m_availTableNums.end() )

{

sprintf(tmpStr, "| %02d | EMPTY |", tableNum );

} else {

sprintf(tmpStr, "| %02d | USED |", tableNum );

}

printf("%s\n", tmpStr);

}

tmpStr[0]='\0';

sprintf(tmpStr, "+-------+-------+");

printf("%s\n", tmpStr);

return 0;

}

```

| # Header files

It's strange that this code uses the C header `<string.h>` but the C++ versions of `<cmath>`, `<ctime>` and `<cstdlib>`. I recommend sticking to the C++ headers except on the rare occasions that you need to compile the same code with a C compiler. In this case, I don't see anything using `<cstring>`, so we can probably just drop that, along with `<iomanip>`, `<iostream>` and `<cmath>`. And we need to add some missing includes: `<cstdint>` and `<cstdio>`.

# Avoid `using namespace std`

The standard namespace is not one that's designed for wholesale import like this. Unexpected name collisions when you add another header or move to a newer C++ could even cause changes to the meaning of your program.

# Use the appropriate signature for `main()`

Since we're ignoring the command-line arguments, we can use a `main()` that takes no parameters:

```

int main()

```

# Remove pointless temporary string

Instead of formatting into `tmpStr` and immediately printing its contents to standard output, we can eliminate that variable by formatting directly to standard output (using the same format string). For example, instead of:

>

>

> ```

> std::sprintf(tmpStr, "+-------+-------+");

> std::printf("%s\n", tmpStr);

> tmpStr[0]='\0';

>

> std::sprintf(tmpStr, "| TABLE | STATE |");

> std::printf("%s\n", tmpStr);

> tmpStr[0]='\0';

>

> std::sprintf(tmpStr, "+-------+-------+");

> std::printf("%s\n", tmpStr);

>

> ```

>

>

we could simply write:

```

std::puts("+-------+-------+\n"

"| TABLE | STATE |\n"

"+-------+-------+");

```

And instead of

>

>

> ```

> tmpStr[0]='\0';

> if ( std::find(m_availTableNums.begin(), m_availTableNums.end(), tableNum) != m_availTableNums.end() )

> {

> std::sprintf(tmpStr, "| %02d | EMPTY |", tableNum );

> } else {

> std::sprintf(tmpStr, "| %02d | USED |", tableNum );

> }

>

> printf("%s\n", tmpStr);

>

> ```

>

>

we would have:

```

if (std::find(m_availTableNums.begin(), m_availTableNums.end(), tableNum) != m_availTableNums.end()) {

std::printf("| %02d | EMPTY |\n", tableNum);

} else {

std::printf("| %02d | USED |\n", tableNum);

}

```

# Reduce duplication

Most of these statements are common:

>

>

> ```

> std::printf("| %02d | EMPTY |\n", tableNum);

> } else {

> std::printf("| %02d | USED |\n", tableNum);

> }

>

> ```

>

>

The only bit that's different is the `EMPTY` or `USED` string. So let's decide that first:

```

const char *status =

std::find(m_availTableNums.begin(), m_availTableNums.end(), tableNum) != m_availTableNums.end()

? "EMPTY" : "USED";

std::printf("| %02d | %-5s |\n", tableNum, status);

```

# Prefer `nullptr` value to `NULL` macro

The C++ null pointer has strong type, whereas `NULL` or `0` can be interpreted as integer.
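To make the difference concrete, here is a minimal sketch with a pair of overloads. The names `describe`, `with_zero` and `with_nullptr` are made up for this illustration and do not appear in the reviewed code.

```cpp
#include <string>

// Hypothetical overload pair, used only for illustration.
std::string describe(int) { return "int"; }
std::string describe(const char*) { return "pointer"; }

// A literal 0 is an int first and a null pointer constant second,
// so overload resolution picks the int version.
std::string with_zero() { return describe(0); }

// nullptr has type std::nullptr_t, which converts to pointer types
// but never to int, so the pointer version is the only candidate.
std::string with_nullptr() { return describe(nullptr); }
```

With these definitions, `with_zero()` selects the `int` overload and `with_nullptr()` selects the pointer overload. For `std::time()` either spelling works, since its parameter is a pointer; `nullptr` just states the intent unambiguously.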

# Reduce scope of variables

`randomCount` doesn't need to be valid outside the first `for` loop, and we don't need to use the same `tableNum` for both loops. Also, we could follow convention and use a short name for a short-lived loop index; `i` is the usual choice:

```

for (std::uint8_t i = 0; i < 20; ++i) {

std::uint8_t randomCount = std::rand() % 80;

m_availTableNums.push_back(randomCount);

}

```

```

for (std::uint8_t i = 0; i < 80; ++i) {

```

# Avoid magic numbers

What's special about `80`? Could we need a different range? Let's give the constant a name, and then we can be sure that the loop matches this range:

```

constexpr std::uint8_t LIMIT = 80;

...

std::uint8_t randomCount = std::rand() % LIMIT;

...

for (std::uint8_t i = 0; i < LIMIT; ++i) {

```

# A departure from specification

The description says

>

> I'm putting 20 random table numbers into the above vector such that, all the remaining 60 would be empty.

>

>

>

That's not exactly what's happening, as we're sampling *with replacement* from the values 0..79. There's nothing to prevent duplicates being added (it's actually quite unlikely that there will be exactly 60 empty values).
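If exactly 20 distinct table numbers are wanted, one option is to sample *without* replacement: shuffle the full range and keep the first few values. This is only a sketch of that idea, not part of the original program; the helper name `pick_distinct` is hypothetical, and it swaps `rand()` for the `<random>` facilities.

```cpp
#include <algorithm>
#include <cstddef>
#include <cstdint>
#include <numeric>
#include <random>
#include <vector>

// Hypothetical helper: pick `count` distinct table numbers from 0..limit-1.
std::vector<std::uint8_t> pick_distinct(std::uint8_t limit, std::size_t count,
                                        std::mt19937& rng)
{
    std::vector<std::uint8_t> all(limit);
    std::iota(all.begin(), all.end(), std::uint8_t{0});   // 0, 1, ..., limit-1
    std::shuffle(all.begin(), all.end(), rng);            // random order, no repeats
    all.resize(std::min<std::size_t>(count, all.size())); // keep the first `count`
    return all;
}
```

A caller could seed a `std::mt19937` once and then use `pick_distinct(LIMIT, 20, rng)` to fill `m_availTableNums`. Shuffling all 80 values is more work than strictly needed, but for a range this small the simplicity is probably worth it.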

# Reduce the algorithmic complexity

Each time through the loop, we use `std::find()` to see whether we have any matching elements. This is a *linear* search, so it examines elements in turn until it finds a match. Since it only finds a match one-quarter of the time, the other three-quarters will examine *every element in the list*, and the time it takes will be proportional to the list length - we say it scales as O(*n*), where *n* is the size of the vector. The complete loop therefore scales as O(*mn*), where *m* is the value of `LIMIT`.

We can reduce the complexity to O(*m* + *n*) if we use some extra storage to store the values in a way that makes them easy to test. For example, we could populate a vector that's indexed by the values from `m_availTableNums`:

```

auto by_val = std::vector<bool>(LIMIT, false);

for (auto value: m_availTableNums)

by_val[value] = true;

for (std::uint8_t i = 0; i < LIMIT; ++i) {

const char *status = by_val[i] ? "EMPTY" : "USED";

std::printf("| %02d | %-5s |\n", i, status);

}

```

If the range were much larger, we might use an (unordered) set instead of `vector<bool>`. We might also choose `vector<char>` instead of `vector<bool>` for better speed at a cost of more space.
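As a rough sketch of the set-based alternative, assuming the table numbers were widened to `std::uint32_t` for a much larger range (the helper names `make_lookup` and `is_available` are hypothetical):

```cpp
#include <cstdint>
#include <unordered_set>
#include <vector>

// Hypothetical helper: build the set once, in O(n) on average.
std::unordered_set<std::uint32_t> make_lookup(const std::vector<std::uint32_t>& values)
{
    return std::unordered_set<std::uint32_t>(values.begin(), values.end());
}

// Each membership test is then O(1) on average, instead of a linear scan.
bool is_available(const std::unordered_set<std::uint32_t>& available,
                  std::uint32_t table)
{
    return available.count(table) != 0;
}
```

The set only stores the values that are actually present, so its memory use depends on the number of entries rather than on the size of the range. Duplicates in the input collapse into a single entry.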

---

# Simplified code

Here's my version, keeping to the spirit of the original (creating a list of indices, rather than changing to storing in the form we want to use them):

```
#include <algorithm>
#include <cstdint>
#include <cstdio>
#include <cstdlib>
#include <ctime>
#include <vector>

int main()
{
    constexpr std::uint8_t LIMIT = 80;

    std::vector<std::uint8_t> m_availTableNums;

    std::srand(std::time(nullptr));

    for (std::uint8_t i = 0; i < 20; ++i) {
        std::uint8_t randomCount = std::rand() % LIMIT;
        m_availTableNums.push_back(randomCount);
    }

    std::puts("+-------+-------+\n"
              "| TABLE | STATE |\n"
              "+-------+-------+");

    auto by_val = std::vector<bool>(LIMIT, false);
    for (auto value: m_availTableNums)
        by_val[value] = true;

    for (std::uint8_t i = 0; i < LIMIT; ++i) {
        const char *status = by_val[i] ? "EMPTY" : "USED";
        std::printf("| %02d | %-5s |\n", i, status);
    }

    std::puts("+-------+-------+");
}
```

|

When searchController is active, status bar style changes

Throughout my app I have set the status bar style to light content.[](https://i.stack.imgur.com/st8JB.png)

However, when the search controller is active, it resets to the default style: [](https://i.stack.imgur.com/sCfIM.png)

I have tried everything to fix this, including checking if the search controller is active in an if statement, and then changing the tint color of the navigation bar to white, and setting the status bar style to light content. How do I fix this?

| There are a couple of options. This could be a bug, but in the meantime, have you tried the following?

**Option 1:**

In your Info.plist, set the "Status bar style" option; it is a string with the value "UIStatusBarStyleLightContent".

Also in your Info.plist, set the "View controller-based status bar appearance" entry to "NO".

Then, in each view controller in your app, explicitly set the following in your initializers, your `viewWillAppear`, and your `viewDidLoad`:

```

UIApplication.sharedApplication().statusBarStyle = UIStatusBarStyle.LightContent

```

**Option 2:**

In your Info.plist, set the "Status bar style" option to "UIStatusBarStyleLightContent". Also in your Info.plist, set the "View controller-based status bar appearance" entry to "YES".

Then, in each view controller, add the following methods:

```

override func preferredStatusBarStyle() -> UIStatusBarStyle {

return UIStatusBarStyle.LightContent;

}

override func prefersStatusBarHidden() -> Bool {

return false

}

```

Also, you may need to do something like this:

```

self.extendedLayoutIncludesOpaqueBars = true

```

Also, I translated it to Swift code for you

|

Remote Desktop Forgets Multi Monitor Configuration

By default, I RDP from my personal PC to my work laptop, in order to make use of all my monitors without needing to resort to a KVM.

On my old laptop, it would remember the position of windows between RDP sessions, provided that I had not logged into the machine physically between sessions. This new laptop, however, forgets the window positions on each connection and forces my windows all onto the main monitor.

It *does* span multiple monitors, and I'm able to use them fine during the session, but once I end it and reconnect, it resets every time.

I'm using the same shortcut I used with the old laptop (I use a USB-C ethernet dongle on the laptop with a static IP assigned on the router), so the settings should all be the same.

How can I stop the laptop from resetting the screens on every reconnection?

| I think this is a bug introduced by the latest two Windows updates (1903, as listed in the tags of the original post, and also the more recently released 1909), because I had exactly the same issue and it was resolved by rolling back to 1809.

My local computer has 12 monitors, and I RDP into a host computer with only 1 monitor, using the "use all local monitors" option. On 1809 I was able to use all 12 local monitors, and it would remember the window locations between sessions. Since the update, it is like starting from scratch each time I connect via RDP, even if there isn't a local session between RDP sessions. Annoying AF. The first solution I came up with was to roll back to 1809.

I then discovered that a second host, also upgraded from 1809 to 1909, had the same issue, but this host was past the 10-day rollback window. Since the rollback to 1809 resolved the issue on the first host, I investigated what had changed between 1809 and 1909 to find the root cause. The issue seems to be the deprecation of the XDDM display driver and the forced use of WDDM.

Using GPO or registry modification to eliminate the forced use of WDDM also resolved the intersession windowing issue (amongst other things), without needing a rollback. See here for instructions on how to implement: [Remote Desktop black screen](https://answers.microsoft.com/en-us/windows/forum/all/remote-desktop-black-screen/63c665b5-883d-45e7-9356-f6135f89837a?page=2)

>

> In [Local Group Policy Editor->Local Computer Policy->Administrative

> Templates->Windows Components->Remote Desktop Services->Remote Desktop

> Session Host->Remote Session Environment], set the Policy **[Use WDDM

> graphics display driver for Remote Desktop Connections]** to

> **Disabled**.

>

>

> [](https://i.stack.imgur.com/znCHG.png)

>

>

>

Alternatively, to use the registry method, open a command prompt with administrator privileges and run:

`reg add "HKLM\SOFTWARE\Policies\Microsoft\Windows NT\Terminal Services" /v "fEnableWddmDriver" /t REG_DWORD /d 0 /f`

See: <https://answers.microsoft.com/en-us/windows/forum/all/windows-10-1903-may-update-black-screen-with/23c8a740-0c79-4042-851e-9d98d0efb539?page=1>

Note that the machine must be restarted for this change to take effect.

---

Note: Some articles also claim KB452941 will solve various display issues related to the XDDM vs WDDM driver as well but it did not in my case.

|

Does NSPasteboard retain owner objects?

You can call `NSPasteboard` like this:

```

[pboard declareTypes:types owner:self];

```

Which means that the pasteboard will later ask the owner to supply data for a type as needed. However, what I can't find from the docs (and maybe I've missed something bleeding obvious), is whether or not `owner` is retained.

In practice what's worrying me is if the owner is a **weak** reference, it could be deallocated, causing a crash if the pasteboard then tries to request data from it.

**Note:** I should probably clarify that I'm interested in this more as an aid to tracking down a bug, than making my app rely on it. But I do also want the docs clarified.

| The docs:

>

> *newOwner*

>

>

> The object responsible for writing

> data to the pasteboard, or nil if you

> provide data for all types

> immediately. If you specify a newOwner

> object, it must support all of the

> types declared in the newTypes

> parameter and must remain valid for as

> long as the data is promised on the

> pasteboard.

>

>

>

Translation: The pasteboard may or may not retain the owner. Whether it does is an implementation detail that you should not rely upon. It is your responsibility to retain the owner for as long as it acts as an owner.

What the docs are saying about "remain valid" actually refers to the *proxied contents* that you might lazily provide. I.e. if the user were to copy something, you wouldn't want the owner's representation of what was copied to change as the user makes further edits with an intention of pasting sometime later.

The documentation says nothing about the retain/release policy of the owner (nor is there any kind of blanket rule statement). It should be clarified (rdar://8966209 filed). As it is, making an assumption about the retain/release behavior is dangerous.

|

I can't start a new project on Netbeans

## The issue:

When I open the "add new project" dialog (screenshot below), I can't create a new project. The loading message (hourglass icon) stays on forever. Except for "cancel", the other buttons are disabled.

It was working fine a few days ago, I haven't changed any setting prior to the issue appearing. I ran the internal update feature, but the issue persists.

## The info:

**My OS version**: Ubuntu 12.04.2 LTS 64 bits

**Netbeans version**:

Help -> about

```

Product Version: NetBeans IDE 7.2.1 (Build 201210100934)

Java: 1.6.0_27; OpenJDK 64-Bit Server VM 20.0-b12

System: Linux version 3.2.0-49-generic running on amd64; UTF-8; pt_BR (nb)

User directory: /home/user/.netbeans/7.2.1

Cache directory: /home/user/.cache/netbeans/7.2.1

```

## What I tried:

- Changing the Look and Feel with the `--laf` command-line option. The look-and-feel does change, but the issue persists.

- Using the internal update command, a plugin got updated but the issue persists.

- Downloading and installing the latest version (7.3.1). It imported the settings from the previous version, and the issue persists.

- Removing the settings folder `~/.netbeans/7.3.1`, restarting NetBeans, and choosing not to import settings but to start with a clean install instead.

| Just posted the same question [here](https://askubuntu.com/questions/326933/netbeans-broken-after-openjdk-update) ... the solution for me was to downgrade OpenJDK from **6b27** to **6b24** (look at the link for details).

My NetBeans was looking ***exactly*** like in your screenshot and also had some other strange problems.

I would suggest you run `java -version`. If it shows that you have **6b27** installed, and `cat /var/log/dpkg.log | grep openjdk` shows that you recently received the OpenJDK update, you can try to fix the problem by reverting to **6b24** with this command:

```

apt-get install openjdk-6-jre=6b24-1.11.1-4ubuntu2 openjdk-6-jre-headless=6b24-1.11.1-4ubuntu2 icedtea-6-jre-cacao=6b24-1.11.1-4ubuntu2

```

\*\*\*\*edit\*\*\*\*

As I discovered some other problems (SSH connection wouldn't establish within NetBeans) I finally took the step to upgrade to Oracle JDK7. To start NetBeans with another JDK you have got to edit `./netbeans-7.X/etc/netbeans.conf` and change the line `netbeans_jdkhome="/usr/lib/jvm/java-7-oracle/jre"` (I guess this gets generated in the NetBeans installation process). Otherwise it will still use your old JDK no matter what `java -version` says.

|

Recyclerview item click ripple effect

I am trying to add a `Ripple` effect to the items of a `RecyclerView`. I had a look online, but could not find what I need. I have tried setting the `android:background` attribute on the `RecyclerView` itself to `"?android:selectableItemBackground"`, but it did not work.

My Parent layout is like this

```

<LinearLayout

android:layout_width="match_parent"

android:layout_height="wrap_content"

android:padding="10dp">

<android.support.v7.widget.RecyclerView

android:id="@+id/dailyTaskList"

android:layout_width="match_parent"

android:layout_height="wrap_content"

android:clickable="true"

android:focusable="true"

android:scrollbars="vertical" />

</LinearLayout>

```

and the adapter item layout is shown below:

```

<?xml version="1.0" encoding="utf-8"?>

<LinearLayout xmlns:android="http://schemas.android.com/apk/res/android"

xmlns:custom="http://schemas.android.com/apk/res-auto"

android:layout_width="match_parent"

android:layout_height="wrap_content"

android:orientation="vertical">

<LinearLayout

android:layout_width="wrap_content"

android:layout_height="wrap_content"

android:orientation="vertical"

android:padding="5dp">

<TextView

android:id="@+id/txtTitle"

style="@style/kaho_panel_sub_heading_textview_style"

android:layout_width="wrap_content"

android:layout_height="wrap_content" />

<TextView

android:id="@+id/txtDate"

style="@style/kaho_content_small_textview_style"

android:layout_width="wrap_content"

android:layout_height="wrap_content" />

</LinearLayout>

</LinearLayout>

```

Kindly give me solution

| Adding the `android:background="?attr/selectableItemBackground"` to the top most parent of your item layout should do the trick.

However, in some cases the animation is still missing; adding `android:clickable="true"` to the same element fixes that.

```

<?xml version="1.0" encoding="utf-8"?>

<LinearLayout xmlns:android="http://schemas.android.com/apk/res/android"

android:layout_width="match_parent"

android:layout_height="wrap_content"

android:background="?attr/selectableItemBackground"

android:orientation="vertical">

<LinearLayout

android:layout_width="wrap_content"

android:layout_height="wrap_content"

android:orientation="vertical"

android:padding="5dp">

<TextView

android:id="@+id/txtTitle"

style="@style/kaho_panel_sub_heading_textview_style"

android:layout_width="wrap_content"

android:layout_height="wrap_content" />

<TextView

android:id="@+id/txtDate"

style="@style/kaho_content_small_textview_style"

android:layout_width="wrap_content"

android:layout_height="wrap_content" />

</LinearLayout>

</LinearLayout>

```

|

C++ name mangling and linker symbol resolution

The name mangling schemes of C++ compilers vary, but they are documented publicly. Why aren't linkers made to decode a mangled symbol from an object file and attempt to find a mangled version via any of the mangling conventions across the other object files/static libraries? If linkers could do this, wouldn't it help alleviate the problem with C++ libraries that they have to be re-compiled by each compiler to have symbols that can be resolved?

List of mangling documentations I found:

- [MSVC++ mangling conventions](http://en.wikipedia.org/wiki/Microsoft_Visual_C++_Name_Mangling)

- [GCC un-mangler](https://stackoverflow.com/questions/4468770/c-name-mangling-decoder-for-g?answertab=votes#tab-top)

- [MSVC++ un-mangling function documentation](http://msdn.microsoft.com/en-us/library/windows/desktop/ms681400.aspx)

- [LLVM mangle class](https://llvm.org/svn/llvm-project/cfe/tags/RELEASE_26/lib/CodeGen/Mangle.cpp)

| Name mangling is a very small part of the problem.

Object layout is only defined in the C++ standard for a very restricted set of classes (essentially only standard layout types, and then only as much as the C standard does; alignment and padding are still to be considered). For anything that has virtuals, any form of non-trivial inheritance, mixed public and private members, etc. the standard doesn't say how they should be laid out in memory.

Two compilers can (and this is not purely hypothetical, this does happen in practice) return different values for `sizeof(std::string)` for instance. There is nothing in the standard that says: *an `std::string` is represented like this in memory*. So interoperability at the object file level doesn't exist.

Binary compatibility between C++ compilers is a much larger problem than just name mangling. You'd need to standardize much more than what is currently specified.

|

apply function not changing original value

Related to question on this page: [Randomly associate elements of two vectors given conditions](https://stackoverflow.com/questions/25221199/randomly-associate-elements-of-two-vectors-given-conditions)

If I have following data:

```

loss=c(45,10,5,1)

capitals = structure(list(capital = c(100L, 50L, 4L, 25L, 5L), loss = c(5L,

10L, 10L, 1L, 45L)), .Names = c("capital", "loss"), class = "data.frame", row.names = c(NA,

-5L))

capitals

capital loss

1 100 5

2 50 10

3 4 10

4 25 1

5 5 45

>

```

I am trying to correct any row with loss>capital (assign another random value from vector loss so that loss<=capital) by following command:

```

apply(capitals, 1, function(x){while(x[2]>x[1]) {x[2] = sample(loss,1); print(x[2])} })

```

print function shows that the value is changing in the function but value is not changing in dataframe capitals:

```

apply(capitals, 1, function(x){while(x[2]>x[1]) {x[2] = sample(loss,1); print(x[2])} })

loss

5

loss

10

loss

10

loss

1

loss

5

NULL

> capitals

capital loss

1 100 5

2 50 10

3 4 10

4 25 1

5 5 45

>

```

Why is value in capitals dataframe not changing and how can this be corrected? Thanks for your help.

| `apply` is evaluating a function, and assignments within functions do not affect the enclosing environment. A copy is being modified, and that copy is destroyed when the function exits.

Instead, to make use of `apply`, you should build an object, letting `apply` return each element. Something like this perhaps:

```

capitals$loss <-

apply(capitals, 1,

function(x){

while(x[2]>x[1])

x[2] <- sample(loss,1)

x[2]

}

)

capitals

## capital loss

## 1 100 5

## 2 50 10

## 3 4 1

## 4 25 1

## 5 5 5

```

Here, the new value for `loss` (`x[2]`) is returned from the function, and collected into a vector by `apply`. This is then used to replace the column in the data frame.

This can be done without the `while` loop, by sampling the desired subset of `loss`. An `if` is required to determine if sampling is needed:

```

apply(capitals, 1,

function(x)

if (x[2] > x[1])

sample(loss[loss<=x[1]], 1)

else

x[2]

)

```

Better yet, instead of using `if`, you can replace only those rows where the condition holds:

```

r <- capitals$capital < capitals$loss

capitals[r, 'loss'] <-

sapply(capitals[r,'capital'],

function(x) sample(loss[loss<=x], 1)

)

```

Here, the rows where replacement is needed are identified by `r` and only those rows are modified (this is the same condition present for the `while` in the original, but the order of the elements has been swapped, hence the change from greater-than to less-than).

The `sapply` expression loops through the values of `capital` for those rows, and returns a single sample from those entries of `loss` that do not exceed the `capital` value.

|

IIS\_IUSRS and IUSR permissions in IIS8

I've just moved away from IIS6 on Win2003 to IIS8 on Win2012 for hosting ASP.NET applications.

Within one particular folder in my application I need to Create & Delete files. After copying the files to the new server, I kept seeing the following errors when I tried to delete files:

>

> Access to the path 'D:\WebSites\myapp.co.uk\companydata\filename.pdf' is denied.

>

>

>

When I check IIS I see that the application is running under the DefaultAppPool account, however, I never set up Windows permissions on this folder to include **IIS AppPool\DefaultAppPool**

Instead, to stop screaming customers I granted the following permissions on the folder:

**IUSR**

- Read & Execute

- List Folder Contents

- Read

- Write

**IIS\_IUSRS**

- Modify

- Read & Execute

- List Folder Contents

- Read

- Write

This seems to have worked, but I am concerned that too many privileges have been set. I've read conflicting information online about whether **IUSR** is actually needed at all here. Can anyone clarify which users/permissions would suffice to Create and Delete documents on this folder please? Also, is IUSR part of the IIS\_IUSRS group?

## Update & Solution

Please see [my answer below](https://stackoverflow.com/a/36597241/792888). I've had to do this sadly as some recent suggestions were not well thought out, or even safe (IMO).

| I hate to post my own answer, but some answers recently have ignored the solution I posted in my own question, suggesting approaches that are nothing short of foolhardy.

In short - **you do not need to edit any Windows user account privileges at all**. Doing so only introduces risk. The process is entirely managed in IIS using inherited privileges.

## Applying Modify/Write Permissions to the *Correct* User Account

1. Right-click the domain when it appears under the Sites list, and choose *Edit Permissions*

[](https://i.stack.imgur.com/b237H.png)

Under the *Security* tab, you will see `MACHINE_NAME\IIS_IUSRS` is listed. This means that IIS automatically has read-only permission on the directory (e.g. to run ASP.Net in the site). **You do not need to edit this entry**.

[](https://i.stack.imgur.com/1Jnl7.png)

2. Click the *Edit* button, then *Add...*

3. In the text box, type `IIS AppPool\MyApplicationPoolName`, substituting `MyApplicationPoolName` with your domain name or whatever application pool is accessing your site, e.g. `IIS AppPool\mydomain.com`

[](https://i.stack.imgur.com/P4BQe.png)

4. Press the *Check Names* button. The text you typed will transform (notice the underline):

[](https://i.stack.imgur.com/cZDJK.png)

5. Press *OK* to add the user

6. With the new user (your domain) selected, now you can safely provide any *Modify* or *Write* permissions

[](https://i.stack.imgur.com/x3TXY.png)

|

How much space does BigInteger use?

How many bytes of memory does a BigInteger object use in general ?

| BigInteger internally uses an `int[]` to represent the huge numbers you use.

Thus it really **depends on the size of the number you store in it**. A `BigInteger` is immutable, so each instance holds an `int[]` just long enough for its value; a larger number simply needs a longer array.

To get the number of bytes your `BigInteger` instance *currently* uses, you can make use of the `Instrumentation` interface, especially [`getObjectSize(Object)`](http://docs.oracle.com/javase/7/docs/api/java/lang/instrument/Instrumentation.html#getObjectSize%28java.lang.Object%29).

```

import java.lang.instrument.Instrumentation;

public class ObjectSizeFetcher {

private static Instrumentation instrumentation;

public static void premain(String args, Instrumentation inst) {

instrumentation = inst;

}

public static long getObjectSize(Object o) {

return instrumentation.getObjectSize(o);

}

}

```

To convince yourself, take a look at the [source code](http://grepcode.com/file/repository.grepcode.com/java/root/jdk/openjdk/6-b14/java/math/BigInteger.java), where it says:

```

/**

* The magnitude of this BigInteger, in <i>big-endian</i> order: the

* zeroth element of this array is the most-significant int of the

* magnitude. The magnitude must be "minimal" in that the most-significant

* int ({@code mag[0]}) must be non-zero. This is necessary to

* ensure that there is exactly one representation for each BigInteger

* value. Note that this implies that the BigInteger zero has a

* zero-length mag array.

*/

final int[] mag;

```
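
As a rough cross-check that needs no Java agent, the length of the `mag` array can be derived from `bitLength()`. The following is a minimal sketch under stated assumptions: the class `MagnitudeEstimate` and its methods are hypothetical names, and the result covers only the payload of the `int[]`. Object headers, the other fields of `BigInteger` and alignment padding vary by JVM and are deliberately left out. Also keep in mind that `getObjectSize` typically reports a shallow size, so the array may need to be measured separately.

```java
import java.math.BigInteger;

final class MagnitudeEstimate {
    // Number of 32-bit ints needed to hold the magnitude (the length of `mag`).
    // abs() is used because bitLength() of a negative value describes its
    // two's-complement form, not its magnitude.
    static int magnitudeInts(BigInteger value) {
        return (value.abs().bitLength() + 31) / 32;
    }

    // Bytes of payload in the int[] only; headers and padding are excluded.
    static long magnitudeBytes(BigInteger value) {
        return 4L * magnitudeInts(value);
    }

    public static void main(String[] args) {
        BigInteger big = BigInteger.ONE.shiftLeft(100); // 2^100 needs 101 bits
        System.out.println(magnitudeInts(big) + " ints, " + magnitudeBytes(big) + " bytes of magnitude");
    }
}
```

Zero yields an estimate of 0 ints, which matches the remark in the source comment that zero has a zero-length `mag` array.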

|

Single quote Issue when executing Linux command in Java

I need to execute a Linux command like this using Runtime.getRuntime().exec():

```

/opt/ie/bin/targets --a '10.1.1.219 10.1.1.36 10.1.1.37'

```

Basically, this command connects each target to the server one by one (10.1.1.219, 10.1.1.36, 10.1.1.37). It works well in a terminal; the result should be:

```

['10.1.1.219', '10.1.1.36', '10.1.1.37']

```

But if I execute the command using Runtime.getRuntime().exec(execute), like this :

```

execute = "/opt/ie/bin/targets" + " " + "--a" + " " + "'" + sb

+ "'";

```

Java will treat the single quote as string to execute, the result will be :

```

callProcessWithInput executeThis=/opt/ie/bin/targets --a '10.1.1.219 10.1.1.36 10.1.1.37'

The output for removing undesired targets :["'10.1.1.219"]

```

Does anyone know how to solve it? Thanks!

| Quote characters are interpreted by the shell, to control how it splits up the command line into a list of arguments. But when you call `exec` from Java, you're not using a shell; you're invoking the program directly. When you pass a single `String` to `exec`, it's split up into command arguments using a `StringTokenizer`, which just splits on whitespace and doesn't give any special meaning to quotes.

If you want more control over the arguments passed to the program, call one of the versions of `exec` that takes a `String[]` parameter. This skips the `StringTokenizer` step and lets you specify the exact argument list that the called program should receive. For example:

```

String[] cmdarray = { "/opt/ie/bin/targets", "--a", "10.1.1.219 10.1.1.36 10.1.1.37" };

Runtime.getRuntime().exec(cmdarray);

```
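
To see why the single-`String` form produces `'10.1.1.219` as an argument, the whitespace splitting described above can be reproduced directly. This is only an illustrative sketch: `ExecTokenDemo` and `tokenize` are hypothetical names, and the code imitates the splitting step with a plain `StringTokenizer` rather than calling into `Runtime` itself.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.StringTokenizer;

final class ExecTokenDemo {
    // Splits a command line on whitespace only; quotes get no special treatment.
    static List<String> tokenize(String command) {
        List<String> tokens = new ArrayList<>();
        StringTokenizer tokenizer = new StringTokenizer(command);
        while (tokenizer.hasMoreTokens()) {
            tokens.add(tokenizer.nextToken());
        }
        return tokens;
    }

    public static void main(String[] args) {
        String command = "/opt/ie/bin/targets --a '10.1.1.219 10.1.1.36 10.1.1.37'";
        // Each token is printed in brackets so stray quote characters are visible.
        for (String token : tokenize(command)) {
            System.out.println("[" + token + "]");
        }
    }
}
```

The quoted address list ends up as three separate tokens, with the quote characters still attached to the first and last one, which is consistent with the output shown in the question.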

|

Python csv.DictReader: parse string?

I am downloading a CSV file directly from a URL using `requests`.

How can I parse the resulting string with `csv.DictReader`?

Right now I have this:

```

r = requests.get(url)

reader_list = csv.DictReader(r.text)

print reader_list.fieldnames

for row in reader_list:

print row

```

But I just get `['r']` as the result of `fieldnames`, and then all kinds of weird things from `print row`.

| From the documentation of [`csv`](https://docs.python.org/3/library/csv.html#csv.reader), the first argument to [`csv.reader`](https://docs.python.org/3/library/csv.html#csv.reader) or [`csv.DictReader`](https://docs.python.org/3/library/csv.html#csv.DictReader) is `csvfile` -

>

> *csvfile* can be any object which supports the [iterator](https://docs.python.org/3/glossary.html#term-iterator) protocol and returns a string each time its `__next__()` method is called — [file objects](https://docs.python.org/3/glossary.html#term-file-object) and list objects are both suitable.

>

>

>

In your case when you give the string as the direct input for the `csv.DictReader()` , the `__next__()` call on that string only provides a single character, and hence that becomes the header, and then `__next__()` is continuously called to get each row.

Hence, you need to either provide an in-memory stream of strings using `io.StringIO`:

```

>>> import csv

>>> s = """a,b,c

... 1,2,3

... 4,5,6

... 7,8,9"""

>>> import io

>>> reader_list = csv.DictReader(io.StringIO(s))

>>> print(reader_list.fieldnames)

['a', 'b', 'c']

>>> for row in reader_list:

... print(row)

...

{'a': '1', 'b': '2', 'c': '3'}

{'a': '4', 'b': '5', 'c': '6'}

{'a': '7', 'b': '8', 'c': '9'}

```

or a list of lines using [`str.splitlines`](https://docs.python.org/3/library/stdtypes.html#str.splitlines):

```

>>> reader_list = csv.DictReader(s.splitlines())

>>> print(reader_list.fieldnames)

['a', 'b', 'c']

>>> for row in reader_list:

... print(row)

...

{'a': '1', 'b': '2', 'c': '3'}

{'a': '4', 'b': '5', 'c': '6'}

{'a': '7', 'b': '8', 'c': '9'}

```

|

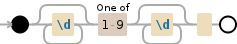

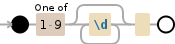

Regular expression to check if a String is a positive natural number

I want to check if a string is a positive natural number but I don't want to use `Integer.parseInt()` because the user may enter a number larger than an int. Instead I would prefer to use a regex to return false if a numeric String contains all "0" characters.

```

if(val.matches("[0-9]+")){

// We know that it will be a number, but what if it is "000"?

// what should I change to make sure

// "At Least 1 character in the String is from 1-9"

}

```

Note: the string must contain only `0`-`9` and it must not contain all `0`s; in other words it must have at least 1 character in `[1-9]`.

| You'd be better off using [`BigInteger`](http://docs.oracle.com/javase/7/docs/api/java/math/BigInteger.html) if you're trying to work with an arbitrarily large integer, however the following pattern should match a series of digits containing at least one non-zero character.

```

\d*[1-9]\d*

```

[Debuggex Demo](https://www.debuggex.com/r/rg9jF3AETqaCMAj8)

Debuggex's unit tests seem a little buggy, but you can play with the pattern there. It's simple enough that it should be reasonably cross-language compatible, but in Java you'd need to escape the backslashes.

```

Pattern positiveNumber = Pattern.compile("\\d*[1-9]\\d*");

```

---

Note the above (intentionally) matches strings we wouldn't normally consider "positive natural numbers", as a valid string can start with one or more `0`s, e.g. `000123`. If you don't want to match such strings, you can simplify the pattern further.

```

[1-9]\d*

```

[Debuggex Demo](https://www.debuggex.com/r/ZEmlyzwjNlq1mnX-)

```

Pattern exactPositiveNumber = Pattern.compile("[1-9]\\d*");

```
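
Putting the two suggestions together, a check could validate the string with the first pattern and only then hand it to `BigInteger`. This is a sketch rather than a definitive implementation; the class `PositiveNumberCheck` and its method names are made up for illustration.

```java
import java.math.BigInteger;
import java.util.regex.Pattern;

final class PositiveNumberCheck {
    // Digits only, with at least one digit in 1-9 somewhere in the string.
    private static final Pattern POSITIVE_NUMBER = Pattern.compile("\\d*[1-9]\\d*");

    // matches() requires the whole string to fit the pattern, like String.matches.
    static boolean isPositiveNumber(String value) {
        return POSITIVE_NUMBER.matcher(value).matches();
    }

    // Parses the value without the range limit of Integer.parseInt.
    static BigInteger parsePositive(String value) {
        if (!isPositiveNumber(value)) {
            throw new NumberFormatException("not a positive number: " + value);
        }
        return new BigInteger(value);
    }

    public static void main(String[] args) {
        for (String candidate : new String[] {"000", "000123", "12a", "99999999999999999999"}) {
            System.out.println(candidate + " -> " + isPositiveNumber(candidate));
        }
    }
}
```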

|

What does idl attribute mean in the WHATWG html5 standard document?

While reading over the WHATWG's [HTML5 - A technical specification for Web developers](http://developers.whatwg.org) I see many references such as:

>

> # Reflecting content attributes in IDL attributes

>

>

> Some IDL attributes are defined to reflect a particular content

> attribute. This means that on getting, the IDL attribute returns the

> current value of the content attribute, and on setting, the IDL

> attribute changes the value of the content attribute to the given

> value.

>

>

>

and:

>

> In conforming documents, there is only one body element. The

> document.body IDL attribute provides scripts with easy access to a

> document's body element.

>

>

> The body element exposes as event handler content attributes a number

> of the event handlers of the Window object. It also mirrors their

> event handler IDL attributes.

>

>

>

My (admittedly fuzzy) understanding comes from the Windows world. I think an .idl file is used to map remote procedure calls in an n-tier distributed app. I would assume a content attribute refers to html element attributes.

There is no place in the standard *that I can see* that explains this usage of the terms "content attribute" and "IDL attribute". Could anyone explain what these terms mean and how the two kinds of attributes relate?

| The IDL ([Interface Definition Language](https://en.wikipedia.org/wiki/Interface_description_language)) comes from the [Web IDL](http://dev.w3.org/2006/webapi/WebIDL/) spec:

>

> This document defines an interface definition language, Web IDL, that

> can be used to describe interfaces that are intended to be implemented

> in web browsers. Web IDL is an IDL variant with a number of features

> that allow the behavior of common script objects in the web platform

> to be specified more readily. How interfaces described with Web IDL

> correspond to constructs within ECMAScript execution environments is

> also detailed in this document.

>

>

>

Content attributes are the ones that appear in the markup:

```

<div id="mydiv" class="example"></div>

```

In the above code `id` and `class` are content attributes. Usually a content attribute will have a corresponding IDL attribute.

For example, the following JavaScript:

```

document.getElementById('mydiv').className = 'example'

```

is equivalent to setting the `class` content attribute.

In JavaScript texts, the IDL attributes are often referred to as properties because they are exposed as properties of DOM objects to JavaScript.

While there's usually a corresponding pair of a content attribute and an IDL attribute/property, they are not necessarily interchangeable. For example, for an `<option>` element:

- the content attribute `selected` indicates the *initial* state of the option (and does not change when the user changes the option),

- the property `selected` reflects the *current* state of the control

|

What is the difference between "workflow engine" and "business process management engine"?

I have been hearing about these two concepts for a long time.

For example, "Windows Workflow Foundation" is described as a "workflow engine", while Activiti, jBPM and other projects are described as "business process management engines".

Are these two nouns ("workflow engine" and "business process management engine") the same thing?

| In Wikipedia, a ["Workflow Engine"](https://en.wikipedia.org/wiki/Workflow_engine) is defined as:

>

> A software application that manages and executes modeled computer processes.

>

>

>

and from the same source, ["Business Process Management"](https://en.wikipedia.org/wiki/Business_process_management) is defined as:

>

> A "holistic management" approach to aligning an organization's business processes

> with the wants and needs of clients

>

>

>

Hence these nouns should ***not*** be used interchangeably; a "Workflow engine" is a generic engine used for modelling *any* workflow, whereas a "Business Process Management Engine" is a specific implementation of a "Workflow Engine" which models business process management.

So you could make the analogy, "Workflow Engine" is to a block of wood as "Business Process Management Engine" is to a carved wooden walking stick.

|

Why does map return an additional element when using ranges in Haskell?

I've just started learning Haskell and found a strange thing.

Suppose we have a list:

```

ghci> [0,2..5]

[0,2,4]

```

It has 3 elements. When I use `map` with this list I get 3 elements as output, for example:

```

ghci> map (+ 1) [0,2..5]

[1,3,5]

ghci> map (* 2) [0,2..5]

[0,4,8]

ghci> map (`div` 2) [0,2..5]

[0,1,2]

```

But when I use fractional division I get 4 elements in output list:

```

ghci> map (/ 2) [0,2..5]

[0.0,1.0,2.0,3.0]

ghci> length (map (/ 2) [0,2..5])

4

```

Could you please explain why `map` may return more elements than the input list has?

Thank you!

| It's due to the implementation of `Enum` for `Float` and `Double`:

```

> [0,2..5] :: [Float]

[0.0,2.0,4.0,6.0]

```

It's not `map` doing it, but `Float`. Specifically, if you call `enumFromThenTo 0 2 5 :: [Float]`, you'll get the same list. You'll see the same results for `Double`.

This is hinted at in [the haskell report](https://www.haskell.org/onlinereport/haskell2010/haskellch9.html#verbatim-224), but the behavior is definitely non-obvious. Essentially, it comes down to the implementation of `numericEnumFromThenTo` (we're getting into some Haskell internals here), which is used by the `Enum Float` instance:

```

numericEnumFromThenTo n n' m = takeWhile p (numericEnumFromThen n n')

where

p | n' >= n = (<= m + (n' - n) / 2)

| otherwise = (>= m + (n' - n) / 2)

numericEnumFromThen n m = iterate (+ (m - n)) n

```

So you have `numericEnumFromThen 0.0 2.0` generating the list `[0.0,2.0,4.0,6.0,8.0,...]`, then you do `takeWhile p` on that, which in this case is equivalent to the function `\x -> x <= 5.0 + (2.0 - 0.0) / 2`, or more simply `\x -> x <= 6.0`, which is why `6.0` is included in the output list of `[0.0,2.0..5.0]`.

I can't explain *why* it's implemented this way, that's pretty baffling to me too, but hopefully I've answered the *how* for its implementation.
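
For readers more at home in an imperative language, the rule above can be traced step by step. The following is a hedged Java port of the quoted `numericEnumFromThenTo` definition, not the Haskell implementation itself: `FractionalRange` and `enumFromThenTo` are hypothetical names, and only the increasing branch (`n' >= n` with a positive step) is handled.

```java
import java.util.ArrayList;
import java.util.List;

final class FractionalRange {
    // Keeps values while x <= limit + step / 2, mirroring the predicate p
    // from the quoted definition for an increasing range.
    static List<Double> enumFromThenTo(double first, double next, double limit) {
        double step = next - first;
        if (step <= 0) {
            throw new IllegalArgumentException("this sketch only handles increasing ranges");
        }
        double bound = limit + step / 2;
        List<Double> result = new ArrayList<>();
        // Repeatedly add the step, like iterate (+ (next - first)) first.
        for (double x = first; x <= bound; x += step) {
            result.add(x);
        }
        return result;
    }

    public static void main(String[] args) {
        // Corresponds to [0,2..5] at a fractional type.
        System.out.println(enumFromThenTo(0, 2, 5));
    }
}
```

With the arguments from the question the bound works out to `6.0`, so `6.0` is kept and `8.0` is the first value rejected.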

|

How to check the uniqueness inside a for-loop?

Is there a way to check slices/maps for the presence of a value?

I would like to add a value to a slice ***only*** if it does ***not*** exist in the slice.

This works, but it seems verbose. Is there a better way to do this?

```

orgSlice := []int{1, 2, 3}

newSlice := []int{}

newInt := 2

newSlice = append(newSlice, newInt)

for _, v := range orgSlice {

if v != newInt {

newSlice = append(newSlice, v)

}

}

newSlice == [2 1 3]

```

| Your approach would take linear time for each insertion. A better way would be to use a `map[int]struct{}`. Alternatively, you could also use a `map[int]bool` or something similar, but the empty `struct{}` has the advantage that it doesn't occupy any additional space. Therefore `map[int]struct{}` is a popular choice for a set of integers.

**Example:**

```

set := make(map[int]struct{})

set[1] = struct{}{}

set[2] = struct{}{}

set[1] = struct{}{}

// ...

for key := range(set) {

fmt.Println(key)

}

// each value will be printed only once, in no particular order

// you can use the ,ok idiom to check for existing keys

if _, ok := set[1]; ok {

fmt.Println("element found")

} else {

fmt.Println("element not found")

}

```

|

Does using a 'foreign' domain as email sender reduce email reputation?

We want to send emails through our webapp.

Users of the app provide their email addresses.

In some cases, we want to send transactional email from the webapp, using the current user as a sender.

Does using the user's name and email address in the email `from` header affect email deliverability reputation?

Are there any other (bad) consequences we should be aware of?

**adding details about the use case:**

- Say PersonA uses our app on myapp.com

- PersonA verified his email address personA@example.com with a confirmation email we sent him (he clicked a unique URL in the email he got).

- Using the app, PersonA can invite other people to do something (attend an event for example)

- If PersonA invites PersonB we want to send an email, to let PersonB know that he has been invited. To do so, we would like to send an email from personA@example.com to PersonB.

- Having a sender header with myapp.com is totally fine. But PersonB should see "PersonA ".

We are not going to send hundreds of emails like that. But we would like to create some trust when PersonB sees, that his good old friend PersonA invited him, not a stupid "notification@myapp.com" he never heard about.

| You would be opening a whole can of worms if you do not authenticate the email address first.

Without authentication, users could send emails with any from address. To authenticate, send an email to the address each user wants to use and have them either provide (unique) information from that email or click on a unique link in it.

After an email has been authenticated, you know that they have (or at least had) access to that email account. It is now safer to send emails as that user.

However, this will still cause issues under specific circumstances. If the user's domain has SPF enabled (SPF checks that only certain IPs send emails for that domain), it is likely that emails will be tagged as spam (at least for users whose domains use SPF).

This may increase the overall spam "rating" of your server with specific servers under specific circumstances. It is possible to alleviate this in various ways but that is a fair bit of work.

Unless there is a really good reason to have the emails show up as from a user, it would be better to not do that.

There is an option to use the "Sender: " header which may resolve this issue for you. <https://stackoverflow.com/questions/4367358/whats-the-difference-between-sender-from-and-return-path> provides a good example.

I, however, have no experience with this or its impact on messages or servers being tagged as spam.

|

Guice don't inject to Jersey's resources

I've searched all over the internet, but can't figure out why this happens. I've got the simplest possible project (based on the jersey-quickstart-grizzly2 archetype) with one Jersey resource. I'm using Guice for DI because CDI doesn't want to work with Jersey either. The problem is that Guice can't resolve the class to use when injecting into Jersey's resources. It works great outside, but not with Jersey.

Here is the Jersey resource:

```

import com.google.inject.Inject;

import javax.ws.rs.GET;

import javax.ws.rs.Path;

import javax.ws.rs.Produces;

import javax.ws.rs.core.MediaType;

@Path("api")

public class MyResource {

private Transport transport;

@Inject

public void setTransport(Transport transport) {

this.transport = transport;

}

@GET

@Produces(MediaType.TEXT_PLAIN)

public String getIt() {

return transport.encode("Got it!");

}

}

```

Transport interface:

```

public interface Transport {

String encode(String input);

}

```

And its implementation:

```

public class TransportImpl implements Transport {

@Override

public String encode(String input) {

return "before:".concat(input).concat(":after");

}

}

```

Following Google's GettingStarted manual, I've inherited `AbstractModule` and bound my classes like this:

```

public class TransportModule extends AbstractModule {

@Override

protected void configure() {

bind(Transport.class).to(TransportImpl.class);

}

}

```

I get injector in `main()` with this, but don't really need it here:

```

Injector injector = Guice.createInjector(new TransportModule());

```

By the way, there's no problem when I try to do something like this:

```

Transport transport = injector.getInstance(Transport.class);

```

| Jersey 2 already has a DI framework, [HK2](https://hk2.java.net/2.4.0-b07/). You can either use it, or if you want, you can use the HK2/Guice bridge to bridge your Guice module with HK2.

If you want to work with HK2, at the most basic level, it's not much different from the Guice module. For example, in your current code, you could do this

```

public class Binder extends AbstractBinder {

@Override

protected void configure() {

bind(TransportImpl.class).to(Transport.class);

}

}

```

Then just register the binder with Jersey

```

new ResourceConfig().register(new Binder());

```

One difference is in the binding declarations. With Guice, it's "bind contract to implementation", while with HK2, it's "bind implementation to contract". You can see it's reversed from the Guice module.

If you want to bridge Guice and HK2, it's a little more complicated. You need to understand a little more about HK2. Here's an example of how you can get it to work

```

@Priority(1)

public class GuiceFeature implements Feature {

@Override

public boolean configure(FeatureContext context) {

ServiceLocator locator = ServiceLocatorProvider.getServiceLocator(context);

GuiceBridge.getGuiceBridge().initializeGuiceBridge(locator);

Injector injector = Guice.createInjector(new TransportModule());

GuiceIntoHK2Bridge guiceBridge = locator.getService(GuiceIntoHK2Bridge.class);

guiceBridge.bridgeGuiceInjector(injector);

return true;

}

}

```

Then register the feature

```

new ResourceConfig().register(new GuiceFeature());

```

Personally, I would recommend getting familiar with HK2, if you're going to use Jersey.

**See Also:**

- [HK2 Documentation](https://hk2.java.net/2.4.0-b07/)

- [Custom Injection and Lifecycle Management](https://jersey.java.net/documentation/latest/ioc.html)

---

### UPDATE

Sorry, I forgot to add that to use the Guice Bridge, you need to add a dependency.

```

<dependency>

<groupId>org.glassfish.hk2</groupId>

<artifactId>guice-bridge</artifactId>

<version>2.4.0-b31</version>

</dependency>

```

Note that this is the dependency that goes with Jersey 2.22.1. If you are using a different version of HK2, you should make sure to use the same HK2 version that your Jersey version is using.

|

Text based game in Java

To help with learning code in my class, I've been working on this text based game to keep myself coding (almost) every day. I have a class called `BasicUnit`, and in it I have methods to create a custom class. I use 2 methods for this, allowing the user to enter the information for the class. I'm just wondering if I can do this in a more simplified manner?

```

public void buildCustomClass(int maxHP, int maxMP, int maxSP, int baseMeleeDmg, int baseSpellDmg, int baseAC, int baseSpeed) {

this.maxHP = maxHP;

this.maxMP = maxMP;

this.maxSP = maxSP;

this.baseMeleeDmg = baseMeleeDmg;

this.baseSpellDmg = baseSpellDmg;

this.baseAC = baseAC;

this.baseSpeed = baseSpeed;

lvl = 1;

xp = 0;

curHP = maxHP;

curMP = maxMP;

curSP = maxSP;

}

public void createCustomClass() {

kb = new Scanner(System.in);

System.out.println("Enter the information for your class: ");

System.out.println("Enter HP: ");

maxHP = kb.nextInt();

System.out.println("Enter MP: ");

maxMP = kb.nextInt();

System.out.println("Enter SP: ");

maxSP = kb.nextInt();

System.out.println("Enter Base Melee Damage: ");

baseMeleeDmg = kb.nextInt();

System.out.println("Enter Base Spell Damage: ");

baseSpellDmg = kb.nextInt();

System.out.println("Enter AC: ");

baseAC = kb.nextInt();

System.out.println("Enter Speed: ");

baseSpeed = kb.nextInt();

buildCustomClass(maxHP, maxMP, maxSP, baseMeleeDmg, baseSpellDmg, baseAC, baseSpeed);

}

```

| Welcome to Code Review and thanks for sharing your code!

# General issues

## Naming

Finding good names is the hardest part of programming. So always take your time to think carefully about your identifier names.

### Naming Conventions

It looks like you already know the [Java Naming Conventions](http://www.oracle.com/technetwork/java/codeconventions-135099.html).

### Avoid abbreviations

In your code you use some abbreviations such as `maxSP` and `baseMeleeDmg`.

Although these abbreviations make sense to you (now), anyone reading your code who is not familiar with the problem (like me) has a hard time finding out what they mean.

If you do this to save typing: remember that you read your code far more often than you type it. Also, for Java you have good IDE support with code completion, so you will most likely type a long identifier only once and later select it from the IDE's code completion proposals.

---

>

> The other identifiers make perfect sense to me. [because of context] – AJD

>

>

>

Context looks like your friend but in fact it is your enemy.

There are two reasons:

1. Context depends on *knowledge* and *experience*. But different people have different knowledge and experience, so for one it might be easy to remember the context and for another it might be hard. Your own knowledge and experience also change over time, so you might find it hard to remember the context of a code snippet you wrote when you come back to it in 3 years or even 3 months.

The point is that in any case you need (more or less) *time* to bring the context back into your brain.

But the one thing you usually don't have when you program for a living is time. So anything that makes you faster at understanding the code is worth money. Not needing to remember any context is such a time saver.

2. You may argue that we have a very simple problem with an easy and common context. That's true.

But:

- Real-life projects usually have higher complexity and contexts that are less easy to remember. The point here is:

At which point is your context so complex that you switch from "acronym naming" to "verbose naming"?

Again, this point changes with your knowledge and your experience, which may lead to code that others have a hard time understanding.

The much better way to deal with it is to *always* write your code in a way that the dumbest person you know is able to understand it. This includes not using acronyms in your identifiers that *might* need context to be understood.

- This is a *training* project. When you train a physical skill like high jumping, you start with a very low bar that you could easily clear even without the *flop* technique, just to have a safe environment.

The same applies here: the problem may be simple enough to be understood with the abbreviated identifiers, but for the sake of training you should avoid acronyms.

### Avoid misleading naming

Both of your method names are misleading: They claim to *create* and *build* something but in reality neither one is creating or building anything.

One method is doing *user interaction* and the other is *configuring* the object.

The names of the methods should reflect that.

### Add units to identifiers for physical values

Physical values mean nothing without a *unit*. This is a special case of the *context problem* mentioned above. The most famous example is the loss of the *Mars Climate Orbiter*. NASA's navigation software worked with *metric* units (`newton seconds`), while the ground software supplied by *Lockheed Martin* reported thruster impulse in *imperial* units (`pound-force seconds`).

The point is: not having the *units* of physical values in your identifiers forces you to *think* about whether there is a problem or not:

```

double acceleration = flightManagement.calculateAcceleration();

engine.accelerate(acceleration);

```

But usually you don't question it unless you have a reason...

With the units in the identifier names, the problem becomes obvious:

```

double meterPerSquareSeconds =

flightManagement.calculateAccelerationInMeterPerSquareSecond();

engine.accelerateByFeetPerSquareMinute(

meterPerSquareSeconds); // oops

```

And we have the same argument again: your particular code is so easy and so small that we don't need the overhead.

But then: How do you decide at which point the overhead is needed? And again it depends on knowledge and experience which still are different among people...
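When two parts of a program really do use different units, the conversion between them can carry the units in its name too. The following is only a minimal sketch: the class `UnitConversion` and its method are hypothetical and not part of the reviewed code.

```java
// Hypothetical helper: every identifier states the unit of the value it holds.
class UnitConversion {

    static final double METERS_PER_FOOT = 0.3048;
    static final double SECONDS_PER_MINUTE = 60.0;

    // Converts an acceleration from m/s^2 to ft/min^2.
    static double metersPerSquareSecondToFeetPerSquareMinute(double metersPerSquareSecond) {
        double feetPerSquareSecond = metersPerSquareSecond / METERS_PER_FOOT;
        // Time appears squared in the denominator, so the factor is applied twice.
        return feetPerSquareSecond * SECONDS_PER_MINUTE * SECONDS_PER_MINUTE;
    }
}
```

A caller then reads `engine.accelerateByFeetPerSquareMinute(UnitConversion.metersPerSquareSecondToFeetPerSquareMinute(metersPerSquareSecond))`, and a missing conversion stands out when reading the line.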

# Flawed implementation

Both methods use the *same* member variables.

Take your variable `maxSP`: in `createCustomClass()` you assign it the result of `kb.nextInt()`. Then you pass this value as a parameter to `buildCustomClass()`, where you assign the parameter value to the *same* member variable again.

Besides being useless, this may lead to confusing bugs later.
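One way to remove the double assignment is to read the input into local variables, so that only `buildCustomClass()` writes the member variables. This is a sketch under assumptions, not your actual class: the class name `CustomClassInput`, the `Scanner` parameter, the local variable names and the getters are illustrative additions, and only two of the seven values are shown.

```java
import java.util.Scanner;

// Illustrative sketch: class name, Scanner parameter and getters are assumptions.
class CustomClassInput {

    private int maxHP;
    private int maxSP;

    void createCustomClass(Scanner kb) {
        System.out.println("Enter HP: ");
        int enteredHP = kb.nextInt(); // local variable, the field is not touched here

        System.out.println("Enter SP: ");
        int enteredSP = kb.nextInt();

        buildCustomClass(enteredHP, enteredSP);
    }

    // The only place where the member variables are assigned.
    void buildCustomClass(int maxHP, int maxSP) {
        this.maxHP = maxHP;
        this.maxSP = maxSP;
    }

    int getMaxHP() {
        return maxHP;
    }

    int getMaxSP() {
        return maxSP;
    }
}
```

Passing the `Scanner` in also makes the method easy to try out with canned input, e.g. `new Scanner("30 12")`.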

# Keep the same level of abstraction

Methods should either do "primitive" operations or call other methods, not both at the same time.

At the end of your method `createCustomClass()` you call the other method (`buildCustomClass()`). The better way to do this is to extract the code before the call to `buildCustomClass()` into a separate (private) method:

```

public void createCustomClass() {

acquireDataFromUser();

buildCustomClass(

maxHP,

maxMP,

maxSP,

baseMeleeDmg,

baseSpellDmg,

baseAC,

baseSpeed);

}

private void acquireDataFromUser() {

kb = new Scanner(System.in);

System.out.println("Enter the information for your class: ");

System.out.println("Enter HP: ");

maxHP = kb.nextInt();

System.out.println("Enter MP: ");

maxMP = kb.nextInt();

System.out.println("Enter SP: ");

maxSP = kb.nextInt();

System.out.println("Enter Base Melee Damage: ");

baseMeleeDmg = kb.nextInt();

System.out.println("Enter Base Spell Damage: ");

baseSpellDmg = kb.nextInt();

System.out.println("Enter AC: ");

baseAC = kb.nextInt();

System.out.println("Enter Speed: ");

baseSpeed = kb.nextInt();

}

```

Besides making `createCustomClass()` shorter, it makes the useless reassignment of the member variables obvious.

|

Disable Python requests SSL validation for an imported module

I'm running a Python script that uses the `requests` package for making web requests. However, the web requests go through a proxy with a self-signed cert. As such, requests raise the following Exception:

`requests.exceptions.SSLError: ("bad handshake: Error([('SSL routines', 'SSL3_GET_SERVER_CERTIFICATE', 'certificate verify failed')],)",)`

I know that SSL validation can be disabled in my own code by passing `verify=False`, e.g.: `requests.get("https://www.google.com", verify=False)`. I also know that if I had the certificate bundle, I could set the `REQUESTS_CA_BUNDLE` or `CURL_CA_BUNDLE` environment variables to point to those files. However, I do not have the certificate bundle available.

How can I disable SSL validation for external modules without editing their code?

| **Note**: This solution is a complete hack.

**Short answer**: Set the `CURL_CA_BUNDLE` environment variable to an empty string.

Before:

```

$ python

import requests

requests.get('http://www.google.com')

<Response [200]>

requests.get('https://www.google.com')

...

File "/usr/local/lib/python2.7/site-packages/requests-2.17.3-py2.7.egg/requests/adapters.py", line 514, in send

raise SSLError(e, request=request)

requests.exceptions.SSLError: ("bad handshake: Error([('SSL routines', 'SSL3_GET_SERVER_CERTIFICATE', 'certificate verify failed')],)",)

```

After:

```

$ CURL_CA_BUNDLE="" python

import requests

requests.get('http://www.google.com')

<Response [200]>

requests.get('https://www.google.com')

/usr/local/lib/python2.7/site-packages/urllib3-1.21.1-py2.7.egg/urllib3/connectionpool.py:852: InsecureRequestWarning: Unverified HTTPS request is being made. Adding certificate verification is strongly advised. See: https://urllib3.readthedocs.io/en/latest/advanced-usage.html#ssl-warnings InsecureRequestWarning)

<Response [200]>

```

**How it works**

This solution works because Python `requests` overwrites the default value for `verify` from the environment variables `CURL_CA_BUNDLE` and `REQUESTS_CA_BUNDLE`, as can be seen [here](https://github.com/psf/requests/blob/8c211a96cdbe9fe320d63d9e1ae15c5c07e179f8/requests/sessions.py#L718):

```

if verify is True or verify is None:

verify = (os.environ.get('REQUESTS_CA_BUNDLE') or

os.environ.get('CURL_CA_BUNDLE'))

```

The environment variables are meant to specify the path to the certificate file or CA\_BUNDLE and are copied into `verify`. However, by setting `CURL_CA_BUNDLE` to an empty string, the empty string is copied into `verify` and in Python, an empty string evaluates to `False`.

Note that this hack only works with the `CURL_CA_BUNDLE` environment variable - it does not work with the `REQUESTS_CA_BUNDLE`. This is because `verify` [is set with the following statement](https://github.com/psf/requests/blob/8c211a96cdbe9fe320d63d9e1ae15c5c07e179f8/requests/sessions.py#L718):

`verify = (os.environ.get('REQUESTS_CA_BUNDLE') or os.environ.get('CURL_CA_BUNDLE'))`

It only works with `CURL_CA_BUNDLE` because `'' or None` is not the same as `None or ''`, as can be seen below:

```

print(repr(None or ""))

# Prints: ''

print(repr("" or None))

# Prints: None

```

|