| datasetId | card |
|---|---|

code_x_glue_ct_code_to_text | ---

annotations_creators:

- found

language_creators:

- found

language:

- code

- en

license:

- c-uda

multilinguality:

- other-programming-languages

size_categories:

- 100K<n<1M

- 10K<n<100K

source_datasets:

- original

task_categories:

- translation

task_ids: []

pretty_name: CodeXGlueCtCodeToText

config_names:

- go

- java

- javascript

- php

- python

- ruby

tags:

- code-to-text

dataset_info:

- config_name: go

features:

- name: id

dtype: int32

- name: repo

dtype: string

- name: path

dtype: string

- name: func_name

dtype: string

- name: original_string

dtype: string

- name: language

dtype: string

- name: code

dtype: string

- name: code_tokens

sequence: string

- name: docstring

dtype: string

- name: docstring_tokens

sequence: string

- name: sha

dtype: string

- name: url

dtype: string

splits:

- name: train

num_bytes: 342243143

num_examples: 167288

- name: validation

num_bytes: 13721860

num_examples: 7325

- name: test

num_bytes: 16328406

num_examples: 8122

download_size: 121341698

dataset_size: 372293409

- config_name: java

features:

- name: id

dtype: int32

- name: repo

dtype: string

- name: path

dtype: string

- name: func_name

dtype: string

- name: original_string

dtype: string

- name: language

dtype: string

- name: code

dtype: string

- name: code_tokens

sequence: string

- name: docstring

dtype: string

- name: docstring_tokens

sequence: string

- name: sha

dtype: string

- name: url

dtype: string

splits:

- name: train

num_bytes: 452553835

num_examples: 164923

- name: validation

num_bytes: 13366344

num_examples: 5183

- name: test

num_bytes: 29080753

num_examples: 10955

download_size: 154701399

dataset_size: 495000932

- config_name: javascript

features:

- name: id

dtype: int32

- name: repo

dtype: string

- name: path

dtype: string

- name: func_name

dtype: string

- name: original_string

dtype: string

- name: language

dtype: string

- name: code

dtype: string

- name: code_tokens

sequence: string

- name: docstring

dtype: string

- name: docstring_tokens

sequence: string

- name: sha

dtype: string

- name: url

dtype: string

splits:

- name: train

num_bytes: 160860431

num_examples: 58025

- name: validation

num_bytes: 10337344

num_examples: 3885

- name: test

num_bytes: 10190713

num_examples: 3291

download_size: 65788314

dataset_size: 181388488

- config_name: php

features:

- name: id

dtype: int32

- name: repo

dtype: string

- name: path

dtype: string

- name: func_name

dtype: string

- name: original_string

dtype: string

- name: language

dtype: string

- name: code

dtype: string

- name: code_tokens

sequence: string

- name: docstring

dtype: string

- name: docstring_tokens

sequence: string

- name: sha

dtype: string

- name: url

dtype: string

splits:

- name: train

num_bytes: 614654499

num_examples: 241241

- name: validation

num_bytes: 33283045

num_examples: 12982

- name: test

num_bytes: 35374993

num_examples: 14014

download_size: 219692158

dataset_size: 683312537

- config_name: python

features:

- name: id

dtype: int32

- name: repo

dtype: string

- name: path

dtype: string

- name: func_name

dtype: string

- name: original_string

dtype: string

- name: language

dtype: string

- name: code

dtype: string

- name: code_tokens

sequence: string

- name: docstring

dtype: string

- name: docstring_tokens

sequence: string

- name: sha

dtype: string

- name: url

dtype: string

splits:

- name: train

num_bytes: 813663148

num_examples: 251820

- name: validation

num_bytes: 46888564

num_examples: 13914

- name: test

num_bytes: 50659688

num_examples: 14918

download_size: 325551862

dataset_size: 911211400

- config_name: ruby

features:

- name: id

dtype: int32

- name: repo

dtype: string

- name: path

dtype: string

- name: func_name

dtype: string

- name: original_string

dtype: string

- name: language

dtype: string

- name: code

dtype: string

- name: code_tokens

sequence: string

- name: docstring

dtype: string

- name: docstring_tokens

sequence: string

- name: sha

dtype: string

- name: url

dtype: string

splits:

- name: train

num_bytes: 51956439

num_examples: 24927

- name: validation

num_bytes: 2821037

num_examples: 1400

- name: test

num_bytes: 2671551

num_examples: 1261

download_size: 21921316

dataset_size: 57449027

configs:

- config_name: go

data_files:

- split: train

path: go/train-*

- split: validation

path: go/validation-*

- split: test

path: go/test-*

- config_name: java

data_files:

- split: train

path: java/train-*

- split: validation

path: java/validation-*

- split: test

path: java/test-*

- config_name: javascript

data_files:

- split: train

path: javascript/train-*

- split: validation

path: javascript/validation-*

- split: test

path: javascript/test-*

- config_name: php

data_files:

- split: train

path: php/train-*

- split: validation

path: php/validation-*

- split: test

path: php/test-*

- config_name: python

data_files:

- split: train

path: python/train-*

- split: validation

path: python/validation-*

- split: test

path: python/test-*

- config_name: ruby

data_files:

- split: train

path: ruby/train-*

- split: validation

path: ruby/validation-*

- split: test

path: ruby/test-*

---

# Dataset Card for "code_x_glue_ct_code_to_text"

## Table of Contents

- [Dataset Description](#dataset-description)

- [Dataset Summary](#dataset-summary)

- [Supported Tasks and Leaderboards](#supported-tasks-and-leaderboards)

- [Languages](#languages)

- [Dataset Structure](#dataset-structure)

- [Data Instances](#data-instances)

- [Data Fields](#data-fields)

- [Data Splits](#data-splits)

- [Dataset Creation](#dataset-creation)

- [Curation Rationale](#curation-rationale)

- [Source Data](#source-data)

- [Annotations](#annotations)

- [Personal and Sensitive Information](#personal-and-sensitive-information)

- [Considerations for Using the Data](#considerations-for-using-the-data)

- [Social Impact of Dataset](#social-impact-of-dataset)

- [Discussion of Biases](#discussion-of-biases)

- [Other Known Limitations](#other-known-limitations)

- [Additional Information](#additional-information)

- [Dataset Curators](#dataset-curators)

- [Licensing Information](#licensing-information)

- [Citation Information](#citation-information)

- [Contributions](#contributions)

## Dataset Description

- **Homepage:** https://github.com/microsoft/CodeXGLUE/tree/main/Code-Text/code-to-text

### Dataset Summary

CodeXGLUE code-to-text dataset, available at https://github.com/microsoft/CodeXGLUE/tree/main/Code-Text/code-to-text

The dataset is derived from CodeSearchNet and filtered as follows:

- Remove examples whose code cannot be parsed into an abstract syntax tree.

- Remove examples whose documentation has fewer than 3 or more than 256 tokens.

- Remove examples whose documentation contains special tokens (e.g. `<img ...>` or `https:...`).

- Remove examples whose documentation is not in English.
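The four filters can be sketched as a single predicate. This is a minimal illustration under stated assumptions, not the CodeXGLUE preprocessing code:

- It handles Python input only. The standard-library `ast` module stands in for whatever per-language parser the authors used.
- A pure-ASCII check is a crude stand-in for real language identification.
- `SPECIAL_TOKEN_RE` is a hypothetical pattern covering only the two examples the card gives.
- All function names here are illustrative.

```python
import ast
import re
from typing import List

# Hypothetical stand-in for the card's "special tokens" (HTML tags such as <img ...>, URLs).
SPECIAL_TOKEN_RE = re.compile(r"<img\b|https?:")


def parses(code: str) -> bool:
    """True if `code` parses into an AST; Python's `ast` stands in for a per-language parser."""
    try:
        ast.parse(code)
    except (SyntaxError, ValueError):
        return False
    return True


def looks_english(text: str) -> bool:
    """Crude proxy for language identification: accept pure-ASCII text only."""
    return text.isascii()


def keep_example(code: str, docstring_tokens: List[str]) -> bool:
    """Apply the four filters listed above to one (code, docstring) pair."""
    doc = " ".join(docstring_tokens)
    return (
        parses(code)
        and 3 <= len(docstring_tokens) <= 256  # drop docs with < 3 or > 256 tokens
        and SPECIAL_TOKEN_RE.search(doc) is None
        and looks_english(doc)
    )
```

A real pipeline would swap in a parser for each of the six languages and a proper language-identification model. The structure of the predicate would stay the same.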

### Supported Tasks and Leaderboards

- `machine-translation`: The dataset can be used to train a model for automatically generating **English** docstrings for code.
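For sequence-to-sequence training, each record can be reduced to a (source, target) text pair. The sketch below assumes a simple format: code tokens in, docstring tokens out, joined with single spaces. The CodeXGLUE baseline may preprocess differently, and `to_seq2seq_pair` is a hypothetical helper.

```python
from typing import Any, Dict, Tuple


def to_seq2seq_pair(record: Dict[str, Any]) -> Tuple[str, str]:
    """Build a (source, target) text pair from one dataset record.

    Tokens are joined with single spaces. Joining and then re-splitting collapses
    newline tokens (present in the Go example) into ordinary spaces.
    """
    source = " ".join(" ".join(record["code_tokens"]).split())
    target = " ".join(" ".join(record["docstring_tokens"]).split())
    return source, target
```

Records can be obtained with `datasets.load_dataset` using one of the config names listed above (`go`, `java`, `javascript`, `php`, `python`, `ruby`).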

### Languages

- Go **programming** language

- Java **programming** language

- JavaScript **programming** language

- PHP **programming** language

- Python **programming** language

- Ruby **programming** language

- English **natural** language

## Dataset Structure

### Data Instances

#### go

An example of 'test' looks as follows.

```

{

"code": "func NewSTM(c *v3.Client, apply func(STM) error, so ...stmOption) (*v3.TxnResponse, error) {\n\topts := &stmOptions{ctx: c.Ctx()}\n\tfor _, f := range so {\n\t\tf(opts)\n\t}\n\tif len(opts.prefetch) != 0 {\n\t\tf := apply\n\t\tapply = func(s STM) error {\n\t\t\ts.Get(opts.prefetch...)\n\t\t\treturn f(s)\n\t\t}\n\t}\n\treturn runSTM(mkSTM(c, opts), apply)\n}",

"code_tokens": ["func", "NewSTM", "(", "c", "*", "v3", ".", "Client", ",", "apply", "func", "(", "STM", ")", "error", ",", "so", "...", "stmOption", ")", "(", "*", "v3", ".", "TxnResponse", ",", "error", ")", "{", "opts", ":=", "&", "stmOptions", "{", "ctx", ":", "c", ".", "Ctx", "(", ")", "}", "\n", "for", "_", ",", "f", ":=", "range", "so", "{", "f", "(", "opts", ")", "\n", "}", "\n", "if", "len", "(", "opts", ".", "prefetch", ")", "!=", "0", "{", "f", ":=", "apply", "\n", "apply", "=", "func", "(", "s", "STM", ")", "error", "{", "s", ".", "Get", "(", "opts", ".", "prefetch", "...", ")", "\n", "return", "f", "(", "s", ")", "\n", "}", "\n", "}", "\n", "return", "runSTM", "(", "mkSTM", "(", "c", ",", "opts", ")", ",", "apply", ")", "\n", "}"],

"docstring": "// NewSTM initiates a new STM instance, using serializable snapshot isolation by default.",

"docstring_tokens": ["NewSTM", "initiates", "a", "new", "STM", "instance", "using", "serializable", "snapshot", "isolation", "by", "default", "."],

"func_name": "NewSTM",

"id": 0,

"language": "go",

"original_string": "func NewSTM(c *v3.Client, apply func(STM) error, so ...stmOption) (*v3.TxnResponse, error) {\n\topts := &stmOptions{ctx: c.Ctx()}\n\tfor _, f := range so {\n\t\tf(opts)\n\t}\n\tif len(opts.prefetch) != 0 {\n\t\tf := apply\n\t\tapply = func(s STM) error {\n\t\t\ts.Get(opts.prefetch...)\n\t\t\treturn f(s)\n\t\t}\n\t}\n\treturn runSTM(mkSTM(c, opts), apply)\n}",

"path": "clientv3/concurrency/stm.go",

"repo": "etcd-io/etcd",

"sha": "616592d9ba993e3fe9798eef581316016df98906",

"url": "https://github.com/etcd-io/etcd/blob/616592d9ba993e3fe9798eef581316016df98906/clientv3/concurrency/stm.go#L89-L102"

}

```

#### java

An example of 'test' looks as follows.

```

{

"code": "protected final void fastPathOrderedEmit(U value, boolean delayError, Disposable disposable) {\n final Observer<? super V> observer = downstream;\n final SimplePlainQueue<U> q = queue;\n\n if (wip.get() == 0 && wip.compareAndSet(0, 1)) {\n if (q.isEmpty()) {\n accept(observer, value);\n if (leave(-1) == 0) {\n return;\n }\n } else {\n q.offer(value);\n }\n } else {\n q.offer(value);\n if (!enter()) {\n return;\n }\n }\n QueueDrainHelper.drainLoop(q, observer, delayError, disposable, this);\n }",

"code_tokens": ["protected", "final", "void", "fastPathOrderedEmit", "(", "U", "value", ",", "boolean", "delayError", ",", "Disposable", "disposable", ")", "{", "final", "Observer", "<", "?", "super", "V", ">", "observer", "=", "downstream", ";", "final", "SimplePlainQueue", "<", "U", ">", "q", "=", "queue", ";", "if", "(", "wip", ".", "get", "(", ")", "==", "0", "&&", "wip", ".", "compareAndSet", "(", "0", ",", "1", ")", ")", "{", "if", "(", "q", ".", "isEmpty", "(", ")", ")", "{", "accept", "(", "observer", ",", "value", ")", ";", "if", "(", "leave", "(", "-", "1", ")", "==", "0", ")", "{", "return", ";", "}", "}", "else", "{", "q", ".", "offer", "(", "value", ")", ";", "}", "}", "else", "{", "q", ".", "offer", "(", "value", ")", ";", "if", "(", "!", "enter", "(", ")", ")", "{", "return", ";", "}", "}", "QueueDrainHelper", ".", "drainLoop", "(", "q", ",", "observer", ",", "delayError", ",", "disposable", ",", "this", ")", ";", "}"],

"docstring": "Makes sure the fast-path emits in order.\n@param value the value to emit or queue up\n@param delayError if true, errors are delayed until the source has terminated\n@param disposable the resource to dispose if the drain terminates",

"docstring_tokens": ["Makes", "sure", "the", "fast", "-", "path", "emits", "in", "order", "."],

"func_name": "QueueDrainObserver.fastPathOrderedEmit",

"id": 0,

"language": "java",

"original_string": "protected final void fastPathOrderedEmit(U value, boolean delayError, Disposable disposable) {\n final Observer<? super V> observer = downstream;\n final SimplePlainQueue<U> q = queue;\n\n if (wip.get() == 0 && wip.compareAndSet(0, 1)) {\n if (q.isEmpty()) {\n accept(observer, value);\n if (leave(-1) == 0) {\n return;\n }\n } else {\n q.offer(value);\n }\n } else {\n q.offer(value);\n if (!enter()) {\n return;\n }\n }\n QueueDrainHelper.drainLoop(q, observer, delayError, disposable, this);\n }",

"path": "src/main/java/io/reactivex/internal/observers/QueueDrainObserver.java",

"repo": "ReactiveX/RxJava",

"sha": "ac84182aa2bd866b53e01c8e3fe99683b882c60e",

"url": "https://github.com/ReactiveX/RxJava/blob/ac84182aa2bd866b53e01c8e3fe99683b882c60e/src/main/java/io/reactivex/internal/observers/QueueDrainObserver.java#L88-L108"

}

```

#### javascript

An example of 'test' looks as follows.

```

{

"code": "function createInstance(defaultConfig) {\n var context = new Axios(defaultConfig);\n var instance = bind(Axios.prototype.request, context);\n\n // Copy axios.prototype to instance\n utils.extend(instance, Axios.prototype, context);\n\n // Copy context to instance\n utils.extend(instance, context);\n\n return instance;\n}",

"code_tokens": ["function", "createInstance", "(", "defaultConfig", ")", "{", "var", "context", "=", "new", "Axios", "(", "defaultConfig", ")", ";", "var", "instance", "=", "bind", "(", "Axios", ".", "prototype", ".", "request", ",", "context", ")", ";", "// Copy axios.prototype to instance", "utils", ".", "extend", "(", "instance", ",", "Axios", ".", "prototype", ",", "context", ")", ";", "// Copy context to instance", "utils", ".", "extend", "(", "instance", ",", "context", ")", ";", "return", "instance", ";", "}"],

"docstring": "Create an instance of Axios\n\n@param {Object} defaultConfig The default config for the instance\n@return {Axios} A new instance of Axios",

"docstring_tokens": ["Create", "an", "instance", "of", "Axios"],

"func_name": "createInstance",

"id": 0,

"language": "javascript",

"original_string": "function createInstance(defaultConfig) {\n var context = new Axios(defaultConfig);\n var instance = bind(Axios.prototype.request, context);\n\n // Copy axios.prototype to instance\n utils.extend(instance, Axios.prototype, context);\n\n // Copy context to instance\n utils.extend(instance, context);\n\n return instance;\n}",

"path": "lib/axios.js",

"repo": "axios/axios",

"sha": "92d231387fe2092f8736bc1746d4caa766b675f5",

"url": "https://github.com/axios/axios/blob/92d231387fe2092f8736bc1746d4caa766b675f5/lib/axios.js#L15-L26"

}

```

#### php

An example of 'train' looks as follows.

```

{

"code": "public static function build($serviceAddress, $restConfigPath, array $config = [])\n {\n $config += [\n 'httpHandler' => null,\n ];\n list($baseUri, $port) = self::normalizeServiceAddress($serviceAddress);\n $requestBuilder = new RequestBuilder(\"$baseUri:$port\", $restConfigPath);\n $httpHandler = $config['httpHandler'] ?: self::buildHttpHandlerAsync();\n return new RestTransport($requestBuilder, $httpHandler);\n }",

"code_tokens": ["public", "static", "function", "build", "(", "$", "serviceAddress", ",", "$", "restConfigPath", ",", "array", "$", "config", "=", "[", "]", ")", "{", "$", "config", "+=", "[", "'httpHandler'", "=>", "null", ",", "]", ";", "list", "(", "$", "baseUri", ",", "$", "port", ")", "=", "self", "::", "normalizeServiceAddress", "(", "$", "serviceAddress", ")", ";", "$", "requestBuilder", "=", "new", "RequestBuilder", "(", "\"$baseUri:$port\"", ",", "$", "restConfigPath", ")", ";", "$", "httpHandler", "=", "$", "config", "[", "'httpHandler'", "]", "?", ":", "self", "::", "buildHttpHandlerAsync", "(", ")", ";", "return", "new", "RestTransport", "(", "$", "requestBuilder", ",", "$", "httpHandler", ")", ";", "}"],

"docstring": "Builds a RestTransport.\n\n@param string $serviceAddress\nThe address of the API remote host, for example \"example.googleapis.com\".\n@param string $restConfigPath\nPath to rest config file.\n@param array $config {\nConfig options used to construct the gRPC transport.\n\n@type callable $httpHandler A handler used to deliver PSR-7 requests.\n}\n@return RestTransport\n@throws ValidationException",

"docstring_tokens": ["Builds", "a", "RestTransport", "."],

"func_name": "RestTransport.build",

"id": 0,

"language": "php",

"original_string": "public static function build($serviceAddress, $restConfigPath, array $config = [])\n {\n $config += [\n 'httpHandler' => null,\n ];\n list($baseUri, $port) = self::normalizeServiceAddress($serviceAddress);\n $requestBuilder = new RequestBuilder(\"$baseUri:$port\", $restConfigPath);\n $httpHandler = $config['httpHandler'] ?: self::buildHttpHandlerAsync();\n return new RestTransport($requestBuilder, $httpHandler);\n }",

"path": "src/Transport/RestTransport.php",

"repo": "googleapis/gax-php",

"sha": "48387fb818c6882296710a2302a0aa973b99afb2",

"url": "https://github.com/googleapis/gax-php/blob/48387fb818c6882296710a2302a0aa973b99afb2/src/Transport/RestTransport.php#L85-L94"

}

```

#### python

An example of 'validation' looks as follows.

```

{

"code": "def save_act(self, path=None):\n \"\"\"Save model to a pickle located at `path`\"\"\"\n if path is None:\n path = os.path.join(logger.get_dir(), \"model.pkl\")\n\n with tempfile.TemporaryDirectory() as td:\n save_variables(os.path.join(td, \"model\"))\n arc_name = os.path.join(td, \"packed.zip\")\n with zipfile.ZipFile(arc_name, 'w') as zipf:\n for root, dirs, files in os.walk(td):\n for fname in files:\n file_path = os.path.join(root, fname)\n if file_path != arc_name:\n zipf.write(file_path, os.path.relpath(file_path, td))\n with open(arc_name, \"rb\") as f:\n model_data = f.read()\n with open(path, \"wb\") as f:\n cloudpickle.dump((model_data, self._act_params), f)",

"code_tokens": ["def", "save_act", "(", "self", ",", "path", "=", "None", ")", ":", "if", "path", "is", "None", ":", "path", "=", "os", ".", "path", ".", "join", "(", "logger", ".", "get_dir", "(", ")", ",", "\"model.pkl\"", ")", "with", "tempfile", ".", "TemporaryDirectory", "(", ")", "as", "td", ":", "save_variables", "(", "os", ".", "path", ".", "join", "(", "td", ",", "\"model\"", ")", ")", "arc_name", "=", "os", ".", "path", ".", "join", "(", "td", ",", "\"packed.zip\"", ")", "with", "zipfile", ".", "ZipFile", "(", "arc_name", ",", "'w'", ")", "as", "zipf", ":", "for", "root", ",", "dirs", ",", "files", "in", "os", ".", "walk", "(", "td", ")", ":", "for", "fname", "in", "files", ":", "file_path", "=", "os", ".", "path", ".", "join", "(", "root", ",", "fname", ")", "if", "file_path", "!=", "arc_name", ":", "zipf", ".", "write", "(", "file_path", ",", "os", ".", "path", ".", "relpath", "(", "file_path", ",", "td", ")", ")", "with", "open", "(", "arc_name", ",", "\"rb\"", ")", "as", "f", ":", "model_data", "=", "f", ".", "read", "(", ")", "with", "open", "(", "path", ",", "\"wb\"", ")", "as", "f", ":", "cloudpickle", ".", "dump", "(", "(", "model_data", ",", "self", ".", "_act_params", ")", ",", "f", ")"],

"docstring": "Save model to a pickle located at `path`",

"docstring_tokens": ["Save", "model", "to", "a", "pickle", "located", "at", "path"],

"func_name": "ActWrapper.save_act",

"id": 0,

"language": "python",

"original_string": "def save_act(self, path=None):\n \"\"\"Save model to a pickle located at `path`\"\"\"\n if path is None:\n path = os.path.join(logger.get_dir(), \"model.pkl\")\n\n with tempfile.TemporaryDirectory() as td:\n save_variables(os.path.join(td, \"model\"))\n arc_name = os.path.join(td, \"packed.zip\")\n with zipfile.ZipFile(arc_name, 'w') as zipf:\n for root, dirs, files in os.walk(td):\n for fname in files:\n file_path = os.path.join(root, fname)\n if file_path != arc_name:\n zipf.write(file_path, os.path.relpath(file_path, td))\n with open(arc_name, \"rb\") as f:\n model_data = f.read()\n with open(path, \"wb\") as f:\n cloudpickle.dump((model_data, self._act_params), f)",

"path": "baselines/deepq/deepq.py",

"repo": "openai/baselines",

"sha": "3301089b48c42b87b396e246ea3f56fa4bfc9678",

"url": "https://github.com/openai/baselines/blob/3301089b48c42b87b396e246ea3f56fa4bfc9678/baselines/deepq/deepq.py#L55-L72"

}

```

#### ruby

An example of 'train' looks as follows.

```

{

"code": "def render_body(context, options)\n if options.key?(:partial)\n [render_partial(context, options)]\n else\n StreamingTemplateRenderer.new(@lookup_context).render(context, options)\n end\n end",

"code_tokens": ["def", "render_body", "(", "context", ",", "options", ")", "if", "options", ".", "key?", "(", ":partial", ")", "[", "render_partial", "(", "context", ",", "options", ")", "]", "else", "StreamingTemplateRenderer", ".", "new", "(", "@lookup_context", ")", ".", "render", "(", "context", ",", "options", ")", "end", "end"],

"docstring": "Render but returns a valid Rack body. If fibers are defined, we return\n a streaming body that renders the template piece by piece.\n\n Note that partials are not supported to be rendered with streaming,\n so in such cases, we just wrap them in an array.",

"docstring_tokens": ["Render", "but", "returns", "a", "valid", "Rack", "body", ".", "If", "fibers", "are", "defined", "we", "return", "a", "streaming", "body", "that", "renders", "the", "template", "piece", "by", "piece", "."],

"func_name": "ActionView.Renderer.render_body",

"id": 0,

"language": "ruby",

"original_string": "def render_body(context, options)\n if options.key?(:partial)\n [render_partial(context, options)]\n else\n StreamingTemplateRenderer.new(@lookup_context).render(context, options)\n end\n end",

"path": "actionview/lib/action_view/renderer/renderer.rb",

"repo": "rails/rails",

"sha": "85a8bc644be69908f05740a5886ec19cd3679df5",

"url": "https://github.com/rails/rails/blob/85a8bc644be69908f05740a5886ec19cd3679df5/actionview/lib/action_view/renderer/renderer.rb#L38-L44"

}

```

### Data Fields

The data fields are identical across all six configs and all splits.

#### go, java, javascript, php, python, ruby

| field name | type | description |
|---|---|---|
| id | int32 | Index of the sample |
| repo | string | Source repository, as `owner/repo` |
| path | string | Full path to the original file within the repository |
| func_name | string | Function or method name |
| original_string | string | Raw string before tokenization or parsing |
| language | string | Programming language name |
| code | string | Part of `original_string` that is code (also called `function`) |
| code_tokens | Sequence[string] | Tokenized version of `code` |
| docstring | string | Top-level comment or docstring, if one exists in the original string |
| docstring_tokens | Sequence[string] | Tokenized version of `docstring` |
| sha | string | Git commit SHA the file was taken from |
| url | string | GitHub URL of the function, including its line range |
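In every example record above, `url` encodes `repo`, `sha`, `path`, and a line range. The sketch below cross-checks those fields. The URL layout is inferred from those examples only, and the sketch assumes every `url` ends with a `#L<start>-L<end>` range. `parse_url` and `url_matches_fields` are hypothetical helpers.

```python
import re
from typing import Dict, Optional

# Assumed layout, inferred from the example records:
# https://github.com/<owner>/<repo>/blob/<40-hex sha>/<path>#L<start>-L<end>
URL_RE = re.compile(
    r"^https://github\.com/(?P<repo>[^/]+/[^/]+)/blob/(?P<sha>[0-9a-f]{40})/"
    r"(?P<path>[^#]+)#L(?P<start>\d+)-L(?P<end>\d+)$"
)


def parse_url(url: str) -> Optional[Dict[str, object]]:
    """Split a record URL into its components, or return None if it does not match."""
    m = URL_RE.match(url)
    if m is None:
        return None
    return {
        "repo": m["repo"],
        "sha": m["sha"],
        "path": m["path"],
        "start": int(m["start"]),
        "end": int(m["end"]),
    }


def url_matches_fields(record: Dict[str, str]) -> bool:
    """True if the URL agrees with the record's repo, sha and path fields."""
    parsed = parse_url(record["url"])
    return (
        parsed is not None
        and parsed["repo"] == record["repo"]
        and parsed["sha"] == record["sha"]
        and parsed["path"] == record["path"]
    )
```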

### Data Splits

| name | train | validation | test |
|----------|-------:|-----------:|------:|
| go | 167288 | 7325 | 8122 |
| java | 164923 | 5183 | 10955 |
| javascript | 58025 | 3885 | 3291 |
| php | 241241 | 12982 | 14014 |
| python | 251820 | 13914 | 14918 |
| ruby | 24927 | 1400 | 1261 |
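The split sizes in the table above can be totaled with a few lines of arithmetic. The numbers are copied from the table; the helper names are illustrative.

```python
from typing import Dict, Tuple

# (train, validation, test) sizes copied from the Data Splits table.
SPLITS: Dict[str, Tuple[int, int, int]] = {
    "go": (167288, 7325, 8122),
    "java": (164923, 5183, 10955),
    "javascript": (58025, 3885, 3291),
    "php": (241241, 12982, 14014),
    "python": (251820, 13914, 14918),
    "ruby": (24927, 1400, 1261),
}


def totals(splits: Dict[str, Tuple[int, int, int]]) -> Tuple[Dict[str, int], int]:
    """Return per-language totals and the grand total across all configs."""
    per_lang = {lang: sum(sizes) for lang, sizes in splits.items()}
    return per_lang, sum(per_lang.values())


def train_fraction(splits: Dict[str, Tuple[int, int, int]], lang: str) -> float:
    """Share of a language's examples that fall in the train split."""
    return splits[lang][0] / sum(splits[lang])
```

The six configs hold 1,005,474 examples in total, ranging from 27,588 (ruby) to 280,652 (python). Roughly 90% of each language's examples are in the train split.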

## Dataset Creation

### Curation Rationale

[More Information Needed]

### Source Data

#### Initial Data Collection and Normalization

The data comes from the CodeSearchNet Challenge dataset.

[More Information Needed]

#### Who are the source language producers?

Software developers.

### Annotations

#### Annotation process

[More Information Needed]

#### Who are the annotators?

[More Information Needed]

### Personal and Sensitive Information

[More Information Needed]

## Considerations for Using the Data

### Social Impact of Dataset

[More Information Needed]

### Discussion of Biases

[More Information Needed]

### Other Known Limitations

[More Information Needed]

## Additional Information

### Dataset Curators

https://github.com/microsoft, https://github.com/madlag

### Licensing Information

Computational Use of Data Agreement (C-UDA) License.

### Citation Information

```

@article{husain2019codesearchnet,

title={Codesearchnet challenge: Evaluating the state of semantic code search},

author={Husain, Hamel and Wu, Ho-Hsiang and Gazit, Tiferet and Allamanis, Miltiadis and Brockschmidt, Marc},

journal={arXiv preprint arXiv:1909.09436},

year={2019}

}

```

### Contributions

Thanks to @madlag (and partly also @ncoop57) for adding this dataset. |

flaviagiammarino/vqa-rad | ---

license: cc0-1.0

task_categories:

- visual-question-answering

language:

- en

paperswithcode_id: vqa-rad

tags:

- medical

pretty_name: VQA-RAD

size_categories:

- 1K<n<10K

dataset_info:

features:

- name: image

dtype: image

- name: question

dtype: string

- name: answer

dtype: string

splits:

- name: train

num_bytes: 95883938.139

num_examples: 1793

- name: test

num_bytes: 23818877.0

num_examples: 451

download_size: 34496718

dataset_size: 119702815.139

---

# Dataset Card for VQA-RAD

## Dataset Description

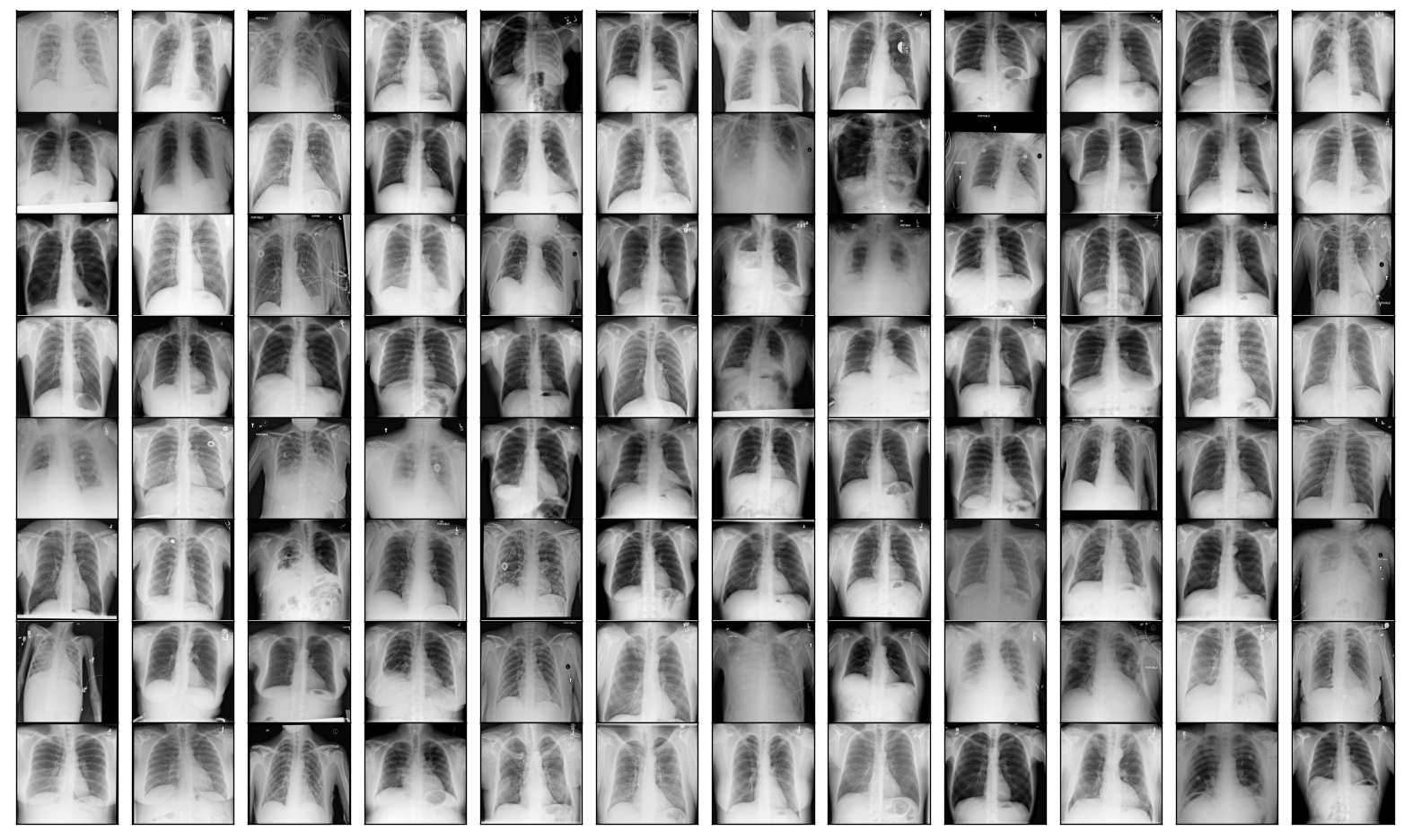

VQA-RAD is a dataset of question-answer pairs on radiology images. The dataset is intended to be used for training and testing Medical Visual Question Answering (VQA) systems. The dataset includes both open-ended questions and binary "yes/no" questions. The dataset is built from [MedPix](https://medpix.nlm.nih.gov/), which is a free open-access online database of medical images. The question-answer pairs were manually generated by a team of clinicians.

**Homepage:** [Open Science Framework Homepage](https://osf.io/89kps/)<br>

**Paper:** [A dataset of clinically generated visual questions and answers about radiology images](https://www.nature.com/articles/sdata2018251)<br>

**Leaderboard:** [Papers with Code Leaderboard](https://paperswithcode.com/sota/medical-visual-question-answering-on-vqa-rad)

### Dataset Summary

The dataset was downloaded from the [Open Science Framework Homepage](https://osf.io/89kps/) on June 3, 2023. It contains 2,248 question-answer pairs and 315 images. Of the 315 images, 314 are referenced by a question-answer pair and 1 is not used. The training set contains 3 duplicate image-question-answer triplets, and it also shares 1 image-question-answer triplet with the test set. After these 4 triplets are dropped from the training set, the dataset contains 2,244 question-answer pairs on 314 images.
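The card does not say how duplicates were detected. The sketch below assumes each triplet is a hashable key: an image identifier (for example a file name or a hash of the image bytes) plus the question and answer strings. It keeps the first occurrence of each training triplet and drops any triplet that also appears in the test set. `clean_train` is a hypothetical helper.

```python
from typing import List, Tuple

# (image_id, question, answer); image_id is an assumed stand-in for the image itself.
Triplet = Tuple[str, str, str]


def clean_train(train: List[Triplet], test: List[Triplet]) -> List[Triplet]:
    """Drop repeated train triplets and any train triplet that also appears in test."""
    test_set = set(test)
    seen = set()
    cleaned = []
    for t in train:
        if t in seen or t in test_set:
            continue  # duplicate within train, or overlapping with test
        seen.add(t)
        cleaned.append(t)
    return cleaned
```

Applied to the counts in the summary, removing 3 duplicates and 1 overlapping triplet takes the total from 2,248 to 2,244 pairs. That total matches the 1,793 training plus 451 test pairs in the split table.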

#### Supported Tasks and Leaderboards

This dataset has an active leaderboard on [Papers with Code](https://paperswithcode.com/sota/medical-visual-question-answering-on-vqa-rad) where models are ranked based on three metrics: "Close-ended Accuracy", "Open-ended accuracy" and "Overall accuracy". "Close-ended Accuracy" is the accuracy of a model's generated answers for the subset of binary "yes/no" questions. "Open-ended accuracy" is the accuracy of a model's generated answers for the subset of open-ended questions. "Overall accuracy" is the accuracy of a model's generated answers across all questions.
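The three metrics can be computed from aligned prediction and reference lists. This sketch rests on two assumptions:

- This version of the dataset carries no explicit question-type field, so a question counts as closed-ended when its reference answer is "yes" or "no".
- Answers are compared by exact match after lowercasing and trimming.

Leaderboard entries may use different matching rules, and the function names are illustrative.

```python
from typing import Dict, List, Tuple

CLOSED_ANSWERS = {"yes", "no"}


def _norm(text: str) -> str:
    # Assumed normalization: case-insensitive exact match after trimming whitespace.
    return text.strip().lower()


def _accuracy(pairs: List[Tuple[str, str]]) -> float:
    if not pairs:
        return 0.0
    return sum(_norm(p) == _norm(a) for p, a in pairs) / len(pairs)


def vqa_accuracies(preds: List[str], answers: List[str]) -> Dict[str, float]:
    """Closed-ended, open-ended and overall accuracy over aligned prediction/answer lists."""
    pairs = list(zip(preds, answers))
    # Assumption: a question is closed-ended when its reference answer is "yes" or "no".
    closed = [(p, a) for p, a in pairs if _norm(a) in CLOSED_ANSWERS]
    open_ended = [(p, a) for p, a in pairs if _norm(a) not in CLOSED_ANSWERS]
    return {
        "closed": _accuracy(closed),
        "open": _accuracy(open_ended),
        "overall": _accuracy(pairs),
    }
```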

#### Languages

The question-answer pairs are in English.

## Dataset Structure

### Data Instances

Each instance consists of an image-question-answer triplet.

```

{

'image': <PIL.JpegImagePlugin.JpegImageFile image mode=RGB size=566x555>,

'question': 'are regions of the brain infarcted?',

'answer': 'yes'

}

```

### Data Fields

- `'image'`: the image referenced by the question-answer pair.

- `'question'`: the question about the image.

- `'answer'`: the expected answer.

### Data Splits

The dataset is split into a training set and a test set; the split is provided by the authors.

| | Training Set | Test Set |
|-------------------------|:------------:|:---------:|
| Question-answer pairs | 1,793 | 451 |
| Images | 313 | 203 |

## Additional Information

### Licensing Information

The authors have released the dataset under the CC0 1.0 Universal License.

### Citation Information

```

@article{lau2018dataset,

title={A dataset of clinically generated visual questions and answers about radiology images},

author={Lau, Jason J and Gayen, Soumya and Ben Abacha, Asma and Demner-Fushman, Dina},

journal={Scientific data},

volume={5},

number={1},

pages={1--10},

year={2018},

publisher={Nature Publishing Group}

}

``` |

timit_asr | ---

pretty_name: TIMIT

annotations_creators:

- expert-generated

language_creators:

- expert-generated

language:

- en

license:

- other

license_details: "LDC-User-Agreement-for-Non-Members"

multilinguality:

- monolingual

size_categories:

- 1K<n<10K

source_datasets:

- original

task_categories:

- automatic-speech-recognition

task_ids: []

paperswithcode_id: timit

train-eval-index:

- config: clean

task: automatic-speech-recognition

task_id: speech_recognition

splits:

train_split: train

eval_split: test

col_mapping:

file: path

text: text

metrics:

- type: wer

name: WER

- type: cer

name: CER

---

# Dataset Card for timit_asr

## Table of Contents

- [Dataset Description](#dataset-description)

- [Dataset Summary](#dataset-summary)

- [Supported Tasks and Leaderboards](#supported-tasks-and-leaderboards)

- [Languages](#languages)

- [Dataset Structure](#dataset-structure)

- [Data Instances](#data-instances)

- [Data Fields](#data-fields)

- [Data Splits](#data-splits)

- [Dataset Creation](#dataset-creation)

- [Curation Rationale](#curation-rationale)

- [Source Data](#source-data)

- [Annotations](#annotations)

- [Personal and Sensitive Information](#personal-and-sensitive-information)

- [Considerations for Using the Data](#considerations-for-using-the-data)

- [Social Impact of Dataset](#social-impact-of-dataset)

- [Discussion of Biases](#discussion-of-biases)

- [Other Known Limitations](#other-known-limitations)

- [Additional Information](#additional-information)

- [Dataset Curators](#dataset-curators)

- [Licensing Information](#licensing-information)

- [Citation Information](#citation-information)

- [Contributions](#contributions)

## Dataset Description

- **Homepage:** [TIMIT Acoustic-Phonetic Continuous Speech Corpus](https://catalog.ldc.upenn.edu/LDC93S1)

- **Repository:** [Needs More Information]

- **Paper:** [TIMIT: Dataset designed to provide speech data for acoustic-phonetic studies and for the development and evaluation of automatic speech recognition systems.](https://catalog.ldc.upenn.edu/LDC93S1)

- **Leaderboard:** [Paperswithcode Leaderboard](https://paperswithcode.com/sota/speech-recognition-on-timit)

- **Point of Contact:** [Needs More Information]

### Dataset Summary

The TIMIT corpus of read speech is designed to provide speech data for acoustic-phonetic studies and for the development and evaluation of automatic speech recognition systems. TIMIT contains broadband recordings of 630 speakers of eight major dialects of American English, each reading ten phonetically rich sentences. The TIMIT corpus includes time-aligned orthographic, phonetic and word transcriptions as well as a 16-bit, 16kHz speech waveform file for each utterance. Corpus design was a joint effort among the Massachusetts Institute of Technology (MIT), SRI International (SRI) and Texas Instruments, Inc. (TI). The speech was recorded at TI, transcribed at MIT and verified and prepared for CD-ROM production by the National Institute of Standards and Technology (NIST).
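TIMIT's time alignments are given as sample offsets into the 16 kHz waveform, so dividing an offset by 16,000 gives seconds. The sketch below assumes segments shaped like the `phonetic_detail` entries in this card's data instance, with string-valued `start`, `stop`, and `utterance` keys. `segments_to_seconds` is a hypothetical helper.

```python
from typing import Dict, List, Tuple

SAMPLE_RATE = 16_000  # TIMIT waveforms are sampled at 16 kHz


def segments_to_seconds(
    segments: List[Dict[str, str]], sample_rate: int = SAMPLE_RATE
) -> List[Tuple[str, float, float]]:
    """Convert segments whose start/stop are sample offsets into (label, start_s, stop_s)."""
    return [
        (seg["utterance"], int(seg["start"]) / sample_rate, int(seg["stop"]) / sample_rate)
        for seg in segments
    ]
```

For example, the leading silence segment `h#` spanning samples 0 to 1960 lasts 0.1225 seconds.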

The dataset needs to be downloaded manually from https://catalog.ldc.upenn.edu/LDC93S1:

```

To use TIMIT you have to download it manually.

Please create an account and download the dataset from https://catalog.ldc.upenn.edu/LDC93S1

Then extract all files in one folder and load the dataset with:

`datasets.load_dataset('timit_asr', data_dir='path/to/folder/folder_name')`

```

### Supported Tasks and Leaderboards

- `automatic-speech-recognition`, `speaker-identification`: The dataset can be used to train a model for Automatic Speech Recognition (ASR). The model is presented with an audio file and asked to transcribe the audio file to written text. The most common evaluation metric is the word error rate (WER). The task has an active leaderboard which can be found at https://paperswithcode.com/sota/speech-recognition-on-timit and ranks models based on their WER.
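WER is the word-level edit distance between the reference and the hypothesis, divided by the number of reference words. Edits are substitutions, deletions, and insertions. Below is a minimal dynamic-programming sketch. It is case- and punctuation-sensitive, and evaluations reported on leaderboards may normalize the text first. `word_error_rate` is an illustrative name.

```python
from typing import List


def word_error_rate(reference: str, hypothesis: str) -> float:
    """WER = (substitutions + deletions + insertions) / number of reference words."""
    ref: List[str] = reference.split()
    hyp: List[str] = hypothesis.split()
    if not ref:
        raise ValueError("reference must contain at least one word")
    # After processing i reference words, prev[j] is the edit distance
    # between ref[:i] and hyp[:j]; start with i = 0 (only insertions).
    prev = list(range(len(hyp) + 1))
    for i, r in enumerate(ref, start=1):
        curr = [i] + [0] * len(hyp)
        for j, h in enumerate(hyp, start=1):
            curr[j] = min(
                prev[j] + 1,             # deletion of a reference word
                curr[j - 1] + 1,         # insertion of a hypothesis word
                prev[j - 1] + (r != h),  # substitution, or free match
            )
        prev = curr
    return prev[-1] / len(ref)
```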

### Languages

The audio is in English.

The TIMIT corpus transcriptions have been hand-verified. Test and training subsets, balanced for phonetic and dialectal coverage, are specified. Tabular computer-searchable information is included, as well as written documentation.

## Dataset Structure

### Data Instances

A typical data point comprises the path to the audio file, called `file`, and its transcription, called `text`. Additional information about the speaker and the passage that contains the transcription is also provided.

```
{
  'file': '/data/TRAIN/DR4/MMDM0/SI681.WAV',
  'audio': {'path': '/data/TRAIN/DR4/MMDM0/SI681.WAV',
            'array': array([-0.00048828, -0.00018311, -0.00137329, ..., 0.00079346, 0.00091553, 0.00085449], dtype=float32),
            'sampling_rate': 16000},
  'text': 'Would such an act of refusal be useful?',
  'phonetic_detail': [{'start': '0', 'stop': '1960', 'utterance': 'h#'},
                      {'start': '1960', 'stop': '2466', 'utterance': 'w'},
                      {'start': '2466', 'stop': '3480', 'utterance': 'ix'},
                      {'start': '3480', 'stop': '4000', 'utterance': 'dcl'},
                      {'start': '4000', 'stop': '5960', 'utterance': 's'},
                      {'start': '5960', 'stop': '7480', 'utterance': 'ah'},
                      {'start': '7480', 'stop': '7880', 'utterance': 'tcl'},
                      {'start': '7880', 'stop': '9400', 'utterance': 'ch'},
                      {'start': '9400', 'stop': '9960', 'utterance': 'ix'},
                      {'start': '9960', 'stop': '10680', 'utterance': 'n'},
                      {'start': '10680', 'stop': '13480', 'utterance': 'ae'},
                      {'start': '13480', 'stop': '15680', 'utterance': 'kcl'},
                      {'start': '15680', 'stop': '15880', 'utterance': 't'},
                      {'start': '15880', 'stop': '16920', 'utterance': 'ix'},
                      {'start': '16920', 'stop': '18297', 'utterance': 'v'},
                      {'start': '18297', 'stop': '18882', 'utterance': 'r'},
                      {'start': '18882', 'stop': '19480', 'utterance': 'ix'},
                      {'start': '19480', 'stop': '21723', 'utterance': 'f'},
                      {'start': '21723', 'stop': '22516', 'utterance': 'y'},
                      {'start': '22516', 'stop': '24040', 'utterance': 'ux'},
                      {'start': '24040', 'stop': '25190', 'utterance': 'zh'},
                      {'start': '25190', 'stop': '27080', 'utterance': 'el'},
                      {'start': '27080', 'stop': '28160', 'utterance': 'bcl'},
                      {'start': '28160', 'stop': '28560', 'utterance': 'b'},
                      {'start': '28560', 'stop': '30120', 'utterance': 'iy'},
                      {'start': '30120', 'stop': '31832', 'utterance': 'y'},
                      {'start': '31832', 'stop': '33240', 'utterance': 'ux'},
                      {'start': '33240', 'stop': '34640', 'utterance': 's'},
                      {'start': '34640', 'stop': '35968', 'utterance': 'f'},
                      {'start': '35968', 'stop': '37720', 'utterance': 'el'},
                      {'start': '37720', 'stop': '39920', 'utterance': 'h#'}],
  'word_detail': [{'start': '1960', 'stop': '4000', 'utterance': 'would'},
                  {'start': '4000', 'stop': '9400', 'utterance': 'such'},
                  {'start': '9400', 'stop': '10680', 'utterance': 'an'},
                  {'start': '10680', 'stop': '15880', 'utterance': 'act'},
                  {'start': '15880', 'stop': '18297', 'utterance': 'of'},
                  {'start': '18297', 'stop': '27080', 'utterance': 'refusal'},
                  {'start': '27080', 'stop': '30120', 'utterance': 'be'},
                  {'start': '30120', 'stop': '37720', 'utterance': 'useful'}],
  'dialect_region': 'DR4',
  'sentence_type': 'SI',
  'speaker_id': 'MMDM0',
  'id': 'SI681'
}
```
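In the example, the `start` and `stop` values in `phonetic_detail` and `word_detail` are strings. The following is a minimal sketch that assumes they are sample offsets into the 16 kHz waveform; the example is consistent with this, since the final `h#` segment ends at sample 39920, about 2.5 s. The sketch converts the offsets to seconds and cuts one segment out of the decoded `array`. It treats `stop` as exclusive because consecutive segments share boundary values. The helper names `segments_in_seconds` and `cut_segment` are hypothetical and are not part of the `datasets` API.

```python
from typing import Dict, List, Sequence, Tuple


def segments_in_seconds(
    details: List[Dict[str, str]], sampling_rate: int = 16000
) -> List[Tuple[str, float, float]]:
    """Convert {'start', 'stop', 'utterance'} entries to (label, start_s, stop_s).

    Assumes 'start' and 'stop' are sample offsets stored as strings.
    """
    out = []
    for d in details:
        start = int(d["start"])
        stop = int(d["stop"])
        out.append((d["utterance"], start / sampling_rate, stop / sampling_rate))
    return out


def cut_segment(array: Sequence[float], detail: Dict[str, str]) -> Sequence[float]:
    """Return the part of the waveform covered by one segment (stop is exclusive)."""
    return array[int(detail["start"]):int(detail["stop"])]


# Usage with two word-level entries copied from the example above.
words = [
    {"start": "1960", "stop": "4000", "utterance": "would"},
    {"start": "4000", "stop": "9400", "utterance": "such"},
]
for label, start_s, stop_s in segments_in_seconds(words):
    print(f"{label}: {start_s:.4f}-{stop_s:.4f} s")
```

With a real sample, `cut_segment(sample["audio"]["array"], sample["word_detail"][0])` would return the audio for the first word. This relies on the same sample-offset assumption.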

### Data Fields

- file: A path to the downloaded audio file in .wav format.

- audio: A dictionary containing the path to the downloaded audio file, the decoded audio array, and the sampling rate. Note that when accessing the audio column: `dataset[0]["audio"]` the audio file is automatically decoded and resampled to `dataset.features["audio"].sampling_rate`. Decoding and resampling of a large number of audio files might take a significant amount of time. Thus it is important to first query the sample index before the `"audio"` column, *i.e.* `dataset[0]["audio"]` should **always** be preferred over `dataset["audio"][0]`.

- text: The transcription of the audio file.

- phonetic_detail: The phonemes that make up the sentence. The file PHONCODE.DOC, distributed with the corpus, contains a table of all the phonemic and phonetic symbols used in the TIMIT lexicon.

- word_detail: Word level split of the transcript.

- dialect_region: The dialect code of the recording.

- sentence_type: The type of the sentence: `SA` (dialect), `SX` (compact) or `SI` (diverse).

- speaker_id: Unique id of the speaker. The same speaker id can be found for multiple data samples.

- id: ID of the data sample, of the form `<SENTENCE_TYPE><SENTENCE_NUMBER>` (e.g. `SI681`).
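The `file` path in the example appears to encode the split, dialect region, speaker ID and sample ID as directory levels. The following is a minimal sketch that assumes the layout `.../<SPLIT>/<DIALECT_REGION>/<SPEAKER_ID>/<ID>.WAV` seen in `/data/TRAIN/DR4/MMDM0/SI681.WAV`. It recovers those fields and splits `id` into its sentence type and number. The function name `parse_timit_path` is hypothetical, and other copies of the corpus may use a different directory layout or lowercase names.

```python
import re
from pathlib import PurePosixPath
from typing import Dict

# Sentence types listed in the data fields: SA, SX and SI, followed by a number.
ID_PATTERN = re.compile(r"^(SA|SX|SI)(\d+)$")


def parse_timit_path(path: str) -> Dict[str, object]:
    """Split a TIMIT-style path into its metadata parts.

    Assumes the layout <...>/<SPLIT>/<DIALECT_REGION>/<SPEAKER_ID>/<ID>.WAV.
    """
    p = PurePosixPath(path)
    utt_id = p.stem  # e.g. 'SI681'
    match = ID_PATTERN.match(utt_id.upper())
    if match is None:
        raise ValueError(f"unexpected sample id: {utt_id!r}")
    return {
        "split": p.parent.parent.parent.name,      # e.g. 'TRAIN'
        "dialect_region": p.parent.parent.name,    # e.g. 'DR4'
        "speaker_id": p.parent.name,               # e.g. 'MMDM0'
        "id": utt_id,
        "sentence_type": match.group(1),
        "sentence_number": int(match.group(2)),
    }


print(parse_timit_path("/data/TRAIN/DR4/MMDM0/SI681.WAV"))
```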

### Data Splits

The speech material has been subdivided into portions for training and testing. The default train-test split is provided with the downloaded data.

The test set has a core portion of 24 speakers: 2 male and 1 female from each of the 8 dialect regions. More information about the test set can be found [here](https://catalog.ldc.upenn.edu/docs/LDC93S1/TESTSET.TXT).

## Dataset Creation

### Curation Rationale

[Needs More Information]

### Source Data

#### Initial Data Collection and Normalization

[Needs More Information]

#### Who are the source language producers?

[Needs More Information]

### Annotations

#### Annotation process

[Needs More Information]

#### Who are the annotators?

[Needs More Information]

### Personal and Sensitive Information

The dataset consists of recordings of speakers reading prompted sentences; the speech was recorded at Texas Instruments. You agree not to attempt to determine the identity of speakers in this dataset.

## Considerations for Using the Data

### Social Impact of Dataset

[More Information Needed]

### Discussion of Biases

[More Information Needed]

### Other Known Limitations

The dataset is provided for research purposes only. Please check the dataset license for additional information.

## Additional Information

### Dataset Curators

The dataset was created by John S. Garofolo, Lori F. Lamel, William M. Fisher, Jonathan G. Fiscus, David S. Pallett, Nancy L. Dahlgren and Victor Zue.

### Licensing Information

[LDC User Agreement for Non-Members](https://catalog.ldc.upenn.edu/license/ldc-non-members-agreement.pdf)

### Citation Information

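```
@misc{garofolo1993timit,
  title={TIMIT Acoustic-Phonetic Continuous Speech Corpus},
  author={Garofolo, John S. and Lamel, Lori F. and Fisher, William M. and Fiscus, Jonathan G. and Pallett, David S. and Dahlgren, Nancy L. and Zue, Victor},
  note={LDC Catalog No. LDC93S1},
  doi={10.35111/17gk-bn40},
  publisher={Linguistic Data Consortium},
  address={Philadelphia},
  year={1993}
}
```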

### Contributions

Thanks to [@vrindaprabhu](https://github.com/vrindaprabhu) for adding this dataset.

|

PKU-Alignment/processed-hh-rlhf | ---

license: mit

task_categories:

- conversational

language:

- en

tags:

- rlhf

- harmless

- helpful

- human-preference

pretty_name: hh-rlhf

size_categories:

- 100K<n<1M

---

# Dataset Card for Processed-Hh-RLHF

This is a dataset that processes [hh-rlhf](https://huggingface.co/datasets/Anthropic/hh-rlhf) into an easy-to-use conversational and human-preference form.

|

emo | ---

annotations_creators:

- expert-generated

language_creators:

- crowdsourced

language:

- en

license:

- unknown

multilinguality:

- monolingual

size_categories:

- 10K<n<100K

source_datasets:

- original

task_categories:

- text-classification

task_ids:

- sentiment-classification

paperswithcode_id: emocontext

pretty_name: EmoContext

dataset_info:

features:

- name: text

dtype: string

- name: label

dtype:

class_label:

names:

'0': others

'1': happy

'2': sad

'3': angry

config_name: emo2019

splits:

- name: train

num_bytes: 2433205

num_examples: 30160

- name: test

num_bytes: 421555

num_examples: 5509

download_size: 3362556

dataset_size: 2854760

---

# Dataset Card for "emo"

## Table of Contents

- [Dataset Description](#dataset-description)

- [Dataset Summary](#dataset-summary)

- [Supported Tasks and Leaderboards](#supported-tasks-and-leaderboards)

- [Languages](#languages)

- [Dataset Structure](#dataset-structure)

- [Data Instances](#data-instances)

- [Data Fields](#data-fields)

- [Data Splits](#data-splits)

- [Dataset Creation](#dataset-creation)

- [Curation Rationale](#curation-rationale)

- [Source Data](#source-data)

- [Annotations](#annotations)

- [Personal and Sensitive Information](#personal-and-sensitive-information)

- [Considerations for Using the Data](#considerations-for-using-the-data)

- [Social Impact of Dataset](#social-impact-of-dataset)

- [Discussion of Biases](#discussion-of-biases)

- [Other Known Limitations](#other-known-limitations)

- [Additional Information](#additional-information)

- [Dataset Curators](#dataset-curators)

- [Licensing Information](#licensing-information)

- [Citation Information](#citation-information)

- [Contributions](#contributions)

## Dataset Description

- **Homepage:** [https://www.aclweb.org/anthology/S19-2005/](https://www.aclweb.org/anthology/S19-2005/)

- **Repository:** [More Information Needed](https://github.com/huggingface/datasets/blob/master/CONTRIBUTING.md#how-to-contribute-to-the-dataset-cards)

- **Paper:** [More Information Needed](https://github.com/huggingface/datasets/blob/master/CONTRIBUTING.md#how-to-contribute-to-the-dataset-cards)

- **Point of Contact:** [More Information Needed](https://github.com/huggingface/datasets/blob/master/CONTRIBUTING.md#how-to-contribute-to-the-dataset-cards)

- **Size of downloaded dataset files:** 3.37 MB

- **Size of the generated dataset:** 2.85 MB

- **Total amount of disk used:** 6.22 MB

### Dataset Summary

Given a textual dialogue, i.e. an utterance along with the two previous turns of context, the goal is to infer the underlying emotion of the utterance by choosing from four emotion classes: Happy, Sad, Angry and Others.

### Supported Tasks and Leaderboards

[More Information Needed](https://github.com/huggingface/datasets/blob/master/CONTRIBUTING.md#how-to-contribute-to-the-dataset-cards)

### Languages

[More Information Needed](https://github.com/huggingface/datasets/blob/master/CONTRIBUTING.md#how-to-contribute-to-the-dataset-cards)

## Dataset Structure

### Data Instances

#### emo2019

- **Size of downloaded dataset files:** 3.37 MB

- **Size of the generated dataset:** 2.85 MB

- **Total amount of disk used:** 6.22 MB

An example of 'train' looks as follows.

```
{
    "label": 0,
    "text": "don't worry i'm girl hmm how do i know if you are what's ur name"
}
```

### Data Fields

The data fields are the same among all splits.

#### emo2019

- `text`: a `string` feature.

- `label`: a classification label, with possible values including `others` (0), `happy` (1), `sad` (2), `angry` (3).
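To turn the integer `label` back into an emotion name, you only need the index order listed above. If the dataset is loaded with the `datasets` library, the `label` feature is a `ClassLabel`, and its `int2str` method performs the same mapping. The dependency-free sketch below hard-codes the four names so it works without a download. `LABEL_NAMES` and `decode_label` are illustrative names, not part of the dataset.

```python
from typing import Dict, List

# Label names in index order, as listed for the emo2019 config.
LABEL_NAMES: List[str] = ["others", "happy", "sad", "angry"]
NAME_TO_ID: Dict[str, int] = {name: i for i, name in enumerate(LABEL_NAMES)}


def decode_label(label_id: int) -> str:
    """Map an integer label (0-3) to its emotion name."""
    if not 0 <= label_id < len(LABEL_NAMES):
        raise ValueError(f"label out of range: {label_id}")
    return LABEL_NAMES[label_id]


example = {"label": 0, "text": "don't worry i'm girl hmm how do i know if you are what's ur name"}
print(decode_label(example["label"]))
```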

### Data Splits

| name  |train|test|
|-------|----:|---:|
|emo2019|30160|5509|

## Dataset Creation

### Curation Rationale

[More Information Needed](https://github.com/huggingface/datasets/blob/master/CONTRIBUTING.md#how-to-contribute-to-the-dataset-cards)

### Source Data

#### Initial Data Collection and Normalization

[More Information Needed](https://github.com/huggingface/datasets/blob/master/CONTRIBUTING.md#how-to-contribute-to-the-dataset-cards)

#### Who are the source language producers?

[More Information Needed](https://github.com/huggingface/datasets/blob/master/CONTRIBUTING.md#how-to-contribute-to-the-dataset-cards)

### Annotations

#### Annotation process

[More Information Needed](https://github.com/huggingface/datasets/blob/master/CONTRIBUTING.md#how-to-contribute-to-the-dataset-cards)

#### Who are the annotators?

[More Information Needed](https://github.com/huggingface/datasets/blob/master/CONTRIBUTING.md#how-to-contribute-to-the-dataset-cards)

### Personal and Sensitive Information

[More Information Needed](https://github.com/huggingface/datasets/blob/master/CONTRIBUTING.md#how-to-contribute-to-the-dataset-cards)

## Considerations for Using the Data

### Social Impact of Dataset

[More Information Needed](https://github.com/huggingface/datasets/blob/master/CONTRIBUTING.md#how-to-contribute-to-the-dataset-cards)

### Discussion of Biases

[More Information Needed](https://github.com/huggingface/datasets/blob/master/CONTRIBUTING.md#how-to-contribute-to-the-dataset-cards)

### Other Known Limitations

[More Information Needed](https://github.com/huggingface/datasets/blob/master/CONTRIBUTING.md#how-to-contribute-to-the-dataset-cards)

## Additional Information

### Dataset Curators

[More Information Needed](https://github.com/huggingface/datasets/blob/master/CONTRIBUTING.md#how-to-contribute-to-the-dataset-cards)

### Licensing Information

[More Information Needed](https://github.com/huggingface/datasets/blob/master/CONTRIBUTING.md#how-to-contribute-to-the-dataset-cards)

### Citation Information

```
@inproceedings{chatterjee-etal-2019-semeval,
  title={SemEval-2019 Task 3: EmoContext Contextual Emotion Detection in Text},
  author={Ankush Chatterjee and Kedhar Nath Narahari and Meghana Joshi and Puneet Agrawal},
  booktitle={Proceedings of the 13th International Workshop on Semantic Evaluation},
  year={2019},
  address={Minneapolis, Minnesota, USA},
  publisher={Association for Computational Linguistics},
  url={https://www.aclweb.org/anthology/S19-2005},
  doi={10.18653/v1/S19-2005},
  pages={39--48},
  abstract={In this paper, we present the SemEval-2019 Task 3 - EmoContext: Contextual Emotion Detection in Text. Lack of facial expressions and voice modulations make detecting emotions in text a challenging problem. For instance, as humans, on reading ''Why don't you ever text me!'' we can either interpret it as a sad or angry emotion and the same ambiguity exists for machines. However, the context of dialogue can prove helpful in detection of the emotion. In this task, given a textual dialogue i.e. an utterance along with two previous turns of context, the goal was to infer the underlying emotion of the utterance by choosing from four emotion classes - Happy, Sad, Angry and Others. To facilitate the participation in this task, textual dialogues from user interaction with a conversational agent were taken and annotated for emotion classes after several data processing steps. A training data set of 30160 dialogues, and two evaluation data sets, Test1 and Test2, containing 2755 and 5509 dialogues respectively were released to the participants. A total of 311 teams made submissions to this task. The final leader-board was evaluated on Test2 data set, and the highest ranked submission achieved 79.59 micro-averaged F1 score. Our analysis of systems submitted to the task indicate that Bi-directional LSTM was the most common choice of neural architecture used, and most of the systems had the best performance for the Sad emotion class, and the worst for the Happy emotion class}
}
```

### Contributions

Thanks to [@thomwolf](https://github.com/thomwolf), [@lordtt13](https://github.com/lordtt13), [@lhoestq](https://github.com/lhoestq) for adding this dataset. |

sahil2801/CodeAlpaca-20k | ---

license: cc-by-4.0

task_categories:

- text-generation

tags:

- code

pretty_name: CodeAlpaca 20K

size_categories:

- 10K<n<100K

language:

- en

--- |

ucberkeley-dlab/measuring-hate-speech | ---

annotations_creators:

- crowdsourced

language:

- en

license:

- cc-by-4.0

multilinguality:

- monolingual

source_datasets:

- original

task_categories:

- text-classification

task_ids:

- hate-speech-detection

- sentiment-classification

- multi-label-classification

pretty_name: measuring-hate-speech

tags:

- arxiv:2009.10277

- counterspeech

- hate-speech

- text-regression

- irt

---

## Dataset Description

- **Homepage:** http://hatespeech.berkeley.edu

- **Paper:** https://arxiv.org/abs/2009.10277

# Dataset card for _Measuring Hate Speech_

This is a public release of the dataset described in Kennedy et al. (2020) and Sachdeva et al. (2022), consisting of 39,565 comments annotated by 7,912 annotators, for 135,556 combined rows. The primary outcome variable is the "hate speech score", but the 10 constituent ordinal labels (sentiment, (dis)respect, insult, humiliation, inferior status, violence, dehumanization, genocide, attack/defense, hate speech benchmark) can also be treated as outcomes. The dataset includes 8 target identity groups (race/ethnicity, religion, national origin/citizenship, gender, sexual orientation, age, disability, political ideology) and 42 target identity subgroups, as well as 6 annotator demographic variables with 40 subgroups. The hate speech score incorporates an item response theory (IRT) adjustment that estimates how annotators vary in their interpretation of the labeling guidelines.

This dataset card is a work in progress and will be improved over time.

## Key dataset columns

* hate_speech_score - continuous hate speech measure, where higher = more hateful and lower = less hateful. Scores above 0.5 are approximately hate speech, scores below -1 are counter or supportive speech, and scores from -1 to +0.5 are neutral or ambiguous.

* text - lightly processed text of a social media post

* comment\_id - unique ID for each comment

* annotator\_id - unique ID for each annotator

* sentiment - ordinal label that is combined into the continuous score

* respect - ordinal label that is combined into the continuous score

* insult - ordinal label that is combined into the continuous score

* humiliate - ordinal label that is combined into the continuous score

* status - ordinal label that is combined into the continuous score

* dehumanize - ordinal label that is combined into the continuous score

* violence - ordinal label that is combined into the continuous score

* genocide - ordinal label that is combined into the continuous score

* attack\_defend - ordinal label that is combined into the continuous score

* hatespeech - ordinal label that is combined into the continuous score

* annotator_severity - annotator's estimated survey interpretation bias
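The approximate cut-offs for `hate_speech_score` can be written as a small bucketing function. This is a minimal sketch. The card calls the thresholds approximate, and the sketch treats the boundary values -1 and 0.5 as neutral, following the "-1 to +0.5" wording. The function name and bucket strings are hypothetical labels chosen for illustration.

```python
import math


def interpret_score(score: float) -> str:
    """Bucket a hate_speech_score using the approximate cut-offs from this card.

    > 0.5 -> 'hate speech', < -1 -> 'counter/supportive', otherwise 'neutral/ambiguous'.
    """
    if math.isnan(score):
        raise ValueError("score is NaN")
    if score > 0.5:
        return "hate speech"
    if score < -1.0:
        return "counter/supportive"
    return "neutral/ambiguous"


for s in (1.2, 0.5, -0.3, -1.0, -2.4):
    print(s, interpret_score(s))
```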

## Code to download

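The dataset can be downloaded using the following Python code:

```python
import datasets

# Download the dataset from the Hugging Face Hub.
dataset = datasets.load_dataset('ucberkeley-dlab/measuring-hate-speech', 'binary')

# Convert the training split to a pandas DataFrame and show summary statistics.
df = dataset['train'].to_pandas()
print(df.describe())
```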
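Each row is one comment–annotator pair (135,556 rows for 39,565 comments, or roughly 3.4 annotations per comment). Per-comment analyses therefore usually need to group rows by `comment_id`. With the DataFrame above, one way to do this is `df.groupby("comment_id")["hatespeech"].mean()`. The sketch below shows the same averaging without pandas. The function name and the toy rows are hypothetical, and averaging is only one possible way to combine annotator labels.

```python
from collections import defaultdict
from typing import Dict, Iterable, Mapping


def mean_by_comment(rows: Iterable[Mapping[str, object]], column: str) -> Dict[object, float]:
    """Average one numeric column over all annotator rows of each comment_id."""
    sums: Dict[object, float] = defaultdict(float)
    counts: Dict[object, int] = defaultdict(int)
    for row in rows:
        key = row["comment_id"]
        sums[key] += float(row[column])
        counts[key] += 1
    return {key: sums[key] / counts[key] for key in sums}


# Toy rows with made-up values, shaped like the columns described above.
rows = [
    {"comment_id": 1, "annotator_id": 10, "hatespeech": 2},
    {"comment_id": 1, "annotator_id": 11, "hatespeech": 1},
    {"comment_id": 2, "annotator_id": 10, "hatespeech": 0},
]
print(mean_by_comment(rows, "hatespeech"))
```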

## Citation

```
@article{kennedy2020constructing,
  title={Constructing interval variables via faceted Rasch measurement and multitask deep learning: a hate speech application},
  author={Kennedy, Chris J and Bacon, Geoff and Sahn, Alexander and von Vacano, Claudia},
  journal={arXiv preprint arXiv:2009.10277},
  year={2020}
}
```

## Contributions

Dataset curated by [@ck37](https://github.com/ck37), [@pssachdeva](https://github.com/pssachdeva), et al.

## References

Kennedy, C. J., Bacon, G., Sahn, A., & von Vacano, C. (2020). [Constructing interval variables via faceted Rasch measurement and multitask deep learning: a hate speech application](https://arxiv.org/abs/2009.10277). arXiv preprint arXiv:2009.10277.

Pratik Sachdeva, Renata Barreto, Geoff Bacon, Alexander Sahn, Claudia von Vacano, and Chris Kennedy. 2022. [The Measuring Hate Speech Corpus: Leveraging Rasch Measurement Theory for Data Perspectivism](https://aclanthology.org/2022.nlperspectives-1.11/). In *Proceedings of the 1st Workshop on Perspectivist Approaches to NLP @LREC2022*, pages 83–94, Marseille, France. European Language Resources Association. |

openslr | ---

pretty_name: OpenSLR

annotations_creators:

- found

language_creators:

- found

language:

- af

- bn

- ca

- en

- es

- eu

- gl

- gu

- jv

- km

- kn

- ml

- mr

- my

- ne

- si

- st

- su

- ta

- te

- tn

- ve

- xh

- yo

language_bcp47:

- en-GB

- en-IE

- en-NG

- es-CL

- es-CO

- es-PE

- es-PR

license:

- cc-by-sa-4.0

multilinguality:

- multilingual

size_categories:

- 1K<n<10K

source_datasets:

- original

task_categories:

- automatic-speech-recognition

task_ids: []

paperswithcode_id: null

dataset_info:

- config_name: SLR41

features:

- name: path

dtype: string

- name: audio

dtype:

audio:

sampling_rate: 48000

- name: sentence

dtype: string

splits:

- name: train

num_bytes: 2423902

num_examples: 5822

download_size: 1890792360

dataset_size: 2423902

- config_name: SLR42

features:

- name: path

dtype: string

- name: audio

dtype:

audio:

sampling_rate: 48000

- name: sentence

dtype: string

splits:

- name: train

num_bytes: 1427984

num_examples: 2906

download_size: 866086951

dataset_size: 1427984

- config_name: SLR43

features:

- name: path

dtype: string

- name: audio

dtype:

audio:

sampling_rate: 48000

- name: sentence

dtype: string

splits:

- name: train

num_bytes: 1074005

num_examples: 2064

download_size: 800375645

dataset_size: 1074005

- config_name: SLR44

features:

- name: path

dtype: string

- name: audio

dtype:

audio:

sampling_rate: 48000

- name: sentence

dtype: string

splits:

- name: train

num_bytes: 1776827

num_examples: 4213

download_size: 1472252752

dataset_size: 1776827

- config_name: SLR63

features:

- name: path

dtype: string

- name: audio

dtype:

audio:

sampling_rate: 48000

- name: sentence

dtype: string

splits:

- name: train

num_bytes: 2016587

num_examples: 4126

download_size: 1345876299

dataset_size: 2016587

- config_name: SLR64

features:

- name: path

dtype: string

- name: audio

dtype:

audio:

sampling_rate: 48000

- name: sentence

dtype: string

splits:

- name: train

num_bytes: 810375

num_examples: 1569

download_size: 712155683

dataset_size: 810375

- config_name: SLR65

features:

- name: path

dtype: string

- name: audio

dtype:

audio:

sampling_rate: 48000

- name: sentence

dtype: string

splits:

- name: train

num_bytes: 2136447

num_examples: 4284

download_size: 1373304655

dataset_size: 2136447

- config_name: SLR66

features:

- name: path

dtype: string

- name: audio

dtype:

audio:

sampling_rate: 48000

- name: sentence

dtype: string

splits:

- name: train

num_bytes: 1898335

num_examples: 4448

download_size: 1035127870

dataset_size: 1898335

- config_name: SLR69

features:

- name: path

dtype: string

- name: audio

dtype:

audio:

sampling_rate: 48000

- name: sentence

dtype: string

splits:

- name: train

num_bytes: 1647263

num_examples: 4240

download_size: 1848659543

dataset_size: 1647263

- config_name: SLR35

features:

- name: path

dtype: string

- name: audio

dtype:

audio:

sampling_rate: 48000

- name: sentence

dtype: string

splits:

- name: train

num_bytes: 73565374

num_examples: 185076

download_size: 18900105726

dataset_size: 73565374

- config_name: SLR36

features:

- name: path

dtype: string

- name: audio

dtype:

audio:

sampling_rate: 48000

- name: sentence

dtype: string

splits:

- name: train

num_bytes: 88942337

num_examples: 219156

download_size: 22996553929

dataset_size: 88942337

- config_name: SLR70

features:

- name: path

dtype: string

- name: audio

dtype:

audio:

sampling_rate: 48000

- name: sentence

dtype: string

splits:

- name: train

num_bytes: 1339608

num_examples: 3359

download_size: 1213955196

dataset_size: 1339608

- config_name: SLR71

features:

- name: path

dtype: string

- name: audio

dtype:

audio:

sampling_rate: 48000

- name: sentence

dtype: string

splits:

- name: train

num_bytes: 1676273

num_examples: 4374

download_size: 1445365903

dataset_size: 1676273

- config_name: SLR72

features:

- name: path

dtype: string

- name: audio

dtype:

audio:

sampling_rate: 48000

- name: sentence

dtype: string

splits:

- name: train

num_bytes: 1876301

num_examples: 4903

download_size: 1612030532

dataset_size: 1876301

- config_name: SLR73

features:

- name: path

dtype: string

- name: audio

dtype:

audio:

sampling_rate: 48000

- name: sentence

dtype: string

splits:

- name: train

num_bytes: 2084052

num_examples: 5447

download_size: 1940306814

dataset_size: 2084052

- config_name: SLR74

features:

- name: path

dtype: string

- name: audio

dtype:

audio:

sampling_rate: 48000

- name: sentence

dtype: string

splits:

- name: train

num_bytes: 237395

num_examples: 617

download_size: 214181314

dataset_size: 237395

- config_name: SLR75

features:

- name: path

dtype: string

- name: audio

dtype:

audio:

sampling_rate: 48000

- name: sentence

dtype: string

splits:

- name: train

num_bytes: 1286937

num_examples: 3357

download_size: 1043317004

dataset_size: 1286937

- config_name: SLR76

features:

- name: path

dtype: string

- name: audio

dtype:

audio:

sampling_rate: 48000

- name: sentence

dtype: string

splits:

- name: train

num_bytes: 2756507

num_examples: 7136

download_size: 3041125513

dataset_size: 2756507

- config_name: SLR77

features:

- name: path

dtype: string

- name: audio

dtype:

audio:

sampling_rate: 48000

- name: sentence

dtype: string

splits:

- name: train

num_bytes: 2217652

num_examples: 5587

download_size: 2207991775

dataset_size: 2217652

- config_name: SLR78

features:

- name: path

dtype: string

- name: audio

dtype:

audio:

sampling_rate: 48000

- name: sentence

dtype: string

splits:

- name: train

num_bytes: 2121986

num_examples: 4272

download_size: 1743222102

dataset_size: 2121986

- config_name: SLR79

features:

- name: path

dtype: string

- name: audio

dtype:

audio:

sampling_rate: 48000

- name: sentence

dtype: string

splits:

- name: train

num_bytes: 2176539

num_examples: 4400

download_size: 1820919115

dataset_size: 2176539

- config_name: SLR80

features:

- name: path

dtype: string

- name: audio

dtype:

audio:

sampling_rate: 48000

- name: sentence

dtype: string

splits:

- name: train

num_bytes: 1308651

num_examples: 2530

download_size: 948181015

dataset_size: 1308651

- config_name: SLR86

features:

- name: path

dtype: string

- name: audio

dtype:

audio:

sampling_rate: 48000

- name: sentence

dtype: string

splits:

- name: train

num_bytes: 1378801

num_examples: 3583

download_size: 907065562

dataset_size: 1378801

- config_name: SLR32

features:

- name: path

dtype: string

- name: audio

dtype:

audio:

sampling_rate: 48000

- name: sentence

dtype: string

splits:

- name: train

num_bytes: 4544052380

num_examples: 9821

download_size: 3312884763

dataset_size: 4544052380

- config_name: SLR52

features:

- name: path

dtype: string

- name: audio

dtype:

audio:

sampling_rate: 48000

- name: sentence

dtype: string

splits:

- name: train

num_bytes: 77369899

num_examples: 185293

download_size: 14676484074

dataset_size: 77369899

- config_name: SLR53

features:

- name: path

dtype: string

- name: audio

dtype:

audio:

sampling_rate: 48000

- name: sentence

dtype: string

splits:

- name: train

num_bytes: 88073248

num_examples: 218703

download_size: 14630810921

dataset_size: 88073248

- config_name: SLR54

features:

- name: path

dtype: string

- name: audio

dtype:

audio:

sampling_rate: 48000

- name: sentence

dtype: string

splits:

- name: train

num_bytes: 62735822

num_examples: 157905

download_size: 9328247362

dataset_size: 62735822

- config_name: SLR83

features:

- name: path

dtype: string

- name: audio

dtype:

audio:

sampling_rate: 48000

- name: sentence

dtype: string

splits:

- name: train

num_bytes: 7098985

num_examples: 17877

download_size: 7229890819

dataset_size: 7098985

config_names:

- SLR32

- SLR35

- SLR36

- SLR41

- SLR42

- SLR43

- SLR44

- SLR52

- SLR53

- SLR54

- SLR63

- SLR64

- SLR65

- SLR66

- SLR69

- SLR70

- SLR71

- SLR72

- SLR73

- SLR74

- SLR75

- SLR76

- SLR77

- SLR78

- SLR79

- SLR80

- SLR83

- SLR86

---

# Dataset Card for openslr

## Table of Contents

- [Dataset Description](#dataset-description)

- [Dataset Summary](#dataset-summary)

- [Supported Tasks and Leaderboards](#supported-tasks-and-leaderboards)

- [Languages](#languages)

- [Dataset Structure](#dataset-structure)

- [Data Instances](#data-instances)

- [Data Fields](#data-fields)

- [Data Splits](#data-splits)

- [Dataset Creation](#dataset-creation)

- [Curation Rationale](#curation-rationale)

- [Source Data](#source-data)

- [Annotations](#annotations)

- [Personal and Sensitive Information](#personal-and-sensitive-information)

- [Considerations for Using the Data](#considerations-for-using-the-data)

- [Social Impact of Dataset](#social-impact-of-dataset)

- [Discussion of Biases](#discussion-of-biases)

- [Other Known Limitations](#other-known-limitations)

- [Additional Information](#additional-information)

- [Dataset Curators](#dataset-curators)

- [Licensing Information](#licensing-information)

- [Citation Information](#citation-information)

- [Contributions](#contributions)

## Dataset Description

- **Homepage:** https://www.openslr.org/

- **Repository:** [Needs More Information]

- **Paper:** [Needs More Information]

- **Leaderboard:** [Needs More Information]

- **Point of Contact:** [Needs More Information]

### Dataset Summary

OpenSLR is a site devoted to hosting speech and language resources, such as training corpora for speech recognition and software related to speech recognition. Currently, the following resources are available:

#### SLR32: High quality TTS data for four South African languages (af, st, tn, xh).

This data set contains multi-speaker high quality transcribed audio data for four languages of South Africa.

The data set consists of wave files and a TSV file transcribing the audio. In each folder, the file line_index.tsv contains a FileID (which in turn contains the UserID) and the transcription of the audio in the file.

The data set has had some quality checks, but there might still be errors.

This data set was collected as a collaboration between North West University and Google.

The dataset is distributed under Creative Commons Attribution-ShareAlike 4.0 International Public License.

See https://github.com/google/language-resources#license for license information.

Copyright 2017 Google, Inc.

#### SLR35: Large Javanese ASR training data set.

This data set contains transcribed audio data for Javanese (~185K utterances). The data set consists of wave files and a TSV file. The file utt_spk_text.tsv contains a FileID, UserID and the transcription of the audio in the file.

The data set has been manually quality checked, but there might still be errors.

This dataset was collected by Google in collaboration with Reykjavik University and Universitas Gadjah Mada in Indonesia.

The dataset is distributed under Creative Commons Attribution-ShareAlike 4.0 International Public License.

See the [LICENSE](https://www.openslr.org/resources/35/LICENSE) file and https://github.com/google/language-resources#license for license information.

Copyright 2016, 2017 Google, Inc.
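For SLR35 (and SLR36, which uses the same file), `utt_spk_text.tsv` holds a FileID, a UserID and a transcription per line. The following is a minimal parsing sketch. It assumes the three fields are tab-separated in that order with no header row, and it disables CSV quoting so that quotation marks inside transcriptions are kept literally. The names `Utterance` and `read_utt_spk_text` are hypothetical, as are the sample IDs.

```python
import csv
import io
from typing import List, NamedTuple, TextIO


class Utterance(NamedTuple):
    file_id: str
    user_id: str
    text: str


def read_utt_spk_text(handle: TextIO) -> List[Utterance]:
    """Parse lines of the form FileID<TAB>UserID<TAB>transcription."""
    reader = csv.reader(handle, delimiter="\t", quoting=csv.QUOTE_NONE)
    utterances = []
    for row in reader:
        if not row:
            continue  # skip blank lines
        if len(row) != 3:
            raise ValueError(f"expected 3 columns, got {len(row)}: {row!r}")
        utterances.append(Utterance(*row))
    return utterances


# Hypothetical sample; real FileIDs and UserIDs will differ.
sample = io.StringIO("file_a\tspk_1\thello world\nfile_b\tspk_2\tsecond line\n")
for utt in read_utt_spk_text(sample):
    print(utt.file_id, utt.user_id, utt.text)
```

For the real file, pass a handle opened with `open("utt_spk_text.tsv", encoding="utf-8", newline="")`. The `newline=""` argument is what the `csv` module documentation recommends for file objects.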

#### SLR36: Large Sundanese ASR training data set.

This data set contains transcribed audio data for Sundanese (~220K utterances). The data set consists of wave files and a TSV file. The file utt_spk_text.tsv contains a FileID, UserID and the transcription of the audio in the file.

The data set has been manually quality checked, but there might still be errors.

This dataset was collected by Google in Indonesia.

The dataset is distributed under Creative Commons Attribution-ShareAlike 4.0 International Public License.

See the [LICENSE](https://www.openslr.org/resources/36/LICENSE) file and https://github.com/google/language-resources#license for license information.

Copyright 2016, 2017 Google, Inc.

#### SLR41: High quality TTS data for Javanese.

This data set contains high-quality transcribed audio data for Javanese. The data set consists of wave files and a TSV file. The file line_index.tsv contains a filename and the transcription of the audio in the file. Each filename is prepended with a speaker identification number.

The data set has been manually quality checked, but there might still be errors.

This dataset was collected by Google in collaboration with Gadjah Mada University in Indonesia.

The dataset is distributed under Creative Commons Attribution-ShareAlike 4.0 International Public License.

See the [LICENSE](https://www.openslr.org/resources/41/LICENSE) file and https://github.com/google/language-resources#license for license information.

Copyright 2016, 2017, 2018 Google LLC

#### SLR42: High quality TTS data for Khmer.

This data set contains high-quality transcribed audio data for Khmer. The data set consists of wave files and a TSV file. The file line_index.tsv contains a filename and the transcription of the audio in the file. Each filename is prepended with a speaker identification number.

The data set has been manually quality checked, but there might still be errors.

This dataset was collected by Google.

The dataset is distributed under the Creative Commons Attribution-ShareAlike 4.0 International Public License.

See the [LICENSE](https://www.openslr.org/resources/42/LICENSE) file and https://github.com/google/language-resources#license for license information.

Copyright 2016, 2017, 2018 Google LLC

#### SLR43: High quality TTS data for Nepali.

This data set contains high-quality transcribed audio data for Nepali. The data set consists of wave files and a TSV file. The file line_index.tsv contains a filename and the transcription of the audio in the file. Each filename is prefixed with a speaker identification number.

The data set has been manually quality checked, but there might still be errors.

This dataset was collected by Google in Nepal.

The dataset is distributed under the Creative Commons Attribution-ShareAlike 4.0 International Public License.

See the [LICENSE](https://www.openslr.org/resources/43/LICENSE) file and https://github.com/google/language-resources#license for license information.

Copyright 2016, 2017, 2018 Google LLC

#### SLR44: High quality TTS data for Sundanese.

This data set contains high-quality transcribed audio data for Sundanese. The data set consists of wave files and a TSV file. The file line_index.tsv contains a filename and the transcription of the audio in the file. Each filename is prefixed with a speaker identification number.

The data set has been manually quality checked, but there might still be errors.

This dataset was collected by Google in collaboration with Universitas Pendidikan Indonesia.

The dataset is distributed under the Creative Commons Attribution-ShareAlike 4.0 International Public License.

See the [LICENSE](https://www.openslr.org/resources/44/LICENSE) file and https://github.com/google/language-resources#license for license information.

Copyright 2016, 2017, 2018 Google LLC

#### SLR52: Large Sinhala ASR training data set.

This data set contains transcribed audio data for Sinhala (~185K utterances). The data set consists of wave files and a TSV file. The file utt_spk_text.tsv contains a FileID, a UserID and the transcription of the audio in the file.

The data set has been manually quality checked, but there might still be errors.
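utt_spk_text.tsv records a UserID for every utterance. That makes it possible to build train/test splits in which no speaker appears on both sides, so evaluation measures generalization to unseen voices. The sketch below makes these assumptions:

- Rows are `FileID<TAB>UserID<TAB>transcription`.
- Speakers are assigned to a split by hashing the UserID. This is one possible technique, not an official split.

`speaker_disjoint_split` is a hypothetical helper.

```python
import hashlib
from typing import Iterable, List, Tuple


def speaker_disjoint_split(
    lines: Iterable[str], test_fraction: float = 0.1
) -> Tuple[List[str], List[str]]:
    """Split utt_spk_text.tsv rows so that no UserID appears in both halves.

    Each speaker is assigned by hashing its UserID, so the split is
    deterministic across runs and machines. Returns (train_ids, test_ids).
    """
    train, test = [], []
    for line in lines:
        line = line.rstrip("\n")
        if not line:
            continue  # skip blank lines
        file_id, user_id, _text = line.split("\t", 2)
        digest = hashlib.sha256(user_id.encode("utf-8")).digest()
        # 48 bits fit exactly in a float, so bucket is uniform in [0, 1)
        bucket = int.from_bytes(digest[:6], "big") / 2**48
        (test if bucket < test_fraction else train).append(file_id)
    return train, test
```

Because assignment is per speaker, the actual test fraction in utterances will only approximate `test_fraction`.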

The dataset is distributed under the Creative Commons Attribution-ShareAlike 4.0 International Public License.

See the [LICENSE](https://www.openslr.org/resources/52/LICENSE) file and https://github.com/google/language-resources#license for license information.

Copyright 2016, 2017, 2018 Google, Inc.

#### SLR53: Large Bengali ASR training data set.

This data set contains transcribed audio data for Bengali (~196K utterances). The data set consists of wave files and a TSV file. The file utt_spk_text.tsv contains a FileID, a UserID and the transcription of the audio in the file.

The data set has been manually quality checked, but there might still be errors.

The dataset is distributed under the Creative Commons Attribution-ShareAlike 4.0 International Public License.

See the [LICENSE](https://www.openslr.org/resources/53/LICENSE) file and https://github.com/google/language-resources#license for license information.

Copyright 2016, 2017, 2018 Google, Inc.

#### SLR54: Large Nepali ASR training data set.

This data set contains transcribed audio data for Nepali (~157K utterances). The data set consists of wave files and a TSV file. The file utt_spk_text.tsv contains a FileID, a UserID and the transcription of the audio in the file.

The data set has been manually quality checked, but there might still be errors.

The dataset is distributed under the Creative Commons Attribution-ShareAlike 4.0 International Public License.

See the [LICENSE](https://www.openslr.org/resources/54/LICENSE) file and https://github.com/google/language-resources#license for license information.

Copyright 2016, 2017, 2018 Google, Inc.

#### SLR63: Crowdsourced high-quality Malayalam multi-speaker speech data set

This data set contains transcribed high-quality audio of Malayalam sentences recorded by volunteers. The data set consists of wave files and a TSV file (line_index.tsv). The file line_index.tsv contains an anonymized FileID and the transcription of the audio in the file.

The data set has been manually quality checked, but there might still be errors.
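The following Python sketch loads line_index.tsv into a lookup from anonymized FileID to transcription. It assumes:

- The first tab-separated column is the FileID and the last column is the transcription, so an empty middle column would be ignored.
- Each recording is stored as `<FileID>.wav` under a chosen root directory. This file naming is an assumption and should be checked against the archive.

`load_line_index` and `audio_path` are hypothetical helpers.

```python
from pathlib import Path
from typing import Dict, Iterable


def load_line_index(lines: Iterable[str]) -> Dict[str, str]:
    """Map each anonymized FileID in line_index.tsv to its transcription."""
    index: Dict[str, str] = {}
    for line in lines:
        line = line.rstrip("\n")
        if not line:
            continue  # skip blank lines
        fields = line.split("\t")
        if len(fields) < 2:
            raise ValueError(f"expected FileID and transcription: {line!r}")
        # First column: FileID; last column: transcription (whitespace trimmed)
        file_id, text = fields[0], fields[-1].strip()
        if file_id in index:
            raise ValueError(f"duplicate FileID: {file_id}")
        index[file_id] = text
    return index


def audio_path(root: Path, file_id: str) -> Path:
    # ASSUMPTION: each recording is stored as <root>/<FileID>.wav
    return root / f"{file_id}.wav"
```

Raising on duplicate FileIDs surfaces the kind of residual errors that manual quality checking may have missed, instead of silently overwriting a transcription.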

Please report any issues on the following GitHub issue tracker: https://github.com/googlei18n/language-resources/issues

The dataset is distributed under the Creative Commons Attribution-ShareAlike 4.0 International Public License.

See the [LICENSE](https://www.openslr.org/resources/63/LICENSE) file and https://github.com/google/language-resources#license for license information.

Copyright 2018, 2019 Google, Inc.

#### SLR64: Crowdsourced high-quality Marathi multi-speaker speech data set

This data set contains transcribed high-quality audio of Marathi sentences recorded by volunteers. The data set consists of wave files and a TSV file (line_index.tsv). The file line_index.tsv contains an anonymized FileID and the transcription of the audio in the file.

The data set has been manually quality checked, but there might still be errors.

Please report any issues on the following GitHub issue tracker: https://github.com/googlei18n/language-resources/issues

The dataset is distributed under the Creative Commons Attribution-ShareAlike 4.0 International Public License.

See the [LICENSE](https://www.openslr.org/resources/64/LICENSE) file and https://github.com/google/language-resources#license for license information.

Copyright 2018, 2019 Google, Inc.

#### SLR65: Crowdsourced high-quality Tamil multi-speaker speech data set

This data set contains transcribed high-quality audio of Tamil sentences recorded by volunteers. The data set consists of wave files and a TSV file (line_index.tsv). The file line_index.tsv contains an anonymized FileID and the transcription of the audio in the file.

The data set has been manually quality checked, but there might still be errors.

Please report any issues on the following GitHub issue tracker: https://github.com/googlei18n/language-resources/issues

The dataset is distributed under the Creative Commons Attribution-ShareAlike 4.0 International Public License.

See the [LICENSE](https://www.openslr.org/resources/65/LICENSE) file and https://github.com/google/language-resources#license for license information.

Copyright 2018, 2019 Google, Inc.