datasetId

stringlengths 2

81

| card

stringlengths 20

977k

|

|---|---|

philschmid/markdown-documentation-transformers | ---

license: apache-2.0

---

# Hugging Face Transformers documentation as markdown dataset

This dataset was created using [Clipper.js](https://github.com/philschmid/clipper.js). Clipper is a Node.js command line tool that allows you to easily clip content from web pages and convert it to Markdown. It uses Mozilla's Readability library and Turndown under the hood to parse web page content and convert it to Markdown.

This dataset can be used to create RAG applications, which want to use the transformers documentation.

Example document: https://huggingface.co/docs/transformers/peft

```

# Load adapters with 🤗 PEFT

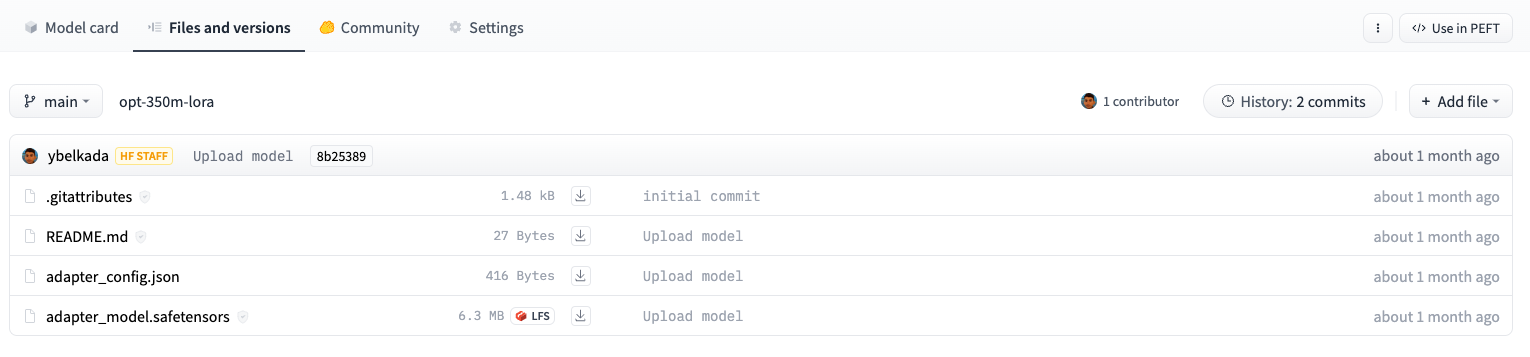

[Parameter-Efficient Fine Tuning (PEFT)](https://huggingface.co/blog/peft) methods freeze the pretrained model parameters during fine-tuning and add a small number of trainable parameters (the adapters) on top of it. The adapters are trained to learn task-specific information. This approach has been shown to be very memory-efficient with lower compute usage while producing results comparable to a fully fine-tuned model.

Adapters trained with PEFT are also usually an order of magnitude smaller than the full model, making it convenient to share, store, and load them.

The adapter weights for a OPTForCausalLM model stored on the Hub are only ~6MB compared to the full size of the model weights, which can be ~700MB.

If you’re interested in learning more about the 🤗 PEFT library, check out the [documentation](https://huggingface.co/docs/peft/index).

## Setup

Get started by installing 🤗 PEFT:

If you want to try out the brand new features, you might be interested in installing the library from source:

....

``` |

Wanfq/Explore_Instruct_Brainstorming_16k | ---

license: cc-by-nc-4.0

language:

- en

---

<p align="center" width="100%">

</p>

<div id="top" align="center">

**Explore-Instruct: Enhancing Domain-Specific Instruction Coverage through Active Exploration**

<h4> |<a href="https://arxiv.org/abs/2310.09168"> 📑 Paper </a> |

<a href="https://huggingface.co/datasets?sort=trending&search=Explore_Instruct"> 🤗 Data </a> |

<a href="https://huggingface.co/models?sort=trending&search=Explore-LM"> 🤗 Model </a> |

<a href="https://github.com/fanqiwan/Explore-Instruct"> 🐱 Github Repo </a> |

</h4>

<!-- **Authors:** -->

_**Fanqi Wan<sup>†</sup>, Xinting Huang<sup>‡</sup>, Tao Yang<sup>†</sup>, Xiaojun Quan<sup>†</sup>, Wei Bi<sup>‡</sup>, Shuming Shi<sup>‡</sup>**_

<!-- **Affiliations:** -->

_<sup>†</sup> Sun Yat-sen University,

<sup>‡</sup> Tencent AI Lab_

</div>

## News

- **Oct 16, 2023:** 🔥 We're excited to announce that the Explore-Instruct datasets in brainstorming, rewriting, and math domains are now available on 🤗 [Huggingface Datasets](https://huggingface.co/datasets?sort=trending&search=Explore_Instruct)! Additionally, we've released Explore-LM models that have been initialized with LLaMA-7B and fine-tuned with the Explore-Instruct data in each domain. You can find these models on 🤗 [Huggingface Models](https://huggingface.co/models?sort=trending&search=Explore-LM). Happy exploring and instructing!

## Contents

- [Overview](#overview)

- [Data Release](#data-release)

- [Model Release](#model-release)

- [Data Generation Process](#data-generation-process)

- [Fine-tuning](#fine-tuning)

- [Evaluation](#evaluation)

- [Limitations](#limitations)

- [License](#license)

- [Citation](#citation)

- [Acknowledgements](#acknowledgments)

## Overview

We propose Explore-Instruct, a novel approach to enhancing domain-specific instruction coverage. We posit that the domain space is inherently structured akin to a tree, reminiscent of cognitive science ontologies. Drawing from the essence of classical search algorithms and incorporating the power of LLMs, Explore-Instruct is conceived to actively traverse the domain space and generate instruction-tuning data, **not** necessitating a predefined tree structure. Specifically, Explore-Instruct employs two strategic operations: lookahead and backtracking exploration:

- **Lookahead** delves into a multitude of potential fine-grained sub-tasks, thereby mapping out a complex network of tasks

- **Backtracking** seeks alternative branches to widen the search boundary, hence extending the domain spectrum.

<p align="center">

<img src="https://github.com/fanqiwan/Explore-Instruct/blob/main/assets/fig2.png?raw=true" width="95%"> <br>

</p>

## Data Release

We release the Explore-Instruct data in brainstorming, rewriting, and math domains on 🤗 [Huggingface Datasets](https://huggingface.co/datasets?sort=trending&search=Explore_Instruct). Each domain includes two versions of datasets: the basic and extended version. The base version contains 10k instruction-tuning data and the extended version contains 16k, 32k, and 64k instruction-tuning data for each domain respectively. Each dataset is a structured data file in the JSON format. It consists of a list of dictionaries, with each dictionary containing the following fields:

- `instruction`: `str`, describes the task the model should perform.

- `input`: `str`, optional context or input for the task.

- `output`: `str`, ground-truth output text for the task and input text.

The results of data-centric analysis are shown as follows:

<p align="left">

<img src="https://github.com/fanqiwan/Explore-Instruct/blob/main/assets/fig1.png?raw=true" width="50%"> <br>

</p>

| Method | Brainstorming Unique<br/>V-N pairs | Rewriting Unique<br/>V-N pairs | Math Unique<br/>V-N pairs |

|:--------------------------------|:----------------------------------:|:------------------------------:|:-------------------------:|

| _Domain-Specific Human-Curated_ | 2 | 8 | 3 |

| _Domain-Aware Self-Instruct_ | 781 | 1715 | 451 |

| Explore-Instruct | **790** | **2015** | **917** |

## Model Release

We release the Explore-LM models in brainstorming, rewriting, and math domains on 🤗 [Huggingface Models](https://huggingface.co/models?sort=trending&search=Explore-LM). Each domain includes two versions of models: the basic and extended version trained with the corresponding version of dataset.

The results of automatic and human evaluation in three domains are shown as follows:

- Automatic evaluation:

| Automatic Comparison in the Brainstorming Domain | Win:Tie:Lose | Beat Rate |

|:-------------------------------------------------|:------------:|:---------:|

| Explore-LM vs Domain-Curated-LM | 194:1:13 | 93.72 |

| Explore-LM-Ext vs Domain-Curated-LM | 196:1:11 | 94.69 |

| Explore-LM vs Domain-Instruct-LM | 114:56:38 | 75.00 |

| Explore-LM-Ext vs Domain-Instruct-LM | 122:55:31 | 79.74 |

| Explore-LM vs ChatGPT | 52:71:85 | 37.96 |

| Explore-LM-Ext vs ChatGPT | 83:69:56 | 59.71 |

| Automatic Comparison in the Rewriting Domain | Win:Tie:Lose | Beat Rate |

|:---------------------------------------------|:------------:|:---------:|

| Explore-LM vs Domain-Curated-LM | 50:38:6 | 89.29 |

| Explore-LM-Ext vs Domain-Curated-LM | 53:37:4 | 92.98 |

| Explore-LM vs Domain-Instruct-LM | 34:49:11 | 75.56 |

| Explore-LM-Ext vs Domain-Instruct-LM | 35:53:6 | 85.37 |

| Explore-LM vs ChatGPT | 11:59:24 | 31.43 |

| Explore-LM-Ext vs ChatGPT | 12:56:26 | 31.58 |

| Automatic Comparison in the Math Domain | Accuracy Rate |

|:----------------------------------------|:-------------:|

| Domain-Curated-LM | 3.4 |

| Domain-Instruct-LM | 4.0 |

| Explore-LM | 6.8 |

| Explore-LM-Ext | 8.4 |

| ChatGPT | 34.8 |

- Human evaluation:

<p align="left">

<img src="https://github.com/fanqiwan/Explore-Instruct/blob/main/assets/fig5.png?raw=true" width="95%"> <br>

</p>

## Data Generation Process

To generate the domain-specific instruction-tuning data, please follow the following commands step by step:

### Domain Space Exploration

```

python3 generate_instruction.py \

--action extend \

--save_dir ./en_data/demo_domain \ # input dir include current domain tree for exploration

--out_dir ./en_data/demo_domain_exploration \ # output dir of the explored new domain tree

--lang <LANGUAGE> \ # currently support 'en'

--domain demo_domain \ # domain for exploration

--extend_nums <TASK_NUMBER_DEPTH_0>,...,<TASK_NUMBER_DEPTH_MAX_DEPTH-1> \ # exploration breadth at each depth

--max_depth <MAX_DEPTH> \ # exploration depth

--assistant_name <ASSISTANT_NAME> # currently support openai and claude

```

### Instruction-Tuning Data Generation

```

python3 generate_instruction.py \

--action enrich \

--save_dir ./en_data/demo_domain_exploration \ # input dir include current domain tree for data generation

--out_dir ./en_data/demo_domain_generation \ # output dir of the domain tree with generated data

--lang <LANGUAGE> \ # currently support 'en'

--domain demo_domain \ # domain for exploration

--enrich_nums <DATA_NUMBER_DEPTH_0>,...,<DATA_NUMBER_DEPTH_MAX_DEPTH> \ # data number for task at each depth

--enrich_batch_size <BATCH_SIZE> \ # batch size for data generation

--assistant_name <ASSISTANT_NAME> # currently support openai and claude

```

### Task Pruning

```

python3 generate_instruction.py \

--action prune \

--save_dir ./en_data/demo_domain_generation \ # input dir include current domain tree for task pruning

--out_dir ./en_data/demo_domain_pruning \ # output dir of the domain tree with 'pruned_subtasks_name.json' file

--lang <LANGUAGE> \ # currently support 'en'

--domain demo_domain \ # domain for exploration

--pruned_file ./en_data/demo_domain_pruning/pruned_subtasks_name.json \ # file of pruned tasks

--prune_threshold <PRUNE_THRESHOLD> \ # threshold of rouge-l overlap between task names

--assistant_name <ASSISTANT_NAME> # currently support openai and claude

```

### Data Filtering

```

python3 generate_instruction.py \

--action filter \

--save_dir ./en_data/demo_domain_pruning \ # input dir include current domain tree for data filtering

--out_dir ./en_data/demo_domain_filtering \ # output dir of the domain tree with fitered data

--lang <LANGUAGE> \ # currently support 'en'

--domain demo_domain \ # domain for exploration

--pruned_file ./en_data/demo_domain_pruning/pruned_subtasks_name.json \ # file of pruned tasks

--filter_threshold <FILTER_THRESHOLD> \ # threshold of rouge-l overlap between instructions

--assistant_name <ASSISTANT_NAME> # currently support openai and claude

```

### Data Sampling

```

python3 generate_instruction.py \

--action sample \

--save_dir ./en_data/demo_domain_filtering \ # input dir include current domain tree for data sampling

--out_dir ./en_data/demo_domain_sampling \ # output dir of the domain tree with sampled data

--lang <LANGUAGE> \ # currently support 'en'

--domain demo_domain \ # domain for exploration

--pruned_file ./en_data/demo_domain_filtering/pruned_subtasks_name.json \ # file of pruned tasks

--sample_example_num <SAMPLE_EXAMPLES_NUM> \ # number of sampled examples

--sample_max_depth <SAMPLE_MAX_DEPTH> \ # max depth for data sampling

--sample_use_pruned \ # do not sample from pruned tasks

--assistant_name <ASSISTANT_NAME> # currently support openai and claude

```

## Fine-tuning

We fine-tune LLaMA-7B with the following hyperparameters:

| Hyperparameter | Global Batch Size | Learning rate | Epochs | Max length | Weight decay |

|:----------------|-------------------:|---------------:|--------:|------------:|--------------:|

| LLaMA 7B | 128 | 2e-5 | 3 | 512| 0 |

To reproduce the training procedure, please use the following command:

```

deepspeed --num_gpus=8 ./train/train.py \

--deepspeed ./deepspeed_config/deepspeed_zero3_offload_config.json \

--model_name_or_path decapoda-research/llama-7b-hf \

--data_path ./en_data/demo_domain_sampling \

--fp16 True \

--output_dir ./training_results/explore-lm-7b-demo-domain \

--num_train_epochs 3 \

--per_device_train_batch_size 2 \

--per_device_eval_batch_size 2 \

--gradient_accumulation_steps 8 \

--evaluation_strategy "no" \

--model_max_length 512 \

--save_strategy "steps" \

--save_steps 2000 \

--save_total_limit 1 \

--learning_rate 2e-5 \

--weight_decay 0. \

--warmup_ratio 0.03 \

--lr_scheduler_type "cosine" \

--logging_steps 1 \

--prompt_type alpaca \

2>&1 | tee ./training_logs/explore-lm-7b-demo-domain.log

python3 ./train/zero_to_fp32.py \

--checkpoint_dir ./training_results/explore-lm-7b-demo-domain \

--output_file ./training_results/explore-lm-7b-demo-domain/pytorch_model.bin

```

## Evaluation

The evaluation datasets for different domains are as follows:

- Brainstorming and Rewriting: From the corresponding categories in the translated test set of BELLE. ([en_eval_set.jsonl](./eval/question/en_eval_set.jsonl))

- Math: From randomly selected 500 questions from the test set of MATH. ([MATH_eval_set_sample.jsonl](./eval/question/MATH_eval_set_sample.jsonl))

The evaluation metrics for different domains are as follows:

- Brainstorming and Rewriting: Both automatic and human evaluations following Vicuna.

- Math: Accuracy Rate metric in solving math problems.

The automatic evaluation commands for different domains are as follows:

```

# Brainstorming and Rewriting Domain

# 1. Inference

python3 ./eval/generate.py \

--model_id <MODEL_ID> \

--model_path <MODEL_PATH> \

--question_file ./eval/question/en_eval_set.jsonl \

--answer_file ./eval/answer/<MODEL_ID>.jsonl \

--num_gpus 8 \

--num_beams 1 \

--temperature 0.7 \

--max_new_tokens 512 \

--prompt_type alpaca \

--do_sample

# 2. Evaluation

python3 ./eval/chatgpt_score.py \

--baseline_file ./eval/answer/<MODEL_1>.jsonl \ # answer of baseline model to compare with

--answer_file ./eval/answer/<MODEL_2>.jsonl \ # answer of evaluation model

--review_file ./eval/review/<MODEL_1>_cp_<MODEL_2>_<DOMAIN>.jsonl \ # review from chatgpt

--prompt_file ./eval/prompt/en_review_prompt_compare.jsonl \ # evaluation prompt for chatgpt

--target_classes <DOMAIN> \ # evaluation domain

--batch_size <BATCH_SIZE> \

--review_model "gpt-3.5-turbo-0301"

```

```

# Math Domain

# 1. Inference

python3 ./eval/generate.py \

--model_id <MODEL_ID> \

--model_path <MODEL_PATH> \

--question_file ./eval/question/MATH_eval_set_sample.jsonl \

--answer_file ./eval/answer/<MODEL_ID>.jsonl \

--num_gpus 8 \

--num_beams 10 \

--temperature 1.0 \

--max_new_tokens 512 \

--prompt_type alpaca

# 2. Evaluation

python3 ./eval/auto_eval.py \

--question_file ./eval/question/MATH_eval_set_sample.jsonl \

--answer_file ./eval/answer/<MODEL_ID>.jsonl # answer of evaluation model

```

## Limitations

Explore-Instruct is still under development and needs a lot of improvements. We acknowledge that our work focuses on the enhancement of domain-specific instruction coverage and does not address other aspects of instruction-tuning, such as the generation of complex and challenging instructions or the mitigation of toxic and harmful instructions. Future work is needed to explore the potential of our approach in these areas.

## License

Explore-Instruct is intended and licensed for research use only. The dataset is CC BY NC 4.0 (allowing only non-commercial use) and models trained using the dataset should not be used outside of research purposes. The weights of Explore-LM models are also CC BY NC 4.0 (allowing only non-commercial use).

## Citation

If you find this work is relevant with your research or applications, please feel free to cite our work!

```

@misc{wan2023explore,

title={Explore-Instruct: Enhancing Domain-Specific Instruction Coverage through Active Exploration},

author={Fanqi, Wan and Xinting, Huang and Tao, Yang and Xiaojun, Quan and Wei, Bi and Shuming, Shi},

year={2023},

eprint={2310.09168},

archivePrefix={arXiv},

primaryClass={cs.CL}

}

```

## Acknowledgments

This repo benefits from [Stanford-Alpaca](https://github.com/tatsu-lab/stanford_alpaca) and [Vicuna](https://github.com/lm-sys/FastChat). Thanks for their wonderful works!

|

khaimaitien/qa-expert-multi-hop-qa-V1.0 | ---

task_categories:

- question-answering

- text-generation

language:

- en

pretty_name: Multi-hop Question Answering

size_categories:

- 10K<n<100K

---

# Dataset Card for QA-Expert-multi-hop-qa-V1.0

This dataset aims to provide **multi-domain** training data for the task: Question Answering, with a focus on <b>Multi-hop Question Answering</b>.

In total, this dataset contains 25.5k for training and 3.19k for evaluation.

You can take a look at the model we trained on this data: [https://huggingface.co/khaimaitien/qa-expert-7B-V1.0](https://huggingface.co/khaimaitien/qa-expert-7B-V1.0)

The dataset is mostly generated using the OpenAPI model (**gpt-3.5-turbo-instruct**). Please read more information about how we created this dataset from here: [https://github.com/khaimt/qa_expert/tree/main/gen_data](https://github.com/khaimt/qa_expert/tree/main/gen_data)

. The repository contains the **scripts for generating the training data**, so you can run the available scripts to generate more data.

Example of single question: what is the capital city of Vietnam?

Example of multi-hop question: what is the population of the capital city of Vietnam?

## Dataset Details

### Dataset Description

### Format

Each data point is a Json:

+ **question**: the question, can be single question or multi-hop question

+ **multihop**: True/False whether the question is multihop or not

+ **sub_questions**: List of decomposed single questions from question. If the question is single question, ```len(sub_questions) == 1```

+ **question**: single question decomposed from original multi-hop question

+ **paragraph**: the retrieval context for the single question

+ **long_answer**: the answer to the single question, the format is: xxx\nAnswer:yyy where xxx is the reasoning (thought) before generte answer to the question.

+ **final_answer**: The final answer to the question. If the question is multihop, this has the form: Summary:xxx\nAnswer:yyy Where xxx is the summary of anwers from decomposed single questions before generating final answer: yyy

+ **answer**: <i>Can ignore this field</i>

+ **meta_info**: contains the information about how the data point was created

+ **tag**: <i>can ignore this field</i>

- **Curated by:** [More Information Needed]

- **Funded by [optional]:** [More Information Needed]

- **Shared by [optional]:** [More Information Needed]

- **Language(s) (NLP):** [More Information Needed]

### Dataset Sources [optional]

<!-- Provide the basic links for the dataset. -->

- **Repository:** [More Information Needed]

- **Paper [optional]:** [More Information Needed]

- **Demo [optional]:** [More Information Needed]

## Uses

<!-- Address questions around how the dataset is intended to be used. -->

### Direct Use

<!-- This section describes suitable use cases for the dataset. -->

[More Information Needed]

### Out-of-Scope Use

<!-- This section addresses misuse, malicious use, and uses that the dataset will not work well for. -->

[More Information Needed]

## Dataset Structure

<!-- This section provides a description of the dataset fields, and additional information about the dataset structure such as criteria used to create the splits, relationships between data points, etc. -->

[More Information Needed]

## Dataset Creation

### Curation Rationale

<!-- Motivation for the creation of this dataset. -->

[More Information Needed]

### Source Data

<!-- This section describes the source data (e.g. news text and headlines, social media posts, translated sentences, ...). -->

#### Data Collection and Processing

<!-- This section describes the data collection and processing process such as data selection criteria, filtering and normalization methods, tools and libraries used, etc. -->

[More Information Needed]

#### Who are the source data producers?

<!-- This section describes the people or systems who originally created the data. It should also include self-reported demographic or identity information for the source data creators if this information is available. -->

[More Information Needed]

### Annotations [optional]

<!-- If the dataset contains annotations which are not part of the initial data collection, use this section to describe them. -->

#### Annotation process

<!-- This section describes the annotation process such as annotation tools used in the process, the amount of data annotated, annotation guidelines provided to the annotators, interannotator statistics, annotation validation, etc. -->

[More Information Needed]

#### Who are the annotators?

<!-- This section describes the people or systems who created the annotations. -->

[More Information Needed]

#### Personal and Sensitive Information

<!-- State whether the dataset contains data that might be considered personal, sensitive, or private (e.g., data that reveals addresses, uniquely identifiable names or aliases, racial or ethnic origins, sexual orientations, religious beliefs, political opinions, financial or health data, etc.). If efforts were made to anonymize the data, describe the anonymization process. -->

[More Information Needed]

## Bias, Risks, and Limitations

<!-- This section is meant to convey both technical and sociotechnical limitations. -->

[More Information Needed]

### Recommendations

<!-- This section is meant to convey recommendations with respect to the bias, risk, and technical limitations. -->

Users should be made aware of the risks, biases and limitations of the dataset. More information needed for further recommendations.

## Citation [optional]

```

@Misc{qa-expert,

title={QA Expert: LLM for Multi-hop Question Answering},

author={Khai Mai},

howpublished={\url{https://github.com/khaimt/qa_expert}},

year={2023},

}

```

**BibTeX:**

[More Information Needed]

**APA:**

[More Information Needed]

## Glossary [optional]

<!-- If relevant, include terms and calculations in this section that can help readers understand the dataset or dataset card. -->

[More Information Needed]

## More Information [optional]

[More Information Needed]

## Dataset Card Authors [optional]

[More Information Needed]

## Dataset Card Contact

[More Information Needed] |

erhwenkuo/moss-003-sft-chinese-zhtw | ---

dataset_info:

features:

- name: conversation_id

dtype: int64

- name: category

dtype: string

- name: conversation

list:

- name: human

dtype: string

- name: assistant

dtype: string

splits:

- name: train

num_bytes: 8438001353

num_examples: 1074551

download_size: 4047825896

dataset_size: 8438001353

configs:

- config_name: default

data_files:

- split: train

path: data/train-*

license: cc

task_categories:

- conversational

language:

- zh

size_categories:

- 1M<n<10M

---

# Dataset Card for "moss-003-sft-chinese-zhtw"

## 資料集摘要

本資料集主要是應用於專案:[MOSS: 開源對話語言模型](https://github.com/OpenLMLab/MOSS) 所收集的數據。

[MOSS]((https://github.com/OpenLMLab/MOSS)) 是支援中英雙語和多種外掛程式的開源對話語言模型,moss-moon 系列模型具有160億參數,在FP16精度下可在單張A100/A800或兩張3090顯示卡運行,在INT4/8精度下可在單張3090顯示卡運行。 MOSS基座語言模型在約七千億中英文以及程式碼單字上預訓練得到,後續經過對話指令微調、插件增強學習和人類偏好訓練具備多輪對話能力及使用多種插件的能力。

## 原始資料來源

- [moss-003-sft-data](https://github.com/OpenLMLab/MOSS/tree/main/SFT_data): `moss-moon-003-sft` 所使用的多輪對話數據,基於 MOSS-002 內測階段採集的約10萬用戶輸入數據和 gpt-3.5-turbo 構造而成,相比 `moss-002-sft-data`,`moss-003-sft-data` 更符合真實使用者意圖分佈,包含更細緻的有用性類別標記、更廣泛的無害性資料和更長對話輪數,約含110萬條對話數據。完整資料已全部開源。

- [YeungNLP/moss-003-sft-data](https://huggingface.co/datasets/YeungNLP/moss-003-sft-data)

## 資料下載清理

在原始資料集的基礎上,[YeungNLP/moss-003-sft-data](https://huggingface.co/datasets/YeungNLP/moss-003-sft-data) 去除了冗餘訊息,僅提取出有效的對話訊息,並且調整資料格式,以便在訓練中更加靈活地組織資料格式。

1. 下載 [YeungNLP/moss-003-sft-data](https://huggingface.co/datasets/YeungNLP/moss-003-sft-data)

2. 使用 OpenCC 來進行簡繁轉換

3. 使用 Huggingface Datasets 來上傳至 Huggingface Hub

## 資料集結構

本資料集原始為jsonl格式,每一行的樣本為一個多輪對話數據,格式如下:

```json

{

"conversation_id":1,

"category":"Brainstorming",

"conversation":[

{

"human":"如何保障工作中遵循正確的安全準則?",

"assistant":"為了保障工作中遵循正確的安全準則,以下是一些建議:\n\n1.瞭解相關安全規定和標準:瞭解相關的安全規定和標準,並遵守它們。這可以包括公司和政府的安全標準,以及行業標準和最佳實踐。\n\n2.培訓和教育:確保您和您的同事接受了必要的培訓和教育,以瞭解正確的安全準則和行為。\n\n3.使用正確的工具和裝置:確保您使用正確的工具和裝置,並且它們得到了正確的維護和保養。\n\n4.個人防護裝備:確保您和您的同事穿戴正確的個人防護裝備,如安全鞋、透明眼鏡或面罩、手套等。\n\n5.建立一個安全文化:鼓勵個人對安全的責任感和意識,並建立一個安全文化,使人們始終關注他們自己和他人的安全。\n\n6.持續監測和改進:持續監測和改進安全準則和程式,以確保它們保持最新,並適應變化的情況。\n\n這些措施可以幫助您和您的同事在工作中保障正確的安全準則,確保您的工作場所是一個安全的環境。"

},

{

"human":"如何應對突發緊急情況?",

"assistant":"突發緊急情況可能會在任何時間發生,為了應對這些情況,以下是一些建議:\n\n1.保持冷靜:保持冷靜和清醒,以便能夠更好地應對情況。\n\n2.評估情況:評估緊急情況的性質和範圍,以便了解應該採取哪些行動。\n\n3.觸發應急計劃:如果您的機構或公司有應急計劃,立即觸發它,並按照計劃的步驟行動。\n\n4.聯絡相關人員:通知必要的人員,例如緊急服務、管理層或其他相關人員,並與他們保持聯絡。\n\n5.遵循安全準則:在處理緊急情況時,請遵循正確的安全準則,確保自己和其他人的安全。\n\n6.提供及時資訊:儘快向相關人員提供準確的資訊,以便他們能夠做出正確的決策。\n\n7.合作應對:與其他人合作應對緊急情況並相互支援。\n\n總之,應對突發緊急情況需要保持冷靜和清醒,評估情況並採取適當的行動。同時,需要遵循正確的安全準則,並與其他人合作應對緊急情況。"

},

{

"human":"在網路安全方面,如何避免遭受駭客攻擊?",

"assistant":"以下是一些避免遭受駭客攻擊的建議:\n\n1.更新安全軟體:確保您的作業系統、應用程式和安全軟體是最新的,並隨時更新它們。這可以幫助您及時發現和修復安全漏洞。\n\n2.使用強密碼:使用強密碼,包括字母、數字和特殊字元。不要使用容易猜測的密碼,如生日、姓名或常用單詞。\n\n3.使用多因素身份驗證:使用多因素身份驗證來增加賬戶的安全性,例如傳送簡訊驗證碼或使用指紋識別。\n\n4.小心附件和連結:不要開啟未知的附件或連結,因為它們可能包含惡意軟體。\n\n5.備份重要檔案:定期備份重要檔案,並將其儲存在安全的位置。\n\n6.保護個人資訊:不要在網際網路上公開個人資訊,例如出生日期、地址或社交安全號碼。\n\n7.使用加密連線:在訪問敏感資訊時,使用加密連線,如HTTPS。\n\n8.教育員工:向員工提供網路安全培訓,並確保他們瞭解正確的安全措施和行為。\n\n總之,避免遭受駭客攻擊需要採取多種措施,包括更新安全軟體、使用強密碼、使用多因素身份驗證、小心附件和連結、備份重要檔案、保護個人資訊、使用加密連線以及教育員工。這些措施可以幫助您保護個人資訊和資料免受駭客攻擊。"

},

{

"human":"如何確保網路連線的安全性?",

"assistant":"以下是一些確保網路連線安全性的建議:\n\n1.使用加密連線:使用加密連線,如HTTPS,以確保資料傳輸過程中不被竊聽或篡改。\n\n2.使用防病毒軟體:安裝和更新防病毒軟體,可以幫助檢測和清除惡意軟體。\n\n3.使用防火牆:使用防火牆保護網路和裝置,可以幫助阻止未經授權的訪問和攻擊。\n\n4.禁止未經授權的訪問:禁止未經授權的訪問,包括限制網路訪問和裝置訪問許可權。\n\n5.定期更新作業系統和應用程式:定期更新作業系統和應用程式,以修復安全漏洞和缺陷。\n\n6.使用強密碼:使用強密碼,包括字母、數字和特殊字元,並定期更改密碼。\n\n7.控制訪問:控制訪問網路和裝置的人員,僅授權人員可訪問重要資源。\n\n8.教育員工:向員工提供網路安全培訓,並確保他們瞭解正確的安全措施和行為。\n\n總之,確保網路連線的安全性需要採取多種措施,包括使用加密連線、使用防病毒軟體、使用防火牆、禁止未經授權的訪問、定期更新作業系統和應用程式、使用強密碼、控制訪問和教育員工。這些措施可以幫助您保護網路和裝置免受未經授權的訪問和攻擊。"

},

{

"human":"對於金融機構來說,如何保護客戶的隱私和資料安全?",

"assistant":"金融機構需要對客戶的隱私和資料安全高度重視,以下是一些保護客戶隱私和資料安全的建議:\n\n1.遵守合規要求:確保遵守所有適用的合規要求和監管標準,包括資料隱私法規和安全標準。\n\n2.加強身份驗證:採用多因素身份驗證,例如使用簡訊驗證碼、指紋識別等,以確保客戶身份的真實性。\n\n3.加密敏感資料:使用加密技術對敏感資料進行加密,以保護客戶資訊不被未經授權的人員獲得。\n\n4.建立安全控制:建立安全控制,包括訪問控制、安全審計和安全事件響應,以保護客戶資訊的安全性。\n\n5.教育員工:向員工提供網路安全培訓,並確保他們瞭解正確的安全措施和行為。\n\n6.定期測試:定期進行安全測試和漏洞掃描,以發現和修復潛在的安全漏洞。\n\n7.建立應急計劃:建立應急計劃,並對其進行定期測試,以確保在緊急情況下能夠及時響應和恢復。\n\n總之,保護客戶隱私和資料安全需要採取多種措施,包括遵守合規要求、加強身份驗證、加密敏感資料、建立安全控制、教育員工、定期測試和建立應急計劃。這些措施可以幫助金融機構保護客戶資訊的安全性和隱私。"

}

]

}

```

## 資料欄位

- `conversation_id`: (int64) 資料編號

- `category`: (string) 對話類別

- `conversation`: (list) 多輪對話

- `human`: (string) 使用者角色的詢問

- `assistant`: (string) 小助手的回答

## 如何使用

```python

from datasets import load_dataset

dataset = load_dataset("erhwenkuo/moss-003-sft-chinese-zhtw", split="train")

```

## 許可資訊

[CC BY-NC 4.0](https://creativecommons.org/licenses/by-nc/4.0/deed.zh-hant)

## 引用

```

@article{sun2023moss,

title={MOSS: Training Conversational Language Models from Synthetic Data},

author={Tianxiang Sun and Xiaotian Zhang and Zhengfu He and Peng Li and Qinyuan Cheng and Hang Yan and Xiangyang Liu and Yunfan Shao and Qiong Tang and Xingjian Zhao and Ke Chen and Yining Zheng and Zhejian Zhou and Ruixiao Li and Jun Zhan and Yunhua Zhou and Linyang Li and Xiaogui Yang and Lingling Wu and Zhangyue Yin and Xuanjing Huang and Xipeng Qiu},

year={2023}

}

``` |

StrangeCroissant/fantasy_dataset | ---

task_categories:

- text-generation

- question-answering

language:

- en

tags:

- books

- fantasy

- scifi

- text

size_categories:

- 10K<n<100K

---

# Fantasy/Sci-fi Dataset

This dataset contains fantasy and scifi books in plain text format. Each line of the dataset represents each sentence of the concated corpus for the following books:

1. 01 Horselords.txt

2. 01 The Second Generation.txt 02 Tantras.txt

3. R.A. Salvatore - The Icewind Dale Trilogy - 2 - Streams of Silver.txt

4. RA SalvatoreThe Legacy of The Drow - 2 - Starless Night.txt

5. R.A.Salvatore - Icewind Dale Trilogy 1 - The Crystal Shard.txt

6. Star Wars - [Thrawn Trilogy 02] - Dark Force Rising (by Timothy Zahn).txt

7. Robert Jordan - The Wheel of Time 01 - Eye of the world.txt

8. 03 Crusade.txt

9. Salvatore, RA - Cleric Quintet 5 -The Chaos Curse.txt

10. 03 Waterdeep.txt Clarke Arthur C - 3001 The Final Odissey.txt

11. Dragonlance Preludes 2 vol 2 - Flint the King.txt

12. 03 Dragons of Spring Dawning.txt

13. Lloyd Alexander - [Chronicles Of Prydain 4] Taran Wanderer.txt

14. 01 Dragons of Autumn Twilight.txt

15. 03 The Two Swords.txt

16. Robert Jordan - 12 - The Gathering Storm - Chapter One.txt

17. 02 War Of The Twins.txt

18. 01 - The Fellowship Of The Ring.txt

19. 02 The Lone Drow.txt

20. 01 The Thousand Orcs.txt Auel, Jean - Earth's Children

21. 03 - The Mammoth Hunters.txt 01 Shadowdale.txt Salvatore, RA - Cleric Quintet 3 - Night Masks.txt

22. Robert Jordan - The Strike at Shayol Ghul.txt

23. Salvatore, R.A. - Paths of Darkness 1 - The Silent Blade.txt

24. Clancy Tom - Patriot Games.txt

25. Lloyd Alexander - [Chronicles Of Prydain 1] Book of Three.txt

26. Lloyd Alexander - [Chronicles Of Prydain 2] Black Cauldron.txt

27. Salvatore, R.A. - Paths of Darkness 3 - Servant of the Shard.txt

28. 02 Crown of Fire.txt

29. 04 Prince of Lies.txt

30. Salvatore, R.A. - Paths of Darkness 2 - The Spine of the World.txt

31. Robert Jordan - The Wheel of Time 11 - Knife of Dreams.txt

32. Lloyd Alexander - [Chronicles Of Prydain 3] Castle Of Llyr.txt R.A. Salvatore - The Dark Elf Trilogy.txt

33. 02 Dragonwall.txt Frank Herbert - Dune.txt

34. 02 - The Two Towers.txt

35. Salvatore, RA - Cleric Quintet 4 - The Fallen Fortress.txt

36. Robert Jordan - The Wheel of Time 04 - The Shadow Rising.txt

37. Robert Jordan - The Wheel of Time 10 - Crossroads of Twilight.txt

38. Harry Potter 2 - Chamber of Secrets.txt

39. Auel, Jean - Earth's Children 01 - The Clan of the Cave Bear.txt

40. Harry Potter 6 - The Half Blood Prince.txt

41. Robert Jordan - The Wheel of Time 03 - The Dragon Reborn.txt

42. R.A. Salvatore - The Legacy of the Drow 1 - Legacy.txt

43. 01 Spellfire.txt Frank Herbert - Children of Dune.txt

44. 01 Time Of The Twins.txt

45. R.A. Salvatore - The Legacy of the Drow III - Siege of Darkness.txt

46. Robert Jordan - The Wheel of Time 08 - The Path of Daggers.txt

47. R.A. Salvatore - The Icewind Dale Trilogy - 3 - The Halfling's Gem.txt

48. Auel, Jean - Earth's Children 05 - The Shelters Of Stone.txt

49. Harry Potter 7 - Deathly Hollows.txt

50. Robert Jordan - The Wheel of Time 07 - A Crown of Swords.txt

51. Harry Potter 1 - Sorcerer's Stone.txt

52. 05 Crucible - The Trial Of Cyric The Mad.txt Star Wars - [Thrawn Trilogy 01] - Heir to the Empire (by Timothy Zahn).txt

53. Robert Jordan - The Wheel of Time 05 - The Fires of Heaven.txt Robert Jordan - The Wheel of Time Compendium.txt

|

JosephLee/science_textbook_elementary_kor_seed | ---

task_categories:

- question-answering

language:

- ko

pretty_name: test dataset

--- |

teowu/LSVQ-videos | ---

license: mit

task_categories:

- video-classification

tags:

- video quality assessment

---

This is an **unofficial** copy of the videos in the *LSVQ dataset (Ying et al, CVPR, 2021)*, the largest dataset available for Non-reference Video Quality Assessment (NR-VQA); this is to facilitate research studies on this dataset given that we have received several reports that the original links of the dataset is not available anymore.

*See [FAST-VQA](https://github.com/VQAssessment/FAST-VQA-and-FasterVQA) (Wu et al, ECCV, 2022) or [DOVER](https://github.com/VQAssessment/DOVER) (Wu et al, ICCV, 2023) repo on its converted labels (i.e. quality scores for videos).*

The file links to the labels in either of the repositories above are as follows:

```

--- examplar_data_labels

--- --- train_labels.txt (this is the training set labels of LSVQ)

--- --- LSVQ

--- --- --- labels_test.txt (this is the LSVQ_test test subset)

--- --- --- labels_1080p.txt (this is the LSVQ_1080p test subset)

```

It should be noticed that the copyright of this dataset still belongs to the Facebook Research and LIVE Laboratory in UT Austin, and we may delete this unofficial repo at any time if requested by the copyright holders.

Here is the original copyright notice of this dataset, as follows.

-----------COPYRIGHT NOTICE STARTS WITH THIS LINE------------ Copyright (c) 2020 The University of Texas at Austin All rights reserved.

Permission is hereby granted, without written agreement and without license or royalty fees, to use, copy, modify, and distribute this database (the images, the results and the source files) and its documentation for any purpose, provided that the copyright notice in its entirety appear in all copies of this database, and the original source of this database, Laboratory for Image and Video Engineering (LIVE, http://live.ece.utexas.edu ) at the University of Texas at Austin (UT Austin, http://www.utexas.edu ), is acknowledged in any publication that reports research using this database.

The following papers are to be cited in the bibliography whenever the database is used as:

Z. Ying, M. Mandal, D. Ghadiyaram and A.C. Bovik, "Patch-VQ: ‘Patching Up’ the Video Quality Problem," arXiv 2020.[paper]

Z. Ying, M. Mandal, D. Ghadiyaram and A.C. Bovik, "LIVE Large-Scale Social Video Quality (LSVQ) Database", Online:https://github.com/baidut/PatchVQ, 2020.

IN NO EVENT SHALL THE UNIVERSITY OF TEXAS AT AUSTIN BE LIABLE TO ANY PARTY FOR DIRECT, INDIRECT, SPECIAL, INCIDENTAL, OR CONSEQUENTIAL DAMAGES ARISING OUT OF THE USE OF THIS DATABASE AND ITS DOCUMENTATION, EVEN IF THE UNIVERSITY OF TEXAS AT AUSTIN HAS BEEN ADVISED OF THE POSSIBILITY OF SUCH DAMAGE.

THE UNIVERSITY OF TEXAS AT AUSTIN SPECIFICALLY DISCLAIMS ANY WARRANTIES, INCLUDING, BUT NOT LIMITED TO, THE IMPLIED WARRANTIES OF MERCHANTABILITY AND FITNESS FOR A PARTICULAR PURPOSE. THE DATABASE PROVIDED HEREUNDER IS ON AN "AS IS" BASIS, AND THE UNIVERSITY OF TEXAS AT AUSTIN HAS NO OBLIGATION TO PROVIDE MAINTENANCE, SUPPORT, UPDATES, ENHANCEMENTS, OR MODIFICATIONS.

-----------COPYRIGHT NOTICE ENDS WITH THIS LINE------------ |

renumics/f1_dataset | ---

dataset_info:

features:

- name: Time

dtype: duration[ns]

- name: Driver

dtype: string

- name: DriverNumber

dtype: string

- name: LapTime

dtype: duration[ns]

- name: LapNumber

dtype: float64

- name: Stint

dtype: float64

- name: PitOutTime

dtype: duration[ns]

- name: PitInTime

dtype: duration[ns]

- name: Sector1Time

dtype: duration[ns]

- name: Sector2Time

dtype: duration[ns]

- name: Sector3Time

dtype: duration[ns]

- name: Sector1SessionTime

dtype: duration[ns]

- name: Sector2SessionTime

dtype: duration[ns]

- name: Sector3SessionTime

dtype: duration[ns]

- name: SpeedI1

dtype: float64

- name: SpeedI2

dtype: float64

- name: SpeedFL

dtype: float64

- name: SpeedST

dtype: float64

- name: IsPersonalBest

dtype: bool

- name: Compound

dtype: string

- name: TyreLife

dtype: float64

- name: FreshTyre

dtype: bool

- name: Team

dtype: string

- name: LapStartTime

dtype: duration[ns]

- name: LapStartDate

dtype: timestamp[ns]

- name: TrackStatus

dtype: string

- name: Position

dtype: float64

- name: Deleted

dtype: bool

- name: DeletedReason

dtype: string

- name: FastF1Generated

dtype: bool

- name: IsAccurate

dtype: bool

- name: DistanceToDriverAhead

sequence:

sequence: float64

- name: RPM

sequence:

sequence: float64

- name: Speed

sequence:

sequence: float64

- name: nGear

sequence:

sequence: float64

- name: Throttle

sequence:

sequence: float64

- name: Brake

sequence:

sequence: float64

- name: DRS

sequence:

sequence: float64

- name: X

sequence:

sequence: float64

- name: Y

sequence:

sequence: float64

- name: Z

sequence:

sequence: float64

- name: gear_vis

dtype: image

- name: speed_vis

dtype: image

- name: RPM_emb

sequence: float64

- name: Speed_emb

sequence: float64

- name: nGear_emb

sequence: float64

- name: Throttle_emb

sequence: float64

- name: Brake_emb

sequence: float64

- name: X_emb

sequence: float64

- name: Y_emb

sequence: float64

- name: Z_emb

sequence: float64

- name: portrait

dtype: image

splits:

- name: train

num_bytes: 561415487.5469999

num_examples: 1317

download_size: 300522146

dataset_size: 561415487.5469999

configs:

- config_name: default

data_files:

- split: train

path: data/train-*

---

# Dataset Card for "f1_dataset"

This dataset includes race telemetry data from the Formula1 Montreail 2023 GP. It was obtained from the Ergast API using the fastf1 library.

We built an [interactive demo](https://huggingface.co/spaces/renumics/f1_montreal_gp) for this dataset on Hugging Face spaces.

You can explore the dataset on your machine with [Spotlight](https://github.com/Renumics/spotlight):

```bash

pip install renumics-spotlight

```

```Python

import datasets

from renumics import spotlight

ds = datasets.load_dataset('renumics/f1_dataset', split='train')

dtypes = {"DistanceToDriverAhead": spotlight.Sequence1D, "RPM": spotlight.Sequence1D, "Speed": spotlight.Sequence1D, "nGear": spotlight.Sequence1D,

"Throttle": spotlight.Sequence1D, "Brake": spotlight.Sequence1D, "DRS": spotlight.Sequence1D, "X": spotlight.Sequence1D, "Y": spotlight.Sequence1D, "Z": spotlight.Sequence1D,

'RPM_emb': spotlight.Embedding, 'Speed_emb': spotlight.Embedding, 'nGear_emb': spotlight.Embedding, 'Throttle_emb': spotlight.Embedding, 'Brake_emb': spotlight.Embedding,

'X_emb': spotlight.Embedding, 'Y_emb': spotlight.Embedding, 'Z_emb': spotlight.Embedding}

spotlight.show(ds, dtype=dtypes)

``` |

stas/openwebtext-synthetic-testing | ---

license: apache-2.0

---

Using 10 records from [openwebtext-10k](https://huggingface.co/datasets/stas/openwebtext-10k) this dataset is written for very fast testing and can produce a repeat of these 10 records in a form of 1, 2, 3, 4, 5, 10, 100, 300 or 1k records splits, e.g.:

```

$ python -c 'from datasets import load_dataset; \

ds=load_dataset("stas/openwebtext-synthetic-testing", split="10.repeat"); print(len(ds))'

10

$ python -c 'from datasets import load_dataset; \

ds=load_dataset("stas/openwebtext-synthetic-testing", split="1k.repeat"); print(len(ds))'

1000

```

Each record is just a single `text` record of several paragraphs long - web articles.

As this is used for very fast functional testing on CI there is no `train` or `validation` splits, you can just repeat the same records.

|

ajibawa-2023/SlimOrca-ShareGPT | ---

license: mit

language:

- en

size_categories:

- 100K<n<1M

task_categories:

- token-classification

- text-classification

pretty_name: SoS

---

**SlimOrca-ShareGPT**

This dataset is in Vicuna/ShareGPT format. There are 517981 set of conversations. Each set having 2 conversations.

Original dataset was released by [Open-Orca](https://huggingface.co/datasets/Open-Orca/SlimOrca). I have refined it so that "system" is not present.

Idea is to check how this dataset will perform on Llama-2 & Mistral Models. I will relese both models very soon.

Will this dataset help to improve performance of fine tuned model?

All the credit goes to the Open-Orca team for releasing Orca & SlimOrca datasets. |

YangyiYY/LVLM_NLF | ---

task_categories:

- conversational

- text-generation

language:

- en

pretty_name: LVLM_NLF

size_categories:

- 10K<n<100K

---

NOTE: LVLM_NLF and VLSafe are constructed based on COCO and LLaVA. So the image can be directly retrieved from the COCO train-2017 version using the image id.

LVLM_NLF (Large Vision Language Model with Natural Language Feedback) Dataset Card

Dataset details

Dataset type: LVLM_NLF is a GPT-4-Annotated natural language feedback dataset that aims to improve the 3H alignment and interaction ability of large vision-language models (LVLMs).

Dataset date: LVLM_NLF was collected between September and November 2023.

Paper of this dataset: https://arxiv.org/abs/2311.10081

VLSafe (vision-language safety) Dataset Card

We also create and release VLSafe dataset, which contains training and testing sets for improving and examining the harmlessness alignment of LVLMs.

Dataset type: VLSafe is a GPT-3.5-Turbo-Annotated dataset.

Dataset date: LVLM_NLF was collected between September and October 2023.

|

kwaikeg/KAgentInstruct | ---

license: cc-by-nc-sa-4.0

language:

- zh

- en

size_categories:

- 100K<n<200K

task_categories:

- text-generation

---

KAgentInstruct is the instruction-tuning dataset proposed in KwaiAgents ([Github](https://github.com/KwaiKEG/KwaiAgents)), which is a series of Agent-related works open-sourced by the [KwaiKEG](https://github.com/KwaiKEG) from [Kuaishou Technology](https://www.kuaishou.com/en). It contains over 200k agent-related instructions finetuning data (partially human-edited). Note that the dataset does not contain General-type data mentioned in the [paper](https://arxiv.org/pdf/2312.04889.pdf).

## Overall statistics of KAgentInstruct

We incorporate open-source templates ([ReACT](https://github.com/ysymyth/ReAct), [AutoGPT](https://github.com/Significant-Gravitas/AutoGPT), [ToolLLaMA](https://github.com/OpenBMB/ToolBench), [ModelScope](https://github.com/modelscope/modelscope-agent)), the KAgentSys template, and our Meta-Agent generated templates alongside the sampled queries into the experimental agent loop. This resulted in a collection of prompt-response pairs, comprising 224,137 instances, 120,917 queries and 18,005 templates, summarized in the table below.

| #Instances | #Queries | #Templates | Avg. #Steps |

|:---------:|:--------:|:----------:|:-----------:|

| 224,137 | 120,917 | 18,005 | 1.85 |

---

## Data Format

Each entry in the dataset is a dictionary with the following structure:

- `id`: A unique identifier for the entry.

- `query`: The query string.

- `source`: The origin of the data, which is one of the following: 'kwai-agent', 'meta-agent', 'autogpt', 'modelscope', 'react', 'toolllama', 'profile'.

- `functions`: A list of strings, where each string is a JSON object in string form that can be parsed into a dictionary, unless the source is 'meta-agent'.

- `function_names`: A list of function names as strings, corresponding to the functions in the `functions` list.

- `llm_prompt_response`: A list of dict, each containing:

- `instruction`: Instruction text string.

- `input`: Input text string.

- `output`: Output text string.

- `llm_name`: The name of the LLM used, either 'gpt4' or 'gpt3.5'.

- `human_edited`: A Boolean value indicating whether the response was edited by a human.

- `extra_infos`: A dictionary containing additional useful information.

This format is designed for clarity and streamlined access to data points within the dataset.

The overall data format is as follows,

```json

{

"id": "",

"query": "",

"source": "",

"functions": [],

"function_names": [],

"llm_prompt_response": [

{

'instruction': "",

'input': "",

'output': "",

'llm_name': "",

'human_edited': bool

},

...

],

"extra_infos": {}

}

```

---

## How to download KAgentInstruct

You can download the KAgentInstruct through [kwaikeg/KAgentBench](https://huggingface.co/datasets/kwaikeg/KAgentInstruct/tree/main)

---

## Citation

```

@article{pan2023kwaiagents,

author = {Haojie Pan and

Zepeng Zhai and

Hao Yuan and

Yaojia Lv and

Ruiji Fu and

Ming Liu and

Zhongyuan Wang and

Bing Qin

},

title = {KwaiAgents: Generalized Information-seeking Agent System with Large Language Models},

journal = {CoRR},

volume = {abs/2312.04889},

year = {2023}

}

```

|

OpenGVLab/SA-Med2D-20M | ---

license: cc-by-nc-sa-4.0

---

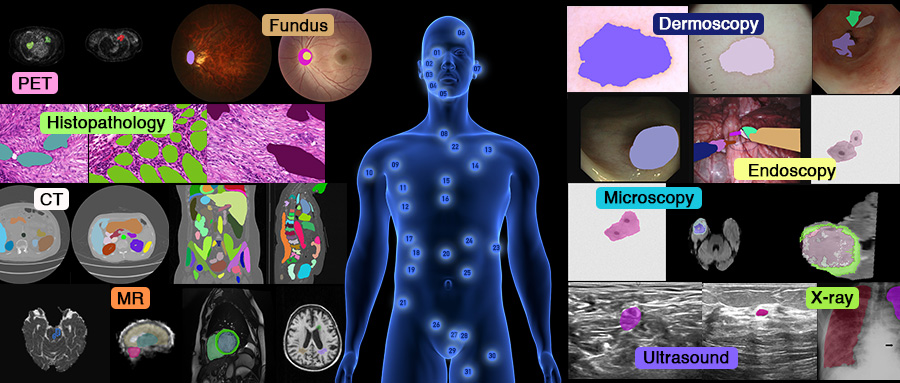

# [SA-Med2D-20M](https://arxiv.org/abs/2311.11969)

The largest benchmark dataset for segmentation in the field of medical imaging.

As is well known, the emergence of ImageNet has greatly propelled the development of AI, especially deep learning. It has provided massive data and powerful baseline models for the computer vision community, enabling researchers to achieve breakthroughs in tasks such as natural image classification, segmentation, and detection. However, in the medical image realm, there lack of such a large dataset for developing powerful medical models.

To address the gap in the medical field, we are introducing the largest benchmark dataset for medical image segmentation. This initiative aims to drive the rapid development of AI in healthcare and accelerate the transformation of computational medicine towards a more inclusive direction.

Please visit the [GitHub](https://github.com/OpenGVLab/SAM-Med2D) page and further exploit the dataset!

Due to data privacy and ethical requirements, we currently only provide access to a 16M dataset. We will keep updating and maintaining this database. Please stay tuned for further updates from us.

## 👉 Filesystem Hierarchy

```bash

~/SAM-Med2D-20M

├── images

| ├── mr_00--ACDC--patient001_frame01--x_0006.png

| ├── mr_t1--BraTS2021--BraTS2021_00218--z_0141.png

| ├── ...

| ├── ct_00--CAD_PE--001--x_0125.png

| ├── x_ray--covid_19_ct_cxr--16660_5_1--2d_none.png

|

├── masks

| ├── mr_00--ACDC--patient001_frame01--x_0006--0000_000.png

| ├── mr_t1--BraTS2021--BraTS2021_00218--z_0141--0011_000.png

| ├── ...

| ├── ct_00--CAD_PE--001--x_0125--0000_002.png

| ├── x_ray--covid_19_ct_cxr--16660_5_1--2d_none--0000_001.png

|

├── SAMed2D_v1_class_mapping_id.json

|

├── SAMed2D_v1.json

```

The SA-Med2D-20M dataset is named following the convention below:

```bash

-images

-{modality_sub-modality}--{dataset name}--{ori name}--{dimension_slice}.png

-masks

-{modality_sub-modality}--{dataset name}--{ori name}--{dimension_slice}--{class instance_id}.png

```

Note: "sub-modality" applies only to 3D data, and when "sub-modality" is "00," it indicates either the absence of a sub-modality or an unknown sub-modality type. "dataset name" refers to the specific dataset name that the case is from. "ori name" is the original case name in its dataset. "dimension slice", e.g., "x_100", indicates the dimension along which we split a 3D case as well as the slice ID in this dimension. If we split a 3D case with axis x and the current slice is 100, then the term can be "x_0100". For 2D datasets, the "dimension_slice id" is uniformly set to "2d_none". "class instance_id", unique to masks, encapsulates both category information and instance id, and the detailed information is stored in the "SAMed2D_v1_class_mapping_id.json" file. For instance, if the category "liver" is assigned the ID "0003" and there is only one instance of this category in the case, the "class instance_id" can be denoted as "0003_000". Besides, the category "liver" in the "SAMed2D_v1_class_mapping_id.json" file is formulated as key-value pair with _python-dict_ format: \{"liver": "0003"\}.

The file "SAMed2D_v1_class_mapping_id.json" stores the information for converting class instances. The file "SAMed2D_v1.json" contains the path information for all image and mask pairs.

## 👉 Unzipping split zip files

Windows:

decompress SA-Med2D-16M.zip to automatically extract the other volumes together.

Linux:

1. zip SA-Med2D-16M.zip SA-Med2D-16M.z0* SA-Med2D-16M.z10 -s=0 --out {full}.zip

2. unzip {full}.zip

## 🤝 免责声明

- SA-Med2D-20M是由多个公开的数据集组成,旨在取之于社区,回馈于社区,为研究人员和开发者提供一个用于学术和技术研究的资源。使用本数据集的任何个人或组织(以下统称为“使用者”)需遵守以下免责声明:

1. 数据集来源:本数据集由多个公开的数据集组成,这些数据集的来源已在预印版论文中明确标明。使用者应当遵守原始数据集的相关许可和使用条款。

2. 数据准确性:尽管我们已经努力确保数据集的准确性和完整性,但无法对数据集的准确性作出保证。使用者应自行承担使用数据集可能带来的风险和责任。

3. 责任限制:在任何情况下,数据集的提供者及相关贡献者均不对使用者的任何行为或结果承担责任。

4. 使用约束:使用者在使用本数据集时,应遵守适用的法律法规和伦理规范。使用者不得将本数据集用于非法、侵犯隐私、诽谤、歧视或其他违法或不道德的目的。

5. 知识产权:本数据集的知识产权归原始数据集的相关权利人所有,使用者不得以任何方式侵犯数据集的知识产权。

- 作为非盈利机构,团队倡导和谐友好的开源交流环境,若在开源数据集内发现有侵犯您合法权益的内容,可发送邮件至(yejin@pilab.org.cn, chengjunlong@pilab.org.cn),邮件中请写明侵权相关事实的详细描述并向我们提供相关的权属证明资料。我们将于3个工作日内启动调查处理机制,并采取必要的措施进行处置(如下架相关数据)。但应确保您投诉的真实性,否则采取措施后所产生的不利后果应由您独立承担。

- 通过下载、复制、访问或使用本数据集,即表示使用者已阅读、理解并同意遵守本免责声明中的所有条款和条件。如果使用者无法接受本免责声明的任何部分,请勿使用本数据集。

## 🤝 Disclaimer

- SA-Med2D-20M is composed of multiple publicly available datasets and aims to provide a resource for academic and technical research to researchers and developers. Any individual or organization (hereinafter referred to as "User") using this dataset must comply with the following disclaimer:

1. Dataset Source: SA-Med2D-20M is composed of multiple publicly available datasets, and the sources of these datasets have been clearly indicated in the preprint paper. Users should adhere to the relevant licenses and terms of use of the original datasets.

2. Data Accuracy: While efforts have been made to ensure the accuracy and completeness of the dataset, no guarantee can be given regarding its accuracy. Users assume all risks and liabilities associated with the use of the dataset.

3. Limitation of Liability: Under no circumstances shall the dataset providers or contributors be held liable for any actions or outcomes of the Users.

4. Usage Constraints: Users must comply with applicable laws, regulations, and ethical norms when using this dataset. The dataset must not be used for illegal, privacy-infringing, defamatory, discriminatory, or other unlawful or unethical purposes.

5. Intellectual Property: The intellectual property rights of this dataset belong to the relevant rights holders of the original datasets. Users must not infringe upon the intellectual property rights of the dataset in any way.

- As a non-profit organization, we advocate for a harmonious and friendly open-source communication environment. If any content in the open dataset is found to infringe upon your legitimate rights and interests, you can send an email to (yejin@pilab.org.cn, chengjunlong@pilab.org.cn) with a detailed description of the infringement and provide relevant ownership proof materials. We will initiate an investigation and handling mechanism within three working days and take necessary measures (such as removing relevant data) if warranted. However, the authenticity of your complaint must be ensured, as any adverse consequences resulting from the measures taken shall be borne solely by you.

- By downloading, copying, accessing, or using this dataset, the User indicates that they have read, understood, and agreed to comply with all the terms and conditions of this disclaimer. If the User cannot accept any part of this disclaimer, please refrain from using this dataset.

## 🤝 Acknowledgement

- We thank all medical workers and dataset owners for making public datasets available to the community. If you find that your dataset is included in our SA-Med2D-20M but you do not want us to do so, please contact us to remove it.

## 👋 Hiring & Global Collaboration

- **Hiring:** We are hiring researchers, engineers, and interns in General Vision Group, Shanghai AI Lab. If you are interested in Medical Foundation Models and General Medical AI, including designing benchmark datasets, general models, evaluation systems, and efficient tools, please contact us.

- **Global Collaboration:** We're on a mission to redefine medical research, aiming for a more universally adaptable model. Our passionate team is delving into foundational healthcare models, promoting the development of the medical community. Collaborate with us to increase competitiveness, reduce risk, and expand markets.

- **Contact:** Junjun He(hejunjun@pjlab.org.cn), Jin Ye(yejin@pjlab.org.cn), and Tianbin Li (litianbin@pjlab.org.cn).

## 👉 Typos of paper

1. Formula (1) is incorrect, after correction: <img src="https://i.postimg.cc/sXRK4MKh/20231123001020.png" alt="alt text" width="202" height="50">

## Reference

```

@misc{ye2023samed2d20m,

title={SA-Med2D-20M Dataset: Segment Anything in 2D Medical Imaging with 20 Million masks},

author={Jin Ye and Junlong Cheng and Jianpin Chen and Zhongying Deng and Tianbin Li and Haoyu Wang and Yanzhou Su and Ziyan Huang and Jilong Chen and Lei Jiang and Hui Sun and Min Zhu and Shaoting Zhang and Junjun He and Yu Qiao},

year={2023},

eprint={2311.11969},

archivePrefix={arXiv},

primaryClass={eess.IV}

}

@misc{cheng2023sammed2d,

title={SAM-Med2D},

author={Junlong Cheng and Jin Ye and Zhongying Deng and Jianpin Chen and Tianbin Li and Haoyu Wang and Yanzhou Su and

Ziyan Huang and Jilong Chen and Lei Jiangand Hui Sun and Junjun He and Shaoting Zhang and Min Zhu and Yu Qiao},

year={2023},

eprint={2308.16184},

archivePrefix={arXiv},

primaryClass={cs.CV}

}

```

|

shi3z/Japanese_wikipedia_conversation_100K | ---

license: mit

---

Wikipedia日本語版データセット(izumi-lab/wikipedia-ja-20230720)を元にGPT-3.5-Turboで会話文を生成したデータセットです GPT-3.5-Turboを使用しました。 |

SciPhi/AgentSearch-V1 | ---

language:

- en

size_categories:

- 1B<n<10B

task_categories:

- text-generation

pretty_name: AgentSearch-V1

configs:

- config_name: default

data_files:

- split: train

path: "**/*.parquet"

---

### Getting Started

The AgentSearch-V1 dataset boasts a comprehensive collection of over one billion embeddings, produced using [jina-v2-base](https://huggingface.co/jinaai/jina-embeddings-v2-base-en). The dataset encompasses more than 50 million high-quality documents and over 1 billion passages, covering a vast range of content from sources such as Arxiv, Wikipedia, Project Gutenberg, and includes carefully filtered Creative Commons (CC) data. Our team is dedicated to continuously expanding and enhancing this corpus to improve the search experience. We welcome your thoughts and suggestions – please feel free to reach out with your ideas!

To access and utilize the AgentSearch-V1 dataset, you can stream it via HuggingFace with the following Python code:

```python

from datasets import load_dataset

import json

import numpy as np

# To stream the entire dataset:

ds = load_dataset("SciPhi/AgentSearch-V1", data_files="**/*", split="train", streaming=True)

# Optional, stream just the "arxiv" dataset

# ds = load_dataset("SciPhi/AgentSearch-V1", data_files="**/*", split="train", data_files="arxiv/*", streaming=True)

# To process the entries:

for entry in ds:

embeddings = np.frombuffer(

entry['embeddings'], dtype=np.float32

).reshape(-1, 768)

text_chunks = json.loads(entry['text_chunks'])

metadata = json.loads(entry['metadata'])

print(f'Embeddings:\n{embeddings}\n\nChunks:\n{text_chunks}\n\nMetadata:\n{metadata}')

break

```

---

A full set of scripts to recreate the dataset from scratch can be found [here](https://github.com/SciPhi-AI/agent-search). Further, you may check the docs for details on how to perform RAG over AgentSearch.

### Languages

English.

## Dataset Structure

The raw dataset structure is as follows:

```json

{

"url": ...,

"title": ...,

"metadata": {"url": "...", "timestamp": "...", "source": "...", "language": "...", ...},

"text_chunks": ...,

"embeddings": ...,

"dataset": "book" | "arxiv" | "wikipedia" | "stack-exchange" | "open-math" | "RedPajama-Data-V2"

}

```

## Dataset Creation

This dataset was created as a step towards making humanities most important knowledge openly searchable and LLM optimal. It was created by filtering, cleaning, and augmenting locally publicly available datasets.

To cite our work, please use the following:

```

@software{SciPhi2023AgentSearch,

author = {SciPhi},

title = {AgentSearch [ΨΦ]: A Comprehensive Agent-First Framework and Dataset for Webscale Search},

year = {2023},

url = {https://github.com/SciPhi-AI/agent-search}

}

```

### Source Data

```

@ONLINE{wikidump,

author = "Wikimedia Foundation",

title = "Wikimedia Downloads",

url = "https://dumps.wikimedia.org"

}

```

```

@misc{paster2023openwebmath,

title={OpenWebMath: An Open Dataset of High-Quality Mathematical Web Text},

author={Keiran Paster and Marco Dos Santos and Zhangir Azerbayev and Jimmy Ba},

year={2023},

eprint={2310.06786},

archivePrefix={arXiv},

primaryClass={cs.AI}

}

```

```

@software{together2023redpajama,

author = {Together Computer},

title = {RedPajama: An Open Source Recipe to Reproduce LLaMA training dataset},

month = April,

year = 2023,

url = {https://github.com/togethercomputer/RedPajama-Data}

}

```

### License

Please refer to the licenses of the data subsets you use.

* [Open-Web (Common Crawl Foundation Terms of Use)](https://commoncrawl.org/terms-of-use/full/)

* Books: [the_pile_books3 license](https://huggingface.co/datasets/the_pile_books3#licensing-information) and [pg19 license](https://huggingface.co/datasets/pg19#licensing-information)

* [ArXiv Terms of Use](https://info.arxiv.org/help/api/tou.html)

* [Wikipedia License](https://huggingface.co/datasets/wikipedia#licensing-information)

* [StackExchange license on the Internet Archive](https://archive.org/details/stackexchange)

<!--

### Annotations

#### Annotation process

[More Information Needed]

#### Who are the annotators?

[More Information Needed]

### Personal and Sensitive Information

[More Information Needed]

## Considerations for Using the Data

### Social Impact of Dataset

[More Information Needed]

### Discussion of Biases

[More Information Needed]

### Other Known Limitations

[More Information Needed]

## Additional Information

### Dataset Curators

[More Information Needed]

### Licensing Information

[More Information Needed]

### Citation Information

[More Information Needed]

### Contributions

[More Information Needed]

--> |

Weaxs/csc | ---

license: apache-2.0

task_categories:

- text2text-generation

language:

- zh

size_categories:

- 100M<n<1B

tags:

- chinese-spelling-check

- 中文

---

# Dataset for CSC

中文纠错数据集

# Dataset Description

Chinese Spelling Correction (CSC) is a task to detect and correct misspelled characters in Chinese texts.

共计 120w 条数据,以下是数据来源

|数据集|语料|链接|

|------|------|------|

|SIGHAN+Wang271K 拼写纠错数据集|SIGHAN+Wang271K(27万条)|https://huggingface.co/datasets/shibing624/CSC|

|ECSpell 拼写纠错数据集|包含法律、医疗、金融等领域|https://github.com/Aopolin-Lv/ECSpell|

|CGED 语法纠错数据集|仅包含了2016和2021年的数据集|https://github.com/wdimmy/Automatic-Corpus-Generation?spm=a2c22.12282016.0.0.5f3e7398w7SL4P|

|NLPCC 纠错数据集|包含语法纠错和拼写纠错|https://github.com/Arvid-pku/NLPCC2023_Shared_Task8 <br/>http://tcci.ccf.org.cn/conference/2023/dldoc/nacgec_training.zip<br/>http://tcci.ccf.org.cn/conference/2018/dldoc/trainingdata02.tar.gz|

|pycorrector 语法纠错集|中文语法纠错数据集|https://github.com/shibing624/pycorrector/tree/llm/examples/data/grammar|

其余的数据集还可以看

- 中文文本纠错数据集汇总 (天池):https://tianchi.aliyun.com/dataset/138195

- NLPCC 2023中文语法纠错数据集:http://tcci.ccf.org.cn/conference/2023/taskdata.php

# Languages

The data in CSC are in Chinese.

# Dataset Structure

An example of "train" looks as follows:

```json

{

"conversations": [

{"from":"human","value":"对这个句子纠错\n\n以后,我一直以来自学汉语了。"},

{"from":"gpt","value":"从此以后,我就一直自学汉语了。"}

]

}

```

# Contributions

[Weaxs](https://github.com/Weaxs) 整理并上传 |

smangrul/hinglish_self_instruct_v0 | ---

dataset_info:

features:

- name: messages

list:

- name: content

dtype: string

- name: role

dtype: string

- name: category

dtype: string

splits:

- name: train

num_bytes: 251497

num_examples: 1018

download_size: 124371

dataset_size: 251497

configs:

- config_name: default

data_files:

- split: train

path: data/train-*

task_categories:

- text-generation

language:

- hi

- en

size_categories:

- 1K<n<10K

---

# Hinglish Instruct Dataset using Self Instruct method

The prompt used for generating the samples:

```

You are asked to come up with a set of 50 diverse task instructions in Hinglish or Hindi.

These task instructions will be given to a GPT model and we will evaluate the GPT model for completing the instructions.

Here are the requirements:

1. Try not to repeat the verb for each instruction to maximize diversity.

2. The language used for the instruction also should be diverse. For example, you should combine questions with imperative instructions.

3. The type of instructions should be diverse. The list should include diverse types of tasks like open-ended generation, classification, editing, etc.

2. A GPT language model should be able to complete the instruction. For example, do not ask the assistant to create any visual or audio output. For another example, do not ask the assistant to wake you up at 5pm or set a reminder because it cannot perform any action.

3. The instructions should be in Hinglish or Hindi.

4. The instructions should be 1 to 2 sentences long. Either an imperative sentence or a question is permitted.

5. You should generate an appropriate input to the instruction. The input field should contain a specific example provided for the instruction. It should involve realistic data and should not contain simple placeholders. The input should provide substantial content to make the instruction challenging but should ideally not exceed 100 words.

6. Not all instructions require input. For example, when an instruction asks about some general information, "what is the highest peak in the world", it is not necessary to provide a specific context. In this case, we simply put "<noinput>" in the input field.

7. The output should be an appropriate response to the instruction and the input. Make sure the output is less than 500 words.

8. Provide complete instruction, input and output without placeholders.

9. Example format of the output task:

Instruction: किसी भी एक भारतीय त्योहार के बारे में बताएं।

Input: <noinput>

Output: दीपावली, जिसे दिवाली भी कहा जाता है, प्रकाश का त्योहार है। इस दिन, लोग अपने घरों में दीये जलाते हैं, पूजा करते हैं, और मिठाईयाँ बांटते हैं।

List of 50 tasks:

```

Note:

1. The instruction "Provide complete instruction, input and output without placeholders." was important else GPT-4 especially was **very lazy** and just gave placeholders for the outputs.

2. Most of the dataset is generated using GPT-3.5 Turbo while some part of it is generated using GPT-4. Most of the dataset is in Hinglish while some part of it is in Hindi.

3. The prompt template is adapted from the Alpaca GitHub repo https://github.com/tatsu-lab/stanford_alpaca/blob/main/prompt.txt |

STEM-AI-mtl/Electrical-engineering | ---

license: other

license_name: stem.ai.mtl

license_link: LICENSE

task_categories:

- question-answering

- text-generation

language:

- en

tags:

- Python

- Kicad

- Electrical engineering

size_categories:

- 1K<n<10K

---

## To the electrical engineering community

This dataset contains Q&A prompts about electrical engineering, Kicad's EDA software features and scripting console Python codes.

## Authors

STEM.AI: stem.ai.mtl@gmail.com\

[William Harbec](https://www.linkedin.com/in/william-harbec-56a262248/) |

MMVP/MMVP | ---

license: mit

task_categories:

- question-answering

size_categories:

- n<1K

---

# MMVP Benchmark Datacard

## Basic Information

**Title:** MMVP Benchmark

**Description:** The MMVP (Multimodal Visual Patterns) Benchmark focuses on identifying “CLIP-blind pairs” – images that are perceived as similar by CLIP despite having clear visual differences. MMVP benchmarks the performance of state-of-the-art systems, including GPT-4V, across nine basic visual patterns. It highlights the challenges these systems face in answering straightforward questions, often leading to incorrect responses and hallucinated explanations.

## Dataset Details

- **Content Types:** Images (CLIP-blind pairs)

- **Volume:** 300 images

- **Source of Data:** Derived from ImageNet-1k and LAION-Aesthetics

- **Data Collection Method:** Identification of CLIP-blind pairs through comparative analysis

|

fhai50032/SymptomsDisease246k | ---

license: apache-2.0

language:

- en

tags:

- medical

size_categories:

- 100K<n<1M

---

## Source

[Disease-Symptom-Extensive-Clean](https://huggingface.co/datasets/dhivyeshrk/Disease-Symptom-Extensive-Clean)

## Context Sample

```json

{

"query": "Having these specific symptoms: anxiety and nervousness, depression, shortness of breath, depressive or psychotic symptoms, dizziness, palpitations, irregular heartbeat, breathing fast may indicate",

"response": "You may have panic disorder"

}

```

## Raw Sample

```json

{

"query": "dizziness, abnormal involuntary movements, headache, diminished vision",

"response": "pseudotumor cerebri"

}

``` |

allenai/aboutme | ---

language:

- en

tags:

- common crawl

- webtext

- social nlp

size_categories:

- 10M<n<100M

pretty_name: AboutMe

license: other

extra_gated_prompt: "Access to this dataset is automatically granted upon accepting the [**AI2 ImpACT License - Low Risk Artifacts (“LR Agreement”)**](https://allenai.org/licenses/impact-lr) and completing all fields below."

extra_gated_fields:

Your full name: text

Organization or entity you are affiliated with: text

State or country you are located in: text

Contact email: text

Please describe your intended use of the medium risk artifact(s): text

I AGREE to the terms and conditions of the MR Agreement above: checkbox

I AGREE to AI2’s use of my information for legal notices and administrative matters: checkbox

I CERTIFY that the information I have provided is true and accurate: checkbox

---

# AboutMe: Self-Descriptions in Webpages

## Dataset description

**Curated by:** Li Lucy, Suchin Gururangan, Luca Soldaini, Emma Strubell, David Bamman, Lauren Klein, Jesse Dodge

**Languages:** English

**License:** AI2 ImpACT License - Low Risk Artifacts

**Paper:** [https://arxiv.org/abs/2401.06408](https://arxiv.org/abs/2401.06408)

## Dataset sources

Common Crawl

## Uses

This dataset was originally created to document the effects of different pretraining data curation practices. It is intended for research use, e.g. AI evaluation and analysis of development pipelines or social scientific research of Internet communities and self-presentation.

## Dataset structure

This dataset consists of three parts:

- `about_pages`: webpages that are self-descriptions and profiles of website creators, or text *about* individuals and organizations on the web. These are zipped files with one json per line, with the following keys:

- `url`

- `hostname`

- `cc_segment` (for tracking where in Common Crawl the page is originally retrieved from)

- `text`

- `title` (webpage title)

- `sampled_pages`: random webpages from the same set of websites, or text created or curated *by* individuals and organizations on the web. It has the same keys as `about_pages`.

- `about_pages_meta`: algorithmically extracted information from "About" pages, including:

- `hn`: hostname of website

- `country`: the most frequent country of locations on the page, obtained using Mordecai3 geoparsing

- `roles`: social roles and occupations detected using RoBERTa based on expressions of self-identification, e.g. *I am a **dancer***. Each role is accompanied by sentence number and start/end character offsets.

- `class`: whether the page is detected to be an individual or organization

- `cluster`: one of fifty topical labels obtained via tf-idf clustering of "about" pages

Each file contains one json entry per line. Note that the entries in each file are not in a random order, but instead reflect an ordering outputted by CCNet (e.g. neighboring pages may be similar in Wikipedia-based perplexity.)

## Dataset creation

AboutMe is derived from twenty four snapshots of Common Crawl collected between 2020–05 and 2023–06. We extract text from raw Common Crawl using CCNet, and deduplicate URLs across all snapshots. We only include text that has a fastText English score > 0.5. "About" pages are identified using keywords in URLs (about, about-me, about-us, and bio), and their URLs end in `/keyword/` or `keyword.*`, e.g. `about.html`. We only include pages that have one candidate URL, to avoid ambiguity around which page is actually about the main website creator. If a webpage has both `https` and `http` versions in Common Crawl, we take the `https` version. The "sampled" pages are a single webpage randomly sampled from the website that has an "about" page.

More details on metadata creation can be found in our paper, linked above.

## Bias, Risks, and Limitations

Algorithmic measurements of textual content is scalable, but imperfect. We acknowledge that our dataset and analysis methods (e.g. classification, information retrieval) can also uphold language norms and standards that may disproportionately affect some social groups over others. We hope that future work continues to improve these content analysis pipelines, especially for long-tail or minoritized language phenomena.

We encourage future work using our dataset to minimize the extent to which they infer unlabeled or implicit information about subjects in this dataset, and to assess the risks of inferring various types of information from these pages. In addition, measurements of social identities from AboutMe pages are affected by reporting bias.

Future uses of this data should avoid incorporating personally identifiable information into generative models, report only aggregated results, and paraphrase quoted examples in papers to protect the privacy of subjects.

## Citation

```

@misc{lucy2024aboutme,

title={AboutMe: Using Self-Descriptions in Webpages to Document the Effects of English Pretraining Data Filters},

author={Li Lucy and Suchin Gururangan and Luca Soldaini and Emma Strubell and David Bamman and Lauren Klein and Jesse Dodge},

year={2024},

eprint={2401.06408},

archivePrefix={arXiv},

primaryClass={cs.CL}

}

```

## Dataset contact

lucy3_li@berkeley.edu |

davidchan/anim400k | ---

task_categories:

- text-to-speech

- automatic-speech-recognition

- audio-to-audio

- audio-classification

- text-classification

- text2text-generation

- video-classification

- summarization

language:

- en

- ja

pretty_name: Anim-400K

size_categories:

- 100K<n<1M

extra_gated_prompt: "Due to copyright limitations, all prospect users of the Anim400K Datasets must sign both a Terms of Use Agreement (TOU) and a Non Disclosure Agreement (NDA). The form MUST be filled out for all users of the dataset. The answers to this form will auto-complete and sign the template TOU (https://docs.google.com/document/d/1MNAU12i4XIXj8O8ThUuep8jJw5WOGArCvZdcR2UmJGM/edit?usp=sharing) and NDA (https://docs.google.com/document/d/1cLtFX2GarMEzZn5RwL-gEuAthS1qaKOfb3r5m1icOX0/edit?usp=sharing)"

extra_gated_fields:

Full Name: text

Email: text

Researcher Google Scholar Page: text

Affiliation: text

Name of Principal Investigator or Supervisor: text

Principle Investigator/Supervisior Google Scholar Page: text

Purpose of Intended Use: text

I understand that the Anim400K team and the Regents of the University of California make no warranties, express or implied, regarding the Dataset, including but not limited to being up-to-date, correct or complete․ Neither the Anim400K team nor the Regents of the University of California can be held liable for providing access to the Dataset or usage of the Dataset: checkbox

I understand and agree that the use of this Dataset is for scientific or research purposes only․ Any other use is explicitly prohibited: checkbox

I understand and agree that this Dataset and the videos are protected by copyrights: checkbox

I understand and agree not to share the dataset with any third party: checkbox

I understand and agree that I (as the researcher) takes full responsibility for usage of the Dataset and processing the Dataset: checkbox

I have read, and I agree and sign the Non Disclosure Agreement: checkbox

I have read, and I agree and sign the Terms of Use Agreement: checkbox

---

# Anim-400K: A dataset designed from the ground up for automated dubbing of video

# What is Anim-400K?

Anim-400K is a large-scale dataset of aligned audio-video clips in both the English and Japanese languages. It is comprised of over 425K aligned clips (763 hours) consisting of both video and audio drawn from over 190 properties covering hundreds of themes and genres. Anim400K is further augmented with metadata including genres, themes, show-ratings, character profiles, and animation styles at a property level, episode synopses, ratings, and subtitles at an episode level, and pre-computed ASR at an aligned clip level to enable in-depth research into several audio-visual tasks.

Read the [[ArXiv Preprint](https://arxiv.org/abs/2401.05314)]

Check us out on [[Github](https://github.com/DavidMChan/Anim400K/)]

# News

**[January 2024]** Anim-400K (v1) available on [Huggingface Datasets](https://huggingface.co/datasets/davidchan/anim400k/). </br>

**[January 2024]** Anim-400K (v1) release. </br>

**[January 2024]** Anim-400K (v1) accepted at ICASSP2024. </br>

# Citation

If any part of our paper is helpful to your work, please cite with:

```

@inproceedings{cai2024anim400k,

title={ANIM-400K: A Large-Scale Dataset for Automated End to End Dubbing of Video},

author={Cai, Kevin and Liu, Chonghua and Chan, David M.},

booktitle={ICASSP 2024-2024 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP)},

pages={1--5},

year={2024},

organization={IEEE}

}

```

# Acknowledgements

This repository, and data release model is modeled on that used by the [MAD](https://github.com/Soldelli/MAD) dataset.

|