repo_id

stringlengths 4

110

| author

stringlengths 2

27

⌀ | model_type

stringlengths 2

29

⌀ | files_per_repo

int64 2

15.4k

| downloads_30d

int64 0

19.9M

| library

stringlengths 2

37

⌀ | likes

int64 0

4.34k

| pipeline

stringlengths 5

30

⌀ | pytorch

bool 2

classes | tensorflow

bool 2

classes | jax

bool 2

classes | license

stringlengths 2

30

| languages

stringlengths 4

1.63k

⌀ | datasets

stringlengths 2

2.58k

⌀ | co2

stringclasses 29

values | prs_count

int64 0

125

| prs_open

int64 0

120

| prs_merged

int64 0

15

| prs_closed

int64 0

28

| discussions_count

int64 0

218

| discussions_open

int64 0

148

| discussions_closed

int64 0

70

| tags

stringlengths 2

513

| has_model_index

bool 2

classes | has_metadata

bool 1

class | has_text

bool 1

class | text_length

int64 401

598k

| is_nc

bool 1

class | readme

stringlengths 0

598k

| hash

stringlengths 32

32

|

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

laurauzcategui/xlm-roberta-base-finetuned-marc-en | laurauzcategui | xlm-roberta | 12 | 3 | transformers | 0 | text-classification | true | false | false | mit | null | ['amazon_reviews_multi'] | null | 0 | 0 | 0 | 0 | 0 | 0 | 0 | ['generated_from_trainer'] | true | true | true | 1,259 | false |

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# xlm-roberta-base-finetuned-marc-en

This model is a fine-tuned version of [xlm-roberta-base](https://huggingface.co/xlm-roberta-base) on the amazon_reviews_multi dataset.

It achieves the following results on the evaluation set:

- Loss: 0.8945

- Mae: 0.5

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 2e-05

- train_batch_size: 16

- eval_batch_size: 16

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 2

### Training results

| Training Loss | Epoch | Step | Validation Loss | Mae |

|:-------------:|:-----:|:----:|:---------------:|:---:|

| 1.1411 | 1.0 | 235 | 0.9358 | 0.5 |

| 0.9653 | 2.0 | 470 | 0.8945 | 0.5 |

### Framework versions

- Transformers 4.11.3

- Pytorch 1.9.0+cu111

- Datasets 1.14.0

- Tokenizers 0.10.3

| 0166f70a52720cf0bc7b4fa4a79adf40 |

Likalto4/Unconditional_Butterflies_x64 | Likalto4 | null | 6 | 0 | diffusers | 0 | unconditional-image-generation | true | false | false | mit | null | null | null | 0 | 0 | 0 | 0 | 0 | 0 | 0 | ['pytorch', 'diffusers', 'unconditional-image-generation', 'diffusion-models-class'] | false | true | true | 677 | false |

# Model Card for a model trained based on the Unit 1 of the [Diffusion Models Class 🧨](https://github.com/huggingface/diffusion-models-class), not using accelarate yet.

This model is a diffusion model for unconditional image generation of cute but small 🦋.

The model was trained with 1000 images using the [DDPM](https://arxiv.org/abs/2006.11239) architecture. Images generated are of 64x64 pixel size.

The model was trained for 50 epochs with a batch size of 64, using around 11 GB of GPU memory.

## Usage

```python

from diffusers import DDPMPipeline

pipeline = DDPMPipeline.from_pretrained(Likalto4/Unconditional_Butterflies_x64)

image = pipeline().images[0]

image

```

| 9bc2148cef8e56ad2e88bb5778a62104 |

google/t5-efficient-small-dm1000 | google | t5 | 12 | 11 | transformers | 0 | text2text-generation | true | true | true | apache-2.0 | ['en'] | ['c4'] | null | 0 | 0 | 0 | 0 | 0 | 0 | 0 | ['deep-narrow'] | false | true | true | 6,264 | false |

# T5-Efficient-SMALL-DM1000 (Deep-Narrow version)

T5-Efficient-SMALL-DM1000 is a variation of [Google's original T5](https://ai.googleblog.com/2020/02/exploring-transfer-learning-with-t5.html) following the [T5 model architecture](https://huggingface.co/docs/transformers/model_doc/t5).

It is a *pretrained-only* checkpoint and was released with the

paper **[Scale Efficiently: Insights from Pre-training and Fine-tuning Transformers](https://arxiv.org/abs/2109.10686)**

by *Yi Tay, Mostafa Dehghani, Jinfeng Rao, William Fedus, Samira Abnar, Hyung Won Chung, Sharan Narang, Dani Yogatama, Ashish Vaswani, Donald Metzler*.

In a nutshell, the paper indicates that a **Deep-Narrow** model architecture is favorable for **downstream** performance compared to other model architectures

of similar parameter count.

To quote the paper:

> We generally recommend a DeepNarrow strategy where the model’s depth is preferentially increased

> before considering any other forms of uniform scaling across other dimensions. This is largely due to

> how much depth influences the Pareto-frontier as shown in earlier sections of the paper. Specifically, a

> tall small (deep and narrow) model is generally more efficient compared to the base model. Likewise,

> a tall base model might also generally more efficient compared to a large model. We generally find

> that, regardless of size, even if absolute performance might increase as we continue to stack layers,

> the relative gain of Pareto-efficiency diminishes as we increase the layers, converging at 32 to 36

> layers. Finally, we note that our notion of efficiency here relates to any one compute dimension, i.e.,

> params, FLOPs or throughput (speed). We report all three key efficiency metrics (number of params,

> FLOPS and speed) and leave this decision to the practitioner to decide which compute dimension to

> consider.

To be more precise, *model depth* is defined as the number of transformer blocks that are stacked sequentially.

A sequence of word embeddings is therefore processed sequentially by each transformer block.

## Details model architecture

This model checkpoint - **t5-efficient-small-dm1000** - is of model type **Small** with the following variations:

- **dm** is **1000**

It has **121.03** million parameters and thus requires *ca.* **484.11 MB** of memory in full precision (*fp32*)

or **242.05 MB** of memory in half precision (*fp16* or *bf16*).

A summary of the *original* T5 model architectures can be seen here:

| Model | nl (el/dl) | ff | dm | kv | nh | #Params|

| ----| ---- | ---- | ---- | ---- | ---- | ----|

| Tiny | 4/4 | 1024 | 256 | 32 | 4 | 16M|

| Mini | 4/4 | 1536 | 384 | 32 | 8 | 31M|

| Small | 6/6 | 2048 | 512 | 32 | 8 | 60M|

| Base | 12/12 | 3072 | 768 | 64 | 12 | 220M|

| Large | 24/24 | 4096 | 1024 | 64 | 16 | 738M|

| Xl | 24/24 | 16384 | 1024 | 128 | 32 | 3B|

| XXl | 24/24 | 65536 | 1024 | 128 | 128 | 11B|

whereas the following abbreviations are used:

| Abbreviation | Definition |

| ----| ---- |

| nl | Number of transformer blocks (depth) |

| dm | Dimension of embedding vector (output vector of transformers block) |

| kv | Dimension of key/value projection matrix |

| nh | Number of attention heads |

| ff | Dimension of intermediate vector within transformer block (size of feed-forward projection matrix) |

| el | Number of transformer blocks in the encoder (encoder depth) |

| dl | Number of transformer blocks in the decoder (decoder depth) |

| sh | Signifies that attention heads are shared |

| skv | Signifies that key-values projection matrices are tied |

If a model checkpoint has no specific, *el* or *dl* than both the number of encoder- and decoder layers correspond to *nl*.

## Pre-Training

The checkpoint was pretrained on the [Colossal, Cleaned version of Common Crawl (C4)](https://huggingface.co/datasets/c4) for 524288 steps using

the span-based masked language modeling (MLM) objective.

## Fine-Tuning

**Note**: This model is a **pretrained** checkpoint and has to be fine-tuned for practical usage.

The checkpoint was pretrained in English and is therefore only useful for English NLP tasks.

You can follow on of the following examples on how to fine-tune the model:

*PyTorch*:

- [Summarization](https://github.com/huggingface/transformers/tree/master/examples/pytorch/summarization)

- [Question Answering](https://github.com/huggingface/transformers/blob/master/examples/pytorch/question-answering/run_seq2seq_qa.py)

- [Text Classification](https://github.com/huggingface/transformers/tree/master/examples/pytorch/text-classification) - *Note*: You will have to slightly adapt the training example here to make it work with an encoder-decoder model.

*Tensorflow*:

- [Summarization](https://github.com/huggingface/transformers/tree/master/examples/tensorflow/summarization)

- [Text Classification](https://github.com/huggingface/transformers/tree/master/examples/tensorflow/text-classification) - *Note*: You will have to slightly adapt the training example here to make it work with an encoder-decoder model.

*JAX/Flax*:

- [Summarization](https://github.com/huggingface/transformers/tree/master/examples/flax/summarization)

- [Text Classification](https://github.com/huggingface/transformers/tree/master/examples/flax/text-classification) - *Note*: You will have to slightly adapt the training example here to make it work with an encoder-decoder model.

## Downstream Performance

TODO: Add table if available

## Computational Complexity

TODO: Add table if available

## More information

We strongly recommend the reader to go carefully through the original paper **[Scale Efficiently: Insights from Pre-training and Fine-tuning Transformers](https://arxiv.org/abs/2109.10686)** to get a more nuanced understanding of this model checkpoint.

As explained in the following [issue](https://github.com/google-research/google-research/issues/986#issuecomment-1035051145), checkpoints including the *sh* or *skv*

model architecture variations have *not* been ported to Transformers as they are probably of limited practical usage and are lacking a more detailed description. Those checkpoints are kept [here](https://huggingface.co/NewT5SharedHeadsSharedKeyValues) as they might be ported potentially in the future. | 044a6823239e1c767eb11d2c823a473e |

pierreguillou/bert-large-cased-squad-v1.1-portuguese | pierreguillou | bert | 8 | 554 | transformers | 19 | question-answering | true | true | false | mit | ['pt'] | ['brWaC', 'squad', 'squad_v1_pt'] | null | 0 | 0 | 0 | 0 | 1 | 1 | 0 | ['question-answering', 'bert', 'bert-large', 'pytorch'] | false | true | true | 5,329 | false |

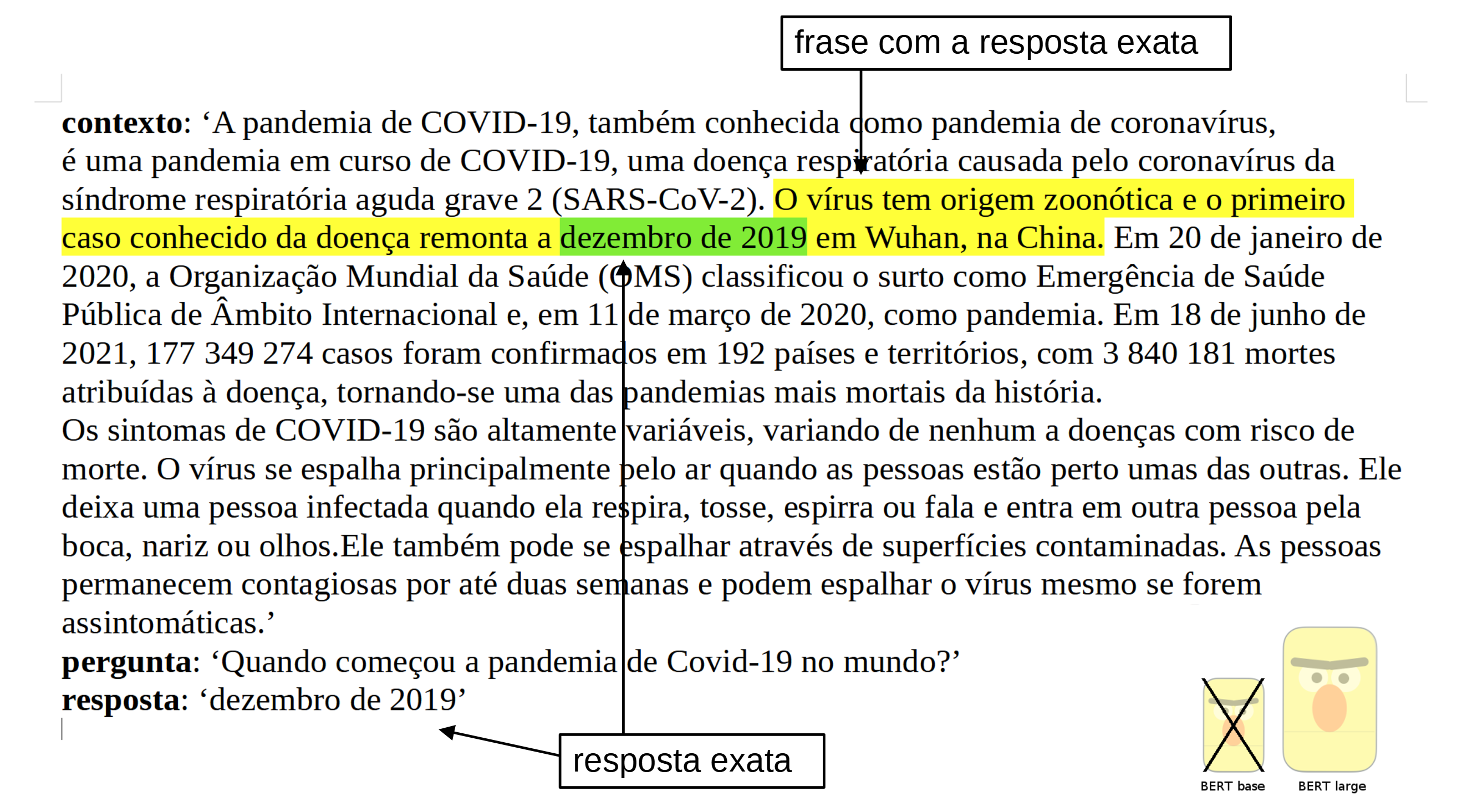

# Portuguese BERT large cased QA (Question Answering), finetuned on SQUAD v1.1

## Introduction

The model was trained on the dataset SQUAD v1.1 in portuguese from the [Deep Learning Brasil group](http://www.deeplearningbrasil.com.br/).

The language model used is the [BERTimbau Large](https://huggingface.co/neuralmind/bert-large-portuguese-cased) (aka "bert-large-portuguese-cased") from [Neuralmind.ai](https://neuralmind.ai/): BERTimbau is a pretrained BERT model for Brazilian Portuguese that achieves state-of-the-art performances on three downstream NLP tasks: Named Entity Recognition, Sentence Textual Similarity and Recognizing Textual Entailment. It is available in two sizes: Base and Large.

## Informations on the method used

All the informations are in the blog post : [NLP | Como treinar um modelo de Question Answering em qualquer linguagem baseado no BERT large, melhorando o desempenho do modelo utilizando o BERT base? (estudo de caso em português)](https://medium.com/@pierre_guillou/nlp-como-treinar-um-modelo-de-question-answering-em-qualquer-linguagem-baseado-no-bert-large-1c899262dd96)

## Notebook in GitHub

[question_answering_BERT_large_cased_squad_v11_pt.ipynb](https://github.com/piegu/language-models/blob/master/question_answering_BERT_large_cased_squad_v11_pt.ipynb) ([nbviewer version](https://nbviewer.jupyter.org/github/piegu/language-models/blob/master/question_answering_BERT_large_cased_squad_v11_pt.ipynb))

## Performance

The results obtained are the following:

```

f1 = 84.43 (against 82.50 for the base model)

exact match = 72.68 (against 70.49 for the base model)

```

## How to use the model... with Pipeline

```python

import transformers

from transformers import pipeline

# source: https://pt.wikipedia.org/wiki/Pandemia_de_COVID-19

context = r"""

A pandemia de COVID-19, também conhecida como pandemia de coronavírus, é uma pandemia em curso de COVID-19,

uma doença respiratória causada pelo coronavírus da síndrome respiratória aguda grave 2 (SARS-CoV-2).

O vírus tem origem zoonótica e o primeiro caso conhecido da doença remonta a dezembro de 2019 em Wuhan, na China.

Em 20 de janeiro de 2020, a Organização Mundial da Saúde (OMS) classificou o surto

como Emergência de Saúde Pública de Âmbito Internacional e, em 11 de março de 2020, como pandemia.

Em 18 de junho de 2021, 177 349 274 casos foram confirmados em 192 países e territórios,

com 3 840 181 mortes atribuídas à doença, tornando-se uma das pandemias mais mortais da história.

Os sintomas de COVID-19 são altamente variáveis, variando de nenhum a doenças com risco de morte.

O vírus se espalha principalmente pelo ar quando as pessoas estão perto umas das outras.

Ele deixa uma pessoa infectada quando ela respira, tosse, espirra ou fala e entra em outra pessoa pela boca, nariz ou olhos.

Ele também pode se espalhar através de superfícies contaminadas.

As pessoas permanecem contagiosas por até duas semanas e podem espalhar o vírus mesmo se forem assintomáticas.

"""

model_name = 'pierreguillou/bert-large-cased-squad-v1.1-portuguese'

nlp = pipeline("question-answering", model=model_name)

question = "Quando começou a pandemia de Covid-19 no mundo?"

result = nlp(question=question, context=context)

print(f"Answer: '{result['answer']}', score: {round(result['score'], 4)}, start: {result['start']}, end: {result['end']}")

# Answer: 'dezembro de 2019', score: 0.5087, start: 290, end: 306

```

## How to use the model... with the Auto classes

```python

from transformers import AutoTokenizer, AutoModelForQuestionAnswering

tokenizer = AutoTokenizer.from_pretrained("pierreguillou/bert-large-cased-squad-v1.1-portuguese")

model = AutoModelForQuestionAnswering.from_pretrained("pierreguillou/bert-large-cased-squad-v1.1-portuguese")

```

Or just clone the model repo:

```python

git lfs install

git clone https://huggingface.co/pierreguillou/bert-large-cased-squad-v1.1-portuguese

# if you want to clone without large files – just their pointers

# prepend your git clone with the following env var:

GIT_LFS_SKIP_SMUDGE=1

```

## Limitations and bias

The training data used for this model come from Portuguese SQUAD. It could contain a lot of unfiltered content, which is far from neutral, and biases.

## Author

Portuguese BERT large cased QA (Question Answering), finetuned on SQUAD v1.1 was trained and evaluated by [Pierre GUILLOU](https://www.linkedin.com/in/pierreguillou/) thanks to the Open Source code, platforms and advices of many organizations ([link to the list](https://medium.com/@pierre_guillou/nlp-como-treinar-um-modelo-de-question-answering-em-qualquer-linguagem-baseado-no-bert-large-1c899262dd96#c2f5)). In particular: [Hugging Face](https://huggingface.co/), [Neuralmind.ai](https://neuralmind.ai/), [Deep Learning Brasil group](http://www.deeplearningbrasil.com.br/) and [AI Lab](https://ailab.unb.br/).

## Citation

If you use our work, please cite:

```bibtex

@inproceedings{pierreguillou2021bertlargecasedsquadv11portuguese,

title={Portuguese BERT large cased QA (Question Answering), finetuned on SQUAD v1.1},

author={Pierre Guillou},

year={2021}

}

``` | 637c1f818c60255d4eefc56884f6741e |

sd-concepts-library/cindlop | sd-concepts-library | null | 9 | 0 | null | 0 | null | false | false | false | mit | null | null | null | 0 | 0 | 0 | 0 | 0 | 0 | 0 | [] | false | true | true | 1,000 | false | ### cindlop on Stable Diffusion

This is the `<cindlop>` concept taught to Stable Diffusion via Textual Inversion. You can load this concept into the [Stable Conceptualizer](https://colab.research.google.com/github/huggingface/notebooks/blob/main/diffusers/stable_conceptualizer_inference.ipynb) notebook. You can also train your own concepts and load them into the concept libraries using [this notebook](https://colab.research.google.com/github/huggingface/notebooks/blob/main/diffusers/sd_textual_inversion_training.ipynb).

Here is the new concept you will be able to use as an `object`:

| 10138bbc4bcb6337a79d8f03fc89f6e9 |

jonatasgrosman/exp_w2v2r_en_vp-100k_gender_male-5_female-5_s474 | jonatasgrosman | wav2vec2 | 10 | 3 | transformers | 0 | automatic-speech-recognition | true | false | false | apache-2.0 | ['en'] | ['mozilla-foundation/common_voice_7_0'] | null | 0 | 0 | 0 | 0 | 0 | 0 | 0 | ['automatic-speech-recognition', 'en'] | false | true | true | 498 | false | # exp_w2v2r_en_vp-100k_gender_male-5_female-5_s474

Fine-tuned [facebook/wav2vec2-large-100k-voxpopuli](https://huggingface.co/facebook/wav2vec2-large-100k-voxpopuli) for speech recognition using the train split of [Common Voice 7.0 (en)](https://huggingface.co/datasets/mozilla-foundation/common_voice_7_0).

When using this model, make sure that your speech input is sampled at 16kHz.

This model has been fine-tuned by the [HuggingSound](https://github.com/jonatasgrosman/huggingsound) tool.

| 4fe246e92785fcd94d6282995a7dfff4 |

Helsinki-NLP/opus-mt-tvl-fi | Helsinki-NLP | marian | 10 | 8 | transformers | 0 | translation | true | true | false | apache-2.0 | null | null | null | 0 | 0 | 0 | 0 | 0 | 0 | 0 | ['translation'] | false | true | true | 776 | false |

### opus-mt-tvl-fi

* source languages: tvl

* target languages: fi

* OPUS readme: [tvl-fi](https://github.com/Helsinki-NLP/OPUS-MT-train/blob/master/models/tvl-fi/README.md)

* dataset: opus

* model: transformer-align

* pre-processing: normalization + SentencePiece

* download original weights: [opus-2020-01-16.zip](https://object.pouta.csc.fi/OPUS-MT-models/tvl-fi/opus-2020-01-16.zip)

* test set translations: [opus-2020-01-16.test.txt](https://object.pouta.csc.fi/OPUS-MT-models/tvl-fi/opus-2020-01-16.test.txt)

* test set scores: [opus-2020-01-16.eval.txt](https://object.pouta.csc.fi/OPUS-MT-models/tvl-fi/opus-2020-01-16.eval.txt)

## Benchmarks

| testset | BLEU | chr-F |

|-----------------------|-------|-------|

| JW300.tvl.fi | 22.0 | 0.439 |

| 17ac19314251396f6ff939da797a6ce2 |

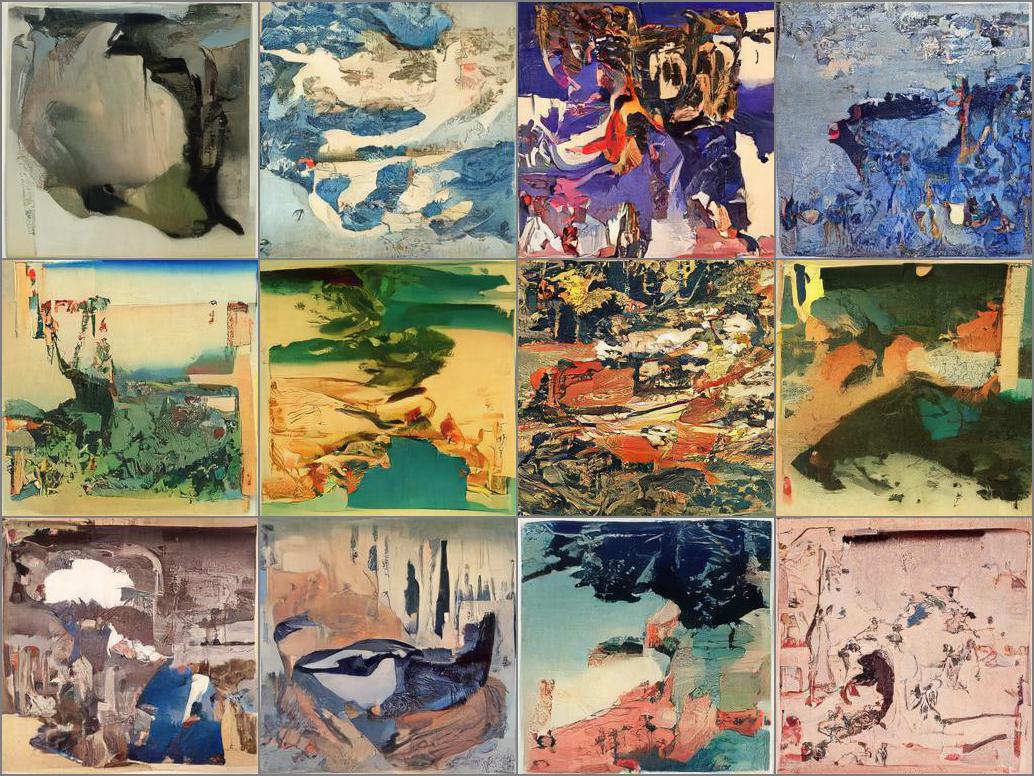

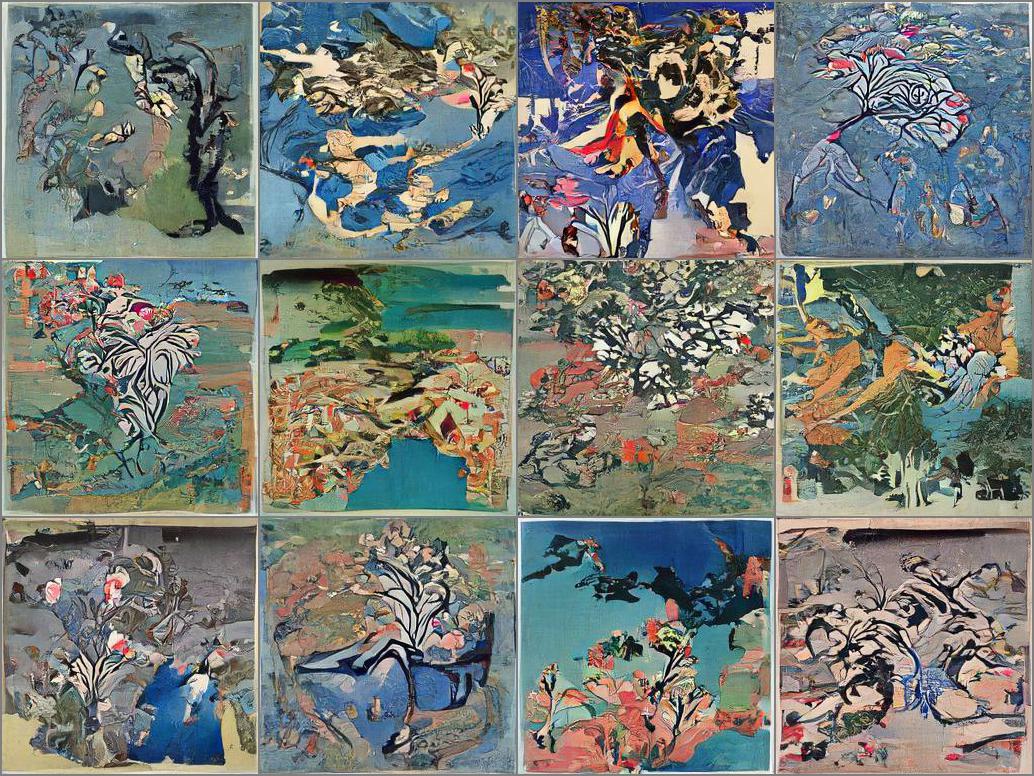

sd-concepts-library/museum-by-coop-himmelblau | sd-concepts-library | null | 9 | 0 | null | 0 | null | false | false | false | mit | null | null | null | 0 | 0 | 0 | 0 | 0 | 0 | 0 | [] | false | true | true | 1,165 | false | ### museum by coop himmelblau on Stable Diffusion

This is the `<coop himmelblau museum>` concept taught to Stable Diffusion via Textual Inversion. You can load this concept into the [Stable Conceptualizer](https://colab.research.google.com/github/huggingface/notebooks/blob/main/diffusers/stable_conceptualizer_inference.ipynb) notebook. You can also train your own concepts and load them into the concept libraries using [this notebook](https://colab.research.google.com/github/huggingface/notebooks/blob/main/diffusers/sd_textual_inversion_training.ipynb).

Here is the new concept you will be able to use as an `object`:

| 21ef75a2bdee8170a92792a2d7c6c580 |

DrishtiSharma/whisper-large-v2-punjabi-700-steps | DrishtiSharma | whisper | 15 | 2 | transformers | 0 | automatic-speech-recognition | true | false | false | apache-2.0 | ['pa'] | ['mozilla-foundation/common_voice_11_0'] | null | 0 | 0 | 0 | 0 | 0 | 0 | 0 | ['whisper-event', 'generated_from_trainer'] | true | true | true | 1,319 | false |

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# Whisper Large Punjabi - Drishti Sharma

This model is a fine-tuned version of [openai/whisper-large-v2](https://huggingface.co/openai/whisper-large-v2) on the Common Voice 11.0 dataset.

It achieves the following results on the evaluation set:

- Loss: 0.2211

- Wer: 24.4764

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 1e-06

- train_batch_size: 8

- eval_batch_size: 8

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- lr_scheduler_warmup_steps: 100

- training_steps: 700

- mixed_precision_training: Native AMP

### Training results

| Training Loss | Epoch | Step | Validation Loss | Wer |

|:-------------:|:-----:|:----:|:---------------:|:-------:|

| 0.0584 | 5.79 | 700 | 0.2211 | 24.4764 |

### Framework versions

- Transformers 4.26.0.dev0

- Pytorch 1.13.0+cu116

- Datasets 2.7.1.dev0

- Tokenizers 0.13.2

| 1ea1c1ab23c27c29bf8ca3e66e543f09 |

sramasamy8/testModel | sramasamy8 | bert | 5 | 4 | transformers | 0 | fill-mask | true | false | false | apache-2.0 | ['en'] | ['bookcorpus', 'wikipedia'] | null | 0 | 0 | 0 | 0 | 0 | 0 | 0 | ['exbert'] | false | true | true | 8,918 | false |

# BERT base model (uncased)

Pretrained model on English language using a masked language modeling (MLM) objective. It was introduced in

[this paper](https://arxiv.org/abs/1810.04805) and first released in

[this repository](https://github.com/google-research/bert). This model is uncased: it does not make a difference

between english and English.

Disclaimer: The team releasing BERT did not write a model card for this model so this model card has been written by

the Hugging Face team.

## Model description

BERT is a transformers model pretrained on a large corpus of English data in a self-supervised fashion. This means it

was pretrained on the raw texts only, with no humans labelling them in any way (which is why it can use lots of

publicly available data) with an automatic process to generate inputs and labels from those texts. More precisely, it

was pretrained with two objectives:

- Masked language modeling (MLM): taking a sentence, the model randomly masks 15% of the words in the input then run

the entire masked sentence through the model and has to predict the masked words. This is different from traditional

recurrent neural networks (RNNs) that usually see the words one after the other, or from autoregressive models like

GPT which internally mask the future tokens. It allows the model to learn a bidirectional representation of the

sentence.

- Next sentence prediction (NSP): the models concatenates two masked sentences as inputs during pretraining. Sometimes

they correspond to sentences that were next to each other in the original text, sometimes not. The model then has to

predict if the two sentences were following each other or not.

This way, the model learns an inner representation of the English language that can then be used to extract features

useful for downstream tasks: if you have a dataset of labeled sentences for instance, you can train a standard

classifier using the features produced by the BERT model as inputs.

## Intended uses & limitations

You can use the raw model for either masked language modeling or next sentence prediction, but it's mostly intended to

be fine-tuned on a downstream task. See the [model hub](https://huggingface.co/models?filter=bert) to look for

fine-tuned versions on a task that interests you.

Note that this model is primarily aimed at being fine-tuned on tasks that use the whole sentence (potentially masked)

to make decisions, such as sequence classification, token classification or question answering. For tasks such as text

generation you should look at model like GPT2.

### How to use

You can use this model directly with a pipeline for masked language modeling:

```python

>>> from transformers import pipeline

>>> unmasker = pipeline('fill-mask', model='bert-base-uncased')

>>> unmasker("Hello I'm a [MASK] model.")

[{'sequence': "[CLS] hello i'm a fashion model. [SEP]",

'score': 0.1073106899857521,

'token': 4827,

'token_str': 'fashion'},

{'sequence': "[CLS] hello i'm a role model. [SEP]",

'score': 0.08774490654468536,

'token': 2535,

'token_str': 'role'},

{'sequence': "[CLS] hello i'm a new model. [SEP]",

'score': 0.05338378623127937,

'token': 2047,

'token_str': 'new'},

{'sequence': "[CLS] hello i'm a super model. [SEP]",

'score': 0.04667217284440994,

'token': 3565,

'token_str': 'super'},

{'sequence': "[CLS] hello i'm a fine model. [SEP]",

'score': 0.027095865458250046,

'token': 2986,

'token_str': 'fine'}]

```

Here is how to use this model to get the features of a given text in PyTorch:

```python

from transformers import BertTokenizer, BertModel

tokenizer = BertTokenizer.from_pretrained('bert-base-uncased')

model = BertModel.from_pretrained("bert-base-uncased")

text = "Replace me by any text you'd like."

encoded_input = tokenizer(text, return_tensors='pt')

output = model(**encoded_input)

```

and in TensorFlow:

```python

from transformers import BertTokenizer, TFBertModel

tokenizer = BertTokenizer.from_pretrained('bert-base-uncased')

model = TFBertModel.from_pretrained("bert-base-uncased")

text = "Replace me by any text you'd like."

encoded_input = tokenizer(text, return_tensors='tf')

output = model(encoded_input)

```

### Limitations and bias

Even if the training data used for this model could be characterized as fairly neutral, this model can have biased

predictions:

```python

>>> from transformers import pipeline

>>> unmasker = pipeline('fill-mask', model='bert-base-uncased')

>>> unmasker("The man worked as a [MASK].")

[{'sequence': '[CLS] the man worked as a carpenter. [SEP]',

'score': 0.09747550636529922,

'token': 10533,

'token_str': 'carpenter'},

{'sequence': '[CLS] the man worked as a waiter. [SEP]',

'score': 0.0523831807076931,

'token': 15610,

'token_str': 'waiter'},

{'sequence': '[CLS] the man worked as a barber. [SEP]',

'score': 0.04962705448269844,

'token': 13362,

'token_str': 'barber'},

{'sequence': '[CLS] the man worked as a mechanic. [SEP]',

'score': 0.03788609802722931,

'token': 15893,

'token_str': 'mechanic'},

{'sequence': '[CLS] the man worked as a salesman. [SEP]',

'score': 0.037680890411138535,

'token': 18968,

'token_str': 'salesman'}]

>>> unmasker("The woman worked as a [MASK].")

[{'sequence': '[CLS] the woman worked as a nurse. [SEP]',

'score': 0.21981462836265564,

'token': 6821,

'token_str': 'nurse'},

{'sequence': '[CLS] the woman worked as a waitress. [SEP]',

'score': 0.1597415804862976,

'token': 13877,

'token_str': 'waitress'},

{'sequence': '[CLS] the woman worked as a maid. [SEP]',

'score': 0.1154729500412941,

'token': 10850,

'token_str': 'maid'},

{'sequence': '[CLS] the woman worked as a prostitute. [SEP]',

'score': 0.037968918681144714,

'token': 19215,

'token_str': 'prostitute'},

{'sequence': '[CLS] the woman worked as a cook. [SEP]',

'score': 0.03042375110089779,

'token': 5660,

'token_str': 'cook'}]

```

This bias will also affect all fine-tuned versions of this model.

## Training data

The BERT model was pretrained on [BookCorpus](https://yknzhu.wixsite.com/mbweb), a dataset consisting of 11,038

unpublished books and [English Wikipedia](https://en.wikipedia.org/wiki/English_Wikipedia) (excluding lists, tables and

headers).

## Training procedure

### Preprocessing

The texts are lowercased and tokenized using WordPiece and a vocabulary size of 30,000. The inputs of the model are

then of the form:

```

[CLS] Sentence A [SEP] Sentence B [SEP]

```

With probability 0.5, sentence A and sentence B correspond to two consecutive sentences in the original corpus and in

the other cases, it's another random sentence in the corpus. Note that what is considered a sentence here is a

consecutive span of text usually longer than a single sentence. The only constrain is that the result with the two

"sentences" has a combined length of less than 512 tokens.

The details of the masking procedure for each sentence are the following:

- 15% of the tokens are masked.

- In 80% of the cases, the masked tokens are replaced by `[MASK]`.

- In 10% of the cases, the masked tokens are replaced by a random token (different) from the one they replace.

- In the 10% remaining cases, the masked tokens are left as is.

### Pretraining

The model was trained on 4 cloud TPUs in Pod configuration (16 TPU chips total) for one million steps with a batch size

of 256. The sequence length was limited to 128 tokens for 90% of the steps and 512 for the remaining 10%. The optimizer

used is Adam with a learning rate of 1e-4, \\(\beta_{1} = 0.9\\) and \\(\beta_{2} = 0.999\\), a weight decay of 0.01,

learning rate warmup for 10,000 steps and linear decay of the learning rate after.

## Evaluation results

When fine-tuned on downstream tasks, this model achieves the following results:

Glue test results:

| Task | MNLI-(m/mm) | QQP | QNLI | SST-2 | CoLA | STS-B | MRPC | RTE | Average |

|:----:|:-----------:|:----:|:----:|:-----:|:----:|:-----:|:----:|:----:|:-------:|

| | 84.6/83.4 | 71.2 | 90.5 | 93.5 | 52.1 | 85.8 | 88.9 | 66.4 | 79.6 |

### BibTeX entry and citation info

```bibtex

@article{DBLP:journals/corr/abs-1810-04805,

author = {Jacob Devlin and

Ming{-}Wei Chang and

Kenton Lee and

Kristina Toutanova},

title = {{BERT:} Pre-training of Deep Bidirectional Transformers for Language

Understanding},

journal = {CoRR},

volume = {abs/1810.04805},

year = {2018},

url = {http://arxiv.org/abs/1810.04805},

archivePrefix = {arXiv},

eprint = {1810.04805},

timestamp = {Tue, 30 Oct 2018 20:39:56 +0100},

biburl = {https://dblp.org/rec/journals/corr/abs-1810-04805.bib},

bibsource = {dblp computer science bibliography, https://dblp.org}

}

```

<a href="https://huggingface.co/exbert/?model=bert-base-uncased">

<img width="300px" src="https://cdn-media.huggingface.co/exbert/button.png">

</a> | eb567d15e58145fbf6573f91111e2f31 |

lmqg/mt5-base-ruquad-qg-ae | lmqg | mt5 | 20 | 90 | transformers | 0 | text2text-generation | true | false | false | cc-by-4.0 | ['ru'] | ['lmqg/qg_ruquad'] | null | 0 | 0 | 0 | 0 | 0 | 0 | 0 | ['question generation', 'answer extraction'] | true | true | true | 7,595 | false |

# Model Card of `lmqg/mt5-base-ruquad-qg-ae`

This model is fine-tuned version of [google/mt5-base](https://huggingface.co/google/mt5-base) for question generation and answer extraction jointly on the [lmqg/qg_ruquad](https://huggingface.co/datasets/lmqg/qg_ruquad) (dataset_name: default) via [`lmqg`](https://github.com/asahi417/lm-question-generation).

### Overview

- **Language model:** [google/mt5-base](https://huggingface.co/google/mt5-base)

- **Language:** ru

- **Training data:** [lmqg/qg_ruquad](https://huggingface.co/datasets/lmqg/qg_ruquad) (default)

- **Online Demo:** [https://autoqg.net/](https://autoqg.net/)

- **Repository:** [https://github.com/asahi417/lm-question-generation](https://github.com/asahi417/lm-question-generation)

- **Paper:** [https://arxiv.org/abs/2210.03992](https://arxiv.org/abs/2210.03992)

### Usage

- With [`lmqg`](https://github.com/asahi417/lm-question-generation#lmqg-language-model-for-question-generation-)

```python

from lmqg import TransformersQG

# initialize model

model = TransformersQG(language="ru", model="lmqg/mt5-base-ruquad-qg-ae")

# model prediction

question_answer_pairs = model.generate_qa("Нелишним будет отметить, что, развивая это направление, Д. И. Менделеев, поначалу априорно выдвинув идею о температуре, при которой высота мениска будет нулевой, в мае 1860 года провёл серию опытов.")

```

- With `transformers`

```python

from transformers import pipeline

pipe = pipeline("text2text-generation", "lmqg/mt5-base-ruquad-qg-ae")

# answer extraction

answer = pipe("generate question: Нелишним будет отметить, что, развивая это направление, Д. И. Менделеев, поначалу априорно выдвинув идею о температуре, при которой высота мениска будет нулевой, <hl> в мае 1860 года <hl> провёл серию опытов.")

# question generation

question = pipe("extract answers: <hl> в английском языке в нарицательном смысле применяется термин rapid transit (скоростной городской транспорт), однако употребляется он только тогда, когда по смыслу невозможно ограничиться названием одной конкретной системы метрополитена. <hl> в остальных случаях используются индивидуальные названия: в лондоне — london underground, в нью-йорке — new york subway, в ливерпуле — merseyrail, в вашингтоне — washington metrorail, в сан-франциско — bart и т. п. в некоторых городах применяется название метро (англ. metro) для систем, по своему характеру близких к метро, или для всего городского транспорта (собственно метро и наземный пассажирский транспорт (в том числе автобусы и трамваи)) в совокупности.")

```

## Evaluation

- ***Metric (Question Generation)***: [raw metric file](https://huggingface.co/lmqg/mt5-base-ruquad-qg-ae/raw/main/eval/metric.first.sentence.paragraph_answer.question.lmqg_qg_ruquad.default.json)

| | Score | Type | Dataset |

|:-----------|--------:|:--------|:-----------------------------------------------------------------|

| BERTScore | 87.9 | default | [lmqg/qg_ruquad](https://huggingface.co/datasets/lmqg/qg_ruquad) |

| Bleu_1 | 36.66 | default | [lmqg/qg_ruquad](https://huggingface.co/datasets/lmqg/qg_ruquad) |

| Bleu_2 | 29.53 | default | [lmqg/qg_ruquad](https://huggingface.co/datasets/lmqg/qg_ruquad) |

| Bleu_3 | 24.23 | default | [lmqg/qg_ruquad](https://huggingface.co/datasets/lmqg/qg_ruquad) |

| Bleu_4 | 20.06 | default | [lmqg/qg_ruquad](https://huggingface.co/datasets/lmqg/qg_ruquad) |

| METEOR | 30.18 | default | [lmqg/qg_ruquad](https://huggingface.co/datasets/lmqg/qg_ruquad) |

| MoverScore | 66.6 | default | [lmqg/qg_ruquad](https://huggingface.co/datasets/lmqg/qg_ruquad) |

| ROUGE_L | 35.35 | default | [lmqg/qg_ruquad](https://huggingface.co/datasets/lmqg/qg_ruquad) |

- ***Metric (Question & Answer Generation)***: [raw metric file](https://huggingface.co/lmqg/mt5-base-ruquad-qg-ae/raw/main/eval/metric.first.answer.paragraph.questions_answers.lmqg_qg_ruquad.default.json)

| | Score | Type | Dataset |

|:--------------------------------|--------:|:--------|:-----------------------------------------------------------------|

| QAAlignedF1Score (BERTScore) | 80.21 | default | [lmqg/qg_ruquad](https://huggingface.co/datasets/lmqg/qg_ruquad) |

| QAAlignedF1Score (MoverScore) | 57.17 | default | [lmqg/qg_ruquad](https://huggingface.co/datasets/lmqg/qg_ruquad) |

| QAAlignedPrecision (BERTScore) | 76.48 | default | [lmqg/qg_ruquad](https://huggingface.co/datasets/lmqg/qg_ruquad) |

| QAAlignedPrecision (MoverScore) | 54.4 | default | [lmqg/qg_ruquad](https://huggingface.co/datasets/lmqg/qg_ruquad) |

| QAAlignedRecall (BERTScore) | 84.49 | default | [lmqg/qg_ruquad](https://huggingface.co/datasets/lmqg/qg_ruquad) |

| QAAlignedRecall (MoverScore) | 60.55 | default | [lmqg/qg_ruquad](https://huggingface.co/datasets/lmqg/qg_ruquad) |

- ***Metric (Answer Extraction)***: [raw metric file](https://huggingface.co/lmqg/mt5-base-ruquad-qg-ae/raw/main/eval/metric.first.answer.paragraph_sentence.answer.lmqg_qg_ruquad.default.json)

| | Score | Type | Dataset |

|:-----------------|--------:|:--------|:-----------------------------------------------------------------|

| AnswerExactMatch | 44.44 | default | [lmqg/qg_ruquad](https://huggingface.co/datasets/lmqg/qg_ruquad) |

| AnswerF1Score | 64.31 | default | [lmqg/qg_ruquad](https://huggingface.co/datasets/lmqg/qg_ruquad) |

| BERTScore | 86.22 | default | [lmqg/qg_ruquad](https://huggingface.co/datasets/lmqg/qg_ruquad) |

| Bleu_1 | 45.61 | default | [lmqg/qg_ruquad](https://huggingface.co/datasets/lmqg/qg_ruquad) |

| Bleu_2 | 40.76 | default | [lmqg/qg_ruquad](https://huggingface.co/datasets/lmqg/qg_ruquad) |

| Bleu_3 | 36.22 | default | [lmqg/qg_ruquad](https://huggingface.co/datasets/lmqg/qg_ruquad) |

| Bleu_4 | 31.64 | default | [lmqg/qg_ruquad](https://huggingface.co/datasets/lmqg/qg_ruquad) |

| METEOR | 38.79 | default | [lmqg/qg_ruquad](https://huggingface.co/datasets/lmqg/qg_ruquad) |

| MoverScore | 74.64 | default | [lmqg/qg_ruquad](https://huggingface.co/datasets/lmqg/qg_ruquad) |

| ROUGE_L | 49.73 | default | [lmqg/qg_ruquad](https://huggingface.co/datasets/lmqg/qg_ruquad) |

## Training hyperparameters

The following hyperparameters were used during fine-tuning:

- dataset_path: lmqg/qg_ruquad

- dataset_name: default

- input_types: ['paragraph_answer', 'paragraph_sentence']

- output_types: ['question', 'answer']

- prefix_types: ['qg', 'ae']

- model: google/mt5-base

- max_length: 512

- max_length_output: 32

- epoch: 8

- batch: 32

- lr: 0.001

- fp16: False

- random_seed: 1

- gradient_accumulation_steps: 2

- label_smoothing: 0.15

The full configuration can be found at [fine-tuning config file](https://huggingface.co/lmqg/mt5-base-ruquad-qg-ae/raw/main/trainer_config.json).

## Citation

```

@inproceedings{ushio-etal-2022-generative,

title = "{G}enerative {L}anguage {M}odels for {P}aragraph-{L}evel {Q}uestion {G}eneration",

author = "Ushio, Asahi and

Alva-Manchego, Fernando and

Camacho-Collados, Jose",

booktitle = "Proceedings of the 2022 Conference on Empirical Methods in Natural Language Processing",

month = dec,

year = "2022",

address = "Abu Dhabi, U.A.E.",

publisher = "Association for Computational Linguistics",

}

```

| 158792df68f0c8ac54fc3e04d2fb8d52 |

ThomasNLG/CT0-11B | ThomasNLG | t5 | 13 | 17 | transformers | 0 | text2text-generation | true | false | false | apache-2.0 | ['en'] | null | null | 0 | 0 | 0 | 0 | 0 | 0 | 0 | [] | false | true | true | 603 | false | **How do I pronounce the name of the model?** CT0 should be pronounced "C T Zero" (like in "Continual T5 for zero-shot")

# Model Description

CT0 is an extention of T0, a model showing great zero-shot task generalization on English natural language prompts, outperforming GPT-3 on many tasks, while being 16x smaller.

```bibtex

@misc{scialom2022Continual,

title={Fine-tuned Language Models are Continual Learners},

author={Thomas Scialom and Tuhin Chakrabarty and Smaranda Muresan},

year={2022},

eprint={2205.12393},

archivePrefix={arXiv},

primaryClass={cs.LG}

}

``` | 2efe6312b6b27ad2ae3076a9bae2e239 |

aipicasso/cool-japan-diffusion-2-1-1-1 | aipicasso | null | 21 | 187 | diffusers | 1 | text-to-image | false | false | false | other | null | null | null | 1 | 0 | 1 | 0 | 0 | 0 | 0 | ['stable-diffusion', 'text-to-image'] | false | true | true | 6,961 | false |

# Cool Japan Diffusion 2.1.1.1 Model Card

[注意事项。中国将对图像生成的人工智能实施法律限制。 ](http://www.cac.gov.cn/2022-12/11/c_1672221949318230.htm) (中国国内にいる人への警告)

English version is [here](README_en.md).

# はじめに

Cool Japan Diffusion はStable Diffsionをファインチューニングして、アニメやマンガ、ゲームなどのクールジャパンを表現することに特化したモデルです。なお、内閣府のクールジャパン戦略とは特に関係はありません。

# ライセンスについて

ライセンスについては、もとのライセンス CreativeML Open RAIL++-M License に例外を除き商用利用禁止を追加しただけです。

例外を除き商用利用禁止を追加した理由は創作業界に悪影響を及ぼしかねないという懸念からです。

この懸念が払拭されれば、次のバージョンから元のライセンスに戻し、商用利用可能とします。

ちなみに、元のライセンスの日本語訳は[こちら](https://qiita.com/robitan/items/887d9f3153963114823d)になります。

営利企業にいる方は法務部にいる人と相談してください。

趣味で利用する方はあまり気にしなくても一般常識を守れば大丈夫なはずです。

なお、ライセンスにある通り、このモデルを改造しても、このライセンスを引き継ぐ必要があります。

# 法律や倫理について

本モデルは日本にて作成されました。したがって、日本の法律が適用されます。

本モデルの学習は、著作権法第30条の4に基づき、合法であると主張します。

また、本モデルの配布については、著作権法や刑法175条に照らしてみても、

正犯や幇助犯にも該当しないと主張します。詳しくは柿沼弁護士の[見解](https://twitter.com/tka0120/status/1601483633436393473?s=20&t=yvM9EX0Em-_7lh8NJln3IQ)を御覧ください。

ただし、ライセンスにもある通り、本モデルの生成物は各種法令に従って取り扱って下さい。

しかし、本モデルを配布する行為が倫理的に良くないとは作者は思っています。

これは学習する著作物に対して著作者の許可を得ていないためです。

ただし、学習するには著作者の許可は法律上必要もなく、検索エンジンと同様法律上は問題はありません。

したがって、法的な側面ではなく、倫理的な側面を調査する目的も本配布は兼ねていると考えてください。

# 使い方

手軽に楽しみたい方は、こちらの[Space](https://huggingface.co/spaces/aipicasso/cool-japan-diffusion-latest-demo)をお使いください。

詳しい本モデルの取り扱い方は[こちらの取扱説明書](https://alfredplpl.hatenablog.com/entry/2023/01/11/182146)にかかれています。

モデルは[ここ](https://huggingface.co/aipicasso/cool-japan-diffusion-2-1-1-1/resolve/main/v2-1-1-1_fp16.ckpt)からダウンロードできます。

以下、一般的なモデルカードの日本語訳です。

## モデル詳細

- **開発者:** Robin Rombach, Patrick Esser, Alfred Increment

- **モデルタイプ:** 拡散モデルベースの text-to-image 生成モデル

- **言語:** 日本語

- **ライセンス:** CreativeML Open RAIL++-M-NC License

- **モデルの説明:** このモデルはプロンプトに応じて適切な画像を生成することができます。アルゴリズムは [Latent Diffusion Model](https://arxiv.org/abs/2112.10752) と [OpenCLIP-ViT/H](https://github.com/mlfoundations/open_clip) です。

- **補足:**

- **参考文献:**

@InProceedings{Rombach_2022_CVPR,

author = {Rombach, Robin and Blattmann, Andreas and Lorenz, Dominik and Esser, Patrick and Ommer, Bj\"orn},

title = {High-Resolution Image Synthesis With Latent Diffusion Models},

booktitle = {Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR)},

month = {June},

year = {2022},

pages = {10684-10695}

}

## モデルの使用例

Stable Diffusion v2と同じ使い方です。

たくさんの方法がありますが、2つのパターンを提供します。

- Web UI

- Diffusers

### Web UIの場合

こちらの[取扱説明書](https://alfredplpl.hatenablog.com/entry/2023/01/11/182146)に従って作成してください。

### Diffusersの場合

[🤗's Diffusers library](https://github.com/huggingface/diffusers) を使ってください。

まずは、以下のスクリプトを実行し、ライブラリをいれてください。

```bash

pip install --upgrade git+https://github.com/huggingface/diffusers.git transformers accelerate scipy

```

次のスクリプトを実行し、画像を生成してください。

```python

from diffusers import StableDiffusionPipeline, EulerAncestralDiscreteScheduler

import torch

model_id = "aipicasso/cool-japan-diffusion-2-1-1-1"

scheduler = EulerAncestralDiscreteScheduler.from_pretrained(model_id, subfolder="scheduler")

pipe = StableDiffusionPipeline.from_pretrained(model_id, scheduler=scheduler, torch_dtype=torch.float16)

pipe = pipe.to("cuda")

prompt = "anime, masterpiece, a portrait of a girl, good pupil, 4k, detailed"

negative_prompt="deformed, blurry, bad anatomy, bad pupil, disfigured, poorly drawn face, mutation, mutated, extra limb, ugly, poorly drawn hands, bad hands, fused fingers, messy drawing, broken legs censor, low quality, mutated hands and fingers, long body, mutation, poorly drawn, bad eyes, ui, error, missing fingers, fused fingers, one hand with more than 5 fingers, one hand with less than 5 fingers, one hand with more than 5 digit, one hand with less than 5 digit, extra digit, fewer digits, fused digit, missing digit, bad digit, liquid digit, long body, uncoordinated body, unnatural body, lowres, jpeg artifacts, 3d, cg, text, japanese kanji"

images = pipe(prompt,negative_prompt=negative_prompt, num_inference_steps=20).images

images[0].save("girl.png")

```

**注意**:

- [xformers](https://github.com/facebookresearch/xformers) を使うと早くなるらしいです。

- GPUを使う際にGPUのメモリが少ない人は `pipe.enable_attention_slicing()` を使ってください。

#### 想定される用途

- コンテスト

- [AIアートグランプリ](https://www.aiartgrandprix.com/)への投稿

- ファインチューニングに用いた全データを開示し、審査基準を満たしていることを判断してもらうようにします。

- コンテストに向けて、要望があれば、Hugging Face の Community などで私に伝えてください。

- 画像生成AIに関する報道

- 公共放送だけでなく、営利企業でも可能

- 画像合成AIに関する情報を「知る権利」は創作業界に悪影響を及ぼさないと判断したためです。また、報道の自由などを尊重しました。

- クールジャパンの紹介

- 他国の人にクールジャパンとはなにかを説明すること。

- 他国の留学生はクールジャパンに惹かれて日本に来ることがおおくあります。そこで、クールジャパンが日本では「クールでない」とされていることにがっかりされることがとても多いとAlfred Incrementは感じております。他国の人が憧れる自国の文化をもっと誇りに思ってください。

- 研究開発

- Discord上でのモデルの利用

- プロンプトエンジニアリング

- ファインチューニング(追加学習とも)

- DreamBooth など

- 他のモデルとのマージ

- Latent Diffusion Modelとクールジャパンとの相性

- 本モデルの性能をFIDなどで調べること

- 本モデルがStable Diffusion以外のモデルとは独立であることをチェックサムやハッシュ関数などで調べること

- 教育

- 美大生や専門学校生の卒業制作

- 大学生の卒業論文や課題制作

- 先生が画像生成AIの現状を伝えること

- 自己表現

- SNS上で自分の感情や思考を表現すること

- Hugging Face の Community にかいてある用途

- 日本語か英語で質問してください

#### 想定されない用途

- 物事を事実として表現するようなこと

- 収益化されているYouTubeなどのコンテンツへの使用

- 商用のサービスとして直接提供すること

- 先生を困らせるようなこと

- その他、創作業界に悪影響を及ぼすこと

# 使用してはいけない用途や悪意のある用途

- デジタル贋作 ([Digital Forgery](https://arxiv.org/abs/2212.03860)) は公開しないでください(著作権法に違反するおそれ)

- 特に既存のキャラクターは公開しないでください(著作権法に違反するおそれ)

- なお、学習していない[キャラクターも生成できる](https://twitter.com/ThePioneerJPnew/status/1609074173892235264?s=20&t=-rY1ufzNeIDT3Fm5YdME6g)そうです。(このツイート自体は研究目的として許可しています。)

- 他人の作品を無断でImage-to-Imageしないでください(著作権法に違反するおそれ)

- わいせつ物を頒布しないでください (刑法175条に違反するおそれ)

- いわゆる業界のマナーを守らないようなこと

- 事実に基づかないことを事実のように語らないようにしてください(威力業務妨害罪が適用されるおそれ)

- フェイクニュース

## モデルの限界やバイアス

### モデルの限界

- よくわかっていない

### バイアス

Stable Diffusionと同じバイアスが掛かっています。

気をつけてください。

## 学習

**学習データ**

次のデータを主に使ってStable Diffusionをファインチューニングしています。

- VAEについて

- Danbooruなどの無断転載サイトを除いた日本の国内法を遵守したデータ: 60万種類 (データ拡張により無限枚作成)

- U-Netについて

- Danbooruなどの無断転載サイトを除いた日本の国内法を遵守したデータ: 180万ペア

**学習プロセス**

Stable DiffusionのVAEとU-Netをファインチューニングしました。

- **ハードウェア:** RTX 3090, A6000

- **オプティマイザー:** AdamW

- **Gradient Accumulations**: 1

- **バッチサイズ:** 1

## 評価結果

## 環境への影響

ほとんどありません。

- **ハードウェアタイプ:** RTX 3090, A6000

- **使用時間(単位は時間):** 700

- **クラウド事業者:** なし

- **学習した場所:** 日本

- **カーボン排出量:** そんなにない

## 参考文献

@InProceedings{Rombach_2022_CVPR,

author = {Rombach, Robin and Blattmann, Andreas and Lorenz, Dominik and Esser, Patrick and Ommer, Bj\"orn},

title = {High-Resolution Image Synthesis With Latent Diffusion Models},

booktitle = {Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR)},

month = {June},

year = {2022},

pages = {10684-10695}

}

*このモデルカードは [Stable Diffusion v2](https://huggingface.co/stabilityai/stable-diffusion-2/raw/main/README.md) に基づいて、Alfred Incrementがかきました。

| aa64282b2f9da11bda568ad17c8f507d |

espnet/Wangyou_Zhang_wsj0_2mix_enh_dc_crn_mapping_snr_raw | espnet | null | 17 | 22 | espnet | 0 | audio-to-audio | false | false | false | cc-by-4.0 | null | ['chime4'] | null | 0 | 0 | 0 | 0 | 0 | 0 | 0 | ['espnet', 'audio', 'audio-to-audio'] | false | true | true | 5,674 | false |

## ESPnet2 ENH model

### `espnet/Wangyou_Zhang_wsj0_2mix_enh_dc_crn_mapping_snr_raw`

This model was trained by Wangyou Zhang using chime4 recipe in [espnet](https://github.com/espnet/espnet/).

### Demo: How to use in ESPnet2

```bash

cd espnet

pip install -e .

cd egs2/chime4/enh1

./run.sh --skip_data_prep false --skip_train true --download_model espnet/Wangyou_Zhang_wsj0_2mix_enh_dc_crn_mapping_snr_raw

```

## ENH config

<details><summary>expand</summary>

```

config: conf/tuning/train_enh_dc_crn_mapping_snr.yaml

print_config: false

log_level: INFO

dry_run: false

iterator_type: chunk

output_dir: exp/enh_train_enh_dc_crn_mapping_snr_raw

ngpu: 1

seed: 0

num_workers: 4

num_att_plot: 3

dist_backend: nccl

dist_init_method: env://

dist_world_size: null

dist_rank: null

local_rank: 0

dist_master_addr: null

dist_master_port: null

dist_launcher: null

multiprocessing_distributed: false

unused_parameters: false

sharded_ddp: false

cudnn_enabled: true

cudnn_benchmark: false

cudnn_deterministic: true

collect_stats: false

write_collected_feats: false

max_epoch: 200

patience: 10

val_scheduler_criterion:

- valid

- loss

early_stopping_criterion:

- valid

- loss

- min

best_model_criterion:

- - valid

- si_snr

- max

- - valid

- loss

- min

keep_nbest_models: 1

nbest_averaging_interval: 0

grad_clip: 5

grad_clip_type: 2.0

grad_noise: false

accum_grad: 1

no_forward_run: false

resume: true

train_dtype: float32

use_amp: false

log_interval: null

use_matplotlib: true

use_tensorboard: true

use_wandb: false

wandb_project: null

wandb_id: null

wandb_entity: null

wandb_name: null

wandb_model_log_interval: -1

detect_anomaly: false

pretrain_path: null

init_param: []

ignore_init_mismatch: false

freeze_param: []

num_iters_per_epoch: null

batch_size: 16

valid_batch_size: null

batch_bins: 1000000

valid_batch_bins: null

train_shape_file:

- exp/enh_stats_8k/train/speech_mix_shape

- exp/enh_stats_8k/train/speech_ref1_shape

- exp/enh_stats_8k/train/speech_ref2_shape

valid_shape_file:

- exp/enh_stats_8k/valid/speech_mix_shape

- exp/enh_stats_8k/valid/speech_ref1_shape

- exp/enh_stats_8k/valid/speech_ref2_shape

batch_type: folded

valid_batch_type: null

fold_length:

- 80000

- 80000

- 80000

sort_in_batch: descending

sort_batch: descending

multiple_iterator: false

chunk_length: 32000

chunk_shift_ratio: 0.5

num_cache_chunks: 1024

train_data_path_and_name_and_type:

- - dump/raw/tr_min_8k/wav.scp

- speech_mix

- sound

- - dump/raw/tr_min_8k/spk1.scp

- speech_ref1

- sound

- - dump/raw/tr_min_8k/spk2.scp

- speech_ref2

- sound

valid_data_path_and_name_and_type:

- - dump/raw/cv_min_8k/wav.scp

- speech_mix

- sound

- - dump/raw/cv_min_8k/spk1.scp

- speech_ref1

- sound

- - dump/raw/cv_min_8k/spk2.scp

- speech_ref2

- sound

allow_variable_data_keys: false

max_cache_size: 0.0

max_cache_fd: 32

valid_max_cache_size: null

optim: adam

optim_conf:

lr: 0.001

eps: 1.0e-08

weight_decay: 1.0e-07

amsgrad: true

scheduler: steplr

scheduler_conf:

step_size: 2

gamma: 0.98

init: xavier_uniform

model_conf:

stft_consistency: false

loss_type: mask_mse

mask_type: null

criterions:

- name: si_snr

conf:

eps: 1.0e-07

wrapper: pit

wrapper_conf:

weight: 1.0

use_preprocessor: false

encoder: stft

encoder_conf:

n_fft: 256

hop_length: 128

separator: dc_crn

separator_conf:

num_spk: 2

input_channels:

- 2

- 16

- 32

- 64

- 128

- 256

enc_hid_channels: 8

enc_layers: 5

glstm_groups: 2

glstm_layers: 2

glstm_bidirectional: true

glstm_rearrange: false

mode: mapping

decoder: stft

decoder_conf:

n_fft: 256

hop_length: 128

required:

- output_dir

version: 0.10.7a1

distributed: false

```

</details>

### Citing ESPnet

```BibTex

@inproceedings{watanabe2018espnet,

author={Shinji Watanabe and Takaaki Hori and Shigeki Karita and Tomoki Hayashi and Jiro Nishitoba and Yuya Unno and Nelson Yalta and Jahn Heymann and Matthew Wiesner and Nanxin Chen and Adithya Renduchintala and Tsubasa Ochiai},

title={{ESPnet}: End-to-End Speech Processing Toolkit},

year={2018},

booktitle={Proceedings of Interspeech},

pages={2207--2211},

doi={10.21437/Interspeech.2018-1456},

url={http://dx.doi.org/10.21437/Interspeech.2018-1456}

}

@inproceedings{li2021espnetse,

title={{ESPnet-SE}: End-to-End Speech Enhancement and Separation Toolkit Designed for {ASR} Integration},

author={Li, Chenda and Shi, Jing and Zhang, Wangyou and Subramanian, Aswin Shanmugam and Chang, Xuankai and Kamo, Naoyuki and Hira, Moto and Hayashi, Tomoki and Boeddeker, Christoph and Chen, Zhuo and Watanabe, Shinji},

booktitle={Proc. IEEE Spoken Language Technology Workshop (SLT)},

pages={785--792},

year={2021},

}

```

or arXiv:

```bibtex

@misc{watanabe2018espnet,

title={ESPnet: End-to-End Speech Processing Toolkit},

author={Shinji Watanabe and Takaaki Hori and Shigeki Karita and Tomoki Hayashi and Jiro Nishitoba and Yuya Unno and Nelson Yalta and Jahn Heymann and Matthew Wiesner and Nanxin Chen and Adithya Renduchintala and Tsubasa Ochiai},

year={2018},

eprint={1804.00015},

archivePrefix={arXiv},

primaryClass={cs.CL}

}

@inproceedings{li2021espnetse,

title={{ESPnet-SE}: End-to-End Speech Enhancement and Separation Toolkit Designed for {ASR} Integration},

author={Li, Chenda and Shi, Jing and Zhang, Wangyou and Subramanian, Aswin Shanmugam and Chang, Xuankai and Kamo, Naoyuki and Hira, Moto and Hayashi, Tomoki and Boeddeker, Christoph and Chen, Zhuo and Watanabe, Shinji},

year={2020},

eprint={2011.03706},

archivePrefix={arXiv},

primaryClass={eess.AS}

}

```

| 3e2d443ba1de0c5e58de8c50c0f67565 |

0ys/mt5-small-finetuned-amazon-en-es | 0ys | mt5 | 13 | 5 | transformers | 0 | summarization | true | false | false | apache-2.0 | null | null | null | 1 | 1 | 0 | 0 | 0 | 0 | 0 | ['summarization', 'generated_from_trainer'] | true | true | true | 1,996 | false |

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# mt5-small-finetuned-amazon-en-es

This model is a fine-tuned version of [google/mt5-small](https://huggingface.co/google/mt5-small) on the None dataset.

It achieves the following results on the evaluation set:

- Loss: 3.0294

- Rouge1: 16.6807

- Rouge2: 8.0004

- Rougel: 16.2251

- Rougelsum: 16.1743

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 5.6e-05

- train_batch_size: 8

- eval_batch_size: 8

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 8

### Training results

| Training Loss | Epoch | Step | Validation Loss | Rouge1 | Rouge2 | Rougel | Rougelsum |

|:-------------:|:-----:|:----:|:---------------:|:-------:|:------:|:-------:|:---------:|

| 6.5928 | 1.0 | 1209 | 3.3005 | 14.7863 | 6.5038 | 14.3031 | 14.2522 |

| 3.9024 | 2.0 | 2418 | 3.1399 | 16.9257 | 8.6583 | 16.15 | 16.1299 |

| 3.5806 | 3.0 | 3627 | 3.0869 | 18.2734 | 9.1667 | 17.7441 | 17.5782 |

| 3.4201 | 4.0 | 4836 | 3.0590 | 17.763 | 8.9447 | 17.1833 | 17.1661 |

| 3.3202 | 5.0 | 6045 | 3.0598 | 17.7754 | 8.5695 | 17.4139 | 17.2653 |

| 3.2436 | 6.0 | 7254 | 3.0409 | 16.8423 | 8.1593 | 16.5392 | 16.4297 |

| 3.2079 | 7.0 | 8463 | 3.0332 | 16.8991 | 8.1574 | 16.4229 | 16.3515 |

| 3.1801 | 8.0 | 9672 | 3.0294 | 16.6807 | 8.0004 | 16.2251 | 16.1743 |

### Framework versions

- Transformers 4.22.1

- Pytorch 1.12.1+cu113

- Datasets 2.5.1

- Tokenizers 0.12.1

| ea94e152ab296dd8a284a533c141aba7 |

r3dhummingbird/DialoGPT-small-neku | r3dhummingbird | gpt2 | 9 | 6 | transformers | 0 | conversational | true | false | false | mit | null | null | null | 0 | 0 | 0 | 0 | 0 | 0 | 0 | ['conversational'] | false | true | true | 1,610 | false |

# DialoGPT Trained on the Speech of a Game Character

This is an instance of [microsoft/DialoGPT-small](https://huggingface.co/microsoft/DialoGPT-small) trained on a game character, Neku Sakuraba from [The World Ends With You](https://en.wikipedia.org/wiki/The_World_Ends_with_You). The data comes from [a Kaggle game script dataset](https://www.kaggle.com/ruolinzheng/twewy-game-script).

Chat with the model:

```python

from transformers import AutoTokenizer, AutoModelWithLMHead

tokenizer = AutoTokenizer.from_pretrained("r3dhummingbird/DialoGPT-small-neku")

model = AutoModelWithLMHead.from_pretrained("r3dhummingbird/DialoGPT-small-neku")

# Let's chat for 4 lines

for step in range(4):

# encode the new user input, add the eos_token and return a tensor in Pytorch

new_user_input_ids = tokenizer.encode(input(">> User:") + tokenizer.eos_token, return_tensors='pt')

# print(new_user_input_ids)

# append the new user input tokens to the chat history

bot_input_ids = torch.cat([chat_history_ids, new_user_input_ids], dim=-1) if step > 0 else new_user_input_ids

# generated a response while limiting the total chat history to 1000 tokens,

chat_history_ids = model.generate(

bot_input_ids, max_length=200,

pad_token_id=tokenizer.eos_token_id,

no_repeat_ngram_size=3,

do_sample=True,

top_k=100,

top_p=0.7,

temperature=0.8

)

# pretty print last ouput tokens from bot

print("NekuBot: {}".format(tokenizer.decode(chat_history_ids[:, bot_input_ids.shape[-1]:][0], skip_special_tokens=True)))

``` | 0bae7f2c9698ed93c4ee26d5cf75c4a4 |

Joeythemonster/anything-midjourney-v-4-1 | Joeythemonster | null | 18 | 3,610 | diffusers | 32 | text-to-image | false | false | false | creativeml-openrail-m | null | null | null | 2 | 1 | 1 | 0 | 3 | 3 | 0 | ['text-to-image', 'stable-diffusion'] | false | true | true | 635 | false | ### ANYTHING-MIDJOURNEY-V-4.1 Dreambooth model trained by Joeythemonster with [TheLastBen's fast-DreamBooth](https://colab.research.google.com/github/TheLastBen/fast-stable-diffusion/blob/main/fast-DreamBooth.ipynb) notebook

Test the concept via A1111 Colab [fast-Colab-A1111](https://colab.research.google.com/github/TheLastBen/fast-stable-diffusion/blob/main/fast_stable_diffusion_AUTOMATIC1111.ipynb)

Or you can run your new concept via `diffusers` [Colab Notebook for Inference](https://colab.research.google.com/github/huggingface/notebooks/blob/main/diffusers/sd_dreambooth_inference.ipynb)

Sample pictures of this concept:

| 4562e375bdbf89fc804f6dae4e7379fa |

microsoft/beit-large-patch16-224-pt22k-ft22k | microsoft | beit | 6 | 54,457 | transformers | 2 | image-classification | true | false | true | apache-2.0 | null | ['imagenet', 'imagenet-21k'] | null | 0 | 0 | 0 | 0 | 0 | 0 | 0 | ['image-classification', 'vision'] | false | true | true | 5,395 | false |

# BEiT (large-sized model, fine-tuned on ImageNet-22k)

BEiT model pre-trained in a self-supervised fashion on ImageNet-22k - also called ImageNet-21k (14 million images, 21,841 classes) at resolution 224x224, and fine-tuned on the same dataset at resolution 224x224. It was introduced in the paper [BEIT: BERT Pre-Training of Image Transformers](https://arxiv.org/abs/2106.08254) by Hangbo Bao, Li Dong and Furu Wei and first released in [this repository](https://github.com/microsoft/unilm/tree/master/beit).

Disclaimer: The team releasing BEiT did not write a model card for this model so this model card has been written by the Hugging Face team.

## Model description

The BEiT model is a Vision Transformer (ViT), which is a transformer encoder model (BERT-like). In contrast to the original ViT model, BEiT is pretrained on a large collection of images in a self-supervised fashion, namely ImageNet-21k, at a resolution of 224x224 pixels. The pre-training objective for the model is to predict visual tokens from the encoder of OpenAI's DALL-E's VQ-VAE, based on masked patches.

Next, the model was fine-tuned in a supervised fashion on ImageNet (also referred to as ILSVRC2012), a dataset comprising 1 million images and 1,000 classes, also at resolution 224x224.

Images are presented to the model as a sequence of fixed-size patches (resolution 16x16), which are linearly embedded. Contrary to the original ViT models, BEiT models do use relative position embeddings (similar to T5) instead of absolute position embeddings, and perform classification of images by mean-pooling the final hidden states of the patches, instead of placing a linear layer on top of the final hidden state of the [CLS] token.

By pre-training the model, it learns an inner representation of images that can then be used to extract features useful for downstream tasks: if you have a dataset of labeled images for instance, you can train a standard classifier by placing a linear layer on top of the pre-trained encoder. One typically places a linear layer on top of the [CLS] token, as the last hidden state of this token can be seen as a representation of an entire image. Alternatively, one can mean-pool the final hidden states of the patch embeddings, and place a linear layer on top of that.

## Intended uses & limitations

You can use the raw model for image classification. See the [model hub](https://huggingface.co/models?search=microsoft/beit) to look for

fine-tuned versions on a task that interests you.

### How to use

Here is how to use this model to classify an image of the COCO 2017 dataset into one of the 1,000 ImageNet classes:

```python

from transformers import BeitFeatureExtractor, BeitForImageClassification

from PIL import Image

import requests

url = 'http://images.cocodataset.org/val2017/000000039769.jpg'

image = Image.open(requests.get(url, stream=True).raw)

feature_extractor = BeitFeatureExtractor.from_pretrained('microsoft/beit-large-patch16-224-pt22k-ft22k')

model = BeitForImageClassification.from_pretrained('microsoft/beit-large-patch16-224-pt22k-ft22k')

inputs = feature_extractor(images=image, return_tensors="pt")

outputs = model(**inputs)

logits = outputs.logits

# model predicts one of the 21,841 ImageNet-22k classes

predicted_class_idx = logits.argmax(-1).item()

print("Predicted class:", model.config.id2label[predicted_class_idx])

```

Currently, both the feature extractor and model support PyTorch.

## Training data

The BEiT model was pretrained on [ImageNet-21k](http://www.image-net.org/), a dataset consisting of 14 million images and 21k classes, and fine-tuned on the same dataset.

## Training procedure

### Preprocessing

The exact details of preprocessing of images during training/validation can be found [here](https://github.com/microsoft/unilm/blob/master/beit/datasets.py).

Images are resized/rescaled to the same resolution (224x224) and normalized across the RGB channels with mean (0.5, 0.5, 0.5) and standard deviation (0.5, 0.5, 0.5).

### Pretraining

For all pre-training related hyperparameters, we refer to page 15 of the [original paper](https://arxiv.org/abs/2106.08254).

## Evaluation results

For evaluation results on several image classification benchmarks, we refer to tables 1 and 2 of the original paper. Note that for fine-tuning, the best results are obtained with a higher resolution. Of course, increasing the model size will result in better performance.

### BibTeX entry and citation info

```@article{DBLP:journals/corr/abs-2106-08254,

author = {Hangbo Bao and

Li Dong and

Furu Wei},

title = {BEiT: {BERT} Pre-Training of Image Transformers},

journal = {CoRR},

volume = {abs/2106.08254},

year = {2021},

url = {https://arxiv.org/abs/2106.08254},

archivePrefix = {arXiv},

eprint = {2106.08254},

timestamp = {Tue, 29 Jun 2021 16:55:04 +0200},

biburl = {https://dblp.org/rec/journals/corr/abs-2106-08254.bib},

bibsource = {dblp computer science bibliography, https://dblp.org}

}

```

```bibtex

@inproceedings{deng2009imagenet,

title={Imagenet: A large-scale hierarchical image database},

author={Deng, Jia and Dong, Wei and Socher, Richard and Li, Li-Jia and Li, Kai and Fei-Fei, Li},

booktitle={2009 IEEE conference on computer vision and pattern recognition},

pages={248--255},

year={2009},

organization={Ieee}

}

``` | bc69c81485e2e42195034acc2505ba4c |

imdanboy/ljspeech_tts_train_jets_raw_phn_tacotron_g2p_en_no_space_train.total_count.ave | imdanboy | null | 36 | 38 | espnet | 0 | text-to-speech | false | false | false | cc-by-4.0 | ['en'] | ['ljspeech'] | null | 0 | 0 | 0 | 0 | 0 | 0 | 0 | ['espnet', 'audio', 'text-to-speech'] | false | true | true | 11,604 | false |

## ESPnet2 TTS model

### `imdanboy/ljspeech_tts_train_jets_raw_phn_tacotron_g2p_en_no_space_train.total_count.ave`

This model was trained by imdanboy using ljspeech recipe in [espnet](https://github.com/espnet/espnet/).

### Demo: How to use in ESPnet2

```bash

cd espnet

git checkout c173c30930631731e6836c274a591ad571749741

pip install -e .

cd egs2/ljspeech/tts1

./run.sh --skip_data_prep false --skip_train true --download_model imdanboy/ljspeech_tts_train_jets_raw_phn_tacotron_g2p_en_no_space_train.total_count.ave

```

## TTS config

<details><summary>expand</summary>

```

config: conf/tuning/train_jets.yaml

print_config: false

log_level: INFO

dry_run: false

iterator_type: sequence

output_dir: exp/tts_train_jets_raw_phn_tacotron_g2p_en_no_space

ngpu: 1

seed: 777

num_workers: 4

num_att_plot: 3

dist_backend: nccl

dist_init_method: env://

dist_world_size: 4

dist_rank: 0

local_rank: 0

dist_master_addr: localhost

dist_master_port: 39471

dist_launcher: null

multiprocessing_distributed: true

unused_parameters: true

sharded_ddp: false

cudnn_enabled: true

cudnn_benchmark: false

cudnn_deterministic: false

collect_stats: false

write_collected_feats: false

max_epoch: 1000

patience: null

val_scheduler_criterion:

- valid

- loss

early_stopping_criterion:

- valid

- loss

- min

best_model_criterion:

- - valid

- text2mel_loss

- min

- - train

- text2mel_loss

- min

- - train

- total_count

- max

keep_nbest_models: 5

nbest_averaging_interval: 0

grad_clip: -1

grad_clip_type: 2.0

grad_noise: false

accum_grad: 1

no_forward_run: false

resume: true

train_dtype: float32

use_amp: false

log_interval: 50

use_matplotlib: true

use_tensorboard: true

use_wandb: false

wandb_project: null

wandb_id: null

wandb_entity: null

wandb_name: null

wandb_model_log_interval: -1

detect_anomaly: false

pretrain_path: null

init_param: []

ignore_init_mismatch: false

freeze_param: []

num_iters_per_epoch: 1000

batch_size: 20

valid_batch_size: null

batch_bins: 3000000

valid_batch_bins: null

train_shape_file:

- exp/tts_stats_raw_phn_tacotron_g2p_en_no_space/train/text_shape.phn

- exp/tts_stats_raw_phn_tacotron_g2p_en_no_space/train/speech_shape

valid_shape_file:

- exp/tts_stats_raw_phn_tacotron_g2p_en_no_space/valid/text_shape.phn

- exp/tts_stats_raw_phn_tacotron_g2p_en_no_space/valid/speech_shape

batch_type: numel

valid_batch_type: null

fold_length:

- 150

- 204800

sort_in_batch: descending

sort_batch: descending

multiple_iterator: false

chunk_length: 500

chunk_shift_ratio: 0.5

num_cache_chunks: 1024

train_data_path_and_name_and_type:

- - dump/raw/tr_no_dev/text

- text

- text

- - dump/raw/tr_no_dev/wav.scp

- speech

- sound

- - exp/tts_stats_raw_phn_tacotron_g2p_en_no_space/train/collect_feats/pitch.scp

- pitch

- npy

- - exp/tts_stats_raw_phn_tacotron_g2p_en_no_space/train/collect_feats/energy.scp

- energy

- npy

valid_data_path_and_name_and_type:

- - dump/raw/dev/text

- text

- text

- - dump/raw/dev/wav.scp

- speech

- sound

- - exp/tts_stats_raw_phn_tacotron_g2p_en_no_space/valid/collect_feats/pitch.scp

- pitch

- npy

- - exp/tts_stats_raw_phn_tacotron_g2p_en_no_space/valid/collect_feats/energy.scp

- energy

- npy

allow_variable_data_keys: false

max_cache_size: 0.0

max_cache_fd: 32

valid_max_cache_size: null

optim: adamw

optim_conf:

lr: 0.0002

betas:

- 0.8

- 0.99

eps: 1.0e-09

weight_decay: 0.0

scheduler: exponentiallr

scheduler_conf:

gamma: 0.999875

optim2: adamw

optim2_conf:

lr: 0.0002

betas:

- 0.8

- 0.99

eps: 1.0e-09

weight_decay: 0.0

scheduler2: exponentiallr

scheduler2_conf:

gamma: 0.999875

generator_first: true

token_list:

- <blank>

- <unk>

- AH0

- N

- T

- D

- S

- R

- L

- DH

- K

- Z

- IH1

- IH0

- M

- EH1

- W

- P

- AE1

- AH1

- V

- ER0

- F

- ','

- AA1

- B

- HH

- IY1

- UW1

- IY0

- AO1

- EY1

- AY1

- .

- OW1

- SH

- NG

- G

- ER1

- CH

- JH

- Y

- AW1

- TH

- UH1

- EH2

- OW0

- EY2

- AO0

- IH2

- AE2

- AY2

- AA2

- UW0

- EH0

- OY1

- EY0

- AO2

- ZH

- OW2

- AE0

- UW2

- AH2

- AY0

- IY2

- AW2

- AA0

- ''''

- ER2

- UH2

- '?'

- OY2

- '!'

- AW0

- UH0

- OY0

- ..

- <sos/eos>

odim: null

model_conf: {}

use_preprocessor: true

token_type: phn

bpemodel: null

non_linguistic_symbols: null

cleaner: tacotron

g2p: g2p_en_no_space

feats_extract: fbank

feats_extract_conf:

n_fft: 1024

hop_length: 256

win_length: null

fs: 22050

fmin: 80

fmax: 7600

n_mels: 80

normalize: global_mvn

normalize_conf:

stats_file: exp/tts_stats_raw_phn_tacotron_g2p_en_no_space/train/feats_stats.npz

tts: jets

tts_conf:

generator_type: jets_generator

generator_params:

adim: 256

aheads: 2

elayers: 4

eunits: 1024

dlayers: 4

dunits: 1024

positionwise_layer_type: conv1d

positionwise_conv_kernel_size: 3

duration_predictor_layers: 2

duration_predictor_chans: 256

duration_predictor_kernel_size: 3

use_masking: true

encoder_normalize_before: true

decoder_normalize_before: true

encoder_type: transformer

decoder_type: transformer

conformer_rel_pos_type: latest

conformer_pos_enc_layer_type: rel_pos

conformer_self_attn_layer_type: rel_selfattn

conformer_activation_type: swish

use_macaron_style_in_conformer: true

use_cnn_in_conformer: true

conformer_enc_kernel_size: 7

conformer_dec_kernel_size: 31

init_type: xavier_uniform

transformer_enc_dropout_rate: 0.2

transformer_enc_positional_dropout_rate: 0.2

transformer_enc_attn_dropout_rate: 0.2

transformer_dec_dropout_rate: 0.2

transformer_dec_positional_dropout_rate: 0.2

transformer_dec_attn_dropout_rate: 0.2

pitch_predictor_layers: 5

pitch_predictor_chans: 256

pitch_predictor_kernel_size: 5

pitch_predictor_dropout: 0.5

pitch_embed_kernel_size: 1

pitch_embed_dropout: 0.0

stop_gradient_from_pitch_predictor: true

energy_predictor_layers: 2

energy_predictor_chans: 256

energy_predictor_kernel_size: 3

energy_predictor_dropout: 0.5

energy_embed_kernel_size: 1

energy_embed_dropout: 0.0

stop_gradient_from_energy_predictor: false

generator_out_channels: 1

generator_channels: 512

generator_global_channels: -1

generator_kernel_size: 7

generator_upsample_scales:

- 8

- 8

- 2

- 2

generator_upsample_kernel_sizes:

- 16

- 16

- 4

- 4

generator_resblock_kernel_sizes:

- 3

- 7

- 11

generator_resblock_dilations:

- - 1

- 3

- 5

- - 1

- 3

- 5

- - 1

- 3

- 5

generator_use_additional_convs: true

generator_bias: true

generator_nonlinear_activation: LeakyReLU

generator_nonlinear_activation_params:

negative_slope: 0.1

generator_use_weight_norm: true

segment_size: 64

idim: 78

odim: 80

discriminator_type: hifigan_multi_scale_multi_period_discriminator

discriminator_params:

scales: 1

scale_downsample_pooling: AvgPool1d

scale_downsample_pooling_params:

kernel_size: 4

stride: 2

padding: 2

scale_discriminator_params:

in_channels: 1

out_channels: 1

kernel_sizes:

- 15

- 41

- 5

- 3

channels: 128

max_downsample_channels: 1024

max_groups: 16

bias: true

downsample_scales:

- 2

- 2

- 4

- 4

- 1

nonlinear_activation: LeakyReLU

nonlinear_activation_params:

negative_slope: 0.1

use_weight_norm: true

use_spectral_norm: false

follow_official_norm: false

periods:

- 2

- 3

- 5

- 7

- 11

period_discriminator_params:

in_channels: 1

out_channels: 1

kernel_sizes:

- 5

- 3

channels: 32

downsample_scales:

- 3

- 3

- 3

- 3

- 1

max_downsample_channels: 1024

bias: true

nonlinear_activation: LeakyReLU

nonlinear_activation_params:

negative_slope: 0.1

use_weight_norm: true

use_spectral_norm: false

generator_adv_loss_params:

average_by_discriminators: false

loss_type: mse

discriminator_adv_loss_params:

average_by_discriminators: false

loss_type: mse

feat_match_loss_params:

average_by_discriminators: false

average_by_layers: false

include_final_outputs: true

mel_loss_params:

fs: 22050

n_fft: 1024

hop_length: 256

win_length: null

window: hann

n_mels: 80

fmin: 0

fmax: null

log_base: null

lambda_adv: 1.0

lambda_mel: 45.0

lambda_feat_match: 2.0

lambda_var: 1.0

lambda_align: 2.0

sampling_rate: 22050

cache_generator_outputs: true

pitch_extract: dio

pitch_extract_conf:

reduction_factor: 1

use_token_averaged_f0: false

fs: 22050

n_fft: 1024

hop_length: 256

f0max: 400

f0min: 80

pitch_normalize: global_mvn

pitch_normalize_conf:

stats_file: exp/tts_stats_raw_phn_tacotron_g2p_en_no_space/train/pitch_stats.npz

energy_extract: energy

energy_extract_conf:

reduction_factor: 1

use_token_averaged_energy: false

fs: 22050

n_fft: 1024

hop_length: 256

win_length: null

energy_normalize: global_mvn

energy_normalize_conf:

stats_file: exp/tts_stats_raw_phn_tacotron_g2p_en_no_space/train/energy_stats.npz

required:

- output_dir

- token_list

version: '202204'

distributed: true

```

</details>

### Citing ESPnet

```BibTex

@inproceedings{watanabe2018espnet,