repo

stringclasses 147

values | number

int64 1

172k

| title

stringlengths 2

476

| body

stringlengths 0

5k

| url

stringlengths 39

70

| state

stringclasses 2

values | labels

listlengths 0

9

| created_at

timestamp[ns, tz=UTC]date 2017-01-18 18:50:08

2026-01-06 07:33:18

| updated_at

timestamp[ns, tz=UTC]date 2017-01-18 19:20:07

2026-01-06 08:03:39

| comments

int64 0

58

⌀ | user

stringlengths 2

28

|

|---|---|---|---|---|---|---|---|---|---|---|

huggingface/swift-transformers

| 72

|

How to use BertTokenizer?

|

what is the best way to use the BertTokenizer? its not a public file so I'm not sure whats the best way to use it

|

https://github.com/huggingface/swift-transformers/issues/72

|

closed

|

[] | 2024-03-16T18:13:36Z

| 2024-03-22T10:29:54Z

| null |

jonathan-goodrx

|

huggingface/chat-ui

| 934

|

What are the rules to create a chatPromptTemplate in .env.local?

|

We know that chatPromptTemplate for google/gemma-7b-it in .env.local is:

"chatPromptTemplate" : "{{#each messages}}{{#ifUser}}<start_of_turn>user\n{{#if @first}}{{#if @root.preprompt}}{{@root.preprompt}}\n{{/if}}{{/if}}{{content}}<end_of_turn>\n<start_of_turn>model\n{{/ifUser}}{{#ifAssistant}}{{content}}<end_of_turn>\n{{/ifAssistant}}{{/each}}",

and its chat template is:

"chat_template": "{{ bos_token }}{% if messages[0]['role'] == 'system' %}{{ raise_exception('System role not supported') }}{% endif %}{% for message in messages %}{% if (message['role'] == 'user') != (loop.index0 % 2 == 0) %}{{ raise_exception('Conversation roles must alternate user/assistant/user/assistant/...') }}{% endif %}{% if (message['role'] == 'assistant') %}{% set role = 'model' %}{% else %}{% set role = message['role'] %}{% endif %}{{ '<start_of_turn>' + role + '\n' + message['content'] | trim + '<end_of_turn>\n' }}{% endfor %}{% if add_generation_prompt %}{{'<start_of_turn>model\n'}}{% endif %}",

The question is:

Are there any rules that are used to create the chatPromptTemplate for a model? Usually we have

the chat template from the model. But when we need to use this model in chat-ui, we have to use chatPromptTemplate.

|

https://github.com/huggingface/chat-ui/issues/934

|

open

|

[

"question"

] | 2024-03-16T17:51:38Z

| 2024-04-04T14:02:20Z

| null |

houghtonweihu

|

huggingface/chat-ui

| 933

|

Why the chat template of google/gemma-7b-it is invalid josn format in .env.local?

|

I used the chat template from google/gemma-7b-it in .env.local, shown below:

"chat_template": "{{ bos_token }}{% if messages[0]['role'] == 'system' %}{{ raise_exception('System role not supported') }}{% endif %}{% for message in messages %}{% if (message['role'] == 'user') != (loop.index0 % 2 == 0) %}{{ raise_exception('Conversation roles must alternate user/assistant/user/assistant/...') }}{% endif %}{% if (message['role'] == 'assistant') %}{% set role = 'model' %}{% else %}{% set role = message['role'] %}{% endif %}{{ '<start_of_turn>' + role + '\n' + message['content'] | trim + '<end_of_turn>\n' }}{% endfor %}{% if add_generation_prompt %}{{'<start_of_turn>model\n'}}{% endif %}",

I got this error:

[vite] Error when evaluating SSR module /src/lib/server/models.ts:

|- SyntaxError: Unexpected token ''', "'[" is not valid JSON

|

https://github.com/huggingface/chat-ui/issues/933

|

closed

|

[

"question"

] | 2024-03-15T20:34:11Z

| 2024-03-18T13:24:55Z

| null |

houghtonweihu

|

pytorch/xla

| 6,760

|

xla_model.RateTracker doesn't have a docstring and its behavior is subtle and potentially confusing.

|

## 📚 Documentation

The `RateTracker` class in https://github.com/pytorch/xla/blob/fe3f23c62c747da30595cb9906d929b926aae6e4/torch_xla/core/xla_model.py doesn't have a docstring. This class is [used in lots of tests](https://github.com/search?q=repo%3Apytorch%2Fxla%20RateTracker&type=code), including [this one](https://github.com/pytorch/xla/blob/master/test/test_train_mp_mnist.py) that is referenced from the [main documentation](https://pytorch.org/xla/release/2.2/index.html), so new PyTorch/XLA users may see it as a natural and supported way to track and report training efficiency metrics.

`RateTracker`'s behavior is subtle and potentially confusing, since tracking throughput can involve measuring data at different granularities (e.g. batch, example, or, for LLMs, tokens) and reporting per-accelerator, per-host, or globally. Here is what I think the answers to these are; please correct me.

Following the examples in those tests, (where the batch size is added to the tracker at each training step), I think that `rate` measures the examples (not tokens) per second seen during the last batch (specifically, since the last time `.rate()` was called) and `global_rate` measures the same for the whole training run. Therefore the expectation is that global_rate will be slow in the beginning but after compilation and other one-time costs it will rise and typically approach the per-batch training rate, though the latter may vary.

In terms of what granularity of devices the metrics reflect, for SPMD, I think these will be both global metrics (for the whole training job), but for other distribution strategies, I think they're per-device.

Is that right?

|

https://github.com/pytorch/xla/issues/6760

|

closed

|

[

"usability"

] | 2024-03-15T17:23:46Z

| 2025-04-18T13:52:01Z

| 10

|

ebreck

|

pytorch/xla

| 6,759

|

Do I have to implement PjRtLoadedExecutable::GetHloModules when `XLA_STABLEHLO_COMPILE=1` ?

|

## ❓ Questions and Help

Hi, I'm from a hardware vendor and we want to implement a PJRT plugin for our DSA accelerator. We have our own MLIR-based compiler stack and it takes StableHLO as the input IR.

I'm new to PJRT, according to the [description](https://opensource.googleblog.com/2024/03/pjrt-plugin-to-accelerate-machine-learning.html), PJRT API is supposed to be compiler-agnostic and should not assume a PJRT plugin's compiler backend must be XLA. However, in `PyTorch/XLA`'s PJRT runtime: `PjRtComputationClient::Compile`, it calls `PjRtLoadedExecutable::GetHloModules` (which we left unimplemented in our `PjRtLoadedExecutable` implementation) and expects returning of valid `xla::HloModule`:

https://github.com/pytorch/xla/blob/19b83830ac4ee3a39d99abaf154f485c2399f47a/torch_xla/csrc/runtime/pjrt_computation_client.cc#L585

My question is, does `PyTorch/XLA`'s `PjRtComputationClient` requires these `xla::HloModule` for execution? If not, when user set `XLA_STABLEHLO_COMPILE=1`, `PyTorch/XLA` should not expect the compiled `PjRtLoadedExecutable` has anything to do with XLA/HLO related stuff.

|

https://github.com/pytorch/xla/issues/6759

|

open

|

[

"question",

"stablehlo"

] | 2024-03-15T10:59:36Z

| 2025-04-18T13:58:24Z

| null |

Nullkooland

|

huggingface/diffusers

| 7,337

|

How to convert multiple piped files into a single SafeTensor file?

|

How to convert multiple piped files into a single SafeTensor file?

For example, from this address: https://huggingface.co/Vargol/sdxl-lightning-4-steps/tree/main

```python

import torch

from diffusers import StableDiffusionXLPipeline, EulerDiscreteScheduler

base = "Vargol/sdxl-lightning-4-steps"

pipe = StableDiffusionXLPipeline.from_pretrained(base, torch_dtype=torch.float16).to("cuda")

```

How can I convert `pipe` into a single SafeTensor file as a whole?

Just like the file `sd_xl_base_1.0_0.9vae.safetensors`, which contains the components needed from `diffusers`.

_Originally posted by @xddun in https://github.com/huggingface/diffusers/issues/5360#issuecomment-1998986263_

|

https://github.com/huggingface/diffusers/issues/7337

|

closed

|

[] | 2024-03-15T05:49:01Z

| 2024-03-15T06:51:24Z

| null |

xxddccaa

|

huggingface/transformers.js

| 648

|

`aggregation_strategy` in TokenClassificationPipeline

|

### Question

Hello, from Transformers original version they have aggregation_strategy parameter to group the token corresponding to the same entity together in the predictions or not. But in transformers.js version I haven't found this parameter. Is it possible to provide this parameter? I want the prediction result as same as the original version.

|

https://github.com/huggingface/transformers.js/issues/648

|

closed

|

[

"question"

] | 2024-03-15T04:07:22Z

| 2024-04-10T21:35:42Z

| null |

boat-p

|

pytorch/vision

| 8,317

|

position, colour, and background colour of text labels in draw_bounding_boxes

|

### 🚀 The feature

Text labels from `torchvision.utils.draw_bounding_boxes` are currently always inside the box with origin at the top left corner of the box, without a background colour, and the same colour as the bounding box itself. These are three things that would be nice to control.

### Motivation, pitch

The problem with the current implementation is that it makes it hard to read the label, particularly when the bounding box is filled (because the text has the same colour as the filling colour and is placed inside the box.

For example, this is the results from the current implementation:

Moving the label to outside the box already makes things better:

But by controlling those three things (placement of label, background colour behind the label, and text colour) one could fit to whatever they have. For what is worth, in the original issue for this feature, the only example image had labels outside the box, text coloured different from the box (black), and background of the same colour as the box. See https://github.com/pytorch/vision/issues/2556#issuecomment-671344086

I'm happy to contribute this but want to know if this will be accepted and with what interface.

|

https://github.com/pytorch/vision/issues/8317

|

open

|

[] | 2024-03-14T13:50:17Z

| 2025-04-17T13:28:39Z

| 9

|

carandraug

|

huggingface/transformers.js

| 646

|

Library no longer maintained?

|

### Question

1 year has passed since this PR is ready for merge: [Support React Native #118](https://github.com/xenova/transformers.js/pull/118)

Should we do our own fork of xenova/transformers.js ?

|

https://github.com/huggingface/transformers.js/issues/646

|

closed

|

[

"question"

] | 2024-03-14T10:37:33Z

| 2024-06-10T15:32:41Z

| null |

pax-k

|

pytorch/serve

| 3,026

|

Exception when using torchserve to deploy hugging face model: java.lang.InterruptedException: null

|

### 🐛 Describe the bug

I followed the tutorial as https://github.com/pytorch/serve/tree/master/examples/Huggingface_Transformers

First,

```

python Download_Transformer_models.py

```

Then,

```

torch-model-archiver --model-name BERTSeqClassification --version 1.0 --serialized-file Transformer_model/pytorch_model.bin --handler ./Transformer_handler_generalized.py --extra-files "Transformer_model/config.json,./setup_config.json,./Seq_classification_artifacts/index_to_name.json"

```

Finally,

```

torchserve --start --model-store model_store --models my_tc=BERTSeqClassification.mar --ncs

```

The system cannot start as usualy, it gives out the error log, throwing an Exception

```

java.lang.InterruptedException: null

at java.util.concurrent.locks.AbstractQueuedSynchronizer$ConditionObject.awaitNanos(AbstractQueuedSynchronizer.java:1679) ~[?:?]

at java.util.concurrent.LinkedBlockingDeque.pollFirst(LinkedBlockingDeque.java:515) ~[?:?]

at java.util.concurrent.LinkedBlockingDeque.poll(LinkedBlockingDeque.java:677) ~[?:?]

at org.pytorch.serve.wlm.Model.pollBatch(Model.java:367) ~[model-server.jar:?]

at org.pytorch.serve.wlm.BatchAggregator.getRequest(BatchAggregator.java:36) ~[model-server.jar:?]

at org.pytorch.serve.wlm.WorkerThread.run(WorkerThread.java:194) [model-server.jar:?]

at java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1136) [?:?]

at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:635) [?:?]

at java.lang.Thread.run(Thread.java:833) [?:?]

```

I tried curl to check the model

```

root@0510f3693f42:/home/model-server# curl http://127.0.0.1:8081/models

{

"models": []

}

```

### Error logs

2024-03-14T07:34:24,938 [INFO ] epollEventLoopGroup-5-17 org.pytorch.serve.wlm.WorkerThread - 9015 Worker disconnected. WORKER_STARTED

2024-03-14T07:34:24,938 [INFO ] W-9015-my_tc_1.0-stdout MODEL_LOG - Connection accepted: /home/model-server/tmp/.ts.sock.9015.

2024-03-14T07:34:24,938 [DEBUG] W-9015-my_tc_1.0 org.pytorch.serve.wlm.WorkerThread - System state is : WORKER_STARTED

2024-03-14T07:34:24,938 [DEBUG] W-9015-my_tc_1.0 org.pytorch.serve.wlm.WorkerThread - Backend worker monitoring thread interrupted or backend worker process died.

java.lang.InterruptedException: null

at java.util.concurrent.locks.AbstractQueuedSynchronizer$ConditionObject.awaitNanos(AbstractQueuedSynchronizer.java:1679) ~[?:?]

at java.util.concurrent.LinkedBlockingDeque.pollFirst(LinkedBlockingDeque.java:515) ~[?:?]

at java.util.concurrent.LinkedBlockingDeque.poll(LinkedBlockingDeque.java:677) ~[?:?]

at org.pytorch.serve.wlm.Model.pollBatch(Model.java:367) ~[model-server.jar:?]

at org.pytorch.serve.wlm.BatchAggregator.getRequest(BatchAggregator.java:36) ~[model-server.jar:?]

at org.pytorch.serve.wlm.WorkerThread.run(WorkerThread.java:194) [model-server.jar:?]

at java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1136) [?:?]

at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:635) [?:?]

at java.lang.Thread.run(Thread.java:833) [?:?]

2024-03-14T07:34:24,938 [DEBUG] W-9015-my_tc_1.0 org.pytorch.serve.wlm.WorkerThread - W-9015-my_tc_1.0 State change WORKER_STARTED -> WORKER_STOPPED

2024-03-14T07:34:24,938 [WARN ] W-9015-my_tc_1.0 org.pytorch.serve.wlm.WorkerThread - Auto recovery failed again

2024-03-14T07:34:24,939 [WARN ] W-9015-my_tc_1.0 org.pytorch.serve.wlm.WorkerLifeCycle - terminateIOStreams() threadName=W-9015-my_tc_1.0-stderr

2024-03-14T07:34:24,939 [WARN ] W-9015-my_tc_1.0 org.pytorch.serve.wlm.WorkerLifeCycle - terminateIOStreams() threadName=W-9015-my_tc_1.0-stdout

2024-03-14T07:34:24,939 [INFO ] W-9015-my_tc_1.0 org.pytorch.serve.wlm.WorkerThread - Retry worker: 9015 in 3 seconds.

2024-03-14T07:34:24,946 [INFO ] W-9015-my_tc_1.0-stdout org.pytorch.serve.wlm.WorkerLifeCycle - Stopped Scanner - W-9015-my_tc_1.0-stdout

2024-03-14T07:34:24,946 [INFO ] W-9015-my_tc_1.0-stderr org.pytorch.serve.wlm.WorkerLifeCycle - Stopped Scanner - W-9015-my_tc_1.0-stderr

2024-03-14T07:34:27,207 [DEBUG] W-9010-my_tc_1.0 org.pytorch.serve.wlm.WorkerLifeCycle - Worker cmdline: [/home/venv/bin/python, /home/venv/lib/python3.9/site-packages/ts/model_service_worker.py, --sock-type, unix, --sock-name, /home/model-server/tmp/.ts.sock.9010, --metrics-config, /home/venv/lib/python3.9/site-packages/ts/configs/metrics.yaml]

2024-03-14T07:34:27,489 [DEBUG] W-9012-my_tc_1.0 org.pytorch.serve.wlm.WorkerLifeCycle - Worker cmdline: [/home/venv/bin/python, /home/venv/lib/python3.9/site-packages/ts/model_service_worker.py, --sock-type, unix, --sock-name, /home/model-server/tmp/.ts.sock.9012, --metrics-config, /home/venv/lib/python3.9/site-packages/ts/configs/metrics.yaml]

2024-03-14T07:34:27,579 [DEBUG] W-9000-my_tc_1.0 org.pytorch.serve.wlm.WorkerLifeCycle - Wo

|

https://github.com/pytorch/serve/issues/3026

|

open

|

[

"help wanted",

"triaged",

"needs-reproduction"

] | 2024-03-14T07:56:57Z

| 2024-03-19T16:44:51Z

| 4

|

yolk-pie-L

|

pytorch/serve

| 3,025

|

torchserve output customization

|

Hi team

To process a inference request in torchserve, there are stages like initialize, preprocess, inference, postprocess.

If I want to convert the output format from tensor to my custom textual format, where and how can I carry this out ?

I am able to receive output in json format. But I need to make some customizations. Is it possible in torchserve ?

regards

|

https://github.com/pytorch/serve/issues/3025

|

closed

|

[

"triaged"

] | 2024-03-13T20:37:39Z

| 2024-03-14T21:05:42Z

| 3

|

advaitraut

|

pytorch/executorch

| 2,397

|

How to perform inference and gathering accuracy metrics on executorch model

|

Hi, I am having trouble finding solid documentation that explains how to do the following with executorch (stable):

- Load in the exported .pte model

- Run inference with images

- Gather accuracy

I have applied quantization and other optimizations to the original model and exported it to .pte. I'd like to see the accuracy after these techniques were applied. I followed the following tutorial for exporting the model. If we can't do the above items on the directly exported .pte file, then is there a way we can based on the below steps for preparing the model for edge dialect?

https://pytorch.org/executorch/stable/tutorials/export-to-executorch-tutorial.html

cc @mergennachin @byjlw

|

https://github.com/pytorch/executorch/issues/2397

|

open

|

[

"module: doc",

"need-user-input",

"triaged"

] | 2024-03-13T14:40:01Z

| 2025-02-04T20:21:12Z

| null |

mmingo848

|

huggingface/tokenizers

| 1,469

|

How to load tokenizer trained by sentencepiece or tiktoken

|

Hi, does this lib supports loading pre-trained tokenizer trained by other libs, like `sentencepiece` and `tiktoken`? Many models on hf hub store tokenizer in these formats

|

https://github.com/huggingface/tokenizers/issues/1469

|

closed

|

[

"Stale",

"planned"

] | 2024-03-13T10:22:00Z

| 2024-04-30T10:15:32Z

| null |

jordane95

|

pytorch/pytorch

| 121,798

|

what is the match numpy verison, can not build from source

|

### 🐛 Describe the bug

what is the match numpy verison, can not build from source

after run ` python3 setup.py develop`

got this error

```

error: no member named 'elsize' in '_PyArray_Descr'

```

### Versions

OS: macOS 14.4 (arm64)

GCC version: Could not collect

Clang version: 15.0.0 (clang-1500.3.9.4)

CMake version: version 3.22.2

Libc version: N/A

Python version: 3.11.7 (main, Jan 16 2024, 14:42:22) [Clang 14.0.0 (clang-1400.0.29.202)] (64-bit runtime)

Python platform: macOS-14.4-arm64-arm-64bit

Is CUDA available: N/A

CUDA runtime version: Could not collect

CUDA_MODULE_LOADING set to: N/A

GPU models and configuration: Could not collect

Nvidia driver version: Could not collect

cuDNN version: Could not collect

HIP runtime version: N/A

MIOpen runtime version: N/A

Is XNNPACK available: N/A

CPU:

Apple M1 Max

Versions of relevant libraries:

[pip3] numpy==2.0.0b1

[pip3] torch==2.3.0.dev20240311

[pip3] torchaudio==2.2.0.dev20240311

[pip3] torchvision==0.18.0.dev20240311

[conda] Could not collect

cc @malfet @seemethere @mruberry @rgommers

|

https://github.com/pytorch/pytorch/issues/121798

|

closed

|

[

"module: build",

"triaged",

"module: numpy"

] | 2024-03-13T09:52:46Z

| 2024-03-14T07:10:15Z

| null |

yourmoonlight

|

pytorch/functorch

| 1,142

|

Swapping 2 columns in a 2d tensor

|

I have a function ```tridiagonalization``` to tridiagonalize matrix (2d tensor), and I want to map it to batch. It involves a for loop and on each iteration a permutation of 2 columns and 2 rows inside it. I do not understand how to permute 2 columns without errors. So my code for rows works and looks as follows:

```

row_temp = matrix_stacked[pivot[None]][0]

matrix_stacked[[pivot[None]][0]] = matrix_stacked[i+1].clone()

matrix_stacked[i+1] = row_temp

```

Where ```pivot``` is a tensor and ```i``` is a Python integer variable. For columns I have something like this:

```

column_temp = matrix_stacked[:, [pivot[None]][0]]

matrix_stacked[:, [pivot[None]][0]] = matrix_stacked[:, [i+1]].clone()

matrix_stacked[:, i+1] = column_temp

```

It does not wotk because of issues with size. What should I do in order to permute ```i+1``` and ```pivot``` columns?

|

https://github.com/pytorch/functorch/issues/1142

|

open

|

[] | 2024-03-13T09:33:29Z

| 2024-03-13T09:33:29Z

| 0

|

Kreativshikkk

|

huggingface/transformers.js

| 644

|

Contribution Question-What's next after run scripts.convert?

|

### Question

Hi @xenova I am trying to figure out how to contribute. I am new to huggingface. Just 2 months down the rabbit hole.

I ran

`python -m scripts.convert --quantize --model_id SeaLLMs/SeaLLM-7B-v2`

command

Here is a list of file I got in `models/SeaLLMs/SeaLLM-7B-v2` folder

```

_model_layers.0_self_attn_rotary_emb_Constant_5_attr__value

_model_layers.0_self_attn_rotary_emb_Constant_attr__value

config.json

generation_config.json

model.onnx

model.onnx_data

special_tokens_map.json

tokenizer.json

tokenizer.model

tokenizer_config.json

```

Does it work?

What's next from here? Do I upload the models to huggingface?

Do you have example commits or PR I should take a look? I have been scanning the model PR but none of which mentioned what happen after you ran `scripts/convert`

I have seen some other issues mentioned the need for document. I know you don't have it yet. That's fine. That's why I am only asking for a hint or a little guidiance.

|

https://github.com/huggingface/transformers.js/issues/644

|

closed

|

[

"question"

] | 2024-03-13T08:51:37Z

| 2024-04-11T02:33:04Z

| null |

pacozaa

|

huggingface/making-games-with-ai-course

| 11

|

[UPDATE] Typo in Unit 1, "What is HF?" section. The word "Danse" should be "Dance"

|

# What do you want to improve?

There is a typo in Unit 1, "What is HF?" section.

The word "Danse" should be "Dance"

- Explain the typo/error or the part of the course you want to improve

There is a typo in Unit 1, "What is HF?" section.

The word "Danse" should be "Dance"

The English spelling doesn't seem to include the French spelling.

https://www.dictionary.com/browse/dance

I assume this will also come up in later places, but I haven't gotten that far yet. :)

# Actual Issue:

In this image:

https://huggingface.co/datasets/huggingface-ml-4-games-course/course-images/resolve/main/en/unit1/unity/models4.jpg

which is used here:

https://github.com/huggingface/making-games-with-ai-course/blob/main/units/en/unit1/what-is-hf.mdx

# **Also, don't hesitate to open a Pull Request with the update**. This way you'll be a contributor of the project.

Sorry, I have no access to the problematic image's source

|

https://github.com/huggingface/making-games-with-ai-course/issues/11

|

closed

|

[

"documentation"

] | 2024-03-12T17:12:20Z

| 2024-04-18T07:18:12Z

| null |

PaulForest

|

huggingface/transformers.js

| 642

|

RangeError: offset is out of bounds #601

|

### Question

```

class NsfwDetector {

constructor() {

this._threshold = 0.5;

this._nsfwLabels = [

'FEMALE_BREAST_EXPOSED',

'FEMALE_GENITALIA_EXPOSED',

'BUTTOCKS_EXPOSED',

'ANUS_EXPOSED',

'MALE_GENITALIA_EXPOSED',

'BLOOD_SHED',

'VIOLENCE',

'GORE',

'PORNOGRAPHY',

'DRUGS',

'ALCOHOL',

];

}

async isNsfw(imageUrl) {

let blobUrl = '';

try {

// Load and resize the image first

blobUrl = await this._loadAndResizeImage(imageUrl);

const classifier = await window.tensorflowPipeline('zero-shot-image-classification', 'Xenova/clip-vit-base-patch16');

const output = await classifier(blobUrl, this._nsfwLabels);

console.log(output);

const nsfwDetected = output.some(result => result.score > this._threshold);

return nsfwDetected;

} catch (error) {

console.error('Error during NSFW classification: ', error);

throw error;

} finally {

if (blobUrl) {

URL.revokeObjectURL(blobUrl); // Ensure blob URLs are revoked after use to free up memory

}

}

}

async _loadAndResizeImage(imageUrl) {

const img = await this._loadImage(imageUrl);

const offScreenCanvas = document.createElement('canvas');

const ctx = offScreenCanvas.getContext('2d');

offScreenCanvas.width = 224;

offScreenCanvas.height = 224;

ctx.drawImage(img, 0, 0, offScreenCanvas.width, offScreenCanvas.height);

return new Promise((resolve, reject) => {

offScreenCanvas.toBlob(blob => {

if (!blob) {

reject('Canvas to Blob conversion failed');

return;

}

const blobUrl = URL.createObjectURL(blob);

resolve(blobUrl);

}, 'image/jpeg');

});

}

async _loadImage(url) {

return new Promise((resolve, reject) => {

const img = new Image();

img.crossOrigin = 'anonymous';

img.onload = () => resolve(img);

img.onerror = () => reject(`Failed to load image: ${url}`);

img.src = url;

});

}

}

window.NsfwDetector = NsfwDetector;

```

when used on a bunch of images, it fails, "RangeError: offset is out of bounds".

|

https://github.com/huggingface/transformers.js/issues/642

|

closed

|

[

"question"

] | 2024-03-12T16:47:58Z

| 2024-03-13T05:57:23Z

| null |

vijishmadhavan

|

huggingface/chat-ui

| 926

|

AWS credentials resolution for Sagemaker models

|

chat-ui is excellent, thanks for all your amazing work here!

I have been experimenting with a model in Sagemaker and am having some issues with the model endpoint configuration. It currently requires credentials to be provided explicitly. This does work, but the ergonomics are not great for our use cases:

- in development, my team uses AWS SSO and it would be great to use our session credentials and not need to update our MODELS environment variable manually every time our sessions refresh

- in deployments, we would want to use an instance or task execution role to sign requests

In my investigation I found this area of code https://github.com/huggingface/chat-ui/blob/eb071be4c938b0a2cf2e89a152d68305d4714949/src/lib/server/endpoints/aws/endpointAws.ts#L22-L37, which uses the `aws4fetch` library that only support signing with explicitly passed AWS credentials.

I was able to update this area of code locally and support AWS credential resolution by switching this to use a different library [`aws-sigv4-fetch`](https://github.com/zirkelc/aws-sigv4-fetch) like so:

```ts

try {

createSignedFetcher = (await import("aws-sigv4-fetch")).createSignedFetcher;

} catch (e) {

throw new Error("Failed to import aws-sigv4-fetch");

}

const { url, accessKey, secretKey, sessionToken, model, region, service } =

endpointAwsParametersSchema.parse(input);

const signedFetch = createSignedFetcher({

service,

region,

credentials:

accessKey && secretKey

? { accessKeyId: accessKey, secretAccessKey: secretKey, sessionToken }

: undefined,

});

// Replacer `aws.fetch` with `signedFetch` below when passing `fetch` to `textGenerationStream#options`

```

My testing has found this supports passing credentials like today, or letting the AWS SDK resolve them through the default chain.

Would you be open to a PR with this change? Or is there a different/better/more suitable way to accomplish AWS credential resolution here?

|

https://github.com/huggingface/chat-ui/issues/926

|

open

|

[] | 2024-03-12T16:24:57Z

| 2024-03-13T10:30:52Z

| 1

|

nason

|

huggingface/optimum

| 1,754

|

How to tell whether the backend of ONNXRuntime accelerator is Intel VINO.

|

According to the [wiki](https://onnxruntime.ai/docs/execution-providers/#summary-of-supported-execution-providers), OpenVINO is one of the ONNXRuntime's execution providers.

I am deploying model on Intel Xeon Gold server, which supports AVX512 and which is compatible with Intel OpenVINO. How could I tell if the accelerator is Default CPU or OpenVINO?

```python

from sentence_transformers import SentenceTransformer, models

from optimum.onnxruntime import ORTModelForCustomTasks

from transformers import AutoTokenizer

ort_model = ORTModelForCustomTasks.from_pretrained('Geotrend/distilbert-base-zh-cased', export=True)

tokenizer = AutoTokenizer.from_pretrained(checkpoint)

ort_model.save_pretrained(save_directory + "/" + checkpoint)

tokenizer.save_pretrained(save_directory + "/" + checkpoint)

```

```shell

Framework not specified. Using pt to export to ONNX.

Using the export variant default. Available variants are:

- default: The default ONNX variant.

Using framework PyTorch: 2.1.2.post300

```

|

https://github.com/huggingface/optimum/issues/1754

|

closed

|

[] | 2024-03-12T08:54:01Z

| 2024-07-08T11:31:13Z

| null |

ghost

|

huggingface/alignment-handbook

| 134

|

Is there a way to freeze some layers of a model ?

|

Can we follow the normal way of:

```

for param in model.base_model.parameters():

param.requires_grad = False

```

|

https://github.com/huggingface/alignment-handbook/issues/134

|

open

|

[] | 2024-03-12T02:06:03Z

| 2024-03-12T02:06:03Z

| 0

|

shamanez

|

huggingface/diffusers

| 7,283

|

How to load lora trained with Stable Cascade?

|

I finished a lora traning based on Stable Cascade with onetrainer, but I cannot find a solution to load the load in diffusers pipeline. Anyone who can help me will be appreciated.

|

https://github.com/huggingface/diffusers/issues/7283

|

closed

|

[

"stale"

] | 2024-03-12T01:33:01Z

| 2024-06-29T13:35:45Z

| null |

zengjie617789

|

huggingface/datasets

| 6,729

|

Support zipfiles that span multiple disks?

|

See https://huggingface.co/datasets/PhilEO-community/PhilEO-downstream

The dataset viewer gives the following error:

```

Error code: ConfigNamesError

Exception: BadZipFile

Message: zipfiles that span multiple disks are not supported

Traceback: Traceback (most recent call last):

File "/src/services/worker/src/worker/job_runners/dataset/config_names.py", line 67, in compute_config_names_response

get_dataset_config_names(

File "/src/services/worker/.venv/lib/python3.9/site-packages/datasets/inspect.py", line 347, in get_dataset_config_names

dataset_module = dataset_module_factory(

File "/src/services/worker/.venv/lib/python3.9/site-packages/datasets/load.py", line 1871, in dataset_module_factory

raise e1 from None

File "/src/services/worker/.venv/lib/python3.9/site-packages/datasets/load.py", line 1846, in dataset_module_factory

return HubDatasetModuleFactoryWithoutScript(

File "/src/services/worker/.venv/lib/python3.9/site-packages/datasets/load.py", line 1240, in get_module

module_name, default_builder_kwargs = infer_module_for_data_files(

File "/src/services/worker/.venv/lib/python3.9/site-packages/datasets/load.py", line 584, in infer_module_for_data_files

split_modules = {

File "/src/services/worker/.venv/lib/python3.9/site-packages/datasets/load.py", line 585, in <dictcomp>

split: infer_module_for_data_files_list(data_files_list, download_config=download_config)

File "/src/services/worker/.venv/lib/python3.9/site-packages/datasets/load.py", line 526, in infer_module_for_data_files_list

return infer_module_for_data_files_list_in_archives(data_files_list, download_config=download_config)

File "/src/services/worker/.venv/lib/python3.9/site-packages/datasets/load.py", line 554, in infer_module_for_data_files_list_in_archives

for f in xglob(extracted, recursive=True, download_config=download_config)[

File "/src/services/worker/.venv/lib/python3.9/site-packages/datasets/download/streaming_download_manager.py", line 576, in xglob

fs, *_ = fsspec.get_fs_token_paths(urlpath, storage_options=storage_options)

File "/src/services/worker/.venv/lib/python3.9/site-packages/fsspec/core.py", line 622, in get_fs_token_paths

fs = filesystem(protocol, **inkwargs)

File "/src/services/worker/.venv/lib/python3.9/site-packages/fsspec/registry.py", line 290, in filesystem

return cls(**storage_options)

File "/src/services/worker/.venv/lib/python3.9/site-packages/fsspec/spec.py", line 79, in __call__

obj = super().__call__(*args, **kwargs)

File "/src/services/worker/.venv/lib/python3.9/site-packages/fsspec/implementations/zip.py", line 57, in __init__

self.zip = zipfile.ZipFile(

File "/usr/local/lib/python3.9/zipfile.py", line 1266, in __init__

self._RealGetContents()

File "/usr/local/lib/python3.9/zipfile.py", line 1329, in _RealGetContents

endrec = _EndRecData(fp)

File "/usr/local/lib/python3.9/zipfile.py", line 286, in _EndRecData

return _EndRecData64(fpin, -sizeEndCentDir, endrec)

File "/usr/local/lib/python3.9/zipfile.py", line 232, in _EndRecData64

raise BadZipFile("zipfiles that span multiple disks are not supported")

zipfile.BadZipFile: zipfiles that span multiple disks are not supported

```

The files (https://huggingface.co/datasets/PhilEO-community/PhilEO-downstream/tree/main/data) are:

<img width="629" alt="Capture d’écran 2024-03-11 à 22 07 30" src="https://github.com/huggingface/datasets/assets/1676121/0bb15a51-d54f-4d73-8572-e427ea644b36">

|

https://github.com/huggingface/datasets/issues/6729

|

closed

|

[

"enhancement",

"question"

] | 2024-03-11T21:07:41Z

| 2024-06-26T05:08:59Z

| null |

severo

|

huggingface/candle

| 1,834

|

How to increase model performance?

|

Hello all,

I have recently benchmarked completion token time, which is 30ms on an H100. However, with llama.cpp it is 10ms. Because [mistral.rs](https://github.com/EricLBuehler/mistral.rs) is built on Candle, it inherits this performance deficit. In #1680, @guoqingbao said that the Candle implementation is not suitable for batched computing because of naive CUDA kernels. What other areas could be optimized?

|

https://github.com/huggingface/candle/issues/1834

|

closed

|

[] | 2024-03-11T12:36:45Z

| 2024-03-29T20:44:46Z

| null |

EricLBuehler

|

huggingface/transformers.js

| 638

|

Using an EfficientNet Model - Looking for advice

|

### Question

Discovered this project from the recent Syntax podcast episode (which was excellent) - it got my mind racing with different possibilities.

I got some of the example projects up and running without too much issue and naturally wanted to try something a little more outside the box, which of course has led me down some rabbit holes.

I came across this huggingface model;

https://huggingface.co/chriamue/bird-species-classifier

and https://huggingface.co/dennisjooo/Birds-Classifier-EfficientNetB2

Great, file size is only like 32 mb... however just swapping in this model into the example code didn't work - something about efficientnet models not supported yet. Okay I'll just try to convert this model with the provided script.

Similar error about EfficientNet... Okay I will clone the repo, and retrain using a different architecture... Then looking at the training data https://www.kaggle.com/datasets/gpiosenka/100-bird-species, it seems like maybe it's meant for efficientnet?

Also digging into how the above huggingface projects were done, I realized they are fine-tunes of other image classification models...

So my questions is, can I fine tune an existing transformer js image classification model? such as https://huggingface.co/Xenova/convnext-tiny-224 or am I better off using the original https://huggingface.co/facebook/convnext-tiny-224 model and creating a fine tune from there, then converting it to onnx using the script?

Thanks for your help on this and for this awesome project. Really just looking for some direction.

|

https://github.com/huggingface/transformers.js/issues/638

|

closed

|

[

"question"

] | 2024-03-11T01:31:49Z

| 2024-03-11T17:42:31Z

| null |

ozzyonfire

|

pytorch/xla

| 6,710

|

Does XLA use the Nvidia GPU's tensor cores?

|

## ❓ Questions and Help

1. Does XLA use the Nvidia GPU's tensor cores?

2. Is Pytorch XLA only designed to accelerate neural network training or does it accelerate their inferencing as well?

|

https://github.com/pytorch/xla/issues/6710

|

closed

|

[] | 2024-03-11T00:55:36Z

| 2024-03-15T23:42:26Z

| 2

|

Demis6

|

huggingface/text-generation-inference

| 1,636

|

Need instructions for how to optimize for production serving (fast startup)

|

### Feature request

I suggest better educating developers how to download and optimize the model at build time (in container or in a volume) so that the command `text-generation-launcher` serves as fast as possible.

### Motivation

By default, when running TGI using Docker, the container downloads the model on the fly and spend a long time optimizing it.

The [quicktour](https://huggingface.co/docs/text-generation-inference/en/quicktour) recommends using a local volume, which is great, but this isn't really compatible with autoscaled cloud environments, where container startup as to be as fast as possible.

### Your contribution

As I explore this area, I will share my findings in this issue.

|

https://github.com/huggingface/text-generation-inference/issues/1636

|

closed

|

[

"Stale"

] | 2024-03-10T22:17:53Z

| 2024-04-15T02:49:03Z

| null |

steren

|

pytorch/tutorials

| 2,797

|

Contradiction in `save_for_backward`, what is permitted to be saved

|

https://pytorch.org/tutorials/beginner/examples_autograd/two_layer_net_custom_function.html

"ctx is a context object that can be used to stash information for backward computation. You can **cache arbitrary objects** for use in the backward pass using the ctx.save_for_backward method."

https://pytorch.org/docs/stable/generated/torch.autograd.function.FunctionCtx.save_for_backward.html

"save_for_backward should be called at most once, only from inside the forward() method, and **only with tensors**."

Most likely the second is correct, and the first is not. I haven't checked.

Suggestion: "You can cache **tensors** for use in the backward pass using the ctx.save_for_backward method. Other miscellaneous objects can be cached using ctx.my_object_name = object."

cc @albanD @jbschlosser

|

https://github.com/pytorch/tutorials/issues/2797

|

closed

|

[

"core",

"medium",

"docathon-h1-2025"

] | 2024-03-10T19:40:16Z

| 2025-06-04T21:11:21Z

| null |

ad8e

|

huggingface/optimum

| 1,752

|

Documentation for exporting openai/whisper-large-v3 to ONNX

|

### Feature request

Hello, I am exporting the [OpenAI Whisper-large0v3](https://huggingface.co/openai/whisper-large-v3) to ONNX and see it exports several files, most importantly in this case encoder (encoder_model.onnx & encoder_model.onnx.data) and decoder (decoder_model.onnx, decoder_model.onnx.data, decoder_with_past_model.onnx, decoder_with_past_model.onnx.data) files. I'd like to also be able to use as much as possible from the pipe in the new onnx files:

`pipe = pipeline(

"automatic-speech-recognition",

model=model,

tokenizer=processor.tokenizer,

feature_extractor=processor.feature_extractor,

max_new_tokens=128,

chunk_length_s=30,

batch_size=16,

return_timestamps=True,

torch_dtype=torch_dtype,

device=device,

)`

Is there documentation that explains how to incorporate all these different things? I know transformer models are much different in this whole process and I cannot find a clear A -> B process on how to export this model and perform tasks such as quantization, etc. I see I can do the following for the tokenizer with ONNX, but I'd like more insight about the rest I mentioned above (how to use the seperate onnx files & how to use as much as the preexisting pipeline).

`processor.tokenizer.save_pretrained(onnx_path)`

I also see I can do:

`model = ORTModelForSpeechSeq2Seq.from_pretrained(

model_id, export=True

)`

but I cannot find documentation on how to specify where it is exported to, which seem's like I am either missing something fairly simple or it is just not hyperlinked in the documentation.

### Motivation

I'd love to see further documentation on the entire export process for this highly popular model. Deployment is significantly slowed due to there not being a easy to find A -> B process for exporting the model and using the pipeline given in the vanilla model.

### Your contribution

I am able to provide additional information to make this process easier.

|

https://github.com/huggingface/optimum/issues/1752

|

open

|

[

"feature-request",

"onnx"

] | 2024-03-10T05:24:36Z

| 2024-10-09T09:18:27Z

| 10

|

mmingo848

|

huggingface/transformers

| 29,564

|

How to add new special tokens

|

### System Info

- `transformers` version: 4.38.0

- Platform: Linux-6.5.0-21-generic-x86_64-with-glibc2.35

- Python version: 3.10.13

- Huggingface_hub version: 0.20.2

- Safetensors version: 0.4.2

- Accelerate version: not installed

- Accelerate config: not found

- PyTorch version (GPU?): 2.2.0 (False)

- Tensorflow version (GPU?): not installed (NA)

- Flax version (CPU?/GPU?/TPU?): not installed (NA)

- Jax version: not installed

- JaxLib version: not installed

- Using GPU in script?: yes and no

- Using distributed or parallel set-up in script?: no

### Who can help?

_No response_

### Information

- [ ] The official example scripts

- [X] My own modified scripts

### Tasks

- [ ] An officially supported task in the `examples` folder (such as GLUE/SQuAD, ...)

- [X] My own task or dataset (give details below)

### Reproduction

Execute the code below:

```

from transformers import AutoTokenizer, AutoModel

import torch

import os

from datasets import load_dataset

dataset = load_dataset("ftopal/huggingface-datasets-processed")

os.environ['CUDA_LAUNCH_BLOCKING'] = "1"

device = torch.device("cuda") if torch.cuda.is_available() else torch.device("cpu")

# device = torch.device("cpu")

checkpoint = 'intfloat/multilingual-e5-base'

model = AutoModel.from_pretrained(checkpoint)

tokenizer = AutoTokenizer.from_pretrained(

checkpoint,

additional_special_tokens=['<URL>']

)

model.to(device)

encoded_input = tokenizer(

dataset['train'][0]['input_texts'], # A tensor with 2, 512 shape

padding='max_length',

max_length=tokenizer.model_max_length,

truncation=True,

return_tensors="pt",

)

encoded_input_dict = {

k: v.to(device) for k, v in encoded_input.items()

}

with torch.no_grad():

model_output = model(**encoded_input_dict)

```

### Expected behavior

I expect this code to work however this results in very weird errors. More details on error stack trace can be found here: https://github.com/pytorch/pytorch/issues/121493

I found that if I remove `additional_special_tokens` param, code works. So that seems to be the problem. Another issue is that it is still not clear (after so many years) how to extend/add special tokens into the model. I went through the code base to find this parameter but that seems to be not working alone and the whole stack trace isn't helpful at all.

Questions from my side:

- What is the expected solution for this and could we document this somewhere? I can't find this anywhere or somehow i am not able to find this.

- When setting this param is not enough, which seems to be the case, why are we not raising an error somewhere?

|

https://github.com/huggingface/transformers/issues/29564

|

closed

|

[] | 2024-03-09T22:56:44Z

| 2024-04-17T08:03:43Z

| null |

lordsoffallen

|

pytorch/vision

| 8,305

|

aarch64 build for AWS Linux - Failed to load image Python extension

|

### 🐛 Describe the bug

Built Torch 2.1.2 and TorchVision 0.16.2 from source and running into the following problem:

/home/ec2-user/conda/envs/textgen/lib/python3.10/site-packages/torchvision/io/image.py:13: UserWarning: Failed to load image Python extension: '/home/ec2-user/conda/envs/textgen/lib/python3.10/site-packages/torchvision/image.so: undefined symbol: _ZNK3c1017SymbolicShapeMeta18init_is_contiguousEv'If you don't plan on using image functionality from `torchvision.io`, you can ignore this warning. Otherwise, there might be something wrong with your environment. Did you have `libjpeg` or `libpng` installed before building `torchvision` from source?

previously the error was about missing libs and not undefined symbol, so I believe the libs are correctly installed now. Building says:

```

Compiling extensions with following flags:

FORCE_CUDA: False

FORCE_MPS: False

DEBUG: False

TORCHVISION_USE_PNG: True

TORCHVISION_USE_JPEG: True

TORCHVISION_USE_NVJPEG: True

TORCHVISION_USE_FFMPEG: True

TORCHVISION_USE_VIDEO_CODEC: True

NVCC_FLAGS:

Compiling with debug mode OFF

Found PNG library

Building torchvision with PNG image support

libpng version: 1.6.37

libpng include path: /home/ec2-user/conda/envs/textgen/include/libpng16

Running build on conda-build: False

Running build on conda: True

Building torchvision with JPEG image support

libjpeg include path: /home/ec2-user/conda/envs/textgen/include

libjpeg lib path: /home/ec2-user/conda/envs/textgen/lib

Building torchvision without NVJPEG image support

Building torchvision with ffmpeg support

ffmpeg version: b'ffmpeg version 4.2.2 Copyright (c) 2000-2019 the FFmpeg developers\nbuilt with gcc 10.2.0 (crosstool-NG 1.22.0.1750_510dbc6_dirty)\nconfiguration: --prefix=/opt/conda/conda-bld/ffmpeg_1622823166193/_h_env_placehold_placehold_placehold_placehold_placehold_placehold_placehold_placehold_placehold_placehold_placehold_placehold_placehold_placehold_placehold_placehold_placehold_placehold_placehold_placehold_placeh --cc=/opt/conda/conda-bld/ffmpeg_1622823166193/_build_env/bin/aarch64-conda-linux-gnu-cc --disable-doc --enable-avresample --enable-gmp --enable-hardcoded-tables --enable-libfreetype --enable-libvpx --enable-pthreads --enable-libopus --enable-postproc --enable-pic --enable-pthreads --enable-shared --enable-static --enable-version3 --enable-zlib --enable-libmp3lame --disable-nonfree --enable-gpl --enable-gnutls --disable-openssl --enable-libopenh264 --enable-libx264\nlibavutil 56. 31.100 / 56. 31.100\nlibavcodec 58. 54.100 / 58. 54.100\nlibavformat 58. 29.100 / 58. 29.100\nlibavdevice 58. 8.100 / 58. 8.100\nlibavfilter 7. 57.100 / 7. 57.100\nlibavresample 4. 0. 0 / 4. 0. 0\nlibswscale 5. 5.100 / 5. 5.100\nlibswresample 3. 5.100 / 3. 5.100\nlibpostproc 55. 5.100 / 55. 5.100\n'

ffmpeg include path: ['/home/ec2-user/conda/envs/textgen/include']

ffmpeg library_dir: ['/home/ec2-user/conda/envs/textgen/lib']

Building torchvision without video codec support

```

So I believe I do have things set up correctly to be able to do image calls (I don't care about video). Any idea why I would still be getting the undefined symbol warning? Thanks!

### Versions

Collecting environment information...

PyTorch version: 2.1.2+cu121

Is debug build: False

CUDA used to build PyTorch: 12.2

ROCM used to build PyTorch: N/A

OS: Amazon Linux 2023.3.20240304 (aarch64)

GCC version: (GCC) 11.4.1 20230605 (Red Hat 11.4.1-2)

Clang version: Could not collect

CMake version: version 3.28.3

Libc version: glibc-2.34

Python version: 3.10.9 (main, Mar 8 2023, 10:41:45) [GCC 11.2.0] (64-bit runtime)

Python platform: Linux-6.1.79-99.164.amzn2023.aarch64-aarch64-with-glibc2.34

Is CUDA available: True

CUDA runtime version: 12.2.140

CUDA_MODULE_LOADING set to: LAZY

GPU models and configuration: GPU 0: NVIDIA T4G

Nvidia driver version: 550.54.14

cuDNN version: Probably one of the following:

/usr/local/cuda-12.2/targets/sbsa-linux/lib/libcudnn.so.8.9.4

/usr/local/cuda-12.2/targets/sbsa-linux/lib/libcudnn_adv_infer.so.8.9.4

/usr/local/cuda-12.2/targets/sbsa-linux/lib/libcudnn_adv_train.so.8.9.4

/usr/local/cuda-12.2/targets/sbsa-linux/lib/libcudnn_cnn_infer.so.8.9.4

/usr/local/cuda-12.2/targets/sbsa-linux/lib/libcudnn_cnn_train.so.8.9.4

/usr/local/cuda-12.2/targets/sbsa-linux/lib/libcudnn_ops_infer.so.8.9.4

/usr/local/cuda-12.2/targets/sbsa-linux/lib/libcudnn_ops_train.so.8.9.4

HIP runtime version: N/A

MIOpen runtime version: N/A

Is XNNPACK available: True

CPU:

Architecture: aarch64

CPU op-mode(s): 32-bit, 64-bit

Byte Order: Little Endian

CPU(s): 4

On-line CPU(s) list: 0-3

Vendor ID: ARM

Model name: Neoverse-N1

Model:

|

https://github.com/pytorch/vision/issues/8305

|

open

|

[] | 2024-03-09T20:13:46Z

| 2024-03-12T18:53:04Z

| 6

|

elkay

|

huggingface/datasets

| 6,726

|

Profiling for HF Filesystem shows there are easy performance gains to be made

|

### Describe the bug

# Let's make it faster

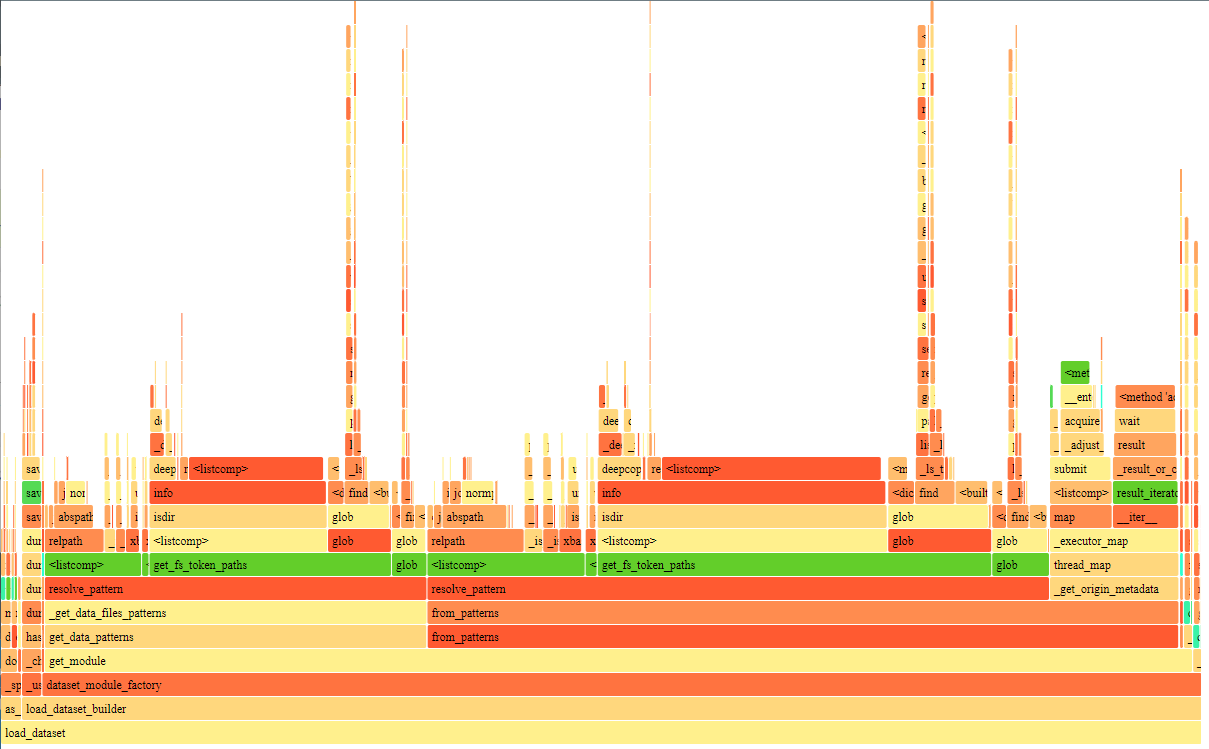

First, an evidence...

Figure 1: CProfile for loading 3 files from cerebras/SlimPajama-627B train split, and 3 files from test split using streaming=True. X axis is 1106 seconds long.

See? It's pretty slow.

What is resolve pattern doing?

```

resolve_pattern called with **/train/** and hf://datasets/cerebras/SlimPajama-627B@2d0accdd58c5d5511943ca1f5ff0e3eb5e293543

resolve_pattern took 20.815081119537354 seconds

```

Makes sense. How to improve it?

## Bigger project, biggest payoff

Databricks (and consequently, spark) store a compressed manifest file of the files contained in the remote filesystem.

Then, you download one tiny file, decompress it, and all the operations are local instead of this shenanigans.

It seems pretty straightforward to make dataset uploads compute a manifest and upload it alongside their data.

This would make resolution time so fast that nobody would ever think about it again.

It also means you either need to have the uploader compute it _every time_, or have a hook that computes it.

## Smaller project, immediate payoff: Be diligent in avoiding deepcopy

Revise the _ls_tree method to avoid deepcopy:

```

def _ls_tree(

self,

path: str,

recursive: bool = False,

refresh: bool = False,

revision: Optional[str] = None,

expand_info: bool = True,

):

..... omitted .....

for path_info in tree:

if isinstance(path_info, RepoFile):

cache_path_info = {

"name": root_path + "/" + path_info.path,

"size": path_info.size,

"type": "file",

"blob_id": path_info.blob_id,

"lfs": path_info.lfs,

"last_commit": path_info.last_commit,

"security": path_info.security,

}

else:

cache_path_info = {

"name": root_path + "/" + path_info.path,

"size": 0,

"type": "directory",

"tree_id": path_info.tree_id,

"last_commit": path_info.last_commit,

}

parent_path = self._parent(cache_path_info["name"])

self.dircache.setdefault(parent_path, []).append(cache_path_info)

out.append(cache_path_info)

return copy.deepcopy(out) # copy to not let users modify the dircache

```

Observe this deepcopy at the end. It is making a copy of a very simple data structure. We do not need to copy. We can simply generate the data structure twice instead. It will be much faster.

```

def _ls_tree(

self,

path: str,

recursive: bool = False,

refresh: bool = False,

revision: Optional[str] = None,

expand_info: bool = True,

):

..... omitted .....

def make_cache_path_info(path_info):

if isinstance(path_info, RepoFile):

return {

"name": root_path + "/" + path_info.path,

"size": path_info.size,

"type": "file",

"blob_id": path_info.blob_id,

"lfs": path_info.lfs,

"last_commit": path_info.last_commit,

"security": path_info.security,

}

else:

return {

"name": root_path + "/" + path_info.path,

"size": 0,

"type": "directory",

"tree_id": path_info.tree_id,

"last_commit": path_info.last_commit,

}

for path_info in tree:

cache_path_info = make_cache_path_info(path_info)

out_cache_path_info = make_cache_path_info(path_info) # copy to not let users modify the dircache

parent_path = self._parent(cache_path_info["name"])

self.dircache.setdefault(parent_path, []).append(cache_path_info)

out.append(out_cache_path_info)

return out

```

Note there is no longer a deepcopy in this method. We have replaced it with generating the output twice. This is substantially faster. For me, the entire resolution went from 1100s to 360s.

## Medium project, medium payoff

After the above change, we have this profile:

Figure 2: x-axis is 355 seconds. Note that globbing and _ls_tree deep copy is gone. No surprise there. It's much faster now, but we still spend ~187seconds i

|

https://github.com/huggingface/datasets/issues/6726

|

open

|

[] | 2024-03-09T07:08:45Z

| 2024-03-09T07:11:08Z

| 2

|

awgr

|

huggingface/alignment-handbook

| 133

|

Early Stopping Issue when used with ConstantLengthDataset

|

Hello

I modified the code to include the Constant Length Dataset and it's early stopping at around 15% of the training. This issue doesn't occur when not used with the normal code given. Is there an issue with constant length dataset? I used it with SFTTrainer.

|

https://github.com/huggingface/alignment-handbook/issues/133

|

open

|

[] | 2024-03-08T23:08:08Z

| 2024-03-08T23:08:08Z

| 0

|

sankydesai

|

pytorch/serve

| 3,008

|

very high QueueTime

|

Hi, I am seeing a very high queue time in my torchserve setup.

if I am considering correctly the `QueueTime.ms:19428` means this particular request had to wait for 19 sec for processing

while the QueTime just before that request was `QueueTime.ms:0` so why suddenly 18 sec delay

If I am wrong then what does this QueueTime parameter represent?

my env torch131+cu117, torchserve 0.7.2, and the model used is yolov5s which is a very small model, in input I am accepting an s3 uri downloading the image internally, and then processing

attaching the logs here any idea what could be happening here

```

2024-03-08T08:44:35,261 [INFO ] W-9003-vehicledetection TS_METRICS - QueueTime.ms:0|#Level:Host|#hostname:ai-gpu-service-5b585f9b9d-x4r7z,timestamp:1709887475

2024-03-08T08:44:35,261 [INFO ] W-9003-vehicledetection TS_METRICS - WorkerThreadTime.ms:0|#Level:Host|#hostname:ai-gpu-service-5b585f9b9d-x4r7z,timestamp:1709887475

2024-03-08T08:44:35,261 [INFO ] W-9003-vehicledetection org.pytorch.serve.wlm.WorkerThread - Flushing req. to backend at: 1709887475261

2024-03-08T08:44:35,262 [INFO ] W-9003-vehicledetection-stdout MODEL_LOG - Backend received inference at: 1709887475

2024-03-08T08:44:35,262 [INFO ] W-9003-vehicledetection-stdout MODEL_LOG - Received backend request -> {'image_uri': 's3://mubucket/062c650b3213.jpeg', 'conf_thresh': 0.5}

2024-03-08T08:44:35,282 [INFO ] W-9003-vehicledetection-stdout MODEL_LOG - completed processing results

2024-03-08T08:44:35,283 [INFO ] W-9003-vehicledetection-stdout MODEL_METRICS - HandlerTime.Milliseconds:20.93|#ModelName:vehicledetection,Level:Model|#hostname:ai-gpu-service-5b585f9b9d-x4r7z,requestID:3a44a9f4-4f5d-4ead-8f1f-153ecf6b001f,timestamp:1709887475

2024-03-08T08:44:35,283 [INFO ] W-9003-vehicledetection-stdout MODEL_METRICS - PredictionTime.Milliseconds:21.03|#ModelName:vehicledetection,Level:Model|#hostname:ai-gpu-service-5b585f9b9d-x4r7z,requestID:3a44a9f4-4f5d-4ead-8f1f-153ecf6b001f,timestamp:1709887475

2024-03-08T08:44:35,283 [INFO ] W-9003-vehicledetection org.pytorch.serve.wlm.WorkerThread - Backend response time: 22

2024-03-08T08:44:35,283 [INFO ] W-9003-vehicledetection ACCESS_LOG - /xxx.xx.xxx.xxx:18363 "POST /predictions/vehicledetection HTTP/1.1" 200 19450

2024-03-08T08:44:35,283 [INFO ] W-9003-vehicledetection TS_METRICS - Requests2XX.Count:1|#Level:Host|#hostname:ai-gpu-service-5b585f9b9d-x4r7z,timestamp:1706999000

2024-03-08T08:44:35,283 [DEBUG] W-9003-vehicledetection org.pytorch.serve.job.Job - Waiting time ns: 19428751625, Backend time ns: 21770097

2024-03-08T08:44:35,283 [INFO ] W-9003-vehicledetection TS_METRICS - QueueTime.ms:19428|#Level:Host|#hostname:ai-gpu-service-5b585f9b9d-x4r7z,timestamp:1709887475

```

|

https://github.com/pytorch/serve/issues/3008

|

closed

|

[] | 2024-03-08T14:52:09Z

| 2024-03-09T17:12:37Z

| 0

|

PushpakBhoge512

|

huggingface/transformers.js

| 635

|

Failed to process file. and Failed to upload.

|

### Question

I am hosting Supabase on Docker in Ubuntu, and I am facing file upload failures on the chatbot-ui. The error messages displayed are "Failed to process file" and "Failed to upload." The console output error messages are as follows:

- POST https://chat.example.com/api/retrieval/process 500 (Internal Server Error)

- GET https://supa.example.com/rest/v1/files?select=*&id=eq.5186a7c7-ff34-4a40-98c1-db8d36e47896 406 (Not Acceptable)

File uploads fail regardless of the file type - whether it's a file with a purely English filename, a .txt file, or a .docx file.

Additionally, registration, login, chatting, and uploading images are functioning properly.

|

https://github.com/huggingface/transformers.js/issues/635

|

closed

|

[

"question"

] | 2024-03-08T13:07:18Z

| 2024-03-08T13:22:57Z

| null |

chawaa

|

huggingface/peft

| 1,545

|

How to use lora finetune moe model

|

https://github.com/huggingface/peft/issues/1545

|

closed

|

[] | 2024-03-08T11:45:09Z

| 2024-04-16T15:03:39Z

| null |

Minami-su

|

|

huggingface/datatrove

| 119

|

how about make a ray executor to deduplication

|

- https://github.com/ChenghaoMou/text-dedup/blob/main/text_dedup/minhash_spark.py

- reference:https://github.com/alibaba/data-juicer/blob/main/data_juicer/core/ray_executor.py

- Ray is simpler and faster than Spark

|

https://github.com/huggingface/datatrove/issues/119

|

closed

|

[] | 2024-03-08T11:37:13Z

| 2024-04-11T12:48:53Z

| null |

simplew2011

|

huggingface/transformers.js

| 634

|

For nomic-ai/nomic-embed-text-v1 8192 context length

|

### Question

As per document: https://huggingface.co/nomic-ai/nomic-embed-text-v1

Model supports 8192 context length, however, in transformers.js model_max_length: 512.

Any guidance how to use full context (8192) instead of 512?

|

https://github.com/huggingface/transformers.js/issues/634

|

closed

|

[

"question"

] | 2024-03-08T05:33:39Z

| 2025-10-13T04:57:49Z

| null |

faizulhaque

|

huggingface/diffusers

| 7,254

|

Request proper examples on how to training a diffusion models with diffusers on large scale dataset like LAION

|

Hi, I do not see any examples in diffusers/examples on how to training a diffusion models with diffusers on large scale dataset like LAION. However, it is important since many works and models is willing integrate their models into diffusers, so if they can train their models in diffusers, it would be more easy when they want to do it.

|

https://github.com/huggingface/diffusers/issues/7254

|

closed

|

[

"stale"

] | 2024-03-08T01:31:33Z

| 2024-06-30T05:27:57Z

| null |

Luciennnnnnn

|

huggingface/swift-transformers

| 56

|

How to get models?

|

Missing in docu?

|

https://github.com/huggingface/swift-transformers/issues/56

|

closed

|

[] | 2024-03-07T15:47:54Z

| 2025-02-11T11:41:32Z

| null |

pannous

|

huggingface/datasets

| 6,721

|

Hi,do you know how to load the dataset from local file now?

|

Hi, if I want to load the dataset from local file, then how to specify the configuration name?

_Originally posted by @WHU-gentle in https://github.com/huggingface/datasets/issues/2976#issuecomment-1333455222_

|

https://github.com/huggingface/datasets/issues/6721

|

open

|

[] | 2024-03-07T13:58:40Z

| 2024-03-31T08:09:25Z

| null |

Gera001

|

pytorch/executorch

| 2,293

|

How to analyze executorch .pte file performance?

|

I am looking for a way to either benchmark the .pte files performance, the final state of the ExecutorchProgramManager object, or similar after following [this](https://pytorch.org/executorch/stable/tutorials/export-to-executorch-tutorial.html) tutorial. I used the PyTorch profiler on the model before putting it through executorch. I can’t find a way to use any one of the above on the profiler. I’d like to use the same or similar to compare the original model to the executorch model with quantization to see the performance differences. Thanks!

|

https://github.com/pytorch/executorch/issues/2293

|

closed

|

[

"module: devtools"

] | 2024-03-07T12:12:41Z

| 2025-02-03T22:04:48Z

| null |

mmingo848

|

huggingface/transformers.js

| 633

|

Is 'aggregation_strategy' parameter available for token classification pipeline?

|

### Question

Hi. I have question.

From HuggingFace Transformers documentation, they have **'aggregation_strategy'** parameter in token classification pipeline. [Link](https://huggingface.co/docs/transformers/en/main_classes/pipelines#transformers.TokenClassificationPipeline.aggregation_strategy)

Need to know in this library provide this parameter?

Thanks.

|

https://github.com/huggingface/transformers.js/issues/633

|

open

|

[

"help wanted",

"good first issue",

"question"

] | 2024-03-07T07:02:55Z

| 2024-06-09T15:16:56Z

| null |

boat-p

|

pytorch/xla

| 6,674

|

How to minimize memory expansion due to padding during sharding

|

Hello

For a model that can be sharded in model parallelization in TPUv4 (4x32) device, I am getting the error below at the beginning of the training on TPUv3 (8x16) device. There is `4x expansion` with respect to console message. Even if both both TPUv4 and TPUv3 devices have same total memory I cannot run the training on TPUv3 device.

```

Program hbm requirement 15.45G:

global 2.36M

scoped 3.88M

HLO temp 15.45G (60.9% utilization: Unpadded (9.40G) Padded (15.44G), 0.0% fragmentation (5.52M))

Largest program allocations in hbm:

1. Size: 4.00G

Shape: bf16[2048,1,2048,128]{0,1,3,2:T(4,128)(2,1)}

Unpadded size: 1.00G

Extra memory due to padding: 3.00G (4.0x expansion)

XLA label: broadcast.6042.remat3 = broadcast(bitcast.26), dimensions={2,3}

Allocation type: HLO temp

==========================

2. Size: 4.00G

Shape: bf16[2048,1,2048,128]{0,1,3,2:T(4,128)(2,1)}

Unpadded size: 1.00G

Extra memory due to padding: 3.00G (4.0x expansion)

XLA label: broadcast.6043.remat3 = broadcast(bitcast.27), dimensions={0,3}

Allocation type: HLO temp

==========================

```

The lines that causes `4x expansion` is below:

```

def forward(self, x): # Activation map volume = 1,128,2048,1

...

...

x = torch.transpose(x, 1, 3) # Activation map volume = 1,1,2048,128

x_batch_0 = x.expand(2048, -1, -1, -1) # Activation map volume = 2048,1,2048,128

x_batch_1 = x.repeat_interleave(2048, dim=2).reshape(2048, 1, 2048, 128) # Activation map volume = 2048,1,2048,128

x_batch = torch.cat((x_batch_0, x_batch_1), dim=1) # Activation map volume = 2048,2,2048,128

...

...

```

Here are the sharding properties that I set.

```

mesh_shape = (num_devices, 1, 1, 1)

mesh = xs.Mesh(device_ids, mesh_shape, ('w', 'x', 'y', 'z'))

partition_spec = (0, 1, 2, 3) # Apply sharding along all axes

for name, layer in model.named_modules():

if ( 'conv2d' in name ):

xs.mark_sharding(layer.weight, mesh, partition_spec)

```

How can I prevent `4x expansion`?

|

https://github.com/pytorch/xla/issues/6674

|

open

|

[

"performance",

"distributed"

] | 2024-03-06T15:23:31Z

| 2025-04-18T18:42:38Z

| null |

mfatih7

|

huggingface/swift-coreml-diffusers

| 93

|

Blocked at "loading" screen - how to reset the app / cache ?

|

After playing a bit with the app, it now stays in "Loading" state at startup (see screenshot)

I tried to remove the cache in `~/Library/Application Support/hf-diffusion-models` but it just cause a re-download.

How can I reset the app, delete all files created and start like on a fresh machine again ?

Alternatively, how can I pass the "Loading" screen ?

<img width="1016" alt="image" src="https://github.com/huggingface/swift-coreml-diffusers/assets/401798/15c7c67a-f61f-4855-a11e-ea7bd61b0a09">

|

https://github.com/huggingface/swift-coreml-diffusers/issues/93

|

open

|

[] | 2024-03-06T12:50:29Z

| 2024-03-10T11:24:49Z

| null |

sebsto

|

huggingface/chat-ui

| 905

|

Fail to create assistant.

|

I use the docker image chat-ui-db as the frontend, text-generation-inference as the inference backend, and meta-llamaLlama-2-70b-chat-hf as the model. Using the image and model mentioned above, I set up a large language model dialog service on server A. Assume that the IP address of the server A is x.x.x.x.

I use docker compose to deploy it. The content of docker-compose.yml is as follows:

```

services:

chat-ui:

image: chat-ui-db:latest

ports:

- "3000:3000"

restart: unless-stopped

textgen:

image: huggingface/text-generation-inference:1.4

ports:

- "8080:80"

command: ["--model-id", "/data/models/meta-llamaLlama-2-70b-chat-hf"]

volumes:

- /home/test/llm-test/serving/data:/data

deploy:

resources:

reservations:

devices:

- driver: nvidia

count: 8

capabilities: [gpu]

restart: unless-stopped

```

I set ENABLE_ASSISTANTS=true in .env.local to enable assistants feature.

I logged into localhost:3000 using chrome, clicked the settings button, and then clicked the create new assistant button. Enter the information in the Name and Description text boxes, select a model, and enter the information in the User start messages and Instructions (system prompt) text boxes. Finally, click the Create button. I can create an assistant just fine.

When I go to xxxx:3000 from a browser on a different server and access the service. (One may ask, how can I achieve access to server A's services from other servers without logging. The solution is to use nginx as a http to https anti-proxy(https://www.inovex.de/de/blog/code-assistant-how-to-self-host-your-own/)). I clicked the settings button, and then clicked the create new assistant button. Enter the information in the Name and Description text boxes, select a model, and enter the information in the User start messages and Instructions (system prompt) text boxes. Finally, click the Create button. The webpage is not responding. The container logs don't show anything either. I couldn't create an assistant.

What should i do?

Do I have to enable login authentication to create an assistant? unless I'm accessing it from localhost. I'm on a LAN and I can't get user authentication through Huggingface or google. I have also tried to set up a user authentication service using keycloak and configure .env.local to enable open id login. But the attempt failed. See this page(https://github.com/huggingface/chat-ui/issues/896) for the specific problem.

|

https://github.com/huggingface/chat-ui/issues/905

|

open

|

[] | 2024-03-06T08:33:03Z

| 2024-03-06T08:33:03Z

| 0

|

majestichou

|

pytorch/serve

| 3,004

|

How to 'Create model archive pod and run model archive file generation script' in the ‘User Guide’

|

### 🐛 Describe the bug

I'm reading the User Guide of KServe doc. One part of the 'Deploy a PyTorch Model with TorchServe InferenceService' is hard to understand.

3 'Create model archive pod and run model archive file generation script'

3.1 Create model archive pod and run model archive file generation script[¶](https://kserve.github.io/website/0.11/modelserving/v1beta1/torchserve/model-archiver/#31-create-model-archive-pod-and-run-model-archive-file-generation-script)

kubectl apply -f model-archiver.yaml -n kserve-test

(https://kserve.github.io/website/0.11/modelserving/v1beta1/torchserve/model-archiver/)

Idk how to write the model-archiver.yaml and the model archive file generation script. I would be very grateful if anyone can help me!

### Error logs

Not yet

### Installation instructions

Yes

yes

### Model Packaing

Not yet

### config.properties

_No response_

### Versions

aiohttp==3.8.6

aiohttp-cors==0.7.0

aiorwlock==1.3.0

aiosignal==1.3.1

anyio==4.0.0

async-timeout==4.0.3

attrs==23.1.0

azure-core==1.29.5

azure-identity==1.15.0

azure-storage-blob==12.18.3

azure-storage-file-share==12.14.2

blessed==1.20.0

#boto==31.28.73

botocore==1.31.73

cachetools==5.3.2

captum==0.6.0

certifi==2023.7.22

cffi==1.16.0

charset-normalizer==3.3.0

click==8.1.7

cloudevents==1.10.1

colorful==0.5.5

contourpy==1.1.1

cryptography==41.0.5

cuda-python==12.3.0

cycler==0.12.1

Cython==0.29.34

deprecation==2.1.0

distlib==0.3.7

enum-compat==0.0.3

exceptiongroup==1.1.3

fastapi==0.95.2

filelock==3.12.4

fonttools==4.43.1

frozenlist==1.4.0

fsspec==2023.9.2

google-api-core==2.12.0

google-auth==2.23.3

google-cloud-core==2.3.3

google-cloud-storage==1.44.0

#google-crc==32c1.5.0

google-resumable-media==2.6.0

googleapis-common-protos==1.61.0

gpustat==1.1.1

grpcio==1.51.3

grpcio-tools==1.48.2

#h==110.14.0

httpcore==0.16.3

httptools==0.6.1

httpx==0.23.3

huggingface-hub==0.17.3

idna==3.4

importlib-resources==6.1.0

isodate==0.6.1

#Jinja==23.1.2

jmespath==1.0.1

jsonschema==4.19.2

jsonschema-specifications==2023.7.1

kiwisolver==1.4.5

kserve==0.11.1

kubernetes==28.1.0

MarkupSafe==2.1.3

matplotlib==3.8.0

mpmath==1.3.0

msal==1.24.1

msal-extensions==1.0.0

msgpack==1.0.7

multidict==6.0.4

networkx==3.1

numpy==1.24.3

nvidia-ml-py==12.535.108

oauthlib==3.2.2

opencensus==0.11.3

opencensus-context==0.1.3

orjson==3.9.10

packaging==23.2

pandas==2.1.2

Pillow==10.0.1

#pip==23.3.1

platformdirs==3.11.0

portalocker==2.8.2

prometheus-client==0.13.1

protobuf==3.20.3

psutil==5.9.5

py-spy==0.3.14

#pyasn==10.5.0

#pyasn==1-modules0.3.0

pycparser==2.21

pydantic==1.10.13

PyJWT==2.8.0

pynvml==11.4.1

pyparsing==3.1.1

python-dateutil==2.8.2

python-dotenv==1.0.0

python-rapidjson==1.13

pytz==2023.3.post1

PyYAML==6.0

ray==2.4.0

referencing==0.30.2

regex==2023.10.3

requests==2.31.0

requests-oauthlib==1.3.1

#rfc==39861.5.0

rpds-py==0.10.6

rsa==4.9

#s==3transfer0.7.0

safetensors==0.4.0

setuptools==68.2.2

six==1.16.0

smart-open==6.4.0

sniffio==1.3.0

starlette==0.27.0

sympy==1.12

tabulate==0.9.0

timing-asgi==0.3.1

tokenizers==0.14.1

torch==2.1.0

torch-model-archiver==0.9.0

torch-workflow-archiver==0.2.11

torchaudio==2.1.0

torchdata==0.7.0

torchserve==0.9.0

torchtext==0.16.0

torchvision==0.16.0

tqdm==4.66.1

transformers==4.34.1

tritonclient==2.39.0

typing_extensions==4.8.0

tzdata==2023.3

#urllib==31.26.18

uvicorn==0.19.0

#uvloop==0.19.0

virtualenv==20.21.0

watchfiles==0.21.0

wcwidth==0.2.8

websocket-client==1.6.4

websockets==12.0

wheel==0.40.0

yarl==1.9.2

zipp==3.17.0

### Repro instructions

None

### Possible Solution

_No response_

|

https://github.com/pytorch/serve/issues/3004

|

open

|

[

"triaged",

"kfserving"

] | 2024-03-06T07:42:50Z

| 2024-03-07T07:06:52Z

| null |

Enochlove

|

huggingface/chat-ui

| 904

|

Running the project with `npm run dev`, but it does not hot reload.

|

Am I alone in this issue or are you just developing without hot reload? Does anyone have any ideas on how to resolve it?

**UPDATES:**

It has to do whenever you're running it on WSL.

I guess this is an unrelated issue so feel free to close, but would still be nice to know how to resolve this.

|

https://github.com/huggingface/chat-ui/issues/904

|

closed

|

[] | 2024-03-06T03:34:21Z

| 2024-03-06T16:07:11Z

| 2

|

CakeCrusher

|

huggingface/dataset-viewer

| 2,550

|

More precise dataset size computation

|

Currently, the Hub uses the `/size` endpoint's `num_bytes_original_files` value to display the `Size of downloaded dataset files` on a dataset's card page. However, this value does not consider a possible overlap between the configs' data files (and simply [sums](https://github.com/huggingface/datasets-server/blob/e4aac49c4d3c245cb3c0e48695b7d24a934a8377/services/worker/src/worker/job_runners/dataset/size.py#L97-L98) all the configs' sizes up), in which case the shared files need to be downloaded only once. Both `datasets` and `hfh` recognize this (by downloading them once), so the size computation should account for it, too.