text

stringlengths 184

4.48M

|

|---|

package estudos.maratonajava.javacore.streams.test;

//1. Order LightNovel by title

//2. Retrive the first 3 titles light novels with price less than 4

import estudos.maratonajava.javacore.streams.dominio.LightNovel;

import java.util.ArrayList;

import java.util.Comparator;

import java.util.List;

public class StreamTest01 {

private static List<LightNovel> lightNovels = new ArrayList<>(List.of(

new LightNovel("Tensei Shittara", 8.99),

new LightNovel("Overlord", 3.99),

new LightNovel("Violet Evergarden", 5.99),

new LightNovel("No Game No Life", 2.99),

new LightNovel("Fullmetal Alchemist", 8.99),

new LightNovel("Kumo desuga", 1.99),

new LightNovel("Monogatari", 4.00)

));

public static void main(String[] args) {

lightNovels.sort(Comparator.comparing(LightNovel::getTitle));

List<String> titles = new ArrayList<>();

for (LightNovel lightNovel : lightNovels) {

if (lightNovel.getPrice() <= 4) {

titles.add(lightNovel.getTitle());

}

if (titles.size() >= 3) {

break;

}

}

System.out.println(lightNovels);

System.out.println(titles);

}

} |

package com.orderfleet.webapp.domain;

import java.io.Serializable;

import java.time.LocalDateTime;

import java.util.Objects;

import javax.persistence.Column;

import javax.persistence.Entity;

import javax.persistence.GeneratedValue;

import javax.persistence.Id;

import javax.persistence.ManyToOne;

import javax.persistence.Table;

import javax.validation.constraints.NotNull;

import org.hibernate.annotations.GenericGenerator;

import org.hibernate.annotations.Parameter;

/**

* A ExecutiveTaskGroupPlan.

*

* @author Sarath

* @since July 14, 2016

*/

@Entity

@Table(name = "tbl_executive_task_group_plan")

public class ExecutiveTaskGroupPlan implements Serializable {

private static final long serialVersionUID = 1L;

@Id

@GenericGenerator(name = "seq_executive_task_group_plan_id_GEN", strategy = "org.hibernate.id.enhanced.SequenceStyleGenerator", parameters = {

@Parameter(name = "sequence_name", value = "seq_executive_task_group_plan_id") })

@GeneratedValue(generator = "seq_executive_task_group_plan_id_GEN")

@Column(name = "id", insertable = false, updatable = false, columnDefinition = "bigint DEFAULT nextval('seq_executive_task_group_plan_id')")

private Long id;

@NotNull

@Column(name = "pid", unique = true, nullable = false, updatable = false)

private String pid;

@NotNull

@Column(name = "planned_date", nullable = false)

private LocalDateTime plannedDate;

@NotNull

@Column(name = "created_date", nullable = false)

private LocalDateTime createdDate;

@Column(name = "remarks")

private String remarks;

@ManyToOne

@NotNull

private TaskGroup taskGroup;

@ManyToOne

@NotNull

private User user;

@ManyToOne

@NotNull

private Company company;

public Long getId() {

return id;

}

public void setId(Long id) {

this.id = id;

}

public String getPid() {

return pid;

}

public void setPid(String pid) {

this.pid = pid;

}

public LocalDateTime getPlannedDate() {

return plannedDate;

}

public void setPlannedDate(LocalDateTime plannedDate) {

this.plannedDate = plannedDate;

}

public LocalDateTime getCreatedDate() {

return createdDate;

}

public void setCreatedDate(LocalDateTime createdDate) {

this.createdDate = createdDate;

}

public String getRemarks() {

return remarks;

}

public void setRemarks(String remarks) {

this.remarks = remarks;

}

public TaskGroup getTaskGroup() {

return taskGroup;

}

public void setTaskGroup(TaskGroup taskGroup) {

this.taskGroup = taskGroup;

}

public User getUser() {

return user;

}

public void setUser(User user) {

this.user = user;

}

public Company getCompany() {

return company;

}

public void setCompany(Company company) {

this.company = company;

}

@Override

public boolean equals(Object o) {

if (this == o) {

return true;

}

if (o == null || getClass() != o.getClass()) {

return false;

}

ExecutiveTaskGroupPlan executiveTaskGroupPlan = (ExecutiveTaskGroupPlan) o;

if (executiveTaskGroupPlan.id == null || id == null) {

return false;

}

return Objects.equals(id, executiveTaskGroupPlan.id);

}

@Override

public int hashCode() {

return Objects.hashCode(id);

}

@Override

public String toString() {

return "ExecutiveTaskGroupPlan{" + "id=" + id + ", plannedDate='" + plannedDate + "'" + ", createdDate='"

+ createdDate + "'" + ", remarks='" + remarks + "'" + '}';

}

} |

const { MessageEmbed, CommandInteraction } = require("discord.js")

module.exports = {

name: 'nick-reset',

description: 'Removes The Nickname Of A User ',

type: 'Moderation',

perms: 'MANAGE_NICKNAMES',

usage: '/nick-reset',

options: [

{

name: 'user',

description: 'Select the user',

type: 'USER',

required: true

}

],

/**

*

* @param {Client} client

* @param {CommandInteraction} interaction

* @param {String[]} args

*/

async run(client, interaction, args) {

const { member, guild, options } = interaction

const user = options.getMember('user')

if (interaction.member.permissions.has("MANAGE_NICKNAMES")) {

try {

await user.setNickname("", 'noreason')

interaction.reply({

embeds: [

new MessageEmbed().setColor(`BLUE`)

.setDescription(`\n Removed Nickname of <@${user.id}> `)

.setFooter(`Nickname Removed By Shasri`)

.setTimestamp()

]

})

} catch (Err) {

return interaction.reply({

embeds: [

new MessageEmbed().setColor("BLUE")

.setDescription(`The person you are trying to change nickname is

having a role higher than you. To Change the nickname the bot must have a role higher than the mentioned one`)

], ephemeral: true

})

}

} else {

interaction.reply({

embeds: [

new MessageEmbed().setColor("BLUE")

.setDescription(`You Dont Have Permission To Manage Nicknames`)

]

})

}

}

} |

import Card from "@mui/material/Card";

import Grid from "@material-ui/core/Grid";

import CardActions from "@mui/material/CardActions";

import CardContent from "@mui/material/CardContent";

import IconButton from "@material-ui/core/IconButton";

import { Delete, Edit } from "@mui/icons-material";

import Button from "@mui/material/Button";

import Typography from "@mui/material/Typography";

import { Component } from "react";

import { withStyles } from "@material-ui/core";

import { useNavigate } from "react-router-dom";

const styles = {

card: {

margin: "1rem",

width: "16rem",

height: "16rem",

},

cardContent: {

minHeight: "13rem",

maxHeight: "13rem",

},

cardActions: {

height: "3rem",

},

iconButton: {

marginLeft: "auto",

width: "3rem",

height: "3rem",

borderRadius: "50%",

},

expandMore: {

position: "absolute",

left: "0",

top: "0",

width: "100%",

height: "100%",

padding: "0.5rem",

},

};

class FlashCard extends Component {

constructor(props) {

super(props);

this.state = {

isFlipped: true,

};

this.handleFlipClick = this.handleFlipClick.bind(this);

this.handleDelete = this.handleDelete.bind(this);

this.handleEdit = this.handleEdit.bind(this);

}

handleFlipClick(e) {

e.preventDefault();

this.setState((prevState) => ({ isFlipped: !prevState.isFlipped }));

}

async handleDelete(e) {

e.preventDefault();

const card = this.props.content;

await this.props.deleteCard(card);

}

handleEdit(e) {

e.preventDefault();

const content = this.props.content;

this.props.navigate(`/edit?id=${content._id}`, {

state: {

word: content.word,

definition: content.definition,

_id: content._id,

deck: content.deck,

},

});

}

render() {

const { content } = this.props;

return (

<Grid item>

<Card style={styles.card}>

<CardContent style={styles.cardContent}>

<div

style={{

overflow: "scroll",

textOverflow: "ellipsis",

maxHeight: "12rem",

}}

>

<Typography>

{this.state.isFlipped ? content.word : content.definition}

</Typography>

</div>

</CardContent>

<CardActions style={styles.cardActions}>

<Button size="small" onClick={this.handleFlipClick}>

Flip

</Button>

<IconButton style={styles.iconButton} onClick={this.handleDelete}>

<Delete />

</IconButton>

<IconButton style={styles.iconButton} onClick={this.handleEdit}>

<Edit />

</IconButton>

</CardActions>

</Card>

</Grid>

);

}

}

function WithNavigate(props) {

let navigate = useNavigate();

return <FlashCard {...props} navigate={navigate} />;

}

export default withStyles(styles)(WithNavigate); |

#version 330 core

out vec4 FragColor;

struct Material {

float ambient;

float diffuse;

float specular;

float shininess;

};

struct DirLight {

vec3 direction;

vec3 ambient;

vec3 diffuse;

vec3 specular;

};

struct PointLight {

vec3 position;

float constant;

float linear;

float quadratic;

vec3 ambient;

vec3 diffuse;

vec3 specular;

};

struct SpotLight {

vec3 position;

vec3 direction;

float cutOff;

float outerCutOff;

float constant;

float linear;

float quadratic;

vec3 ambient;

vec3 diffuse;

vec3 specular;

};

#define NR_POINT_LIGHTS 6

in VS_OUT

{

vec3 pos;

vec3 normal;

} fs_in;

uniform vec3 object_color;

uniform vec3 view_pos;

uniform DirLight dirLight;

uniform PointLight pointLights[NR_POINT_LIGHTS];

uniform SpotLight spotLight;

uniform Material material;

// function prototypes

// vec3 CalcDirLight(DirLight light, vec3 normal, vec3 viewDir);

vec3 CalcPointLight(PointLight light, vec3 normal, vec3 fragPos, vec3 viewDir);

// vec3 CalcSpotLight(SpotLight light, vec3 normal, vec3 fragPos, vec3 viewDir);

void main()

{

// properties

vec3 norm = normalize(fs_in.normal);

vec3 viewDir = normalize(view_pos - fs_in.pos);

// Our lighting is set up in 3 phases: directional, point lights and an optional flashlight

// For each phase, a calculate function is defined that calculates the corresponding color

// per lamp. In the main() function we take all the calculated colors and sum them up for

// this fragment's final color.

vec3 result = vec3(0,0,0);

// phase 1: directional lighting

// result += CalcDirLight(dirLight, norm, viewDir);

// // phase 2: point lights

for(int i = 0; i < NR_POINT_LIGHTS; i++)

result += CalcPointLight(pointLights[i], norm, fs_in.pos, viewDir);

// // phase 3: spot light

// result += CalcSpotLight(spotLight, norm, fs_in.pos, viewDir);

FragColor = vec4(result, 1.0);

}

// // calculates the color when using a directional light.

// vec3 CalcDirLight(DirLight light, vec3 normal, vec3 viewDir)

// {

// vec3 lightDir = normalize(-light.direction);

// // diffuse shading

// float diff = max(dot(normal, lightDir), 0.0);

// // specular shading

// vec3 reflectDir = reflect(-lightDir, normal);

// float spec = pow(max(dot(viewDir, reflectDir), 0.0), material.shininess);

// // combine results

// // vec3 ambient = light.ambient * vec3(texture(material.diffuse, TexCoords));

// // vec3 diffuse = light.diffuse * diff * vec3(texture(material.diffuse, TexCoords));

// // vec3 specular = light.specular * spec * vec3(texture(material.specular, TexCoords));

// vec3 color = vec3(texture(object_texture, fs_in.texcoord));

// // vec3 ambient = light.ambient * color;

// // vec3 ambient = material.ambient * color;

// // vec3 diffuse = light.diffuse * diff * vec3(material.diffuse, material.diffuse,material.diffuse);

// // vec3 specular = light.specular * spec * vec3(material.specular, material.specular, material.specular);

// // return (ambient + diffuse + specular);

// return color;

// }

// calculates the color when using a point light.

vec3 CalcPointLight(PointLight light, vec3 normal, vec3 fragPos, vec3 viewDir)

{

vec3 lightDir = normalize(light.position - fragPos);

// diffuse shading

float diff = max(dot(normal, lightDir), 0.0);

// specular shading

vec3 reflectDir = reflect(-lightDir, normal);

float spec = pow(max(dot(viewDir, reflectDir), 0.0), material.shininess);

// attenuation

float distance = dot((light.position - fragPos),lightDir);

float total_dist = (light.constant + light.linear * distance + light.quadratic * (distance * distance));

if(total_dist > 20) total_dist = 20;

if(total_dist < 1) total_dist = 1;

float attenuation =

1.0 / total_dist;

// combine results

// vec3 color = vec3(texture(object_texture, fs_in.texcoord));

vec3 color = object_color;

vec3 ambient = light.ambient * material.ambient * color;

// vec3 diffuse = light.diffuse * diff * vec3(material.diffuse, material.diffuse, material.diffuse);

// vec3 specular = light.specular * spec * vec3(material.specular, material.specular, material.specular);

vec3 diffuse = light.diffuse * material.diffuse * diff * color;

vec3 specular = light.specular * material.specular * spec * color;

// if(attenuation > 50) attenuation = 1;

ambient *= attenuation;

diffuse *= attenuation;

specular *= attenuation;

return (ambient + diffuse + specular);

}

// // calculates the color when using a spot light.

// vec3 CalcSpotLight(SpotLight light, vec3 normal, vec3 fragPos, vec3 viewDir)

// {

// vec3 lightDir = normalize(light.position - fragPos);

// // diffuse shading

// float diff = max(dot(normal, lightDir), 0.0);

// // specular shading

// vec3 reflectDir = reflect(-lightDir, normal);

// float spec = pow(max(dot(viewDir, reflectDir), 0.0), material.shininess);

// // attenuation

// float distance = length(light.position - fragPos);

// float attenuation = 1.0 / (light.constant + light.linear * distance + light.quadratic * (distance * distance));

// // spotlight intensity

// float theta = dot(lightDir, normalize(-light.direction));

// float epsilon = light.cutOff - light.outerCutOff;

// float intensity = clamp((theta - light.outerCutOff) / epsilon, 0.0, 1.0);

// // combine results

// vec3 ambient = light.ambient * vec3(texture(object_texture, fs_in.texcoord));

// vec3 diffuse = light.diffuse * diff * vec3(material.diffuse, material.diffuse,material.diffuse);

// vec3 specular = light.specular * spec * vec3(material.specular, material.specular, material.specular);

// ambient *= attenuation * intensity;

// diffuse *= attenuation * intensity;

// specular *= attenuation * intensity;

// return (ambient + diffuse + specular);

// } |

import { useEffect, useState } from 'react'

import './App.css'

import PhotoComponent from './component/PhotoComponent'

function App() {

const apiKey = `Iv2GvHOGSHue1ZUpCH5e_9aDhyMLHMs5m5XiceF3Fwo`

const [photo,setPhotos] = useState([])

const [page,setPage] =useState(1)

const [isLoading,setIsLoading] = useState(false)

const fetchImage =async()=>{

setIsLoading(true)

try{

const apiUrl = `https://api.unsplash.com/photos/?client_id=${apiKey}&page=${page}`

const response = await fetch(apiUrl)

const data =await response.json()

setPhotos((oldData)=>{

return [...oldData,...data]

})

}catch(error){

console.log(error)

}

setIsLoading(false)

}

useEffect(()=>{

fetchImage()

},[page])

useEffect(()=>{

const event= window.addEventListener('scroll',()=>{

if(window.innerHeight+window.scrollY>document.body.offsetHeight-500 && !isLoading){

setPage((oldPage)=>{

return oldPage+1

})

}

})

return ()=>{

window.removeEventListener('scroll',event)

}

},[])

return (

<main>

<h1>Infinite Scroll Photo | Unsplash API</h1>

<section className='photo'>

<div className="display-photo">

{photo.map((data,index)=>{

return <PhotoComponent key={index} {...data}/>

})}

</div>

</section>

</main>

)

}

export default App |

package com.crossmin.megaverse.application.usecase;

import com.crossmin.megaverse.application.model.ActualMap;

import com.crossmin.megaverse.application.model.ContentObject;

import com.crossmin.megaverse.application.model.GoalMap;

import com.crossmin.megaverse.application.model.MapObject;

import com.crossmin.megaverse.domain.repository.MapRepository;

import org.junit.Test;

import org.junit.jupiter.api.Assertions;

import org.junit.runner.RunWith;

import org.mockito.InjectMocks;

import org.mockito.Mock;

import org.springframework.boot.test.context.SpringBootTest;

import org.springframework.test.context.junit4.SpringRunner;

import java.util.ArrayList;

import java.util.List;

import static org.mockito.Mockito.when;

@RunWith(SpringRunner.class)

@SpringBootTest

public class VerifyMegaverseUseCaseTest {

@Mock

MapRepository mapRepository;

@InjectMocks

VerifyMegaverseUseCase verifyMegaverseUseCase;

@Test

public void verify_checkBothMapAreEquals() {

List<String> rowGoal1 = List.of("SPACE");

List<String> rowGoal2 = List.of("POLYANET");

List<List<String>> rows = List.of(rowGoal1, rowGoal2);

when(mapRepository.findGoalMap()).thenReturn(new GoalMap(rows));

List<ContentObject> rowActual1 = new ArrayList<>();

rowActual1.add(null);

List<ContentObject> rowActual2 = List.of(new ContentObject(0));

List<List<ContentObject>> content = List.of(rowActual1, rowActual2);

when(mapRepository.findActualMap()).thenReturn(new ActualMap(new MapObject(content)));

String result = verifyMegaverseUseCase.verify();

Assertions.assertEquals("Actual and Goal Maps Match", result);

}

@Test

public void verify_checkBothMapAreNotEquals() {

List<String> rowGoal1 = List.of("SPACE");

List<String> rowGoal2 = List.of("POLYANET");

List<List<String>> rows = List.of(rowGoal1, rowGoal2);

when(mapRepository.findGoalMap()).thenReturn(new GoalMap(rows));

List<ContentObject> rowActual1 = List.of(new ContentObject(0));

List<ContentObject> rowActual2 = List.of(new ContentObject(0));

List<List<ContentObject>> content = List.of(rowActual1, rowActual2);

when(mapRepository.findActualMap()).thenReturn(new ActualMap(new MapObject(content)));

String result = verifyMegaverseUseCase.verify();

Assertions.assertEquals("Actual and Goal Maps NOT Match", result);

}

} |

package study.spring.springmyshop.model;

import java.util.List;

import com.google.gson.reflect.TypeToken;

import com.google.gson.Gson;

import study.spring.springmyshop.helper.UploadItem;

/** `상품` 테이블의 POJO 클래스 (20/05/08 22:58:51) */

public class Products {

/** 일련번호, IS NOT NULL, PRI */

private int id;

/** 상품명, IS NOT NULL */

private String name;

/** 간략설명, IS NOT NULL */

private String description;

/** 상품가격, IS NOT NULL */

private int price;

/** 할인가(할인없을 경우 0), IS NOT NULL */

private int salePrice;

/** 옵션(json=ProductOptions,list=True), IS NOT NULL */

private List<ProductOptions> productOption;

/** 노출여부(Y/N), IS NOT NULL */

private String isOpen;

/** 신상품(Y/N), IS NOT NULL */

private String isNew;

/** 베스트(Y/N), IS NOT NULL */

private String isBest;

/** 추천상품(Y/N), IS NOT NULL */

private String isVote;

/** 상품 URL(크롤링한 원본 사이트), IS NOT NULL */

private String url;

/** 상품설명, IS NOT NULL */

private String content;

/** 리스트 이미지{json=UploadItem}, IS NOT NULL */

private UploadItem listImg;

/** 상품 타이틀 이미지{json=UploadItem}, IS NOT NULL */

private UploadItem titleImg;

/** 등록일시, IS NOT NULL */

private String regDate;

/** 변경일시, IS NOT NULL */

private String editDate;

/** 일련번호, IS NOT NULL, PRI */

public void setId(int id) {

this.id = id;

}

/** 일련번호, IS NOT NULL, PRI */

public int getId() {

return this.id;

}

/** 상품명, IS NOT NULL */

public void setName(String name) {

this.name = name;

}

/** 상품명, IS NOT NULL */

public String getName() {

return this.name;

}

/** 간략설명, IS NOT NULL */

public void setDescription(String description) {

this.description = description;

}

/** 간략설명, IS NOT NULL */

public String getDescription() {

return this.description;

}

/** 상품가격, IS NOT NULL */

public void setPrice(int price) {

this.price = price;

}

/** 상품가격, IS NOT NULL */

public int getPrice() {

return this.price;

}

/** 할인가(할인없을 경우 0), IS NOT NULL */

public void setSalePrice(int salePrice) {

this.salePrice = salePrice;

}

/** 할인가(할인없을 경우 0), IS NOT NULL */

public int getSalePrice() {

return this.salePrice;

}

/** 옵션(json=ProductOptions,list=True), IS NOT NULL */

public void setProductOptionJson(String productOption) {

this.productOption = new Gson().fromJson(productOption, new TypeToken<List<ProductOptions>>() {}.getType());

}

/** 옵션(json=ProductOptions,list=True), IS NOT NULL */

public void setProductOption(List<ProductOptions> productOption) {

this.productOption = productOption;

}

/** 옵션(json=ProductOptions,list=True), IS NOT NULL */

public List<ProductOptions> getProductOption() {

return this.productOption;

}

/** 옵션(json=ProductOptions,list=True), IS NOT NULL */

public String getProductOptionJson() {

return new Gson().toJson(productOption);

}

/** 노출여부(Y/N), IS NOT NULL */

public void setIsOpen(String isOpen) {

this.isOpen = isOpen;

}

/** 노출여부(Y/N), IS NOT NULL */

public String getIsOpen() {

return this.isOpen;

}

/** 신상품(Y/N), IS NOT NULL */

public void setIsNew(String isNew) {

this.isNew = isNew;

}

/** 신상품(Y/N), IS NOT NULL */

public String getIsNew() {

return this.isNew;

}

/** 베스트(Y/N), IS NOT NULL */

public void setIsBest(String isBest) {

this.isBest = isBest;

}

/** 베스트(Y/N), IS NOT NULL */

public String getIsBest() {

return this.isBest;

}

/** 추천상품(Y/N), IS NOT NULL */

public void setIsVote(String isVote) {

this.isVote = isVote;

}

/** 추천상품(Y/N), IS NOT NULL */

public String getIsVote() {

return this.isVote;

}

/** 상품 URL(크롤링한 원본 사이트), IS NOT NULL */

public void setUrl(String url) {

this.url = url;

}

/** 상품 URL(크롤링한 원본 사이트), IS NOT NULL */

public String getUrl() {

return this.url;

}

/** 상품설명, IS NOT NULL */

public void setContent(String content) {

this.content = content;

}

/** 상품설명, IS NOT NULL */

public String getContent() {

return this.content;

}

/** 리스트 이미지{json=UploadItem}, IS NOT NULL */

public void setListImgJson(String listImg) {

this.listImg = new Gson().fromJson(listImg, UploadItem.class);

}

/** 리스트 이미지{json=UploadItem}, IS NOT NULL */

public void setListImg(UploadItem listImg) {

this.listImg = listImg;

}

/** 리스트 이미지{json=UploadItem}, IS NOT NULL */

public UploadItem getListImg() {

return this.listImg;

}

/** 리스트 이미지{json=UploadItem}, IS NOT NULL */

public String getListImgJson() {

return new Gson().toJson(this.listImg);

}

/** 상품 타이틀 이미지{json=UploadItem}, IS NOT NULL */

public void setTitleImgJson(String titleImg) {

this.titleImg = new Gson().fromJson(titleImg, UploadItem.class);

}

/** 상품 타이틀 이미지{json=UploadItem}, IS NOT NULL */

public void setTitleImg(UploadItem titleImg) {

this.titleImg = titleImg;

}

/** 상품 타이틀 이미지{json=UploadItem}, IS NOT NULL */

public UploadItem getTitleImg() {

return this.titleImg;

}

/** 상품 타이틀 이미지{json=UploadItem}, IS NOT NULL */

public String getTitleImgJson() {

return new Gson().toJson(this.titleImg);

}

/** 등록일시, IS NOT NULL */

public void setRegDate(String regDate) {

this.regDate = regDate;

}

/** 등록일시, IS NOT NULL */

public String getRegDate() {

return this.regDate;

}

/** 변경일시, IS NOT NULL */

public void setEditDate(String editDate) {

this.editDate = editDate;

}

/** 변경일시, IS NOT NULL */

public String getEditDate() {

return this.editDate;

}

/** LIMIT 절에서 사용할 조회 시작 위치 */

private static int offset;

/** LIMIT 절에서 사용할 조회할 데이터 수 */

private static int listCount;

public static int getOffset() {

return offset;

}

public static void setOffset(int offset) {

Products.offset = offset;

}

public static int getListCount() {

return listCount;

}

public static void setListCount(int listCount) {

Products.listCount = listCount;

}

@Override

public String toString() {

String str = "\n[Products]\n";

str += "id: " + this.id + " (일련번호, IS NOT NULL, PRI)\n";

str += "name: " + this.name + " (상품명, IS NOT NULL)\n";

str += "description: " + this.description + " (간략설명, IS NOT NULL)\n";

str += "price: " + this.price + " (상품가격, IS NOT NULL)\n";

str += "salePrice: " + this.salePrice + " (할인가(할인없을 경우 0), IS NOT NULL)\n";

str += "productOption: " + this.productOption + " (옵션(json=ProductOptions,list=True), IS NOT NULL)\n";

str += "isOpen: " + this.isOpen + " (노출여부(Y/N), IS NOT NULL)\n";

str += "isNew: " + this.isNew + " (신상품(Y/N), IS NOT NULL)\n";

str += "isBest: " + this.isBest + " (베스트(Y/N), IS NOT NULL)\n";

str += "isVote: " + this.isVote + " (추천상품(Y/N), IS NOT NULL)\n";

str += "url: " + this.url + " (상품 URL(크롤링한 원본 사이트), IS NOT NULL)\n";

str += "content: " + this.content + " (상품설명, IS NOT NULL)\n";

str += "listImg: " + this.listImg + " (리스트 이미지{json=UploadItem}, IS NOT NULL)\n";

str += "titleImg: " + this.titleImg + " (상품 타이틀 이미지{json=UploadItem}, IS NOT NULL)\n";

str += "regDate: " + this.regDate + " (등록일시, IS NOT NULL)\n";

str += "editDate: " + this.editDate + " (변경일시, IS NOT NULL)\n";

return str;

}

} |

package main

import (

"bytes"

"context"

"encoding/json"

"io/ioutil"

"testing"

"github.com/stretchr/testify/require"

)

func TestInfoCommand(t *testing.T) {

for _, test := range []struct {

name string

args []string

expectedOutput []byte

}{

{"info command with store",

[]string{"-s", "testdata/blob1.store", "testdata/blob1.caibx"},

[]byte(`{

"total": 161,

"unique": 131,

"in-store": 131,

"in-seed": 0,

"in-cache": 0,

"not-in-seed-nor-cache": 131,

"size": 2097152,

"dedup-size-not-in-seed": 1114112,

"dedup-size-not-in-seed-nor-cache": 1114112,

"chunk-size-min": 2048,

"chunk-size-avg": 8192,

"chunk-size-max": 32768

}`)},

{"info command with seed",

[]string{"-s", "testdata/blob1.store", "--seed", "testdata/blob2.caibx", "testdata/blob1.caibx"},

[]byte(`{

"total": 161,

"unique": 131,

"in-store": 131,

"in-seed": 124,

"in-cache": 0,

"not-in-seed-nor-cache": 7,

"size": 2097152,

"dedup-size-not-in-seed": 80029,

"dedup-size-not-in-seed-nor-cache": 80029,

"chunk-size-min": 2048,

"chunk-size-avg": 8192,

"chunk-size-max": 32768

}`)},

{"info command with seed and cache",

[]string{"-s", "testdata/blob2.store", "--seed", "testdata/blob1.caibx", "--cache", "testdata/blob2.cache", "testdata/blob2.caibx"},

[]byte(`{

"total": 161,

"unique": 131,

"in-store": 131,

"in-seed": 124,

"in-cache": 18,

"not-in-seed-nor-cache": 5,

"size": 2097152,

"dedup-size-not-in-seed": 80029,

"dedup-size-not-in-seed-nor-cache": 67099,

"chunk-size-min": 2048,

"chunk-size-avg": 8192,

"chunk-size-max": 32768

}`)},

{"info command with cache",

[]string{"-s", "testdata/blob2.store", "--cache", "testdata/blob2.cache", "testdata/blob2.caibx"},

[]byte(`{

"total": 161,

"unique": 131,

"in-store": 131,

"in-seed": 0,

"in-cache": 18,

"not-in-seed-nor-cache": 113,

"size": 2097152,

"dedup-size-not-in-seed": 1114112,

"dedup-size-not-in-seed-nor-cache": 950410,

"chunk-size-min": 2048,

"chunk-size-avg": 8192,

"chunk-size-max": 32768

}`)},

} {

t.Run(test.name, func(t *testing.T) {

exp := make(map[string]interface{})

err := json.Unmarshal(test.expectedOutput, &exp)

require.NoError(t, err)

cmd := newInfoCommand(context.Background())

cmd.SetArgs(test.args)

b := new(bytes.Buffer)

// Redirect the command's output

stdout = b

cmd.SetOutput(ioutil.Discard)

_, err = cmd.ExecuteC()

require.NoError(t, err)

// Decode the output and compare to what's expected

got := make(map[string]interface{})

err = json.Unmarshal(b.Bytes(), &got)

require.NoError(t, err)

require.Equal(t, exp, got)

})

}

} |

import PropTypes from 'prop-types';

import {

Card,

CardActionArea,

CardContent,

CardMedia,

Rating,

Stack,

Typography,

} from '@mui/material';

import { Link } from 'react-router-dom';

interface MovieCardProps {

id: number;

title: string;

rating: number;

director: string;

genre: string[];

imageUrls: string[];

excerpt: string;

grossIncome: number;

year: number;

writers: string[];

stars: string[];

description: string;

}

[];

const MovieCard = (props: MovieCardProps) => {

const { id, imageUrls, title, rating, description, year, grossIncome } =

props;

return (

<Card sx={{ mr: 2, height: '99.8%' }}>

<CardActionArea LinkComponent={Link} to={`/movie/${id}`}>

<CardMedia sx={{ height: 200 }} image={imageUrls[0]} title={title} />

<CardContent>

<Typography variant="h5" fontSize="18px" component="div">

{title} {year && `(${year})`}

</Typography>

{rating && (

<Stack direction="row" alignItems="center" gap={1} mt={1}>

<Rating

name="movie-rating"

size="small"

value={rating}

readOnly

/>

<Typography variant="body1" color="text.secondary">

{rating}

</Typography>

</Stack>

)}

{description && (

<Typography variant="body2" color="text.secondary" mt={1}>

{description}

</Typography>

)}

{grossIncome && (

<Typography

variant="body2"

color="text.secondary"

mt={1}

fontSize="16px"

>

Current Income:{' '}

<Typography

variant="body2"

color="success.main"

component="span"

>

${grossIncome}

</Typography>

</Typography>

)}

</CardContent>

</CardActionArea>

</Card>

);

};

// MovieCard.propTypes = {

// imageUrls: PropTypes.array.isRequired,

// title: PropTypes.string.isRequired,

// rating: PropTypes.number,

// description: PropTypes.string,

// year: PropTypes.number,

// };

export default MovieCard; |

---

title: Easy Tutorial for Activating iCloud from Apple iPhone 15 Plus Safe and Legal

date: 2024-04-08T06:25:27.004Z

updated: 2024-04-09T06:25:27.004Z

tags:

- unlock

- bypass activation lock

categories:

- ios

- iphone

description: This article describes Easy Tutorial for Activating iCloud from Apple iPhone 15 Plus Safe and Legal

excerpt: This article describes Easy Tutorial for Activating iCloud from Apple iPhone 15 Plus Safe and Legal

keywords: bypass activation lock on ipad,iphone imei icloud unlock,jailbreak icloud locked iphone,how to remove icloud account,mac activation lock,bypass activation lock on iphone 15,icloud unlocker download,ipad stuck on activation lock,apple watch activation lock,bypass iphone icloud activation lock

thumbnail: https://www.lifewire.com/thmb/V0mVc7hXHyanE76GvodfwiaKNvE=/400x300/filters:no_upscale():max_bytes(150000):strip_icc():format(webp)/GettyImages-966273172-fbc5e7e0e68a48f69a1a5ddc0d6df827.jpg

---

## Easy Tutorial for Activating iCloud on Apple iPhone 15 Plus: Safe and Legal

Any iOS device needs iCloud to function properly. In addition to storing and backing up your contacts, photos, passcodes, and documents, iCloud is an essential part of the iOS operating system.

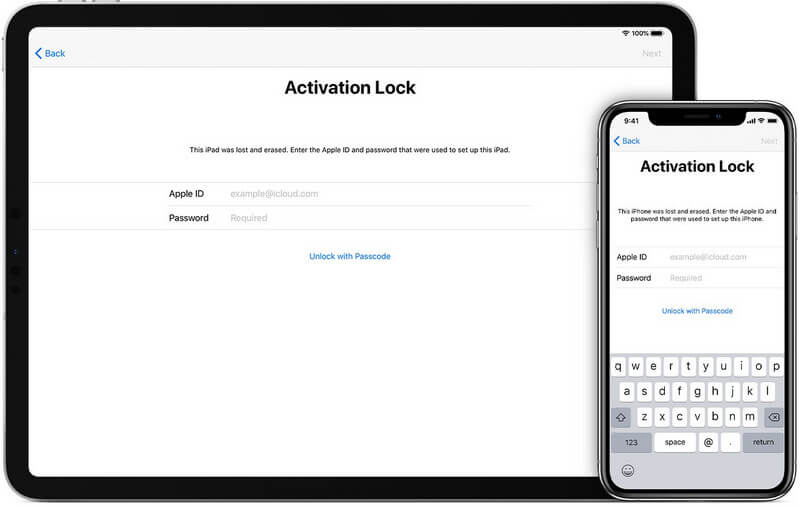

Activation locks prevent users from accessing iCloud. Users cannot back up their files to iCloud if the software has an activation lock. Activation locks protect your device if it is lost or stolen.

The former owner often sells their device with an activation lock enabled, which can cause difficulties for the current owner/buyer. The device may appear to be stolen by the current owner.

Fortunately, we have the best solutions for you-the five best tools for iOS 17 iCloud bypass. You can unlock the activation lock by following these simple steps.

Let's get started!

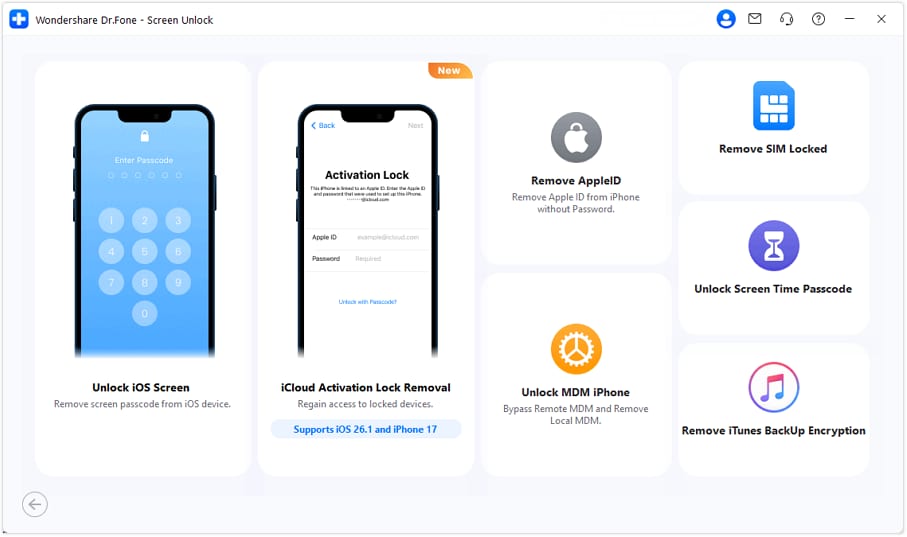

Choosing the right tool for iOS 17 bypasses is challenging. Looking for a safe, legal, and effective way to bypass the iCloud Activation lock is exhausting. To make understanding easier, we have added pictorial tutorials and a step-by-step guide.

Check out these iOS 17 iCloud bypass tools:

### 1\. Check M8

The best tool for bypassing the iCloud lock screen on iOS 17 is Check M8, software designed to unlock the iCloud lock screen on iOS 17.

- **Step 1:** Visit Check M8 website.

- **Step 2:** Select your computer model to find the '**Download**' button.

- **Step 3:** Run the software on your computer.

- **Step 4:** Connect your iOS device to your computer.

- **Step 5:** Tap on '**Start Bypass**' to start the process.

- **Step 6:** Wait for the bypass to complete, and you're done!

The iCloud activation lock can be unlocked in six easy steps, right after which you should update your phone.

### 2\. Frpfile All-In-One Tool

Bypassing iOS 17 is easy with Frpfile, and it has several other features as well. There are so many things you can accomplish with just one tool. For quick iOS 17 iCloud bypass, follow these steps:

- **Step 1:** Visit iFrpfile All-In-One Tool and click '**Download**' to install the software.

- **Step 2:** Run the software on your computer. Connect your device to your computer.

- **Step 3:** Click '**Process**' to start the bypass.

This free tool can simplify your work 10x, meaning you can use your phone/device to its fullest.

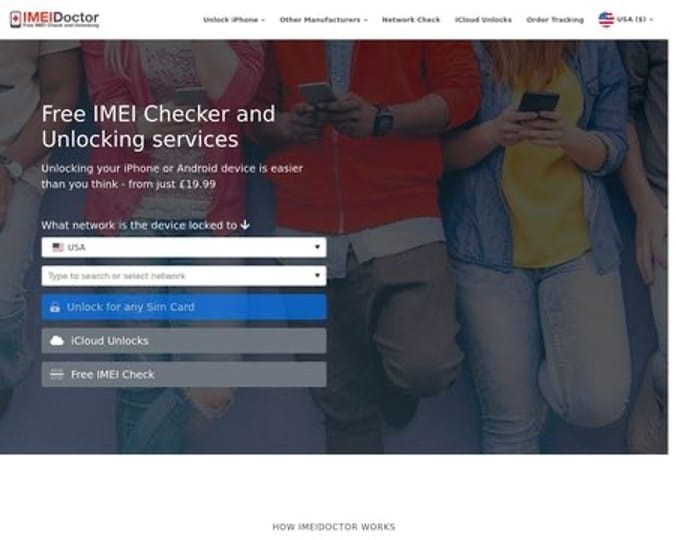

### 3\. IMEI Doctor

The best alternative for bypassing iCloud in iOS 17 is IMEI Doctor. A top-notch tech team does unlock your phone/device, so you can enjoy using it. Unlocking your phone/device is worth $19.

Follow these simple steps for iOS 17 bypass:

- **Step 1:** Go to the IMEI Doctor website.

- **Step 2:** Select your region.

- **Step 3:** Type in your iCloud IMEI number.

- **Step 4:** Select your device and model type from the drop-down button.

- **Step 5:** Tap "**Remove Activation Lock**".

- **Step 6:** Process the fee for unlocking the activation lock.

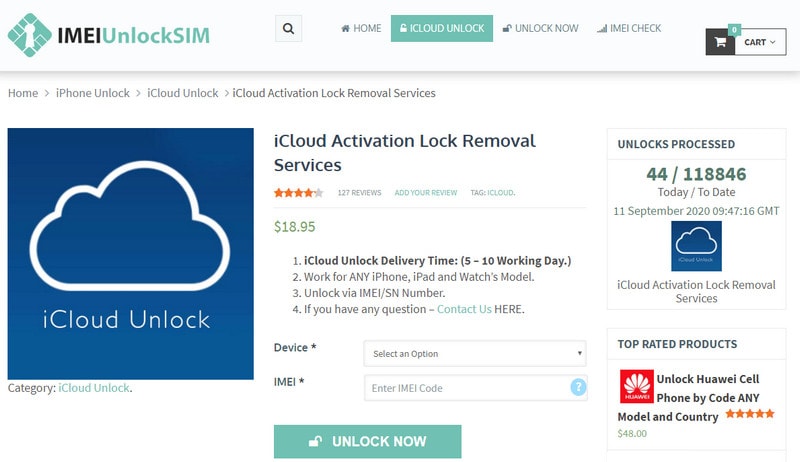

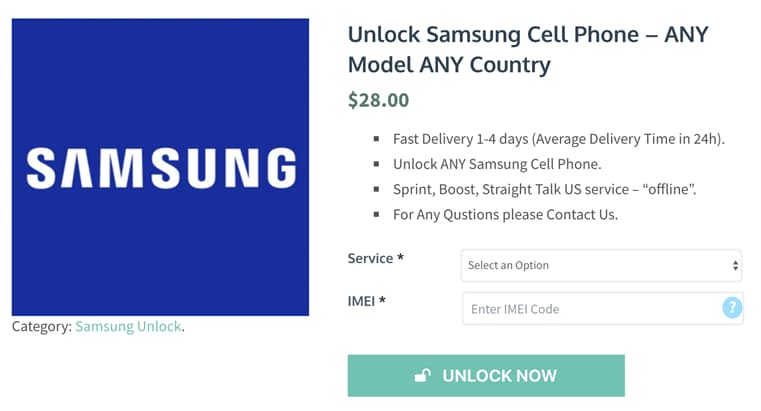

### 4\. IMEI Unlock Sim

There is some similarity between IMEI Doctor and IMEI Unlock Sim, but IMEI Unlock Sim is much better and more convenient. There is a guarantee that you will receive the results within 24 hours. Furthermore, all devices and models are compatible with the system.

Follow these steps to iOS 17 iCloud bypass:

- **Step 1:** Visit the IMEI Unlock Sim site.

- **Step 2:** Select your device by clicking the drop-down button. Type the IMEI code of your device.

- **Step 3:** Click on "**Unlock Now**", and process the payment.

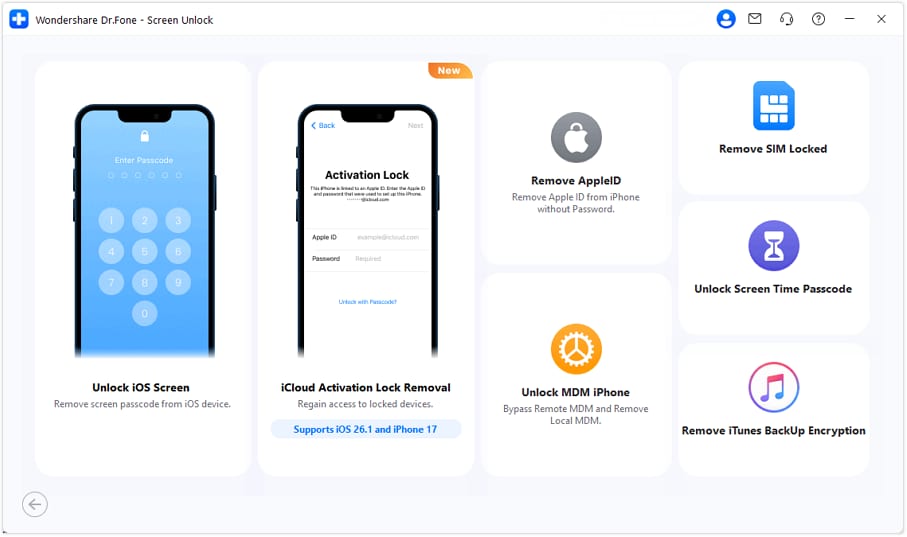

### 5\. Dr.Fone - Screen Unlock (iOS)

It is possible to bypass the iCloud activation lock with a tool called [Dr.Fone - Screen Unlock (iOS)](https://tools.techidaily.com/wondershare/drfone/iphone-unlock/). For some tools, it requires jailbreak before removing the activation lock. Lucky enough, Wondershare Dr.Fone launched an activation bypass solution that doesn’t require jailbreak (running on IOS 12.0-IOS 16.6). With the help of this powerful tool, you will be able to bypass iCloud on iOS 17 Without Jailbreak.

### [Dr.Fone - Screen Unlock (iOS)](https://tools.techidaily.com/wondershare/drfone/iphone-unlock/)

Remove Activation Lock on Apple iPhone 15 Pluss at Ease

- Unlock Face ID, Touch ID, Apple ID without password.

- Bypass the iCloud activation lock without hassle.

- Remove iPhone carrier restrictions for unlimited usage.

- No tech knowledge required, Simple, click-through, process.

**3,981,454** people have downloaded it

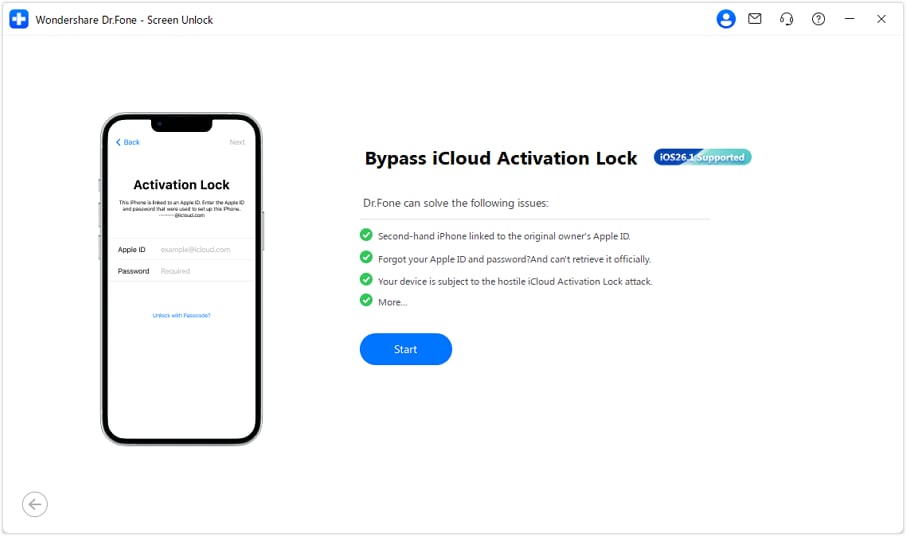

**Here's how to use Dr.Fone - Screen Unlock to bypass the iOS 17 activation lock without jailbreak:**

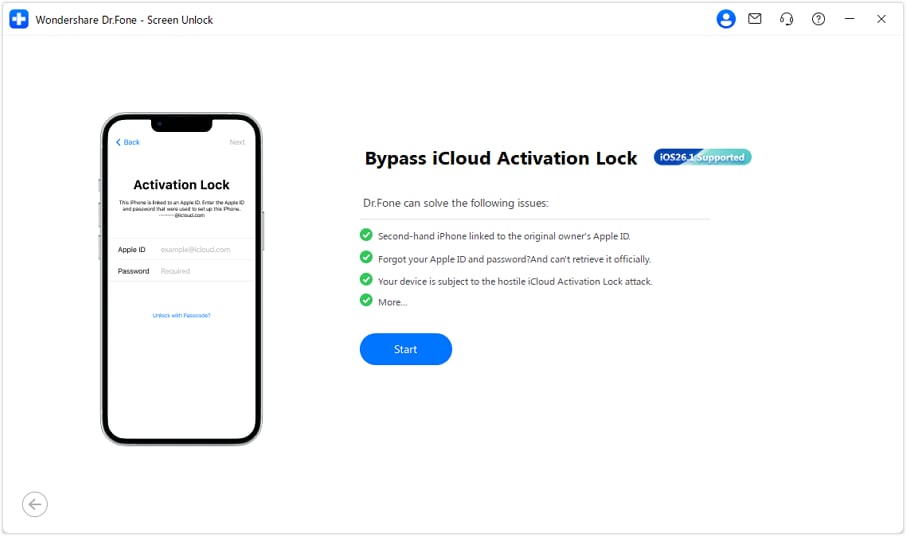

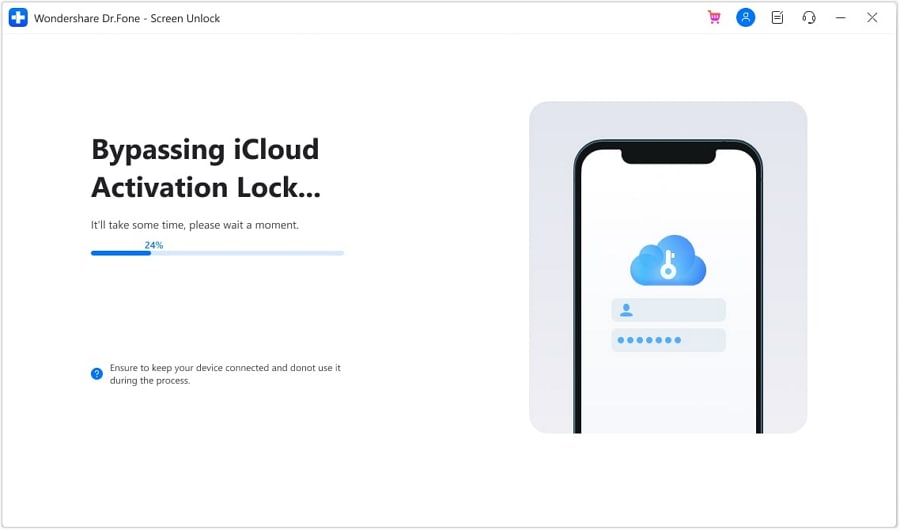

- **Step 1:** Log in Wondershare Dr.Fone and click **Toolbox** > **Screen Unlock** > **iOS**.

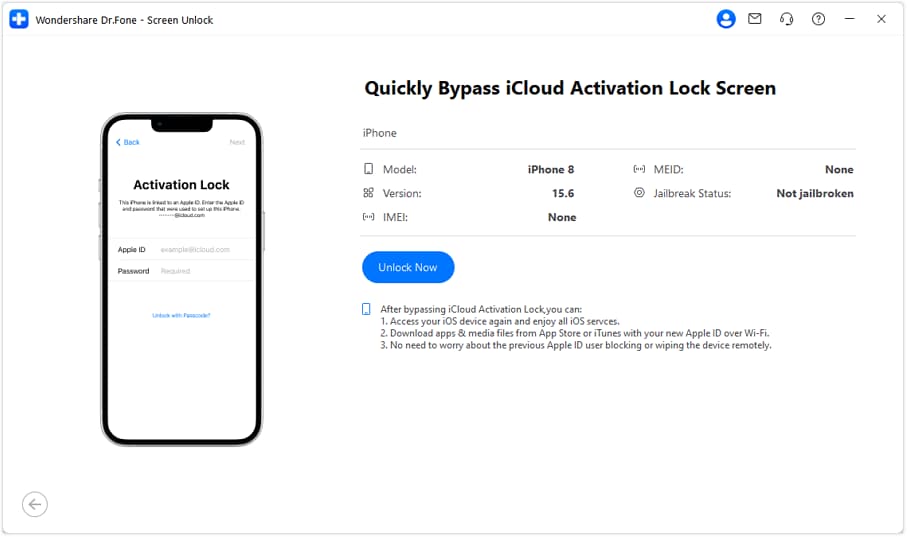

- **Step 2:** Make the Apple iPhone 15 Plus device connected to computer and choose “**iCloud Activation Lock Removal**”.

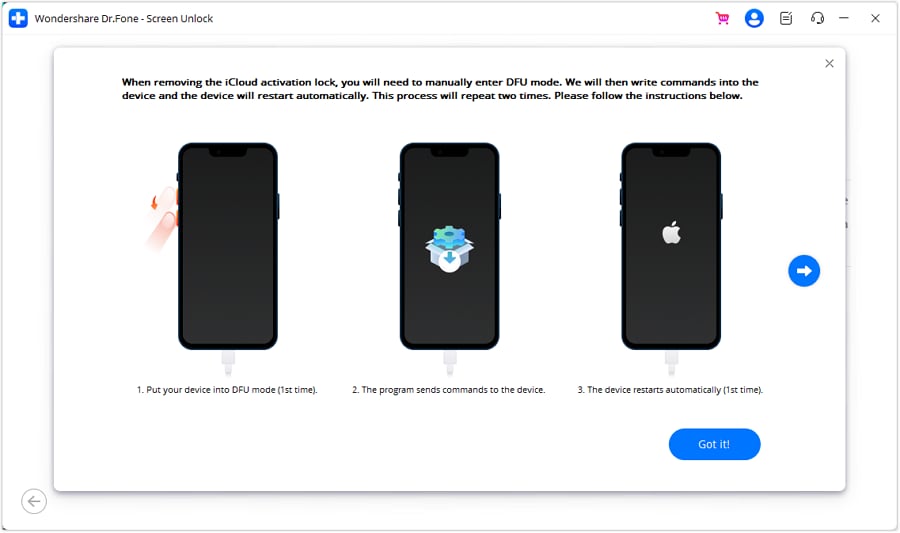

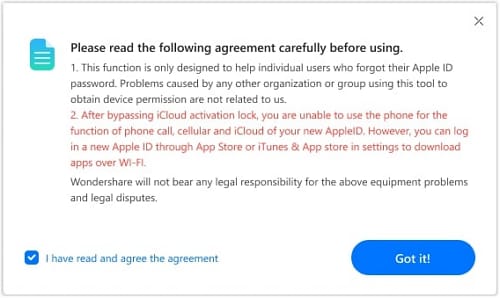

- **Step 3:** Tab '**Unlock Now**' button on the next window. When a prompt show up, read the details carefully and checkmark “I have read and agree the agreement”. Click “**Got it!**” button as well.

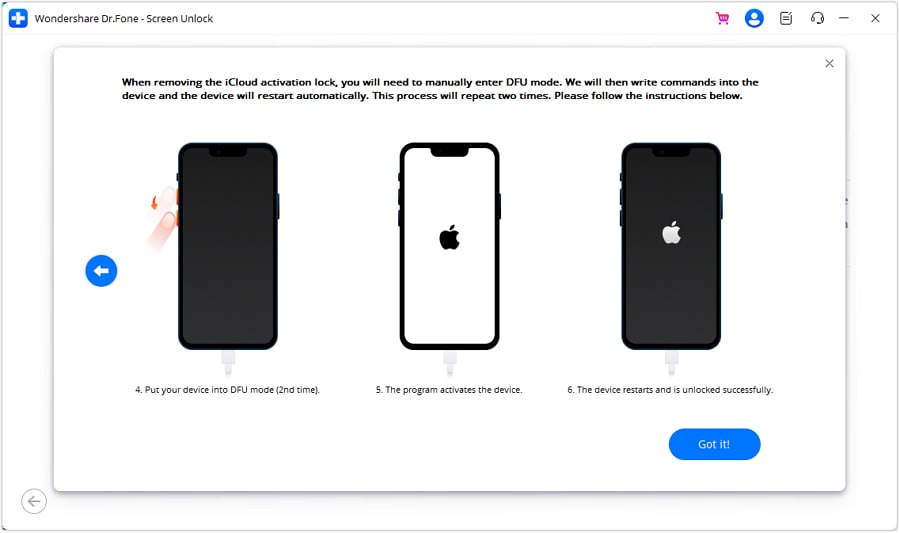

- **Step 4:** Put your iOS Device in DFU Mode for the first time: wait the program to send a command to the Apple iPhone 15 Plus device, and then it will restart.

- **Step 5:** Put the Apple iPhone 15 Plus device in DFU mode for the second time. The program will activate the Apple iPhone 15 Plus device when it finishes.

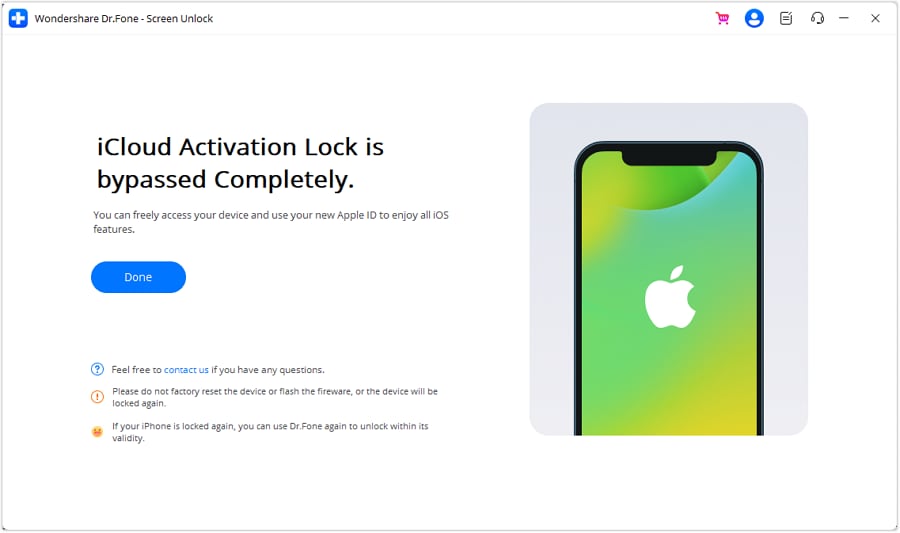

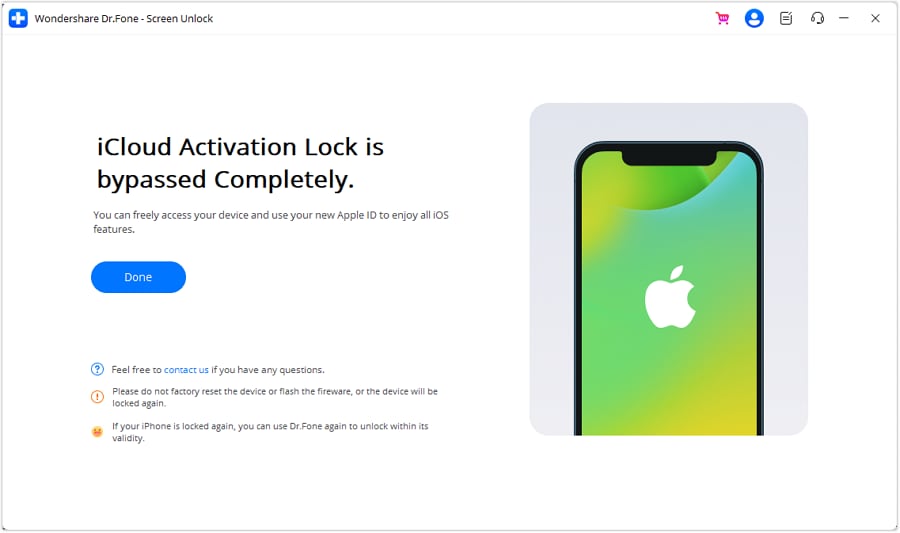

- **Step 6:** Once the Apple iPhone 15 Plus device has been processed under the DFU Mode, the computer’s screen will start displaying the removal of the iCloud Activation Lock. When it’s done, click '**Done**'.

Please note that after the removal of the Activation Lock from the iPhone, it won't be able to make or receive any calls or text messages through cellular networks.

## Part 2: FAQs

### 1\. Why do we need to bypass the iCloud activation lock?

iCloud is software that secures your backups, photos, and passcodes, allows access to Apple's credentials, and much more. iCloud activation lock is one of the features of 'Find Phone' that secures your device's personal information from falling into the wrong hands.

However, it can be trouble if you buy a second-hand iOS device. It can be difficult to access iCloud if the previous one has enabled activation lock. That's why you need to bypass iOS 17, so you can access all the applications and secure all backups.

### 2\. Is it legal to bypass the iCloud activation lock?

Bypassing the iCloud activation lock with or without the previous owner is legal. There are no illegal ways to unlock it. The steps and tools are all legal and safe to bypass the iOS 17 iCloud activation lock. Without bypassing the activation lock, you won't be able to use your iOS device freely or at all.

### 3\. What will happen after a successful bypass?

The data you previously had on your iOS device will be permanently erased as soon as iOS 17 has been bypassed. Additionally, the Apple iPhone 15 Plus device will be set up as a new one. After finishing the bypass, you can now enter all your details and start using the Apple iPhone 15 Plus device. Furthermore, you can back up all your data easily as the previous one has been deleted.

## Conclusion

There is nothing more frustrating than an iCloud activation lock. You cannot access your iOS devices without iCloud. Since there are 5 incredible tools to bypass iOS 17, this issue has been resolved.

You can easily unlock anything with one of the best tools available. Lock-screen removal is made easy with Wondershare [Dr.Fone - Screen Unlock (iOS)](https://tools.techidaily.com/wondershare/drfone/iphone-unlock/). You can conveniently bypass the lock screen without any difficulty. The tool works smoothly on iOS devices, which is the most important feature.

## How To Bypass iCloud Activation Lock on Mac For Apple iPhone 15 Plus?

_How to do Mac activation lock bypass?_

iCloud Activation Lock stands as a reliable security measure in the Apple ecosystem. It protects your Apple devices from unauthorized access and theft. Yet, navigating this security feature can be overwhelming for users locked out of their devices. It can happen due to forgotten passwords or second-hand purchases.

Mac users often seek effective methods to bypass this security measure. They aim to reclaim access to their devices without compromising safety. This article serves as a comprehensive guide, exploring the details of bypassing the iCloud **Activation Lock on Mac**. It will go through various methods, strategies, and best practices to unlock your Mac.

## Part 1. What is Mac Activation Lock?

**Mac Activation Lock**, a key part of Apple's security, safeguards your MacBook by linking it to your Apple ID. Similar to iOS devices, it makes it tough for others to access your device without permission. Once activated, it requires your Apple ID and password for various functions. These include the likes of disabling Find My Mac, erasing the Apple iPhone 15 Plus device, or using it after a factory reset.

This feature works hand-in-hand with the 'Find My' app. It ensures your data's safety even if your MacBook is lost or stolen. Only the rightful owner can disable **Activation Lock Mac** via their Apple ID. Yet, dealing with this security measure might be challenging for genuine users. This is especially true if they face issues like forgotten passwords or when buying a used device.

Navigating through the iCloud **Activation Lock on a MacBook** can be daunting. This is especially true when faced with legitimate scenarios like forgotten passwords. It can also happen in the case of purchasing pre-owned locked devices. Yet, several methods and techniques exist to bypass this security feature. Below, we'll go through various methods for how to bypass iCloud **Activation Lock on Mac**:

### Fix 1. Retrieve Your Password

Forgetting the Apple ID password can often lead to being locked out of your own device due to the iCloud Activation Lock. Fortunately, Apple provides a streamlined process to reset and retrieve forgotten passwords. This allows users to regain entry to their devices. Apple offers a mechanism to reset forgotten passwords via the Apple ID account recovery process.

Visit the [Apple ID account page](https://appleid.apple.com/sign-in) through a web browser on any device. Choose the option that says, "Forgot password?" and continue by following the instructions shown on the screen. You might need to answer security questions, use two-factor authentication, or receive account recovery instructions. It can happen via email or SMS to reset the password.

### Fix 2. Remove Mac Activation Lock from Another Device

When faced with a Mac locked by iCloud Activation Lock, another effective method exists. You can bypass this security measure using another trusted device. That device must be linked to the same Apple ID as the Mac. Employing this method allows you to remove the Activation Lock from the locked Mac through the “Find My” feature. Follow these steps to bypass the **Mac Activation Lock**:

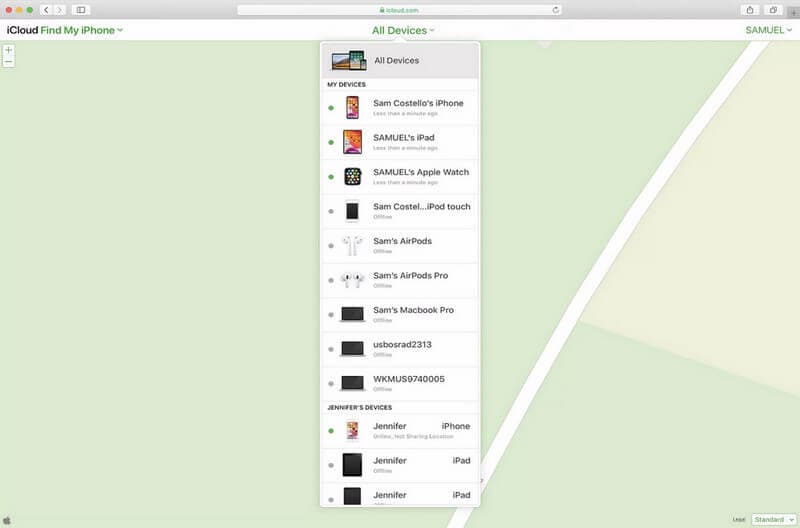

**Step 1.** Utilize a different Apple device, like an iPhone, iPad, or another Mac, that is currently signed in with the identical Apple ID. From there, navigate to the [iCloud website](https://www.icloud.com/) and sign in using your Apple account credentials.

**Step 2.** On the iCloud website, tap the grid icon from the top right corner and select "Find My." Here, enter your password and click on "All Devices." Choose the locked Mac from the Apple iPhone 15 Plus device and go on to tap "Remove This Device." Now, follow the on-screen prompts to complete the process.

### Fix 3. Ask The Previous Owner for Help

Have you acquired a second-hand Mac that is locked with iCloud Activation Lock? If faced with this scenario, seeking assistance from the previous owner can prove to be a valuable solution. Describe your situation to the previous owner and kindly ask them to log in to their iCloud account for support. Otherwise, they can use the iCloud website to disassociate the Apple iPhone 15 Plus device from their account.

They can do this by removing the Apple iPhone 15 Plus device from their iCloud account or disabling Find My Mac. Seeking help from the previous owner to remove the **Activation Lock Mac** is often the most straightforward method.

## Part 3. FAQs About Activation Lock on Mac

1. **Is Bypassing Activation Lock Legal?**

Bypassing Activation Lock mechanisms frequently fall into a legal gray area. The intention can be to regain access to a locked device legitimately owned by the user. Yet, circumventing security features may violate terms of service. It's important to know that attempting to bypass the Activation Lock might void warranties.

2. **How Does Activation Lock Work on Mac?**

**Activation Lock on Mac** is part of Apple's security framework designed to deter unauthorized access and protect user data. When enabled, Activation Lock ties the Mac to the owner's Apple ID. It requires the correct credentials to disable Find My Mac, erase the Apple iPhone 15 Plus device, or reactivate it after a factory reset. This feature effectively prevents unauthorized users from accessing or using a locked Mac.

3. **What Are the Risks of Bypassing Activation Lock?**

Bypassing Activation Lock might carry the following risks and implications:

1. It can expose the Apple iPhone 15 Plus device and personal data to potential security threats.

2. Unauthorized modifications or bypassing of security measures could void the Apple iPhone 15 Plus device's warranty.

3. Improper bypass attempts might lead to device malfunction.

## Extra Tip. How to Remove Activation Lock on iPhone/iPad/iPod Touch?

### [Dr.Fone - Screen Unlock (iOS)](https://tools.techidaily.com/wondershare/drfone/iphone-unlock/)

Bypass iCloud Activation Lock on iPhone Without Hassle.

- Simple, click-through, process.

- Bypass iCloud activation lock and Apple ID without password.

- No tech knowledge is required, everybody can handle it.

- Compatible with iPhone 5S to iPhone X, iPad 3 to iPad 7, and iPod touch 6 to iPod touch 7 running iOS 12.0 to iOS 16.6!

**4,395,219** people have downloaded it

The problem of iCloud Activation Lock is not limited to Mac computers. Apple devices such as iPhones and iPads commonly encounter this situation. If you're locked out of your Apple iPhone 15 Plus and can't recall your Apple ID credentials, there's no cause for concern. Wondershare Dr.Fone provides a robust solution to the iCloud Activation Lock issue. You can follow these steps to bypass the iCloud **Mac Activation Lock**:

### Step 1. Commence Unlocking iCloud Activation Lock Using Wondershare Dr.Fone

Install the most recent edition of Wondershare Dr.Fone on your computer and open the application. Proceed to the Toolbox section, then select "Screen Unlock." Follow it by selecting "iOS" to define the Apple iPhone 15 Plus device type. Next, opt for "iCloud Activation Lock Removal" for the intended purpose. Once directed to a new window, click on "Start" to commence the process.

### Step 2. Unlocking iOS Devices: GSM and CDMA Activation Differences

Follow the prompt to connect your iOS device with a USB cable. Identify if your device is GSM or CDMA. After bypassing iCloud Activation Lock on a GSM device, it will work normally. However, for a CDMA device, calling and other cellular functions won't be available. As you continue unlocking the CDMA device, you'll receive step-by-step instructions. Choose the agreement option and click 'Got It!' to move forward.

### Step 3. Enabling DFU Mode on iOS Devices (Versions 15.0 to 16.3)

Afterward, if your Apple device hasn't undergone jailbreaking, the system will prompt the user to proceed with the process. Guidelines for jailbreaking are available in both written and video formats. For iOS/iPadOS versions 16.4 to 16.6, Dr.Fone is designed to perform the jailbreaking process automatically on your device.

Put iOS devices running versions 15.0 to 16.3 into DFU Mode following the on-screen instructions. After initiating DFU Mode for the first time, the program will command the Apple iPhone 15 Plus device to restart. Click the right arrow to proceed. Repeat the process to enter DFU Mode for the second time. Upon completion, the program will activate and unlock the Apple iPhone 15 Plus device. Once finished, select the "Got It!" button to complete the process.

### Step 4. Complete the Activation Lock Removal Process

After confirming your jailbroken iOS device, the process initiates automatically to remove the Activation Lock. Upon completion, a message confirming the finished process will be displayed on the screen. While the Apple iPhone 15 Plus device is in DFU Mode, the computer screen will show the progress of removing the iCloud **Mac Activation Lock**. Keep an eye on the progress bar until it reaches completion. Click the 'Done' button to finish unlocking the Activation Lock.

## Conclusion

Navigating iCloud Activation Lock on Mac demands a balance between accessibility and security. The article explored fixes such as password retrieval, remote disassociation, and collaboration with the previous owner. Following them, users can unlock their devices securely. However, if you need to [bypass iCloud Activation Lock on an iOS device](https://tools.techidaily.com/wondershare/drfone/iphone-unlock/), Dr.Fone comes to the rescue.

## How to Bypass Activation Lock on Apple iPhone 15 Plus or iPad?

Apple has long been famous for providing sound devices, with nifty safety and user-friendly features. With that said, if you just purchased a used iOS device, you may be required to bypass the activation lock on your device using iCloud, or the previous user’s account. Before we take a look at how to bypass an activation lock on an Apple iPhone 15 Plus or iPad, let’s examine what an activation lock on an Apple iPhone 15 Plus or iPad entails.

## Part 1. What is Activation Lock on Apple iPhone 15 Plus or iPad?

This theft deterrent feature is cool for the sole reason that it helps keep your data safe, in case of misplacement or thievery. Without access to the owner’s Apple ID and/or password, accessing the Apple iPhone 15 Plus device becomes impossible. Unfortunately for used purchases, you may have procured a used item legitimately, but have no access to said device.

This feature is enabled by default when the Find My Apple iPhone 15 Plus option is selected on an iOS device. It is necessary when a user needs to erase data on an iOS device, set it up using a new Apple ID, or turn off Find My Apple iPhone 15 Plus. Knowing the activation lock is enabled on an Apple iPhone 15 Plus or iPad is easy, as the screen prompts you to input a user ID and password.

<iframe width="560" height="315" src="https://www.youtube.com/embed/lKERrs5S_uU" title="YouTube video player" frameborder="0" allow="accelerometer; autoplay; clipboard-write; encrypted-media; gyroscope; picture-in-picture" allowfullscreen="allowfullscreen"></iframe>

## Part 2. How to Bypass Activation Lock on Apple iPhone 15 Plus or iPad with Previous Owner's Account?

Using a valid Apple ID and password is the easiest way to bypass the activation lock on Apple iPhone 15 Plus or iPad Mini. In any case, if you legitimately purchased the Apple iPhone 15 Plus device from the previous owner, they should have no qualms giving you these details. If it’s a new device, and you are the original owner, you will have this information ready to use for activation. Whatever the case, follow the steps below to remove the activation lock to Apple iPhone 15 Plus or iPad Mini.

- **Step 1.** Have the previous owner enter their details on the Apple iPhone 15 Plus or iPad Mini, or request them to send the same to you.

- **Step 2.** Fire up the Apple iPhone 15 Plus device and when prompted on the Activation Lock Screen, enter the Apple ID and password.

- **Step 3.** Within a few minutes, the home screen should appear on the Apple iPhone 15 Plus or iPad.

- **Step 4.** Upon reaching this page, navigate to the settings tab to sign out of iCloud.

_**A note for users before we proceed with the bypass steps:**

Users on iOS 12 or earlier can locate this option on settings, navigating to iCloud, then signing out. For iOS 13 or later, click on settings, then your name, and sign out._

- **Step 5.** Chances are, the Apple iPhone 15 Plus or iPad will prompt you to enter the original user’s ID and Password. Simply enter the details available to you.

- **Step 6.** Finally, the best part of the unlocking process; navigate to the settings tab to erase all data. Open up settings, click reset and proceed to erase all content, including settings.

- **Step 7.** At this point, your Apple iPhone 15 Plus or iPad will restart/reboot, allowing you to set up the Apple iPhone 15 Plus device anew.

There are a few web-based resources and tricks that facilitate this procedure. Suffice to say, these methods, known as Jailbreaking, do not work when activation lock is enabled. Stick to using credible methods like the one listed above. Alternatively, you can use iCloud to bypass the Apple iPhone 15 Plus or iPad Mini activation lock. It does, however, require the original owner’s iCloud information. Assuming they are in contact with you, have them use the following steps to bypass the activation lock.

## Part 3. How to Remove iCloud Activation Lock on Apple iPhone 15 Plus or iPad Without Password Using Dr.Fone?

This cool software program is available for use with every iOS device out there. It offers utility for all matters security, revamping or repairing as well as unlocking of iOS devices. On removing Apple ID and activation lock without a password, [Dr.Fone - Screen Unlock (iOS)](https://tools.techidaily.com/wondershare/drfone/iphone-unlock/) is one of the few recommended programs.

### [Dr.Fone - Screen Unlock (iOS)](https://tools.techidaily.com/wondershare/drfone/iphone-unlock/)

Remove Activation Lock from iPhone/Apple iPhone 15 Plus or iPad without Password

- Remove the 4-digit/6-digit passcode, Touch ID, and Face ID.

- Bypass iCloud activation lock and Apple ID without password.

- Remove mobile device management (MDM) iPhone.

- A few clicks and the iOS lock screen is gone.

- Fully compatible with all iOS device models and iOS versions.

**4,395,216** people have downloaded it

Follow the guide to remove activation lock on Apple iPhone 15 Plus or iPad without a password:

- **Step 1.** Click the “Start Download” button above to Download Dr.Fone onto your computer. Once the interface pops up, select the Screen Unlock option.

- **Step 2.** Then select iCloud Activation Lock Removal.

- **Step 3.** Start the Remove process, and connect your Apple iPhone 15 Plus or iPad to your computer.

- **Step 6.** Wait a moment for the removal process.

## Part 4. How to Bypass Apple iPhone 15 Plus or iPad Mini Activation Lock Using iCloud.com?

- **Step 1.** The original user (or yourself) should proceed to iCloud and sign in using a valid Apple ID and password. Goes without saying that they have to be valid details.

- **Step 2.** Click on the option to Find iPhone.

- **Step 3.** Select All Devices, and a screen should appear similar to the one below.

- **Step 4.** Select the Apple iPhone 15 Plus or iPad Mini that you need to unlock.

- **Step 5.** Click on the option to erase the Apple iPhone 15 Plus or iPad, then proceed to remove the Apple iPhone 15 Plus device from the account.

- **Step 6.** Completing this process will remove the Apple iPhone 15 Plus device from the previous user’s account, subsequently removing the activation lock from your Apple iPhone 15 Plus or iPad. Restart the Apple iPhone 15 Plus device and a different interface should appear, without the activation lock screen.

A popular query regarding the activation lock on an Apple iPhone 15 Plus or iPad Mini is why access is denied if you are not the original owner? This is explained in detail below.

## Conclusion

Having an iOS device is a unique and satisfying experience, one that many smart device users wish they could have. On that note, activation locks on Apple iPhone 15 Plus or iPads and other iOS devices are meant to protect user information and ensure privacy. Furthermore, using shady programs downloaded from the web may lead to the destruction of a device. Use the handy methods suggested above to fully enjoy the features on your iOS device.

<ins class="adsbygoogle"

style="display:block"

data-ad-format="autorelaxed"

data-ad-client="ca-pub-7571918770474297"

data-ad-slot="1223367746"></ins>

<ins class="adsbygoogle"

style="display:block"

data-ad-client="ca-pub-7571918770474297"

data-ad-slot="8358498916"

data-ad-format="auto"

data-full-width-responsive="true"></ins>

<span class="atpl-alsoreadstyle">Also read:</span>

<div><ul>

<li><a href="https://activate-lock.techidaily.com/in-2024-the-ultimate-guide-to-bypassing-icloud-activation-lock-on-apple-iphone-13-pro-max-by-drfone-ios/"><u>In 2024, The Ultimate Guide to Bypassing iCloud Activation Lock on Apple iPhone 13 Pro Max</u></a></li>

<li><a href="https://activate-lock.techidaily.com/in-2024-how-to-remove-icloud-from-iphone-8-plus-smoothly-by-drfone-ios/"><u>In 2024, How To Remove iCloud From iPhone 8 Plus Smoothly</u></a></li>

<li><a href="https://activate-lock.techidaily.com/in-2024-effective-ways-to-fix-checkra1n-error-31-from-iphone-15-pro-by-drfone-ios/"><u>In 2024, Effective Ways To Fix Checkra1n Error 31 From iPhone 15 Pro</u></a></li>

<li><a href="https://activate-lock.techidaily.com/a-how-to-guide-on-bypassing-the-iphone-11-pro-max-icloud-lock-by-drfone-ios/"><u>A How-To Guide on Bypassing the iPhone 11 Pro Max iCloud Lock</u></a></li>

<li><a href="https://activate-lock.techidaily.com/easy-fixes-how-to-recover-forgotten-icloud-password-on-your-iphone-13-mini-by-drfone-ios/"><u>Easy Fixes How To Recover Forgotten iCloud Password On your iPhone 13 mini</u></a></li>

<li><a href="https://activate-lock.techidaily.com/a-comprehensive-guide-to-icloud-unlock-on-apple-iphone-7-online-by-drfone-ios/"><u>A Comprehensive Guide to iCloud Unlock On Apple iPhone 7 Online</u></a></li>

<li><a href="https://activate-lock.techidaily.com/latest-guide-on-ipad-23-and-iphone-14-pro-max-icloud-activation-lock-bypass-by-drfone-ios/"><u>Latest Guide on iPad 2/3 and iPhone 14 Pro Max iCloud Activation Lock Bypass</u></a></li>

<li><a href="https://activate-lock.techidaily.com/in-2024-what-you-want-to-know-about-two-factor-authentication-for-icloud-from-your-iphone-14-pro-by-drfone-ios/"><u>In 2024, What You Want To Know About Two-Factor Authentication for iCloud From your iPhone 14 Pro</u></a></li>

<li><a href="https://activate-lock.techidaily.com/3-easy-methods-to-unlock-icloud-locked-apple-iphone-14-pro-maxipadipod-by-drfone-ios/"><u>3 Easy Methods to Unlock iCloud Locked Apple iPhone 14 Pro Max/iPad/iPod</u></a></li>

<li><a href="https://activate-lock.techidaily.com/in-2024-bypass-icloud-activation-lock-with-imei-code-from-your-apple-iphone-12-pro-by-drfone-ios/"><u>In 2024, Bypass iCloud Activation Lock with IMEI Code From your Apple iPhone 12 Pro</u></a></li>

<li><a href="https://activate-lock.techidaily.com/how-to-bypass-icloud-activation-lock-on-ipod-and-apple-iphone-6s-the-right-way-by-drfone-ios/"><u>How To Bypass iCloud Activation Lock On iPod and Apple iPhone 6s The Right Way</u></a></li>

<li><a href="https://activate-lock.techidaily.com/how-to-bypass-icloud-activation-lock-on-ipod-and-iphone-se-2020-the-right-way-by-drfone-ios/"><u>How To Bypass iCloud Activation Lock On iPod and iPhone SE (2020) The Right Way</u></a></li>

<li><a href="https://activate-lock.techidaily.com/easy-fixes-how-to-recover-forgotten-icloud-password-from-your-iphone-6-plus-by-drfone-ios/"><u>Easy Fixes How To Recover Forgotten iCloud Password From your iPhone 6 Plus</u></a></li>

<li><a href="https://activate-lock.techidaily.com/in-2024-unlock-your-device-icloud-dns-bypass-explained-and-tested-plus-easy-alternatives-from-iphone-6-plus-by-drfone-ios/"><u>In 2024, Unlock Your Device iCloud DNS Bypass Explained and Tested, Plus Easy Alternatives From iPhone 6 Plus</u></a></li>

<li><a href="https://activate-lock.techidaily.com/in-2024-how-to-remove-find-my-iphone-without-apple-id-from-your-iphone-12-by-drfone-ios/"><u>In 2024, How to Remove Find My iPhone without Apple ID From your iPhone 12?</u></a></li>

<li><a href="https://android-pokemon-go.techidaily.com/how-to-come-up-with-the-best-pokemon-team-on-lava-yuva-3-drfone-by-drfone-virtual-android/"><u>How to Come up With the Best Pokemon Team On Lava Yuva 3? | Dr.fone</u></a></li>

<li><a href="https://pokemon-go-android.techidaily.com/how-pgsharp-save-you-from-ban-while-spoofing-pokemon-go-on-nubia-red-magic-8s-pro-drfone-by-drfone-virtual-android/"><u>How PGSharp Save You from Ban While Spoofing Pokemon Go On Nubia Red Magic 8S Pro? | Dr.fone</u></a></li>

<li><a href="https://review-topics.techidaily.com/in-2024-how-can-i-use-a-fake-gps-without-mock-location-on-oppo-find-x6-drfone-by-drfone-virtual-android/"><u>In 2024, How Can I Use a Fake GPS Without Mock Location On Oppo Find X6? | Dr.fone</u></a></li>

<li><a href="https://blog-min.techidaily.com/how-to-retrieve-erased-videos-from-honor-90-pro-by-fonelab-android-recover-video/"><u>How to retrieve erased videos from Honor 90 Pro</u></a></li>

<li><a href="https://change-location.techidaily.com/in-2024-pokemon-go-cooldown-chart-on-vivo-x-flip-drfone-by-drfone-virtual-android/"><u>In 2024, Pokémon Go Cooldown Chart On Vivo X Flip | Dr.fone</u></a></li>

<li><a href="https://android-location-track.techidaily.com/top-10-best-spy-watches-for-your-poco-m6-pro-4g-drfone-by-drfone-virtual-android/"><u>Top 10 Best Spy Watches For your Poco M6 Pro 4G | Dr.fone</u></a></li>

<li><a href="https://ios-pokemon-go.techidaily.com/latest-way-to-get-shiny-meltan-box-in-pokemon-go-mystery-box-on-apple-iphone-xs-max-drfone-by-drfone-virtual-ios/"><u>Latest way to get Shiny Meltan Box in Pokémon Go Mystery Box On Apple iPhone XS Max | Dr.fone</u></a></li>

<li><a href="https://iphone-unlock.techidaily.com/how-to-unlock-your-iphone-14-pro-passcode-4-easy-methods-with-or-without-itunes-drfone-by-drfone-ios/"><u>How to Unlock Your iPhone 14 Pro Passcode 4 Easy Methods (With or Without iTunes) | Dr.fone</u></a></li>

<li><a href="https://pokemon-go-android.techidaily.com/pokemon-go-no-gps-signal-heres-every-possible-solution-on-poco-c55-drfone-by-drfone-virtual-android/"><u>Pokemon Go No GPS Signal? Heres Every Possible Solution On Poco C55 | Dr.fone</u></a></li>

<li><a href="https://ios-unlock.techidaily.com/resolve-your-apple-iphone-13-pro-keeps-asking-for-outlook-password-by-drfone-ios/"><u>Resolve Your Apple iPhone 13 Pro Keeps Asking for Outlook Password</u></a></li>

<li><a href="https://fix-guide.techidaily.com/restore-missing-app-icon-on-nubia-red-magic-9-pro-step-by-step-solutions-drfone-by-drfone-fix-android-problems-fix-android-problems/"><u>Restore Missing App Icon on Nubia Red Magic 9 Pro Step-by-Step Solutions | Dr.fone</u></a></li>

</ul></div> |

import { Component, OnInit, ViewChild, HostListener } from '@angular/core';

import { MatDialogRef } from '@angular/material/dialog';

import { AlarmDefinitionDataUIModel } from '@core/models/webModels/AlarmDefinitionDataUI.model';

import { AlarmService } from '@core/services/alarm.service';

import { InforceDeviceDataModel } from '@core/models/webModels/InforceDeviceData.model';

import { IAlarmsState } from '@store/state/alarms.state';

import { Store } from '@ngrx/store';

import { RealTimeDataSignalRService } from '@core/services/realTimeDataSignalR.service';

import { AlarmDefinition } from '@core/models/UIModels/alarmUIModel.model';

import { DeviceIdIndexValue } from '@core/models/webModels/PointTemplate.model';

import { Observable, Subscription } from 'rxjs';

import { UIReportUnits } from '@core/data/UICommon';

@Component({

selector: 'app-alarm-object',

templateUrl: './alarms-dialog.component.html',

styleUrls: ['./alarms-dialog.component.scss']

})

export class AlarmsDialogComponent implements OnInit {

cellStyles: any = null;

IsMobileView: boolean = false;

ActiveAlarmDescriptions: AlarmDefinitionDataUIModel[] = [];

AlarmsState$: Observable<IAlarmsState>;

inforcedevices: InforceDeviceDataModel[] = [];

isDirty: boolean = false;

Title: string;

Details: string;

public AlarmDescriptionsUI: AlarmDefinition[] = [];

private deviceIdIndexValue: DeviceIdIndexValue[] = [];

public handle: number;

private predefinedIndex: number = 0;

private realTimeSubscriptions: Subscription[] = [];

private dataSubscriptions: Subscription[] = [];

HasConfigurationDataLoaded: boolean = false;

constructor(

public dialogRef: MatDialogRef<AlarmsDialogComponent>,

protected alarmService: AlarmService,

protected store: Store<{ alarmsState: IAlarmsState }>,

private realTimeDataSignalRService: RealTimeDataSignalRService

) {

}

styles = {

padding: '2px'

}

private assignAlarms() {

let subscription = this.alarmService.subscribeToActiveAlarms().subscribe(alarmDesc => {

this.ActiveAlarmDescriptions = alarmDesc;

this.getData();

});

this.dataSubscriptions.push(subscription);

}

private getAlarmCount(): void {

let subscription = this.alarmService.subscribeToActiveAlarmCount().subscribe(alarmCount => {

this.Title = alarmCount ? "Alarms(" + alarmCount + ")" : "No active alarms";

});

this.dataSubscriptions.push(subscription);

}

private detectScreenSize() {

this.IsMobileView = window.innerWidth < 480 ? true : false;

}

OnCancel() {

this.unsubscribeSubscriptions();

this.dialogRef.close();

}

getAlarmMessage(alarm: AlarmDefinitionDataUIModel): string {

if (this.AlarmDescriptionsUI != null) {

let currentalarm: AlarmDefinition = this.getCurrentAlarmDefinition(alarm);

if (currentalarm != null) {

let realtimeValue = this.getDeviceIndexValue(currentalarm.currentIndex);

let alarmMessage = currentalarm.Details;

alarmMessage = alarmMessage.replace("{0}", realtimeValue.toFixed(1) + " " + currentalarm.Unit);

if (currentalarm.LimitType == 1 || currentalarm.LimitType == 2)//low alarm

alarmMessage = alarmMessage.replace("{1}", (Number(currentalarm.LimitValue.toFixed(1)) + this.convertPressure(Number(currentalarm.Deadband.toFixed(1)), this.alarmService.PressureUnit)).toFixed(1).toString() + " " + currentalarm.Unit);

else if (currentalarm.LimitType == 3 || currentalarm.LimitType == 4)//high alarm

alarmMessage = alarmMessage.replace("{1}", (Number(currentalarm.LimitValue.toFixed(1)) - this.convertPressure(Number(currentalarm.Deadband.toFixed(1)), this.alarmService.PressureUnit)).toFixed(1).toString() + " " + currentalarm.Unit);

else

alarmMessage = alarmMessage.replace("{1}", Number(currentalarm.LimitValue.toFixed(1)) + " " + currentalarm.Unit);

return alarmMessage;

}

}

else {

return null;

}

}

getCurrentAlarmDefinition(alarm: AlarmDefinitionDataUIModel): AlarmDefinition {

let currentalarm: AlarmDefinition;

if (this.AlarmDescriptionsUI.findIndex(a => a.AlarmId == alarm.AlarmId) != -1) {

for (let i = 0; i < this.AlarmDescriptionsUI.length; i++) {

if (this.AlarmDescriptionsUI[i].AlarmId == alarm.AlarmId)

currentalarm = this.AlarmDescriptionsUI[i];

}

}

return currentalarm;

}

getDeviceIndexValue(index: number): number {

if (this.deviceIdIndexValue != null && this.deviceIdIndexValue[index] != null)

return this.deviceIdIndexValue[index].value;

}

convertPressure(pressure: number, pressureUnit: string): number {

let convertedPressure: number = pressure;

if (pressureUnit == UIReportUnits._UnitPSIA.Name)

convertedPressure = pressure + 14.7;

else if (pressureUnit == UIReportUnits._UnitKPA.Name)

convertedPressure = pressure * 6.89476;

else if (pressureUnit == UIReportUnits._UnitMPA.Name)

convertedPressure = pressure * 0.00689476;

else if (pressureUnit == UIReportUnits._Unitbara.Name)

convertedPressure = pressure * 0.0689476;

else if (pressureUnit == UIReportUnits._Unitbarg.Name)

convertedPressure = pressure * 0.0689476;

else

convertedPressure = pressure;

return Number(convertedPressure.toFixed(1));

}

subScribeToRealTimeData() {

this.realTimeSubscriptions = [];

let deviceSubs = null;

if (this.deviceIdIndexValue != undefined && this.deviceIdIndexValue != null) {

this.deviceIdIndexValue.forEach(e => {

deviceSubs = this.realTimeDataSignalRService.GetRealtimeData(e.deviceId, e.pointIndex).subscribe(d => {

if (d != undefined && d != null)

e.match(d);

});

this.realTimeSubscriptions.push(deviceSubs);

});

}

}

createDeviceIdAndIndexArray() {

this.deviceIdIndexValue = [];

if (this.AlarmDescriptionsUI != null) {

for (let i = 0; i < this.AlarmDescriptionsUI.length; i++) {

this.AlarmDescriptionsUI[i].currentIndex = this.predefinedIndex;

this.predefinedIndex++;

this.deviceIdIndexValue.push(new DeviceIdIndexValue(this.AlarmDescriptionsUI[i].DeviceId, this.AlarmDescriptionsUI[i].DataPointIndex, -999.0, ''));

}

}

}

getAlarm() {

for (let i = 0; i < this.alarmService.AllAlarmsDescription.length; i++) {

this.AlarmDescriptionsUI.push(AlarmDefinition.CopyToAlarmDefinition(this.alarmService.AllAlarmsDescription[i]));

}

this.createDeviceIdAndIndexArray();

this.subScribeToRealTimeData();

}

getData() {

if (this.HasConfigurationDataLoaded == false) {

if (this.alarmService.AllAlarmsDescription && this.alarmService.AllAlarmsDescription.length > 0) {

this.HasConfigurationDataLoaded = true;

this.getAlarm();

}

}

}

unsubscribeSubscriptions(): void {

if (this.realTimeSubscriptions && this.realTimeSubscriptions.length > 0) {

this.realTimeSubscriptions.forEach(subscription => {

subscription.unsubscribe();

subscription = null;

});

}

this.realTimeSubscriptions = [];

if (this.dataSubscriptions && this.dataSubscriptions.length > 0) {

this.dataSubscriptions.forEach(subscription => {

subscription.unsubscribe();

subscription = null;

});

}

this.dataSubscriptions = [];

}

@HostListener("window:resize", [])

public onResize() {

this.detectScreenSize();

}

ngOnChanges() {

}

ngAfterViewInit(): void {

this.detectScreenSize();

}

ngOnInit(): void {

this.assignAlarms();

this.getAlarmCount();

}

} |

import Grid from "@mui/material/Grid";

import Typography from "@mui/material/Typography";

import { useFormContext } from "react-hook-form";

import AppTextInput from "../../app/components/AppTextInput";

import AppCheckBox from "../../app/components/AppCheckBox";

export default function AddressForm() {

const { control, formState } = useFormContext();

return (

<>

<Typography

variant="h6"

gutterBottom

>

Shipping address

</Typography>

<Grid

container

spacing={3}

>

<Grid

item

xs={12}

sm={12}

>

<AppTextInput

control={control}

name="fullName"

label="Full Name"

/>

</Grid>

<Grid

item

xs={12}

sm={6}

></Grid>

<Grid

item

xs={12}

>

<AppTextInput

control={control}

name="address1"

label="Address 1"

/>

</Grid>

<Grid

item

xs={12}

>

<AppTextInput

control={control}

name="address2"

label="Address 2"

/>

</Grid>

<Grid

item

xs={12}

sm={6}

>

<AppTextInput

control={control}

name="city"

label="City"

/>

</Grid>

<Grid

item

xs={12}

sm={6}

>

<AppTextInput

control={control}

name="state"

label="State"

/>

</Grid>

<Grid

item

xs={12}

sm={6}

>

<AppTextInput

control={control}

name="zip"

label="Zip / Postal Code"

/>

</Grid>

<Grid

item

xs={12}

sm={6}

>

<AppTextInput

control={control}

name="country"

label="Country"

/>

</Grid>

<Grid

item

xs={12}

>

<AppCheckBox

disabled={!formState.isDirty}

name="savedAddress" //important to match api value

label="Save this as default address"

control={control}

/>

</Grid>

</Grid>

</>

);

} |

const express = require('express')

const { createProduct, getProducts} = require('../dao/controllers/productController')

const { userRequired } = require('../dao/controllers/tokenController')

const Product = require('../dao/models/productModel')

const productRouter = express.Router()

productRouter.get('/', userRequired, async (req, res) => {

try {

res.json({ok: true, products: await getProducts()})

} catch (error) {

return res.status(500).json({ message: error.message })

}

})

productRouter.post('/', userRequired, async (req, res) => {

try {

const {nombre, tag, precio, stock, descripcion, thumbnail} = req.body

const desc = descripcion.split('\n')

await createProduct({nombre, tag, precio, stock, descripcion: desc, thumbnail})

res.json({ok: true, products: await getProducts()})

} catch (error) {

return res.status(500).json({ message: error.message })

}

})

productRouter.get('/:id', userRequired, async (req, res) => {

try {

const item = await Product.findById(req.params.id)

if (!item) return res.status(404).json({ message: 'No se encontro el producto' })

return res.json(item)

} catch (error) {

return res.status(500).json({ message: error.message })

}

})

productRouter.delete('/:id', userRequired, async (req, res) => {

try {

const deletedProduct = await Product.findByIdAndDelete(req.params.id)

if(!deletedProduct) return res.status(404).json({ message: 'Producto no encontrado' })

return res.sendStatus(204)

}catch(err){

return res.status(500).json({ message: error.message })

}

})

productRouter.put('/', userRequired, async (req, res) => {

try{

const {_id, precio, stock} = req.body

const updateProduct = await Product.findByIdAndUpdate( {_id}, {precio, stock}, {new: true})

return res.json(updateProduct)

}catch(error){

return res.status(500).json({ message: error.message })

}

})

module.exports = productRouter |

#####

### covidImpactVisualization Utility functions

#####

percent_proficient <- function(variable, achievement_levels, proficient_achievement_levels) {

tmp.table <- table(variable)

round(100*sum(tmp.table[achievement_levels[proficient_achievement_levels=="Proficient"]], na.rm=TRUE)/sum(tmp.table[achievement_levels], na.rm=TRUE), digits=1)

}

participation_rate <- function(numerator, denominator) {

round(100*(sum(!is.na(numerator))/length(denominator)), digits=1)

}

getCutscores <- function(state_abb, content_area, year) {

tmp_names <- names(SGPstateData[[state_abb]][['Achievement']][['Cutscores']])

if (paste(content_area, year, sep=".") %in% tmp_names) {

cutscore_name <- paste(content_area, year, sep=".")

} else {