Build and load¶

Nearly every deep learning workflow begins with loading a dataset, which makes it one of the most important steps. With 🤗 Datasets, there are more than 900 datasets available to help you get started with your NLP task. All you have to do is call: datasets.load_dataset() to take your first step. This function is a true workhorse in every sense because it builds and loads every dataset you use.

ELI5: load_dataset¶

Let’s begin with a basic Explain Like I’m Five.

For community datasets, datasets.load_dataset() downloads and imports the dataset loading script associated with the requested dataset from the Hugging Face Hub. The Hub is a central repository where all the Hugging Face datasets and models are stored. Code in the loading script defines the dataset information (description, features, URL to the original files, etc.), and tells 🤗 Datasets how to generate and display examples from it.

If you are working with a canonical dataset, datasets.load_dataset() downloads and imports the dataset loading script from GitHub.

See also

Read the Share section to learn more about the difference between community and canonical datasets. This section also provides a step-by-step guide on how to write your own dataset loading script!

The loading script downloads the dataset files from the original URL, generates the dataset and caches it in an Arrow table on your drive. If you’ve downloaded the dataset before, then 🤗 Datasets will reload it from the cache to save you the trouble of downloading it again.

Now that you have a high-level understanding about how datasets are built, let’s take a closer look at the nuts and bolts of how all this works.

Building a dataset¶

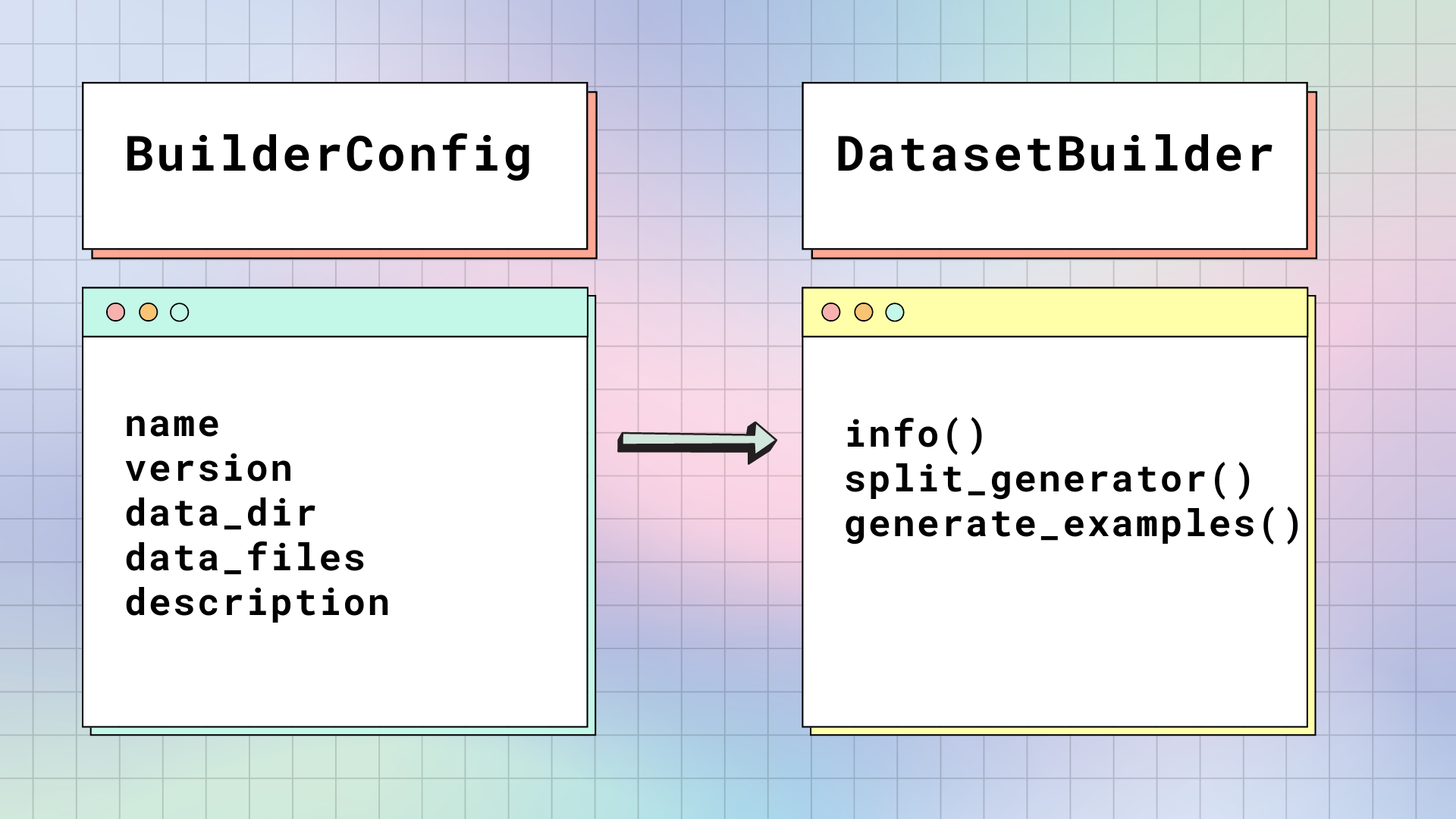

When you load a dataset for the first time, 🤗 Datasets takes the raw data file and builds it into a table of rows and typed columns. There are two main classes responsible for building a dataset: datasets.BuilderConfig and datasets.DatasetBuilder.

datasets.BuilderConfig¶

datasets.BuilderConfig is the configuration class of datasets.DatasetBuilder. The datasets.BuilderConfig contains the following basic attributes about a dataset:

Attribute |

Description |

|---|---|

|

Short name of the dataset. |

|

Dataset version identifier. |

|

Stores the path to a local folder containing the data files. |

|

Stores paths to local data files. |

|

Description of the dataset. |

If you want to add additional attributes to your dataset such as the class labels, you can subclass the base datasets.BuilderConfig class. There are two ways to populate the attributes of a datasets.BuilderConfig class or subclass:

Provide a list of predefined

datasets.BuilderConfigclass (or subclass) instances in the datasetsdatasets.DatasetBuilder.BUILDER_CONFIGSattribute.When you call

datasets.load_dataset(), any keyword arguments that are not specific to the method will be used to set the associated attributes of thedatasets.BuilderConfigclass. This will override the predefined attributes if a specific configuration was selected.

You can also set the datasets.DatasetBuilder.BUILDER_CONFIG_CLASS to any custom subclass of datasets.BuilderConfig.

datasets.DatasetBuilder¶

datasets.DatasetBuilder accesses all the attributes inside datasets.BuilderConfig to build the actual dataset.

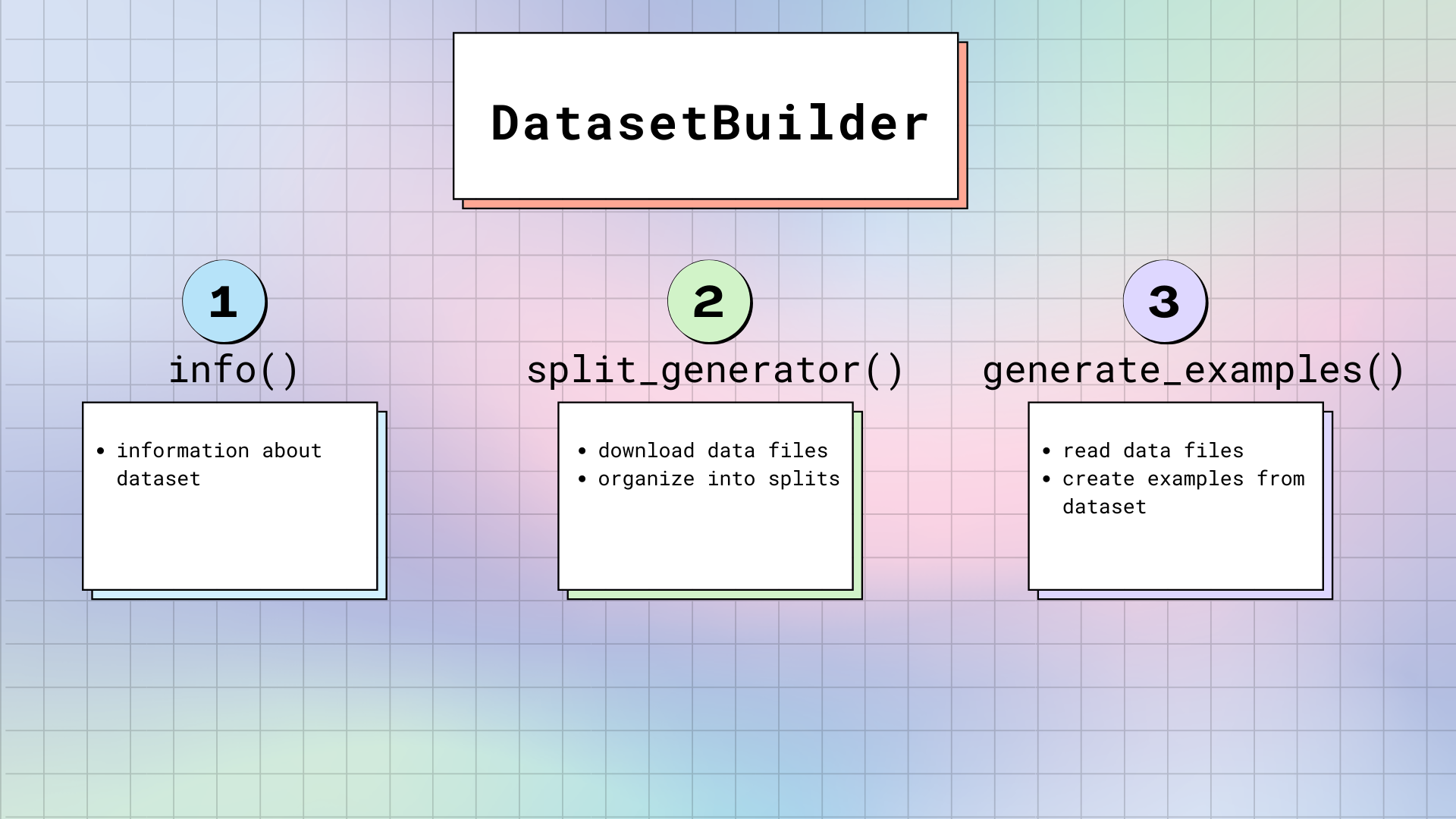

There are three main methods in datasets.DatasetBuilder:

datasets.DatasetBuilder._info()is in charge of defining the dataset attributes. When you calldataset.info, 🤗 Datasets returns the information stored here. Likewise, thedatasets.Featuresare also specified here. Remember, thedatasets.Featuresare like the skeleton of the dataset. It provides the names and types of each column.datasets.DatasetBuilder._split_generator()downloads or retrieves the requested data files, organizes them into splits, and defines specific arguments for the generation process. This method has adatasets.DownloadManagerthat downloads files or fetches them from your local filesystem. Within thedatasets.DownloadManager, there is adatasets.DownloadManager.download_and_extract()method that accepts a dictionary of URLs to the original data files, and downloads the requested files. Accepted inputs include: a single URL or path, or a list/dictionary of URLs or paths. Any compressed file types like TAR, GZIP and ZIP archives will be automatically extracted.Once the files are downloaded,

datasets.SplitGeneratororganizes them into splits. Thedatasets.SplitGeneratorcontains the name of the split, and any keyword arguments that are provided to thedatasets.DatasetBuilder._generate_examples()method. The keyword arguments can be specific to each split, and typically comprise at least the local path to the data files for each split.Tip

datasets.DownloadManager.download_and_extract()can download files from a wide range of sources. If the data files are hosted on a special access server, you should usedatasets.DownloadManger.download_custom(). Refer to the reference ofdatasets.DownloadManagerfor more details.datasets.DatasetBuilder._generate_examples()reads and parses the data files for a split. Then it yields dataset examples according to the format specified in thefeaturesfromdatasets.DatasetBuilder._info(). The input ofdatasets.DatasetBuilder._generate_examples()is actually thefilepathprovided in the keyword arguments of the last method.The dataset is generated with a Python generator, which doesn’t load all the data in memory. As a result, the generator can handle large datasets. However, before the generated samples are flushed to the dataset file on disk, they are stored in an

ArrowWriterbuffer. This means the generated samples are written by batch. If your dataset samples consumes a lot of memory (images or videos), then make sure to specify a low value for theDEFAULT_WRITER_BATCH_SIZEattribute indatasets.DatasetBuilder. We recommend not exceeding a size of 200 MB.

Without loading scripts¶

As a user, you want to be able to quickly use a dataset. Implementing a dataset loading script can sometimes get in the way, or it may be a barrier for some people without a developer background. 🤗 Datasets removes this barrier by making it possible to load any dataset from the Hub without a dataset loading script. All a user has to do is upload the data files (see Add a community dataset for a list of supported file formats) to a dataset repository on the Hub, and they will be able to load that dataset without having to create a loading script. This doesn’t mean we are moving away from loading scripts because they still offer the most flexibility in controlling how a dataset is generated.

The loading script-free method uses the huggingface_hub library to list the files in a dataset repository. You can also provide a path to a local directory instead of a repository name, in which case 🤗 Datasets will use glob instead. Depending on the format of the data files available, one of the data file builders will create your dataset for you. If you have a CSV file, the CSV builder will be used and if you have a Parquet file, the Parquet builder will be used. The drawback of this approach is it’s not possible to simultaneously load a CSV and JSON file. You will need to load the two file types separately, and then concatenate them.

Maintaining integrity¶

To ensure a dataset is complete, datasets.load_dataset() will perform a series of tests on the downloaded files to make sure everything is there. This way, you don’t encounter any surprises when your requested dataset doesn’t get generated as expected. datasets.load_dataset() verifies:

The list of downloaded files.

The number of bytes of the downloaded files.

The SHA256 checksums of the downloaded files.

The number of splits in the generated

DatasetDict.The number of samples in each split of the generated

DatasetDict.

If the dataset doesn’t pass the verifications, it is likely that the original host of the dataset made some changes in the data files.

In this case, an error is raised to alert that the dataset has changed.

To ignore the error, one needs to specify ignore_verifications=True in load_dataset().

Anytime you see a verification error, feel free to open an issue on GitHub so that we can update the integrity checks for this dataset.