xlm-roberta-large-pooled-long-cap

Model description

An xlm-roberta-large model fine-tuned on multilingual training data containing longer documents (>= 512 tokens) labelled with major topic codes from the Comparative Agendas Project.

Fine-tuning procedure

xlm-roberta-large-pooled-long-cap was fine-tuned using the Hugging Face Trainer with a batch size of 8, a learning rate of 5e-06, and a maximum sequence length of 512. Early stopping was implemented with a patience of 2 epochs.
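The settings above could be expressed with the Trainer API roughly as follows. This is a minimal sketch, not the authors' training script: only the batch size, learning rate, maximum sequence length and patience come from this card. The output directory, the per-epoch evaluation and saving schedule, the use of `eval_loss` as the early-stopping metric, and the reading of "batch size" as per-device are all assumptions.

```python
import inspect

# Values stated in this model card.
HYPERPARAMS = {
    "per_device_train_batch_size": 8,  # assumption: the stated batch size is per device
    "learning_rate": 5e-6,
}
MAX_SEQ_LENGTH = 512  # applied at tokenization time, not by TrainingArguments
EARLY_STOPPING_PATIENCE = 2  # evaluations (here: epochs) without improvement


def build_training_setup(output_dir="cap-long-checkpoints"):
    """Return (TrainingArguments, callbacks) for a Trainer run.

    Everything except HYPERPARAMS and the patience value is an assumption.
    """
    # Imported lazily so the constants above can be inspected without transformers.
    from transformers import EarlyStoppingCallback, TrainingArguments

    # The evaluation-strategy argument was renamed in newer transformers releases.
    params = inspect.signature(TrainingArguments.__init__).parameters
    eval_key = "eval_strategy" if "eval_strategy" in params else "evaluation_strategy"

    args = TrainingArguments(
        output_dir=output_dir,            # hypothetical path
        save_strategy="epoch",            # assumption: must match the evaluation schedule
        load_best_model_at_end=True,      # required by EarlyStoppingCallback
        metric_for_best_model="eval_loss",  # assumption
        **{eval_key: "epoch"},
        **HYPERPARAMS,
    )
    callbacks = [EarlyStoppingCallback(early_stopping_patience=EARLY_STOPPING_PATIENCE)]
    return args, callbacks
```

The returned objects would be passed to `Trainer(args=args, callbacks=callbacks, ...)` together with a model and tokenized datasets.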

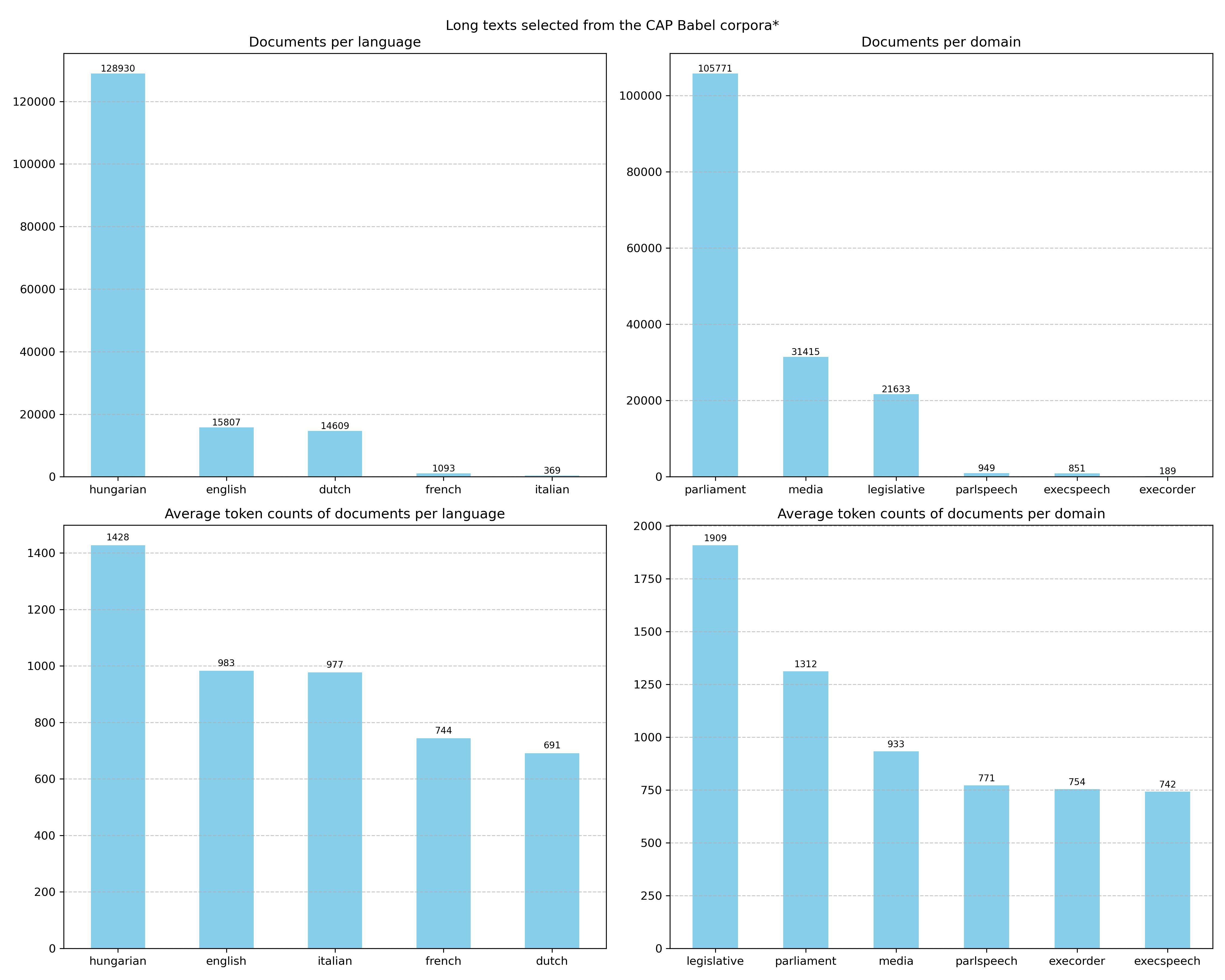

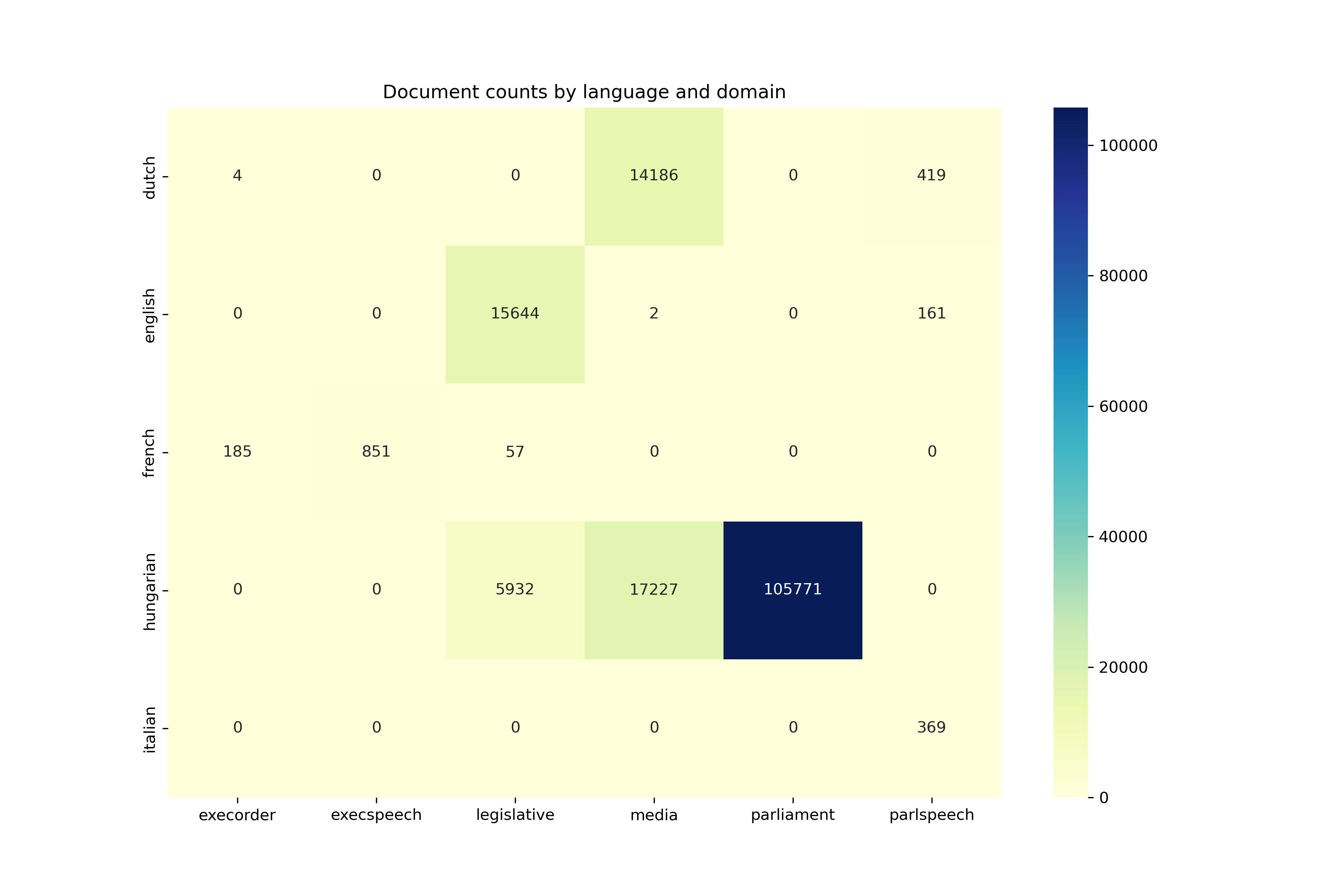

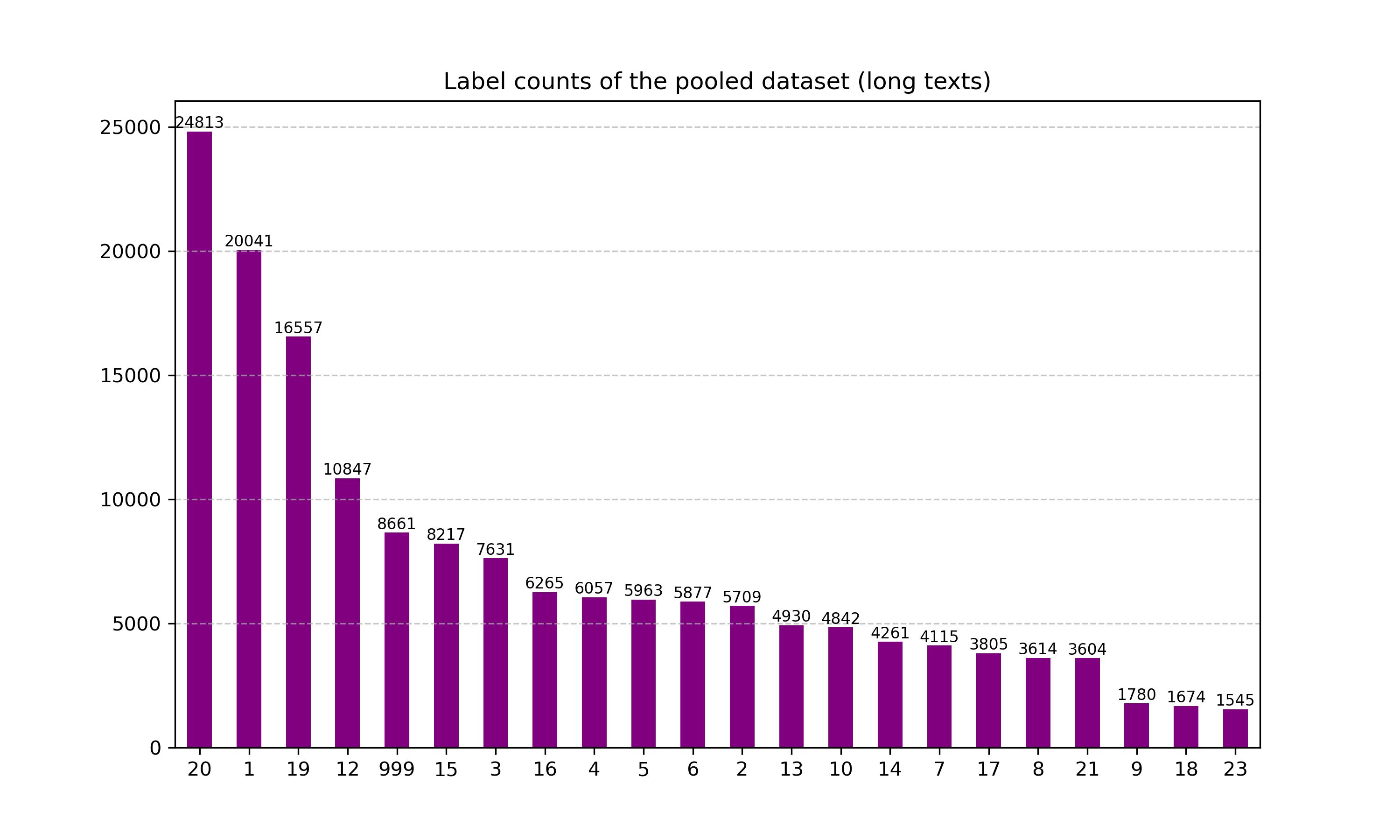

The training data consisted of 160,808 texts that were longer than 512 tokens after tokenization with the xlm-roberta-large tokenizer. Domain and language shares are the following:
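A length filter of this kind can be sketched as below. The function names and the choice to count special tokens are assumptions; the card does not say how the token count was taken. Any callable that returns a dict with `input_ids` works, for example `AutoTokenizer.from_pretrained("xlm-roberta-large")`.

```python
def token_count(text, tokenizer):
    """Number of token ids produced for `text`, without truncation."""
    # Assumption: special tokens (<s>, </s>) are included in the count.
    return len(tokenizer(text, add_special_tokens=True)["input_ids"])


def select_long_texts(texts, tokenizer, threshold=512):
    """Keep only the texts whose token count exceeds `threshold`."""
    return [t for t in texts if token_count(t, tokenizer) > threshold]
```

With a Hugging Face tokenizer, calling it without `truncation=True` returns the full sequence, so the count reflects the real document length rather than the model limit.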

Model performance

The model was evaluated on a test set of 40,203 examples.

Metrics:

| | precision | recall | f1-score | support |
|---|---|---|---|---|
| Macroeconomics | 0.7573 | 0.7872 | 0.7720 | 5010 |
| Civil Rights | 0.6608 | 0.6566 | 0.6587 | 1427 |
| Health | 0.8407 | 0.8328 | 0.8368 | 1908 |
| Agriculture | 0.7910 | 0.8402 | 0.8149 | 1514 |
| Labor | 0.6608 | 0.6834 | 0.6719 | 1491 |
| Education | 0.8396 | 0.8796 | 0.8591 | 1470 |
| Environment | 0.7893 | 0.7901 | 0.7897 | 1029 |
| Energy | 0.7918 | 0.7865 | 0.7891 | 904 |
| Immigration | 0.7907 | 0.7640 | 0.7771 | 445 |
| Transportation | 0.8413 | 0.8413 | 0.8413 | 1210 |
| Law and Crime | 0.7715 | 0.7670 | 0.7692 | 2712 |
| Social Welfare | 0.6768 | 0.7070 | 0.6915 | 1232 |
| Housing | 0.7205 | 0.6948 | 0.7075 | 1065 |
| Domestic Commerce | 0.7277 | 0.7522 | 0.7398 | 2054 |
| Defense | 0.8839 | 0.8314 | 0.8569 | 1566 |
| Technology | 0.8536 | 0.8212 | 0.8371 | 951 |
| Foreign Trade | 0.7929 | 0.7512 | 0.7715 | 418 |
| International Affairs | 0.7961 | 0.8505 | 0.8224 | 4140 |
| Government Operations | 0.7746 | 0.7120 | 0.7420 | 6204 |
| Public Lands | 0.6109 | 0.6970 | 0.6511 | 901 |
| Culture | 0.7748 | 0.6062 | 0.6802 | 386 |
| No Policy Content | 0.8415 | 0.7992 | 0.8198 | 2166 |

Average metrics:

| | precision | recall | f1-score | support |
|---|---|---|---|---|
| macro avg | 0.7736 | 0.7736 | 0.7736 | 40203 |
| weighted avg | 0.7747 | 0.7736 | 0.7735 | 40203 |

The accuracy is 0.7736.
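The relationship between the per-class rows and the summary figures can be checked with a few lines of arithmetic. The sketch below copies the rounded values from the per-class table; the variable and function names are illustrative only. It relies on two standard definitions: F1 is the harmonic mean of precision and recall, and the support-weighted mean of per-class recall equals overall accuracy.

```python
# (label, precision, recall, f1, support), copied from the per-class table.
ROWS = [
    ("Macroeconomics", 0.7573, 0.7872, 0.7720, 5010),
    ("Civil Rights", 0.6608, 0.6566, 0.6587, 1427),
    ("Health", 0.8407, 0.8328, 0.8368, 1908),
    ("Agriculture", 0.7910, 0.8402, 0.8149, 1514),
    ("Labor", 0.6608, 0.6834, 0.6719, 1491),
    ("Education", 0.8396, 0.8796, 0.8591, 1470),
    ("Environment", 0.7893, 0.7901, 0.7897, 1029),
    ("Energy", 0.7918, 0.7865, 0.7891, 904),
    ("Immigration", 0.7907, 0.7640, 0.7771, 445),
    ("Transportation", 0.8413, 0.8413, 0.8413, 1210),
    ("Law and Crime", 0.7715, 0.7670, 0.7692, 2712),
    ("Social Welfare", 0.6768, 0.7070, 0.6915, 1232),
    ("Housing", 0.7205, 0.6948, 0.7075, 1065),
    ("Domestic Commerce", 0.7277, 0.7522, 0.7398, 2054),
    ("Defense", 0.8839, 0.8314, 0.8569, 1566),
    ("Technology", 0.8536, 0.8212, 0.8371, 951),
    ("Foreign Trade", 0.7929, 0.7512, 0.7715, 418),
    ("International Affairs", 0.7961, 0.8505, 0.8224, 4140),
    ("Government Operations", 0.7746, 0.7120, 0.7420, 6204),
    ("Public Lands", 0.6109, 0.6970, 0.6511, 901),
    ("Culture", 0.7748, 0.6062, 0.6802, 386),
    ("No Policy Content", 0.8415, 0.7992, 0.8198, 2166),
]


def f1_from(precision, recall):
    """Harmonic mean of precision and recall."""
    return 2 * precision * recall / (precision + recall)


total_support = sum(row[4] for row in ROWS)

# Support-weighted mean of recall; by definition this equals accuracy.
weighted_recall = sum(row[2] * row[4] for row in ROWS) / total_support

# Unweighted (macro) means over the 22 classes.
macro_precision = sum(row[1] for row in ROWS) / len(ROWS)
macro_recall = sum(row[2] for row in ROWS) / len(ROWS)

print(f"total support:   {total_support}")
print(f"weighted recall: {weighted_recall:.4f}")
print(f"macro precision: {macro_precision:.4f}")
print(f"macro recall:    {macro_recall:.4f}")
```

Because the inputs are rounded to four decimals, recomputed values can differ from the reported ones in the last digit. The unweighted means printed here can be compared directly with the macro avg row.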

How to use the model

from transformers import AutoTokenizer, pipeline

tokenizer = AutoTokenizer.from_pretrained("xlm-roberta-large")

pipe = pipeline(model="poltextlab/xlm-roberta-large-pooled-long-cap", tokenizer=tokenizer, use_fast=False)

text = "We will place an immediate 6-month halt on the finance driven closure of beds and wards, and set up an independent audit of needs and facilities."

pipe(text)
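Since the model was fine-tuned with a maximum sequence length of 512, longer inputs need to be truncated at inference time. The sketch below wraps the pipeline call with explicit truncation and accepts a list of texts. The helper names `classify` and `load_pipe` are hypothetical, and passing `truncation` and `max_length` at call time assumes a transformers version whose text-classification pipeline forwards tokenizer arguments.

```python
def classify(pipe, texts, max_length=512):
    """Run a text-classification pipeline on several texts with truncation.

    `pipe` is any callable with the pipeline call signature.
    """
    return pipe(list(texts), truncation=True, max_length=max_length)


def load_pipe():
    """Build the pipeline for this model (downloads the weights on first use)."""
    from transformers import AutoTokenizer, pipeline

    tokenizer = AutoTokenizer.from_pretrained("xlm-roberta-large")
    return pipeline(
        task="text-classification",
        model="poltextlab/xlm-roberta-large-pooled-long-cap",
        tokenizer=tokenizer,
    )


# Usage (not executed here because it downloads the model):
# pipe = load_pipe()
# results = classify(pipe, ["first long document ...", "second long document ..."])
```

Each result is a dict with a `label` and a `score`; how the label strings map to Comparative Agendas Project codes depends on the model configuration and is not stated in this card.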

Debugging and issues

This architecture uses the sentencepiece tokenizer. To run the model with a transformers version earlier than 4.27, you need to install sentencepiece manually.
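A quick environment check along these lines may help before loading the model. It is a sketch with hypothetical helper names; the 4.27 threshold is taken from the note above, and the package can be installed with `pip install sentencepiece`.

```python
import importlib.util


def needs_manual_sentencepiece(transformers_version, threshold=(4, 27)):
    """True if the given transformers version is older than the threshold."""
    major, minor = (int(part) for part in transformers_version.split(".")[:2])
    return (major, minor) < threshold


def sentencepiece_available():
    """True if the sentencepiece package can be imported in this environment."""
    return importlib.util.find_spec("sentencepiece") is not None


# Usage:
# import transformers
# if needs_manual_sentencepiece(transformers.__version__) and not sentencepiece_available():
#     print("Run: pip install sentencepiece")
```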
