Use a custom Container Image

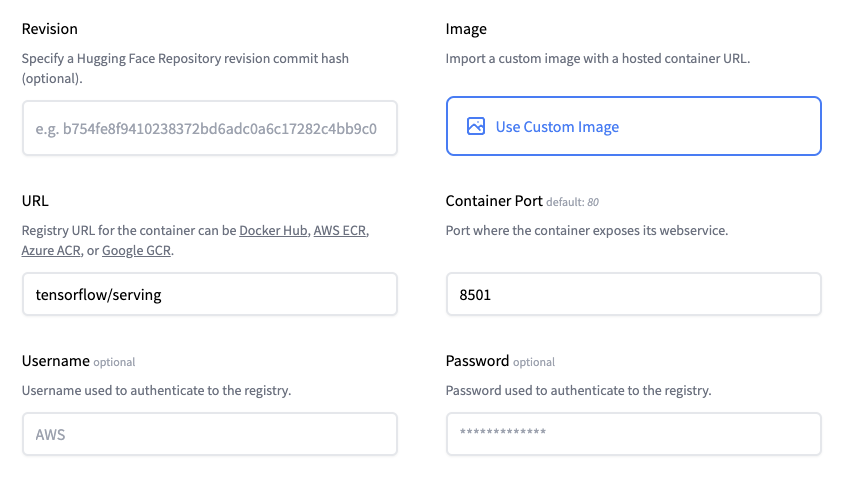

Inference Endpoints lets you not only customize your inference handler but also provide a custom container image. This can be a public image such as `tensorflow/serving:2.7.3` or a private image hosted on Docker Hub, AWS ECR, Azure ACR, or Google GCR.

Image artifacts are created from a custom image through the same flow as from the base image: Inference Endpoints builds a unique image artifact derived from the image you provide, which includes all model artifacts.

The model artifacts (weights) are stored under `/repository`. For example, if you use `tensorflow/serving` as your custom image, you have to set `model_base_path="/repository"`:

```bash
tensorflow_model_server \
  --rest_api_port=5000 \
  --model_name=my_model \
  --model_base_path="/repository"
```
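TensorFlow Serving looks for numbered version subdirectories under `model_base_path` (for example `/repository/1/saved_model.pb`), so a misplaced SavedModel often only surfaces as a server that starts but never loads a model. The following is a minimal sketch of an entrypoint script for a `tensorflow/serving`-based custom image that checks for such a directory before launching the server with the flags shown above. It assumes the weights are a SavedModel laid out in that versioned form; the function names `has_version_dir` and `start_tf_serving` are hypothetical and not part of Inference Endpoints or TensorFlow Serving.

```bash
#!/usr/bin/env bash
# Hypothetical entrypoint sketch for a tensorflow/serving-based custom image.
# Assumption: model artifacts live under /repository in TF Serving's
# versioned layout, e.g. /repository/1/saved_model.pb.

# Return 0 if DIR contains at least one purely numeric subdirectory.
has_version_dir() {
  local dir="$1" entry
  # The trailing slash makes the glob match directories only.
  for entry in "$dir"/*/; do
    entry="${entry%/}"      # drop trailing slash
    entry="${entry##*/}"    # keep only the directory name
    [[ "$entry" =~ ^[0-9]+$ ]] && return 0
  done
  return 1
}

# Validate the layout, then replace the shell with the model server.
start_tf_serving() {
  local base_path="${1:-/repository}"
  if ! has_version_dir "$base_path"; then
    echo "error: no numeric version directory under ${base_path}" >&2
    return 1
  fi
  # exec hands the process over to the server so it receives container signals directly.
  exec tensorflow_model_server \
    --rest_api_port=5000 \
    --model_name=my_model \
    --model_base_path="$base_path"
}
```

Calling `start_tf_serving` with no argument uses `/repository`. Using `exec` instead of running the server as a child process keeps the model server as the container's main process, so stop signals reach it without an extra wrapper.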