MSRA Text Detection 500 Database (MSRA-TD500)

The MSRA Text Detection 500 Database (MSRA-TD500) is a publicly released benchmark for evaluating text detection algorithms. It is intended to track recent progress in text detection in natural images, with a particular focus on text of arbitrary orientation.

Dataset Overview

MSRA-TD500 contains 500 natural images sourced from indoor (e.g., office and mall) and outdoor (e.g., street) scenes captured with a pocket camera. The images depict various elements such as:

- Indoor: Signs, doorplates, and caution plates.

- Outdoor: Guide boards and billboards, often set against complex backgrounds.

Image resolutions range from 1296x864 to 1920x1280. The dataset is challenging because of the diversity of the texts and the complexity of the backgrounds: texts appear in different languages (Chinese, English, or both), fonts, sizes, colors, and orientations, and backgrounds may include elements such as vegetation and repeated patterns that are difficult to distinguish from text.

Example Images

Figure 1: Typical images from MSRA-TD500 showing texts labeled as difficult due to factors like blur or occlusion.

Dataset Structure

The dataset is split into two sets:

- Training Set: 300 images randomly selected from the original dataset.

- Test Set: 200 images.

All images are fully annotated, with the primary unit of annotation being the text line. This differs from the ICDAR datasets, which use the word as the basic unit.

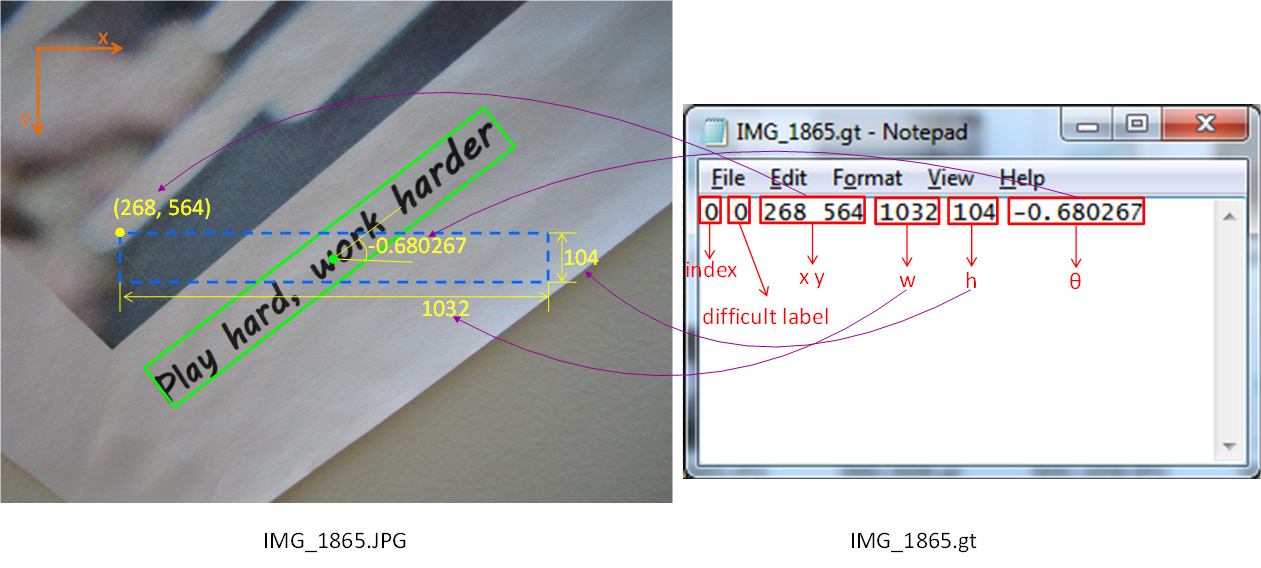

Ground Truth Annotation

Ground truth generation involves locating and bounding each text line using a four-vertex polygon, followed by fitting a minimum area rectangle around the polygon.

Figure 2: Ground truth generation process.
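The second step of the process above, fitting a minimum area rectangle around a four-vertex polygon, can be sketched in plain Python. This is an illustrative sketch only, not the tool used to build the dataset: the function names `convex_hull` and `min_area_rect` and the `(cx, cy, width, height, angle)` return layout are assumptions made for this example. It relies on the standard result that a minimum area enclosing rectangle has one side collinear with an edge of the convex hull.

```python
import math


def convex_hull(points):
    """Andrew's monotone chain; returns hull vertices in counter-clockwise order."""
    pts = sorted(set(points))
    if len(pts) <= 2:
        return pts

    def cross(o, a, b):
        return (a[0] - o[0]) * (b[1] - o[1]) - (a[1] - o[1]) * (b[0] - o[0])

    lower, upper = [], []
    for p in pts:
        while len(lower) >= 2 and cross(lower[-2], lower[-1], p) <= 0:
            lower.pop()
        lower.append(p)
    for p in reversed(pts):
        while len(upper) >= 2 and cross(upper[-2], upper[-1], p) <= 0:
            upper.pop()
        upper.append(p)
    return lower[:-1] + upper[:-1]


def min_area_rect(points):
    """Return (cx, cy, width, height, angle) of a smallest enclosing rectangle.

    `angle` is in radians and gives the direction of the side reported as
    `width`, measured from the x-axis. (Hypothetical return layout.)
    """
    hull = convex_hull(points)
    if len(hull) < 3:
        raise ValueError("need at least three non-collinear points")

    best_area, best_rect = None, None
    for i in range(len(hull)):
        (x0, y0), (x1, y1) = hull[i], hull[(i + 1) % len(hull)]
        theta = math.atan2(y1 - y0, x1 - x0)
        c, s = math.cos(theta), math.sin(theta)
        # Rotate the hull by -theta so the current edge lies along the u-axis.
        us = [x * c + y * s for x, y in hull]
        vs = [-x * s + y * c for x, y in hull]
        width, height = max(us) - min(us), max(vs) - min(vs)
        area = width * height
        if best_area is None or area < best_area:
            uc, vc = (max(us) + min(us)) / 2, (max(vs) + min(vs)) / 2
            # Rotate the box centre back into image coordinates.
            cx, cy = uc * c - vc * s, uc * s + vc * c
            best_area, best_rect = area, (cx, cy, width, height, theta)
    return best_rect


if __name__ == "__main__":
    # A slanted four-vertex polygon (made-up coordinates).
    print(min_area_rect([(0, 0), (4, 0), (5, 2), (1, 2)]))
```

In practice, a library routine such as OpenCV's `cv2.minAreaRect` would normally be used for this step; the pure-Python version is shown only to make the geometry explicit.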

Evaluation Protocol

The evaluation protocol is designed to accommodate texts of arbitrary orientations and uses minimum area rectangles because they fit the text more tightly. Texts that are hard to detect because of small size, occlusion, blur, or truncation are labeled "difficult", and missing such texts is not penalized.
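One way to read the rule about "difficult" texts is sketched below. The sketch assumes that matching between detections and ground truth rectangles has already been done by some other step, and it adds two conventions that are not stated here: a detection that lands on a "difficult" text is ignored, and a second detection of an already matched text counts as a false positive. The function name `score_detections` and its argument layout are hypothetical.

```python
def score_detections(gt_difficult, det_matches):
    """Compute (precision, recall, f_measure) while skipping difficult texts.

    gt_difficult: list of bool, one per ground truth text line.
    det_matches:  one entry per detection, either the index of the matched
                  ground truth line or None if the detection matched nothing.
    """
    matched = set()
    true_pos = false_pos = 0
    for match in det_matches:
        if match is None:
            false_pos += 1
        elif gt_difficult[match]:
            # Assumption: detections on difficult text are neither
            # rewarded nor penalized.
            continue
        elif match in matched:
            # Assumption: duplicate detections count as false positives.
            false_pos += 1
        else:
            matched.add(match)
            true_pos += 1

    # Difficult lines are excluded from the recall denominator, so
    # missing them costs nothing.
    required = sum(1 for d in gt_difficult if not d)
    detected = true_pos + false_pos
    precision = true_pos / detected if detected else 0.0
    recall = true_pos / required if required else 0.0
    total = precision + recall
    f_measure = 2 * precision * recall / total if total else 0.0
    return precision, recall, f_measure


if __name__ == "__main__":
    # Three ground truth lines, the last one difficult; three detections.
    print(score_detections([False, False, True], [0, None, 2]))
```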

Ground Truth File Format

Each image has a corresponding ground truth file. Each line in the file provides details about one text line, marking "difficult" texts with a label.

# Ground Truth Format Example

Index; Text Coords; Difficulty

0; x1,y1,x2,y2,x3,y3,x4,y4; 0

Figure 3: Illustration of the ground truth file format.
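A minimal parser for the layout shown in the example above might look like the following. It assumes exactly what the example shows, namely three semicolon-separated fields with eight comma-separated coordinates in the middle, and additionally assumes that a difficulty value of `1` marks a "difficult" text (the example only shows `0`). The names `TextLine`, `parse_gt_line`, and `parse_gt` are hypothetical, and real ground truth files should be checked against this layout before relying on it.

```python
from typing import List, NamedTuple, Tuple


class TextLine(NamedTuple):
    index: int
    polygon: List[Tuple[float, float]]  # four (x, y) vertices
    difficult: bool


def parse_gt_line(line: str) -> TextLine:
    """Parse one 'index; x1,y1,...,x4,y4; difficulty' record."""
    fields = [f.strip() for f in line.split(";")]
    if len(fields) != 3:
        raise ValueError(f"expected 3 fields, got {len(fields)}: {line!r}")
    index_s, coords_s, difficulty_s = fields
    values = [float(v) for v in coords_s.split(",")]
    if len(values) != 8:
        raise ValueError(f"expected 8 coordinates, got {len(values)}: {line!r}")
    polygon = list(zip(values[0::2], values[1::2]))
    # Assumption: "1" means difficult, anything else means not difficult.
    return TextLine(int(index_s), polygon, difficulty_s == "1")


def parse_gt(text: str) -> List[TextLine]:
    """Parse a whole ground truth file, skipping blanks, comments, and the header."""
    records = []
    for raw in text.splitlines():
        raw = raw.strip()
        if not raw or raw.startswith("#") or raw.lower().startswith("index"):
            continue
        records.append(parse_gt_line(raw))
    return records


if __name__ == "__main__":
    sample = """# made-up sample data
Index; Text Coords; Difficulty
0; 10,20,110,20,110,50,10,50; 0
1; 30,80,90,95,85,115,25,100; 1
"""
    for record in parse_gt(sample):
        print(record)
```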

Reference

C. Yao, X. Bai, W. Liu, Y. Ma, and Z. Tu. "Detecting Texts of Arbitrary Orientations in Natural Images." CVPR 2012.
