code

stringlengths 235

11.6M

| repo_path

stringlengths 3

263

|

|---|---|

# ---

# jupyter:

# jupytext:

# text_representation:

# extension: .py

# format_name: light

# format_version: '1.5'

# jupytext_version: 1.14.4

# kernelspec:

# display_name: Python 3

# language: python

# name: python3

# ---

# +

import panel as pn

import numpy as np

import holoviews as hv

pn.extension()

# -

# For a large variety of use cases we do not need complete control over the exact layout of each individual component on the page, as could be achieved with a [custom template](../../user_guide/Templates.ipynb); we just want a more polished look and feel. For these cases Panel ships with a number of default templates, which are defined by declaring four main content areas on the page that can be populated as desired:

#

# * **`header`**: The header area of the HTML page

# * **`sidebar`**: A collapsible sidebar

# * **`main`**: The main area of the application

# * **`modal`**: A modal area which can be opened and closed from Python

#

# These four areas behave very similarly to other Panel layout components and have list-like semantics. The `ReactTemplate` is an exception to this rule, however, as its `main` area behaves like a Panel `GridSpec` object. Unlike regular layout components, the contents of the areas are fixed once rendered. If you need a dynamic layout you should therefore insert a regular Panel layout component (e.g. a `Column` or `Row`) and modify it in place once added to one of the content areas.

#

# Templates allow us to quickly and easily create web apps for displaying our data. Panel comes with a default Template and includes multiple Templates that extend the default with some customization for a better display.

#

# #### Parameters:

#

# In addition to the four different areas we can populate, the `ReactTemplate` declares a few parameters to configure the layout:

#

# * **`cols`** (dict): Number of columns in the grid for different display sizes (`default={'lg': 12, 'md': 10, 'sm': 6, 'xs': 4, 'xxs': 2}`)

# * **`breakpoints`** (dict): Sizes in pixels for various layouts (`default={'lg': 1200, 'md': 996, 'sm': 768, 'xs': 480, 'xxs': 0}`)

# * **`row_height`** (int, default=150): Height per row in the grid

# * **`dimensions`** (dict): Minimum/Maximum sizes of cells in grid units (`default={'minW': 0, 'maxW': 'Infinity', 'minH': 0, 'maxH': 'Infinity'}`)

# * **`prevent_collision`** (bool, default=False): Prevent collisions between grid items.
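#
# As a rough illustration of how `breakpoints` and `cols` interact, the sketch below picks the active layout for a given page width. The helper `active_layout` is hypothetical and only assumes that the largest breakpoint not exceeding the width wins; the actual selection happens in react-grid-layout on the client and may differ in edge cases.

```python
# Defaults copied from the parameter list above.
BREAKPOINTS = {'lg': 1200, 'md': 996, 'sm': 768, 'xs': 480, 'xxs': 0}
COLS = {'lg': 12, 'md': 10, 'sm': 6, 'xs': 4, 'xxs': 2}

def active_layout(width, breakpoints=BREAKPOINTS, cols=COLS):
    """Hypothetical helper: return (layout name, number of columns) for a page width in pixels."""
    # Assumption: the largest breakpoint that does not exceed the width is selected.
    name = max((b for b in breakpoints if breakpoints[b] <= width),
               key=lambda b: breakpoints[b])
    return name, cols[name]

layout_wide = active_layout(1400)    # wide desktop window
layout_narrow = active_layout(600)   # narrow window
```

# Under this assumption a 1400 pixel wide page uses the 12-column `lg` grid, while a 600 pixel wide page falls back to the 4-column `xs` grid.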

#

# These parameters control the responsive resizing in different layouts. The `ReactTemplate` also exposes the same parameters as other templates:

#

# * **`busy_indicator`** (BooleanIndicator): Visual indicator of application busy state.

# * **`header_background`** (str): Optional header background color override.

# * **`header_color`** (str): Optional header text color override.

# * **`logo`** (str): URI of logo to add to the header (if local file, logo is base64 encoded as URI).

# * **`site`** (str): Name of the site. Will be shown in the header. Default is '', i.e. not shown.

# * **`site_url`** (str): Url of the site and logo. Default is "/".

# * **`title`** (str): A title to show in the header.

# * **`theme`** (Theme): A Theme class (available in `panel.template.theme`)

#

# ________

# In this case we are using the `ReactTemplate`, built on [react-grid-layout](https://github.com/STRML/react-grid-layout), which provides a responsive, resizable, draggable grid layout. Here is an example of how you can set up a display using this template:

# +

react = pn.template.ReactTemplate(title='React Template')

pn.config.sizing_mode = 'stretch_both'

xs = np.linspace(0, np.pi)

freq = pn.widgets.FloatSlider(name="Frequency", start=0, end=10, value=2)

phase = pn.widgets.FloatSlider(name="Phase", start=0, end=np.pi)

@pn.depends(freq=freq, phase=phase)
def sine(freq, phase):
    return hv.Curve((xs, np.sin(xs*freq+phase))).opts(
        responsive=True, min_height=400)

@pn.depends(freq=freq, phase=phase)
def cosine(freq, phase):
    return hv.Curve((xs, np.cos(xs*freq+phase))).opts(
        responsive=True, min_height=400)

react.sidebar.append(freq)

react.sidebar.append(phase)

# Unlike other templates the `ReactTemplate.main` area acts like a GridSpec

react.main[:4, :6] = pn.Card(hv.DynamicMap(sine), title='Sine')

react.main[:4, 6:] = pn.Card(hv.DynamicMap(cosine), title='Cosine')

react.servable();

# -

# With `row_height=150`, the two `Card` objects each fill 4 rows, totalling 600 pixels in height, and each take up 6 columns; they resize responsively to fill the screen and reflow on smaller screens. Hovering over the top-left corner of a card reveals a drag handle for moving the component around, while a resize handle shows up at the bottom-right corner.
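#
# The arithmetic behind these numbers can be sketched as follows. `cell_size` is a hypothetical helper that ignores the margins and padding react-grid-layout adds between cells, so real pixel sizes will differ slightly.

```python
def cell_size(row_slice, col_slice, row_height=150, n_cols=12):
    """Hypothetical helper: approximate height in pixels and width fraction of a grid cell."""
    n_rows = row_slice.stop - row_slice.start
    n_span = col_slice.stop - col_slice.start
    return n_rows * row_height, n_span / n_cols

# react.main[:4, :6] spans rows 0-3 and columns 0-5 of the 12-column 'lg' grid
height_px, width_fraction = cell_size(slice(0, 4), slice(0, 6))
```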

# <h3><b>ReactTemplate with DefaultTheme</b></h3>

# <img src="../../assets/React.png" style="margin-left: auto; margin-right: auto; display: block;"></img>

# </br>

# <h3><b>ReactTemplate with DarkTheme</b></h3>

# <img src="../../assets/ReactDark.png" style="margin-left: auto; margin-right: auto; display: block;"></img>

# The app can be displayed within the notebook by using `.servable()`, or rendered in another tab by replacing it with `.show()`.

#

# Themes can be added using the optional keyword argument `theme`. Each template comes with a DarkTheme and a DefaultTheme, which can be set via `ReactTemplate(theme=DarkTheme)`. If no theme is set, the DefaultTheme is applied.

#

# Note that Templates may not render correctly in a notebook; for the best performance they should ideally be deployed to a server.

| examples/reference/templates/React.ipynb |

# ---

# jupyter:

# jupytext:

# text_representation:

# extension: .py

# format_name: light

# format_version: '1.5'

# jupytext_version: 1.14.4

# kernelspec:

# display_name: Python 3

# language: python

# name: python3

# ---

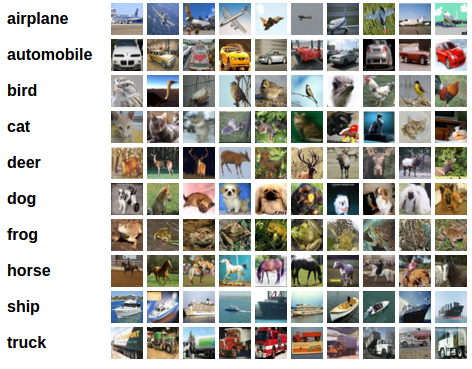

# # Convolutional Neural Networks: Application

#

# Welcome to Course 4's second assignment! In this notebook, you will:

#

# - Implement helper functions that you will use when implementing a TensorFlow model

# - Implement a fully functioning ConvNet using TensorFlow

#

# **After this assignment you will be able to:**

#

# - Build and train a ConvNet in TensorFlow for a classification problem

#

# We assume here that you are already familiar with TensorFlow. If you are not, please refer to the *TensorFlow Tutorial* of the third week of Course 2 ("*Improving deep neural networks*").

# ## 1.0 - TensorFlow model

#

# In the previous assignment, you built helper functions using numpy to understand the mechanics behind convolutional neural networks. Most practical applications of deep learning today are built using programming frameworks, which have many built-in functions you can simply call.

#

# As usual, we will start by loading in the packages.

# +

import math

import numpy as np

import h5py

import matplotlib.pyplot as plt

import scipy

from PIL import Image

from scipy import ndimage

import tensorflow as tf

from tensorflow.python.framework import ops

from cnn_utils import *

# %matplotlib inline

np.random.seed(1)

# -

# Run the next cell to load the "SIGNS" dataset you are going to use.

# Loading the data (signs)

X_train_orig, Y_train_orig, X_test_orig, Y_test_orig, classes = load_dataset()

# As a reminder, the SIGNS dataset is a collection of 6 signs representing numbers from 0 to 5.

#

# <img src="images/SIGNS.png" style="width:800px;height:300px;">

#

# The next cell will show you an example of a labelled image in the dataset. Feel free to change the value of `index` below and re-run to see different examples.

# Example of a picture

index = 6

plt.imshow(X_train_orig[index])

print ("y = " + str(np.squeeze(Y_train_orig[:, index])))

# In Course 2, you built a fully-connected network for this dataset. But since this is an image dataset, it is more natural to apply a ConvNet to it.

#

# To get started, let's examine the shapes of your data.

X_train = X_train_orig/255.

X_test = X_test_orig/255.

Y_train = convert_to_one_hot(Y_train_orig, 6).T

Y_test = convert_to_one_hot(Y_test_orig, 6).T

print ("number of training examples = " + str(X_train.shape[0]))

print ("number of test examples = " + str(X_test.shape[0]))

print ("X_train shape: " + str(X_train.shape))

print ("Y_train shape: " + str(Y_train.shape))

print ("X_test shape: " + str(X_test.shape))

print ("Y_test shape: " + str(Y_test.shape))

conv_layers = {}

# ### 1.1 - Create placeholders

#

# TensorFlow requires that you create placeholders for the input data that will be fed into the model when running the session.

#

# **Exercise**: Implement the function below to create placeholders for the input image X and the output Y. You should not define the number of training examples for the moment. To do so, use `None` as the batch size, which gives you the flexibility to choose it later. Hence X should be of dimension **[None, n_H0, n_W0, n_C0]** and Y should be of dimension **[None, n_y]**. [Hint](https://www.tensorflow.org/api_docs/python/tf/placeholder).

# +

# GRADED FUNCTION: create_placeholders

def create_placeholders(n_H0, n_W0, n_C0, n_y):
    """
    Creates the placeholders for the tensorflow session.

    Arguments:
    n_H0 -- scalar, height of an input image
    n_W0 -- scalar, width of an input image
    n_C0 -- scalar, number of channels of the input
    n_y -- scalar, number of classes

    Returns:
    X -- placeholder for the data input, of shape [None, n_H0, n_W0, n_C0] and dtype "float"
    Y -- placeholder for the input labels, of shape [None, n_y] and dtype "float"
    """

    ### START CODE HERE ### (≈2 lines)
    X = tf.placeholder(tf.float32, [None, n_H0, n_W0, n_C0])
    Y = tf.placeholder(tf.float32, [None, n_y])
    ### END CODE HERE ###

    return X, Y

# -

X, Y = create_placeholders(64, 64, 3, 6)

print ("X = " + str(X))

print ("Y = " + str(Y))

# **Expected Output**

#

# <table>

# <tr>

# <td>

# X = Tensor("Placeholder:0", shape=(?, 64, 64, 3), dtype=float32)

#

# </td>

# </tr>

# <tr>

# <td>

# Y = Tensor("Placeholder_1:0", shape=(?, 6), dtype=float32)

#

# </td>

# </tr>

# </table>

# ### 1.2 - Initialize parameters

#

# You will initialize weights/filters $W1$ and $W2$ using `tf.contrib.layers.xavier_initializer(seed = 0)`. You don't need to worry about bias variables as you will soon see that TensorFlow functions take care of the bias. Note also that you will only initialize the weights/filters for the conv2d functions. TensorFlow initializes the layers for the fully connected part automatically. We will talk more about that later in this assignment.

#

# **Exercise:** Implement initialize_parameters(). The dimensions for each group of filters are provided below. Reminder - to initialize a parameter $W$ of shape [1,2,3,4] in Tensorflow, use:

# ```python

# W = tf.get_variable("W", [1,2,3,4], initializer = ...)

# ```

# [More Info](https://www.tensorflow.org/api_docs/python/tf/get_variable).
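#
# For intuition, Xavier (Glorot) uniform initialization can be sketched in NumPy. This is an illustration rather than the TensorFlow implementation: `xavier_uniform` is a hypothetical helper, it assumes the uniform variant with limit $\sqrt{6/(fan_{in}+fan_{out})}$, and its random numbers will not match those produced by `tf.contrib.layers.xavier_initializer(seed = 0)`.

```python
import numpy as np

def xavier_uniform(shape, seed=0):
    """Hypothetical helper: Glorot-uniform sample for a conv filter of shape [f, f, n_C_prev, n_C]."""
    receptive_field = int(np.prod(shape[:-2]))   # f * f
    fan_in = receptive_field * shape[-2]         # inputs feeding each output unit
    fan_out = receptive_field * shape[-1]        # outputs fed by each input unit
    limit = np.sqrt(6.0 / (fan_in + fan_out))
    rng = np.random.RandomState(seed)
    return rng.uniform(-limit, limit, size=shape)

W1_sketch = xavier_uniform([4, 4, 3, 8])     # same shape as W1
W2_sketch = xavier_uniform([2, 2, 8, 16])    # same shape as W2
```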

# +

# GRADED FUNCTION: initialize_parameters

def initialize_parameters():
    """
    Initializes weight parameters to build a neural network with tensorflow. The shapes are:
                        W1 : [4, 4, 3, 8]
                        W2 : [2, 2, 8, 16]
    Returns:
    parameters -- a dictionary of tensors containing W1, W2
    """

    tf.set_random_seed(1)                              # so that your "random" numbers match ours

    ### START CODE HERE ### (approx. 2 lines of code)
    W1 = tf.get_variable("W1", [4, 4, 3, 8], initializer=tf.contrib.layers.xavier_initializer(seed=0))
    W2 = tf.get_variable("W2", [2, 2, 8, 16], initializer=tf.contrib.layers.xavier_initializer(seed=0))
    ### END CODE HERE ###

    parameters = {"W1": W1,
                  "W2": W2}

    return parameters

# -

tf.reset_default_graph()

with tf.Session() as sess_test:
    parameters = initialize_parameters()
    init = tf.global_variables_initializer()
    sess_test.run(init)
    print("W1 = " + str(parameters["W1"].eval()[1,1,1]))
    print("W2 = " + str(parameters["W2"].eval()[1,1,1]))

# **Expected Output:**

#

# <table>

#

# <tr>

# <td>

# W1 =

# </td>

# <td>

# [ 0.00131723 0.14176141 -0.04434952 0.09197326 0.14984085 -0.03514394 <br>

# -0.06847463 0.05245192]

# </td>

# </tr>

#

# <tr>

# <td>

# W2 =

# </td>

# <td>

# [-0.08566415 0.17750949 0.11974221 0.16773748 -0.0830943 -0.08058 <br>

# -0.00577033 -0.14643836 0.24162132 -0.05857408 -0.19055021 0.1345228 <br>

# -0.22779644 -0.1601823 -0.16117483 -0.10286498]

# </td>

# </tr>

#

# </table>

# ### 1.2 - Forward propagation

#

# In TensorFlow, there are built-in functions that carry out the convolution steps for you.

#

# - **tf.nn.conv2d(X,W1, strides = [1,s,s,1], padding = 'SAME'):** given an input $X$ and a group of filters $W1$, this function convolves $W1$'s filters on X. The third input ([1,s,s,1]) represents the strides for each dimension of the input (m, n_H_prev, n_W_prev, n_C_prev). You can read the full documentation [here](https://www.tensorflow.org/api_docs/python/tf/nn/conv2d)

#

# - **tf.nn.max_pool(A, ksize = [1,f,f,1], strides = [1,s,s,1], padding = 'SAME'):** given an input A, this function uses a window of size (f, f) and strides of size (s, s) to carry out max pooling over each window. You can read the full documentation [here](https://www.tensorflow.org/api_docs/python/tf/nn/max_pool)

#

# - **tf.nn.relu(Z1):** computes the elementwise ReLU of Z1 (which can be any shape). You can read the full documentation [here.](https://www.tensorflow.org/api_docs/python/tf/nn/relu)

#

# - **tf.contrib.layers.flatten(P)**: given an input P, this function flattens each example into a 1D vector while maintaining the batch-size. It returns a flattened tensor with shape [batch_size, k]. You can read the full documentation [here.](https://www.tensorflow.org/api_docs/python/tf/contrib/layers/flatten)

#

# - **tf.contrib.layers.fully_connected(F, num_outputs):** given the flattened input F, it returns the output computed using a fully connected layer. You can read the full documentation [here.](https://www.tensorflow.org/api_docs/python/tf/contrib/layers/fully_connected)

#

# In the last function above (`tf.contrib.layers.fully_connected`), the fully connected layer automatically initializes weights in the graph and keeps on training them as you train the model. Hence, you did not need to initialize those weights when initializing the parameters.

#

#

# **Exercise**:

#

# Implement the `forward_propagation` function below to build the following model: `CONV2D -> RELU -> MAXPOOL -> CONV2D -> RELU -> MAXPOOL -> FLATTEN -> FULLYCONNECTED`. You should use the functions above.

#

# In detail, we will use the following parameters for all the steps:

# - Conv2D: stride 1, padding is "SAME"

# - ReLU

# - Max pool: Use an 8 by 8 filter size and an 8 by 8 stride, padding is "SAME"

# - Conv2D: stride 1, padding is "SAME"

# - ReLU

# - Max pool: Use a 4 by 4 filter size and a 4 by 4 stride, padding is "SAME"

# - Flatten the previous output.

# - FULLYCONNECTED (FC) layer: Apply a fully connected layer without a non-linear activation function. Do not call the softmax here. This will result in 6 neurons in the output layer, which then get passed later to a softmax. In TensorFlow, the softmax and cost function are lumped together into a single function, which you'll call in a different function when computing the cost.
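#
# To see why the final fully connected layer receives 64 features per example, the shapes can be traced by hand. With "SAME" padding the spatial output size is $\lceil n / s \rceil$ regardless of the filter size. The sketch below uses a hypothetical helper `same_out` and the filter counts from `initialize_parameters` (8 and 16).

```python
import math

def same_out(n, stride):
    """Spatial output size of a conv/pool layer with 'SAME' padding."""
    return math.ceil(n / stride)

n, channels = 64, 3                      # SIGNS images are 64 x 64 x 3
n, channels = same_out(n, 1), 8          # CONV2D with W1 -> 64 x 64 x 8
n = same_out(n, 8)                       # MAXPOOL 8x8, stride 8 -> 8 x 8 x 8
n, channels = same_out(n, 1), 16         # CONV2D with W2 -> 8 x 8 x 16
n = same_out(n, 4)                       # MAXPOOL 4x4, stride 4 -> 2 x 2 x 16
flat_features = n * n * channels         # FLATTEN -> 64 features per example
```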

# +

# GRADED FUNCTION: forward_propagation

def forward_propagation(X, parameters):
    """
    Implements the forward propagation for the model:
    CONV2D -> RELU -> MAXPOOL -> CONV2D -> RELU -> MAXPOOL -> FLATTEN -> FULLYCONNECTED

    Arguments:
    X -- input dataset placeholder, of shape (number of examples, n_H0, n_W0, n_C0)
    parameters -- python dictionary containing your parameters "W1", "W2"
                  the shapes are given in initialize_parameters

    Returns:
    Z3 -- the output of the last LINEAR unit
    """

    # Retrieve the parameters from the dictionary "parameters"
    W1 = parameters['W1']
    W2 = parameters['W2']

    ### START CODE HERE ###
    # CONV2D: stride of 1, padding 'SAME'
    Z1 = tf.nn.conv2d(X, W1, strides=[1,1,1,1], padding="SAME")
    # RELU
    A1 = tf.nn.relu(Z1)
    # MAXPOOL: window 8x8, stride 8, padding 'SAME'
    P1 = tf.nn.max_pool(A1, ksize=[1,8,8,1], strides=[1,8,8,1], padding="SAME")
    # CONV2D: filters W2, stride 1, padding 'SAME'
    Z2 = tf.nn.conv2d(P1, W2, strides=[1,1,1,1], padding="SAME")
    # RELU
    A2 = tf.nn.relu(Z2)
    # MAXPOOL: window 4x4, stride 4, padding 'SAME'
    P2 = tf.nn.max_pool(A2, ksize=[1,4,4,1], strides=[1,4,4,1], padding="SAME")
    # FLATTEN
    P2 = tf.contrib.layers.flatten(P2)
    # FULLY-CONNECTED without non-linear activation function (do not call softmax).
    # 6 neurons in output layer. Hint: one of the arguments should be "activation_fn=None"
    Z3 = tf.contrib.layers.fully_connected(P2, num_outputs=6, activation_fn=None)
    ### END CODE HERE ###

    return Z3

# +

tf.reset_default_graph()

with tf.Session() as sess:
    np.random.seed(1)
    X, Y = create_placeholders(64, 64, 3, 6)
    parameters = initialize_parameters()
    Z3 = forward_propagation(X, parameters)
    init = tf.global_variables_initializer()
    sess.run(init)
    a = sess.run(Z3, {X: np.random.randn(2,64,64,3), Y: np.random.randn(2,6)})
    print("Z3 = " + str(a))

# -

# **Expected Output**:

#

# <table>

# <td>

# Z3 =

# </td>

# <td>

# [[-0.44670227 -1.57208765 -1.53049231 -2.31013036 -1.29104376 0.46852064] <br>

# [-0.17601591 -1.57972014 -1.4737016 -2.61672091 -1.00810647 0.5747785 ]]

# </td>

# </table>

# ### 1.3 - Compute cost

#

# Implement the compute cost function below. You might find these two functions helpful:

#

# - **tf.nn.softmax_cross_entropy_with_logits(logits = Z3, labels = Y):** computes the softmax entropy loss. This function both computes the softmax activation function as well as the resulting loss. You can check the full documentation [here.](https://www.tensorflow.org/api_docs/python/tf/nn/softmax_cross_entropy_with_logits)

# - **tf.reduce_mean:** computes the mean of elements across dimensions of a tensor. Use this to average the losses over all the examples to get the overall cost. You can check the full documentation [here.](https://www.tensorflow.org/api_docs/python/tf/reduce_mean)

#

# **Exercise**: Compute the cost below using the functions above.
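#
# What the two functions compute together can be sketched in NumPy. This is only an illustration of the formula, not the TensorFlow implementation; `softmax_cross_entropy_mean` is a hypothetical helper and the logits and labels are made up.

```python
import numpy as np

def softmax_cross_entropy_mean(logits, labels):
    """Mean softmax cross-entropy; logits and labels have shape (number of examples, n_y)."""
    shifted = logits - logits.max(axis=1, keepdims=True)    # shift for numerical stability
    log_softmax = shifted - np.log(np.exp(shifted).sum(axis=1, keepdims=True))
    per_example = -(labels * log_softmax).sum(axis=1)       # one loss value per example
    return per_example.mean()                               # average over the batch

logits = np.array([[2.0, 1.0, 0.1], [0.5, 0.5, 0.5]])       # made-up scores
labels = np.array([[1.0, 0.0, 0.0], [0.0, 1.0, 0.0]])       # one-hot labels
cost_sketch = softmax_cross_entropy_mean(logits, labels)
```

# When all logits are equal, the loss of each example is $\log n_y$, which is a handy sanity check for an untrained network.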

# +

# GRADED FUNCTION: compute_cost

def compute_cost(Z3, Y):
    """
    Computes the cost

    Arguments:
    Z3 -- output of forward propagation (output of the last LINEAR unit), of shape (number of examples, 6)
    Y -- "true" labels vector placeholder, same shape as Z3

    Returns:
    cost - Tensor of the cost function
    """

    ### START CODE HERE ### (1 line of code)
    cost = tf.reduce_mean(tf.nn.softmax_cross_entropy_with_logits(logits = Z3, labels = Y))
    ### END CODE HERE ###

    return cost

# +

tf.reset_default_graph()

with tf.Session() as sess:
    np.random.seed(1)
    X, Y = create_placeholders(64, 64, 3, 6)
    parameters = initialize_parameters()
    Z3 = forward_propagation(X, parameters)
    cost = compute_cost(Z3, Y)
    init = tf.global_variables_initializer()
    sess.run(init)
    a = sess.run(cost, {X: np.random.randn(4,64,64,3), Y: np.random.randn(4,6)})
    print("cost = " + str(a))

# -

# **Expected Output**:

#

# <table>

# <td>

# cost =

# </td>

#

# <td>

# 2.91034

# </td>

# </table>

# ## 1.4 Model

#

# Finally you will merge the helper functions you implemented above to build a model. You will train it on the SIGNS dataset.

#

# You have implemented `random_mini_batches()` in the Optimization programming assignment of course 2. Remember that this function returns a list of mini-batches.

#

# **Exercise**: Complete the function below.

#

# The model below should:

#

# - create placeholders

# - initialize parameters

# - forward propagate

# - compute the cost

# - create an optimizer

#

# Finally you will create a session and run a for loop for num_epochs, get the mini-batches, and then for each mini-batch you will optimize the function. [Hint for initializing the variables](https://www.tensorflow.org/api_docs/python/tf/global_variables_initializer)
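#
# The course helper `random_mini_batches` lives in `cnn_utils` and is not shown here. A minimal sketch of the idea, under the assumption that it shuffles the examples and then cuts them into consecutive batches, could look like this; `random_mini_batches_sketch` is a hypothetical stand-in, not the course implementation.

```python
import numpy as np

def random_mini_batches_sketch(X, Y, mini_batch_size=64, seed=0):
    """Hypothetical stand-in: shuffle (X, Y) together and split into mini-batches."""
    m = X.shape[0]                                    # number of examples
    permutation = np.random.RandomState(seed).permutation(m)
    X_shuffled, Y_shuffled = X[permutation], Y[permutation]
    return [(X_shuffled[k:k + mini_batch_size], Y_shuffled[k:k + mini_batch_size])
            for k in range(0, m, mini_batch_size)]    # the last batch may be smaller

X_demo = np.zeros((150, 4, 4, 3))                     # small dummy images
Y_demo = np.zeros((150, 6))                           # dummy one-hot labels
batches = random_mini_batches_sketch(X_demo, Y_demo, mini_batch_size=64, seed=3)
```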

# +

# GRADED FUNCTION: model

def model(X_train, Y_train, X_test, Y_test, learning_rate = 0.009,
          num_epochs = 100, minibatch_size = 64, print_cost = True):
    """
    Implements a three-layer ConvNet in Tensorflow:
    CONV2D -> RELU -> MAXPOOL -> CONV2D -> RELU -> MAXPOOL -> FLATTEN -> FULLYCONNECTED

    Arguments:
    X_train -- training set, of shape (None, 64, 64, 3)
    Y_train -- training labels, of shape (None, n_y = 6)
    X_test -- test set, of shape (None, 64, 64, 3)
    Y_test -- test labels, of shape (None, n_y = 6)
    learning_rate -- learning rate of the optimization
    num_epochs -- number of epochs of the optimization loop
    minibatch_size -- size of a minibatch
    print_cost -- True to print the cost every 5 epochs

    Returns:
    train_accuracy -- real number, accuracy on the train set (X_train)
    test_accuracy -- real number, testing accuracy on the test set (X_test)
    parameters -- parameters learnt by the model. They can then be used to predict.
    """

    ops.reset_default_graph()                         # to be able to rerun the model without overwriting tf variables
    tf.set_random_seed(1)                             # to keep results consistent (tensorflow seed)
    seed = 3                                          # to keep results consistent (numpy seed)
    (m, n_H0, n_W0, n_C0) = X_train.shape
    n_y = Y_train.shape[1]
    costs = []                                        # To keep track of the cost

    # Create Placeholders of the correct shape
    ### START CODE HERE ### (1 line)
    X, Y = create_placeholders(n_H0, n_W0, n_C0, n_y)
    ### END CODE HERE ###

    # Initialize parameters
    ### START CODE HERE ### (1 line)
    parameters = initialize_parameters()
    ### END CODE HERE ###

    # Forward propagation: Build the forward propagation in the tensorflow graph
    ### START CODE HERE ### (1 line)
    Z3 = forward_propagation(X, parameters)
    ### END CODE HERE ###

    # Cost function: Add cost function to tensorflow graph
    ### START CODE HERE ### (1 line)
    cost = compute_cost(Z3, Y)
    ### END CODE HERE ###

    # Backpropagation: Define the tensorflow optimizer. Use an AdamOptimizer that minimizes the cost.
    ### START CODE HERE ### (1 line)
    optimizer = tf.train.AdamOptimizer(learning_rate=learning_rate).minimize(cost)
    ### END CODE HERE ###

    # Initialize all the variables globally
    init = tf.global_variables_initializer()

    # Start the session to compute the tensorflow graph
    with tf.Session() as sess:

        # Run the initialization
        sess.run(init)

        # Do the training loop
        for epoch in range(num_epochs):

            minibatch_cost = 0.
            num_minibatches = int(m / minibatch_size) # number of minibatches of size minibatch_size in the train set
            seed = seed + 1
            minibatches = random_mini_batches(X_train, Y_train, minibatch_size, seed)

            for minibatch in minibatches:

                # Select a minibatch
                (minibatch_X, minibatch_Y) = minibatch
                # IMPORTANT: The line that runs the graph on a minibatch.
                # Run the session to execute the optimizer and the cost, the feed_dict should contain a minibatch for (X,Y).
                ### START CODE HERE ### (1 line)
                _ , temp_cost = sess.run([optimizer, cost], feed_dict={X: minibatch_X, Y: minibatch_Y})
                ### END CODE HERE ###

                minibatch_cost += temp_cost / num_minibatches

            # Print the cost every 5 epochs and record it every epoch
            if print_cost == True and epoch % 5 == 0:
                print ("Cost after epoch %i: %f" % (epoch, minibatch_cost))
            if print_cost == True and epoch % 1 == 0:
                costs.append(minibatch_cost)

        # plot the cost
        plt.plot(np.squeeze(costs))
        plt.ylabel('cost')
        plt.xlabel('iterations (per tens)')
        plt.title("Learning rate =" + str(learning_rate))
        plt.show()

        # Calculate the correct predictions
        predict_op = tf.argmax(Z3, 1)
        correct_prediction = tf.equal(predict_op, tf.argmax(Y, 1))

        # Calculate accuracy on the train and test sets
        accuracy = tf.reduce_mean(tf.cast(correct_prediction, "float"))
        print(accuracy)
        train_accuracy = accuracy.eval({X: X_train, Y: Y_train})
        test_accuracy = accuracy.eval({X: X_test, Y: Y_test})
        print("Train Accuracy:", train_accuracy)
        print("Test Accuracy:", test_accuracy)

        return train_accuracy, test_accuracy, parameters

# -

# Run the following cell to train your model for 100 epochs. Check if your cost after epoch 0 and 5 matches our output. If not, stop the cell and go back to your code!

_, _, parameters = model(X_train, Y_train, X_test, Y_test)

# **Expected output**: although it may not match perfectly, your expected output should be close to ours and your cost value should decrease.

#

# <table>

# <tr>

# <td>

# **Cost after epoch 0 =**

# </td>

#

# <td>

# 1.917929

# </td>

# </tr>

# <tr>

# <td>

# **Cost after epoch 5 =**

# </td>

#

# <td>

# 1.506757

# </td>

# </tr>

# <tr>

# <td>

# **Train Accuracy =**

# </td>

#

# <td>

# 0.940741

# </td>

# </tr>

#

# <tr>

# <td>

# **Test Accuracy =**

# </td>

#

# <td>

# 0.783333

# </td>

# </tr>

# </table>

# Congratulations! You have finished the assignment and built a model that recognizes sign language with almost 80% accuracy on the test set. If you wish, feel free to play around with this dataset further. You can actually improve its accuracy by spending more time tuning the hyperparameters, or using regularization (as this model clearly has a high variance).

#

# Once again, here's a thumbs up for your work!

fname = "images/thumbs_up.jpg"

image = np.array(ndimage.imread(fname, flatten=False))

my_image = scipy.misc.imresize(image, size=(64,64))

plt.imshow(my_image)

| Convolutional Neural Networks/Week 1/Convolution+model+-+Application+-+v1.ipynb |

# ---

# jupyter:

# jupytext:

# text_representation:

# extension: .py

# format_name: light

# format_version: '1.5'

# jupytext_version: 1.14.4

# kernelspec:

# display_name: Python 2

# language: python

# name: python2

# ---

# # Nonparametric tests

# Test | One-sample | Two-sample | Two-sample (paired samples)
# ------------- | ------------- | ------------- | -------------
# **Sign** | $\times$ | | $\times$
# **Rank** | $\times$ | $\times$ | $\times$
# **Permutation** | $\times$ | $\times$ | $\times$

# ## Mirrors as potential environmental enrichment for individually housed laboratory mice

# (Sherwin, 2004): 16 laboratory mice were housed in two-compartment cages, one compartment of which contained a mirror. To determine whether the mice have any preference regarding mirrors, the proportion of time each mouse spent in each of its two compartments was measured.

# +

import numpy as np

import pandas as pd

import itertools

from scipy import stats

from statsmodels.stats.descriptivestats import sign_test

from statsmodels.stats.weightstats import zconfint

# -

# %pylab inline

# ### Loading the data

mouses_data = pd.read_csv('mirror_mouses.txt', header = None)

mouses_data.columns = ['proportion_of_time']

mouses_data

mouses_data.describe()

pylab.hist(mouses_data.proportion_of_time)

pylab.show()

# ## One-sample tests

print '95%% confidence interval for the mean time: [%f, %f]' % zconfint(mouses_data)

# ### Sign test

# $H_0\colon$ the median proportion of time spent in the compartment with the mirror equals 0.5

#

# $H_1\colon$ the median proportion of time spent in the compartment with the mirror does not equal 0.5

print "M: %d, p-value: %f" % sign_test(mouses_data, 0.5)
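# For reference, the two-sided sign test can be written directly from the binomial distribution. This Python 3 sketch is not the `statsmodels` implementation; `sign_test_sketch` is a hypothetical name, and the sample values are made up for illustration, not taken from `mirror_mouses.txt`. Observations equal to the hypothesised median are dropped.

```python
from math import comb

def sign_test_sketch(sample, m0):
    """Two-sided sign test: returns (M, p-value) with M = (n_pos - n_neg) / 2."""
    n_pos = sum(1 for x in sample if x > m0)
    n_neg = sum(1 for x in sample if x < m0)
    n = n_pos + n_neg                                   # ties with m0 are discarded
    k = min(n_pos, n_neg)
    # Under H0 the number of positive signs is Binomial(n, 1/2)
    tail = sum(comb(n, i) for i in range(k + 1)) / 2 ** n
    return (n_pos - n_neg) / 2, min(1.0, 2 * tail)

toy_sample = [0.35, 0.42, 0.46, 0.48, 0.49, 0.47, 0.44, 0.52]   # made-up proportions
M, p_value = sign_test_sketch(toy_sample, 0.5)
```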

# ### Wilcoxon signed-rank test

m0 = 0.5

stats.wilcoxon(mouses_data.proportion_of_time - m0)

# ### Permutation test

# $H_0\colon$ the mean equals 0.5

#

# $H_1\colon$ the mean does not equal 0.5

def permutation_t_stat_1sample(sample, mean):
    t_stat = sum(map(lambda x: x - mean, sample))
    return t_stat

permutation_t_stat_1sample(mouses_data.proportion_of_time, 0.5)

def permutation_zero_distr_1sample(sample, mean, max_permutations = None):
    centered_sample = map(lambda x: x - mean, sample)
    if max_permutations:
        signs_array = set([tuple(x) for x in 2 * np.random.randint(2, size = (max_permutations,
                                                                              len(sample))) - 1 ])
    else:
        signs_array = itertools.product([-1, 1], repeat = len(sample))
    distr = [sum(centered_sample * np.array(signs)) for signs in signs_array]
    return distr

pylab.hist(permutation_zero_distr_1sample(mouses_data.proportion_of_time, 0.5), bins = 15)

pylab.show()

def permutation_test(sample, mean, max_permutations = None, alternative = 'two-sided'):
    if alternative not in ('two-sided', 'less', 'greater'):
        raise ValueError("alternative not recognized\n"
                         "should be 'two-sided', 'less' or 'greater'")

    t_stat = permutation_t_stat_1sample(sample, mean)

    zero_distr = permutation_zero_distr_1sample(sample, mean, max_permutations)

    if alternative == 'two-sided':
        return sum([1. if abs(x) >= abs(t_stat) else 0. for x in zero_distr]) / len(zero_distr)

    if alternative == 'less':
        return sum([1. if x <= t_stat else 0. for x in zero_distr]) / len(zero_distr)

    if alternative == 'greater':
        return sum([1. if x >= t_stat else 0. for x in zero_distr]) / len(zero_distr)

print "p-value: %f" % permutation_test(mouses_data.proportion_of_time, 0.5)

print "p-value: %f" % permutation_test(mouses_data.proportion_of_time, 0.5, 10000)
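# The same procedure can be written compactly in Python 3, where `map` returns an iterator and `print` is a function. This is a sketch, not a drop-in replacement: `sign_flip_permutation_test` is a hypothetical name, it enumerates all $2^n$ sign assignments (so it is only practical for small samples), and the sample values are made up.

```python
import itertools

def sign_flip_permutation_test(sample, mean):
    """Exact two-sided one-sample permutation test based on the sum of centered values."""
    centered = [x - mean for x in sample]
    t_stat = sum(centered)
    # Under H0 each centered value is equally likely to carry either sign
    zero_distr = [sum(s * c for s, c in zip(signs, centered))
                  for signs in itertools.product([-1, 1], repeat=len(centered))]
    extreme = sum(1 for t in zero_distr if abs(t) >= abs(t_stat))
    return extreme / len(zero_distr)

toy_sample = [0.35, 0.42, 0.46, 0.48]    # made-up proportions, all below 0.5
p_value = sign_flip_permutation_test(toy_sample, 0.5)
```

# With four observations on the same side of 0.5, only the two sign assignments that keep or flip all signs are as extreme as the observed statistic, so the smallest attainable two-sided p-value is 2/16.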

| statistics/Одновыборочные непараметрические критерии stat.non_parametric_tests_1sample.ipynb |

# ---

# jupyter:

# jupytext:

# text_representation:

# extension: .py

# format_name: light

# format_version: '1.5'

# jupytext_version: 1.14.4

# kernelspec:

# display_name: Python [default]

# language: python

# name: python3

# ---

# # The Multivariate Gaussian distribution

#

# The density of a multivariate Gaussian with mean vector $\mu$ and covariance matrix $\Sigma$ is given as

#

# \begin{align}

# \mathcal{N}(x; \mu, \Sigma) &= |2\pi \Sigma|^{-1/2} \exp\left( -\frac{1}{2} (x-\mu)^\top \Sigma^{-1} (x-\mu) \right) \\

# & = \exp\left(-\frac{1}{2} x^\top \Sigma^{-1} x + \mu^\top \Sigma^{-1} x - \frac{1}{2} \mu^\top \Sigma^{-1} \mu -\frac{1}{2}\log \det(2\pi \Sigma) \right) \\

# \end{align}

#

# Here, $|X|$ denotes the determinant of a square matrix.

#

# $\newcommand{\trace}{\mathop{Tr}}$

#

# \begin{align}

# {\cal N}(s; \mu, P) & = |2\pi P|^{-1/2} \exp\left(-\frac{1}2 (s-\mu)^\top P^{-1} (s-\mu) \right)

# \\

# & = \exp\left(

# -\frac{1}{2}s^\top{P^{-1}}s + \mu^\top P^{-1}s -\frac{1}{2}\mu^\top P^{-1}\mu -\frac12\log|2\pi P|

# \right) \\

# \log {\cal N}(s; \mu, P) & = -\frac{1}{2}s^\top{P^{-1}}s + \mu^\top P^{-1}s + \text{ const} \\

# & = -\frac{1}{2}\trace {P^{-1}} s s^\top + \mu^\top P^{-1}s + \text{ const} \\

# \end{align}
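#
# The last step uses the identity $s^\top P^{-1} s = \trace P^{-1} s s^\top$, which follows from the cyclic property of the trace. A quick numerical check in NumPy, with an arbitrarily chosen positive definite $P$ and vector $s$:

```python
import numpy as np

P = np.array([[2.0, 0.5],
              [0.5, 1.0]])            # arbitrary symmetric positive definite matrix
s = np.array([0.3, -1.2])             # arbitrary vector
P_inv = np.linalg.inv(P)

quadratic_form = s @ P_inv @ s                    # s^T P^{-1} s
trace_form = np.trace(P_inv @ np.outer(s, s))     # Tr(P^{-1} s s^T)
```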

#

# ## Special Cases

#

# To build intuition, we look at a few special cases.

# ### Bivariate Gaussian

#

# #### Example 1: Identity covariance matrix

#

# $

# x = \left(\begin{array}{c} x_1 \\ x_2 \end{array} \right)

# $

#

# $

# \mu = \left(\begin{array}{c} 0 \\ 0 \end{array} \right)

# $

#

# $

# \Sigma = \left(\begin{array}{cc} 1& 0 \\ 0 & 1 \end{array} \right) = I_2

# $

#

# \begin{align}

# \mathcal{N}(x; \mu, \Sigma) &= |2\pi I_{2}|^{-1/2} \exp\left( -\frac{1}{2} x^\top x \right)

# = (2\pi)^{-1} \exp\left( -\frac{1}{2} \left( x_1^2 + x_2^2\right) \right) = (2\pi)^{-1/2} \exp\left( -\frac{1}{2} x_1^2 \right)(2\pi)^{-1/2} \exp\left( -\frac{1}{2} x_2^2 \right)\\

# & = \mathcal{N}(x_1; 0, 1) \mathcal{N}(x_2; 0, 1)

# \end{align}

#

# #### Example 2: Diagonal covariance

# $\newcommand{\diag}{\text{diag}}$

#

# $

# x = \left(\begin{array}{c} x_1 \\ x_2 \end{array} \right)

# $

#

# $

# \mu = \left(\begin{array}{c} \mu_1 \\ \mu_2 \end{array} \right)

# $

#

# $

# \Sigma = \left(\begin{array}{cc} s_1 & 0 \\ 0 & s_2 \end{array} \right) = \diag(s_1, s_2)

# $

#

# \begin{eqnarray}

# \mathcal{N}(x; \mu, \Sigma) &=& \left|2\pi \left(\begin{array}{cc} s_1 & 0 \\ 0 & s_2 \end{array} \right)\right|^{-1/2} \exp\left( -\frac{1}{2} \left(\begin{array}{c} x_1 - \mu_1 \\ x_2-\mu_2 \end{array} \right)^\top \left(\begin{array}{cc} 1/s_1 & 0 \\ 0 & 1/s_2 \end{array} \right) \left(\begin{array}{c} x_1 - \mu_1 \\ x_2-\mu_2 \end{array} \right) \right) \\

# &=& ((2\pi)^2 s_1 s_2 )^{-1/2} \exp\left( -\frac{1}{2} \left( \frac{(x_1-\mu_1)^2}{s_1} + \frac{(x_2-\mu_2)^2}{s_2}\right) \right) \\

# & = &\mathcal{N}(x_1; \mu_1, s_1) \mathcal{N}(x_2; \mu_2, s_2)

# \end{eqnarray}
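#
# The factorization can be checked numerically. The sketch below evaluates the bivariate density with diagonal covariance directly from the definition and compares it with the product of two univariate densities; the values of $\mu$, $s_1$, $s_2$ and $x$ are arbitrary.

```python
import math

def normal_pdf(x, mu, s):
    """Univariate Gaussian density with mean mu and variance s."""
    return math.exp(-0.5 * (x - mu) ** 2 / s) / math.sqrt(2 * math.pi * s)

def diag_bivariate_pdf(x1, x2, mu1, mu2, s1, s2):
    """Bivariate Gaussian density with covariance diag(s1, s2)."""
    norm = ((2 * math.pi) ** 2 * s1 * s2) ** -0.5
    return norm * math.exp(-0.5 * ((x1 - mu1) ** 2 / s1 + (x2 - mu2) ** 2 / s2))

joint = diag_bivariate_pdf(0.3, -1.0, 0.0, 0.5, 2.0, 0.7)
product = normal_pdf(0.3, 0.0, 2.0) * normal_pdf(-1.0, 0.5, 0.7)
```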

#

# #### Example 3:

# $

# x = \left(\begin{array}{c} x_1 \\ x_2 \end{array} \right)

# $

#

# $

# \mu = \left(\begin{array}{c} \mu_1 \\ \mu_2 \end{array} \right)

# $

#

# $

# \Sigma = \left(\begin{array}{cc} 1 & \rho \\ \rho & 1 \end{array} \right)

# $

# for $-1<\rho<1$.

#

# We need $K = \Sigma^{-1}$. When $|\Sigma| \neq 0$ we have $\Sigma K = I$.

#

# $

# \left(\begin{array}{cc} 1 & \rho \\ \rho & 1 \end{array} \right) \left(\begin{array}{cc} k_{11} & k_{12} \\ k_{21} & k_{22} \end{array} \right) = \left(\begin{array}{cc} 1& 0 \\ 0 & 1 \end{array} \right)

# $

# \begin{align}

# k_{11} &+ \rho k_{21} & & &=1 \\

# \rho k_{11} &+ k_{21} & & &=0 \\

# && k_{12} &+ \rho k_{22} &=0 \\

# && \rho k_{12} &+ k_{22} &=1 \\

# \end{align}

# Solving these equations leads to the solution

#

# $$

# \left(\begin{array}{cc} k_{11} & k_{12} \\ k_{21} & k_{22} \end{array} \right) = \frac{1}{1-\rho^2}\left(\begin{array}{cc} 1 & -\rho \\ -\rho & 1 \end{array} \right)

# $$
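#
# As a sanity check, the closed-form inverse can be compared with a numerical inverse. This is a minimal sketch; the function name `precision_from_rho` and the sample values of $\rho$ are assumptions made for illustration.

```python
import numpy as np

def precision_from_rho(rho):
    """Closed-form inverse of [[1, rho], [rho, 1]], valid for |rho| < 1."""
    return np.array([[1.0, -rho], [-rho, 1.0]]) / (1.0 - rho ** 2)

for rho in (-0.9, -0.3, 0.0, 0.6):
    Sigma = np.array([[1.0, rho], [rho, 1.0]])
    K = precision_from_rho(rho)
    # Sigma K should be the identity, and K should match the numerical inverse
    assert np.allclose(Sigma @ K, np.eye(2))
    assert np.allclose(K, np.linalg.inv(Sigma))
```

# As $|\rho|$ approaches 1 the factor $1/(1-\rho^2)$ blows up, which reflects $\Sigma$ becoming singular.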

# Plotting the equal-probability contours

# +

# %matplotlib inline

import matplotlib.pyplot as plt

import numpy as np

from notes_utilities import pnorm_ball_points

RHO = np.arange(-0.9,1,0.3)

plt.figure(figsize=(20,20/len(RHO)))

plt.rc('text', usetex=True)

plt.rc('font', family='serif')

for i, rho in enumerate(RHO):
    plt.subplot(1, len(RHO), i+1)
    plt.axis('equal')
    ax = plt.gca()
    ax.set_xlim(-4, 4)
    ax.set_ylim(-4, 4)
    # Covariance with unit variances and correlation rho
    S = np.array([[1, rho], [rho, 1]])
    # Cholesky factor A (S = A A^T) maps the unit circle onto a contour of S
    A = np.linalg.cholesky(S)
    dx, dy = pnorm_ball_points(3*A)
    plt.title(r'$\rho =$ '+str(rho if np.abs(rho)>1E-9 else 0), fontsize=16)
    ln = plt.Line2D(dx, dy, markeredgecolor='k', linewidth=1, color='b')
    ax.add_line(ln)
    ax.set_axis_off()
    #ax.set_visible(False)

plt.show()

# +

from ipywidgets import interact, interactive, fixed

import ipywidgets as widgets

from IPython.display import clear_output, display, HTML

from matplotlib import rc

from notes_utilities import bmatrix, pnorm_ball_line

rc('font',**{'family':'sans-serif','sans-serif':['Helvetica']})

## for Palatino and other serif fonts use:

#rc('font',**{'family':'serif','serif':['Palatino']})

rc('text', usetex=True)

fig = plt.figure(figsize=(5,5))

S = np.array([[1,0],[0,1]])

dx,dy = pnorm_ball_points(S)

ln = plt.Line2D(dx,dy,markeredgecolor='k', linewidth=1, color='b')

dx,dy = pnorm_ball_points(np.eye(2))

ln2 = plt.Line2D(dx,dy,markeredgecolor='k', linewidth=1, color='k',linestyle=':')

plt.xlabel('$x_1$')

plt.ylabel('$x_2$')

ax = fig.gca()

ax.set_xlim((-4,4))

ax.set_ylim((-4,4))

txt = ax.text(-1,-3,r'$\left(\right)$',fontsize=15)

ax.add_line(ln)

ax.add_line(ln2)

plt.close(fig)

def set_line(s_1, s_2, rho, p, a, q):
    # Covariance built from standard deviations s_1, s_2 and correlation rho
    S = np.array([[s_1**2, rho*s_1*s_2],[rho*s_1*s_2, s_2**2]])
    A = np.linalg.cholesky(S)
    #S = A.dot(A.T)
    dx,dy = pnorm_ball_points(A,p=p)
    ln.set_xdata(dx)
    ln.set_ydata(dy)
    # Reference p-norm ball of radius a
    dx,dy = pnorm_ball_points(a*np.eye(2),p=q)
    ln2.set_xdata(dx)
    ln2.set_ydata(dy)
    txt.set_text(bmatrix(S))
    display(fig)

ax.set_axis_off()

interact(set_line, s_1=(0.1,2,0.01), s_2=(0.1, 2, 0.01), rho=(-0.99, 0.99, 0.01), p=(0.1,4,0.1), a=(0.2,10,0.1), q=(0.1,4,0.1))

# -

# %run plot_normballs.py

# %run matrix_norm_sliders.py

# Exercise:

#

# $

# x = \left(\begin{array}{c} x_1 \\ x_2 \end{array} \right)

# $

#

# $

# \mu = \left(\begin{array}{c} \mu_1 \\ \mu_2 \end{array} \right)

# $

#

# $

# \Sigma = \left(\begin{array}{cc} s_{11} & s_{12} \\ s_{12} & s_{22} \end{array} \right)

# $

#

#

# We need $K = \Sigma^{-1}$. When $|\Sigma| \neq 0$ we have $\Sigma K = I$.

#

# $

# \left(\begin{array}{cc} s_{11} & s_{12} \\ s_{12} & s_{22} \end{array} \right) \left(\begin{array}{cc} k_{11} & k_{12} \\ k_{21} & k_{22} \end{array} \right) = \left(\begin{array}{cc} 1& 0 \\ 0 & 1 \end{array} \right)

# $

#

# Derive the result

# $$

# K = \left(\begin{array}{cc} k_{11} & k_{12} \\ k_{21} & k_{22} \end{array} \right)

# $$

#

# Step 1: Verify

#

# $$

# \left(\begin{array}{cc} s_{11} & s_{12} \\ s_{21} & s_{22} \end{array} \right) = \left(\begin{array}{cc} 1 & s_{12}/s_{22} \\ 0 & 1 \end{array} \right) \left(\begin{array}{cc} s_{11}-s_{12}^2/s_{22} & 0 \\ 0 & s_{22} \end{array} \right) \left(\begin{array}{cc} 1 & 0 \\ s_{12}/s_{22} & 1 \end{array} \right)
# $$
#
# Step 2: Show that
# $$
# \left(\begin{array}{cc} 1 & a\\ 0 & 1 \end{array} \right)^{-1} = \left(\begin{array}{cc} 1 & -a\\ 0 & 1 \end{array} \right)
# $$
# and
# $$
# \left(\begin{array}{cc} 1 & 0\\ b & 1 \end{array} \right)^{-1} = \left(\begin{array}{cc} 1 & 0\\ -b & 1 \end{array} \right)
# $$
#
# Step 3: Using the fact $(A B)^{-1} = B^{-1} A^{-1}$ and $s_{12}=s_{21}$, show the following and simplify
# $$
# \left(\begin{array}{cc} s_{11} & s_{12} \\ s_{21} & s_{22} \end{array} \right)^{-1} =
# \left(\begin{array}{cc} 1 & 0 \\ -s_{12}/s_{22} & 1 \end{array} \right)
# \left(\begin{array}{cc} 1/(s_{11}-s_{12}^2/s_{22}) & 0 \\ 0 & 1/s_{22} \end{array} \right) \left(\begin{array}{cc} 1 & -s_{12}/s_{22} \\ 0 & 1 \end{array} \right)

# $$
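#
# The three steps of the exercise can be checked numerically before simplifying by hand. The sketch below builds a unit-triangular factor with off-diagonal entry $a = s_{12}/s_{22}$ and a diagonal factor, and confirms that they reproduce $\Sigma$ and its inverse. The numerical entries of $\Sigma$ are hypothetical.

```python
import numpy as np

s11, s12, s22 = 2.0, 0.6, 1.5                    # hypothetical entries (positive definite)
Sigma = np.array([[s11, s12], [s12, s22]])

a = s12 / s22
U = np.array([[1.0, a], [0.0, 1.0]])             # unit upper-triangular factor
D = np.diag([s11 - s12 ** 2 / s22, s22])         # Schur complement and s22

# Step 1: Sigma = U D U^T
assert np.allclose(U @ D @ U.T, Sigma)

# Step 2: inverting a unit-triangular 2x2 matrix flips the sign of the off-diagonal entry
U_inv = np.array([[1.0, -a], [0.0, 1.0]])
assert np.allclose(U @ U_inv, np.eye(2))

# Step 3: Sigma^{-1} = U^{-T} D^{-1} U^{-1}
K = U_inv.T @ np.diag(1.0 / np.diag(D)) @ U_inv
assert np.allclose(K, np.linalg.inv(Sigma))
```

# Multiplying out the product in Step 3 gives $K = \frac{1}{s_{11}s_{22}-s_{12}^2}\left(\begin{array}{cc} s_{22} & -s_{12} \\ -s_{12} & s_{11} \end{array}\right)$, the familiar $2\times 2$ inverse.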

#

#

# ## Gaussian Processes Regression

#

#

# In Bayesian machine learning, a frequently encountered problem is regression, where we are given pairs of inputs $x_i \in \mathbb{R}^N$ and associated noisy observations $y_i \in \mathbb{R}$. We assume the following model

#

# \begin{eqnarray*}

# y_i &\sim& {\cal N}(y_i; f(x_i), R)

# \end{eqnarray*}

#

# The interesting thing about a Gaussian process is that the function $f$ is not specified in closed form; instead, we assume that the function values

# \begin{eqnarray*}

# f_i & = & f(x_i)

# \end{eqnarray*}

# are jointly Gaussian distributed as

# \begin{eqnarray*}

# \left(

# \begin{array}{c}

# f_1 \\

# \vdots \\

# f_L \\

# \end{array}

# \right) & = & f_{1:L} \sim {\cal N}(f_{1:L}; 0, \Sigma(x_{1:L}))

# \end{eqnarray*}

# Here, we define the entries of the covariance matrix $\Sigma(x_{1:L})$ as

# \begin{eqnarray*}

# \Sigma_{i,j} & = & K(x_i, x_j)

# \end{eqnarray*}

# for $i,j \in \{1, \dots, L\}$. Here, $K$ is a given covariance function. Now, if we wish to predict the value of $f$ for a new $x$, we simply form the following joint distribution:

# \begin{eqnarray*}

# \left(

# \begin{array}{c}

# f_1 \\

# f_2 \\

# \vdots \\

# f_L \\

# f \\

# \end{array}

# \right) & \sim & {\cal N}\left( \left(\begin{array}{c}

# 0 \\

# 0 \\

# \vdots \\

# 0 \\

# 0 \\

# \end{array}\right)

# , \left(\begin{array}{cccccc}

# K(x_1,x_1) & K(x_1,x_2) & \dots & K(x_1, x_L) & K(x_1, x) \\

# K(x_2,x_1) & K(x_2,x_2) & \dots & K(x_2, x_L) & K(x_2, x) \\

# \vdots &\\

# K(x_L,x_1) & K(x_L,x_2) & \dots & K(x_L, x_L) & K(x_L, x) \\

# K(x,x_1) & K(x,x_2) & \dots & K(x, x_L) & K(x, x) \\

# \end{array}\right) \right) \\

# \left(

# \begin{array}{c}

# f_{1:L} \\

# f

# \end{array}

# \right) & \sim & {\cal N}\left( \left(\begin{array}{c}

# \mathbf{0} \\

# 0 \\

# \end{array}\right)

# , \left(\begin{array}{cc}

# \Sigma(x_{1:L}) & k(x_{1:L}, x) \\

# k(x_{1:L}, x)^\top & K(x, x) \\

# \end{array}\right) \right) \\

# \end{eqnarray*}

#

# Here, $k(x_{1:L}, x)$ is a $L \times 1$ vector with entries $k_i$ where

#

# \begin{eqnarray*}

# k_i = K(x_i, x)

# \end{eqnarray*}

#

# Popular choices of covariance functions that generate smooth regression functions include a bell-shaped one

# \begin{eqnarray*}

# K_1(x_i, x_j) & = & \exp\left(-\frac{1}2 \| x_i - x_j \|^2 \right)

# \end{eqnarray*}

# and a Laplacian

# \begin{eqnarray*}

# K_2(x_i, x_j) & = & \exp\left(-\frac{1}2 \| x_i - x_j \| \right)

# \end{eqnarray*}

#

# where $\| x \| = \sqrt{x^\top x}$ is the Euclidean norm.

#
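#
# To make the construction of $\Sigma(x_{1:L})$ concrete, the sketch below builds the covariance matrix for scalar inputs with both covariance functions. The function names `k_bell`, `k_laplace` and `gram`, and the sample inputs, are assumptions made for illustration; for scalar inputs the Euclidean norm reduces to the absolute value.

```python
import numpy as np

def k_bell(xi, xj):
    """Bell-shaped covariance K_1 for scalar inputs."""
    return np.exp(-0.5 * (xi - xj) ** 2)

def k_laplace(xi, xj):
    """Laplacian covariance K_2 for scalar inputs."""
    return np.exp(-0.5 * np.abs(xi - xj))

def gram(kernel, x):
    """Covariance matrix with entries Sigma[i, j] = kernel(x[i], x[j])."""
    x = np.asarray(x, dtype=float)
    return kernel(x[:, None], x[None, :])       # broadcasting forms all pairs

x = np.array([-2.0, -1.0, 0.0, 3.5, 4.0])       # sample scalar inputs
for kernel in (k_bell, k_laplace):
    Sigma = gram(kernel, x)
    # A valid covariance matrix is symmetric with non-negative eigenvalues
    print(kernel.__name__, np.allclose(Sigma, Sigma.T), np.linalg.eigvalsh(Sigma).min())
```

# Both matrices have ones on the diagonal, since $K(x, x) = 1$ for either choice.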

# ## Part 1

# Derive the expressions to compute the predictive density

# \begin{eqnarray*}

# p(\hat{y}| y_{1:L}, x_{1:L}, \hat{x})

# \end{eqnarray*}

#

#

# \begin{eqnarray*}

# p(y | y_{1:L}, x_{1:L}, x) &=& {\cal N}(y; m, S) \\

# m & = & \\

# S & = &

# \end{eqnarray*}

#

# ## Part 2

# Write a program to compute the mean and covariance of $p(\hat{y}| y_{1:L}, x_{1:L}, \hat{x})$ for the following data:

#

# x = [-2 -1 0 3.5 4]

# y = [4.1 0.9 2 12.3 15.8]

#

# Try different covariance functions $K_1$ and $K_2$ and observation noise covariances $R$ and comment on the nature of the approximation.

#

# ## Part 3

# Suppose we are using a covariance function parameterised by

# \begin{eqnarray*}

# K_\beta(x_i, x_j) & = & \exp\left(-\frac{1}\beta \| x_i - x_j \|^2 \right)

# \end{eqnarray*}

# Find the optimum regularisation parameter $\beta^*(R)$ as a function of observation noise variance via maximisation of the marginal likelihood, i.e.

# \begin{eqnarray*}

# \beta^* & = & \arg\max_{\beta} p(y_{1:L}| x_{1:L}, \beta, R)

# \end{eqnarray*}

# Generate a plot of $\beta^*(R)$ for $R = 0.01, 0.02, \dots, 1$ for the dataset given in Part 2.

#
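#
# One possible way to approach Part 3 is a simple grid search over $\beta$. The sketch below is not a full solution: it assumes scalar inputs, a zero-mean prior, and a hypothetical search grid, and the names `log_marginal_likelihood` and `best_beta` are introduced here for illustration. Under the model, $y_{1:L} \sim {\cal N}(0, \Sigma_\beta + R I)$, so the log marginal likelihood is $-\frac{1}{2}\left(y^\top C^{-1} y + \log|C| + L \log 2\pi\right)$ with $C = \Sigma_\beta + R I$.

```python
import numpy as np

def log_marginal_likelihood(x, y, beta, R):
    """log N(y; 0, K_beta(x, x) + R I) for scalar inputs x."""
    x = np.asarray(x, dtype=float)
    y = np.asarray(y, dtype=float)
    C = np.exp(-(x[:, None] - x[None, :]) ** 2 / beta) + R * np.eye(len(x))
    _, logdet = np.linalg.slogdet(C)             # C is positive definite, so the sign is +1
    quad = y @ np.linalg.solve(C, y)
    return -0.5 * (quad + logdet + len(x) * np.log(2.0 * np.pi))

def best_beta(x, y, R, betas):
    """Grid search: return the entry of `betas` with the highest marginal likelihood."""
    scores = [log_marginal_likelihood(x, y, b, R) for b in betas]
    return betas[int(np.argmax(scores))]

x = np.array([-2.0, -1.0, 0.0, 3.5, 4.0])
y = np.array([4.1, 0.9, 2.0, 12.3, 15.8])
betas = np.logspace(-2, 3, 200)                  # assumed search range
beta_star = best_beta(x, y, R=0.05, betas=betas)
print(beta_star)
```

# Repeating the search for each $R$ on the requested grid gives the curve $\beta^*(R)$. A finer grid or a one-dimensional optimiser could replace the grid search.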

# +

def cov_fun_bell(x1,x2,delta=1):
    # Bell-shaped covariance; delta rescales the squared distance
    return np.exp(-0.5*np.abs(x1-x2)**2/delta)

def cov_fun_exp(x1,x2):
    # Laplacian covariance
    return np.exp(-0.5*np.abs(x1-x2))

def cov_fun(x1,x2):
    return cov_fun_bell(x1,x2,delta=0.1)

R = 0.05

x = np.array([-2, -1, 0, 3.5, 4])
y = np.array([4.1, 0.9, 2, 12.3, 15.8])

# Covariance of the noisy observations: Sigma(x_{1:L}) + R I
Sig = cov_fun(x.reshape((len(x),1)),x.reshape((1,len(x)))) + R*np.eye(len(x))
SigI = np.linalg.inv(Sig)

xx = np.linspace(-10,10,100)
yy = np.zeros_like(xx)
ss = np.zeros_like(xx)

for i in range(len(xx)):
    z = np.r_[x,xx[i]]
    # Cross-covariance between the training inputs and the new input
    CrossSig = cov_fun(x,xx[i])
    PriorSig = cov_fun(xx[i],xx[i]) + R
    # Predictive mean and variance from Gaussian conditioning
    yy[i] = np.dot(np.dot(CrossSig, SigI),y)
    ss[i] = PriorSig - np.dot(np.dot(CrossSig, SigI),CrossSig)

plt.plot(x,y,'or')

plt.plot(xx,yy,'b.')

plt.plot(xx,yy+3*np.sqrt(ss),'b:')

plt.plot(xx,yy-3*np.sqrt(ss),'b:')

plt.show()
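
# The loop above can also be written in vectorised form. The sketch below is one possible arrangement under the same model (zero-mean prior, scalar inputs, noise variance $R$); the names `bell_kernel` and `gp_predict` are assumptions introduced here. It uses `np.linalg.solve` rather than an explicit inverse, which is usually the more stable choice.

```python
import numpy as np

def bell_kernel(a, b, delta=1.0):
    """Same functional form as cov_fun_bell above; broadcasts over arrays."""
    return np.exp(-0.5 * (a - b) ** 2 / delta)

def gp_predict(x_train, y_train, x_new, kernel, R):
    """Predictive mean and variance of noisy observations at x_new (scalar inputs)."""
    x_train = np.asarray(x_train, dtype=float)
    y_train = np.asarray(y_train, dtype=float)
    x_new = np.atleast_1d(np.asarray(x_new, dtype=float))
    Sig = kernel(x_train[:, None], x_train[None, :]) + R * np.eye(len(x_train))
    cross = kernel(x_new[:, None], x_train[None, :])       # shape (M, L)
    mean = cross @ np.linalg.solve(Sig, y_train)
    # Diagonal of cross Sig^{-1} cross^T without forming the full M x M matrix
    reduction = np.sum(cross * np.linalg.solve(Sig, cross.T).T, axis=1)
    var = kernel(x_new, x_new) + R - reduction
    return mean, var

x = np.array([-2.0, -1.0, 0.0, 3.5, 4.0])
y = np.array([4.1, 0.9, 2.0, 12.3, 15.8])
mean, var = gp_predict(x, y, np.linspace(-10, 10, 100), bell_kernel, R=0.05)
```

# Far from the data the mean returns to the prior mean of zero and the variance returns to $K(x, x) + R$, which is why the error bars widen away from the observations.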

| MultivariateGaussian.ipynb |

# ---

# jupyter:

# jupytext:

# text_representation:

# extension: .py

# format_name: light

# format_version: '1.5'

# jupytext_version: 1.14.4

# kernelspec:

# display_name: Python 2

# language: python

# name: python2

# ---

# Analysis of data from fault-scarp model runs.

#

# Start by setting up arrays to hold the data.

# +

import numpy as np

N = 125

run_number = np.zeros(N)

hill_length = np.zeros(N)

dist_rate = np.zeros(N)

uplint = np.zeros(N)

max_ht = np.zeros(N)

mean_slope = np.zeros(N)

mean_ht = np.zeros(N)

# -

# Set up info on domain size and parameters.

domain_lengths = np.array([58, 103, 183, 325, 579])

disturbance_rates = 10.0 ** np.array([-4, -3.5, -3, -2.5, -2])

uplift_intervals = 10.0 ** np.array([2, 2.5, 3, 3.5, 4])

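for i in range(N):
    # Runs are ordered with disturbance rate varying fastest, then uplift
    # interval, then domain length
    hill_length[i] = domain_lengths[i // 25]
    dist_rate[i] = disturbance_rates[i % 5]
    uplint[i] = uplift_intervals[(i // 5) % 5]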
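
# The same run-to-parameter mapping can be written without a loop using integer-array indexing. This is only a sketch of an equivalent formulation; the `_vec` variable names are assumptions and are not used elsewhere in this notebook.

```python
import numpy as np

domain_lengths = np.array([58, 103, 183, 325, 579])
disturbance_rates = 10.0 ** np.array([-4, -3.5, -3, -2.5, -2])
uplift_intervals = 10.0 ** np.array([2, 2.5, 3, 3.5, 4])

run = np.arange(125)                                    # run index 0..124
hill_length_vec = domain_lengths[run // 25].astype(float)  # changes every 25 runs
dist_rate_vec = disturbance_rates[run % 5]              # cycles every run
uplint_vec = uplift_intervals[(run // 5) % 5]           # changes every 5 runs
```

# Each of the 125 runs then corresponds to a unique (domain length, uplift interval, disturbance rate) triple.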

# Read data from file.

# +

import csv

i = -1
with open('./grain_hill_stats.csv', 'rb') as csvfile:
    myreader = csv.reader(csvfile)
    for row in myreader:
        if i > -1:  # skip the header row
            run_number[i] = int(row[0])
            max_ht[i] = float(row[1])
            mean_ht[i] = float(row[2])
            mean_slope[i] = float(row[3])
        i += 1

# -

# Let's revisit the question of how to plot, now that I seem to have solved the "not run long enough" problem. If you take the primary variables, the scaling is something like

#

# $h = f( d, \tau, \lambda )$

#

# There is just one dimension here: time. So our normalization becomes simply:

#

# $h = f( d\tau, \lambda )$

#

# This suggests plotting $h$ versus $d\tau$ segregated by $\lambda$.

max_ht

# +

import matplotlib.pyplot as plt

# %matplotlib inline

dtau = dist_rate * uplint # Here's our dimensionless disturbance rate

syms = ['k+', 'k.', 'kv', 'k*', 'ko']

for i in range(5):
    idx = (i * 25) + np.arange(25)  # the 25 runs sharing domain length i
    plt.loglog(dtau[idx], mean_ht[idx], syms[i])
plt.xlabel(r"Dimensionless disturbance rate, $d'$", {'fontsize' : 12})
plt.ylabel(r'Mean height, $h$', {'fontsize' : 12})
plt.legend([r'$\lambda = 58$', r'$\lambda = 103$', r'$\lambda = 183$', r'$\lambda = 325$', r'$\lambda = 579$'])

#plt.savefig('mean_ht_vs_dist_rate.pdf') # UNCOMMENT TO GENERATE FIGURE FILE

# -

# Try Scott's idea of normalizing by lambda

for i in range(5):
    idx = (i * 25) + np.arange(25)
    plt.loglog(dtau[idx], mean_ht[idx] / hill_length[idx], syms[i])
plt.xlabel(r"Dimensionless disturbance rate, $d'$", {'fontsize' : 12})
plt.ylabel(r'$h / \lambda$', {'fontsize' : 12})
plt.legend([r'$\lambda = 58$', r'$\lambda = 103$', r'$\lambda = 183$', r'$\lambda = 325$', r'$\lambda = 579$'])

plt.savefig('h_over_lam_vs_dist_rate.pdf')

# This (h vs d') is actually a fairly straightforward result. For any given hillslope width, there are three domains: (1) threshold, in which height is independent of disturbance or uplift rate; (2) linear, in which height is inversely proportional to $d\tau$, and (3) finite-size, where mean height is only one or two cells, and which is basically meaningless and can be ignored.

# Next, let's look at the effective diffusivity. One method is to start with mean hillslope height, $H_m$. Diffusion theory predicts that mean height should be given by:

#

# $H = \frac{U}{3D}L^2$

#

# Then simply invert this to solve for $D$:

#

# $D = \frac{U}{3H}L^2$

#

# In the particle model, $H$ in real length units is equal to height in cells, $h$, times the scale of a cell, $\delta$. Similarly, $L = \lambda \delta / 2$, and $U = \delta / I_u$, where $I_u$ is the uplift interval in time per cell (the factor of 2 in the expression for $L$ comes from the fact that hillslope length is half the domain length). Substituting,

#

# $D = \frac{1}{12 I_u h} \lambda^2 \delta^2$
#
# This of course requires defining cell size. We could also do it in terms of a disturbance rate, $d_{eff}$, equal to $D/\delta^2$,
#
# $d_{eff} = \frac{1}{12 I_u h} \lambda^2$
#
# Ok, here's a neat thing: we can define a dimensionless effective diffusivity as follows:
#
# $D' = \frac{D}{d \delta^2} = \frac{1}{12 d I_u h} \lambda^2$

#

# This measures the actual diffusivity relative to the nominal value reflected by the disturbance rate. Here we'll plot it against slope gradient in both linear and log-log.

#

# +

hill_halflen = hill_length / 2.0

D_prime = (hill_halflen * hill_halflen) / (12 * uplint * mean_ht * dist_rate)

plt.plot(mean_slope, D_prime, 'k.')

plt.xlabel('Mean slope gradient')

plt.ylabel('Dimensionless diffusivity')

# -

syms = ['k+', 'k.', 'kv', 'k*', 'ko']

for i in range(0, 5):
    idx = (i * 25) + np.arange(25)
    plt.semilogy(mean_slope[idx], D_prime[idx], syms[i])

plt.xlabel('Mean slope gradient', {'fontsize' : 12})

plt.ylabel('Dimensionless diffusivity', {'fontsize' : 12})

plt.ylim([1.0e0, 1.0e5])

plt.legend(['L = 58', 'L = 103', 'L = 183', 'L = 325', 'L = 579'])

idx1 = np.where(max_ht > 4)[0]

idx2 = np.where(max_ht <= 4)[0]

plt.semilogy(mean_slope[idx1], D_prime[idx1], 'ko', mfc='none')

plt.semilogy(mean_slope[idx2], D_prime[idx2], '.', mfc='0.5')

plt.xlabel('Mean slope gradient', {'fontsize' : 12})

plt.ylabel(r"Dimensionless diffusivity, $D_e'$", {'fontsize' : 12})

plt.legend(['Mean height > 4 cells', 'Mean height <= 4 cells'])

plt.savefig('dimless_diff_vs_grad.pdf')

# Just for fun, let's try to isolate the portion of $D_e$ that doesn't contain Furbish et al.'s $\cos^2\theta$ factor. In other words, we'll plot against $\cos^2 \theta S$. Remember that $S=\tan \theta$, so $\theta = \tan^{-1} S$ and $\cos \theta = \cos\tan^{-1}S$.

theta = np.arctan(mean_slope)

cos_theta = np.cos(theta)

cos2_theta = cos_theta * cos_theta

cos2_theta_S = cos2_theta * mean_slope

idx1 = np.where(max_ht > 4)[0]

idx2 = np.where(max_ht <= 4)[0]

plt.semilogy(cos2_theta_S[idx1], D_prime[idx1], 'ko', mfc='none')

plt.semilogy(cos2_theta_S[idx2], D_prime[idx2], '.', mfc='0.5')

plt.xlabel(r'Mean slope gradient $\times \cos^2 \theta$', {'fontsize' : 12})

plt.ylabel(r"Dimensionless diffusivity, $D_e'$", {'fontsize' : 12})

plt.legend(['Mean height > 4 cells', 'Mean height <= 4 cells'])

plt.savefig('dimless_diff_vs_grad_cos2theta.pdf')
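
# The $\cos^2\theta$ factor used above has a closed form in terms of slope: since $S = \tan\theta$, we have $\cos^2\theta = 1/(1+S^2)$. The sketch below checks this identity on a hypothetical range of slope gradients; the variable names are assumptions.

```python
import numpy as np

S = np.linspace(0.0, 0.6, 13)                  # hypothetical slope gradients
cos2_direct = np.cos(np.arctan(S)) ** 2        # as computed above via theta
cos2_closed = 1.0 / (1.0 + S ** 2)             # closed form, no trigonometry needed

assert np.allclose(cos2_direct, cos2_closed)
```

# For the gradients in this range the factor stays between about 0.74 and 1, so it rescales the horizontal axis only modestly.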

# Now let's try $D / (1 - (S/S_c)^2)$ and see if that collapses things...

Sc = np.tan(np.pi * 30.0 / 180.0)

de_with_denom = D_prime * (1.0 - (mean_slope / Sc) ** 2)

# +

plt.semilogy(mean_slope[idx1], D_prime[idx1], 'ko', mfc='none')

plt.semilogy(mean_slope[idx2], D_prime[idx2], '.', mfc='0.5')

plt.xlabel('Mean slope grad', {'fontsize' : 12})

plt.ylabel(r"Dimensionless diffusivity, $D_e'$", {'fontsize' : 12})

plt.legend(['Mean height > 4 cells', 'Mean height <= 4 cells'])

# Now add analytical

slope = np.arange(0, 0.6, 0.05)

D_pred = 10.0 / (1.0 - (slope/Sc)**2)

plt.plot(slope, D_pred, 'r')

# -

# Version of the De-S plot with lower end zoomed in to find the approximate asymptote:

idx1 = np.where(max_ht > 4)[0]

idx2 = np.where(max_ht <= 4)[0]

plt.semilogy(mean_slope[idx1], D_prime[idx1], 'ko', mfc='none')

plt.semilogy(mean_slope[idx2], D_prime[idx2], '.', mfc='0.5')

plt.xlabel('Mean slope gradient', {'fontsize' : 12})

plt.ylabel(r"Dimensionless diffusivity, $D_e'$", {'fontsize' : 12})

plt.ylim(10, 100)

# ====================================================

# OLDER STUFF BELOW HERE

# Start with a plot of $D$ versus slope for given fixed values of everything but $I_u$.

halflen = hill_length / 2.0

D = (halflen * halflen) / (2.0 * uplint * max_ht)

print(np.amin(max_ht))

import matplotlib.pyplot as plt

# %matplotlib inline

# +

idx = np.arange(0, 25, 5)

plt.semilogy(mean_slope[idx], D[idx], '.')

idx = np.arange(25, 50, 5)

plt.plot(mean_slope[idx], D[idx], '.')

idx = np.arange(50, 75, 5)

plt.plot(mean_slope[idx], D[idx], '.')

idx = np.arange(75, 100, 5)

plt.plot(mean_slope[idx], D[idx], '.')

idx = np.arange(100, 125, 5)

plt.plot(mean_slope[idx], D[idx], '.')

# +

idx = np.arange(25, 50, 5)

plt.plot(mean_slope[idx], D[idx], '.')

idx = np.arange(100, 125, 5)

plt.plot(mean_slope[idx], D[idx], '.')

# -

# Is there something we could do with integrated elevation? To reduce noise...

#

# $\int_0^L z(x) dx = A = \int_0^L \frac{U}{2D}(L^2 - x^2) dx$

#

# $A= \frac{U}{2D}L^3 - \frac{U}{6D}L^3$

#

# $A= \frac{U}{3D}L^3$

#

# $A/L = H_{mean} = \frac{U}{3D}L^2$

#

# Rearranging,

#

# $D = \frac{U}{3H_{mean}}L^2$

#

# $D/\delta^2 = \frac{1}{3 I_u h_{mean}} \lambda^2$

#

# This might be more stable, since it measures area (a cumulative metric).
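#
# The mean-height result can be verified numerically by averaging the parabolic steady-state profile $z(x) = \frac{U}{2D}(L^2 - x^2)$ over the slope. The parameter values below are hypothetical and chosen only to exercise the formula.

```python
import numpy as np

U, D, L = 1.0e-3, 0.02, 50.0                   # hypothetical uplift rate, diffusivity, length
n = 100000
x = (np.arange(n) + 0.5) * (L / n)             # midpoints of n equal sub-intervals on [0, L]
z = U / (2.0 * D) * (L ** 2 - x ** 2)          # steady-state diffusive profile

H_mean_numeric = z.mean()                      # midpoint-rule estimate of A / L
H_mean_formula = U * L ** 2 / (3.0 * D)
print(H_mean_numeric, H_mean_formula)
```

# The mean height is two thirds of the crest height $U L^2 / (2D)$, which is the ratio of the factors 3 and 2 in the two expressions.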

# First, a little nondimensionalization. We have an outcome, mean height, $h_m$, that is a function of three inputs: disturbance rate, $d$, system length $\lambda$, and uplift interval, $I_u$. If we treat cells as a kind of dimension, our dimensions are: C, C/T, C, T/C. This implies two dimensionless parameters:

#

# $h_m / \lambda = f( d I_u )$

#

# So let's calculate these quantities:

hmp = mean_ht / hill_length

di = dist_rate * uplint

plt.plot(di, hmp, '.')

plt.loglog(di, hmp, '.')

# I guess that's kind of a collapse? Need to split apart by different parameters. But first, let's try the idea of an effective diffusion coefficient:

dd = (1.0 / (uplint * mean_ht)) * halflen * halflen

plt.plot(mean_slope, dd, '.')

# Ok, kind of a mess. Let's try holding everything but uplift interval constant.

var_uplint = np.arange(0, 25, 5) + 2

for i in range(5):
    idx = (i * 25) + var_uplint
    plt.plot(mean_slope[idx], dd[idx], '.')

plt.xlabel('Mean slope gradient')

plt.ylabel('Effective diffusivity')

plt.legend(['L = 58', 'L = 103', 'L = 183', 'L = 325', 'L = 579'])

var_uplint = np.arange(0, 25, 5) + 3

for i in range(5):
    idx = (i * 25) + var_uplint
    plt.plot(mean_slope[idx], dd[idx], '.')

plt.xlabel('Mean slope gradient')

plt.ylabel('Effective diffusivity')

plt.legend(['L = 58', 'L = 103', 'L = 183', 'L = 325', 'L = 579'])

var_uplint = np.arange(0, 25, 5) + 4

for i in range(5):
    idx = (i * 25) + var_uplint
    plt.plot(mean_slope[idx], dd[idx], '.')

plt.xlabel('Mean slope gradient')

plt.ylabel('Effective diffusivity')

plt.legend(['L = 58', 'L = 103', 'L = 183', 'L = 325', 'L = 579'])

for i in range(5):
    idx = np.arange(5) + 100 + 5 * i
    plt.loglog(di[idx], hmp[idx], '.')

plt.xlabel('d I_u')

plt.ylabel('H_m / L')

plt.grid('on')

plt.legend(['I_u = 100', 'I_u = 316', 'I_u = 1000', 'I_u = 3163', 'I_u = 10,000'])

hmp2 = (mean_ht + 0.5) / hill_length

for i in range(5):
    idx = np.arange(5) + 0 + 5 * i
    plt.loglog(di[idx], hmp2[idx], '.')

plt.xlabel('d I_u')

plt.ylabel('H_m / L')

plt.grid('on')

plt.legend(['I_u = 100', 'I_u = 316', 'I_u = 1000', 'I_u = 3163', 'I_u = 10,000'])

# They don't seem to segregate much by $I_u$. I suspect they segregate by $L$. So let's plot ALL the data, colored by $L$:

for i in range(5):
    idx = i * 25 + np.arange(25)
    plt.loglog(di[idx], hmp2[idx], '.')

plt.xlabel('d I_u')

plt.ylabel('H_m / L')

plt.grid('on')

plt.legend(['L = 58', 'L = 103', 'L = 183', 'L = 325', 'L = 579'])

# The above plot makes sense actually. Consider the end members:

#

# At low $d I_u$, you have angle-of-repose:

#

# $H_m = \tan (30^\circ) L/4$ (I think)

#

# or

#

# $H_m / L \approx 0.15$

#

# At high $d I_u$, we have the diffusive case:

#

# $H_m = \frac{U}{3D} L^2$, or

#

# $H_m / L = \frac{U}{3D} L$
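#
# The two end members can be put into numbers with a short sketch. The function names and the sample values of $U$, $D$ and $L$ are hypothetical; only the $30^\circ$ angle of repose comes from the text above.

```python
import math

def repose_ratio(angle_deg=30.0):
    """H_m / L for a slope held at the angle of repose: tan(angle) / 4."""
    return math.tan(math.radians(angle_deg)) / 4.0

def diffusive_ratio(U, D, L):
    """H_m / L in the diffusive case: U L / (3 D)."""
    return U * L / (3.0 * D)

print(repose_ratio())                           # independent of L
print(diffusive_ratio(1.0e-3, 0.02, 50.0))      # hypothetical U, D, L
print(diffusive_ratio(1.0e-3, 0.02, 100.0))     # doubling L doubles the ratio
```

# The angle-of-repose ratio does not depend on $L$, whereas the diffusive ratio grows linearly with $L$.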

#

# But wait a minute, that's backwards from what the plot shows. Could this be a finite-size effect? Let's suppose that finite-size effects mean that there's a minimum $H_m$ equal to $N$ times the size of one particle. Then,

#

# $H_m / L = N / L$

#

# which has the right direction. What would $N$ actually be? From reading the graph above, estimate that (using $L$ as half-length, so half of the above numbers in legend):

#

# For $L=29$, $N/L \approx 0.02$

#

# For $L=51.5$, $N/L \approx 0.015$

#

# For $L=91.5$, $N/L \approx 0.009$

#

# For $L=162.5$, $N/L \approx 0.0055$

#

# For $L=289.5$, $N/L \approx 0.004$

nl = np.array([0.02, 0.015, 0.009, 0.0055, 0.004])

l = np.array([29, 51.5, 91.5, 162.5, 289.5])

n = nl * l

n

1.0 / l

# Ok, so these are all hovering around 1 cell! So, that could well explain the scatter at the right side.

#

# This is starting to make more sense. The narrow window around $d I_u \approx 10$ represents the diffusive regime. To the left, the angle-of-repose regime. To the right, the finite-size-effect regime. For good reasons, the diffusive regime is biggest with the biggest $L$ (more particles, so finite-size effect doesn't show up until larger $d I_u$). Within the diffusive regime, points separate according to scale, reflecting the $H_m / L \propto L$ effect. So, if we took points with varying $L$ but identical $d I_u$, ...

idx = np.where(np.logical_and(di>9.0, di<11.0))[0]

hmd = mean_ht[idx]

ld = hill_length[idx]

plt.plot(ld, hmd/ld, '.')

idx2 = np.where(np.logical_and(di>0.9, di<1.1))[0]

plt.plot(hill_length[idx2], mean_ht[idx2]/hill_length[idx2], 'o')

plt.grid('on')

plt.xlabel('L')

plt.ylabel('Hm/L')

plt.plot([0, 600.0], [0.5774/4.0, 0.5774/4.0])

plt.plot([0.0, 600.0], [0.0, 0.02 * 0.1/3.0 * 600.0])

# In the above plot, diffusive behavior is indicated by a slope of 1:1, whereas angle-of-repose is indicated by a flat trend. One thing this says is that, for a given $d I_u$, a longer slope is more likely to be influenced by the angle of repose. That makes sense I think...?

plt.loglog(ld, hmd, '*')

plt.grid('on')

# Now, what if it's better to consider disturbance rate, $d$, to be in square cells / time? That is, when dimensionalized, to be $L^2/T$ rather than $L/T$? Let's see what happens when we do it this way:

d2 = dist_rate * uplint / hill_length

plt.loglog(hmp, d2, '.')

# Not so great ...

#

#

# Let's try another idea, based again on dimensional analysis. Start with dimensional quantities $U$ (uplift rate), $L$ (length), $H$ (mean height), and $\delta$ (cell size). Nondimensionalize in a somewhat surprising way:

#

# $\frac{UL^2}{Hd\delta^2} = f( d\delta / U, L/\delta )$

#

# This is actually a dimensionless diffusivity: diffusivity relative to disturbance intensity.

#

# Now translate back: $H=h\delta$, $U=\delta / I_u$, and $L=\lambda \delta$:

#

# $\frac{\lambda^2}{hdI_u} = f( d I_u, \lambda )$

#

# So what happens if we plot thus?

diff_nd = halflen * halflen / (uplint * mean_ht * dist_rate)

diu = dist_rate * uplint

for i in range(5):
    idx = (i * 25) + np.arange(25)
    plt.loglog(diu[idx], diff_nd[idx], '+')
plt.grid('on')
plt.xlabel(r'Dimensionless disturbance rate ($d I_u$)')
plt.ylabel(r'Dimensionless diffusivity ($\lambda^2/hdI_u$)')
plt.legend([r'$\lambda = 58$', r'$\lambda = 103$', r'$\lambda = 183$', r'$\lambda = 325$', r'$\lambda = 579$'])

# Now THAT'S a collapse. Good. Interpretation: as we go left to right, we go from faster uplift or lower disturbance to slower uplift or faster disturbance. That means the relief goes from high to low. At high relief, we get angle-of-repose behavior, for which the effective diffusivity increases with relief---hence, diffusivity decreases with increasing $dI_u$. Then we get to a realm that is presumably the diffusive regime, where the curve flattens out. This represents a constant diffusivity. Finally, we get to the far right side, where you hit finite-size effects: there will be a hill at least one particle high on average no matter how high $d I_u$, so diffusivity appears to drop again.

#

# There's a one-to-one relation between $D'$ and $\lambda$, at least in the steep regime. This reflects simple scaling. In the steep regime, $H = S_c L / 4$. By definition $D' = (U / 3H\delta^2 d) L^2$, or $H = (U / 3D'\delta^2 d) L^2$. Substituting,

#

# $(U / 3D'\delta^2 d) L^2 = S_c L / 4$

#

# $D' = 4 U L / 3 \delta^2 d S_c$

#

# In other words, we expect $D' \propto L$ in this regime. If we translate back, this becomes

#

# $D' = 4 \lambda / 3 I_u d S_c$

#

# Voila!

#

# Ok, but why does the scaling between $D'$ and $\lambda$ continue in the diffusive regime? My guess is as follows. To relate disturbance rate, $d$, to diffusivity, $D$, consider that disturbance acts over depth $\delta$ and length $L$. Therefore, one might scale diffusivity as follows:

#

# $D \propto dL\delta \propto d \lambda$

#

# By that argument, $D$ should be proportional to $\lambda$. Another way to say this is that in order to preserve constant $D$, $d$ should be treated as a scale-dependent parameter: $d \propto D/\lambda$.

#

# A further thought: can we define diffusivity more carefully? One approach would be

#

# (frequency of disturbance events per unit time per unit length, $F$) [1/LT]

#

# x

#

# (cross-sectional area disturbed, $A$)

#

# x

#

# (characteristic displacement length,$\Lambda$)

#

# For the first, take the expected number of events across the whole slope in unit time and divide by the length of the slope:

#

# $F = \lambda d / L = ...$

#

# hmm, this isn't going where I thought...

#

| ModelInputsAndRunScripts/DataAnalysis/analysis_of_grain_hill_data.ipynb |

# # Beyond linear separation in classification

#

# As we saw in the regression section, the linear classification model

# expects the data to be linearly separable. When this assumption does not

# hold, the model is not expressive enough to properly fit the data.

# Therefore, we need to apply the same tricks as in regression: feature

# augmentation (potentially using expert-knowledge) or using a

# kernel-based method.

#

# We will provide examples where we will use a kernel support vector machine

# to perform classification on some toy-datasets where it is impossible to

# find a perfect linear separation.

#

# We will generate a first dataset where the data are represented as two

# interlaced half circles. This dataset is generated using the function

# [`sklearn.datasets.make_moons`](https://scikit-learn.org/stable/modules/generated/sklearn.datasets.make_moons.html).

# +

import numpy as np

import pandas as pd

from sklearn.datasets import make_moons

feature_names = ["Feature #0", "Features #1"]

target_name = "class"

X, y = make_moons(n_samples=100, noise=0.13, random_state=42)

# We store both the data and target in a dataframe to ease plotting

moons = pd.DataFrame(np.concatenate([X, y[:, np.newaxis]], axis=1),

columns=feature_names + [target_name])

data_moons, target_moons = moons[feature_names], moons[target_name]

# -

# Since the dataset contains only two features, we can make a scatter plot to

# have a look at it.

# +

import matplotlib.pyplot as plt

import seaborn as sns

sns.scatterplot(data=moons, x=feature_names[0], y=feature_names[1],

hue=target_moons, palette=["tab:red", "tab:blue"])

_ = plt.title("Illustration of the moons dataset")

# -

# From the intuitions that we got by studying linear models, it should be

# obvious that a linear classifier will not be able to find a perfect decision

# function to separate the two classes.

#

# Let's look at the decision boundary of such a linear classifier.

# We will create a predictive model by standardizing the dataset followed by

# a linear support vector machine classifier.

import sklearn

sklearn.set_config(display="diagram")

# +

from sklearn.pipeline import make_pipeline

from sklearn.preprocessing import StandardScaler

from sklearn.svm import SVC

linear_model = make_pipeline(StandardScaler(), SVC(kernel="linear"))

linear_model.fit(data_moons, target_moons)

# -

# <div class="admonition warning alert alert-danger">

# <p class="first admonition-title" style="font-weight: bold;">Warning</p>

# <p class="last">Be aware that we fit and will check the decision boundary of the classifier

# on the same dataset without splitting the dataset into a training set and a

# testing set. While this is a bad practice, we use it for the sake of

# simplicity to depict the model behavior. Always use cross-validation when

# you want to assess the generalization performance of a machine-learning model.</p>

# </div>

# Let's check the decision boundary of such a linear model on this dataset.

# +

from helpers.plotting import DecisionBoundaryDisplay

DecisionBoundaryDisplay.from_estimator(

linear_model, data_moons, response_method="predict", cmap="RdBu", alpha=0.5

)

sns.scatterplot(data=moons, x=feature_names[0], y=feature_names[1],

hue=target_moons, palette=["tab:red", "tab:blue"])

_ = plt.title("Decision boundary of a linear model")

# -

# As expected, a linear decision boundary is not flexible enough to split the

# two classes.

#

# To push this example to the limit, we will create another dataset where

# samples of a class will be surrounded by samples from the other class.

# +

from sklearn.datasets import make_gaussian_quantiles

feature_names = ["Feature #0", "Features #1"]

target_name = "class"

X, y = make_gaussian_quantiles(

n_samples=100, n_features=2, n_classes=2, random_state=42)

gauss = pd.DataFrame(np.concatenate([X, y[:, np.newaxis]], axis=1),

columns=feature_names + [target_name])

data_gauss, target_gauss = gauss[feature_names], gauss[target_name]

# -

ax = sns.scatterplot(data=gauss, x=feature_names[0], y=feature_names[1],

hue=target_gauss, palette=["tab:red", "tab:blue"])

_ = plt.title("Illustration of the Gaussian quantiles dataset")

# Here, it is even more obvious that a linear decision function is not
# suitable. We can check what decision function a linear support vector machine
# will find.

linear_model.fit(data_gauss, target_gauss)

DecisionBoundaryDisplay.from_estimator(

linear_model, data_gauss, response_method="predict", cmap="RdBu", alpha=0.5

)

sns.scatterplot(data=gauss, x=feature_names[0], y=feature_names[1],

hue=target_gauss, palette=["tab:red", "tab:blue"])

_ = plt.title("Decision boundary of a linear model")

# As expected, a linear separation cannot be used to separate the classes

# properly: the model will under-fit as it will make errors even on

# the training set.

#

# In the section about linear regression, we saw that we could use several

# tricks to make a linear model more flexible by augmenting features or

# using a kernel. Here, we will use the latter solution by using a radial basis

# function (RBF) kernel together with a support vector machine classifier.

#

# We will repeat the two previous experiments and check the obtained decision

# function.

kernel_model = make_pipeline(StandardScaler(), SVC(kernel="rbf", gamma=5))

kernel_model.fit(data_moons, target_moons)

DecisionBoundaryDisplay.from_estimator(

kernel_model, data_moons, response_method="predict", cmap="RdBu", alpha=0.5

)

sns.scatterplot(data=moons, x=feature_names[0], y=feature_names[1],

hue=target_moons, palette=["tab:red", "tab:blue"])

_ = plt.title("Decision boundary with a model using an RBF kernel")

# We see that the decision boundary is no longer a straight line. Indeed,

# an area is defined around the red samples and we could imagine that this

# classifier should be able to generalize on unseen data.

#

# Let's check the decision function on the second dataset.

kernel_model.fit(data_gauss, target_gauss)

DecisionBoundaryDisplay.from_estimator(

kernel_model, data_gauss, response_method="predict", cmap="RdBu", alpha=0.5

)

ax = sns.scatterplot(data=gauss, x=feature_names[0], y=feature_names[1],

hue=target_gauss, palette=["tab:red", "tab:blue"])

_ = plt.title("Decision boundary with a model using an RBF kernel")

# We observe something similar to the previous case. The decision function

# is more flexible and does not underfit anymore.

#

# Thus, the kernel trick and feature expansion are both ways to make a linear

# classifier more expressive, exactly as we saw in regression.

#

# Keep in mind that adding flexibility to a model can also risk increasing

# overfitting by making the decision function sensitive to individual

# (possibly noisy) data points of the training set. Here we can observe that

# the decision functions remain smooth enough to preserve good generalization.

# If you are curious, you can try to repeat the above experiment with

# `gamma=100` and look at the decision functions.
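
#

# As a rough sketch of that experiment, the snippet below compares `gamma=5` and `gamma=100` on a moons dataset by scoring each model on its training data and on held-out data. The dataset settings (`n_samples=200`, `noise=0.13`), the `random_state` values, the 50/50 split, and the helper name `gamma_scores` are assumptions made for illustration; they are not taken from this notebook.

```python
from sklearn.datasets import make_moons
from sklearn.model_selection import train_test_split
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler
from sklearn.svm import SVC


def gamma_scores(gammas=(5, 100), random_state=0):
    """Return {gamma: (train_accuracy, test_accuracy)} for an RBF SVC."""
    # Assumed dataset settings, chosen only for this illustration.
    X, y = make_moons(n_samples=200, noise=0.13, random_state=random_state)
    X_train, X_test, y_train, y_test = train_test_split(
        X, y, test_size=0.5, random_state=random_state
    )
    scores = {}
    for gamma in gammas:
        model = make_pipeline(StandardScaler(), SVC(kernel="rbf", gamma=gamma))
        model.fit(X_train, y_train)
        scores[gamma] = (
            model.score(X_train, y_train),
            model.score(X_test, y_test),
        )
    return scores


for gamma, (train_acc, test_acc) in gamma_scores().items():
    print(f"gamma={gamma}: train={train_acc:.2f}, test={test_acc:.2f}")
```

# A large gap between the training and held-out accuracy for one value of `gamma` would suggest that the corresponding decision function is too sensitive to individual training points.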

| notebooks/logistic_regression_non_linear.ipynb |

# ---

# jupyter:

# jupytext:

# text_representation:

# extension: .py

# format_name: light

# format_version: '1.5'

# jupytext_version: 1.14.4

# kernelspec:

# display_name: Python 3

# language: python

# name: python3

# ---

# # Overfitting II

#

# Last time, we saw a theoretical example of *overfitting*, in which we fit a machine learning model that perfectly fit the data it saw, but performed extremely poorly on fresh, unseen data. In this lecture, we'll observe overfitting in a more practical context, using the Titanic data set again. We'll then begin to study *validation* techniques for finding models with "just the right amount" of flexibility.

import numpy as np

from matplotlib import pyplot as plt

import pandas as pd

# +

# assumes that you have run the function retrieve_data()

# from "Introduction to ML in Practice" in ML_3.ipynb

titanic = pd.read_csv("data.csv")

titanic

# -

# Recall that we diagnosed overfitting by testing our model against some new data. In this case, we don't have any more data. So, what we can do instead is *hold out* some data that we won't let our model see at first. This holdout data is called the *validation* or *testing* data, depending on the use to which we put it. In contrast, the data that we allow our model to see is called the *training* data. `sklearn` provides a convenient function for partitioning our data into training and holdout sets called `train_test_split`. The default and generally most useful behavior is to randomly select rows of the data frame to be in each set.

# +

from sklearn.model_selection import train_test_split

np.random.seed(1234)

train, test = train_test_split(titanic, test_size = 0.3) # hold out 30% of the data

train.shape, test.shape

# -
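
# As a small illustration of how `train_test_split` partitions rows, the sketch below splits a toy data frame. The frame `toy` and its columns are hypothetical stand-ins for the Titanic data. Passing `random_state` is one way to make the split reproducible without relying on the global NumPy seed, and `stratify` is an optional extra that keeps the class proportions similar in both parts.

```python
import pandas as pd
from sklearn.model_selection import train_test_split

# Hypothetical toy data frame standing in for the Titanic data.
toy = pd.DataFrame({
    "Age": range(20),
    "Survived": [0, 1] * 10,
})

# random_state fixes the shuffle; stratify balances the classes across parts.
toy_train, toy_test = train_test_split(
    toy, test_size=0.25, random_state=1234, stratify=toy["Survived"]
)

# Every row lands in exactly one of the two parts.
print(toy_train.shape, toy_test.shape)
print(sorted(toy_test.index))
```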

# Now we have two data frames. As you may recall from a previous lecture, we need to do some data cleaning, and split them into predictor variables `X` and target variables `y`.

# +

from sklearn import preprocessing

def prep_titanic_data(data_df):

    df = data_df.copy()

    # convert male/female to 1/0

    le = preprocessing.LabelEncoder()

    df['Sex'] = le.fit_transform(df['Sex'])

    # don't need name column

    df = df.drop(['Name'], axis = 1)

    # split into X and y

    X = df.drop(['Survived'], axis = 1)

    y = df['Survived']

    return X, y

# -

X_train, y_train = prep_titanic_data(train)

X_test, y_test = prep_titanic_data(test)
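
# Note that `prep_titanic_data` fits a fresh `LabelEncoder` on each data frame. That gives consistent codes as long as both frames contain the same categories. A more defensive variant, sketched below on hypothetical miniature frames (`train_toy` and `test_toy` are not part of the lecture data), fits the encoder once on the training data and reuses it for the holdout data.

```python
import pandas as pd
from sklearn.preprocessing import LabelEncoder

# Hypothetical miniature train/test frames; only the 'Sex' column matters here.
train_toy = pd.DataFrame({"Sex": ["male", "female", "female", "male"]})
test_toy = pd.DataFrame({"Sex": ["male", "male"]})

# Fit the encoder once, on the training data only.
encoder = LabelEncoder()
train_codes = encoder.fit_transform(train_toy["Sex"])

# Reuse the fitted encoder so the test data gets the same codes,
# even though 'female' never appears in test_toy.
test_codes = encoder.transform(test_toy["Sex"])

print(list(encoder.classes_))
print(list(train_codes), list(test_codes))
```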

# Now we're able to train our model on the `train` data, and then evaluate its performance on the `test` data, which plays the role of validation data here. This will help us to diagnose and avoid overfitting.

#

# Let's try using the decision tree classifier again. As you may remember, the `DecisionTreeClassifier()` class takes an argument `max_depth` that governs how many layers of decisions the tree is allowed to make. Larger `max_depth` values correspond to more complicated trees. In this way, `max_depth` is a model complexity parameter, similar to the `degree` when we did polynomial regression.

#

# For example, with a small `max_depth`, the model scores on the training and validation data are relatively close.

# +

from sklearn import tree

T = tree.DecisionTreeClassifier(max_depth = 3)

T.fit(X_train, y_train)

T.score(X_train, y_train), T.score(X_test, y_test)

# -

# On the other hand, if we use a much higher `max_depth`, we can achieve a substantially better score on the training data, but our performance on the validation data has not improved by much, and might even suffer.

# +

T = tree.DecisionTreeClassifier(max_depth = 20)

T.fit(X_train, y_train)

T.score(X_train, y_train), T.score(X_test, y_test)

# -

# That looks like overfitting! The model achieves a near-perfect score on the training data, but a much lower one on the test data.

# +

fig, ax = plt.subplots(1, figsize = (10, 7))

for d in range(1, 30):

    T = tree.DecisionTreeClassifier(max_depth = d)

    T.fit(X_train, y_train)

    ax.scatter(d, T.score(X_train, y_train), color = "black")

    ax.scatter(d, T.score(X_test, y_test), color = "firebrick")

ax.set(xlabel = "Complexity (depth)", ylabel = "Performance (score)")

# -

# Observe that the training score (black) always increases, while the test score (red) tops out around 83\% and then even begins to trail off slightly. It looks like the optimal depth might be around 5-7 or so, but there's some random noise that can prevent us from being able to determine exactly what the optimal depth is.

#

# Increasing performance on the training set combined with decreasing performance on the test set is the trademark of overfitting.

#

# This noise reflects the fact that we took a single, random subset of the data for testing. In a more systematic experiment, we would draw many different subsets of the data for each value of depth and average over them. This is what *cross-validation* does, and we'll talk about it in the next lecture.
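
#

# As a preview, here is a minimal sketch of that averaging idea using `cross_val_score`. It runs on synthetic data from `make_classification` rather than the Titanic data, and the sample size, feature counts, depth range, and `cv=5` are all assumptions chosen for illustration.

```python
from sklearn.datasets import make_classification
from sklearn.model_selection import cross_val_score
from sklearn.tree import DecisionTreeClassifier

# Synthetic stand-in data; the Titanic data frame is not used here.
X_demo, y_demo = make_classification(
    n_samples=300, n_features=6, n_informative=3, random_state=0
)

cv_means = {}
for depth in range(1, 11):
    clf = DecisionTreeClassifier(max_depth=depth, random_state=0)
    # Score on five different train/validation partitions and average them.
    cv_means[depth] = cross_val_score(clf, X_demo, y_demo, cv=5).mean()

# Depth with the highest average validation score.
best_depth = max(cv_means, key=cv_means.get)
print(best_depth, round(cv_means[best_depth], 3))
```

# Averaging over several partitions smooths out part of the noise that comes from evaluating on a single random holdout set.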

| content/ML/ML_5.ipynb |

# ---

# jupyter:

# jupytext:

# text_representation:

# extension: .py

# format_name: light

# format_version: '1.5'

# jupytext_version: 1.14.4

# kernelspec:

# display_name: Python 3

# language: python

# name: python3

# ---

# # A Simple Autoencoder

#

# We'll start off by building a simple autoencoder to compress the MNIST dataset. With autoencoders, we pass input data through an encoder that makes a compressed representation of the input. Then, this representation is passed through a decoder to reconstruct the input data. Generally the encoder and decoder will be built with neural networks, then trained on example data.

#

# <img src='notebook_ims/autoencoder_1.png' />

#

# ### Compressed Representation

#