Update readme

README.md

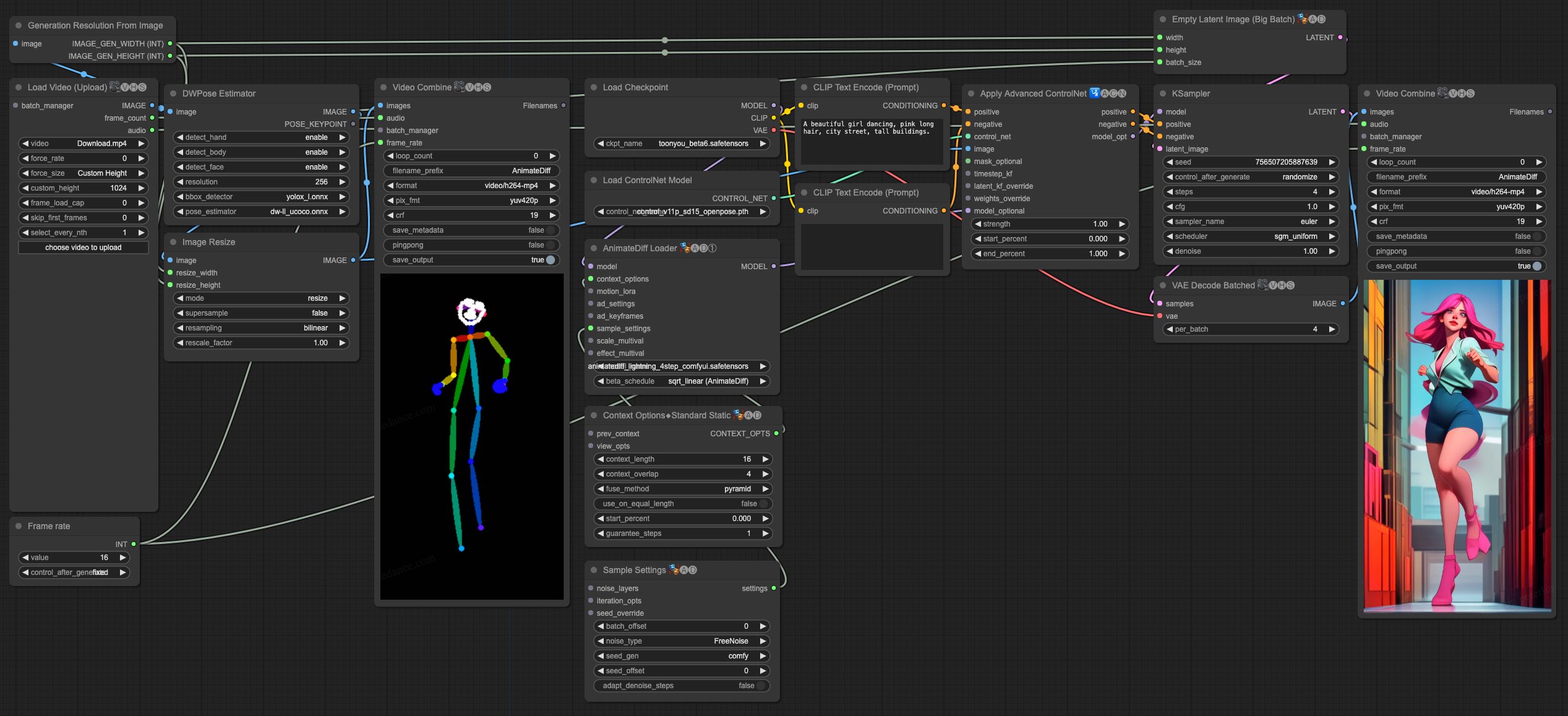

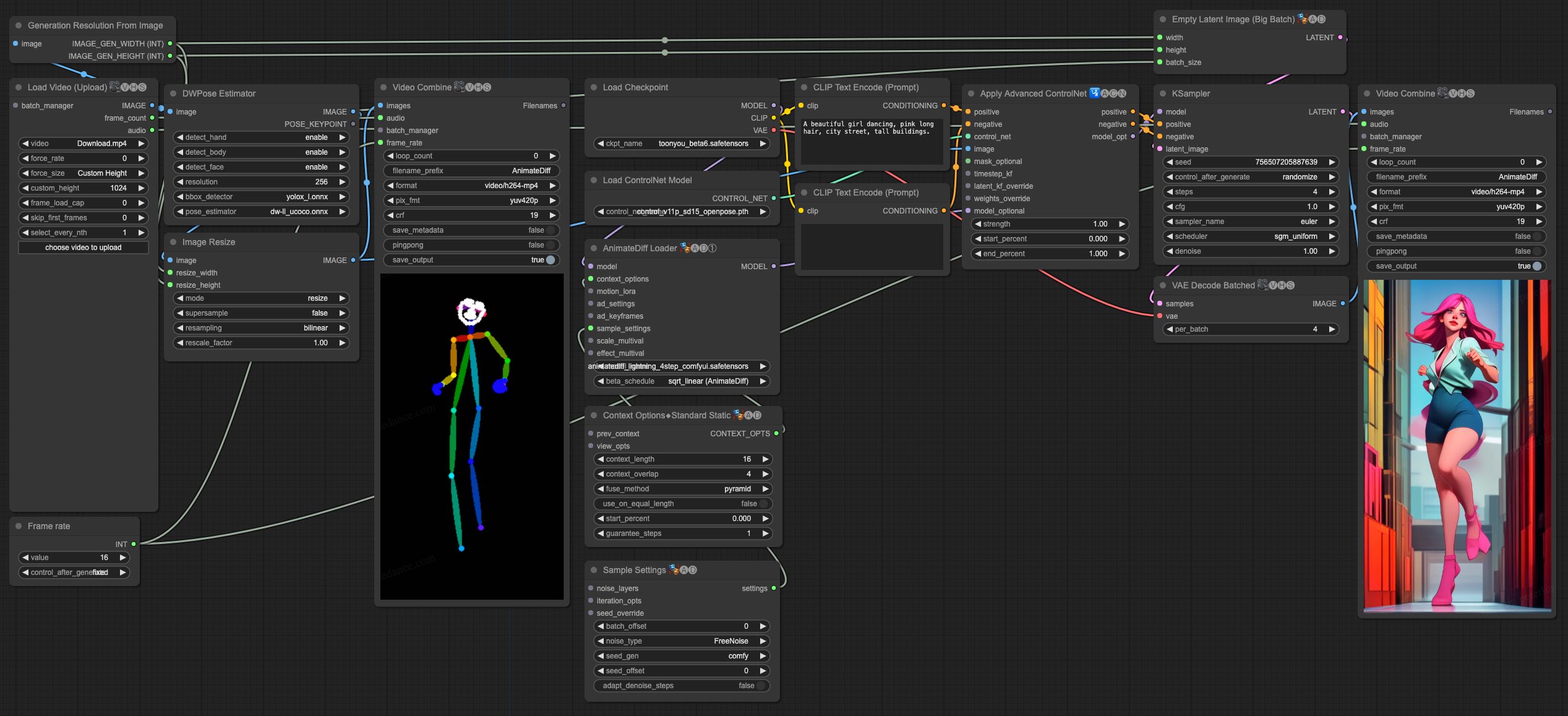

<video src='https://huggingface.co/ByteDance/AnimateDiff-Lightning/resolve/main/animatediff_lightning_samples_t2v.mp4' width="100%" autoplay muted loop style='margin:0'></video>

<video src='https://huggingface.co/ByteDance/AnimateDiff-Lightning/resolve/main/animatediff_lightning_samples_v2v.mp4' width="100%" autoplay muted loop style='margin:0'></video>

AnimateDiff-Lightning is a lightning-fast text-to-video generation model. It can generate videos more than ten times faster than the original AnimateDiff. For more information, please refer to our research paper: [AnimateDiff-Lightning: Cross-Model Diffusion Distillation](https://arxiv.org/abs/2403.12706). We release the model as part of the research.

Our models are distilled from [AnimateDiff SD1.5 v2](https://huggingface.co/guoyww/animatediff). This repository contains checkpoints for 1-step, 2-step, 4-step, and 8-step distilled models. Our 2-step, 4-step, and 8-step models produce high-quality results; the 1-step model is provided for research purposes only.
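The paragraph above lists one checkpoint per distilled step count. As a rough illustration of how a script might choose among them, the sketch below maps a step count to a download URL. The helper name `checkpoint_url` is hypothetical. The file-naming pattern `animatediff_lightning_{step}step_diffusers.safetensors` is an assumption that does not come from this README, so check the repository's file listing before relying on it. The URL prefix follows the one used by the sample videos above.

```python
# Hypothetical helper: builds a download URL for a distilled
# AnimateDiff-Lightning checkpoint. The filename pattern is an ASSUMPTION.
REPO_URL = "https://huggingface.co/ByteDance/AnimateDiff-Lightning/resolve/main"

# Step counts for which distilled checkpoints are provided.
SUPPORTED_STEPS = (1, 2, 4, 8)
# The 1-step model is provided for research purposes only.
RESEARCH_ONLY_STEPS = (1,)


def checkpoint_url(step: int, allow_research_only: bool = False) -> str:
    """Return the (assumed) URL of the checkpoint distilled for `step` steps."""
    if step not in SUPPORTED_STEPS:
        raise ValueError(f"step must be one of {SUPPORTED_STEPS}, got {step}")
    if step in RESEARCH_ONLY_STEPS and not allow_research_only:
        raise ValueError(
            "the 1-step model is provided for research purposes only; "
            "pass allow_research_only=True to use it"
        )
    # Assumed filename convention; verify against the repository's file list.
    filename = f"animatediff_lightning_{step}step_diffusers.safetensors"
    return f"{REPO_URL}/{filename}"


if __name__ == "__main__":
    # Print URLs for the checkpoints recommended for general use.
    for step in (2, 4, 8):
        print(step, checkpoint_url(step))
```

By default, the sketch refuses the 1-step checkpoint, which mirrors the note that it is meant for research only. A caller would presumably also run the sampler with the same number of steps the chosen checkpoint was distilled for.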

1. DWPose may appear stuck in the UI, but the pipeline is still running in the background. Check the ComfyUI log and your output folder.

1. Because of its limited context window, this simple pipeline will produce scene changes. More sophisticated pipelines, such as IP-Adapter for identity preservation, matting, or recurrent image-to-video (i2v) generation, can be explored.

# Cite Our Work

```
@misc{lin2024animatedifflightning,
      title={AnimateDiff-Lightning: Cross-Model Diffusion Distillation},
      author={Shanchuan Lin and Xiao Yang},
      year={2024},
      eprint={2403.12706},
      archivePrefix={arXiv},
      primaryClass={cs.CV}
}
```