diff --git a/.gitignore b/.gitignore

new file mode 100644

index 0000000000000000000000000000000000000000..4e0bbdfaa692aff943e039a75fa6203770ea5ae7

--- /dev/null

+++ b/.gitignore

@@ -0,0 +1,2 @@

+venv/

+.idea/

diff --git a/README.md b/README.md

index fb10e2534b090242b9f5d8fe0abdb092a7bd152a..e692edc79d0c9be045aab6fd3e8f9014d7454aec 100644

--- a/README.md

+++ b/README.md

@@ -10,3 +10,19 @@ pinned: false

---

Check out the configuration reference at https://huggingface.co/docs/hub/spaces-config-reference

+

+[Open In Colab](https://colab.research.google.com/github/ajay-sainy/Wav2Lip-GFPGAN/blob/main/Wav2Lip-GFPGAN.ipynb)

+

+Combine lip-sync AI (Wav2Lip) and face-restoration AI (GFPGAN) to get ultra-high-quality videos.

+

+[Demo Video](https://www.youtube.com/watch?v=jArkTgAMA4g)

+


+Projects referenced:

+1. https://github.com/Rudrabha/Wav2Lip

+2. https://github.com/TencentARC/GFPGAN

+

+Video sources:

+1. https://www.youtube.com/watch?v=39w_zYB7AVM&t=0s

+2. https://www.youtube.com/watch?v=LQCQym6hVMo&t=0s

diff --git a/Wav2Lip-GFPGAN.ipynb b/Wav2Lip-GFPGAN.ipynb

new file mode 100644

index 0000000000000000000000000000000000000000..97118cbce46c937e88e3a2dd57c91db73070c5d7

--- /dev/null

+++ b/Wav2Lip-GFPGAN.ipynb

@@ -0,0 +1,208 @@

+{

+ "nbformat": 4,

+ "nbformat_minor": 0,

+ "metadata": {

+ "colab": {

+ "name": "Wav2Lip.ipynb",

+ "provenance": [],

+ "collapsed_sections": []

+ },

+ "kernelspec": {

+ "name": "python3",

+ "display_name": "Python 3"

+ },

+ "language_info": {

+ "name": "python"

+ },

+ "accelerator": "GPU",

+ "gpuClass": "standard"

+ },

+ "cells": [

+ {

+ "cell_type": "code",

+ "source": [

+ "!git clone https://github.com/ajay-sainy/Wav2Lip-GFPGAN.git\n",

+ "basePath = \"/content/Wav2Lip-GFPGAN\"\n",

+ "%cd {basePath}"

+ ],

+ "metadata": {

+ "id": "YhFe3CJGAIiV"

+ },

+ "execution_count": null,

+ "outputs": []

+ },

+ {

+ "cell_type": "code",

+ "source": [

+ "wav2lipFolderName = 'Wav2Lip-master'\n",

+ "gfpganFolderName = 'GFPGAN-master'\n",

+ "wav2lipPath = basePath + '/' + wav2lipFolderName\n",

+ "gfpganPath = basePath + '/' + gfpganFolderName\n",

+ "\n",

+ "!wget 'https://www.adrianbulat.com/downloads/python-fan/s3fd-619a316812.pth' -O {wav2lipPath}'/face_detection/detection/sfd/s3fd.pth'\n",

+ "!gdown https://drive.google.com/uc?id=1fQtBSYEyuai9MjBOF8j7zZ4oQ9W2N64q --output {wav2lipPath}'/checkpoints/'"

+ ],

+ "metadata": {

+ "id": "mH7A_OaFUs8U"

+ },

+ "execution_count": null,

+ "outputs": []

+ },

+ {

+ "cell_type": "code",

+ "source": [

+ "!pip install -r requirements.txt"

+ ],

+ "metadata": {

+ "id": "CAJqWQS17Qk1"

+ },

+ "execution_count": null,

+ "outputs": []

+ },

+ {

+ "cell_type": "code",

+ "execution_count": null,

+ "metadata": {

+ "id": "EqX_2YtkUjRI"

+ },

+ "outputs": [],

+ "source": [

+ "import os\n",

+ "outputPath = basePath+'/outputs'\n",

+ "inputAudioPath = basePath + '/inputs/kim_audio.mp3'\n",

+ "inputVideoPath = basePath + '/inputs/kimk_7s_raw.mp4'\n",

+ "lipSyncedOutputPath = basePath + '/outputs/result.mp4'\n",

+ "\n",

+ "if not os.path.exists(outputPath):\n",

+ " os.makedirs(outputPath)\n",

+ "\n",

+ "!cd $wav2lipFolderName && python inference.py \\\n",

+ "--checkpoint_path checkpoints/wav2lip.pth \\\n",

+ "--face {inputVideoPath} \\\n",

+ "--audio {inputAudioPath} \\\n",

+ "--outfile {lipSyncedOutputPath}"

+ ]

+ },

+ {

+ "cell_type": "code",

+ "source": [

+ "!cd $gfpganFolderName && python setup.py develop\n",

+ "!wget https://github.com/TencentARC/GFPGAN/releases/download/v1.3.0/GFPGANv1.3.pth -P {gfpganFolderName}'/experiments/pretrained_models'"

+ ],

+ "metadata": {

+ "id": "PPBew5FGGvP9"

+ },

+ "execution_count": null,

+ "outputs": []

+ },

+ {

+ "cell_type": "code",

+ "source": [

+ "import os\n",
+ "from os import path\n",
+ "\n",
+ "import cv2\n",
+ "from tqdm import tqdm\n",
+ "\n",
+ "# Wav2Lip's lip-synced output from the previous inference cell\n",
+ "lipSyncedVideoPath = outputPath+'/result.mp4'\n",
+ "unProcessedFramesFolderPath = outputPath+'/frames'\n",
+ "\n",
+ "os.makedirs(unProcessedFramesFolderPath, exist_ok=True)\n",
+ "\n",
+ "vidcap = cv2.VideoCapture(lipSyncedVideoPath)\n",
+ "numberOfFrames = int(vidcap.get(cv2.CAP_PROP_FRAME_COUNT))\n",
+ "fps = vidcap.get(cv2.CAP_PROP_FPS)\n",
+ "print(\"FPS: \", fps, \"Frames: \", numberOfFrames)\n",
+ "\n",
+ "for frameNumber in tqdm(range(numberOfFrames)):\n",
+ "    success, image = vidcap.read()\n",
+ "    if not success:\n",
+ "        # CAP_PROP_FRAME_COUNT is only an estimate; stop once no more frames decode\n",
+ "        break\n",
+ "    cv2.imwrite(path.join(unProcessedFramesFolderPath, str(frameNumber).zfill(4)+'.jpg'), image)\n",
+ "vidcap.release()\n"

+ ],

+ "metadata": {

+ "id": "X_RNegAcISU2"

+ },

+ "execution_count": null,

+ "outputs": []

+ },

+ {

+ "cell_type": "code",

+ "source": [

+ "!cd $gfpganFolderName && \\\n",

+ " python inference_gfpgan.py -i $unProcessedFramesFolderPath -o $outputPath -v 1.3 -s 2 --only_center_face --bg_upsampler None"

+ ],

+ "metadata": {

+ "id": "k6krjfxTJYlu"

+ },

+ "execution_count": null,

+ "outputs": []

+ },

+ {

+ "cell_type": "code",

+ "source": [

+ "import os\n",
+ "import cv2\n",
+ "from tqdm import tqdm\n",
+ "\n",
+ "restoredFramesPath = outputPath + '/restored_imgs/'\n",
+ "processedVideoOutputPath = outputPath\n",
+ "\n",
+ "# Sort so the zero-padded frames (0000.jpg, 0001.jpg, ...) are written in order\n",
+ "dir_list = sorted(os.listdir(restoredFramesPath))\n",
+ "\n",
+ "batch = 0\n",
+ "batchSize = 300\n",
+ "for start in tqdm(range(0, len(dir_list), batchSize)):\n",
+ "    end = start + batchSize\n",
+ "    print(\"processing \", start, end)\n",
+ "    img_array = []\n",
+ "    for filename in tqdm(dir_list[start:end]):\n",
+ "        img = cv2.imread(restoredFramesPath + filename)\n",
+ "        if img is None:\n",
+ "            continue\n",
+ "        height, width, layers = img.shape\n",
+ "        size = (width, height)\n",
+ "        img_array.append(img)\n",
+ "    if not img_array:\n",
+ "        continue\n",
+ "\n",
+ "    # Write at the lip-synced video's frame rate (fps from the frame-extraction cell), not a fixed 30, so the audio stays in sync\n",
+ "    out = cv2.VideoWriter(processedVideoOutputPath + '/batch_' + str(batch).zfill(4) + '.avi', cv2.VideoWriter_fourcc(*'DIVX'), fps, size)\n",
+ "    batch = batch + 1\n",
+ "    for img in img_array:\n",
+ "        out.write(img)\n",
+ "    out.release()\n"

+ ],

+ "metadata": {

+ "id": "XibzGPIVJfvP"

+ },

+ "execution_count": null,

+ "outputs": []

+ },

+ {

+ "cell_type": "code",

+ "source": [

+ "concatTextFilePath = outputPath + \"/concat.txt\"\n",
+ "with open(concatTextFilePath, \"w\") as concatTextFile:\n",
+ "    for ips in range(batch):\n",
+ "        concatTextFile.write(\"file batch_\" + str(ips).zfill(4) + \".avi\\n\")\n",
+ "\n",
+ "concatedVideoOutputPath = outputPath + \"/concated_output.avi\"\n",
+ "!ffmpeg -y -f concat -i {concatTextFilePath} -c copy {concatedVideoOutputPath}\n",
+ "\n",
+ "finalProcessedOutputVideo = processedVideoOutputPath+'/final_with_audio.avi'\n",
+ "!ffmpeg -y -i {concatedVideoOutputPath} -i {inputAudioPath} -map 0 -map 1:a -c:v copy -shortest {finalProcessedOutputVideo}\n",
+ "\n",
+ "from google.colab import files\n",
+ "files.download(finalProcessedOutputVideo)"

+ ],

+ "metadata": {

+ "id": "jtde28qwpDd6"

+ },

+ "execution_count": null,

+ "outputs": []

+ }

+ ]

+}

\ No newline at end of file
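The notebook's last cell drives ffmpeg through IPython `!` shell strings. A minimal sketch of the same two steps (concatenating the batch files, then muxing in the original audio) as plain Python, assuming ffmpeg is on `PATH` when the commands are actually run. The helper names `write_concat_list`, `concat_command` and `mux_audio_command` are hypothetical and not part of this repository; the flags are copied from the cell.

```python
"""Sketch: the notebook's final ffmpeg steps expressed as argument lists."""
import tempfile
from pathlib import Path
from typing import List


def write_concat_list(output_dir: Path, num_batches: int) -> Path:
    """Write concat.txt with one 'file batch_NNNN.avi' line per batch, in order."""
    list_path = output_dir / "concat.txt"
    entries = [f"file batch_{i:04d}.avi\n" for i in range(num_batches)]
    list_path.write_text("".join(entries))
    return list_path


def concat_command(list_path: Path, out_path: Path) -> List[str]:
    # Concat demuxer plus stream copy: joins the batch files without re-encoding.
    return ["ffmpeg", "-y", "-f", "concat", "-i", str(list_path),
            "-c", "copy", str(out_path)]


def mux_audio_command(video_path: Path, audio_path: Path, out_path: Path) -> List[str]:
    # All streams from input 0 (the silent video) plus the audio of input 1;
    # the video stream is copied and output stops at the shorter input.
    return ["ffmpeg", "-y", "-i", str(video_path), "-i", str(audio_path),
            "-map", "0", "-map", "1:a", "-c:v", "copy", "-shortest", str(out_path)]


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        out_dir = Path(tmp)
        list_path = write_concat_list(out_dir, 3)
        print(list_path.read_text(), end="")
        print(concat_command(list_path, out_dir / "concated_output.avi"))
        print(mux_audio_command(out_dir / "concated_output.avi",
                                Path("inputs/kim_audio.mp3"),
                                out_dir / "final_with_audio.avi"))
```

Each returned list can be passed to `subprocess.run(cmd, check=True)`, which raises `CalledProcessError` when ffmpeg exits non-zero, whereas a failing `!` line in a notebook simply prints its output and lets the next line run. Argument lists also avoid shell-quoting problems when paths contain spaces.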

diff --git a/app.py b/app.py

new file mode 100644

index 0000000000000000000000000000000000000000..b6a4f308bbfd5078e7cee63131f1d3667ea5ae04

--- /dev/null

+++ b/app.py

@@ -0,0 +1,66 @@

+import os

+

+import gradio as gr

+import subprocess

+from subprocess import call

+

+basePath = os.path.dirname(os.path.realpath(__file__))

+

+outputPath = os.path.join(basePath, 'outputs')

+inputAudioPath = basePath + '/inputs/kim_audio.mp3'

+inputVideoPath = basePath + '/inputs/kimk_7s_raw.mp4'

+lipSyncedOutputPath = basePath + '/outputs/result.mp4'

+

+with gr.Blocks() as ui:
+    with gr.Row():
+        video = gr.File(label="Video or Image", info="Filepath of video/image that contains faces to use")
+        audio = gr.File(label="Audio", info="Filepath of video/audio file to use as raw audio source")
+        with gr.Column():
+            checkpoint = gr.Radio(["wav2lip", "wav2lip_gan"], label="Checkpoint",
+                                  info="Name of saved checkpoint to load weights from")
+            no_smooth = gr.Checkbox(label="No Smooth",
+                                    info="Prevent smoothing face detections over a short temporal window")
+            resize_factor = gr.Slider(minimum=1, maximum=4, step=1, value=1, label="Resize Factor",
+                                      info="Reduce the resolution by this factor. Sometimes, best results are obtained at 480p or 720p")
+    with gr.Row():
+        with gr.Column():
+            pad_top = gr.Slider(minimum=0, maximum=50, step=1, value=0, label="Pad Top", info="Padding above lips")
+            pad_bottom = gr.Slider(minimum=0, maximum=50, step=1, value=10,
+                                   label="Pad Bottom (Often increasing this to 20 allows chin to be included)",
+                                   info="Padding below lips")
+            pad_left = gr.Slider(minimum=0, maximum=50, step=1, value=0, label="Pad Left",
+                                 info="Padding to the left of lips")
+            pad_right = gr.Slider(minimum=0, maximum=50, step=1, value=0, label="Pad Right",
+                                  info="Padding to the right of lips")
+            generate_btn = gr.Button("Generate")
+        with gr.Column():
+            result = gr.Video()
+
+
+    def generate(video, audio, checkpoint, no_smooth, resize_factor, pad_top, pad_bottom, pad_left, pad_right):
+        if video is None or audio is None or checkpoint is None:
+            return
+
+        cmd = [
+            "python",
+            "inference.py",
+            "--checkpoint_path", f"checkpoints/{checkpoint}.pth",
+            "--segmentation_path", "checkpoints/face_segmentation.pth",
+            "--enhance_face", "gfpgan",
+            "--face", video.name,
+            "--audio", audio.name,
+            "--outfile", "results/output.mp4",
+            # Forward the remaining UI controls; --pads expects top, bottom, left, right
+            "--pads", str(int(pad_top)), str(int(pad_bottom)), str(int(pad_left)), str(int(pad_right)),
+            "--resize_factor", str(int(resize_factor)),
+        ]
+        if no_smooth:
+            cmd.append("--nosmooth")
+
+        call(cmd)
+        return "results/output.mp4"
+
+
+    # The inputs must be listed in the same order as generate's parameters
+    generate_btn.click(
+        generate,
+        [video, audio, checkpoint, no_smooth, resize_factor, pad_top, pad_bottom, pad_left, pad_right],
+        result)
+

+ui.queue().launch(debug=True)
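`generate` has to forward every UI control to `inference.py`, and Gradio passes the `click` inputs positionally. A minimal sketch of that mapping as a pure, testable function, assuming the script keeps upstream Wav2Lip's `--pads` (top, bottom, left, right), `--resize_factor` and `--nosmooth` flags. `build_inference_cmd` is a hypothetical name, and the fork-specific `--segmentation_path` and `--enhance_face` flags are left out for brevity.

```python
"""Sketch: map the Gradio controls in app.py onto inference.py command-line flags."""
from typing import List, Optional


def build_inference_cmd(face: Optional[str], audio: Optional[str], checkpoint: Optional[str],
                        no_smooth: bool, resize_factor: float,
                        pad_top: float, pad_bottom: float, pad_left: float, pad_right: float,
                        outfile: str = "results/output.mp4") -> Optional[List[str]]:
    """Return the argument list for subprocess, or None if a required input is missing."""
    if not face or not audio or not checkpoint:
        return None
    cmd = [
        "python", "inference.py",
        "--checkpoint_path", f"checkpoints/{checkpoint}.pth",
        "--face", face,
        "--audio", audio,
        "--outfile", outfile,
        # Assumed upstream Wav2Lip order: top, bottom, left, right.
        "--pads", *(str(int(p)) for p in (pad_top, pad_bottom, pad_left, pad_right)),
        # Sliders may deliver floats; the CLI expects integers.
        "--resize_factor", str(int(resize_factor)),
    ]
    if no_smooth:
        # A store_true flag: it is either present or absent, never given a value.
        cmd.append("--nosmooth")
    return cmd


if __name__ == "__main__":
    cmd = build_inference_cmd("face.mp4", "speech.wav", "wav2lip_gan", True, 1.0, 0, 10.0, 0, 0)
    print(" ".join(cmd))
```

Keeping command construction separate from `call` lets the argument order be checked without a GPU or model weights; `generate` would then only call `build_inference_cmd(video.name, audio.name, ...)` and hand the list to `subprocess.call`.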

diff --git a/gfpgan/.gitignore b/gfpgan/.gitignore

new file mode 100644

index 0000000000000000000000000000000000000000..8151890ed0f735bc3db37b3900616e1657dee170

--- /dev/null

+++ b/gfpgan/.gitignore

@@ -0,0 +1,139 @@

+# ignored folders

+datasets/*

+experiments/*

+results/*

+tb_logger/*

+wandb/*

+tmp/*

+

+version.py

+

+# Byte-compiled / optimized / DLL files

+__pycache__/

+*.py[cod]

+*$py.class

+

+# C extensions

+*.so

+

+# Distribution / packaging

+.Python

+build/

+develop-eggs/

+dist/

+downloads/

+eggs/

+.eggs/

+lib/

+lib64/

+parts/

+sdist/

+var/

+wheels/

+pip-wheel-metadata/

+share/python-wheels/

+*.egg-info/

+.installed.cfg

+*.egg

+MANIFEST

+

+# PyInstaller

+# Usually these files are written by a python script from a template

+# before PyInstaller builds the exe, so as to inject date/other infos into it.

+*.manifest

+*.spec

+

+# Installer logs

+pip-log.txt

+pip-delete-this-directory.txt

+

+# Unit test / coverage reports

+htmlcov/

+.tox/

+.nox/

+.coverage

+.coverage.*

+.cache

+nosetests.xml

+coverage.xml

+*.cover

+*.py,cover

+.hypothesis/

+.pytest_cache/

+

+# Translations

+*.mo

+*.pot

+

+# Django stuff:

+*.log

+local_settings.py

+db.sqlite3

+db.sqlite3-journal

+

+# Flask stuff:

+instance/

+.webassets-cache

+

+# Scrapy stuff:

+.scrapy

+

+# Sphinx documentation

+docs/_build/

+

+# PyBuilder

+target/

+

+# Jupyter Notebook

+.ipynb_checkpoints

+

+# IPython

+profile_default/

+ipython_config.py

+

+# pyenv

+.python-version

+

+# pipenv

+# According to pypa/pipenv#598, it is recommended to include Pipfile.lock in version control.

+# However, in case of collaboration, if having platform-specific dependencies or dependencies

+# having no cross-platform support, pipenv may install dependencies that don't work, or not

+# install all needed dependencies.

+#Pipfile.lock

+

+# PEP 582; used by e.g. github.com/David-OConnor/pyflow

+__pypackages__/

+

+# Celery stuff

+celerybeat-schedule

+celerybeat.pid

+

+# SageMath parsed files

+*.sage.py

+

+# Environments

+.env

+.venv

+env/

+venv/

+ENV/

+env.bak/

+venv.bak/

+

+# Spyder project settings

+.spyderproject

+.spyproject

+

+# Rope project settings

+.ropeproject

+

+# mkdocs documentation

+/site

+

+# mypy

+.mypy_cache/

+.dmypy.json

+dmypy.json

+

+# Pyre type checker

+.pyre/

diff --git a/gfpgan/.pre-commit-config.yaml b/gfpgan/.pre-commit-config.yaml

new file mode 100644

index 0000000000000000000000000000000000000000..d221d29fbaac74bef1c0cd910ce8d8b6526181b8

--- /dev/null

+++ b/gfpgan/.pre-commit-config.yaml

@@ -0,0 +1,46 @@

+repos:
+  # flake8
+  - repo: https://github.com/PyCQA/flake8
+    rev: 3.8.3
+    hooks:
+      - id: flake8
+        args: ["--config=setup.cfg", "--ignore=W504, W503"]
+
+  # modify known_third_party
+  - repo: https://github.com/asottile/seed-isort-config
+    rev: v2.2.0
+    hooks:
+      - id: seed-isort-config
+
+  # isort
+  - repo: https://github.com/timothycrosley/isort
+    rev: 5.2.2
+    hooks:
+      - id: isort
+
+  # yapf
+  - repo: https://github.com/pre-commit/mirrors-yapf
+    rev: v0.30.0
+    hooks:
+      - id: yapf
+
+  # codespell
+  - repo: https://github.com/codespell-project/codespell
+    rev: v2.1.0
+    hooks:
+      - id: codespell
+
+  # pre-commit-hooks
+  - repo: https://github.com/pre-commit/pre-commit-hooks
+    rev: v3.2.0
+    hooks:
+      - id: trailing-whitespace  # Trim trailing whitespace
+      - id: check-yaml  # Attempt to load all yaml files to verify syntax
+      - id: check-merge-conflict  # Check for files that contain merge conflict strings
+      - id: double-quote-string-fixer  # Replace double quoted strings with single quoted strings
+      - id: end-of-file-fixer  # Make sure files end in a newline and only a newline
+      - id: requirements-txt-fixer  # Sort entries in requirements.txt and remove incorrect entry for pkg-resources==0.0.0
+      - id: fix-encoding-pragma  # Remove the coding pragma: # -*- coding: utf-8 -*-
+        args: ["--remove"]
+      - id: mixed-line-ending  # Replace or check mixed line ending
+        args: ["--fix=lf"]

diff --git a/gfpgan/CODE_OF_CONDUCT.md b/gfpgan/CODE_OF_CONDUCT.md

new file mode 100644

index 0000000000000000000000000000000000000000..e8cc4daa4345590464314889b187d6a2d7a8e20f

--- /dev/null

+++ b/gfpgan/CODE_OF_CONDUCT.md

@@ -0,0 +1,128 @@

+# Contributor Covenant Code of Conduct

+

+## Our Pledge

+

+We as members, contributors, and leaders pledge to make participation in our

+community a harassment-free experience for everyone, regardless of age, body

+size, visible or invisible disability, ethnicity, sex characteristics, gender

+identity and expression, level of experience, education, socio-economic status,

+nationality, personal appearance, race, religion, or sexual identity

+and orientation.

+

+We pledge to act and interact in ways that contribute to an open, welcoming,

+diverse, inclusive, and healthy community.

+

+## Our Standards

+

+Examples of behavior that contributes to a positive environment for our

+community include:

+

+* Demonstrating empathy and kindness toward other people

+* Being respectful of differing opinions, viewpoints, and experiences

+* Giving and gracefully accepting constructive feedback

+* Accepting responsibility and apologizing to those affected by our mistakes,

+ and learning from the experience

+* Focusing on what is best not just for us as individuals, but for the

+ overall community

+

+Examples of unacceptable behavior include:

+

+* The use of sexualized language or imagery, and sexual attention or

+ advances of any kind

+* Trolling, insulting or derogatory comments, and personal or political attacks

+* Public or private harassment

+* Publishing others' private information, such as a physical or email

+ address, without their explicit permission

+* Other conduct which could reasonably be considered inappropriate in a

+ professional setting

+

+## Enforcement Responsibilities

+

+Community leaders are responsible for clarifying and enforcing our standards of

+acceptable behavior and will take appropriate and fair corrective action in

+response to any behavior that they deem inappropriate, threatening, offensive,

+or harmful.

+

+Community leaders have the right and responsibility to remove, edit, or reject

+comments, commits, code, wiki edits, issues, and other contributions that are

+not aligned to this Code of Conduct, and will communicate reasons for moderation

+decisions when appropriate.

+

+## Scope

+

+This Code of Conduct applies within all community spaces, and also applies when

+an individual is officially representing the community in public spaces.

+Examples of representing our community include using an official e-mail address,

+posting via an official social media account, or acting as an appointed

+representative at an online or offline event.

+

+## Enforcement

+

+Instances of abusive, harassing, or otherwise unacceptable behavior may be

+reported to the community leaders responsible for enforcement at

+xintao.wang@outlook.com or xintaowang@tencent.com.

+All complaints will be reviewed and investigated promptly and fairly.

+

+All community leaders are obligated to respect the privacy and security of the

+reporter of any incident.

+

+## Enforcement Guidelines

+

+Community leaders will follow these Community Impact Guidelines in determining

+the consequences for any action they deem in violation of this Code of Conduct:

+

+### 1. Correction

+

+**Community Impact**: Use of inappropriate language or other behavior deemed

+unprofessional or unwelcome in the community.

+

+**Consequence**: A private, written warning from community leaders, providing

+clarity around the nature of the violation and an explanation of why the

+behavior was inappropriate. A public apology may be requested.

+

+### 2. Warning

+

+**Community Impact**: A violation through a single incident or series

+of actions.

+

+**Consequence**: A warning with consequences for continued behavior. No

+interaction with the people involved, including unsolicited interaction with

+those enforcing the Code of Conduct, for a specified period of time. This

+includes avoiding interactions in community spaces as well as external channels

+like social media. Violating these terms may lead to a temporary or

+permanent ban.

+

+### 3. Temporary Ban

+

+**Community Impact**: A serious violation of community standards, including

+sustained inappropriate behavior.

+

+**Consequence**: A temporary ban from any sort of interaction or public

+communication with the community for a specified period of time. No public or

+private interaction with the people involved, including unsolicited interaction

+with those enforcing the Code of Conduct, is allowed during this period.

+Violating these terms may lead to a permanent ban.

+

+### 4. Permanent Ban

+

+**Community Impact**: Demonstrating a pattern of violation of community

+standards, including sustained inappropriate behavior, harassment of an

+individual, or aggression toward or disparagement of classes of individuals.

+

+**Consequence**: A permanent ban from any sort of public interaction within

+the community.

+

+## Attribution

+

+This Code of Conduct is adapted from the [Contributor Covenant][homepage],

+version 2.0, available at

+https://www.contributor-covenant.org/version/2/0/code_of_conduct.html.

+

+Community Impact Guidelines were inspired by [Mozilla's code of conduct

+enforcement ladder](https://github.com/mozilla/diversity).

+

+[homepage]: https://www.contributor-covenant.org

+

+For answers to common questions about this code of conduct, see the FAQ at

+https://www.contributor-covenant.org/faq. Translations are available at

+https://www.contributor-covenant.org/translations.

diff --git a/gfpgan/Comparisons.md b/gfpgan/Comparisons.md

new file mode 100644

index 0000000000000000000000000000000000000000..1542d4c0c0a04ceeba42f24d5277351c2514214a

--- /dev/null

+++ b/gfpgan/Comparisons.md

@@ -0,0 +1,24 @@

+# Comparisons

+

+## Comparisons among different model versions

+

+Note that V1.3 is not always better than V1.2. You may need to try different models based on your purpose and inputs.

+

+| Version | Strengths | Weaknesses |

+| :---: | :---: | :---: |

+|V1.3 | ✓ natural outputs <br> ✓ better results on very low-quality inputs <br> ✓ works on relatively high-quality inputs <br> ✓ can have repeated (twice) restorations | ✗ not very sharp <br> ✗ slight change in identity |
+|V1.2 | ✓ sharper output <br> ✓ with beauty makeup | ✗ some outputs are unnatural |

+

+For the following images, you may need to **zoom in** to compare details, or **click an image** to see it at full size.

+

+| Input | V1 | V1.2 | V1.3

+| :---: | :---: | :---: | :---: |

+||  |  |  |

+|  |  |  | |

+|  |  |  | |

+|  |  |  | |

+|  |  |  | |

+|  |  |  | |

+|  |  |  | |

+

+

diff --git a/gfpgan/FAQ.md b/gfpgan/FAQ.md

new file mode 100644

index 0000000000000000000000000000000000000000..e4d5a49cc216ffe987c7ab195a430f463f375425

--- /dev/null

+++ b/gfpgan/FAQ.md

@@ -0,0 +1,7 @@

+# FAQ

+

+1. **How to finetune the GFPGANCleanv1-NoCE-C2 (v1.2) model**

+

+**A:** 1) The GFPGANCleanv1-NoCE-C2 (v1.2) model uses the *clean* architecture, which is easier to deploy.

+2) This model is not directly trained. Instead, it is converted from another *bilinear* model.

+3) If you want to finetune the GFPGANCleanv1-NoCE-C2 (v1.2), you need to finetune its original *bilinear* model, and then do the conversion.

diff --git a/gfpgan/LICENSE b/gfpgan/LICENSE

new file mode 100644

index 0000000000000000000000000000000000000000..24384c0728442ace20d180d784cb3ea714413923

--- /dev/null

+++ b/gfpgan/LICENSE

@@ -0,0 +1,351 @@

+Tencent is pleased to support the open source community by making GFPGAN available.

+

+Copyright (C) 2021 THL A29 Limited, a Tencent company. All rights reserved.

+

+GFPGAN is licensed under the Apache License Version 2.0 except for the third-party components listed below.

+

+

+Terms of the Apache License Version 2.0:

+---------------------------------------------

+Apache License

+

+Version 2.0, January 2004

+

+http://www.apache.org/licenses/

+

+TERMS AND CONDITIONS FOR USE, REPRODUCTION, AND DISTRIBUTION

+1. Definitions.

+

+“License” shall mean the terms and conditions for use, reproduction, and distribution as defined by Sections 1 through 9 of this document.

+

+“Licensor” shall mean the copyright owner or entity authorized by the copyright owner that is granting the License.

+

+“Legal Entity” shall mean the union of the acting entity and all other entities that control, are controlled by, or are under common control with that entity. For the purposes of this definition, “control” means (i) the power, direct or indirect, to cause the direction or management of such entity, whether by contract or otherwise, or (ii) ownership of fifty percent (50%) or more of the outstanding shares, or (iii) beneficial ownership of such entity.

+

+“You” (or “Your”) shall mean an individual or Legal Entity exercising permissions granted by this License.

+

+“Source” form shall mean the preferred form for making modifications, including but not limited to software source code, documentation source, and configuration files.

+

+“Object” form shall mean any form resulting from mechanical transformation or translation of a Source form, including but not limited to compiled object code, generated documentation, and conversions to other media types.

+

+“Work” shall mean the work of authorship, whether in Source or Object form, made available under the License, as indicated by a copyright notice that is included in or attached to the work (an example is provided in the Appendix below).

+

+“Derivative Works” shall mean any work, whether in Source or Object form, that is based on (or derived from) the Work and for which the editorial revisions, annotations, elaborations, or other modifications represent, as a whole, an original work of authorship. For the purposes of this License, Derivative Works shall not include works that remain separable from, or merely link (or bind by name) to the interfaces of, the Work and Derivative Works thereof.

+

+“Contribution” shall mean any work of authorship, including the original version of the Work and any modifications or additions to that Work or Derivative Works thereof, that is intentionally submitted to Licensor for inclusion in the Work by the copyright owner or by an individual or Legal Entity authorized to submit on behalf of the copyright owner. For the purposes of this definition, “submitted” means any form of electronic, verbal, or written communication sent to the Licensor or its representatives, including but not limited to communication on electronic mailing lists, source code control systems, and issue tracking systems that are managed by, or on behalf of, the Licensor for the purpose of discussing and improving the Work, but excluding communication that is conspicuously marked or otherwise designated in writing by the copyright owner as “Not a Contribution.”

+

+“Contributor” shall mean Licensor and any individual or Legal Entity on behalf of whom a Contribution has been received by Licensor and subsequently incorporated within the Work.

+

+2. Grant of Copyright License. Subject to the terms and conditions of this License, each Contributor hereby grants to You a perpetual, worldwide, non-exclusive, no-charge, royalty-free, irrevocable copyright license to reproduce, prepare Derivative Works of, publicly display, publicly perform, sublicense, and distribute the Work and such Derivative Works in Source or Object form.

+

+3. Grant of Patent License. Subject to the terms and conditions of this License, each Contributor hereby grants to You a perpetual, worldwide, non-exclusive, no-charge, royalty-free, irrevocable (except as stated in this section) patent license to make, have made, use, offer to sell, sell, import, and otherwise transfer the Work, where such license applies only to those patent claims licensable by such Contributor that are necessarily infringed by their Contribution(s) alone or by combination of their Contribution(s) with the Work to which such Contribution(s) was submitted. If You institute patent litigation against any entity (including a cross-claim or counterclaim in a lawsuit) alleging that the Work or a Contribution incorporated within the Work constitutes direct or contributory patent infringement, then any patent licenses granted to You under this License for that Work shall terminate as of the date such litigation is filed.

+

+4. Redistribution. You may reproduce and distribute copies of the Work or Derivative Works thereof in any medium, with or without modifications, and in Source or Object form, provided that You meet the following conditions:

+

+You must give any other recipients of the Work or Derivative Works a copy of this License; and

+

+You must cause any modified files to carry prominent notices stating that You changed the files; and

+

+You must retain, in the Source form of any Derivative Works that You distribute, all copyright, patent, trademark, and attribution notices from the Source form of the Work, excluding those notices that do not pertain to any part of the Derivative Works; and

+

+If the Work includes a “NOTICE” text file as part of its distribution, then any Derivative Works that You distribute must include a readable copy of the attribution notices contained within such NOTICE file, excluding those notices that do not pertain to any part of the Derivative Works, in at least one of the following places: within a NOTICE text file distributed as part of the Derivative Works; within the Source form or documentation, if provided along with the Derivative Works; or, within a display generated by the Derivative Works, if and wherever such third-party notices normally appear. The contents of the NOTICE file are for informational purposes only and do not modify the License. You may add Your own attribution notices within Derivative Works that You distribute, alongside or as an addendum to the NOTICE text from the Work, provided that such additional attribution notices cannot be construed as modifying the License.

+

+You may add Your own copyright statement to Your modifications and may provide additional or different license terms and conditions for use, reproduction, or distribution of Your modifications, or for any such Derivative Works as a whole, provided Your use, reproduction, and distribution of the Work otherwise complies with the conditions stated in this License.

+

+5. Submission of Contributions. Unless You explicitly state otherwise, any Contribution intentionally submitted for inclusion in the Work by You to the Licensor shall be under the terms and conditions of this License, without any additional terms or conditions. Notwithstanding the above, nothing herein shall supersede or modify the terms of any separate license agreement you may have executed with Licensor regarding such Contributions.

+

+6. Trademarks. This License does not grant permission to use the trade names, trademarks, service marks, or product names of the Licensor, except as required for reasonable and customary use in describing the origin of the Work and reproducing the content of the NOTICE file.

+

+7. Disclaimer of Warranty. Unless required by applicable law or agreed to in writing, Licensor provides the Work (and each Contributor provides its Contributions) on an “AS IS” BASIS, WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied, including, without limitation, any warranties or conditions of TITLE, NON-INFRINGEMENT, MERCHANTABILITY, or FITNESS FOR A PARTICULAR PURPOSE. You are solely responsible for determining the appropriateness of using or redistributing the Work and assume any risks associated with Your exercise of permissions under this License.

+

+8. Limitation of Liability. In no event and under no legal theory, whether in tort (including negligence), contract, or otherwise, unless required by applicable law (such as deliberate and grossly negligent acts) or agreed to in writing, shall any Contributor be liable to You for damages, including any direct, indirect, special, incidental, or consequential damages of any character arising as a result of this License or out of the use or inability to use the Work (including but not limited to damages for loss of goodwill, work stoppage, computer failure or malfunction, or any and all other commercial damages or losses), even if such Contributor has been advised of the possibility of such damages.

+

+9. Accepting Warranty or Additional Liability. While redistributing the Work or Derivative Works thereof, You may choose to offer, and charge a fee for, acceptance of support, warranty, indemnity, or other liability obligations and/or rights consistent with this License. However, in accepting such obligations, You may act only on Your own behalf and on Your sole responsibility, not on behalf of any other Contributor, and only if You agree to indemnify, defend, and hold each Contributor harmless for any liability incurred by, or claims asserted against, such Contributor by reason of your accepting any such warranty or additional liability.

+

+END OF TERMS AND CONDITIONS

+

+

+

+Other dependencies and licenses:

+

+

+Open Source Software licensed under the Apache 2.0 license and Other Licenses of the Third-Party Components therein:

+---------------------------------------------

+1. basicsr

+Copyright 2018-2020 BasicSR Authors

+

+

+This BasicSR project is released under the Apache 2.0 license.

+

+A copy of Apache 2.0 is included in this file.

+

+StyleGAN2

+The codes are modified from the repository stylegan2-pytorch. Many thanks to the author - Kim Seonghyeon 😊 for translating from the official TensorFlow codes to PyTorch ones. Here is the license of stylegan2-pytorch.

+The official repository is https://github.com/NVlabs/stylegan2, and here is the NVIDIA license.

+DFDNet

+The codes are largely modified from the repository DFDNet. Their license is Creative Commons Attribution-NonCommercial-ShareAlike 4.0 International License.

+

+Terms of the Nvidia License:

+---------------------------------------------

+

+1. Definitions

+

+"Licensor" means any person or entity that distributes its Work.

+

+"Software" means the original work of authorship made available under

+this License.

+

+"Work" means the Software and any additions to or derivative works of

+the Software that are made available under this License.

+

+"Nvidia Processors" means any central processing unit (CPU), graphics

+processing unit (GPU), field-programmable gate array (FPGA),

+application-specific integrated circuit (ASIC) or any combination

+thereof designed, made, sold, or provided by Nvidia or its affiliates.

+

+The terms "reproduce," "reproduction," "derivative works," and

+"distribution" have the meaning as provided under U.S. copyright law;

+provided, however, that for the purposes of this License, derivative

+works shall not include works that remain separable from, or merely

+link (or bind by name) to the interfaces of, the Work.

+

+Works, including the Software, are "made available" under this License

+by including in or with the Work either (a) a copyright notice

+referencing the applicability of this License to the Work, or (b) a

+copy of this License.

+

+2. License Grants

+

+ 2.1 Copyright Grant. Subject to the terms and conditions of this

+ License, each Licensor grants to you a perpetual, worldwide,

+ non-exclusive, royalty-free, copyright license to reproduce,

+ prepare derivative works of, publicly display, publicly perform,

+ sublicense and distribute its Work and any resulting derivative

+ works in any form.

+

+3. Limitations

+

+ 3.1 Redistribution. You may reproduce or distribute the Work only

+ if (a) you do so under this License, (b) you include a complete

+ copy of this License with your distribution, and (c) you retain

+ without modification any copyright, patent, trademark, or

+ attribution notices that are present in the Work.

+

+ 3.2 Derivative Works. You may specify that additional or different

+ terms apply to the use, reproduction, and distribution of your

+ derivative works of the Work ("Your Terms") only if (a) Your Terms

+ provide that the use limitation in Section 3.3 applies to your

+ derivative works, and (b) you identify the specific derivative

+ works that are subject to Your Terms. Notwithstanding Your Terms,

+ this License (including the redistribution requirements in Section

+ 3.1) will continue to apply to the Work itself.

+

+ 3.3 Use Limitation. The Work and any derivative works thereof only

+ may be used or intended for use non-commercially. The Work or

+ derivative works thereof may be used or intended for use by Nvidia

+ or its affiliates commercially or non-commercially. As used herein,

+ "non-commercially" means for research or evaluation purposes only.

+

+ 3.4 Patent Claims. If you bring or threaten to bring a patent claim

+ against any Licensor (including any claim, cross-claim or

+ counterclaim in a lawsuit) to enforce any patents that you allege

+ are infringed by any Work, then your rights under this License from

+ such Licensor (including the grants in Sections 2.1 and 2.2) will

+ terminate immediately.

+

+ 3.5 Trademarks. This License does not grant any rights to use any

+ Licensor's or its affiliates' names, logos, or trademarks, except

+ as necessary to reproduce the notices described in this License.

+

+ 3.6 Termination. If you violate any term of this License, then your

+ rights under this License (including the grants in Sections 2.1 and

+ 2.2) will terminate immediately.

+

+4. Disclaimer of Warranty.

+

+THE WORK IS PROVIDED "AS IS" WITHOUT WARRANTIES OR CONDITIONS OF ANY

+KIND, EITHER EXPRESS OR IMPLIED, INCLUDING WARRANTIES OR CONDITIONS OF

+MERCHANTABILITY, FITNESS FOR A PARTICULAR PURPOSE, TITLE OR

+NON-INFRINGEMENT. YOU BEAR THE RISK OF UNDERTAKING ANY ACTIVITIES UNDER

+THIS LICENSE.

+

+5. Limitation of Liability.

+

+EXCEPT AS PROHIBITED BY APPLICABLE LAW, IN NO EVENT AND UNDER NO LEGAL

+THEORY, WHETHER IN TORT (INCLUDING NEGLIGENCE), CONTRACT, OR OTHERWISE

+SHALL ANY LICENSOR BE LIABLE TO YOU FOR DAMAGES, INCLUDING ANY DIRECT,

+INDIRECT, SPECIAL, INCIDENTAL, OR CONSEQUENTIAL DAMAGES ARISING OUT OF

+OR RELATED TO THIS LICENSE, THE USE OR INABILITY TO USE THE WORK

+(INCLUDING BUT NOT LIMITED TO LOSS OF GOODWILL, BUSINESS INTERRUPTION,

+LOST PROFITS OR DATA, COMPUTER FAILURE OR MALFUNCTION, OR ANY OTHER

+COMMERCIAL DAMAGES OR LOSSES), EVEN IF THE LICENSOR HAS BEEN ADVISED OF

+THE POSSIBILITY OF SUCH DAMAGES.

+

+MIT License

+

+Copyright (c) 2019 Kim Seonghyeon

+

+Permission is hereby granted, free of charge, to any person obtaining a copy

+of this software and associated documentation files (the "Software"), to deal

+in the Software without restriction, including without limitation the rights

+to use, copy, modify, merge, publish, distribute, sublicense, and/or sell

+copies of the Software, and to permit persons to whom the Software is

+furnished to do so, subject to the following conditions:

+

+The above copyright notice and this permission notice shall be included in all

+copies or substantial portions of the Software.

+

+THE SOFTWARE IS PROVIDED "AS IS", WITHOUT WARRANTY OF ANY KIND, EXPRESS OR

+IMPLIED, INCLUDING BUT NOT LIMITED TO THE WARRANTIES OF MERCHANTABILITY,

+FITNESS FOR A PARTICULAR PURPOSE AND NONINFRINGEMENT. IN NO EVENT SHALL THE

+AUTHORS OR COPYRIGHT HOLDERS BE LIABLE FOR ANY CLAIM, DAMAGES OR OTHER

+LIABILITY, WHETHER IN AN ACTION OF CONTRACT, TORT OR OTHERWISE, ARISING FROM,

+OUT OF OR IN CONNECTION WITH THE SOFTWARE OR THE USE OR OTHER DEALINGS IN THE

+SOFTWARE.

+

+

+

+Open Source Software licensed under the BSD 3-Clause license:

+---------------------------------------------

+1. torchvision

+Copyright (c) Soumith Chintala 2016,

+All rights reserved.

+

+2. torch

+Copyright (c) 2016- Facebook, Inc (Adam Paszke)

+Copyright (c) 2014- Facebook, Inc (Soumith Chintala)

+Copyright (c) 2011-2014 Idiap Research Institute (Ronan Collobert)

+Copyright (c) 2012-2014 Deepmind Technologies (Koray Kavukcuoglu)

+Copyright (c) 2011-2012 NEC Laboratories America (Koray Kavukcuoglu)

+Copyright (c) 2011-2013 NYU (Clement Farabet)

+Copyright (c) 2006-2010 NEC Laboratories America (Ronan Collobert, Leon Bottou, Iain Melvin, Jason Weston)

+Copyright (c) 2006 Idiap Research Institute (Samy Bengio)

+Copyright (c) 2001-2004 Idiap Research Institute (Ronan Collobert, Samy Bengio, Johnny Mariethoz)

+

+

+Terms of the BSD 3-Clause License:

+---------------------------------------------

+Redistribution and use in source and binary forms, with or without modification, are permitted provided that the following conditions are met:

+

+1. Redistributions of source code must retain the above copyright notice, this list of conditions and the following disclaimer.

+

+2. Redistributions in binary form must reproduce the above copyright notice, this list of conditions and the following disclaimer in the documentation and/or other materials provided with the distribution.

+

+3. Neither the name of the copyright holder nor the names of its contributors may be used to endorse or promote products derived from this software without specific prior written permission.

+

+THIS SOFTWARE IS PROVIDED BY THE COPYRIGHT HOLDERS AND CONTRIBUTORS “AS IS” AND ANY EXPRESS OR IMPLIED WARRANTIES, INCLUDING, BUT NOT LIMITED TO, THE IMPLIED WARRANTIES OF MERCHANTABILITY AND FITNESS FOR A PARTICULAR PURPOSE ARE DISCLAIMED. IN NO EVENT SHALL THE COPYRIGHT HOLDER OR CONTRIBUTORS BE LIABLE FOR ANY DIRECT, INDIRECT, INCIDENTAL, SPECIAL, EXEMPLARY, OR CONSEQUENTIAL DAMAGES (INCLUDING, BUT NOT LIMITED TO, PROCUREMENT OF SUBSTITUTE GOODS OR SERVICES; LOSS OF USE, DATA, OR PROFITS; OR BUSINESS INTERRUPTION) HOWEVER CAUSED AND ON ANY THEORY OF LIABILITY, WHETHER IN CONTRACT, STRICT LIABILITY, OR TORT (INCLUDING NEGLIGENCE OR OTHERWISE) ARISING IN ANY WAY OUT OF THE USE OF THIS SOFTWARE, EVEN IF ADVISED OF THE POSSIBILITY OF SUCH DAMAGE.

+

+

+

+Open Source Software licensed under the BSD 3-Clause License and Other Licenses of the Third-Party Components therein:

+---------------------------------------------

+1. numpy

+Copyright (c) 2005-2020, NumPy Developers.

+All rights reserved.

+

+A copy of BSD 3-Clause License is included in this file.

+

+The NumPy repository and source distributions bundle several libraries that are

+compatibly licensed. We list these here.

+

+Name: Numpydoc

+Files: doc/sphinxext/numpydoc/*

+License: BSD-2-Clause

+ For details, see doc/sphinxext/LICENSE.txt

+

+Name: scipy-sphinx-theme

+Files: doc/scipy-sphinx-theme/*

+License: BSD-3-Clause AND PSF-2.0 AND Apache-2.0

+ For details, see doc/scipy-sphinx-theme/LICENSE.txt

+

+Name: lapack-lite

+Files: numpy/linalg/lapack_lite/*

+License: BSD-3-Clause

+ For details, see numpy/linalg/lapack_lite/LICENSE.txt

+

+Name: tempita

+Files: tools/npy_tempita/*

+License: MIT

+ For details, see tools/npy_tempita/license.txt

+

+Name: dragon4

+Files: numpy/core/src/multiarray/dragon4.c

+License: MIT

+ For license text, see numpy/core/src/multiarray/dragon4.c

+

+

+

+Open Source Software licensed under the MIT license:

+---------------------------------------------

+1. facexlib

+Copyright (c) 2020 Xintao Wang

+

+2. opencv-python

+Copyright (c) Olli-Pekka Heinisuo

+Please note that only files in cv2 package are used.

+

+

+Terms of the MIT License:

+---------------------------------------------

+Permission is hereby granted, free of charge, to any person obtaining a copy of this software and associated documentation files (the “Software”), to deal in the Software without restriction, including without limitation the rights to use, copy, modify, merge, publish, distribute, sublicense, and/or sell copies of the Software, and to permit persons to whom the Software is furnished to do so, subject to the following conditions:

+

+The above copyright notice and this permission notice shall be included in all copies or substantial portions of the Software.

+

+THE SOFTWARE IS PROVIDED “AS IS”, WITHOUT WARRANTY OF ANY KIND, EXPRESS OR IMPLIED, INCLUDING BUT NOT LIMITED TO THE WARRANTIES OF MERCHANTABILITY, FITNESS FOR A PARTICULAR PURPOSE AND NONINFRINGEMENT. IN NO EVENT SHALL THE AUTHORS OR COPYRIGHT HOLDERS BE LIABLE FOR ANY CLAIM, DAMAGES OR OTHER LIABILITY, WHETHER IN AN ACTION OF CONTRACT, TORT OR OTHERWISE, ARISING FROM, OUT OF OR IN CONNECTION WITH THE SOFTWARE OR THE USE OR OTHER DEALINGS IN THE SOFTWARE.

+

+

+

+Open Source Software licensed under the MIT license and Other Licenses of the Third-Party Components therein:

+---------------------------------------------

+1. tqdm

+Copyright (c) 2013 noamraph

+

+`tqdm` is a product of collaborative work.

+Unless otherwise stated, all authors (see commit logs) retain copyright

+for their respective work, and release the work under the MIT licence

+(text below).

+

+Exceptions or notable authors are listed below

+in reverse chronological order:

+

+* files: *

+ MPLv2.0 2015-2020 (c) Casper da Costa-Luis

+ [casperdcl](https://github.com/casperdcl).

+* files: tqdm/_tqdm.py

+ MIT 2016 (c) [PR #96] on behalf of Google Inc.

+* files: tqdm/_tqdm.py setup.py README.rst MANIFEST.in .gitignore

+ MIT 2013 (c) Noam Yorav-Raphael, original author.

+

+[PR #96]: https://github.com/tqdm/tqdm/pull/96

+

+

+Mozilla Public Licence (MPL) v. 2.0 - Exhibit A

+-----------------------------------------------

+

+This Source Code Form is subject to the terms of the

+Mozilla Public License, v. 2.0.

+If a copy of the MPL was not distributed with this file,

+You can obtain one at https://mozilla.org/MPL/2.0/.

+

+

+MIT License (MIT)

+-----------------

+

+Copyright (c) 2013 noamraph

+

+Permission is hereby granted, free of charge, to any person obtaining a copy of

+this software and associated documentation files (the "Software"), to deal in

+the Software without restriction, including without limitation the rights to

+use, copy, modify, merge, publish, distribute, sublicense, and/or sell copies of

+the Software, and to permit persons to whom the Software is furnished to do so,

+subject to the following conditions:

+

+The above copyright notice and this permission notice shall be included in all

+copies or substantial portions of the Software.

+

+THE SOFTWARE IS PROVIDED "AS IS", WITHOUT WARRANTY OF ANY KIND, EXPRESS OR

+IMPLIED, INCLUDING BUT NOT LIMITED TO THE WARRANTIES OF MERCHANTABILITY, FITNESS

+FOR A PARTICULAR PURPOSE AND NONINFRINGEMENT. IN NO EVENT SHALL THE AUTHORS OR

+COPYRIGHT HOLDERS BE LIABLE FOR ANY CLAIM, DAMAGES OR OTHER LIABILITY, WHETHER

+IN AN ACTION OF CONTRACT, TORT OR OTHERWISE, ARISING FROM, OUT OF OR IN

+CONNECTION WITH THE SOFTWARE OR THE USE OR OTHER DEALINGS IN THE SOFTWARE.

diff --git a/gfpgan/MANIFEST.in b/gfpgan/MANIFEST.in

new file mode 100644

index 0000000000000000000000000000000000000000..bcaa7179b82f6f0eebace30fa7e4ebea88408f52

--- /dev/null

+++ b/gfpgan/MANIFEST.in

@@ -0,0 +1,8 @@

+include assets/*

+include inputs/*

+include scripts/*.py

+include inference_gfpgan.py

+include VERSION

+include LICENSE

+include requirements.txt

+include gfpgan/weights/README.md

diff --git a/gfpgan/PaperModel.md b/gfpgan/PaperModel.md

new file mode 100644

index 0000000000000000000000000000000000000000..e9c8bdc4e757a9818f18d1926b7452172486ec92

--- /dev/null

+++ b/gfpgan/PaperModel.md

@@ -0,0 +1,76 @@

+# Installation

+

+We now provide a *clean* version of GFPGAN, which does not require customized CUDA extensions. See [here](README.md#installation) for this easier installation.

+If you want to use the original model in our paper, please follow the instructions below.

+

+1. Clone repo

+

+ ```bash

+ git clone https://github.com/xinntao/GFPGAN.git

+ cd GFPGAN

+ ```

+

+1. Install dependent packages

+

+ As StyleGAN2 uses customized PyTorch C++ extensions, you need to **compile them during installation** or **load them just-in-time (JIT)**.

+ You can refer to [BasicSR-INSTALL.md](https://github.com/xinntao/BasicSR/blob/master/INSTALL.md) for more details.

+

+ **Option 1: Load extensions just-in-time (JIT)** (for those who just want to run simple inference; may cause fewer issues)

+

+ ```bash

+ # Install basicsr - https://github.com/xinntao/BasicSR

+ # We use BasicSR for both training and inference

+ pip install basicsr

+

+ # Install facexlib - https://github.com/xinntao/facexlib

+ # We use face detection and face restoration helper in the facexlib package

+ pip install facexlib

+

+ pip install -r requirements.txt

+ python setup.py develop

+

+ # Remember to set BASICSR_JIT=True before running your commands

+ ```

+

+ **Option 2: Compile extensions during installation** (for those who need to run training or inference many times)

+

+ ```bash

+ # Install basicsr - https://github.com/xinntao/BasicSR

+ # We use BasicSR for both training and inference

+ # Set BASICSR_EXT=True to compile the cuda extensions in the BasicSR - It may take several minutes to compile, please be patient

+ # Add -vvv for detailed log prints

+ BASICSR_EXT=True pip install basicsr -vvv

+

+ # Install facexlib - https://github.com/xinntao/facexlib

+ # We use face detection and face restoration helper in the facexlib package

+ pip install facexlib

+

+ pip install -r requirements.txt

+ python setup.py develop

+ ```

+

+## :zap: Quick Inference

+

+Download pre-trained models: [GFPGANv1.pth](https://github.com/TencentARC/GFPGAN/releases/download/v0.1.0/GFPGANv1.pth)

+

+```bash

+wget https://github.com/TencentARC/GFPGAN/releases/download/v0.1.0/GFPGANv1.pth -P experiments/pretrained_models

+```

+

+- Option 1: Load extensions just-in-time (JIT)

+

+ ```bash

+ BASICSR_JIT=True python inference_gfpgan.py --input inputs/whole_imgs --output results --version 1

+

+ # for aligned images

+ BASICSR_JIT=True python inference_gfpgan.py --input inputs/whole_imgs --output results --version 1 --aligned

+ ```

+

+- Option 2: Have successfully compiled extensions during installation

+

+ ```bash

+ python inference_gfpgan.py --input inputs/whole_imgs --output results --version 1

+

+ # for aligned images

+ python inference_gfpgan.py --input inputs/whole_imgs --output results --version 1 --aligned

+ ```
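The PaperModel.md commands above select Option 1 by prefixing the shell command with `BASICSR_JIT=True`. When driving inference from Python instead of a shell, the same effect can be had by passing a modified environment to a subprocess. The following is a minimal sketch under that assumption; the helper names `paper_model_argv` and `jit_env` are hypothetical, and only the `--input`, `--output`, `--version` and `--aligned` flags shown in PaperModel.md are used.

```python
import os


def paper_model_argv(input_dir='inputs/whole_imgs', output_dir='results', aligned=False):
    """Build argv for the paper-model (version 1) inference command from PaperModel.md."""
    argv = ['python', 'inference_gfpgan.py',
            '--input', input_dir, '--output', output_dir, '--version', '1']
    if aligned:
        argv.append('--aligned')  # inputs are already aligned face crops
    return argv


def jit_env(base=None):
    """Return a copy of `base` (default: os.environ) with BASICSR_JIT=True (Option 1)."""
    env = dict(os.environ if base is None else base)
    env['BASICSR_JIT'] = 'True'
    return env


# Usage (not executed here; needs the repo checkout, the GFPGANv1.pth weights
# and a toolchain able to build the extensions just-in-time):
#   import subprocess
#   subprocess.run(paper_model_argv(aligned=True), env=jit_env(), check=True)
print(' '.join(paper_model_argv(aligned=True)))
# prints: python inference_gfpgan.py --input inputs/whole_imgs --output results --version 1 --aligned
```

Passing `env=` to the child process keeps `BASICSR_JIT` scoped to that one run instead of mutating `os.environ` for the whole Python session.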

diff --git a/gfpgan/README.md b/gfpgan/README.md

new file mode 100644

index 0000000000000000000000000000000000000000..b6296252fe04dae9116817552c3620ea937b7287

--- /dev/null

+++ b/gfpgan/README.md

@@ -0,0 +1,192 @@

+


+

+[](https://github.com/TencentARC/GFPGAN/releases)

+[](https://pypi.org/project/gfpgan/)

+[](https://github.com/TencentARC/GFPGAN/issues)

+[](https://github.com/TencentARC/GFPGAN/issues)

+[](https://github.com/TencentARC/GFPGAN/blob/master/LICENSE)

+[](https://github.com/TencentARC/GFPGAN/blob/master/.github/workflows/pylint.yml)

+[](https://github.com/TencentARC/GFPGAN/blob/master/.github/workflows/publish-pip.yml)

+

+1. [Colab Demo](https://colab.research.google.com/drive/1sVsoBd9AjckIXThgtZhGrHRfFI6UUYOo) for GFPGAN; (Another [Colab Demo](https://colab.research.google.com/drive/1Oa1WwKB4M4l1GmR7CtswDVgOCOeSLChA?usp=sharing) for the original paper model)

+2. Online demo: [Huggingface](https://huggingface.co/spaces/akhaliq/GFPGAN) (returns only the cropped face)

+3. Online demo: [Replicate.ai](https://replicate.com/xinntao/gfpgan) (may require signing in; returns the whole image)

+4. Online demo: [Baseten.co](https://app.baseten.co/applications/Q04Lz0d/operator_views/8qZG6Bg) (backed by GPU, returns the whole image)

+5. We provide a *clean* version of GFPGAN, which can run without CUDA extensions, so it can run on **Windows** or in **CPU mode**.

+

+> :rocket: **Thanks for your interest in our work. You may also want to check our new updates on the *tiny models* for *anime images and videos* in [Real-ESRGAN](https://github.com/xinntao/Real-ESRGAN/blob/master/docs/anime_video_model.md)** :blush:

+

+GFPGAN aims at developing a **Practical Algorithm for Real-world Face Restoration**.

+It leverages rich and diverse priors encapsulated in a pretrained face GAN (*e.g.*, StyleGAN2) for blind face restoration.

+

+:question: Frequently Asked Questions can be found in [FAQ.md](FAQ.md).

+

+:triangular_flag_on_post: **Updates**

+

+- :fire::fire::white_check_mark: Add **[V1.3 model](https://github.com/TencentARC/GFPGAN/releases/download/v1.3.0/GFPGANv1.3.pth)**, which produces **more natural** restoration results, and better results on *very low-quality* / *high-quality* inputs. See more in [Model zoo](#european_castle-model-zoo), [Comparisons.md](Comparisons.md)

+- :white_check_mark: Integrated to [Huggingface Spaces](https://huggingface.co/spaces) with [Gradio](https://github.com/gradio-app/gradio). See [Gradio Web Demo](https://huggingface.co/spaces/akhaliq/GFPGAN).

+- :white_check_mark: Support enhancing non-face regions (background) with [Real-ESRGAN](https://github.com/xinntao/Real-ESRGAN).

+- :white_check_mark: We provide a *clean* version of GFPGAN, which does not require CUDA extensions.

+- :white_check_mark: We provide an updated model without colorizing faces.

+

+---

+

+If GFPGAN is helpful for your photos/projects, please :star: this repo or recommend it to your friends. Thanks :blush:

+Other recommended projects:

+:arrow_forward: [Real-ESRGAN](https://github.com/xinntao/Real-ESRGAN): A practical algorithm for general image restoration

+:arrow_forward: [BasicSR](https://github.com/xinntao/BasicSR): An open-source image and video restoration toolbox

+:arrow_forward: [facexlib](https://github.com/xinntao/facexlib): A collection that provides useful face-related functions

+:arrow_forward: [HandyView](https://github.com/xinntao/HandyView): A PyQt5-based image viewer that is handy for viewing and comparing images

+

+---

+

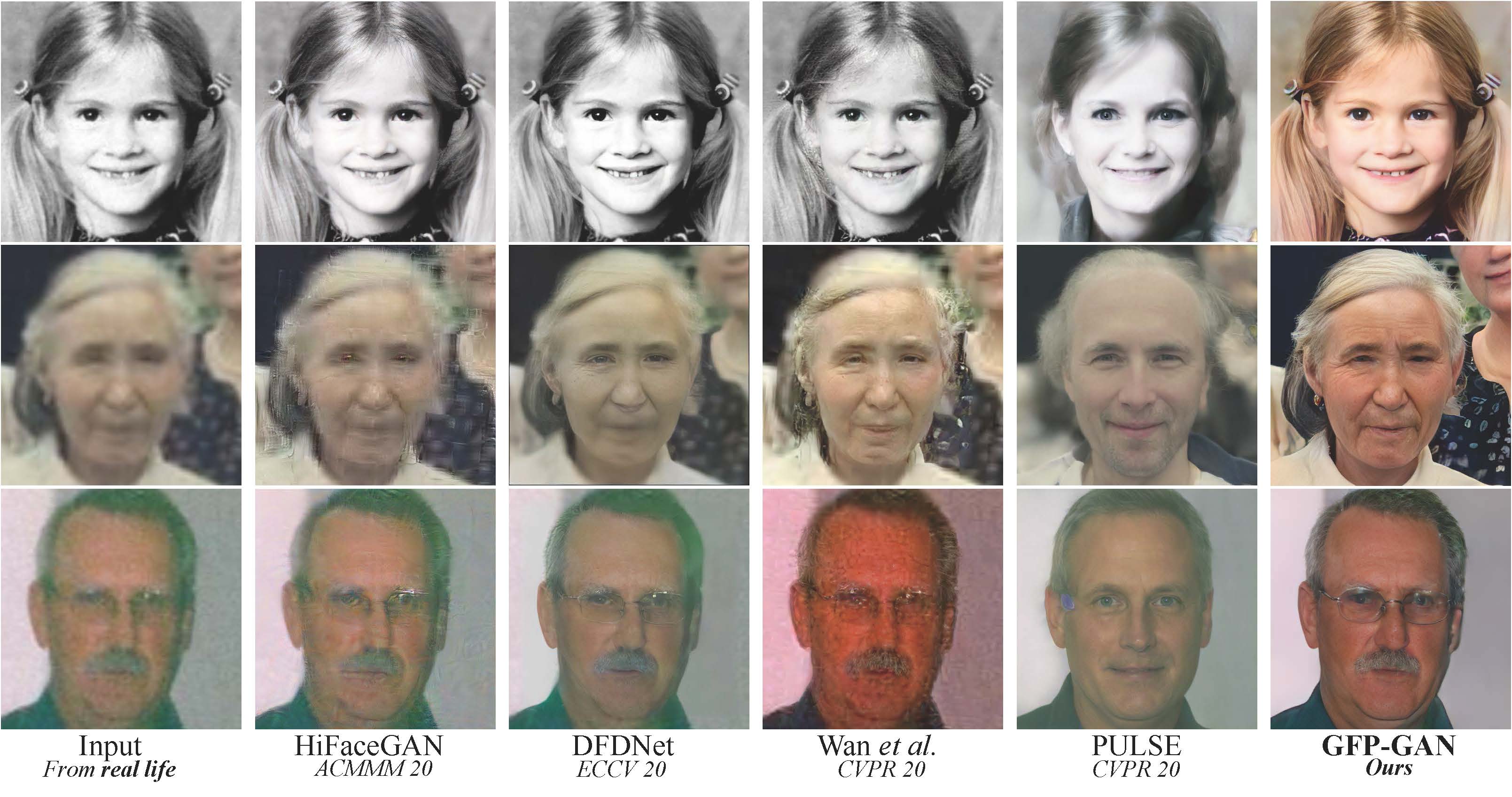

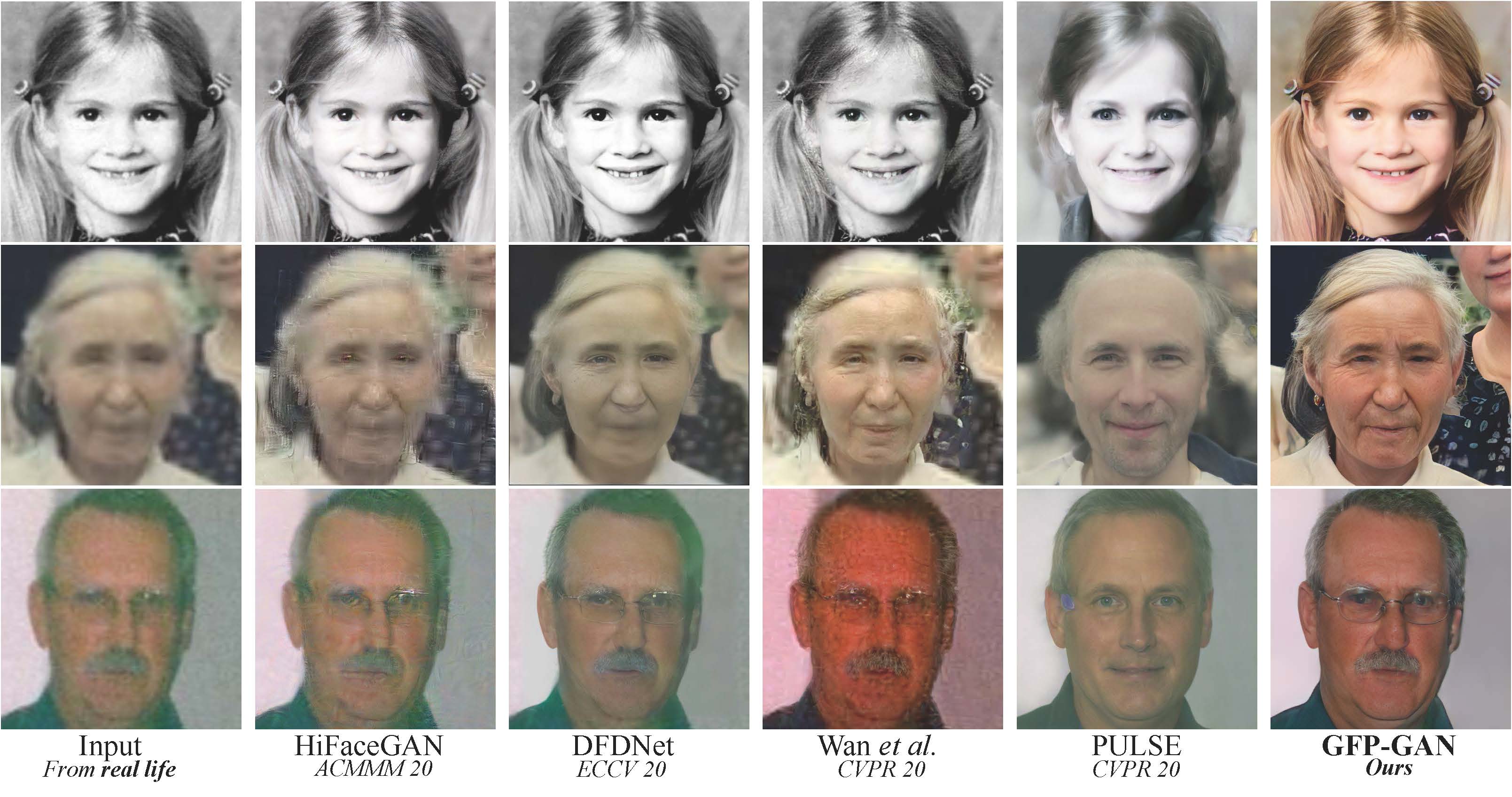

+### :book: GFP-GAN: Towards Real-World Blind Face Restoration with Generative Facial Prior

+

+> [[Paper](https://arxiv.org/abs/2101.04061)] [[Project Page](https://xinntao.github.io/projects/gfpgan)] [Demo]

+> [Xintao Wang](https://xinntao.github.io/), [Yu Li](https://yu-li.github.io/), [Honglun Zhang](https://scholar.google.com/citations?hl=en&user=KjQLROoAAAAJ), [Ying Shan](https://scholar.google.com/citations?user=4oXBp9UAAAAJ&hl=en)

+> Applied Research Center (ARC), Tencent PCG

+

+


+

+

+---

+

+## :wrench: Dependencies and Installation

+

+- Python >= 3.7 (we recommend using [Anaconda](https://www.anaconda.com/download/#linux) or [Miniconda](https://docs.conda.io/en/latest/miniconda.html))

+- [PyTorch >= 1.7](https://pytorch.org/)

+- Option: NVIDIA GPU + [CUDA](https://developer.nvidia.com/cuda-downloads)

+- Option: Linux

+

+### Installation

+

+We now provide a *clean* version of GFPGAN, which does not require customized CUDA extensions.

+If you want to use the original model in our paper, please see [PaperModel.md](PaperModel.md) for installation.

+

+1. Clone repo

+

+ ```bash

+ git clone https://github.com/TencentARC/GFPGAN.git

+ cd GFPGAN

+ ```

+

+1. Install dependent packages

+

+ ```bash

+ # Install basicsr - https://github.com/xinntao/BasicSR

+ # We use BasicSR for both training and inference

+ pip install basicsr

+

+ # Install facexlib - https://github.com/xinntao/facexlib

+ # We use face detection and face restoration helper in the facexlib package

+ pip install facexlib

+

+ pip install -r requirements.txt

+ python setup.py develop

+

+ # If you want to enhance the background (non-face) regions with Real-ESRGAN,

+ # you also need to install the realesrgan package

+ pip install realesrgan

+ ```

+

+## :zap: Quick Inference

+

+We take the v1.3 version as an example. More models can be found [here](#european_castle-model-zoo).

+

+Download pre-trained models: [GFPGANv1.3.pth](https://github.com/TencentARC/GFPGAN/releases/download/v1.3.0/GFPGANv1.3.pth)

+

+```bash

+wget https://github.com/TencentARC/GFPGAN/releases/download/v1.3.0/GFPGANv1.3.pth -P experiments/pretrained_models

+```

+

+**Inference!**

+

+```bash

+python inference_gfpgan.py -i inputs/whole_imgs -o results -v 1.3 -s 2

+```

+

+```console

+Usage: python inference_gfpgan.py -i inputs/whole_imgs -o results -v 1.3 -s 2 [options]...

+

+ -h show this help

+ -i input Input image or folder. Default: inputs/whole_imgs

+ -o output Output folder. Default: results

+ -v version GFPGAN model version. Option: 1 | 1.2 | 1.3. Default: 1.3

+ -s upscale The final upsampling scale of the image. Default: 2

+ -bg_upsampler background upsampler. Default: realesrgan

+ -bg_tile Tile size for background sampler, 0 for no tile during testing. Default: 400

+ -suffix Suffix of the restored faces

+ -only_center_face Only restore the center face

+ -aligned Input are aligned faces

+ -ext Image extension. Options: auto | jpg | png, auto means using the same extension as inputs. Default: auto

+```

+

+If you want to use the original model in our paper, please see [PaperModel.md](PaperModel.md) for installation and inference.

+

+## :european_castle: Model Zoo

+

+| Version | Model Name | Description |

+| :---: | :---: | :---: |

+| V1.3 | [GFPGANv1.3.pth](https://github.com/TencentARC/GFPGAN/releases/download/v1.3.0/GFPGANv1.3.pth) | Based on V1.2; **more natural** restoration results; better results on very low-quality / high-quality inputs. |

+| V1.2 | [GFPGANCleanv1-NoCE-C2.pth](https://github.com/TencentARC/GFPGAN/releases/download/v0.2.0/GFPGANCleanv1-NoCE-C2.pth) | No colorization; no CUDA extensions are required. Trained with more data with pre-processing. |

+| V1 | [GFPGANv1.pth](https://github.com/TencentARC/GFPGAN/releases/download/v0.1.0/GFPGANv1.pth) | The paper model, with colorization. |

+

+The comparisons are in [Comparisons.md](Comparisons.md).

+

+Note that V1.3 is not always better than V1.2. You may need to select different models based on your purpose and inputs.

+

+| Version | Strengths | Weaknesses |

+| :---: | :---: | :---: |

+|V1.3 | ✓ natural outputs<br>✓ better results on very low-quality inputs<br>✓ works on relatively high-quality inputs<br>✓ can have repeated (twice) restorations | ✗ not very sharp<br>✗ has a slight change in identity |

+|V1.2 | ✓ sharper output<br>✓ with beauty makeup | ✗ some outputs are unnatural |

+

+You can find **more models (such as the discriminators)** here: [[Google Drive](https://drive.google.com/drive/folders/17rLiFzcUMoQuhLnptDsKolegHWwJOnHu?usp=sharing)], OR [[Tencent Cloud 腾讯微云](https://share.weiyun.com/ShYoCCoc)]

+

+## :computer: Training

+

+We provide the training code for GFPGAN (used in our paper).

+You could improve it according to your own needs.

+

+**Tips**

+

+1. More high-quality faces can improve the restoration quality.

+2. You may need to perform some pre-processing, such as beauty makeup.

+

+**Procedures**

+

+(You can try a simple version (`options/train_gfpgan_v1_simple.yml`) that does not require face component landmarks.)

+

+1. Dataset preparation: [FFHQ](https://github.com/NVlabs/ffhq-dataset)

+

+1. Download pre-trained models and other data. Put them in the `experiments/pretrained_models` folder.

+ 1. [Pre-trained StyleGAN2 model: StyleGAN2_512_Cmul1_FFHQ_B12G4_scratch_800k.pth](https://github.com/TencentARC/GFPGAN/releases/download/v0.1.0/StyleGAN2_512_Cmul1_FFHQ_B12G4_scratch_800k.pth)

+ 1. [Component locations of FFHQ: FFHQ_eye_mouth_landmarks_512.pth](https://github.com/TencentARC/GFPGAN/releases/download/v0.1.0/FFHQ_eye_mouth_landmarks_512.pth)

+ 1. [A simple ArcFace model: arcface_resnet18.pth](https://github.com/TencentARC/GFPGAN/releases/download/v0.1.0/arcface_resnet18.pth)

+

+1. Modify the configuration file `options/train_gfpgan_v1.yml` accordingly.

+

+1. Training

+

+> python -m torch.distributed.launch --nproc_per_node=4 --master_port=22021 gfpgan/train.py -opt options/train_gfpgan_v1.yml --launcher pytorch

+

+## :scroll: License and Acknowledgement

+

+GFPGAN is released under Apache License Version 2.0.

+

+## BibTeX

+

+ @InProceedings{wang2021gfpgan,

+ author = {Xintao Wang and Yu Li and Honglun Zhang and Ying Shan},

+ title = {Towards Real-World Blind Face Restoration with Generative Facial Prior},

+ booktitle={The IEEE Conference on Computer Vision and Pattern Recognition (CVPR)},

+ year = {2021}

+ }

+

+## :e-mail: Contact

+

+If you have any questions, please email `xintao.wang@outlook.com` or `xintaowang@tencent.com`.
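The Quick Inference and Model Zoo sections of `gfpgan/README.md` tie each `-v` version to a release URL and assume the weights land in `experiments/pretrained_models`. A minimal sketch of that mapping in Python follows, assuming the default `wget -P` behaviour of naming the file after the last URL path segment; `MODEL_URLS`, `model_path` and `quick_inference_argv` are hypothetical helpers, and the URLs are copied verbatim from the Model Zoo table.

```python
from pathlib import PurePosixPath
from urllib.parse import urlparse

# Download URLs copied from the Model Zoo table in gfpgan/README.md.
MODEL_URLS = {
    '1': 'https://github.com/TencentARC/GFPGAN/releases/download/v0.1.0/GFPGANv1.pth',
    '1.2': 'https://github.com/TencentARC/GFPGAN/releases/download/v0.2.0/GFPGANCleanv1-NoCE-C2.pth',
    '1.3': 'https://github.com/TencentARC/GFPGAN/releases/download/v1.3.0/GFPGANv1.3.pth',
}


def _check_version(version):
    # Mirrors the documented `-v` options: 1 | 1.2 | 1.3.
    if version not in MODEL_URLS:
        raise ValueError(f'unknown version {version!r}; expected one of {sorted(MODEL_URLS)}')


def model_path(version, model_dir='experiments/pretrained_models'):
    """Path where `wget <url> -P <model_dir>` would normally save the weights."""
    _check_version(version)
    filename = PurePosixPath(urlparse(MODEL_URLS[version]).path).name
    return str(PurePosixPath(model_dir) / filename)


def quick_inference_argv(input_path='inputs/whole_imgs', output_dir='results', version='1.3', upscale=2):
    """argv equivalent of `python inference_gfpgan.py -i ... -o ... -v ... -s ...`."""
    _check_version(version)
    return ['python', 'inference_gfpgan.py', '-i', input_path, '-o', output_dir,
            '-v', version, '-s', str(upscale)]


print(model_path('1.3'))  # experiments/pretrained_models/GFPGANv1.3.pth
```

Validating the version up front surfaces a typo such as `-v 1.1` before any download or GPU work starts.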

diff --git a/gfpgan/README_CN.md b/gfpgan/README_CN.md

new file mode 100644

index 0000000000000000000000000000000000000000..880f20631cb199fb33e1541ea7fd38bf1d29167b

--- /dev/null

+++ b/gfpgan/README_CN.md

@@ -0,0 +1,7 @@

+


+

+Not yet finished; contributions are welcome!

diff --git a/gfpgan/VERSION b/gfpgan/VERSION

new file mode 100644

index 0000000000000000000000000000000000000000..d0149fef743a8035720ed161412709e87702dcab

--- /dev/null

+++ b/gfpgan/VERSION

@@ -0,0 +1 @@

+1.3.4

diff --git a/gfpgan/assets/gfpgan_logo.png b/gfpgan/assets/gfpgan_logo.png

new file mode 100644

index 0000000000000000000000000000000000000000..f01937838faf7689869d3a4dfd50da006af8fd5d

Binary files /dev/null and b/gfpgan/assets/gfpgan_logo.png differ

diff --git a/gfpgan/experiments/pretrained_models/GFPGANv1.3.pth b/gfpgan/experiments/pretrained_models/GFPGANv1.3.pth

new file mode 100644

index 0000000000000000000000000000000000000000..1da748a3ef84ff85dd2c77c836f222aae22b007e

--- /dev/null

+++ b/gfpgan/experiments/pretrained_models/GFPGANv1.3.pth

@@ -0,0 +1,3 @@

+version https://git-lfs.github.com/spec/v1

+oid sha256:c953a88f2727c85c3d9ae72e2bd4846bbaf59fe6972ad94130e23e7017524a70

+size 348632874

diff --git a/gfpgan/experiments/pretrained_models/GFPGANv1.4.pth b/gfpgan/experiments/pretrained_models/GFPGANv1.4.pth

new file mode 100644

index 0000000000000000000000000000000000000000..afedb5c7e826056840c9cc183f2c6f0186fd17ba

--- /dev/null

+++ b/gfpgan/experiments/pretrained_models/GFPGANv1.4.pth

@@ -0,0 +1,3 @@

+version https://git-lfs.github.com/spec/v1

+oid sha256:e2cd4703ab14f4d01fd1383a8a8b266f9a5833dacee8e6a79d3bf21a1b6be5ad

+size 348632874
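The two `.pth` entries above are Git LFS pointer files, not the weights themselves: each is a small text file of `key value` lines recording the spec version, the SHA-256 `oid` and the byte `size` of the real object. A clone made without Git LFS leaves only these pointers on disk, so loading the model would fail. The sketch below parses such a pointer and checks a downloaded file against it; `parse_lfs_pointer` and `matches_pointer` are hypothetical helpers, and only SHA-256 oids (the kind shown here) are handled.

```python
import hashlib
import os


def parse_lfs_pointer(text):
    """Parse a Git LFS pointer ('key value' per line) into a dict."""
    fields = dict(line.split(' ', 1) for line in text.strip().splitlines())
    algo, _, digest = fields['oid'].partition(':')
    return {'version': fields['version'], 'algo': algo, 'oid': digest, 'size': int(fields['size'])}


def matches_pointer(path, pointer, chunk_size=1 << 20):
    """True if the file at `path` has the size and SHA-256 digest recorded in `pointer`."""
    if pointer['algo'] != 'sha256':
        raise ValueError(f"unsupported oid algorithm: {pointer['algo']}")
    if os.path.getsize(path) != pointer['size']:
        return False  # e.g. the file on disk is still the small pointer text
    digest = hashlib.sha256()
    with open(path, 'rb') as f:
        # Hash in chunks so a ~350 MB checkpoint is never read into memory at once.
        for chunk in iter(lambda: f.read(chunk_size), b''):
            digest.update(chunk)
    return digest.hexdigest() == pointer['oid']


# Pointer text for GFPGANv1.3.pth, copied from the diff.
V13_POINTER = """version https://git-lfs.github.com/spec/v1
oid sha256:c953a88f2727c85c3d9ae72e2bd4846bbaf59fe6972ad94130e23e7017524a70
size 348632874
"""

info = parse_lfs_pointer(V13_POINTER)
print(info['algo'], info['size'])  # sha256 348632874
# matches_pointer('gfpgan/experiments/pretrained_models/GFPGANv1.3.pth', info)
```

Checking the size first is cheap and catches the common case of an un-fetched pointer before any hashing is done.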

diff --git a/gfpgan/gfpgan/__init__.py b/gfpgan/gfpgan/__init__.py

new file mode 100644

index 0000000000000000000000000000000000000000..94daaeebce5604d61999f0b1b354b9a9e299b991

--- /dev/null

+++ b/gfpgan/gfpgan/__init__.py

@@ -0,0 +1,7 @@

+# flake8: noqa

+from .archs import *

+from .data import *

+from .models import *

+from .utils import *

+

+# from .version import *

diff --git a/gfpgan/gfpgan/archs/__init__.py b/gfpgan/gfpgan/archs/__init__.py

new file mode 100644

index 0000000000000000000000000000000000000000..bec5f17bfa38729b55f57cae8e40c27310db2b7b

--- /dev/null

+++ b/gfpgan/gfpgan/archs/__init__.py

@@ -0,0 +1,10 @@

+import importlib

+from basicsr.utils import scandir

+from os import path as osp

+

+# automatically scan and import arch modules for registry

+# scan all the files that end with '_arch.py' under the archs folder

+arch_folder = osp.dirname(osp.abspath(__file__))

+arch_filenames = [osp.splitext(osp.basename(v))[0] for v in scandir(arch_folder) if v.endswith('_arch.py')]

+# import all the arch modules

+_arch_modules = [importlib.import_module(f'gfpgan.archs.{file_name}') for file_name in arch_filenames]
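The `archs/__init__.py` above imports every module whose filename ends in `_arch.py` purely for its side effect: classes decorated with the registry's `register()` add themselves to `ARCH_REGISTRY` when their module is executed. The self-contained sketch below reproduces that pattern. `Registry` is a hypothetical stand-in assumed to mirror the `@ARCH_REGISTRY.register()` decorator style, not basicsr's actual implementation; `os.listdir` replaces `basicsr.utils.scandir`, and the registry is injected into each module only to avoid needing a real package.

```python
import importlib.util
import os
import tempfile


class Registry:
    """Hypothetical minimal name -> class registry (stand-in, not basicsr's code)."""

    def __init__(self, name):
        self._name = name
        self._obj_map = {}

    def register(self):
        # Return a class decorator that records the class under its own name.
        def deco(cls):
            if cls.__name__ in self._obj_map:
                raise KeyError(f"'{cls.__name__}' is already registered in '{self._name}'")
            self._obj_map[cls.__name__] = cls
            return cls
        return deco

    def get(self, name):
        return self._obj_map[name]

    def names(self):
        return sorted(self._obj_map)


def import_arch_modules(folder, registry):
    """Execute every '*_arch.py' file directly inside `folder`."""
    modules = []
    for fname in sorted(os.listdir(folder)):
        if not fname.endswith('_arch.py'):
            continue  # same suffix filter as archs/__init__.py
        mod_name = os.path.splitext(fname)[0]
        spec = importlib.util.spec_from_file_location(mod_name, os.path.join(folder, fname))
        module = importlib.util.module_from_spec(spec)
        module.ARCH_REGISTRY = registry  # demo shortcut instead of a package-level import
        spec.loader.exec_module(module)  # running the module triggers its @register() decorators
        modules.append(module)
    return modules


ARCH_REGISTRY = Registry('arch')

with tempfile.TemporaryDirectory() as tmp:
    with open(os.path.join(tmp, 'toy_arch.py'), 'w') as f:
        f.write('@ARCH_REGISTRY.register()\nclass ToyNet:\n    pass\n')
    with open(os.path.join(tmp, 'helpers.py'), 'w') as f:
        # Would raise if imported, showing that the suffix filter skips it.
        f.write("raise RuntimeError('helpers.py should not be imported')\n")
    loaded = import_arch_modules(tmp, ARCH_REGISTRY)

print(len(loaded), ARCH_REGISTRY.names())  # 1 ['ToyNet']
```

The payoff of this design is that a config file can name an architecture by string (for example `ToyNet`) and the training code looks it up with `get()`, without any central list of imports to maintain.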

diff --git a/gfpgan/gfpgan/archs/arcface_arch.py b/gfpgan/gfpgan/archs/arcface_arch.py

new file mode 100644

index 0000000000000000000000000000000000000000..e6d3bd97f83334450bd78ad2c3b9871102a56b70

--- /dev/null

+++ b/gfpgan/gfpgan/archs/arcface_arch.py

@@ -0,0 +1,245 @@

+import torch.nn as nn

+from basicsr.utils.registry import ARCH_REGISTRY

+

+

+def conv3x3(inplanes, outplanes, stride=1):

+ """A simple wrapper for 3x3 convolution with padding.

+

+ Args:

+ inplanes (int): Channel number of inputs.

+ outplanes (int): Channel number of outputs.

+ stride (int): Stride in convolution. Default: 1.

+ """

+ return nn.Conv2d(inplanes, outplanes, kernel_size=3, stride=stride, padding=1, bias=False)

+

+

+class BasicBlock(nn.Module):

+ """Basic residual block used in the ResNetArcFace architecture.

+

+ Args:

+ inplanes (int): Channel number of inputs.

+ planes (int): Channel number of outputs.

+ stride (int): Stride in convolution. Default: 1.

+ downsample (nn.Module): The downsample module. Default: None.

+ """

+ expansion = 1 # output channel expansion ratio

+

+ def __init__(self, inplanes, planes, stride=1, downsample=None):

+ super(BasicBlock, self).__init__()

+ self.conv1 = conv3x3(inplanes, planes, stride)

+ self.bn1 = nn.BatchNorm2d(planes)

+ self.relu = nn.ReLU(inplace=True)

+ self.conv2 = conv3x3(planes, planes)

+ self.bn2 = nn.BatchNorm2d(planes)

+ self.downsample = downsample

+ self.stride = stride

+

+ def forward(self, x):

+ residual = x

+

+ out = self.conv1(x)

+ out = self.bn1(out)

+ out = self.relu(out)

+

+ out = self.conv2(out)

+ out = self.bn2(out)

+

+ if self.downsample is not None:

+ residual = self.downsample(x)

+

+ out += residual

+ out = self.relu(out)

+

+ return out

+

+

+class IRBlock(nn.Module):

+ """Improved residual block (IR Block) used in the ResNetArcFace architecture.

+

+ Args:

+ inplanes (int): Channel number of inputs.

+ planes (int): Channel number of outputs.

+ stride (int): Stride in convolution. Default: 1.

+ downsample (nn.Module): The downsample module. Default: None.

+ use_se (bool): Whether to use the SEBlock (squeeze-and-excitation block). Default: True.

+ """

+ expansion = 1 # output channel expansion ratio

+

+ def __init__(self, inplanes, planes, stride=1, downsample=None, use_se=True):

+ super(IRBlock, self).__init__()

+ self.bn0 = nn.BatchNorm2d(inplanes)

+ self.conv1 = conv3x3(inplanes, inplanes)

+ self.bn1 = nn.BatchNorm2d(inplanes)

+ self.prelu = nn.PReLU()

+ self.conv2 = conv3x3(inplanes, planes, stride)

+ self.bn2 = nn.BatchNorm2d(planes)

+ self.downsample = downsample

+ self.stride = stride

+ self.use_se = use_se

+ if self.use_se:

+ self.se = SEBlock(planes)

+

+ def forward(self, x):

+ residual = x

+ out = self.bn0(x)

+ out = self.conv1(out)

+ out = self.bn1(out)

+ out = self.prelu(out)

+

+ out = self.conv2(out)

+ out = self.bn2(out)

+ if self.use_se:

+ out = self.se(out)

+

+ if self.downsample is not None:

+ residual = self.downsample(x)

+

+ out += residual

+ out = self.prelu(out)

+

+ return out

+

+

+class Bottleneck(nn.Module):

+ """Bottleneck block used in the ResNetArcFace architecture.

+

+ Args:

+ inplanes (int): Channel number of inputs.

+ planes (int): Channel number of outputs.

+ stride (int): Stride in convolution. Default: 1.

+ downsample (nn.Module): The downsample module. Default: None.

+ """

+ expansion = 4 # output channel expansion ratio

+

+ def __init__(self, inplanes, planes, stride=1, downsample=None):

+ super(Bottleneck, self).__init__()

+ self.conv1 = nn.Conv2d(inplanes, planes, kernel_size=1, bias=False)

+ self.bn1 = nn.BatchNorm2d(planes)

+ self.conv2 = nn.Conv2d(planes, planes, kernel_size=3, stride=stride, padding=1, bias=False)

+ self.bn2 = nn.BatchNorm2d(planes)

+ self.conv3 = nn.Conv2d(planes, planes * self.expansion, kernel_size=1, bias=False)

+ self.bn3 = nn.BatchNorm2d(planes * self.expansion)

+ self.relu = nn.ReLU(inplace=True)

+ self.downsample = downsample

+ self.stride = stride

+

+ def forward(self, x):

+ residual = x

+

+ out = self.conv1(x)

+ out = self.bn1(out)

+ out = self.relu(out)

+

+ out = self.conv2(out)

+ out = self.bn2(out)

+ out = self.relu(out)

+

+ out = self.conv3(out)

+ out = self.bn3(out)

+

+ if self.downsample is not None:

+ residual = self.downsample(x)

+

+ out += residual

+ out = self.relu(out)

+

+ return out

+

+

+class SEBlock(nn.Module):

+ """The squeeze-and-excitation block (SEBlock) used in the IRBlock.

+

+ Args:

+ channel (int): Channel number of inputs.

+ reduction (int): Channel reduction ratio. Default: 16.

+ """

+

+ def __init__(self, channel, reduction=16):

+ super(SEBlock, self).__init__()

+ self.avg_pool = nn.AdaptiveAvgPool2d(1) # pool to 1x1 without spatial information

+ self.fc = nn.Sequential(

+ nn.Linear(channel, channel // reduction), nn.PReLU(), nn.Linear(channel // reduction, channel),

+ nn.Sigmoid())

+

+ def forward(self, x):

+ b, c, _, _ = x.size()

+ y = self.avg_pool(x).view(b, c)

+ y = self.fc(y).view(b, c, 1, 1)

+ return x * y

+

+

+@ARCH_REGISTRY.register()

+class ResNetArcFace(nn.Module):

+ """ArcFace with ResNet architectures.

+

+ Ref: ArcFace: Additive Angular Margin Loss for Deep Face Recognition.

+

+ Args:

+ block (str): Block used in the ArcFace architecture. Only 'IRBlock' is mapped to a block class.

+ layers (tuple(int)): Block numbers in each layer.

+ use_se (bool): Whether to use the SEBlock (squeeze-and-excitation block). Default: True.

+ """

+

+ def __init__(self, block, layers, use_se=True):

+ if block == 'IRBlock':

+ block = IRBlock

+ self.inplanes = 64

+ self.use_se = use_se

+ super(ResNetArcFace, self).__init__()

+

+ self.conv1 = nn.Conv2d(1, 64, kernel_size=3, padding=1, bias=False)

+ self.bn1 = nn.BatchNorm2d(64)

+ self.prelu = nn.PReLU()

+ self.maxpool = nn.MaxPool2d(kernel_size=2, stride=2)

+ self.layer1 = self._make_layer(block, 64, layers[0])

+ self.layer2 = self._make_layer(block, 128, layers[1], stride=2)

+ self.layer3 = self._make_layer(block, 256, layers[2], stride=2)

+ self.layer4 = self._make_layer(block, 512, layers[3], stride=2)

+ self.bn4 = nn.BatchNorm2d(512)

+ self.dropout = nn.Dropout()

+ self.fc5 = nn.Linear(512 * 8 * 8, 512)

+ self.bn5 = nn.BatchNorm1d(512)

+

+ # initialization

+ for m in self.modules():

+ if isinstance(m, nn.Conv2d):

+ nn.init.xavier_normal_(m.weight)

+ elif isinstance(m, nn.BatchNorm2d) or isinstance(m, nn.BatchNorm1d):

+ nn.init.constant_(m.weight, 1)

+ nn.init.constant_(m.bias, 0)

+ elif isinstance(m, nn.Linear):

+ nn.init.xavier_normal_(m.weight)

+ nn.init.constant_(m.bias, 0)

+

+ def _make_layer(self, block, planes, num_blocks, stride=1):

+ downsample = None

+ if stride != 1 or self.inplanes != planes * block.expansion:

+ downsample = nn.Sequential(

+ nn.Conv2d(self.inplanes, planes * block.expansion, kernel_size=1, stride=stride, bias=False),

+ nn.BatchNorm2d(planes * block.expansion),

+ )

+ layers = []

+ layers.append(block(self.inplanes, planes, stride, downsample, use_se=self.use_se))

+ self.inplanes = planes

+ for _ in range(1, num_blocks):

+ layers.append(block(self.inplanes, planes, use_se=self.use_se))

+

+ return nn.Sequential(*layers)

+

+ def forward(self, x):

+ x = self.conv1(x)

+ x = self.bn1(x)

+ x = self.prelu(x)

+ x = self.maxpool(x)

+

+ x = self.layer1(x)

+ x = self.layer2(x)

+ x = self.layer3(x)

+ x = self.layer4(x)

+ x = self.bn4(x)

+ x = self.dropout(x)

+ x = x.view(x.size(0), -1)

+ x = self.fc5(x)

+ x = self.bn5(x)

+

+ return x
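In `ResNetArcFace`, `fc5` is declared as `nn.Linear(512 * 8 * 8, 512)`. The flattened `layer4` output must therefore be exactly 512 x 8 x 8, and that constrains the input resolution. The following pure-Python sketch traces a square input through `conv1`, `maxpool`, and the four stages. It needs no PyTorch. `conv_out` and `arcface_fc5_in_features` are hypothetical helpers. The sketch assumes the `IRBlock` path, where the strided operation is a 3x3 convolution with padding 1.

```python
def conv_out(size, kernel, stride=1, padding=0):
    # Output size of Conv2d / MaxPool2d with dilation 1 and floor rounding.
    return (size + 2 * padding - kernel) // stride + 1


def arcface_fc5_in_features(input_size, channels=512):
    """Trace a square input through the ResNetArcFace stem and stages (IRBlock path)."""
    s = conv_out(input_size, kernel=3, stride=1, padding=1)  # conv1: size unchanged
    s = conv_out(s, kernel=2, stride=2)  # maxpool: halves the size
    # layer1 keeps stride 1; layer2-layer4 each downsample once with stride 2.
    # In IRBlock the strided op is conv2 (3x3, padding 1); the 1x1 stride-2
    # downsample branch produces the same output size, so the residual add lines up.
    for stride in (1, 2, 2, 2):
        s = conv_out(s, kernel=3, stride=stride, padding=1)
    return channels * s * s


print(arcface_fc5_in_features(128))
print(arcface_fc5_in_features(112))
```

By this arithmetic, a 128x128 input yields 512 * 8 * 8 = 32768 features, which matches `fc5`. The input must also be single-channel, because `conv1` takes 1 input channel. A 112x112 input would yield 512 * 7 * 7 = 25088 features and fail at `fc5`.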

diff --git a/gfpgan/gfpgan/archs/gfpgan_bilinear_arch.py b/gfpgan/gfpgan/archs/gfpgan_bilinear_arch.py

new file mode 100644

index 0000000000000000000000000000000000000000..52e0de88de8543cf4afdc3988c4cdfc7c7060687

--- /dev/null

+++ b/gfpgan/gfpgan/archs/gfpgan_bilinear_arch.py

@@ -0,0 +1,312 @@

+import math

+import random

+import torch

+from basicsr.utils.registry import ARCH_REGISTRY

+from torch import nn

+

+from .gfpganv1_arch import ResUpBlock

+from .stylegan2_bilinear_arch import (ConvLayer, EqualConv2d, EqualLinear, ResBlock, ScaledLeakyReLU,

+ StyleGAN2GeneratorBilinear)

+

+

+class StyleGAN2GeneratorBilinearSFT(StyleGAN2GeneratorBilinear):

+ """StyleGAN2 Generator with SFT modulation (Spatial Feature Transform).

+