Update app.py

app.py

CHANGED

@@ -4,12 +4,12 @@ from PIL import Image
 
 from lambda_diffusers import StableDiffusionImageEmbedPipeline
 
-def ask(input_im, scale, n_samples, steps, seed, images):
     images = images
     generator = torch.Generator(device=device).manual_seed(int(seed))
 
     images_list = pipe(
-
         guidance_scale=scale,
         num_inference_steps=steps,
         generator=generator,
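The `guidance_scale` argument passed to `pipe(...)` is, in the standard Stable Diffusion pipelines of 🤗 Diffusers, the weight for classifier-free guidance. Assuming `StableDiffusionImageEmbedPipeline` follows the same convention (this diff does not show its internals), each denoising step mixes an unconditional and a conditional noise prediction as sketched below. The helper name `guided_noise` and the use of plain lists instead of tensors are illustrative assumptions.

```python
from __future__ import annotations

from typing import Sequence


def guided_noise(noise_uncond: Sequence[float],
                 noise_cond: Sequence[float],
                 guidance_scale: float) -> list[float]:
    """Classifier-free guidance, element-wise: uncond + s * (cond - uncond).

    Hypothetical helper; a real pipeline applies this to latent tensors.
    """
    if len(noise_uncond) != len(noise_cond):
        raise ValueError("predictions must have the same shape")
    return [u + guidance_scale * (c - u)
            for u, c in zip(noise_uncond, noise_cond)]


uncond = [0.0, 1.0, -2.0]
cond = [1.0, 1.0, 2.0]
print(guided_noise(uncond, cond, 0.0))  # [0.0, 1.0, -2.0] (unconditional only)
print(guided_noise(uncond, cond, 1.0))  # [1.0, 1.0, 2.0] (conditional only)
print(guided_noise(uncond, cond, 3.0))  # [3.0, 1.0, 10.0] (app default, pushed past cond)
```

Larger values follow the conditioning more strongly. In the standard Diffusers pipelines guidance is skipped entirely when `guidance_scale` is 1 or less; whether the Lambda pipeline behaves the same way is not visible here.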

@@ -23,7 +23,7 @@ def ask(input_im, scale, n_samples, steps, seed, images):
         images.append(image)
     return images
 
-def main(input_im, scale,
 
     images = []
     images = ask(input_im, scale, n_samples, steps, seed, images)

@@ -41,12 +41,33 @@ pipe = pipe.to(device)
 inputs = [
     gr.Image(),
     gr.Slider(0, 25, value=3, step=1, label="Guidance scale"),
-    gr.Slider(1, 2, value=2, step=1, label="Number images"),
     gr.Slider(5, 50, value=25, step=5, label="Steps"),
-    gr.
 ]
 output = gr.Gallery(label="Generated variations")
-output.style(grid=2)
 
 demo = gr.Interface(
     fn=main,

@@ -4,12 +4,12 @@ from PIL import Image
 
 from lambda_diffusers import StableDiffusionImageEmbedPipeline
 
+def ask(input_im, scale, steps, seed, images):
     images = images
     generator = torch.Generator(device=device).manual_seed(int(seed))
 
     images_list = pipe(
+        2*[input_im],
         guidance_scale=scale,
         num_inference_steps=steps,
         generator=generator,
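After this change the pipeline is always called with `2*[input_im]`: a list holding the same image object twice, so both samples share one conditioning input and can differ only through the random noise drawn from the single seeded `generator`. The sketch below models that with Python's `random.Random` standing in for `torch.Generator` (an assumption for illustration; torch samples its latent noise differently), and `initial_noise` is a hypothetical helper.

```python
import random


def initial_noise(batch, seed, size=4):
    """Draw one noise vector per batch entry from a single seeded RNG.

    Hypothetical stand-in for a diffusion pipeline's latent noise;
    random.Random replaces torch.Generator in this sketch.
    """
    rng = random.Random(seed)
    return [[rng.gauss(0.0, 1.0) for _ in range(size)] for _ in batch]


input_im = object()        # placeholder for the PIL input image
batch = 2 * [input_im]     # the same object twice, as in the diff
print(len(batch), batch[0] is batch[1])  # 2 True

run_a = initial_noise(batch, seed=42)
run_b = initial_noise(batch, seed=42)
print(run_a == run_b)        # True: the same seed reproduces the whole batch
print(run_a[0] == run_a[1])  # False: the two samples receive different noise
```

Because one generator feeds the whole batch, a given seed reproduces both variations together rather than each one independently.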

@@ -23,7 +23,7 @@ def ask(input_im, scale, n_samples, steps, seed, images):
         images.append(image)
     return images
 
+def main(input_im, scale, steps, seed):
 
     images = []
     images = ask(input_im, scale, n_samples, steps, seed, images)
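The unchanged context line in `main` still calls `ask(input_im, scale, n_samples, steps, seed, images)`, although this commit removes `n_samples` from both signatures. Unless `n_samples` is defined at module level elsewhere in app.py (not visible in this diff), that call raises `NameError`; if it is defined, `ask` receives six positional arguments for five parameters and raises `TypeError`. A minimal sketch of a consistent pair follows. `fake_pipe` is a hypothetical stand-in for the real pipeline, and its `"images"` output key is an assumption, since the diff does not show how `images_list` is indexed.

```python
def fake_pipe(batch, guidance_scale, num_inference_steps, generator):
    """Hypothetical stand-in for `pipe`: returns one 'image' per batch entry."""
    return {"images": [f"variation-{i}" for i in range(len(batch))]}


def ask(input_im, scale, steps, seed, images):
    # The real app builds a torch.Generator from `seed`; omitted here.
    images_list = fake_pipe(
        2 * [input_im],
        guidance_scale=scale,
        num_inference_steps=steps,
        generator=None,
    )
    for image in images_list["images"]:
        images.append(image)
    return images


def main(input_im, scale, steps, seed):
    images = []
    # Pass exactly the parameters `ask` now accepts (no n_samples).
    return ask(input_im, scale, steps, seed, images)


print(main("input.png", 3, 25, 0))  # ['variation-0', 'variation-1']
```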

@@ -41,12 +41,33 @@ pipe = pipe.to(device)
 inputs = [
     gr.Image(),
     gr.Slider(0, 25, value=3, step=1, label="Guidance scale"),
     gr.Slider(5, 50, value=25, step=5, label="Steps"),
+    gr.Slider(label = "Seed", minimum = 0, maximum = 2147483647, step = 1, randomize = True)
 ]
 output = gr.Gallery(label="Generated variations")
+output.style(grid=2, height="")

|

| 49 |

+

|

| 50 |

+

description = \

|

| 51 |

+

"""

|

| 52 |

+

|

| 53 |

+

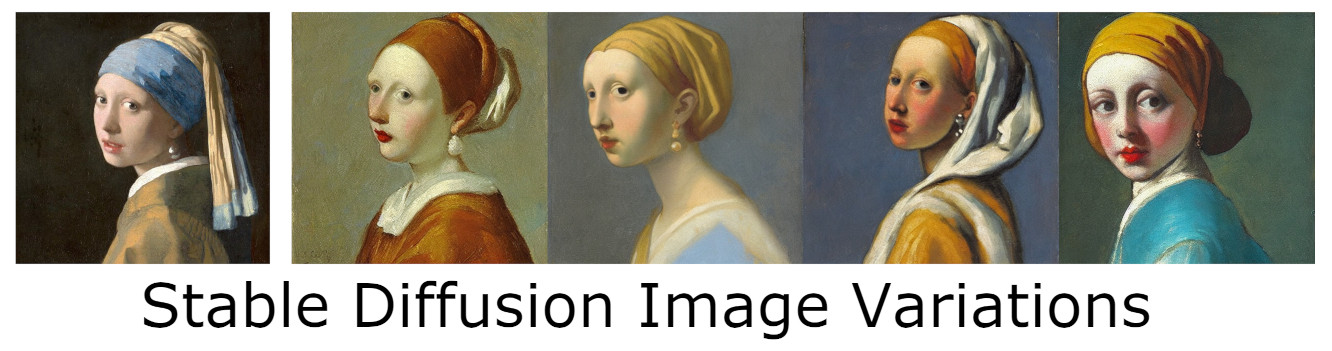

Generate variations on an input image using a fine-tuned version of Stable Diffision.

|

| 54 |

+

Trained by [Justin Pinkney](https://www.justinpinkney.com) ([@Buntworthy](https://twitter.com/Buntworthy)) at [Lambda](https://lambdalabs.com/)

|

| 55 |

+

This version has been ported to 🤗 Diffusers library, see more details on how to use this version in the [Lambda Diffusers repo](https://github.com/LambdaLabsML/lambda-diffusers).

|

| 56 |

+

__For the original training code see [this repo](https://github.com/justinpinkney/stable-diffusion).

|

| 57 |

+

|

| 58 |

+

"""

+
+article = \
+"""
+## How does this work?
+The normal Stable Diffusion model is trained to be conditioned on text input. This version has had the original text encoder (from CLIP) removed, and replaced with
+the CLIP _image_ encoder instead. So instead of generating images based on a text input, images are generated to match CLIP's embedding of the image.
+This creates images which have the same rough style and content but different details; in particular, the composition is generally quite different.
+This is a totally different approach from the img2img script of the original Stable Diffusion and gives very different results.
+The model was fine-tuned on the [LAION Aesthetics v2 6+ dataset](https://laion.ai/blog/laion-aesthetics/) to accept the new conditioning.
+Training was done on 4x A6000 GPUs on [Lambda GPU Cloud](https://lambdalabs.com/service/gpu-cloud).
+More details on the method and training will come in a future blog post.
+"""

 
 demo = gr.Interface(
     fn=main,
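`gr.Interface` passes the value of each component in `inputs` to `fn` positionally, in list order. After this commit that list has four components (image, guidance scale, steps, seed), which lines up with the four parameters of the new `main(input_im, scale, steps, seed)`. The stdlib-only check below makes that correspondence explicit; the label list is copied from the diff, `"Image"` is a placeholder because the image component has no label, and `check_wiring` is a hypothetical helper. It also confirms that the seed slider's maximum, 2147483647, is 2**31 - 1, the largest signed 32-bit integer.

```python
import inspect

# Component labels in `inputs`, in order, as of this commit.
# The gr.Image() component has no label in the diff; "Image" is a placeholder.
INPUT_LABELS = ["Image", "Guidance scale", "Steps", "Seed"]

SEED_MAX = 2147483647  # maximum of the "Seed" slider in the diff


def main(input_im, scale, steps, seed):
    """Signature copied from the diff; the body is irrelevant to this check."""
    raise NotImplementedError


def check_wiring(fn, labels):
    """Pair fn's parameters with interface labels; fail if the counts differ."""
    params = list(inspect.signature(fn).parameters)
    if len(params) != len(labels):
        raise ValueError(f"{fn.__name__} takes {len(params)} args, "
                         f"but the interface supplies {len(labels)}")
    return list(zip(params, labels))


for param, label in check_wiring(main, INPUT_LABELS):
    print(label, "->", param)

print(SEED_MAX == 2**31 - 1)  # True
```

Running the same check against the old five-input list and the new four-parameter `main` would raise `ValueError`, which is the mismatch Gradio would otherwise surface only at request time.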