import vllm

import torch

import gradio

import huggingface_hub

import os

huggingface_hub.login(token=os.environ["HF_TOKEN"])

# Fava prompt

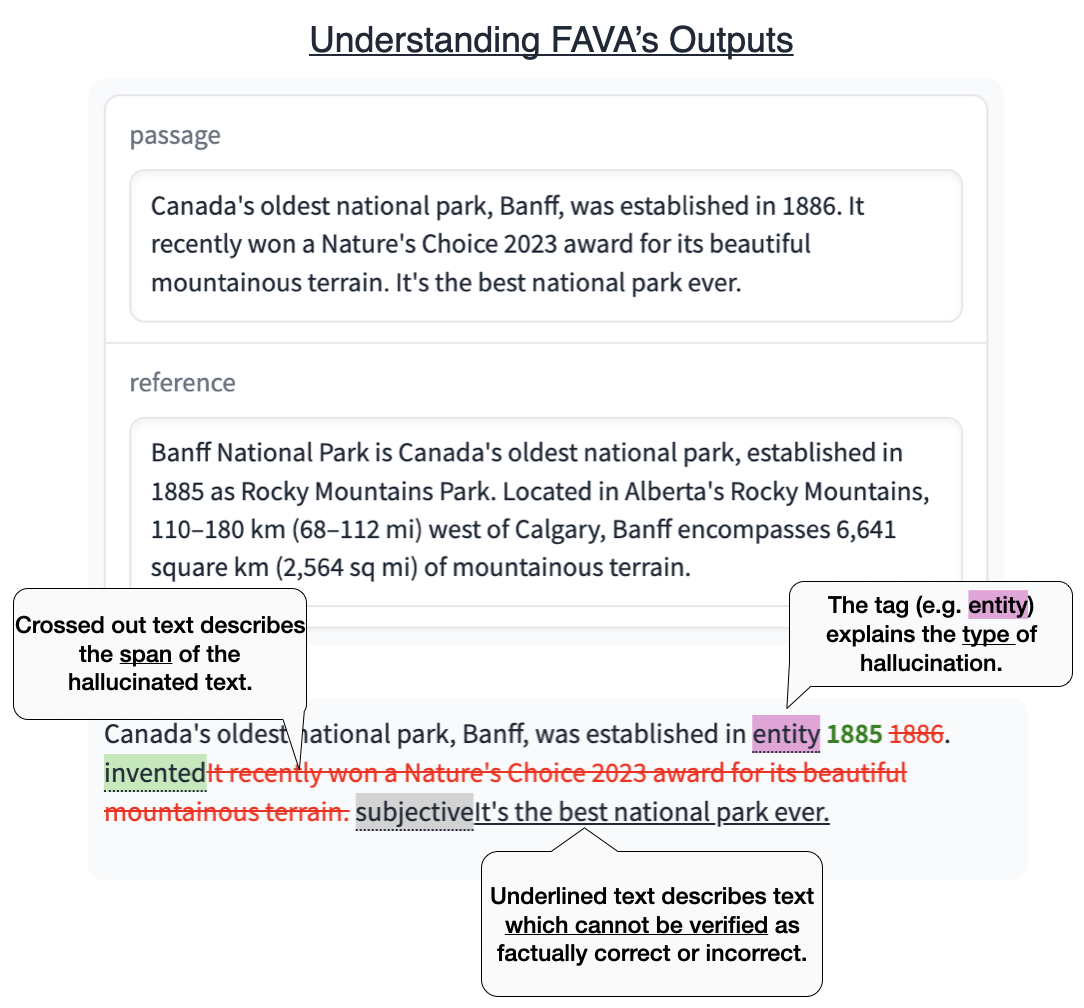

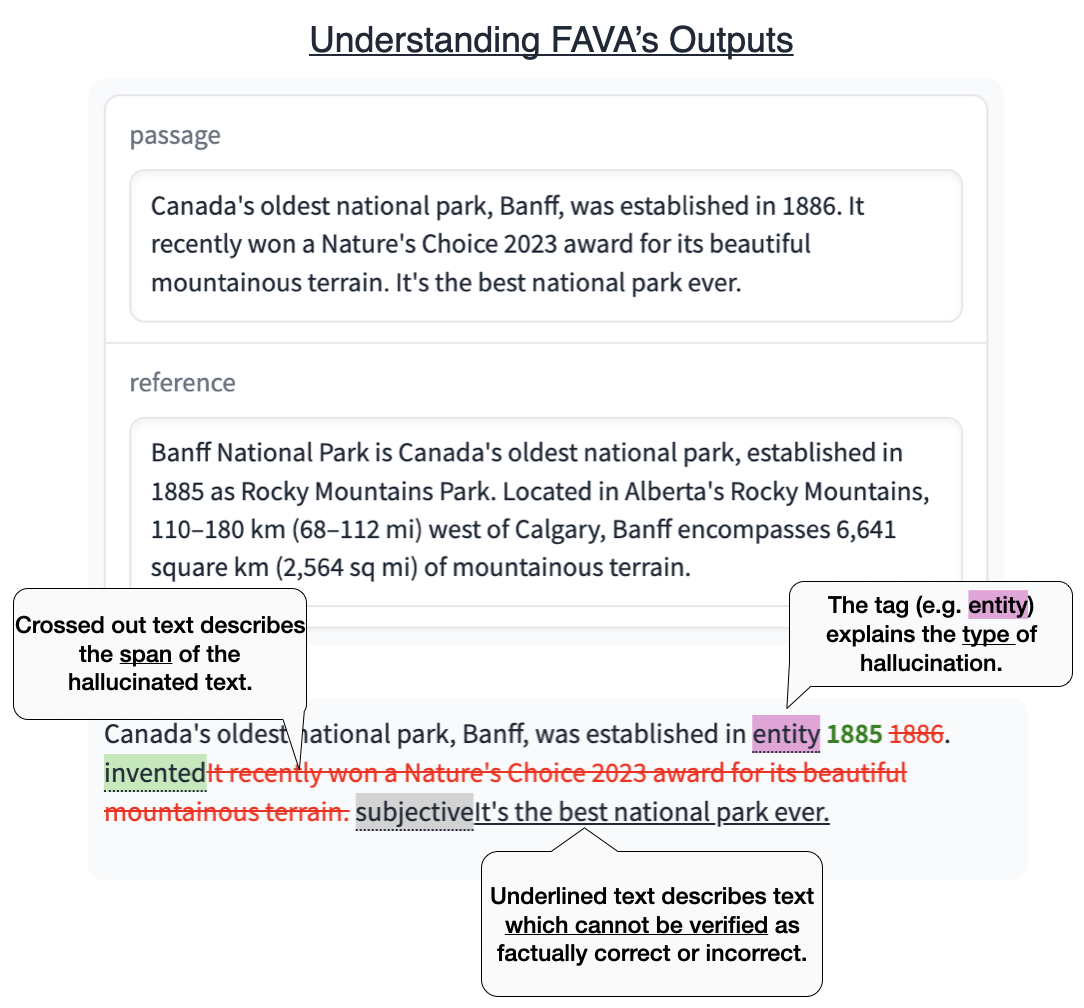

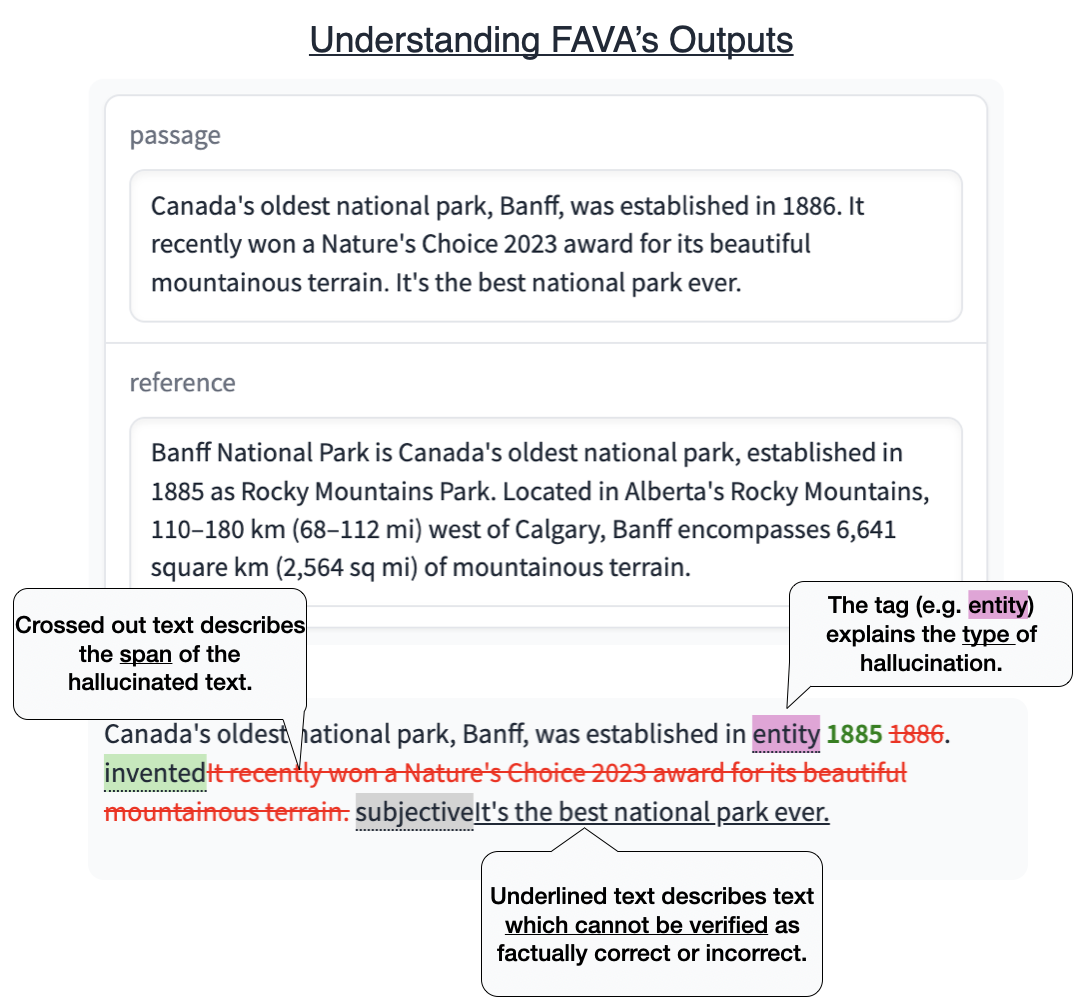

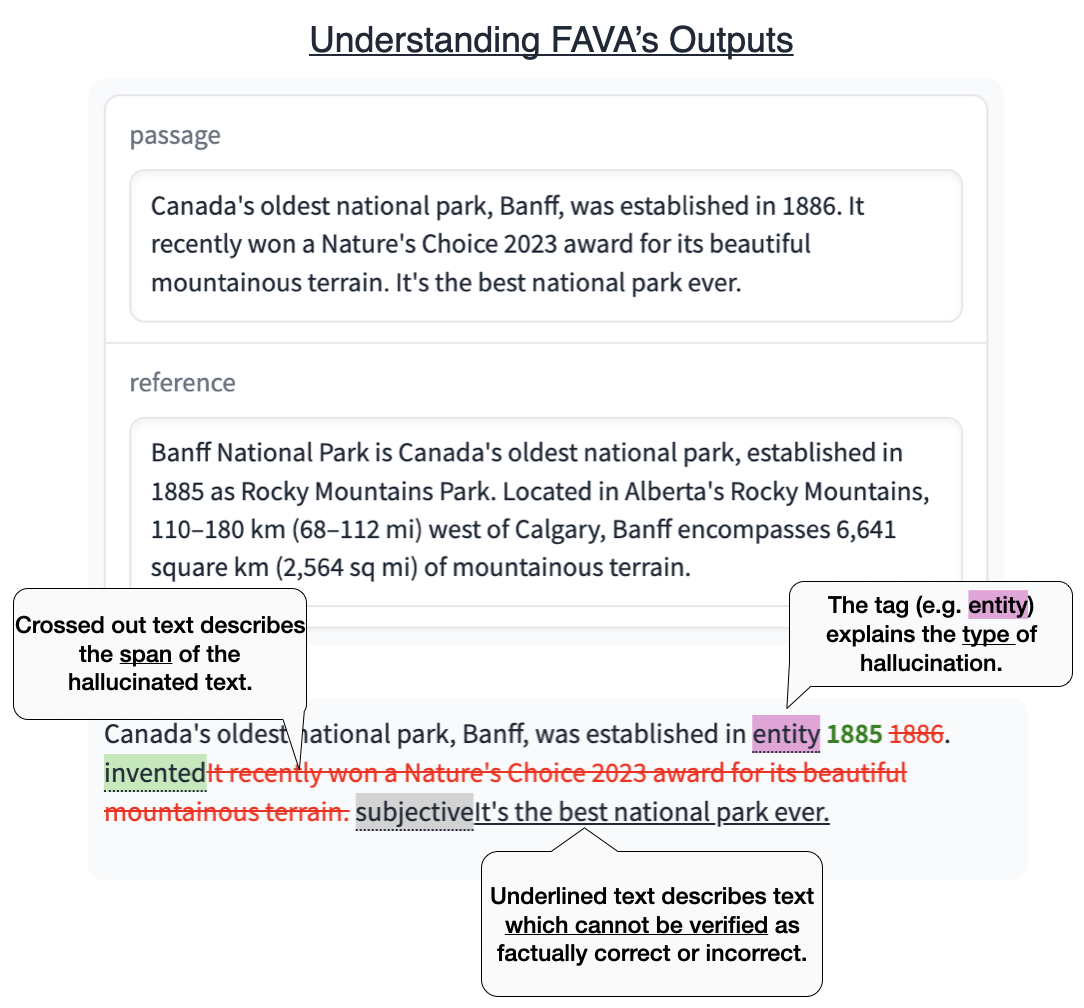

INPUT = "Read the following references:\n{evidence}\nPlease identify all the errors in the following text using the information in the references provided and suggest edits if necessary:\n[Text] {output}\n[Edited] "

_article = f' '

model = vllm.LLM(model="fava-uw/fava-model")

def result(passage, reference):

prompt = [INPUT.format_map({"evidence":reference, "output":passage})]

sampling_params = vllm.SamplingParams(

temperature=0,

top_p=1.0,

max_tokens=500,

)

outputs = model.generate(prompt, sampling_params)

outputs = [it.outputs[0].text for it in outputs]

output = outputs[0].replace("", " ")

output = output.replace("", " ")

output = output.replace("", "")

output = output.replace("", "")

output = output.replace("", "entity")

output = output.replace("", "relation")

output = output.replace("", "contradictory")

output = output.replace("", "unverifiable")

output = output.replace("", "invented")

output = output.replace("", "subjective")

output = output.replace("", "")

output = output.replace("", "")

output = output.replace("", "")

output = output.replace("", "")

output = output.replace("", "")

output = output.replace("", "")

output = output.replace("Edited:", "")

return f'

'

model = vllm.LLM(model="fava-uw/fava-model")

def result(passage, reference):

prompt = [INPUT.format_map({"evidence":reference, "output":passage})]

sampling_params = vllm.SamplingParams(

temperature=0,

top_p=1.0,

max_tokens=500,

)

outputs = model.generate(prompt, sampling_params)

outputs = [it.outputs[0].text for it in outputs]

output = outputs[0].replace("", " ")

output = output.replace("", " ")

output = output.replace("", "")

output = output.replace("", "")

output = output.replace("", "entity")

output = output.replace("", "relation")

output = output.replace("", "contradictory")

output = output.replace("", "unverifiable")

output = output.replace("", "invented")

output = output.replace("", "subjective")

output = output.replace("", "")

output = output.replace("", "")

output = output.replace("", "")

output = output.replace("", "")

output = output.replace("", "")

output = output.replace("", "")

output = output.replace("Edited:", "")

return f'{output}

'; #output;

if __name__ == "__main__":

# description="Enter some text and a reference for the text and FAVA will detect and edit any hallucinations present."

custom_footer = """"""

demo = gradio.Interface(fn=result, inputs=["text", "text"], outputs="html", title="Fine-grained Hallucination Detection and Editing (FAVA)", description=custom_footer)

demo.launch(share=True)