
added files

This view is limited to 50 files because it contains too many changes.

- LICENSE +57 -0

- README.md +145 -12

- app.py +7 -0

- assets/overview.png +0 -0

- assets/teaser.png +0 -0

- common/constants.py +35 -0

- common/renderer_pyrd.py +112 -0

- configs/cfg_test.yml +28 -0

- configs/cfg_train.yml +29 -0

- data/base_dataset.py +164 -0

- data/mixed_dataset.py +42 -0

- data/preprocess/behave-30fps-error_frames.json +511 -0

- data/preprocess/behave.py +0 -0

- data/preprocess/behave_test/behave_simple_test.npz.py +37 -0

- data/preprocess/behave_test/split.json +327 -0

- data/preprocess/hot_dca.py +220 -0

- data/preprocess/hot_noprox.py +241 -0

- data/preprocess/hot_prox.py +217 -0

- data/preprocess/prepare_damon_behave_split.py +69 -0

- data/preprocess/rich_smplx.py +222 -0

- data/preprocess/rich_smplx_agniv.py +578 -0

- data/preprocess/yoga-82_test/yoga82_simple_test.npz.py +34 -0

- example_images/213.jpg +0 -0

- example_images/pexels-photo-15732209.jpeg +0 -0

- example_images/pexels-photo-207569.webp +0 -0

- example_images/pexels-photo-3622517.webp +0 -0

- fetch_data.sh +18 -0

- hot_analysis/.ipynb_checkpoints/hico_analysis-checkpoint.ipynb +307 -0

- hot_analysis/.ipynb_checkpoints/vcoco_analysis-checkpoint.ipynb +276 -0

- hot_analysis/agniv_pose_filter/hico.npy +3 -0

- hot_analysis/agniv_pose_filter/hot.npy +3 -0

- hot_analysis/agniv_pose_filter/hot_dict.pkl +3 -0

- hot_analysis/agniv_pose_filter/pq_wnp.npy +3 -0

- hot_analysis/agniv_pose_filter/vcoco.npy +3 -0

- hot_analysis/count_objects_per_img.py +35 -0

- hot_analysis/create_combined_objectwise_plots.ipynb +291 -0

- hot_analysis/create_part_probability_mesh.py +86 -0

- hot_analysis/damon_qc_stats/compute_accuracy_iou_damon.py +59 -0

- hot_analysis/damon_qc_stats/compute_fleiss_kappa_damon.py +111 -0

- hot_analysis/damon_qc_stats/qa_accuracy_gt_contact_combined.npz +3 -0

- hot_analysis/damon_qc_stats/quality_assurance_accuracy.csv +0 -0

- hot_analysis/damon_qc_stats/quality_assurance_fleiss.csv +0 -0

- hot_analysis/damon_qc_stats/successful_qualifications_fleiss.csv +0 -0

- hot_analysis/filtered_data/v_1/hico/hico_imglist_all_140223.txt +0 -0

- hot_analysis/filtered_data/v_1/hico/image_per_object_category.png +0 -0

- hot_analysis/filtered_data/v_1/hico/imgnames_per_object_dict.json +0 -0

- hot_analysis/filtered_data/v_1/hico/imgnames_per_object_dict.txt +0 -0

- hot_analysis/filtered_data/v_1/hico/object_per_image_dict.json +0 -0

- hot_analysis/filtered_data/v_1/hico/object_per_image_dict.txt +0 -0

- hot_analysis/filtered_data/v_1/hico_imglist_all_140223.txt +0 -0

LICENSE

ADDED

@@ -0,0 +1,57 @@

License

Data & Software Copyright License for non-commercial scientific research purposes

Please read carefully the following terms and conditions and any accompanying documentation before you download and/or use the DECO data, models and software, (the "Data & Software"), including DECO baseline models, 3D meshes, MANO/SMPL-X parameters, images, MoCap data, videos, software, and scripts. By downloading and/or using the Data & Software (including downloading, cloning, installing, and any other use of the corresponding GitHub repository), you acknowledge that you have read these terms and conditions, understand them, and agree to be bound by them. If you do not agree with these terms and conditions, you must not download and/or use the Data & Software. Any infringement of the terms of this agreement will automatically terminate your rights under this License

Ownership / Licensees

The Data & Software and the associated materials have been developed at the Max Planck Institute for Intelligent Systems (hereinafter "MPI").

Any copyright or patent right is owned by and proprietary material of the Max-Planck-Gesellschaft zur Förderung der Wissenschaften e.V. (hereinafter “MPG”; MPI and MPG hereinafter collectively “Max-Planck”)

hereinafter the “Licensor”.

License Grant

Licensor grants you (Licensee) personally a single-user, non-exclusive, non-transferable, free of charge right:

To install the Data & Software on computers owned, leased or otherwise controlled by you and/or your organization;
To use the Data & Software for the sole purpose of performing non-commercial scientific research, non-commercial education, or non-commercial artistic projects;
Any other use, in particular any use for commercial, pornographic, military, or surveillance, purposes is prohibited. This includes, without limitation, incorporation in a commercial product, use in a commercial service, or production of other artifacts for commercial purposes. The Data & Software may not be used to create fake, libelous, misleading, or defamatory content of any kind excluding analyses in peer-reviewed scientific research. The Data & Software may not be reproduced, modified and/or made available in any form to any third party without Max-Planck’s prior written permission.

The Data & Software may not be used for pornographic purposes or to generate pornographic material whether commercial or not. This license also prohibits the use of the Data & Software to train methods/algorithms/neural networks/etc. for commercial, pornographic, military, surveillance, or defamatory use of any kind. By downloading the Data & Software, you agree not to reverse engineer it.

No Distribution

The Data & Software and the license herein granted shall not be copied, shared, distributed, re-sold, offered for re-sale, transferred or sub-licensed in whole or in part except that you may make one copy for archive purposes only.

Disclaimer of Representations and Warranties

You expressly acknowledge and agree that the Data & Software results from basic research, is provided “AS IS”, may contain errors, and that any use of the Data & Software is at your sole risk. LICENSOR MAKES NO REPRESENTATIONS OR WARRANTIES OF ANY KIND CONCERNING THE DATA & SOFTWARE, NEITHER EXPRESS NOR IMPLIED, AND THE ABSENCE OF ANY LEGAL OR ACTUAL DEFECTS, WHETHER DISCOVERABLE OR NOT. Specifically, and not to limit the foregoing, licensor makes no representations or warranties (i) regarding the merchantability or fitness for a particular purpose of the Data & Software, (ii) that the use of the Data & Software will not infringe any patents, copyrights or other intellectual property rights of a third party, and (iii) that the use of the Data & Software will not cause any damage of any kind to you or a third party.

Limitation of Liability

Because this Data & Software License Agreement qualifies as a donation, according to Section 521 of the German Civil Code (Bürgerliches Gesetzbuch – BGB) Licensor as a donor is liable for intent and gross negligence only. If the Licensor fraudulently conceals a legal or material defect, they are obliged to compensate the Licensee for the resulting damage.
Licensor shall be liable for loss of data only up to the amount of typical recovery costs which would have arisen had proper and regular data backup measures been taken. For the avoidance of doubt Licensor shall be liable in accordance with the German Product Liability Act in the event of product liability. The foregoing applies also to Licensor’s legal representatives or assistants in performance. Any further liability shall be excluded.
Patent claims generated through the usage of the Data & Software cannot be directed towards the copyright holders.
The Data & Software is provided in the state of development the licensor defines. If modified or extended by Licensee, the Licensor makes no claims about the fitness of the Data & Software and is not responsible for any problems such modifications cause.

No Maintenance Services

You understand and agree that Licensor is under no obligation to provide either maintenance services, update services, notices of latent defects, or corrections of defects with regard to the Data & Software. Licensor nevertheless reserves the right to update, modify, or discontinue the Data & Software at any time.

Defects of the Data & Software must be notified in writing to the Licensor with a comprehensible description of the error symptoms. The notification of the defect should enable the reproduction of the error. The Licensee is encouraged to communicate any use, results, modification, or publication.

Publications using the Data & Software

You acknowledge that the Data & Software is a valuable scientific resource and agree to appropriately reference the following paper in any publication making use of the Data & Software.

Subjects' Consent: All subjects gave informed written consent to share their data for research purposes. You further agree to delete data or change their use, in case a subject changes or withdraws their consent.

Citation:

@InProceedings{Tripathi_2023_ICCV,
  author = {Tripathi, Shashank and Chatterjee, Agniv and Passy, Jean-Claude and Yi, Hongwei and Tzionas, Dimitrios and Black, Michael J.},
  title = {DECO: Dense Estimation of 3D Human-Scene Contact In The Wild},
  booktitle = {Proceedings of the IEEE/CVF International Conference on Computer Vision (ICCV)},
  month = {October},
  year = {2023},
  pages = {8001-8013}
}

Commercial licensing opportunities

For commercial uses of the Data & Software, please send emails to ps-license@tue.mpg.de

This Agreement shall be governed by the laws of the Federal Republic of Germany except for the UN Sales Convention.

README.md

CHANGED

@@ -1,12 +1,145 @@

| 1 |

+

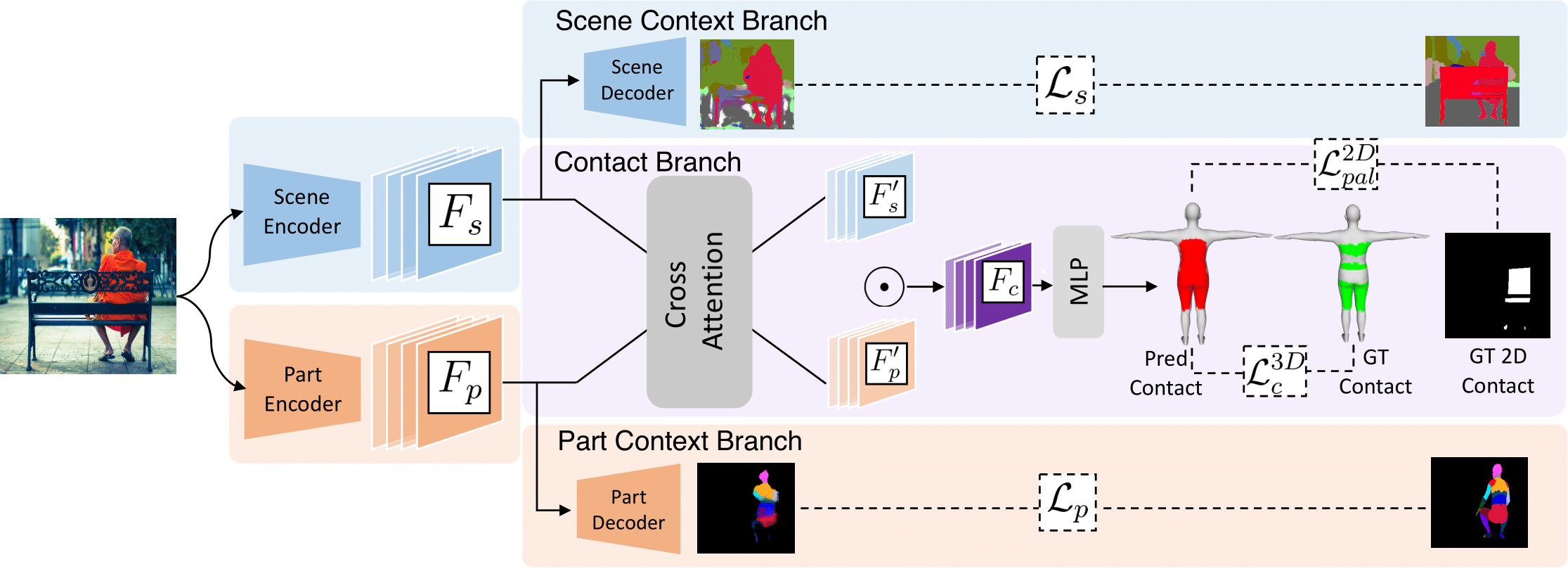

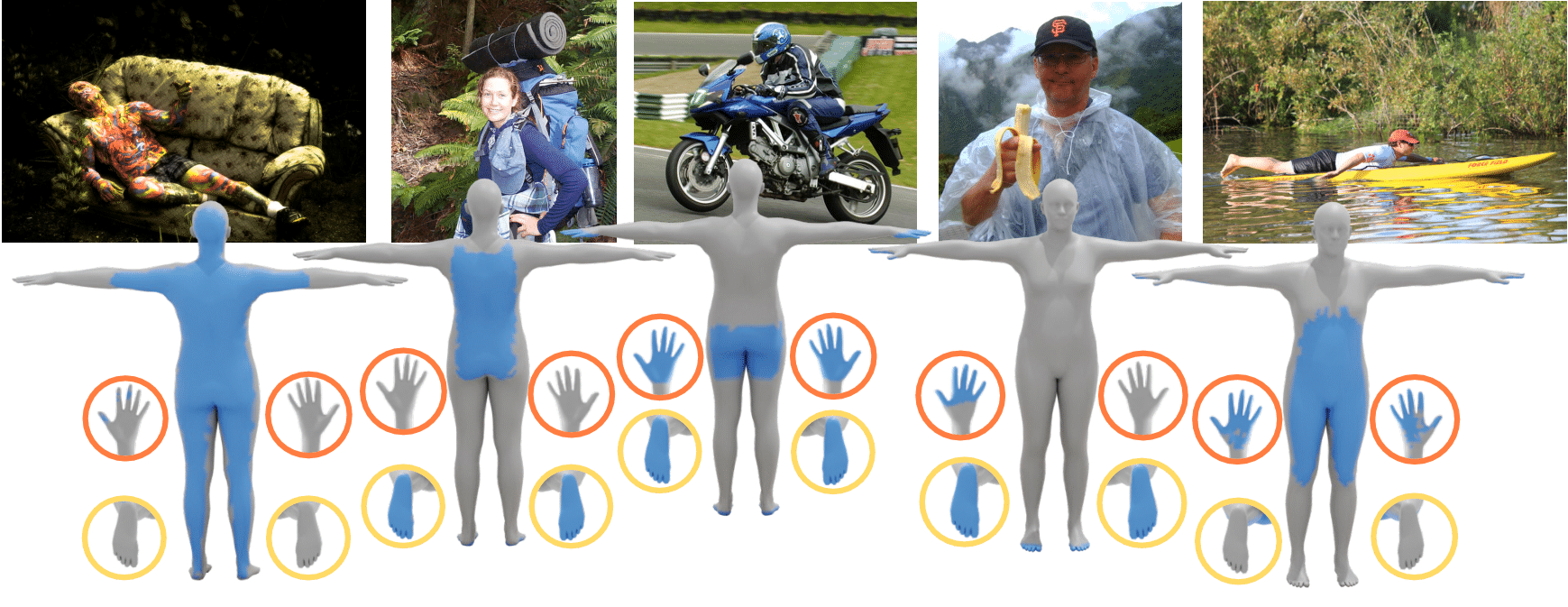

# DECO: Dense Estimation of 3D Human-Scene Contact in the Wild [ICCV 2023 (Oral)]

|

| 2 |

+

|

| 3 |

+

> Code repository for the paper:

|

| 4 |

+

> [**DECO: Dense Estimation of 3D Human-Scene Contact in the Wild**](https://openaccess.thecvf.com/content/ICCV2023/html/Tripathi_DECO_Dense_Estimation_of_3D_Human-Scene_Contact_In_The_Wild_ICCV_2023_paper.html)

|

| 5 |

+

> [Shashank Tripathi](https://sha2nkt.github.io/), [Agniv Chatterjee](https://ac5113.github.io/), [Jean-Claude Passy](https://is.mpg.de/person/jpassy), [Hongwei Yi](https://xyyhw.top/), [Dimitrios Tzionas](https://ps.is.mpg.de/person/dtzionas), [Michael J. Black](https://ps.is.mpg.de/person/black)<br />

|

| 6 |

+

> *IEEE International Conference on Computer Vision (ICCV), 2023*

|

| 7 |

+

|

| 8 |

+

[](https://arxiv.org/abs/2309.15273) [](https://deco.is.tue.mpg.de/) []() []()

|

| 9 |

+

|

| 10 |

+

|

| 11 |

+

|

| 12 |

+

[[Project Page](https://deco.is.tue.mpg.de)] [[Paper](https://arxiv.org/abs/2309.15273)] [[Video](https://www.youtube.com/watch?v=o7MLobqAFTQ)] [[Poster](https://www.dropbox.com/scl/fi/kvhpfnkvga2pt19ayko8u/ICCV2023_DECO_Poster_v2.pptx?rlkey=ihbf3fi6u9j0ha9x1gfk2cwd0&dl=0)] [[License](https://deco.is.tue.mpg.de/license.html)] [[Contact](mailto:deco@tue.mpg.de)]

|

| 13 |

+

|

## Installation and Setup

1. First, clone the repo. Then, we recommend creating a clean [conda](https://docs.conda.io/) environment, activating it and installing torch and torchvision, as follows:
```shell
git clone https://github.com/sha2nkt/deco.git
cd deco
conda create -n deco python=3.9 -y
conda activate deco
pip install torch==1.13.0+cu117 torchvision==0.14.0+cu117 --extra-index-url https://download.pytorch.org/whl/cu117
```
Please adjust the CUDA version (the `cu117` suffixes and the index URL) as required.

2. Install PyTorch3D. Users may refer to [PyTorch3D-install](https://github.com/facebookresearch/pytorch3d/blob/main/INSTALL.md) for the official installation options.
However, our tests show that installing with ``conda`` sometimes runs into dependency conflicts.
Hence, we recommend installing PyTorch3D from source following the steps below.
```shell
git clone https://github.com/facebookresearch/pytorch3d.git
cd pytorch3d
pip install .
cd ..
```

3. Install the other dependencies and download the required data.
```bash
pip install -r requirements.txt
sh fetch_data.sh
```

4. Please download the [SMPL](https://smpl.is.tue.mpg.de/) (version 1.1.0) and [SMPL-X](https://smpl-x.is.tue.mpg.de/) (v1.1) files into the data folder, and rename the SMPL files to ```SMPL_FEMALE.pkl```, ```SMPL_MALE.pkl``` and ```SMPL_NEUTRAL.pkl```. The expected structure of the ```data``` folder is shown below:

```
├── preprocess
├── smpl
│   ├── SMPL_FEMALE.pkl
│   ├── SMPL_MALE.pkl
│   ├── SMPL_NEUTRAL.pkl
│   ├── smpl_neutral_geodesic_dist.npy
│   ├── smpl_neutral_tpose.ply
│   ├── smplpix_vertex_colors.npy
├── smplx
│   ├── SMPLX_FEMALE.npz
│   ├── SMPLX_FEMALE.pkl
│   ├── SMPLX_MALE.npz
│   ├── SMPLX_MALE.pkl
│   ├── SMPLX_NEUTRAL.npz
│   ├── SMPLX_NEUTRAL.pkl
│   ├── smplx_neutral_tpose.ply
├── weights
│   ├── pose_hrnet_w32_256x192.pth
├── J_regressor_extra.npy
├── base_dataset.py
├── mixed_dataset.py
├── smpl_partSegmentation_mapping.pkl
├── smpl_vert_segmentation.json
└── smplx_vert_segmentation.json
```
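
Before moving on, it can help to verify that the downloaded files ended up where the tree above expects them. The snippet below is a minimal sketch and is not part of the DECO repository: `missing_data_files` and `EXPECTED_DATA_FILES` are hypothetical names, and the file list is transcribed from the tree above. It leaves out `preprocess` and the `.py` files that ship with the repo.

```python
from pathlib import Path

# Hypothetical list transcribed from the README tree (paths relative to data/).
EXPECTED_DATA_FILES = [
    "smpl/SMPL_FEMALE.pkl",
    "smpl/SMPL_MALE.pkl",
    "smpl/SMPL_NEUTRAL.pkl",
    "smpl/smpl_neutral_geodesic_dist.npy",
    "smpl/smpl_neutral_tpose.ply",
    "smpl/smplpix_vertex_colors.npy",
    "smplx/SMPLX_FEMALE.npz",
    "smplx/SMPLX_FEMALE.pkl",
    "smplx/SMPLX_MALE.npz",
    "smplx/SMPLX_MALE.pkl",
    "smplx/SMPLX_NEUTRAL.npz",
    "smplx/SMPLX_NEUTRAL.pkl",
    "smplx/smplx_neutral_tpose.ply",
    "weights/pose_hrnet_w32_256x192.pth",
    "J_regressor_extra.npy",
    "smpl_partSegmentation_mapping.pkl",
    "smpl_vert_segmentation.json",
    "smplx_vert_segmentation.json",
]


def missing_data_files(data_dir="data"):
    """Return the expected files (relative paths) that are not present under data_dir."""
    root = Path(data_dir)
    return [rel for rel in EXPECTED_DATA_FILES if not (root / rel).is_file()]


if __name__ == "__main__":
    missing = missing_data_files("data")
    if missing:
        print("Missing files:")
        for rel in missing:
            print("  " + rel)
    else:
        print("data/ folder looks complete")
```

Run it from the repository root. An empty list means every listed file is present.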

## Run demo on images

The following command runs DECO on all images in the directory given by `--img_src` and saves the renderings and colored meshes to `--out_dir`. The `--model_path` flag selects the checkpoint to use. The base mesh color and the color of the predicted contact annotation can be set with the `--mesh_colour` and `--annot_colour` flags, respectively.

```bash
python inference.py \
    --img_src example_images \
    --out_dir demo_out
```
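
If you call the demo from another script, or want to toggle the optional flags programmatically, a small wrapper can assemble the command for you. This is a sketch: the flag names come from the paragraph above, but `build_deco_demo_cmd` and `run_deco_demo` are hypothetical helpers. The expected format of the colour values is not documented here, so check the argument parser in `inference.py` before passing them. Sequences are split into one token per element as an assumption.

```python
import subprocess
import sys


def build_deco_demo_cmd(img_src, out_dir, model_path=None,
                        mesh_colour=None, annot_colour=None):
    """Assemble the argument list for inference.py (flag names taken from the README)."""
    cmd = [sys.executable, "inference.py", "--img_src", img_src, "--out_dir", out_dir]
    optional = {
        "--model_path": model_path,
        "--mesh_colour": mesh_colour,
        "--annot_colour": annot_colour,
    }
    for flag, value in optional.items():
        if value is None:
            continue  # keep whatever default inference.py defines
        cmd.append(flag)
        if isinstance(value, (list, tuple)):
            cmd.extend(str(v) for v in value)  # assumption: one token per colour channel
        else:
            cmd.append(str(value))
    return cmd


def run_deco_demo(cmd):
    """Run the demo from the repository root; raises CalledProcessError on failure."""
    subprocess.run(cmd, check=True)


if __name__ == "__main__":
    print(" ".join(build_deco_demo_cmd("example_images", "demo_out")))
```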

## Training and Evaluation

We release 3 versions of the DECO model:
<ol>
<li> DECO-HRNet (<em> Best performing model </em>) </li>
<li> DECO-HRNet w/o context branches </li>
<li> DECO-Swin </li>
</ol>

All the checkpoints are downloaded to ```checkpoints```.
Please note, however, that versions 2 and 3 were trained solely on the RICH dataset. <br>
We recommend using the first DECO version.

The dataset npz files are downloaded to ```datasets/Release_Datasets```. Please download the actual DAMON data and place it in ```datasets```, following the instructions given.

### Evaluation
To run evaluation on the DAMON dataset, run the following command:

```bash
python tester.py --cfg configs/cfg_test.yml
```

### Training
The provided config (```cfg_train.yml```) trains and evaluates on all three datasets: DAMON, RICH and PROX. To change this, edit the values of the keys ```TRAINING.DATASETS``` and ```VALIDATION.DATASETS``` in the config (and adjust ```TRAINING.DATASET_MIX_PDF``` accordingly). <br>
By default, the best checkpoint is stored in ```checkpoints/Other_Checkpoints``` (see ```TRAINING.BEST_MODEL_PATH```).
Run the following command to start training the DECO model:

```bash
python train.py --cfg configs/cfg_train.yml
```
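
`TRAINING.DATASET_MIX_PDF` in `cfg_train.yml` pairs each entry of `TRAINING.DATASETS` with a mixing weight, stored as strings (`['0.4', '0.3', '0.3']`). The sketch below shows one way such a PDF can drive sampling: it picks the source dataset of each training sample with those probabilities. It is an illustration under that assumption and is not the code in `data/mixed_dataset.py`. The helper names are hypothetical. `require_unit_sum` can be switched off because the config comment allows weights that do not sum to 1.0.

```python
import random


def parse_mix_pdf(datasets, mix_pdf, require_unit_sum=True, tol=1e-6):
    """Pair TRAINING.DATASETS with float weights parsed from TRAINING.DATASET_MIX_PDF."""
    if len(datasets) != len(mix_pdf):
        raise ValueError("DATASETS and DATASET_MIX_PDF must have the same length")
    weights = [float(p) for p in mix_pdf]  # the config stores the values as strings
    if any(w < 0 for w in weights):
        raise ValueError("mixing weights must be non-negative")
    total = sum(weights)
    if require_unit_sum and abs(total - 1.0) > tol:
        raise ValueError(f"DATASET_MIX_PDF sums to {total:.3f}, expected 1.0")
    return dict(zip(datasets, weights))


def sample_sources(pdf, n, seed=0):
    """Pick the source dataset for each of n training samples according to the weights."""
    rng = random.Random(seed)
    names = list(pdf)
    return rng.choices(names, weights=[pdf[name] for name in names], k=n)


if __name__ == "__main__":
    pdf = parse_mix_pdf(['damon', 'rich', 'prox'], ['0.4', '0.3', '0.3'])
    draws = sample_sources(pdf, 10_000)
    for name in pdf:
        print(f"{name}: {draws.count(name) / len(draws):.3f}")
```

`random.choices` normalizes the weights itself, so unnormalized weights still work when the unit-sum check is disabled.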

### Training on custom datasets

To train on other datasets, please follow these steps:
1. Create an npz file for the dataset, following the structure of the datasets in ```datasets/Release_Datasets``` with the corresponding keys and values.
2. Create scene segmentation maps, if not available. We used [Mask2Former](https://github.com/facebookresearch/Mask2Former) in our work.
3. To create the part segmentation maps, refer to this [sample script](https://github.com/sha2nkt/deco/blob/main/scripts/datascripts/get_part_seg_mask.py).
4. Add the dataset name(s) to ```train.py``` ([these lines](https://github.com/sha2nkt/deco/blob/d5233ecfad1f51b71a50a78c0751420067e82c02/train.py#L83)), ```tester.py``` ([these lines](https://github.com/sha2nkt/deco/blob/d5233ecfad1f51b71a50a78c0751420067e82c02/tester.py#L51)) and ```data/mixed_dataset.py``` ([these lines](https://github.com/sha2nkt/deco/blob/d5233ecfad1f51b71a50a78c0751420067e82c02/data/mixed_dataset.py#L17)), according to the body model being used (SMPL/SMPL-X).
5. Add the path(s) to the dataset npz(s) to ```common/constants.py``` ([these lines](https://github.com/sha2nkt/deco/blob/d5233ecfad1f51b71a50a78c0751420067e82c02/common/constants.py#L19)).
6. Finally, change ```TRAINING.DATASETS``` and ```VALIDATION.DATASETS``` in the config file and you're good to go!
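
For step 1, a quick way to learn the expected keys is to inspect one of the released npz files and then compare your own file against it. A minimal NumPy sketch follows. `npz_schema` and `compare_to_reference` are hypothetical helpers. The reference path is the DAMON training file listed in `common/constants.py`. Passing `allow_pickle=True` is an assumption in case some keys hold object arrays; only use it on files you trust.

```python
import os

import numpy as np


def npz_schema(path):
    """Return {key: (shape, dtype)} for every array stored in an .npz file."""
    # allow_pickle=True in case some fields are object arrays; only use on trusted files.
    with np.load(path, allow_pickle=True) as data:
        return {key: (data[key].shape, str(data[key].dtype)) for key in data.files}


def compare_to_reference(custom_path, reference_path):
    """Return (keys missing from the custom npz, keys not present in the reference)."""
    custom = set(npz_schema(custom_path))
    reference = set(npz_schema(reference_path))
    return sorted(reference - custom), sorted(custom - reference)


if __name__ == "__main__":
    ref = "datasets/Release_Datasets/damon/hot_dca_trainval.npz"  # from common/constants.py
    if os.path.exists(ref):
        for key, (shape, dtype) in npz_schema(ref).items():
            print(f"{key:30s} {str(shape):20s} {dtype}")
    else:
        print(f"Reference file not found: {ref}")
```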

## Citing
If you find this code useful for your research, please consider citing the following paper:

```bibtex
@InProceedings{Tripathi_2023_ICCV,
  author = {Tripathi, Shashank and Chatterjee, Agniv and Passy, Jean-Claude and Yi, Hongwei and Tzionas, Dimitrios and Black, Michael J.},
  title = {DECO: Dense Estimation of 3D Human-Scene Contact In The Wild},
  booktitle = {Proceedings of the IEEE/CVF International Conference on Computer Vision (ICCV)},
  month = {October},
  year = {2023},
  pages = {8001-8013}
}
```

### License

See [LICENSE](LICENSE).

### Acknowledgments

We sincerely thank Alpar Cseke for his contributions to DAMON data collection and PHOSA evaluations, Sai K. Dwivedi for facilitating PROX downstream experiments, Xianghui Xie for his generous help with CHORE evaluations, Lea Muller for her help in initiating the contact annotation tool, Chun-Hao P. Huang for RICH discussions and Yixin Chen for details about the HOT paper. We are grateful to Mengqin Xue and Zhenyu Lou for their collaboration in BEHAVE evaluations, Joachim Tesch and Nikos Athanasiou for insightful visualization advice, and Tsvetelina Alexiadis for valuable data collection guidance. Their invaluable contributions enriched this research significantly. We also thank Benjamin Pellkofer for help with the website and IT support. This work was funded by the International Max Planck Research School for Intelligent Systems (IMPRS-IS).

### Contact

For technical questions, please create an issue. For other questions, please contact `deco@tue.mpg.de`.

For commercial licensing, please contact `ps-licensing@tue.mpg.de`.

app.py

ADDED

@@ -0,0 +1,7 @@

import gradio as gr

def greet(name):
    return "Hello " + name + "!!"

iface = gr.Interface(fn=greet, inputs="text", outputs="text")
iface.launch()

assets/overview.png

ADDED

assets/teaser.png

ADDED

common/constants.py

ADDED

@@ -0,0 +1,35 @@

from os.path import join

DIST_MATRIX_PATH = 'data/smpl/smpl_neutral_geodesic_dist.npy'
SMPL_MEAN_PARAMS = 'data/smpl_mean_params.npz'
SMPL_MODEL_DIR = 'data/smpl/'
SMPLX_MODEL_DIR = 'data/smplx/'

N_PARTS = 24

# Mean and standard deviation for normalizing input image
IMG_NORM_MEAN = [0.485, 0.456, 0.406]
IMG_NORM_STD = [0.229, 0.224, 0.225]

# Output folder to save test/train npz files
DATASET_NPZ_PATH = 'datasets/Release_Datasets'
CONTACT_MAPPING_PATH = 'data/conversions'

# Path to test/train npz files
DATASET_FILES = {
    'train': {
        'damon': join(DATASET_NPZ_PATH, 'damon/hot_dca_trainval.npz'),
        'rich': join(DATASET_NPZ_PATH, 'rich/rich_train_smplx_cropped_bmp.npz'),
        'prox': join(DATASET_NPZ_PATH, 'prox/prox_train_smplx_ds4.npz'),
    },
    'val': {
        'damon': join(DATASET_NPZ_PATH, 'damon/hot_dca_test.npz'),
        'rich': join(DATASET_NPZ_PATH, 'rich/rich_test_smplx_cropped_bmp.npz'),
        'prox': join(DATASET_NPZ_PATH, 'prox/prox_val_smplx_ds4.npz'),
    },
    'test': {
        'damon': join(DATASET_NPZ_PATH, 'damon/hot_dca_test.npz'),
        'rich': join(DATASET_NPZ_PATH, 'rich/rich_test_smplx_cropped_bmp.npz'),
        'prox': join(DATASET_NPZ_PATH, 'prox/prox_val_smplx_ds4.npz'),
    },
}
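
`IMG_NORM_MEAN` and `IMG_NORM_STD` are the standard ImageNet per-channel RGB statistics. The sketch below shows the usual way they are applied. It assumes pixel values are first scaled from [0, 255] to [0, 1] and the result is laid out channel-first for PyTorch. The DECO data loaders may do this differently (for example through torchvision transforms), and `normalize_image` / `denormalize_image` are hypothetical names.

```python
import numpy as np

# Values copied from common/constants.py.
IMG_NORM_MEAN = [0.485, 0.456, 0.406]
IMG_NORM_STD = [0.229, 0.224, 0.225]

_MEAN = np.array(IMG_NORM_MEAN, dtype=np.float32)
_STD = np.array(IMG_NORM_STD, dtype=np.float32)


def normalize_image(img_uint8):
    """Map an HxWx3 uint8 RGB image to a float32, per-channel normalized 3xHxW array."""
    img = img_uint8.astype(np.float32) / 255.0  # scale to [0, 1]
    img = (img - _MEAN) / _STD                  # broadcast over the channel axis
    return np.transpose(img, (2, 0, 1))         # HWC -> CHW


def denormalize_image(img_chw):
    """Invert normalize_image, e.g. to visualize network inputs."""
    img = np.transpose(img_chw, (1, 2, 0)) * _STD + _MEAN
    return np.clip(np.rint(img * 255.0), 0, 255).astype(np.uint8)
```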

common/renderer_pyrd.py

ADDED

@@ -0,0 +1,112 @@

# Copyright (C) 2022. Huawei Technologies Co., Ltd. All rights reserved.

# This program is free software; you can redistribute it and/or modify it
# under the terms of the MIT license.

# This program is distributed in the hope that it will be useful, but WITHOUT ANY
# WARRANTY; without even the implied warranty of MERCHANTABILITY or FITNESS FOR A
# PARTICULAR PURPOSE. See the MIT License for more details.

import os
import trimesh
import pyrender
import numpy as np
import colorsys
import cv2


class Renderer(object):

    def __init__(self, focal_length=600, img_w=512, img_h=512, faces=None,
                 same_mesh_color=False):
        os.environ['PYOPENGL_PLATFORM'] = 'egl'
        self.renderer = pyrender.OffscreenRenderer(viewport_width=img_w,
                                                   viewport_height=img_h,
                                                   point_size=1.0)
        self.camera_center = [img_w // 2, img_h // 2]
        self.focal_length = focal_length
        self.faces = faces
        self.same_mesh_color = same_mesh_color

    def render_front_view(self, verts, bg_img_rgb=None, bg_color=(0, 0, 0, 0), vertex_colors=None, render_part_seg=False, part_label_bins=None):
        # Create a scene for each image and render all meshes
        scene = pyrender.Scene(bg_color=bg_color, ambient_light=np.ones(3) * (1 if render_part_seg else 0))
        # Create camera. Camera will always be at [0,0,0]
        camera = pyrender.camera.IntrinsicsCamera(fx=self.focal_length, fy=self.focal_length,
                                                  cx=self.camera_center[0], cy=self.camera_center[1])
        scene.add(camera, pose=np.eye(4))

        # Create light source
        if not render_part_seg:
            light = pyrender.DirectionalLight(color=[1.0, 1.0, 1.0], intensity=3.0)
            # for DirectionalLight, only rotation matters
            light_pose = trimesh.transformations.rotation_matrix(np.radians(-45), [1, 0, 0])
            scene.add(light, pose=light_pose)
            light_pose = trimesh.transformations.rotation_matrix(np.radians(45), [0, 1, 0])
            scene.add(light, pose=light_pose)

        # Rotate 180 degrees about the x-axis (this negates y and z) to match
        # pyrender's OpenGL camera convention
        rot = trimesh.transformations.rotation_matrix(np.radians(180), [1, 0, 0])
        # multiple people
        num_people = len(verts)

        # for every person in the scene
        for n in range(num_people):
            mesh = trimesh.Trimesh(verts[n], self.faces, process=False)
            mesh.apply_transform(rot)
            if self.same_mesh_color:
                mesh_color = colorsys.hsv_to_rgb(0.6, 0.5, 1.0)
            else:
                mesh_color = colorsys.hsv_to_rgb(float(n) / num_people, 0.5, 1.0)
            material = pyrender.MetallicRoughnessMaterial(
                metallicFactor=1.0,
                alphaMode='OPAQUE',
                baseColorFactor=mesh_color)

            if vertex_colors is not None:
                # color individual vertices based on part labels
                mesh.visual.vertex_colors = vertex_colors
            mesh = pyrender.Mesh.from_trimesh(mesh, material=material, wireframe=False)
            scene.add(mesh, 'mesh')

        # Alpha channel was not working previously, need to check again
        # Until this is fixed use hack with depth image to get the opacity
        color_rgba, depth_map = self.renderer.render(scene, flags=pyrender.RenderFlags.RGBA)
        color_rgb = color_rgba[:, :, :3]

        if render_part_seg:
            body_parts = color_rgb.copy()
            # make single channel
            body_parts = body_parts.max(-1)  # reduce to single channel
            # convert pixel value to bucket indices
            # body_parts = torch.bucketize(body_parts, self.part_label_bins, right=True)
            body_parts = np.digitize(body_parts, part_label_bins, right=True)
            # np.digitize puts both the background and the first part (hip, label 0) in bucket 0.
            # Shift every label by +1 so that parts go from 1 to 24, then use the depth mask
            # to reset background pixels to 0.
            body_parts = body_parts + 1
            mask = depth_map > 0
            body_parts = body_parts * mask
            return body_parts, color_rgb

        if bg_img_rgb is None:
            return color_rgb
        else:
            mask = depth_map > 0
            bg_img_rgb[mask] = color_rgb[mask]
            return bg_img_rgb

    def render_side_view(self, verts):
        centroid = verts.mean(axis=(0, 1))  # n*6890*3 -> 3
        # make the centroid at the image center (the X and Y coordinates are zeros)
        centroid[:2] = 0
        aroundy = cv2.Rodrigues(np.array([0, np.radians(90.), 0]))[0][np.newaxis, ...]  # 1*3*3
        pred_vert_arr_side = np.matmul((verts - centroid), aroundy) + centroid
        side_view = self.render_front_view(pred_vert_arr_side)
        return side_view

    def delete(self):
        """
        Need to delete before creating the renderer next time
        """
        self.renderer.delete()
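
The `render_part_seg` branch of `render_front_view` turns a flat-shaded render into per-pixel part labels in three steps: `np.digitize(..., right=True)`, a `+1` shift and a depth mask. The sketch below reproduces only that post-processing on plain arrays, so it can be checked without pyrender or a GPU. The part-to-gray-level encoding in `PART_LEVELS` is hypothetical, because the real `part_label_bins` are built outside this file. The helper name `part_labels_from_render` is also hypothetical.

```python
import numpy as np

N_PARTS = 24  # from common/constants.py


def part_labels_from_render(color_rgb, depth_map, part_label_bins):
    """Post-process a flat-shaded part render into a label map (0 = background, 1..N_PARTS = parts).

    Mirrors the render_part_seg branch of Renderer.render_front_view.
    """
    gray = color_rgb.max(-1)                                  # reduce RGB to one channel
    labels = np.digitize(gray, part_label_bins, right=True)  # bins[i-1] < x <= bins[i] -> i
    labels = labels + 1                                       # part 0 shared bucket 0 with background
    return labels * (depth_map > 0)                           # zero out pixels with no geometry


# Hypothetical encoding: part p is rendered with the gray level PART_LEVELS[p].
PART_LEVELS = np.linspace(0, 255, N_PARTS).round().astype(np.uint8)
```

Because `right=True` assigns every value in `(bins[i-1], bins[i]]` to bucket `i`, a pixel rendered slightly darker than its part's level still receives that part's label.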

configs/cfg_test.yml

ADDED

@@ -0,0 +1,28 @@

EXP_NAME: 'damon_hrnet_testing'
PROJECT_NAME: 'DECO_DAMON_Testing'
OUTPUT_DIR: 'deco_results'
CONDOR_DIR: ''
DATASET:
  BATCH_SIZE: 16
  NUM_WORKERS: 4
  NORMALIZE_IMAGES: [True]
OPTIMIZER:
  TYPE: 'adam'
  LR: [5e-5]
  NUM_UPDATE_LR: 3
TRAINING:
  ENCODER: 'hrnet'
  CONTEXT: [True]
  NUM_EPOCHS: 1
  NUM_EARLY_STOP: 10
  SUMMARY_STEPS: 5
  CHECKPOINT_EPOCHS: 5
  DATASETS: ['damon']
  DATASET_MIX_PDF: ['1.']
  DATASET_ROOT_PATH: ''
  BEST_MODEL_PATH: './checkpoints/Release_Checkpoint/deco_best.pth'
  PAL_LOSS_WEIGHTS: 0.0
VALIDATION:
  SUMMARY_STEPS: 1000
  DATASETS: ['damon']
  MAIN_DATASET: 'damon'

configs/cfg_train.yml

ADDED

@@ -0,0 +1,29 @@

EXP_NAME: 'demo_train'
PROJECT_NAME: 'DECO_demo_training'
OUTPUT_DIR: 'deco_results'
CONDOR_DIR: ''
DATASET:
  BATCH_SIZE: 4
  NUM_WORKERS: 8
  NORMALIZE_IMAGES: [True]
OPTIMIZER:
  TYPE: 'adam'
  LR: [1e-5]
  NUM_UPDATE_LR: 3
TRAINING:
  ENCODER: 'hrnet'
  CONTEXT: [True]
  NUM_EPOCHS: 100
  NUM_EARLY_STOP: 10
  SUMMARY_STEPS: 5
  CHECKPOINT_EPOCHS: 5
  DATASETS: ['damon', 'rich', 'prox']
  DATASET_MIX_PDF: ['0.4', '0.3', '0.3'] # sampling probability per dataset; must sum to 1.0 (MixedDataset asserts this)
  DATASET_ROOT_PATH: ''
  BEST_MODEL_PATH: './checkpoints/Other_Checkpoints/demo_train.pth'
  LOSS_WEIGHTS: 1.
  PAL_LOSS_WEIGHTS: 0.01
VALIDATION:
  SUMMARY_STEPS: 5
  DATASETS: ['damon', 'rich', 'prox']
  MAIN_DATASET: 'damon'
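`TRAINING.DATASETS` and `TRAINING.DATASET_MIX_PDF` must agree in length, and the weights (stored as strings) must sum to 1.0. A sketch of a pre-flight check follows. It assumes the TRAINING section has already been parsed into a plain dict. `TRAINING_CFG`, `MODEL_TYPE` and `check_training_mix` are hypothetical names, and the dataset-to-body-model table copies the dispatch in `data/mixed_dataset.py`.

```python
import math

# Hypothetical in-memory copy of the TRAINING section of configs/cfg_train.yml;
# a real loader would read these values from the YAML file.
TRAINING_CFG = {
    "DATASETS": ["damon", "rich", "prox"],
    "DATASET_MIX_PDF": ["0.4", "0.3", "0.3"],
}

# Body model per dataset, following the dispatch in data/mixed_dataset.py
MODEL_TYPE = {"damon": "smpl", "rich": "smplx", "prox": "smplx"}


def check_training_mix(cfg: dict) -> dict:
    """Return {dataset: sampling probability}, raising ValueError on an invalid mix."""
    names = cfg["DATASETS"]
    weights = [float(w) for w in cfg["DATASET_MIX_PDF"]]  # entries are stored as strings
    if len(weights) != len(names):
        raise ValueError("DATASET_MIX_PDF needs one entry per dataset in DATASETS")
    unknown = [n for n in names if n not in MODEL_TYPE]
    if unknown:
        raise ValueError(f"unsupported datasets: {unknown}")
    # tolerance instead of ==, since float sums can carry rounding error
    if not math.isclose(sum(weights), 1.0, abs_tol=1e-9):
        raise ValueError(f"DATASET_MIX_PDF sums to {sum(weights)}, expected 1.0")
    return dict(zip(names, weights))


print(check_training_mix(TRAINING_CFG))
```

Running a check like this before launching a job surfaces a mismatched mix immediately. Otherwise the error would only appear once the datasets have been loaded.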

data/base_dataset.py

ADDED

@@ -0,0 +1,164 @@

import torch
import cv2
import numpy as np
from torch.utils.data import Dataset
from torchvision.transforms import Normalize
from common import constants


def mask_split(img, num_parts):
    """Convert an (H, W) or (H, W, C) label image into a (num_parts, H, W) one-hot mask."""
    if not len(img.shape) == 2:
        img = img[:, :, 0]
    mask = np.zeros((img.shape[0], img.shape[1], num_parts))
    for i in np.unique(img):
        mask[:, :, i] = np.where(img == i, 1., 0.)
    return np.transpose(mask, (2, 0, 1))


class BaseDataset(Dataset):

    def __init__(self, dataset, mode, model_type='smpl', normalize=False):
        self.dataset = dataset
        self.mode = mode

        print(f'Loading dataset: {constants.DATASET_FILES[mode][dataset]} for mode: {mode}')

        self.data = np.load(constants.DATASET_FILES[mode][dataset], allow_pickle=True)

        self.images = self.data['imgname']

        # get 3d contact labels, if available
        try:
            self.contact_labels_3d = self.data['contact_label']
            # has_contact_3d[i] is 1 if the contact labels of sample i are non-empty and 0 otherwise
            self.has_contact_3d = np.array([1 if len(x) > 0 else 0 for x in self.contact_labels_3d])
        except KeyError:
            self.has_contact_3d = np.zeros(len(self.images))

        # get 2d polygon contact labels, if available
        try:
            self.polygon_contacts_2d = self.data['polygon_2d_contact']
            self.has_polygon_contact_2d = np.ones(len(self.images))
        except KeyError:
            self.has_polygon_contact_2d = np.zeros(len(self.images))

        # Get camera parameters - only intrinsics for now
        try:
            self.cam_k = self.data['cam_k']
        except KeyError:
            self.cam_k = np.zeros((len(self.images), 3, 3))

        self.sem_masks = self.data['scene_seg']
        self.part_masks = self.data['part_seg']

        # Get gt SMPL parameters, if available
        try:
            self.pose = self.data['pose'].astype(float)
            self.betas = self.data['shape'].astype(float)
            self.transl = self.data['transl'].astype(float)
            if 'has_smpl' in self.data:
                self.has_smpl = self.data['has_smpl']
            else:
                self.has_smpl = np.ones(len(self.images))
            self.is_smplx = np.ones(len(self.images)) if model_type == 'smplx' else np.zeros(len(self.images))
        except KeyError:
            self.has_smpl = np.zeros(len(self.images))
            self.is_smplx = np.zeros(len(self.images))

        if model_type == 'smpl':
            self.n_vertices = 6890
        elif model_type == 'smplx':
            self.n_vertices = 10475
        else:
            raise NotImplementedError

        self.normalize = normalize
        self.normalize_img = Normalize(mean=constants.IMG_NORM_MEAN, std=constants.IMG_NORM_STD)

    def __getitem__(self, index):
        item = {}

        # Load image
        img_path = self.images[index]
        try:
            img = cv2.imread(img_path)
            img_h, img_w, _ = img.shape
            # the third positional argument of cv2.resize is `dst`, so the
            # interpolation flag must be passed by keyword to take effect
            img = cv2.resize(img, (256, 256), interpolation=cv2.INTER_CUBIC)
            img = img.transpose(2, 0, 1) / 255.0
        except Exception:
            print('Img: ', img_path)
            raise

        img_scale_factor = np.array([256 / img_w, 256 / img_h])

        # Get SMPL parameters, if available
        if self.has_smpl[index]:
            pose = self.pose[index].copy()
            betas = self.betas[index].copy()
            transl = self.transl[index].copy()
        else:
            pose = np.zeros(72)
            betas = np.zeros(10)
            transl = np.zeros(3)

        # Load vertex_contact
        if self.has_contact_3d[index]:
            contact_label_3d = self.contact_labels_3d[index]
        else:
            contact_label_3d = np.zeros(self.n_vertices)

        sem_mask_path = self.sem_masks[index]
        try:
            sem_mask = cv2.imread(sem_mask_path)
            # nearest-neighbour resizing keeps the integer class labels intact
            sem_mask = cv2.resize(sem_mask, (256, 256), interpolation=cv2.INTER_NEAREST)
            sem_mask = mask_split(sem_mask, 133)
        except Exception:
            print('Scene seg: ', sem_mask_path)
            raise

        try:
            part_mask_path = self.part_masks[index]
            part_mask = cv2.imread(part_mask_path)
            part_mask = cv2.resize(part_mask, (256, 256), interpolation=cv2.INTER_NEAREST)
            part_mask = mask_split(part_mask, 26)
        except Exception:
            print('Part seg: ', part_mask_path)
            raise

        try:
            if self.has_polygon_contact_2d[index]:
                polygon_contact_2d_path = self.polygon_contacts_2d[index]
                polygon_contact_2d = cv2.imread(polygon_contact_2d_path)
                polygon_contact_2d = cv2.resize(polygon_contact_2d, (256, 256), interpolation=cv2.INTER_NEAREST)
                # binarize the 2D polygon contact mask
                polygon_contact_2d = np.where(polygon_contact_2d > 0, 1, 0)
            else:
                polygon_contact_2d = np.zeros((256, 256, 3))
        except Exception:
            print('2D polygon contact: ', polygon_contact_2d_path)
            raise

        if self.normalize:
            img = torch.tensor(img, dtype=torch.float32)
            item['img'] = self.normalize_img(img)
        else:
            item['img'] = torch.tensor(img, dtype=torch.float32)

        if self.is_smplx[index]:
            # Add 6 zeros to the end of the pose vector to match with smpl
            pose = np.concatenate((pose, np.zeros(6)))

        item['img_path'] = img_path
        item['pose'] = torch.tensor(pose, dtype=torch.float32)
        item['betas'] = torch.tensor(betas, dtype=torch.float32)
        item['transl'] = torch.tensor(transl, dtype=torch.float32)
        item['cam_k'] = self.cam_k[index]
        item['img_scale_factor'] = torch.tensor(img_scale_factor, dtype=torch.float32)
        item['contact_label_3d'] = torch.tensor(contact_label_3d, dtype=torch.float32)
        item['sem_mask'] = torch.tensor(sem_mask, dtype=torch.float32)
        item['part_mask'] = torch.tensor(part_mask, dtype=torch.float32)
        item['polygon_contact_2d'] = torch.tensor(polygon_contact_2d, dtype=torch.float32)

        item['has_smpl'] = self.has_smpl[index]
        item['is_smplx'] = self.is_smplx[index]
        item['has_contact_3d'] = self.has_contact_3d[index]
        item['has_polygon_contact_2d'] = self.has_polygon_contact_2d[index]

        return item

    def __len__(self):
        return len(self.images)
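`mask_split` turns a label image into one channel per class. The sketch below computes the same one-hot layout without the Python loop, by indexing an identity matrix with the label map. It assumes the labels are non-negative integers smaller than `num_classes`, and it adds an explicit bounds check. `one_hot_label_map` is a hypothetical name, not part of the repository.

```python
import numpy as np


def one_hot_label_map(labels: np.ndarray, num_classes: int) -> np.ndarray:
    """(H, W) or (H, W, C) integer label image -> (num_classes, H, W) float one-hot mask."""
    if labels.ndim == 3:
        labels = labels[:, :, 0]  # same channel choice as mask_split
    if labels.min() < 0 or labels.max() >= num_classes:
        raise ValueError("labels must lie in [0, num_classes)")
    eye = np.eye(num_classes, dtype=np.float64)
    # eye[labels] has shape (H, W, num_classes); move the class axis to the front
    return np.transpose(eye[labels], (2, 0, 1))


labels = np.array([[0, 2], [1, 2]], dtype=np.uint8)
print(one_hot_label_map(labels, 3)[2])
```

Every pixel gets exactly one active channel. If a label map had been resized with cubic or linear interpolation, blended values at class boundaries could land in the wrong channel. The bounds check makes such out-of-range values fail loudly.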

data/mixed_dataset.py

ADDED

@@ -0,0 +1,42 @@

"""
This file contains the definition of different heterogeneous datasets used for training
"""
import torch
import numpy as np

from .base_dataset import BaseDataset


class MixedDataset(torch.utils.data.Dataset):

    def __init__(self, ds_list, mode, dataset_mix_pdf, **kwargs):
        self.dataset_list = ds_list
        print('Training Dataset list: ', self.dataset_list)
        self.num_datasets = len(self.dataset_list)

        self.datasets = []
        for ds in self.dataset_list:
            if ds in ['rich', 'prox']:
                self.datasets.append(BaseDataset(ds, mode, model_type='smplx', **kwargs))
            elif ds in ['damon']:
                self.datasets.append(BaseDataset(ds, mode, model_type='smpl', **kwargs))
            else:
                raise ValueError(f'Dataset not supported: {ds}')

        # the epoch length is the size of the largest dataset; indices wrap around
        # smaller datasets via the modulo in __getitem__
        self.length = max([len(ds) for ds in self.datasets])

        # convert list of strings to list of floats
        self.partition = [float(i) for i in dataset_mix_pdf]
        # compare with a tolerance, since the float sum of the weights can carry rounding error
        assert np.isclose(sum(self.partition), 1.0), "Dataset Mix PDF must sum to 1.0"
        assert len(self.partition) == self.num_datasets, "Number of partitions must be equal to number of datasets"
        self.partition = np.array(self.partition).cumsum()

    def __getitem__(self, index):
        p = np.random.rand()
        for i in range(self.num_datasets):
            if p <= self.partition[i]:
                return self.datasets[i][index % len(self.datasets[i])]
        # rounding in the cumulative sum can leave partition[-1] slightly below p
        return self.datasets[-1][index % len(self.datasets[-1])]

    def __len__(self):
        return self.length
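`MixedDataset.__getitem__` chooses a dataset by drawing `p` uniformly from [0, 1). It then takes the first index whose cumulative weight satisfies `p <= partition[i]`. The sketch below expresses the same rule with `np.searchsorted(..., side="left")`, clamped to the last index, and checks empirically that the draw frequencies match the configured mix. The names `pick_dataset` and `empirical_mix` are hypothetical.

```python
import numpy as np


def pick_dataset(cum_pdf: np.ndarray, p: float) -> int:
    """Index i of the first cumulative bound with p <= cum_pdf[i], clamped to the last index."""
    i = int(np.searchsorted(cum_pdf, p, side="left"))
    return min(i, len(cum_pdf) - 1)


def empirical_mix(pdf, n_draws: int = 100_000, seed: int = 0) -> np.ndarray:
    """Draw n_draws uniform numbers and report how often each dataset would be picked."""
    cum_pdf = np.cumsum(np.asarray(pdf, dtype=float))
    draws = np.random.default_rng(seed).random(n_draws)  # uniform in [0, 1)
    idx = np.minimum(np.searchsorted(cum_pdf, draws, side="left"), len(cum_pdf) - 1)
    return np.bincount(idx, minlength=len(cum_pdf)) / n_draws


print(empirical_mix([0.4, 0.3, 0.3]))
```

With the cfg_train.yml mix of `['0.4', '0.3', '0.3']`, about 40% of samples come from DAMON and 30% each from RICH and PROX, regardless of how many images each dataset holds. The sample index only selects a position within the chosen dataset, via `index % len(dataset)`.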

data/preprocess/behave-30fps-error_frames.json

ADDED

@@ -0,0 +1,511 @@