diff --git a/.dockerignore b/.dockerignore

new file mode 100644

index 0000000000000000000000000000000000000000..d600b6c76dd93f7b2472160d42b2797cae50c8e5

--- /dev/null

+++ b/.dockerignore

@@ -0,0 +1,25 @@

+# Logs

+logs

+*.log

+npm-debug.log*

+yarn-debug.log*

+yarn-error.log*

+pnpm-debug.log*

+lerna-debug.log*

+

+node_modules

+dist

+dist-ssr

+*.local

+

+# Editor directories and files

+.vscode/*

+!.vscode/extensions.json

+.idea

+.DS_Store

+*.suo

+*.ntvs*

+*.njsproj

+*.sln

+*.sw?

+

diff --git a/.editorconfig b/.editorconfig

new file mode 100644

index 0000000000000000000000000000000000000000..a78447ebf932f1bb3a5b124b472bea8b3a86f80f

--- /dev/null

+++ b/.editorconfig

@@ -0,0 +1,7 @@

+[*]

+charset = utf-8

+insert_final_newline = true

+end_of_line = lf

+indent_style = space

+indent_size = 2

+max_line_length = 80

\ No newline at end of file

diff --git a/.env.example b/.env.example

new file mode 100644

index 0000000000000000000000000000000000000000..c603625379c2f3519c90d1ba67a08bee3d4f6c48

--- /dev/null

+++ b/.env.example

@@ -0,0 +1,32 @@

+# A comma-separated list of access keys. Example: `ACCESS_KEYS="ABC123,JUD71F,HUWE3"`. Leave blank for unrestricted access.

+ACCESS_KEYS=""

+

+# The timeout in hours for access key validation. Set to 0 to require validation on every page load.

+ACCESS_KEY_TIMEOUT_HOURS="24"

+

+# The default model ID for WebLLM with F16 shaders.

+WEBLLM_DEFAULT_F16_MODEL_ID="SmolLM2-360M-Instruct-q0f16-MLC"

+

+# The default model ID for WebLLM with F32 shaders.

+WEBLLM_DEFAULT_F32_MODEL_ID="SmolLM2-360M-Instruct-q0f32-MLC"

+

+# The default model ID for Wllama.

+WLLAMA_DEFAULT_MODEL_ID="smollm2-360m"

+

+# The base URL for the internal OpenAI compatible API. Example: `INTERNAL_OPENAI_COMPATIBLE_API_BASE_URL="https://api.openai.com/v1"`. Leave blank to disable internal OpenAI compatible API.

+INTERNAL_OPENAI_COMPATIBLE_API_BASE_URL=""

+

+# The access key for the internal OpenAI compatible API.

+INTERNAL_OPENAI_COMPATIBLE_API_KEY=""

+

+# The model for the internal OpenAI compatible API.

+INTERNAL_OPENAI_COMPATIBLE_API_MODEL=""

+

+# The name of the internal OpenAI compatible API, displayed in the UI.

+INTERNAL_OPENAI_COMPATIBLE_API_NAME="Internal API"

+

+# The type of inference to use by default. The possible values are:

+# "browser" -> In the browser (Private)

+# "openai" -> Remote Server (API)

+# "internal" -> $INTERNAL_OPENAI_COMPATIBLE_API_NAME

+DEFAULT_INFERENCE_TYPE="browser"

diff --git a/.github/workflows/ai-pr-summarizer/model.txt b/.github/workflows/ai-pr-summarizer/model.txt

new file mode 100644

index 0000000000000000000000000000000000000000..f020f00858aaaf640145b8ce7f70bac61649ede3

--- /dev/null

+++ b/.github/workflows/ai-pr-summarizer/model.txt

@@ -0,0 +1 @@

+qwen2.5-coder:7b-instruct-q8_0

diff --git a/.github/workflows/ai-pr-summarizer/ollama-version.txt b/.github/workflows/ai-pr-summarizer/ollama-version.txt

new file mode 100644

index 0000000000000000000000000000000000000000..0b69c00c5f5a0e553ff0bab7ae97f473d9facfe0

--- /dev/null

+++ b/.github/workflows/ai-pr-summarizer/ollama-version.txt

@@ -0,0 +1 @@

+0.3.14

diff --git a/.github/workflows/ai-pr-summarizer/prompt.txt b/.github/workflows/ai-pr-summarizer/prompt.txt

new file mode 100644

index 0000000000000000000000000000000000000000..f172dc1aac7a93ef6fd69ae51731d02a6de6a227

--- /dev/null

+++ b/.github/workflows/ai-pr-summarizer/prompt.txt

@@ -0,0 +1,29 @@

+You are an expert code reviewer analyzing git diff output. Provide a clear, actionable review summary focusing on what matters most to the development team:

+

+1. Changes Overview. For example:

+ - Key functionality modifications

+ - API/interface changes

+ - Data structure updates

+ - New dependencies

+

+2. Technical Impact. For example:

+ - Logic changes and their implications

+ - Performance considerations

+ - Security implications

+ - Breaking changes or migration requirements

+

+3. Quality Assessment. For example:

+ - Test coverage adequacy

+ - Error handling completeness

+ - Code complexity concerns

+ - Documentation updates needed

+

+4. Actionable Recommendations. For example:

+ - Critical issues that must be addressed

+ - Suggested improvements

+ - Potential refactoring opportunities

+

+5. Suggested Commit Message.

+ - Provide a one-sentence commit message summarizing the changes

+

+Here is the git diff output for review:

diff --git a/.github/workflows/on-pull-request-to-main.yml b/.github/workflows/on-pull-request-to-main.yml

new file mode 100644

index 0000000000000000000000000000000000000000..aed7fec3e98ee32b8e9218f2cf2710fa6233a03c

--- /dev/null

+++ b/.github/workflows/on-pull-request-to-main.yml

@@ -0,0 +1,29 @@

+name: On Pull Request To Main

+on:

+ pull_request:

+ types: [opened, synchronize, reopened]

+ branches: ["main"]

+jobs:

+ test-lint-ping:

+ uses: ./.github/workflows/reusable-test-lint-ping.yml

+ ai-pr-summarizer:

+ needs: [test-lint-ping]

+ runs-on: ubuntu-latest

+ name: AI PR Summarizer

+ permissions:

+ pull-requests: write

+ contents: read

+ steps:

+ - name: Checkout Repository

+ uses: actions/checkout@v4

+ - name: Summarize PR with AI

+ uses: behrouz-rad/ai-pr-summarizer@v1

+ with:

+ llm-model: 'qwen2.5-coder:7b-instruct-q8_0'

+ prompt-file: ./.github/workflows/ai-pr-summarizer/prompt.txt

+ models-file: ./.github/workflows/ai-pr-summarizer/model.txt

+ version-file: ./.github/workflows/ai-pr-summarizer/ollama-version.txt

+ context-window: 16384

+ upload-changes: false

+ fail-on-error: false

+ token: ${{ secrets.GITHUB_TOKEN }}

diff --git a/.github/workflows/on-push-to-main.yml b/.github/workflows/on-push-to-main.yml

new file mode 100644

index 0000000000000000000000000000000000000000..4ab001108ac59f57665437d3e5d9042474cff7ff

--- /dev/null

+++ b/.github/workflows/on-push-to-main.yml

@@ -0,0 +1,50 @@

+name: On Push To Master

+on:

+ push:

+ branches: ["master"]

+jobs:

+ test-lint-ping:

+ uses: ./.github/workflows/reusable-test-lint-ping.yml

+ build-and-push-image:

+ needs: [test-lint-ping]

+ name: Publish Docker image to Docker Hub

+ runs-on: ubuntu-latest

+ steps:

+ - name: Checkout repository

+ uses: actions/checkout@v4

+ - name: Login to DockerHub

+ if: github.ref == 'refs/heads/master'

+ uses: docker/login-action@v2

+ with:

+ username: jack20191124

+ password: ${{ secrets.DOCKERHUB_TOKEN }}

+ - name: Push image to DockerHub

+ if: github.ref == 'refs/heads/master'

+ uses: docker/build-push-action@v3

+ with:

+ context: .

+ push: true

+ tags: jack20191124/mini-search:latest

+ - name: Push image Description

+ if: github.ref == 'refs/heads/master'

+ uses: peter-evans/dockerhub-description@v4

+ with:

+ username: jack20191124

+ password: ${{ secrets.DOCKERHUB_TOKEN }}

+ repository: jack20191124/mini-search

+ readme-filepath: README.md

+ sync-to-hf:

+ needs: [test-lint-ping]

+ name: Sync to HuggingFace Spaces

+ runs-on: ubuntu-latest

+ steps:

+ - uses: actions/checkout@v4

+ with:

+ lfs: true

+ - uses: JacobLinCool/huggingface-sync@v1

+ with:

+ github: ${{ secrets.GITHUB_TOKEN }}

+ user: QubitPi

+ space: miniSearch

+ token: ${{ secrets.HF_TOKEN }}

+ configuration: "hf-space-config.yml"

diff --git a/.github/workflows/reusable-test-lint-ping.yml b/.github/workflows/reusable-test-lint-ping.yml

new file mode 100644

index 0000000000000000000000000000000000000000..63c8e7c09f4a8598702dd4a30cd4a920d770043d

--- /dev/null

+++ b/.github/workflows/reusable-test-lint-ping.yml

@@ -0,0 +1,25 @@

+on:

+ workflow_call:

+jobs:

+ check-code-quality:

+ name: Check Code Quality

+ runs-on: ubuntu-latest

+ steps:

+ - uses: actions/checkout@v4

+ - uses: actions/setup-node@v4

+ with:

+ node-version: 20

+ cache: "npm"

+ - run: npm ci --ignore-scripts

+ - run: npm test

+ - run: npm run lint

+ check-docker-container:

+ needs: [check-code-quality]

+ name: Check Docker Container

+ runs-on: ubuntu-latest

+ steps:

+ - uses: actions/checkout@v4

+ - run: docker compose -f docker-compose.production.yml up -d

+ - name: Check if main page is available

+ run: until curl -s -o /dev/null -w "%{http_code}" localhost:7860 | grep 200; do sleep 1; done

+ - run: docker compose -f docker-compose.production.yml down

diff --git a/.github/workflows/update-searxng-docker-image.yml b/.github/workflows/update-searxng-docker-image.yml

new file mode 100644

index 0000000000000000000000000000000000000000..50261a76e8453bc473fa6e487d81a45cebe7cd1a

--- /dev/null

+++ b/.github/workflows/update-searxng-docker-image.yml

@@ -0,0 +1,44 @@

+name: Update SearXNG Docker Image

+

+on:

+ schedule:

+ - cron: "0 14 * * *"

+ workflow_dispatch:

+

+permissions:

+ contents: write

+

+jobs:

+ update-searxng-image:

+ runs-on: ubuntu-latest

+ steps:

+ - name: Checkout code

+ uses: actions/checkout@v4

+ with:

+ token: ${{ secrets.GITHUB_TOKEN }}

+

+ - name: Get latest SearXNG image tag

+ id: get_latest_tag

+ run: |

+ LATEST_TAG=$(curl -s "https://hub.docker.com/v2/repositories/searxng/searxng/tags/?page_size=3&ordering=last_updated" | jq -r '.results[] | select(.name != "latest-build-cache" and .name != "latest") | .name' | head -n 1)

+ echo "LATEST_TAG=${LATEST_TAG}" >> $GITHUB_OUTPUT

+

+ - name: Update Dockerfile

+ run: |

+ sed -i 's|FROM searxng/searxng:.*|FROM searxng/searxng:${{ steps.get_latest_tag.outputs.LATEST_TAG }}|' Dockerfile

+

+ - name: Check for changes

+ id: git_status

+ run: |

+ git diff --exit-code || echo "changes=true" >> $GITHUB_OUTPUT

+

+ - name: Commit and push if changed

+ if: steps.git_status.outputs.changes == 'true'

+ run: |

+ git config --local user.email "github-actions[bot]@users.noreply.github.com"

+ git config --local user.name "github-actions[bot]"

+ git add Dockerfile

+ git commit -m "Update SearXNG Docker image to tag ${{ steps.get_latest_tag.outputs.LATEST_TAG }}"

+ git push

+ env:

+ GITHUB_TOKEN: ${{ secrets.GITHUB_TOKEN }}

diff --git a/.gitignore b/.gitignore

new file mode 100644

index 0000000000000000000000000000000000000000..932b6ac5f1f717d633b25b367df8518db38702a8

--- /dev/null

+++ b/.gitignore

@@ -0,0 +1,8 @@

+node_modules

+.DS_Store

+/client/dist

+/server/models

+.vscode

+/vite-build-stats.html

+.env

+.idea/

diff --git a/.npmrc b/.npmrc

new file mode 100644

index 0000000000000000000000000000000000000000..80bcbed90c4f2b3d895d5086dc775e1bd8b32b43

--- /dev/null

+++ b/.npmrc

@@ -0,0 +1 @@

+legacy-peer-deps = true

diff --git a/Dockerfile b/Dockerfile

new file mode 100644

index 0000000000000000000000000000000000000000..47c81b44ae5ded2a9951d658dfe4c50ccd2c0406

--- /dev/null

+++ b/Dockerfile

@@ -0,0 +1,82 @@

+# Use the SearXNG image as the base

+FROM searxng/searxng:2024.11.1-b07c0ae39

+

+# Set the default port to 7860 if not provided

+ENV PORT=7860

+

+# Expose the port specified by the PORT environment variable

+EXPOSE $PORT

+

+# Install necessary packages using Alpine's package manager

+RUN apk add --update \

+ nodejs \

+ npm \

+ git \

+ build-base \

+ cmake \

+ ccache

+

+# Set the SearXNG settings folder path

+ARG SEARXNG_SETTINGS_FOLDER=/etc/searxng

+

+# Modify SearXNG configuration:

+# 1. Change output format from HTML to JSON

+# 2. Remove user switching in the entrypoint script

+# 3. Create and set permissions for the settings folder

+RUN sed -i 's/- html/- json/' /usr/local/searxng/searx/settings.yml \

+ && sed -i 's/su-exec searxng:searxng //' /usr/local/searxng/dockerfiles/docker-entrypoint.sh \

+ && mkdir -p ${SEARXNG_SETTINGS_FOLDER} \

+ && chmod 777 ${SEARXNG_SETTINGS_FOLDER}

+

+# Set up user and directory structure

+ARG USERNAME=user

+ARG HOME_DIR=/home/${USERNAME}

+ARG APP_DIR=${HOME_DIR}/app

+

+# Create a non-root user and set up the application directory

+RUN adduser -D -u 1000 ${USERNAME} \

+ && mkdir -p ${APP_DIR} \

+ && chown -R ${USERNAME}:${USERNAME} ${HOME_DIR}

+

+# Switch to the non-root user

+USER ${USERNAME}

+

+# Set the working directory to the application directory

+WORKDIR ${APP_DIR}

+

+# Define environment variables that can be passed to the container during build.

+# This approach allows for dynamic configuration without relying on a `.env` file,

+# which might not be suitable for all deployment scenarios.

+ARG ACCESS_KEYS

+ARG ACCESS_KEY_TIMEOUT_HOURS

+ARG WEBLLM_DEFAULT_F16_MODEL_ID

+ARG WEBLLM_DEFAULT_F32_MODEL_ID

+ARG WLLAMA_DEFAULT_MODEL_ID

+ARG INTERNAL_OPENAI_COMPATIBLE_API_BASE_URL

+ARG INTERNAL_OPENAI_COMPATIBLE_API_KEY

+ARG INTERNAL_OPENAI_COMPATIBLE_API_MODEL

+ARG INTERNAL_OPENAI_COMPATIBLE_API_NAME

+ARG DEFAULT_INFERENCE_TYPE

+

+# Copy package.json, package-lock.json, and .npmrc files

+COPY --chown=${USERNAME}:${USERNAME} ./package.json ./package.json

+COPY --chown=${USERNAME}:${USERNAME} ./package-lock.json ./package-lock.json

+COPY --chown=${USERNAME}:${USERNAME} ./.npmrc ./.npmrc

+

+# Install Node.js dependencies

+RUN npm ci

+

+# Copy the rest of the application files

+COPY --chown=${USERNAME}:${USERNAME} . .

+

+# Configure Git to treat the app directory as safe

+RUN git config --global --add safe.directory ${APP_DIR}

+

+# Build the application

+RUN npm run build

+

+# Set the entrypoint to use a shell

+ENTRYPOINT [ "/bin/sh", "-c" ]

+

+# Run SearXNG in the background and start the Node.js application using PM2

+CMD [ "(/usr/local/searxng/dockerfiles/docker-entrypoint.sh -f > /dev/null 2>&1) & (npx pm2 start ecosystem.config.cjs && npx pm2 logs production-server)" ]

diff --git a/README.md b/README.md

new file mode 100644

index 0000000000000000000000000000000000000000..30243afdf4d88f374218e90b79a9675879ec5d0d

--- /dev/null

+++ b/README.md

@@ -0,0 +1,146 @@

+---

+title: MiniSearch

+emoji: 👌🔍

+colorFrom: yellow

+colorTo: yellow

+sdk: docker

+short_description: Minimalist web-searching app with browser-based AI assistant

+pinned: false

+custom_headers:

+ cross-origin-embedder-policy: require-corp

+ cross-origin-opener-policy: same-origin

+ cross-origin-resource-policy: cross-origin

+---

+

+[![Hugging Face space badge]][Hugging Face space URL]

+[![GitHub workflow status badge][GitHub workflow status badge]][GitHub workflow status URL]

+[![Hugging Face sync status badge]][Hugging Face sync status URL]

+[![Docker Hub][Docker Pulls Badge]][Docker Hub URL]

+[![Apache License Badge]][Apache License, Version 2.0]

+

+# MiniSearch

+

+A minimalist web-searching app with an AI assistant that runs directly from your browser.

+

+Live demo: https://huggingface.co/spaces/QubitPi/miniSearch

+

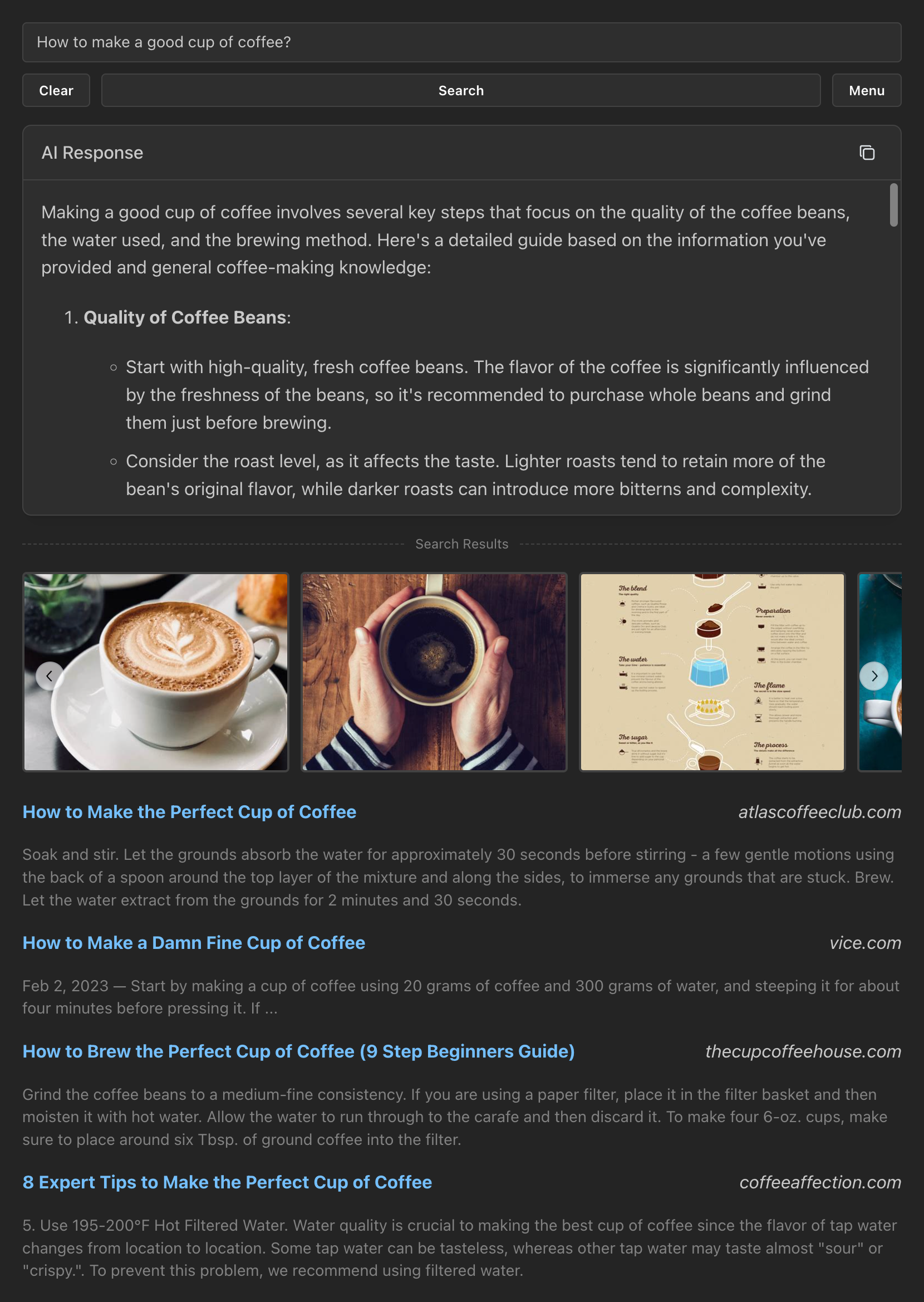

+## Screenshot

+

+

+

+## Features

+

+- **Privacy-focused**: [No tracking, no ads, no data collection](https://docs.searxng.org/own-instance.html#how-does-searxng-protect-privacy)

+- **Easy to use**: Minimalist yet intuitive interface for all users

+- **Cross-platform**: Models run inside the browser, both on desktop and mobile

+- **Integrated**: Search from the browser address bar by setting it as the default search engine

+- **Efficient**: Models are loaded and cached only when needed

+- **Customizable**: Tweakable settings for search results and text generation

+- **Open-source**: [The code is available for inspection and contribution at GitHub](https://github.com/QubitPi/MiniSearch)

+

+## Prerequisites

+

+- [Docker](https://docs.docker.com/get-docker/)

+

+## Getting started

+

+There are two ways to get started with MiniSearch. Pick one that suits you best.

+

+**Option 1** - Use [MiniSearch's Docker Image][Docker Hub URL] by running:

+

+```bash

+docker run -p 7860:7860 jack20191124/mini-search:main

+```

+

+**Option 2** - Build from source by [downloading the repository files](https://github.com/QubitPi/MiniSearch/archive/refs/heads/main.zip) and running:

+

+```bash

+docker compose -f docker-compose.production.yml up --build

+```

+

+Then, open http://localhost:7860 in your browser and start searching!

+

+## Frequently asked questions

+

+

+ How do I search via the browser's address bar?

+

+ You can set MiniSearch as your browser's address-bar search engine using the pattern http://localhost:7860/?q=%s, in which your search term replaces %s.

+

+

+

+

+ Can I use custom models via OpenAI-Compatible API?

+

+ Yes! For this, open the Menu and change the "AI Processing Location" to Remote server (API). Then configure the Base URL, and optionally set an API Key and a Model to use.

+

+

+

+

+ How do I restrict the access to my MiniSearch instance via password?

+

+ Create a .env file and set a value for ACCESS_KEYS. Then reset the MiniSearch docker container.

+

+

+ For example, if you to set the password to PepperoniPizza, then this is what you should add to your .env:

+ ACCESS_KEYS="PepperoniPizza"

+

+

+ You can find more examples in the .env.example file.

+

+

+

+

+ I want to serve MiniSearch to other users, allowing them to use my own OpenAI-Compatible API key, but without revealing it to them. Is it possible?

+

Yes! In MiniSearch, we call this text-generation feature "Internal OpenAI-Compatible API". To use this it:

+

+

Set up your OpenAI-Compatible API endpoint by configuring the following environment variables in your .env file:

+

+

INTERNAL_OPENAI_COMPATIBLE_API_BASE_URL: The base URL for your API

+

INTERNAL_OPENAI_COMPATIBLE_API_KEY: Your API access key

+

INTERNAL_OPENAI_COMPATIBLE_API_MODEL: The model to use

+

INTERNAL_OPENAI_COMPATIBLE_API_NAME: The name to display in the UI

+

+

+

Restart MiniSearch server.

+

In the MiniSearch menu, select the new option (named as per your INTERNAL_OPENAI_COMPATIBLE_API_NAME setting) from the "AI Processing Location" dropdown.

+

+

+

+

+ How can I contribute to the development of this tool?

+

Fork this repository and clone it. Then, start the development server by running the following command:

+

docker compose up

+

Make your changes, push them to your fork, and open a pull request! All contributions are welcome!

+

+

+

+ Why is MiniSearch built upon SearXNG's Docker Image and using a single image instead of composing it from multiple services?

+

There are a few reasons for this:

+

+

MiniSearch utilizes SearXNG as its meta-search engine.

+

Manual installation of SearXNG is not trivial, so we use the docker image they provide, which has everything set up.

+

SearXNG only provides a Docker Image based on Alpine Linux.

+

The user of the image needs to be customized in a specific way to run on HuggingFace Spaces, where MiniSearch's demo runs.

+

HuggingFace only accepts a single docker image. It doesn't run docker compose or multiple images, unfortunately.