---

title: MiniSearch
emoji: 👌🔍
colorFrom: yellow
colorTo: yellow
sdk: docker
short_description: Minimalist web-searching app with browser-based AI assistant
pinned: true
custom_headers:
  cross-origin-embedder-policy: require-corp
  cross-origin-opener-policy: same-origin
  cross-origin-resource-policy: cross-origin

---

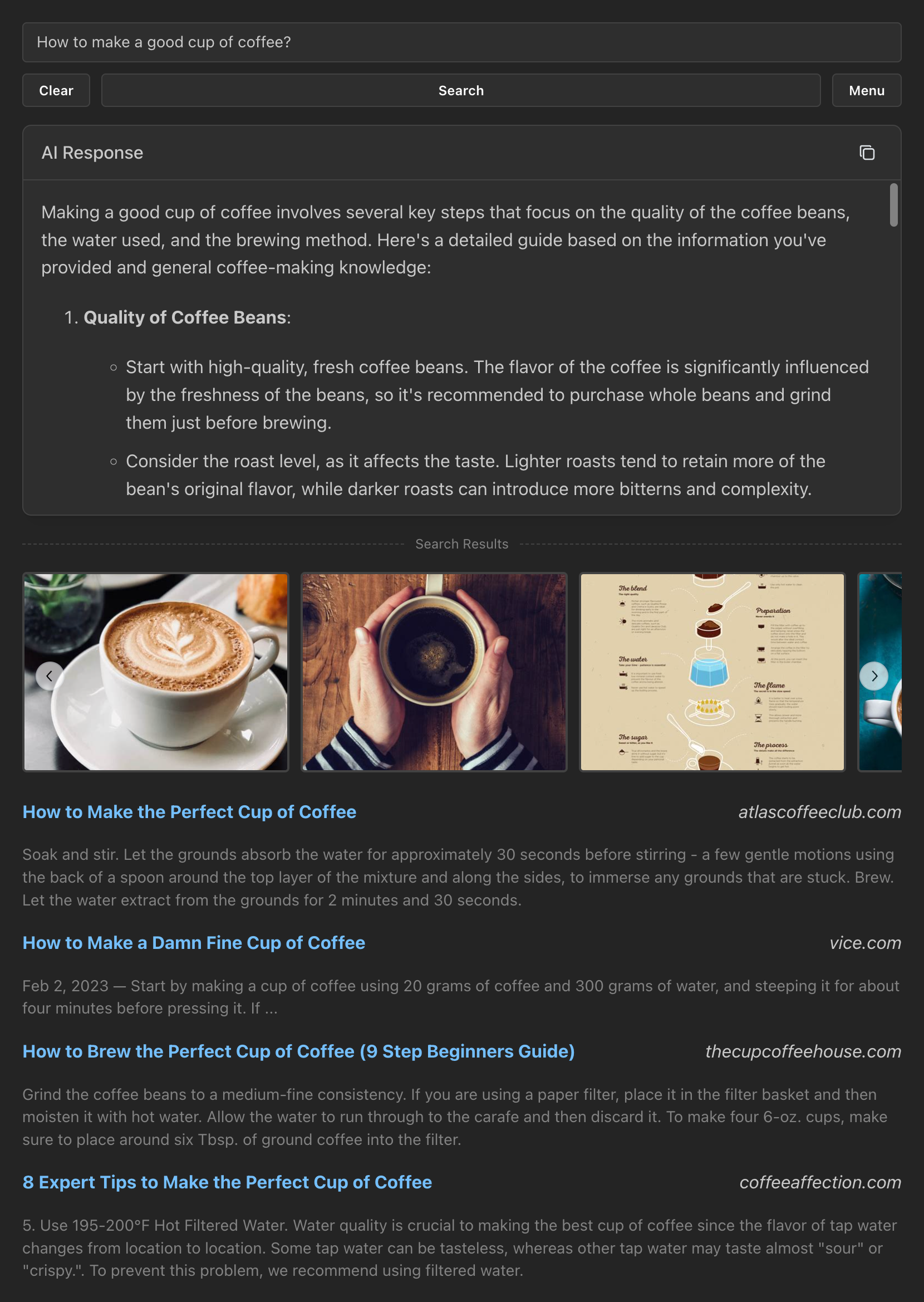

# MiniSearch

A minimalist web-searching app with an AI assistant that runs directly from your browser.

Live demo: https://felladrin-minisearch.hf.space

## Screenshot

## Features

- **Privacy-focused**: [No tracking, no ads, no data collection](https://docs.searxng.org/own-instance.html#how-does-searxng-protect-privacy)

- **Easy to use**: Minimalist yet intuitive interface for all users

- **Cross-platform**: Models run inside the browser, both on desktop and mobile

- **Integrated**: Search from the browser address bar by setting it as the default search engine

- **Efficient**: Models are loaded and cached only when needed

- **Customizable**: Tweakable settings for search results and text generation

- **Open-source**: [The code is available for inspection and contribution on GitHub](https://github.com/felladrin/MiniSearch)

## Prerequisites

- [Docker](https://docs.docker.com/get-docker/)

## Getting started

Here are the easiest ways to get started with MiniSearch. Pick the one that suits you best.

**Option 1** - Use [MiniSearch's Docker Image](https://github.com/felladrin/MiniSearch/pkgs/container/minisearch) by running the following in your terminal:

```bash
docker run -p 7860:7860 ghcr.io/felladrin/minisearch:main
```

**Option 2** - Add MiniSearch's Docker Image to your existing Docker Compose file:

```yaml
services:
  minisearch:
    image: ghcr.io/felladrin/minisearch:main
    ports:
      - "7860:7860"
```

**Option 3** - Build from source by [downloading the repository files](https://github.com/felladrin/MiniSearch/archive/refs/heads/main.zip) and running:

```bash
docker compose -f docker-compose.production.yml up --build
```

Once the container is running, open http://localhost:7860 in your browser and start searching!
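The container may need a few seconds before it starts answering. If you start it from a script, a small helper like the one below can poll the address until it responds. This is a sketch that assumes `curl` is installed; the function name `wait_for_minisearch` and its default timeout of roughly 60 seconds are illustrative choices, not part of MiniSearch.

```bash
#!/usr/bin/env bash
# Hypothetical helper (not part of MiniSearch): poll a URL about once per
# second until it answers with a successful HTTP status, or give up.
wait_for_minisearch() {
  local url="${1:-http://localhost:7860}"
  local timeout="${2:-60}"   # approximate seconds (one retry per second)
  local elapsed=0
  # -f fails on HTTP errors, -s silences progress and error output,
  # --max-time stops a single attempt from hanging indefinitely.
  until curl -fs --max-time 5 -o /dev/null "$url"; do
    if [ "$elapsed" -ge "$timeout" ]; then
      echo "MiniSearch did not respond at $url within ~${timeout}s" >&2
      return 1
    fi
    sleep 1
    elapsed=$((elapsed + 1))
  done
  echo "MiniSearch is up at $url"
}
```

For example, `wait_for_minisearch http://localhost:7860 120` waits up to about two minutes and returns a non-zero status if the instance never answers.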

## Frequently asked questions

**How do I search via the browser's address bar?**

You can set MiniSearch as your browser's address-bar search engine using the pattern `http://localhost:7860/?q=%s`, where `%s` is replaced by your search term.
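To build such a search URL from a script (for example, to start a search from the terminal), the term has to be percent-encoded before it takes the place of `%s`. The sketch below does this in plain Bash. The helper names `urlencode` and `search_url` and the `MINISEARCH_URL` variable are hypothetical, and the base address defaults to `http://localhost:7860` as an assumption.

```bash
#!/usr/bin/env bash
# Hypothetical helpers (not part of MiniSearch).

# Percent-encode a string, keeping only RFC 3986 unreserved characters as-is.
urlencode() {
  local LC_ALL=C   # iterate over bytes rather than multibyte characters
  local input="$1" output="" char hex i
  for (( i = 0; i < ${#input}; i++ )); do
    char="${input:i:1}"
    case "$char" in
      [a-zA-Z0-9.~_-]) output+="$char" ;;
      *)
        printf -v hex '%%%02X' "'$char"   # byte value written as %XX
        output+="$hex"
        ;;
    esac
  done
  printf '%s' "$output"
}

# Fill MiniSearch's "?q=%s" pattern with an encoded search term.
search_url() {
  local base="${MINISEARCH_URL:-http://localhost:7860}"
  printf '%s/?q=%s' "$base" "$(urlencode "$1")"
}
```

For example, `xdg-open "$(search_url 'privacy tools')"` opens that search in the default browser on most Linux desktops.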

**Can I use custom models via OpenAI-Compatible API?**

Yes! Open the menu and change **AI Processing Location** to **Remote server (API)**. Then configure the Base URL and, optionally, set an API Key and a Model to use.
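Before entering a Base URL in the menu, it can help to confirm that the server answers at all. Many OpenAI-compatible servers implement the `GET /models` listing from the OpenAI API, so a request against it makes a reasonable smoke test. This is a sketch under that assumption (some servers may not expose `/models`), and it also assumes `curl` is installed; `check_openai_endpoint` is a hypothetical name.

```bash
#!/usr/bin/env bash
# Hypothetical smoke test: list the models an OpenAI-compatible server offers.
# Assumes the server implements GET {base_url}/models, as the OpenAI API does.
check_openai_endpoint() {
  local base="${1%/}"   # strip one trailing slash, if present
  local key="${2:-}"    # optional API key
  local -a auth=()
  if [ -n "$key" ]; then
    auth=(-H "Authorization: Bearer $key")
  fi
  # -f makes curl return non-zero on HTTP errors such as 401 or 404.
  curl -fsS --max-time 10 "${auth[@]}" "$base/models"
}
```

For example, `check_openai_endpoint "https://api.example.com/v1" "$MY_API_KEY"` (placeholder URL and variable) prints the server's model list on success; an error usually points to a wrong Base URL or key.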

**How do I restrict access to my MiniSearch instance with a password?**

Create a `.env` file and set a value for `ACCESS_KEYS`. Then restart the MiniSearch Docker container.

For example, if you want to set the password to `PepperoniPizza`, add this to your `.env`:

`ACCESS_KEYS="PepperoniPizza"`

You can find more examples in the `.env.example` file.
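To script this step, the sketch below adds or updates a single `KEY="value"` line in `.env` without touching the rest of the file. `set_env_var` is a hypothetical helper; it assumes values contain no double quotes or newlines and that keys contain only letters, digits, and underscores.

```bash
#!/usr/bin/env bash
# Hypothetical helper (not part of MiniSearch): upsert KEY="value" in a .env file.
set_env_var() {
  local file="$1" key="$2" value="$3" tmp
  tmp="$(mktemp)"   # mktemp creates the file readable only by its owner
  if [ -f "$file" ]; then
    # Copy every line except an existing assignment of the same key.
    grep -v "^${key}=" "$file" > "$tmp" || true
  fi
  printf '%s="%s"\n' "$key" "$value" >> "$tmp"
  mv "$tmp" "$file"
}
```

For example, `set_env_var .env ACCESS_KEYS PepperoniPizza` writes the line from the example above; restart the container afterwards so the new value is picked up.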

**I want to serve MiniSearch to other users, allowing them to use my own OpenAI-Compatible API key, but without revealing it to them. Is it possible?**

Yes! In MiniSearch, we call this text-generation feature "Internal OpenAI-Compatible API". To use it:

- Set up your OpenAI-Compatible API endpoint by configuring the following environment variables in your `.env` file:
  - `INTERNAL_OPENAI_COMPATIBLE_API_BASE_URL`: The base URL for your API
  - `INTERNAL_OPENAI_COMPATIBLE_API_KEY`: Your API access key
  - `INTERNAL_OPENAI_COMPATIBLE_API_MODEL`: The model to use
  - `INTERNAL_OPENAI_COMPATIBLE_API_NAME`: The name to display in the UI

- Restart the MiniSearch server.

- In the MiniSearch menu, select the new option (named after your `INTERNAL_OPENAI_COMPATIBLE_API_NAME` setting) from the **AI Processing Location** dropdown.
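A missing or empty variable is easy to overlook, so it can be worth checking `.env` before restarting. The sketch below verifies that each of the four variables listed above has a non-empty assignment. `check_internal_api_env` is a hypothetical helper, and it assumes simple `KEY=value` or `KEY="value"` lines.

```bash
#!/usr/bin/env bash
# Hypothetical check (not part of MiniSearch): confirm that a .env file
# defines every variable used by the "Internal OpenAI-Compatible API" feature.
check_internal_api_env() {
  local file="${1:-.env}" var missing=0
  local -a required_vars=(
    INTERNAL_OPENAI_COMPATIBLE_API_BASE_URL
    INTERNAL_OPENAI_COMPATIBLE_API_KEY
    INTERNAL_OPENAI_COMPATIBLE_API_MODEL
    INTERNAL_OPENAI_COMPATIBLE_API_NAME
  )
  if [ ! -f "$file" ]; then
    echo "File not found: $file" >&2
    return 1
  fi
  for var in "${required_vars[@]}"; do
    # Require a non-empty value, quoted or not (KEY="" and KEY= both fail).
    if ! grep -Eq "^${var}=\"?[^\"]+" "$file"; then
      echo "Missing or empty: $var" >&2
      missing=1
    fi
  done
  return "$missing"
}
```

Running `check_internal_api_env .env && echo "All set"` before restarting reports any variable that still needs a value.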

**How can I contribute to the development of this tool?**

Fork this repository and clone it. Then start the development server by running the following command:

`docker compose up`

Make your changes, push them to your fork, and open a pull request! All contributions are welcome!

**Why is MiniSearch built upon SearXNG's Docker Image and using a single image instead of composing it from multiple services?**

There are a few reasons for this:

- MiniSearch uses SearXNG as its meta-search engine.

- Installing SearXNG manually is not trivial, so we use the Docker image its maintainers provide, which comes fully set up.

- SearXNG only provides a Docker image based on Alpine Linux.

- The image's user needs to be customized in a specific way to run on Hugging Face Spaces, where MiniSearch's demo runs.

- Hugging Face Spaces only accepts a single Docker image; unfortunately, it doesn't run Docker Compose or multiple images.