Commit 031a736

Parent(s): 722a99c

Update README.md


README.md

CHANGED


@@ -131,8 +131,6 @@ For GGUFs, [mradermacher/Lamarck-14B-v0.3-i1-GGUF](https://huggingface.co/mrader

Removed (old lines 134-135):

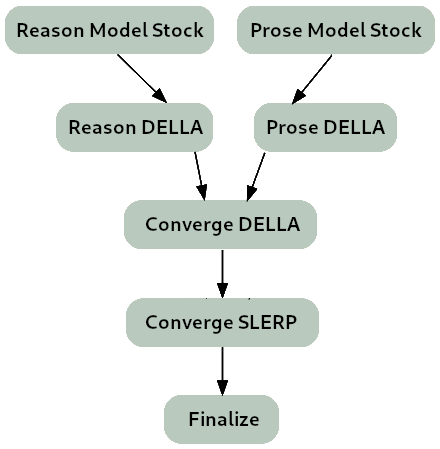

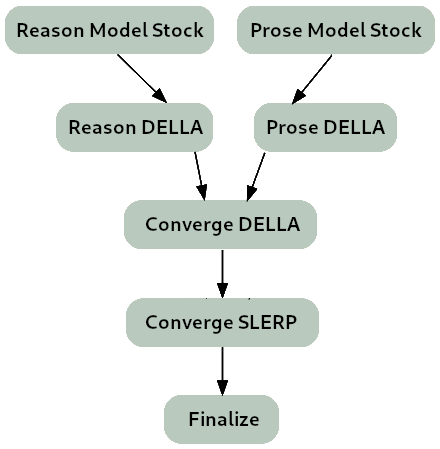

The first two layers come entirely from Virtuoso. The choice to leave these layers untouched is motivated by [arxiv.org/abs/2307.03172](https://arxiv.org/abs/2307.03172), which identifies early attention glitches as a chief cause of hallucinations. Layers 3-8 use a SLERP gradient that introduces the DELLA merge tree, in which the reason branch is emphasized and the prose branch is given only a small ranking.

Unchanged context (old lines 136-138, new lines 134-136):

### Thanks go to:


- @arcee-ai's team for the ever-capable mergekit, and the exceptional Virtuoso Small model.

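The removed README paragraph describes a layer-wise schedule: the first two layers come entirely from Virtuoso, and layers 3-8 apply a SLERP gradient toward the DELLA merge tree. The sketch below illustrates that idea in plain Python under stated assumptions. `slerp` is a generic spherical linear interpolation with a linear fallback for nearly parallel vectors, not mergekit's own code. `layer_t` is a hypothetical linear ramp, because the README does not give the actual gradient values, and treating layers after 8 as fully DELLA is likewise an assumption. Weights are modeled as flat lists of floats.

```python
import math


def slerp(t, v0, v1, dot_threshold=0.9995):
    """Spherical linear interpolation between two flat weight vectors.

    t=0 returns v0 and t=1 returns v1. When the vectors are nearly
    parallel (or antiparallel) the spherical formula is numerically
    unstable, so plain linear interpolation is used instead.
    """
    norm0 = math.sqrt(sum(x * x for x in v0))
    norm1 = math.sqrt(sum(x * x for x in v1))
    if norm0 == 0.0 or norm1 == 0.0:
        # Direction is undefined for a zero vector; fall back to lerp.
        return [(1 - t) * a + t * b for a, b in zip(v0, v1)]
    # Cosine of the angle between the vectors, clamped into acos's domain.
    cos_theta = sum(a * b for a, b in zip(v0, v1)) / (norm0 * norm1)
    cos_theta = max(-1.0, min(1.0, cos_theta))
    if abs(cos_theta) > dot_threshold:
        return [(1 - t) * a + t * b for a, b in zip(v0, v1)]
    theta = math.acos(cos_theta)
    sin_theta = math.sin(theta)
    # Weights that move along the arc between v0 and v1.
    w0 = math.sin((1 - t) * theta) / sin_theta
    w1 = math.sin(t * theta) / sin_theta
    return [w0 * a + w1 * b for a, b in zip(v0, v1)]


def layer_t(layer, first_blend=3, last_blend=8):
    """Hypothetical SLERP factor for a 1-based layer index.

    Layers before `first_blend` get t=0 (entirely Virtuoso). Inside the
    blend range t ramps linearly, reaching 1 (entirely the DELLA tree)
    at `last_blend`; later layers stay at 1. The linear shape and the
    behaviour after `last_blend` are assumptions, not from the README.
    """
    if layer < first_blend:
        return 0.0
    if layer >= last_blend:
        return 1.0
    span = last_blend - first_blend + 1
    return (layer - first_blend + 1) / span
```

For a given layer `k`, a merged tensor would be `slerp(layer_t(k), virtuoso_w, della_w)`, where `virtuoso_w` and `della_w` are hypothetical placeholders for the corresponding flattened weights. SLERP follows the arc between the two vectors, so for equal-norm inputs it avoids the norm shrinkage a straight-line average causes when they point in different directions.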