---
language:
- en
license: llama3
datasets:
- liuhaotian/LLaVA-CC3M-Pretrain-595K
pipeline_tag: visual-question-answering
---

# llama3-vision-alpha

projection module trained to add vision capabilities to Llama 3 using SigLIP. built by [@yeswondwerr](https://x.com/yeswondwerr) and [@qtnx_](https://x.com/qtnx_)

usable directly in Transformers

**usage**

```
pip install torch transformers pillow bitsandbytes accelerate
```

```python
import torch
from PIL import Image
from transformers import AutoModelForCausalLM, AutoTokenizer
from transformers import BitsAndBytesConfig

# load the language model in 4-bit, but leave the projector and vision tower unquantized
bnb_cfg = BitsAndBytesConfig(
    load_in_4bit=True,
    bnb_4bit_compute_dtype=torch.float16,
    llm_int8_skip_modules=["mm_projector", "vision_model"],
)

model_id = "qresearch/llama-3-vision-alpha-hf"

# trust_remote_code is needed because answer_question lives in the model repo
model = AutoModelForCausalLM.from_pretrained(
    model_id,
    trust_remote_code=True,
    torch_dtype=torch.float16,
    quantization_config=bnb_cfg,
)

tokenizer = AutoTokenizer.from_pretrained(
    model_id,
    use_fast=True,
)

# replace "image_path" and "question" with your own image file and prompt
image = Image.open("image_path")

print(
    tokenizer.decode(
        model.answer_question(image, "question", tokenizer),
        skip_special_tokens=True,
    )
)
```
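the examples further down ask several questions about the same image. a minimal sketch of how that could be wrapped, assuming `model`, `tokenizer`, and `image` are set up as in the snippet above; `ask_many` is a hypothetical helper name, not part of the model repo, and it only relies on the `answer_question` and `decode` calls already shown:

```python
from typing import Any, Dict, List


def ask_many(model: Any, tokenizer: Any, image: Any, questions: List[str]) -> Dict[str, str]:
    """Ask several questions about one image; returns {question: answer}.

    Hypothetical convenience wrapper, assuming model.answer_question(image,
    question, tokenizer) returns token ids as in the usage snippet.
    """
    answers: Dict[str, str] = {}
    for question in questions:
        # same call as in the usage snippet, once per question
        output_ids = model.answer_question(image, question, tokenizer)
        text = tokenizer.decode(output_ids, skip_special_tokens=True)
        # strip surrounding whitespace left over from decoding
        answers[question] = text.strip()
    return answers


# example call (needs the model, tokenizer, and image loaded as above):
# answers = ask_many(model, tokenizer, image, [
#     "What is the title of this book? answer briefly",
#     "Describe the image",
# ])
# for q, a in answers.items():
#     print(q, "->", a)
```

each question triggers a separate generation pass, so this is a convenience loop rather than true batching.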

**examples**

| Image | Examples |

| --- | --- |

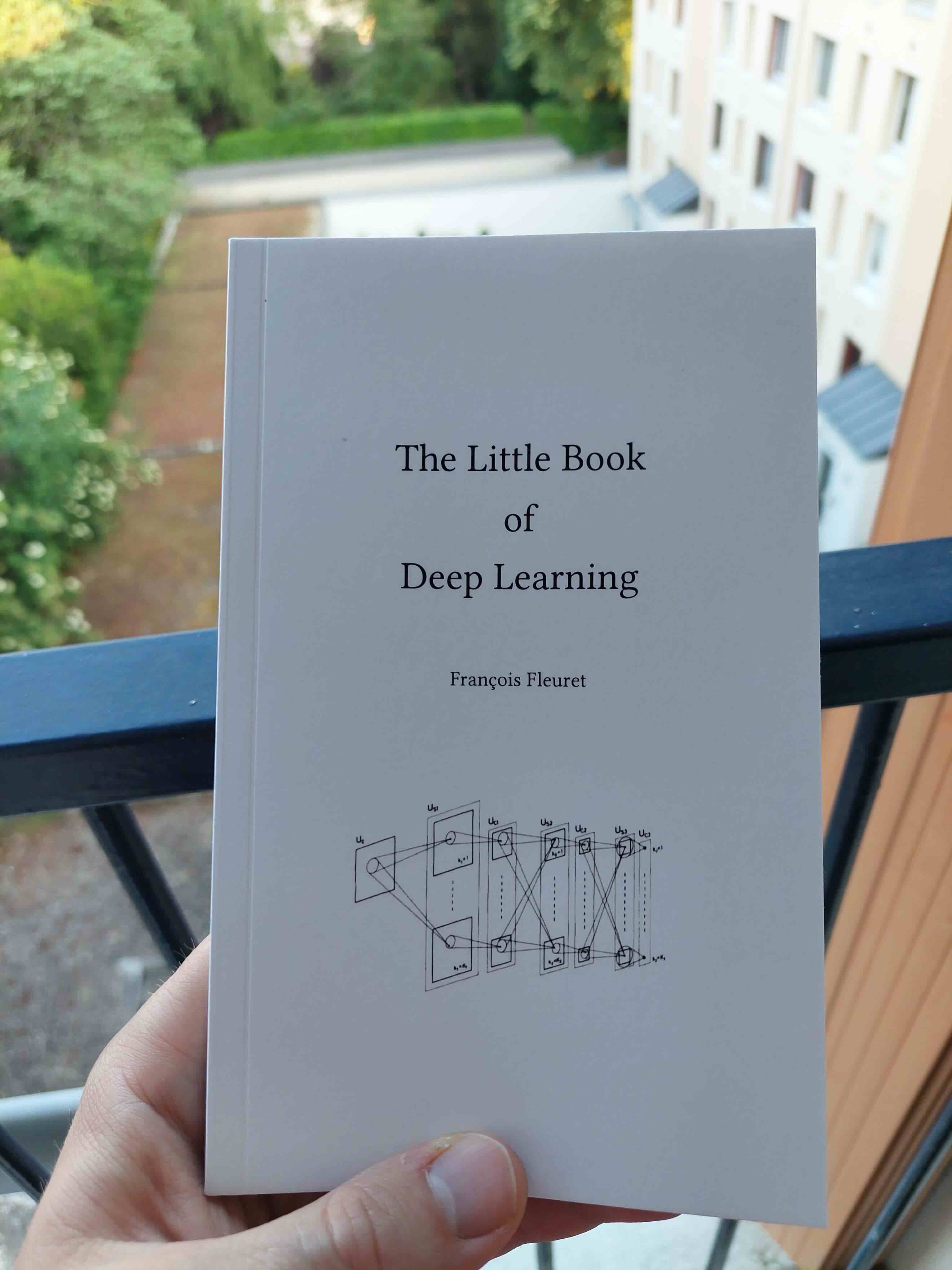

|  | **What is the title of this book? answer briefly**

| **What is the title of this book? answer briefly**

The title of the book is "The Little Book of Deep Learning".

**Where is the person standing? answer briefly**

The person is standing on the balcony.

**Describe the image**

The image shows a person holding a book with a cityscape visible through the window behind them. The book has a cover with a title that reads "The Little Book of Deep Learning" in bold letters. |

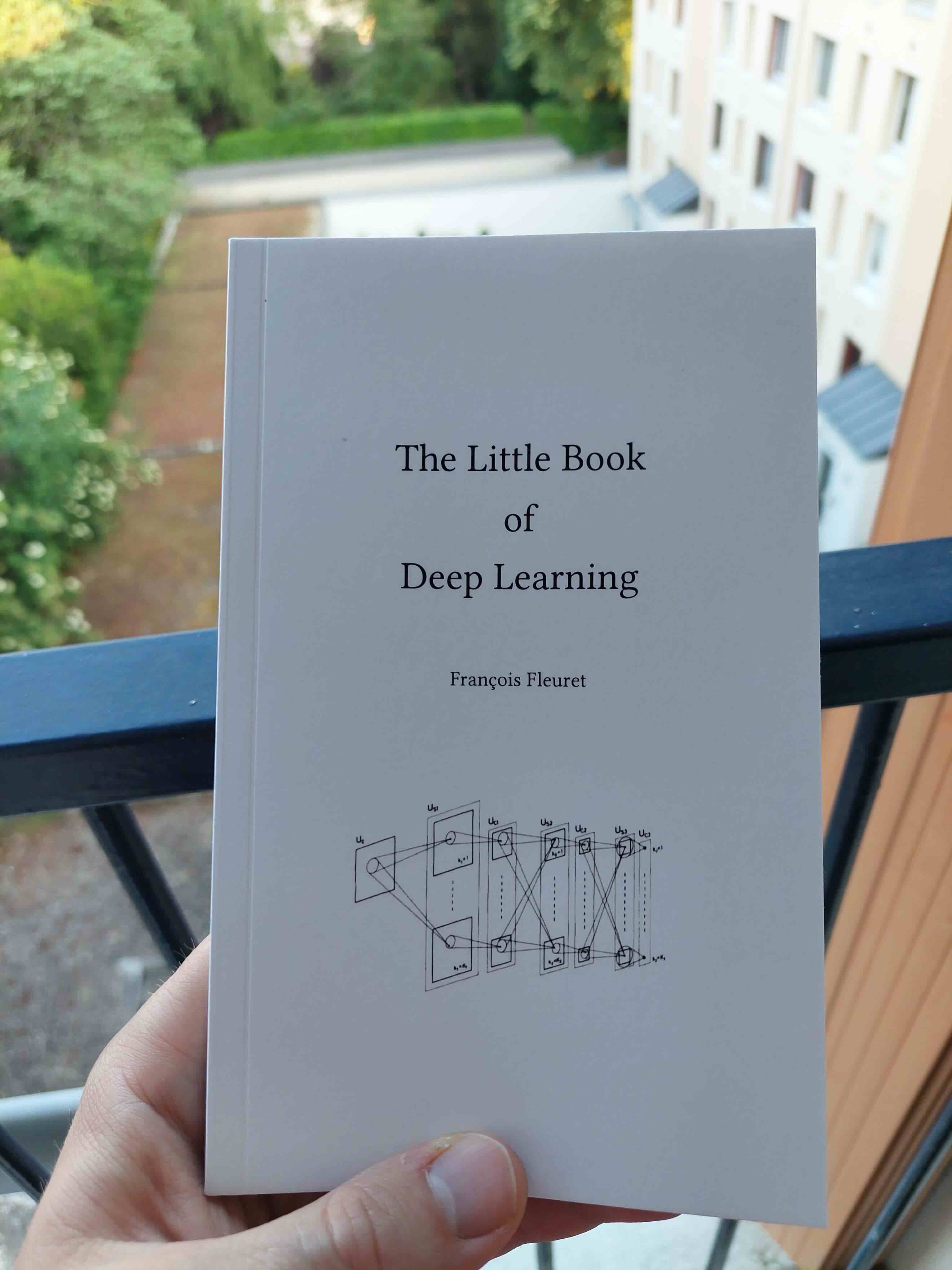

|  | **What type of food is the girl holding? answer briefly**

| **What type of food is the girl holding? answer briefly**

A hamburger!

**What color is the woman's hair? answer briefly**

It's white!

**Describe the image**

The image is of a young girl with short, curly hair and a sweet smile, holding a giant hamburger in her hand. She's sitting at a table with a festive dinner setting, surrounded by candles and a warm glow. Her eyes are shining with excitement and contentment as she takes a big bite of the burger. |

**acknowledgements**

- Liu et al. : [LLaVA](https://arxiv.org/abs/2304.08485)

- Moon et al. : [AnyMAL](https://arxiv.org/abs/2309.16058)

- vikhyatk : moondream, test images
