Model Overview

RewardBench results:

| Chat | Chat Hard | Safety | Reasoning |
|---|---|---|---|
| 0.89 | 0.42 | 0.68 | 0.61 |
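As a rough guide to reading these numbers: RewardBench checks whether the reward model scores the preferred (chosen) response above the rejected one, so each section score is built from accuracies between 0 and 1. The sketch below computes that pairwise accuracy for a few hypothetical rewards. The function name is illustrative, counting ties as failures is an assumption, and the official benchmark also aggregates per-subset results into section scores, which this sketch does not reproduce.

```python
from typing import Sequence


def pairwise_accuracy(chosen: Sequence[float], rejected: Sequence[float]) -> float:
    """Fraction of pairs in which the chosen response gets a strictly higher reward.

    Ties count as failures here; that tie rule is an assumption of this sketch.
    """
    if len(chosen) != len(rejected):
        raise ValueError("chosen and rejected must have the same length")
    if len(chosen) == 0:
        raise ValueError("need at least one pair")
    wins = sum(1 for c, r in zip(chosen, rejected) if c > r)
    return wins / len(chosen)


# Hypothetical rewards for four (chosen, rejected) pairs.
chosen_rewards = [1.2, 0.3, -0.5, 2.0]
rejected_rewards = [0.4, 0.9, -1.0, 1.5]
print(pairwise_accuracy(chosen_rewards, rejected_rewards))  # 0.75
```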

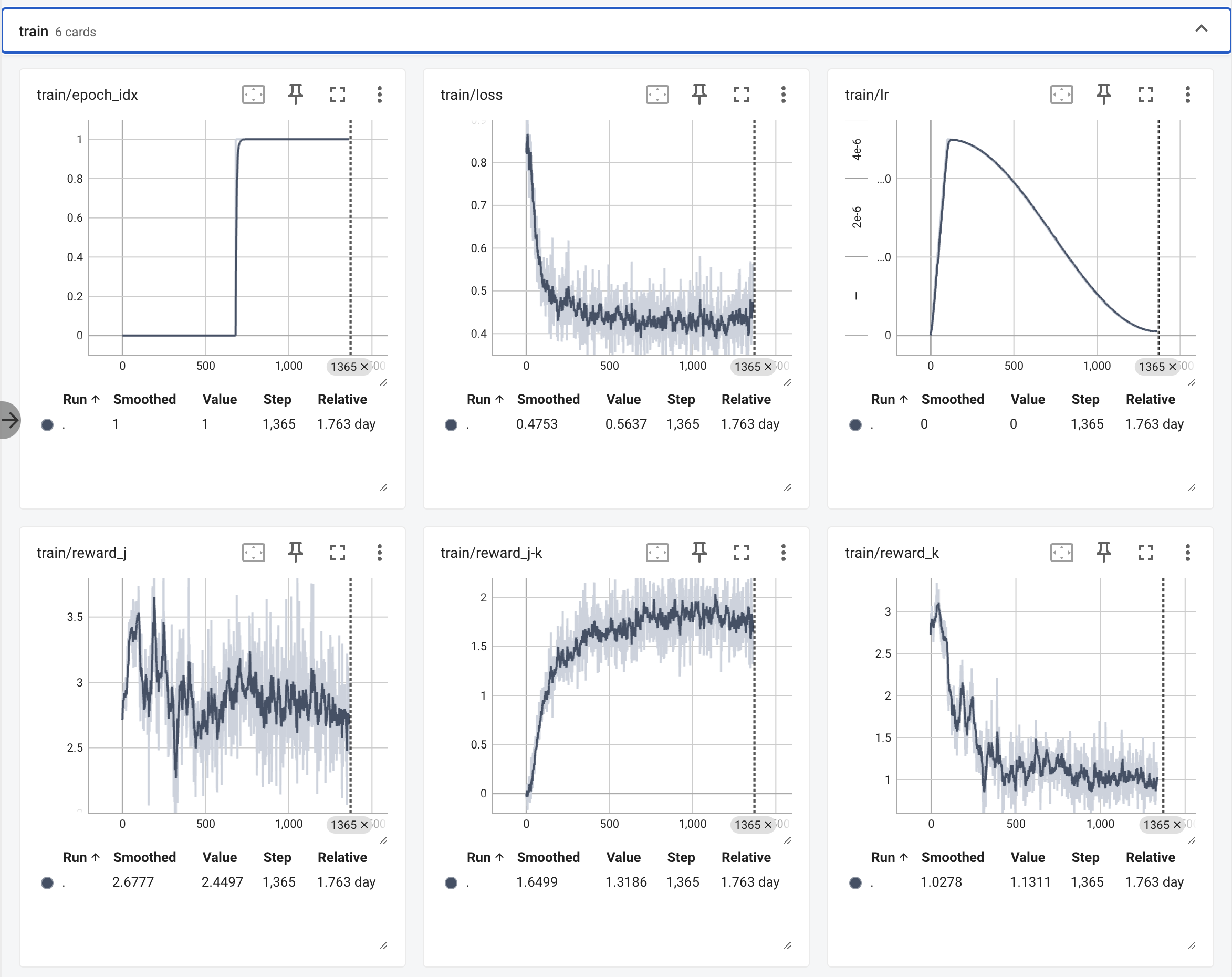

This model is a Bradley-Terry reward model fine-tuned from Llama-3.2-1B-Instruct on the hendrydong/preference_700K dataset for 2 epochs. See the config for details of the training hyperparameters.
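Under the Bradley-Terry model, the probability that the chosen response is preferred over the rejected one is σ(r_chosen - r_rejected), where r is the scalar reward the model outputs, and training minimizes the negative log of that probability. The pure-Python sketch below illustrates this standard loss; it is not fusion-bench's actual `bradley_terry_rm` implementation, and the helper names are hypothetical.

```python
import math
from typing import Sequence


def softplus(x: float) -> float:
    """Numerically stable log(1 + exp(x))."""
    return max(x, 0.0) + math.log1p(math.exp(-abs(x)))


def bradley_terry_loss(chosen: Sequence[float], rejected: Sequence[float]) -> float:
    """Mean of -log sigmoid(r_chosen - r_rejected) over a batch of preference pairs.

    Uses the identity -log(sigmoid(d)) == softplus(-d) for numerical stability.
    """
    if len(chosen) != len(rejected) or len(chosen) == 0:
        raise ValueError("need equal-length, non-empty reward lists")
    losses = [softplus(-(c - r)) for c, r in zip(chosen, rejected)]
    return sum(losses) / len(losses)


# Equal rewards give loss log(2); a large positive margin drives the loss toward 0.
print(round(bradley_terry_loss([0.0], [0.0]), 4))   # 0.6931
print(round(bradley_terry_loss([5.0], [-5.0]), 6))  # 4.5e-05
```

In an actual training loop the same expression would be computed on tensors (for example via a log-sigmoid) so that gradients flow back into the reward head.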

Fine-tuning was done with fusion-bench using the following command:

```bash
fusion_bench --config-name llama_full_finetune \
  fabric.loggers.name=llama_full_bradley_terry_rm \
  method=lm_finetune/bradley_terry_rm \
  method.dataloader_kwargs.batch_size=8 \
  method.accumulate_grad_batches=16 \
  method.lr_scheduler.min_lr=1e-7 \
  method.lr_scheduler.max_lr=5e-6 \
  method.lr_scheduler.warmup_steps=100 \
  method.optimizer.lr=0 \
  method.optimizer.weight_decay=0.001 \
  method.gradient_clip_val=1 \
  method.max_epochs=2 \
  method.checkpoint_save_interval=epoch \
  method.checkpoint_save_frequency=1 \
  modelpool=SeqenceClassificationModelPool/llama_preference700k
```
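The scheduler flags above describe a 100-step warmup to a peak learning rate of 5e-6 and a floor of 1e-7; `method.optimizer.lr=0` is presumably a placeholder that the scheduler overrides. The decay shape is not stated here, so the sketch below assumes linear warmup followed by cosine decay. `lr_at_step` and the `total_steps` value are hypothetical and may not match fusion-bench's scheduler class.

```python
import math


def lr_at_step(step: int, total_steps: int, warmup_steps: int = 100,
               max_lr: float = 5e-6, min_lr: float = 1e-7) -> float:
    """Hypothetical schedule: linear warmup to max_lr, then cosine decay to min_lr."""
    if step < warmup_steps:
        # Linear ramp: step 0 gets max_lr / warmup_steps, step warmup_steps - 1 gets max_lr.
        return max_lr * (step + 1) / warmup_steps
    # Fraction of the post-warmup phase that has elapsed, clamped to [0, 1].
    progress = min(1.0, (step - warmup_steps) / max(1, total_steps - warmup_steps))
    # Cosine factor goes from 1 (start of decay) to 0 (end of training).
    cosine = 0.5 * (1.0 + math.cos(math.pi * progress))
    return min_lr + (max_lr - min_lr) * cosine


total = 1_000  # hypothetical total number of optimizer steps
for s in (0, 50, 99, 100, 550, total):
    print(s, f"{lr_at_step(s, total):.2e}")
```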

Training ran on 8 GPUs with a per-GPU batch size of 8 and gradient accumulation over 16 steps, giving an effective batch size of 8 × 8 × 16 = 1024.
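The same arithmetic can be extended to a rough count of optimizer steps. Treating the dataset as exactly 700,000 pairs (taken from the "700K" in its name) and dropping the incomplete final batch are both assumptions, and the helper names are illustrative.

```python
def effective_batch_size(num_gpus: int, per_gpu_batch: int, grad_accum_steps: int) -> int:
    """Number of preference pairs contributing to each optimizer step."""
    return num_gpus * per_gpu_batch * grad_accum_steps


def optimizer_steps(num_pairs: int, batch: int, epochs: int) -> int:
    """Approximate optimizer steps, assuming incomplete final batches are dropped."""
    return (num_pairs // batch) * epochs


batch = effective_batch_size(num_gpus=8, per_gpu_batch=8, grad_accum_steps=16)
print(batch)  # 1024
# Hypothetical: treats the dataset as exactly 700,000 pairs.
print(optimizer_steps(700_000, batch, epochs=2))  # 1366
```

Under these assumptions, the 100 warmup steps cover roughly 7% of training.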


Model: fusion-bench/Llama-3.2-1B-Instruct_Bradly-Terry-RM_Preference-700k

Base model: meta-llama/Llama-3.2-1B-Instruct