import from zenodo

- README.md +51 -0

- exp/enh_stats_8k/train/feats_stats.npz +0 -0

- exp/enh_train_enh_conv_tasnet_raw/66epoch.pth +3 -0

- exp/enh_train_enh_conv_tasnet_raw/RESULTS.md +20 -0

- exp/enh_train_enh_conv_tasnet_raw/config.yaml +146 -0

- exp/enh_train_enh_conv_tasnet_raw/images/backward_time.png +0 -0

- exp/enh_train_enh_conv_tasnet_raw/images/forward_time.png +0 -0

- exp/enh_train_enh_conv_tasnet_raw/images/iter_time.png +0 -0

- exp/enh_train_enh_conv_tasnet_raw/images/loss.png +0 -0

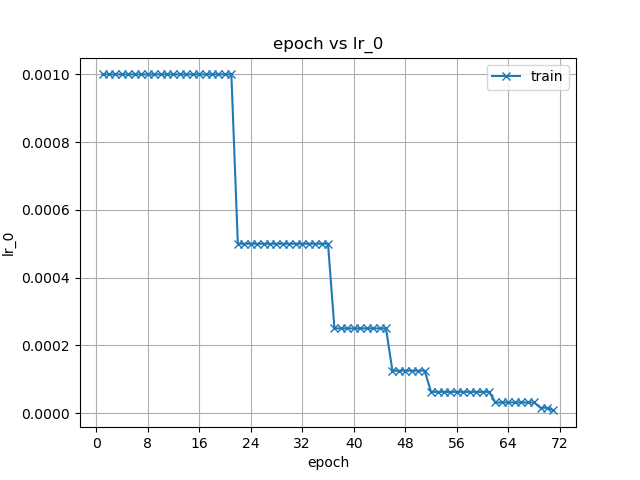

- exp/enh_train_enh_conv_tasnet_raw/images/lr_0.png +0 -0

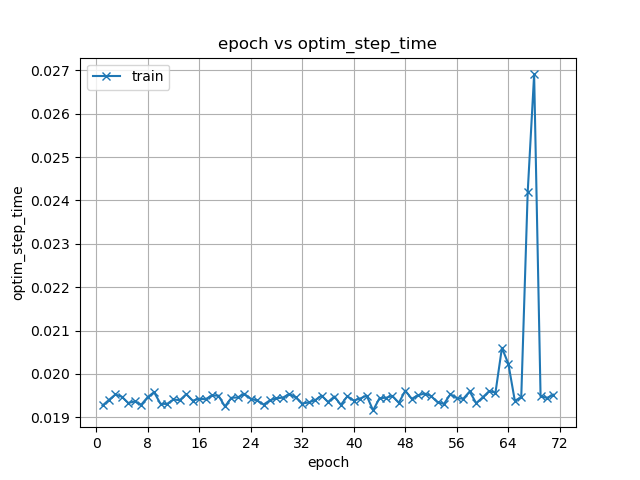

- exp/enh_train_enh_conv_tasnet_raw/images/optim_step_time.png +0 -0

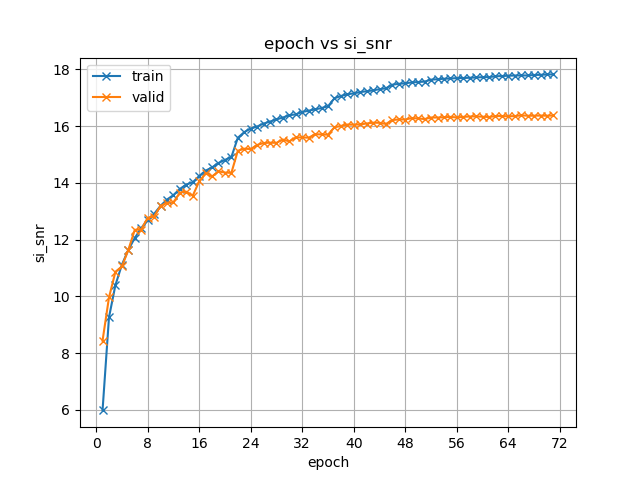

- exp/enh_train_enh_conv_tasnet_raw/images/si_snr.png +0 -0

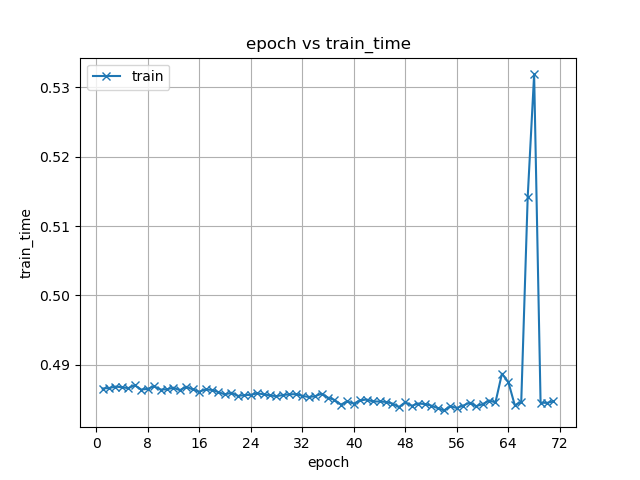

- exp/enh_train_enh_conv_tasnet_raw/images/train_time.png +0 -0

- meta.yaml +8 -0

README.md

ADDED

@@ -0,0 +1,51 @@
+---
+tags:
+- espnet
+- audio
+- speech-enhancement
+- audio-to-audio
+language: en
+datasets:
+- wsj0_2mix
+license: cc-by-4.0
+---
+## Example ESPnet2 ENH model
+### `Chenda_Li/wsj0_2mix_enh_train_enh_conv_tasnet_raw_valid.si_snr.ave`
+♻️ Imported from https://zenodo.org/record/4498562/
+
+This model was trained by Chenda Li using wsj0_2mix/enh1 recipe in [espnet](https://github.com/espnet/espnet/).
+### Demo: How to use in ESPnet2
+```python
+# coming soon
+```
+### Citing ESPnet
+```BibTex
+@inproceedings{watanabe2018espnet,
+  author={Shinji Watanabe and Takaaki Hori and Shigeki Karita and Tomoki Hayashi and Jiro Nishitoba and Yuya Unno and Nelson {Enrique Yalta Soplin} and Jahn Heymann and Matthew Wiesner and Nanxin Chen and Adithya Renduchintala and Tsubasa Ochiai},
+  title={{ESPnet}: End-to-End Speech Processing Toolkit},
+  year={2018},
+  booktitle={Proceedings of Interspeech},
+  pages={2207--2211},
+  doi={10.21437/Interspeech.2018-1456},
+  url={http://dx.doi.org/10.21437/Interspeech.2018-1456}
+}
+@inproceedings{hayashi2020espnet,
+  title={{Espnet-TTS}: Unified, reproducible, and integratable open source end-to-end text-to-speech toolkit},
+  author={Hayashi, Tomoki and Yamamoto, Ryuichi and Inoue, Katsuki and Yoshimura, Takenori and Watanabe, Shinji and Toda, Tomoki and Takeda, Kazuya and Zhang, Yu and Tan, Xu},
+  booktitle={Proceedings of IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP)},
+  pages={7654--7658},
+  year={2020},
+  organization={IEEE}
+}
+```
+or arXiv:
+```bibtex
+@misc{watanabe2018espnet,
+  title={ESPnet: End-to-End Speech Processing Toolkit},
+  author={Shinji Watanabe and Takaaki Hori and Shigeki Karita and Tomoki Hayashi and Jiro Nishitoba and Yuya Unno and Nelson Enrique Yalta Soplin and Jahn Heymann and Matthew Wiesner and Nanxin Chen and Adithya Renduchintala and Tsubasa Ochiai},
+  year={2018},
+  eprint={1804.00015},
+  archivePrefix={arXiv},
+  primaryClass={cs.CL}
+}
+```

exp/enh_stats_8k/train/feats_stats.npz

ADDED

Binary file (778 Bytes).

exp/enh_train_enh_conv_tasnet_raw/66epoch.pth

ADDED

@@ -0,0 +1,3 @@
+version https://git-lfs.github.com/spec/v1
+oid sha256:3a324193d6a4537be5711e00caf18e631df5e10c88896a388e5745626bfe22ae
+size 34969477
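The `66epoch.pth` entry is a Git LFS pointer rather than the checkpoint itself: three `key value` lines giving the spec version, a SHA-256 object ID, and the size in bytes. A minimal sketch of how a downloaded checkpoint could be checked against that pointer is shown below. The helper names `parse_lfs_pointer` and `matches_pointer` are hypothetical and introduced only for illustration; only the Python standard library is used.

```python
import hashlib
from pathlib import Path

# Pointer text copied from the 66epoch.pth diff.
POINTER_TEXT = """\
version https://git-lfs.github.com/spec/v1
oid sha256:3a324193d6a4537be5711e00caf18e631df5e10c88896a388e5745626bfe22ae
size 34969477
"""


def parse_lfs_pointer(text):
    """Split each 'key value' line of a Git LFS pointer into a dict."""
    fields = dict(line.split(" ", 1) for line in text.splitlines() if line)
    algo, digest = fields["oid"].split(":", 1)
    return {
        "version": fields["version"],
        "algo": algo,
        "digest": digest,
        "size": int(fields["size"]),
    }


def matches_pointer(path, pointer, chunk_size=1 << 20):
    """Return True if the file at `path` has the size and SHA-256 in `pointer`."""
    path = Path(path)
    if path.stat().st_size != pointer["size"]:
        return False  # cheap check first, avoids hashing a wrong-sized file
    sha = hashlib.sha256()
    with path.open("rb") as handle:
        # Hash in chunks so a large checkpoint is never fully loaded in memory.
        for chunk in iter(lambda: handle.read(chunk_size), b""):
            sha.update(chunk)
    return sha.hexdigest() == pointer["digest"]


pointer = parse_lfs_pointer(POINTER_TEXT)
print(pointer["algo"], pointer["size"])
```

Calling `matches_pointer("66epoch.pth", pointer)` on a local copy (the path is an assumption about where the file was saved) would then report whether the download is intact.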

exp/enh_train_enh_conv_tasnet_raw/RESULTS.md

ADDED

@@ -0,0 +1,20 @@
+<!-- Generated by ./scripts/utils/show_enh_score.sh -->
+# RESULTS
+## Environments
+- date: `Thu Feb 4 01:16:18 CST 2021`
+- python version: `3.7.6 (default, Jan 8 2020, 19:59:22) [GCC 7.3.0]`
+- espnet version: `espnet 0.9.7`
+- pytorch version: `pytorch 1.5.0`
+- Git hash: `a3334220b0352931677946d178fade3313cf82bb`
+- Commit date: `Fri Jan 29 23:35:47 2021 +0800`
+
+
+## enh_train_enh_conv_tasnet_raw
+
+config: ./conf/tuning/train_enh_conv_tasnet.yaml
+
+|dataset|STOI|SAR|SDR|SIR|
+|---|---|---|---|---|
+|enhanced_cv_min_8k|0.949205|17.3785|16.8028|26.9785|
+|enhanced_tt_min_8k|0.95349|16.6221|15.9494|25.9032|
+
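The table reports STOI, SAR, SDR and SIR, while the model name in the README (`valid.si_snr.ave`) indicates that checkpoints were selected on validation SI-SNR. For readers unfamiliar with that metric, the following is a minimal pure-Python sketch of the usual scale-invariant SNR definition. It is an illustration under that textbook definition, not ESPnet's implementation, which may differ in details such as epsilon handling and permutation search over speakers.

```python
import math


def si_snr(estimate, reference, eps=1e-8):
    """Scale-invariant SNR in dB between two equal-length 1-D signals."""
    n = len(reference)
    # Remove the mean so a constant offset does not affect the score.
    est_mean = sum(estimate) / n
    ref_mean = sum(reference) / n
    est = [e - est_mean for e in estimate]
    ref = [r - ref_mean for r in reference]
    # Project the estimate onto the reference to get the target component.
    dot = sum(e * r for e, r in zip(est, ref))
    ref_energy = sum(r * r for r in ref) + eps
    target = [dot / ref_energy * r for r in ref]
    # Whatever is left after removing the target counts as noise.
    noise = [e - t for e, t in zip(est, target)]
    target_energy = sum(t * t for t in target)
    noise_energy = sum(x * x for x in noise) + eps
    return 10.0 * math.log10(target_energy / noise_energy + eps)


# Toy signals: a 5-cycle sine as reference, plus a 50-cycle sine at one
# tenth of the amplitude acting as interference.
N = 800
reference = [math.sin(2 * math.pi * 5 * t / N) for t in range(N)]
interference = [0.1 * math.sin(2 * math.pi * 50 * t / N) for t in range(N)]
estimate = [r + i for r, i in zip(reference, interference)]

score = si_snr(estimate, reference)
rescaled = si_snr([2.0 * e for e in estimate], reference)
print(round(score, 3), round(rescaled, 3))
```

Because the two sinusoids are orthogonal over the window, the score comes out at about 20 dB, and rescaling the estimate leaves it essentially unchanged, which is the property the "scale-invariant" name refers to.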

exp/enh_train_enh_conv_tasnet_raw/config.yaml

ADDED

@@ -0,0 +1,146 @@
+config: ./conf/tuning/train_enh_conv_tasnet.yaml
+print_config: false
+log_level: INFO
+dry_run: false
+iterator_type: chunk
+output_dir: exp/enh_train_enh_conv_tasnet_raw
+ngpu: 1
+seed: 0
+num_workers: 4
+num_att_plot: 3
+dist_backend: nccl
+dist_init_method: env://
+dist_world_size: null
+dist_rank: null
+local_rank: 0
+dist_master_addr: null
+dist_master_port: null
+dist_launcher: null
+multiprocessing_distributed: false
+cudnn_enabled: true
+cudnn_benchmark: false
+cudnn_deterministic: true
+collect_stats: false
+write_collected_feats: false
+max_epoch: 100
+patience: 4
+val_scheduler_criterion:
+- valid
+- loss
+early_stopping_criterion:
+- valid
+- loss
+- min
+best_model_criterion:
+- - valid
+  - si_snr
+  - max
+- - valid
+  - loss
+  - min
+keep_nbest_models: 1
+grad_clip: 5.0
+grad_clip_type: 2.0
+grad_noise: false
+accum_grad: 1
+no_forward_run: false
+resume: true
+train_dtype: float32
+use_amp: false
+log_interval: null
+unused_parameters: false
+use_tensorboard: true
+use_wandb: false
+wandb_project: null
+wandb_id: null
+pretrain_path: null
+init_param: []
+freeze_param: []
+num_iters_per_epoch: null
+batch_size: 8
+valid_batch_size: null
+batch_bins: 1000000
+valid_batch_bins: null
+train_shape_file:
+- exp/enh_stats_8k/train/speech_mix_shape
+- exp/enh_stats_8k/train/speech_ref1_shape
+- exp/enh_stats_8k/train/speech_ref2_shape
+valid_shape_file:
+- exp/enh_stats_8k/valid/speech_mix_shape
+- exp/enh_stats_8k/valid/speech_ref1_shape
+- exp/enh_stats_8k/valid/speech_ref2_shape
+batch_type: folded
+valid_batch_type: null
+fold_length:
+- 80000
+- 80000
+- 80000
+sort_in_batch: descending
+sort_batch: descending
+multiple_iterator: false
+chunk_length: 32000
+chunk_shift_ratio: 0.5
+num_cache_chunks: 1024
+train_data_path_and_name_and_type:
+- - dump/raw/tr_min_8k/wav.scp
+  - speech_mix
+  - sound
+- - dump/raw/tr_min_8k/spk1.scp
+  - speech_ref1
+  - sound
+- - dump/raw/tr_min_8k/spk2.scp
+  - speech_ref2
+  - sound
+valid_data_path_and_name_and_type:
+- - dump/raw/cv_min_8k/wav.scp
+  - speech_mix
+  - sound
+- - dump/raw/cv_min_8k/spk1.scp
+  - speech_ref1
+  - sound
+- - dump/raw/cv_min_8k/spk2.scp
+  - speech_ref2
+  - sound
+allow_variable_data_keys: false
+max_cache_size: 0.0
+max_cache_fd: 32
+valid_max_cache_size: null
+optim: adam
+optim_conf:
+  lr: 0.001
+  eps: 1.0e-08
+  weight_decay: 0
+scheduler: reducelronplateau
+scheduler_conf:
+  mode: min
+  factor: 0.5
+  patience: 1
+init: xavier_uniform
+model_conf:
+  loss_type: si_snr
+use_preprocessor: false
+encoder: conv
+encoder_conf:
+  channel: 256
+  kernel_size: 20
+  stride: 10
+separator: tcn
+separator_conf:
+  num_spk: 2
+  layer: 8
+  stack: 4
+  bottleneck_dim: 256
+  hidden_dim: 512
+  kernel: 3
+  causal: false
+  norm_type: gLN
+  nonlinear: relu
+decoder: conv
+decoder_conf:
+  channel: 256
+  kernel_size: 20
+  stride: 10
+required:
+- output_dir
+version: 0.9.7
+distributed: false
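The encoder and separator settings in this config fix the time resolution of the model, and a short calculation makes that concrete. The sketch below rests on three assumptions that the config does not state: the audio is sampled at 8 kHz (inferred from the `8k` in the data paths), the encoder convolution is unpadded, and the TCN uses the standard Conv-TasNet dilation pattern of `2**i` in layer `i` of every stack. The function names are hypothetical.

```python
# Values copied from config.yaml.
KERNEL_SIZE = 20      # encoder_conf.kernel_size, in samples
STRIDE = 10           # encoder_conf.stride, in samples
CHUNK_LENGTH = 32000  # chunk_length, in samples
TCN_KERNEL = 3        # separator_conf.kernel
TCN_LAYERS = 8        # separator_conf.layer
TCN_STACKS = 4        # separator_conf.stack

# Assumption: 8 kHz audio, inferred from the "8k" in the data paths.
SAMPLE_RATE = 8000


def encoder_frames(num_samples, kernel_size, stride):
    """Frames produced by an unpadded strided 1-D convolution."""
    return (num_samples - kernel_size) // stride + 1


def tcn_receptive_field(kernel, layers, stacks):
    """Receptive field in frames, assuming dilation 2**i in layer i of each stack."""
    return 1 + stacks * sum((kernel - 1) * 2 ** i for i in range(layers))


frames = encoder_frames(CHUNK_LENGTH, KERNEL_SIZE, STRIDE)
rf_frames = tcn_receptive_field(TCN_KERNEL, TCN_LAYERS, TCN_STACKS)
# Convert the receptive field from encoder frames back to input samples.
rf_samples = (rf_frames - 1) * STRIDE + KERNEL_SIZE

print(frames)                      # 3199
print(rf_frames)                   # 2041
print(rf_samples / SAMPLE_RATE)    # 2.5525
print(CHUNK_LENGTH / SAMPLE_RATE)  # 4.0
```

Under these assumptions each 4-second training chunk yields 3199 encoder frames, and a single separator output frame can draw on roughly 2.55 seconds of input.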

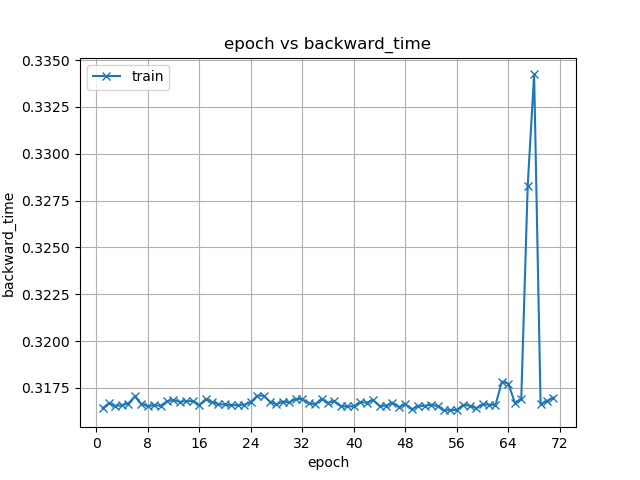

exp/enh_train_enh_conv_tasnet_raw/images/backward_time.png

ADDED

|

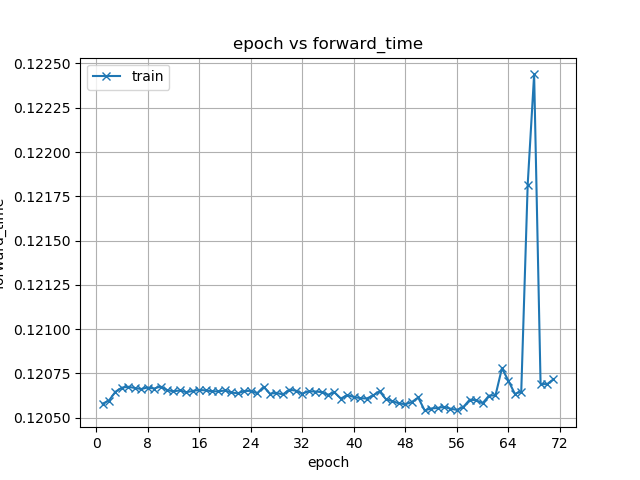

exp/enh_train_enh_conv_tasnet_raw/images/forward_time.png

ADDED

|

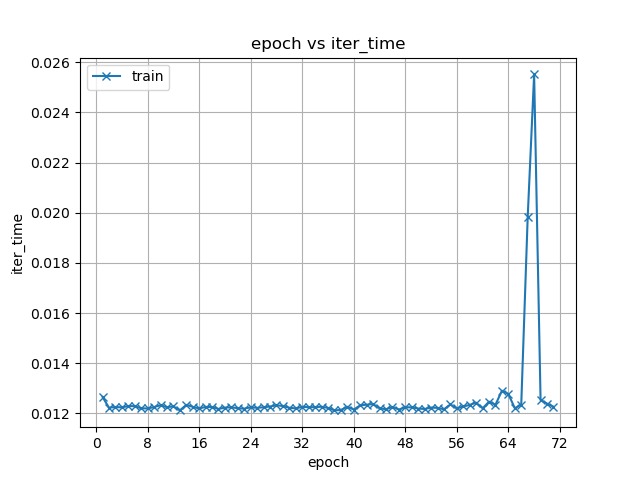

exp/enh_train_enh_conv_tasnet_raw/images/iter_time.png

ADDED

|

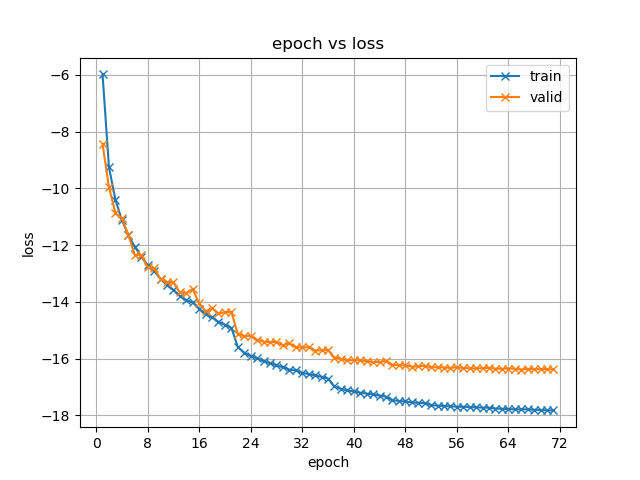

exp/enh_train_enh_conv_tasnet_raw/images/loss.png

ADDED

|

exp/enh_train_enh_conv_tasnet_raw/images/lr_0.png

ADDED

|

exp/enh_train_enh_conv_tasnet_raw/images/optim_step_time.png

ADDED

|

exp/enh_train_enh_conv_tasnet_raw/images/si_snr.png

ADDED

|

exp/enh_train_enh_conv_tasnet_raw/images/train_time.png

ADDED

|

meta.yaml

ADDED

@@ -0,0 +1,8 @@
+espnet: 0.9.7
+files:
+  model_file: exp/enh_train_enh_conv_tasnet_raw/66epoch.pth
+python: "3.7.6 (default, Jan 8 2020, 19:59:22) \n[GCC 7.3.0]"
+timestamp: 1612372579.804363
+torch: 1.5.0
+yaml_files:
+  train_config: exp/enh_train_enh_conv_tasnet_raw/config.yaml
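The `timestamp` field in `meta.yaml` is a Unix epoch value, so it can be cross-checked against the human-readable date in `RESULTS.md`. The sketch below does that conversion with the standard library. It assumes that "CST" in `RESULTS.md` means China Standard Time (UTC+8), which is consistent with the `+0800` offset on the commit date but is not stated outright.

```python
from datetime import datetime, timedelta, timezone

TIMESTAMP = 1612372579.804363  # `timestamp` field from meta.yaml

utc = datetime.fromtimestamp(TIMESTAMP, tz=timezone.utc)
# Assumption: "CST" in RESULTS.md means China Standard Time (UTC+8).
cst = utc.astimezone(timezone(timedelta(hours=8)))

print(utc.strftime("%Y-%m-%d %H:%M:%S"))  # 2021-02-03 17:16:19
print(cst.strftime("%Y-%m-%d %H:%M:%S"))  # 2021-02-04 01:16:19
```

Under that assumption the packaging timestamp falls one second after the `Thu Feb 4 01:16:18 CST 2021` date recorded in `RESULTS.md`, which suggests the results file and the model archive were produced in the same run.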