Unnamed: 0,title,content,source,url

0,Multimodal Financial Document Analysis and Recall;,"# Multimodal Financial Document Analysis and Recall; Tesla Investor Presentations

In this lesson, we will explore how the Retrieval-Augmented Generation (RAG) method can process a company's financial information contained in a PDF document. The process includes extracting critical data from the PDF file (text, tables, graphs, etc.) and saving it in a vector database such as Deep Lake for quick and efficient retrieval. A RAG-enabled bot can then access the stored information to respond to end-user queries. This task requires several tools: [Unstructured.io](https://unstructured.io/) for text/table extraction, OpenAI's GPT-4V for extracting information from graphs, and LlamaIndex for developing a bot with retrieval capabilities. As previously mentioned, data preprocessing plays a significant role in the RAG process, so we start by pulling data from a PDF document. For ease of understanding, this lesson demonstrates how to extract data from a single PDF document; the accompanying notebook provided after the lesson analyzes three separate reports, offering a broader scope of information.",advanced_rag_course,https://learn.activeloop.ai/courses/take/rag/multimedia/51320368-building-a-multi-modal-financial-document-analysis-and-recall-for-tesla-investor-presentations

1,Multimodal Financial Document Analysis and Recall;,"# Extracting Data

Extracting textual data is relatively straightforward, but processing graphical elements such as line or bar charts can be more challenging. OpenAI's vision-capable model, GPT-4V, is valuable for these visual elements: we can feed it the slides and ask it to describe them in detail, and those descriptions then complement the textual information. This lesson uses Tesla's [Q3 financial report](https://digitalassets.tesla.com/tesla-contents/image/upload/IR/TSLA-Q3-2023-Update-3.pdf) as the source document, which can be downloaded with the `wget` command.

```bash
wget https://digitalassets.tesla.com/tesla-contents/image/upload/IR/TSLA-Q3-2023-Update-3.pdf
```",advanced_rag_course,https://learn.activeloop.ai/courses/take/rag/multimedia/51320368-building-a-multi-modal-financial-document-analysis-and-recall-for-tesla-investor-presentations

2,Multimodal Financial Document Analysis and Recall;,"# Extracting Data

## 1. Text/Tables

The `unstructured` package is an effective tool for extracting information from PDF files. It relies on two tools, `poppler` and `tesseract`: `poppler` renders the PDF pages, and `tesseract` performs optical character recognition (OCR) on them. We suggest setting up these packages on [Google Colab](https://colab.research.google.com/), which is freely available for students to execute and experiment with code. We will briefly mention the installation of these packages on other operating systems. Let's install the utilities and their dependencies using the following commands.

```bash
apt-get -qq install poppler-utils
apt-get -qq install tesseract-ocr
pip install -q unstructured[all-docs]==0.11.0 fastapi==0.103.2 kaleido==0.2.1 uvicorn==0.24.0.post1 typing-extensions==4.5.0 pydantic==1.10.13
```

After installing all the necessary packages and dependencies, the process is simple. The `partition_pdf` function extracts text and table data from the PDF and divides it into multiple chunks, whose size can be customized based on the number of characters.

```python
from unstructured.partition.pdf import partition_pdf

raw_pdf_elements = partition_pdf(
    filename=""./TSLA-Q3-2023-Update-3.pdf"",
    # Use the layout model (YOLOX) to get bounding boxes (for tables) and find titles.
    # Titles are any sub-section of the document.
    infer_table_structure=True,
    # Post-processing to aggregate text once we have the titles
    chunking_strategy=""by_title"",
    # Chunking parameters to aggregate text blocks:
    # hard maximum on chunk size
    max_characters=4000,
    # soft limit: start a new chunk after roughly 3800 characters
    new_after_n_chars=3800,
    # try to keep chunks above 2000 characters by combining small sections
    combine_text_under_n_chars=2000,
)
```

The previous code identifies and extracts various elements from the PDF, which can be classified into `CompositeElement`s (the textual content) and `Table`s. We use the [Pydantic](https://docs.pydantic.dev/latest/) package to create a new data structure that stores information about each element, including its `type` and `text`. 
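Before building that structure, it may help to see how the three size limits passed to `partition_pdf` interact. The sketch below is a simplified greedy packer written for illustration only; it is not `unstructured`'s actual `by_title` implementation, and `chunk_by_title` and `joined_len` are hypothetical names. It assumes each titled section arrives as a list of text elements: a new title closes the current chunk unless that chunk is still below `combine_text_under_n_chars`, a chunk is closed at the next element boundary once it reaches `new_after_n_chars`, and no chunk may exceed `max_characters`.

```python
from typing import List


def joined_len(elements: List[str]) -> int:
    # Length of the chunk text once its elements are joined with spaces
    return len(' '.join(elements))


def chunk_by_title(sections: List[List[str]], max_characters: int = 4000,
                   new_after_n_chars: int = 3800,
                   combine_text_under_n_chars: int = 2000) -> List[str]:
    # Toy illustration only: each section is the list of text elements under one title.
    chunks: List[List[str]] = []
    current: List[str] = []
    for section in sections:
        # A new title normally closes the current chunk, unless that chunk is
        # still shorter than combine_text_under_n_chars (then sections are merged).
        if current and joined_len(current) >= combine_text_under_n_chars:
            chunks.append(current)
            current = []
        for element in section:
            # Hard limit: an element longer than max_characters is split into pieces.
            pieces = [element[i:i + max_characters]
                      for i in range(0, len(element), max_characters)]
            for piece in pieces:
                over_soft = joined_len(current) >= new_after_n_chars
                over_hard = joined_len(current + [piece]) > max_characters
                # Soft limit reached, or adding the piece would break the hard limit:
                # close the chunk and start a new one at this element boundary.
                if current and (over_soft or over_hard):
                    chunks.append(current)
                    current = []
                current.append(piece)
    if current:
        chunks.append(current)
    return [' '.join(chunk) for chunk in chunks]


sections = [['a' * 5], ['b' * 5], ['c' * 30]]
chunks = chunk_by_title(sections, max_characters=12, new_after_n_chars=10,
                        combine_text_under_n_chars=8)
print(chunks[0])                 # aaaaa bbbbb
print([len(c) for c in chunks])  # [11, 12, 12, 6]
# With combining disabled, every title starts its own chunk.
print(chunk_by_title(sections, 12, 10, 0)[:2])  # ['aaaaa', 'bbbbb']
```

The two short sections are merged because the first one is below the combine threshold, while the long section is cut into pieces that respect the hard limit.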
The code below iterates through all extracted elements, keeping them in a list where each item is an instance of the `Element` type.

```python
from typing import Any

from pydantic import BaseModel


# Define the data structure for a single extracted element
class Element(BaseModel):
    type: str
    text: Any


# Categorize the elements by type (tables vs. text)
categorized_elements = []
for element in raw_pdf_elements:
    if ""unstructured.documents.elements.Table"" in str(type(element)):
        categorized_elements.append(Element(type=""table"", text=str(element)))
    elif ""unstructured.documents.elements.CompositeElement"" in str(type(element)):
        categorized_elements.append(Element(type=""text"", text=str(element)))
```

Creating the `Element` data structure makes it convenient to store additional information, which can help identify the source of each answer, whether it is derived from texts, tables, or figures.",advanced_rag_course,https://learn.activeloop.ai/courses/take/rag/multimedia/51320368-building-a-multi-modal-financial-document-analysis-and-recall-for-tesla-investor-presentations

3,Multimodal Financial Document Analysis and Recall;,"# Extracting Data

## 2. Graphs

The next step is gathering information from the charts to add context. The primary challenge is extracting images from the pages to feed into OpenAI's endpoint. A practical approach is to convert each PDF page to an image and pass it to the model, asking whether it detects any graphs. If it identifies one or more charts, the model describes the data and the trends they represent; if no graphs are detected, it returns an empty array. The first step is installing the `pdf2image` package to convert the PDF into images. It also requires the `poppler` tool, which we have already installed.

```bash
!pip install -q pdf2image==1.16.3
```

The code below uses the `convert_from_path` function, which takes the path of a PDF file and returns one image per page. We iterate over the pages and save each one as a PNG file in the `./pages` directory using the `.save()` method. Additionally, we define the `pages_png` variable, which holds the file name of each image.

```python
import os

from pdf2image import convert_from_path

# Create the output directory (no error if it already exists)
os.makedirs(""./pages"", exist_ok=True)

# Render every PDF page as an image and save it as a PNG file
convertor = convert_from_path('./TSLA-Q3-2023-Update-3.pdf')
for idx, image in enumerate(convertor):
    image.save(f""./pages/page-{idx}.png"")

# File names of the saved pages (os.listdir order is arbitrary, so sort them)
pages_png = sorted(file for file in os.listdir(""./pages"") if file.endswith('.png'))
```

Before sending the image files to the OpenAI API, we need a few helper variables and functions. The `headers` variable contains the OpenAI API key, enabling the server to authenticate our requests. The `payload` carries configurations such as the model name, the maximum token limit, and the prompts. The prompts instruct the model to describe the graphs and respond in JSON format, covering scenarios like encountering multiple graphs on a single page or finding no graphs at all. We will add the images to the `payload` before sending the requests. 
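One way to attach an image without mutating the shared template is sketched below. It assumes the message layout described above (a single `user` message whose `content` is a list of parts) and uses a hypothetical helper name, `with_image`, together with a stand-in template and a placeholder base64 string; the request loop in this lesson performs the same `copy.deepcopy` and append inline.

```python
import copy
from typing import Any, Dict


def with_image(template: Dict[str, Any], base64_png: str) -> Dict[str, Any]:
    # Deep-copy so the shared template never accumulates images from earlier pages
    request = copy.deepcopy(template)
    # Append the page as an image part, passed as a base64 data URL
    request['messages'][0]['content'].append({
        'type': 'image_url',
        'image_url': {'url': f'data:image/png;base64,{base64_png}'},
    })
    return request


# Minimal stand-in with the same shape as the payload described above
template = {
    'model': 'gpt-4-vision-preview',
    'messages': [{'role': 'user',
                  'content': [{'type': 'text', 'text': 'Describe the graphs.'}]}],
    'max_tokens': 1000,
}
request = with_image(template, 'iVBORw0KGgo=')
print(len(template['messages'][0]['content']))  # 1 (template unchanged)
print(len(request['messages'][0]['content']))   # 2 (text prompt + image)
```

A shallow copy would not be enough here: the nested `content` list would still be shared, so every page would keep appending to the same template.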
Finally, the `encode_image()` function encodes the images in base64 format so that they can be sent to OpenAI.

```python
import base64
import os

headers = {
    ""Content-Type"": ""application/json"",
    ""Authorization"": ""Bearer "" + str(os.environ[""OPENAI_API_KEY""]),
}

payload = {
    ""model"": ""gpt-4-vision-preview"",
    ""messages"": [
        {
            ""role"": ""user"",
            ""content"": [
                {
                    ""type"": ""text"",
                    ""text"": ""You are an assistant that finds charts, graphs, or diagrams from an image and summarize their information. There could be multiple diagrams in one image, so explain each one of them separately. ignore tables.""
                },
                {
                    ""type"": ""text"",
                    ""text"": 'The response must be a JSON in following format {""graphs"": [, , ]} where , , and placeholders that describe each graph found in the image. Do not append or add anything other than the JSON format response.'
                },
                {
                    ""type"": ""text"",
                    ""text"": 'If could not find a graph in the image, return an empty list JSON as follows: {""graphs"": []}. Do not append or add anything other than the JSON format response. Dont use coding ""```"" marks or the word json.'
                },
                {
                    ""type"": ""text"",
                    ""text"": ""Look at the attached image and describe all the graphs inside it in JSON format. ignore tables and be concise.""
                }
            ]
        }
    ],
    ""max_tokens"": 1000
}


# Encode an image file as a base64 string
def encode_image(image_path):
    with open(image_path, ""rb"") as image_file:
        return base64.b64encode(image_file.read()).decode('utf-8')
```

The remaining steps are: 1) looping through the images in the `pages_png` variable, 2) encoding each image in base64 format, 3) adding the image to the payload, and finally, 4) sending",advanced_rag_course,https://learn.activeloop.ai/courses/take/rag/multimedia/51320368-building-a-multi-modal-financial-document-analysis-and-recall-for-tesla-investor-presentations

4,Multimodal Financial Document Analysis and Recall;,"# Extracting Data

## 2. Graphs

the request to OpenAI and handling its responses. We will use the same `Element` data structure to store each image's type (graph) and text (the descriptions of the graphs).

```python
import copy
import json

import requests
from tqdm import tqdm

graphs_description = []
for page in tqdm(pages_png):
    # Get the base64 string of the page image
    base64_image = encode_image(f""./pages/{page}"")

    # Copy the template payload and append the image to the user message
    tmp_payload = copy.deepcopy(payload)
    tmp_payload['messages'][0]['content'].append({
        ""type"": ""image_url"",
        ""image_url"": {
            ""url"": f""data:image/png;base64,{base64_image}""
        }
    })

    try:
        response = requests.post(""https://api.openai.com/v1/chat/completions"", headers=headers, json=tmp_payload)
        response = response.json()
        # The prompt asks for a JSON object with a list under the graphs key
        graph_data = json.loads(response['choices'][0]['message']['content'])['graphs']
        # Prefix each description with the page file name for traceability
        desc = [f""{page}\n"" + '\n'.join(f""{key}: {item[key]}"" for key in item.keys()) for item in graph_data]
        graphs_description.extend(desc)
    except Exception:
        # Skip the page if the request or the JSON decoding fails
        print(""skipping... error in decoding."")
        continue

graphs_description = [Element(type=""graph"", text=str(item)) for item in graphs_description]
```",advanced_rag_course,https://learn.activeloop.ai/courses/take/rag/multimedia/51320368-building-a-multi-modal-financial-document-analysis-and-recall-for-tesla-investor-presentations

5,Multimodal Financial Document Analysis and Recall;,"# Store on Deep Lake

This section uses the Deep Lake vector database to store the collected information and its embeddings. An embedding model converts each piece of text into a numerical vector that captures its meaning, which enables similarity metrics such as cosine similarity to identify closely related documents. For instance, a prompt asking about a company's total revenue would have a high cosine similarity with a database document stating that the revenue amounted to X dollars. With all the crucial information extracted from the PDF, data preparation is complete. The next step is combining the outputs of the previous sections into a single list, which contains 41 entries.

```python
all_docs = categorized_elements + graphs_description
print(len(all_docs))
```

```
41
```

Since we are using LlamaIndex, we can use its integration with Deep Lake to create and store the dataset. Begin by installing the LlamaIndex and deeplake packages along with their dependencies.

```bash
!pip install -q llama_index==0.9.8 deeplake==3.8.8 cohere==4.37
```

Before using the libraries, configure the `OPENAI_API_KEY` and `ACTIVELOOP_TOKEN` environment variables. Remember to replace the placeholder values with your actual keys from the respective platforms.

```python
import os

os.environ[""OPENAI_API_KEY""] = """"
os.environ[""ACTIVELOOP_TOKEN""] = """"
```

The LlamaIndex integration provides the `DeepLakeVectorStore` class, which creates a new dataset. Enter your organization ID, which by default is your Activeloop username, in the code below. The code generates an empty dataset, ready to store documents.

```python
from llama_index.vector_stores import DeepLakeVectorStore

# TODO: use your organization id here (by default, the org id is your username).
my_activeloop_org_id = """"
my_activeloop_dataset_name = ""tsla_q3""
dataset_path = f""hub://{my_activeloop_org_id}/{my_activeloop_dataset_name}""

vector_store = DeepLakeVectorStore(
    dataset_path=dataset_path,
    runtime={""tensor_db"": True},
    overwrite=False,
)
```

```
Your Deep Lake dataset has been successfully created!
```

Next, we pass the created vector store to the `StorageContext` class. This class serves as a wrapper for creating storage from various data types. In our case, we build the storage from a vector database simply by passing the database instance to the `.from_defaults()` method.

```python
from llama_index.storage.storage_context import StorageContext

storage_context = StorageContext.from_defaults(vector_store=vector_store)
```

To store our preprocessed data, we must transform it into LlamaIndex `Document` objects for compatibility with the library. The LlamaIndex `Document` class is a generic container for various data types, including text files, PDFs, and database outputs. This wrapper lets us store valuable information alongside each sample: in our case, a metadata tag holding extra details like the data type (text, table, or graph), which could also denote relationships between documents. This approach simplifies retrieving these details later. You can use built-in readers like `SimpleDirectoryReader` to load files from a specified path automatically, or build the documents manually, as the code below does by looping through the extracted elements and assigning a text and a category to each document. Note that it iterates over `categorized_elements` (texts and tables only); pass `all_docs` instead to include the graph descriptions as well.

```python
from llama_index import Document

documents = [Document(text=t.text, metadata={""category"": t.type}) for t in categorized_elements]
```

Lastly, we can use the `VectorStoreIndex` class to generate embeddings for the documents and store them in the database instance. 
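To make the role of cosine similarity and the `category` metadata concrete, the sketch below runs a brute-force top-k search over a handful of records and can optionally restrict it to one category. It is an illustration under simplifying assumptions: the 3-dimensional vectors and record texts are made up, and `search` is a hypothetical helper, not the Deep Lake or LlamaIndex API.

```python
import math
from typing import Dict, List, Optional, Tuple


def cosine_similarity(a: List[float], b: List[float]) -> float:
    # cos(a, b) = (a . b) / (|a| * |b|)
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def search(query: List[float], records: List[Dict], k: int = 2,
           category: Optional[str] = None) -> List[Tuple[float, str]]:
    # Optional metadata filter: keep only records of the requested category
    candidates = [r for r in records if category is None or r['category'] == category]
    scored = [(cosine_similarity(query, r['embedding']), r['text']) for r in candidates]
    # Highest similarity first, keep the top k
    return sorted(scored, reverse=True)[:k]


# Made-up 3-dimensional embeddings; the Ada embeddings used here have 1536 dimensions
records = [
    {'text': 'Total revenue grew year over year.', 'category': 'text', 'embedding': [0.9, 0.1, 0.0]},
    {'text': 'Revenue by segment table.', 'category': 'table', 'embedding': [0.8, 0.2, 0.1]},
    {'text': 'Bar chart of deliveries rising each quarter.', 'category': 'graph', 'embedding': [0.1, 0.9, 0.2]},
]
query = [1.0, 0.0, 0.0]  # pretend embedding of a revenue question
print([text for _, text in search(query, records, k=2)])
# ['Total revenue grew year over year.', 'Revenue by segment table.']
print([text for _, text in search(query, records, k=2, category='graph')])
# ['Bar chart of deliveries rising each quarter.']
```

In the real pipeline, Deep Lake performs this search over the stored vectors, while `VectorStoreIndex` computes the embeddings.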
By default, `VectorStoreIndex` uses OpenAI's Ada model to create the embeddings.

```python
from llama_index import VectorStoreIndex

index = VectorStoreIndex.from_documents(documents, storage_context=storage_context)
```

```
Uploading data to deeplake dataset.
100%|██████████| 29/29 [00:00<00:00, 46.26it/s]
Dataset(path='hub://alafalaki/tsla_q3-nograph', tensors=['text', 'metadata', 'embedding', 'id'])

  tensor      htype      shape      dtype  compression
  -------    -------    -------    -------  -------
   text       text      (29, 1)      str     None
 metadata     json      (29, 1)      str     None
 embedding  embedding  (29, 1536)  float32   None
    id        text      (29, 1)      str     None
```",advanced_rag_course,https://learn.activeloop.ai/courses/take/rag/multimedia/51320368-building-a-multi-modal-financial-document-analysis-and-recall-for-tesla-investor-presentations

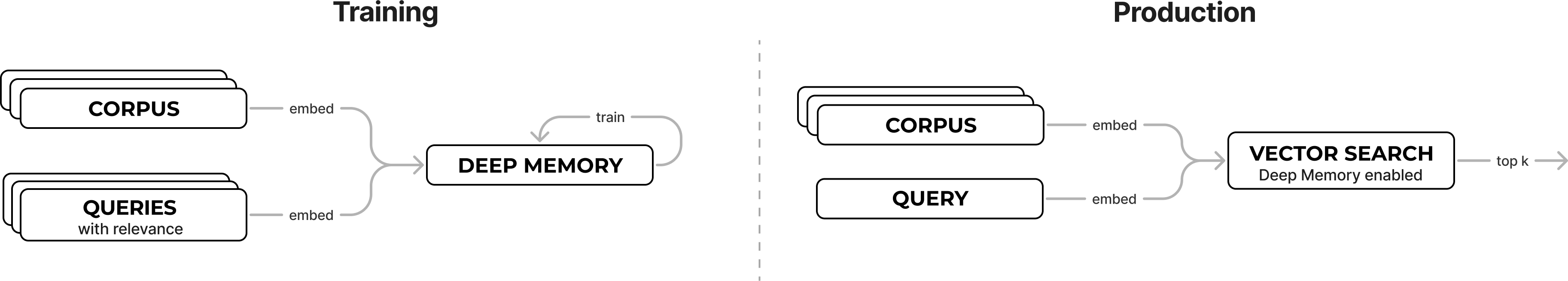

7,Multimodal Financial Document Analysis and Recall;,"# Activate DeepMemory

The deep memory feature from Activeloop improves the retriever's accuracy, giving the model access to more relevant data and leading to more detailed and informative responses. We covered the basics of deep memory in earlier lessons, so we will not go into more detail here. The process begins by fetching chunks of data from the cloud and using GPT-3.5 to create specific questions for each chunk. These generated questions are then used in the deep memory training procedure to improve the embedding quality. In our experiment, this approach improved recall@10 by 25 percentage points (from 0.25 to 0.5, as shown in the training log later in this lesson). The first phase involves loading the existing dataset and reading the text of each chunk along with its corresponding ID.

```python
from llama_index.vector_stores import DeepLakeVectorStore

# TODO: use your organization id here (by default, the org id is your username).
my_activeloop_org_id = """"
my_activeloop_dataset_name = ""LlamaIndex_tsla_q3""
dataset_path = f""hub://{my_activeloop_org_id}/{my_activeloop_dataset_name}""

db = DeepLakeVectorStore(
    dataset_path=dataset_path,
    runtime={""tensor_db"": True},
    read_only=True,
)

# Fetch the dataset texts and ids if they exist (optionally, you can also ingest new data)
docs = db.vectorstore.dataset.text.data(fetch_chunks=True, aslist=True)['value']
ids = db.vectorstore.dataset.id.data(fetch_chunks=True, aslist=True)['value']
print(len(docs))
```

```
Deep Lake Dataset in hub://genai360/tesla_quarterly_2023 already exists, loading from the storage
127
```

The following code defines a function that uses GPT-3.5 to generate a question for each data chunk. It relies on OpenAI's function-calling feature: a tool schema forces the model to return a single `question` field. The code configures the prompts for the API requests and compiles the generated questions, together with their associated chunk IDs, into lists. 
```python
import json
import random

from openai import OpenAI
from tqdm import tqdm

client = OpenAI()

# JSON Schema for the OpenAI function-calling feature
tools = [
    {
        ""type"": ""function"",
        ""function"": {
            ""name"": ""create_question_from_text"",
            ""parameters"": {
                ""type"": ""object"",
                ""properties"": {
                    ""question"": {
                        ""type"": ""string"",
                        ""description"": ""Question created from the given text"",
                    },
                },
                ""required"": [""question""],
            },
            ""description"": ""Create question from a given text."",
        },
    }
]


def generate_question(tools, text):
    try:
        response = client.chat.completions.create(
            model=""gpt-3.5-turbo"",
            tools=tools,
            # Force the model to call our function
            tool_choice={
                ""type"": ""function"",
                ""function"": {""name"": ""create_question_from_text""},
            },
            messages=[
                {""role"": ""system"", ""content"": ""You are a world class expert for generating questions based on provided context. You make sure the question can be answered by the text.""},
                {""role"": ""user"", ""content"": text},
            ],
        )
        json_response = response.choices[0].message.tool_calls[0].function.arguments
        parsed_response = json.loads(json_response)
        return parsed_response[""question""]
    except Exception:
        return ""No question generated""


def generate_queries(docs: list[str], ids: list[str], n: int):
    questions = []
    relevances = []
    pbar = tqdm(total=n)
    while len(questions) < n:
        # 1. Randomly draw a piece of text and its relevance id
        r = random.randint(0, len(docs) - 1)
        text, label = docs[r], ids[r]

        # 2. Generate a question and assign the relevance id
        generated_qs = [generate_question(tools, text)]
        if generated_qs == [""No question generated""]:
            continue

        questions.extend(generated_qs)
        relevances.extend([[(label, 1)] for _ in generated_qs])
        pbar.update(len(generated_qs))

    return questions[:n], relevances[:n]


questions, relevances = generate_queries(docs, ids, n=20)
```

```
100%|██████████| 20/20 [00:19<00:00,  1.02it/s]
```

Now we can use the questions and the reference IDs to activate deep memory with the `.deep_memory.train()` method, which improves the embedding representations. Printing the embedding tensor's `info` attribute shows the deep memory metadata, including the recall before and after training.

```python
from langchain.embeddings.openai import OpenAIEmbeddings

embeddings = OpenAIEmbeddings()

job_id = db.vectorstore.deep_memory.train(
    queries=questions,
    relevance=relevances,
    embedding_function=embeddings.embed_documents,
)

print(db.vectorstore.dataset.embedding.info)",advanced_rag_course,https://learn.activeloop.ai/courses/take/rag/multimedia/51320368-building-a-multi-modal-financial-document-analysis-and-recall-for-tesla-investor-presentations

8,Multimodal Financial Document Analysis and Recall;,"# Activate DeepMemory

```
```
Starting DeepMemory training job
Your Deep Lake dataset has been successfully created!
Preparing training data for deepmemory:
Creating 20 embeddings in 1 batches of size 20:: 100%|██████████| 1/1 [00:03<00:00,  3.23s/it]
DeepMemory training job started. Job ID: 6581e3056a1162b64061a9a4
{'deepmemory': {'6581e3056a1162b64061a9a4_0.npy': {'base_recall@10': 0.25, 'deep_memory_version': '0.2', 'delta': 0.25, 'job_id': '6581e3056a1162b64061a9a4_0', 'model_type': 'npy', 'recall@10': 0.5}, 'model.npy': {'base_recall@10': 0.25, 'deep_memory_version': '0.2', 'delta': 0.25, 'job_id': '6581e3056a1162b64061a9a4_0', 'model_type': 'npy', 'recall@10': 0.5}}}
```

The dataset is now prepared and compatible with the deep memory feature. Note that deep memory must be explicitly enabled (`deep_memory=True`) when using the dataset for inference.",advanced_rag_course,https://learn.activeloop.ai/courses/take/rag/multimedia/51320368-building-a-multi-modal-financial-document-analysis-and-recall-for-tesla-investor-presentations

9,Multimodal Financial Document Analysis and Recall;,"# Chatbot In Action

In this section, we use the created dataset as the retrieval source, providing the necessary context for the `gpt-3.5-turbo` model (LlamaIndex's default choice) to answer the questions. Keep in mind that the inference results presented below are derived from processing three PDF files, consistent with the sample code provided in the notebook. To access the processed dataset containing all the PDF documents, use `hub://genai360/tesla_quarterly_2023` as the dataset path in the code below. The `DeepLakeVectorStore` class also handles loading a dataset from the hub. The key difference from the previous sections is the `.from_vector_store()` method, which builds the index directly from the existing vector store rather than from in-memory documents.

```python
from llama_index import VectorStoreIndex
from llama_index.storage.storage_context import StorageContext
from llama_index.vector_stores import DeepLakeVectorStore

vector_store = DeepLakeVectorStore(dataset_path=dataset_path, overwrite=False)
storage_context = StorageContext.from_defaults(vector_store=vector_store)

index = VectorStoreIndex.from_vector_store(
    vector_store, storage_context=storage_context
)
```

We can now use the index's `.as_query_engine()` method to create a query engine that answers questions from the various data sources. Notice the `vector_store_kwargs` argument, which enables the `deep_memory` feature on the retriever by setting it to `True`; this step is essential. The `.query()` method takes a prompt, searches the database for the most relevant data points, and uses them to construct an answer.

```python
query_engine = index.as_query_engine(vector_store_kwargs={""deep_memory"": True})
response = query_engine.query(
    ""What are the trends in vehicle deliveries?"",
)
```

```
The trends in vehicle deliveries on the Quarter 3 report show an increasing trend over the quarters.
```

Screenshot: referenced graph.

As observed, the chatbot effectively used the graph descriptions we generated from the report. On the right, there's a screenshot of the bar chart that the chatbot referenced to generate its response. Additionally, we ran an experiment in which we compiled the same dataset but excluded the graph descriptions. This dataset is available at the `hub://genai360/tesla_quarterly_2023-nograph` path. The purpose was to determine whether including the descriptions aids the chatbot's performance. The chatbot's answer using this dataset:

```
In quarter 3, there was a decrease in Model S/X deliveries compared to the previous quarter, with a 14% decline. However, there was an increase in Model 3/Y deliveries, with a 29% growth. Overall, total deliveries in quarter 3 increased by 27% compared to the previous quarter.
```

You'll observe that the chatbot points to incorrect text segments. Although the answer is contextually related, it doesn't answer the question correctly. The graph shows an upward trend, a detail that might not be mentioned in the report's text.",advanced_rag_course,https://learn.activeloop.ai/courses/take/rag/multimedia/51320368-building-a-multi-modal-financial-document-analysis-and-recall-for-tesla-investor-presentations

10,Multimodal Financial Document Analysis and Recall;,"# Conclusion

In this lesson, we explored the steps of developing a chatbot that uses PDF files as a knowledge base to answer questions. Additionally, we employed the vision capability of GPT-4V to identify and describe the graphs on each page. Describing the charts and the trends they illustrate improves the chatbot's answer accuracy and provides additional context.

---

>> [Notebook](https://colab.research.google.com/drive/1JHevaKUazdjSptTMzjFR9BCQ2RTBq73o?usp=sharing).

>> Preprocessed Text/Label: [categorized_elements.pkl](Multimodal%20Financial%20Document%20Analysis%20and%20Recall;%20974bbe2cce5d4402a7ac0bec9022a7f3/categorized_elements.pkl)

>> Preprocessed Graphs: [graphs_description.pkl](Multimodal%20Financial%20Document%20Analysis%20and%20Recall;%20974bbe2cce5d4402a7ac0bec9022a7f3/graphs_description.pkl)",advanced_rag_course,https://learn.activeloop.ai/courses/take/rag/multimedia/51320368-building-a-multi-modal-financial-document-analysis-and-recall-for-tesla-investor-presentations

11,Production-Ready RAG Solutions with LlamaIndex,"# Introduction

[LlamaIndex](https://www.llamaindex.ai/) is a framework for developing data-driven LLM applications, offering data ingestion, indexing, and querying tools. It plays a key role in incorporating additional data sources into LLMs, which is essential for RAG systems. In this lesson, we will explore how to improve RAG-based applications by building production-ready code with a focus on data considerations. We'll discuss how to improve RAG retrieval performance through clear data definitions and state management. We will also cover how to use LLMs to extract metadata that boosts retrieval efficiency. The lesson then examines how embedding references and summaries of text chunks can significantly improve retrieval performance, and how LLMs can infer metadata filters for structured retrieval. Finally, we'll discuss fine-tuning embedding representations in LLM applications to achieve optimal retrieval performance.",advanced_rag_course,https://learn.activeloop.ai/courses/take/rag/multimedia/51320355-production-ready-rag-solutions-with-llamaindex

12,Production-Ready RAG Solutions with LlamaIndex,"# Challenges of RAG Systems

Retrieval-Augmented Generation (RAG) applications present unique challenges that must be addressed for a successful implementation. In this section, we explore managing data dynamically, ensuring varied and effective data representations, and adhering to regulatory standards, which together highlight the intricate balance RAG systems require.

### **Document Updates and Stored Vectors**

A significant challenge in RAG systems is keeping up with changes in documents and ensuring these updates are accurately reflected in the stored vectors. When documents are modified, added, or removed, the corresponding vectors need to be updated to maintain the accuracy and relevance of the retrieval system. Not addressing this can lead to outdated or irrelevant data retrieval, negatively impacting the system's effectiveness. Implementing dynamic update mechanisms for vectors can greatly improve the system's ability to provide relevant and current information, enhancing its overall performance.

### **Chunking and Data Distribution**

The granularity level (chunk size) is vital for achieving accurate retrieval results. If the chunk size is too large, important details might be missed; if it's too small, the system might get bogged down in details and miss the bigger picture. This setting requires testing and refinement tailored to the specific characteristics of the data and its application.

### **Diverse Representations in Latent Space**

The presence of different representations in the same latent space can be challenging (e.g., representing a paragraph of text versus a table or an image). These diverse representations can cause conflicts or inconsistencies when retrieving information, leading to less accurate results.

### **Compliance**

Compliance is another critical issue, especially when implementing RAG systems in regulated industries or environments with strict data handling requirements, particularly for private documents with limited access. Non-compliance can lead to legal issues (think about a finance application), data breaches, or misuse of sensitive information. Ensuring the system adheres to relevant laws, regulations, and ethical guidelines mitigates these risks and increases the system's reliability and trustworthiness, which are vital for its successful deployment.",advanced_rag_course,https://learn.activeloop.ai/courses/take/rag/multimedia/51320355-production-ready-rag-solutions-with-llamaindex

13,Production-Ready RAG Solutions with LlamaIndex,"# Optimization

Understanding the challenges of RAG systems and their solutions is crucial for boosting their overall effectiveness. We will explore several optimization strategies that can improve performance.

### **Model Selection and Hybrid Retrieval**

Selecting appropriate models for the embedding and generation phases is critical. Efficient, inexpensive embedding models can reduce costs while maintaining retrieval performance; the generation phase, however, still requires a capable LLM. Different options are available for both phases, including proprietary models with API access, such as [OpenAI](https://openai.com/blog/openai-api) or [Cohere](https://docs.cohere.com/docs), as well as open-source alternatives like [LLaMA-2](https://ai.meta.com/llama/) and [Mistral](https://mistral.ai/), which can be self-hosted or used through third-party APIs. The choice should be based on the unique needs and resources of the application. It’s worth noting that, in some retrieval systems, balancing latency with quality is essential. Combining different methods, like keyword and embedding retrieval with reranking, keeps the system fast enough to meet user expectations while still providing accurate results. LlamaIndex also offers extensive integrations with various platforms, making it easy to select and compare different providers and find the optimal balance between cost and performance for specific needs.

### **CPU-Based Inference**

In production, relying on GPU-based inference can incur substantial costs. Exploring options like better hardware or optimizing the inference code can lower costs in large-scale applications, where small inefficiencies can accumulate into considerable expenses. This is particularly important when using open-source models from sources such as the [HuggingFace hub](https://huggingface.co/docs/hub/index).

Intel®'s optimization technologies help with the efficient fine-tuning and inference of neural network models on CPUs. The 4th Gen Intel® Xeon® Scalable processors come with Intel® Advanced Matrix Extensions (Intel® AMX), an AI acceleration feature. Each core of these processors includes integrated BF16 and INT8 accelerators, which speed up deep learning fine-tuning and inference. Additionally, libraries such as [Intel Extension for PyTorch](https://github.com/intel/intel-extension-for-pytorch) and [Intel® Extension for Transformers](https://github.com/intel/intel-extension-for-transformers) further optimize compute-intensive neural network workloads on CPUs.

### **Retrieval Performance**

In RAG applications, the standard approach involves dividing the data into smaller, independent units and storing them in a vector database. However, this often leads to retrieval failures, as individual segments may lack the broader context needed to answer specific queries. LlamaIndex offers features for building a network of interlinked chunks (nodes), along with retrieval tools. These tools improve search by augmenting user queries, extracting key terms, or navigating through the connected nodes to locate the information needed to answer a query. Advanced data management tools can help organize, index, and retrieve data more effectively. New tooling can also assist in handling the large data volumes and complex queries common in RAG systems.",advanced_rag_course,https://learn.activeloop.ai/courses/take/rag/multimedia/51320355-production-ready-rag-solutions-with-llamaindex

14,Production-Ready RAG Solutions with LlamaIndex,"# Optimization

## The Role of the Retrieval Step

While the retrieval step is frequently underestimated, it is vital to the effectiveness of the RAG pipeline. The techniques used in this phase significantly influence the relevance and contextuality of the output. The LlamaIndex framework provides a variety of retrieval methods, complete with practical examples for different use cases, including the following:

- Combining keyword and embedding search in a hybrid approach can improve retrieval for specific queries. [[link](https://docs.llamaindex.ai/en/stable/examples/query_engine/CustomRetrievers.html)]
- Metadata filtering can provide additional context and improve the performance of the RAG pipeline. [[link](https://docs.llamaindex.ai/en/stable/examples/vector_stores/WeaviateIndexDemo.html#metadata-filtering)]
- Re-ranking reorders the search results by their relevance to the user’s input query. [[link](https://docs.llamaindex.ai/en/stable/examples/node_postprocessor/CohereRerank.html)]
- Indexing documents by summaries and then retrieving relevant information within the document. [[link](https://docs.llamaindex.ai/en/stable/examples/index_structs/doc_summary/DocSummary.html)]

Additionally, augmenting chunks with metadata provides more context and improves retrieval accuracy by defining relationships between chunks (nodes) that retrieval algorithms can follow. Language models can help extract page numbers and other annotations from text chunks. Decoupling the embeddings from the raw text chunks helps avoid biases and improves context capture. Embedding references, summaries of text chunks, or individual sentences improves retrieval performance by fetching more granular pieces of information. Organizing data with metadata filters supports structured retrieval by ensuring that relevant chunks are fetched.",advanced_rag_course,https://learn.activeloop.ai/courses/take/rag/multimedia/51320355-production-ready-rag-solutions-with-llamaindex

15,Production-Ready RAG Solutions with LlamaIndex,"# RAG Best Practices

Here are some good practices for dealing with RAG:",advanced_rag_course,https://learn.activeloop.ai/courses/take/rag/multimedia/51320355-production-ready-rag-solutions-with-llamaindex

16,Production-Ready RAG Solutions with LlamaIndex,"# RAG Best Practices

## Fine-Tuning the Embedding Model

[Fine-tuning the embedding model](https://docs.llamaindex.ai/en/stable/optimizing/fine-tuning/fine-tuning.html#finetuning-embeddings) involves several key steps to improve embedding performance. First, you need a training set, which can be created by generating synthetic question/answer pairs from randomly selected documents. Next, the model is fine-tuned on this training set. Optionally, the fine-tuned model can then be evaluated to measure the improvement. Numbers reported by LlamaIndex show that fine-tuning can yield a 5-10% improvement in retrieval metrics, after which the fine-tuned model can be integrated into RAG applications. LlamaIndex supports several fine-tuning types, including embedding models, adapters, and even routers, to improve the overall efficiency of the pipeline. The goal is embedding representations that better capture the meaning of your data. You can [read here](https://docs.llamaindex.ai/en/stable/optimizing/fine-tuning/fine-tuning.html#finetuning-embeddings) for more information.",advanced_rag_course,https://learn.activeloop.ai/courses/take/rag/multimedia/51320355-production-ready-rag-solutions-with-llamaindex

17,Production-Ready RAG Solutions with LlamaIndex,"# RAG Best Practices

## LLM Fine-Tuning

[Fine-tuning the LLM](https://docs.llamaindex.ai/en/stable/optimizing/fine-tuning/fine-tuning.html#fine-tuning-llms) produces a model that better captures the overall style of the dataset, leading to more precise responses. Fine-tuning the generative model brings several advantages, such as reducing hallucinations, which are often difficult to eliminate through prompt engineering alone. Moreover, the fine-tuned model has a deeper understanding of the dataset, which can improve performance even for smaller models. As a result, a more cost-effective model such as GPT-3.5 can come closer to GPT-4-level performance on the target task. LlamaIndex offers a variety of fine-tuning schemas tailored to specific goals, such as following a predetermined output structure, converting natural language into SQL queries, or memorizing new knowledge. The [documentation](https://docs.llamaindex.ai/en/stable/optimizing/fine-tuning/fine-tuning.html#fine-tuning-llms) section has several examples.",advanced_rag_course,https://learn.activeloop.ai/courses/take/rag/multimedia/51320355-production-ready-rag-solutions-with-llamaindex

18,Production-Ready RAG Solutions with LlamaIndex,"# RAG Best Practices

## Evaluation

Regularly monitoring the performance of your RAG pipeline is recommended, as it lets you assess changes and their impact on the overall results. Evaluating a model's response is challenging because it can be highly subjective, but several methods are available to track progress effectively. LlamaIndex provides [modules for assessing the quality](https://docs.llamaindex.ai/en/stable/optimizing/evaluation/evaluation.html) of both the generated results and the retrieval process. Response evaluation checks whether the response aligns with the retrieved context and the original query, and whether it matches a reference answer or follows set guidelines. Retrieval evaluation focuses on the relevance of the retrieved sources to the query. A common method for assessing responses is to use a strong LLM, such as GPT-4, to judge the generated responses against various criteria, such as correctness, semantic similarity, and faithfulness. Please refer to the [following tutorial](https://docs.llamaindex.ai/en/stable/module_guides/evaluating/root.html) for more information on the evaluation process and techniques.",advanced_rag_course,https://learn.activeloop.ai/courses/take/rag/multimedia/51320355-production-ready-rag-solutions-with-llamaindex

19,Production-Ready RAG Solutions with LlamaIndex,"# RAG Best Practices

## Generative Feedback Loops

A key aspect of generative feedback loops is injecting data into prompts: specific data points are fed into the RAG system to generate contextualized outputs. Once the RAG system generates descriptions or vector embeddings, these outputs can be stored back in the database. Creating a loop in which generated data continually enriches and updates the database can improve the quality of the system's future outputs.",advanced_rag_course,https://learn.activeloop.ai/courses/take/rag/multimedia/51320355-production-ready-rag-solutions-with-llamaindex

20,Production-Ready RAG Solutions with LlamaIndex,"# RAG Best Practices

## Hybrid Search

Keep in mind that embedding-based retrieval is not always practical for entity lookup. A hybrid search that combines keyword lookup with the additional context provided by embeddings can yield better results, balancing specificity and context.",advanced_rag_course,https://learn.activeloop.ai/courses/take/rag/multimedia/51320355-production-ready-rag-solutions-with-llamaindex

21,Production-Ready RAG Solutions with LlamaIndex,"# Conclusion

In this lesson, we covered the challenges and optimization strategies of Retrieval-Augmented Generation (RAG) systems, emphasizing the importance of effective data management, diverse representations in latent space, and compliance in complex environments. We highlighted techniques like dynamic updating of vectors, chunk size optimization, and hybrid retrieval approaches. We also explored the role of LlamaIndex in improving retrieval performance through data organization, and the value of fine-tuning embedding models and LLMs for RAG applications. Lastly, we recommended regular evaluation, generative feedback loops, and hybrid search for maintaining and improving RAG systems.

RESOURCES:

- “Make RAG Production-Ready” webinar: [https://www.youtube.com/watch?v=g-VvYLhYhOg](https://www.youtube.com/watch?v=g-VvYLhYhOg)

---

*For more information on Intel® Accelerator Engines, visit [this resource page](https://download.intel.com/newsroom/2023/data-center-hpc/4th-Gen-Xeon-Accelerator-Fact-Sheet.pdf). Learn more about Intel® Extension for Transformers, an Innovative Transformer-based Toolkit to Accelerate GenAI/LLM Everywhere [here](https://github.com/intel/intel-extension-for-transformers).*

*Intel, the Intel logo, and Xeon are trademarks of Intel Corporation or its subsidiaries.*",advanced_rag_course,https://learn.activeloop.ai/courses/take/rag/multimedia/51320355-production-ready-rag-solutions-with-llamaindex

22,LlamaIndex Introduction Precision and Simplicity i,"# LlamaIndex Introduction: Precision and Simplicity in Information Retrieval

In this guide, we will explore the LlamaIndex framework in depth; it helps in building Retrieval-Augmented Generation (RAG) systems for LLM-based applications. LlamaIndex, like other RAG frameworks, combines fetching relevant information from a large database with the generative capabilities of Large Language Models. It provides supplementary information to the LLM for a given question, reducing the chance that the LLM generates inaccurate responses. The aim is to provide a clear understanding of best practices for developing LLM-based applications. We will explain LlamaIndex's fundamental components in the following sections.",advanced_rag_course,https://learn.activeloop.ai/courses/take/rag/multimedia/51320311-llamaindex-introduction-precision-and-simplicity-in-information-retrieval

23,LlamaIndex Introduction Precision and Simplicity i,"# **Vector Stores**

Vector store databases enable the storage of large, high-dimensional data and provide the essential tools for semantically retrieving relevant documents. Rather than naively checking for the presence of specific words in a document, these systems compare embedding vectors that encode the meaning of the whole document. This makes search both simpler and more accurate. Searching in vector stores means fetching data according to its vector representations. These databases are integral across various domains, including Natural Language Processing (NLP) and multimodal applications, allowing for the efficient storage and analysis of high-dimensional datasets. A primary function of vector stores is similarity search, which locates the vectors most similar to a specific query vector. This functionality is central to numerous AI-driven systems, such as recommendation engines and image retrieval platforms, where pinpointing contextually relevant data is critical. Semantic search goes beyond traditional keyword matching by seeking information that aligns conceptually with the user's query. It captures meaning in vector representations by employing advanced ML techniques. Semantic search can be applied to any data format that can be vectorized before being stored in the database. Once the data is embedded, we can compute the similarity between the query vector and the indexed vectors, capturing the context of the query. This helps keep the results relevant and aligned with the contextual and conceptual nuances of the user's intent.",advanced_rag_course,https://learn.activeloop.ai/courses/take/rag/multimedia/51320311-llamaindex-introduction-precision-and-simplicity-in-information-retrieval

24,LlamaIndex Introduction Precision and Simplicity i,"# **Data Connectors**

The effectiveness of a RAG-based application is significantly enhanced by access to a vector store that compiles information from various sources. However, managing data in diverse formats can be challenging. Data connectors, also called `Readers`, are essential in LlamaIndex. Readers parse the data and convert it into a simplified `Document` representation consisting of text and basic metadata. Data connectors are designed to streamline data ingestion by automating the task of fetching data from various sources (like APIs, PDFs, and SQL databases) and formatting it.

[LlamaHub](https://llamahub.ai/) is an open-source project that hosts data connectors for ingesting a wide range of data formats into the LLM. You can explore the available integrations and data sources, and test some of the loaders, at [https://llamahub.ai/](https://llamahub.ai/). These implementations make the preprocessing step as simple as executing a function. Take the [Wikipedia](https://llamahub.ai/l/wikipedia?from=loaders) integration, for instance.

Before testing loaders, we must install the required packages and set the OpenAI API key for LlamaIndex. You can get the API key on [OpenAI's website](https://platform.openai.com/playground) and set it in the `OPENAI_API_KEY` environment variable. Please note that LlamaIndex defaults to OpenAI's `gpt-3.5-turbo` for text generation and the `text-embedding-ada-002` model for embedding generation.

```bash
pip install -q llama-index==0.9.14.post3 openai==1.3.8 cohere==4.37
```

```python
# Add API keys
import os

os.environ['OPENAI_API_KEY'] = ''  # paste your OpenAI API key here

# Enable logging
import logging
import sys

# Set the logging level to DEBUG for more verbose output,
# or use level=logging.INFO for less detailed information.
logging.basicConfig(stream=sys.stdout, level=logging.DEBUG)
```

We have also added a logging mechanism to the code. Logging in LlamaIndex is a way to monitor the operations and events that occur during the execution of your application. Logging helps with development and debugging, and shows in detail what the application is doing. In a production environment, you can configure the logging module to output log messages to a file or a logging service.

We can now use the `download_loader` function to access integrations from LlamaHub by passing it the integration name, then instantiate the returned class. In our sample code, the `WikipediaReader` class takes in several page titles and returns the text contained within them as `Document` objects.

```python
from llama_index import download_loader

# Download the Wikipedia integration from LlamaHub
WikipediaReader = download_loader(""WikipediaReader"")

loader = WikipediaReader()
documents = loader.load_data(pages=['Natural Language Processing', 'Artificial Intelligence'])
print(len(documents))
```

```
2
```

This retrieved information can be stored and used to enhance the knowledge base of our chatbot.",advanced_rag_course,https://learn.activeloop.ai/courses/take/rag/multimedia/51320311-llamaindex-introduction-precision-and-simplicity-in-information-retrieval

25,LlamaIndex Introduction Precision and Simplicity i,"# **Nodes**

In LlamaIndex, once data is ingested as documents, it passes through a processing structure that transforms these documents into `Node` objects. Nodes are smaller, more granular data units created from the original documents. Besides their primary content, nodes also contain metadata and contextual information. LlamaIndex provides a `NodeParser` class designed to convert the content of documents into structured nodes automatically. The `SimpleNodeParser` converts a list of document objects into nodes.

```python
from llama_index.node_parser import SimpleNodeParser

# Assuming documents have already been loaded

# Initialize the parser: chunks of up to 512 tokens with a 20-token overlap
parser = SimpleNodeParser.from_defaults(chunk_size=512, chunk_overlap=20)

# Parse documents into nodes
nodes = parser.get_nodes_from_documents(documents)
print(len(nodes))
```

```
48
```

The code above splits the two Wikipedia documents retrieved earlier into 48 smaller chunks with slight overlap.",advanced_rag_course,https://learn.activeloop.ai/courses/take/rag/multimedia/51320311-llamaindex-introduction-precision-and-simplicity-in-information-retrieval

26,LlamaIndex Introduction Precision and Simplicity i,"# **Indices**

At the heart of LlamaIndex is the ability to index and search various data formats like documents, PDFs, and database queries. Indexing is an initial step for storing information in a database; it essentially transforms unstructured data into embeddings that capture semantic meaning and optimizes the data format so it can be easily accessed and queried. LlamaIndex has a variety of index types, each of which fulfills a specific role. We highlight some of the popular index types in the following subsections.",advanced_rag_course,https://learn.activeloop.ai/courses/take/rag/multimedia/51320311-llamaindex-introduction-precision-and-simplicity-in-information-retrieval

27,LlamaIndex Introduction Precision and Simplicity i,"# **Indices**

## **Document Summary Index**

The [Document Summary Index](https://docs.llamaindex.ai/en/stable/examples/index_structs/doc_summary/DocSummary.html) extracts a summary from each document and stores it along with all the nodes of that document. Since it is not always easy to match small node embeddings to a query, having a document-level summary can help.",advanced_rag_course,https://learn.activeloop.ai/courses/take/rag/multimedia/51320311-llamaindex-introduction-precision-and-simplicity-in-information-retrieval

28,LlamaIndex Introduction Precision and Simplicity i,"# **Indices**

## Vector Store Index

The [Vector Store Index](https://docs.llamaindex.ai/en/stable/module_guides/indexing/vector_store_guide.html) generates embeddings during index construction and uses them to identify the top-k most similar nodes in response to a query. It is suitable for small-scale applications and scales to larger datasets when backed by high-performance vector databases.

*Figure: Fetching the top-k nodes and passing them on to generate the final response.*

The crawled Wikipedia documents can be stored in a Deep Lake vector store, and an index object can be created from its data. We can create the dataset in [Activeloop](https://www.activeloop.ai/) and append documents to it using the `DeepLakeVectorStore` class. First, we need to set the Activeloop and OpenAI API keys in the environment using the following code.

```python
import os

os.environ['OPENAI_API_KEY'] = ''    # your OpenAI API key
os.environ['ACTIVELOOP_TOKEN'] = ''  # your Activeloop API token
```

To connect to the platform, use the `DeepLakeVectorStore` class and provide the dataset path through the `dataset_path` argument. To save the dataset in your own workspace, replace the `genai360` name with your organization ID (which defaults to your Activeloop username). Running the following code will create an empty dataset.

```python
from llama_index.vector_stores import DeepLakeVectorStore

my_activeloop_org_id = ""genai360""
my_activeloop_dataset_name = ""LlamaIndex_intro""
dataset_path = f""hub://{my_activeloop_org_id}/{my_activeloop_dataset_name}""

# Create (or connect to) the Deep Lake vector store at dataset_path
vector_store = DeepLakeVectorStore(dataset_path=dataset_path, overwrite=False)
```

```
Your Deep Lake dataset has been successfully created!
```

Now, we need to create a storage context using the `StorageContext` class with the Deep Lake dataset as the source. Pass this storage context to the `VectorStoreIndex` class to create the index (generate embeddings) and store the results in the defined dataset.

```python
from llama_index.storage.storage_context import StorageContext
from llama_index import VectorStoreIndex

# Route the index's vector storage to the Deep Lake dataset
storage_context = StorageContext.from_defaults(vector_store=vector_store)

# Embed the documents and upload them to the dataset
index = VectorStoreIndex.from_documents(
    documents, storage_context=storage_context
)
```

```
Uploading data to deeplake dataset.
100%|██████████| 23/23 [00:00<00:00, 69.43it/s]
Dataset(path='hub://genai360/LlamaIndex_intro', tensors=['text', 'metadata', 'embedding', 'id'])

  tensor      htype       shape      dtype  compression
  -------    -------     -------    -------  -------
   text       text       (23, 1)      str     None
 metadata     json       (23, 1)      str     None
 embedding  embedding  (23, 1536)  float32   None
    id        text       (23, 1)      str     None
```

The created dataset remains accessible for future use. The Deep Lake database efficiently stores and retrieves high-dimensional vectors.",advanced_rag_course,https://learn.activeloop.ai/courses/take/rag/multimedia/51320311-llamaindex-introduction-precision-and-simplicity-in-information-retrieval

29,LlamaIndex Introduction Precision and Simplicity i,"# **Query Engines**

The next step is to use the generated indexes to query the information. The Query Engine is a wrapper that combines a Retriever and a Response Synthesizer into a pipeline. The pipeline uses the query string to fetch nodes and then sends them to the LLM to generate a response. A query engine can be created by calling the `as_query_engine()` method on an existing index.

The code below uses the documents fetched from Wikipedia to construct a Vector Store Index with the `VectorStoreIndex` class. The `.from_documents()` method simplifies building indexes on these processed documents. The created index can then be used to create a `query_engine` object, allowing us to ask questions about the documents with the `.query()` method.

```python
from llama_index import VectorStoreIndex

# Build an in-memory vector index over the Wikipedia documents
index = VectorStoreIndex.from_documents(documents)

query_engine = index.as_query_engine()
response = query_engine.query(""What does NLP stand for?"")
print(response.response)
```

```
NLP stands for Natural Language Processing.
```

The indexes can also function solely as retrievers that fetch documents relevant to a query. This enables the creation of a Custom Query Engine, offering more control over aspects such as the prompt or the output format. You can learn more [here](https://docs.llamaindex.ai/en/stable/examples/query_engine/custom_query_engine.html#defining-a-custom-query-engine).",advanced_rag_course,https://learn.activeloop.ai/courses/take/rag/multimedia/51320311-llamaindex-introduction-precision-and-simplicity-in-information-retrieval

30,LlamaIndex Introduction Precision and Simplicity i,"# **Routers**

[Routers](https://docs.llamaindex.ai/en/stable/module_guides/querying/router/root.html#routers) play a role in determining the most appropriate retriever for extracting context from the knowledge base. The routing function selects the optimal query engine for each task, improving performance and accuracy. These functions are beneficial when dealing with multiple data sources, each holding unique information. Consider an application that employs a SQL database and a Vector Store as its knowledge base. In this setup, the router can determine which data source is most applicable to the given query. You can see a working example of incorporating the routers [in this tutorial](https://docs.llamaindex.ai/en/stable/module_guides/querying/router/root.html#routers).",advanced_rag_course,https://learn.activeloop.ai/courses/take/rag/multimedia/51320311-llamaindex-introduction-precision-and-simplicity-in-information-retrieval

31,LlamaIndex Introduction Precision and Simplicity i,"# Saving and Loading Indexes Locally

All the examples so far stored indexes in cloud-based vector stores like Deep Lake. However, there are scenarios, such as rapid testing, where saving the data to disk is preferable. Storing here means saving the index data, including the nodes and their associated embeddings, to disk. This is done with the `persist()` method of the index's `storage_context` object.

```python
# Store the index (nodes and vector embeddings) on disk.
# By default, this writes to the ./storage directory,
# so the data does not have to be reprocessed later.
index.storage_context.persist()
```

If the index already exists in storage, you can load it directly instead of recreating it. We simply need to check whether the index already exists on disk and proceed accordingly:

```python
# Index storage checks
import os.path

from llama_index import (
    VectorStoreIndex,
    StorageContext,
    load_index_from_storage,
)
from llama_index import download_loader

# Check whether the index already exists in storage
if not os.path.exists(""./storage""):
    # If not, load the Wikipedia data and create a new index
    WikipediaReader = download_loader(""WikipediaReader"")
    loader = WikipediaReader()
    documents = loader.load_data(pages=['Natural Language Processing', 'Artificial Intelligence'])
    index = VectorStoreIndex.from_documents(documents)
    # Persist the new index to ./storage
    index.storage_context.persist()
else:
    # If the index already exists, just load it
    storage_context = StorageContext.from_defaults(persist_dir=""./storage"")
    index = load_index_from_storage(storage_context)
```

In this example, `os.path.exists(""./storage"")` checks whether the 'storage' directory exists. If it does not, the Wikipedia data is loaded and a new index is created and persisted; otherwise, the existing index is loaded from disk.",advanced_rag_course,https://learn.activeloop.ai/courses/take/rag/multimedia/51320311-llamaindex-introduction-precision-and-simplicity-in-information-retrieval

32,LlamaIndex Introduction Precision and Simplicity i,"# **LangChain vs. LlamaIndex**

LangChain and LlamaIndex are both designed to extend the capabilities of LLMs, and each has its own strengths.

**LlamaIndex** specializes in processing, structuring, and accessing private or domain-specific data, with a focus on specific LLM interactions. It suits tasks that demand high precision and quality when dealing with specialized, domain-specific data. Its main strength lies in connecting Large Language Models (LLMs) to external data sources.

**LangChain** is dynamic, suited for context-rich interactions, and effective for applications like chatbots and virtual assistants. These features make it well suited to quick prototyping and application development.

Although the two are generally used independently, functions from LangChain and LlamaIndex can be combined where their strengths differ; they can be complementary tools. The attached video in the course also aims to help you decide which tool to use for your application: LlamaIndex, LangChain, OpenAI Assistants, or building it all from scratch yourself. The table below compares them on a few topics you may consider when choosing:

| | LangChain | LlamaIndex | OpenAI Assistants |
| --- | --- | --- | --- |
| | Interact with LLMs - Modular and more flexible | Data framework for LLMs - Empower RAG | Assistant API - SaaS |
| Data | • Standard formats like CSV, PDF, TXT, … • Mostly focuses on vector stores. | • LlamaHub with dedicated data loaders for different sources (Discord, Slack, Notion, …). • Efficient indexing and retrieval; new data points can be added without recomputing all embeddings. • Improved chunking strategy that links chunks and uses metadata. • Supports multimodality. | • Up to 20 files, each up to 512 MB. • Accepts a wide range of [file types](https://platform.openai.com/docs/assistants/tools/supported-files). |
| LLM Interaction | • Prompt templates to facilitate interactions. • Very flexible: chains are easy to define and modules easy to combine. Choose the prompting strategy, model, and output parser from many options. • Can interact with LLMs and create chains directly, without additional data. | • Mostly uses LLMs to manipulate data, either for indexing or querying. | • Either GPT-3.5 Turbo or GPT-4, plus any fine-tuned model. |
| Optimizations | - | • LLM fine-tuning • Embedding fine-tuning | - |
| Querying | • Uses retriever functions. | • Indexing/querying techniques like sub-questions, HyDE, … [[link](https://twitter.com/llama_index/status/1729303619760259463?s=20)] • Routing: enables using multiple data sources. | • Threads and messages to keep track of user conversations. |
| Agents | • LangSmith | • LlamaHub | • Code interpreter, knowledge retriever, and custom function calls. |
| Documentation | • Easy to debug. • Easy to find concepts and understand function usage. | • As of November 2023, the methods are mostly explained through tutorials or blog posts. A bit harder to debug. | • Great. |
| Pricing | FREE | FREE | • $0.03 / code interpreter session • $0.20 / GB / assistant / day • Plus the usual LLM usage costs |

A smart approach involves closely examining your unique use case and its specific demands. The key decision boils down to whether you need interactive agents or powerful search capabilities for retrieving information. If your aim leans towards the latter, LlamaIndex stands out as a likely choice",advanced_rag_course,https://learn.activeloop.ai/courses/take/rag/multimedia/51320311-llamaindex-introduction-precision-and-simplicity-in-information-retrieval

33,LlamaIndex Introduction Precision and Simplicity i,"# **LangChain vs. LlamaIndex**

for enhanced performance.",advanced_rag_course,https://learn.activeloop.ai/courses/take/rag/multimedia/51320311-llamaindex-introduction-precision-and-simplicity-in-information-retrieval

34,LlamaIndex Introduction Precision and Simplicity i,"# **Conclusion**

We explored the LlamaIndex library and its building blocks. First, we discussed data connectors and LlamaHub, which ingest data from various sources, such as APIs, PDFs, and SQL databases. We then looked at how the indexing step structures the data into embedding representations, and how vector stores and retrievers fetch the relevant data. We also talked about routers, which help select the most suitable retriever. Lastly, we covered query engines, which provide knowledge retrieval. LangChain and LlamaIndex are both valuable and popular frameworks for developing apps powered by language models. LangChain offers a broader range of capabilities and tool integrations, while LlamaIndex specializes in indexing and retrieving information. Read the [LlamaIndex documentation](https://gpt-index.readthedocs.io/en/stable/) to explore its full potential.

>> [Notebook](https://colab.research.google.com/drive/1CgTSpnTNj50PBMbA8g8QOqZCIK-UvWLU?usp=sharing).

---

**RESOURCES**:

- OpenAI [Financial Document Analysis with LlamaIndex | OpenAI Cookbook](https://cookbook.openai.com/examples/third_party/financial_document_analysis_with_llamaindex)
- datacamp [LlamaIndex: A Data Framework for the Large Language Models (LLMs) based applications](https://www.datacamp.com/tutorial/llama-index-adding-personal-data-to-llms)
- indexing [LlamaIndex: How to use Index correctly.](https://howaibuildthis.substack.com/p/llamaindex-how-to-use-index-correctly)",advanced_rag_course,https://learn.activeloop.ai/courses/take/rag/multimedia/51320311-llamaindex-introduction-precision-and-simplicity-in-information-retrieval

35,Mastering Advanced RAG Techniques with LlamaIndex,"# Introduction

The Retrieval-Augmented Generation (RAG) pipeline relies heavily on retrieval performance, which can be improved by adopting various techniques and advanced strategies. Methods like query expansion, query transformations, and query construction each play a distinct role in refining the search process. These techniques broaden the scope of search queries and improve overall result quality. In addition to these core methods, strategies such as reranking (with the Cohere Reranker), recursive retrieval, and small-to-big retrieval further enhance the retrieval process. Together, these techniques form a comprehensive and efficient approach to information retrieval, helping searches stay wide-ranging, highly relevant, and accurate.",advanced_rag_course,https://learn.activeloop.ai/courses/take/rag/multimedia/51334510-mastering-advanced-rag-techniques-with-llamaindex

36,Mastering Advanced RAG Techniques with LlamaIndex,"# Querying in LlamaIndex

As mentioned in a previous lesson, querying an index in LlamaIndex is structured around several key components.

- **Retrievers**: These classes retrieve a set of nodes from an index based on a given query. Retrievers source the relevant data from the index.
- **Query Engine**: The central class that processes a query and returns a response object. A query engine uses the retrievers and the response synthesizer module to curate the final output.
- **Query Transform**: A class that enhances a raw query string with various transformations to improve retrieval effectiveness. It can be used in conjunction with a retriever and a query engine.

Combining these components produces an effective retrieval engine that complements any RAG-based application. Beyond that, more advanced techniques like query construction, query expansion, and query transformations can noticeably improve the relevance of search results.",advanced_rag_course,https://learn.activeloop.ai/courses/take/rag/multimedia/51334510-mastering-advanced-rag-techniques-with-llamaindex

37,Mastering Advanced RAG Techniques with LlamaIndex,"# Query Construction

[Query construction](https://blog.langchain.dev/query-construction/) in RAG converts user queries into a format that matches the data source being searched. For unstructured data, this means transforming questions into vectors that can be compared with the vector representations of the source documents to identify the most relevant ones. For structured data, such as databases, queries are formatted in a compatible language like SQL, enabling effective data retrieval. The core idea is to answer user queries by leveraging the inherent structure of the data. For instance, a query like ""movies about aliens in the year 1980"" combines a semantic component like ""aliens"" (which is best retrieved through vector search) with a structured component like ""year == 1980"". The process involves translating a natural language query into the query language of a specific database, such as SQL for relational databases or Cypher for graph databases. Which query construction approach to use depends on the specific use case. The first category targets vector stores with metadata filtering: the **MetadataFilter** classes, combined with an auto-retriever that translates natural language into structured queries. This involves defining the data source, interpreting the user query, extracting logical conditions, and forming a structured request. The other approach is **Text-to-SQL** for relational databases. Converting natural language into SQL poses challenges such as hallucination (inventing tables or fields that do not exist) and user errors (misspellings or irregularities). These are addressed by providing the LLM with an accurate database description and using few-shot examples to guide query generation. Query construction improves RAG answer quality by applying logical filter conditions inferred directly from the user's question, so the text chunks passed to the LLM are already refined before final answer synthesis.
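The movies-about-aliens example above can be made concrete with a minimal, framework-free sketch. It assumes a hypothetical rule-based parser (`construct_query`) in place of the LLM that would normally extract the filter, and it uses a naive word-overlap score in place of embedding similarity. `StructuredQuery`, `construct_query`, and `retrieve` are illustrative names, not LlamaIndex APIs. The sketch shows the two-stage shape: structured conditions narrow the candidates, and the semantic part ranks the remaining documents.

```python
import re
from dataclasses import dataclass, field


@dataclass
class StructuredQuery:
    # Free-text part, meant to be matched semantically (e.g. by vector search)
    semantic: str
    # Exact-match metadata conditions, e.g. {'year': 1980}
    filters: dict = field(default_factory=dict)


def construct_query(question: str) -> StructuredQuery:
    # Hypothetical rule-based stand-in for the LLM step: extract an
    # 'in the year NNNN' clause as a structured filter and keep the rest
    # of the question as the semantic component.
    filters = {}
    match = re.search(r'\bin the year (\d{4})\b', question)
    if match:
        filters['year'] = int(match.group(1))
        question = question[:match.start()] + question[match.end():]
    # Drop the generic 'movies about' prefix, which carries no topic signal
    semantic = re.sub(r'^movies about\s+', '', question.strip())
    return StructuredQuery(semantic=semantic.strip(), filters=filters)


def retrieve(query: StructuredQuery, docs: list) -> list:
    # 1) Structured step: keep only docs whose metadata satisfies every filter
    candidates = [
        d for d in docs
        if all(d['meta'].get(k) == v for k, v in query.filters.items())
    ]
    # 2) Semantic step: rank survivors by word overlap with the semantic part
    #    (a placeholder for embedding similarity in a real vector store)
    words = set(query.semantic.lower().split())
    return sorted(
        candidates,
        key=lambda d: len(words & set(d['text'].lower().split())),
        reverse=True,
    )


if __name__ == '__main__':
    movies = [
        {'text': 'aliens invade a remote outpost', 'meta': {'year': 1980}},
        {'text': 'aliens land in a small town', 'meta': {'year': 1982}},
        {'text': 'a detective story in new york', 'meta': {'year': 1980}},
    ]
    q = construct_query('movies about aliens in the year 1980')
    print(q)  # StructuredQuery(semantic='aliens', filters={'year': 1980})
    print([m['text'] for m in retrieve(q, movies)])
    # ['aliens invade a remote outpost', 'a detective story in new york']
```

In LlamaIndex, the same split would be handled by metadata filters on the vector store and an LLM-driven auto-retriever rather than a regex. The regex here only keeps the sketch self-contained.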
",advanced_rag_course,https://learn.activeloop.ai/courses/take/rag/multimedia/51334510-mastering-advanced-rag-techniques-with-llamaindex

38,Mastering Advanced RAG Techniques with LlamaIndex,"# Query Expansion

Query expansion works by extending the original query with additional related or synonymous terms and phrases. For instance, if the original query is too narrow or uses specialized terminology, query expansion can add broader or more commonly used terms relevant to the topic. Suppose the original query is ""*climate change effects*."" Query expansion would add related terms or synonyms to this query, such as ""*global warming impact*,"" ""*environmental consequences*,"" or ""*temperature rise implications*."" One way to do this in LlamaIndex is the `synonym_expand_policy` parameter of the `KnowledgeGraphRAGRetriever` class. Query expansion in LlamaIndex can often be made more effective by combining it with query transforms.",advanced_rag_course,https://learn.activeloop.ai/courses/take/rag/multimedia/51334510-mastering-advanced-rag-techniques-with-llamaindex

39,Mastering Advanced RAG Techniques with LlamaIndex,"# **Query Transformation**

Query transformations modify the original query to make it more effective at retrieving relevant information. Transformations can change the query's structure, substitute synonyms, or add contextual information. Consider a user query like ""*What were Microsoft's revenues in 2021?*"" To improve retrieval, this query could be rewritten as ""*Microsoft revenues 2021*"", a keyword-style form that is better suited to search engines and vector DBs. In short, query transformations **change the structure** of a query to improve its retrieval performance.",advanced_rag_course,https://learn.activeloop.ai/courses/take/rag/multimedia/51334510-mastering-advanced-rag-techniques-with-llamaindex

40,Mastering Advanced RAG Techniques with LlamaIndex,"# **Query Engine**

A [Query engine](https://docs.llamaindex.ai/en/stable/module_guides/deploying/query_engine/root.html) is an interface for interacting with data through natural language queries: it processes a query and returns a response. As mentioned in previous lessons, multiple query engines can be combined to handle complex data interrogation needs. For an interactive, back-and-forth conversation involving multiple queries and responses, a [Chat Engine](https://docs.llamaindex.ai/en/stable/module_guides/deploying/chat_engines/root.html) can be used instead. The simplest way to create a query engine is to call the `.as_query_engine()` method on an existing index. This section walks through a step-by-step example of creating an index from a text file and using a query engine to interact with it. The first step is to install the required packages with pip, Python's package manager, and then set the API key environment variables.

```bash
pip install -q llama-index==0.9.14.post3 deeplake==3.8.8 openai==1.3.8 cohere==4.37
```

```python
import os

# Fill in your own OpenAI API key and Activeloop token
os.environ['OPENAI_API_KEY'] = ''
os.environ['ACTIVELOOP_TOKEN'] = ''
```

The next step is to download the text file that serves as our source document. This file contains a long essay by Paul Graham, stored as a single text file. You can download the file from the [provided URL](https://raw.githubusercontent.com/run-llama/llama_index/main/docs/examples/data/paul_graham/paul_graham_essay.txt), or run the following commands in your terminal to create a directory and store the file there.
```bash
mkdir -p './paul_graham/'
wget 'https://raw.githubusercontent.com/run-llama/llama_index/main/docs/examples/data/paul_graham/paul_graham_essay.txt' -O './paul_graham/paul_graham_essay.txt'
```

Now, use the `SimpleDirectoryReader` class from the LlamaIndex framework to read all files in a specified directory. This class automatically iterates over the files and loads each one as a `Document` object.

```python
from llama_index import SimpleDirectoryReader

# Load every file in ./paul_graham as Document objects
documents = SimpleDirectoryReader(""./paul_graham"").load_data()
```

Next, we use a `ServiceContext` to split the long document into smaller, overlapping chunks (512 tokens each, with a 64-token overlap) and create nodes from them.

```python
from llama_index import ServiceContext

# Configure chunking: 512-token chunks with 64 tokens of overlap
service_context = ServiceContext.from_defaults(chunk_size=512, chunk_overlap=64)
node_parser = service_context.node_parser
# Split the loaded documents into nodes (chunks)
nodes = node_parser.get_nodes_from_documents(documents)
```

The nodes must be stored in a vector store database to enable easy access. Given a path, the `DeepLakeVectorStore` class can create an empty dataset. You can use `genai360` to access the already processed dataset, or change the organization ID to your Activeloop username to store the data in your own workspace.

```python
from llama_index.vector_stores import DeepLakeVectorStore

my_activeloop_org_id = ""genai360""
my_activeloop_dataset_name = ""LlamaIndex_paulgraham_essays""
dataset_path = f""hub://{my_activeloop_org_id}/{my_activeloop_dataset_name}""

# Create (or connect to) the Deep Lake vector store at dataset_path
vector_store = DeepLakeVectorStore(dataset_path=dataset_path, overwrite=False)
```

```
Your Deep Lake dataset has been successfully created!
```

The new database is wrapped in a `StorageContext` object, whose document store receives the nodes so that relationships between them can be tracked if needed. Finally, `VectorStoreIndex` takes the nodes along with the storage context and uploads the data to the cloud.
Essentially, it builds the index and generates an embedding for each chunk.

```python
from llama_index.storage.storage_context import StorageContext
from llama_index import VectorStoreIndex

# Wrap the Deep Lake vector store in a storage context
storage_context = StorageContext.from_defaults(vector_store=vector_store)
# Register the nodes in the document store
storage_context.docstore.add_documents(nodes)
# Embed the nodes and upload them to the Deep Lake dataset
vector_index = VectorStoreIndex(nodes, storage_context=storage_context)
```

```
Uploading data to deeplake dataset.
100%|██████████| 40/40 [00:00<00:00, 40.60it/s]
|Dataset(path='hub://genai360/LlamaIndex_paulgraham_essays', tensors=['text', 'metadata', 'embedding', 'id'])

  tensor      htype       shape      dtype   compression
  -------    -------     -------    -------   -------
   text       text       (40, 1)      str      None
 metadata     json       (40, 1)      str      None
 embedding  embedding  (40, 1536)   float32    None
    id        text       (40, 1)      str      None
```

The created index serves as the basis for the query engine. We create a query engine by calling the `.as_query_engine()` method on the vector index object. The following code sets the `streaming` flag to True, which reduces idle waiting time for the end user (more details on this will follow). It also uses the `similarity_top_k` parameter to set how many of the most similar chunks are retrieved to answer each query.

```python",advanced_rag_course,https://learn.activeloop.ai/courses/take/rag/multimedia/51334510-mastering-advanced-rag-techniques-with-llamaindex

41,Mastering Advanced RAG Techniques with LlamaIndex,"# **Query Engine**