input

stringlengths 38

38.8k

| target

stringlengths 30

27.8k

|

|---|---|

I started to do Monte Carlo in R as a hobby, but eventually a financial analyst advised to migrate to Matlab.

I'm an experienced software developer.

but a Monte Carlo beginner.

I want to construct static models with sensitivity analysis, later dynamic models.

Need good libraries/ algorithms that guide me.

To me seems that R has excellent libraries, and I suspect mathlab is preferred by inexperienced programmers because of the easy pascal-like language. The R language is based on scheme and this is hard for beginners, but not for me. If Matlab/ Octave has not advantages on the numerical/ library side I would stick with R. | To be honest, I think any question you ask around here about R vs ... will be bias towards R. Remember that R is by far the most used [tag](https://stats.stackexchange.com/tags)!

**What I do**

My current working practice is to use R to prototype and use C when I need an extra boost of speed. It used to be that I would have to switch to C very quickly (again for my particular applications), but the R [multicore](http://www.rforge.net/doc/packages/multicore/multicore.html) libraries have helped delay that switch. Essentially, you make a `for` loop run in parallel with a trivial change.

I should mention that my applications are *very* computationally intensive.

**Recommendation**

To be perfectly honest, it really depends on exactly what you want to do. So I'm basing my answer on this statement in your question.

>

> I want to construct static models

> with sensitivity analysis, later

> dynamic models. Need good libraries/

> algorithms that guide me

>

>

>

I'd imagine that this problem would be ideally suited to prototyping in R and using C when needed (or some other compiled language).

On saying that, typically Monte-Carlo/sensitivity analysis doesn't involve particularly advanced statistical routines - of course it may needed other advanced functionality. So I think (without more information) that you *could* carry out your analysis in any language, but being completely biased, I would recommend R! |

I'm working through the examples in Kruschke's [Doing Bayesian Data Analysis](http://www.indiana.edu/%7Ekruschke/DoingBayesianDataAnalysis/), specifically the Poisson exponential ANOVA in ch. 22, which he presents as an alternative to frequentist chi-square tests of independence for contingency tables.

I can see how we get information about about interactions that occur more or less frequently than would be expected if the variables were independent (ie. when the HDI excludes zero).

My question is how can I compute or interpret an *effect size* in this framework? For example, Kruschke writes "the combination of blue eyes with black hair happens less frequently than would be expected if eye color and hair color were independent", but how can we describe the strength of that association? How can I tell which interactions are more extreme than others? If we did a chi-square test of these data we might compute the Cramér's V as a measure of the overall effect size. How do I express effect size in this Bayesian context?

Here's the self-contained example from the book (coded in `R`), just in case the answer is hidden from me in plain sight ...

```r

df <- structure(c(20, 94, 84, 17, 68, 7, 119, 26, 5, 16, 29,

14, 15, 10, 54, 14), .Dim = c(4L, 4L),

.Dimnames = list(c("Black", "Blond",

"Brunette", "Red"), c("Blue", "Brown", "Green", "Hazel")))

df

Blue Brown Green Hazel

Black 20 68 5 15

Blond 94 7 16 10

Brunette 84 119 29 54

Red 17 26 14 14

```

Here's the frequentist output, with effect size measures (not in the book):

```r

vcd::assocstats(df)

X^2 df P(> X^2)

Likelihood Ratio 146.44 9 0

Pearson 138.29 9 0

Phi-Coefficient : 0.483

Contingency Coeff.: 0.435

Cramer's V : 0.279

```

Here's the Bayesian output, with HDIs and cell probabilities (directly from the book):

```r

# prepare to get Krushkes' R codes from his web site

Krushkes_codes <- c(

"http://www.indiana.edu/~kruschke/DoingBayesianDataAnalysis/Programs/openGraphSaveGraph.R",

"http://www.indiana.edu/~kruschke/DoingBayesianDataAnalysis/Programs/PoissonExponentialJagsSTZ.R")

# download Krushkes' scripts to working directory

lapply(Krushkes_codes, function(i) download.file(i, destfile = basename(i)))

# run the code to analyse the data and generate output

lapply(Krushkes_codes, function(i) source(basename(i)))

```

And here are plots of the posterior of Poisson exponential model applied to the data:

And plots of the posterior distribution on estimated cell probabilities:

| Improper scoring rules such as proportion classified correctly, sensitivity, and specificity are not only arbitrary (in choice of threshold) but are improper, i.e., they have the property that maximizing them leads to a bogus model, inaccurate predictions, and selecting the wrong features. It is good that they disagree with proper scoring (log-likelihood; logarithmic scoring rule; Brier score) rules and the $c$-index (a semi-proper scoring rule - area under ROC curve; concordance probability; Wilcoxon statistic; Somers' $D\_{xy}$ rank correlation coefficient); this gives us more confidence in proper scoring rules. |

We have submitted a paper reporting a statistically significant result.

One reviewer asks us to report what is the power to detect a significant association. As there was a previous paper on this issue, we could use the effect size of that paper to do the calculation.

However, we are surprised by this comment, and would be happy to know what is your opinion and whether you know of references that discuss calculation of power at posteriori when the result is significant.

---

Thank you very much for your responses.

I should have made clearer that we used a large dataset to run these analyses, so the study is unlikely to be underpowered. However, it involves a complex design, and other than running simulations, there is no simple way to compute power. We are not familiar with simulations to compute power, so I was trying to avoid this :-) | *Context*: I wrote this answer before the OP clarified that they are working with a large dataset, so the study (probably) has sufficient power. In my post I consider the more common case of a small study with a "significant finding". Imagine, for example, that the article under review presents an estimate of 1.25 in a domain where previous studies about related phonemena have reported estimates in the range [0.9, 1.1]. How does the article's author respond to the reviewer's request for a post-hoc estimate of power to detect an effect of size 1.25?

---

It's hard to argue that it doesn't matter if a study with significant p-value is underpowered. If a study has low power *and* the null hypothesis is rejected, then the sample statistic is likely to be a biased estimate of the population parameter. Yes, you are lucky to get evidence against the null hypothesis but also likely to be over-optimistic. The reviewer knows this so he asks how much power your study had to detect the effect you detected.

It's not recommended to do post-hoc power estimation. This is a much discussed topic on CV; see references below. In short – if your study was indeed underpowered to detect the true effect size – by doing post-hoc power analysis you compound the issue of overestimating the effect by also overestimating the power. Mathematically, the power at the observed effect is a function of the p-value: if the p-value is small, the post-hoc power is large. It's as if the result is more convincing because the same fact — the null is rejected — gets reported twice.

Okay, so enough bad news. How can you respond to the reviewer? Computing the power retroactively is not meaningful because your study is already done. Instead compute confidence interval(s) for the effect(s) of interest and emphasize estimation, not hypothesis testing. If the power of your study is low, the intervals are wide (as low power means that we can't make precise estimates). If the power of your study is high, the intervals are tight, demonstrating convincingly how much you have learned from your data.

If the reviewer insists on a power calculation, don't compute the power by plugging in the estimated effect for the true effect, aka post-hoc power. Instead do sensitivity power analysis: For example, fix the sample size, the power and the significance level, and determine the range of effect sizes that can be detected. Or fix the sample size and the significance, and plot power as a function of effect size. It will be especially informative to know what the power is for a range of realistic effect sizes.

Daniël Lakens discusses power at great length in [Improving Your Statistical Inferences](https://lakens.github.io/statistical_inferences/). There is even a section on "What to do if Your Editor Asks for Post-hoc Power?" He has great advice.

*References*

J. M. Hoenig and D. M. Heisey. The abuse of power. *The American Statistician*, 55(1):19–24, 2001.

A. Gelman. Don't calculate post-hoc power using observed estimate of effect size. *Annals of Surgery*, 269(1), 2019.

[Do underpowered studies have increased likelihood of false positives?](https://stats.stackexchange.com/q/176384/237901)

[What is the post-hoc power in my experiment? How to calculate this?](https://stats.stackexchange.com/q/430030/237901)

[Why is the power of studies that only report significant effects not always 100%?](https://stats.stackexchange.com/questions/263383/why-is-the-power-of-studies-that-only-report-significant-effects-not-always-100)

[Post hoc power analysis for a non significant result?](https://stats.stackexchange.com/questions/193726/post-hoc-power-analysis-for-a-non-significant-result)

---

This simulation shows that "significant" estimates from underpowered studies are inflated. A study with little power to detect a small effect has more power to detect a large effect. So if the true effect is small and the null hypothesis of no effect is rejected, the estimated effect tends to be larger than the true one.

I simulate 1000 studies with 50%, so about half of the studies have p-value < 0.05. The sample means from those "significant" studies are mostly to the right of the true mean 0.1, ie. they overestimate the true mean, often by a lot.

```r

library("pwr")

library("tidyverse")

# Choose settings for an underpowered study

mu0 <- 0

mu <- 0.1

sigma <- 1

alpha <- 0.05

power <- 0.5

pwr.t.test(d = (mu - mu0) / sigma, power = power, sig.level = alpha, type = "one.sample")

#>

#> One-sample t test power calculation

#>

#> n = 386.0261

#> d = 0.1

#> sig.level = 0.05

#> power = 0.5

#> alternative = two.sided

# Sample size to achieve 50% power to detect mean 0.1 with a one-sided t-test

n <- 387

# Simulate 1,000 studies with low power

set.seed(123)

reps <- 1000

studies <-

tibble(

study = rep(seq(reps), each = n),

x = rnorm(reps * n, mean = mu, sd = sigma)

)

results <- studies %>%

group_by(

study

) %>%

group_modify(

~ broom::tidy(t.test(.))

)

results %>%

# We are only interested in studies where the null is rejected

filter(

p.value < alpha

) %>%

ggplot(

aes(estimate)

) +

geom_histogram(

bins = 33

) +

geom_vline(

xintercept = mu,

color = "red"

) +

labs(

x = glue::glue("estimate of true effect {mu} in studies with {100*power}% power"),

y = "",

title = "\"Significant\" effect estimates from underpowered studies are inflated"

)

```

Created on 2022-04-30 by the [reprex package](https://reprex.tidyverse.org) (v2.0.1) |

The fixed-point combinator FIX (aka the Y combinator) in the (untyped) lambda calculus ($\lambda$) is defined as:

FIX $\triangleq \lambda f.(\lambda x. f~(\lambda y. x~x~y))~(\lambda x. f~(\lambda y. x~x~y))$

I understand its purpose and I can trace the execution of its application perfectly fine; **I would like to understand how to derive FIX from first principles**.

Here is as far as I get when I try to derive it myself:

1. FIX is a function: FIX $\triangleq \lambda\_\ldots$

2. FIX takes another function, $f$, to make it recursive: FIX $\triangleq \lambda f.\_\ldots$

3. The first argument of the function $f$ is the "name" of the function, used where a recursive application is intended. Therefore, all appearances of the first argument to $f$ should be replaced by a function, and this function should expect the rest of the arguments of $f$ (let's just assume $f$ takes one argument): FIX $\triangleq \lambda f.\_\ldots f~(\lambda y. \_\ldots y)$

This is where I do not know how to "take a step" in my reasoning. The small ellipses indicate where my FIX is missing something (although I am only able to know that by comparing it to the "real" FIX).

I already have read [Types and Programming Languages](http://rads.stackoverflow.com/amzn/click/0262162091), which does not attempt to derive it directly, and instead refers the reader to [The Little Schemer](http://mitpress.mit.edu/books/little-schemer) for a derivation. I have read that, too, and its "derivation" was not so helpful. Moreover, it is less of a direct derivation and more of a use of a very specific example and an ad-hoc attempt to write a suitable recursive function in $\lambda$. | As Yuval has pointed out there is not just one fixed-point operator. There are many of them. In other words, the equation for fixed-point theorem do not have a single answer. So you can't derive the operator from them.

It is like asking how people derive $(x,y)=(0,0)$ as a solution for $x=y$. They don't! The equation doesn't have a unique solution.

---

Just in case that what you want to know is how the first fixed-point theorem was discovered. Let me say that I also wondered about how they came up with the fixed-point/recursion theorems when I first saw them. It seems so ingenious. Particularly in the computability theory form. Unlike what Yuval says it is not the case that people played around till they found something. Here is what I have found:

As far as I remember, the theorem is originally due to S.C. Kleene. Kleene came up with the original fixed-point theorem by salvaging the proof of inconsistency of Church's original lambda calculus. Church's original lambda calculus suffered from a Russel type paradox. The modified lambda calculus avoided the problem. Kleene studied the proof of inconsistency probably to see how if the modified lambda calculus would suffer from a similar problem and turned the proof of inconsistency into a useful theorem in of the modified lambda calculus. Through his work regarding equivalence of lambada calculus with other models of computation (Turing machines, recursive functions, etc.) he transferred it to other models of computation.

---

How to derive the operator you might ask? Here is how I keep it in mind. Fixed-point theorem is about removing self-reference.

Everyone knows the liar paradox:

>

> I am a lair.

>

>

>

Or in the more linguistic form:

>

> This sentence is false.

>

>

>

Now most people think the problem with this sentence is with the self-reference. It is not! The self-reference can be eliminated (the problem is with truth, a language cannot speak about the truth of its own sentences in general, see [Tarski's undefinability of truth theorem](https://en.wikipedia.org/wiki/Tarski%27s_undefinability_theorem)). The form where the self-reference is removed is as follows:

>

> If you write the following quote twice, the second time inside quotes, the resulting sentence is false: "If you write the following quote twice, the second time inside quotes, the resulting sentence is false:"

>

>

>

No self-reference, we have instructions about how to construct a sentence and then do something with it. And the sentence that gets constructed is equal to the instructions. Note that in $\lambda$-calculus we don't need quotes because there is no distinction between data and instructions.

Now if we analyse this we have $MM$ where $Mx$ is the instructions to construct $xx$ and do something to it.

>

> $Mx = f(xx)$

>

>

>

So $M$ is $\lambda x. f(xx)$ and we have

>

> $MM = (\lambda x. f(xx))(\lambda x. f(xx))$

>

>

>

This is for a fixed $f$. If you want to make it an operator we just add $\lambda f$ and we get $Y$:

>

> $Y = \lambda f. (MM) = \lambda f.((\lambda x. f(xx))(\lambda x. f(xx)))$

>

>

>

So I just keep in mind the paradox without self-reference and that helps me understand what $Y$ is about. |

[Ladner's Theorem](http://doi.acm.org/10.1145/321864.321877) states that if P ≠ NP, then there is an infinite hierarchy of [complexity classes](http://en.wikipedia.org/wiki/Complexity_class) strictly containing P and strictly contained in NP. The proof uses the completeness of SAT under many-one reductions in NP. The hierarchy contains complexity classes constructed by a kind of diagonalization, each containing some language to which the languages in the lower classes are not many-one reducible.

This motivates my question:

>

> Let C be a complexity class, and let D be a complexity class that strictly contains C. If D contains languages that are complete for some notion of reduction, does there exist an infinite hierarchy of complexity classes between C and D, with respect to the reduction?

>

>

>

More specifically, I would like to know if there are results known for D = P and C = [LOGCFL](http://qwiki.stanford.edu/wiki/Complexity_Zoo%3aL#logcfl) or C = [NC](http://qwiki.stanford.edu/wiki/Complexity_Zoo%3aN#nc), for an appropriate notion of reduction.

---

Ladner's paper already includes Theorem 7 for space-bounded classes C, as Kaveh pointed out in an answer. In its strongest form this says: if NL ≠ NP then there is an infinite sequence of languages between NL and NP, of strictly increasing hardness. This is slightly more general than the usual version (Theorem 1), which is conditional on P ≠ NP. However, Ladner's paper only considers D = NP. | It is very likely that you can accomplish this in a generic setting. Almost certainly such a result **has** been proved in a generic setting already, but the references escape me at the moment. So here's an argument from scratch.

The writeup at <http://oldblog.computationalcomplexity.org/media/ladner.pdf> has two proofs of Ladner's theorem. The second proof, by Russell Impagliazzo, produces a language $L\_1$ of the form {$ x01^{f(|x|)}$} where $x$ encodes a satisfiable formula and $f$ is a particular polynomial time computable function. That is, by simply padding SAT with the appropriate number of $1$'s, you can get "NP-intermediate" sets. The padding is performed to "diagonalize" over all possible polynomial time reductions, so that no polynomial time reduction from SAT to $L\_1$ will work (assuming $P \neq NP$). To prove that there are infinitely many degrees of hardness, one should be able to substitute $L\_1$ in place of SAT in the above argument, and repeat the argument for $L\_2 = ${$x 0 1^{f(|x|)} | x \in L\_1$}. Repeat with $L\_i = ${$x 0 1^{f(|x|)} | x \in L\_{i-1}$}.

It seems clear that such a proof can be generalized to classes $C$ and $D$, where (1) $C$ is properly contained in $D$, (2) $D$ has a complete language under $C$-reductions, (3) the list of all $C$-reductions can be recursively enumerated, and (4) the function $f$ is computable in $C$. Perhaps the only worrisome requirement is the last one, but if you look at the definition of $f$ in the link, it looks very easy to compute, for most reasonable classes $C$ that I can think of. |

Is there a natural class $C$ of CNF formulas - preferably one that has previously been studied in the literature - with the following properties:

* $C$ is an easy case of SAT, like e.g. Horn or 2-CNF, i.e., membership in $C$ can be tested in polynomial time, and formulas $F\in C$ can be tested for satisfiability in polynomial time.

* Unsatisfiable formulas $F\in C$ are not known to have short (polynomial size) tree-like resolution refutations. Even better would be: there are unsatisfiable formulas in $C$ for which a super-polynomial lower bound for tree-like resolution is known.

* On the other hand, unsatisfiable formulas in $C$ are known to have short proofs in some stronger proof system, e.g. in dag-like resolution or some even stronger system.

$C$ should not be too sparse, i.e., contain many formulas with $n$ variables, for every (or at least for most values of) $n\in \mathbb{N}$. It should also be non-trivial, in the sense of containing satisfiable as well as unsatisfiable formulas.

The following approach to solving an arbitrary CNF formula $F$ should be meaningful: find a partial assignment $\alpha$ s.t. the residual formula $F\alpha$ is in $C$, and then apply the polynomial time algorithm for formulas in $C$ to $F\alpha$. Therefore I would like other answers besides the *all-different constraints* from the currently accepted answer, as I think it is rare that an arbitrary formula will become an all-different constraint after applying a restriction. | I'm not sure why one would require also sat formulas but there are some articles on the separation between General and Tree resolution eg [1]. It sounds to me that this is what you want.

[1] Ben-Sasson, Eli, Russell Impagliazzo, and Avi Wigderson. "Near optimal separation of tree-like and general resolution." Combinatorica 24.4 (2004): 585-603. |

What is the difference between programming language and a scripting language?

For example, consider C versus Perl.

Is the only difference that scripting languages require only the interpreter and don't require compile and linking? | I think the difference has a lot more to do with the intended use of the language.

For example, Python is interpreted, and doesn't require compiling and linking, as is Prolog. I would classify both of these as programming languges.

Programming langauges are meant for writing software. They are designed to manage large projects. They can probably call programs, read files, etc., but might not be quite as good at that as a scripting language.

Scripting langauges aren't meant for large-scale software development. Their syntax, features, library, etc. are focused more around accomplishing small tasks quickly. This means they are sometimes more "hackish" than programming langauges, and might not have all of the same nice features. They're designed to make commonly performed tasks, like iterating through a bunch of files or performing sysadmin tasks, to be automated.

For example, Bash doesn't do arithmetic nicely, which would probably make writing large-scale software in it a nightmare.

As a kind of benchmark: I would never write a music player in perl, even though I probably could. Likewise, I would never try to use C++ to rename all the files in a given folder.

This line is becoming blurrier and blurrier. JavaScript, by definition a "scripting" langauge, is increasingly used to develop "web apps" which are more in the realm of software. Likewise, Python initially fit many of the traits of a scripting language but is seeing more and more sofware developed using Python as the primary platform. |

For every Kth operation:

Right rotate the array clockwise by 1.

Delete the (n-k+1)th last element.

eg:

A = {1, 2, 3, 4, 5, 6}. Rotate the array clockwise i.e. after rotation the array A = {6, 1, 2, 3, 4, 5} and delete the last element that is {5} so A = {6, 1, 2, 3, 4}. Again rotate the array for the second time and deletes the second last element that is {2} so A = {4, 6, 1, 3}, doing these steps when he reaches 4th time, 4th last element does not exists so he deletes 1st element ie {1} so A={3, 6}. So continuing this procedure the last element in A is {3}, so outputp will be 3

How to solve this? | It seems some kind of problem with mathematical solution (try to find the position with a closed math formula). However, here is an algorithmic approach that runs in $O(n \log^2 n)$.

Using segment tree / Fenwick tree, you can find the number of removed elements in a range (using prefix sums). Keep track of the offset (imagine having a pointer on the first element (imagine a cyclic arry and in each step we push it one element to the left instead of rotating right). Using binary search on the Fenwick tree, you can find the index of element having the distance you are looking for the offset element. Nite that after deleting half of the elements $k$ will be greater than the size and hence you can directly output the first unremoved element before the offset. |

I am conducting an ordinal logistic regression. I have an ordinal variable, let's call it Change, that expresses the change in a biological parameter between two time points 5 years apart. Its values are 0 (no change), 1 (small change), 2 (large change).

I have several other variables (VarA, VarB, VarC, VarD) measured between the two time points. My intention is to perform an ordinal logistic regression to assess whether the entity of Change is more strongly associated with VarA or VarB. I'm really interested only in VarA and VarB, and I'm not trying to create a model. VarC and VarD are variables that I know *may* affect Change, but probably not very much, and in any case I'm not interested in them. I just want to know if the association in the period of observation (5 years) was stroger for VarA or for VarB.

Would it be wrong to not include VarC and VarD in the regression? | This depends on the relationships between the predictor variables (how are VarC and VarD related to VarA and VarB?) and also what question you are trying to answer.

Consider the possible case where VarA causes VarC which causes the response. If your only interest is the relationship between VarA and the response then including VarC would hide the indirect relationship. But if we are interested in if VarA has a direct effect on the response above and beyond the indirect effect through VarC then including VarC is important.

Sometimes it is helpful to draw a diagram with all the different variables and then draw lines/arrows showing the potential and/or interesting relationships between all the variables. Then use that along with the question of interest to decide on the model. |

I have one question with respect to need to use feature selection methods (Random forests feature importance value or Univariate feature selection methods etc) before running a statistical learning algorithm.

We know to avoid overfitting we can introduce regularization penalty on the weight vectors.

So if I want to do linear regression, then I could introduce the L2 or L1 or even Elastic net regularization parameters. To get sparse solutions, L1 penalty helps in feature selection.

Then is it still required to do feature selection before Running L1 regularizationn regression such as Lasso?. Technically Lasso is helping me reduce the features by L1 penalty then why is the feature selection needed before running the algo?

I read a research article saying that doing Anova then SVM gives better performance than using SVM alone. Now question is: SVM inherently does regularization using L2 norm. In order to maximise the margin, it is minimising the weight vector norm. So it is doing regularization in it's objective function. Then technically algorithms such as SVM should not be bothered about feature selection methods?. But the report still says doing Univariate Feature selection before normal SVM is more powerful.

Anyone with thoughts? | I don't think overfitting is the reason that we need feature selection in the first place. In fact, overfitting is something that happens if we don't give our model enough data, and feature selection further *reduces* the amount of data that we pass our algorithm.

I would instead say that feature selection is needed as a preprocessing step for models which do not have the power to determine the importance of features on their own, or for algorithms which get much less efficient if they have to do this importance weighting on their own.

Take for instance a simple k-nearest neighbor algorithm based on Euclidean distance. It will always look at all features as having the same weight or importance to the final classification. So if you give it 100 features but only three of these are relevant for your classification problem, then all the noise from these extra features will completely drown out the information from the three important features, and you won't get any useful predictions. If you instead determine the critical features beforehand and pass only those to the classifier, it will work much better (not to mention be much faster).

On the other hand, look at a random forest classifier. While training, it will automatically determine which features are the most useful by finding an optimal split by choosing from a subset of all features. Therefore, it will do much better at sifting through the 97 useless features to find the three good ones. Of course, it will still run faster if you do the selection beforehand, but its classification power will usually not suffer much by giving it a lot of extra features, even if they are not relevant.

Finally, look at neural networks. Again, this is a model which has the power to ignore irrelevant features, and training by backpropagation will usually converge to using the interesting features. However, it is known that standard training algorithm converge much faster if the inputs are "whitened", i.e., scaled to unit variance and with removed cross correlation [(LeCun et al, 1998)](http://yann.lecun.com/exdb/publis/pdf/lecun-98b.pdf). Therefore, although you don't strictly need to do feature selection, it can pay in pure performance terms to do preprocessing of the input data.

So in summary, I would say feature selection has less to do with overfitting and more with enhancing the classification power and computational efficiency of a learning method. How much it is needed depends a lot on the method in question. |

A [domino tiling](https://en.wikipedia.org/wiki/Domino_tiling) is a tesselation of a region in the plane by 2 × 1 squares. What is a good data type for storing and manipulating such objects?

In my current manipulation, use an array to store all the half-squares numbering them 1-2 for a horizontal domino, 3 4 for a vertical. It's not ideal but I can draw the tiling in `ASCII` or using a graphics editor.

```

_ _ _ _

1 2 |_ _| 3 3 | | |

1 2 |_ _| 4 4 |_|_|

```

In some applications, I have to identify specific features within array. For example, I might have to count all instances of 2 ×2 squares of dominos in my array, excluding things like

```

_ _

_|_ 2 1

|_ _| 1 2

```

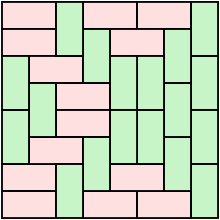

These queries are not difficult, but they make me start to question my use of arrays. | There is an alternative, more compact kind of array representation which might be better if you are working with diamonds as defined in the paper you linked (for axis aligned rectangles you need to find the smallest covering diamond).

The idea is to checkerboard the plane and assign a 2-bit number to each "black" cell, such that the number represents the orientation of the domino relative to the cell. Consider for example:

where $0$ means "horizontal domino pointing east", $1$ means "vertical domino pointing north", etc. Tilting the numbers clockwise yields the array representation:

$$A = \begin{pmatrix} 0 & 0 & 0 & 0 \\ 2 & 3 & 3 & 0 \\ 2 & 3 & 0 & 2 \\ 2 & 1 & 0 & 1 \\ 2 & 2 & 2 & 1 \\ \end{pmatrix}$$

To find two dominos forming a square search for a $0$ adjacent to a $2$ or a $1$ adjacent to a $3$. Now, if you deal with big tesselations and are into implemtations sped up by bit operations, you can do this:

Let $A$ be a $\{0,1,2,3\}^{m \times n}$ matrix, $b$ be the bit mask $\dots 01010101$, then we can represent the $i^{th}$ row of $A$ as a single integer by using bit-concatenation:

$$r\_i = \bigvee\_{j=1}^n (a\_{ij} << 2(j-1))$$

where $\vee$ denotes a bitwise OR and $<<$ a leftshift. Denoting $\oplus$ as bitwise XOR we can calculate

$$s\_i=(r\_i \text{ mod } 2^{2n}) \oplus (r\_i >> 2) \oplus b \\

t\_i=r\_i \oplus r\_{i+1} \oplus b, ~~i < m$$

Then domino $a\_{ij}$ and domino $a\_{ij+1}$ form a square iff bits $(s\_i)\_{2j-2}$ and $(s\_i)\_{2j-1}$ are both $1$. Likewise domino $a\_{ij}$ and domino $a\_{i+1j}$ do so iff bits $(t\_i)\_{2j-2}$ and $(t\_i)\_{2j-1}$ are both $1$.

Example calculation for rows 1,2 (little-endian!):

```

r_1 00 00 00 00

r_2 10 11 11 00

b 01 01 01 01

xor -----------

11 10 10 01

```

Therefore $a\_{11}$ and $a\_{21}$ form a square. |

In all of the contexts I've seen loss functions in statistics/machine learning so far, loss functions are additive in observations. i.e.: loss $Q\_D$ of dataset $D$ is an additive aggregation of losses at observations $i\in D$: $Q\_D(\beta)=\sum\_{i\in D}Q\_i(\beta)$. e.g. in the loss that is a simple sum of squared residuals: $Q\_D=\sum\_i(y\_i-X\_i\beta)^2$.

This seems sensible, but I am wondering: **Are there contexts in statistics/machine learning in which it happens (or reasons in theory why one might want) that a loss function is used that is not additive (or even separable) in observations?** | **Loss functions are not always additive in observations:** A loss function is function of an estimator (or predictor) and the thing it is estimating (predicting). The loss function is often, but not always, a distance function. Moreover, the estimator (predictor) sometimes, but not always, involves a sum of terms involving a single observation. Generally speaking, the loss function does not always have a form that is additive with respect to the observations. For prediction problems, deviation from this form occurs because of the form of the loss function. For estimation problems, it occurs either because of the form of the loss function, or because of the form of the estimator appearing in the loss function.

To see the generality of the loss form for a prediction problem, consider the general case where we have an observed data $\mathbf{y} = (y\_1,...,y\_n)$ and we want to predict the observable vector $\mathbf{y}\_\* = (y\_{n+1},...,y\_{n+k})$ using the predictor $\hat{\mathbf{y}}\_\* = \mathbf{H}(\mathbf{y})$. We can write the loss for this prediction problem as:

$$L(\hat{\mathbf{y}}\_\*, \mathbf{y}\_\*) = L(\mathbf{H}(\mathbf{y}), \mathbf{y}\_\*).$$

The loss function in your question is the Euclidean distance between the prediction vector and the observed data vector, which is $L(\hat{\mathbf{y}}\_\*, \mathbf{y}\_\*) = ||\hat{\mathbf{y}}\_\* - \mathbf{y}\_\*||^2 = \sum\_i (\hat{y}\_{\*i} - y\_{\*i})^2$. That particular form is composed of a sum of terms involving the observed values being predicted, and so the additivity property holds in that case. However, there are many other examples of loss functions that give rise to a form that does not have this additivity property.

A simple example of two loss functions that are not additive in the observations are when the loss is equal to the prediction error either from the best prediction, or from the worst prediction. In the case of "loss from best prediction" we have the loss function $L(\hat{\mathbf{y}}\_\*, \mathbf{y}\_\*) = \min\_i |\hat{y}\_{\*i} - y\_{\*i}|$, and in "loss from worse prediction" we have the loss function $L(\hat{\mathbf{y}}\_\*, \mathbf{y}\_\*) = \max\_i |\hat{y}\_{\*i} - y\_{\*i}|$. In either case, the loss function is not additive for the individual terms. |

How is [real-time computing](http://en.wikipedia.org/wiki/Real-time_computing) defined in theoretical computer science (e.g. complexity theory)? Are there complexity theoretic models designed to capture the real-time computation? | Like many things in life, there is no one definitive definition. For an algorithm to run in real-time, some people on the theoretical side say that this means it will take constant time per 'something.' Now you have to decide what a 'something' is but let me give a concrete example. Let's say that the input arrives one symbol at a time and you want to output the answer to a query as soon as a new symbol arrives. If calculating that output takes constant time per new symbol then you might say the algorithm runs in real-time. An example of this is real-time exact string matching, which outputs whether a pattern matches the latest suffix of a text in constant time per new symbol. The text is assumed to arrive one symbol at a time.

However, an engineering answer will be less worried about "constant time" and more worried about it happening fast in practice and in particular fast enough that the result can be used by the time it is needed. So for example in robotics, if you want to play ping-pong it is useful for the robot to be able to work out where the ball is and move to hit it as the ball arrives, and not after the ball has passed. The asymptotic time complexity of the underlying algorithms will perhaps be of less interest there than just the observation that the code works out the location quickly enough. To give another example, if you want to render video and can do it at 25 frames per second then it is reasonable to say that the rendering is happening in real-time.

So basically you have two answers. One for the theoreticians/algorithmists and one that just says that you are doing the work as you need it on the fly.

EDIT: I should probably add that one extra feature one should require of even a constant time algorithm is that the time complexity is not amortised. In this context, real-time == unamortised constant time. |

I've always thought vaguely that the answer to the above question was affirmative along the following lines. Gödel's incompleteness theorem and the undecidability of the halting problem both being negative results about decidability and established by diagonal arguments (and in the 1930's), so they must somehow be two ways to view the same matters. And I thought that Turing used a universal Turing machine to show that the halting problem is unsolvable. (See also [this math.SE](https://math.stackexchange.com/questions/108964/halting-problem-and-universality) question.)

But now that (teaching a course in computability) I look closer into these matters, I am rather bewildered by what I find. So I would like some help with straightening out my thoughts. I realise that on one hand Gödel's diagonal argument is very subtle: it needs a lot of work to construct an arithmetic statement that can be interpreted as saying something about it's own derivability. On the other hand the proof of the undecidability of the halting problem I found [here](http://en.wikipedia.org/wiki/Halting_problem#Sketch_of_proof) is extremely simple, and doesn't even explicitly mention Turing machines, let alone the existence of universal Turing machines.

A practical question about universal Turing machines is whether it is of any importance that the alphabet of a universal Turing machine be the same as that of the Turing machines that it simulates. I thought that would be necessary in order to concoct a proper diagonal argument (having the machine simulate itself), but I haven't found any attention to this question in the bewildering collection of descriptions of universal machines that I found on the net. If not for the halting problem, are universal Turing machines useful in any diagonal argument?

Finally I am confused by [this further section](http://en.wikipedia.org/wiki/Halting_problem#Relationship_with_G.C3.B6del.27s_incompleteness_theorem) of the same WP article, which says that a weaker form of Gödel's incompleteness follows from the halting problem: "a complete, consistent and sound axiomatisation of all statements about natural numbers is unachievable" where "sound" is supposed to be the weakening. I know a theory is consistent if one cannot derive a contradiction, and a complete theory about natural numbers would seem to mean that all true statements about natural numbers can be derived in it; I know Gödel says such a theory does not exist, but I fail to see how such a hypothetical beast could possibly fail to be sound, i.e., also derive statements which are false for the natural numbers: the negation of such a statement would be true, and therefore by completeness also derivable, which would contradict consistency.

I would appreciate any clarification on one of these points. | Universal Turing machines are useful for some diagonal arguments, e.g in the separation of some classes in the [hierarchies of time](http://en.wikipedia.org/wiki/Time_hierarchy_theorem) or [space](http://en.wikipedia.org/wiki/Space_hierarchy_theorem) complexity: the universal machine is used to prove there is a decision problem in $\mbox{DTIME}(f(n)^3)$ but not in $\mbox{DTIME}(f(n/2))$. (Better bounds can be found in the WP article)

However, to be perfectly honest, if you look closely, the universal machine is not used in the `negative' part: the proof supposes there is a machine $K$ that would solve a time-limited version of the halting problem and then proceeds to build $¬KK$. (No universal machine here) The universal machine is used to solve the time-limited version of the halting problem in a larger amount of time. |

I’m a sucker for mathematical elegance and rigour, and now am looking for such literature on algorithms and algorithm analysis. Now, it doesn’t matter much to me *what* algorithms are covered, but very much *how* they are presented and treated.¹ I most value a very clear and precise language which *defines* all used notions in a stringent and abstract manner.

I found that the classic *Introduction to Algorithms*, by Cormen, Leiserson, Rivest and Stein is pretty neat, but doesn’t handle the mathematics well and is quite informal with its proofs and definitions. Sipser’s *Introduction to the Theory of Computation* seems better in that regard, but still offers no seamless transition from mathematics to algorithms.

Can anyone recommend something?

---

¹: The algorithms should at least invole the management of their needed data using classical non-trivial abstract data structures like graphs, arrays, sets, lists, trees and so on – preferably also operating on such data structures. I wouldn’t be too interested if the issue of usage and management of data structures was ignored altogether. I don’t care much about the problems solved with them, though. | Well, you can always consider a Turing machine equipped with an oracle for the ordinary Turing machine halting problem. That is, your new machine has a special tape, onto which it can write the description of an ordinary Turing machine and its input and ask if that machine halts on that input. In a single step, you get an answer, and you can use that to perform further computation. (It doesn't matter whether it's in a single step or not: it would be enough if it was guaranteed to be in some finite number of steps.)

However, there are two problems with this approach.

1. Turing machines equipped with such an oracle can't decide their own halting problem: Turing's proof of the undecidability of the ordinary halting problem can easily be modified to this new setting. In fact, there's an infinite hierarchy, known as the "Turing degrees", generated by giving the next level of the hierarchy an oracle for the halting problem of the previous one.

2. Nobody has ever suggested any way in which such an oracle could be physically implemented. It's all very well as a theoretical device but nobody has any clue how to build one.

Also, note that ZFC is, in a sense, weaker than naive set theory, not stronger. ZFC can't express Russell's paradox, whereas naive set theory can. As such, a better analogy would be to ask whether the halting problem is decidable for weaker models of computation than Turing machines. For example, the halting problem for deterministic finite automata (DFAs) is decidable, since DFAs are guaranteed to halt for every input. |

I have been struggling with [flat file databases](https://en.wikipedia.org/wiki/Flat_file_database) and corresponding statistical packages for almost 20 years now (from Excel to SPSS, then Stata, and currently R).

However, I have always had to convert complex and multidimensional [relational databases](https://en.wikipedia.org/wiki/Relational_database) (eg in Access or MySQL) to often overly simplified flat sheet databases, which is at best time consuming (but often means reducing the amount of information available for each analysis).

Indeed, the approach I have always followed is the typical one of converting a relational database through specific queries into one or more flat file databases. While this is simple enough for most analyses, especially univariate and bivariate, it may become more confusing for multivariable and multivariate analyses, as it requires taking multiple and complex queries, and most importantly often oversimplifying the data themselves.

Now that I try to get more acquainted with big data and data science, I wonder whether the shift to big data will require also a shift to data analysis encompassing multiple tables and relations, without diluting the efficiency and power of a relational database when it is converted into multiple flat file databases.

So, my question is, simply: is it possible to directly perform complex (eg multivariable) analyses of relational databases? And if yes, how?

This is not a philosophical question (only). For instance, I am now working on a relatively large (reaching 2000 patients) observational study on transcatheter aortic valve implantation for severe aortic stenosis ([RISPEVA](https://clinicaltrials.gov/ct2/show/NCT02713932)). It is based on a MySQL electronic case report form which corresponds to 12 separate tables with complex relations and often multiple entries per each patient. My approach so far to try to identify predictors of long-term death (eg if looking for a score) has been, as usual, to create multiple tables through queries, and then distill the key features capable of predicting death. This means going through multiple stages of analysis and, at best, it is time consuming.

My fear is however that it might overlook one or more of the relational features of the data, and thus loosing precision or accuracy. Could it be done in a different fashion, directly analyzing the relational database as it stands? | My understanding of your question is that you are interested in methods to uncover multidimensional relationships in data yet are reluctant to take low-dimensional slices of the data for analysis. This is, in a sense, the basis of many machine learning algorithms that use data in high dimensions to make predictions or classifications with often very complex rules that are learned directly from the data.

There are classes of relational methods which perhaps fit more neatly into what you are thinking of, however. For example, the [infinite relational model](http://www.psy.cmu.edu/~ckemp/papers/KempTGYU06.pdf) is a Bayesian nonparametric framework for identifying hidden structure across many dimensions in a way that appears to conceptually match what you want. For a sample problem that this might be used for, consider a relational database which contains 3 tables with 3 different primary keys and containing information on a set of cases $S$, a set of patients $P$ and a set of doctors $D$ that performed procedures during these cases. I offer this as a low-dimensional example but all of this can be scaled up to include more data.

Then, suppose that you have an indicator variable denoting whether or not the patient had a good outcome. As shown in the paper I linked, you could simultaneously find partitionings of each of $S$, $D$ and $P$ such that each partition cell contained similar outcomes. This learning is done by performing optimization of the likelihood of the data under a Bayesian model. This might inform you as to which doctors are good or bad, or whether certain patients are particularly troublesome for a given procedure. Again, this framework is flexible and affords a range of generative models for the underlying process.

This may be more complex than what you desired - it's a bit of a jump from Excel or SPSS to writing custom inference code in another programming language. Still, it's how I would approach this problem. |

I am interested in modeling objects, from object oriented programming, in dependent type theory. As a possible application, I would like to have a model where I can describe different features of imperative programming languages.

I could only find one Paper on modeling objects in dependent type theory, which is:

[Object-oriented programming in dependent type theory by A. Setzer (2006)](http://www.cs.swan.ac.uk/~csetzer/articles/objectOrientedProgrammingInDepTypeTheoryTfp2006PostProceedings.pdf)

Are there further references on the topic that I missed and perhaps are there more recent ones?

Is there perhaps an implementation (i.e. proof) available for a theorem prover, like Coq or Agda? | Some early(?) work done in this area was by Bart Jacobs (Nijmegen) and Marieke Huisman. Their work is based on the PVS tool and relied on a coalgebraic encoding of classes (if I remember correctly). Look at Marieke's [publication page](http://wwwhome.ewi.utwente.nl/~marieke/papers.html) for papers in the year 2000 and her PhD thesis in 2001. Also look at the papers by Bart Jacobs cited in the A Setzer paper you mention.

Back in those days, they had something called the LOOP tool, but it seems to have vanished from the internets.

There is a workshop series known as [FTfJP](http://www.cs.ru.nl/ftfjp/) (Formal Techniques for Java-like Programs) that addresses the formal verification of OO programs. Undoubtedly some of the work uses dependent type theory/higher-order logic. The workshop series has been running for some 14 years. |

The problem is whether a graph (which we represent as a flow network) has a single min-cut, or there could be multiple min cuts with the same maximum flow value, I've yet to encounter a well explained algorithm for solving this problem, let alone a complexity anaylsis or proof.

I was not able to find the exact resource of information to help me answer the problem. I'm not sure where else to search, therefore I thought asking here could perhaps help me better my understanding of the problem and the algorithms that help solving them. | Here is a simple algorithm that determines whether a flow network $G=(V, E, c)$ with source $s$ and sink $t$ has a single min-cut or not.

1. Find a maximum flow $f$ of $G$. Let $R\_f$ be the residual network of $G$ with respect to $f$.

2. Let $X$ be the set of all nodes that are reachable from $s$ in $R\_f$.

3. Let $Y$ be the set of all nodes from which $t$ is reachable or, what is equivalent, all nodes reachable from $t$ in $R\_f$ with all direction of flow capacity reversed.

4. If $X\cup Y=V$, there is a unique minimum cut (which is $(X,Y)$). Otherwise, there are more than one minimum cut.

#### Why is the algorithm above correct?

First, we know that $(X, V\setminus X)$ is a minimum cut. By symmetry, so is $(V\setminus Y, Y)$.

Suppose $(S,T)$ is an arbitrary minimum-cut of $G$ where $s\in S$ and $t\in T$. [This post](https://cs.stackexchange.com/q/149194/91753) tells that $X$ is contained in $S$. By symmetry, $Y$ is contained in $T$. Hence, $(S,T)$ is the unique minimum-cut iff $X\cup Y=V$.

#### Time-complexity of the algorithm

It mostly depends on the complexity of [the algorithm that is used to compute a maximum flow](https://en.wikipedia.org/wiki/Maximum_flow_problem#Algorithms).

It takes $O(E)$ time to compute $X$ and $Y$, given the max-flow $f$.

It takes $O(V)$ time to check whether $X=Y$. |

I can't find how to prove the decibility with a reduction.

EDIT:

I've tried the reduction from the halting problem and the aceptance problem. Stopping for at least one entry has infinite inputs (you have to check all possible inputs) but the halting problem only has one input for the TM.

I don't understand how can i formally define a machine that using a machine that checks all inputs solves all cases of the halting problem. | It more or less depends on the implementation. If you have implemented the matrix using linked lists (which isn't what we usually do), then adding a new vertex in $G$ will be linear in the number of vertices of $G$. But we don't usually use linked lists because then reading/checking an edge will take $V(G)^2$ time.

To allow random/constant time access of the adjacency matrix, we need to use fixed-size arrays of arrays (or matrix). Now, when you add new vertex, we are required to copy the whole matrix into a bigger size matrix: hence the quadratic time.

Generally, we use $vectors$ instead of $arrays$ which hold *extra hidden memory*, and hence it will indeed be linear time to add a vertex on average. <https://www.geeksforgeeks.org/vector-in-cpp-stl/> |

I understand the textbook explanation of how to use dynamic programming to find the minimum edit distance between 2 strings but how do we get to pick the 2nd string?

I don't think the entire dictionary is compared as sometimes the difference is either in the middle or the end. I assume that in the end, what is suggested is the string that has the minimum edit distance after creating a certain number of $n \times m$ tables, where $n$ is the typed string length and $m$ is that of the other words that may be close. | Companies with search engines (e.g. Microsoft or Google) don't always directly search for the string with the smallest Levenshtein distance. They have a huge database of search queries, from which they have developed a huge database of commonly misspelled/mistyped variants, and what word the user probably meant to type instead.

They also have a huge corpus of text, and can use this to (for example) predict which word is most likely to come next based on what you've typed so far, or to assist with autocompletion. The set of likely words is much smaller than the set of possible words.

Don't underestimate the value of understanding exactly how real humans misspell or mistype things. For example, when you misspell something, you rarely get the first letter wrong, unless it's an ambiguous letter such as a vowel or "c" vs "k".

With all that said, let's assume that you're not doing any of that, and just want to find a string with edit distance as close as possible. The general idea is to find a set of candidate words first (e.g. all words within a certain edit distance, or all words with promising sub-matches), and then use some kind of finer-grained metric to decide which member of the set to suggest.

A simple approach is to use a trie, such as a [ternary search trie](https://en.wikipedia.org/wiki/Ternary_search_tree). Another option is to combine [k-mer](https://en.wikipedia.org/wiki/K-mer) matches. |

I am reading/studying this paper [1](https://arxiv.org/pdf/1601.00670.pdf) and got confused with some expressions. It might be basic for many of you, so my apologizes. In the paper the following prior model is assumed:

$\mu\_k \sim \mathcal{N}(0, \sigma^2) \\ c\_i \sim Categorical(1/K, ... 1/K) \\ x\_i|c\_i, \mu \sim \mathcal{N}(c\_i^{T}\mu, 1)$

The joint density is modeled as follows:

$p(x, c, \mu) = p(\mu)\prod\_{i=1}^{n}p(c\_i)p(x\_i|c\_i, \mu)$

Using the mean-field approximation as,

$q(\mu, c) = \prod\_{k=1}^{K}q(\mu\_k; m\_k, s\_k^{2}) \prod\_{i=1}^{n}q(c\_i;\varphi\_i)$

the authors arrive to the ELBO,

$ELBO(\textbf{m}, \textbf{s}^2, \varphi) = \sum\_{k=1}^{K}\mathbb{E}[\log p(\mu\_k);m\_k, s\_k^{2}] + \\

+ \sum\_{i=1}^{n}(\mathbb{E}[\log p(c\_i);\varphi\_i] + \mathbb{E}[\log p(x\_i|c\_i, \mu); \textbf{m}, \textbf{s}^{2}, \varphi\_i]) + \\

- \sum\_{i=1}^{n}\mathbb{E}[\log q(c\_i;\varphi\_i)] - \sum\_{k=1}^{K}\mathbb{E}[\log q(\mu\_k; m\_k, s\_k^{2})]$

I am kind of lost in how to compute the ELBO. E.g., the first term is the prior on $\mu\_k$, which is a zero-mean Gaussian. Then I would say that term is zero. Am I right? In the second term, $\sum\_{i=1}^{n}(\mathbb{E}[\log p(c\_i);\varphi\_i]$, should it be $\log (K)$? Can someone give me a hint how to compute this equation?

Besides this, the paper goes on presenting the update algorithm on page 14. The update equation for the latent variables $\varphi\_i$ is:

For $i=1....n$

$ \varphi\_{ik} \propto \texttt{exp}\{\mathbb{E}[\mu\_k; m\_k, s\_k^{2}]x\_i - \mathbb{E}[\mu\_k^{2};m\_k,s\_k^{2}]/2\}$

Again, $\mathbb{E}[\cdot]$ is computed w.r.t. $q(\cdot)$, and, assuming that $\mu\_k$ is a Gaussian distribution centered here at $m\_k$, the first term should be simply $\texttt{exp}\{m\_k x\_i\}$ ? the second term just $\texttt{exp}\{(s\_k^{2} + m\_k^{2})/2\}$ ?

Help in understanding these expressions would be very appreciated! Thanks! | Ok, I believe I got some feeling about what , e.g., the first term of the ELBO might be:

$\sum\_{k=1}^{K}\mathbb{E}[\log p(\mu\_k);m\_k;s\_k^{2}] \sum\_{k=1}^{K}\mathbb{E}[-\frac{1}{2}\log(\frac{1}{2\pi\sigma^2})-\frac{\mu\_k^{2}}{2\sigma^{2}};m\_k;s\_k^{2}] = \\

\sum\_{k=1}^{K}-\frac{1}{2}\log(\frac{1}{2\pi\sigma^2})-\mathbb{E}[\frac{\mu\_k^{2}}{2\sigma^{2}};m\_k;s\_k^{2}] = \sum\_{k=1}^{K}-\frac{1}{2}\log(\frac{1}{2\pi\sigma^2})-\frac{\mathbb{E}[\mu\_k^{2};m\_k;s\_k^{2}]}{2\sigma^{2}} = \\

\sum\_{k=1}^{K}-\frac{1}{2}\log(\frac{1}{2\pi\sigma^2})-\frac{s\_k^{2}+m\_k^{2}}{2\sigma^{2}} = -\frac{K}{2}\log(\frac{1}{2\pi\sigma^2})-\sum\_{k=1}^{K}\frac{s\_k^{2}+m\_k^{2}}{2\sigma^{2}}$

which is a function of the variational parameters and hence can be computed. |

I'm following-up on [this great answer](https://stats.stackexchange.com/a/540019/140365). Essentially, I was wondering how could misspecification of random-effects bias the estimates of fixed-effects?

So, can the same set of fixed-effect coefficients become biased if we create models that only differ in their random-effect specification?

Also as a conceptual matter, can we say in mixed-effect models, the fixed-effect coef is some kind of (weighted) average of the individual regression counterparts fit to each individual cluster and that is why fixed-effect coefs in mixed models can prevent [something like this Simpson's Paradox case](https://stats.stackexchange.com/a/478580/42952) from happening?

A possible `R` demonstration is appreciated. | >

> Can fixed-effects become biased due to random structure misspecification

>

>

>

Yes they can. Let's do a simulation in R to show it.

We will simulate data according to the following model:

```

Y ~ treatment + time + (1 | site) + (time | subject)

```

So we have fixed effects for `treatment` and `time`, random intercepts for `subject` nested within `site` and random slopes for `time` over `subject`. There are many things that we can vary with this simulation and obviously there is a limit to what I can do here. But if you (or others) have some suggestions for altering the simulations, then please let me know. Of course you can also play with the code yourself :)

In order to look at bias in the fixed effects we will do a Monte Carlo simulation. We will make use of the following helper function to determine if the model converged properly or not:

```r

hasConverged <- function (mm) {

if ( !(class(mm)[1] == "lmerMod" | class(mm)[1] == "lmerModLmerTest")) stop("Error must pass a lmerMod object")

retval <- NULL

if(is.null(unlist(mm@optinfo$conv$lme4))) {

retval = 1

}

else {

if (isSingular(mm)) {

retval = 0

} else {

retval = -1

}

}

return(retval)

}

```

So we will start by setting up the parameters for the nested factors:

```r

n_site <- 100; n_subject_site <- 5; n_time <- 2

```

which are the number of sites, the number of subjects per site and the number of measurements within subjects.

So now we simulate the factors:

```r

dt <- expand.grid(

time = seq(0, 2, length = n_time),

site = seq_len(n_site),

subject = seq_len(n_subject_site),

reps = 1:2

) %>%

mutate(

subject = interaction(site, subject),

treatment = sample(0:1, size = n_site * n_subject_site,, replace =

TRUE)[subject],

Y = 1

)

X <- model.matrix(~ treatment + time, dt) # model matrix for fixed effects

```

where we also add a column of 1s for the reponse at this stage in order to make use of the `lFormula` function in `lme4` which can construct the model matrix of random effects `Z`:

```r

myFormula <- "Y ~ treatment + time + (1 | site) + (time|subject)"

foo <- lFormula(eval(myFormula), dt)

Z <- t(as.matrix(foo$reTrms$Zt))

```

Now we set up the parameters we will use in the simulations:

```r

# fixed effects

intercept <- 10; trend <- 0.1; effect <- 0.5

# SDs of random effects

sigma_site <- 5; sigma_subject_ints <- 2; sigma_noise <- 1; sigma_subj_slopes <- 0.5

# correlation between intercepts and slopes for time over subject

rho_subj_time <- 0.2

betas <- c(intercept, effect, trend) # Fixed effects parameters

```

Then we perform the simulations:

```r

n_sim <- 200

# vectrs to store the fixed effects from each simulations

vec_intercept <- vec_treatment <- vec_time <- numeric(n_sim)

for (i in 1:n_sim) {

set.seed(i)

u_site <- rnorm(n_site, 0, sigma_site) # standard deviation of random intercepts for site

cormat <- matrix(c(sigma_subject_ints, rho_subj_time, rho_subj_time, sigma_subj_slopes), 2, 2) # correlation matrix

covmat <- lme4::sdcor2cov(cormat)

umat <- MASS::mvrnorm(n_site * n_subject_site, c(0, 0), covmat, empirical = TRUE) # simulate the random effects

u_subj <- c(rbind(umat[, 1], umat[, 2])) # lme4 needs the random effects in this order (interleaved) when there are slopes and intercepts

u <- c(u_subj, u_site)

e <- rnorm(nrow(dt), 0, sigma_noise) # residual error

dt$Y <- X %*% betas + Z %*% u + e

m0 <- lmer(myFormula, dt)

summary(m0) %>% coef() -> dt.tmp

if(hasConverged(m0)) {

vec_intercept[i] <- dt.tmp[1, 1]

vec_treatment[i] <- dt.tmp[2, 1]

vec_time[i] <- dt.tmp[3, 1]

} else {

vec_intercept[i] <- vec_treatment[i] <- vec_time[i] <- NA

}

}

```

And finally we can check for bias:

```r

mean(vec_intercept, na.rm = TRUE)

## [1] 10.04665

mean(vec_treatment, na.rm = TRUE)

## 0.497358

mean(vec_time, na.rm = TRUE)

## [1] 0.09761494

```

...and these agree closely with the values used in the simulation: 10, 0.5 and 0.1.

Now, let us repeat the simulations, based on the same model:

```r

Y ~ treatment + time + (1 | site) + (time|subject)

```

but instead of fitting this model, we will fit:

```r

Y ~ treatment + time + (1 | site)

```

So we just need to make a simple change:

```r

m0 <- lmer(myFormula, dt)

```

to

```r

m0 <- lmer(Y ~ treatment + time + (1 | site), data = dt )

```

And the results are:

```r

mean(vec_intercept, na.rm = TRUE)

## [1] 10.04169

mean(vec_treatment, na.rm = TRUE)

##[1] 0.5068864

mean(vec_time, na.rm = TRUE)

##[1] 0.09761494

```

So that's all good.

Now we make a simple change:

```r

n_site <- 4

```

So now, instead of 100 sites, we have 4 sites. We retain the number of subjects per site (5) and the number of time points per subject (2).

For the "correct" model, the results are:

```r

mean(vec_intercept, na.rm = TRUE)

## 10.16447

mean(vec_treatment, na.rm = TRUE)

## [1] 0.422812

mean(vec_time, na.rm = TRUE)

## [1] 0.1049933

```

Now, while the intercept and time are close to unbiased, the `treatment` fixed effect is a little off (0.42 vs 0.5, a bias of around -15% which perhaps stregthens the argument for not fitting random intercepts at all for such a small group even when the random structure is correct). But, if we fit the "wrong" model, the results are:

```r

mean(vec_intercept, na.rm = TRUE)

## [1] 10.0194

mean(vec_treatment, na.rm = TRUE)

## [1] 0.7084542

mean(vec_time, na.rm = TRUE)

## [1] 0.1029664

```

So now we find the bias of around +42%

As mentioned above, there are a huge number of possible ways this simulation can be altered and adapted, but it does show that biased fixed effects can result when the random structure is wrong, as requested. |

"The same value in all the parameters makes all the

neurons have the same effect on the input, which causes

the gradient with respect to all the weights is the same and, therefore,

the parameters always change in the same way."

Taken from my course. | a. For a beginner I would suggest the [fullstackdeeplearning](https://fullstackdeeplearning.com/spring2021/lecture-6/) course, it's a modern overview of tools and best practices for ML in production. As you can see below, there are a lot of moving pieces.

[](https://i.stack.imgur.com/rBfCn.png)

b. What you are asking for can be done with Spark + Airflow. In particular Airflow (or similar tools such as Luigi) allows to create very customised data pipelines. The learning curve is a bit steep, but there are good resources available online.

c. The course above should answer your questions, as the data side is not really deep learning specific, but can apply also to data-science workflows. |

Going through [some knowledge representation tutorials](http://www.cs.toronto.edu/~sheila/384/w11/) on resolution at the moment, and I came across [slide 05.KR, no77](http://www.cs.toronto.edu/~sheila/384/w11/Lectures/csc384w11-Lecture-05-KR.pdf).

There it is mentioned that "the procedure is also complete".

I think this completeness can not mean that if a sentence is entailed by KB, then it will be derived by resolution. For example, resolution can not derive $(q \lor \neg q)$ from a KB with single clause $\neg p$. (Example from KRR, Brachman and Levesque, page 53).

Could anyone help me figure out what is meant in this slide? Is the completeness of slide refer to being refutaton-complete and not a complete proof procedure? | Resolution is only refutationally complete, as you mentioned. This is *intended* and very useful, because it drastically reduces the search space. Instead of having to eventually derive every possible consequence (to find a proof of some conjecture), resolution is only trying to derive the empty clause. |

Let's say I have a context free language. It can be recognised by a pushdown automaton. Chances are it can't be parsed with a regular expression, as regular expressions are not as powerful as pushdown automata.

Now, let's put an additional constraint on the language: the maximum recursion amount must be finite.

Because the stack size has an upper bound in this case, my understanding is that there are finite number of stack configurations reachable. This means I could number them 0, 1, 2, 3, ..., N. So, I should be able to create a deterministic finite automaton (DFA) with states 0, 1, 2, 3, ..., N that recognises the same language that the pushdown automaton recognises.

Now, if I'm able to create an equivalent DFA, doesn't it mean that there exists a regular expression that can parse the context-free language with maximum recursion amount?

So, my theory is that all context-free languages that have a maximum recursion amount can be parsed with regular expressions. Is this theory correct? Of course, the theory says nothing about the complexity of the regular expression, it just says such a regular expression should exist.

So, in other words: if your stack memory is limited, a regexp can do the job of a HTML/XML parser!

In principle, isn't it true that computers with finite memory are actually DFAs and not Turning machines? | We can take it even further: if we put a limit on the size of the HTML/XML, say 1PB, then there is only a finite number of them, so we can trivially parse them in $O(1)$ using a giant look-up table. However, that doesn't seem to tell us much about the complexity of parsing HTML/XML in practice.

The issue at stake here is *modeling*. A good model abstracts away the salient points of a real world situation in a way that makes the situation amenable to mathematical inquiry. Modeling HTML/XMLs as instances of arbitrarily recursing language forms a better model in practice than your suggestion or my suggestion. |

I need a data structure which can include millions of elements, minimum and maximum must be accesable in constant time and inserting and erasing element time complexity must be better than linear. | A basic data structure that allows insertion and deletion in time $\Theta(\log n)$ are [balanced binary search trees](https://en.wikipedia.org/wiki/Balanced_binary_search_tree). Their memory overhead is reasonable (in case of AVL trees, two pointers and three bits per entry) so millions of entries are no problem at all on modern machines.

Note that in a search tree, finding the minimum (or maximum) is conceptually easy by descending always left (right) starting in the root. This works in time $\Theta(\log n)$, too, which is too slow for you.

However, we can certainly store pointers to these tree nodes, similar to front and end pointers in double-linked linear lists. But what happens when the elements are deleted? In this case, we have to find the [in-order](https://en.wikipedia.org/wiki/In-order_traversal#In-order_.28symmetric.29) successor (predecessor) and update the pointer to the minimum (maximum). Finding this node works in time $O(\log n)$ so it does not hurt deletion time, asymptotically.

You can, however, enable time $O(1)$ deletion of minimum and maximum by *threading* the tree, that is maintaining -- in addition to the binary search tree -- a double-linked list in in-order. Then, finding the new minimum/maximum is possible in time $O(1)$. This list requires additional space (two pointers per entry) and has to be maintained during insertions and deletions; this does not make the asymptotics worse but certainly slows down *every* such operation (I leave the details to you). So you have to trade-off the options given your application, that is which operations occur more often and which you want to be fastest.

Note that trees, as all linked structures, tend to be bad for memory hierarchies since they don't necessarily preserve data locality. If your sets are so large that they don't fit into cache completely, you should check out [B-trees](https://en.wikipedia.org/wiki/B-tree) which are designed to minimise page loads. The above works with them, too. |

My question is simple:

>

> What is the worst-case running time of the best known algorithm for computing an [eigendecomposition](http://mathworld.wolfram.com/EigenDecomposition.htmlBlockquoteBlockquote) of an $n \times n$ matrix?

>

>

>

Does eigendecomposition reduce to matrix multiplication or are the best known algorithms $O(n^3)$ (via [SVD](http://en.wikipedia.org/wiki/Singular_value_decomposition)) in the worst case ?

Please note that I am asking for a worst case analysis (only in terms of $n$), not for bounds with problem-dependent constants like condition number.

**EDIT**: Given some of the answers below, let me adjust the question: I'd be happy with an $\epsilon$-approximation. The approximation can be multiplicative, additive, entry-wise, or whatever reasonable definition you'd like. I am interested if there's a known algorithm that has better dependence on $n$ than something like $O(\mathrm{poly}(1/\epsilon)n^3)$?

**EDIT 2**: See [this related question](https://cstheory.stackexchange.com/questions/3115/complexity-of-finding-the-eigendecomposition-of-a-symmetric-matrix) on *symmetric matrices*. | Ryan answered a similar question on mathoverflow. Here's the link: [mathoverflow-answer](https://mathoverflow.net/questions/24287/what-is-the-best-algorithm-to-find-the-smallest-nonzero-eigenvalue-of-a-symmetric/24294#24294)

Basically, you can reduce eigenvalue computation to matrix multiplication by computing a symbolic determinant. This gives a running time of O($n^{\omega+1}m$) to get $m$ bits of the eigenvalues; the best currently known runtime is O($n^3+n^2\log^2 n\log b$) for an approximation within $2^{-b}$.

Ryan's reference is ``Victor Y. Pan, Zhao Q. Chen: The Complexity of the Matrix Eigenproblem. STOC 1999: 507-516''.

(I believe there is also a discussion about the relationship between the complexities of eigenvalues and matrix multiplication in the older Aho, Hopcroft and Ullman book ``The Design and Analysis of Computer Algorithms'', however, I don't have the book in front of me, and I can't give you the exact page number.) |

I'm facing a problem where I want to model a GEE with a tweedie distribution but it's not implemented in any R package that I found.

I know that GEEs and linear mixture models (LMM) are somehow related but I'm not an expert. It's very easy to define an LMM in Bayesian terms and carry out parameter estimation in `rStan` for example.

Is there a way to do this for GEEs as well? I'm interested in an example as well. | As far as I know, this is not possible. GEE uses estimating equations for the various moments. The benefit of this approach is that you don't have to write down a likelihood and make the assumptions therein, however this also makes it limited in terms of using Bayesian methods that require specification of a likelihood. Here is a link <https://ete-online.biomedcentral.com/articles/10.1186/s12982-015-0030-y> |

There is given sequence $a\_1,...a\_n$ such that there are $O(n^{\frac{3}{2}}) $ inversions in this sequence. I am thinking about sorting algorithm for that.

I know lower bound for number of comparisons - it is $O(n)$ - on the contrary, there would be a minimum finding algorithm faster than $O(n)$.

Nevertheless, I don't have idea how sort it in linear time ? What doy you think ?

Inversion is a pair $(i, j)$ such that $i < j$ and $a\_i > a\_j$ | You can't sort it in linear time.

Suppose you have $n$ items, and you divide them into $\sqrt{n}$ consecutive blocks of $\sqrt{n}$ items each.

You need to take $\sqrt{n} \log \sqrt{n}$ comparisons to sort each one. And there are $\sqrt{n}$ of them, giving $\theta(n \log n)$ time total. And it's easy to see that there can't be more than $n^{3/2}$ inversions in the sequence, since there can't be more than $n$ inversions in each subsequence. |

Stable Marriage Problem: <http://en.wikipedia.org/wiki/Stable_marriage_problem>

I am aware that for an instance of a SMP, many other stable marriages are possible apart from the one returned by the Gale-Shapley algorithm. However, if we are given only $n$ , the number of men/women, we ask the following question - Can we construct a preference list that gives the maximum number of stable marriages? What is the upper bound on such a number? | An upper bound on the maximum number of stable matchings for a Stable Marriage instance is given in my Master's thesis and it is extended to the Stable Roommates problem as well.The bound is of magnitude $O(n!/2^n)$ and it can be shown that it is actually of magnitude $O\left((n!)^\frac{2}{3}\right)$.

The document is thesis number 97 on page <http://mpla.math.uoa.gr/msc/> |

I was reading [this article](http://www.paulgraham.com/avg.html). The author talks about "The Blub Paradox". He says programming languages vary in power. That makes sense to me. For example, Python is more powerful than C/C++. But its performance is not as good as that of C/C++.

Is it always true that more powerful languages must **necessarily** have lesser **possible** performance when compared to less powerful languages? Is there a law/theory for this? | **TL;DR:** Performance is a factor of **Mechanical Sympathy** and **Doing Less**. Less *flexible* languages are generally doing less and being more mechanically sympathetic, hence they generally perform better *out of the box*.

### Physics Matter

As Jorg mentioned, CPU designs today co-evolved with C. It's especially telling for the x86 instruction set which features SSE instructions specifically tailored for NUL-terminated strings.

Other CPUs could be tailored for other languages, and that may give an edge to such other languages, but regardless of the instruction set there are some hard physics constraints:

* The size of transistors. The latest CPUs feature 7nm, with 5nm being experimental. Size immediately places an upper bound on density.

* The speed of light, or rather the speed of electricity in the medium, places on an upper bound on the speed of transmission of information.

Combining the two places an upper bound on the size of L1 caches, in the absence of 3D designs – which suffer from heat issues.

**Mechanical Sympathy** is the concept of designing software with hardware/platform constraints in mind, and essentially to play to the platform's strengths. Language Implementations with better Mechanical Sympathy will outperform those with lesser Mechanical Sympathy on a given platform.

A critical constraint today is being cache-friendly, notably keeping the working set in the L1 cache, and typically GCed languages use more memory (and more indirections) compared to languages where memory is manually managed.

### Less (Work) is More (Performance)

There's no better optimization than removing work.

A typical example is accessing a property:

* In C `value->name` is a single instruction (`lea`).

* In Python or Ruby, the same typically involves a hash table lookup.