diff --git "a/jsteinhardt_blog.jsonl" "b/jsteinhardt_blog.jsonl"

new file mode 100644
--- /dev/null

+++ "b/jsteinhardt_blog.jsonl"

@@ -0,0 +1,39 @@

+{"text": "New new blog location\n\nI’ve switched the host of my blog to Ghost, so my blog is now located at . This is different from the previous re-hosting on my personal website, which is no longer maintained.\n\n\nFeedly users can subscribe via RSS [here](https://feedly.com/i/subscription/feed/https://bounded-regret.ghost.io/rss/). \nOr click the bottom-right subscribe button on [this](https://bounded-regret.ghost.io/) page to get e-mail notifications of new posts.\n\n", "url": "https://jsteinhardt.wordpress.com/2021/10/13/new-new-blog-location/", "title": "New new blog location", "source": "jsteinhardt.wordpress.com", "source_type": "wordpress", "date_published": "2021-10-13T22:16:16+00:00", "paged_url": "https://jsteinhardt.wordpress.com/feed?paged=1", "authors": ["jsteinhardt"], "id": "7af08cc55e69629d19952f50757a4cc3", "summary": []}

+{"text": "New blog location\n\n*[Update: This post is out of date. My blog has now moved again, to .]*\n\n\nI’ve decided to move this blog to my personal website. It’s now located here: , including all the old posts and comments, plus some new and upcoming posts :).\n\n", "url": "https://jsteinhardt.wordpress.com/2021/06/23/new-blog-location/", "title": "New blog location", "source": "jsteinhardt.wordpress.com", "source_type": "wordpress", "date_published": "2021-06-23T23:39:11+00:00", "paged_url": "https://jsteinhardt.wordpress.com/feed?paged=1", "authors": ["jsteinhardt"], "id": "130777e457a45a578fe92c25625b980b", "summary": []}

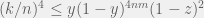

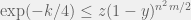

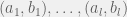

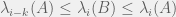

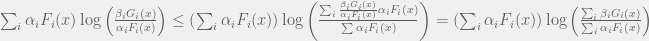

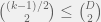

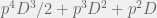

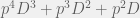

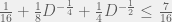

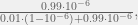

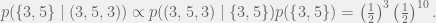

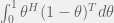

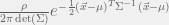

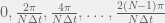

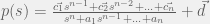

+{"text": "Sets with Small Intersection\n\nSuppose that we want to construct subsets  with the following properties:\n\n\n1. $|S_a| \\geq k$ for all $a$\n2. $|S_a \\cap S_b| \\leq 1$ for all $a \\neq b$\n\n\nThe goal is to construct as large a family of such subsets as possible (i.e., to make  as large as possible). If , then up to constants it is not hard to show that the optimal number of sets is  (that is, the trivial construction with all sets disjoint is essentially the best we can do).\n\n\nHere I am interested in the case when . In this case I claim that we can substantially outperform the trivial construction: we can take . The proof is a very nice application of the asymmetric Lovasz Local Lemma. (Readers can refresh their memory [here](https://en.wikipedia.org/wiki/Lov%C3%A1sz_local_lemma#Asymmetric_Lov.C3.A1sz_local_lemma) on what the asymmetric LLL says.)\n\n\n**Proof.** We will take a randomized construction. For , , let  be the event that . We will take the  to be independent each with probability . Also define the events\n\n\n![Y_{i,j,a,b} = I[i \\in S_a \\wedge j \\in S_a \\wedge i \\in S_b \\wedge j \\in S_b]](https://s0.wp.com/latex.php?latex=Y_%7Bi%2Cj%2Ca%2Cb%7D+%3D+I%5Bi+%5Cin+S_a+%5Cwedge+j+%5Cin+S_a+%5Cwedge+i+%5Cin+S_b+%5Cwedge+j+%5Cin+S_b%5D&bg=f0f0f0&fg=555555&s=0&c=20201002)\n\n\n![Z_{a} = I[|S_a| < k]](https://s0.wp.com/latex.php?latex=Z_%7Ba%7D+%3D+I%5B%7CS_a%7C+%3C+k%5D&bg=f0f0f0&fg=555555&s=0&c=20201002)\n\n\nIt suffices to show that with non-zero probability, all of the $Y_{i,j,a,b}$ and $Z_a$ are false. Note that each  depends on , and each  depends on . Thus each  depends on at most  other  and  other , and each  depends on at most  of the . Also note that  and  (by the Chernoff bound). It thus suffices to find constants  such that\n\n\n\n\n\n\n\n\nWe will guess , , in which case the bottom inequality is approximately  (which is satisfied for large enough ), and the top inequality is approximately , which is satisfied for  (assuming ). 
Hence in particular we can indeed take , as claimed.\n\n", "url": "https://jsteinhardt.wordpress.com/2017/03/17/sets-with-small-intersection/", "title": "Sets with Small Intersection", "source": "jsteinhardt.wordpress.com", "source_type": "wordpress", "date_published": "2017-03-17T04:42:29+00:00", "paged_url": "https://jsteinhardt.wordpress.com/feed?paged=1", "authors": ["jsteinhardt"], "id": "9674a312afb4fe19cb9b5ef08241f4fb", "summary": []}
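The randomized construction in the "Sets with Small Intersection" post can be explored numerically. Below is a minimal sketch that swaps in a Moser-Tardos-style resampling loop (an algorithmic version of the LLL; the post itself only uses the LLL to show existence): start from independent memberships with probability `p`, and while some bad event $Y_{i,j,a,b}$ or $Z_a$ holds, redraw only the membership indicators that event depends on. The value of `p` is left to the caller because the post's exact choice is not recoverable here, and the names `is_valid_family`, `find_violated_event`, and `resample_family` are hypothetical helpers, not from the original.

```python
import itertools
import random


def is_valid_family(sets, k):
    """Check properties 1 and 2: every set has size >= k, and
    every pair of distinct sets shares at most one element."""
    if any(len(s) < k for s in sets):
        return False
    return all(len(s & t) <= 1 for s, t in itertools.combinations(sets, 2))


def find_violated_event(member, n, m, k):
    """Return one bad event that currently holds, or None if none do.

    member[a][i] is True iff element i is in S_a (a hypothetical encoding
    of the membership indicators).
    """
    for a in range(m):
        if sum(member[a]) < k:  # Z_a: S_a is too small
            return ("Z", a)
    for a, b in itertools.combinations(range(m), 2):
        common = [i for i in range(n) if member[a][i] and member[b][i]]
        if len(common) >= 2:  # Y_{i,j,a,b}: S_a and S_b share i and j
            return ("Y", a, b, common[0], common[1])
    return None


def resample_family(n, m, k, p, seed=0, max_rounds=10_000):
    """Moser-Tardos-style resampling (an assumed substitute for the
    non-constructive LLL argument). Returns m sets or None on give-up."""
    rng = random.Random(seed)
    member = [[rng.random() < p for _ in range(n)] for _ in range(m)]
    for _ in range(max_rounds):
        event = find_violated_event(member, n, m, k)
        if event is None:
            return [{i for i in range(n) if member[a][i]} for a in range(m)]
        if event[0] == "Z":
            # Z_a depends on every membership indicator of S_a.
            member[event[1]] = [rng.random() < p for _ in range(n)]
        else:
            # Y_{i,j,a,b} depends only on the four indicators for i, j in S_a, S_b.
            _, a, b, i, j = event
            for s in (a, b):
                for e in (i, j):
                    member[s][e] = rng.random() < p
    return None  # the LLL conditions may not hold for these parameters


fano = [{1, 2, 3}, {1, 4, 5}, {1, 6, 7}, {2, 4, 6}, {2, 5, 7}, {3, 4, 7}, {3, 5, 6}]
print(is_valid_family(fano, 3))                     # True
print(is_valid_family([{1, 2, 3}, {1, 2, 4}], 3))   # False
family = resample_family(n=60, m=8, k=3, p=0.1, seed=1)
print(family is None or is_valid_family(family, 3))  # True
```

Any family the loop returns satisfies both properties by construction, since it only stops when no $Y$ or $Z$ event holds. When the asymmetric LLL conditions are met, this style of resampling is known to terminate quickly in expectation; for parameters outside that regime the `max_rounds` cap makes it give up instead of looping forever.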

+{"text": "Advice for Authors\n\nI’ve spent much of the last few days reading various ICML papers and I find there are a few pieces of feedback that I give consistently across several papers. I’ve collated some of these below. As a general note, many of these are about local style rather than global structure; I think that good local style probably contributes substantially more to readability than global structure and is in general under-rated. I’m in general pretty willing to break rules about global structure (such as even having a conclusion section in the first place! though this might cause reviewers to look at your paper funny), but not to break local stylistic rules without strong reasons.\n\n\n**General Writing Advice**\n\n\n* Be precise. This isn’t about being pedantic, but about maximizing information content. Choose your words carefully so that you say what you mean to say. For instance, replace “performance” with “accuracy” or “speed” depending on what you mean.\n* Be concise. Most of us write in an overly wordy style, because it’s easy to do and no one drilled it out of us. Not only does wordiness decrease readability, it wastes precious space if you have a page limit.\n* Avoid complex sentence structure. Most research is already difficult to understand and digest; there’s no reason to make it harder by having complex run-on sentences.\n* Use consistent phrasing. In general prose, we’re often told to refer to the same thing in different ways to avoid boring the reader, but in technical writing this will lead to confusion. Hopefully your actual results are interesting enough that the reader doesn’t need to be entertained by your large vocabulary.\n\n\n**Abstract**\n\n\n* There’s more than one approach to writing a good abstract, and which one you take will depend on the sort of paper you’re writing. 
I’ll give one approach that is good for papers presenting an unusual or unfamiliar idea to readers.\n* The first sentence / phrase should be something that all readers will agree with. The second should be something that many readers would find surprising, or wouldn’t have thought about before; but it should follow from (or at least be supported by) the first sentence. The general idea is that you need to start by warming the reader up and putting them in the right context, before they can appreciate your brilliant insight.\n* Here’s an example from my [Reified Context Models](http://jmlr.org/proceedings/papers/v37/steinhardta15.html) paper: “A classic tension exists between exact inference in a simple model and approximate inference in a complex model. The latter offers expressivity and thus accuracy, but the former provides coverage of the space, an important property for confidence estimation and learning with indirect supervision.” Note how the second sentence conveys a non-obvious claim — that coverage is important for confidence estimation as well as for indirect supervision. It’s tempting to lead with this in order to make the first sentence more punchy, but this will tend to go over readers’ heads. Imagine if the abstract had started, “In the context of inference algorithms, coverage of the space is important for confidence estimation and indirect supervision.” No one is going to understand what that means.\n\n\n**Introduction** \n\n\n* The advice in this section is most applicable to the introduction section (and maybe related work and discussion), but applies on some level to other parts of the paper as well.\n* Many authors (myself included) end up using phrases like “much recent interest” and “increasingly important” because these phrases show up frequently in academic papers, and they are vague enough to be defensible. Even though these phrases are common, they are bad writing! 
They are imprecise and rely on hedge words to avoid having to explain why something is interesting or important.\n* Make sure to provide context before introducing a new concept; if you suddenly start talking about “NP-hardness” or “local transformations”, you need to first explain to the reader why this is something that should be considered in the present situation.\n* Don’t beat around the bush; if the point is “A, therefore B” (where B is some good fact about your work), then say that, rather than being humble and just pointing out A.\n* Don’t make the reader wait for the payoff; spell it out in the introduction. I frequently find that I have to wait until Section 4 to find out why I should care about a paper; while I might read that far, most reviewers are going to give up about halfway through Section 1. (Okay, that was a bit of an exaggeration; they’ll probably wait until the end of Section 1 before giving up.)\n\n\n**Conclusion / Discussion**\n\n\n* I generally put in the conclusion everything that I wanted to put in the introduction, but couldn’t because readers wouldn’t be able to appreciate the context without reading the rest of the paper first. This is a relatively straightforward way to write a conclusion that isn’t just a re-hash of the introduction.\n* The conclusion can also be a good place to discuss open questions that you’d like other researchers to think about.\n* My model is that only the ~5 people most interested in your paper are going to actually read this section, so it’s worth somewhat tailoring to that audience. Unfortunately, the paper reviewers might also read this section, so you can’t tailor it too much or the reviewers might get upset if they end up not being in the target audience.\n* For theory papers, having a conclusion is completely optional (I usually skip it). In this case, open problems can go in the introduction. 
If you’re submitting a theory paper to NIPS or ICML, you unfortunately need a conclusion or reviewers will get upset. In my opinion, this is an instance where peer review makes the paper worse rather than better.\n\n\n**LaTeX**\n\n\n* Proper citation style: one should write “Widgets are awesome (Smith, 2001).” or “Smith (2001) shows that widgets are awesome.” but never “(Smith, 2001) shows that widgets are awesome.” You can control this in LaTeX using \\citep{} and \\citet{} if you use natbib.\n* Display equations can take up a lot of space if over-used, but at the same time, too many in-line equations can make your document hard to read. Think carefully about which equations are worth displaying, and whether your in-line equations are becoming too dense.\n* If you leave a blank line after \\end{equation} or $$, you will create an extra line break in the document. This is sort of annoying because white-space isn’t supposed to matter in that way, but you can save a lot of space by remembering this.\n* DON’T use the fullpage package. I’m used to using \\usepackage{fullpage} in documents to get the margins that I want, but this will override options in many style files (including jmlr.sty which is used in machine learning).\n* \\left( and \\right) can be convenient for auto-sizing parentheses, but are often overly conservative (e.g. making parentheses too big due to serifs or subscripts). It’s fine to use \\left( and \\right) initially, but you might want to specify explicit sizes with \\big(, \\Big(, \\bigg(, etc. in the final pass.\n* When displaying a sequence of equations (e.g. with the align environment), use \\stackrel{} on any non-trivial equality or inequality statements and justify these steps immediately after the equation. 
See the bottom of page 6 of [this](http://www.jmlr.org/proceedings/papers/v40/Steinhardt15.pdf) paper for an example.\n* Make sure that \\label{} commands come after the \\caption{} command in a figure (rather than before), otherwise your numbering will be wrong.\n\n\n**Math**\n\n\n* When using a variable that hasn’t appeared in a while, remind the reader what it is (e.g., “the sample space ” rather than just “”).\n* If it’s one of the main points of your work, call it a Theorem. If it’s a non-trivial conclusion that requires a somewhat involved argument (but it’s not a main point of the work), call it a Proposition. If the proof is short or routine, call it a Lemma, unless it follows directly from a Theorem you just stated, in which case call it a Corollary.\n* As a general rule there shouldn’t be more than 3 theorems in your paper (probably not more than 1). If you think this is unreasonable, consider that my [COLT 2015 paper](http://www.jmlr.org/proceedings/papers/v40/Steinhardt15.pdf) has 3 theorems across 24 pages, and my STOC 2017 paper has 2 theorems across 47 pages (not counting stating the same theorem in multiple locations).\n* If you just made a mathematical argument in the text that ended up with a non-trivial conclusion, you probably want to encapsulate it in a Proposition or Theorem. (Better yet, state the theorem before the argument so that the reader knows what you’re arguing for; although this isn’t always the best ordering.)\n", "url": "https://jsteinhardt.wordpress.com/2017/02/28/advice-for-authors/", "title": "Advice for Authors", "source": "jsteinhardt.wordpress.com", "source_type": "wordpress", "date_published": "2017-02-28T01:11:50+00:00", "paged_url": "https://jsteinhardt.wordpress.com/feed?paged=1", "authors": ["jsteinhardt"], "id": "3f3ca381d809cba7d7a64d49ac63f439", "summary": []}
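To illustrate the \stackrel{} and explicit-sizing advice from the LaTeX section of "Advice for Authors", here is a small hypothetical example. The inequality is a standard one chosen only for illustration (it is not from the post), and the snippet assumes the amsmath package is loaded for the align* environment. The non-trivial step is labeled (i) and justified immediately after the display, and \big( is used instead of \left( ... \right).

```latex
\begin{align*}
\big(a + b\big)^2 &= a^2 + 2ab + b^2 \\
  &\stackrel{(i)}{\leq} a^2 + \big(a^2 + b^2\big) + b^2 \\
  &= 2\big(a^2 + b^2\big).
\end{align*}
Here (i) uses $2ab \leq a^2 + b^2$, which follows from $(a - b)^2 \geq 0$.
```

The trivial equalities carry no label; only the step a reader might pause on gets one.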

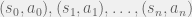

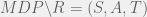

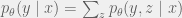

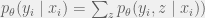

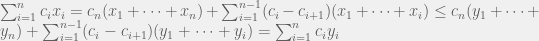

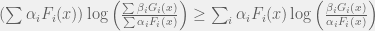

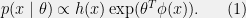

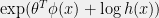

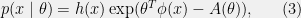

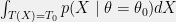

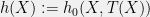

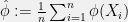

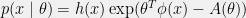

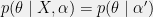

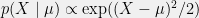

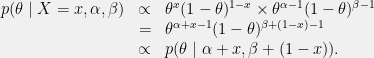

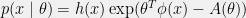

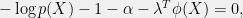

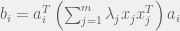

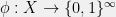

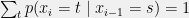

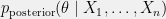

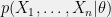

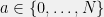

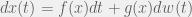

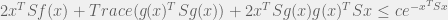

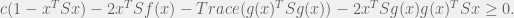

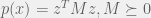

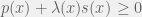

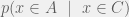

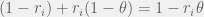

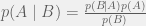

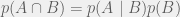

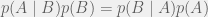

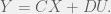

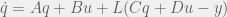

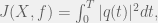

+{"text": "Model Mis-specification and Inverse Reinforcement Learning\n\nIn my previous post, “[Latent Variables and Model Mis-specification](https://jsteinhardt.wordpress.com/2017/01/10/latent-variables-and-model-mis-specification/)”, I argued that while machine learning is good at optimizing accuracy on observed signals, it has less to say about correctly inferring the values for unobserved variables in a model. In this post I’d like to focus in on a specific context for this: inverse reinforcement learning ([Ng et al. 2000](http://ai.stanford.edu/~ang/papers/icml00-irl.pdf), [Abbeel et al. 2004](http://machinelearning.wustl.edu/mlpapers/paper_files/icml2004_PieterN04.pdf), [Ziebart et al. 2008](http://www.aaai.org/Papers/AAAI/2008/AAAI08-227.pdf), [Ho et al. 2016](http://papers.nips.cc/paper/6391-generative-adversarial-imitation-learning)), where one observes the actions of an agent and wants to infer the preferences and beliefs that led to those actions. For this post, I am pleased to be joined by Owain Evans, who is an active researcher in this area and has co-authored an online [book](http://agentmodels.org/) about building models of agents (see [here](http://agentmodels.org/chapters/4-reasoning-about-agents.html) in particular for a tutorial on inverse reinforcement learning and inverse planning).\n\n\nOwain and I are particularly interested in inverse reinforcement learning (IRL) because it has been proposed (most notably by [Stuart Russell](http://papers.nips.cc/paper/6420-cooperative-inverse-reinforcement-learning)) as a method for learning human values in the context of AI safety; among other things, this would eventually involve learning and correctly implementing human values by artificial agents that are much more powerful, and act with much broader scope, than any humans alive today. 
While we think that overall IRL is a promising route to consider, we believe that there are also a number of non-obvious pitfalls related to performing IRL with a mis-specified model. The role of IRL in AI safety is to infer human values, which are represented by a reward function or utility function. But crucially, human values (or human reward functions) are never directly observed.\n\n\nBelow, we elaborate on these issues. We hope that by being more aware of these issues, researchers working on inverse reinforcement learning can anticipate and address the resulting failure modes. In addition, we think that considering issues caused by model mis-specification in a particular concrete context can better elucidate the general issues pointed to in the previous post on model mis-specification.\n\n\n### **Specific Pitfalls for Inverse Reinforcement Learning**\n\n\nIn “[Latent Variables and Model Mis-specification](https://jsteinhardt.wordpress.com/2017/01/10/latent-variables-and-model-mis-specification/)”, Jacob talked about *model mis-specification*, where the “true” model does not lie in the model family being considered. We encourage readers to read that post first, though we’ve also tried to make the below readable independently.\n\n\nIn the context of inverse reinforcement learning, one can see some specific problems that might arise due to model mis-specification. For instance, the following are things we could misunderstand about an agent, which would cause us to make incorrect inferences about the agent’s values:\n\n\n* The **actions** of the agent. If we believe that an agent is capable of taking a certain action, but in reality they are not, we might make strange inferences about their values (for instance, that they highly value not taking that action). Furthermore, if our data is e.g. videos of human behavior, we have an additional inference problem of recognizing actions from the frames.\n* The **information** available to the agent. 
If an agent has access to more information than we think it does, then a plan that seems irrational to us (from the perspective of a given reward function) might actually be optimal for reasons that we fail to appreciate. In the other direction, if an agent has less information than we think, then we might incorrectly believe that they don’t value some outcome A, even though they really only failed to obtain A due to lack of information.\n\n\n* The **long-term plans** of the agent. An agent might take many actions that are useful in accomplishing some long-term goal, but not necessarily within the time horizon over which we observe the agent. Inferring correct values thus also requires inferring such long-term goals. In addition, long time horizons can make models more brittle, thereby exacerbating model mis-specification issues.\n\n\nThere are likely other sources of error as well. The general point is that, given a mis-specified model of the agent, it is easy to make incorrect inferences about an agent’s values if the optimization pressure on the learning algorithm is only towards predicting actions correctly in-sample.\n\n\nIn the remainder of this post, we will cover each of the above aspects — actions, information, and plans — in turn, giving both quantitative models and qualitative arguments for why model mis-specification for that aspect of the agent can lead to perverse beliefs and behavior. First, though, we will briefly review the definition of inverse reinforcement learning and introduce relevant notation.\n\n\n### **Inverse Reinforcement Learning: Definition and Notation**\n\n\nIn inverse reinforcement learning, we want to model an agent taking actions in a given environment. We therefore suppose that we have a **state space** $S$ (the set of states the agent and environment can be in), an **action space** $A$ (the set of actions the agent can take), and a **transition function** $T(s, a, s')$, which gives the probability of moving from state $s$ to state $s'$ when taking action $a$. 
For instance, for an AI learning to control a car, the state space would be the possible locations and orientations of the car, the action space would be the set of control signals that the AI could send to the car, and the transition function would be the dynamics model for the car. The tuple $(S, A, T)$ is called an MDP\\R, which is a Markov Decision Process without a reward function. (The MDP\\R will either have a known horizon or a discount rate $\\gamma$, but we’ll leave these out for simplicity.)\n\n\n\n\n\n*Figure 1: Diagram showing how IRL and RL are related. (Credit: Pieter Abbeel’s* [*slides*](https://people.eecs.berkeley.edu/~pabbeel/cs287-fa12/slides/inverseRL.pdf) *on IRL)* \n\n\nThe inference problem for IRL is to infer a reward function $R$ given an optimal policy $\\pi$ for the MDP\\R (see Figure 1). We learn about the policy $\\pi$ from samples $(s, a)$ of states and the corresponding action according to $\\pi$ (which may be random). Typically, these samples come from a trajectory, which records the full history of the agent’s states and actions in a single episode:\n\n$$\\tau = \\big((s_0, a_0), (s_1, a_1), \\ldots, (s_n, a_n)\\big)$$\n\nIn the car example, this would correspond to the actions taken by an expert human driver who is demonstrating desired driving behaviour (where the actions would be recorded as the signals to the steering wheel, brake, etc.).\n\n\nGiven the MDP\\R and the observed trajectory $\\tau$, the goal is to infer the reward function $R$. 
In a Bayesian framework, if we specify a prior $P(R)$ on $R$, we have:\n\n$$P(R \\mid \\tau) \\propto P(R) \\prod_{i=0}^{n} P(a_i \\mid s_i, R)$$\n\nThe likelihood $P(a_i \\mid s_i, R)$ is just ![\\pi_R(s)[a_i]](https://s0.wp.com/latex.php?latex=%5Cpi_R%28s%29%5Ba_i%5D&bg=f0f0f0&fg=555555&s=0&c=20201002), where $\\pi_R$ is the optimal policy under the reward function $R$. Note that computing the optimal policy given the reward is in general non-trivial; except in simple cases, we typically approximate the policy using reinforcement learning (see Figure 1). Policies are usually assumed to be noisy (e.g. using a softmax instead of deterministically taking the best action). 
Due to the challenges of specifying priors, computing optimal policies and integrating over reward functions, most work in IRL uses some kind of approximation to the Bayesian objective (see the references in the introduction for some examples).\n\n\n### **Recognizing Human Actions in Data**\n\n\nIRL is a promising approach to learning human values in part because of the easy availability of data. For supervised learning, humans need to produce many labeled instances specialized for a task. IRL, by contrast, is an unsupervised/semi-supervised approach where any record of human behavior is a potential data source. Facebook’s logs of user behavior provide trillions of data points. YouTube videos, history books, and literature are a trove of data on human behavior in both actual and imagined scenarios. However, while there is lots of existing data that is informative about human preferences, we argue that exploiting this data for IRL will be a difficult, complex task with current techniques.\n\n\n*Inferring Reward Functions from Video Frames*\n\n\nAs we noted above, applications of IRL typically infer the reward function $R$ from observed samples of the human policy $\\pi$. Formally, the environment is a known MDP\\R and the observations are state-action pairs, $(s, a)$. This assumes that (a) the environment’s dynamics $T$ are given as part of the IRL problem, and (b) the observations are structured as “state-action” pairs. When the data comes from a human expert parking a car, these assumptions are reasonable. The states and actions of the driver can be recorded and a car simulator can be used for $T$. For data from YouTube videos or history books, the assumptions fail. The data is a sequence of partial observations: the transition function $T$ is unknown and the data does not separate out *state* and *action*. 
Indeed, it’s a challenging ML problem to infer human actions from text or videos.\n\n\n\n\n\n*Movie still: What actions are being performed in this situation?* ([Source](http://www.moviemoviesite.com/People/A/attenborough-richard/filmography.htm))\n\n\nAs a concrete example, suppose the data is a video of two co-pilots flying a plane. The successive frames provide only limited information about the state of the world at each time step and the frames often jump forward in time. So it’s more like a [POMDP](https://en.wikipedia.org/wiki/Partially_observable_Markov_decision_process) with a complex observation model. Moreover, the actions of each pilot need to be inferred. This is a challenging inference problem, because actions can be subtle (e.g. when a pilot nudges the controls or nods to his co-pilot).\n\n\nTo infer actions from observations, some model relating the true state-action pair $(s, a)$ to the observed video frame must be used. But choosing any model makes substantive assumptions about how human values relate to their behavior. For example, suppose someone attacks one of the pilots and (as a reflex) he defends himself by hitting back. Is this reflexive or instinctive response (hitting the attacker) an action that is informative about the pilot’s values? Philosophers and neuroscientists might investigate this by considering the mental processes that occur before the pilot hits back. If an IRL algorithm uses an off-the-shelf action classifier, it will lock in some (contentious) assumptions about these mental processes. At the same time, an IRL algorithm cannot *learn* such a model because it never directly observes the mental processes that relate rewards to actions.\n\n\n*Inferring Policies from Video Frames*\n\n\nWhen learning a reward function via IRL, the ultimate goal is to use the reward function to guide an artificial agent’s behavior (e.g. to perform useful tasks for humans). This goal can be formalized directly, without including IRL as an intermediate step. 
For example, in **Apprenticeship Learning,** the goal is to learn a “good” policy for the MDP\\R from samples of the human’s policy $\\pi$ (where $\\pi$ is assumed to approximately optimize an unknown reward function). In **Imitation Learning,** the goal is simply to learn a policy that is similar to the human’s policy.\n\n\nLike IRL, policy search techniques need to recognize an agent’s actions to infer their policy. So they have the same challenges as IRL in learning from videos or history books. Unlike IRL, policy search does not explicitly model the reward function that underlies an agent’s behavior. This leads to an additional challenge. Humans and AI systems face vastly different tasks and have different action spaces. Most actions in videos and books would never be performed by a software agent. Even when tasks are similar (e.g. humans driving in the 1930s vs. a self-driving car in 2016), it is a difficult [transfer learning](https://openreview.net/pdf?id=B16dGcqlx) problem to use human policies in one task to improve AI policies in another.\n\n\n*IRL Needs Curated Data*\n\n\nWe argued that records of human behaviour in books and videos are difficult for IRL algorithms to exploit. Data from Facebook seems more promising: we can store the state (e.g. the HTML or pixels displayed to the human) and each human action (clicks and scrolling). This extends beyond Facebook to any task that can be performed on a computer. While this covers a broad range of tasks, there are obvious limitations. Many people in the world have a limited ability to use a computer: we can’t learn about their values in this way. Moreover, some kinds of human preferences (e.g. preferences over physical activities) seem hard to learn about from behaviour on a computer.\n\n\n### **Information and Biases**\n\n\nHuman actions depend both on their preferences and their *beliefs*. The beliefs, like the preferences, are never directly observed. For narrow tasks (e.g. 
people choosing their favorite photos from a display), we can model humans as having full knowledge of the state (as in an [MDP](https://en.wikipedia.org/wiki/Markov_decision_process)). But for most real-world tasks, humans have limited information and their information changes over time (as in a [POMDP](https://en.wikipedia.org/wiki/Partially_observable_Markov_decision_process) or [RL](https://en.wikipedia.org/wiki/Reinforcement_learning) problem). If IRL assumes the human has full information, then the model is mis-specified and generalizing about what the human would prefer in other scenarios can be mistaken. Here are some examples:\n\n\n(1). Someone travels from their house to a cafe, which has already closed. If they are assumed to have full knowledge, then IRL would infer an alternative preference (e.g. going for a walk) rather than a preference to get a drink at the cafe.\n\n\n(2). Someone takes a drug that is widely known to be ineffective. This could be because they have a false belief that the drug is effective, or because they picked up the wrong pill, or because they take the drug for its side-effects. Each possible explanation could lead to different conclusions about preferences.\n\n\n(3). Suppose an IRL algorithm is inferring a person’s goals from key-presses on their laptop. The person repeatedly forgets their login passwords and has to reset them. This behavior is hard to capture with a POMDP-style model: humans forget some strings of characters and not others. IRL might infer that the person *intends* to repeatedly reset their passwords.\n\n\nExample (3) above arises from humans forgetting information — even if the information is only a short string of characters. This is one way in which humans systematically deviate from rational Bayesian agents. The field of psychology has documented many other deviations. 
Below we discuss one such deviation — *time-inconsistency* — which has been used to explain temptation, addiction and procrastination.\n\n\n*Time-inconsistency and Procrastination*\n\n\nAn IRL algorithm is inferring Alice’s preferences. In particular, the goal is to infer Alice’s preference for completing a somewhat tedious task (e.g. writing a paper) as opposed to relaxing. Alice has  days in which she could complete the task and IRL observes her working or relaxing on each successive day.\n\n\n\n\n\n*Figure 2: MDP graph for choosing whether to “work” or “wait” (relax) on a task.* \n\n\nFormally, let $R$ be the preference/reward Alice assigns to completing the task. Each day, Alice can “work” (receiving cost  for doing tedious work) or “wait” (cost ). If she works, she later receives the reward $R$ minus a tiny, linearly increasing cost (because it’s better to submit a paper earlier). Beyond the deadline at , Alice cannot get the reward $R$. For IRL, we fix  and  and infer $R$.\n\n\nSuppose Alice chooses “wait” on Day 1. If she were fully rational, it would follow that $R$ (the preference for completing the task) is small compared to  (the psychological cost of doing the tedious work). In other words, Alice doesn’t care much about completing the task. Rational agents will do the task on Day 1 or never do it. Yet humans often care deeply about tasks and still leave them until the last minute (when finishing early would be optimal). Here we imagine that Alice has 9 days to complete the task and waits until the last possible day.\n\n\n\n\n\n*Figure 3: Graph showing IRL inferences for the Optimal model (which is mis-specified) and the Possibly Discounting model (which includes hyperbolic discounting). On each day (x-axis) the model gets another observation of Alice’s choice. The y-axis shows the posterior mean for $R$ (reward for task), with the cost of the tedious work held fixed.* \n\n\nFigure 3 shows results from running IRL on this problem. 
There is an “Optimal” model, where the agent is optimal up to an unknown level of softmax random noise (a typical assumption for IRL). There is also a “Possibly Discounting” model, where the agent is either softmax optimal or is a hyperbolic discounter (with unknown level of discounting). We do joint Bayesian inference over the completion reward $R$, the softmax noise, and (for “Possibly Discounting”) how much the agent hyperbolically discounts. The work cost  is set to . Figure 3 shows that after 6 days of observing Alice procrastinate, the “Optimal” model is very confident that Alice does not care about the task . When Alice completes the task on the last possible day, the posterior mean on $R$ is not much more than the prior mean. By contrast, the “Possibly Discounting” model never becomes confident that Alice doesn’t care about the task. (Note that the gap between the models would be bigger for larger . The “Optimal” model’s posterior on $R$ shoots back to its Day-0 prior because it explains the whole action sequence as due to high softmax noise — optimal agents without noise would either do the task immediately or not at all. Full details and code are [here](http://agentmodels.org/chapters/5d-joint-inference.html).)\n\n\n### **Long-term Plans**\n\n\nAgents will often take long sequences of actions that generate negative utility for them in the moment in order to accomplish a long-term goal (for instance, studying every night in order to perform well on a test). Such long-term plans can make IRL more difficult for a few reasons. Here we focus on two: (1) IRL systems may not have access to the right type of data for learning about long-term goals, and (2) needing to predict long sequences of actions can make algorithms more fragile in the face of model mis-specification.\n\n\n*(1) Wrong type of data.* To make inferences based on long-term plans, it would be helpful to have coherent data about a single agent’s actions over a long period of time (so that we can e.g. 
see the plan unfolding). But in practice we will likely have substantially more data consisting of short snapshots of a large number of different agents (e.g. because many internet services already record user interactions, but it is uncommon for a single person to be exhaustively tracked and recorded over an extended period of time even while they are offline).\n\n\nThe former type of data (about a single representative population measured over time) is called **panel data**, while the latter type of data (about different representative populations measured at each point in time) is called **repeated cross-section data**. The differences between these two types of data are [well-studied](http://www.uio.no/studier/emner/sv/oekonomi/ECON5103/v10/undervisningsmateriale/PDAppl_17.pdf) in econometrics, and a general theme is the following: it is difficult to infer individual-level effects from cross-sectional data.\n\n\nAn easy and familiar example of this difference (albeit not in an IRL setting) can be given in terms of election campaigns. Most campaign polling is cross-sectional in nature: a different population of respondents is polled at each point in time. Suppose that Hillary Clinton gives a speech and her overall support according to cross-sectional polls increases by 2%; what can we conclude from this? Does it mean that 2% of people switched from Trump to Clinton? Or did 6% of people switch from Trump to Clinton while 4% switched from Clinton to Trump?\n\n\nAt a minimum, then, using cross-sectional data leads to a difficult disaggregation problem; for instance, different agents taking different actions at a given point in time could be due to being at different stages in the same plan, or due to having different plans, or some combination of these and other factors.
Collecting demographic and other side data can help us (by allowing us to look at variation and shifts within each subpopulation), but it is unclear if this will be sufficient in general.\n\n\nOn the other hand, there are some services (such as Facebook or Google) that do have extensive data about individual users across a long period of time. However, this data has another issue: it is incomplete in a very systematic way (since it only tracks online behaviour). For instance, someone might go online most days to read course notes and Wikipedia for a class; this is data that would likely be recorded. However, it is less likely that one would have a record of that person taking the final exam, passing the class and then getting an internship based on their class performance. Of course, some pieces of this sequence would be inferable based on some people’s e-mail records, etc., but it would likely be under-represented in the data relative to the record of Wikipedia usage. In either case, some non-trivial degree of inference would be necessary to make sense of such data.\n\n\n*(2) Fragility to mis-specification.* Above we discussed why observing only short sequences of actions from an agent can make it difficult to learn about their long-term plans (and hence to reason correctly about their values). Next we discuss another potential issue — fragility to model mis-specification.\n\n\nSuppose someone spends 99 days doing a boring task to accomplish an important goal on day 100. A system that is only trying to correctly predict actions will be right 99% of the time if it predicts that the person inherently enjoys boring tasks. 
Of course, a system that understands the goal and how the tasks lead to the goal will be right 100% of the time, but even minor errors in its understanding could bring the accuracy back below 99%.\n\n\nThe general issue is the following: large changes in the model of the agent might only lead to small changes in the predictive accuracy of the model, and the longer the time horizon on which a goal is realized, the more this might be the case. This means that even slight mis-specifications in the model could tip the scales back in favor of a (very) incorrect reward function. A potential way of dealing with this might be to identify “important” predictions that seem closely tied to the reward function, and focus particularly on getting those predictions right (see [here](http://www.di.ens.fr/~slacoste/research/pubs/lacoste-AISTATS11-lossBayes.pdf) for a paper exploring a similar idea in the context of approximate inference).\n\n\nOne might object that this is only a problem in this toy setting; for instance, in the real world, one might look at the particular way in which someone is studying or performing some other boring task to see that it coherently leads towards some goal (in a way that would be less likely were the person to be doing something boring purely for enjoyment). In other words, correctly understanding the agent’s goals might allow for more fine-grained accurate predictions which would fare better under e.g. log-score than would an incorrect model.\n\n\nThis is a reasonable objection, but there are some historical examples of this going wrong that should give one pause. That is, there are historical instances where: (i) people expected a more complex model that seemed to get at some underlying mechanism to outperform a simpler model that ignored that mechanism, and (ii) they were wrong (the simpler model did better under log-score). The example we are most familiar with is n-gram models vs. 
parse trees for language modelling; the most successful language models (in terms of having the best log-score on predicting the next word given a sequence of previous words) essentially treat language as a high-order Markov chain or hidden Markov model, despite the fact that linguistic theory predicts that language should be tree-structured rather than linearly-structured. Indeed, NLP researchers have tried building language models that assume language is tree-structured, and these models perform worse, or at least do not seem to have been adopted in practice (this is true both for older discrete models and newer continuous models based on neural nets). It’s plausible that a similar issue will occur in inverse reinforcement learning, where correctly inferring plans is not enough to win out in predictive performance. The reason for the two issues might be quite similar (in language modelling, the tree structure only wins out in statistically uncommon corner cases involving long-term and/or nested dependencies, and hence getting that part of the prediction correct doesn’t help predictive accuracy much).\n\n\nThe overall point is: in the case of even slight model mis-specification, the “correct” model might actually perform worse under typical metrics such as predictive accuracy. Therefore, more careful methods of constructing a model might be necessary.\n\n\n### **Learning Values != Robustly Predicting Human Behaviour**\n\n\nThe problems with IRL described so far will result in poor performance for predicting human choices out-of-sample. For example, if someone is observed doing boring tasks for 99 days (where they only achieve the goal on Day 100), they’ll be predicted to continue doing boring tasks even when a short-cut to the goal becomes available. 
So even if the goal is simply to predict human behaviour (not to infer human values), mis-specification leads to bad predictions on realistic out-of-sample scenarios.\n\n\nLet’s suppose that our goal is not to predict human behaviour but to create AI systems that promote and respect human values. These goals (predicting humans and building safe AI) are distinct. Here’s an example that illustrates the difference. Consider a long-term smoker, Bob, who would continue smoking even if there were (counterfactually) a universally effective anti-smoking treatment. Maybe Bob is in denial about the health effects of smoking or Bob thinks he’ll inevitably go back to smoking whatever happens. If an AI system were assisting Bob, we might expect it to avoid promoting his smoking habit (e.g. by not offering him cigarettes at random moments). This is not paternalism, where the AI system imposes someone else’s values on Bob. The point is that even if Bob would continue smoking across many counterfactual scenarios this doesn’t mean that he places value on smoking.\n\n\nHow do we choose between the theory that Bob values smoking and the theory that he does not (but smokes anyway because of the powerful addiction)? Humans choose between these theories based on our experience with addictive behaviours and our insights into people’s preferences and values. This kind of insight can’t easily be captured as formal assumptions about a model, or even as a criterion about counterfactual generalization. (The theory that Bob values smoking *does* make accurate predictions across a wide range of counterfactuals.) Because of this, learning human values from IRL has a more profound kind of model mis-specification than the examples in Jacob’s previous post. 
Even in the limit of data generated from an infinite series of random counterfactual scenarios, standard IRL algorithms would not infer someone’s true values.\n\n\nPredicting human actions is neither necessary nor sufficient for learning human values. In what ways, then, are the two related? One such way stems from the premise that if someone spends more resources making a decision, the resulting decision tends to be more in keeping with their true values. For instance, someone might spend lots of time thinking about the decision, they might consult experts, or they might try out the different options in a trial period before they make the real decision. Various authors have thus suggested that people’s choices under sufficient “reflection” act as a reliable indicator of their true values. Under this view, predicting a certain kind of behaviour (choices under reflection) is sufficient for learning human values. Paul Christiano has written about [some](https://medium.com/ai-control/model-free-decisions-6e6609f5d99e#.y6gg55z3r) [proposals](https://sideways-view.com/2016/12/01/optimizing-the-news-feed/) for doing this, though we will not discuss them here (the first link is for general AI systems while the second is for newsfeeds). In general, turning these ideas into algorithms that are tractable and learn safely remains a challenging problem.\n\n\n### **Further reading**\n\n\nThere is [research](http://www.jmlr.org/papers/v12/choi11a.html) on doing IRL for agents in POMDPs. Owain and collaborators explored the effects of limited information and cognitive biases on IRL: [paper](https://arxiv.org/pdf/1512.05832), [paper](http://citeseerx.ist.psu.edu/viewdoc/download?doi=10.1.1.705.2548&rep=rep1&type=pdf), [online book](http://agentmodels.org).\n\n\nFor many environments it will not be possible to *identify* the reward function from the observed trajectories. These identification problems are related to the mis-specification problems but are not the same thing. 
Active learning can help with identification ([paper](https://arxiv.org/abs/1601.06569)).\n\n\nPaul Christiano raised many similar points about mis-specification in a [post](https://medium.com/ai-control/the-easy-goal-inference-problem-is-still-hard-fad030e0a876#.mb33rtxo8) on his blog.\n\n\nFor a big-picture monograph on relations between human preferences, economic utility theory and welfare/well-being, see Hausman’s [“Preference, Value, Choice and Welfare”.](https://www.amazon.com/Preference-Choice-Welfare-Daniel-Hausman/dp/1107695120)\n\n\n### **Acknowledgments**\n\n\nThanks to Sindy Li for reviewing a full draft of this post and providing many helpful comments. Thanks also to Michael Webb and Paul Christiano for doing the same on specific sections of the post.\n\n", "url": "https://jsteinhardt.wordpress.com/2017/02/07/model-mis-specification-and-inverse-reinforcement-learning/", "title": "Model Mis-specification and Inverse Reinforcement Learning", "source": "jsteinhardt.wordpress.com", "source_type": "wordpress", "date_published": "2017-02-07T21:25:15+00:00", "paged_url": "https://jsteinhardt.wordpress.com/feed?paged=1", "authors": ["jsteinhardt"], "id": "ae7ff499faa47ec174608acb353071c8", "summary": []}

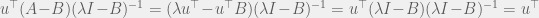

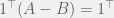

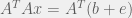

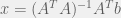

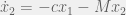

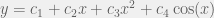

+{"text": "Linear algebra fact\n\nHere is an interesting linear algebra fact: let  be an  matrix and  be a vector such that . Then for any matrix , .\n\n\nThe proof is just basic algebra: .\n\n\nWhy care about this? Let’s imagine that  is a (not necessarily symmetric) stochastic matrix, so . Let  be a low-rank approximation to  (so  consists of all the large singular values, and  consists of all the small singular values). Unfortunately, since  is not symmetric, this low-rank approximation doesn’t preserve the eigenvalues of  and so we need not have . The  can be thought of as a “correction” term such that the resulting matrix is still low-rank, but we’ve preserved one of the eigenvectors of .\n\n", "url": "https://jsteinhardt.wordpress.com/2017/02/06/linear-algebra-fact/", "title": "Linear algebra fact", "source": "jsteinhardt.wordpress.com", "source_type": "wordpress", "date_published": "2017-02-06T02:33:39+00:00", "paged_url": "https://jsteinhardt.wordpress.com/feed?paged=1", "authors": ["jsteinhardt"], "id": "eefc78d4cc3385de7429409ee95f7f40", "summary": []}

+{"text": "Prékopa–Leindler inequality\n\nConsider the following statements:\n\n\n1. The shape with the largest volume enclosed by a given surface area is the -dimensional sphere.\n2. A marginal or sum of log-concave distributions is log-concave.\n3. Any Lipschitz function of a standard -dimensional Gaussian distribution concentrates around its mean.\n\n\nWhat do these all have in common? Despite being fairly non-trivial and deep results, they all can be proved in less than half of a page using the [Prékopa–Leindler inequality](https://en.wikipedia.org/wiki/Pr%C3%A9kopa%E2%80%93Leindler_inequality).\n\n\n(I won’t show this here, or give formal versions of the statements above, but time permitting I will do so in a later blog post.)\n\n", "url": "https://jsteinhardt.wordpress.com/2017/02/05/prekopa-leindler-inequality/", "title": "Prékopa–Leindler inequality", "source": "jsteinhardt.wordpress.com", "source_type": "wordpress", "date_published": "2017-02-05T22:02:43+00:00", "paged_url": "https://jsteinhardt.wordpress.com/feed?paged=1", "authors": ["jsteinhardt"], "id": "014ba3e02251aa6ba5389cabf071f444", "summary": []}

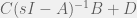

+{"text": "Latent Variables and Model Mis-specification\n\nMachine learning is very good at optimizing predictions to match an observed signal — for instance, given a dataset of input images and labels of the images (e.g. dog, cat, etc.), machine learning is very good at correctly predicting the label of a new image. However, performance can quickly break down as soon as we care about criteria other than predicting observables. There are several cases where we might care about such criteria:\n\n\n* In scientific investigations, we often care less about predicting a specific observable phenomenon, and more about what that phenomenon implies about an underlying scientific theory.\n* In economic analysis, we are most interested in what policies will lead to desirable outcomes. This requires predicting what would counterfactually happen if we were to enact the policy, which we (usually) don’t have any data about.\n* In machine learning, we may be interested in learning value functions which match human preferences (this is especially important in complex settings where it is hard to specify a satisfactory value function by hand). However, we are unlikely to observe information about the value function directly, and instead must infer it implicitly. For instance, one might infer a value function for autonomous driving by observing the actions of an expert driver.\n\n\nIn all of the above scenarios, the primary object of interest — the scientific theory, the effects of a policy, and the value function, respectively — is not part of the observed data. Instead, we can think of it as an unobserved (or “latent”) variable in the model we are using to make predictions. 
While we might hope that a model that makes good predictions will also place correct values on unobserved variables, this need not be the case in general, especially if the model is *mis-specified*.\n\n\nI am interested in latent variable inference because I think it is a potentially important sub-problem for building AI systems that behave safely and are aligned with human values. The connection is most direct for value learning, where the value function is the latent variable of interest and the fidelity with which it is learned directly impacts the well-behavedness of the system. However, one can imagine other uses as well, such as making sure that the concepts that an AI learns sufficiently match the concepts that the human designer had in mind. It will also turn out that latent variable inference is related to *counterfactual reasoning*, which has a large number of tie-ins with building safe AI systems that I will elaborate on in forthcoming posts.\n\n\nThe goal of this post is to explain why problems show up if one cares about predicting latent variables rather than observed variables, and to point to a research direction (counterfactual reasoning) that I find promising for addressing these issues. More specifically, in the remainder of this post, I will: (1) give some formal settings where we want to infer unobserved variables and explain why we can run into problems; (2) propose a possible approach to resolving these problems, based on counterfactual reasoning.\n\n\n1 Identifying Parameters in Regression Problems\n-----------------------------------------------\n\n\nSuppose that we have a regression model , which outputs a probability distribution over  given a value for . Also suppose we are explicitly interested in identifying the “true” value of  rather than simply making good predictions about  given .
For instance, we might be interested in whether smoking causes cancer, and so we care not just about predicting whether a given person will get cancer () given information about that person (), but specifically whether the coefficients in  that correspond to a history of smoking are large and positive.\n\n\nIn a typical setting, we are given data points  on which to fit a model. Most methods of training machine learning systems optimize predictive performance, i.e. they will output a parameter  that (approximately) maximizes . For instance, for a linear regression problem we have . Various more sophisticated methods might employ some form of regularization to reduce overfitting, but they are still fundamentally trying to maximize some measure of predictive accuracy, at least in the limit of infinite data.\n\n\nCall a model **well-specified** if there is some parameter  for which  matches the true distribution over , and call a model **mis-specified** if no such  exists. One can show that for well-specified models, maximizing predictive accuracy works well (modulo a number of technical conditions). In particular, maximizing  will (asymptotically, as ) lead to recovering the parameter .\n\n\nHowever, if a model is mis-specified, then it is not even clear what it means to correctly infer . We could declare the  maximizing predictive accuracy to be the “correct” value of , but this has issues:\n\n\n1. While  might do a good job of predicting  in the settings we’ve seen, it may not predict  well in very different settings.\n2. If we care about determining  for some scientific purpose, then good predictive accuracy may be an unsuitable metric.
For instance, even though margarine consumption might [correlate well with](http://www.tylervigen.com/spurious-correlations) (and hence be a good predictor of) divorce rate, that doesn’t mean that there is a causal relationship between the two.\n\n\nThe two problems above also suggest a solution: we will say that we have done a good job of inferring a value for  if  can be used to make *good predictions in a wide variety of situations*, and not just the situation we happened to train the model on. (For the latter case of predicting causal relationships, the “wide variety of situations” should include the situation in which the relevant causal intervention is applied.)\n\n\nNote that both of the problems above are different from the typical statistical problem of overfitting. Classically, overfitting occurs when a model is too complex relative to the amount of data at hand, but the problems above can occur even when we have a large amount of data. This is illustrated in the following graph:\n\n\n\n\n\nHere the blue line is the data we have (), and the green line is the model we fit (with slope and intercept parametrized by ). We have more than enough data to fit a line to it. However, because the true relationship is quadratic, the best linear fit depends heavily on the distribution of the training data. If we had fit to a different part of the quadratic, we would have gotten a potentially very different result. Indeed, in this situation, there is no linear relationship that can do a good job of extrapolating to new situations, unless the domain of those new situations is restricted to the part of the quadratic that we’ve already seen.\n\n\nI will refer to the type of error in the diagram above as *mis-specification error*. Again, mis-specification error is different from error due to overfitting.
Overfitting occurs when there is too little data and noise is driving the estimate of the model; in contrast, mis-specification error can occur even if there is plenty of data, and instead occurs because the best-performing model is different in different scenarios.\n\n\n**2 Structural Equation Models**\n--------------------------------\n\n\nWe will next consider a slightly subtler setting, which in economics is referred to as a *structural equation model*. In this setting we again have an output  whose distribution depends on an input , but now this relationship is mediated by an *unobserved* variable . A common example is a [discrete choice](https://en.wikipedia.org/wiki/Discrete_choice) model, where consumers make a choice among multiple goods () based on a consumer-specific utility function () that is influenced by demographic and other information about the consumer (). Natural language processing provides another source of examples: in [semantic parsing](http://www-nlp.stanford.edu/software/sempre/), we have an input utterance () and output denotation (), mediated by a latent logical form ; in [machine translation](https://en.wikipedia.org/wiki/Statistical_machine_translation), we have input and output sentences ( and ) mediated by a latent [alignment](https://en.wikipedia.org/wiki/IBM_alignment_models) ().\n\n\nSymbolically, we represent a structural equation model as a parametrized probability distribution , where we are trying to fit the parameters . Of course, we can always turn a structural equation model into a regression model by using the identity , which allows us to ignore  altogether. In economics this is called a *reduced form model*. 
We use structural equation models if we are specifically interested in the unobserved variable  (for instance, in the examples above we are interested in the value function for each individual, or in the logical form representing the sentence’s meaning).\n\n\nIn the regression setting where we cared about identifying , it was obvious that there was no meaningful “true” value of  when the model was mis-specified. In this structural equation setting, we now care about the latent variable , which can take on a meaningful true value (e.g. the actual utility function of a given individual) even if the overall model  is mis-specified. It is therefore tempting to think that if we fit parameters  and use them to impute , we will have meaningful information about the actual utility functions of individual consumers. However, this is a notational sleight of hand — just because we call  “the utility function” does not make it so. The variable  need not correspond to the actual utility function of the consumer, nor do the consumer’s preferences even need to be representable by a utility function.\n\n\nWe can understand what goes wrong by considering the following procedure, which formalizes the proposal above:\n\n\n1. Find  to maximize the predictive accuracy on the observed data, , where . Call the result .\n2. Using this value , treat  as being distributed according to . On a new value  for which  is not observed, treat  as being distributed according to .\n\n\nAs before, if the model is well-specified, one can show that such a procedure asymptotically outputs the correct probability distribution over . However, if the model is mis-specified, things can quickly go wrong. For example, suppose that  represents what choice of drink a consumer buys, and  represents consumer utility (which might be a function of the price, attributes, and quantity of the drink).
Now suppose that individuals have preferences which are influenced by unmodeled covariates: for instance, a preference for cold drinks on warm days, while the input  does not have information about the outside temperature when the drink was bought. This could cause any of several effects:\n\n\n* If there is a covariate that happens to correlate with temperature in the data, then we might conclude that that covariate is predictive of preferring cold drinks.\n* We might increase our uncertainty about  to capture the unmodeled variation in .\n* We might implicitly increase uncertainty by moving utilities closer together (allowing noise or other factors to more easily change the consumer’s decision).\n\n\nIn practice we will likely have some mixture of all of these, and this will lead to systematic biases in our conclusions about the consumers’ utility functions.\n\n\nThe same problems as before arise: while we by design place probability mass on values of  that correctly predict the observation , under model mis-specification this could be due to spurious correlations or other perversities of the model. Furthermore, even though predictive performance is high on the observed data (and data similar to the observed data), there is no reason for this to continue to be the case in settings very different from the observed data, which is particularly problematic if one is considering the effects of an intervention. 
For instance, while inferring preferences between hot and cold drinks might seem like a silly example, the [design](http://web.stanford.edu/~jdlevin/Papers/Auctions.pdf) of [timber auctions](http://web.stanford.edu/~jdlevin/Papers/Skewing.pdf) constitutes a much more important example with a roughly similar flavour, where it is important to correctly understand the utility functions of bidders in order to predict their behaviour under alternative auction designs (the model is also more complex, allowing even more opportunities for mis-specification to cause problems).\n\n\n3 A Possible Solution: Counterfactual Reasoning\n-----------------------------------------------\n\n\nIn general, under model mis-specification we have the following problems:\n\n\n* It is often no longer meaningful to talk about the “true” value of a latent variable  (or at the very least, not one within the specified model family).\n* Even when there is a latent variable  with a well-defined meaning, the imputed distribution over  need not match reality.\n\n\nWe can make sense of both of these problems by thinking in terms of *counterfactual reasoning*. Without defining it too formally, counterfactual reasoning is the problem of making good predictions not just in the actual world, but in a wide variety of counterfactual worlds that “could” exist. (I recommend [this](http://leon.bottou.org/publications/pdf/tr-2012-09-12.pdf) paper as a good overview for machine learning researchers.)\n\n\nWhile machine learning models are typically optimized to predict well on a specific distribution, systems capable of counterfactual reasoning must make good predictions on many distributions (essentially any distribution that can be captured by a reasonable counterfactual). This stronger guarantee allows us to resolve many of the issues discussed above, while still thinking in terms of predictive performance, which historically seems to have been a successful paradigm for machine learning.
In particular:\n\n\n* While we can no longer talk about the “true” value of , we can say that a value of  is a “good” value if it makes good predictions on not just a single test distribution, but many different counterfactual test distributions. This allows us to have more confidence in the generalizability of any inferences we draw based on  (for instance, if  is the coefficient vector for a regression problem, any variable with positive sign is likely to robustly correlate with the response variable for a wide variety of settings).\n* The imputed distribution over a variable  must also lead to good predictions for a wide variety of distributions. While this does not force  to match reality, it is a much stronger condition and does at least mean that any aspect of  that can be measured in some counterfactual world must correspond to reality. (For instance, any aspect of a utility function that could at least counterfactually result in a specific action would need to match reality.)\n* We will successfully predict the effects of an intervention, as long as that intervention leads to one of the counterfactual distributions considered.\n\n\n(Note that it is less clear how to actually train models to optimize counterfactual performance, since we typically won’t observe the counterfactuals! But it does at least define an end goal with good properties.)\n\n\nMany people have a strong association between the concepts of “counterfactual reasoning” and “causal reasoning”. It is important to note that these are distinct ideas; causal reasoning is a type of counterfactual reasoning (where the counterfactuals are often thought of as centered around interventions), but I think of counterfactual reasoning as any type of reasoning that involves making robustly correct statistical inferences across a wide variety of distributions. 
On the other hand, some people take robust statistical correlation to be the *definition* of a causal relationship, and thus do consider causal and counterfactual reasoning to be the same thing.\n\n\nI think that building machine learning systems that can do a good job of counterfactual reasoning is likely to be an important challenge, especially in cases where reliability and safety are important, and necessitates changes in how we evaluate machine learning models. In my mind, while the Turing test has many flaws, one thing it gets very right is the ability to evaluate the accuracy of counterfactual predictions (since dialogue provides the opportunity to set up counterfactual worlds via shared hypotheticals). In contrast, most existing tasks focus on repeatedly making the same type of prediction with respect to a fixed test distribution. This latter type of benchmarking is of course easier and more clear-cut, but fails to probe important aspects of our models. I think it would be very exciting to design good benchmarks that require systems to do counterfactual reasoning, and I would even be happy to [incentivize](https://jsteinhardt.wordpress.com/2016/12/31/individual-project-fund-further-details/) such work monetarily.\n\n\n**Acknowledgements**\n\n\nThanks to Michael Webb, Sindy Li, and Holden Karnofsky for providing feedback on drafts of this post. If any readers have additional feedback, please feel free to send it my way.\n\n", "url": "https://jsteinhardt.wordpress.com/2017/01/10/latent-variables-and-model-mis-specification/", "title": "Latent Variables and Model Mis-specification", "source": "jsteinhardt.wordpress.com", "source_type": "wordpress", "date_published": "2017-01-10T02:45:46+00:00", "paged_url": "https://jsteinhardt.wordpress.com/feed?paged=1", "authors": ["jsteinhardt"], "id": "e19dda5ee6f3f5f7754fa81360a2cdf1", "summary": []}

+{"text": "Individual Project Fund: Further Details\n\nIn my post on [where I plan to donate in 2016](https://jsteinhardt.wordpress.com/2016/12/28/donations-for-2016/), I said that I would set aside $2000 for funding promising projects that I come across in the next year:\n\n\n\n> The idea behind the project fund is … [to] give in a low-friction way on scales that are too small for organizations like Open Phil to think about. Moreover, it is likely good for me to develop a habit of evaluating projects I come across and thinking about whether they could benefit from additional money (either because they are funding constrained, or to incentivize an individual who is on the fence about carrying the project out). Finally, if this effort is successful, it is possible that other EAs will start to do this as well, which could magnify the overall impact. I think there is some danger that I will not be able to allocate the $2000 in the next year, in which case any leftover funds will go to next year’s donor lottery.\n> \n> \n\n\nIn this post I will give some further details about this fund. My primary goal is to give others an idea of what projects I am likely to consider funding, so that anyone who thinks they might be a good fit for this can get in contact with me. (I also expect many of the best opportunities to come from people whom I meet in person but who don’t necessarily read this blog, so I plan to actively look for projects throughout the year as well.)\n\n\nI am looking to fund or incentivize projects that meet several of the criteria below:\n\n\n* The project is in the area of computer science, especially one of machine learning, cyber security, algorithmic game theory, or computational social choice. 
[Some other areas that I would be somewhat likely to consider, in order of plausibility: economics, statistics, political science (especially international security), and biology.]\n* The project either wouldn’t happen, or would seem less worthwhile / higher-effort without the funding.\n* The organizer is someone who either I or someone I trust has an exceptionally high opinion of.\n* The project addresses a topic that I personally think is highly important. High-level areas that I tend to care about include international security, existential risk, AI safety, improving political institutions, improving scientific institutions, and helping the global poor. Technical areas that I tend to care about include reliable machine learning, machine learning and security, counterfactual reasoning, and value learning. On the other hand, if you have a project that you feel has a strong case for importance but doesn’t fit into these areas, I am interested in hearing about it.\n* It is unlikely that this project or a substantially similar project would be done by someone else at a similar level of quality. (Or, whoever else is likely to do it would instead focus on a similarly high-value project, if this one were to be taken care of.)\n* The topic pertains to a technical area that I or someone I trust has a high degree of expertise in, and can evaluate more quickly and accurately than a non-specialized funder.\n\n\nIt isn’t necessary to meet all of the criteria above, but I would probably want most things I fund to meet at least 4 of these 6.\n\n\nHere are some concrete examples of things I might fund:\n\n\n* Someone is thinking of doing a project that is undervalued (in terms of career benefits) but would be very useful. 
They don’t feel excited about allocating time to a non-career-relevant task, but would feel more excited if they received an award of $1000 for their efforts.\n* Someone I trust is starting a new discussion group in an area that I think is important, but can’t find anyone to sponsor it, and wants money for providing food at the meetings.\n* Someone wants to do an experiment that I find valuable, but needs more compute resources than they have, and could use money for buying AWS hours.\n* Someone wants to curate a valuable dataset and needs money for hiring Mechanical Turk workers.\n* Someone is organizing a workshop and needs money for securing a venue.\n* One project I am particularly interested in is a good survey paper at the intersection of machine learning and cyber security. If you might be interested in doing this, I would likely be willing to pay you.\n* There are likely many projects in the area of political activism that I would be interested in funding, although (due to crowdedness concerns) I have a particularly high bar for this area in terms of the criteria I laid out above.\n\n\nIf you think you might have a project that could use funding, please get in touch with me at jacob.steinhardt@gmail.com. Even if you are not sure whether your project would be a good target for funding, I am very happy to talk to you about it. In addition, please feel free to comment either here or via e-mail if you have feedback on this general idea, or thoughts on types of small-project funding that I missed above.\n\n", "url": "https://jsteinhardt.wordpress.com/2016/12/31/individual-project-fund-further-details/", "title": "Individual Project Fund: Further Details", "source": "jsteinhardt.wordpress.com", "source_type": "wordpress", "date_published": "2016-12-31T22:10:28+00:00", "paged_url": "https://jsteinhardt.wordpress.com/feed?paged=1", "authors": ["jsteinhardt"], "id": "9ddf977255a2988c534758d4b282c17d", "summary": []}